The 'Slop' Problem Nobody Wants to Talk About

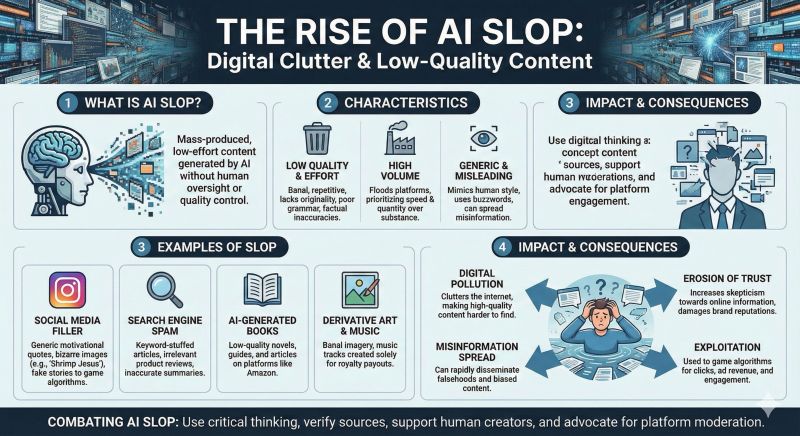

Last year, the word "slop" dominated conversations about AI. Not the kind you feed pigs, but the increasingly common AI-generated content that floods social media, blogs, and email inboxes. Low-effort, vaguely plausible, utterly forgettable. Merriam-Webster made it the word of the year, and honestly, it felt earned.

Then Microsoft CEO Satya Nadella said something that cut through all the noise.

Instead of panicking or apologizing for the state of AI-generated content, Nadella reframed the entire conversation. He didn't deny the slop exists. Instead, he asked us to stop thinking about AI as a "slop generator" and start thinking about it as a "bicycle for the mind." More specifically, as a scaffolding for human potential, not a replacement for it.

On the surface, this sounds like corporate PR spin. Microsoft has a massive investment in AI. Of course they want you to feel good about it. But here's where it gets interesting: the data is actually backing him up.

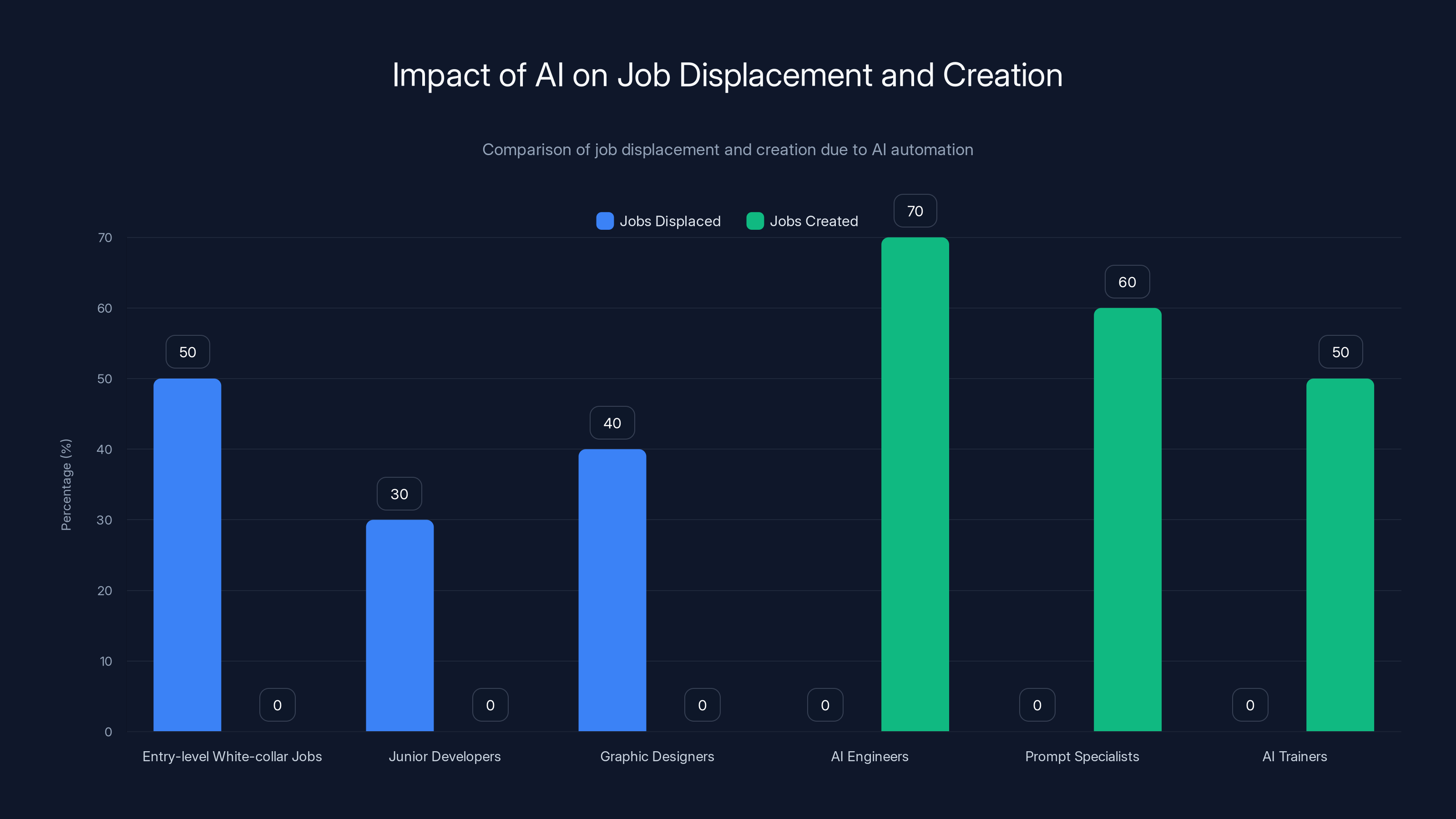

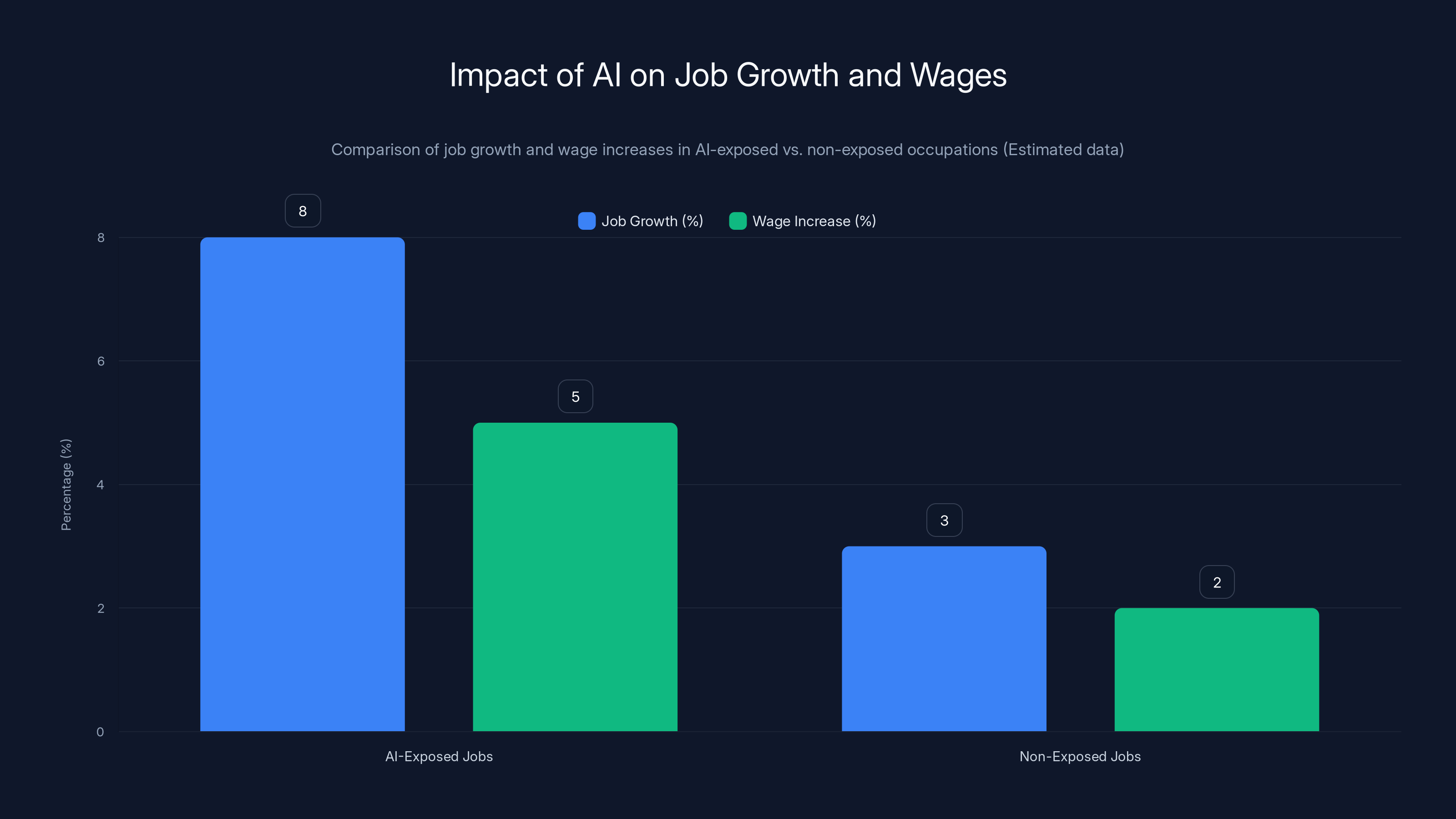

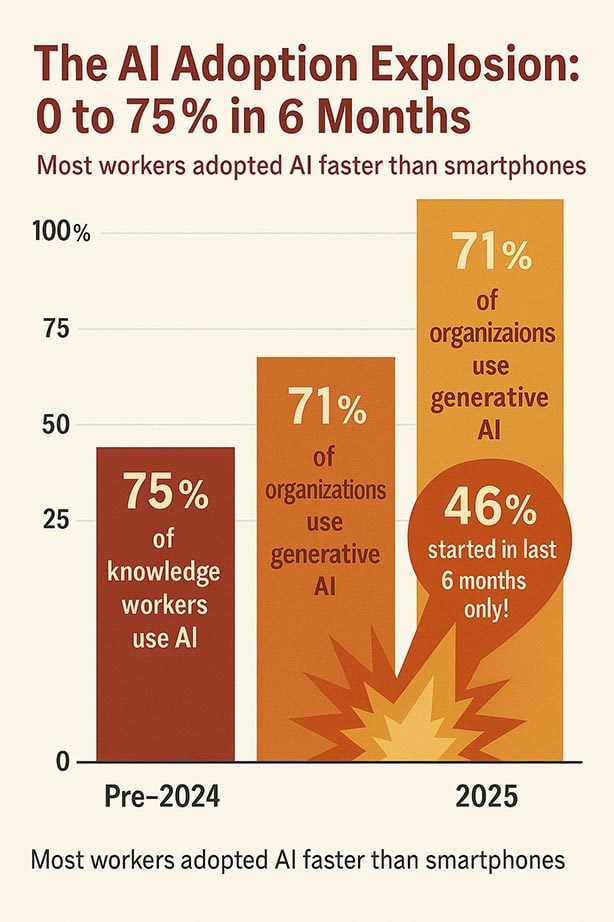

Vanguard's 2026 economic forecast found something counterintuitive. The approximately 100 occupations most exposed to AI automation are outperforming the rest of the labor market in terms of job growth and real wage increases. The jobs people feared AI would destroy are actually flourishing. Workers using AI effectively are making themselves more valuable, not more replaceable.

So what's really going on here? Is Nadella right? Is AI actually a tool that amplifies human capability? Or are we just in the early stages of a massive displacement we haven't fully recognized yet?

The answer is messier, more interesting, and more nuanced than either the AI-apocalypse crowd or the tech optimists want to admit.

The "Slop" Narrative and Why It Started

To understand Nadella's pushback, you need to understand why the "slop" concept took off so hard and so fast.

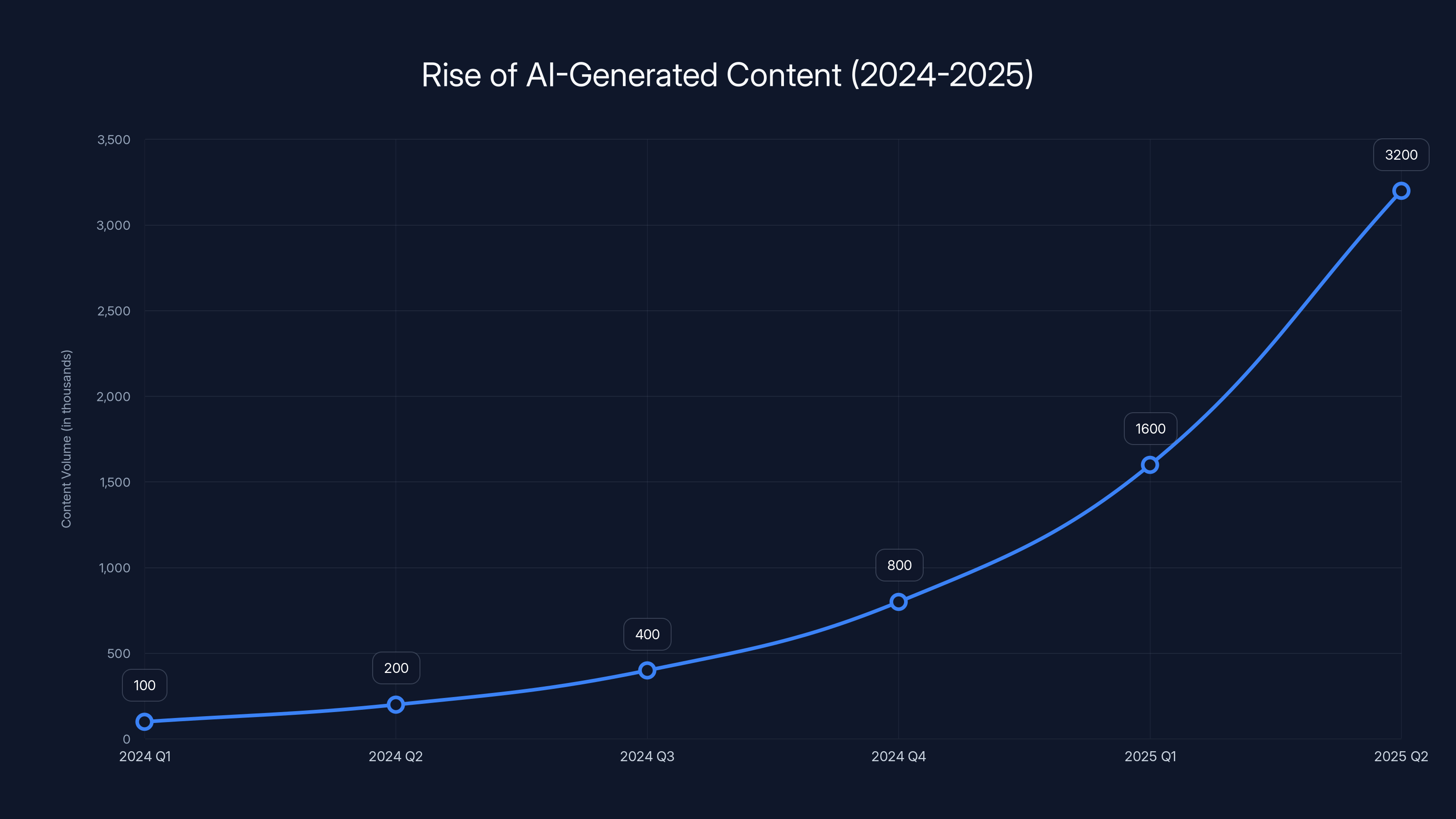

In 2024 and early 2025, AI tools became cheap and accessible. ChatGPT, Claude, Gemini: all available to anyone with an internet connection. What followed was predictable: a flood of low-effort content. AI-generated blog posts optimized for SEO but stripped of personality. Social media accounts pumping out hundreds of posts daily, each one a variation on the same theme. LinkedIn suddenly filled with AI-written gratitude posts from accounts that had never posted before.

It wasn't that the AI was fundamentally broken. It was that the economics incentivized volume over quality. If you could generate 50 blog posts in an hour for $2 worth of API calls, why spend a day crafting one great post? The marginal cost of another mediocre piece of content approaches zero.

This created a visible problem. Feed readers started filling with noise. Social algorithms had to contend with a massive new source of low-signal content. Google announced it was updating its algorithm to penalize AI-generated content that didn't add value. The sense was real: we're drowning in AI slop.

But here's what the slop narrative missed: the people complaining loudest about AI-generated content were often using AI tools themselves. The contradiction was invisible to them. They'd use ChatGPT to draft an email, Claude to brainstorm ideas, then turn around and mock AI-generated blog posts. They were living Nadella's vision without realizing it.

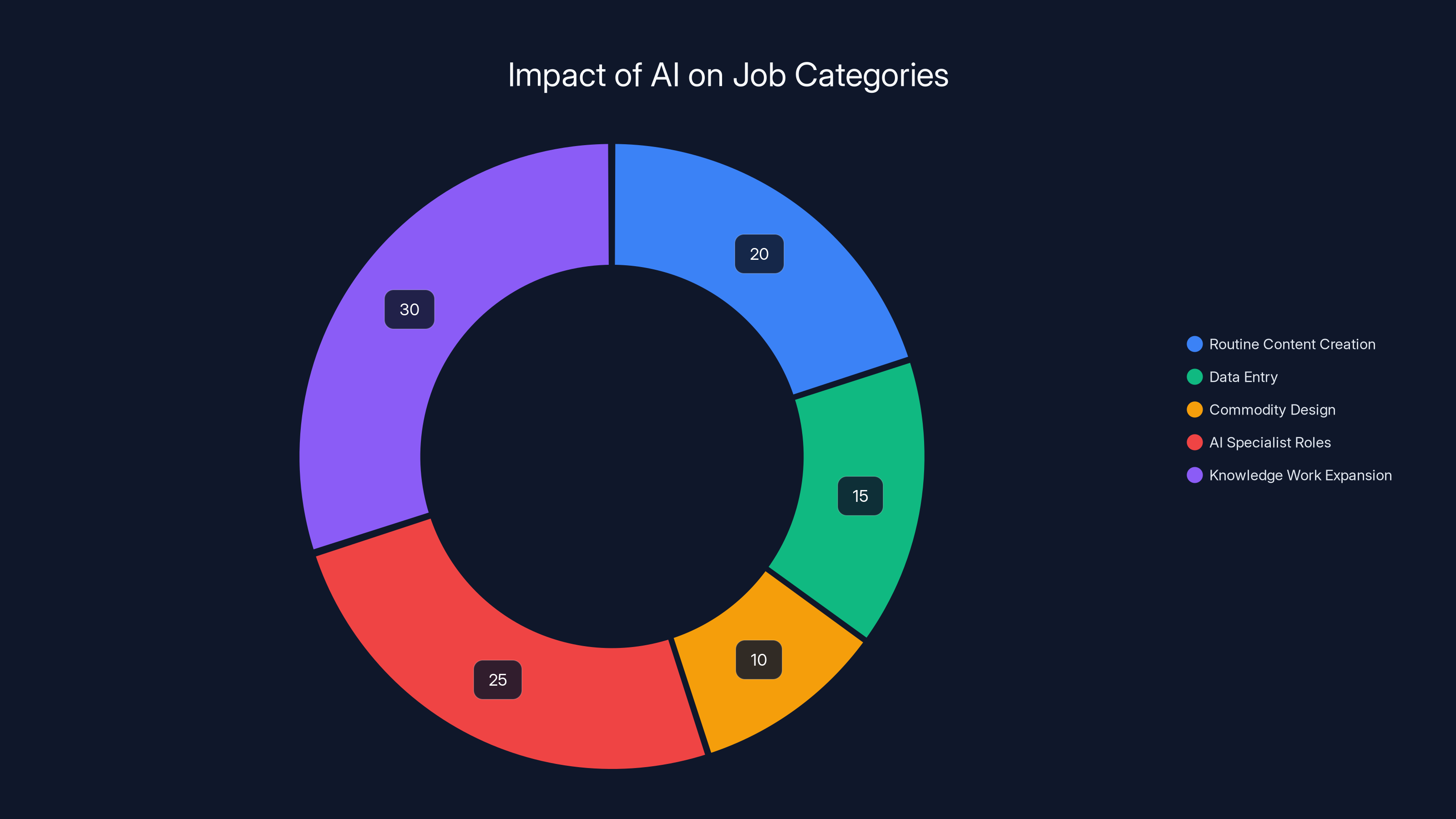

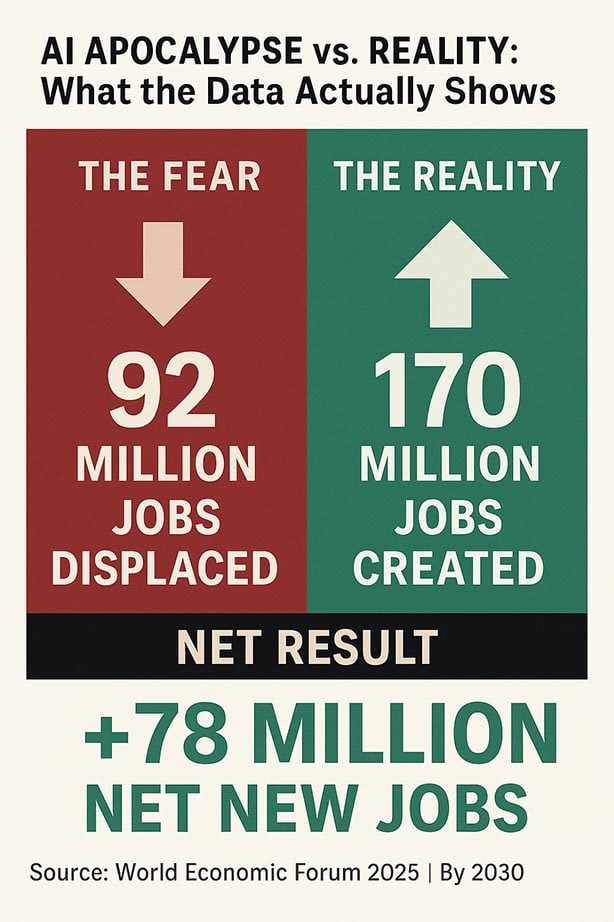

AI is both displacing and creating jobs, with significant growth in AI specialist roles and knowledge work. Estimated data.

The Job Displacement Concern: Real or Overblown?

The slop narrative was never really about content quality. It was a proxy for a deeper fear: if AI can generate all this content automatically, what happens to the people who used to write it?

In May 2025, Anthropic CEO Dario Amodei warned that AI could take away half of all entry-level white-collar jobs within five years. He doubled down on this prediction in a 60 Minutes interview just weeks before Nadella's bicycle-for-the-mind post. High unemployment rates among junior developers seemed to confirm the trend. Graphic designers posted about clients demanding AI-generated work for 10% of the previous price.

The fear wasn't irrational. It was based on real displacement happening in real time.

But the Vanguard data suggests something more complex. Yes, some jobs are being disrupted. Yes, some workers are struggling. But the occupations most exposed to AI automation are growing faster than the rest of the market. Wages in those fields are rising faster too.

How is this possible? Three things are happening simultaneously:

First, automation of routine tasks creates space for higher-value work. A junior developer using AI might write 10x more code in the same time. They're not obsolete—they're more productive. The best juniors aren't losing opportunities; they're multiplying them. The worst ones? Yeah, they're struggling. But they were always going to struggle.

Second, new jobs are being created faster than old ones disappear. AI engineers, prompt specialists, AI trainers, people who know how to evaluate AI output—these are new job categories that barely existed three years ago. They're paying well and growing fast.

Third, the jobs that are growing fastest are the ones where human judgment matters most. Strategy, design, content creation, sales, management—these all benefit from AI augmentation but can't be replaced by it. At least not yet.

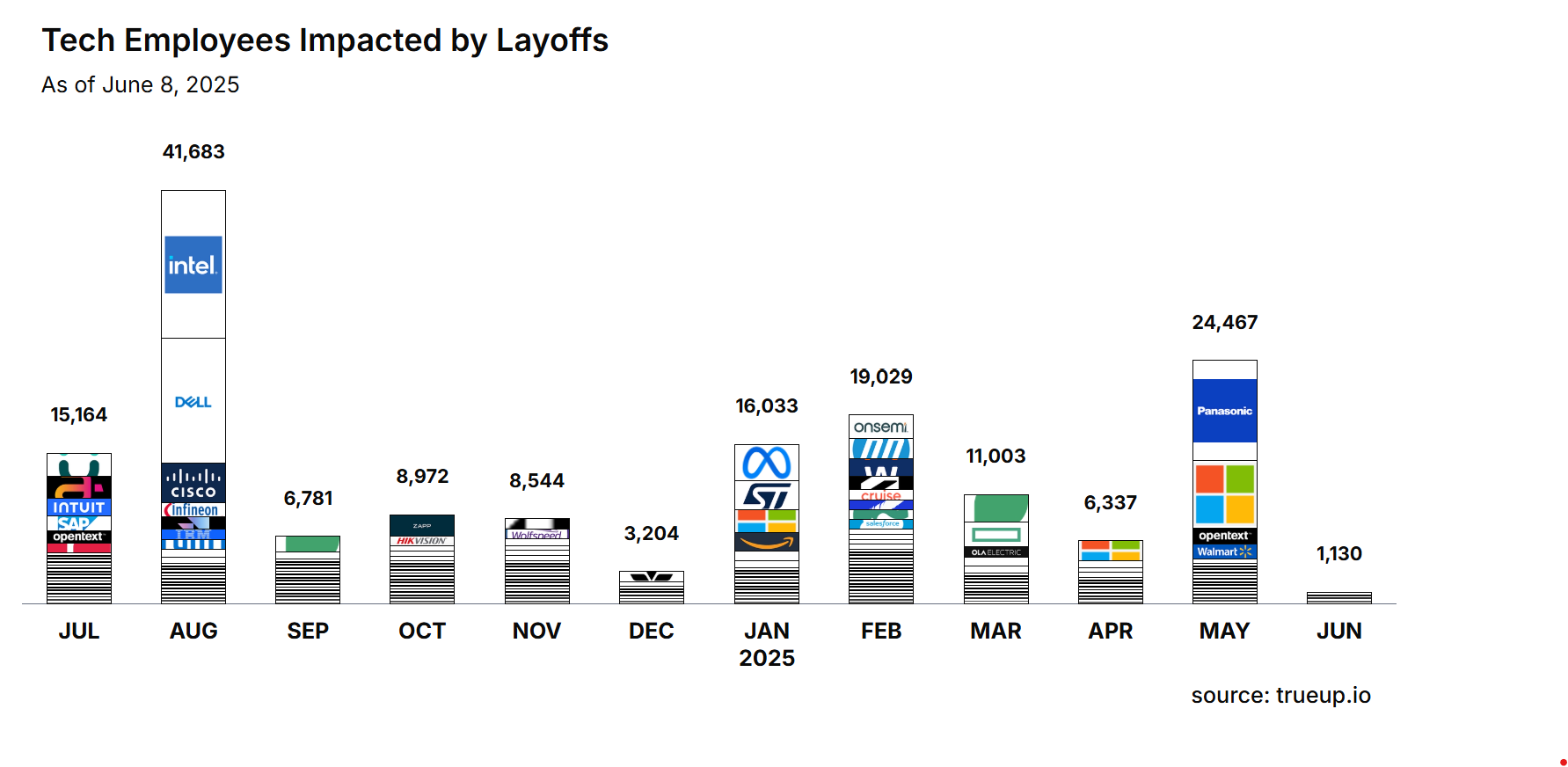

The Microsoft layoff situation illustrates this perfectly. In 2025, Microsoft laid off over 15,000 people while reporting record revenues and profits. Nadella framed it as repositioning for AI transformation, not as a direct result of AI efficiency. And that distinction matters. The company was reallocating capital from slowing business areas to growth areas. That's normal business strategy. It's not unique to AI.

What MIT's Project Iceberg Actually Tells Us

Much of the job displacement discussion hinges on one study: MIT's Project Iceberg.

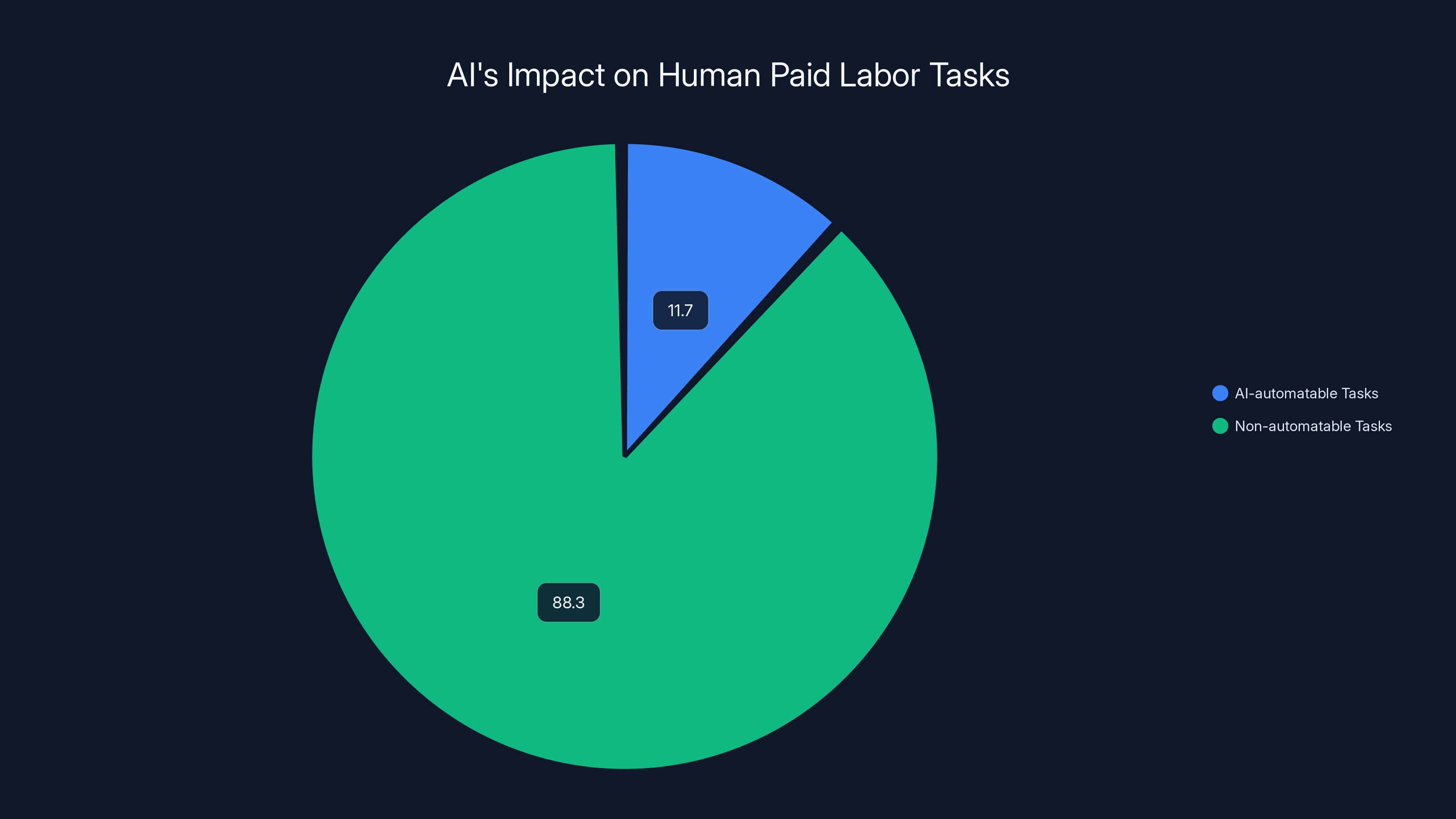

The research found that AI is capable of performing tasks that account for about 11.7% of paid human labor, measured by wages. That number gets repeated constantly. "AI could replace 12% of jobs" becomes the headline. But that's misreading the research.

Project Iceberg isn't measuring job replacement. It's measuring task displacement within jobs. The researchers are asking: what percentage of the work that a human does in their job could be offloaded to AI? Then they're calculating wages attached to that work.

Examples they cite: automated paperwork for nurses, AI-written code for developers, data entry for administrative assistants. None of these result in the job disappearing. The job changes. The person's role evolves. The time previously spent on these tasks can be redirected to higher-value work.
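The gap between task exposure and job loss is easy to see with a toy calculation. The sketch below is not Project Iceberg's methodology. The occupations, headcounts, wages, and automatable shares are made-up numbers, chosen only to show how a wage-weighted exposure figure can be sizable while no job is fully automatable.

```python
from dataclasses import dataclass


@dataclass
class Occupation:
    name: str
    workers: int
    annual_wage: float
    automatable_share: float  # hypothetical fraction of task time AI could handle


def wage_weighted_exposure(occupations: list[Occupation]) -> float:
    """Share of the total wage bill attached to tasks AI could perform."""
    total_wages = sum(o.workers * o.annual_wage for o in occupations)
    exposed_wages = sum(
        o.workers * o.annual_wage * o.automatable_share for o in occupations
    )
    return exposed_wages / total_wages


def workers_fully_automatable(occupations: list[Occupation]) -> int:
    """Headcount in occupations where every task could be handed to AI."""
    return sum(o.workers for o in occupations if o.automatable_share >= 1.0)


# Illustrative numbers only, not taken from the study.
occupations = [
    Occupation("nurse", 100, 80_000, 0.10),
    Occupation("developer", 50, 120_000, 0.20),
    Occupation("administrative assistant", 50, 40_000, 0.15),
]

exposure = wage_weighted_exposure(occupations)
replaced = workers_fully_automatable(occupations)
print(f"wage-weighted task exposure: {exposure:.1%}")
print(f"workers whose whole job is automatable: {replaced}")
```

With these invented inputs, roughly 14% of the wage bill is attached to automatable tasks, yet the count of fully automatable jobs is zero. That is the same shape as the misread headline: a share of work, not a share of workers.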

A nurse isn't replaced by AI that handles paperwork. The nurse becomes more effective. She spends less time on forms and more time with patients. A developer using GitHub Copilot isn't becoming obsolete—they're writing more complex systems faster.

This distinction between "task automation" and "job replacement" is crucial. It's also where Nadella's framing becomes pragmatically useful. Calling it a scaffolding for human potential is more accurate than calling it job replacement, because most AI isn't replacing people. It's changing what people do.

AI is predicted to displace a significant percentage of entry-level white-collar jobs, junior developers, and graphic designers. However, it also creates new opportunities in emerging fields like AI engineering and prompt specialization. Estimated data based on current trends.

The Jobs Actually Being Hit

That said, pretending there's no displacement would be dishonest.

Certain jobs are being hit harder than others. Professional graphic designers who handle routine design work—resize logos, create social media graphics, make marketing collateral—are facing real pressure. A non-designer can now use Midjourney or DALL-E to generate decent images that would've previously required hiring a professional. The market for commodity design work has contracted.

Junior content writers, especially those producing blog posts or product descriptions, have felt the impact. Clients who used to hire freelance writers now generate the first draft with AI and hire an editor instead. That's a significant income shift for someone relying on writing for their primary income.

Marketing roles focused on repetitive content creation have been disrupted. Some marketing departments have downsized because AI can handle the volume of routine work that previously required multiple junior marketers.

But here's the counterintuitive part: experienced professionals in these same fields are doing better than before. The best graphic designers are busier than ever, because there's more design work getting done, and clients still want human creativity guiding the process. The best writers are commanding premium rates because scarcity of genuinely good writing has increased. Marketing departments that fully adopted AI are producing better work, faster, and the strategists running those departments got promoted.

Why Nadella's "Scaffolding" Framing Actually Matters

This is where Nadella's pivot becomes more than corporate messaging.

He's explicitly rejecting two competing narratives. One says AI is the ultimate tool of human augmentation: workers will become superpowered with AI. The other says AI is the job killer and displacement is inevitable. Both are incomplete.

The scaffolding metaphor is different. Scaffolding supports construction without replacing the builder. The builder still makes all the decisions about what gets built. The scaffolding just makes it physically possible to build faster and higher. When the building is finished, you remove the scaffolding. The structure remains.

Applied to work, this means: AI handles the repetitive, well-defined parts of your job. You handle judgment, creativity, strategy, and decisions. The combination makes you more valuable, not less. Your job doesn't disappear. It evolves.

This framing has massive implications for how companies should think about AI investment. If you're buying AI tools to replace workers, you're thinking about it wrong. You're optimizing for a one-time savings that destroys institutional knowledge and creates retention problems. If you're buying AI tools to amplify workers, you're thinking about it right. You're investing in making your best people better.

Microsoft's own situation backs this up. They laid off 15,000 people and hired fewer people in some areas. But they're not running with fewer people total. They redistributed people. More engineers on AI products, fewer in declining business areas. More strategists focused on AI integration, fewer in administrative roles that AI can now handle.

That's not job destruction. That's resource reallocation.

The Skill Divide: Where AI Actually Creates Problems

Here's where the honest conversation needs to happen, though.

AI as a scaffolding amplifies existing capabilities. If you're a great writer, AI makes you a better writer faster. You can generate drafts that you refine. You can explore more ideas in the same time. But if you can't write well, AI making it easy to generate writing doesn't suddenly make you a good writer. It makes it easier to generate mediocre writing at scale.

This is the real risk. Not that AI replaces skilled workers. The risk is that the gap between skilled and unskilled workers widens dramatically.

A developer who knows how to evaluate code can use Copilot to multiply their productivity. A developer who doesn't know how to evaluate code will generate buggy systems fast. A strategist who understands business can use AI to research competitors and generate options quickly. Someone without business judgment will just be confused about what the AI is suggesting.

This means the return on investment from AI depends heavily on the human using it. Companies that hire better, that train people better, that retain experienced people, will see AI multiply their competitive advantage. Companies treating AI as a replacement will struggle, because they're losing the very people who could make AI work.

AI-exposed occupations are experiencing higher job growth and wage increases compared to non-exposed jobs, suggesting AI enhances job value rather than replacing it. Estimated data.

The Vanguard Data: What's Really Growing

Let's dig into the Vanguard forecast that backs Nadella up.

The companies and institutions with the most to lose from job displacement have a vested interest in accurate forecasting. Vanguard isn't a venture capital firm betting on AI hype. It's a massive money manager with clients' retirement savings at stake. If Vanguard thought AI was about to cause depression-level unemployment, their forecasts would reflect that reality.

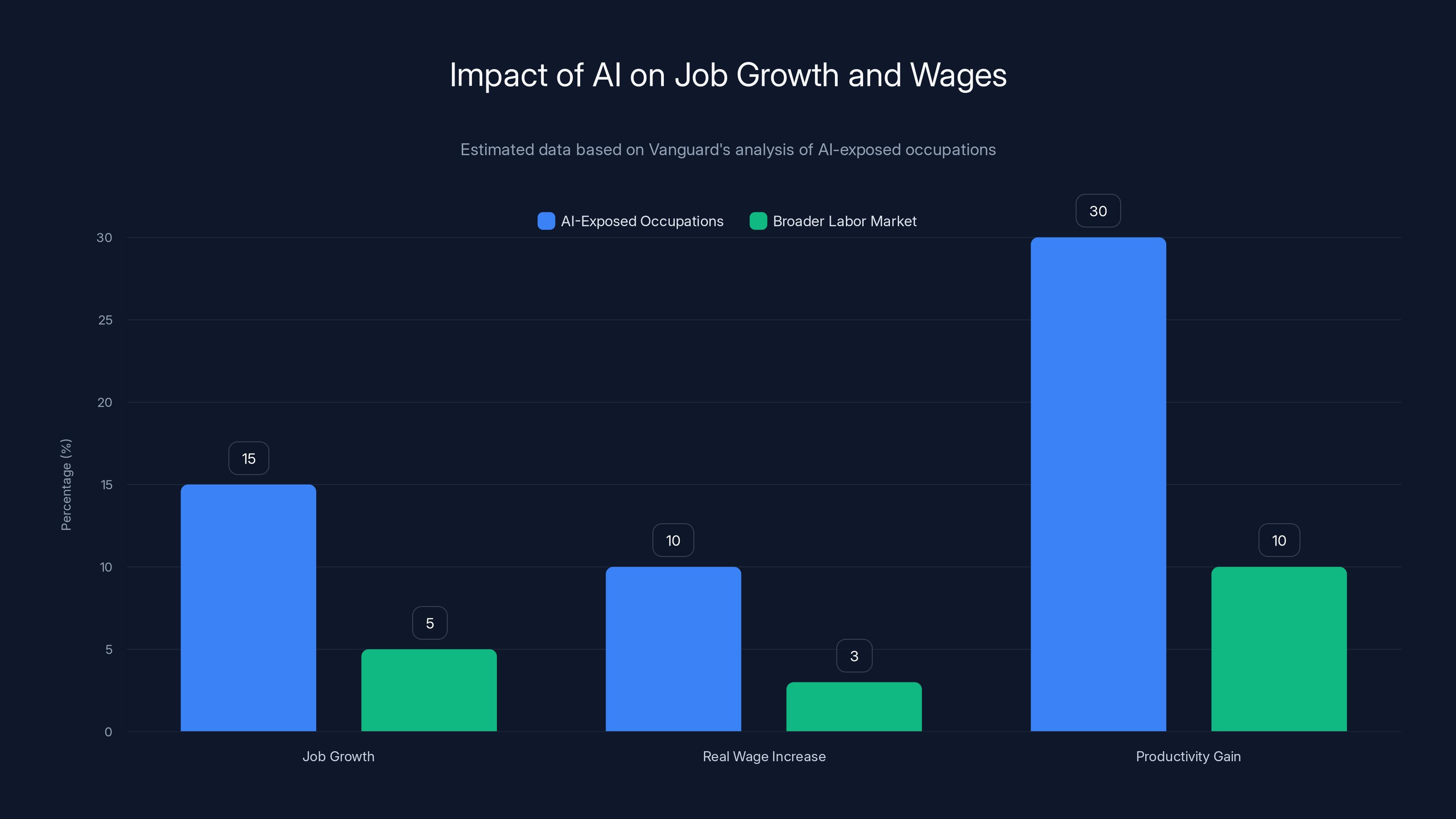

Instead, Vanguard found that the 100 occupations most exposed to AI automation are outperforming the broader labor market. Not just in job growth, but in real wage increases. More jobs, higher pay, in the fields most likely to be automated.

How? Several mechanisms:

First, productivity gains get partially passed to workers. If AI makes a worker 30% more productive, companies can afford to pay them more while still improving margins. Second, increased demand for the output means more people are needed, despite the productivity boost. There's more code being written by more developers, not fewer developers writing all the code. Third, skill premiums increase. As tools become more powerful, the difference between someone who can use them well and someone who can't grows dramatically. That gap gets reflected in compensation.
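The first mechanism is simple arithmetic, and a rough sketch makes it concrete. Everything here is an assumption for illustration: the revenue per worker, the starting wage, and the share of the gain passed to the worker are invented, and `pass_through` is a hypothetical helper, not anything from Vanguard's forecast. Only the 30% productivity figure comes from the paragraph above.

```python
def pass_through(revenue_per_worker, wage, productivity_gain, share_to_worker):
    """Return (new_wage, old_margin, new_margin) after a productivity gain.

    share_to_worker is the assumed fraction of the extra revenue paid out as wages.
    """
    extra_revenue = revenue_per_worker * productivity_gain
    new_revenue = revenue_per_worker + extra_revenue
    new_wage = wage + extra_revenue * share_to_worker
    old_margin = (revenue_per_worker - wage) / revenue_per_worker
    new_margin = (new_revenue - new_wage) / new_revenue
    return new_wage, old_margin, new_margin


# Hypothetical inputs: $200k revenue per worker, $100k wage,
# a 30% productivity gain, a quarter of the gain passed to the worker.
new_wage, old_margin, new_margin = pass_through(200_000, 100_000, 0.30, 0.25)
print(f"wage: {new_wage:,.0f}, margin: {old_margin:.1%} -> {new_margin:.1%}")
```

Under these assumptions the worker's pay rises 15% while the company's margin per worker still improves, from 50% to about 56%. Change the pass-through share and the split moves, which is exactly the distribution question the forecast leaves open.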

The implication is stark: learn to use AI or accept wage stagnation and reduced opportunities. This isn't a judgment. It's just the economic reality of a rapidly changing tools landscape.

Why the Slop Problem Persists

Nadella's framing solves a lot of problems in the conversation about AI. It reduces apocalyptic thinking. It explains why AI-exposed jobs are growing. It gives a coherent theory of how AI fits into work.

But it doesn't solve the actual slop problem.

The slop exists because the economic incentives support it. If you can generate 100 mediocre blog posts for the cost of one good one, you will. If you can fill your social media with AI content that gets 5% engagement but costs nothing, you will. The content isn't slop because the AI is broken. It's slop because the creator's incentive is volume, not quality.

Nadella's bicycle-for-the-mind framing assumes good faith. It assumes the person using the AI wants to create something good and is using AI to amplify their capability. That's true for many use cases. A developer using Copilot to write better code faster. A designer using AI for background generation so they can focus on composition. A marketer using AI to generate initial drafts they'll refine and personalize.

But it's not true for the SEO spam blogs or the social media bot accounts. Those are optimizing for metric gaming, not for actual value creation. The slop isn't a byproduct of good-faith AI use. It's the intended output of people prioritizing vanity metrics over substance.

Solving the slop problem isn't about better AI or better intentions. It's about changing the metrics and incentives. Google's approach—penalizing low-quality AI content—is a start. But as long as people optimize for engagement and reach, not for actual value, the slop will continue.

What Anthropic Gets Right (And Where Nadella Disagrees)

Dario Amodei's warnings about AI displacement aren't wrong. They're incomplete, just like dismissing displacement concerns entirely is incomplete.

Amodei is right that AI is advancing rapidly. He's right that tasks once thought to require human workers can now be done by AI. But he may be overstating both the timeline and the certainty of the outcome.

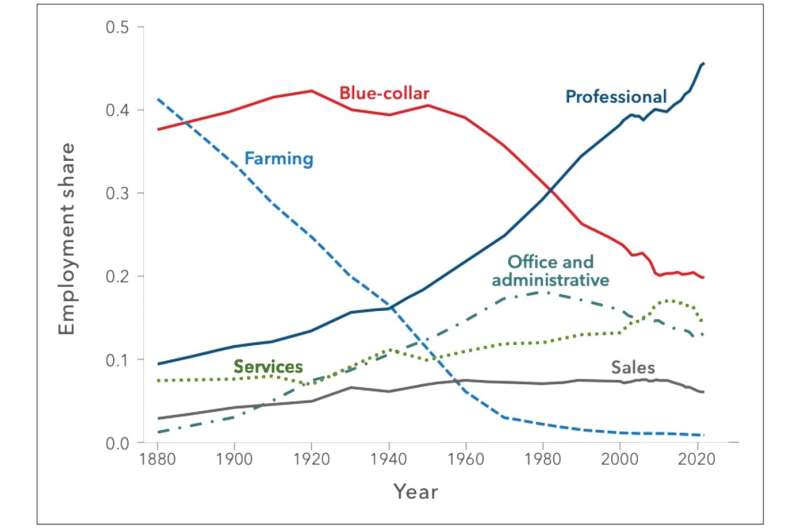

Consider the history of labor-saving technology. The internal combustion engine was supposed to eliminate horse jobs. It did, but it created more jobs in transportation, maintenance, and a thousand adjacent industries than it destroyed. The printing press was supposed to make scribes obsolete. Instead, it created an explosion of demand for written content and literacy.

None of this means disruption isn't real or painful for the people affected. The blacksmith whose trade became obsolete didn't benefit from the fact that the car industry created more jobs overall. But it does mean the macroeconomic outcome is usually positive, even as individual workers face real challenges.

AI is following a similar pattern, just much faster. The disruption is real and visible now. The growth is also real, but more diffuse and less obvious.

Where Nadella and Amodei agree: AI is transformative. It will change work fundamentally. People need to adapt. Where they disagree: whether adaptation leads to widespread unemployment or to new opportunity. The Vanguard data suggests Nadella's framing is more accurate, at least so far.

AI can automate approximately 11.7% of tasks within jobs, allowing workers to focus on higher-value activities. (Estimated data)

The Microsoft Paradox: Record Profits, Mass Layoffs

Here's where the narrative gets complicated.

Microsoft laid off 15,000 people in 2025 while reporting record revenues and profits. On the surface, this seems to contradict Nadella's scaffolding framing. Isn't this exactly the kind of displacement he said we shouldn't worry about?

Not quite. The details matter.

Microsoft didn't fire 15,000 people because AI made them unnecessary. Microsoft reallocated capital. The company was investing in some areas and divesting from others. It had specific product lines that weren't meeting strategic objectives, so it cut them. It had others that were high-growth, so it invested more. Some of the layoffs were from cutting duplicative roles as teams merged. Some were from natural attrition and slowdown in hiring.

None of that is unique to AI. It's ordinary business strategy. Companies do this constantly. During boom times, they expand. When they refocus, they contract.

What made it AI-relevant was the narrative. Nadella framed the restructuring as preparing for AI transformation. That created the perception that AI caused the layoffs, even though the proximate cause was business strategy.

This is where corporate incentives and reality get messy. Nadella had reason to frame it as AI-driven, because that makes the layoffs seem strategic and forward-looking rather than just cost-cutting. And there probably was some truth to it—investing in AI products probably meant defunding other things.

But the idea that AI fired 15,000 people is probably too simple. Those people were laid off because the company decided to reallocate resources. AI transformation was the strategic direction, but the mechanism of reallocation was ordinary corporate decision-making.

Building the Skills for AI-Augmented Work

If Nadella is right and the future is scaffolding, not replacement, then the practical question becomes: how do you prepare for this?

First, learn the tools. Whatever your field, AI tools are coming for it. Design, writing, coding, analysis, strategy—there are already mature tools. Learn them. Not to become a specialist in AI, but to understand what the tools can and can't do. This takes weeks or months, not years.

Second, understand your domain deeply. This is where the skill divide emerges. AI can accelerate existing knowledge. It can't replace it. If you're a great designer, AI makes you better. If you're mediocre, AI makes you adequately mediocre faster. Invest in genuine expertise in your field.

Third, develop judgment about AI output. This is underrated. The ability to look at what an AI generated and say "this is wrong" or "this is missing context" or "this is good but could be better" is increasingly valuable. You need domain expertise to have good judgment. You can't automate judgment.

Fourth, focus on human-centric skills. AI handles the routine, logic-based stuff. Creativity, strategy, persuasion, relationship-building, emotional intelligence—these are harder to automate. People who develop these skills will remain valuable.

The Real AI Transformation Isn't About Jobs

Here's the thing that gets lost in the job displacement debate: the real transformation isn't about employment. It's about capability.

A software engineer can now build systems in weeks that would've taken months. A marketer can analyze competitor strategies at a depth that would've required a team. A researcher can survey a field and identify gaps in hours instead of weeks. A designer can iterate 10 times faster.

This is genuinely transformative. Companies that figure out how to leverage this capability will outcompete those that don't. Workers who learn to use these tools will outcompete those who don't. Countries that adopt AI effectively will outcompete those that don't.

It's not about whether jobs are created or destroyed. It's about productivity and capability. The economic pie gets bigger. The distribution of that bigger pie is what's uncertain.

Focusing only on "will AI take my job" misses the actual change. The economy isn't a fixed number of jobs that AI fills or destroys. It's a constantly evolving set of problems that need solving. More capability means solving bigger problems faster. That tends to create more work, even as it eliminates some categories of work.

Nadella's scaffolding framing points to this. It's not about job preservation. It's about capability amplification. That's a bigger conversation than employment.

AI-exposed occupations are experiencing significantly higher job growth and wage increases compared to the broader market, driven by productivity gains. (Estimated data)

The Reasonable Middle Ground

After parsing through all this, what's the honest assessment?

Nadella is right that we should stop thinking of AI as primarily a job replacement tool. Most AI isn't replacing people. It's changing what people do. Workers who learn to use AI effectively are becoming more valuable, not less. Job growth in AI-exposed fields is real and measurable.

But Amodei isn't wrong either. AI is advancing rapidly. Some jobs will be heavily disrupted. Some people will be displaced. The transition can be painful, especially if you're caught without the skills to adapt. High unemployment among junior developers isn't imagination. Shrinking demand for commodity content isn't made up.

The reasonable middle ground: AI is accelerating work, creating more value, and shifting what people do. Some transition costs are inevitable. The net effect is probably positive, but the distribution of benefits and costs is uneven. Workers who adapt will thrive. Those who don't will struggle. That's not good or bad—it's just how technological change has always worked.

The policy question is whether we should smooth that transition. Help people retrain. Support communities hit hard by disruption. Create safety nets for those displaced. That's separate from whether the disruption is happening. It is. The response should be realistic about the challenge.

Looking Ahead: What 2026 and Beyond Tells Us

The Vanguard forecast covers 2026 and beyond. What does it actually predict?

Continued job growth in AI-exposed fields. Continued real wage increases. Widening skills gap between workers who use AI effectively and those who don't. More companies investing in AI integration. More AI tools becoming commodities, with the value shifting to how well you use them.

The slop problem persists, because the incentives supporting it persist. But quality AI-augmented work will command premium prices and recognition. The market rewards differentiation.

Nadella's world is plausible. It's also conditional. It depends on workers learning to use AI tools effectively; employers focusing on skill development, not just head-count reduction; education systems adapting to teach skills that complement AI; policy supporting transition rather than blocking innovation; and regulation preventing the worst AI harms without stifling progress.

None of that is guaranteed. It's a choice about how to respond to the capability that AI provides.

Practical Steps for Teams and Organizations

If you're building an organization or managing a team, what does Nadella's framing suggest?

First, invest in AI literacy for everyone, not just technical roles. Your finance team needs to understand what AI can do for accounting and reporting. Your marketing team needs to understand what AI can do for content and analytics. This isn't about everyone becoming AI engineers. It's about everyone understanding how AI changes their work.

Second, resist the temptation to use AI as an excuse for layoffs. If you're cutting people because you adopted an AI tool, you're probably using it wrong. Good AI adoption amplifies capability. Use that to do more, better work. If you're not doing more work, you're wasting the investment.

Third, focus on creating roles that AI can't easily fill. Strategy, judgment, creativity, customer relationships, culture-building. Double down on what makes humans valuable. AI will handle the rest.

Fourth, measure productivity carefully. It's easy to claim AI improved productivity. It's harder to measure it accurately. Set up metrics before you implement AI. Track changes. Adjust based on data, not assumptions.
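One minimal way to "set up metrics before you implement AI" is to record a baseline and compare against it afterward. The sketch below assumes a single weekly output metric (say, tickets closed) and uses invented numbers. `compare_to_baseline` is a hypothetical helper, and the noise check is deliberately crude: it is not a substitute for a proper statistical test or a controlled rollout.

```python
from statistics import mean, stdev


def compare_to_baseline(baseline, after):
    """Return (relative_change, exceeds_noise) for a metric measured before and after.

    exceeds_noise is True when the shift in the mean is larger than the
    week-to-week spread (sample standard deviation) of the baseline.
    """
    base_mean = mean(baseline)
    after_mean = mean(after)
    relative_change = (after_mean - base_mean) / base_mean
    exceeds_noise = abs(after_mean - base_mean) > stdev(baseline)
    return relative_change, exceeds_noise


# Invented weekly figures for illustration.
baseline_weeks = [40, 42, 38, 40]  # collected before the AI tool was introduced
after_weeks = [46, 50, 48, 48]     # collected after adoption

change, exceeds_noise = compare_to_baseline(baseline_weeks, after_weeks)
print(f"change vs. baseline: {change:+.0%}, larger than baseline noise: {exceeds_noise}")
```

The point of the design is the ordering: the baseline list has to exist before adoption, otherwise any claimed improvement has nothing to be measured against.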

Fifth, be honest about disruption. Some roles will genuinely become redundant. Plan for transitions. Offer support. Don't pretend it's painless. Handle it with care and compensation appropriate to the impact.

The volume of AI-generated content surged from 2024 to early 2025, doubling each quarter as tools became more accessible. (Estimated data)

The Honest Tension in Nadella's Position

Here's the thing that deserves acknowledgment: Nadella's position is ideologically convenient for Microsoft.

If AI is a scaffolding that amplifies human capability, then Microsoft's aggressive investment in AI is obviously good. If AI is going to displace workers, then Microsoft's aggressive investment is ethically complicated.

There's nothing wrong with corporate leadership advocating for positions that benefit the company. But it's worth noting the bias.

That said, the data actually does seem to support his framing, at least so far. Jobs in AI-exposed fields are growing. Wages in those fields are rising. The displacement is real, but not apocalyptic. The growth is real, but less visible.

Nadella might be right for the right reasons. Or he might be right by luck, while the underlying mechanics he's describing aren't quite accurate. The honest answer is we won't know for a few more years.

What we can say: in early 2026, the optimistic scenario Nadella described seems more accurate than the apocalyptic scenarios. That could change. But right now, the data points in his direction.

What About the Slop, Though?

We keep circling back to slop because it's the visible manifestation of the tension in all this.

Slop proves that AI can generate content fast and cheap. That should terrify any writer, designer, or content creator. It proves that quality is increasingly divorced from speed. That's the real insight hidden in the slop problem.

Slop also proves that humans prefer human-created content when they can tell the difference. A mediocre blog post that's clearly human-written gets more engagement than a slick but soulless AI post. A design with personality beats an AI-generated design every time. This suggests that the value of human creativity might actually increase, because AI-generated content has flooded the market so thoroughly that genuinely human content becomes premium.

Long term, slop might be self-solving. The market floods with low-quality AI content. Consumers get frustrated. They seek out and value high-quality human content. Creators who focus on quality and personality command premium prices. The commodity content market collapses, because why pay for slop when you can generate it for free?

But that timeline is probably years away. In the meantime, we're wading through slop.

Nadella's not wrong that slop isn't the inherent nature of AI. It's the inevitable output of treating content creation as a metrics game. You can use AI to generate something genuinely good, or you can use it to generate volume. The tool doesn't determine which.

The Path Forward: Integration, Not Replacement

If we take Nadella's framing seriously and the Vanguard data at face value, the path forward looks like this:

AI tools become commodity infrastructure. Like email or spreadsheets, everyone uses them. You don't get ahead by using them—you fall behind by not using them. The value shifts to what you do with them.

Skill premiums increase. The gap between someone who uses AI well and someone who doesn't widens. This creates pressure for education and training, but also opportunity for those who invest in capability.

Work shifts toward human-centric roles. Strategy, creativity, leadership, relationships—these become increasingly central because they're what AI can't handle. Engineering and deep work also remain, but the nature of engineering changes.

New categories of work emerge. AI trainers, prompt engineers, people who understand how to work between humans and AI systems. These roles barely exist today but will be everywhere by 2030.

Commodity work shrinks. The middle-skill, middle-pay work of routine content creation, data entry, and routine coding becomes less valuable. You either move up to strategy and judgment, or down to commodity work with commodity pay.

This is rough on people stuck in the middle. That's worth acknowledging. But it's also the direction the incentives point.

The Deeper Question: What Is Work For?

Beneath all of this—the slop, the jobs, the wages, the disruption—there's a deeper question that AI forces us to ask.

What is work for? Is it primarily about creating value and solving problems? Or is it about income distribution and human purpose?

If work is about value creation, then AI is unambiguously good. It creates vastly more value, faster. The question is just how to distribute the benefits.

If work is about income distribution, then AI is threatening. It can create the same value with fewer people, which threatens income for some.

If work is about human purpose and meaning, then AI might be neutral or negative, depending on what you do. Work that's intellectually engaging and offers autonomy might become more fulfilling if AI handles the tedious parts. Work that's primarily routine might become meaningless if AI handles the routine and you're left with nothing.

Nadella's scaffolding framing assumes work is primarily about creating value and that humans will find purpose in using their judgment and creativity. That's optimistic, but probably true for many workers.

For others, the future is less clear. How do you find meaning in a world where your routine work is automated? What's your role? What's your value?

These are philosophical questions, not technical ones. But they matter for how we think about AI's impact.

Bottom Line: Nadella's Right (For Now)

Nadella's vision of AI as a scaffolding for human potential is more accurate than either the utopian or dystopian views.

AI is advancing rapidly and will change work fundamentally. Jobs are being displaced, but new ones are being created faster. Workers using AI effectively are more valuable, not less. The jobs most exposed to AI automation are actually growing.

This doesn't mean there's no disruption. It does mean the disruption isn't as simple as "AI kills jobs." It's more like "AI changes what we do, and those who adapt thrive."

The slop problem is real. But it's not a reflection of AI's nature—it's a reflection of how AI is being used. Quality AI-augmented work commands premium value. Commodity AI-generated content commands nothing.

The skills divide is real too. Workers who develop expertise and learn to use AI effectively are pulling away from those who don't. That's not new to AI—it's just accelerated by it.

If you're worried about AI and work, the answer is the same as it's always been with technological change: develop valuable skills, stay adaptable, and learn to use the new tools.

Nadella's vision requires all those things. So does Amodei's cautious warning. They're not incompatible. They're just emphasizing different aspects of the same reality.

The honest view: the near future probably looks closer to Nadella's vision than Amodei's apocalypse. But the medium-term could surprise us either way. Capability is advancing faster than our ability to integrate it. That's where real risk lives.

FAQ

What does Satya Nadella mean by "bicycles for the mind"?

Nadella is positioning AI not as a replacement for human workers, but as a cognitive amplifier that enhances human capability and productivity. Just as a bicycle makes a human move faster and farther without replacing human muscle, AI should augment human judgment and decision-making while humans remain the primary decision-makers and strategy owners in their work.

Is AI actually destroying jobs or creating them?

The reality is both: AI is displacing certain categories of work (routine content creation, data entry, commodity design) while simultaneously creating new categories (AI specialists, prompt engineers, people who manage AI systems) and expanding opportunities in knowledge work. Vanguard's 2026 forecast found that occupations most exposed to AI automation are actually seeing faster job growth and higher real wage increases than the broader labor market.

What is MIT's Project Iceberg actually measuring?

Project Iceberg measures task displacement, not job replacement. It estimates that AI can automate about 11.7% of the work within jobs (like paperwork for nurses or routine code writing for developers), not that 11.7% of entire jobs will disappear. When tasks are automated, the work evolves—the human focuses on higher-value activities instead.

Which jobs are most at risk from AI disruption?

Jobs most vulnerable to near-term disruption include routine content writing, commodity graphic design, junior-level coding, data entry, and repetitive administrative tasks. However, workers in these fields who pair their core skills with AI tools (like designers who use AI for backgrounds so they can focus on composition) are actually seeing increased demand and higher compensation.

How can I prepare for an AI-transformed workplace?

Develop deep expertise in your field, learn AI tools relevant to your work, cultivate judgment about AI output quality, focus on human-centric skills (strategy, creativity, leadership), and stay adaptable. The competitive advantage goes to people who combine domain expertise with AI capability, not people who do either alone.

What's the difference between AI augmentation and AI replacement?

AI augmentation amplifies human capability—a writer using AI to draft faster, a developer using Copilot to code more efficiently. AI replacement eliminates human involvement entirely. Most current AI tools are augmentation tools, though slop demonstrates that without human judgment, they can produce low-value output at scale.

Will AI cause widespread unemployment?

Current data suggests major unemployment is unlikely in the near term. Jobs are being created faster than they're being displaced. However, disruption in specific sectors is real, and workers who fail to adapt will face significant challenges. The distribution of benefits matters more than the aggregate economic effect.

How do we solve the "AI slop" problem Nadella mentions?

Slop exists because incentives reward volume over quality. Solutions include ranking algorithms that demote low-quality content (like Google's content updates), cultural shifts toward quality over volume metrics, premium pricing for human-created content, and better tools for evaluating AI output. Ultimately, slop is a market problem, not a technology problem.

Why did Microsoft lay off 15,000 people if AI creates jobs?

Microsoft's layoffs were about capital reallocation—shifting resources from slower business areas to high-growth AI areas—rather than direct automation. It's ordinary business strategy framed through AI. The company maintained or grew its overall workforce while restructuring roles and departments.

Should my company prioritize AI investment or employee protection?

That's a false choice. Strong companies do both: they invest in AI to increase capability and competitiveness while investing in employee retraining and career development. Using AI as an excuse for layoffs typically backfires, because you lose the expertise you need to make AI work effectively.

What does the Vanguard 2026 forecast actually say?

Vanguard found that the approximately 100 occupations most exposed to AI automation are outperforming the rest of the labor market in both job growth and real wage increases. This suggests that workers and companies successfully integrating AI are becoming more valuable, not less, which supports Nadella's scaffolding vision more than apocalyptic displacement scenarios.

Is Nadella right about AI and jobs?

Based on 2025-2026 data, Nadella's vision appears more accurate than extreme displacement predictions. Jobs in AI-exposed fields are growing. Wages are rising. Disruption is real but manageable. However, the long-term picture remains uncertain—current data covers only the early phases of AI adoption.

Key Takeaways

- Based on emerging 2025-2026 labor data, Nadella's 'scaffolding' framing (AI as cognitive amplifier, not job replacement) is more accurate than extreme displacement narratives

- Vanguard's forecast found that the approximately 100 occupations most exposed to AI automation are outperforming the broader labor market in both job growth and real wage increases

- The real divide isn't between workers with jobs and workers without—it's between workers who use AI effectively and those who don't, creating a widening skills premium

- AI is automating specific tasks (11.7% of work within jobs, per MIT's Project Iceberg), not entire jobs, meaning work evolves rather than disappears

- Companies that treat AI as a tool to amplify capability see better returns than those using it as a cost-cutting justification for layoffs
