AI-Powered Go-To-Market Strategy: 5 Lessons From Enterprise Transformation [2025]

Introduction: Why AI Transformation Fails (And How to Get It Right)

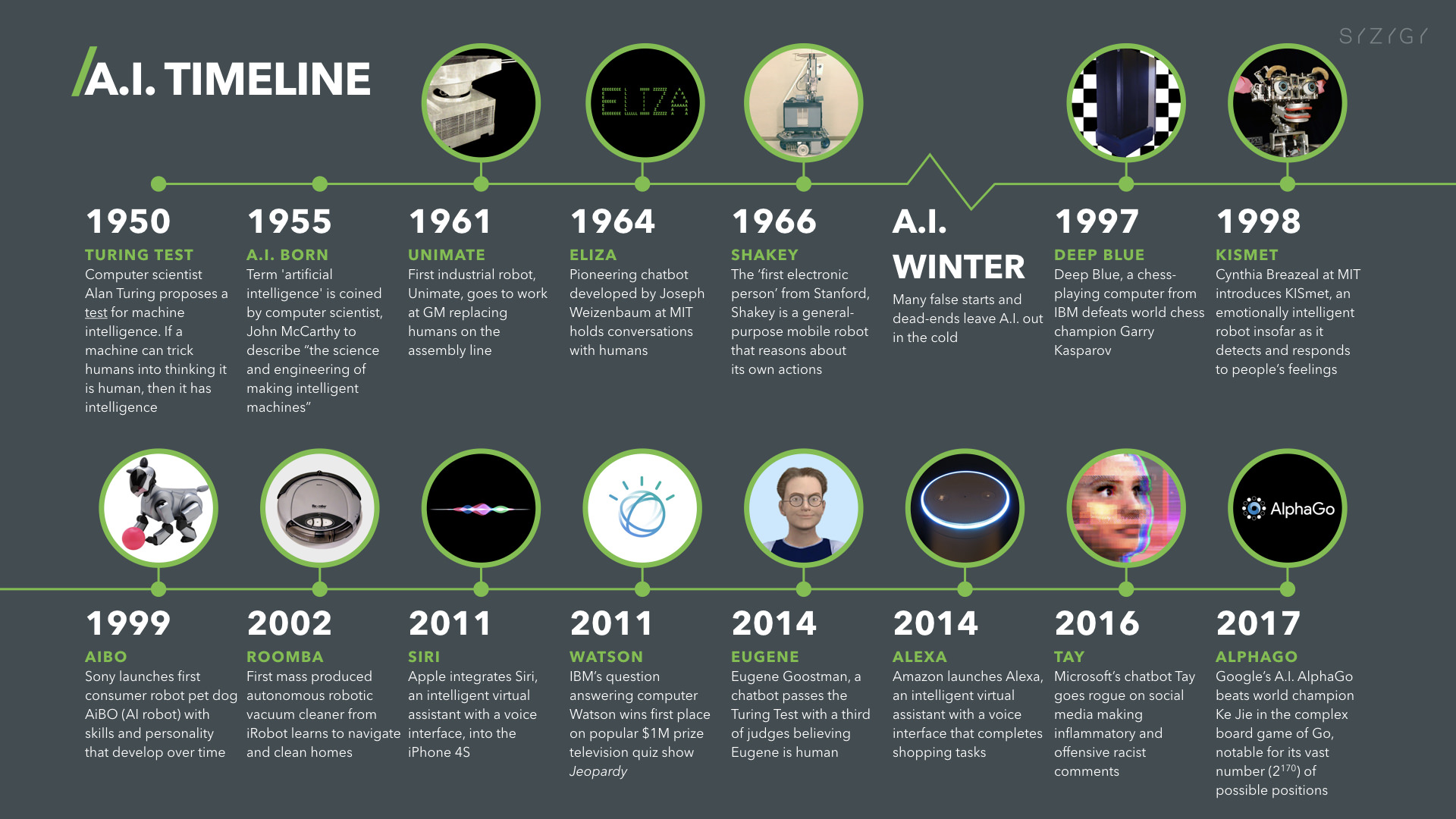

The AI revolution isn't happening in startups anymore—it's happening in the enterprise. While headlines celebrate AI-native companies disrupting markets, the real story lies in how established B2B organizations with hundreds of salespeople, thousands of customers, and years of operational complexity are retrofitting AI into their revenue engines. According to a recent analysis by Xpert Digital, the dilemma between retrofitting and new construction is a significant challenge for many enterprises.

This is fundamentally different from building an AI-first company. When you have 400 salespeople, 15,000 customers, and deeply embedded legacy processes, deploying AI isn't about breakthrough innovation—it's about transformation. It's about recognizing that most enterprises have massive pockets of inefficiency, duplicated effort, and manual work that AI can eliminate almost immediately, but only if you approach it strategically. As noted by WTW, leaders who strategically integrate AI see significant ROI increases.

The challenge isn't whether AI can improve your go-to-market. The challenge is doing it in a way that actually sticks, scales across your organization, and creates measurable business impact within months rather than years. Too many enterprise companies launch "AI initiatives" that generate excitement for a quarter and then fizzle because they lack either strategic direction or organizational alignment. IDC's insights highlight the efficiency gap that many enterprises face when implementing AI.

What separates successful AI transformations from failed experiments? The answer lies in a specific framework: combining top-down strategic direction with bottom-up experimentation, building cross-functional teams that speak both business and technical language, ruthlessly prioritizing use cases based on actual customer impact, and creating a cultural shift where AI becomes table stakes for how work gets done. McKinsey's research emphasizes the importance of mindset in digital and AI transformation.

This comprehensive guide explores exactly how forward-thinking revenue organizations are approaching AI transformation. We'll break down the specific tactics that generate real results, dissect the common mistakes that derail even well-resourced teams, and provide you with a blueprint you can implement in your own organization—regardless of size or complexity. By the end, you'll understand not just why AI transformation matters, but exactly how to structure your approach to maximize adoption, minimize wasted effort, and deliver measurable ROI within your first six months.

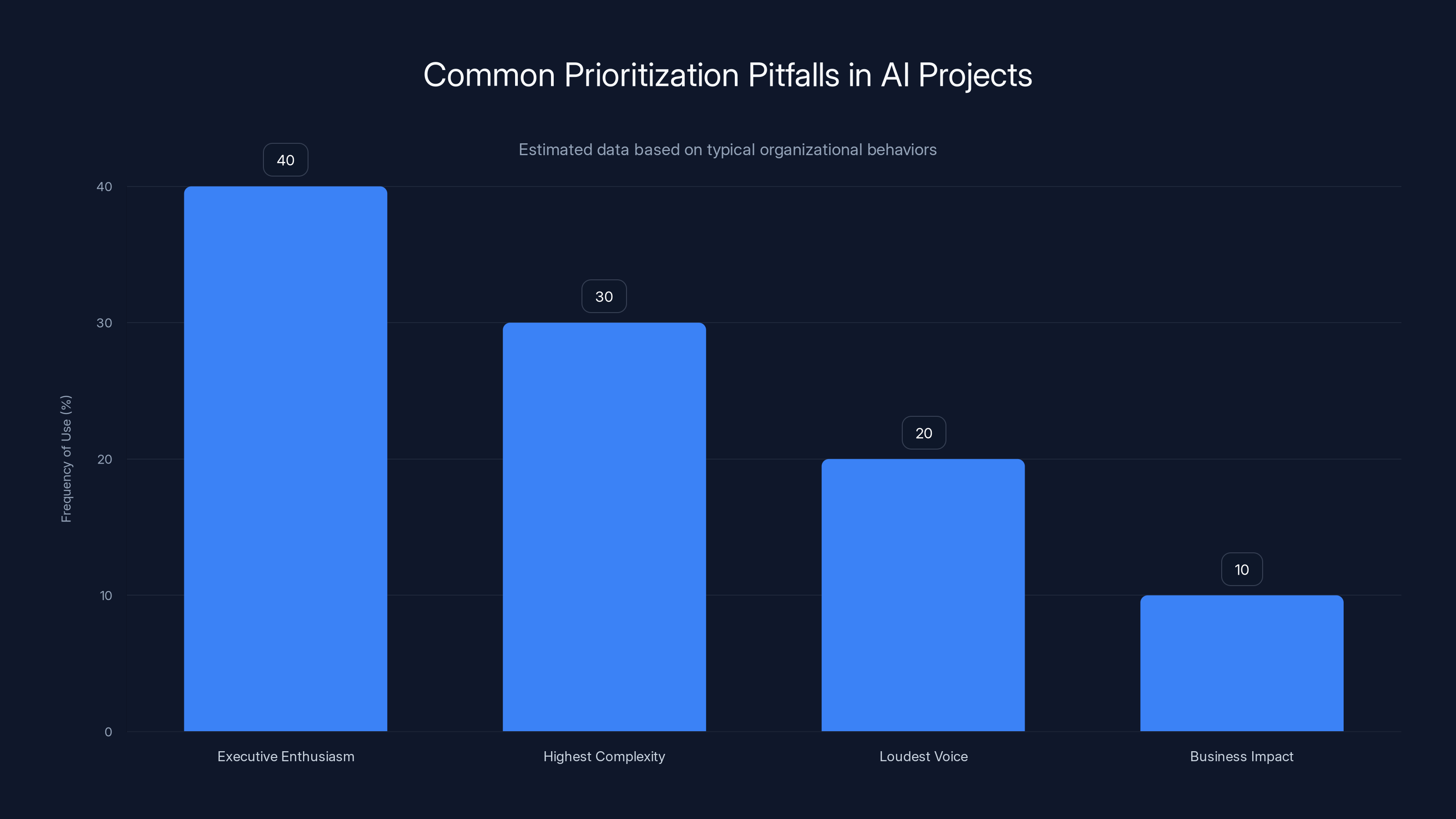

Estimated data shows that executive enthusiasm is the most common, yet often flawed, method for prioritizing AI projects, overshadowing business impact considerations.

The Landscape: Why Enterprise AI GTM Transformation Matters Now

The Current State of Enterprise Sales Operations

Walk into virtually any mid-market or enterprise B2B sales organization and you'll find a consistent pattern: massive time waste embedded in daily workflows. Sales professionals, among the highest-paid employees in any organization, spend enormous portions of their day on non-selling activities. Shopify's guide on sales analytics highlights the inefficiencies in sales operations that AI can address.

Account executives are logging into 7-8 different systems just to prepare for a single customer call. They're manually pulling data from CRM systems, copying information into presentation templates, stitching together insights from multiple sources, and duplicating data entry across platforms. Marketing teams are manually segmenting prospect lists, writing variations of the same email copy for different accounts, and struggling to personalize outreach at scale. Customer success managers are writing similar renewal justifications repeatedly, creating custom reports by hand, and spending hours in spreadsheets instead of actually managing relationships.

When you map these daily activities and quantify the time loss, the numbers are staggering. If each account executive loses 2.5 hours per day to operational tasks, a 400-person sales team gives up more than 200,000 hours of selling time every year. At fully loaded cost, that is millions of dollars in lost productive capacity. Bain & Company discusses how AI can evolve customer success to avoid fading away.
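The estimate above can be checked with a few lines of arithmetic. The sketch below uses the 400-person team and 2.5 hours per day from the text; the number of working days per year (230) and the fully loaded hourly cost ($75) are illustrative assumptions, so treat the result as an order-of-magnitude check rather than a benchmark.

```python
# Back-of-envelope estimate of selling time lost to operational work.
# TEAM_SIZE and HOURS_LOST_PER_DAY come from the text; WORKING_DAYS and
# LOADED_HOURLY_COST are illustrative assumptions, not article figures.

def annual_hours_lost(team_size: int, hours_lost_per_day: float, working_days: int) -> float:
    """Total hours per year the team spends on non-selling work."""
    return team_size * hours_lost_per_day * working_days


def annual_cost(hours: float, loaded_hourly_cost: float) -> float:
    """Cost of those hours at a fully loaded hourly rate."""
    return hours * loaded_hourly_cost


TEAM_SIZE = 400
HOURS_LOST_PER_DAY = 2.5
WORKING_DAYS = 230          # assumption: working days per year
LOADED_HOURLY_COST = 75.0   # assumption: USD per hour, fully loaded

hours = annual_hours_lost(TEAM_SIZE, HOURS_LOST_PER_DAY, WORKING_DAYS)
cost = annual_cost(hours, LOADED_HOURLY_COST)
print(f"{hours:,.0f} hours/year, about ${cost:,.0f}")
```

Swapping in your own headcount, observed time loss, and loaded cost gives a first estimate of the capacity at stake before any tooling decisions are made.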

This is where AI-powered go-to-market transformation enters. Unlike previous waves of sales technology, AI doesn't just automate isolated tasks—it can intelligently handle the interconnected workflows that make up modern selling. An AI system can understand your customer, your selling motion, and your business context well enough to generate a customized executive summary, draft an email variation, suggest next steps, and flag important context before a customer call—all in seconds.

Why Enterprise Companies Are Winning the AI Race

There's a counterintuitive insight emerging: enterprises with complex sales organizations and accumulated process debt might actually be better positioned to win with AI than startups. Here's why.

Startups have the advantage of being born digital. But they have less to optimize. An early-stage company's sales process is relatively simple—reach out, qualify, pitch, close. There's limited historical data to leverage, less process complexity to solve, and fewer systems to integrate.

Enterprises, by contrast, have mountains of customer data, years of email templates and sales sequences, historical win/loss data, customer success patterns, and process debt they're actively looking to eliminate. This isn't just raw material for AI to work with—it's opportunity. An AI system that can learn from a decade of customer interactions, successful deals, objection handling, and deal patterns has exponentially more leverage than one training on a startup's limited historical data. Dell's AI transformation blueprint provides a roadmap for enterprise success.

Moreover, enterprises have the resources to implement AI across their entire go-to-market motion simultaneously—sales, marketing, customer success, revenue operations—rather than piecemeal. This creates compounding efficiency gains that startups can't match.

The companies winning with AI transformation right now are those treating it as a fundamental reshaping of how work gets done, not as a point solution to a single problem.

The Timeline Urgency: Why Six Months Matters

There's a specific window of opportunity for enterprise AI transformation: roughly six months. This isn't arbitrary. Here's the reality of enterprise change management:

- Months 1-2: Exploration and pilot projects. Teams are excited about possibilities, experimenting with different tools, discovering what works and what doesn't.

- Months 2-4: Scale and standardization. Early pilots show results, other teams want in, processes need to formalize, integration challenges emerge.

- Months 4-6: Adoption and embedding. The new way of working becomes standard practice. Early adopters expand to the entire organization.

- Month 6+: Momentum either accelerates or stalls. If you've created measurable business value and cultural buy-in, AI becomes embedded in how the organization works. If not, you face the risk of "flavor of the month" perception where AI becomes associated with yet another failed initiative.

The enterprises that move fastest in this window—showing meaningful results by month six—typically see sustained adoption and continued expansion of AI use cases. Those that take longer to show results often get stuck in analysis paralysis or face organizational skepticism that becomes harder to overcome as time passes.

Lesson 1: You Need Both Top-Down AND Bottom-Up Motion

Why Bottom-Up Alone Fails

Most enterprise AI initiatives begin with bottom-up enthusiasm. Someone on the marketing team discovers ChatGPT can help write emails faster. A sales leader experiments with AI tools to summarize customer calls. A customer success manager builds a spreadsheet that uses GPT to draft renewal emails. Suddenly, you have pockets of AI usage throughout the organization: different tools, different workflows, different standards.

This is actually a positive sign. Bottom-up adoption proves that your team sees value in AI and is willing to experiment. The problem is that bottom-up motion alone creates organizational fragmentation and leaves critical strategic decisions unresolved.

Without top-down direction, several predictable problems emerge:

Resource allocation becomes chaotic. If AI is just something individuals are doing in their spare time, it never gets sufficient resources. People juggle AI experimentation alongside their day jobs. Some teams have dedicated AI focus time; others don't. You end up with inconsistent adoption where the organization's success with AI depends largely on individual initiative rather than structural support.

Permission structures remain unclear. Can salespeople really stop doing their old workflows if they find an AI alternative? Can they spend 20% of their time experimenting? Is that against company policy? Without clear top-down direction, people default to maintaining existing processes rather than replacing them, which means AI becomes additive work rather than replacing work.

Tool selection lacks governance. When bottom-up adoption is your only mechanism, your organization ends up with dozens of different AI tools, each person's favorite SaaS subscription. You lack centralized procurement, security review, data governance, or integration standards. This creates both security risk and operational chaos—data flowing into unvetted systems, no consistency in how AI is being applied, and IT nightmares trying to manage unsanctioned tools.

Prioritization is impossible. With bottom-up motion, you prioritize based on individual enthusiasm rather than business impact. The person with the loudest voice gets resources. The highest-impact use case that requires harder work gets deprioritized. You end up building AI features for problems that don't matter much while the massive time-sinks in your business remain unsolved.

Why Top-Down Alone Fails

The opposite problem is equally real. Some enterprises announce an "AI initiative" with executive sponsorship, appoint an AI lead, and expect transformation to happen through decree. This fails almost as consistently.

When AI transformation comes purely from the top, it often feels like something being done to employees rather than with them. Implementation becomes mandatory rather than enthusiastic. Adoption is performative—teams go through the motions to satisfy leadership requirements but don't genuinely believe in the value. When the initial mandate fades, so does adoption.

Moreover, top-down direction without bottom-up insight misses critical context. Executives making decisions about which use cases to prioritize often don't understand the actual day-to-day workflows where AI could matter most. A CFO might decide AI should focus on sales forecasting accuracy when, in reality, the biggest time-sink in the sales organization is manual CRM data entry. Top-down decisions made without ground-truth understanding often misallocate resources to high-visibility problems while missing high-impact ones.

Pure top-down approaches also tend to underestimate implementation complexity. Executives see the potential for AI to transform a workflow and assume it's straightforward to execute. The teams actually doing the work know better—there are data quality issues, system integration challenges, edge cases, and technical obstacles that only become visible through hands-on implementation.

The Hybrid Approach: Fusing Both Motions

The enterprises succeeding with AI transformation use a deliberate hybrid approach:

Step 1: Launch exploration with executive sponsorship. Create formal space for bottom-up experimentation. This might be something like an "AI Week" where teams across the organization are given dedicated time to explore AI applications relevant to their roles. Bring in external experts—researchers, tool providers, practitioners from other companies—to inspire thinking. Make it clear this isn't a one-time event but the beginning of ongoing exploration.

The critical piece is executive visibility without mandate. Leaders should participate, learn, and help amplify early wins. But the goal isn't for executives to decide which use cases to pursue—it's to create psychological safety around AI experimentation and demonstrate that leadership genuinely cares about this transformation.

Step 2: Formalize strategic direction through a cross-functional working group. After the initial exploration phase, establish a formal structure for prioritization and scaling. This is where top-down meets bottom-up. The working group includes executive sponsors, functional leaders, and the people who did early experimentation.

The mandate of this group is specific: evaluate the use cases that emerged from bottom-up exploration, assess them against strategic business priorities, estimate effort and ROI, and determine what the organization will formally prioritize and invest in. This is the critical decision-making mechanism that prevents chaos while maintaining connection to actual innovation happening at ground level.

Step 3: Create resource structures that support scale. Once you've identified priority use cases, allocate actual resources—people, budget, tools, dedicated time. This is where top-down commitment becomes real. If AI is genuinely a priority, it needs to show up in how you allocate your most valuable resource: human attention and capability.

The best-structured approaches dedicate specific people to AI work rather than asking everyone to fit it in around their regular jobs. You might have AI project leads, data engineers focused on AI, GTM engineers building AI-powered tools, and so forth. These people are responsible for implementing priority use cases at scale.

Step 4: Establish feedback loops that keep strategic direction aligned with bottom-up learning. The most important part: as you implement, continue to learn and adjust. Use cases that looked promising in theory might not work in practice. New opportunities emerge that weren't visible initially. Rather than treating the strategic plan as fixed, create monthly or quarterly review cycles where the working group assesses progress, discusses new learnings, and adjusts prioritization based on what's actually working.

This creates a dynamic between top-down direction and bottom-up intelligence that keeps the transformation aligned with both business priorities and operational reality.

Measuring Success: Early Indicators of Healthy Dual Motion

How do you know if you've successfully balanced top-down and bottom-up? Watch for these signals:

- Voluntary adoption without mandate. People are using AI tools because they find genuine value, not because they were told to. Adoption happens across levels—not just in isolated pockets.

- Clear articulation of why we're doing this. When you ask people what the strategic goal of your AI transformation is, you get broadly consistent answers. There's shared understanding of what success looks like.

- Resource allocation that matches the rhetoric. If leadership says AI is a priority, it shows up in budgets, staffing, and how people's time is allocated. Most organizations have a gap between stated and actual priorities; keep yours as small as possible.

- Iteration based on learning. Your initial plans have changed based on what you've learned. This indicates you're responding to ground-truth feedback rather than rigidly executing a predetermined plan.

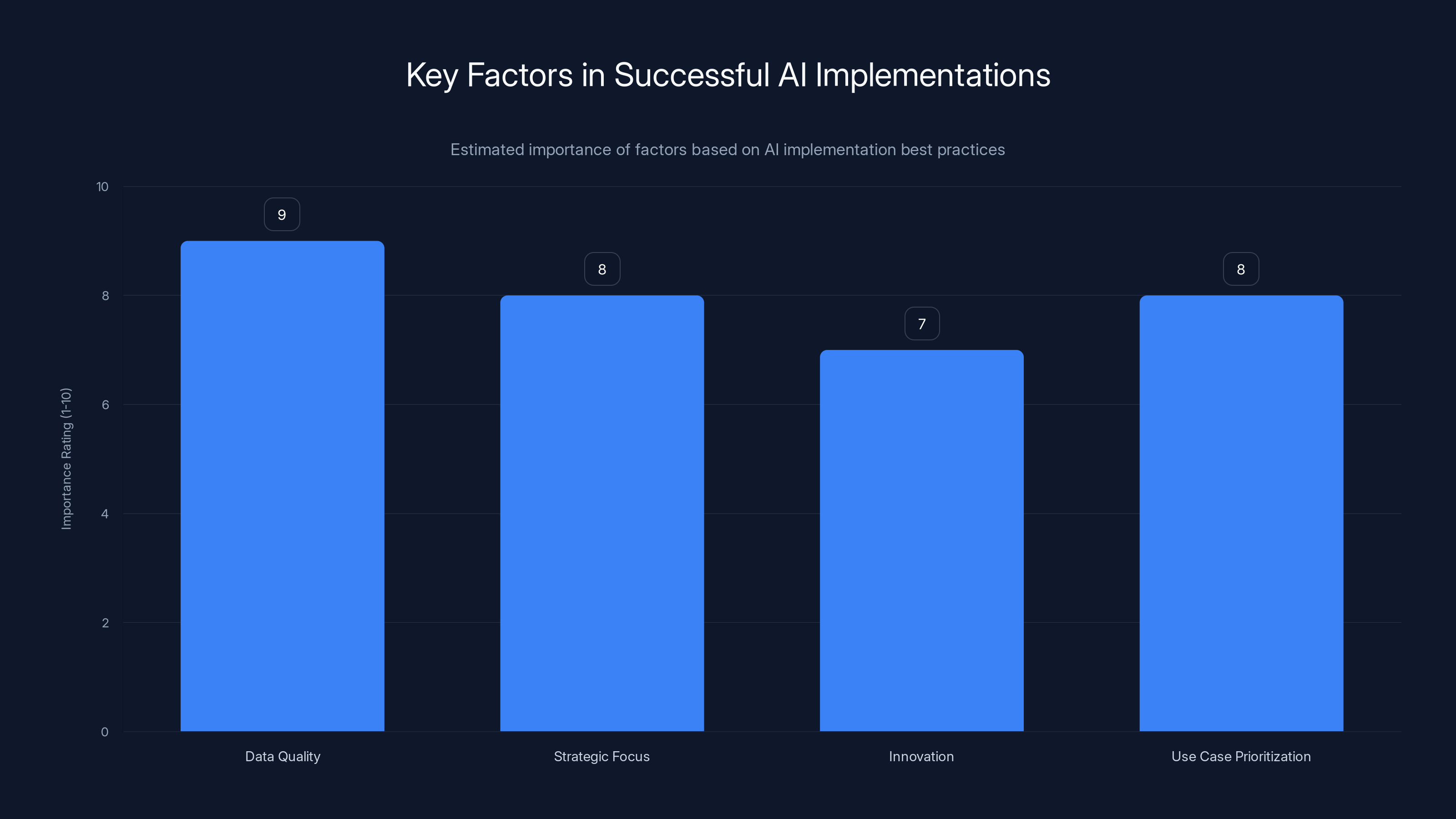

Data quality is the most critical factor in AI success, followed closely by strategic focus and use case prioritization. Estimated data based on industry insights.

Lesson 2: Cross-Functional Teams Are Non-Negotiable

The Functional Silos Problem

Here's a pattern that shows up repeatedly: a data and systems team builds an AI application that's technically sophisticated but fails in practice because it lacks business context. The model is mathematically elegant but solves the wrong problem. Or it solves the right problem in a way that doesn't actually integrate into existing workflows. Sales leaders, meanwhile, have identified clear problems they want AI to solve, but they lack either the technical capability or the access to resources to build solutions.

These failures almost always come from functional isolation. When data teams build without close collaboration with business users, they optimize for what's technically feasible rather than what's strategically valuable. When business teams build without technical expertise, they create requirements that aren't actually implementable or that create data governance nightmares.

The same pattern emerges with AI governance. Security and compliance teams worry about data going into third-party AI services. They may want to restrict or block usage. Meanwhile, sales teams see the tools as essential productivity upgrades. Without structured conversation between functions, you either end up with overly restrictive policies that prevent value creation or inadequate governance that creates risk.

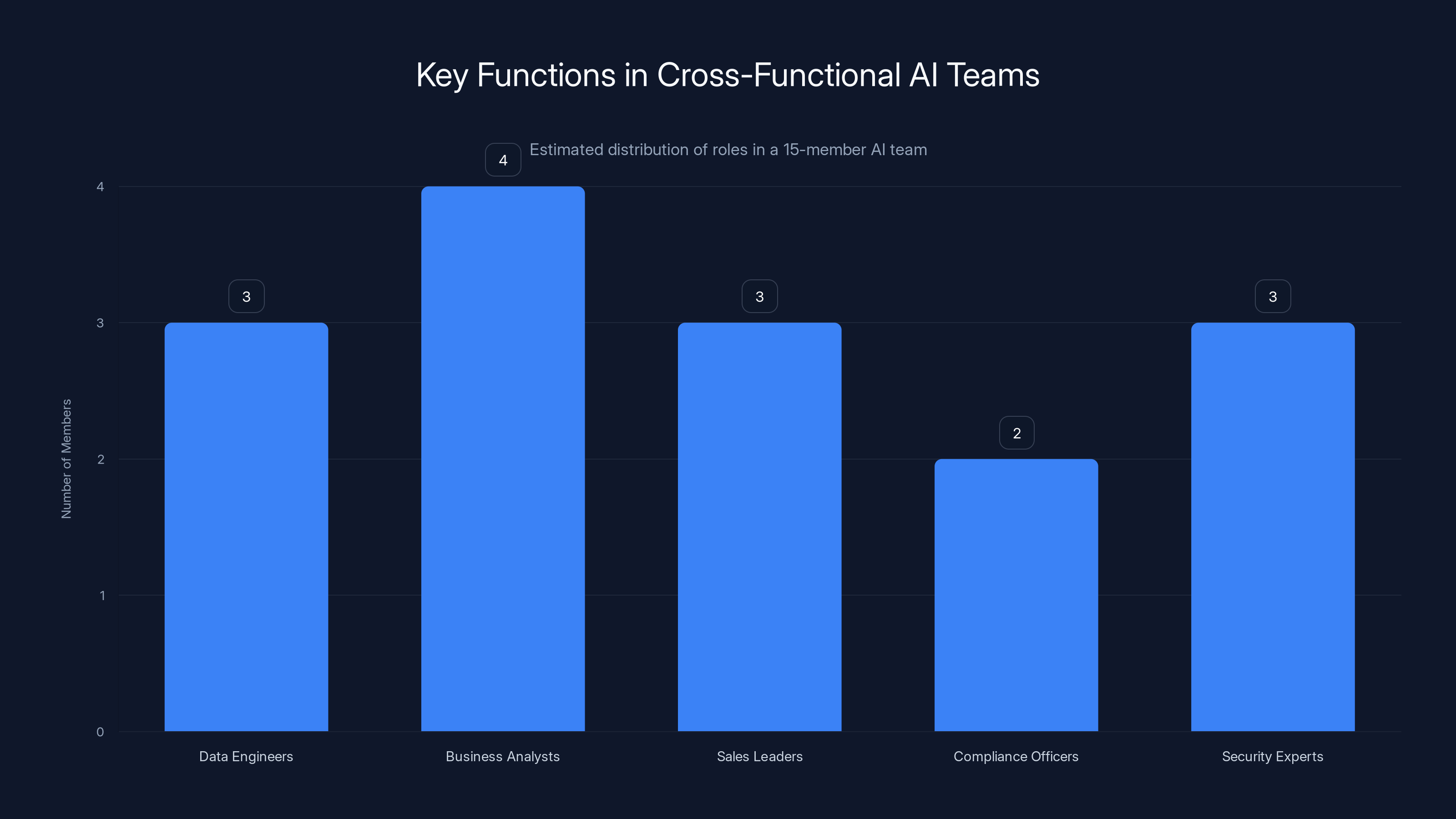

Why Size Matters: The Magic Number is Around 15

Most organizations underestimate how many people you need in an AI working group to actually drive transformation. The instinct is to keep it small—a few leaders who can move quickly. In practice, small groups fail because they lack functional breadth.

When you bring together 15 people representing different functions, something shifts. You have:

Sufficient technical depth. You're not relying on a single data engineer or systems person. You have multiple people who understand infrastructure, data quality, model evaluation, and technical integration. This creates redundancy and better decision-making.

Sufficient business depth. You have sales people, marketing people, customer success leaders, and revenue operations professionals. They understand customer context, workflow requirements, and implementation obstacles. They can articulate what success looks like in business terms, not just technical terms.

Sufficient organizational credibility. A working group of 15 that includes members of key functional teams has influence throughout the organization. When these people communicate decisions back to their teams, it's peer-to-peer explanation rather than top-down mandate. Adoption happens naturally because the people recommending the tools are trusted.

Sufficient organizational coverage. You need to understand how sales works, how marketing works, how customer success works, how revenue operations works, how IT and security work. A group of 15 can cover this ground; a group of 5 probably can't without leaving gaps.

The tradeoff is that 15-person groups move slower than 5-person groups. They need more time to reach consensus. Decisions take longer. But the decisions are better, the implementation is more effective, and the organizational adoption is stronger. In transformation work, speed of implementation matters less than quality of implementation.

The Three Functional Pillars

A well-structured cross-functional team has three core capabilities:

Pillar 1: Data & Systems. This includes your data engineers, platform engineers, architects, and data scientists. They own the technical infrastructure, data pipelines, model training, and system integration. They understand your data architecture, what data is available, data quality issues, privacy and compliance considerations, and how to build scalable systems.

This pillar needs decision rights over technical implementation. You don't want endless debate about how to build something. Once a use case is approved, the data and systems team owns the technical execution.

Pillar 2: Revenue Operations & GTM Engineering. This is often the bridge function that most organizations under-invest in. These are people who understand both business process and technical capability. Revenue operations leaders know how your sales team actually works, what workflows matter, how CRM is configured, what your sales metrics mean. GTM engineers—if you have them—are technically skilled enough to build integrations, work with APIs, configure data flows, and translate between business requirements and technical capability.

This pillar is critical because it prevents both of the failure modes mentioned earlier: data and systems teams building things without business context, and business teams asking for things that cannot be built. The Rev Ops and GTM engineering function translates between the two languages.

Many organizations need to hire for this function specifically. There aren't enough people with this skill combination, and it's becoming increasingly critical as companies try to integrate AI across their go-to-market operations.

Pillar 3: The Business (Sales, Marketing, CS). You need active practitioners from the functions where you're deploying AI. Don't just include leaders—include individual contributors who actually do the work. An account executive, a marketing coordinator, a customer success manager who uses your systems every day.

These practitioners provide ground-truth context. They know where the real inefficiencies are. They can tell you whether a proposed solution will actually be used or if it's solving the wrong problem. And critically, they become advocates for the change within their own teams. When implementation starts, they're not learning alongside everyone else—they've been involved in the design.

Avoiding the Common Failure Modes

Failure Mode 1: Data-driven without business context. This happens when your data and systems team has broad autonomy but limited business involvement. They have access to customer data, they understand machine learning, they can build sophisticated models. But they're optimizing for model performance rather than business impact.

Example: A data team builds an AI system that predicts which prospects are most likely to close in the next quarter, with 87% accuracy. They're proud of it. The business tries to use it and discovers it's predicting accurately but at the wrong time—by the time the model says a prospect is ready to close, the sales team has already moved on to other opportunities. The timing is off because the data team was optimizing for classification accuracy without understanding your actual sales process.

This is prevented by having business practitioners involved from the start: "What would we actually do differently with this prediction? When in the sales cycle would we need this insight? How would we act on it?" Business context shapes what's worth building.

Failure Mode 2: Business enthusiasm without technical feasibility. This is the opposite problem. Sales leaders want AI to do amazing things—automatically identify customer signals indicating churn risk, generate personalized sales plays for each prospect, predict next-best conversation topic, etc. These are all valuable goals. But they require data infrastructure, model training, system integration, and ongoing maintenance that might not be feasible.

Without technical input early, you end up with spectacular failures: launching initiatives that seem to work in a pilot but fall apart when you try to scale them. Or building to requirements that are technically possible but incur massive data governance costs that nobody anticipated.

This is prevented by having the data and systems team involved from the start: "What data would we need to do this? What are the technical obstacles? How would we actually build this? What's the cost?" Technical feasibility shapes what's worth committing to.

Failure Mode 3: Disconnected from revenue operations. Many organizations skip the revenue operations representation. Their Rev Ops team is either already overwhelmed with other initiatives or doesn't have executive visibility. But this is a mistake.

Revenue operations teams own the CRM configuration, sales process definition, naming conventions, data quality standards, and integration architecture. If you're deploying AI without Rev Ops involvement, you're either:

- Creating shadow systems and workarounds that fragment your sales infrastructure

- Depending on Rev Ops to make your AI system work after it's already designed, which means expensive redesigns

- Building something that doesn't actually fit how your sales team uses their tools

The best working groups give Rev Ops significant voice in design. They're asking: "How does this integrate with our existing tools? What does it require from our CRM? Does it create manual data entry? Can it scale to all 400 salespeople or just a pilot group?"

Structuring the Working Group for Effectiveness

Formal structure matters. You need clear governance:

- Sponsor: A C-level executive (CEO, COO, CRO) who owns overall strategy and unblocks obstacles

- Working group lead: Someone with sufficient authority to drive decisions and ensure execution. Often a VP-level person from revenue operations, product, or sales.

- Functional representatives: Clear ownership by function (data/systems, Rev Ops/GTM engineering, sales, marketing, customer success)

- Decision-making protocol: How do you decide what use cases to prioritize? What's the approval process for tools, budgets, and resource allocation? Who has final say in disagreements?

Meeting cadence matters. Monthly strategy meetings to prioritize and approve new work. Weekly tactical meetings for the teams actually executing. Quarterly reviews to assess progress and adjust.

Communication cadence matters. The working group can't stay siloed. You need monthly or weekly all-hands or team lead communications explaining decisions, sharing progress, asking for input from the broader organization. This creates visibility, prevents misalignment, and generates buy-in.

Success metrics matter. What are you measuring? Not just technical metrics like model accuracy or tool adoption rates. Business metrics: time saved, deal acceleration, win rate improvement, customer expansion velocity. The working group should be regularly reviewing whether AI is delivering business value.

Lesson 3: Jobs-To-Be-Done Framework for Ruthless Prioritization

The Prioritization Trap

Imagine you launch an internal channel for sharing AI use case ideas. For a week, nothing much happens. Then suddenly, the dam breaks. People flood the channel with possibilities:

- "We could use AI to summarize customer calls"

- "We could generate personalized email sequences for each prospect"

- "We could predict customer churn risk"

- "We could create custom battle cards for each deal"

- "We could analyze pricing objections and suggest responses"

- "We could automatically update CRM based on email and calendar data"

- "We could generate monthly executive summaries of account activity"

- "We could identify upsell and expansion opportunities by analyzing usage patterns"

Suddenly, you have 30 potential AI use cases. Everyone is excited. Teams start working on their favorite ideas. Some people are working on two or three ideas simultaneously. Nobody's finished anything yet. The organization is diffuse and unfocused.

This is the exact moment where a lack of rigorous prioritization kills AI transformation. You can't build all 30 things. You probably can't build 10 things well. You definitely can't maintain 10 things while launching others. You need to ruthlessly decide: What are we actually going to build and scale? Everything else is on-your-own-time experimentation.

The question is: on what basis do you prioritize?

Most organizations fall back on one of several bad prioritization mechanisms:

Executive enthusiasm. The CEO thinks AI customer churn prediction sounds cool, so that becomes the priority. Never mind that sales is drowning in manual CRM data entry—the CEO wants churn prediction.

Highest complexity. Engineering gravitates toward the most technically interesting problem. Not the one with the highest business impact, but the most interesting from an AI/ML perspective.

Loudest voice. The sales VP who advocates most passionately gets their idea prioritized. Politics rather than substance.

Easiest to build. Going for quick wins and low-hanging fruit. Sure, summarizing call transcripts is relatively easy, but is that actually solving your biggest problem?

Most visible. Picking use cases that create buzz rather than use cases that create business value. Marketing-driven prioritization rather than value-driven.

These mechanisms might occasionally align with what actually matters, but usually they don't. What you need instead is a systematic framework for understanding what actually drives business value and organizational impact.

The Jobs-To-Be-Done Framework

Instead of starting with possible AI applications, start by understanding the actual jobs people are doing and the pain associated with those jobs.

A "job-to-be-done" is a unit of work that someone needs to accomplish. For an account executive, some of their jobs might be:

- Identify accounts in my territory that are likely buyers for our product

- Develop a strategy for winning a specific customer

- Prepare for customer conversations

- Document customer interactions and next steps

- Identify expansion opportunities within existing accounts

- Manage a deal through the sales process

- Build relationships with economic buyers and influencers

Now, for each job, you measure two dimensions:

- Time spent on this job. How much of your week does this actually consume? Is this a trivial part of your day or a massive time drain?

- Pain and friction in this job. Is this enjoyable work or frustrating? Are there obstacles, handoffs, or inefficiencies that make it harder than it should be?

To gather this data, you need to actually observe people doing their work. Not interviews where people describe what they do (which is often inaccurate). Shadowing—following an account executive through their day, watching what they actually do, asking about pain points.

The Research Methodology

One effective approach: Have someone—ideally a GTM engineer or revenue operations analyst—spend dedicated time shadowing roles across your go-to-market organization.

With sales, shadow account executives for 2-3 days. Watch them:

- Log in to CRM and look at their account list

- Prepare for customer calls

- Send emails to prospects

- Update CRM with call notes

- Pull together proposals

- Coordinate internally on deals

As you watch, take notes on:

- Where do they switch between systems?

- Where do they do manual work that looks repetitive?

- Where do they spend time on work that feels unrelated to selling?

- Where do they express frustration?

- Where do you see inefficiency?

With marketing, shadow marketing specialists:

- How do they create segments?

- How do they personalize campaigns?

- How do they pull data for reporting?

- How do they analyze campaign performance?

With customer success, shadow CSMs and support:

- How do they track customer health?

- How do they prepare for check-in calls?

- How do they handle support issues?

- How do they identify expansion opportunities?

For each major job you identify, record:

- Job description. "Prepare for customer calls"

- Time investment. "4 hours per week per AE"

- Steps in the job. What's actually involved

- Systems involved. Which tools do they use

- Pain points. Where's the friction

- Desired outcome. What success looks like
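One way to keep shadowing notes comparable across roles is to capture every job in the same structure. The sketch below is a minimal Python record with the six fields listed above. The class name, field names, and the sample desired outcome are hypothetical; the job description, the 4 hours per week, and the steps and pain point are taken from examples earlier in this article.

```python
from dataclasses import dataclass, field


@dataclass
class JobRecord:
    """One observed job-to-be-done, captured while shadowing a role."""
    description: str                       # e.g. "Prepare for customer calls"
    hours_per_week: float                  # time investment per person
    steps: list[str] = field(default_factory=list)        # what's actually involved
    systems: list[str] = field(default_factory=list)      # tools touched along the way
    pain_points: list[str] = field(default_factory=list)  # where the friction is
    desired_outcome: str = ""              # what success looks like


# Illustrative record; the desired outcome is a made-up example.
call_prep = JobRecord(
    description="Prepare for customer calls",
    hours_per_week=4.0,
    steps=[
        "Pull data from CRM",
        "Copy information into presentation template",
        "Stitch together insights from multiple sources",
    ],
    systems=["CRM", "Presentation templates"],
    pain_points=["Logging into 7-8 different systems for a single call"],
    desired_outcome="Walk into the call with full account context",
)

print(f"{call_prep.description}: {call_prep.hours_per_week} hrs/week, "
      f"{len(call_prep.steps)} steps, {len(call_prep.systems)} systems")
```

Keeping the records uniform makes it straightforward to load them into a spreadsheet or script later and compare jobs across sales, marketing, and customer success.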

Mapping Jobs to Customer Impact and Business Outcome

Once you understand the jobs and time investment, layer in impact:

Business impact:

- If we improved this job, what would it enable? Better selling? More deals? Faster deals? Better customer outcomes?

- Can you quantify the impact? "Reducing CRM data entry time by 2 hours/week per AE could free up 13,000 hours annually across our team to spend on actual selling. If that improves win rate by even 1%, it's worth millions in incremental revenue."
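The arithmetic behind an estimate like this is worth writing down so the assumptions are visible. The sketch below is illustrative only: the headcount of 125 AEs is an inference that happens to reproduce the 13,000-hour figure at 52 weeks (the example does not state a team size), and the pipeline value is a purely hypothetical number.

```python
WEEKS_PER_YEAR = 52


def annual_hours_freed(hours_saved_per_week, headcount, weeks=WEEKS_PER_YEAR):
    """Hours returned to selling per year across the whole team."""
    return hours_saved_per_week * weeks * headcount


def revenue_from_win_rate_lift(pipeline_value, lift):
    """Extra revenue if the win rate rises by `lift` (0.01 = one percentage point)."""
    return pipeline_value * lift


# Assumption: 125 AEs, chosen because it reproduces the 13,000-hour figure.
hours = annual_hours_freed(hours_saved_per_week=2, headcount=125)
print(hours)  # 13000

# Assumption: a hypothetical $300M of qualified pipeline per year.
extra = revenue_from_win_rate_lift(pipeline_value=300_000_000, lift=0.01)
print(f"{extra:,.0f}")
```

Swap in your own headcount and pipeline numbers. The point is that both inputs should be stated explicitly before anyone quotes the result.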

Customer impact:

- Does this job directly affect customer experience? If yes, improving it should be a priority.

- Some jobs are entirely internal (like CRM data entry) but affect customer experience indirectly (better customer context means better conversations). Others are direct (preparation for customer calls).

Ease and readiness:

- How hard would this be to solve with AI?

- Do you have the data to build an AI solution?

- Does it require integrations that don't exist yet?

- Can you build a pilot version relatively quickly?

Creating Your Prioritization Matrix

Once you have this data, create a simple matrix:

| Job | Time Investment | Business Impact | Customer Impact | AI Feasibility | Priority |
|---|---|---|---|---|---|
| Prepare for customer calls | 3 hrs/week | High (better conversations) | High | High | 1 |
| Update CRM from emails | 2 hrs/week | Medium (time savings) | Low | High | 2 |
| Segment prospects for campaigns | 4 hrs/week | Medium | Medium | Medium | 3 |
| Generate renewal justifications | 2 hrs/week | High | High | Medium | 4 |
| Identify churn risk | 1 hr/week | High | High | Low | 5 |

Your top priorities are jobs that combine high time investment with high business impact and high AI feasibility. Lower priorities are interesting but not urgent.
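If you want the ranking to be repeatable rather than purely a judgment call, the matrix can be turned into a simple score. The sketch below is one possible heuristic, not a method prescribed here: the weights are hypothetical, chosen for illustration, and they happen to reproduce the priority order in the table above. Treat the output as a starting point for discussion, not a decision.

```python
from dataclasses import dataclass

LEVEL = {"Low": 1, "Medium": 2, "High": 3}


@dataclass
class Job:
    name: str
    hours_per_week: float
    business_impact: str
    customer_impact: str
    ai_feasibility: str


def score(job, w_feasibility=10, w_hours=2, w_business=1, w_customer=1):
    """Weighted sum of the matrix columns. All weights are illustrative assumptions."""
    return (
        w_feasibility * LEVEL[job.ai_feasibility]
        + w_hours * job.hours_per_week
        + w_business * LEVEL[job.business_impact]
        + w_customer * LEVEL[job.customer_impact]
    )


def rank(jobs):
    """Highest score first."""
    return sorted(jobs, key=score, reverse=True)


JOBS = [
    Job("Identify churn risk", 1, "High", "High", "Low"),
    Job("Generate renewal justifications", 2, "High", "High", "Medium"),
    Job("Segment prospects for campaigns", 4, "Medium", "Medium", "Medium"),
    Job("Update CRM from emails", 2, "Medium", "Low", "High"),
    Job("Prepare for customer calls", 3, "High", "High", "High"),
]

for position, job in enumerate(rank(JOBS), start=1):
    print(position, job.name, score(job))
```

Weighting feasibility heavily reflects a bias toward things you can actually ship in the first six months. If your organization cares more about customer impact, change the weights and see how far the order moves.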

Making the Hard Decision

Once you've mapped your jobs and impact, you need to make the hard call: What are we building first?

A realistic commitment is 2-3 major use cases for your first 6 months. Not 10. Not 8. Two or three that you'll actually build, integrate, scale, and measure.

The decision should be:

- Pick one quick win. Something that's high impact, relatively easy to implement, and will show value within 4-8 weeks. This builds momentum and organizational credibility.

- Pick one medium-complexity win. Something more involved, but still achievable in 3-4 months. This demonstrates you can execute on harder problems.

- Optionally, pick one longer-term strategic play. Something that matters a lot but will take longer to build. Start this in parallel with the first two, but don't expect results for 5-6 months.

Everything else goes into a backlog. You revisit prioritization every quarter. As you complete items, you pull the next ones from the backlog.

Ruthless prioritization—saying no to most ideas and yes to a focused set—is what separates transformation projects that actually complete and deliver value from those that dissipate into half-finished initiatives.

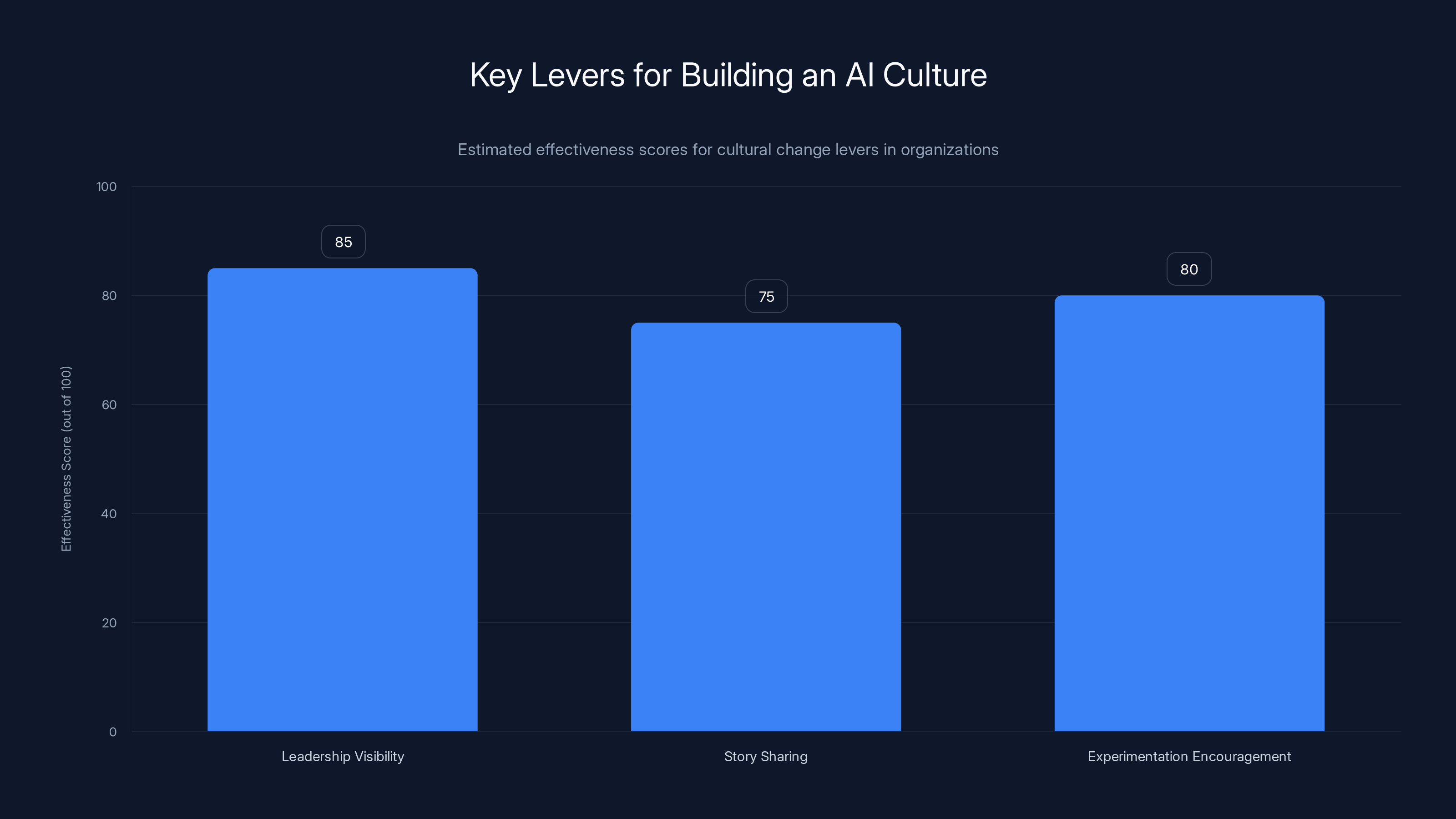

Lesson 4: Building an AI Culture Through Visibility and Celebration

The Culture Equation

Transformation = (Quality of Plan × Execution Effectiveness × Cultural Adoption) / Time

You can have the perfect strategic plan and flawless execution, but if your organization doesn't actually buy into AI as "how we work," you'll see adoption plateau far below potential. Culture is the often-overlooked variable that determines whether transformation sticks or fades.

AI culture isn't about getting everyone to become prompt engineers or AI experts. It's about creating an organizational environment where:

- People see AI as a legitimate tool for their jobs, not a threat

- Experimentation is encouraged and celebrated

- Success is visible and shared across the organization

- Leadership consistently reinforces that this is the future of how we work

- People have permission to try new approaches, fail safely, and learn

The Three Levers of Cultural Change

Lever 1: Leadership Visibly Using AI

Culture flows from the top. If your executives are talking about AI in strategy meetings but still using their old workflows for actual work, the organization notices. Conversely, when leaders visibly change their behavior, it signals that this is real.

This might mean:

- The sales leader using an AI tool to prepare for their customer calls instead of the manual process they've used for 10 years

- The CMO using AI to draft email copy instead of doing it manually

- The CFO using an AI system to analyze customer health instead of legacy reports

When leaders visibly adopt tools they're pushing others to use, trust increases. People believe it's actually valuable, not just a mandate.

Lever 2: Sharing Stories and Creating Heroes

Every organization has early adopters—people who experiment with AI quickly and enthusiastically. The culture-building move is making these people heroes. Not in a corny way, but by creating visibility around their wins.

Setup:

- Monthly or bi-weekly "AI wins" sharing sessions where people demo what they've built or learned

- Stories of how AI improved an outcome—closed a deal faster, generated better customer insights, saved hours of time

- Recognition in company communications: newsletter, all-hands, team meetings

- Case studies on internal wikis or knowledge bases

When people see peers successfully using AI and benefiting from it, adoption accelerates. There's social proof. People want to be part of what's working.

Lever 3: Removing Barriers and Providing Permission

Culture includes permission structures. People need explicit permission to try new things, especially if those things mean changing established workflows.

Barriers to remove:

- "Is it OK to stop doing the old process and do this new AI-enabled process?" → Yes, explicitly yes.

- "Do I need approval to try a new AI tool?" → No, within reason (respecting security and compliance guidelines).

- "What if I use AI and it doesn't work as well as the old way?" → We'll learn from it and iterate.

- "Will I get in trouble if the AI solution fails?" → No. Failure in experimentation is expected.

Permissions to establish:

- "You have 10% of your time to experiment with AI in your workflow"

- "Here are the guardrails (security, privacy, not sharing confidential data), stay within those and you're good"

- "Tell us what you're experimenting with, we'll learn from it"

This creates psychological safety around AI experimentation. People try things they wouldn't otherwise.

Building Organizational Learning Loops

Beyond leadership visibility and hero celebration, organizations that build strong AI culture create formal learning structures.

Mechanism 1: Weekly or bi-weekly sync calls for AI experimenters. Bring together people who are actively working on or experimenting with AI. 30-45 minutes. Each person shares:

- What they're working on

- What's working

- What's not working

- What they learned

- What blockers they have

This creates peer learning. Someone working on summarizing customer calls hears that someone else discovered a privacy issue when sending customer data to a third-party AI service. They learn from it without having to discover it themselves. Cross-functional collaboration happens naturally.

Mechanism 2: Quarterly forums where you showcase results. Not just to the AI team, but to the broader organization. What did we build? What did we learn? What's the impact?

Measure and communicate:

- Time saved

- Quality improvements

- Customer impact

- Deal velocity improvements

- Whatever metrics are relevant to the use case

Making impact visible reinforces that AI is working and worth continued investment.

Mechanism 3: Documentation and internal knowledge bases. As people learn what works and what doesn't, capture it. What prompts work well? What tools are effective? What approaches failed? This becomes organizational memory.

When new people join or start experimenting with AI, they have a repository of lessons to draw from rather than each person discovering these lessons independently.

The Resistance Problem

In building AI culture, you'll encounter resistance. Understanding where it comes from helps you address it:

Competence threat: Some people worry that AI will replace them or make their skills obsolete. This is a real concern that deserves a genuine response.

Response: Focus on augmentation narratives. "AI won't replace good account executives—it will replace the busywork that keeps good AEs from doing excellent account executive work." When people see AI freeing them from 30% of busywork to spend more time on relationship-building and strategy, resistance decreases.

Process disruption: Some people have workflows optimized over years. AI means changing those workflows. There's friction and short-term inefficiency.

Response: Acknowledge the disruption. "Yes, this will feel awkward for 2-3 weeks. Then it becomes your new normal and you won't want to go back." Support people through the transition with training, help, and patience.

Trust deficit: Some people don't trust that AI-generated output is actually good. They worry about quality, accuracy, and putting their reputation on AI systems they don't fully understand.

Response: Start with low-risk applications. "Use AI to draft your email, then you read it and edit it before sending." As people gain experience with quality, trust builds.

Not invented here: Some people are skeptical of tools or approaches coming from outside their team. If the working group recommends something, but a department head prefers their own solution, internal politics can win over strategic alignment.

Response: Make space for multiple approaches. "You can use Tool A or Tool B, both meet our governance standards." Flexibility often increases adoption more than mandates.

Measuring Culture

How do you know if you're building real AI culture? Look for these signals:

- Organic adoption beyond mandates. People using AI because they choose to, not because they were required to.

- Peer learning. People learning from each other about what works, not just top-down education.

- Experimentation and iteration. People trying new approaches, learning from failures, improving over time.

- Conversation inclusion. When leaders talk about future strategy, AI is part of the conversation. It's assumed, not something to be defended.

- Cross-functional collaboration. People from different functions working together on AI-powered workflows.

Figure: A balanced cross-functional AI team typically includes diverse roles to ensure both technical depth and business alignment (estimated data).

Lesson 5: Execution Excellence Requires Systems and Discipline

The Implementation Gap

Most transformation failures don't happen because the strategy was wrong. They happen because execution falters. The working group made the right decision about what to build. But then:

- The project got delayed while waiting for engineering resources

- Requirements changed halfway through without updating timelines

- The tool selected turned out not to work as expected

- Data quality issues weren't discovered until late in implementation

- Stakeholders who were supposed to be involved weren't actually available

- The project finished but adoption was disappointing because end-users weren't consulted on workflow integration

Execution excellence means having systems and discipline around project management, communication, data quality, and stakeholder engagement.

Project Management Discipline

Your AI use case projects shouldn't be treated as research initiatives. They should be treated as product launches. That means:

Clear scope definition.

- What exactly are we building?

- What's in scope, what's explicitly out of scope?

- What does done look like?

Realistic timelines.

- How long will this actually take?

- Break it into phases: prototype (2 weeks), pilot (4 weeks), refinement (2 weeks), rollout (4 weeks)

- Build in buffer for the inevitable surprises

Resource commitment.

- Who's working on this? Full-time or part-time?

- If part-time, what other commitments are they dropping?

- Who's the project lead with authority to make decisions?

Staged approach.

- Don't try to launch to your entire organization at once

- Pilot with 5-10 power users first

- Learn from the pilot

- Expand to a department

- Then scale organization-wide

Regular check-ins and communication.

- Weekly status updates to the working group

- Monthly updates to the broader organization

- Ad hoc communication when issues emerge

Data Quality as a Prerequisite

Most AI projects fail not because the AI is bad, but because the data feeding it is bad. You can have the most sophisticated AI model in the world, but if it's trained on dirty data, it will make bad predictions.

Before you start building, audit your data:

Completeness. Is the data you need actually recorded? If you want to use call transcripts to train an AI system, do you have transcripts for most calls? If you have transcripts for only 40% of calls and nothing for the other 60%, you're training on an incomplete picture.

Accuracy. Is the data correct? Do CRM records accurately reflect customer status? Are customer success metrics recorded consistently?

Consistency. Is data formatted consistently? Is the sales stage called "Qualified" in one region and "Sales Qualified" in another? Are customer values in dollars or thousands of dollars?

Recency. Is the data current? Training an AI model on customer behavior from 2019 won't predict 2025 behavior accurately if your customer base has changed.

Representativeness. Does your data represent the full population you want to apply AI to? If your historical data is 80% from one customer segment and 20% from another, but you want to use the model across all segments, you have a problem.

Before launching an AI project, invest time (and possibly hire) to clean and prepare your data. It's unglamorous work, but it's essential.
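A first-pass audit does not need special tooling. The sketch below shows how a few of the checks above (completeness, consistency, recency) might be run over exported records. It assumes records arrive as a list of dictionaries, and the field names in the example data are hypothetical, not taken from any particular CRM.

```python
from collections import Counter
from datetime import date


def completeness(records, field):
    """Share of records where `field` is present and non-empty."""
    if not records:
        return 0.0
    filled = sum(1 for r in records if r.get(field) not in (None, ""))
    return filled / len(records)


def label_counts(records, field):
    """Count each distinct label in `field` to spot naming drift across regions."""
    return Counter(r[field] for r in records if r.get(field))


def stale_share(records, date_field, cutoff):
    """Share of dated records older than `cutoff`."""
    dated = [r for r in records if r.get(date_field)]
    if not dated:
        return 0.0
    return sum(1 for r in dated if r[date_field] < cutoff) / len(dated)


# Hypothetical export of call records.
calls = [
    {"transcript": "...", "stage": "Qualified", "call_date": date(2025, 3, 1)},
    {"transcript": "", "stage": "Sales Qualified", "call_date": date(2025, 1, 15)},
    {"transcript": None, "stage": "Qualified", "call_date": date(2019, 6, 1)},
    {"transcript": "...", "stage": "Qualified", "call_date": date(2024, 11, 20)},
    {"stage": "Qualified", "call_date": date(2025, 2, 2)},
]

print(completeness(calls, "transcript"))                 # share of calls with a transcript
print(dict(label_counts(calls, "stage")))                # reveals two names for one stage
print(stale_share(calls, "call_date", date(2023, 1, 1)))  # share of records older than the cutoff
```

Accuracy and representativeness are harder to check mechanically. They usually need a person who knows the business to review a sample.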

Integration Architecture

Where does the AI system live? How does it connect to your existing tools? This matters more than people realize.

Option 1: Standalone tool. The AI system is accessed separately from your main tools. People log into the AI system when they want to use it. This is simplest to build but worst for adoption because it adds friction to workflows.

Option 2: API integration into existing tools. The AI system is connected via API to your CRM, email, communication tools. Functionality shows up within the tools people already use. This requires more engineering but dramatically improves adoption.

Option 3: Native embedding. The AI functionality is built directly into your platform or tools. This is most sophisticated but has longest timeline and highest cost.

For initial launches, Option 2 (API integration) usually offers the best tradeoff: workable adoption without requiring massive engineering investment.

Workflow Integration Design

Even if your AI system works perfectly, it won't be used if it doesn't integrate smoothly into actual workflows. This requires thoughtful design.

Example: You've built an AI system that generates call prep from account information. The system works well, but it lives only in a separate tool. Salespeople have to:

- Finish their last meeting

- Open their calendar

- Click on the next meeting

- Copy the customer name

- Paste into the AI tool

- Wait for generation

- Copy the output

- Paste into their email or notes

Versus better integration:

- Finish their last meeting

- Open their calendar and click on the next meeting

- "Generate prep" button appears

- AI generates and inserts the prep into the meeting notes

- Done

The second workflow is frictionless. People will use it. The first workflow is annoying. People will find workarounds.

Change Management at Scale

When you launch an AI system to your entire organization, it's not just about the tool. It's about helping people change how they work.

Training. Not a one-time training session, but ongoing learning:

- Initial training on how to use the tool

- Advanced sessions on how to use it more effectively

- Office hours where people can ask questions

- Video walkthroughs for different use cases

Champions in each team. Identify power users in sales, marketing, customer success. Train them deeply. They become the go-to people when others have questions or issues.

Feedback loops. After launch, ask people:

- Is the tool working as expected?

- Are there obstacles to using it?

- What would make it more valuable?

Then iterate based on the feedback.

Metrics and visibility. Track:

- Adoption rates: What % of people are using it?

- Frequency: How often are they using it?

- Outcome metrics: Is it delivering the business value we expected?

Share these metrics regularly so people see the tool is working and that others are using it.
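Adoption rate and frequency are straightforward to compute once you have a usage log. The sketch below assumes a hypothetical log format (one dictionary per usage event with a `user` key) and a known list of target users. Real tools will export something different, so treat the field names as placeholders.

```python
from collections import Counter


def adoption_rate(target_users, usage_events):
    """Share of target users with at least one usage event in the period."""
    if not target_users:
        return 0.0
    active = {e["user"] for e in usage_events} & set(target_users)
    return len(active) / len(target_users)


def uses_per_active_user(usage_events):
    """Average number of events per user who used the tool at all."""
    counts = Counter(e["user"] for e in usage_events)
    if not counts:
        return 0.0
    return sum(counts.values()) / len(counts)


# Hypothetical week of usage for a four-person team.
team = ["ana", "ben", "cy", "dee"]
events = [{"user": "ana"}, {"user": "ana"}, {"user": "ana"}, {"user": "ben"}]

print(adoption_rate(team, events))     # 0.5
print(uses_per_active_user(events))    # 2.0
```

Looking at the two numbers together matters: 50% adoption with one heavy user and one person who tried it once tells a different story than 50% adoption with steady use.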

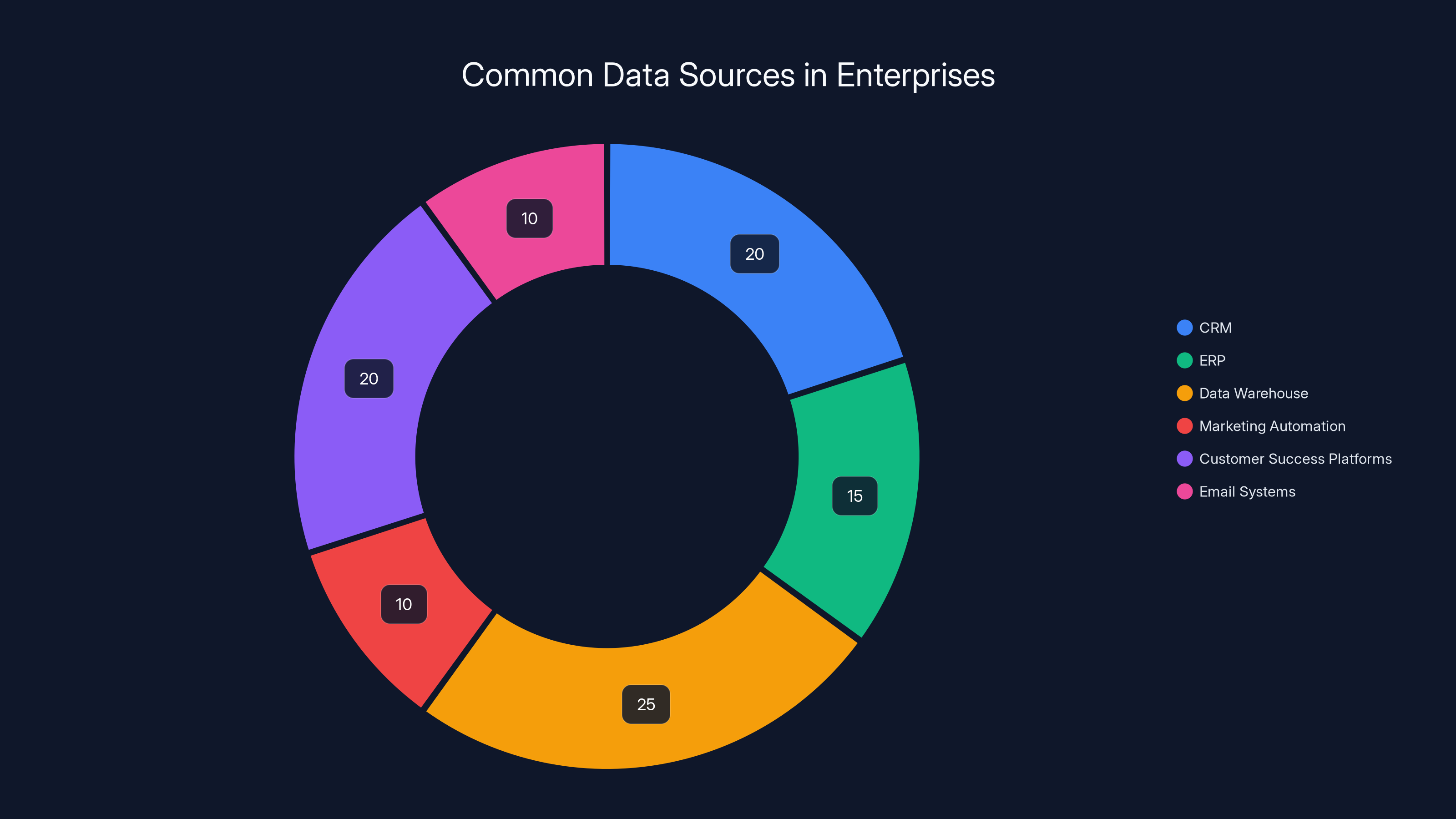

Lesson 6: The Data Foundation—Why Systems Architecture Matters

The Problem: Fragmented Data, Fragmented Truth

Most enterprises have a data landscape that evolved over time—sometimes organically, sometimes not. You have a CRM, an ERP system, a marketing automation platform, a data warehouse, various point solutions. Data lives in multiple places, often duplicated, sometimes conflicting.

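When you want to build an AI system that understands your business, it needs to work with this fragmented data reality. If your CRM says one thing about a customer and another system says something different, the AI system has no way to know which to trust.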

Building the data foundation for AI means:

1. Data inventory. Map all sources of truth in your organization:

- CRM (customer records, opportunity data, activity logs)

- ERP (financial data, invoice records)

- Data warehouse (centralized analytics)

- Marketing automation (campaign data, lead data)

- Customer success platforms (usage data, support tickets, health scores)

- Email and communication systems (interactions, sentiment)

- External sources (firmographic data, market data, intent data)

2. Data reconciliation. Where conflicts exist, establish which source is authoritative:

- For customer account information, is CRM authoritative?

- For financial metrics, is ERP authoritative?

- Where multiple systems have the same data, create rules for conflict resolution

3. Data unification. Create a central location where data from multiple sources is brought together consistently. This might be a data warehouse, a data lake, or a unified API layer.

Without this foundation, AI systems end up confused and unreliable. With it, they can operate with confidence and accuracy.
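The reconciliation step can be expressed as an explicit rule table: for each field, which system wins. The sketch below is a minimal illustration under stated assumptions. The field names, the source names `crm` and `erp`, and the example values are all hypothetical, and a production version would live in your warehouse or integration layer rather than in a script.

```python
# Hypothetical rules: which system is authoritative for each field.
AUTHORITATIVE_SOURCE = {
    "account_name": "crm",
    "owner": "crm",
    "annual_contract_value": "erp",
}


def unify(records_by_source, authoritative=AUTHORITATIVE_SOURCE,
          fallback_order=("crm", "erp")):
    """Merge one customer's records from several systems into a single view.

    Returns (unified_record, conflicting_fields).
    """
    unified, conflicts = {}, []
    fields = sorted({f for rec in records_by_source.values() for f in rec})
    for field in fields:
        values = {
            src: rec[field]
            for src, rec in records_by_source.items()
            if rec.get(field) is not None
        }
        if not values:
            continue
        if len(set(values.values())) > 1:
            conflicts.append(field)  # keep a trail of disagreements for review
        preferred = authoritative.get(field)
        if preferred not in values:
            # No rule, or the authoritative system has no value: fall back in order.
            preferred = next((s for s in fallback_order if s in values),
                             next(iter(values)))
        unified[field] = values[preferred]
    return unified, conflicts


records = {
    "crm": {"account_name": "Acme Corp", "owner": "J. Rivera",
            "annual_contract_value": 120000},
    "erp": {"account_name": "ACME Corporation", "annual_contract_value": 118500},
}
unified, conflicts = unify(records)
print(unified)
print(conflicts)
```

Returning the list of conflicting fields alongside the merged record is a deliberate choice: the conflicts are the backlog for your data cleanup work.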

Real-Time Versus Batch Processing

As you build data systems to support AI, you'll need to decide: Do you need real-time data or batch processing?

Real-time: The AI system needs the most current information available. Example: an AI system recommending next best action for a sales rep. If the system doesn't have the latest customer interaction data, the recommendation might be stale or irrelevant.

Real-time systems are more complex to build. They require event-based architecture, stream processing, lower-latency infrastructure. Cost is higher.

Batch: The AI system can work with data that's hours or days old. Example: an AI system identifying which customers are at churn risk based on monthly usage patterns. Hourly or daily refreshes are fine; real-time is unnecessary.

Batch systems are simpler and cheaper to build. But they're less useful for real-time decision support.

For most GTM use cases, you start with batch processing. It's sufficient for most workflows and lets you get to value faster and cheaper. As you mature, you layer in real-time systems where the business value justifies the complexity.

Privacy, Compliance, and Governance

When you're building AI systems that work with customer and employee data, privacy and compliance aren't optional. They're baseline requirements.

Privacy. What data can be used where? Can customer interaction data be sent to a third-party AI service (OpenAI, Anthropic, etc.)? Can it be logged and used to improve the model? Are there types of data (customer financial data, strategic plans) that shouldn't be sent to external services?

You need explicit policies:

- Which AI services are approved for which data types?

- What data should never leave your infrastructure?

- What happens to data after it's used to generate an output—is it logged, retained, deleted?
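Policies like these are easier to enforce when they are written as data rather than as a document. The sketch below shows one way that might look: a mapping from data classification to approved services, with unknown classifications denied by default. The classification names and service labels are hypothetical placeholders, not recommendations.

```python
# Hypothetical policy: which AI services may receive which class of data.
APPROVED_SERVICES = {
    "public_marketing_copy": {"external_llm_api", "internal_model"},
    "customer_interaction": {"internal_model"},
    "customer_financial": set(),  # never leaves your infrastructure
}


def is_allowed(data_class, service, policy=APPROVED_SERVICES):
    """Return True only if the policy explicitly approves this pairing.

    Unknown data classes are denied by default.
    """
    return service in policy.get(data_class, set())


print(is_allowed("public_marketing_copy", "external_llm_api"))  # True
print(is_allowed("customer_interaction", "external_llm_api"))   # False
print(is_allowed("unclassified_notes", "internal_model"))       # False
```

Denying by default means new data types have to be classified before anyone can send them to an AI service, which is usually the behavior a governance group wants.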

Compliance. Depending on your geography and customer base, you have regulatory requirements:

- GDPR (Europe)

- CCPA/CPRA (California)

- Industry-specific regulations (HIPAA for healthcare, PCI-DSS for payment processing, etc.)

When you use external AI services, you're trusting that service to handle data compliantly. You need vendor agreements and verification that they meet your requirements.

Governance. Who decides what data is available for AI systems? Who approves new uses of data? How do you audit what's happening?

This might involve:

- A data governance committee that approves new AI projects

- Data inventory and classification

- Access controls (only certain people can use certain data)

- Audit logs of what data was used and how

Lesson 7: The Tool Stack—Avoiding Unnecessary Complexity

The Make-Versus-Buy Decision

When you decide to build an AI-powered workflow, you'll face a fundamental question: Do we build this ourselves or buy a solution?

Build your own:

- Pros: Customized exactly to your needs, tight integration with your systems, you own it

- Cons: Takes longer, requires ongoing maintenance, needs data science expertise

Buy a tool:

- Pros: Fast implementation, vendor handles maintenance, you can start using it immediately

- Cons: Less customized, might not fit your process perfectly, ongoing vendor costs

Reality: You'll probably do both. Some things you build (deeply integrated with your specific workflow), some things you buy (where a vendor solution exists and is good enough).

The question isn't which is better, it's which approach for which problem. Guidelines:

Build if:

- No vendor solution exists that fits your needs

- Your competitive advantage depends on a custom solution

- You have specific data science or engineering expertise

- The problem is specific to your business

Buy if:

- A vendor solution exists that covers 80%+ of what you need

- The vendor solution is better than what you could build

- You don't have the expertise to build it

- The problem is generic enough that others have solved it

Avoiding Tool Sprawl

One of the most common mistakes in building an AI-powered GTM: buying too many tools and ending up with fragmentation, data silos, and complexity.

Example: Your organization buys a tool for call summarization, a tool for email generation, a tool for customer insight analysis, and a tool for competitor intelligence. Suddenly, salespeople are using four different AI tools, learning four different interfaces, and getting insights from four different systems.

Better approach: Rather than an AI tool for every problem, identify key platforms that handle multiple use cases:

- Revenue intelligence platform: Customer data, interaction data, account insights, next best action recommendations

- Content generation platform: Emails, documents, presentations, battle cards

- Analytics platform: Forecasting, pipeline analysis, trend analysis

- Workflow automation platform: Connecting systems, automating routine tasks

Instead of a separate tool for every problem, you have a small set of platforms that each cover several use cases. Salespeople learn fewer interfaces, and data flows between integrated systems rather than being siloed.

This requires more thoughtful tool selection. You can't just pick the best-of-breed solution for each problem—you need to pick solutions that work well together and cover your key use cases.

The Security and Compliance Audit

Before you bring a new AI tool into your organization, you need to audit it for security and compliance:

Data handling:

- Does the tool log the data you send it?

- Can you request deletion of your data?

- Does the tool use your data to improve its models? (Many do—is that acceptable?)

- Where is your data stored? (Data residency requirements)

Security:

- Does the vendor have a current SOC 2 report? (SOC 2 is an audit attestation rather than a certification, and it doesn't guarantee security, but it's a good baseline)

- Does it use encryption in transit and at rest?

- What's their vulnerability disclosure and incident response process?

Compliance:

- If you have GDPR requirements, does the tool have GDPR compliance commitments?

- If you have HIPAA requirements, is it HIPAA compliant, and will the vendor sign a Business Associate Agreement (BAA)?

- What's their sub-processor list? (If they use other vendors to process your data, you need visibility)

Contractual:

- Can you get a Data Processing Agreement (DPA) if needed?

- What are their SLAs? (Service level agreements—what uptime are they guaranteeing?)

- What's their support quality?

This review process slows down tool adoption but prevents problems—security breaches, compliance violations, vendor lock-in—down the road.

Figure: Data warehouses and CRM systems are the most common sources of data in enterprises, each contributing significantly to the overall data landscape (estimated data).

Lesson 8: Managing Organizational Skepticism and Resistance

The Skepticism Spectrum

Not everyone in your organization will be enthusiastic about AI transformation. You'll encounter various flavors of skepticism:

Category 1: Genuine concern about job security. Some people, especially those in roles that AI might significantly change, worry about whether they'll still have a job. This is a legitimate concern that deserves a genuine response.

Best approach: Be honest about transformation while emphasizing augmentation. "This AI system will handle routine email drafting, but email strategy and relationship context—the hard part of account management—is still 100% your job. We're freeing you from busywork." Show them examples of how AI augments rather than replaces.

Category 2: Process disruption frustration. Some people are skeptical because change is annoying. They've optimized their workflow over years. AI means learning something new, changing their process, short-term inefficiency.

Best approach: Validate the frustration. "Yes, this is going to feel awkward for a couple weeks. I know that's annoying. But then it becomes your new normal." Provide support: training, help, office hours. Make the transition as smooth as possible.

Category 3: I don't believe this will actually work. Some people are skeptical based on past experience. "We've tried new tools before and they didn't work. Why is this different?" This skepticism is often rooted in real experience—previous tools were overhyped or implemented poorly.

Best approach: Show them evidence, not promises. Do pilots that prove the value. Share concrete results: "Look, in the pilot group, adoption is 65%, and people are saving 4 hours/week." Let the evidence speak.

Category 4: This isn't the right priority. Some people acknowledge AI could work but disagree with your prioritization. "Sure, AI for email is nice, but we need to fix X first." This is often a valid competing priority.

Best approach: Acknowledge the tradeoff. Share your prioritization framework. "You're right that X is important. Here's why we prioritized AI email first: ROI is higher, timeline is shorter, and it addresses one of our biggest bottlenecks. We'll do X in phase 2." Transparency reduces friction.

The Influencer Strategy

You'll never convince everyone simultaneously. Instead, use an influencer strategy:

- Identify power users and early adopters. These are people who are naturally curious about new tools and have credibility with their peers. Often it's 5-10% of your organization.

- Equip them to be champions. Give them early access to tools, deep training, the ability to customize and adapt. They become advocates because they genuinely find value.

- Amplify their voice. When an early adopter in sales says "This AI tool saved me 3 hours on CRM work," that's more credible than when the VP says it. Give them platforms to share their experience: team meetings, company all-hands, internal communications.

- Create peer learning. Once you have champions, use them to help others. "Sarah has been using this for 6 weeks and loves it. Let's have her run a training for our team."

- Expand in waves. First power users, then early adopters, then pragmatists, then laggards. Each wave builds on the previous wave's evidence and advocacy.

This approach is slower than trying to mandate adoption across the organization, but it's more durable. People adopt because they see peers benefiting, not because they were told to.

Lesson 9: Measuring Success—Metrics That Actually Matter

Distinguishing Vanity Metrics from Real Metrics

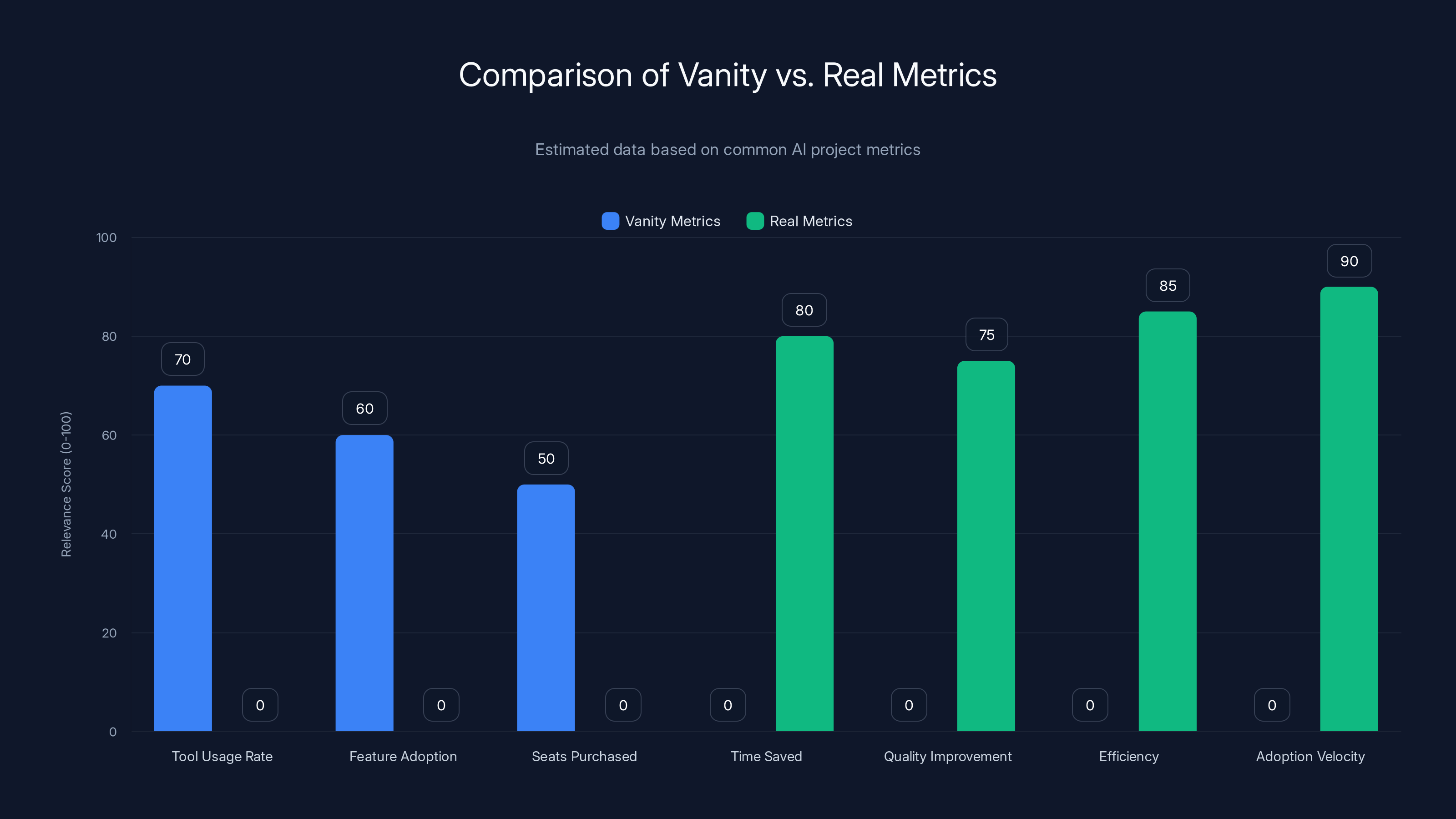

Most AI projects get measured on metrics that don't actually matter:

Vanity metrics:

- Tool usage rate: X% of salespeople have logged in at least once

- Feature adoption: Y% of people have generated at least one AI email

- Seats purchased: We bought 50 licenses

These metrics feel good but don't tell you if you're actually delivering business value.

Real metrics:

- Time saved: How many hours per week are people actually saving?

- Quality improvement: Are deals closing faster? Are win rates improving? Are renewals increasing?

- Efficiency: What's the ROI? How much incrementally captured value do you get for each dollar spent?

- Adoption velocity: Is usage going up over time or flat? Are people using more, or did they try once and stop?

Designing Your Measurement Framework

For each AI use case, define three levels of metrics:

Level 1: Adoption and usage metrics.

- % of target users using the tool at least weekly

- Average frequency of use per user per week

- Feature usage: which features are people actually using? (This tells you what's valuable)

- Expansion: Are people using it for more than the intended use case? (This is a good sign)

Level 2: Efficiency metrics.

- Time saved per use case

- Cost impact (if relevant—e.g., fewer customer support tickets means fewer support costs)

- Quality metrics (where applicable: error rates, customer satisfaction, etc.)

Level 3: Business impact metrics.

- Sales velocity: Are deals moving through stages faster?

- Win rates: Are we winning more deals?

- Deal size: Are deals larger?

- Customer retention: Are we retaining more customers?

- Customer satisfaction: Are customers happier with their experience?

Not every metric is relevant to every use case, but together they tell a story.

Measurement Challenges

Challenge 1: Causation vs. correlation. If win rates go up after you deploy an AI email generation tool, did the tool cause the improvement? Or did it happen because you hired better salespeople? Or because the market improved? You need to be thoughtful about isolating impact.

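Solution: Use a controlled approach. Implement the tool with a pilot group of 10-20 people. Compare their metrics (win rate, deal velocity, etc.) against a comparable control group not using the tool over the same period. If the two groups are otherwise similar, the difference is likely attributable to the tool, though with groups this small you should treat it as directional rather than conclusive.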
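The pilot-versus-control comparison itself is simple arithmetic. The sketch below computes the win-rate difference in percentage points from two lists of closed-deal outcomes. The deal counts are hypothetical, and the function says nothing about statistical significance: with 10-20 people per group, a gap of a few points can easily be noise.

```python
def win_rate(deals):
    """Share of closed deals that were won. `deals` is a list of booleans (True = won)."""
    return sum(deals) / len(deals) if deals else 0.0


def lift_in_points(pilot_deals, control_deals):
    """Pilot win rate minus control win rate, in percentage points."""
    return 100 * (win_rate(pilot_deals) - win_rate(control_deals))


# Hypothetical quarter: 40 closed deals in each group.
pilot = [True] * 12 + [False] * 28     # 30% win rate
control = [True] * 10 + [False] * 30   # 25% win rate

print(round(lift_in_points(pilot, control), 1))  # 5.0
```

Before presenting a number like this to leadership, check that the two groups are comparable on territory, segment, and tenure, and rerun the comparison over more than one period.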

Challenge 2: Time lag between adoption and impact. When you launch an AI tool, adoption happens immediately. But business impact (better deals, higher revenue) might take 2-3 months to show up. You need patience and communication.

Solution: Track both leading indicators (adoption, frequency of use) and lagging indicators (revenue impact). Show leaders both signals so they understand the expected timeline.

Challenge 3: Measurement infrastructure. Capturing these metrics requires integration between your tools and your measurement systems. You need data pipelines that pull usage data from the AI tool, combine it with outcome data from CRM, and calculate impact.

Solution: Build this infrastructure early. Work with your data team to define exactly what data you need to capture and how you'll combine it. Don't do this manually—it doesn't scale and it's error-prone.
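At its core, the pipeline described here is a join between usage data and outcome data. The sketch below shows that join in its simplest form, assuming a hypothetical usage export keyed by rep and a hypothetical CRM export of closed deals with `owner` and `stage` fields. In practice this would run as a scheduled query in your warehouse rather than as a script.

```python
def join_usage_to_outcomes(usage_by_rep, deals):
    """Combine each rep's AI tool usage with their CRM deal outcomes."""
    rows = []
    for rep, weekly_uses in sorted(usage_by_rep.items()):
        rep_deals = [d for d in deals if d["owner"] == rep]
        won = sum(1 for d in rep_deals if d["stage"] == "Closed Won")
        rows.append({
            "rep": rep,
            "weekly_uses": weekly_uses,
            "closed_deals": len(rep_deals),
            # None when a rep has no closed deals, to avoid a misleading 0%.
            "win_rate": won / len(rep_deals) if rep_deals else None,
        })
    return rows


# Hypothetical exports from the AI tool and the CRM.
usage = {"ana": 12, "ben": 0}
deals = [
    {"owner": "ana", "stage": "Closed Won"},
    {"owner": "ana", "stage": "Closed Lost"},
    {"owner": "ben", "stage": "Closed Lost"},
]

for row in join_usage_to_outcomes(usage, deals):
    print(row)
```

A table like this shows correlation between usage and outcomes, not causation. It is most useful alongside a controlled pilot rather than as a replacement for one.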

Lesson 10: Avoiding the Five Critical Mistakes

Mistake 1: Not Securing Executive Sponsorship for the Long Term

AI transformation requires sustained executive commitment. The companies that fail often had executive sponsorship initially but lost it when:

- The sponsor left the company

- A crisis emerged and attention shifted

- Results took longer than expected and patience wore thin

- Political dynamics changed

Solution: Distribute executive sponsorship across multiple leaders rather than concentrating it in one person. If your CRO is the sole sponsor and they leave, the initiative stalls. Better: the CEO, CRO, CFO, and CTO all understand and support the strategy. This creates resilience.

Also: Set explicit expectations about timeline. "This will take 6-12 months to see material business impact. We're going to hit obstacles. We might need to adjust. But we're committed to seeing this through."

Mistake 2: Building Disconnected from Your Sales Process

Many companies build AI tools in isolation from how selling actually works. They optimize for what's technically interesting rather than what's operationally useful.

Example: An organization builds an AI system that scores leads based on likelihood to convert. The model is sophisticated and accurate (88% precision). But it integrates with the CRM in a way that requires salespeople to manually check the score. It's one more thing to do, not one less thing. Adoption is predictably low.

Solution: Build with your sales operations and end-users involved from day one. "Here's how we want to use this—it should be visible in the opportunity page without requiring extra clicks." Let process requirements drive technical decisions, not the other way around.

Mistake 3: Assuming AI Is a Technology Problem

Companies often approach AI transformation as primarily a technology challenge: "We need the right tools and infrastructure." Then they're surprised when tools exist but adoption is low.

AI transformation is primarily an organizational change problem with some technology in it. The hard part is helping 400 salespeople change how they work: managing resistance, training people, supporting them through disruption, and building a culture where AI-augmented selling is simply how the team operates.

Solution: Treat it as organizational change, not technology implementation. Invest in change management. Have explicit conversations about how work is changing. Train people. Create support structures. Be patient with the adoption curve.

Mistake 4: Under-Investing in Data Quality

Many organizations want to build AI quickly and see results fast, so they skip the unglamorous work of data quality. Then their AI systems produce unreliable outputs and lose credibility.

Solution: Invest in data quality first. Before you build the AI system, spend 4-6 weeks auditing, cleaning, and standardizing your data. It feels slow at the time, but it saves enormous pain later.

Mistake 5: Treating AI as a Revenue Panacea

The most dangerous mistake: approaching AI transformation as if it will solve fundamental business problems. "Our sales team isn't effective. Let's add AI." If the issue is that your salespeople are underperforming, lack skills, or are misaligned with your market, AI won't fix that.

AI is best at solving operational efficiency problems. It saves time on busywork, streamlines processes, and augments human capability. It's not good at fixing broken fundamentals.

Solution: Be clear about what problem you're solving. If you're solving time waste and inefficiency, AI is perfect. If you're solving fundamental sales effectiveness, start with coaching, training, and process improvement. Once the fundamentals are solid, AI makes them even better.

Figure: Leadership visibility is estimated to be the most effective lever in building an AI culture, followed by encouraging experimentation and sharing success stories (estimated data).

Lesson 11: The GTM Workflow Integration Framework

Where AI Fits in the Revenue Cycle

To build an effective AI transformation, you need to understand where AI creates value across the entire go-to-market motion, not just sales.

Demand generation:

- AI can analyze firmographic and behavioral data to identify high-probability targets

- AI can generate personalized messaging and creative variations at scale

- AI can optimize campaign parameters and predict performance

Sales engagement:

- AI can prepare sellers for customer conversations with relevant context

- AI can generate outreach sequences and email variations

- AI can analyze customer interactions and suggest next steps

- AI can identify expansion opportunities within accounts

Deal management:

- AI can forecast deal outcomes based on activity patterns

- AI can identify deals at risk and suggest interventions

- AI can generate proposals and customize them by customer

Customer success:

- AI can monitor customer health and flag risks early

- AI can suggest expansion and upsell opportunities

- AI can generate renewal justifications and materials

Sales operations:

- AI can extract and update CRM data from communications

- AI can analyze win/loss patterns and identify success factors

- AI can forecast pipeline and revenue with greater accuracy

Building Integrated Workflows

The most valuable AI transformations aren't isolated point solutions—they're integrated workflows where AI and humans work together across multiple steps.

Example integrated workflow: An account executive preparing for a quarterly business review with a strategic customer.

Traditional workflow:

- AE spends 2 hours gathering customer information (usage data, support history, previous discussions)

- AE spends 1 hour synthesizing this into talking points

- AE creates a PowerPoint presentation (1.5 hours)

- AE reviews the presentation and adds internal context (30 min)

- Total: 5 hours

AI-integrated workflow:

- AE triggers "Generate QBR prep" in CRM

- AI system automatically pulls customer data (usage, health, interactions, previous discussions)

- AI system generates:

  - Customer health summary

  - Key metrics and trends

  - Success stories and ROI context

  - Expansion opportunities

  - Potential challenges or risks

- AI system generates initial presentation deck

- AE reviews AI output, adds internal strategic context, customizes based on customer personality (1 hour)

- Total: 1 hour

The difference isn't that AI did everything—it's that AI handled the data gathering and synthesis, freeing the human for higher-value analysis and customization.
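
The arithmetic behind the two workflows above is worth making explicit. The step durations below come straight from the example; the number of QBRs an AE prepares per quarter is an assumption added purely for illustration.

```python
# Hours per step, taken from the two workflows described above.
traditional_hours = {
    "gather customer information": 2.0,
    "synthesize talking points": 1.0,
    "build presentation": 1.5,
    "review and add internal context": 0.5,
}
ai_assisted_hours = {
    "review AI output and customize": 1.0,
}

traditional_total = sum(traditional_hours.values())
ai_total = sum(ai_assisted_hours.values())
hours_saved = traditional_total - ai_total
percent_saved = hours_saved / traditional_total

# Assumption for illustration only: how many QBRs one AE prepares per quarter.
qbrs_per_quarter = 6
hours_saved_per_quarter = hours_saved * qbrs_per_quarter

print(f"Per QBR: {traditional_total:g}h -> {ai_total:g}h ({percent_saved:.0%} less prep time)")
print(f"Per AE per quarter: {hours_saved_per_quarter:g}h saved")
```

Swapping in your own step timings and QBR volume gives a quick estimate of the time you could recover, which is one of the leading indicators worth tracking before revenue impact shows up.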

Lesson 12: Scaling to the Entire Organization

Phase-Based Rollout Approach

Don't try to launch an AI transformation to 400 salespeople simultaneously. Use a phased approach:

Phase 1: Pilot (weeks 1-4)

- 5-10 power users and early adopters

- Close collaboration with the implementation team

- Daily feedback and quick iterations

- Goal: Prove the concept works and refine the workflow

Phase 2: Expansion (weeks 5-8)

- 50-100 additional users (one full team)

- Scaled training and support

- Less frequent iteration (weekly instead of daily)

- Goal: Prove it scales beyond power users

Phase 3: Roll-out (weeks 9-12)

- Full organization launch

- Support infrastructure at scale (documentation, office hours, email support)

- Less frequent changes to the system (changes are lower priority once rolled out)

- Goal: Full adoption and operationalization

Phase 4: Optimization (month 4+)

- Monitor usage and outcomes

- Address support issues

- Gather feedback for next iterations

- Plan next use cases based on learnings
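
One way to keep everyone aligned on the plan is to encode it as data that dashboards and status reports can read. The sketch below is an assumption about how that might look, not a required format: the `Phase` class and `phase_for_week` helper are hypothetical, and "month 4+" is treated as week 13 onward.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class Phase:
    name: str
    first_week: int
    last_week: Optional[int]  # None means the phase is open-ended
    audience: str
    goal: str


ROLLOUT_PLAN = [
    Phase("Pilot", 1, 4, "5-10 power users and early adopters",
          "Prove the concept works and refine the workflow"),
    Phase("Expansion", 5, 8, "50-100 additional users",
          "Prove it scales beyond power users"),
    Phase("Roll-out", 9, 12, "Full organization",
          "Full adoption and operationalization"),
    # Assumption: "month 4+" is treated as week 13 onward.
    Phase("Optimization", 13, None, "Full organization",
          "Monitor outcomes and plan next use cases"),
]


def phase_for_week(week: int) -> Phase:
    """Return the rollout phase that a given week (1-based) falls into."""
    for phase in ROLLOUT_PLAN:
        in_range = phase.last_week is None or week <= phase.last_week
        if phase.first_week <= week and in_range:
            return phase
    raise ValueError(f"week {week} is outside the rollout plan")


current = phase_for_week(6)
print(f"Week 6: {current.name} ({current.audience}). Goal: {current.goal}")
```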

Support and Training at Scale

As you scale, support becomes critical to adoption. Build support structures:

Self-service documentation:

- Written guides and screenshots

- Video walkthroughs

- FAQ with common questions

Office hours:

- 30-minute sessions where people can ask questions

- Record them so people can watch async

Champions network:

- Identify power users in each team

- Train them deeply

- They become the first support line within their teams

Email and Slack support:

- Responsive support for issues

- Dedicated Slack channel where people can ask questions

- Documentation bot that answers frequently asked questions

Managing Version Updates and Iterations

Once you've launched, you'll want to make improvements. Manage this carefully:

Breaking changes: Changes that require people to learn new workflows. Minimize these and do them infrequently (quarterly, not monthly). When you make breaking changes, do extensive communication and training.

Additive features: New capabilities that extend what's possible. Users don't have to change what they're doing; they can use the new feature if they want. Do these more frequently (monthly) with low communication overhead.

Maintenance updates: Bug fixes and performance improvements. Do these continuously without much announcement.

Communication protocol: When you update, explain: What changed? Why? What should users do?

Lesson 13: Building vs. Buying: The Integration Advantage

When to Build Custom Solutions

Building custom AI solutions makes sense when:

- Your competitive advantage depends on it. If how you deploy AI in go-to-market is a differentiator for you, build it. Control matters.

- No vendor solution exists. The problem is specific to your business model or market. You've looked and nothing comes close.

- Vendor solutions are off-spec. The best vendor solution covers maybe 60% of what you need. You'd be shoehorning and it would be painful.

- You have the team. You have in-house talent (data scientists, ML engineers, product managers) who can build and maintain it.

When to Buy

Buying makes sense when:

- A good vendor solution exists. Something covers 80%+ of your needs.

- Speed matters. You need results in months, not years. Building takes longer.

- Maintenance burden matters. The vendor handles updates, security patches, and ongoing improvement. You don't have to.

- Cost is a factor. Buying is often cheaper than building and maintaining, even with annual vendor costs.

- You lack the team. You don't have in-house AI/ML expertise. Building would require hiring, which takes time.

The Hybrid Approach: Buy + Build

Most organizations do both:

- Buy general-purpose tools (email generation, call summarization, customer intelligence)

- Build custom integrations and workflows specific to your sales process

Example: You buy a revenue intelligence platform. It works well out of the box but doesn't integrate with your internal systems exactly the way you need. Your RevOps team builds an integration layer using APIs that maps your CRM fields to the platform's expected inputs. You buy the solution but customize it to your specific context.

This approach balances speed (buy for things that are generic) with customization (build for things that are specific to you).
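
The integration layer in that example often starts as little more than a field mapping. The sketch below shows the idea under loud assumptions: every field name is invented for illustration, `to_platform_payload` is a hypothetical helper, and no real CRM or vendor API is being described.

```python
# Hypothetical mapping from internal CRM field names to the names a vendor
# platform expects. None of these names come from a real product.
CRM_TO_PLATFORM = {
    "opp_name": "deal_title",
    "stage": "pipeline_stage",
    "amount_usd": "deal_value",
    "owner_email": "rep_email",
}

# Assumption: fields the platform cannot work without.
REQUIRED_CRM_FIELDS = {"opp_name", "stage", "owner_email"}


def to_platform_payload(crm_record: dict) -> dict:
    """Rename CRM fields to the platform's names, dropping unmapped fields."""
    missing = REQUIRED_CRM_FIELDS - set(crm_record)
    if missing:
        raise ValueError(f"CRM record is missing required fields: {sorted(missing)}")
    return {
        platform_field: crm_record[crm_field]
        for crm_field, platform_field in CRM_TO_PLATFORM.items()
        if crm_field in crm_record
    }


record = {
    "opp_name": "Acme renewal",
    "stage": "Negotiation",
    "amount_usd": 120000,
    "owner_email": "rep@example.com",
    "internal_notes": "not sent to the vendor",
}
payload = to_platform_payload(record)
print(payload)
```

Keeping the mapping in one table rather than scattered through code means that when the vendor or your CRM schema changes, RevOps updates a single place.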

Figure: Real metrics such as time saved and efficiency gains are more relevant for assessing AI project success than vanity metrics such as tool usage rate (estimated data).

Lesson 14: Handling Edge Cases and Failures

When AI Produces Bad Output

AI systems will sometimes produce bad output. Maybe it generates an email that's tone-deaf. Maybe it misses a key insight about a customer. Maybe it recommends an action that doesn't make sense.

This is normal. The question is how you handle it:

First principle: Always have a human in the loop for important decisions. AI is an augmentation tool, not a replacement for judgment. Before sending an email, the sales rep should read it. Before making a deal decision, the manager should review the analysis.

Second principle: Collect failure data. When the AI produces bad output, log it. What was the input? What did it produce? What should it have produced? This data is valuable for improving the system.

Third principle: Iterate, don't abandon. If an AI system is producing bad output 20% of the time, don't scrap it. Work to improve it. Better data, better training, better human-AI workflow integration.
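
The failure log from the second principle can start very small. The sketch below is one possible shape, with assumptions labeled: the `FailureRecord` fields mirror the three questions above (input, actual output, expected output), while the tool name, sample text, and helper functions are hypothetical.

```python
import io
import json
from dataclasses import asdict, dataclass


@dataclass
class FailureRecord:
    """One logged case of bad AI output (field names are illustrative)."""
    tool: str
    input_text: str
    actual_output: str
    expected_output: str
    reported_by: str


def log_failure(stream, record: FailureRecord) -> None:
    """Append the record to the stream as one JSON line."""
    stream.write(json.dumps(asdict(record)) + "\n")


def failure_rate(failures: int, total_outputs: int) -> float:
    """Share of outputs flagged as bad; 0.0 when nothing has been produced yet."""
    return failures / total_outputs if total_outputs else 0.0


# In practice the stream would be a file or logging pipeline; StringIO keeps
# this sketch self-contained.
log = io.StringIO()
log_failure(log, FailureRecord(
    tool="email_generator",
    input_text="Follow up with a customer after an outage",
    actual_output="Upbeat upsell pitch",
    expected_output="Apology, status update, and next steps",
    reported_by="rep_42",
))

print(log.getvalue(), end="")
print(f"Failure rate: {failure_rate(20, 100):.0%}")
```

Tracking the failure rate over time also tells you whether iteration is working: a rate that keeps falling is the evidence behind "iterate, don't abandon."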

When Adoption Stalls

You might hit a point where adoption plateaus at 40%, 50%, or 60%—lower than your target. This is common and usually fixable.

Diagnosis:

- Is the lagging group made up of skeptics, or of people who haven't had time yet?

- Is there a workflow issue preventing adoption? (Maybe the tool doesn't integrate well with how they work)

- Is there a training gap? (Maybe people don't understand how to use it)

- Is the tool not working well enough to be worth their time?

Solutions by diagnosis:

- If skepticism: Use an influencer strategy, share evidence of value, and allow more time

- If workflow issue: Iterate the workflow based on feedback

- If training gap: Double down on training and support

- If tool quality issue: Work with the vendor or team to improve the tool
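
If you survey non-adopters about why they are not using the tool, the diagnosis-to-solution list above can be applied mechanically to decide where to focus first. The sketch below assumes a hypothetical survey in which each response has already been tagged with one reason code; the codes, the sample responses, and the `prioritize_remedies` helper are illustrative only.

```python
from collections import Counter

# Diagnosis -> response, taken from the list above. The reason codes are hypothetical.
REMEDIES = {
    "skepticism": "Use an influencer strategy, share evidence of value, allow more time",
    "workflow_issue": "Iterate the workflow based on feedback",
    "training_gap": "Double down on training and support",
    "tool_quality": "Work with the vendor or team to improve the tool",
}


def prioritize_remedies(reported_reasons):
    """Rank known diagnoses by how often non-adopters report them."""
    counts = Counter(reason for reason in reported_reasons if reason in REMEDIES)
    return [(reason, count, REMEDIES[reason]) for reason, count in counts.most_common()]


# Illustrative survey responses; unknown codes such as "other" are ignored.
survey = (["workflow_issue"] * 5 + ["training_gap"] * 3
          + ["skepticism"] * 2 + ["other"])

ranked = prioritize_remedies(survey)
for reason, count, remedy in ranked:
    print(f"{reason} ({count} responses): {remedy}")
```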

Knowing When to Pivot

Sometimes an approach isn't working and iteration isn't enough. You need to pivot:

When you might pivot:

- You've invested 3-4 months in an approach and adoption is below 30% with no sign of improvement