How Amazon's CISO Uses AI to Stay Ahead of Threat Actors [2025]

Threat actors aren't interested in your company's security posture. They care about one thing: achieving their goal. Whether that's stealing data, disrupting operations, or extorting millions, attackers will find the path of least resistance. They'll test your defenses, exploit misconfigured cloud resources, manipulate your employees, or pivot laterally through compromised systems until they break through.

This perspective isn't cynical—it's practical. And it's exactly why a fundamental shift in how enterprises approach cybersecurity is happening right now.

At AWS re:Invent 2025, Amy Herzog, Chief Information Security Officer at Amazon, shared insights that challenge how most organizations think about security. Her message was clear: traditional security practices are no longer sufficient. The pace of threats, the complexity of modern infrastructure, and the sheer volume of security alerts have overwhelmed human-centric defense models. Enter artificial intelligence and, more specifically, agentic AI: autonomous agents that work alongside security teams to catch threats before they ever harm customers.

This isn't about replacing security professionals. It's about augmenting them with tools intelligent enough to understand context, prioritize risk, and act on findings faster than humans ever could. For teams already drowning in alert fatigue, managing sprawling cloud environments, and struggling to hire experienced security talent, this shift represents a genuine lifeline.

But here's what matters most: understanding how to implement these tools thoughtfully. Because AI in security, like AI everywhere else, comes with its own set of challenges, misconceptions, and potential pitfalls.

TL;DR

- Threat actors are outcome-focused: They don't care about your security strategy—they'll exploit whatever vulnerability gets them to their goal fastest

- AI agents work best when specialized: Focus agents on doing one job exceptionally well, then integrate them into larger security frameworks

- Prevention beats response: Catching threats before they reach customers avoids most of the damage and cost associated with breaches

- Security isn't binary: The goal isn't 100% security (which breaks functionality)—it's the optimal balance of protection and usability

- Speed of adaptation matters more than initial perfection: Security landscapes change monthly; organizations that continuously measure and adapt win


The Reality of Modern Threat Actors: Why Traditional Defenses Fall Short

When we talk about cybersecurity strategy, we often start with a defense-centric mindset. We build walls, implement firewalls, deploy intrusion detection systems, and monitor logs. Then we assume we're safe.

Threat actors don't think that way.

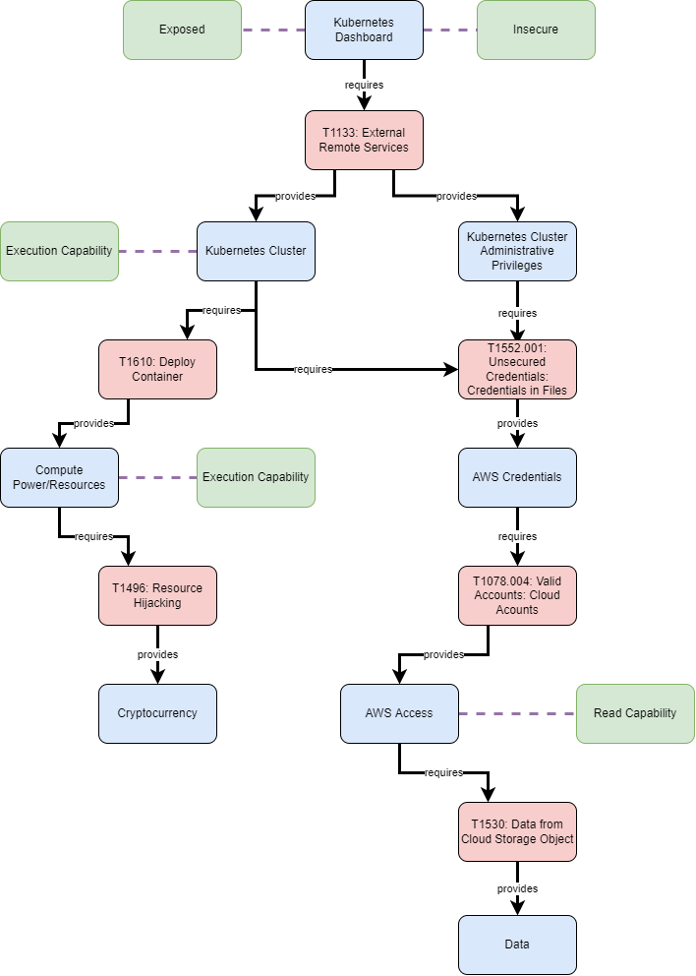

They start with an objective. Maybe it's accessing customer financial data. Maybe it's disrupting critical infrastructure. Maybe it's simply establishing a foothold for future exploitation. From there, they work backward. They'll conduct reconnaissance on your organization, identify potential entry points, and then execute a series of attacks designed to find the weakest link.

This is fundamentally asymmetrical. You have to defend everywhere. They only need to find one gap.

What makes this worse today is scale and speed. A single misconfigured AWS S3 bucket can expose millions of records. A compromised developer workstation can grant access to your entire codebase. A phishing email can look identical to legitimate communication. An unpatched vulnerability that you didn't know existed can be weaponized within 24 hours of disclosure.

Traditional security—based on quarterly penetration tests, annual security audits, and reactive incident response—was built for a different era. When vulnerabilities took months to exploit. When attackers couldn't automate reconnaissance. When your infrastructure was mostly static and on-premises.

Now? Threat actors use AI to scan for vulnerabilities at scale. They automate credential stuffing attacks. They generate convincing phishing emails. They analyze your public GitHub repositories to understand your tech stack and identify known weaknesses. They're moving faster than any human security team can respond.

That's why the security industry is fundamentally shifting. It's not about doing more of the same thing. It's about working smarter, leveraging intelligence, and getting ahead of threats before they materialize.

Leading organizations significantly outperform average ones in key security metrics, reducing detection and response times and focusing spending on critical risks. Estimated data.

Understanding Agentic AI: Beyond the Hype

When most people hear "AI agents," they picture something from science fiction: autonomous robots making decisions independently, systems that operate without human oversight. That misconception is exactly why Herzog spends energy demystifying AI agents for customers.

In reality, security-focused AI agents are far more grounded. They're specialized tools designed to automate specific, well-defined tasks that would otherwise consume human security analysts' time.

An effective security agent might:

- Analyze logs from thousands of servers in real-time, flagging anomalous behavior patterns that humans would miss

- Correlate security events across multiple tools and data sources to identify coordinated attack chains

- Prioritize alerts based on business impact, not just threat severity

- Suggest remediation steps backed by context about your environment

- Continuously monitor for known vulnerabilities and misconfigurations

- Simulate attack paths to identify which vulnerabilities represent the highest actual risk

Herzog's key insight applies here: agents work best when each one is built to do one thing exceptionally well and then integrated into a larger framework. Don't try to build an all-knowing security agent that handles every security task simultaneously. That's a recipe for false positives, missed signals, and wasted effort.

Instead, think of it like a surgical team. You have specialists: the orthopedic surgeon, the anesthesiologist, the nurses. Each is an expert in their domain. They coordinate through established protocols. The head surgeon integrates their findings into a coherent treatment plan.

Security agents should work the same way. One agent specializes in cloud configuration auditing. Another focuses on network anomaly detection. A third handles vulnerability assessment. They feed information into a central security platform where human analysts synthesize findings and make decisions.
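The division of labor described above can be sketched in a few lines of Python. This is a minimal illustration, not any vendor's agent framework: each "agent" is just a function that inspects a hypothetical environment snapshot for one narrow class of problem, and a central step merges their findings into a single queue for a human analyst. The `Finding` fields, the 1-5 severity scale, and the snapshot format are all assumptions made for the example.

```python
from dataclasses import dataclass
from typing import Callable, Iterable


@dataclass(frozen=True)
class Finding:
    agent: str      # which specialist produced the finding
    resource: str   # affected asset identifier
    issue: str      # short human-readable description
    severity: int   # hypothetical scale: 1 (low) to 5 (critical)


# A specialist agent is modeled as a function from an environment snapshot
# to the findings in its own narrow domain.
Agent = Callable[[dict], Iterable[Finding]]


def config_auditor(env: dict) -> list:
    """Cloud configuration specialist: flags publicly readable buckets."""
    return [Finding("config", name, "publicly readable bucket", 4)
            for name, bucket in env.get("buckets", {}).items()
            if bucket.get("public")]


def vuln_assessor(env: dict) -> list:
    """Vulnerability specialist: flags hosts with unpatched CVEs."""
    return [Finding("vuln", host, f"unpatched {cve}", 5)
            for host, cves in env.get("unpatched", {}).items()
            for cve in cves]


def central_platform(env: dict, agents: list) -> list:
    """Merge every specialist's findings into one queue, most severe first,
    for a human analyst to review and decide on."""
    findings = [f for agent in agents for f in agent(env)]
    return sorted(findings, key=lambda f: f.severity, reverse=True)


# Hypothetical snapshot; the CVE ID is a placeholder, not a real advisory.
env = {
    "buckets": {"app-logs": {"public": False}, "exports": {"public": True}},
    "unpatched": {"web-1": ["CVE-XXXX-0001"]},
}
queue = central_platform(env, [config_auditor, vuln_assessor])
for f in queue:
    print(f.severity, f.agent, f.resource, f.issue)
```

Adding a new specialist means writing one more function and adding it to the list; the central step and the human review stay the same.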

This approach solves a genuine problem: alert fatigue. Most security teams today are drowning in alerts. A typical enterprise generates millions of security events daily. Traditional SIEM systems can flag tens of thousands of potential issues. Security analysts manually sort through this noise, investigating which alerts are actually critical.

When a skilled agent filters, prioritizes, and contextualizes this information, suddenly the security team isn't overwhelmed. They're empowered.
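One way to picture that filtering step is a small triage function: collapse duplicate alerts, weight each one by how much the affected asset matters to the business, and surface only what clears a threshold. This is a simplified sketch; the alert fields, the severity-times-criticality score, and the cutoff of 6 are illustrative assumptions, not a standard scoring scheme.

```python
def triage(alerts, asset_criticality, min_score=6, top_n=10):
    """Deduplicate raw alerts, score each group by severity x business
    criticality, and return only the highest-priority groups."""
    groups = {}
    for a in alerts:
        key = (a["rule"], a["asset"])
        count, sev = groups.get(key, (0, 0))
        # Collapse repeats of the same rule on the same asset, keeping the worst severity.
        groups[key] = (count + 1, max(sev, a["severity"]))

    ranked = []
    for (rule, asset), (count, sev) in groups.items():
        weight = asset_criticality.get(asset, 1)  # unknown assets get the lowest weight
        score = sev * weight
        if score >= min_score:
            ranked.append({"rule": rule, "asset": asset, "count": count, "score": score})
    ranked.sort(key=lambda r: r["score"], reverse=True)
    return ranked[:top_n]


# Hypothetical alerts: three brute-force hits on a payments database,
# one port scan on a test VM, one malware detection on a laptop.
alerts = (
    [{"rule": "brute_force", "asset": "payments-db", "severity": 3}] * 3
    + [{"rule": "port_scan", "asset": "test-vm", "severity": 2},
       {"rule": "malware", "asset": "laptop-42", "severity": 5}]
)
criticality = {"payments-db": 5, "test-vm": 1, "laptop-42": 2}
result = triage(alerts, criticality)
print(result)
```

Five raw alerts become two items for an analyst, and the medium-severity alert on the payments database outranks the high-severity one on a laptop because of business impact.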

The Three Pillars of Effective Security: Grounding, Measurement, and Adaptation

Herzog emphasizes something that gets lost in technical discussions about AI and security: grounding. Your security strategy must be grounded in actual, measurable reality. Not in theoretical attack vectors. Not in what vendors tell you matters. Not in what competitors are doing.

What matters is understanding your specific environment, your specific threats, and your specific business objectives. That grounding comes from genuine engagement with teams building and using your systems.

She notes that product teams need to understand customer experience. Security teams need to understand the builder experience. You can't secure what you don't comprehend. This is why many organizations struggle when they adopt security tools: they implement them without understanding how work actually happens in their business. A firewall rule that technically improves security but leaves developers unable to deploy their code doesn't actually help you. It creates friction, frustration, and workarounds that undermine security.

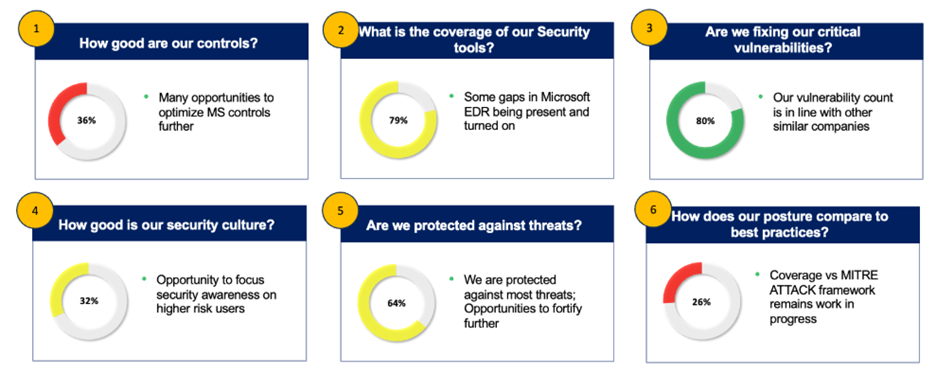

This brings us to the second pillar: measurement. Most security teams measure the wrong things.

They track: "How many vulnerabilities did our scanner find?" and "How many issues did we resolve?"

They should track: "How fast are we fixing each vulnerability?" and "Are we reducing actual risk over time?"

It's the difference between activity metrics and impact metrics. One tells you how busy you are. The other tells you whether you're actually getting safer.

Consider a practical example. You run a vulnerability scanner. It finds 5,000 issues this quarter. You remediate 3,500 of them. Sounds good, right? But what if those 1,500 unresolved issues are critical bugs that could be exploited? What if those 3,500 remediations were for low-priority issues that attackers wouldn't bother exploiting? Your metrics make you look productive, but your actual risk increased.
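The gap between the two kinds of metrics is easy to show with numbers. The sketch below uses a hypothetical split of the 5,000 findings (200 critical, of which only 20 were fixed, and 4,800 low-severity, of which 3,480 were fixed) so that the totals match the example; the split itself is invented for illustration.

```python
def activity_vs_impact(findings):
    """Contrast an activity metric (overall remediation rate) with impact
    metrics (how many critical findings remain open)."""
    fixed = sum(f["fixed"] for f in findings)
    critical = [f for f in findings if f["severity"] == "critical"]
    critical_open = sum(not f["fixed"] for f in critical)
    return {
        "remediation_rate": fixed / len(findings) if findings else 0.0,
        "critical_open": critical_open,
        "critical_closure_rate": (
            (len(critical) - critical_open) / len(critical) if critical else 1.0
        ),
    }


# Hypothetical split of the 5,000 findings: 200 critical (only 20 fixed)
# and 4,800 low-severity (3,480 fixed), for 3,500 remediations in total.
findings = (
    [{"severity": "critical", "fixed": i < 20} for i in range(200)]
    + [{"severity": "low", "fixed": i < 3480} for i in range(4800)]
)
report = activity_vs_impact(findings)
print(report)
```

The activity metric reads 70% remediated; the impact metrics show 180 critical issues still open and only 10% of criticals closed.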

Proper measurement means:

- Categorizing vulnerabilities by exploitability: Not all bugs are equally dangerous. A missing HTTP security header is less critical than an unauthenticated API endpoint exposing customer data

- Tracking mean time to remediation (MTTR) by severity level: How fast can you fix critical issues? That's your real competitive advantage

- Measuring business impact of security incidents: What did that breach actually cost? This drives smarter investment decisions

- Monitoring attack surface expansion: Is your environment growing in ways that increase risk faster than your controls can keep up?

- Assessing security tool effectiveness: Are your tools actually preventing attacks, or just generating noise?
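Mean time to remediation by severity, from the list above, needs nothing more than a discovery date and a fix date per finding. A minimal sketch, assuming a simple record format (the field names are hypothetical):

```python
from collections import defaultdict
from datetime import date
from statistics import mean


def mttr_by_severity(records):
    """Mean days from discovery to fix, grouped by severity.
    Findings without a fix date are skipped here; track them separately
    as open exposure rather than letting them disappear from the metric."""
    buckets = defaultdict(list)
    for r in records:
        if r.get("fixed") is not None:
            buckets[r["severity"]].append((r["fixed"] - r["found"]).days)
    return {sev: mean(days) for sev, days in buckets.items()}


records = [
    {"severity": "critical", "found": date(2025, 1, 1), "fixed": date(2025, 1, 3)},
    {"severity": "critical", "found": date(2025, 1, 10), "fixed": date(2025, 1, 14)},
    {"severity": "low", "found": date(2025, 1, 1), "fixed": date(2025, 3, 2)},
    {"severity": "critical", "found": date(2025, 2, 1), "fixed": None},  # still open
]
print(mttr_by_severity(records))
```

Reporting the open critical separately matters: dropping unfixed findings from an average makes MTTR look better precisely when the backlog is worst.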

The third pillar is continuous adaptation. The security landscape isn't static. New vulnerabilities emerge weekly. Threat actors develop new tactics monthly. Regulations change. Your business pivots. Your infrastructure evolves.

A security strategy designed once and implemented once is obsolete within months. Effective security is iterative. You measure. You learn. You adapt. You measure again.

Herzog specifically notes that security teams often get caught in a trap: they become so focused on what their tools are producing that they lose sight of actual outcomes. The scanner said to do X, so they do X. But is X actually making them safer? Sometimes the answer is no.

Bringing this back to AI agents: good agents support this adaptive cycle. They continuously monitor your environment, flag changes, highlight new risks, and suggest improvements. But they require humans to set direction, validate findings, and make strategic decisions.

Activity metrics show high productivity with 5,000 vulnerabilities found and 3,500 issues resolved. However, impact metrics highlight unresolved critical bugs, indicating increased risk. Estimated data.

Preventing Threats Before They Become Incidents

Here's something that separates sophisticated security programs from reactive ones: prevention beats response by orders of magnitude.

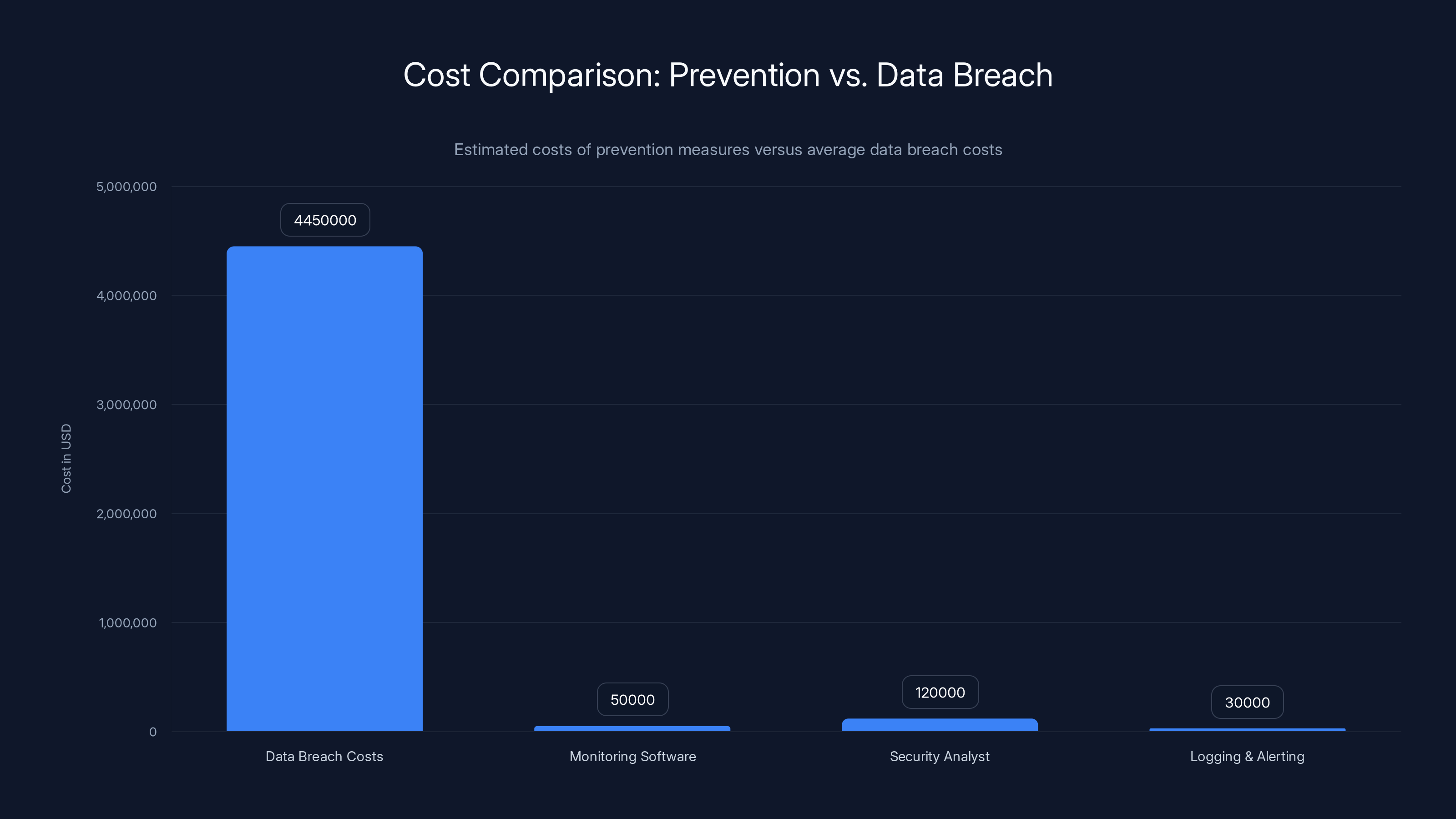

Consider the economics. IBM's Cost of a Data Breach Report 2023 put the global average cost of a data breach at $4.45 million. And the damage keeps accruing after the incident closes: reputational harm, customer churn, regulatory fines, incident response costs, forensics, legal fees, and long-term brand damage.

Contrast that with the cost of preventing that breach. Better monitoring software: maybe

The math is staggering. Spending

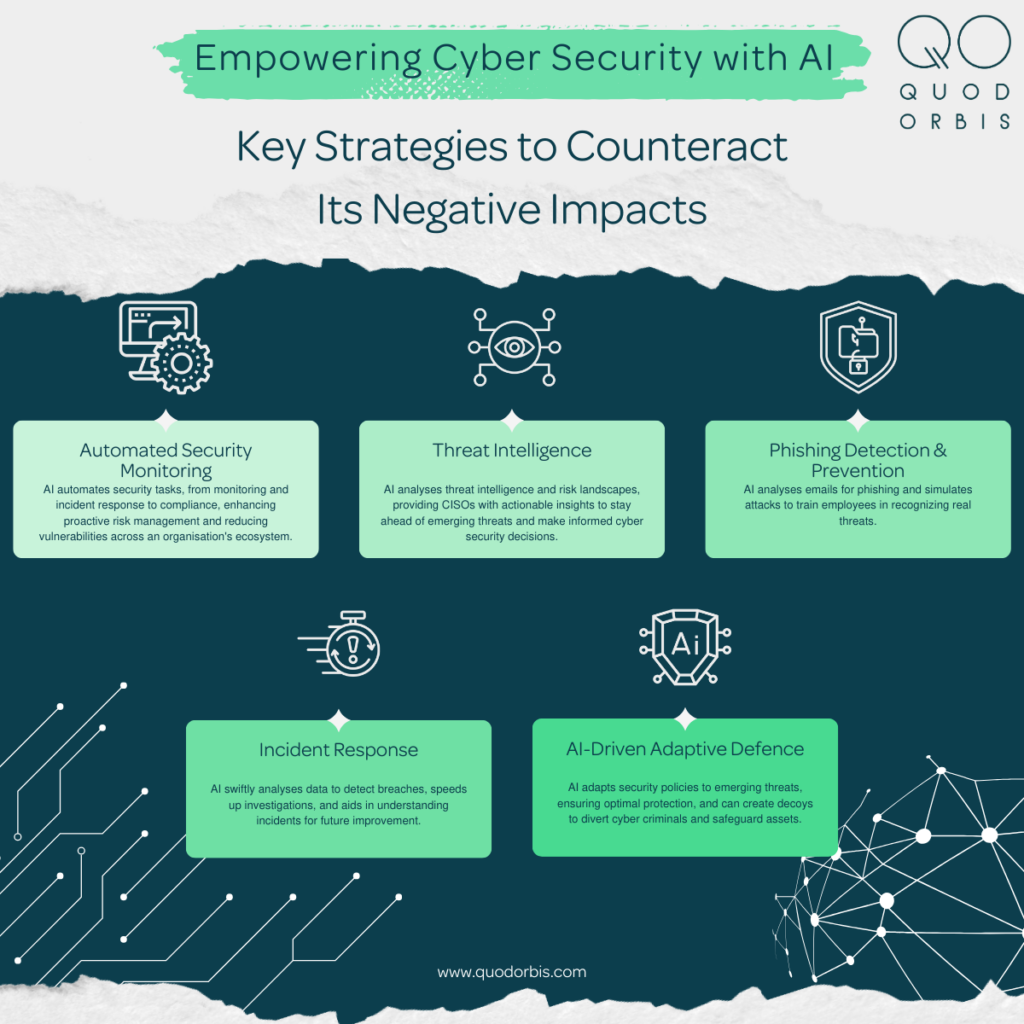

AI agents change this equation. They work 24/7, don't get fatigued, and apply the same checks every time. An agent monitoring your cloud infrastructure can detect misconfigured permissions in seconds. A human might take days to discover the same issue, if they ever do.

What gets lost in incident response discussions is what Herzog highlights: the goal is to catch and prevent things before they're ever in front of a customer's eye. If a threat actor doesn't reach your data, there's no breach. If an insider doesn't exfiltrate intellectual property, there's no loss. If malware doesn't encrypt your systems, there's no downtime.

This requires a fundamental mindset shift:

Prevention-focused security means:

- Continuously mapping your attack surface (what's exposed to the internet, what can be accessed from where, what data is accessible without proper authentication)

- Prioritizing prevention controls over detection controls (firewalls matter more than intrusion detection if they actually stop attacks)

- Testing defenses regularly through red-team exercises and controlled simulations

- Implementing compensating controls for vulnerabilities you can't immediately patch

- Monitoring for early indicators of compromise before attackers achieve their objectives

- Automating routine security tasks so humans focus on complex decisions

- Building redundancy and resilience into critical systems

AI agents excel at many of these. They can continuously map your attack surface. They can monitor for early indicators. They can automate routine tasks. They can identify where compensating controls are needed.

But here's the catch: prevention only works if you actually respond to what agents discover. A perfectly functioning threat detection system that gets ignored by busy security teams prevents nothing.

The False Choice: Security Versus Functionality

One of Herzog's most important insights deserves its own exploration: the idea that "100% security" is a false goal.

You could theoretically create a perfectly secure computer. Disconnect it from all networks. Disable all peripherals. Allow no user input. Never turn it on. That computer is maximally secure. It's also completely useless.

Every security control creates friction. Every control limits functionality. A restrictive password policy (16 characters, special symbols, changed monthly) is more secure than a simple password on paper. But it also leads to password fatigue and passwords written on sticky notes.

Multi-factor authentication is more secure than passwords alone. But it adds friction to every sign-in, and users who lose or can't reach their second factor in an emergency can be locked out of the applications they need.

Zero-trust architecture is theoretically more secure than perimeter-based security. But it means every access requires verification, slowing down legitimate work.

Strict data classification schemes improve data protection. But they also require employees to make decisions about sensitivity levels, creating overhead and errors.

The security profession has historically approached this wrong. The goal becomes "make it as secure as possible" rather than "make it optimally secure for our business context."

The better question is: What's the optimal balance of functionality and control that achieves what we're trying to accomplish?

For a financial services company handling regulated data, that balance looks different than for a software-as-a-service startup. The startup might accept more risk in exchange for developer velocity. The financial services company might accept more friction in exchange for compliance certainty.

There's no universal answer. But the framework is sound: define what you're trying to protect, define what level of risk is acceptable given your business context, then design controls that achieve that risk tolerance without unnecessarily constraining operations.
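That framework can be expressed as a small decision rule: estimate residual risk for each control option, discard the options that exceed your tolerance, and pick the least disruptive of what remains. The sketch below uses a deliberately crude model (annual likelihood times dollar impact) and invented numbers for the options and friction scores; real risk quantification is more involved, but the shape of the trade-off is the same.

```python
def choose_control(options, tolerance):
    """Pick the lowest-friction option whose residual risk is within tolerance.
    Residual risk here is a simple expected loss: annual likelihood x impact."""
    acceptable = [o for o in options if o["likelihood"] * o["impact"] <= tolerance]
    if not acceptable:
        return None  # nothing meets the tolerance: escalate for a human decision
    return min(acceptable, key=lambda o: o["friction"])


# Hypothetical control options for protecting one system.
options = [
    {"name": "none", "likelihood": 0.30, "impact": 1_000_000, "friction": 0},
    {"name": "mfa", "likelihood": 0.05, "impact": 1_000_000, "friction": 2},
    {"name": "air_gap", "likelihood": 0.001, "impact": 1_000_000, "friction": 10},
]

print(choose_control(options, 400_000)["name"])  # a risk-tolerant startup
print(choose_control(options, 100_000)["name"])  # a more cautious business
print(choose_control(options, 5_000)["name"])    # a heavily regulated firm
```

The same options produce different answers as the tolerance changes, which is the point of the startup-versus-financial-services comparison: the controls don't change, the acceptable risk does.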

This is where human judgment becomes irreplaceable. An AI agent can flag that you're leaving sensitive data in publicly accessible cloud storage. But it can't decide whether that's an acceptable business risk given that sales processes require quick document sharing and stricter controls would slow deals.

Humans make that trade-off decision. Agents provide information and recommendations. Humans decide.

The roadmap for AI-assisted security implementation shows a phased approach, with significant progress expected during the integration phase. Estimated data.

Agentic AI in Practice: Real-World Applications

Theory is useful. But where does agentic AI actually help?

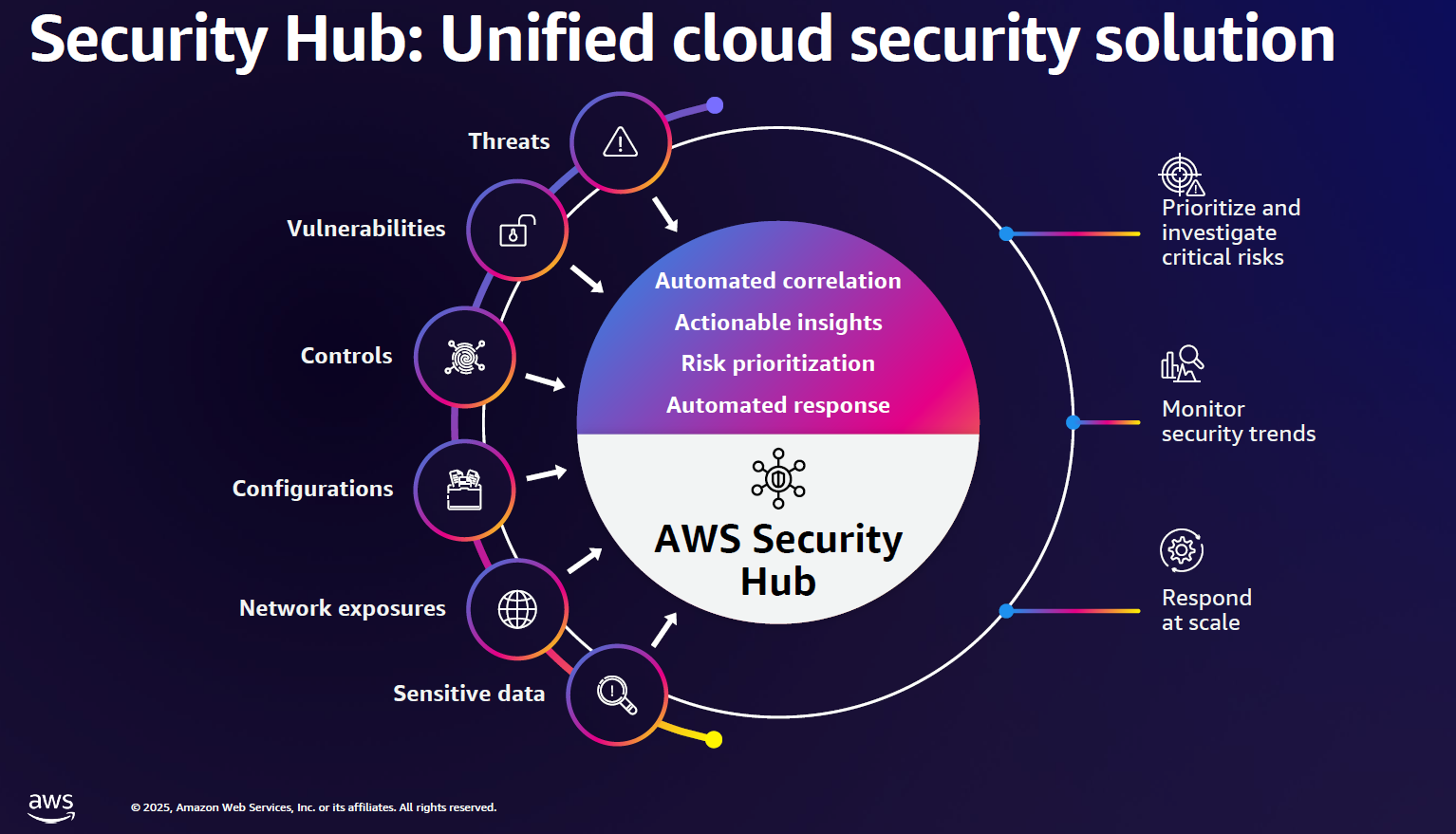

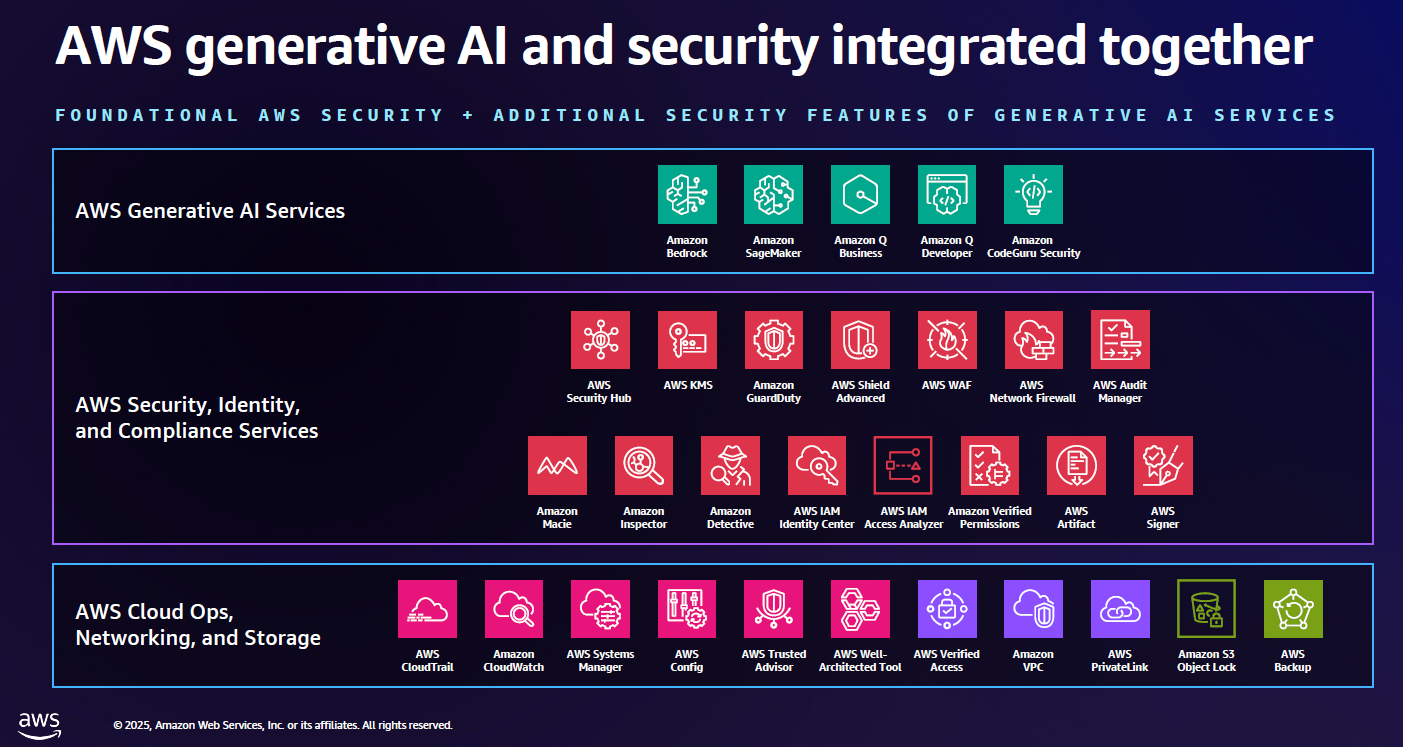

Cloud Configuration Auditing: Agents continuously scan your AWS, Azure, or GCP environments for misconfigurations: public S3 buckets, overly permissive security groups, unencrypted databases, IAM roles with excessive privileges. These agents don't get tired, and they apply the same checks every time. They flag issues in minutes that humans might never discover. For organizations managing hundreds or thousands of cloud resources, this is transformative.
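At its core, a configuration audit is a set of rules applied to every resource, every time. The sketch below checks the four misconfigurations named above against a hypothetical, simplified snapshot format; it is not the schema returned by any cloud provider's API, and a real agent would collect this data from the provider's own APIs and cover far more rules.

```python
def audit(resources):
    """Apply simple misconfiguration rules to a resource snapshot and
    return (resource_id, problem) pairs."""
    findings = []
    for r in resources:
        kind, rid = r["type"], r["id"]
        if kind == "bucket" and r.get("public_read"):
            findings.append((rid, "bucket allows public read"))
        elif kind == "database" and not r.get("encrypted", False):
            findings.append((rid, "database storage not encrypted"))
        elif kind == "security_group":
            for rule in r.get("ingress", []):
                # SSH or RDP reachable from anywhere on the internet.
                if rule["cidr"] == "0.0.0.0/0" and rule["port"] in (22, 3389):
                    findings.append((rid, f"port {rule['port']} open to the internet"))
        elif kind == "iam_role" and "*" in r.get("actions", []):
            findings.append((rid, "role grants wildcard actions"))
    return findings


snapshot = [
    {"type": "bucket", "id": "exports", "public_read": True},
    {"type": "bucket", "id": "private-logs", "public_read": False},
    {"type": "database", "id": "orders-db", "encrypted": False},
    {"type": "security_group", "id": "sg-web",
     "ingress": [{"cidr": "0.0.0.0/0", "port": 443}, {"cidr": "0.0.0.0/0", "port": 22}]},
    {"type": "iam_role", "id": "ci-deployer", "actions": ["s3:GetObject", "*"]},
]
findings = audit(snapshot)
for rid, problem in findings:
    print(rid, "->", problem)
```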

Log Analysis at Scale: A typical enterprise generates terabytes of security logs daily. Sifting through that data manually is impossible. AI agents can analyze logs in real-time, identifying anomalies (unusual login attempts from unexpected locations, large data transfers to external accounts, privilege escalation attempts) that might signal a breach. The agent learns what "normal" looks like for your environment and flags deviations.
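"Learning what normal looks like" can be as simple as a per-user statistical baseline. The sketch below flags users whose outbound data volume today sits more than three standard deviations above their own history. A z-score is a deliberately basic stand-in for the models a production agent would use, and the data format and threshold are assumptions.

```python
from statistics import mean, stdev


def flag_anomalies(history, today, threshold=3.0):
    """history: {user: [daily outbound MB, ...]}; today: {user: MB}.
    Flag users whose volume today is more than `threshold` standard
    deviations above their own baseline."""
    flagged = []
    for user, volume in today.items():
        past = history.get(user, [])
        if len(past) < 2:
            continue  # not enough history to know what normal looks like
        mu, sigma = mean(past), stdev(past)
        if sigma > 0:
            z = (volume - mu) / sigma
        else:
            z = float("inf") if volume > mu else 0.0
        if z > threshold:
            flagged.append((user, round(z, 1)))
    return flagged


history = {
    "alice": [100, 110, 90, 105, 95],
    "bob": [50, 52, 48, 51, 49],
}
today = {"alice": 5000, "bob": 53, "carol": 900}  # carol has no baseline yet
print(flag_anomalies(history, today))
```

Note that the new user is skipped rather than flagged; how to treat accounts with no baseline is itself a policy decision a human should make.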

Vulnerability Prioritization: Vulnerability scanners generate long lists. But which vulnerabilities should you fix first? The agent can analyze each finding and determine: Is this exploitable in our environment? Are there existing compensating controls? Is this accessible to untrusted users? How quickly could an attacker exploit this? This transforms vulnerability management from "fix everything" to "fix what matters."
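Those questions translate naturally into adjustments on top of a base severity score. The multipliers below (double for a known exploit, 1.5 times for internet exposure, half for a compensating control) are illustrative assumptions, not an industry standard; the point is that context can reorder a list sorted by raw CVSS score.

```python
def prioritize(vulns):
    """Sort findings by a context-adjusted score rather than raw CVSS."""
    def score(v):
        s = v["cvss"]                   # base severity, 0-10
        if v["known_exploit"]:
            s *= 2                      # attackers already have a working exploit
        if v["internet_facing"]:
            s *= 1.5                    # reachable by untrusted users
        if v["compensating_control"]:
            s *= 0.5                    # e.g. a WAF rule or network isolation
        return s
    return sorted(vulns, key=score, reverse=True)


vulns = [
    {"id": "A", "cvss": 9.8, "known_exploit": False, "internet_facing": False,
     "compensating_control": True},
    {"id": "B", "cvss": 7.5, "known_exploit": True, "internet_facing": True,
     "compensating_control": False},
    {"id": "C", "cvss": 5.3, "known_exploit": False, "internet_facing": True,
     "compensating_control": False},
]
print([v["id"] for v in prioritize(vulns)])
```

The 9.8 finding drops to last because it is internal and already mitigated, while the 7.5 with a public exploit on an internet-facing system moves to the top.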

Threat Intelligence Integration: Your organization subscribes to threat feeds, receives vulnerability disclosures, gets alerts from security vendors. An agent can correlate this intelligence with your specific environment, identifying which threats are relevant to you, which vulnerabilities affect systems you actually operate, and which attack techniques match the threat profile of your industry.
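The core of that correlation is a join between advisories and your asset inventory. In the sketch below, the advisory IDs, product names, and versions are all hypothetical, and matching on an exact set of affected versions is a simplification; real feeds express affected ranges that need proper version comparison.

```python
def relevant_advisories(advisories, inventory):
    """advisories: [{"id", "product", "affected_versions": set}, ...]
    inventory: {host: {product: installed_version}}
    Return (advisory_id, [affected hosts]) for advisories that touch
    systems you actually run."""
    hits = []
    for adv in advisories:
        hosts = sorted(
            host for host, software in inventory.items()
            if software.get(adv["product"]) in adv["affected_versions"]
        )
        if hosts:
            hits.append((adv["id"], hosts))
    return hits


advisories = [
    {"id": "ADV-001", "product": "example-tls-lib", "affected_versions": {"3.0.1", "3.0.2"}},
    {"id": "ADV-002", "product": "example-web-server", "affected_versions": {"1.18.0"}},
    {"id": "ADV-003", "product": "example-mail-server", "affected_versions": {"2019"}},
]
inventory = {
    "web-1": {"example-tls-lib": "3.0.2", "example-web-server": "1.24.0"},
    "web-2": {"example-tls-lib": "3.0.1"},
    "db-1": {"example-db": "15.4"},
}
print(relevant_advisories(advisories, inventory))
```

Three advisories arrive; only one affects this environment, and the output names exactly which hosts need attention.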

Incident Response Assistance: When a security incident occurs, time is critical. An agent can assist by: gathering logs relevant to the incident, identifying affected systems, suggesting containment steps, documenting timeline, and preparing initial incident reports. Security analysts can focus on decision-making while agents handle information gathering.

Security Awareness: Agents can monitor for phishing emails, flag suspicious attachments, and educate users in real-time. When someone clicks a malicious link, an agent can intercept before damage occurs and provide education about what they fell for and how to recognize similar attacks.

Compliance Validation: Regulatory and compliance frameworks (HIPAA, PCI DSS, SOC 2, GDPR) demand specific controls. Agents can continuously validate that you're meeting these requirements, identifying gaps before audits occur.

What's important about all these applications: they're augmenting human judgment, not replacing it. The agent provides information. Humans make decisions. The combination is more effective than either alone.

Building Your Security Resilience Strategy

Given that complete prevention is impossible (threat actors will always find some gap), the goal becomes resilience. How quickly can you detect compromise? How fast can you respond? How much damage can you limit?

This requires thinking about security in stages:

Prevention Stage: Controls that stop attacks before they succeed. Firewalls, access controls, encryption, network segmentation. AI agents help by identifying weaknesses in these controls before attackers do.

Detection Stage: Controls that identify attacks in progress. Network monitoring, endpoint detection and response (EDR), security information and event management (SIEM). This is where AI agents genuinely excel, analyzing data faster and at a scale beyond human capability.

Response Stage: Processes and tools that limit damage once compromise is confirmed. Incident response teams, forensics capabilities, communication protocols, remediation procedures.

Recovery Stage: Systems and processes that restore normal operations and prevent recurrence. Patching, process improvements, monitoring enhancement, architecture changes.

A mature security program invests across all four stages. Organizations that over-invest in prevention while neglecting detection are vulnerable to sophisticated attackers who will eventually break through. Organizations that focus only on detection without strong response procedures suffer high-cost, prolonged incidents.

The math here is important. Each layer catches part of what the previous one missed, so the stages compound:

- Prevention that stops 95% of attacks, detection that catches 90% of what gets through, and response that still contains 50% of the intrusions detection misses = overall success rate of 95% + (5% × 90%) + (5% × 10% × 50%) = 99.75%

Compare that to:

- Prevention that stops 80% of attacks, detection that catches 50% of what gets through, and response that still contains 30% of the intrusions detection misses = overall success rate of 80% + (20% × 50%) + (20% × 50% × 30%) = 93%

That gap of roughly 7 percentage points sounds small. But compare the attacks that succeed: 0.25% in the first program versus 7% in the second, 28 times as many. That difference can decide whether your organization experiences a major breach.
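Both calculations share one structure: with prevention rate p, detection rate d for attacks that get through, and containment rate r for intrusions that detection misses, the overall success rate is p + (1 − p)·d + (1 − p)(1 − d)·r. A minimal Python version of that formula (the function name is illustrative) makes it easy to try other combinations and see which layer is worth improving:

```python
def layered_success(prevent, detect, contain):
    """Fraction of attacks stopped by a three-layer defense:
    prevented outright, detected after getting through, or (if detection
    misses them) contained by response."""
    through = 1 - prevent
    return prevent + through * detect + through * (1 - detect) * contain


strong = layered_success(0.95, 0.90, 0.50)
weak = layered_success(0.80, 0.50, 0.30)
print(f"strong: {strong:.4f}, weak: {weak:.4f}")
print(f"successful attacks: {1 - strong:.4f} vs {1 - weak:.4f}")
```

Because the layers multiply, raising detection from 50% to 90% in the weaker program does more than a small tweak to any single stage would suggest; the function lets you test that directly.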

The role of AI agents in this framework is clear: they make detection faster and more reliable, improving the second multiplier. They also help with prevention by identifying vulnerabilities and misconfigurations before deployment. And they assist with recovery by automating remediation and validation.

Organizations using AI security agents have reduced average threat dwell time from over 200 days to under 15 days, significantly limiting potential damage from breaches. Estimated data.

Preparing Your Team for AI-Assisted Security

Technology doesn't win security battles. People do. AI agents amplify what security teams do, but they don't replace the need for skilled professionals who understand your business, your threats, and your environment.

As organizations adopt agentic AI, teams need to evolve:

From Alert Responders to Analysts: Security analysts today spend 40-50% of their time investigating alerts, most of which are false positives or low-priority issues. When AI agents pre-filter alerts, analysts transition to deeper investigation, root cause analysis, and strategic security improvements.

From Generalists to Specialists: As routine tasks are automated, teams shift toward specialization. Someone focuses on cloud security. Someone else specializes in application security. A third handles threat intelligence. Rather than everyone knowing a little about everything, teams develop deeper expertise in specific domains.

From Reactive to Proactive: Without AI agents, teams are perpetually behind, reacting to the latest alert or incident. With agents handling routine monitoring, teams can engage in proactive activities: penetration testing, security architecture reviews, threat modeling, control validation.

From Manual to Orchestrated: Many security tasks are becoming orchestrated. An agent identifies a misconfigured storage bucket. It validates the misconfiguration. It checks impact. It suggests remediation. An analyst reviews and approves. A separate agent or automation system implements the fix. This orchestration is far more efficient than a human handling each step.

Skill shifts required:

- Deep technical knowledge becomes more critical (AI agents need human oversight and direction)

- Business acumen matters more (security professionals need to understand risk in business terms)

- Soft skills become a differentiator (communication, stakeholder management, decision-making)

- Vendor/tool expertise becomes less critical (agents abstract away many tool details)

- Architecture and design skills become more valuable (designing resilient systems beats fixing broken ones)

Organizations that recognize these shifts and invest in team development will capture the value of AI-assisted security. Organizations that simply deploy tools without evolving team practices will struggle to realize benefits.

The Governance Challenge: Controlling AI in Security

Here's a challenge that gets less attention than it should: what happens when your security AI makes a mistake?

If an agent incorrectly blocks legitimate traffic, developers can't deploy code. If an agent misidentifies a security incident and triggers incident response procedures, you burn resources on false alarms. If an agent approves remediation that breaks production systems, customers experience downtime.

This is why governance around security AI agents is critical.

Effective AI governance includes:

- Clear authority and approval workflows: Which decisions can agents make autonomously? Which require human approval? For remediation of critical issues, who decides whether implementing the fix is worth the risk?

- Audit trails and explainability: When an agent flags something, you need to understand why. What data did it analyze? What rules did it apply? This matters for compliance, debugging, and learning.

- Regular testing and validation: Do agent recommendations actually improve security? Are false positive rates acceptable? Is the agent recommending technically sound solutions? This requires ongoing testing against known good and bad configurations.

- Threshold management: Some actions are too risky for autonomous implementation. Critical system changes should require human sign-off. Less impactful changes (enabling monitoring on a database) might be safe to automate.

- Feedback loops: When an agent makes a recommendation and humans override it, that's valuable training data. When an agent approves something that later turns out to be problematic, that's important feedback. Organizations need processes to capture and act on this information.

- Regular review and adaptation: Agents aren't fire-and-forget. They require ongoing review. Are recommendations still accurate? Has your environment changed in ways that affect agent performance? Do thresholds need adjustment?

Without governance, AI security agents can cause more problems than they solve. With proper governance, they become force multipliers.

One practical example: an agent identifies that a development database contains copies of production data (a bad practice that creates security risk) and recommends deleting it. Because deletion requires human approval, the agent can't act on its own. During review, the approver learns that developers intentionally created the copy to debug a customer issue, so the deletion is deferred until the issue is resolved. Governance prevented a productivity-damaging mistake.
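An approval gate like the one in that example can be sketched as a small class: a short allowlist of low-risk actions is approved automatically, everything else waits for a named human, and every decision lands in an audit trail. The action names, the allowlist, and the decision strings are hypothetical, and this sketch only records decisions; actually executing an approved action is out of scope.

```python
from dataclasses import dataclass, field
from datetime import datetime, timezone

# Hypothetical allowlist of actions considered safe to automate.
LOW_RISK_ACTIONS = {"enable_logging", "tag_resource"}


@dataclass
class ApprovalGate:
    audit_log: list = field(default_factory=list)

    def submit(self, action, target, reason, approver=None, approved=True):
        """Record a decision for a proposed agent action.
        Low-risk actions are auto-approved; anything else needs a human."""
        if action in LOW_RISK_ACTIONS:
            decision = "auto-approved"
        elif approver is None:
            decision = "pending-approval"  # held until a human reviews it
        elif approved:
            decision = f"approved-by:{approver}"
        else:
            decision = f"deferred-by:{approver}"
        # Audit trail: who decided what, when, and why the agent proposed it.
        self.audit_log.append({
            "time": datetime.now(timezone.utc).isoformat(),
            "action": action,
            "target": target,
            "reason": reason,
            "decision": decision,
        })
        return decision


gate = ApprovalGate()
print(gate.submit("enable_logging", "orders-db", "turn on audit logging"))
print(gate.submit("delete_database", "dev-db-copy", "contains production data"))
print(gate.submit("delete_database", "dev-db-copy", "contains production data",
                  approver="oncall-lead", approved=False))
```

Keeping the allowlist small and explicit is the design choice that matters: anything not on it defaults to human review.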

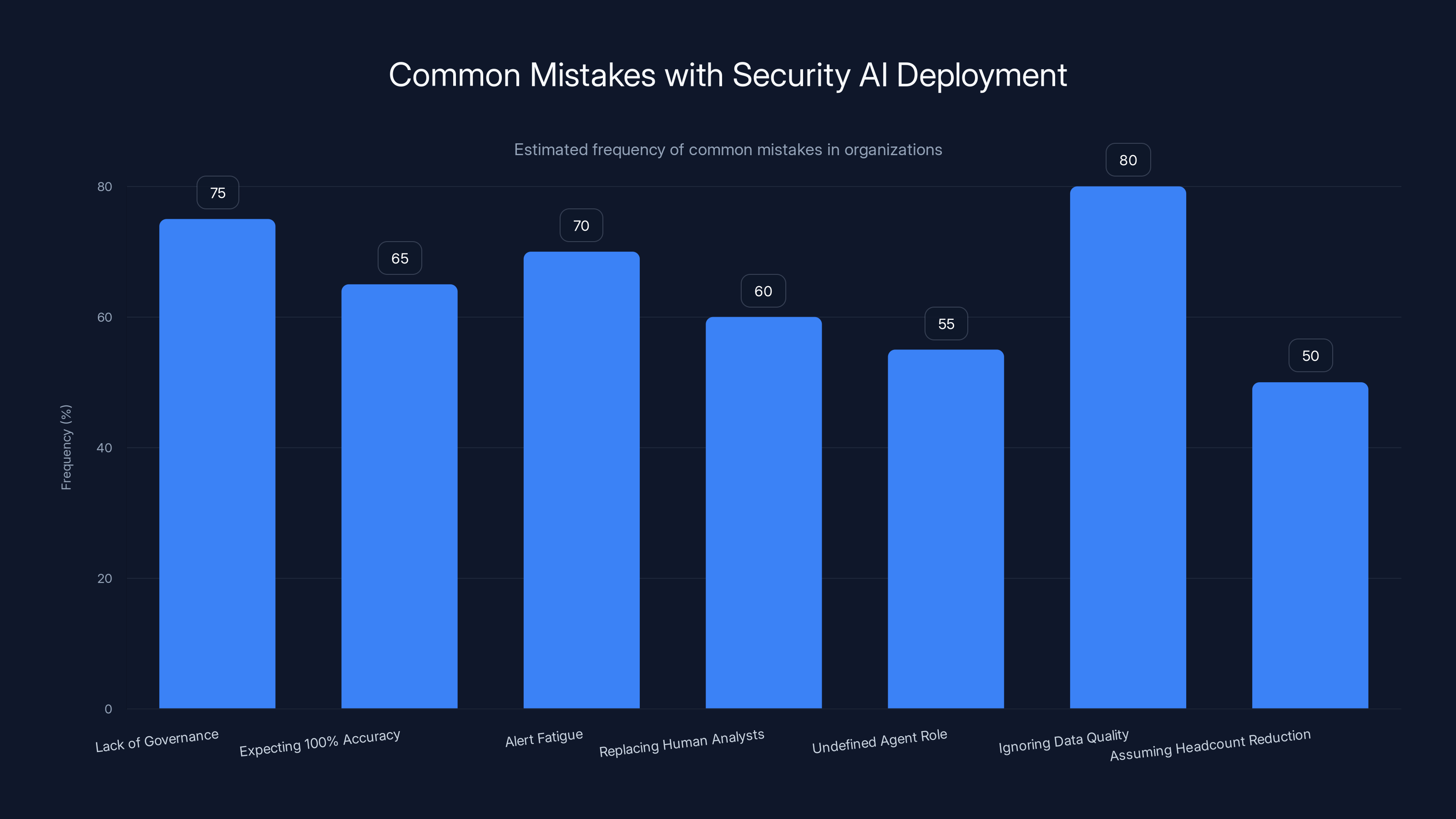

Estimated data shows that ignoring data quality issues is the most frequent mistake, affecting 80% of organizations, while assuming headcount reduction is the least frequent at 50%.

Measuring Success: What Security Metrics Actually Matter

You can't improve what you don't measure. But many organizations measure the wrong things.

Avoid these vanity metrics:

- "Number of vulnerabilities found": Finding vulnerabilities without fixing them is pointless. This metric incentivizes scanning more, not securing better.

- "Number of security incidents investigated": More investigations don't mean you're safer. It might mean you have terrible detection or are investigating lots of false positives.

- "Percentage of security training completed": Completions don't equal learning. Training completion is a compliance metric, not a security metric.

- "Number of security tools deployed": More tools create complexity and overhead. You need the right tools, not all the tools.

Focus on these impactful metrics:

Mean Time to Detect (MTTD): How long does it take from when an attacker enters your environment until you notice them? Industry average is 200+ days. Leading organizations achieve 8-15 days. This is directly influenced by AI agent effectiveness.

Mean Time to Respond (MTTR): How long from detection until containment? From containment until eradication? This directly impacts damage. A week to respond means attackers have seven days to steal data. A day to respond limits exposure dramatically.

Vulnerability Exposure Time (VET): How long from vulnerability disclosure until you patch/mitigate? This is especially critical for vulnerabilities with known exploits. A VET of 30 days means attackers have a 30-day window to exploit known vulnerabilities in your environment.
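These three timing metrics fall out of timestamps most teams already record. A minimal sketch, assuming each incident record carries an estimated intrusion time, a detection time, and a containment time (the field names and dates are hypothetical; the intrusion time usually comes from forensics after the fact):

```python
from datetime import datetime
from statistics import mean

DAY = 86400  # seconds


def incident_metrics(incidents):
    """Return (MTTD, MTTR) in days: intrusion -> detection, and
    detection -> containment, averaged across incidents."""
    mttd = mean((i["detected"] - i["intrusion"]).total_seconds() / DAY for i in incidents)
    mttr = mean((i["contained"] - i["detected"]).total_seconds() / DAY for i in incidents)
    return round(mttd, 1), round(mttr, 1)


def exposure_days(disclosed, mitigated):
    """Vulnerability exposure time for one vulnerability, in whole days."""
    return (mitigated - disclosed).days


incidents = [
    {"intrusion": datetime(2025, 1, 1), "detected": datetime(2025, 1, 11),
     "contained": datetime(2025, 1, 12)},
    {"intrusion": datetime(2025, 2, 1), "detected": datetime(2025, 2, 21),
     "contained": datetime(2025, 2, 24)},
]
print(incident_metrics(incidents))
print(exposure_days(datetime(2025, 3, 1), datetime(2025, 3, 31)))
```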

Risk-Based Security Spending: What percentage of your security budget addresses your most significant risks? This requires understanding your threat landscape, your vulnerabilities, your likelihood of compromise, and your potential impact. Random spending doesn't improve security. Targeted spending does.

Breach Cost Trend: Organizations that improve security show declining breach costs (if breaches happen, they're smaller and less damaging). This is a lagging indicator but captures overall effectiveness.

Operational Efficiency: How much time does your team spend on routine tasks versus strategic activities? AI agents should shift this ratio. If you're still spending 80% of time on routine work, agents aren't delivering value.

Security Maturity: How mature are your processes? Maturity models help here (CMM, NIST, etc.). Progress on this scale indicates systematic improvement.

When you measure the right things, you can make smart decisions. "Our MTTD dropped from 180 days to 60 days after deploying AI agents" is valuable information. "We resolved 23% more vulnerabilities" isn't.

Future-Proofing Your Security Program

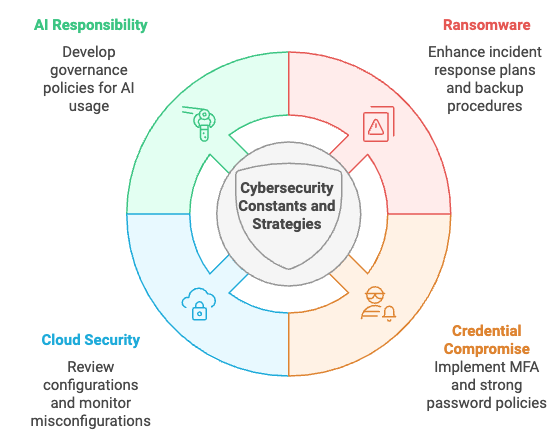

Herzog made an important point: security in six months will look different from security today. Organizations that acknowledge this and build adaptability into their security strategies will endure. Organizations that optimize for current threats will become vulnerable as threats evolve.

Where is security headed?

Increased AI-Enabled Threats: Threat actors are already using AI. Automated vulnerability scanning, credential stuffing at scale, sophisticated phishing emails generated by language models, malware that adapts to defensive measures. This arms race will accelerate. Security that works today might fail against AI-enhanced threats tomorrow.

Quantum Computing: The timeline is debated, but sufficiently powerful quantum computers are expected to break today's widely used public-key algorithms, such as RSA and elliptic-curve cryptography. Organizations need to begin planning for post-quantum cryptography now: understanding which systems will need re-encryption and building migration plans.

Regulatory Expansion: Expect more regulations similar to GDPR, emerging regulations around AI governance, increasingly strict data protection requirements, and compliance burden that continues to expand. This makes it essential that security scales with compliance.

Convergence of Operational Technology and Information Technology: Factories, power grids, healthcare systems, transportation networks are increasingly connected. Security breaches in these domains cause physical harm, not just data loss. This changes risk calculus and required controls.

Expanded Attack Surface: Cloud computing, remote work, containerization, microservices, IoT devices, supply chain connectivity: the attack surface keeps growing. Traditional perimeter-based security becomes increasingly irrelevant.

Insider Threat Evolution: Remote work, contractor reliance, high turnover—the insider threat landscape is changing. More people have access to more sensitive systems from more locations. This requires new approaches to access control and monitoring.

Building adaptability means:

- Treating security as an iterative process: Not a one-time implementation but continuous improvement

- Investing in fundamentals: Strong identity and access management, network segmentation, encryption, logging—these won't become obsolete

- Building flexibility into architecture: Decisions that couple you to specific tools or vendors limit your ability to adapt

- Continuous learning: Your team needs to stay current on emerging threats, new attack techniques, and effective defenses

- Regular strategy review: Quarterly or semi-annual reviews of your threat landscape and whether your controls still make sense

- Staying vendor-neutral where possible: Proprietary lock-in limits flexibility. Open standards and multi-vendor approaches preserve adaptability

Organizations that embed adaptability into their security culture will navigate whatever comes next. Those that treat security as a checkpoint will struggle.

Implementing AI-Assisted Security: A Practical Roadmap

Understanding the theory of AI-assisted security is one thing. Implementing it in your organization is another.

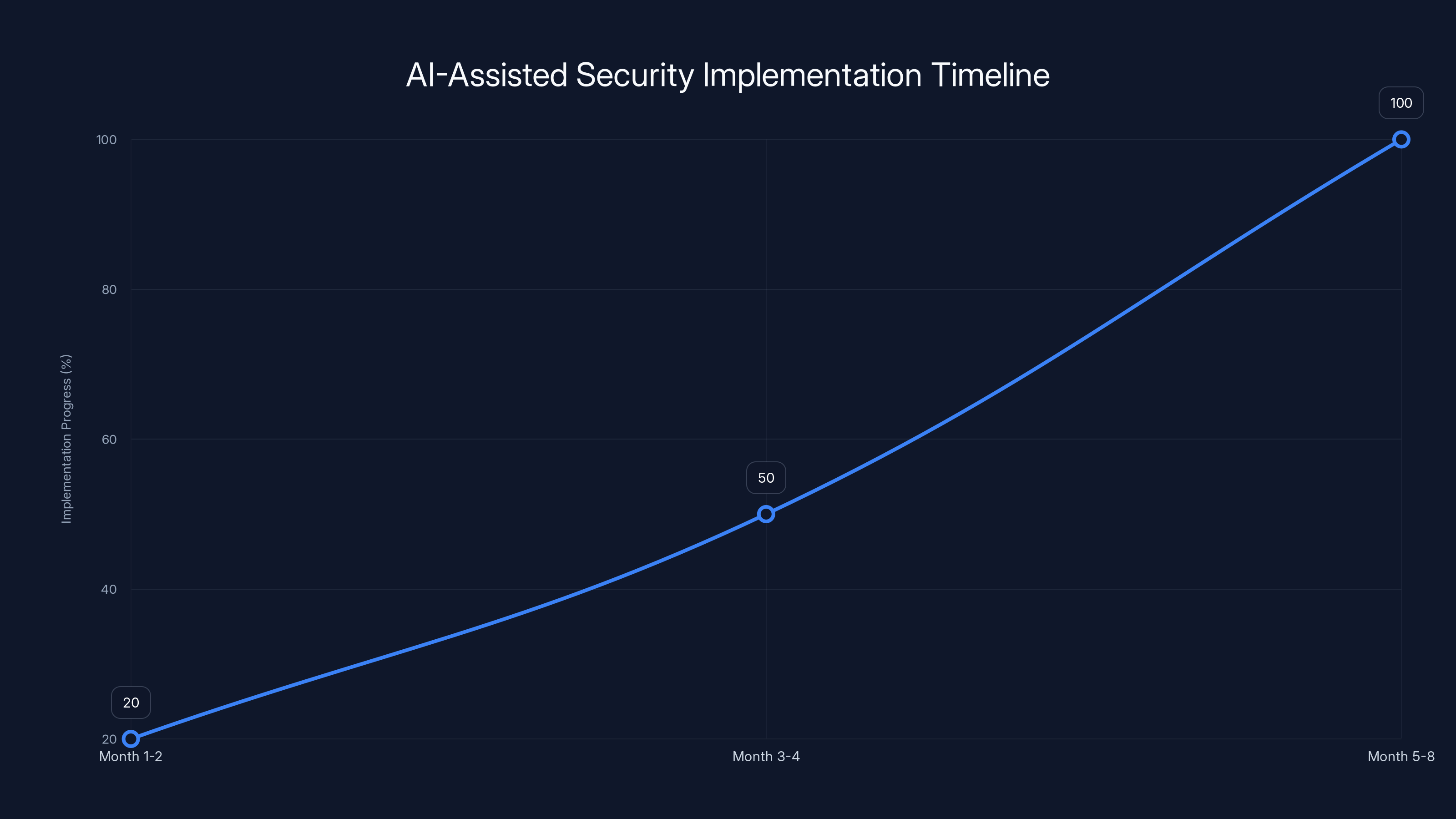

Phase 1: Assessment (Months 1-2)

Start by understanding your current state:

- What's your current threat landscape? Which threat actors target your industry? What are they after?

- What's your current security maturity? Where are your biggest gaps?

- What's your current tech stack? Which systems generate logs and alerts?

- What's your team's capacity? How many people are on your security team? What are they currently focused on?

- What's your security budget? What tools already have budget allocated?

From this assessment, identify the highest-impact area for AI agents. If your MTTD is 200 days, deploying agents for log analysis and anomaly detection might deliver the highest ROI. If your vulnerability management is chaotic, agents for vulnerability prioritization might be a better starting point.

Phase 2: Pilot (Months 3-4)

Pilot one specific agent for one specific use case:

- Define clear success criteria: "Reduce MTTD from 180 to 90 days" or "Improve vulnerability remediation rate from 60% to 80%"

- Implement with realistic expectations: First-generation agents have limitations. Accuracy might be 85%, not 100%

- Establish feedback loops: How do you collect information on agent performance? How do you iterate?

- Build team familiarity: Let your team work with the agent, understand its strengths and limitations, develop trust
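Pilot success criteria are easiest to enforce when they are written down precisely. This minimal sketch assumes analysts label each agent finding as a true or false positive (a hypothetical labeling scheme) and uses precision, the share of findings that turned out to be real, as a stand-in for "accuracy." The 85% floor is an assumed threshold, not an industry standard.

```python
from collections import Counter


def agent_precision(dispositions: list[str]) -> float:
    """Share of reviewed agent findings that analysts confirmed as real issues.

    `dispositions` holds one verdict per finding: "true_positive" or
    "false_positive" (hypothetical labels used only in this sketch).
    """
    counts = Counter(dispositions)
    reviewed = counts["true_positive"] + counts["false_positive"]
    if reviewed == 0:
        raise ValueError("no reviewed findings yet")
    return counts["true_positive"] / reviewed


def pilot_met_criteria(baseline_mttd_days: float, pilot_mttd_days: float,
                       target_mttd_days: float, precision: float,
                       min_precision: float = 0.85) -> bool:
    """True if MTTD improved, reached the target, and findings were precise enough."""
    improved = pilot_mttd_days < baseline_mttd_days
    return improved and pilot_mttd_days <= target_mttd_days and precision >= min_precision
```

For a criterion like "reduce MTTD from 180 to 90 days," a pilot that reaches 85 days with 90% precision passes, while one that reaches only 120 days does not, however precise its findings are.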

Phase 3: Integration (Months 5-8)

Once the pilot proves value, integrate into operations:

- Develop processes around agent recommendations: Who reviews? Who approves? What's the escalation path?

- Build governance: Define what agents can do autonomously vs. what requires human approval

- Train the broader team: People outside the pilot program need to understand the agent and how to work with it

- Establish metrics: Track the success criteria from the pilot. Is the agent delivering promised value?

Phase 4: Expansion (Months 9-12)

With one agent working well, expand:

- Deploy a second agent for a different use case: Complementary agents (one focused on detection, another on vulnerability management) create synergies

- Consider consolidation: Some platforms offer multiple agents. Consolidation reduces complexity

- Continue optimization: Each agent can be tuned, thresholds adjusted, new data sources integrated

- Develop internal expertise: Your team should be developing deep expertise with these tools

Phase 5: Continuous Improvement (Ongoing)

AI-assisted security isn't a "deploy and forget" project:

- Regular performance review: Are agents still delivering value? Have threats changed in ways that affect agent effectiveness?

- Threshold adjustment: As your environment changes, agent sensitivity might need adjustment

- New use cases: As you become comfortable with agents, identify new applications

- Team evolution: Shift team members' responsibilities to leverage agents for routine work, freeing them for strategic activities

This roadmap acknowledges that AI-assisted security is a journey, not a destination. Organizations that execute this methodically build sustainable capability. Those that try to shortcut by deploying everything at once typically struggle with adoption, governance, and ROI.

Common Mistakes Organizations Make with Security AI

Forewarned is forearmed. Here are mistakes organizations commonly make:

Mistake 1: Deploying agents without governance. The agent flags an issue and automatically remediates it, breaking production. Or the agent is too restrictive and blocks legitimate business processes. Governance matters.

Mistake 2: Expecting 100% accuracy. An agent that's 90% accurate and reduces MTTD from 200 days to 50 days is valuable. Don't hold agents to impossible standards. Hold them to a practical one: do they improve outcomes?

Mistake 3: Ignoring alert fatigue in the opposite direction. Sometimes organizations deploy agents and are amazed at how many issues the agent finds. They get overwhelmed by agent output and stop trusting the agent. Agents that surface 10,000 issues aren't helpful. Agents that surface the 50 most critical issues are.
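The remedy is ranking, not volume. Here is a minimal sketch, assuming each finding carries a likelihood score (0 to 1) and an impact score (1 to 10); both scales and the `Finding` type are hypothetical. Risk is the simple product of likelihood and impact, and only the top `limit` findings are surfaced.

```python
import heapq
from dataclasses import dataclass


@dataclass(frozen=True)
class Finding:
    id: str
    likelihood: float  # estimated probability of exploitation, 0.0 to 1.0 (assumed scale)
    impact: int        # business impact if exploited, 1 to 10 (assumed scale)

    @property
    def risk(self) -> float:
        # Simple likelihood x impact score; real programs often weight more factors.
        return self.likelihood * self.impact


def top_findings(findings: list[Finding], limit: int = 50) -> list[Finding]:
    """Return the `limit` highest-risk findings, highest risk first."""
    return heapq.nlargest(limit, findings, key=lambda f: f.risk)
```

Fed 10,000 findings, `top_findings` returns only the 50 with the highest risk scores, which is the list a human team can realistically act on this week.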

Mistake 4: Treating AI agents as replacements for human analysts. The best outcomes come from human-agent collaboration. Agents can process information faster. Humans provide judgment, context, and nuance. Both are necessary.

Mistake 5: Deploying before understanding what the agent is supposed to do. "Deploy this agent and tell me what happens" is a recipe for confusion. Define the agent's charter clearly first. "Detect unusual login patterns that might indicate account compromise" is clear. "Improve security" is not.

Mistake 6: Ignoring data quality issues. Agents learn from data. If you're feeding agents poor-quality data, incomplete logs, or inaccurate configuration data, agent recommendations will be poor. Data quality matters.

Mistake 7: Assuming agents reduce headcount. Most organizations that deploy security agents initially find they need more people to manage the agents. Early wins can come from redeployment (moving analysts toward higher-value work) rather than reduction. Headcount reduction comes later, after agents mature.

Mistake 8: Not integrating with existing tools. Your new agent doesn't operate in isolation. It needs to integrate with your SIEM, your vulnerability scanner, your ticketing system, your compliance tools. Integration is critical.

Avoid these mistakes and you dramatically improve your odds of successful AI-assisted security deployment.

Runable for Security Documentation and Reporting

AI-assisted security generates massive volumes of data. Vulnerability reports, incident analyses, threat assessments, compliance documentation—this work is documentation-intensive.

Platforms like Runable can help by automating report generation. Instead of security analysts manually compiling findings into presentations and documents, Runable's AI agents can take raw security data, analyze it, and generate executive summaries, technical deep-dives, and compliance reports. This is particularly valuable for organizations deploying multiple security agents that produce more data than humans can manually synthesize.

Use Case: Security teams can use AI agents to automatically generate weekly threat summaries, vulnerability reports, and executive security briefings without manual compilation.


The Broader Philosophy: From Perfection to Pragmatism

What Herzog's perspective really boils down to is a shift from perfectionist security thinking to pragmatic security thinking.

Perfectionist security says: "Find every vulnerability. Fix every issue. Eliminate every risk." This is exhausting, often impossible, and leads to burnout.

Pragmatic security says: "Understand your actual risks. Prioritize based on likelihood and impact. Invest where it matters most. Continuously adapt as things change." This is sustainable and actually improves security over time.

AI agents support pragmatic security. They identify risks faster. They prioritize better. They enable faster adaptation. But they work best when humans set strategic direction.

The companies that win at security long-term aren't the ones with the most tools or the most restrictions. They're the ones that balance protection with functionality, that measure outcomes rather than activity, and that continuously adapt to emerging threats.

If your organization is serious about security, start there. Get the philosophy right. Then layer in technology.

FAQ

What are AI agents in cybersecurity?

AI agents in cybersecurity are autonomous software systems designed to perform specific security tasks efficiently and at scale. They analyze logs, identify threats, prioritize vulnerabilities, and assist security analysts by handling routine work. Unlike traditional automated tools, modern security agents use machine learning to understand context and make more nuanced decisions. Examples include cloud configuration auditing agents, anomaly detection agents, and vulnerability prioritization agents. These agents don't replace human security teams; they augment them, providing faster analysis and freeing analysts for strategic work.

How do AI security agents reduce threat dwell time?

AI security agents reduce threat dwell time (the period an attacker remains undetected) by continuously monitoring for suspicious behavior 24/7 without human fatigue. Agents can analyze logs and network traffic in real-time, identifying anomalous patterns like unusual login attempts, lateral movement, or data exfiltration that humans might miss. By detecting threats faster, your team has more time to contain the breach before attackers achieve objectives. Organizations deploying effective security agents have reduced average dwell time from 200+ days to under 15 days, dramatically limiting damage from breaches.
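Production agents use far richer models, but the core idea behind flagging "unusual login attempts" can be shown with a simple statistical baseline. This sketch assumes you already collect per-account counts of failed logins per hour, and flags a new count that sits more than three standard deviations above that account's history. The function name and the 3.0 default are illustrative choices, not taken from any product.

```python
from statistics import mean, stdev


def is_anomalous(history: list[int], current: int, threshold: float = 3.0) -> bool:
    """Flag `current` if it is more than `threshold` standard deviations above `history`.

    `history` is a baseline of past counts for one account, e.g. failed
    logins per hour (a hypothetical feed for this sketch).
    """
    if len(history) < 2:
        return False  # too little data to estimate a baseline
    mu = mean(history)
    sigma = stdev(history)
    if sigma == 0:
        return current > mu  # perfectly flat baseline: any increase stands out
    return (current - mu) / sigma > threshold
```

Raising `threshold` trades missed detections for fewer alerts; this is the kind of sensitivity setting the roadmap suggests revisiting as your environment changes.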

What's the difference between detection and prevention in security?

Prevention controls stop attacks before they succeed (firewalls, access controls, encryption). Detection controls identify attacks that have already begun (network monitoring, endpoint detection). Most modern security strategies combine both because perfect prevention is impossible: attackers eventually find gaps. AI agents enhance both prevention (by identifying vulnerabilities before deployment) and detection (by analyzing vast data streams for attack signatures). The most effective approach invests across prevention, detection, response, and recovery, with each stage reinforcing the others.

Should we automate security remediation?

Partially. Automating routine remediation (enabling encryption on a database, closing overly permissive firewall rules) can be safe if proper governance exists. Major changes (deleting databases, removing administrator access) should require human approval. The key is establishing clear authority levels: which changes can agents implement autonomously based on rules you've defined, and which require human sign-off? This balance improves security speed while maintaining control.
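One way to express those authority levels is an explicit policy table that an agent must consult before acting. This is a minimal sketch; the action names, the `Authority` enum, and the `decide` function are hypothetical, not any product's API. Actions missing from the table fall back to requiring human approval.

```python
from enum import Enum
from typing import Optional


class Authority(Enum):
    AUTONOMOUS = "agent may act on its own"
    HUMAN_APPROVAL = "agent must wait for sign-off"


# Hypothetical policy table; the action names are illustrative only.
POLICY = {
    "enable_database_encryption": Authority.AUTONOMOUS,
    "close_permissive_firewall_rule": Authority.AUTONOMOUS,
    "delete_database": Authority.HUMAN_APPROVAL,
    "remove_admin_access": Authority.HUMAN_APPROVAL,
}


def decide(action: str, approved_by: Optional[str] = None) -> str:
    """Return "execute" or "queue_for_approval" for an agent-proposed remediation."""
    # Fail closed: anything not explicitly listed requires a human decision.
    authority = POLICY.get(action, Authority.HUMAN_APPROVAL)
    if authority is Authority.AUTONOMOUS or approved_by:
        return "execute"
    return "queue_for_approval"
```

The fail-closed default is the key design choice: a remediation type the policy's authors never anticipated waits for a person instead of running unchecked in production.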

How do we measure if AI security agents are working?

Focus on outcome metrics rather than activity metrics. Track Mean Time to Detect (MTTD), Mean Time to Respond (MTTR), and Vulnerability Exposure Time (VET). Has MTTD improved? Are you responding to incidents faster? Are you remediating vulnerabilities before they can be exploited? These metrics tell you whether agents are actually improving security. Also measure operational efficiency: is your team spending less time on routine work? Are they shifting toward strategic activities? These shifts indicate agents are delivering value.
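Once incidents and vulnerabilities carry timestamps, these metrics are straightforward averages. The sketch below assumes MTTD runs from when malicious activity started to detection, MTTR from detection to resolution, and VET from discovery to remediation of a vulnerability. Organizations define these start and end points differently, so treat this as one reasonable convention; the record types are hypothetical.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta
from statistics import mean


@dataclass
class Incident:
    started: datetime   # when malicious activity began (often estimated during investigation)
    detected: datetime  # when an agent or analyst first flagged it
    resolved: datetime  # when the incident was contained and closed


@dataclass
class Vulnerability:
    discovered: datetime
    remediated: datetime


def _mean_days(deltas: list[timedelta]) -> float:
    """Average a list of durations and express the result in days."""
    if not deltas:
        raise ValueError("no records to average")
    return mean([d.total_seconds() for d in deltas]) / 86400


def mttd_days(incidents: list[Incident]) -> float:
    return _mean_days([i.detected - i.started for i in incidents])


def mttr_days(incidents: list[Incident]) -> float:
    return _mean_days([i.resolved - i.detected for i in incidents])


def vet_days(vulns: list[Vulnerability]) -> float:
    return _mean_days([v.remediated - v.discovered for v in vulns])
```

Computing the same three numbers for the quarter before and the quarter after an agent goes live gives a direct answer to "has MTTD improved?"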

What's the biggest challenge in deploying security AI?

Understanding what problem you're solving. Organizations often deploy agents without clear objectives, then wonder why they're not getting value. Start by defining: "What specific security problem is this agent solving? How will we measure success? What's our baseline?" From there, pilot with realistic expectations. First-generation agents aren't perfect, but if they improve key metrics, they're working. The biggest risk is expecting human-level performance or giving up after initial setbacks rather than investing in optimization.

How do AI agents affect security team size and roles?

Initially, deploying agents doesn't reduce headcount; it typically requires someone to manage the agent, tune thresholds, and oversee recommendations. But it does shift work. Analysts spend less time investigating false positives and more time on complex analysis. Junior analysts focus on agent oversight rather than alert triage. Over time, mature agent deployment enables teams to cover more ground with existing staff. Instead of hiring more security analysts to handle growing infrastructure, agents absorb the volume increase.

Can AI security agents be tricked or bypassed?

Yes. Like any security control, agents can have vulnerabilities. An attacker who understands how an agent works might craft attacks to evade detection. This is why governance and regular testing matter. You need to validate that agents catch realistic attacks, test them against new threat techniques, and update detection rules as threats evolve. Agents aren't perfect. They're force multipliers. Proper governance and continuous improvement are essential.
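Regular testing can start as simply as replaying known attack samples through a detector and checking what it misses. This minimal sketch assumes a detector is any function that takes a log event (a plain dict with a hypothetical schema) and returns True when it raises an alert; `detection_rate` and `missed` are illustrative helper names.

```python
from typing import Callable

Event = dict  # one log event; the field names used with it are hypothetical


def detection_rate(detector: Callable[[Event], bool], attack_samples: list[Event]) -> float:
    """Fraction of known-malicious samples the detector flags (its recall on this set)."""
    if not attack_samples:
        raise ValueError("need at least one attack sample")
    caught = sum(1 for event in attack_samples if detector(event))
    return caught / len(attack_samples)


def missed(detector: Callable[[Event], bool], attack_samples: list[Event]) -> list[Event]:
    """Return the attack samples the detector failed to flag, for follow-up tuning."""
    return [event for event in attack_samples if not detector(event)]
```

For example, a rule that alerts when `failed_logins` exceeds 20 catches noisy brute-force samples but misses a low-and-slow attempt with only 5 failures, which is exactly the kind of evasion a crafted attack relies on. Adding each newly observed technique to the sample set turns this into a regression test for your detections.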

What role does human judgment play if we're using AI agents?

Human judgment is irreplaceable. Agents gather information, prioritize findings, and suggest actions. Humans decide whether recommendations are sound, whether trade-offs between security and functionality are acceptable, and whether to implement recommendations. The best results come from human-AI collaboration: agents handling information processing at scale, humans making strategic decisions. An agent can flag that you're exposing sensitive data. But deciding whether that's an acceptable business risk requires understanding business context that agents don't have.

How do we ensure AI agents align with compliance requirements?

Most modern compliance frameworks (HIPAA, PCI-DSS, SOC 2, GDPR) care about outcomes, not specific tools. If an agent helps you meet compliance requirements faster and more consistently, that's valuable. Document how agents contribute to compliance: reduced vulnerability exposure time helps meet patching requirements; improved threat detection helps satisfy incident response requirements. Some agents specifically audit compliance, validating that you're meeting regulatory requirements continuously rather than only during annual audits.

Final Thoughts: Building the Security Organization of Tomorrow

Amy Herzog's perspective from the AWS CISO office reflects a maturing security industry. The days of security through obscurity, perimeter-based defense, and manually intensive processes are ending. Modern threats move too fast, attack surfaces are too large, and talent constraints are too severe for traditional approaches to scale.

The future of enterprise security looks like this: AI agents handling routine monitoring, anomaly detection, and vulnerability assessment. Security analysts focusing on complex investigations, strategic improvements, and decision-making. Automation handling repetitive tasks, freeing humans for judgment. Teams measuring outcomes, not activities. Organizations balancing protection with functionality rather than pursuing impossible perfection.

This isn't science fiction. Organizations are doing this today. Those who move first build competitive advantage through better security outcomes and more efficient security operations. Those who wait risk falling further behind.

The good news: you don't need to overhaul everything at once. Start with assessment. Identify your biggest pain point. Run a pilot. Measure results. Expand methodically. Over 12-18 months, you can transform your security posture.

The hard truth: this requires investment. Not just in tools, but in team development, governance establishment, and continuous improvement. But the alternative—staying with traditional approaches as threats evolve—carries far higher long-term cost.

Threat actors have a goal in mind. They'll use whatever path they see to achieve it. Your job is to make every path harder, every attack slower, and every compromise more costly and risky for them. AI-assisted security makes that possible at scale.

The question isn't whether to adopt AI agents. It's how quickly you can do it well.

Key Takeaways

- Threat actors are outcome-focused and will exploit whatever path reaches their goal fastest; traditional reactive security fails against this asymmetry

- AI agents in security work best when specialized for specific tasks (cloud auditing, anomaly detection, vulnerability prioritization) and integrated into larger frameworks

- Prevention catches threats before they harm customers and costs orders of magnitude less than breach response; organizations should shift investment accordingly

- Perfect security is impossible and counterproductive; optimal security balances protection with functionality to achieve business objectives

- Success metrics should measure outcomes (MTTD, MTTR, VET) not activities (vulnerabilities found, alerts generated); pragmatic security beats perfectionist security


![AWS CISO Strategy: How AI Transforms Enterprise Security [2025]](https://tryrunable.com/blog/aws-ciso-strategy-how-ai-transforms-enterprise-security-2025/image-1-1766918165473.jpg)