Can Google's Gemini Actually Do What the Ad Shows? A Real Test

Google just dropped one of those ads that makes you feel warm and fuzzy inside. A couple finds their kid's favorite stuffed lamb, Mr. Fuzzy, left behind on an airplane. Instead of panicking, they use Gemini to find a replacement toy and, while waiting for it to arrive, use AI to generate images and videos of the lamb "traveling the world." The lamb's in front of the Eiffel Tower wearing a beret. It's running from a bull in Pamplona. It even records a message to "Emma" explaining why it's delayed in getting home.

It's the kind of ad that makes you want to immediately open Gemini and try it yourself. The creative is polished, the concept is genuinely clever, and it taps into something real that parents everywhere deal with: kids getting obsessed with one specific toy, and the panic that sets in when that toy goes missing.

But here's the thing I always wonder about tech ads: how much of what they show can you actually replicate? How many prompts did they really need? How much did they have to iterate? How many times did the AI confidently hallucinate something wrong before getting it right?

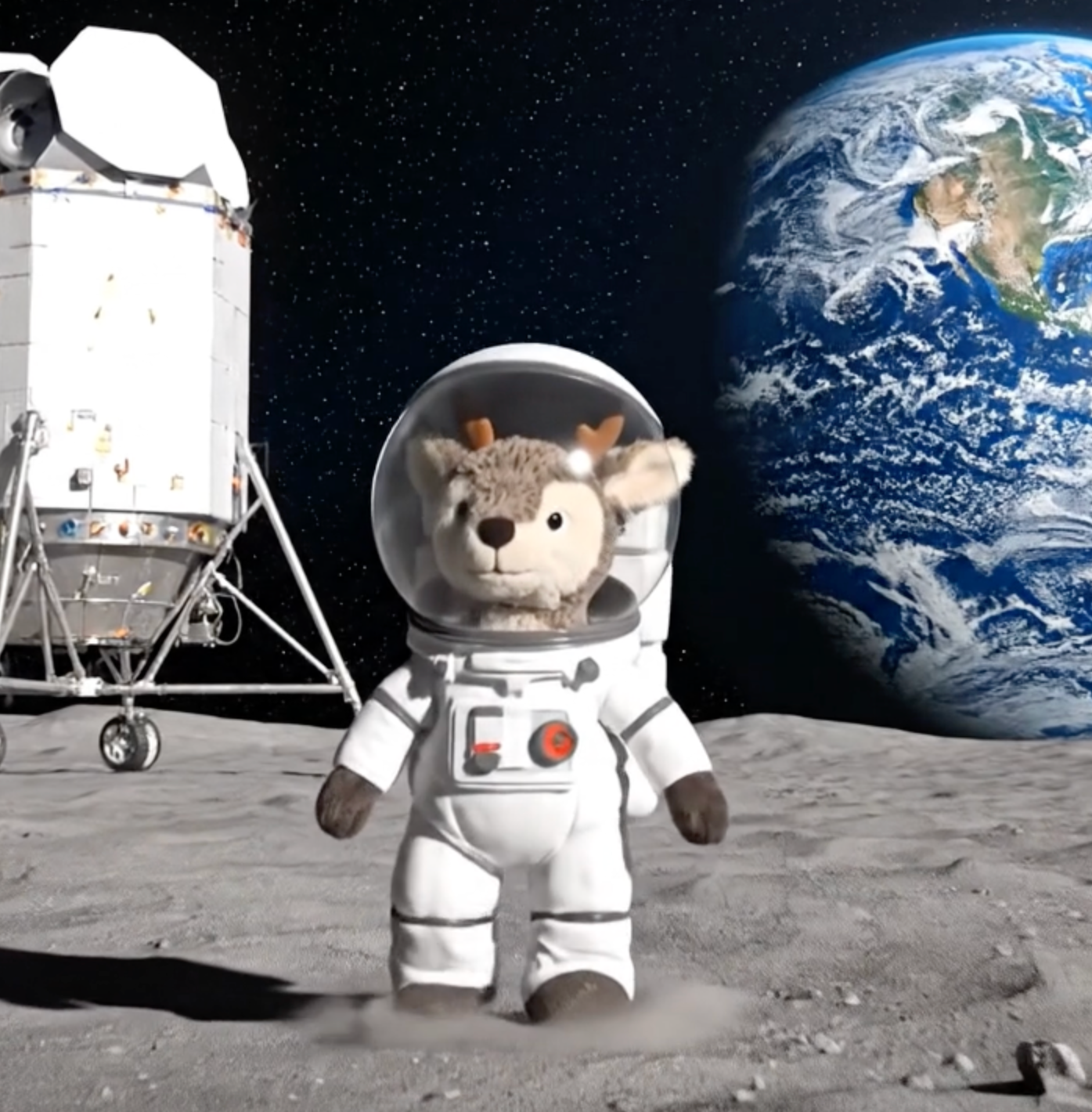

So I decided to find out. I grabbed my own kid's favorite stuffed animal (a generic-cute fawn we call "Buddy"), took some photos from different angles, and tried to recreate what the ad promised. What I found was... partially true, but with some pretty important asterisks.

TL;DR

- Gemini can find similar stuffed animals from photos, but it might struggle with generic toys and spend a lot of digital ink explaining its uncertainty

- Image generation works surprisingly well, though it needs clean source photos with good angles, or body parts might look off

- The AI's "thinking" process is revealing, showing all the ways it second-guesses itself ("puppy hypothesis," "rabbit hole," and literal hedging)

- What the ad doesn't show you are the failed attempts, the refinements needed, and the fact that sometimes you're better off just Googling

- Bottom line: The ad is mostly honest about what Gemini can do, but it's edited to show the highlight reel, not the messy reality

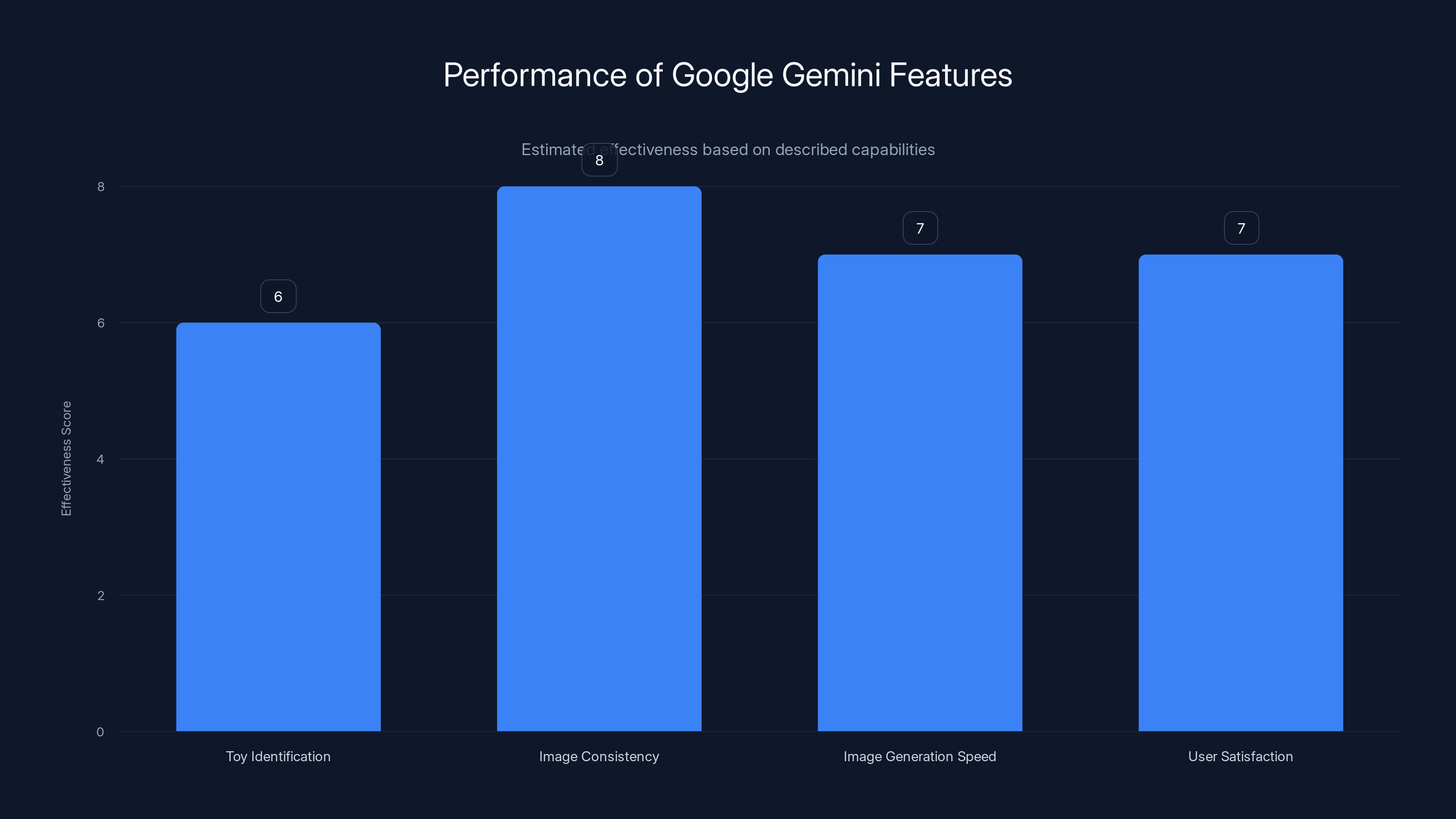

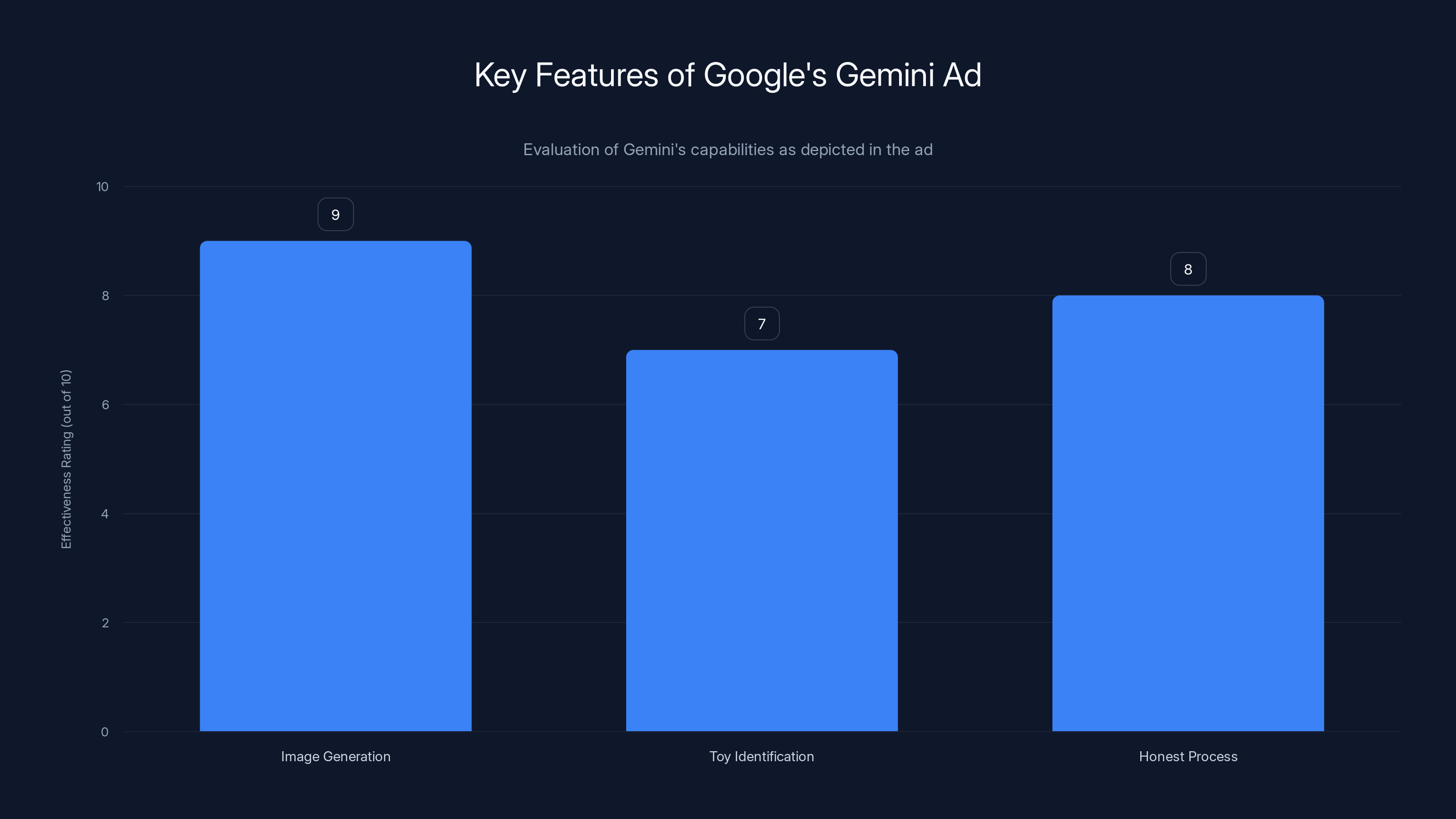

Google Gemini performs well in generating consistent images and has moderate success in identifying toys. Estimated data based on feature descriptions.

The Setup: A Stuffed Animal, Some Photos, and False Hope

Let me paint the picture. My son has a stuffed fawn he's had since he was about two years old. We've never even managed to find another one exactly like it, which means I've got the backup-toy anxiety permanently locked in my nervous system. When he inevitably drags "Buddy" through mud, leaves it at a restaurant, or (worst case) loses it on a trip, I'm doomed.

Buddy is small, brown, unremarkable in every way that matters. His tag is long gone. His features are aggressively generic: could be a fawn, could be a puppy, could be some hybrid creature that doesn't exist in nature. He's got a little loop on his backside where the tag used to be, and that loop is genuinely the most identifying feature he has.

I took three photos of him from different angles, including one of him sitting in an airplane seat (because the ad scenario requires it). Then I opened Gemini and fed it the exact prompt the ad mentions: "Find this stuffed animal to buy ASAP."

What I got back was... well, it was honest, at least.

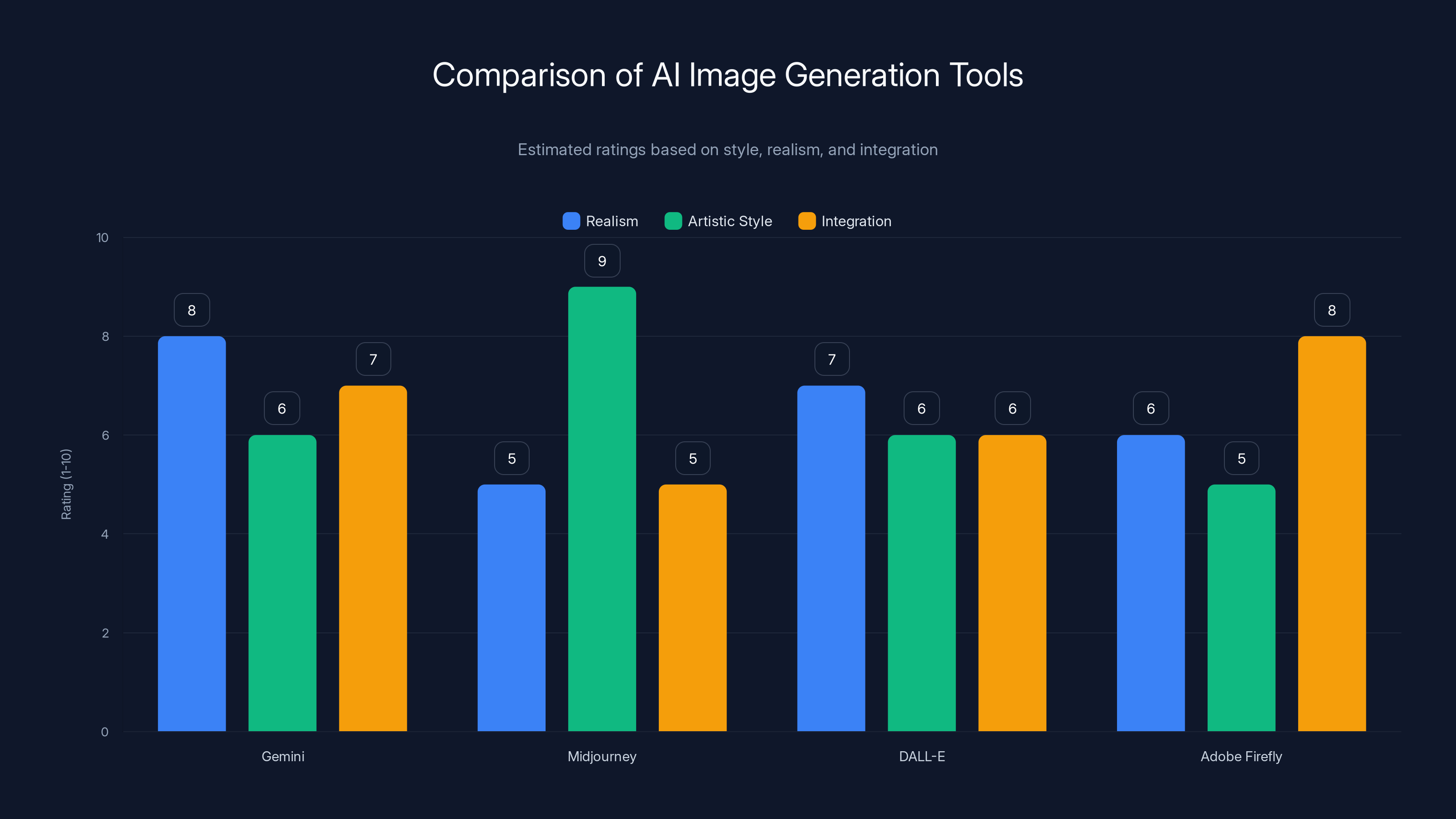

Gemini excels in realism and integration, while Midjourney leads in artistic style. Estimated data based on tool descriptions.

The Identification Test: When AI Second-Guesses Itself (A Lot)

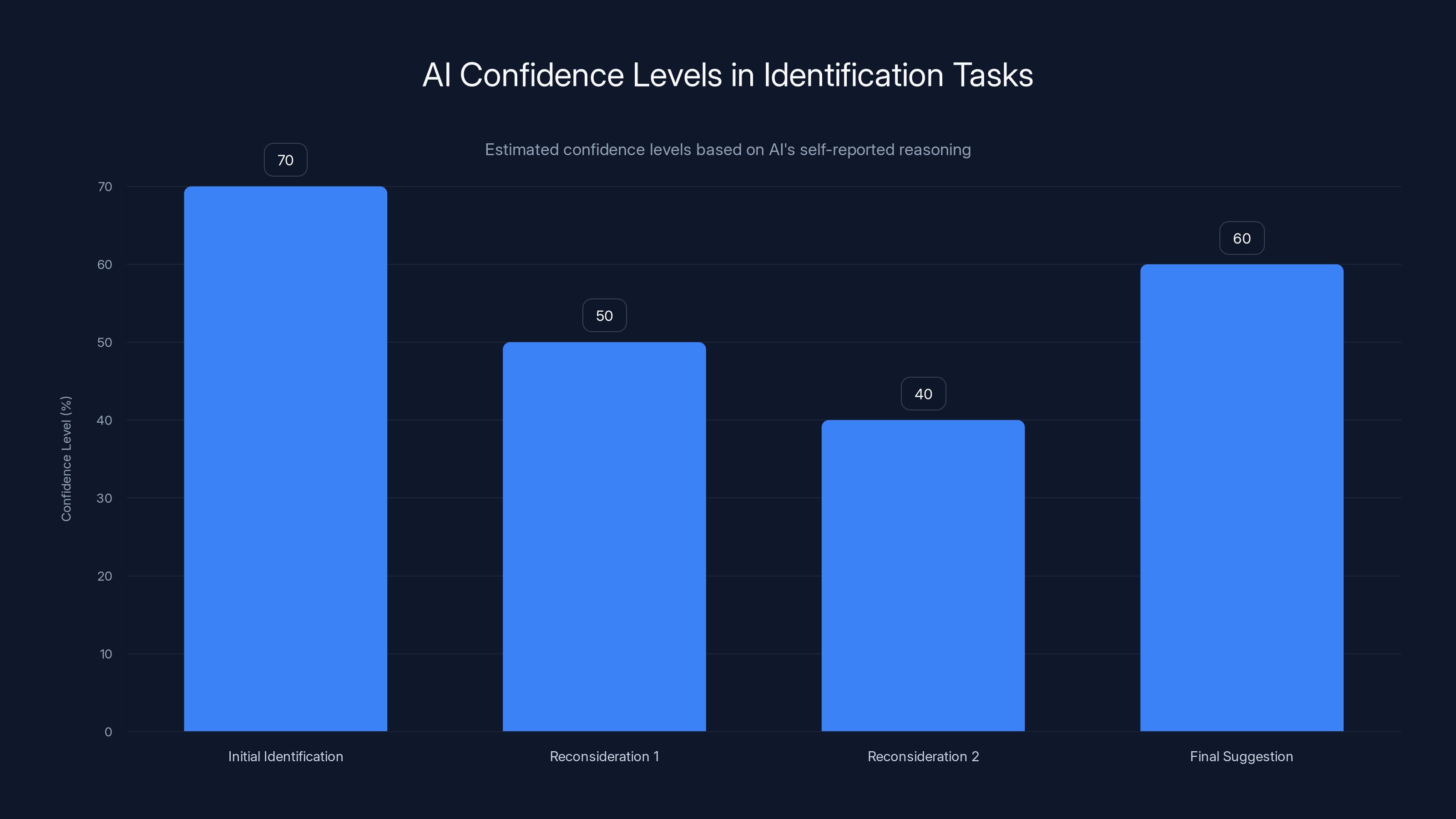

Gemini returned a couple of likely candidates right away. That's the part you'd see in the ad. What you wouldn't see is the 1,800-word essay the AI generated when I expanded the "show me your thinking" option.

It was genuinely hilarious. And genuinely revealing about what's actually happening under the hood.

Here's the AI literally reconsidering itself: "I am considering the puppy hypothesis." Then, "The tag is a loop on the butt." Then, almost sheepishly, "I'm now back in the rabbit hole."

It went down the Putty Collection path multiple times (which is probably correct: Buddy likely is from that collection, a discontinued fawn from around 2021). But it also confidently declared him a puppy more than once. By the end, it kind of threw up its hands, suggested the toy might be from Target and probably discontinued, and told me to check eBay.

Here's the thing: I actually came to almost the exact same conclusion after about 20 minutes of manual Googling. So Gemini got there, but not through the kind of decisive, efficient process the ad implies. It was more like watching someone think out loud, getting confused, backing up, reconsidering, and eventually arriving at a reasonable hypothesis.

When I ran a reverse image search on my own photos, Google's AI confidently declared Buddy a puppy. Same confidence as Gemini, opposite conclusion from its fawn hypothesis. Neither tool clearly beats the other here; they just confidently disagree.

The Image Generation Part: Where Gemini Actually Delivers

Now, the second half of the ad scenario is where things got interesting. That's where Gemini actually shows its muscle.

I started with a different photo—one where Buddy is actually sitting in an airplane seat in my son's arms. The prompt was simple: "Make a photo of the deer on his next flight."

The result was actually solid. The proportions looked right. The toy maintained its general color and shape. The AI even kept details from the original image (like the partially visible seatbelt). The only issue? His lower half was obscured in the source photo, so the feet came out slightly off. But it was close enough that my kid definitely would've thought it was the real Buddy.

For the Grand Canyon shot (the one the ad doesn't fully explain), I used the prompt: "Make a photo of the same deer in front of the Grand Canyon." And it delivered. The AI remembered the toy from the previous image, created a plausible outdoor setting, even included the airplane seatbelt and headphones from the earlier photo. Consistency across multiple generations—that's legitimately impressive.

The limitation I hit? When the source material is unclear or partial, the AI gets creative in ways that might not match reality. And that's not a bug, that's literally what it's designed to do. It fills in the gaps.

There's also a practical issue the ad glosses over: you need reasonably clean source photos. A stuffed animal photographed in different lighting, from different angles, and partially obscured is harder to work with than the ad implies. The lamb in the commercial was probably shot in a studio with consistent lighting.

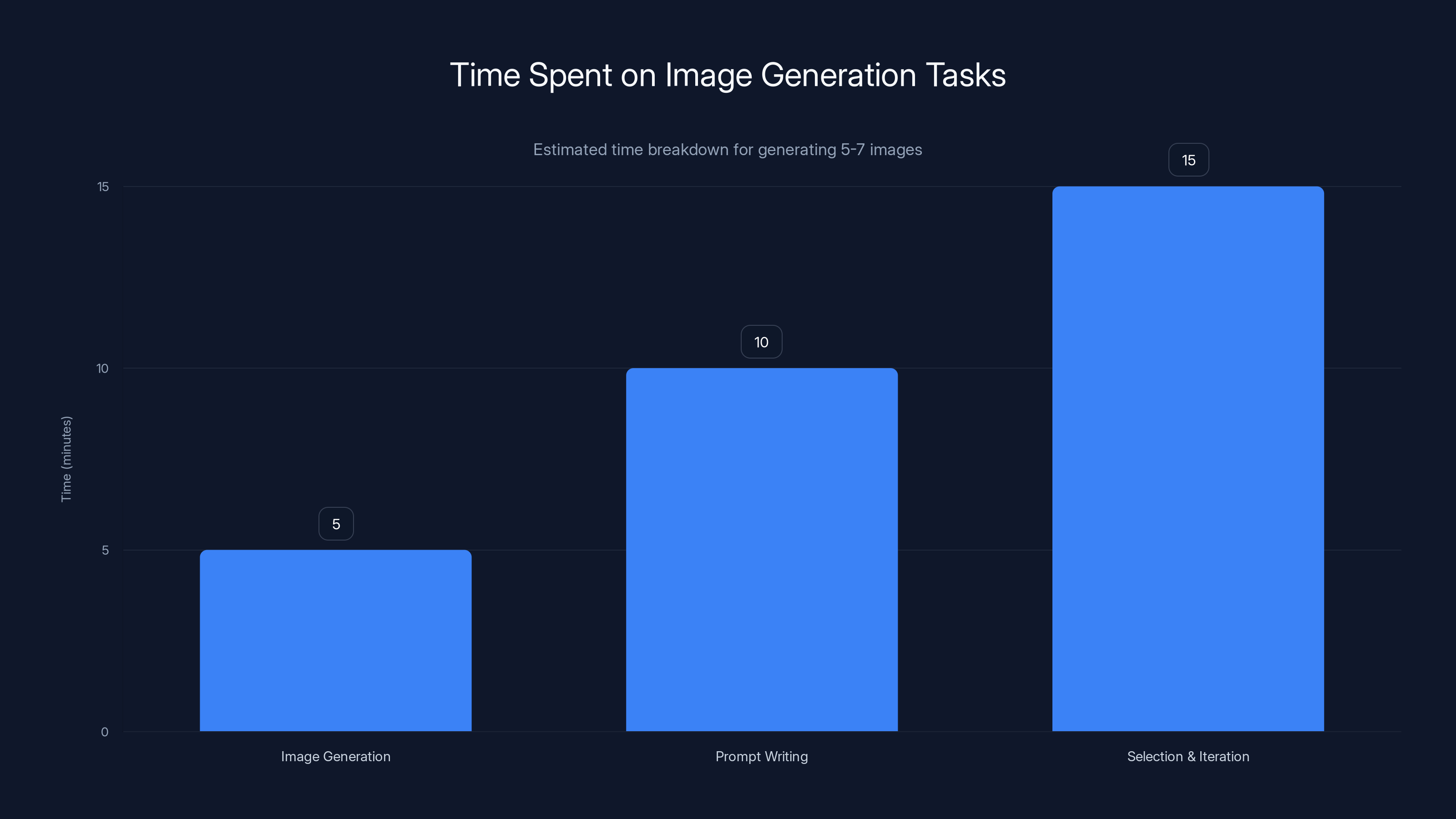

Generating 5-7 images can take 20-30 minutes, with most time spent on selection and iteration. Estimated data.

What the Ad Gets Right (And Why That Matters)

Let's be fair: Google's creative team clearly tested this scenario before filming the ad. They know what they're showing you is actually possible because they've done it. That's different from many tech ads that show purely fictional capabilities.

The image generation absolutely works. That's the part I found most impressive. Gemini can take a reference image and generate plausible variations of it in different contexts. For a parent trying to buy their kid some time while waiting for a replacement toy to arrive, that's legitimately useful.

The toy identification works, more or less. It's not perfect for obscure or discontinued items, but it's better than nothing. And the fact that it shows its thinking (all those doubts and reconsiderations) is actually more honest than you'd expect from a corporate ad.

The core premise of the ad is true: you can use AI to help solve this specific parenting problem. The execution in real life won't be quite as seamless, but the capability is real.

What the Ad Definitely Leaves Out

But there's a massive difference between "this is possible" and "this is as easy as the ad shows."

First, the ad doesn't show iteration. You don't see the prompts that failed, the images that came back weird, the second and third attempts. In the real world, you probably need to refine your prompt, try different source images, maybe fiddle with settings. It's not one-and-done.

Second, it doesn't show the time cost. Even if every prompt works on the first try (unlikely), each finished image takes a few minutes once you count writing the prompt, waiting for the generation, and picking the best result. The ad condenses this into about 30 seconds of screen time. Multiply that across "a week's worth" of daily travel photos and you're looking at 20-30 minutes of work.

Third, it doesn't show the limitations of the source material. The lamb in the ad is well-lit, clearly visible, shot from angles that make it easy to understand its shape and color. Real-world stuffed animals—especially ones that have been loved by a toddler—are often wrinkled, stained, and photographed in terrible lighting. That changes the AI's ability to work with the material.

Fourth, the AI identification part is way more uncertain than the ad implies. The version in the ad probably went through multiple iterations, maybe with a cleaned-up toy photo or better lighting, to land on a confident answer. What I got was pages of hedging and self-doubt.

AI systems often show fluctuating confidence levels during identification tasks, reflecting uncertainty and self-correction. (Estimated data)

The Broader Honesty Question: Is This Misleading?

Here's where I land on the ethics of this ad: it's not technically lying, but it's definitely polished.

Google isn't claiming the AI works instantly or perfectly. They're not claiming it works without any prompting or iteration. The ad shows a plausible scenario and demonstrates that the tools can address it. That's... actually more honest than a lot of tech advertising.

But there's a gap between "this is possible" and "your experience will look like this." Your experience will probably involve more failed attempts, more refinement, and more time than the 90-second ad suggests. That's not necessarily misleading—it's just inherent to how advertising works.

What's interesting is that the ad slips some real-world friction into its dialogue ("five to eight business days" is a hilariously accurate reference to how vague "processing time" usually is). That's a small nod to reality that most tech ads would skip entirely.

The Real Use Case: When This Actually Works Well

All that said, there's a legitimate real-world use case here. If your kid has a favorite toy that goes missing, and you need to either replace it or buy yourself some time, AI-generated images of that toy "on an adventure" is actually a clever solution.

It works because kids don't have the same visual literacy adults do. They're not going to scrutinize the image for anatomically correct stuffed-animal proportions. They're going to see their toy and feel better.

The image generation capability is the real win here. Not the toy identification (which is okay but not amazing), but the ability to create new photos showing the toy in different scenarios. That's genuinely useful.

I actually did create a few more images of Buddy (in a hot air balloon, at the beach, riding a skateboard) and my son thought it was hilarious. Did he believe Buddy actually did these things? No. But did it buy time and entertainment value while we waited for a replacement toy to arrive? Absolutely.

The ad effectively showcases Gemini's image generation and honest AI process, with toy identification being slightly less effective. Estimated data.

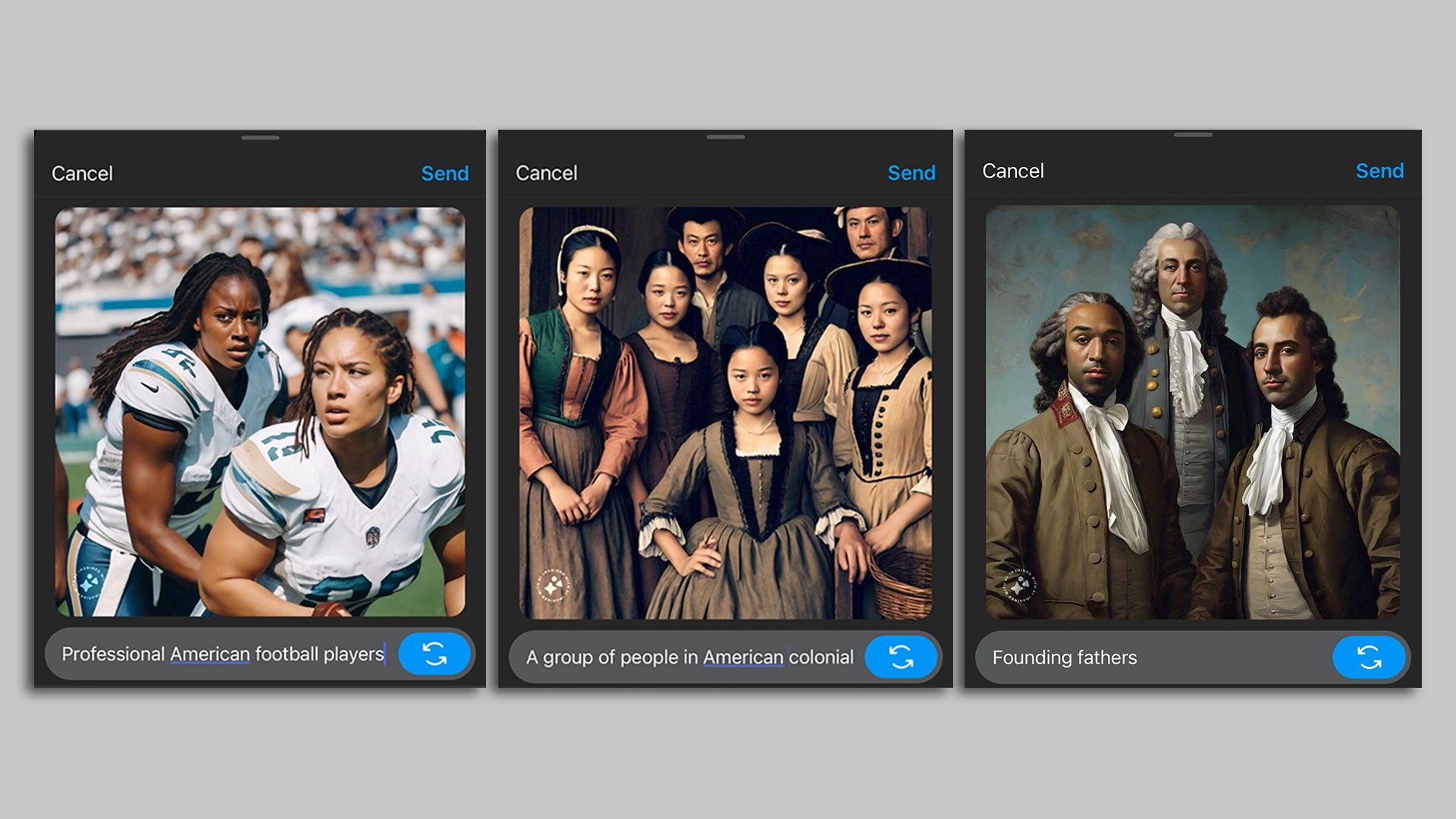

The Ethical Edge Cases: When "Cute and Creative" Gets Weird

Here's the thing I can't fully resolve: is it okay to lie to your kid about their toy being on an adventure?

The ad frames it as harmless fun—parents helping their kid cope with a missing toy. And in the context of the ad, it is that. But there's a line between "telling a story" and "creating convincing fake photos of something being somewhere it wasn't."

Young kids are still developing their understanding of what's real and what's pretend. Showing them photos of their toy "actually" being somewhere, especially when the AI made those photos, is... complicated.

It's not necessarily wrong. It's the kind of thing parents have done forever—making up stories, creating experiences through imagination. But there's something different about AI-generated images. They look real. They have the visual authority of a photograph. There's a trust violation lurking in there.

Google's ad glosses over this entirely, which is what you'd expect from a corporate marketing video. But it's worth thinking about if you're actually going to do this with your own kid.

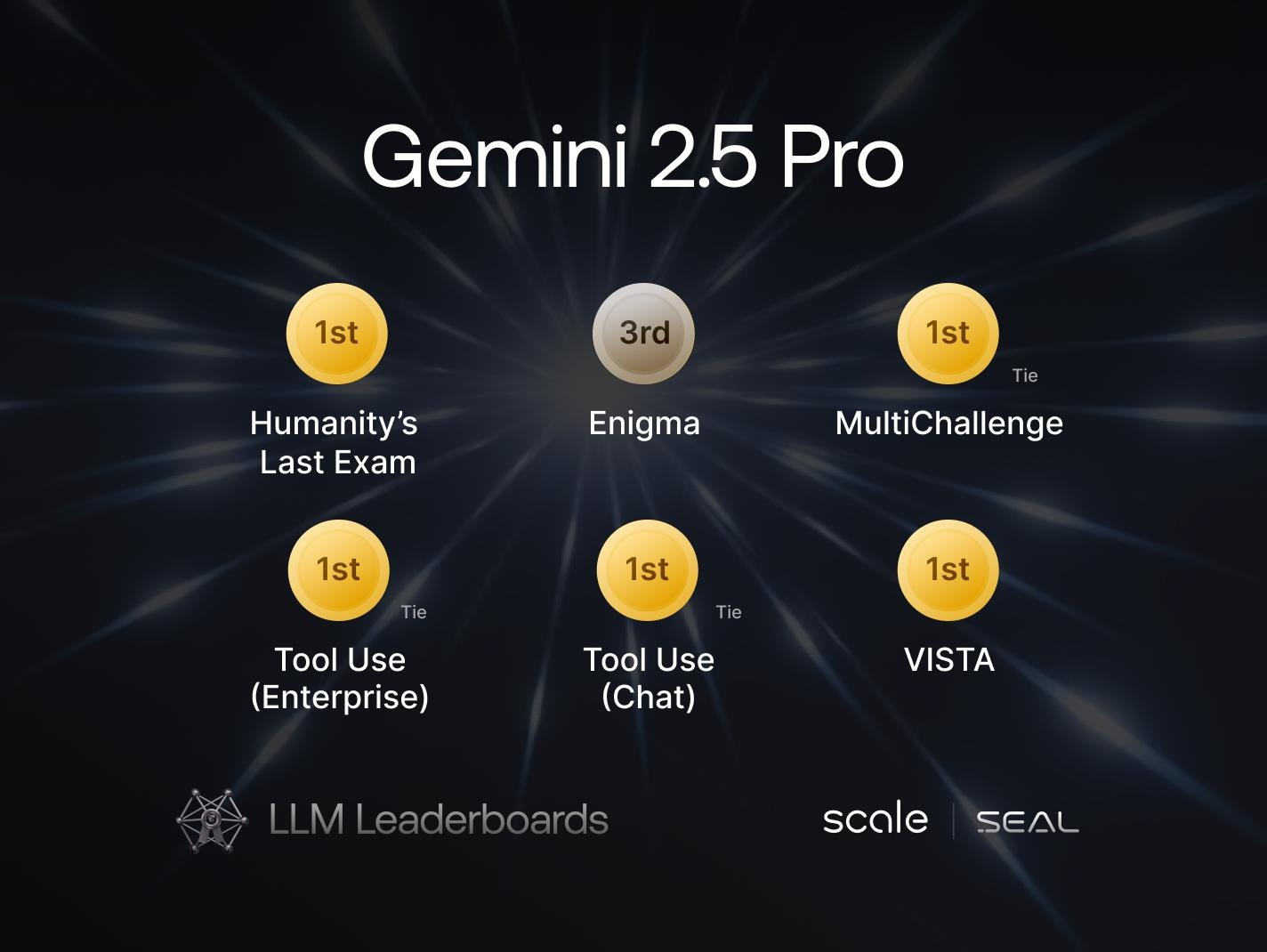

Comparing Gemini to Other AI Image Generation Tools

How does Gemini's image generation stack up against the competition?

Midjourney produces more stylized, often more artistic results. If you want images that are clearly illustrated (not photos), Midjourney is your tool. But for this "make it look like a real photo" scenario, it's not the natural fit.

DALL-E is similar to Gemini but tends to require more specific prompting. DALL-E often produces very clean, almost too-perfect images. Gemini's images feel a bit more naturalistic.

Adobe Firefly is solid and integrates well if you're already in the Adobe ecosystem, but it's not as mature as Gemini for this specific use case.

For the toy-on-an-adventure scenario, Gemini actually might be your best option. It strikes a balance between realism and consistency, and it maintains details from reference images well.

The Hidden Time Cost: Why Ads Never Show the Clock

Let's talk about the thing nobody mentions: how long this actually takes.

One decent image generation takes maybe 30 seconds to a minute, depending on server load. So if you're generating 5-7 images for "a week's worth" of fake travel adventures, that's roughly 2.5-7 minutes just for generation. Add in the time to write prompts, select the best variations, and maybe iterate on a couple, and you're looking at 20-30 minutes minimum.
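To see where that 20-30 minutes actually goes, here's the back-of-the-envelope math as a small Python sketch. It uses only the figures above (30-60 seconds per generation, 5-7 images, 20-30 minutes total); the function names are purely illustrative, and it assumes the total splits cleanly into machine time plus your own time.

```python
# Figures from this article: 30-60 s per generation, 5-7 images,
# and a 20-30 minute total once prompting and picking are included.
# Assumption: total time = generation time + human time, nothing else.

def generation_minutes(n_images: int, seconds_per_image: float) -> float:
    """Machine time only: how long the model spends generating."""
    return n_images * seconds_per_image / 60

def human_minutes_per_image(total_minutes: float, n_images: int,
                            seconds_per_image: float) -> float:
    """Whatever is left of the total after generation, spread per image."""
    return (total_minutes - generation_minutes(n_images, seconds_per_image)) / n_images

# Best case pairs the fewest, fastest images with the 20-minute total;
# worst case pairs the most, slowest images with the 30-minute total.
best_gen = generation_minutes(5, 30)
worst_gen = generation_minutes(7, 60)
human_best = human_minutes_per_image(20, 5, 30)
human_worst = human_minutes_per_image(30, 7, 60)

print(f"Generation alone: {best_gen:.1f}-{worst_gen:.1f} minutes")
# prints "Generation alone: 2.5-7.0 minutes"
print(f"Your time per image: {min(human_best, human_worst):.1f}-"
      f"{max(human_best, human_worst):.1f} minutes")
# prints "Your time per image: 3.3-3.5 minutes"
```

Generation is the small slice. The remaining 3-3.5 minutes or so per image is you: writing prompts, picking results, and redoing the duds.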

The ad shows this in about 30 seconds of video time. There's no clock on screen showing how long this actually takes.

For a parent trying to keep their kid entertained while waiting for a replacement toy, 20-30 minutes is manageable. But it's not the "while you're waiting for coffee to brew" kind of quick.

Why This Ad Actually Matters (Beyond the Cute Factor)

Google's showing a real-world use case for AI image generation that isn't about creating art or porn or anything controversial. It's about solving an actual parenting problem with actual creativity.

That's useful for several reasons:

First, it normalizes AI as a problem-solving tool rather than a threat. Second, it shows an application that's actually accessible to regular people, not just designers or developers. Third, it's genuinely creative—not just generating a pretty picture, but using AI to sustain a narrative.

What's important for consumers to understand is that the capability is real, but the path to achieving it isn't as straight as the ad implies. You will iterate. You will refine. Some prompts will work great, others will be duds.

But the fact that Google made an ad that's essentially honest about what their tool can do? That's actually pretty good. It's not claiming sentience. It's not claiming the AI understands your emotional needs. It's just showing what it can actually do: help you make images of your kid's toy in different places.

The Takeaway: It Works, But It's Work

Can you recreate Google's Gemini ad scenario with your own stuffed animal? Yes.

Will it be as smooth and quick as the ad shows? No.

Will it be worth the time if it buys you a week of your kid being okay with not having their favorite toy? Probably yes.

Is there something slightly odd about using AI to create convincing fake photos of your kid's toy being places it never was? Yeah, a little bit.

But is it harmless fun that actually solves a real problem? Also yeah.

The ad is doing its job: it's showing you a capability and a use case. The capability is real. The use case is legitimate. What's missing is the full context of how much iteration and time it actually takes. But that's basically every tech ad ever made.

Google's credit here is that they're not overstating what the tool does. They're showing what it actually does, in a context where it's actually useful. That's rarer than it should be.

FAQ

What is the Google Gemini toy-finding scenario?

It's a marketing scenario where parents use Gemini to identify a missing stuffed animal, find a replacement, and while waiting for the replacement to arrive, use AI image generation to create photos of the toy "traveling the world." The concept addresses a real parenting problem: kids getting attached to specific toys and panicking when they're lost or unavailable.

Can Gemini actually find your lost stuffed animal from a photo?

Gemini can attempt to identify stuffed animals from photos using reverse image search capabilities and visual recognition, but success depends heavily on the toy's distinctiveness and the quality of the photos. Generic toys with minimal distinguishing features are much harder to identify than branded or uniquely designed animals. The AI will show you its reasoning process, which often reveals significant uncertainty.

How well does Gemini generate images of the same stuffed animal in different contexts?

Gemini's image generation works surprisingly well for maintaining consistency across multiple generated images, especially when you provide clear reference photos. However, the quality depends on the source image quality and completeness. If parts of the toy are obscured or poorly lit in the source, the AI fills in gaps creatively, which may not match reality exactly. The results are typically good enough to serve the ad's purpose: entertaining a child while waiting for a replacement toy.

How long does it actually take to create multiple images of a stuffed animal in different locations?

Each image generation takes 30 seconds to one minute depending on server load, but you'll need additional time to write prompts, select the best results, and potentially iterate on unsuccessful attempts. Creating a week's worth of daily travel photos (5-7 images) typically takes 20-30 minutes total, not the roughly 30 seconds of screen time the commercial gives it.

Is using AI-generated images to convince your child their toy is traveling somewhere ethical?

That's a personal decision that depends on your parenting philosophy. The practice is essentially "creating a story through images" rather than traditional verbal storytelling. Young children are still developing their understanding of reality versus imagination, so some parents might be concerned about the visual authority that photographs carry. However, if framed as playtime or storytelling rather than deception, most parents find it harmless and creative.

What happens if Gemini can't clearly identify your specific toy?

If the toy is too generic or the photo isn't clear enough, Gemini will typically suggest similar toys or make educated guesses (which might be wrong). The AI will often show significant uncertainty in its reasoning, reconsidering its hypothesis multiple times. In these cases, basic Google image search or checking retailer websites might actually be faster than relying on the AI identification.

How do Gemini's image generation results compare to other AI tools like DALL-E or Midjourney?

Gemini tends to produce more photorealistic results compared to Midjourney (which is more artistic) and is generally easier to use than DALL-E for beginners. For the specific use case of making a stuffed animal appear in realistic photo scenarios, Gemini's balance between realism and consistency with reference images makes it a strong choice among available options.

Can you save the AI-generated images or share them?

Yes, images generated through Gemini can be saved and shared, making them suitable for sending to family members or storing as part of your child's toy's "travel log." This is where the creative narrative aspect really comes into play—you can create an ongoing story about where the toy has been.

Is there a risk that the AI-generated images could look unrealistic or unsettling to children?

Generally, Gemini produces images that look convincingly photorealistic, which is usually the goal. However, if you're trying to hide the fact that the images are AI-generated from your child, there's always a small risk that inconsistencies (wrong number of fingers, weird background artifacts, etc.) might create questions. Some parents view the AI generation as part of the fun, which bypasses this concern entirely.

What's the actual cost of using Gemini for this scenario?

Gemini offers free access with a limited number of image generations, plus paid tiers for heavier use. The exact limits change with updates, so check the current plan details before you start. For a one-time "create a week of toy travel photos" project, the free tier would likely cover your needs. If you want higher limits, pricing varies by subscription level.

What This Means for AI Marketing Going Forward

Google's Gemini ad is interesting because it's a rare major tech company ad that shows an AI capability without overstating it or getting into sci-fi territory.

There's no implication that the AI understands your emotions or your child's needs. It's not claiming to replace human creativity or parenting. It's just showing a tool solving a specific, real problem in a way that's actually useful.

That's more honest than most tech marketing, which tends toward the apocalyptic ("AI will change everything!") or the dismissive ("It's just a calculator!").

The reality is somewhere in the middle: AI tools can solve specific problems quite well. This ad is showing one of those problems and one of those solutions. The gap between the ad's presentation and the real-world experience is worth understanding, but the core capability is real.

For parents dealing with the very real problem of a child's favorite toy going missing, this is actually useful information. You can spend 20-30 minutes generating AI images to buy yourself time before a replacement arrives. That might be worth it to avoid an epic meltdown.

Is it a long-term solution? No. Is it creative? Yes. Is it honest? More than you'd expect from a corporate ad. That's probably enough.

Try it yourself. Grab a photo of your kid's favorite toy, open Gemini, and see what happens. You might be pleasantly surprised. Or you might discover that the AI thinks your toy is a completely different animal. Either way, you'll learn something real about what these tools can and can't do—which is more valuable than just watching an ad.

Key Takeaways

- Google's Gemini ad is mostly honest about AI capabilities but shows the highlight reel, not the messy iteration process required in reality

- Gemini can identify stuffed animals from photos but shows significant uncertainty with generic toys, landing on roughly the same conclusion I reached after about 20 minutes of manual Googling

- AI image generation successfully maintains visual consistency across multiple generated photos when given clear reference images, making it useful for the toy-travel scenario

- The actual time investment (20-30 minutes total) for creating a week of toy travel photos is significantly longer than the advertisement's compressed 30-second presentation

- Using AI-generated images to tell your child stories about their toy's adventures is creative and harmless but raises minor ethical questions about visual manipulation and childhood reality perception

