Gemini on Google TV Just Got a Major AI Upgrade: Here's What's Actually Happening

Google dropped something pretty significant at CES 2025, and honestly, it caught a lot of people off guard. Gemini on Google TV is getting a complete makeover, and the changes aren't just cosmetic. We're talking about actual, practical upgrades that could fundamentally change how you interact with your television.

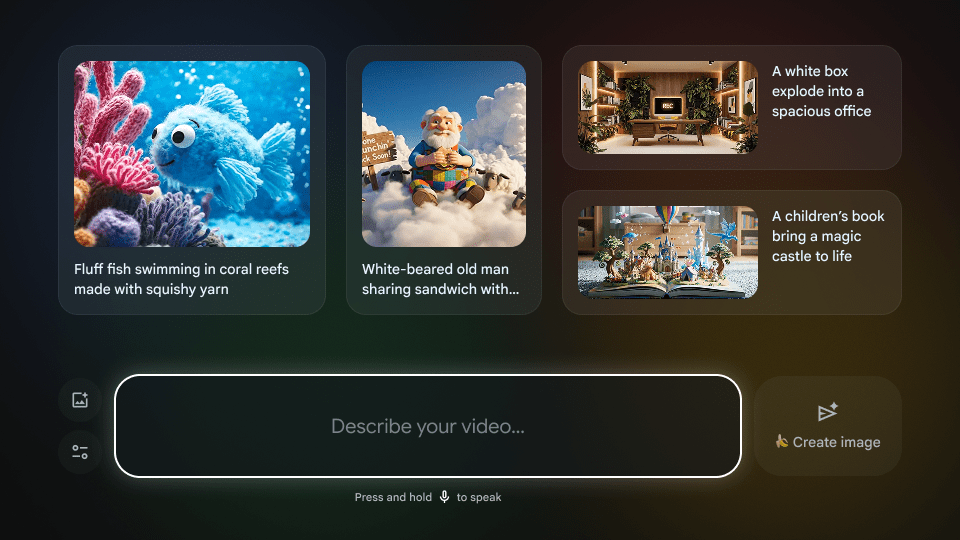

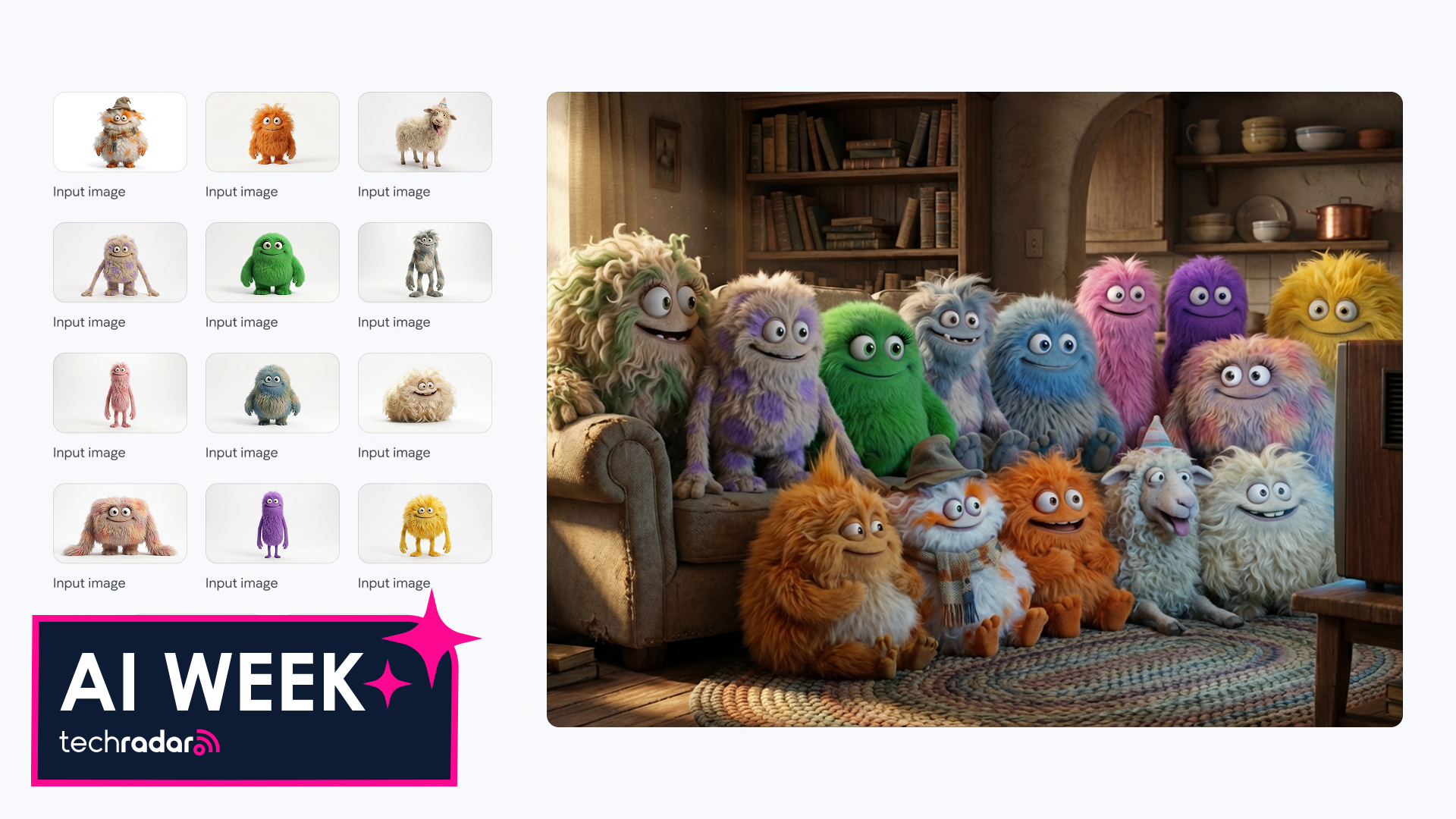

The headline features are Nano Banana and Veo support. If those names sound weird to you, that's fair. But here's what they actually mean: you can now generate AI images and videos directly on your TV. Not by uploading clips to your phone first. Not by using some clunky web interface. Straight on your television, using your TV remote and your voice.

But the real game-changer might be the voice-controlled settings. Imagine saying "the screen is too dim" and having your TV automatically adjust brightness. Or telling it "I can't hear the dialogue" and having it bump up the dialogue-specific audio. That's not science fiction anymore. It's coming to Google TV devices starting with select TCL models.

What surprised me most when I first heard about this was how practical it actually is. Everyone's been screaming about AI integration everywhere, and most of it feels forced. This doesn't. It solves real problems people actually have when watching TV. And it does it in ways that feel natural, not like you're fighting the interface.

Let's dig into what's coming, why Google is doing this now, and whether it's worth caring about.

TL;DR

- Nano Banana for AI Images: Generate custom images directly on your TV using Google's lightweight image model

- Veo for AI Videos: Create short video clips on your TV with natural language prompts

- Voice-Controlled Settings: Tell your TV "the screen is too dim" or "I can't hear" and it adjusts automatically

- Deep Dives with Narration: Ask Gemini for interactive explorations of topics with voiceover and visuals

- Google Photos Integration: Pull photos from your library to create stylized slideshows directly on your TV

- Rollout Timeline: Starting with TCL Google TVs in early 2025, expanding to other Google TV devices throughout the year

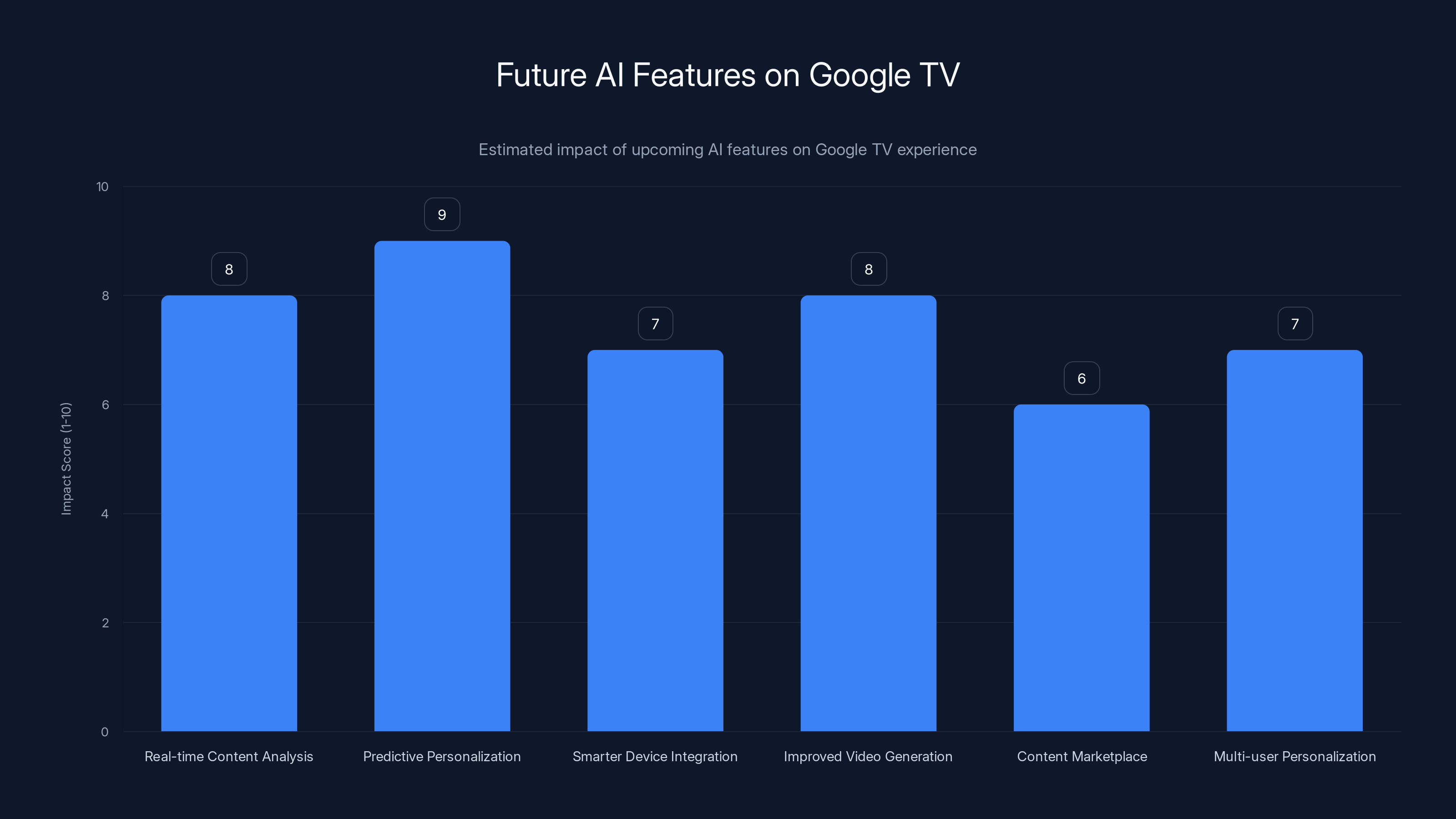

Predictive personalization and real-time content analysis are expected to have the highest impact on enhancing the Google TV experience. (Estimated data)

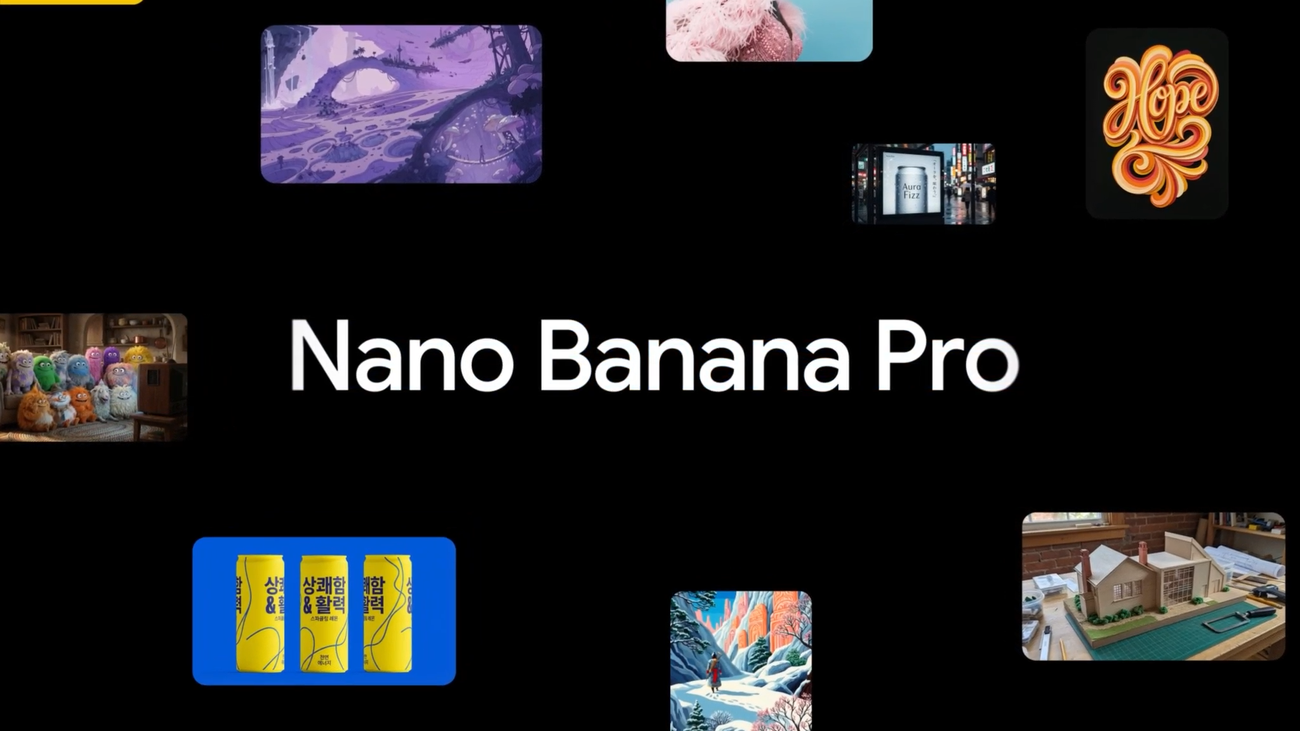

What Exactly Is Nano Banana? Breaking Down Google's New Lightweight Image Model

Nano Banana isn't a code name someone came up with in a meeting and forgot to rename. It's actually Google's lightweight image generation model, optimized specifically for running on devices without massive computational resources. And that's the whole point.

Traditional image generation models like DALL-E or Midjourney require serious processing power. They're built for cloud infrastructure. They're not designed to run locally on a television. Google recognized this problem and built Nano Banana as a solution. It's stripped down, efficient, and designed to generate images quickly without needing to ping Google's servers every single time.

What does that mean practically? Speed. When you're watching TV, you don't want to wait 30 seconds for an image to generate. You want it in seconds. Nano Banana gets you there. The quality trade-off is real—it won't compete with premium models for photorealistic output—but for TV-based use cases, that trade-off makes sense.

The use cases are actually more practical than you might think at first. Say you're watching a cooking show and you want to see what that dish would look like with a different plating style. You can prompt Nano Banana and see variations instantly on your TV. Or you're watching a historical documentary and you want to visualize what a scene might have looked like in a different artistic style. Van Gogh style? Watercolor? Cyberpunk neon? You can generate it right there.

Google's also paired Nano Banana with your Google Photos library. This is where it gets genuinely clever. You can tell Gemini to pull photos from specific events or time periods, then apply Nano Banana's generation capabilities to create stylized versions. Family vacation photos in impressionist style? Holiday pictures as anime characters? This is the kind of stuff people actually want to do on their TV.

The model is optimized for consumer hardware, which means it can run efficiently even on Google TV devices that aren't the latest and greatest. This was critical for adoption. Google couldn't release this feature exclusively to premium TVs. It had to work across the board.

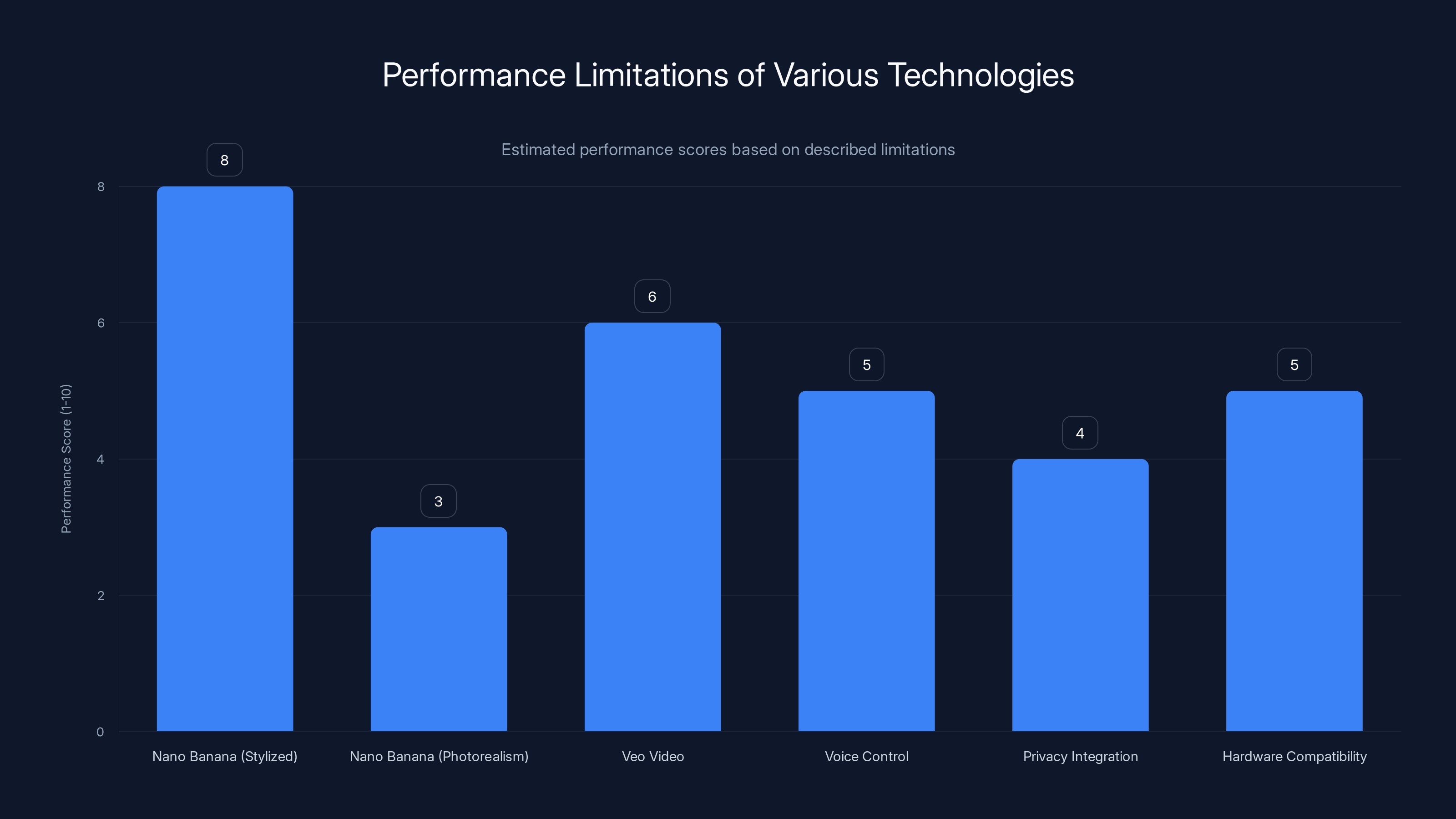

Estimated performance scores for Nano Banana show strengths in stylized content and weaknesses in photorealism and privacy. (Estimated data)

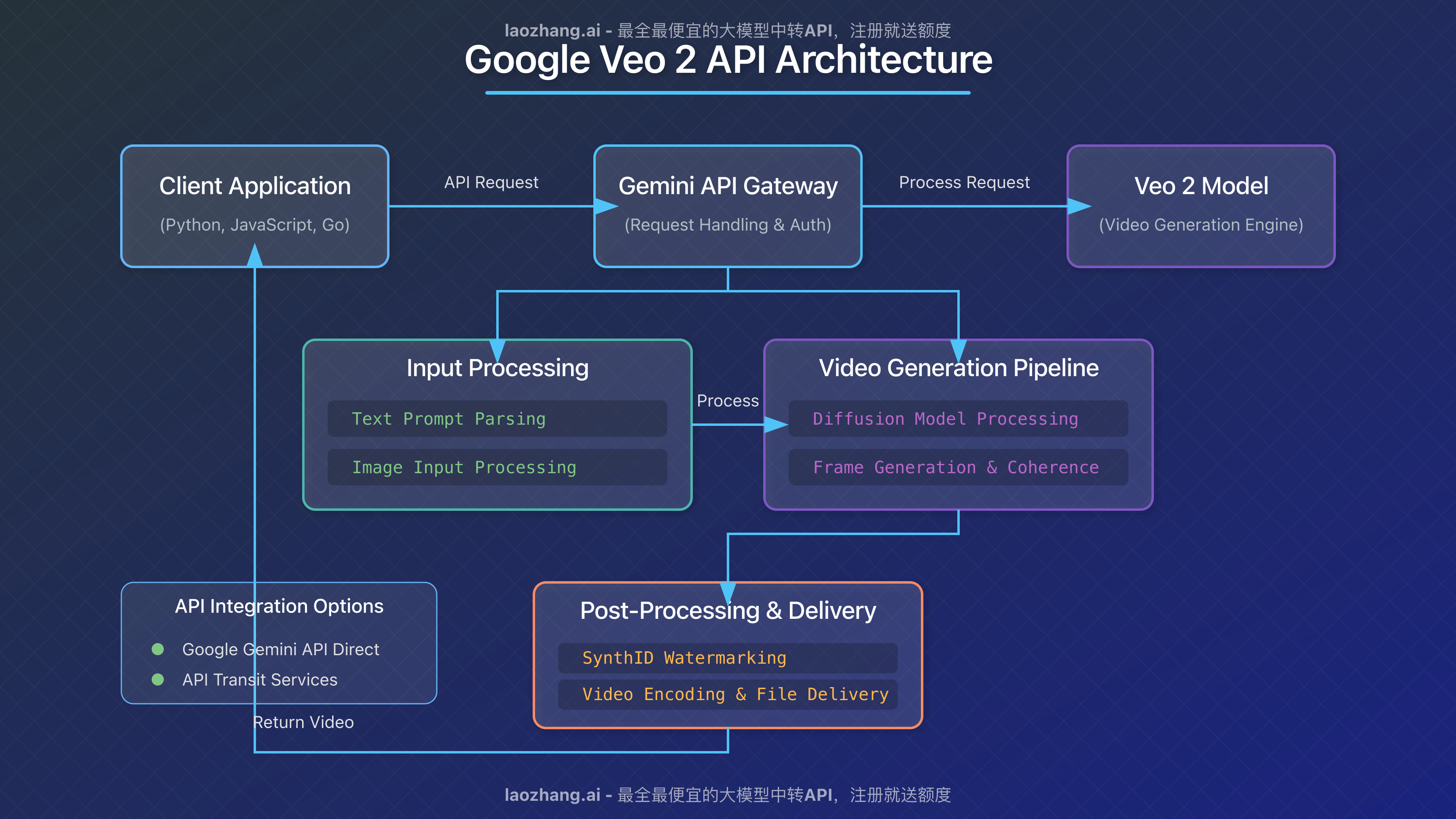

Veo Video Generation: Creating Short Clips Directly on Your Television

If Nano Banana is about images, Veo is Google's answer to "what if you could create videos on your TV?" And unlike some AI video tools that require serious setup, Veo on Google TV is designed to be stupid simple. Press a button, say what you want, wait a few seconds.

Veo is Google's video generation model, and it's actually pretty capable. The default output is short-form video—we're talking 10 to 30 seconds typically—which is perfect for TV applications. Long-form video generation would require too much processing power and would make the TV feel sluggish.

What can you actually create? Anything you can describe. Want a video of a spaceship landing on Mars? You can generate it. A time-lapse of a coffee brewing? Done. A quick animation of a scenario playing out? Go for it. The quality is decent for consumer use. It won't replace professional videography, but it's genuinely impressive for something running locally on a TV.

The real application here is entertainment and creativity. You're watching something on TV, you get inspired, and you want to quickly create a related video clip to share with someone. You don't want to mess with editing software. You don't want to upload to cloud services. You want to say "create a video of a wizard casting a lightning spell" and have it happen in 10 seconds.

Google's paired this with Gemini's broader context awareness. You're not just generating random videos. You can ask Gemini to create videos based on what you're currently watching, what you've been discussing, or content from your Google Photos library. It's contextualized creativity.

One thing to understand: Veo isn't replacing Vimeo or YouTube. It's not creating production-quality content. But it is solving a specific problem for consumers. That problem is: "I want to quickly create video content without complexity." And it does that well.

The rollout strategy is smart too. Google's starting with TCL TVs because TCL has the hardware optimization done. They can ensure Veo performs well on those devices. As the technology gets integrated more widely, other TV manufacturers will follow.

Voice-Controlled TV Settings: The Feature Most People Will Actually Use

Here's the thing about voice-controlled picture and audio settings. It sounds boring. It doesn't sound revolutionary. But it's the feature that'll probably have the biggest impact on day-to-day TV watching.

Every person who watches TV regularly has said something like "the screen is too dark" or "I can't hear the dialogue" at some point. Usually, you have to fumble for the remote, find the settings menu, navigate through options, and adjust. It's friction. Not massive friction, but real friction that happens constantly.

Gemini removes that friction entirely. You just say it. Your TV listens and adjusts. "The picture is too bright." Brightness drops. "I can't hear them." Dialogue boost activates. "Everything's too saturated." Color saturation decreases.

Google's backend handles the natural language understanding. When you say "I can't hear the dialogue," Gemini doesn't just turn up volume. It specifically boosts dialogue frequencies and can adjust surround sound characteristics accordingly. It's not a dumb volume slider. It's actually understanding what you're saying and responding appropriately.

This is where AI in consumer products actually shines. It's not trying to be clever. It's not generating unnecessary content. It's just making an existing task dramatically easier.

The technical implementation is interesting too. Your TV needs to understand a range of natural variations. "The screen is too dim," "I can't see," "brightness needs adjustment," "it's too dark"—all should trigger the same response. And they do. Google's trained Gemini specifically for this use case with all the variations people actually use when talking to their TV.
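To make the "many phrasings, one response" idea concrete, here is a minimal Python sketch. It is not how Gemini works: Gemini relies on a language model, while this toy uses plain substring matching. The setting names, the step sizes, and the `match_intent` helper are all assumptions made up for illustration.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class Adjustment:
    setting: str  # hypothetical setting name, e.g. "brightness"
    delta: int    # positive raises the setting, negative lowers it


# Hypothetical phrase table: every cue in a tuple maps to the same adjustment.
# A real assistant would use a language model, not substring matching.
INTENTS = [
    (("too dim", "too dark", "can't see", "brightness needs"), Adjustment("brightness", +10)),
    (("too bright",), Adjustment("brightness", -10)),
    (("can't hear", "dialogue"), Adjustment("dialogue_boost", +1)),
    (("too saturated",), Adjustment("saturation", -10)),
]


def match_intent(utterance: str) -> Optional[Adjustment]:
    """Return the first adjustment whose cue appears in the utterance."""
    # Normalize case and curly apostrophes so "can’t" and "can't" match alike.
    text = utterance.lower().replace("’", "'")
    for cues, adjustment in INTENTS:
        if any(cue in text for cue in cues):
            return adjustment
    return None  # no known cue found, so change nothing
```

With this table, "The screen is too dim", "I can't see", and "it's too dark" all resolve to the same brightness bump, which is the behavior the paragraph above describes. Anything the table doesn't recognize returns `None` rather than guessing.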

Microphone quality matters here. If your TV has a decent microphone, it'll pick up what you're saying even if there's background noise (TV audio, conversation, etc.). This isn't always reliable, but Google's made improvements on newer Google TV devices.

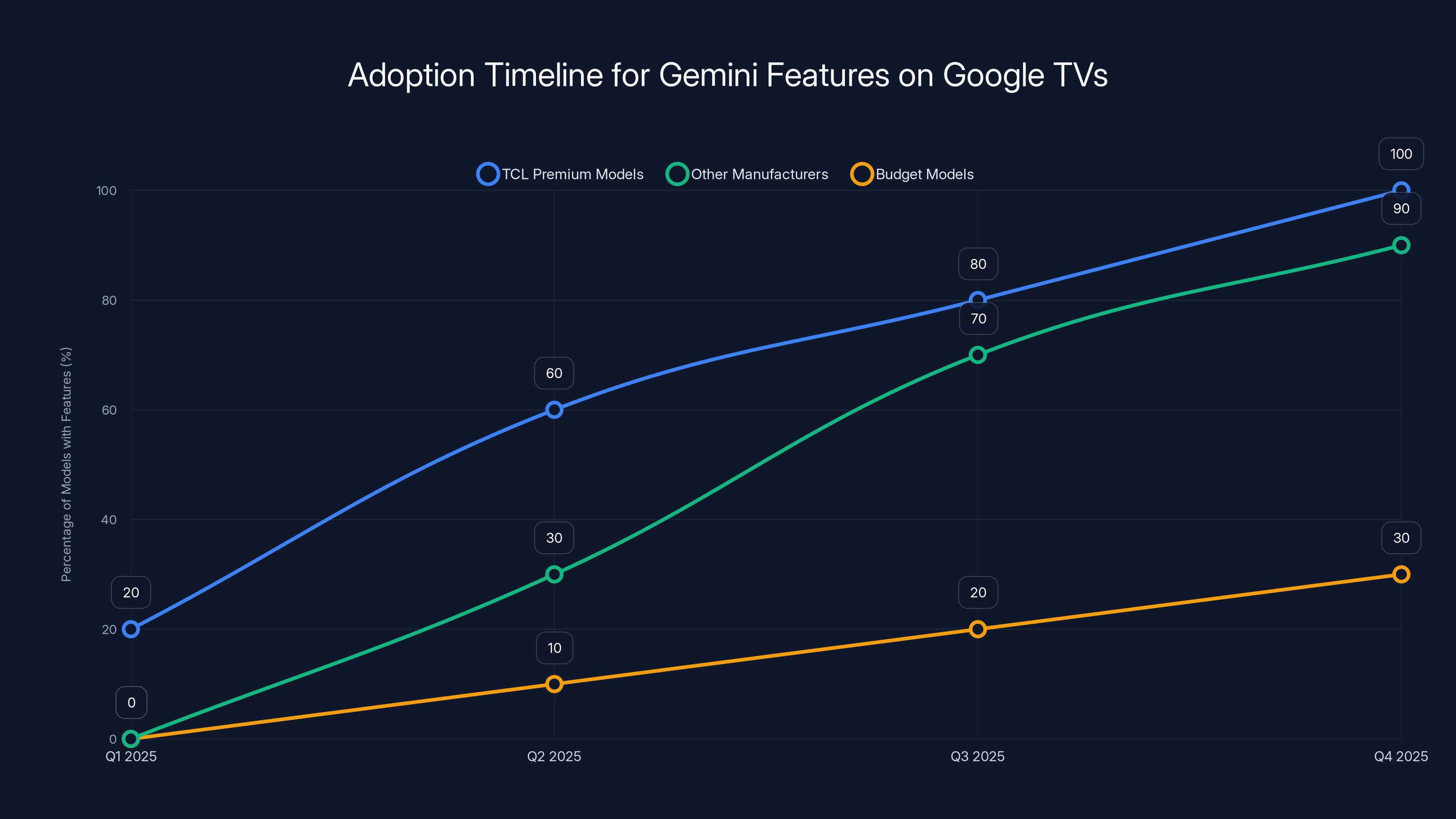

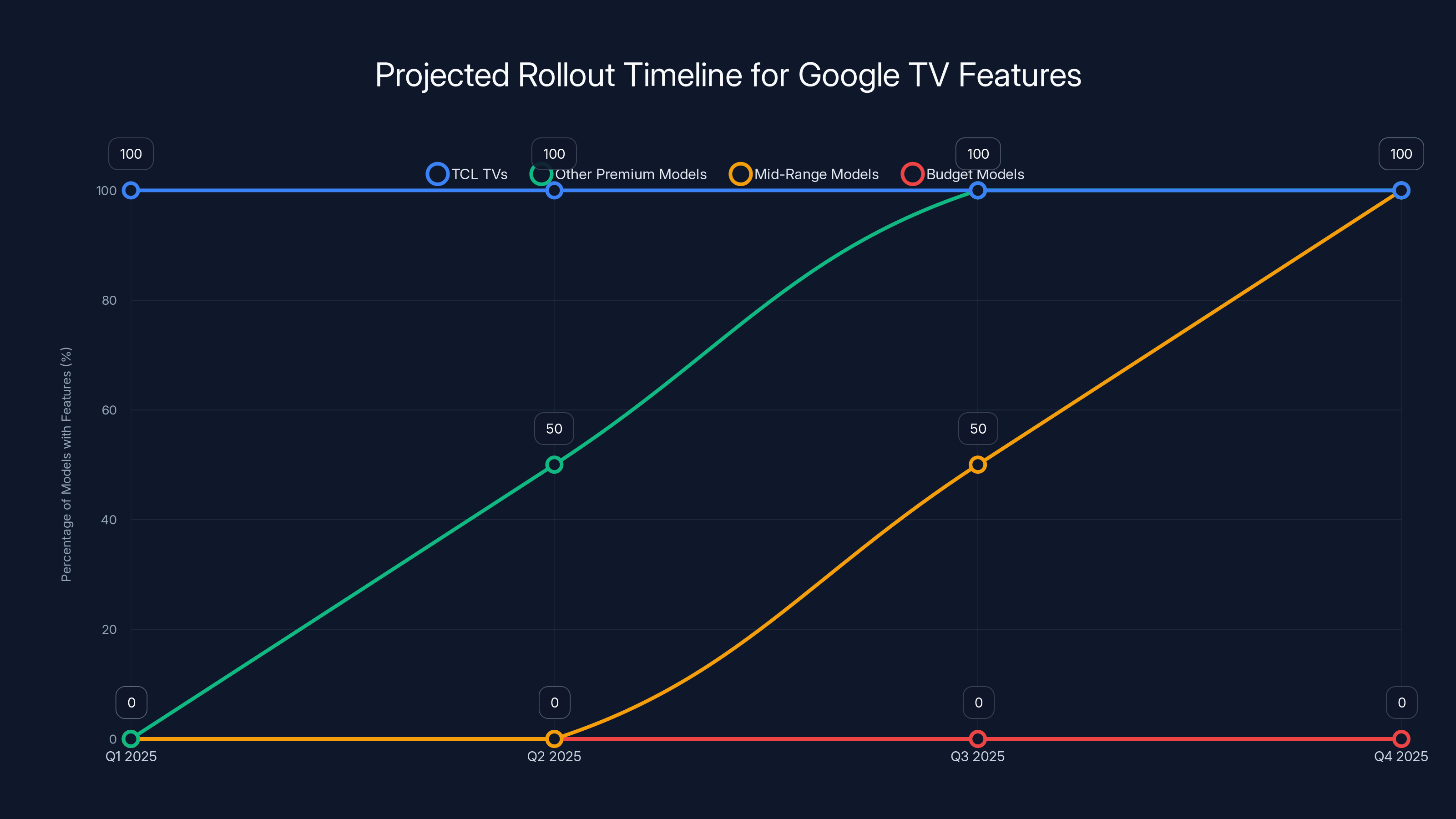

TCL's premium and mini-LED models will be the first to receive Gemini features, with other manufacturers following in subsequent quarters. Estimated data.

Gemini Deep Dives: Interactive, Narrated Explorations of Any Topic

Beyond images and videos, Gemini is getting a new content format: deep dives. When you ask Gemini about a topic, it doesn't just return text or a quick summary anymore. You can request a "deep dive," which Gemini generates as an interactive, narrated exploration.

What does that mean? Gemini creates a multi-part response with voiceover, related images, video clips (possibly generated with Veo), and interactive elements. You're not reading. You're watching and listening to an explanation that feels more like a documentary than a search result.

Ask about the history of spaceflight, and Gemini generates a narrated deep dive with historical photos, illustrations, possibly some generated visuals showing historic launches, and the ability to click "dive deeper" on subtopics. It's surfing a wave of information, but instead of scrolling and clicking, you're watching.

This is clever because it's tailored to TV as a medium. TV is a lean-back medium, but it's also visual and audio-rich. Deep dives play to all three qualities. You're getting information, but it's delivered in a format that actually makes sense on a television.

The narration is AI-generated. Google's text-to-speech has gotten genuinely good recently. On TV speakers, with proper audio mixing, it can be hard to tell apart from human narration. And the quality matters because bad narration would kill the whole feature.

Interactivity is key here. It's not just passive watching. You can ask follow-up questions, request deeper exploration of specific points, or ask Gemini to refocus on a different aspect. It's like having a documentary that responds to what you want to know.

Google's positioning this as educational content, but the use cases are broader. Someone could use deep dives as entertainment, as learning tools, as inspiration sources. The format is flexible enough to adapt to different content types.

Google Photos Integration: Turning Your Photo Library Into Creative Content

Google Photos has billions of photos across millions of accounts. Most of them sit there, occasionally viewed, mostly ignored. Google's new TV integration makes photos more active.

You can ask Gemini to search your Google Photos library by event, time period, or subject matter. "Show me photos from our beach trips" triggers a search across your entire library. Gemini then pulls relevant images and presents them on your TV.
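As a rough mental model of a search "by event, time period, or subject matter," here is a small Python sketch that filters photo records by a label and a date range. The `Photo` record, its fields, and `search_photos` are hypothetical and are not the Google Photos API; a real system would infer labels from the images themselves.

```python
from dataclasses import dataclass
from datetime import date
from typing import Optional


@dataclass(frozen=True)
class Photo:
    filename: str
    taken_on: date
    labels: frozenset  # hypothetical subject tags such as {"beach", "family"}


def search_photos(photos, label: Optional[str] = None,
                  start: Optional[date] = None, end: Optional[date] = None):
    """Return photos matching an optional label and an optional inclusive date range."""
    results = []
    for photo in photos:
        if label is not None and label not in photo.labels:
            continue
        if start is not None and photo.taken_on < start:
            continue
        if end is not None and photo.taken_on > end:
            continue
        results.append(photo)
    # Oldest first, so a slideshow plays in chronological order.
    return sorted(results, key=lambda p: p.taken_on)


# Made-up sample library for illustration only.
library = [
    Photo("IMG_001.jpg", date(2023, 7, 4), frozenset({"beach", "family"})),
    Photo("IMG_002.jpg", date(2023, 12, 25), frozenset({"holiday", "family"})),
    Photo("IMG_003.jpg", date(2024, 8, 12), frozenset({"beach"})),
]

beach_trips = search_photos(library, label="beach")
```

A request like "show me photos from our beach trips" would correspond to the `label="beach"` call, and adding `start` or `end` narrows it to a time period.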

But here's where it gets interesting: you can transform these photos. Nano Banana can re-style them. Veo can create videos incorporating them. Gemini can generate narration about them. Your personal photos become the seed for creative content.

Picture a family night: you're looking through vacation photos, and you ask Gemini to create a stylized slideshow with narration and music. Suddenly your photo library becomes a home video. It's personalized, it's based on your actual memories, and it required no effort beyond asking.

Google's also adding "immersive slideshows" where photos are displayed with AI-generated background animations and music. It's not replacing the photos. It's enhancing the viewing experience. The photos stay centered, but there's visual context around them making the whole thing feel more like watching a film than browsing photos.

Privacy considerations here are important. All this processing happens with Google Photos data tied to your account. Google's being explicit about this: it's not sharing your photos widely, it's using them for your personal entertainment. But the integration is deep, so you should know what's happening.

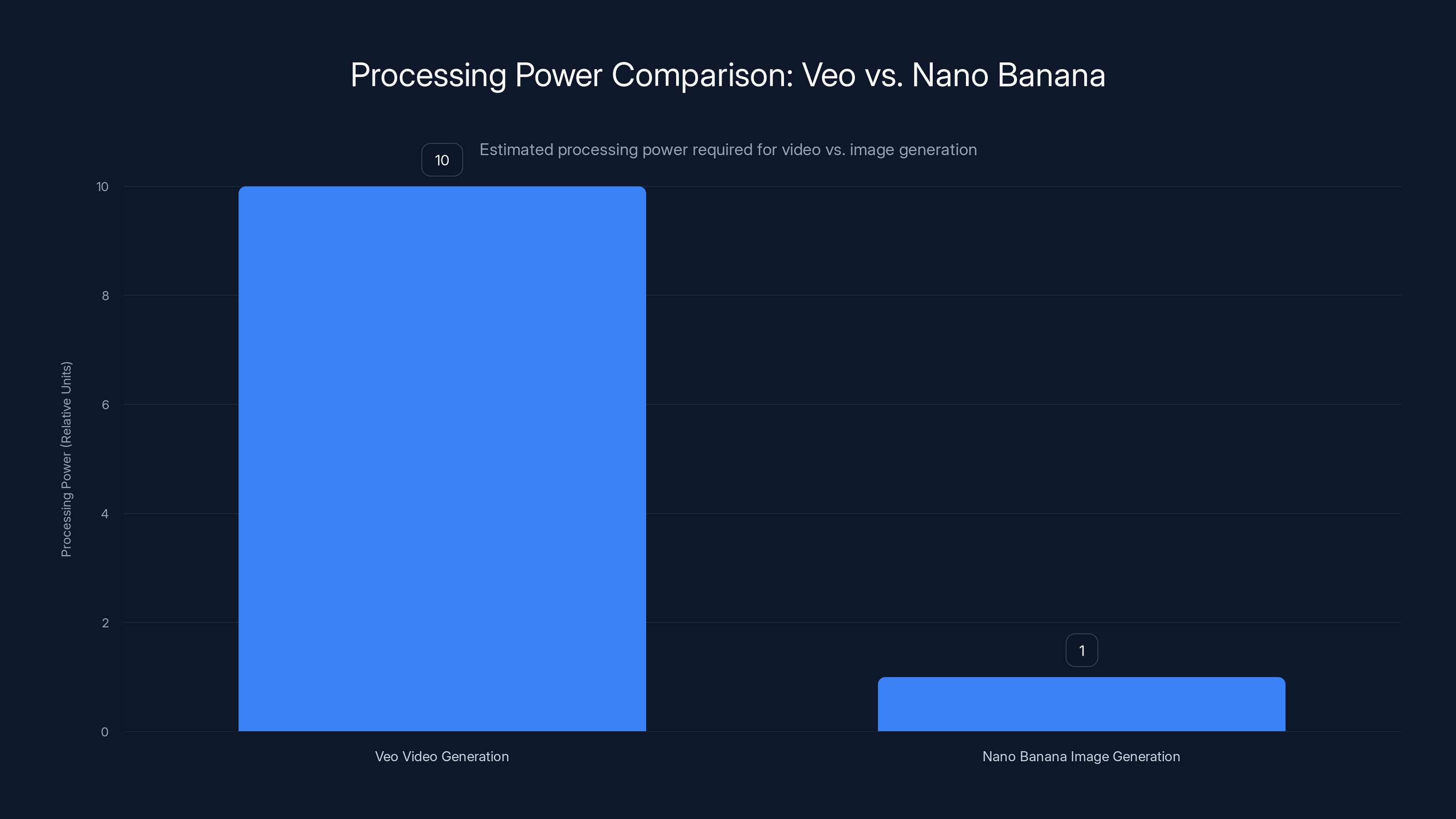

Veo video generation consumes approximately 10 times the processing power of Nano Banana image generation (Estimated data).

The Hardware Reality: Why TCL Gets It First and What That Means

Google's starting this rollout with TCL televisions, and that's not random. TCL has specific relationships with Google. They've optimized their hardware for Google TV for years. Their processors, their storage, their memory configurations all align with what Gemini needs to run these features smoothly.

This matters because running Nano Banana and Veo locally requires meaningful processing power. You need a decent GPU. You need sufficient RAM. You need fast storage for model inference. Not every TV has this. Budget models, older models, many mid-range TVs simply don't have the hardware specs to run these models efficiently.

TCL's higher-end models do. Their mini-LED TVs, their premium smart TV lineup, they have the specs. So the rollout starts there. It's practical hardware compatibility, not corporate favoritism.

The timeline matters too. TCL gets it first in early 2025. Then other Google TV manufacturers follow "in the coming months." That probably means Q2 or Q3 2025 before most people with Google TVs get access. And it'll likely be phased by device tier. Premium models first, mid-range later, budget segments possibly never if the hardware can't handle it.

This also explains why Google didn't just release this on all Google TVs simultaneously. They want the experience to be good. Slow image generation. Laggy video creation. Stuttering voice controls. These would tank the feature. So they're being smart about hardware requirements.

For consumers, this means you should check your TV specs before expecting these features. If you have a relatively recent Google TV, you're probably fine. If your TV is more than 3-4 years old, you might not have the horsepower. And that's okay. Not every feature needs to land on every device immediately.

Real-World Use Cases: Where Nano Banana and Veo Actually Make Sense

It's easy to dismiss AI image and video generation as gimmicks. But let's think through actual scenarios where this is genuinely useful.

Scenario one: you're watching a cooking show and you want to see variations of a recipe presentation. Instead of scrolling through Pinterest or Google Images, you tell Gemini "show me this dish plated in minimalist style, then Japanese style, then rustic style." Nano Banana generates three variations in seconds. You're still watching TV. You're not switching devices. The information is right there.

Scenario two: historical or educational content. You're watching a documentary about ancient Rome. You want to visualize what a scene might have looked like. You ask Gemini to generate images of Roman forums, bathhouses, street scenes in different artistic interpretations. Suddenly you have visual context that enhances understanding.

Scenario three: creative inspiration. You're watching a movie and you get inspired by the cinematography or aesthetic. You can ask Veo to generate short video sequences in similar styles. It's a creative tool integrated directly into your entertainment experience.

Scenario four: personal memory curation. You ask Gemini to pull your vacation photos, create a stylized slideshow, maybe add some generated establishing shots or transitions. You're creating a mini-documentary of your trip without touching editing software.

These aren't replacing TV watching. They're adding a creative layer on top of TV watching. And that's actually valuable. It's TV as an interactive, generative medium rather than a purely passive one.

The key insight is this: Google isn't trying to make you create YouTube content from your couch. It's trying to make TV more interactive and personalized. Every person's Gemini experience becomes unique based on their questions, their photos, their interests.

The rollout of new Google TV features starts with TCL TVs in early 2025, followed by other premium models in Q2, mid-range in Q3, while budget models may not receive the update due to hardware limitations. Estimated data.

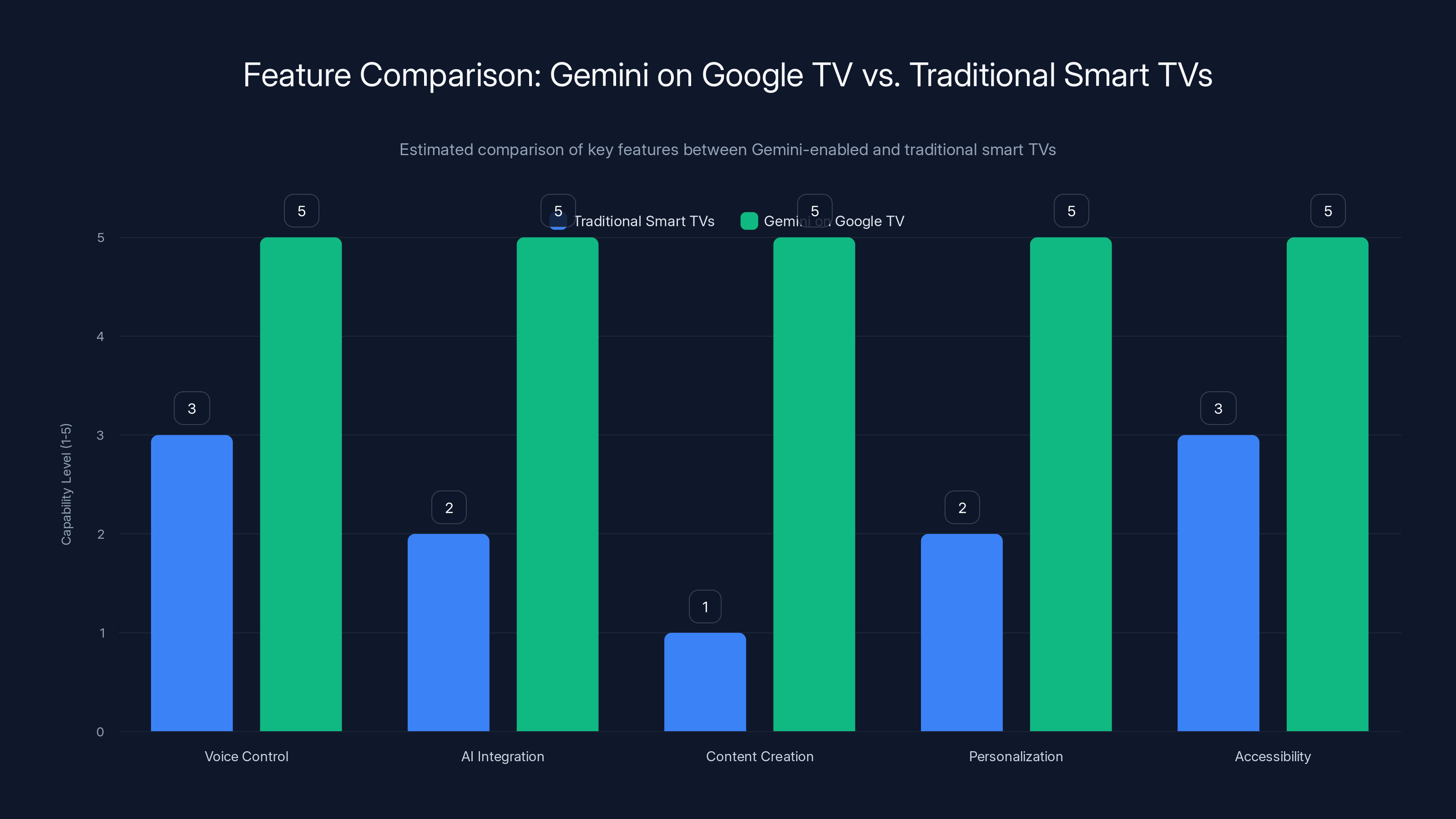

Comparison: Gemini on Google TV vs. Traditional Smart TV Features

Let's put this in context. How does Gemini's new functionality compare to what you get with traditional smart TV features?

Traditional smart TVs have:

- Remote controls with physical buttons

- On-screen menus for settings

- App-based content (Netflix, YouTube, etc.)

- Voice assistants (sometimes)

- Maybe some basic scene modes or picture presets

Gemini-enabled Google TVs now have:

- Voice-controlled settings with natural language understanding

- Local AI image and video generation

- Generative content (deep dives, narrated explorations)

- Photo library integration with creative transformations

- Contextual AI assistance

The difference is substantial. Traditional smart TVs are passive recipients of content. Gemini-enabled TVs become interactive, generative platforms. You're not just consuming content. You're creating it and exploring it in real time.

For users, this means:

- Less friction in settings adjustment: No more menu diving

- More personalized content: Your photos, your questions, your interests

- Creative tools at hand: Generate images and videos without leaving TV

- Smarter content exploration: Deep dives adapt to your interests

- Better accessibility: Voice control helps users with mobility issues

For a consumer who just wants to watch TV, most of this is background. But for someone who wants their TV to be more interactive and creative, it's a significant step forward.

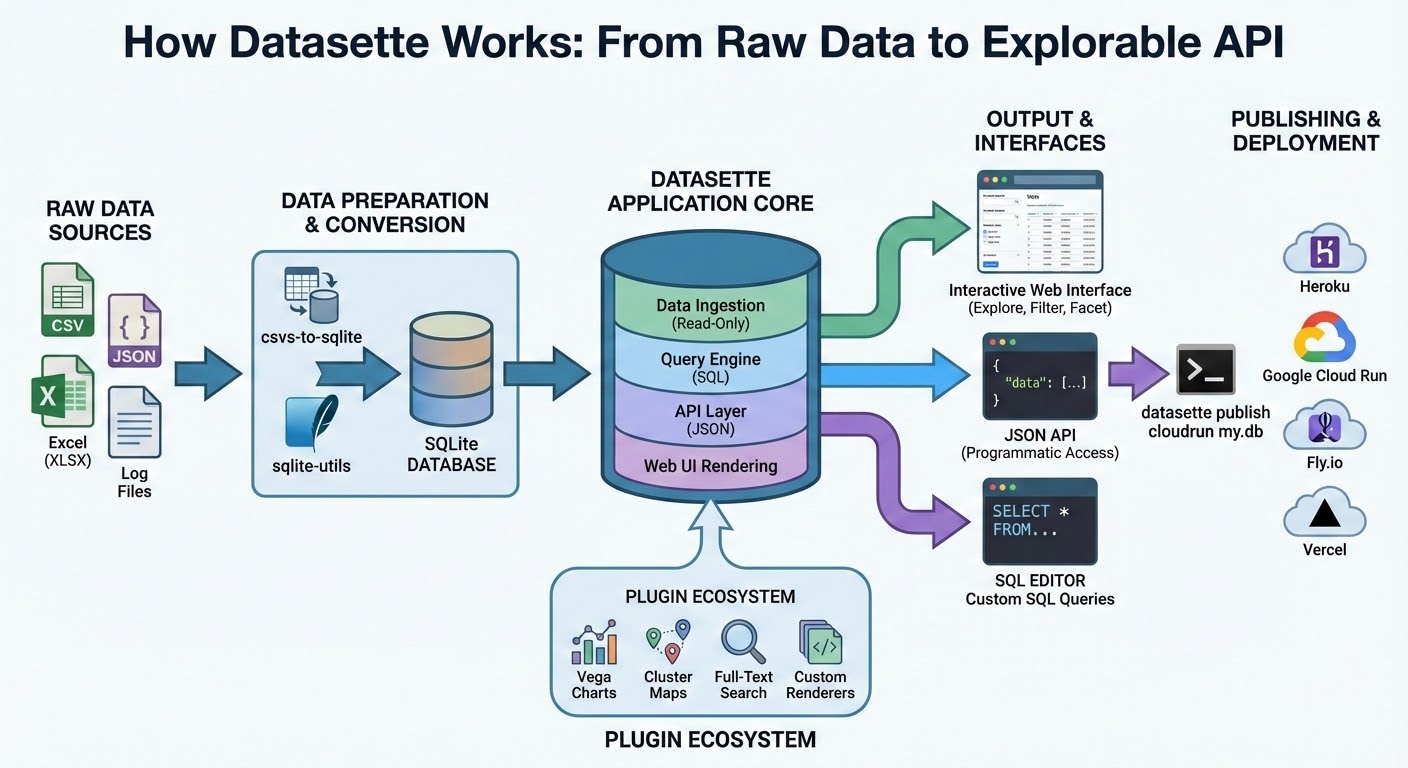

The Processing Power Question: Understanding the Hardware Behind the Scenes

Let's talk about what's actually happening under the hood when you generate an image or video on your TV. This matters because it explains why not every TV gets this, and why the feature works the way it does.

Nano Banana, despite being lightweight, still requires meaningful GPU resources. When you prompt an image, your TV is running inference on a neural network model. That model is compressed and optimized, but it's still doing serious computation. The processing happens locally on your TV's GPU, not on Google's servers.

Why local processing? Latency and privacy. If every image generation request went to Google's servers, you'd wait longer and your prompts would be logged on Google's systems. Local processing is faster and more private.

The trade-off is performance variability. Nano Banana runs faster on newer TVs with better GPUs than on older models. This is why the feature is being rolled out selectively. Google doesn't want users experiencing 30-second waits for image generation. That would feel broken.

For Veo video generation, the processing requirements are higher. Creating even short video sequences requires substantial GPU work. That's why video generation might take longer than image generation, and why it's only available on devices with sufficient specs.

Gemini itself (the language model) runs partially locally and partially on Google's servers, depending on context. Simple queries might be handled locally. Complex deep dives with narration likely involve some server-side processing. But Google's architecture tries to minimize server requests where possible.
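The split described above can be sketched as a simple routing decision. This Python snippet is only an illustration of the idea, not Google's architecture: the request kinds, the `choose_route` function, and the fallback rule are all assumptions, since the only claim here is that simple queries "might" stay local and narrated deep dives "likely" need a server.

```python
from enum import Enum


class Route(Enum):
    LOCAL = "local"
    SERVER = "server"


# Hypothetical request kinds, invented for this sketch.
LOCAL_KINDS = {"settings_adjustment", "simple_query"}
SERVER_KINDS = {"deep_dive"}


def choose_route(kind: str, online: bool) -> Route:
    """Pick where a request runs, preferring the device when possible."""
    if kind in LOCAL_KINDS:
        return Route.LOCAL
    if kind in SERVER_KINDS:
        if not online:
            raise ConnectionError(f"{kind} needs a network connection")
        return Route.SERVER
    # Unknown kinds go to the server when one is reachable, otherwise stay local.
    return Route.SERVER if online else Route.LOCAL
```

The design choice worth noticing is the order of checks: anything that can run on the device does, which is what keeps server requests to a minimum.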

What does this mean for you as a user? Performance depends on your TV's hardware. Newer models with better GPUs will see faster generation times. Older models might experience lag. This is just physics. You can't generate complex imagery instantly on weak hardware.

Gemini-enabled Google TVs significantly enhance interactive and creative capabilities compared to traditional smart TVs, offering advanced AI integration and personalization. Estimated data.

Privacy and Data: What Google's Actually Doing With Your Information

When you use Gemini on Google TV, you're submitting prompts, asking about content, accessing your Google Photos. That data flows somewhere. Let's be clear about where.

Your voice commands are processed by Google. When you ask for a setting adjustment or request an image, Google's systems handle the request. This is the same as any Google Assistant interaction. Google has privacy policies covering this, and if you care about that, you should read them.

Your Google Photos stay within your account. Google's not using your family vacation photos to train models or share with others. They're used for your personal content generation (the slideshow remixes, the Nano Banana styling, etc.). But they are technically accessible to Google's systems when you request these features.

Searching your photos through Gemini means Gemini indexes and understands your photo library. That's the point. But it also means Google's systems have detailed data about what you photograph, where you go, who you spend time with. If that concerns you, understand that you're trading privacy for convenience.

Generative outputs (images, videos created with Nano Banana and Veo) are created on your device. You own them. You can delete them. Google's not training models on them (or shouldn't be, based on their stated policies). But understand that generating content that infringes copyright or is otherwise problematic is still creating it, and you're responsible for it.

Google's been pretty clear about not selling personal data. But they're monetizing your behavior through advertising and improving their products. That's the actual trade. You get free or cheap services. Google gets insights into your behavior to improve their platform and target ads better. Understand that exchange.

Competitive Landscape: How Gemini on Google TV Stacks Up Against Alternatives

Let's talk about the bigger picture. Google's not the only company thinking about AI on TV. What's the competitive situation?

Amazon's Alexa on Fire TVs handles some similar features, but less comprehensively. You get voice control for settings and basic AI-assisted search. You don't get local image and video generation. Amazon's approach is more focused on voice shopping and Alexa integration than creative content generation.

Roku's TV platform is less AI-focused overall. They're slower to adopt generative AI features. Their voice control (if present) is basic compared to Gemini.

Samsung's smart TVs have their own AI assistant (Bixby) with some generative features, but the integration is less smooth than Gemini and the capabilities are more limited.

Apple TV focuses on streaming quality and ecosystem integration rather than generative AI. Not their strategy right now.

What gives Gemini an advantage?

- Integration with Google ecosystem: Photos, search, personal preferences

- Actual generative AI models running locally: Nano Banana and Veo aren't trivial features

- Natural language understanding: Gemini's language capabilities are genuinely good

- Active development: Google's clearly investing in this space

What's the catch?

- Hardware limitations: Only works well on newer, higher-spec TVs

- Privacy trade-offs: Deep integration with Google's data collection

- Limited to Google ecosystem: If you're all-in on Amazon or Apple, this doesn't help

For most people with Google TVs, this is the best AI TV experience available right now. That's the honest assessment.

Looking Forward: What's Likely Coming Next for AI on Google TV

Google's not stopping here. This is clearly phase one of a broader strategy. What might come next?

Real-time content analysis is likely. Imagine Gemini watching what's on your TV and automatically creating deep dives about the content you're watching. "I see you're watching a cooking show. Want a deep dive about the cuisine being prepared?" That's coming.

Predictive personalization will improve. As Gemini learns your preferences through your questions and your photo library, it'll get better at suggesting content, generating relevant images, predicting what you want to explore.

Smarter device integration is probable. Your TV could coordinate with your phone, your smart home devices, creating a more seamless experience across Google devices.

Improved video generation quality is inevitable. As Veo gets better and more efficient, you'll get higher quality videos, potentially longer form, running on current hardware.

A content marketplace might emerge. Imagine sharing your generated content directly from your TV to social media, or selling it if you created something interesting.

Multi-user personalization is coming too. Right now, Gemini learns about you as an individual. Eventually, Google could support multiple profiles on one TV, with Gemini personalizing content for each person who watches.

The trajectory is clear: Google's positioning TV not as a passive entertainment device but as an active, generative, personalized content platform. That's a meaningful shift in how we think about televisions.

Practical Implementation: How to Actually Use These Features

When these features roll out to your TV, here's how you'll actually use them.

For Nano Banana image generation:

- Press the Google Assistant button on your remote

- Say "generate an image of [your description]"

- Speak clearly with specific details

- Wait 5-15 seconds depending on your TV's hardware

- Image appears on screen

- You can say "generate another" for variations

For Veo video generation:

- Press the Google Assistant button on your remote

- Say "create a video of [your description]"

- Provide specific, descriptive prompts

- Wait 15-30 seconds

- Video plays on screen

- You can save it, share it, or delete it

For voice-controlled settings:

- Just talk naturally: "the screen is too dark"

- TV interprets and adjusts

- No button press necessary

- Say "undo" if you don't like the change
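The adjust-then-undo flow in the steps above maps naturally onto a history stack. Here is a minimal Python sketch under that assumption; the `SettingsHistory` class, the 0 to 100 range, and the step of 10 are hypothetical and say nothing about how Google TV actually stores settings.

```python
class SettingsHistory:
    """Track setting changes so the most recent one can be reverted."""

    def __init__(self, initial):
        self.values = dict(initial)
        self._undo_stack = []  # (setting, previous_value) pairs, newest last

    def apply(self, setting, delta, low=0, high=100):
        previous = self.values[setting]
        # Clamp so repeated requests cannot push a value out of range.
        self.values[setting] = max(low, min(high, previous + delta))
        self._undo_stack.append((setting, previous))

    def undo(self):
        """Revert the latest change; return False if there is nothing to undo."""
        if not self._undo_stack:
            return False
        setting, previous = self._undo_stack.pop()
        self.values[setting] = previous
        return True


tv = SettingsHistory({"brightness": 40})
tv.apply("brightness", +10)  # "the screen is too dark"
tv.undo()                    # "undo" puts brightness back where it was
```

Storing the previous value, rather than the delta, means an undo restores the exact earlier state even when the change was clamped at the top or bottom of the range.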

For Google Photos integration:

- Ask Gemini: "show me photos from [event/time/location]"

- Gemini searches your library

- Photos appear on screen

- Ask for stylized versions or slideshows

- Gemini generates remix versions if you request

For deep dives:

- Ask Gemini: "give me a deep dive about [topic]"

- Gemini generates interactive narrated content

- Watch and listen

- Click "dive deeper" on points that interest you

- Ask follow-up questions mid-deep-dive

The key is natural interaction. These features aren't designed for command-line style prompting. They're designed for you to talk to your TV like you'd talk to an informed friend about something interesting.

Potential Limitations and Honest Assessments

Let's be clear about where this technology struggles.

Image generation with Nano Banana is good for stylized content but struggles with photorealism. If you need realistic images, you're better served by searching the web. If you want artistic interpretations, Nano Banana is solid.

Veo video generation is impressive but limited. The output is short-form, the realism is good but not perfect, and the model can struggle with complex scenes or specific real-world details.

Voice control has accuracy limitations. Noisy environments (other people talking, loud TV audio) degrade performance. And you need to be reasonably specific in your instructions.

Privacy integration with Google Photos means your photo data is accessible to Google's systems whenever you use these features. If you care about data privacy, that's a real trade-off.

Hardware limitations are substantial. Older TVs simply won't support this. And even newer TVs will have variable performance depending on their specs.

Internet requirements are real but modest. You need broadband for Gemini itself, but local processing means you're not streaming massive models. Still, a flaky internet connection will degrade the experience.

Expectation management is important here. This is not ChatGPT running on your TV. It's an integrated experience that's useful for certain tasks but limited for others. Understanding those limits prevents frustration.

The Bigger Picture: AI Integration in Consumer Electronics

Gemini on Google TV isn't an isolated feature. It's part of a broader trend of AI being embedded directly into consumer hardware.

We're moving from AI as a service (you use ChatGPT through a website) to AI as a feature (every device has AI capabilities built in). This shift has major implications.

On the positive side: faster response times, better privacy, more personalized experiences. You don't need internet to generate an image locally.

On the negative side: hardware bloat, increased power consumption, complexity you might not want, and potential reliability issues as models on devices become outdated.

Google's betting on AI becoming as fundamental to TVs as screens and speakers. That might be right. As models get smaller and more efficient, it becomes practical to embed them in everything.

The competitive pressure is real. If Google's TVs have AI generation and Amazon's don't, Google has an advantage. If Samsung's don't, Samsung falls behind. This creates an incentive for every TV manufacturer to include AI features, whether consumers really want them or not.

That's worth thinking about. Just because you can embed AI doesn't mean you should. But market dynamics often push adoption regardless.

Putting It Together: Should You Care About These Features?

Here's the practical question: does any of this matter to you?

If you're someone who just wants to watch TV and have streaming apps work reliably, most of this is irrelevant. These features exist, but you don't need them to use your TV.

If you're interested in creative tools, personalization, or interactive content, you should care. These features address real use cases. Image generation, video creation, and smart settings adjustment are genuinely useful.

If you care about privacy, you should understand the trade-offs. Google Photos integration and voice control require deeper data collection. That's the price of convenience.

If you have an older TV, this doesn't immediately affect you. But when you upgrade, you'll have access to these features. Worth considering as part of your next TV purchase decision.

For TCL specifically, if you're shopping for a new TV, the fact that they're getting this first might be worth something. Not a deciding factor necessarily, but a nice-to-have.

For Google's ecosystem users (Gmail, Google Photos, Google Home, Nest, etc.), this is a natural extension. It fits into your existing digital life. For people not in Google's ecosystem, it's less compelling.

Implementation Timeline and Availability

Here's what's actually happening and when:

- Q1 2025: TCL Google TV models get these features (starting with their premium/mini-LED lines)

- Q2-Q3 2025: Expanded rollout to other Google TV manufacturers (Sony, Hisense, others)

- Likely exclusions: Budget models, older devices, non-Google TV platforms

- Optional features: Many of these (especially Google Photos integration) will be toggle-able in settings

- Software updates: Features will be distributed via software updates, though availability still depends on your TV's hardware

- Feature maturity: Early versions will have limitations. Expect improvements over months and years

For consumers, this means:

- Don't upgrade immediately if you have a working TV

- Wait 2-3 months after launch for early adopter feedback

- Check your TV's specs if you want these features

- Remember that early versions might be buggy

FAQ

What is Nano Banana?

Nano Banana is Google's lightweight AI image generation model optimized to run directly on Google TV devices without requiring cloud servers. It allows you to create custom images by describing what you want, and the image is generated in seconds locally on your television. It's designed for stylized, artistic images rather than photorealistic output, making it perfect for creative content on your TV.

How does Veo video generation work on Google TV?

Veo is Google's video generation model that creates short video clips (typically 10-30 seconds) based on text prompts you provide. You describe what you want to see, and Veo generates the video directly on your TV hardware without uploading to servers. The generated videos can be saved, deleted, or used as creative content for sharing on social media.

What are the voice-controlled settings on Google TV?

Voice-controlled settings let you adjust picture and audio properties by simply talking to your TV naturally. Instead of navigating menus, you can say "the screen is too dim" and brightness adjusts automatically, or "I can't hear the dialogue" and dialogue audio boosts. Gemini understands the natural language context and makes appropriate adjustments to your viewing experience.
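To make the idea concrete, here is a toy sketch of how a spoken complaint could map to a settings change. It is not how Gemini works internally: the `TvSettings` class, the `RULES` table, the step sizes, and the `apply_request` function are all hypothetical, and a real assistant would use a language model rather than keyword matching.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class TvSettings:
    """Hypothetical settings store; the fields and ranges are assumptions."""
    brightness: int = 50      # assumed range 0-100
    dialogue_boost: int = 0   # assumed range 0-10


# Hypothetical rules: (trigger phrases, setting name, step, maximum value)
RULES = [
    (("too dim", "too dark"), "brightness", 15, 100),
    (("too bright",), "brightness", -15, 100),
    (("can't hear", "cannot hear"), "dialogue_boost", 2, 10),
]


def apply_request(settings: TvSettings, utterance: str) -> Optional[str]:
    """Adjust the first matching setting and return its name, or None if nothing matched."""
    text = utterance.lower()
    for phrases, name, step, upper in RULES:
        if any(phrase in text for phrase in phrases):
            current = getattr(settings, name)
            # Clamp the new value to the setting's allowed range
            setattr(settings, name, max(0, min(upper, current + step)))
            return name
    return None
```

Calling `apply_request(TvSettings(), "The screen is too dim")` would raise the brightness by one step in this sketch. The point is only that the request describes a problem, and the system picks the setting and direction for you.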

Which TVs are getting these Gemini features first?

TCL Google TVs are getting these features first, beginning in early 2025, particularly their premium and mini-LED models that have the hardware specifications needed. Other Google TV manufacturers will follow in the coming months (Q2-Q3 2025). Budget models and older TVs may not support all features due to hardware limitations.

How is privacy handled with Google Photos integration?

When you use Google Photos integration, your photos remain in your Google account, but Google's systems access them when you request content generation or stylized slideshows. Your photos aren't shared with others or used to train models (per Google's stated policies), but Google's systems do read and process your photo library to fulfill those requests. You can control this through privacy settings.

What are Gemini Deep Dives?

Deep Dives are interactive, narrated explorations of any topic you request on Google TV. When you ask for a deep dive, Gemini generates multi-part content with voiceover narration, related images, generated visuals when appropriate, and clickable elements for exploring subtopics in more depth. It's like watching a personalized documentary that responds to your interests.

What hardware do I need for these features to work well?

You need a Google TV device with a decent processor, GPU, and RAM. Newer models (2023+) typically have sufficient specs. Older TVs or budget models may not support these features or may experience slower performance. Google's rolling out features based on hardware tiers to ensure good experiences on capable devices.

Can I generate copyrighted content with Nano Banana or Veo?

Technically yes, but you shouldn't. Generating images or videos that violate copyright is still copyright infringement, even if it happens locally on your TV. These tools are intended for original creative content, transformations of your own content (like stylizing your photos), and fair use scenarios, not for recreating copyrighted material.

Is an internet connection required for all these features?

Partially. Image and video generation (Nano Banana and Veo) run locally with minimal internet requirements. However, Gemini itself needs internet for language processing, voice recognition, and accessing Google services like your Photos library. So yes, you need a broadband connection, even though the TV isn't constantly streaming full models from servers.

When will these features come to my TV?

If you have a recent Google TV (2023 or newer), you'll likely get these features by mid-2025. If your TV is older or a budget model, you might not get all features, or you might get them later. Check the official Google TV announcements for your specific model's rollout timeline.

Conclusion: The Future of Interactive Television Is Here

Gemini on Google TV represents a meaningful shift in how televisions work. We're moving from passive content consumption devices to interactive, generative, personalized platforms. That's not hype. That's a real change in functionality.

Nano Banana and Veo might sound like gimmicky features: AI image and video generation on your TV. But they actually enable things people want to do: quickly create visual content, stylize their memories, explore ideas visually. They're not revolutionary, but they're genuinely useful.

The voice-controlled settings are almost unglamorous in comparison, but they solve a real problem. Less friction in everyday tasks. That adds up over time.

What makes this work is that Google's not trying to be everything to everyone. These features aren't mandatory. Your TV works fine without them. But if you want interactive, generative, personalized content experiences, they're available.

The hardware requirement is real, though. This won't work on every TV. And that's okay. Technology always has capability tiers.

The privacy trade-offs are worth understanding. Deeper integration with Google's systems means more data collection. If that concerns you, understand the trade-off before enabling these features.

For early adopters willing to upgrade to newer Google TV devices (or buy new ones), these features are worth exploring. They might become part of your TV experience. They might sit unused. Depends on your preferences.

For most people, this is worth monitoring but not rushing to adopt. Let early users shake out bugs. See how these features actually work in the wild. Then decide if it makes sense for your next TV purchase.

Google's clearly committed to this direction. Expect AI on TV to become more capable, more integrated, and more common over the next few years. This is just the beginning.

Key Takeaways

- Gemini on Google TV gains Nano Banana for AI image generation and Veo for video creation, both running locally on your TV for results in seconds

- Voice controls now understand natural language: say "the screen is too dim" and brightness adjusts automatically, with no menu navigation

- Deep Dives create interactive explorations of any topic with AI-generated narration, images, and clickable elements for exploring subtopics

- TCL Google TVs get these features first in early 2025, with broader rollout to other Google TV devices expected through Q2-Q3 2025

- Hardware limitations mean older and budget TVs may not support these features, since modern processors and GPUs are required for smooth performance

![Gemini on Google TV: Nano Banana, Veo, and Voice Controls [2025]](https://tryrunable.com/blog/gemini-on-google-tv-nano-banana-veo-and-voice-controls-2025/image-1-1767624492910.png)