Google Meet's Conference Room Detection: How It's Changing Workplace Communication [2025]

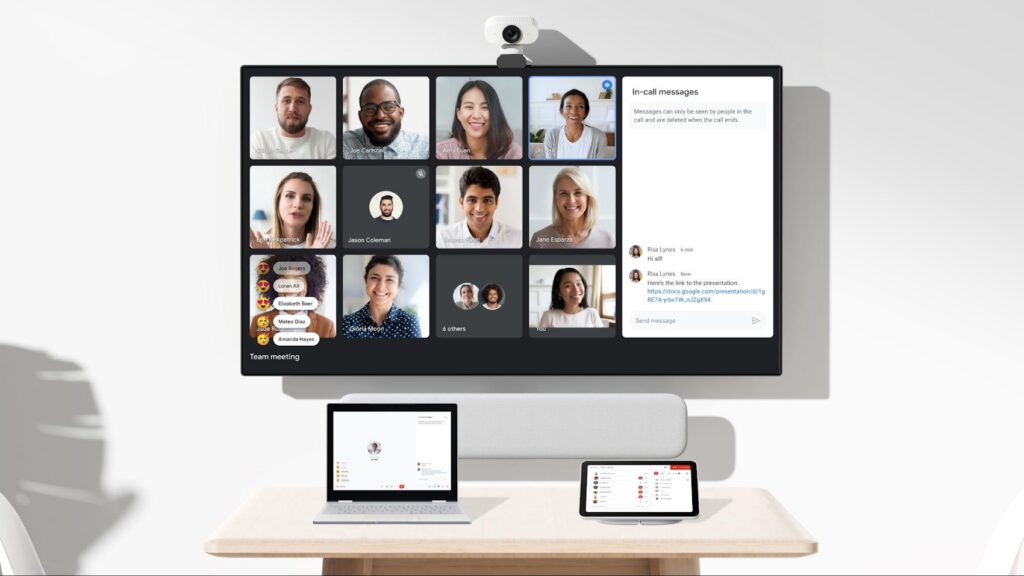

Last year, I walked into a conference room for a client meeting and watched something frustrating happen. The main projector had Google Meet open, my colleague joined on his laptop three feet away, and suddenly we had this awful echo feedback loop that derailed the first five minutes of discussion. Everyone was talking over each other, frustrated, adjusting volume, and generally wasting time on audio problems that shouldn't exist in 2025.

That's exactly the problem Google Meet is now solving with its conference room detection feature.

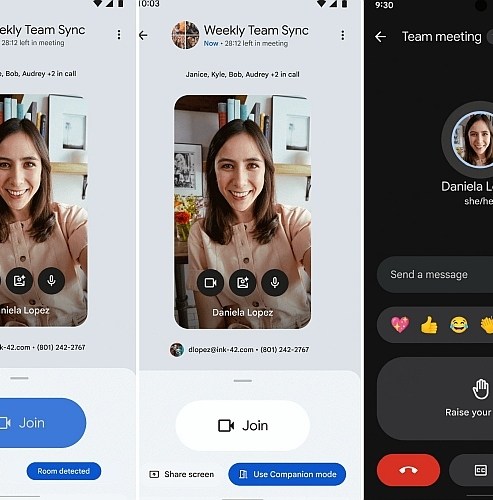

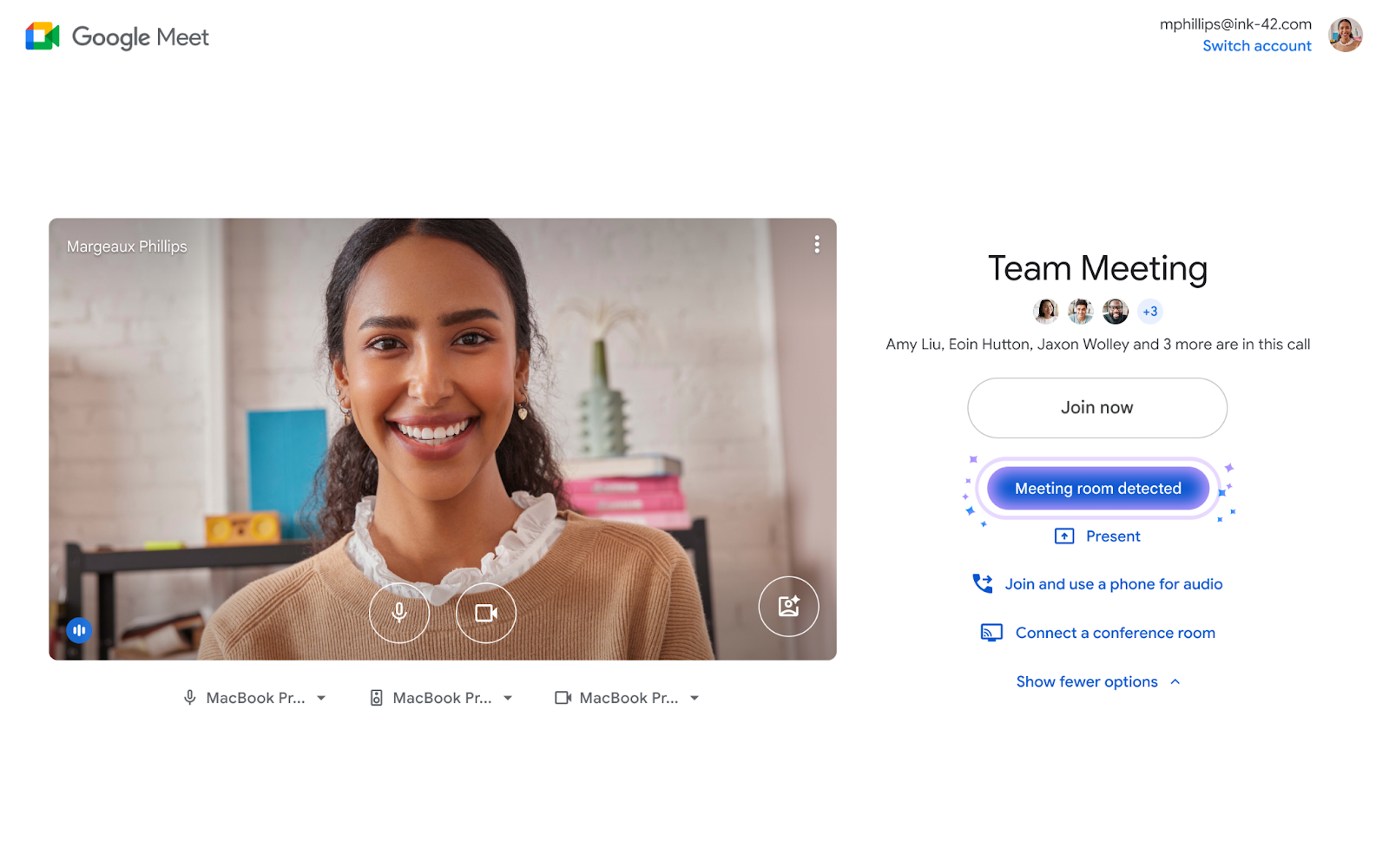

Google just rolled out a smart update that automatically detects when you're sitting in a meeting room and makes joining calls significantly smoother. The system uses ultrasonic sound technology (frequencies above human hearing range) to identify when devices are in the same physical space, then automatically prioritizes Companion Mode as the default joining method. This simple shift eliminates the audio feedback, duplicate microphone inputs, and other problems that plague hybrid meetings.

Here's what's wild: this isn't just a convenience feature. It's solving one of the biggest pain points in modern remote work—the hybrid meeting problem where some people are in the office while others dial in from home. When done wrong, it creates a two-tier meeting experience where remote attendees feel left out. Google's solution addresses this directly by recognizing the physical context and adjusting the tech accordingly.

The feature started rolling out to all Google Workspace users in late 2024, including individual account holders, though some domains are seeing slower rollouts. Admins can control it at the room level, enabling or disabling proximity detection based on their infrastructure. Whether you personally have a choice is less clear: Google hasn't documented a per-user opt-out, so in practice the control sits with your admin, which is worth paying attention to from a privacy perspective.

In this guide, I'm breaking down exactly how Google Meet's conference room detection works, why it matters for your organization, the technical implementation details, privacy considerations, and how it compares to competitor solutions. Whether you're managing IT infrastructure for an enterprise or just attending too many video calls, this feature changes the calculus on how you approach hybrid meetings.

TL;DR

- Ultrasonic Detection: Google Meet now uses silent ultrasonic signals emitted by conference room hardware to automatically detect when devices are in the same physical space

- Companion Mode Priority: The system automatically makes Companion Mode the default joining option, reducing echo and audio feedback from multiple active microphones

- Automatic Check-In: Devices in meeting rooms are automatically checked in, helping admins track attendance without extra steps

- Admin Control: Organization admins can enable or disable proximity detection at the room level, giving IT departments flexibility

- Broad Rollout: The feature is available on iOS and Android devices using both the Meet and Gmail apps, with support for both primary and secondary devices

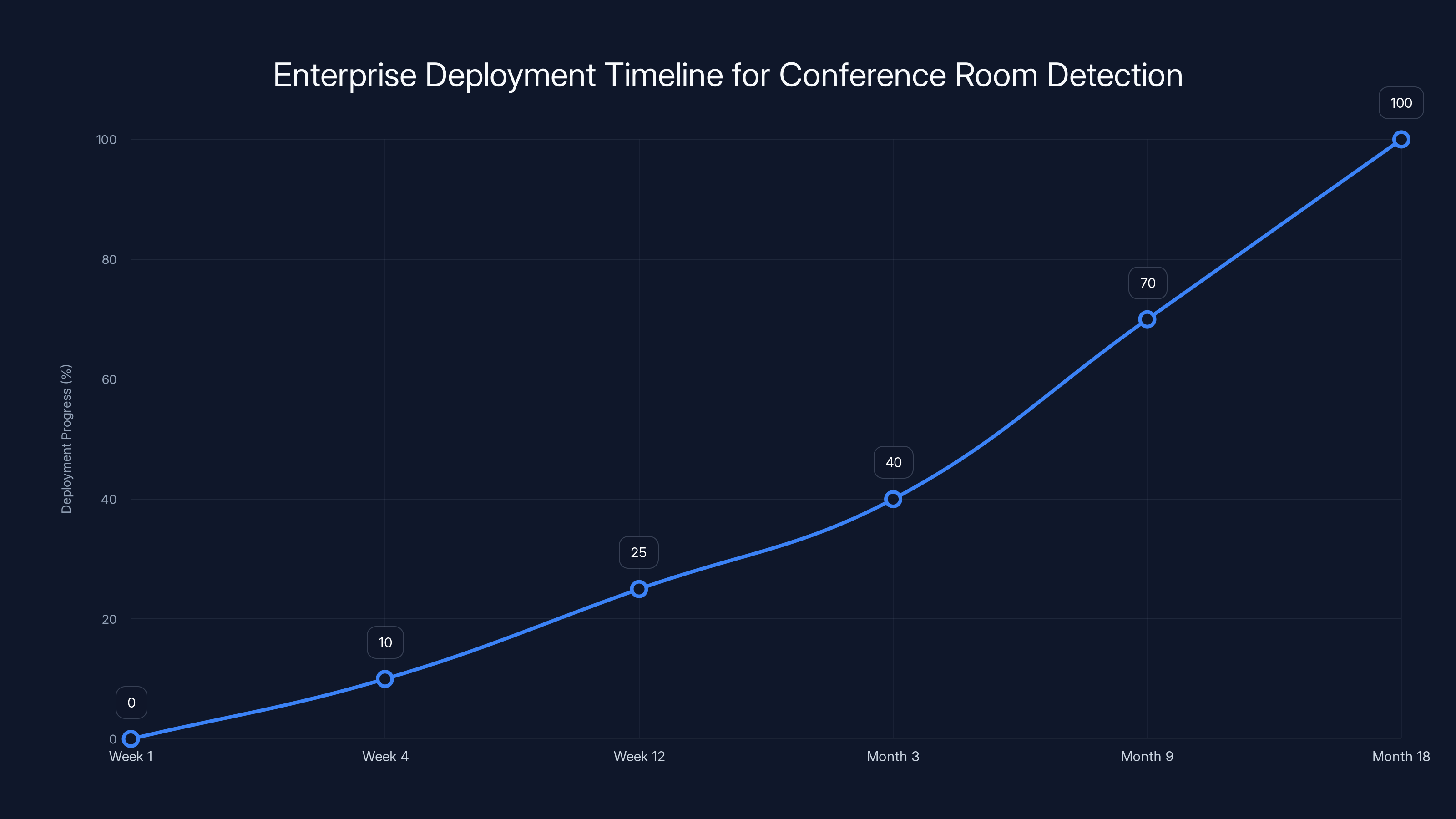

The deployment of conference room detection progresses from assessment to full deployment over 18 months, with key milestones at weeks 4, 12, and months 3, 9, and 18. Estimated data.

How Google Meet's Ultrasonic Room Detection Works

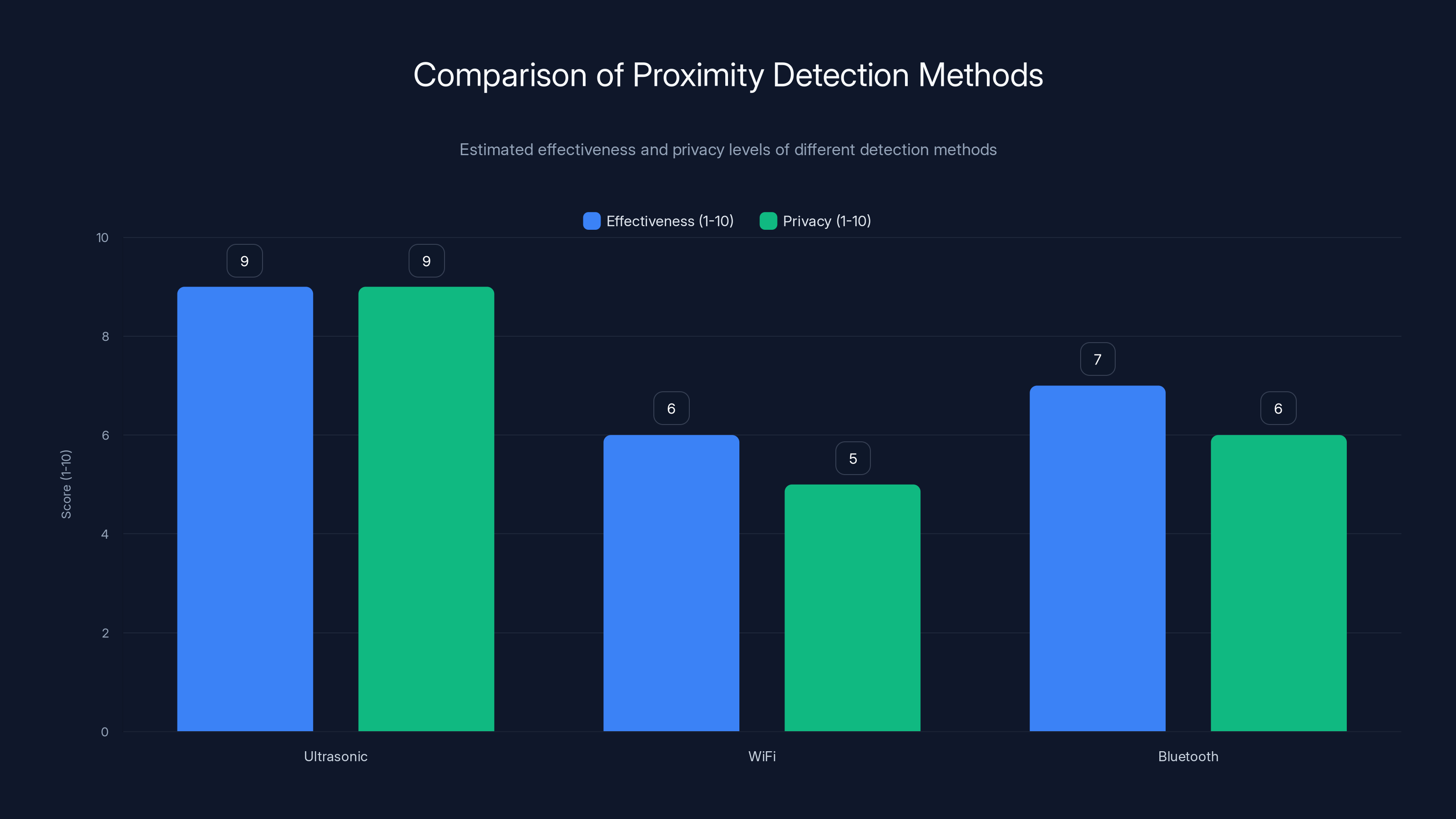

The technology behind Google Meet's room detection is deceptively elegant. Instead of relying on WiFi signal strength, Bluetooth proximity, or calendar integration (the old ways), Google uses ultrasonic sound waves that exist just outside human hearing range.

Here's the actual mechanism: Conference room hardware (typically the main display system, speakerphone, or dedicated video conferencing equipment) emits an ultrasonic signal at frequencies around 18-20 kHz. That sits at the very top of the nominal human hearing range of roughly 20 Hz to 20 kHz, and most adults can't hear tones that high, so in practice this happens silently. Your phone or tablet has microphones that are sensitive enough to pick up these frequencies, even though you won't hear a thing.

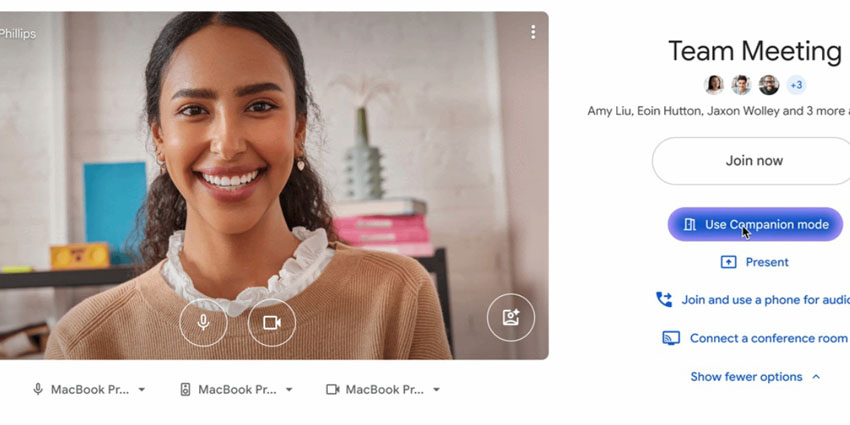

When your Android or iOS device detects this ultrasonic signal, it knows you're in that specific meeting room. The Google Meet or Gmail app then automatically displays Companion Mode as the primary joining option instead of making you choose between full participant mode or companion mode.
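
To make the detection step concrete, here is a minimal sketch of how a device could check a short frame of microphone samples for energy at one high frequency, using the Goertzel algorithm. Google has not published its signal format, so everything here is an assumption for illustration: the 19 kHz tone, the 48 kHz sample rate, the threshold, and the function names are hypothetical, and a real beacon almost certainly encodes a room identifier rather than a plain tone.

```python
import math

def goertzel_power(samples, sample_rate, target_hz):
    """Squared DFT magnitude at the bin nearest target_hz (Goertzel algorithm)."""
    n = len(samples)
    k = round(n * target_hz / sample_rate)   # nearest DFT bin index
    coeff = 2.0 * math.cos(2.0 * math.pi * k / n)
    s_prev, s_prev2 = 0.0, 0.0
    for x in samples:
        s = x + coeff * s_prev - s_prev2
        s_prev2, s_prev = s_prev, s
    power = s_prev ** 2 + s_prev2 ** 2 - coeff * s_prev * s_prev2
    return max(power, 0.0)                   # guard against tiny negative rounding

def beacon_present(samples, sample_rate=48_000, beacon_hz=19_000, threshold=0.1):
    """Hypothetical check: is there a tone near beacon_hz above `threshold` amplitude?"""
    n = len(samples)
    if n == 0:
        return False
    # A sine of amplitude A centred on the bin has |X[k]| = A * n / 2.
    amplitude = 2.0 * math.sqrt(goertzel_power(samples, sample_rate, beacon_hz)) / n
    return amplitude >= threshold
```

The appeal of Goertzel for this kind of task is that it measures a single frequency without computing a full spectrum, which keeps the work per audio frame small. Note that the function only inspects the energy at one frequency; it never stores or interprets the rest of the audio.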

This is fundamentally different from previous proximity detection methods. WiFi-based detection requires devices to be on the same network and can give false positives in open office spaces where signal bleeds between areas. Bluetooth requires explicit pairing. Calendar integration depends on your meeting actually being scheduled and synced. Ultrasonic detection is physical, immediate, and doesn't require authentication or setup—it just works when the hardware is configured correctly.

The beauty of ultrasonic technology is its short range and reliability. High-frequency sound is largely blocked by walls and closed doors, and it weakens quickly with distance, which means it's effective within a conference room's footprint but not across the entire office. This precision is exactly what you need for room-level detection.
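
The way the signal fades with distance can be put into rough numbers. The sketch below uses free-field spherical spreading (about 6 dB lost per doubling of distance) plus two illustrative terms. The absorption figure of 0.5 dB per metre and the wall loss value are assumptions chosen for the example, not measurements of any Google hardware.

```python
import math

def spreading_loss_db(distance_m, reference_m=1.0):
    """Level drop relative to the reference distance under spherical spreading."""
    if distance_m <= 0 or reference_m <= 0:
        raise ValueError("distances must be positive")
    return 20.0 * math.log10(distance_m / reference_m)

def received_level_db(source_db_at_1m, distance_m,
                      absorption_db_per_m=0.5,   # assumed air absorption near 20 kHz
                      wall_loss_db=0.0):         # assumed extra loss if a wall is in the way
    """Rough received level: source level minus spreading, absorption and wall loss."""
    return (source_db_at_1m
            - spreading_loss_db(distance_m)
            - absorption_db_per_m * distance_m
            - wall_loss_db)
```

With these assumed numbers, a beacon at 60 dB measured at one metre arrives at roughly 46 dB four metres away, and any wall loss is subtracted on top of that. The useful takeaway is the shape of the curve, not the exact values: measure in your own rooms before relying on any threshold.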

Google didn't invent this approach. Retailers have used ultrasonic beacons for location-based mobile experiences for years. What's different here is the scale and the problem it solves. For the first time, there's a seamless, standards-agnostic way to make hybrid meetings work without requiring users to think about which mode they should join in.

Companion Mode: The Key to Fixing Hybrid Meetings

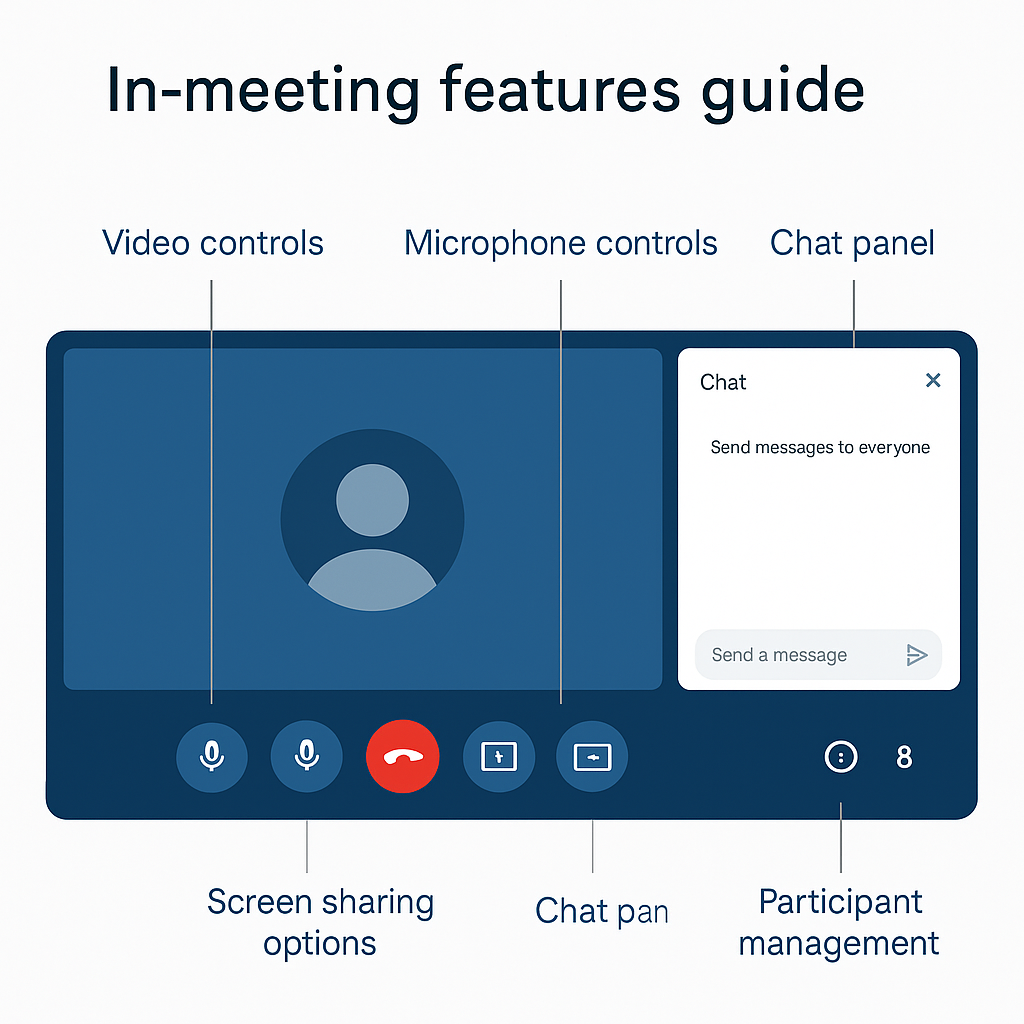

Before diving deeper into how the detection works, you need to understand what Companion Mode actually is and why automatically selecting it matters so much for meeting quality.

Companion Mode is a secondary joining option for people already sitting in a conference room. When someone joins a Google Meet through Companion Mode, their device doesn't activate its microphone. Instead, it uses the room's audio system. This means you get one microphone in the room (the conference equipment) rather than multiple devices all trying to capture and transmit audio independently.

The problem it solves is spectacularly common: you're in a conference room with five colleagues. The main video display is showing the meeting. Someone's laptop is open on the table running the full meeting. A colleague has their phone with the call open. Suddenly you have three microphones all picking up the same room audio and transmitting it to remote participants. The remote folks hear massive echo, reverb, and feedback. People talking in the room sound like they're in a wind tunnel. The whole meeting becomes unpleasant for everyone.

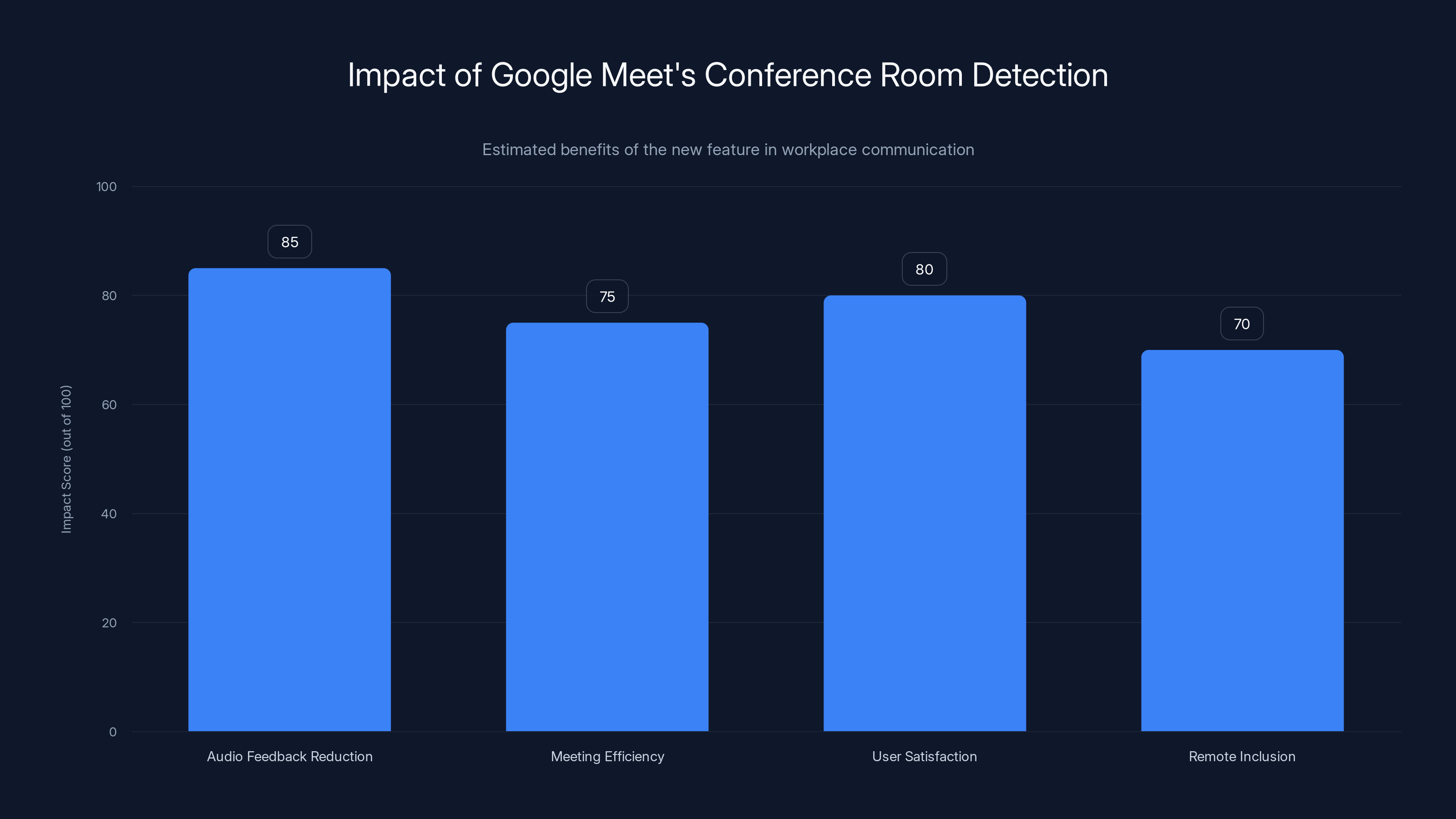

Companion Mode fixes this by designating one audio source. People in the room use the room's professional audio equipment. Their phones or tablets become video-only displays. Remote participants hear clean audio from a single microphone rather than multiple overlapping sources.

The problem Google is solving with automatic detection is that most people don't know Companion Mode exists or when to use it. They just open the Google Meet app and join the meeting the normal way. This is why automatic detection through ultrasonic signals is so valuable. It removes the decision-making step. You walk into a meeting room with your phone, the app automatically detects the ultrasonic signal from the conference equipment, and Companion Mode becomes the obvious default choice.
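
The decision the app makes on the user's behalf can be sketched in a few lines. This is an illustrative model only: `JoinMode`, `default_join_mode`, and `active_microphones` are hypothetical names, and the microphone count assumes the worst case in which every full-participant join leaves its microphone unmuted.

```python
from enum import Enum

class JoinMode(Enum):
    FULL = "full participant"
    COMPANION = "companion"

def default_join_mode(beacon_detected: bool, proximity_enabled: bool = True) -> JoinMode:
    """Pick the default join option; the user can still choose the other one."""
    if proximity_enabled and beacon_detected:
        return JoinMode.COMPANION
    return JoinMode.FULL

def active_microphones(device_modes, room_mics: int = 1) -> int:
    """Count live microphones: the room system plus every full-participant device."""
    return room_mics + sum(1 for mode in device_modes if mode is JoinMode.FULL)
```

With three personal devices in a room, defaulting all of them to Companion Mode leaves one live microphone (the room system). If all three join as full participants, the same room has four.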

Google also made sharing audio easier from third-party apps when using Chrome on desktop. Previously, if you wanted to share audio from Spotify, Apple Music, or another streaming service during a Meet call, you had to use workarounds. Now, sharing audio directly from media apps works natively. This further reduces the need for multiple audio sources and the confusion that creates.

Ultrasonic detection is estimated to be the most effective and privacy-preserving method compared to WiFi and Bluetooth. Estimated data.

Automatic Check-In and Attendance Tracking

One secondary benefit of the ultrasonic detection system is automatic check-in. When your device detects the ultrasonic signal from conference room hardware, Google Meet automatically marks you as checked in to that meeting, assuming you're in the call or about to be.

For enterprise organizations with significant conference room utilization, this is a meaningful operational improvement. IT admins and facilities teams can track which meeting rooms are actually being used, when they're occupied, and by how many people. Instead of relying on calendar data (which is often inaccurate because meetings get scheduled but moved or cancelled), they have real-time information about room occupancy based on actual device proximity.

This data informs capacity planning, helps identify underutilized conference spaces, and can feed into hot-desking and desk-sharing systems. If you have data about which rooms are consistently full during certain time windows, you can make better infrastructure investments.

The check-in happens automatically through the phone's microphone detecting the ultrasonic signal. No additional user action required. No QR codes to scan or Bluetooth devices to connect. The system is entirely passive from the user's perspective.
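
As a rough illustration of how check-in events could turn into occupancy figures, the sketch below counts distinct devices per room. The `CheckIn` record and its fields are hypothetical, not a Google export format, and the result counts devices rather than people, so someone carrying a phone and a tablet would be counted twice.

```python
from collections import defaultdict
from dataclasses import dataclass

@dataclass(frozen=True)
class CheckIn:
    room: str
    device_id: str
    minute: int  # minutes since midnight when the beacon was detected

def occupancy_by_room(events):
    """Return {room: number of distinct devices that checked in}."""
    devices = defaultdict(set)
    for event in events:
        devices[event.room].add(event.device_id)
    return {room: len(ids) for room, ids in devices.items()}

events = [
    CheckIn("Room A", "phone-1", 540),
    CheckIn("Room A", "phone-1", 541),   # repeat detection, same device
    CheckIn("Room A", "tablet-2", 542),
    CheckIn("Room B", "phone-3", 600),
]
print(occupancy_by_room(events))
```

Deduplicating by device matters because a device that stays in the room will detect the signal repeatedly.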

However, there's a privacy question worth noting: your phone's microphone is used to listen for these signals whenever the Meet or Gmail app is open. Google says this is limited to the ultrasonic frequency range and doesn't record audio, but it does mean your device's audio hardware is actively listening while those apps are running. For most organizations, this is a reasonable tradeoff for the operational benefits and improved meeting quality. But it's worth understanding what's happening under the hood.

Technical Implementation: What IT Admins Need to Know

For organizations deploying this feature, the technical requirements are straightforward but important to understand.

Hardware Requirements: Your conference room hardware needs to emit the ultrasonic signal. This includes:

- Google Meet hardware (Nest Hub, Nest Hub Max, and dedicated Meet hardware like the Series One)

- Compatible third-party conferencing systems that support Google's ultrasonic protocol

- Dedicated video bar systems from vendors who've integrated the technology

If you have older conference room equipment—basic projectors, simple speakerphones, or non-smart displays—you won't get ultrasonic beacon functionality. You'll need to upgrade to compatible hardware to enable this feature.

Software Requirements: On the user side, proximity detection requires:

- Google Meet app on iOS or Android (version with the detection feature enabled)

- Gmail app with integrated Meet functionality

- Microphone permissions enabled for the app

- Bluetooth or location services are NOT required

The feature works on both primary devices (the ones you use for work) and secondary devices (tablets, older phones, or shared devices in the meeting room).

Admin Controls: Workspace admins can manage this feature through the Google Admin console:

- Enable or disable proximity detection at the organization level

- Enable or disable at the room level (if your hardware supports it)

- No granular user-level controls are currently available

- Organizations can't require users to use Companion Mode, only recommend it through auto-selection
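
The relationship between the organization-level and room-level switches can be modelled as a default with optional overrides. This is a sketch of one plausible precedence rule (a room setting, when present, wins over the organization default). It is an assumption for illustration and not the Admin console's documented behavior or API, and the function and room names are hypothetical.

```python
from typing import Dict, Optional

def effective_proximity_setting(org_enabled: bool,
                                room_overrides: Dict[str, Optional[bool]],
                                room: str) -> bool:
    """Resolve whether proximity detection is on for a room.

    A room override of True/False takes precedence (assumed);
    a missing or None override falls back to the organization default.
    """
    override = room_overrides.get(room)
    return org_enabled if override is None else override

overrides = {"Boardroom": False, "Huddle 3": None}
print(effective_proximity_setting(True, overrides, "Boardroom"))  # room opted out
print(effective_proximity_setting(True, overrides, "Huddle 3"))   # inherits org default
```

Writing the rule down like this is mostly useful for planning: it forces you to decide which rooms are exceptions before you touch the console.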

Google stores information about which devices detected which conference room signals, but this is used primarily for functionality and quality improvement. The company doesn't link this data to user identity beyond what's necessary to mark attendance.

Network Considerations: The ultrasonic detection doesn't depend on network connectivity, but Companion Mode itself requires the device to be connected to the same network as the conference room hardware for optimal functionality. Your WiFi infrastructure doesn't need special configuration, but it should be robust enough to handle multiple simultaneous connections in each conference room.

Privacy and Security Implications

Anytime you're talking about microphones actively listening for signals, privacy concerns are legitimate. Let me break down what's actually happening and what the real risks are.

What Google Is Actually Doing: The phone's microphone is listening for specific ultrasonic frequencies (around 18-20 kHz) that are way above human hearing range. The Google Meet app doesn't record audio. It doesn't analyze speech. It's literally just detecting the presence of a specific frequency pattern and acting on that detection.

This is fundamentally different from the phone's microphone listening to conversations. It's more like a proximity beacon. Your phone is asking, "Is there an ultrasonic signal in this room?" If yes, it does something. If no, it doesn't.

Privacy Tradeoffs: The real privacy question is whether you want your organization to know when you're physically in specific conference rooms. For most corporate environments, this is acceptable—your employer already knows you're in the building, in meetings, and using conference rooms. The ultrasonic detection is just making implicit knowledge explicit and usable.

However, if you work in a field where location privacy is critical (journalism, healthcare research, legal services), you might want this feature disabled. You can ask your IT department to turn off proximity detection, and they should be able to do this at the organization or room level.

Security Considerations: The ultrasonic signals are one-way broadcasts, and detecting one only changes which join option the app suggests. Someone can't use an ultrasonic signal to get into a meeting they shouldn't be in. A one-way broadcast can, in principle, be imitated or replayed, but the worst that should cause is Companion Mode being suggested when it shouldn't be, which is a mild annoyance rather than a security breach.

The real security advantage is actually the flip side: by automatically using Companion Mode in conference rooms, you reduce the risk of people accidentally joining the main meeting twice or from multiple devices, which can create audio loops and make calls harder to secure.

Data Retention: Google retains proximity detection logs for operational purposes—tracking which rooms were used, capacity planning, and system improvement. This data is subject to the same security and privacy policies as other Google Workspace data. Organizations can request this data be deleted, and it's included in data export requests.

For most organizations, the privacy tradeoff is worth it. The improvement in meeting quality and the operational data for room utilization are valuable. But you should make an informed decision based on your industry and security requirements.

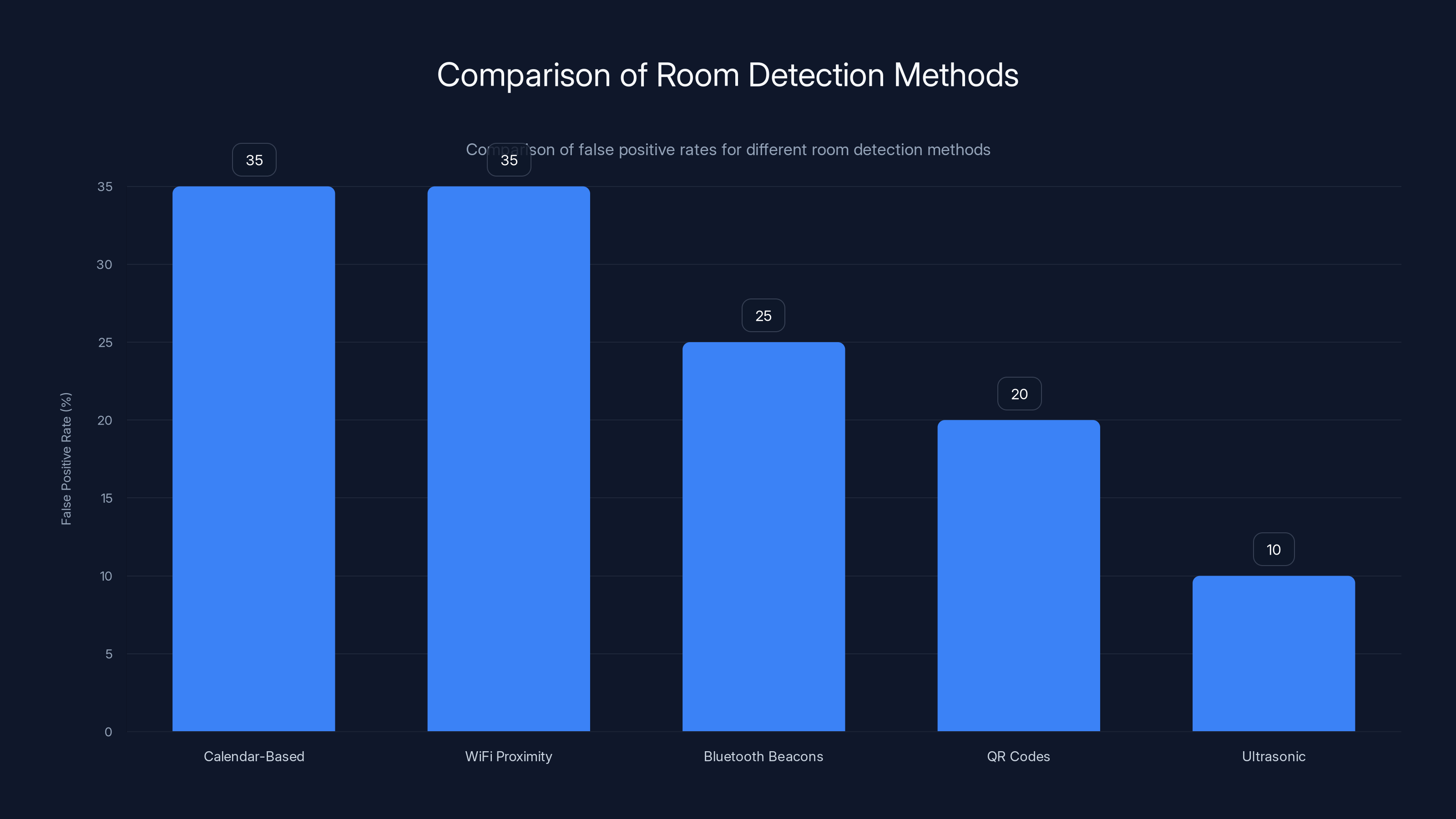

In this comparison, Google's ultrasonic detection has the lowest false positive rate at 10%, making it more reliable than calendar-based and WiFi proximity detection, which both sit around 35%. Estimated data.

Comparison with Alternative Room Detection Methods

Google Meet's ultrasonic approach is new, but it's worth comparing to other methods organizations have tried for solving the hybrid meeting problem.

Calendar-Based Detection: Some conferencing systems try to detect when you're in a meeting room by looking at your calendar. If you have a meeting scheduled in the conference room calendar and you're joining at that time, the system assumes you're in that room.

Problems: Calendars are frequently inaccurate. Meetings get scheduled multiple times, rescheduled, moved, or cancelled. People join meetings from different locations than where they're scheduled. This approach has an estimated false positive rate of around 30-40%.

WiFi Proximity Detection: By analyzing signal strength from WiFi access points, systems can estimate whether devices are in the same physical area.

Problems: WiFi signals penetrate walls, making it hard to pinpoint specific rooms. Signal strength varies. Multiple access points can create confusion about location. You need to be on the same network. False positive rates are similar to calendar-based detection.

Bluetooth Beacons: Dedicated Bluetooth devices placed in conference rooms broadcast their presence. Devices with Bluetooth enabled can detect and join accordingly.

Problems: Requires Bluetooth to be enabled (battery drain, security concerns). Requires explicit pairing or discovery in many cases. Works at variable ranges. Requires additional hardware that needs maintenance and battery replacement.

QR Codes and Manual Check-In: Users manually scan a QR code or tap a button in the room to indicate they're present.

Problems: Relies on user behavior. People forget to check in, don't find the QR code, or don't understand the purpose. High friction for a basic use case. Check-in doesn't automatically select Companion Mode.

Ultrasonic Detection (Google's Approach): Devices listen for inaudible ultrasonic signals emitted by conference room hardware.

Advantages: Precise room-level detection with minimal false positives. No user action required. No additional hardware pairing. Works reliably within a room's footprint. The signal is a one-way broadcast, so phones only listen and never transmit anything to the room hardware. Detection itself happens on the device, although the resulting check-in data is still visible to Google and your admins.

Limitations: Requires compatible conference room hardware. Ultrasonic signal range is limited to the physical room. Older equipment can't support it without replacement.
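
Figures like the false positive rates quoted for these methods are easier to trust when you measure them in your own rooms. The sketch below assumes the standard definition (false positives divided by all cases where the person was not actually in the room) and a hypothetical list of observations you would collect by hand during a pilot.

```python
def false_positive_rate(observations):
    """observations: iterable of (detected_in_room, actually_in_room) booleans.

    Returns FP / (FP + TN), or None if nobody was ever outside the room.
    """
    obs = list(observations)
    false_pos = sum(1 for detected, actual in obs if detected and not actual)
    true_neg = sum(1 for detected, actual in obs if not detected and not actual)
    negatives = false_pos + true_neg
    return false_pos / negatives if negatives else None

pilot = [
    (True, True),    # in the room, detected
    (True, False),   # at a nearby desk, wrongly detected
    (False, False),  # at a nearby desk, correctly ignored
    (False, False),
]
print(false_positive_rate(pilot))
```

A handful of deliberate tests from adjacent desks and corridors is usually enough to show whether a room leaks signal into neighboring spaces.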

Ultrasonic detection is arguably the strongest of these options for room-level detection. It's why companies like Apple and others have been exploring similar technologies. The main limitation is hardware compatibility, which is a deployment issue rather than a technology flaw.

Rollout Timeline and Availability

Google began rolling out conference room detection in late 2024, but like most Google Workspace features, the rollout is happening in waves rather than all at once. Understanding the timeline helps IT teams plan when to expect the feature and how to prepare.

Initial Rollout: Started in October 2024 for Google Meet Enterprise customers and Google Workspace customers on certain account tiers. Individual Google account holders using Google Meet were included in the initial rollout, though with potential delays.

Current Status: By late 2024 and into 2025, the feature should be available to all Google Workspace users, including Business Starter, Business Standard, Business Plus, and Enterprise tiers. Individual account holders (people using Meet without a Workspace subscription) have access, but some features like admin controls are Workspace-specific.

Domain Variation: As with most Workspace launches, some domains get the feature sooner than others. If you don't see it yet, it's likely still on its way. The variation depends on Google's deployment infrastructure, regional factors, and account characteristics.

No Opt-Out at User Level: Users can't disable the feature individually. If your organization has enabled it, the feature is available. You can ask your admin to disable it at the room or organization level, but there's no per-user toggle. This is worth communicating to your workforce so they understand what's happening when Companion Mode suddenly becomes their default.

Hardware Update Timeline: For the feature to actually work in your conference rooms, you need compatible hardware. If you have older meeting equipment, upgrading will take time. Google is working with hardware manufacturers to support the ultrasonic standard, but adoption varies:

- Google's own hardware: Full support across the entire product line

- Cisco Webex systems: Support coming through firmware updates

- Polycom and other vendors: Varying degrees of support and update timelines

- Older equipment: Likely won't be updated; upgrade required

For organizations with lots of conference rooms, the hardware replacement timeline might be 12-24 months to get full coverage. Plan accordingly.
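
A quick back-of-the-envelope calculation helps sanity-check a replacement timeline like this one. The sketch below is simple arithmetic under stated assumptions: the room counts and the monthly upgrade rate are hypothetical inputs you would replace with your own.

```python
import math

def months_to_full_coverage(total_rooms: int, compatible_rooms: int,
                            upgrades_per_month: int) -> int:
    """Months needed to upgrade every remaining room at a steady pace."""
    remaining = max(total_rooms - compatible_rooms, 0)
    if remaining == 0:
        return 0
    if upgrades_per_month <= 0:
        raise ValueError("upgrades_per_month must be positive")
    return math.ceil(remaining / upgrades_per_month)

# Example: 100 rooms, 40 already compatible, 4 upgrades per month.
print(months_to_full_coverage(100, 40, 4))
```

In that example the remaining 60 rooms take 15 months, which lands inside the 12-24 month window. Halving the upgrade rate to two rooms per month pushes it to 30 months, so the budget per month is the number to negotiate.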

How to Enable and Configure Room Detection

If you're an IT administrator, here's what you need to do to enable conference room detection in your organization.

Step 1: Verify Hardware Compatibility. First, inventory your conference rooms and determine which have compatible hardware. Check with your vendors:

- Google Meet hardware: Usually compatible out of the box

- Third-party systems: Contact the manufacturer for firmware version requirements

- Older equipment: Probably not compatible; plan upgrade timeline
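
The inventory step can be as simple as tagging each room with a hardware category and mapping it to a next action. The categories and the `next_action` helper below are hypothetical and mirror the three cases in the list above. Actual compatibility still has to be confirmed with each vendor.

```python
from collections import defaultdict

# Hypothetical mapping from hardware category to the follow-up it needs.
ACTIONS = {
    "google_meet": "verify in pilot (usually compatible out of the box)",
    "third_party": "ask vendor for required firmware version",
    "legacy": "plan upgrade",
}

def next_action(hardware_category: str) -> str:
    """Return the follow-up for a room; unknown categories are treated as legacy."""
    return ACTIONS.get(hardware_category, ACTIONS["legacy"])

def group_rooms_by_action(rooms):
    """rooms: iterable of (room_name, hardware_category) pairs."""
    grouped = defaultdict(list)
    for name, category in rooms:
        grouped[next_action(category)].append(name)
    return dict(grouped)

inventory = [("Boardroom", "google_meet"), ("Huddle 3", "third_party"),
             ("Training Room", "legacy"), ("Annex", "projector_only")]
print(group_rooms_by_action(inventory))
```

Treating anything unrecognized as legacy is a deliberately cautious default: it is cheaper to discover a room is compatible after all than to promise a feature that never appears.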

Step 2: Access the Admin Console. Go to the Google Admin console (admin.google.com). You need an account with the appropriate admin permissions. If you don't have this access, your super admin needs to delegate the Google Meet administration role to you.

Step 3: Navigate to Meet Settings. In the admin console, go to Apps & services > Google Meet > Settings. The conference room detection settings should appear there once the late-2024 rollout has reached your domain.

Step 4: Enable Proximity Detection. At the organization level, you can enable or disable proximity-based joining. If you enable it, all compatible conference rooms will start broadcasting ultrasonic signals and devices will start detecting them.

Step 5 (Optional): Configure at Room Level. If your hardware supports it, you can enable or disable proximity detection for specific rooms. This is useful if:

- Some rooms have newer hardware and others don't

- You want to pilot the feature in certain areas before full rollout

- You have privacy concerns about specific room usage tracking

Step 6: Communicate to Users. This is critical and often overlooked. Send out a message explaining:

- What's happening (ultrasonic detection for better meeting quality)

- Why it matters (eliminates echo and audio problems)

- How it works from a user perspective (automatic Companion Mode selection)

- What they should do (nothing—it's automatic)

- Who to contact if they have concerns

Step 7: Monitor Adoption and Feedback. Check your Admin console for usage metrics. Are users actually getting into Companion Mode in conference rooms? Are there technical issues? Are there complaints? Gather feedback and adjust accordingly.
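
One concrete number to watch is the share of in-room joins that end up in Companion Mode. The sketch below assumes you can assemble a list of join records with an `in_room` flag and a `mode` field. That record shape is hypothetical, and the Admin console may expose this data differently or not at all.

```python
def companion_adoption_rate(joins):
    """Share of in-room joins that used Companion Mode, or None if there were none.

    joins: list of dicts like {"in_room": True, "mode": "companion"}.
    """
    in_room = [j for j in joins if j["in_room"]]
    if not in_room:
        return None
    companion = sum(1 for j in in_room if j["mode"] == "companion")
    return companion / len(in_room)

week = [
    {"in_room": True, "mode": "companion"},
    {"in_room": True, "mode": "companion"},
    {"in_room": True, "mode": "full"},       # switched back to full participant
    {"in_room": False, "mode": "full"},      # remote attendee, not counted
]
print(companion_adoption_rate(week))
```

A rate that stays low in rooms with compatible hardware usually points to a communication problem (people switching back because they think something is broken) rather than a technical one.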

Google Meet's conference room detection feature significantly reduces audio feedback and improves meeting efficiency and user satisfaction. Estimated data based on typical feature impact.

Real-World Impact: Conference Room Scenarios

To understand why this feature matters, let's walk through some concrete meeting scenarios and see how room detection changes the experience.

Scenario 1: The Hybrid All-Hands. A company has 40 employees. 15 are in the main conference room for an all-hands meeting. 25 are dialing in from home. Someone's laptop is on the table where the main camera is set up. Two people have their phones out on the table checking notes. One person has a Bluetooth speaker nearby for whatever reason.

Old approach: Three audio devices actively transmitting. The people dialing in hear themselves echoing. The in-room folks hear their own voices come back through the speaker system. The meeting is miserable, takes 3 minutes to sort out audio, and people are frustrated for the whole call.

New approach: Conference room detection makes Companion Mode the default for everyone's phones and laptops in the room. One audio source (the room's system). Clear audio from the room. Remote participants hear exactly what's happening, and the meeting starts on time.

Scenario 2: The Back-to-Back Meeting Blender. A manager has meetings scheduled in three different conference rooms in a row. She walks from room to room, trying to join her second meeting while still in the first room (running late). She opens Google Meet, and normally would have to choose which room she's actually in or just join normally and create an audio problem.

With detection: The app automatically offers her Companion Mode for the room she's in. When she walks down the hall to the next room, the detection updates automatically. Transitions between meetings are seamless.

Scenario 3: The Late Joiner. A participant walks into a meeting room 10 minutes late. With calendar-based or manual check-in systems, they either don't get checked in or they have to take manual action. With ultrasonic detection, they walk in and open the meeting, Companion Mode is already selected, and they're checked in automatically, ready to participate immediately.

Scenario 4: The Video-Only Collaboration Session. A team is in a conference room working on designs or code together. They want to show their work to a remote colleague. They don't need shared audio (the room's audio quality is fine for background). They just need good video and clean screen sharing.

Companion Mode handles this perfectly. The room's audio stays isolated. High-quality video and screen sharing goes to the remote colleague. The room's team uses their room audio, the remote person uses their home audio. No conflicts.

In all these scenarios, the old approach required users to understand the system, make correct choices, or tolerate poor quality. The new approach just works automatically.

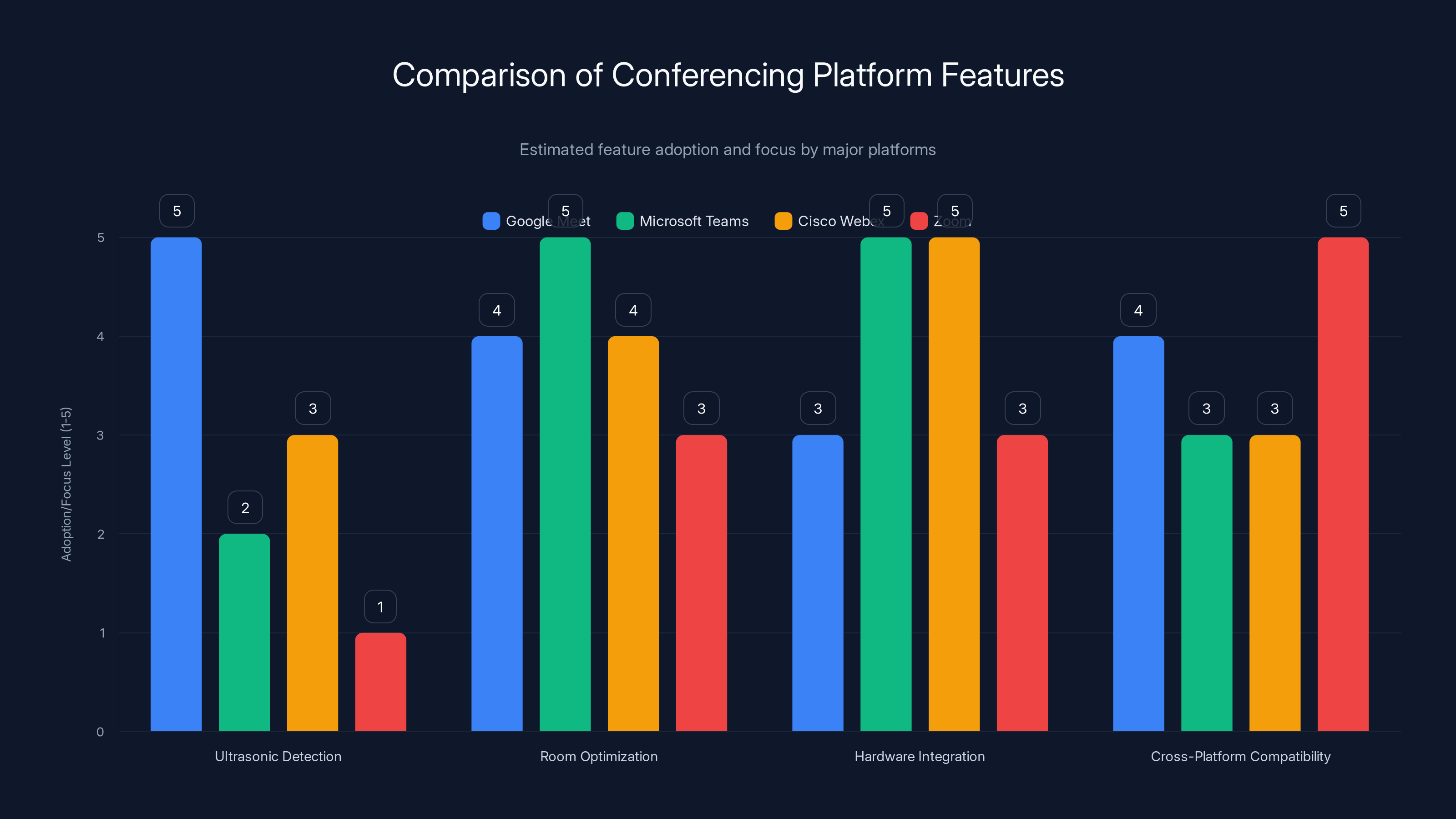

Competitive Landscape: What Others Are Doing

While Google is leading with ultrasonic detection, other conferencing platforms are approaching the hybrid meeting problem differently. Understanding where the industry is headed helps contextualize Google Meet's strategy.

Microsoft Teams: Microsoft has been investing in room optimization features, including intelligent camera systems that detect how many people are in a room and adjust the video feed accordingly. Teams doesn't yet have ultrasonic room detection, but they're moving toward similar automation-first approaches. They're also integrating with hardware partners more aggressively.

Cisco Webex: Cisco has been building room intelligence features for years. They offer proximity detection through Webex Desk Pro devices and are working on integration with their broader hardware ecosystem. Cisco is also exploring ultrasonic detection capabilities, though its rollout has been slower than Google's.

Zoom: Zoom has been focused on cross-platform compatibility and has been slower to add room-specific features. They're adding Zoom Rooms intelligence features but aren't yet at the automatic detection level.

The trend is clear: every major conferencing platform is moving toward context awareness. Instead of users making decisions about how to join or configure settings, the software automatically detects context (is this a conference room? How many people? What's the network quality?) and optimizes accordingly.

Google's ultrasonic approach is the cleanest implementation of this concept because:

- It's network-independent (detection works with any WiFi setup)

- It's location-precise (identifies specific rooms, not just "in a building")

- It's one-way (phones only listen and never transmit to the room hardware)

- It's passive from the user perspective (no actions required)

Other platforms will likely adopt similar approaches. The ultrasonic standard isn't Google-exclusive, though Google is driving its adoption.

Audio Sharing Improvements for Desktop Users

Along with room detection, Google also improved audio sharing from third-party applications when using Google Meet on the desktop (specifically Chrome). This is a smaller but meaningful improvement for certain use cases.

The Problem: If you wanted to share audio from Spotify, Apple Music, YouTube, or any other streaming service during a Meet call, you had limited options. You could share your entire screen and share audio that way, but it was clunky. Or you could play music through your speakers and rely on your microphone to pick it up, which sounds terrible.

The Solution: Google Meet on Chrome now allows direct audio sharing from compatible apps. You select the audio source you want to share (Spotify's playback, a YouTube video, etc.) and it goes directly into the Meet call. Remote participants hear clean, high-quality audio without going through your microphone.

Real-World Use Cases:

- Sharing a presentation that includes background music

- Playing a training video with sound during a meeting

- Sharing music during a team celebration or casual hangout

- Demonstrating audio applications during a technical presentation

This feature was "highly requested" according to Google, which suggests many people were relying on workarounds. It's somewhat surprising that it took this long to arrive, given that competitors have had it for a while, but it's good to see it here.

How to Use It: Open a Google Meet call on Chrome on the desktop. Click the "Share screen" button. Instead of selecting your entire screen, select "A tab" or "A window". When you select a tab with audio, Google Meet now captures that audio automatically and includes it in the call.

This is technically a separate feature from room detection, but it's part of Google's broader effort to improve audio quality in video calls.

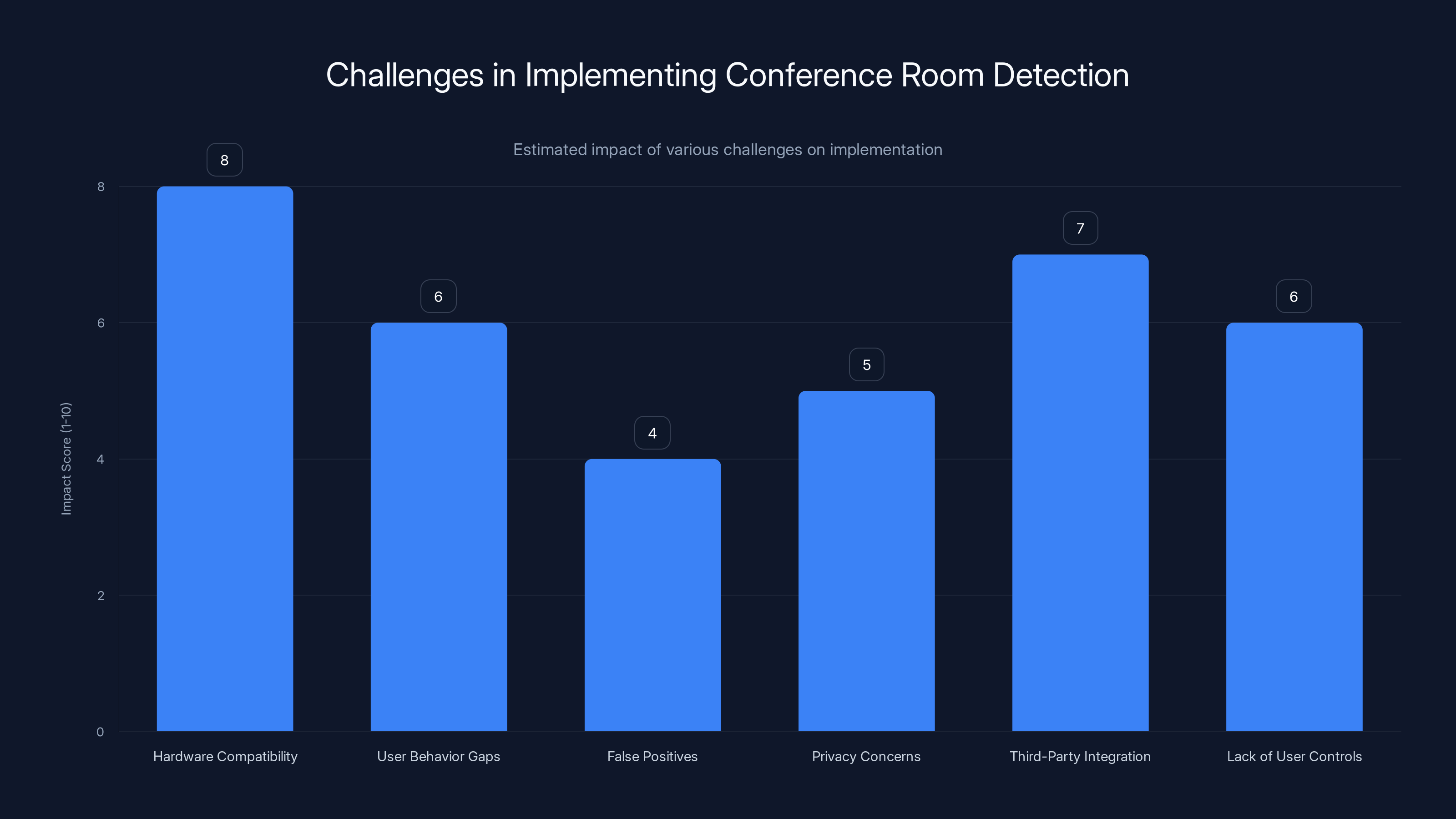

Hardware compatibility and third-party integration pose the highest challenges in implementing conference room detection. Estimated data based on typical IT deployment issues.

Implementation Challenges and Limitations

While conference room detection is a solid feature, real-world deployment will expose some limitations and create specific challenges IT teams need to anticipate.

Hardware Compatibility Challenges: Not all conference room hardware will support ultrasonic broadcasting. Older systems, cheaper equipment, and devices from smaller manufacturers might not have firmware support. This creates a patchwork where some rooms work perfectly while others don't, creating user confusion. Teams should prioritize upgrading conference room hardware, but this is expensive and time-consuming.

User Behavior Gaps: Some users won't understand why Companion Mode is suddenly selected for them. They might think the app is broken, might switch back to participant mode to troubleshoot, or might disable the feature because they don't understand it. Clear communication and documentation are essential.

False Positives in Open Spaces: While ultrasonic signals don't travel far, there could be edge cases where detection triggers unexpectedly. If a conference room is immediately adjacent to an open office area, the signal might reach nearby desks. For most organizations, this is a minor issue, but it's worth testing in your specific environment.

Privacy Pushback: Some organizations will face questions from employees about microphones listening for signals. Even though it's safe, explaining the technical details to non-technical staff takes effort. Having clear privacy documentation from Google helps, but IT teams might still face questions.

Integration with Third-Party Systems: If your organization uses third-party attendance tracking, resource booking systems, or analytics tools, integrating room detection data with those systems requires additional work. Google provides APIs and data exports, but custom integration isn't automatic.

No Per-User Controls: Unlike some features, users can't disable room detection for themselves if their organization enables it. They have to ask an admin. For organizations where privacy or personal preference is important, this is a limitation worth noting.

None of these are deal-breakers, but they're worth planning for during rollout.

The Broader Vision: Context-Aware Conferencing

Conference room detection is a specific feature, but it's part of a larger trend in video conferencing: context-aware systems that automatically adapt to the situation rather than requiring users to configure things manually.

In the future, you can expect video conferencing systems to:

Detect Physical Context: Know whether you're in a conference room, home office, car, or public space, and optimize audio, video, and privacy accordingly.

Optimize Network Conditions: Automatically adjust video quality, resolution, and bitrate based on available bandwidth rather than requiring manual selection.

Understand Participant Roles: Recognize who's participating from where and optimize the layout accordingly. With 5 people in a room and 10 dialing in, the system shows the room prominently and the remote participants in a grid. With 1 person in the room and 15 remote, it reverses the emphasis.

Manage Peripherals Automatically: Connect to room cameras, microphones, and speakers without requiring users to select devices. The system finds compatible devices and uses them.

Predict Problems and Prevent Them: Instead of waiting for echo or feedback, the system detects the configuration (multiple microphones in the same room) and prevents it automatically through Companion Mode selection.
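To make the "predict and prevent" idea concrete, here's a minimal sketch of the decision it describes: if a joining device has been placed in a room that already has live room audio, suggest Companion Mode instead of a full join. Everything here is an assumption for illustration (the `JoinRequest` type, the room IDs, and the `suggest_join_mode` function are hypothetical), and it is not how Google Meet implements the feature.

```python
from dataclasses import dataclass
from typing import Optional, Set


@dataclass
class JoinRequest:
    user: str
    # Hypothetical field: room ID reported by proximity detection,
    # or None when no room was detected.
    detected_room: Optional[str]


def suggest_join_mode(request: JoinRequest, rooms_with_live_audio: Set[str]) -> str:
    """Suggest 'companion' when the room already provides a microphone.

    A second open microphone in the same room is what causes echo,
    so the device should join without audio in that case.
    """
    if request.detected_room in rooms_with_live_audio:
        return "companion"
    # No room detected, or the room has no active audio system.
    return "full"
```

The point of the sketch is that the decision happens before anyone hears an echo: the configuration alone (one room, more than one potential microphone) is enough to pick the safer default.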

Google's ultrasonic detection is the opening chapter of this vision. The feature solves one specific problem, but it's built on infrastructure and thinking that enables much more sophisticated context awareness in the future.

For organizations, this means the next few years will see conferencing software becoming less about "what buttons do I click?" and more about "the software understands my situation and optimizes accordingly." The manual burden decreases, and the experience improves.

Deploying in Enterprise Environments

If you're managing a large organization with dozens or hundreds of conference rooms, conference room detection creates both opportunities and deployment challenges. Here's how to think about rolling this out at scale.

Phase 1: Assessment (Weeks 1-4). Inventory all conference rooms and meeting spaces. Categorize them by:

- Hardware type and age

- Size and primary use case

- Utilization rates

- Which departments use them most

Identify which rooms have compatible hardware and which will need upgrades. For a typical organization, 30-50% of conference rooms might have compatible hardware initially.
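The assessment step above can be sketched as a small inventory script. This assumes you record compatibility yourself after checking each room's hardware against vendor documentation; the `Room` fields and the `compatibility_summary` function are hypothetical names, not part of any Google tool.

```python
from dataclasses import dataclass
from typing import List, Tuple


@dataclass
class Room:
    name: str
    hardware_age_years: int
    capacity: int
    weekly_bookings: int
    compatible: bool  # assumption: filled in manually during the audit


def compatibility_summary(rooms: List[Room]) -> Tuple[float, List[str]]:
    """Return the share of compatible rooms and an upgrade list.

    The upgrade list is ordered by weekly bookings, busiest first,
    so the most-used rooms are upgraded before quiet ones.
    """
    if not rooms:
        return 0.0, []
    compatible_count = sum(1 for room in rooms if room.compatible)
    needs_upgrade = sorted(
        (room for room in rooms if not room.compatible),
        key=lambda room: room.weekly_bookings,
        reverse=True,
    )
    return compatible_count / len(rooms), [room.name for room in needs_upgrade]
```

Sorting the upgrade list by utilization is one reasonable choice; you could just as easily prioritize by department or hardware age, depending on what your inventory shows.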

Phase 2: Pilot Program (Weeks 4-12). Enable conference room detection in 5-10 high-traffic conference rooms that have compatible hardware. Brief the teams who use these rooms. Collect feedback about whether the feature works as expected, whether there are technical issues, and whether users understand what's happening.

Monitor for problems like:

- Rooms that don't detect properly

- Users who keep switching out of Companion Mode

- Audio issues that the feature doesn't solve

- Network problems related to the feature

Phase 3: Iterative Rollout (Months 3-9). Expand to more rooms. Begin hardware upgrade planning for incompatible equipment. Update documentation and training materials based on pilot feedback. Brief new groups of users before their conference rooms get the feature.

Phase 4: Full Deployment (Months 9-18). Roll out organization-wide with upgraded hardware. At this point, most rooms should support the feature. Continue monitoring usage metrics and user feedback.

Success Metrics to Track:

- Adoption rate: How many people are using Companion Mode when in conference rooms?

- Audio quality: Do reported audio problems decrease after deployment?

- Attendance tracking: Is room detection data useful for your occupancy tracking?

- User satisfaction: Are people happy with the automatic Companion Mode selection?

- Hardware utilization: Are conference rooms being used more effectively now that meetings work better?
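Two of the metrics above reduce to simple arithmetic, sketched here. The inputs are assumed to be counts you already collect (for example from help desk tickets and whatever join data you can export); the function names are hypothetical.

```python
def adoption_rate(in_room_joins: int, companion_joins: int) -> float:
    """Fraction of in-room joins that used Companion Mode."""
    if in_room_joins == 0:
        return 0.0
    return companion_joins / in_room_joins


def relative_change(before: float, after: float) -> float:
    """Relative change between two periods; negative means a decrease."""
    if before == 0:
        raise ValueError("baseline must be non-zero to compute a relative change")
    return (after - before) / before
```

For example, 150 Companion Mode joins out of 200 in-room joins is an adoption rate of 0.75, and a drop from 40 reported audio problems to 30 is a relative change of -0.25. Compare like-for-like periods (same length, similar meeting volume) so the change reflects the feature rather than seasonality.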

For a large organization, this process takes 12-24 months from assessment to full deployment. This isn't because the feature is complicated, but because coordinating hardware upgrades, training staff, and rolling out across multiple locations takes time.

Google Meet leads in ultrasonic detection, while Microsoft Teams excels in room optimization and hardware integration. Zoom focuses on cross-platform compatibility. (Estimated data)

Best Practices for Maximizing the Feature

Once you've deployed conference room detection, how do you make sure it's delivering value and people are using it effectively?

Clear Communication: Before enabling the feature, send an email explaining what's happening, why it matters, and what users should expect. Make it clear that Companion Mode is automatic and that it improves meeting quality.

Adequate Hardware: Make sure your conference room hardware is actually compatible and properly configured. A partially-deployed feature creates confusion. Either fully deploy it or don't deploy it.

Training: Include conference room detection in your video conferencing training and documentation. Show people what Companion Mode is, why it's used, and how to switch out if they absolutely need to (though they shouldn't).

Monitoring: Use the admin console to monitor adoption. If rooms aren't getting used or Companion Mode isn't being selected consistently, there's a problem to troubleshoot.

Feedback Loop: Ask users for feedback. If certain rooms are having issues, identify them early. If users are confused, provide more documentation.

Hardware Refresh Planning: As you replace conference room equipment for normal reasons, prioritize compatible hardware. Over time, your entire fleet becomes compatible.

Meeting Room Standards: If you have the opportunity to standardize on conference room equipment, do so. It makes deployment and support much easier.

Privacy, Security, and Compliance Considerations

Before deploying conference room detection at scale, think through privacy, security, and compliance angles.

Privacy Framework: Does your organization have a privacy policy that addresses location tracking and device monitoring? Conference room detection isn't location tracking in the sense of GPS or cellular data, but it does create a record of which devices were in which rooms at what times. Make sure this aligns with your privacy policy.

Compliance Requirements: Some industries have specific requirements about monitoring and location tracking:

- HIPAA (healthcare): Conference room detection would generally be fine, but document how you handle health information discussed in rooms.

- PII Protection: If your organization is subject to PII protection regulations, be clear about how device-to-room data is handled.

- Labor Laws: Some jurisdictions have specific rules about employee monitoring. Conference room detection is very light monitoring, but it's worth reviewing.

Data Residency: Where is the conference room detection data stored? Google Workspace stores this data in the regions specified in your Workspace agreement. Make sure that aligns with your data residency requirements.

Data Retention: How long does Google keep the data? You should have a policy about how long your organization retains this information. You can request deletion at any time.

User Rights: Do employees have the right to know when conference room detection is enabled? Should you have explicit consent? Different organizations will answer these questions differently, but consistency and transparency are important.

For most organizations, conference room detection doesn't create significant privacy concerns beyond standard video conferencing. But it's worth thinking through proactively rather than reactively.
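If your organization exports device-to-room records and stores them itself, the data retention point above can be enforced with a small filter. This is a sketch under assumptions: the `CheckIn` record shape and the 30-day window used in the example are hypothetical, and it says nothing about how long Google retains its own copy.

```python
from datetime import datetime, timedelta
from typing import List, NamedTuple


class CheckIn(NamedTuple):
    device_id: str      # hypothetical field names for an exported record
    room: str
    seen_at: datetime


def within_retention(
    records: List[CheckIn], now: datetime, retention_days: int
) -> List[CheckIn]:
    """Keep only records newer than the retention cutoff."""
    cutoff = now - timedelta(days=retention_days)
    return [record for record in records if record.seen_at >= cutoff]
```

Calling `within_retention(records, datetime.now(), 30)` on a schedule and writing back only the returned list keeps your stored copy aligned with a 30-day policy.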

Looking Forward: The Future of Hybrid Meetings

Conference room detection is one step in a larger evolution of how we'll work in hybrid environments. What's coming next?

Spatial Audio: Future systems will use directional audio to enhance the in-room experience. Remote participants will be able to hear audio from multiple in-room participants as if they're in the room, with audio coming from the direction each person is sitting.

AI-Assisted Facilitation: Video conferencing systems will use AI to identify when remote participants aren't being heard and automatically adjust camera angles, audio levels, and speaker focus to include them more fairly.

Virtual Room Presence: Instead of just seeing a video feed of the conference room, remote participants might see an immersive view that makes them feel more present. This could involve AR/VR integration.

Automatic Transcription and Summarization: Conference room detection could trigger automatic transcription and summarization specific to that room, with summaries automatically sent to absent team members.

Cross-Room Meetings: When multiple teams in different office locations are in their own conference rooms joining the same call, the system could orchestrate camera and audio switching to make it feel like one shared room rather than separate video feeds.

Ambient Intelligence: Conference rooms themselves could become smart participants, managing cameras, lighting, and audio based on what's happening in the meeting.

These aren't science fiction. They're the natural evolution of systems like conference room detection. The technical foundation is being laid now.

Troubleshooting Common Issues

When you do deploy this feature, some issues will inevitably arise. Here's how to troubleshoot the most common ones.

Issue: Companion Mode isn't selecting automatically in a specific conference room

Diagnosis: The room's hardware probably isn't emitting the ultrasonic signal, or your device isn't picking it up.

Solutions:

- Check that the hardware in the room is compatible and has the latest firmware

- Verify that the room is enabled for proximity detection in the admin console

- Test with a different device (if it works on your phone but not your tablet, it might be device-specific)

- Move closer to the conference room's speaker system—range is limited

- Check that your app is up to date

- Restart the app and try again

Issue: Users are confused about why Companion Mode is suddenly selected

Diagnosis: Users didn't get adequate communication about the feature.

Solutions:

- Send follow-up documentation explaining Companion Mode and why it's used

- Post a short notice in the room or in the room's calendar description explaining automatic Companion Mode selection

- Include conference room detection in onboarding training

Issue: Audio quality is still bad even with Companion Mode

Diagnosis: Companion Mode is working, but there's a different audio problem—poor room microphone quality, network issues, etc.

Solutions:

- Check the quality of the conference room's microphone hardware

- Test with a different device and different participant to isolate whether the problem is inbound or outbound

- Check network connectivity and bandwidth

- Consider upgrading the room's audio hardware if it's outdated

Issue: Privacy concerns from employees about microphone monitoring

Diagnosis: Employees don't understand what the feature does or don't trust it.

Solutions:

- Provide clear, non-technical explanation of how the feature works

- Share Google's security and privacy documentation

- Offer the option to disable at the admin level if concerns are widespread

- Be clear about who controls the feature: admins can turn it off for specific rooms or the whole organization, but there is no per-user toggle

Runable: Automating Your Meeting Workflows

While Google Meet's room detection improves the meeting experience itself, automating the broader meeting workflow is just as important. Runable can help teams automate meeting-related tasks like generating meeting notes, creating action item documents, and producing meeting summaries—all without manual work.

Imagine your team's Google Meet call automatically triggers a workflow that generates a clean meeting summary document, extracts action items with owners and due dates, and distributes it to all participants. That's the kind of automation that eliminates the administrative burden of meetings.

Use Case: Automatically generate meeting notes, action items, and summaries from Google Meet calls with AI agents, eliminating manual documentation work.

FAQ

What exactly is ultrasonic detection and how is it different from other proximity detection methods?

Ultrasonic detection uses sound frequencies above the range of human hearing (typically 18-20 kHz) emitted by conference room hardware to signal to nearby devices that they're in that specific room. Unlike WiFi-based detection which can have false positives across large areas, or Bluetooth which requires pairing, ultrasonic detection is precise to the room level, immediate, and requires no setup. The device's microphone detects the frequency but doesn't record audio, making it both effective and privacy-preserving.
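To show what "detecting a frequency without recording audio" can look like, here's a minimal sketch using the Goertzel algorithm, a standard way to measure signal power at a single frequency. This is not Google's implementation. The 19 kHz beacon frequency, the 48 kHz sample rate, and the threshold are all assumptions chosen for illustration (the sample rate just needs to be more than twice the beacon frequency).

```python
import math
from typing import Sequence


def goertzel_power(samples: Sequence[float], sample_rate: int, target_hz: float) -> float:
    """Return the squared DFT magnitude at the bin nearest target_hz."""
    n = len(samples)
    if n == 0:
        return 0.0
    k = round(n * target_hz / sample_rate)  # nearest DFT bin index
    coeff = 2.0 * math.cos(2.0 * math.pi * k / n)
    s_prev, s_prev2 = 0.0, 0.0
    for x in samples:
        s = x + coeff * s_prev - s_prev2
        s_prev2, s_prev = s_prev, s
    return s_prev ** 2 + s_prev2 ** 2 - coeff * s_prev * s_prev2


def has_tone(
    samples: Sequence[float], sample_rate: int, target_hz: float, threshold: float
) -> bool:
    """True when the estimated squared amplitude at target_hz meets the threshold."""
    n = len(samples)
    if n == 0:
        return False
    # For a pure sine of amplitude A on an exact bin, power == (A * n / 2) ** 2,
    # so dividing by (n / 2) ** 2 gives an estimate of A ** 2.
    amplitude_sq = goertzel_power(samples, sample_rate, target_hz) / (n / 2) ** 2
    return amplitude_sq >= threshold
```

The function only ever produces one number per audio buffer (the power at the target frequency), which is why this style of detection doesn't need to keep or transmit what was said in the room. A real detector would also have to decode which room the signal identifies, which this sketch doesn't attempt.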

Does Companion Mode mean I won't be able to share my screen or video from my device?

No, Companion Mode doesn't restrict sharing. Your device still participates fully in the video call—it displays video, shares screens, and enables chat. The only thing Companion Mode changes is that your device's microphone isn't active. Instead, the room's audio system captures and transmits sound. You keep all the visual and interactive features of full participation.

Can I opt out of automatic Companion Mode selection if I'm in a conference room?

Partly. If Companion Mode is automatically selected, you can manually switch to participant mode for that meeting if you need your device's microphone (though this will likely create audio problems). There is no permanent per-user toggle, however. To disable the feature entirely, ask your IT administrator to turn off proximity detection for your organization or for specific rooms.

What happens if the conference room hardware doesn't emit the ultrasonic signal—will Companion Mode still work?

Companion Mode itself still works, but it won't be selected automatically. Automatic selection depends on your device detecting the room's ultrasonic signal. If the room's hardware isn't compatible or isn't broadcasting the signal, your device shows the standard joining options, and you can still choose Companion Mode manually if it's available. To get automatic selection, you'll need to upgrade the conference room hardware so it supports proximity detection.

Does this feature work on all devices and with all Google Meet clients?

Conference room detection works on iOS and Android devices running the Google Meet app or Gmail app (which includes Meet integration) with the ultrasonic detection feature enabled. Desktop/web versions don't currently detect ultrasonic signals, though this could change. The feature requires that your device's microphone is functioning and that the Meet or Gmail app has microphone permissions enabled.

How does Google use the data collected when devices detect conference rooms?

Google uses proximity detection data for operational purposes—tracking which rooms are being used, capacity planning, system improvement, and ensuring the feature works correctly. This data is subject to standard Google Workspace privacy policies. Organizations can request data exports, deletion, or can disable proximity detection entirely. The data is not used for advertising or sold to third parties. Your organization controls whether the feature is enabled, and Google provides transparency about how the data is handled.

What's the business case for deploying conference room detection? What ROI should we expect?

The primary business benefits are improved meeting quality (reducing audio problems and the time spent troubleshooting them), better attendance tracking for conference room utilization, and reduced friction for users (no decisions required, automatic optimization). The quantifiable ROI depends on your organization's size, meeting frequency, and pain points. Organizations with frequent hybrid meetings typically see 10-15% time savings on meeting-related troubleshooting and better data for conference room capacity planning. There's also an intangible benefit of improved remote worker satisfaction—being part of a hybrid meeting that works well is significantly better than one that doesn't.

Can the ultrasonic signals be detected or exploited from outside the conference room?

Ultrasonic signals have very limited range—they degrade quickly with distance and don't travel far through walls. Someone would need to be in the room or immediately adjacent to detect them. The signals themselves are one-way broadcasts and can't be used to send data or attack anything. The worst-case scenario is someone generating ultrasonic noise nearby to trigger false detections, which would cause a minor inconvenience but no security breach. From a security perspective, ultrasonic detection is actually safer than some alternative methods.

Conclusion: The Small Feature That Shapes the Future of Work

Conference room detection sounds like a small thing. It's a convenience feature that automatically selects the right mode for joining a meeting from a conference room. But its implications are larger than the feature itself.

This is Google saying: We understand that hybrid work is the default. We're building infrastructure that acknowledges physical context and optimizes for it automatically. The technology is smart enough to eliminate decisions that users were often getting wrong anyway. The feature reduces friction. It improves quality. It solves a real, recurring problem in hybrid calls.

For organizations deploying this feature, the timeline is clear: assessment now, pilot within a few months, full deployment within 12 to 24 months. The hardware compatibility challenge is real but solvable through normal refresh cycles. The privacy concerns are manageable through transparency and control.

For the industry more broadly, this is one piece of a larger trend. Video conferencing systems are becoming context-aware. They're moving from "user configures everything" to "system detects context and optimizes accordingly." Conference room detection is step one. Spatial audio, AI-assisted facilitation, cross-location meeting optimization—these are coming next.

The future of hybrid work isn't about making remote participation feel like being in the room. It's about making it irrelevant where you're joining from. The system figures out your context and optimizes automatically. Google Meet's conference room detection is a small but significant move in that direction.

If you're responsible for video conferencing infrastructure in your organization, start planning now. Assess your hardware, prepare your users, and plan your rollout. This feature is coming to your organization, and being ready makes the transition seamless.

If you're a user, understand what's happening. Companion Mode is better for everyone. You don't need to do anything—the system will handle it. The next time you join a meeting from a conference room and see Companion Mode automatically selected, you're experiencing the future of work that Google and others are building right now.

Key Takeaways

- Google Meet's ultrasonic detection uses inaudible 18-20 kHz sound frequencies emitted by conference room hardware to identify when devices are in physical proximity, automatically enabling Companion Mode to eliminate audio problems

- Companion Mode designation of a single audio source (the room's professional system) prevents echo and feedback by removing multiple microphone inputs that plague hybrid meetings

- Ultrasonic detection outperforms calendar-based, WiFi, and Bluetooth proximity methods with precise room-level identification and minimal false positives, requiring no user action or additional hardware pairing

- IT administrators can enable/disable proximity detection at organization or room level through Google Admin console, with automatic user check-in providing valuable occupancy data for facilities planning

- Enterprise deployment requires 12-24 months including assessment, hardware compatibility evaluation, pilot testing, iterative rollout, and staff communication to maximize adoption and resolve technical issues
