The GPU Market Just Broke, And We're All Paying For It

Last year, I watched a gaming PC enthusiast spend

The problem isn't just the price tags, though those are painful. It's the fundamental misalignment between what GPU manufacturers are optimizing for and what gamers actually need. Nvidia, AMD, and Intel have collectively decided that AI training and inference are where the real money lives. Gaming? That's a rounding error now. A legacy market. The thing that used to fund GPU development but now gets whatever's left over.

I've been covering hardware for eight years. I've never seen the market this broken. Graphics cards are becoming like printer ink: priced for industries with unlimited budgets, not consumers trying to play Cyberpunk 2077 at 4K.

Here's the thing that really gets me: this wasn't inevitable. There were choices made. Bad ones.

Why AI Completely Hijacked The GPU Market

GPUs are fundamentally good at two things: rendering pixels really fast, and doing massive parallel mathematical operations. For thirty years, gaming was the primary use case. Then deep learning arrived, and suddenly those same parallel operations became essential for training models that could answer questions, generate images, and make predictions.

The math is straightforward. An AI company training a large language model might spend

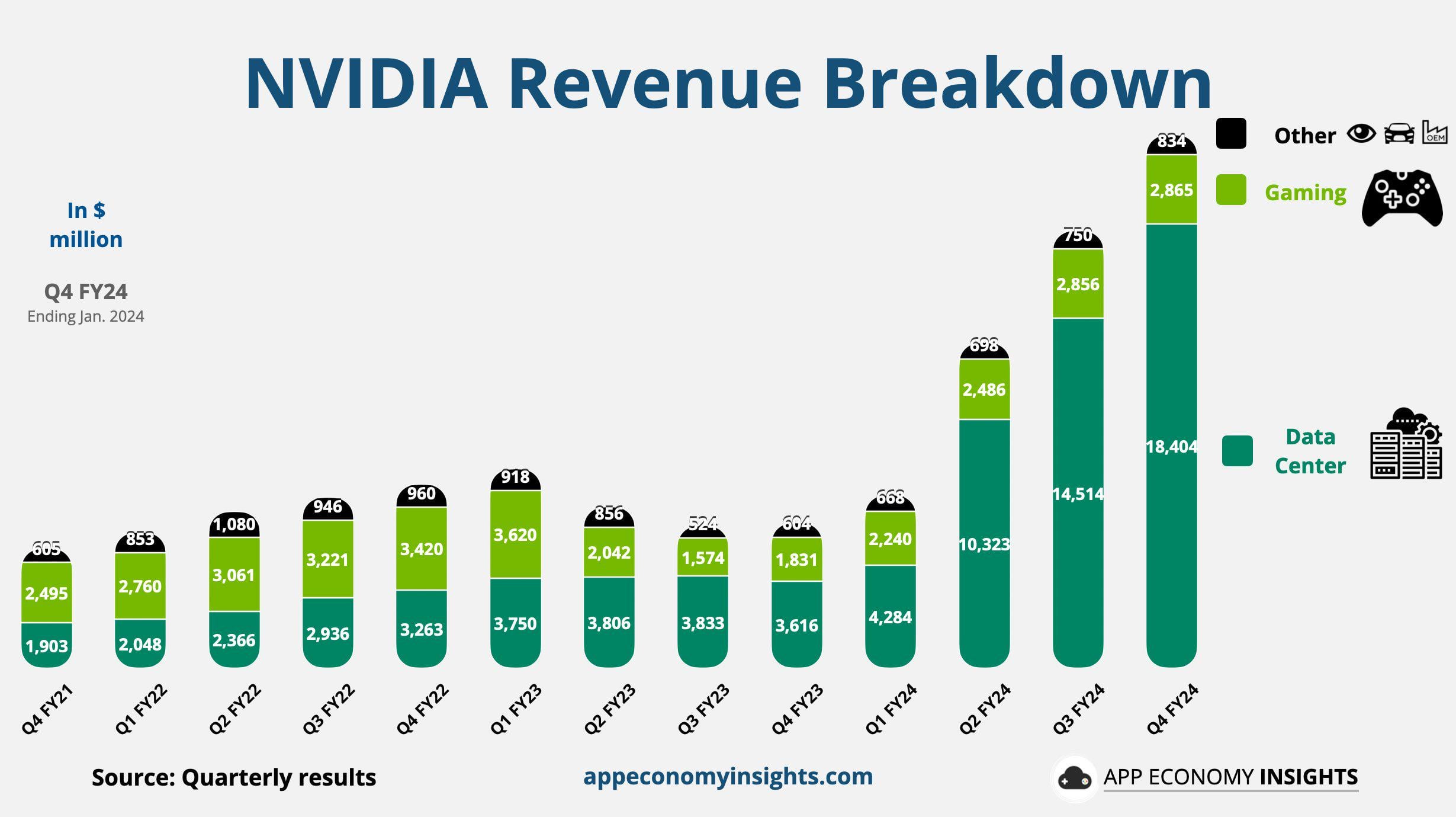

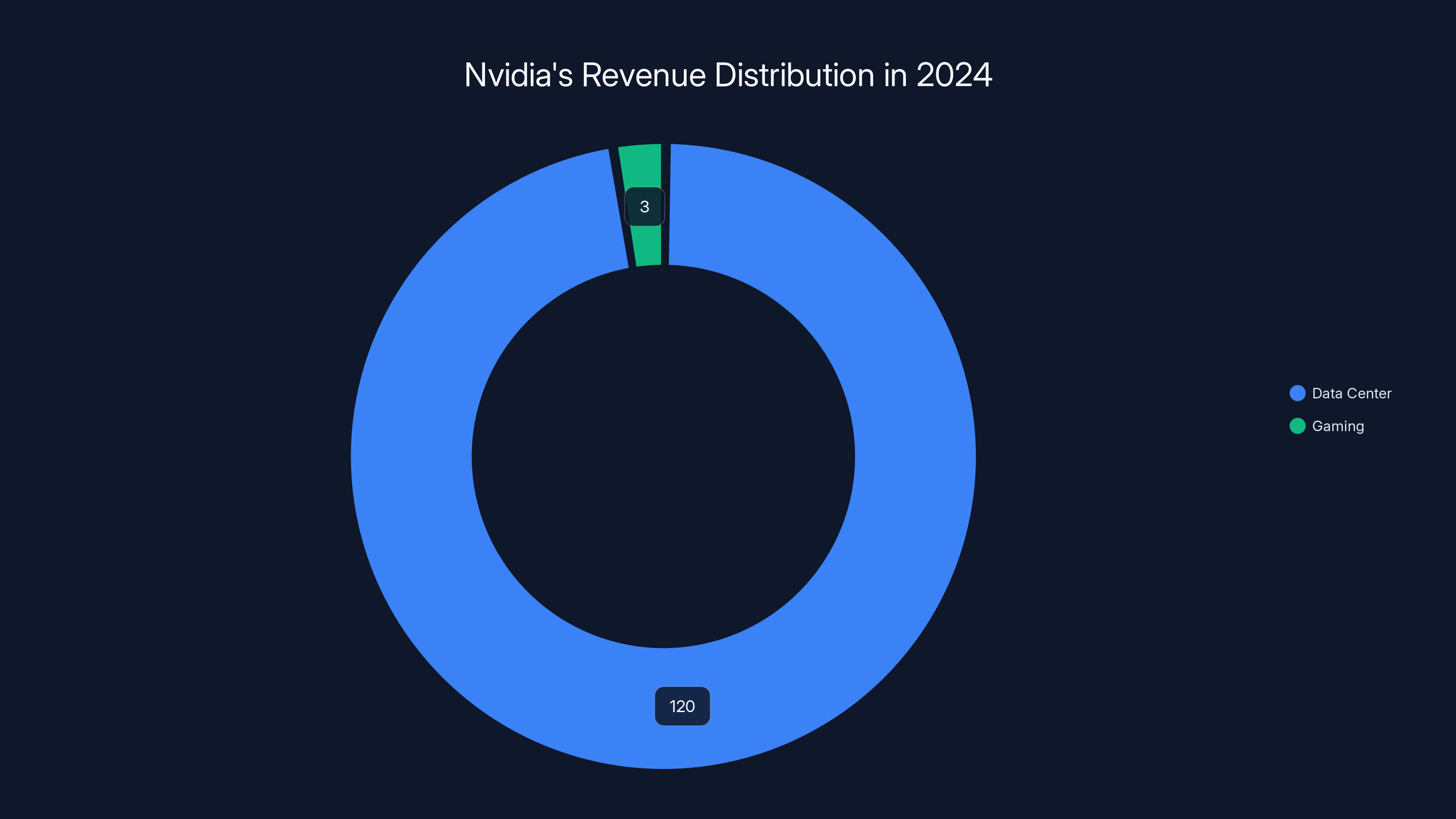

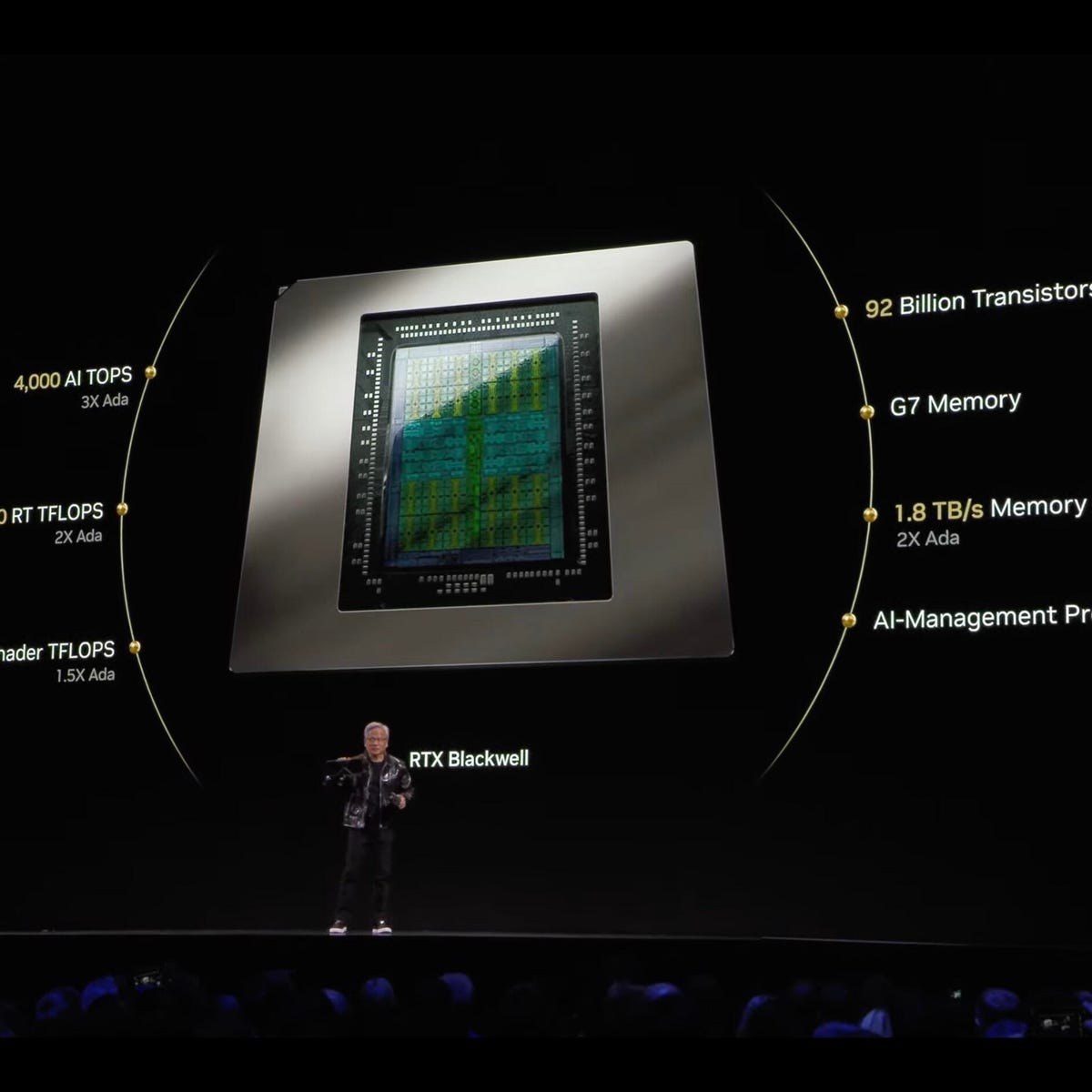

Nvidia saw this shift coming and committed entirely to it. Their CEO Jensen Huang made the call around 2016-2017 when deep learning started becoming mainstream. By 2024, Nvidia's data center revenue (which is almost entirely AI-focused) hit

This isn't conspiracy thinking. This is just how capitalism works. Manufacturers follow the money.

But here's where it gets genuinely problematic: the infrastructure, the supply chains, and the manufacturing capacity all got reallocated. TSMC (which fabricates most of Nvidia's and AMD's GPU chips) started prioritizing Nvidia's H100, H200, and newer chips designed specifically for AI workloads. Gaming GPUs got pushed to secondary production lines with lower priority.

The result? Gaming GPUs became supply-constrained exactly when prices should have been dropping due to new releases. Instead, Nvidia can charge whatever they want because enterprise demand is insatiable. If they sell 100,000 RTX 5090s to gamers at inflated prices versus 50,000 H200s to enterprises at premium pricing, the enterprise deal still wins on total revenue.
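To make that revenue comparison concrete, here is a quick back-of-the-envelope calculation. The scenario above doesn't state prices, so both unit prices below are hypothetical assumptions (the H200 figure is simply picked from inside the $15,000-50,000 enterprise price range cited later in this article); swap in your own numbers. It also computes the break-even point: with twice as many gaming cards in the deal, the enterprise deal wins whenever an H200 sells for more than double the RTX 5090's price.

```python
def deal_revenue(units: int, unit_price: float) -> float:
    """Total revenue for selling `units` cards at `unit_price` dollars each."""
    return units * unit_price


# Hypothetical prices for illustration only, not quoted figures.
GAMING_UNITS, GAMING_PRICE = 100_000, 3_000.0          # RTX 5090 at an assumed inflated price
ENTERPRISE_UNITS, ENTERPRISE_PRICE = 50_000, 30_000.0  # H200 at an assumed enterprise price

gaming = deal_revenue(GAMING_UNITS, GAMING_PRICE)
enterprise = deal_revenue(ENTERPRISE_UNITS, ENTERPRISE_PRICE)

# Break-even: the enterprise deal wins whenever its unit price exceeds
# (GAMING_UNITS / ENTERPRISE_UNITS) * GAMING_PRICE, i.e. 2x the gaming price here.
break_even = GAMING_UNITS / ENTERPRISE_UNITS * GAMING_PRICE

print(f"Gaming deal:     ${gaming:,.0f}")
print(f"Enterprise deal: ${enterprise:,.0f}")
print(f"H200 break-even price: ${break_even:,.0f}")
```

With these assumed prices the enterprise deal brings in five times the revenue, and it keeps winning as long as the H200 sells for more than $6,000, which every price in that enterprise range does. This is gross revenue only; it ignores board-partner margins and retail markups.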

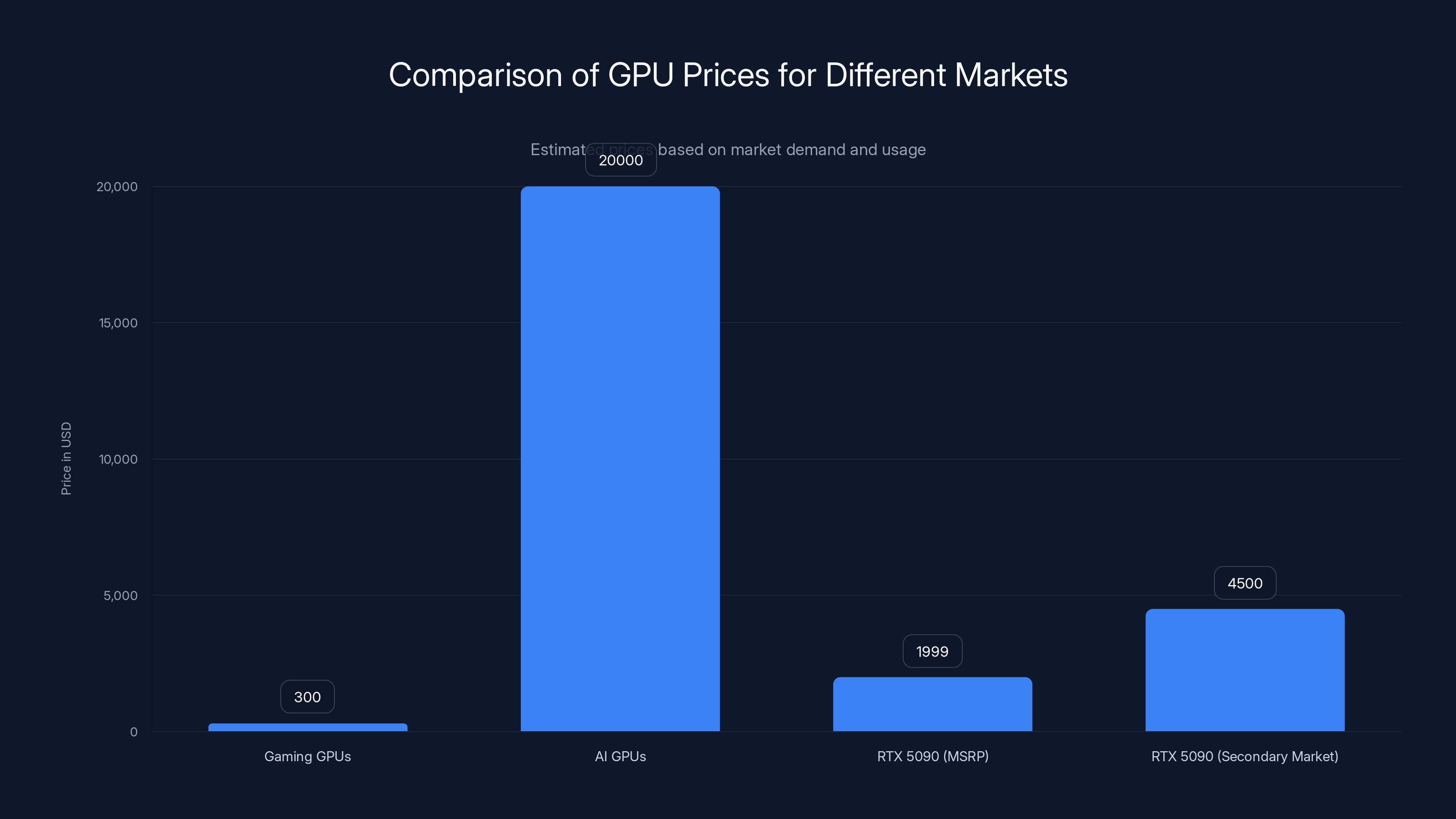

AI GPUs command significantly higher prices due to enterprise demand, while gaming GPUs remain more affordable. The RTX 5090's secondary market price reflects scarcity and high enterprise value. Estimated data.

The RTX 5090: A Case Study In Market Failure

Let's talk specifics, because the RTX 5090 is the perfect example of how broken things have gotten.

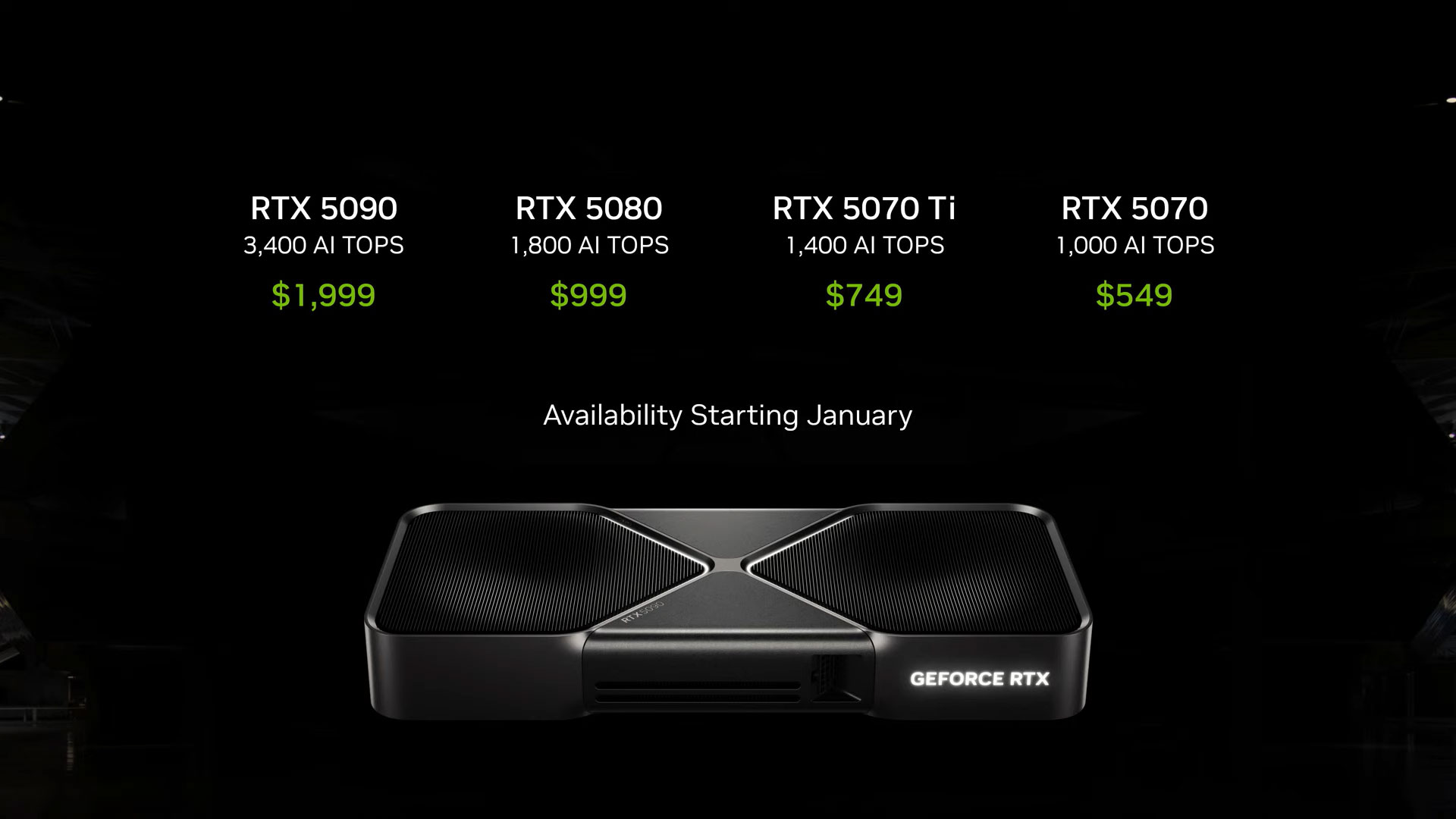

Nvidia's official MSRP for the RTX 5090 is $1,999. Sounds almost reasonable until you remember that Nvidia hasn't controlled pricing on enthusiast GPUs in years. Partners like Asus, MSI, and Gigabyte set their own prices, and suddenly that "official" price becomes theater.

At launch in January 2025, the RTX 5090 was selling for

Why? Because the RTX 5090 isn't really a gaming card anymore. It's a compute accelerator that happens to also play games. Its architecture was optimized for inference workloads (running AI models), not rasterization or ray tracing. Yes, it's faster at gaming than the RTX 4090. But that's almost incidental to its design goals.

The specifications tell the story: 32GB of GDDR7 memory with roughly 1.8TB/second (1,792GB/s) of bandwidth. That bandwidth is insane for gaming; you don't need it. But for running quantized language models? For batch inference on multiple AI queries? It's essential. The RTX 5090 was built for data centers first, and gaming was an afterthought.
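A common rule of thumb explains why bandwidth matters so much here: when a language model generates text one token at a time, each new token requires reading roughly all of the model's weights from memory, so memory bandwidth caps generation speed. The sketch below computes that rough ceiling under this assumption only; it ignores KV-cache traffic, compute limits, and batching, and the 1,000GB/s figure is a round hypothetical number, not the spec of any particular card.

```python
def max_tokens_per_second(bandwidth_gb_s: float, model_params_billion: float,
                          bits_per_weight: int) -> float:
    """Rough upper bound on single-stream decode speed for a memory-bound LLM.

    Assumes every generated token streams all weights from VRAM exactly once
    and ignores KV-cache traffic, compute limits, and batching.
    """
    # Billions of parameters * bytes per parameter = gigabytes of weights.
    model_gb = model_params_billion * bits_per_weight / 8
    return bandwidth_gb_s / model_gb


# Hypothetical card with 1,000GB/s running an 8-billion-parameter model.
for bits in (16, 4):
    print(f"8B model @ {bits}-bit: <= {max_tokens_per_second(1_000, 8, bits):.1f} tokens/s")
```

Halving the bandwidth halves the ceiling, and shrinking the weights from 16-bit to 4-bit quadruples it, which is why inference buyers care about memory bandwidth far more than most games do.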

Compare this to previous GPU generations. When the RTX 2080 Ti launched in 2018, the MSRP was

Nvidia could have made supply decisions to support gamers. They could have prioritized gaming GPU production or prevented enterprise bulk purchases of consumer cards. They didn't. Why would they? If a data center is willing to pay

In 2024, Nvidia's data center revenue reached

AMD And Intel's Terrible Response To The Crisis

You'd think AMD would seize this moment. Their Radeon RX 7900 XTX is legitimately good, performs well in rasterization, and costs less than comparable Nvidia cards. So why hasn't AMD captured the market?

Because AMD faced an identical choice, and they made the same calculation. Their MI300X accelerator (designed for AI) is where the profit lives. Their gaming GPU division got deprioritized. Radeon released the RDNA 4 architecture in early 2025, which was underwhelming. Not terrible, but not a "must buy" either.

Intel tried to be aggressive with Arc. The Arc B580 actually offers decent 1440p gaming performance for under $250. On paper, it's one of the only sane GPU options left. In practice, driver support has been spotty, performance scaling between games is inconsistent, and adoption rates remain abysmal. Gamers don't want to be beta testers for GPU drivers.

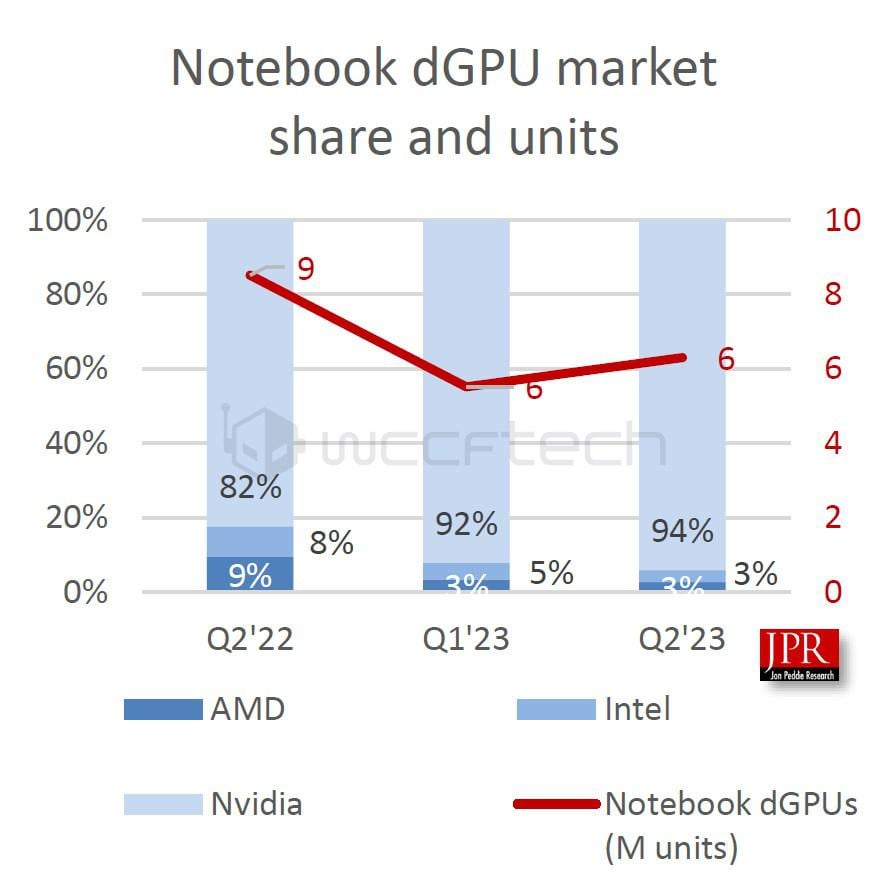

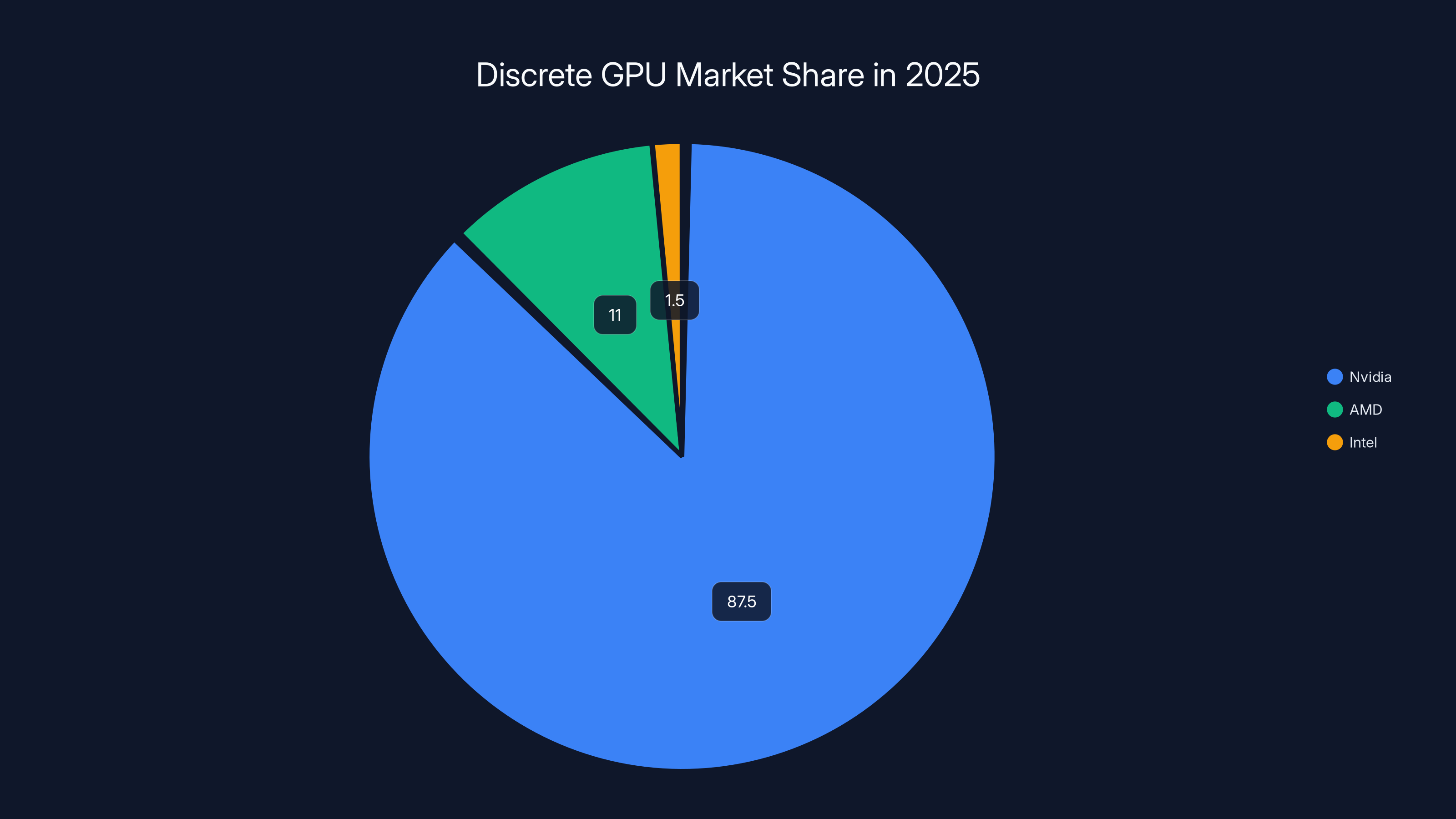

So here we are. Nvidia has 85-90% of the discrete GPU market. AMD is a distant second with maybe 10-12%. Intel is struggling to hit 3%. In a healthy market, Nvidia's dominance and price hikes would create opportunity for competitors. Instead, the competitors are equally focused on the AI market and equally ignoring gaming.

This is actually worse than a monopoly. At least with a monopoly, there's hope that a competitor will eventually challenge the leader. Here, the entire industry collectively decided that gaming is beneath them now.

The Real Damage: Stagnation In Gaming Innovation

High-end GPU prices matter beyond just the sticker shock. They affect the entire gaming ecosystem.

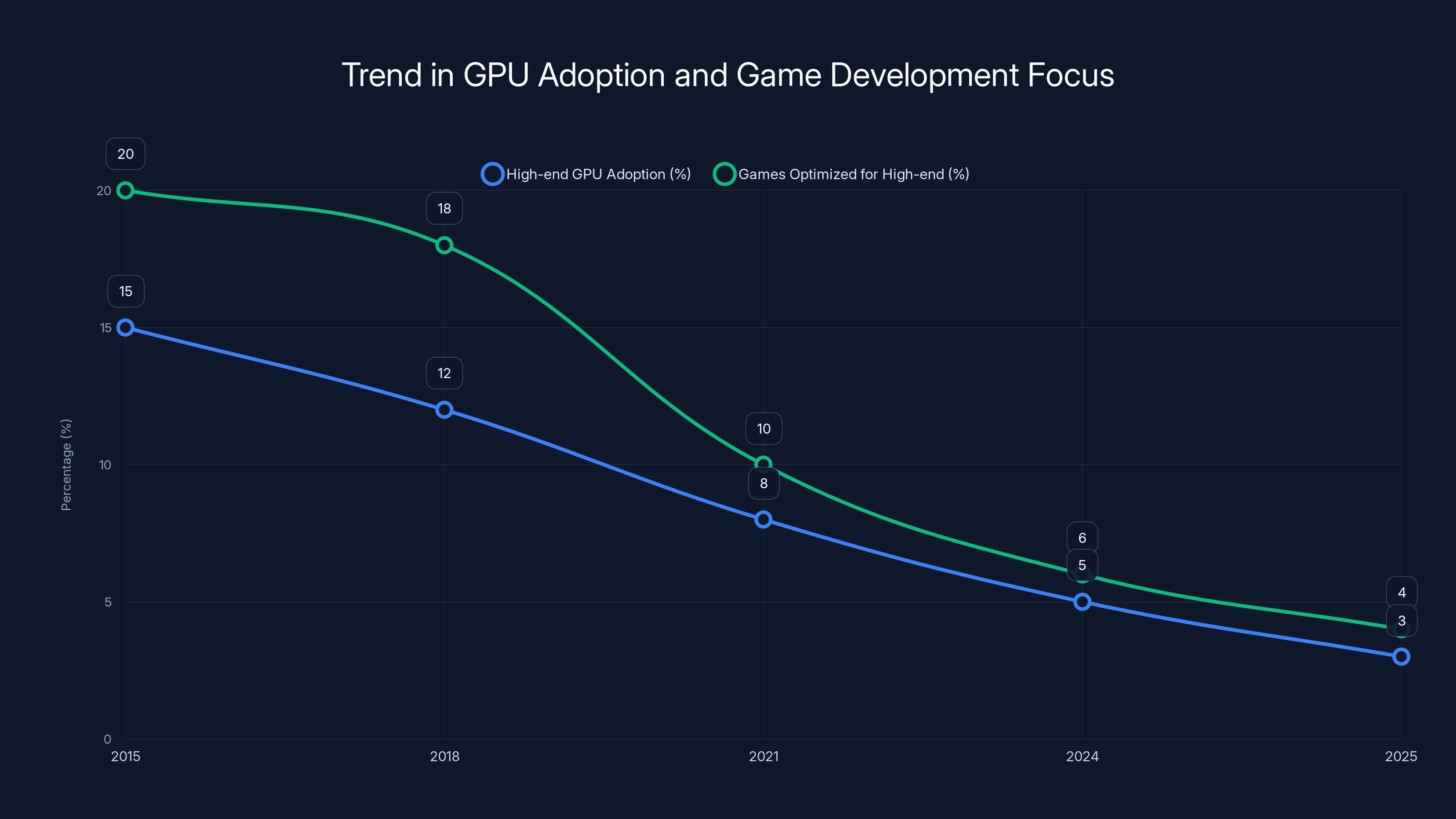

When top-tier GPUs cost $4,000, game developers stop optimizing for high-end hardware. They optimize for midrange hardware—whatever the majority of players can afford. This means development budgets for advanced graphics features shrink. Ray tracing improvements slow down. DLSS and FSR innovations plateau because there's less incentive to push visual boundaries.

You see this in 2024-2025 game releases. Most new AAA titles run acceptably on a $300-400 GPU (like the RTX 4060 Ti). The games that pushed real visual boundaries—games that required high-end hardware to showcase—those became rare. There's no business case for developing games that only 2-3% of your audience can experience at full quality.

Compare this to the mid-2010s, when a $1,000-1,500 GPU purchase was common for enthusiasts. Developers optimized aggressively for high-end hardware. We got games like Crysis 3, heavily modded Skyrim, and VR titles that legitimately required top-tier cards. Those projects justified their existence because there was an audience willing to buy the hardware.

Now? The economics have flipped. If you're a studio deciding whether to optimize your game for RTX 5090-level features versus targeting 1440p midrange performance for 80% of your audience, you're going midrange every time.

This creates a feedback loop. As high-end gaming becomes niche, fewer studios invest in high-end features. As fewer features exist, there's less reason to buy high-end hardware. As fewer people buy high-end hardware, manufacturers deprioritize it further. You're watching a market slowly kill itself.

Estimated data shows a decline in both high-end GPU adoption and the percentage of games optimized for high-end features from 2015 to 2025. As high-end gaming becomes niche, fewer games are developed to leverage such hardware.

What Enterprise Demand Actually Looks Like

To understand why manufacturers made these choices, you need to see the enterprise perspective.

OpenAI's ChatGPT has over 200 million users. Every conversation requires compute. When someone asks ChatGPT a question, it's running inference on servers filled with Nvidia's H100 and H200 accelerators. That's thousands of GPUs running 24/7, generating hundreds of millions in revenue annually.

Take another example: Microsoft's Copilot enterprise customers. A company with 50,000 employees using Copilot for writing, coding, and analysis needs massive inference capacity. We're talking about purchasing thousands of GPUs, not dozens.

Meta's training of their Llama models required custom-ordering tens of thousands of H100s. Google's training of Gemini required similar scales. These aren't small orders. At tens of thousands of accelerators apiece, they're nine- or ten-figure GPU purchases per major AI company.

Now compare: One data center customer ordering 10,000 GPUs at an average

And enterprise customers aren't price sensitive the way gamers are. If they need compute capacity, they'll pay

Manufacturers seeing these dynamics would be irrational not to focus on enterprise. This is basic business math.

The Artificial Demand Problem

But here's the thing that genuinely bothers me: much of the AI demand is not real.

I don't mean AI itself isn't real. I mean that much of the computational demand is driven by wasteful implementation and hype cycles rather than genuine necessity.

Large language models are computationally expensive partly because of their architecture, but also partly because companies haven't bothered to optimize them. Frontier models like GPT-4 require massive inference hardware. But quantized versions of open-weight models like Meta's Llama (where the weights are compressed to lower precision) run on consumer hardware. They're slower, but they work.

Yet enterprises continue buying premium hardware for less-optimized deployments because optimization requires scarce engineering effort, while more hardware is just a capital expenditure.
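To see what quantization actually does, here is a minimal sketch of one simple scheme, symmetric per-tensor int8 quantization: each weight is stored as an 8-bit integer plus one shared scale factor. It also estimates weight memory at different precisions. This is an illustrative textbook scheme, not how any specific model or library quantizes (production tools typically use per-channel or per-group scales and 4-bit formats), and the weights and the 8-billion and 70-billion parameter sizes are example values.

```python
def quantize_int8(weights: list[float]) -> tuple[list[int], float]:
    """Symmetric per-tensor int8 quantization: w is approximated by q * scale."""
    max_abs = max(abs(w) for w in weights)
    # Map the largest-magnitude weight to +/-127; guard against all-zero input.
    scale = max_abs / 127 if max_abs > 0 else 1.0
    q = [max(-127, min(127, round(w / scale))) for w in weights]
    return q, scale


def dequantize_int8(q: list[int], scale: float) -> list[float]:
    """Recover approximate float weights from int8 codes and the shared scale."""
    return [v * scale for v in q]


def weight_memory_gb(params_billion: float, bits_per_weight: int) -> float:
    """Memory for the weights alone (no activations or KV cache), in GB."""
    return params_billion * bits_per_weight / 8


weights = [0.5, -1.27, 0.003, 1.0, -0.42]
q, scale = quantize_int8(weights)
restored = dequantize_int8(q, scale)
max_error = max(abs(a - b) for a, b in zip(weights, restored))
print("int8 codes:", q)
print(f"max round-trip error: {max_error:.5f} (bounded by scale / 2 = {scale / 2:.5f})")

for params in (8, 70):
    for bits in (16, 8, 4):
        print(f"{params}B params @ {bits}-bit: {weight_memory_gb(params, bits):.0f} GB")
```

At 4-bit, an 8-billion-parameter model needs about 4GB for its weights instead of 16GB, which is why quantized models fit on consumer cards; a 70-billion-parameter model still needs around 35GB, more than even a 32GB card holds.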

Similarly, many AI startups are building infrastructure before they have product-market fit. They're ordering massive GPU quantities as a hedge, betting that when they scale, they'll need the capacity. Some of those bets will be wrong. Some of those GPUs will sit idle.

This artificial demand, combined with real demand from major AI labs, is what's inflating GPU prices beyond what actual adoption curves would predict.

Add to this the "everyone needs AI" marketing narrative from chip manufacturers, and you've got a perfect storm. Nvidia's investor calls in 2023-2024 were dominated by AI hype. The stock price reflects that hype. Wall Street expects GPU growth to continue at 30-40% annually. If actual demand doesn't support that, something has to give.

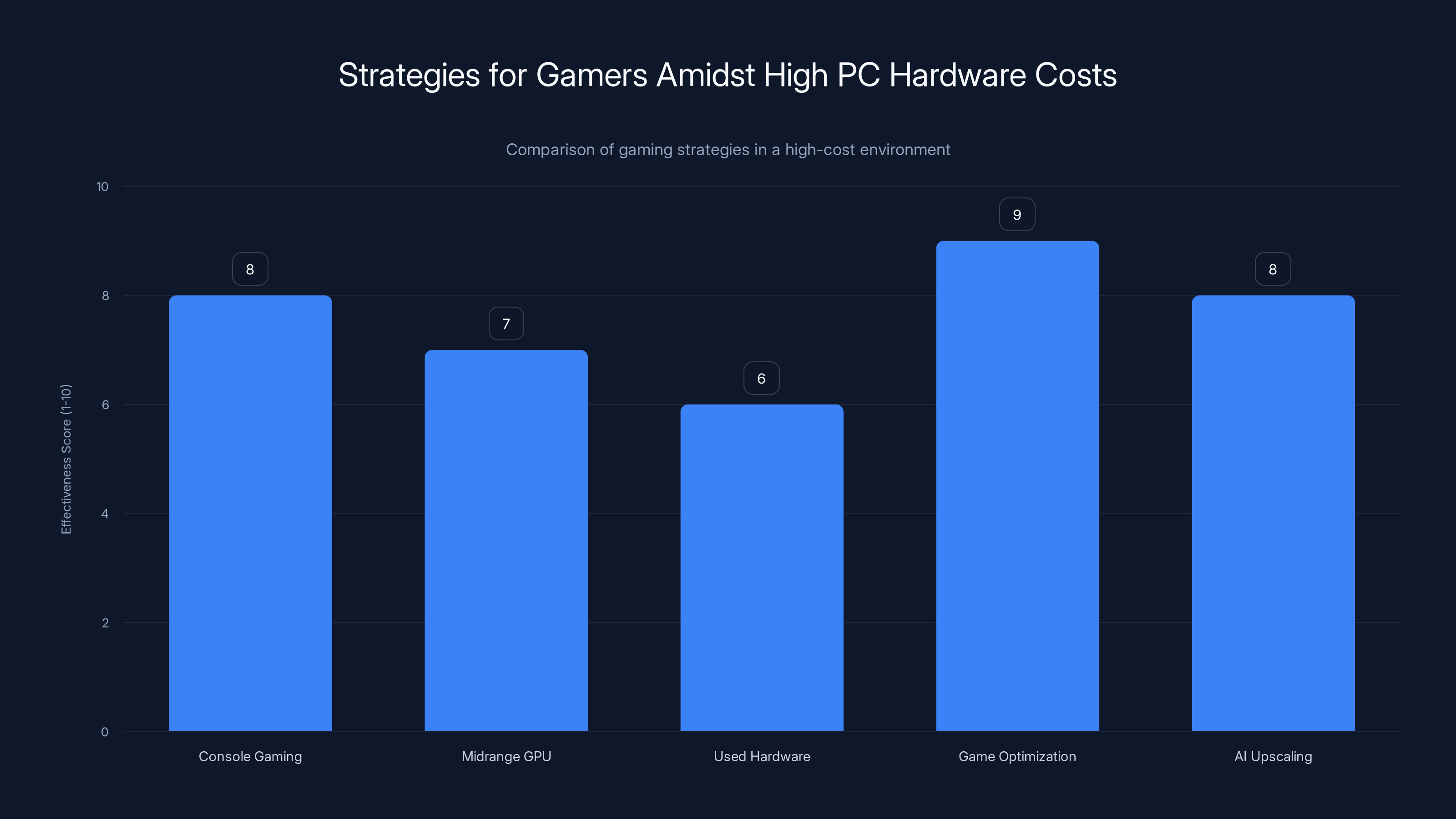

Game optimization and AI upscaling are highly effective strategies for maintaining gaming performance without high costs. Estimated data based on current trends.

Gaming GPUs Get The Scraps

Understanding all of this makes what happened to gaming GPUs inevitable.

Manufacturers aren't intentionally sabotaging gaming. They're just prioritizing based on profit and demand. Gaming gets whatever production capacity is left after enterprise orders are fulfilled.

When Nvidia launches a new generation, they allocate silicon wafers across different products: H200 accelerators, RTX 5000-series enterprise cards, RTX 50-series consumer cards, and mobile GPUs. The H200 comes first because that's the highest-margin product. By the time gaming cards get allocated wafer capacity, enterprise demand has already consumed available supplies.
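That priority ordering is essentially a greedy allocation: fill the highest-margin product's demand first, then move down the list until capacity runs out. The sketch below models the idea; the product list, margins, demand, and capacity are all hypothetical illustration values, not Nvidia figures, and real wafer planning involves contracts, yields, and packaging constraints that this ignores.

```python
from dataclasses import dataclass


@dataclass
class Product:
    name: str
    margin_per_wafer: float  # hypothetical profit per wafer, arbitrary units
    wafers_demanded: int     # hypothetical demand, in wafers


def allocate(capacity: int, products: list[Product]) -> dict[str, int]:
    """Greedy allocation: serve products in descending margin order."""
    allocation: dict[str, int] = {}
    remaining = capacity
    for product in sorted(products, key=lambda p: p.margin_per_wafer, reverse=True):
        granted = min(product.wafers_demanded, remaining)
        allocation[product.name] = granted
        remaining -= granted
    return allocation


lineup = [
    Product("RTX 50-series consumer", margin_per_wafer=2.0, wafers_demanded=50),
    Product("H200", margin_per_wafer=10.0, wafers_demanded=70),
    Product("RTX 5000-series workstation", margin_per_wafer=5.0, wafers_demanded=20),
]

result = allocate(100, lineup)
for p in lineup:
    got = result[p.name]
    print(f"{p.name}: {got}/{p.wafers_demanded} wafers ({got / p.wafers_demanded:.0%} of demand)")
```

With 100 wafers of capacity, both enterprise products get everything they ask for and the consumer line receives 10 of the 50 wafers it needs: gaming gets the scraps, in miniature.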

Add in the fact that some gaming card components (like GDDR7 memory) are also used in enterprise cards, and you've got a bottleneck on top of a bottleneck.

The result is gaming GPU availability that looks more like a limited release than a mainstream product. When RTX 5090s hit the market, there weren't millions available. There were tens of thousands. That scarcity drives prices up. Secondary markets inflate further. Gamers either pay inflated prices or wait for lower-tier cards.

This would be irritating but manageable if lower-tier cards were reasonably priced. Instead, the entire product line gets inflated. An RTX 4070 that should cost

The Secondary Market Collapse

One of the most damaging effects is what's happening in the used GPU market.

Traditionally, gamers upgraded by selling their old GPU and using the proceeds to offset the cost of a new one. An RTX 3090 bought for

That market has broken down. Used GPU prices are divorced from new pricing. Older-generation cards (like the RTX 3000 and 4000 series) are holding their value artificially high because new cards are unaffordable. Gamers can't justify upgrading when selling their old card doesn't cover enough of the new cost.
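The arithmetic behind that squeeze is simple: what matters is the share of the new card's price that your old card's resale value covers. The prices below are hypothetical, chosen only to show the effect: an old card holding more of its value doesn't help if new-card prices rise faster.

```python
def net_upgrade_cost(new_price: float, resale_value: float) -> float:
    """Out-of-pocket cost after selling the old card."""
    return new_price - resale_value


def resale_coverage(new_price: float, resale_value: float) -> float:
    """Fraction of the new card's price covered by selling the old one."""
    return resale_value / new_price


# Hypothetical scenarios: (label, new card price, resale value of old card)
scenarios = [
    ("healthy market", 1_200, 600),
    ("inflated market", 3_000, 800),
]

for label, new_price, resale in scenarios:
    print(
        f"{label}: pay ${net_upgrade_cost(new_price, resale):,} out of pocket, "
        f"resale covers {resale_coverage(new_price, resale):.0%}"
    )
```

In the second scenario the old card sells for more than in the first, yet it covers only about a quarter of the upgrade instead of half.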

This creates perverse incentives. Someone holding an RTX 3080 might be tempted to sell it on the used market for

Manufacturers see this as good news: people are keeping older hardware longer, which means longer replacement cycles, which means less need to produce new cards. In reality, it's a warning sign. They're optimizing for short-term margin at the cost of long-term ecosystem health.

Nvidia dominates the discrete GPU market with an estimated 87.5% share, leaving AMD and Intel with significantly smaller portions. Estimated data.

What Gamers Are Actually Doing

Instead of buying premium GPUs, gamers are finding increasingly creative alternatives.

Some have switched to lower-end discrete cards paired with AI upscaling. An RTX 4060 that costs $300 paired with DLSS 3 or FSR 3 can deliver surprisingly good framerates on demanding games. You're trading native resolution for upscaling quality, but the visual difference is minimal for most players.
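Upscaling buys so much performance because the GPU shades far fewer pixels and the upscaler reconstructs the rest. The sketch below computes the internal render resolution for a 4K output. The per-axis ratios are the commonly published FSR 2 presets (DLSS uses similar ones), treated here as assumptions since exact values vary by vendor and version. It counts shaded pixels only and ignores the upscaler's own cost and frame generation.

```python
# Per-axis upscaling ratios (output / internal), assumed from FSR 2's published presets.
PRESETS = {
    "Quality": 1.5,
    "Balanced": 1.7,
    "Performance": 2.0,
    "Ultra Performance": 3.0,
}


def internal_resolution(out_w: int, out_h: int, ratio: float) -> tuple[int, int]:
    """Resolution the GPU actually renders before the upscaler runs."""
    return round(out_w / ratio), round(out_h / ratio)


def shaded_pixel_fraction(out_w: int, out_h: int, ratio: float) -> float:
    """Share of output pixels that are natively shaded each frame."""
    w, h = internal_resolution(out_w, out_h, ratio)
    return (w * h) / (out_w * out_h)


for name, ratio in PRESETS.items():
    w, h = internal_resolution(3840, 2160, ratio)
    share = shaded_pixel_fraction(3840, 2160, ratio)
    print(f"{name:>17}: renders {w}x{h}, {share:.0%} of 4K pixels")
```

At 4K, Quality mode renders at 2560x1440, roughly 44% of the output pixels, which is why a midrange card can hold playable framerates at resolutions it couldn't drive natively.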

Others have moved to console gaming entirely. PlayStation 5 and Xbox Series X offer a known gaming experience at a fixed price point. Yes, you're usually capped at 30 or 60 fps depending on the game's settings, but there's no uncertainty about whether you can afford the next generation. There's also no Windows driver hell, no compatibility issues, no hardware conflicts.

Still others are shifting to competitive multiplayer games that don't require cutting-edge hardware. Valorant, CS2, and similar esports titles run on basically any GPU from the past eight years. The entire esports industry doesn't need premium hardware—a good monitor and keyboard matter more than a high-end GPU.

And a significant portion has just... stopped gaming. Or reduced their gaming significantly. They're not buying RTX 5090s because they can't justify the cost relative to other entertainment options. Streaming services, mobile gaming, and other hobbies have become more appealing when PC gaming requires a second mortgage.

Manufacturers should be alarmed by this shift. They're not. Why? Because they've already stopped caring about gaming as a growth market. The money is in enterprise.

The Manufacturing Perspective: Why This Seems Rational

To be fair to manufacturers, their decision-making does make sense from their standpoint.

If you're Nvidia's CEO looking at market data in late 2023, what you see is:

- Gaming GPU demand: Relatively stable at 20-30 million units annually, trending slightly down

- Enterprise GPU demand: Exploding at 50% YoY growth, with no ceiling in sight

- Average selling price for gaming GPUs: $300-500

- Average selling price for enterprise accelerators: $15,000-50,000

- Profit margin on gaming: 15-20%

- Profit margin on enterprise: 50-60%

Given those numbers, reallocating fab capacity toward enterprise is obviously correct from a shareholder perspective. Growing a 50%+ margin business with unlimited demand is what you're supposed to do.
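Running those estimates through a quick calculation shows how lopsided the comparison is. The ranges below come straight from the list above; pairing the low end for enterprise with the high end for gaming is a deliberately conservative assumption.

```python
def profit_per_unit(asp: float, margin: float) -> float:
    """Profit per unit = average selling price * profit margin."""
    return asp * margin


# (ASP range in dollars, margin range as a fraction), from the estimates above.
GAMING = ((300, 500), (0.15, 0.20))
ENTERPRISE = ((15_000, 50_000), (0.50, 0.60))


def per_unit_range(segment):
    """Return (low, high) profit per unit for a segment's ASP and margin ranges."""
    (asp_lo, asp_hi), (m_lo, m_hi) = segment
    return profit_per_unit(asp_lo, m_lo), profit_per_unit(asp_hi, m_hi)


gaming_lo, gaming_hi = per_unit_range(GAMING)              # about $45 to $100 per card
enterprise_lo, enterprise_hi = per_unit_range(ENTERPRISE)  # about $7,500 to $30,000 per unit

# Least favorable comparison for enterprise: its worst case vs gaming's best case.
worst_case_ratio = enterprise_lo / gaming_hi

print(f"Gaming profit per card:     ${gaming_lo:,.0f}-${gaming_hi:,.0f}")
print(f"Enterprise profit per unit: ${enterprise_lo:,.0f}-${enterprise_hi:,.0f}")
print(f"One accelerator ~ {worst_case_ratio:.0f} gaming cards (worst case)")
```

Even in that least favorable pairing, one enterprise accelerator earns as much profit as about 75 gaming cards, which is why the reallocation looks so obvious from the boardroom.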

The problem is that this optimization is destroying an important part of Nvidia's ecosystem: gamers. Without gamers buying GPUs, there's less consumer interest in Nvidia technology. Without consumer demand, there's less software development targeting Nvidia hardware. Without software, enterprise customers have fewer reasons to prefer Nvidia to AMD or other competitors.

By optimizing for short-term enterprise margin, Nvidia might be setting itself up for long-term competitive vulnerability. But that's a problem for 2030. The stock price is driven by 2025 earnings, so here we are.

How This Breaks The Innovation Cycle

There's a hidden cost to prioritizing enterprise over gaming: you lose the feedback loop that drives innovation.

When gamers were the primary customer, GPU architecture evolved to solve gaming problems. Better rasterization. Improved ray tracing. Faster VRAM. These innovations didn't emerge from nowhere—they came from studying how games actually use hardware and designing accordingly.

Gamers also drove software innovation. Graphics APIs like Vulkan and DirectX evolved because game developers pushed hard for better hardware abstraction and lower overhead. Deep learning frameworks got GPU support because researchers wanted faster neural network training, and game developers had already proven that GPUs could be reprogrammed for arbitrary compute tasks.

Now that enterprise is the primary customer, architecture decisions are driven by AI workloads. Tensor operations. Floating-point precision tradeoffs. Memory bandwidth for serving models. These are real optimizations for real problems, but they're narrow optimizations focused on a single use case.

The result is that GPU innovation is becoming increasingly specialized. Nvidia's H200 is brilliantly designed for inference but mediocre for gaming. The RTX 50 series is gaming-capable but not optimally architected for the use case.

This matters because it means the innovation pipeline for consumers is broken. We're not getting the next generation of GPU breakthroughs that would make gaming more compelling. We're getting incremental refreshes that aren't worth upgrading for.

A healthy market would have competing priorities—enterprise and gaming both pulling innovation in different directions, resulting in products that excel at both. Instead, gaming is being neglected, and we're all worse off for it.

The Price Spiral Continues

Here's what worries me most: there's no correction mechanism anymore.

In previous cycles, high GPU prices would stimulate competitor innovation or new entrants. AMD would release aggressive products. Intel would make a play. Startup manufacturers would emerge. Market competition would drive prices down.

That mechanism has broken. AMD and Intel are equally focused on enterprise. There are no startup GPU manufacturers because the barrier to entry is too high (you need billions in R&D and fabrication capacity). So Nvidia faces no real price pressure.

Meanwhile, enterprise demand shows no signs of slowing. If anything, it's accelerating. Every tech company and enterprise is investing in AI infrastructure. The compute capacity needed just keeps growing.

So what you'll see is:

- Stable or rising prices for consumer GPUs

- Continued supply constraints

- Gradual erosion of gaming as a use case

- Further consolidation around enterprise hardware

This could persist for years. AI adoption will eventually hit saturation, but that's 3-5 years away minimum. Until then, expect the market to stay broken.

The Path Forward (If There Is One)

Okay, so the situation is grim. What actually fixes this?

The most realistic scenario is that enterprise AI demand eventually moderates as the technology matures and companies finish deploying infrastructure. When that happens—probably in 2027-2028—you'll see a sudden shift of manufacturing capacity back toward consumer products and prices will normalize.

Until then, gamers have limited options:

Console gaming becomes more attractive when PC hardware costs $3,000+ for a competitive experience. PS5 and Xbox Series X offer fixed-cost gaming that doesn't require annual equipment anxiety.

Midrange GPU purchases make more financial sense than premium. An RTX 4070 paired with upscaling tech delivers 90% of what an RTX 5090 does for 30% of the cost.

Used hardware markets might stabilize once new hardware pricing stabilizes. Right now, secondhand prices are inflated. When new cards drop in price, used cards will follow.

Game optimization becomes more important than raw GPU power. Studios that optimize aggressively deliver better experiences than studios that assume high-end hardware.

AI upscaling technology (DLSS, FSR, XeSS) will continue improving. At some point, AI upscaling will be indistinguishable from native resolution rendering for most visual cases. That flips the calculus: you can buy a midrange GPU and let AI handle the heavy lifting.

Beyond these interim solutions, the only real fix is for manufacturers to remember that gaming matters to their long-term ecosystem health and to balance enterprise and consumer optimization accordingly. That isn't a business incentive right now, but it might become one as the market matures.

Why This Should Make You Angry

I'm not trying to be a doomer here. But I think it's fair to be angry about what's happening to gaming hardware.

For 30 years, gamers funded GPU innovation. Hobbyists and enthusiasts bought the highest-end hardware and drove demand that kept fabs operating at capacity. That demand created the competitive environment that produced the world-class GPUs that eventually enabled AI and deep learning.

Now that AI has become more profitable, manufacturers are treating gaming like an unwanted stepchild. Not because gaming isn't profitable—it is. But because gaming isn't as profitable as enterprise, so it gets deprioritized in allocation decisions.

The frustrating part is that this doesn't have to happen. A company with Nvidia's resources could support both markets adequately. They could allocate sufficient fab capacity to gaming products, set competitive pricing, and still dominate enterprise. They choose not to because the profit margins are higher if they don't.

This is capitalism working exactly as designed: optimization toward maximum shareholder value. The problem is that this optimization is destroying consumer choice and innovation in a market that used to be healthy.

Somewhere, a 16-year-old who dreams about building a high-end gaming PC is looking at GPU prices and deciding it's not worth it. That kid who might have become a game developer or GPU engineer is choosing a different path because the barrier to entry got too expensive.

That's the real cost of this market failure.

The Runable Connection: Productivity In A GPU-Scarce World

While we can't fix GPU pricing directly, we can at least optimize how we use the computing resources we do have available.

Tools like Runable help teams automate document generation, report creation, and workflow optimization without requiring high-end GPU hardware. If you're creating presentations, reports, or documents that would normally require hours of manual work, AI-powered automation can handle that efficiently on modest hardware, freeing up your actual GPU compute for tasks that genuinely need it.

The point is: if you're working with limited hardware resources (which most gamers are now), focus your compute on what actually matters. Use AI tools to handle boilerplate work. Save your GPU power for things that truly benefit from high-end acceleration.

Use Case: Automate your weekly reports and presentations so you have more time and resources to focus on actual gaming, not work overhead.


FAQ

Why did GPU prices spike when AI became popular?

GPU manufacturers realized that AI applications generate significantly more profit per unit than gaming. Enterprise customers building AI infrastructure are willing to pay

Is the RTX 5090 actually worth $5,000 for gaming?

Absolutely not. The RTX 5090's MSRP is

Will GPU prices ever come down?

Yes, but probably not until 2027-2028 when AI infrastructure buildout slows down. Once enterprise demand moderates and manufacturing capacity becomes available again, manufacturers will shift focus back to consumer markets and prices will normalize. Until then, expect gradual increases or flat pricing in the high-end segment.

Should I buy a gaming PC now or wait?

Unless you absolutely need one immediately, waiting is generally the better choice. If you must buy, skip the premium tier and opt for a midrange GPU ($400-600 range) paired with modern upscaling technology like DLSS 3 or FSR 3. You'll get 85-90% of the gaming experience at 40% of the cost. Avoid the high-end RTX 5000 series unless you're willing to pay inflated prices.

Why haven't AMD and Intel captured market share from Nvidia despite high prices?

AMD and Intel made identical business decisions to prioritize enterprise AI products over gaming. AMD's focus has shifted from Radeon to its Instinct AI accelerators, and Intel's Arc division gets minimal resources. Without competitive pressure from two well-resourced competitors, Nvidia faces no incentive to lower prices or improve gaming support. The entire industry collectively abandoned gaming as a primary market.

How can I game affordably while GPU prices are inflated?

Consider these alternatives: Switch to console gaming (PS5/Xbox Series X offer fixed pricing), play competitive multiplayer titles that don't need high-end hardware (Valorant, CS2), build a budget gaming PC with a midrange GPU and AI upscaling, or try cloud gaming services where you don't own hardware directly. These options sidestep the premium GPU pricing problem entirely.

What impact do high GPU prices have on game development?

High prices reduce the developer incentive to optimize games for high-end hardware. When fewer people can afford premium GPUs, there's less business case for pushing visual boundaries. This creates a feedback loop where game innovation stagnates because fewer resources go to advanced graphics features that only premium GPUs can handle. It's bad for gamers and ultimately bad for hardware innovation.

Is AI demand artificial or genuine?

It's both. Genuine demand from major AI companies (OpenAI, Meta, Google, Microsoft) is real and massive. However, some demand is artificial: startups over-investing in infrastructure before proving market fit, companies choosing suboptimal implementations that require more compute than necessary, and hype-driven investment cycles. This artificial component inflates prices beyond what real adoption curves would predict.

Conclusion: The GPU Market Is Broken, And Nobody's Fixing It

The GPU market fracture isn't a temporary crisis. It's a structural shift that will persist for years. Manufacturers have consciously chosen enterprise profit over gaming ecosystems. They've done the math, seen that AI generates higher margins, and optimized accordingly. This is rational behavior from a shareholder value perspective.

But it's terrible for consumers, bad for gaming innovation, and ultimately risky for long-term competitive positioning. By neglecting gaming, manufacturers are eroding the consumer base and software ecosystem that historically drove GPU innovation.

The frustrating part is that none of this had to happen. Nvidia, AMD, and Intel have sufficient resources to support both markets adequately. They chose not to. That choice reflects current incentive structures and shareholder expectations, but it's still a choice with real consequences for millions of potential customers.

If you're a gamer hoping to upgrade, accept that the premium market is off-limits for now. Buy midrange hardware paired with modern upscaling tech, or switch to console gaming. These aren't ideal solutions, but they're rational responses to an irrational market.

And if you're paying attention to tech industry trends, understand what's happening here. The GPU market breaking under AI demand is a preview of what happens when one use case becomes so profitable that manufacturers stop optimizing for diversity. We'll see this dynamic play out in other hardware categories too. It's the inevitable result of AI becoming the most lucrative application of computing resources.

The market will eventually correct. Demand will moderate, supply will normalize, and prices will fall. Until then, we're all just waiting in a market that doesn't want our business anymore.

Key Takeaways

- GPU manufacturers reallocated production toward enterprise AI, making gaming GPUs artificially scarce and expensive

- The RTX 5090's $5,000 secondary market price reflects enterprise demand, not gaming value

- AMD and Intel prioritized enterprise AI over gaming, eliminating competitive pressure on pricing

- Gaming GPU innovation has stalled because developers won't optimize for hardware most players can't afford

- Gamers should consider console gaming, budget GPUs with upscaling, or waiting until 2027-2028 when enterprise demand moderates


![GPU Gaming Crisis: How AI Is Destroying High-End PC Gaming [2025]](https://tryrunable.com/blog/gpu-gaming-crisis-how-ai-is-destroying-high-end-pc-gaming-20/image-1-1767366512158.jpg)