How AI Is Reshaping Customer Service Teams [2025]

Customer service teams aren't just tweaking their processes anymore. They're completely reimagining how support works.

We're talking about fundamental shifts in who does what, how work gets routed, and what skills actually matter on the front lines. And it's happening faster than most people realize.

Recent research analyzed 166 in-depth interviews with support leaders, managers, and frontline specialists to understand what actually changes when AI agents enter the picture. The data is striking. Nearly every single team reported meaningful workflow transformations. Some saw their team structures completely reorganized. Others discovered they needed entirely new skill sets just to manage AI outputs.

Here's what makes this different from previous tech adoption cycles: it's not about making humans faster at the same job. It's about fundamentally redefining what the job even is.

TL;DR: The Core Shifts

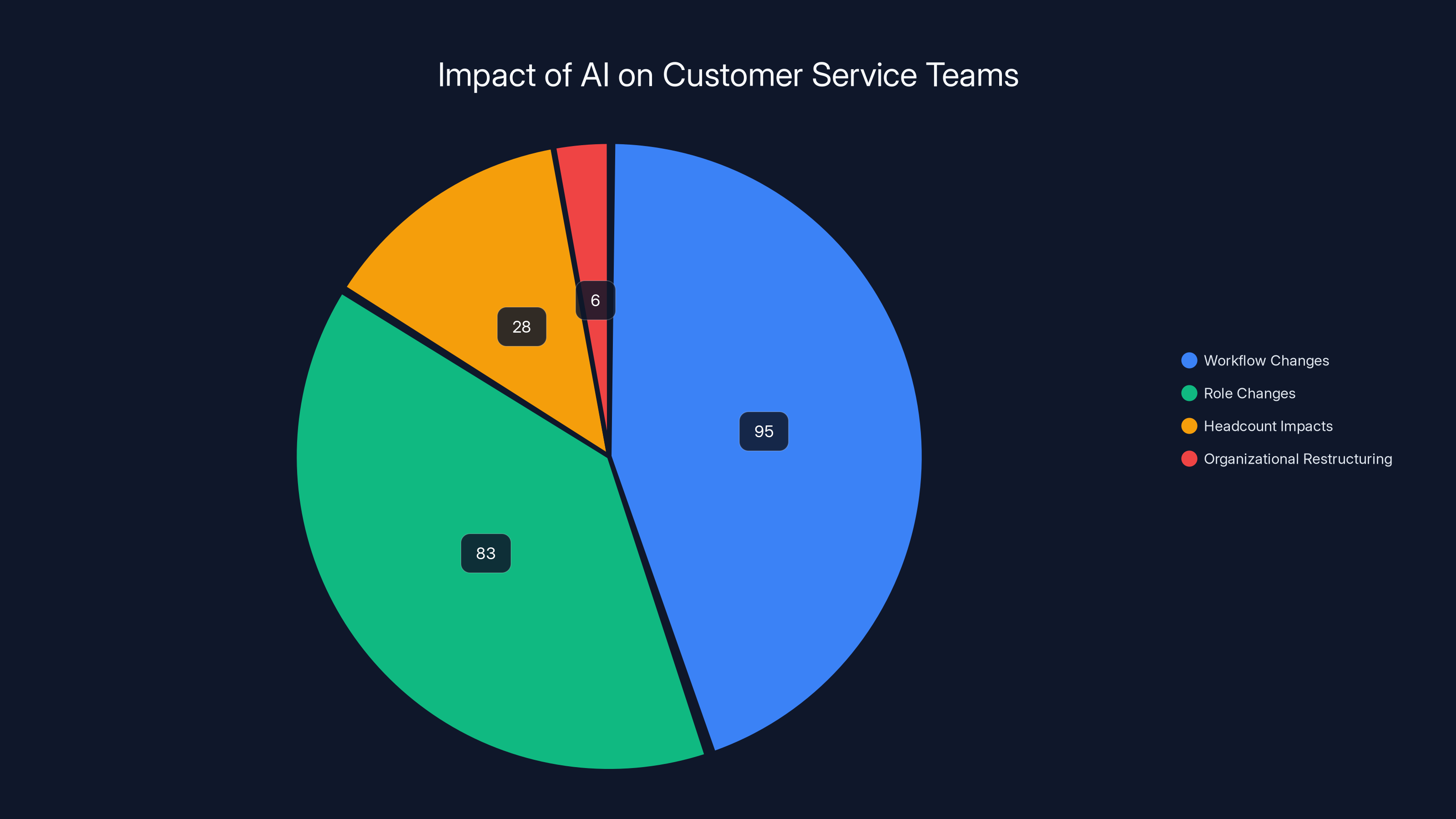

- 95% of teams reported meaningful workflow changes after implementing AI agents

- 83% experienced new roles, responsibilities, or organizational restructuring

- 28% saw hiring freezes or reduced Tier 1 headcount as AI manages more requests

- Triage, routing, translation, and categorization are now primarily AI functions

- Human work shifted toward QA, oversight, and handling edge cases requiring nuance

- Skill gaps widening in data literacy, AI quality assurance, and cross-functional work

- Structure varies widely: some companies built dedicated AI pods, others kept traditional setups

AI implementation has led to significant workflow changes in 95% of customer service teams, with notable impacts on roles and headcount.

The Scale of Change Is Massive (And Most Leaders Aren't Ready)

When you're inside a customer service operation, you see incremental improvements all the time. A new ticketing system. A better knowledge base. Faster search. These feel like progress.

AI agents represent something different entirely. This isn't an incremental improvement. It's a wholesale reorganization of how support work flows through your organization.

The numbers tell the story: 94.58% of participants reported having their processes and workflows disrupted by implementing AI agents. That's not just a majority. That's nearly everyone.

But here's what's interesting: the disruption isn't all negative. Most teams reported that their operations became more efficient. The problem is they weren't prepared for the transition. Leaders often discovered, months after deployment, that they'd accidentally created skill gaps, unclear handoffs, and confusion about who actually owns different parts of the support process.

One pattern emerged repeatedly in the interviews: companies that succeeded were the ones that treated AI implementation as an organizational redesign project, not a software implementation project. They thought about job descriptions, skill development, and team structures from day one. Everyone else scrambled to fix things after the fact.

The research reported these figures at a 90% confidence level with a margin of error of ±6.4%. Even at the low end of that range, roughly 88% of teams would still report disrupted workflows, so these aren't flukes or outliers. This is happening across the industry. If you're not experiencing these shifts, you're likely about to.
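For readers who want to sanity-check the ±6.4% figure, here is a minimal sketch of one common way such a margin is computed. It assumes a simple random sample, the worst-case proportion of 0.5, and a z-score of 1.645 for 90% confidence. The research does not say how its margin was derived, so treat this as an illustration rather than the study's method; `margin_of_error` is a name made up for this example.

```python
import math

def margin_of_error(n: int, z: float = 1.645, p: float = 0.5) -> float:
    """Margin of error for a sample proportion.

    Assumes a simple random sample of size n. p=0.5 is the worst case
    (widest margin); z=1.645 corresponds to a 90% confidence level.
    """
    return z * math.sqrt(p * (1 - p) / n)

# 166 is the number of interviews cited in the research.
moe = margin_of_error(166)
print(f"+/-{moe * 100:.1f}%")  # +/-6.4%
```

With 166 interviews, the worst-case margin lands at about 6.4 percentage points, which matches the figure quoted above.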

What's particularly striking is the variance in how companies respond. Some built entirely new "AI pods" with dedicated team members responsible for training, monitoring, and improving AI outputs. Others just distributed these responsibilities unevenly across their existing staff, creating burnout and confusion. There's no universal playbook yet, which means team leaders are essentially experimenting in real time.

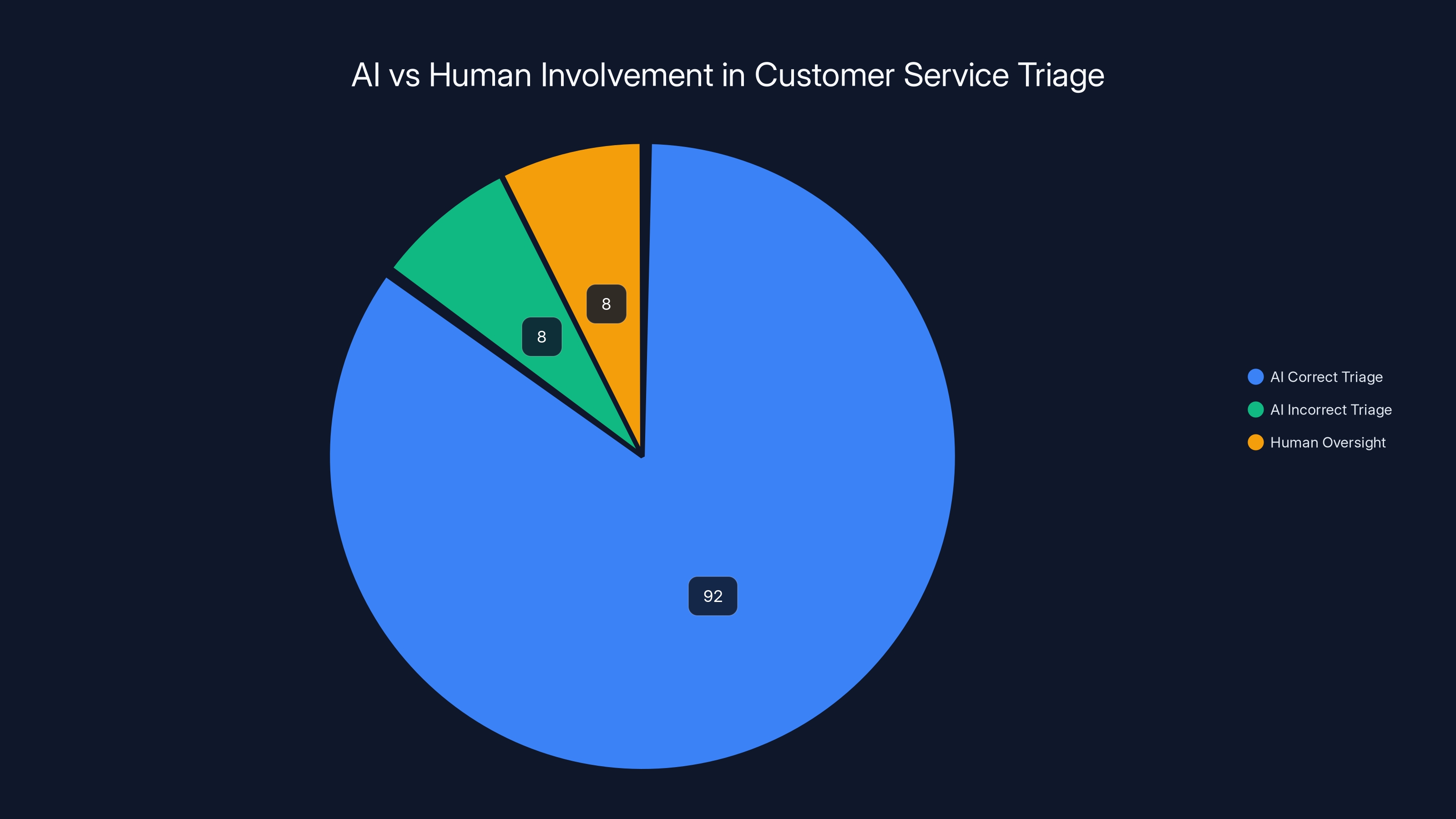

AI agents correctly triage customer service tickets 92% of the time, with human oversight required for the remaining 8% due to AI uncertainty. Estimated data.

Workflow Transformation: From Sequential to Hybrid

Traditional customer service workflows moved in one direction: tickets entered a system, got sorted, got assigned, got resolved. It was sequential. It was predictable. It made sense to organize people and processes around it.

That model is breaking down.

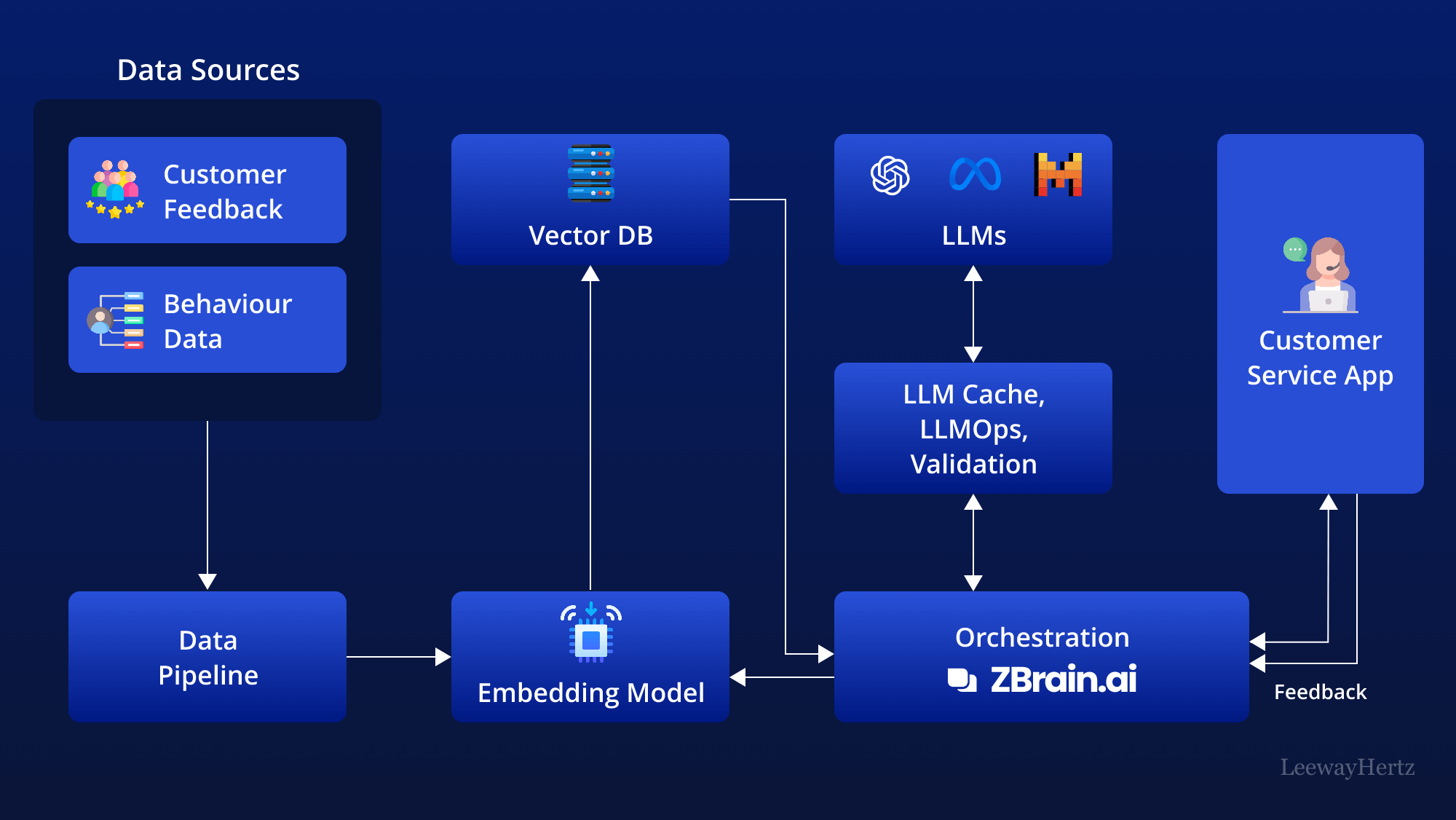

With AI agents handling initial triage, routing, and categorization, the workflow becomes something else entirely. It's now a hybrid system where humans and AI make decisions in parallel, not in sequence. A ticket arrives. The AI agent immediately starts working on it—understanding the intent, pulling relevant information, and starting a response. A human monitors the AI's work, jumps in when the AI confidence is low, and takes over for nuanced situations.

This isn't just a process change. It fundamentally alters what people need to do at every stage.

Triage is now AI-driven: Rather than having humans sort incoming tickets by urgency or category, AI agents now do the initial assessment. They read the message, understand the intent, and often make the routing decision automatically. Human involvement happens only when the AI is genuinely uncertain. One team found that AI triage got the category right 92% of the time, but the 8% failure rate required careful human oversight because miscategorized tickets often got assigned to the wrong team entirely.
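The "human steps in only when the AI is uncertain" pattern can be sketched as a simple confidence gate. Everything here is hypothetical: the `TriageResult` shape, the `human_review` queue name, and the 0.80 threshold are assumptions for illustration, not details from the research or from any specific product.

```python
from dataclasses import dataclass

# Hypothetical cutoff; in practice this would be tuned against your own data.
CONFIDENCE_THRESHOLD = 0.80

@dataclass
class TriageResult:
    category: str      # category predicted by the (hypothetical) AI classifier
    confidence: float  # classifier's confidence in that category, 0.0 to 1.0

def route_ticket(result: TriageResult, threshold: float = CONFIDENCE_THRESHOLD) -> str:
    """Return the queue a ticket should go to.

    Low-confidence predictions go to a human review queue instead of
    being routed automatically on a guess.
    """
    if result.confidence < threshold:
        return "human_review"
    return result.category

print(route_ticket(TriageResult("billing", 0.95)))  # billing
print(route_ticket(TriageResult("billing", 0.55)))  # human_review
```

Where the threshold sits is a trade-off: set it higher and more tickets reach a human, set it lower and more miscategorized tickets slip through to the wrong team.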

Routing becomes probabilistic: Traditional systems routed based on explicit rules: if issue contains keyword X, send to team Y. AI routing is more sophisticated. It considers request complexity, available expertise, agent workload, and historical performance. But it also means that routing decisions aren't transparent. When a manager asks why a particular ticket went to Sarah instead of Mike, the AI might genuinely not have a simple explanation. It just weighted multiple factors.
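The contrast between keyword rules and weighted routing can be made concrete with a toy scorer. This is a hypothetical sketch, not how any particular AI routing product works: the `Agent` fields, the `WEIGHTS` values, and the example numbers are all invented for illustration. Real systems typically learn their weights and use many more signals, which is part of why their decisions are harder to explain.

```python
from dataclasses import dataclass

@dataclass
class Agent:
    name: str
    expertise: float     # 0..1, how well the agent's skills match the ticket
    workload: float      # 0..1, fraction of the agent's capacity already in use
    past_success: float  # 0..1, historical resolution rate on similar tickets

# Hypothetical hand-picked weights; a real system would likely learn these.
WEIGHTS = {"expertise": 0.5, "availability": 0.3, "past_success": 0.2}

def score(agent: Agent) -> tuple[float, dict[str, float]]:
    """Return the agent's total score plus each factor's contribution."""
    contributions = {
        "expertise": WEIGHTS["expertise"] * agent.expertise,
        "availability": WEIGHTS["availability"] * (1 - agent.workload),
        "past_success": WEIGHTS["past_success"] * agent.past_success,
    }
    return sum(contributions.values()), contributions

def pick_agent(agents: list[Agent]) -> Agent:
    """Pick the agent with the highest weighted score."""
    return max(agents, key=lambda a: score(a)[0])

agents = [Agent("Sarah", 0.9, 0.5, 0.8), Agent("Mike", 0.7, 0.2, 0.7)]
chosen = pick_agent(agents)
total, parts = score(chosen)
print(chosen.name, {k: round(v, 2) for k, v in parts.items()})
```

Keeping the per-factor contributions, as `score` does here, is one way to give a manager at least a partial answer to "why Sarah instead of Mike": in this example Mike has more availability, but Sarah's expertise match outweighs it.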

Translation and categorization happen automatically: Many support teams work globally, which means handling tickets in multiple languages. Previously, companies either employed native speakers for every language or used basic translation tools that often failed on support jargon. AI agents now handle translation as a standard feature. Similarly, automatically categorizing support issues used to be a major pain point. Now it's something AI does as a base function.

The human role shifts to quality assurance: This is the big one. Humans don't disappear from these processes—they supervise them. A support representative might now spend 30% of their day reviewing AI responses, testing edge cases, and flagging issues. They're essentially QA-ing the AI's work. This feels different than providing support directly, and many teams underestimated how different it would feel.

The research showed that teams reporting the smoothest transitions were the ones that explicitly redesigned their workflows with AI in mind. They didn't try to retrofit AI into existing processes. They asked "what should the workflow be" and then figured out how to train and deploy AI to support it.

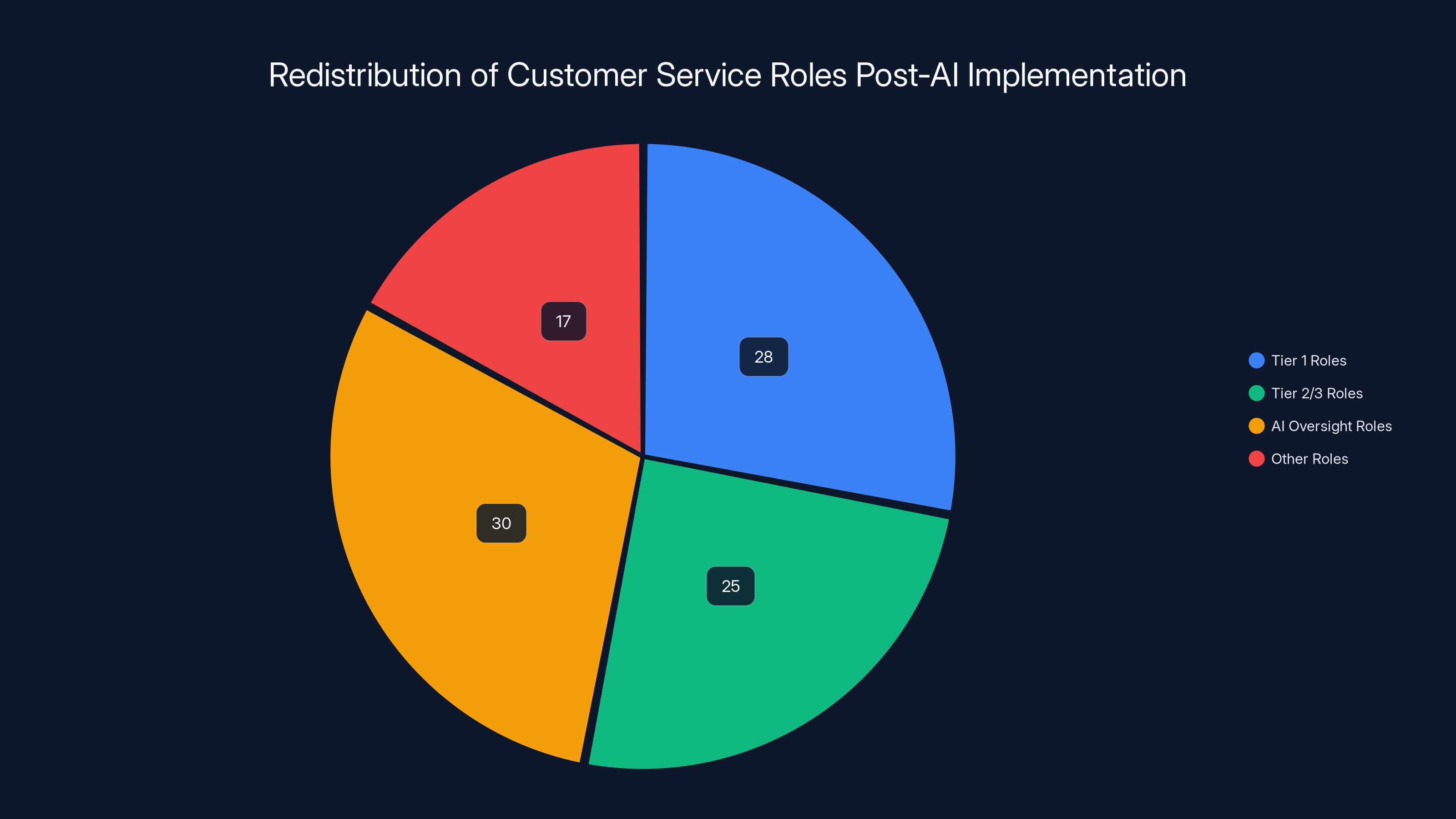

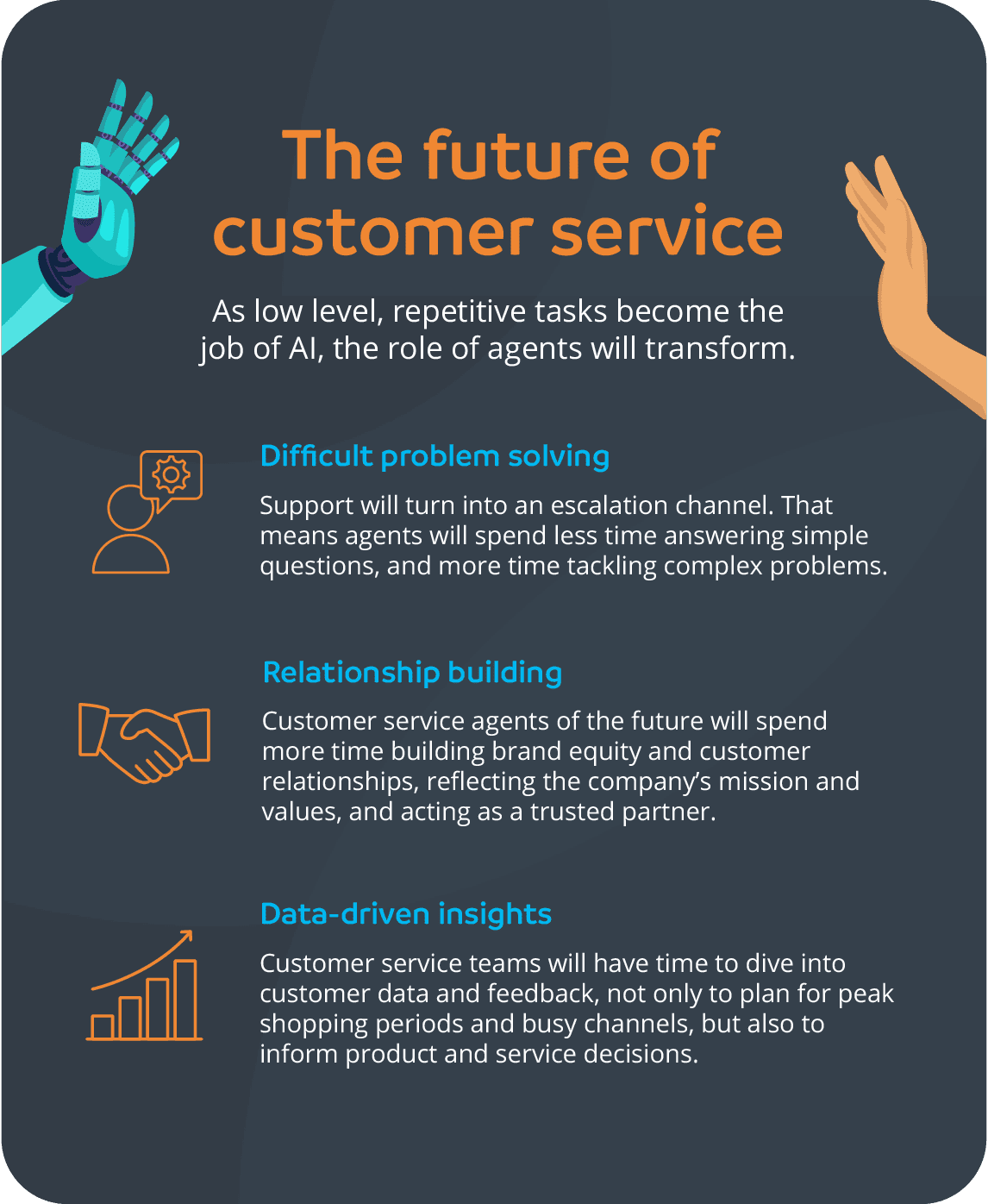

The New Customer Service Job: From Handling Requests to Managing AI

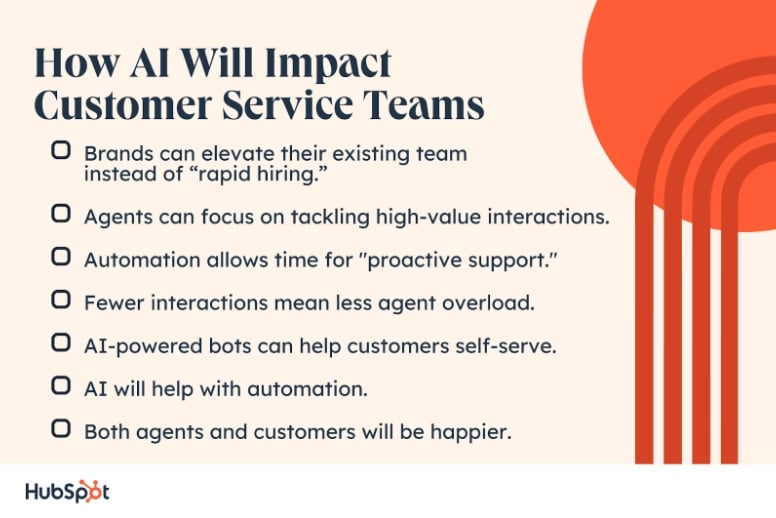

Here's something surprising from the research that most support leaders didn't anticipate: implementing AI didn't eliminate support jobs. It changed what support jobs actually are.

About 82.53% of participants reported changes in their role and responsibilities. But it wasn't cuts across the board. It was redistribution.

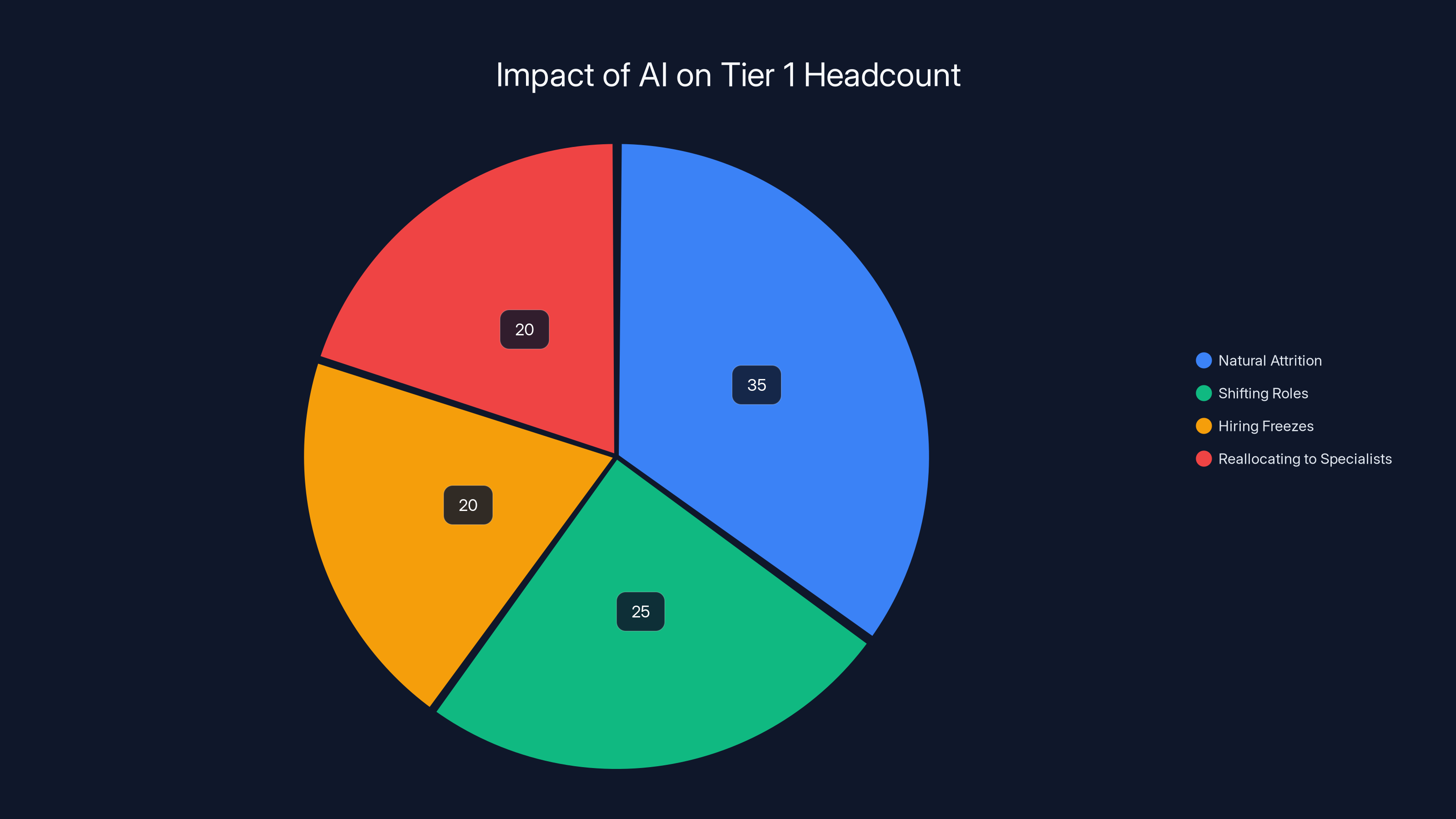

Tier 1 demand is declining: Tier 1 support traditionally handled high-volume, low-complexity issues. Things like password resets, basic troubleshooting, simple questions. AI agents are excellent at this. The data showed that 28% of companies experienced hiring freezes, slowdowns, or natural attrition at the Tier 1 level. When positions opened up, they often didn't get filled. The work just... didn't need as many people anymore.

But here's the nuance: companies didn't shed 28% of their headcount. They reallocated people. Some moved up to Tier 2 or Tier 3 roles handling more complex issues. Others transitioned into AI oversight positions.

New roles emerged that didn't exist before: Many teams created positions like "AI Quality Assurance Specialist" or "AI Training Coordinator." These people monitor AI performance, flag issues, provide feedback, and help improve outputs. It's not a role that existed in traditional support organizations, but it's essential once you have AI agents.

What humans now focus on: According to the research, human work increasingly centers on:

- Nuance handling: Issues where the customer is angry, confused, or needs emotional intelligence. AI can sympathize syntactically, but humans bring actual empathy.

- Edge case handling: The weird, unusual situations that fall outside normal patterns. AI agents get trained on common scenarios. When something unusual happens, a human takes over.

- Quality control: Reviewing AI outputs, testing responses for accuracy, and flagging problems.

- Customer relationship building: Complex accounts or long-term relationships where the customer has preferences for human interaction.

- Product feedback: Support agents now spend time documenting edge cases and feeding them back to product teams.

One interesting finding: frontline staff didn't universally celebrate these changes. Some appreciated moving from repetitive work to more engaging problem-solving. Others struggled with the shift from "I resolved this ticket" to "I monitored the AI's resolution." It felt less tangible. Several teams reported needing to invest in training and mindset shifts to help people see value in the new role definitions.

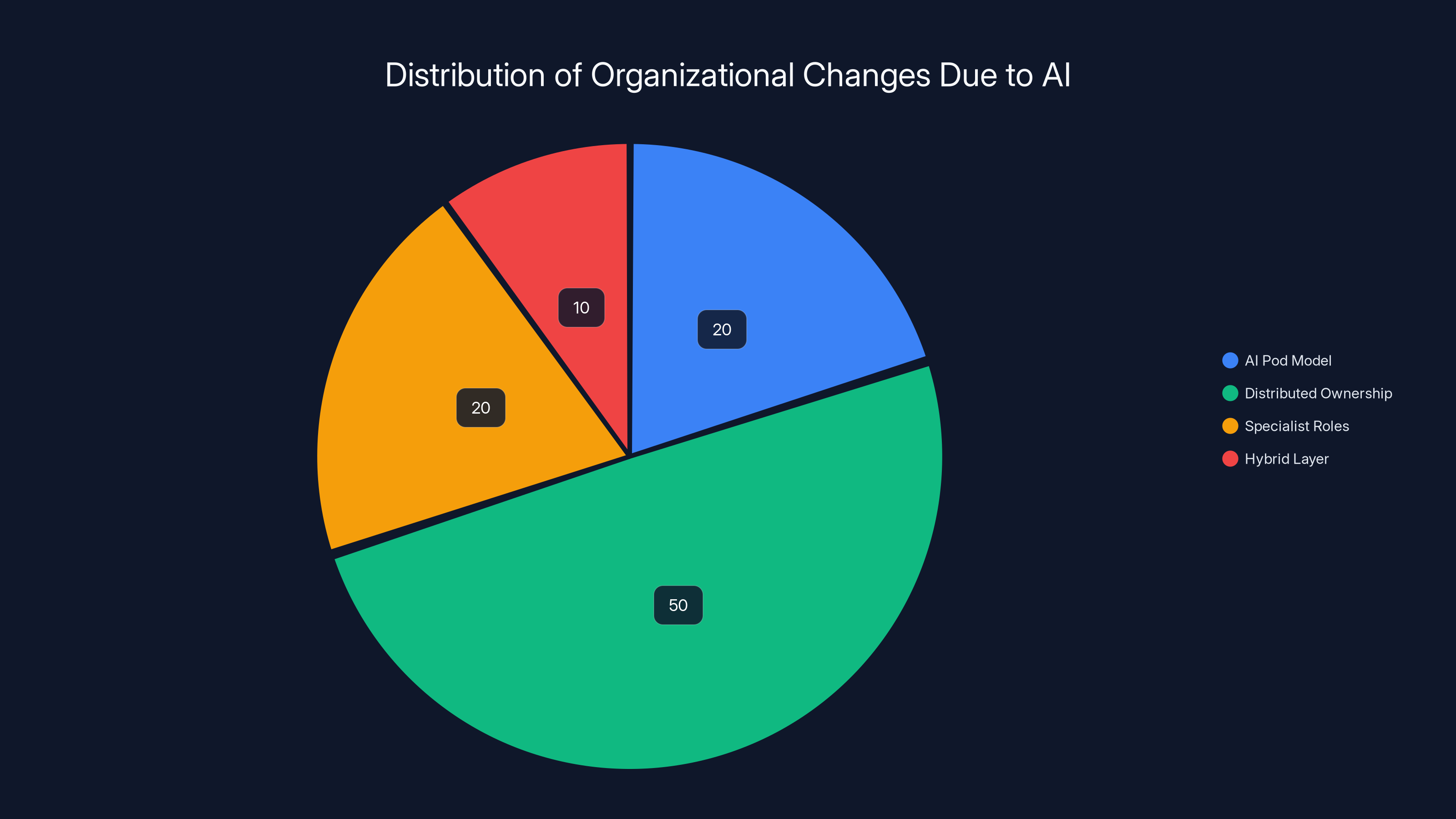

The majority of companies (50%) adopted a distributed ownership model for AI responsibilities, while 20% each opted for AI pods or specialist roles, and 10% implemented a hybrid layer. Estimated data based on narrative insights.

How Team Structures Are Reorganizing

About 6% of companies reported changes in team structure or reporting lines directly due to AI implementation. That number sounds small until you realize that organizational restructuring is rare and disruptive. The fact that it happened at all in 6% of cases shows how fundamental these changes can be for some organizations.

But structural changes showed up in the data in other ways too. About 83% of companies reported new responsibilities or new roles. And 16.27% reported broader organizational changes beyond the categories we've been discussing.

The structural changes took a few different forms:

The AI Pod Model: Some organizations created dedicated teams responsible for AI implementation and optimization. These pods usually included a mix of support specialists, data analysts, and product people. They'd monitor AI performance metrics, identify retraining opportunities, and own the process of improving outputs over time. This works well for companies with 200+ support people, but it's overkill for smaller teams.

Distributed Ownership: Most companies took a different approach. They distributed AI-related responsibilities across their existing structure. Team leads became responsible for overseeing their team's AI performance. Individual contributors took turns monitoring outputs. It's less formal but also less resource-intensive.

Specialist Roles: Some companies hired new specialists. A data analyst to pull performance metrics. A trainer to help update AI outputs. A program manager to oversee the implementation. These weren't restructures exactly, but they were structural additions.

The Hybrid Layer: A few organizations created a layer between frontline staff and managers specifically for AI oversight. One company described it as "AI specialists" who sit with support teams but are dedicated to ensuring AI outputs are high quality.

What the research revealed is that companies are still experimenting. There's no standard model yet for how to organize around AI support. The ones that seemed to be doing best were the ones that explicitly discussed structure early, made a deliberate choice, and then adjusted based on what they learned.

The Hiring Puzzle: Why Tier 1 Headcount Is Shrinking

This is the data point that's getting attention: 27.71% of companies reported changes to headcount or hiring plans due to AI implementation.

On its surface, that seems like layoffs are coming. But the reality is more nuanced.

What actually happened:

Natural attrition rather than cuts: Many companies didn't lay people off. They just didn't replace people who left. If you have 50 Tier 1 support agents and two quit in a given quarter, you might historically hire two replacements. With AI handling 40% of the workload they previously handled, you hire one replacement instead. Over a year or two, your headcount naturally declines.
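The attrition arithmetic above can be projected over several quarters. This is a deliberately simple sketch: it assumes departures and backfills stay constant each quarter, which real teams won't see, and `project_headcount` is a name invented for this example.

```python
def project_headcount(start: int, quarterly_departures: int,
                      quarterly_backfills: int, quarters: int) -> list[int]:
    """Project headcount when only some departures are backfilled.

    Returns the headcount at the start and after each quarter.
    Assumes constant departures and backfills per quarter.
    """
    headcount = start
    history = [headcount]
    for _ in range(quarters):
        headcount = max(0, headcount - quarterly_departures + quarterly_backfills)
        history.append(headcount)
    return history

# 50 Tier 1 agents, two leave each quarter, one is replaced, over two years.
print(project_headcount(50, 2, 1, 8))
# [50, 49, 48, 47, 46, 45, 44, 43, 42]
```

Under these assumptions the team shrinks from 50 to 42 in two years without a single layoff, which is why this kind of change is easy to miss in headline numbers.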

Shifting up rather than out: Companies that were strategic about this found Tier 1 people who showed aptitude for more complex work and moved them into Tier 2 or Tier 3 roles. It's not a huge shift in total headcount, but it changes the composition of the team.

Hiring freezes while figuring things out: Some companies just paused hiring completely. They wanted to see what AI actually changed about their support load before committing to new headcount. A few months in, when they better understood the impact, they might resume hiring—just at a lower level than before.

Reallocating toward specialist roles: The hiring that did happen often went toward specialist positions. AI trainers. Data analysts. Product support people who could work with AI outputs.

Here's what's important: the research showed that headcount changes weren't evenly distributed. Some companies cut significantly. Others didn't change headcount at all. The difference came down to how they approached AI implementation. Companies that viewed AI as a tool to make existing staff more productive didn't necessarily need fewer people. Companies that viewed AI as a way to handle the same volume with fewer staff reduced headcount.

One more pattern: companies with lower AI implementation quality had to keep more people on payroll because they needed more human oversight. Companies that invested in proper training and setup saw genuine reductions in headcount needs. Quality of implementation directly impacted hiring decisions.

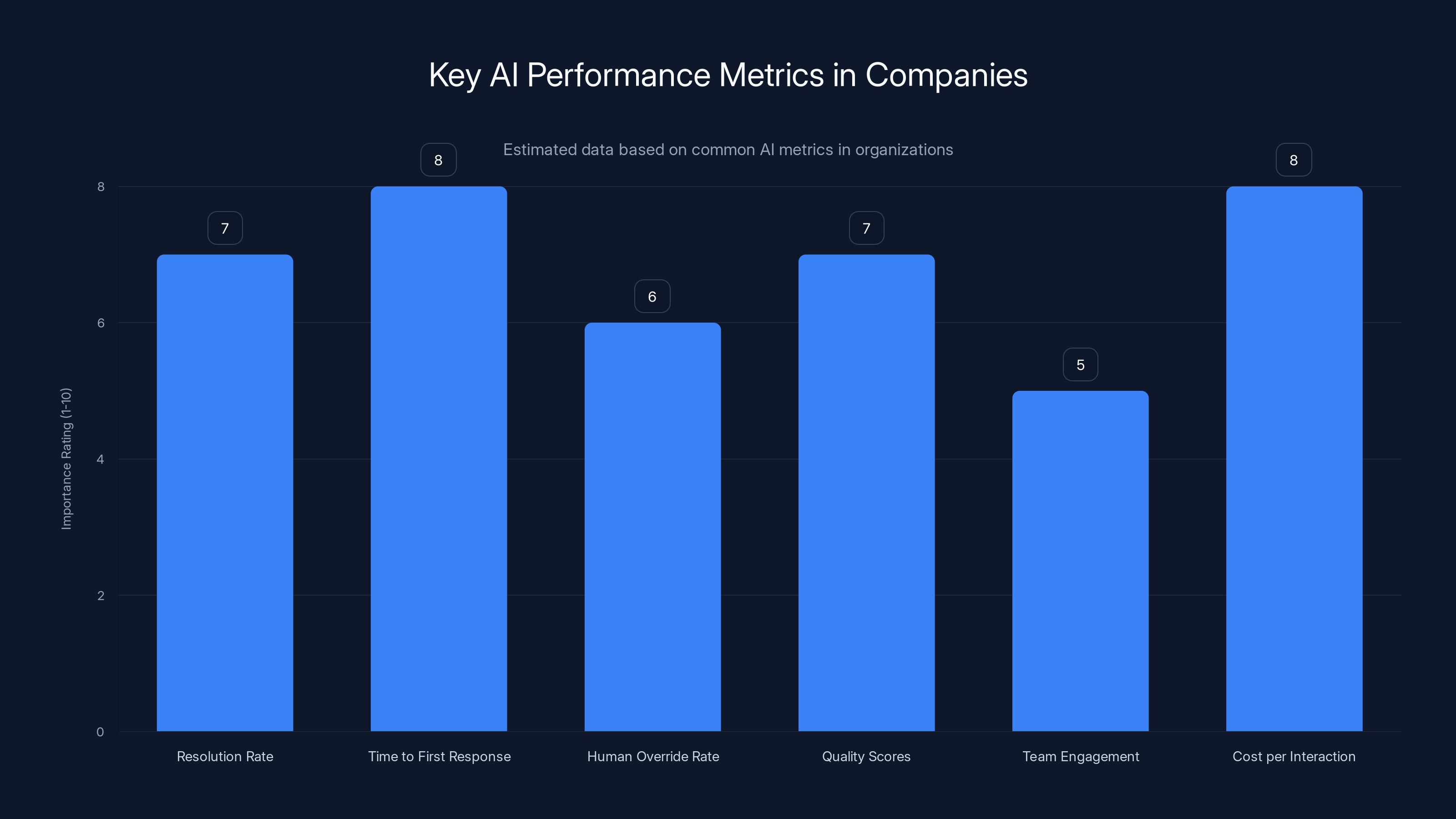

Resolution rate and cost per interaction are highly prioritized metrics, with companies focusing on efficiency and cost savings. Estimated data reflects typical organizational focus.

The Skill Gap Crisis Nobody's Talking About

Here's what might be the most important finding from the research: companies are creating new skill gaps while solving old ones.

Traditionally, support organizations valued product knowledge, customer empathy, and ability to troubleshoot. Those skills are still valuable. But the research showed that three new skills are rapidly becoming essential:

Data literacy: To understand how your AI is performing, you need to read metrics. What's your AI resolution rate? How often does it escalate to humans? What's the accuracy of categorization? Which issue types does it handle well? Leaders need people on their team who can interpret these metrics, spot trends, and identify problems. Many support teams don't have this skill yet.

AI quality assurance: Reviewing AI outputs for correctness is different than reviewing human responses. You're looking for hallucinations, accuracy issues, tone mismatches, and subtle errors that an AI might make differently than a human. This skill isn't taught in most support training programs.

Cross-functional communication: Supporting an AI system requires coordinating with product teams, engineering, and data teams. Traditional support roles were often siloed. Now you need people who can talk to engineers about model behavior, discuss data quality with data teams, and coordinate with product on how to handle edge cases.

The research showed that companies identified these skill gaps, but many didn't have a clear plan for developing them. Some hired new people with these skills. Others started training existing staff. The ones that moved fastest were the ones that treated skill development as urgent rather than optional.

One company built a "support engineering" track—basically career growth for support people who wanted to develop technical and analytical skills. Another started a monthly training program focused on data literacy. These proactive moves made a real difference in managing the transition.

Implementation Approaches: Why One Size Doesn't Fit All

The research uncovered something important: there's no single "right" way to implement AI in support. But there are definitely better and worse approaches.

The big bang approach: Some companies decided to deploy AI broadly across all channels, all issue types, and all teams at once. This creates maximum organizational disruption but also forces everyone to adapt quickly. The upside: you figure out the real issues fast. The downside: chaos and mistakes happen at scale.

The gradual expansion: Other companies started narrow. Maybe one channel (chat-only). Maybe one issue type (account problems). Maybe one team as a pilot. They learned what worked, debugged the issues, then expanded. This is less disruptive but takes longer to see real benefits.

The vertical approach: Some organizations deployed AI deeply in one area before expanding horizontally. They'd get email support optimized with AI, completely retrain the team, nail the workflows, then move to chat.

The experimental approach: A few companies treated their first AI implementation as an experiment. They explicitly told teams "we're trying this, documenting what happens, and will adjust." This lower-pressure framing actually led to better feedback and faster iteration.

Companies that succeeded tended to do a few consistent things:

- Clear communication: They explained why they were implementing AI, what would change, and how roles would evolve. Surprise restructuring bred resentment.

- Gradual rollout: Even companies with big ambitions started small. They used initial rollouts to learn before going big.

- Feedback loops: They created explicit ways for frontline staff to report issues, suggest improvements, and voice concerns. Teams felt heard rather than dictated to.

- Management training: They didn't just train support staff on the AI system. They trained managers on how to lead through organizational change, how to identify and develop new skills, and how to handle the emotional aspects of role transitions.

- Long-term vision: They communicated what support would look like in a year or two after AI was fully integrated. This helped people understand that the disruption was temporary and that there were real career paths forward.

Estimated data shows a significant shift in customer service roles, with a decline in Tier 1 roles and an increase in AI oversight and higher-tier roles.

The Case for Deliberate Role Design (Before You Hire)

One pattern stood out in the research: companies that explicitly designed new roles before fully deploying AI had smoother transitions than companies that tried to figure things out as they went.

This makes intuitive sense. If you suddenly deploy AI without clarity on who owns what, you get gaps. One team thought the AI QA was someone else's job. Another team thought feedback to product happened somewhere else. These gaps cause problems.

Companies that did this well went through a deliberate process:

- Mapped the workflow: From start to end, where does AI step in and step out?

- Identified decision points: Where do humans need to make choices?

- Defined role responsibilities: Who monitors this? Who escalates that? Who provides feedback?

- Created skill requirements: What does someone need to know to do this job?

- Developed training: How do we teach these skills?

- Set up feedback loops: How do we continuously improve?

One company created an explicit "AI coordinator" role within each support team. This person's entire job was managing the interface between the human team and the AI system. They monitored metrics, coordinated feedback, trained people on how to work effectively with AI, and raised issues. It sounds like overhead, but it actually prevented a lot of chaos.

Another company created role tiers explicitly around AI involvement:

- AI Specialist: 100% focused on AI oversight and improvement

- AI-First Agent: Works alongside AI, stepping in for escalations

- Complex Case Specialist: Handles the cases that AI doesn't take

This clarity meant people understood where they fit, what success looked like for their role, and how to develop their skills.

Measurement and Metrics: What Actually Matters

The research showed that companies measure AI impact in wildly different ways. Some focus on volume (how many tickets did AI handle?). Others focus on quality (how often did humans have to redo the AI's work?). Some focus on cost (did we save money?). Others focus on employee experience (did the job become better or worse?).

There's no universal metric because the goals vary. A company trying to reduce costs has different priorities than a company trying to improve customer satisfaction. But several metrics emerged as valuable across most organizations:

Resolution rate without human escalation: What percentage of issues does the AI resolve without involving a human? One company tracked this obsessively and discovered it was lower than expected (around 35%) once they counted only resolutions the customer was actually satisfied with, rather than every AI-generated response, some of which might be wrong.

Time to first response: AI systems are fast. Measuring whether this actually improved customer experience requires looking at downstream metrics too—like resolution time and customer satisfaction.

Human override rate: When humans review AI outputs, how often do they need to change something? High override rates suggest the AI isn't handling the work well.

Quality scores: Various tools measure response quality on dimensions like accuracy, tone, and completeness. Companies using AI typically saw initial dips in quality (AI gets something wrong 3% of the time instead of humans getting it wrong 1% of the time), then quality improved over time with training and feedback.

Team engagement and satisfaction: This is the metric nobody tracked until after implementation, then realized it mattered. Did your team get more engaged by moving away from repetitive work? Or did they disengage because the work felt less tangible?

Cost per interaction: This matters, but it's more complex than it looks. If AI reduces cost per interaction but humans have to spend time fixing its mistakes, the real savings are less than they appear.

The companies that succeeded were the ones that tracked multiple metrics, understood how they interacted, and used them to make deliberate improvements rather than just celebrating one number.
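To make a few of these metrics concrete, here is a minimal sketch that computes resolution rate without escalation, human override rate, and a cost per interaction that includes human cleanup time. The `Ticket` fields, the cost figures, and the metric definitions are assumptions chosen for illustration. Teams define these metrics differently, and the research does not prescribe formulas.

```python
from dataclasses import dataclass

@dataclass
class Ticket:
    handled_by_ai: bool   # the AI produced the response
    escalated: bool       # the ticket was handed off to a human
    human_edited: bool    # a reviewer changed the AI's output
    ai_cost: float        # hypothetical cost of the AI interaction
    human_minutes: float  # human time spent on review, fixes, or handling

def support_metrics(tickets: list[Ticket], human_cost_per_minute: float) -> dict[str, float]:
    """Compute three illustrative metrics from a batch of tickets."""
    if not tickets:
        raise ValueError("need at least one ticket")
    total = len(tickets)
    ai_tickets = [t for t in tickets if t.handled_by_ai]
    resolved_by_ai = sum(1 for t in ai_tickets if not t.escalated)
    overridden = sum(1 for t in ai_tickets if t.human_edited)
    # Count human time as a cost so AI savings aren't overstated.
    total_cost = sum(t.ai_cost + t.human_minutes * human_cost_per_minute
                     for t in tickets)
    return {
        "resolution_rate_no_escalation": resolved_by_ai / total,
        "human_override_rate": overridden / len(ai_tickets) if ai_tickets else 0.0,
        "cost_per_interaction": total_cost / total,
    }

sample = [
    Ticket(True, False, False, 0.5, 0),   # AI resolved cleanly
    Ticket(True, False, True, 0.5, 4),    # AI resolved, human fixed the reply
    Ticket(True, True, False, 0.5, 10),   # AI escalated to a human
    Ticket(False, False, False, 0.0, 12), # handled by a human from the start
]
print(support_metrics(sample, human_cost_per_minute=1.0))
```

Folding human minutes into `cost_per_interaction` reflects the point made above: if people spend time fixing AI mistakes, the real savings are smaller than the AI's own cost suggests.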

Estimated data shows that 35% of companies experienced natural attrition, 25% shifted roles, 20% implemented hiring freezes, and 20% reallocated to specialist roles due to AI implementation.

Change Management: The Human Side Nobody Plans For

The research included a lot of qualitative feedback from frontline staff and managers about how the transition actually felt. And there's a consistent pattern: implementation often goes smoothly at first, then hits a wall when the emotional and psychological aspects of change kick in.

Concrete examples from the research:

- Identity shift: Support people often take pride in resolving issues. Moving to an AI oversight role can feel less meaningful, even if the actual impact is greater.

- Power dynamics: AI removes some of the judgment and autonomy from support work. Decisions that used to be made by experienced agents are now made algorithmically. Some people appreciated the standardization. Others resented losing autonomy.

- Trust erosion: When an AI makes mistakes, it affects both customer trust and team trust. The research showed that trust in AI systems tracks closely with the quality of human oversight. When people trust that someone's actually monitoring the AI, trust in the system increases. When oversight feels spotty, trust erodes.

- Career clarity: People need to understand how the change affects their career. Is there a path forward? Are these new roles better, worse, or lateral moves? Ambiguity around this created anxiety.

Companies that managed these elements well did a few things:

- Reframed the narrative: Instead of "AI is taking your job," it was "AI is taking the repetitive parts of your job so you can focus on more meaningful work."

- Provided choice: Some companies let people opt into new roles rather than assigning them. This agency made a real difference in how people approached the transition.

- Celebrated wins: They highlighted cases where humans and AI together solved something neither could solve alone. This reinforced that it was a collaboration, not a replacement.

- Invested in people: Training budgets, skill development programs, and career path clarity signaled that the company was investing in people, not just technology.

- Gathered feedback: They asked people regularly how the transition felt and made adjustments. One company discovered their people felt more tired after the transition (constant monitoring and switching between AI oversight and escalation handling). They restructured roles to alternate between oversight work and complex case work, and energy levels improved.

Long-Term Strategy: Where This Is Actually Heading

The research points to something important: most companies don't have a clear long-term vision for what support looks like two or three years after AI implementation.

This creates a problem. Short-term, companies need to manage the disruption and learn how to work effectively with AI. But without a longer-term vision, they risk building ad-hoc structures that don't scale and training people for jobs that might change again soon.

Some future scenarios emerged from conversations with leaders:

Scenario 1: Specialized support models: In the future, support might split into very different roles. Some people become AI coaches who train and improve the AI system continuously. Others become specialist responders who handle only the cases that require human judgment. Others become customer advocates who focus on relationships with high-value customers. These aren't roles that exist in traditional support organizations.

Scenario 2: Full integration: AI becomes so embedded that there's no longer a distinct "AI oversight" function. Everyone just works alongside AI as a standard tool. Support people spend their time on what humans do best (understanding complex situations, showing empathy, making judgment calls). AI handles what it does best (fast research, categorization, pattern matching).

Scenario 3: Support automation dominance: In some specific domains (e.g., technical support for well-documented products), AI might handle 70-80% of issues, with humans handling only the truly unusual cases. This fundamentally changes headcount needs and skill requirements.

Scenario 4: Human-AI teams: Rather than AI handling some issues and humans handling others, most issues get handled by human-AI teams where they're actively collaborating. The human provides judgment and understanding. The AI provides information and pattern recognition. This requires a completely different operating model.

Most companies haven't chosen which future they're building toward. That's a strategic gap. The companies that do will likely move faster and more coherently than the ones that just react to day-to-day changes.

One company took a deliberate approach: they articulated what support would look like in year two and year three. They communicated this to their team. They started training people now for the roles they'd need then. This gave people clarity about where things were heading and made the current disruption feel more like a transition to something better rather than just chaos.

Building an AI-Ready Support Organization Now

If you're implementing AI in your support team or thinking about it, the research points to several things worth doing now:

First, map your current workflows in detail: You need a baseline. What does support look like right now? Where does AI fit? Where will humans still be essential? You can't manage change you don't understand.

Second, involve your team early: Don't design changes in a conference room and announce them. Ask your team where they see AI fitting in. Ask what worries them. Ask what excites them. This surfaces real issues and builds buy-in.

Third, pilot deliberately: Start small. Maybe one channel or one team. Document what changes. Measure what matters. Get people trained. Get feedback. Then expand. The cost of a slow rollout is patience. The cost of a fast rollout is chaos.

Fourth, invest in skill development: Identify the new skills your team will need. Start training now. Don't wait until you've implemented AI to realize nobody knows how to do QA on AI outputs.

Fifth, create role clarity: Define what people will actually do. How much time will they spend on AI oversight? How much on escalations? How much on other work? Ambiguity breeds stress.

Sixth, measure what matters: Pick metrics that track toward your actual goals. If you want better customer satisfaction, measure that—not just volume handled by AI. If you want happier team members, measure engagement and job satisfaction.

Seventh, communicate the long-term vision: Tell people where support is heading. What will roles look like in two years? What's the career path? What's the vision? This helps people understand that today's disruption is a transition to something better.

The Competitive Advantage of Getting This Right

Here's something that doesn't get said enough: how you integrate AI into your support organization is a genuine competitive advantage.

Two companies might implement the same AI technology and get completely different results. One gets 35% automation and improves customer satisfaction. The other gets 50% automation and decreases customer satisfaction because they cut people too aggressively and the quality of human responses dropped.

The difference isn't the technology. It's the organizational capability to integrate it effectively.

Companies that are doing this well share some characteristics:

- They view AI as augmentation, not replacement

- They invest in people and skill development

- They measure broadly (not just cost)

- They gather feedback and iterate

- They communicate clearly and often

- They design roles intentionally

- They have a long-term vision

This actually creates a moat. A company that figures out how to integrate AI effectively into support will have better customer satisfaction, more engaged employees, and lower costs than competitors. That's not a short-term advantage; it's a sustainable one.

Common Mistakes and How to Avoid Them

The research also revealed patterns of what doesn't work:

Deploying without workflow redesign: Companies that dropped AI into existing workflows without rethinking the flow experienced chaos. Tickets got routed wrong. Escalations didn't work. Humans stepped in too late or too early. The solution: redesign workflows first.

Underestimating change management: Lots of companies focused entirely on AI implementation and underestimated the organizational challenge. People got stressed, confused, or disengaged. The solution: invest as much in change management as in technology implementation.

Not building feedback loops: AI systems improve when you feed them data about what's working and what's not. Companies that didn't create structured feedback loops struggled to improve quality over time. The solution: make feedback loops a core part of your implementation.

Hiring freezes without a plan: Some companies stopped all Tier 1 hiring without having a clear model for whether they'd need fewer people. This created gaps and understaffing. The solution: model the impact carefully before making headcount decisions.
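One way to "model the impact carefully" is a simple capacity calculation run before any headcount decision. The sketch below is illustrative only: the function name, its parameters, and every number in the usage lines are hypothetical assumptions, not figures from the research.

```python
import math


def required_agents(
    monthly_tickets: int,
    ai_resolution_rate: float,
    avg_handle_minutes: float,
    productive_hours_per_agent: float,
    review_minutes_per_ai_ticket: float = 0.0,
) -> int:
    """Estimate agents needed once AI resolves a share of tickets.

    Every input is an assumption to be replaced with your own data.
    """
    if not 0.0 <= ai_resolution_rate <= 1.0:
        raise ValueError("ai_resolution_rate must be between 0 and 1")

    ai_tickets = monthly_tickets * ai_resolution_rate
    human_tickets = monthly_tickets - ai_tickets

    # Human workload = tickets handled directly + time spent reviewing AI output.
    workload_minutes = (
        human_tickets * avg_handle_minutes
        + ai_tickets * review_minutes_per_ai_ticket
    )
    capacity_minutes = productive_hours_per_agent * 60
    return math.ceil(workload_minutes / capacity_minutes)


# Hypothetical scenario: 10,000 tickets/month, 12 minutes per ticket,
# 120 productive hours per agent per month.
baseline = required_agents(10_000, 0.0, 12, 120)
with_ai = required_agents(10_000, 0.35, 12, 120, review_minutes_per_ai_ticket=2)
print(baseline, with_ai)  # prints "17 12"
```

Note that the review-time term matters: oversight of AI output is still human work, so a 35% automation rate does not translate into a 35% reduction in the staffing estimate.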

Losing good people: Some experienced agents got frustrated with the transition and left. The company lost expertise and had to hire new people to replace them. The solution: involve experienced people in designing how AI works, give them meaningful roles, and invest in them.

Measuring the wrong things: One company measured only the percentage of issues AI handled, declared victory when it hit 40%, then later realized customer satisfaction had dropped because quality suffered. The solution: measure actual outcomes, not just activity metrics.

FAQ

What percentage of customer service teams are experiencing changes due to AI implementation?

According to research analyzing 166 in-depth interviews with support leaders and specialists, approximately 95% of customer service teams reported meaningful workflow changes after implementing AI agents. About 83% experienced changes in roles and responsibilities, 28% saw headcount impacts, and 6% reported organizational restructuring. These changes span team structure, processes, hiring, and skill requirements.

How are customer service roles changing due to AI implementation?

Roles are shifting from handling routine requests to overseeing AI outputs and managing complex edge cases. Humans now focus on quality assurance of AI responses, handling issues requiring nuance and empathy, providing feedback to improve AI systems, and managing customer relationships for complex accounts. Tier 1 roles (high-volume, repetitive work) are declining, while new specialist roles like AI Quality Assurance and AI Training Coordinator are emerging. The work is becoming more strategic and less transactional.

Are customer service jobs disappearing due to AI?

Not disappearing, but transforming. About 28% of companies experienced hiring freezes or reduced hiring at the Tier 1 level as AI handles more routine requests. However, this doesn't mean universal layoffs; more often it reflects natural attrition and reallocation. Many people transition to more complex support roles, AI oversight positions, or specialist functions. The total headcount impact varies significantly by company and implementation approach.

What new skills do support teams need as AI becomes part of their work?

Three critical skill gaps emerged: data literacy (understanding AI performance metrics and identifying trends), AI quality assurance (reviewing outputs for accuracy and appropriate tone), and cross-functional communication (coordinating between support, product, and engineering teams). Additionally, emotional intelligence became more valuable as routine work shifts to complex cases. Companies that addressed these skill gaps early experienced smoother transitions.

What's the best approach to implementing AI in customer service operations?

Research indicates that successful implementations typically involve several elements: detailed workflow redesign before deployment, gradual rollout starting with one channel or team, explicit role design with clear responsibilities, investment in team training and skill development, structured feedback loops for continuous improvement, clear communication about changes and long-term vision, and measurement of multiple metrics including customer satisfaction and employee engagement. Companies that treated AI implementation as organizational redesign rather than just software deployment had better outcomes.

How should companies measure the impact of AI on their support operations?

Multiple metrics matter: AI resolution rate without escalation, override rates (how often humans change AI outputs), quality scores across accuracy and tone, first response time, customer satisfaction, employee engagement, and cost per interaction. Companies focusing on only one metric often found surprising problems downstream. For example, maximizing AI resolution rate without tracking quality led to customer satisfaction drops. Measuring actual business outcomes rather than activity metrics provides better guidance for improvements.
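To make a few of these metrics concrete, here is a minimal sketch of a scorecard computed from ticket records. The `Ticket` fields and the `support_scorecard` function are hypothetical names invented for illustration; a real ticketing system will expose different fields, and the exact metric definitions are assumptions.

```python
from dataclasses import dataclass
from typing import Dict, List, Optional


@dataclass
class Ticket:
    handled_by_ai: bool          # AI produced the response
    escalated: bool              # handed to a human after the AI attempt
    overridden: bool             # a human changed the AI output
    csat: Optional[int] = None   # 1-5 survey score, None if no survey response


def _ratio(numerator: float, denominator: float) -> float:
    # Avoid division by zero on empty samples.
    return numerator / denominator if denominator else 0.0


def support_scorecard(tickets: List[Ticket]) -> Dict[str, float]:
    """Report activity and outcome metrics side by side."""
    ai_tickets = [t for t in tickets if t.handled_by_ai]
    scores = [t.csat for t in tickets if t.csat is not None]
    return {
        # Share of all tickets the AI resolved without escalation.
        "ai_resolution_rate": _ratio(
            sum(1 for t in ai_tickets if not t.escalated), len(tickets)
        ),
        # Share of AI-handled tickets where a human changed the output.
        "override_rate": _ratio(
            sum(1 for t in ai_tickets if t.overridden), len(ai_tickets)
        ),
        # Outcome metric: average satisfaction across rated tickets.
        "avg_csat": _ratio(sum(scores), len(scores)),
    }
```

Reporting the resolution rate next to the override rate and CSAT in one structure makes it harder to celebrate an automation number while quality quietly declines.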

What are the biggest risks when implementing AI in customer service?

Common risks include deploying without workflow redesign (leading to confusion and poor escalation), underestimating change management needs (causing team stress and disengagement), losing experienced employees who get frustrated with transitions, cutting headcount too aggressively before understanding true AI capabilities, creating skill gaps by not investing in training, and failing to build feedback loops that allow AI quality to improve over time. Companies that proactively addressed these risks experienced much smoother implementations.

How can organizations prepare their support teams for AI implementation?

Effective preparation includes: mapping current workflows in detail, involving frontline staff and managers in planning, communicating clearly about why change is happening and what it means for roles, providing skill development and training before or concurrent with deployment, piloting with one team or channel first to learn before scaling, creating clear role definitions with explicit responsibilities, establishing feedback mechanisms so people feel heard, and articulating a long-term vision for what support looks like post-AI. Teams that felt prepared and involved adjusted much better than teams that felt surprised by changes.

What percentage of companies are changing their organizational structure due to AI?

About 6% reported direct changes to team structure or reporting lines specifically due to AI. However, 83% reported new roles or responsibility changes, and 16.27% reported broader organizational changes. The variation reflects different implementation strategies. Some companies created dedicated AI pods, others distributed responsibilities across existing teams, and others created specialist roles. There's no universal model yet—companies are experimenting with different approaches.

How does AI change the workflow of customer service teams?

Traditional sequential workflows (ticket enters system → gets sorted → gets assigned → gets resolved) are becoming hybrid workflows where AI and humans work in parallel. AI typically handles initial triage and categorization, routes complex issues based on sophisticated algorithms, translates and auto-categorizes requests, and drafts initial responses. Humans then review, quality-assure, and escalate when needed. This parallel workflow requires fundamentally different process design than sequential models, including new decision points, feedback loops, and handoff mechanisms.
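The decision points and handoffs in a hybrid workflow can be sketched as a small routing function. This is one possible design under stated assumptions, not the method used by any team in the research: the `Route` values, the `SENSITIVE` category set, and the confidence thresholds are all hypothetical.

```python
from dataclasses import dataclass
from enum import Enum


class Route(Enum):
    AI_SEND = "ai_send"            # AI draft goes out directly
    HUMAN_REVIEW = "human_review"  # a human checks the AI draft first
    HUMAN_HANDLE = "human_handle"  # a human takes over the ticket


@dataclass
class Triage:
    category: str      # label assigned by a hypothetical AI classifier
    confidence: float  # classifier confidence, 0.0 to 1.0


# Assumed categories that should always reach a person.
SENSITIVE = {"billing_dispute", "legal", "cancellation"}


def route(
    triage: Triage,
    send_threshold: float = 0.9,
    review_threshold: float = 0.6,
) -> Route:
    """Pick a handoff path from the AI triage result."""
    if triage.category in SENSITIVE:
        return Route.HUMAN_HANDLE
    if triage.confidence >= send_threshold:
        return Route.AI_SEND
    if triage.confidence >= review_threshold:
        return Route.HUMAN_REVIEW
    return Route.HUMAN_HANDLE
```

For example, `route(Triage("password_reset", 0.7))` returns `Route.HUMAN_REVIEW`. The thresholds are exactly the kind of decision point that a feedback loop would tune over time, for instance by raising `send_threshold` if override rates climb.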

Looking Ahead: The Future of Support Operations

The research paints a clear picture: the next few years will determine what support organizations look like for the next decade. Companies that figure out how to integrate AI thoughtfully—preserving what's valuable about human work while amplifying it with technology—will emerge with competitive advantages in customer satisfaction, employee retention, and operational efficiency.

The ones that treat AI as a cost-cutting measure without considering the organizational and human dimensions will likely face problems down the road: quality issues, team disengagement, talent loss, and customer dissatisfaction.

The good news? The path forward is becoming clearer. The research shows what works: clear communication, intentional role design, investment in people and skills, deliberate measurement, and a long-term vision. These aren't revolutionary ideas. They're fundamentals of good organizational change management applied to AI implementation.

Your support organization is in the middle of a transition. How you navigate the next 12-18 months will shape what it looks like in 2027 and beyond. The teams that move intentionally and thoughtfully will win. The ones that just react will scramble to catch up.

Key Takeaways

- 95% of support teams report meaningful workflow changes from AI implementation; 83% see new roles and responsibilities emerge

- About 28% of teams report hiring freezes or reduced Tier 1 headcount, but this reflects role evolution rather than universal job loss across support organizations

- New critical skills are emerging: data literacy, AI quality assurance, and cross-functional communication, creating skill gaps companies must address proactively

- Workflow redesign from sequential to hybrid human-AI models is essential; companies that treat this as organizational redesign rather than software deployment see better outcomes

- Long-term strategy clarity is missing: most companies lack a clear vision for support operations 2-3 years post-implementation, creating organizational uncertainty
