Introduction: Why Visual Explanations Beat Words Every Time

You know that moment when you're trying to explain something complicated and the person just isn't getting it? Then you sketch something on a napkin and suddenly their eyes light up. That's the power of visual explanation.

But here's the frustrating part: not everyone can draw. Some of us can barely stick-figure our way through a concept. That's where AI image tools come in, and honestly, they've become genuinely useful in the last couple of years. According to PCMag, AI image generators have advanced significantly, offering tools that can create professional visuals without needing artistic skills.

The shift is real. A few years ago, AI-generated images looked like they were created by an alien trying to understand human anatomy through fever dreams. Now? You can generate clean, professional visuals that actually help people understand what you're trying to say. No Picasso degree required.

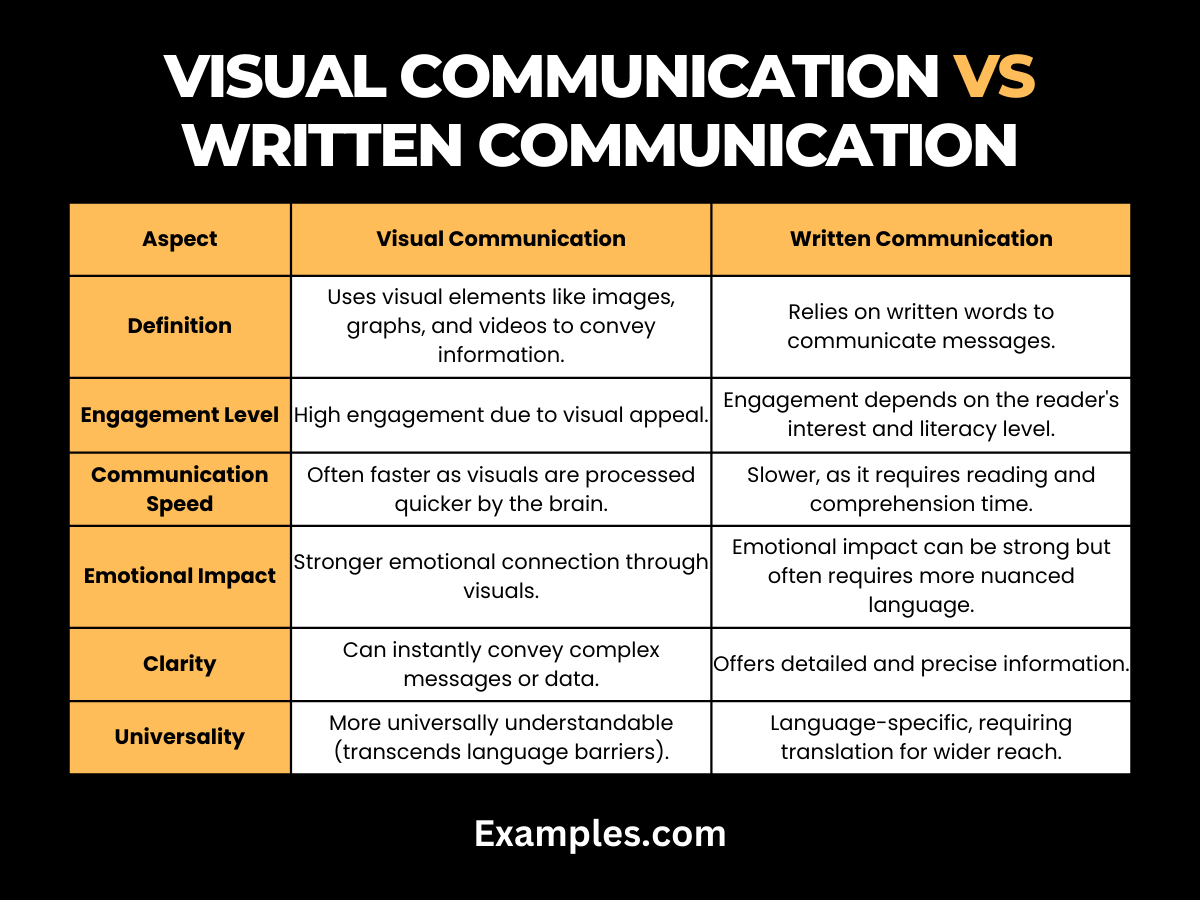

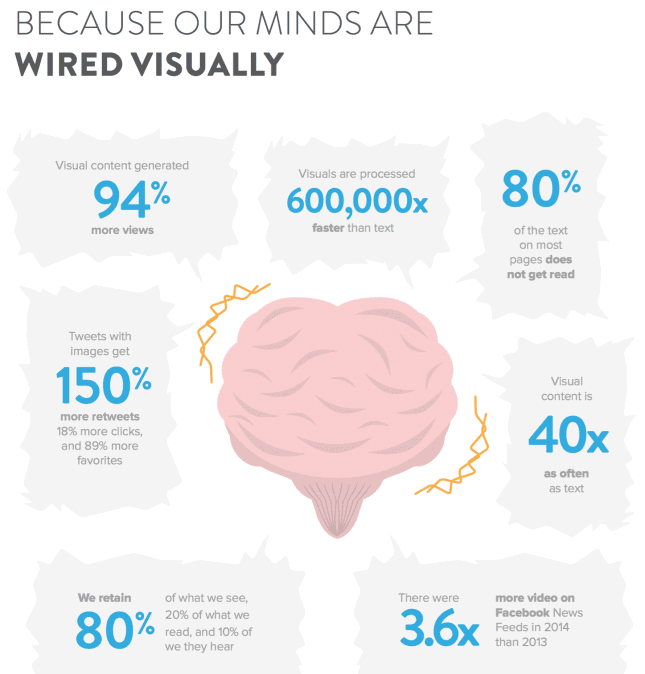

What makes this work is understanding how visuals communicate differently than text. An often-quoted claim says your brain processes images 60,000 times faster than text. That exact figure is hard to trace to a published study, but the broader point holds: an infographic that would take three paragraphs to explain in writing can be absorbed in seconds. This isn't just about making things look pretty. It's about making information stick. Research on visual cognition supports the general efficiency of visual information processing, even if the precise multiplier is debatable.

The real advantage emerges when you're dealing with abstract concepts. How do you show "workflow optimization" or "data fragmentation" in a way that doesn't feel like corporate nonsense? AI image tools let you generate custom visualizations that match exactly what you're describing, not some generic stock photo from 2008.

Throughout this guide, we're going to walk through how to actually use these tools effectively. We'll cover which platforms work best for different types of explanations, the specific techniques that produce results, and the common mistakes that make AI images look like AI images (spoiler: you want to avoid that).

The goal isn't to replace illustrators or designers. It's to give you a way to visualize ideas quickly, iterate on them, and communicate more effectively. Whether you're building a product, explaining a concept to stakeholders, or creating educational content, these tools have legitimate value when you know how to use them.

TL;DR

- AI image tools have matured significantly and can now generate professional visuals for explanations without design skills, as noted by PCMag.

- Visual communication is 60,000x faster than text processing, making images crucial for idea explanation.

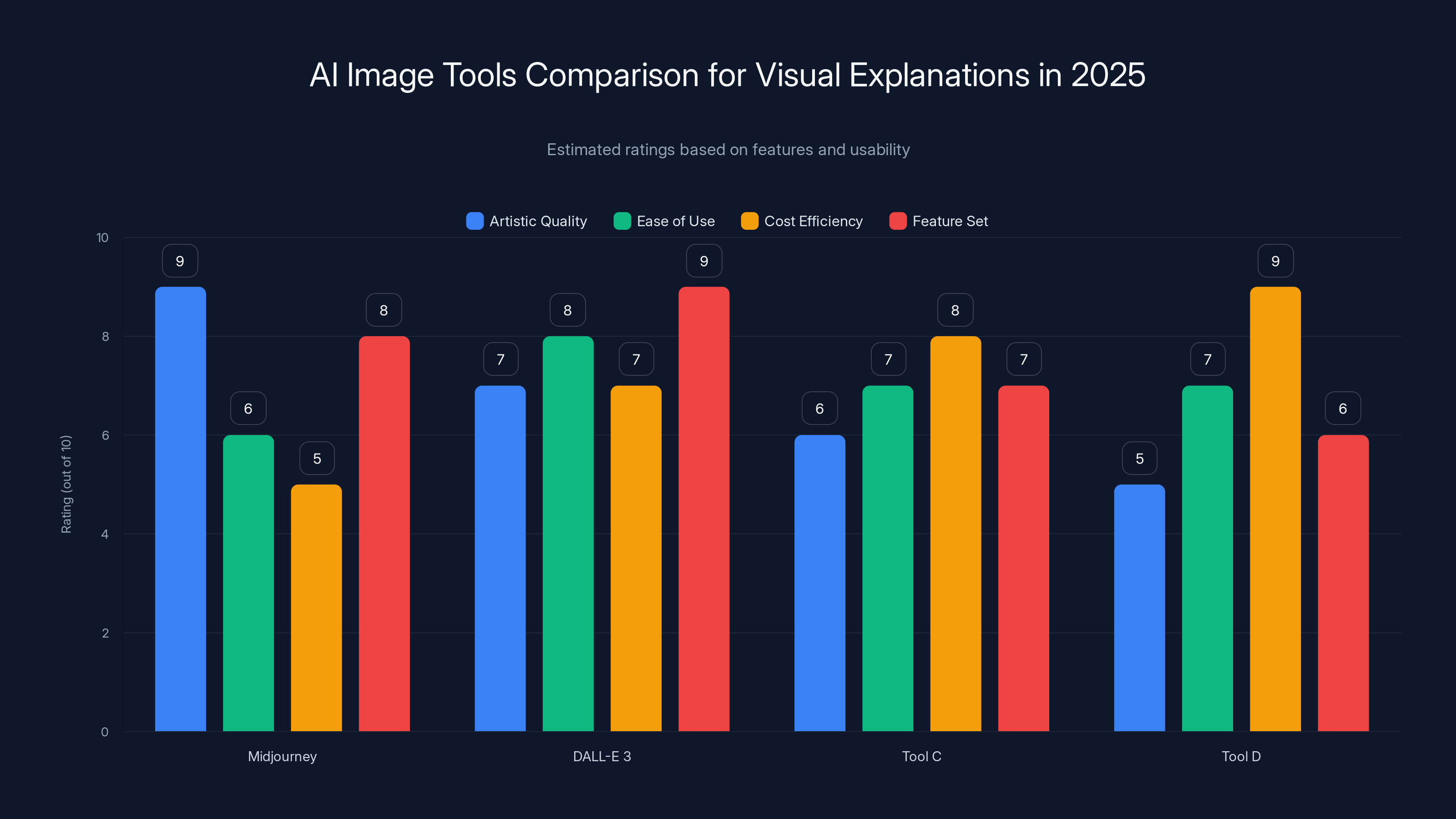

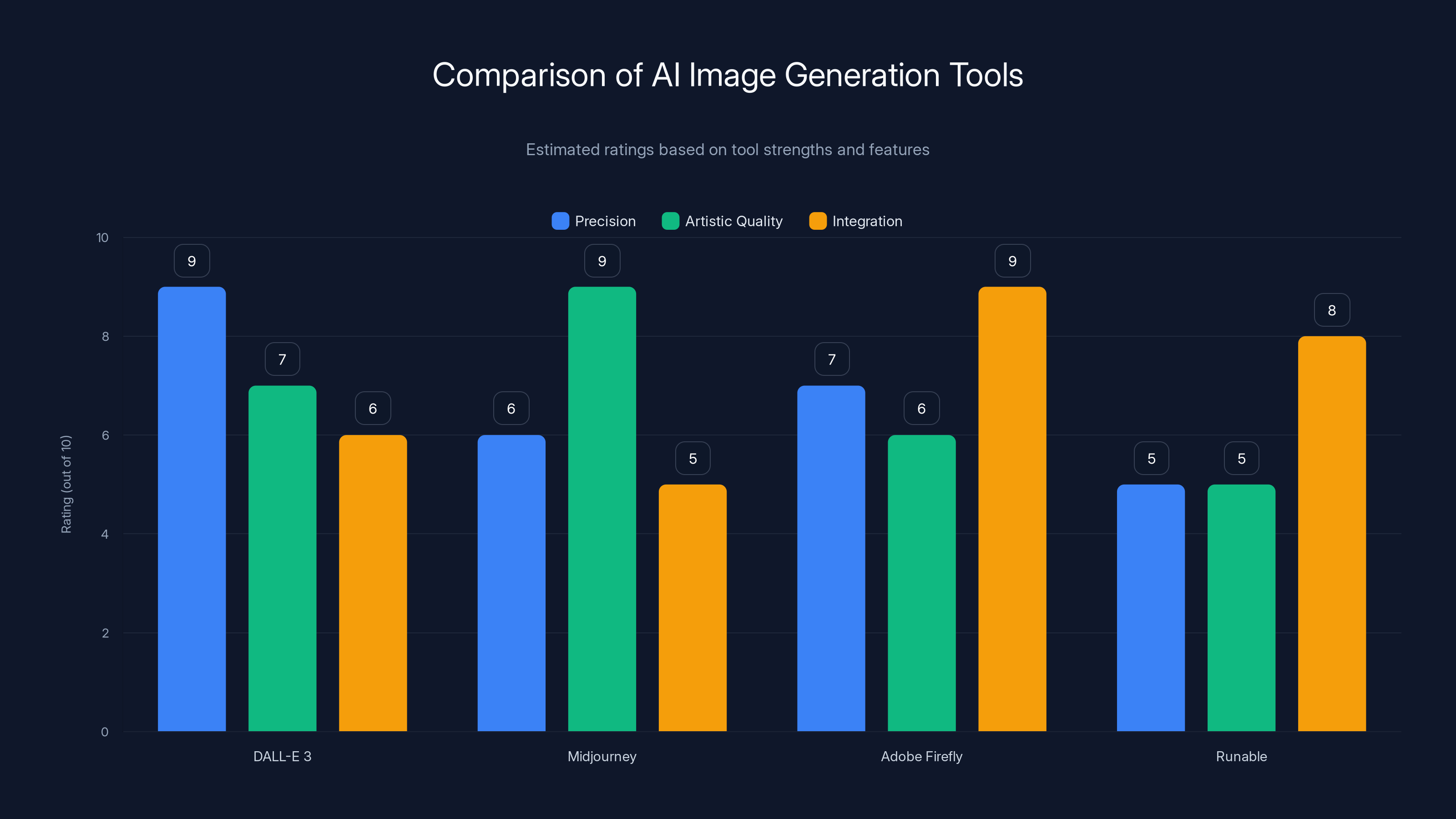

- Best tools vary by use case: Midjourney for artistic renders, DALL-E 3 for precision, and Adobe Firefly for professional integration.

- Specific prompting techniques matter more than tool choice, including detail specificity, style references, and layout descriptions.

- Practical workflow involves starting with concept sketches, iterating rapidly, and using AI outputs as bases for refinement.

Midjourney excels in artistic quality but has a steeper learning curve and higher cost. DALL-E 3 offers a balanced feature set with ease of use. Estimated data based on tool descriptions.

Understanding Why Visuals Beat Text for Complex Ideas

Before jumping into tools, let's look at why this works. Your brain has dedicated neural pathways for processing visual information. As a simplified model: when you read text, your brain has to decode language, translate it to meaning, then build a mental image. That's three steps. When you see an image, recognition is much closer to a single step.

This matters especially for explaining relationships. Try describing a flowchart in pure text and you'll understand immediately why visuals are essential. Even a simple five-step process becomes tedious to read as paragraphs. The same process as a diagram? Instant comprehension.

The real power emerges with abstract concepts. You can't photograph "digital transformation" or "customer journey optimization." These are conceptual frameworks that need visual representation. That's where AI image generation becomes genuinely useful rather than just convenient.

There's also a credibility factor worth mentioning. Some studies suggest people find information more trustworthy when it includes relevant visuals. It's not just about looking nice. It's about how our brains actually process information. We evolved to learn from visual observation; text is the relatively recent, unusual medium. According to Nerdbot, animation and visual storytelling are becoming strategic tools for businesses to convey complex ideas effectively.

Another dimension: retention. A widely repeated rule of thumb says people remember 80% of what they see and do, compared to just 20% of what they read. Those exact percentages aren't well supported by research, but the direction is useful: that diagram you create explaining your quarterly goals is far more likely to stick when you revisit it next quarter.

For educators, this becomes transformative. A statistics teacher can generate 50 different visualizations of the same distribution concept, each with slightly different parameters, to help students really internalize the idea. That would be impractical without AI tools.

But there's a practical reality: until recently, custom visuals took talent and time. You had to commission an illustrator, spend hours in design software, or settle for generic stock images. AI changes that equation. Suddenly the friction disappears. You can ideate, generate, refine, and iterate in minutes instead of days.

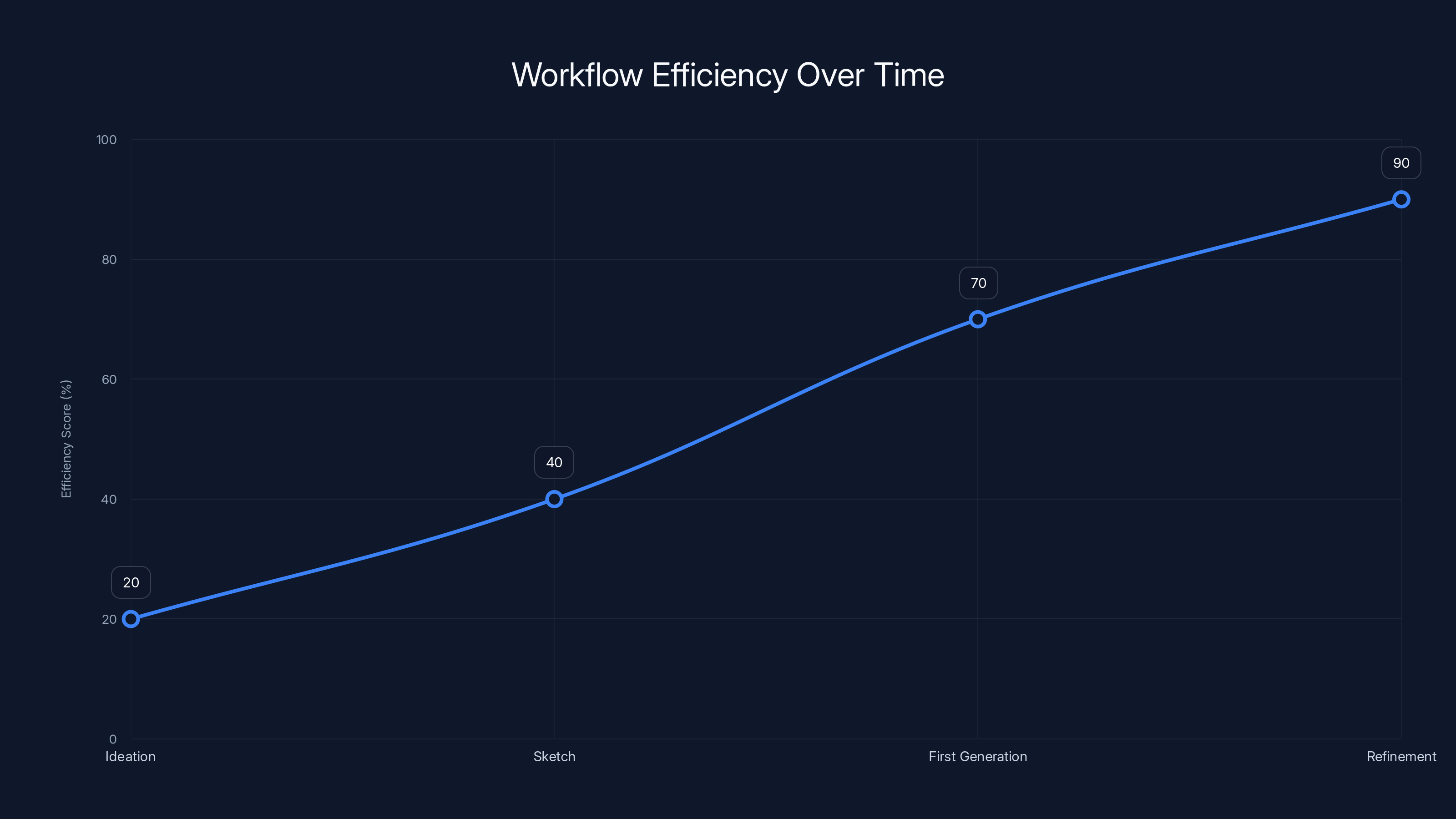

Chart: Estimated data suggests that integrating AI image generation into a workflow significantly improves efficiency, especially during the refinement phase.

The Best AI Image Tools for Visual Explanations in 2025

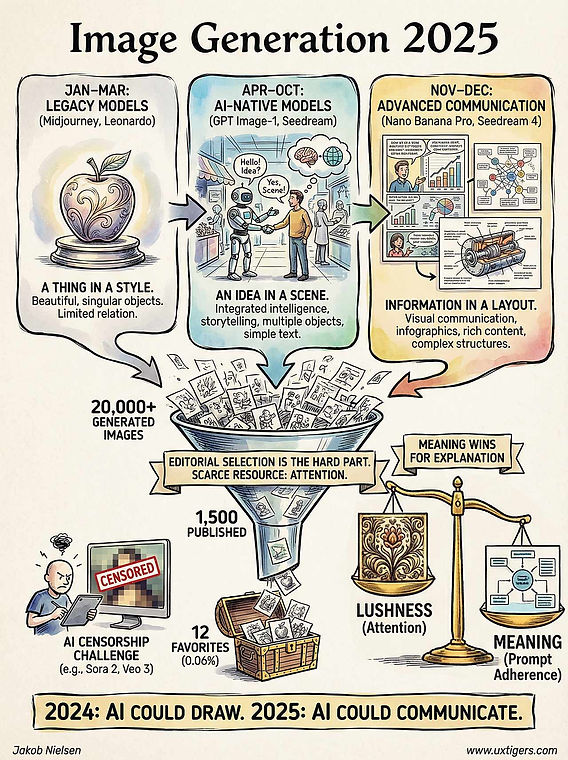

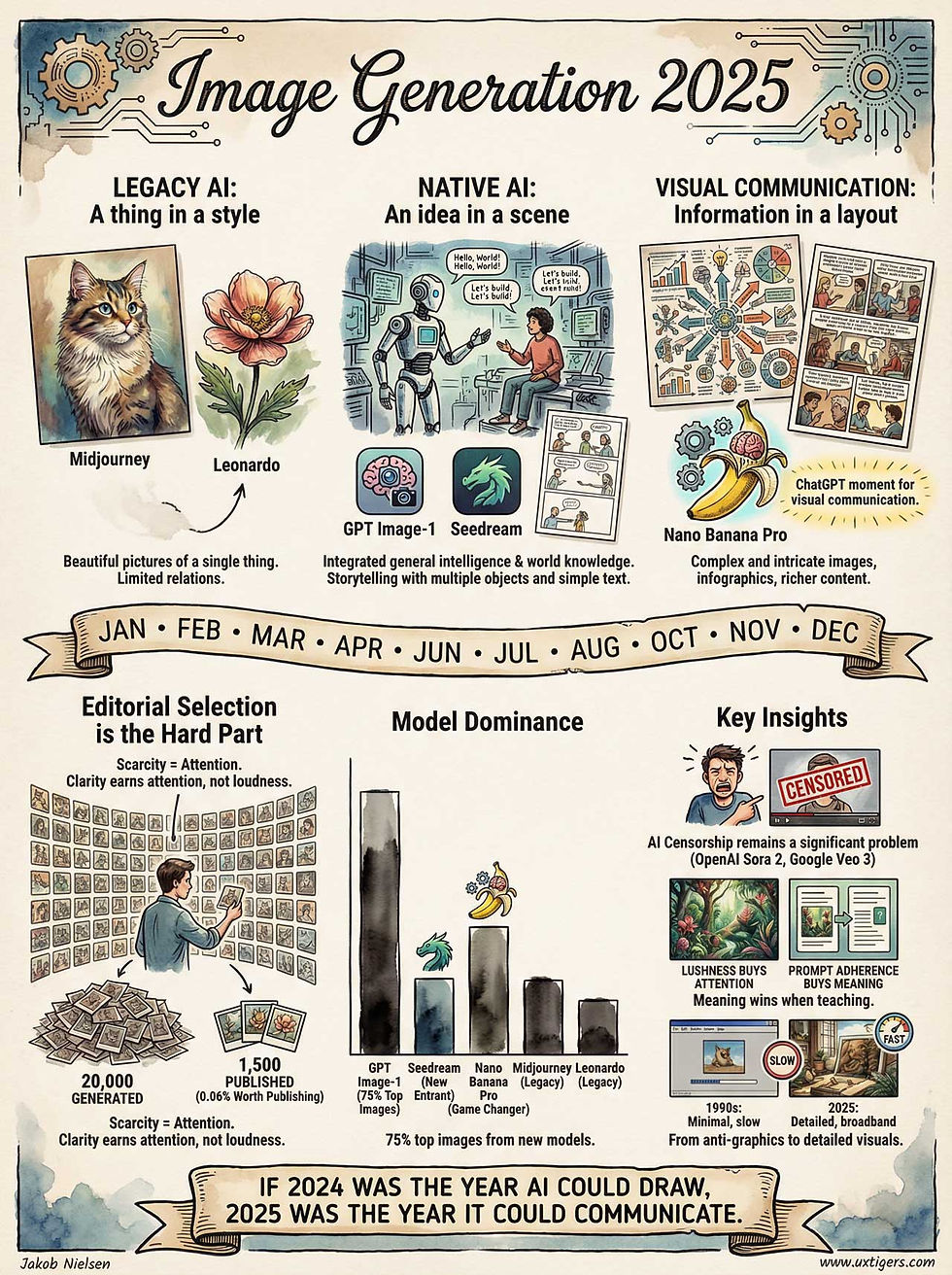

The landscape has matured significantly. We're past the point where one tool dominates everything. Different tools excel at different tasks.

Midjourney: The Artistic Powerhouse

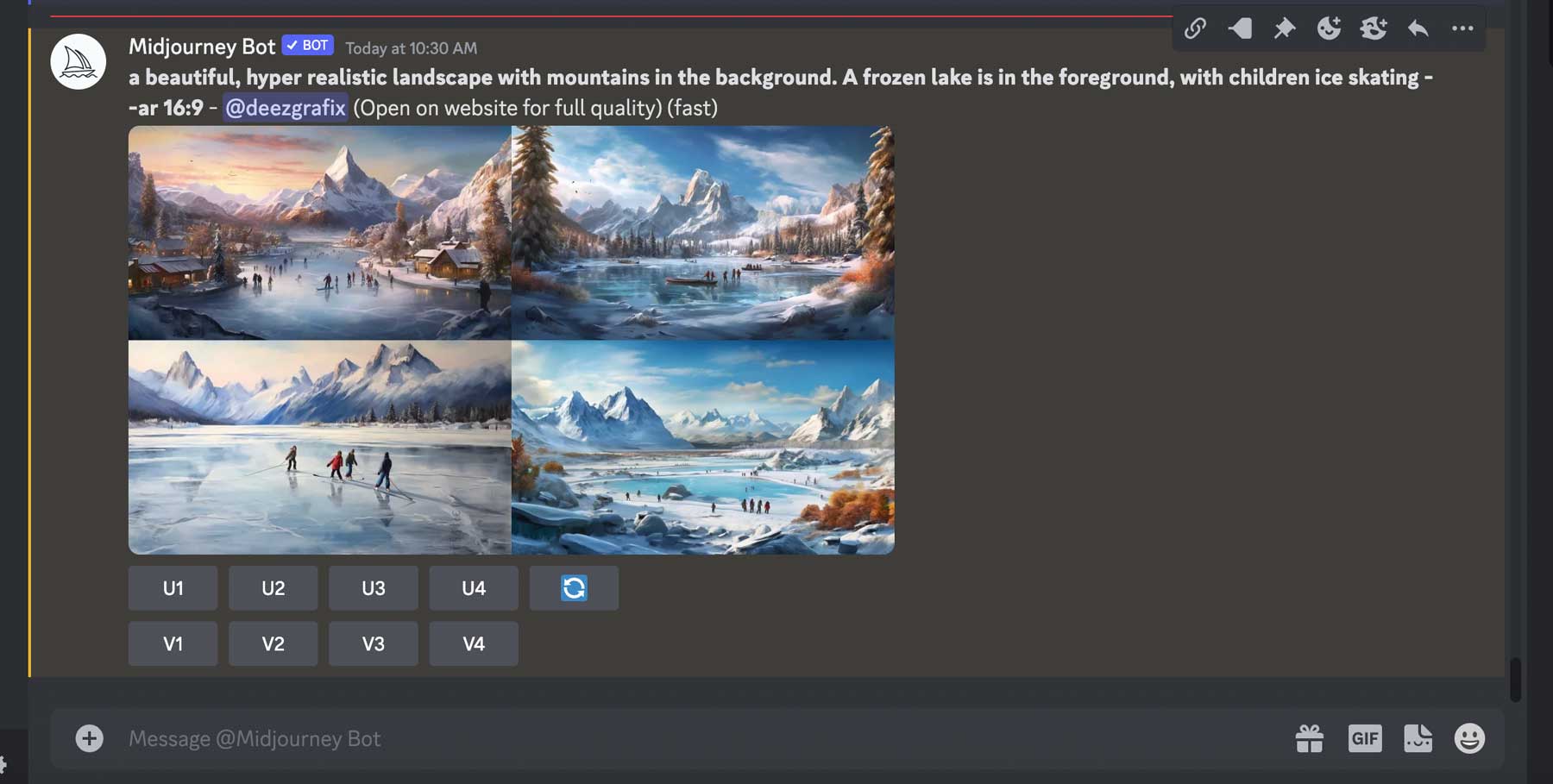

Midjourney is the tool that created the "AI art" explosion. It works through Discord, which feels clunky at first but becomes natural once you understand the workflow.

What makes Midjourney special is consistency and artistic quality. If you're explaining something that benefits from aesthetic polish, this is your tool. The iteration system is phenomenal. You can generate variants, refine specific areas, upscale, or modify prompts and run again.

Real talk: it's not cheap. Pricing starts at

The learning curve is steeper than other tools. You need to understand prompting language, reference styles, and composition. But once you internalize it? You get incredibly consistent results.

When to use Midjourney:

- Conceptual illustrations with artistic style

- Diagrams where aesthetics matter

- Generated background elements for custom designs

- Situation simulations (showing "what this looks like in practice")

The Discord interface actually becomes an advantage once you realize you can save your entire conversation history. You can revisit old prompts, iterate further, or reference what worked six months ago.

DALL-E 3: The Precision Option

DALL-E 3 is integrated into ChatGPT, which sounds like a mere convenience but actually transforms how you work. You can describe what you need in conversation, refine through dialogue, and iterate based on what's working.

DALL-E 3 excels at understanding detailed instructions. You can specify exact layouts, text placement, and specific objects, and it gets surprisingly close to what you're asking for. Its prompt-to-image alignment has improved over earlier versions, which matters for precision-dependent explanations.

Pricing is tied to ChatGPT Plus (

The real advantage is the conversation interface. Instead of iterating through Discord commands, you're having a chat about what works and what doesn't. Many people find this more intuitive than Midjourney's command structure.

When to use DALL-E 3:

- Technical diagrams with precise specifications

- Educational content where clarity matters most

- Business presentations needing professional feel

- Quick iterations based on feedback

- Integrated workflows where you're already in ChatGPT

Adobe Firefly: The Professional Choice

Adobe Firefly is integrated into Creative Cloud, which means it plays nicely with Photoshop, Illustrator, and InDesign. For people already working in Adobe software, this is the obvious choice.

Firefly's strength is integration and licensing clarity. Adobe says Firefly is trained on licensed and public-domain content and positions it as safe for commercial use. For companies concerned about licensing, this matters.

The tool itself is more constrained than Midjourney or DALL-E. You're not getting the artistic freedom, but you are getting reliable, professional results. Think of it as the sensible, enterprise-friendly option.

Pricing depends on your Creative Cloud plan. Most plans include Firefly access with a monthly allowance of generative credits, so if you're already paying for creative software, it's effectively included at no extra cost.

When to use Adobe Firefly:

- Professional design workflows

- Marketing materials needing brand consistency

- Integrating with Photoshop or Illustrator

- When clear commercial licensing matters

- Teams already standardized on Creative Cloud

Stable Diffusion via Clipdrop: The Free Alternative

Clipdrop offers free access to Stable Diffusion models. It's not as polished as the commercial options, but it's genuinely usable.

For education or non-commercial work, this is worth exploring. You get surprising quality without cost. The trade-off is less consistency and fewer refinement options.

Runable: The Automation-First Approach

If you're building multiple visuals as part of a larger workflow, Runable provides AI-powered automation for creating presentations, documents, reports, and images starting at just $9/month. Rather than jumping between tools, you can orchestrate image generation alongside other content creation tasks. This becomes powerful when you're explaining ideas through multiple formats (slides with generated visuals, documents with infographics, presentations with custom illustrations).

Runable's strength is workflow integration. Generate the image, then automatically use it in your slide deck or report. It's not best for pure artistic generation, but it's exceptional for building complete visual explanations across multiple deliverables.

Crafting Effective Prompts for Visual Explanations

Here's where most people stumble. They treat AI image generation like a search engine: type what you want, get an image. That's not how it works.

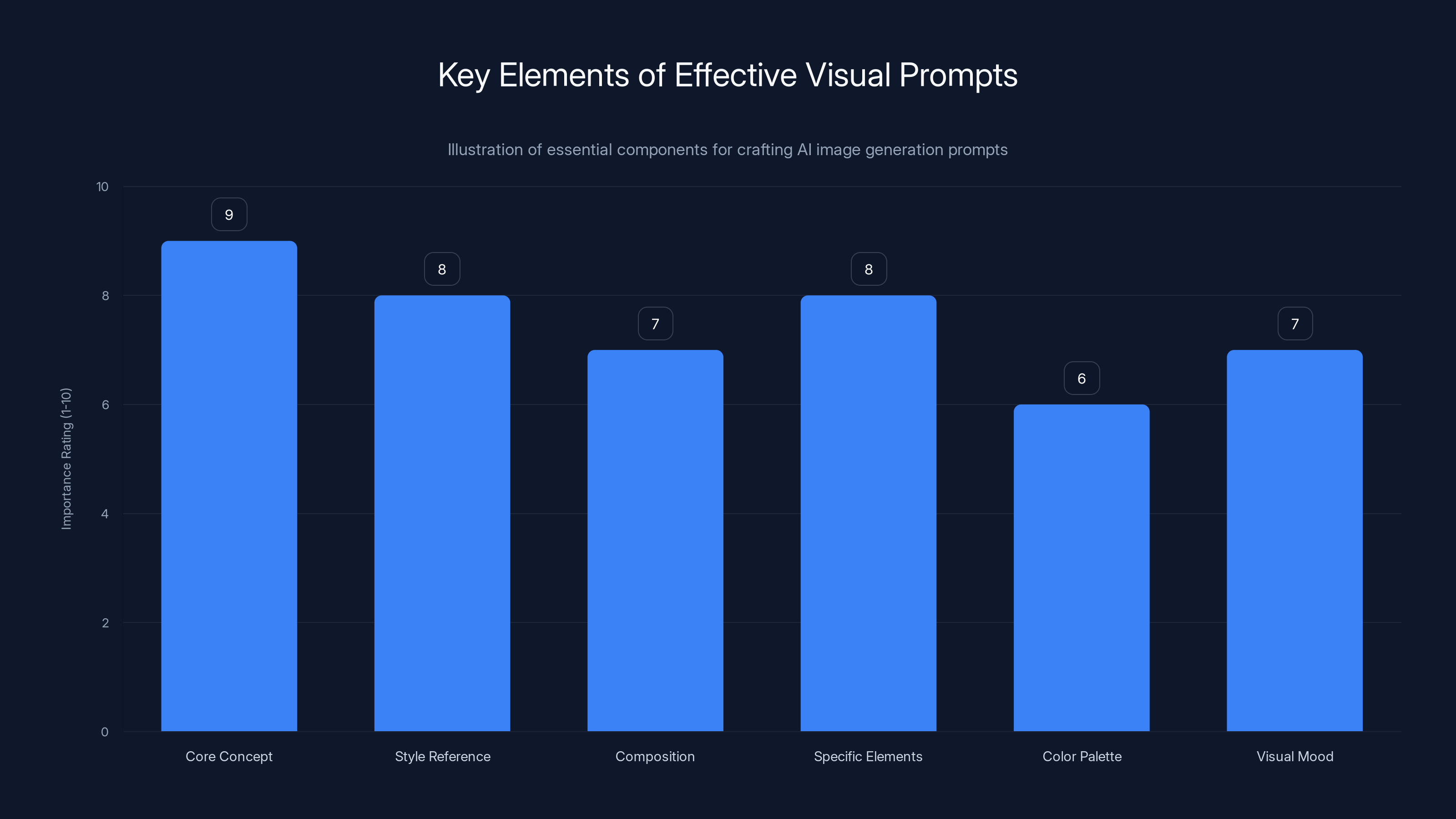

Effective prompts follow a specific structure. You're not just naming the subject. You're describing everything about the image: composition, style, perspective, specific elements, color palette, mood, and reference points.

The Anatomy of a Winning Prompt

A prompt should include these elements:

1. Core Concept (what you're visualizing): Start specific. "A diagram showing data flow" is too vague. "A technical diagram showing how customer data flows from point-of-sale systems through data warehouses into analytics dashboards" is specific.

2. Style Reference (aesthetic approach): This is critical. Do you want an illustration, photography, a technical diagram, an infographic, or a 3D render? Reference existing work: "In the style of technical documentation from Apple's design system" or "Minimalist flat illustration like the Shopify design guidelines."

3. Composition (how things are arranged): Describe the layout. "Left side shows the problem, right side shows the solution" or "Center composition with icons arranged in a circle."

4. Specific Elements (what's actually in the image): List what needs to appear. "Include three stages labeled with timestamps, arrows showing progression, and icons representing data security at each stage."

5. Color Palette (which colors should dominate): "Professional color scheme using navy blue, white, and teal accents" works better than "nice colors."

6. Visual Mood (the feeling it should convey): Is this technical and serious? Approachable and friendly? Energetic and modern? State it explicitly.
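If you write a lot of prompts, it can help to keep the six elements as separate fields so none of them gets forgotten. The sketch below is a hypothetical Python helper (`VisualPrompt` and its field names are illustrative, not part of any tool's API); it simply joins the parts into one string you can paste into Midjourney, DALL-E 3, or Firefly.

```python
from dataclasses import dataclass


@dataclass
class VisualPrompt:
    """Hypothetical container for the six prompt elements listed above."""
    core_concept: str
    style_reference: str
    composition: str
    elements: list[str]
    color_palette: str
    mood: str

    def render(self) -> str:
        # Join the parts in the same order as the list above.
        parts = [
            self.core_concept,
            f"Style: {self.style_reference}",
            f"Composition: {self.composition}",
            "Include: " + ", ".join(self.elements),
            f"Colors: {self.color_palette}",
            f"Mood: {self.mood}",
        ]
        # Strip trailing periods so each part ends with exactly one.
        return ". ".join(p.rstrip(".") for p in parts) + "."


prompt = VisualPrompt(
    core_concept=(
        "A technical diagram showing how customer data flows from point-of-sale "
        "systems through data warehouses into analytics dashboards"
    ),
    style_reference="minimalist flat illustration",
    composition="arranged horizontally, flowing left to right",
    elements=["three stages labeled with timestamps", "arrows showing progression"],
    color_palette="navy blue, white, and teal accents",
    mood="technical and serious",
)
print(prompt.render())
```

Keeping the parts separate also makes it easy to swap a single element, such as the color palette, while holding everything else constant during iteration.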

Real Examples That Work

Let's walk through an actual example. Say you're explaining how machine learning models make predictions.

Bad prompt: "A diagram of machine learning"

Better prompt: "A technical illustration showing the machine learning pipeline: on the left, rows of training data flowing into a central neural network represented as interconnected nodes, then flowing right to predictions. Use a clean, minimalist style with blue as the primary color. Include subtle icons representing each stage (database, neural network, prediction output). Arrange horizontally, high contrast, professional technical documentation style, like TensorFlow documentation."

See the difference? The second prompt gives the AI tool specific instructions about layout, style, color, and exactly what should appear.

Here's another real example for explaining customer journey:

Bad prompt: "Show customer journey"

Better prompt: "An infographic showing a customer journey in 5 stages: awareness, consideration, decision, onboarding, retention. Each stage as a distinct section with icons representing that stage. Flowing left to right with arrows connecting stages. Use warm colors (orange, coral, amber) against a light background. Style should be modern and approachable, like Mailchimp's design language. Include subtle decorative elements at each stage. Wide aspect ratio suitable for presentation slide."

Notice we're being extremely specific about what we want, but not telling the AI how to draw it. We're giving parameters, not line-by-line instructions.

Iterating and Refining

Your first generation is rarely perfect. The workflow should be:

- Generate initial concept

- Identify what's working

- Identify what's missing or wrong

- Refine the prompt based on what you learned

- Generate variations

- Repeat until satisfied

This is where tool choice matters. Midjourney's variant and remix features make rapid iteration frictionless. DALL-E's conversation interface helps you articulate what's wrong more naturally. You'll develop preferences based on how your brain works.

One practical tip: generate multiple versions of each concept (use the platform's grid generation feature). Usually one or two will be close to what you need. Refine from the best one rather than starting over.

Common Prompting Mistakes

Vague positioning: "Put the chart in the middle" is weaker than "Create a wide-format infographic with the timeline across the center, supporting icons arranged above and below in a symmetrical pattern."

Too many ideas: If you're asking the tool to show five different concepts in one image, you're losing clarity. Better to generate separate visuals for each concept.

Conflicting styles: "Photorealistic technical diagram" doesn't make sense. Be consistent about aesthetic approach.

Ignoring aspect ratio: If you're putting this in a presentation, specify "16:9 landscape format." If it's for social media, specify "1:1 square." Wrong dimensions waste your generation.

Forgetting readability: For diagrams with text, explicitly request readable labels and sufficient contrast. AI often makes text illegible.
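Several of these mistakes are easy to catch mechanically before you spend a generation. The following is a rough keyword-based sketch; the word lists and the `lint_prompt` function are assumptions for illustration, and a prompt that passes can still produce a poor image.

```python
import re

# Assumed keyword lists; adjust them to match how you write prompts.
ASPECT_RATIO = re.compile(r"\b\d{1,2}:\d{1,2}\b")
PHOTO_WORDS = {"photorealistic", "photograph", "photo"}
DIAGRAM_WORDS = {"diagram", "flowchart", "infographic"}
READABILITY_WORDS = {"readable", "legible"}


def lint_prompt(prompt: str) -> list[str]:
    """Flag a few common prompting mistakes with simple keyword checks."""
    words = set(re.findall(r"[a-z]+", prompt.lower()))
    warnings = []
    if not ASPECT_RATIO.search(prompt):
        warnings.append("No aspect ratio given (e.g. 16:9 or 1:1).")
    if words & PHOTO_WORDS and words & DIAGRAM_WORDS:
        warnings.append("Conflicting styles: photographic look plus diagram.")
    if words & DIAGRAM_WORDS and not words & READABILITY_WORDS:
        warnings.append("Diagram requested without asking for readable labels.")
    return warnings


for warning in lint_prompt("Photorealistic technical diagram"):
    print(warning)
```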

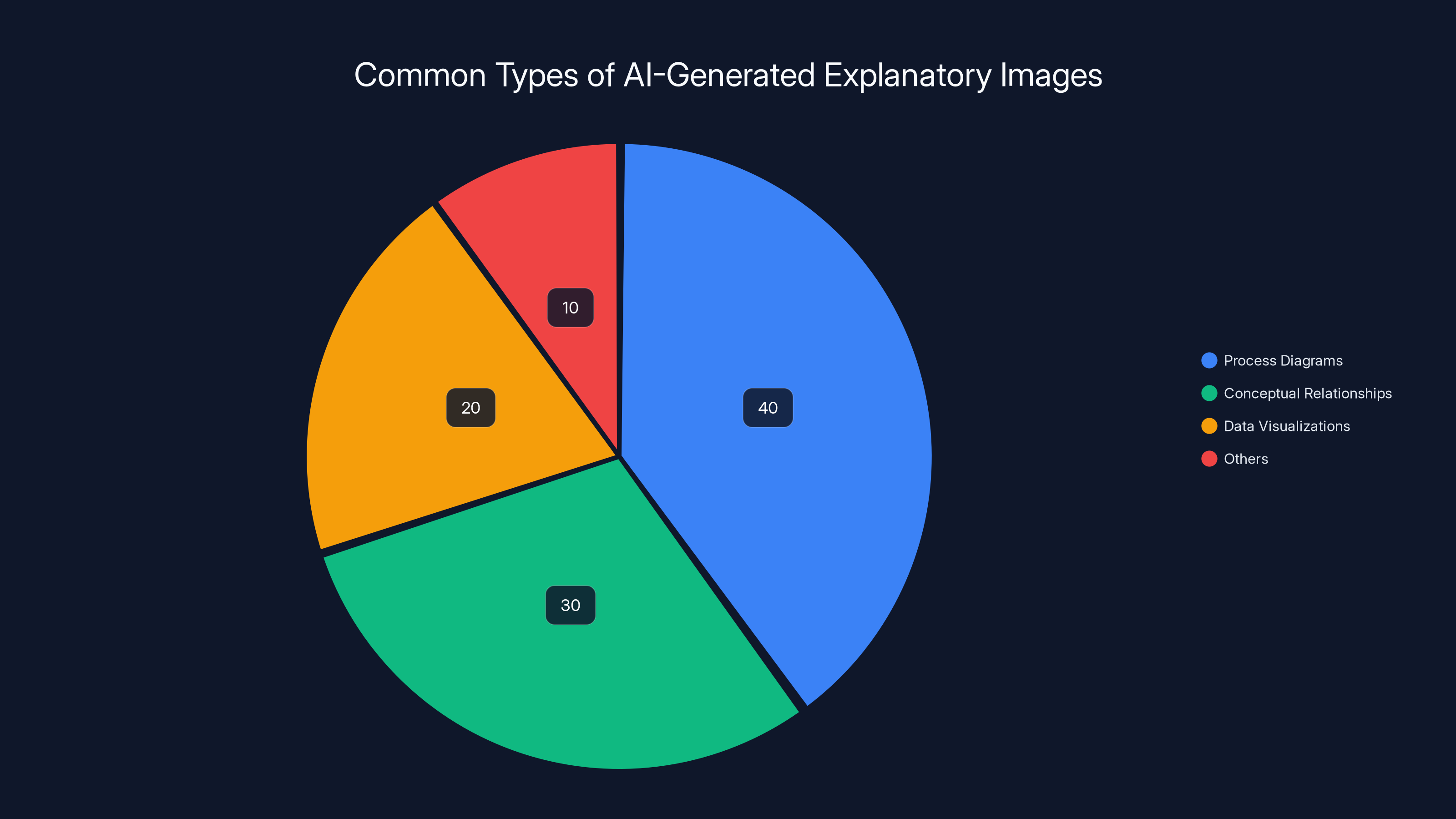

Chart: Estimated data suggests that process diagrams are the most common AI-generated images for explanations, followed by conceptual relationship diagrams.

Using AI Images for Different Types of Explanations

Different explanation types need different approaches. Let's talk through how to handle each.

Explaining Processes and Workflows

For processes, your primary goal is showing sequence and progression. The visual should show clear stages with transitions between them.

Key elements for process diagrams:

- Clear stages: Each step distinct and labeled

- Visual progression: Arrows or flow lines showing direction

- Consistent iconography: Each stage should have a recognizable icon or symbol

- Logical color gradient: Often useful to shift colors through the progression

Example use case: Explaining how a payment processor works. You'd want to visualize: customer initiates transaction → payment gateway validates → bank processes → confirmation returns. Each stage needs distinct visual representation.

When prompting, be explicit about the number of stages and what happens at each. "Show a 4-stage pipeline with clear visual progression, each stage a slightly different shade of blue to show progression."
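If you want the stage shading to be deliberate rather than left to the model, you can compute evenly spaced shades yourself. This sketch linearly interpolates between two assumed endpoint blues and returns one hex code per stage; the endpoint values and the `blue_progression` name are illustrative. Not every image tool follows hex codes reliably, so treat them as a hint in the prompt and as the exact values to apply if you recolor the stages in design software afterward.

```python
def blue_progression(
    stages: int,
    light: tuple[int, int, int] = (198, 219, 239),
    dark: tuple[int, int, int] = (8, 48, 107),
) -> list[str]:
    """Return one hex color per stage, stepping evenly from light to dark blue.

    The two endpoint RGB values are assumptions; substitute your own brand blues.
    """
    if stages < 2:
        raise ValueError("a progression needs at least two stages")
    colors = []
    for i in range(stages):
        t = i / (stages - 1)  # 0.0 at the first stage, 1.0 at the last
        r, g, b = (round(start + (end - start) * t) for start, end in zip(light, dark))
        colors.append(f"#{r:02x}{g:02x}{b:02x}")
    return colors


shades = blue_progression(4)
prompt = (
    "Show a 4-stage pipeline with clear visual progression, "
    f"stages colored {', '.join(shades)} from first to last."
)
print(prompt)
```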

The generated image should immediately show a viewer "oh, this happens first, then this, then that." If they can't tell the sequence without reading labels, iterate.

Explaining Conceptual Relationships

Sometimes you're not showing sequence but relationship. How do different elements connect?

For these, consider:

- Hub-and-spoke layouts: One central concept with connections radiating out

- Matrix approaches: Showing multiple dimensions at once

- Venn diagram style: Overlapping concepts showing intersection

Example: Explaining the intersection of UX, Business, and Technology in product development. You could show three overlapping circles, with each intersection labeled with what emerges (tech + UX = prototyping, UX + business = research, etc.)

Prompt specifically about the relationship structure: "Create a diagram showing three overlapping circular concepts: UX, Business, and Technology. Where they overlap, show the combined discipline (product design, strategy, engineering). Use icons to represent each discipline."

Explaining Comparisons and Contrasts

When you're showing how things differ, visual comparison is powerful. Split-screen layouts work well here.

Example: Explaining the difference between supervised and unsupervised learning in machine learning. Show supervised learning on the left (labeled training data with clear categories) and unsupervised learning on the right (unlabeled data with emerging patterns), with visual distinction making the difference immediate.

Prompt for comparison structures: "Create a side-by-side comparison diagram. Left side shows [concept A with these attributes]. Right side shows [concept B with these different attributes]. Use contrasting colors (warm vs cool) to visually reinforce the difference. Include specific icons and labels for each side."

Explaining Data and Metrics

When your explanation involves numbers, the visual approach is critical.

You could generate actual charts, but often a custom visualization works better. Example: Instead of a standard bar chart showing engagement metrics, generate an illustration showing these metrics as visual metaphors. Customer retention as a fortress (stronger = higher retention), engagement as plants growing (growth visualization), churn as water draining away.

This is where AI excels: generating custom metaphor visualizations that communicate data through visual story.

Prompt for data visualization: "Create an infographic showing three key metrics: 85% customer retention (show as a strong structure), 150% engagement growth (show as vibrant plant growth), and 3% monthly churn (show as minimal water loss from a container). Arrange horizontally with the metrics clearly labeled and using a green color scheme to emphasize positive growth."

Explaining Problems and Solutions

Often you need to show a problem then show how your solution fixes it.

Structurally, this is before-and-after. The AI image should clearly show the contrast: problem state on left/top, solution state on right/bottom, with clear visual difference.

Example: Explaining how a project management tool solves team coordination problems. Left side shows chaos (people pointing different directions, confusion, missed deadlines as warning signs). Right side shows the same scenario with the tool (aligned team, clear timeline, everyone coordinated). The difference should be unmistakable visually.

Prompt for problem-solution: "Create a before-and-after comparison. Left side shows the problem: a team struggling with project chaos (scattered documents, confused people, tangled lines representing miscommunication). Right side shows the solution: the same team now organized (clear structure, coordinated arrows showing communication, clean timeline). Use red/chaotic colors on left, green/organized colors on right. Make the contrast very obvious."

Building a Practical Workflow for Visual Explanations

Now let's talk about integrating AI image generation into your actual work process.

The Ideation Phase

Start with rough thinking. What exactly are you trying to help people understand? Write it down in one sentence. Not your entire explanation, but the core idea.

"I'm trying to help people understand that our platform reduces decision-making time through better data visibility."

Now ask: what's the visual story? What would help someone see this immediately?

Maybe it's: "Fast-moving data flowing into a clear dashboard (representing visibility) enabling quick decisions (represented by rapid actions)."

Don't start with the tool yet. Start with the visual concept. What's the metaphor? What's the core visual you're building?

The Sketch Phase

Yes, actually sketch. Even stick figures count. This forces you to think about composition before you start prompting.

You don't need to be good at drawing. You're just roughing out: where do elements go? What's the flow? What's the visual hierarchy?

This 5-minute sketch saves hours of regenerating AI images because you already know what arrangement works.

The First Generation

Write your prompt based on your sketch. Include composition notes from your rough layout.

Generate. Then immediately evaluate:

- Is the composition close to your sketch?

- Is the style appropriate?

- Are all the key elements present?

- Is the message clear without explanation?

Rate it: is this 80% there (worth refining) or 20% there (need a different approach)?

If 80% there, proceed to refinement. If 20% there, consider whether your prompt needs rethinking or your approach needs revision.
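The checklist and the 80%/20% call can be made a little more explicit. In this hypothetical sketch, each of the four questions is a yes/no answer, and an image that passes at least three of them (an assumed threshold standing in for "about 80% there") goes to refinement; anything less sends you back to the prompt.

```python
from dataclasses import astuple, dataclass


@dataclass
class FirstPassReview:
    """Yes/no answers to the four evaluation questions above."""
    composition_matches_sketch: bool
    style_is_appropriate: bool
    key_elements_present: bool
    message_clear_without_explanation: bool


def next_step(review: FirstPassReview, min_passed: int = 3) -> str:
    """Return 'refine' if enough checks pass, otherwise 'rethink'.

    min_passed=3 is an assumed stand-in for "about 80% there".
    """
    passed = sum(astuple(review))  # True counts as 1, False as 0
    return "refine" if passed >= min_passed else "rethink"


print(next_step(FirstPassReview(True, True, True, False)))
```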

The Refinement Phase

Here's where the tool's iteration features matter most.

If you're using Midjourney, use the U (upscale) button on your best generation and the V (variation) buttons to generate variations. Or modify your prompt and regenerate.

If you're using DALL-E, describe what's working and what needs adjustment. "The layout is good but the icons need to be more technical-looking" or "The color works but add more contrast between the stages."

Refinement iterations are cheap (in terms of time and usually cost). Do 5-10 variations until you have something that effectively explains the concept.

The Integration Phase

Once you have your AI-generated image, it becomes a foundation for further work.

For marketing materials: use it as the base, then overlay text in design software.

For presentations: import it, resize it, ensure it fits your slide design.

For documents: embed it with proper captions and citations explaining what you're showing.

For products: sometimes you use the AI image directly, sometimes you have a designer refine it for final quality.

The key: the AI image is rarely your final product. It's your starting point that gets you 80% of the way there, then you add the final 20% through polish, context, and integration.

Building Your Visual Library

As you generate images, keep the successful ones. Create a folder structure:

- Process Diagrams

- Conceptual Illustrations

- Comparison Visuals

- Data Visualizations

- Problem-Solution Pairs

Include the prompts that generated them. In three months, you'll have a library of reference work you can remix and rebuild for new contexts.

This becomes your visual style guide. When you need a new process diagram, you reference your existing ones and ensure consistency.
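One low-effort way to keep prompts attached to images is a small JSON file next to each saved image, inside the category folders listed above. The sketch below uses only Python's standard library; the `record_prompt` and `find_prompts` helpers and the JSON fields are assumptions, not a required format.

```python
import json
from pathlib import Path

# Category folders from the list above.
CATEGORIES = (
    "Process Diagrams",
    "Conceptual Illustrations",
    "Comparison Visuals",
    "Data Visualizations",
    "Problem-Solution Pairs",
)


def record_prompt(library: Path, category: str, image_name: str,
                  prompt: str, tool: str) -> Path:
    """Write a JSON sidecar (same stem as the image) holding the prompt used."""
    if category not in CATEGORIES:
        raise ValueError(f"unknown category: {category!r}")
    folder = library / category
    folder.mkdir(parents=True, exist_ok=True)
    sidecar = folder / f"{Path(image_name).stem}.json"
    entry = {"image": image_name, "tool": tool, "prompt": prompt}
    sidecar.write_text(json.dumps(entry, indent=2), encoding="utf-8")
    return sidecar


def find_prompts(library: Path, keyword: str) -> list[dict]:
    """Return every saved entry whose prompt mentions the keyword."""
    matches = []
    for sidecar in sorted(library.glob("*/*.json")):
        entry = json.loads(sidecar.read_text(encoding="utf-8"))
        if keyword.lower() in entry["prompt"].lower():
            matches.append(entry)
    return matches
```

For example, `record_prompt(Path("visual-library"), "Process Diagrams", "payment-flow.png", prompt_text, "DALL-E 3")` writes `visual-library/Process Diagrams/payment-flow.json`, and `find_prompts(Path("visual-library"), "pipeline")` pulls back every saved prompt that mentions pipelines.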

Chart: DALL-E 3 excels in precision, while Midjourney leads in artistic quality. Adobe Firefly offers the best integration with Creative Cloud. (Estimated data based on tool descriptions.)

Common Mistakes and How to Fix Them

Let's be honest about where things go wrong.

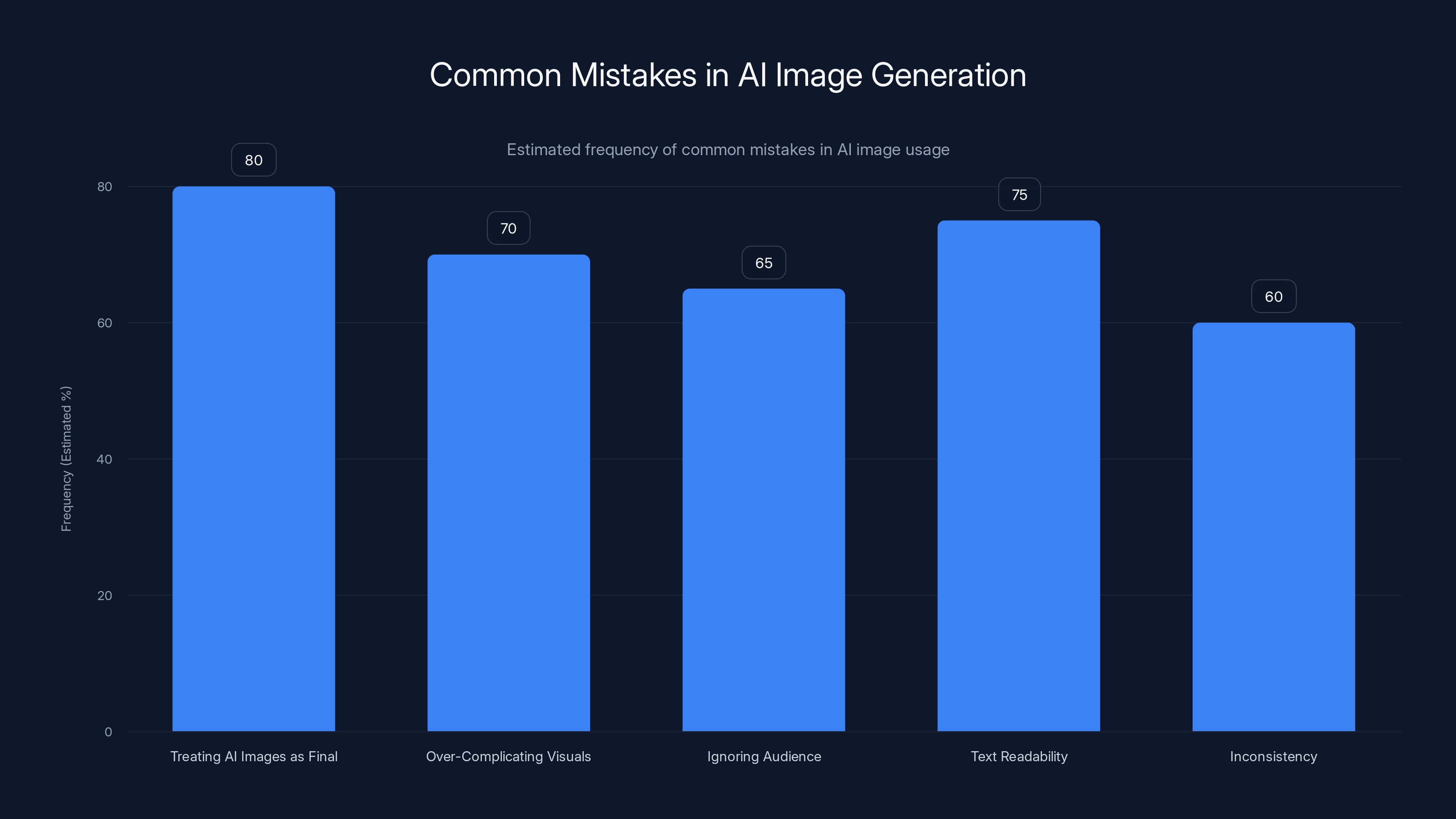

Mistake: Treating AI Images as Final Product

The biggest error is hitting generate, loving the result, and using it immediately without context or refinement.

AI images are building blocks. They work best when you're contextualizing them, adding typography, integrating them with other visuals, and treating them as part of a larger communication system.

If your image is doing all the explaining, it's probably too complicated. Pair it with one clear headline or caption that anchors the interpretation.

Mistake: Over-Complicating the Visual

Trying to show five different concepts in one image creates confusion rather than clarity.

Better approach: Generate separate visuals for each major concept, then show their relationships through layout (side-by-side, sequential, hierarchical).

Specificity matters more than comprehensiveness. A clear image of one thing beats a cluttered image of five things.

Mistake: Ignoring the Actual Audience

You're not generating for yourself. You're generating for people who need to understand something.

Will they have domain knowledge? Will they recognize the metaphors? Will the visual style feel appropriate to them?

Generating a playful, cartoonish illustration for a serious B2B compliance explanation creates cognitive dissonance. Generate an approachable but professional visual instead.

Before you prompt, think about your audience. That directly impacts your style choice.

Mistake: Text Readability

AI tools struggle with rendering readable text within images. Labels often come out blurry or oddly positioned.

Workaround: Ask the AI to leave space for labels you'll add later. Or request text be placed outside the main visual.

Alternatively, use DALL-E 3, which handles text better than many other tools, or add text in post-production using design software.

Mistake: Inconsistency Across Multiple Images

If you're generating a series of related visuals, they need consistency in style, color, iconography.

Fix this by saving your successful prompts and using them as templates. Build in color palette specifications. Create a reference style sheet showing your icon approach.

When generating related images, reference your first successful image in your prompt: "Create a similar illustration to [reference], showing [new concept], maintaining the same style and color palette."
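Saved prompts become more reusable when the style parts stay fixed and only the concept changes. This sketch uses Python's standard `string.Template`; the `HOUSE_STYLE` values are placeholders for whatever worked in your first successful image, and `series_prompt` is a hypothetical helper name.

```python
from string import Template

# Placeholder house style; copy these values from your first successful image.
HOUSE_STYLE = {
    "style": "clean minimalist flat illustration",
    "palette": "navy blue, white, and teal accents",
    "aspect": "16:9 landscape format",
}

SERIES_TEMPLATE = Template(
    "Create an illustration showing $concept. "
    "Keep the same style as the rest of the series: $style, "
    "color palette of $palette, $aspect."
)


def series_prompt(concept: str, **overrides: str) -> str:
    """Fill the template, letting callers override individual style fields."""
    values = {**HOUSE_STYLE, **overrides, "concept": concept}
    return SERIES_TEMPLATE.substitute(values)


print(series_prompt("how a refund moves from request to payout"))
```

Because `substitute` raises a `KeyError` when a placeholder has no value, a template that silently drops part of the house style fails loudly instead.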

Mistake: Not Iterating Enough

Some people generate once and call it done. They're leaving real value on the table.

Your first generation shows you what the tool understands about your prompt. Your second and third iterations refine it based on what you learned.

Build iteration into your timeline. Budget for 5-10 generations per final image.

Advanced Techniques for Professional Results

Once you understand the basics, here are techniques that professionals use.

Style Stacking

You can reference multiple styles to find your aesthetic. "In the style of Dieter Rams industrial design combined with Bauhaus minimalism" gives very different results than "In the style of modern Instagram infographics."

Experiment with style combinations that resonate with your brand or project.

Color Psychology Integration

Don't request colors casually. Understand what they communicate:

- Blue: Trust, stability, professional (B2B)

- Green: Growth, health, sustainability

- Orange: Energy, enthusiasm, approachability

- Red: Urgency, importance, action

Request specific colors based on what you're trying to communicate emotionally. "Use professional blue and green with gold accents" sends a different message than "Use bright red and pink."

Negative Space Strategy

Instruct the AI to use lots of white space. "Create a clean, minimalist design with significant negative space around the central concepts" produces very different results than requesting a dense, information-filled graphic.

Negative space makes images feel professional and easier to read.

Reference Image Integration

Many tools let you upload reference images. If you have an existing visual you love the style of, upload it and say "Create a new diagram in this visual style."

This dramatically improves consistency and quality of results.

Perspective and Scale Manipulation

"Show this from a bird's eye view" or "Extreme close-up macro photography style" or "Isometric perspective" gives completely different visual approaches to the same content.

Experiment with perspective requests to find what communicates your concept most effectively.

Chart: Core Concept and Style Reference are the most critical elements in crafting effective prompts for AI image generation, with ratings of 9 and 8 respectively. (Estimated data based on topic analysis.)

Integrating AI Visuals with Written Explanations

Your visual doesn't stand alone. It works with text, headlines, and supporting explanation.

The Caption Layer

Every image needs a headline that anchors what viewers should see. "How our platform processes customer data" is more powerful than no context.

Keep it short (5-10 words max) but specific enough that viewers understand what they're looking at.

The Supporting Text

Your image should show the main idea. Your text then explains implications or details.

If your image shows a workflow, your text might explain why that workflow exists or what it enables. The visual and text should complement, not repeat.

Hierarchy and Layout

Whether it's a presentation slide, webpage, or document, the visual should be the primary element, with text supporting it.

- Primary visual: 60-70% of space

- Headline: 5-10% of space

- Supporting text: 15-25% of space

This visual hierarchy guides attention properly.
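To turn those percentages into concrete sizes, you can apply the midpoint of each range to your canvas. This sketch assumes a vertically stacked slide layout (visual, headline, and supporting text sharing the height) and treats whatever is left over as margin; both the layout and the default shares are illustrative choices, and `allocate_space` is a hypothetical helper.

```python
def allocate_space(total_px: int, visual: float = 0.65, headline: float = 0.075,
                   supporting_text: float = 0.20) -> dict[str, int]:
    """Split one slide dimension by share; whatever is left over becomes margin.

    Defaults are the midpoints of the 60-70%, 5-10%, and 15-25% ranges above.
    """
    shares = {"visual": visual, "headline": headline, "supporting_text": supporting_text}
    if sum(shares.values()) > 1:
        raise ValueError("shares add up to more than the whole slide")
    sizes = {name: round(total_px * share) for name, share in shares.items()}
    sizes["margins"] = total_px - sum(sizes.values())
    return sizes


print(allocate_space(1080))  # height of a 1920x1080 (16:9) slide
```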

Multiple Visuals for Complete Explanation

Complex ideas often need multiple images working together.

Example: Explaining machine learning might involve:

- Training data flowing into the model

- The model's internal process

- The output/prediction

Generate each separately, then arrange them sequentially. This creates a visual narrative that's more compelling than one complicated image.

Using AI Images in Different Contexts

The same generated image works differently in different contexts.

For Presentations and Slides

AI images excel in presentations. They break up text slides, help audiences follow complex logic, and make presentations more memorable.

Best practices:

- One major image per key idea

- Ensure it's visible from the back of the room (large enough, high contrast)

- Pair with minimal text (let the image explain most of it)

- Use consistent visual style across all slides

For Marketing and Web Content

Marketing benefits from custom visuals that align with brand identity.

Generate landing page hero images, infographics explaining your value proposition, process diagrams showing how your service works.

Key consideration: make sure generated visuals feel intentional, not random. Connect them to your brand voice and visual identity.

For Educational Content

Educators get enormous value from custom visualizations. You can generate multiple variations of the same concept to show different perspectives or build understanding progressively.

Example: Generate five different ways to visualize the water cycle, each highlighting different aspects. Use them sequentially to build understanding.

For Internal Documentation

Company wikis and internal documentation become clearer with custom visuals.

Instead of describing your architecture in text, generate a diagram. Instead of explaining a process with paragraphs, generate the visual process flow.

This becomes especially valuable for onboarding, where visual clarity dramatically reduces learning time.

For Social Media and Posts

AI-generated visuals perform well on social platforms where visual content drives engagement.

Generate explainer graphics for LinkedIn posts, visual tips for Twitter/X, or educational illustrations for Instagram.

Key: ensure the generated image looks intentional and on-brand, not obviously AI-generated in a way that feels cheap.

(Chart: Treating AI images as final products is the most common mistake, occurring in an estimated 80% of cases. Estimated data based on typical user errors.)

Quality Control and Ensuring Professional Results

How do you know if your generated image is actually good?

The Clarity Test

Show it, without explanation, to someone unfamiliar with your project. Can they understand the core idea?

If they guess the concept correctly, you've succeeded. If they're confused, iterate.

The Context Test

Does it actually explain what you need explained? Or are you just using it because it looks nice?

Every visual should have a purpose in your explanation. Decorative visuals are sometimes fine, but primary visuals need to do explanatory work.

The Appropriateness Test

Does the visual style feel right for the context?

Playful cartoon styles work for consumer education but feel wrong in an enterprise compliance document. Professional technical diagrams feel stuffy in a startup pitch deck.

Match visual style to context and audience.

The Consistency Test

If generating multiple images for the same project, do they feel like a cohesive set?

Consistency in color palette, icon style, and visual approach makes your explanation feel more intentional and professional.

The Legibility Test

Can people read any text? Do icons remain clear at smaller sizes? Are colors contrasting enough?

When you export your final image, test it at the actual size it will be used. Text and fine details that look crisp at 1000px wide can become illegible at 200px.
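For the "are colors contrasting enough?" question, one widely used yardstick (swapped in here; the article doesn't name a specific standard) is the WCAG 2.x contrast ratio: normal text should reach at least 4.5:1 against its background, and large text at least 3:1. The minimal Python sketch below assumes colors are given as six-digit `#RRGGBB` hex strings, for example as sampled from your exported image; the function names are hypothetical helpers, not part of any image tool.

```python
def _linear_channel(c: int) -> float:
    """Convert one 0-255 sRGB channel to linear light (WCAG 2.x formula)."""
    s = c / 255
    return s / 12.92 if s <= 0.03928 else ((s + 0.055) / 1.055) ** 2.4


def relative_luminance(hex_color: str) -> float:
    """Relative luminance of a '#RRGGBB' color, from 0.0 (black) to 1.0 (white)."""
    h = hex_color.lstrip("#")
    if len(h) != 6:
        raise ValueError("expected a six-digit hex color like '#1a2b3c'")
    r, g, b = (int(h[i:i + 2], 16) for i in (0, 2, 4))
    return 0.2126 * _linear_channel(r) + 0.7152 * _linear_channel(g) + 0.0722 * _linear_channel(b)


def contrast_ratio(fg: str, bg: str) -> float:
    """WCAG contrast ratio between two colors, from 1.0 (identical) to 21.0."""
    lighter, darker = sorted((relative_luminance(fg), relative_luminance(bg)), reverse=True)
    return (lighter + 0.05) / (darker + 0.05)


def passes_aa(fg: str, bg: str, large_text: bool = False) -> bool:
    """True if the pair meets the WCAG AA minimum (4.5:1, or 3:1 for large text)."""
    return contrast_ratio(fg, bg) >= (3.0 if large_text else 4.5)
```

For example, `contrast_ratio("#000000", "#ffffff")` is about 21, the maximum. Mid-gray `#777777` text on white falls just short of 4.5:1 for body text, while `#767676` just clears it, which is why light gray labels in generated diagrams are worth checking.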

Future of AI-Generated Visuals for Explanation

Where is this going?

Improved Text Handling

Many current tools still struggle to render readable text in images. The next generation will likely handle this better, making diagram generation smoother.

Real-Time Iteration

Tools will get faster and cheaper, enabling truly interactive visual design where you tweak and regenerate in seconds.

Consistency Engines

Future tools will maintain visual style consistency across dozens of images automatically, eliminating the manual reference work.

Interactive Visuals

Static images are effective now, but imagine AI-generated interactive explanations where users can adjust parameters and see the visual update in real-time.

Domain-Specific Models

Specialized AI models for specific industries (healthcare visualization, financial diagrams, technical documentation) will produce better results than general-purpose tools.

Integration Into Content Workflows

Tools like Runable point toward a future where you write the explanation text and the AI generates appropriate visuals, keeps them consistent, and assembles everything into your final output format (slides, documents, web pages).

This removes the friction of switching between tools. You explain what you need, and the system handles visualization, formatting, and assembly.

Measuring the Impact of Visual Explanations

How do you know if your AI-generated visuals are actually helping?

Understanding Metrics

If you're creating content, track:

- Time on page: Do pages with visuals keep people engaged longer? (They often do.)

- Share rate: Is content with visuals shared more often than text-only content?

- Comprehension tests: Do people demonstrate better understanding of explained concepts?

- Return rate: Do people come back for more visual explanations?

Audience Feedback

Direct feedback is valuable. Ask people: "Did the visual help you understand this?"

You'll quickly learn what visual styles and approaches resonate with your audience.

Adoption and Implementation

For internal documentation, do people actually implement what they learn?

Visual explanations often lead to higher implementation rates than text-only documentation, because people understand more clearly what they need to do.

Training Effectiveness

For educational content, track:

- Test scores for visually-explained concepts vs. text-only explanations

- Time to competency (how long until people become effective?)

- Error rates (fewer errors when people truly understand)
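To make the first of these comparisons concrete, here is a minimal Python sketch that summarizes test scores for visually explained concepts against text-only ones. It assumes you already have two lists of scores on the same scale; `compare_scores` is a hypothetical helper, not part of any analytics product.

```python
from statistics import mean


def compare_scores(visual: list[float], text_only: list[float]) -> dict[str, float]:
    """Summarize test scores for visually explained vs. text-only concepts.

    Returns each group's mean, the absolute difference, and the relative
    lift of the visual group over the text-only baseline.
    """
    if not visual or not text_only:
        raise ValueError("both groups need at least one score")
    v, t = mean(visual), mean(text_only)
    return {
        "visual_mean": v,
        "text_mean": t,
        "difference": v - t,
        # Relative lift is undefined when the baseline mean is zero.
        "relative_lift": (v - t) / t if t else float("nan"),
    }
```

A raw difference in means is only a starting point: with small groups it can easily be noise, so run a proper significance test (such as a t-test) before concluding that the visuals made the difference.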

Ethical Considerations and Transparency

One important reality: be honest when you're using AI-generated imagery.

For public-facing content, consider disclosing when images are AI-generated. Some audiences care, others don't, but transparency builds trust.

If you're using AI images in an internal professional setting (internal documentation, pitches, presentations), disclosure is usually less critical, but remembering that an image is AI-generated should still shape how carefully you review and refine it.

For marketing content where you're claiming to show real scenarios, using AI-generated imagery that could be mistaken for photography raises ethical questions. Make it clear these are illustrations, not photographs of real situations.

The goal is effective communication, not deception. There's a real difference between "we created this illustration to show the concept" and "here's a photograph of our system in action."

FAQ

What is an AI image generation tool?

AI image generation tools use machine learning models trained on billions of images to create new images from text descriptions. You describe what you want to see, the model interprets your description, and it generates an original image to match. Under the hood, neural networks that have learned how visual elements relate to language convert your text prompt into a visual representation.

How do AI image tools help with explaining ideas?

Visuals are often cited as being processed by the human brain 60,000 times faster than text, which makes them well suited to explanation. AI tools let you quickly generate custom visuals that match your exact concept without needing design skills or waiting weeks for an illustrator. You can iterate rapidly, refining visuals based on what's working, and create visual explanations that would be impossible (or very expensive) to commission traditionally.

Which AI image tool is best for creating diagrams?

The best tool depends on your specific needs. DALL-E 3 excels at precise, technical diagrams because it understands detailed layout instructions. Midjourney produces more artistic, polished visuals but is less precise about exact positioning. Adobe Firefly works best if you're already using Creative Cloud software and need professional integration. For workflow integration, Runable streamlines generating images as part of larger automation workflows, starting at just $9/month.

How do I write effective prompts for AI image generation?

Effective prompts include five key elements: the core concept (be specific about what you're visualizing), style reference (what aesthetic you want), composition (how things are arranged), specific elements (what must appear in the image), and color palette (what colors should dominate). Example: instead of "make a diagram," try "Create a technical diagram showing three stages connected by arrows, arranged horizontally, professional minimalist style with blue and white colors, technical documentation aesthetic."
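If you generate many images, it can help to keep the five elements as structured fields and assemble the final prompt from them, so every image in a set shares the same style and palette wording. The Python sketch below is a minimal illustration under that assumption; `PromptSpec` and `build_prompt` are hypothetical names, and the output is simply plain text you would paste into whichever tool you use (no tool's API is implied).

```python
from dataclasses import dataclass, field


@dataclass
class PromptSpec:
    """The five prompt elements described above (field names are illustrative)."""
    concept: str
    style: str
    composition: str
    elements: list[str] = field(default_factory=list)
    palette: str = ""


def build_prompt(spec: PromptSpec) -> str:
    """Join the elements into one comma-separated prompt, skipping empty ones."""
    parts = [
        spec.concept,
        spec.composition,
        "including " + ", ".join(spec.elements) if spec.elements else "",
        spec.style,
        spec.palette,
    ]
    return ", ".join(p.strip() for p in parts if p.strip())


if __name__ == "__main__":
    diagram = PromptSpec(
        concept="Create a technical diagram showing three stages connected by arrows",
        composition="arranged horizontally",
        style="professional minimalist style, technical documentation aesthetic",
        palette="blue and white colors",
    )
    print(build_prompt(diagram))
```

Running it reproduces the example prompt above. To make a consistent series, reuse the same `style` and `palette` values and change only `concept` and `elements` for each image.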

Can AI-generated images be used commercially?

Yes, but terms vary by tool. Adobe Firefly gives clear commercial rights and is designed for this. DALL-E 3 allows commercial use for paid subscribers. Midjourney allows commercial use on paid plans. Always check current terms for your specific tool, as licensing policies sometimes change.

How long does it take to generate an AI image?

Generation typically takes 30 seconds to 2 minutes, depending on the tool and the complexity of the image. DALL-E 3 is usually fastest; Midjourney takes longer but often produces more polished results. Once you have a base image, each iteration takes roughly the same time, so rapid refinement is feasible within a single work session.

Should I use AI-generated images or hire an illustrator?

It depends on your timeline and budget. AI-generated images are faster and cheaper for initial creation and iteration. Professional illustrators provide custom work tailored to your needs and can refine AI-generated images into final, publication-ready artwork. Many professionals use AI to create rough concepts quickly, then have an illustrator polish them. For educational content, internal documentation, and presentations, AI-generated images often don't need further refinement. For marketing materials and published work, professional polish typically adds value.

How can I ensure AI-generated images look professional?

Specific prompting with style references is crucial. Reference professional design systems (Apple's design language, Shopify's visual style, technical documentation from your industry). Request consistent color palettes and icon styles if generating multiple images. Iterate multiple times to refine results. Integrate generated images with appropriate typography and layout in design software rather than using them standalone. Set clear expectations: AI images work well for explanation visuals; less well for photorealistic product photography.

What's the difference between different AI image tools?

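Midjourney produces highly artistic, beautiful results but less precise layouts. DALL-E 3 follows detailed layout instructions more closely, which suits technical diagrams. Adobe Firefly fits best if you already work in Creative Cloud and need professional integration and clear commercial rights. Runable focuses on generating images as part of larger automation workflows.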

Can I use AI images for scientific or technical documentation?

Absolutely, but with caveats. AI excels at conceptual diagrams, process flows, and explanatory illustrations. For accuracy-critical technical documentation, verify the generated image matches technical specifications. AI sometimes creates plausible-looking but incorrect technical details. For scientific explanation, AI works great for concepts; for actual data visualization, use tools designed for data. The best approach: use AI for diagram concepts, then verify technical accuracy and refine if needed.

Conclusion: Making Visual Explanation Your Superpower

We've covered a lot of ground here. Let's recap the key insights and how you actually use this.

AI image generation has reached the point where it's genuinely useful for explanation. You don't need to be an artist or designer. You don't need to spend thousands commissioning illustrations. You can generate custom visuals in minutes.

But here's the crucial part: the tool isn't what makes this work. Your ability to think visually, to understand your audience, and to refine iteratively makes this work. The tool is just removing friction.

Start with clarity about what you're trying to help people understand. Then think visually. Sketch rough ideas. Write specific prompts. Generate, evaluate, refine. Integrate your results into complete explanations.

The competitive advantage emerges when you develop this as a system. You'll build intuition about what prompts work, you'll develop your visual style, you'll create reusable approaches that you apply to new contexts faster and faster.

Do this consistently and you develop a superpower: the ability to communicate complex ideas visually, quickly, and effectively. In a world drowning in information and text, visual communicators stand out.

The tools are democratizing something that used to require specialized skills. But specialized skills still matter: understanding composition, knowing your audience, maintaining consistency, and actually making the visual do explanatory work rather than just decorative work.

Leverage these tools to become better at visual explanation. Your ideas will land harder, your audience will understand better, and you'll communicate more effectively.

Now go generate something. Pick an idea you're struggling to explain. Spend 20 minutes creating a visual for it. See if it helps. I'm confident it will.

The technology is ready. The question is whether you're ready to use it.

Key Takeaways

- Visuals are often cited as being processed 60,000x faster than text, which makes AI-generated visuals a powerful tool for effective explanation.

- Different AI tools excel at different tasks: Midjourney for artistic polish, DALL-E for precision, Adobe Firefly for professional integration, Runable for workflow automation.

- Specific, detailed prompts with composition instructions dramatically outperform vague requests.

- Effective workflow involves ideation, rough sketching, generation, refinement iteration, and integration into larger explanations.

- AI images work best as building blocks within comprehensive visual systems, not standalone assets.
