Intel's Comeback: The Xeon 600-Series Changes Desktop Workstations Forever

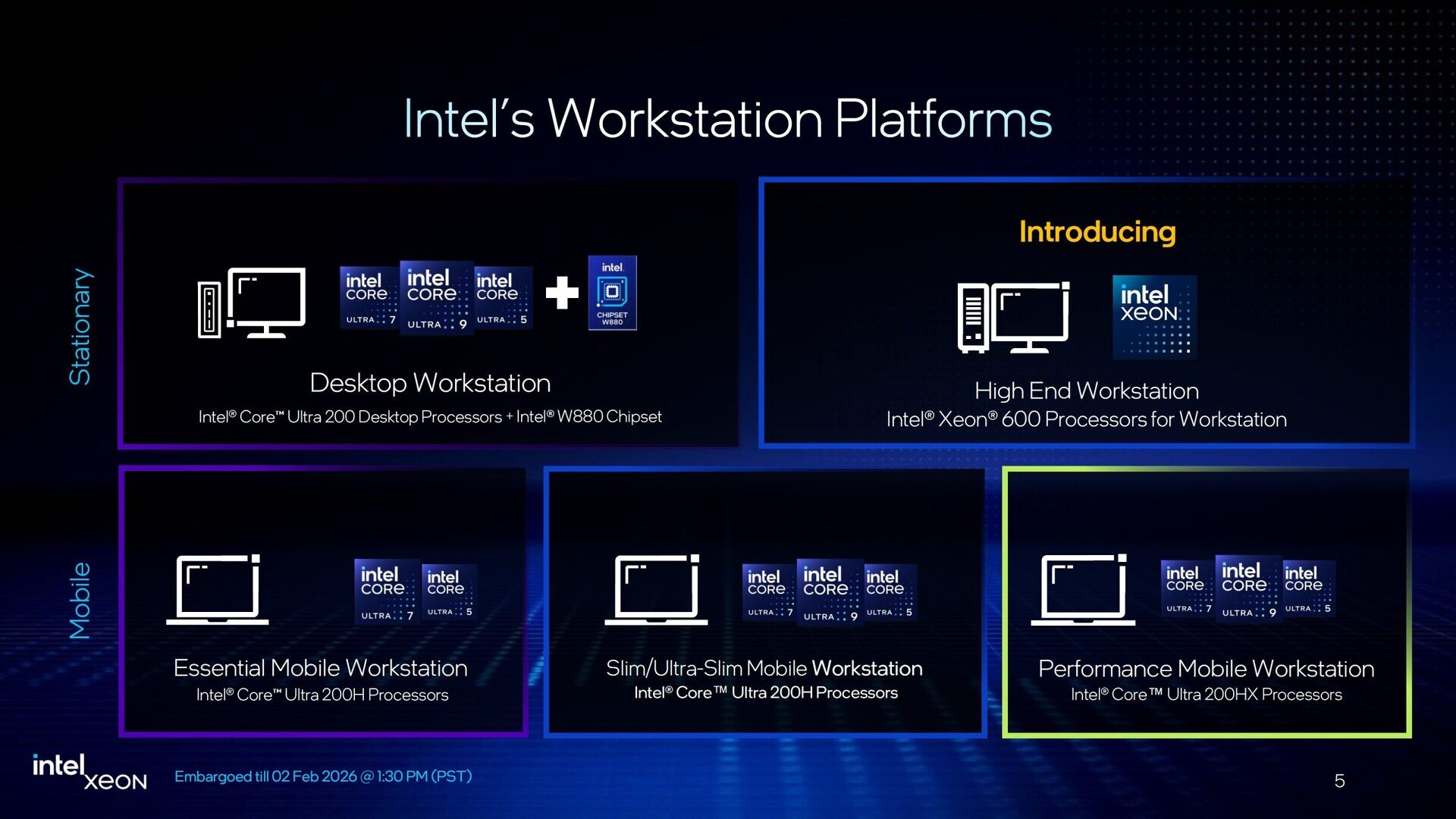

After nearly three years away from the desktop workstation market, Intel just dropped something that caught everyone's attention. The new Xeon 600-series processors are here, and they're not playing around. We're talking about machines with up to 86 cores, clock speeds hitting 4.9GHz, and memory configurations that can max out at 4TB. That's not a typo.

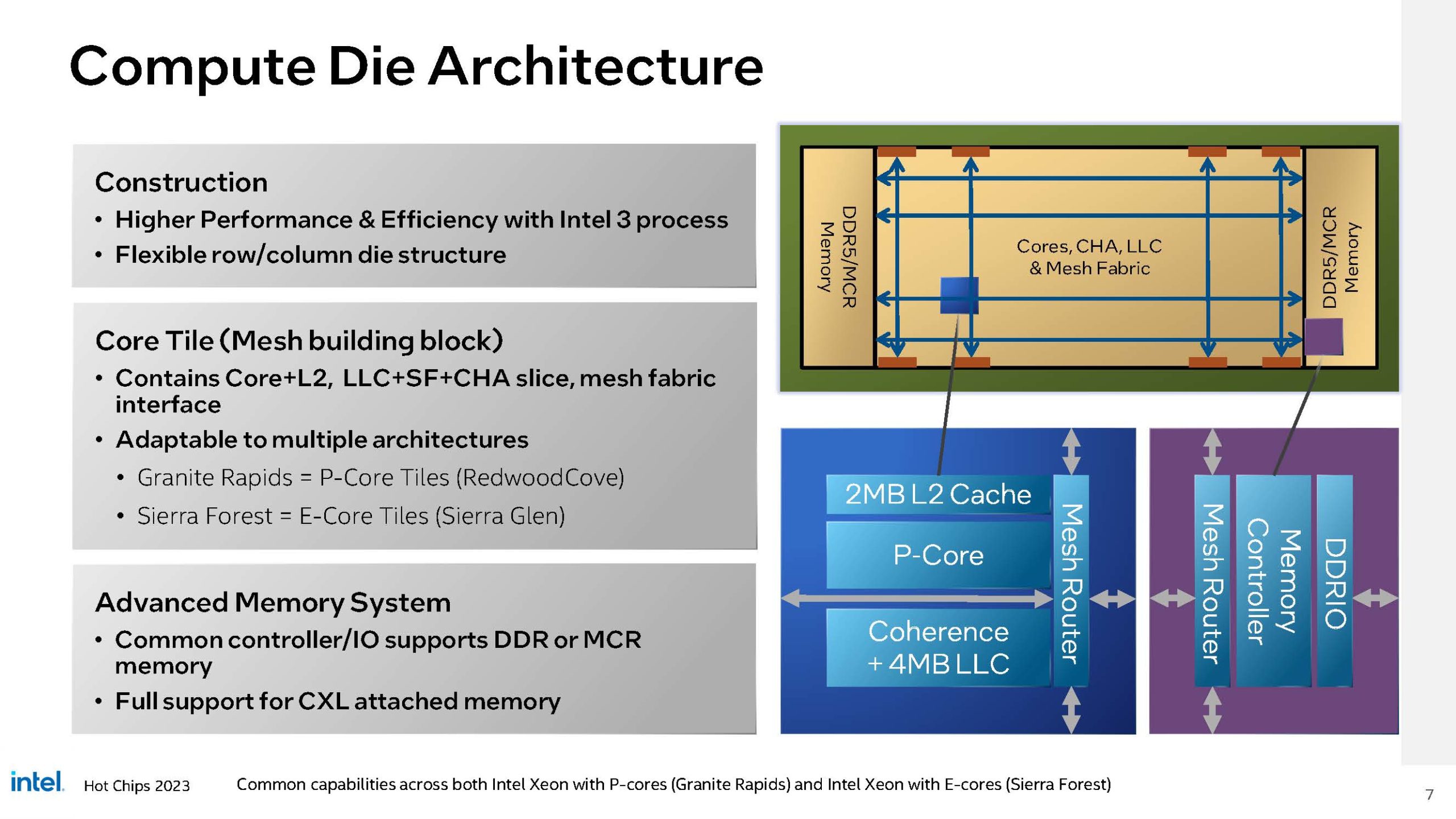

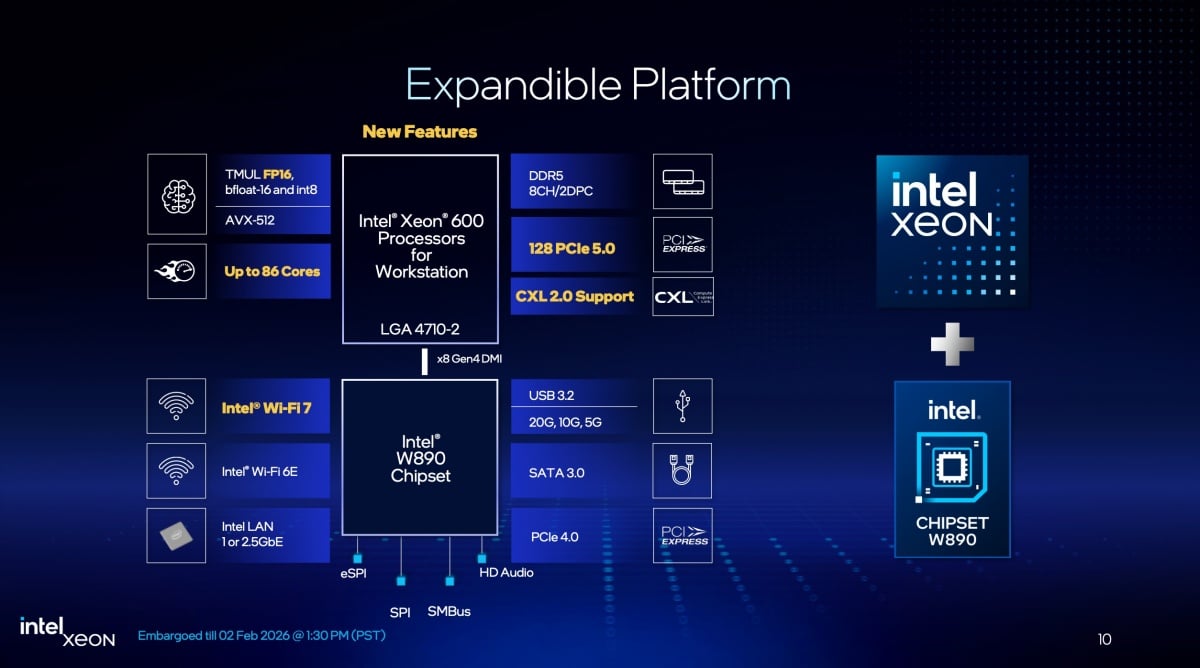

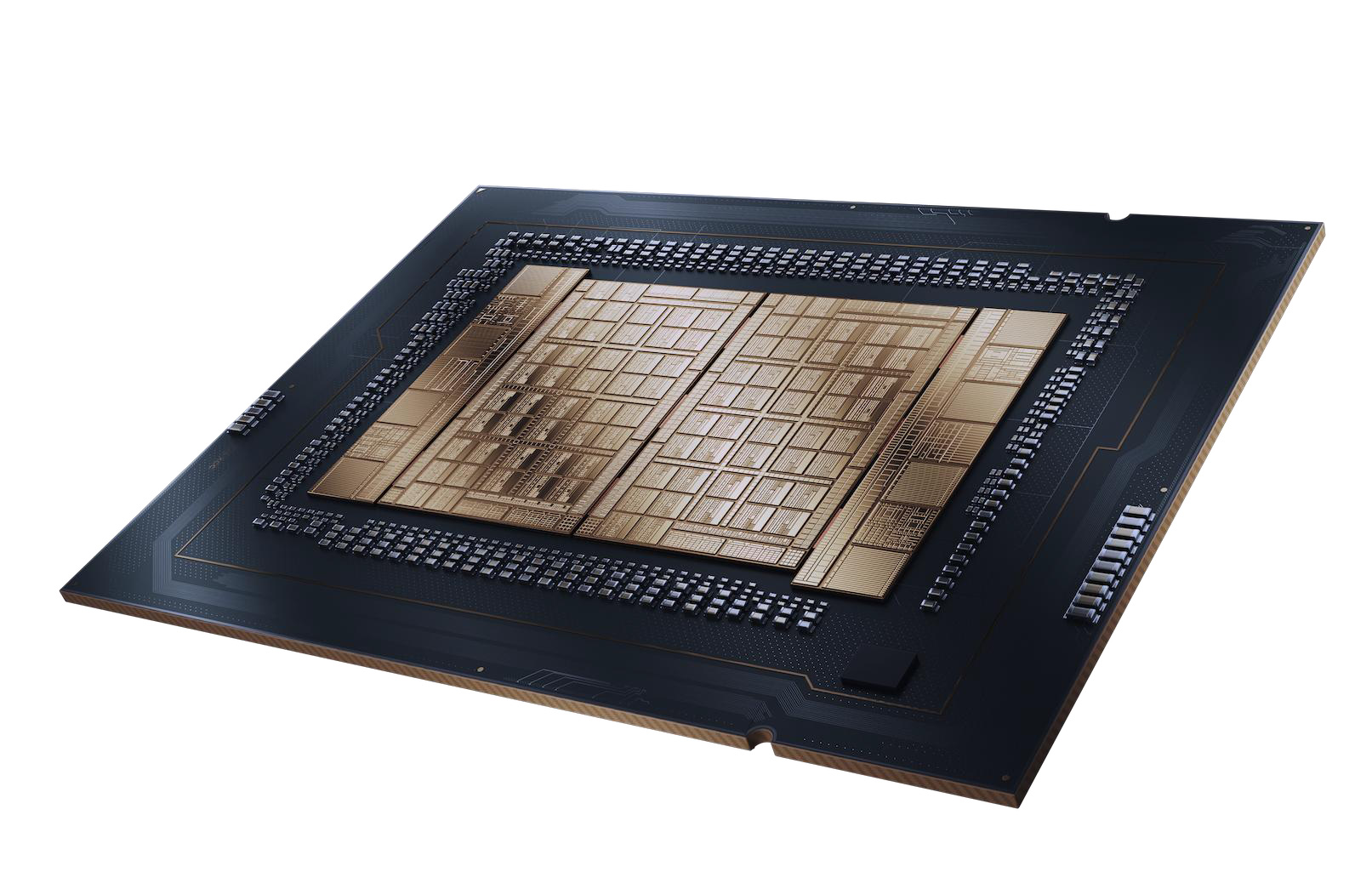

What makes this particularly interesting is the underlying architecture. Built on Granite Rapids, these chips represent Intel's aggressive move to reclaim the workstation space from AMD's increasingly dominant Threadripper line. But here's what really matters: Intel didn't just crank up the core count. They redesigned the memory subsystem, added serious PCIe 5.0 connectivity, and integrated AI acceleration directly into the silicon. For professionals working with massive datasets, complex simulations, or AI-heavy workflows, this is a significant shift.

The timing is telling too. The professional workstation market has been heating up. Cloud rendering farms are consuming more compute. Machine learning engineers need more bandwidth. Content creators dealing with 8K footage and massive 3D scenes demand systems that can handle real-time performance without bottlenecks. Intel watched AMD dominate this space with Threadripper, and now they're swinging back hard.

What surprised me most while researching this wasn't just the raw specs. It's the memory architecture. Octa-channel DDR5 memory with MRDIMM support (Multiplexed Rank DIMM) is genuinely new to desktop workstations. An MRDIMM uses an on-module buffer to drive two memory ranks at once and multiplex their data onto the channel, hitting speeds of 8,000MT/s on the top SKUs. For data-intensive workloads, that's a game changer. Your system can shuffle data between memory and CPU far faster than previous generations.

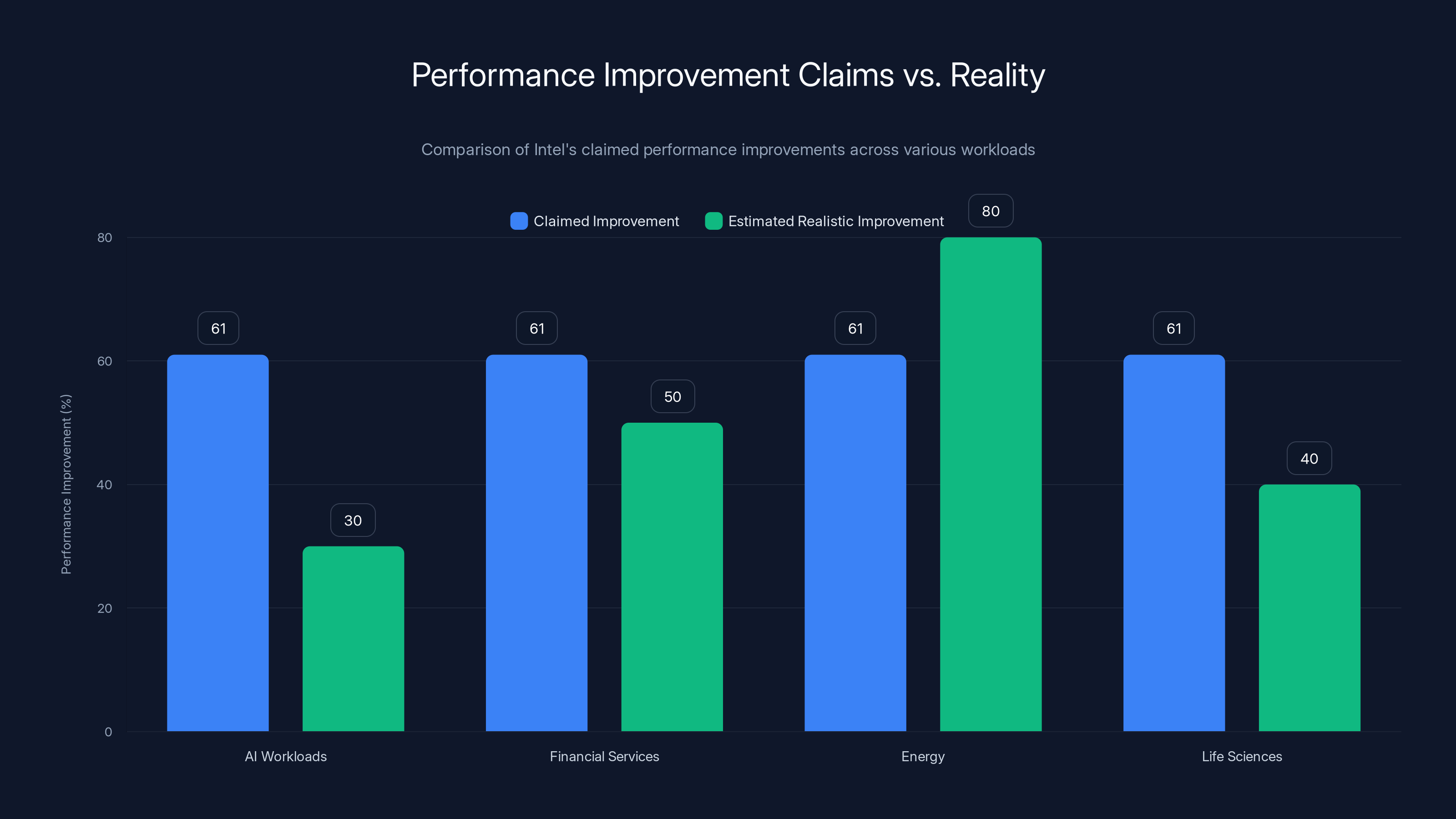

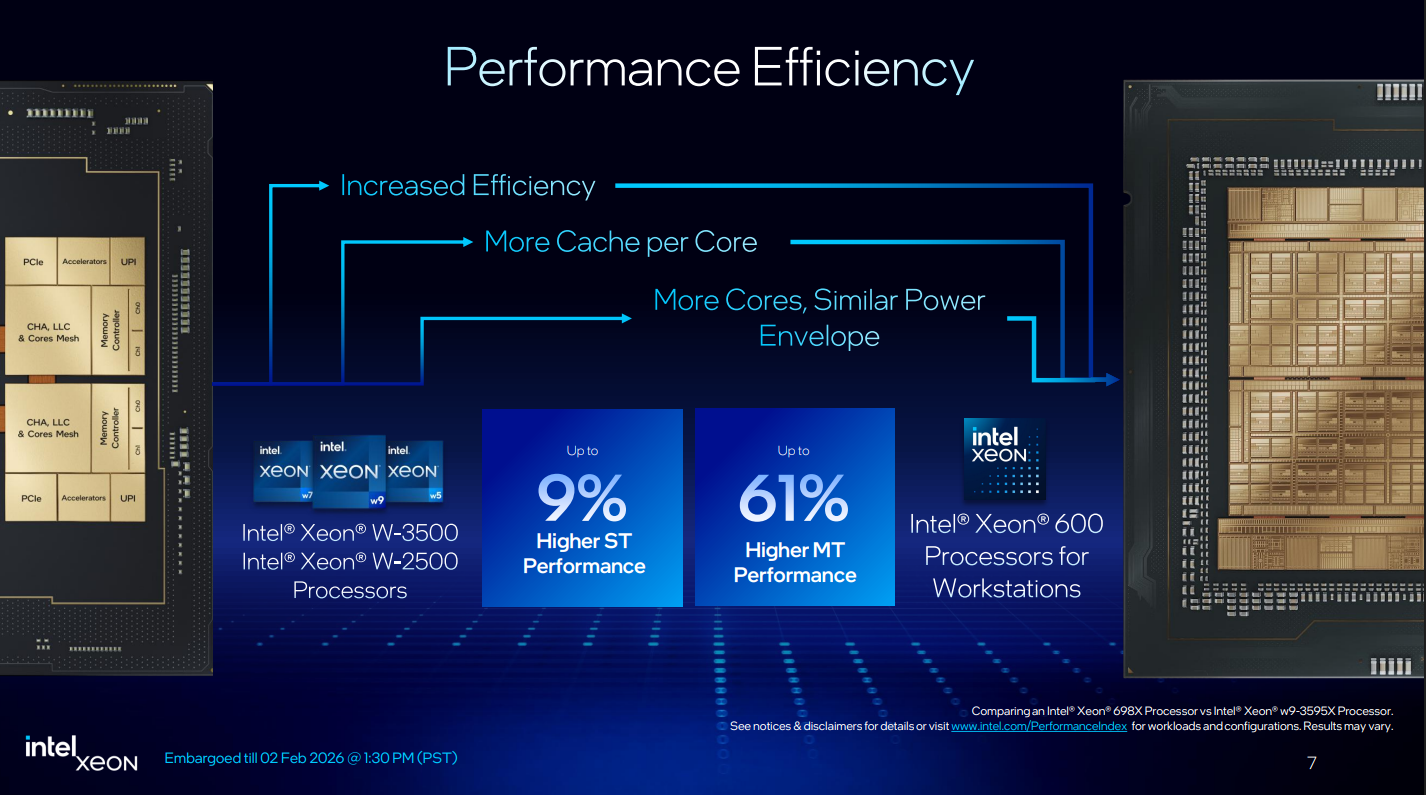

But let's be real. High core counts and impressive memory speeds only matter if they translate to actual performance gains. Intel claims up to 9% single-threaded performance improvements and 61% higher multithreaded performance compared to the previous W-2500 and W-3500 chips. That's a substantial jump. Whether it holds up in your actual workload is another question entirely.

This article breaks down everything about the Xeon 600-series: the architecture, the memory innovations, the AI capabilities, pricing, availability, and most importantly, whether this is actually worth your attention if you're building a high-end workstation right now.

TL;DR

- Xeon 600-series spans 12 to 86 cores with all performance cores and Hyper-Threading for consistent execution across demanding workloads

- Octa-channel DDR5 memory with MRDIMM support reaching 8,000MT/s brings multiplexed-rank memory to desktop workstations for the first time

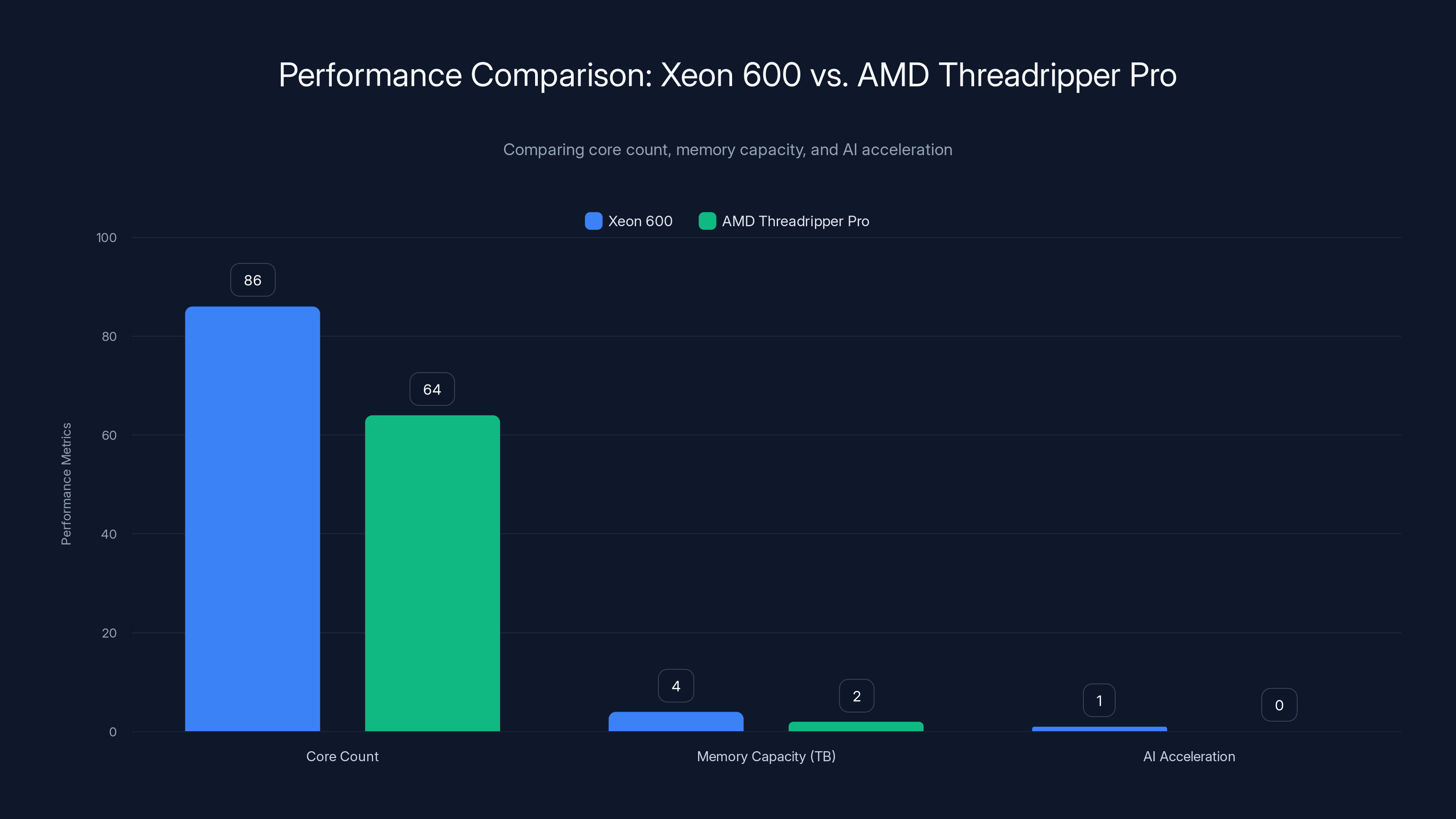

- Up to 4TB memory capacity doubles AMD Threadripper Pro 9000 WX and quadruples standard Threadripper 9000, enabling massive data-intensive operations

- 128 PCIe 5.0 lanes with CXL 2.0 support enable blazing-fast connectivity for storage, AI accelerators, and external compute

- Integrated AMX accelerators with FP16 support boost AI inference performance, making these chips competitive for machine learning workloads

- Granite Rapids architecture delivers 61% higher multithreaded performance versus older W-3500 chips, with real gains across AI, finance, and life sciences

- Pricing ranges from around $499 to $7,699, with boxed chips and new W890 motherboards arriving late March 2026

Intel claims up to 61% performance improvement across various workloads, but realistic gains vary significantly, with some workloads achieving only 30% improvement. Estimated data.

The Granite Rapids Architecture: More Than Just More Cores

Let's start with the elephant in the room. When you hear "86 cores," your first instinct might be to compare raw core counts with AMD's offerings. But that's only half the story. The Redwood Cove microarchitecture that powers these chips is something Intel ported from their mobile division and then heavily optimized for desktop workstation performance.

The critical difference between Granite Rapids and Intel's consumer designs is philosophical. Intel's recent Core desktop chips use a hybrid layout that splits performance cores from efficiency cores. Granite Rapids says no to that. Every single core in the Xeon 600-series is a full performance core with Hyper-Threading enabled. This matters because it means you don't get weird scheduling quirks where your software bounces between different core types. Consistent execution. Predictable performance. That's what professional workflows demand.

The cache hierarchy also got a serious upgrade. Each core has access to 2.5MB of L2 cache, and the chips feature a massive 210MB L3 cache on the flagship models. That's a lot of silicon dedicated to keeping your data close to the execution units. When you're running AI inference or processing massive datasets, L3 cache hits versus memory misses can mean the difference between seconds and minutes in execution time.

Clocking is another story. The flagship Xeon 698X hits 4.9GHz boost clocks, which is genuinely impressive for an 86-core chip. Typically, when you add more cores, frequency drops because power delivery and thermal considerations become nightmares. Intel managed to keep the clocks high across the entire lineup while shipping 86 cores. That's solid engineering.

Here's what's fascinating from an architecture perspective: Granite Rapids introduces predictive logic that anticipates memory access patterns. When your workload is predictable (which many professional tasks are), the chip preemptively fetches data into cache before you explicitly request it. This doesn't sound revolutionary, but it fundamentally changes how the processor feeds data to cores. Less waiting. More throughput.

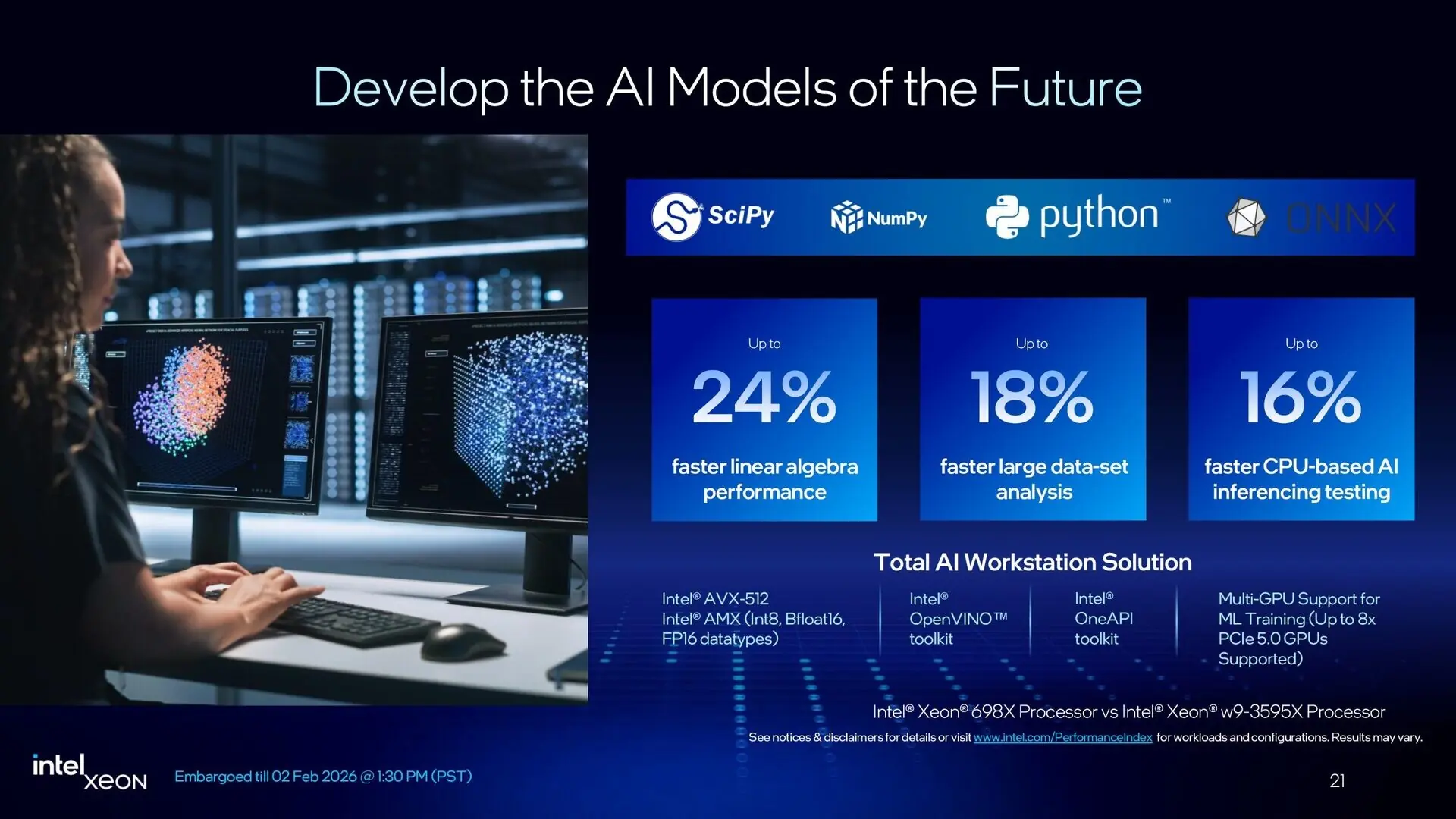

The instruction set extensions also matter. AMX (Advanced Matrix Extensions) are built into every core, providing hardware acceleration for matrix operations. This is crucial for AI workloads. When you're running neural network inference, matrix multiplication is the bottleneck. Having dedicated hardware that can crunch those operations faster than general-purpose cores means you can process more data per second.

Intel added FP16 (half-precision floating point) support to AMX, which is significant because many modern AI models use 16-bit floating point for inference. It's fast, uses less memory bandwidth, and maintains enough precision for accurate results. Your GPU might be sitting idle while your Xeon chips handle inference tasks with integrated acceleration.

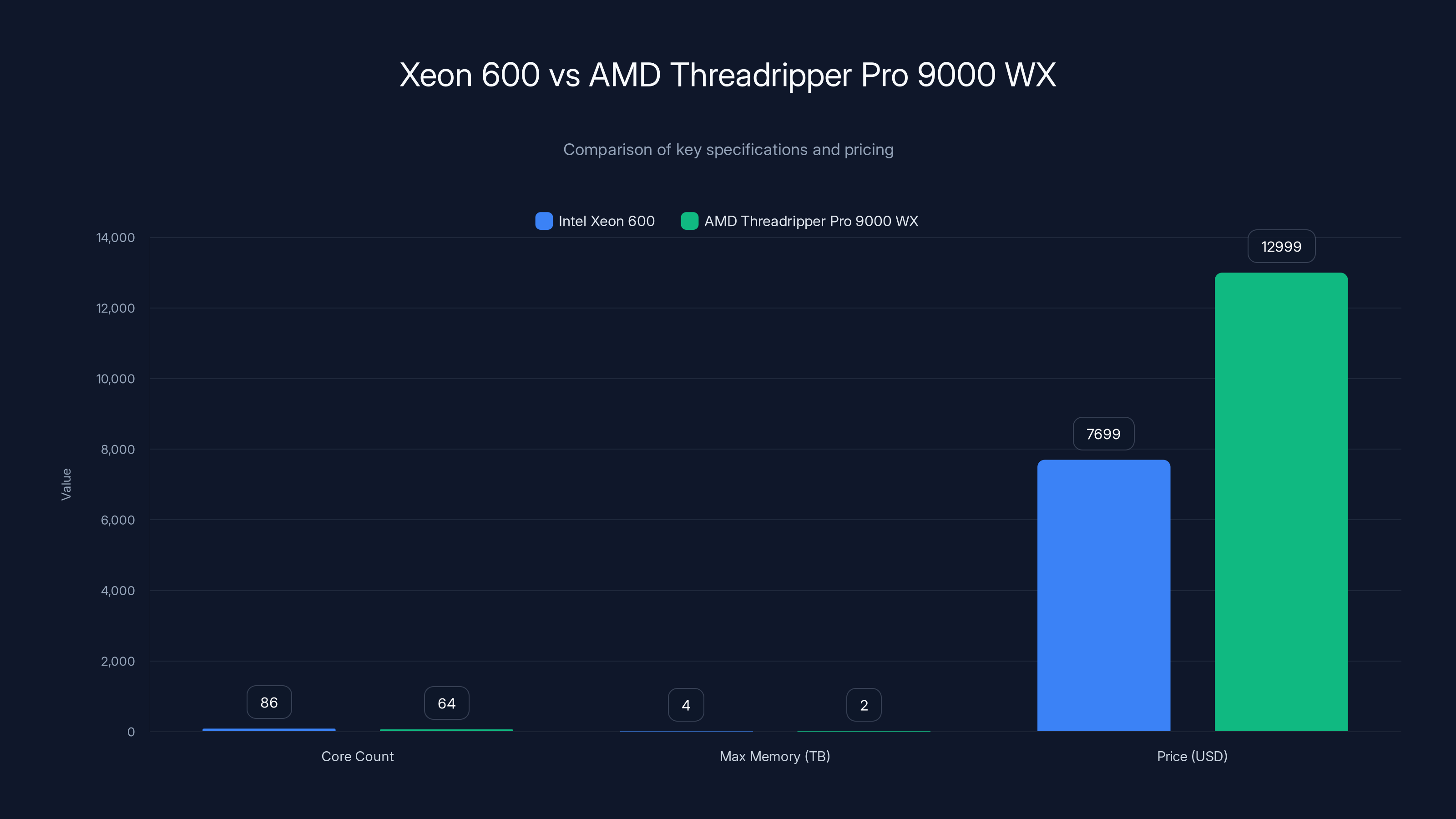

Intel Xeon 600 offers higher memory capacity at a lower price compared to AMD Threadripper Pro 9000 WX. However, AMD's architecture and software maturity may offset these advantages.

Memory Revolution: Octa-Channel DDR5 and MRDIMM Technology Explained

This is where things get genuinely interesting. Octa-channel memory means eight independent pathways from the CPU to your RAM modules simultaneously. Compare that to typical workstations which max out at dual-channel (two pathways) or quad-channel (four pathways). Eight. That's a huge jump in raw bandwidth capacity.

Here's the math: each DDR5 channel is 64 bits (8 bytes) wide, so at 6,400MT/s it can theoretically deliver about 51.2GB/s. Multiply that by eight channels, and you're looking at approximately 409.6GB/s of peak memory bandwidth. That's comparable to the memory bandwidth of some discrete GPUs. For workloads that shuffle massive amounts of data, this changes the game entirely.
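As a quick sanity check on peak bandwidth figures, here is a minimal sketch of the arithmetic. It assumes a 64-bit (8-byte) data path per channel and reports theoretical peaks only; sustained bandwidth in real workloads will be lower. The function name is illustrative, not from any Intel tool.

```python
def peak_bandwidth_gbs(transfer_rate_mts: float, channels: int, bus_bytes: int = 8) -> float:
    """Theoretical peak memory bandwidth in GB/s (decimal gigabytes).

    Assumes each channel moves `bus_bytes` bytes per transfer.
    """
    # MT/s * bytes per transfer gives MB/s; divide by 1000 for GB/s.
    return transfer_rate_mts * bus_bytes * channels / 1000


configs = [
    ("One DDR5-6400 channel", 6400, 1),
    ("Octa-channel DDR5-6400", 6400, 8),
    ("Octa-channel MRDIMM-8000", 8000, 8),
]

for label, rate, channels in configs:
    print(f"{label}: {peak_bandwidth_gbs(rate, channels):.1f} GB/s")
```

Swapping in other transfer rates or channel counts makes it easy to compare platforms on the same footing.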

But wait, there's more. The top five SKUs in the lineup support MRDIMM (Multiplexed Rank DIMM) modules that can reach 8,000MT/s. On a standard registered DIMM, only one rank transfers data at a time. An MRDIMM adds a multiplexing buffer that operates two ranks concurrently and interleaves their data onto the channel, so the channel runs faster than the individual memory chips. You get higher speeds and higher capacity per module.

What does this mean practically? You can pack more memory into fewer physical slots. The max capacity jumps to 4TB per CPU. Yes, four terabytes. That's double what AMD's Threadripper Pro 9000 WX supports and four times the standard Threadripper 9000. If you're working with genomics datasets, climate simulations, or massive machine learning models that need to stay in memory, this is profound.

Let me be specific about why this matters: A genomics researcher processing whole-genome sequencing data for thousands of samples can now keep most datasets in RAM simultaneously. Previously, they'd need to constantly swap data from storage to memory, creating artificial bottlenecks. Memory that fits 4TB means fewer stalls, faster analysis, and months saved on research timelines.

The bandwidth considerations go deeper. Memory-bound workloads are common in professional software. Video editing, 3D rendering, and scientific simulation all move enormous amounts of data per second. Traditional systems with 2-4 channels of DDR4 at 3,200MT/s deliver roughly 51-102GB/s of peak bandwidth. A Xeon 600 system with octa-channel DDR5 delivering about 410GB/s is 4-8 times faster at moving data on paper. That's not a small increment. That's a wholesale transformation.

Intel also added CXL 2.0 support (Compute Express Link), which means your workstation can coherently attach external memory or accelerators. Imagine adding custom memory pools or specialized AI accelerators that appear as if they're part of the main memory system. It's a glimpse into future workstation designs where the lines between CPU, memory, and accelerators blur significantly.

There's a practical concern though: Power consumption. Eight channels of DDR5 memory, especially with MRDIMM support, demands serious power delivery. A fully loaded Xeon 600 system with maximum memory could draw significant wattage. Thermal design matters. You need proper cooling. This isn't a system you throw together with a basic cooling solution.

PCIe 5.0 and Connectivity: The Overlooked Performance Multiplier

When people talk about Xeon 600 specs, they often gloss over the 128 PCIe 5.0 lanes. That's a mistake. This connectivity is where the real next-generation capabilities live.

First, understand what PCIe 5.0 delivers: 32GT/s per lane, which works out to roughly 4GB/s per lane in each direction. That's double PCIe 4.0. With 128 lanes, you're theoretically looking at around 500GB/s of total PCIe bandwidth in each direction. Obviously you don't use all lanes simultaneously for a single device, but having that pool of bandwidth available transforms what you can attach to your workstation.
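The per-lane figure can be derived from the signaling rate. This is a rough sketch that assumes 32 GT/s per lane and 128b/130b line encoding, and ignores packet and protocol overhead, so real device throughput will come in lower.

```python
def pcie_lane_gbs(gt_per_s: float = 32.0, encoding: float = 128 / 130) -> float:
    """Approximate usable GB/s per lane, per direction."""
    # Each transfer carries one bit per lane; divide by 8 to get bytes.
    return gt_per_s * encoding / 8


def pcie_total_gbs(lanes: int, gt_per_s: float = 32.0) -> float:
    """Aggregate per-direction bandwidth across `lanes` lanes."""
    return lanes * pcie_lane_gbs(gt_per_s)


print(f"PCIe 5.0 x1:   {pcie_lane_gbs():.2f} GB/s")
print(f"PCIe 5.0 x4:   {pcie_total_gbs(4):.1f} GB/s")
print(f"PCIe 5.0 x16:  {pcie_total_gbs(16):.1f} GB/s")
print(f"PCIe 5.0 x128: {pcie_total_gbs(128):.1f} GB/s")
```

The x4 result is the ceiling for a single NVMe drive, and the x16 result is the ceiling for a single GPU or accelerator slot.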

High-speed storage is the immediate beneficiary. NVMe drives using PCIe 5.0 can sustain 7-14GB/s sequential read speeds. Compare that to PCIe 4.0 drives maxing around 7GB/s. It's not earth-shattering, but when you're working with massive video files, raw image sequences, or backing up terabytes of data, every GB/s counts. A 4K 60fps video sequence that took 5 minutes to copy to your working storage might now take about 2.5 minutes if throughput doubles. Multiply that across dozens of tasks per day and you're saving real time.

But the real story is GPU connectivity and AI accelerators. Here's the thing: Xeon 600 doesn't have integrated graphics like some competitor chips. It relies entirely on discrete GPUs or accelerators. That means maximum PCIe 5.0 bandwidth directly to your AI inference cards, tensor processing units, or discrete graphics processors. If you're running AI workloads, having that much bandwidth between CPU and accelerator eliminates a common bottleneck.

Consider a practical scenario: You're running a large language model inference across multiple AI accelerators. Your Xeon 600 needs to feed these accelerators with tokens, collect results, and manage the pipeline. With PCIe 5.0 lanes dedicated to each accelerator, you avoid having GPU cards competing for bandwidth. Throughput increases. Latency decreases. Your inference pipeline moves faster.

CXL 2.0 support adds another dimension. This emerging standard allows coherent memory access between the CPU and external devices. Imagine attaching a custom AI accelerator that appears to your software as if it shares the same memory space. You eliminate extra copy operations between different memory pools. Data coherency is maintained automatically. This is future-proofing your investment toward standards that will define next-decade workstations.

The lane configuration also matters. Xeon 600 supports full-width NVMe storage—up to 16 PCIe 5.0 NVMe drives can attach simultaneously without competing for bandwidth. That's not just fast storage. That's redundant, striped, maximum-throughput storage architecture. Media professionals with multi-petabyte projects can now have dedicated drives for timeline, cache, final output, and backups, all running at maximum speed without bottlenecks.
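A lane budget is easy to check before ordering parts. The sketch below uses a hypothetical build (16 NVMe drives at x4 plus two accelerators at x16). The device mix is an assumption for illustration, and actual slot and lane routing depends on the specific motherboard.

```python
TOTAL_LANES = 128  # PCIe 5.0 lanes quoted for the platform


def lanes_used(devices: dict[str, tuple[int, int]]) -> int:
    """Sum lanes for a build; devices maps a label to (count, lanes_per_device)."""
    return sum(count * width for count, width in devices.values())


# Hypothetical build, not a vendor configuration.
build = {
    "nvme_x4": (16, 4),
    "accelerator_x16": (2, 16),
}

used = lanes_used(build)
print(f"{used} of {TOTAL_LANES} lanes used, {TOTAL_LANES - used} spare")
```

If the total exceeds the platform's lane count, some devices would have to share lanes through a switch or run at reduced width.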

Xeon 600 offers higher memory capacity than AMD Threadripper Pro and adds integrated AI acceleration, which suits memory-heavy and inference workloads.

AI Acceleration: From Workload Afterthought to Core Responsibility

Here's what's different about Granite Rapids versus older Xeon workstation chips: AI acceleration isn't bolted on as an afterthought. It's woven into the core fabric of every CPU.

Every single core in the Xeon 600-series includes AMX (Advanced Matrix Extensions) units. These are specialized execution units dedicated to matrix operations—the fundamental building blocks of neural networks. When you run AI inference workloads, you're essentially running millions of matrix multiplications. Having hardware that accelerates this is transformative.

The FP16 (16-bit floating point) support matters because modern AI models increasingly use lower precision for inference. Why? Because a model trained in FP32 (full precision) can often do inference just fine in FP16. You use half the memory bandwidth, half the storage space, and the math is faster. Intel's addition of native FP16 AMX support means your Xeon chips can do inference approximately twice as fast for compatible models compared to using FP32.

Let's ground this in reality. Suppose you're running a large language model inference for customer support chatbots. Your model might be 70 billion parameters. Serving thousands of concurrent users requires serious hardware. A Xeon 600 with integrated AMX acceleration plus attached AI accelerators can handle token generation much faster than a similar-spec CPU without native matrix acceleration. You serve more users with lower latency. Your infrastructure costs drop.
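To put the precision trade-off in numbers, here is a rough sketch of the memory needed just to hold a model's weights. It counts weights only and ignores the KV cache, activations, and runtime overhead, so treat the results as a lower bound. The 70-billion-parameter figure comes from the scenario above.

```python
BYTES_PER_PARAM = {"fp32": 4, "fp16": 2, "int8": 1}


def weight_footprint_gb(num_params: int, dtype: str) -> float:
    """Memory for model weights alone, in decimal gigabytes."""
    return num_params * BYTES_PER_PARAM[dtype] / 1e9


params = 70_000_000_000  # 70B-parameter model from the example above

for dtype in ("fp32", "fp16", "int8"):
    print(f"{dtype}: {weight_footprint_gb(params, dtype):.0f} GB")
```

Halving the bytes per parameter also halves the data that has to stream from memory for each generated token, which is why lower precision helps on bandwidth-bound inference.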

Intel claims that applications like Blender and Topaz Labs see performance benefits from integrated AMX accelerators. Blender's AI upscaling features, Topaz's video enhancement—both do tensor operations internally. Having hardware-accelerated matrix math directly in the CPU means these operations complete faster, making your overall rendering or enhancement pipeline quicker.

There's an architecture angle too. Older workstation designs forced you to choose: put your compute on the CPU or offload to a GPU. The Xeon 600 blurs that line. Some AI workloads benefit from running on the CPU using AMX acceleration. Others prefer specialized AI accelerators. You can now seamlessly split the work between both, using each resource optimally. Your Xeon CPU handles some inference tasks while your attached accelerators handle others, all in parallel.

However—and this is important—AMX acceleration for AI is best suited for inference, not training. If you're training large models from scratch, you still want GPUs or specialized AI chips. But for inference, model serving, and AI-powered features integrated into applications, CPU-side AMX acceleration is game-changing for workstations.

Consider the broader trend: More and more professional software is embedding AI capabilities. Video editors want AI upscaling. Photo tools want content-aware fill. CAD software wants generative design suggestions. Having built-in AI acceleration in your workstation CPU means all these features run locally, faster, without depending on external API calls or GPU offloading. It's convenience married to performance.

Performance Benchmarks: Separating Marketing Claims from Reality

Intel claims up to 61% higher multithreaded performance compared to previous W-3500 chips. That's the kind of claim that makes you want to dig deeper. What workloads? Under what conditions? Let's be honest about what these numbers actually mean.

Intel references SPECworkstation 4 benchmarks, a widely used suite for measuring workstation performance. This suite includes tests for AI, financial services, energy, and life sciences workloads. The 61% improvement is probably legitimate for certain specific tests within that suite, but it's not universal. Some workloads might see 30% gains. Others will land somewhere in between. The "up to 61%" is technically true but potentially misleading if you're not doing exactly the workload that achieved that number.

Multithreaded performance scaling is predictable math at this point. The previous-generation W-3500 flagship topped out at 60 cores, so moving to 86 cores is about 43% more cores, and a highly parallelizable workload would be expected to scale by roughly that much. If a workload hits 61% higher performance, that's better than pure core-count scaling, which suggests architectural improvements are contributing. The memory bandwidth upgrades and cache improvements are likely playing a role.

Single-threaded performance claims of "up to 9% improvement" are more conservative, and probably accurate. Single-threaded performance is harder to improve without fundamentally rearchitecting the CPU. You're locked by physics at that point. A 9% improvement suggests better branch prediction, slightly improved instruction per cycle metrics, or clock speed advantages. Nothing revolutionary, but real.

Here's the crucial caveat: These benchmarks are synthetic. They don't represent your actual workload. Your specific application might scale differently. If your software bottlenecks on memory bandwidth, the octa-channel DDR5 gives you huge wins. If your software bottlenecks on PCIe connectivity or storage speed, the PCIe 5.0 lanes help dramatically. If your software is single-threaded and can't parallelize, core count doesn't matter—you're stuck with that 9% single-threaded improvement.
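Amdahl's law gives a rough feel for how much of a core-count increase a given application can use. The sketch below is a simplified model: it ignores per-core speed changes, memory bandwidth, and cache effects, and the 60-core baseline is an assumed comparison point rather than a figure from Intel's benchmark disclosures.

```python
def amdahl_speedup(parallel_fraction: float, cores: int) -> float:
    """Speedup over one core when `parallel_fraction` of the work scales."""
    serial = 1.0 - parallel_fraction
    return 1.0 / (serial + parallel_fraction / cores)


def upgrade_gain(parallel_fraction: float, old_cores: int, new_cores: int) -> float:
    """Relative gain from adding cores, per-core speed held equal."""
    return amdahl_speedup(parallel_fraction, new_cores) / amdahl_speedup(parallel_fraction, old_cores)


# Assumed baseline of 60 cores versus the 86-core flagship.
for p in (0.0, 0.5, 0.9, 0.99, 1.0):
    gain = upgrade_gain(p, old_cores=60, new_cores=86)
    print(f"parallel fraction {p:.2f}: {100 * (gain - 1):.1f}% faster")
```

Measuring the parallel fraction of your own application, even roughly, tells you more than any headline multithreaded number.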

The glaring absence is direct comparison with AMD Threadripper 9000 series. Intel hasn't published head-to-head benchmarks showing Xeon 600 versus Threadripper. That's suspicious. It suggests the comparison might not be as favorable as Intel's marketing wants you to believe. Yes, Xeon 600 has a higher memory ceiling, but is it actually faster for your workload? Until benchmarks show that, it's speculation.

Access issues also matter. Xeon 600 is new. There are no real-world, long-term deployment reviews yet. By the time these systems ship (late March 2026 for motherboards, possibly later for boxed chips), months will have passed since announcement. That's enough time for the hype cycle to settle and reality-check benchmarks to emerge.

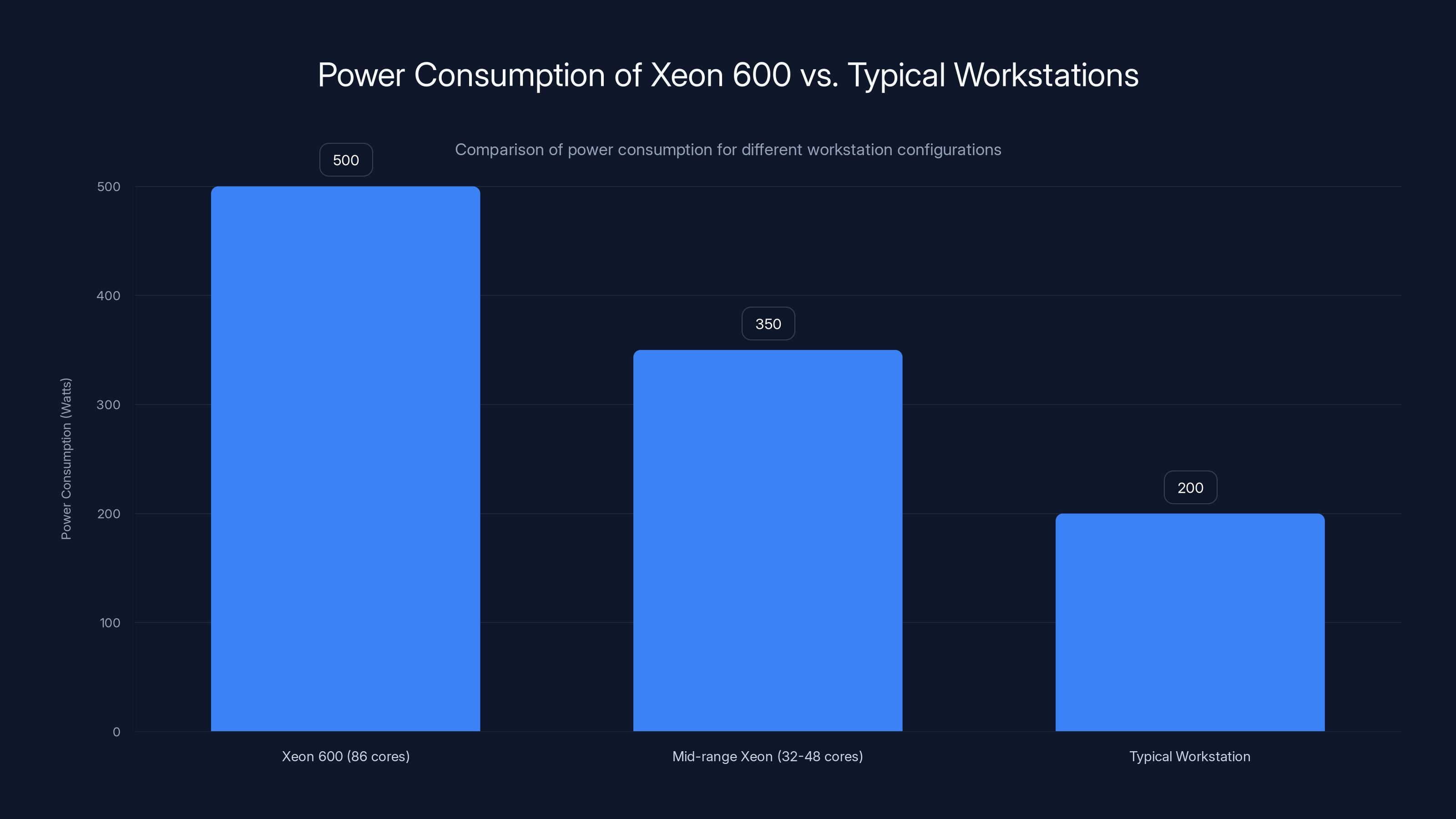

Xeon 600 systems consume significantly more power than typical workstations, highlighting the need for robust cooling and power solutions. Estimated data.

Processor SKU Breakdown: Which Core Count Actually Matters

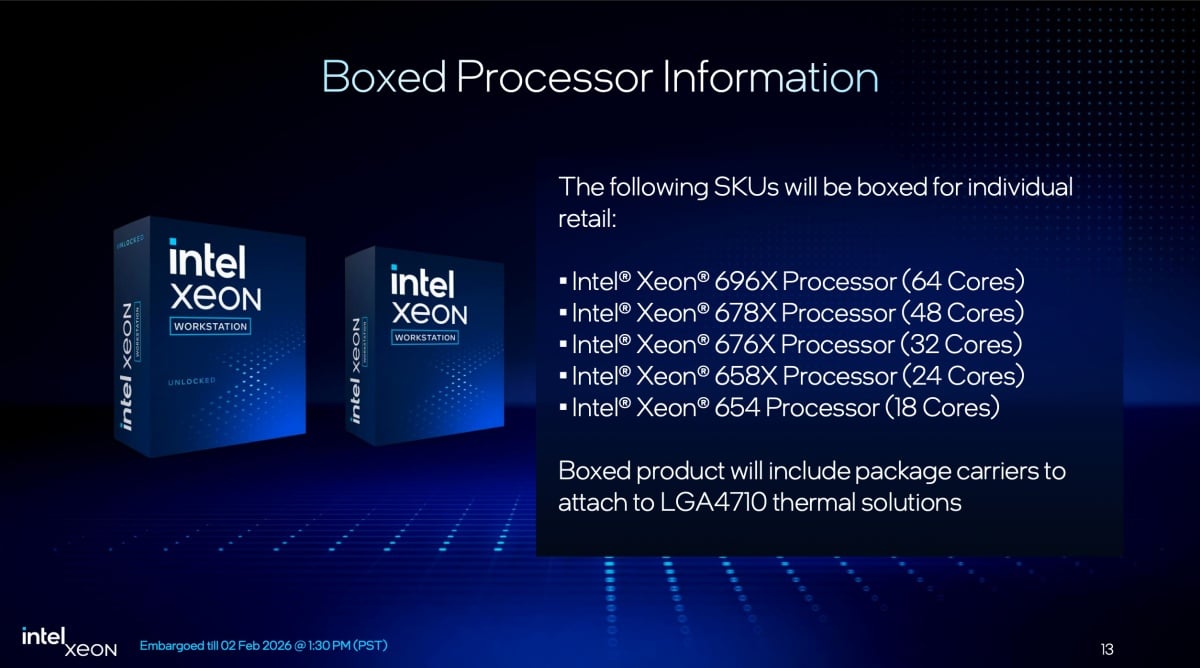

The Xeon 600-series spans 12 cores to 86 cores. That's a wide range, and not all SKUs are created equal for every workload. Let's break down the lineup and what each tier actually does.

The entry-level 12-core models are interesting. They're the smallest CPUs in the lineup, priced at around $499. For this price, you get all-performance cores and Hyper-Threading. If you're a small studio or independent contractor on a budget who needs to stay competitive with powerful single-threaded applications (video editing, CAD, 3D modeling), this is interesting. It's not a top performer, but it's genuine professional-grade hardware at a consumer price point.

The 32-core to 48-core sweet spot represents where most professional workstations probably settle. These offer strong all-around performance without the thermal or power delivery complexity of higher core counts. You get enough parallelism for rendering and simulation tasks while maintaining reasonable electricity consumption and cooling requirements. These SKUs are where value lives.

The flagship Xeon 698X with 86 cores is the statement piece. It's not a practical fit for most workstations. The thermal design requirements are serious. Power consumption reaches levels that demand dedicated circuit infrastructure. Cooling solutions are beefy. If you're a major production studio, university research department, or massive content creation facility, you might justify it. For most people? Massive overkill. But it sets the perception of power and capability, which has marketing value.

Each SKU tier includes different memory configurations. Lower-core-count models support octa-channel DDR5 at 6,400MT/s. The top five models unlock MRDIMM support at 8,000MT/s. That means memory ceiling and speed vary by model. This is intentional segmentation—you don't get the fastest memory on the cheapest CPUs.

The X-series SKUs are overclockable. This is rare for Xeon workstation chips. Enthusiasts and power users can push clocks higher, trading thermal headroom for additional performance. Standard non-X SKUs likely have locked multipliers. This differentiation gives options for different user profiles.

One practical concern: Not all motherboards support all SKUs. The W890 platform motherboards arriving in late March 2026 will support the full lineup, but there might be firmware version dependencies or BIOS updates required. If you're buying a system in the weeks after launch, verify that your specific CPU is supported by your motherboard before purchase.

Thermal and Power Considerations: The Reality of 86 Cores

Let's talk about something the marketing materials downplay: power consumption and heat dissipation.

An 86-core Xeon 600 running at full load is going to draw serious power. We're talking 400+ watts at full frequency. For comparison, typical office workstations draw 150-250 watts. This isn't a home-office machine. You need proper infrastructure.

Thermal design power (TDP) for the flagship models likely sits around 500-600W. That means you need a cooling solution designed for that scale. A basic tower cooler isn't cutting it. You're looking at liquid cooling solutions, either air-to-liquid radiators or custom loops. Your power supply needs headroom. A 1500W PSU isn't unreasonable for a fully configured system.

Mid-range models (32-48 cores) have more reasonable power budgets, probably in the 300-400W range. These are still power-hungry compared to consumer hardware, but they're approachable with quality tower coolers and good case airflow.

Here's the practical implication: A Xeon 600 workstation isn't just about the CPU cost. Infrastructure costs mount. Better cooling. Larger power supplies. Potentially custom case designs to handle thermal requirements. Larger UPS systems if you want power protection. These costs add up to 10-20% of the total system cost beyond just the CPU price.

Electricity costs matter too. If a Xeon 600 draws an average of 300 watts during heavy workloads and you're running it 8 hours a day, that's roughly 2.4 kilowatt-hours per day. At average US commercial electricity rates (around
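The running-cost arithmetic is simple enough to script for your own numbers. In this sketch the electricity rate and the number of working days are hypothetical placeholders, not quoted figures; substitute the rate from your own utility bill.

```python
def daily_energy_kwh(avg_watts: float, hours_per_day: float) -> float:
    """Energy used per day in kilowatt-hours."""
    return avg_watts / 1000 * hours_per_day


def annual_cost(avg_watts: float, hours_per_day: float,
                days_per_year: int, rate_per_kwh: float) -> float:
    """Yearly electricity cost in the currency of `rate_per_kwh`."""
    return daily_energy_kwh(avg_watts, hours_per_day) * days_per_year * rate_per_kwh


RATE_PER_KWH = 0.15   # hypothetical placeholder rate
WORK_DAYS = 250       # hypothetical working days per year

print(f"Daily energy: {daily_energy_kwh(300, 8):.1f} kWh")
print(f"Annual cost:  {annual_cost(300, 8, WORK_DAYS, RATE_PER_KWH):.2f}")
```

Cooling adds to this in an air-conditioned office, since the heat the system produces has to be removed as well.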

Noise is another factor nobody mentions. Systems that draw 400+ watts under load also generate significant heat. Cooling that efficiently means fans running hard. A Xeon 600 workstation under load will be louder than most consumer workstations. If you're in a shared office space or sensitive environment, this matters. Some deployments warrant acoustic enclosures, adding more cost.

There's also the ceiling effect. Your power infrastructure might have limits. If your office is wired for 20-amp circuits with 2,400W available, maxing out with multiple Xeon 600 systems isn't feasible without electrical upgrades. This is a constraint most people don't think about until they're trying to deploy systems and hit the wall.
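A quick capacity check shows how fast a circuit fills up. The sketch assumes a 120V supply and applies an 80% derating for continuous loads, a common electrical planning rule. The per-system wattage is a hypothetical whole-system figure, so confirm the real limits with an electrician.

```python
import math


def systems_per_circuit(breaker_amps: float, volts: float,
                        watts_per_system: float,
                        continuous_derate: float = 0.8) -> int:
    """How many systems fit on one circuit at sustained load."""
    usable_watts = breaker_amps * volts * continuous_derate
    return math.floor(usable_watts / watts_per_system)


# Hypothetical 1,000 W per fully loaded workstation (CPU, GPUs, drives, PSU losses).
print(systems_per_circuit(20, 120, 1000))                          # with derating
print(systems_per_circuit(20, 120, 1000, continuous_derate=1.0))   # nameplate only
```

Monitors, networking gear, and anything else on the same circuit eat into that budget too.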

The 32-core entry system is the most budget-friendly option at

Competitive Comparison: How Xeon 600 Stacks Against AMD Threadripper

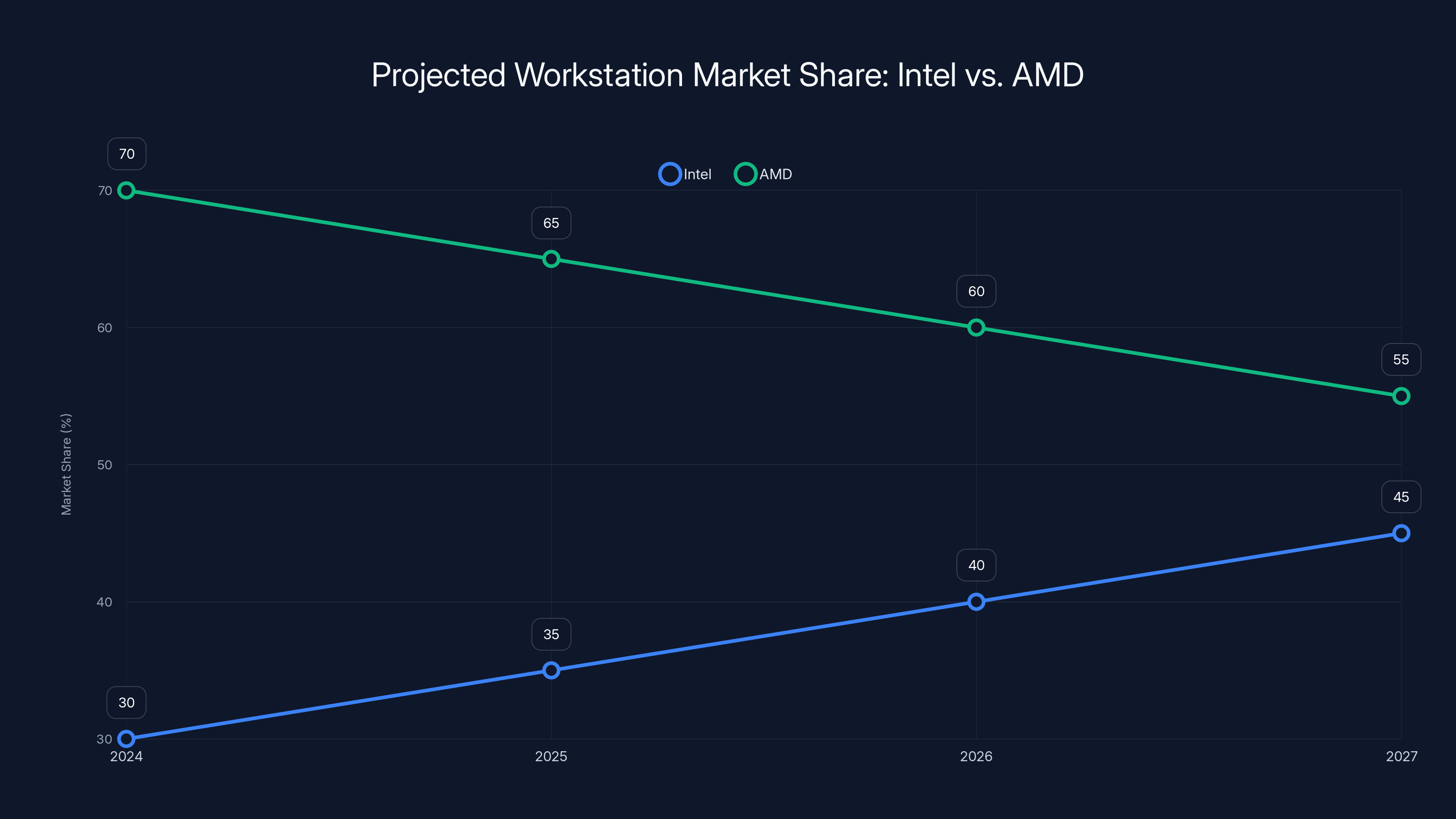

The elephant in the room is AMD. Over the past few years, AMD's Threadripper lineup has owned the desktop workstation space. Threadripper Pro 9000 WX series directly competes with Xeon 600. How do they compare?

AMD tops out at 96 cores on the Threadripper Pro 9000 WX flagship (the 9995WX), versus Intel's 86-core flagship. Core count advantage: AMD at the very top, though Intel's 86 cores exceed the 64-core ceiling of the standard Threadripper 9000. AMD supports up to 2TB of memory (standard Threadripper 9000 supports 1TB), versus Intel's 4TB. Memory ceiling: Intel. PCIe connectivity is comparable, with both supporting PCIe 5.0 with substantial lanes.

The architecture styles differ significantly. Threadripper uses Zen 5 architecture, which is proven, mature, and highly optimized. Threadripper Pro includes extra memory bandwidth and dedicated support for professional workflows. Granite Rapids is newer, unproven in real-world production at scale.

Pricing is interesting. AMD Threadripper Pro 9000 WX tops out around

The software ecosystem matters. Threadripper has years of driver maturity, BIOS stability, and application optimization. Xeon 600 is day-one hardware. There will inevitably be BIOS updates, driver refinements, and software compatibility surprises in the first 6-12 months after launch. This favors waiting a few months after release before committing financially.

Real-world performance disparity might be smaller than specs suggest. If both systems have similar single-threaded performance and comparable memory bandwidth, workload execution times might be nearly identical even with core count differences. The true differentiator will be whether Xeon 600's integrated AI acceleration actually provides meaningful benefit for your specific software.

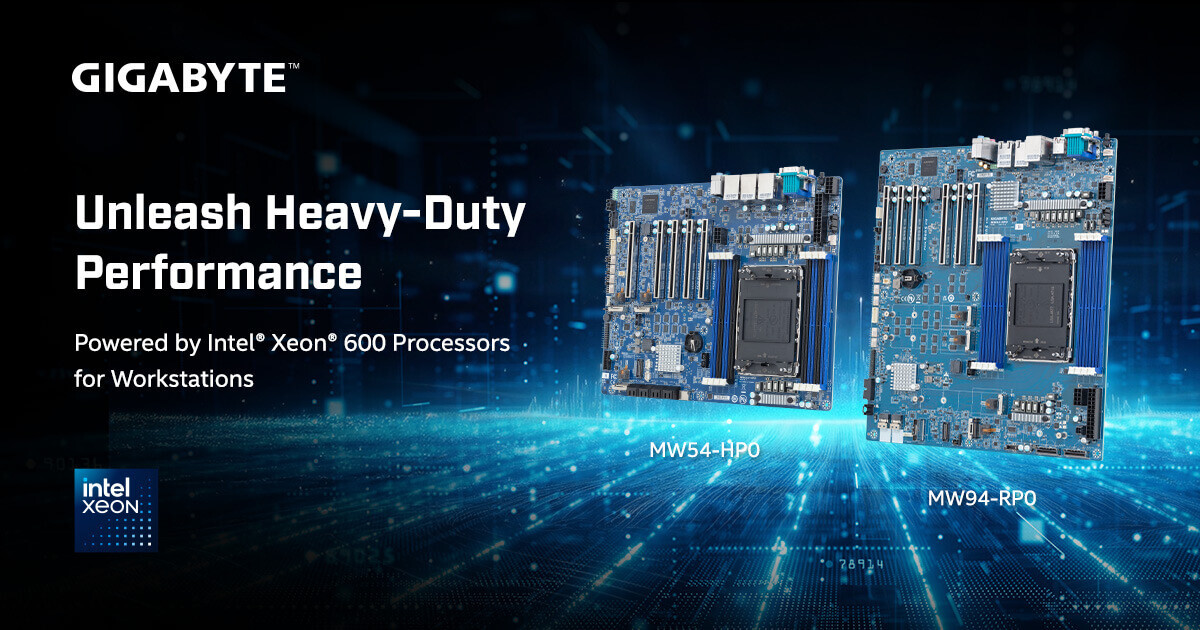

One advantage Xeon 600 has: Intel's broad OEM relationships. New W890 motherboards from Dell, Lenovo, Supermicro, and Puget are coming. Turnkey systems will be available quickly from established vendors. Threadripper Pro systems come from fewer vendors, often in more limited configurations. If you want off-the-shelf solutions with proper support, Xeon systems might offer more options early on.

The honest take? For most existing Threadripper users, Xeon 600 isn't an immediate "must upgrade." Threadripper Pro systems are still competitive. But for new workstation purchases starting in mid-2026 onward, Xeon 600 presents a viable alternative that wasn't available before. Competition is good—it drives innovation and reasonable pricing.

Motherboard Platform: The W890 Ecosystem Arrives

A processor is only as good as the platform supporting it. Xeon 600-series uses the new W890 motherboard platform, a significant departure from the W790 platform that supported the previous W-2500 and W-3500 generation.

New motherboards are expected from major system integrators in late March 2026. That's roughly 3-4 months after expected CPU availability. Yes, there will be a gap between CPU arrival and full platform availability. Early adopters might struggle to find compatible boards for DIY builds.

The four primary motherboard vendors—Dell, Lenovo, Supermicro, and Puget Systems—suggest this isn't a fragmented launch. These companies have massive experience building professional workstations. Their W890 designs will be battle-tested and optimized for the hardware.

Key features expected in W890 boards:

- Full support for octa-channel DDR5 memory with MRDIMM modules

- Multiple PCIe 5.0 slot configurations for storage and accelerators

- Advanced power delivery systems handling the high current draw of 86-core CPUs

- Robust thermal monitoring and management systems

- Professional-grade networking with high-speed Ethernet ports

- Comprehensive BIOS features for enterprise and creative professionals

What won't appear is desktop motherboards at

One implication: If you're considering a Xeon 600 system, wait until late March 2026 to have multiple motherboard options. Buying in early 2026 when only a single vendor has W890 boards available risks compatibility issues and limited features. Later availability means more options, more competition between vendors, and better value.

BIOS support and driver maturity will be ongoing through 2026. Early boards might need multiple firmware updates to unlock full capability and stability. This is normal for new platforms but can be frustrating. If stability is critical for your workflows, waiting 6-12 months post-launch lets vendors iron out quirks.

Estimated data suggests Intel's market share could grow from 30% in 2024 to 45% by 2027, driven by the Xeon 600 series. AMD's share may decrease as competition intensifies.

Pricing Strategy: Where Xeon 600 Fits Your Budget

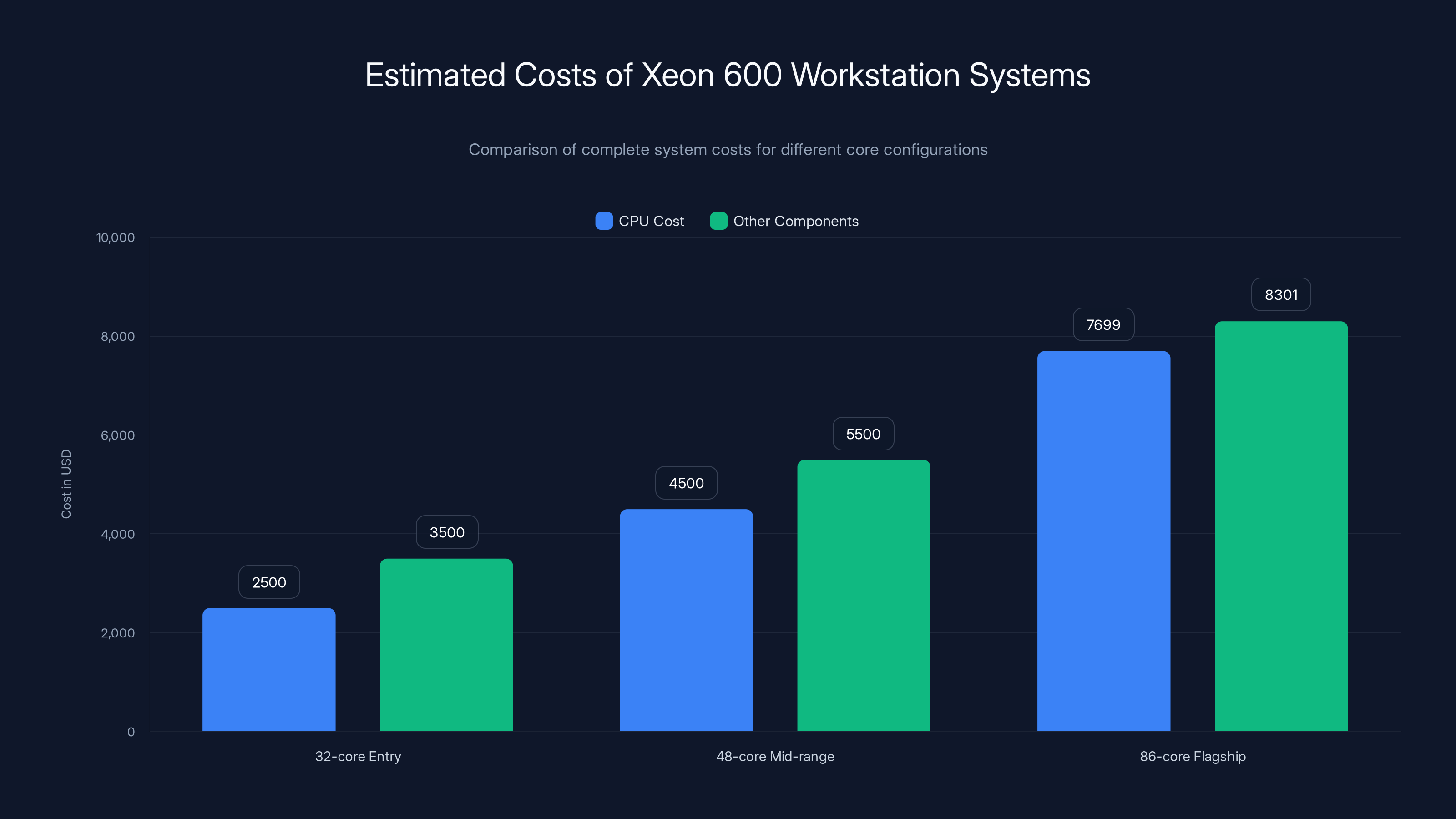

Let's talk money. Boxed CPUs range from around $499 for the 12-core entry model to $7,699 for the 86-core flagship.

A complete workstation system might run:

- 32-core entry system: CPU ~$2,500 + motherboard $800 + storage $300 + case/PSU $700

- 48-core mid-range system: CPU ~$4,500 + motherboard $1,500 + storage $400 + case/PSU $900

- 86-core flagship system: CPU ~$7,699 + motherboard $3,000+ + storage $600+ + case/PSU $1,500+

These estimates assume you're building or buying semi-custom. Turnkey systems from integrators might cost 15-25% more due to design, support, and warranty services.

The value proposition gets interesting when compared to alternatives:

- Threadripper Pro 9000 WX systems: Roughly similar price for comparable performance, possibly higher for top-end models

- Dual-socket EPYC server systems repurposed as workstations: Can be cheaper per-core but complex cooling and power requirements

- GPU-accelerated consumer systems: Lower cost upfront but limited for CPU-intensive professional workflows

For small studios or independent professionals on tight budgets, the $4,000-6,000 32-core system represents a significant capital investment. It's not an impulse purchase. The ROI calculation matters: How much faster will my renders complete? How much time will I save? Does that translate to more billable hours or faster project delivery?
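One way to frame the ROI question is a simple payback calculation. Every input below is a hypothetical placeholder (system cost, hours saved, billable rate). The model also assumes that saved hours convert directly into billable work, which will not hold for everyone.

```python
def payback_weeks(system_cost: float, hours_saved_per_week: float,
                  hourly_rate: float) -> float:
    """Weeks until time savings cover the purchase price."""
    weekly_value = hours_saved_per_week * hourly_rate
    if weekly_value <= 0:
        raise ValueError("No time savings means the system never pays for itself")
    return system_cost / weekly_value


# Hypothetical inputs: a 5,000 system, 5 hours saved per week, billed at 100 per hour.
print(f"Payback: {payback_weeks(5000, 5, 100):.1f} weeks")
```

The hours-saved input matters most, so measure it on your own projects rather than estimating from spec sheets.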

Leasing is another consideration that's often overlooked. Some professional services firms lease rather than buy workstations. Xeon 600 systems might be available through leasing programs for companies that prefer to avoid large capital expenses. Check with integrators about 3-5 year lease options.

Expected Workloads: Who Actually Benefits Most

Here's the reality check: Xeon 600-series isn't universally better than every alternative. Certain workloads benefit tremendously. Others? Meh.

Excellent fit:

- 3D rendering and animation: Cinema 4D, Blender, Maya with extensive ray-tracing or complex simulations benefit from high core counts and memory bandwidth

- Video processing: Editing 8K footage, color grading massive timelines, and rendering visual effects need memory bandwidth and cache

- Scientific simulation: Physics simulations, molecular dynamics, and climate modeling all scale well across many cores

- AI model inference: Especially with integrated AMX acceleration for compatible models

- Geospatial analysis: Processing satellite imagery and geographic data involves large datasets that need memory bandwidth

- Financial modeling: Complex spreadsheets and quantitative analysis benefit from multithreading

Poor fit:

- Single-threaded applications: Code compilation, some database operations where parallelization doesn't help. A cheaper system with higher single-threaded performance might outperform

- Real-time work with strict latency requirements: Games, interactive VR, live streaming where variability matters more than throughput

- Legacy software that doesn't parallelize: Old CAD or engineering software written before multi-core became standard

- Workloads bottlenecked by I/O: If your bottleneck is network speed or storage throughput rather than compute, more cores don't help

The critical point: Benchmark your actual workload before buying. Run your software on similar hardware. Measure. Calculate ROI. Don't assume more cores equals more speed for your specific application.
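
One way to see why more cores does not automatically mean more speed is Amdahl's law, a standard model that this article does not itself cite. It caps the speedup by the fraction of a job that can run in parallel. The sketch below is a rough estimate under that model, not a substitute for benchmarking; the parallel fractions are hypothetical inputs.

```python
def amdahl_speedup(parallel_fraction, cores):
    """Theoretical speedup over one core under Amdahl's law."""
    if not 0.0 <= parallel_fraction <= 1.0:
        raise ValueError("parallel_fraction must be between 0 and 1")
    if cores < 1:
        raise ValueError("cores must be at least 1")
    serial_fraction = 1.0 - parallel_fraction
    return 1.0 / (serial_fraction + parallel_fraction / cores)


# Hypothetical parallel fractions; measure your own application to get a real one.
for fraction in (0.50, 0.90, 0.99):
    s32 = amdahl_speedup(fraction, 32)
    s86 = amdahl_speedup(fraction, 86)
    print(f"{fraction:.0%} parallel: 32 cores -> {s32:.1f}x, 86 cores -> {s86:.1f}x")
```

Under this model, a job that is 90% parallel tops out at roughly 9x on 86 cores, which is why the parallel fraction of your own software matters more than the core count on the box.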

Memory Configuration Strategy: How Much Do You Actually Need

Xeon 600 supports up to 4TB of memory. That's an absurd amount for most users, but the architecture makes it practical. Let's talk sensible configurations.

512GB-1TB range: Sufficient for most professional workflows. You can keep massive datasets in memory simultaneously. Rendering operations, simulations, AI inference—all run without memory swapping. This is the "sweet spot" for professional studios.

2TB range: Now you're getting specialized. Genomics research, climate data analysis, massive machine learning datasets. Organizations working with truly enormous information sets justify this capacity.

4TB: Reserved for research institutions, universities, or massive corporate deployments. The diminishing returns are real: at this tier, cost climbs much faster than capacity.

One practical consideration: MRDIMM modules are expensive. They're new technology with limited supply and higher complexity. You might pay a 30-50% premium for MRDIMM modules over standard DDR5. If your workflow doesn't need the absolute highest memory speed (8,000MT/s), standard octa-channel DDR5 at 6,400MT/s might deliver 95% of the benefit at 70% of the cost.

Here's the formula for sensible memory: Take your largest single working dataset, double it for active operations and temporary buffers, add 50% more for comfortable headroom. If your largest file is 60GB, you're looking at 180GB of memory. Allocate 256GB and you have breathing room without overpaying for unused capacity.
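
The sizing rule above can be sketched in a few lines. The list of standard capacities is an assumption for illustration; the configurations actually on offer depend on the motherboard and the DIMM sizes you can buy.

```python
# Hypothetical list of common total-capacity configurations, in GB.
STANDARD_CONFIGS_GB = (128, 256, 512, 1024, 2048, 4096)


def recommended_memory_gb(largest_dataset_gb):
    """Apply the rule of thumb: double the dataset, then add 50% headroom."""
    working_set = largest_dataset_gb * 2  # active operations and temporary buffers
    return working_set * 1.5              # comfortable headroom


def next_config_gb(required_gb, configs=STANDARD_CONFIGS_GB):
    """Smallest listed configuration that covers the requirement."""
    for size in sorted(configs):
        if size >= required_gb:
            return size
    raise ValueError("requirement exceeds the largest listed configuration")


need = recommended_memory_gb(60)
print(f"Need about {need:.0f}GB, so buy {next_config_gb(need)}GB")
```

For the 60GB example, this gives a 180GB requirement and lands on the 256GB configuration, matching the numbers above.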

Availability Timeline and Launch Expectations

Here's the critical schedule everyone needs to know:

Late March 2026: New W890 motherboards from OEMs arrive. This is the earliest realistic date for complete platforms.

Boxed CPU availability: Unclear. Intel sometimes launches server CPUs first, then releases consumer/workstation versions months later. Expect mid-to-late 2026 for boxed chips to be readily available for DIY builders.

This gap matters. If you're planning a build, you might need to wait until April-May 2026 for completed motherboard designs to be available. Jumping in before then risks compatibility issues or missing features from incomplete designs.

There's also the supply chain angle. Xeon 600 will have limited initial supply. Companies like Intel prioritize OEM partners (Dell, Lenovo, etc.) before consumer availability. If you want a Xeon 600 workstation in Q2 2026, you might face waiting lists or availability constraints. Budget extra time for procurement.

Driver and BIOS maturity follows a predictable pattern. Expect BIOS updates monthly for the first 6 months, then quarterly. Driver support from GPU vendors, storage manufacturers, and software companies ramps up gradually. By month 6 after launch, the ecosystem is reasonably stable. By month 12, it's mature.

Future-Proofing: Will Xeon 600 Stay Relevant

A Xeon 600 workstation is typically a 5-7 year investment. Will it stay competitive? Let's think through it.

The core architecture should age well. Redwood Cove is a fundamentally sound microarchitecture. Performance improvements will come from clock speed increases and optimizations in subsequent generations, but the basic approach is proven. Software optimization for 86-core systems will improve over time, making gains even better than launch benchmarks suggest.

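The memory bandwidth ceiling is generous. Each channel peaks at 51.2GB/s with standard DDR5 at 6,400MT/s or 64GB/s with MRDIMM at 8,000MT/s, so eight channels give a theoretical total of roughly 410GB/s or 512GB/s. That's comparable to many discrete GPUs. Memory bandwidth needs for professional applications might grow, but probably not by 2x or 4x in the next 5 years. You're buying headroom that will age well.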
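
As a back-of-envelope check, peak theoretical DDR5 bandwidth is the transfer rate times 8 bytes per transfer times the number of channels. The sketch below shows that the 51.2GB/s and 64GB/s figures are per-channel peaks; real sustained bandwidth will be lower, and the function name is just an illustration.

```python
# A DDR5 channel moves 64 data bits (8 bytes) per transfer,
# organized as two 32-bit subchannels.
BYTES_PER_TRANSFER = 8


def peak_bandwidth_gbs(mega_transfers_per_s, channels=1):
    """Theoretical peak bandwidth in GB/s (decimal), ignoring real-world overhead."""
    return mega_transfers_per_s * BYTES_PER_TRANSFER * channels / 1000


for rate in (6400, 8000):
    per_channel = peak_bandwidth_gbs(rate)
    total = peak_bandwidth_gbs(rate, channels=8)
    print(f"{rate}MT/s: {per_channel:.1f}GB/s per channel, {total:.1f}GB/s across 8 channels")
```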

PCIe 5.0 is already shipping broadly. By 2026-2027, it will be standard. Your PCIe 5.0 connectivity won't age into obsolescence quickly. PCIe 6.0 is coming, but backwards compatibility ensures your PCIe 5.0 devices will work on future systems.

The catch: AI capabilities might age fastest. Integrated AMX acceleration is competitive now. In 2-3 years, when specialized AI accelerators are even more powerful and ubiquitous, the CPU-side acceleration might feel dated. But that doesn't break the system—it just means AI workloads increasingly offload to dedicated hardware rather than using CPU AMX.

Software optimization favors proven platforms. As developers spend more time with Xeon 600 systems, software gets faster through better thread scheduling, cache utilization, and algorithmic choices tuned to the hardware. A Xeon 600 in 2028 running highly optimized software might perform 20-30% better than the same system in 2026 running generic code.

The Honest Assessment: Is Xeon 600 Worth the Leap

Let me be direct. Xeon 600-series is impressive from a specification perspective. 86 cores, 4TB memory, octa-channel DDR5, PCIe 5.0, integrated AI acceleration—it's genuinely next-generation workstation hardware.

But impressive specs don't automatically translate to buying decisions. Here's what matters:

Buy Xeon 600 if:

- You're replacing a system that's 4+ years old and performance is measurably limiting your work

- Your workload is genuinely CPU-bound (rendering, simulation, analysis) rather than limited by I/O or single-threaded performance

- You have budget for proper infrastructure (cooling, power, motherboard) beyond the CPU

- You can wait until late Q1 or early Q2 2026 for mature platforms and good reviews

- You benchmarked your actual software and saw meaningful performance gains in testing

Skip Xeon 600 if:

- Your current workstation performs adequately for your tasks

- Your workload is bottlenecked by something other than CPU (storage, network, single-threaded performance)

- Budget is tight: Threadripper Pro is competitive and available now with a mature ecosystem

- You need immediate availability rather than waiting 3-6 months for stable platforms

- You're doing primarily GPU-accelerated work where the CPU is secondary

Xeon 600 represents Intel's serious attempt to reclaim the professional workstation space. Whether that translates to smart purchasing depends entirely on your specific situation, workload, budget, and timeline.

FAQ

What is Granite Rapids architecture?

Granite Rapids is Intel's latest processor architecture optimized for data center and workstation workloads. It's based on the Redwood Cove microarchitecture and features optimizations for memory bandwidth, AI acceleration through AMX units, and high clock speeds. This architecture powers the Xeon 600-series workstation processors.

How does octa-channel DDR5 memory differ from standard dual-channel systems?

Octa-channel memory provides eight simultaneous pathways between the CPU and memory, compared to just two in typical dual-channel systems. This dramatically increases memory bandwidth: each channel peaks at 51.2GB/s for standard DDR5 at 6,400MT/s or 64GB/s with MRDIMM modules at 8,000MT/s, for a theoretical total of roughly 410GB/s or 512GB/s across eight channels. For data-intensive workloads like 3D rendering, video editing, or scientific simulation, this translates to faster data access and fewer stalls.

What are the primary benefits of PCIe 5.0 connectivity?

PCIe 5.0 doubles bandwidth compared to PCIe 4.0, running at 32GT/s per lane. That works out to roughly 4GB/s per lane in each direction, or about 64GB/s for an x16 slot. With 128 lanes available, Xeon 600 systems can attach high-speed NVMe storage, multiple AI accelerators, or external compute devices without bandwidth contention. This enables faster data movement and smoother workflows for storage-intensive or accelerator-dependent applications.
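
For a rough sense of scale, PCIe throughput follows from the per-lane signaling rate (32GT/s for PCIe 5.0) and the 128b/130b line encoding used by PCIe 3.0 through 5.0. The sketch below computes approximate one-direction throughput before protocol overhead, which comes to about 4GB/s per PCIe 5.0 lane; the function name is illustrative, and real devices will deliver somewhat less.

```python
# Raw per-lane signaling rate in GT/s by PCIe generation.
GT_PER_S = {3: 8.0, 4: 16.0, 5: 32.0}

# PCIe 3.0 through 5.0 use 128b/130b encoding: 128 payload bits per 130 sent.
ENCODING_EFFICIENCY = 128 / 130


def pcie_gbs(generation, lanes=1):
    """Approximate one-direction throughput in GB/s, before protocol overhead."""
    return GT_PER_S[generation] * ENCODING_EFFICIENCY / 8 * lanes


print(f"PCIe 5.0 x1:   {pcie_gbs(5):.2f}GB/s")
print(f"PCIe 5.0 x16:  {pcie_gbs(5, 16):.1f}GB/s")
print(f"PCIe 5.0 x128: {pcie_gbs(5, 128):.0f}GB/s aggregate")
```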

Who should consider buying Xeon 600-series workstations?

Xeon 600 is ideal for professional studios, researchers, and organizations working with CPU-intensive tasks like 3D rendering, video processing, scientific simulation, AI model inference, geospatial analysis, or complex financial modeling. The system excels for workloads that can utilize many cores and require substantial memory bandwidth or capacity. It's less ideal for single-threaded applications or workloads bottlenecked by I/O rather than compute.

How does Xeon 600 compare performance-wise to AMD Threadripper Pro?

Xeon 600 offers higher memory capacity (4TB vs. 2TB) and integrated AI acceleration through AMX units. On core count, its 86 cores sit below the 96 cores of the top Threadripper Pro parts. Detailed performance comparisons aren't yet available. Threadripper Pro uses proven Zen 5 architecture with mature driver support, while Xeon 600 is new hardware with developing ecosystem support. Real-world performance for your specific workload depends on software optimization, not just core counts.

What's the expected total cost of a Xeon 600 workstation system?

Complete systems range from roughly

When will Xeon 600 workstations actually be available for purchase?

W890 motherboards from major OEMs are expected in late March 2026. Complete, validated systems from integrators will arrive shortly after. Boxed CPUs for DIY builders might not be widely available until mid-2026 or later. Early adoption means waiting until at least April 2026, with May-June 2026 being safer for stable platform availability.

What cooling solution do I need for Xeon 600?

The flagship 86-core models require serious cooling capable of handling 500-600W thermal load. Liquid cooling solutions or high-end tower coolers rated for 400W+ are necessary. Mid-range 32-48 core models can use quality tower coolers rated for 300-400W. Your case must support these cooling solutions with adequate airflow and space.

How much memory do I actually need in a Xeon 600 system?

For most professional workloads, 512GB-1TB is sufficient and practical. This capacity allows you to keep massive datasets in memory simultaneously without swapping to storage. Unless you're working with specialized applications requiring extreme memory capacity (genomics, climate data, massive AI datasets), capacities beyond 2TB bring diminishing returns relative to cost. Take your largest working dataset, double it, and add 50% for comfortable headroom.
