Microsoft Teams Gets Serious About Security: What's Changing in 2026

If you've ever felt like your organization's security posture was held together with duct tape and prayers, you're not alone. Most companies stick with default security settings because, honestly, nobody has time to audit every single protection layer. Microsoft's betting that you won't notice the change they're about to make, and frankly, that's the point.

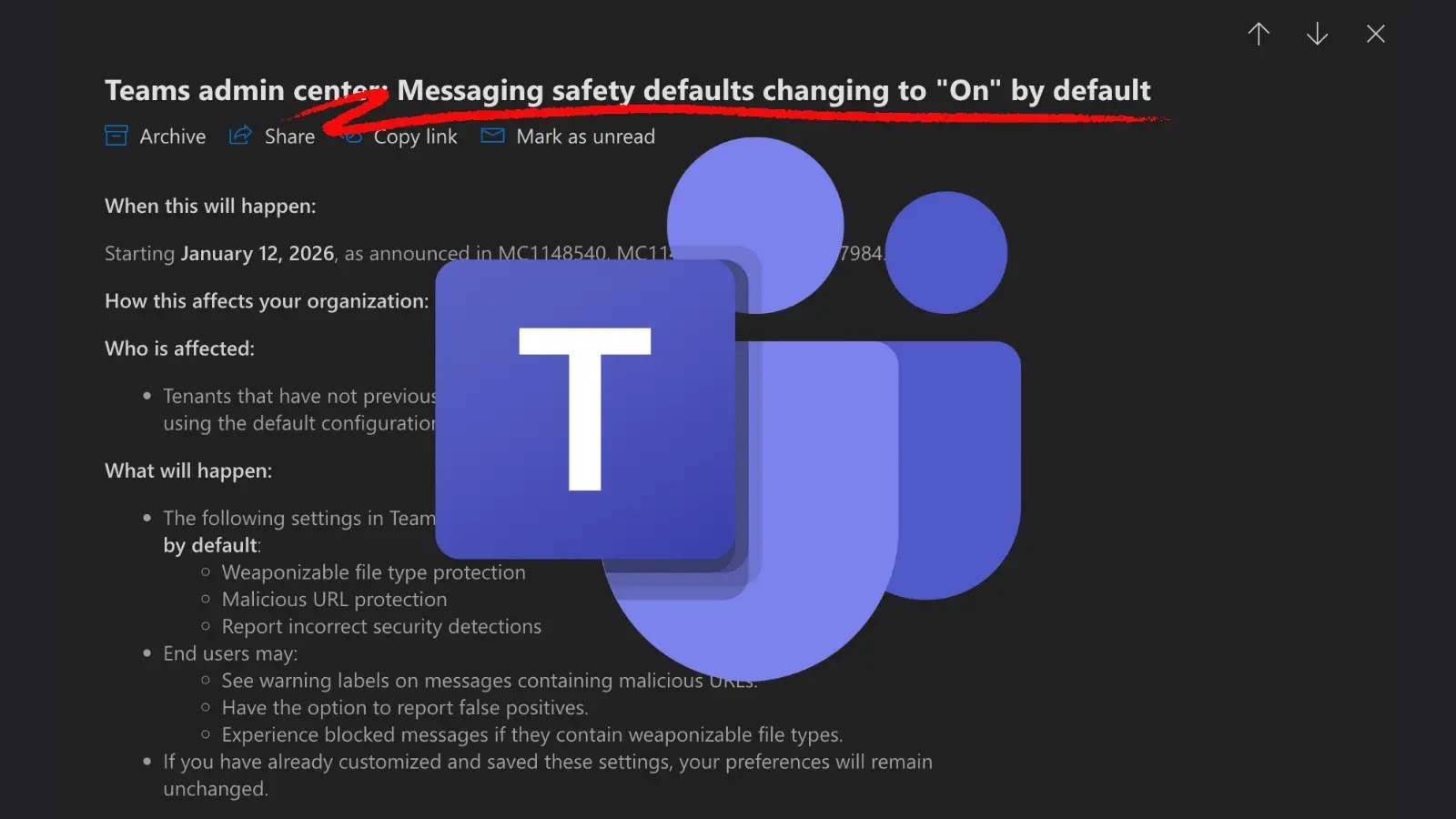

Starting January 12, 2026, Microsoft is flipping the switch on three critical security protections in Teams without asking permission first. These aren't opt-in niceties—they're automatic, enterprise-wide implementations designed to stop the stuff that actually hurts: malware, phishing, and the kinds of social engineering attacks that cost companies millions.

Here's what's wild about this move: Microsoft isn't waiting for you to get hacked. They're implementing what security experts have been screaming about for years. The average organization experiences 32 security incidents annually, according to industry benchmarks, and a huge portion of those come through messaging platforms like Teams. When you've got thousands of employees clicking links and downloading files all day, the math gets grim fast.

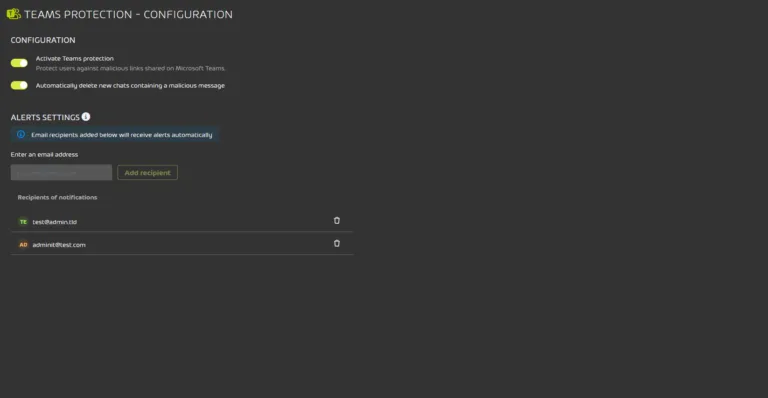

The three protections rolling out are weaponizable file type blocking, malicious URL detection, and a feedback mechanism for false positives. Each one addresses a specific attack vector, and together they form a baseline that should've been there from the start. But better late than getting pwned, right?

What makes this announcement interesting isn't the features themselves. It's that Microsoft is making them the default. They're not waiting for IT teams to opt in. They're not hiding the settings in some obscure admin portal and hoping nobody notices. This is Microsoft essentially saying: "We're taking security seriously enough to change your defaults unless you tell us otherwise."

That's a big shift in philosophy for a software company. Normally they add features that sit in settings menus, accumulating dust until someone discovers them years later. This time, the default is the secure option, and if you want the weaker configuration, you've got to actively opt out before the deadline.

Why This Matters Now

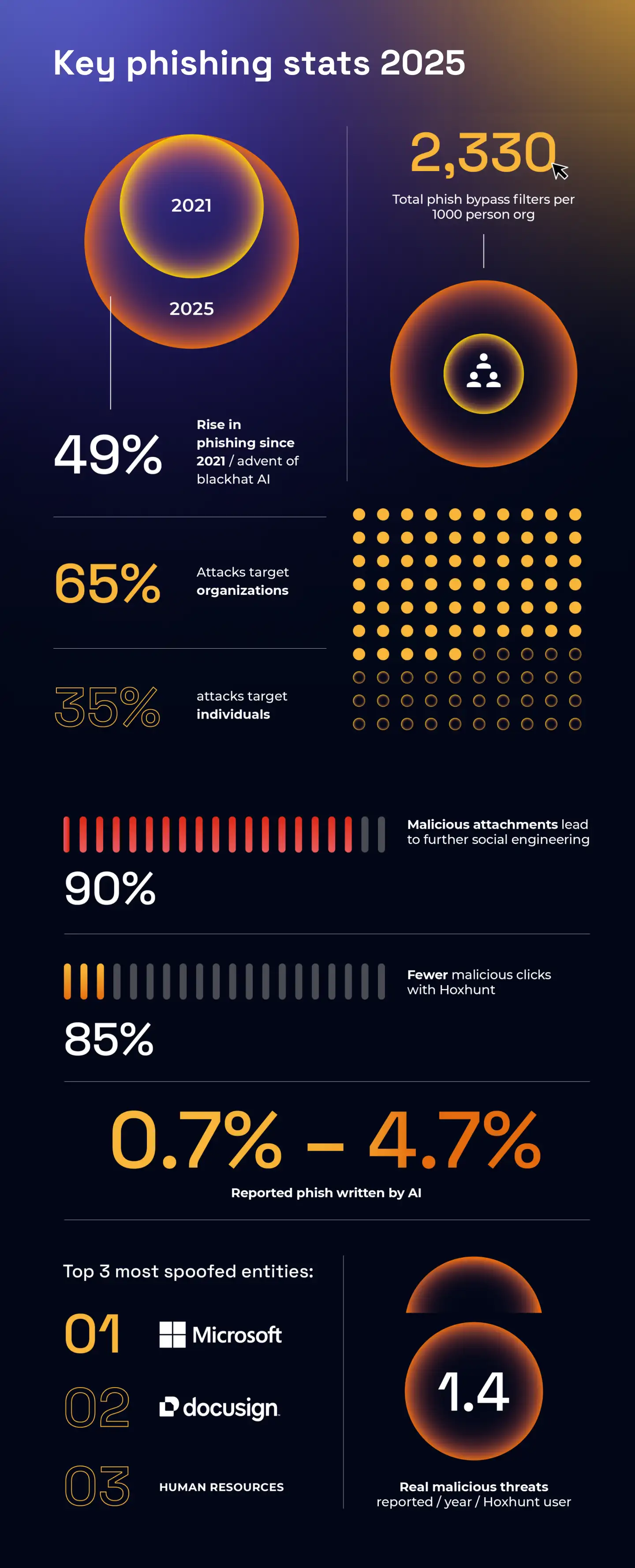

Phishing attacks have gotten worse. Since generative AI became widely available, phishing sophistication has climbed sharply: attackers now use it to craft messages that sound eerily authentic, clone voices from minutes of audio, and create convincing fake documents. Your executives aren't just getting spoofed anymore. They're getting AI-spoofed, and that makes the spoofing far harder to spot.

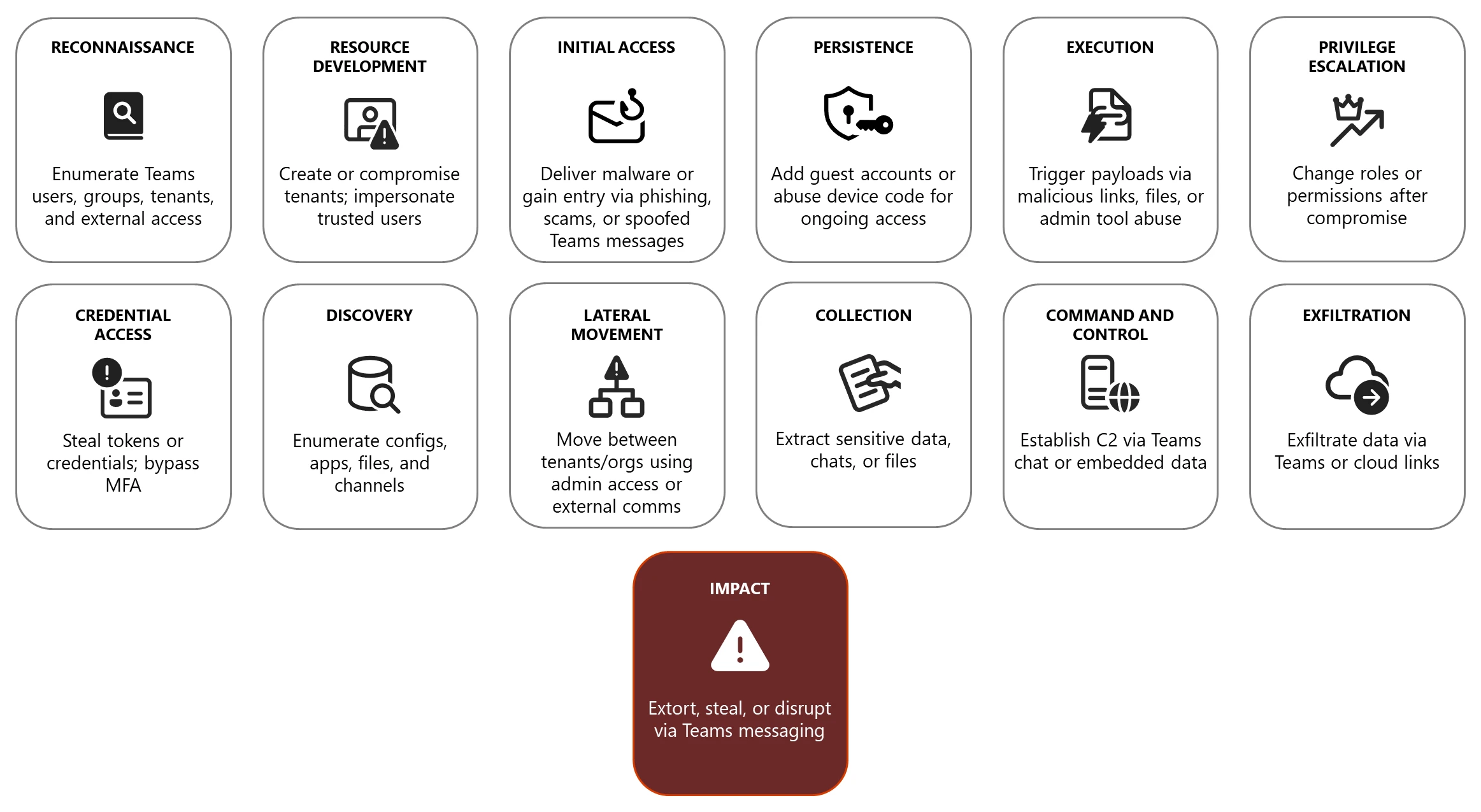

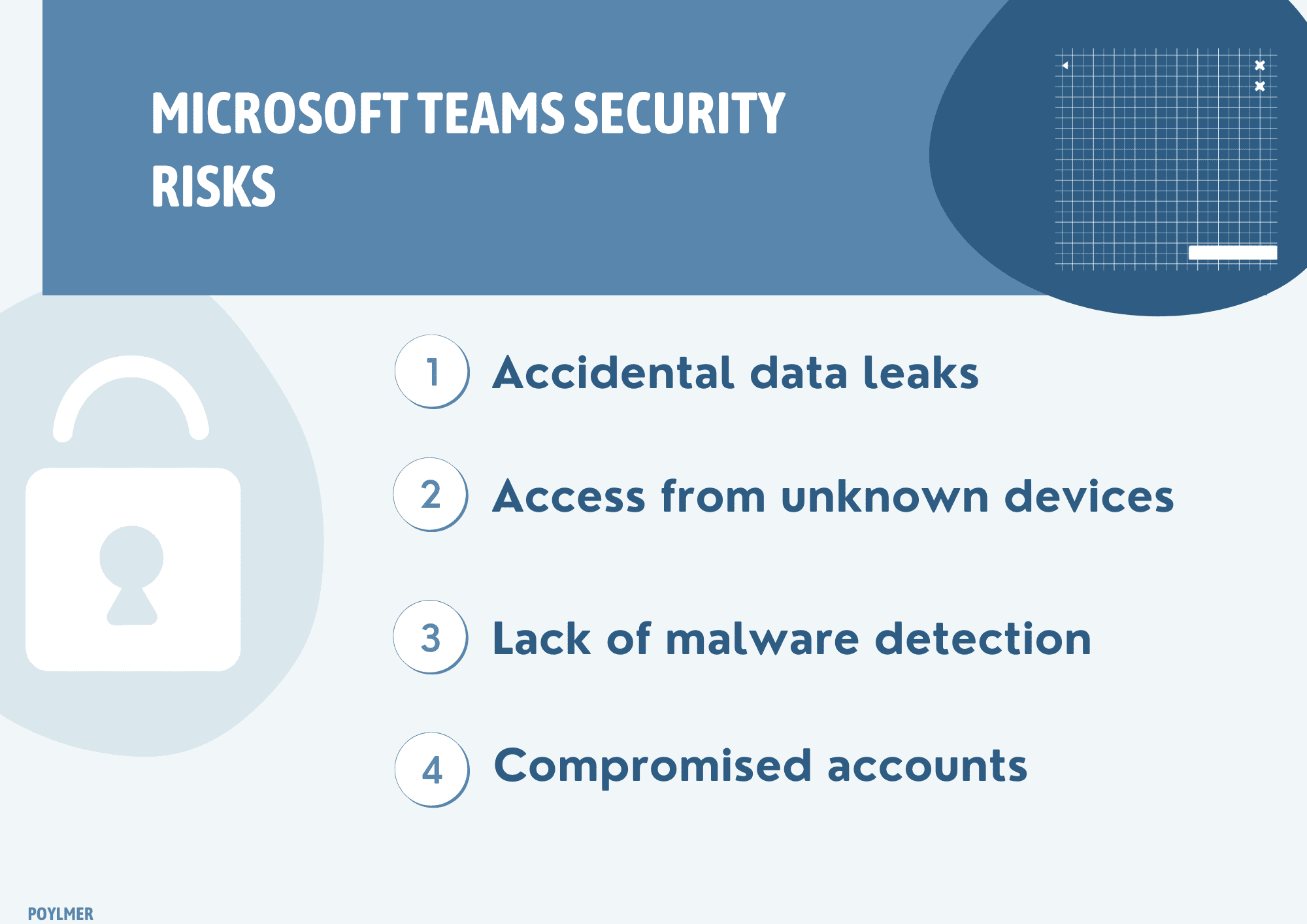

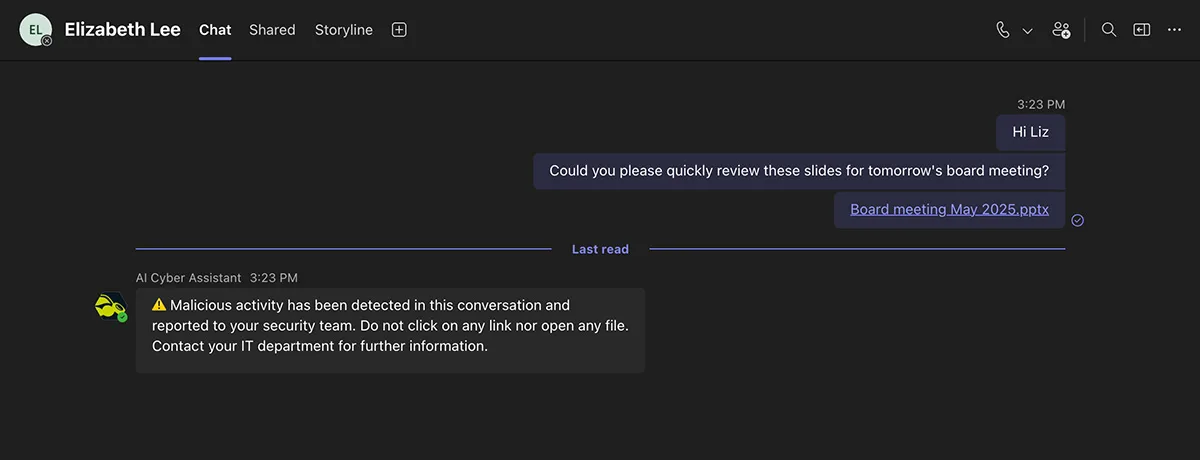

Teams is a prime target because it's where work happens. Unlike email, which many people have trained themselves to be suspicious about, Teams feels safe. It's internal. Your coworkers are in there. The boss sends messages through it. When someone tells you to click a link or download a file in Teams, you're more likely to comply than if they'd sent the same request via email.

That trust is exactly what attackers exploit. There have been documented cases of hackers compromising Teams accounts, then using those accounts to target executives with convincing requests. A single breach in a mid-level department can spread laterally through the entire organization because Teams makes collaboration so frictionless. The authentication happens once, and then the attacker has the run of the place.

Microsoft's response is practical. They're not trying to make Teams completely unhackable—that's impossible. What they're doing is raising the baseline friction for attack execution. If you want to send malicious files through Teams, you need to get creative. The obvious approach stops working.

The Three Protections Explained: What's Actually Being Blocked

Weaponizable File Type Protection: The First Line of Defense

When security teams talk about "weaponizable file types," they're referring to executable and macro-enabled files that attackers use to deploy malware. We're talking about .exe files, .dll files, Office documents with embedded macros (.docm, .xlsm), scripts (.ps1, .bat, .cmd), and archive files that might contain any of the above.

The protection works by preventing messages containing these file types from being delivered through Teams channels and direct chats. If someone tries to upload a .exe file to a channel, Teams stops it. If someone tries to send a .docm file in a direct message, it gets blocked. The message itself doesn't go through—the user gets a notification that the file type isn't allowed.

Now, here's where it gets nuanced. Most organizations probably already have file storage protections elsewhere in their stack. OneDrive, SharePoint, and email already scan for malicious files. But Teams occupies a weird middle ground. It's not email, so email security rules don't always apply. It's not file storage, so storage protections sometimes don't catch it. Teams is this direct-communication channel that sits outside traditional security perimeters.

Attackers know this. They use Teams specifically because it feels like collaboration, not email. The user experience is smoother. You don't think as hard before clicking. It's the path of least resistance, and attackers are lazy—they'll take the easy route every time.

The weaponizable file type blocking closes that gap. It's not sophisticated, but that's the point. It doesn't rely on AI or machine learning to detect threats. It just looks at the file extension and says no. This approach has two big advantages: it's fast (there's no content scanning overhead), and it reliably catches every file whose extension is on the list. The tradeoff is false positives. If you legitimately need to send a PowerShell script through Teams, you can't. You'll need to use a different channel or find some other workaround.
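To make "it just looks at the file extension" concrete, here is a minimal Python sketch of extension-only blocking. It assumes a hypothetical blocklist built from the extensions named earlier in this section; it is not Microsoft's actual list or implementation. Only the final extension is checked, so a double extension like `invoice.pdf.exe` is still caught, and nothing inside the file is ever opened.

```python
from pathlib import PurePosixPath

# Hypothetical blocklist drawn from the examples in the text.
# This is NOT Microsoft's actual weaponizable file type list.
BLOCKED_EXTENSIONS = frozenset({
    ".exe", ".dll", ".docm", ".xlsm", ".ps1", ".bat", ".cmd",
})


def is_blocked(filename: str) -> bool:
    """Return True if the file's final extension is on the blocklist.

    Only the last suffix counts, and the comparison is case-insensitive.
    The file's contents are never inspected.
    """
    suffix = PurePosixPath(filename.strip()).suffix.lower()
    return suffix in BLOCKED_EXTENSIONS


if __name__ == "__main__":
    for name in ["setup.EXE", "invoice.pdf.exe", "budget.xlsm", "notes.txt", "deploy.ps1"]:
        print(f"{name}: {'blocked' if is_blocked(name) else 'allowed'}")
```

The speed and the blind spot come from the same design choice: because the check trusts the filename, it costs almost nothing to run, but a file whose extension has been changed passes straight through.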

For most organizations, this is fine. For development teams or system administrators who frequently share scripts, it's annoying. They'll have to change workflows. But Microsoft's betting that the security benefit outweighs the friction, and based on what actually happens in enterprise environments, they're probably right.

Malicious URL Detection: Real-Time Link Scanning

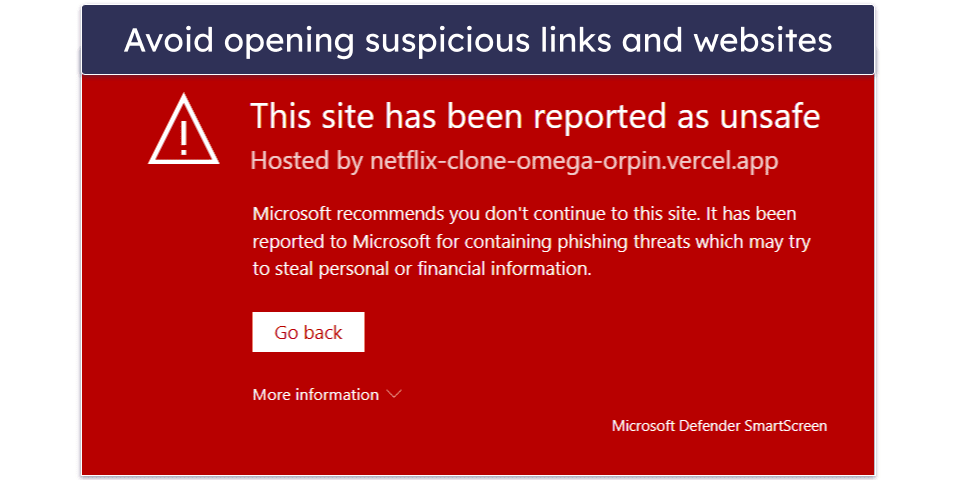

The second protection is malicious URL detection. This is where it gets more complex because URLs are harder to categorize than file types. A URL is just text. It doesn't have an extension. It can point to legitimate-looking domains that are actually malicious. It can redirect through multiple hops. It can change what it serves depending on who's accessing it.

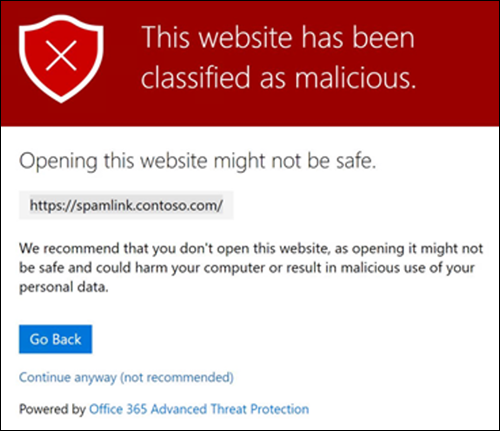

Microsoft's approach is real-time scanning. When someone posts a link in Teams, the system checks it against threat intelligence databases. These databases include known phishing URLs, drive-by download sites, credential harvesting pages, and other malicious domains. If a match is found, the link gets flagged. Ideally, the message doesn't get delivered, or it gets delivered with a warning.
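A reputation check like the one described above can be sketched as a lookup of the link's host, and each of its parent domains, against a set of known-bad domains. The Python below is illustrative only: `KNOWN_BAD_DOMAINS` stands in for a threat intelligence feed, the domains are made up, and Microsoft's real system is certainly more involved.

```python
from urllib.parse import urlsplit

# Hypothetical threat-intelligence feed of known-bad domains.
# A real feed would be far larger and updated continuously.
KNOWN_BAD_DOMAINS = {"login-micros0ft.example", "evil-downloads.example"}


def host_candidates(host: str) -> list:
    """Return the host and each parent domain:
    a.b.example -> [a.b.example, b.example, example]."""
    labels = host.split(".")
    return [".".join(labels[i:]) for i in range(len(labels))]


def check_url(url: str) -> str:
    """Return 'flag' if the URL's host or any parent domain is on the feed."""
    # urllib lowercases .hostname; strip a trailing dot ("evil.example.")
    host = (urlsplit(url.strip()).hostname or "").rstrip(".")
    if any(candidate in KNOWN_BAD_DOMAINS for candidate in host_candidates(host)):
        return "flag"
    return "allow"


print(check_url("https://cdn.evil-downloads.example/update.zip"))
print(check_url("https://intranet.contoso.example/wiki"))
```

Checking parent domains means a fresh subdomain on a known-bad domain is still caught. A brand-new domain that isn't in the feed yet sails through, which is the freshness problem discussed below.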

The accuracy of this system depends heavily on the threat intelligence feeds. How fresh are they? How many threats do they cover? How many false positives do they generate? These are the questions that determine whether this feature is helpful or just annoying.

Microsoft has advantages here. They monitor hundreds of millions of email messages daily through Outlook. They track threat patterns across the entire Microsoft 365 ecosystem. They see attacks at scale. When a new phishing campaign starts spreading, they see it fast. That data feeds directly into URL reputation systems that power Teams protection.

But there's still room for attackers to evade. New domains created specifically for phishing campaigns might not be in the database yet. Legitimate domains that have been compromised might not be flagged immediately. Attackers can use URL shorteners to obfuscate the actual destination. And here's the tricky part: users will still click suspicious links if they trust the person who sent them. A coworker's compromised account posting a malicious link is more dangerous than an obvious phishing attempt, because the trust is real.
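URL shorteners and multi-hop redirects are one reason a scanner can't just look at the link as posted. One common countermeasure is to follow the redirect chain and check every hop. The sketch below simulates that offline with a hypothetical `REDIRECTS` table in place of real HTTP responses; the domains and the table are invented for illustration and say nothing about how Microsoft handles redirects.

```python
from urllib.parse import urlsplit

# Hypothetical threat feed and simulated redirects (what a shortener or
# tracking service would return). No network requests are made.
KNOWN_BAD_DOMAINS = {"credential-harvest.example"}
REDIRECTS = {
    "https://short.example/abc": "https://tracker.example/r?id=1",
    "https://tracker.example/r?id=1": "https://credential-harvest.example/login",
}


def redirect_chain(url: str, redirects: dict, max_hops: int = 10) -> list:
    """Follow redirects from `url`, stopping at a loop or after max_hops."""
    chain = [url]
    while url in redirects and len(chain) <= max_hops:
        url = redirects[url]
        if url in chain:  # redirect loop: stop rather than spin forever
            break
        chain.append(url)
    return chain


def is_bad_host(url: str) -> bool:
    """True if the URL's host or any parent domain is on the feed."""
    labels = (urlsplit(url).hostname or "").split(".")
    return any(".".join(labels[i:]) in KNOWN_BAD_DOMAINS for i in range(len(labels)))


def check_link(url: str) -> str:
    """Flag the link if any hop in its redirect chain is known-bad."""
    chain = redirect_chain(url, REDIRECTS)
    return "flag" if any(is_bad_host(hop) for hop in chain) else "allow"


print(check_link("https://short.example/abc"))
```

Checking only the posted link would miss this one, because `short.example` itself is clean. Even following every hop doesn't help if the final page serves different content depending on who asks, and it does nothing about the trusted-sender problem above.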

What this protection does is catch the obvious stuff. The phishing campaigns that cast wide nets. The drive-by downloads from known-bad domains. The credential harvesting pages that show up in spam campaigns. It's not a complete solution, but it's better than nothing, which is what most organizations have if they're relying purely on user judgment.

Feedback Mechanism: Teaching the Algorithm to Learn

The third protection is a feedback mechanism. This one's important but often overlooked. When security systems block something, they can be wrong. False positives are inevitable. Someone sends a link that's been temporarily flagged by the URL detection system, but it's actually a legitimate business tool. A file type gets blocked even though the user has a valid reason to share it.

Without a feedback mechanism, the user is stuck. They can't send the file. They can't do anything about the block. They're frustrated. And the security system never learns that it made a mistake. The same false positive repeats again and again.

Microsoft's feedback system lets users report when the security detection is wrong. They can flag a blocked message and provide context. That feedback gets sent back to Microsoft. It gets analyzed. And ideally, the threat detection system learns from it and adjusts.

This matters because it keeps the false positive rate manageable. If users have no way to report false positives, they start disabling security features entirely. They find workarounds. They use shadow IT. They ship content outside the system. Ironically, trying to be too strict makes the organization less secure overall.

The feedback mechanism also gives Microsoft real-world data about where their threat detection misfires. They see patterns. They see which legitimate URLs are getting flagged, which file types are getting caught, where the system is too aggressive. They can then tune the detection rules to be more precise.

Of course, this creates a potential attack vector. If the feedback system isn't designed carefully, attackers could abuse it to get malicious content approved. Microsoft's going to have to be careful about how they implement this. The feedback probably gets routed to security teams for review, not automatically into the system. There's likely some kind of approval process. But the general principle is sound: let users report false positives, collect that data, and use it to improve the system.
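The kind of review process speculated about above, where reports are collected but never change detection on their own, could look something like this. It's a hypothetical Python sketch, not Microsoft's design: reports are counted per distinct reporter so one user can't inflate an item's priority, and nothing reaches the allowlist without an explicit reviewer decision.

```python
from collections import defaultdict
from dataclasses import dataclass, field


@dataclass
class FeedbackQueue:
    """Hypothetical false-positive review queue.

    Reports only affect ordering; an item is allowlisted solely
    through an explicit reviewer approval.
    """
    reports: dict = field(default_factory=lambda: defaultdict(set))
    allowlist: set = field(default_factory=set)

    def report(self, item: str, reporter: str) -> None:
        # A set of reporters per item: repeat reports from one user count once.
        self.reports[item].add(reporter)

    def pending(self) -> list:
        # Unresolved items, most distinct reporters first.
        return sorted(self.reports, key=lambda item: len(self.reports[item]), reverse=True)

    def review(self, item: str, approved: bool) -> None:
        # The only path onto the allowlist is an approval here.
        if approved:
            self.allowlist.add(item)
        self.reports.pop(item, None)  # resolved either way
```

Keeping approval as a separate, human step is what closes the abuse path: an attacker can file as many reports as they like, but reports only reorder the queue.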

Chart (estimated data): Expected effectiveness of the new Teams protections. Malicious URL detection ranks highest, followed by file type blocking and the feedback mechanism.

How These Protections Work Together: Layered Defense Strategy

None of these three features is revolutionary on its own. Blocking file types is basic. URL scanning has been around for years. Feedback mechanisms are standard security practice. The real value comes from implementing them together, automatically, across the entire organization.

That's a three-layer defense. First layer stops file-based attacks. Second layer stops link-based attacks. Third layer creates a feedback loop that improves both. Individually, each one has limitations. Together, they eliminate the lowest-hanging fruit for attackers.
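The three layers can be pictured as a short pipeline. This Python sketch assumes blocked file types stop the whole message and known-bad links get a warning (one of the two outcomes described earlier), with reviewed false-positive reports feeding an allowlist that overrides the URL layer. All three data sets are hypothetical stand-ins, and the ordering of checks is an illustration, not Microsoft's documented behavior.

```python
import re
from pathlib import PurePosixPath
from urllib.parse import urlsplit

# Hypothetical data for each layer.
BLOCKED_EXTENSIONS = {".exe", ".docm", ".ps1"}                  # layer 1
KNOWN_BAD_DOMAINS = {"phish.example", "build-tools.example"}  # layer 2; second entry is a false positive
REVIEWED_ALLOWLIST = {"build-tools.example"}                  # layer 3: approved false-positive reports

URL_PATTERN = re.compile(r"https?://\S+")


def evaluate(text: str, attachments: list) -> str:
    """Run a message through the three layers and return its fate."""
    # Layer 1: any blocked attachment stops the whole message.
    for name in attachments:
        if PurePosixPath(name).suffix.lower() in BLOCKED_EXTENSIONS:
            return "blocked"
    # Layer 2: a known-bad link triggers a warning, unless layer 3
    # (reviewed feedback) has allowlisted that host.
    # Exact host match only, to keep the sketch short.
    for url in URL_PATTERN.findall(text):
        host = urlsplit(url).hostname or ""
        if host in KNOWN_BAD_DOMAINS and host not in REVIEWED_ALLOWLIST:
            return "delivered with warning"
    return "delivered"


print(evaluate("reset here https://phish.example/login", []))
```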

Here's the logic: when you make the obvious attacks harder, attackers move up the sophistication ladder. They invest more effort. They target fewer organizations. The cost of attack increases. For most organizations, this shift is huge. The attackers targeting you aren't nation-state adversaries with unlimited budgets. They're opportunistic. They're running automated campaigns. Raise the bar, and a lot of them move on.

The beauty of mandatory implementation is that this protection applies to everyone. Your competitor down the street? They get it too. Your customers? Same protections. This creates a baseline level of security across the entire Microsoft 365 ecosystem. It's not perfect, but it's better than the current situation where every organization patches their security differently.

The Attack Vectors This Actually Stops

Let's be specific about what actually gets disrupted here. First, the "I'll send you a document" attack largely stops working through Teams. Attackers can't attach a malicious Word document with embedded macros because the system blocks it. They could try converting it to PDF or other formats, but that changes the attack vector and limits options. Some attackers will find workarounds. Many won't bother.

Second, the phishing link distribution gets harder. If an attacker is running a large phishing campaign and needs to get thousands of users to click a malicious link, Teams becomes less useful. The URL detection catches a lot of these links. Users who might have clicked get a warning instead. The conversion rate drops. Attackers are running the math and deciding if it's worth it.
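"Running the math" can be taken literally. A rough model treats a campaign's yield as a chain of rates multiplied together, so cutting any one of them cuts the whole result. Every number below is hypothetical, chosen only to show the shape of the calculation, not to estimate real-world rates.

```python
def cost_per_compromise(campaign_cost: float, messages: int, unwarned_rate: float,
                        click_rate: float, entry_rate: float) -> float:
    """Attacker cost per captured credential in a simple multiplicative model.

    unwarned_rate: share of messages delivered without a block or warning
    click_rate:    share of those recipients who click the link
    entry_rate:    share of clickers who enter credentials
    """
    compromises = messages * unwarned_rate * click_rate * entry_rate
    return campaign_cost / compromises


# Hypothetical campaign: same cost, same audience, before and after the defaults change.
before = cost_per_compromise(500.0, 10_000, unwarned_rate=1.0, click_rate=0.05, entry_rate=0.2)
after = cost_per_compromise(500.0, 10_000, unwarned_rate=0.2, click_rate=0.05, entry_rate=0.2)

print(f"before: ${before:.2f} per compromise")
print(f"after:  ${after:.2f} per compromise ({after / before:.1f}x)")
```

Because the factors multiply, the protections don't have to stop every message. If only a fifth of messages reach users without a block or warning, the attacker pays five times as much per compromised account.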

Third, the account compromise attack becomes more visible. When a compromised account starts sending malicious content, the blocking protections create artifacts: blocked messages and flagged links that recipients notice and might report. The security team gets visibility into the account compromise faster. Detection time matters in breach scenarios. Faster detection means less lateral movement, less dwell time, less total damage.

What these protections don't stop: sophisticated spear-phishing from trusted senders with clean links, social engineering that doesn't rely on direct links or files, supply chain attacks that come through legitimate vendors, and insider threats where the attacker has legitimate access. The security model here is "stop the low-skill opportunistic attacks" not "stop determined adversaries."

That's actually the right approach for most organizations. Opportunistic, automated attacks vastly outnumber sophisticated, targeted ones. Defend against the statistical reality, and you get the most bang for your security buck.

Chart (estimated data): Most commonly blocked weaponizable file types in Teams. Executable (.exe) files lead, followed by scripts and macro-enabled Office documents.

The Timeline: January 12, 2026 and What to Do Now

Microsoft announced this change to give organizations time to prepare. January 12, 2026 is the activation date. That gives IT teams about a month (depending on when this article reaches you) to review settings and make decisions.

The implementation approach is important. Microsoft isn't turning this on silently. They're sending notifications to administrators through the Microsoft 365 message center. IT teams will see messages warning them about the change. Microsoft is giving explicit instructions about where to find these settings if you want to modify them.

The settings location is: Teams admin center > Messaging > Messaging settings > Messaging safety.

If you want to keep weaker security settings, you need to go into that menu and disable the protections before January 12. If you don't do anything, the stronger settings automatically become your default.

Now, practically speaking, most organizations won't do anything. They'll ignore the notifications. They'll let the defaults roll out. Partly because they're busy, partly because the defaults are good, and partly because enabling these protections is the right move anyway.

But some organizations will have legitimate reasons to adjust. Development teams might need to share scripts. Vendors might send legitimate executable files. Some organizations might have custom security policies that conflict with these settings. For those organizations, the advance notice is important. They need time to test the new defaults against their workflows, identify conflicts, and adjust settings if necessary.

Microsoft has also built in flexibility. You can enable or disable each protection separately. You don't have to take all three. You can enable file blocking but disable URL detection if that makes sense for your use case. You can keep the feedback mechanism but adjust the file type list.
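The opt-out model described in this section boils down to a simple rule: a setting the admin never touched picks up the new default, and an explicit choice wins. Here's that rule as a hypothetical Python sketch. The setting names are invented for illustration and are not the actual Teams admin center or PowerShell setting names.

```python
from dataclasses import dataclass
from typing import Optional

# Hypothetical setting names, not the real admin center or PowerShell names.
NEW_DEFAULTS = {
    "file_type_blocking": True,
    "url_detection": True,
    "report_incorrect_detections": True,
}


@dataclass
class TenantOverrides:
    """An admin's explicit choices. None means 'never changed'."""
    file_type_blocking: Optional[bool] = None
    url_detection: Optional[bool] = None
    report_incorrect_detections: Optional[bool] = None


def effective_settings(overrides: TenantOverrides) -> dict:
    """Explicit admin choices win; untouched settings take the new default."""
    result = {}
    for name, default in NEW_DEFAULTS.items():
        choice = getattr(overrides, name)
        result[name] = default if choice is None else choice
    return result


# A tenant that only turned off URL detection keeps the other two protections.
print(effective_settings(TenantOverrides(url_detection=False)))
```

Modeling "never touched" separately from "explicitly enabled" is what lets the new default reach tenants that did nothing while leaving deliberate choices alone.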

The key point is: the change is happening. Microsoft is making a strategic bet that this is the right default for enterprise security. They're probably right. But they're also giving enough notice and enough flexibility that organizations that need different settings can adjust.

The Bigger Picture: Microsoft's Security Philosophy Shift

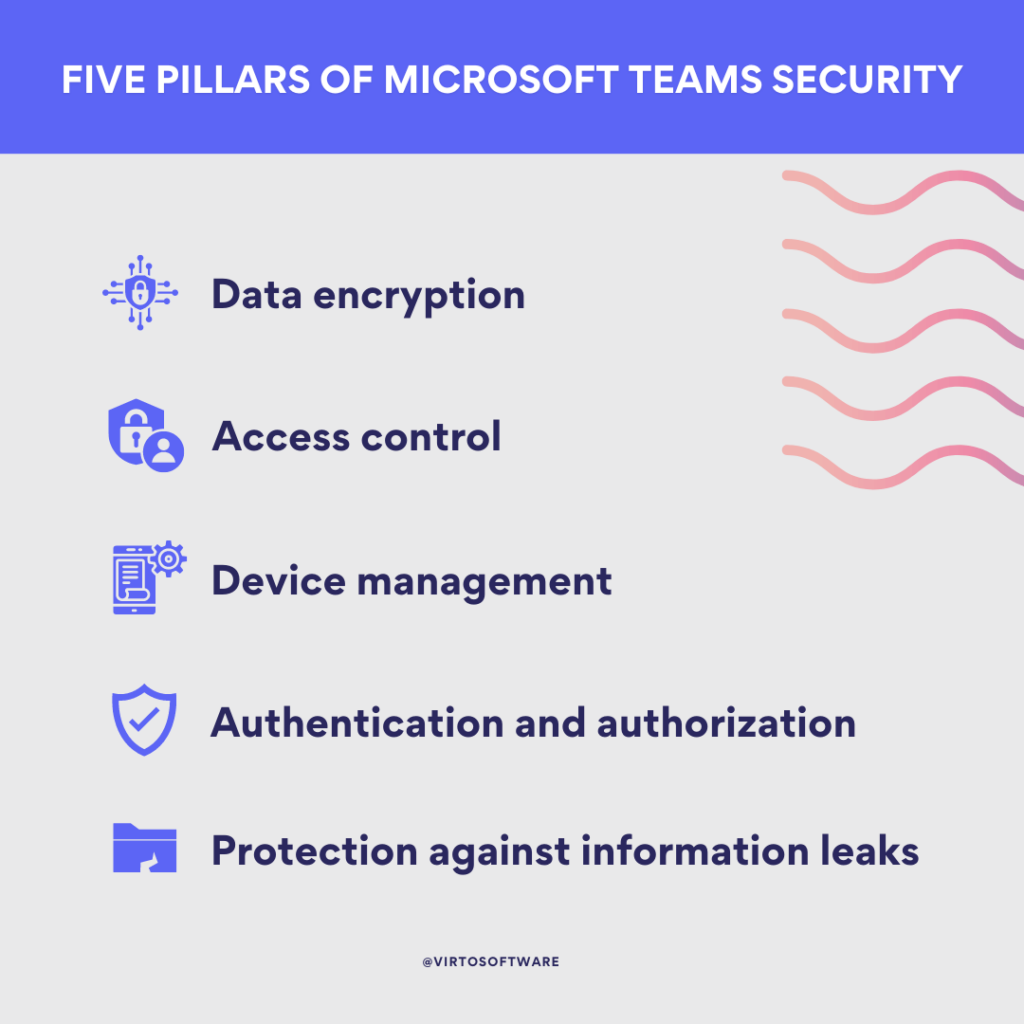

This announcement is part of a larger pattern. Microsoft has been moving toward "secure by default" for years. They've been making controversial decisions to force security improvements. They've required multi-factor authentication for administrators. They've encrypted data by default. They've added security holds to prevent accidental deletion. Each move was met with some resistance from organizations that wanted the flexibility, but overall, they've raised the security baseline.

Teams security is the latest evolution of this philosophy. Microsoft looked at how Teams is used, identified the attack vectors, and decided that the default configuration should protect against those vectors. They decided that the tradeoff between security and usability favors security, at least for this feature set.

This is different from how enterprise software traditionally works. Historically, vendors add features and let customers enable what they want. The philosophy is "we give you the tools, you decide how to use them." That approach respects customer autonomy but creates massive variations in security posture across organizations.

Microsoft's new approach is "we decide what's secure by default, and you can opt out if you have specific reasons." It's more paternalistic, but it's also more effective at actually reducing incidents across their customer base. When the default is secure, most organizations end up secure. When the default is permissive, most organizations end up exposed.

The implications for security professionals are mixed. On one hand, it's nice to have baseline protections that are automatically applied. On the other hand, it's frustrating when a major vendor makes decisions that override your security strategy. In this case, I think Microsoft's got it right. The protections they're enabling are reasonable. They block relatively few legitimate use cases. They're catching the obvious attacks. The feedback mechanism provides an escape hatch for false positives.

Competitive Implications

Other platforms are going to face pressure to match these protections. Google Workspace users are going to ask whether Google Chat offers the same protections by default. Slack users will ask why their messaging platform doesn't have the same protections. Zoom users will wonder about security in their chat features.

This creates a competitive dynamic. Microsoft moves first with a security feature. Competitors have to follow or lose customers. The baseline gets raised across the entire industry. Everyone benefits, which is exactly how security improvements should happen.

It also puts open-source collaboration platforms and self-hosted solutions in an interesting position. They've got to implement these protections themselves or accept that they're less secure than the major cloud platforms. For many organizations, that's fine—they accept the security risk of self-hosting. For others, it's a forcing function to migrate to platforms with better security infrastructure.

Chart (estimated data): Projected effect of the new default protections in Teams, with average annual security incidents potentially falling from 32 to 20.

Implementation Reality: What Actually Happens on January 12

When the switch flips on January 12, most users won't notice anything. That's by design. The protections are meant to be invisible when they're working correctly. Most legitimate messages never trigger a file block, and clean URLs don't get warnings. Life goes on as normal.

But some users will definitely notice. Someone will try to send a PowerShell script and get a blocked message. Someone will click a link that gets flagged and see a warning. Someone will try to share a macro-enabled Excel file and hit a wall. They'll contact IT support. IT support will have to explain that the file type is blocked for security reasons. There will be workarounds requested. Some will be granted. Some won't.

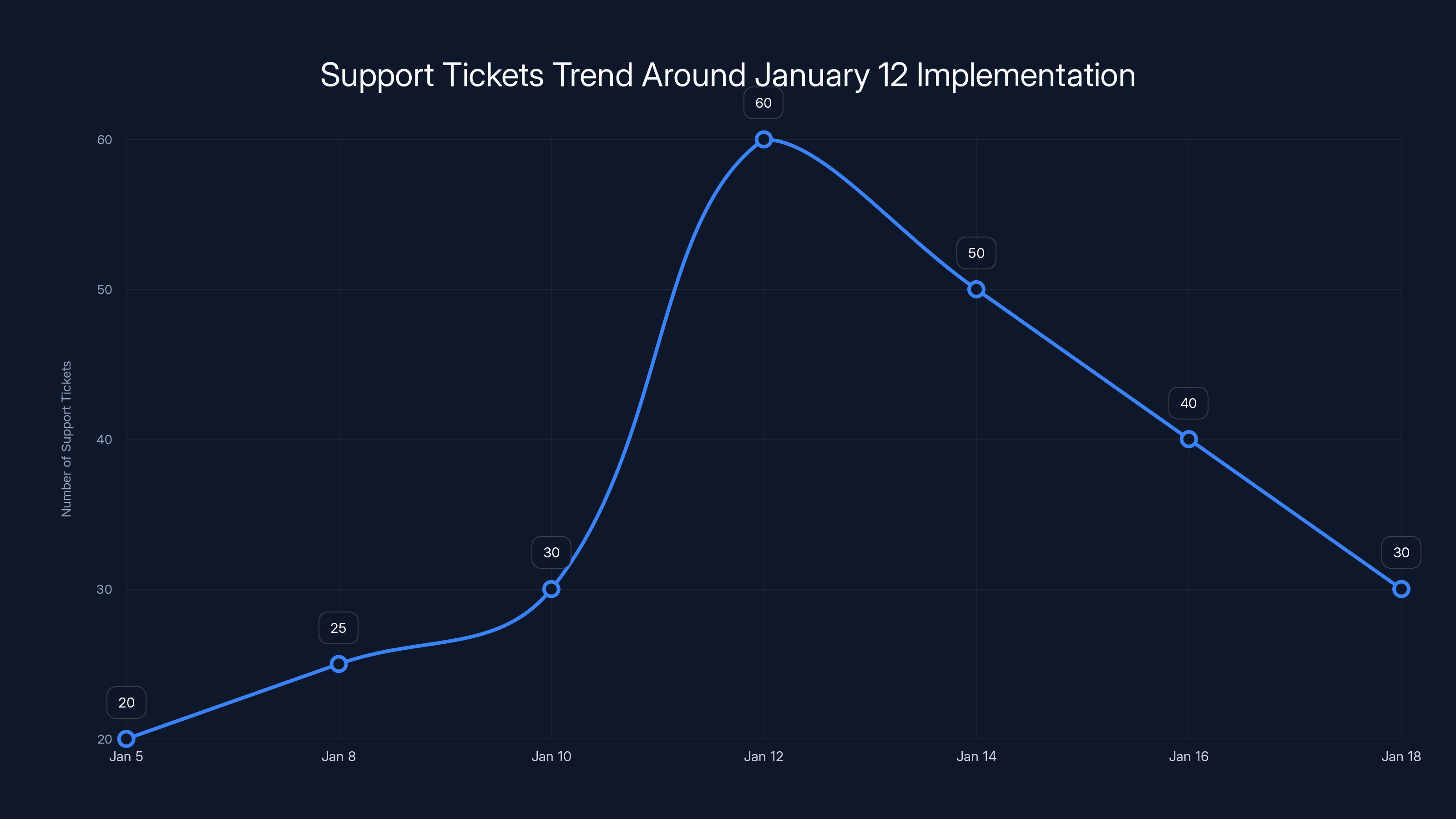

For IT teams, there will be a predictable uptick in support tickets around the change date. Users will call asking why their file got blocked. IT will need to be trained on the new feature set. Help desk documentation will need updating. Some organizations might see issues if they have workflows that depend on sending blocked file types through Teams.

The feedback mechanism will also start generating data. Users will report false positives. That data flows back to Microsoft. Microsoft adjusts detection rules. In subsequent updates, the system gets smarter and more accurate. The false positive rate should decrease over time.

Most organizations will see net positive results. The blocked malicious files will prevent some infections. The flagged phishing URLs will prevent some compromise. The reduction in incidents will be worth the minor friction from false positives. But some organizations will hate it and immediately start looking for configuration options to disable everything.

False Positives and When They Become a Real Problem

Here's the part nobody wants to admit: all security systems create false positives, and false positives are worse than people realize. When security blocks something legitimate, it doesn't just create friction. It erodes trust in the security system. Users start finding workarounds. They use email instead. They use external file sharing services. They use personal devices. The security model breaks down.

Microsoft's betting that they can keep false positives low enough that this doesn't happen at scale. Let's hope they're right. The URL detection should be fairly accurate—it's built on their own threat intelligence feeds from Outlook and Office 365, which see massive volumes of data. The file type blocking is almost certain to be accurate by definition—if you're not trying to send a .exe file, you won't see it blocked.

But the intersection of these protections with legitimate business workflows is where things get tricky. Some organizations run security infrastructure in a hybrid model where they need to pass certain script files through Teams. Some organizations work with vendors who send macro-enabled documents. Some organizations use older software that requires executable files.

For these organizations, the feedback mechanism is critical. They need to report the false positives. They need data showing that their workflows are being impacted. They need to request exceptions or adjusted rules. If Microsoft doesn't respond to this feedback, the system will become unpopular fast.

There's also a timing factor. If the feedback mechanism takes weeks to process reports, organizations won't wait. They'll just disable the protections. If feedback is processed quickly and rules are adjusted within days, the system maintains trust and adoption.

Chart (estimated data): How much the layered defense disrupts common attack vectors. Link-based attacks are the most effectively mitigated.

What About Organizations That Need Different Settings?

Some organizations operate in regulatory environments or security models where these settings don't work. Healthcare organizations with specific compliance requirements. Financial services organizations with strict protocols. Government contractors with classified information. These organizations might legitimately need different configurations.

For these organizations, the advance notice is critical. They need time to test the new defaults in a staging environment. They need to identify conflicts. They need to plan their exceptions strategy. And they need to get internal approvals for any deviations from the new default.

Microsoft is typically good about providing compliance-specific guidance for these situations. They'll have documentation explaining how to configure Teams for different regulatory environments. But it's on the organization to do this planning before January 12.

Large organizations with security teams should already be tracking this announcement. They should be setting up test environments. They should be documenting their current Teams security configuration. They should be planning their change management process. If you're not doing this, your organization is behind.

The Role of Tenant-Level Configuration

The settings are at the tenant level, which means they apply organization-wide. Individual teams or channels can't have different security settings. That's actually good for security—it prevents someone from creating an unsecured channel for quick collaboration and accidentally exposing sensitive data. But it also means you need to find a configuration that works for the entire organization.

For organizations with diverse use cases—developers in one part, finance in another, executives in another—finding that sweet spot is tricky. Developers want the ability to share scripts. Finance wants conservative security. Executives want a good user experience. You're trying to satisfy all of these with one configuration.

The answer is that these configurations aren't all-or-nothing. Microsoft's giving you granular controls. You can enable file type blocking but keep URL detection disabled if that makes sense. You can customize the list of blocked file types. You can adjust how feedback is handled.

Using these granular controls, most organizations should be able to find a configuration that balances security and usability for their specific needs.

The Phishing Problem That Sparked This Move

Why is Microsoft doing this now? Because phishing is out of control. Email-based phishing has been a problem for decades, and email security is relatively mature. Spam filters catch most obvious phishing. Multi-factor authentication blocks many account takeovers even when someone falls for a phishing attack. But Teams phishing is newer, and the defenses are less mature.

Attackers have discovered that phishing is more effective in Teams than in email because users let their guard down. Email is where you expect attacks. Teams is where you expect legitimate business communication. The cognitive difference matters. Users click more readily. Compromise rates are higher.

And then there's the account compromise aspect. If an attacker compromises a Teams account, they can impersonate that person. They can send messages to their entire organization pretending to be them. The social engineering is trivial at that point. "It's me, the CEO. Here's the important file you need to open." People listen to the CEO.

One documented case had attackers compromising a mid-level employee's Teams account, then using it to target executives with convincing requests. The organization didn't realize it was compromised until weeks later. The damage was significant. The attacker had lateral access to sensitive systems. The compromise had spread to other departments.

Microsoft's looking at these incidents and concluding that the status quo isn't acceptable. They're using their platform to push security improvements. It's a reasonable move given the actual threat landscape.

Chart (estimated data): Support ticket volume around January 12, spiking on the activation date and declining gradually as users adapt.

Integration With Other Microsoft Security Tools

These protections don't exist in isolation. They're part of a larger Microsoft security ecosystem. Teams integrates with Microsoft Entra ID (formerly Azure AD) for authentication. It integrates with Microsoft Defender for cloud-based threat detection. It integrates with Data Loss Prevention (DLP) policies. It integrates with eDiscovery for compliance.

When you enable weaponizable file type blocking in Teams, it works in concert with DLP policies that might already be blocking certain file types. When you enable URL detection, it shares threat intelligence with Outlook and Microsoft Defender. The feedback mechanism generates data that flows into Microsoft's broader threat intelligence system.

For organizations using the full Microsoft security stack, these protections make that stack more effective. For organizations mixing Microsoft with other vendors, the benefits are more limited but still useful.

The integration also creates interesting possibilities. Microsoft could theoretically use Teams as a delivery mechanism for security updates. If they identify a new malicious domain through URL detection, they could push a block to all Teams tenants within hours. The platform becomes a security control point, not just a communication tool.

This is a subtle but important shift. Your communication platform isn't just for communication anymore—it's a security boundary. That's good for security but creates new operational challenges. When Teams has a security issue, it can't be fixed by just updating the app. It requires orchestrating changes across the entire infrastructure.

Looking Ahead: Is This Just the Beginning?

If you're thinking this is just the start, you're probably right. Microsoft has signaled that secure-by-default is their philosophy going forward. Expect more automated security features. Expect more defaults that might conflict with existing workflows. Expect Microsoft to make controversial decisions in the name of security.

Some of those decisions will be right. Some will be overly aggressive. But the overall trend is toward higher baseline security across the platform. Organizations that adapt early will have an easier time than those that wait and then have to scramble.

The evolution of Teams security also signals what's coming for other Microsoft products. If you see patterns in Teams, you'll likely see them in other products eventually. Microsoft is using Teams as a proving ground for secure-by-default approaches. If they work here, they'll expand.

For IT teams, this means staying on top of announcements. It means having test environments where you can validate changes before they impact production. It means building processes to handle security updates that come with behavioral changes. It means shifting from a reactive security posture to a proactive one.

It also means accepting that not all security changes will be popular. Some users will complain that files can't be shared. Some workflows will break. Some legitimate use cases will be blocked. You'll need to have procedures for handling these situations. You'll need to balance security against usability. You'll need to explain security decisions to non-technical stakeholders.

But overall, Microsoft's moving in a direction that makes organizations more secure, and that's worth some friction in the short term.

Chart (estimated data): Security standards rising steadily as major vendors adopt secure-by-default practices.

Best Practices for Preparing Your Organization

If your organization runs Teams, you should be preparing now. Here's what that means:

First: Conduct an audit of current workflows. Identify which teams or departments send file types that will be blocked. Identify which workflows rely on sharing URLs that might be flagged. Document these systematically. You need an inventory before January 12.

Second: Set up a staging environment. If you have the resources, create a test tenant or test team with the new security settings enabled. Run your critical workflows through it. Identify conflicts early. This is especially important if you have custom workflows or integrations that depend on Teams.

Third: Train your IT support team. They're going to get calls from confused users. They need to understand the new protections. They need to know how to explain them. They need to know the workarounds. Get them educated before January 12.

Fourth: Prepare your user communication. You'll want to send out a message to users explaining the changes. Explain what's changing, why it's changing, and what users should do if they encounter blocked content. Make it clear that these are security protections, not bugs.

Fifth: Establish a process for handling exceptions. Some legitimate file types might get blocked. You need a process for users to request exceptions. You need approval criteria. You need to document these requests so you have data when you need to escalate to Microsoft.
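A lightweight record format keeps exception requests consistent. It also gives you the per-file-type counts you would want in hand before escalating. This is a minimal sketch, and its details are assumptions borrowed from the advice elsewhere in this article rather than a Microsoft-defined process. That covers the `ExceptionRequest` fields and the rule that approval requires both a business justification and documented compensating controls.

```python
from dataclasses import dataclass
from datetime import date


@dataclass
class ExceptionRequest:
    """One request to allow a blocked file type (hypothetical record format)."""
    requester: str
    file_extension: str
    business_justification: str
    compensating_controls: str
    submitted: date
    approved: bool = False


def review(request: ExceptionRequest) -> bool:
    """Approve only when a justification AND compensating controls are documented.

    These two criteria are an assumption; adapt them to your own policy.
    """
    request.approved = bool(request.business_justification.strip()) and bool(
        request.compensating_controls.strip()
    )
    return request.approved


def escalation_summary(log: list[ExceptionRequest]) -> dict[str, int]:
    """Count requests per file extension: the data you would bring to Microsoft."""
    counts: dict[str, int] = {}
    for req in log:
        counts[req.file_extension] = counts.get(req.file_extension, 0) + 1
    return counts


if __name__ == "__main__":
    log = [
        ExceptionRequest("alice", ".ps1", "Weekly maintenance scripts",
                         "Scripts are signed and peer-reviewed", date(2026, 1, 5)),
        ExceptionRequest("bob", ".ps1", "", "", date(2026, 1, 6)),
    ]
    for req in log:
        print(req.requester, "approved" if review(req) else "needs more documentation")
    print(escalation_summary(log))
```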

Sixth: Plan for the feedback mechanism. Designate someone to monitor feedback reports. When users report false positives, you need to collect, analyze, and potentially escalate this data. This information is valuable for tuning your settings.

If you're a smaller organization without IT staff, you might not be able to do all of this. That's fine. In that case, just let the defaults apply. They're reasonable for most organizations. Just be aware that some workflows might break, and be prepared to help users find workarounds.

Comparing Teams Security to Competing Platforms

How do Teams' new security features stack up against alternatives? Slack, Google Workspace, and other collaboration platforms all have security features, but the level of automatic protection varies.

Slack has similar capabilities—they can block certain file types, they have URL detection, they have detection for compromised accounts. But many of these features are optional or require specific plan levels. Slack doesn't automatically enable them the way Microsoft is doing.

Google Workspace has strong email security through Gmail, and that extends partially to Chat and Spaces. But Google's approach is less aggressive about blocking by default. They tend to flag and warn rather than block, which preserves usability at some cost to security.

Microsoft Teams is moving toward a more aggressive stance. That's partly because Microsoft owns the entire stack—they can see the full picture of threats across Outlook, Office, and Teams. That visibility makes them more confident in their threat detection. They can make decisions about defaults that other platforms can't afford to make.

For organizations evaluating platforms, this is worth considering. Microsoft's commitment to secure-by-default might be appealing if you're security-focused. It might be frustrating if you value flexibility and user choice. There's a tradeoff. Pick the platform that aligns with your security philosophy.

The Cost of Implementation

Microsoft isn't charging extra for these security features. They're available at the same pricing tier you're already paying for Teams. That's actually important. They could have made advanced security a premium tier, but they didn't. They're making it available to everyone.

The cost is in implementation and change management. Your IT team will spend time testing the new settings. They'll spend time handling support tickets from users hitting false positives. They might need to implement workarounds or exceptions for specific workflows. That's not a monetary cost, but it's a real cost in terms of time and effort.

For most organizations, this cost is worth it. Preventing one incident—one compromised account that spreads to ten other users—can save thousands in incident response, remediation, and lost productivity. The security benefit vastly outweighs the implementation cost.

But for some organizations with particularly complex workflows or edge cases, the implementation cost might be higher. Those organizations need to do the upfront planning and testing to figure out if the new settings will work for them.

How to Handle Legitimate Use Cases That Get Blocked

Let's say your organization needs to distribute PowerShell scripts through Teams as part of your standard operating procedure. The new file type blocking is going to prevent this. You've got options:

Option 1: Use a different channel. Don't send the scripts through Teams. Use email, or a dedicated secure file sharing platform, or a code repository. Shift the workflow away from Teams.

Option 2: Compress the files. Zip up the PowerShell script so it becomes a .zip file. A zip file isn't executable on its own, so an extension-based filter may let it through. Test this first, though: if the protection also inspects archive contents, the zip will be blocked too. Users download the zip and extract it. A bit more friction, but it can work.
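Whether compression actually gets a script through depends on whether the filter looks inside archives. Test that in your own tenant before you build a workflow on it. The hypothetical sketch below contrasts an extension-only check with an archive-aware one, using Python's standard `zipfile` module. The blocklist is an illustrative subset, and neither function describes how Teams itself inspects files.

```python
import io
import zipfile
from pathlib import PurePath

# Illustrative subset of blocked extensions (an assumption, not Microsoft's list).
BLOCKED_EXTENSIONS = {".exe", ".dll", ".cmd", ".bat", ".ps1"}


def outer_extension_blocked(filename: str) -> bool:
    """Extension-only check: looks at the attachment's own name."""
    return PurePath(filename).suffix.lower() in BLOCKED_EXTENSIONS


def archive_contains_blocked(data: bytes) -> bool:
    """Archive-aware check: also inspects the names of files inside a .zip."""
    with zipfile.ZipFile(io.BytesIO(data)) as zf:
        return any(
            PurePath(name).suffix.lower() in BLOCKED_EXTENSIONS
            for name in zf.namelist()
        )


def build_zip(members: dict[str, str]) -> bytes:
    """Create an in-memory .zip from {filename: text content}."""
    buffer = io.BytesIO()
    with zipfile.ZipFile(buffer, "w") as zf:
        for name, content in members.items():
            zf.writestr(name, content)
    return buffer.getvalue()


if __name__ == "__main__":
    payload = build_zip({"deploy.ps1": "Write-Output 'hello'"})
    print(outer_extension_blocked("deploy.zip"))  # extension-only check
    print(archive_contains_blocked(payload))      # archive-aware check
```

Run as-is, the extension-only check prints `False` for `deploy.zip`, while the archive-aware check prints `True` for the same payload. The zip workaround depends entirely on that gap.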

Option 3: Request an exception. Work with your IT team and Microsoft to request an exception for that specific file type. Microsoft can exclude certain file types from blocking if you have a documented business need. This requires approval and documentation, but it's possible.

Option 4: Disable the protection for your organization. If you really need the flexibility, you can disable weaponizable file type blocking for your organization. You lose the protection, but you gain the flexibility. This is a tradeoff: security for usability.

Which option you choose depends on your specific situation. For most organizations, Option 1 (using a different channel) or Option 2 (compressing files) is the right answer. For organizations with specific compliance or operational requirements, Option 3 (requesting an exception) might be necessary. Option 4 is the last resort—you'd only do it if none of the other options work and you really need maximum flexibility.

What Happens If You Ignore This Announcement

If your organization ignores this announcement and doesn't prepare, what happens? On January 12, the security features automatically enable. Most users don't notice. But some do. They can't send files they need to send. They can't access links they need to access. They contact IT support complaining.

Your IT team, caught unprepared, has to scramble. They don't understand the new features. They don't have procedures for handling exceptions. Nobody has been assigned to review the false-positive reports coming in through the feedback mechanism. They're reactive instead of proactive.

This situation is fixable but unpleasant. You'll eventually get your systems configured correctly. But you'll have spent extra time and effort that you could have avoided with advance planning.

Plus, there's a coordination problem. If multiple organizations ignore the announcement and scramble on the same day, Microsoft's support channels get overwhelmed. Your support requests go into a queue. Your problems don't get solved as quickly. You stay frustrated longer.

The organizations that prepared in advance are running smoothly on January 12. The unprepared ones are dealing with chaos. The difference comes down to planning.

The Broader Implications for Enterprise Security

Microsoft's move here has implications beyond Teams. When a major platform vendor makes security the default, it raises the baseline for the entire industry. Customers of other platforms start asking questions. "Why doesn't our platform do this? Why do we have to configure security manually?" That pressure drives improvements elsewhere.

It also sends a signal to the market about what's acceptable. Secure-by-default is becoming the expectation, not the exception. Software vendors that continue shipping with permissive defaults are going to face criticism. They'll be seen as less security-conscious. Over time, this competitive pressure improves security across the board.

It also normalizes the idea that vendors can force security improvements. Previously, enterprise software companies were very cautious about making changes that users didn't explicitly ask for. They feared backlash. They worried about support issues. They let security remain optional.

Microsoft's demonstrating that you can make security changes, get some complaints, and still have a good outcome overall because the benefits outweigh the costs. That's going to embolden other vendors.

For security professionals taking the long view, this is mostly positive. It means the baseline keeps getting higher. It means attacks have to get more sophisticated. It means the low-skill, opportunistic attackers get weeded out, leaving only more determined adversaries. That's actually better for defense, because you can focus your resources on real threats instead of commodity malware.

FAQ

What are the three security features that Microsoft is enabling in Teams on January 12, 2026?

Microsoft is enabling three protections: weaponizable file type blocking (prevents messages with dangerous files like .exe, .ps1, or macro-enabled Office documents), malicious URL detection (scans links in real-time against threat intelligence databases to catch phishing URLs), and a feedback mechanism (allows users to report false positives so Microsoft can improve detection accuracy). These three work together as a layered defense against the most common attack vectors in Teams.

Can I disable these new security features if they interfere with my workflows?

Yes, you can disable any or all of these protections if you have specific business needs that require it. The settings are located in the Teams admin center under Messaging > Messaging settings > Messaging safety. You'll need to make these changes before January 12, 2026, since that's when they automatically enable. However, Microsoft recommends keeping at least some of these protections enabled for security reasons. If you need to disable protections, document your business justification and any compensating controls you have in place.

What file types will be blocked by the weaponizable file type protection?

The protection blocks high-risk executable and script files including .exe, .dll, .cmd, .bat, .ps1 (PowerShell), and macro-enabled Office documents like .docm and .xlsm. It may also block archive files that contain these types of files, so compressing them into a .zip isn't a guaranteed workaround; test it first. If you need to share any of these file types legitimately, you can also use a different communication channel or request an exception from your IT team.

How does the malicious URL detection work in Teams?

When someone posts a link in Teams, the system scans it against Microsoft's threat intelligence databases in real-time. These databases contain known phishing URLs, malware distribution sites, credential harvesting pages, and other malicious domains collected from Outlook and Microsoft 365 activity across hundreds of millions of messages. If the system detects a suspicious link, it flags the message and typically prevents delivery or displays a warning. Users can report false positives through the feedback mechanism.
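The core idea of matching a link's domain against a list of known-bad hosts can be sketched in a few lines. This is a toy model under stated assumptions. `KNOWN_BAD_DOMAINS` stands in for Microsoft's threat intelligence, which isn't exposed as a simple list, and real reputation systems typically weigh more signals than a domain lookup. `check_url` and `host_candidates` are hypothetical names.

```python
from urllib.parse import urlsplit

# Hypothetical threat-intelligence feed: a stand-in for Microsoft's reputation
# databases, which are not publicly queryable like this.
KNOWN_BAD_DOMAINS = {"login-micros0ft.example", "malware-drop.example"}


def host_candidates(host: str) -> list[str]:
    """Return the host and each parent domain, e.g. a.b.c -> [a.b.c, b.c, c]."""
    labels = host.lower().rstrip(".").split(".")
    return [".".join(labels[i:]) for i in range(len(labels))]


def check_url(url: str) -> str:
    """Return 'block' if the URL's host or any parent domain is on the feed, else 'allow'."""
    host = urlsplit(url).hostname  # lowercased; port and credentials stripped
    if host is None:
        return "block"  # links without a parseable host are treated conservatively here
    if any(candidate in KNOWN_BAD_DOMAINS for candidate in host_candidates(host)):
        return "block"
    return "allow"


if __name__ == "__main__":
    for link in ("https://sso.login-micros0ft.example/reset",
                 "https://www.microsoft.com/microsoft-teams"):
        print(link, "->", check_url(link))
```

Checking parent domains means a subdomain of a flagged host is caught too. Returning `block` for links with no parseable host is a conservative choice made for this sketch, not a documented Teams behavior.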

What should I do to prepare my organization for these changes?

You should start by auditing your current Teams workflows to identify which teams regularly send file types that will be blocked or rely on sharing URLs that might be flagged. Set up a test environment with the new security settings enabled to identify conflicts before January 12. Train your IT support team on the new features so they can help users when issues arise. Prepare user-facing communication explaining what's changing and why. Finally, establish a process for handling exceptions when legitimate use cases get blocked—you'll likely need this for handling false positives.

Will these security features block legitimate business activities?

Possibly, yes. Some legitimate workflows do rely on sharing file types that will be blocked (like PowerShell scripts for system administrators) or URLs that might be flagged as suspicious. This is why the feedback mechanism matters. When your legitimate use case gets blocked, you report it as a false positive. Microsoft collects this data and adjusts the detection rules to be more precise. This is a normal part of the system working correctly. If you have specific workflows that consistently get blocked, you can work with your IT team to request an exception.

What happens if I don't prepare for these changes before January 12?

The security features will automatically enable on January 12 regardless of whether you've prepared or not. Most users won't notice if the default settings work with their workflows. However, users who rely on sending blocked file types or accessing URLs that get flagged will encounter errors. Without advance preparation, your IT team will be reactive instead of proactive, responding to confused users instead of having planned solutions ready. You'll also lack the vendor relationship and documentation to quickly escalate issues to Microsoft if needed.

How does the feedback mechanism improve the system over time?

When users report false positives through the feedback mechanism, that information gets sent back to Microsoft. Security teams analyze the feedback to identify patterns. If a legitimate URL is being over-flagged, Microsoft adjusts the detection rules to be more precise. If a legitimate file type is being blocked unnecessarily, they update the blocklist. This feedback loop means the system should get more accurate over time, with fewer false positives annoying users while maintaining protection against actual threats.
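Microsoft's internal analysis pipeline isn't public. The organization-side half of the loop, collecting reports and deciding which ones deserve escalation, is easy to model, though. This is a minimal sketch that assumes reports arrive as plain strings (a URL or a file extension) and uses a hypothetical escalation threshold of three reports. `review_queue` is an illustrative name.

```python
from collections import Counter

REVIEW_THRESHOLD = 3  # hypothetical: reports needed before an item is escalated


def review_queue(reports: list[str], threshold: int = REVIEW_THRESHOLD) -> list[tuple[str, int]]:
    """Return (item, report_count) pairs that reached the threshold, most-reported first."""
    counts = Counter(reports)
    return [(item, n) for item, n in counts.most_common() if n >= threshold]


if __name__ == "__main__":
    # Hypothetical false-positive reports collected from users.
    reports = [
        "https://intranet.example/wiki",
        ".ps1",
        "https://intranet.example/wiki",
        ".ps1",
        "https://intranet.example/wiki",
        "https://vendor.example",
    ]
    for item, n in review_queue(reports):
        print(f"escalate {item} ({n} reports)")
```

Raising or lowering `threshold` trades reviewer workload against how quickly a recurring false positive reaches Microsoft.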

Are these security features available in all Teams pricing tiers?

Yes, weaponizable file type blocking, malicious URL detection, and the feedback mechanism are being rolled out to all Teams users at no additional cost. Microsoft isn't creating premium security tiers for these features—they're making them available to everyone because the baseline security benefit is important for the entire ecosystem. This is different from how some features are traditionally licensed.

What if our organization has specific compliance requirements that conflict with these settings?

If your organization operates in a regulated industry (healthcare, finance, government) with specific compliance requirements, you might have legitimate reasons to adjust these settings. The good news is that Microsoft has provided granular controls—you can enable or disable each protection separately, and you can customize which file types get blocked. Before January 12, work with your security team to determine the configuration that meets both your compliance requirements and Microsoft's security defaults. If needed, you can also request exceptions from Microsoft for specific use cases with proper documentation.

Conclusion: Security by Default Is Here

Microsoft Teams is joining a broader movement toward security-by-default. The automatic activation of weaponizable file type blocking, malicious URL detection, and feedback mechanisms represents a philosophical shift in how enterprise software handles security. It's no longer optional. It's no longer something you configure later. It's the baseline.

For most organizations, this is good news. These protections will catch the obvious attacks. They'll reduce the volume of malware and phishing that reaches users. They'll make life harder for attackers without making it much harder for legitimate users. The false positive rate should be manageable, especially with the feedback mechanism in place.

For some organizations with specific workflows or compliance requirements, the change will require adjustment. You'll need to find workarounds or request exceptions. You'll need to update procedures. But even for these organizations, the security benefit is worth the friction.

The real challenge is organizational. You need to prepare now. You need to audit your workflows. You need to test in staging environments. You need to train your teams. You need to have a plan for January 12. Organizations that do this preparation will transition smoothly. Organizations that don't will face a chaotic few weeks dealing with support requests and frustrated users.

The good news is that Microsoft gave you advance notice. They're not forcing this change silently. They're giving organizations time to prepare. That's responsible behavior from a major vendor. Use that time wisely. Get your house in order before January 12. Test the new settings. Identify conflicts. Plan your approach. Then on January 12, when the switch flips, you'll be ready.

This is how security improves at scale. Not through individual organizations making better decisions (they often don't), but through platforms making the secure decision the default decision. Expect more of this from Microsoft and other major vendors. Expect your security posture to improve whether you actively work for it or not. And expect to have some friction along the way. That's the tradeoff. You gain security. You lose a little flexibility. For most organizations, that's a deal worth taking.

Key Takeaways

- Microsoft is automatically enabling weaponizable file type blocking, malicious URL detection, and feedback mechanisms in Teams starting January 12, 2026

- Organizations have until January 12 to test these settings and adjust them if needed for their specific workflows

- The three protections create a layered defense against file-based malware, phishing attacks, and account compromise

- False positives are inevitable, which is why the feedback mechanism is critical for reporting legitimate files that get blocked

- Organizations should audit current workflows, test in staging environments, train support teams, and prepare exception procedures before the January 12 activation date

![Microsoft Teams Security Features: Malicious Content Protection [2025]](https://tryrunable.com/blog/microsoft-teams-security-features-malicious-content-protecti/image-1-1767119800957.jpg)