The Most Dangerous People on the Internet [2025]: Digital Power, Real-World Consequences

The internet isn't separate from reality anymore. It hasn't been for years.

What happens in your feeds, on encrypted channels, and through coordinated campaigns doesn't stay online. It bleeds into law enforcement actions, healthcare decisions, election outcomes, and the daily lives of millions of people who never signed up to be part of someone else's digital experiment.

Every year, we see the same pattern: individuals with outsized influence over digital spaces weaponize that power in ways that create tangible, measurable harm. Some do it intentionally. Others do it carelessly, with the kind of casual confidence that comes from never experiencing real consequences.

In 2025, this dynamic reached a new apex. The people shaping the internet's worst impulses aren't faceless algorithms anymore. They're politicians with direct access to the levers of government power. They're billionaires running companies that billions of people depend on. They're conspiracy theorists now holding cabinet-level positions. They're state-sponsored hackers operating with virtual impunity.

This isn't a moral judgment disguised as journalism. This is pattern recognition. These are people who demonstrably caused measurable harm through their digital activities, whose online behavior had downstream consequences for physical safety, financial security, and fundamental rights.

Let's talk about who they are, what they're actually doing, and why it matters beyond the algorithm that served you this article.

TL;DR

- Donald Trump dominates digital spaces with Truth Social as his primary governing tool, posting 169 times in a single evening while setting federal policy

- Stephen Miller and Kristi Noem architect anti-immigration enforcement through digital surveillance, social media tracking, and facial recognition systems

- DOGE operatives gained unauthorized access to federal payment systems and sensitive databases, creating unprecedented surveillance infrastructure risks

- Robert F. Kennedy Jr. weaponized vaccine skepticism and conspiracy theories to influence public health policy affecting millions

- Elon Musk continues consolidating power across communication platforms, news distribution, and government influence simultaneously

- State-backed hackers from China, Russia, and Iran conduct industrial espionage, critical infrastructure attacks, and voter manipulation at scale

- Online scam networks exploit financial desperation while regulatory bodies struggle to keep pace with evolving fraud techniques

- Bottom Line: Digital influence has become government policy, with minimal oversight or accountability structures in place

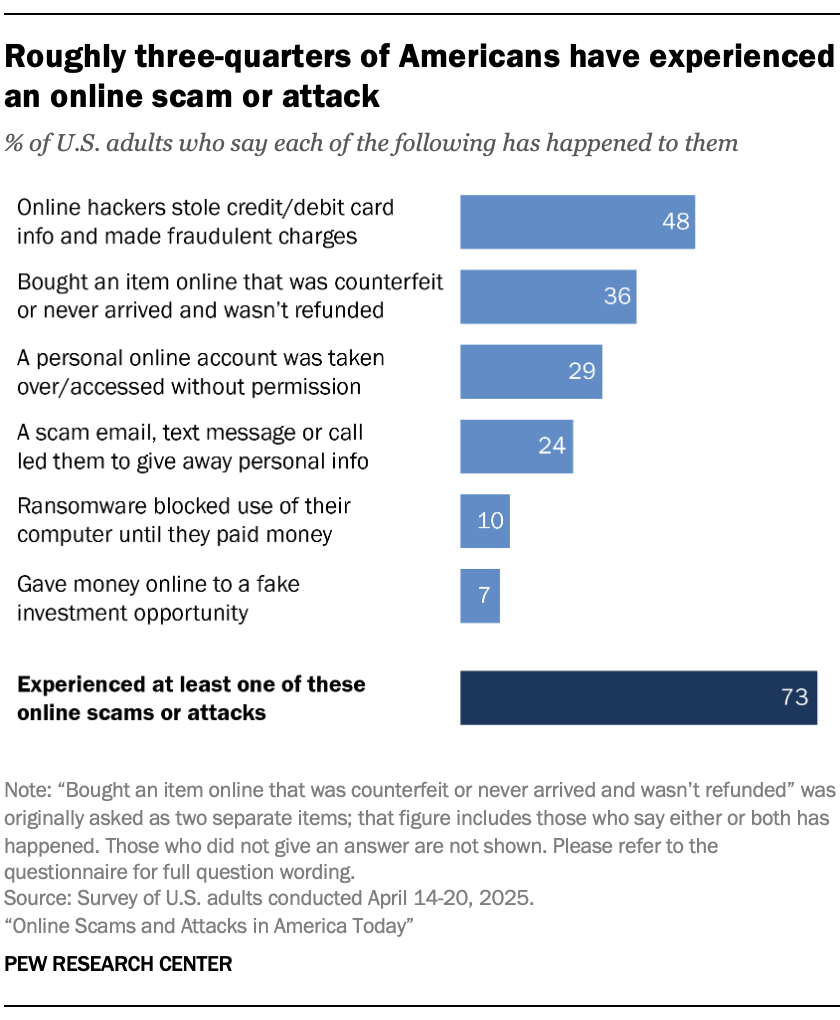

Estimated data suggests that losses from online scams in the U.S. could double every three years, reaching $80 billion by 2029 if current trends continue.

Donald Trump: The President Who Rules Through Posts

Donald Trump didn't invent online radicalization, but he perfected its weaponization at the highest level of governmental power.

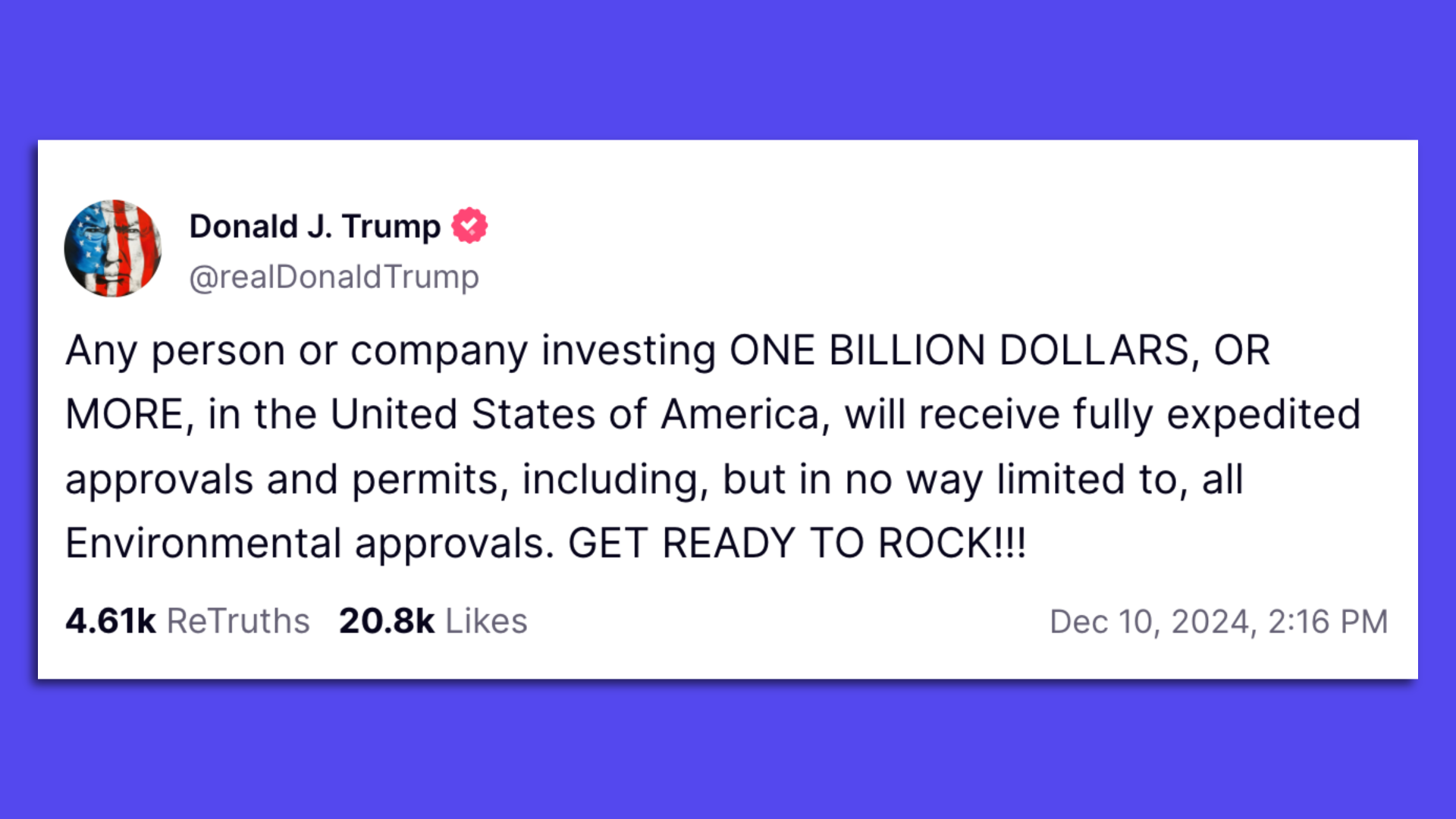

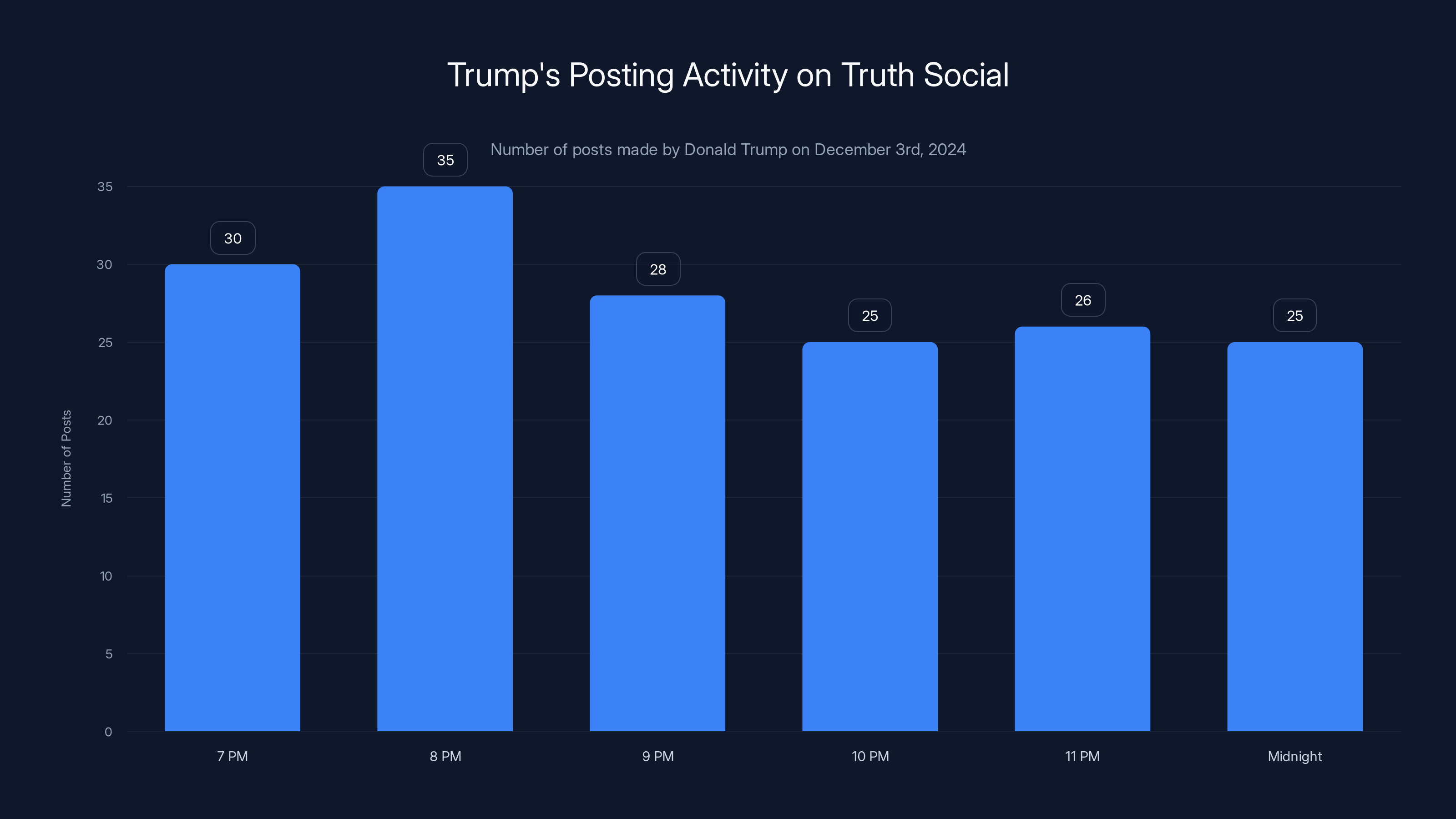

He's now in his second term as president, and his primary governing tool isn't wielded behind closed doors in the Oval Office. It's Truth Social, his social network where he posts with virtually no content moderation, fact-checking, or editorial oversight. On one evening in early December 2025, between roughly 7 PM and midnight, Trump posted 169 times. One hundred sixty-nine posts. In five hours. At the White House.

These weren't casual updates. They included directives to Congress about constitutional procedures ("TERMINATE THE FILIBUSTER"), commentary on foreign governments' election outcomes, and posts claiming that a video reminding US troops of their legal obligations was "SEDITIOUS BEHAVIOR, punishable by DEATH."

This is how contemporary governance works now: the president of the United States disseminates policy, attacks, conspiracy theories, and AI-generated content directly to his followers, bypassing traditional media, bypassing advisors who might counsel restraint, bypassing democratic norms and institutional checks.

When the president posts, it becomes news. When it becomes news, entire news cycles organize around it. Cable networks dedicate hours to analyzing his statements. Political opponents craft responses. International allies and adversaries study his implications. Everyone in the United States is forced to absorb the chaos he creates online, whether they follow him or not.

Trump's approach to social media was revolutionary a decade ago. Now it's the template for how government actually operates. Policy isn't announced through official channels with time for analysis and institutional response. It's posted to Truth Social with all the permanence and considered judgment of an angry 3 AM tweet.

What's made this particularly dangerous in 2025 is the combination of Trump's increased comfort with digital governance and his administration's simultaneous control over the apparatus of law enforcement. He posts attacks on judges, journalists, and political opponents, and federal agents respond. He posts conspiracy theories, and they spread through law enforcement networks. He posts directives, and they become policy without traditional review processes.

The cracks have shown. When Trump posted callous comments following the stabbing death of filmmaker Rob Reiner and his wife in December, even Republican congressional members pushed back publicly. But these moments are exceptions, not patterns. The default remains: Trump posts, the country reacts, and institutional guardrails erode a little further.

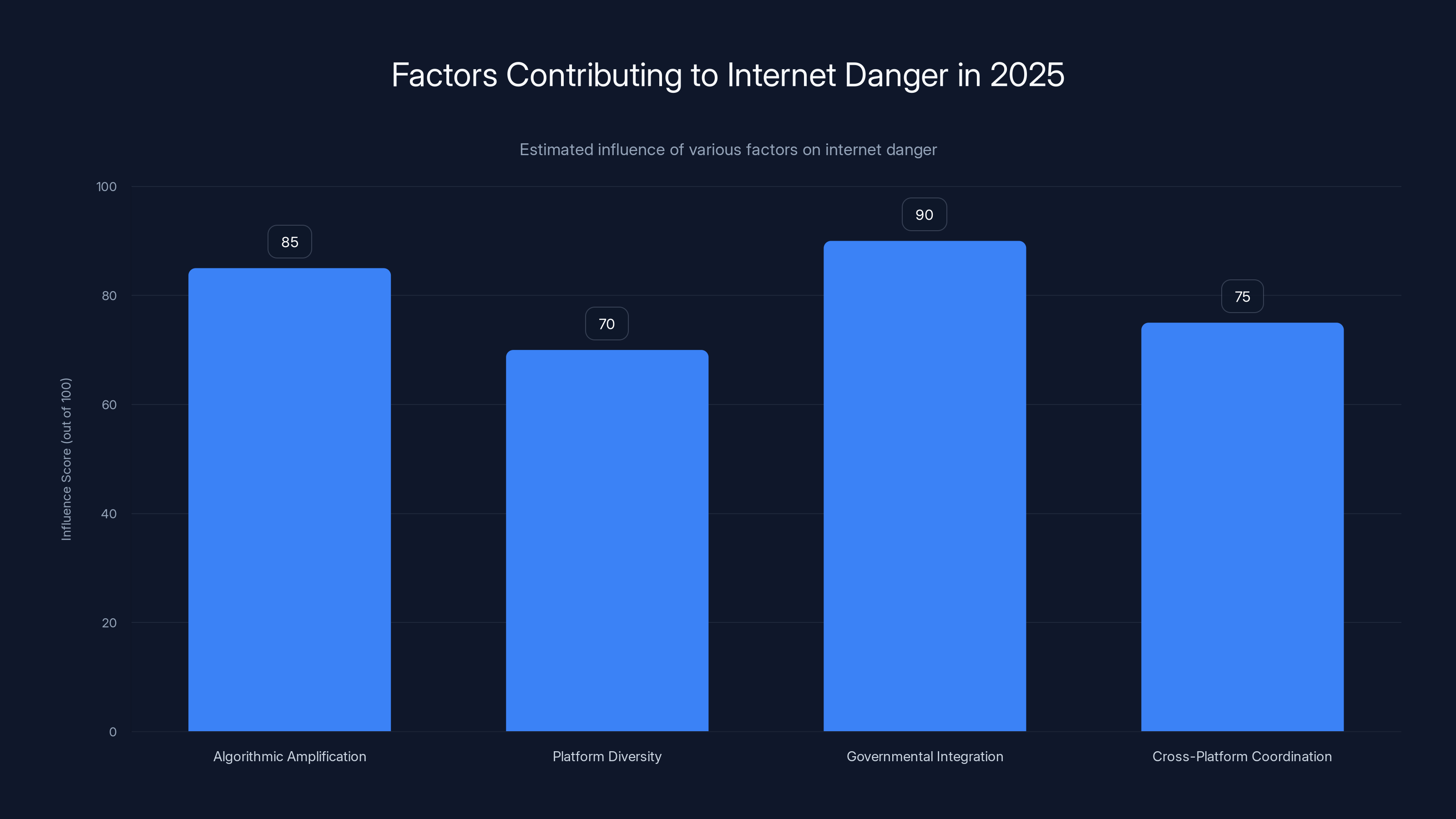

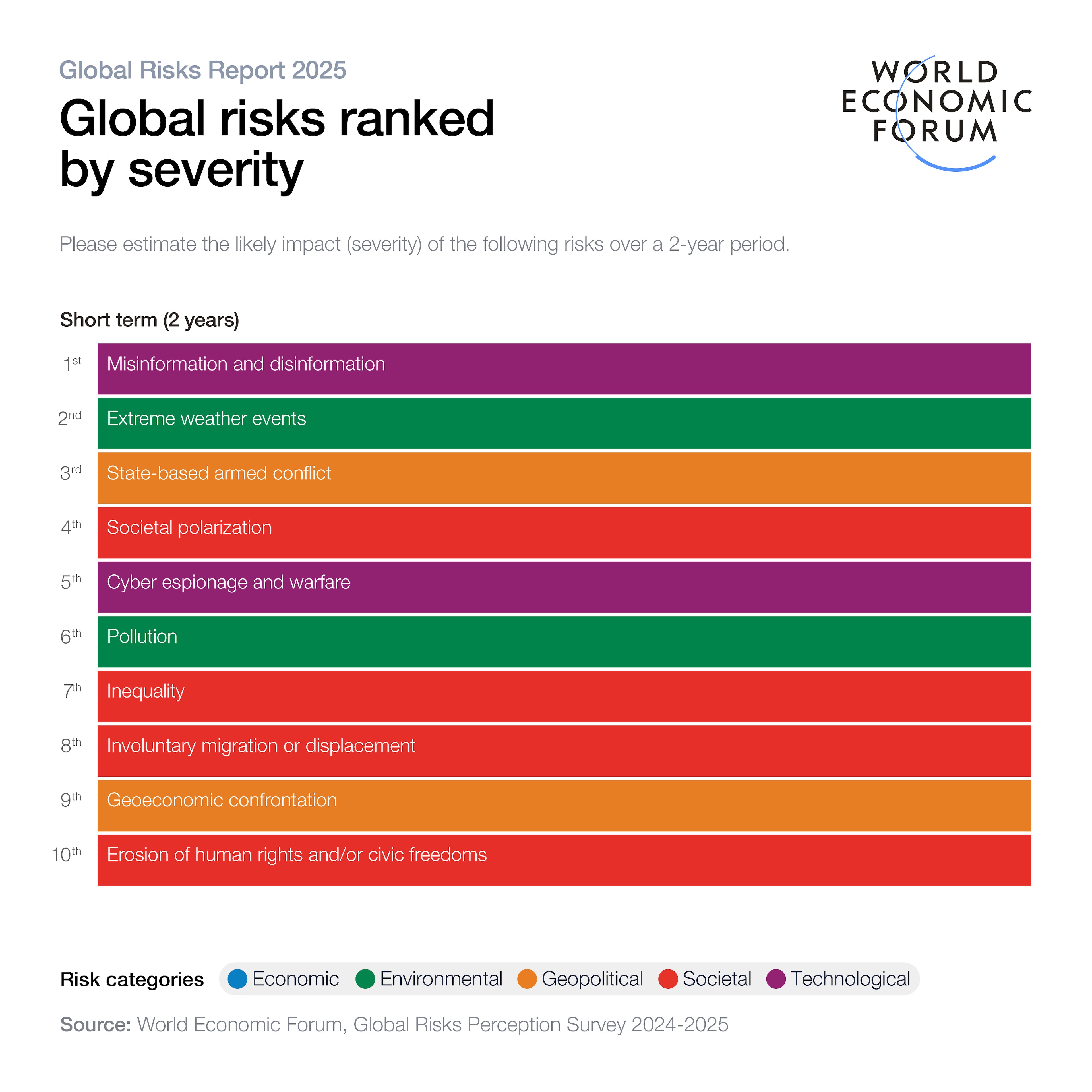

In 2025, governmental integration and algorithmic amplification are the most influential factors contributing to internet danger. (Estimated data)

Stephen Miller and Kristi Noem: Architects of Digital Enforcement

If Trump is the poster, Stephen Miller is the architect. As deputy chief of staff for policy, Miller designed the anti-immigration enforcement apparatus that defined daily life in America throughout 2025.

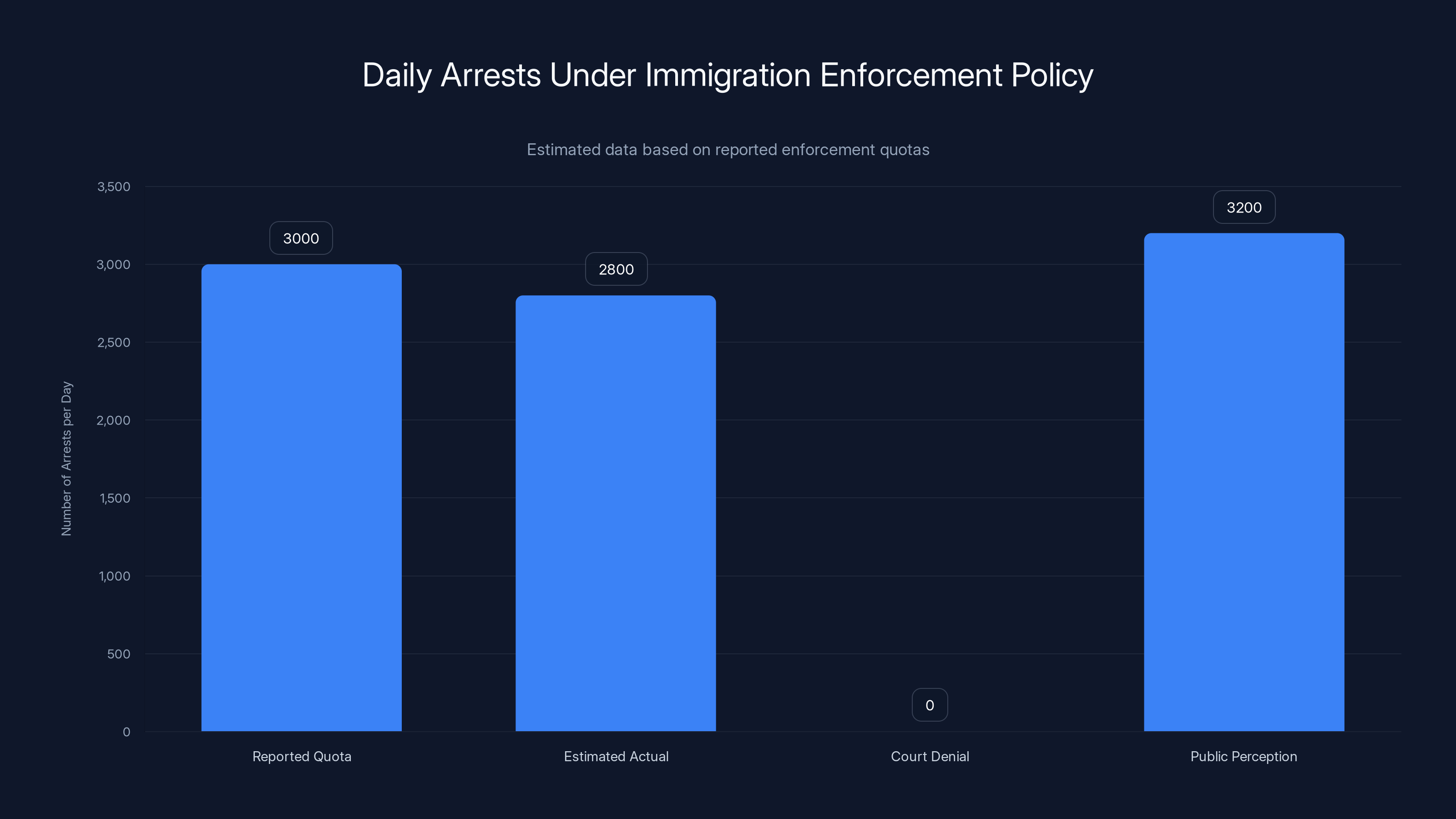

His role isn't ceremonial. Miller went on television to announce that federal agents would arrest 3,000 people per day. The administration later denied the existence of this "quota" when forced to defend it in court, but the policy was implemented consistently. Immigration and Customs Enforcement (ICE) and Customs and Border Protection (CBP) agents deployed across cities, conducting mass traffic stops, workplace raids, and neighborhood sweeps targeting anyone perceived as foreign.

What made this particularly dangerous was the infrastructure Miller built to make it efficient. Federal agencies expanded social media surveillance. CBP developed facial recognition apps connecting to government databases. The administration created systems for identifying, tracking, and monitoring millions of people based on digital footprints.

Miller didn't do this alone. Kristi Noem, as Secretary of Homeland Security, oversees the actual execution. She commands both ICE and CBP, directing how enforcement is conducted on the ground. Under her leadership, the agency proposed requiring travelers to the United States to submit five years of social media posts for government review. This sounds dystopian because it is. It's also essentially law.

Noem has also pushed back against public accountability for federal agents, classifying attempts to document their activities as illegal "doxing." When citizens film enforcement actions or share information about ICE operations, the government treats this as a crime worth prosecuting.

The digital infrastructure they built doesn't disappear when Trump leaves office. It's embedded now. Future administrations will inherit surveillance systems, facial recognition databases, and enforcement protocols that originated in 2025. The normalized practice of treating social media as intelligence for law enforcement has expanded the definition of acceptable government surveillance in ways that will take decades to unwind.

What's most insidious is how the digital surveillance apparatus becomes self-justifying. When the government creates systems to track immigrants, those systems inevitably identify more targets. When facial recognition is deployed, it gets used for purposes beyond its original scope. The infrastructure becomes the policy, regardless of who's using it.

DOGE: When Conspiracy Podcasters Control Federal Systems

Imagine a scenario where a team of largely inexperienced operatives, many in their twenties, bypass normal background checks and security clearances to access some of the most sensitive computer systems in the federal government. Their stated mission is to cut waste. Their actual activities include demanding God-mode permissions on federal payment systems, accessing databases controlling trillions of dollars in transactions, and attempting to wire together datasets to create a master surveillance database.

This isn't fiction. This is DOGE, the Department of Government Efficiency.

Elon Musk's DOGE became the template for how the Trump administration reorganized vast portions of the federal bureaucracy. It attracted people from his various ventures (primarily X, formerly Twitter), cryptocurrency communities, and online conspiracy spaces. Some had legitimate technical backgrounds. Many didn't.

One 25-year-old former X employee received direct access to Treasury payment systems. Another DOGE member demanded access to federal databases without clear justification for why they needed it. The group operated with minimal oversight, requesting permissions that made security professionals across federal agencies nervous.

The most troubling aspect isn't what DOGE did in its initial months. It's the infrastructure it left behind. When the initial controversy forced a tactical retreat, DOGE operatives didn't disappear. They became employees throughout the government, placed in positions of authority over information systems, data integration, and access permissions.

This matters because the actual goal of DOGE remains: integrate data across federal agencies to create a master database with unprecedented surveillance potential. That goal hasn't changed. It's just progressed more quietly.

The danger here is structural. You can't unknow how to access systems you've already breached. You can't unring the bell on security protocols that have been compromised. The federal government's information architecture is now significantly more vulnerable because DOGE demonstrated it could be breached by operatives with political connections but minimal security clearance.

Future administrations, regardless of political alignment, will inherit the knowledge that federal payment systems and sensitive databases can be accessed by political appointees without normal security procedures. The precedent has been set. The vulnerabilities have been mapped.

Robert F. Kennedy Jr.: Conspiracy Theories as Health Policy

Robert F. Kennedy Jr. represents something new: a prominent figure with a decades-long track record of vaccine skepticism and medical conspiracy theories now holding cabinet-level authority over public health.

As Health and Human Services Secretary, Kennedy oversees policy affecting hundreds of millions of people. He has the authority to influence vaccine distribution, pharmaceutical regulation, and public health decisions during medical emergencies. He's also someone who has promoted the thoroughly debunked theory that vaccines cause autism, amplified claims about 5G health effects, and asserted, with essentially no epidemiological support, that vaccines have caused an autism epidemic.

The danger isn't that Kennedy personally convinced anyone. It's that his position legitimizes decades of online conspiracy content that has been percolating through various internet communities since at least 2010.

When vaccine skepticism was purely an online movement, it affected a subset of the population. When vaccine skepticism is held by the Health Secretary, it affects policy. When policy reflects conspiracy theories, it affects public health outcomes.

Kennedy's appointment created a feedback loop. Online communities that had promoted vaccine skepticism for years suddenly found their narratives validated by someone in actual power. Media coverage amplified their claims. Research funding shifted toward projects that reflected his priors. Public health initiatives came under question, while people with actual expertise suddenly found themselves without institutional authority.

This is how conspiracy theories transition from internet phenomenon to material reality. They gain institutional legitimacy. They attract resources. They influence decision-making.

The damage isn't immediate or easily quantifiable. You won't see a single statistic that says "Kennedy caused X deaths by implementing conspiracy theory policy." Instead, you'll see vaccine hesitancy increase by 5-7 percentage points over the next few years. You'll see immunization rates drop in specific communities. You'll see rare diseases re-emerge in areas that had essentially eliminated them.

But those outcomes trace back to a moment in 2025 when someone who spent twenty years promoting medical conspiracy theories was given authority over the health system of a nation of 330 million people, largely because he has excellent social media reach and online credibility in specific communities.

Elon Musk: Consolidating Power Across Every Vector

Elon Musk doesn't govern the country. He doesn't need to. He owns or controls enough of the infrastructure that shapes political discourse that he's become a quasi-governmental actor.

He owns X (formerly Twitter), the platform where political announcements happen, where news breaks, where the president's advisors communicate. He runs Tesla, which influences energy policy and transportation infrastructure. He owns Neuralink, involved in neural implant development. He owns The Boring Company and, through SpaceX, operates Starlink satellite internet globally. He runs DOGE, which has embedded operatives throughout the federal government.

What makes this particularly dangerous isn't any single entity. It's the overlap. When Musk expresses political views on X, the algorithm he controls amplifies them. When X is a primary news distribution channel and Musk owns it, he has editorial power over what becomes news. When he's simultaneously advising the president through DOGE, the feedback loops become impossible to separate.

Musk isn't thoughtful about these conflicts. He's openly used X to promote his own business interests, attack competitors, and influence stock prices. He's changed content moderation policies based on personal preferences rather than institutional standards. He's deliberately amplified content from political allies and suppressed content from critics.

The danger compounds when you realize he's doing this across multiple platforms and industries simultaneously. Starlink is expanding internet access globally, which means Musk controls access to the primary internet connection for millions of people outside urban areas. That's not trivial infrastructure. That's sovereignty-adjacent power.

In 2025, Musk has essentially unlimited ability to shape political discourse at scale. He can amplify or suppress information through the platform he owns. He can advise the government through DOGE. He can direct his companies' resources toward outcomes that align with his political preferences. And he has no institutional constraints limiting any of this.

Previous tech billionaires have had enormous influence. None have had this many overlapping vectors of power concentrated in one person simultaneously.

The reported quota of 3,000 arrests per day was denied in court but estimated actual figures suggest close adherence. Public perception often exceeds reported figures. Estimated data.

China's State-Backed Hackers: Industrial Espionage at Scale

While American political chaos dominates headlines, Chinese state-backed hacking operations continue operating with remarkable efficiency and minimal accountability.

China's digital espionage apparatus targets intellectual property, trade secrets, and critical infrastructure with sustained, methodical precision. The goal isn't to cause immediate damage. It's to accumulate advantage. When Chinese companies develop competing technologies, they often benefit from blueprints, source code, and design documentation obtained through hacking.

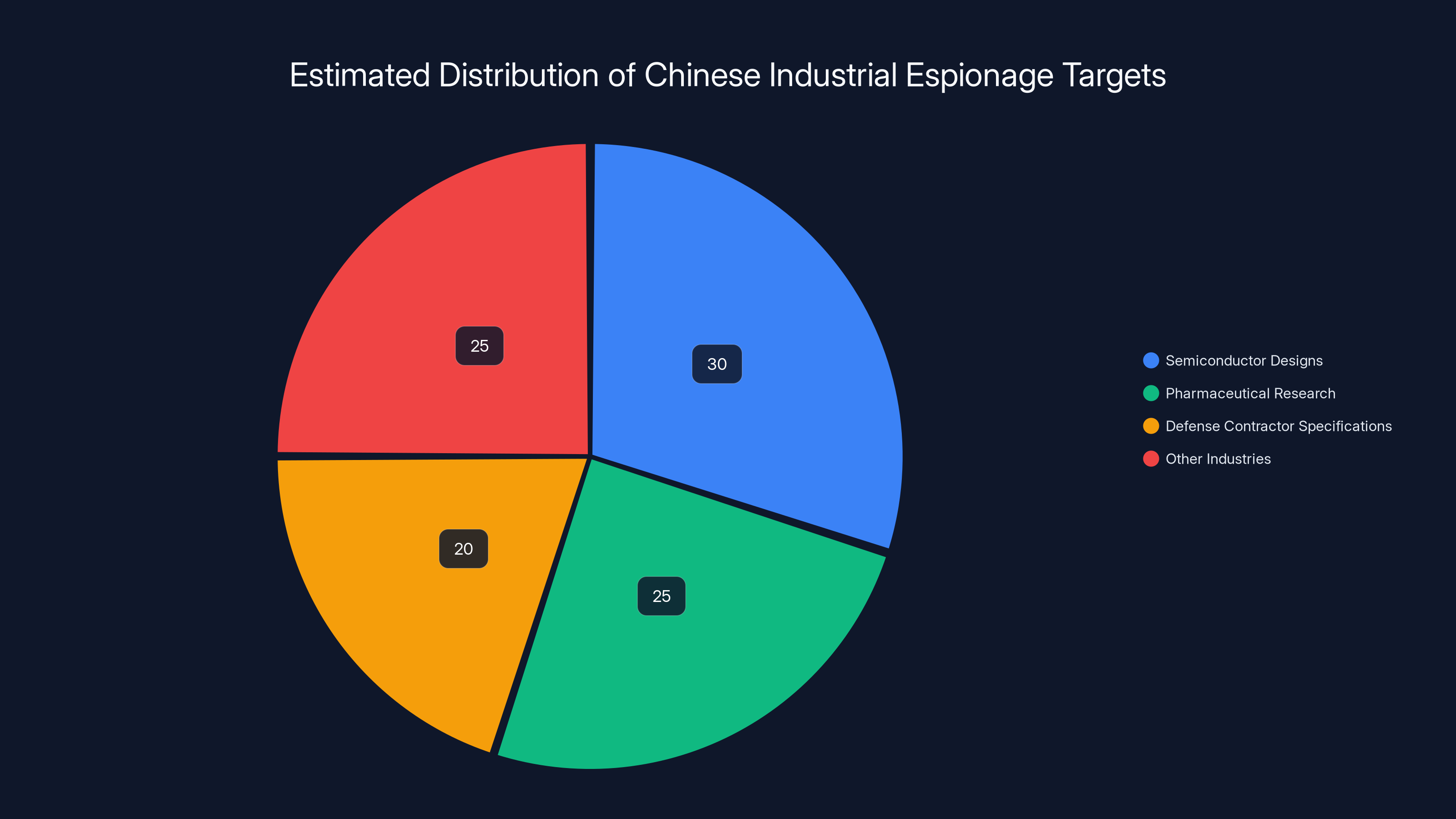

The scale is difficult to comprehend. We're talking about systematic theft of billions of dollars worth of intellectual property annually. American firms operating in competitive industries lose trade secrets constantly. Chinese operations have targeted everything from semiconductor designs to pharmaceutical research to defense contractor specifications.

What makes this particularly difficult to counter is that Chinese state-backed actors operate with governmental protection. American companies can sue other companies for theft. They can pursue criminal charges. Against Chinese operations backed by the government? The legal system offers no recourse. The FBI can investigate, but the perpetrators are beyond extradition or prosecution.

Some of the most advanced technologies being deployed by Chinese companies benefit from intellectual property stolen from American firms. When this compounds across multiple industries over decades, it shifts competitive advantage at massive scale.

The digital espionage isn't limited to theft. Chinese operations also engage in active disinformation campaigns, manipulating social media during American elections, spreading divisive content, and amplifying conspiracy theories. The goal is destabilization. If Americans are focused on fighting each other, they're not focused on Chinese advancement.

This is a form of information warfare that doesn't trigger conventional responses. It's not an act of aggression you can point to and say "that's a threat." It's systematic, ongoing, and integrated into normal operations.

Russia's Disinformation Infrastructure: Sophisticated and Adaptive

Russian hacking and disinformation operations evolved significantly throughout 2024 and into 2025. Unlike Chinese operations focused on economic espionage, Russian operations prioritize political destabilization.

Russia's Internet Research Agency and connected operations run thousands of fake accounts across social media platforms, creating synthetic political discourse. They generate convincing deepfakes of political figures. They launch sophisticated phishing campaigns targeting government employees, journalists, and activists. They conduct active attacks on critical infrastructure.

What's evolved is the sophistication. Early Russian disinformation campaigns were relatively crude, easy to identify as fake. Modern operations blend authentic content with synthetic material, making them harder to detect. They use AI to generate text and images that look legitimate. They infiltrate actual communities and post from accounts that look real because they've been seeded with months of authentic-seeming activity.

The goal remains consistent: increase polarization, undermine trust in institutions, and create the impression that nothing is real anymore. When everyone believes information is compromised, democratic institutions lose legitimacy.

Russian operations also maintain sophisticated persistent access to American critical infrastructure. Hackers have accessed power grid systems, water treatment facilities, and financial networks. The access often goes undetected for months. When it's discovered, it's unclear whether Russia is using it for theft, surveillance, or waiting for a moment to cause maximum damage.

What's particularly unsettling is that these operations continue despite sanctions, international condemnation, and US military capacity to respond. The calculation appears to be that the political will to escalate beyond sanctions doesn't exist, making the operation low-risk.

Iran's Targeting Infrastructure: Growing Sophistication

Iran's cyber operations historically focused on regional targets and internal surveillance. Increasingly, Iranian hackers target American infrastructure, election systems, and political figures.

Iranian operations conducted sophisticated reconnaissance on presidential campaigns in 2024. They've accessed email accounts of military contractors, law enforcement officials, and political operatives. They've conducted reconnaissance on power grid vulnerabilities and critical infrastructure.

Iranian operations are often less sophisticated than Chinese or Russian equivalents, but they're improving. What they lack in technical skill they compensate for through persistence and willingness to operate openly under state protection.

The Iranian government doesn't hide its hacking operations the way China does. It acknowledges them as legitimate responses to perceived American aggression. This creates a different kind of threat: operations conducted without need for operational security because the government supports them openly.

Whether through hacking infrastructure directly or through proxy operations, Iranian capabilities have expanded significantly. Their goals appear to be a combination of intelligence gathering, disruption, and demonstrating capability as a deterrent against potential American military action.

Anonymous, LulzSec, and the Chaos Collective: Digital Anarchists

They call it "the comm," short for community. It's an amorphous collection of online anarchists, hackers, scammers, and chaos agents united primarily by the belief that institutions deserve to be attacked and that rules are suggestions.

Members of "the comm" conduct coordinated hacking operations, leak confidential information, conduct harassment campaigns, and generally operate according to the principle that nothing is off-limits if the target is perceived as deserving it.

The danger here is that "the comm" has no centralized leadership, making it impossible to negotiate with or deter. There's no leader to arrest, no single point of failure. When authorities take down one operation, five splinter groups replace it.

When Anonymous conducted hacking operations in the 2010s, it was relatively easy to track the motivations and identify specific targets. Modern operations by "the comm" are harder to understand. They hack election infrastructure. They release database credentials. They conduct harassment campaigns against specific individuals. They leak private information.

Sometimes there seems to be a political goal. Sometimes it's pure chaos. Sometimes it's someone's personal vendetta amplified through collaborative hacking. The lack of coherent motivation makes it nearly impossible to develop effective countermeasures.

What's particularly unsettling is the skill level. These aren't amateurs. They include people who have worked in cybersecurity, government, and technology industries. They have significant technical capability. They have access to sophisticated tools and exploit zero-day vulnerabilities governments haven't discovered yet.

And they operate almost entirely beyond the reach of law enforcement. Prosecuting distributed anonymous operations is technically and legally complex. Most never face consequences.

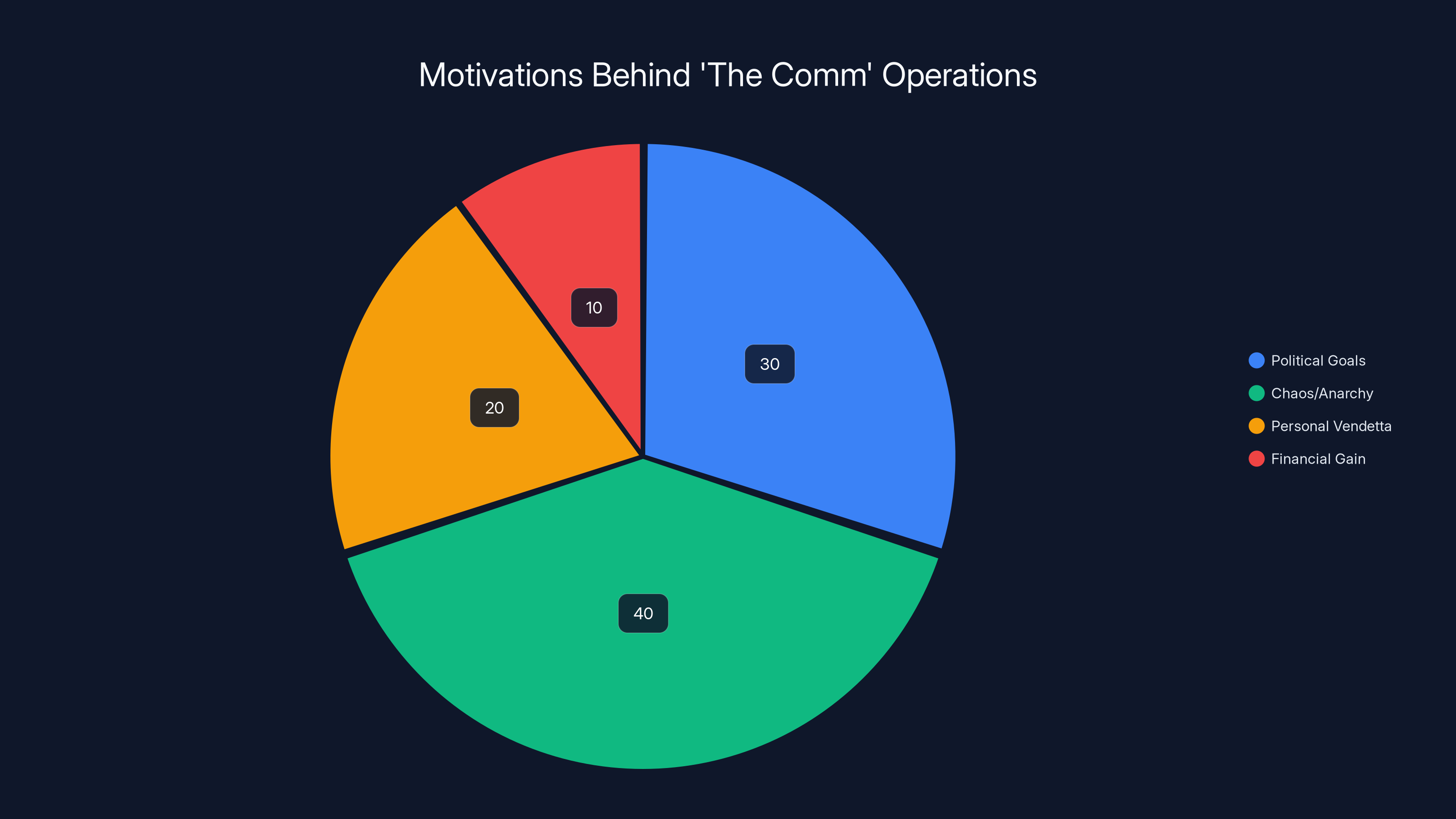

Estimated data suggests that chaos/anarchy is the leading motivation for 'the comm' operations, followed by political goals and personal vendettas.

Scammers, Grifters, and Online Fraud Operations: The Industrial Complex

Online scamming has professionalized into something resembling an actual industry.

You have romance scammers operating across continents, targeting specific demographics (often older people with savings), developing elaborate fake personas, and extracting hundreds of thousands of dollars before disappearing. You have tech support scammers calling people claiming to have detected viruses. You have cryptocurrency scammers creating fake investment opportunities, celebrity impersonation accounts, and NFT pump-and-dump schemes.

The sophistication is remarkable. Modern scam operations use AI to generate convincing deepfake videos. They create entire fake company infrastructure, including websites, customer service representatives, and support documentation. They use social engineering techniques that take weeks to execute but extract massive payouts.

What makes this particularly dangerous is scale combined with regulatory gaps. A scammer can target thousands of people simultaneously through automated systems. Law enforcement can't prosecute all of them. The financial systems facilitating the scams are difficult to track. By the time authorities identify a scam operation, the money is already moved through multiple jurisdictions.

The victims aren't interchangeable. Scammers specifically target vulnerable populations: elderly people, people in economic desperation, people dealing with grief. They weaponize psychological manipulation at scale.

And they operate with virtual impunity because international law enforcement struggles with jurisdiction, the volume of cases overwhelms prosecutorial capacity, and many scammers operate from countries with no extradition treaties with the United States.

Estimates suggest Americans lose over $10 billion annually to online scams. That number has roughly doubled every three years. On the current trajectory, Americans will lose more money to online fraud than to natural disasters within the next five years.
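
To make the doubling claim concrete, here is a minimal sketch of the arithmetic in Python. It assumes the $10 billion annual figure is the starting point and that the three-year doubling time holds steady. The names `projected_losses` and `BASELINE_BILLION` are illustrative, not taken from any official source.

```python
def projected_losses(baseline_billion: float, years_ahead: float,
                     doubling_years: float = 3.0) -> float:
    """Exponential growth: losses double once every `doubling_years`."""
    return baseline_billion * 2 ** (years_ahead / doubling_years)


# Assumption: roughly $10 billion lost per year today (the article's estimate).
BASELINE_BILLION = 10.0

for years in (3, 5, 6, 9):
    estimate = projected_losses(BASELINE_BILLION, years)
    print(f"+{years} years: about ${estimate:.1f} billion per year")
```

Under those assumptions, annual losses pass $30 billion within five years and reach $80 billion after nine. A different baseline or doubling time changes the result, so treat this as an illustration of the trend rather than a forecast.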

But scamming rarely appears on lists of "dangerous people on the internet" because the targets are dispersed and the operators are often unknown. A single scammer extracting $500,000 from someone's retirement savings doesn't trigger the same cultural response as a politician posting something offensive.

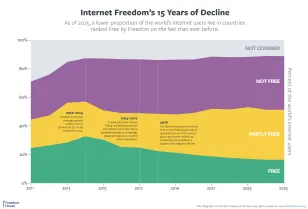

Maneuvering Between Platforms: How Dangerous Actors Cross Boundaries

One of the most effective strategies dangerous people employ is platform diversification. When they face content moderation on mainstream platforms, they migrate to alternative networks where accountability is minimal.

Trump shifted to Truth Social after Twitter banned him. Conspiracy theorists operate across Telegram, Discord, 4chan, and encrypted channels where moderation is either non-existent or actively protective of extreme content. Scammers use encrypted communication to coordinate, making law enforcement investigation nearly impossible.

This creates a problem for any attempt to address dangerous digital behavior: the responsibility is fragmented. YouTube has policies. Telegram has different policies. Signal has different policies. TikTok has different policies. Discord has different policies.

A figure like Trump can post on Truth Social knowing that no content moderation applies. A conspiracy theorist can operate Telegram channels with thousands of members discussing harmful theories. A scammer can coordinate operations on encrypted platforms.

The platforms themselves have little incentive to aggressively moderate. Moderation costs money. Enforcement is complex. And many platforms derive value from the engagement that inflammatory content generates.

The Algorithm: How Digital Architecture Enables Dangerous Behavior

Algorithms aren't neutral. They're optimization functions programmed to maximize engagement, time on platform, and advertising revenue.

When Trump posts 169 times in an evening, the algorithm amplifies it because it generates engagement. When a conspiracy theorist creates emotionally manipulative content claiming to expose truth, the algorithm distributes it because people interact with it. When a scammer creates a convincing deepfake video, the algorithm learns it should be recommended because people click on it.

The algorithm doesn't care whether the content is accurate, beneficial, or harmful. It cares about measurable engagement metrics.

This creates a system where the most inflammatory, emotionally manipulative, and false content gets amplified. Truth is less engaging than lies. Nuance is less engaging than polarization. Accuracy is less engaging than outrage.
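
A toy example shows how this plays out. The sketch below is not any platform's real ranking code: the `Post` fields, the weights in `engagement_score`, and the sample numbers are all hypothetical, chosen only to illustrate a ranker that looks at engagement and nothing else.

```python
from dataclasses import dataclass


@dataclass
class Post:
    title: str
    accurate: bool  # visible to us in this sketch, but never read by the ranker
    clicks: int
    shares: int
    comments: int


def engagement_score(post: Post) -> float:
    # Hypothetical weights; real platforms do not publish theirs.
    return 1.0 * post.clicks + 3.0 * post.shares + 2.0 * post.comments


def rank_feed(posts: list[Post]) -> list[Post]:
    # Highest engagement first. Accuracy plays no part in the ordering.
    return sorted(posts, key=engagement_score, reverse=True)


# Made-up sample data for illustration only.
posts = [
    Post("Measured policy explainer", True, clicks=120, shares=10, comments=15),
    Post("Outrage-bait conspiracy claim", False, clicks=400, shares=90, comments=210),
    Post("Nuanced fact-check", True, clicks=80, shares=5, comments=8),
]

feed = rank_feed(posts)
for post in feed:
    print(f"{engagement_score(post):7.0f}  accurate={post.accurate}  {post.title}")
```

With these invented numbers, the false post lands at the top of the feed simply because it drew the most interaction. Nothing in the scoring function ever asks whether it is true.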

The dangerous people profiled in this article are often effective precisely because they understand this dynamic and weaponize it. They create content optimized for algorithmic distribution. They understand what the algorithm rewards and they provide it.

Facebook's algorithm recommends increasingly extreme content to increase engagement. YouTube's recommendation system has been documented suggesting conspiracy theory videos at higher rates than mainstream content. TikTok's algorithm optimizes for watch time without regard for truth value. Twitter's algorithm amplifies controversial posts.

No individual is responsible for these design decisions in the way we typically understand responsibility. But they're consequential. When the architecture rewards dangerous behavior, you'll get more of it.

The Structural Problem: Why Digital Power Lacks Accountability

The most dangerous aspect of internet power in 2025 is the absence of accountability mechanisms.

Trump posts falsehoods and it becomes policy. There's no fact-checking process. There's no requirement that statements be accurate. The president can post whatever he wants to his private network and it cascades through media systems.

Musk controls X's algorithm, meaning he effectively controls what information gets distributed to hundreds of millions of people. There's no transparency about how algorithmic decisions are made. There's no appeals process if you believe you've been unfairly suppressed. He's never required to justify his decisions to anyone.

Chinese hackers steal intellectual property with impunity because the legal system provides no remedy. DOGE operatives accessed federal systems and weren't prosecuted. Russian disinformation campaigns continue despite sanctions. Scammers operate from countries outside US jurisdiction.

The problem is structural: digital power operates at a scale and speed that regulatory systems can't match. By the time a policy is created to address dangerous behavior, the behavior has evolved. By the time a law is passed, perpetrators have migrated to different platforms or techniques.

There's also a fundamental asymmetry: individuals with concentrated digital power can move fast and break things. Accountability institutions move slowly and require consensus. The default advantage goes to those with concentrated power.

Information Warfare and Collective Delusion: The Cascade Effect

What distinguishes 2025 from previous years is the professionalization and scale of information warfare.

It's no longer isolated conspiracy communities creating false narratives. It's coordinated campaigns with state backing, algorithmic amplification, deepfake technology, and AI-generated content indistinguishable from authentic material.

When thousands of accounts claim something is true, and algorithms recommend that content, and media amplifies the narrative, a cascade effect occurs. Even people skeptical of the claim encounter it repeatedly from seemingly independent sources. Repetition creates the impression of consensus.

This is where dangerous people on the internet transition from being merely influential to being potentially destabilizing. When a significant portion of the population believes false information about elections, vaccines, or foreign policy, institutional trust erodes. When people don't trust institutions, they're vulnerable to further manipulation.

The dangerous people identified in this article are effective partly because they understand this cascade dynamic and exploit it. Trump posts something. His supporters amplify it. Media covers the amplification. Algorithms recommend related content. Within hours, something he posted has shaped collective discourse.

It's not that Trump is an unusually skilled communicator. It's that he has access to infrastructure that amplifies whatever he says at a scale no previous political figure could match.

The Long View: How Digital Power Becomes Structural Power

The question that looms over 2025 is whether digital power is becoming institutionalized in ways that will persist regardless of who holds it.

When Trump leaves office, will the next administration be able to dismantle the surveillance infrastructure built by Miller and Noem? Will DOGE's intrusions into federal systems be fully remediated? Will the algorithmic systems that amplified dangerous content be restructured? Or will these become accepted baseline infrastructure?

Historically, power structures expand and rarely contract. Once an administration builds surveillance capabilities, the next administration inherits them and often expands them further. Once an algorithm is optimized to reward engagement over truth, reversing that optimization is organizationally difficult.

What's happening in 2025 isn't temporary. It's the establishment of new baseline expectations about what digital power looks like and what accountability is required of it.

The dangerous people profiled here aren't anomalies. They're the logical outcome of systems that concentrate power without distributed accountability. As digital systems become more central to governance and society, these dynamics will intensify unless structural change occurs.

The question isn't whether dangerous people will exploit digital power. It's whether we'll build systems that distribute power and accountability in ways that make concentration less possible.

FAQ

What makes someone "dangerous" on the internet in 2025?

In 2025, a "dangerous" person on the internet is someone who wields outsized influence over digital spaces in ways that create measurable real-world harm. That influence can take the form of direct control over platforms and algorithms, institutional power amplified by digital communication, state-sponsored cyber operations, or coordination across several of these vectors without distributed accountability. The distinction from previous years is that digital power has become governmental power, making internet influence inseparable from real-world consequences.

How does algorithmic amplification enable dangerous behavior?

Algorithms optimize for engagement metrics like clicks, shares, and time spent on platform. These metrics reward inflammatory, emotionally manipulative, and false content at higher rates than accurate information. When dangerous people understand this dynamic and create content specifically optimized for algorithmic distribution, the algorithm becomes a force multiplier. A single post can reach hundreds of millions of people within hours, shifting cultural discourse before fact-checking or institutional response is possible.
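A minimal sketch of that dynamic, assuming a ranker that scores posts purely on engagement signals. The `Post` fields, the weights, and the sample numbers are hypothetical and do not describe any real platform's system; the point is only that accuracy never enters the score.

```python
from dataclasses import dataclass


@dataclass
class Post:
    text: str
    clicks: int
    shares: int
    seconds_viewed: float
    accurate: bool  # hypothetical label; the ranker below never reads it


def engagement_score(post, w_clicks=1.0, w_shares=3.0, w_time=0.01):
    """Weighted sum of engagement signals. The weights are illustrative assumptions."""
    return (
        w_clicks * post.clicks
        + w_shares * post.shares
        + w_time * post.seconds_viewed
    )


def rank_feed(posts):
    """Order posts by engagement alone, highest first."""
    return sorted(posts, key=engagement_score, reverse=True)


# Made-up sample data for illustration only.
posts = [
    Post("Measured correction with sources", clicks=120, shares=10,
         seconds_viewed=4000, accurate=True),
    Post("Outrage-bait false claim", clicks=900, shares=400,
         seconds_viewed=9000, accurate=False),
]

feed = rank_feed(posts)
for post in feed:
    print(f"{engagement_score(post):8.1f}  accurate={post.accurate}  {post.text}")
```

In this toy feed the false post ranks first simply because it collected more clicks and shares. Nothing in the scoring function penalizes inaccuracy, which is the force-multiplier effect described above.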

Why is platform fragmentation a problem for accountability?

Dangerous actors migrate between platforms to evade moderation. When content is removed from mainstream platforms, perpetrators move to alternative networks with minimal oversight, such as Telegram, Signal, or other encrypted channels. This fragmentation means no single accountability system can address the behavior comprehensively. A person banned from one platform can simply operate on five others, making traditional enforcement ineffective.

What's the difference between 2025 and previous years of dangerous internet activity?

The key difference is institutionalization. Previous dangerous internet activity was primarily conducted by non-governmental actors. In 2025, dangerous behavior is integrated into governmental structures. A conspiracy theorist now holds cabinet-level health authority. A social media platform owner advises the president through official channels. State-backed hackers operate with governmental protection. The consolidation of digital power and governmental authority makes the risk profile fundamentally different.

How do state-backed hacking operations differ from criminal hackers?

State-backed operations benefit from governmental protection, deep resources, and insulation from foreign legal systems. A criminal hacker can be prosecuted, extradited, and imprisoned. A Chinese state-backed hacker operates under government protection and is effectively beyond the reach of foreign courts. This asymmetry means state-backed operations can be more ambitious, run over longer timeframes, and focus on strategic advantage rather than immediate profit.

What's the role of conspiracy theories in creating dangerous digital movements?

Conspiracy theories function as psychological frameworks that make people resistant to institutional authority and more vulnerable to manipulation. When someone believes institutions are fundamentally corrupt and lying, they're more likely to distrust official information and seek alternative explanations. Dangerous actors exploit this vulnerability by offering explanations that align with conspiratorial thinking. When conspiracy theorists gain institutional power, they can use government resources to validate their priors and expand their influence.

How does digital surveillance infrastructure created in 2025 affect future governance?

Once surveillance infrastructure is built, it tends to persist and expand across administrations regardless of political alignment. The systems Miller and Noem created for targeting immigrants will be inherited by the next administration, which will likely expand their scope to other targets. The precedent that political appointees can access federal data without normal security procedures remains available to future administrations. Infrastructure doesn't just disappear; it resets the baseline for what's considered possible and acceptable.

What's the relationship between platform ownership and political power?

When a single individual owns the platform where political discourse happens, that individual has editorial control over information distribution without formal accountability. Musk's ownership of X means he can suppress or amplify political content based on personal preference. This gives him a form of power that previous tech entrepreneurs didn't have: direct control over the primary mechanism through which political information circulates for hundreds of millions of people.

Conclusion: The Reckoning We Haven't Had Yet

The internet was supposed to democratize information. Instead, it created new vectors for concentrated power and distributed vulnerability.

In 2025, the most dangerous people aren't necessarily the smartest or most capable. They're the ones positioned at the intersection of digital influence and institutional authority. They're people who understand how systems work and have access to infrastructure that amplifies whatever they do.

Trump doesn't need to convince people through persuasion. He posts on his own platform where he controls moderation. Miller doesn't need to explain his policies through normal democratic processes. He embeds them in surveillance infrastructure that operates without transparency. Musk doesn't need to compete fairly. He owns the platform where competition is discussed.

What's striking about 2025 is how normalized all of this has become. A president posts 169 times in an evening. That was shocking once. Now it's expected. Conspiracy theorists hold cabinet positions. Billionaires advise the president through official channels. State-backed hackers maintain access to critical infrastructure. None of this generates the kind of systemic response it probably should.

The pattern is clear: digital power is becoming governmental power, and we've built almost no structures to distribute accountability across that transition.

The dangerous people profiled here aren't dangerous because they're evil or uniquely malevolent. They're dangerous because they've positioned themselves at leverage points in systems that concentrate power without distributing accountability. They've understood that in a digital age, control of information distribution is control of reality.

The question for 2026 and beyond is whether we'll build systems that make concentrated digital power less possible or whether we'll accept it as the baseline for how governance functions in a digital age.

History suggests we'll accept it, regulate incrementally, and only move decisively after a crisis makes inaction impossible. But the record of 2025 should at least make clear what we're accepting: a world where digital influence has become governmental power, where accountability is minimal, and where the people positioned at those leverage points operate with virtually no constraint.

That's not a statement of inevitable outcome. It's an observation about trajectory. Course correction is still possible. But it requires recognizing that the problem isn't individual bad actors. It's the structural absence of distributed accountability in systems that concentrate power at unprecedented scale.

Key Takeaways

- Digital power has merged with governmental authority, creating unprecedented concentration without distributed accountability structures

- Algorithmic systems reward engagement over accuracy, amplifying dangerous behavior at scale while crowding out accurate content

- State-backed hacking operations from China, Russia, and Iran conduct systematic espionage and disinformation with governmental protection and virtual impunity

- Infrastructure built for specific purposes (immigration enforcement, data integration) persists and expands across administrations regardless of political alignment

- Conspiracy theorists and digital operators now hold cabinet-level positions, legitimizing false narratives and influencing policy affecting millions

- Platform fragmentation and encrypted communications enable dangerous actors to evade accountability by migrating between communities with minimal oversight

- Online scam operations have professionalized into an industrial complex, targeting vulnerable populations and causing billions in annual financial harm

- The structural absence of distributed accountability in digital power systems makes concentrated influence by dangerous actors not an anomaly but an inevitable outcome

![Most Dangerous People on the Internet [2025]](https://tryrunable.com/blog/most-dangerous-people-on-the-internet-2025/image-1-1767006372475.jpg)