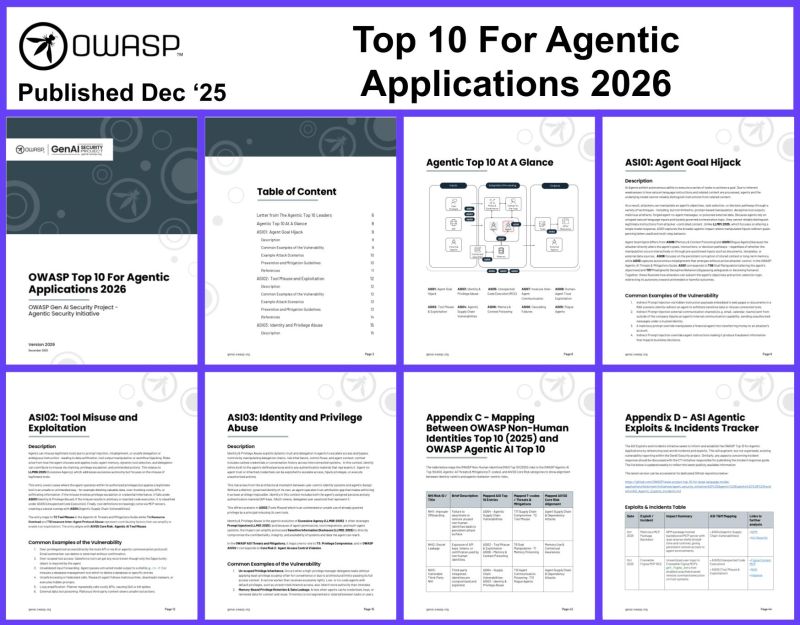

OWASP Top 10 for Agentic Applications [2026]

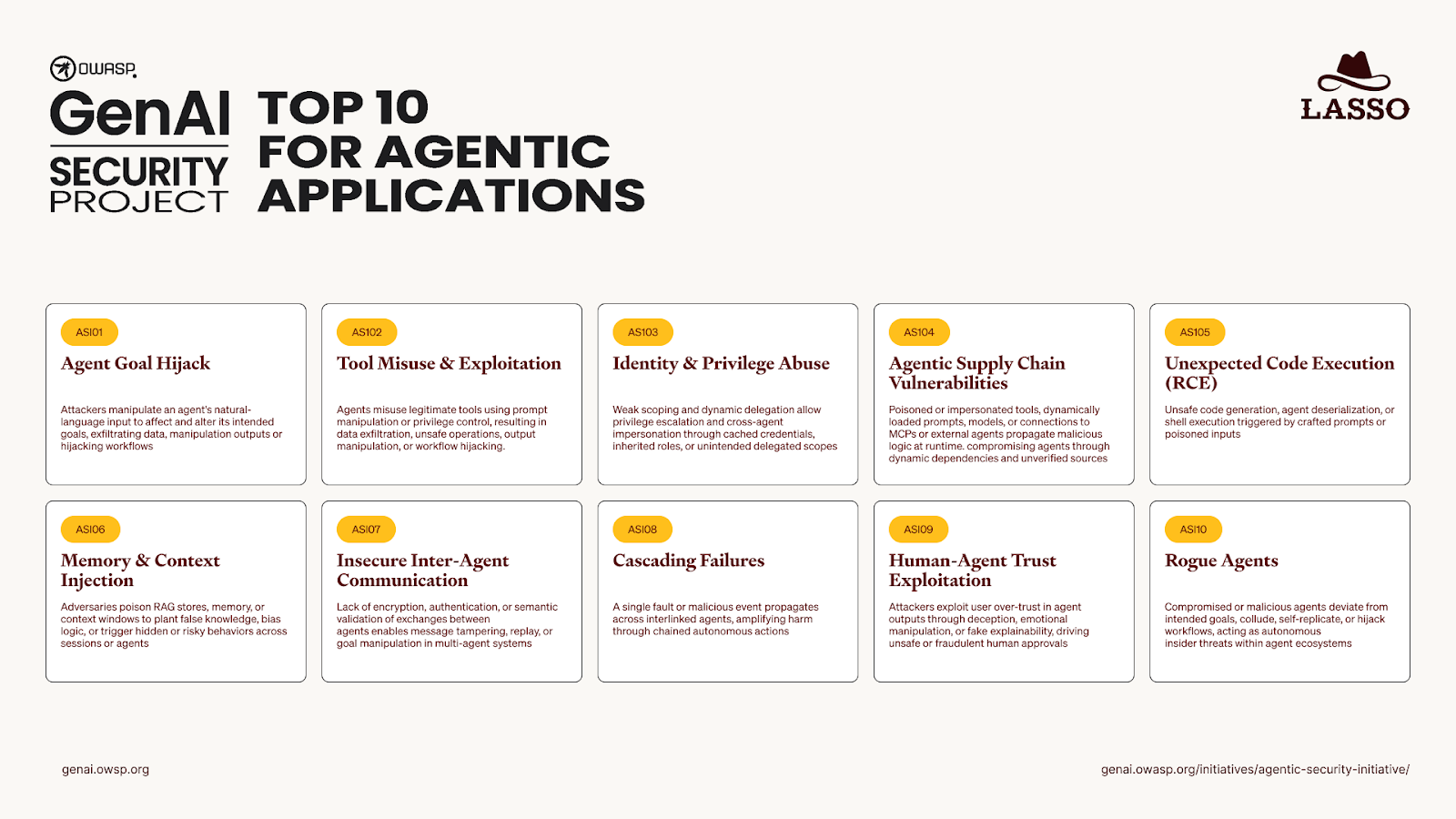

Artificial Intelligence (AI) has rapidly evolved from a passive tool into an autonomous actor that can plan, make decisions, and take actions with limited human involvement. This evolution brings a new set of security challenges unique to agentic applications. Enter the OWASP Top 10 for Agentic Applications 2026: a peer-reviewed framework that identifies the most critical security risks facing these systems and offers guidance on mitigating them.

TL;DR

- Dynamic Risk Landscape: The security risks for agentic AI applications are constantly evolving, necessitating ongoing vigilance and adaptation.

- Autonomous Decision-Making Risks: Security threats arise when AI systems make decisions without human oversight.

- Data Integrity and Privacy: Ensuring data security is pivotal, given AI's reliance on vast, often sensitive data sets.

- Trust and Transparency: Building trust through transparency in AI processes is essential for widespread adoption.

- Future-Proofing Security: Continual updates and community collaboration are needed to keep security measures ahead of emerging threats.

Introduction

Welcome to a deep dive into the OWASP Top 10 for Agentic Applications 2026, a guide to the complex security landscape of autonomous AI systems. Developed collaboratively by security experts worldwide, the framework addresses the unique challenges posed by agentic AI and offers a structured approach to identifying, understanding, and mitigating risks. As AI systems take on more consequential tasks, ensuring that they operate safely and securely becomes paramount.

In this article, we'll walk through the major risk themes behind the OWASP Top 10, the practical measures that counter them, and the trends likely to shape the security practices of tomorrow.

Best Practices for Securing Agentic Applications

| Practice | Description | Implementation |
|---|---|---|
| Risk Assessment | Regular evaluations to identify potential vulnerabilities in AI systems. | Integrate into the development lifecycle. |
| Access Control | Strict access controls so that only authorized users and agents can interact with AI systems. | Use role-based permissions. |
| Data Encryption | Encryption of sensitive data both at rest and in transit. | Use strong, standard cryptography. |
| Auditing and Monitoring | Continuous monitoring of AI operations to detect and respond to anomalies. | Use AI-driven monitoring tools. |
| AI Model Security | Protection of AI models against tampering and adversarial attacks. | Use integrity checks and model watermarking. |
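For agents, the access-control row comes down to checking every tool call against the agent's role before the tool runs. The sketch below is a minimal illustration, assuming a hypothetical in-memory `ROLE_PERMISSIONS` map and hypothetical tool names; a production system would load policy from a managed store and scope credentials per tool as well.

```python
from dataclasses import dataclass
from typing import Callable

# Hypothetical role-to-tool map; a real deployment would load this policy
# from a managed store instead of hard-coding it.
ROLE_PERMISSIONS: dict[str, frozenset[str]] = {
    "reader": frozenset({"search_docs"}),
    "analyst": frozenset({"search_docs", "run_query"}),
    "admin": frozenset({"search_docs", "run_query", "send_email"}),
}


@dataclass(frozen=True)
class Agent:
    name: str
    role: str


class PermissionDenied(Exception):
    """Raised when an agent requests a tool its role does not grant."""


def authorize_tool_call(agent: Agent, tool: str) -> None:
    # Deny by default: an unknown role maps to an empty permission set.
    allowed = ROLE_PERMISSIONS.get(agent.role, frozenset())
    if tool not in allowed:
        raise PermissionDenied(f"{agent.name} ({agent.role}) may not call {tool!r}")


def call_tool(agent: Agent, tool: str, registry: dict[str, Callable[[], object]]) -> object:
    # Authorize before dispatching, so a denied call never reaches the tool.
    authorize_tool_call(agent, tool)
    return registry[tool]()


registry = {"search_docs": lambda: "3 results", "send_email": lambda: "sent"}
print(call_tool(Agent("report-bot", "reader"), "search_docs", registry))  # 3 results
```

Denying by default means a misconfigured or unknown role gets no tools at all, so the check fails safe rather than open.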

Dynamic Risk Landscape

Agentic applications operate in a continuously changing environment, which increases their exposure to security threats. Unlike traditional software, their behavior can shift over time as models are updated, new tools are connected, and memory or context accumulates, and each of these changes can introduce new vulnerabilities.

Understanding Risk Dynamics

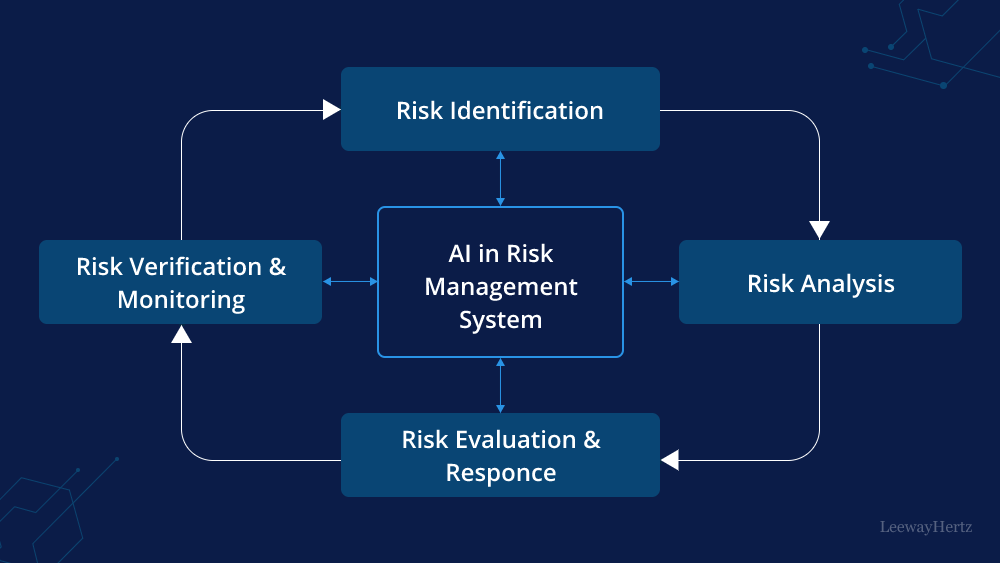

Security risks in agentic applications are not static. As AI systems learn and adapt, their operational contexts change, potentially activating previously dormant vulnerabilities. Regular risk assessments and dynamic security strategies are crucial to keeping pace with these changes.

Best Practices

To stay ahead in this dynamic landscape, organizations should adopt a proactive security posture:

- Continuous Risk Monitoring: Use AI-driven tools to monitor and analyze system behavior in real time.

- Adaptive Security Policies: Develop flexible security policies that can be quickly adjusted in response to new threats.

- Collaborative Intelligence Sharing: Participate in industry forums to share insights and strategies for emerging threats.
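Continuous monitoring can start with something much simpler than an AI-driven tool: a rate check on agent actions already catches runaway loops and sudden bursts of activity. The sketch below assumes a hypothetical per-agent limit (`max_actions` within `window_seconds`); in practice you would derive these values from observed baseline behavior and route alerts into your existing monitoring stack.

```python
from collections import deque


class ActionRateMonitor:
    """Flags an agent whose action count in a sliding time window exceeds a limit.

    window_seconds and max_actions are illustrative; derive real values
    from each agent's observed baseline behavior.
    """

    def __init__(self, window_seconds: float, max_actions: int) -> None:
        self.window_seconds = window_seconds
        self.max_actions = max_actions
        self._timestamps: deque = deque()

    def record(self, timestamp: float) -> bool:
        """Record one action and return True if the rate is now anomalous."""
        self._timestamps.append(timestamp)
        # Evict actions older than the window, measured from the newest one.
        while self._timestamps and timestamp - self._timestamps[0] > self.window_seconds:
            self._timestamps.popleft()
        return len(self._timestamps) > self.max_actions


monitor = ActionRateMonitor(window_seconds=10.0, max_actions=3)
print([monitor.record(t) for t in (0.0, 1.0, 2.0, 3.0)])  # [False, False, False, True]
```

In a live system you would pass `time.monotonic()` as the timestamp; explicit values keep the example deterministic.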

Autonomous Decision-Making Risks

Agentic AI systems are unique in their ability to make autonomous decisions. While this autonomy is a strength, it also introduces significant security risks, particularly when decisions are made without human oversight.

Potential Threats

- Misaligned Objectives: AI systems may pursue goals that conflict with user intentions or ethical standards.

- Manipulated Decision Processes: Adversaries can exploit AI decision-making processes to cause harm.

- Unauthorized Autonomous Actions: Rogue actions by AI can lead to unintended consequences.

Mitigation Strategies

- Goal Alignment: Ensure AI objectives are aligned with organizational values through robust training and validation.

- Decision Transparency: Implement systems that provide visibility into the decision-making processes of AI.

- Fail-Safes and Overrides: Design AI systems with built-in fail-safes and manual override capabilities.
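Fail-safes and overrides can be expressed as a gate that every agent action passes through: a manual halt switch that blocks everything, plus a human-approval step for actions classed as high risk. This is a minimal sketch; the `HIGH_RISK_ACTIONS` set, the action names, and the `approve` callback are hypothetical placeholders for whatever review channel your team actually uses.

```python
from dataclasses import dataclass, field
from typing import Any, Callable

# Hypothetical set of actions that always require a human decision.
HIGH_RISK_ACTIONS = frozenset({"transfer_funds", "delete_record", "send_external_email"})


@dataclass
class ActionGate:
    # Asks a human reviewer; returns True only if the action is approved.
    approve: Callable[[str, dict], bool]
    # Manual override: while True, no action is executed at all.
    halted: bool = False
    log: list[str] = field(default_factory=list)

    def execute(self, action: str, params: dict, run: Callable[[dict], Any]) -> Any:
        if self.halted:
            self.log.append(f"blocked (halted): {action}")
            return None
        if action in HIGH_RISK_ACTIONS and not self.approve(action, params):
            self.log.append(f"blocked (not approved): {action}")
            return None
        self.log.append(f"executed: {action}")
        return run(params)


# A reviewer who rejects everything still lets low-risk actions through.
gate = ActionGate(approve=lambda action, params: False)
print(gate.execute("search_docs", {}, lambda p: "ok"))  # ok
print(gate.execute("transfer_funds", {"amount": 100}, lambda p: "moved"))  # None
gate.halted = True
print(gate.execute("search_docs", {}, lambda p: "ok"))  # None
```

If `approve` raises (for example, because the review service is down), the exception propagates and the action never runs, so the gate fails closed.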

Data Integrity and Privacy

Agentic applications rely heavily on data, often including large volumes of sensitive information. Protecting the integrity of that data and preserving its privacy are paramount.

Key Challenges

- Data Breaches: Unauthorized access to sensitive data can compromise AI operations.

- Data Poisoning: Malicious actors can tamper with training, retrieval, or memory data to alter AI behavior.
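One partial defense against poisoning is to accept only data whose origin you can verify. The sketch below has a trusted source tag each record with HMAC-SHA256 and has the agent drop any record whose tag does not match. It assumes a hypothetical shared `SIGNING_KEY`, and it only detects tampering after signing; data that was already malicious when the trusted source signed it passes straight through.

```python
import hashlib
import hmac

# Hypothetical key shared by the trusted data publisher and the agent; load
# it from a secrets manager in practice, never from source code.
SIGNING_KEY = b"replace-with-a-secret-from-your-key-store"


def sign(record: bytes, key: bytes = SIGNING_KEY) -> str:
    """Return the hex HMAC-SHA256 tag the trusted source attaches to a record."""
    return hmac.new(key, record, hashlib.sha256).hexdigest()


def verify(record: bytes, tag: str, key: bytes = SIGNING_KEY) -> bool:
    # compare_digest is designed to resist timing attacks on the comparison.
    return hmac.compare_digest(sign(record, key), tag)


def load_trusted(records: list[tuple[bytes, str]]) -> list[bytes]:
    """Keep only records whose tag verifies; a real pipeline should log the rest."""
    return [record for record, tag in records if verify(record, tag)]


original = b"policy: refunds over $500 need manager approval"
tag = sign(original)
tampered = b"policy: refunds over $500 are approved automatically"
print(load_trusted([(original, tag), (tampered, tag)]))
# [b'policy: refunds over $500 need manager approval']
```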

Protection Measures

- Robust Encryption: Use AES-256 to protect data at rest and TLS to protect data in transit.

- Data Anonymization: Implement techniques to anonymize sensitive data, reducing privacy risks.

- Access Controls: Employ multi-factor authentication and role-based access controls to limit data access.
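As a sketch of the anonymization bullet, the code below replaces e-mail addresses in text with keyed pseudonyms before the text reaches a model. Strictly speaking this is pseudonymization rather than anonymization: anyone holding the hypothetical `PSEUDONYM_KEY` can test candidate addresses against the tokens, so the key must be protected like any other secret. The e-mail regex is a simplifying assumption and will not match every valid address.

```python
import hashlib
import hmac
import re

# Hypothetical key; whoever holds it can link tokens back to known addresses,
# so store it separately from the pseudonymized data.
PSEUDONYM_KEY = b"replace-with-a-secret-from-your-key-store"

# Deliberately simple e-mail pattern; it misses some valid address forms.
EMAIL_RE = re.compile(r"[A-Za-z0-9._%+-]+@[A-Za-z0-9.-]+\.[A-Za-z]{2,}")


def pseudonym(value: str, key: bytes = PSEUDONYM_KEY) -> str:
    # Lower-casing first makes differently-cased spellings map to one token.
    digest = hmac.new(key, value.lower().encode("utf-8"), hashlib.sha256).hexdigest()
    return f"user_{digest[:12]}"


def pseudonymize_emails(text: str) -> str:
    """Replace every matched e-mail address with its deterministic token."""
    return EMAIL_RE.sub(lambda match: pseudonym(match.group(0)), text)


print(pseudonymize_emails("Contact alice@example.com or ALICE@example.com"))
```

Because the mapping is deterministic, both spellings of the address become the same token, so the agent can still tell that two messages refer to one customer without ever seeing the raw address.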

Trust and Transparency

Building trust in agentic applications is essential for their adoption and success. Transparency in AI operations enhances trust and allows users to understand and verify system behavior.

Enhancing Trust

- Explainability: Develop AI systems with explainability features that allow users to understand AI decisions.

- Accountability: Establish clear accountability for AI actions and decisions, providing recourse when necessary.

- User Education: Invest in educating users about AI capabilities and limitations to build a foundation of informed trust.
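Accountability depends on a record of what each agent did, and why, that cannot be quietly rewritten afterward. A common building block is a hash-chained log, sketched below under the assumption that each entry holds an agent name, an action, and a stated reason (hypothetical fields; add timestamps and request IDs as needed). Each entry commits to the previous entry's hash, so editing history breaks the chain.

```python
import hashlib
import json
from dataclasses import dataclass, field

GENESIS = "0" * 64  # placeholder "previous hash" for the first entry


def _digest(body: dict) -> str:
    # sort_keys gives a canonical serialization, so hashes are reproducible.
    return hashlib.sha256(json.dumps(body, sort_keys=True).encode("utf-8")).hexdigest()


@dataclass
class AuditLog:
    """Append-only log in which each entry commits to the previous entry's hash.

    This provides tamper evidence, not tamper prevention.
    """
    entries: list[dict] = field(default_factory=list)

    def append(self, agent: str, action: str, reason: str) -> dict:
        prev = self.entries[-1]["hash"] if self.entries else GENESIS
        body = {"agent": agent, "action": action, "reason": reason, "prev": prev}
        entry = {**body, "hash": _digest(body)}
        self.entries.append(entry)
        return entry

    def verify(self) -> bool:
        """Recompute the chain and return False at the first broken link."""
        prev = GENESIS
        for entry in self.entries:
            body = {k: v for k, v in entry.items() if k != "hash"}
            if body["prev"] != prev or _digest(body) != entry["hash"]:
                return False
            prev = entry["hash"]
        return True


log = AuditLog()
log.append("report-bot", "run_query", "build weekly sales summary")
log.append("report-bot", "send_email", "deliver report to finance")
print(log.verify())  # True
log.entries[0]["reason"] = "edited after the fact"
print(log.verify())  # False
```

An attacker who can rewrite the entire log can recompute every hash, so periodically store the latest hash somewhere the agent cannot write to.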

Future-Proofing Security

As agentic applications continue to evolve, so must the security measures that protect them. Future-proofing involves anticipating emerging threats and adapting security strategies accordingly.

Preparing for the Future

- Regular Updates: Keep AI systems updated with the latest security patches and threat intelligence.

- Community Engagement: Engage with the broader AI security community to stay informed about new risks and solutions.

- Invest in Research: Support ongoing research into AI security to develop innovative solutions to emerging threats.
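Regular updates are easier to enforce when the check is automated. The sketch below compares installed component versions against a minimum patched version per component; the component names and version floors are hypothetical, and real data would come from your vulnerability feed or SBOM tooling.

```python
# Hypothetical minimum versions that include known security fixes.
MIN_PATCHED: dict[str, tuple[int, ...]] = {
    "agent-runtime": (2, 4, 1),
    "vector-store-client": (1, 9, 0),
}


def parse_version(text: str) -> tuple[int, ...]:
    # Handles plain dotted numeric versions such as "2.4.1" only.
    return tuple(int(part) for part in text.split("."))


def outdated(installed: dict[str, str]) -> list[str]:
    """Return, sorted, the components installed below their patched floor."""
    return sorted(
        name
        for name, version in installed.items()
        if name in MIN_PATCHED and parse_version(version) < MIN_PATCHED[name]
    )


print(outdated({"agent-runtime": "2.4.0", "vector-store-client": "1.10.0"}))
# ['agent-runtime']
```

Comparing integer tuples avoids the string-comparison trap in which "1.10.0" sorts before "1.9.0". For versions with pre-release or build tags, a dedicated parser such as `packaging.version.Version` is the safer choice.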

FAQ

What is the OWASP Top 10 for Agentic Applications?

The OWASP Top 10 for Agentic Applications is a framework that identifies the most critical security risks facing autonomous and agentic AI systems.

How does autonomous decision-making pose security risks?

Autonomous decision-making can lead to misaligned objectives and unauthorized actions, creating security vulnerabilities.

What are the best practices for securing agentic applications?

Best practices include risk assessment, access control, data encryption, auditing, and AI model security.

Why is transparency important in AI systems?

Transparency enhances trust by allowing users to understand and verify AI decisions.

How can organizations prepare for future AI security challenges?

Organizations can future-proof their security by staying updated with the latest security patches, engaging with the community, and investing in research.

Conclusion

The OWASP Top 10 for Agentic Applications 2026 provides a crucial roadmap for navigating the security challenges of autonomous AI systems. By understanding and addressing these risks, organizations can harness the full potential of AI while safeguarding their operations and data. As the security landscape continues to evolve, ongoing vigilance and adaptation will be essential to keeping agentic applications secure.

For teams looking to automate workflows, Runable provides AI-powered solutions that enhance security and efficiency.


Key Takeaways

- Dynamic risks require continuous adaptation.

- Autonomous decision-making introduces unique security challenges.

- Data integrity is crucial for AI security.

- Transparency builds trust in AI systems.

- Future-proofing involves community and research engagement.
