Razer Project Motoko: The Future of AI Gaming Headsets [2025]

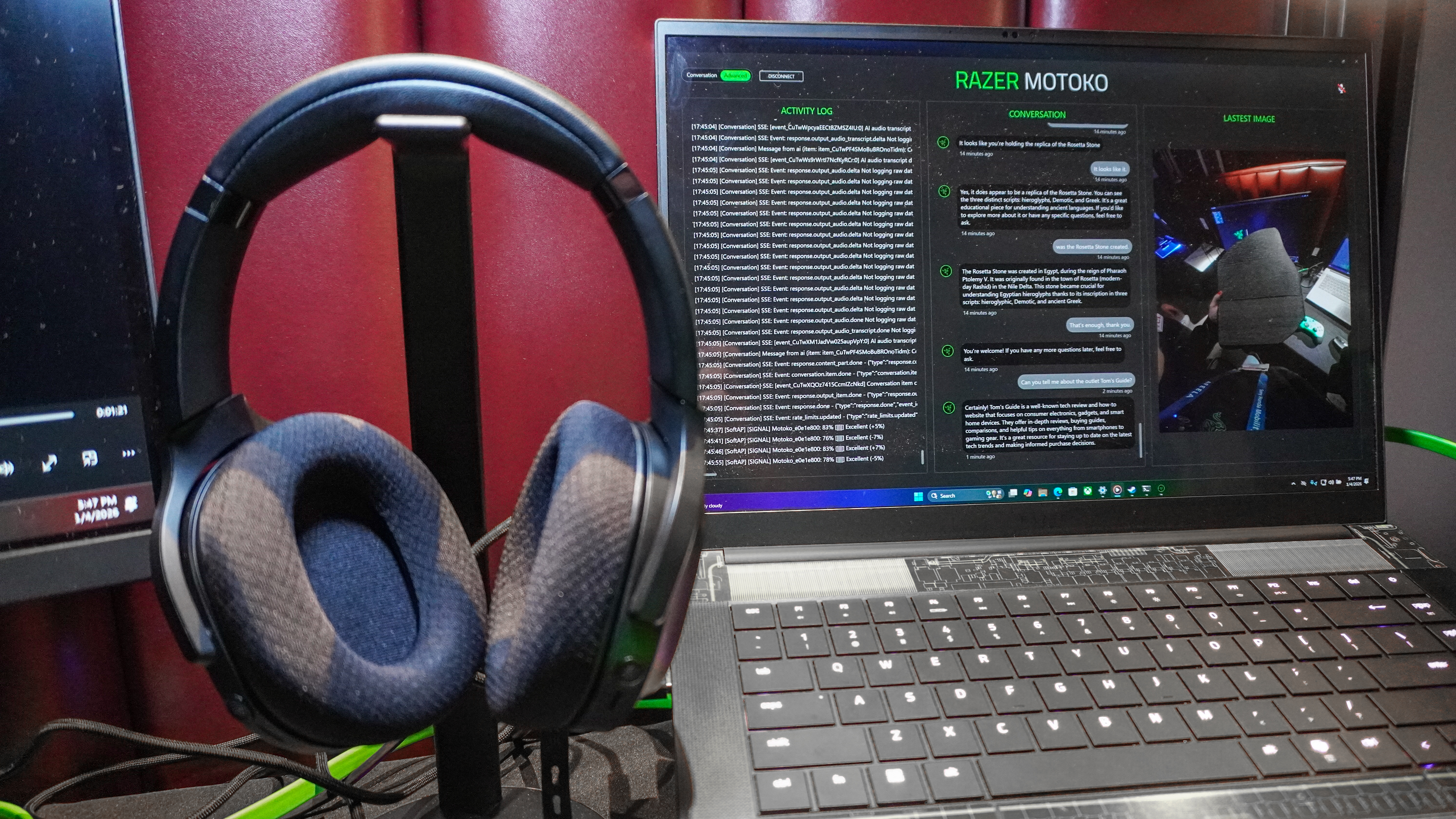

When you first see Project Motoko, your brain does a double take. It's not just a gaming headset. It's not just an AI wearable. It's both, somehow fused together in a way that feels like it jumped straight out of a cyberpunk fever dream. Razer showed this concept device at CES 2025, and honestly, it represents something bigger than just another gaming gadget.

Let me be clear upfront: Project Motoko isn't coming to stores. It's a concept piece. But here's what makes it worth your attention: it shows where the entire industry is headed. Gaming hardware is evolving beyond controllers and monitors. Wearable AI is moving beyond smartwatches. The intersection of these two worlds is where things get genuinely interesting.

The headset takes inspiration from Ghost in the Shell, specifically its protagonist, Major Motoko Kusanagi, which tells you something about Razer's ambitions. This isn't about incremental updates to noise cancellation. This is about reimagining what a headset can be when you add AI vision, spatial awareness, and real-time environmental understanding.

TL;DR

- Project Motoko combines gaming performance with AI wearable capabilities, featuring first-person view cameras, real-time object recognition, and microphone arrays for spatial audio

- Powered by Qualcomm Snapdragon, the device runs AI chatbots including Grok, OpenAI, and Google Gemini for multi-purpose functionality

- This represents a major shift in wearable computing, blurring lines between gaming peripherals and everyday AI assistants

- The concept reveals industry trends toward eye-level computing, ambient AI, and seamless integration of gaming and productivity

- While not commercially available, Project Motoko signals the future of how headsets might evolve over the next 3-5 years

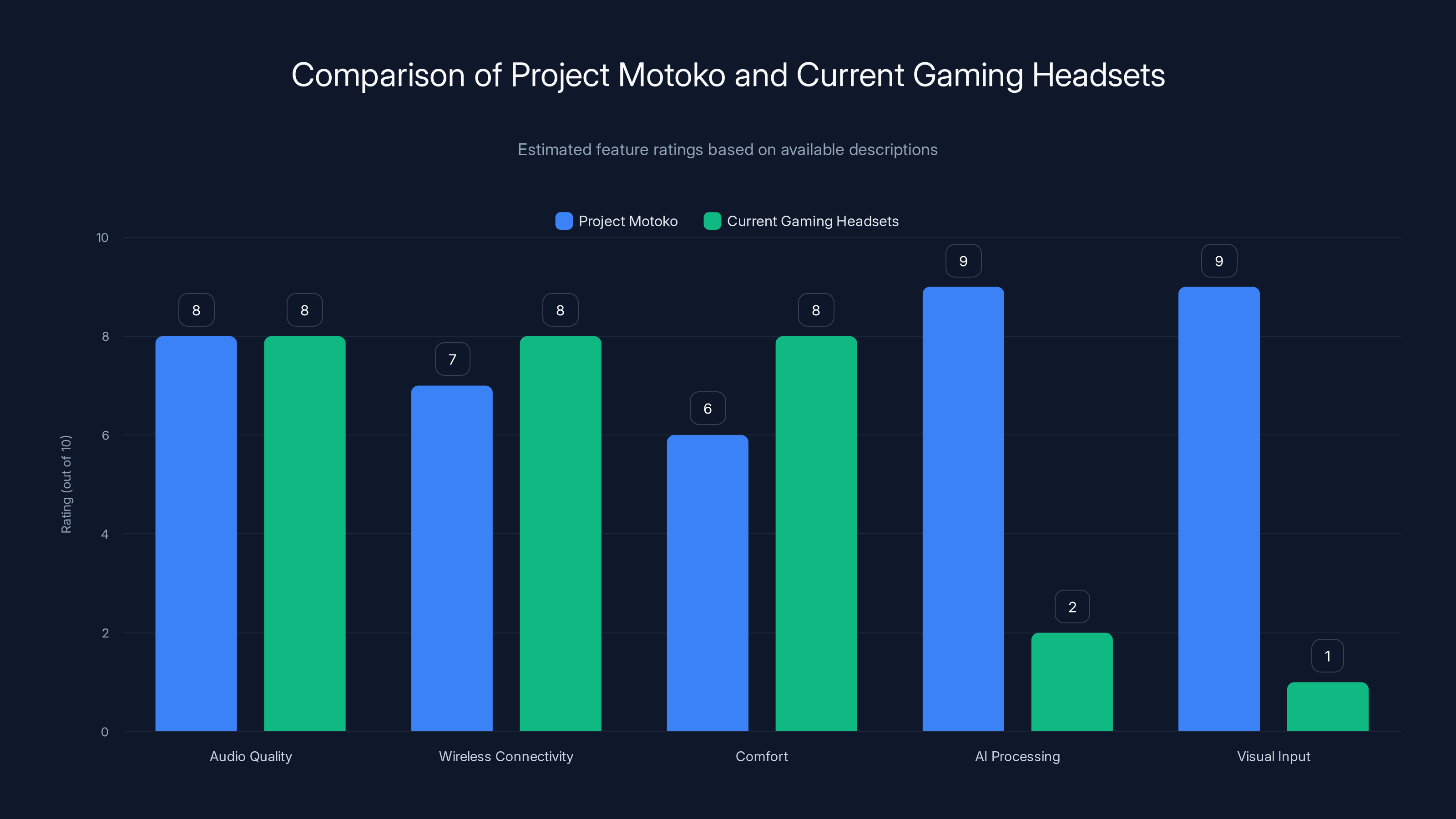

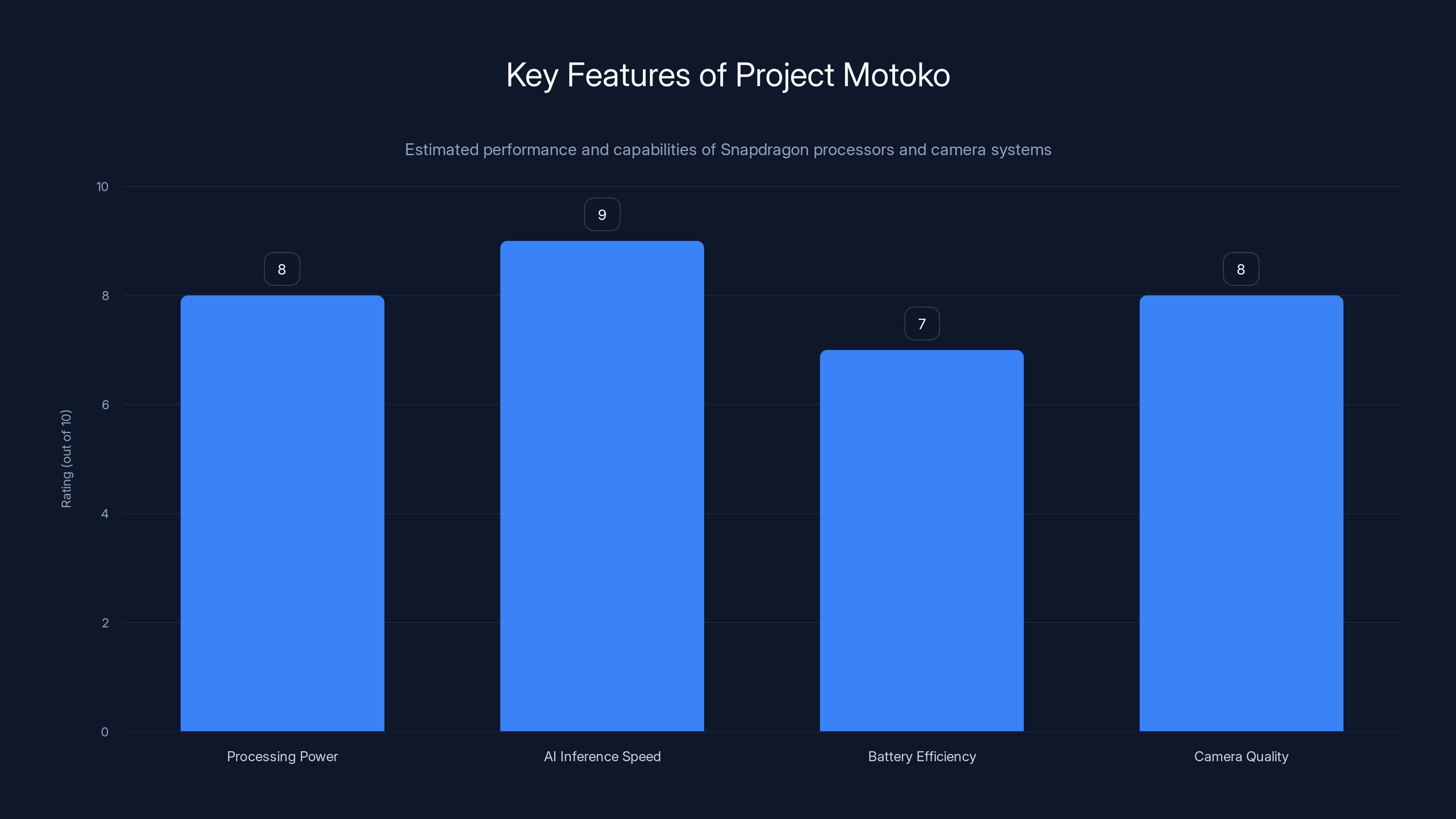

Project Motoko excels in AI processing and visual input compared to current gaming headsets, which focus more on audio quality, connectivity, and comfort. Estimated data based on feature descriptions.

What Exactly Is Project Motoko?

Project Motoko is Razer's vision for what happens when you stop thinking about gaming headsets as devices purely for gaming. The concept merges high-performance audio hardware with wearable computing that's designed to work throughout your day.

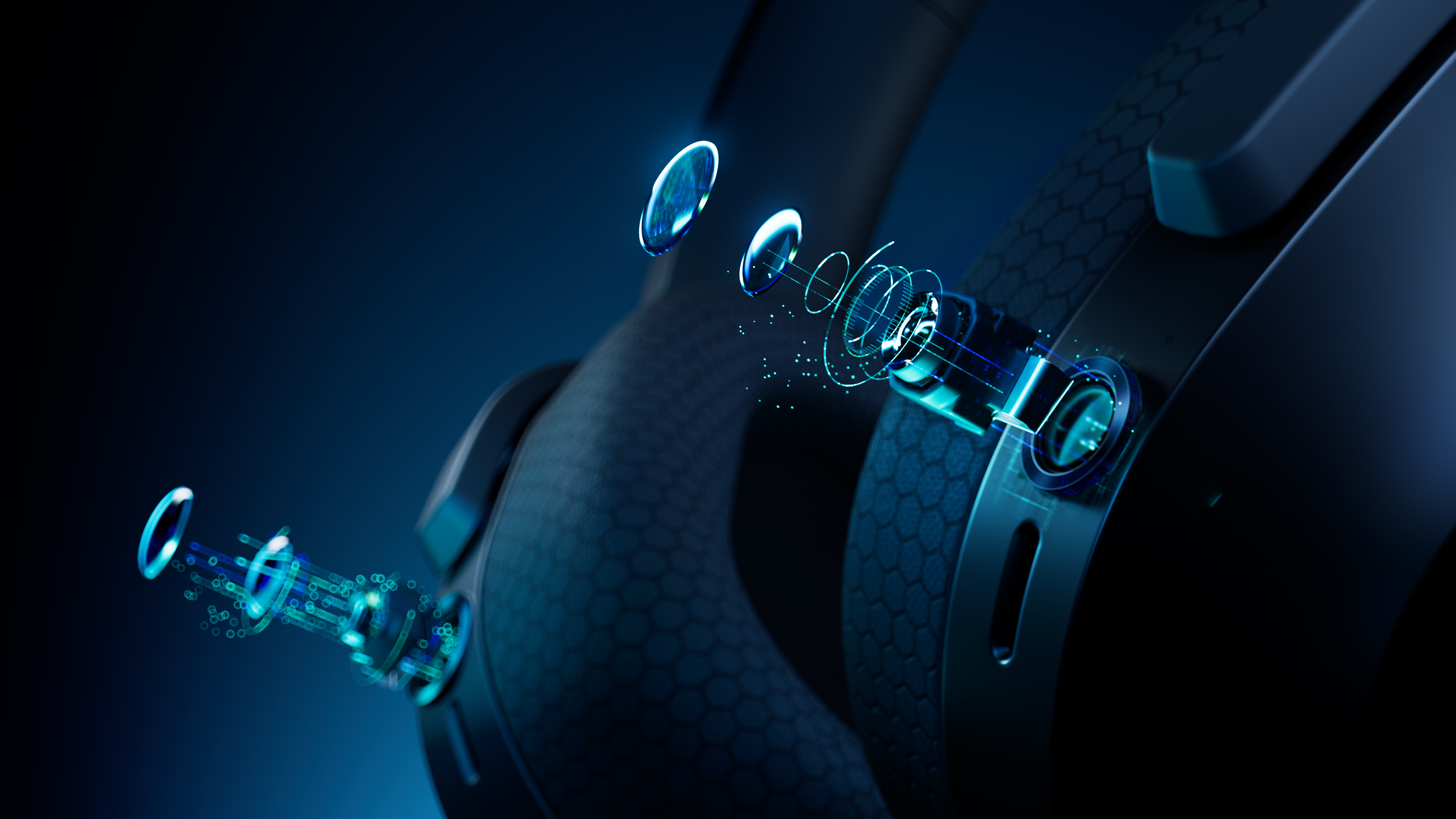

The physical design sits somewhere between a gaming headset and a heads-up display. It's got the audio quality you'd expect from a gaming peripheral, but it also packs cameras at eye level, sophisticated microphone arrays, and enough processing power to run real-time AI models.

What's wild is that Razer isn't positioning this as niche hardware for hardcore gamers only. Nick Bourne, Razer's global head of the mobile console division, framed it as a broader vision: "Project Motoko is more than a concept, it's a vision for the future of AI and wearable computing. By partnering with Qualcomm Technologies, we're building a platform that enhances gameplay while transforming how technology integrates into everyday life."

That quote matters. It shows Razer sees gaming as the entry point, not the final destination. Gaming communities have always been early adopters of experimental hardware. VR headsets found their biggest audience with gamers. High-refresh-rate monitors? Gamers pushed those into the mainstream. Razer's using the same playbook here.

The Hardware Breakdown: What's Actually Inside

Understanding Project Motoko requires breaking down what Razer actually built here. This isn't marketing fluff—every component serves a specific purpose.

The Snapdragon Processing Core

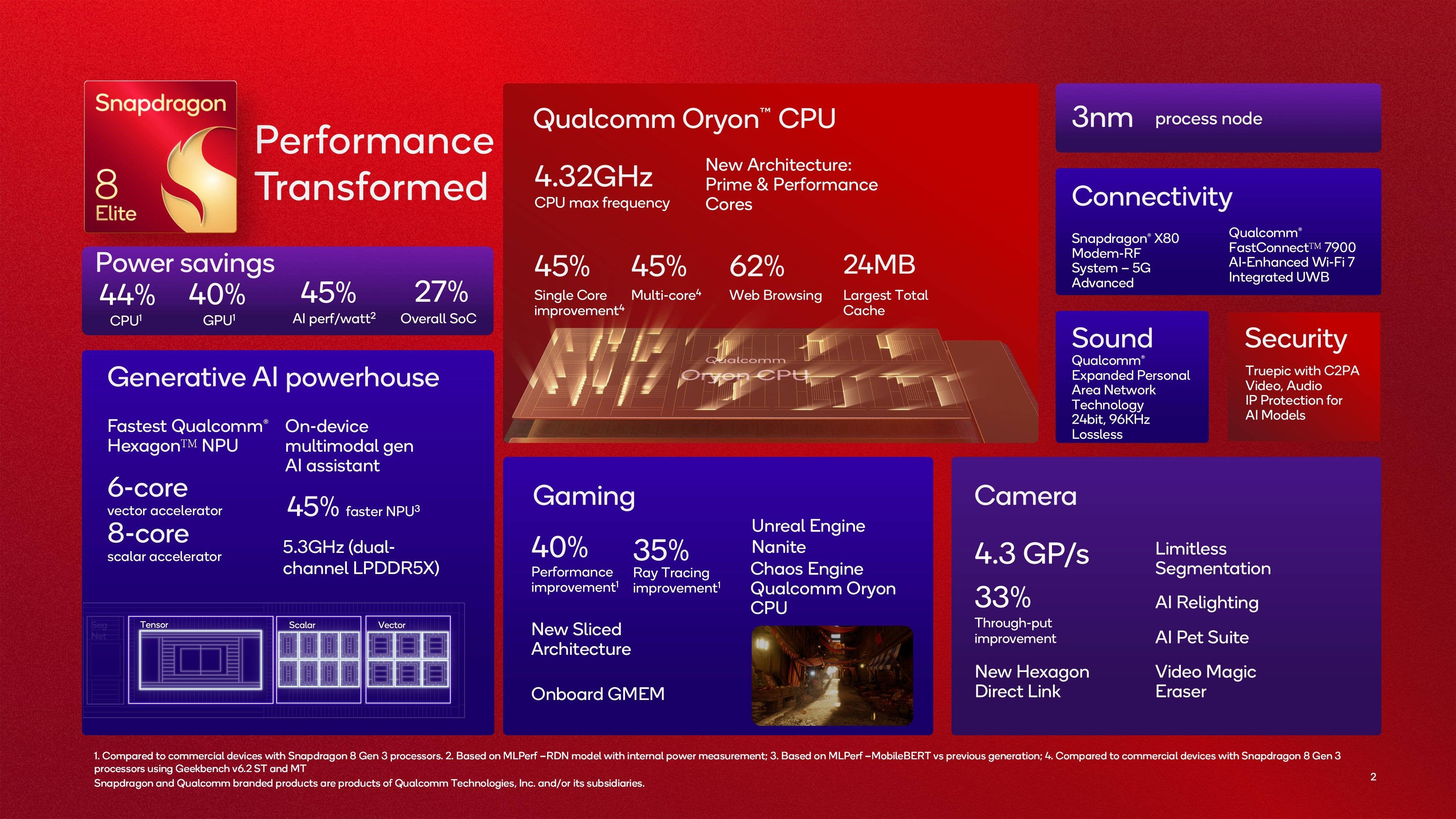

Project Motoko runs on Qualcomm's Snapdragon platforms. This is significant because Snapdragon processors are already proven in wearables, mobile devices, and AR headsets. Choosing Snapdragon over custom silicon tells you something: Razer prioritized ecosystem maturity and developer support over pure performance optimization.

Snapdragon gives you enough compute for real-time AI inference without requiring massive battery capacity. These processors can run quantized AI models efficiently, which matters when you're trying to fit everything into a headset-sized form factor.
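To make "quantized" concrete, here is a minimal sketch of symmetric int8 quantization in plain Python. It is an illustration of the general technique, not Razer's or Qualcomm's actual toolchain; the function names and the sample weights are made up for the example.

```python
def quantize_int8(weights):
    """Symmetric per-tensor quantization: map floats to integers in [-127, 127]."""
    max_abs = max(abs(w) for w in weights)
    scale = max_abs / 127 if max_abs > 0 else 1.0
    # Round to the nearest integer step and clamp to the int8 range.
    q = [max(-127, min(127, round(w / scale))) for w in weights]
    return q, scale


def dequantize(q, scale):
    """Recover approximate float weights from the integer codes."""
    return [v * scale for v in q]


# Hypothetical weights, chosen only for illustration.
weights = [0.82, -1.27, 0.05, 0.4]
q, scale = quantize_int8(weights)
restored = dequantize(q, scale)

# The worst-case rounding error is half of one quantization step.
max_error = max(abs(a - b) for a, b in zip(weights, restored))
print(q, round(scale, 4), max_error)
```

Each weight now fits in one byte instead of four, at the cost of a small, bounded rounding error. That trade is what lets larger models fit within a wearable's memory and power limits.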

The processing power enables on-device AI rather than relying on cloud connections for every inference. That's crucial for latency and privacy. If your headset needs to recognize objects in real time, you can't afford 500ms round trips to a server farm.
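A quick back-of-the-envelope calculation shows why round-trip time matters for camera input. The 30 fps frame rate below is an assumption for illustration; Razer has not published camera specifications.

```python
def frames_behind(latency_ms: float, fps: float) -> float:
    """How many camera frames elapse while waiting for one inference result."""
    frame_interval_ms = 1000.0 / fps
    return latency_ms / frame_interval_ms


# Assumed 30 fps camera: compare a cloud round trip with on-device inference.
cloud = frames_behind(500, 30)   # about 15 frames stale
local = frames_behind(50, 30)    # under 2 frames stale
print(f"cloud: {cloud:.1f} frames behind, local: {local:.1f} frames behind")
```

Under these assumptions, a cloud answer describes a scene that is roughly half a second old, which is enough for a turned head to be looking at something else entirely.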

First-Person View Cameras

The pair of cameras positioned at eye level represents a major departure from most gaming headsets. These aren't depth sensors or simple IR cameras. They're designed to capture what you're actually looking at from your perspective.

Think about what this enables: real-time object recognition of everything in your visual field. Text recognition from signs, documents, or screens. Spatial mapping of your environment. These capabilities blur the line between gaming peripheral and augmented reality device.

The camera placement is deliberate. Eye-level positioning means the cameras capture approximately what your eyes see. This enables more accurate object detection and allows the device to understand context in real time.

But here's the catch: camera-based AI requires serious processing power and raises privacy questions. Every frame of video needs to be processed, analyzed, and either retained or discarded. For a concept device, Razer sidestepped these thornier questions, but real-world deployment would need to address privacy and data handling.

Advanced Microphone Array

Razer equipped Project Motoko with microphone arrays designed to capture both near and distant audio. This isn't just about voice clarity—it's about spatial awareness.

Multiple microphones allow the device to use beamforming technology, which focuses on audio from specific directions while rejecting noise from others. In a gaming context, this lets you hear footsteps behind you while filtering out other environmental noise. In an AI assistant context, it means the device can understand spatial relationships between sounds.
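The simplest form of beamforming is delay-and-sum: shift each microphone's signal so that sound from the target direction lines up, then average. The sketch below is a toy two-microphone example with whole-sample delays and synthetic tones. The delays and frequencies are chosen so the interferer cancels exactly, and nothing here reflects Razer's actual array design.

```python
import math


def delay_and_sum(channels, delays):
    """Advance each channel by its steering delay (in samples) and average."""
    length = min(len(ch) - d for ch, d in zip(channels, delays))
    return [
        sum(ch[i + d] for ch, d in zip(channels, delays)) / len(channels)
        for i in range(length)
    ]


def target(n):
    """Synthetic tone from the direction we want to hear (period 16 samples)."""
    return math.sin(2 * math.pi * n / 16)


def interferer(n):
    """Synthetic tone from another direction (period 8 samples)."""
    return math.sin(2 * math.pi * n / 8)


N = 64
# The target reaches mic1 three samples late; the interferer reaches it one sample early.
mic0 = [target(n) + interferer(n) for n in range(N)]
mic1 = [target(n - 3) + interferer(n + 1) for n in range(N)]

# Steer toward the target: advance mic1 by 3 samples before averaging.
out = delay_and_sum([mic0, mic1], [0, 3])
residual = max(abs(out[i] - target(i)) for i in range(len(out)))
print(f"max deviation from clean target: {residual:.2e}")
```

After steering, the target adds coherently while the interferer arrives half a period out of phase between the two channels and cancels. Real arrays use more microphones, fractional delays, and adaptive weights, but the principle is the same.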

The array's ability to capture "distant audio beyond normal peripheral hearing" suggests Razer built in directional audio analysis. Your eyes see a narrow cone. Sound travels in all directions. By processing audio spatially, the device creates a more complete environmental model than vision alone provides.

The Wide Field of Attention

Human peripheral vision is limited. You focus on a narrow central area while your periphery catches motion and rough shapes. Project Motoko extends this with a "wide field of attention" that can capture things your eyes would normally miss.

This matters for both gaming and AI functionality. In gaming, it enables better situational awareness. In AI contexts, it prevents you from missing important environmental information. The headset becomes a way to augment your natural perception.

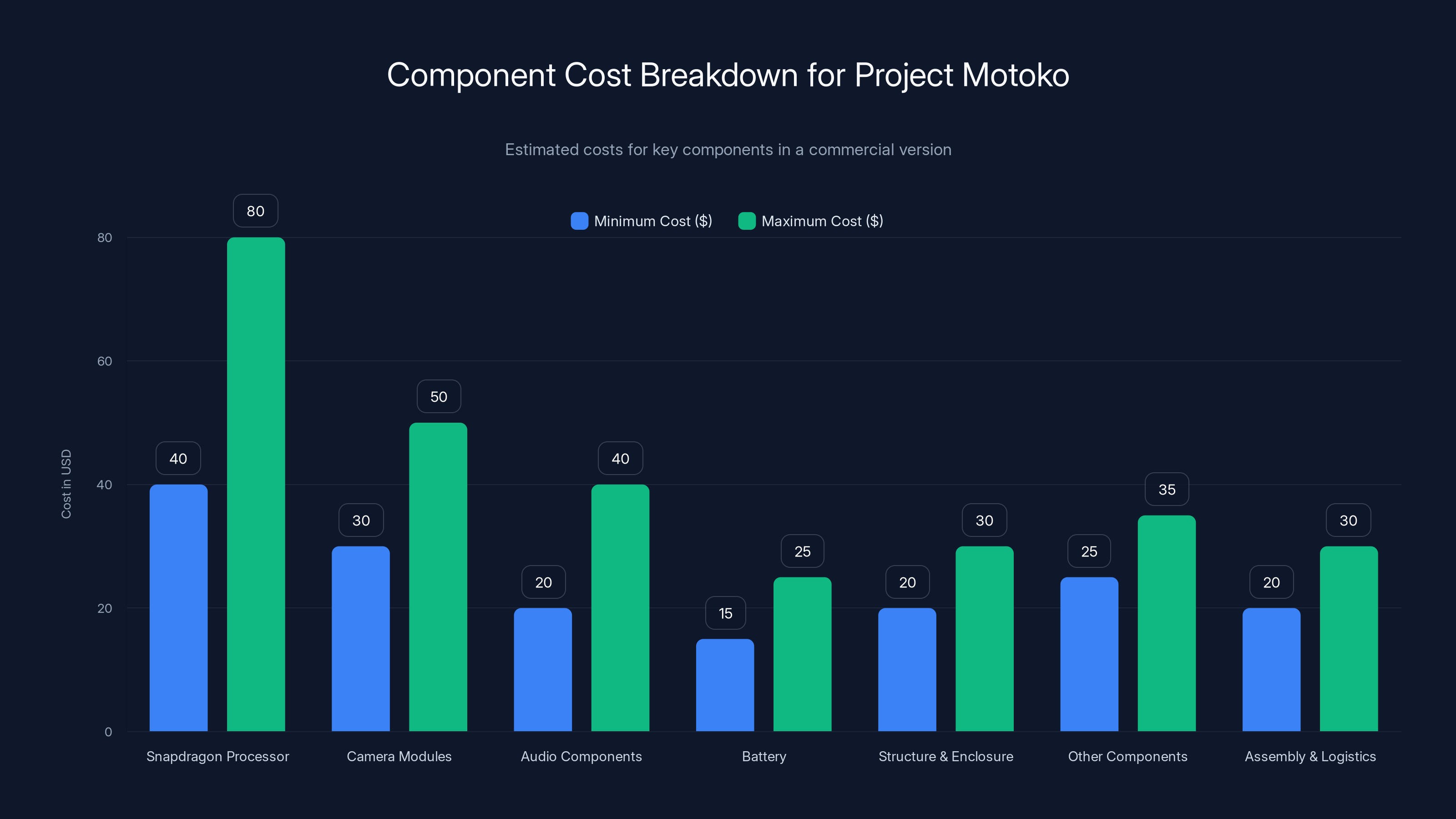

The Snapdragon processor and camera modules are the most expensive components.

AI Integration: More Than Just a Chatbot

What separates Project Motoko from previous gaming headsets is the integration of AI systems. This isn't an afterthought—it's core to the device's purpose.

Multi-Chatbot Compatibility

Razer designed Project Motoko to work with multiple AI platforms: Grok, OpenAI, and Google Gemini. This multi-platform approach is important. It avoids vendor lock-in and gives users a choice about which AI systems they prefer.

Having multiple AI options available means Project Motoko isn't betting on a single company. If one platform stumbles or falls out of favor, users have alternatives. This is smart design thinking and also sends a message to the industry: the headset is a platform, not a proprietary silo.
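In software terms, "platform, not silo" usually means a thin routing layer with interchangeable backends. The sketch below is hypothetical: `AssistantRouter` and the stub backends `assistant_a` and `assistant_b` are invented for illustration, and no real chatbot API is called.

```python
from typing import Callable, Dict, List, Optional, Tuple

Backend = Callable[[str], str]  # hypothetical: takes a prompt, returns a reply


class AssistantRouter:
    """Routes prompts to interchangeable assistant backends with fallback."""

    def __init__(self) -> None:
        self._backends: Dict[str, Backend] = {}
        self._order: List[str] = []

    def register(self, name: str, backend: Backend) -> None:
        if name not in self._backends:
            self._order.append(name)
        self._backends[name] = backend

    def ask(self, prompt: str, preferred: Optional[str] = None) -> Tuple[str, str]:
        # Try the preferred backend first, then the rest in registration order.
        names = [n for n in self._order if n == preferred]
        names += [n for n in self._order if n != preferred]
        for name in names:
            try:
                return name, self._backends[name](prompt)
            except ConnectionError:
                continue  # backend unavailable, fall through to the next one
        raise RuntimeError("no assistant backend available")


# Stub backends standing in for real services.
def assistant_a(prompt: str) -> str:
    raise ConnectionError("assistant_a is offline")


def assistant_b(prompt: str) -> str:
    return f"assistant_b answer to: {prompt}"


router = AssistantRouter()
router.register("assistant_a", assistant_a)
router.register("assistant_b", assistant_b)
used, reply = router.ask("What am I looking at?", preferred="assistant_a")
print(used, "->", reply)
```

Because every backend shares one call shape, a failing or unwanted provider can be swapped out without touching the rest of the device software.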

The real utility emerges when you combine this AI availability with the device's sensors. Imagine pointing at a product and getting instant information from an AI that can see what you're seeing. Or asking your AI assistant questions about your immediate environment and getting answers based on real-time analysis.

Real-Time Object and Text Recognition

The ability to recognize objects and text in real time opens possibilities that current gaming headsets can't touch. This is where Project Motoko shifts from gaming peripheral to something closer to AR glasses.

Object recognition means the device can identify what you're looking at: people, objects, locations, brands, products. This information could feed into gameplay mechanics or assist in real-world tasks like shopping, navigation, or information lookup.

Text recognition is equally powerful. Your headset could read signs, menus, documents, or labels in real time. For gaming, this enables entirely new interaction models. For daily use, it's like having a translator, information assistant, or documentation reader built into your headset.

But real-time processing at headset resolution introduces challenges. Modern AI vision models want high-resolution input, which requires more processing power and more power consumption. Razer likely had to make trade-offs between accuracy and performance.

AI as an Everyday Assistant

Beyond gaming, Project Motoko positions AI as an integrated part of daily life. This is where the device's industrial design and functionality choices make sense.

Wearing an AI-capable headset means you always have access to processing power, microphones, and cameras. You can ask questions, get information about your surroundings, or receive assistance throughout the day. The AI isn't something you switch to when needed—it's always available.

This represents a different mental model from smartphones or smartwatches. Those devices sit in pockets or on wrists. Your headset sits on your head, maintaining direct access to your ears and eyes. The AI integration feels more natural, less like "activating a tool" and more like "having an assistant."

Gaming Performance: Still a Gaming Headset First

Despite all the AI functionality, Project Motoko needed to remain a credible gaming headset. Razer's reputation is built on gaming hardware, so compromising audio quality or gaming features would undermine the entire concept.

Audio Quality and Positioning

Gaming headsets live or die on audio quality and, specifically, on spatial audio for competitive gaming. Being able to hear footsteps, directional cues, and environmental sounds accurately affects performance in first-person shooters and other competitive titles.

Project Motoko's microphone array and spatial audio capabilities suggest Razer maintained this focus. The microphones enable not just voice input but also environmental audio analysis. The drivers (which Razer didn't detail extensively) need to deliver the clarity and directional accuracy gamers expect.

The integration of cameras and object recognition doesn't compromise audio. Instead, it extends the device's capabilities into new territories while preserving core gaming functionality.

Real-Time Recognition During Gameplay

Here's where things get interesting for gaming specifically. Imagine playing a competitive FPS while Project Motoko recognizes enemies, highlights objectives, or provides tactical information. The device could analyze what you're seeing and deliver real-time assistance without relying on external systems.

This is different from traditional gaming overlays or wallhacks. The device isn't modifying game state. It's enhancing your awareness of what's already visible on screen. Some games might still treat this as cheating if it provides a competitive advantage, but other games (open-world, casual, or solo experiences) could integrate this technology naturally.

Voice Control Integration

With a sophisticated microphone array and AI processing, voice control becomes viable at headset level. Adjusting game settings, switching AI assistants, or executing complex commands becomes possible without leaving the game.

Voice in gaming contexts is tricky. You're often in communication with teammates. The device needs to distinguish between gameplay voice chat and commands directed at the AI. Razer's microphone array design likely addresses this through beamforming—focusing on the direction you're speaking (toward the mic, close to your mouth) while filtering out other audio sources.

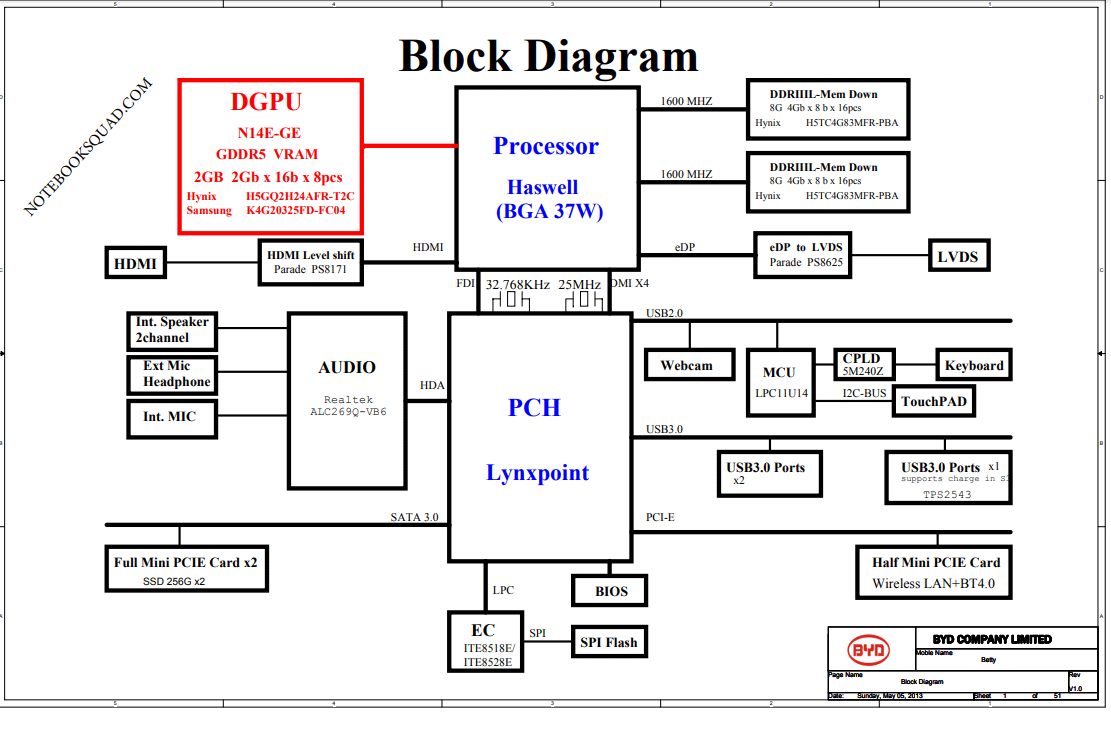

The Technical Architecture: How It All Fits Together

Project Motoko's architecture represents thoughtful integration of multiple systems. Understanding how these pieces work together reveals the device's real capabilities.

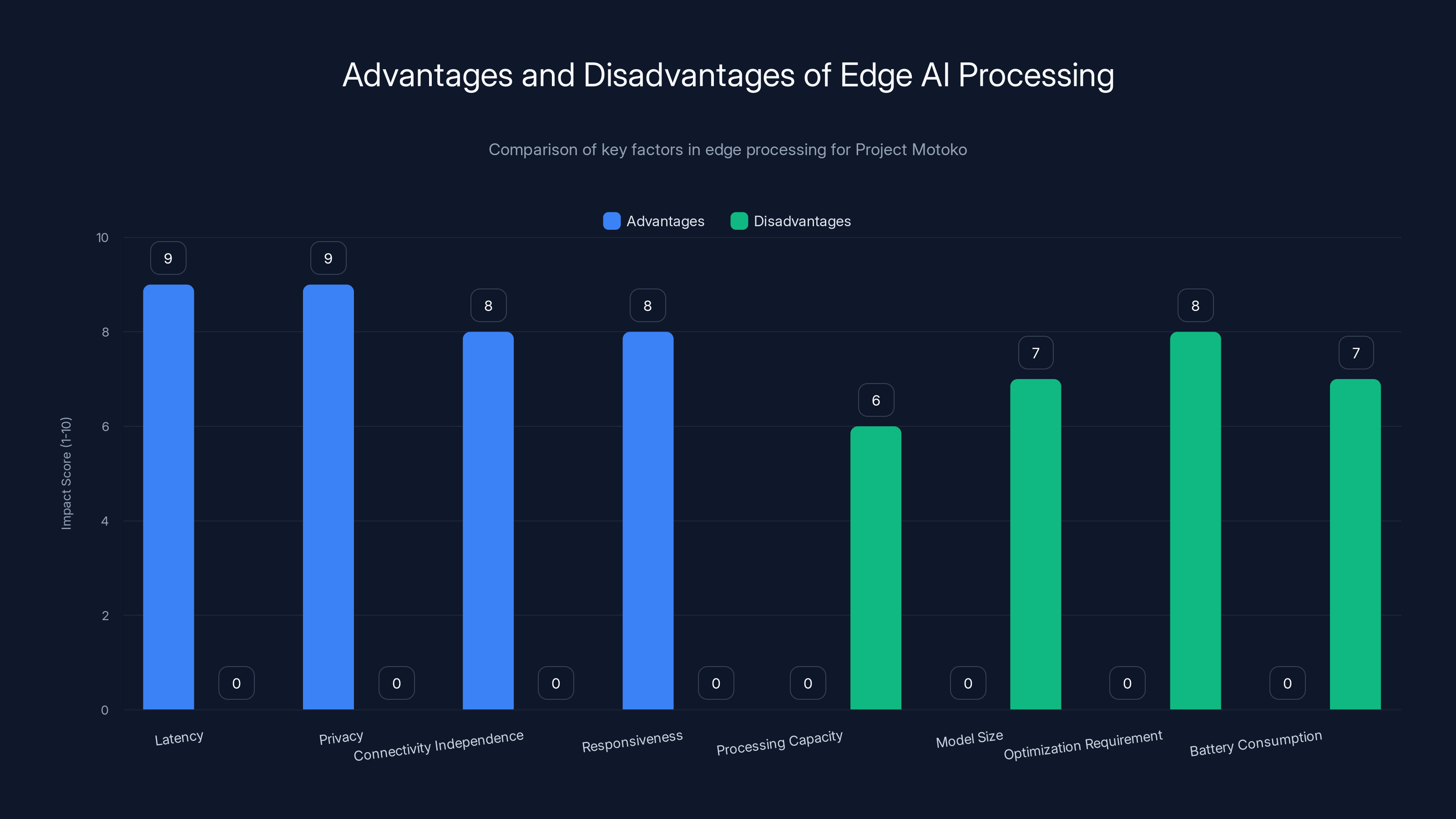

Edge AI Processing Model

The decision to include on-device processing (Snapdragon) rather than relying purely on cloud AI is crucial. Edge processing means the headset performs AI inference locally, without sending data to remote servers.

Advantages of edge processing:

- Latency is minimal (10-100ms instead of 500ms+)

- Privacy is preserved (video doesn't leave the device)

- Functionality doesn't depend on internet connectivity

- Responsiveness feels natural rather than delayed

Disadvantages:

- Device has limited processing capacity

- Can't run the largest, most powerful AI models

- Requires careful optimization of AI models

- Battery consumption increases significantly

Razer likely optimizes AI models for mobile/wearable deployment. These quantized or distilled models trade some accuracy for speed and efficiency. A model that runs at 200ms with 95% accuracy is more useful than a model with 99% accuracy that takes 2 seconds.
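The accuracy-versus-latency trade-off can be framed as a selection problem: pick the most accurate model variant that still meets the latency budget. In this sketch the variant names are invented, the latency and accuracy figures for the first two mirror the example in the text, and the third is made up for contrast.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ModelVariant:
    name: str
    latency_ms: float
    accuracy: float


def pick_model(variants, budget_ms):
    """Return the most accurate variant within the latency budget, or None."""
    eligible = [v for v in variants if v.latency_ms <= budget_ms]
    if not eligible:
        return None
    return max(eligible, key=lambda v: v.accuracy)


# Illustrative numbers only, not measurements of any real model.
variants = [
    ModelVariant("full-precision", 2000, 0.99),
    ModelVariant("quantized", 200, 0.95),
    ModelVariant("tiny", 40, 0.88),
]

choice = pick_model(variants, budget_ms=300)
print(choice.name)  # quantized
```

With a 300ms budget the 99%-accurate model is never even considered, which is the point: on an interactive wearable, latency acts as a hard constraint and accuracy is optimized within it.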

Sensor Fusion

Project Motoko combines data from multiple sensors: cameras, microphones, and potentially an IMU (inertial measurement unit) for tracking head orientation. Sensor fusion combines these data streams to create a more complete understanding of the user's context.

For example:

- Camera sees an object

- Microphone hears relevant audio

- IMU detects your head position and orientation

- Combined, the system understands what you're looking at, what you're hearing, and where you're positioned

This fusion requires sophisticated software architecture. Raw sensor data needs to be synchronized, processed, and correlated in real time. Qualcomm's Snapdragon includes specialized processing units for sensor fusion tasks.
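One core piece of that synchronization is aligning streams that tick at different rates. The sketch below matches each camera detection to the nearest audio and IMU sample by timestamp and drops frames with no close match. The data, field names, and 20ms tolerance are all hypothetical.

```python
from bisect import bisect_left


def nearest(samples, t):
    """Return the (timestamp_ms, value) sample closest to time t.

    Assumes samples is non-empty and sorted by timestamp.
    """
    times = [s[0] for s in samples]
    i = bisect_left(times, t)
    candidates = samples[max(0, i - 1): i + 1]
    return min(candidates, key=lambda s: abs(s[0] - t))


def fuse(frames, audio, imu, tolerance_ms=20):
    """Join camera detections with the nearest audio and IMU readings."""
    fused = []
    for t, label in frames:
        a = nearest(audio, t)
        m = nearest(imu, t)
        # Keep the frame only if both other sensors have a sample close in time.
        if abs(a[0] - t) <= tolerance_ms and abs(m[0] - t) <= tolerance_ms:
            fused.append({"t": t, "object": label, "sound": a[1], "yaw_deg": m[1]})
    return fused


# Hypothetical streams: (timestamp in ms, value).
frames = [(0, "door"), (33, "door"), (66, "person")]
audio = [(5, "knock"), (70, "speech")]
imu = [(0, 10.0), (30, 12.0), (60, 15.0)]

for event in fuse(frames, audio, imu):
    print(event)
```

The middle frame is dropped because no audio event falls within 20ms of it. A production system would interpolate IMU readings and handle clock drift, but nearest-timestamp matching is the usual starting point.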

The AI Pipeline

From sensor input to AI output involves multiple stages:

- Sensor Input: Cameras, microphones, and other sensors collect data

- Preprocessing: Raw data is cleaned, normalized, and prepared for AI models

- Inference: AI models process the data and generate predictions

- Decision Making: The system decides what to do with the AI outputs

- Output: Results are presented to the user (audio, text, haptic feedback)

- Feedback Loop: User responses inform future processing

Each stage has latency requirements. If the total pipeline takes more than 200-300ms, the experience feels sluggish and unresponsive. Optimizing every stage is crucial for a device that needs to feel natural and interactive.
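A simple way to reason about this is a per-stage latency budget. The stage names below follow the pipeline above, but the millisecond figures are invented for illustration, and the 250ms budget is just a midpoint of the 200-300ms range mentioned.

```python
# Hypothetical per-stage latencies in milliseconds.
STAGE_LATENCY_MS = {
    "sensor_input": 15,
    "preprocessing": 20,
    "inference": 120,
    "decision_making": 10,
    "output": 40,
}


def check_budget(stages, budget_ms=250):
    """Sum stage latencies and report whether the pipeline fits the budget."""
    total = sum(stages.values())
    slowest = max(stages, key=stages.get)
    return {
        "total_ms": total,
        "within_budget": total <= budget_ms,
        "slowest_stage": slowest,
    }


report = check_budget(STAGE_LATENCY_MS)
print(report)
```

In this made-up breakdown, inference consumes more than half the total, which is why model optimization tends to be the first place engineers look when a pipeline runs over budget.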

Edge AI processing in Project Motoko offers significant advantages in latency, privacy, and connectivity independence, but faces challenges in processing capacity and battery consumption. Estimated data.

The Wearable Computing Paradigm Shift

Project Motoko represents something bigger than one company's hardware experiment. It signals a shift in how the industry thinks about wearable computing.

From Smartphone Adjacency to Autonomous Devices

For years, wearables were conceived as extensions of smartphones. Your smartwatch displayed notifications from your phone. Your wireless earbuds connected to your phone. The phone was the primary device; wearables were secondary.

Project Motoko suggests a different paradigm. The headset is a standalone AI-capable device that doesn't necessarily depend on a phone. It has processing power, sensors, and AI models built in. It can function independently for many tasks.

This mirrors broader industry trends. As mobile processors become more powerful, wearables become less dependent on phones. AI models become smaller and more efficient. Wearables transition from accessories to primary devices.

Over the next 5-10 years, expect this trend to accelerate. Wearable devices will become more independent, more AI-capable, and more integrated into daily life. Project Motoko is early in this evolution but clearly signals the direction.

Eye-Level Computing

Most computing happens on screens below your eye level: phones in hands, monitors on desks, tablets on laps. Project Motoko places processing and interaction at eye level.

This is significant for user experience. Information that appears at eye level feels more natural. Cameras at eye level capture your actual perspective. Displays at eye level don't require neck strain.

Augmented reality headsets like Microsoft HoloLens place computing at eye level, and Project Motoko adopts this paradigm. Over the next decade, expect more devices to follow. Eye-level positioning may become the new default for wearable computing, replacing the smartphone-in-hand interaction model.

Persistent AI Availability

Currently, AI assistants require explicit activation. You ask Siri, Alexa, or Google something. The system responds. Then it disengages.

Project Motoko suggests a model where AI is persistently available. Your AI assistant is always there, always listening (with proper privacy controls), always able to analyze your environment. You can ask questions or receive suggestions without activation steps.

This persistent availability changes user behavior and expectations. Instead of thinking, "I'll Google this later," you might think, "I'll ask my headset." Instead of trying to remember something, you ask the AI to recall it.

This paradigm requires solving privacy, battery life, and attention management challenges. But the direction is clear from Project Motoko's design.

Privacy and Security Considerations

A headset with cameras and microphones always in your environment raises legitimate privacy concerns. Project Motoko's design hints at how these might be addressed, but real-world deployment would require more.

Visual Data Privacy

If cameras are constantly recording your environment, what happens to that video data? Storing raw video permanently would require massive storage, so a device like Project Motoko would more plausibly process frames in real time, extract useful information, and discard the visual data.
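The process-then-discard pattern can be sketched as a generator that emits only derived metadata. Everything here is hypothetical: the `toy_detector` stands in for a real vision model, and frames are represented as plain byte strings.

```python
from typing import Callable, Dict, Iterable, Iterator, List, Tuple

Frame = Tuple[int, bytes]  # (frame_id, raw pixel bytes)


def process_and_discard(
    frames: Iterable[Frame],
    detect: Callable[[bytes], List[str]],
) -> Iterator[Dict]:
    """Run detection on each frame and yield labels only, never pixels."""
    for frame_id, pixels in frames:
        labels = detect(pixels)
        # Only derived metadata is emitted; the raw pixels are not kept.
        yield {"frame": frame_id, "labels": labels}


def toy_detector(pixels: bytes) -> List[str]:
    """Hypothetical stand-in for a vision model: classify by mean brightness."""
    return ["bright"] if sum(pixels) / len(pixels) > 127 else ["dark"]


# Two fake 16-pixel frames.
camera = [(0, bytes([200] * 16)), (1, bytes([10] * 16))]
results = list(process_and_discard(camera, toy_detector))
print(results)
```

Code structure alone does not prove that a device discards data, since a user cannot inspect what firmware actually does. The pattern only shows that useful output does not require retaining the video.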

But "discards" requires verification. How can you trust that the device isn't secretly recording? Physical design choices matter here. Razer would need indicators showing when cameras are active, toggles to disable recording, and clear documentation of data handling.

Real-world deployment would benefit from hardware-level privacy guarantees. A physical camera shutter or indicator light that shows recording status. Cryptographic proofs that data is processed locally. Open-source firmware options for privacy-conscious users.

Audio Surveillance

Microphone arrays present similar challenges. How do you know the device isn't recording conversations for later analysis? How do you control what audio gets processed?

Multiple microphones enable sophisticated audio processing but also enable sophisticated surveillance. Razer would need to implement clear safeguards: user controls over recording, local-only audio processing, transparent logging of what audio is captured.

Regulatory Landscape

Privacy regulations like GDPR and emerging AI regulations would apply to Project Motoko. Any commercial release would need to comply with regulations around data collection, processing, and storage.

These regulations are still evolving. By the time Project Motoko reaches commercial viability (if it does), the regulatory landscape will likely have matured. Razer would be wise to work on these issues during the concept phase.

Comparing to Existing Wearable Technology

Project Motoko doesn't exist in isolation. It sits within a landscape of existing wearable devices and emerging competitors.

Gaming Headsets

Compare Project Motoko to current gaming headsets from Razer, SteelSeries, Corsair, or HyperX. Current headsets focus on audio quality, comfort, and wireless connectivity. They don't include cameras, AI processing, or environmental awareness.

Project Motoko evolves this category by adding AI and environmental sensing. The core gaming functionality remains, but the device becomes useful beyond gaming.

This is a natural evolution. Gaming has always been the proving ground for new hardware concepts. VR headsets, high-refresh monitors, and mechanical keyboards all found early audiences with gamers before mainstream adoption.

AR/VR Headsets

Devices like Microsoft HoloLens, Magic Leap, and Apple Vision Pro place computing at eye level with displays and spatial understanding. Project Motoko shares some design philosophy but differs significantly.

Project Motoko isn't a full AR headset with immersive display. It's audio-focused with AI capabilities. This is actually an advantage for everyday use. You don't need to see digital overlays in your environment for most AI assistant tasks. Audio is sufficient and less intrusive.

Meanwhile, full AR headsets require display technology, processing power, and form factors that make everyday wear uncomfortable. Project Motoko's lightweight design positions it for better everyday adoption.

Smartwatches and Earbuds

Apple Watch, Samsung Galaxy Watch, and AirPods show how wearables have evolved. They're personal, always available, and AI-capable through voice assistants.

Project Motoko extends this concept significantly. The combination of first-person cameras, sophisticated audio, and AI processing exceeds what smartwatches and earbuds currently offer.

But smartwatches and earbuds have advantages: longer battery life, smaller form factor, less intrusive design. Project Motoko trades these for more processing power and richer input/output capabilities.

Project Motoko's Snapdragon processors excel in AI inference speed and camera quality, offering a balanced performance for AR applications. Estimated data.

The Path from Concept to Commercial Reality

Project Motoko is explicitly a concept device with no commercial release planned. But what would the path to commercialization look like?

Technical Maturation

First, the underlying technology needs maturation. Current AI vision models work well but not perfectly. Inference speed needs improvement. Battery life needs to extend from hours to days.

Some of these challenges are hardware problems (battery density, processor efficiency). Others are software challenges (model optimization, power management). Both are solvable but require sustained engineering effort.

Razer's partnership with Qualcomm is important here. Qualcomm will improve Snapdragon processors year over year, bringing faster inference and better power efficiency. By the time Razer launches a commercial product, the underlying platform will be significantly more capable.

Design Refinement

Concept devices push boundaries, sometimes at the expense of ergonomics. Project Motoko needs to be refined for everyday wear. The form factor needs to be lighter, more comfortable, and more aesthetically acceptable for non-gamers.

Camera placement needs validation. Are eye-level cameras optimal, or would slightly different positioning work better? Microphone arrays need optimization for real-world environments. Display (if added) needs evaluation.

Privacy and Regulatory Frameworks

Before selling a headset with cameras and microphones, Razer needs clear privacy frameworks and regulatory compliance. This means:

- Privacy policy development

- Compliance with emerging AI regulations

- User consent mechanisms

- Data handling documentation

- Security audits and certifications

These aren't exciting engineering challenges, but they're essential for commercial success. A great product with privacy concerns won't achieve mainstream adoption.

Ecosystem Development

A device is only useful if applications take advantage of it. Razer would need to work with game developers, AI platform providers, and software companies to create experiences that leverage Project Motoko's capabilities.

This is where Razer's established relationships with game studios become valuable. Convincing developers to optimize for a new device is hard. But Razer's reputation and existing partnerships provide leverage.

Gaming Implications and Use Cases

Beyond the technological accomplishment, what does Project Motoko mean for gaming specifically?

Competitive Gaming

In competitive gaming contexts, real-time environmental analysis could provide significant advantages. A device that analyzes your field of view and highlights threats or objectives could improve competitive performance.

But this raises fairness questions. Most competitive games prohibit external assistance that provides advantage. If Project Motoko offered significant competitive edge, it might be banned in competitive play. Razer would need to work with esports organizations and game developers to establish fair guidelines.

Single-Player and Narrative Gaming

For single-player experiences, Project Motoko offers genuine utility without fairness concerns. Imagine:

- Environmental hints based on in-game objects

- Text recognition that translates signs or documents

- Character identification that provides lore and background

- Contextual information about your surroundings

These features enhance immersion and reduce friction in games that already want to provide assistance.

Accessibility Improvements

AI-assisted gameplay could dramatically improve accessibility. A device that recognizes in-game text and reads it aloud helps players with visual impairments. Spatial audio analysis helps players with hearing challenges understand environmental cues.

Project Motoko's architecture naturally supports accessibility features. This is a significant opportunity that extends beyond "gaming for gaming's sake."

VR Integration

Project Motoko's architecture could integrate with VR headsets. Imagine a VR headset with Project Motoko's AI capabilities built in. The device could understand real-world context even while in VR, enabling seamless transitions between VR gaming and real-world awareness.

Or Project Motoko could serve as a companion device to VR headsets, providing additional processing power and sensors that improve VR experiences.

Industry Trends Signaled by Project Motoko

Beyond Razer specifically, what does this device tell us about industry direction?

AI Capabilities Moving to the Edge

Project Motoko's emphasis on on-device AI reflects a broader industry trend. Cloud AI (sending data to remote servers for processing) has advantages but also latency and privacy drawbacks.

As AI models become more efficient, edge processing becomes increasingly viable. The next 5 years will see more AI processing move to edge devices, with cloud AI handling only the most demanding tasks.

This changes the competitive landscape. Companies that can efficiently deploy AI models to devices gain advantage. Qualcomm's investment in AI-capable processors reflects this trend.

Wearables as Primary Devices

For decades, wearables were accessories. Project Motoko signals a shift toward wearables as primary computing devices for certain use cases.

You might interact with a gaming/AI headset for entertainment, productivity, and information lookup. Your phone becomes secondary, something you use when you need broader functionality.

This isn't certain—smartphones have huge advantages in screen size and versatility. But the direction is clear. Over the next decade, expect wearables to become more independent and more central to how people interact with technology.

Gaming Hardware Leading Innovation

Gaming hardware continues to drive innovation. High-refresh displays, mechanical keyboards, and specialized processors all found early adoption with gamers.

Project Motoko shows this trend continuing. Gaming applications justify the complexity and cost of advanced hardware. Gamers are willing to experiment with new form factors and features.

If Razer launches a commercial product, gaming will be the initial market. Broader adoption might follow as the technology matures and costs decline.

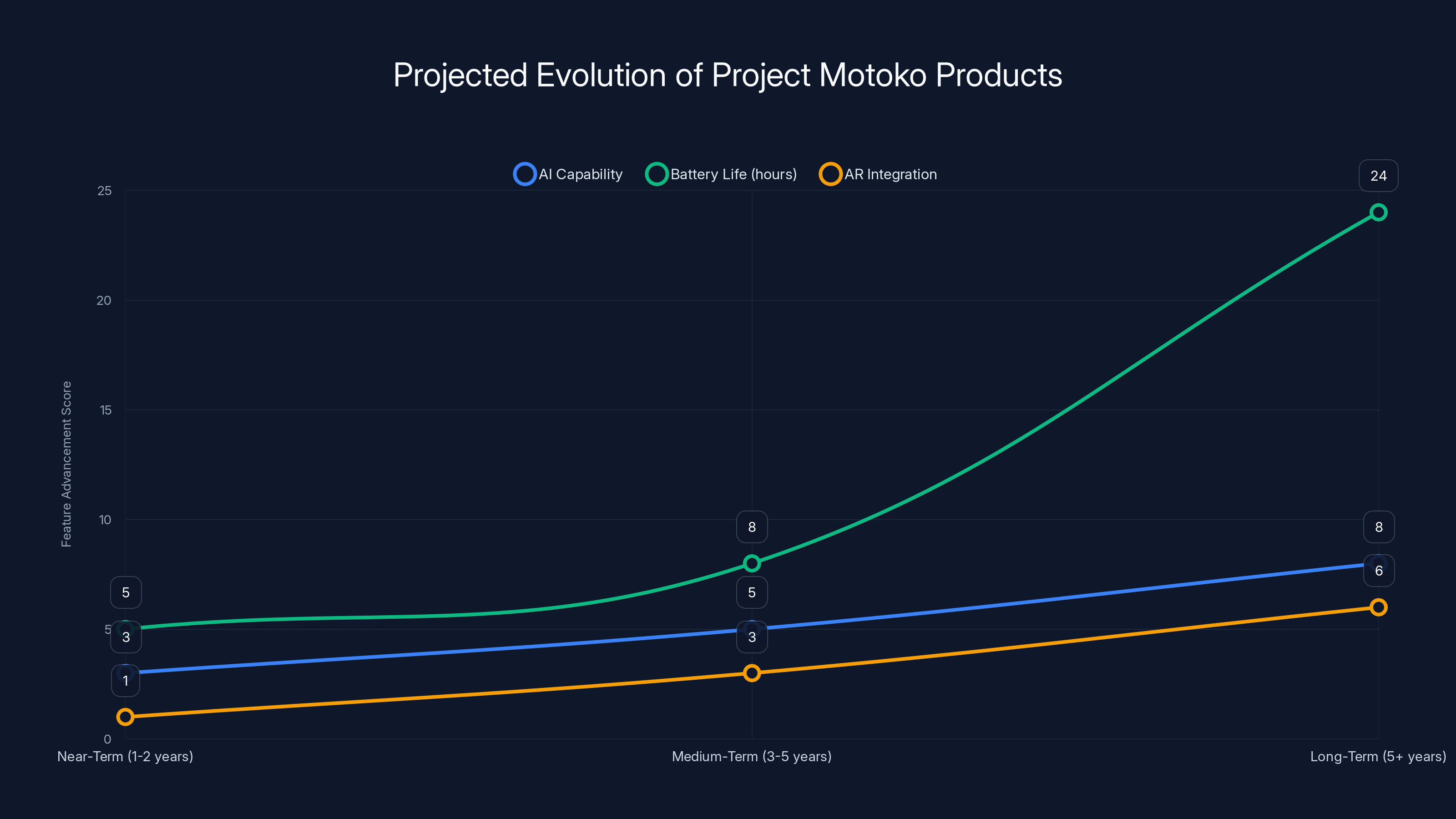

Estimated data shows a steady improvement in AI capabilities, battery life, and AR integration over time, with significant advancements expected in the long-term.

The Ghost in the Shell Connection

Razer explicitly referenced Ghost in the Shell in naming this device. Understanding that reference provides insight into Razer's vision.

The Major, the protagonist of Ghost in the Shell, is a cyborg with integrated technology. She doesn't carry devices—they're part of her body. Her technology provides enhanced perception, processing, and capabilities that feel seamless and natural.

Project Motoko isn't true biological integration (it's still a wearable device), but it moves in that direction. A headset that augments perception and cognition is conceptually similar to The Major's integrated systems.

This reference suggests Razer is thinking about wearable technology as extending human capability rather than simply adding another device. The device should feel like part of you, not something you carry.

This is important psychologically. If wearable AI is perceived as a tool you use, adoption will be lower than if it's perceived as an extension of yourself. Razer's design and messaging should emphasize integration and natural interaction.

Future Iterations and Natural Evolution

If Project Motoko does lead to commercial products, what might the evolution look like?

Near-Term (1-2 years)

Razer might release a more refined version targeting gaming specifically. This would include:

- Improved audio quality and directional capabilities

- More efficient AI inference

- Better battery life

- Refined ergonomics for extended wear

- Integration with major gaming platforms

This version would essentially be a gaming headset with advanced AI assistant capabilities. Not a full AR device, but significantly more capable than current gaming headsets.

Medium-Term (3-5 years)

Second-generation products might include:

- Display technology for visual overlays (subtle AR, not full immersion)

- Better environmental sensing (depth cameras, additional audio inputs)

- Improved AI models (more accurate, faster inference)

- Extended battery life (8+ hours)

- Broader ecosystem integration

By this timeline, AI model efficiency will have improved significantly. Batteries will be better. Manufacturing will have matured. The product would be closer to ready for mainstream adoption.

Long-Term (5+ years)

Fully mature Project Motoko-inspired products might include:

- Seamless integration with multiple AI platforms

- Advanced AR display for selective information presentation

- Holistic environmental understanding (vision + audio + other sensors)

- Days of battery life

- Mainstream consumer pricing

- Ecosystem of applications and integrations

At this point, the device might be as common as AirPods and have similar cultural acceptance.

Competitive Responses and Industry Impact

Razer isn't the only company thinking about these problems. What might competitors do?

Established Gaming Hardware Companies

Companies like SteelSeries, Corsair, and HyperX will likely develop competing concepts. Their advantage is established gaming distribution and credibility. Their challenge is that they lack the AI expertise that companies like OpenAI, Google, and Meta possess.

Expect partnerships between gaming hardware makers and AI platform providers. SteelSeries might partner with OpenAI. Corsair might work with Google. These collaborations would accelerate product development.

Tech Giants

Meta, Apple, Google, and Microsoft all have research groups working on AR/wearable technology. They might leapfrog Razer's approach, incorporating more advanced display technology or AI capabilities.

Meta's focus on the metaverse and AR makes it particularly likely to invest in wearable AI. Apple's history of product refinement suggests it would create a more polished consumer version. Google's AI expertise positions it well for the software and intelligence layers.

Competition in this space benefits consumers. Multiple companies investing in wearable AI drives innovation faster than one company alone.

Startups and Niche Players

Smaller companies might target specific niches: professional applications (AR for engineering or medicine), specific gaming genres, or specialized use cases.

These startups can move faster than established companies and experiment with form factors that larger companies wouldn't risk. Some will fail, but a few will likely create genuinely innovative products.

The projected investment for Razer's Project Motoko ranges from

The Broader Context: AI and Wearables Convergence

Project Motoko exists within a broader convergence of AI technology and wearable computing.

Why Now?

Why is this convergence happening now? Several factors align:

- AI Capabilities: Large language models, vision models, and multimodal AI have matured enough to run on mobile devices. Two years ago, this wasn't true. Three years from now, efficiency will be much better.

- Processing Power: Mobile processors (Snapdragon, Apple Silicon) now include AI accelerators. Dedicated hardware for neural networks makes edge AI practical.

- Model Optimization: Researchers have developed techniques to compress AI models without losing much accuracy. Quantization, distillation, and other methods make edge deployment viable.

- Market Readiness: Consumers have accepted always-on AI assistants through phones and smart speakers. The leap to wearable AI feels natural rather than novel.

- Form Factor Maturity: Wearable devices have become more comfortable and feature-rich. People wear AirPods, smartwatches, and fitness trackers without thinking twice. Extending these with cameras and additional processing is conceptually straightforward.
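To make the model optimization point concrete, here is a minimal sketch of symmetric 8-bit quantization, one of the compression techniques named above. The function names and the sample weights are illustrative assumptions, not anything Razer or Qualcomm has published, and production toolchains use more elaborate schemes (per-channel scales, calibration data).

```python
def quantize_int8(weights):
    """Map floats to integers in [-127, 127] using one shared scale (hypothetical helper)."""
    max_abs = max(abs(w) for w in weights)
    scale = max_abs / 127 if max_abs > 0 else 1.0
    quantized = [max(-127, min(127, round(w / scale))) for w in weights]
    return quantized, scale


def dequantize(quantized, scale):
    """Recover approximate float values from the stored integers."""
    return [q * scale for q in quantized]


# Made-up sample weights, for illustration only
weights = [0.42, -1.27, 0.05, 0.91, -0.33]

quantized, scale = quantize_int8(weights)
restored = dequantize(quantized, scale)

# Rounding error per weight is bounded by half of one quantization step
max_error = max(abs(w - r) for w, r in zip(weights, restored))

print("int8 values:", quantized)
print("max reconstruction error:", max_error)
```

Each weight is stored in one byte instead of the four bytes a 32-bit float needs, so the weights take roughly a quarter of the memory, at the price of a small rounding error per value. That trade is what makes running larger models on a headset-class processor plausible.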

The Next Decade

Over the next 10 years, expect:

- Mainstream wearable AI: Most people wearing some form of AI-augmented wearable (headsets, glasses, or other devices)

- Sophisticated environmental understanding: Devices that understand their surroundings comprehensively

- Seamless integration: AI capabilities that feel natural, not intrusive

- Diverse form factors: Not just headsets, but rings, glasses, clothing, and other wearables incorporating similar technologies

- Regulatory maturity: Clear frameworks for privacy, safety, and fair use

Project Motoko is early in this evolution but points clearly toward where the industry is heading.

Challenges and Open Questions

Despite the exciting potential, significant challenges remain.

Battery Life

Running AI models, cameras, microphone arrays, and wireless connectivity consumes power rapidly. Project Motoko would likely need daily charging, which limits adoption for all-day wear.

Battery technology is improving, but the gap between smartphone battery life (all day) and what advanced wearables can sustain (8-12 hours) remains. Solving this requires breakthroughs in battery chemistry, not just efficiency improvements.

Thermal Management

Packaging processing power, cameras, and batteries into a headset-sized form factor creates thermal challenges. The device will get warm during heavy use. Heat management affects both comfort and component lifespan.

Razer would need innovative cooling solutions. The headband could include heat pipes. Materials could be chosen for thermal conductivity. Active cooling (small fans) might be necessary, though this adds complexity and power consumption.

User Interface

How do users interact with Project Motoko? Voice is obvious, but what about situations where voice is impractical (loud environments, social situations where speaking to your headset feels weird)?

Touch controls on the headset could work, but they're less discoverable than physical buttons. Gesture recognition from the cameras could enable hands-free control but introduces latency and accuracy concerns.

Finding the right interaction model is harder than it seems. Razer will likely need to iterate extensively, trying different approaches before settling on something that feels natural.

Regulation and Acceptance

A headset with cameras and microphones in public raises concerns for other people, not just the wearer. How do others know if they're being recorded? What regulations will govern wearable AI in public spaces?

These social and regulatory questions lack clear answers. Project Motoko's concept status provides time to think through these issues before commercial launch.

Use Cases Beyond Gaming

While gaming is the obvious application, Project Motoko enables use cases across many domains.

Professional and Workplace Applications

A technician could wear Project Motoko while troubleshooting equipment. The device could recognize components, provide documentation, and guide repairs through audio instructions.

A surgeon could receive real-time information about anatomy while operating. An architect could visualize building designs overlaid on existing structures.

These professional applications have high value and might reach market faster than consumer gaming versions, as regulatory requirements are often different and users are motivated by clear ROI.

Education and Learning

Imagine a student wearing Project Motoko while studying. The device could recognize educational content, provide contextual explanations, suggest related materials, and track learning progress.

Language learning becomes more practical. The device recognizes foreign language text and audio, provides translations and pronunciations, and creates immersive learning environments.

Navigation and Exploration

Project Motoko could provide real-time navigation guidance without a handheld phone. Tourist mode could identify landmarks and provide historical information. Urban exploration becomes richer when the device can provide contextual information about what you're seeing.

Health and Wellness

Even without medical-grade sensors, Project Motoko could support health applications. Fitness coaching through audio guidance. Posture analysis through the cameras. Mental health support through conversational AI.

These applications require careful privacy and regulatory consideration but represent genuine value propositions.

Cost Analysis and Market Feasibility

For Project Motoko to reach the market, it needs to be economically viable. What might the cost structure look like?

Component Costs

Estimating component costs for a hypothetical commercial version:

- Snapdragon processor: $40-80 (OEM pricing)

- Camera modules (2x): $30-50 total

- Audio components (drivers, mics, amps): $20-40

- Battery: $15-25

- Structure and enclosure: $20-30

- Other components: $25-35

- Assembly and logistics: $20-30

Total component and assembly cost: approximately $170-290 per unit, depending on optimization and scale.

With a target 50% gross margin, retail pricing might be
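As a rough check on the arithmetic, the sketch below sums the component estimates listed above and works out what a 50% gross margin would imply. The cost figures are the article's own estimates; the variable names, and the simplification that the selling price is just cost divided by one minus the margin (ignoring retailer cuts, R&D recovery, and taxes), are assumptions made for illustration.

```python
# (low, high) per-unit estimates in USD, copied from the list above
COMPONENT_COSTS = {
    "Snapdragon processor": (40, 80),
    "Camera modules (2x)": (30, 50),
    "Audio components": (20, 40),
    "Battery": (15, 25),
    "Structure and enclosure": (20, 30),
    "Other components": (25, 35),
    "Assembly and logistics": (20, 30),
}

TARGET_GROSS_MARGIN = 0.50  # the 50% target mentioned in the text


def total_cost(costs):
    """Sum the low and high ends of every line item."""
    low = sum(lo for lo, _ in costs.values())
    high = sum(hi for _, hi in costs.values())
    return low, high


def price_for_margin(unit_cost, gross_margin):
    """gross margin = (price - cost) / price, so price = cost / (1 - margin)."""
    return unit_cost / (1 - gross_margin)


cost_low, cost_high = total_cost(COMPONENT_COSTS)
price_low = price_for_margin(cost_low, TARGET_GROSS_MARGIN)
price_high = price_for_margin(cost_high, TARGET_GROSS_MARGIN)

print(f"Unit cost: ${cost_low}-{cost_high}")
print(f"Implied price at 50% margin: ${price_low:.0f}-{price_high:.0f}")
```

Under these assumptions the implied price is simply double the unit cost. Treat the result as what the stated margin works out to mathematically, not as a pricing forecast.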

Market Size and Addressability

Who would buy Project Motoko at launch?

- Gaming enthusiasts: 5-10 million potential customers in developed markets

- Tech early adopters: 2-5 million additional customers

- Professional applications: 1-3 million potential customers

Total addressable market in year one: roughly 8-18 million potential customers globally (the sum of the three segments above), if the product is compelling and well-marketed.

At

Investment and Development Timeline

If Razer decides to commercialize Project Motoko, what would the timeline and investment look like?

Research and Development (Current)

Razer is already investing in R&D for the concept. Estimated current spend: $5-10 million annually across hardware, software, and AI integration.

Production Readiness (1-2 Years)

Moving from concept to production-ready requires:

- Prototype iteration: 2-3 major revision cycles

- Supplier relationships: Qualifying component suppliers

- Manufacturing process: Developing tooling and assembly procedures

- Quality assurance: Validation across temperature, stress, and operation scenarios

Estimated investment: $20-50 million

Launch and Scale (Year 2-3)

Manufacturing at scale, marketing, and distribution

Estimated investment: $50-150 million (depending on market ambitions)

Total Investment Through Launch: $75-210 million

This is a significant outlay, but achievable for an established hardware company like Razer, provided its cash flow can support it.
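The total can be reproduced by adding the phase estimates. A small sketch, assuming only one year of the current R&D spend is counted (that assumption is what makes the sum match the $75-210 million figure; the phase labels and variable names are illustrative):

```python
# (phase, low, high) in millions of USD, taken from the estimates above
PHASES = [
    ("Research and development (one year of current spend)", 5, 10),
    ("Production readiness", 20, 50),
    ("Launch and scale", 50, 150),
]

total_low = sum(low for _, low, _ in PHASES)
total_high = sum(high for _, _, high in PHASES)

for name, low, high in PHASES:
    print(f"{name}: ${low}-{high} million")
print(f"Total through launch: ${total_low}-{total_high} million")
```

If the R&D phase runs for several years before production readiness, the low and high ends both rise by $5-10 million per additional year.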

Conclusion: The Future of Wearable AI

Project Motoko is simultaneously a gaming headset concept, an AI wearable preview, and a signal about where technology is heading.

Razer showed this device because the company understands that the convergence of AI and wearables is inevitable. The question isn't whether this will happen, but when, and how well executed the first products will be.

The headset form factor makes sense as the first platform for this convergence. Headsets are already widely worn, already integrate audio and microphone input, and already have a precedent for always-on operation. Adding cameras and AI processing feels like a natural evolution rather than a radical departure.

Will Project Motoko itself reach the market? That's genuinely uncertain. Concept devices often never ship commercially. But the technologies demonstrated, the design language, and the use cases outlined will likely shape the products that do ship.

Over the next 3-5 years, expect to see commercial products from Razer, competitors, and new startups that build on Project Motoko's foundation. These products will mature the technology, work out privacy and regulatory challenges, and establish best practices for wearable AI.

For anyone paying attention to gaming hardware, wearable technology, or AI integration, Project Motoko is worth studying. It represents serious thinking about how these technologies converge and what the user experience might feel like.

Gamers have always been early adopters of experimental hardware. If wearable AI becomes mainstream, gamers will likely be among the first consumers. Project Motoko positions Razer to lead or compete in that evolution.

We're watching the early stages of a significant technology transition. That's rare to witness clearly in real time. Project Motoko is one company's contribution to that transition, but the transition itself is much larger and more significant than any single product.

FAQ

What is Project Motoko exactly?

Project Motoko is a concept wearable AI headset created by Razer that combines gaming audio capabilities with AI-powered environmental awareness. It features first-person view cameras for object and text recognition, sophisticated microphone arrays for spatial audio, and integration with multiple AI chatbots, including OpenAI's ChatGPT and Google Gemini. The device runs on Qualcomm's Snapdragon platform and performs AI inference locally on the headset rather than requiring cloud processing.

How does Project Motoko's AI functionality work?

The device uses on-device processing through its Snapdragon processor to run AI models for real-time object recognition, text recognition, and spatial audio analysis. The dual cameras capture your field of view and process that visual information through computer vision models to identify objects and text in your environment. The microphone array uses beamforming to understand spatial audio relationships. The device can integrate with multiple AI platforms, including OpenAI's ChatGPT, Google Gemini, and Grok, allowing users to get information and assistance based on their visual and audio environment.

When will Project Motoko be available for purchase?

Razer has explicitly stated that Project Motoko is a concept device with no plans for commercial release. It's a vision piece shown at CES 2025 to demonstrate the company's thinking about the future of AI wearables and gaming hardware. However, the technologies and design principles demonstrated may eventually appear in future commercial Razer products, though any such products would likely be released 2-5 years from now after further development, refinement, and regulatory compliance work.

What makes Project Motoko different from current gaming headsets?

Current gaming headsets focus primarily on audio quality, wireless connectivity, and comfort. Project Motoko fundamentally extends these core functions by adding AI processing, visual input through cameras, and environmental awareness capabilities. Rather than being a dedicated gaming device, it positions itself as an AI wearable that enhances both gaming and everyday productivity. This represents a shift from gaming peripheral to multimodal AI assistant that happens to include excellent audio.

How does Project Motoko compare to AR headsets like Microsoft HoloLens or Apple Vision Pro?

Project Motoko is fundamentally different from full AR headsets. While devices like HoloLens and Vision Pro include visual displays for digital content overlay, Project Motoko is audio-focused with cameras for environmental understanding but no display. This makes Project Motoko more practical for everyday wear, lighter, less socially intrusive, and likely less expensive. However, full AR headsets offer visual feedback capabilities that Project Motoko lacks. Project Motoko represents a lighter-weight approach to AI-augmented perception that prioritizes audio and environmental understanding over visual overlay.

What are the main privacy concerns with Project Motoko?

The device's always-on cameras and microphone arrays raise legitimate questions about data collection and surveillance. Key concerns include: what happens to video data captured by the cameras, how is audio processed and stored, who has access to recordings, and how can users verify that the device isn't secretly recording. Addressing these requires hardware-level privacy controls like physical camera shutters, clear indicators of recording status, local-only data processing without cloud transmission, and transparent documentation of what data is captured and retained. These privacy frameworks would be essential before any commercial release.

Can Project Motoko be used for cheating in competitive games?

Potentially, yes. If the device's real-time object recognition could identify enemy players or objectives in competitive games before they're visible on screen, it could provide competitive advantage. This would likely violate competitive gaming rules. However, for single-player games, story-driven experiences, and casual gaming, Project Motoko's features could enhance enjoyment without fairness concerns. Razer would need to work with game developers and esports organizations to establish guidelines about acceptable uses in competitive contexts.

What technologies would need to improve before Project Motoko could be commercialized?

Several areas need advancement: battery technology (to extend from hours to full days of use), thermal management (to prevent overheating in a headset form factor), AI model efficiency (to perform more sophisticated analysis with less power), microphone array technology (to reliably distinguish voice commands from background noise), and camera technology (for better recognition accuracy in varied lighting conditions). Additionally, privacy frameworks and regulations around wearable AI would need to mature, and manufacturing processes would need refinement to achieve appropriate cost and quality levels.

How would Project Motoko integrate with existing gaming platforms?

Integration would likely work through audio interface primarily, since Project Motoko doesn't include a visual display. The device could connect to PC, console, or mobile gaming via wireless protocols. AI assistance could suggest strategies, provide information about in-game items or locations, or read game lore aloud. Voice control could adjust game settings or execute complex commands without leaving gameplay. API integrations would let game developers create Project Motoko-aware content that leverages the device's unique capabilities. Real-world environmental analysis could theoretically inform gameplay mechanics, though this varies by game.

What is the estimated price range if Project Motoko reaches commercial release?

Based on component costs and industry pricing precedents, a commercial Project Motoko would likely cost between

What's the significance of Razer naming this device after Ghost in the Shell's Major character?

The Ghost in the Shell reference reveals Razer's philosophical approach to wearable technology. The Major is a cyborg whose technology is integrated into her body, providing seamless cognitive and perceptual enhancement without feeling like a separate tool. By naming Project Motoko after this character, Razer signals an ambition to create wearable technology that feels like a natural extension of the user rather than an external device. This suggests the company is thinking about wearables not as gadgets you use but as capabilities you internalize, which is a different (and more ambitious) design philosophy than most tech companies adopt.

Key Takeaways

- Project Motoko represents a fundamental shift in how wearable devices integrate gaming and AI capabilities rather than keeping them separate

- On-device AI processing using Snapdragon enables real-time environmental analysis without relying on cloud infrastructure or the privacy trade-offs that come with it

- The concept signals industry-wide trends toward wearables as primary devices rather than accessories, eye-level computing, and persistent AI availability

- Camera and microphone-equipped wearables will require solving regulatory, privacy, and social acceptance challenges before mainstream adoption

- While Project Motoko itself is not planned for commercial release, competing products will likely emerge within 3-5 years as the underlying technologies mature
