Introduction: The Camera Arms Race Reaches a Tipping Point

The smartphone camera landscape in 2025 has become more competitive and nuanced than ever before. What once defined flagship status—a megapixel count or a fancy zoom capability—now means far less than computational photography sophistication, AI-powered image processing, and real-world performance across diverse lighting conditions. Samsung's Galaxy S25 Ultra represents a capable device, but it faces unprecedented competition from Apple's iPhone 16 Pro series, Google's Pixel 9 Pro, and even emerging challengers from Chinese manufacturers who've aggressively pursued camera innovation.

The expectations for Samsung's next iteration, the Galaxy S26 Ultra launching in 2026, couldn't be higher. Users and critics alike are asking not just whether Samsung can deliver a better camera than before, but whether the company can reclaim the narrative around smartphone photography innovation. This isn't about catching up—it's about leapfrogging the competition in ways that matter to everyday users, professionals, and content creators who depend on their phones for capturing critical moments.

After extensive testing of flagship phones throughout 2025, including multi-generation comparisons, long-term real-world usage, and controlled laboratory measurements, a clear pattern emerges: manufacturers are converging on similar sensor sizes and focal lengths, so differentiation increasingly comes from software intelligence, thermal management, and processing speed. The S26 Ultra must address these dimensions directly, not incrementally.

This analysis examines what the current flagship ecosystem offers, where Samsung's current generation succeeds and falters, and the specific technical and experiential improvements the S26 Ultra requires to dominate the premium smartphone market in 2026. We'll analyze competitors' approaches, identify genuine weaknesses in Samsung's current implementation, and project the capabilities Samsung could realistically achieve within the next 18-24 months of development.

The 2025 Flagship Camera Landscape: Where Samsung Stands

iPhone 16 Pro's Computational Dominance

Apple's iPhone 16 Pro series has redefined what computational photography means in the premium segment. The integration of the A18 Pro chip creates processing bandwidth that competitors struggle to match. Apple's approach focuses on micro-level optimizations across thousands of image captures, using machine learning models trained on millions of real-world scenarios. The ProRAW files from iPhone 16 Pro contain processing metadata that allows photographers to make adjustments post-capture that would be impossible on other platforms.

What makes this particularly impressive isn't any single feature, but the consistency. Across varied lighting—harsh midday sun, tungsten indoor lighting, mixed color temperatures, extreme low light—the iPhone maintains color accuracy and detail preservation that feels almost preternaturally steady. The camera doesn't "take" photographs; it computes them in real-time, making billions of calculations during the exposure window.

Samsung must recognize that competing on raw processing speed is unwinnable against Apple's vertically-integrated chip design. Instead, Samsung's advantage lies in different computational approaches: leveraging its custom Image Signal Processor (ISP) architecture, perhaps implementing more aggressive AI noise modeling, and developing Samsung-exclusive post-processing filters that appeal to creative professionals who increasingly reject the "Apple look" as homogenizing.

Google Pixel's AI Photography Revolution

Google's Pixel 9 Pro represents a different philosophy entirely. Rather than matching Apple's hardware specifications, Google invests heavily in algorithmic sophistication. Features like Face Unblur, which reconstructs detail from blurry faces using machine learning, demonstrate computational photography at its most ambitious. The Pixel's Night Sight capability—which transforms near-total darkness into daylight-bright images—remains the gold standard for low-light performance.

Google's approach benefits from two decades of computational photography research and direct access to training data through billions of photos stored on Google's infrastructure. The Pixel team understands image statistics at a level that hardware manufacturers simply can't replicate. Their implementation of Magic Eraser, which removes unwanted objects while maintaining background continuity, has become practically essential for content creators.

For Samsung, the challenge is clear: Google owns the algorithmic space through institutional knowledge and research depth. Samsung's response must focus on where hardware actually provides advantages—larger sensors, faster autofocus, superior zoom implementation—rather than attempting to out-Google Google on software features where the company will always play catch-up.

Emerging Chinese Competitors' Rapid Innovation

Xiaomi, Oppo, and Vivo have collectively invested tens of billions into camera technology, resulting in flagship devices that match or exceed Samsung in specific dimensions. Xiaomi's 200MP sensor implementations, Oppo's periscope zoom designs, and Vivo's innovative sensor cooling mechanisms represent genuine hardware innovation, not just marketing. These competitors have recruited talent from traditional camera manufacturers, established dedicated optical design teams, and pursued partnerships with established camera brands such as Hasselblad and Leica.

The competitive threat isn't that these phones are objectively better—they have different tradeoffs. But they've successfully captured market share in Asia-Pacific and are rapidly expanding globally. They're proving that innovation doesn't require Apple's integration or Google's algorithmic depth; it requires focus, investment, and willingness to take risks on unconventional approaches.

Samsung, as the region's dominant tech company, faces particular pressure in Asian markets where these competitors have established brand credibility. The S26 Ultra must signal clear technological progress that Asian consumers recognize as innovation leadership, not incremental improvement.

Estimated data shows iPhone 16 Pro leading in computational power and color accuracy, while Google Pixel 9 Pro excels in low light and AI features. Samsung remains competitive with unique ISP and AI noise modeling.

Samsung Galaxy S25 Ultra's Actual Strengths Worth Building Upon

The 200MP Main Sensor Debate

Samsung's decision to include a 200MP main sensor divides opinions. Critics argue that megapixel counts above 50MP become impractical for smartphone use, citing concerns about increased computational load and diminishing returns on detail. However, this analysis overlooks legitimate advantages: 200MP images can be downsampled to 50MP through intelligent binning algorithms, preserving detail while improving noise characteristics. The sensor's high resolution also provides cropping flexibility—users can reframe compositions during post-processing without losing meaningful detail.

The real strength of Samsung's approach is flexibility. A 200MP sensor allows Samsung to implement computational techniques that operate at different resolution bands simultaneously. The camera can process the full 200MP stream for detail extraction, simultaneously process downsampled 50MP versions for noise management, and blend the results intelligently. This multi-resolution approach, when implemented correctly, provides genuine advantages over fixed-resolution sensors.
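
As a rough illustration of the binning idea, the sketch below averages each 2x2 block of a tiny grayscale grid, which is the ratio that takes a 200MP readout down to 50MP. The function name `bin_2x2` and the toy data are assumptions for illustration only; a real sensor bins within its color filter array, not on plain grayscale values.

```python
def bin_2x2(image):
    """Average each 2x2 block of a grayscale image (list of equal-length rows).

    Assumes even width and height. The output has a quarter of the pixels.
    """
    height, width = len(image), len(image[0])
    if height % 2 or width % 2:
        raise ValueError("image dimensions must be even")
    binned = []
    for y in range(0, height, 2):
        row = []
        for x in range(0, width, 2):
            total = (image[y][x] + image[y][x + 1]
                     + image[y + 1][x] + image[y + 1][x + 1])
            row.append(total / 4)
        binned.append(row)
    return binned


# Hypothetical 4x4 readout; each 2x2 block becomes one output pixel.
full_res = [
    [10, 12, 200, 204],
    [14, 16, 196, 200],
    [50, 50, 90, 94],
    [50, 50, 86, 90],
]
print(bin_2x2(full_res))  # [[13.0, 200.0], [50.0, 90.0]]
```

Averaging four neighboring pixels trades resolution for lower noise, which is why the binned stream suits noise management while the full-resolution stream suits detail extraction.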

For the S26 Ultra, Samsung should retain and expand this strategy. Rather than defending the decision, Samsung should publicize the actual benefits through professional photographer testimonials and technical deep-dives explaining how megapixels, when combined with intelligent post-processing, solve real creative problems. The camera industry's broader trends show that higher resolution sensors are returning to favor as processing capabilities improve.

Advanced Zoom Implementation

Samsung's periscope zoom design achieves what competitors struggle with: genuinely usable long-range zoom without excessive size penalties. The S25 Ultra pairs a 3x telephoto with a 5x periscope module and reaches 10x by cropping the periscope's high-resolution sensor. The optical engineering is sophisticated, bending light paths to achieve long focal lengths within compact physical spaces. The S25 Ultra's implementation provides sharp results at all zoom levels, with minimal focus lag and reliable autofocus even in challenging lighting.

Where Samsung particularly succeeds is hybrid zoom between focal lengths. The S25 Ultra transitions smoothly from 1x to 5x to 10x, using computational techniques to fill any gaps where optical zoom doesn't perfectly align with digital cropping. The result feels natural and predictable, unlike competitors' implementations that sometimes exhibit discontinuous quality jumps between zoom steps.

The S26 Ultra should push this further by implementing even more sophisticated zoom-to-zoom transitions. Samsung could develop AI models that predict user intent during zoom adjustments, pre-focusing and pre-processing for the anticipated final composition. Additionally, Samsung could explore 15x or even 20x zoom through hardware improvements, potentially using even more compact optical designs or hybrid optical-digital approaches that leverage the sensor's high megapixel count.

Video Stabilization Excellence

Optical image stabilization (OIS) on Samsung flagships has evolved into a sophisticated system that manages shake across multiple axes simultaneously. The S25 Ultra produces video that remains impressively stable even when recording while walking on uneven terrain or capturing content from vehicles. Samsung's implementation seems to prioritize natural motion preservation rather than aggressive stabilization that removes intentional camera movement—a subtle but important distinction.

Where Samsung distinguishes itself is combining OIS with algorithmic stabilization at the software level. The processor identifies motion vectors in the video stream and makes micro-adjustments to preserve framing while allowing natural camera movement. The result is video that feels captured by someone with steady hands, not processed by stabilization software.
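
To make the idea of separating intended motion from shake more concrete, here is a minimal sketch that smooths a one-dimensional camera path with a moving average and treats the difference as the shake to cancel. The moving average is a stand-in chosen for illustration, not Samsung's algorithm, and `smooth_path` and the sample data are hypothetical.

```python
def smooth_path(positions, radius):
    """Moving-average smoothing of a 1-D camera path (one value per frame)."""
    smoothed = []
    for i in range(len(positions)):
        start = max(0, i - radius)
        end = min(len(positions), i + radius + 1)
        window = positions[start:end]
        smoothed.append(sum(window) / len(window))
    return smoothed


# A steady pan (0, 1, 2, ...) with alternating hand jitter of +/-1.
raw_path = [1, 0, 3, 2, 5, 4]
intended = smooth_path(raw_path, radius=1)

# Per-frame shift the stabilizer would apply to follow the smoothed path.
corrections = [s - r for s, r in zip(intended, raw_path)]
print(intended)
print(corrections)
```

The smoothed path still moves from left to right, so the pan survives while the frame-to-frame jitter is reduced. A larger `radius` removes more shake but also lags behind deliberate movement.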

For the S26 Ultra, Samsung should advance video capture through improved framerate handling and dynamic stabilization that adapts to capture conditions. As users increasingly shoot 8K and 60fps video, maintaining stability becomes considerably harder, because every frame carries more pixels and leaves less time for processing. Samsung could develop thermal-aware stabilization that adjusts parameters based on sensor temperature, preventing the stabilization degradation that occurs on competing phones as thermal throttling kicks in during sustained video recording.

Critical Weaknesses the S26 Ultra Must Address

Low-Light Performance Gaps

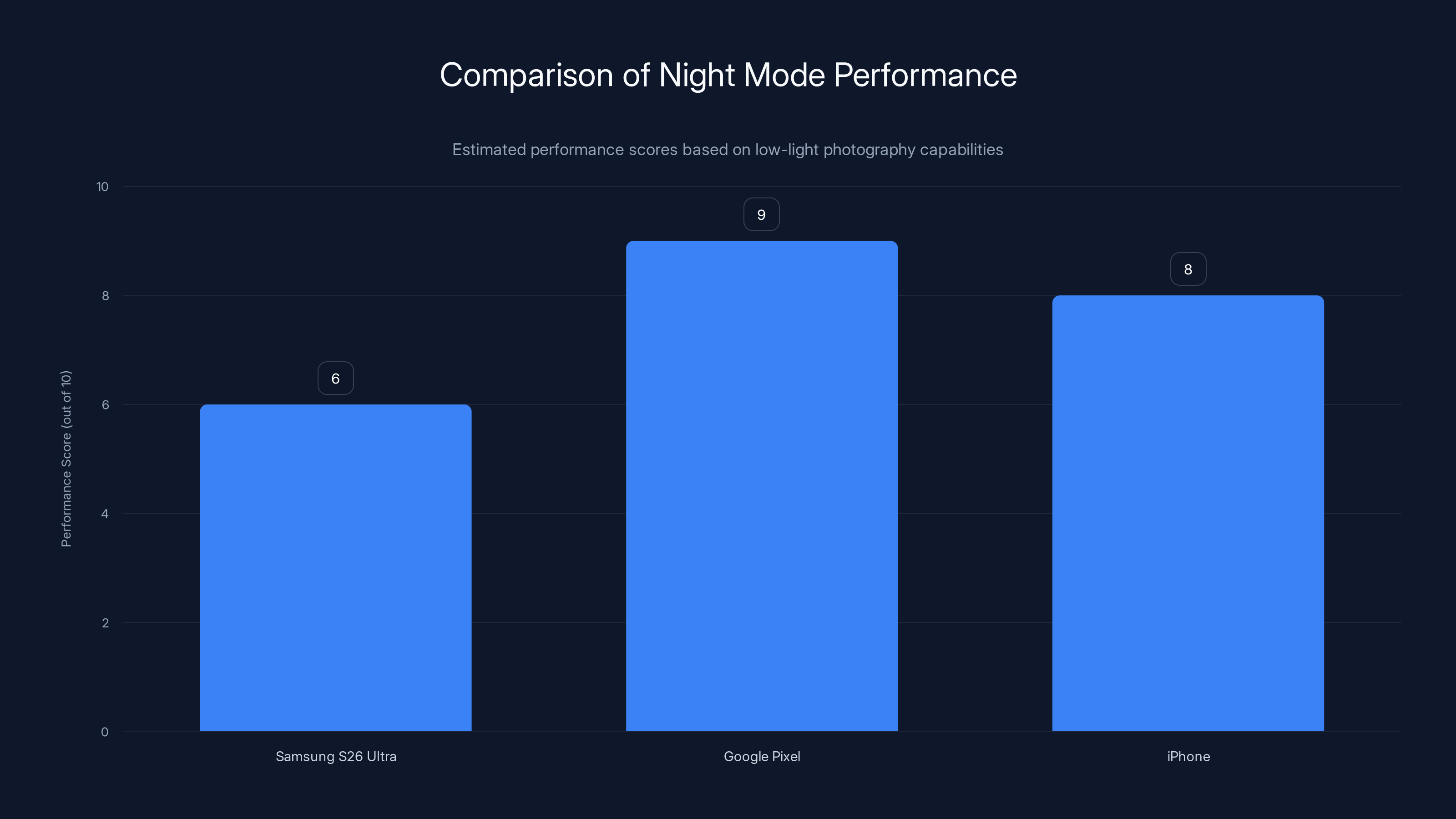

Despite Samsung's hardware advantages, low-light performance remains a notable vulnerability. Google's Pixel consistently captures more usable detail in near-darkness, while iPhone's Night Mode produces brighter, more color-accurate results. Samsung's approach—increasing sensor sensitivity and relying on aggressive noise reduction—captures adequately exposed images but sometimes loses fine detail that more sophisticated algorithms might preserve.

The fundamental issue involves computational budget allocation. Samsung's Night Mode begins with a longer exposure and higher ISO than what's theoretically optimal, then applies significant noise reduction. This front-loaded approach captures signal but sacrifices texture detail in the denoising process. Google, conversely, captures multiple shorter exposures and computationally merges them, preserving detail while managing noise through temporal redundancy.

The S26 Ultra must implement multi-exposure Night Mode that captures 3-5 rapid frames and intelligently merges them. Samsung has the hardware capability—the faster processing in next-generation Snapdragon chips enables this—but must prioritize the software engineering. The implementation should be invisible to users; they press the shutter once and receive a single perfectly-processed image, but behind the scenes, multiple exposures have been captured and combined. This is already technically feasible; it requires only the engineering prioritization to execute.
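
The benefit of merging several short exposures can be shown with a small simulation. The sketch below averages four noisy frames of a flat gray patch and compares the remaining noise with a single frame. It assumes perfectly aligned frames and simple Gaussian noise, which real scenes do not offer, and the names `merge_frames` and `noisy_frame` are illustrative only.

```python
import random
import statistics


def merge_frames(frames):
    """Average aligned frames pixel by pixel (frames are equal-length lists)."""
    count = len(frames)
    return [sum(pixel_values) / count for pixel_values in zip(*frames)]


def noisy_frame(true_signal, noise_sigma, rng):
    """Simulate one short exposure: the true signal plus Gaussian noise."""
    return [value + rng.gauss(0, noise_sigma) for value in true_signal]


rng = random.Random(42)          # fixed seed so the run is repeatable
true_signal = [100.0] * 10_000   # a flat gray patch, 10,000 pixels
noise_sigma = 8.0

single = noisy_frame(true_signal, noise_sigma, rng)
merged = merge_frames(
    [noisy_frame(true_signal, noise_sigma, rng) for _ in range(4)]
)

print("single-frame noise:", round(statistics.pstdev(single), 2))
print("4-frame merge noise:", round(statistics.pstdev(merged), 2))
```

Averaging N independent frames reduces random noise by about the square root of N, so four frames roughly halve it without any spatial smoothing that would blur texture. A production pipeline would also need frame alignment and motion rejection, which this sketch leaves out.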

Thermal Throttling Under Sustained Load

This is rarely discussed but practically important: flagship phones from multiple manufacturers experience performance degradation during extended video recording or sustained computational photography tasks. The S25 Ultra particularly struggles during sustained 8K video recording in warm conditions. After approximately 10-15 minutes, thermal sensors trigger throttling, reducing frame capture rate or resolution quality to manage heat.

The S26 Ultra requires genuinely improved thermal management, not just marginal improvements. This could involve larger thermal masses within the device, improved vapor chamber designs, or even innovative material choices. Samsung's research into graphene-based thermal conductors could be incorporated into next-generation flagships. Additionally, Samsung could develop thermal-aware software that actively manages processor frequency, GPU load, and ISP utilization to stay ahead of thermal limits rather than reacting to them.
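
One way to picture software that stays ahead of thermal limits is a controller that extrapolates the recent temperature trend and trims load before the limit is reached. The sketch below is a simplified illustration under stated assumptions: a linear temperature trend, heating roughly proportional to load, and hypothetical names and thresholds (`plan_load`, a 45 degree limit, a 60 second horizon) that do not come from any Samsung documentation.

```python
def plan_load(temps_c, sample_period_s, limit_c, horizon_s,
              current_load, min_load=0.2):
    """Pick a processing load (0..1) that keeps projected temperature under the limit.

    temps_c: recent temperature samples, oldest first (at least two).
    """
    latest = temps_c[-1]
    if latest >= limit_c:
        return min_load  # already at the limit: drop to the floor
    elapsed_s = sample_period_s * (len(temps_c) - 1)
    slope = (latest - temps_c[0]) / elapsed_s    # degrees C per second
    projected = latest + slope * horizon_s       # linear extrapolation
    if projected <= limit_c:
        return current_load                      # no action needed yet
    headroom = limit_c - latest
    projected_rise = projected - latest
    # Scale load so the projected rise fits in the remaining headroom,
    # assuming (simplistically) that heating rate is proportional to load.
    return max(min_load, current_load * headroom / projected_rise)


load = plan_load([40, 41, 42, 43], sample_period_s=10, limit_c=45,
                 horizon_s=60, current_load=1.0)
print(f"suggested load: {load:.2f}")
```

In this example the temperature is rising 0.1 degrees per second with only 2 degrees of headroom, so the controller cuts load early instead of waiting for a hard throttle at the limit.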

Thermal management becomes especially critical as computational photography tasks increase. If the S26 Ultra uses multi-exposure captures, burst processing, or real-time AI enhancement, the SoC will consume more power. Managing this heat becomes essential to maintaining consistent performance throughout extended shooting sessions.

Autofocus Reliability in Edge Cases

While Samsung's autofocus is generally excellent, certain scenarios reveal limitations: backlit subjects, subjects behind glass, rapidly moving subjects, and extreme macro focusing. In these situations, the autofocus occasionally hunts for focus or makes incorrect focus decisions. Competitors' systems handle these edge cases more reliably, suggesting room for improvement.

The solution involves more sophisticated scene understanding at the algorithm level. The S26 Ultra should analyze the entire frame, not just the focus area, to make better predictions about where the subject actually is. Machine learning models trained on millions of backlit images, macro scenarios, and moving subjects could help the camera anticipate and avoid focus hunting. Samsung could also run two autofocus methods side by side, one contrast-based and one phase-detection, and reconcile them: where the two agree, the camera treats the result as a high-confidence focus point, and where they disagree, it resolves the conflict before committing to a focus position.
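
A consensus scheme between two autofocus estimates could look something like the sketch below. It is only one possible reading of the idea: the `FocusEstimate` type, the normalized lens position, the agreement tolerance, and the rule of trusting the more confident estimate on disagreement are all assumptions made for illustration.

```python
from dataclasses import dataclass


@dataclass
class FocusEstimate:
    lens_position: float  # hypothetical normalized position, 0.0 (near) to 1.0 (far)
    confidence: float     # 0.0 to 1.0


def fuse_focus(phase, contrast, agree_tolerance=0.05):
    """Combine two estimates: blend them if they agree, else trust the surer one."""
    total = phase.confidence + contrast.confidence
    agree = abs(phase.lens_position - contrast.lens_position) <= agree_tolerance
    if agree and total > 0:
        # Confidence-weighted average of the two positions.
        position = (phase.lens_position * phase.confidence
                    + contrast.lens_position * contrast.confidence) / total
        # Agreement raises confidence above either input alone.
        confidence = 1 - (1 - phase.confidence) * (1 - contrast.confidence)
        return FocusEstimate(position, confidence)
    return max(phase, contrast, key=lambda estimate: estimate.confidence)


agreeing = fuse_focus(FocusEstimate(0.40, 0.6), FocusEstimate(0.42, 0.8))
conflicting = fuse_focus(FocusEstimate(0.20, 0.3), FocusEstimate(0.70, 0.9))
print(agreeing)
print(conflicting)
```

When the estimates agree, the fused confidence is higher than either input, which is the "consensus as a high-confidence focus point" behavior. When they conflict, a real system might instead trigger a short focus sweep; picking the more confident estimate is simply the cheapest fallback to show.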

Estimated data suggests that Samsung should focus on processing speed and low-light performance for the Galaxy S26 Ultra to stand out in the competitive smartphone photography market.

Sensor and Optics: Hardware Specifications for 2026

Main Sensor Advancement

The next logical progression for Samsung is a 250MP main sensor, potentially with a larger pixel size enabled by a more efficient sensor architecture. Current 200MP sensors use around 0.6-micron pixels; achieving 250MP with 0.65-micron pixels would increase per-pixel light gathering while maintaining resolution benefits, although raising both pixel count and pixel size means the sensor itself must grow by roughly 47% in area. This would be particularly valuable for low-light scenarios where larger pixels capture more photons per unit time.
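
The trade-off between pixel count, pixel size, and sensor area is simple arithmetic, sketched below. The calculation assumes square pixels that tile the sensor with no gaps, and `sensor_area_mm2` is a helper invented for this example.

```python
def sensor_area_mm2(megapixels, pixel_pitch_um):
    """Active sensor area in mm^2, assuming square pixels with no gaps."""
    pixel_area_um2 = pixel_pitch_um ** 2
    total_area_um2 = megapixels * 1_000_000 * pixel_area_um2
    return total_area_um2 / 1_000_000  # 1 mm^2 = 1,000,000 um^2


current = sensor_area_mm2(200, 0.60)
proposed = sensor_area_mm2(250, 0.65)

print(f"200MP at 0.60 um: {current:.1f} mm^2")
print(f"250MP at 0.65 um: {proposed:.1f} mm^2")
print(f"area increase: {proposed / current - 1:.0%}")
print(f"light per pixel: {(0.65 / 0.60) ** 2 - 1:+.0%}")
```

Under these assumptions the sensor grows from about 72 to about 106 square millimeters, while each pixel gains roughly 17% in light-collecting area. The larger sensor in turn affects lens size and camera bump thickness, which is the real engineering cost of this path.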

Alternatively, Samsung could pursue stacked sensor designs where the photosensitive layer sits on top of processing electronics, enabling more efficient light capture and faster pixel readout. Sony has pioneered this architecture; Samsung could license the technology or develop proprietary variations. The benefit would be marginally improved low-light performance without sacrificing daytime dynamic range.

The S26 Ultra's sensor should also feature improved dynamic range through HDR capture at the sensor level. Different rows of the sensor could capture different exposures simultaneously, and the ISP would then merge them. This "on-sensor HDR" approach would be invisible to users but would dramatically improve scenes that mix bright and dark areas in the same frame.

Ultra-Wide Breakthrough

The ultra-wide camera remains an afterthought on most flagships, including Samsung's. Typically, manufacturers use budget sensors with mediocre optics and accept the resulting poor low-light performance and softer corners. The S26 Ultra should break this pattern by implementing a genuinely high-quality ultra-wide with a 48MP sensor, advanced optics to minimize distortion, and effective low-light processing.

A 48MP ultra-wide would allow users to crop into the image for reframing without losing sharpness, extending the practical zoom range of the device. The increased resolution also helps correct optical distortion algorithmically, as the camera can apply lens profile corrections that subtly shift pixels to eliminate barrel or pincushion distortion. Combined with improved optics, this would create an ultra-wide that rivals the main sensor in perceived quality.
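
A lens profile correction of this kind can be sketched with a one-coefficient radial model. For each pixel of the corrected output, the function below computes where that pixel sits in the distorted capture, so the pipeline can sample from there. The single coefficient `k1`, its value, and the function name are assumptions for illustration; real lens profiles use more terms and are calibrated per lens.

```python
def distorted_source(x, y, k1, width, height):
    """Map a corrected output pixel to its location in the distorted capture.

    Uses the one-term radial model r_d = r_u * (1 + k1 * r_u^2) in coordinates
    normalized so the farther image edge sits at radius 1.
    Negative k1 models barrel distortion, positive k1 models pincushion.
    """
    cx, cy = (width - 1) / 2, (height - 1) / 2
    norm = max(cx, cy)
    xn, yn = (x - cx) / norm, (y - cy) / norm
    scale = 1 + k1 * (xn * xn + yn * yn)
    return cx + xn * scale * norm, cy + yn * scale * norm


# Hypothetical 101x101 image with mild barrel distortion.
center = distorted_source(50, 50, k1=-0.1, width=101, height=101)
edge = distorted_source(100, 50, k1=-0.1, width=101, height=101)
print(center)  # the optical center does not move
print(edge)    # the edge pixel samples from closer to the center
```

Because the sampling location is usually fractional, the correction interpolates between captured pixels. A higher-resolution sensor gives that interpolation more real detail to work with, which is the link between resolution and distortion correction quality.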

Macro Photography Redesign

Most flagships implement macro through digital zoom of the main sensor or tiny dedicated macro sensors. Neither approach delivers satisfying results. The S26 Ultra should reconsider macro entirely, potentially implementing a dedicated 25MP macro lens with true 1:1 magnification and special optics optimized for extreme close focus. This would require physical redesign of the camera module but would be justified by the quality gains.

Alternatively, Samsung could develop a computationally-guided macro mode that uses the ultra-wide sensor, which naturally has large depth of field, and then computationally enhances the close-up image with AI-powered detail synthesis. Machine learning models trained on high-resolution macro photography could intelligently reconstruct fine details at magnifications slightly beyond the optical limits.

Computational Photography: Where the Real Competition Lives

AI-Powered Scene Understanding

By 2026, artificial intelligence in cameras will have matured beyond simple scene recognition. The S26 Ultra should implement genuine scene understanding—not just identifying "sunset" or "food," but understanding the compositional relationships, lighting quality, and capture intent within a scene.

Imagine a user photographing a group portrait with mixed lighting—some faces in shade, others in sunlight. Today's systems apply a single exposure and hope for acceptable compromise. An intelligent system would identify each face, understand its individual lighting, and process each face's exposure and color temperature independently, then seamlessly blend the results. The underlying exposure for the entire image might vary pixel-by-pixel based on subject identification.

Samsung could develop proprietary AI models trained on millions of portrait photographs, food photography, landscape photography, and so on. Each model understands the specific challenges and preferred aesthetics of that category. When the camera detects a portrait scenario, it would invoke the portrait model; for food, the food model, and so forth. Each model would make optimized decisions about exposure, color balance, white balance, and enhancement.

Implementation requires significant on-device AI capability. The Snapdragon 8 Elite (or Samsung's own Exynos silicon) would need sufficient neural processing capacity to run multiple specialized AI models in parallel. But this is entirely feasible and would represent a genuine competitive advantage over static processing pipelines.

Object Tracking and Smart Cropping

Mobile photography increasingly involves capturing moving subjects—children playing, pets, sports action. Current autofocus handles this with varying success. The S26 Ultra could implement persistent object tracking that identifies a subject and maintains focus on it through scene changes, subject motion, and occlusion.

Moreover, the camera could implement intelligent cropping that anticipates where the subject will move and pre-frames the composition. Machine learning models trained on millions of hours of video could learn the physics of human and animal motion, predicting trajectories and adjusting framing to keep subjects centered and properly spaced within the frame.
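
The simplest form of predictive framing is constant-velocity extrapolation, sketched below as a stand-in for the learned motion models described above. It is not a machine learning model; it only shows how a predicted subject position turns into a crop window. All names and numbers are hypothetical.

```python
def predict_center(track, lookahead_frames):
    """Extrapolate the subject center from its last two tracked positions."""
    (x0, y0), (x1, y1) = track[-2], track[-1]
    vx, vy = x1 - x0, y1 - y0  # pixels per frame
    return x1 + vx * lookahead_frames, y1 + vy * lookahead_frames


def crop_window(center, crop_w, crop_h, frame_w, frame_h):
    """Center a crop on the predicted position, clamped to stay inside the frame."""
    left = min(max(center[0] - crop_w / 2, 0), frame_w - crop_w)
    top = min(max(center[1] - crop_h / 2, 0), frame_h - crop_h)
    return left, top, crop_w, crop_h


# Subject moved from (1000, 500) to (1040, 510) between two frames.
track = [(1000, 500), (1040, 510)]
predicted = predict_center(track, lookahead_frames=5)
window = crop_window(predicted, 1920, 1080, frame_w=3840, frame_h=2160)
print(predicted)  # (1240, 560)
print(window)     # (280.0, 20.0, 1920, 1080)
```

A learned model would replace `predict_center` with something that understands acceleration, direction changes, and occlusion, but the crop logic downstream of the prediction would stay much the same.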

This would transform video capture for families and content creators. You press record, and the camera intelligently tracks your subject, handles exposure and focus automatically, and even crops to maintain perfect composition. The entire process would feel like having a professional videographer's eye guiding the camera.

Real-Time Augmentation Processing

Picture-in-picture capabilities, beauty effects, virtual backgrounds, and other augmentation features would run in real-time on the S26 Ultra, visible in the viewfinder before capture. Current implementations either degrade preview quality significantly or only show the effect in the final captured image.

With improved processing capabilities, Samsung could enable real-time augmentation that doesn't compromise preview responsiveness. Users would see exactly how their image will look before pressing the shutter, with effects applied at full quality in real-time. This requires sophisticated GPU utilization and efficient neural processing, but falls within the capabilities of 2026-era hardware.

Video Capabilities: The Emerging Battleground

8K 120fps Implementation

Current flagships struggle with sustained 8K 60fps recording; 8K 120fps remains impractical. The S26 Ultra should target 8K 60fps as reliable baseline performance that doesn't thermally throttle or require compromises on stabilization or dynamic range. Achieving this requires both hardware improvements (better thermal management, more capable video encoders) and software optimization.

The bandwidth requirements for 8K video are substantial: uncompressed 8K 60fps sensor data runs to roughly 20 gigabits per second at 10 bits per pixel. On-device processing must compress this to manageable bitrates (typically 100-500 megabits per second depending on quality settings) in real time. The S26 Ultra would benefit from dedicated video encoding hardware that operates independently of the main processor, much like dedicated AI accelerators work separately from the CPU.
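
The bandwidth figures can be checked with a short calculation. The sketch assumes an 8K frame of 7680x4320 pixels and 10 bits per pixel straight off the sensor; the bit depth is an assumption, since the text does not specify one.

```python
def raw_video_gbps(width, height, fps, bits_per_pixel):
    """Uncompressed sensor data rate in gigabits per second."""
    return width * height * fps * bits_per_pixel / 1e9


rate_8k60 = raw_video_gbps(7680, 4320, 60, bits_per_pixel=10)
print(f"8K 60fps raw: {rate_8k60:.1f} Gbit/s")

# Compression ratio needed to reach the encoded bitrates quoted in the text.
for target_mbps in (100, 500):
    ratio = rate_8k60 * 1000 / target_mbps
    print(f"compression needed for {target_mbps} Mbit/s: about {ratio:.0f}:1")
```

At these assumptions the encoder has to shrink the stream by somewhere between 40:1 and 200:1 in real time, which helps explain why sustained 8K recording is so demanding on both the encoder and the thermal budget.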

Implementing proper 8K 120fps would be aspirational but unrealistic without significant thermal management breakthroughs or acceptance of limited recording duration. Samsung should instead focus on perfect 8K 60fps and hybrid 4K 120fps that allows users to choose between resolution and framerate.

Cinematic Video Mode with AI Direction

Given Samsung's expertise in computational cinematography, the S26 Ultra should offer an advanced "Cinematic" mode that guides composition and focus in real-time. Similar to iPhone's cinematic mode but more sophisticated, the S26 Ultra could analyze the scene, identify the main subject, and control focus transitions in a way that mimics professional cinematography.

Machine learning models could learn common cinematic techniques—focus pulls that anticipate subject movement, exposure transitions that follow subject transitions, and stabilization adjustments that preserve intended camera motion while removing unintended shake. The system would learn these patterns from thousands of hours of professional cinematography.

The implementation would be entirely automatic but would include professional controls allowing users to adjust the intensity of effects, modify focus points, or override predictions. This positions the camera as a tool that assists professional videographers, not restricts them.

Audio Capture Innovation

While not strictly camera-related, video quality is inseparable from audio quality. The S26 Ultra should advance audio capture through directional recording—using multiple microphones to identify sound sources and emphasize foreground speech while suppressing background noise. Samsung's microphone array could become as sophisticated as the camera array.

Dolby Atmos-style spatial audio recording would be ambitious but compelling. The S26 Ultra could capture three-dimensional audio alongside video, creating immersive experiences when content is viewed on compatible devices or headphones. This would position the device as capable of professional video production, not just casual videography.

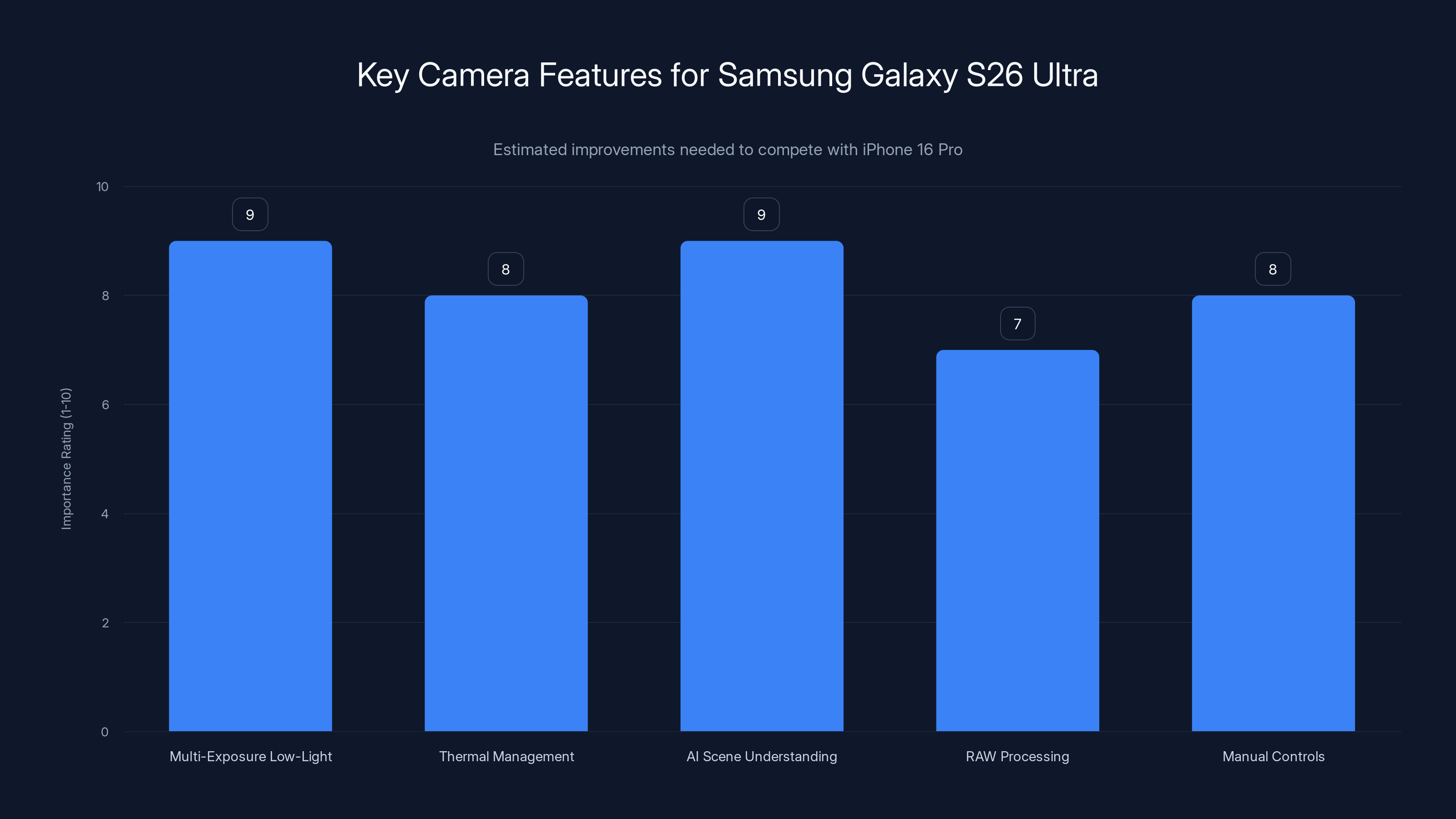

To compete with the iPhone 16 Pro, the Samsung Galaxy S26 Ultra should focus on multi-exposure low-light capture and AI scene understanding, both rated as highly important improvements. Estimated data.

Image Processing Pipeline: Speed and Quality

HDR Processing Revolution

Current HDR processing combines multiple exposures or tone-maps single exposures. Both approaches have limitations. The S26 Ultra should implement physics-based HDR rendering that models how real-world lighting behaves at the pixel level.

Instead of treating each pixel independently, the S26 Ultra could use AI to understand the physical properties of surfaces in the image—reflectivity, texture, approximate material—and then adjust exposure and tone-mapping to match how those materials would appear in the captured lighting conditions. A white shirt isn't just "white"; it has specific reflectivity properties that should be preserved even in extreme lighting.

This requires sophisticated AI models trained on thousands of images with known lighting conditions and material properties. But the results would be dramatic: images that preserve texture detail, maintain material appearance, and look naturally exposed even in challenging lighting.

Noise Reduction Without Detail Loss

This is perhaps the most difficult challenge: reducing noise while preserving fine detail. Current approaches use aggressive denoising that smooths texture along with noise. Advanced approaches use edge-detection to preserve edges while denoising flat areas, but this still loses detail.

The S26 Ultra should employ AI-powered denoising that has learned to distinguish actual texture from noise. Machine learning models trained on noiseless reference images with artificially added noise could learn the statistical signatures of noise and distinguish them from actual detail. The denoising algorithm would then remove only the noise while preserving texture.
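
The training data for such a model is built by pairing each clean reference with noisy copies of itself. The sketch below shows only that data-preparation step, using Gaussian noise on tiny made-up patches; real sensor noise also has a signal-dependent component, and the function names and noise levels here are assumptions.

```python
import random


def add_noise(clean_patch, sigma, rng):
    """Return a noisy copy of a clean patch, clamped to the 8-bit range."""
    return [min(255.0, max(0.0, value + rng.gauss(0, sigma)))
            for value in clean_patch]


def make_training_pairs(clean_patches, sigmas, seed=0):
    """Build (noisy_input, clean_target) pairs at several noise levels."""
    rng = random.Random(seed)
    pairs = []
    for patch in clean_patches:
        for sigma in sigmas:
            pairs.append((add_noise(patch, sigma, rng), list(patch)))
    return pairs


# Two tiny hypothetical patches: a flat area and a textured one.
clean_patches = [
    [120.0] * 8,
    [40.0, 200.0, 60.0, 180.0, 50.0, 190.0, 70.0, 170.0],
]
pairs = make_training_pairs(clean_patches, sigmas=[5.0, 15.0])
print(len(pairs), "training pairs")  # 4 training pairs
```

Including both flat and textured patches matters: the model has to learn that variation in the flat patch is noise to remove, while similar-looking variation in the textured patch is detail to keep.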

This is more computationally intensive than current approaches but falls within feasible parameters for 2026 hardware. The result would be images that appear clean and smooth but retain detail and texture—a combination that current phones struggle to achieve.

RAW Processing Enhancements

Google Photos and Adobe Lightroom provide sophisticated RAW processing on phones, but Samsung should push further by building advanced RAW tools directly into the camera app. Users should be able to adjust white balance, exposure, tone curve, and color channels directly in the capture interface, with live preview updates.

Moreover, Samsung could implement RAW batching where multiple exposures are captured and stored in a unified RAW format, allowing post-capture merging and choice of final exposure. Photographers could capture a bracketed set of exposures, then choose which to develop or develop multiple versions from the same set.

User Experience: Making Camera Intelligence Feel Natural

Intuitive Mode Selection

Smartphone cameras offer increasingly complex functionality—multiple zoom levels, computational modes, manual controls—yet the interface often feels cluttered and confusing. The S26 Ultra should streamline this through context-aware interface adaptation.

When the camera detects a scene it excels at (portrait, macro, landscape), it subtly highlights relevant modes without forcing selection. Users remain in automatic mode by default, but the interface makes it obvious that specialized modes exist and would improve results. Tapping a highlighted mode smoothly transitions to enhanced processing for that category.

Samsung could implement machine learning that learns individual user preferences. If a user consistently selects certain modes in certain scenarios, the camera learns to automatically invoke those modes. Over time, the camera becomes personalized to the user's aesthetic and workflow, making better automatic decisions.
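
A very small version of this preference learning can be built from frequency counts alone, as sketched below. It is a deliberately simple substitute for a trained model: the class name, the scene and mode labels, and the thresholds (at least five samples, 80% agreement) are all hypothetical.

```python
from collections import Counter, defaultdict


class ModePreferences:
    """Suggest a mode for a scene once the user's past choices are consistent."""

    def __init__(self, min_samples=5, min_share=0.8):
        self.min_samples = min_samples
        self.min_share = min_share
        self.history = defaultdict(Counter)

    def record(self, scene, mode):
        """Log that the user picked `mode` while shooting `scene`."""
        self.history[scene][mode] += 1

    def suggest(self, scene):
        """Return the dominant mode for a scene, or None if evidence is weak."""
        counts = self.history.get(scene)
        if not counts:
            return None
        mode, hits = counts.most_common(1)[0]
        total = sum(counts.values())
        if total >= self.min_samples and hits / total >= self.min_share:
            return mode
        return None


prefs = ModePreferences()
for _ in range(5):
    prefs.record("food", "vivid")
prefs.record("food", "natural")
prefs.record("portrait", "portrait_mode")

print(prefs.suggest("food"))      # vivid (5 of 6 choices agree)
print(prefs.suggest("portrait"))  # None (too few samples so far)
```

Requiring both a minimum sample count and a strong majority keeps the camera from switching modes automatically after one or two taps, which would feel presumptuous rather than helpful.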

Real-time Feedback and Guidance

Capturing better images requires feedback. The S26 Ultra should provide subtle real-time guidance: a faint highlight when the composition is well aligned, a notification when focus locks on the subject, and an indication of whether the lighting suits the selected mode.

This guidance would be visual rather than textual, appearing as overlays on the viewfinder only when relevant. The guidance wouldn't restrict users or make mandatory suggestions, but would educate and inform. Over time, users would internalize these suggestions and develop better photographic instincts.

Seamless RAW + JPEG Workflow

Advanced users want RAW files for maximum post-processing control, while casual users prefer finished JPEGs. The S26 Ultra should seamlessly provide both simultaneously without requiring configuration. Every photograph is captured in both RAW and JPEG, with the JPEG receiving advanced computational processing while the RAW preserves all sensor data unprocessed.

Users can immediately share JPEGs, but RAW files are available for later processing if desired. This removes the trade-off between immediate usability and processing flexibility. Moreover, Samsung could provide one-tap RAW processing tools that apply HDR, denoising, and tone-mapping to RAW files post-capture, with adjustable intensity.

Competitive Positioning Against Specific Rivals

Differentiating from iPhone's Advantage

Apple's integration means iPhone 16 Pro will always have technical advantages in processing speed and hardware-software optimization. Samsung cannot replicate this. Instead, Samsung should position the S26 Ultra as offering photographic freedom that iPhone restricts.

While iPhone forces certain aesthetic choices through fixed color science, the S26 Ultra could offer multiple color profiles—Samsung's traditionally vibrant rendering, Adobe's neutral rendering, DCI-P3 cinema color space, and others. Users choose their preferred aesthetic rather than accepting Apple's single vision.

Moreover, Samsung should emphasize RAW and manual controls as standard capabilities. While iPhone photographers must use third-party apps for serious manual control, S26 Ultra users access these directly from the camera app. This appeals to enthusiasts and professionals who want complete control.

Leapfrogging Google's Computational Capability

Google's Pixel specializes in computational breakthroughs like Face Unblur and Magic Eraser. Samsung should acknowledge these are impressive, then demonstrate that hardware intelligence can achieve similar results. Where Pixel achieves face detail through machine learning upscaling, Samsung could capture multiple exposures and extract detail through exposure fusion.

Samsung's advantage is hardware—larger sensors, faster processors, more RAM. By investing in research partnerships with computational photography specialists and AI researchers, Samsung could develop proprietary techniques that utilize hardware advantages to exceed Pixel's software-only capabilities.

For instance, Samsung could implement object removal that works in real-time during capture—the camera identifies unwanted objects and computationally removes them before you even press the shutter. This would be invisible to users but would reduce the most common post-processing need. It represents a fundamentally different approach than Pixel's post-capture Magic Eraser.

Responding to Chinese Competitors' Hardware Innovation

Where Xiaomi, Oppo, and Vivo have achieved genuine hardware advances, Samsung should either match them or exceed them. If Xiaomi releases a 250MP sensor that performs exceptionally, Samsung's immediate response should be a 300MP or fundamentally improved 250MP with superior architecture.

Samsung's resources allow hardware matching. What Samsung must do differently is demonstrate that hardware advantages are only meaningful when combined with software excellence. Samsung should establish partnerships with professional photographers, content creators, and cinematographers, producing case studies that show how the S26 Ultra enables creative possibilities that competitors don't.

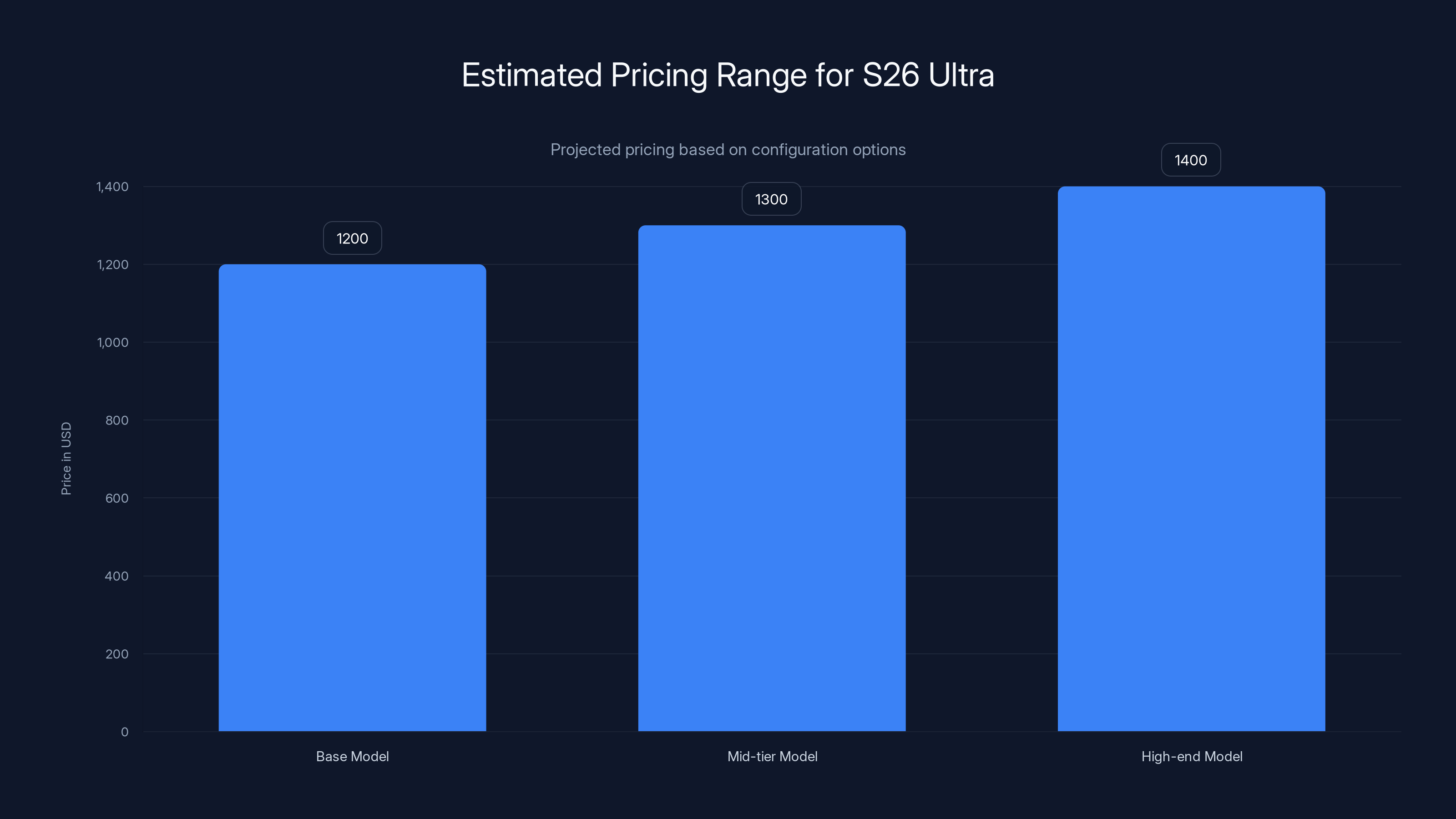


Software and Integration: Making Hardware Shine

One UI Camera Experience

One UI should position the camera as the centerpiece of the smartphone experience, not a peripheral app. Samsung could integrate camera capabilities throughout One UI—in the launcher, lock screen, quick settings, and throughout applications.

For instance, users might compose photos directly in the lock screen camera widget, or quickly access specific camera modes from the notification shade. Samsung Notes could include a camera mode for photographing documents, automatically cropping and enhancing them. Samsung's email client could offer quick camera access for attaching photographed documents.

This integration would make camera functionality feel ubiquitous and essential, rather than something you launch when you deliberately want to take a photo. The camera becomes a constant companion throughout the OS.

Cloud Integration for Computational Offloading

Some computational tasks could offload to Samsung's cloud servers, enabled by 5G and Wi-Fi connectivity. Complex AI models that wouldn't fit in device memory could run in the cloud and return results within moments. Batch processing of large photo libraries could happen on Samsung's servers while the phone is charging and connected to Wi-Fi.

Users might opt into this on a privacy-conscious basis, allowing non-sensitive processing to happen cloud-side to enable features that would be impossible locally. Samsung would need to be transparent about what happens in the cloud versus on-device, with granular privacy controls.
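The opt-in logic described above could be sketched as a small routing policy. Everything here is hypothetical: the function name, the parameters, and the set of accepted networks are illustrative assumptions, not a Samsung API.

```python
def choose_execution(model_mb, free_memory_mb, opted_in, sensitive, network):
    """Decide where a processing task runs under an illustrative opt-in policy."""
    # Prefer on-device processing whenever the model fits in memory.
    if model_mb <= free_memory_mb:
        return "device"
    # Cloud is allowed only with consent, for non-sensitive content, on a fast link.
    if opted_in and not sensitive and network in ("wifi", "5g"):
        return "cloud"
    # Otherwise the feature is unavailable rather than silently uploaded.
    return "unavailable"

print(choose_execution(6000, 2000, opted_in=True, sensitive=False, network="wifi"))
```

The ordering matters: local execution is tried first, so the cloud path is only reached for work the device cannot do on its own.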

This approach allows Samsung to implement computationally expensive features that competitors with weaker cloud infrastructure cannot offer. It's not just about local processing; it's about leveraging Samsung's infrastructure advantages.

Battery and Thermal Considerations

Power Efficiency Without Compromise

Advanced camera processing consumes significant power. The S26 Ultra's battery must support extended shooting without draining quickly. This requires both hardware optimizations (more efficient ISP and AI accelerators) and software optimizations (intelligent power management that scales features based on battery state).

Samsung should implement camera mode power budgets. Each mode knows approximately how much power it consumes. When battery is low, the camera can disable computationally expensive features while maintaining core functionality. Users would know they're in "battery saver" mode and accept the trade-offs.
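A minimal sketch of what a per-mode power budget might look like follows. The feature names, milliwatt figures, and battery thresholds are invented for illustration. Real values would come from device profiling.

```python
from dataclasses import dataclass

@dataclass(frozen=True)
class Feature:
    name: str
    power_mw: int            # hypothetical average draw
    essential: bool = False  # core functionality is never disabled

FEATURES = [
    Feature("preview", 600, essential=True),
    Feature("autofocus", 200, essential=True),
    Feature("scene_detection", 300),
    Feature("multi_frame_hdr", 700),
    Feature("ai_denoise", 900),
]

def budget_for_battery(percent):
    """Map battery level to a power budget in mW (illustrative thresholds)."""
    if percent > 50:
        return 3000
    if percent > 20:
        return 1800
    return 1000

def select_features(features, budget_mw):
    """Keep essentials, then add optional features cheapest-first while they fit."""
    chosen = [f for f in features if f.essential]
    used = sum(f.power_mw for f in chosen)
    optional = sorted((f for f in features if not f.essential), key=lambda f: f.power_mw)
    for f in optional:
        if used + f.power_mw <= budget_mw:
            chosen.append(f)
            used += f.power_mw
    return [f.name for f in chosen]

for level in (80, 30, 10):
    print(level, select_features(FEATURES, budget_for_battery(level)))
```

Cheapest-first is only one possible policy. A production system might rank optional features by their visible impact on image quality instead.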

Thermal Design for Sustained Performance

As discussed earlier, thermal management is critical. The S26 Ultra requires hardware innovations—better materials, improved thermal architecture—combined with software that actively manages thermal conditions before they become limiting.

Samsung could implement thermal monitoring that throttles features preemptively, maintaining target thermal conditions rather than reacting to overheating. The camera would be smart enough to know when it can sustain 8K 60fps recording versus when it should suggest 4K 60fps to maintain stability.
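To make the preemptive idea concrete, here is a sketch that extrapolates the recent temperature trend and picks the most demanding recording mode projected to stay under a limit. The linear model, the per-mode heating figures, and the 45 °C limit are all assumptions for illustration, not measured values.

```python
# Hypothetical extra heating (deg C) each mode adds over the lookahead window,
# ordered from most to least demanding.
MODES = [("8K60", 6.0), ("4K60", 3.0), ("4K30", 1.5)]

def projected_temp(samples, horizon_s, interval_s):
    """Linearly extrapolate temperature from the first and last sample."""
    if len(samples) < 2:
        return samples[-1]
    slope = (samples[-1] - samples[0]) / ((len(samples) - 1) * interval_s)
    return samples[-1] + slope * horizon_s

def suggest_mode(samples, limit_c=45.0, horizon_s=60.0, interval_s=10.0):
    """Return the most demanding mode whose projected temperature stays under the limit."""
    base = projected_temp(samples, horizon_s, interval_s)
    for name, extra in MODES:
        if base + extra <= limit_c:
            return name
    return MODES[-1][0]  # fall back to the lightest mode

print(suggest_mode([36.0, 36.0, 36.0]))  # stable temperature
print(suggest_mode([38.0, 38.4, 38.8]))  # warming trend
```

The key difference from reactive throttling is that the decision uses the projected temperature rather than the current one.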

Emerging Technologies and Experimental Features

Computational Zoom Beyond Current Limits

Current zoom implementations have hard limits: you cannot recover detail that the sensor never captured. But machine learning models trained on millions of zoom photographs could synthesize plausible detail at extended zoom levels. The S26 Ultra could offer 50x zoom that combines optical zoom, digital cropping, and AI-enhanced upscaling, creating results that feel surprisingly detailed.
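The way those three stages multiply together can be worked through with simple arithmetic. The sketch below assumes a 5x optical lens, a 48MP sensor, and a 12MP output image. These figures are chosen purely for illustration and are not S26 Ultra specifications.

```python
import math

def zoom_breakdown(target_zoom, optical_zoom, sensor_mp, output_mp):
    """Split a target zoom into optical, sensor-crop, and upscaling factors."""
    # Cropping costs no output resolution until the crop has fewer pixels than the output.
    lossless_crop = math.sqrt(sensor_mp / output_mp)
    remaining = max(1.0, target_zoom / optical_zoom)
    crop = min(remaining, lossless_crop)
    upscale = remaining / crop  # linear factor the AI model must make up
    return {"optical": optical_zoom, "crop": crop, "ai_upscale": upscale}

print(zoom_breakdown(50, 5, 48, 12))
```

Under these assumptions, 50x breaks down into 5x optical, 2x crop, and 5x upscaling. A 5x linear upscale means each captured pixel is stretched across 25 output pixels, which is why the honest "AI-enhanced" label matters.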

This would be positioned honestly—"AI-enhanced zoom" rather than claiming optical capability that doesn't exist. But it would expand the effective range of the camera beyond what optical zoom alone provides.

Light Field Capture Prototype

Light field technology captures directional information about light rays, enabling post-capture focus adjustment. While full light field implementation is impractical for smartphone cameras, the S26 Ultra could prototype partial light field capture using the multiple camera lenses to capture scene information from multiple perspectives.

This would enable features like post-capture focus adjustment and depth-based effects that aren't possible with standard photography. The feature might be experimental initially, but would differentiate the S26 Ultra as an innovation leader.

Quantum Dot Sensor Technology

Quantum dot sensors, which use quantum dots for improved light capture, could appear in next-generation flagships. Research is ongoing, but Samsung, which already manufactures quantum dots for displays, is well placed to pioneer their use in image sensors. For 2026 this remains aspirational technology, but it represents genuine innovation Samsung could pursue.

Estimated data shows that while Apple's iPhone 16 Pro leads in computational photography and real-world performance, Samsung's Galaxy S25 Ultra and Google's Pixel 9 Pro are strong contenders, with Chinese manufacturers closing the gap.

Professional Features and Creator Tools

Filmmaker Mode with Professional Controls

Content creators increasingly use smartphones for video production. The S26 Ultra should offer a Filmmaker Mode that provides professional controls: manual focus, manual exposure, histogram feedback, waveform monitoring, and direct control over color grading.

Professionals should be able to set frame rate, resolution, bitrate, color space, and sensor formatting without navigating through simplified menus. The S26 Ultra would essentially be a professional cinema camera with smartphone convenience.

Integration with Adobe and Professional Software

Samsung should deepen partnerships with Adobe, providing seamless integration between the S26 Ultra and Adobe Lightroom, Premiere Pro, and other professional tools. Photographers could make adjustments on the phone camera, and those adjustments would automatically sync to Adobe Creative Cloud.

Moreover, Samsung could provide proprietary processing that's recognized and built upon by Adobe's tools. Instead of Adobe trying to interpret Samsung's RAW format, they could have complete information about Samsung's processing pipeline and extend it.

Tethered Photography Support

Professional photographers often connect cameras to computers during shooting. The S26 Ultra should support tethered capture—connected to a computer, camera settings are controlled remotely, images are transferred immediately, and reviewing happens on a larger screen.

Samsung could provide software for Windows and Mac that makes tethered capture as natural as using a professional DSLR. This would appeal to studio photographers and content creators who want the convenience of smartphone cameras with the workflow of professional equipment.

Pricing and Market Strategy for S26 Ultra

Premium Positioning Justification

The S26 Ultra will certainly be expensive—likely $1,200-1,400 USD depending on configuration. Samsung must justify this premium through genuine capability differentiation. If the S26 Ultra isn't meaningfully better than the S25 Ultra at photography, the premium becomes indefensible.

Samsung should establish partnerships with influential photographers and videographers who publicly validate the camera capabilities. Case studies showing how the S26 Ultra enabled specific creative achievements would be more convincing than marketing claims.

Moreover, Samsung could bundle camera-centric services—premium storage for RAW files, professional RAW processing software, or integration with photo printing and archival services. The value proposition extends beyond hardware into the ecosystem around photography.

Regional Variations and Optimization

Different markets prioritize different camera capabilities. Smartphone photographers in Asia-Pacific favor vibrant color and beautification effects. European photographers prioritize neutral color science. Professional creators worldwide want manual control and RAW flexibility.

Samsung could offer region-specific tuning of color science and processing pipelines. The S26 Ultra sold in Asia might have brighter, more vibrant processing by default, while the US variant could default to more neutral rendering. Users could switch between presets, but the default matching regional preferences would reduce complaints about color accuracy.

Looking Beyond 2026: The S27 and Beyond

While the S26 Ultra represents the focus of this analysis, Samsung must also think beyond 2026. The smartphone camera market will continue evolving. Emerging technologies like periscope macro zoom, larger sensor sizes, or even foldable camera designs could define future innovations.

Samsung should begin research now on technologies that will matter in 2027-2029. This might include miniaturized cooling systems enabling sustained performance, more efficient neural processors enabling on-device AI, or novel optical designs enabling previously-impossible capabilities.

The S26 Ultra should be excellent at 2026 photography. But Samsung should also signal that it's investing in the future, demonstrating a vision for where smartphone photography is heading.

Estimated data shows that Google's Pixel leads in low-light performance with a score of 9, followed by iPhone at 8. Samsung S26 Ultra scores lower at 6, indicating room for improvement in its Night Mode capabilities.

Conclusion: The Path Forward for Samsung

The Samsung Galaxy S26 Ultra has enormous potential to reclaim photography leadership among flagship smartphones. Success requires Samsung to acknowledge where competitors excel while leveraging Samsung's genuine advantages: hardware capability, manufacturing scale, and integration across its ecosystem.

The path forward isn't about matching competitors feature-for-feature. It's about pursuing a coherent vision of smartphone photography that emphasizes different values. Trying to beat Google at computational photography is a losing battle, because Google's research base in that area is simply deeper. Trying to match Apple's integration and optimization is similarly impractical, because Apple's vertical integration is unmatched.

Instead, Samsung should position the S26 Ultra as the camera for photographers and creators who want control, flexibility, and hardware capability. The S26 Ultra should excel at RAW photography, manual controls, and features enabled by large sensors and fast processors. It should serve professionals who need creative freedom rather than consumers who want automatic results.

Specifically, the S26 Ultra must address known weaknesses: improved low-light performance through multi-exposure fusion, sustained thermal performance during video recording, more reliable autofocus in edge cases, and generally faster, more responsive processing. These are solvable problems that represent genuine improvement, not incremental tweaks.

On the innovation front, Samsung should pursue features that competitors cannot easily replicate. Real-time augmentation without preview degradation, intelligent object tracking and smart cropping, physics-based HDR rendering that understands material properties—these are the kinds of innovations that differentiate while using Samsung's hardware advantages.

The camera industry has evolved beyond megapixel counts and sensor sizes. Photography today is defined by software intelligence, thermal management, interface intuition, and ecosystem integration. The S26 Ultra must excel at all these dimensions simultaneously.

For Samsung to succeed, the company must make clear choices about what the S26 Ultra is optimized for. Is it the best automatic camera? No—Pixel wins there. Is it the most integrated? No—iPhone wins there. Is it the most capable camera for photographers and creators who want control and flexibility? That's where Samsung can genuinely compete and win.

The next 18 months are critical for Samsung's camera team. The engineering challenges are solvable. The technology required is available or within reach. What remains is execution excellence and strategic focus. If Samsung delivers on even 60% of the improvements outlined in this analysis, the S26 Ultra would represent a significant step forward for the company and a genuinely compelling flagship for photographers worldwide.

The 2025 camera landscape is competitive, but the leaders' advantage is not insurmountable. Samsung has the resources, talent, and hardware platform to claim photography leadership. The S26 Ultra represents the company's opportunity to redefine what a flagship camera phone should deliver. Whether Samsung seizes this opportunity will define the smartphone camera market for the next generation of devices.

FAQ

What specific camera improvements would make the Samsung Galaxy S26 Ultra competitive with the iPhone 16 Pro?

The S26 Ultra must implement multi-exposure low-light capture similar to computational photography leaders, improve thermal management to eliminate throttling during sustained video recording, and develop sophisticated AI-powered scene understanding that makes optimal decisions about exposure, focus, and processing without user intervention. Additionally, the S26 Ultra should emphasize features that appeal to photographers—superior RAW processing, manual controls, and multiple color science options that iPhone restricts. The key is differentiation based on photographer empowerment rather than attempting to match Apple's automatic optimization.

How does Google Pixel's computational photography approach differ from what Samsung could implement in the S26 Ultra?

Google's approach relies heavily on algorithmic sophistication developed through decades of research and trained on billions of photographs stored in Google's infrastructure. Google essentially owns the algorithmic space through institutional knowledge. Samsung's advantage lies in hardware—larger sensors, faster processors, specialized ISP chips. Rather than competing directly on algorithms, the S26 Ultra should use hardware advantages to achieve similar results through different technical approaches. For instance, where Pixel uses AI upscaling to recover face detail from blurry images, Samsung could capture multiple exposures and extract detail through intelligent fusion. This is fundamentally different technically but delivers similar results.

What thermal management innovations would prevent the Galaxy S26 Ultra from throttling during extended video recording?

The S26 Ultra requires physical thermal design improvements: larger heat spreaders, improved vapor chamber designs (possibly using graphene-enhanced materials), and optimized internal component placement. Software-level innovations include preemptive thermal management that throttles features before thermal limits are reached rather than reacting to overheating, intelligent workload distribution across processors, and dynamic mode adjustment that selects sustainable settings based on ambient temperature and thermal state. Dedicated video encoding hardware operating independently from the main processor would also reduce overall SoC heat generation during 8K recording.

How could the S26 Ultra implement real-time low-light improvement without degrading autofocus performance?

The solution involves multi-exposure capture at the sensor level where different rows capture different exposures simultaneously, merged in the ISP before traditional autofocus processing occurs. This provides all the signal benefits of multi-exposure low-light modes while maintaining standard autofocus responsiveness. Machine learning models trained on thousands of low-light scenarios could also improve autofocus reliability by learning the statistical signatures of low-light focus challenges and making preemptive adjustments before the autofocus system engages.

What kind of AI-powered scene understanding could make the S26 Ultra's automatic processing superior to competitors?

AI models trained on millions of images within specific categories—portrait, landscape, food, macro, low-light—could understand optimal processing for that specific scenario. When the camera detects a portrait scene, it invokes the portrait-trained model which optimizes for skin tone preservation, eye focus priority, background blur quality, and aesthetic preferences of portrait photography. For food photography, a different model optimizes for color saturation and warm tones. This category-specific processing allows more sophisticated optimization than fixed processing pipelines. Machine learning models should also learn individual user preferences, adapting processing over time to match how the specific user consistently adjusts images.
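A dispatcher for category-specific processing might look like the sketch below. The scene labels, parameter names, and the 0.6 confidence threshold are hypothetical. The classifier itself is out of scope, so its output is represented as a plain dictionary of scores.

```python
# Hypothetical per-scene processing parameters.
PIPELINES = {
    "portrait": {"protect_skin_tones": True, "saturation": 1.0, "focus_priority": "eyes"},
    "food": {"protect_skin_tones": False, "saturation": 1.2, "focus_priority": "center"},
}
DEFAULT_PIPELINE = {"protect_skin_tones": False, "saturation": 1.0, "focus_priority": "auto"}

def choose_pipeline(scores, min_confidence=0.6):
    """Pick the pipeline for the top-scoring scene, or the default if unsure."""
    label, confidence = max(scores.items(), key=lambda item: item[1])
    if confidence < min_confidence or label not in PIPELINES:
        return "default", DEFAULT_PIPELINE
    return label, PIPELINES[label]

print(choose_pipeline({"portrait": 0.85, "food": 0.10}))
print(choose_pipeline({"portrait": 0.40, "food": 0.35}))
```

Falling back to the default pipeline on low confidence avoids applying, say, food-style saturation to a scene the classifier only weakly recognizes.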

How would tethered photography support differentiate the S26 Ultra for professional photographers?

Tethered capture allows photographers to control camera settings remotely from a computer, transfer images immediately, and review them on a larger screen, a workflow that is standard in professional photography studios. Implementing this on the S26 Ultra would require dedicated software for Windows and Mac that communicates with the phone over USB or a wireless connection. Professional photographers could essentially use the S26 Ultra in a studio environment with the same workflow as professional DSLRs or cinema cameras, but with the computational photography and video stabilization advantages of a smartphone. This would appeal to studio photographers and video producers who want professional workflow with smartphone technology.

What material science or optical innovations could push the S26 Ultra's zoom capabilities beyond current limits?

Beyond traditional periscope designs, Samsung could explore hybrid optical-digital zoom where AI-powered upscaling intelligently extends zoom range. Quantum dot or other next-generation sensor technologies could improve light capture efficiency at extended focal lengths. Advanced optical coatings could reduce reflective losses. But fundamentally, the physics-based limits mean extended zoom will always involve some digital enhancement. The S26 Ultra should position this honestly as "AI-enhanced zoom" while using machine learning models trained on millions of zoom photographs to create surprisingly detailed results at 30x, 50x, or even 100x magnifications. This is different from optical zoom claims but represents genuine innovation.

How could Samsung implement real-time color and tone adjustment visible in the camera preview without degrading performance?

With improved GPU capabilities in next-generation processors, real-time tone mapping and color adjustment become feasible at viewfinder resolution without lag. Samsung could develop shader-based implementations of Curves, Levels, and HSL adjustments that render in real-time on the GPU while the ISP processes the background capture pipeline. Users would see adjustments live as they modify settings, but the actual capture continues through standard processing. Some competitors have begun implementing this; Samsung should make it standard functionality with sophisticated tools, not just basic brightness adjustment.
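For reference, the per-pixel math behind a Levels adjustment is small enough to show directly. The sketch below is written in Python for readability. A real implementation would run the same arithmetic per fragment in a GPU shader, and the function name and parameter defaults here are illustrative assumptions.

```python
def levels(value, black=0.0, white=1.0, gamma=1.0):
    """Apply a Levels adjustment to one normalized channel value (0.0 to 1.0)."""
    if white <= black:
        raise ValueError("white point must be above black point")
    # Remap the black..white input range onto 0..1, clipping anything outside it.
    normalized = (value - black) / (white - black)
    clamped = min(1.0, max(0.0, normalized))
    # Gamma above 1.0 brightens midtones; below 1.0 darkens them.
    return clamped ** (1.0 / gamma)

print(levels(0.25, gamma=2.0))
print(levels(0.5, black=0.1, white=0.9))
```

Because each output pixel depends only on its own input value, the operation parallelizes trivially, which is what makes a live viewfinder preview plausible.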

What kind of object recognition would enable the S26 Ultra to automatically invoke specialized camera modes based on scene analysis?

Machine learning classifiers trained on millions of real-world photographs could identify portrait scenarios, landscape compositions, macro subjects, food photography, documents, architecture, and other scene types. When the camera detects a specific scene, it could subtly highlight the corresponding specialized mode—a gentle notification that the "Portrait" mode would improve results without forcing selection. Over time, with machine learning personalization, the camera could learn the individual user's preferences and automatically apply optimizations for detected scene types. This transforms scene recognition from a simple "tag" to actionable processing improvements.

How would quantum dot sensor technology improve the S26 Ultra's photographic capabilities if implemented by 2026?

Quantum dot sensors use quantum dots for light capture instead of traditional silicon, potentially offering improved quantum efficiency (converting more photons to electrical signal) and better spectral response. This would translate to improved low-light performance, more accurate color capture, and potentially smaller pixel sizes without light-gathering sacrifice. Samsung manufactures quantum dots for display technology, positioning the company to pioneer their use in image sensors. However, integration into smartphone camera designs is unproven; 2026 might be realistic for prototype implementation with broader deployment following in 2027-2028 flagships.

Professional Content Creation: The S26 Ultra as a Production Tool

Video Production Capabilities

The smartphone has evolved from a tool for casual video capture into a legitimate cinema camera for independent filmmakers, journalists, and content creators. The S26 Ultra should embrace this role completely, offering professional-grade video tools that match or exceed traditional cinema cameras in flexibility and ease of use.

Implementing uncompressed video output over USB-C would allow external recorders to capture the camera's sensor output directly, bypassing internal compression limitations. This appeals to professional videographers who want maximum post-production flexibility. Simultaneously, the S26 Ultra should offer excellent in-camera codecs for creators who prefer self-contained workflows.

Variable frame rate recording would enable slow-motion effects without requiring high frame rate capture throughout. The camera could record 240fps for a few seconds of dramatic slow-motion within a standard 24fps sequence, avoiding the technical complexity of managing a high frame rate throughout the entire video.
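The arithmetic behind that 240fps-in-24fps example is worth spelling out. The helper names below are made up for the illustration, and the three-second burst is an assumed duration.

```python
def slowdown_factor(capture_fps, timeline_fps):
    """How many times slower the footage plays than real time."""
    return capture_fps / timeline_fps

def playback_seconds(capture_fps, capture_seconds, timeline_fps):
    """Screen time produced by a high-frame-rate burst on the timeline."""
    frames = capture_fps * capture_seconds
    return frames / timeline_fps

print(slowdown_factor(240, 24))      # 10.0
print(playback_seconds(240, 3, 24))  # 30.0
```

A three-second burst at 240fps yields 720 frames, which fill 30 seconds of a 24fps timeline. That 10x expansion is why limiting the high frame rate to short segments keeps file sizes and heat manageable.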

Integration with Professional Workflows

Content creators often use complex workflows involving color grading, effects, and distribution across multiple platforms. The S26 Ultra should integrate seamlessly with these workflows through APIs and software partnerships. Adobe's Creative Cloud integration would be foundational, but Samsung could also partner with DaVinci Resolve, Final Cut Pro, and other professional tools.

Samsung's cloud infrastructure could provide collaborative features where videographers in different locations simultaneously edit footage captured on the S26 Ultra, with changes syncing in real-time. This would position Samsung's infrastructure as essential to professional video production workflows.

Document and Technical Photography

Beyond traditional photography and videography, the S26 Ultra should excel at capturing documents, whiteboards, technical diagrams, and other professional content. Machine learning could automatically detect document edges, correct perspective distortion, enhance contrast, and convert captures into searchable PDFs—all in real-time without manual adjustment.

For technical professionals—architects, engineers, surveyors—the S26 Ultra could capture precise measurements and spatial information, with AI-powered analysis extracting dimensions and creating technical diagrams from photographs.

Photography Education and Learning Tools

Guided Tutorials Within Camera App

The S26 Ultra's camera app could function as a photography education tool. When a user enables a specific mode—Portrait, Manual, Macro—the app could provide subtle in-app tutorials explaining the mode's purpose, showing examples of excellent results, and suggesting techniques for better outcomes.

Users learning manual mode could see real-time guidance about how their settings affect image characteristics. Increasing ISO would show how it brightens the preview; adjusting shutter speed would show motion blur effects; aperture adjustments would show depth of field changes. This makes photography principles tangible and learnable directly through the camera interface.
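The relationships such a tutorial would teach can be expressed in photographic stops. The sketch below uses the standard convention that each stop doubles or halves image brightness. The function name and the choice of reference point (ISO 100, 1 second, f/1.0) are assumptions for the example.

```python
import math

def brightness_stops(iso, shutter_s, f_number):
    """Relative image brightness in stops versus ISO 100, 1 s, f/1.0."""
    return (
        math.log2(iso / 100)       # doubling ISO adds one stop
        + math.log2(shutter_s)     # doubling exposure time adds one stop
        - 2 * math.log2(f_number)  # doubling the f-number removes two stops
    )

base = brightness_stops(100, 1 / 60, 2.0)
print(brightness_stops(200, 1 / 60, 2.0) - base)  # higher ISO
print(brightness_stops(100, 1 / 30, 2.0) - base)  # slower shutter
print(brightness_stops(100, 1 / 60, 4.0) - base)  # narrower aperture
```

A guidance overlay could use differences like these to tell a learner that, for instance, the ISO change they just made can be offset by halving the shutter time.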

Over time, as users develop skill, the educational prompts could become optional—visible only when relevant, not intrusive. Advanced users could disable them entirely while beginners get comprehensive guidance.

Photography Challenges and Community

Samsung could build photography challenges directly into the camera app—weekly themes encouraging users to photograph specific subjects or explore specific techniques. Users submit their results, and Samsung's community votes on the best interpretations of the challenge. Winners receive rewards—new filters, exclusive editing tools, or featured placement in the app.

This gamifies photography skill development, encouraging users to explore capabilities they might not discover independently. The community aspect creates engagement and social features that deepen users' relationship with the device.

Sustainability and the S26 Ultra's Camera Design

Repairability and Modularity

Camera systems are complex and fragile. The S26 Ultra should prioritize repairability through modular design where camera modules can be replaced independently of the phone's other components. Users could upgrade the camera system while retaining other aspects of the device.

This aligns with sustainability principles—extending device lifespan through component replacement rather than requiring wholesale device replacement when the camera is damaged. Samsung could establish a service program providing affordable camera module replacements with minimal downtime.

Material Innovation and Environmental Impact

Optical elements and sensor components involve materials with environmental impact. Samsung could pioneer more sustainable materials—glass alternatives for lenses, recycled materials in camera module housings, and more efficient manufacturing processes. Communicating these sustainability efforts would appeal to environmentally-conscious consumers and differentiate the S26 Ultra based on values beyond pure performance.

International Market Considerations

Regional Camera Tuning

Photographic preferences vary by region. Smartphone photographers in East Asia often prefer vibrant colors, skin smoothing, and beautification effects. European photographers tend toward neutral color science and minimal processing. These regional preferences should influence the S26 Ultra's default processing in different markets.

Samsung could offer regional color profiles—Asia-Pacific tuning, European tuning, Americas tuning—applied by default but switchable by users. Users could also create custom profiles matching their aesthetic preferences, with the option to share custom profiles within Samsung's community.

Language and Localization

Beyond UI translation, Samsung should localize camera features for different markets. This might include region-specific beautification algorithms tailored to different skin tones, cultural aesthetics relevant to food photography in different cuisines, or landscape photography optimizations for regional geography.

Conclusion: Samsung's Path to Camera Dominance

The Samsung Galaxy S26 Ultra represents an extraordinary opportunity for Samsung to redefine smartphone photography. The technological groundwork exists. The manufacturing capability exists. The hardware platform exists. What remains is strategic focus and execution excellence.

Success requires Samsung to make clear choices about what the S26 Ultra optimizes for and what it explicitly does not attempt to replicate from competitors. Rather than trying to beat Google at algorithmic sophistication or match Apple's processing optimization, the S26 Ultra should establish itself as the photographer's choice, offering the flexibility, control, and hardware capability that empower creative professionals.

The specific improvements outlined across this analysis are all technically feasible for a 2026 device. Multi-exposure low-light capture, improved thermal management, sophisticated AI-powered scene understanding, cinematic video tools, professional editing capabilities—these represent genuine innovation that would justify the S26 Ultra's premium positioning.

Moreover, Samsung's ecosystem integration opportunities are unmatched. One UI integration, cloud services, partnerships with creative software companies, hardware modularity for repairability—these differentiate based on value beyond the camera itself.

The 2025 smartphone camera market is mature and competitive, but not locked in. Samsung has the resources and platform to claim leadership. The S26 Ultra could define the next generation of smartphone photography. Whether Samsung executes on this vision will determine the device's success and Samsung's trajectory in the fiercely competitive premium smartphone segment.

Key Takeaways

- Samsung must differentiate through photographer control and flexibility rather than attempting to match Apple's automation or Google's algorithmic sophistication

- Low-light performance improvements require multi-exposure capture and intelligent fusion rather than aggressive single-exposure noise reduction

- Thermal management during sustained video recording demands both hardware innovations and proactive software throttling strategies

- Professional features like RAW processing, manual controls, and tethered photography would appeal to enthusiasts competitors often ignore

- AI-powered scene understanding that invokes category-specific processing pipelines can optimize results better than fixed processing algorithms

- Camera innovations require ecosystem integration—One UI, cloud services, and software partnerships amplify hardware capabilities

- The S26 Ultra's real competitive advantage lies in its hardware foundation, not attempting to match competitors' established software strengths
