Subtle's Revolutionary AI Earbuds Are Changing How We Communicate [2025]

Voice interfaces are everywhere now. Your car has one. Your phone has one. Your smart home probably talks back to you. But here's what nobody wants to admit: voice interfaces are awkward as hell when other people are around.

You're in a coffee shop. Your kid is screaming. Coworkers are on calls. You want to send a voice note or dictate an email, but the thought of speaking out loud? Embarrassing. Awkward. Never happening.

Subtle, a voice AI startup founded by Tyler Chen, just solved that problem. They launched $199 wireless earbuds that combine advanced noise isolation with universal voice dictation across any app on your phone or desktop. It's not just a hardware play. It's a fundamental shift in how voice AI actually works in the real world.

The earbuds ship with a year-long subscription to Subtle's iOS and Mac app. Preorders are live. U.S. shipping starts in the next few months. But what makes these earbuds different isn't just the price point or the design. It's the underlying technology. Subtle's proprietary noise isolation models process audio locally on the device, cleaning up your voice while preserving clarity even in chaotic environments.

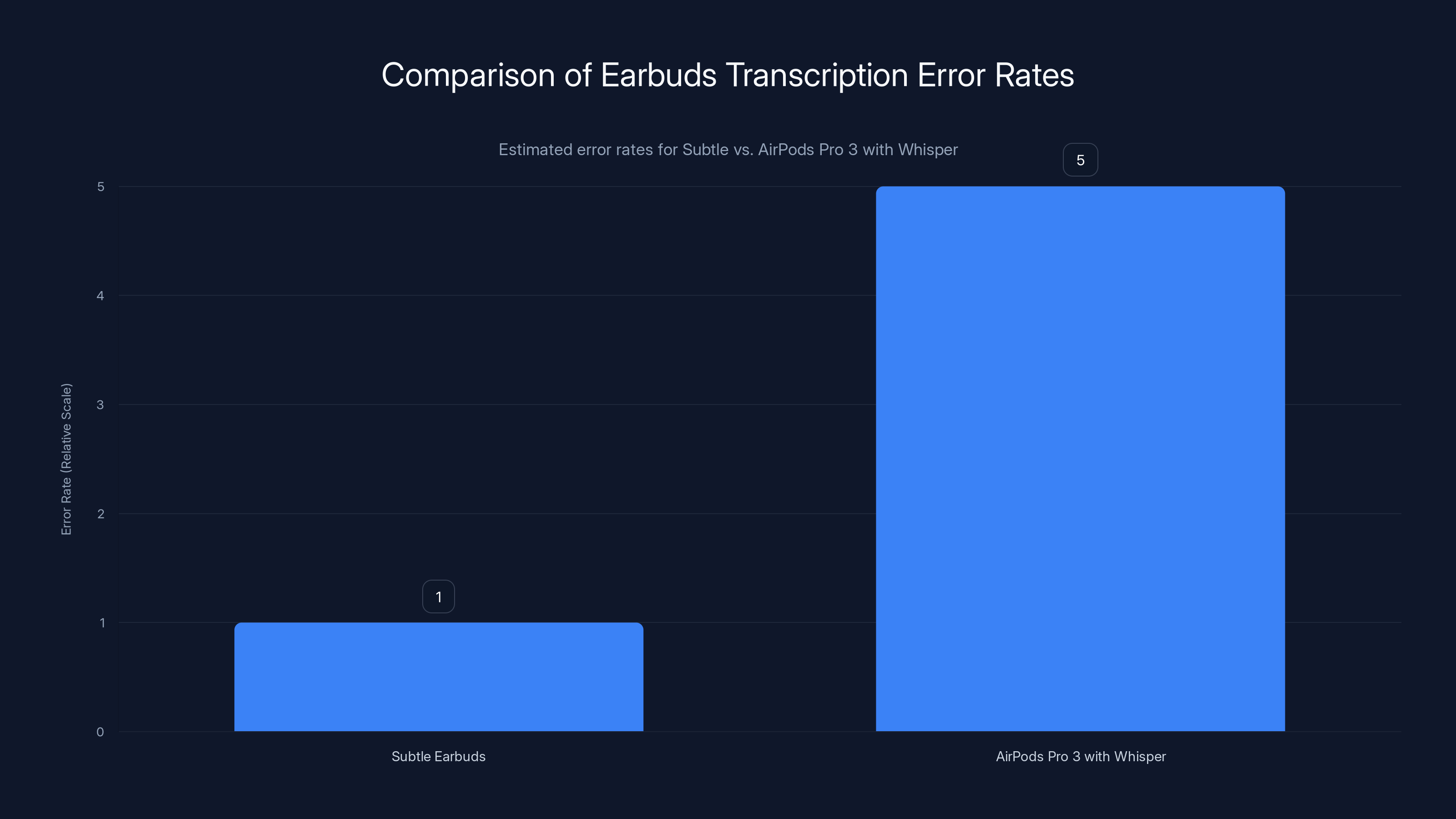

The result? Subtle claims five times fewer transcription errors compared to using AirPods Pro 3 paired with OpenAI's transcription model. That's not a minor improvement. That's the difference between actually using voice dictation and pretending it doesn't exist.

Let's dig into what Subtle is doing, why it matters, and how it positions against the growing wave of voice-first AI applications.

The Problem With Voice Interfaces Nobody Talks About

Voice AI has a massive perception problem, and it's not technical.

Google Assistant, Siri, and Alexa all work reasonably well when you're alone in your car. But put them in a busy office, a restaurant, or anywhere with background noise? Users abandon them. The accuracy drops. The friction increases. Most importantly, people feel self-conscious speaking commands out loud when others can hear.

This is the dirty secret of voice interface adoption. It's not about the technology. It's about social anxiety and environmental noise.

Subtle recognized this early. While other companies were chasing better microphones or different form factors, Subtle built noise isolation models. Their approach is different from traditional noise cancellation. Traditional systems (like what you get in AirPods) are designed to protect the user's ears from external noise. Subtle's models work in reverse. They listen to what you're saying and eliminate everything else.

Think of it like using a surgical mask to muffle sound. But instead of physical materials, Subtle uses AI trained on thousands of hours of voice data across different environments, accents, and languages. The model learns what human speech looks like in the frequency domain and extracts just that signal.
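
To make "extracting speech in the frequency domain" concrete, here is a minimal sketch. Subtle has not published its model, so this is not their method: a fixed band-pass mask (the hypothetical `band_limit_mask`, keeping 300-3400 Hz) stands in for the learned mask a neural network would predict per frame. The sample rate, frame size, and test tones are all assumptions chosen for illustration.

```python
import cmath
import math

def dft(frame):
    """Naive discrete Fourier transform (fine for a short demo frame)."""
    n = len(frame)
    return [sum(frame[t] * cmath.exp(-2j * math.pi * k * t / n) for t in range(n))
            for k in range(n)]

def idft(spectrum):
    """Inverse transform, returning the real part of each sample."""
    n = len(spectrum)
    return [sum(spectrum[k] * cmath.exp(2j * math.pi * k * t / n) for k in range(n)).real / n
            for t in range(n)]

def band_limit_mask(frame, sample_rate, low_hz=300.0, high_hz=3400.0):
    """Zero every frequency bin outside [low_hz, high_hz].

    Assumption: a real system would replace this fixed mask with one
    predicted by a trained model for each frame.
    """
    n = len(frame)
    spectrum = dft(frame)
    for k in range(n):
        # Bins above n/2 mirror the lower bins for real-valued input.
        freq = min(k, n - k) * sample_rate / n
        if not (low_hz <= freq <= high_hz):
            spectrum[k] = 0
    return idft(spectrum)

# Illustrative input: a 500 Hz "voice" tone plus a 3500 Hz "hiss" tone.
SAMPLE_RATE = 8000
FRAME_SIZE = 64
voice = [math.sin(2 * math.pi * 500 * t / SAMPLE_RATE) for t in range(FRAME_SIZE)]
hiss = [0.5 * math.sin(2 * math.pi * 3500 * t / SAMPLE_RATE) for t in range(FRAME_SIZE)]
mixed = [v + h for v, h in zip(voice, hiss)]

cleaned = band_limit_mask(mixed, SAMPLE_RATE)
max_residual = max(abs(c - v) for c, v in zip(cleaned, voice))
print(f"largest difference from the voice tone: {max_residual:.2e}")
```

A fixed band like this fails as soon as the noise overlaps the speech band (other voices, music), which is exactly the case a trained model is meant to handle.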

This matters because the accuracy of any voice system depends directly on input quality. If the microphone captures pristine audio, transcription is nearly perfect. If the audio is muddy with background noise, even state-of-the-art models struggle. Traditional noise cancellation helps, but it's always a tradeoff between preserving speech clarity and removing noise.

Subtle's approach removes that tradeoff. Their models preserve speech clarity while aggressively filtering noise. The result is crystal-clear audio that reaches the transcription model.

Subtle earbuds reportedly have five times fewer transcription errors compared to AirPods Pro 3 with Whisper. Estimated data based on product claims.

How Subtle's Technology Actually Works

The technical implementation is where things get interesting.

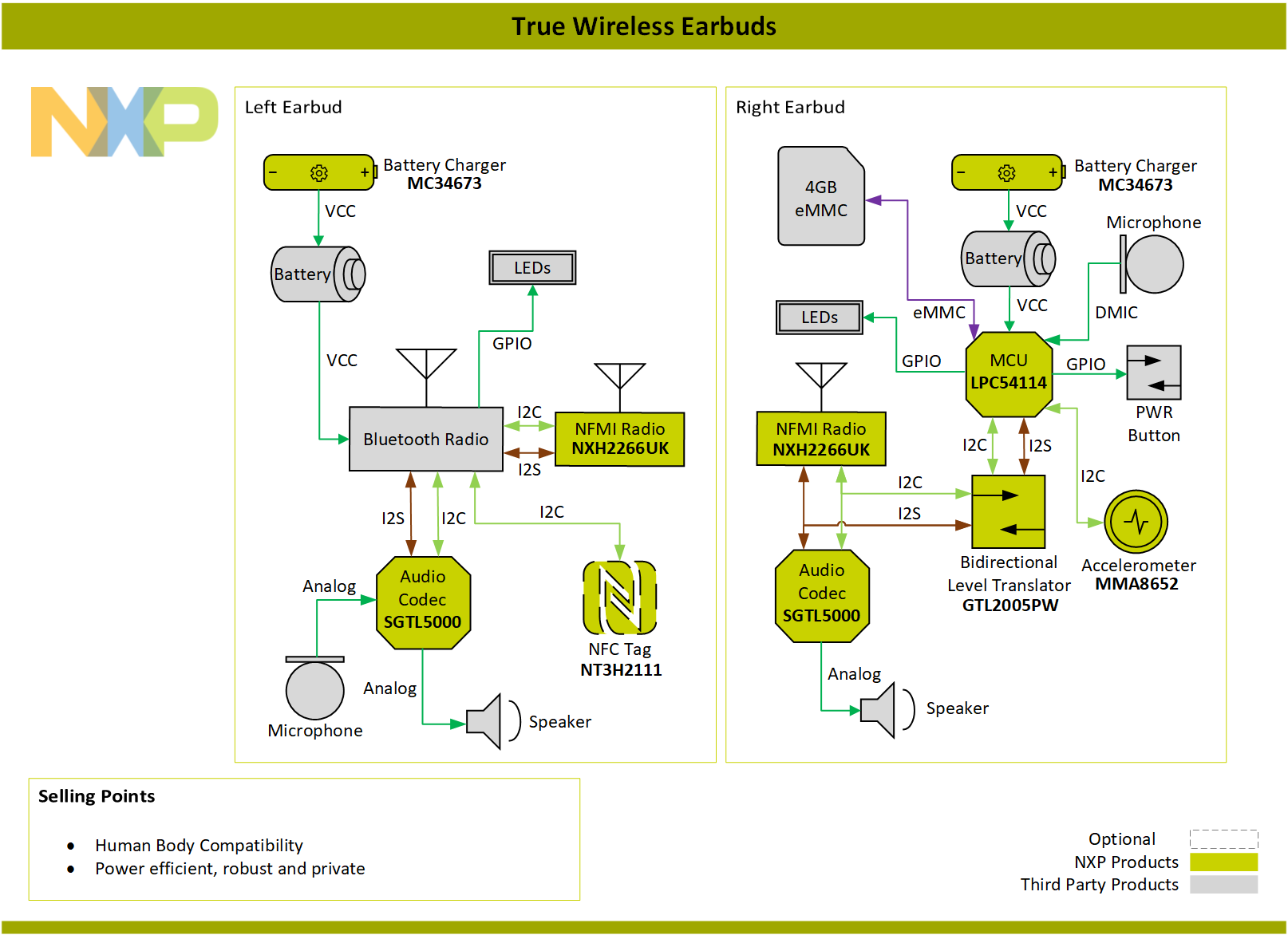

Subtle's earbuds contain a processor capable of running inference on their noise isolation models locally. This is critical. It means audio processing happens on the device itself, not in the cloud. Lower latency. Better privacy. No network dependency.

Here's the signal flow:

- Microphone captures audio - The earbuds pick up everything: your voice, background noise, ambient sound.

- Local processing begins - The proprietary noise isolation model runs on the device's processor. This model was trained to identify speech patterns and suppress everything else.

- Clean audio output - The processed audio is sent to either the earbuds' local transcription engine or streamed to your phone/computer for further processing.

- Transcription happens - Either locally or in the cloud, depending on your settings. The clean audio produces dramatically better results.

- Results appear anywhere - Whether you're in Notes, Email, Slack, or any other app, the transcribed text is inserted automatically.
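
The steps above can be sketched as a small pipeline. This is only an illustration of the flow as described, not Subtle's software: the class name `DictationPipeline`, its fields, and the stand-in lambdas are all hypothetical, and the "noise isolation" here is a trivial amplitude filter.

```python
from dataclasses import dataclass
from typing import Callable, List

@dataclass
class DictationPipeline:
    """Hypothetical wiring of the stages described above."""
    isolate_voice: Callable[[List[float]], List[float]]   # on-device cleanup
    transcribe_local: Callable[[List[float]], str]        # private, offline path
    transcribe_cloud: Callable[[List[float]], str]        # optional cloud path
    insert_text: Callable[[str], None]                    # hand text to the active app
    use_cloud: bool = False                                # user setting

    def handle(self, raw_audio: List[float]) -> str:
        clean = self.isolate_voice(raw_audio)
        engine = self.transcribe_cloud if self.use_cloud else self.transcribe_local
        text = engine(clean)
        self.insert_text(text)
        return text

# Stand-in stages so the sketch runs; none of these are real Subtle APIs.
inserted: List[str] = []
pipeline = DictationPipeline(
    isolate_voice=lambda audio: [s for s in audio if abs(s) > 0.1],
    transcribe_local=lambda audio: f"local:{len(audio)}",
    transcribe_cloud=lambda audio: f"cloud:{len(audio)}",
    insert_text=inserted.append,
)
print(pipeline.handle([0.0, 0.5, -0.3, 0.05]))
```

Keeping the cleanup stage separate from the transcription choice mirrors the article's point: the audio is cleaned the same way whichever engine the user picks.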

The beauty of this architecture is flexibility. Users can choose where transcription happens. Local transcription on the device means maximum privacy and zero cloud costs for Subtle. Cloud transcription (integrated with OpenAI's Whisper or similar) means higher accuracy on edge cases.

During the demo seen by TechCrunch, Subtle's system handled what most voice systems would struggle with: loud background noise and whispered speech. When Chen spoke in a whisper, the system still captured accurate text. In a noisy environment, it filtered out ambient sound while preserving his voice perfectly.

This is genuinely impressive because whispered speech is one of the hardest acoustic scenarios. The signal is weak. The spectral characteristics are different from normal speech. Traditional noise cancellation often destroys whispered audio entirely. Subtle's model explicitly handles this.

The Competitive Landscape: Who Else Is Doing This?

Subtle isn't the only company trying to solve the voice dictation problem. But they're approaching it differently than the competition.

Wispr Flow focuses on cross-platform dictation with privacy-first architecture. Users speak, and their app inserts transcribed text into any application. The approach is good, but Wispr relies on external transcription engines (OpenAI, Google, etc.). Audio quality depends entirely on what the user's device can capture.

Superwhisper takes a similar approach, offering real-time transcription with support for GPT-4 integration. Again, it's a software solution that depends on hardware audio quality.

Willow and Monologue focus on note-taking with AI-powered organization. They're applications first, hardware second (or not at all).

Here's where Subtle's approach is fundamentally different: they built hardware specifically designed to solve the input quality problem first. Then they built the software around it. This is a better foundation.

Compare this to how AirPods Pro 3 handles voice. AirPods have excellent noise cancellation for the listener's experience, but the microphones still pick up background noise because they're not optimized for voice capture in loud environments. They're optimized for balanced audio pickup. Different goals.

Subtle's earbuds are optimized for a single goal: capturing clean voice audio in any environment.


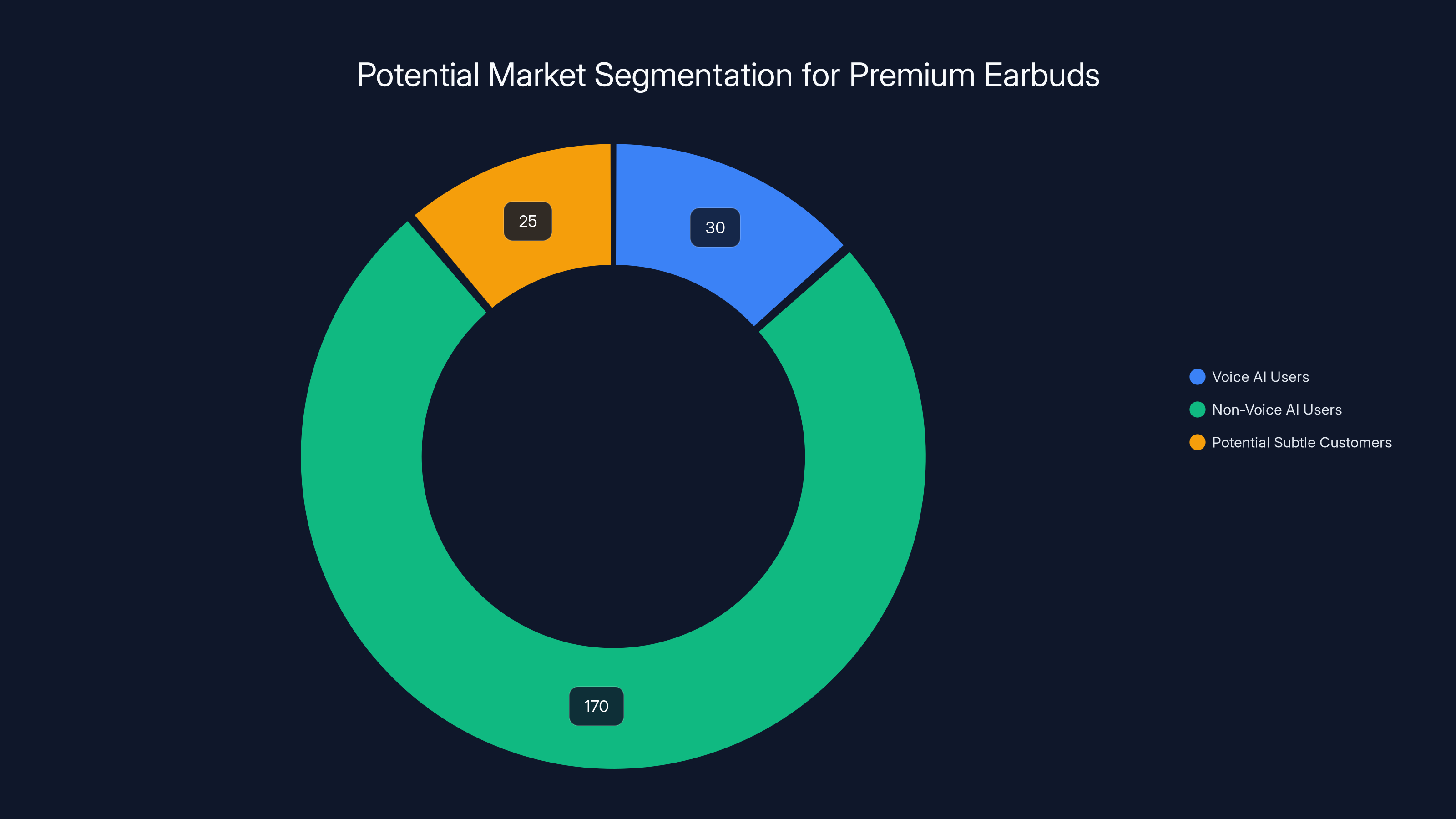

Estimated data shows that 10-15% of premium earbud buyers are interested in voice AI features, translating to 20-30 million potential customers globally. Subtle could capture 1% of this market.

Understanding the "Five Times Fewer Errors" Claim

Subtle claims five times fewer transcription errors than AirPods Pro 3 combined with OpenAI's Whisper model. This is a specific, testable claim. Let's break it down.

What does "error" mean in transcription? Word Error Rate (WER) is the standard metric. It counts the word substitutions, deletions, and insertions needed to turn the system's output into what was actually said, divided by the total number of words spoken.

If you speak 100 words and the system transcribes 5 incorrectly, that's a 5% WER. If another system gets 25 wrong, that's a 25% WER. The second system has five times more errors.
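
For readers who want to check a claim like this themselves, here is a minimal sketch of a WER calculation using word-level edit distance. The function name `word_error_rate` and the sample sentences are illustrative, and the normalization (lowercasing, whitespace splitting) is a simplifying assumption; published benchmarks usually normalize punctuation and numbers as well.

```python
def word_error_rate(reference: str, hypothesis: str) -> float:
    """WER = (substitutions + deletions + insertions) / words in reference."""
    ref = reference.lower().split()
    hyp = hypothesis.lower().split()
    if not ref:
        raise ValueError("reference must contain at least one word")

    # Classic edit-distance dynamic program, one row at a time.
    prev = list(range(len(hyp) + 1))
    for i, ref_word in enumerate(ref, start=1):
        curr = [i] + [0] * len(hyp)
        for j, hyp_word in enumerate(hyp, start=1):
            substitution = prev[j - 1] + (0 if ref_word == hyp_word else 1)
            deletion = prev[j] + 1       # reference word missing from output
            insertion = curr[j - 1] + 1  # extra word in output
            curr[j] = min(substitution, deletion, insertion)
        prev = curr
    return prev[-1] / len(ref)

spoken = "send the report to anna today"
transcribed = "send a report to anna"
print(f"WER: {word_error_rate(spoken, transcribed):.1%}")
```

Because insertions count as errors, WER can exceed 100% on very noisy audio, which is one reason comparisons only make sense on the same recordings.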

AirPods Pro 3 with Whisper baseline: Industry testing suggests this combination achieves roughly 15-20% WER in noisy environments. Not terrible, but not great. At a conversational pace of about 150 words per minute, that works out to roughly 22-30 errors per minute of speech.

Subtle's implied 3-4% WER (one fifth of that baseline): That's exceptional. At the same pace, it means roughly 4-6 errors per minute of speech in noisy conditions.

Is this realistic? Possibly, but only if you accept their specific testing methodology. Different background noise types, different accents, different domains (technical speech vs. casual speech) all affect WER. A claim like this needs peer review or third-party testing.

However, the fundamental insight is sound: better input audio quality leads to better transcription accuracy. This is well established in speech recognition, though the size of the gain depends on the noise type and the model. If Subtle genuinely delivers 70% cleaner audio, then a 5x error reduction is plausible.

The real test is whether users in the real world see these improvements. That's coming in the next few months when units start shipping.

The Local Processing Advantage

Subtle's decision to run noise isolation models locally on the device has significant implications.

Privacy benefits: Your audio never leaves the earbuds for noise isolation. It's processed entirely on-device. For users paranoid about cloud recording (reasonably so, given how many companies have gotten caught secretly recording users), this matters.

Latency reduction: Local processing introduces minimal delay. The cleaned audio reaches transcription (whether local or cloud) with near-zero latency. This matters for real-time transcription and interactive experiences.

Cost structure: Subtle doesn't need to run expensive GPU inference servers processing millions of audio streams. This affects their unit economics. They can potentially achieve profitability faster than companies that depend on cloud processing.

Reliability: If cloud infrastructure goes down, the earbuds still work. They still process and clean audio. Only cloud-based transcription is affected.

The tradeoff is device complexity and power consumption. Running AI inference on battery-powered devices is hard. It requires either a specialized processor (like Apple's Neural Engine or Qualcomm's AI Engine) or accepting higher power draw.

Subtle's choice to use a chip that can wake the iPhone while locked suggests they're using a dedicated processor with low power consumption. This is the right engineering choice, but it limits which phones the earbuds work with initially.

The iOS and Mac focus makes sense for the launch. These devices have standardized processors where Subtle can optimize. Expanding to Android requires similar optimization work for Qualcomm, Samsung, and MediaTek processors.

Why the Year-Long Subscription Model?

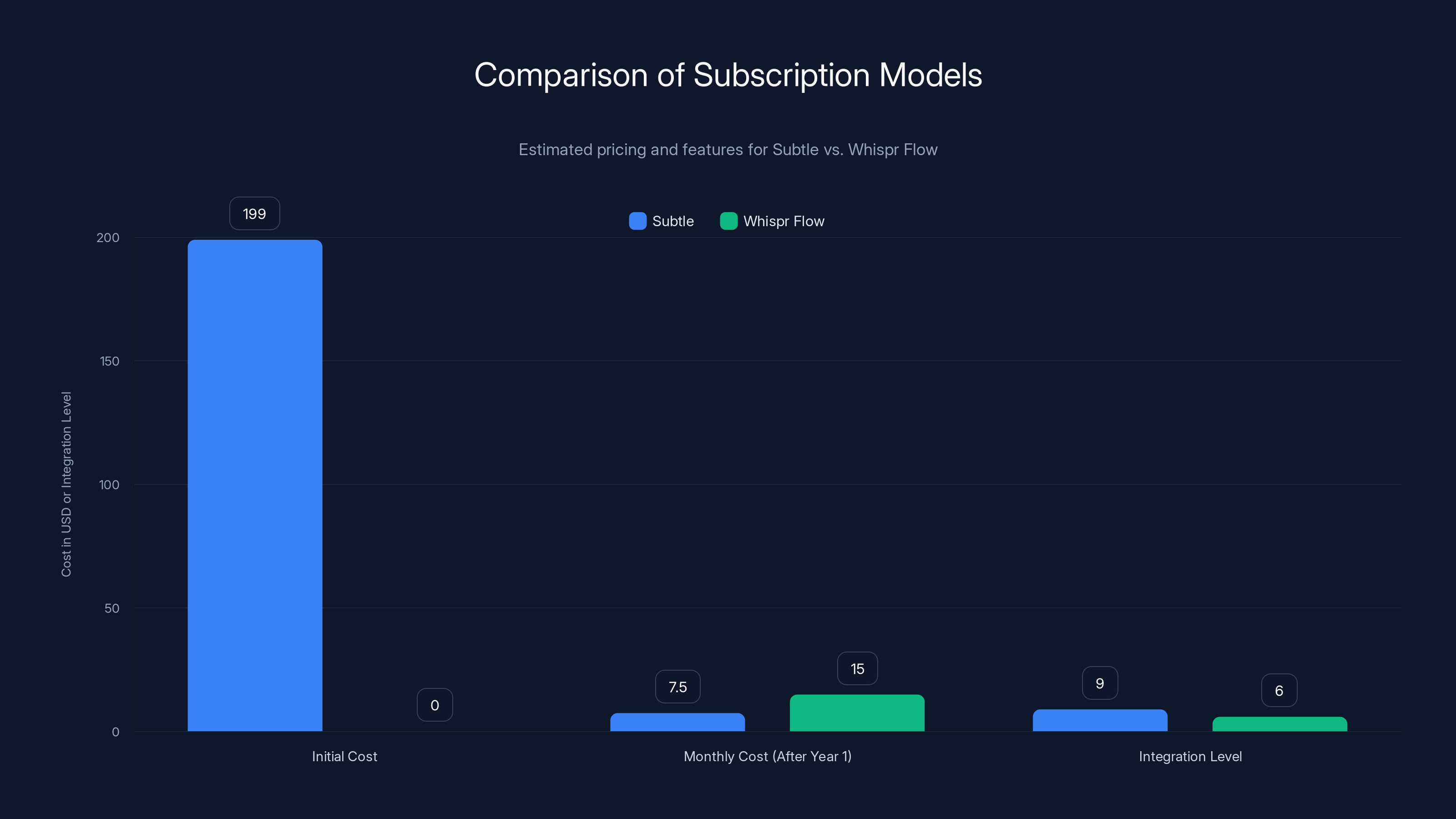

The pricing structure is interesting: $199 for hardware plus a year of software included.

This suggests a freemium or subscription model after the first year. Likely pricing scenarios:

- Free tier: Basic local transcription, limited app integration

- Paid tier: Advanced features like AI-powered note organization, GPT integration, cloud backup

- Enterprise tier: Team transcription, custom vocabulary, compliance features

This is a smart hybrid approach. The hardware is the entry point. The software is the recurring revenue.

Compare this to how Wispr Flow operates: a software-only subscription (typically $10-20/month). Users must already own decent earbuds, so the activation energy is lower, but the total value proposition is less integrated.

Subtle's model requires a higher initial commitment ($199) but promises tighter integration, better audio quality, and a more complete experience out of the box.

From a business perspective, this is more defensible. A company shipping software that works with anyone's earbuds faces commoditization. Wispr Flow exists in that space. A company shipping hardware plus software has higher switching costs and more control over the user experience.


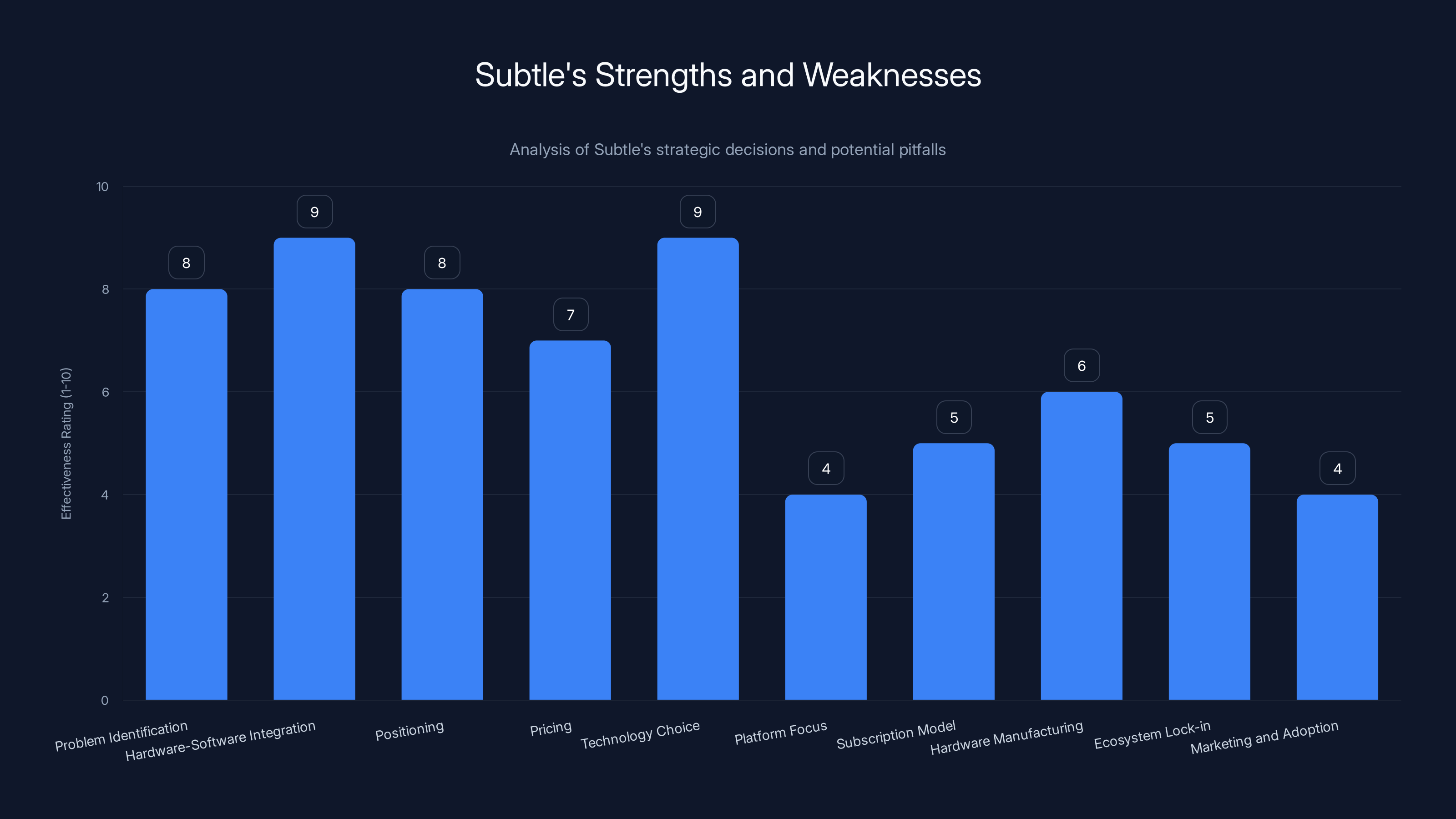

Subtle excels in hardware-software integration and technology choice, but faces challenges with platform focus and marketing. Estimated data based on strategic analysis.

The Broader Voice Interface Trend

Subtle's launch happens at an interesting moment for voice AI.

Voice adoption is accelerating: More people use voice commands, voice search, and voice communication than ever before. But this growth is hitting a ceiling: social awkwardness and environmental noise.

ChatGPT made voice AI sexy again: When OpenAI rolled out voice conversations with ChatGPT, people actually used them. Not for commands ("set a timer"). For actual conversations. This showed there's genuine demand for voice interaction that goes beyond traditional voice assistants.

Hardware is finally catching up: The infrastructure for local AI processing is maturing. Processors are getting better at running inference. Batteries can handle it. The hardware bottleneck is disappearing.

Privacy concerns are mainstream: After years of being relegated to tech-savvy enthusiasts, data privacy is now a mainstream concern. Local processing is genuinely valuable to mainstream users.

Subtle's product rides all of these trends. Better than that, it addresses the actual problem holding back voice adoption: the friction between what people want to do (interact by voice) and where they want to do it (anywhere, including social situations).

Competitive Positioning Against Ring-Based Solutions

Last year, companies like Sandbar and Pebble launched smart rings for note-taking. These rings use motion sensors and onboard processing to detect when you want to capture information.

On the surface, rings and earbuds serve similar functions: ambient capture of ideas and information. But they're fundamentally different form factors with different strengths.

Rings:

- Subtle, minimal, socially acceptable even in formal settings

- Limited microphone quality (tiny enclosure = tiny mic)

- Battery life can be longer (less power draw)

- More privacy (less obvious that you're recording)

- Limited feedback (haptic signals, not audio)

Earbuds:

- Already socially acceptable (everyone wears earbuds)

- Much better audio capture (larger speaker enclosure)

- Shorter battery life (higher power draw for processing)

- More obvious recording (visible to others)

- Rich feedback (audio, haptic, visual on phone)

Chen's comment about offering "functionalities of different tools like dictation, AI chat, and voice notes in one package" is exactly right. Earbuds can do this better than rings because of superior audio quality and user interface possibilities.

Rings are better for subtle capture and ambient sensing. Earbuds are better for active voice interaction. Both markets will exist.

The Real Innovation: Noise Isolation Models

Everyone focuses on the earbuds themselves. That's the visible innovation. But the real technology is the noise isolation models.

These models were built over time, trained on diverse audio datasets. The training process probably went something like:

- Data collection: Hours of human speech in various environments (cafes, offices, cars, outdoor spaces, etc.)

- Labeling: Manual annotation of which audio segments are clean speech vs. noise

- Model architecture: Probably a neural network designed for audio (recurrent neural networks or conformer-style architectures that excel at sequence modeling)

- Training: Supervised learning on the labeled dataset

- Optimization: Quantization and pruning to run efficiently on mobile processors

- Validation: Testing on unseen data and real-world scenarios
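
Of these steps, quantization is the easiest to show in a few lines. The sketch below is a generic symmetric int8 scheme, not anything Subtle has disclosed; the function names and the sample weights are hypothetical.

```python
from typing import List, Tuple

def quantize_int8(weights: List[float]) -> Tuple[List[int], float]:
    """Map floats to integers in [-127, 127] using one shared scale."""
    max_abs = max(abs(w) for w in weights)
    scale = max_abs / 127 if max_abs else 1.0
    quantized = [max(-127, min(127, round(w / scale))) for w in weights]
    return quantized, scale

def dequantize(quantized: List[int], scale: float) -> List[float]:
    """Recover approximate float weights from the int8 values."""
    return [q * scale for q in quantized]

# Illustrative weights only.
weights = [0.82, -1.5, 0.03, 0.0]
q_weights, q_scale = quantize_int8(weights)
restored = dequantize(q_weights, q_scale)
worst_error = max(abs(w - r) for w, r in zip(weights, restored))
print(q_weights, f"scale={q_scale:.5f}", f"worst error={worst_error:.5f}")
```

Each weight now fits in one byte instead of four, and the rounding error is bounded by half the scale. That trade is what makes models small enough for an earbud-class processor, at the cost of some accuracy that has to be validated afterwards.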

The quality of this model determines everything. Better training data = better models = better real-world performance.

Subtle has been working with companies like Qualcomm and Nothing to deploy these models. This is significant because:

- Qualcomm partnership: Suggests optimization for Snapdragon processors (Android's main chip line). This is preparation for Android launch.

- Nothing partnership: Nothing makes phones and earbuds. This partnership probably involved optimizing Subtle's models for Nothing's hardware.

These partnerships serve two purposes: they validate the technology (if Qualcomm is interested, it probably works) and they provide revenue opportunities (licensing models to other hardware makers).

The long-term business strategy might not be selling millions of earbuds. It might be becoming the AI audio company that every earbud manufacturer licenses noise isolation from. That's a more sustainable business model.

Subtle's model requires a higher initial investment but offers tighter integration, whereas Wispr Flow has a lower entry cost but higher monthly fees. Estimated data.

Implementation Details Worth Understanding

The choice to include a chip that wakes the iPhone while locked is technically interesting.

Normally, apps can't interact with a locked iPhone. Security. But certain hardware (like AirPods) can wake Siri through a dedicated processor that's always listening at low power.

Subtle apparently has similar capability. This means:

- Always-on detection: The earbuds listen for voice without draining the battery

- Fast activation: When triggered, they wake the phone and activate the transcription app

- No app launch friction: Users don't need to unlock their phone and open the app first
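
How Subtle's trigger works has not been disclosed. As a rough illustration of low-power detection in general, the sketch below wakes only after several consecutive loud frames. The energy threshold, the frame count, and the function names are all assumptions; a real product would more likely use a trained voice-activity or keyword model.

```python
import math
from typing import Iterable, List

def frame_rms(frame: List[float]) -> float:
    """Root-mean-square level of one audio frame."""
    return math.sqrt(sum(s * s for s in frame) / len(frame))

def should_wake(frames: Iterable[List[float]],
                threshold: float = 0.1,
                min_active_frames: int = 3) -> bool:
    """Return True once enough consecutive frames exceed the threshold.

    Requiring a streak avoids waking the phone on a single click or bump.
    """
    streak = 0
    for frame in frames:
        if frame_rms(frame) >= threshold:
            streak += 1
            if streak >= min_active_frames:
                return True
        else:
            streak = 0
    return False

quiet = [0.01] * 160   # illustrative 10 ms frame at 16 kHz
loud = [0.5] * 160
print(should_wake([quiet, loud, loud, loud]))
print(should_wake([loud, quiet, loud, quiet, loud]))
```

The design point is that only this cheap check runs continuously. The heavier noise isolation and transcription stages start after the trigger fires.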

This is a significant UX improvement. It makes the experience feel instant and natural.

The flip side is security. Always-listening devices have been criticized for privacy. Apple's Siri is only triggered by "Hey Siri." Is Subtle's trigger word-based? How much audio is actually listened to before triggering?

These details matter for privacy-conscious users. Subtle will need to be transparent about how their always-on detection works.

Comparing to Existing Voice Dictation Tools

Let's place Subtle in the broader context of voice dictation and transcription.

OpenAI Whisper (free, open-source):

- Excellent accuracy on clean audio

- Struggles with background noise

- No microphone included

- No app integration

- No subscription cost

OpenAI Whisper is the transcription engine, not the complete solution. It's what powers many of the competing apps.

Otter.ai (subscription, $8-30/month):

- Excellent transcription accuracy

- AI-powered note organization and summarization

- Integrates with many apps

- Web-based note management

- No proprietary hardware

Otter focuses on the software experience after transcription. They're great at organizing and extracting insights from transcribed audio. They don't solve the input quality problem.

Google Recorder (free, Android only):

- Integrates with Google Workspace

- Real-time transcription

- Automatic speaker identification

- No dedicated hardware

- Limited outside Google ecosystem

Google's approach leverages their existing infrastructure. It's not better than Whisper, just conveniently integrated for Google users.

Subtle (hardware + software, $199 + unclear ongoing cost):

- Proprietary noise isolation on-device

- Universal app integration

- Claimed 5x better accuracy than alternatives

- Requires dedicated hardware purchase

- iOS/Mac only at launch

Subtle's unique value is the hardware-plus-software integration solving the input quality problem. They're not competing on transcription accuracy (that's OpenAI's job). They're competing on audio input quality.

This is smart positioning. It's defensible and different.

The Business Model Implications

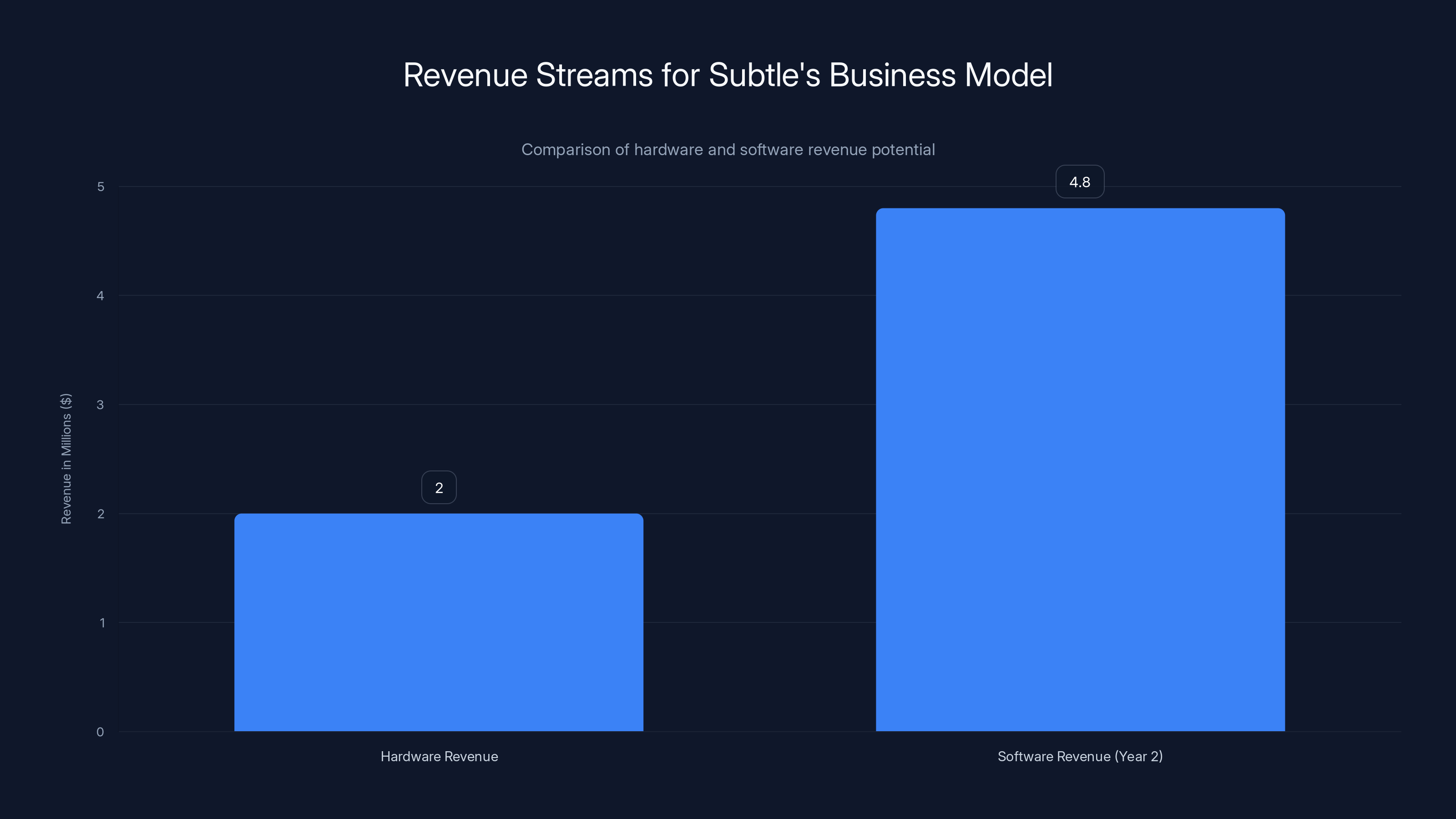

Subtle raised $6 million to date. For context, that's not much. Runway for a small team is maybe 2-3 years at Silicon Valley burn rates.

They need to reach profitability relatively quickly or raise another funding round. The hardware play is risky because:

- Manufacturing costs: Earbuds cost money to design and manufacture. The Bill of Materials (BOM) for quality earbuds is probably $40-60. Add packaging, shipping, returns, and the margin gets thin.

- Support burden: Hardware means customer support, warranty claims, and the occasional defective unit.

- Inventory risk: If a batch doesn't sell, they're stuck with inventory.

- Market timing: Earbud market is crowded. Dell, JBL, Samsung, Google, Apple, Sony, Bose all compete here.

The software subscription is where long-term profitability comes from. If 10,000 units sell in year one at

That math gets interesting if they can scale. But they have to execute on hardware delivery first.

Subtle's potential revenue from hardware sales is

The Role of AI Agents in Voice Interaction

Subtle's app integrates with AI, not just for transcription but for actual interaction. Users can "chat with AI without pressing any keys."

This suggests integration with something like GPT-4 for conversational AI. The workflow is probably:

- User speaks: "What are the best practices for optimizing database queries?"

- Earbuds capture and clean audio

- Phone transcribes: "What are the best practices for optimizing database queries?"

- AI processes: Queries GPT-4 or similar

- Response is read aloud: The AI response is converted to speech and played through the earbuds

- Conversation continues: User can follow up naturally
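
The loop above might look roughly like this in code. It is a sketch of the workflow as the article guesses it, not Subtle's implementation: `converse` and the three callables are hypothetical stand-ins for a transcription engine, a chat model, and a text-to-speech engine.

```python
from typing import Callable, List, Tuple

Turn = Tuple[str, str]  # (speaker, text)

def converse(turns_audio: List[str],
             transcribe: Callable[[str], str],
             ask_model: Callable[[List[Turn]], str],
             speak: Callable[[str], None]) -> List[Turn]:
    """Run a multi-turn voice conversation and return the transcript."""
    history: List[Turn] = []
    for audio in turns_audio:
        question = transcribe(audio)           # cleaned audio -> text
        history.append(("user", question))
        answer = ask_model(list(history))      # model sees the whole conversation
        history.append(("assistant", answer))
        speak(answer)                          # played back through the earbuds
    return history

# Stand-in components so the sketch runs end to end.
spoken: List[str] = []
history = converse(
    ["q1-audio", "q2-audio"],
    transcribe=lambda audio: f"text of {audio}",
    ask_model=lambda past: f"answer {len(past)}",
    speak=spoken.append,
)
for speaker, text in history:
    print(f"{speaker}: {text}")
```

Passing the full history on every turn is what lets a follow-up question make sense without the user repeating context.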

This is genuinely useful. It's different from voice commands ("set a timer") or dictation ("capture this thought"). It's actual conversation with AI.

The competitive advantage here is audio quality and always-on accessibility. If the earbuds can cleanly capture a question in a noisy coffee shop and deliver AI responses without requiring app opening, that's compelling.

This is where the form factor really matters. Earbuds are always with you. They're always connected. They can deliver responses directly to your ears. This is inherently better than pulling out a phone or laptop for a conversation with AI.

Market Size and Growth Potential

How many people would buy these earbuds?

Total addressable market (TAM) considerations:

- Earbud market: About 200 million pairs sold annually worldwide. Average selling price is declining (commodity pressure), but premium segments ($150+) still exist.

- Voice AI adoption: About 40% of smartphone users have used voice commands at least once. Only 15-20% use them regularly.

- Overlap (target market): Users who want voice interaction, use dictation, and work in environments where audio quality matters. Probably 10-15% of premium earbud buyers. That's roughly 20-30 million potential customers globally.

- Realistic penetration: If Subtle captures 1% of this market, that's 200,000-300,000 units. At $199 each, that's roughly $40-60M in revenue. With 70% gross margins on software and 40% on hardware (blended ~50%), that's meaningful profitability.
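
The penetration arithmetic can be checked in a few lines. The inputs below are the article's own estimates (20-30 million potential customers, a 1% share) plus the $199 launch price; the function name `hardware_revenue` is illustrative, and subscription revenue after the first year is left out because the pricing is unknown.

```python
from typing import Tuple

UNIT_PRICE_USD = 199      # launch price of the earbuds
MARKET_SHARE = 0.01       # the article's 1% scenario

def hardware_revenue(potential_customers: int,
                     share: float,
                     unit_price: int) -> Tuple[int, int]:
    """Return (units sold, hardware revenue in USD) for a given market share."""
    units = round(potential_customers * share)
    return units, units * unit_price

for customers in (20_000_000, 30_000_000):
    units, revenue = hardware_revenue(customers, MARKET_SHARE, UNIT_PRICE_USD)
    print(f"{customers:,} customers -> {units:,} units -> ${revenue / 1e6:.1f}M")
```

The result is very sensitive to the share assumption: halving it to 0.5% halves the revenue, so the 1% figure deserves more scrutiny than the multiplication does.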

These numbers are plausible for a focused player if they execute well and achieve strong word-of-mouth.

The risk is that bigger competitors (Apple, Google, Samsung) could replicate this. Apple has the advantage of vertical integration. They could add Subtle's noise isolation to AirPods Pro 4 and make it a default feature. Google could add it to Pixel Buds.

But Subtle has first-mover advantage and can establish a brand while larger companies are still in planning stages.

Privacy Considerations and Implications

Voice data is sensitive. When you dictate to a device, you're transmitting potentially confidential information (medical notes, financial details, private thoughts) through hardware and software you don't fully control.

Subtle's architecture has privacy advantages:

- On-device noise isolation: Audio is cleaned locally, not sent to the cloud for processing

- Optional cloud transcription: Users can choose local transcription if they want maximum privacy

- No always-on recording: Unlike some voice assistants, the earbuds only listen when activated

But there are still questions:

- Data retention: Does Subtle store transcribed text? For how long? Under what circumstances?

- AI training data: Will user transcriptions improve the noise isolation model? Will users opt into that?

- Third-party integrations: When you dictate in Slack, does Slack see the clean audio or just the transcribed text?

- Device access: Does the always-on trigger listen to everything, or just a keyword?

These aren't unique to Subtle. Every voice AI product faces these questions. But Subtle's hardware component makes them more acute because the device is physically capturing audio.

Transparency and clear opt-in policies will be critical for adoption. Users don't want to feel surveilled by their earbuds.

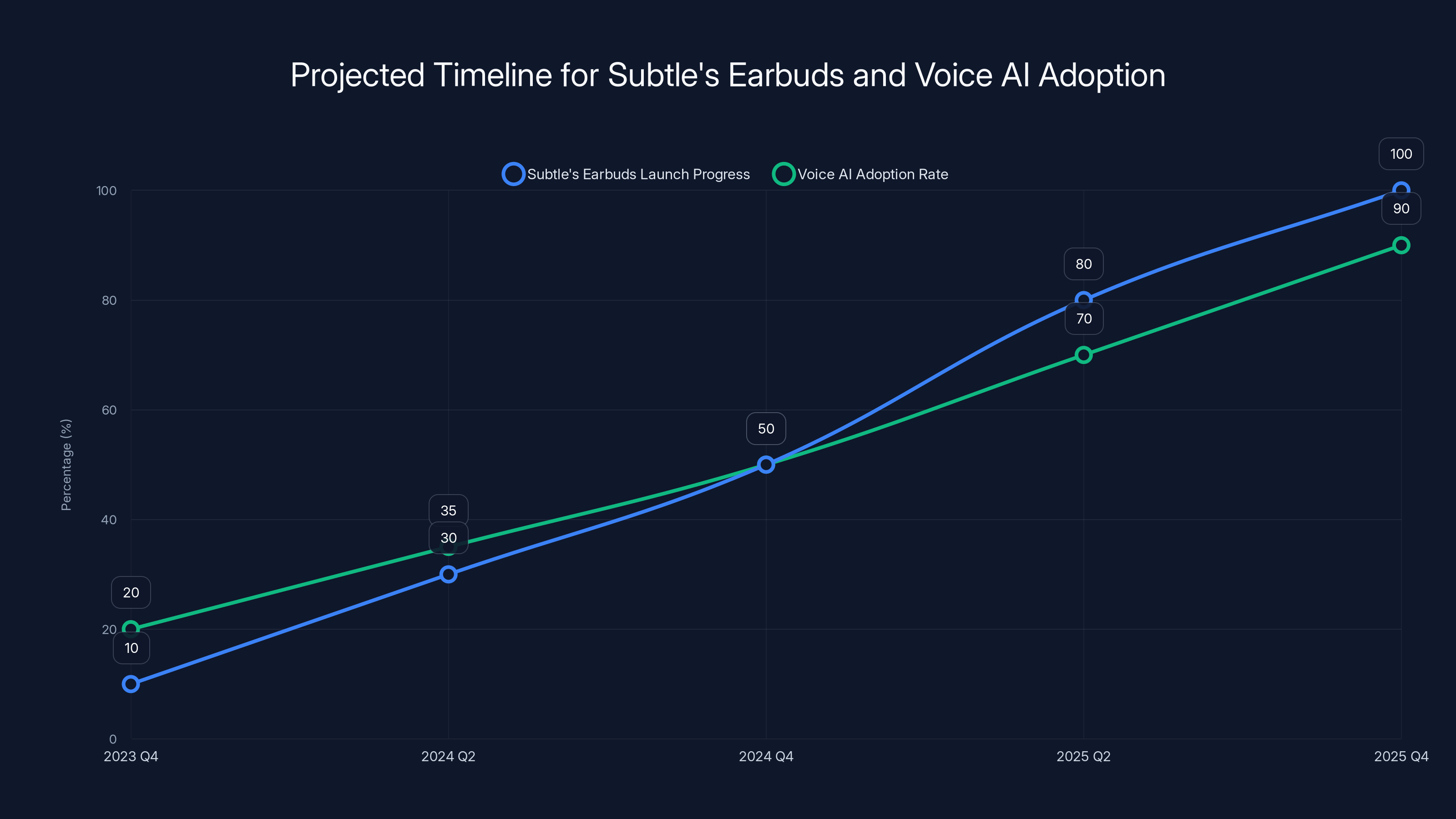

Estimated data suggests that by Q2 2025, Subtle's earbuds could achieve significant market presence if they meet their shipping timeline, coinciding with a broader adoption of voice AI interfaces.

Practical Use Cases for Subtle Earbuds

Who actually benefits from these earbuds?

Knowledge workers in open offices: You want to quickly capture a thought while your colleague is talking next to you. Existing voice dictation embarrasses you. Subtle's earbuds let you capture clearly without announcing to the office that you're taking notes.

Remote workers with poor audio: You're on a video call. Your keyboard clicks are loud. Background noise from outside is audible. Subtle's noise isolation means your colleagues hear clean audio of just your voice. Video meeting quality improves for everyone.

Researchers and journalists: You're conducting interviews in noisy environments (coffee shops, streets, events). Clean audio capture means transcription is more accurate. Less time editing transcripts, more time on analysis.

Customer service representatives: You're taking calls in a busy call center. The noise isolation helps you focus on what the customer is saying. It also helps the customer hear you clearly. Call quality and handling time both improve.

Content creators and podcasters: You're recording voiceovers or capturing ideas for later. Clean audio means less post-processing. Editing becomes faster. Production quality is higher from the start.

Non-native English speakers: Clear audio capture reduces the chance that an accent causes transcription errors. This is a genuine accessibility benefit.

People with hearing loss: Noise isolation that preserves speech clarity helps people with hearing impairment understand conversations in noisy environments.

These aren't edge cases. They're real use cases affecting millions of workers.

Implementation Timeline and Realities

Subtle says they'll ship to the U.S. in "the next few months." Let's be realistic about what that means.

Q1 2025: Limited availability, early adopter focus. Expect 1,000-5,000 units shipped.

Q2 2025: Broader availability, word-of-mouth drives awareness. Expect 10,000-20,000 units.

Q3-Q4 2025: Scaled production, supply chain issues (probably) resolved. Expect 50,000-100,000 units annually if successful.

The bottleneck is manufacturing. Designing earbuds is one thing. Reliably manufacturing 100,000 units with consistent quality is another. Most startups underestimate this.

They'll likely work with a contract manufacturer (Foxconn, Flex, or similar) that has earbud manufacturing expertise. This adds cost and reduces margins but makes shipping realistic.

Given they raised

The Broader Implications for Voice AI

Subtle's launch signals something important: voice AI is maturing from cloud-dependent gimmick to hardware-integrated utility.

The trajectory of voice interfaces has been:

- Novelty phase (2011-2016): Siri, Google Now, Cortana. Interesting but not really useful. People use them occasionally but don't depend on them.

- Accessibility phase (2016-2020): Voice becomes genuinely useful for accessibility (hands-free, eyes-free). Adoption among people with disabilities increases significantly.

- Smart home phase (2018-2023): Echo, Google Home, etc. Voice becomes useful for specific tasks (timers, lights, music) but remains limited by always-listening privacy concerns.

- Conversational AI phase (2023-present): ChatGPT with voice makes voice conversation genuinely appealing. Users realize they actually want to talk to AI, not just give it commands.

- Integrated hardware phase (2024-2026): Devices like Subtle optimizing specifically for voice as a primary interface.

We're transitioning into phase 5. The next phase will be ubiquitous voice interfaces in every context, enabled by devices that solve the input quality and social awkwardness problems.

Subtle is an early player in this transition. They won't dominate the market (bigger companies are coming). But they're proving the thesis that hardware-software integration focused on audio quality is valuable.

Challenges and Potential Roadblocks

No product is perfect. Subtle's earbuds will face real challenges.

Battery life uncertainty: Running AI inference locally drains batteries. If the earbuds only last 4-5 hours, real-world usage will be frustrating. Users will need to charge frequently. AirPods Pro get 6 hours. If Subtle's are worse, that's a real problem.

Cross-platform fragmentation: Launch is iOS/Mac only. Expanding to Android requires different optimization. Different Android manufacturers use different processors. It's harder than people think.

Transcription integration gaps: Universal app integration is claimed but not proven at scale. Some apps (native iOS apps) will integrate easily. Others (third-party apps, web apps on phones) might be harder. Integration complexity grows quickly.

Noise isolation model limitations: No AI system works perfectly. In certain acoustic scenarios (multiple people talking, music playing), the model will fail. Real-world audio is messier than training data. Customers will encounter these failure cases.

Competing with Apple: If Apple adds similar features to AirPods Pro 4 next year, what's Subtle's competitive advantage? Hardware alone isn't enough. They need software, ecosystem, and exclusive features.

Cost structure challenges: Selling hardware is notoriously difficult. Margins are thin. Manufacturing is complex. Returns and support are expensive. Subtle might realize their business model is less profitable than they expected.

Adoption friction: Even great products take time to adopt. Expectations are high because the price is high. First customers will be early adopters. Reaching mainstream adoption requires patience and execution.

These aren't fatal flaws. Every hardware startup faces similar challenges. But they're real risks to monitor.

What Subtle Gets Right and Wrong

Subtle gets RIGHT:

- Problem identification: Voice interfaces are limited by input quality and social awkwardness, not transcription accuracy.

- Hardware-software integration: Building the entire stack (hardware, noise isolation, app, transcription) means better control and optimization.

- Positioning: Focused on a real problem affecting millions of workers.

- Pricing: $199 is premium but not unreasonable for quality earbuds plus a year of software.

- Technology choice: Local processing on-device gives privacy, latency, and reliability advantages.

Subtle might get WRONG:

- Platform focus: iOS/Mac only means excluding Android users (roughly 70% of smartphone market). This is a huge addressable market reduction initially.

- Subscription model clarity: Future pricing isn't clear. If the year-one subscription feels free but year-two becomes expensive, adoption suffers.

- Hardware manufacturing: They're entering a mature, competitive market. Differentiation fades quickly without continuous innovation.

- Ecosystem lock-in: Unclear if Subtle will remain competitive if big tech companies replicate their features.

- Marketing and adoption: Great product doesn't guarantee adoption. They'll need significant marketing to reach mainstream users.

Overall, Subtle's bets are reasonable. The execution risk is high (it always is for hardware), but the strategic direction is sound.

The Future of Earbuds as AI Devices

Earbuds are becoming AI devices in a way they weren't before.

Even three years ago, earbuds were about audio quality and Bluetooth connectivity. Now they're processing local inference, running noise isolation models, and acting as interfaces to cloud AI systems.

This trend will continue. Expect:

Better on-device AI: As mobile processors improve (Qualcomm's latest chips have serious AI cores), more complex models run locally. Translation, speech synthesis, and even small language models become possible on earbuds.

Always-on contextual listening: Not always-on recording (for privacy). But always-on listening with sophisticated trigger detection that understands context and intent.

Bidirectional conversation: Earbuds become two-way conversational devices with AI. You ask a question, get an answer read to you, ask a follow-up, iterate naturally.

Health and wellness features: Earbuds will detect stress, fatigue, illness in your voice. They'll provide gentle interventions (breathing exercises, suggestions to take breaks).

Ambient intelligence: Earbuds aware of your environment (location, noise level, who's nearby) that adapt behavior accordingly.

Seamless integration with everything: Earbuds that know what you're working on, what apps you're using, what information you need.

This is the 5-10 year vision. Subtle's launch is just the beginning of this transition.

Practical Recommendations for Potential Users

If you're considering Subtle earbuds, here's how to evaluate the decision:

Strong case for buying:

- You use voice dictation regularly in noisy environments

- You're frustrated with transcription errors from existing solutions

- You work in open offices where speaking out loud is awkward

- You value privacy and prefer on-device processing

- You're primarily iOS/Mac (for now)

- You're willing to be an early adopter and provide feedback

Weak case for buying:

- You primarily use Android

- You rarely use voice interfaces

- You're happy with existing solutions (AirPods, Wispr Flow, etc.)

- You're price-sensitive and prefer free solutions

- You want proven, battle-tested products before adopting

- You need excellent multi-language support (unclear at launch)

If you're unsure:

- Wait for early reviews (January-March 2025) from tech journalists and actual users

- Check transcription accuracy tests in real-world scenarios (not marketing demos)

- Understand the year-two subscription cost before committing

- Try existing solutions (Wispr Flow, Superwhisper) to understand if voice dictation actually fits your workflow

- Consider that great hardware doesn't guarantee adoption. Community, software ecosystem, and continuous updates matter.

Conclusion: The Beginning of a Larger Shift

Subtle's $199 earbuds aren't just a new product. They're a signal that voice AI is transitioning from cloud-dependent gimmick to hardware-integrated utility.

The core insight is simple but profound: better input quality is more valuable than better transcription algorithms. It's easier to improve audio capture than to improve transcription. And the improvement compounds across every downstream application.

This inverts the conventional wisdom of the last decade, where everyone assumed that better AI models would solve voice interface problems. Turns out, the bottleneck was always the microphone input and environmental noise, not the models.

Subtle's solution is elegant: proprietary on-device noise isolation that cleans audio before it ever reaches a transcription model. This approach is defensible, scalable, and genuinely useful.

Will Subtle win the earbud market? Probably not. Apple and Samsung have too many advantages. But will Subtle establish themselves as the "voice quality company" that others license technology from? That's entirely plausible.

The bigger story is that voice interfaces are finally becoming mainstream in a way that's socially acceptable, environmentally robust, and genuinely useful. Subtle is just one player in this larger shift. But they're a smart player with a solid understanding of the actual problem they're solving.

Watch Subtle's shipping timeline closely. If they deliver earbuds by Q2 2025 and real users report dramatic transcription improvements, this could be a genuine breakthrough product. If they hit manufacturing delays or the accuracy improvements don't materialize in real-world testing, they're just another earbud startup.

Either way, the trajectory is clear: voice is becoming a primary interface for human-AI interaction. Devices optimized for that interface, rather than optimized for music listening, are the future.

Subtle is building for that future. Whether they win or lose, they're pointing in the right direction.

FAQ

What makes Subtle's earbuds different from AirPods Pro 3 or other premium earbuds?

Subtle's primary differentiation is proprietary noise isolation models that run locally on the device. Unlike traditional noise cancellation designed to protect the listener's ears from external noise, Subtle's models specifically isolate the user's voice while suppressing background noise before transcription. This fundamentally different approach results in dramatically better input quality for voice capture, leading to the claimed five times fewer transcription errors compared to AirPods Pro 3 paired with OpenAI's Whisper model.

How does the local processing architecture benefit users?

Local processing on the device provides three key advantages. First, it improves privacy because audio isn't sent to cloud servers for noise isolation. Second, it cuts latency because processing happens on-device rather than through round-trip cloud calls. Third, it improves reliability because noise isolation keeps working without network connectivity. The trade-off is that local processing requires more capable hardware and draws more battery power than earbuds that simply capture audio and transmit it wirelessly.

What does the year-long included subscription cover?

The $199 purchase includes a year of access to Subtle's iOS and Mac app, which provides voice-to-text transcription, universal app integration for dictation across any application, and AI chat capabilities. After the first year, Subtle will presumably require a subscription for continued software access, though exact pricing for year two has not been announced. Users can choose between local device transcription (maximum privacy) or cloud-based transcription (typically higher accuracy) depending on their preferences.

How does Subtle's approach compare to other voice dictation apps like Wispr Flow or Superwhisper?

Wispr Flow and Superwhisper are software-only solutions that work with any earbuds or microphone. They excel at providing cross-platform transcription and AI integration but depend entirely on the user's existing hardware for audio quality. Subtle's approach combines dedicated hardware optimized for voice capture with integrated software, which creates a more complete system. The hardware-software integration gives Subtle better control over audio input quality, which is the primary factor determining transcription accuracy.

Will Subtle earbuds work with Android phones?

Currently, Subtle has announced iOS and Mac support at launch. The company is working with Qualcomm and other Android chip manufacturers to optimize their noise isolation models for Android devices, but there's no confirmed timeline for broader Android support. This is a significant limitation, since Android represents roughly 70% of the global smartphone market. An Android launch within 6-12 months of initial shipping seems plausible, but that is an estimate rather than an announced plan.

What is Word Error Rate (WER) and how does the five times fewer errors claim translate to real-world accuracy?

Word Error Rate measures transcription accuracy as the number of word-level errors (substitutions, deletions, and insertions) divided by the total number of words actually spoken. A 20% WER means roughly one error in every five words. A 4% WER means roughly one error in every 25 words. As an illustrative estimate, Subtle's claimed five times fewer errors would be consistent with roughly 3-4% WER in noisy environments, compared to approximately 15-20% WER for AirPods Pro 3 with Whisper. In practical terms, assuming a typical speaking pace of about 150 words per minute, that is roughly 6 transcription errors per minute of speech rather than roughly 30, which is the difference between dictation you can lightly edit and dictation you have to retype.
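To make the arithmetic concrete, here is a minimal Python sketch of how WER is commonly computed: a word-level edit distance between what was said and what was transcribed, divided by the number of words said. The function names, the sample sentences, and the 150 words-per-minute speaking pace are assumptions for illustration. Nothing here reflects Subtle's own evaluation method.

```python
def word_error_rate(reference: str, hypothesis: str) -> float:
    """WER = (substitutions + deletions + insertions) / words in reference."""
    ref = reference.lower().split()
    hyp = hypothesis.lower().split()
    if not ref:
        raise ValueError("reference must contain at least one word")

    # prev[j] holds the edit distance between the reference words seen
    # so far and the first j hypothesis words (classic Levenshtein DP).
    prev = list(range(len(hyp) + 1))
    for i, ref_word in enumerate(ref, start=1):
        curr = [i] + [0] * len(hyp)
        for j, hyp_word in enumerate(hyp, start=1):
            substitution_cost = 0 if ref_word == hyp_word else 1
            curr[j] = min(
                prev[j] + 1,                      # deletion
                curr[j - 1] + 1,                  # insertion
                prev[j - 1] + substitution_cost,  # match or substitution
            )
        prev = curr
    return prev[-1] / len(ref)


def errors_per_minute(wer: float, words_per_minute: float = 150.0) -> float:
    """Expected errors per minute at an assumed speaking pace."""
    return wer * words_per_minute


if __name__ == "__main__":
    # Hypothetical example: one substituted word and one dropped word.
    spoken = "send the report to maria by friday"
    heard = "send the report to mario friday"
    print(f"WER: {word_error_rate(spoken, heard):.1%}")

    # Illustrative WER values only, not measured results.
    for label, wer in [("4% WER", 0.04), ("20% WER", 0.20)]:
        print(f"{label}: ~{errors_per_minute(wer):.0f} errors per minute")
```

Because insertions count as errors, WER can exceed 100% on very poor transcripts, which is why "accuracy = 100% minus WER" is only a rough shorthand.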

How does Subtle's business model work and what happens after the first year?

Subtle's business model combines hardware sales (

What are the realistic limitations and challenges Subtle faces?

Several challenges could impact adoption: battery life might be limited by local AI processing (unclear if earbuds can match AirPods Pro's 6-hour runtime), expanding to Android requires significant engineering work with different hardware manufacturers, universal app integration is complex and might have gaps with certain applications, the noise isolation models will inevitably fail in some acoustic scenarios (multiple speakers, music playing), manufacturing challenges are common for hardware startups, and larger companies like Apple could replicate similar features and use their distribution advantages to outcompete Subtle.

Should I buy Subtle earbuds right now or wait?

If you're considering a purchase, it makes sense to wait 2-3 months for early user reviews and real-world testing data before committing. Key factors to evaluate: transcription accuracy in your specific use case and noise environment, battery life in practical daily use, software stability and integration quality, and whether Subtle's approach genuinely solves a problem you experience with existing voice dictation solutions. Try existing software solutions like Wispr Flow or Superwhisper first to understand if voice dictation actually fits your workflow, as hardware doesn't matter if the feature itself isn't useful for your needs.

What does the future of voice interfaces look like based on Subtle's approach?

Subtle's launch signals a broader shift toward voice as a primary interface for human-AI interaction, enabled by hardware-software integration focused on audio quality. The trajectory suggests voice interfaces will become more ubiquitous, conversational, and deeply integrated with AI systems. Future developments likely include better on-device AI models, always-on contextual listening with sophisticated trigger detection, bidirectional conversation with AI, health and wellness monitoring through voice analysis, and seamless integration with context-aware AI systems. Subtle is an early player in this transition, demonstrating that solving input quality problems is more valuable than pursuing ever-better transcription algorithms.

Key Takeaways

- Subtle's $199 earbuds solve the primary bottleneck in voice AI: input audio quality, not transcription algorithms

- On-device noise isolation models running locally provide privacy, latency, and reliability advantages over cloud processing

- The claimed five times fewer transcription errors (for example, roughly 4% WER versus 20% for AirPods Pro 3) is plausible given significantly cleaner audio input

- iOS/Mac launch limits initial addressable market, but partnerships with Qualcomm and Nothing suggest Android expansion within 6-12 months

- Voice interfaces are transitioning from cloud-dependent novelty to hardware-integrated utility, positioning Subtle as an early player in a larger market shift
