Switch Bot's New AI Recording Device: The Rise of Wearable Conversation Capture

Last year, the idea of a tiny device clipped to your collar that records everything you say sounded like science fiction. Now it's real. Switch Bot, the smart home automation company behind everything from robotic vacuum cleaners to smart locks, just released a wearable recorder that does exactly that. And honestly? It feels like we've crossed a line we should pause and think about.

The device is small. Impossibly small. You clip it to your collar, pocket, or bag, and it starts capturing every word you say, every cough, every awkward silence in a conversation. The pitch is simple: never miss another important detail again. Never forget what someone said. Never have to actually pay attention because the AI will do it for you.

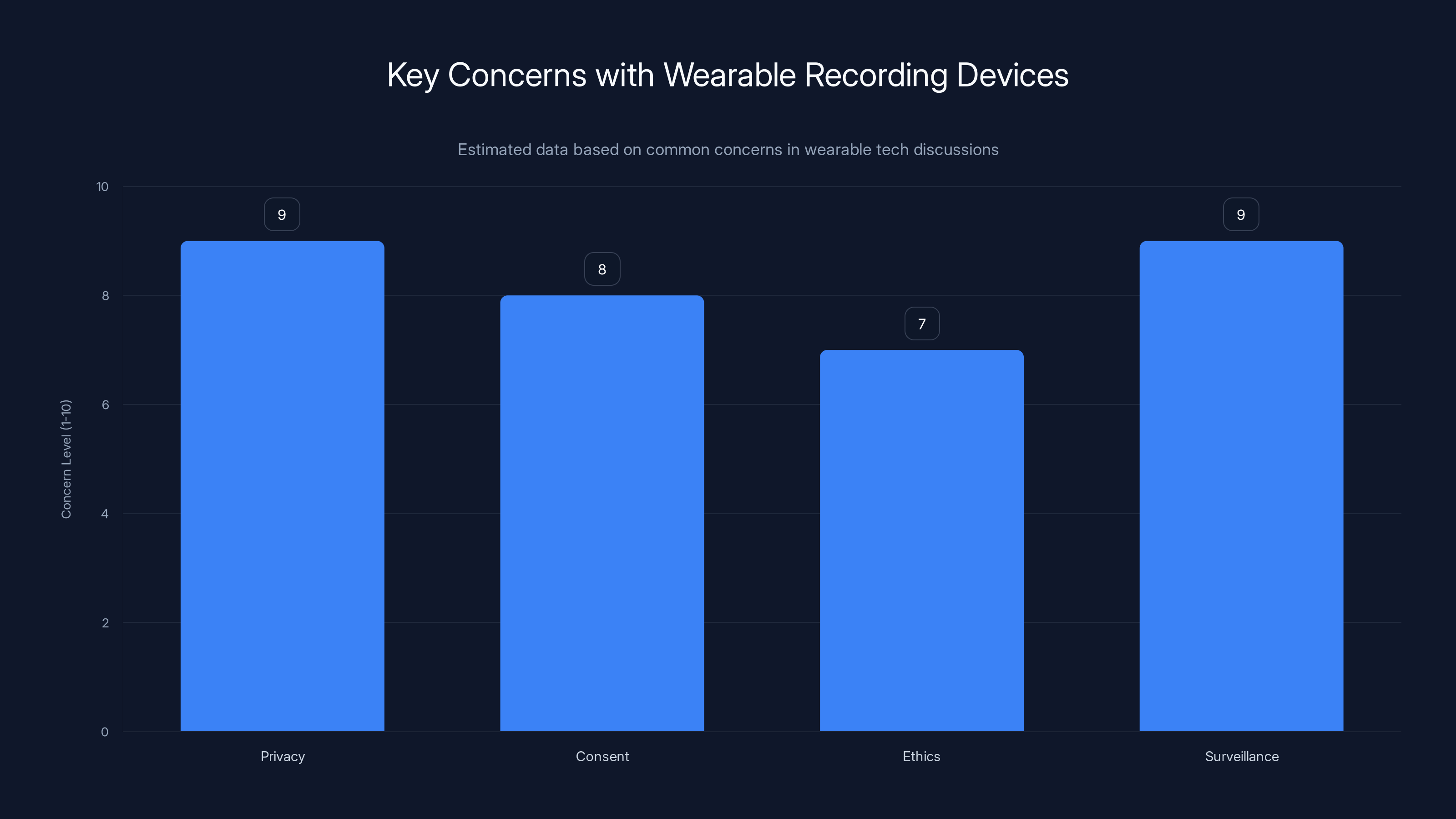

But here's where it gets complicated. This isn't just about convenience. It's about privacy, consent, ethics, and the kind of surveillance culture we're sleepwalking into. When one person in a conversation is recording without everyone's knowledge or explicit consent, we've entered murky legal and moral territory. Different countries have different rules about this. Some places require all-party consent to record conversations. Others don't. And Switch Bot's marketing doesn't seem overly concerned about the distinction.

The device represents a broader trend that's been accelerating for the past few years: the normalization of recording technology. We've gotten used to Alexa listening in our kitchens. We accept that Siri might be recording our voice commands. We know Google Assistant is always listening for the wake word. But those are devices we consciously activated and brought into our homes. This is different. This is a device you wear. On your body. Recording the people around you.

What makes this particularly interesting (and troubling) is the positioning. Switch Bot isn't marketing this as a surveillance tool. They're marketing it as a productivity solution. A memory aid. Something that will make you more efficient, more organized, less scattered. And for some use cases, that's genuinely true. If you're a journalist conducting interviews, or a researcher gathering data, or someone with ADHD who struggles with memory, this has legitimate value. But the marketing glosses over the elephant in the room: the people you're recording might not want to be recorded.

The technology itself is competent. It uses AI to transcribe conversations, identify key topics, and surface important moments. You clip it on, and the companion app gives you a transcript and summary. For some users, it's genuinely useful. But usefulness isn't the only consideration here. We need to talk about what this means for privacy, for consent, and for the kind of society we're building.

Understanding the Technology Behind the Device

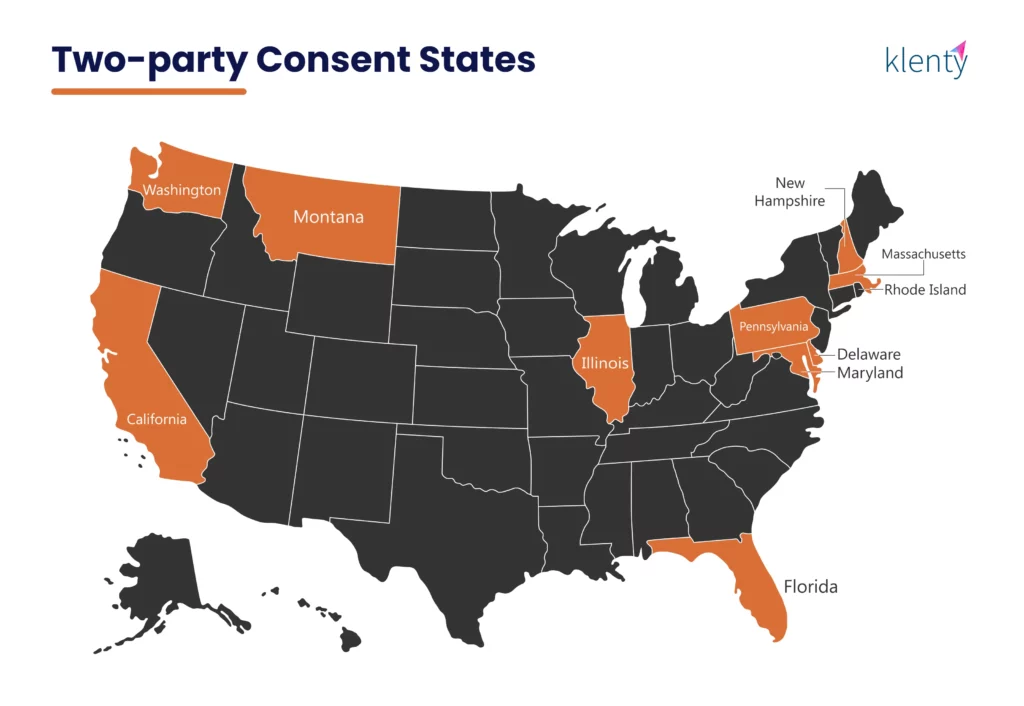

The Switch Bot recorder isn't revolutionary technology. It's competent execution of existing capabilities. At its core, it's a small microphone paired with local processing and cloud AI integration. The device captures audio, either processes it locally or sends it to the cloud, and then uses speech-to-text and AI analysis to extract meaning.

What sets it apart from a simple voice memo app on your phone is the always-on design. Your phone sits in your pocket. This device is actively positioned to capture conversations around you. The microphone is optimized for picking up speech in noisy environments. The AI is trained to recognize not just words, but context and significance.

The processing pipeline works roughly like this: audio comes in, gets compressed, and is then either transcribed locally or sent to servers for processing. The AI identifies speakers, topics, and important moments. You get back a transcript plus a summary of key points. All of this happens in real time or near real time.
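To make that flow concrete, here is a minimal Python sketch of a pipeline with the same shape. It is entirely hypothetical. Switch Bot hasn't published how its recorder works, so the function names, the use of `zlib` as a stand-in for an audio codec, and the keyword-based "key moments" step are all placeholders. The "audio" is plain UTF-8 text so the sketch runs without a speech model. Speaker identification is left out.

```python
import zlib
from dataclasses import dataclass


@dataclass
class Result:
    transcript: str
    summary: list[str]
    uploaded: bool  # True if the audio was routed to the cloud branch


def compress(audio: bytes) -> bytes:
    # Stand-in for an audio codec; zlib just keeps the sketch dependency-free.
    return zlib.compress(audio)


def transcribe_locally(payload: bytes) -> str:
    # Placeholder for an on-device speech-to-text model.
    return zlib.decompress(payload).decode("utf-8")


def transcribe_in_cloud(payload: bytes) -> str:
    # Placeholder for a server-side call. In a real product, this is the
    # point where the recording leaves the device.
    return zlib.decompress(payload).decode("utf-8")


def key_moments(transcript: str, keywords: set[str]) -> list[str]:
    # Toy summary: keep sentences that mention any keyword.
    # Keywords are expected in lowercase.
    sentences = [s.strip() for s in transcript.split(".") if s.strip()]
    return [s for s in sentences if any(k in s.lower() for k in keywords)]


def process(audio: bytes, prefer_local: bool, keywords: set[str]) -> Result:
    payload = compress(audio)
    if prefer_local:
        text = transcribe_locally(payload)
    else:
        text = transcribe_in_cloud(payload)
    return Result(text, key_moments(text, keywords), uploaded=not prefer_local)


r = process(b"We meet Friday. Budget is tight. Nice weather.",
            prefer_local=False, keywords={"budget", "friday"})
print(r.summary)   # ['We meet Friday', 'Budget is tight']
print(r.uploaded)  # True
```

From a privacy standpoint, the branch that matters is the one that sets `uploaded`. Once the pipeline takes the cloud path, the recording exists on someone else's servers.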

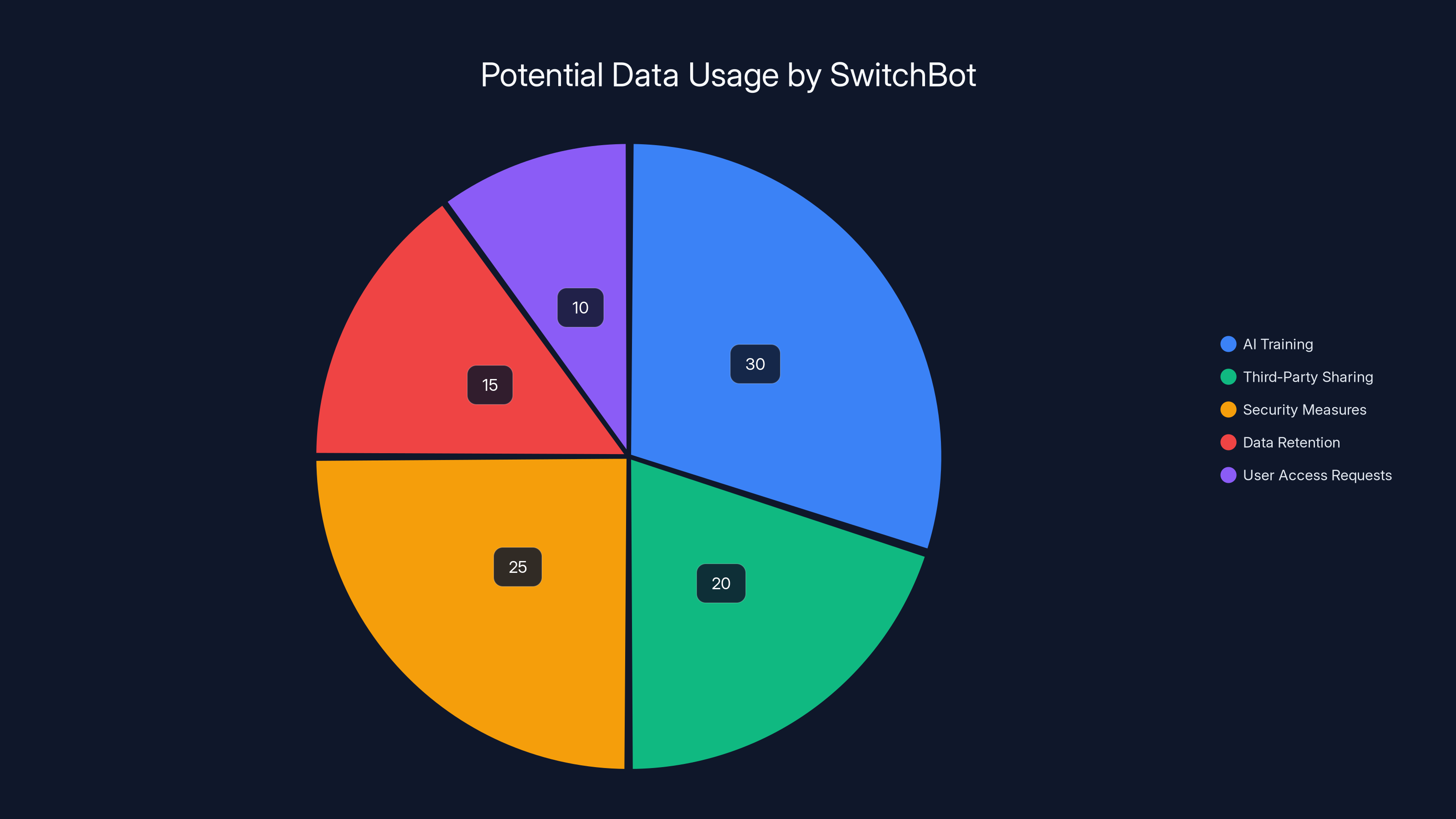

One technical consideration that gets glossed over in the marketing: where is the data going? Switch Bot has to process this audio somewhere. Is it all local? No. Some of it is, but the heavy lifting happens in the cloud. Which means your conversations are being uploaded to Switch Bot's servers. Which means Switch Bot has access to everything you're recording. Which means there's a whole new security and privacy surface to worry about.

The battery life is respectable, usually lasting through a full workday. The form factor is genuinely clever. It's small enough that you might forget you're wearing it. Which is kind of the point. And also kind of the problem.

Chart: the SwitchBot Recorder compared with typical voice memo apps; it stands out on cloud AI integration and always-on recording (estimated data based on feature descriptions).

The Legal Minefield: Where Recording Gets Complicated

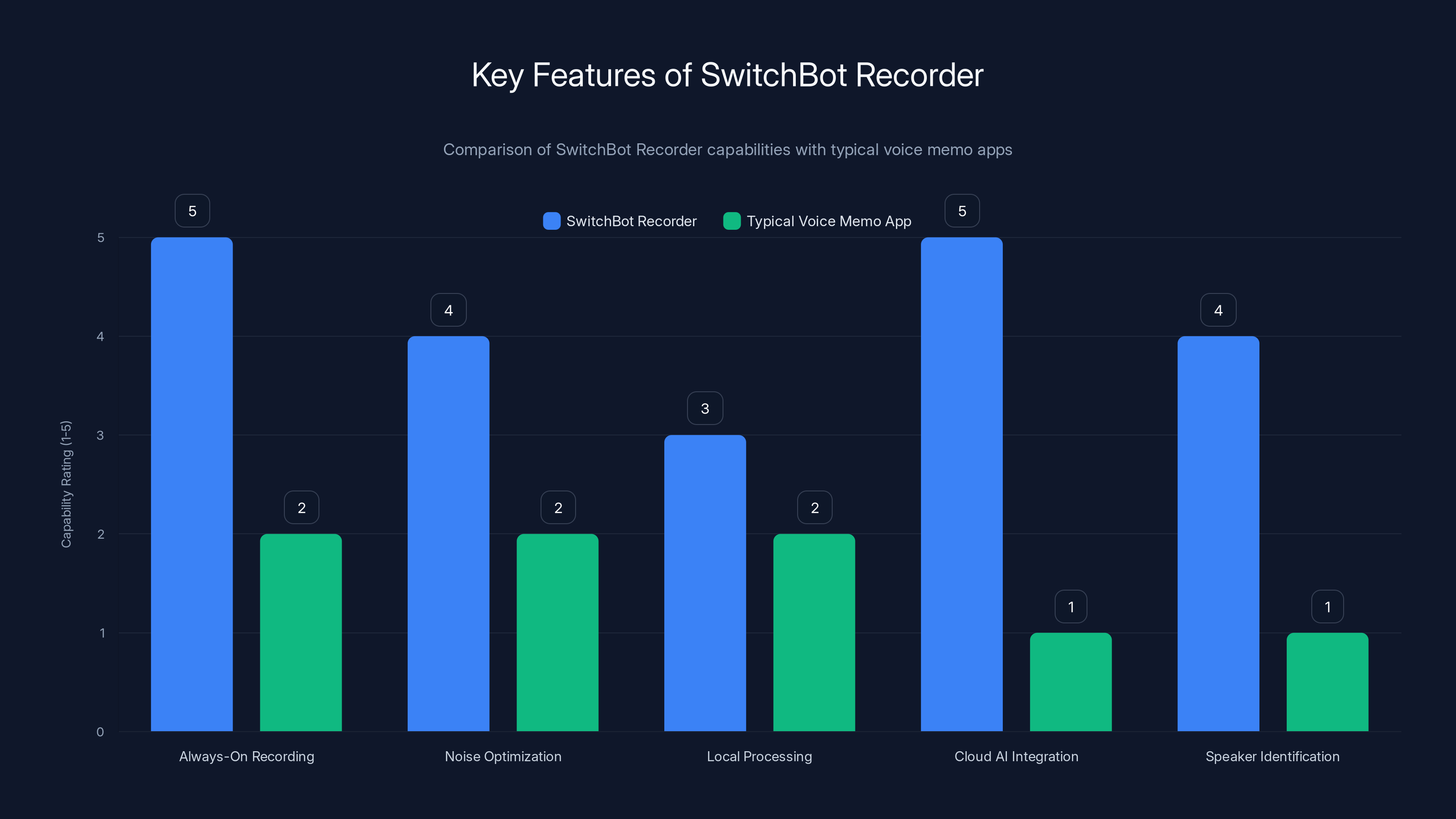

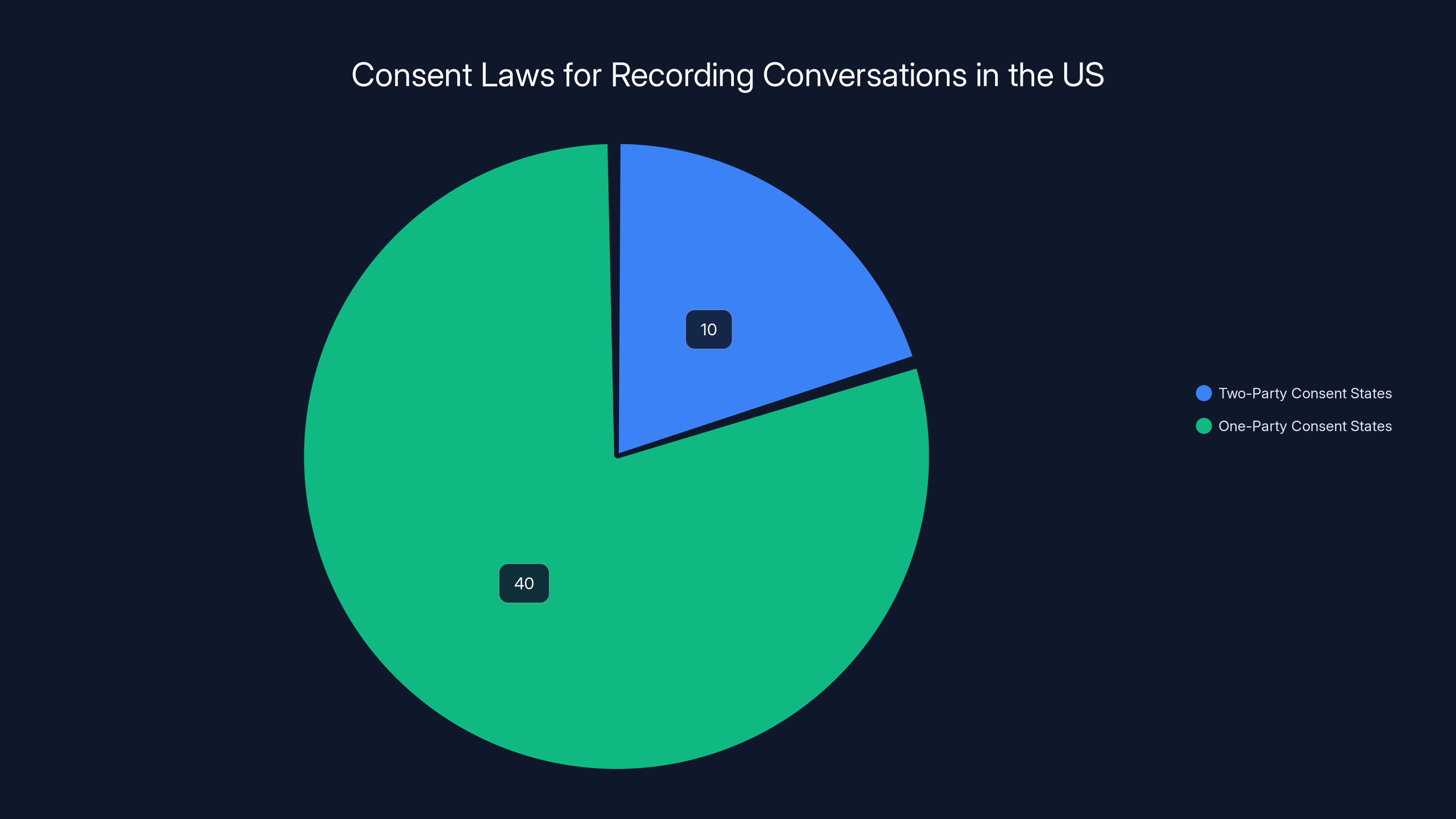

This is the part that should concern you most. Recording laws in the United States are a patchwork. Federal law follows a one-party consent rule, so it doesn't prohibit recording a conversation you're part of. But state laws vary dramatically.

In two-party (more accurately, all-party) consent states, you cannot record a conversation without the knowledge and consent of everyone involved. Roughly a dozen states take this approach for at least some kinds of conversations. Commonly cited examples include California, Florida, Illinois, Maryland, Massachusetts, Michigan, Montana, New Hampshire, Pennsylvania, and Washington. In these states, if you clip this device to your collar and record someone without telling them, you could face criminal charges, fines that can run into thousands of dollars, and even jail time in some cases. It's serious.

In one-party consent states, you only need the consent of one party to the conversation. So if you're part of the conversation, you can record it without telling the other person. That's legal in the majority of states. But here's the thing: just because something is legal doesn't mean it's ethical or smart. And it doesn't mean your employer will allow it, or that your relationships will survive the discovery that you've been secretly recording conversations.
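The difference between the two regimes comes down to a single check. The Python sketch below models it under heavy simplifying assumptions. `may_record` and `ALL_PARTY_STATES` are hypothetical names, and the set contains only the ten states named above. Every other state is treated as one-party. Real exceptions (phone calls versus in-person talk, expectations of privacy, public settings) are ignored. It illustrates the logic, not the law.

```python
# Illustrative only: the ten all-party states named above. This is not an
# exhaustive or authoritative list, and it ignores statutory exceptions.
# Any state not in the set is treated as one-party (a simplification).
ALL_PARTY_STATES = frozenset(
    {"CA", "FL", "IL", "MD", "MA", "MI", "MT", "NH", "PA", "WA"}
)


def may_record(state: str, consenting: int, participants: int) -> bool:
    """Simplified one-party vs all-party consent check (hypothetical helper).

    `consenting` counts participants who agree to the recording. If you are
    a participant doing the recording, you count as one of them.
    """
    if participants < 1 or not 0 <= consenting <= participants:
        raise ValueError("need 1+ participants and 0 <= consenting <= participants")
    if state.upper() in ALL_PARTY_STATES:
        # All-party rule: every participant must agree.
        return consenting == participants
    # One-party rule: a single consenting participant is enough.
    return consenting >= 1


# You, wearing the recorder, talking with one other person who wasn't asked:
print(may_record("TX", consenting=1, participants=2))  # True
print(may_record("CA", consenting=1, participants=2))  # False
```

The sketch makes one point obvious. In an all-party state, the wearer's own consent is never enough by itself.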

Europe has its own set of rules, and they're stricter. Under GDPR, processing someone's personal data (and a recording of their voice is personal data) requires a legitimate legal basis. Consent is one of those bases, but consent has to be informed, specific, and freely given. Simply clipping a recorder to your collar and recording everyone around you doesn't meet that standard.

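In Canada, the federal Criminal Code sets a one-party consent rule nationwide, much like US federal law, though provincial privacy laws can add obligations. In Australia, the rules vary by state. Some states let you record a private conversation you're part of, while others generally require the consent of all parties. Recording a private conversation you're not part of is generally prohibited.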

The practical issue is this: Switch Bot's marketing makes no real effort to educate users about these legal complexities. The assumption seems to be that because the device exists and is being sold, recording with it must be fine. It's not. It's a legal minefield, and users are responsible for navigating it.

Chart: privacy and surveillance rank as the highest concerns with wearable recording devices, underscoring the ethical and consent issues involved (estimated data).

Privacy Concerns: Where Your Data Actually Goes

Here's what the marketing doesn't emphasize: your conversations are now part of someone else's database. Switch Bot's database. When you use this device, you're trusting Switch Bot with some of the most sensitive information in your life.

Think about what you talk about in conversations. Business strategy. Personal health information. Financial details. Relationship problems. Passwords. Security codes. Sensitive employee information. All of it gets captured, transcribed, and stored somewhere on Switch Bot's infrastructure.

Now, Switch Bot's privacy policy probably says something about protecting your data. Most companies' privacy policies do. But privacy policies are written in legal language that obscures more than it reveals. What actually happens to this data? How long is it stored? Who has access to it? Can it be sold or shared with third parties? Can law enforcement access it? What happens if Switch Bot gets hacked?

There's also the question of how the data is used for AI training. Your conversations might be used to improve Switch Bot's AI models. You might have consented to this in the terms of service, buried in a 40-page document nobody actually reads. But consent buried in dense legal language isn't really meaningful consent.

The security aspect is another layer of concern. Any data that's transmitted and stored is vulnerable to breaches. Switch Bot would need serious security infrastructure to protect audio recordings of conversations: strong encryption in transit and at rest, redundant backups, intrusion detection, and strict access controls. Not every company, especially smaller ones focused on smart home automation, has the security infrastructure to protect this kind of sensitive data.

There's also the question of retention. How long does Switch Bot keep this data? Forever? A few years? Do you have the ability to delete it? Can you request a copy of everything Switch Bot has recorded about you? Under GDPR, you have the right to request this data. Under CCPA in California, you have similar rights. But not every jurisdiction has these protections.
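Good answers to those questions can be stated in a few lines of code. The Python sketch below is hypothetical and doesn't describe how Switch Bot actually stores data, which isn't public. The `Recording` type, the function names, and the 30-day window are invented for illustration. The sketch shows two of the controls discussed above:

- a retention limit that drops old recordings
- an access request that lists every stored recording a given person appears in

```python
from dataclasses import dataclass
from datetime import datetime, timedelta, timezone


@dataclass(frozen=True)
class Recording:
    """Hypothetical stored recording: an ID, a timestamp, and who was in it."""
    rec_id: str
    created: datetime
    participants: frozenset[str]


def apply_retention(recs: list[Recording], max_age: timedelta,
                    now: datetime) -> list[Recording]:
    """Retention limit: keep only recordings newer than `max_age`."""
    cutoff = now - max_age
    return [r for r in recs if r.created >= cutoff]


def access_request(recs: list[Recording], person: str) -> list[str]:
    """Access right: list the IDs of every stored recording `person` is in."""
    return [r.rec_id for r in recs if person in r.participants]


now = datetime(2025, 1, 31, tzinfo=timezone.utc)
recs = [
    Recording("a", now - timedelta(days=40), frozenset({"me", "alice"})),
    Recording("b", now - timedelta(days=5), frozenset({"me", "bob"})),
]
kept = apply_retention(recs, timedelta(days=30), now)
print([r.rec_id for r in kept])       # ['b']
print(access_request(recs, "alice"))  # ['a']
print(access_request(kept, "alice"))  # []
```

Once the retention limit runs, the older recording is gone, and so is Alice's presence in it. That is why retention limits count as a privacy protection in their own right.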

The Ethical Problem: Consent and the People Around You

Let's zoom out from the legal questions and talk about the ethical ones. Those might matter more.

When you record someone without their knowledge, you're making a decision for them. You're saying: "I've decided to capture this interaction, and I'm not going to ask your permission or even tell you it's happening." That's not a small choice. It's a choice about autonomy, agency, and basic respect.

Imagine you're in a meeting at work. Your colleague clips this recorder to their collar. Without telling anyone. Without asking permission. They're now capturing everything you say, everything your boss says, everything that happens. You don't know it's happening. You have no control over what's recorded or what gets done with that recording. How does that feel?

Or imagine you're at dinner with a friend. They clip on the recorder. They're recording your conversation. Your personal thoughts. Things you said in confidence. Things you'd only say because you thought it was private. They never tell you. You only find out later when they mention the AI summary. How does that change your relationship?

This is the core ethical issue. Covert recording normalizes the idea that conversations don't need to be consensual experiences. It creates an information asymmetry. One person knows the conversation is being recorded. The others don't. That asymmetry is the basis for a lot of harm.

There's also the question of power dynamics. If you're the boss, and you're recording conversations with employees, you're using a power advantage to gather information about them without their knowledge. If you're a parent recording your teenager, you're using authority to bypass their privacy expectations. If you're a partner recording your spouse, you're undermining trust.

The device also changes the nature of conversations. When people know they're being recorded, they behave differently. They're more careful. More guarded. They might not share genuine thoughts or feelings. Recording secretly gets "authentic" conversations, but at the cost of violating consent. There's a trade-off here, and it's worth acknowledging.

What concerns me most is the normalization aspect. If enough people start wearing these devices, if they become as common as smartwatches, then the expectation of conversation privacy disappears. We'll all just assume that every conversation might be recorded, transcribed, and stored. That's a different world. It's less trusting. Less vulnerable. Less human.

Chart: in this estimate, 10 US states require two-party consent for recording conversations and the remaining 40 allow one-party consent (estimated data based on typical state distribution).

Use Cases Where This Device Actually Makes Sense

Now, I don't want to be entirely dismissive. There are legitimate use cases where a recording device like this serves a real purpose.

Journalism and Interviews: If you're a journalist conducting interviews, a recorder is essential. You get accurate quotes. You have proof of what was said. You don't have to rely on handwritten notes that might be inaccurate. As long as you disclose to your subject that you're recording, this is perfectly legitimate and ethically sound. This is literally what journalists do.

Research and Academic Work: Researchers often record interviews and focus groups. Again, with proper consent, this is standard practice. A wearable recorder is more convenient than a tabletop mic or a phone placed on the table.

Accessibility and Medical Applications: If you have hearing loss, or certain types of cognitive impairment, a recording of conversations can be genuinely helpful. You can play back conversations later. You can get AI-generated summaries. You can make sure you didn't miss important information. This is accessibility technology.

Legal Protection: If you're in a profession where you might need documentation of conversations—a police officer, for example, or someone in a legal field—recording can protect you. It creates a record of what was actually said, not what someone claims was said later.

Personal Note-Taking: Some people use voice recorders just for themselves. Recording ideas, thoughts, reminders. If you're doing that and you're not capturing anyone else's conversations, that's fine. That's just a voice memo device, which phones already do.

The common thread in these legitimate use cases: consent and disclosure. The people you're recording know it's happening. They've agreed to it. You're not sneaking around.

Where this device becomes problematic is when it's used as a general-purpose conversation capture tool without consent. When people clip it on and start recording everyone around them without asking. That's where it crosses ethical and legal lines.

Workplace Implications: When Recording Gets Complicated

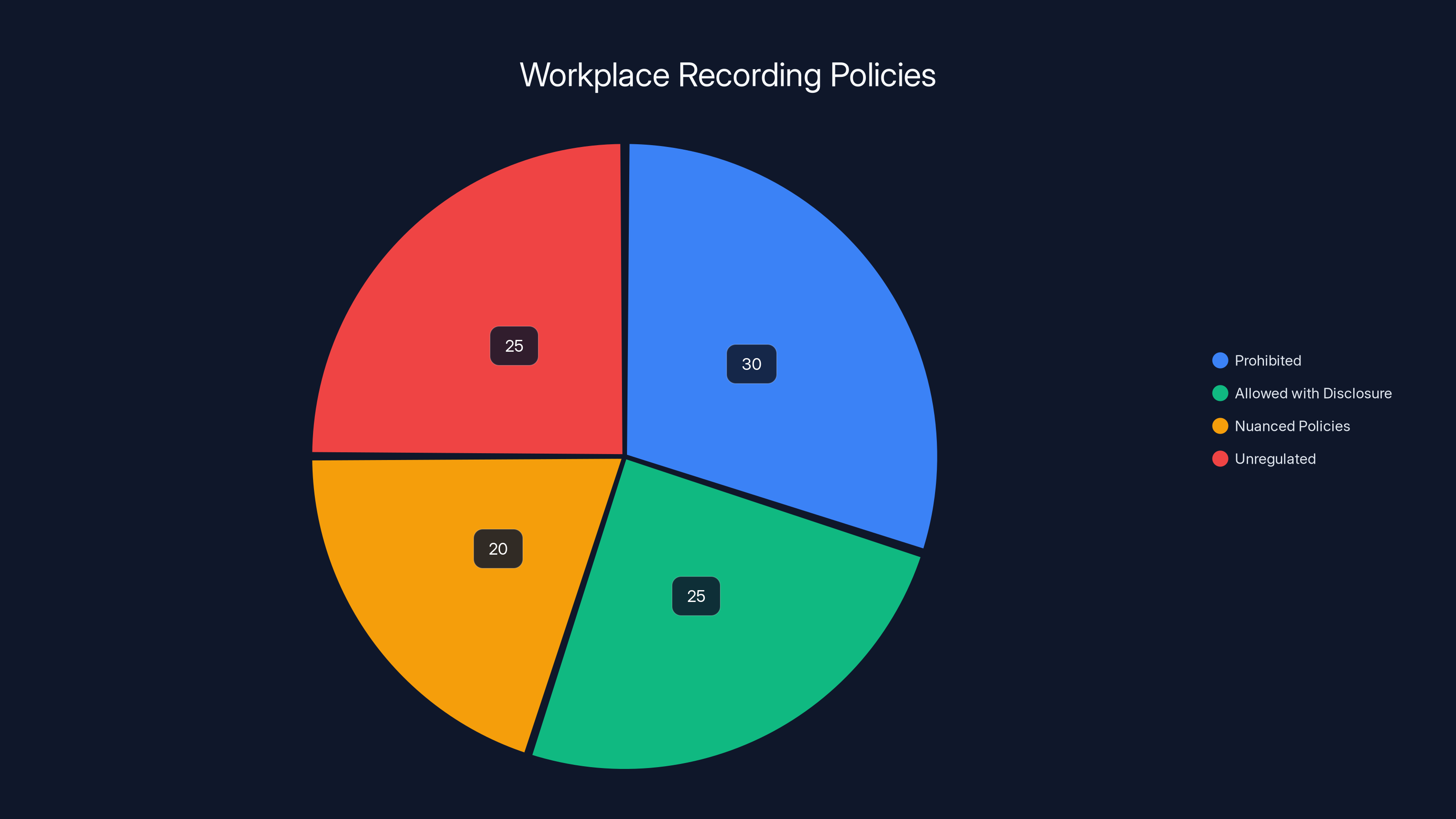

One place where this device creates immediate problems is the workplace. Most employers have policies about recording in the office. Some explicitly prohibit it. Some allow it only with disclosure. Some have nuanced policies about what you can and can't record.

If you clip this device to your collar at work and start recording meetings without telling anyone, you might be violating company policy. You might be putting yourself at legal risk. You might be violating your employees' or colleagues' rights. And you're definitely eroding trust.

There's also the question of intellectual property. If you're recording work conversations, who owns the recording? In many cases, your employer might claim ownership. Or they might claim ownership of any ideas discussed in the recording. Recording at work can create legal complications you hadn't thought about.

For HR purposes, this is a nightmare. If managers start recording employee conversations without disclosure, it creates an adversarial workplace. Employees will feel watched, monitored, and distrusted. Engagement suffers. Retention suffers. Culture suffers.

For managers trying to be transparent: if you're going to record conversations, tell people. Make it part of your communication style. "I record conversations so I have an accurate record of what we discussed." Some people might object. But at least you're being honest about it.

The potential for abuse is significant. An unethical manager could record employee conversations, pick out comments that sound bad out of context, and use them to justify firing someone. A colleague could record a conversation where you said something stupid and use it against you. A business rival could record a sales pitch and steal your ideas. These aren't far-fetched scenarios. They're realistic, and they happen.

Chart: estimated breakdown of how user data might be used, with AI training as a major share and significant portions potentially shared with third parties or used for security measures (estimated data).

Comparison: Other Recording Solutions and Alternatives

If you genuinely need to record conversations, there are other options that might be better depending on your situation.

Phone-Based Recording Apps: Almost every smartphone has a voice memo or recording app built in. You can record conversations directly on your phone. The advantage is visibility: a phone held up or set on the table is hard to miss, so it's more obvious that you're recording. The disadvantage is that it's less convenient and less discreet.

Dedicated Digital Recorders: Companies like Zoom (the audio equipment maker, not the video-call company) make dedicated recorders designed for meetings and interviews. The Zoom H6, for example, is a professional-grade portable recorder with much higher audio quality than a tiny clip-on device. But it's also more obvious and less discreet.

AI Meeting Transcription Tools: Services like Otter.ai or Fireflies.ai integrate with video conferencing platforms and automatically transcribe meetings. There's no separate device, and transcription happens automatically. The trade-off is that they're built mainly for video calls. For privacy, they have an edge: the assistant typically joins the call as a visible participant, so everyone in the meeting can see that it's being recorded.

Smart Speaker with Recording: Devices like Amazon Echo already have always-listening microphones, though they only start recording after hearing the wake word. They're less discreet than a wearable, which is actually a feature for transparency. Everyone knows the microphone is there.

Analog Note-Taking: Just write things down. Use a notebook. It's slower, but it forces you to actually engage with the conversation instead of relying on a device to capture everything.

The Switch Bot device's advantage is form factor and integration. It's small, it's wearable, it's designed specifically for capturing conversations. But those advantages come with ethical and legal trade-offs.

Technology Trajectory: Will This Get Better or Worse?

The Switch Bot device is just the beginning. We're going to see more wearable recording technology. More AI-powered conversation capture. More devices designed to record, transcribe, and analyze what people say.

In the near term, expect smaller devices. More sophisticated AI. Better battery life. Better integration with other smart home systems. Switch Bot will probably release updates that improve transcription accuracy, speaker identification, and topic detection.

Longer term, we might see this technology integrated into clothing. Earbuds that record continuously. Smart glasses with built-in microphones. Devices so small and so ubiquitous that recording becomes the default state.

There's also the question of AI sophistication. Right now, the AI does basic transcription and summary. In a few years, it might do real-time emotion detection. It might claim to detect when someone is lying based on voice patterns. It might recognize people by their voice. It might extract personal information and flag it as sensitive.

And then there's the data side. As companies like Switch Bot collect more conversation data, they'll have massive databases of how people actually talk. How they make arguments. How they persuade. How they manipulate. This data could be valuable for training more sophisticated AI models. It could be valuable for marketing purposes. It could be valuable for surveillance and social control.

The technology trajectory is clear: more recording, more analysis, more intelligence extracted from conversations. The question is whether society will put guardrails in place to limit what can be done with this technology.

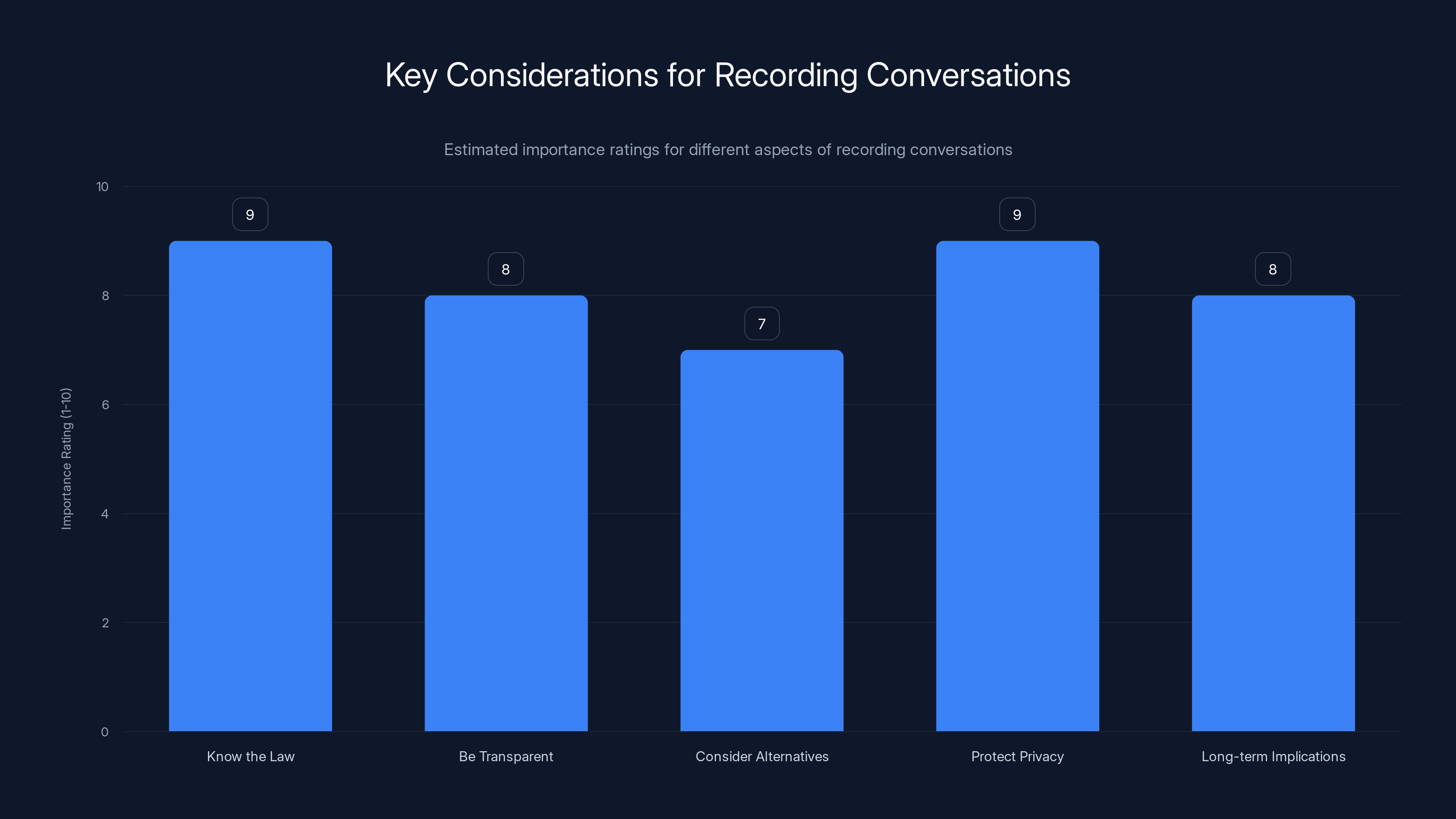

Chart: understanding legal requirements and protecting privacy rank as the most critical considerations before recording conversations (estimated data based on topic importance).

Regulatory Response: What Laws Are Changing

Governments are starting to notice this trend. Some are already making changes. Others are at least talking about it.

The European Union has taken the strongest stance through GDPR. Processing someone's personal data, including a recording of their voice, requires a legitimate legal basis, and often that basis is consent. Consent has to be informed, specific, and freely given. You can't just secretly record people. The EU has also adopted the AI Act, which restricts certain uses of biometric identification and emotion recognition.

California has some of the strictest recording laws in the US (two-party consent) and also passed CCPA, which gives people rights over their personal data. California is also one of the few places that have started talking specifically about regulating recording technology.

China has taken a different approach, actually encouraging recording in some contexts while strictly controlling it in others. In some cities, camera networks with facial recognition are already ubiquitous, and some surveillance systems reportedly capture audio as well.

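India passed the Digital Personal Data Protection Act in 2023, but it is still being phased in, and several other countries are still figuring out their regulatory approach. Right now, recording laws in many of these places are more permissive, but that's likely to change as the technology becomes more prevalent.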

What's interesting is that regulation is lagging behind technology. The devices are already here. The ability to record is already here. The legal and regulatory frameworks are still catching up.

I expect we'll see more rules about:

- Notification requirements (you have to tell people you're recording)

- Data retention limits (how long companies can keep recordings)

- Data access rights (people should know what's been recorded about them)

- Purpose limitations (data collected for one purpose can't be used for another)

- Security standards (companies have to protect recorded data)

- Consent requirements (more jurisdictions moving toward explicit consent)

But this will happen slowly, jurisdiction by jurisdiction, and companies will probably fight every restriction.

The Broader Surveillance Trend: Where This Fits In

The Switch Bot device doesn't exist in a vacuum. It's part of a much larger trend toward ubiquitous surveillance and data collection.

We already accept a level of monitoring that would have seemed dystopian 20 years ago. Your phone tracks your location constantly. Your email provider scans your messages, if only to filter spam and power features. Your social media activity is used to target ads. Your smart home devices listen for wake words. Your fitness tracker monitors your heart rate and sleep. Your car collects data about how you drive.

Now we're adding conversation recording to that list. It's another data point. Another way companies and individuals can monitor, track, and analyze us.

The concerning part is the normalization. Each new technology pushes the boundaries of what feels normal. We got used to location tracking. Then we got used to targeted ads based on our searches. Then we got used to smart speakers listening in our homes. Each incremental step feels small. But cumulatively, we've built a surveillance infrastructure that would have seemed impossible in 1995.

The conversation recording device is just the next step. And if it becomes normalized, if people start expecting that conversations might be recorded, it changes the nature of how we interact. We become more guarded. Less authentic. More aware that we're being observed.

This is the part that keeps me up at night. Not just the immediate privacy violation, but the long-term social impact. A society where everyone is potentially recording everyone else is not a free society. It's an anxious, suspicious, controlled society.

Chart: an estimated 30% of workplaces prohibit recording and 25% allow it with disclosure; nuanced policies and unregulated environments make up the rest (estimated data).

What You Should Actually Care About

If you're considering using this device, or if you're worried about others using it around you, here's what actually matters.

First, know the law. Look up the recording laws in your area. Understand whether you're in a one-party or two-party consent jurisdiction. Understand what you can and can't legally record. Don't assume that because a device exists, it's legal to use.

Second, be transparent. If you're going to record conversations, tell people. Make it explicit. Ideally, get written consent. At minimum, tell people verbally. The moment you're being secretive about recording, you're crossing into ethically and legally questionable territory.

Third, consider alternatives. Do you actually need to record conversations? Could you take good notes instead? Could you send a follow-up email summarizing what was discussed? Could you use a meeting transcription tool that's visible to all participants? Recording shouldn't be your default solution.

Fourth, protect your privacy. If others might be recording you, you need to be aware of it. Know the recording laws in your area. If someone records you without consent in a two-party consent state, you might have legal recourse. Don't assume you have no options.

Fifth, think about the long-term implications. Every time you normalize recording, you're changing what's acceptable in society. You're saying it's okay to gather information about people without their knowledge or consent. Is that the kind of world you want to live in?

The Myth of the Perfect Memory

One of the marketing angles for devices like this is the idea that if you record everything, you'll have a perfect record of what happened. You'll never forget. You'll never misremember. You'll always know what was actually said.

But this is kind of a lie. Recording doesn't create truth. It creates data. And data is subject to interpretation.

Humans misremember things all the time. But we also remember context, emotion, and meaning in ways that recordings don't capture. A recording is just audio. It doesn't capture what the speaker was feeling. What they meant to convey. What was going on beneath the surface.

There's also the question of selection bias. If you're recording conversations, you're choosing which conversations to record. You're choosing what to focus on when reviewing the recording. You're choosing which parts matter. That's not objective. That's very subjective.

There's also an argument that trying to have a perfect record of conversations is actually damaging to relationships. Part of what makes relationships work is a shared, negotiated understanding of what happened. When one person has a recording and the other person only has their memory, it creates an asymmetry of power. The person with the recording can always say, "No, you're wrong, I have proof." That's not conducive to trust or understanding.

So the promise of the perfect memory is actually a false promise. What you get is a recording. Whether that recording is useful or harmful depends entirely on what you do with it.

Moving Forward: How to Think About This Technology

Switch Bot's recording device isn't inherently evil. It's a tool. And like all tools, it can be used well or badly.

Used well: A journalist uses it to accurately capture interviews. A researcher uses it to record focus groups with participant consent. Someone with a memory condition uses it to help them remember important conversations.

Used badly: A manager uses it to secretly record employees and build a case for firing them. A partner uses it to spy on their spouse. A person uses it to covertly gather information about others without consent.

The problem is that it's designed to be unobtrusive. It's designed to be discreet. Which means it's designed to be used without others knowing. That's a red flag. If a tool is designed to be hidden, that's usually because using it openly would be objectionable.

I think about how I'd feel if I found out someone had been wearing a device like this around me. Recording my conversations. Recording my thoughts. Recording things I said in what I thought was confidence. I wouldn't feel violated just by the recording. I'd feel violated by the deception.

Transparency matters more than the technology itself. If you're recording conversations, everyone involved should know it. It should be expected. It should be normal. The moment it becomes something hidden, something sneaky, something you do without telling people, that's when it becomes problematic.

So my recommendation: Be very cautious with recording devices. Use them transparently. Get consent. Understand the laws in your area. And think about whether you really need to record something, or whether you're just trying to outsource the work of actually paying attention to conversations.

Conclusion: The Choice We're Making

The Switch Bot recording device is here. It's not going away. More devices like it are probably coming: smaller, smarter, more integrated into our clothing and accessories.

But just because the technology exists doesn't mean we have to embrace it. We have choices about how these devices are used, and what we accept as normal.

Right now, we're at a decision point. We can let recording become ubiquitous and normalized. We can build a world where every conversation might be captured, transcribed, and stored. Where privacy is a luxury rather than a right. Where trust is impossible because everyone is potentially recording everyone else.

Or we can draw lines. We can say that certain interactions require consent. That certain spaces are private. That recording other people without their knowledge isn't okay, regardless of whether it's legal.

These are society-level questions, not just individual questions. And they require more than just individual choices. They require regulation, cultural norms, and a collective decision about what kind of world we want to build.

For now, if you're using a device like this: be honest about it. Tell people you're recording. Understand the legal implications. Think about whether you're using it as a tool or as a weapon. And be prepared for the day when everyone around you is doing the same thing, and you realize what you've built.

For everyone else: be aware that these devices exist. Understand the recording laws in your area. Protect your own privacy. And think carefully about the kind of society we're creating, one conversation recording at a time.

The technology is neutral. But how we use it, and what we allow as normal, will define what kind of world we live in. Choose carefully.

FAQ

What is the Switch Bot recording device?

The Switch Bot recording device is a small, wearable clip-on recorder designed to capture conversations. It uses AI to transcribe audio in real time and provide summaries of key topics discussed. The device connects to a mobile app where users can review transcriptions, listen to recordings, and search for specific topics mentioned in conversations.

Is it legal to use the Switch Bot recorder?

Legality depends entirely on your location and local recording laws. In one-party consent states (like Texas and New York), you can legally record a conversation you're part of without telling the other person. In two-party consent states (like California and Florida), all parties must consent to being recorded. Outside the US, GDPR requires a lawful basis for processing someone's voice data in the EU, and in practice that often means informed consent. Check your local laws before using any recording device.

What are the privacy concerns with conversation recording devices?

The main concerns are: where your conversation data is stored and who can access it, how long the company keeps the recordings, whether law enforcement can subpoena the data, whether the AI training uses your conversations, and the risk of data breaches. Additionally, recording without consent creates information asymmetry—the person wearing the device knows what's being recorded while others don't.

Do people need to know they're being recorded?

Ethically, yes. Legally, it depends on where you are. In two-party consent jurisdictions, it's required by law. In one-party consent areas, it's not legally required, but it's ethically important for maintaining trust and respecting others' autonomy. Many workplace, professional, and relationship contexts expect transparency about recording before it happens.

What are legitimate uses for recording conversations?

Legitimate uses include: journalism and interviews (with subject consent), academic research (with participant consent), medical and legal documentation, accessibility for people with hearing loss or cognitive conditions, and personal voice note-taking when you're not capturing others' conversations. The common thread is informed consent from all participants.

How does the device store and process conversation data?

The Switch Bot device captures audio locally but relies on cloud processing for transcription and AI analysis. This means your conversations are uploaded to Switch Bot's servers, raising questions about data security, retention policies, and who can access the recordings. These details should be spelled out in the company's privacy policy, which you should review before using the device.

What should I do if I discover someone is recording me without my knowledge?

First, understand your local recording laws: if you're in a two-party consent state, you may have legal remedies. Document what happened and when, and save any evidence. Consult a lawyer, particularly if the recording was used to harm you professionally or personally. Depending on your jurisdiction, you may be able to bring a civil claim or report the recording to law enforcement as a possible crime.

How is the Switch Bot recorder different from other recording solutions?

The key difference is form factor and discretion. Dedicated recorders like the Zoom H6 are conspicuous handheld devices. Phone apps are tied to your phone and visible. Video conferencing transcription tools run inside meetings where participants can usually see that transcription is on. The Switch Bot device is designed to be worn and can record conversations more discreetly, which is an advantage for convenience and a disadvantage for transparency.

Will this technology become more common?

Almost certainly. Expect smaller wearable recorders, better AI processing, longer battery life, and integration into clothing and accessories. The question isn't whether the technology will improve, but whether society will put guardrails around how it's used. Some jurisdictions are already considering regulations that would require notification when recording takes place.

What's the ethical problem with secret recording?

Recording someone without their knowledge removes their ability to consent to the interaction. It creates an information asymmetry where you know what's happening but they don't, and it violates basic respect for their autonomy. It also changes how people behave: once people suspect they could be recorded at any time, they become more guarded, and authentic communication gets harder. Trust requires transparency.

Key Takeaways

- Recording laws vary dramatically by location: two-party (all-party) consent states require everyone in the conversation to agree, while one-party consent states only require the consent of one participant, who can be the person recording

- SwitchBot's device uploads conversations to cloud servers, creating data privacy risks around storage, retention, and potential law enforcement access

- Ethical concerns center on consent and transparency—recording someone without their knowledge violates autonomy regardless of legality

- Legitimate uses exist for journalism, research, and accessibility, but only with proper disclosure and informed consent from all participants

- Normalizing secret recording creates a surveillance culture that undermines trust and changes how people communicate authentically