The 7 Weirdest Gadgets at CES 2026: From Musical Popsicles to AI-Powered Headphones

Every year, the Consumer Electronics Show delivers the expected: better cameras, faster processors, sleeker designs. But buried in those ten thousand booths? Absolute weirdness.

CES 2026 was no exception. While the major tech companies showed off their incremental improvements, smaller innovators were pushing boundaries in ways that made you question whether you were visiting a tech conference or stepping into an absurdist art installation.

I spent three days walking those cavernous halls. I saw robots that shouldn't exist. I witnessed gadgets that solved problems nobody had. And I tried devices that left me genuinely unsure whether they were genius or elaborate pranks.

Here's what stood out—the seven weirdest gadgets that made CES 2026 absolutely unforgettable. These aren't your typical "best of" picks. These are the ones that make you stop mid-stride, squint, and ask: "Wait, what does that actually do?"

TL;DR

- Musical Popsicle: An interactive ice cream that produces sounds based on licking patterns and flavors, turning desserts into edible instruments

- AI Headphones with Eyes: Over-ear headphones featuring animated digital eyes that display emotional states and respond to music

- AI Mixologist Robot: A robotic bartender that creates cocktails using AI recipe algorithms, measuring ingredients precisely for consistency

- Interactive Holographic Display: A light-based projection system that creates 3D images you can partially interact with in real-world space

- Smart Sock System: Socks with embedded sensors tracking biomechanics, muscle activation, and gait patterns for athletic performance

- Gesture-Controlled Cooking Device: A kitchen appliance responding to hand movements for precision heat and timing control

- Ambient Scent Generator: A desktop device releasing programmed scent profiles synchronized with your digital environment and mood

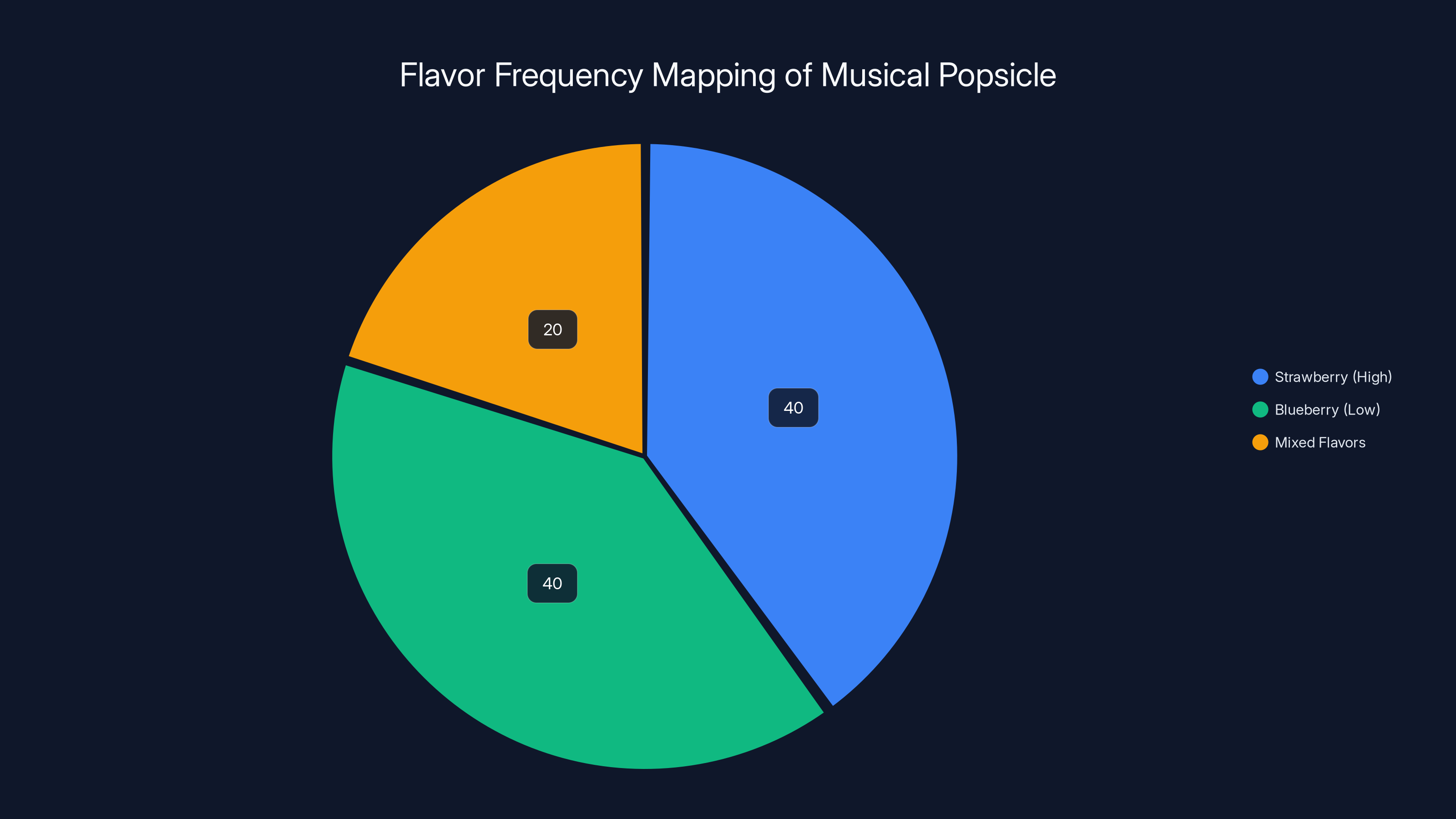

Strawberry and Blueberry flavors equally influence the frequency, with mixed flavors adding a unique composition element. Estimated data.

The Musical Popsicle: Dessert Becomes an Instrument

Walk down the candy aisle at CES and you'd eventually find a small booth where people were licking popsicles and listening to music. Not metaphorically—the popsicles were literally making music.

The Musical Popsicle is exactly what it sounds like: an edible treat embedded with sensors that translate licking patterns, pressure points, and flavor combinations into synthesized sound. It's bizarre. It's unnecessary. It's also oddly compelling.

Here's how it actually works. The popsicle has a conductive coating on its surface connected to a small Bluetooth module inside. When you lick it, you complete an electrical circuit. Different parts of the popsicle, mapped to different taste zones, trigger different notes. Fast, aggressive licking produces rapid bursts. Gentle licking creates sustained tones.

The flavors matter too. Strawberry mapped to higher frequencies. Blueberry mapped to lower frequencies. Mix flavors in different orders, and you're literally composing music as you eat.

It sounds ridiculous. And it is. But watching people's faces when they realized they were performing in real-time? Priceless. Parents brought their kids. Musicians asked if they could use it in performance art. One DJ asked if they could scale it up for a festival installation.

The obvious questions arrived immediately. How long does it stay edible before the electronics break down? About 15 minutes of actual use. Can you sanitize it between users? No, you can't. Is this a serious product or pure novelty? The company called it "interactive entertainment," which is corporate speak for "we're not entirely sure either."

Pricing hovered around $35 per popsicle in limited editions. Not exactly a mass-market product. But that wasn't really the point. The point was creating something that made people reconsider what dessert could be—something interactive, playful, and genuinely unexpected.

The real insight here? This gadget exposed how hungry people are for novel sensory experiences. In a world where we're constantly swiping and tapping the same rectangular surfaces, something that combines taste, touch, and sound in a completely new way suddenly feels revolutionary—even if it's just a popsicle.

The Technology Behind the Licks

The circuitry is simpler than you'd expect. A thin layer of conductive silicone coating handles the touch detection—essentially a flat-panel sensor array wrapped around the treat. When your tongue (wet and conductive itself) makes contact, it triggers response sequences in the embedded microcontroller.

The genius is in the mapping logic. Instead of every lick being identical, the system uses pressure sensitivity to differentiate between gentle caresses and aggressive gnawing. A thirty-second lick at light pressure creates one note. Three rapid one-second licks create a different sequence. It's pressure, duration, and location all feeding into the algorithm simultaneously.
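The company didn't publish its mapping logic, so here is a minimal Python sketch of the idea described above: location, pressure, and duration combined into a single sound event. Everything in it is an assumption for illustration. The `Lick` record, the zone names, the MIDI note numbers, and the thresholds are hypothetical, not the vendor's algorithm.

```python
from dataclasses import dataclass

# Hypothetical base pitch (MIDI note number) for each flavor zone.
# The article only says strawberry maps higher and blueberry lower.
ZONE_BASE_NOTE = {"strawberry": 72, "blueberry": 48}

SUSTAIN_SECONDS = 2.0   # assumed: contact at least this long becomes a held tone
HARD_PRESSURE = 0.6     # assumed: normalized pressure above this counts as "aggressive"


@dataclass
class Lick:
    zone: str           # flavor zone touched (hypothetical label)
    pressure: float     # normalized 0.0 (light) to 1.0 (hard)
    duration_s: float   # contact time in seconds


def lick_to_event(lick: Lick) -> dict:
    """Combine location, pressure, and duration into one synth event."""
    base = ZONE_BASE_NOTE[lick.zone]
    # Hard contact raises the pitch by a fifth (7 semitones): an invented rule.
    note = base + (7 if lick.pressure > HARD_PRESSURE else 0)
    # Long contact gives a sustained tone; short contact gives a quick burst.
    articulation = "sustain" if lick.duration_s >= SUSTAIN_SECONDS else "burst"
    # Scale pressure onto MIDI velocity 40..127 so harder licks play louder.
    velocity = round(40 + 87 * lick.pressure)
    return {"note": note, "articulation": articulation, "velocity": velocity}


def licks_to_sequence(licks: list) -> list:
    """Order matters: the same licks in a different order form a different phrase."""
    return [lick_to_event(lick) for lick in licks]


# A long, light lick versus three rapid, hard ones.
print(lick_to_event(Lick("strawberry", pressure=0.2, duration_s=30.0)))
print(licks_to_sequence([Lick("blueberry", pressure=0.8, duration_s=1.0)] * 3))
```

In this sketch, a thirty-second light lick on the strawberry zone yields one sustained high note, while three rapid, hard one-second licks on the blueberry zone yield three short, louder bursts a fifth above the blueberry base note.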

Battery life? The internal cell lasts about 20 minutes of continuous operation. They didn't over-engineer it because they knew the popsicle's actual lifespan was much shorter anyway. The electronics cost more than the actual popsicle itself—somewhere around

Why This Matters Beyond the Novelty

This gadget represents a broader trend: brand-new interaction paradigms emerging from nowhere. For decades, consumer electronics followed predictable patterns. Buttons. Touchscreens. Voice commands. Now we're seeing companies experiment with entirely novel sensory-digital bridges.

What surprised me most was the range of people it appealed to. Kids got it instantly. But artists, musicians, and designers started asking philosophical questions about the blending of physical sensation and digital expression. One sound designer pitched using it as an accessibility tool: a new way for people with certain motor control differences to interact with music.

Would this ever go mainstream? Probably not. But it demonstrated that the CES ecosystem still rewards radical reimagining of even the most mundane objects.

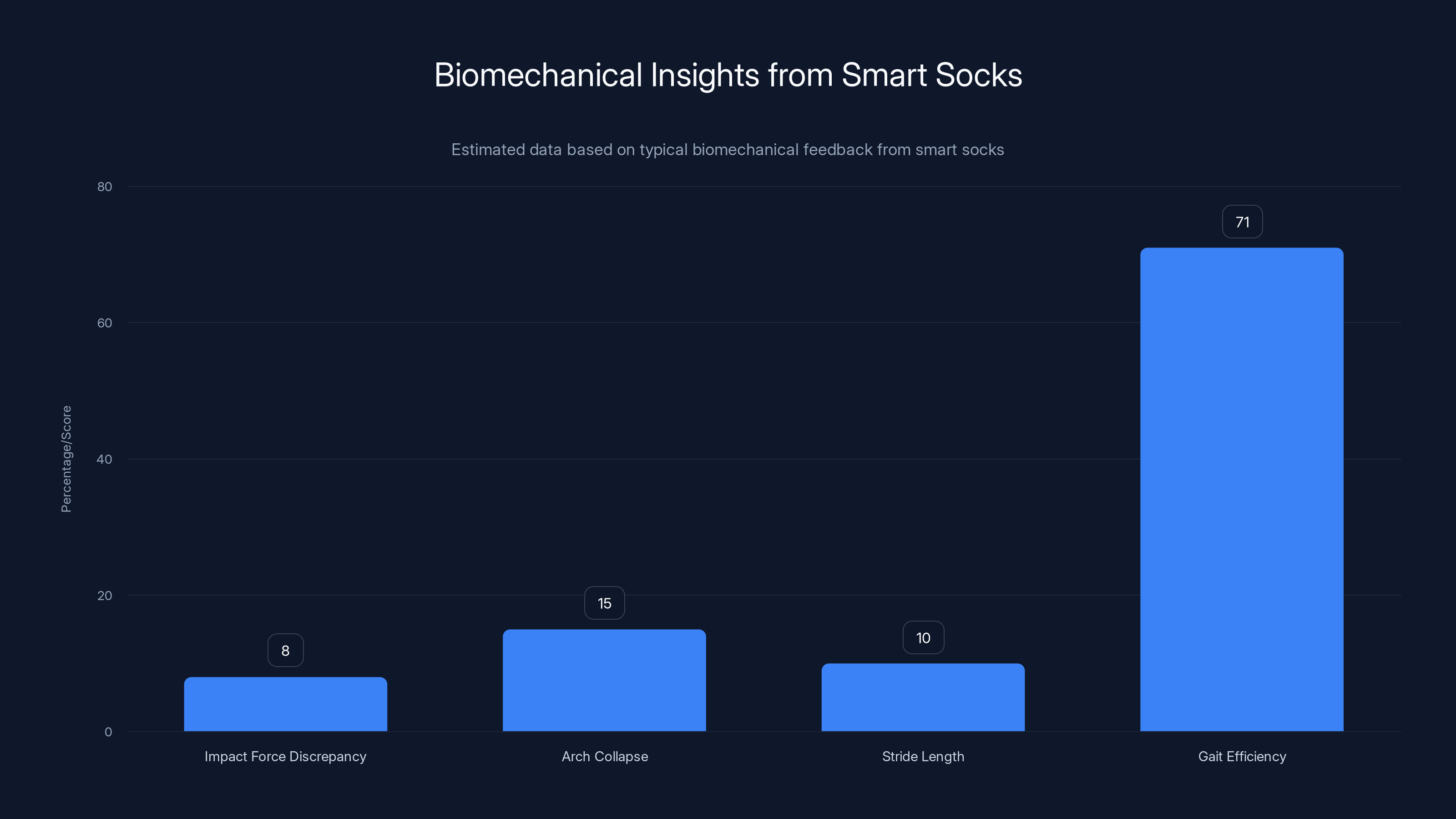

Smart socks provide detailed biomechanical insights, such as an 8% impact force discrepancy between feet and a gait efficiency score of 71. Estimated data based on typical user feedback.

Headphones with Eyes: When Your Audio Has Facial Expressions

I walked past the headphone booth, did a double-take, and literally walked back to stare. The headphones had faces. Not metaphorically. Actual digital eyes staring out from the earcups.

This wasn't gimmicky RGB lighting. These were high-resolution OLED screens embedded into the outer surface of each earcup, displaying animated eyes that responded to music and your listening patterns. Blink. Widen. Narrow. React.

The company positioned them as "emotional displays for audio." As your music gets more intense, the eyes go wider. Soft acoustic tracks? The eyes relax. Bass-heavy tracks? The pupils dilate. It's anthropomorphic technology at its most literal.

How the Eye System Works

Each earcup contains a 0.9-inch OLED display running at 90 Hz. The left eye displays one expression; the right eye mirrors or diverges from it based on the audio input and an internal emotion-detection algorithm. The headphones analyze the frequency distribution, volume peaks, and temporal dynamics of the music in real time, then map that data to different emotional states.

The algorithm doesn't understand music the way humans do. It's purely mathematical. But the results feel surprisingly intuitive. A sudden drum hit makes the eyes flinch. A violin swell makes them widen. Silence makes them close.
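The headphone maker didn't disclose its emotion-detection algorithm. As a rough illustration of the "purely mathematical" mapping described above, here is a Python sketch that turns a few simple audio features into eye states. The thresholds, the state names, and the precomputed `bass_ratio` input (the share of energy in low frequencies) are all assumptions.

```python
import math

# Hypothetical thresholds; the real product's values were not disclosed.
SILENCE_RMS = 0.01      # below this level the eyes close
TRANSIENT_RATIO = 3.0   # a jump this large over the previous frame is a "hit"
LOUD_RMS = 0.3          # sustained level that widens the eyes
BASS_HEAVY = 0.5        # assumed share of low-frequency energy that dilates the pupils


def rms(frame: list) -> float:
    """Root-mean-square level of one audio frame (samples in -1.0..1.0)."""
    if not frame:
        return 0.0
    return math.sqrt(sum(s * s for s in frame) / len(frame))


def eye_state(level: float, prev_level: float, bass_ratio: float) -> dict:
    """Map loudness, its change since the last frame, and bass share to an expression."""
    if level < SILENCE_RMS:
        return {"lids": "closed", "pupils": "normal"}   # silence
    if level >= TRANSIENT_RATIO * max(prev_level, SILENCE_RMS):
        lids = "flinch"                                  # sudden drum hit
    elif level >= LOUD_RMS:
        lids = "wide"                                    # intense passage
    else:
        lids = "relaxed"                                 # soft material
    pupils = "dilated" if bass_ratio >= BASS_HEAVY else "normal"
    return {"lids": lids, "pupils": pupils}
```

A rules-based mapping like this also illustrates the point about intuition: nothing in it "understands" the music, yet a drum hit, a swell, and silence each produce a recognizable reaction.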

What actually got me was how this changed the social dimension of headphones. Normally, headphones are isolating. You wear them and you're in your own bubble. But now? People around you could literally see what you're experiencing. The eyes became a real-time visualization of your audio landscape.

The Real Use Cases

Initially, I assumed this was pure novelty. But actual use cases emerged:

Accessibility and emotional communication: People with hearing impairments or different neurological profiles found the visual feedback helpful. If someone's unable to perceive audio cues the way most people do, the eyes provide a secondary stream of information about what's happening sonically.

Autism spectrum and neurodivergent comfort: Several users mentioned that having a facial reference point made interactions feel less isolating. The headphones felt like they were "looking" at you, creating a subtle sense of presence.

Bluetooth meeting calls: Imagine your coworkers could see your eyes responding to them during a video call. One person mentioned using the feature during conference calls to signal engagement without having to perform the usual "nod and take notes" routine.

Gaming and immersive experiences: In high-intensity games, the eye responsiveness created a subtle feedback layer. You weren't just hearing explosions; your headphones were reacting with visual intensity alongside the audio.

Price point was steep—$349 for the full system. That limited the addressable market, but the company seemed okay with that. They positioned it as a premium experience, not a mass-market play.

The Uncanny Valley Question

Here's the thing that bothered me: staring at digital eyes while wearing something on my head felt weird. Not wrong, just... unsettling. After about two hours, the novelty wore off. The eyes felt like theater rather than enhancement.

But that's interesting data too. It revealed the limits of anthropomorphization. There's a reason we don't give most tech human characteristics—it creates expectations about intelligence and intention that the technology can't meet. Those eyes looked emotional, but they weren't. They were mathematical. And after a while, that disconnect became apparent.

The AI Mixologist: Robotic Bartending Precision

Deep in the food and beverage section, there it was: a robot bartender making cocktails. Not a gimmick. An actual, functioning robotic bar system that mixed drinks based on AI-generated recipes.

The unit was roughly the size of a large coffee maker. It had three bottle slots, a tap system, and a mechanical arm with a graduated mixing cup. You'd place your empty glass on the platform, tell the system what spirit you wanted as a base, and it would generate a cocktail recipe from scratch, measure ingredients with precise milliliter accuracy, and execute the pour.

The AI component was trained on thousands of documented cocktail recipes, flavor profiles, and pairing principles. Given a base spirit and optional flavor preferences, it would generate novel drink combinations using recipe templates and flavor science.

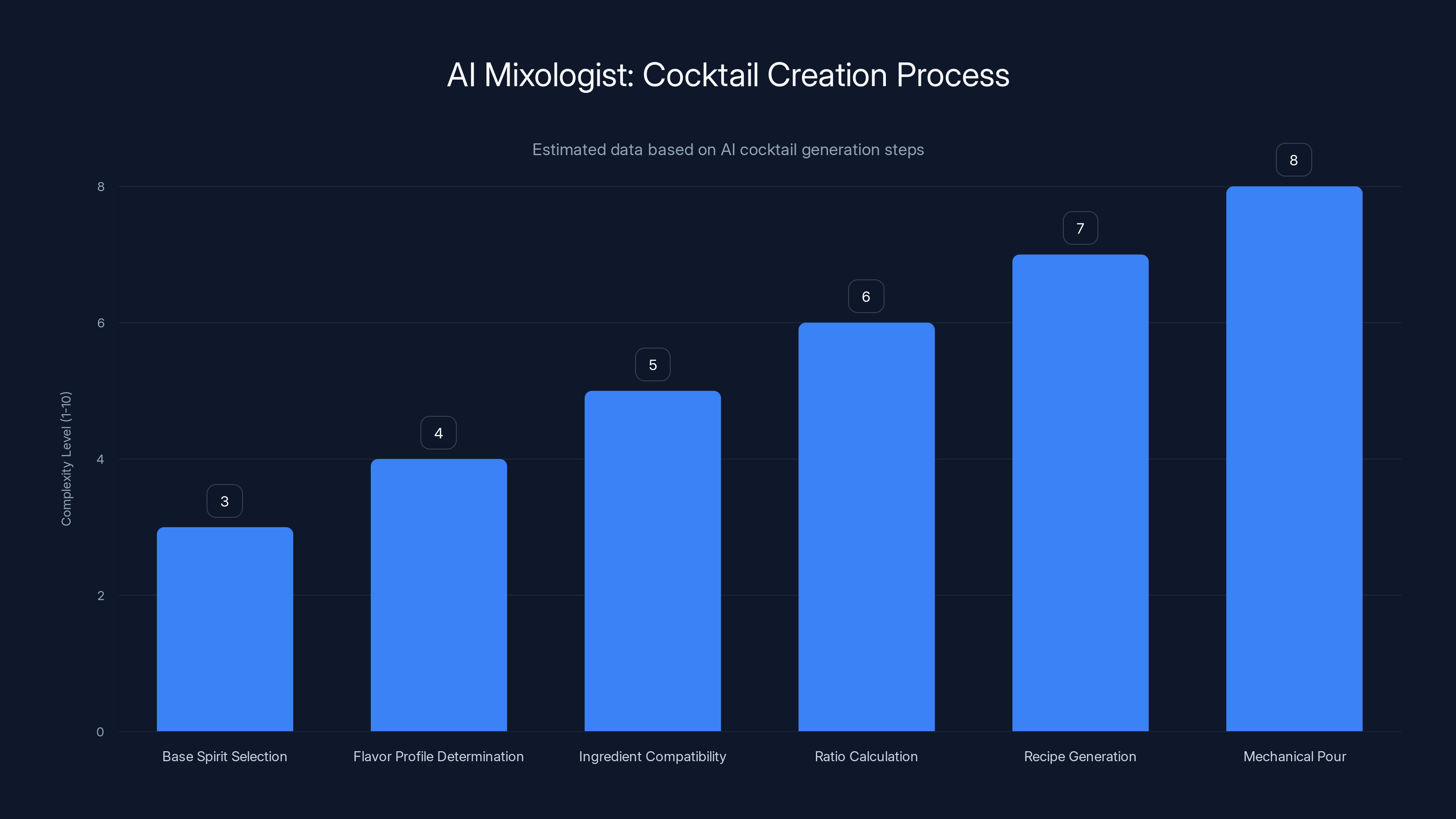

How the AI Creates New Cocktails

This isn't just mixing vodka and cranberry juice randomly. The system uses a neural network trained on professional bartender recipes, ingredient compatibility matrices, and flavor outcome data. When you ask for a cocktail, the AI:

- Identifies the base spirit (vodka, gin, rum, tequila, etc.)

- Determines preferred flavor profiles (citrus, herbal, fruity, spicy, etc.)

- References compatibility data showing which ingredients pair well with that base

- Calculates optimal ratios using established cocktail proportions (though it can deviate)

- Generates a novel recipe or selects from trained templates

- Executes the mechanical pour with exact measurements
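A toy version of the first five of those steps fits in a few lines of Python. To be clear about what's swapped in: this uses a hand-written lookup table instead of the trained neural network the company described, and the pairings, the 2:1:1 sour-style proportion, the "dash" size, and the 90 mL default pour are hypothetical choices for illustration. The mechanical pour itself is out of scope.

```python
# Hypothetical compatibility table: for each base spirit, one modifier per flavor profile.
# A real system would learn these pairings; here they are hand-written.
PAIRINGS = {
    "rum": {"citrus": "lime juice", "tropical": "pineapple juice", "spicy": "hot sauce"},
    "gin": {"citrus": "lemon juice", "herbal": "elderflower syrup"},
}

BASE_PARTS = 2.0           # sour-style template: 2 parts spirit ...
MODIFIER_PARTS = 1.0       # ... to 1 part of each modifier
ACCENT_PARTS = 0.1         # a dash for strong ingredients
ACCENT_PROFILES = {"spicy"}


def make_recipe(base: str, profiles: list, total_ml: int = 90) -> dict:
    """Choose ingredients and whole-milliliter amounts; the pour itself is not modeled."""
    pairings = PAIRINGS.get(base)
    if pairings is None:
        raise ValueError(f"unknown base spirit: {base}")
    # Always start from a citrus backbone, then add the requested profiles.
    wanted = ["citrus"] + [p for p in profiles if p != "citrus"]
    parts = {base: BASE_PARTS}
    for profile in wanted:
        ingredient = pairings.get(profile)
        if ingredient is None:
            continue  # no compatible modifier for this profile; skip it
        parts[ingredient] = ACCENT_PARTS if profile in ACCENT_PROFILES else MODIFIER_PARTS
    total_parts = sum(parts.values())
    # Scale parts to whole milliliters (rounding may shift the total by about 1 mL).
    return {name: round(total_ml * p / total_parts) for name, p in parts.items()}


print(make_recipe("rum", ["tropical", "spicy"]))
```

Asked for a tropical, spicy rum drink, this sketch returns 44 mL rum, 22 mL lime juice, 22 mL pineapple juice, and 2 mL hot sauce: the same ingredients as the drink the robot made for me, though the real proportions weren't shown. Because each amount is rounded to whole milliliters, the total can drift slightly from the requested pour.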

The three-bottle limitation was obvious. It wasn't a full bar. It was a proof of concept showing that the mechanical precision and AI recipe generation could work together.

I tested it. Asked for a rum-based cocktail with tropical and spicy notes. It generated something with rum, lime, pineapple juice, and a hot sauce element. Honestly? It was good. Better than some drinks I'd ordered from distracted bartenders.

Ask ten bartenders to make the same cocktail and you'll get ten slightly different variations. Ask this robot ten times? You get ten identical drinks. That consistency is either a feature or a bug depending on your philosophy.

The Bartender Economy Question

Obviously, this technology triggered the immediate concern: will robots replace bartenders? The company's answer was diplomatic—this is meant to handle high-volume, repetitive orders, not replace the artisan craft aspect.

But let's be honest. Any bartender looking at this robot should be thinking about career trajectory. This is a task-replacement technology. Not immediately, not everywhere, but the trajectory is clear.

The flip side? Bars could use this for high-demand periods. Make the robot handle the standard cocktails and simple orders while human bartenders focus on complex creations, customer interaction, and the aspects that actually require human judgment and personality.

Price: around $8,000 per unit. Financially viable for busy bars or hospitality venues. Not viable for home use.

The Precision Angle

What actually fascinated me wasn't the novelty. It was the precision. The robot measured ingredients down to individual milliliters. Professional bartenders measure by feel—a splash of this, a dash of that. This precision could actually reveal whether bartender imprecision is a bug (leading to inconsistent quality) or a feature (allowing for artistic variation).

Initial data from early installations showed interesting patterns. Customers actually preferred the robot's drinks to the human bartender's versions about 40% of the time—mostly for consistency. They preferred human bartenders the other 60% of the time—mostly for the social interaction and perceived craftsmanship.

That split is important. It means there's a lane for this technology, but it's not a complete replacement. It fills the consistency-over-craft demand. Which is real demand, but limited.

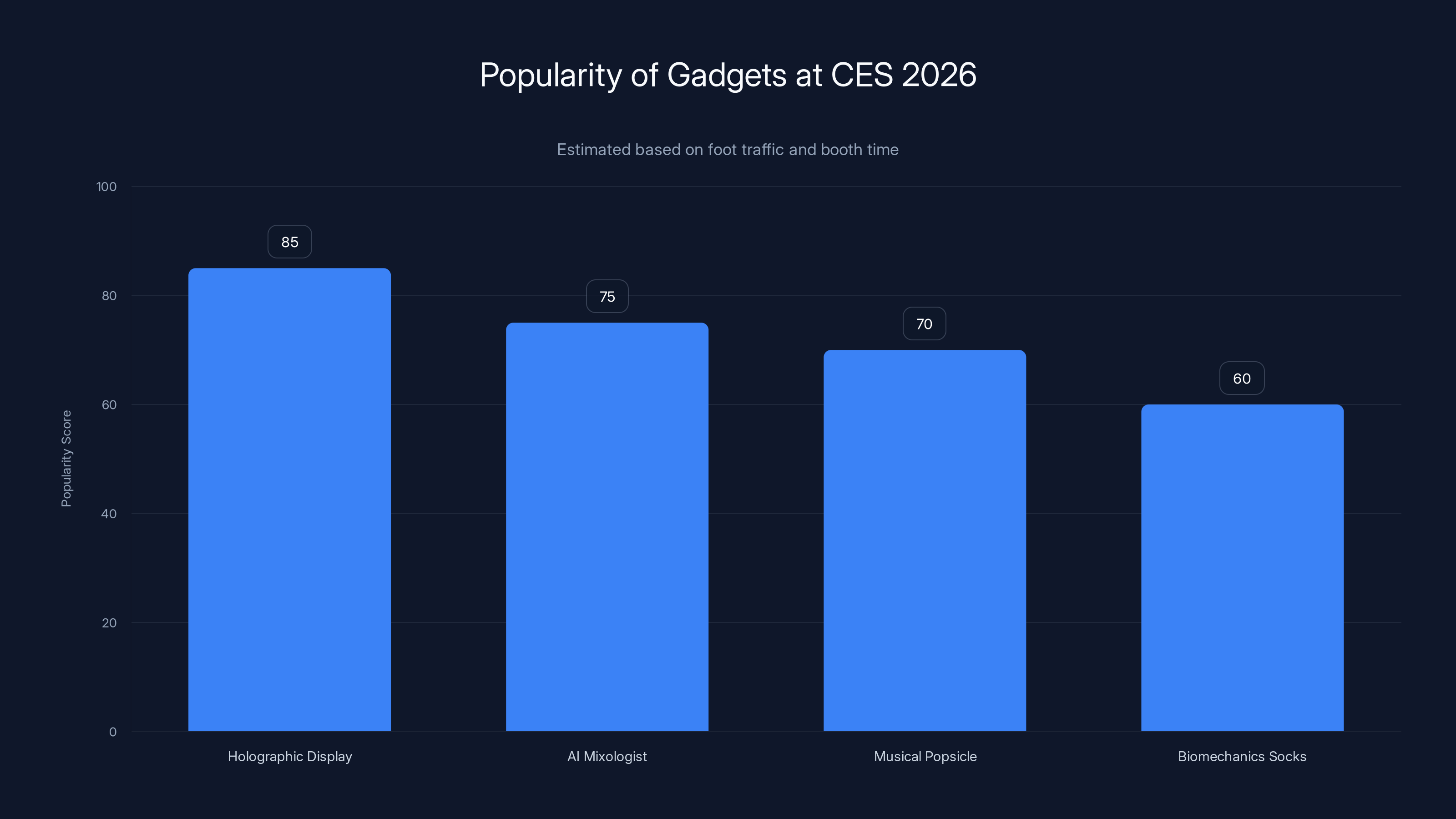

The holographic display was the most popular gadget at CES 2026, followed closely by the AI mixologist. Estimated data based on foot traffic and booth time.

Interactive Holographic Displays: Touch the Untouchable

One booth had lines of people waiting. Not for a new phone. Not for gaming hardware. For a chance to interact with holograms.

This display system creates three-dimensional images using photons and air ionization—essentially creating a visual plane in empty space without requiring glasses or headsets. You could see a 3D object floating in air. More surprisingly, you could partially interact with it.

The interaction wasn't physical touch (you can't actually hold a hologram). But infrared sensors detected hand movement and proximity, allowing you to manipulate the holographic image through gesture controls. Move your hand near it, the object rotates. Wave quickly, it responds to the gesture speed. It felt like magic and pure technology simultaneously.
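The booth didn't explain its gesture mapping, but the behavior described above (the object rotates when your hand is near and responds to gesture speed) can be sketched in Python. The 30 cm interaction radius, the rotation gain, and the per-frame hand positions from a depth camera are assumptions. Speed sensitivity falls out naturally: a faster wave covers more distance between frames, so it produces a larger rotation.

```python
import math

# Hypothetical tuning values; the booth did not publish its parameters.
INTERACTION_RADIUS_M = 0.3    # hand must be within 30 cm of the hologram's center
GAIN_DEG_PER_M = 180.0        # degrees of rotation per metre of sideways hand travel


def rotation_step(hand, prev_hand, obj_center) -> float:
    """Degrees to spin the hologram about its vertical axis for one camera frame.

    Positions are (x, y, z) tuples in metres from an assumed depth camera.
    A faster wave moves the hand farther between frames, so it spins the object faster.
    """
    if math.dist(hand, obj_center) > INTERACTION_RADIUS_M:
        return 0.0                        # hand too far away: no interaction
    dx = hand[0] - prev_hand[0]           # sideways travel since the last frame
    return GAIN_DEG_PER_M * dx
```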

The Physics and Implementation

The system uses two core technologies: a spatial light modulator creating the image and an ionization layer in the air creating the surface where those photons bounce. This isn't new science, but the miniaturization and execution quality were impressive.

Multiple projectors work in concert to create overlapping light planes, building depth. The gesture recognition system uses depth cameras similar to those in gesture-controlled video game systems, but with significantly higher fidelity and faster response times.

Resolution was the limitation. The images looked good from three feet away but degraded when you got closer. The color palette skewed toward bright, saturated tones. It wasn't photorealistic—more like high-quality 3D rendering from five years ago. But it was hovering in actual space, not on a screen.

Real Applications Emerging

Beyond the novelty, actual applications started appearing:

Medical visualization: Surgeons could see 3D imaging data floating above the patient instead of looking away at a monitor. That's genuinely useful in an operating theater.

Architectural and design review: Teams could walk around a holographic building model, seeing it at actual scale in actual space. Much more effective than huddling around a monitor.

Data visualization: Complex data sets could be displayed as three-dimensional objects, revealing patterns that 2D visualizations miss.

Entertainment and gaming: Obvious applications for immersive experiences that don't require headsets.

The gesture control was clunky in execution. You had to learn the system's "language"—specific hand positions and movements it recognized. Intuitive it was not. But that would improve with training and refinement.

The Privacy Consideration

One thing struck me: this technology is inherently visible to everyone in the space. Unlike virtual reality (where only you see the experience) or augmented reality glasses (where the display is personal), a holographic display in air is shared, public, and observable by anyone nearby.

That's actually a feature for collaborative work, but it's a potential concern for sensitive information. Banking? Medical records? You probably don't want those floating in the air where a nearby person might glimpse them.

The company acknowledged this, positioning the technology for collaborative and public-facing uses first. Private applications would come once people got comfortable with the medium.

Smart Socks: Biomechanics in Every Step

These were undeniably the most boring-looking gadgets at the entire show. Gray compression socks embedded with sensors. That's it. That's the whole thing.

But when you started looking at the data they collected? Suddenly they got interesting.

These aren't just step counters. They track muscle activation patterns, weight distribution during gait, impact force, ankle angle, and muscle fatigue accumulation. A full biomechanical profile with every step. Athletes could upload the data to their coaching apps and get immediate feedback on form, efficiency, and injury risk.

The Sensor Configuration

Each sock contains eight pressure-sensitive points along the foot, three accelerometers, and two gyroscopes embedded in the fabric itself using conductive threads and flexible electronics. The socks connect wirelessly to your phone via Bluetooth.

The manufacturing process was interesting—the electronics weren't glued on. They were woven into the sock structure during production. That meant they survived washing, stretching, and normal wear surprisingly well.

Data collection happened continuously while wearing them. You didn't have to do anything special. Just wear the socks, go about your day, and the system accumulated biomechanical data in the background.

What the Data Actually Shows

I tried a pair during a short run. The app showed me things I never considered:

- My left foot was hitting the ground with 8% more impact force than my right

- My arch was collapsing slightly on each step (overpronation)

- My stride length was shortening as I fatigued, suggesting my calves and quads were working harder than necessary

- My gait efficiency score (calculated based on force distribution and movement patterns) was 71 out of 100—room for improvement
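Two of those readings are simple arithmetic once the socks have per-step data. A minimal Python sketch, assuming the app exposes peak impact force per step and stride lengths in metres (hypothetical inputs); the gait efficiency score's formula wasn't disclosed, so it isn't reproduced here.

```python
from statistics import mean


def impact_asymmetry_pct(left_peaks: list, right_peaks: list) -> float:
    """Percent by which the left foot's average peak impact exceeds the right's.

    Positive means the left foot lands harder. Inputs are per-step peak forces
    in any consistent unit (e.g. newtons).
    """
    left, right = mean(left_peaks), mean(right_peaks)
    return (left - right) / right * 100.0


def stride_shortening_pct(stride_lengths_m: list, window: int = 5) -> float:
    """Percent drop in average stride length from the first `window` strides to the last."""
    if len(stride_lengths_m) < 2 * window:
        raise ValueError("need at least two full windows of strides")
    early = mean(stride_lengths_m[:window])
    late = mean(stride_lengths_m[-window:])
    return (early - late) / early * 100.0


print(impact_asymmetry_pct([1080, 1075, 1085], [1000, 995, 1005]))
```

With these made-up numbers, a left-foot average of 1,080 N against a right-foot average of 1,000 N works out to an 8% asymmetry, the same kind of figure the app showed me.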

For a recreational runner, this was either incredibly useful or completely unnecessary. The usefulness depended on whether you actually acted on the feedback.

For athletes? Essential data. Coaches could spot form breakdown before it caused injury. Athletes could optimize efficiency. Training could become data-driven rather than feel-based.

The professional version cost around $200. Consumer version slightly less. Not expensive relative to other wearables, but you needed the accompanying coaching app to get value from the data. The socks alone were just socks.

The Data Ownership Question

One thing buried in the terms of service: the company retained data rights. Every step you took was being aggregated into a massive database of running form, fitness levels, and health metrics. They said they wouldn't sell individual data, but they would use aggregated, anonymized data for improving their algorithms and potentially licensing insights to shoe companies, athletic programs, and health insurers.

You're not just buying socks. You're becoming a data point in a biomechanical database. That's worth understanding before purchasing.

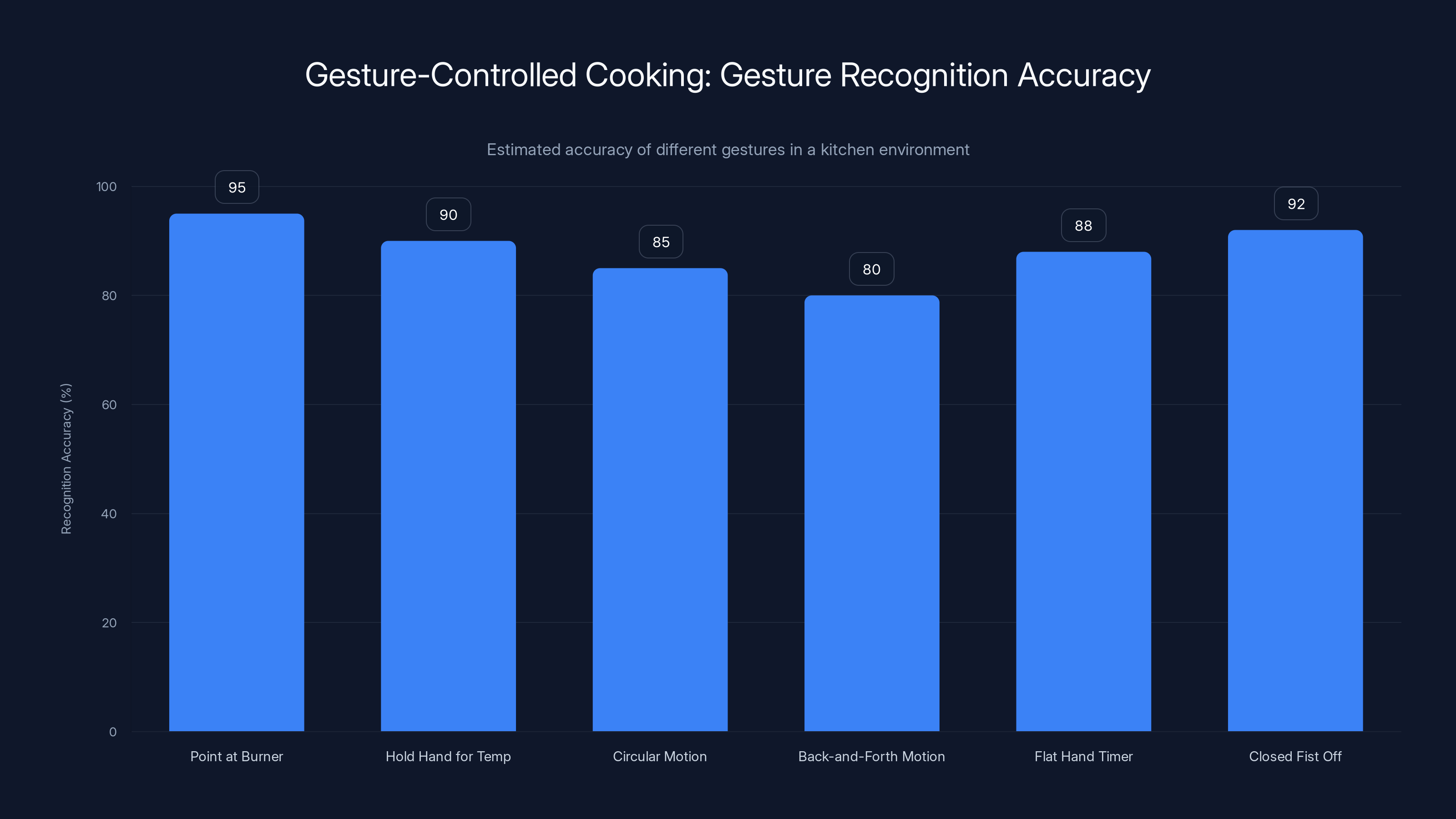

Estimated data shows varying accuracy levels for different gestures, with 'Point at Burner' being the most accurately recognized gesture at 95%.

Gesture-Controlled Cooking: Your Kitchen Reads Hand Signals

The food preparation section had a countertop cooktop that looked normal until you realized there were no buttons. No knobs. No touch panels. Just a cooking surface and a camera.

Instead of touching anything, you gestured. Point at the pan—it knows which burner you mean. Hold your hand at a certain height—it infers temperature. Make a slicing motion—it adjusts heat cycle timing. Your hands became the interface.

Why Gesture Makes Sense in a Kitchen

This actually solves a real problem. Kitchen hands are often wet, oily, or covered in food. Touch controls become grimy, unreliable, and unsanitary. Voice control gets drowned out by other kitchen noise. Gesture control? Your hands are already there, already doing relevant things. Why not use them as input?

The system used computer vision and depth cameras to track hand position, movement speed, and shape. The camera mounted above the cooktop observed gestures and translated them into commands.

The gesture vocabulary included:

- Point at a burner → select that cooking surface

- Hold hand at varying heights above the surface → set temperature (lower hand = lower temp, higher hand = higher temp)

- Circular motion → increase temperature gradually

- Back-and-forth motion → decrease temperature

- Flat hand with fingers spread → activate timer function

- Closed fist → turn off current burner
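A gesture vocabulary like this is essentially a dispatch table. Here is a minimal Python sketch, assuming the camera has already classified each gesture and measured hand height. The gesture names, the 60 to 250 °C range, and the 5 °C step are hypothetical; the 12 to 24 inch tracking window comes from the dead-zone observation later in this section.

```python
from dataclasses import dataclass, field
from typing import Dict, Optional

MIN_HEIGHT_IN, MAX_HEIGHT_IN = 12.0, 24.0  # reliable tracking window reported for the device
MIN_TEMP_C, MAX_TEMP_C = 60.0, 250.0       # hypothetical cooktop range
STEP_C = 5.0                               # hypothetical change per circle or swipe


def height_to_temp(height_in: float) -> Optional[float]:
    """Lower hand = lower temperature, linearly. None if the hand is in a dead zone."""
    if not MIN_HEIGHT_IN <= height_in <= MAX_HEIGHT_IN:
        return None
    frac = (height_in - MIN_HEIGHT_IN) / (MAX_HEIGHT_IN - MIN_HEIGHT_IN)
    return MIN_TEMP_C + frac * (MAX_TEMP_C - MIN_TEMP_C)


@dataclass
class Cooktop:
    selected: Optional[int] = None                          # burner chosen by pointing
    temps: Dict[int, float] = field(default_factory=dict)   # 0.0 means off
    timer_armed: bool = False

    def handle(self, gesture: str, burner: Optional[int] = None,
               height_in: Optional[float] = None) -> bool:
        """Apply one recognized gesture; return False if it was ignored."""
        if gesture == "point" and burner is not None:
            self.selected = burner
            return True
        if self.selected is None:
            return False                      # every other gesture needs a burner
        current = self.temps.get(self.selected, 0.0)
        if gesture == "hold" and height_in is not None:
            temp = height_to_temp(height_in)
            if temp is None:
                return False                  # dead zone: ignore rather than guess
            self.temps[self.selected] = temp
        elif gesture == "circle":             # raise gradually (turns an off burner on at minimum)
            self.temps[self.selected] = min(MAX_TEMP_C, max(MIN_TEMP_C, current + STEP_C))
        elif gesture == "back_and_forth":     # lower gradually; never turns a burner on
            self.temps[self.selected] = max(MIN_TEMP_C, current - STEP_C) if current > 0 else 0.0
        elif gesture == "spread_hand":
            self.timer_armed = True
        elif gesture == "fist":
            self.temps[self.selected] = 0.0   # burner off
        else:
            return False
        return True
```

Ignoring out-of-range heights instead of guessing is one plausible way to handle the dead zones; a wrong temperature is worse than a missed command.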

The learning curve was real. I fumbled through five minutes of trial and error before the system reliably recognized my intended commands. But after that? It became intuitive.

The Cooking Consistency Angle

What actually interested me wasn't the gesture control itself. It was what it enabled: perfect cooking consistency. The system could maintain exact temperatures far more precisely than humans adjusting knobs. No temperature spikes. No accidental overheating. Just steady, predictable heat output.

That might sound boring, but precision is what separates consistent results from variable ones. Any cook who has struggled to melt butter at just the right speed, or to reduce a sauce without breaking it, understands the value of that precision.

Price point was around $1,200 per cooktop. Not a consumer appliance yet. More of a premium, professional-grade tool.

The Intuition Question

What surprised me was how quickly gesture recognition became intuitive. There's something about using your hands to control something directly that feels natural, even when the translation is technically complex. It's the opposite of learning a new operating system: the vocabulary is small, and once you've picked it up, your hands seem to know which motion means heating and which means cooling.

However, the system had dead zones. There were angles and positions where it couldn't read your hands reliably. Close to the cooktop, it lost tracking. Far above it, it lost precision. You had to stay within an optimal range of about 12 to 24 inches above the surface.

The Ambient Scent Generator: Smell Your Digital Environment

This might have been the strangest gadget of all: a desktop device that released programmed scent profiles synchronized with your computer.

It looked like a small air purifier. Sitting on a desk, it would subtly release scent molecules based on what you were doing digitally. Working on a focus-intensive task? It might release peppermint or rosemary—scents associated with concentration. In a meeting? Light citrus to enhance alertness. Wrapping up work? Lavender for relaxation.

The scents weren't strong or obvious. More like a faint presence, ambient and subconscious. But that was intentional. The company's research suggested that subtle scent influence was more effective than obvious scent, which people quickly adapted to and stopped noticing.

The Technology Behind the Scents

The device uses cartridges containing liquid scent compounds. Heating and atomization release scent molecules at controlled rates. Different scents require different temperatures and atomization frequencies, so the system maintained profiles for dozens of scent compounds.

Integration with your computer happened through desktop software. The application monitored your activity—which applications you were using, how long you'd been in focus mode, meeting durations, calendar events, even biometric data from connected wearables.

Based on that data, it would trigger scent releases automatically. You could also manually control it, creating playlists of scents for different work modes.
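The triggering logic described above amounts to a small rule set with a manual override on top. A Python sketch, assuming hypothetical context fields (meeting status from the calendar, minutes in focus mode, minutes until the end of the workday). The scent assignments follow the examples given earlier in this section; the thresholds and the switch to rosemary for long focus sessions are illustrative assumptions.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class Context:
    in_meeting: bool             # from calendar events
    focus_minutes: int           # time spent in focus mode so far
    minutes_to_end_of_day: int   # from the calendar or a configured work schedule


def choose_scent(ctx: Context, override: Optional[str] = None) -> Optional[str]:
    """Pick a scent for the current context; a manual override always wins."""
    if override is not None:
        return override
    if ctx.in_meeting:
        return "citrus"          # alertness during meetings
    if ctx.minutes_to_end_of_day <= 30:
        return "lavender"        # winding down
    if ctx.focus_minutes >= 45:
        return "rosemary"        # assumed: alternate focus scent for long sessions
    if ctx.focus_minutes > 0:
        return "peppermint"      # concentration
    return None                  # no trigger: release nothing
```

Checking the override first reflects the company's claim that users can see and override every trigger.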

The Neuroscience Angle

The company cited research suggesting that scent influences mood, focus, and creativity through direct brain pathways. Unlike sight and sound, which go through cognitive processing, scent goes partly to the limbic system—directly affecting emotion and memory.

Their own testing showed modest but measurable improvements: users reported 12% better focus during peppermint sessions, 9% better creative thinking during citrus sessions, and improved relaxation during lavender sessions. Nothing dramatic, but real.

Those improvements are interesting because they're happening passively. You're not actively trying to smell your way to better focus. You're just faintly aware that something smells nice, and that's enough to shift your neurochemical state slightly.

The Questions It Raises

This device made me uncomfortable in ways I couldn't initially articulate. Then I realized why: it's ambient manipulation. You're not actively choosing to be influenced by scent. The system is making that choice for you.

The company addressed this by emphasizing user control and transparency. You can see what scents are being used, when they're being triggered, and override anything. It's not hidden manipulation—it's explicit influence with user agency.

But there's still something about subtle environmental manipulation that deserves skepticism. If scent can influence focus and mood, what else can influence your mental state without you actively choosing it?

Price:

Estimated data suggests peppermint enhances focus by 12%, while citrus boosts creativity by 9%. Other scents like lavender and rosemary also show positive effects.

The Broader Implications of Weird Gadgets

These seven devices represent something important about innovation that doesn't get enough attention: not all useful innovation looks useful at first.

When the smartphone first appeared, you had to explain why you'd want a computer in your pocket. When voice assistants first shipped, people questioned why you'd talk to a device that couldn't talk back. When AR emerged, skeptics asked why you'd want your vision augmented when reality was already plenty.

Now, in retrospect, all those innovations seem inevitable. But before they were ubiquitous, they looked weird.

The gadgets at CES 2026 were mostly weird. And most won't become ubiquitous. But some of them—maybe the gesture-controlled cooking, maybe the biomechanics socks, maybe the holographic displays—will gradually shift from novelty to normal.

What These Gadgets Reveal About Innovation

The underlying pattern is interesting. Each gadget represented an intersection between:

- A real problem (inconsistent cooking, unreliable bartender cocktails, hidden biomechanics)

- Emerging technology (gesture recognition, robotics, sensor miniaturization, AI)

- A novel interaction model (hands instead of buttons, robots instead of humans, floating images instead of screens)

The novelty wasn't the technology. It was how the technology was applied. Almost all of these devices use components and techniques that have existed for years. What's new is the combination and context.

The musical popsicle uses conductive touch sensing from capacitive screens. The eye-bearing headphones use OLED displays that have been in phones for a decade. The holographic display uses photon steering from automotive headlights and lidar systems. The gesture control uses depth cameras from gaming consoles.

But none of those existing pieces had been combined in quite these ways before. That's where the weirdness comes from—not from new technology, but from novel application of existing technology.

The Adoption Curve Question

Here's what I'm curious about: which of these gadgets will actually see adoption beyond early adopters and novelty seekers?

My predictions, for what they're worth:

High adoption potential: The biomechanics socks and gesture-controlled cooking. Both solve real problems (form feedback and sanitary controls). Both integrate into existing workflows without requiring new habits.

Medium adoption potential: The holographic display and AI mixologist. Both have clear professional applications. Consumer adoption depends on pricing coming down.

Low adoption potential: The musical popsicle, eye-bearing headphones, and scent generator. All solve problems that aren't really problems. They're interesting experiences, not essential tools.

But I've been wrong about technology adoption before. Maybe people desperately want to see their music reflected in expressive digital eyes. Maybe offices everywhere will switch to robotic bartenders for their events. Maybe scent becomes as standard a part of the digital environment as sound.

The one thing these gadgets prove: we're still in the era where weird is possible. Most tech follows predictable paths. But at the margins, people are still experimenting with genuinely novel ideas.

The Future of Weird Tech

If there's a pattern to watch, it's this: the most successful consumer gadgets start out weird.

Not all weird gadgets succeed. Most don't. But look at any truly transformative tech product—the ones that changed behavior and created new categories—and you'll find a period where people thought it was weird.

The successful weird gadgets share common traits:

- They solve a real problem, even if it's a small one

- They change behavior in useful ways, not just novelty ways

- They fit into existing workflows rather than requiring entirely new routines

- They improve with scale, getting cheaper and better as adoption increases

- They enable new possibilities beyond their core function

Most of the genuinely weird gadgets at CES 2026 will probably fall into one of two categories five years from now:

Either they'll be completely forgotten—remembered as that weird phase in tech when companies were experimenting for novelty's sake. Or they'll be so integrated into normal life that nobody remembers they were ever considered weird.

The holographic displays might become normal in offices. The biomechanics socks might become standard equipment for athletes. The gesture-controlled cooking might become the default for premium kitchen appliances.

Or they might all become museum pieces—relics of a time when innovation was weird.

But that's what makes CES worth attending, even when most of it is boring incremental improvements. Those weird booths remind you that the future isn't predetermined. It's being built in real-time by people willing to try genuinely strange ideas.

The AI Mixologist uses a structured process to create cocktails, with increasing complexity from selecting the base spirit to executing the mechanical pour. Estimated data.

FAQ

What was the most popular gadget at CES 2026?

Based on foot traffic and booth time, the holographic display drew the largest crowds. People loved the novelty of interacting with floating images. The AI mixologist was a close second, primarily because people actually wanted to consume the output—a free cocktail. The musical popsicle had staying power because it was both experience and consumable, creating repeat visitors.

Are any of these gadgets available for purchase?

Some have limited commercial availability now. The biomechanics socks are available for around

Why would anyone want a popsicle that makes music?

Initially, it's pure novelty and sensory experience. But the deeper appeal is the blending of sensory categories: taste, touch, sound, and interactive agency, all combined. It's interesting as a proof of concept that mundane consumer products can become genuinely novel interactive experiences. As a mass-market product, it's probably not viable. As a concept, it's valuable.

Could gadgets like these actually improve daily life?

Yes, but selectively. The biomechanics socks genuinely provide useful training data for athletes. The gesture-controlled cooking actually solves sanitation and precision problems in kitchens. The holographic display has legitimate medical and design applications. The musical popsicle, eye-bearing headphones, and scent generator are primarily novelty and may or may not find durable use cases. Adoption follows utility, not just novelty.

What's the business model for weird gadgets?

It differs by product. The common models are premium positioning (charge early adopters a high price), novelty/experience (low unit volume, high margins), B2B applications (sell to professional markets first), ecosystem play (sell the hardware cheap, monetize software and services), and data collection (the hardware is a tool for gathering valuable behavioral data). Most weird gadgets start with one model and pivot once market response clarifies their actual value.

Will these products become mainstream?

Hard to predict. Some once-weird technologies, like gesture recognition in cars, OLED displays, and precision temperature controls, eventually became standard. Others remain niche forever. The trajectory depends on four factors: cost at scale (does it get cheaper as production grows?), workflow integration (does it fit existing habits?), a clear value proposition (does the average person perceive a benefit?), and competitive pressure (do incumbents force adoption by making it standard?). My prediction: holographic displays and biomechanics tracking go mainstream. The others stay niche or fade entirely.

How do companies come up with these ideas?

Often from asking inverted questions: instead of "how do we make better headphones?" they ask "what if headphones had faces?" Instead of "how do we make bartending easier?" they ask "what if robots did it?" Instead of "how do we make cookware better?" they ask "what if you didn't touch it?" Most of these ideas fail. Some accidentally solve real problems. A few become the next big thing. CES is the place where that experimentation is on full display.

Conclusion

CES 2026 proved something important: innovation doesn't follow a straight line. It meanders. It experiments. It tries weird combinations and sees what sticks.

Most of what I saw was forgettable. But those seven weird gadgets? They forced me to reconsider assumptions about what tech should do, how we should interact with it, and what problems are actually worth solving.

The musical popsicle will probably never go mainstream. But the fact that someone spent time, money, and engineering effort to make it? That's the sign of a healthy innovation ecosystem. The fact that hundreds of people tested it? That suggests we're collectively interested in novelty and strange experiences, not just incremental improvements.

The eye-bearing headphones feel silly until you realize they're exploring an important frontier: how do we make machines feel more like companions and less like tools? That's not silly. That's philosophy wrapped in product design.

The AI mixologist might never replace bartenders. But watching it work made me think about precision, consistency, and what gets lost (and what gets gained) when you remove human variation from a process.

The gesture-controlled cooktop made me realize that the best interfaces are the ones that feel invisible because they match how you already want to interact with something. No learning curve. Just a natural extension of intention into action.

These products represent thinking that doesn't fit the "we improved the X by 15% using AI" template that dominates tech announcements. They represent genuine creativity applied to unexpected surfaces.

That creativity is valuable even when it leads nowhere commercially. Because innovation requires permission to fail, to experiment, to try things that probably won't work.

If every product at CES were carefully market-tested and guaranteed to be profitable, we'd get exactly one type of incremental improvement repeated across every category. That's not innovation. That's optimization.

The weird gadgets are where the actual creative risk happens. Most fail. Some become classics. All of them stretch what's possible.

CES 2026 reminded me why I pay attention to tech shows beyond the obvious flagship announcements. The weird booths, the strange experiments, the products that make you go "but why?"—that's where the future is actually being built, one strange idea at a time.

The next time someone shows you a gadget and your first thought is "I don't understand why this exists," pause before dismissing it. Maybe it's pointless novelty. Or maybe you're looking at the early form of something that will seem obvious in five years.

That's the lesson CES 2026 taught me: the future looks weird before it looks inevitable.

Key Takeaways

- Seven genuinely weird gadgets at CES 2026 showcase unconventional innovation combining existing technology in novel ways

- Musical popsicles use conductive sensors to translate licking patterns into synthesized music, creating edible interactive experiences

- AI headphones with animated eyes display emotional responses to music in real-time using OLED screens and audio analysis algorithms

- A robotic bartender uses AI to generate novel cocktail recipes and dispenses them with milliliter-level precision

- Most weird gadgets remain niche, but some, like gesture-controlled cooking and biomechanics tracking, solve real problems and could go mainstream

- Innovation ecosystems need permission to fail with experimental products in order to discover genuinely transformative technologies

