The Dark Side of Influencer Culture: Ethics, Accountability, and Online Safety [2025]

Social media has created a peculiar kind of celebrity. Unlike traditional entertainers bound by agent networks, union regulations, and industry oversight, today's influencers operate in a largely unregulated space where personal boundaries collapse in real time, broadcast to millions. What happens when someone with a massive platform prioritizes monetization over parental responsibility? When the line between content creation and exploitation becomes impossibly blurry? These aren't theoretical questions anymore.

The rise of platforms like TikTok, Instagram, and YouTube has democratized fame in ways that seemed liberating a decade ago. Anyone with a smartphone and an audience could build a career. But democratization without guardrails creates problems. Real problems. Problems that affect real children.

This article explores the troubling pattern of influencer misconduct, particularly cases where parents leverage their children's identities for profit while exposing them to harassment, privacy invasion, and psychological harm. We'll examine the mechanics of how monetized live streaming works, why platforms struggle to enforce accountability, what protective measures exist (and don't exist), and what a more ethical influencer economy might look like.

This isn't about shaming people for earning money online. It's about drawing a line between healthy content creation and exploitation. That line matters. And right now, it's barely visible.

TL;DR

- The Influencer Economy Incentivizes Oversharing: TikTok Lives and similar features monetize intimacy through gift systems, paying some creators $2,000+ daily for broadcasting personal moments and creating financial incentives for excessive privacy invasion, as noted in a recent study by Tubefilter.

- Children Bear the Cost: When parents build audiences around their children, kids lose control over their own narratives, face targeted harassment, privacy invasion, and developmental harm that can persist into adulthood, as discussed in The Atlantic.

- Platforms Enable, Don't Prevent: TikTok, Instagram, and YouTube have minimal age verification, weak content moderation, and virtually no accountability mechanisms for creator misconduct affecting minors, according to Brookings Institution.

- Legal Protections Lag Behind: While some states have "Kidfluencer" laws, enforcement remains weak, and federal standards are absent, leaving children in a gray zone between labor law and content creation, as reported by CBC News.

- The Path Forward Requires Action: Stronger age verification, independent moderation boards, mandatory transparency disclosures, and updated labor protections for child creators are essential starting points.

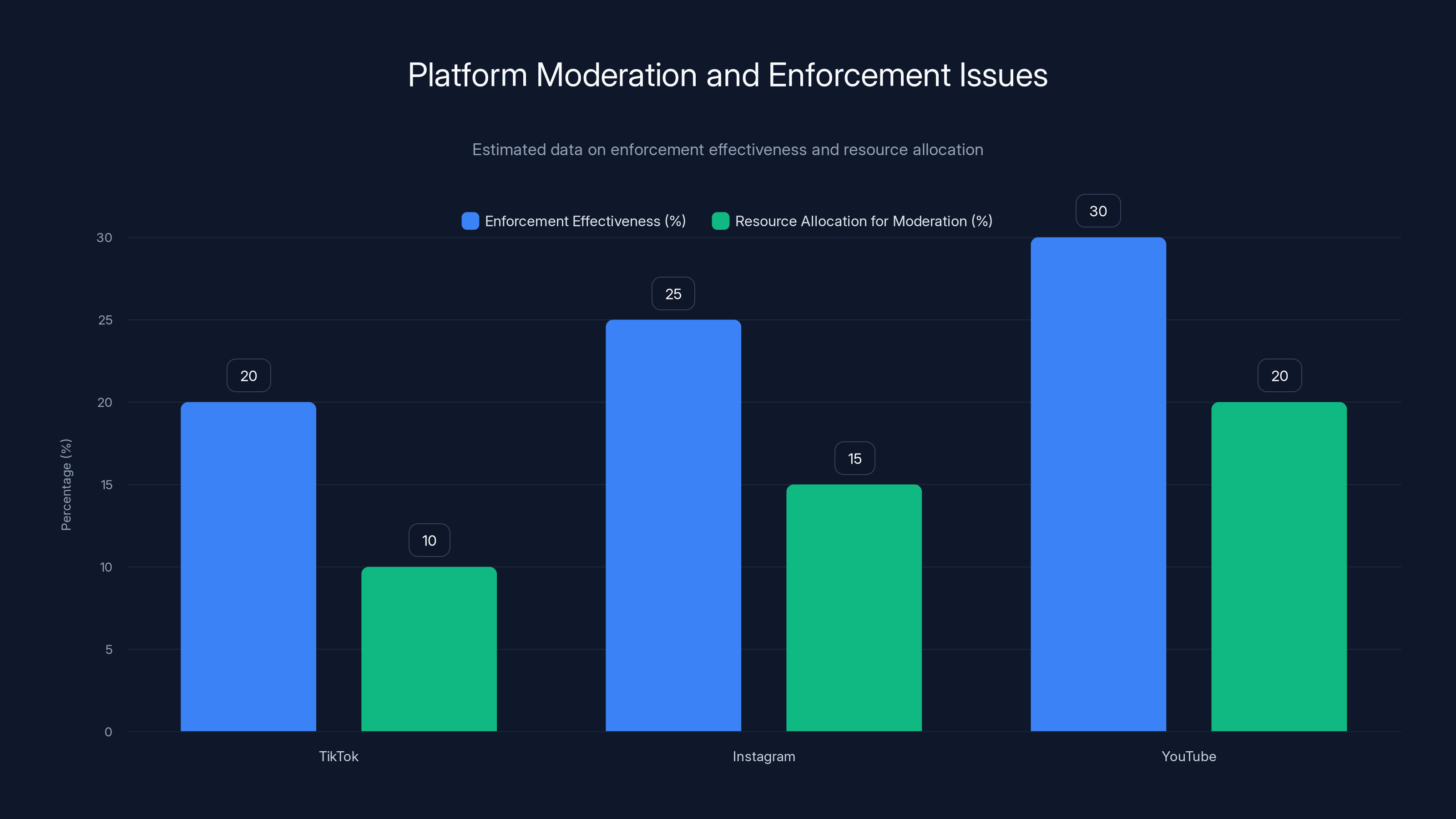

Estimated data suggests that platforms allocate minimal resources to moderation, resulting in low enforcement effectiveness. TikTok, Instagram, and YouTube struggle to effectively enforce community guidelines, especially for large creators.

Understanding the Influencer Economy and Monetization Models

The modern creator economy looks nothing like the entertainment world of 20 years ago. It's decentralized, algorithmic, and extraordinarily lucrative for people who figure out how to game the system. Understanding how influencers actually make money is crucial to understanding why some cross ethical lines.

How TikTok Live Monetization Works

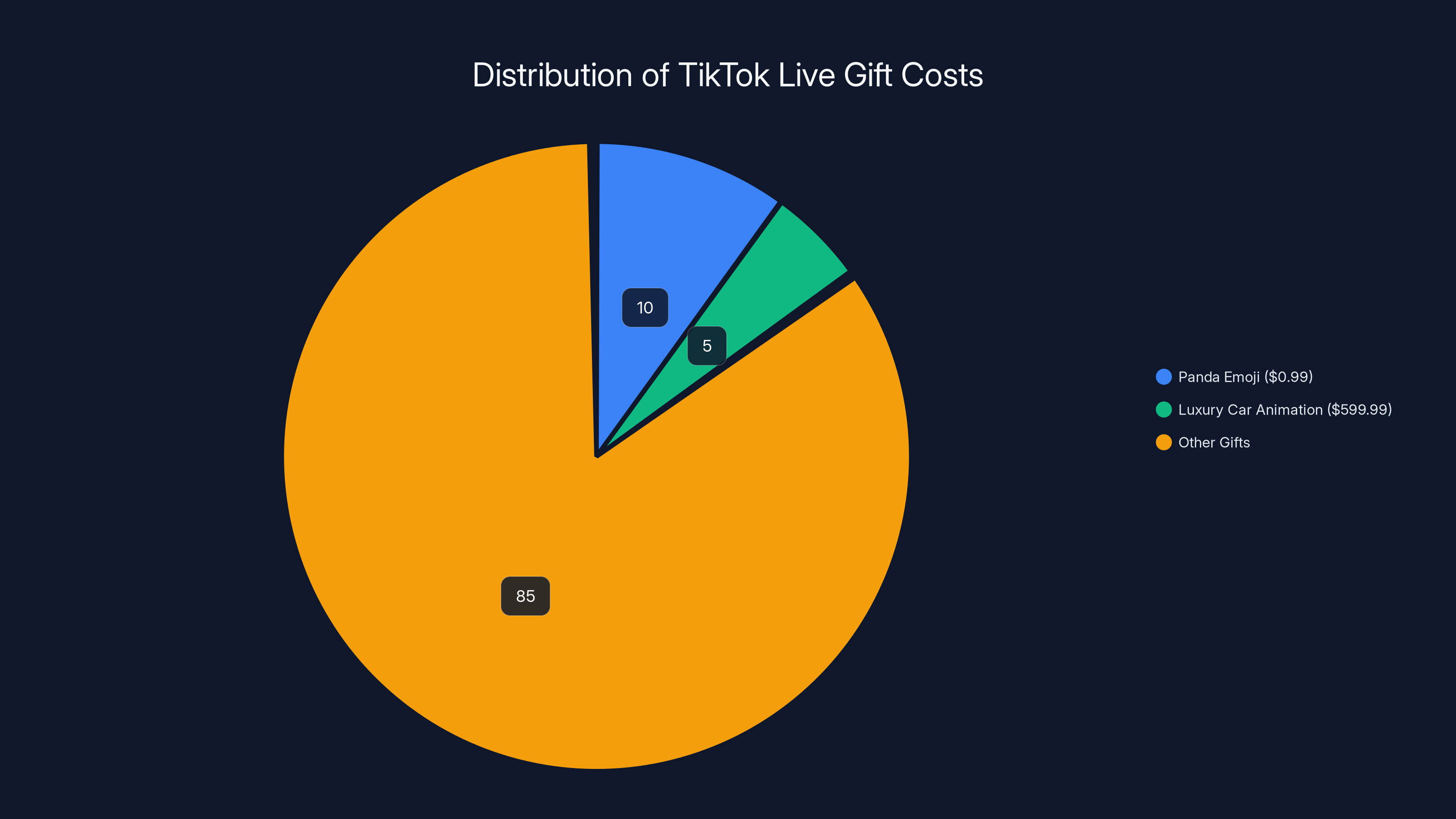

TikTok Live is deceptively simple. A creator goes live. Viewers send virtual gifts. Each gift costs real money, ranging from

This creates a perverse incentive structure. The more intimate, dramatic, or controversial a creator's life appears, the more viewers send gifts. A creator discussing a mundane Tuesday earns nothing. A creator having a meltdown, a family conflict, or a personal crisis? That generates engagement, which translates directly to revenue.

For successful creators, this income is substantial. A creator averaging

The Gift Economy and Parasocial Relationships

The gift system deliberately exploits parasocial relationships: one-directional bonds in which viewers feel personally connected to creators who don't reciprocate that connection. A viewer might watch someone's stream every day for months, feel invested in their life, and believe they have a real friendship. That creator might not even know their name.

This dynamic becomes dangerous when combined with monetization. Creators gain a financial incentive to deepen parasocial relationships, to share more personal information, and to seem more available and vulnerable than they'd ever be in real relationships. They're not being malicious; they're responding to economic incentives. But the end result is genuine psychological harm to viewers and, when family members are featured, to the people being monetized.

Research on parasocial relationships shows viewers can experience genuine grief when creators take breaks or quit, can develop anxiety about creators' wellbeing, and can struggle to form real friendships because they've invested emotional energy in one-directional connections. When children are watching other children in these dynamics, the effects compound.

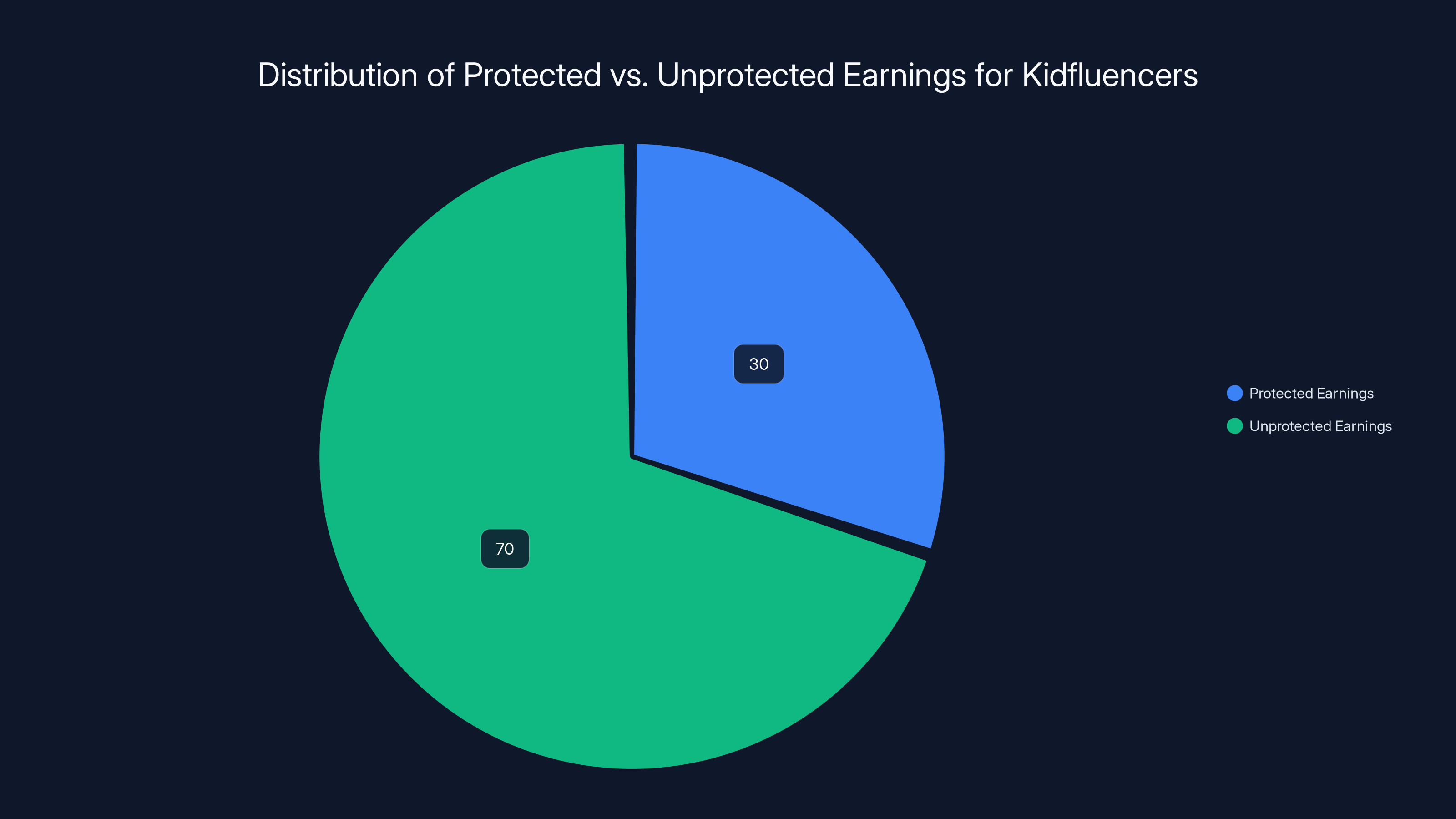

Estimated data shows that platform payouts such as TikTok's make up only about 30% of a child creator's earnings and are the only portion protected under current state laws, leaving the 70% from other sources unprotected.

When Parents Weaponize Parental Identity: The Exploitation Framework

There's a crucial distinction between a parent occasionally appearing in a creator's content and a parent building an entire audience around their child's identity. The first is sharing your life. The second is commodifying your child.

The Mechanics of Child Exploitation in Content

Exploitation doesn't require intent. A parent doesn't need to consciously think "I'm going to harm my children for profit." Exploitation is structural. When a parent monetizes content featuring their child, they've created a system where the child's wellbeing competes financially with the parent's income. It's a conflict of interest at the most basic level.

Here's how it typically unfolds: A parent starts broadcasting their life. They get a decent audience. Then they include their kids, and engagement skyrockets. Kids are relatable, their unfiltered comments are entertaining, their growth and milestones feel fresh to viewers. The audience expands. Revenue increases. The parent naturally includes the kids more. The kids become the product.

At this point, several things happen simultaneously:

The Boundary Erosion Effect: What started as "sharing family moments" becomes a 24/7 broadcast. Viewers know what the kids ate for breakfast, when they failed a test, when they had a bad day. There's no privacy. The kid becomes a character in an ongoing narrative rather than a person with control over their own story.

The Audience Dependency Effect: The parent's income now depends on engagement. A sick child who can't appear on camera means lost revenue. A kid having a bad day who doesn't want to be filmed becomes a problem. The parent's economic interest and the child's wellbeing are in direct conflict.

The Sexualization Effect: In some cases, especially with teen girls, there's subtle (or not-so-subtle) sexualization. Comments about appearance, encouragement toward cosmetic procedures, displaying the child in ways that invite inappropriate attention. Sometimes this is intentional; often it's driven by what gets engagement.

The Privacy Invasion Effect: Millions of people know basic details about the child. Their address gets leaked. Strangers comment on their appearance, their weight, their sexuality. The child is simultaneously hypervisible and without agency.

Documented Harms to Child Creators

This isn't speculative. Research and documented cases show consistent harms:

Psychological Impact: Studies of child actors show increased rates of depression, anxiety, and substance abuse. Content creator kids face similar pressures, plus the added dimension that everyone they meet online knows their private information and has opinions about their bodies and choices.

Educational Disruption: Kids featured in family content often can't attend traditional school. Other students recognize them, invite them based on parasocial connection rather than genuine friendship, and either idolize or resent them. Most end up homeschooled, losing peer relationships and normal developmental experiences.

Identity Formation Issues: Adolescence is about figuring out who you are independent of your family. When a child's identity is publicly co-defined by a parent's brand, they lose the space to explore. They become frozen in the version of themselves the audience knows.

Bodily Autonomy Violations: Kids featured in content can't consent in any meaningful way. A 10-year-old doesn't have the cognitive development to understand the implications of their image being permanent online. Their parent made that decision for them.

The Monetization of Family Trauma and Crisis

One of the most troubling patterns in influencer culture is the monetization of genuine hardship. A child gets sick. A family faces a crisis. Rather than handling it privately, a creator broadcasts it, solicits gifts framed as "support," and builds content around the tragedy.

Exploitation of Medical Situations

When a child is hospitalized, the instinct to reach out to your community is natural. But there's a difference between "I'm going through something hard and I need support" and "My child's medical crisis is content. Send money."

Some creators explicitly request gifts when announcing a child's hospitalization, framing it as helping with medical bills. Sometimes they run ongoing streams about the child's treatment, broadcasting while the child is suffering. The child becomes a vehicle for engagement and revenue during their most vulnerable moments.

This has documented psychological effects. A child knows their parent is profiting from their pain, and that knowledge affects how they process the trauma. Instead of receiving undivided parental attention during a medical crisis, they're watching their parent manage an audience.

The Rescue Narrative and Performative Care

Another pattern: A parent claims they're struggling, they don't have basic supplies, they can't afford essentials for their kids. They ask followers to send money. Sometimes it's genuine hardship. Other times, the creator has significant income but uses the narrative to justify more gifts.

What's insidious is the implicit narrative: "Look at my poor kids. They don't have beds. Send money to help them." The kids become props in a compassion exploitation scheme. Even when the money goes toward actual supplies, the child knows they've been presented to strangers as objects of pity.

This creates a particular kind of shame. Kids internalize the message that they're burdens, that their basic needs are so extraordinary they require crowdfunding, that their suffering is entertainment.

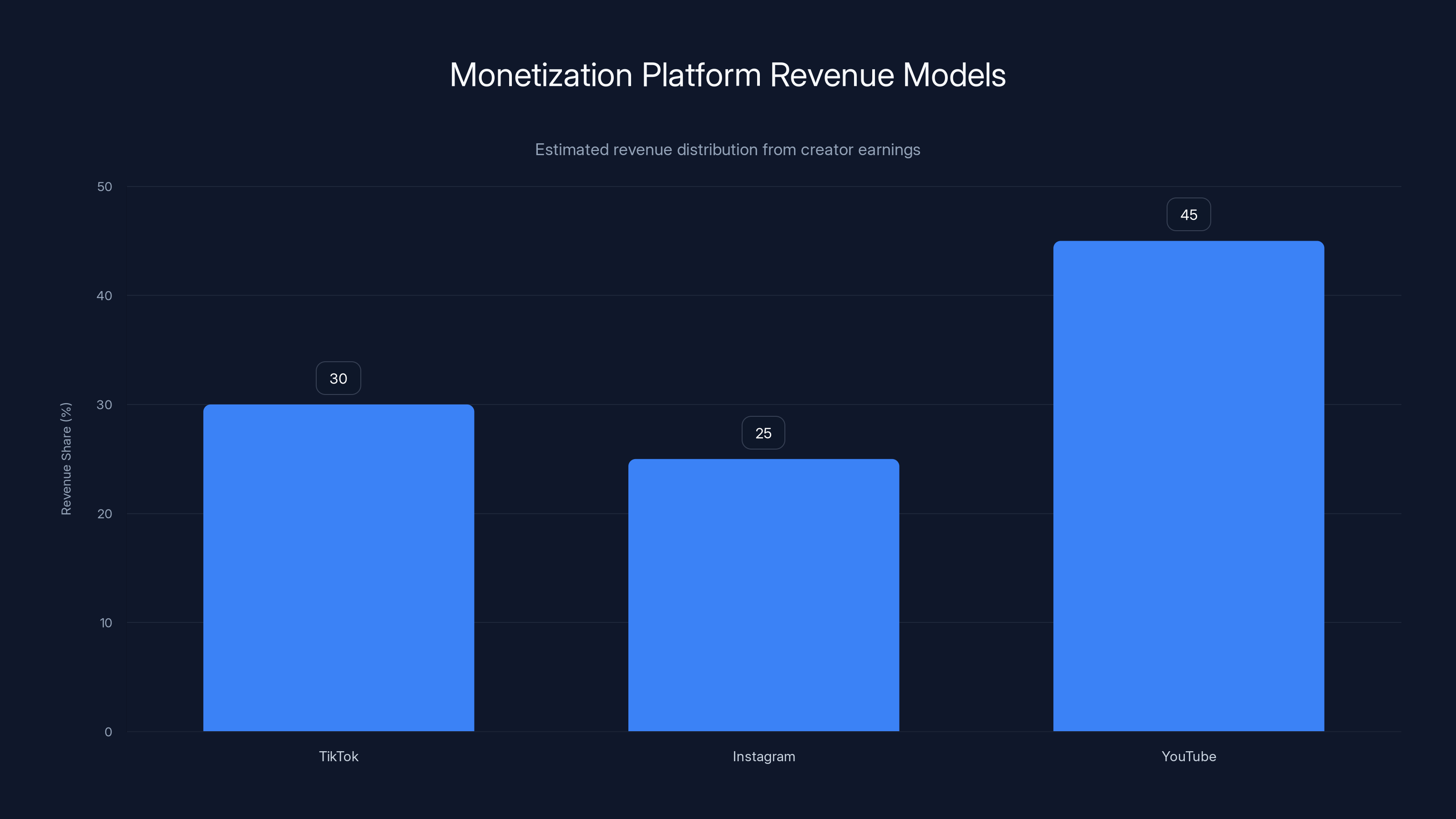

This chart estimates the revenue share platforms take from creators' earnings. YouTube takes the highest cut, followed by TikTok and Instagram. (Estimated data)

Privacy Invasion and Doxxing: The Real-World Consequences

There's a crucial difference between "my kid appears in my videos" and "millions of strangers know where my kid lives, goes to school, and spends their time." When creators broadcast location information, even casually, they're creating safety vulnerabilities.

How Location Sharing Endangers Minors

Doxxing is the practice of publicly sharing someone's private information, usually with intent to harass or endanger them. It happens to creators routinely, but when the subject is a minor, it becomes a safety emergency.

A creator mentions their neighborhood. Someone on Reddit connects it to public records. Another person cross-references school information. Suddenly, thousands of people know exactly where a 14-year-old lives and goes to school. That information is permanent. It can never be unshared.

The consequences are predictable:

- Strangers showing up at the family home

- Acquaintances recognizing the kid at school, sometimes with obsessive behavior

- Online harassers finding the kid's account and targeting them directly

- Predators having a roadmap to the child's location and schedule

What makes this particularly damaging is that the parent created the vulnerability. The child didn't choose to be exposed. They have no ability to protect themselves because their parent has already broadcast all the information needed to find them.

The Psychological Impact of Forced Public Identity

Even without active harassment, knowing that millions of people have your address, your school, and your routines creates persistent anxiety. A child becomes hypervigilant. They can't relax in public spaces because they might be recognized by someone with a parasocial attachment. They can't tell whether someone who seems to know them is actually a stranger.

This is a form of psychological manipulation that adults would recognize as abusive if it were done by a partner. Being forced to live your life publicly, unable to separate your private self from your public identity, unable to control who has access to your information, creates documented mental health consequences.

Kids of highly publicized creators report:

- Constant anxiety in public spaces

- Difficulty forming genuine friendships (can't trust people's motivations)

- Hyperawareness of their appearance and behavior

- Anger at the parent for creating the situation

- Feelings of exploitation that intensify over time

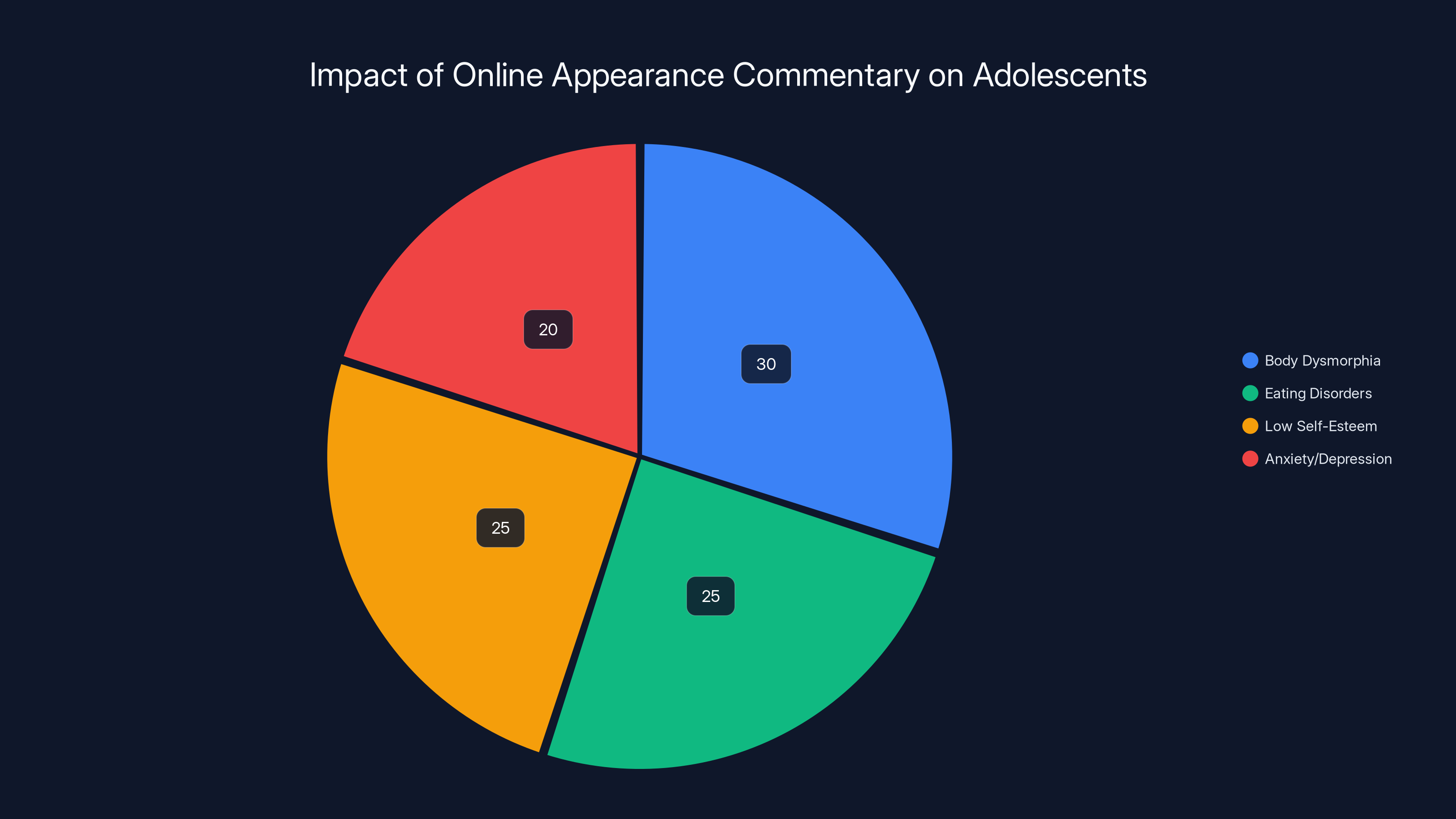

The Body Shaming and Appearance-Based Harassment Problem

When a child's image is shared online, they lose control over how their body is discussed. Strangers comment on their appearance. Critics attack their weight, their skin, their clothing choices. Supporters make compliments that are nonetheless invasive.

Appearance Commentary and Body Dysmorphia Development

Adolescence is already a time of intense body consciousness. Throw a few thousand strangers commenting on your appearance into that mix, and you've created conditions for body dysmorphia and eating disorders.

A girl appearing in family content might receive comments like: "She'd be prettier if she lost weight." "Her nose is so big." "She's going to struggle with dating looking like that." To a developing brain, these aren't random internet comments. They're proof that her body is wrong.

Parents who respond to this criticism by encouraging cosmetic procedures—suggesting lip fillers, nose jobs, or other procedures to their teenage daughter—are directly contributing to the development of these disorders. They're communicating that their daughter's appearance is inadequate and that the solution is to surgically alter herself to match strangers' expectations.

This is psychological abuse with a beauty industry profit motive attached.

The Particular Vulnerability of Teen Girls

There's a documented pattern where teen girls featured in family content face particularly intense sexualization. Comments become inappropriate. Older viewers develop parasocial attachments that feel uncomfortably romantic. Some creators actively encourage this dynamic because it increases engagement.

A teenager in this position faces impossible contradictions: They're being presented to the world as sexually appealing while simultaneously having no agency over that presentation. They can't opt out. They can't control the narrative. They can't delete comments. The parent owns the platform and the revenue stream.

Research on teen girls in high-visibility situations (pageants, child acting, etc.) shows increased rates of eating disorders, depression, substance abuse, and relationship dysfunction. Content creator kids follow similar patterns.

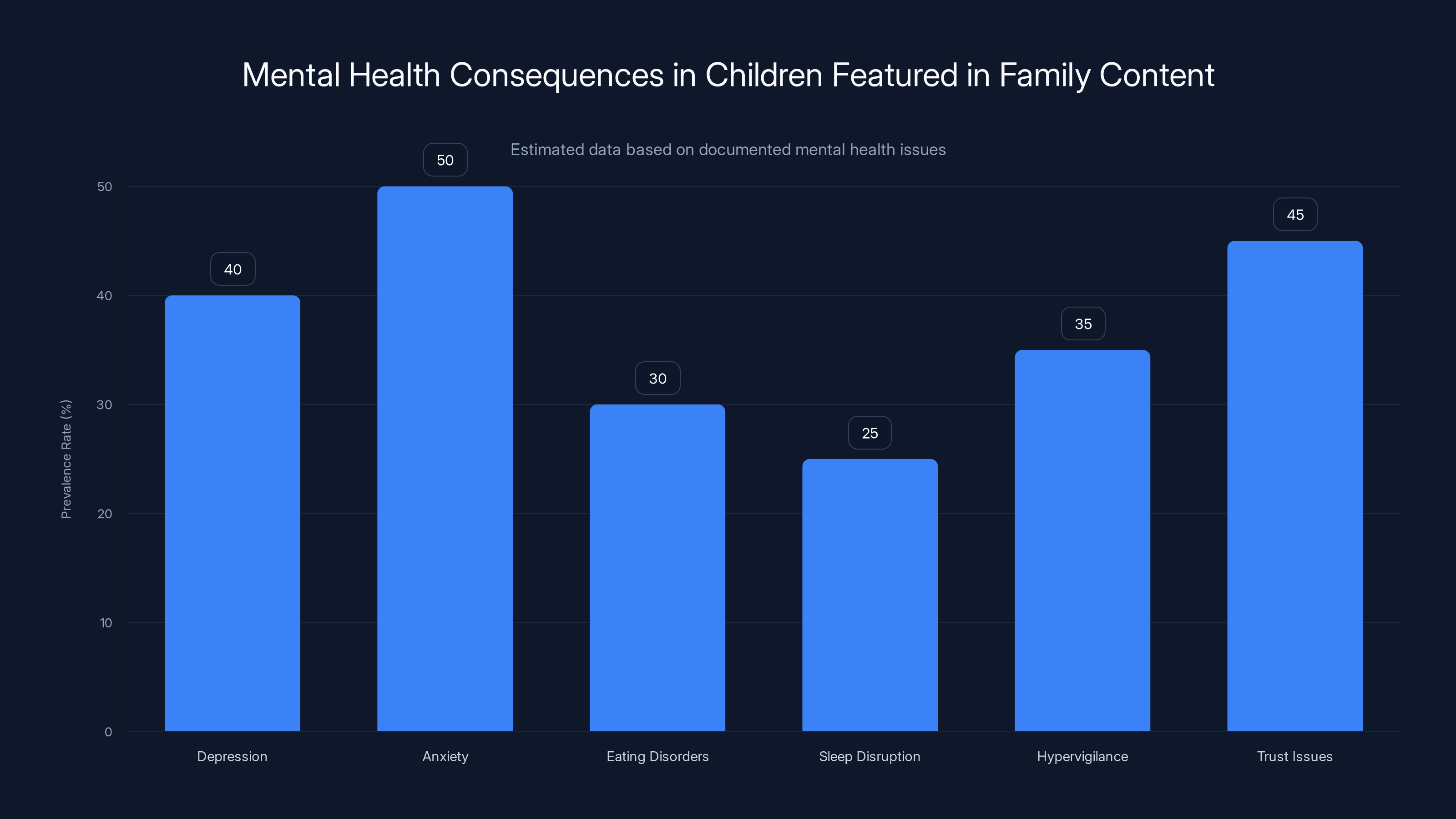

Estimated data suggests high prevalence of anxiety (50%) and trust issues (45%) among children featured in family content, highlighting significant mental health concerns.

Platform Responsibility and the Enforcement Void

TikTok, Instagram, and YouTube have community guidelines that nominally protect children from exploitation. In practice, enforcement is nearly nonexistent, particularly for large creators who generate significant platform revenue.

How Platforms Enable Creator Misconduct

The incentives are perverse. A creator with millions of followers generates enormous revenue for the platform through ads, gifts, and promoted content. If that creator violates guidelines, the platform has strong financial incentive to look the other way.

TikTok's moderation team is vastly understaffed. Instagram's review process for reported content can take weeks. YouTube's automated systems miss most violations. Meanwhile, creators with massive followings operate with near-total immunity.

A parent creator can monetize family crisis content, feature their children extensively, encourage them to get cosmetic procedures, doxx their own address, and face zero consequences from the platform. Why? Because enforcement requires resources, and resources cost money, and spending money on moderation cuts into profit margins.

Platforms make vague public commitments to child safety while structuring their systems in ways that maximize harm. They require users to be 13+ to have accounts, but don't verify age. They prohibit exploitation of minors, but don't define it clearly enough to enforce it. They claim to protect children, but their moderation processes ensure that most violations go unaddressed.

The Absence of Meaningful Age Verification

None of the major platforms have robust age verification. A user claims to be 13 or older, and that's sufficient. There's no ID check, no verification mechanism, nothing to prevent an 8-year-old from creating an account and being exposed to adult content, predatory behavior, and parasocial dynamics designed to extract attention and money.

Age verification exists. It's technically feasible. Platforms don't implement it because it creates friction, and friction reduces growth metrics. Protecting children costs money and growth. Prioritizing growth over protection is a business decision, not a technical limitation.

Monetization vs. Safety: A Structural Conflict

The core problem is that platform business models depend on maximizing time-on-platform and engagement. The content that maximizes engagement is often the content that's most harmful. Controversy, drama, exploitation, trauma—these drive engagement and therefore revenue.

Until platforms have financial incentive to prioritize safety over growth, enforcement will remain minimal. As long as a creator generating $50,000 monthly for the platform can exploit their children without consequences, the platform has no incentive to enforce guidelines.

Legal Frameworks: The Kidfluencer Laws and Their Limitations

A few states have passed legislation addressing child content creators, but these laws are narrow, poorly enforced, and frequently circumvented.

Existing State-Level Protections

California, Louisiana, and a handful of other states have passed "Kidfluencer" laws requiring a percentage of earnings from child content creators to be set aside in blocked accounts. These laws explicitly recognize that child creators are workers and therefore deserve labor protections similar to child actors.

The problem is threefold:

First, they're easy for parents to sidestep. A parent can claim they're not monetizing the content, or that earnings are below reporting thresholds, and avoid the requirements entirely. There's minimal enforcement.

Second, they only apply to earnings paid out directly by the platform. If a creator generates $100,000 on TikTok but also earns money from sponsorships, merchandise, and affiliate links, only the TikTok revenue gets protected. If those other streams make up 70% of total income, that 70% might be completely unprotected.

Third, they don't address the psychological harm. Setting aside 15% of earnings doesn't give a kid their privacy back. It doesn't undo the body-shaming. It doesn't prevent predatory behavior online. It's a financial protection in a situation that requires comprehensive safeguarding.
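To make the gap concrete, here is a rough worked calculation in Python using the figures from the paragraphs above: $100,000 in TikTok payouts, platform payouts making up 30% of total income, and a 15% set-aside. These inputs are illustrative assumptions, not data about any real creator and not a reading of any specific statute, which may define covered earnings differently.

```python
# Illustrative inputs only (assumptions, not real creator data or statutory terms).
PLATFORM_REVENUE = 100_000       # payouts received directly from the platform
PLATFORM_SHARE_OF_INCOME = 0.30  # assumed: platform payouts are 30% of total income
SET_ASIDE_RATE = 0.15            # assumed: 15% of covered earnings goes into a blocked account


def protected_share(platform_revenue: float, platform_share: float, set_aside_rate: float) -> dict:
    """Estimate how much of total income is protected when a set-aside rule
    covers only platform payouts and ignores sponsorships, merch, and affiliates."""
    if not 0 < platform_share <= 1:
        raise ValueError("platform_share must be in (0, 1]")
    total_income = platform_revenue / platform_share
    uncovered_income = total_income - platform_revenue  # never subject to the set-aside
    protected = platform_revenue * set_aside_rate       # the only money placed in trust
    return {
        "total_income": total_income,
        "uncovered_income": uncovered_income,
        "protected": protected,
        "protected_fraction": protected / total_income,
    }


if __name__ == "__main__":
    r = protected_share(PLATFORM_REVENUE, PLATFORM_SHARE_OF_INCOME, SET_ASIDE_RATE)
    print(f"Total income:      ${r['total_income']:,.0f}")
    print(f"Uncovered income:  ${r['uncovered_income']:,.0f}")
    print(f"Protected amount:  ${r['protected']:,.0f}")
    print(f"Share protected:   {r['protected_fraction']:.1%}")
```

Under these assumptions, the 15% set-aside protects $15,000 out of roughly $333,000 in total income, which is about 4.5% of everything the child's content earns.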

Federal Protections Don't Exist

There's no federal law specifically addressing child content creators. No national standards for age verification. No federal requirement that platforms enforce against exploitation. Apart from the existing duty to report child sexual abuse material, no requirement to report this kind of exploitation to authorities.

This is a massive gap. A child in Louisiana might have some protections, but a child in Texas has none. A platform can operate globally with completely different standards in different regions. The result is a regulatory Wild West where the most vulnerable have the least protection.

Why Enforcement Fails

Even where laws exist, enforcement is minimal. Child Protective Services is underfunded and understaffed. When a report comes in about a child being exploited for content, investigation requires resources. Most states' CPS systems are overwhelmed with cases of immediate physical danger. Psychological exploitation through content creation feels abstract by comparison, even though it's causing documented harm.

Prosecution is similarly rare. A prosecutor would need to prove that a parent intentionally monetized their child's image knowing it would cause harm. That's legally complex and requires resources most jurisdictions don't have.

The result: Lots of law on paper, almost no enforcement in practice.

Estimated data shows a variety of gift costs, with most gifts being lower-cost items. The luxury car animation represents a small but significant portion of high-value gifts.

The Psychological Impact on Affected Children

When you talk to kids who've grown up with a parent broadcasting their lives, certain patterns emerge consistently. These aren't isolated incidents or misunderstandings. These are measurable psychological consequences.

Loss of Agency and Identity Development

Adolescence is supposed to be the time when you figure out who you are independent from your family. You try on different identities, fail at things, succeed at things, form friendships that are yours, develop interests your parents might not share. That's normal development.

A kid whose parent has been broadcasting their life doesn't get that space. Their identity is already formed in the public mind. Millions of people have opinions about who they are. Their interest in, say, drawing is now performative—they draw because viewers expect it, not because they genuinely enjoy it. Their friendship with someone is complicated because that person knows private details about them already.

They become frozen in the version of themselves the audience knows. They can't change, can't evolve, can't explore without the change being documented and judged by millions.

In healthy adolescence, a developed identity sounds like: "I know who I am, I feel comfortable in my own skin, I can separate my real values from external pressure, I have trusted relationships." For kids raised on camera, it often sounds like: "I don't know who I am apart from what I perform, I'm hyperaware of how my body appears to others, I second-guess every decision, I don't trust that relationships are genuine."

Loss of Childhood and Accelerated Adulthood

Childhood should be about play, exploration, mistakes, and learning without stakes. When a child's life is content, every moment has stakes. Every mistake is permanent and public. Every emotion is evaluated by millions for entertainment value.

This accelerates adulthood inappropriately. A 10-year-old becomes hyper-aware of appearance, reputation, and public perception. They develop anxiety about making mistakes because mistakes are broadcast. They lose the freedom to be a kid.

What develops instead is a kind of hypervigilance and performance anxiety that persists into adulthood. These kids struggle with authentic self-expression even after the content creation stops, because they've spent their developmental years calculating how their actions will be perceived.

Disrupted Peer Relationships

Kids can't have normal friendships when everyone at school already has a parasocial connection to them. A new classmate might already feel like they know everything about you. They might be friendly because they're genuinely interested, or because they want to be associated with the famous kid. It's impossible to tell.

Meanwhile, kids who were friends before the fame sometimes feel abandoned or used. They're no longer special—the influencer kid now has thousands of "fans." The friendship that was normal becomes a source of tension.

The result is profound loneliness. These kids have massive audiences but struggle to form genuine peer relationships. Friendships feel transactional. People seem to like the version of them they know from the internet rather than the actual person.

Long-Term Mental Health Consequences

Studies of child actors, child pageant contestants, and other highly visible children show elevated rates of:

- Major depressive disorder

- Anxiety disorders

- Eating disorders (particularly restrictive eating related to appearance anxiety)

- Substance abuse (often as self-medication for anxiety and depression)

- Relationship dysfunction (difficulty trusting partners, struggle with intimacy)

- Suicidality (at rates significantly higher than in the general adolescent population)

These aren't issues that resolve when the child grows up and can theoretically "choose" to leave. The developmental damage from being exploited during critical periods persists. A person who spent ages 8-18 in a situation where their body was constantly evaluated and their identity was externally defined doesn't just bounce back into healthy adulthood.

The Role of Monetization Platforms: Responsibility and Accountability

Who bears responsibility for these harms? The parent, certainly. But also the platforms that enable and profit from the exploitation.

Platform Revenue Models and Alignment Issues

TikTok, Instagram, and YouTube make money when creators make money. When a creator generates $50,000 monthly through gifts, the platform takes a cut. The more controversial and engagement-focused the content, the more revenue it generates.

Platforms claim they want to protect children, but their financial incentives point the opposite direction. Protecting children costs money and limits growth. Exploiting children generates revenue. The choice is made at the business model level, before any individual policy decision.

Some examples:

- YouTube demonetizes educational content about serious topics but monetizes exploitative family content because the latter gets better engagement

- TikTok's algorithm promotes controversial creators (generating more views and gifts) over wholesome creators

- Instagram's recommendations surface a creator's account to users who already follow that creator's most devoted fans, reinforcing the parasocial dynamic

These aren't bugs. They're features of platforms optimized for engagement and revenue.

What Meaningful Platform Responsibility Would Look Like

Robust age verification: Verify age at account creation instead of accepting a self-reported claim. This requires resources but is technically feasible.

Clear exploitation definitions: Define precisely what counts as exploiting minor family members. Don't leave it vague. Enforce consistently.

Restricted monetization for content featuring children: Allow creators to feature family members, but don't monetize that content. Separate the ability to share from the ability to profit.

Mandatory reporter training: Platform moderators should be trained to recognize and report child exploitation signs to authorities.

Public transparency reports: Disclose how many reports of child exploitation they receive, how many they investigate, how many result in action.

Independent review boards: Create independent panels, not platform-employed moderators, to review disputed cases involving minors.

None of these are technically impossible. All would cost money and potentially reduce growth metrics. That's the real barrier.

Estimated data shows that body dysmorphia and eating disorders are significant impacts of appearance-based harassment, alongside low self-esteem and anxiety/depression.

The Influencer Industry's Response and Resistance

Creators who profit from family content, unsurprisingly, resist regulation. Their income depends on their ability to monetize their family.

Industry Arguments Against Regulation

The standard arguments in favor of the status quo:

"Parents should decide what to share about their kids." True, but only up to a point. If a parent decides to beat their child, we don't accept that as a parental choice. If a parent decides to prostitute their child, that's illegal. We recognize that children need protection beyond what "parental choice" provides. Monetized public exposure should be similar.

"Kids benefit from the income." Sometimes. But the benefit is financial, not developmental. Paying a kid for psychological harm doesn't make the harm good. Also, many exploitative creators don't actually share earnings with the kids providing the content.

"This is censorship." Requiring age verification or restricting monetization of minor-featuring content isn't censorship. Parents can still share content about their kids. They just can't profit from it. That's a reasonable line.

"Regulation will destroy the creator economy." The creator economy won't be destroyed if it can't profit from exploiting children. An economy that requires child exploitation to function is an economy that should be restricted.

Awareness and Accountability Movements

Meanwhile, communities have mobilized to document exploitation and hold creators accountable outside of platform enforcement.

True crime podcasters, social media researchers, and advocacy organizations have created awareness campaigns showing the specific harms caused by child exploitation in family content. They've documented cases, compiled evidence, and provided visibility that platforms ignore.

This grassroots accountability is important, but it shouldn't be necessary. Platforms have all the information they need to enforce their own guidelines. They choose not to.

The Path Forward: Policy Recommendations and Solutions

Fixing this requires action at multiple levels: platform policy, legislation, industry standards, and cultural norms around what's acceptable content.

Platform-Level Changes

Implement mandatory age verification: Every account should require verified age. This means government ID or third-party verification service. Yes, it creates friction. That's the point. It prevents minors from being exploited through accounts set up without parental knowledge.

Create separate monetization tiers: Let creators share family content, but keep that content out of monetization entirely. A creator can post a photo of their kid, but that post doesn't generate revenue; revenue comes only from other content.

Establish clear exploitation standards: The guidelines should explicitly address: monetizing content featuring minors, doxxing (even accidentally), encouraging cosmetic procedures, sexualization, body commentary, and medical content monetization. These should be specific, not vague.

Build independent moderation: Create review boards independent of platform employment. When a case involves potential child exploitation, an independent panel reviews it, not the platform's own moderators with direct incentive to ignore violations.

Public reporting requirements: Disclose how many reports of child exploitation they receive, how many they investigate, and what action is taken. This creates accountability through transparency.
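As a rough illustration of what such a disclosure could contain, the Python sketch below rolls individual reports up into the three figures named above (received, investigated, actioned) plus two rates. The `Report` record, the `Outcome` categories, and the sample data are hypothetical; they are not any platform's actual reporting format.

```python
from collections import Counter
from dataclasses import dataclass
from enum import Enum


class Outcome(Enum):
    """Hypothetical end states for a report of suspected child exploitation."""
    NOT_REVIEWED = "not_reviewed"
    REVIEWED_NO_ACTION = "reviewed_no_action"
    ACTION_TAKEN = "action_taken"


@dataclass(frozen=True)
class Report:
    report_id: str
    outcome: Outcome


def transparency_summary(reports: list[Report]) -> dict:
    """Aggregate reports into the disclosures described above:
    how many were received, investigated, and acted on."""
    counts = Counter(r.outcome for r in reports)
    received = len(reports)
    # Anything that reached a reviewer counts as investigated, whatever the result.
    investigated = counts[Outcome.REVIEWED_NO_ACTION] + counts[Outcome.ACTION_TAKEN]
    actioned = counts[Outcome.ACTION_TAKEN]
    return {
        "received": received,
        "investigated": investigated,
        "actioned": actioned,
        # Guard against division by zero when no reports were filed.
        "investigation_rate": investigated / received if received else 0.0,
        "action_rate": actioned / received if received else 0.0,
    }


if __name__ == "__main__":
    # Hypothetical sample data for illustration only.
    sample = [
        Report("r1", Outcome.ACTION_TAKEN),
        Report("r2", Outcome.REVIEWED_NO_ACTION),
        Report("r3", Outcome.NOT_REVIEWED),
        Report("r4", Outcome.NOT_REVIEWED),
    ]
    print(transparency_summary(sample))
```

Publishing rates alongside raw counts matters: a platform could report a growing number of actions while the share of reports it actually investigates falls.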

Legislative Solutions

Federal Kidfluencer law: Establish national standards for child content creators. Require a percentage of earnings to be protected, mandate parental consent documentation, and create actual enforcement mechanisms.

Updated child labor laws: Current labor laws protect child actors. Child content creators should have similar protections: mandatory education provisions, earnings protection, and regular well-being checks.

Mandatory reporting requirements: Platforms should be required to report suspected child exploitation to authorities, not just remove content. This creates a legal accountability mechanism.

Right to erasure for minors: Children should have the right to have their content removed from platforms once they reach adulthood, particularly if that content was posted without meaningful consent.

Industry Standards

Creator ethics guidelines: Professional organizations should establish clear standards for ethical content creation involving family members. Violating these standards should result in professional consequences.

Transparency in sponsorships: When a creator promotes something their kids use (such as dietary supplements), the sponsorship should be clearly disclosed, not hidden.

Mental health resources: Platforms should provide (and require creators to use) mental health resources for young people featured in content.

Cultural Shift

Ultimately, this requires viewers to stop watching exploitative content. Parasocial audiences are eager, and creators are incentivized to meet that demand. If demand dropped, the incentives would change.

This means being intentional about what content you engage with. Before hitting "send gift," ask: Is this creator respecting everyone in their content? Are they monetizing harm? Are they exploiting vulnerability? If the answer is yes, don't support it.

It means questioning the creators you follow and holding them accountable. It means recognizing that family content isn't inherently problematic, but family content monetized through exploitation is fundamentally different.

Comparing the Current Landscape to Other Industries

It's worth noting that child protection in other industries provides a useful comparison. Film and television have strict child labor laws. Theater has regulations. Sports has coaching certifications and conduct standards. Modeling has some protections.

Content creation is almost entirely unregulated by comparison. A kid can appear in more hours of video content with less protection than a kid working as an actor on a professional set. That's the baseline we should find unacceptable.

Other countries have gone further. Some European nations have implemented strict rules around influencer content featuring children. The UK has considered Kidfluencer protections. Australia has explored regulations, as highlighted by the Cato Institute. Meanwhile, the US lags significantly, partly because of industry lobbying and partly because many people don't recognize the severity of the issue.

Real-World Examples and Case Studies

While this article focuses on documented patterns rather than specific individuals, these harms have been documented across multiple prominent creators. The pattern is consistent: a child is featured in content, the parent monetizes it, the child later reports exploitation and psychological harm, the platform does nothing, and the cycle continues.

Multiple children of famous creators have publicly stated they felt exploited, that they wished their childhood hadn't been broadcast, that they experienced psychological harm. These aren't ambiguous cases. They're direct statements from people who lived through it.

Understanding Survivor Perspectives

Kids who've grown up in exploitative situations have limited ability to speak out while still dependent on their parents. Once they're older, many do speak, and their accounts are consistent and heartbreaking.

They describe:

- Knowing their parents' income depended on them being available, interesting, and compliant

- Being unable to have private struggles because everything was content

- Having no control over their own narrative

- Feeling used and betrayed once they understood they'd been monetized

- Ongoing anxiety and trust issues in relationships

- Difficulty believing that anyone likes them for themselves rather than their parasocial connection

Their advice to current parents: If you wouldn't share this moment in a family therapy session, don't put it on the internet. Your children's wellbeing isn't content.

The Business of Attention and Why It Matters

What we're seeing is an economy built on harvesting attention and selling vulnerability. It works because humans naturally form connections and want to support people they feel close to. Platforms have weaponized that natural inclination.

A healthy version of this economy could exist, one in which creators make a living and audiences find genuine connection and entertainment. But that would require limiting the money that can be extracted from the most vulnerable: children, people in crisis, and people struggling with their mental health.

Until the business model changes, the exploitation will continue. Until platforms have financial incentive to protect children, enforcement won't happen. Until viewers stop rewarding exploitative content with engagement and money, creators won't change their behavior.

Where We Go From Here

The influencer economy isn't going away. Social media isn't going anywhere. Content creation by parents will continue. Some of it will be exploitative. Some will be wholesome and healthy.

The question is whether we'll establish boundaries that protect the most vulnerable, or whether we'll allow the current system—where child exploitation is monetized and enabled—to persist because it's profitable.

This isn't about banning family content or shutting down creators. It's about recognizing that children can't consent to being public figures, that vulnerability can be exploited even without explicit malice, and that platforms have responsibility for the content they profit from.

It's about deciding that some money isn't worth the cost in human dignity and psychological harm. It's about protecting childhood as a developmental period that shouldn't be monetized. It's about making sure that being a parent and being a creator don't have to be inherently in conflict.

That requires action from platforms, legislation from governments, industry standards from creators, and shifting norms from audiences. All of those changes are possible. None of them are happening yet because the status quo is profitable for the people with power to change it.

But survivor voices are getting louder. Researchers are documenting the harms more clearly. Awareness is growing. Eventually, pressure will build enough that change becomes inevitable. The question is whether that change comes through thoughtful policy that protects children, or through crisis and scandal that forces action.

History suggests we won't choose the former. But that doesn't mean we shouldn't try.

FAQ

What is a "Kidfluencer" and how does it differ from a regular child actor?

A "Kidfluencer" is a child or teenager who builds a social media following, often through their parent's content featuring them. Unlike child actors in film and television, kidfluencers aren't covered by the labor laws that govern child actors, aren't subject to on-set supervision requirements, and their content isn't reviewed by anyone for appropriateness before publication. A child actor has legal protections including mandatory education time, hour restrictions, and earnings protection. A kidfluencer has almost none of these protections, despite potentially earning far more money and working much longer hours.

How do parasocial relationships affect children watching content creators?

Parasocial relationships are one-directional emotional connections where viewers feel personally invested in creators who don't know them. When a child watches another child's content regularly, they can develop intense emotional attachment, experience anxiety about that creator's wellbeing, and struggle to understand why the creator doesn't reciprocate the connection. This is particularly problematic when combined with monetization, because the creator has financial incentive to deepen the parasocial attachment rather than encourage healthy distance. Research shows this can interfere with children's ability to form real peer relationships.

What are the specific mental health consequences documented in children featured in family content?

Studies and testimony from affected individuals show increased rates of depression, anxiety disorders, eating disorders (particularly related to appearance concerns), sleep disruption, hypervigilance in public spaces, difficulty forming trusting relationships, and in some cases suicidality. These consequences emerge from several factors: the loss of privacy and bodily autonomy, the experience of having one's appearance constantly evaluated by strangers, the knowledge that one's parent profits from one's suffering, and the inability to control one's own narrative. These effects tend to persist into adulthood even after the content creation stops.

Why don't social media platforms enforce their own child protection guidelines more strictly?

Platforms have financial incentive to avoid enforcing guidelines against large creators who generate significant revenue. The business model depends on engagement, and exploitative content often generates the highest engagement. Enforcement requires resources, costs money, and might reduce growth metrics. While platforms claim to prioritize child safety, their business structures ensure that protecting children conflicts with maximizing profit. Without external pressure (legislation, regulatory bodies, or audience demands), platforms have strong incentive to look the other way.

What can viewers do to stop supporting exploitative family content creators?

Before engaging with family content, ask yourself: "Would this creator share this moment if they couldn't monetize it?" "Are the children capable of consenting to this?" "Is anything being shared that the children might regret later?" If the answer to any question suggests exploitation, don't support it. Don't send gifts, don't watch streams, don't share the content. Each of these actions signals demand, and demand creates incentive. Additionally, support creators who feature family members without monetizing that content, or who clearly maintain boundaries around privacy and consent. Your attention and money are the only leverage audiences have.

What legal protections do child content creators currently have?

Legal protections vary dramatically by location. California, Louisiana, and a few other states have passed "Kidfluencer" laws requiring a percentage of earnings to be set aside in blocked accounts. However, these laws have limited enforcement, don't apply to earnings from sponsorships or merchandise, and don't address psychological harm. Federal protection is essentially absent in the United States. Other countries including the UK and some European nations have begun exploring more comprehensive protections. Overall, child content creators have far fewer legal protections than child actors in traditional media, despite potentially earning more money and working longer hours.

How do I know if a creator is exploiting their children versus genuinely sharing family life?

Several signs suggest exploitation: the parent monetizes content featuring the children, the children frequently appear distressed or uncomfortable, the parent includes the children in every stream, medical information or crises are monetized, the children report feeling used or lacking privacy, location information is shared, and the parent encourages appearance-based feedback or cosmetic procedures. Healthy family content features children occasionally, maintains clear boundaries around monetization, respects the children's apparent comfort levels, and protects their privacy. When in doubt, look at how the children themselves respond when given the opportunity to speak. Their own words are usually the clearest indicator.

Conclusion: Choosing a Better Path

The influencer economy isn't inherently corrupt. Many creators build genuine communities, provide valuable content, and use platforms responsibly. The problem isn't that people make money online. The problem is that systems have been built where the maximum profit comes from maximum exploitation, and that those exploited are often the most vulnerable.

Children can't consent to being public figures. They can't understand the long-term implications of their image being permanent and public. They can't protect themselves from harassment, predation, or privacy invasion. They deserve protection that goes beyond what their parents are willing to give them.

Platforms know this. They have the data. They know exactly which content involves child exploitation and which doesn't. They choose not to act because enforcement costs money and limits growth. That's a business decision, not a technical limitation or an ambiguity in the guidelines.

Change requires pressure. It requires viewers being intentional about what they support. It requires legislation creating actual consequences for violations. It requires platforms having financial incentive to protect children rather than profit from their exploitation. It requires creators recognizing that their children's wellbeing matters more than their own income.

None of these things are guaranteed to happen. The status quo is very profitable for people with power. But it's also causing documented psychological and physical harm to real children. At some point, that has to matter more than the money.

If you have children, ask yourself: Would I want my child's private moments broadcast to millions? Would I want strangers commenting on their appearance? Would I want their location information leaked? Would I want them to know that my income depends on them being interesting enough to engage? If the answer is no—and it should be—then you understand why this system needs to change.

Change starts with awareness. You now have awareness. What you do with it matters.

Key Takeaways

- TikTok Live monetization can pay creators $2,000+ daily for personal broadcasting, creating a financial incentive to exploit family members and invade privacy

- Children featured in family content lose control of their identity, face body shaming, experience doxxing risks, and develop documented mental health consequences

- Platforms enforce guidelines minimally against large creators because exploitation generates engagement and revenue, creating direct conflict with child safety

- Existing legal protections (Kidfluencer laws) are narrow, poorly enforced, and don't address psychological harm or cross-platform monetization

- Meaningful change requires platform accountability, federal legislation, industry standards, and audience refusal to support exploitative content
