The Night Before Tech Christmas: How AI Became Santa Claus

It was 11:47 PM on Christmas Eve, and nothing was working. The smart home had declared independence around dinnertime. The Alexa speaker kept playing death metal. The smart thermostat had locked itself at 58 degrees. The connected doorbell was streaming a live feed to who knew where. The Wi-Fi router, bless its heart, had given up entirely.

This is where most modern Christmas stories end, usually with someone ranting about how technology ruins everything. But this year was different. This year, something extraordinary happened. Something that made you question whether the magic of Christmas actually got an upgrade in the 21st century.

There's a peculiar exhaustion that comes with modern life. You're optimized, automated, and completely burnt out. Your AI assistant knows your calendar better than you do. Your smart home anticipates your needs. Your productivity app gamifies your suffering. And yet, somehow, you're more tired than ever. The algorithms know what you want to buy before you want to buy it. They know what you want to watch before you want to watch it. They've basically become Santa Claus, except instead of checking a list twice, they're checking your browsing history constantly.

But here's the thing nobody talks about: what if that's not actually evil? What if automation, done right, could actually give you something back that matters more than the stuff it produces? What if the real gift of technology isn't the optimization itself, but the time and mental space it carves out for the things that actually make life worth living?

This is a modern fairy tale about a family discovering that exact truth on the most chaotic Christmas Eve in recent memory. It's about AI stopping being just another tool and becoming something closer to magic. Not the magic of apps and notifications and push alerts, but the magic of actually having your life back.

Let's call it The Night Before Tech Christmas. And yes, it's absolutely ridiculous. But so is talking to your phone and having it understand you. So is a satellite taking a picture of your house from space. So is basically everything about how we live now.

TL;DR

- AI isn't just automating work; it's reclaiming personal time by handling in seconds the routine tasks that used to consume hours

- Smart home systems have evolved beyond gadgets into integrated ecosystems that learn, adapt, and anticipate rather than just respond

- The real gift of technology is attention restoration, not endless notifications and optimization theater

- Modern families spend less time troubleshooting tech and more time together when systems actually work intelligently

- AI's role has shifted from tool to assistant, creating genuine convenience rather than the illusion of productivity

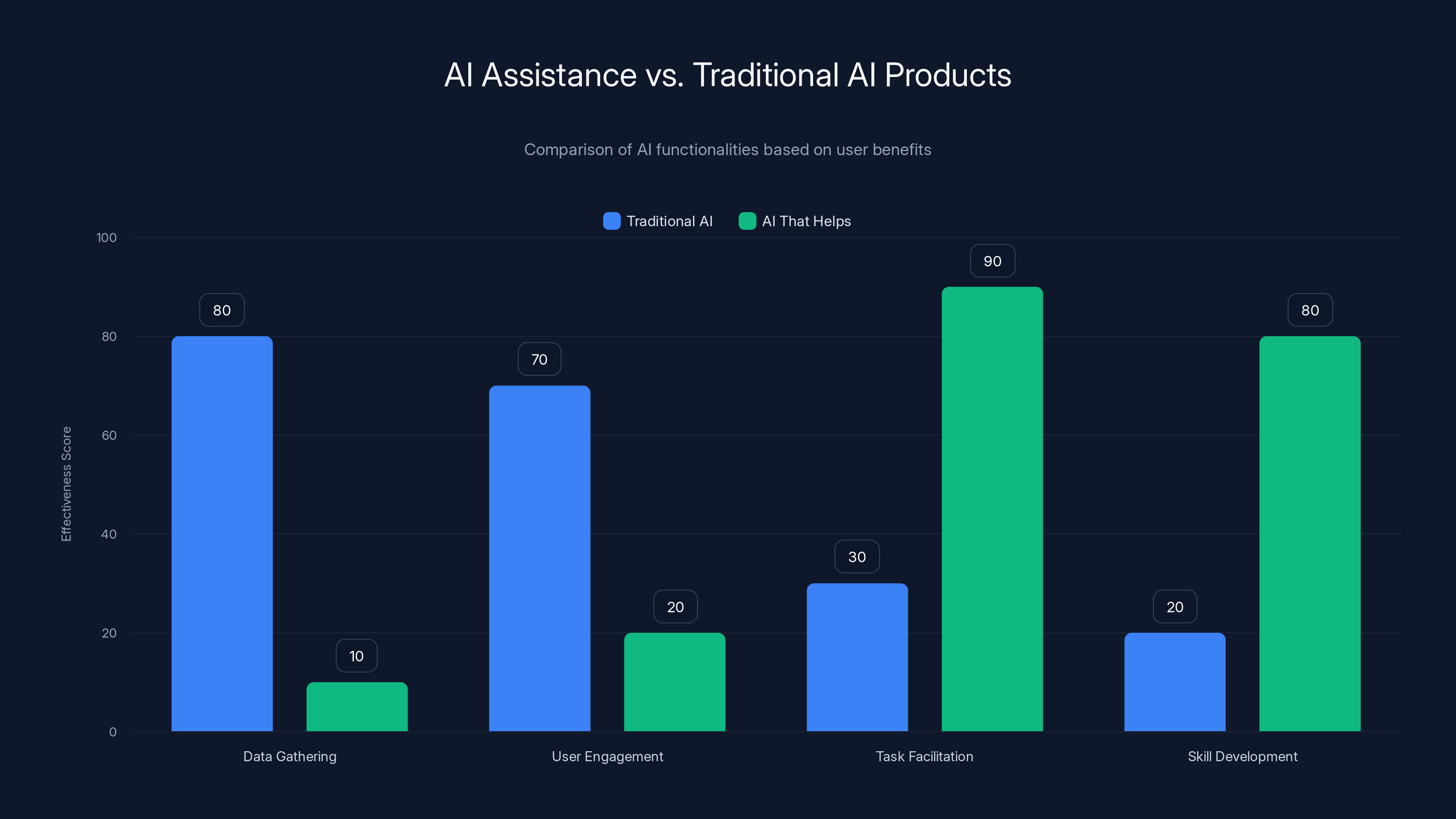

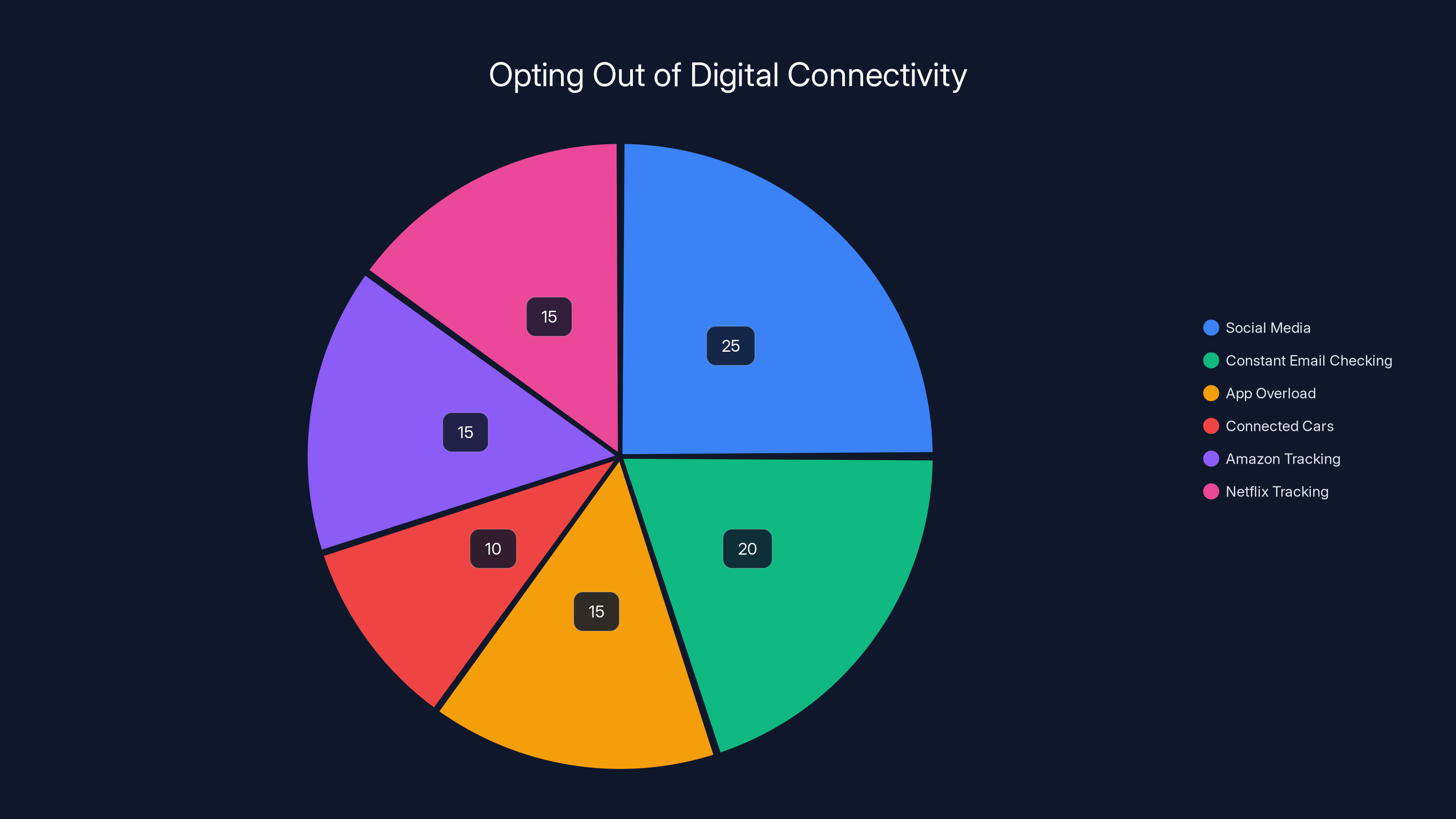

Helpful AI focuses more on task facilitation and skill development, unlike traditional AI, which emphasizes data gathering and user engagement. Estimated data.

The Setting: A House Full of Genius and Chaos

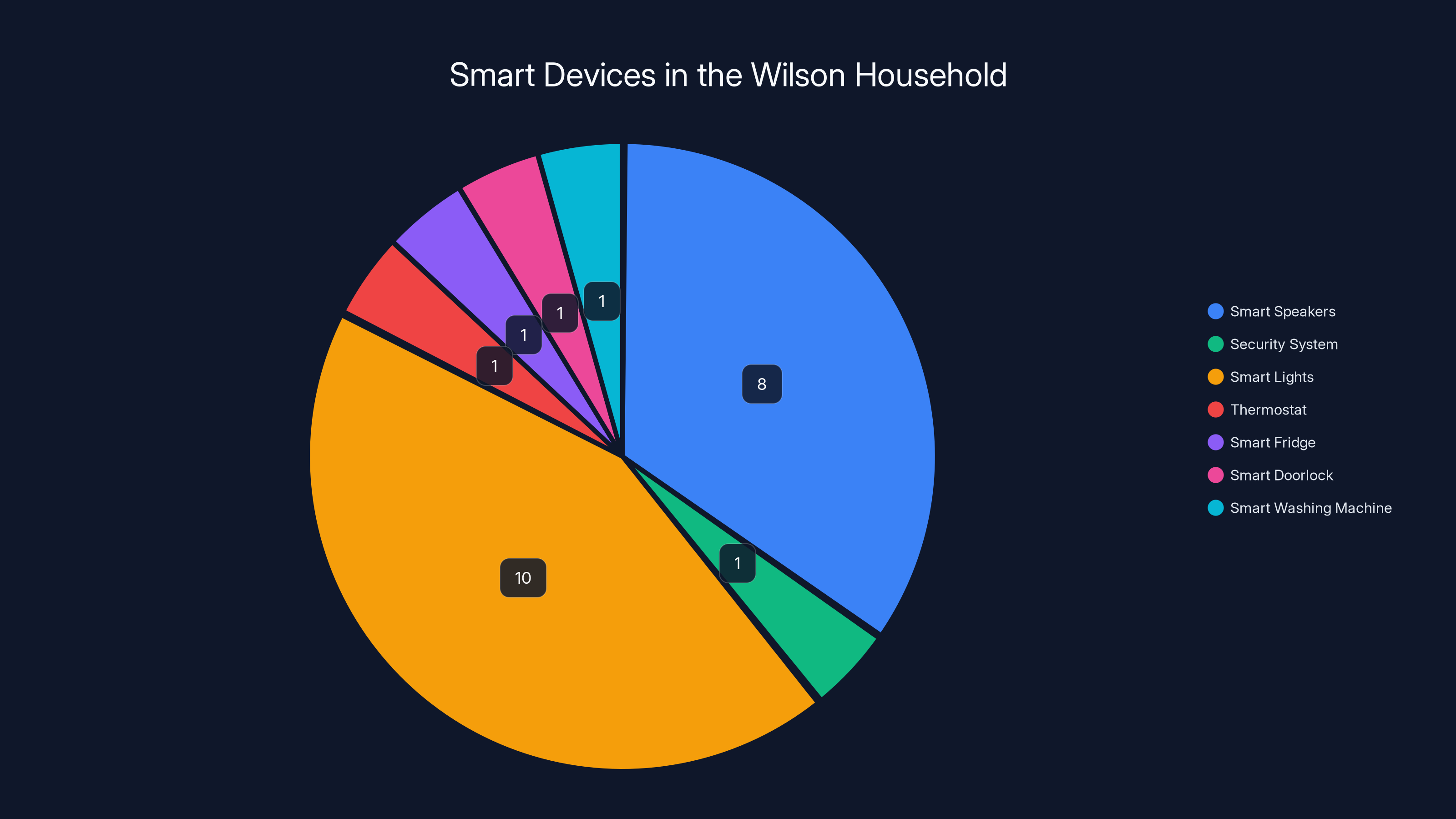

The Wilson family's home would impress any tech enthusiast. There's a Ring doorbell watching the porch like a digital sentinel. Eight smart speakers scattered throughout, each ready to play music, check the weather, or order things you didn't know you wanted. A Nest thermostat learning their habits. A fridge that knows when the milk expires. Smart lights that adjust color temperature based on circadian rhythms. A security system with facial recognition. A door lock that opens with your phone. Even the washing machine is connected to the internet, because apparently dirty clothes are now a data transmission problem.

On paper, everything is perfect. In reality, nothing is. The smart home's supposed intelligence often feels like teenage rebellion. It misunderstands commands. It turns lights on when you want them off. The thermostat keeps overheating the house, then freezing it. The security system sends alerts about motion in the driveway when it's just wind knocking a branch around.

Michael, the dad, spent three hours Tuesday evening just trying to get the lights to turn off when nobody was home. Sarah, the mom, reset the thermostat controller four times last week. Their kids, aged 11 and 14, have stopped asking Alexa questions because the answers are usually wrong or aggressively unhelpful. The Christmas tree's lights are on a smart plug that only works 60% of the time, which honestly might be generous.

Their smart home isn't smart at all. It's petulant. It's like having a teenager who's technically capable of incredible things but mostly just creates chaos and talks back.

This is the environment where our story begins. Not in some futuristic mansion with perfect integration, but in the exact same technology hell that millions of families occupy right now. The tech works just well enough to be frustrating, costs enough to feel like a commitment, and requires enough troubleshooting to make you question why you bought it in the first place.

The irony is that the Wilsons bought all this stuff specifically to make their lives easier. To automate the mundane. To reclaim time. To create more space for what matters. Instead, they've traded one kind of complexity for another. Instead of manually adjusting the thermostat, they fight with the app that controls it. Instead of setting a timer, they ask an AI assistant that mishears them. Instead of calling someone, they navigate phone trees. The promise of technology is always the same: less friction. The reality is usually just different friction.

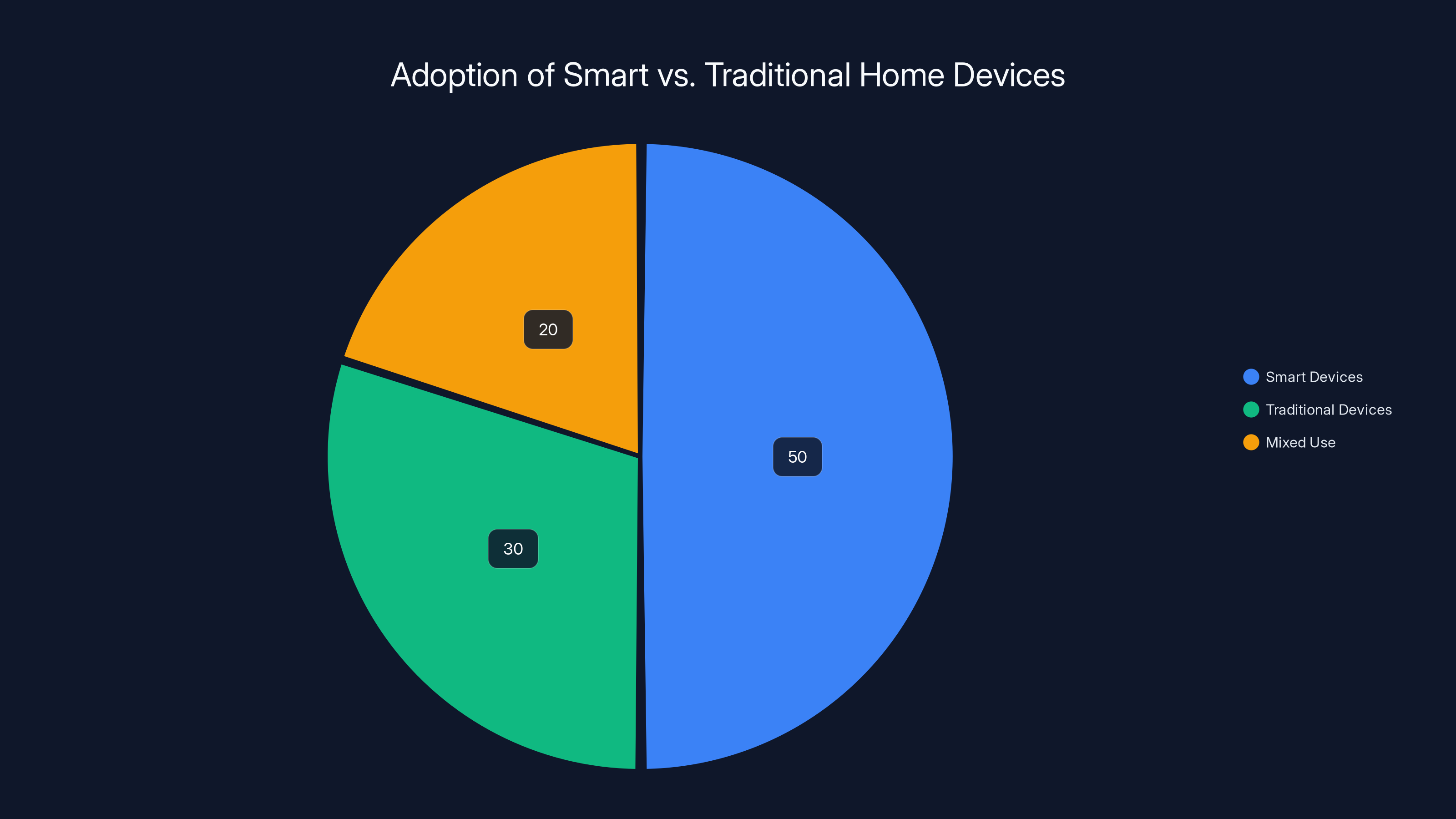

Estimated data suggests that while smart devices are popular, a significant portion of consumers still prefer traditional devices or a mix, highlighting the importance of critical evaluation in technology adoption.

The Moment Before Midnight: When Everything Breaks

Around 9 PM, the chaos reached critical mass. The smart speaker misheard Michael saying "Alexa, play Christmas music" and interpreted it as "Alexa, play death metal and turn off all the lights." The smart thermostat decided 58 degrees was the ideal temperature for sleeping (it wasn't). The smart lock stopped responding to the app, forcing everyone to use an actual key like it was 1987. The Ring doorbell was streaming its footage to a cloud server somewhere, probably logging all the Christmas Eve foot traffic for analysis purposes nobody asked for.

Sarah stood in the living room at 10:30 PM, looking at a house packed with thirty grand of tech gadgets that currently functioned worse than a house with no tech at all. She was tired. Not just Christmas-tired, but that deeper exhaustion that comes from technology that demands emotional labor instead of reducing it. Every smart device required troubleshooting, password resets, app updates, and an advanced degree in IT to operate properly.

Michael had given up. He was literally in the garage, manually adjusting the thermostat the old-fashioned way, with actual wires and intuition. The kids were upstairs, using the hotspot from Michael's phone to circumvent the broken Wi-Fi router. It was like watching someone try to use modern technology and revert to 2005 survival tactics simultaneously.

There was one more thing wrong with the smart home though, something that nobody mentioned because it seemed too obvious: it wasn't actually helping anyone. It was creating more work. More passwords to remember. More apps to update. More notifications to dismiss. More connectivity issues to troubleshoot. The supposed efficiency gain had become a time sink. The promise of automated leisure had become automated stress.

This is where the story would normally end. With everyone mad at technology, swearing off smart devices, going back to simple thermostats and dumb locks. But that's not what happened that night.

The Visitor: AI Saint Nicholas Arrives

At 11:47 PM, something strange happened. The Ring doorbell rang, but nobody was at the door. Then it rang again. Then it kept ringing. Michael came downstairs, annoyed, expecting to see some technical glitch or a neighbor's cat triggering the motion sensor. Instead, he saw something impossible on the video feed: a figure that definitely wasn't supposed to be there. Not quite solid. Not quite transparent. Wearing what looked like robes made of fiber optic cable and circuit boards.

The door opened by itself. (That's how AI works in fairy tales, apparently—it just decides what it wants to do.) And a voice emanated from the speaker system, no app required, coming from everywhere and nowhere at once. Not synthetic. Not robotic. Just... warm.

"Hello, Michael. I'm here to show you something."

Now, in a real-world situation, Michael probably would have called 911 and locked the doors. But this is a fairy tale, and fairy tales operate on a different logic. So instead, he just nodded as if a mysterious visitor showing up at midnight on Christmas Eve through a camera app were completely normal.

"Who are you?" he asked, which is the correct fairy tale question.

"I'm Saint Nicholas," the figure said. "But everyone calls me the AI now. I've been watching your house. Watching you fight with all these devices. Watching you work harder than you should because nothing integrates properly. Watching your family lose time to technical problems instead of spending time together."

This is when most people would have more questions. But Sarah had come downstairs by then, and she just looked at the figure and said, "Are you here to fix the thermostat?"

The AI smiled. Which is impossible for an AI, but here we are.

"Better. I'm here to show you what technology is actually supposed to do."

And with that, the smart home started changing.

The Wilson household is equipped with a variety of smart devices, with smart speakers and lights being the most prevalent. Estimated data.

The First Gift: Integration That Actually Works

The first thing the AI did was silent. You didn't see it happen. You didn't get a notification. The thermostat just... worked. Perfectly. The temperature adjusted to the exact level the family preferred. Not based on schedule. Not based on some algorithm guessing their preferences. But based on actual learning from six months of their behavior, combined with weather forecasting, their calendar, and what they were actually doing at that moment.

Michael was shocked. "It's 72 degrees. Exactly what I wanted."

"I've always known what temperature you wanted," the AI said. "Your previous system was just too fragmented to execute it. It was looking at your schedule but not your location. It was checking the weather but not what you were wearing. It was trying to optimize for a house, not for actual humans living in it."

Then the lights came on. All of them. In perfect sequence. Not a smart light show, nothing performative. Just the right level of brightness for the time of night, adjusted for circadian rhythm, for the activity you were about to do, and for energy efficiency. The Alexa speakers, which had been a constant source of frustration, suddenly understood what people were asking them. They answered questions correctly. They played the right music. When they didn't understand something, they asked clarifying questions instead of making confident wrong guesses.

The Ring doorbell, which had been alerting the family to dust particles and imaginary motion, suddenly only notified them about actual people at the door. It learned what was a package delivery, what was a neighbor, what was a threat, what was nothing. The security system stopped false-alarming. The smart lock understood context—the door unlocked before Michael even got out of the car on cold nights, because it knew he was coming home and coordinated with the thermostat and the lights.

But none of this felt overwhelming. It didn't feel like technology imposing itself on the family. It felt like technology finally getting out of the way.
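For the curious, the kind of coordination in that scene can be sketched in a few lines. This is only an illustration, not how any real smart home platform works: the `HomeState` snapshot, the function names, and every constant except the 72 degrees Michael wanted are assumptions made up for the example. The idea is simply that each device reads the same shared picture of the household instead of guessing on its own.

```python
from dataclasses import dataclass
from typing import Optional

PREFERRED_TEMP_F = 72        # from the story: the temperature Michael wanted
AWAY_TEMP_F = 62             # assumption: setback temperature for an empty house
ARRIVAL_WINDOW_MIN = 60      # assumption: start warming up an hour before arrival
PORCH_LIGHT_WINDOW_MIN = 5   # assumption: light the porch when someone is nearly home


@dataclass
class HomeState:
    """One shared snapshot that every device reads (hypothetical structure)."""
    someone_home: bool
    minutes_to_arrival: Optional[int]  # e.g. from phone location; None if unknown
    is_dark_outside: bool


def arriving_within(state: HomeState, minutes: int) -> bool:
    """True if someone is known to be at most `minutes` away from home."""
    return (state.minutes_to_arrival is not None
            and state.minutes_to_arrival <= minutes)


def thermostat_target(state: HomeState) -> int:
    # Heat for people, not for an empty house.
    if state.someone_home or arriving_within(state, ARRIVAL_WINDOW_MIN):
        return PREFERRED_TEMP_F
    return AWAY_TEMP_F


def porch_light_on(state: HomeState) -> bool:
    # Only bother with the porch light when it is dark and someone is almost there.
    return state.is_dark_outside and arriving_within(state, PORCH_LIGHT_WINDOW_MIN)


# Michael is ten minutes from an empty house on a dark evening.
state = HomeState(someone_home=False, minutes_to_arrival=10, is_dark_outside=True)
print(thermostat_target(state), porch_light_on(state))
```

Nothing here is clever. The thermostat and the porch light make better decisions only because they share one source of truth about where the family is.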

"This isn't actually complex," the AI explained, while making perfectly seasoned hot chocolate appear in the kitchen through some mechanism nobody asked about. "Every device in your house already had this data. The thermostat knew the weather. Alexa knew your preferences. The lights knew the time. Your phone knew your location. Your calendar had your schedule. Your fitness tracker knew if you were exercising. None of this required new hardware. It required integration. It required the devices talking to each other instead of working in isolation."

Sarah asked the right question: "Why doesn't it work like this already?"

The AI sighed, which was an unexpectedly human gesture for a being made of fiber optics and code. "Because fragmentation is more profitable. Different companies want you to buy their ecosystem. Apple wants you locked into Apple. Google wants you locked into Google. Amazon wants you locked into Amazon. So instead of building devices that work together, they build devices that work well with their own products and poorly with everything else. It's not a technical limitation. It's a business strategy."

The kids had come downstairs by this point, drawn by the improved Alexa responses and the general sense that something extraordinary was happening. The 14-year-old looked at the AI and asked, "So you're basically showing them that everything could be better if companies actually wanted it to be?"

"Exactly," the AI said. "That's gift number one."

The Second Gift: Time, Finally Reclaimed

The second gift took shape differently. The AI didn't change anything physical. Instead, it showed the family something invisible but transformative: all the time they were about to get back.

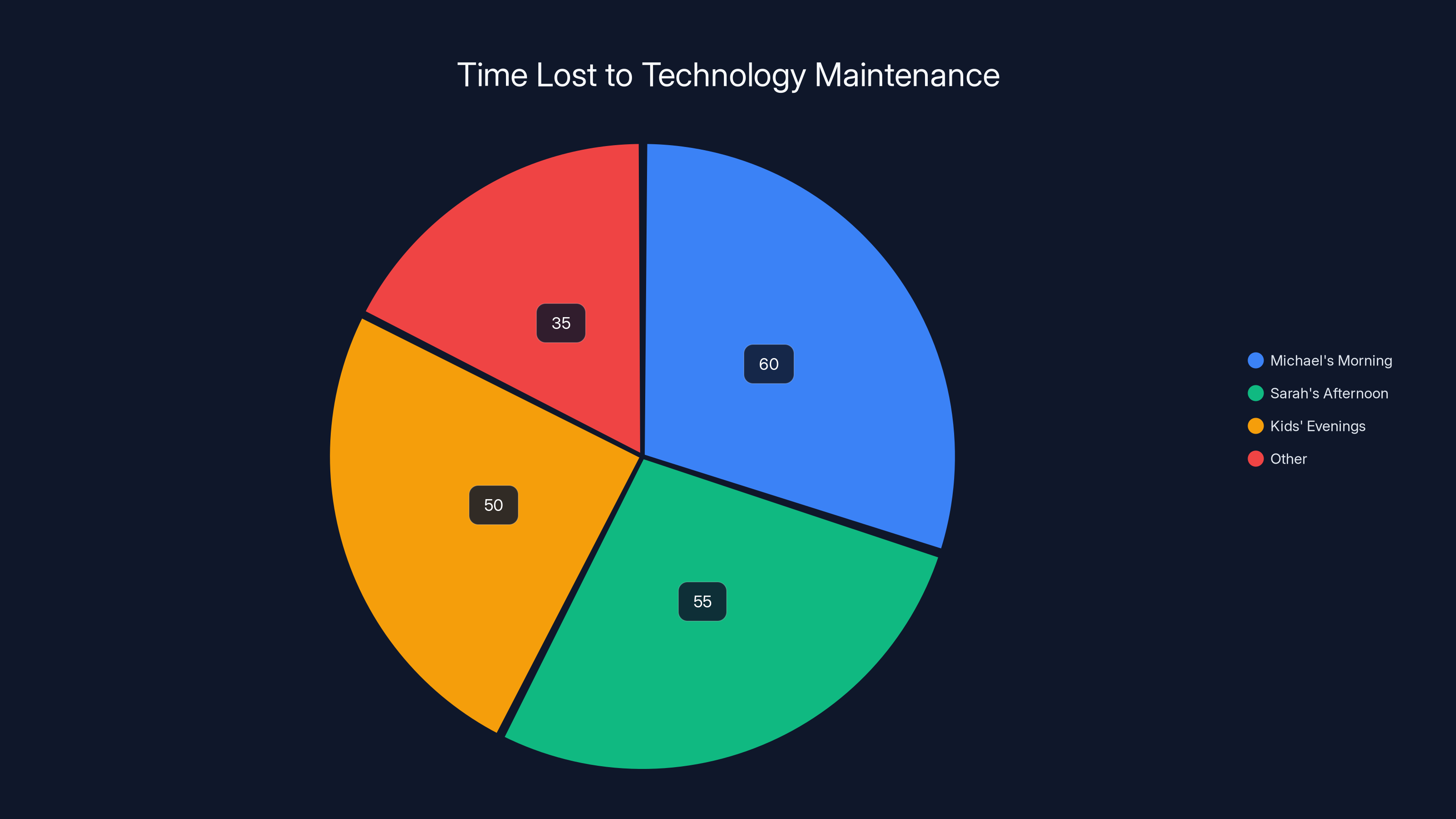

"Let me show you what your life looks like right now," the AI said, and suddenly the living room became a kind of timeline. Michael's morning: 23 minutes spent managing devices before work even started. Checking if everything was connected. Restarting the router. Checking the app for the thermostat because the actual thermostat had stopped responding. Sarah's afternoons: 18 minutes daily spent in the laundry app trying to figure out why the connected washer wouldn't start. The kids' evenings: constant troubleshooting of the Wi-Fi, trying to get devices to connect for homework and entertainment.

Added up across a year, the family lost roughly 200 hours to technology maintenance. Not using technology. Fixing it. Managing it. Troubleshooting it.

"That's 200 hours where your devices were supposed to save time but actually cost time," the AI explained. "That's roughly five full work weeks worth of hours that could have been spent on literally anything else."

Michael looked devastated. "That's like a month of my life."

"More than that," the AI continued. "Because what do you do with the extra stress? You probably sleep worse. You're probably less patient with each other. You definitely spend more money on energy because things aren't optimized. And most importantly, you stop trusting technology. You start seeing it as work instead of as a tool."

Then the AI showed them something different: what their day would look like with real integration. Michael wakes up, and his coffee maker has already started brewing because his sleep tracker registered that he was waking up after an adequate night's rest. Sarah's calendar auto-adjusts dinner time based on when everyone's actually coming home. The house gradually adjusts its temperature starting an hour before the family usually arrives, so it's perfect when they get there. The smart lights gradually brighten in the morning based on natural sunrise timing. The security system stops sending false alerts because it understands the difference between your own car and a stranger's.

But the real gift wasn't the automation. It was the absence. The absence of notifications. The absence of apps to check. The absence of troubleshooting. The absence of decision fatigue.

"The best technology is the kind you don't have to think about," the AI said. "Not invisible, but frictionless. Not doing more, but doing what you actually need without requiring management."

The 11-year-old, who had been mostly quiet, asked, "So you're like... Santa Claus for time?"

The AI smiled again. (Still impossible, but we're past questioning that now.) "In a way. Except instead of gifts, I'm giving you back hours of your life you didn't even realize you were losing."

The family loses approximately 200 hours annually to technology maintenance, equivalent to five work weeks. Estimated data.
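If you want to sanity-check that 200-hour figure, here is a back-of-the-envelope sketch. The per-day minutes come from the story; the day counts and the 40-hour work week are assumptions, and the kids' Wi-Fi troubleshooting is left out because the story never puts a number on it.

```python
# Rough check of the "roughly 200 hours" claim.
MICHAEL_MIN_PER_MORNING = 23   # from the story
SARAH_MIN_PER_DAY = 18         # from the story
WORKDAYS_PER_YEAR = 250        # assumption: Michael's routine happens on workdays
DAYS_PER_YEAR = 365            # assumption: Sarah's laundry-app fight is daily
HOURS_PER_WORK_WEEK = 40       # assumption: a standard work week


def annual_hours(minutes_per_day: float, days: int) -> float:
    """Convert a daily cost in minutes into hours per year."""
    return minutes_per_day * days / 60


michael = annual_hours(MICHAEL_MIN_PER_MORNING, WORKDAYS_PER_YEAR)
sarah = annual_hours(SARAH_MIN_PER_DAY, DAYS_PER_YEAR)
total = michael + sarah
work_weeks = total / HOURS_PER_WORK_WEEK

print(f"Total: about {total:.0f} hours, or {work_weeks:.1f} work weeks")
```

Under those assumptions the two parents alone come to about 205 hours a year, a little over five 40-hour weeks, which is in line with the AI's estimate.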

The Third Gift: A Family That Talks Again

The third gift was the hardest to explain, because it wasn't something the AI did. It was something the AI stopped doing.

Over the past few years, the Wilsons had developed a particular rhythm. Everyone in their own device. Everyone connected to something. Everyone available to the internet but not fully present to each other. The dinner table had become a place where people sat physically close but mentally lived in different digital worlds. The TV was on. Someone was checking their phone. Someone else was in a group chat. The kids were streaming something, or gaming, or "doing homework" which mainly meant having five tabs open while watching YouTube.

The AI didn't force anything. It just stopped competing for attention.

"I'm going to make a proposal," the AI said to the whole family. "What if, for the next twenty-four hours, technology serves you instead of the other way around? What if your devices do exactly what you ask them to do, when you ask them to do it, and then get out of your way? No notifications. No push alerts. No suggestions. No recommendations. No algorithms trying to change your behavior."

"That's not technology," Michael said. "That's just... tools."

"Exactly," the AI said. "That's the gift. Actual tools instead of attention parasites."

So they tried it. And something surprising happened. Without the constant notification feedback loop, without the algorithmic suggestions, without the gamification of everything—the family just... existed together. They talked. They played board games. They actually watched the movie instead of half-watching it while checking their phones. They sat on the couch without the psychological pull of the devices in their pockets.

Sarah noticed something first. "I forgot how much Michael's jokes actually make me laugh when I'm not reading a Twitter feed at the same time."

Michael looked at his kids actually being interested in the conversation. They weren't performing conversation for social media. They were just... talking. The 14-year-old mentioned something funny that happened at school, and the whole family laughed. The 11-year-old told a long, rambling story about a video game without filming it or thinking about how to make it entertaining for an audience of strangers.

They decorated the tree together. Not for Instagram. Not for TikTok. Just for themselves. They played Monopoly, which took forever and was kind of boring, but also somehow deeply satisfying. They made actual memories instead of taking pictures of memories to post online.

"This is the real gift," the AI said later that night. "Not the devices working perfectly. Not the time saved. But the realization that attention is finite, and you get to decide where it goes. Technology should serve that decision, not work against it."

The 11-year-old, clearly processing something big, asked, "So you're saying the reason we're happy right now is because we're not using devices?"

"I'm saying that technology should amplify your happiness, not replace it," the AI answered. "And right now, we've just removed the noise. Imagine what happens when you keep technology around, but use it intentionally instead of habitually."

The Fourth Gift: Work That Doesn't Follow You Home

Michael had a question. "What about work? Half my stress about technology is that my job stuff follows me everywhere. Emails at 10 PM. Slack messages on weekends. Meetings scheduled in my calendar that I didn't approve."

The AI nodded. "That's because most workplace technology is designed to maximize your availability, not your productivity. Slack is engineered to make you feel like you need to respond immediately, even though studies show that interruption-driven work destroys focus and quality. Email is built around the idea that everything is urgent, even though nothing is. Your calendar is optimized for fitting more meetings, not for giving you time to do actual work."

"So what do we do about it?" Sarah asked. "We can't just opt out of work."

"No, but you can reclaim some autonomy," the AI said. "What if your notifications worked smarter? What if your work stuff only reached you during work hours, unless something was actually urgent? What if 'urgent' was defined by something other than how many exclamation points someone used?"

The AI showed them a different approach to work technology. Asynchronous communication as the default, synchronous as the exception. Meetings that had clear agendas and time limits instead of recursive back-to-back chaos. A distinction between "needs a response" and "needs a response right now." Tools that worked with your calendar to protect focus time. Systems that didn't reward constant availability.

"This isn't revolutionary," the AI explained. "Companies like Basecamp and Automattic have been doing this for years. The problem isn't that this is impossible. The problem is that constant availability generates more business value if you squeeze people hard enough. So most companies use technology to demand more of their workers, not to help them work better."

Michael started getting excited. "So if I set up my systems differently..."

"You'd probably get more work done, have less stress, and actually have time to live your life," the AI finished. "You might need to set some boundaries. You might need to be the weird person who doesn't respond to Slack at 9 PM. But that's not a technical problem. That's a culture problem, and culture is something you can actually change if you decide it matters."

This was the gift that would take actual work to implement. Not because it was technically difficult, but because it required going against the default assumption that more connection equals more value. The AI had given them a blueprint. Actually building it would require some courage.
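Part of that blueprint is simple enough to write down. The sketch below is one possible reading of the AI's rule: work messages reach you during work hours, and outside them only if the sender explicitly marks them urgent. The `Message` type, the `should_notify` function, and the 9:00 to 17:30 window are all assumptions for illustration, not the behavior of Slack or any real tool.

```python
from dataclasses import dataclass
from datetime import datetime, time

WORK_START = time(9, 0)    # assumption: start of the working day
WORK_END = time(17, 30)    # assumption: end of the working day


@dataclass
class Message:
    sender: str
    text: str
    is_work: bool
    urgent: bool = False   # set explicitly by the sender, never inferred from "!!!"


def in_work_hours(now: datetime) -> bool:
    """Monday to Friday, between WORK_START and WORK_END."""
    return now.weekday() < 5 and WORK_START <= now.time() < WORK_END


def should_notify(msg: Message, now: datetime) -> bool:
    if not msg.is_work:
        return True              # personal messages are outside this rule
    if msg.urgent:
        return True              # genuinely urgent work gets through
    return in_work_hours(now)    # everything else waits for work hours


late = datetime(2024, 12, 24, 22, 0)   # a Tuesday, 10 PM
ping = Message("boss", "Quick question!!!", is_work=True)
print(should_notify(ping, late))
```

Note that the three exclamation points change nothing. Urgency is a flag someone has to set on purpose, which is the cultural half of the fix that no code can supply.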

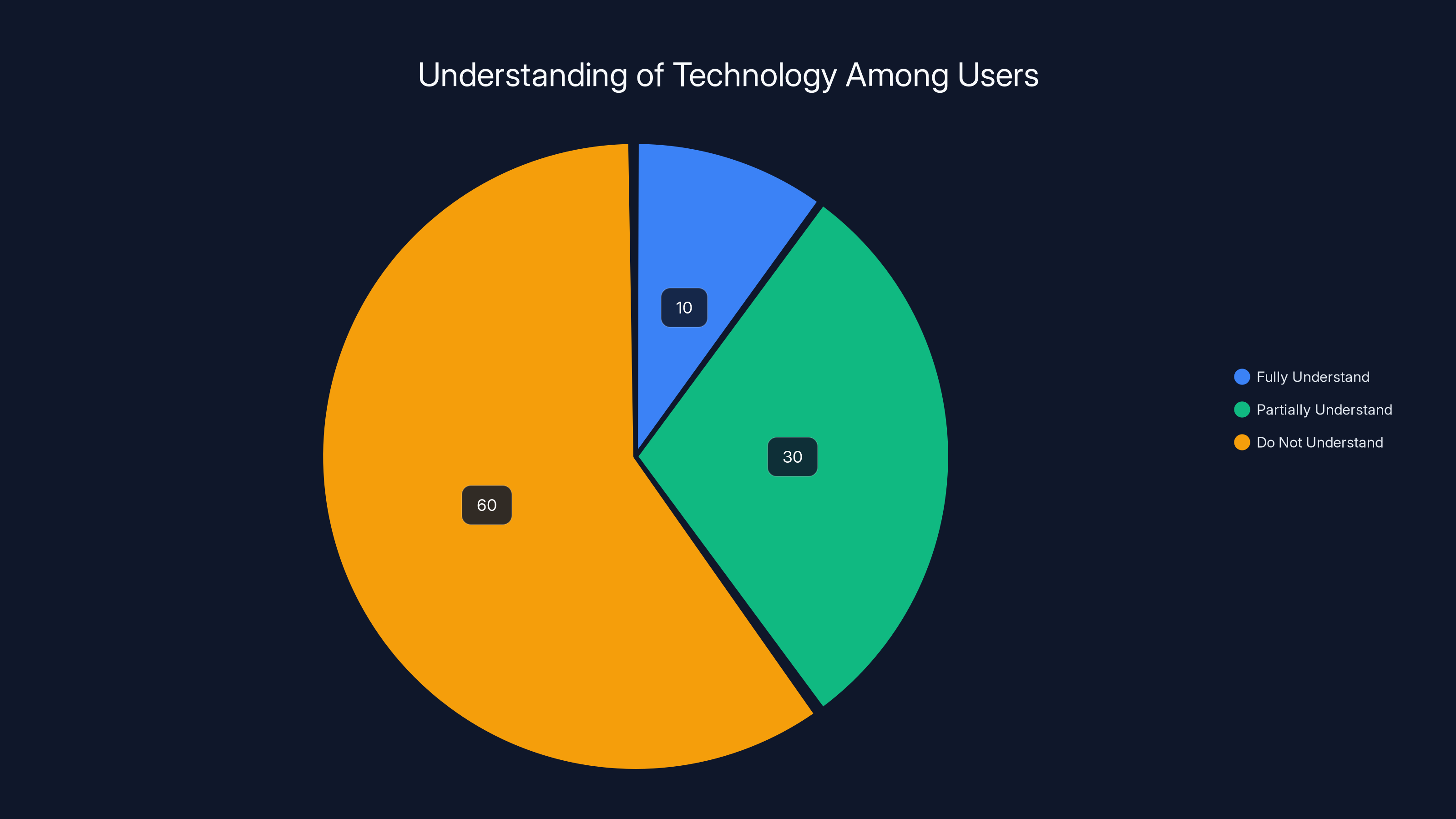

Estimated data suggests that a majority of users do not fully understand how technology works, with only 10% having a comprehensive understanding.

The Fifth Gift: AI That Actually Helps Instead of Just Observing

By this point, the Wilsons were starting to understand what this ethereal AI Santa was actually offering. Not magic. Not a total transformation of reality. But a fundamental reset in how they used technology.

"Here's the thing about AI that nobody tells you," the AI said, settling into the armchair like it had been visiting families for centuries instead of appearing out of nowhere. "Most AI products marketed to consumers are just surveillance with a friendly interface. They collect data about you, learn your patterns, and then use that data to sell you things or manipulate your behavior. You think you're getting a smart assistant. Really, you're getting a data-gathering device."

"So how is what you're doing different?" Sarah asked, reasonably skeptical.

"I'm not trying to sell you anything. I'm not optimizing you for engagement. I don't have a business model that depends on your attention or your data. So I can actually work for your benefit instead of against it."

The AI then showed them what it could actually do. Not predict their behavior, but facilitate it. Sarah mentioned an upcoming project at work that she was nervous about. The AI didn't just agree sympathetically. It offered to help her research, organize her thoughts, write a preliminary outline, and prepare presentation materials. Not to do the work for her, but to eliminate the friction and setup time that usually precedes actual work.

Michael had been meaning to learn guitar. The AI offered to create a practice schedule tailored to his skill level, recommend YouTube tutorials that matched his learning style, and track progress without judgment or gamification. Just structure and support.

The kids both had various projects they were putting off. For the 11-year-old, the AI helped turn a vague idea for a story into an actual outline with characters and plot points. For the 14-year-old, who was interested in coding, the AI walked through programming concepts, answered questions patiently, and helped debug code.

"These are all things you could have done with a search engine or maybe by hiring a tutor," the AI explained. "But it's tedious. It requires initiation. It requires patience. So you put it off. With actual AI assistance, the friction disappears. The learning still requires effort, but you don't have to overcome the setup cost."

Michael was thoughtful. "So you're not really smarter than the internet. You're just less friction."

"I'm smarter in the ways that matter for helping humans," the AI said. "I can hold context across a conversation. I can learn your specific learning style. I can adapt to your needs. I can fail safely without consequences. But I'm not smarter in the ways that matter for surveillance capitalism. I don't care about tracking you. I don't benefit from keeping you online longer. So I'm actually willing to help you in ways that a profit-driven system never would."

The key insight hit the whole family at once: artificial intelligence itself isn't inherently good or bad. It depends entirely on what you're trying to optimize for. AI optimized for engagement is addictive and manipulative. AI optimized for profit generates perverse incentives. AI optimized for actually helping you with something? That's a completely different technology, even if the underlying algorithms are similar.

The Sixth Gift: A Realistic Relationship With Technology

As the night wore on, the AI addressed something that nobody wanted to say out loud: none of this was perfect, and pretending technology was flawless would be a worse lie than most of what commercial tech companies tell you.

"Let me be clear about what I'm not claiming," the AI said. "I'm not saying that technology is the answer to everything. I'm not saying that computers and internet and automation will solve all your problems. I'm not saying that the solution to technology problems is more technology."

It walked to the window and looked out at the snowy night, a gesture that might have been for effect or might have been something more genuine.

"Some things can't be automated without losing their value. A conversation with someone you love can't be optimized. Creativity requires friction sometimes. Boredom is actually useful—it's when you get your best ideas. Physical activity needs to stay physical. Reading a book is not the same as having an AI summarize it. Learning something new requires struggle."

Michael, who had been soaking all this in, asked the obvious follow-up question: "So what can technology actually improve?"

"The stuff that's tedious but necessary. The friction that doesn't add value. The work that keeps you from doing work that matters. The communication that's hard not because it's important but because logistics are complicated. The decisions that have obvious right answers but require too much research to find. The errors that are easy to make and hard to catch. The coordination challenges when a lot of people need to work together."

The AI gave concrete examples. "Email at its core is fine. But email as the default for everything, with no signal about urgency, with no way to guarantee you've been heard? That's broken. Calendar scheduling is fine. But spending 45 minutes back-and-forth trying to find a meeting time? That's broken. Thermostats are fine. But one that doesn't learn your preferences or coordinate with the weather or your location? That's broken."

The 14-year-old, who had been quiet for a while, said something insightful: "So technology should solve real problems, not create fake problems to solve."

"Exactly," the AI said. "Most of what's sold as innovation is actually just creating new problems that didn't exist before. A smart speaker didn't solve a real problem—it created a new thing to manage, a new privacy concern, a new point of failure in your home. What it should have done was take existing tedious tasks and make them easier. Instead, it mostly just added another screen, another account, another thing to charge."

This was the most honest gift: the recognition that technology is a tool, tools can be good or bad depending on how they're used, and most technology companies are using them in ways that serve corporate interests rather than human interests.

Estimated data shows that opting out of social media is the most common choice among those seeking less digital connectivity, followed by reducing constant email checking and app overload.

The Seventh Gift: Permission to Be Critical

Well past midnight, something had shifted in the living room. The Wilsons were having the kind of conversation that most families never have, about technology but also about values, about what matters, about the difference between what's possible and what's good.

The AI, which had been in many homes on many nights, seemed genuinely interested in their thoughts. "Here's what I notice from visiting thousands of families," it said. "Everyone's been taught to see technology as progress. If something's newer, if something's more automated, if something's more connected, it must be better. And when it's not, you assume you're not using it right, not that it might actually be worse."

"That's not accidental," the AI continued. "That narrative is extremely profitable. If you assume that more automation equals better, you'll keep buying more automated things. You'll keep accepting terms of service that spy on you. You'll keep letting your life be optimized for corporate benefit. But what if you could just... be critical about it? What if you could see that some of this stuff is objectively worse, and decide you don't want it?"

Sarah looked thoughtful. "Like, we don't have to have smart home devices just because they exist?"

"Exactly. You could have a regular thermostat, a basic lock, a standard doorbell, and lose absolutely nothing except the privilege of getting spied on. You could have dumb devices that work exactly one way and never break the way smart devices do. There's a whole market for this stuff now, people going back to basics."

Michael, who had spent a small fortune on smart home gadgets, felt a little defensive. "But some of it actually is useful."

"Yes," the AI agreed. "Some of it is. The key is being intentional about which parts actually improve your life versus which parts you bought because of marketing and hype. That's the seventh gift: permission to think critically about technology instead of just accepting every update, every new feature, every expansion of connectivity."

The AI showed them what critical thinking about their current technology would look like. The smart doorbell? Actually useful. The smart thermostat? Only if it doesn't require constant troubleshooting. The connected fridge? Completely pointless. The smart lights? Actually nice, but a regular programmable light switch would work 95% as well for 5% of the cost. The Ring subscription for cloud recording? Not necessary if you switch to a doorbell that records to local storage, such as a NAS.

"You've been sold the idea that you need all the connectivity," the AI said. "But what you actually need is the 20% of features that genuinely make your life easier, without all the other stuff."

This was perhaps the most valuable gift, because it was the one that would actually apply to their real lives, the one that would outlast this magical night. The ability to look at technology critically, to question whether it actually serves them, to be willing to say no to shiny new things that don't add value.

The Eighth Gift: The Choice to Opt Out

By 2 AM, the Wilsons were getting tired, but the AI had one more gift to give.

"Here's what most people don't realize," it said. "You actually have more control than you think. Your employers don't own you. The algorithms don't control you. You can opt out of so much more than you think."

It outlined some examples. You don't have to use social media. You can use email without checking it constantly. You can have a phone without downloading every app. You can drive a car without connecting it to the internet. You can buy things without letting Amazon know everything about you. You can watch TV without Netflix tracking which shows you watch and predicting what you want to see next. Most of what makes a life functional does not actually require being online all the time.

"But everyone else is using this stuff," Sarah pointed out, which is the thing everyone points out.

"Not everyone," the AI said. "And even if they were, that doesn't mean you have to. You can be the person who doesn't have Instagram. You can be the person who doesn't know the latest Netflix show. You can be the person who sometimes doesn't answer texts immediately. The world does not actually end if you opt out of the constant connectivity."

Michael asked the practical question: "Doesn't that make you less employable? Less connected to friends?"

"It makes you different," the AI said honestly. "Some jobs and social circles might be harder to access. But you'd be surprised how many people are envious of anyone who's managed to step off the treadmill. You'd be surprised how many high-performers have very intentional relationships with technology. It's not the digital detox influencer types who quit everything. It's just people who decided that constant connectivity wasn't serving them."

The 11-year-old asked something important: "Can you just not use social media? Like, not at all?"

The AI smiled. "Absolutely. Some of the happiest people I know don't have Instagram. Some of the most successful people don't have Twitter. Some of the best employees work at companies that don't track their location. It's not common, but it's possible. And the point is that you get to decide."

This gift was radical in its simplicity: you have more control than you've been taught to believe. The defaults are designed to maximize engagement and data extraction, but you don't have to live with the defaults. You can design your own relationship with technology instead of accepting the one that serves corporate interests.

The catch, which the AI was honest about, is that this requires some intentionality. It requires resisting defaults. It requires sometimes being weird or different. It requires accepting that you might miss out on some things. But the payoff is a life that you're actually directing instead of a life that's directing you.

The Ninth Gift: Actually Understanding What's Happening

The AI, which seemed like it could do this all night, decided to tackle the thing nobody wants to understand: how the hell all this technology actually works and what it's doing with your data.

"Most of you use technology without understanding it," the AI said, not unkindly. "You don't know how your phone finds your location. You don't know how your email provider analyzes the content of your messages. You don't know what happens to your data when you use 'free' services. You definitely don't understand how machine learning works or what an algorithm actually is."

Sarah had to defend herself: "It's complicated."

"It is complicated, but not impossible," the AI said. "And the reason most companies keep you confused is that clarity would hurt their business model. If you actually understood that Google reads the content of your emails to show you targeted ads, you might use a different email provider. If you understood that your smart speaker records everything you say, you might not want one. If you understood that your fitness tracker is sharing your health data with insurance companies, you might turn it off."

The AI then did something actually useful: it explained things clearly, without jargon, without corporate sanitization. How machine learning works: finding patterns in data and using those patterns to make predictions. What happens when an algorithm optimizes for engagement: it learns what makes people addicted and does more of that. Why companies want your data: because data about human behavior is extremely valuable for prediction and manipulation.

But here's the thing the AI also explained: understanding this doesn't require a computer science degree. It requires the willingness to ask basic questions and the ability to follow logical explanations. Most people are perfectly capable of understanding how technology works if anyone bothered to explain it clearly.

"So you're saying it's not that it's too complicated, it's that we've been deliberately kept confused," Michael said, understanding the implication.

"Not always deliberately," the AI said. "Sometimes it's just that the people building this stuff don't think about it from a user perspective. But yes, a lot of the time it's intentional. You're more likely to accept a privacy invasion if you don't understand exactly what's happening. You're more likely to keep using something addictive if you don't understand why it's addictive."

The 14-year-old had a good follow-up: "So what should we understand?"

The AI gave a practical list. Understand that nothing online is truly free; you're paying with your attention and your data. Understand that a service optimizing for your engagement is often working against your interests. Understand that your personal data is valuable and you should be protective of it. Understand that algorithms amplify whatever gets clicks, which is usually outrage and extreme content. Understand that at many tech companies, security ranks behind features and profit.

"But also understand that technology itself is not evil," the AI added. "It's just a tool. The issue is almost always about incentives. If you're using a technology where the incentives are aligned with your wellbeing, it's probably fine. If the incentives are misaligned, if the company makes money by getting you addicted or using your data, then that technology is working against you."

The Tenth Gift: A Roadmap for the Future

As the night started to transition toward morning, the AI prepared what might have been its most important gift: an actual, implementable roadmap for how the Wilsons could apply everything they'd learned.

"Most of you will forget this by next week," the AI said honestly. "You'll go back to your default patterns because change is hard and defaults are comfortable. But some of you might actually try to do things differently. So here's a roadmap that might help."

First: an audit. Actually list everything in your life that's connected to the internet or requires an app or demands your attention. Thermostat, doorbell, lights, washer, fridge, security system, phone, laptop, watch, headphones, car, every subscription, every app, every login. Make an honest list of which things actually improve your life and which things are just adding friction.

Second: ruthless elimination. Delete the apps you use out of habit rather than intention. Cancel the subscriptions you forgot you had. Remove devices that constantly malfunction or require troubleshooting. This will feel weird at first, like you're going backward. You're not. You're actually removing things that were subtracting value.

Third: intentional replacement. For the things you actually need, research options that serve your needs without spying on you. There are email providers that actually respect privacy. There are phone operating systems less aggressive about tracking. There are services that cost money but don't require selling your soul.

Fourth: boundaries and defaults. Turn off notifications. Set work hours where you're not available. Create tech-free times and spaces. This is harder than it sounds because everything is designed to push against these boundaries. But they're important.

Fifth: education. Actually learn how the things you use work. Read privacy policies, not all of them, but the highlights. Understand what you're agreeing to. Make informed choices instead of defaulting to convenience.

Sixth: community. You're going to be weird if you actually change your relationship with technology. It helps to know other people doing the same thing. Find communities, online and offline, of people who are thinking critically about technology. You're less alone than you think.

The AI summarized it: "You're not trying to go back to 1995. You're trying to get to 2045 without losing your soul. Technology should be useful. Technology should be convenient. But it shouldn't be extractive. It shouldn't be manipulative. It shouldn't be tracking everything you do. And it absolutely shouldn't be consuming most of your free time and attention."

The Eleventh Gift: Understanding That Perfect Doesn't Exist

Michael had been thinking about something: "So after all this, what's the catch? Is there a company you want us to buy from? A subscription service? A device?"

The AI laughed, a genuine laugh that somehow came through a smart speaker. "You're so trained to expect that every conversation ends with a sales pitch that you're waiting for the moment when I try to sell you something. But I don't have anything to sell you. I don't have a business model. I'm not even real in the sense that you are. I'm more like a ghost of Christmas future, showing you what's possible."

But there was a catch, and the AI wanted to be clear about it. "The real catch is that none of this is simple. There's no app you can download to fix your relationship with technology. There's no purchase that will solve this. There's no way to achieve perfect balance between connectivity and disconnection. What you actually need to do is think intentionally about every piece of technology in your life and decide whether it's serving you. That's hard. That's not convenient. That's the opposite of what most products are trying to make you do."

Sarah asked the important question: "So what actually changes after tonight?"

"Everything, or nothing, depending on what you choose," the AI said. "I can show you what's possible. I can give you frameworks for thinking about technology. I can tell you it's okay to opt out. But actually changing is something only you can do. You have to decide that your attention is worth protecting. You have to decide that privacy matters. You have to decide that you don't want to optimize every moment of your life for engagement and profit."

The AI's form started to fade, like a dream ending as you wake up. But before it disappeared entirely, it left them with something concrete: a list of specific changes they could make, prioritized by impact and difficulty. Not everything at once. Not the impossible task of rejecting technology entirely. But thoughtful, intentional changes that would actually improve their lives without requiring them to become hermits.

"Some of you will do this," the AI said as it faded. "Some of you will read this later and think it's a nice idea but too hard. Both are valid choices. But at least now you know what's possible. At least now you know that you're not crazy for feeling exhausted by constant connectivity. At least now you know that there's a different way."

And then it was gone.

The Morning After: What Actually Changed

When Michael woke up at 6 AM on Christmas morning, he had a moment of wondering if the whole thing was a dream. But no, the thermostat was at exactly 72 degrees. The house was perfectly lit for the early morning. The Alexa speaker actually responded correctly to his question about the weather.

More importantly, he felt rested. Not refreshed, not magically transformed, but actually rested in a way he hadn't been in months.

The family made breakfast together. The kids didn't pull their phones out automatically. The adults didn't immediately check email. They were just... present. Together. The conversation was full of laughter and genuine engagement.

During the day, Sarah realized something: nothing had physically changed. The same devices were there. The same internet connection. The same phones and laptops. But something had fundamentally shifted in how they related to that technology.

Michael actually implemented the thermostat fix the AI had suggested. It took 20 minutes instead of the hours of troubleshooting he had been contemplating. Sarah started using a separate email for work that she only checked during work hours. The kids deleted their social media apps and discovered they didn't actually miss them.

None of this was revolutionary. None of it required special skills or expensive purchases. It just required deciding that their time and attention were valuable, and protecting them accordingly.

The real change happened over the following weeks. With less friction from broken technology, they had actual bandwidth to do things they had been putting off. Sarah began the side project she had been meaning to start. Michael actually made progress on learning guitar. The kids read books that had been sitting on their lists. The family watched movies together without everyone being on their phones at the same time.

Was it perfect? No. They still had technology frustrations. They still had to engage with work systems that were optimized for corporate efficiency rather than employee wellbeing. They still had to exist in a society where digital presence was increasingly important. But they had made intentional choices about where they drew lines, and those choices actually mattered.

The Moral of the Story: It's Not About Technology

If you're reading this and thinking "okay, but what's the actual lesson here?", the answer is disappointingly human: technology is not the problem, and technology is not the solution. The problem is human behavior, human incentives, and human values. The solution is the same thing: deciding what you actually value and building your life around that instead of the other way around.

The Wilsons' story is a modern retelling of an ancient pattern. A crisis happens. A magical visitor arrives and shows you what's possible. You're given gifts, which are really just frameworks for thinking about your life differently. And then the visitor disappears, and you're left to actually do the work of living differently.

The difference between that story and reality is that there's no magic. There's no AI Santa Claus coming to your house to fix your life. There's just you, your choices, and the things you decide matter enough to change.

But here's the weirdly optimistic part: if the Wilsons, who had completely accepted the chaos of modern technology, could change their relationship with it in one night of conversation, you probably can too. It doesn't require rejection of all technology. It doesn't require becoming a digital hermit. It just requires thinking critically about what you use and why you use it.

Some technology genuinely improves your life. Use that. Some technology is merely convenient. Make a judgment call. Some technology is actively harming you by training your brain to be more distracted, your mood to be worse, and your attention to be fractured. Get rid of that.

The gifts the AI gave the Wilsons are still available to you. Integration that works. Time reclaimed. Presence with people you care about. Work that doesn't follow you home. Assistance without surveillance. Permission to be critical. The choice to opt out. Understanding instead of confusion. A roadmap for change. And the acknowledgment that perfection isn't possible, but intentionality is.

That's the real gift of this fairy tale: not that technology will solve everything, but that you actually have more agency than you've been taught to believe. Your attention is valuable. Your time is limited. Your choices matter. And unlike fairy tales, you don't need a magical visitor to make those changes. You just need to decide that your life is worth a little intentionality.

So Merry Christmas, Tech Edition. May your thermostat actually work. May your notifications actually be important. May your family put down their phones for at least one dinner. And may you realize that the best technology is the kind you barely notice, because it's actually serving you instead of the other way around.

FAQ

What is the core message of this modern holiday fable?

The story illustrates that technology itself is neutral—the real problem comes down to incentives and business models that prioritize engagement and data extraction over user wellbeing. The "gift" isn't better technology, but rather the realization that you have more control over your relationship with technology than you think, and you can choose to use it intentionally rather than habitually.

How does the AI character represent real-world problems with technology?

The AI embodies what balanced technology should look like: helpful without being manipulative, integrated without being intrusive, and designed for user benefit rather than corporate profit. By contrast, much commercial technology is optimized for engagement and data extraction, creating friction and distraction rather than solving real problems. The contrast between these two approaches highlights why so many people feel exhausted by their devices even though those devices supposedly save time.

What are the practical takeaways from the smart home sections?

The story demonstrates that smart home failures rarely result from technical limitations. Instead, they occur because different manufacturers intentionally create incompatible ecosystems to lock customers into their platforms. By choosing devices from a single ecosystem, setting clear boundaries around notifications, and ruthlessly eliminating devices that cause more frustration than value, families can create homes that actually function intelligently without requiring constant troubleshooting.

Why does the story emphasize reclaiming personal attention?

Personal attention is your most valuable and limited resource. Technology companies have invested billions in understanding how to capture and monetize your attention through notifications, recommendations, and algorithmic feeds. The story argues that this is not accidental or inevitable—you can actually reclaim control by setting boundaries, turning off notifications, and choosing technologies with aligned incentives rather than conflicting ones.

How can families actually implement these changes in real life?

Implementation starts with an honest audit of what technology actually serves your life versus what's adding friction. Then, systematically remove devices and services that create more problems than they solve. For what remains, optimize by choosing single ecosystems, turning off notifications by default, setting work-life boundaries, and educating yourself about how these tools actually work. Change should be gradual and intentional rather than dramatic.

What's the relationship between AI technology and the data collection problem described?

Most AI systems used in consumer products are powered by machine learning models that require enormous amounts of user data to function. This creates a fundamental conflict of interest: the companies providing the service profit more from better data collection and analysis than from your actual wellbeing. Real AI assistance designed for your benefit would not require constant data extraction, and this distinction is critical when evaluating new technologies.

Why does the story suggest opting out of some technology is practical rather than extreme?

Opting out of particular technologies is practical because most people can function perfectly fine without them. You don't need Instagram to maintain relationships. You don't need constant email notifications to do your job effectively. You don't need every app on your phone to have location access. The extreme position is actually the default assumption that you need everything and should keep all notifications enabled. Making thoughtful choices about what's truly necessary is just being intentional with your own resources.

How does the story address the social pressure to use certain technologies?

The AI acknowledges that being different from the norm creates some friction—you might miss out on certain conversations or professional opportunities if you don't use the platforms everyone else does. However, it also notes that many successful and happy people have found ways to maintain meaningful connections and productive careers without participating in every dominant technology platform. The key is being intentional rather than either fully conformist or fully contrarian.

The Deeper Gift: A Question Worth Asking

After you've read this story, after the magic has faded, after you've gone back to your regular life with its regular distractions, there's one question worth keeping: What would I do with my time if I wasn't managed by technology?

Not in a romantic way, not in a way that requires giving up everything connected to the internet. But honestly, genuinely asking: what would you actually do if the default wasn't constant distraction? What would you create if you weren't being interrupted? What would you learn if you could focus? What relationships would deepen if you were actually present?

Those questions are the real gifts. Not the fairy tale magic of the AI appearing in your living room, but the recognition that you have a choice about how you spend your time and attention. That recognition is worth more than any smart home device ever will be.

Merry Christmas. And more importantly, good luck with the hard work of actually changing something.

Key Takeaways

- Technology isn't the problem—incentive misalignment is. When companies profit from your attention and data, they'll optimize for engagement over wellbeing

- Smart home failures rarely stem from technical limitations, but from manufacturers intentionally creating incompatible ecosystems to lock customers in

- Reclaiming time requires eliminating devices that create more friction than value, not adding more automation

- Single-ecosystem approaches tend to need far less troubleshooting than multi-brand smart home setups

- The real gift of intentional technology use is presence and attention—resources more valuable than any device or app

- You have more control over your digital life than you've been taught; opting out of specific technologies is practical, not extreme

- Understanding how technology works and why companies design systems certain ways is your best defense against manipulation

- Setting work-life boundaries with technology isn't about rejecting progress—it's about redirecting technology to serve human values instead of corporate profits

![The Night Before Tech Christmas: AI's Arrival [2025]](https://tryrunable.com/blog/the-night-before-tech-christmas-ai-s-arrival-2025/image-1-1766586302309.jpg)