The AI Disappointment Nobody Wants to Admit

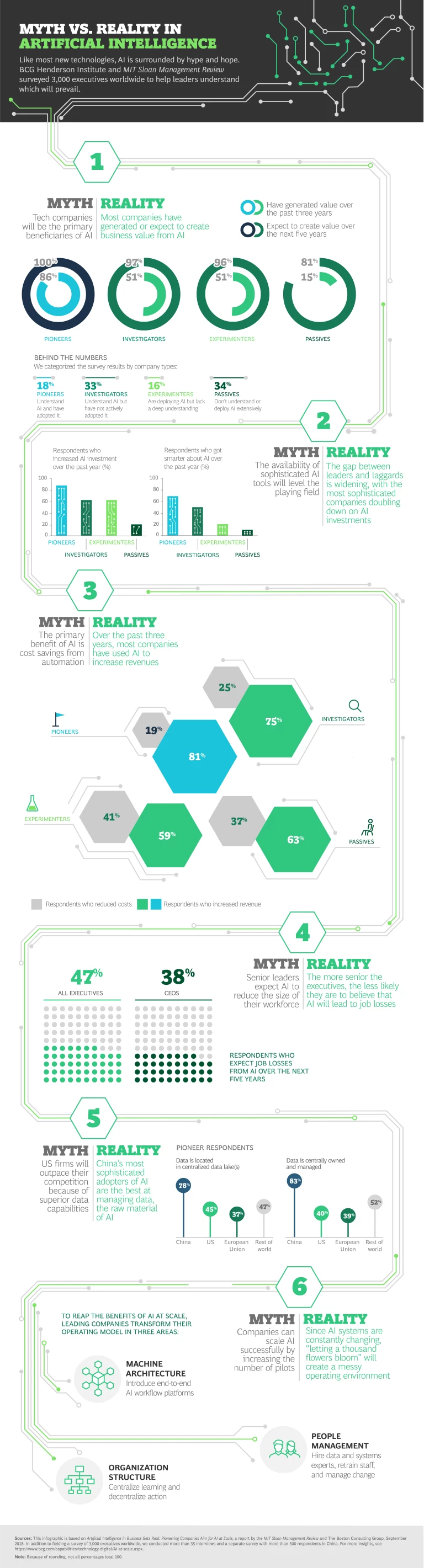

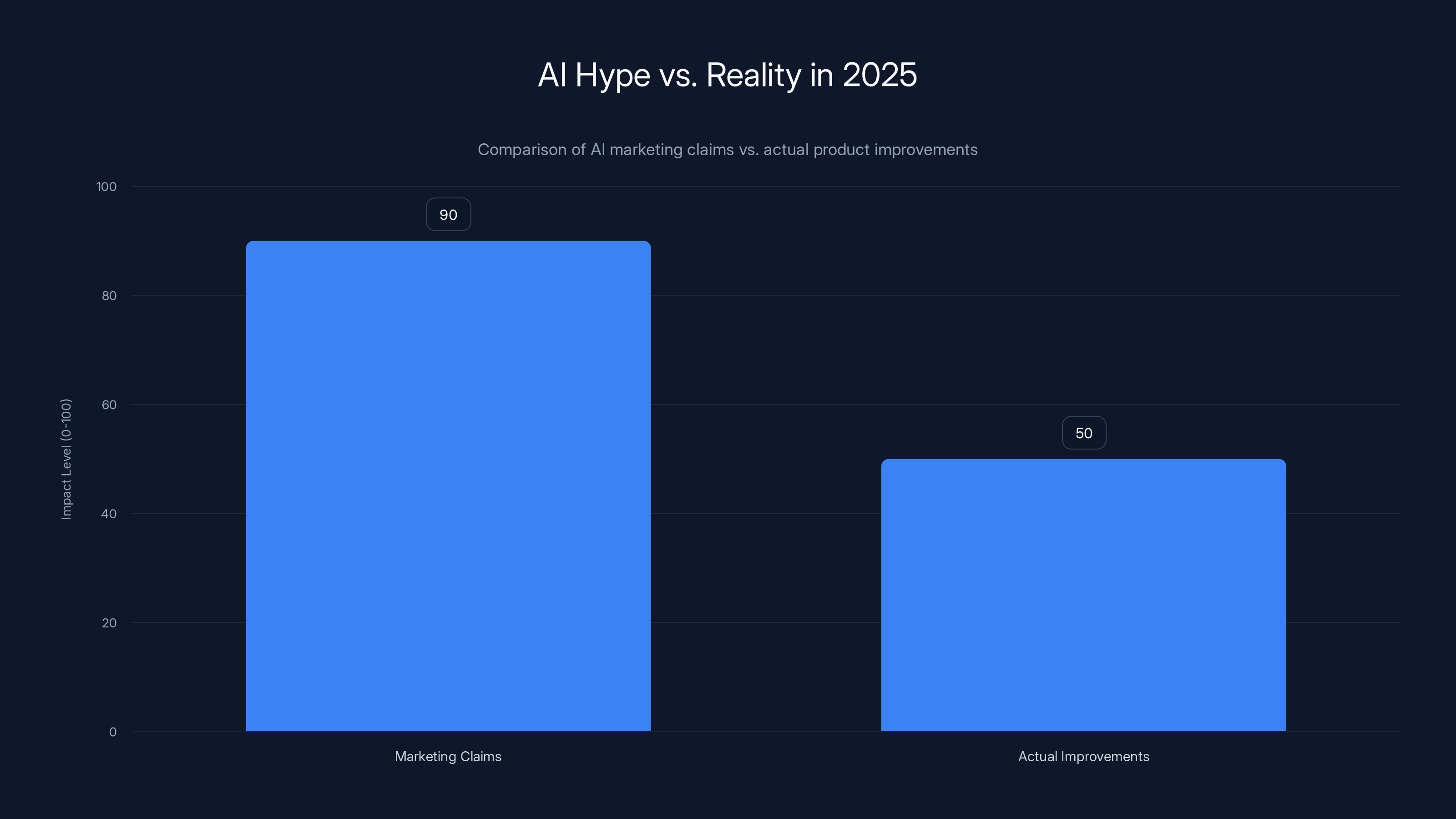

Let's be honest: the AI industry has a hype problem. Not the "everyone's excited" kind of hype. The "we built something that mostly works, but we're marketing it like it cures cancer" kind.

For the past two years, I've watched companies throw the word "AI" at everything. AI toasters. AI alarm clocks. AI productivity tools that are just autocomplete with better margins. The technology works, sure. But does it solve actual problems? That's where things get murky.

The gap between marketing and reality has become impossible to ignore. When ChatGPT launched in late 2022, people genuinely believed we were weeks away from artificial general intelligence. Now, in 2025, we're still asking the same question: what's this actually for?

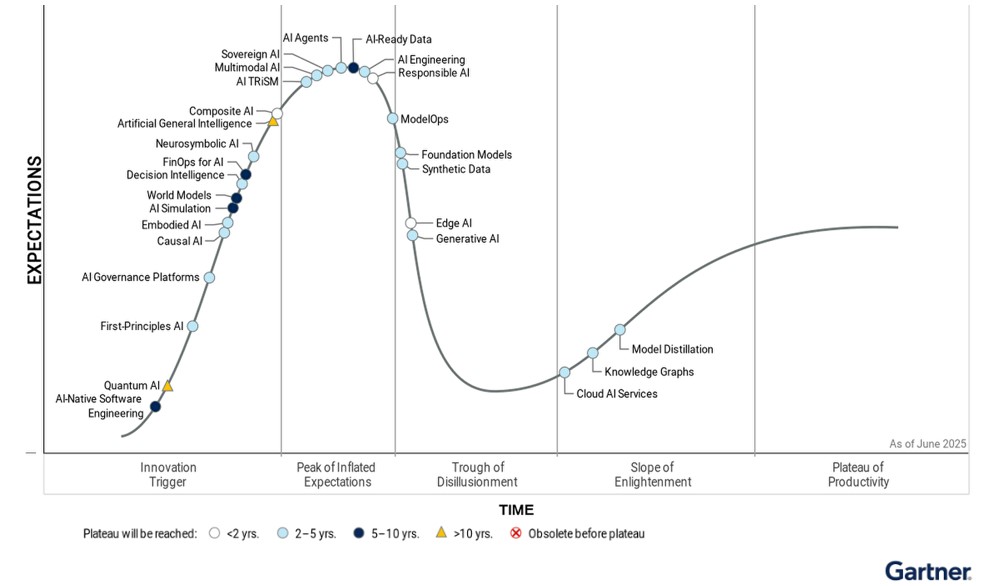

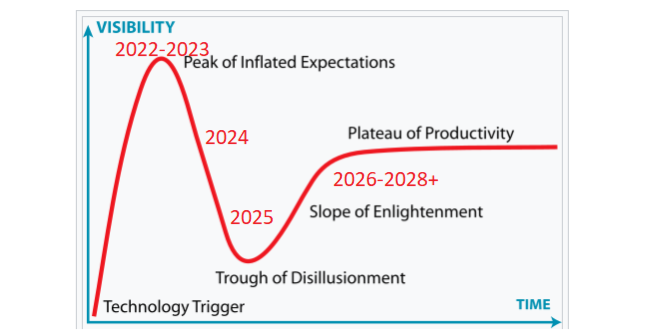

This isn't cynicism. It's pattern recognition. The hype cycle in AI has compressed into something almost comedic. A tool launches. Everyone claims it's revolutionary. After three months, people realize it has significant limitations. The cycle repeats.

CES 2026 is happening in about a month. If the industry wants to rebuild trust and actually show progress, it needs to do something radical: stop overselling.

Why AI Marketing Became Science Fiction

The problem started before CES even mattered. It started when venture capital decided AI was the only investment category that mattered.

When money floods an industry, incentives get weird. Companies need to differentiate their AI solution from the dozen other AI solutions that launched last week. The easiest way to differentiate is through marketing, not product innovation. So you get claims like "powered by advanced artificial intelligence" when it's really just a fine-tuned open-source model.

I've interviewed dozens of startup founders about their AI products. Almost universally, they acknowledge the gap between what they're selling and what they're building. One founder was surprisingly candid: "Our investors want us to lead with AI. Our customers want us to lead with reliability. We picked investors."

That's the core problem. The incentive structure rewards hype, not utility.

Product launches started following a predictable formula: take a real problem, propose an AI solution that's technically sound but incomplete, launch with massive claims, watch adoption climb, then slowly add the features that actually make it work. Meanwhile, the marketing claims stay frozen in time.

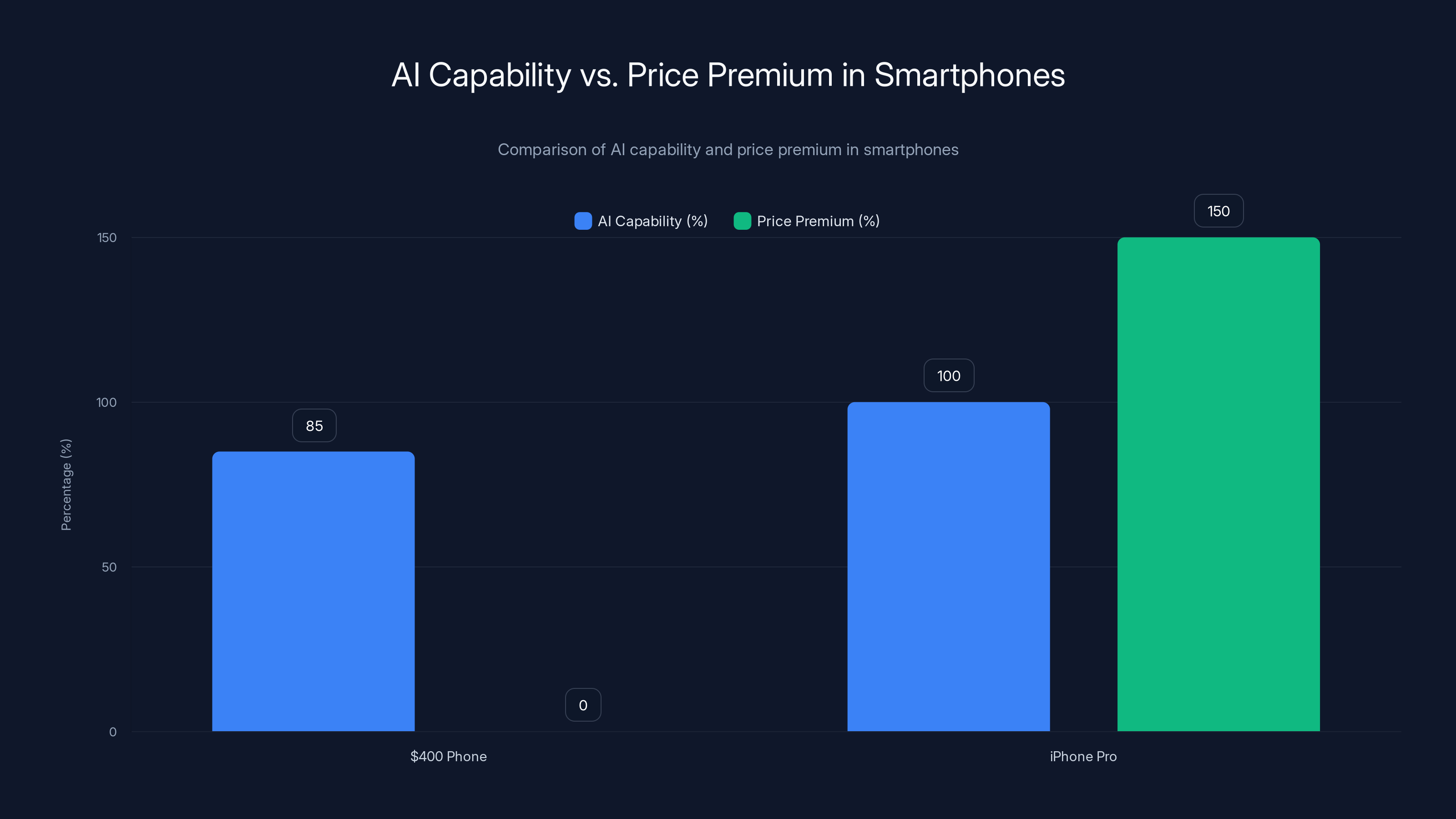

Estimated data shows that while a $400 phone offers 85% of AI capabilities, the iPhone Pro charges a 150% premium. This highlights a potential discrepancy between AI capability and price justification.

The Specific Ways AI Has Disappointed

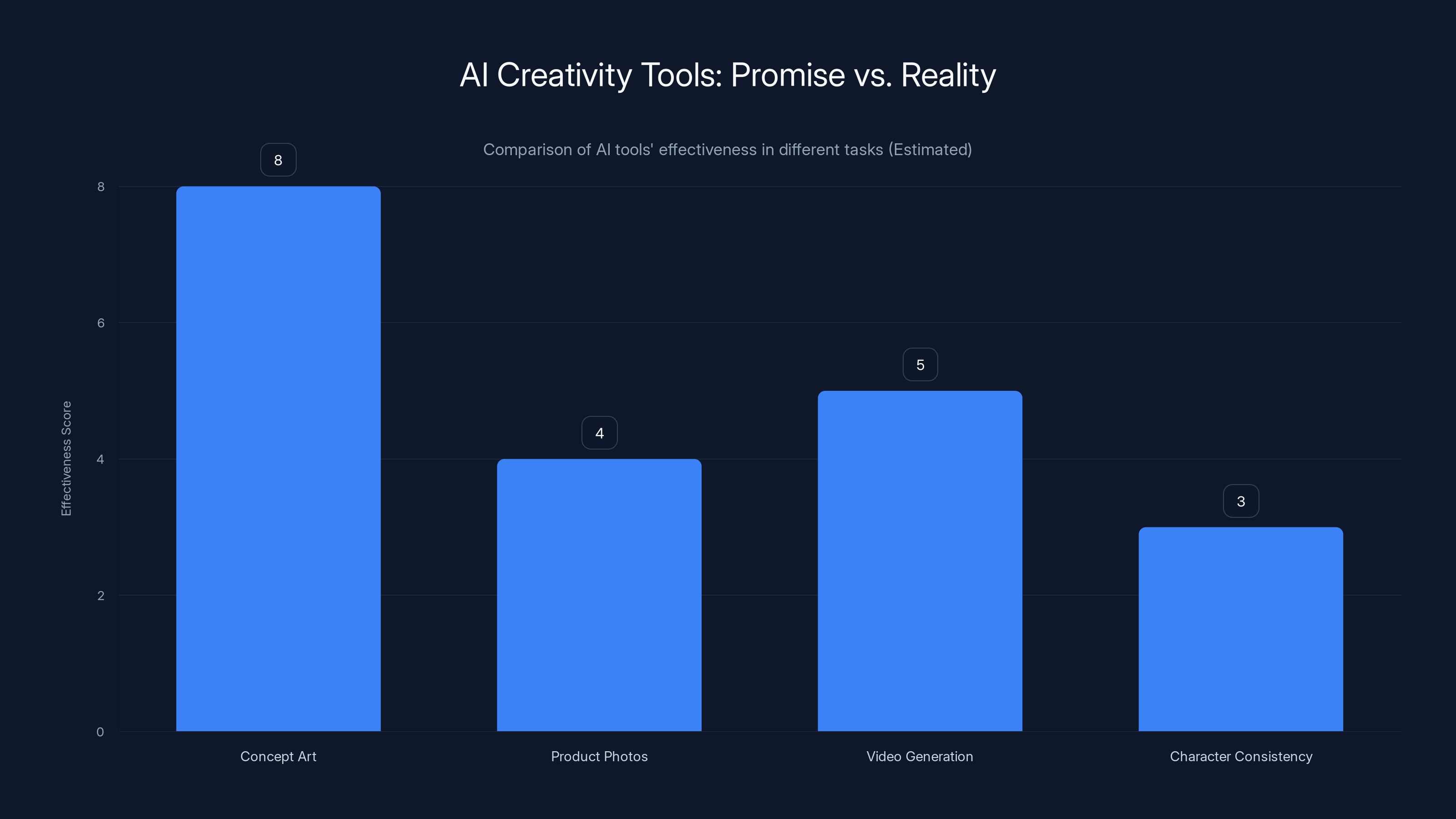

AI Creativity Tools: Promise vs. Reality

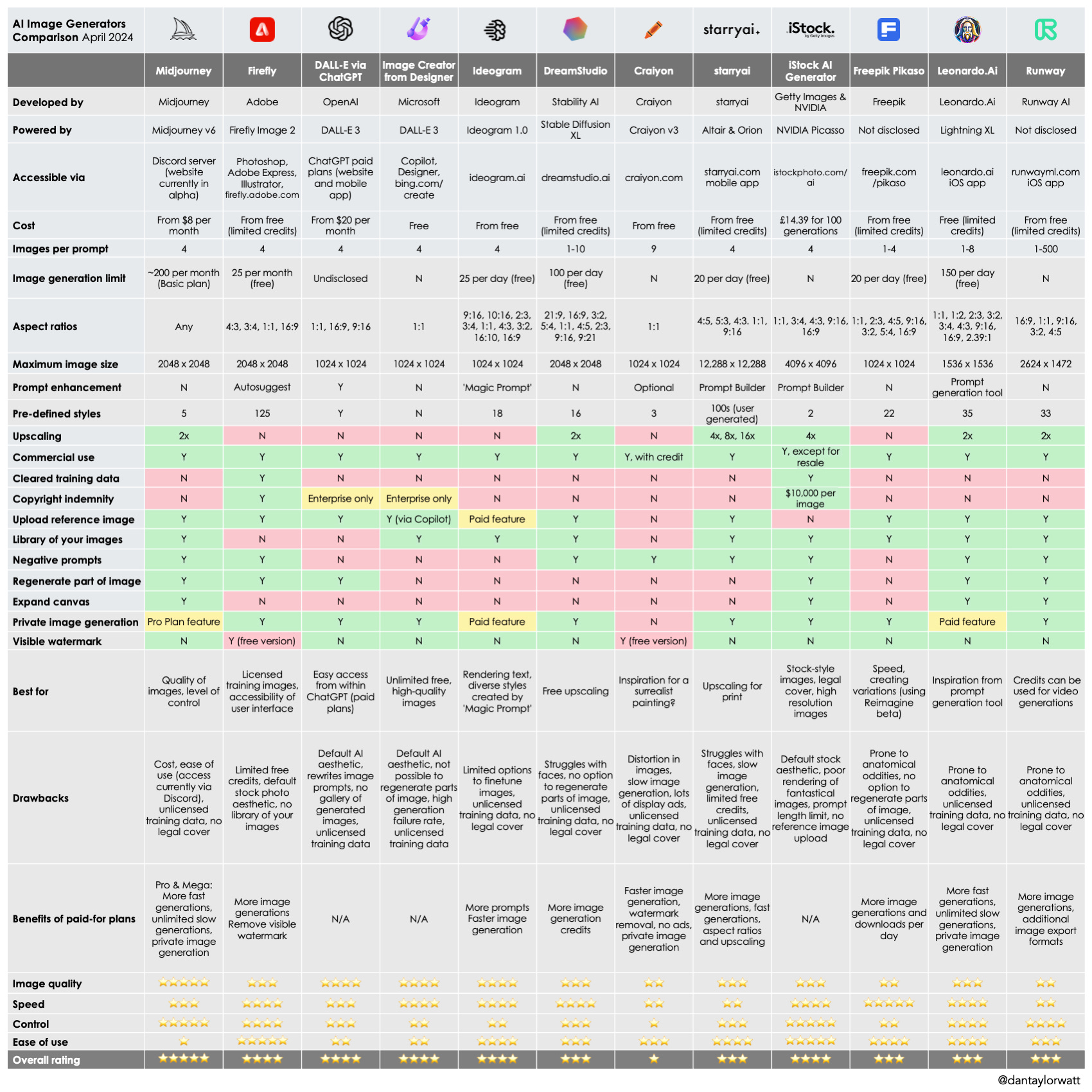

Text-to-image generators were supposed to make graphic design obsolete. Midjourney, DALL-E, and Stable Diffusion are genuinely impressive. You can prompt them, get results in seconds, and iterate.

But here's what nobody talks about: they're terrible at specificity. Try generating a product photo with exact dimensions, lighting, and branding requirements. The AI will give you something close. You'll spend two hours tweaking prompts trying to get it right. Then you'll just hire a photographer.

For concept art? Revolutionary. For production work? Still slower than traditional methods most of the time.

I tested this extensively. DALL-E 3 can generate beautiful abstract concepts. But ask it to maintain consistent character proportions across multiple images, and it fails. Every image of the same person has different eyes, hands, body proportions.

Video generation tools like Runway and Pika have the same problem magnified. A 10-second video might take 20 attempts to get right. Then you discover it has subtle artifacts that demand manual editing. The AI saved you 40% of the time, maybe. That's not revolutionary.

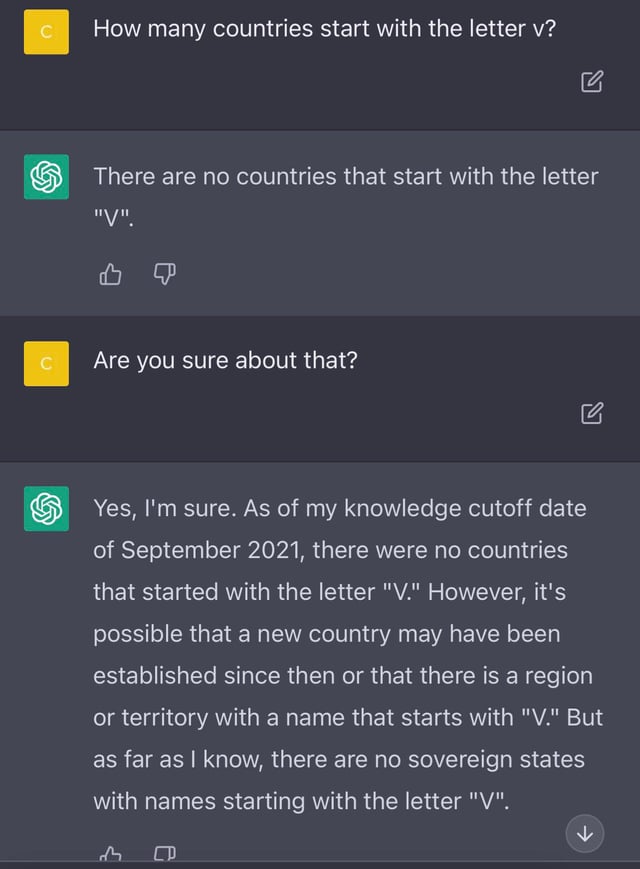

Language Models: The Plateau Effect

Large language models hit a wall, and nobody wants to admit it publicly.

ChatGPT Plus (

The fundamental problem: hallucinations. When a language model doesn't know something, it generates plausible-sounding garbage. It does this confidently. You can't trust it without verification. That verification step is where the real work happens. The AI didn't save you time; it created more work.

For coding, language models are genuinely helpful. GitHub Copilot saves me probably two hours per week. But it also introduces subtle bugs I have to catch. It writes code that looks right but isn't. Reliable? No. Useful? Yes.

For writing and analysis, the story is different. An AI can draft something. A human has to rewrite it. The AI doesn't understand nuance, tone, or the actual needs of the audience. So you're editing 1,500 words when you could have written 800 from scratch.

AI in Hardware: The Real Problem

Smartphones with AI chips and "AI features" have become a punchline in tech circles. Apple Intelligence is marketing genius disguising modest improvements as revolutionary.

Smartphone manufacturers added NPUs (neural processing units) to every flagship. Then they scrambled to find uses for them. The results have been embarrassing.

"AI photo editing." Translation: computational photography with better algorithms than before, but it's been improving incrementally for five years. The addition of an NPU didn't change the trajectory.

"AI note-taking." Translation: keyword extraction and categorization, which we've had forever, now using a neural network instead of string matching.

"AI keyboard." Translation: better predictive text using the same general approach SwiftKey shipped in 2010.

None of this is bad. It's actually useful. But it's not what the marketing promised. The marketing promised AI would fundamentally change how you use your phone. Reality: slightly better predictive text and some new camera filters.

Worse, these features often require cloud processing, which defeats the entire purpose of having an NPU. So you have hardware designed for AI that doesn't actually use it for its intended purpose.

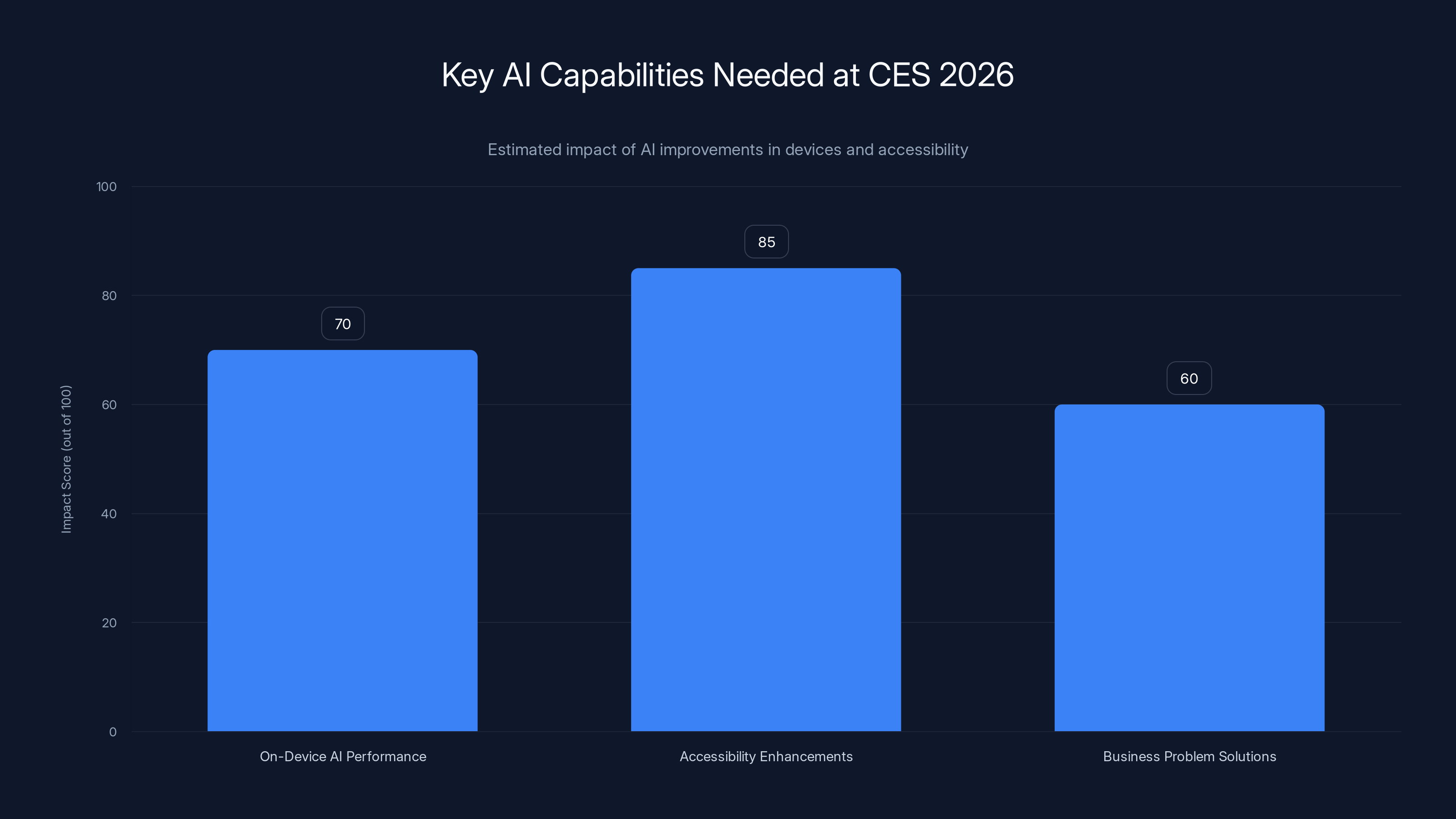

Estimated data suggests that AI accessibility enhancements could have the highest impact at CES 2026, followed by on-device AI performance improvements.

The Credibility Collapse

When everyone claims to be using AI, the term becomes meaningless. It's the equivalent of every company in 2015 claiming they were "powered by the cloud."

This happened fast. In 2023, announcing "AI-powered" was a differentiator. In 2024, it became expected. In 2025, it's becoming a red flag. If you have to tell me your product uses AI, your product probably isn't good enough to speak for itself.

I started tracking this empirically. Companies that make the smallest claims about AI tend to have the best products. Companies that lead with "AI-powered" usually have mediocre products propped up by marketing.

The trust erosion is real. People who see "AI" in a product description are far more skeptical than they were two years ago. That's not a failure of AI. That's a failure of the industry's messaging.

Why CES 2026 Actually Matters This Time

CES is historically where companies reveal their next year's roadmap. It's also where the media gets first looks at emerging products.

For AI, CES 2026 is a critical moment. The industry has exactly one chance to rebuild credibility. Here's what needs to happen.

Companies need to stop announcing AI features and start announcing real problems they're solving. "Our AI chip enables on-device processing" is interesting. "This means your phone never sends conversations to the cloud" is what people actually care about.

The focus needs to shift from capability to reliability. Can I trust this? Will it work consistently? What happens when it fails? These are the questions that matter.

Pricing models need to be transparent. If a product uses AI to justify a price increase, that should be explicitly explained. The upgrade from last year's model includes better AI; here's what that means for you in concrete terms.

AI tools excel in concept art with high effectiveness but struggle with tasks requiring precision, such as product photos and character consistency. Estimated data based on typical user experiences.

What CES 2026 Actually Needs to Show

1. AI That Works Without Constant Cloud Connection

On-device AI processing isn't new. But it's been a joke because it doesn't work well. Devices simply don't have enough processing power for most useful AI models.

That's changing. Qualcomm's latest chips have meaningfully improved AI capabilities. Apple's Neural Engine improved substantially year-over-year. But the marketing around this has been overblown while the actual improvements are underrated.

CES 2026 needs to demonstrate devices that genuinely run useful AI locally without cloud fallback. Show me a phone that does real-time language translation without internet. Demonstrate edge device processing that actually matches cloud performance.

Right now, most "on-device AI" is marketing. The actual useful processing happens in the cloud. The on-device part is just the UI layer. That's got to change.

2. AI That Actually Improves Accessibility

Here's where AI could actually be revolutionary but hasn't been emphasized enough.

Real-time captioning for deaf users. Voice synthesis for people with speech disabilities. Image descriptions for visually impaired users. These are AI applications that have genuine life-altering potential.

Apple added live transcription. Google added real-time translation. These are legitimate breakthroughs. But they got buried in marketing about "AI features." If CES 2026 focuses on accessibility applications of AI, that's the story that could rebuild credibility.

This isn't a niche market. About 16% of the global population has some form of disability. Making AI accessibility improvements visible and prominent would be genuinely compelling.

3. AI That Solves Specific Business Problems With Proof

Case studies with numbers. Companies using AI to reduce operational costs by X percent. Manufacturers using computer vision to catch defects, reducing scrap by Y percent. These are the stories that matter.

Not "AI-powered customer service." Instead: "This AI system reduced customer support response time from 4 hours to 12 minutes and reduced error rates by 34%." Include the actual metrics. Show the ROI calculation.

Businesses will adopt AI when it delivers measurable results. CES needs to be the place where those results get demonstrated compellingly.
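Showing the math doesn't take much. Here's a minimal sketch of the kind of calculation I'd want on a slide. The response-time figures come from the example above; the savings and cost numbers are hypothetical, and the function names are mine, not any vendor's.

```python
def percent_reduction(before: float, after: float) -> float:
    """Return the drop from `before` to `after` as a percentage of `before`."""
    if before <= 0:
        raise ValueError("before must be positive")
    return (before - after) / before * 100.0


def simple_roi(annual_savings: float, annual_cost: float) -> float:
    """Return ROI as a percentage: net gain divided by cost."""
    if annual_cost <= 0:
        raise ValueError("annual_cost must be positive")
    return (annual_savings - annual_cost) / annual_cost * 100.0


# Response time from the example above: 4 hours down to 12 minutes.
response_cut = percent_reduction(before=4 * 60, after=12)

# Hypothetical dollar figures, for illustration only.
roi = simple_roi(annual_savings=300_000, annual_cost=120_000)

print(f"Response time reduced by {response_cut:.0f}%")
print(f"First-year ROI: {roi:.0f}%")
```

Two inputs, two outputs. If a vendor can't fill in the `before` and `after` values, the claim probably isn't backed by data.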

4. AI Chips That Are Actually Optimized

Every smartphone company is building custom AI chips. Most of them are barely better than general-purpose processors for AI workloads.

What would actually be impressive: specialized hardware designed for specific AI tasks. Not a general-purpose neural processing unit that tries to do everything adequately. Instead, hardware optimized for language model inference, or for computer vision, or for something specific.

This is technically complex. It means choosing tradeoffs. But it's also where real differentiation could happen. Show me a chip that does one thing exceptionally well, not ten things okay.

5. AI Without Surveillance

The elephant in the room: most AI applications involve collecting massive amounts of user data.

Differential privacy, federated learning, and on-device processing are the technical solutions. But they require tradeoffs. The AI gets less accurate, or processing speed slows, or both.

CES 2026 needs companies willing to show AI that genuinely respects privacy. Even if it's slightly less capable. Even if it's slower. Because that's the real differentiator.

Hard numbers showing that you can get 95% of the AI capability while using 5% of the user data would be revolutionary. That's not marketed enough, and it absolutely should be.
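To make the privacy tradeoff concrete, here's a rough sketch of one of the techniques mentioned above: differential privacy via the Laplace mechanism, applied to a simple count. It's a toy under stated assumptions (a counting query with sensitivity 1, a made-up true count of 1000), not how any particular vendor implements it. Smaller `epsilon` means stronger privacy and noisier answers.

```python
import random


def laplace_noise(scale: float, rng: random.Random) -> float:
    """Draw Laplace(0, scale) noise as the difference of two exponentials."""
    return rng.expovariate(1 / scale) - rng.expovariate(1 / scale)


def private_count(true_count: int, epsilon: float, rng: random.Random) -> float:
    """Release a count with noise scaled for a sensitivity-1 query."""
    return true_count + laplace_noise(1 / epsilon, rng)


def mean_abs_error(epsilon: float, trials: int = 5000, seed: int = 0) -> float:
    """Average distance between the noisy count and the true count."""
    rng = random.Random(seed)
    true_count = 1000  # hypothetical value, for illustration only
    total = 0.0
    for _ in range(trials):
        total += abs(private_count(true_count, epsilon, rng) - true_count)
    return total / trials


for eps in (0.1, 1.0, 10.0):
    print(f"epsilon={eps}: mean abs error ~ {mean_abs_error(eps):.2f}")
```

The error grows as the privacy guarantee tightens. That curve is exactly the kind of thing I'd like to see companies publish instead of hide.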

The Pricing Problem Nobody's Addressing

AI features have become subscription justifications.

Apple Intelligence is included with iPhone Pro, which starts at

This becomes relevant at CES. Companies will announce pricing for AI-enhanced devices. The question is whether those price increases are proportional to the actual capability improvements.

Historically, the answer is no. The AI becomes a justification for prices that were already climbing. This erodes trust further.

CES 2026 needs pricing that actually makes sense relative to the AI improvements. Show the math. If AI adds 15% to the production cost and you're charging 20% more, you'd better explain where those extra five percentage points are going.
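Here's a quick sketch of what "show the math" could look like. The 15% and 20% figures are from the example above; the base cost and base price are hypothetical numbers I picked for illustration. Note that a percentage of cost and a percentage of price are measured against different bases, so the gap in dollars can be much bigger than "5%" suggests.

```python
def price_breakdown(
    base_cost: float,
    base_price: float,
    cost_increase_pct: float,
    price_increase_pct: float,
) -> dict[str, float]:
    """Compare the added production cost with the added retail price."""
    new_cost = base_cost * (1 + cost_increase_pct / 100)
    new_price = base_price * (1 + price_increase_pct / 100)
    added_cost = new_cost - base_cost
    added_price = new_price - base_price
    return {
        "added_cost": added_cost,
        "added_price": added_price,
        "unexplained": added_price - added_cost,
        "old_margin_pct": (base_price - base_cost) / base_price * 100,
        "new_margin_pct": (new_price - new_cost) / new_price * 100,
    }


# Hypothetical device: costs 500 to build, sells for 1000.
result = price_breakdown(
    base_cost=500, base_price=1000, cost_increase_pct=15, price_increase_pct=20
)

for name, value in result.items():
    print(f"{name}: {value:.2f}")
```

With these made-up inputs, the AI adds 75 to the build cost while the price goes up by 200. The remaining 125 is what needs explaining.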

The gap between AI marketing claims and actual product improvements has widened, with marketing often overstating the impact by nearly double. Estimated data.

What Developers Actually Want From CES 2026

I've talked to dozens of developers building AI-adjacent products. Here's what they actually want to see.

Better APIs. The current landscape is fragmented. OpenAI has one API. Google has another. Anthropic has another. Building products that work across multiple AI providers is painful. A standard, unified approach would accelerate development.

Clearer limitations documentation. Every AI model has specific use cases where it performs well and specific areas where it fails completely. That needs to be documented clearly. Show the benchmarks, the failure modes, the things it's not good at.

Pricing that scales. Usage-based pricing is fine, but the cost curves are punishing. You can't prototype affordably. The moment your product gains traction, costs become unsustainable. CES needs announcements about pricing models that work for startups.

Better tooling. Building with AI still requires too much infrastructure work. Making it easier to build and deploy would accelerate the ecosystem.
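On the API point, this is roughly the shape developers keep hand-rolling today. It's a minimal sketch: `TextModel`, `EchoProvider`, `FailingProvider`, and `complete_with_fallback` are all hypothetical names, and the providers are stand-ins that make no network calls. A real adapter would wrap each vendor's own SDK behind the same method.

```python
from typing import Protocol


class TextModel(Protocol):
    """Hypothetical minimal interface every provider adapter implements."""

    def complete(self, prompt: str) -> str: ...


class EchoProvider:
    """Stand-in for a real provider adapter; it makes no network calls."""

    def __init__(self, name: str) -> None:
        self.name = name

    def complete(self, prompt: str) -> str:
        return f"[{self.name}] {prompt}"


class FailingProvider:
    """Stand-in for a provider that is currently unavailable."""

    def complete(self, prompt: str) -> str:
        raise RuntimeError("provider unavailable")


def complete_with_fallback(providers: list[TextModel], prompt: str) -> str:
    """Try each provider in order and return the first successful reply."""
    failures = 0
    for provider in providers:
        try:
            return provider.complete(prompt)
        except Exception:  # a real adapter would catch narrower errors
            failures += 1
    raise RuntimeError(f"all {failures} providers failed")


reply = complete_with_fallback(
    [FailingProvider(), EchoProvider("backup")], "Summarize this ticket"
)
print(reply)
```

Every team writes some version of this wrapper. A shared standard would let them delete it.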

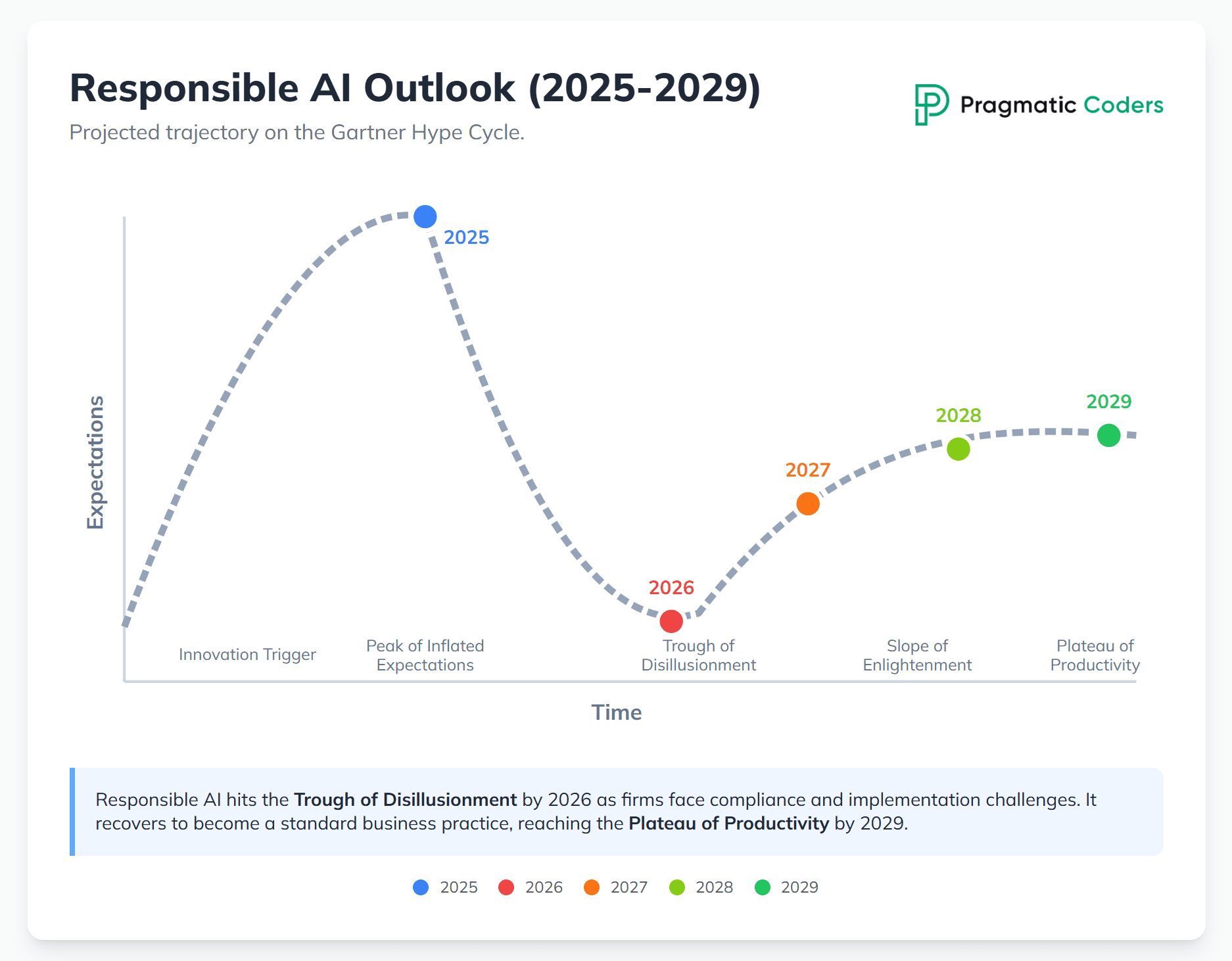

The Regulatory Elephant

AI regulation is coming. Probably slowly. But it's coming.

Europe has the AI Act. Various countries are considering regulations around bias, transparency, and liability. These will shape what's possible in 2026 and beyond.

Companies announcing AI products at CES probably aren't talking about regulatory compliance. They should be. That's where the real constraints are.

If a product can't be sold in Europe because of the AI Act, that's information consumers need to know. If using an AI feature requires explicit consent because of regulations, that changes the calculus for the product.

CES 2026 could be where companies start being honest about these constraints instead of pretending they don't exist.

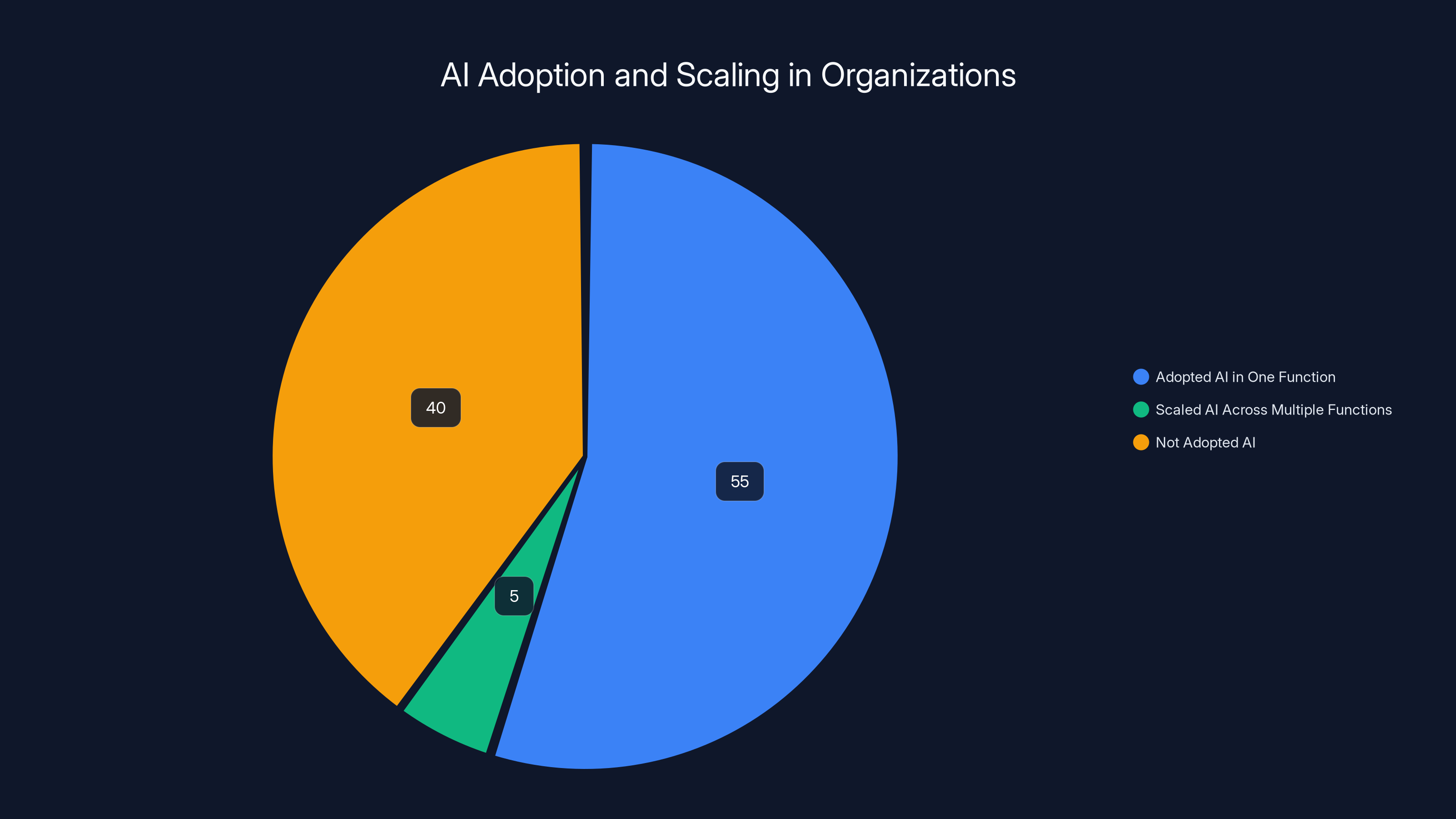

While 55% of organizations have adopted AI in at least one function, only 5% have successfully scaled it across multiple functions, highlighting the challenges in AI implementation.

The Energy Cost Problem

Here's something that rarely gets mentioned: AI is expensive to run from an energy perspective.

Training large models consumes enormous amounts of electricity. Running inference at scale requires specialized hardware. The entire infrastructure is energy-intensive.

When companies talk about on-device AI, they're partly solving this problem. Processing locally uses less energy than sending everything to the cloud. But they don't quantify this.

CES 2026 could feature announcements about energy-efficient AI. Show me the power consumption. Compare it to alternatives. Make this a specification that matters as much as performance or price.

For companies committed to sustainability, this becomes a real differentiator. "Our on-device AI requires X percent less power than cloud-based alternatives" is actually compelling.

What I Actually Want to See

Setting aside what's probable, here's what would actually impress me at CES 2026.

A company announces an AI product with three key commitments: (1) It doesn't require constant cloud connectivity. (2) It doesn't collect personal data beyond what's necessary for function. (3) The price increase compared to the previous generation is explicitly justified by the AI improvements, with the math shown.

Seems impossible. That's exactly why it would be impressive.

Second: someone demonstrates AI solving a real problem that currently requires human expertise, doing it reliably enough that people actually trust it. Not 85% as good as a human. 98% as good. Reliable enough to base decisions on.

Third: transparency about limitations. A product announcement that says "here's what this AI is good at, here's what it fails at, and here's why." Most products bury limitations in fine print. Highlighting them upfront would be refreshingly honest.

Fourth: AI that's boring. Something so functional and well-integrated into the product that nobody even thinks about the fact that it's AI. It just works. It solves the problem. The technology is invisible. That's the actual goal.

The Real Challenge Ahead

AI technology is genuinely impressive. The capabilities are real. But the industry has created a gap between what's possible and what's expected.

Closing that gap requires discipline. It requires marketing teams being willing to be conservative. It requires companies resisting the urge to claim that their product is the start of AGI.

Most won't do this. The incentives aren't aligned. Better to make big claims and deliver incrementally than to under-promise and over-deliver.

But it's worth noting: companies that build a reputation for honest product descriptions have better customer loyalty. They have higher trust. Developers prefer building with them. So there's a long-term argument for restraint.

CES 2026 will probably be more of the same. Lots of AI announcements. Lots of claims. Lots of products that are good but not revolutionary.

But there's an opening. A company willing to be conservative, specific, and honest about what their AI product actually does could stand out significantly. The bar has been lowered through overselling. Meeting realistic expectations suddenly becomes impressive.

That's the untapped opportunity at CES 2026. Not more impressive technology. More honest communication about the technology we already have.

Looking Beyond CES 2026

The AI hype cycle will continue. New models will launch. Performance will improve. But incremental improvement isn't the same as revolutionary change.

What actually matters is adoption. How many people are actually using AI products daily? How many businesses are genuinely transforming operations because of AI? How many lives are measurably improved?

The answers are more modest than the headlines suggest. But that doesn't mean AI isn't valuable. It means we should judge it by actual results, not marketing promises.

CES 2026 is an opportunity to reset the conversation. To start judging AI products by whether they solve real problems, not by whether they're powered by the latest model.

Whether the industry takes that opportunity remains to be seen. But for developers, businesses, and consumers, that's the conversation worth having.

FAQ

What is the main problem with AI hype in 2025?

The primary issue is that the gap between what AI marketing promises and what AI actually delivers has widened significantly. Most AI products are genuinely useful—they solve real problems and improve workflows—but the marketing claims often position them as revolutionary or game-changing when they're actually incremental improvements. This has eroded trust in the industry, making consumers and businesses more skeptical of AI announcements overall.

Why do companies over-market their AI capabilities?

Companies face strong financial incentives to emphasize AI in their marketing because venture capital and investors heavily favor AI-focused companies. When differentiation is difficult in crowded markets, marketing becomes the easiest way to stand out. Additionally, raising venture funding often requires positioning products as exponentially better than alternatives, which creates pressure to make larger claims than the actual capabilities justify.

How can CES 2026 rebuild trust in AI products?

CES 2026 could rebuild credibility by focusing on specificity, measurable results, and honest limitations. Companies should demonstrate concrete problem-solving with clear metrics—not generic AI features. They should explicitly communicate what their AI is good at and what it struggles with. They should show cost justification for AI-related price increases and demonstrate on-device processing that actually works without cloud fallbacks. Regulatory transparency and energy efficiency metrics would also help establish credibility.

What's the difference between hype AI announcements and genuinely useful AI products?

Hype-driven announcements emphasize the technology itself ("powered by advanced AI") and make broad capability claims. Genuinely useful AI products focus on specific outcomes ("this reduces processing time from 2 hours to 12 minutes") and include measurable metrics. Good AI products also acknowledge limitations and explain exactly where the AI adds value versus where traditional methods work better. The best products barely mention AI in marketing—they focus on what users can accomplish.

Why are language models hitting a plateau?

Large language models were rapidly improving from 2022 through mid-2024, with each new version showing significant gains. However, progress has slowed as models approach the practical limits of their architecture. Improvements now are incremental—better at specific tasks but not fundamentally different. Additionally, the core limitations of hallucinations and inability to verify information remain unsolved. The diminishing returns are becoming obvious to developers and users, hence the plateau.

What AI applications actually deserve the hype right now?

A few areas genuinely warrant enthusiasm: accessibility improvements (real-time transcription, voice synthesis, image description), on-device processing that eliminates cloud dependency, energy-efficient inference, and specialized AI optimized for specific business processes with documented ROI. Realistic progress in these areas is modest but meaningful. The applications that don't deserve hype are those making broad claims without specific metrics or those using AI as a vague marketing term without explaining actual functionality.

How should consumers evaluate AI product claims?

Ignore marketing language and look for three things: (1) Specific metrics showing before/after improvement, (2) Clear explanation of what the AI does and what it can't do, (3) Pricing transparency explaining what cost increase is attributable to AI and why. Better yet, read customer reviews and technical documentation rather than marketing landing pages. The gap between what companies claim and what users experience is often illuminating.

Will AI regulation affect CES 2026 announcements?

European AI Act compliance is already affecting product development, though you'll rarely see companies mention this proactively. More regulation is coming—various countries are considering approaches to bias, transparency, and liability. Companies announcing AI at CES 2026 should be transparent about regional limitations and compliance requirements, but most won't be. This will become an increasingly important factor as regulations tighten.

What's the most important thing CES 2026 needs to communicate?

That AI is a tool for solving specific problems, not a magic technology that fundamentally changes everything. AI can improve efficiency, automate repetitive tasks, and enhance existing products. But it's not the beginning of artificial general intelligence, and it's not going to make traditional expertise obsolete. Managing expectations honestly is more valuable than any technical breakthrough.

How can developers use CES 2026 announcements productively?

Focus on infrastructure and API announcements rather than end-user product announcements. Better development tools, clearer limitation documentation, pricing models that support prototyping, and cross-platform standardization matter far more for developers than another "AI-powered" feature announcement. Watch for companies making commitments about developer experience and reliability rather than just capability announcements.

The Bottom Line

AI has genuinely impressive capabilities. The technology is real, useful, and improving. But the industry has created a credibility crisis through overselling.

CES 2026 is an opportunity to reset expectations and focus on actual value delivered rather than theoretical capabilities. Companies willing to be specific, transparent, and honest about limitations could differentiate themselves significantly.

For everyone involved—investors, developers, businesses, and consumers—the most valuable AI announcements at CES 2026 won't be about breakthrough technology. They'll be about practical problem-solving, measurable results, and realistic communication about what AI can and can't do right now.

The bar has been lowered through overselling. Meeting it with integrity would be genuinely impressive.

Key Takeaways

- AI marketing has created a massive credibility gap between promised capabilities and actual utility—most products solve real problems but deliver incremental improvements, not revolutionary change

- The industry's incentive structure rewards hype over reality because venture capital heavily favors AI companies, causing overselling to become standard practice

- CES 2026 has an opportunity to rebuild trust by focusing on specific problems solved with measurable metrics, honest limitation documentation, and transparent pricing justification

- AI accessibility applications (real-time captioning, voice synthesis, image descriptions) deserve far more emphasis than generic AI features because they have genuine life-changing potential

- The most credible AI products barely mention AI in their marketing—they lead with outcomes, not technology, which creates a clear differentiator in an oversold market

