Amazon's AI Tools for Film & TV: What Producers Need to Know [2025]

Last summer, Amazon MGM Studios made a quiet but significant move. They launched a dedicated AI Studio to build proprietary tools for streamlining television and film production. Now, fast forward to 2025, and those tools are about to enter the real world.

In March, Amazon will begin a closed beta program inviting industry partners to test these AI tools. By May, the company plans to share initial results. This isn't just another tech company dabbling in entertainment. This is a studio with actual IP, actual production experience, and actual resources betting big on AI fundamentally changing how content gets made.

The implications? Massive. And messy.

I spent the last few weeks talking to producers, directors, and technical leads in the industry. The consensus is this: Amazon's move signals a tipping point. AI in Hollywood isn't coming. It's here. The question isn't whether studios will use it. The question is how fast, at what cost to jobs, and whether the final product is worth the tradeoffs.

Let's dig into what Amazon's actually building, why it matters, and what happens next.

TL;DR

- Amazon MGM Studios begins testing proprietary AI tools in March 2025 with a closed beta program targeting production companies and studios

- The tools focus on character consistency, pre-production, and post-production workflows, including AI-generated shots that require no manual animation

- "House of David" season two featured 350 AI-generated shots, proving Amazon's tech works at production scale

- Industry leaders like Robert Stromberg (Maleficent director) and Kunal Nayyar (The Big Bang Theory) are collaborating on implementation strategy

- The debate around AI in Hollywood is heating up, with concerns about job displacement, creative integrity, and IP protection

- Bottom line: AI production tools are no longer experimental. They're becoming operational infrastructure for major studios.

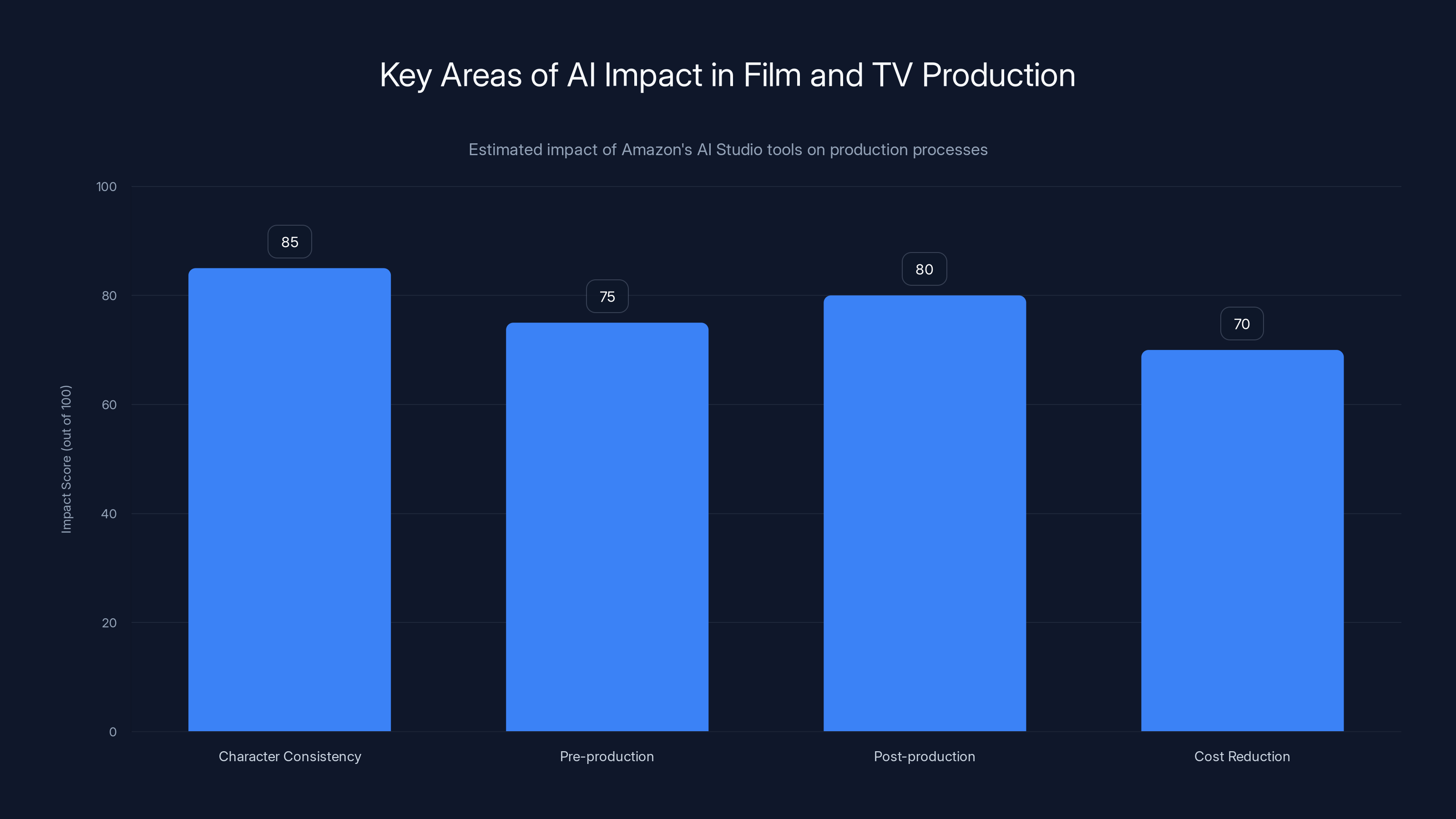

Amazon's AI Studio tools are estimated to significantly enhance character consistency, pre-production, and post-production processes, with notable cost reductions. (Estimated data)

What Amazon's Building: The Technical Foundation

Amazon's AI Studio isn't a single tool. It's a suite of interconnected systems designed to handle specific production challenges that currently consume massive amounts of time and money.

The core focus areas reveal what Amazon sees as the biggest pain points in modern filmmaking. Character consistency across shots is one. When you're shooting a scene with an actor in multiple takes, ensuring that lighting, facial expressions, and proportions remain consistent is laborious. It requires manual adjustments, rotoscoping, and frame-by-frame supervision. Amazon's tools can analyze character features across shots and suggest corrections or generate adjusted versions automatically.

Pre-production work is another target. Concept art, storyboards, animatic sequences, and previz all require skilled artists and substantial time. Early reports suggest Amazon's tools can generate variations on designs, test different compositions, and produce rough animatics faster than traditional methods.

Post-production is where the real complexity lives. Color grading, compositing, visual effects, and shot enhancement typically involve teams of specialists and weeks of iteration. If AI can accelerate even 20% of this work, the time and cost savings are enormous.

What's particularly interesting is that Amazon is positioning these tools as collaborative, not replacements. Albert Cheng, who heads the AI Studios initiative, was explicit about this in early statements. The goal isn't to fire the VFX team. It's to give the VFX team better starting points, faster iterations, and more time for creative decision-making rather than mechanical execution.

That's the messaging, anyway. Whether the market reality matches that messaging is another question entirely.

The Closed Beta: Who Gets Access and Why It Matters

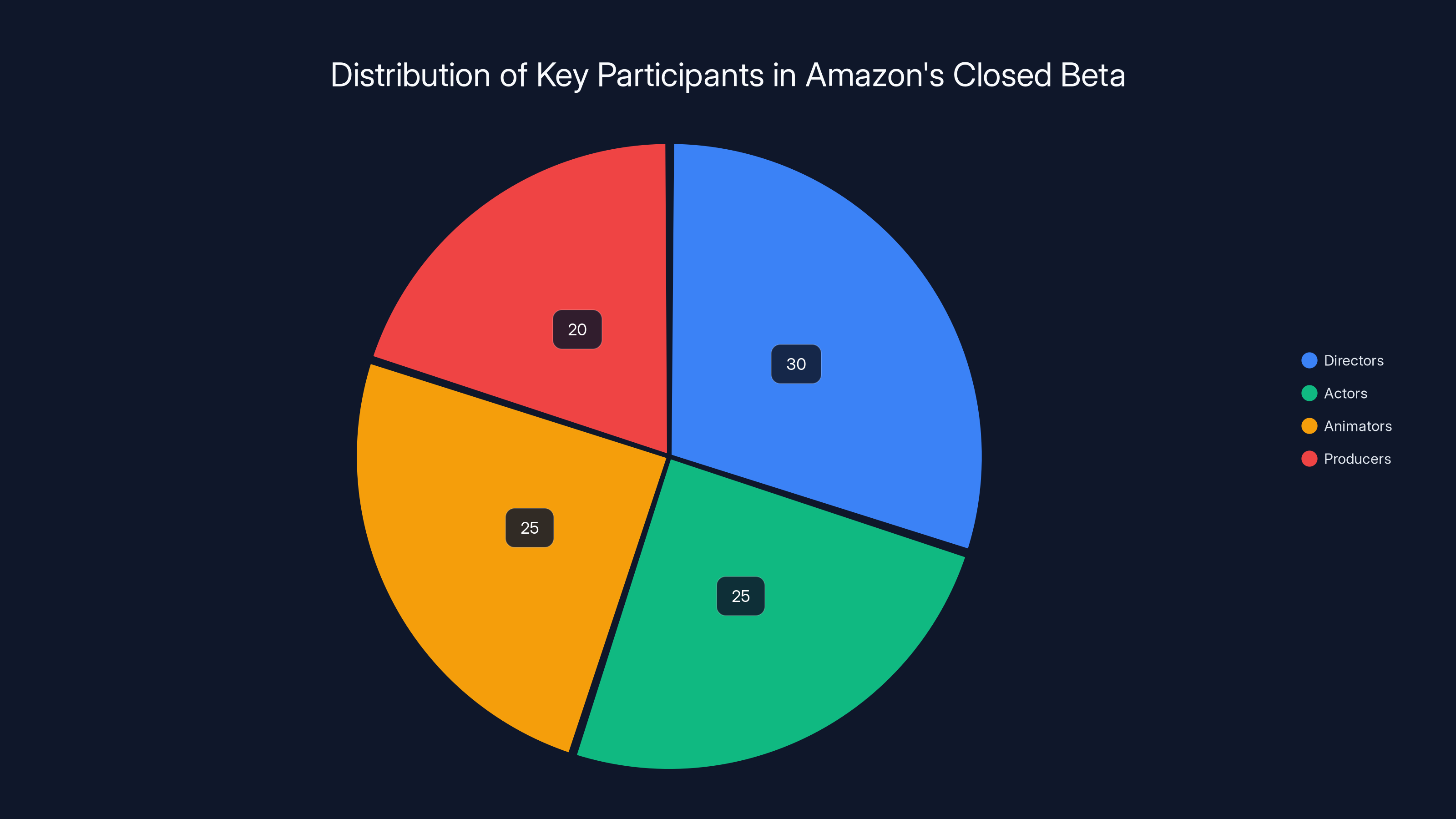

Amazon's not giving these tools to everyone immediately. The closed beta is strategic. It's a way to gather feedback from experienced producers and directors before a wider rollout, and it's also a way to shape the narrative in Hollywood about what these tools can and can't do.

The producers collaborating with Amazon on this are significant. Robert Stromberg directed "Maleficent" and has extensive experience with visual effects-heavy productions. Kunal Nayyar, best known for his role on "The Big Bang Theory," brings a creative sensibility from long-form television production. Colin Brady, formerly at Pixar, brings animation expertise. This isn't a random selection. These are people with credibility in the industry who can influence how other creatives perceive the tools.

The timing matters too. A closed beta in March means results by May, which puts Amazon on a specific timeline. They're likely aiming for announcements at industry events like VidCon or CinemaCon. They want momentum. They want other studios watching, thinking about what this means for their own production pipelines.

Beta testers will likely get access to tools in isolated environments where they can experiment without affecting live productions. They'll probably be signing strict NDAs, which means early feedback won't immediately leak to the broader industry. This gives Amazon runway to refine based on real usage before competitors start building alternatives.

The closed beta also serves as a liability buffer. If something goes wrong (say, the AI generates something unusable or problematic), Amazon's exposure is limited to the beta partners who signed agreements. By the time the tools reach wider availability, many of those rough edges should be polished.

Amazon's AI Studio could potentially save 20-30% of time in key filmmaking processes, enhancing efficiency and creativity. Estimated data.

"House of David": The Proof Point

Amazon didn't just announce this initiative in abstract terms. They showed their work. Season two of "House of David" featured 350 AI-generated shots. That's not 5 shots. That's not 50 shots. That's 350 shots, fully integrated into a completed series that aired on Prime Video.

This is crucial because it proves the technology works at production scale, not just in test environments. These weren't cherry-picked perfect examples. These were shots embedded in a real show that real viewers watched. The fact that they aired without widespread public outcry or critical comment suggests the quality was good enough to blend into the final product.

What we don't know is exactly which shots were AI-generated. That's intentional opacity. It's hard to argue against a technology when you can't identify where it was used. But it also points to the quality level. If viewers couldn't reliably spot the AI shots, that suggests the integration was seamless.

The economics of this are worth considering. If 350 shots would typically require weeks of VFX work at professional rates, that's potentially millions in labor costs avoided. On a TV production budget, that's significant. It could be the difference between a show getting renewed or canceled, between hitting budget targets or going over.
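To see how 350 shots could plausibly add up to "millions," here's a back-of-the-envelope sketch. Every input is a hypothetical placeholder, not a figure Amazon has disclosed: the artist-weeks per shot, the weekly labor rate, and the share of the work the AI absorbs are assumptions you'd replace with real bid numbers.

```python
def avoided_labor_cost(shots: int, artist_weeks_per_shot: float,
                       weekly_rate_usd: float, ai_share_of_work: float) -> float:
    """Estimate labor cost avoided when AI handles part of each shot's work.

    All inputs are hypothetical planning assumptions, not Amazon figures.
    ai_share_of_work is the fraction of per-shot artist time the AI replaces (0-1).
    """
    if not 0.0 <= ai_share_of_work <= 1.0:
        raise ValueError("ai_share_of_work must be between 0 and 1")
    total_artist_weeks = shots * artist_weeks_per_shot
    return total_artist_weeks * weekly_rate_usd * ai_share_of_work


# Hypothetical inputs: 350 shots, 2 artist-weeks each, $4,000/week, AI covers 75%.
estimate = avoided_labor_cost(350, 2.0, 4_000.0, 0.75)
print(f"${estimate:,.0f}")  # $2,100,000
```

Halve any one input and the result halves, so treat the output as an order-of-magnitude check rather than a measured saving.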

But here's where it gets complicated. Under a traditional pipeline, the work on those 350 shots would have gone to VFX artists, compositors, and rotoscope specialists. In an industry already dealing with layoffs and consolidation, that math concerns a lot of people.

The Infrastructure: AWS and LLM Partnerships

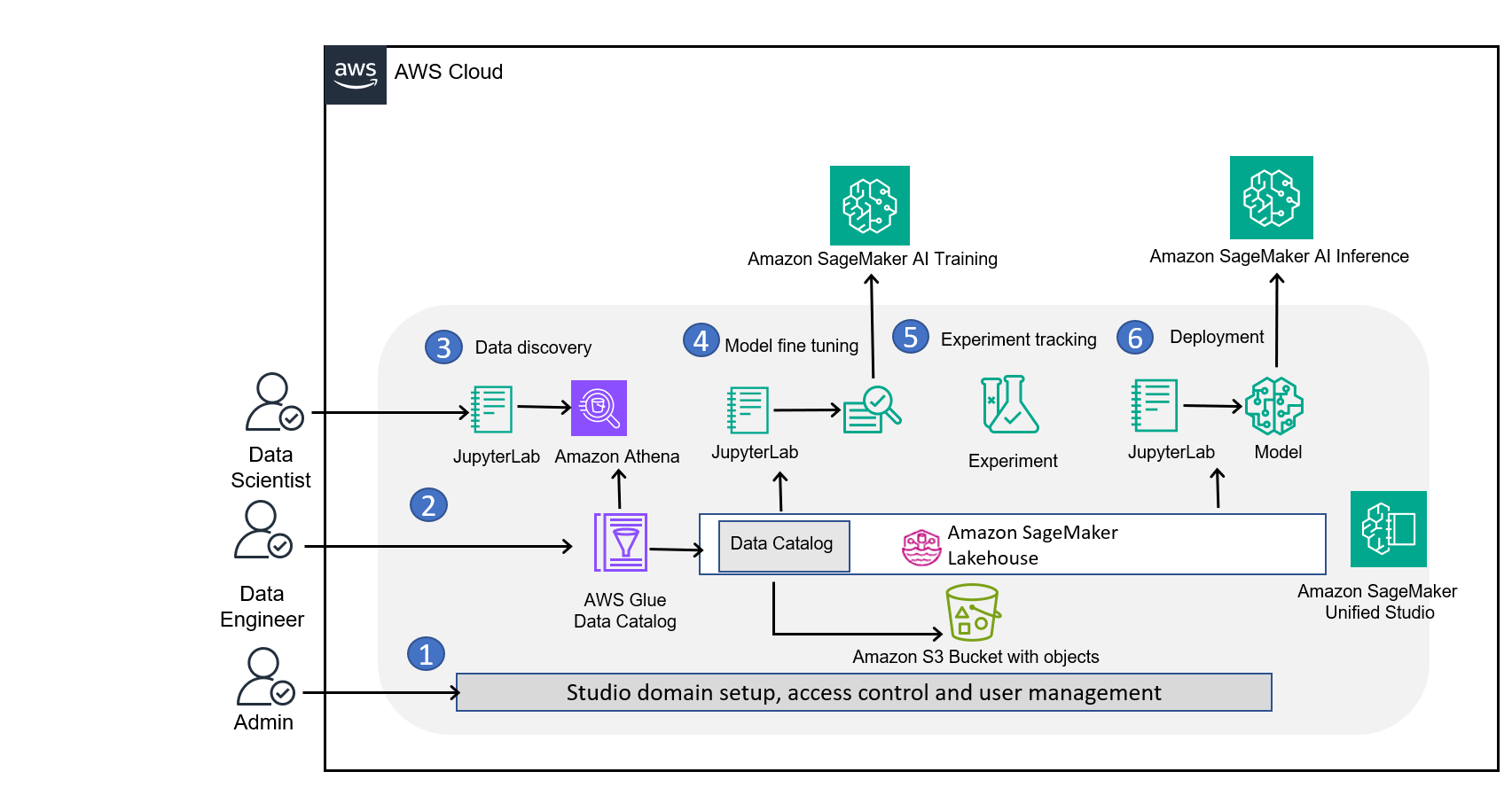

Amazon's not building this alone. They're leveraging Amazon Web Services (AWS) infrastructure and partnering with multiple large language model providers. This matters because it affects scalability, cost, and capability.

AWS provides the compute infrastructure. Running AI inference at production scale requires serious computational power. AWS has that. By building on AWS infrastructure, Amazon ensures that the tools can scale based on demand without Amazon having to build entirely new data centers. It also means Amazon can potentially offer these tools to external studios as a service, generating revenue from the infrastructure.

The LLM partnerships are equally important. No single company owns the best LLM for every task. By working with multiple providers, Amazon gets access to different capabilities. One LLM might be better at understanding spatial relationships for compositing suggestions. Another might be better at semantic understanding for script analysis. By integrating multiple models, Amazon creates something more capable than any single model could provide.

This also creates an interesting dynamic. Amazon isn't betting entirely on its own AI research. They're working with the industry ecosystem. That's partly pragmatic (faster time to market) and partly strategic (reduces risk if one model provider has issues).

The technical architecture likely involves API calls between Amazon's orchestration layer and various model providers, running on AWS. Production companies using these tools probably won't need to understand the underlying infrastructure. They'll see a user interface that takes their production data as input and returns suggestions or generated content as output. The complexity is hidden.
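A minimal sketch of that orchestration pattern, assuming a simple routing table from task type to model. The task names, stub clients, and `ModelClient` signature are hypothetical stand-ins, not Amazon's architecture or any real vendor API; in practice each client would wrap a provider's SDK call.

```python
from dataclasses import dataclass
from typing import Callable, Dict

# Hypothetical client signature: takes a payload string, returns a result string.
ModelClient = Callable[[str], str]


@dataclass
class Orchestrator:
    """Routes each production task to whichever model is configured for it."""
    routes: Dict[str, ModelClient]
    fallback: ModelClient

    def run(self, task_type: str, payload: str) -> str:
        # Use the specialist model for this task type, else the general fallback.
        client = self.routes.get(task_type, self.fallback)
        return client(payload)


# Stub clients standing in for real provider calls (hypothetical).
def compositing_model(payload: str) -> str:
    return f"[compositing] {payload}"


def script_model(payload: str) -> str:
    return f"[script] {payload}"


def general_model(payload: str) -> str:
    return f"[general] {payload}"


orchestrator = Orchestrator(
    routes={"compositing": compositing_model, "script_analysis": script_model},
    fallback=general_model,
)
print(orchestrator.run("script_analysis", "scene 12 beats"))  # [script] scene 12 beats
print(orchestrator.run("color", "shot 4A"))                   # [general] shot 4A
```

Keeping the routing in a table means swapping one provider for another is a configuration change, which is one way to get the provider-risk reduction described earlier.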

Character Consistency: Where AI Excels

One of Amazon's stated focus areas is character consistency across shots. This is worth examining in detail because it reveals both where AI is genuinely useful and where the limitations remain.

In traditional film production, character consistency requires careful attention to detail. If a character has a scratch on their face in shot A, it needs to be in the same place in shot B, or there needs to be a narrative explanation for why it's gone. If hair is parted left in a wide shot, it should be parted left in the close-up. These details are handled through script supervisors who photograph the set, through makeup continuity documentation, and through careful editing.

AI can accelerate this. Computer vision models can analyze character features across shots and flag inconsistencies. They can potentially generate corrected versions of shots where inconsistencies exist. Instead of a continuity specialist spending hours reviewing footage, they can run an AI analysis and review flagged items.
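The flag-then-review step might look like the sketch below. It assumes a hypothetical upstream vision model has already reduced each shot of a character to an appearance feature vector, and it flags shots whose cosine similarity to a reference shot falls below a threshold. The toy vectors and the 0.95 cutoff are made up for illustration.

```python
import math
from typing import Dict, List, Sequence


def cosine_similarity(a: Sequence[float], b: Sequence[float]) -> float:
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0


def flag_inconsistent_shots(features: Dict[str, Sequence[float]],
                            reference_shot: str,
                            threshold: float = 0.95) -> List[str]:
    """Return shots whose character features drift from the reference shot.

    `features` maps shot IDs to appearance vectors from a hypothetical vision model.
    Flagged shots go to a human for review; nothing is auto-corrected here.
    """
    ref = features[reference_shot]
    return sorted(
        shot for shot, vec in features.items()
        if shot != reference_shot and cosine_similarity(ref, vec) < threshold
    )


# Toy vectors: shot 12C drifts (think: a scratch moved or the hair part flipped).
shots = {
    "12A": [0.9, 0.1, 0.4],
    "12B": [0.88, 0.12, 0.41],
    "12C": [0.2, 0.9, 0.1],
}
print(flag_inconsistent_shots(shots, reference_shot="12A"))  # ['12C']
```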

But here's the limit: AI is really good at detecting and suggesting fixes for mechanical inconsistencies. It's less good at understanding intentional inconsistencies that serve narrative purpose. If a character's appearance changes because they've experienced trauma, or if there's a time jump that justifies a different look, AI might flag that as an error when it's actually intentional.

This is why Amazon's messaging about "supporting creative teams, not replacing them" is important. The AI doesn't make final decisions. It surfaces information. The producer or director makes the call. That workflow actually makes sense. It's augmentation, not automation.
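The "AI surfaces, humans decide" workflow can be sketched as a review step. It takes the AI's flagged shots, clears any that script notes already mark as intentional (a time jump, an injury, a costume change), and leaves every other call to a human reviewer. The `intentional_changes` set, the `decide` callback, and the outcome labels are illustrative assumptions, not features of Amazon's tools.

```python
from typing import Callable, Dict, Iterable, Set


def review_flags(flagged: Iterable[str],
                 intentional_changes: Set[str],
                 decide: Callable[[str], bool]) -> Dict[str, str]:
    """Route AI continuity flags through human judgment.

    intentional_changes: shots pre-cleared by script notes as deliberate changes.
    decide: the human reviewer; returns True if the shot should be fixed.
    """
    outcome: Dict[str, str] = {}
    for shot in flagged:
        if shot in intentional_changes:
            outcome[shot] = "dismissed: intentional"
        elif decide(shot):
            outcome[shot] = "send for correction"
        else:
            outcome[shot] = "approved as-is"
    return outcome


# A stand-in for the director's call (hypothetical): only 14B needs fixing.
result = review_flags(["12C", "14B", "20A"], intentional_changes={"20A"},
                      decide=lambda shot: shot == "14B")
print(result)
```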

The efficiency gain comes from not having to manually examine every frame. You get suggestions. You review them in minutes instead of hours. That's real value, and it's why studios are interested.

Estimated distribution of key participants in Amazon's closed beta, highlighting the strategic selection of industry influencers. Estimated data.

The Pre-Production Revolution: From Concept to Animatic

Pre-production is where AI tools could have the most dramatic impact on production timelines. This is where ideas move from written description to visual form. It's also where iteration cycles typically slow things down.

Traditionally, a producer or director has an idea. They work with concept artists to visualize it. The concept artist creates designs. There's feedback. Revisions. More feedback. More revisions. This cycle can take weeks for something that ultimately might not make it into the final film.

AI tools can potentially collapse this timeline. A prompt-based interface could let directors describe a scene in text or rough sketch form, and AI generates multiple visual interpretations instantly. Instead of waiting for a concept artist to create one version, you get five versions in minutes. You pick the direction you like. The concept artist refines that rather than starting from scratch.

For storyboarding, the impact could be even more significant. Storyboards are currently drawn frame-by-frame by storyboard artists. It's skilled work, and it takes time. AI could generate rough storyboards from a script, which artists then refine. The artist's time shifts from creating the basic visual form to enhancing and directing the visual language.

Animatics, rough animated versions of scenes used for timing and pacing, could be generated faster too. Current animatic production involves storyboard frames synced with temporary audio. AI could potentially generate basic animation from storyboards and audio, which directors then review and provide feedback on.

The schedule impact adds up. If pre-production typically takes 20-30% of total production time, and AI tools can compress that by even 25%, the overall schedule shrinks by roughly 5-7.5% (a quarter of 20-30%), with a corresponding impact on budget.

But there's a cultural piece here that Amazon's tools need to navigate. Pre-production is where creative vision gets established. It's where a director's creative choices become tangible. If AI is generating too much of the visual foundation, does that dilute the director's creative ownership of the film? Or does it free them from mechanical work to focus on higher-level creative decisions?

Different directors will have different answers to that question. Amazon's tools need to be flexible enough to work with both workflow philosophies.

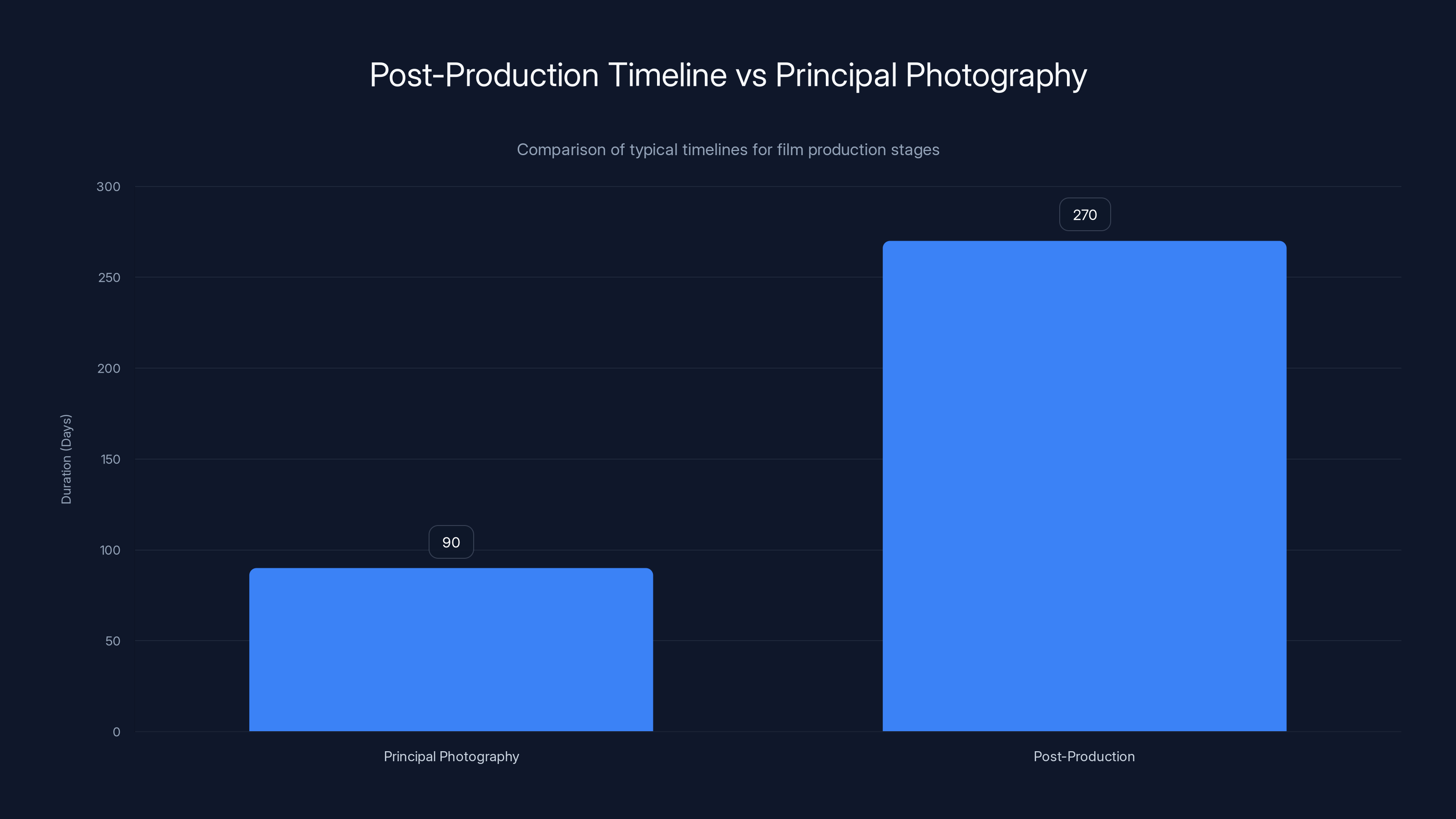

Post-Production: Where the Real Complexity Lives

Post-production is the most technically complex part of film production. It's where all the pieces come together. Color grading, compositing, visual effects, sound design, music, editing. Each of these specialties involves highly trained professionals working with sophisticated tools.

Post-production also has the longest production timeline. A feature film might have principal photography for 60-120 days, but post-production can run 6-12 months or longer. That's where the schedule pressure intensifies and where budgets balloon.

This is also where AI tools could have the most immediate impact, because so much of post-production is iterative refinement. Color grading involves adjusting colors shot-by-shot to achieve a consistent look and emotional tone. An AI could analyze a grader's choices across the first 20% of a project, learn the intended look, and suggest adjustments for the remaining 80%. The colorist reviews and approves them. Work that might take weeks condenses to days.
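A toy version of "learn the look from graded shots, suggest it for the rest" is fitting one gain per RGB channel by least squares on pixels the colorist has already graded, then applying those gains to ungraded shots. Real grading involves curves, secondaries, and scene-specific choices; this single-multiplier model (values in 0-1) is a simplifying assumption for illustration only.

```python
from typing import List, Tuple

RGB = Tuple[float, float, float]


def fit_channel_gains(before: List[RGB], after: List[RGB]) -> RGB:
    """Per channel, the gain g minimizing sum((g * x - y)^2) over sampled pixels."""
    gains = []
    for c in range(3):
        num = sum(x[c] * y[c] for x, y in zip(before, after))
        den = sum(x[c] * x[c] for x in before)
        gains.append(num / den if den else 1.0)  # no signal: leave channel as-is
    return (gains[0], gains[1], gains[2])


def apply_gains(pixels: List[RGB], gains: RGB) -> List[RGB]:
    """Apply the learned gains to ungraded pixels, clipping to the 0-1 range."""
    return [tuple(min(1.0, max(0.0, p[c] * gains[c])) for c in range(3))
            for p in pixels]


# Hypothetical samples from already-graded shots: warmer reds, cooler blues.
ungraded = [(0.5, 0.5, 0.5), (0.2, 0.4, 0.6)]
graded = [(0.55, 0.5, 0.45), (0.22, 0.4, 0.54)]
gains = fit_channel_gains(ungraded, graded)
print([round(g, 3) for g in gains])  # [1.1, 1.0, 0.9]

# Suggested grade for a pixel from a shot the colorist hasn't touched yet.
suggested = apply_gains([(0.8, 0.3, 0.9)], gains)
```

The colorist still reviews every suggestion; the point of the sketch is only that early decisions become data for later ones.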

Compositing, which layers multiple elements (backgrounds, actors, effects, text) into a single shot, offers similar pattern-recognition opportunities. If a composite style is established in early shots, AI could suggest similar approaches for later shots. If masking (isolating specific elements) is required, AI could generate mask suggestions based on what it recognizes in the shot.
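For readers outside post, the core operation behind layering is the standard "over" operator: each output pixel blends foreground and background by the matte (alpha) value. An AI mask suggestion would supply that alpha. This sketch uses straight (non-premultiplied) alpha on single-channel values for brevity; the pixel values are made up.

```python
from typing import List


def composite_over(fg: List[float], bg: List[float], alpha: List[float]) -> List[float]:
    """Straight-alpha 'over': out = fg * a + bg * (1 - a), per pixel.

    alpha is the matte: 1.0 keeps the foreground (e.g., the actor),
    0.0 shows the background plate, fractional values blend soft edges.
    """
    if not (len(fg) == len(bg) == len(alpha)):
        raise ValueError("fg, bg, and alpha must be the same length")
    return [f * a + b * (1.0 - a) for f, b, a in zip(fg, bg, alpha)]


# Hypothetical 4-pixel row: actor on the left, background plate on the right,
# with a soft edge where a suggested mask is uncertain.
actor = [0.9, 0.9, 0.9, 0.9]
plate = [0.1, 0.1, 0.1, 0.1]
mask = [1.0, 1.0, 0.5, 0.0]
print(composite_over(actor, plate, mask))
```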

Visual effects are more complex, but there are still opportunities. If an effect is being applied to multiple shots (color correction, blur, distortion), AI could automate parameter suggestion across shots. Effects that are repeated could be batch-processed more efficiently.
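Batch parameter suggestion could be as simple as this: an artist dials in effect settings on one hero shot, and the tool proposes those settings for every shot using the same effect, while any value the artist has already tweaked on a given shot wins. The shot IDs, parameter names, and merge rule are illustrative assumptions.

```python
from typing import Dict, List

Params = Dict[str, float]


def propagate_params(hero: Params, shots: List[str],
                     overrides: Dict[str, Params]) -> Dict[str, Params]:
    """Suggest hero-shot parameters for each shot; artist overrides win."""
    suggestions: Dict[str, Params] = {}
    for shot in shots:
        params = dict(hero)                     # copy so shots don't share state
        params.update(overrides.get(shot, {}))  # keep any manual tweaks
        suggestions[shot] = params
    return suggestions


# Hypothetical blur settings from a hero shot, with one manual override on 31B.
hero_blur = {"radius": 4.0, "mix": 0.6}
plan = propagate_params(hero_blur, ["31A", "31B", "31C"],
                        overrides={"31B": {"radius": 2.5}})
print(plan["31B"])  # {'radius': 2.5, 'mix': 0.6}
```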

The constraint is that post-production is also where creative decisions are most visible. Unlike pre-production, where rough approximations are acceptable, post-production quality directly affects the final viewer experience. An AI suggestion that's 80% good might take longer to correct than the shot would have taken to build from scratch. So AI's value in post-production depends on suggestions being good enough that approve-and-tweak is faster than create-from-scratch.
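That threshold can be made concrete with a simple expected-time model. Assume reviewing an AI suggestion always costs some hours, a fraction of suggestions are usable after a tweak, and the rest must be rebuilt from scratch anyway. AI assistance pays off only when the expected time per shot beats from-scratch time. The model and the hour figures below are hypothetical.

```python
def expected_hours_with_ai(p_usable: float, review: float, tweak: float,
                           from_scratch: float) -> float:
    """Expected hours per shot: always review; tweak if usable, else rebuild."""
    return review + p_usable * tweak + (1 - p_usable) * from_scratch


def breakeven_usable_rate(review: float, tweak: float, from_scratch: float) -> float:
    """Minimum usable fraction p for AI-assisted work to beat from-scratch work.

    Solves review + p * tweak + (1 - p) * from_scratch < from_scratch,
    which simplifies to p > review / (from_scratch - tweak).
    """
    if from_scratch <= tweak:
        raise ValueError("tweaking must be cheaper than rebuilding")
    return review / (from_scratch - tweak)


# Hypothetical shot: 1h review, 3h tweak, 20h to build from scratch.
print(breakeven_usable_rate(1.0, 3.0, 20.0))         # about 0.059
print(expected_hours_with_ai(0.75, 1.0, 3.0, 20.0))  # 8.25
```

The break-even rate climbs fast as tweak time approaches from-scratch time: with an 18-hour tweak, half of all suggestions must be usable just to break even. That's the "80% good but slower to fix" case.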

Amazon has already run this at scale on "House of David," so it presumably has data on where that threshold is.

The IP Protection Question: The Hidden Technical Challenge

Amazon was explicit about one constraint: AI-generated content can't be absorbed into other AI models, meaning it can't become training data for them. This is more technically complex than it might sound, and it reveals a major concern in the industry.

Here's the problem: if you train a new AI model on content that was generated by another AI model, you're potentially creating a lineage issue. Is the new model derivative? Is there liability? What happens if the original model's training data included copyrighted material? Does that get inherited by the new model?

These aren't just legal questions. They're technical ones. Amazon's tools need to produce content that stays "clean": traceable to the tool that generated it and walled off from any pipeline that trains models. That requires careful architecture.

One approach is provenance tagging: marking every generated asset at creation so that dataset-assembly steps can exclude it from training sets. Another is isolation: running inference in environments where customer inputs and generated outputs never flow back to the model provider. A third is contractual: agreements with LLM partners that bar them from training on content produced through Amazon's tools.
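One way to enforce "AI-generated content can't be absorbed into other AI models" is to record each asset's provenance when it's created and filter on it whenever a training dataset is assembled. The `Asset` fields here are hypothetical; a real pipeline might carry this in content-credential metadata, and nothing below reflects Amazon's actual implementation.

```python
from dataclasses import dataclass
from typing import Iterable, List


@dataclass(frozen=True)
class Asset:
    asset_id: str
    ai_generated: bool   # hypothetical provenance flag, set at creation time
    generator: str = ""  # which tool produced it, if any (hypothetical field)


def training_eligible(assets: Iterable[Asset]) -> List[Asset]:
    """Exclude every AI-generated asset from a training dataset.

    Filtering at dataset assembly keeps generated shots out of model training,
    as long as the provenance flag travels with the asset through the pipeline.
    """
    return [a for a in assets if not a.ai_generated]


library = [
    Asset("plate_0041", ai_generated=False),
    Asset("vfx_0350", ai_generated=True, generator="studio-tool"),
    Asset("plate_0042", ai_generated=False),
]
print([a.asset_id for a in training_eligible(library)])  # ['plate_0041', 'plate_0042']
```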

Production companies need this protection. If they use Amazon's tools to generate a visual effect, and later someone claims that effect violates someone's copyright, the production company needs to know they're protected. That means Amazon has to be absolutely clear about IP ownership and liability.

This is probably a detail that will matter enormously to the beta testers and will influence whether they recommend the tools to others. If IP protection is bulletproof, adoption accelerates. If there's ambiguity, companies will move cautiously.

Post-production typically takes 3 times longer than principal photography, highlighting its complexity and potential for AI optimization. Estimated data.

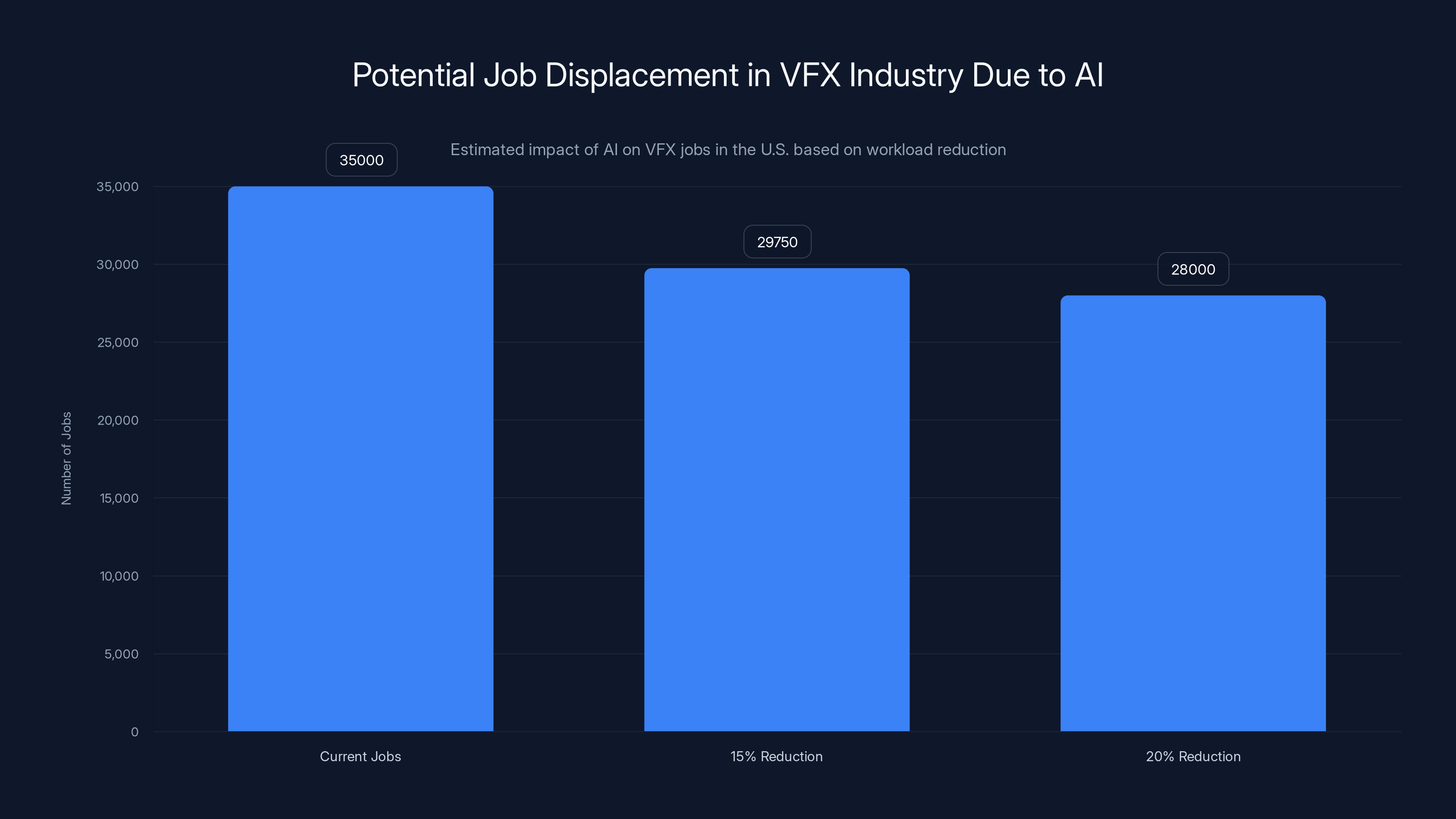

The Job Displacement Reality: What the Numbers Might Look Like

Let's talk about the thing everyone's thinking about but nobody's saying directly: jobs.

VFX is a significant employer. The U.S. VFX industry employs roughly 30,000-40,000 people directly, and many more in supporting roles. Globally, the work is concentrated in a handful of clusters, including Los Angeles, Vancouver, London, and New Zealand, so any impact won't be evenly distributed.

If AI tools reduce VFX workload by even 15-20%, that's 4,500-8,000 jobs affected in the U.S. alone, potentially more globally. That won't happen overnight; it's measured in years. But it's real.
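The arithmetic behind that range pairs the low ends of both estimates and the high ends of both:

```python
def jobs_affected(workforce: int, workload_cut: float) -> int:
    """Rough headcount exposure: workforce times fractional workload reduction."""
    return round(workforce * workload_cut)


low = jobs_affected(30_000, 0.15)
high = jobs_affected(40_000, 0.20)
print(low, high)  # 4500 8000
```

This assumes workload reductions translate one-for-one into headcount, which is the simplest possible model; the effect on real jobs depends on how the work is redistributed.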

Here's what's complicated: the job displacement probably won't be evenly distributed. Senior VFX supervisors and artists who understand creative direction will be more valuable. Junior artists doing mechanical tasks will be more vulnerable. Companies will need fewer people doing grunt work and the same number doing creative leadership. That's a shift in the labor market, not just a reduction.

There's also a potential counterbalance. If production costs drop and timelines accelerate, studios might greenlight more projects. More projects could mean more work overall, just distributed differently. A studio that previously budgeted

Amazon has cited AI as a factor in company-wide layoffs. In January 2025, Amazon eliminated 16,000 jobs. In October 2024, it was another 14,000. The company's messaging was about efficiency and productivity, which translates to: "We can do more with fewer people using AI." Internally, Amazon is applying the same value proposition it's now offering to production studios.

The industry conversation around this is becoming more prominent. Directors, producers, and labor organizations are pushing back on AI adoption without protections for workers. There are conversations about AI revenue sharing, about training requirements, about transition support for workers. These aren't settled questions yet.

Competitive Response: What Netflix and Others Are Doing

Amazon's not alone in exploring AI for entertainment. Netflix has already deployed AI in productions. Their series "The Eternaut" used generative AI to create a building collapse scene. That's significant because it shows the technology being used on a platform-owned show, not hiding in the background but intentionally announced.

The Netflix approach is different from Amazon's. Amazon is building proprietary tools for production efficiency. Netflix is using AI to generate specific visual elements that would've been expensive or complex to create through traditional methods. Both are valuable, but they're different strategies.

Streaming platforms have different incentives than traditional studios. Netflix needs to maximize content output. If AI can generate high-quality visual effects more cheaply, Netflix benefits. Disney, which has vast VFX infrastructure, has more incentive to move slowly because that infrastructure represents investment and competitive advantage.

Other players in the space are developing their own tools. Some VFX software companies are integrating AI capabilities into their products. Adobe is adding AI features to After Effects and Premiere Pro. Autodesk is adding AI to Maya. These are ecosystem moves that let production companies use AI tools they already know rather than learning new software.

The competitive landscape is fragmenting. There's no single dominant AI solution for film and TV production yet. There might never be, because different studios have different workflows and preferences. But the direction is clear: AI is becoming table stakes. If you're not integrating it into your production pipeline, you're falling behind on efficiency and cost.

The Ethical Minefield: Creativity, Authenticity, and Artistic Intent

There's a philosophical question lurking under all this: what does it mean to make a film if significant portions are generated by AI rather than created by human artists?

Film is traditionally understood as a director's art, realized by technical specialists. A cinematographer frames shots based on director vision. A sound designer creates soundscapes based on emotional intent. A VFX artist creates visual effects to serve the story. Each of these specialists brings creative agency to their work.

If portions of that work are delegated to AI, what happens to creative ownership? If a director says "generate 50 versions of this scene with different lighting," and picks the one they like best, are they creating or curating? There's a meaningful difference.

Different filmmakers will answer this differently. Some will see AI as a tool like any other, no different from a camera or editing software. Others will see it as threatening to the core of what filmmaking means.

The industry is going to need to develop frameworks for this. Questions about crediting AI in films, about disclosure of AI-generated content, about artistic intent and ownership. These aren't just philosophical. They affect marketing (consumers want to know what they're watching), awards (do AI-heavy films qualify for film festival categories designed for human creators?), and labor standards (how are artists credited and compensated when their work is substantially AI-assisted?).

Amazon's closed beta is partly an opportunity to gather feedback on these questions from experienced filmmakers. How do they feel about the tools after using them? Do they perceive the AI as supporting their vision or constraining it? Do they worry about the authenticity of the final product?

Estimated data shows that a 15-20% reduction in VFX workload due to AI could affect 4,500-8,000 jobs in the U.S. VFX industry.

The May Announcement: What to Expect

Amazon's committed to sharing initial results from the closed beta by May. That's a specific timeline, and it signals the company's confidence in the technology. But what does "initial results" actually mean?

Most likely, Amazon will publish case studies from beta partner productions. Stories about how many VFX shots were generated, how much time was saved, what the quality level was. They'll probably include testimonials from respected producers about their experience with the tools. They might publish technical documentation about how the tools work.

They probably won't publish negative results. If something failed during the beta, Amazon will frame the narrative around learning and improvement, not emphasizing the failure. That's standard practice for tech companies doing public beta results announcements.

The May announcement will likely coincide with a broader marketing push: blog posts, conference announcements, industry outreach. Amazon will be positioning itself as the studio that figured out AI production at scale. That's valuable positioning because it attracts talent (AI engineers and VFX specialists want to work on cutting-edge tech), attracts partnerships (other studios want access to the tools), and attracts investment (Amazon Studios is part of a larger corporate structure, but reputation matters for greenlight decisions).

The announcement will also be an opportunity for competitors to respond. Other studios and software companies will have to articulate their own AI strategies. If Amazon looks like the leader and everyone else looks like they're playing catch-up, that shifts market dynamics.

Integration Challenges: Making AI Tools Work in Real Production Pipelines

Building tools is one thing. Integrating them into real production workflows is another.

Production pipelines are incredibly complex. A major studio might have hundreds of pieces of software talking to each other. Cameras produce footage in specific formats. That footage gets ingested into media management systems. Color grading happens in one piece of software, editing in another, VFX in a third. Each of these systems has its own data formats, metadata standards, and compatibility requirements.

Adding AI tools to this ecosystem requires integration. The AI tools need to read data from the existing pipeline, produce output in formats the pipeline can ingest, and not break any existing workflows. That's complex work.
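A minimal sketch of such an adapter, assuming a pipeline that hands over shot records as JSON and expects results back in the same shape with a few required fields. The field names (`shot_id`, `frame_range`, `colorspace`, `output_path`) and the `ai_generated` marker are hypothetical; every studio's schema differs.

```python
import json
from typing import Any, Dict

REQUIRED_FIELDS = ("shot_id", "frame_range", "colorspace")  # hypothetical schema


def read_pipeline_record(raw: str) -> Dict[str, Any]:
    """Parse an incoming shot record and fail loudly on missing metadata."""
    record = json.loads(raw)
    missing = [f for f in REQUIRED_FIELDS if f not in record]
    if missing:
        raise ValueError(f"record missing fields: {missing}")
    return record


def write_pipeline_record(source: Dict[str, Any], output_path: str) -> str:
    """Return the AI result in the pipeline's own format, preserving source metadata."""
    result = {k: source[k] for k in REQUIRED_FIELDS}  # pass metadata through unchanged
    result["output_path"] = output_path
    result["ai_generated"] = True                     # provenance marker for reviewers
    return json.dumps(result, sort_keys=True)


incoming = '{"shot_id": "104_020", "frame_range": [1001, 1096], "colorspace": "ACEScg"}'
record = read_pipeline_record(incoming)
print(write_pipeline_record(record, "/renders/104_020_v001.exr"))
```

Validating on the way in and echoing the pipeline's own metadata on the way out is what keeps the AI step from breaking downstream tools.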

Amazon's building on AWS infrastructure, which is a smart choice. AWS already integrates with a lot of production software. By building AI tools that run on AWS and integrate with AWS services, Amazon's making the integration path easier than if they were building entirely separate infrastructure.

But there will still be friction. Every studio has idiosyncratic workflows. What works for Amazon MGM Studios might need significant customization for a different studio. The beta period is partly about identifying those integration challenges and developing solutions.

The tools also need to integrate with the human workflow. If VFX artists typically review composites at 3 PM each day, the AI tools need to fit into that rhythm, not force a different one. If producers need decisions at certain points in the process, the AI tools need to be available and reliable at those points. Integration is as much about human workflow as technical infrastructure.

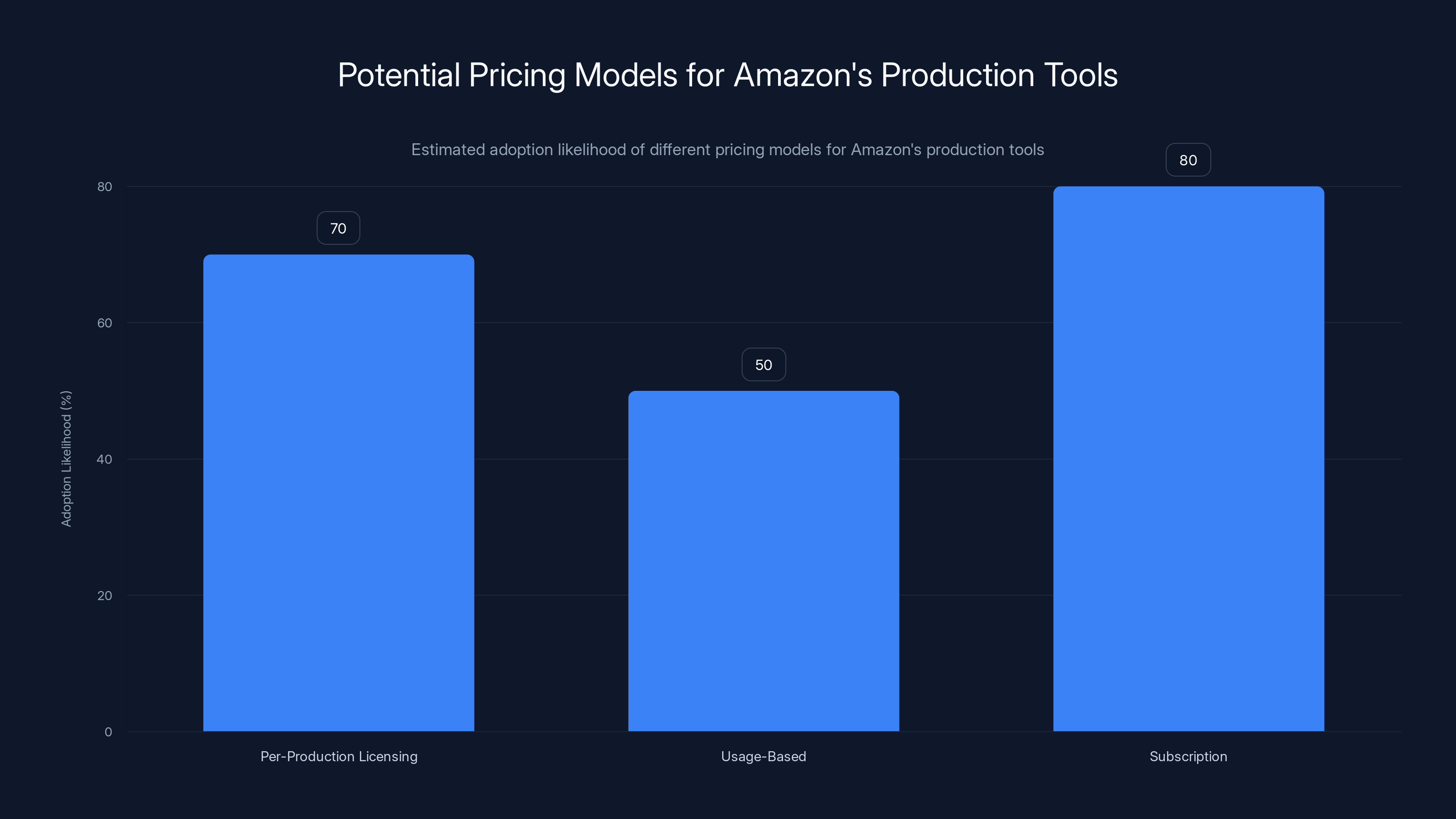

The Pricing Question: How Will Amazon Monetize This?

Amazon hasn't announced pricing yet, and that's a significant strategic question. How the company prices these tools will determine adoption rate and market impact.

There are a few possible models. One is a per-production licensing model, where studios pay a fixed fee for access to the tools for a specific production. Another is a usage-based model, where studios pay based on how much they use the tools (hours of footage processed, number of shots generated, etc.). A third is a subscription model, where studios pay monthly or annually for access.

Per-production licensing makes sense for Amazon because it aligns incentives and makes budgeting predictable for studios. A production that costs

Usage-based pricing could work too, particularly if the tools run on AWS infrastructure. Amazon already has AWS pricing models that are usage-based. Extending that to production tools is natural. But studios might resist because production budgets need to be fixed. Uncertainty about per-shot costs could be problematic.

Subscription pricing could work for ongoing relationships. A studio that produces multiple series per year might pay $X monthly for unlimited access to the tools. That aligns Amazon's incentive with the studio's incentive to maximize usage.
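To compare how the three models land on a single production budget, here's a sketch with entirely hypothetical prices (Amazon has announced none); swap in real quotes if and when they exist.

```python
def per_production_cost(fee: float) -> float:
    return fee  # flat fee per production, known up front


def usage_cost(shots_generated: int, price_per_shot: float) -> float:
    return shots_generated * price_per_shot  # scales with actual usage


def subscription_cost(monthly_fee: float, months: int) -> float:
    return monthly_fee * months  # flat over time, independent of usage


# Hypothetical 6-month production generating 300 shots; all prices invented.
options = {
    "per-production": per_production_cost(250_000),
    "usage-based": usage_cost(300, 1_000),
    "subscription": subscription_cost(50_000, 6),
}
cheapest = min(options, key=options.get)
print(options, cheapest)
```

Only the usage-based line moves when the shot count changes, which is exactly the budgeting uncertainty that makes studios wary of that model.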

Pricing will also affect whether tools are positioned as premium offerings or democratized offerings. High pricing suggests premium positioning, serving major studios and productions. Low pricing suggests democratization, trying to capture market share across independent and mid-tier productions.

Amazon's likely to try different models for different customer segments. Enterprise studios might get subscription pricing. Independent productions might get per-project licensing. That tiered approach maximizes market penetration.

Estimated data suggests subscription pricing might have the highest adoption likelihood due to its alignment with studio incentives and predictability in budgeting.

Looking Forward: The Next 12-24 Months

The May announcement will be important, but it's just the beginning. Over the next 12-24 months, several things are likely to happen.

First, tools will move from closed beta to wider availability. If the beta goes well, Amazon will likely open access to more studios over summer and fall 2025. They might charge for access or offer it free to attract adoption. The goal will be getting these tools embedded in major studio workflows.

Second, competitors will respond. Other studios, software companies, and AI startups will develop their own tools or enhance existing offerings. The market for AI production tools will get crowded. That's actually healthy for the industry because it drives innovation and prevents any single company from having too much control.

Third, the labor question will intensify. There will be formal conversations between studios and labor organizations about how AI adoption affects workers. There might be new contracts, new union agreements, or new industry standards around AI use. These conversations are starting to happen but will become more urgent as AI deployment accelerates.

Fourth, filmmakers will develop best practices around AI integration. Directors and producers will figure out what works and what doesn't. Successful production studios will become known for their ability to use AI effectively without compromising creative vision. That becomes a competitive advantage.

Fifth, regulation might evolve. There might be requirements for disclosing AI-generated content to audiences. There might be labor standards around how much human creative work must be involved in productions. There might be new copyright frameworks around AI-generated content. The legal landscape is still forming.

Real-World Applications: What This Looks Like in Practice

Let's get concrete about what using these tools actually looks like.

Scenario 1: A streaming series needs 200 VFX shots completed in three months. Traditionally, that's a massive undertaking requiring a dedicated VFX team and tight scheduling. With Amazon's tools, the VFX supervisor uses AI to generate rough versions of complex shots. The VFX artists review them, make creative adjustments, and approve them. Instead of creating shots from scratch, they're refining AI suggestions. Timeline compresses from 12 weeks to 8 weeks. Budget drops from

Scenario 2: A director wants to explore multiple lighting setups for a scene before principal photography. Traditionally, they either shoot multiple takes with different lighting or rely on previz created by artists. With Amazon's tools, they upload a rough animatic of the scene and request variations with different lighting approaches. They get 10 variations in hours instead of days. They pick their favorite and shoot the scene accordingly. Pre-production moves faster, and the director's vision is clearer going into production.

Scenario 3: A film is in color grading, and there's disagreement about the overall look. The colorist has finished the first 30 minutes of the film with a specific aesthetic. The director wants to explore alternatives for the remaining 90 minutes. The traditional approach requires the colorist to create multiple complete grades, which takes weeks. With AI assistance, the colorist creates a few variations that the AI applies to the entire film. Review is faster. Decisions are made quicker. Post-production timeline accelerates.

These scenarios sound ideal, and in many cases, they would be. But the actual experience will be messier. AI suggestions won't always be good. Refinement will sometimes take longer than creation from scratch. Integration won't be seamless. But on average, if AI gets it right 70-80% of the time, the value is substantial.
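To see why a 70-80% hit rate can still pay off even though refinement sometimes takes longer than starting from scratch, here is a back-of-the-envelope expected-cost model. Every parameter (the per-shot build time, the cost of refining a good draft, the review time wasted on a bad one) is a hypothetical placeholder, not a figure from Amazon or any studio.

```python
from dataclasses import dataclass


@dataclass
class ShotModel:
    """Hypothetical per-shot cost model for an AI-draft-then-refine workflow."""
    hit_rate: float         # share of AI drafts good enough to refine
    scratch_days: float     # artist-days to build one shot from scratch
    refine_fraction: float  # refining a good draft, as a fraction of scratch time
    reject_overhead: float  # review time lost on a bad draft, as a fraction of scratch time

    def expected_days_per_shot(self) -> float:
        # Good draft: the artist only refines it.
        good = self.hit_rate * self.refine_fraction * self.scratch_days
        # Bad draft: review time is wasted, then the shot is built from scratch.
        bad = (1 - self.hit_rate) * (1 + self.reject_overhead) * self.scratch_days
        return good + bad

    def savings_vs_scratch(self) -> float:
        # Fraction of artist time saved compared with building every shot by hand.
        return 1 - self.expected_days_per_shot() / self.scratch_days


def break_even_hit_rate(refine_fraction: float, reject_overhead: float) -> float:
    # Solve p*r + (1 - p)*(1 + o) = 1 for p: below this hit rate the AI workflow loses time.
    return reject_overhead / (1 + reject_overhead - refine_fraction)


# Placeholder numbers for a 200-shot order like Scenario 1.
SHOTS = 200
for hit_rate in (0.5, 0.7, 0.8):
    model = ShotModel(hit_rate, scratch_days=2.0, refine_fraction=0.3, reject_overhead=0.2)
    total = SHOTS * model.expected_days_per_shot()
    print(f"hit rate {hit_rate:.0%}: {total:.0f} artist-days "
          f"(vs {SHOTS * model.scratch_days:.0f}), saves {model.savings_vs_scratch():.0%}")

print(f"break-even hit rate: {break_even_hit_rate(0.3, 0.2):.0%}")
```

With these placeholder numbers, a 70% hit rate turns 400 artist-days into about 228, a saving of roughly 43%, while a hit rate below about 22% would make the AI workflow slower than building by hand. The model deliberately ignores integration and tooling costs, which will be real.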

Addressing the Skeptics: Legitimate Concerns About AI in Film

There are legitimate reasons to be skeptical about AI in film production. It's worth acknowledging them directly.

One concern is quality degradation. If studios prioritize cost savings over quality, and AI tools enable that shortcut, the final product suffers. That's a real risk. The industry might race to the bottom, settling for "good enough" because it's cheaper.

Another concern is that AI-assisted work lacks authenticity. There's something intangible but real about human creative effort that audiences might perceive and value. If a film feels like it was assembled by algorithm rather than created by artists, does that change its impact?

A third concern is dependency. If studios become dependent on AI tools, what happens when the tools break or the companies providing them change their terms or go out of business? Relying on external tools for core production work creates a real vulnerability.

A fourth concern is bias and representation. AI models are trained on existing content. If that content has biases (underrepresentation of certain groups, stereotypical portrayals), the AI will inherit those biases. Films created with AI tools might inadvertently perpetuate existing stereotypes or miss opportunities for diverse representation.

A fifth concern is creative control. If directors become overly reliant on AI-generated suggestions, do they lose the discipline of making hard creative choices? There's something to the idea that constraints foster creativity. If everything can be generated instantly, does that make creative choices less valuable?

These are legitimate concerns. They won't go away just because the technology is improving. The industry needs to grapple with them thoughtfully.

The Runable Connection: Automation Beyond Film

While Amazon's building tools specifically for film and TV production, the broader AI automation landscape is evolving rapidly. Platforms like Runable are democratizing AI-powered automation for teams and creators who need to generate presentations, documents, reports, and other content at scale.

The principles are similar: use AI to accelerate repetitive work, free humans for creative decision-making, and reduce production time and cost. Runable's approach to AI automation through agents and automated workflows mirrors what Amazon's building for film production, just in different domains.

For production companies, this is relevant because the AI tooling ecosystem is expanding beyond film-specific applications. AI capabilities in video editing software, documentation tools, and project management tools are all becoming table stakes. Production companies that understand how to work with AI tools across multiple domains will be better positioned than those that only understand AI in one context.

Use Case: Generate weekly production status reports and team updates automatically, saving hours of documentation work per week


Industry Predictions: What Happens Next

Based on the trajectory of Amazon's announcement and industry trends, here are some predictions for the next 18-24 months.

Prediction 1: By end of 2025, at least 50% of major Hollywood studios will have evaluated AI production tools. Some will adopt, some will pilot, but most will be actively exploring.

Prediction 2: By mid-2026, there will be formal labor agreements around AI use in film and TV production. These will establish standards for worker retraining, income protection, and creative credit.

Prediction 3: By 2026, films will start to be marketed explicitly around their use of AI. Some will highlight how AI was used to enhance creativity. Others will market against AI, positioning human creativity as the differentiator.

Prediction 4: By 2027, there will be categories of films and series that are predominantly AI-created. These will coexist with traditionally-created content. Audiences will develop preferences. Some will prefer AI-assisted films for their efficiency and cost-effectiveness. Others will prefer traditionally-created films for their perceived authenticity.

Prediction 5: Independent and mid-tier productions will be the first to fully adopt AI tools because they have tighter budgets and smaller teams. Major studios will adopt more cautiously because they have more legacy infrastructure and more resistance to change.

The Bottom Line: AI Is Production Infrastructure Now

Amazon's move isn't revolutionary. It's inevitable. The question was never whether AI would be used in film production. The question was when and by whom.

By launching an AI Studio and building proprietary tools, Amazon is positioning itself at the center of this shift. They're not just investing in technology. They're investing in narrative. They're saying to the industry: "This is the future. We're building it. Join us or fall behind."

The closed beta and May announcement are moments in a longer arc. This is the beginning of mainstream adoption of AI in Hollywood. It will be messy. There will be missteps. There will be beautiful creations and terrible abominations. There will be arguments about whether it's authentic art. There will be serious conversations about labor and ethics.

But the arrow is pointing forward. AI production tools are becoming operational infrastructure for major studios. That isn't inherently good or bad. It's a shift. Like every significant technology shift in entertainment history, it will create new opportunities and new challenges. Creators, studios, and audiences will adapt and find new ways to make meaning.

The game has started. May's announcement is just the first move.

FAQ

What exactly is Amazon's AI Studio building for film and TV production?

Amazon's AI Studio is developing proprietary AI tools designed to improve several aspects of film and TV production, including character consistency across shots, pre-production workflows (concept art, storyboarding, animatics), and post-production work (color grading, compositing, visual effects). The tools aim to accelerate these processes and reduce production costs while maintaining creative control with human producers and directors.

How does Amazon plan to integrate AI tools into existing production workflows?

Amazon is building its AI tools on Amazon Web Services (AWS) infrastructure and working with multiple large language model providers to ensure compatibility and capability. The tools will likely integrate with existing production software through APIs and data format standards. The closed beta period will help Amazon identify integration challenges and develop solutions that fit different studios' unique workflows and requirements.

What does the "House of David" example tell us about the readiness of these tools?

"House of David" season two featured 350 AI-generated shots that were fully integrated into a completed series that aired on Prime Video. This demonstrates that Amazon's technology works at production scale, not just in test environments. The fact that viewers and critics couldn't reliably identify which shots were AI-generated suggests the quality was high enough to blend seamlessly into professional productions, which is a significant proof point for the technology's viability.

Why is IP protection such an important concern for production companies using AI tools?

Production companies need assurance that AI-generated content doesn't inherit licensing issues or training data linkages from the AI model that generated it. If AI-generated content could potentially be absorbed into other AI models or carry legal liability related to the original model's training data, it creates significant risk for studios using the tools. Amazon's explicit commitment to IP protection suggests they understand this concern and are designing their tools to maintain clean legal separation between generated content and the underlying AI models.

What are the job displacement implications of widespread AI adoption in VFX and post-production?

The VFX industry employs roughly 30,000-40,000 people in the U.S. alone. If AI tools reduce VFX workload by even 15-20%, that represents thousands of affected jobs. However, the job displacement likely won't be uniform. Junior artists performing mechanical tasks will face more vulnerability, while senior artists handling creative direction will likely become more valuable. The overall impact depends on whether production cost savings lead to more greenlit projects or just increased studio profitability, and whether labor standards and retraining programs are established to support displaced workers.
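The "thousands of jobs" figure is simple multiplication, and making it explicit shows how sensitive it is to the greenlighting question. This is a naive sketch under stated assumptions: headcount moves one-for-one with workload, and the hypothetical `demand_growth` parameter stands in for extra projects funded by the savings.

```python
def affected_jobs(workforce: int, workload_cut: float, demand_growth: float = 0.0) -> int:
    """Naive estimate of roles affected when AI trims VFX workload.

    Assumes headcount tracks total workload one-for-one. `demand_growth` is a
    hypothetical knob for extra projects greenlit with the cost savings.
    """
    remaining_work = (1 - workload_cut) * (1 + demand_growth)
    # If new projects more than absorb the cut, no net roles are displaced.
    return max(0, round(workforce * (1 - remaining_work)))


low = affected_jobs(30_000, 0.15)
high = affected_jobs(40_000, 0.20)
print(f"No new projects: {low:,} to {high:,} roles affected")

# Same 20% cut, but the savings fund 10% more work.
print(f"With 10% more projects: {affected_jobs(40_000, 0.20, demand_growth=0.10):,}")
```

Even this crude model makes the point: the same 20% workload cut affects 8,000 roles if the savings go to profit, but 4,800 if they fund 10% more work. It also treats all roles alike, while junior artists doing mechanical tasks are likely to be hit hardest.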

How does Amazon's approach to AI in film differ from Netflix's approach?

Amazon is building proprietary AI tools designed to improve production efficiency across multiple aspects of filmmaking. Netflix, by contrast, is using AI to generate specific visual elements that would be expensive or complex using traditional methods, like the building collapse scene in "The Eternaut." Amazon's approach is infrastructure-focused, while Netflix's is application-specific. Both strategies are valuable, but they serve different business objectives and production needs.

What timeline should production companies expect for wider availability of these AI tools?

Amazon will begin a closed beta in March 2025 and share initial results by May 2025. Based on typical tech company timelines, wider availability will likely follow within 6-12 months of a successful beta. That suggests broader access by late 2025 or early 2026. Pricing and licensing models will probably be announced along with the May results, giving more clarity on adoption timeline and cost structure.

What challenges might production companies face when implementing AI tools into their existing workflows?

Production pipelines are extremely complex, with hundreds of pieces of software and systems interacting. Integration challenges will include data format compatibility, metadata standards alignment, and ensuring AI tools don't disrupt existing workflows. Human workflow integration is just as critical: tools need to fit into established production schedules and decision-making processes. Different studios have idiosyncratic requirements, so while tools might work well for Amazon MGM Studios, customization will be necessary for other production companies with different infrastructure and practices.

How might AI adoption affect film quality and creative authenticity?

This is an open question without a single answer. If AI tools are used well, they can accelerate mechanical work and free artists for higher-level creative decisions, potentially improving quality and reducing time pressure that compromises creativity. However, if studios prioritize cost savings over quality, AI tools enable cutting corners that could degrade final products. Additionally, there's a philosophical question about whether films created substantially with AI assistance feel less authentic to audiences, though this will likely vary by viewer and by how transparently studios disclose AI use.

What are the likely regulatory and labor implications of widespread AI adoption in Hollywood?

The industry will likely see new labor agreements establishing standards for worker retraining, income protection, and creative credit allocation when AI is substantially involved in production. There may also be regulatory requirements for disclosing AI-generated content to audiences. Copyright frameworks around AI-generated content are still being developed, and there will probably be new standards around artistic intent, ownership, and liability when AI is involved in content creation. These conversations are already beginning with unions and industry organizations.

Conclusion

Amazon's announcement of AI tools for film and TV production, with a closed beta launching in March 2025 and initial results due by May, marks a definitive moment in entertainment technology. This isn't a company experimenting at the margins. This is one of the largest media companies in the world deploying AI as core production infrastructure.

The significance lies not in the technology itself, which is impressive but not unprecedented. The significance lies in the scale of deployment, the partnership with respected filmmakers, and the commitment to proving this works in real productions at real studios. Three hundred fifty AI-generated shots in a completed television series isn't a proof of concept. It's proof of operational readiness.

What happens next matters. If the closed beta goes well and studios adopt these tools at scale, the production landscape changes fundamentally. Timelines compress. Costs drop. The balance of creative labor shifts. Some roles disappear. New roles emerge. The industry adapts, as it always does when faced with significant technology change.

For creators, the message is clear: understand these tools now. Experiment with them. Figure out how they fit into your creative process and your workflow. The filmmakers and studios that master AI integration over the next 18 months will be the ones setting the standards for everyone else.

For industry professionals worried about displacement, the message is equally clear: this technology is coming, and adaptation beats resistance. Develop skills that complement AI rather than compete with it. Creative direction, artistic vision, project management, and tool expertise all become more valuable as mechanical work becomes automated.

For audiences, the message is more subtle: pay attention to how studios disclose AI use in their productions. That transparency will matter. Some productions will be made better by AI assistance. Others will feel like they were assembled by algorithm. Learning to distinguish between them, and expressing preference for films made with integrity and clear creative vision, will shape how the industry evolves.

Amazon's tools are just the beginning. The real story is how the entire entertainment industry responds, adapts, and evolves. That story is just starting, and May 2025 will be an important chapter.

The future of film and TV production is being written right now. It will include AI. How well it includes AI, and what that means for creators, workers, and audiences, is up to all of us.

Key Takeaways

- Amazon MGM Studios begins closed beta testing of proprietary AI production tools in March 2025, with initial results expected by May

- Tools focus on character consistency, pre-production workflows, and post-production tasks to reduce timelines and costs

- "House of David" season two featured 350 AI-generated shots, proving production-scale deployment is viable and quality is acceptable

- Industry partnerships with directors Robert Stromberg and others shape implementation strategy while maintaining creative control

- Job displacement concerns are legitimate: if AI tools cut VFX workload by 15-20%, thousands of jobs could be affected, though new roles may emerge

- Competitive response from Netflix, software companies, and other studios will fragment the AI production tools market within 12-18 months

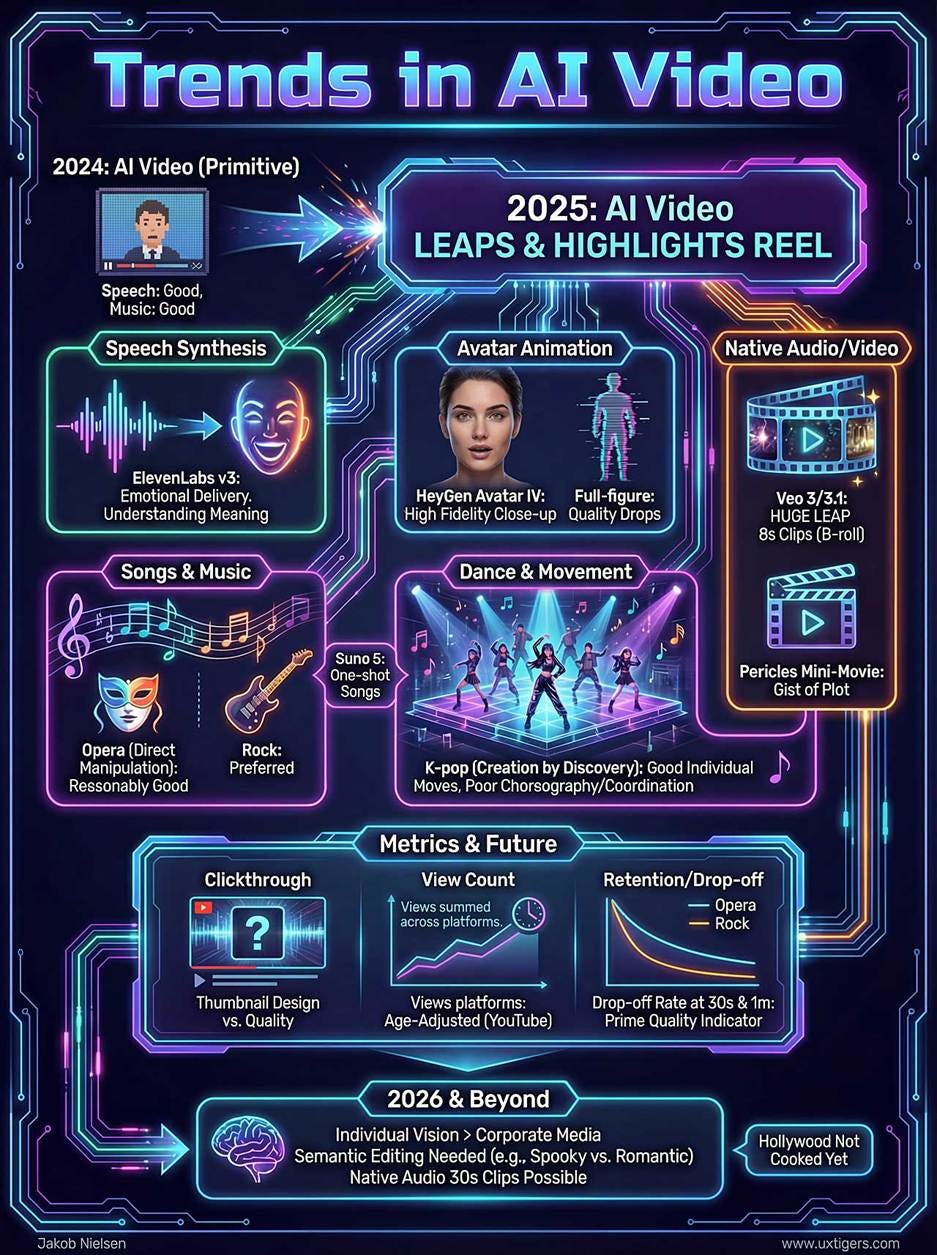
