Introduction: The AI Problem Hollywood Never Wanted to Solve

Sometime in late 2024, a major Hollywood studio quietly panicked. Not about the usual stuff—box office numbers, streaming subscriptions, or the endless fight with talent unions. No, this panic was different. It was about artificial intelligence.

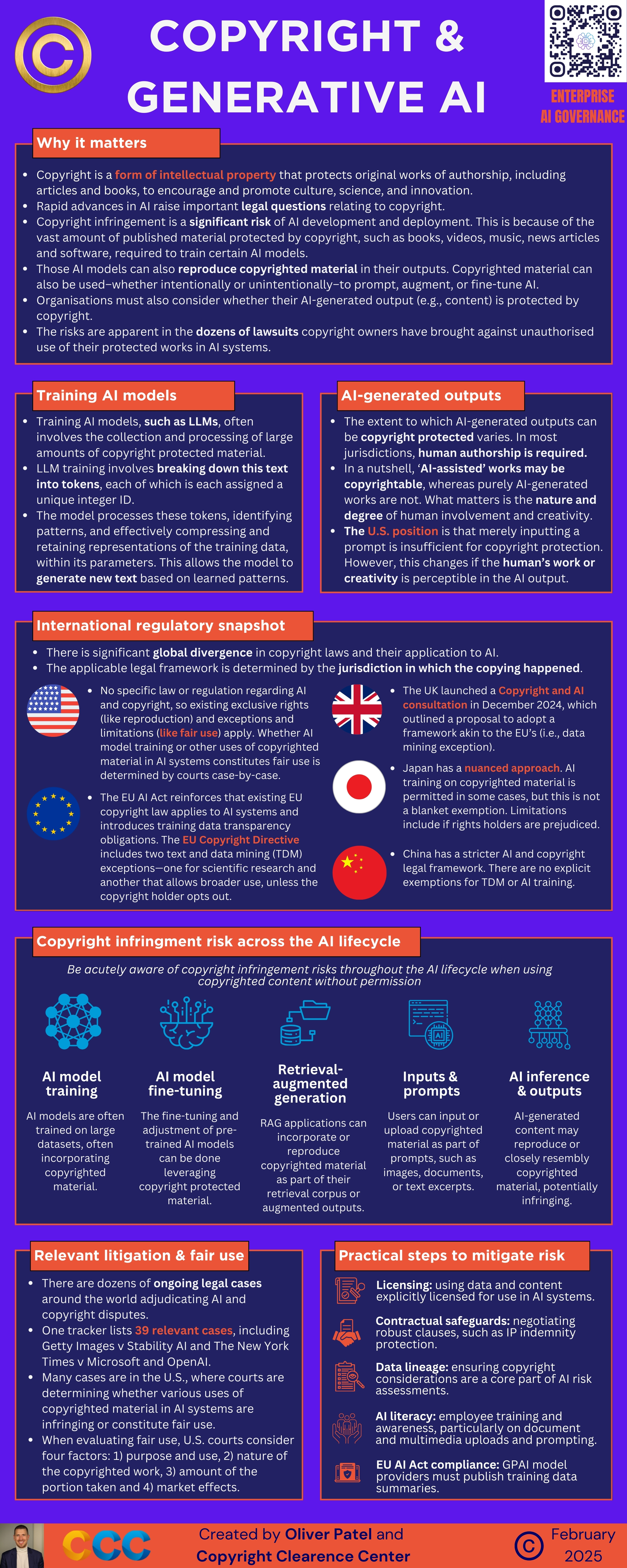

Here's the thing: every major generative AI model on the market was trained on billions of images, videos, scripts, and audio clips scraped from the internet. A lot of that internet? It contained protected intellectual property. Movies. TV shows. Character designs. Original music. The kind of stuff studios own, profit from, and protect fiercely.

Using these generic AI tools meant studios could accidentally (or blatantly) violate copyright laws, trigger lawsuits, or worse, create assets with models that had learned from competitors' work. That's not just a legal headache; it's existential. Your AI-generated character might have accidentally learned to move like someone else's character. Your generated dialogue might echo someone else's writing.

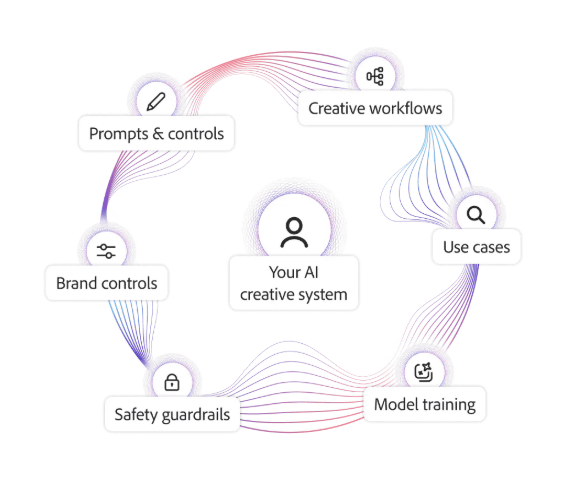

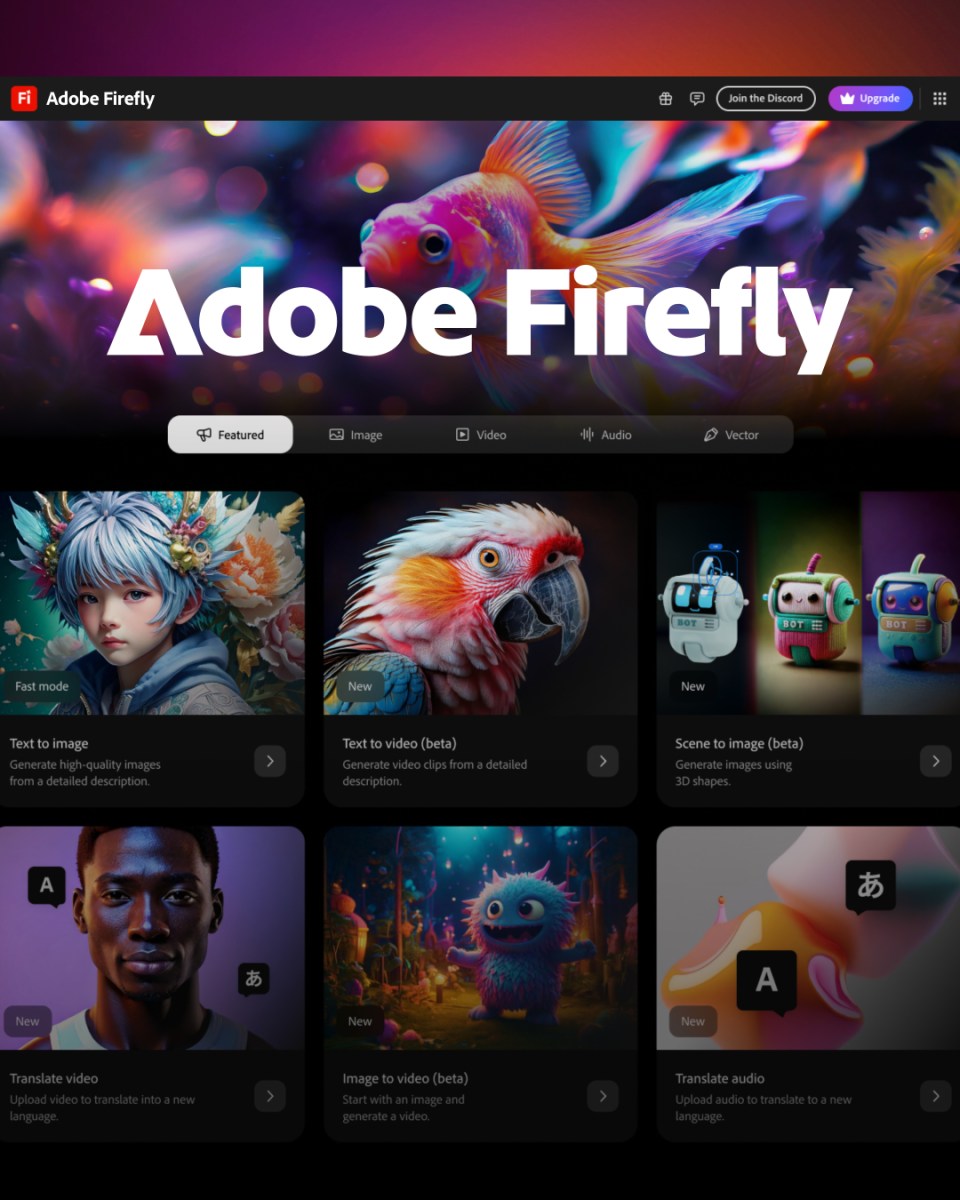

Adobe understood this problem deeply because studios told them about it directly. And in early 2025, the company announced a solution that's been a long time coming: Firefly Foundry. Custom AI models trained exclusively on content studios own, deployed privately, and integrated into creative workflows that already rely on Adobe's software.

This isn't a generic AI tool marketed to everyone. It's enterprise software designed specifically for the entertainment industry's most demanding production challenges. And it might be the breakthrough that finally makes generative AI actually safe for Hollywood to use at scale.

The implications ripple far beyond just Adobe's balance sheet. This represents a fundamental shift in how AI gets deployed in creative industries. Instead of training on the world's content and hoping for the best, studios now have a path to AI that respects their IP, protects their creative vision, and integrates seamlessly into production pipelines that have been built over decades.

Let's dig into what Firefly Foundry actually does, why it matters, and what it says about the future of AI in entertainment.

TL;DR

- Custom AI Training: Firefly Foundry models train exclusively on IP that studios own, eliminating copyright infringement risks

- Production-Ready: Models generate videos, 3D assets, vector graphics, and audio-aware content directly integrated into Premiere and other Adobe tools

- Strategic Partnerships: Adobe partnered with major talent agencies, directors, and production houses to refine the platform for real-world workflows

- Enterprise Focus: Designed for studios, not consumers—each client gets their own private model trained on their proprietary content

- Industry Momentum: Addresses the biggest blocker preventing Hollywood from fully adopting AI: the fear of legal liability and IP violation

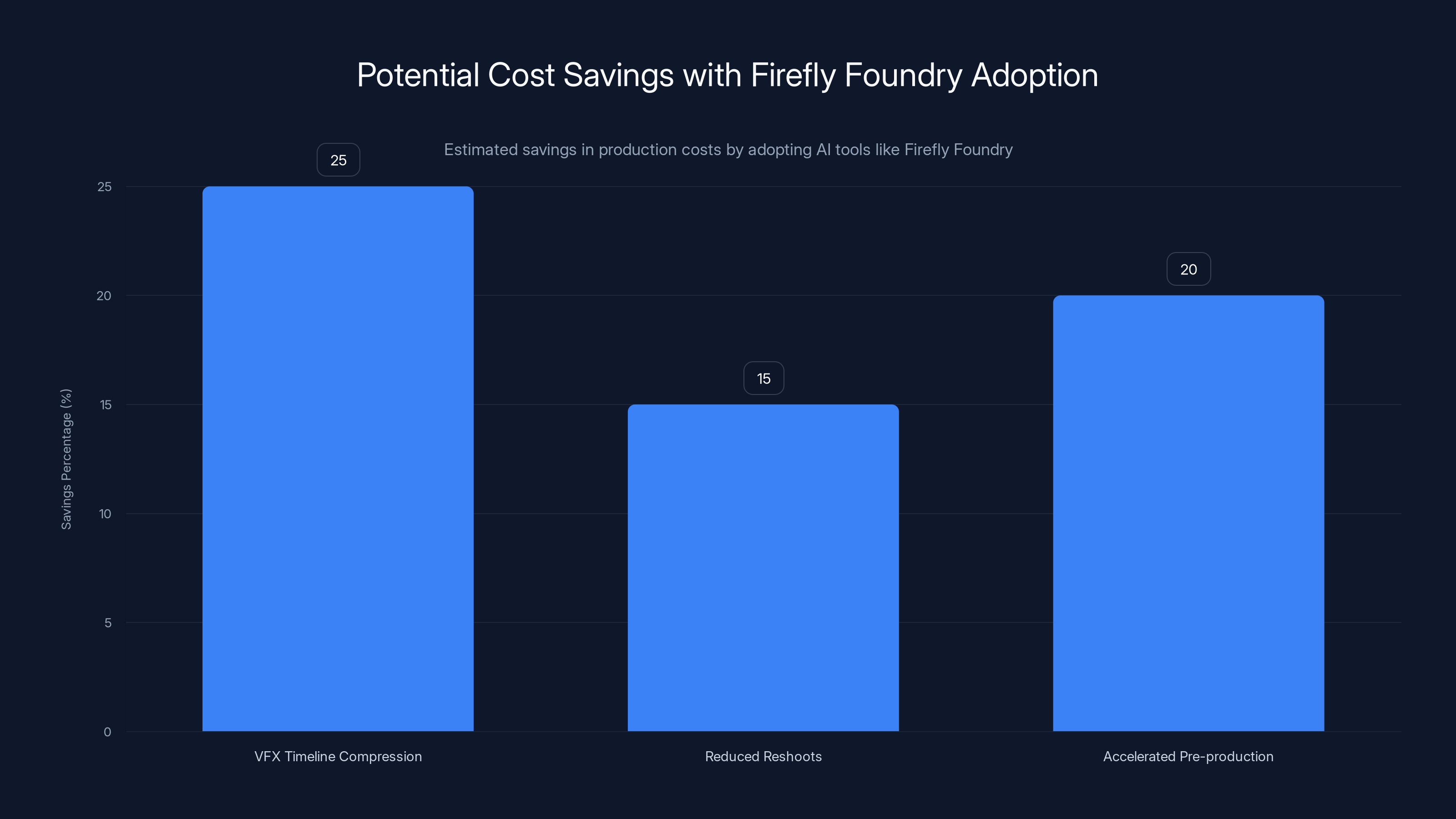

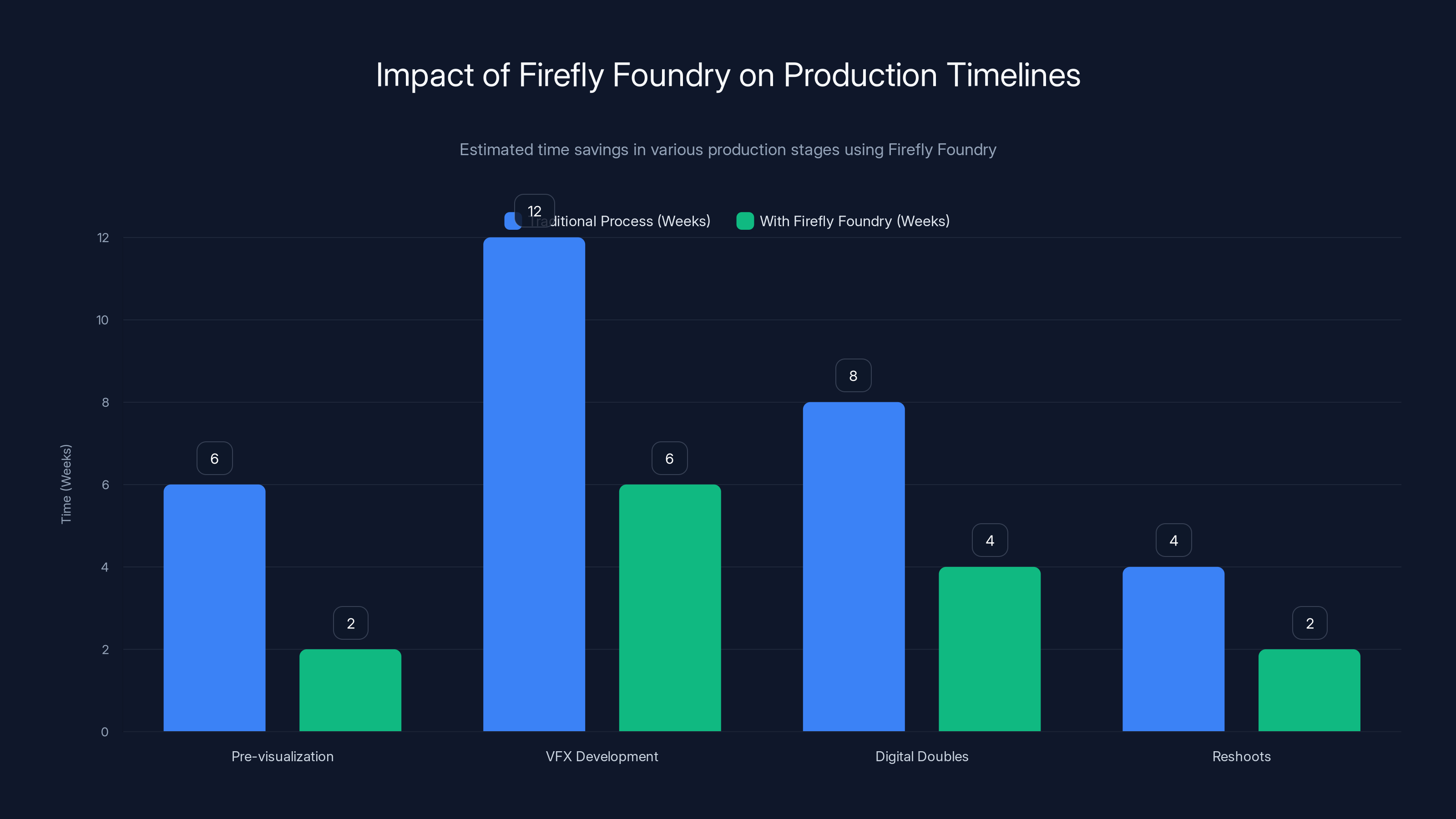

Adopting Firefly Foundry could lead to significant savings by compressing VFX timelines, reducing reshoots, and accelerating pre-production, potentially saving studios tens of millions annually. Estimated data.

The IP Problem That Killed AI Adoption in Entertainment

Let's be honest: Hollywood's relationship with generative AI has been complicated from the jump. Studios love the idea of speeding up production, cutting costs, and automating grunt work. But they've been terrified of the legal consequences.

Nearly every popular public AI model, whether it's ChatGPT, Midjourney, Stable Diffusion, or any of the dozens of video generation tools, was trained on massive datasets pulled from the internet. In most cases, nobody asked permission. Nobody paid licensing fees. The companies scraped everything they could find and fed it to their models.

For studios, this creates a specific nightmare scenario. Let's say you use a popular AI tool to generate concept art for your animated film. The model was trained on thousands of animated films, character designs, and visual styles. Your generated character might contain learned patterns from existing copyrighted work—not because you intended it, but because the model absorbed it during training.

Now you're exposed. Legally. Financially. Creatively.

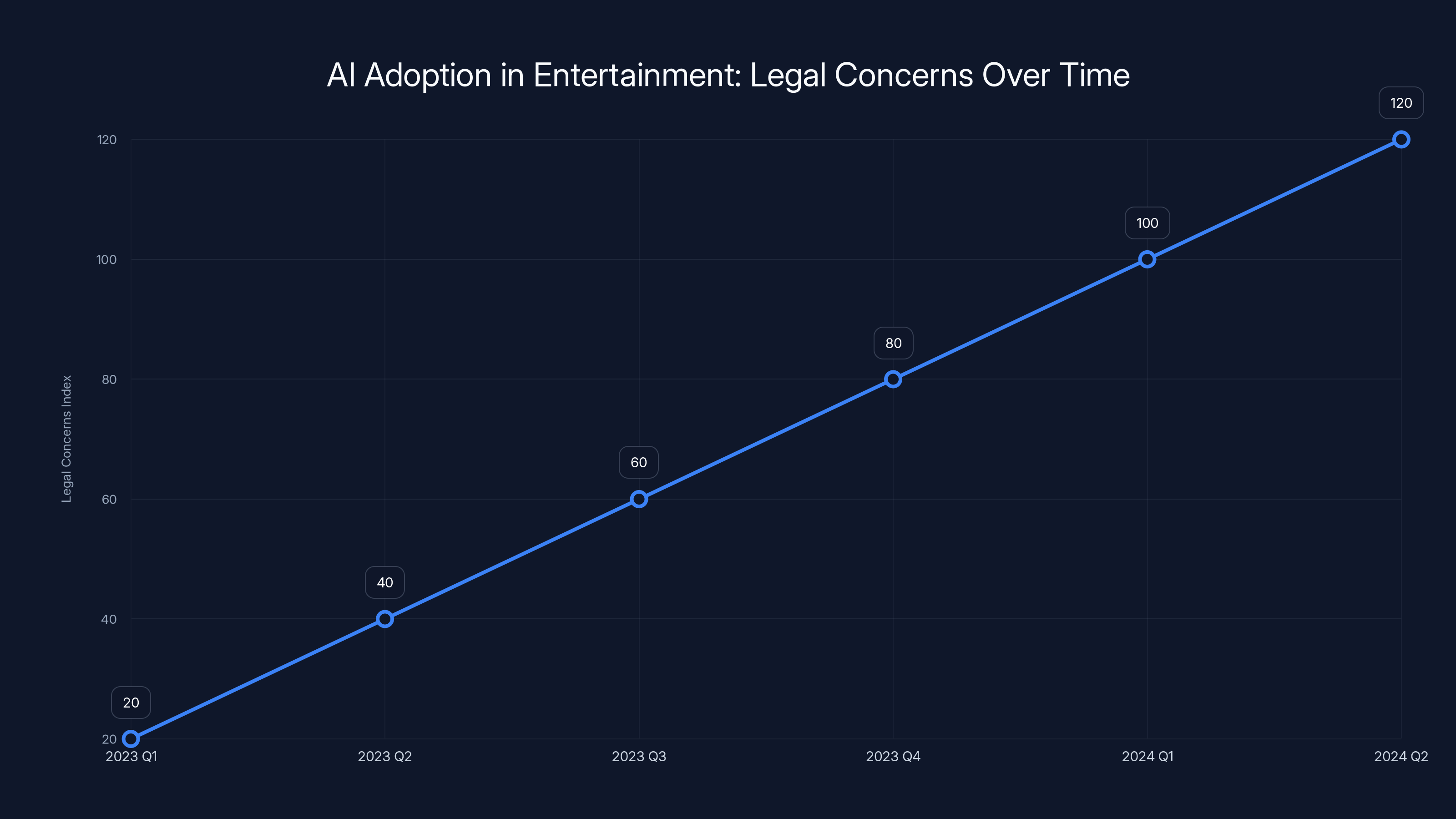

This isn't theoretical. In late 2023 and throughout 2024, multiple lawsuits were filed against AI companies by writers, actors, visual artists, and studios themselves. The Writers Guild struck in 2023, specifically demanding protections against AI replacing their work. The actors' union, SAG-AFTRA, followed with similar demands. Neither was just being stubborn: both were responding to real legal and economic threats.

So when studios looked at generative AI tools, they saw tremendous creative potential paired with genuine legal landmines. That's not a calculus that encourages adoption, especially in an industry where a single copyright lawsuit can cost millions and drag through courts for years.

Adobe recognized this as the primary barrier. The company had been working with major studios using their earlier Firefly models, and repeatedly heard the same request: give us AI that only knows our stuff. AI trained on our IP. AI that we can legally defend.

That insight became Firefly Foundry.

How Firefly Foundry Works: Custom Models for Custom IP

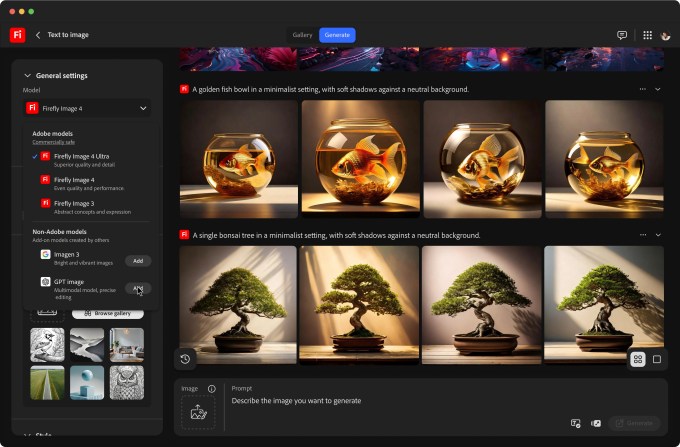

Understanding Firefly Foundry requires understanding how typical generative AI models work, and where Firefly Foundry breaks the mold.

Most generative AI systems follow this pattern: ingest massive datasets, extract patterns, compress those patterns into a model, then let users prompt the model to generate new outputs. The more diverse the training data, the more flexible the model. But that flexibility comes from learning patterns from millions of sources—many of which are copyrighted.

Firefly Foundry inverts this paradigm. Instead of one generic model serving millions of users, each enterprise client gets their own private model. That model trains exclusively on content the client owns or licenses. Disney's Firefly Foundry model only learns from Disney's approved content. Warner Bros. gets their own model trained on their library. Universal gets another.

This creates several immediate advantages.

First, there's absolute ownership clarity. Every piece of training data has documented rights and permissions. When the model generates new assets, those assets don't contain learned patterns from competitors' IP. They're derived from your own creative universe.

Second, the model understands context that generic models can't. Disney's Firefly Foundry model understands how Disney characters move, how Disney's world-building functions, what visual language Disney uses across its properties. It's not guessing based on patterns learned from thousands of studios. It's deeply specialized in one studio's creative DNA.

Third, privacy and security become manageable. The model lives on private servers. The training data never leaves the studio's control. Competitors don't get access to insights about how the model works or what it learned. Each deployment is completely isolated.

Adobe handles the infrastructure, updates, and optimization. But the studio maintains complete control over what gets trained on, what gets generated, and what happens next.

From a technical standpoint, Firefly Foundry still uses generative AI architecture similar to other foundation models. But the data pipeline is completely different. Instead of scraped internet content, training data comes from curated, rights-cleared studio libraries. Instead of training once and deploying to millions, training happens continuously as studios add new content to their approved dataset.

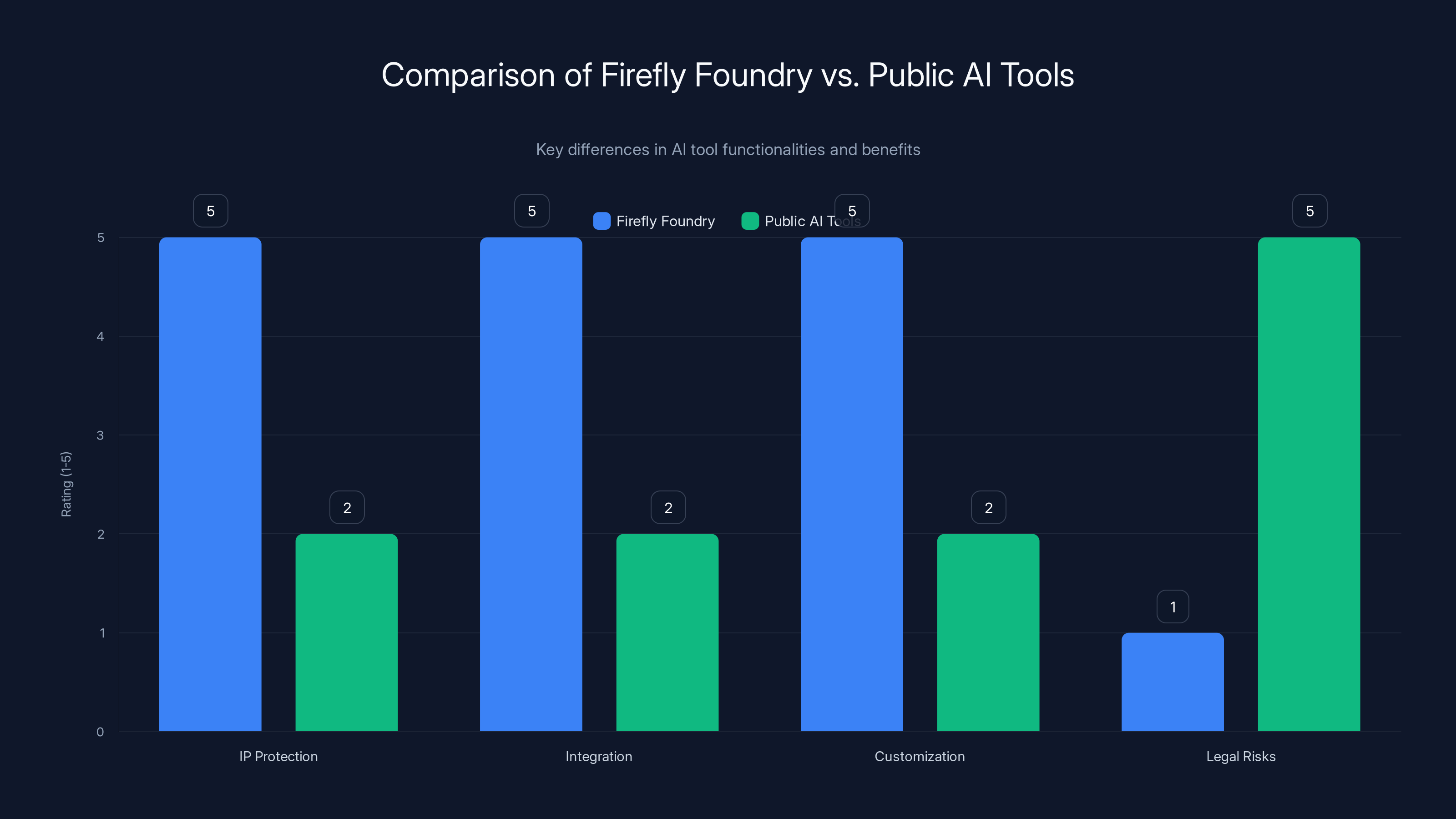

Firefly Foundry excels in IP protection, integration, and customization, while minimizing legal risks, compared to public AI tools. Estimated data based on feature descriptions.

The Production Workflow Integration: Seamless Content Creation

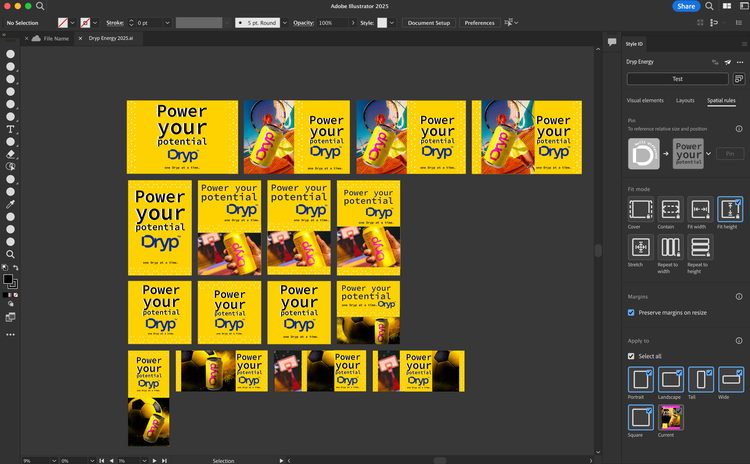

Here's where Firefly Foundry gets genuinely powerful: it doesn't exist in isolation. It integrates directly into production tools studios already use every single day.

Most major studios run their production pipelines through Adobe software. Premiere for editing. After Effects for motion graphics and VFX. Photoshop for concept art. The entire creative workflow is built around these tools. Artists, editors, and directors know these interfaces intimately. Switching tools isn't just inconvenient—it's workflow poison.

Firefly Foundry was built specifically to avoid that switching cost. Imagine you're editing a film in Premiere and you need a shot of your protagonist walking through a specific location. Normally, you'd need to either film a new scene or find stock footage. With Firefly Foundry, you describe what you need, and the AI generates it—right there in Premiere, using a model trained on your film's visual style and characters.

Or you're in post-production and need variations of a scene. Different camera angles, different lighting, different actor expressions—all within the physics and style of your film. Firefly Foundry generates them. Not as final assets, but as rich options for creative decision-making.

For visual effects, the advantage is even clearer. Complex VFX traditionally takes weeks or months. Rendering alone can consume massive computational resources. With Firefly Foundry, you can generate rough VFX sequences in hours, evaluate them creatively, then refine only the shots that make the cut.

Adobe also designed Firefly Foundry to handle audio-aware video generation. This is a specific technical achievement. Most AI video tools generate video independently of sound. Audio-aware generation means the AI understands dialogue, music, and ambient sound, and generates video that syncs meaningfully with the audio. For film production, this is essential: a dialogue shot is unusable if the character's lips don't match the words.

The company also built in 3D and vector generation capabilities. Entertainment production involves 3D modeling for animation, visual effects, and virtual production, and vector graphics are essential for motion graphics and titles. Firefly Foundry generates these asset types directly, not just 2D images.

All of this integrates back into Adobe's existing ecosystem. Assets flow between Premiere, After Effects, Audition, and Substance tools without format conversion or manual export work. That's where the real productivity gain happens—not in the AI generation itself, but in eliminating the friction around it.

Strategic Partnerships: Why Directors and Agencies Chose Adobe

Adobe didn't build Firefly Foundry in a vacuum. The company announced partnerships with some of the entertainment industry's most respected figures and institutions.

Directors matter here. David Ayer, who directed Suicide Squad and Fury, partnered with Adobe to refine Firefly Foundry. So did Jaume Collet-Serra, who directed Black Adam and Jungle Cruise. These aren't studio executives or tech consultants. They're working directors who understand production workflows, budget constraints, and creative vision protection from firsthand experience.

When a director of Ayer's stature says "this tool works for how I actually make films," that carries enormous weight. It signals that Firefly Foundry isn't just theoretically useful—it's been tested against real production pressures.

Talent agencies played a crucial role too. Creative Artists Agency, United Talent Agency, and William Morris Endeavor all partnered with Adobe. These agencies represent actors, writers, and creatives. They're sensitive to IP protection because they profit from selling creative talent and protecting their clients' work from unauthorized use. If these agencies endorsed Firefly Foundry, it meant they believed it respected IP rights and protected their clients.

Production houses also signed on. B5 Studios, Promise Advanced Imagination, and Cantina Creative represent the working end of filmmaking. They know what tools actually accelerate production and which ones create headaches. Their partnerships suggest Firefly Foundry delivered practical benefits in real shoots.

Adobe also invested in education partnerships. The company partnered with Parsons School of Design and the Wearable Worlds Institute to develop curriculum and research resources around AI in creative fields. This was a long-term play. By getting the next generation of designers, animators, and filmmakers comfortable with Firefly Foundry during their education, Adobe builds a pipeline of professionals who'll naturally use the tool throughout their careers.

These partnerships served multiple strategic purposes. They provided real-world feedback that shaped the product. They offered social proof—if major directors and agencies trust this, maybe it's worth trusting. And they created a network of advocates who could explain Firefly Foundry to skeptics within the industry.

Industry adoption rarely happens because a product is technically superior. It happens because respected figures in the industry endorse it, demonstrate it working in their own practice, and create momentum that makes not using the tool feel risky.

The Legal Protection Architecture: How IP-Safe Actually Works

Understanding how Firefly Foundry protects intellectual property requires looking at the legal and technical architecture together.

Traditionally, AI companies argue they're not liable for what their models learn because they're just running an algorithm on publicly available data. The legal responsibility lies with users who generate content. But this argument collapses under scrutiny, especially in entertainment where studios need absolute certainty about their generated assets.

Firefly Foundry eliminates this ambiguity by being selective about training data from the outset. Every piece of data that feeds the model comes with documented licensing rights. Adobe performs due diligence on IP ownership before that data ever gets near the training pipeline.

This creates a legal foundation that's fundamentally different from generic AI tools. If Disney's Firefly Foundry model generates an asset, Disney can point to the exact, documented dataset that model was trained on. If there's ever a copyright question, Disney has documentation proving the training data was properly licensed.

It's not perfect—no system is—but it shifts the risk calculation dramatically. The studio isn't asking "will we get sued?" It's asking "can we defend ourselves if sued?" Firefly Foundry lets studios say yes with actual documentation.

Adobe also included contractual protections in enterprise agreements. If a Firefly Foundry-generated asset somehow infringes on third-party IP despite the careful data curation, Adobe covers legal costs. This shifts liability away from the studio and onto Adobe, which has the resources and insurance to handle it. No studio would adopt a tool without understanding liability. These contractual protections make adoption actually possible.

The technical architecture reinforces this. Because models are private to each client and trained only on that client's data, there's no cross-contamination. Disney's model doesn't learn from Warner Bros.' data. Sony's model doesn't absorb patterns from Universal's films. Each studio's creative universe remains hermetically sealed.
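To make the isolation idea concrete, here is a minimal Python sketch of a per-studio training registry. The `Asset` and `StudioModel` classes, their fields, and the rejection rules are hypothetical illustrations, not Adobe's API; they only show how a simple ownership check could keep one studio's content out of another studio's model.

```python
from dataclasses import dataclass, field


@dataclass(frozen=True)
class Asset:
    """A single piece of training content (hypothetical schema)."""
    asset_id: str
    owner: str            # studio that holds the rights
    rights_cleared: bool  # has legal cleared it for training?


@dataclass
class StudioModel:
    """Hypothetical private model: one per studio, fed only that studio's assets."""
    studio: str
    training_set: list[Asset] = field(default_factory=list)

    def add_training_asset(self, asset: Asset) -> None:
        # Reject anything owned by another studio: the "no cross-contamination" rule.
        if asset.owner != self.studio:
            raise PermissionError(
                f"{asset.asset_id} belongs to {asset.owner}, not {self.studio}"
            )
        # Reject content whose rights have not been cleared for training use.
        if not asset.rights_cleared:
            raise ValueError(f"{asset.asset_id} is not rights-cleared")
        self.training_set.append(asset)


disney = StudioModel("Disney")
disney.add_training_asset(Asset("clip-001", "Disney", True))
try:
    disney.add_training_asset(Asset("clip-777", "Warner Bros.", True))
except PermissionError as err:
    print(err)  # clip-777 belongs to Warner Bros., not Disney
```

In a real deployment the isolation would more likely be enforced at the infrastructure level (separate storage and compute per client), but the principle is the same: the check happens before data ever reaches training.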

Version control also matters. Adobe tracks exactly which version of which training dataset produced which model at which point in time. If an issue emerges, the studio can audit the model, identify the training data that caused it, and make decisions about retraining or modification. This auditability is legally critical.
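An audit trail like this can be sketched as a manifest that ties each model version to a fingerprint of its training set. This is a hypothetical Python illustration: the `AuditLog` class and its methods are invented for this example and are not part of any Adobe product. It hashes the sorted list of asset IDs with SHA-256, so the same dataset always yields the same fingerprint, and it can answer which model versions were trained on a given asset.

```python
import hashlib
import json
from dataclasses import dataclass


@dataclass(frozen=True)
class ModelVersion:
    version: str
    dataset_hash: str           # fingerprint of the exact training manifest
    asset_ids: tuple[str, ...]  # what went into this version


def dataset_fingerprint(asset_ids: list[str]) -> str:
    """Deterministic SHA-256 of a training manifest (order-independent)."""
    payload = json.dumps(sorted(asset_ids)).encode("utf-8")
    return hashlib.sha256(payload).hexdigest()


class AuditLog:
    """Hypothetical record of which dataset produced which model version."""

    def __init__(self) -> None:
        self.versions: dict[str, ModelVersion] = {}

    def record(self, version: str, asset_ids: list[str]) -> ModelVersion:
        mv = ModelVersion(version, dataset_fingerprint(asset_ids), tuple(sorted(asset_ids)))
        self.versions[version] = mv
        return mv

    def versions_containing(self, asset_id: str) -> list[str]:
        """Which model versions were trained on a given asset?"""
        return [v for v, mv in self.versions.items() if asset_id in mv.asset_ids]


log = AuditLog()
log.record("v1", ["film-a", "film-b"])
log.record("v2", ["film-a", "film-b", "film-c"])
print(log.versions_containing("film-c"))  # ['v2']
print(log.versions_containing("film-a"))  # ['v1', 'v2']
```

If an asset later turns out to have a rights problem, `versions_containing` identifies every model version that would need review or retraining.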

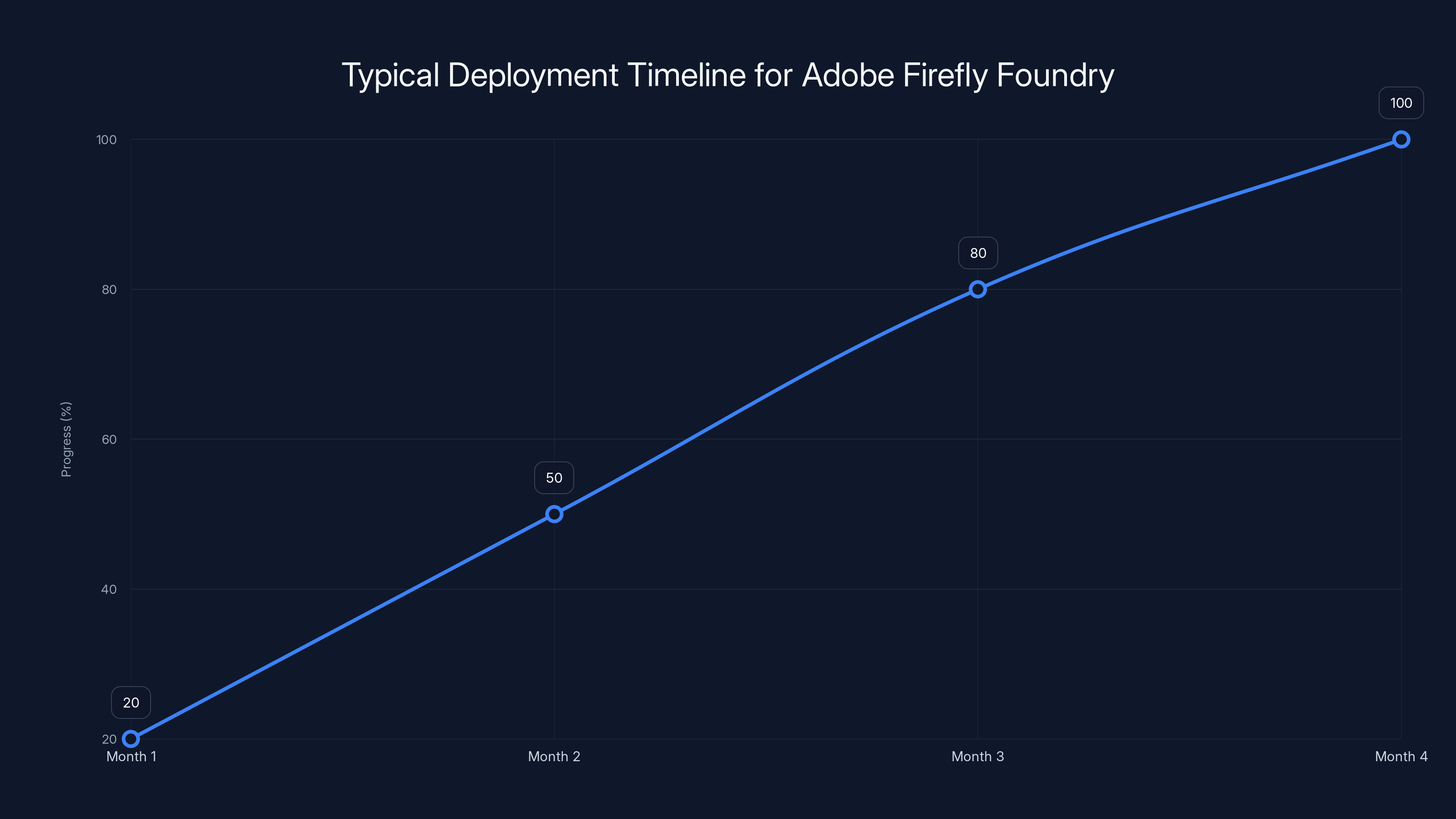

Studios can expect a 3-4 month deployment timeline from contract to production use, with progress milestones at each stage. Estimated data.

Cost Structure: Is Firefly Foundry Expensive?

Adobe hasn't publicly announced Firefly Foundry pricing, which tells you something important: it's not a flat-rate subscription tool. Pricing is enterprise, case-by-case, based on usage and customization.

For context, other custom AI deployments in enterprise environments typically cost six figures annually. You're paying for the model infrastructure, ongoing training and updates, integration engineering, support, and contractual liability protection. These aren't small expenses.

But here's the cost analysis studios actually do: compare Firefly Foundry pricing against the cost of production delays, reshoots, and legal liability. If Firefly Foundry can compress a four-week VFX timeline to two weeks, that's worth significant money. If it eliminates the risk of a copyright lawsuit, that's worth even more.

Large studios with nine-figure production budgets can easily justify investing in custom AI that accelerates production. Mid-sized studios with smaller budgets need to see clearer ROI. Independent filmmakers probably can't afford it.

This creates a tier of adoption where Firefly Foundry becomes standard at major studios first, then potentially becomes more accessible to smaller producers as costs decline over time. That's a typical enterprise software adoption curve.

Adobe also likely offers volume-based pricing where studios pay less per asset if they generate thousands of variations across multiple productions. And they probably offer year-long commitments that discount the per-asset cost compared to monthly metering.

The key insight is this: Firefly Foundry isn't trying to be cheap. It's trying to be cheaper than the alternative costs of traditional production. If you can save money, time, and legal risk, you pay for the tool. If you can't measure that value, you don't.

Firefly Foundry vs. Generic AI Tools: The Strategic Difference

To understand why Firefly Foundry matters, you need to see how it differs from the AI tools studios can already access.

Tools like Midjourney or Stability AI's Stable Diffusion are incredible at generating diverse content because they were trained on massive datasets spanning the entire internet. Ask them to generate a unique character in a specific visual style, and they'll deliver something fast and impressive. But that impressive output comes from a model that learned from billions of images, some of which were copyrighted.

Studios using these tools have to be defensive. Run the generated assets through a legal review. Verify that the output doesn't infringe on existing IP. Modify assets that might be too similar to existing work. This friction extends production timelines and creates legal liability that internal stakeholders have to sign off on.

Firefly Foundry removes most of that friction. Because the model trained only on owned IP, studios can generate assets with far more confidence. Legal review becomes a lighter check rather than a production bottleneck, and liability concerns shrink dramatically. The result is creative iteration at production speed.

The tradeoff is specificity versus flexibility. Generic AI tools can generate almost anything. Firefly Foundry generates variations within your creative universe. If you want a character that looks nothing like anything in your studio's catalog, Firefly Foundry will be less helpful than a generic AI tool. But that's not what studios usually need. Studios need variations on their existing characters, worlds, and visual language.

Another key difference: integration. Generic AI tools exist outside production pipelines. You generate something in Midjourney, download it, import it into Premiere, and hope the format and quality work. Firefly Foundry lives inside Premiere, After Effects, and Photoshop. No export-import workflow. No quality loss. No friction.

There's also the creative control dimension. With generic AI, you're subject to the model's limitations and quirks. It might refuse to generate certain content because of its training or safety guidelines. Firefly Foundry respects studio-specific guidelines. If your studio wants to generate violent content for a rated-R film, the model supports it. If your studio wants to avoid certain visual tropes, you can configure the model accordingly.

From a competitive standpoint, using Firefly Foundry gives studios advantages that other studios can't immediately copy. If Sony builds a Firefly Foundry model trained on decades of Spider-Man content, nobody else has access to that specialization. The model understands Spider-Man's physics, visual style, and character development in ways a generic AI never can. That's defensible differentiation.

Real-World Use Cases: Where Firefly Foundry Actually Accelerates Production

The strategic value of Firefly Foundry becomes clear when you trace it through actual production workflows.

Pre-visualization and concept work. Directors traditionally shoot extensive pre-viz footage to test camera angles, blocking, and sequencing before the expensive main shoot. This takes weeks. With Firefly Foundry trained on a studio's visual library, a director can generate dozens of pre-viz variations in days. Each one maintains consistency with the film's established visual language because the model learned it. This acceleration compresses the pre-production timeline significantly.

Visual effects development. Complex VFX shots take months. Before committing to expensive traditional VFX work, supervisors use Firefly Foundry to generate rough versions of multiple approaches. They evaluate which approach works creatively, then the VFX house executes the final version. This lets studios make creative decisions faster without paying full VFX costs for every iteration explored.

Digital doubles and character animation. If a film needs scenes with actors that weren't possible to shoot—action sequences, aging effects, alternate takes—studios have traditionally turned to expensive digital doubles and animation teams. Firefly Foundry can generate variations of existing character performance trained on footage of the actor in similar scenes. Not as a final deliverable, but as a foundation that animators refine. This compresses timelines and gives creators more options to evaluate.

Reshoots and extended footage. Sometimes films need additional scenes after principal photography wraps. Budget and scheduling constraints can make reshoots difficult. Firefly Foundry can generate variations or extensions of existing scenes trained on the film's visual consistency. Again, not as final content, but as options that accelerate decision-making.

Stock footage replacement. Many films use stock footage for establishing shots or background elements. Generic stock footage often breaks visual consistency. Studios can use Firefly Foundry to generate establishing shots and backgrounds trained on their film's visual language, ensuring perfect continuity.

Motion graphics and titles. Most films use title sequences, end crawls, and motion graphics. These are entirely generatable from Firefly Foundry, trained on the studio's visual identity. A studio's title package can adapt to each film within the studio's established brand language.

Each of these use cases has a similar pattern: they accelerate production by reducing the friction between creative intention and final asset. The director knows exactly what they want, but the traditional process takes weeks to deliver it. Firefly Foundry compresses that timeline to days or hours.

Legal concerns related to AI adoption in entertainment have increased significantly from early 2023 to mid-2024, primarily due to lawsuits and union strikes. Estimated data.

The Competitive Landscape: Why Adobe's Timing Matters

Adobe isn't the only company trying to serve entertainment studios with custom AI. But Adobe's position in the market is uniquely strong.

Most AI startups are trying to convince studios that AI is valuable. Adobe is trying to convince studios that Adobe's AI is safer and more integrated than alternatives. That's a much easier pitch because studios already use Adobe's software.

There's also the incumbent advantage. Adobe has spent 30+ years building relationships with studios, understanding production workflows, and updating software to match how professionals actually work. That institutional knowledge is hard to replicate. A startup can build better AI algorithms, but they can't instantly understand the nuances of film production like Adobe can.

Competitors exist. Companies like Runway are building video generation tools aimed at creators and studios. ElevenLabs is doing audio generation. Synthesia and Synthwave focus on specific use cases like video interviews and digital humans. But none of these offer the full package: legal protection, custom training on owned IP, integration into a complete production suite, and enterprise support.

Some studios might piece together custom solutions from multiple vendors. But that complexity creates technical and operational friction. With Firefly Foundry, everything lives in one system with one vendor managing liability and integration.

The timing also matters because the industry consensus around AI safety is still forming. Studios remain uncertain about AI liability. By offering IP-safe custom models integrated into familiar tools, Adobe positions itself as the conservative, protective option. Not the company promising revolutionary AI, but the company protecting studios from AI's legal risks.

This positioning matters especially because of ongoing litigation. If courts rule that training on copyrighted data without permission violates IP law, generic AI tools could face massive liability. But Firefly Foundry, trained only on owned or licensed content, would be unaffected. That legal protection could become dramatically more valuable than it seems today.

Implementation and Deployment: The Operational Reality

Having a powerful tool is one thing. Integrating it into production is another.

Adobe is handling the deployment heavily. The company manages the model infrastructure, retraining and updates, security, and compliance. Studios don't need to hire specialists to maintain custom AI models. They just provide training data and start generating content.

The deployment timeline typically involves several months. First, studios audit their content library and organize the data Adobe will use for training. Then Adobe handles the technical work of training the custom model. Then there's integration testing and training for creative teams. Most studios probably look at a 3-4 month deployment from contract to production use.

For large studios with massive content libraries, this process is involved but manageable. For smaller studios, it might be simpler since they have less data to curate. But the fundamental workflow is the same: provide data, get a custom model, integrate into existing tools, start creating.

Adobe also provides ongoing support and optimization. As studios use Firefly Foundry, the company monitors performance, updates the model with newly approved training data, and refines the system based on usage patterns. This ongoing engagement is part of the enterprise service, not a separate cost.

The deployment model assumes studios have already standardized on Adobe's creative suite. If a studio uses competing tools like Autodesk software, Cinema 4D, or Nuke, Firefly Foundry integration becomes more complex. But for studios already deep in Adobe's ecosystem, implementation is relatively straightforward.

Training Data Curation: The Foundation of IP Safety

Firefly Foundry's entire value proposition depends on training data quality. If the training data is bad, the model is bad. If the training data has IP problems, the model has IP problems.

Adobe has built significant infrastructure around data curation. The company works with each studio to identify which content should be included in training. This involves both technical and legal review. Technical teams verify that the data is in formats the training system can handle. Legal teams verify that the studio actually owns the rights to use this content in training.

This is more complex than it sounds. A typical major studio owns hundreds of thousands of hours of content. Some of it was produced entirely in-house. Some was licensed from other creators. Some contains third-party visual effects, music, or other elements that weren't created by the studio. Which content should be included in training?

Adobe and the studio make those decisions together. Generally, content the studio created entirely can be included. Content with third-party licensing gets more careful review. The goal is building a training dataset that's unambiguously legally safe to use.
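The triage rule described above can be sketched in a few lines of Python. The `LibraryItem` fields and the three outcomes are hypothetical; the extra rule that undocumented content is excluded outright is an assumption drawn from the article's point that every training item must come with documented rights, not a confirmed Adobe policy.

```python
from dataclasses import dataclass, field
from enum import Enum


class Decision(Enum):
    INCLUDE = "include"            # cleared for the training set
    LEGAL_REVIEW = "legal_review"  # needs closer review before inclusion
    EXCLUDE = "exclude"            # cannot be used for training (assumed rule)


@dataclass
class LibraryItem:
    """Hypothetical catalog entry used for curation decisions."""
    title: str
    produced_in_house: bool
    rights_documented: bool
    # e.g. licensed music, outsourced VFX shots, third-party footage
    third_party_elements: list[str] = field(default_factory=list)


def triage(item: LibraryItem) -> Decision:
    # Assumption: no rights paperwork means the item cannot be defended, so skip it.
    if not item.rights_documented:
        return Decision.EXCLUDE
    # Fully in-house content with no embedded third-party material is the easy case.
    if item.produced_in_house and not item.third_party_elements:
        return Decision.INCLUDE
    # Licensed titles, or titles with third-party elements, get careful review.
    return Decision.LEGAL_REVIEW


library = [
    LibraryItem("Original Feature", True, True),
    LibraryItem("Feature with licensed score", True, True, ["licensed music"]),
    LibraryItem("Acquired series", False, True),
    LibraryItem("Archive reel, no contracts on file", True, False),
]
for item in library:
    print(f"{item.title}: {triage(item).value}")
# Original Feature: include
# Feature with licensed score: legal_review
# Acquired series: legal_review
# Archive reel, no contracts on file: exclude
```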

Once training data is approved, Adobe implements version control and audit trails. The company maintains records of exactly which content was included in which model version. If a legal question ever emerges, Adobe can document the training data provenance.

This curation discipline is labor-intensive. It's one reason Firefly Foundry pricing is enterprise-grade. The value Adobe delivers includes not just the AI model, but the legal and operational infrastructure ensuring the model is actually IP-safe.

As studios add new content to their library, they can update the training data. New films, shows, or digital content get added to the approved training set, and the model gets retrained to incorporate that new creative material. This creates a continuously improving system that stays aligned with the studio's current work.

Firefly Foundry significantly reduces production timelines across various stages, offering up to 50% time savings in VFX development and digital doubles creation. Estimated data.

The Writers and Actors Problem: How Firefly Foundry Protects Talent

Hollywood's writers and actors have been resistant to AI for understandable reasons. AI that can generate scripts, dialogue, or digital doubles threatens their livelihoods.

Firefly Foundry attempts to address this by being transparent about training data. The model only trains on content the studio owns or has licensed. It doesn't train on scripts or performances created by external writers or actors unless the studio has explicit permission and likely compensation agreements.

But this doesn't fully solve the problem. If Firefly Foundry trains on existing films, it's learning from performances by real actors. The model can generate variations or digital doubles based on those performances. That raises obvious questions about actor rights and compensation.

Adobe and studios will need to navigate this carefully. The unions representing writers and actors have political leverage and legal grounds to challenge AI practices that undermine their members' interests. Any contentious use of Firefly Foundry—like generating dialogue that displaces writers or digital doubles that replace actors—could trigger union action.

The path forward likely involves negotiated agreements. Studios might agree to use Firefly Foundry-generated content only in specific circumstances, with union approval and potentially additional compensation. Firefly Foundry might be used for pre-viz and internal production work, but not for final released content without additional permissions.

This mirrors earlier negotiations around other technologies. When digital color grading emerged, unions initially resisted it as threatening traditional colorists. But eventually they negotiated agreements that allowed the technology while protecting their members' work and compensation.

Similar evolution is likely with Firefly Foundry. The unions will push back on perceived abuses. Studios and Adobe will negotiate reasonable limits. Eventually, a consensus will emerge about acceptable uses and protections. But that consensus doesn't exist yet, which creates uncertainty about how aggressively studios will deploy Firefly Foundry.

Production Economics: The Financial Pressure for AI Adoption

Why will studios actually invest in Firefly Foundry despite the legal and union complications?

Because production budgets are under unprecedented pressure.

Film and television production costs have skyrocketed over the past two decades. A major studio blockbuster now regularly costs

These economics are unsustainable. Streaming companies have realized they can't fund endless prestige television with massive budgets. Traditional studios are struggling with theatrical economics. Everyone is looking for ways to maintain creative quality while reducing costs and timelines.

Firefly Foundry offers a real mechanism for doing that. If it can compress VFX timelines by 20-30%, that's meaningful savings. If it can reduce the number of reshoots needed, that's meaningful savings. If it can accelerate pre-production processes, that's meaningful savings.

These savings compound across multiple productions. A studio that adopts Firefly Foundry across 8-10 productions annually could save tens of millions in production costs. That's real money in an era where every production is scrutinized for ROI.
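The "tens of millions" figure is easier to evaluate as a back-of-the-envelope formula. The sketch below is purely illustrative. The $20M per-production VFX budget is a hypothetical input, not a figure from Adobe or any studio. The model also assumes, again hypothetically, that VFX cost falls in direct proportion to the 20-30% timeline compression mentioned above.

```python
def estimated_annual_savings(
    productions_per_year: int,
    vfx_budget_per_production: float,
    timeline_compression: float,
) -> float:
    """Rough annual savings estimate under a simple linear model.

    Hypothetical assumption: VFX cost scales linearly with schedule length,
    so compressing the timeline by a fraction cuts VFX spend by that same
    fraction. Real savings depend on contracts, labor, and fixed overhead.
    """
    if not 0.0 <= timeline_compression <= 1.0:
        raise ValueError("timeline_compression must be a fraction between 0 and 1")
    return productions_per_year * vfx_budget_per_production * timeline_compression


# Hypothetical inputs: 8-10 productions per year, $20M VFX spend each,
# 20-30% timeline compression.
low = estimated_annual_savings(8, 20_000_000, 0.20)
high = estimated_annual_savings(10, 20_000_000, 0.30)
print(f"${low / 1e6:.0f}M to ${high / 1e6:.0f}M per year")  # prints "$32M to $60M per year"
```

The linear model is deliberately crude. Its value is in making the inputs explicit, so a studio can substitute its own budgets and observed compression rates rather than relying on a headline estimate.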

The competitive pressure also drives adoption. If Warner Bros. adopts Firefly Foundry and gains production cost advantages over competitors, other studios have to match that capability or risk falling behind. This creates industry momentum toward adoption.

It's not that studios love AI. It's that they can't afford not to adopt tools that reduce costs and timelines while protecting their IP. Firefly Foundry solves both problems simultaneously, making adoption economically compelling.

Integration with Other Adobe Products: The Network Effect

One of Firefly Foundry's biggest advantages is how it connects to Adobe's broader product ecosystem.

Premiere Pro is one of the most widely used editing tools in film and television. If Firefly Foundry can generate missing shots directly in Premiere, editors gain enormous power. They're not exporting content, waiting for AI generation, importing results, and adjusting. They're creating variations in real time, evaluating them against the cut, and choosing the best option.

After Effects is where motion graphics and compositing happen. Firefly Foundry can generate effects elements, motion graphics, and composite backgrounds directly in After Effects. Again, this eliminates friction and keeps creative decision-making in the tool where artists are already working.

Photoshop connects the same way. Concept artists and retouchers can generate variations and refinements without leaving Photoshop. The tool they've used for 20 years becomes slightly more powerful.

Substance 3D allows game developers and 3D artists to generate materials, textures, and 3D assets trained on the studio's visual language. This opens up game development and interactive content creation to Firefly Foundry.

Audition handles audio, and Firefly Foundry generates audio-aware content that syncs with existing audio tracks. This is critical for film production where dialogue must match visuals.

The network effect here is significant. Because Firefly Foundry integrates across the entire Adobe suite, studios don't need to choose between using Firefly Foundry and maintaining their existing workflows. Firefly Foundry enhances those workflows. This makes adoption much easier than a standalone tool would be.

It also creates lock-in. Once a studio is using Firefly Foundry integrated throughout their production pipeline, switching to a competitor becomes expensive and disruptive. Adobe gains customer stickiness beyond the Firefly Foundry product itself.

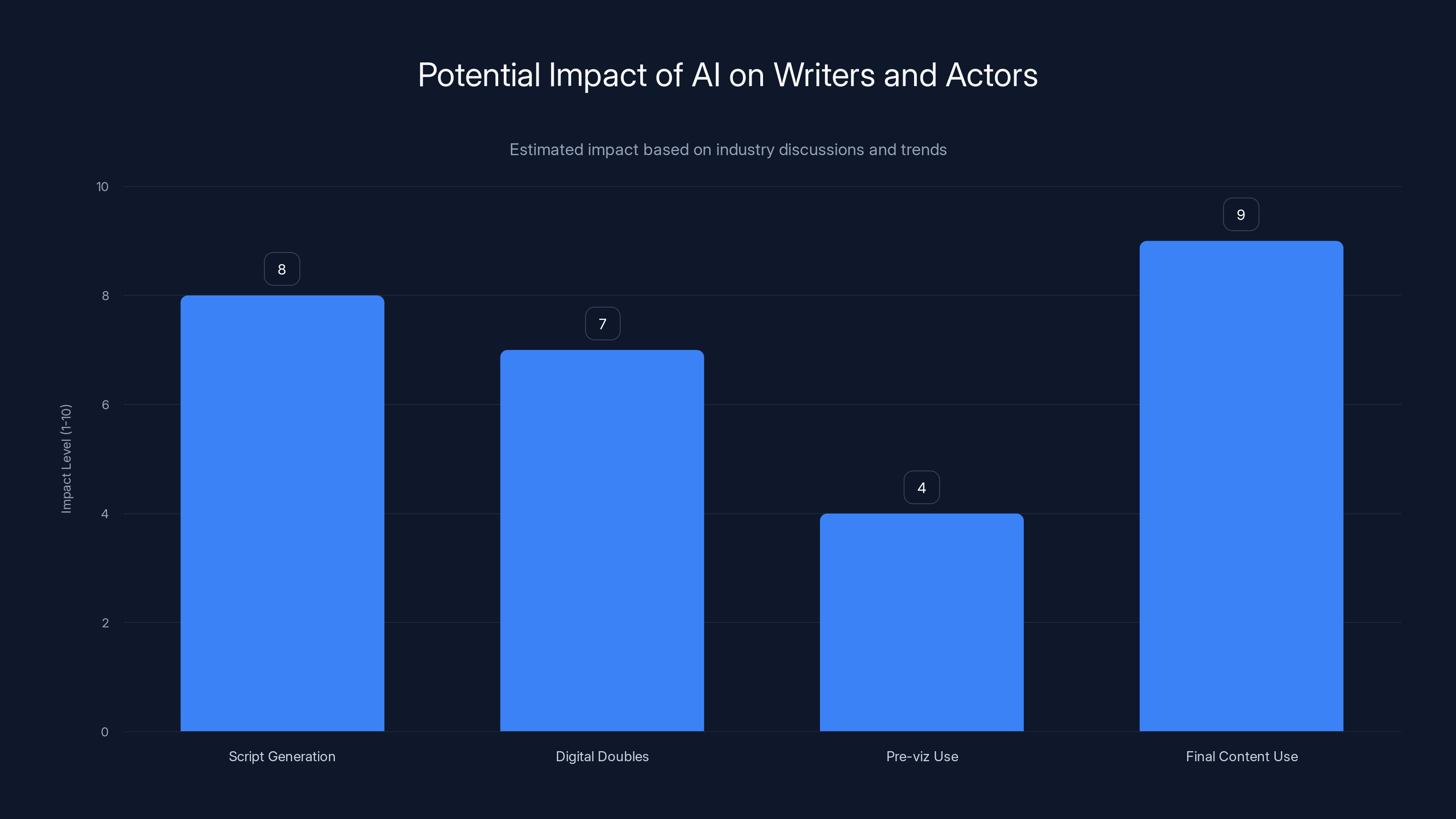

Estimated data suggests that AI's use in final content has the highest potential impact on writers and actors, while pre-viz use is less contentious.

The Sundance Announcement and Market Context

Adobe announced Firefly Foundry at the Sundance Film Festival in early 2025. This venue choice was strategic.

Sundance is where independent filmmakers, small studios, and emerging talent gather. It's the opposite of the Hollywood establishment. Announcing Firefly Foundry at Sundance signaled that Adobe wanted to position the tool as available to diverse creators, not just megacorporations.

But the actual deployment partnerships tell a different story. Adobe signed agreements with major talent agencies, established directors, and production houses. The early adopters are industry insiders, not indie filmmakers.

This gap between the messaging and the reality is important to understand. Adobe wants to seem inclusive and accessible. But enterprise AI tools are expensive, complex, and require significant technical infrastructure. They're not actually accessible to independent creators at launch.

Over time, this might change. Pricing might decrease. Deployment might simplify. Smaller studios might get access to shared model instances rather than fully custom models. But initially, Firefly Foundry is an enterprise tool for established studios.

The Sundance timing also positioned Adobe to capture mindshare before the industry fully confronts AI's role in production. By announcing partnerships with respected directors and offering a credible IP-safe solution, Adobe became the vendor to watch before alternatives could establish themselves.

Future Evolution: Where Firefly Foundry Goes From Here

Assuming Firefly Foundry succeeds with early enterprise adopters, how will it evolve?

The obvious path is expanding to adjacent creative industries. Fashion brands have custom visual languages. Advertising agencies need custom creative generation. Video game studios need asset generation trained on their art direction. Each of these verticals could benefit from custom Firefly Foundry models.

Adobe is likely already thinking about this. The company could offer Firefly Foundry services to luxury brands, architecture firms, product designers, and other creative enterprises. This expands the addressable market beyond entertainment.

Another evolution vector is capability expansion. Early Firefly Foundry might be good at generating variations and complementary assets. As the technology improves, it might become capable of generating complex sequences, entire scenes, or entirely new content within a studio's creative universe. This would further compress production timelines.

There's also the question of AI-to-AI workflow. Instead of humans prompting Firefly Foundry, other AI systems might generate prompts and coordinate multi-step generation. A director's intent could flow through an AI-orchestration system that breaks it into subtasks, dispatches them to Firefly Foundry, and assembles results. This would further abstract human creativity from execution.

But that gets into speculation. The more immediate question is whether Firefly Foundry actually works at scale and whether early adopters see real production benefits. If they do, adoption accelerates. If they don't, the product likely stays niche or gets retooled.

The broader question is whether generative AI in entertainment will follow the same pattern as earlier technologies: initial hype, then real adoption driven by genuine productivity gains, then eventual normalization as part of the creative toolkit. Firefly Foundry is betting that genuine productivity gains exist and that studios will pay for IP-safe tools to capture them.

Industry Reception and Skepticism

Not everyone in entertainment is excited about Firefly Foundry.

There's the obvious skepticism from writers and actors who see AI as threatening their work. That skepticism is politically powerful because these groups have union representation and legal leverage.

There's also skepticism from traditional visual effects houses. VFX companies employ thousands and generate billions in annual revenue. If Firefly Foundry meaningfully reduces VFX work, that's an existential threat. They have incentive to position Firefly Foundry as inferior, limited, or legally risky.

There's skepticism from independent filmmakers and smaller studios who may never be able to afford Firefly Foundry and see it as entrenching the power of large studios that can afford custom AI.

And there's skepticism from creative professionals who doubt that AI can generate content worth using in final productions. They think Firefly Foundry is useful for pre-viz and exploration, but not for delivered work. This skepticism might be justified—the technology is still early and has clear limitations.

These skeptics might be right. Firefly Foundry might end up being less transformative than advocates hope. It might be useful in specific narrow cases but not reshape production broadly. Or it might generate spectacular failures—films that obviously look AI-made, creative disasters that hurt studios' reputations.

But the fact that major studios, directors, and agencies are investing in Firefly Foundry suggests they believe the risk of not adopting is higher than the risk of adopting. That's the real test—not whether skeptics approve, but whether decision-makers inside studios think it's worth the investment.

Comparing Firefly Foundry to Alternative Approaches

Studios considering Firefly Foundry might also consider alternatives.

Building proprietary AI in-house. Some large studios have in-house research teams and could theoretically build custom AI themselves. But this requires significant technical expertise, infrastructure investment, and time. Most studios lack the capability or desire to become AI infrastructure companies. Outsourcing to Adobe is pragmatic.

Using multiple generic AI tools. Studios could continue using Midjourney, Stability AI's models, ChatGPT, and other off-the-shelf tools while accepting IP risk and managing it through legal and creative review processes. This is cheaper than Firefly Foundry but carries greater liability.

Licensing third-party custom models. Other startups besides Adobe are building custom AI solutions. Studios could work with those vendors. But they'd lose the integration benefits and established relationships Adobe provides.

Continuing traditional production without AI. Studios could ignore AI entirely and continue with traditional production methods. But this exposes them to competitive disadvantage as other studios gain cost and timeline advantages.

Each alternative has tradeoffs. Firefly Foundry offers lower risk and better integration in exchange for higher cost and less flexibility. Whether that tradeoff is worth it depends on the studio's size, resources, and risk tolerance.

FAQ

What exactly is Firefly Foundry?

Firefly Foundry is Adobe's enterprise generative AI platform that creates custom models trained exclusively on IP that studios own or have licensed rights to. Unlike generic AI tools trained on internet-scale data, each Firefly Foundry deployment is private to a single client and generates content within that client's established creative universe. The models integrate directly into Adobe's creative suite, including Premiere Pro, After Effects, Photoshop, and other production tools.

How does Firefly Foundry differ from using public AI tools like ChatGPT or Midjourney?

Public AI tools train on massive datasets scraped from the internet, which include copyrighted content and can create legal liability for users. Firefly Foundry trains exclusively on content the studio owns or has proper licensing rights for, which removes the main source of copyright infringement risk. Additionally, Firefly Foundry integrates directly into Adobe's production software, whereas public tools require export-import workflows. The models are also specialized to understand a specific studio's creative language and characters, whereas public tools generate generic content.

What are the main benefits of using Firefly Foundry in production?

Firefly Foundry accelerates production timelines by enabling rapid generation of variations for pre-visualization, visual effects development, digital doubles, and other content. It reduces costs by compressing timelines and reducing the need for expensive reshoots or manual asset creation. It provides IP protection by training only on owned or licensed content, avoiding most of the legal risks associated with generic AI tools. And it maintains consistency within a studio's established visual language since the model learned from the studio's specific creative work.

How does Firefly Foundry handle intellectual property and copyright concerns?

Firefly Foundry addresses IP concerns by training exclusively on content the studio owns or has explicit licensing rights to use. Adobe performs legal due diligence on every piece of training data before including it in the model. The company maintains detailed audit trails documenting the provenance of all training data. Enterprise agreements also include liability protections where Adobe covers legal costs if generated content infringes third-party IP despite these safeguards.

What types of content can Firefly Foundry generate?

Firefly Foundry can generate videos that are audio-aware (synchronizing with dialogue and music), static images and concept art, 3D models and assets, vector graphics, motion graphics and effects elements, and variations of existing scenes or characters. The platform is designed to generate content within a studio's established visual language and creative universe rather than entirely novel content that breaks from a studio's style.

Is Firefly Foundry available to independent filmmakers and smaller studios?

Not currently. Firefly Foundry is positioned as an enterprise tool with custom deployment, infrastructure management, and legal support. Early partnerships are with major studios, production houses, and talent agencies. Pricing and accessibility for smaller producers will likely improve over time as the technology matures and deployment processes simplify, but current access is limited to studios that can afford enterprise-scale investment.

How long does it take to deploy Firefly Foundry?

Deployment typically takes 3-4 months from contract to production use. The process involves auditing and organizing the studio's content library for training, Adobe training the custom model on that data, integration testing with the studio's existing tools and workflows, and training creative teams on how to use the system. Larger studios with massive content libraries might require longer deployment periods.

What happens as new content gets created? Does the model update automatically?

Firefly Foundry doesn't update automatically. Studios control when and what new content gets added to the training data. New films, shows, or digital assets created by the studio can be approved and added to the training set, and Adobe can retrain the model to incorporate that new creative material. This allows the model to evolve with the studio's work while maintaining the studio's control over what gets included in training.
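To make "studios control what gets added" concrete, here is a minimal sketch of an approval-gated training set that also keeps the kind of provenance audit trail described in the IP answer above. Every name here (`Rights`, `Asset`, `TrainingCorpus`, `snapshot_for_retraining`) is hypothetical. Adobe has not published how Firefly Foundry manages training data, and this is not its API.

```python
from dataclasses import dataclass, field
from datetime import datetime, timezone
from enum import Enum


class Rights(Enum):
    OWNED = "owned"
    LICENSED = "licensed"
    UNCLEARED = "uncleared"


@dataclass(frozen=True)
class Asset:
    asset_id: str
    title: str
    rights: Rights


@dataclass
class TrainingCorpus:
    """Hypothetical studio-controlled corpus: nothing enters training unless approved."""

    approved: dict[str, Asset] = field(default_factory=dict)
    # Each entry: (UTC timestamp, asset_id, decision note).
    audit_log: list[tuple[str, str, str]] = field(default_factory=list)

    def approve(self, asset: Asset, approver: str) -> bool:
        timestamp = datetime.now(timezone.utc).isoformat()
        # Rights check first: uncleared material is rejected, and the rejection is logged too.
        if asset.rights is Rights.UNCLEARED:
            self.audit_log.append(
                (timestamp, asset.asset_id, f"rejected by {approver}: rights not cleared")
            )
            return False
        self.approved[asset.asset_id] = asset
        self.audit_log.append(
            (timestamp, asset.asset_id, f"approved by {approver} ({asset.rights.value})")
        )
        return True

    def snapshot_for_retraining(self) -> list[str]:
        # A retraining run sees only what is approved at this moment, sorted for reproducibility.
        return sorted(self.approved)


corpus = TrainingCorpus()
corpus.approve(Asset("film-001", "Catalog Feature", Rights.OWNED), "legal")
corpus.approve(Asset("clip-042", "Archival Footage", Rights.UNCLEARED), "legal")
corpus.approve(Asset("show-007", "Licensed Series", Rights.LICENSED), "legal")
print(corpus.snapshot_for_retraining())  # prints ['film-001', 'show-007']
```

The design choice worth noting is that retraining reads from an explicit snapshot rather than from whatever content exists in the studio's library, which is what keeps the studio, not the vendor, in control of each model update.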

How does Firefly Foundry protect against union and talent concerns?

Firefly Foundry's transparent training data approach helps address union concerns by showing that the system only trains on content studios own or licensed. However, full resolution of writer and actor concerns likely requires negotiated agreements that specify acceptable uses of Firefly Foundry (for example, using it for pre-visualization but not final released content without additional approvals) and potentially additional compensation when digital doubles or generated dialogue replace union work.

What is the competitive advantage for studios that adopt Firefly Foundry first?

Early-adopting studios gain production cost reductions and timeline compression advantages that persist until competitors catch up. More strategically, studios develop specialized models trained on their creative catalogs, creating defensible competitive advantages in their ability to generate variations and extensions of their franchises and characters. These advantages compound across multiple productions annually, creating significant economic moats for early adopters.

Conclusion: The Inevitable Evolution of Entertainment Production

Firefly Foundry represents something important that goes beyond one product announcement. It's evidence that AI adoption in entertainment isn't about whether AI will be used—it's about how responsibly and effectively it gets integrated.

For decades, entertainment studios have operated with relatively stable production methods. You wrote a script, hired talent, shot footage, edited the material, and released the work. The tools changed over time, but the fundamental workflow remained consistent. Firefly Foundry doesn't eliminate this workflow, but it augments it at crucial friction points.

The questions that remain are not technical but practical. Will Firefly Foundry-generated content actually look good enough to ship? Will production timelines actually compress meaningfully? Will cost savings materialize? Will unions accept the technology or fight aggressively against it? Will studios find creative ways to use AI or just replicate what they're already doing faster?

These are not questions Adobe can answer alone. They're answered through real production experience. Early studio partners will try Firefly Foundry, see what works and what doesn't, and adjust. That practical feedback will shape the next iteration.

What's clear is that studios need what Firefly Foundry promises. Production costs are unsustainable. Timelines are too long. And the legal risk of using generic AI tools is real. Any credible alternative to those problems gets serious consideration from major studios.

Adobe has positioned Firefly Foundry as the conservative, protective option. Not the tool promising to replace all creative work, but the tool that helps studios use AI responsibly within their existing creative processes. That positioning matters because Hollywood is skeptical of revolutionary promises but responsive to pragmatic improvements.

The next two years will reveal whether Firefly Foundry delivers on that pragmatic promise. If it does, adoption accelerates and competitive pressure forces other studios to match the capability. If it doesn't, the product gets iterated or deprioritized.

But either way, Firefly Foundry signals an industry consensus that AI has a role in entertainment production. The era of studios ignoring AI is over. The era of figuring out how to use it responsibly is just beginning. And Adobe, with its deep relationships in the industry and integrated tool suite, is well-positioned to lead that transition.

For studios, directors, and creative professionals, the immediate question is pragmatic: does Firefly Foundry solve real production problems better than alternatives? For some studios, the answer is clearly yes, and they're already moving forward. For others, more evidence is needed.

But the momentum is real. The partnerships are real. The production economics are real. And unless something dramatic changes, Firefly Foundry will become an increasingly common tool in entertainment production over the next few years.

The question isn't whether Hollywood will use AI. The question is how effectively they'll integrate it into their creative processes while protecting the intellectual property and creative control that defines the business. Firefly Foundry is Adobe's answer to that question.

Key Takeaways

- Firefly Foundry trains AI models exclusively on studio-owned or licensed IP, sharply reducing the copyright infringement risks that plague generic AI tools

- Custom models integrate directly into Adobe's production suite, compressing VFX timelines and reducing production costs across multiple phases

- Enterprise partnerships with major studios, directors, and talent agencies validate real-world production value and accelerate industry adoption

- IP-safe architecture provides legal protection and liability coverage, addressing the primary barrier preventing studio adoption of AI

- Production economics and competitive pressure drive adoption—studios that gain cost and timeline advantages create momentum for industry-wide change

![Adobe's IP-Safe AI Models Are Reshaping Entertainment Production [2025]](https://tryrunable.com/blog/adobe-s-ip-safe-ai-models-are-reshaping-entertainment-produc/image-1-1769092649780.jpg)