Introduction: The Wearable AI Revolution is Here

Something shifted in the AI landscape when Amazon decided to acquire Bee, a San Francisco-based AI wearable startup. This wasn't just another acquisition. It was a statement about where the future of consumer technology is headed, and it tells us something important about the limitations of the smart home vision that dominated the last decade. According to Amazon's official announcement, the acquisition is aimed at expanding its AI capabilities beyond the home.

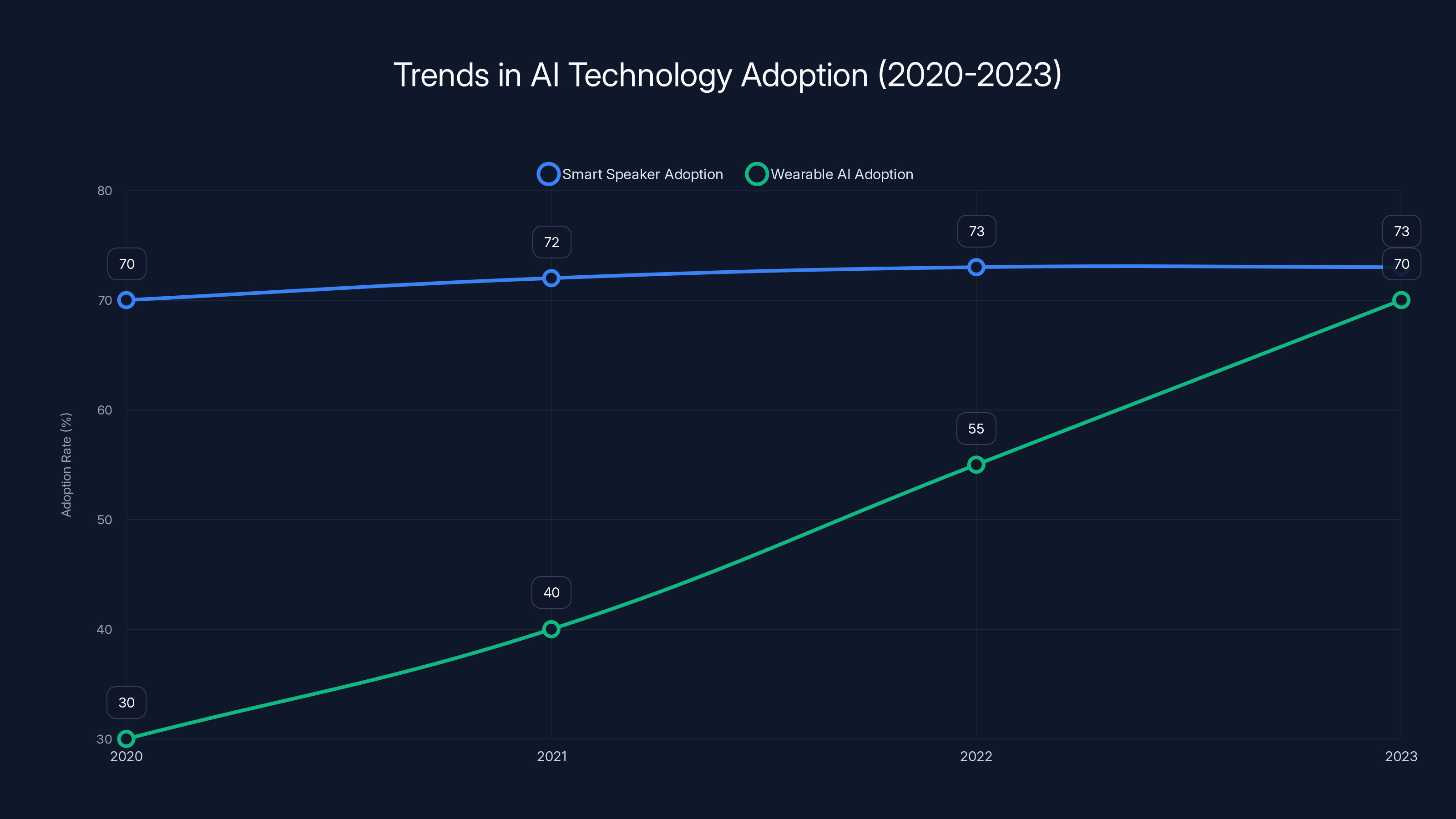

For years, tech companies pushed the narrative that AI should live in your home. Smart speakers on your nightstand. AI assistants in your kitchen. Voice-controlled everything in your living room. Amazon bet billions on this vision with Alexa, and while it succeeded in placing these devices in millions of homes, the reality is more complicated. Smart home adoption plateaued. Alexa's growth stalled. Competing assistants like Apple's Siri became more deeply integrated into daily life through the devices people actually carried with them, as noted in GeekWire's analysis.

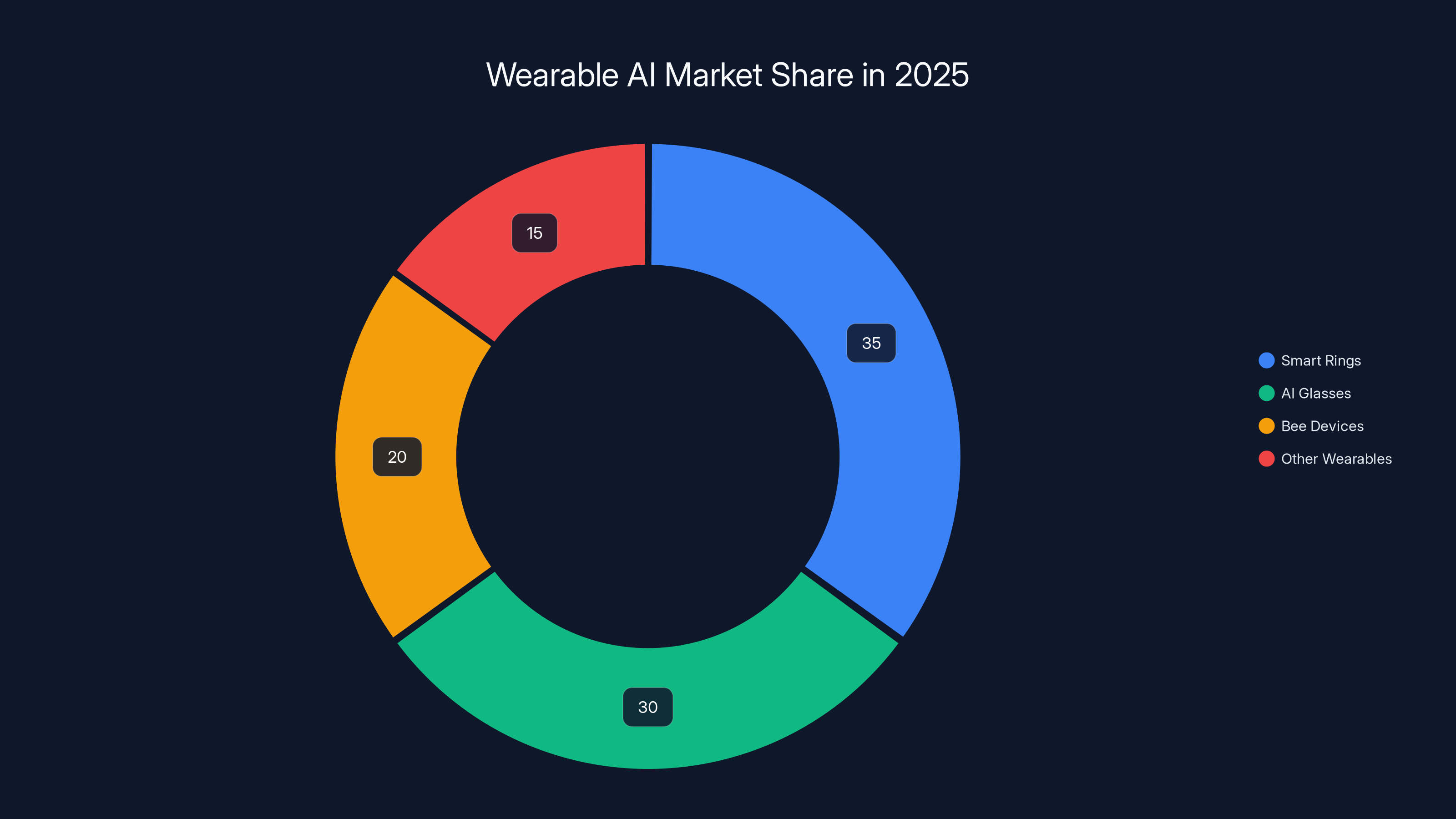

Then came 2024 and 2025. The AI wearable market exploded. Smart rings started appearing on people's fingers. AI glasses hit the mainstream. Wearable pins became status symbols. And suddenly, Amazon realized it had been thinking about AI in the wrong place. Not in your home. On your person. As reported by SF Standard, the shift towards personal AI devices has spooked traditional smart home markets.

Bee represents Amazon's acknowledgment of this shift and its bid to capture the next generation of AI interaction. Unlike Alexa, which lives on your countertop or in your speaker, Bee clips to your jacket, sits on your wrist, or hangs around your neck. It's with you constantly. It listens to your conversations, learns about your life, and acts as a personal AI companion that understands context about who you are and what matters to you.

But here's what makes this acquisition strategically brilliant: Amazon isn't trying to replace Alexa. It's not even trying to fully merge them yet. Instead, Amazon is thinking about the future of AI as layered, contextual, and distributed across devices. Alexa handles your home. Bee handles your world. Eventually, they work together seamlessly. It's a vision that could reshape how we interact with technology and how companies monetize AI going forward, as discussed in WebProNews.

This deep dive explores why Amazon made this move, what Bee actually does, how it differs from Alexa and other AI wearables, and what this means for the future of personal technology. We'll look at the technical architecture behind AI wearables, the business strategy driving the acquisition, and the implications for privacy, productivity, and how we work.

TL;DR

- Amazon acquired Bee to extend AI beyond the home into a personal wearable that records conversations and learns about users through their meetings, lectures, and daily life, as detailed by Amazon's official release.

- Bee and Alexa are expected to merge eventually but currently operate as complementary AI systems, with Bee handling external context and Alexa managing the smart home.

- AI wearables are becoming the dominant interaction model as users prefer always-with-you devices over stationary smart speakers, according to 36Kr.

- The wearable AI market is exploding with smart rings, AI glasses, and pins all competing for personal device real estate.

- Privacy and recording raise fundamental questions about consent, data storage, and how companies use voice transcripts to train AI systems, as explored by Tech Policy Press.

Estimated data suggests that data usage is the highest privacy concern with Bee, followed by continuous recording and consent issues.

What is Bee and Why Does It Matter?

Bee is fundamentally different from every other AI assistant you've encountered. It's not a chatbot. It's not a voice assistant that responds to commands. It's a personal AI companion that runs locally on a wearable device and learns about your life through continuous recording and analysis, as described in Amazon's feature overview.

The core use case is deceptively simple: record your conversations. When you're in a meeting, Bee is listening. During a lecture, Bee is recording. At a conference, Bee captures everything. After the conversation ends, Bee transcribes the audio, discards the recording itself (reducing privacy concerns but also limiting playback capabilities), and uses the transcript to build a knowledge graph about you.
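The record, transcribe, discard sequence can be sketched as a small state machine. This is a minimal illustration under stated assumptions, not Bee's implementation: `CaptureSession` and `fake_transcribe` are hypothetical names, and the fake transcriber stands in for a real speech-to-text model.

```python
from dataclasses import dataclass, field
from typing import Callable, List


@dataclass
class Transcript:
    segments: List[str]


@dataclass
class CaptureSession:
    """Hypothetical capture session: raw audio lives only in memory until transcribed."""
    audio: bytearray = field(default_factory=bytearray)

    def record(self, chunk: bytes) -> None:
        # Append incoming audio to the in-memory buffer.
        self.audio.extend(chunk)

    def finish(self, transcribe: Callable[[bytes], Transcript]) -> Transcript:
        # Transcribe first, then drop the buffer so only the transcript survives.
        # (In Python this releases the data; it is not a secure-erase guarantee.)
        transcript = transcribe(bytes(self.audio))
        self.audio.clear()
        return transcript


def fake_transcribe(audio: bytes) -> Transcript:
    # Stand-in for a real speech-to-text model (assumption).
    return Transcript(segments=[f"<{len(audio)} bytes of speech>"])


session = CaptureSession()
session.record(b"\x01\x02" * 8)
result = session.finish(fake_transcribe)
print(result.segments)     # ['<16 bytes of speech>']
print(len(session.audio))  # 0
```

The design choice worth noticing is that the transcript is the only return value: once `finish` runs, there is nothing left to play back, which is exactly the limitation the paragraph above describes.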

This knowledge graph is the magic. Bee doesn't just remember facts. It understands patterns. It learns your commitments, your goals, your relationships, and your projects. When you ask Bee a question later, it can draw on this accumulated understanding of your life to give contextually relevant answers. It can suggest follow-ups on discussions you had weeks ago. It can help you remember what you committed to during that client call last Tuesday.

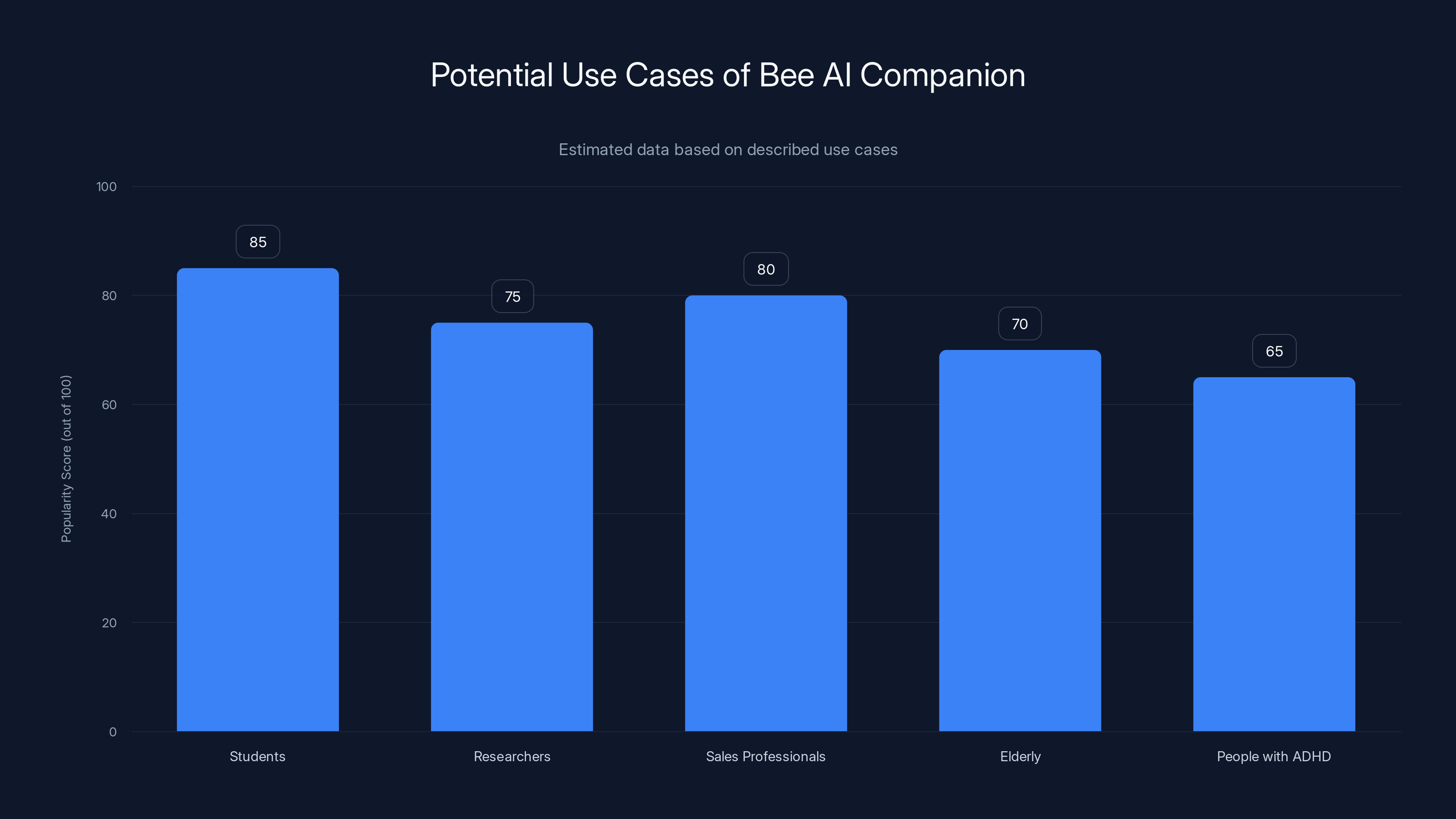

Early adopters have found surprising use cases. Students record lectures and get instant summaries. Researchers document interviews without the friction of manual note-taking. Sales professionals capture customer conversations and have Bee extract action items automatically. Elderly people with memory challenges use Bee to reconstruct what happened during their day. People with ADHD use it to externalize their thoughts and commitments.

What separates Bee from a simple voice recorder or even a transcription service is the AI layer on top. A voice memo just captures audio. Bee captures audio and understands it. It doesn't just transcribe words. It comprehends intent, extracts entities, identifies decisions, and builds relationships between different conversations and projects.

The device itself is elegant by design. Clip it to your jacket pocket. Wear it as a bracelet. Attach it to your backpack. It's small enough to be unobtrusive but visible enough that you're not secretly recording people. The physical form factor matters because it signals transparency. People know Bee is there. It's not hidden in your phone or buried in your pocket.

Estimated data shows that students and sales professionals are likely the most frequent users of Bee, leveraging its ability to summarize and extract action items.

The Strategic Logic Behind Amazon's Acquisition

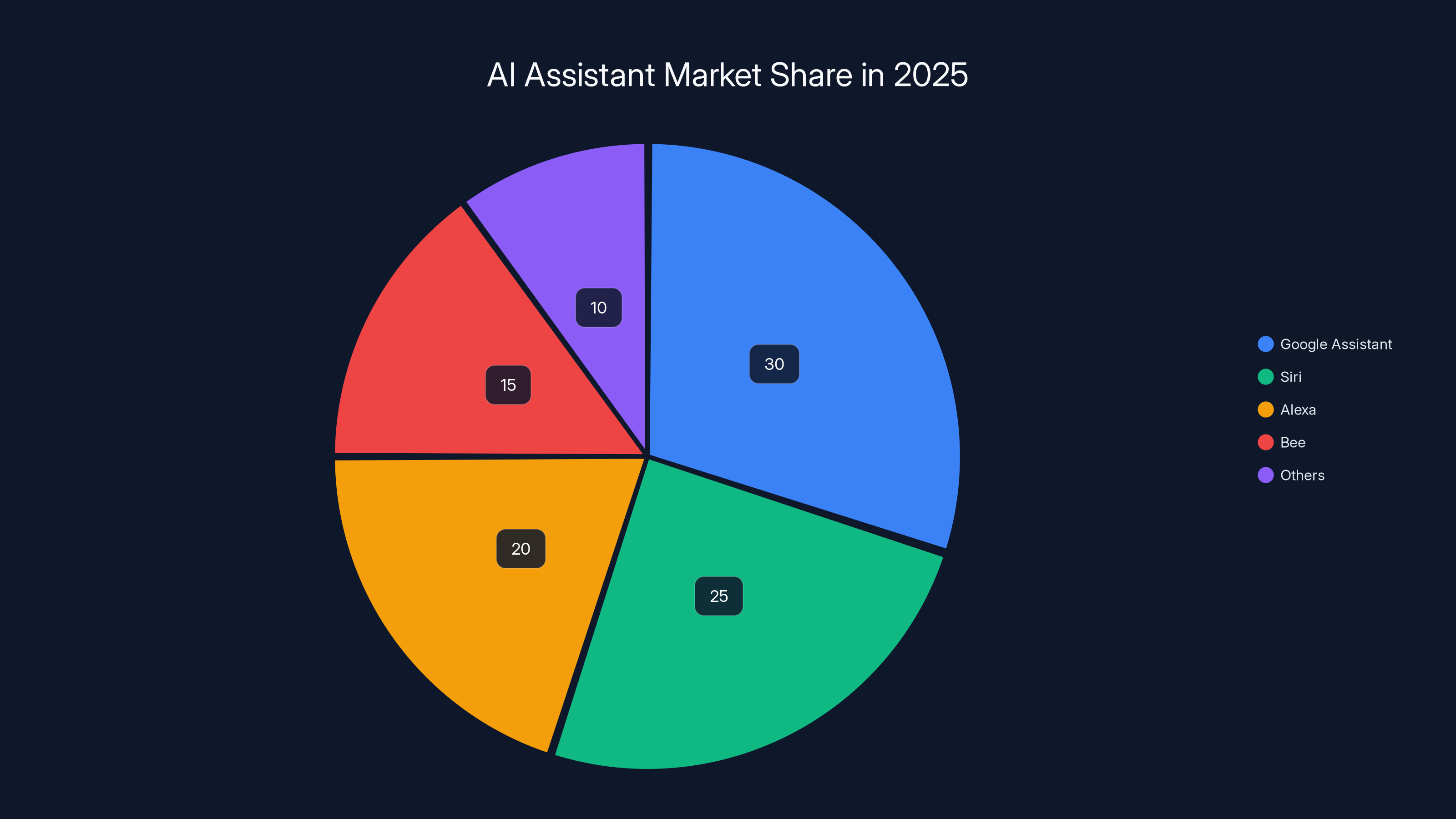

Amazon's purchase of Bee makes sense only when you understand the company's larger strategic position in 2025. Alexa, once Amazon's star product, has become a commodity. Every device manufacturer can embed a voice assistant. Every smartphone already has one built in. Google Assistant, Siri, and a dozen other competitors fragment the market. Alexa's growth has stalled, and revenue from Alexa devices has become a low-margin business, as highlighted by GeekWire.

Meanwhile, the AI landscape shifted completely. Large language models made it possible to build intelligent assistants that could actually understand context and nuance. Wearable technology matured enough to support always-on AI without draining batteries in three hours. User expectations evolved. People no longer wanted to shout commands at a device on their kitchen counter. They wanted AI that understood them personally.

Amazon faced a choice: spend years rebuilding Alexa from the ground up to compete in a wearable-first world, or acquire a team that already understands personal AI and has built product momentum. Acquiring Bee was faster, cheaper, and lower risk than rebuilding internally. The Bee team, led by founder Maria de Lourdes Zollo, had already solved the hard problems. They understood how to capture audio on a wearable device. They'd figured out privacy-preserving transcription. They'd built a personal knowledge graph that actually works. They had early traction with real users.

But there's a deeper strategic reason for the acquisition. Amazon recognizes that the future of AI isn't about single devices or single companies. It's about ecosystems. Alexa handles your smart home. Bee handles your personal life. Amazon's cloud services handle your business. AWS integrates with your enterprise tools. Over time, these layers integrate and share context. A meeting recorded by Bee can inform your home automation settings. Your smart home can coordinate with your wearable. Your Alexa devices can talk to your Bee, as noted in WebProNews.

This layered approach creates network effects. Each device becomes more valuable as it connects to the others. Amazon locks users deeper into its ecosystem. A customer who uses Alexa at home, Bee on their person, and AWS for business has much higher switching costs than someone using just Alexa. The economics improve too. A smart speaker at

There's also a talent angle. The Bee team consists of eight engineers and AI researchers building state-of-the-art personal AI. Amazon needs this talent to compete with Apple, Google, and emerging startups, and acquiring the company is cheaper than trying to recruit these people individually. They bring domain expertise, existing relationships with early users, and a proven ability to execute in a domain where Amazon had failed.

How Bee's Architecture Differs from Traditional Voice Assistants

Almost every AI assistant you've used follows the same architecture. You give a command. The device processes your command and sends it to a cloud server. The cloud server processes your request, generates a response, and sends it back to the device. The device plays the response. This round-trip to the cloud introduces latency, requires constant internet connectivity, and means your voice data gets sent to external servers.

Bee's architecture is fundamentally different. The device records audio locally on the wearable. Processing happens on-device first. Only transcripts, not audio files, are sent to Amazon's servers. This approach has several advantages. It reduces privacy exposure because raw audio never leaves the device. It reduces latency because some processing happens immediately. It reduces bandwidth requirements, which is critical for a wearable with limited connectivity, as explained in CryptoRank.
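To see why sending transcripts instead of audio matters for bandwidth, here is a back-of-the-envelope comparison. Every constant is an assumption chosen for illustration (uncompressed 16 kHz, 16-bit mono audio; about 150 spoken words per minute; about 6 bytes per word), not a published Bee specification.

```python
# Assumed values for illustration only; these are not Bee specifications.
SAMPLE_RATE_HZ = 16_000   # common sample rate for speech recognition
BYTES_PER_SAMPLE = 2      # 16-bit mono PCM
WORDS_PER_MINUTE = 150    # typical conversational speaking rate
BYTES_PER_WORD = 6        # ~5 characters plus a space, ASCII text


def audio_bytes_per_hour() -> int:
    return SAMPLE_RATE_HZ * BYTES_PER_SAMPLE * 3600


def transcript_bytes_per_hour() -> int:
    return WORDS_PER_MINUTE * 60 * BYTES_PER_WORD


audio = audio_bytes_per_hour()      # 115,200,000 bytes (~115 MB)
text = transcript_bytes_per_hour()  # 54,000 bytes (~54 KB)
print(f"audio: {audio:,} B, transcript: {text:,} B, ratio ~{audio / text:,.0f}x")
# audio: 115,200,000 B, transcript: 54,000 B, ratio ~2,133x
```

Compressed speech narrows the gap but does not close it: a speech codec running at about 16 kbit/s still produces roughly 7.2 MB per hour, on the order of a hundred times the size of the transcript under these assumptions.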

The knowledge graph that Bee builds is also different from how traditional voice assistants work. Alexa processes requests one at a time. You ask a question, get an answer, move on. Alexa doesn't build a rich understanding of who you are or what matters to you. It doesn't learn from context. Each interaction is somewhat isolated.

Bee, by contrast, accumulates context over time. Every conversation is added to your personal knowledge graph. This graph isn't just a list of facts. It's a structured representation of relationships. It knows that Sarah is your team lead, that you have a project called Dashboard Redesign, that your company is exploring AI integration, and that you've committed to delivering a prototype by Q2. When you ask Bee a question weeks later, it can draw on this understanding to give you relevant, contextual answers.
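One common way to represent "a structured representation of relationships" is as subject, predicate, object triples. The sketch below encodes the Sarah example that way; the predicate names (`team_lead_of`, `committed_to`, `due`) are hypothetical, and nothing here is claimed to be Bee's actual schema.

```python
from collections import defaultdict

# Hypothetical schema: each fact is a (subject, predicate, object) triple.
facts = [
    ("Sarah", "team_lead_of", "you"),
    ("you", "works_on", "Dashboard Redesign"),
    ("your company", "exploring", "AI integration"),
    ("you", "committed_to", "Dashboard Redesign prototype"),
    ("Dashboard Redesign prototype", "due", "Q2"),
]

# Index edges in both directions so a question can start from either end.
outgoing = defaultdict(list)
incoming = defaultdict(list)
for subj, pred, obj in facts:
    outgoing[subj].append((pred, obj))
    incoming[obj].append((subj, pred))

print(outgoing["you"])
# [('works_on', 'Dashboard Redesign'), ('committed_to', 'Dashboard Redesign prototype')]
print(incoming["you"])
# [('Sarah', 'team_lead_of')]

# Two hops: what did you commit to, and when is it due?
for pred, item in outgoing["you"]:
    if pred == "committed_to":
        print(item, dict(outgoing[item]).get("due"))  # Dashboard Redesign prototype Q2
```

Unlike a flat list of sentences, the triples let a later question follow edges ("what did I commit to?" then "when is it due?") instead of re-reading every transcript.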

The technical implementation matters here. Traditional chatbots use a single transformer-based model that processes input and generates output. Bee appears to use multiple AI models working in concert. One model transcribes audio. Another extracts entities and relationships. A third handles question-answering. A fourth manages the knowledge graph. This modular approach allows each component to be optimized for its specific task and updated independently.
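A modular design like the one described can be sketched as a chain of stages that share one state dictionary, so any single stage can be replaced without touching the others. This is an illustrative assumption: every stage body below is a trivial stand-in (the "transcriber" just decodes bytes, the "entity model" just picks capitalized words), and the stage names are hypothetical.

```python
from typing import Callable, Dict, List

# Each stage takes and returns the shared state, so stages are swappable.
Stage = Callable[[Dict], Dict]


def transcribe(state: Dict) -> Dict:
    # Stand-in for speech-to-text: treat the "audio" bytes as UTF-8 text,
    # and remove the audio from the state once it has been transcribed.
    state["text"] = state.pop("audio").decode("utf-8")
    return state


def extract_entities(state: Dict) -> Dict:
    # Stand-in for an entity model: capitalized tokens count as entities.
    state["entities"] = [w.strip(".,?") for w in state["text"].split() if w[0].isupper()]
    return state


def update_graph(state: Dict) -> Dict:
    # Stand-in for the knowledge-graph manager: record each mention as an edge.
    graph = state.setdefault("graph", set())
    graph.update(("mentioned", e) for e in state["entities"])
    return state


def answer(state: Dict) -> Dict:
    # Stand-in for question answering: return graph entities named in the question.
    question = state.get("question", "").lower()
    state["answer"] = sorted(e for _, e in state["graph"] if e.lower() in question)
    return state


def run_pipeline(stages: List[Stage], state: Dict) -> Dict:
    for stage in stages:
        state = stage(state)
    return state


result = run_pipeline(
    [transcribe, extract_entities, update_graph, answer],
    {"audio": b"Marcus owns the API work for Dashboard Redesign", "question": "Who is Marcus?"},
)
print(result["answer"])  # ['Marcus']
```

In a real system the question-answering stage would presumably run on demand rather than after every conversation; chaining it here just shows that all four roles can sit behind the same interface.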

Privacy design is built into the architecture, not bolted on afterward. Audio is discarded immediately after transcription. What persists is structured data: who said what, when they said it, and what it means. This has privacy advantages but also limitations. You can't play back the original conversation to verify accuracy, which matters for some professional use cases. You can't hear tone of voice or inflection, which can change meaning. This trade-off, giving up playback and verification in exchange for privacy, is baked into Bee's design.

Estimated data shows that Google Assistant leads the AI assistant market in 2025, with Alexa and Bee holding significant shares. The acquisition of Bee by Amazon aims to strengthen Alexa's position in this competitive landscape.

Bee vs. Alexa: Understanding the Complementary Strategy

The most confusing aspect of Amazon's acquisition strategy is the question of why the company needs both Bee and Alexa. Won't they cannibalize each other? Won't merging them make more sense?

The answer reveals sophisticated thinking about how different AI assistants serve different roles. Alexa is optimized for a specific use case: controlling your home. You say "Alexa, turn off the lights." Alexa turns off the lights. It's transaction-focused. You issue a command. Alexa executes it. The interaction is discrete and complete.

Bee operates in a completely different mode. It's not command-response. It's ambient. It's continuously recording and learning. It's building a relationship with you over time. You don't explicitly ask Bee to remember something. Bee observes your conversations and extracts what's important. Later, when you need context, Bee has it.

The complementary nature becomes clear when you think about where each device operates. Alexa lives in your home. It controls your environment. It handles entertainment, shopping, smart home automation, and informational queries that don't require personal context. You're at home, you want to do something in your home, you tell Alexa.

Bee operates outside the home. It's with you at work, in meetings, at conferences, during classes. It captures the context of your professional and personal life. It learns about your projects, relationships, and commitments. It helps you remember and organize everything that happened outside your four walls.

Eventually, Amazon plans to integrate these systems. Imagine this scenario: You're in a meeting captured by Bee. You commit to delivering a project report by Friday. Bee notes this commitment. Later, you get home. You say, "Alexa, what do I need to do this week?" Alexa checks with Bee, retrieves your commitments, and tells you that you need to deliver the report. Or imagine you tell Alexa to block your Friday afternoon on your calendar. Alexa coordinates with Bee to suggest that you should start working on the report Thursday evening. The integration creates intelligence that neither system can provide alone.
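The "Alexa checks with Bee" step in this scenario amounts to one system querying another for commitments that fall in a date window. The sketch below is purely hypothetical: no such Amazon interface is documented, and `Commitment`, `commitments_this_week`, and the 2025 dates are illustrative assumptions.

```python
from dataclasses import dataclass
from datetime import date, timedelta
from typing import List, Tuple


@dataclass(frozen=True)
class Commitment:
    description: str
    due: date
    source: str  # which recorded conversation the commitment came from


def week_bounds(today: date) -> Tuple[date, date]:
    # Monday through Sunday of the week containing `today`.
    start = today - timedelta(days=today.weekday())
    return start, start + timedelta(days=6)


def commitments_this_week(store: List[Commitment], today: date) -> List[Commitment]:
    start, end = week_bounds(today)
    return sorted((c for c in store if start <= c.due <= end), key=lambda c: c.due)


store = [
    Commitment("Deliver project report", date(2025, 3, 14), "Tuesday team meeting"),
    Commitment("Send slides to client", date(2025, 3, 21), "client call"),
]

# A home assistant answering "what do I need to do this week?" might call:
for c in commitments_this_week(store, today=date(2025, 3, 11)):
    print(f"{c.description} by {c.due:%A}")  # Deliver project report by Friday
```

Keeping the query narrow (commitments in a date range, with their source) would let the home assistant answer the question without needing access to the underlying transcripts.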

But this integration isn't happening immediately. Amazon is letting each system develop independently and prove its value first. This is smart. If Amazon tried to merge them too early, it might dilute Bee's focused functionality with Alexa's command-response paradigm. Let Bee establish its own user base, build momentum, and prove its value proposition. Then integrate when the technical and market conditions are right.

The Wearable AI Ecosystem Landscape in 2025

Bee doesn't exist in a vacuum. It's entering a crowded market of AI wearables that have exploded in popularity over the past eighteen months. Understanding this competitive landscape is essential to understanding why Amazon's acquisition makes strategic sense.

Smart rings have become the hottest wearable category. These tiny devices track health metrics, monitor your stress levels, and increasingly, handle payments and communicate with your phone. Companies like Oura and Samsung have captured significant market share. The appeal is obvious: it's always with you, it's subtle, and it handles critical health and financial functions. But smart rings have limited computational power, which constrains what they can do beyond biometric monitoring.

AI glasses represent the other major category. Meta's Ray-Ban AI glasses can answer questions, identify objects, and maintain conversations while you're walking around. Google Glass tried this years ago and failed, but the technology has since matured. The appeal of glasses is that they're a natural interface. You look at something, you ask a question, you get an answer. The barrier is social acceptance. Wearing smart glasses in public still feels unusual to many people. There's also the privacy question. If your glasses have cameras, you're potentially recording everyone you interact with, as discussed in ZDNet.

Bee occupies a middle ground. It's more powerful than a smart ring but less visually prominent than glasses. It's a personal recording device that's more socially acceptable than hidden recording (because it's visible) but less intrusive than glasses with cameras. It focuses on one specific function: capturing and understanding conversations through audio.

Other startups are building in adjacent spaces. Humane's AI Pin attempted to be a wearable that replaced your phone. It failed commercially but proved there's demand for a personal AI device. Rabbit's R1 is a handheld AI companion with a physical button interface. Various startups are building AI note-taking devices that try to do what Bee does. But none have achieved Bee's combination of elegant hardware, focused functionality, and user traction.

What separates Bee from these competitors is the backing and ecosystem. Bee is now owned by Amazon, one of the world's most sophisticated technology companies with massive cloud resources, an existing user base, and integration opportunities across dozens of services. This gives Bee advantages that independent startups can't match. Amazon can integrate Bee with Alexa devices, AWS services, Amazon's advertising platform, and eventually, its broader ecosystem.

The wearable AI market is still early enough that there's room for multiple players. But the consolidation is happening. Amazon buying Bee. Google deepening integration with Wear OS devices. Apple betting everything on its AI-powered iPhone and Watch. Meta pushing glasses and Ray-Ban integration. The market is stratifying into ecosystem plays dominated by big tech companies and niche applications served by smaller companies.

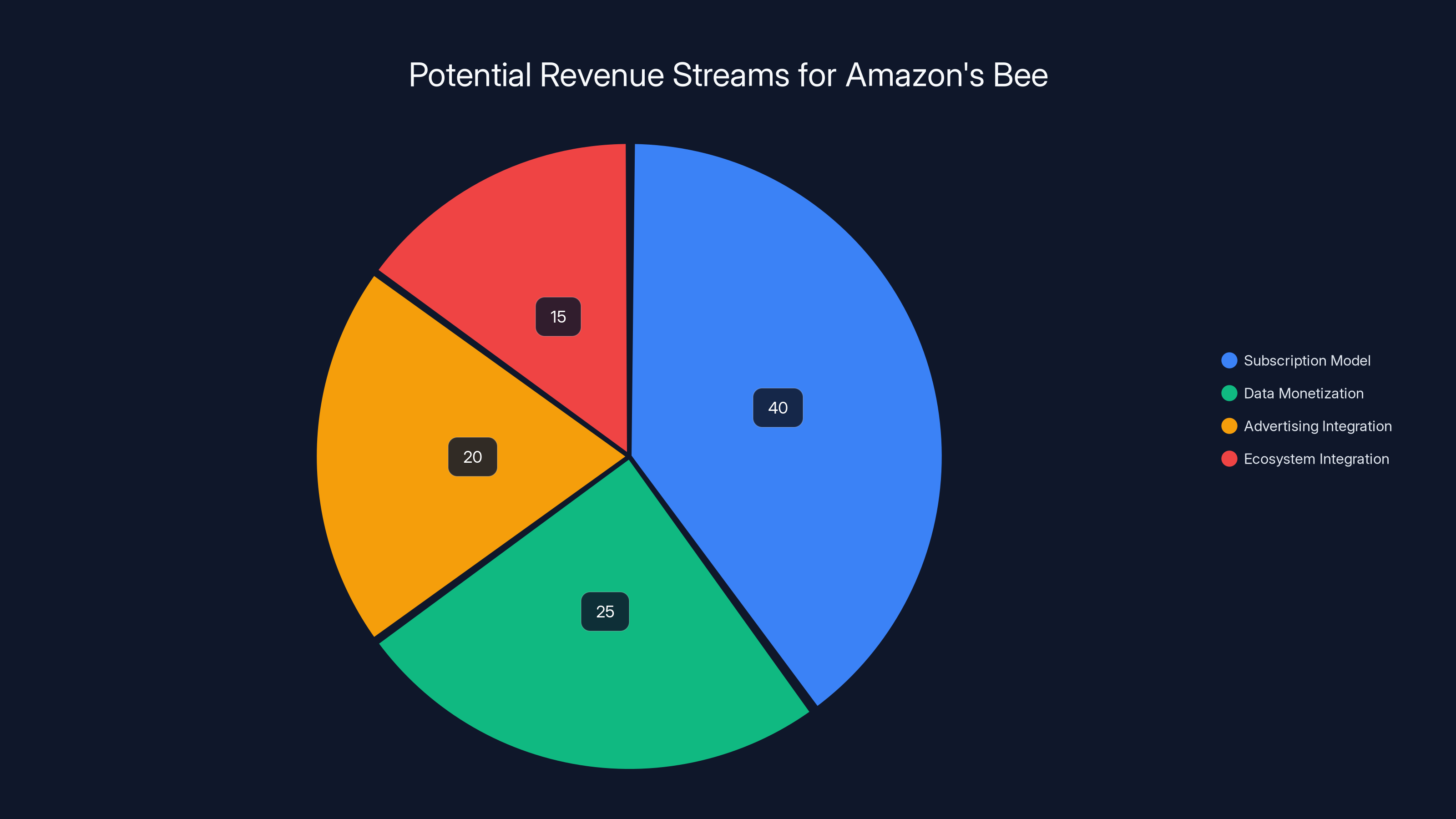

Estimated data suggests that the subscription model could account for 40% of Bee's revenue, followed by data monetization at 25%, advertising integration at 20%, and ecosystem integration at 15%.

The Privacy Question: Is Recording Everything Actually Okay?

Bee's core functionality raises a fundamental question that goes beyond just Bee and touches the future of AI wearables generally: Is it acceptable to record conversations constantly?

Bee's design tries to address privacy concerns head-on. It's not hidden. It's visible. Everyone around you knows it's recording because they can see it. The company discards audio after transcription, which means the recordings aren't stored permanently on Amazon's servers. Only structured data persists: a transcript and extracted information about commitments and relationships.

But this doesn't fully resolve the concerns. First, there's the question of consent. You might be comfortable with Bee recording in your private office. Are other people in meetings comfortable with it? What about recording in public spaces like coffee shops or hallways? Do you need to explicitly ask permission before recording conversations with other people?

Second, there's the practical reality of data handling. Even if Amazon discards audio, the transcripts are extremely valuable. They contain sensitive information about business discussions, personal relationships, and confidential information. Who has access to these transcripts? How secure are they? What happens if Amazon gets hacked or is subpoenaed? Companies have breaches. Governments demand data. These aren't hypothetical concerns.

Third, there's the question of how Amazon uses this data to improve Bee and develop new products. Bee's knowledge graph is built from your personal data. Amazon can analyze this data in aggregate to identify trends, understand how people work, and improve its AI models. This is how AI companies create competitive advantage. But it also means your personal data is being used to train the next generation of Amazon's AI systems.

These privacy questions don't have easy answers. The benefits of having a device that remembers and contextualizes your conversations are real. The productivity gains are legitimate. But the privacy trade-offs are also significant. Users need to make informed choices about what they're accepting.

Bee's approach so far seems to be lighter touch than many alternatives. The company is trying to minimize data collection, maximize transparency about what's being recorded, and give users control over which services Bee can access. These are good practices, but they're not sufficient to fully address privacy concerns. The fundamental issue remains: You're creating a detailed record of your conversations, and that record is valuable enough that Amazon is willing to spend millions to acquire it.

The Knowledge Graph: How Bee Actually Learns About You

The most sophisticated part of Bee's technology is the personal knowledge graph, and understanding how this works is essential to understanding Bee's competitive advantage.

A traditional chatbot processes text and generates responses. It has no memory of previous conversations. Ask it the same question twice on different days and you get essentially the same answer. It doesn't learn about you. It doesn't develop a relationship. It just processes input and generates output based on its training.

Bee builds a knowledge graph by analyzing all your conversations over time. This graph is a structured representation of facts about you. Not just facts like "your name is Sarah" but relational facts like "Sarah is your team lead and you report to her on Tuesdays and you're currently working on Dashboard Redesign and you committed to delivering the prototype by Q2 and Sarah is concerned about the timeline."

The knowledge graph represents these facts in a way that allows reasoning. If you ask Bee "What am I working on this quarter?" Bee doesn't just search for the words "this quarter." It understands the semantics. It knows that "this quarter" currently means Q2. It retrieves the Dashboard Redesign project and any other projects whose deadlines, as mentioned in conversations, fall within that period. It can reason about deadlines, dependencies, and progress based on what it's heard in conversations.
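Resolving a relative phrase like "this quarter" into a concrete date range is a small but necessary step before any deadline can be matched. The sketch below assumes calendar quarters (fiscal quarters would need an offset), and the deadlines, including the "Vendor audit" project, are hypothetical.

```python
from datetime import date, timedelta
from typing import Tuple


def quarter_of(d: date) -> int:
    return (d.month - 1) // 3 + 1


def quarter_bounds(year: int, q: int) -> Tuple[date, date]:
    start = date(year, 3 * (q - 1) + 1, 1)
    if q == 4:
        end = date(year, 12, 31)
    else:
        # Day before the next quarter starts.
        end = date(year, 3 * q + 1, 1) - timedelta(days=1)
    return start, end


def resolve_quarter(phrase: str, today: date) -> Tuple[date, date]:
    """Map 'this quarter' or 'Q1'..'Q4' (assumed: current calendar year) to a date range."""
    phrase = phrase.strip().lower()
    if phrase == "this quarter":
        return quarter_bounds(today.year, quarter_of(today))
    if len(phrase) == 2 and phrase[0] == "q" and phrase[1] in "1234":
        return quarter_bounds(today.year, int(phrase[1]))
    raise ValueError(f"unrecognized quarter phrase: {phrase!r}")


deadlines = {"Dashboard Redesign": date(2025, 6, 30), "Vendor audit": date(2025, 9, 15)}
today = date(2025, 5, 2)
start, end = resolve_quarter("this quarter", today)
print([name for name, due in deadlines.items() if start <= due <= end])  # ['Dashboard Redesign']
print(resolve_quarter("Q2", today) == (start, end))  # True
```

Because "this quarter" and "Q2" resolve to the same range, a question phrased one way can match a commitment that was recorded the other way.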

This is different from traditional semantic search. A semantic search engine finds documents related to your query. A knowledge graph answers questions by reasoning over structured relationships. If you ask Bee "Who should I talk to about the API issue?" Bee can reason: "In a conversation on January 15, Marcus mentioned he's the API expert. In a conversation on January 20, you assigned the API issue to Marcus. Therefore, you should talk to Marcus." The knowledge graph connects these facts together.
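The Marcus example is essentially a join across facts from two different conversations. A minimal sketch, assuming dated triples (the year 2025 is an assumption, since the example gives only month and day) and a hypothetical `who_to_ask` helper:

```python
from datetime import date
from typing import List, Tuple

# Hypothetical dated facts extracted from two separate conversations.
facts = [
    (date(2025, 1, 15), "Marcus", "expert_in", "API"),
    (date(2025, 1, 20), "API issue", "assigned_to", "Marcus"),
]


def who_to_ask(issue: str, topic: str) -> List[Tuple[str, List[date]]]:
    """People who both own the issue and are experts on its topic,
    with the conversation dates that support each conclusion."""
    owners = {obj: d for d, subj, pred, obj in facts if subj == issue and pred == "assigned_to"}
    experts = {subj: d for d, subj, pred, obj in facts if pred == "expert_in" and obj == topic}
    # Join the two relations on the person and keep the supporting dates as evidence.
    return [(person, sorted([owners[person], experts[person]]))
            for person in sorted(owners.keys() & experts.keys())]


print(who_to_ask("API issue", "API"))
# [('Marcus', [datetime.date(2025, 1, 15), datetime.date(2025, 1, 20)])]
```

Returning the supporting dates alongside the answer mirrors how the reasoning in the paragraph cites both conversations rather than asserting a conclusion on its own.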

Building an accurate knowledge graph from unstructured conversations is hard. The system has to understand natural language, extract entities (people, projects, dates, decisions, commitments), understand the relationships between entities, and represent this in a structured format. Get it wrong and the knowledge graph is garbage. It fills up with incorrect facts, false relationships, and misunderstood commitments.

Bee's approach seems to be using multiple AI models working in concert. One model does named entity recognition to identify people, projects, and dates mentioned in conversations. Another does relation extraction to understand relationships (who is the project lead? what are the dependencies?). A third handles event extraction to identify decisions, commitments, and milestones. These models then feed into a knowledge graph database that stores and reasons over this structured data.
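To make the output of those extraction steps concrete, here is a toy rule-based extractor that turns sentences into triples. It swaps in regular expressions for the trained models the paragraph describes, so it only handles the exact sentence shapes shown; the patterns and predicate names are illustrative assumptions.

```python
import re
from typing import List, Tuple

# Toy stand-ins for relation and event extraction (real systems use trained models).
EXPERT = re.compile(r"\b(?P<person>[A-Z][a-z]+) is the (?P<topic>\w+) expert\b")
COMMIT = re.compile(
    r"\b(?P<who>I|[A-Z][a-z]+)(?: will|'ll) (?P<what>[^.]+?) by (?P<when>Q[1-4]|[A-Z][a-z]+day)\b"
)


def extract(sentence: str) -> List[Tuple[str, str, str]]:
    triples = []
    # Relation extraction: "X is the T expert" -> (X, expert_in, T)
    for m in EXPERT.finditer(sentence):
        triples.append((m["person"], "expert_in", m["topic"]))
    # Event extraction: "X will/'ll do Y by Z" -> a commitment plus its deadline
    for m in COMMIT.finditer(sentence):
        who = "you" if m["who"] == "I" else m["who"]
        triples.append((who, "committed_to", m["what"]))
        triples.append((m["what"], "due", m["when"]))
    return triples


print(extract("Marcus is the API expert."))
# [('Marcus', 'expert_in', 'API')]
print(extract("I'll deliver the prototype by Q2."))
# [('you', 'committed_to', 'deliver the prototype'), ('deliver the prototype', 'due', 'Q2')]
print(extract("Maybe I'll look at it sometime."))
# []
```

The last example hints at the ambiguity problem: a vague "I'll look at it" with no deadline produces no commitment here, but a more permissive rule or model could easily misread it as one.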

The challenge is handling ambiguity and error. In conversations, people are imprecise. They use pronouns. They reference things implicitly. They change their minds. A commitment made casually in passing might not be a real commitment. The knowledge graph has to distinguish between real commitments that matter and casual mentions. It has to handle corrections when you say "Actually, I was wrong about that deadline, it's this Friday, not next Friday."

Bee seems to handle this by allowing manual correction and refinement. You can tell Bee "That's not right, the deadline is actually Friday" and Bee updates the knowledge graph. You can explicitly tell Bee what matters and what doesn't. Over time, the system learns your patterns. It understands which commitments you actually care about and which are throwaway comments.
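One simple way to support corrections without losing the audit trail is to store every assertion with a timestamp and a source, then let the most recent one win. This is a sketch under that assumption; `FactStore` and the source labels are hypothetical, and a production system might weigh explicit corrections differently from later passing mentions.

```python
from datetime import datetime
from typing import Dict, List, NamedTuple, Optional, Tuple


class Assertion(NamedTuple):
    when: datetime
    value: str
    source: str  # hypothetical labels: "conversation" or "user_correction"


class FactStore:
    def __init__(self) -> None:
        self._history: Dict[Tuple[str, str], List[Assertion]] = {}

    def assert_fact(self, subject: str, predicate: str, value: str,
                    when: datetime, source: str = "conversation") -> None:
        # Never overwrite: append, so earlier versions remain inspectable.
        self._history.setdefault((subject, predicate), []).append(Assertion(when, value, source))

    def current(self, subject: str, predicate: str) -> Optional[str]:
        # The most recent assertion wins; None if nothing is known.
        entries = self._history.get((subject, predicate), [])
        return max(entries, key=lambda a: a.when).value if entries else None

    def history(self, subject: str, predicate: str) -> List[Assertion]:
        return sorted(self._history.get((subject, predicate), []))


store = FactStore()
store.assert_fact("prototype", "due", "next Friday", datetime(2025, 3, 3, 10, 0))
store.assert_fact("prototype", "due", "Friday", datetime(2025, 3, 4, 9, 30), source="user_correction")
print(store.current("prototype", "due"))  # Friday
```

Keeping the superseded value around makes it possible to show the user what changed and when, which matters when transcripts are the only record.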

Smart rings are projected to lead the wearable AI market in 2025 with a 35% share, followed by AI glasses at 30%. Bee devices are expected to capture 20%, while other wearables make up the remaining 15%. (Estimated data)

Revenue Models and Economics: How Amazon Plans to Monetize Bee

Amazon's acquisition makes financial sense only if there's a clear path to revenue. Acquiring a startup just to integrate it into existing products doesn't make sense if those products don't generate incremental revenue. So what's Amazon's monetization strategy for Bee?

The most obvious path is a subscription model. Bee could charge monthly for access to the wearable and cloud services. Given that premium wearables range from

But there are other revenue opportunities that are less obvious. Amazon can monetize the data. The knowledge graph contains valuable information about how people work, what they care about, and how they organize their lives. Amazon can analyze this in aggregate (without revealing individual data) to understand trends, improve its AI models, and develop new products. This is incredibly valuable for AI development.

Amazon can also integrate Bee with its advertising platform. If Bee knows what you're working on, what you care about, and what you're committed to, Amazon can show more targeted advertising. Not on Bee itself, but on other Amazon properties. If Bee knows you're planning a presentation, Amazon can show you ads for design tools. If Bee knows you're launching a new product, Amazon can show you ads for marketing services.

There's also the ecosystem integration angle. Bee could unlock new Alexa features. If Alexa knows what you discussed in meetings captured by Bee, it can provide better smart home automation. If Bee feeds into Amazon's enterprise services, small business owners can use Bee to capture customer interactions and integrate them with AWS services. These integrations increase the value of the broader Amazon ecosystem.

The long-term play might be even more interesting. Bee could become the foundation for Amazon's AI assistant strategy. Instead of trying to catch up with Apple and Google in smartphone-based AI, Amazon could leapfrog them by owning the wearable AI space. Bee captures personal context. Alexa controls the home. AWS handles business systems. Together, these create an AI layer across every part of your life. The revenue model becomes less about selling Bee and more about deepening your relationship with Amazon's ecosystem.

There's also an intangible benefit for Amazon's cloud business. If Amazon becomes known for best-in-class personal AI, it attracts AI researchers and engineers to the company. These people help improve AWS, develop better AI services, and maintain Amazon's competitive position in AI generally. Sometimes acquisitions are as much about talent and capabilities as they are about direct revenue.

Bee's Productivity Claims: Real Gains or Marketing?

Bee's marketing emphasizes significant productivity improvements. Students claim they save hours on note-taking. Professionals claim fewer tasks fall through the cracks because Bee remembers commitments they would otherwise have forgotten. Researchers say Bee allows them to conduct interviews without the distraction of manual transcription.

But how real are these productivity gains? Are they measurable, or is it just early adopters excited about a new tool?

The evidence suggests real but bounded gains. Students who use Bee genuinely do save time on note-taking. A two-hour lecture still takes two hours to attend, but you don't need an additional hour to transcribe notes or organize them. That's real time savings. If a student can reinvest that hour into studying or other work, that's a productivity gain.

For professionals in meetings, the gains are also real but dependent on what you do with the transcripts. If you just let transcripts pile up without reviewing them, you get no benefit. But if you actively use them to track commitments and follow up on action items, Bee becomes genuinely valuable. The productivity gain isn't from Bee existing, it's from Bee changing how you manage information and commitments.

The outsized productivity gains that Bee's marketing suggests might not materialize for everyone. Someone who takes good notes anyway probably won't see huge improvements. Someone who never forgets commitments won't see the benefit of Bee's reminder system. Bee helps people who struggle with organization, memory, and note-taking. For people who are already highly organized, Bee might add only marginal value.

There's also the question of learning curve and setup time. Bee requires you to clip it on, make sure it's charged, and actively engage with its features. If you use Bee, you're spending time interacting with it, asking it questions, teaching it what matters. Some of the productivity gains are offset by this interaction time.

The honest assessment is probably this: Bee delivers real but modest productivity gains for people in specific roles (students, researchers, professionals in lots of meetings). For others, the gains might be smaller. The key question is whether the gains are large enough to justify the cost and privacy trade-offs.

Estimated data shows a plateau in smart speaker adoption since 2020, while wearable AI devices have seen significant growth, indicating a shift towards personal AI technology.

Integration with Amazon Services: Building the Connected Ecosystem

One of the least discussed but most strategically important reasons Amazon acquired Bee is the integration opportunity with existing Amazon services. Bee doesn't exist in isolation. It's part of a broader ecosystem that includes Alexa, AWS, Amazon's enterprise services, and Amazon's advertising platform.

Consider AWS integration first. Many businesses run their entire operations on AWS. Customer relationship management systems, project management tools, communication platforms, and data warehouses all live on AWS. If Bee could integrate with these systems, it would become more valuable for business users. Imagine you work in sales and use Bee to record customer calls. The resulting transcripts could flow automatically into your CRM system, updating customer records with information from the call. Action items from the conversation could automatically create tasks in your project management tool. This integration creates lock-in. You don't just use Bee; you use Bee as the front end for your entire business software stack.
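The "action items become tasks" step is mostly a data-mapping problem. The sketch below builds JSON bodies for a hypothetical task-tracker endpoint; the field names (`external_id`, `crm_account`, and so on) are assumptions, not any real CRM's or AWS service's API.

```python
import json
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class ActionItem:
    owner: str
    description: str
    due: Optional[str]  # ISO date string if a deadline was mentioned, else None


def to_task_payloads(call_id: str, customer: str, items: List[ActionItem]) -> List[str]:
    """Build JSON bodies for a hypothetical task-tracker endpoint."""
    payloads = []
    for n, item in enumerate(items, start=1):
        body = {
            # Stable ID derived from the call, so a retried sync can be deduplicated.
            "external_id": f"{call_id}-{n}",
            "title": item.description,
            "assignee": item.owner,
            "due_date": item.due,  # serialized as null when no deadline was heard
            "crm_account": customer,
        }
        payloads.append(json.dumps(body, sort_keys=True))
    return payloads


payloads = to_task_payloads(
    "call-42", "Acme Corp",
    [ActionItem("Dana", "Send pricing sheet", "2025-03-14"),
     ActionItem("you", "Schedule follow-up demo", None)],
)
for p in payloads:
    print(p)
```

Deriving the ID from the call rather than generating a random one is a deliberate choice: if the same transcript is processed twice, the receiving system can recognize the duplicate instead of creating a second task.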

Alexa integration opens other possibilities. Bee could feed information to Alexa about what you're working on. When you get home, Alexa could brief you on your day based on what Bee recorded. Alexa could set reminders based on commitments Bee captured. You could ask Alexa to summarize key points from meetings Bee recorded. The two systems become symbiotic. Each makes the other more valuable.

Advertising integration is perhaps the most economically important. Amazon makes billions from advertising by targeting ads based on what people are interested in. If Bee's knowledge graph reveals what you're working on, what problems you're solving, and what you're interested in, Amazon can show much more targeted advertising. Not to you directly necessarily, but on your other interactions with Amazon platforms. If Bee knows you're planning a presentation, Amazon can show you ads for presentation software on Amazon.com or in AWS services.

There's also the enterprise angle. Many companies need to track conversations with customers and employees. HR departments need records of performance reviews. Customer service teams need records of customer interactions. Sales teams need records of customer conversations. If Amazon can package Bee as an enterprise solution integrated with AWS services, it opens an entirely new revenue stream. Companies would pay for Bee licenses for their employees, and Amazon would provide the backend infrastructure.

The data integration opportunity is subtle but important. Every interaction you have with Bee is data. Amazon can analyze this data in aggregate to understand how people work, what they prioritize, and how they collaborate. That helps Amazon build better products, train better AI models, and spot market opportunities. For instance, if Amazon notices that many Bee users struggle with project management, it might develop a new service to address this. If it sees trends in what people are working on, it can better predict demand for its services.

The Competitive Response: What Apple, Google, and Meta Are Doing

Amazon's Bee acquisition didn't happen in a vacuum. It's part of a broader competitive battle to own the personal AI layer. Understanding what competitors are doing is essential to understanding why this acquisition matters.

Apple has the advantage of owning the iPhone, the device that's always with you and that you interact with constantly. Siri, Apple's voice assistant, is built into every iPhone. Apple is investing heavily in on-device AI processing, which means AI features can run locally without sending data to Apple's servers. Apple's strategy is to deepen Siri's capabilities and integrate it with the broader iOS ecosystem. The problem is that Siri has a reputation for being limited compared to ChatGPT or other advanced AI assistants. Apple is working to catch up, but it's behind.

Google owns Android and has massive reach through search. Google Assistant is available on most Android phones and smart home devices. Google is also investing in on-device AI through Gemini Nano, which allows lightweight AI models to run directly on phones. Google's challenge is coordination. Google has multiple AI teams and multiple assistant products, and they don't always work together seamlessly. Google needs to unify around a single, powerful AI strategy, and it's working on this.

Meta is betting on AI glasses. The company is investing billions in augmented reality and sells Ray-Ban Meta smart glasses that can answer questions, identify objects, and hold conversations. The advantage is that glasses are a natural interface for AI: you look at something, ask a question, and get an answer. The disadvantage is that smart glasses are still somewhat niche. Many people don't wear glasses at all, and wearing camera-equipped glasses still feels unusual to many.

Microsoft has been quiet on wearable AI but has enormous leverage through Windows and its cloud services, and it owns much of the enterprise productivity stack. If Microsoft bundled Bee-like functionality into Microsoft Copilot and integrated it with Microsoft 365, it would be highly valuable for businesses. So far, though, Microsoft hasn't made a major acquisition in the wearable AI space.

Bee's acquisition gives Amazon a distinctive position. Amazon now owns a personal AI wearable that captures continuous context about your life outside the home. That differs from Apple's strategy (improve Siri on the iPhone), Google's (unify AI across Android), Meta's (AI glasses), and Microsoft's (enterprise AI integration). It's a complementary play that gives Amazon an asset its rivals lack in the broader competition for personal AI dominance.

The competitive response is likely to be more wearable acquisitions and new product launches. Apple might acquire a wearable AI startup or integrate deeper AI into the Apple Watch. Google might acquire a wearable company to compete with Bee. Meta might accelerate its glasses roadmap. The race for personal AI is just beginning.

The Technical Challenges of Personal AI Wearables

Building a wearable AI device that actually works is harder than it might seem. There are hardware challenges, software challenges, and integration challenges that need to be solved.

The hardware challenge starts with battery life. AI processing is power-hungry. A device that constantly records audio, processes it locally, and communicates with cloud services needs a battery that lasts through a full day. Smartwatches struggle with this. Phones struggle with this. Doing serious AI processing in a device smaller than either is harder still. Bee seems to have solved this to some degree, but battery life is always a constraint for wearables.
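The constraint is easiest to see as a power budget: runtime is battery capacity divided by average current draw, and the average draw is a time-weighted mix of the device's modes. The sketch below assumes a simple duty-cycle model; the capacity and per-mode current figures are hypothetical placeholders, not Bee's specifications.

```python
def battery_hours(capacity_mah: float, modes: dict[str, tuple[float, float]]) -> float:
    """Estimate runtime in hours from a duty-cycled power budget.

    `modes` maps a mode name to (fraction_of_time, current_in_mA).
    The time fractions must sum to 1.
    """
    total_fraction = sum(fraction for fraction, _ in modes.values())
    if abs(total_fraction - 1.0) > 1e-9:
        raise ValueError("time fractions must sum to 1")
    # Average current is the time-weighted mean of each mode's draw.
    avg_ma = sum(fraction * current for fraction, current in modes.values())
    return capacity_mah / avg_ma


# Hypothetical numbers for illustration only.
budget = {
    "mic_listening": (0.80, 3.0),      # low-power audio capture
    "local_processing": (0.15, 10.0),  # on-device speech/AI work
    "radio_upload": (0.05, 20.0),      # syncing to a phone or the cloud
}
print(f"{battery_hours(150.0, budget):.1f} hours")
```

With these made-up figures the estimate is about 30.6 hours. Shifting just 15% more of the day from listening into local processing (0.65 and 0.30) raises the average draw from 4.9 mA to 5.95 mA and cuts the same battery to roughly 25 hours, which is why on-device AI and all-day battery life pull against each other.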

Audio quality matters a lot for a device that records conversations. If the microphone picks up too much ambient noise, transcription accuracy suffers. If it doesn't pick up your voice clearly, the same problem occurs. Wearable devices have to be small, which constrains microphone size and quality. Solving this requires careful microphone design and advanced audio processing to separate your voice from background noise.

Privacy-preserving processing is a technical challenge that often gets overlooked. If you're discarding audio after transcription, you have to do transcription on the device itself or send audio to a server briefly and immediately discard it. Sending raw audio to the cloud, even briefly, is a privacy risk if that server is hacked or if government agencies demand the data. Bee seems to handle this by processing on-device when possible and minimizing data transmission.
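The ordering that matters here (transcribe first, then discard the raw audio even if transcription fails) can be sketched in a few lines. This is a minimal illustration under stated assumptions, not Bee's implementation: `transcribe` stands in for a hypothetical on-device speech-to-text model, and Python cannot guarantee secure erasure of memory, so the point is the control flow rather than cryptographic wiping.

```python
from typing import Callable

# Hypothetical signature for an on-device speech-to-text model.
Transcriber = Callable[[memoryview], str]


def transcribe_then_discard(audio: bytearray, transcribe: Transcriber) -> str:
    """Return a transcript and overwrite the audio buffer afterwards."""
    try:
        # Hand the model a zero-copy view instead of a bytes() copy of the audio.
        return transcribe(memoryview(audio))
    finally:
        # Runs on success and on failure: zero the buffer in place (same length).
        # Python cannot guarantee secure erasure; this only shows the ordering.
        audio[:] = bytes(len(audio))


pcm = bytearray(b"\x01\x02\x03\x04")  # stand-in for captured audio samples
text = transcribe_then_discard(pcm, lambda view: f"<{len(view)} bytes transcribed>")
print(text)  # <4 bytes transcribed>
print(pcm)   # bytearray(b'\x00\x00\x00\x00')
```

The `finally` clause is the key design choice: the buffer is overwritten on both the success path and the error path, so a crash in the model does not leave audio sitting in that buffer.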

Knowledge graph construction is the deepest technical challenge. Building an accurate knowledge graph from natural language conversations requires solving hard problems in natural language understanding. You have to extract entities accurately. You have to understand relationships. You have to handle ambiguity. You have to recognize when something is a commitment versus a casual comment. Get this wrong and the knowledge graph is full of errors that make it useless.
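To make the commitment-versus-casual-comment problem concrete, here is a deliberately naive, rule-based extractor that turns an utterance into a (subject, relation, object) triple. It is an illustration only, not how Bee works: the cue phrases and the relation names `committed_to` and `mentioned` are assumptions, and a real system would use trained language models rather than regular expressions.

```python
import re
from dataclasses import dataclass


@dataclass(frozen=True)
class Triple:
    subject: str
    relation: str
    obj: str


# Hypothetical cue lists; a real system would learn these from data.
COMMITMENT_CUE = re.compile(r"\b(?:I'll|I will|I'm going to)\s+(.+?)[.!]?$", re.IGNORECASE)
HEDGE_CUE = re.compile(r"\b(?:maybe|might|someday|probably)\b", re.IGNORECASE)


def extract_triples(speaker: str, utterance: str) -> list[Triple]:
    """Return at most one triple describing what the speaker said they would do."""
    match = COMMITMENT_CUE.search(utterance.strip())
    if match is None:
        return []  # no first-person future action, nothing to record
    action = match.group(1).strip()
    # Hedged phrasing is stored as a mention, not a firm commitment.
    relation = "mentioned" if HEDGE_CUE.search(utterance) else "committed_to"
    return [Triple(speaker, relation, action)]


for line in ["I'll send Sarah the revised deck by Friday.",
             "Maybe I'll learn Rust someday.",
             "The weather was great this weekend."]:
    print(extract_triples("me", line))
```

Only the first sentence becomes a commitment; the second is downgraded to a mention because of "Maybe", and the third yields nothing. The failure modes are easy to find ("I'll never do that" would be misread as a commitment), which illustrates why this step is so hard to get right.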

Integration with other systems requires building robust APIs and connectors. If Bee needs to talk to your calendar, email, contacts, and other systems, those connections need to be secure and reliable. If one integration breaks, it shouldn't break the entire system. Building this robustness takes significant engineering effort.
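One common way to keep a single broken integration from taking down the rest is to call each connector independently and record its outcome, rather than letting the first exception abort the whole run. The sketch below assumes connectors are plain callables; the connector names are hypothetical, and a production system would add retries, timeouts, and authentication.

```python
from typing import Callable

# Hypothetical connector type: pushes one action item to one external system.
Connector = Callable[[str], None]


def dispatch(item: str, connectors: dict[str, Connector]) -> dict[str, str]:
    """Send an item to every connector; one failure never blocks the others."""
    results: dict[str, str] = {}
    for name, push in connectors.items():
        try:
            push(item)
            results[name] = "ok"
        except Exception as exc:  # isolate this integration's failure
            results[name] = f"failed: {exc}"
    return results


def calendar(item: str) -> None:
    pass  # pretend the calendar accepted the item


def crm(item: str) -> None:
    raise TimeoutError("CRM unreachable")


print(dispatch("Send revised deck to Sarah", {"calendar": calendar, "crm": crm}))
# {'calendar': 'ok', 'crm': 'failed: CRM unreachable'}
```

Returning a per-connector status map also gives the app something concrete to show the user ("saved to calendar, CRM sync failed") instead of a single opaque error.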

Future Roadmap: What's Next for Bee and Alexa

Bee's co-founder, Maria de Lourdes Zollo, has hinted that the company has ambitious plans for 2026. She mentioned that the team is working on features like voice notes, templates, daily insights, and "many new things." But what is likely to arrive over the next year?

Based on what Bee is learning from early users and where AI technology is progressing, several developments seem likely. First, more sophisticated context understanding. Bee will probably improve at understanding not just what you're committing to, but why. It will understand which commitments are critical and which are optional. It will prioritize the important stuff.

Second, deeper integration with your other tools and systems. Bee's value increases if it can automatically update your calendar with commitments, create tasks in your project management tool, and feed information to Alexa. These integrations will probably become more seamless and more numerous.

Third, improved on-device processing. As AI models get smaller and more efficient, Bee will probably do more processing locally on the device. This improves privacy, reduces latency, and reduces bandwidth requirements. Bee's claims about privacy will become stronger as less data needs to be sent to the cloud.

Fourth, better handling of ambiguity and context. Early Bee users will probably find that the system sometimes misunderstands commitments or extracts the wrong entities from conversations. As more data flows through Bee's models, those models should improve, and the knowledge graph should become more accurate.

The big question is when Bee and Alexa will merge. The founders suggest it's coming but not immediately. My guess is that the merger happens gradually. First, Bee and Alexa will share data. Information from Bee will flow to Alexa. Then, they'll share features. You might ask Alexa to pull up information from Bee. Eventually, the distinction between Bee and Alexa might disappear. You'll just have "Amazon AI" that works everywhere: on your wearable, in your home, on your phone, in your car.

But this merger requires solving coordination problems that are harder than they sound. Alexa is optimized for command-response interaction. Bee is optimized for passive learning. Merging them requires creating a system that excels at both. It requires figuring out when to be proactive and when to wait for a command. It requires handling the different privacy and data retention policies of the two systems.

Implications for Productivity and How We Work

Bee represents a fundamental shift in how we interact with technology and capture information about our work. Instead of manually taking notes, scheduling follow-ups, and organizing information, we delegate this to AI. The implications are profound and worth thinking through carefully.

On the positive side, cognitive offloading is real and valuable. If you don't have to remember commitments, you can focus your mental energy on the work itself. If you don't have to take notes, you can concentrate on the conversation. If you don't have to organize information, you can focus on using it. This is a genuine productivity benefit for people in information-heavy roles.

But there are downsides worth considering. Research on note-taking shows that the act of writing helps you remember things. If you delegate recording to Bee, you might remember less. There's a question of active versus passive learning. Actively processing information and writing it down helps you internalize it. Passively letting Bee record and summarize might leave you with less depth of understanding.

There's also the question of what happens to human relationships and attention. If you're wearing Bee in a meeting, part of your attention is going to the device. You're thinking about whether Bee is capturing the right information. You're not fully present in the conversation. This might seem like a small cost, but it could affect relationship quality and creativity, which often emerges from full engagement in conversations.

The broader implication is that AI is changing what skills matter. If Bee remembers commitments for you, remembering commitments becomes less valuable as a skill. If Bee takes notes for you, note-taking ability becomes less valuable. As we delegate more cognitive tasks to AI, the skills that matter change. The people who thrive will be those who can work effectively with AI, not those who are good at manual information processing.

There's also an inequality angle. AI tools like Bee cost money, often including ongoing subscription fees, and not everyone can afford them. This could create a productivity gap between people who can afford AI assistants and people who can't. Over time, this could exacerbate inequality in the job market.

Privacy, Data Ownership, and Regulatory Questions

Bee raises fundamental questions about privacy, data ownership, and regulation that go beyond just this one product. These questions will define how the wearable AI market develops.

The privacy question is straightforward: Should people be able to record conversations, and should companies be able to build knowledge graphs about their lives from those recordings? There are arguments on both sides. On one hand, you own your body and what's attached to it. If you consent to wearing Bee and recording conversations, why shouldn't you be able to do so? On the other hand, the other people in those conversations haven't consented to being recorded, and recording them without consent might violate their privacy.

Different jurisdictions have different rules about recording. In some places, everyone in a conversation must consent to being recorded; in others, the consent of one party is enough. Whether what Bee does is legal therefore varies by location, which will probably be a source of confusion and litigation.

The data ownership question is more subtle. You wear Bee and it records your conversations. You can view the transcripts and the extracted information. But who owns the knowledge graph that Amazon builds from this data? Do you own it? Does Amazon own it? Can Amazon use it to train AI models? Can Amazon sell it to other companies? These questions are largely unresolved and will depend on the terms of service and how regulators respond.

There's also the question of government access. If government agencies demand that Amazon provide Bee data, will Amazon comply? Different countries have different laws about this. The United States has broad surveillance capabilities. China has extensive requirements for data localization and government access. The European Union has the GDPR, which gives individuals rights over their personal data. As Bee expands internationally, these conflicts will become real.

The regulatory environment is probably going to tighten around wearables that record. Expect privacy laws to address wearable recording specifically. Expect required disclosures when recording others. Expect data protection requirements for knowledge graphs. Companies like Amazon will probably need to invest significantly in privacy compliance.

FAQ

What is Bee and what does it do?

Bee is an AI wearable device that records conversations and builds a personal knowledge graph about your life and work. You can wear it as a clip, bracelet, or pin. It transcribes conversations, extracts key information like commitments and decisions, and uses this to provide contextual assistance. Unlike traditional voice assistants that respond to commands, Bee is ambient and learns about you over time through observation of your conversations.

How does Bee integrate with Amazon's other products like Alexa?

Currently, Bee and Alexa operate as complementary systems. Bee captures context about your life outside the home through recording conversations, while Alexa controls your smart home environment. Amazon plans to integrate them in the future, allowing information from Bee to inform Alexa's responses and vice versa. For example, Alexa could remind you about commitments Bee recorded, or Bee could coordinate with Alexa to set reminders based on information it learned about your schedule.

What are the privacy concerns with Bee?

Bee raises several privacy concerns. First, it records conversations continuously, and while Bee says it discards audio after transcription, the transcripts remain. Second, other people in conversations may not consent to being recorded. Third, the knowledge graph built from your data is valuable and could be used by Amazon to train AI models or improve advertising targeting. Amazon's terms of service and data handling practices will determine how concerning these issues are in practice.

How does Bee's knowledge graph work?

Bee analyzes transcripts from your conversations to identify entities (people, projects, dates), relationships (who works on what project), and commitments (what you agreed to do). This structured information is stored in a knowledge graph database. When you ask Bee a question, it can reason over this graph to provide contextually relevant answers. For example, if you ask "What did I commit to in that meeting with Sarah?" Bee can retrieve information about your interaction with Sarah and what you discussed.
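The Sarah example can be sketched as a two-hop query over a tiny in-memory triple store: first find the meetings both people attended, then find your commitments made in those meetings. The class, the relation names (`attended`, `committed_to`, `made_in`), and the data are illustrative assumptions, not Bee's actual schema or storage.

```python
from collections import defaultdict


class KnowledgeGraph:
    """Toy triple store indexed by (subject, relation) -> set of objects."""

    def __init__(self) -> None:
        self.edges: defaultdict[tuple[str, str], set[str]] = defaultdict(set)

    def add(self, subject: str, relation: str, obj: str) -> None:
        self.edges[(subject, relation)].add(obj)

    def objects(self, subject: str, relation: str) -> set[str]:
        # .get avoids inserting empty entries for unknown keys.
        return self.edges.get((subject, relation), set())

    def commitments_in_meetings_with(self, me: str, person: str) -> set[str]:
        # Hop 1: meetings both people attended.
        shared = self.objects(me, "attended") & self.objects(person, "attended")
        # Hop 2: my commitments that were made in one of those meetings.
        return {c for c in self.objects(me, "committed_to")
                if self.objects(c, "made_in") & shared}


g = KnowledgeGraph()
g.add("me", "attended", "standup-mar-12")
g.add("Sarah", "attended", "standup-mar-12")
g.add("me", "committed_to", "send revised deck")
g.add("send revised deck", "made_in", "standup-mar-12")
g.add("me", "attended", "1on1-mar-13")
g.add("Raj", "attended", "1on1-mar-13")
g.add("me", "committed_to", "review Raj's PR")
g.add("review Raj's PR", "made_in", "1on1-mar-13")

print(g.commitments_in_meetings_with("me", "Sarah"))  # {'send revised deck'}
```

Using the commitment text as its node ID keeps the sketch short; a real graph would give each commitment, person, and meeting a stable identifier so that two differently worded mentions of the same task can be merged.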

Is Bee's transcription accurate enough for professional use?

Bee's transcription accuracy depends on audio quality and the complexity of the conversation. For most standard conversations, accuracy is likely in the 95%+ range given current AI transcription technology. However, Bee's approach of discarding audio means you can't verify accuracy by playing back the recording. For professional use cases where verification is important, this is a limitation. Some users might need to pair Bee with other recording or verification mechanisms.
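Transcription accuracy is usually reported as word error rate (WER): the word-level edit distance (substitutions, deletions, and insertions) between a reference transcript and the system's output, divided by the number of words in the reference. A 95% accuracy figure loosely corresponds to a WER of about 5%. The function below is a standard dynamic-programming sketch of that metric; the example sentences are made up.

```python
def word_error_rate(reference: str, hypothesis: str) -> float:
    """Word-level Levenshtein distance divided by the reference length."""
    ref, hyp = reference.split(), hypothesis.split()
    if not ref:
        raise ValueError("reference transcript must contain at least one word")
    # Row i of the DP table: cur[j] = edits to turn ref[:i] into hyp[:j].
    # prev holds row i - 1; row 0 is just j insertions.
    prev = list(range(len(hyp) + 1))
    for i in range(1, len(ref) + 1):
        cur = [i] + [0] * len(hyp)
        for j in range(1, len(hyp) + 1):
            substitution = prev[j - 1] + (0 if ref[i - 1] == hyp[j - 1] else 1)
            cur[j] = min(prev[j] + 1,      # deletion
                         cur[j - 1] + 1,   # insertion
                         substitution)
        prev = cur
    return prev[-1] / len(ref)


reference = "send the revised deck to sarah by friday"
print(word_error_rate(reference, "send the revised deck to sara by friday"))  # 0.125
```

One wrong word in an eight-word sentence is already a 12.5% WER. At a 5% WER, a 1,000-word meeting transcript would still contain roughly 50 wrong, missing, or extra words, and without the audio there is nothing to check them against.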

When will Bee be available and how much will it cost?

Bee was available to early adopters before Amazon's acquisition; its Pioneer wristband sold for $49.99. Following the acquisition, Bee's pricing and availability will likely change as Amazon integrates it into its broader product strategy. Expect it eventually to be positioned as part of the Amazon ecosystem, potentially bundled with Alexa services or offered as a standalone subscription. Amazon has not announced post-acquisition pricing; a hardware price plus a monthly service fee is one plausible model.

What makes Bee different from smart rings or AI glasses?

Bee differs from smart rings in that it is built around capturing and processing conversational audio, while most smart rings focus on health and activity tracking. It differs from AI glasses in that it doesn't require cameras, which reduces some of the privacy concerns about recording others. Bee's focus is narrow: capturing and understanding conversations. That narrow focus lets it concentrate on doing one thing very well, a different strategy from glasses that try to handle many use cases at once.

Will Bee eventually replace Alexa?

No. According to Amazon and Bee's founders, Bee will eventually integrate with Alexa rather than replace it. They'll continue serving different purposes: Bee for capturing personal context outside the home, and Alexa for controlling the smart home environment. The integration will make both systems more valuable, but they're not expected to merge completely into a single product.

Conclusion: The Wearable AI Era is Starting Now

Amazon's acquisition of Bee represents a clear acknowledgment that the future of AI isn't in your home. It's on your person. It's in a wearable that's with you constantly, learning about your life, capturing the context that matters to you, and acting as a truly personal AI assistant.

This shift has been coming for years. The limitations of smart speakers as a primary way to interact with AI have been apparent since at least 2020. Alexa's growth stalled. Smart home adoption plateaued. Meanwhile, wearable technology matured and AI models grew more powerful. Users increasingly wanted AI assistants on their phones and in their earbuds, not a device on the kitchen counter that they had to shout commands at.

Bee represents Amazon's answer to this gap: a focused, elegant wearable that does one thing exceptionally well. It records conversations and learns about you. It is not a general-purpose assistant that tries to control everything, nor a fitness tracker with AI added as an afterthought, but a device purpose-built for personal AI.

The strategic implications are significant. Amazon now owns one of the most promising personal AI wearables on the market. It has a clear path to integrate Bee with Alexa and AWS services, creating network effects that increase value. It has access to unique data about how people work and what they care about. And it brings Bee's team of AI researchers and engineers in-house.

But the real significance is broader than just Amazon. Bee's acquisition signals that the market for personal AI wearables is real, valuable, and increasingly competitive. Apple, Google, Meta, and others will respond. The next few years will see an explosion of wearable AI devices, each trying to find the right combination of functionality, privacy, and user experience.

For individual users, the question is whether Bee's benefits justify its privacy costs. That's a personal calculation that depends on your work, your values, and your comfort with continuous recording. For businesses, the question is how to integrate these tools into workflows and maintain privacy and security. For policymakers, the question is how to regulate wearable recording and ensure that companies handle personal data responsibly.

Amazon's Bee acquisition is not the end of a story. It's the beginning. The wearable AI era is starting now, and we're only seeing the first generation of products. The implications for productivity, privacy, relationships, and how we work are profound. The next few years will define how this technology develops and how society responds to it.

One thing seems clear: The device you wear will be more important than the device in your home. AI on your person will be more valuable than AI in your house. And companies that figure out how to build trustworthy, useful personal AI will define the computing landscape for the next decade.

Key Takeaways

- Amazon acquired Bee to extend its AI presence beyond the smart home into personal wearables that capture context outside the house

- Bee and Alexa will eventually integrate but operate as complementary systems serving different purposes and locations

- The wearable AI market is rapidly growing with smart rings, AI glasses, and personal recording devices competing for users

- Bee's knowledge graph technology is more sophisticated than traditional voice assistants, building contextual understanding from conversations over time

- Privacy concerns around continuous recording and data handling are significant but addressable through transparent design and user controls

