Apple & Google's Gemini Partnership: The Future of AI Siri [2025]

For years, Siri sat on your iPhone like an outdated assistant gathering dust. You'd ask it to set a timer or read your texts, sure. But ask it something that actually required intelligence? Siri would fumble. Meanwhile, ChatGPT, Claude, and Gemini were doing the heavy lifting for millions of users.

Then came the announcement that caught everyone off guard: Apple is partnering with Google to power Siri with Gemini AI. According to CNBC, this partnership marks a significant shift in Apple's AI strategy.

Wait, what? Apple, the company that built its brand on privacy and doing everything in-house, is turning to Google for its biggest AI initiative in years? That's not a small deal. That's a complete recalibration of Apple's AI strategy, and it's happening right now.

This partnership represents something far bigger than a simple software upgrade. It's a statement about where the AI race actually stands. It's an acknowledgment that the capabilities gap between in-house models and cutting-edge foundation models is too wide to ignore. And it's a sign that even the world's most powerful tech companies are willing to partner with rivals when the stakes are high enough.

Let's unpack what's actually happening here, why it matters, and what it means for everyone using an iPhone or relying on these AI systems.

TL;DR

- Apple chose Google Gemini over OpenAI, Anthropic, and others to power a personalized Siri upgrade coming in 2025, as detailed in Tech Times.

- Multi-year partnership includes access to Gemini models and Google Cloud infrastructure for Apple Foundation Models, according to Quiver Quant.

- Why Google won: Gemini outperformed competitors on technical benchmarks and capability metrics Apple needed, as reported by Lifehacker.

- Privacy still matters: Apple plans to run some processing on-device while using cloud for complex tasks, as explained by CNET.

- Implications are massive: This changes how enterprise AI partnerships work and signals the end of the "build-it-all-in-house" era, according to Investor's Business Daily.

The Gemini-powered Siri is expected to have a phased rollout, starting with beta testing in spring 2025 and reaching full availability by winter 2025/2026. Estimated data based on typical release patterns.

The Moment Apple Admitted It Couldn't Build It Alone

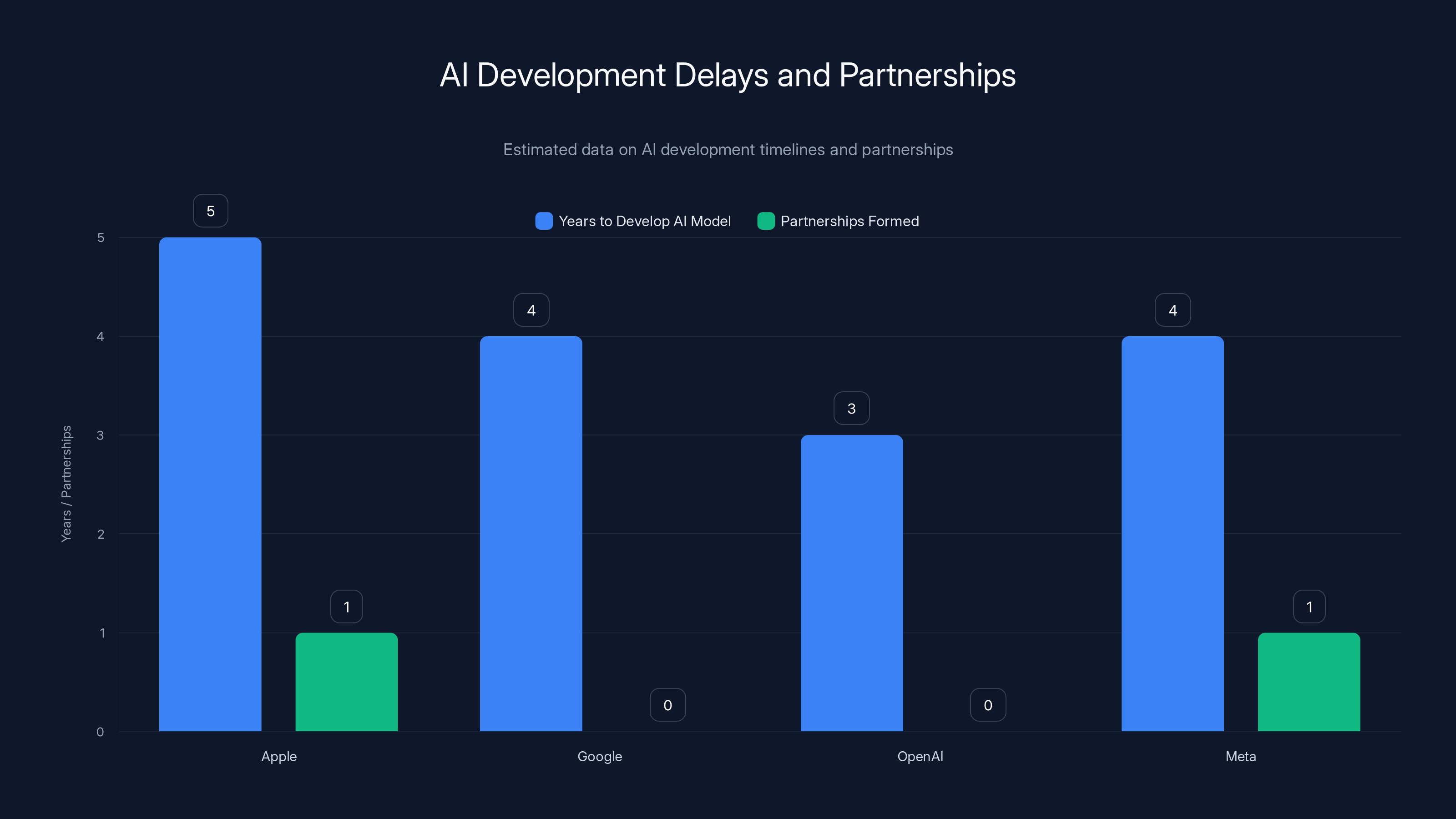

For the last five years, Apple has talked relentlessly about building its own AI. The company invested billions into on-device machine learning, hired top AI researchers, and promised that Siri would eventually become as capable as ChatGPT but faster and more private.

Then came the delays.

First, Apple pushed the Apple Intelligence rollout from iOS 18's launch to later in 2024. Then it pushed again. The company kept saying it needed more time to "get it right." Behind the scenes, internal teams were struggling. Siri couldn't handle complex requests. It couldn't understand context the way users expected. It couldn't match what Google's AI was already doing with Gemini.

So Apple did something that seemed impossible: it partnered with its biggest rival. This move was highlighted in CNBC's report on Apple's AI delays.

This isn't entirely surprising if you've been paying attention to the AI market. Every major tech company has faced the same reality check. Building a truly capable foundation model is expensive, time-consuming, and requires a specific kind of talent that's concentrated at a handful of companies. OpenAI spent years training GPT models. Google spent years on Gemini. Meta built Llama, but even Meta sometimes reaches for external partnerships. The idea that any company, no matter how large, can go it alone and still reach the cutting edge of AI foundation models has died.

Apple's move makes sense when you look at the numbers. Gemini 3 topped multiple AI leaderboards when it launched. It's multimodal, meaning it handles text, images, audio, and video. It can reason through complex problems. And most importantly, it could be integrated into Apple's ecosystem without Apple starting from scratch.

The partnership does something else too: it signals that the old competitive playbook is dead. Apple and Google aren't traditional allies. They fight over search, advertising, mobile OS dominance, and ecosystem lock-in. But when it comes to AI, they're now partners. That tells you something crucial about how the AI industry is restructuring itself.

What Google's Gemini Brings to Siri

So what exactly is Apple getting here? Not just a faster Siri that understands more queries. That's the surface-level answer. The real value is much deeper.

Gemini brings three critical capabilities that Apple couldn't build quickly enough on its own:

Genuine reasoning and context understanding. Current Siri operates like a command processor. You say something, it matches patterns, and executes. Gemini works differently. It understands semantic meaning. It can follow a conversation thread across multiple turns. It understands your context not just from the current request, but from your phone history, your calendar, your previous commands. That means you can ask Siri something like "remind me to follow up with the people I met at lunch," and Gemini-powered Siri doesn't just understand what you asked—it knows who those people are because it looked at your calendar and messages.

Multimodal reasoning across content. You can show Gemini a photo, describe a problem, and ask it to help. It processes the image, understands the text context, and reasons across both simultaneously. Apple was working on this internally, but Gemini is already production-ready and proven at scale.

Real knowledge about the world. Siri's biggest weakness has always been its knowledge cutoff and inability to access current information reliably. Gemini can search the web in real-time, understand what it finds, and summarize information for you. Apple is building a "World Knowledge Answers" feature specifically for this—you ask Siri a question, Gemini searches for current information, and gives you an answer with sources cited.

These aren't incremental improvements. They're the difference between Siri being a useful utility and Siri being an AI assistant that might actually replace your web searches and reduce your time on Google Search.

For Google, that last point is the elephant in the room. By powering Siri with Gemini, Google is essentially letting a competitor get smarter at answering questions that users might otherwise ask Google Search. That's a genuine strategic risk for Google's core search business.

But Google apparently decided that the partnership benefits outweigh the risks. It gets to position Gemini as the AI engine powering Apple's devices. It gets cloud revenue from Apple using Google's infrastructure. And it gets to shape the future of AI assistance on the world's most profitable smartphone platform.

Apple's AI development faced delays, leading to a partnership with a rival. Estimated data shows the time taken by major companies to develop AI models and their reliance on partnerships.

The Technical Architecture: On-Device Plus Cloud

One question immediately jumps out when you hear "Apple partnered with Google for AI": what about privacy? Apple's entire brand promise is built on protecting user data. How does that work when you're sending Siri requests to Google's servers?

Apple's answer is a hybrid approach: some processing happens on your device, some happens on Google's cloud.

Here's roughly how it works:

Simple requests stay on-device. If you ask Siri to set a timer or play music, that computation happens entirely on your iPhone using Apple's neural engine. No data leaves your phone. That's not new—Apple's been doing on-device AI for years.

Complex requests route to the cloud. If you ask Siri something that requires world knowledge, reasoning across multiple information sources, or personalization that draws from your larger data context, that request gets encrypted and sent to Apple's servers, which then route it to Google's Gemini models for processing.

Apple insists that Google can't see your personal data. As Apple describes the architecture, Google receives a query but not the identity or the full data of the person behind it. If you ask Siri "how should I plan around tomorrow's meetings," Siri looks up your calendar on-device, folds only the relevant details into the request, and sends Gemini something like "I have meetings at 2pm and 3pm, what's the best order to tackle these tasks." Google sees that question, but not who asked it or the rest of your personal data.

In theory, that's a reasonable privacy compromise. In practice, people have legitimate reasons to be skeptical. Encrypting data in transit and in processing is one thing. But trust is harder to verify than encryption. You're ultimately betting that Apple's engineering is as good as they claim it is, and that Google's incentive alignment matches what Apple is telling you.

This architecture also creates an interesting technical challenge. Siri has to be smart enough to figure out which requests can be handled on-device and which need cloud processing. Miss that judgment call, and you get either degraded functionality (keeping a request on-device when it needed the cloud) or unnecessary privacy exposure (sending the cloud a request the device could have handled).
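The routing decision can be sketched as a small classifier. This is a minimal illustration, not Apple's implementation: the `Route` enum, the keyword tuples, and `route_request` are hypothetical names, and a production router would presumably use a learned model rather than keyword matching.

```python
from enum import Enum


class Route(Enum):
    ON_DEVICE = "on_device"
    CLOUD = "cloud"


# Hypothetical keyword lists, used only to make the sketch concrete.
ON_DEVICE_KEYWORDS = ("timer", "alarm", "play", "volume", "call")
CLOUD_KEYWORDS = ("why", "explain", "summarize", "compare", "latest")


def route_request(text: str, cloud_allowed: bool = True) -> Route:
    """Pick a route for a request, preferring the device when in doubt."""
    words = text.lower().split()
    # Device-capable commands are checked first so they never leave the phone.
    if any(keyword in words for keyword in ON_DEVICE_KEYWORDS):
        return Route.ON_DEVICE
    # Cloud is used only if the user has not opted out of cloud processing.
    if cloud_allowed and any(keyword in words for keyword in CLOUD_KEYWORDS):
        return Route.CLOUD
    # Assumption: a weaker local answer is cheaper than an unnecessary upload.
    return Route.ON_DEVICE
```

Checking the on-device list first and defaulting to the device biases errors toward degraded functionality rather than privacy exposure. Whether that is the right bias is a product decision, not something the source confirms.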

Apple's solution is to build what they're calling "Apple Foundation Models," which are specialized versions of Google's Gemini adapted to Apple's hardware and privacy requirements. This is a clever bit of engineering. Rather than using Gemini directly, Apple is taking the base Gemini model and optimizing it specifically for iOS and macOS, with privacy safeguards baked in at the model layer.

Why Google Won Over OpenAI, Anthropic, and Perplexity

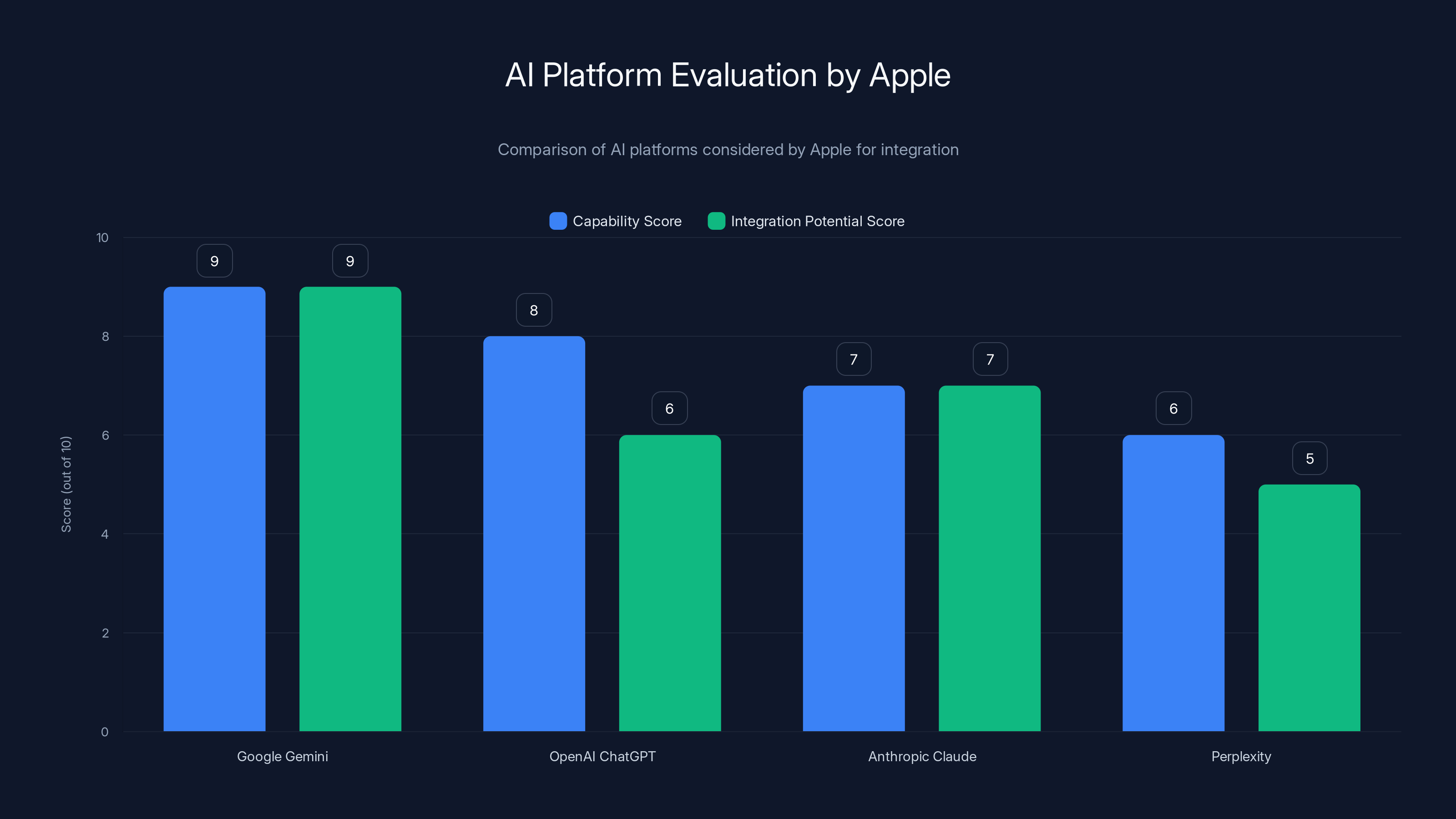

Here's what's fascinating: Apple didn't just pick Google because Google's AI is good. Apple actually evaluated multiple options. The company considered OpenAI (with ChatGPT), Anthropic (with Claude), Perplexity (with their specialized search capabilities), and others.

In the end, Gemini won. Why?

The most likely answer is that Google's Gemini offered the best combination of raw capability and integration potential. Gemini is good, but it's also designed to work at scale across different hardware. Google has been running Gemini on everything from servers to Pixel phones, which means they've solved a lot of the problems Apple needed solved: how to run advanced AI models efficiently, how to handle real-time latency requirements, how to process multimodal inputs reliably.

OpenAI's ChatGPT is arguably more capable in some ways. Claude from Anthropic is often described as more reliable and better at following complex instructions. But neither of those companies has the infrastructure background that Google has. Google owns cloud infrastructure, data centers, and years of optimization experience running AI at planetary scale.

There's also a partnership fit issue. Google and Apple both have decades of experience working together, even as competitors. They understand each other's constraints, their regulatory environment, their business models. There's trust—or at least, a working relationship—already built in. Adding a new AI partner like Anthropic would require building all that from scratch.

Does this mean ChatGPT is no longer available on Apple devices? No. OpenAI's ChatGPT already integrates with Siri, and that relationship continues. But for the core Siri upgrade, for the foundational AI powering Apple's own AI features, Google's Gemini is now the primary partner.

That's a significant shift in how these partnerships work. Rather than Apple building something in-house or exclusively partnering with one company, we're seeing a multi-partner model where different AI companies power different features. Google powers the core reasoning and world knowledge. OpenAI powers ChatGPT integration. Possibly Anthropic or others will power specialized features down the line.

This multi-partner approach actually makes sense. It reduces Apple's dependency on any single AI company. If Google Gemini suddenly degrades, Apple has OpenAI as a backup. If one model is better at reasoning and another is better at code generation, Apple can use both.

The Strategic Pivot: Apple Admits It Needs Partners

There's a broader story here about Apple's AI strategy, and it's not a flattering one for Apple's internal AI teams. For years, the company bet big on building everything in-house. It hired John Giannandrea from Google to lead Apple's AI efforts. Giannandrea is legendary in the AI world: he previously led search and AI at Google and understands how to scale AI across billions of users.

Yet even with Giannandrea, Apple's internal efforts hit a wall. The company kept delaying Apple Intelligence. Siri remained a punchline. And eventually, Apple made a significant leadership change: Giannandrea stepped down, and Mike Rockwell, the head of Vision Pro, took over AI efforts.

That change signals something important. Apple decided it needed different leadership for AI—leadership that understands both AI and products, rather than pure AI research. Vision Pro's success (or failure, depending on your perspective) showed that Rockwell understands how to ship hardware-AI integration. That skill set mattered more than pure AI research credentials.

But here's what's really interesting: the Gemini partnership was probably baked into that leadership change. Giannandrea's departure and Rockwell's ascension likely happened because Apple's board realized that the in-house AI strategy wasn't working and they needed to shift to partnerships. Rockwell is the right leader for that shift—he's a pragmatist who ships products, not a researcher chasing theoretical improvements.

This is a humbling moment for Apple. The company that prided itself on vertical integration and complete control is now outsourcing its most critical AI capabilities to a competitor. That's not weakness exactly—it's pragmatism. Better to ship excellent features using a partner's AI than to ship mediocre features using your own.

But it does mark the end of Apple's grand vision of building an entirely proprietary AI stack. That era is over. The future is partnerships.

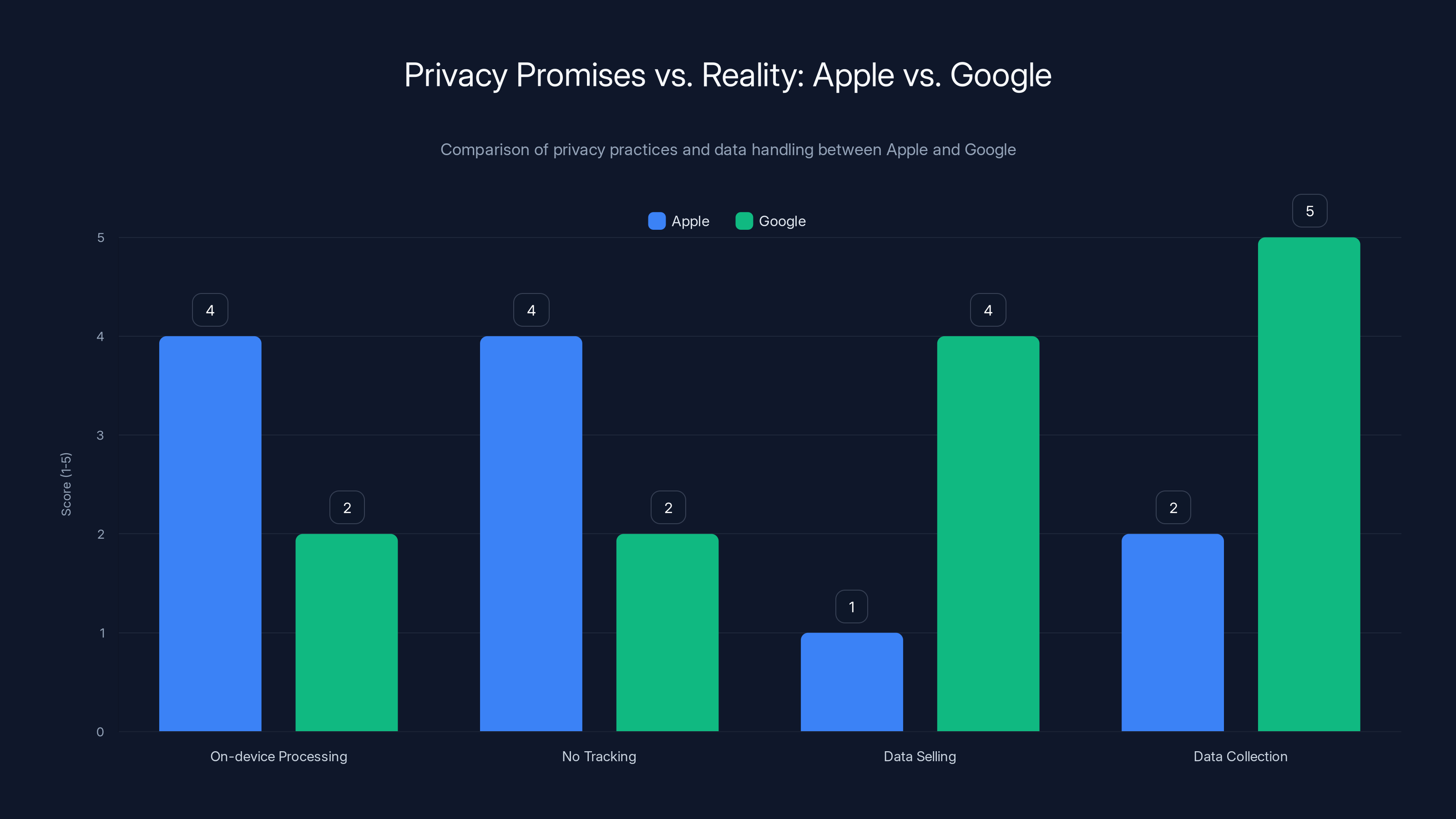

Apple scores higher in on-device processing and no tracking, while Google is more involved in data collection and selling. Estimated data based on privacy practices.

Privacy Promises vs. Reality: What You Need to Know

Let's be direct: there's a credibility gap between Apple's privacy marketing and what this partnership means in practice.

Apple's brand narrative is "we protect your data." That's the core message. On-device processing, no tracking, no selling data to advertisers. It's a compelling story, and in many cases, it's true. Apple genuinely does process a lot of data locally, and Apple doesn't operate an advertising business like Google does.

But now Siri is routing complex requests to Google's servers. Google is the company that built its $280 billion advertising empire on processing user data. Google knows more about you than almost any other company on earth. Google profiles your interests, your search history, your YouTube watch time, your Gmail, and your location data from Maps.

Apple says that Gemini-powered processing is partitioned—Google can't see your personal data. Maybe that's true. But you're still giving Google another signal: you're asking Siri a question. That metadata—the query itself, the fact that you're using Siri, the timestamp, potentially your device type—that's still valuable to Google. It's not your personal calendar or messages, but it's signal.

Over time, as Siri handles more of your requests, Google accumulates a profile of your interests and needs based on what you ask Siri. That's not the same as reading your email, but it's still data collection. And data collection is Google's core business.

Apple's engineers probably have implemented privacy safeguards as well as they can. But the fundamental truth is that you're now giving Google insight into your information needs in a way you weren't before. Whether that's acceptable depends on your threat model and your trust in both Apple's engineering and Google's promises.

One other thing to consider: regulatory risk. If there's ever a major scandal about Google's privacy practices, or if regulators crack down on tech company data collection, this partnership could be retroactively controversial. Apple's brand is tied to privacy. A future data breach involving Google and Siri queries would be a massive PR disaster for Apple.

What This Means for OpenAI and the AI Rivalry

OpenAI should probably be concerned about this partnership, though not for the reasons you might think.

The immediate reaction is that Apple's move means OpenAI lost a major partnership. And that's true—Apple is using Google's Gemini instead of OpenAI's models for core Siri functionality. But ChatGPT is still integrated into Siri as an option, so OpenAI hasn't lost everything.

The deeper concern is strategic positioning. Apple's move signals that Gemini is now the AI engine for one of the world's most important platforms. Every iPhone, iPad, and Mac that runs Apple Intelligence will be powered by Gemini for certain operations. That's an enormous installed base, and a massive distribution channel for Google's AI.

OpenAI's distribution model is different. ChatGPT is available through the web, mobile apps, and integrations like Siri. But it doesn't have the deep integration that Gemini now has with Apple's OS. That's a meaningful competitive advantage for Gemini going forward.

The other thing to consider: this partnership validates the idea that having the best AI model isn't enough. You also need distribution, infrastructure, and relationships. OpenAI has arguably the best consumer AI product in ChatGPT, but it doesn't have Apple-scale distribution. Google has both the best model (arguably) and the distribution infrastructure. That's a powerful combination.

For OpenAI, the silver lining is that ChatGPT can still compete on quality. If ChatGPT's responses are visibly better than Gemini's, users will ask for ChatGPT within Siri. But that's not the default anymore. The default is now Google.

For Anthropic, the implications are even starker. Anthropic doesn't have the distribution infrastructure that Google has, and Claude isn't yet as deeply integrated into major platforms as Gemini or ChatGPT. This partnership suggests that Anthropic might struggle to compete for OS-level integrations unless the company builds its own platform or finds a major partner.

The Broader Implications for Enterprise AI Partnerships

What Apple and Google are doing here is establishing a template for how enterprise AI partnerships should work in 2025 and beyond.

The old model was either build-it-yourself or partner with one company exclusively. Apple historically chose build-it-yourself for almost everything. Google chose partner strategically but maintains its own AI development. Neither approach is fully working anymore.

The new model is portfolio-based: build some stuff yourself, partner for other stuff, maintain flexibility to switch partners if needed. Apple is adopting this with Siri. Google does this across its product line (Google Cloud partners with multiple AI companies, for example). Microsoft does this too, with Copilot integrating multiple models.

This creates several interesting dynamics:

First, it reduces single-company dependency. If Gemini becomes critical to your product, but Google's quality degrades, you have OpenAI as a backup. That competitive pressure keeps Google honest.

Second, it enables faster innovation. Rather than building everything in-house, Apple can adopt best-in-class AI from multiple partners and ship faster.

Third, it commoditizes foundation models somewhat. If Gemini and GPT and Claude are all interchangeable, then the differentiation moves up the stack—to the companies that can best integrate and adapt these models for specific use cases. That's where Apple is placing its bets.

Fourth, it creates new leverage for AI companies. Being integrated into iOS is a massive advantage for Gemini. But it also means Google is now accountable to Apple for reliability, latency, and capability. That changes the power dynamic.

For other tech companies watching this, the message is clear: you can't compete on AI alone anymore. You need distribution, infrastructure, and partnerships. Pure AI companies like Anthropic and OpenAI will have to decide: do we build our own platforms, partner with existing platforms, or remain model-only companies? The margins are thinner in model-only, but the distribution challenge is lower.

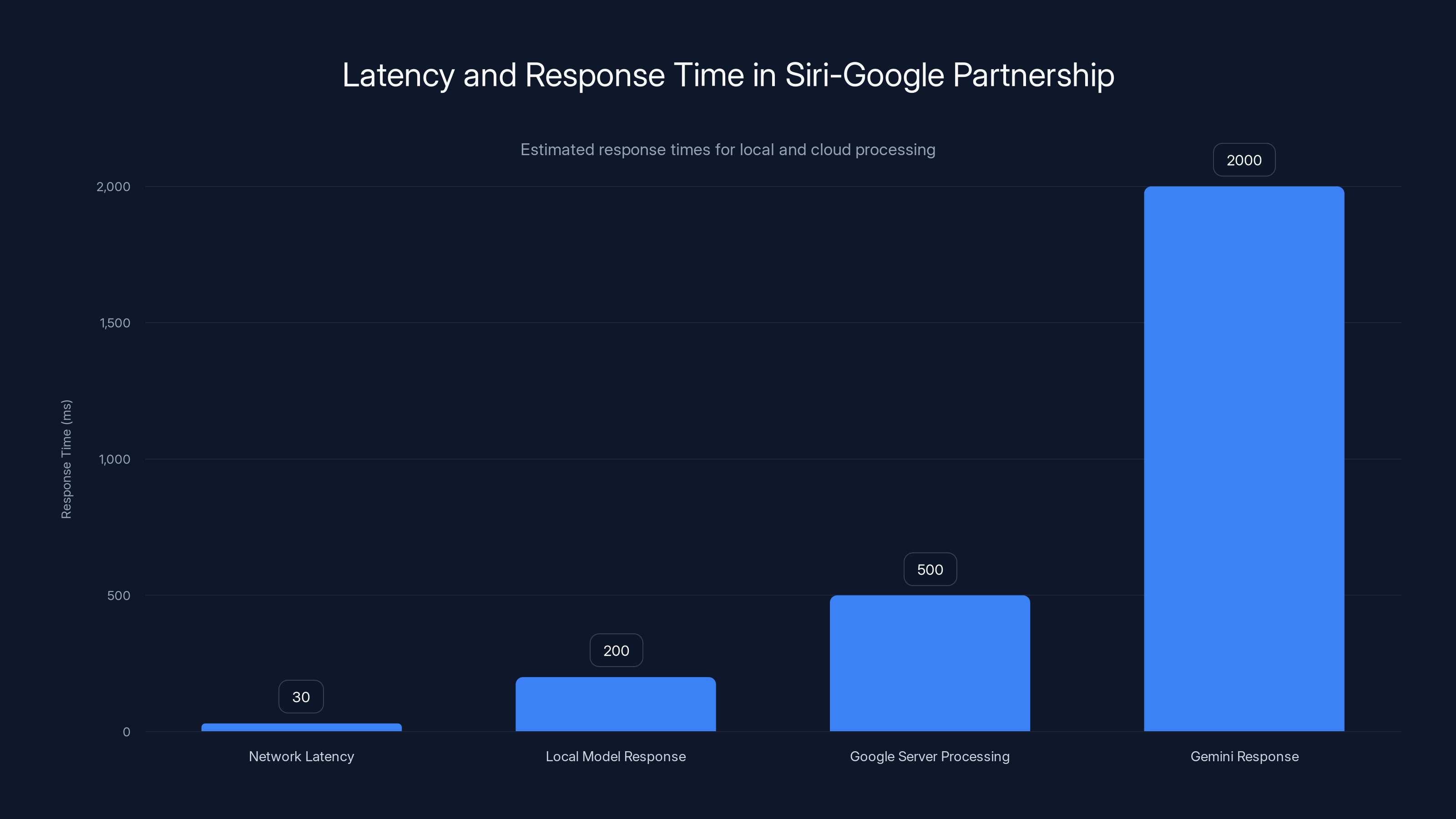

Estimated data shows that local models provide faster initial responses (200ms) compared to cloud processing (500ms to 2000ms), enhancing user experience by reducing perceived latency.

Technical Details That Actually Matter

Let's dig into some of the technical specifics that will determine whether this partnership actually works.

Latency is the first issue. Siri needs to feel responsive. You ask a question, and you expect an answer within about a second. If the request has to round-trip to Google's servers, that's immediately a problem. Suppose the nearest data center serving the request is 3,000 miles away. Signal propagation alone then costs roughly 30-50 milliseconds per round trip. Add in processing time on Google's servers, and you're easily at 500ms minimum, often longer.
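The propagation figure can be checked with back-of-the-envelope arithmetic. The sketch below assumes signals travel through fiber at roughly two-thirds the speed of light (about 200,000 km/s) and ignores routing detours, queuing, and TLS handshakes, all of which add to the real number. The function name is illustrative.

```python
MILES_TO_KM = 1.609344
# Assumption: light in optical fiber covers roughly 200,000 km per second.
FIBER_SPEED_KM_PER_S = 200_000


def propagation_ms(distance_miles: float, round_trip: bool = True) -> float:
    """Lower bound on network delay from distance alone, in milliseconds."""
    distance_km = distance_miles * MILES_TO_KM
    one_way_ms = distance_km / FIBER_SPEED_KM_PER_S * 1000
    return one_way_ms * 2 if round_trip else one_way_ms


print(f"{propagation_ms(3000):.1f} ms round trip over 3,000 miles")
```

Under these assumptions the round trip comes out just under 50 ms, at the top of the 30-50 ms range. Distance is therefore a small share of a 500 ms budget, and most of the delay comes from model inference and server-side queuing.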

Apple's answer is to run a local model that can respond to simple questions instantly while fetching better answers from Gemini in the background. The local model might give you 90% of the answer in 200ms, while Gemini gives you 99% of the answer in 2 seconds. The user sees the quick response immediately, and the better response appears a moment later.

That's elegant, but it only works if the local model is good enough for the initial response. If it's too dumb, the initial response is useless, and users get frustrated waiting for Gemini. Finding that balance is crucial.
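The "quick draft now, better answer later" pattern can be sketched with `asyncio`. Everything here is a stand-in: `local_answer` and `cloud_answer` are hypothetical coroutines that simulate inference with short sleeps (scaled down from the 200ms and 2s figures above), and `show` is whatever callback updates the UI.

```python
import asyncio


async def local_answer(query: str) -> str:
    await asyncio.sleep(0.02)  # stands in for fast on-device inference
    return f"[local] quick answer to: {query}"


async def cloud_answer(query: str) -> str:
    await asyncio.sleep(0.2)  # stands in for the slower cloud round trip
    return f"[cloud] detailed answer to: {query}"


async def answer_progressively(query: str, show, cloud_timeout: float = 1.0) -> None:
    """Show the local draft first, then replace it if the cloud answers in time."""
    # Start the cloud request immediately so it overlaps with local inference.
    cloud_task = asyncio.create_task(cloud_answer(query))
    show(await local_answer(query))
    try:
        show(await asyncio.wait_for(cloud_task, timeout=cloud_timeout))
    except asyncio.TimeoutError:
        pass  # the cloud was too slow, so the local draft stays on screen


if __name__ == "__main__":
    asyncio.run(answer_progressively("weather tomorrow", print))
```

The timeout is the knob that matters. If it is too short, users rarely see the better answer. If it is too long, a weak local draft lingers on screen.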

The second technical challenge is multimodal reasoning. Gemini can handle images, text, audio, and video. But iPhones generate a lot of image data (photos, screenshots) and audio data (Siri requests). Sending all that to Google's servers would be a privacy nightmare and a bandwidth problem. Apple has to be extremely selective about what gets sent to the cloud.

The likely approach is that Apple's on-device model handles basic image understanding (is this a photo of a person, a document, a scene?), and only sends the analyzed result to Gemini, not the image itself. So instead of sending an image to Google, Apple sends "user is showing me a photo of a contract with lots of text." Google then helps reason about that, but never sees the actual image.
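A minimal sketch of that idea follows, assuming a hypothetical on-device analyzer has already produced an `ImageSummary`. The class, its fields, and `build_cloud_payload` are illustrative names, not part of any Apple or Google API.

```python
import json
from dataclasses import asdict, dataclass


@dataclass(frozen=True)
class ImageSummary:
    kind: str          # e.g. "document", "person", "scene"
    has_text: bool
    description: str   # short caption produced on-device


def build_cloud_payload(question: str, summary: ImageSummary) -> str:
    """Serialize only the question and the summary; no pixel data is included."""
    return json.dumps({"question": question, "image_summary": asdict(summary)})


summary = ImageSummary("document", True, "photo of a contract with lots of text")
print(build_cloud_payload("What should I check before signing this?", summary))
```

The payload type has no field for image bytes, so the "never send the image" rule is enforced by construction rather than by convention. The trade-off is that the cloud model can only reason about what the on-device summary captured.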

Third is personalization. Gemini is trained on general web data. It knows about the world, but it doesn't know about you specifically. So when you ask Siri something that requires personal context, Apple has to handle that locally. "When is my next meeting" gets answered on-device with information from your calendar. "What should I do before my next meeting" requires reasoning that might go to Gemini, but only with the meeting description, not with other personal data.

Getting this right requires a sophisticated understanding of which data is personal and which is contextual. One wrong classification and you've either leaked personal data or reduced the quality of the response.
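One way to make that classification explicit is an allowlist of fields that may accompany a cloud query. The sketch below is an assumption about how such a filter could look. The field labels are hypothetical, and which calendar fields count as "personal" is a policy choice the source does not specify.

```python
from enum import Enum


class Sensitivity(Enum):
    PERSONAL = "personal"      # never leaves the device
    CONTEXTUAL = "contextual"  # may be attached to a cloud query


# Hypothetical labels for the fields of a calendar event.
FIELD_SENSITIVITY = {
    "title": Sensitivity.CONTEXTUAL,
    "description": Sensitivity.CONTEXTUAL,
    "start_time": Sensitivity.CONTEXTUAL,
    "attendees": Sensitivity.PERSONAL,
    "location": Sensitivity.PERSONAL,
    "notes": Sensitivity.PERSONAL,
}


def cloud_safe_view(event: dict) -> dict:
    """Keep only fields explicitly labeled contextual; unknown fields are dropped."""
    return {
        key: value
        for key, value in event.items()
        if FIELD_SENSITIVITY.get(key) is Sensitivity.CONTEXTUAL
    }
```

Dropping unlabeled fields by default means a newly added field cannot leak before someone classifies it. The cost is that responses may be less useful until the label is added.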

Timeline and Rollout: When This Actually Ships

Apple announced this partnership in March 2025, but you won't see Gemini-powered Siri until later in the year. The rollout will likely follow Apple's typical pattern:

Phase 1: Beta testing (Spring/Summer 2025). Apple releases the feature to beta testers and developers. This is where the real-world issues surface. Latency problems become apparent. Privacy edge cases emerge. Quality issues get logged.

Phase 2: Limited rollout (Fall 2025). The feature ships with iOS 19 and macOS 16 (or whatever the version numbers are), but probably with limitations. Maybe it only works with certain types of requests initially. Maybe it only works in certain languages or regions initially. This reduces the scope of potential problems.

Phase 3: Full rollout (Winter 2025/2026). Once the feature has been running successfully in limited form, Apple expands it to all users.

This timeline assumes everything goes well. If there are major privacy issues, security concerns, or quality problems, the timeline could slip further. Apple has a lot of incentive to get this right, because Siri's reputation is already fragile. A bad rollout could make things worse.

One thing to watch: whether Apple eventually moves more of the cloud processing onto local Gemini models. Right now, Apple is partnering with Google's cloud services. Over time, Apple might work with Google to create smaller Gemini models that run on-device. That would reduce Google's visibility into queries while maintaining most of the quality benefits.

The Competitive Landscape: What This Means for Other Players

This partnership reshapes the AI competitive landscape in ways that aren't immediately obvious.

For Google: This is a win-win-win situation. Google gets to claim that Gemini powers the world's most popular phone. Google Cloud gets revenue from the partnership. And, depending on how strictly the data partitioning holds, Google may get signals from Siri queries that inform search and advertising. The downsides are the risk to its own search traffic and the reputational damage if a privacy problem surfaces.

For Microsoft: Microsoft has been betting on AI through its partnership with OpenAI. The Siri-Gemini deal doesn't change that fundamental strategy. But it does highlight that Microsoft needs Copilot to be competitive enough to win platform deals on its own merits, not just on the strength of its OpenAI relationship. If Copilot is better than Gemini, companies will choose Microsoft. The bar just got higher.

For OpenAI: This stings a bit. ChatGPT is still the leading consumer AI product, but it's not the default on iOS anymore. OpenAI is now in a supporting role rather than a primary role. That's a strategic loss, though ChatGPT integration still matters.

For Anthropic: Claude has a strong reputation for following complex instructions and reasoning carefully. But Claude doesn't have the distribution or partnership depth that Gemini has. Anthropic needs to either build its own platform, find major partners, or accept being a specialist product used by developers and enterprises rather than consumers.

For Meta: Meta is building its own Llama models and integrating them into WhatsApp and other services. This partnership validates Meta's strategy of building in-house plus integrating with cloud partners. Meta should probably ensure Llama integration into Facebook, Instagram, and WhatsApp is as seamless as Gemini integration into iOS.

For startups and smaller companies: This is actually good news. The Big Tech partnerships mean that on-device and cloud AI is becoming a utility. Smaller companies can focus on building differentiated experiences on top of standard AI backends, rather than trying to build AI from scratch.

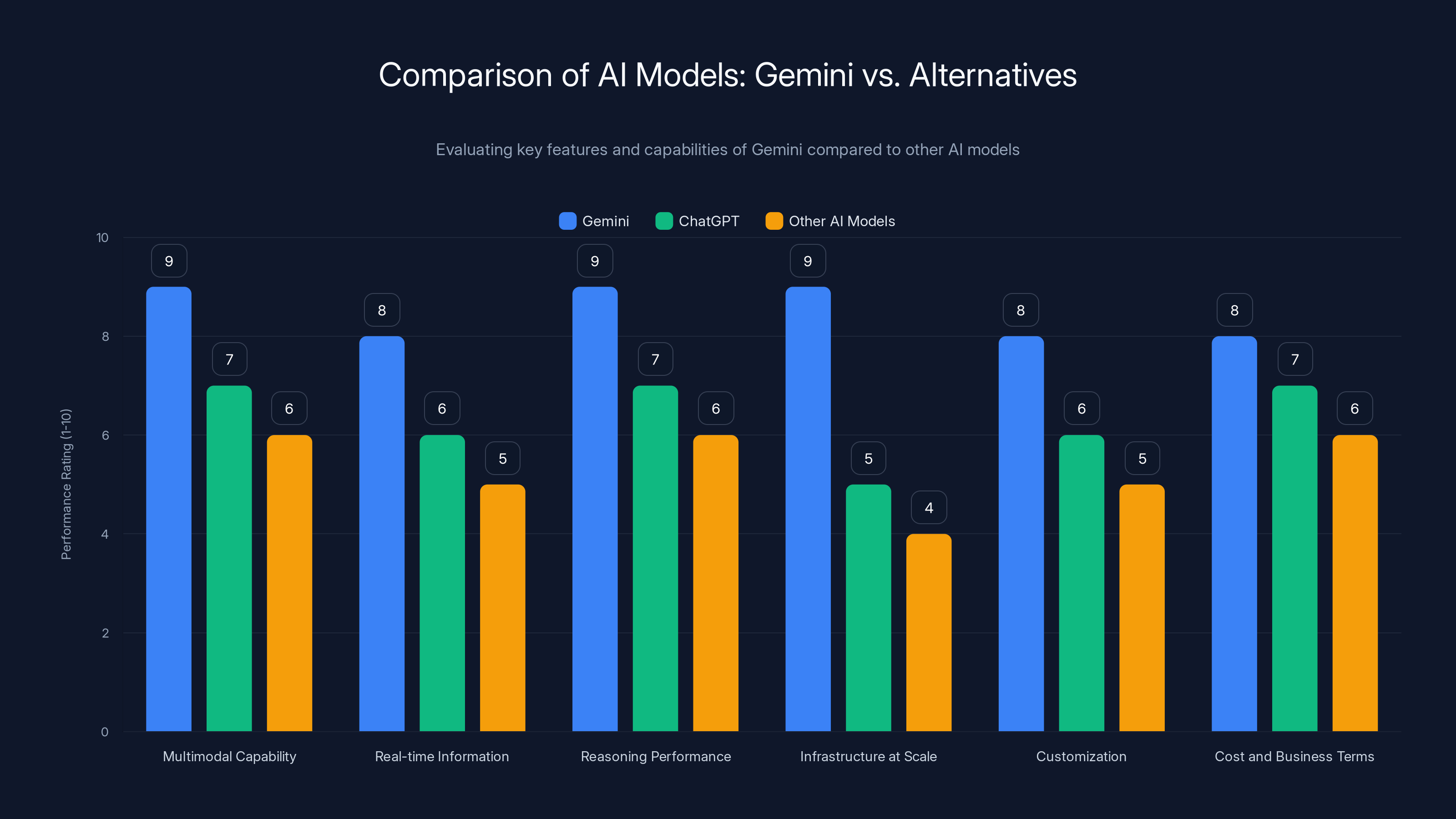

Gemini outperforms other AI models across key features such as multimodal capability and infrastructure scalability, making it a preferred choice for Apple. Estimated data based on qualitative descriptions.

Privacy Architecture Deep Dive: How Apple Says It Works

Apple's privacy promises are specific enough that they're worth examining in detail. Here's what the company is claiming:

- Query encryption in transit. Siri requests are encrypted before they leave your device and remain encrypted in transit to Apple's servers and to Google's servers.

- Data partitioning. Google doesn't receive your full request, only the contextual parts. Personal information like your calendar or messages stays on your device or on Apple's servers.

- No personal data sent to Google. Apple's servers act as an intermediary that removes identifying information before sending queries to Gemini.

- Opt-out capability. Users can disable cloud processing and use only on-device Siri, though with reduced capability.

- No behavioral profiling by Google. Google doesn't build profiles of your Siri usage habits (or so Apple claims).

In principle, these safeguards make sense. In practice, verifying them is difficult. You can't see into Google's systems to confirm that they're not logging queries. You have to trust Apple's engineering and Google's integrity.

The biggest risk is probably what security researchers call "context leakage." Even if Google doesn't know that a query comes from you, the query itself might contain identifying information. For example, if you ask Siri "remind me to call my wife Jennifer on her birthday," that query contains identifying information about your wife's name and birthday. Google learns something about you from that query, even if it doesn't know your identity.

Apple's counter to this is that they've thought about context leakage and built in safeguards. For example, on-device processing might extract just the intent ("set a reminder") and send that to Gemini, without sending the specific content (your wife's name). But implementing that reliably across thousands of possible request types is genuinely hard.
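To make the safeguard concrete, here is a minimal sketch that swaps known contact names for placeholders before a query leaves the device and restores them in the response. It is an illustration under stated assumptions, not Apple's method: `KNOWN_CONTACTS`, `split_request`, and `restore` are hypothetical, and real redaction would need to cover far more than names.

```python
import re
from dataclasses import dataclass


@dataclass
class SplitRequest:
    cloud_query: str   # what would leave the device
    local_slots: dict  # placeholder -> private value, kept on the device


# Hypothetical: names the device already knows from the address book.
KNOWN_CONTACTS = {"Jennifer", "Marcus"}


def split_request(text: str) -> SplitRequest:
    """Replace known contact names with placeholders before cloud dispatch."""
    slots = {}
    query = text
    for index, name in enumerate(sorted(KNOWN_CONTACTS)):
        pattern = rf"\b{re.escape(name)}\b"
        if re.search(pattern, query):
            placeholder = f"<PERSON_{index}>"
            slots[placeholder] = name
            query = re.sub(pattern, placeholder, query)
    return SplitRequest(cloud_query=query, local_slots=slots)


def restore(answer: str, slots: dict) -> str:
    """Re-insert the private values into the cloud response, on-device."""
    for placeholder, value in slots.items():
        answer = answer.replace(placeholder, value)
    return answer
```

For "remind me to call my wife Jennifer on her birthday," the cloud query no longer contains the name, but it still says "my wife" and "her birthday." That residue is exactly the kind of context leakage that makes reliable redaction hard.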

The other privacy consideration is data retention. Does Google keep logs of Siri queries? For how long? Under what circumstances can law enforcement access those logs? These are important questions that Apple hasn't fully answered publicly.

One thing worth noting: this privacy architecture is probably more secure than what many other companies do. Microsoft's Copilot and OpenAI's API partnerships often involve less data partitioning. Google's own services often send more data to servers than Apple's Siri partnership requires. By that standard, Apple's approach is relatively privacy-conscious.

But it's still a step backward from the on-device-only Siri that Apple used to promote. That's the real privacy trade-off here: Apple is gaining capability at the cost of giving Google some visibility into what users ask.

The Road Ahead: What's Next for AI on Apple Devices

This partnership is just the starting point. Here's what's likely coming:

Expanded Gemini integration. Over the next 2-3 years, Gemini will power more and more features across iOS and macOS. Not just Siri, but also writing assistance, image generation, document understanding, and more. Anywhere Apple needs advanced AI, Gemini will probably be involved.

Competing models for specific tasks. While Gemini powers core Siri functionality, other specialized models might power other features. You might see Claude powering code analysis, Llama powering certain types of image understanding, and so on. That requires an abstraction layer that Apple is probably already building.

Local model evolution. Apple will continue investing in local models that run on-device. The split between local processing and cloud processing will probably shift more toward local over time, as on-device chips get more powerful.

Multi-provider redundancy. As Siri becomes more critical, Apple will build redundancy into the cloud processing. If Google's Gemini is unavailable, Apple needs a backup. OpenAI's ChatGPT might serve that role, or Apple might build a secondary partnership with another provider.

Regulatory scrutiny. Regulators in the EU, US, and other jurisdictions will probably scrutinize this partnership. Is Google's involvement anti-competitive? Does it give Google unfair advantage? These are questions that probably haven't been fully resolved yet.

Developer access. Eventually, third-party developers might get access to the same Gemini integration that Apple uses internally. That could open up new categories of intelligent apps that weren't possible before.
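The abstraction layer and backup-provider ideas in the list above can be sketched as an ordered fallback chain. This is a hypothetical design, not a description of anything Apple has announced. Each provider is just a callable taking a prompt, and `ProviderError` is an invented exception type.

```python
from typing import Callable, Sequence, Tuple


class ProviderError(Exception):
    """Raised by a provider that cannot serve the request."""


Provider = Callable[[str], str]


def ask_with_fallback(
    prompt: str, providers: Sequence[Tuple[str, Provider]]
) -> Tuple[str, str]:
    """Try providers in order; return (provider_name, answer) from the first success."""
    errors = []
    for name, call in providers:
        try:
            return name, call(prompt)
        except ProviderError as exc:
            # Record the failure and move on to the next provider in the chain.
            errors.append(f"{name}: {exc}")
    raise ProviderError("all providers failed: " + "; ".join(errors))
```

Because callers see only the `Provider` signature, swapping the primary model or adding a specialist for one task means changing the provider list rather than the features built on top of it.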

Longer term, the question is whether this partnership becomes a template for other companies. Could Samsung partner with Google for Galaxy Assistant? Could other phone makers? Or is this specific to Apple and Google's unique relationship?

For Developers: What This Means for Building Apps

If you're building apps for iOS or macOS, this partnership matters for several reasons:

First, you may gain new capabilities. If Apple opens its Gemini integration to developers, you could build features that rely on Gemini's reasoning and knowledge. Writing assistants, summarization tools, and code generation all become easier to build when you can tap into a high-quality foundation model.

Second, you can't count on being the default anymore. If Siri can do what your app does, some users won't need your app. You need to think about what you're offering on top of the AI—a specialized experience, better UI, domain expertise, or something else.

Third, you might want to integrate Gemini directly into your app. Apple will likely offer APIs for developers to call Gemini, much as you can call OpenAI's APIs today. That's a new business opportunity.

Fourth, you should start thinking about Gemini as a platform. Just as developers built on top of ChatGPT after its launch, developers will probably build on Gemini. Learning how to work with Gemini APIs and capabilities will be valuable.

Fifth, privacy requirements become more complex. If your app relies on Gemini processing, you need to understand and communicate Gemini's privacy policies to your users. That's additional complexity compared to on-device processing.

The developers winning most from this will be those who layer domain expertise on top of Gemini. For example, a legal research app that uses Gemini to understand documents but adds legal-specific knowledge and analysis. A medical app that uses Gemini for reasoning but has medical training baked in. That layering is where the value is.
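Here's a rough sketch of what that layering could look like in code. It assumes the model is reachable as a simple text-in, text-out function. `make_domain_assistant` and the example rules are invented for illustration, and a real legal or medical product would need far more than prompt wrapping.

```python
from typing import Callable, List


def make_domain_assistant(
    model: Callable[[str], str], domain_rules: List[str]
) -> Callable[[str], str]:
    """Wrap a general-purpose model with fixed domain guidance (hypothetical)."""

    def ask(question: str) -> str:
        rules = "\n".join(f"- {rule}" for rule in domain_rules)
        prompt = f"Follow these domain rules:\n{rules}\n\nQuestion: {question}"
        return model(prompt)

    return ask


# Stand-in for a foundation model: it simply echoes the prompt it receives.
def echo_model(prompt: str) -> str:
    return prompt


legal_ask = make_domain_assistant(
    echo_model,
    ["Cite the governing statute", "Flag jurisdiction-specific exceptions"],
)
print(legal_ask("Is this non-compete clause enforceable?"))
```

The general model stays interchangeable; the domain rules, data, and review steps you wrap around it are the part competitors can't copy by calling the same API.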

[Figure: Google's Gemini scored highest in both capability and integration potential, influencing Apple's decision. Estimated data based on qualitative analysis.]

The Market Reaction and Investor Implications

When Apple announced this partnership, markets reacted in predictable ways.

Google's stock was relatively flat. The partnership was viewed as good for Google, but not surprising: Google is already widely seen as a leader in AI, so cementing that position wasn't a dramatic new signal.

Apple's stock was also relatively flat. The partnership signals that Apple's in-house AI efforts didn't work as planned, which is negative. But it also signals pragmatism and a commitment to shipping good products, which is positive. These roughly canceled each other out.

OpenAI wasn't affected directly (it's private), but the AI investment community noted that OpenAI lost a major partnership opportunity. That probably influenced OpenAI's fundraising conversations and strategic decisions.

Anthropic saw a risk signal. If Anthropic wants to compete with Google and OpenAI for OS-level partnerships, it needs to build distribution or find partners faster. Anthropic's funding reflects this reality.

Longer term, this partnership probably validates the idea that AI models are becoming commodities. If Gemini, GPT, and Claude are increasingly interchangeable, then the value in the AI industry moves away from model companies and toward companies that integrate and apply these models.

That's good news for platform companies like Apple and Microsoft, and for Google, which has both its own models and its own platforms. It's harder news for pure model companies like OpenAI and Anthropic, which have to differentiate on quality alone.

Comparing Gemini to Other Options: Why Gemini Won

It's worth understanding exactly why Apple chose Gemini over the alternatives. This wasn't a random choice—it was based on specific technical criteria.

Multimodal capability: Gemini handles text, images, audio, and video. ChatGPT has added image capability, but Gemini was earlier and more comprehensive.

Real-time information: Gemini can search the web and process results. That's critical for the "World Knowledge Answers" feature. ChatGPT offers web search too, but Google's search infrastructure was arguably a better fit for OS-level integration.

Reasoning performance: On standard AI benchmarks, Gemini 3 was outperforming other models when the partnership was being finalized. That matters for a voice assistant that needs to understand context and reason through complex requests.

Infrastructure at scale: Google has been running Gemini on Pixel phones for months. Google understood how to optimize it for mobile, how to handle latency requirements, how to partition processing between device and cloud. OpenAI didn't have that operational experience.

Customization and fine-tuning: Gemini is designed to be fine-tuned for specific use cases. Apple could adapt Gemini specifically for the iPhone use case, with iOS-specific training data. That's not impossible with other models, but Gemini was built with that flexibility in mind.

Cost and business terms: Google and Apple have negotiated terms that work for both companies. Google gets distribution and signals. Apple gets high-quality AI at a cost that makes sense for Apple's business model. The specific terms aren't public, but the deal had to work financially for Apple.

None of these factors alone explains the choice. But together, they explain why Gemini beat the alternatives. It wasn't just raw capability—it was the combination of capability, infrastructure, flexibility, and business fit.

Common Questions and Concerns

Let's address the questions people are actually asking:

Is this bad for privacy? Compared to current Siri, it's a step backward—you're now sharing some data with Google that previously stayed on-device. Compared to ChatGPT or other cloud-based AI, it's probably a step forward—Apple is being more careful about what data gets sent. The net evaluation depends on your privacy bar.

Can I opt out? Yes, Apple says you can disable cloud processing and use on-device Siri only. But that Siri will be less capable. It's a trade-off between capability and privacy.

Will this replace Google Search? Partially. For certain types of questions, Gemini-powered Siri will give you good answers without requiring a web search. But for complex research, looking at multiple sources, and understanding different perspectives, web search is still valuable. Siri will compete with search for some queries, but not all.

Does this mean ChatGPT is dead? No. ChatGPT is still integrated into Siri and still available as a standalone app. But ChatGPT is no longer the default—Gemini is. That's a competitive loss for OpenAI, but not a mortal blow.

Will this work offline? No, not for the Gemini-powered features. The cloud processing requires internet connectivity. Apple's on-device Siri will work offline, but with limited capability.

Does Apple get paid by Google for this? The business terms aren't public, so who pays whom hasn't been confirmed. The arrangement is presumably mutually beneficial and likely involves some combination of revenue sharing, infrastructure costs, and strategic positioning rather than a simple one-way check.

FAQ

What exactly is the Apple-Google partnership for Siri?

Apple has partnered with Google to use Google's Gemini AI models to power an upgraded Siri that will launch later in 2025. The partnership includes a multi-year agreement for Apple to use Gemini models and Google Cloud infrastructure for Apple's AI features. Gemini will handle complex reasoning tasks, real-time web searches, and personalized responses that require cloud processing, while simpler requests will still be processed on-device through Apple's own models.

How does this partnership affect my privacy?

With Gemini-powered Siri, some of your voice requests will be sent to Google's servers for processing. Apple claims that it uses encryption and data partitioning to keep personal information separate from the queries sent to Gemini. You can disable cloud processing and use only on-device Siri for maximum privacy, though with reduced capability. The privacy impact is a trade-off: improved AI functionality in exchange for giving Google some visibility into your requests.

Why didn't Apple use ChatGPT instead of Gemini?

While OpenAI's ChatGPT is excellent, Apple selected Gemini because of its multimodal capabilities, proven scalability on mobile devices, real-time web integration, and strong performance on reasoning benchmarks. Google also brings deep infrastructure expertise from running Gemini on Pixel phones. ChatGPT is still available in Siri as an optional integration, but Gemini powers the core features.

When will Gemini-powered Siri actually launch?

Apple announced the partnership in March 2025, with the Gemini-powered Siri expected to launch later in 2025, likely with iOS 19. Apple will likely do a phased rollout starting with beta testing in spring/summer, limited availability in fall, and full availability by winter 2025/2026.

Can I use Gemini-powered Siri if I don't want Google access to my data?

Yes, you'll be able to disable cloud processing and use only Apple's on-device Siri. However, the on-device version will have significantly reduced capability compared to the Gemini-powered version. You'll be choosing privacy over functionality, which is a legitimate choice for privacy-conscious users.

What does this partnership mean for OpenAI and ChatGPT?

OpenAI has lost the default position on iOS, where Gemini is now the primary AI engine for Siri. ChatGPT remains available as an integration option within Siri and as a standalone app. This is a competitive loss for OpenAI, but not catastrophic—users who prefer ChatGPT can still choose it. However, the default matters significantly for consumer adoption.

Will Gemini-powered Siri replace Google Search?

Partially. For certain types of queries (weather, quick facts, simple questions), Siri will provide answers without requiring you to open Google Search. But for in-depth research, comparing multiple perspectives, and complex queries, Google Search is still valuable. This partnership represents competition between Siri and Search, not replacement of Search.

Is Google paying Apple for this partnership?

The specific financial terms aren't public, so the direction and size of any payments haven't been confirmed. The deal may involve cloud infrastructure costs and some form of revenue sharing. Either way, the partnership appears mutually beneficial: Google benefits from the distribution of Gemini and signals from Siri queries, while Apple benefits from having access to a best-in-class AI model without building it entirely in-house.

How will Apple keep my data private when sending requests to Google?

Apple uses encryption for data in transit and implements data partitioning on its servers to remove identifying information before sending queries to Google's Gemini servers. Apple also claims that simple requests remain entirely on-device without ever reaching Google's servers. However, these privacy safeguards can't be independently verified, so they require trusting both Apple's engineering and Google's integrity.

What about other AI companies like Anthropic and Perplexity?

They weren't chosen for the core Siri upgrade, though partnerships with other AI companies remain possible. Apple has reportedly explored partnerships with Anthropic and Perplexity as well. The multi-partner strategy means Apple might integrate different AI companies for different specialized tasks in the future, but Gemini is now the primary foundation for core Siri intelligence.
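As a rough illustration of the "remove identifying information before sending" idea from the privacy answer above, here is a minimal redaction sketch. Apple hasn't published how its partitioning works. The `redact` function and its two regular expressions are assumptions, and pattern matching like this is far weaker than anything a production system would rely on.

```python
import re

# Deliberately simple patterns for illustration; real PII detection is harder.
EMAIL = re.compile(r"[\w.+-]+@[\w-]+\.[\w.-]+")
PHONE = re.compile(r"\+?\d[\d\s().-]{7,}\d")


def redact(query: str) -> str:
    """Replace email addresses and phone-like numbers with placeholders."""
    query = EMAIL.sub("[EMAIL]", query)
    query = PHONE.sub("[PHONE]", query)
    return query


outgoing = redact("Text 555-123-4567 and mail jane.doe@example.com today")
print(outgoing)
```

Only the redacted string would be forwarded to the cloud model in this sketch. The mapping from placeholders back to real values would stay on the device or on the first-party server.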

The Real Impact: What Happens Next

When you zoom out from the technical details, this partnership is significant because it represents a fundamental shift in how technology companies approach AI.

For years, the vision was that companies would build AI entirely in-house. Apple would build Apple AI. Google would build Google AI. Microsoft would build Microsoft AI. Each company would have its own proprietary model, trained on proprietary data, running on proprietary infrastructure.

That vision is dead.

What's replacing it is a partnership model where companies source best-of-breed AI from multiple providers, customize it for their use cases, and build it into their products. Apple does this now with Siri. Microsoft does this with Copilot. Meta will probably do the same soon with its assistants.

This shift happens because building a truly capable foundation model is hard, expensive, and takes a long time. It's easier to partner with someone who's already done the work. And from a capability standpoint, that's not a compromise—it's a win. Apple gets access to Gemini's capabilities immediately, rather than spending years developing equivalent capabilities in-house.

But this shift also creates new dependencies. Apple is now reliant on Google's Gemini remaining good, remaining available, and remaining compatible with Apple's use cases. If the partnership breaks down or if Gemini degrades, Apple has limited options for pivoting quickly.

That's why Apple is probably also investing in local models and maintaining relationships with other AI providers like OpenAI. Diversity of suppliers reduces single-point-of-failure risk.

For consumers, this partnership should mean a better Siri. A Siri that actually understands context. A Siri that can answer questions reliably. A Siri that doesn't make you feel like you're talking to a confused robot.

Whether that happens in practice depends on execution. Apple has a track record of integrating AI well into products (think of how seamless machine learning is in Photos or Maps). If Apple applies that same discipline to Siri, this partnership could actually work.

But it's also the biggest bet Apple has ever made on Siri. After years of being a punchline, Siri finally has a chance to be good. If Apple ships this and it's still disappointing, Siri's reputation might never recover. Users will just give up and use ChatGPT, Google Search, or whatever else they can rely on.

The stakes are high. The opportunity is real. And the execution challenge is immense.

That's what makes this partnership genuinely interesting to watch. It's not just about AI integration. It's about whether Apple can save Siri from irrelevance and whether the partnership model for AI will actually work at scale.

Come back in six months and we'll know a lot more.

Conclusion: AI Partnerships Are the Future

Apple's decision to partner with Google for Gemini-powered Siri tells us something crucial about the state of AI in 2025: the era of complete vertical integration is over, and the era of strategic partnerships has arrived.

For years, tech companies competed on the idea that they could do everything themselves. Apple built its own chips, its own OS, its own AI. Google built search, advertising, cloud, and AI. Microsoft built Windows, Office, Azure, and AI.

That era created some incredible products. But it also had a fundamental flaw: it assumed that a single company could be world-class at everything simultaneously. Building cutting-edge AI while also maintaining an operating system, managing hardware, handling privacy infrastructure, and serving billions of users is genuinely hard. In practice, it has turned out to be close to impossible.

The winners in the new era will be companies that recognize what they can't do better than anyone else and partner strategically. Apple can't build foundation models faster than Google can. So Apple partners with Google. Apple brings OS integration expertise, privacy architecture, and distribution. Google brings AI capability. Together, they create something neither could build alone.

This pattern will repeat across the tech industry. Microsoft will partner with OpenAI and others for different specialized models. Meta will integrate with multiple AI partners. Amazon will do the same. The companies that are most pragmatic about partnerships will ship the best products.

The interesting question for the future isn't whether companies will build their own AI. Of course they will. It's how they will combine that in-house work with the best models available from partners, whether that's OpenAI, Anthropic, Google, Meta, or specialized providers.

Apple's move is significant because it's Apple—the company most famous for doing everything in-house—admitting that it needs a partner. If Apple needs Google, then everyone needs partners. The partnership model isn't a second-rate option. It's the future of AI in tech.

The Gemini-powered Siri that launches later this year will be just the beginning. Over the next five years, expect to see partnerships like this become the norm rather than the exception. Foundation models become utilities. Integration becomes the differentiator. And companies compete on execution, not on who built the AI first.

That's actually good news for consumers. It means you'll get better AI features, shipped faster, with less lock-in to any single company's ecosystem. You'll have choices about which AI providers to use. And companies will focus on making great products rather than spending years building AI from scratch.

The only question is whether Apple can actually ship this partnership well. If Gemini-powered Siri is genuinely better than current Siri, this partnership will be remembered as the moment Siri finally became useful. If it's a clunky integration that doesn't work reliably, it'll be remembered as another Siri disappointment.

Based on Apple's track record with AI integration and Google's proven ability to scale Gemini, I'm optimistic. But we'll know in about six months when the first beta versions are in users' hands.

Until then, the most important thing to understand is this: Apple and Google just proved that partnerships are how the future of AI gets built. That changes everything.

Key Takeaways

- Apple chose Google's Gemini AI over OpenAI and Anthropic for Siri's core AI engine, representing a strategic pivot from building everything in-house to using strategic partnerships

- The multi-year partnership includes hybrid on-device and cloud processing, with Apple claiming to protect personal data through encryption and partitioning

- Gemini's multimodal capabilities, proven mobile optimization, and real-time web integration made it technically superior to alternatives for Siri's needs

- This partnership signals the end of vertical integration as a tech industry strategy and validates the emerging multi-partner AI model adopted by major companies

- The rollout timeline targets late 2025, with beta testing in spring/summer followed by phased availability, representing a significant gamble on Siri's ability to finally deliver on its AI promise

![Apple & Google's Gemini Partnership: The Future of AI Siri [2025]](https://tryrunable.com/blog/apple-google-s-gemini-partnership-the-future-of-ai-siri-2025/image-1-1768234458372.jpg)