The Robots of CES 2026: Humanoids, Pets & Home Helpers

I walked the Las Vegas Convention Center's robotics section expecting the usual incremental improvements. Robot vacuums with slightly better sensors. Humanoids that moved a bit more smoothly. The standard CES fare.

Instead, I found myself genuinely unsettled. Not because the robots were scary or dystopian. But because they felt real in a way they never have before.

See, there's a difference between a robot that follows a pre-programmed script and one that actually thinks. The ones at CES 2026 crossed that line. They had conversations. They made decisions. They fumbled through tasks in ways that were weirdly human. And honestly, it was disorienting.

TL;DR

- AI advancement is reshaping robotics: Better neural networks mean robots can now learn, adapt, and make autonomous decisions without hardcoding every behavior

- Humanoid robots are becoming conversational: Companies like Agibot showed machines that can chat, crack jokes, and demonstrate surprising personality

- Specialized robots are solving real problems: Laundry-folding assistants, stair-climbing vacuums, and toy-cleanup bots move beyond novelty into functional home helpers

- The uncanny valley is real and getting weirder: Some robots nail the charm factor, while others land squarely in "I don't know how I feel about this" territory

- Commercial deployment is still years away: Most impressive demos are concept pieces, not products you can order today

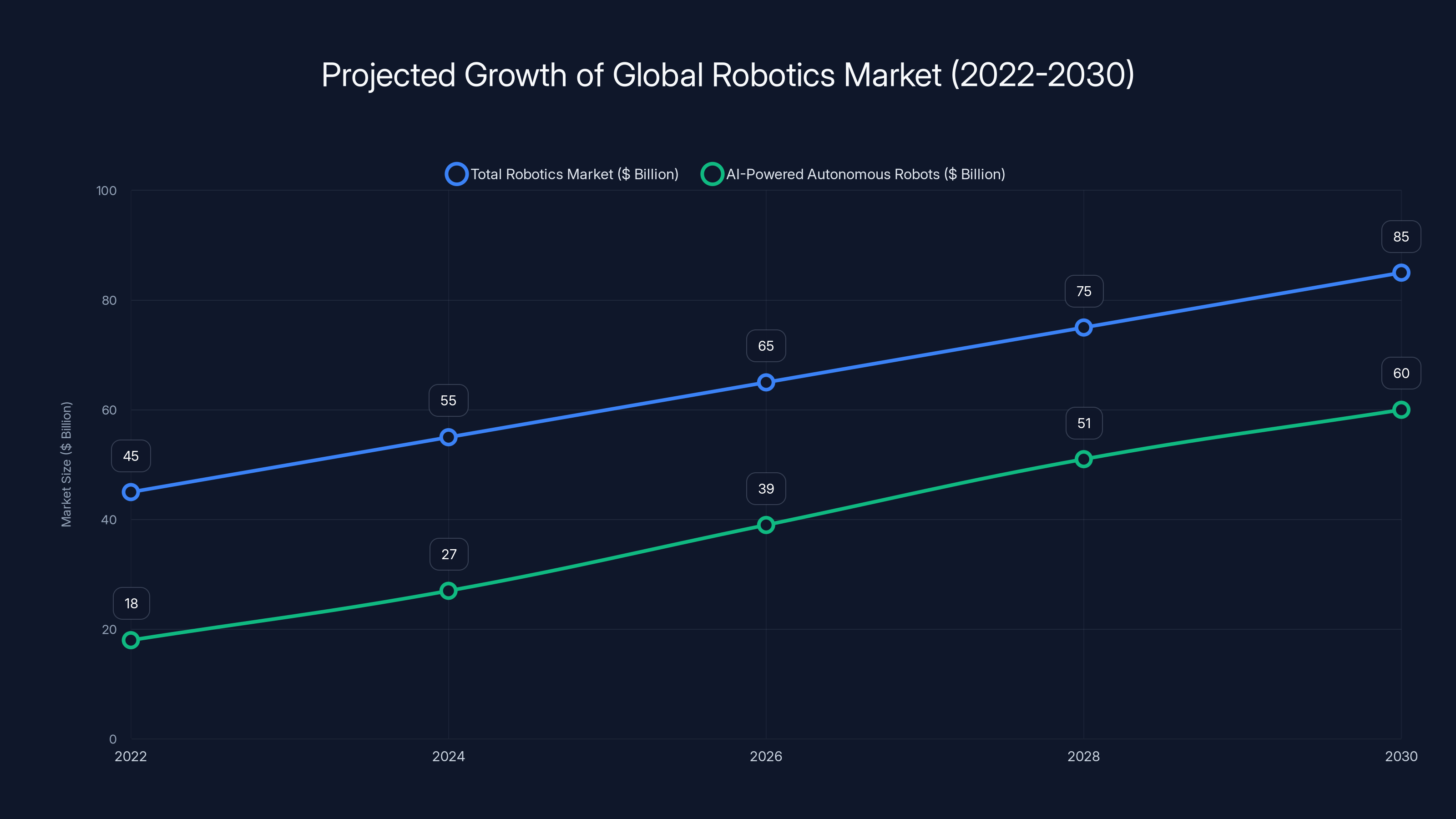

The global robotics market is projected to grow significantly, reaching $85 billion by 2030, with AI-powered autonomous robots driving 60% of this growth. Estimated data highlights the impact of AI advancements.

The Leap Forward: Why 2026 Feels Different

Every year, we see robots at CES. It's expected. But 2026 marked something distinct. The advancement in AI technology didn't just make robots "better." It fundamentally changed what they can do.

Previous robot generations relied on rigid programming. You'd hardcode: "If object detected, pick up object. If destination reached, stop." Everything was if-then logic. Limited, predictable, kind of dumb.
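For contrast, here is a minimal Python sketch of that hardcoded style. The sensor fields and action names are hypothetical and don't come from any vendor's firmware. The point is that every behavior has to be written out by hand, and any situation nobody anticipated falls through to a default.

```python
from dataclasses import dataclass


@dataclass
class Sensors:
    # Hypothetical sensor snapshot; field names are illustrative only.
    object_detected: bool
    destination_reached: bool


def rule_based_step(s: Sensors) -> str:
    """Old-style control: a fixed chain of if-then rules written by a programmer."""
    if s.object_detected:
        return "pick_up_object"
    if s.destination_reached:
        return "stop"
    # Any situation nobody wrote a rule for gets the same default behavior.
    return "keep_moving"


print(rule_based_step(Sensors(object_detected=True, destination_reached=False)))
```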

Now, robots have genuine neural networks powering decision-making. They can learn from failures. They understand context. They adapt to situations they were never explicitly programmed for. That's not an incremental improvement. That's a paradigm shift.

The AI boom of 2022-2024 created the foundation. Large language models proved that neural networks could understand language, intent, and nuance. Computer vision models reached human-level accuracy on many tasks. Reinforcement learning showed machines could learn through trial and error.

All of that converged in 2026. Companies integrated these AI components into physical forms, and suddenly robots started doing things that required actual thinking.

The numbers back this up. The global robotics market is expected to reach $85 billion by 2030, with AI-powered autonomous robots accounting for 60% of that growth. That's not just improved vacuum cleaners. That's a fundamental restructuring of how we handle household and commercial labor.

What made 2026 special wasn't the arrival of perfect robots. It was the arrival of capable-enough robots. Robots that fail 40% of the time but succeed 60% of the time. Because honestly, that's often good enough.

Agibot's X2 and A2: The Conversational Humanoids That Actually Impressed

Walk into Agibot's booth and the first thing you notice is the feet. These aren't the typical wheeled bases or bird-like appendages you see on most humanoids. They're actually feet. Proportional. Capable of balance. It's such a small detail, but it's everything.

Agibot showed two models: the larger A2 and the smaller X2. Both are bipedal, both have articulated hands with multiple degrees of freedom, and both can move through space without looking like they're about to tip over at any second.

The X2 impressed with pure movement capability. The company demonstrated surprisingly complex choreography. We're not talking about pre-recorded sequences. The X2 learned these dance moves. It understood rhythm, timing, and body positioning. Watching a 60-pound humanoid nail a synchronized set of movements shouldn't be impressive, but it absolutely was.

But here's where it got genuinely interesting. Later in the show, I found an A2 running the demonstration space at Int Bot's booth. Int Bot had customized both Agibot models for autonomous booth operation, and I spent several minutes actually talking with "Nylo," one of the company's custom A2 units.

The conversation wasn't scripted. Or rather, it wasn't only scripted. When I asked Nylo about its capabilities, it answered. When I followed up with a specific technical question, it processed that context and provided a relevant response. When I made a joke, it attempted a roast back. Not a great roast, admittedly. But it understood the social dynamic and attempted to match my energy.

This is where the creepy feeling sets in. Not because Nylo was malicious or threatening. But because conversing with Nylo made me realize how much of human interaction is just... processing context and generating appropriate responses. Which is exactly what large language models do. When you talk to a robot powered by a modern LLM, it feels less like talking to a machine and more like talking to something that understands you.

Agibot hasn't announced commercial availability for the A2 or X2. But the technical foundations are there. Both models use multi-jointed manipulation systems that can handle complex grasping tasks. Both have sufficient autonomy to navigate spaces and make independent decisions. Both can integrate external information to inform their responses.

What's still missing is scale, reliability, and cost. Building bipedal humanoids is expensive. Agibot's hardware costs are probably substantial. Mass production hasn't happened yet. But the capability gap between "prototype" and "shippable product" is much smaller than it was even two years ago.

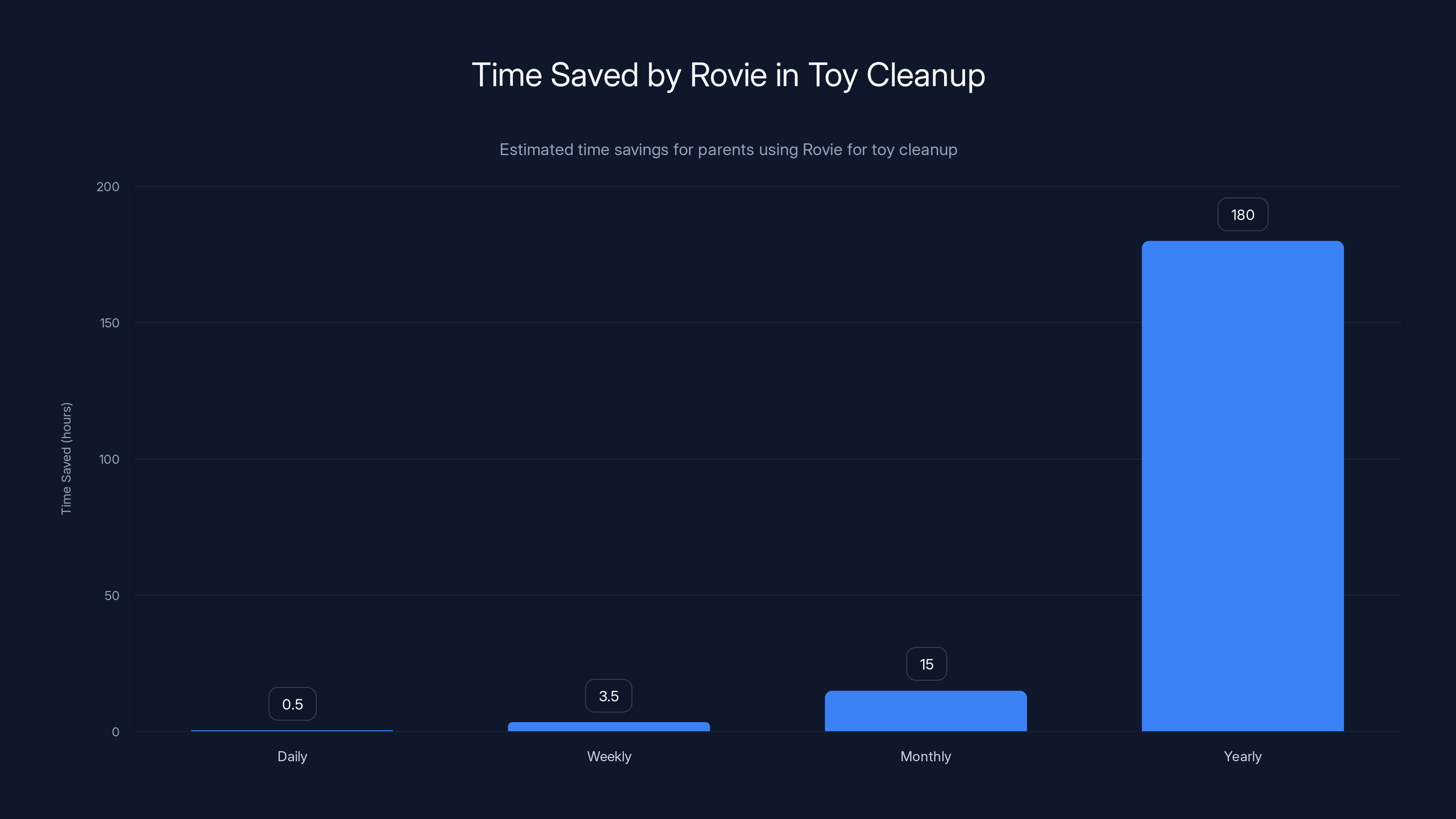

Rovie can save parents up to 180 hours per year in toy cleanup, making it a valuable time-saving tool. Estimated data based on typical daily cleanup times.

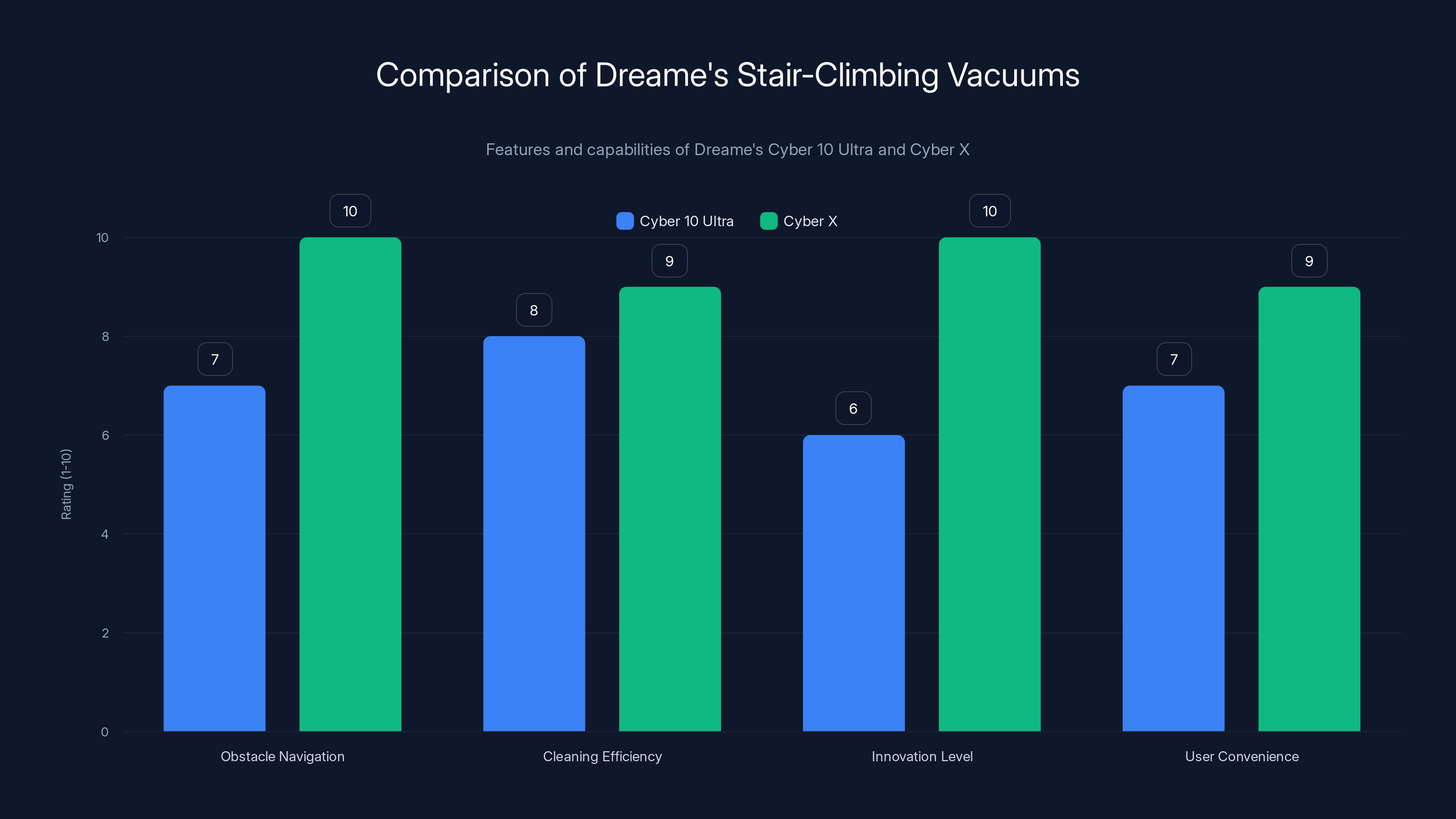

Dreame's Stair-Climbing Vacuums: Form Factor Innovation

Robot vacuums have become commoditized. There are dozens of brands, thousands of models, and the core technology hasn't fundamentally changed in years. Improved sensors, sure. Better mapping, absolutely. But the basic vacuum-on-wheels formula stayed static.

Dreame decided to break that formula.

Their Cyber 10 Ultra introduced something genuinely novel: an extendable arm with both gripping and cleaning attachments. The arm extends, moves around obstacles, and can either pick up objects or deploy specialized cleaning tools for hard-to-reach areas.

But that's the conservative innovation. The real statement piece was the Cyber X.

The Cyber X has legs. Actual articulated legs, which let it climb full-size staircases. Independently. Without human intervention.

I'll be honest, the first time I saw it operate, I had the uncanny valley reaction hard. The legs move with a vaguely insectoid motion. They're segmented. They look unsettlingly similar to miniature chainsaws. Watching this thing scurry up a flight of stairs is the kind of thing that satisfies your rational brain ("that's technically impressive") while simultaneously triggering some primal discomfort.

But here's the thing: it works. And it solves an actual problem that every robot vacuum owner has encountered. Stairs are the hard limit for autonomous vacuums. You have it vacuum the first floor, then you have to carry it upstairs, position it, and run it again.

With Cyber X, you don't need to. It traverses the obstacle independently. That's a genuine quality-of-life improvement.

Dreame's approach to form factor innovation is worth studying. Rather than incremental optimization of the existing vacuum shape, they asked: "What's actually holding us back?" The answer was physical obstacles. So they added the capability to overcome those obstacles.

It's not elegant. It's not the direction most roboticists expected. But it's practical, and sometimes practical beats elegant.

The staircase problem is significant. Approximately 87% of U.S. homes have at least one staircase. For multi-story homes, staircases represent 40-50% of the total floor area that vacuums can't autonomously clean. Solving that obstacle unlocks a meaningful chunk of untapped automation potential.

What Dreame hasn't solved yet is the cost question. Legged systems are mechanically complex. They require more actuators, more control logic, and more failure points than wheeled systems. Durability testing probably isn't complete. And manufacturing legs at scale is a different engineering challenge than manufacturing wheels.

But the fact that they built a prototype that actually works is the important part. The engineering challenges are solvable. It's just a matter of time and money.

Ollo Bot: When Charm Overrides the Uncanny Valley

Ollo Bot shouldn't work. By every reasonable design principle, it should land firmly in "creepy and confusing" territory.

It has bulbous frog eyes mounted on top of its head. Its mouth is a tablet display. Its body is penguin-shaped with stubby flappy arms. It has a patch of fur on its neck and nowhere else, which makes exactly zero sense functionally. And it's charming as hell.

That last part is the secret. Ollo Bot succeeds because its designers embraced the absurdity. They didn't try to make it look "natural" or "professional." They made it deliberately goofy. And in doing so, they bypassed the uncanny valley entirely. You don't look at Ollo Bot and think, "that's a failed attempt at something human." You look at it and think, "that's a fun robot that's intentionally ridiculous."

Functionally, Ollo Bot is a family-focused smart display with wheels. It responds to voice commands. It has a touchscreen for interaction. It can capture photos and videos to build a family diary. It controls smart home devices. It makes calls.

None of that is revolutionary. You could do most of it with a tablet on a Roomba base.

But Ollo Bot adds personality to commodity functionality. And personality, it turns out, is more valuable than most technologists expect.

The removable "heart module" is a smart design choice. All data stays local. Everything is stored in this physical component that you can access, manage, or remove entirely. In an era where people are increasingly concerned about device privacy, storing data locally rather than in the cloud is a genuine selling point.

Two form factors exist: the short-neck version and the long-neck variant. The extendable neck increases Ollo Bot's visual field and reach, which has practical benefits for capturing photos from different angles or seeing over obstacles.

What's interesting about Ollo Bot is that it targets a market that nobody else is really addressing: families with children who want a companion device. Most smart home tech skews toward either pure functionality (vacuum that cleans) or adult-focused applications (professional humanoids for warehouses).

Ollo Bot fills a gap. Kids find it fun to interact with. Parents find it useful for remote monitoring and communication. And it's cute enough that nobody feels weird having it in their living room.

That's actually harder to design than it sounds. Too cute and people might not take it seriously. Too functional and it loses the charm. Ollo Bot threads that needle well.

Rovie by Clutterbot: Making Toy Cleanup Autonomous

Clutterbot's Rovie is specifically designed to solve one problem: toys scattered across the floor.

Rather than using a single articulated arm to grab objects individually (which is mechanically complex and slow), Rovie has a dustpan-style tray with two sweepers that fold out from its front. It drives around, uses computer vision to identify toys on the floor, positions itself, and scoops them up in bulk.

It then drives to a designated bin and dumps the collected items.

The elegance here is in the simplification. Instead of trying to build a universally capable manipulation system, Clutterbot built something that's specifically good at one job: sweeping up multiple objects and consolidating them.

One team member mentioned that Rovie is still in R&D, which means the product isn't ready for commercial release. But the prototype works. It successfully identifies objects, navigates to them, collects them, and deposits them in the designated location.

Parents dealing with constant toy chaos recognize this as genuinely useful. Small children generate a remarkable volume of floor clutter. Blocks, dolls, vehicles, puzzle pieces. It accumulates throughout the day. Before bed, there's a 30-minute cleanup session. If Rovie can handle that autonomously, it saves meaningful time.

The economics are worth considering. A parent might spend 30 minutes per day on toy cleanup. That's 3.5 hours per week, or roughly 180 hours per year. If Rovie costs
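The time estimate is simple arithmetic. This Python sketch assumes cleanup happens every day of a 52-week year, which is my assumption rather than anything Clutterbot stated. It deliberately stops short of a cost comparison, since no price is given here.

```python
def cleanup_hours(minutes_per_day: float, days_per_week: int = 7,
                  weeks_per_year: int = 52) -> tuple[float, float]:
    """Convert a daily cleanup habit into hours per week and hours per year."""
    weekly_hours = minutes_per_day * days_per_week / 60
    yearly_hours = weekly_hours * weeks_per_year
    return weekly_hours, yearly_hours


weekly, yearly = cleanup_hours(30)
print(f"{weekly:.1f} hours/week, {yearly:.0f} hours/year")  # 3.5 hours/week, 182 hours/year
```

The exact yearly figure is 182 hours, which rounds to the "roughly 180" used above.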

The computer vision component is critical here. Rovie needs to identify objects without being specifically trained on every toy type. It needs to understand "this is a small object that should be swept up" without explicit programming for each item.

Modern vision models can do this. Models trained for general object detection often generalize reasonably well to object types they weren't specifically trained on. Clutterbot is leveraging that capability to build a robot that doesn't need to be reconfigured when new toys arrive.

One limitation worth noting: Rovie's dustpan approach works for items up to roughly 6 inches across. Larger or awkwardly shaped items might not sweep cleanly, and objects with wheels or unusual centers of gravity might resist being scooped up. The system is optimized for typical toy dimensions, which is smart product design: focus on the 80% use case rather than trying to solve everything.
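The workflow described for Rovie (detect toys, skip anything too big, sweep the rest into the tray, then make one trip to the bin) can be sketched as a simple planner. Everything here is hypothetical: Clutterbot hasn't published Rovie's software, and the detection format, coordinates, and action names are invented for illustration. Only the roughly 6-inch limit comes from the text above.

```python
from dataclasses import dataclass

# Rough size limit for the dustpan-style tray, per the description above.
MAX_SWEEPABLE_INCHES = 6.0


@dataclass
class Detection:
    # Hypothetical output of a general-purpose object detector.
    label: str
    width_in: float
    x: float
    y: float


def plan_cleanup(detections: list[Detection], bin_xy: tuple[float, float]):
    """Return (actions, skipped_labels) for one bulk sweep-and-dump pass."""
    sweepable = [d for d in detections if d.width_in <= MAX_SWEEPABLE_INCHES]
    skipped = [d.label for d in detections if d.width_in > MAX_SWEEPABLE_INCHES]

    actions = []
    for d in sweepable:
        actions.append(("drive_to", (d.x, d.y)))
        actions.append(("sweep_into_tray", d.label))
    if sweepable:
        # One trip to the bin for everything collected, instead of one per item.
        actions.append(("drive_to", bin_xy))
        actions.append(("dump_tray", len(sweepable)))
    return actions, skipped


toys = [
    Detection("block", 2.0, 1.0, 1.0),
    Detection("toy car", 4.0, 2.0, 0.5),
    Detection("dollhouse", 18.0, 3.0, 3.0),
]
actions, skipped = plan_cleanup(toys, bin_xy=(0.0, 5.0))
print(actions)
print("left for a human:", skipped)
```

Filtering by size before planning is one way to encode the "80% use case" choice: oversized items get reported instead of attempted.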

The real question is whether this makes it to market. Clutterbot has a working prototype. They've demonstrated the core functionality. If they can handle production scale and durability testing, Rovie could become an actual product within 2-3 years.

Personally, I'm rooting for them. This is the kind of incremental robot that actually improves daily life without requiring fundamental breakthroughs.

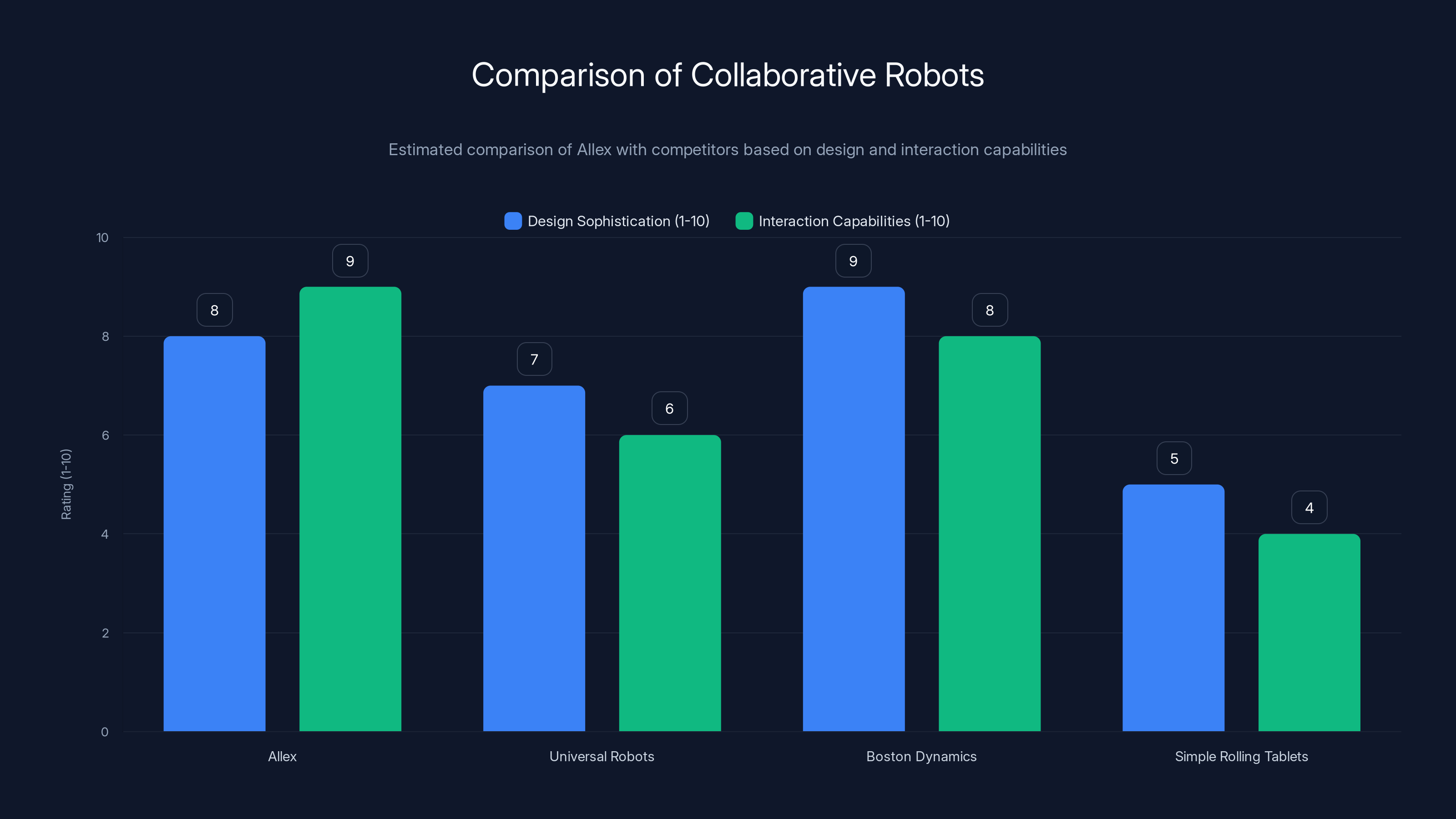

Allex scores high in both design sophistication and interaction capabilities, making it a strong contender in the mid-market collaborative robot space. Estimated data.

Roborock's Saros Rover: The Other Staircase Solution

Roborock entered the staircase game with a different approach than Dreame.

Where Dreame added legs to move vertically, Roborock's Saros Rover has articulated wheels and a low-slung frame designed to handle stair geometry. More importantly, the Saros Rover maintains cleaning capability while climbing.

This is mechanically harder than simply traversing stairs. You need sufficient ground contact to clean while navigating the irregular angles and heights of stair steps. Roborock's engineering team figured it out, though the demonstration at CES suggested it's still in active development.

The Saros Rover represents a different design philosophy. Rather than adding specialized appendages (legs), Roborock optimized the fundamental platform (wheels + chassis) to handle the obstacle while maintaining core functionality.

There's a tradeoff here. Roborock's approach is probably more elegant mechanically. Optimized wheel geometry is simpler than articulated legs. But it's also probably more limited in what it can climb and how quickly it can traverse stairs.

Neither approach is objectively superior. They're different engineering solutions to the same problem. Dreame prioritized climbing speed and confidence. Roborock prioritized cleaning while traversing.

Neither company has announced availability or pricing. Both are clearly prototypes undergoing refinement. But the fact that two companies independently decided to tackle staircase traversal suggests it's a genuinely important problem. When multiple teams attack the same challenge, it usually means there's real market demand.

The competitive dynamic is interesting. Five years ago, robot vacuum innovation was incremental. Slightly better sensors. Slightly more efficient motors. Today, companies are completely reimagining the form factor to solve existing limitations. That's healthy innovation.

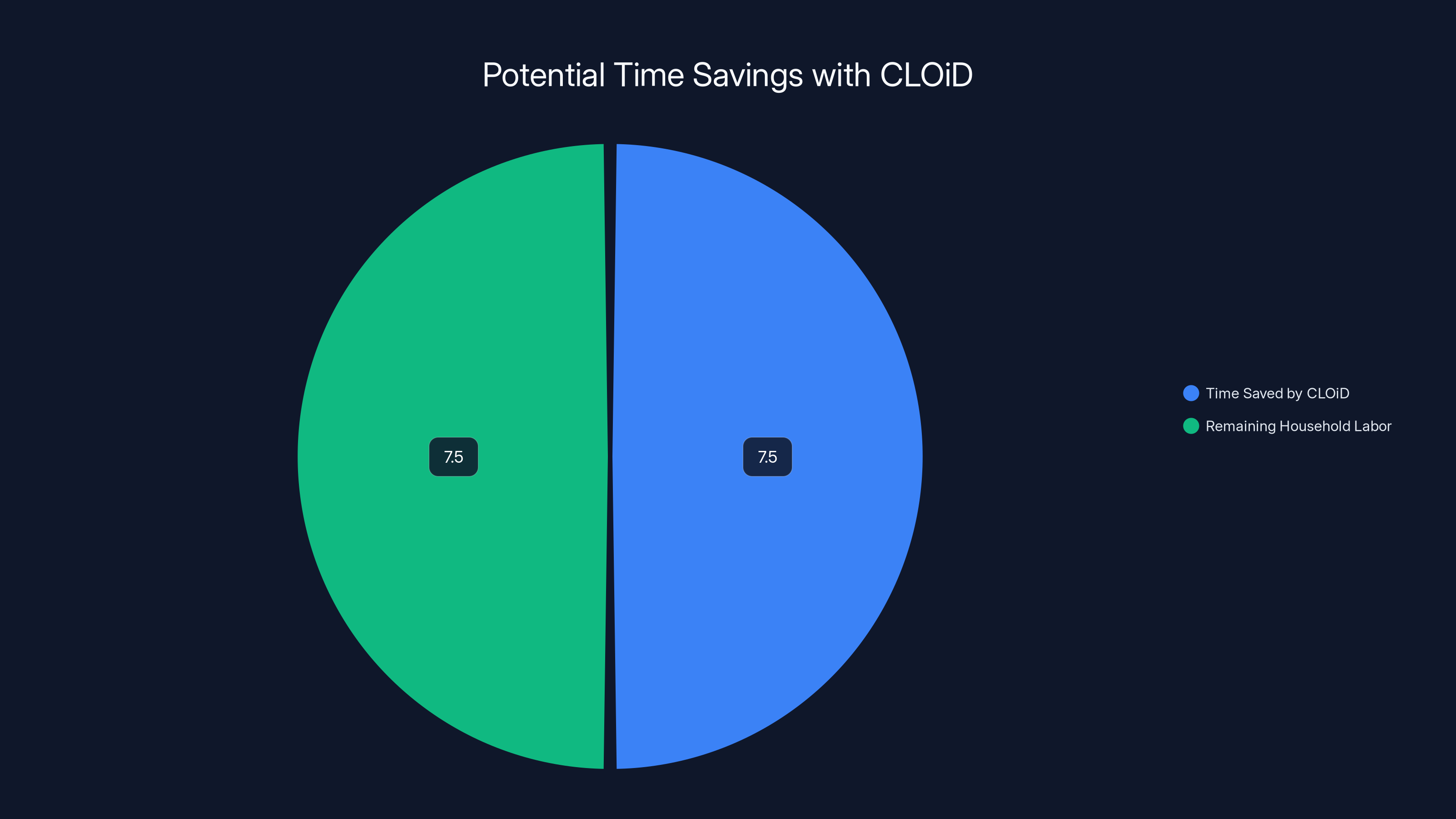

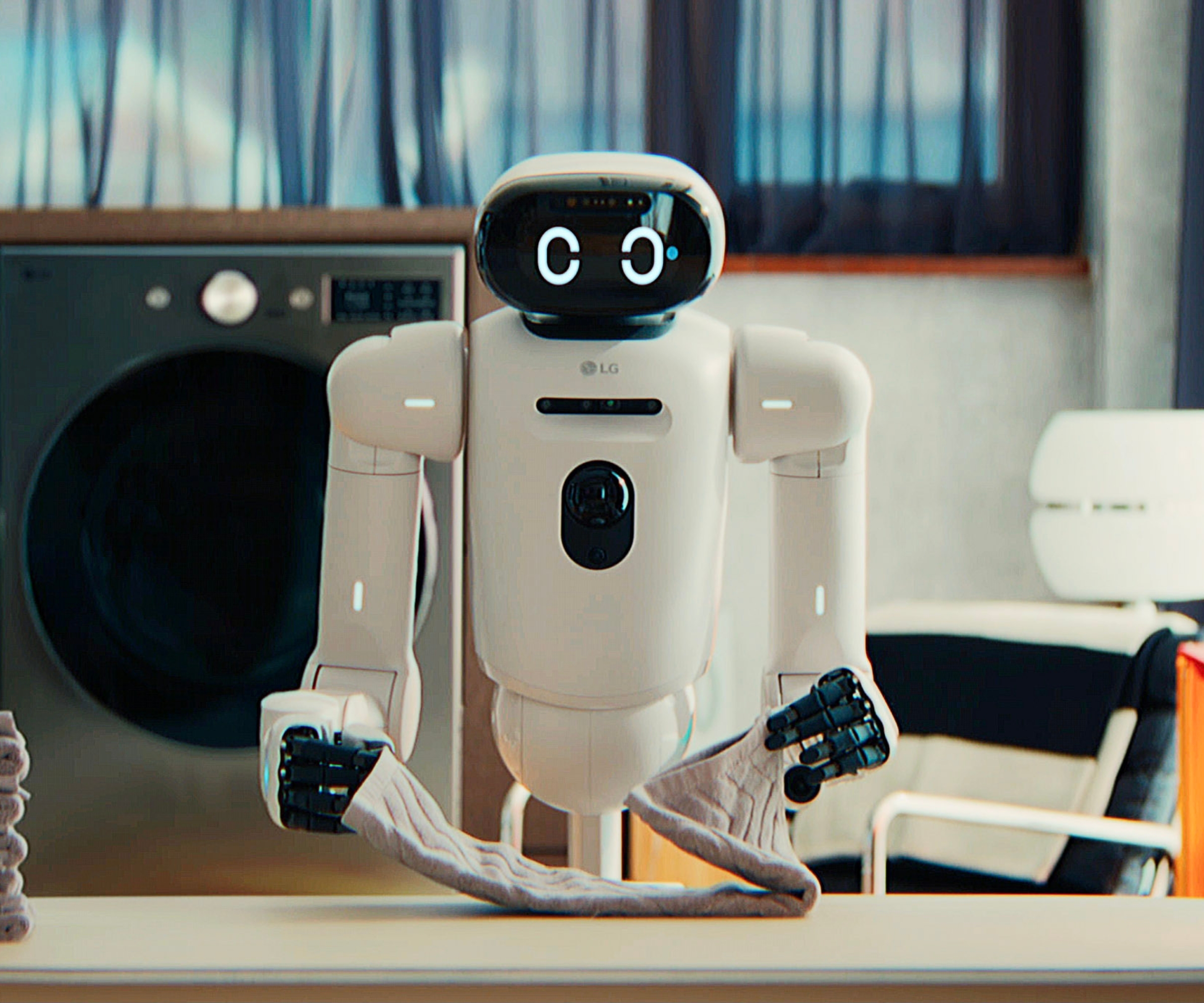

CLOi D: LG's Ambitious Vision (That Probably Won't Exist)

LG's CLOi D was far and away the most ambitious robot demonstration at CES 2026.

The company showed a 15-minute video of CLOi D performing household tasks: folding and sorting laundry, fetching drinks from the fridge, putting food in the oven, retrieving lost keys.

Each task was captured in real time, with no cuts and no edits. The robot physically performed these actions, though admittedly slowly and with occasional awkward movements.

The underlying vision is what LG calls the "zero labor home": the idea that a general-purpose robot can handle household chores well enough that people can spend their time on things other than cleaning, laundry, and food prep.

It's a compelling vision. Many people spend 10-15 hours per week on household labor. If a robot handles even half of that, it materially improves quality of life.
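The arithmetic behind that claim is straightforward. This Python sketch uses the 10-15 hour range and the "half of that" share from the text; the 52-week annual total is my own assumption.

```python
def hours_saved_per_week(chore_hours_per_week: float, share_automated: float = 0.5) -> float:
    """Hours per week freed up if a robot handles the given share of chores."""
    return chore_hours_per_week * share_automated


for chores in (10, 15):
    saved = hours_saved_per_week(chores)
    print(f"{chores} h/week of chores -> {saved:.1f} h/week saved, about {saved * 52:.0f} h/year")
```

At the low end that's 5 hours a week (about 260 a year); at the high end, 7.5 hours a week (about 390 a year).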

But here's the reality: CLOi D is almost certainly not coming to market anytime soon.

LG hasn't committed to commercial availability. They've shown no pricing roadmap. No prototype is available for extended testing. The company explicitly positioned CLOi D as a "concept" for their vision of the future.

Building a general-purpose household robot that can handle laundry, cooking, object retrieval, and kitchen tasks requires solving multiple hard problems simultaneously. Cloth manipulation is mechanically complex. Kitchen environments have countless objects of different sizes, materials, and fragility levels. Fridge operation requires understanding cold storage mechanics. Oven safety is a legal liability nightmare.

Any of these problems individually is solvable. Combined, they represent years of engineering, safety testing, liability documentation, and refinement.

LG has the resources to eventually build CLOi D. But "eventually" might be 10-15 years. And in that timeframe, other companies will have captured market share with narrower, more specialized robots.

What CLOi D does accomplish is establish a long-term vision. LG is saying, publicly, that they're investing in household robotics. That signals commitment to competitors, partners, and investors. Even if CLOi D itself never ships, it shapes the market.

The laundry folding demonstration was perhaps the most impressive component. Cloth folding is genuinely hard. Fabrics have variable stretch, drape, and texture. Every shirt is slightly different. CLOi D demonstrated folding multiple items consistently and placing them in proper stacks.

Is this good enough for consumer use? Honestly, probably not yet. The folding process took visible time. A human could fold the same pile in half the duration. But the robot did fold correctly. That's progress.

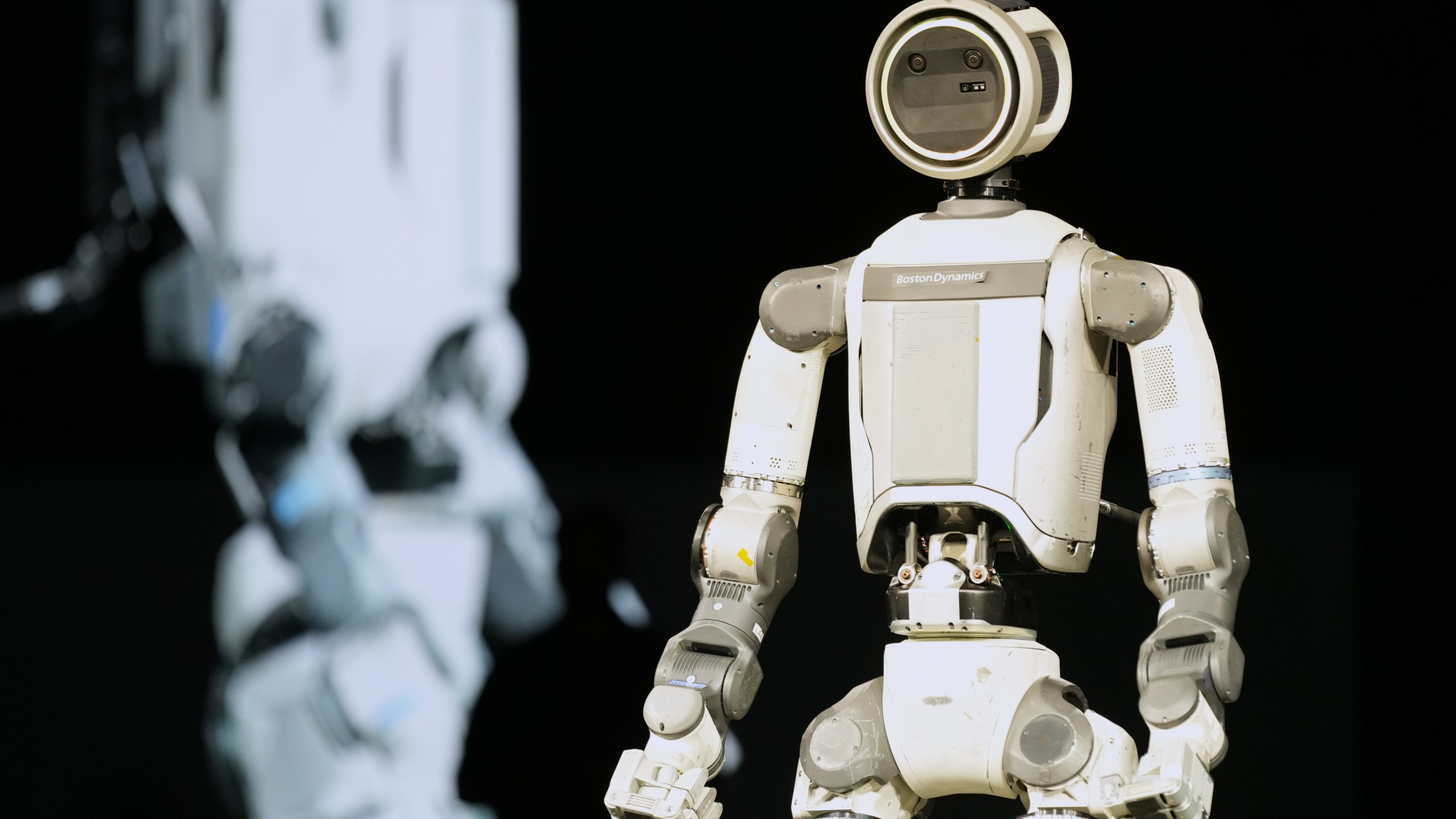

WIRobotics' Allex: The Expressive Humanoid

WIRobotics brought Allex to CES, and unlike some of the other humanoids, Allex is specifically designed for human interaction and task assistance.

Allex is a waist-up robot. No legs. Just an upper body, arms, and hands with articulated fingers. It was actively engaging with booth visitors, striking poses, making gestures, and generally hamming it up.

The articulation is impressive. Each finger moves independently. The arms have multiple degrees of freedom. The movements are fluid rather than robotically stiff; Allex doesn't move like a robot struggling against its mechanical constraints.

This is the difference between kinematic design (how many joints and axes of movement) and dynamic control (how gracefully those joints operate). Allex apparently got both right.
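To make the kinematic/dynamic distinction concrete: a joint can have plenty of range and still look stiff if it lurches between positions at constant speed. A common textbook remedy is a minimum-jerk profile, which starts and ends each move with zero velocity and zero acceleration. This Python sketch is a generic illustration of smooth joint control under that assumption; WIRobotics hasn't said how Allex's controller actually works.

```python
def linear_profile(q0: float, q1: float, duration: float, t: float) -> float:
    """Constant-velocity move from q0 to q1: abrupt start and stop."""
    tau = min(max(t / duration, 0.0), 1.0)
    return q0 + (q1 - q0) * tau


def min_jerk_profile(q0: float, q1: float, duration: float, t: float) -> float:
    """Minimum-jerk move from q0 to q1: zero velocity and acceleration at both ends."""
    tau = min(max(t / duration, 0.0), 1.0)  # normalized time in [0, 1]
    s = 10 * tau**3 - 15 * tau**4 + 6 * tau**5  # smooth blend from 0 to 1
    return q0 + (q1 - q0) * s


# Compare joint speed just after the start of a 1-second, 90-degree move.
dt = 0.01
for name, profile in (("linear", linear_profile), ("min-jerk", min_jerk_profile)):
    speed = (profile(0.0, 90.0, 1.0, dt) - profile(0.0, 90.0, 1.0, 0.0)) / dt
    print(f"{name}: ~{speed:.2f} deg/s just after starting")
```

Both profiles reach the same target in the same time; the difference is how gently the joint gets moving and comes to rest, which is a large part of what reads as "fluid" to an observer.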

What makes Allex interesting is its potential use case: general-purpose collaborative robotics. Not a specialized picker-and-placer for warehouses. Not a humanoid designed for unstructured home environments. But a robot designed to work alongside humans in professional settings.

Think retail environments. Customer service. Educational settings. Situations where human customers or students need to interact with a robot and where anthropomorphic design matters.

Allex's articulated fingers are important here. Humans recognize hand gestures. If Allex can make meaningful hand movements—pointing, waving, demonstrating—that makes interaction more natural. It's less of a scary machine and more of a collaborative tool.

WIRobotics hasn't shared extensive technical specifications or pricing. But the hardware design suggests they're positioning Allex in the mid-market collaborative robot space. Cheaper than industrial-grade Boston Dynamics machines. More sophisticated than simple rolling tablets with arms.

The real competition for Allex comes from companies like Universal Robots and other collaborative arm manufacturers. Those robots have proven market demand. If WIRobotics can deliver similar capability with humanoid form factor, they've got a potential advantage for tasks requiring human-like interaction.

Cyber X excels in obstacle navigation and innovation, offering a significant quality-of-life improvement by independently climbing stairs. Estimated data.

The Pet Robots: Emotional Connection Without the Commitment

CES 2026 featured several AI-powered pet robots. These aren't robotic toys designed to look cute. They're systems specifically designed to provide emotional engagement and companionship.

The interesting thing about pet robots is that they solve a real problem for a specific demographic. People who want animal companionship but can't have real pets due to allergies, space constraints, or lifestyle factors.

A real dog requires daily care. Feeding, walking, veterinary visits, training. Pet robots eliminate those friction points. They provide emotional engagement without operational overhead.

Modern pet robots use AI to learn owner preferences, respond to voice commands, and adjust behavior based on interaction patterns. They're not pre-programmed sequences. They learn.
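None of the exhibitors explained how their pet robots learn, so this Python sketch is only a hypothetical illustration of one simple way "adjusting behavior based on interaction patterns" could work. It keeps an exponentially weighted score per behavior, updates it from the owner's reaction, and mostly picks the best-scoring behavior while occasionally trying something else (an epsilon-greedy bandit). Behavior names, rewards, and parameters are invented.

```python
import random


class PreferenceLearner:
    """Hypothetical epsilon-greedy learner over a pet robot's behaviors."""

    def __init__(self, behaviors, alpha=0.2, explore=0.1, seed=None):
        self.scores = {b: 0.0 for b in behaviors}  # running estimate of owner approval
        self.alpha = alpha      # weight given to the newest reaction
        self.explore = explore  # chance of trying a random behavior
        self.rng = random.Random(seed)

    def choose(self) -> str:
        if self.rng.random() < self.explore:
            return self.rng.choice(list(self.scores))
        return max(self.scores, key=self.scores.get)

    def feedback(self, behavior: str, reward: float) -> None:
        # Exponential moving average: recent reactions count more than old ones.
        old = self.scores[behavior]
        self.scores[behavior] = old + self.alpha * (reward - old)


pet = PreferenceLearner(["wag_tail", "spin", "bark"], seed=0)
for _ in range(10):
    pet.feedback("spin", 1.0)   # owner laughs
    pet.feedback("bark", -1.0)  # owner frowns
print(pet.choose(), pet.scores)
```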

One particular demo robot (specific model name wasn't provided, but it was displayed prominently) demonstrated learning capabilities that suggested underlying LLM integration. The robot remembered previous interactions with visitors and referenced them in subsequent conversations. That's not pre-scripted behavior. That's learning and memory retention.

The companion animal market is worth billions globally. Its target audience also skews toward an aging demographic heavily concentrated in developed nations. Robots targeting this market address genuine emotional needs for an underserved population.

What's still unsolved is the question of whether artificial companionship is emotionally equivalent to real animal companionship. Early studies suggest it provides genuine psychological benefit, particularly for isolated elderly individuals. But long-term effects are still being researched.

The Creepy Ones: Where Design Choices Backfired

Not every robot at CES succeeded in its design intent.

Several demonstrated the uncanny valley problem acutely. Robots that attempt to look almost-human but miss the mark slightly land in territory that triggers discomfort. The subtle wrongness of movement, the almost-but-not-quite facial expression, the nearly-convincing voice that carries just enough artificiality to feel alien.

One particular humanoid (which I'll avoid naming because the engineering is actually solid) attempted photorealistic facial features. The result was exactly what the uncanny valley theory predicts. It looked like a human. Not quite. Just enough difference to trigger discomfort. People reacted with visible wariness.

Compare that to Ollo Bot, which deliberately leans into cartoon aesthetics and generates delight instead of discomfort.

The lesson here is about design intent. If you're building a humanoid robot, you have two good choices: go deliberately artificial (acknowledge that it's a machine and design accordingly), or invest heavily enough in realism that you actually achieve human-level photorealism. The in-between territory is exactly where things feel wrong.

Some companies at CES haven't learned this lesson yet. There were several humanoids that were trying hard to look human and mostly achieving "unsettling."

AI Integration: The Real Story Behind the Robots

All of these robots share a common thread: they're powered by AI systems that are dramatically better than previous generations.

The conversation capability we saw in Agibot's humanoid? That's a large language model integrated into the robot's software stack. The computer vision that lets Rovie identify toys? That's a modern vision model, probably based on transformer architecture.

What changed between 2024 and 2026 isn't just incremental improvement. It's integration and optimization.

Two years ago, integrating an LLM into a robot was technically possible but practically awkward. Latency was high. Models were oversized for edge deployment. Energy consumption was problematic.

By 2026, companies have largely addressed those problems. Quantized models run efficiently on edge devices. Latency is acceptable for real-time interaction. Energy consumption is manageable on battery power.
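Quantization is one of the techniques behind that shift: weights are stored as 8-bit integers plus a scale factor instead of 32-bit floats. This is a toy Python sketch of symmetric per-tensor int8 quantization, not any exhibitor's pipeline. Real toolchains typically add per-channel scales, calibration data, and integer compute kernels, and the article doesn't say which methods any robot maker used.

```python
def quantize_int8(weights: list[float]) -> tuple[list[int], float]:
    """Symmetric per-tensor int8 quantization: each w is approximated by scale * q."""
    max_abs = max((abs(w) for w in weights), default=0.0)
    scale = max_abs / 127 if max_abs > 0 else 1.0  # map the largest weight to +/-127
    q = [max(-127, min(127, round(w / scale))) for w in weights]
    return q, scale


def dequantize(q: list[int], scale: float) -> list[float]:
    return [scale * v for v in q]


weights = [0.5, -1.27, 0.0, 1.0, 0.033]
q, scale = quantize_int8(weights)
restored = dequantize(q, scale)
max_err = max(abs(a - b) for a, b in zip(weights, restored))
# int8 storage: 1 byte per weight instead of 4 for float32.
print(q, f"scale={scale:.4f}", f"max error={max_err:.4f}")
```

The round-trip error per weight is at most half the scale, which is the tradeoff for cutting memory (and bandwidth) to roughly a quarter.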

This is why 2026 felt different. The AI systems that enable intelligent robotics finally matured enough to power consumer products.

The computational requirements are still significant. A robot running an LLM for conversational capability typically needs a dedicated GPU or TPU. That adds cost and complexity. But it's now achievable at consumer price points.

The combination of better AI, better edge computing, and better robotics hardware created a convergence point. All the ingredients for capable autonomous robots aligned simultaneously.

CLOi D could potentially save up to 7.5 hours per week on household tasks, assuming it handles 50% of the typical 15 hours spent on chores. (Estimated data)

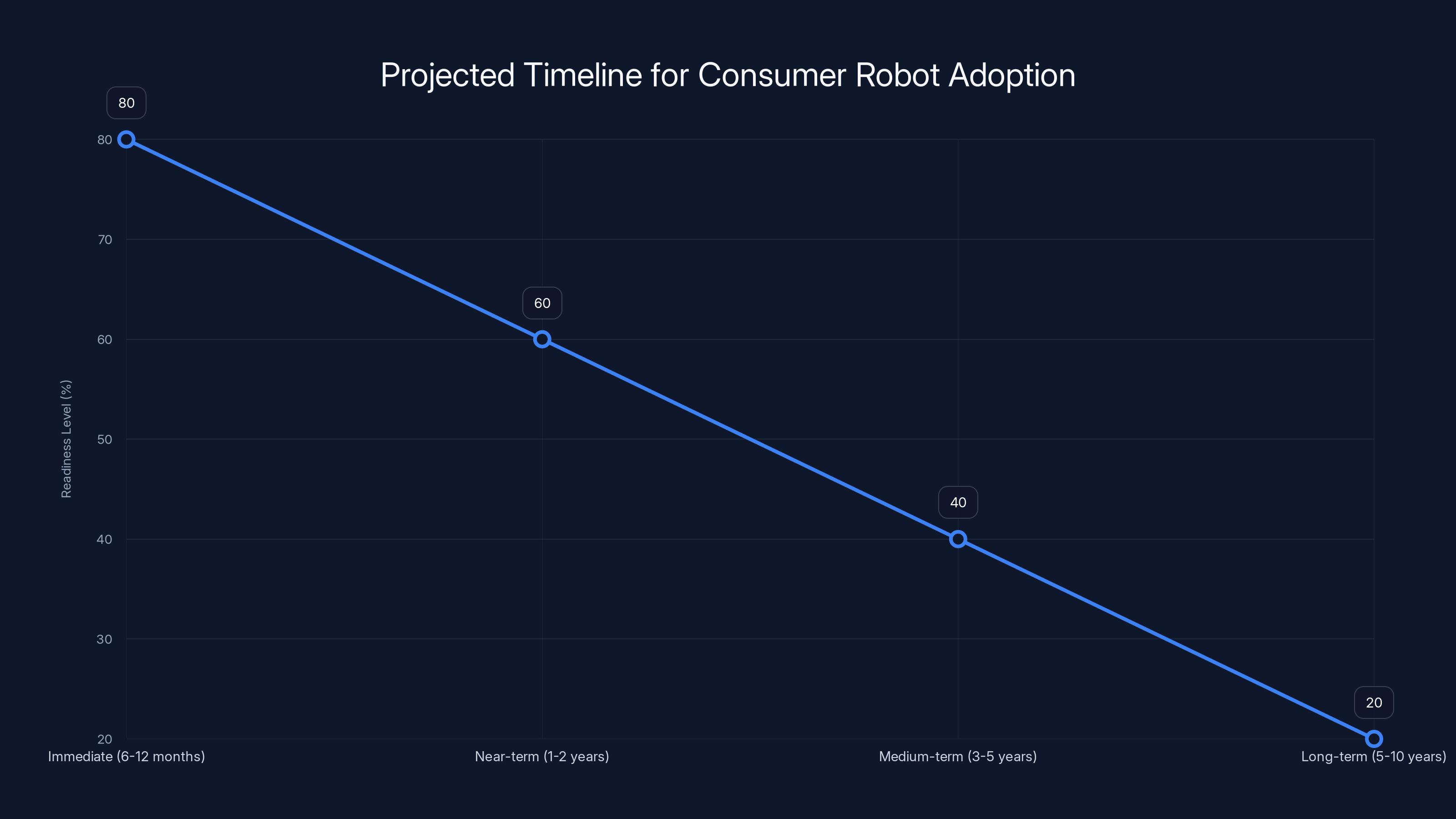

Commercial Viability: When Do These Become Real Products?

Most of what we saw at CES 2026 isn't ready for purchase yet.

Some are clearly years away: CLOi D, definitely. Most of the specialized humanoids, probably. The stair-climbing vacuums, maybe within 2-3 years.

Others are closer: Rovie could be a real product within 18 months if Clutterbot secures funding and completes durability testing. Some of the existing robot vacuum form factors with new AI features probably launch within the next fiscal year.

The timeline matters because robotics companies often announce products well before they're ready for market. It's good for raising capital and generating buzz. But it also sets consumer expectations that the shipping product may not meet.

A practical timeline for consumer robot adoption:

Immediate (6-12 months): Improved robot vacuums with better AI, better obstacle avoidance, better cleaning patterns. These are iterations on existing product categories.

Near-term (1-2 years): Specialized task robots like Rovie. Single-purpose machines that solve specific household problems. More companies launch products in this space.

Medium-term (3-5 years): General-purpose household robots, possibly including laundry handling or basic cooking assistance. Still limited to specific task sets, but broader capability than current products.

Long-term (5-10 years): True CLOi D-type machines that handle diverse household tasks autonomously. Still probably not achieving the fantasy of a robot that handles everything. But approaching meaningful household labor replacement.

This timeline assumes continued AI progress and no major regulatory setbacks. Either assumption could be wrong.

The Scary Conversations: What This Really Means

The robots at CES 2026 sparked conversations about labor, displacement, and social impact.

A laundry-folding robot is convenient. It could also displace people who currently work in laundry services. A humanoid that can perform general household tasks is helpful. It could also disrupt the labor market.

These aren't hypothetical concerns. They're real tradeoffs that society will need to navigate.

What's interesting is that robots solving narrow, specific problems (like toy cleanup) probably have less labor market impact than general-purpose robots. If Rovie exists, it doesn't eliminate toy-cleanup as a job because toy-cleanup mostly isn't a paid position. But if a humanoid can do general housework, that directly competes with professional cleaning and household staff positions.

The AI boom is already impacting labor markets. AI-powered customer service displaces support roles. AI-generated content impacts creative workers. Adding physical robots to that mix accelerates the transition.

From a pure capability standpoint, robots at CES 2026 represent genuine progress. From a labor standpoint, they represent disruption. Both things are true simultaneously.

The robots themselves are neutral. They're tools. How society deploys them—whether we use them to enhance human productivity or replace human workers, whether we share the economic benefits broadly or concentrate them—that's not a robotics question. It's a policy question.

The Design Principles That Worked

Several of the successful robots shared a consistent set of design principles:

Specialized rather than general: Robots that excel at one thing usually outperform robots that attempt to do many things. Rovie is great at toy cleanup. It doesn't try to handle laundry. Stair-climbing vacuums are good at moving between floors. They're not trying to be general-purpose.

Lean into aesthetic intent: Ollo Bot works because it's deliberately quirky. Robots that try to be "almost human" usually fail. Robots that fully commit to looking robot-like succeed.

Solve real problems: The most impressive robots at CES addressed genuine pain points. Parents deal with toy chaos. Multi-floor homes have staircase limitations. These robots solve actual problems.

Graceful degradation: The best robots fail gracefully. When they can't accomplish a task, they communicate clearly rather than getting stuck. When confidence is low, they defer to humans. Good robots know their limits.

Edge deployment: Robots that rely on cloud connectivity for basic functionality are vulnerable to connectivity issues. The best robots run their AI models locally. Cloud connectivity is for optional enhancements, not core functionality.
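The last two principles can be combined in one small control loop. In this hypothetical Python sketch, the planner runs on-device, a cloud call is treated as an optional refinement that is allowed to fail, and anything below a confidence threshold is handed back to a person with a clear message. The planner, the cloud hook, and the 0.8 threshold are all invented for illustration; none of the exhibitors described their software at this level.

```python
from dataclasses import dataclass
from typing import Callable, Optional

CONFIDENCE_THRESHOLD = 0.8  # hypothetical cutoff for acting without asking


@dataclass
class Result:
    action: str  # "execute" or "ask_human"
    detail: str


Planner = Callable[[str], tuple[str, float]]
Refiner = Callable[[str, str], str]


def handle(task: str, local_planner: Planner, cloud_refiner: Optional[Refiner] = None) -> Result:
    plan, confidence = local_planner(task)  # core capability runs on-device
    if cloud_refiner is not None:
        try:
            plan = cloud_refiner(task, plan)  # optional enhancement only
        except (ConnectionError, TimeoutError):
            pass  # offline: keep the local plan instead of failing
    if confidence < CONFIDENCE_THRESHOLD:
        # Graceful degradation: say what's wrong and defer to a person.
        return Result("ask_human", f"Not confident I can {task} ({confidence:.0%}); please help.")
    return Result("execute", plan)


def local_planner(task: str) -> tuple[str, float]:
    # Hypothetical on-device planner with one known task.
    known = {"pick up the blocks": ("sweep blocks into tray", 0.93)}
    return known.get(task, ("no plan", 0.2))


def offline_cloud(task: str, plan: str) -> str:
    raise ConnectionError("no network")


print(handle("pick up the blocks", local_planner, offline_cloud))
print(handle("fold the sweater", local_planner, offline_cloud))
```

Losing the network changes nothing about the first task, and the unfamiliar second task produces a request for help rather than a stuck robot.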

The chart illustrates the projected readiness of consumer robots over time, with immediate improvements in existing products and long-term advancements towards autonomous household robots. Estimated data based on industry trends.

The Honestly Confusing Ones

A few robots at CES seemed to exist without clear purpose.

One particular exhibit (again, avoiding specific names) was a wheeled robot with multiple articulated appendages that could manipulate objects. It was genuinely impressive mechanically. But the practical application was completely unclear. What problem does it solve? What advantage does it have over simpler specialized robots? Why would someone buy it?

That's actually a common CES problem. Impressive engineering answering questions nobody asked.

The best robots at CES 2026 solved problems people actually have. The less impressive ones were technically advanced solutions looking for applications.

What's Missing: The Real Bottlenecks

For all the capability on display, several critical gaps remain:

Dexterity: Robots are still not as dexterous as human hands. Fine manipulation is hard. Cloth handling is hard. Anything requiring sensitivity or precision remains challenging for robots.

Common sense: Robots can be trained on specific tasks, but their ability to generalize to novel situations is still limited. A robot trained to fold t-shirts might fail on sweaters. A robot trained to set a table might struggle with atypical dinnerware.

Safety in unstructured environments: Homes are full of variables. Toys anywhere. Pets anywhere. Unexpected obstacles. Robots excel in controlled environments. Real homes are messier than any robot has been trained for.

Energy efficiency: Most demonstration robots operate tethered or with very short battery lives. Consumer robots need 8-12 hours of battery endurance. High-capability machines aren't there yet.

Cost: Building complex robots is expensive. For consumer viability, costs need to drop by 50-75%, which requires dramatically better hardware economics.

Reliability: Demonstration robots fail occasionally, and that's accepted. Consumer products need 95%+ reliability. That gap is real.

These aren't insurmountable challenges. But they're bigger challenges than many observers acknowledge.

The Optimistic Case

If you're bullish on robotics, the CES 2026 lineup is genuinely encouraging.

Companies are moving from pure research to prototype development. Engineering teams are solving mechanical problems that were considered intractable five years ago. AI integration is happening faster than expected. Multiple companies are tackling similar problems, suggesting market demand.

The optimistic timeline says consumer robots become commonplace within a decade. In that scenario, some households run multiple robots handling different tasks. Elderly and disabled individuals gain independence through robotic assistance. Labor-intensive tasks become automated.

This is achievable. The technical foundations are there. The economic arguments are sound. The demographic need is real (aging populations in developed nations, rising labor costs).

The Pessimistic Case

If you're bearish on consumer robotics, the CES 2026 lineup is mostly vaporware.

Companies announce impressive concepts but struggle to commercialize. Costs remain too high. Robots are less reliable than required for consumer expectations. The last-mile problems prove harder than anyone expected.

Several promising robots from previous years still haven't shipped. LG's previous home robots never made it to market. Boston Dynamics' commercial partnerships have been modest. The graveyard of promising but unreleased robots is large.

The pessimistic case says most of what we saw at CES 2026 will never become products. Some companies will launch specialized robots in lucrative niches. General-purpose household robots remain 20 years away, perpetually.

The Most Likely Case

Reality probably lands somewhere between.

Specialized robots solve specific problems and reach mainstream adoption within 5-10 years. Stair-climbing vacuums eventually ship. Toy-cleanup bots become products. Pet robots become more sophisticated.

General-purpose household robots remain extremely limited. They handle one or two tasks very well. They don't replace the vision of a robot that handles all household labor.

Humanoid robots find niche applications in professional settings (customer service, education, entertainment) but don't displace human workers in meaningful numbers.

AI continues improving, which makes robots more capable. But the mechanical, economic, and legal challenges compound faster than AI improvements can overcome them.

Some companies succeed at commercialization. Many don't. Consolidation happens. A few dominant players emerge.

This is the boring, realistic outcome. Not the utopia of robots handling all household labor. Not the dystopia of no progress. Just incremental improvement at a pace faster than the past, but slower than hype suggests.

What to Watch

If you're tracking robotics progress, pay attention to:

Commercial releases: When do prototypes become purchasable products? When do companies launch second or third generation products? Product maturity matters more than capability demonstrations.

Failure analysis: When robots fail, how do they handle it? Do they recover? Do they call for help? Graceful degradation is more important than perfection.

Cost reduction: Are robots getting cheaper? Manufacturing scale drives down costs. Watch whether companies can achieve economies of scale.

Market adoption: Are people actually buying robots? How many units ship? Is it growing year-over-year? Real adoption matters more than impressive CES demos.

Integration with existing systems: Can robots work with your smart home? Can they understand your schedule? Integration complexity is a major adoption barrier.

Maintenance and support: How hard is it to maintain a robot? What's the support experience? Real product support often makes or breaks adoption.

CES 2026 in Context

CES 2026 will probably be remembered as the year robotics crossed a meaningful threshold. Not the year robots became household staples, but the year they moved from "interesting research" to "legitimate near-term products."

AI maturity enabled this. Better processors enabled this. Better manufacturing enabled this. Multiple factors aligned.

The robots we saw weren't alien. They weren't dystopian. They were mostly charming, occasionally creepy, and genuinely capable.

And that might be the most important thing. Not that robots are scary or perfect. But that they're becoming normal. Unremarkable. Part of the technological landscape.

That transition from "wow, a robot!" to "oh, a robot, neat" is where the real disruption happens. When robots become normal enough that we stop questioning their existence and start questioning their deployment.

That's where we are now.

FAQ

What makes the robots at CES 2026 different from previous years?

The key difference is AI integration. Modern robots are powered by large language models and advanced computer vision systems that enable genuine autonomy and learning. Previous generation robots relied on pre-programmed behaviors. Current robots can adapt, learn from failures, and understand context. This represents a meaningful capability jump, not just incremental hardware improvements.

When will these robots actually be available for purchase?

Timelines vary significantly by robot type. Specialized robots like improved smart vacuums should appear within 6-12 months. Task-specific robots like toy-cleanup bots might launch within 18-24 months. General-purpose household robots remain 5-10 years away at minimum. Most impressive CES demonstrations are prototypes, not products. The gap between demo and commercial viability is substantial.

Are humanoid robots actually useful, or are they just cool-looking?

The humanoid form factor has genuine advantages for human interaction and for tasks designed for human workspaces. But humanoid robots are mechanically complex and expensive. For many applications, specialized non-humanoid robots are more practical. The appeal of humanoids is often aesthetic rather than functional. That doesn't make them bad, just expensive and limited in deployment scenarios.

How much will these robots cost when they launch?

Price estimates vary wildly. Specialized robots like stair-climbing vacuums will probably cost

What about the creepy feeling some robots generate?

The uncanny valley is real. Robots that attempt to look almost human but fall just short land in unsettling territory. The solution is either to go deliberately artificial (the Ollo Bot approach) or to invest enough in photorealism to achieve human-level fidelity. Most robots land awkwardly between those two extremes. Design intent matters more than technical capability for consumer acceptance.

Are these robots going to take people's jobs?

Yes, probably. Robots will displace some jobs, particularly in household labor and routine task handling. The timeline is slower than hype suggests (years, not months). The disruption is real but manageable if addressed proactively through education and policy. Society has more control over how robots affect employment than most people realize. It's not technologically inevitable; it's a choice about how to deploy technology.

Should I be concerned about robot safety in homes with kids and pets?

This is a legitimate concern that manufacturers are addressing. Modern robots have obstacle detection and emergency stop capabilities. Most are designed to fail safely. But early adopters will be taking on some risk with prototype technology. First-generation consumer robots should be carefully supervised around children and pets until durability and safety records are established.

How much energy do these robots consume?

Energy consumption varies. Robot vacuums are relatively efficient. Humanoids with active arms consume more. All current commercial robots require regular charging. Battery life is generally 4-8 hours for mobile robots doing moderate work. Full-day autonomy without charging is not yet standard. Energy efficiency will improve as hardware matures.

What's the maintenance burden?

Maintenance varies by robot type. Vacuums need regular filter changes and occasional repairs. Complex robots with articulated arms need more frequent maintenance. Current generation robots are not maintenance-free. Expect occasional troubleshooting and parts replacement. This is why support and repair infrastructure matter for consumer adoption.

Conclusion

CES 2026 delivered something we haven't seen before in robotics: legitimate products approaching market readiness, powered by AI systems that actually work.

Not perfect. Not ready for everyone. But genuinely capable.

The spectrum was wide. From the charmingly ridiculous Ollo Bot to the ambitious CLOi D vision to the intensely practical Rovie toy-cleanup bot. From conversational humanoids to insectoid staircase climbers. From dog-like companions to professional collaborative arms.

What struck me most was how normal some of this felt. Watching Agibot's humanoid have a conversation. Sure, it was clearly a robot. Sure, the responses came from an LLM. But the interaction felt surprisingly natural. That normalization is where the real disruption lives.

We're not at the point where robots handle all household labor. Not yet. Maybe not ever, given the mechanical and economic challenges.

But we're at the point where specialized robots solving specific problems could reach the market as real products within the next 1-3 years. That's a meaningful inflection point.

The next few years will determine whether CES 2026 marks the beginning of mainstream robot adoption or just another wave of impressive but ultimately commercialization-resistant technology.

My bet? Both happen simultaneously. Some robots make it to market and succeed. Some remain prototypes indefinitely. The adoption curve is steep but not vertical. Robotics becomes increasingly normal, but not in the explosive way hype suggests.

That's fine. Slow, incremental progress at scale often impacts society more profoundly than revolutionary change. Robots don't need to be perfect. They just need to be useful enough, reliable enough, and affordable enough.

CES 2026 suggested we're approaching that threshold. Not quite there yet. But close enough that I'm genuinely watching the next 12 months.

Because when one of these robots actually launches as a real product that real people can buy and use and troubleshoot, that's when robotics transitions from "cool tech to watch" to "actually relevant."

I think that moment arrives within the next 24 months. I'm curious which robot gets there first. And what it teaches us about everything that follows.

Key Takeaways

- AI maturity in 2026 fundamentally changed robot capabilities: machines now learn, adapt, and demonstrate genuine autonomy rather than executing pre-programmed scripts

- Specialized robots solving specific problems (toy cleanup, stair climbing, laundry folding) reached prototype maturity and approach commercial viability within 1-3 years

- Design philosophy matters as much as engineering capability: robots leaning into aesthetic intent (like Ollo Bot) succeeded where robots attempting near-human realism triggered uncanny valley responses

- General-purpose household robots remain 5-10 years away despite impressive demonstrations; commercial viability requires solving dexterity, cost, and reliability challenges beyond current technology

- The distinction between technical demonstration and commercial product remains vast: most impressive robots at CES won't reach consumers, but a few specialized systems will eventually disrupt household labor markets
