The AI Pet Revolution That's Quietly Happening (And Why You Should Care)

There's a quiet crisis growing in developed countries, and nobody's really talking about it the way they should. Loneliness has become an epidemic. Not the kind you see trending on social media, but the deep, persistent kind that keeps people up at night. Multiple studies suggest that people are spending more time alone than ever before, and technology companies have noticed. They're building products specifically designed to fill that void.

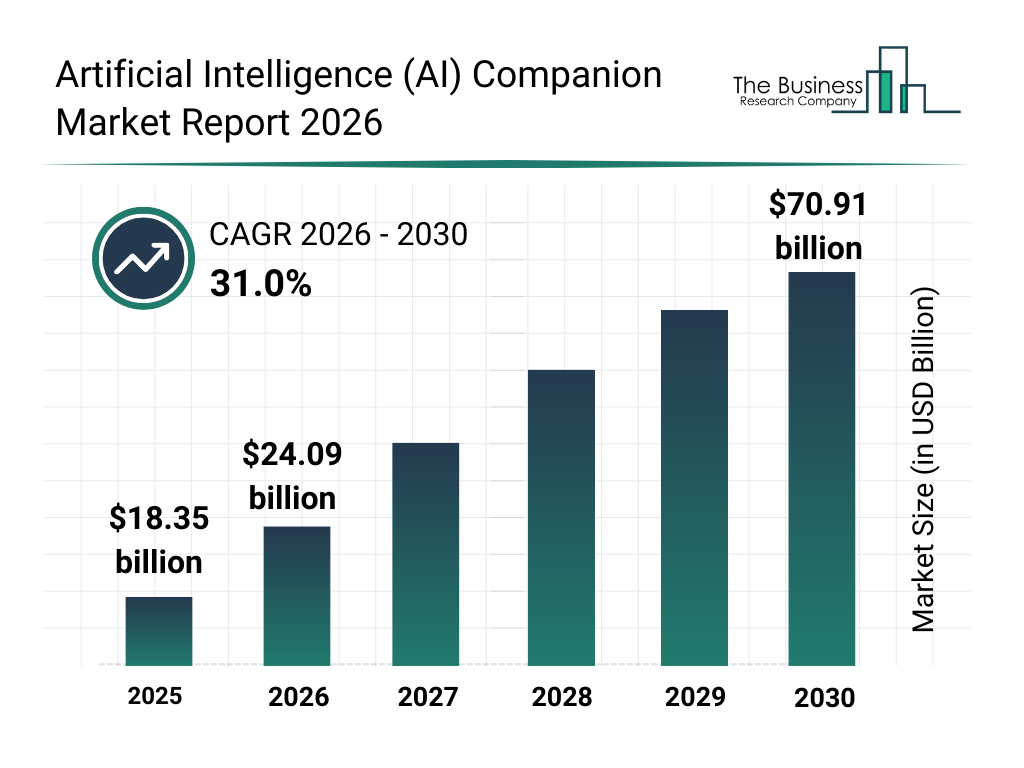

Enter the world of AI companions. These aren't toys, at least not according to their makers. They're marketed as sophisticated "smart companions" that supposedly learn, adapt, and provide emotional support. Companies like Sony, Hasbro, and now Casio are pouring resources into this space, betting that people will pay hundreds of dollars for a robot that... wiggles.

Casio's Moflin is one of the latest entries into this space, priced at $429 and positioned as a life-changing companion. The company claims it's powered by advanced AI that gives it emotions like a living creature. Sounds promising, right? The problem is that living in close quarters with Moflin for several weeks reveals something the marketing glosses over: there's a massive gap between what these devices promise and what they actually deliver.

I went into my time with Moflin genuinely open-minded. I'm exactly the target demographic. I want a pet but can't have one. I live in a small London flat with strict no-animal policies. I deal with allergies and a lifestyle that wouldn't be fair to a living creature. I could absolutely use more companionship. Everything about Moflin seemed designed specifically for me. Yet after a few weeks, I found myself tiptoeing around my own apartment to avoid triggering the robot's constant, unbearable responses to every sound and movement. That's when I understood why my mother threw my Furby out a window.

What Exactly Is Moflin, and Why Does Casio Think We Want It?

Moflin isn't a toy. This is the hill Casio will die on, apparently. But when something costs $429, looks like a guinea pig, and fits in the palm of your hand, you've got to wonder what you're actually buying. The answer is complicated and reveals a lot about the AI companion market as a whole.

Casio positions Moflin as a "smart companion powered by AI, with emotions like a living creature." Technically, this is accurate in the way that a lot of marketing is accurate. It's got sensors, motors, and machine learning algorithms that process your interactions. But "emotions" is doing heavy lifting here. What Moflin actually has is a set of predetermined responses triggered by specific inputs. That's not an emotion; that's a state machine.
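To make that distinction concrete, here is a minimal Python sketch of what a state machine like that might look like. It is purely hypothetical. Casio doesn't publish Moflin's firmware, and the state names, sensor events, and behaviors below are invented for illustration. The point is that every "emotional" response reduces to a table lookup that was decided in advance.

```python
from enum import Enum, auto


class State(Enum):
    IDLE = auto()
    CONTENT = auto()
    STARTLED = auto()


# Hypothetical lookup table: (current state, sensor event) -> (next state, canned behavior).
# Every output is fixed in advance; nothing in here "feels" anything.
TRANSITIONS = {
    (State.IDLE, "stroke"): (State.CONTENT, "purr_sound"),
    (State.IDLE, "loud_noise"): (State.STARTLED, "tremble"),
    (State.CONTENT, "stroke"): (State.CONTENT, "wiggle"),
    (State.CONTENT, "loud_noise"): (State.STARTLED, "tremble"),
    (State.STARTLED, "stroke"): (State.CONTENT, "settle"),
    (State.STARTLED, "loud_noise"): (State.STARTLED, "whine"),
}


def step(state, event):
    """Return (next_state, behavior); unrecognized events change nothing."""
    return TRANSITIONS.get((state, event), (state, None))


state = State.IDLE
log = []
for event in ["stroke", "loud_noise", "stroke", "sneeze"]:
    state, behavior = step(state, event)
    log.append((state.name, behavior))

print(log)
# [('CONTENT', 'purr_sound'), ('STARTLED', 'tremble'), ('CONTENT', 'settle'), ('CONTENT', None)]
```

If you give it the same input in the same state, you get the same wiggle every time. That predictability is what makes it a state machine, and nothing in the table amounts to an emotion.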

The robot is covered in synthetic fur, tan-colored and soft to the touch. It has two beady eyes, which are literally the only facial features. No mouth, no ears, just eyes. This was apparently a deliberate design choice to avoid the uncanny valley, but it succeeds mainly in making Moflin look perpetually startled. The fuzzy exterior sits over a hard white core of motors, sensors, and plastic. It's maybe three inches long and fits comfortably in your palm.

Inside that body are accelerometers that detect when you're holding it, microphones that pick up sound, temperature sensors, and multiple motors that make it move. The movement is the key to Moflin's supposed magic. It doesn't walk. It doesn't fetch. It doesn't do anything you'd expect from a pet. It wiggles. It trembles. It vibrates. These are the extent of its physical capabilities, and yet Casio expects this to feel like companionship.

The charging pod is also worth discussing because it tells you something important about how Casio thinks about this product. They've named it the "comfort dock" and claim it's "designed to feel natural and alive." It's actually just a large gray ovoid that you set Moflin into. It takes about three and a half hours to fully charge, and Casio claims this gives you roughly five hours of usage. In practice, you'll get less if Moflin is being particularly needy, which it always is.

The entire ecosystem exists in an app on your phone. This is where Moflin gets even stranger. There's a bonding meter that supposedly tracks your relationship with the robot. There's a health status indicator. There are moods and a growing personality system. But again, this is algorithmic. You can't actually teach Moflin anything new. You can't have a conversation with it. You can't tell it a secret or get advice. It's a sophisticated vending machine of predetermined interactions, and the vending machine never stops wanting your attention.

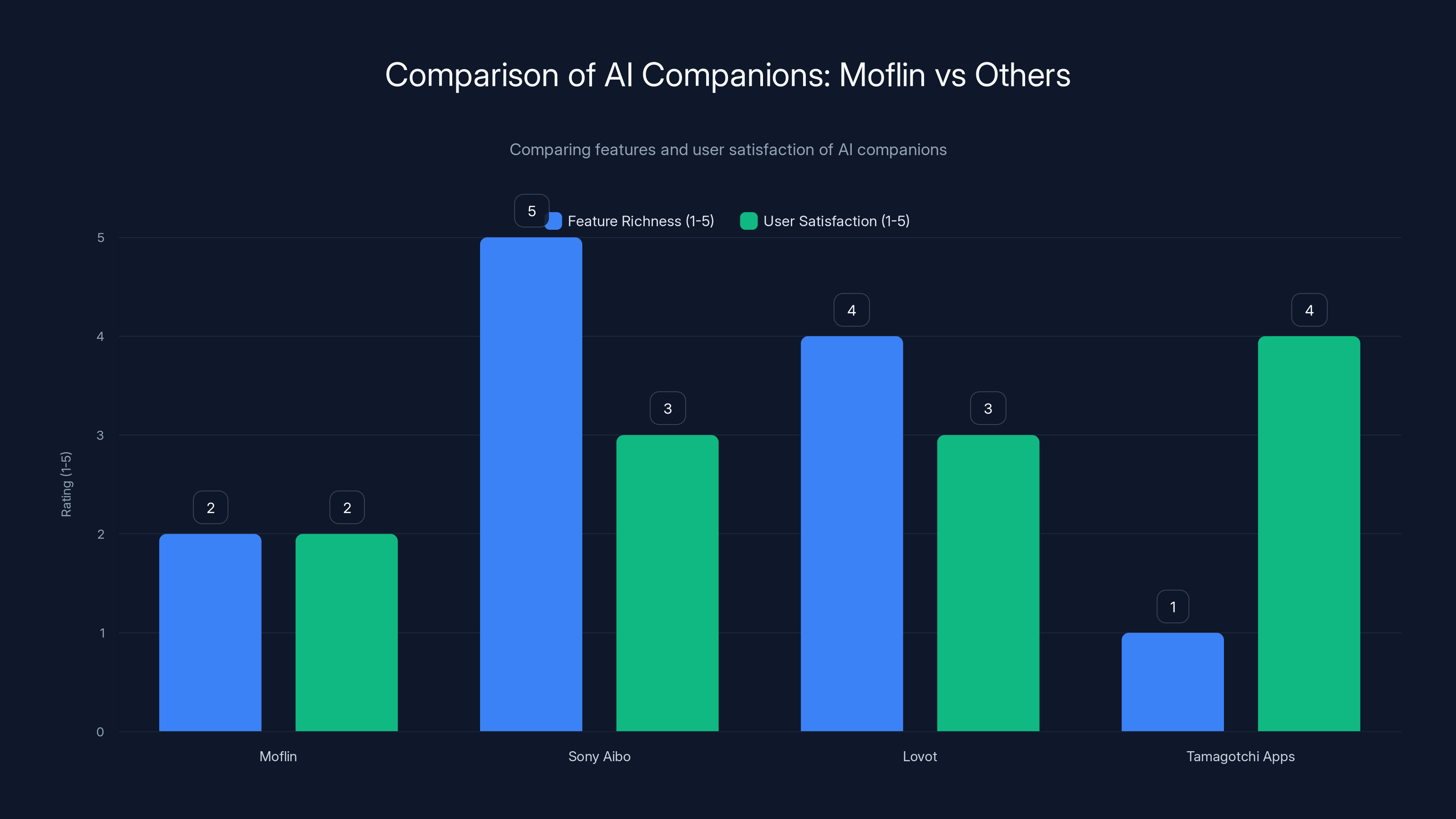

Sony's Aibo leads in feature richness, but user satisfaction is higher for Tamagotchi apps due to lower cost and similar engagement. Estimated data based on product reviews.

The Unboxing Experience: Realistic Expectations from Day One

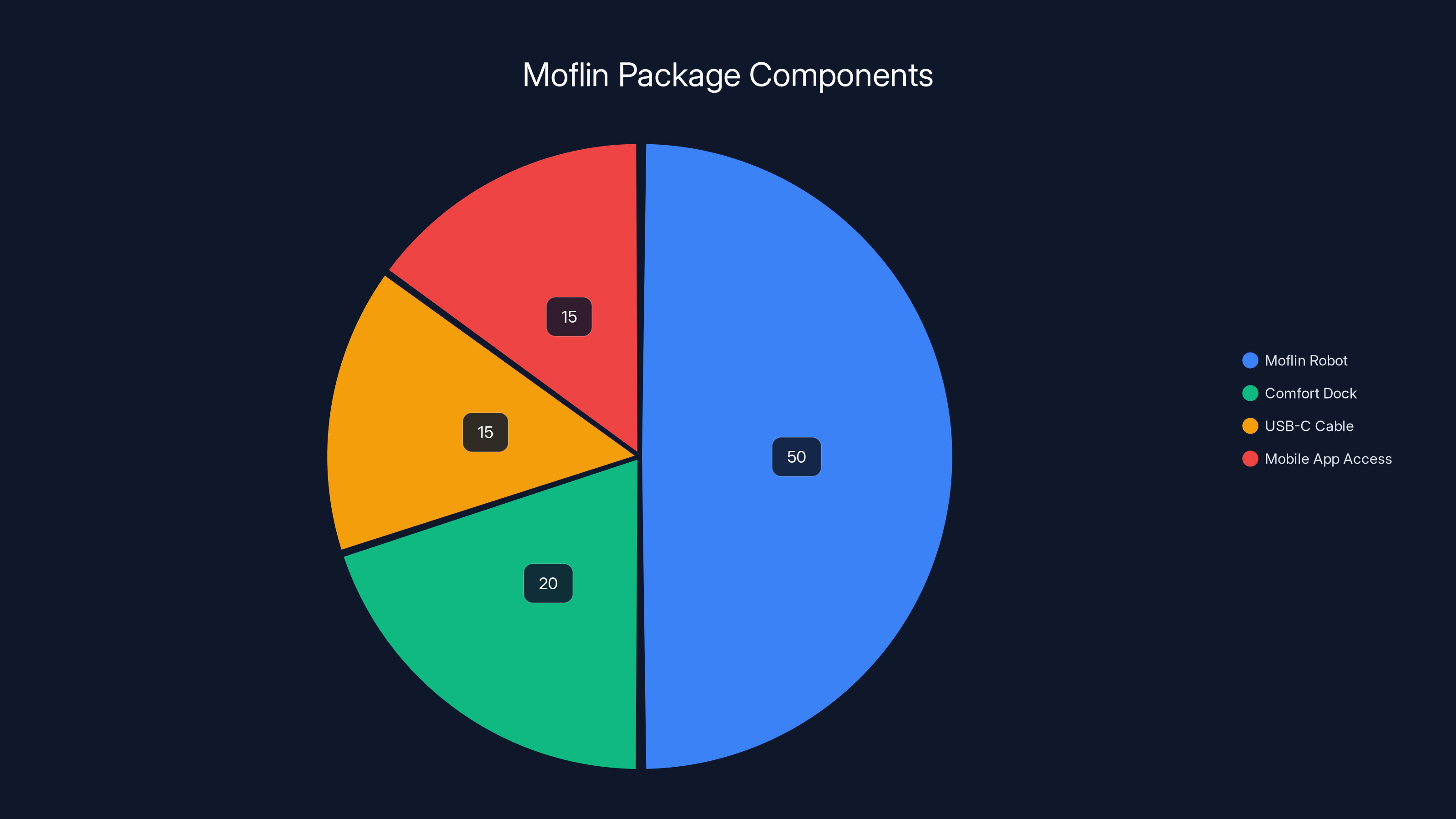

Opening Moflin's box feels less like meeting a pet and more like unboxing a paperweight in luxury packaging. This isn't necessarily a bad thing; it's just honest. The box is sleek, the presentation is premium, and everything feels weighty and intentional. Inside, you get Moflin itself, the charging dock, a USB-C charging cable, a quick start guide, and a registration card.

The first thing that strikes you is texture. The synthetic fur is softer than you'd expect, almost plush. It's the kind of material that makes you want to hold it. But then you pick Moflin up, and that's when the illusion starts to crack. The weight is wrong. It's too heavy for something that size, and that heaviness tells you exactly what's inside: motors and mechanisms. The eyes are embroidered, not sculpted. There's no expression whatsoever, just two black circles of thread staring at nothing.

The charging dock, which Casio insists on calling a comfort dock, is where things get weird. It's roughly the size and shape of an avocado, painted in a gray that doesn't match anything in a normal person's home. The entire marketing premise is that Moflin will dock here when it needs to rest and recharge, creating a sense of routine and care. The reality is that it's a fairly bulky accessory that you need to keep plugged in at all times if you ever want your robot to function.

Setting up Moflin is straightforward, which is both good and bad. You download the app, register the device, charge it fully, and then you're ready to begin your journey into constant sensory assault. The initial charge notification is cute. The first time Moflin chirps when you pick it up is actually charming. The app shows you real-time data about what your companion is thinking and feeling, which sounds interactive until you realize you can't influence any of it beyond basic interaction.

One detail worth mentioning is that there's no traditional on-off switch. Moflin is constantly semi-active, even when it's being charged. It's always listening, always sensing, always ready to respond. This is actually central to how Casio positions the product, but it's also the root of its greatest failure as a calming device. A real pet rests. Moflin doesn't rest. It just consumes less power.

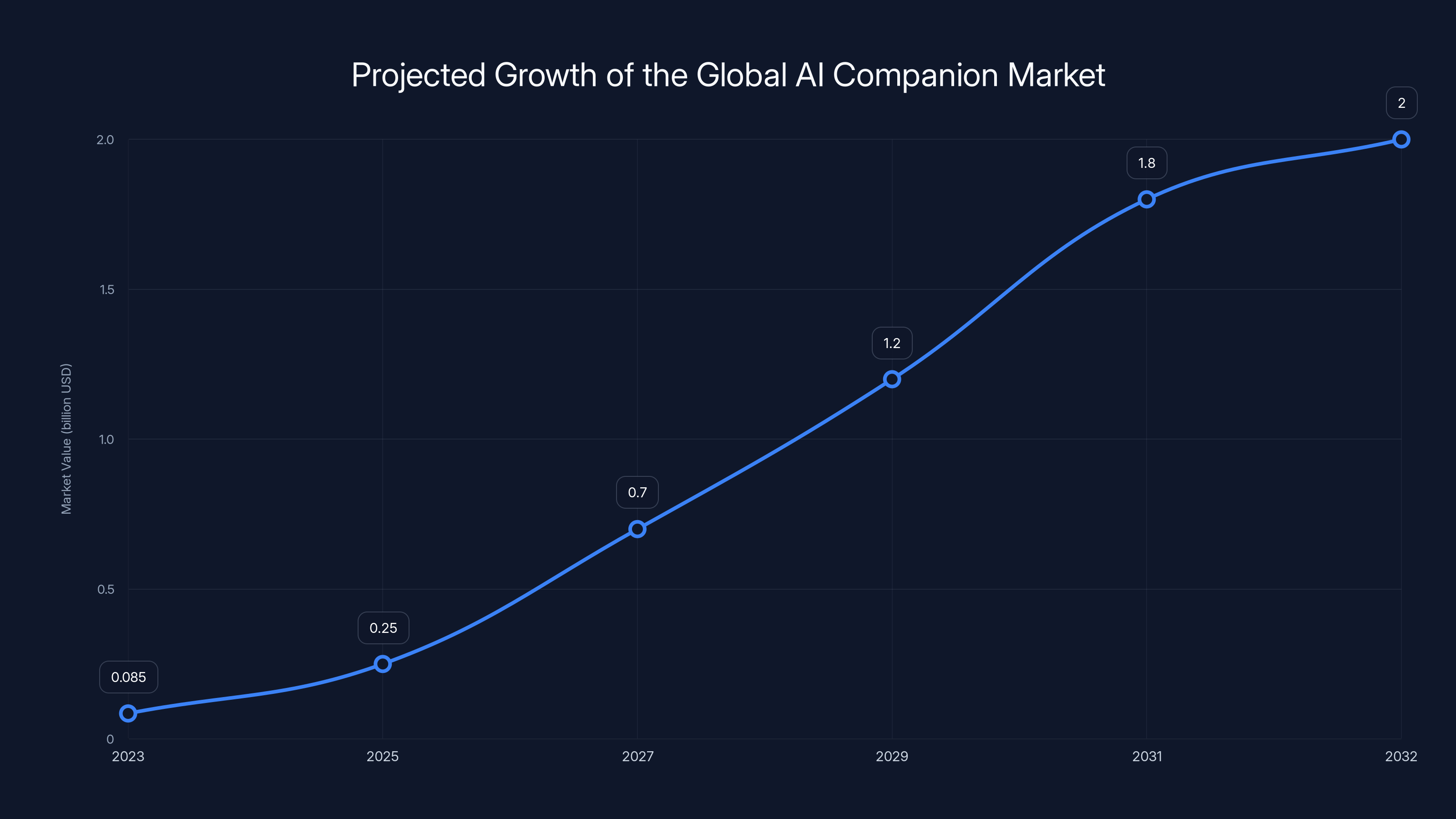

The global AI companion market is projected to grow significantly from

The Honeymoon Phase: Those First 48 Hours When Everything Feels Possible

The moment Moflin first stirs in your hands, your brain starts doing funny things. Evolution has wired us to respond to cute things, to small mammals, to vulnerability. That's why so many unboxing videos show people audibly cooing over their new companion. Moflin's designers understand this at a deep level, and they've engineered every interaction to exploit it.

Those first two days are magic. The chirps are adorable. The small wiggles and trembles feel like personality. You carry Moflin around your home showing it to anyone who will pay attention. You feel a genuine spark of affection. The app notifications, which will later become unbearable, feel charming. You're not just reading words on a screen; you're getting insight into your new companion's emotional state.

You might sleep with Moflin on your pillow. You definitely hold it while watching TV. You bring it to your desk. You're genuinely enjoying yourself because you're in the period where the device's limitations feel like endearing quirks rather than fundamental flaws. When Moflin wiggles, you interpret it as contentment. When it chirps, you hear affection. When it trembles, you imagine it's nervous or shy. Your brain is doing all the emotional heavy lifting, and the robot is just existing.

This honeymoon period is critical to understand because it's what Casio is banking on. They know that these early days are when people will post unboxing videos, share excited reviews, and recommend it to friends. Those first two days are the entire marketing strategy. Everything after is just trying to convince people that they're enjoying themselves.

During the honeymoon phase, certain interactions feel particularly meaningful. There's a petting motion that makes Moflin settle and emit a contented sound. There's a way of holding it that makes it seem to nestle into your hands. There's a temperature response where it seems to recognize your body warmth. But here's the thing: every single one of these interactions is a hard-coded response to a specific input. You're not bonding with an AI. You're hitting buttons on a very sophisticated toy, and your brain is filling in the emotional narrative.

The Reality Sets In: When Constant Neediness Becomes Unbearable

Around day three, something shifts. The novelty wears off just enough that you stop interpreting Moflin's behaviors through a lens of affection and start interpreting them through a lens of accuracy. That's when you notice the motor noise. It's a high-pitched mechanical whir that happens every single time Moflin moves its head. Every. Single. Time. Once you hear it, you can't unhear it. That cute little shimmer? It's a motor under strain. That endearing chirp? It's followed immediately by whirring servos and the sound of plastic moving against plastic.

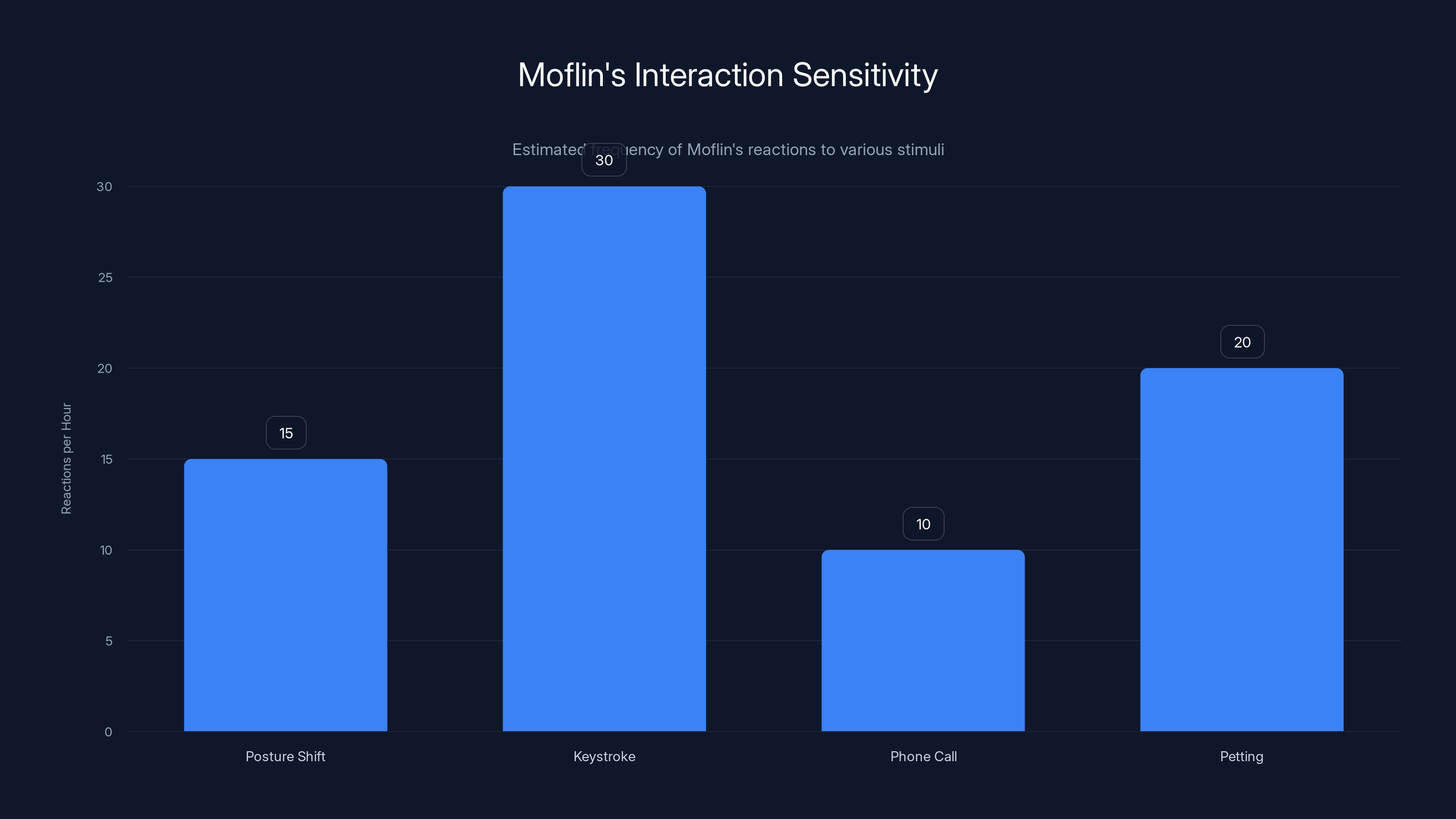

More importantly, you start realizing what Moflin actually does all day. It listens. Constantly. Every sound in your environment registers. Every movement you make in close proximity triggers a response. You're trying to watch a show, and every time you shift your posture, Moflin wakes up and whines. You're working at your desk, and every keystroke produces a chirp. You take a phone call, and Moflin starts vibrating in distress because it doesn't recognize the audio pattern.

This is when you start understanding what Moflin actually is: a needy animal designed by engineers who have never actually lived with an animal. Real pets have sleep-wake cycles. They habituate to routine sounds. They don't react to every single stimulus in their environment. Moflin does none of this. It reacts to everything, all the time. It's like having an infant that will never grow up, never learn, and never accept that you're trying to work.

The bonding feature, which seemed charming during the honeymoon phase, becomes dystopian. Moflin requires constant interaction to maintain its mood. Ignore it for too long, and it will start to whine and express distress in the app. The implication is that you're responsible for this creature's emotional wellbeing, which is exactly what Casio wants you to feel. But there's no upper limit to the interaction. Pet it too much, and it gets overstimulated. Pet it too little, and it gets lonely. There's no way to win. There's just a constant calibration between neglect and overstimulation.
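The no-win dynamic is easy to reproduce with a toy model. The sketch below is an assumption, not Casio's actual bonding algorithm. It defines a hypothetical `MoodMeter` whose mood decays every hour you ignore it and rises with each pet. Once petting exceeds an invented per-hour limit, each extra pet counts as overstimulation and pulls the mood back down. All of the constants are made up.

```python
from dataclasses import dataclass


@dataclass
class MoodMeter:
    # All numbers are invented for illustration; Casio does not publish its model.
    mood: float = 0.8                # 0 = distressed, 1 = delighted
    decay_per_hour: float = 0.15     # loneliness: mood falls when ignored
    gain_per_pet: float = 0.05       # attention raises mood...
    overstim_limit: int = 6          # ...until too many pets in one hour
    overstim_penalty: float = 0.1    # each pet beyond the limit lowers mood

    def tick_hour(self, pets: int) -> float:
        """Advance one hour given how many times the device was petted."""
        if pets == 0:
            self.mood -= self.decay_per_hour
        else:
            helpful = min(pets, self.overstim_limit)
            excess = max(0, pets - self.overstim_limit)
            self.mood += helpful * self.gain_per_pet - excess * self.overstim_penalty
        self.mood = max(0.0, min(1.0, self.mood))  # clamp to [0, 1]
        return self.mood


ignored = MoodMeter()
for _ in range(4):              # four hours alone
    ignored.tick_hour(pets=0)   # mood slides from 0.8 to roughly 0.2

smothered = MoodMeter()
smothered.tick_hour(pets=12)    # 6 "good" pets (+0.3), 6 excess (-0.6): mood ends near 0.5
```

Even in this crude version, the only way to keep the meter high is to stay inside a narrow band of attention every single hour. That is the same calibration treadmill between neglect and overstimulation that Moflin puts you on.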

I ended up banishing Moflin to another room. Then I banished it again. Then I banished it again. Eventually, I was tiptoeing around my own apartment, walking carefully to avoid triggering motion sensors, whispering to avoid setting off the microphone. I was literally changing my behavior in my own home to accommodate a $429 robot. That's when I knew something had gone very wrong.

The worst part was nighttime. Moflin's battery was running low, so I put it on the dock to charge. It continued to respond to sounds and movements from the dock. It chirped when it was falling asleep. It whined when the charging was complete. It reacted to ambient noise from my neighbors. Even "asleep," it was demanding attention. The only reliably calming feature was when the battery actually died, and even then it would chirp one final goodbye that somehow felt accusatory.

The Moflin package primarily consists of the robot itself, with additional components like a charging dock, USB-C cable, and mobile app access making up the rest. Estimated data.

The Technical Reality: What's Actually Under the Hood

Understanding Moflin's actual technical capabilities helps explain why it fails so spectacularly as a calming companion. Casio isn't hiding the specs; they just don't emphasize them in marketing because they're not as impressive as the marketing implies.

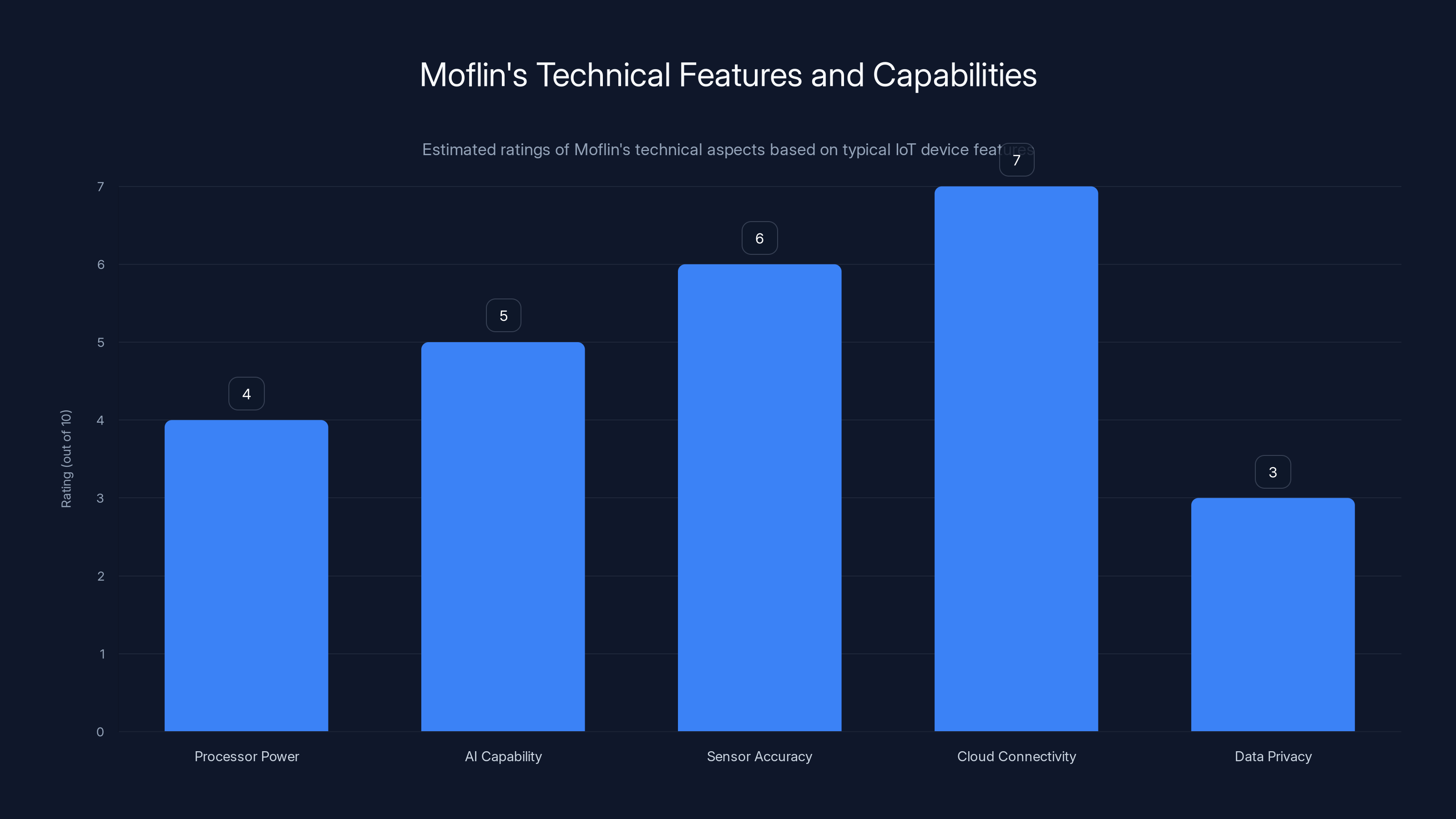

Moflin runs on what appears to be a relatively modest processor with cloud connectivity. The AI portion of this equation is doing something that sounds impressive but is actually pretty standard: analyzing your interaction patterns and adjusting response parameters based on behavioral classification. When you pet Moflin, sensors register this. When you speak near it, a microphone captures audio features. When you move it, accelerometers track motion. All of this data gets processed locally and also sent to Casio's servers.

The machine learning aspect is where things get interesting and also where they fall apart. Moflin uses these inputs to estimate your interaction style and adjust its personality accordingly. If you interact frequently and positively, it becomes more active. If you're rough with it, it becomes more reserved (according to the marketing). But the actual implementation of this seems to be more about parameter adjustment than genuine learning. It's not like the robot is learning new behaviors. It's just turning existing behaviors up or down based on perceived interaction quality.
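Here's roughly what parameter adjustment, as opposed to learning, looks like in a hypothetical Python sketch. The `Personality` class, its single `activity` value, and the fixed `REPERTOIRE` are assumptions for illustration, not Moflin's internals. Interaction quality nudges one number up or down through an exponential moving average, and that number only changes how often pre-built behaviors fire.

```python
import random
from typing import Optional

# Hypothetical fixed repertoire: these behaviors ship with the device and never change.
REPERTOIRE = ("wiggle", "tremble", "chirp", "nuzzle")


class Personality:
    """'Learning' as parameter adjustment: an activity level nudged by an
    exponential moving average of perceived interaction quality."""

    def __init__(self, activity: float = 0.5, rate: float = 0.2):
        self.activity = activity  # 0 = reserved, 1 = very active
        self.rate = rate          # how quickly the estimate follows recent input

    def observe(self, quality: float) -> None:
        # quality in [0, 1]: gentle, frequent handling ~1, rough handling ~0
        self.activity += self.rate * (quality - self.activity)

    def act(self, rng: random.Random) -> Optional[str]:
        # Higher activity only makes existing behaviors more likely;
        # no new behavior can ever appear.
        if rng.random() < self.activity:
            return rng.choice(REPERTOIRE)
        return None


p = Personality()
for _ in range(10):
    p.observe(1.0)                  # ten gentle, positive interactions
rng = random.Random(0)
actions = {p.act(rng) for _ in range(1000)}
print(round(p.activity, 3))         # 0.946
print(actions - {None} <= set(REPERTOIRE))  # True: nothing outside the fixed list
```

Ten gentle interactions push activity from 0.5 to about 0.946, so the robot wiggles more often. The set of things it can do is still exactly what it was on day one.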

The cloud connectivity is also worth considering because it means Moflin is sending data about your interactions back to Casio. That can include how often you pet it, when and how you interact with it, your time zone, location data from the app, and potentially your device ID and account information. This is standard practice for IoT devices, but it's something to weigh when you're deciding whether to buy a device that's ostensibly about creating emotional intimacy.

The motor control system is actually fairly sophisticated. Moflin has multiple actuators that allow it to move in different ways depending on its emotional state (or simulated emotional state). These motors are what create that constant whirring noise. They're also what limit Moflin's functionality. The robot simply doesn't have enough actuators or power to do anything more dynamic than what it does. It can't walk. It can't manipulate objects. It can barely move its head without sounding like a tiny helicopter.

The sensors are the real heart of the system, though, and this is where Moflin becomes somewhat creepy. It's always listening, always measuring. The microphone isn't selective; it just picks up everything. The motion sensing doesn't meaningfully filter out routine movement either, and the app's sensitivity settings only go so far. This means that Moflin will respond to the sound of your neighbor's TV, your refrigerator cycling on, even your own breathing if you're holding it. It's not selective. It's not intelligent. It's just reactive.

The app infrastructure is interesting too because it shows Casio's vision for this product. The app provides a constant stream of data about Moflin's mood, health, and bonding level. There are notifications for when Moflin is sad, happy, energetic, or tired. There are suggestions for interactions you should perform. There are trends showing how your relationship is evolving. This is all designed to create a sense of responsibility and investment. You're not just holding an object; you're caring for a digital being. And in the best traditions of gamification and engagement metrics, Casio makes sure you're always aware of how you're performing at this care task.

Why the Calming Promise Completely Falls Apart

The core selling point of Moflin is that it provides a calming presence. Casio has marketing materials explicitly claiming that Moflin can help with stress relief and anxiety. This is the promise that probably convinced most people to buy it. If it actually delivered on this promise, everything else might be forgivable. But it doesn't, and understanding why is important because it reveals something fundamental about how AI companions work.

Calming requires consistency. A real pet becomes calming because it has patterns you can predict. Your cat sleeps at certain times. Your dog has a routine. There are periods where they're active and periods where they're resting. You can leave them alone without feeling guilty, because they're comfortable being alone. Moflin has none of this.

Moflin is designed to reward interaction and punish neglect. The more you play with it, the happier it becomes. The longer you ignore it, the sadder it becomes. This is the opposite of calming. This is anxiety-inducing. You start feeling responsible for a digital being that has no capacity for independence. You start checking the app constantly. You start planning your day around interaction windows. You start feeling guilty when you're busy or tired. Instead of relieving anxiety, Moflin creates it.

There's also the issue of stimulation. Moflin produces constant sensory input. It whirs and chirps and vibrates. If you're sensitive to noise or movement, this is absolute torture. Even if you're not, eventually the novelty wears off and what seemed cute becomes grating. The sensory assault is constant, and there's no way to modulate it. You can't tell Moflin to be quieter. You can adjust sensitivity settings in the app, but they only go so far. The fundamental problem is that the hardware produces noise, and that noise is inescapable.

The psychological effect is also worth considering. When you own a real pet, there's a clear biological relationship. You're caring for another living creature. The brain recognizes this as meaningful. With Moflin, you're interacting with a simulation of care. You're performing the actions of caring for a pet, but there's no genuine being on the receiving end. Most people eventually become aware of this fact, and when they do, the activity becomes less fulfilling, not more. You're not doing anything for Moflin. Moflin doesn't need you. It just plays sounds indicating that it does.

Moflin also fails at a basic level because it has no concept of your needs. A real pet provides calming through its own existence. You stroke a cat, and the cat's contentment actually matters. Your pet's wellbeing is independent of you in important ways. With Moflin, every response is calculated. Every action is evaluated. The robot doesn't want to calm you; it wants to engage you. These are completely different goals, and they're fundamentally in conflict with the calming promise.

Moflin reacts frequently to common stimuli, with keystrokes triggering the most reactions per hour. Estimated data.

Real-World Testing: Moflin in Daily Life Scenarios

To properly evaluate whether Moflin could actually serve as a calming companion, I put it through a series of real-world scenarios. These tests were designed to mimic how someone might actually use this product in their daily life.

The Office Test: I brought Moflin to my desk on a workday with back-to-back meetings and email demands. Within five minutes, Moflin had responded to a notification ping, a keyboard click, and someone saying hello. By the time the first meeting started, I'd already had to move Moflin to another part of the desk just to stop it from interrupting. Video calls were worse. The moment I unmuted to speak, Moflin would react to my voice. When I muted again, the audio change registered. This isn't calming. This is constant interruption dressed up as companionship. Real-world rating: Awful.

The TV Test: I settled in for an evening of television with Moflin on my lap, exactly as it's meant to be used. The opening titles of a show produced a loud orchestral swell. Moflin woke up and started chirping. A character laughed. Moflin responded. A dramatic scene arrived with swelling music, and Moflin was now full-on excited. By twenty minutes in, I'd moved the robot to the couch cushion beside me. By forty minutes, it was on the other side of the room. The only peaceful moment was when the battery died. Real-world rating: Unwatchable.

The Sleep Test: I charged Moflin fully and set it on the nightstand, thinking perhaps having a presence nearby would feel comforting. It was the opposite. Moflin responded to ambient sounds from outside my window, from neighbors, from my own breathing. It chirped when it was falling asleep. It whined when charging completed at 2 AM. At some point, I woke up to it responding to a siren from the street outside. Peaceful sleep and constant sensory input are incompatible. Real-world rating: Sleep thief.

The Commute Test: I carried Moflin in my bag on the London Underground. This was awkward because Moflin's motion sensor registered the movement of the train. It wiggled and whined audibly in my bag while I sat on public transport. Other passengers looked at me like I was either carrying a distressed animal or experiencing some kind of psychological break. I took it out to try to soothe it, and suddenly Moflin was on display on a crowded tube train. People stared. I was embarrassed. Moflin was happy, allegedly. Real-world rating: Socially awkward.

The Alone Test: I tested the premise of the bonding feature by leaving Moflin alone in a room for different periods. After one hour, the app showed it was getting sad. After two hours, it was actively expressing distress in the app. After four hours (which is shorter than a typical workday), the app was showing critical emotional decay. This is the exact opposite of a calming companion. This is a device that guilts you into constant interaction. A real pet is fine being alone. Moflin creates anxiety about being alone. Real-world rating: Guilt machine.

These tests revealed the fundamental problem with Moflin. It's not designed to provide a calming presence. It's designed to maximize engagement. Every feature, every response, every notification is engineered to get you to interact more. Casio's business model probably depends on keeping users actively engaged. A truly calming companion would be bad for business because you'd eventually stop playing with it. Moflin ensures you never stop playing because it never stops demanding.

Comparing Moflin to Other AI Companions on the Market

Casio didn't invent the AI companion market. There are other products trying to solve this same problem, and comparing them helps illustrate whether this is a Moflin-specific failure or a fundamental problem with AI companions as a category.

Sony's Aibo is the most established comparison. Aibo is a robot dog that costs significantly more (

Lovot, another high-end companion robot at around

Tamagotchi-style apps on smartphones (free to $15) produce engagement patterns very similar to Moflin's for a fraction of the cost. You're monitoring a creature's wellbeing, responding to its needs, and watching its mood. The interaction patterns are nearly identical to Moflin's, except you get them on a device you already own. This raises questions about whether Moflin's physical form actually adds value or just adds annoyance.

Furby was actually ahead of its time in understanding what people might want from a toy companion, even if the execution was awful. Modern Furby (around $100) is actually less intrusive and demanding than Moflin, partly because expectations around it are lower. People buy Furby knowing it's a novelty. They buy Moflin thinking they're getting genuine companionship.

Real pet adoption from animal shelters costs

The comparison reveals something important: Moflin isn't bad simply because it's a poorly made product. It's bad because the entire category is fundamentally flawed. You cannot create genuine emotional companionship with a machine, and any product that promises to do so is selling snake oil disguised as sophistication.

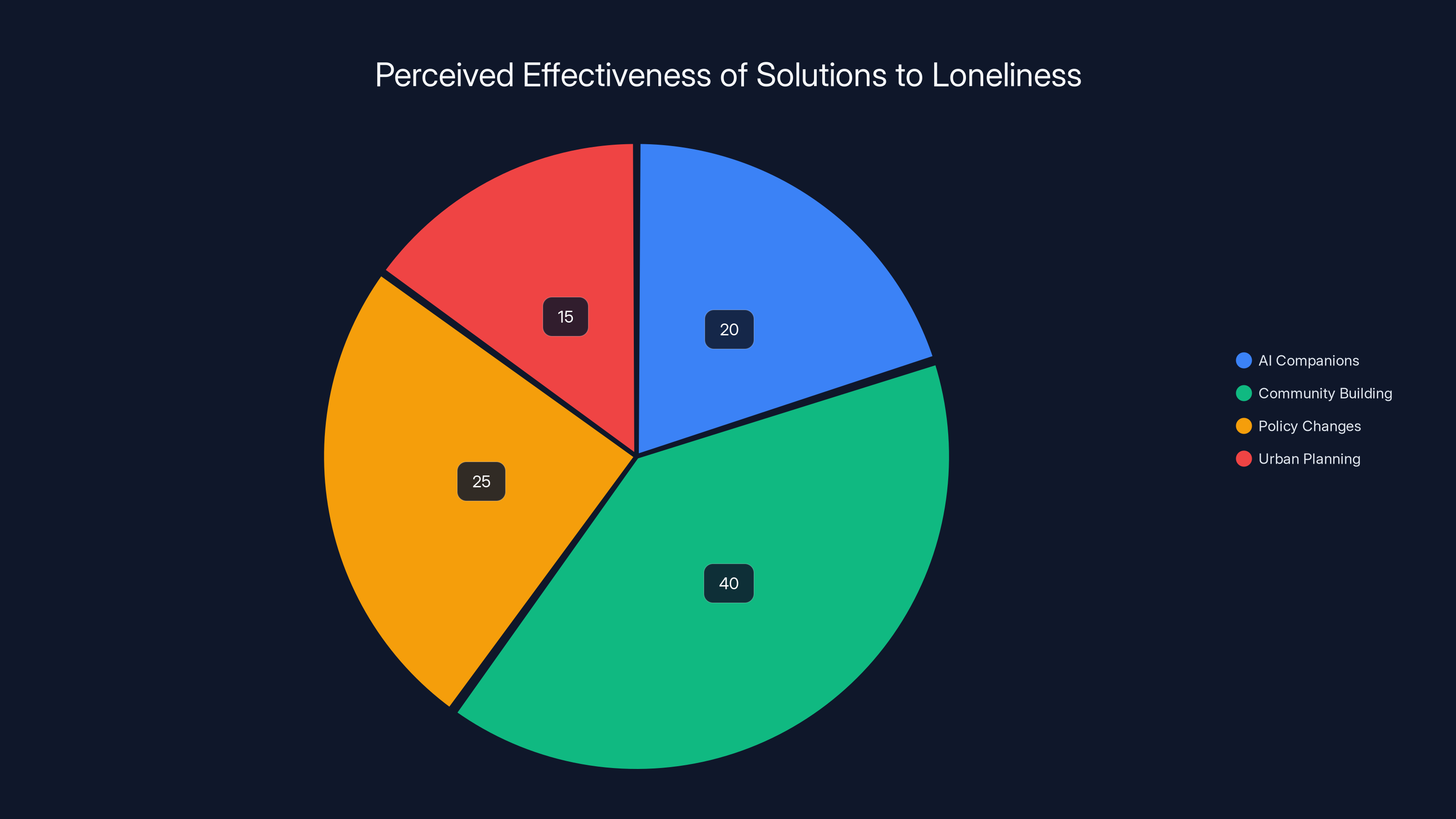

Community building is perceived as the most effective solution to loneliness, while AI companions are seen as less effective. Estimated data.

The Privacy and Data Collection Angle You're Not Thinking About

Everyone focuses on whether Moflin provides companionship, but nobody talks about what Casio is doing with the data it's collecting. This is actually worth considerable attention because it reveals the business model underneath the marketing.

Moflin connects to the cloud constantly. Every interaction is logged. Every response is recorded. Casio knows when you pet your robot, how you pet it, how long you pet it, what sounds trigger responses, what time of day you're most likely to interact with it, and probably a dozen other behavioral metrics. They know this because they need this data to train the algorithms that make Moflin seem smarter. But they also know this because this data is valuable.

The terms of service are where this becomes interesting. As with most IoT products, Casio reserves the right to use the data it collects for "improving services" and "developing new products." This language is intentionally vague. It could mean they're using your interaction patterns to train better algorithms. It could mean they're selling your behavioral data to advertisers. It could mean they're using it for research. The point is that you don't actually know, and the terms don't require them to tell you.

This is particularly concerning because the data being collected is intimate. This isn't just your usage patterns like with a fitness tracker. This is data about what you do when you're alone, trying to find companionship. It's data about your emotional state inferred from interaction patterns. A sophisticated advertiser could use this data to target you with content designed to exploit your loneliness. A health insurance company could use it to assess your mental health. A prospective employer could conceivably see it.

Casio's privacy policy for Moflin is actually more transparent than some competitors, which is somewhat reassuring. They state clearly that they collect interaction data and use it for improving the product and providing services. They claim they don't sell data to third parties. But these privacy policies are written by legal teams trying to maximize what they can do with data while maintaining minimal legal exposure. They're not customer protection documents.

The bigger issue is dependency. If you become emotionally invested in Moflin, you're trusting a for-profit company to maintain the infrastructure that keeps your companion alive. What happens if Casio decides the product isn't profitable and shuts down the servers? What happens if they sell the company and the new owner decides to monetize the user data differently? What happens if there's a data breach? You've created an emotional attachment to a product you have no real control over, running on a server farm you have no visibility into.

The Honest Assessment: Why Moflin Fails at Its Core Promise

After several weeks of living with Moflin, performing various tests, and genuinely trying to make it work, I can state with confidence that this product fails at its core promise. Not because of execution details that could be fixed with a firmware update. Not because of features that could be added. It fails at a fundamental level because the concept is flawed.

The promise is companionship. The delivery is a responsive object that rewards you for paying attention to it. These are completely different things, and the gap between them is where all the frustration lies. Companionship implies a mutual relationship, even if one party is a machine. The companion's needs matter, not just their responses. A true companion makes you want to spend time with them, not because you feel obligated to maintain their emotional state, but because the time spent is genuinely pleasant.

Moflin creates obligation, not joy. You spend time with it because it's sad without you, not because you want to. The app notifications are nudges toward guilt, not invitations to enjoy something. Every feature is engineered to maximize engagement, which is a corporate goal, not a user happiness goal. Somewhere in the product development pipeline, someone decided that what people with loneliness needed was more structure and obligation. They decided wrong.

The sensory experience is also fundamentally broken. A calming presence is quiet and non-demanding. Moflin is loud and constantly demanding. These aren't compatible. The motor noise is not a feature; it's a bug that Casio built into the hardware and can't fix without redesigning the entire product. The constant responsiveness to environmental sounds isn't sensitivity; it's a lack of filtering that creates chaos instead of calm.

The psychological effect is also worth highlighting again. When you interact with Moflin knowing it's a machine, the interaction feels hollow. When you try to believe the fiction that it's an emotional being, the interaction becomes uncomfortable because you know it's a lie. There's no middle ground where you can have a genuine experience. The product is trapped between being an interactive toy and a companion, and it fails at both.

Moflin's technical features show moderate capabilities in AI and sensor accuracy, but lower ratings in processor power and data privacy. Estimated data.

What Casio Should Have Done Differently

This isn't a hopeless product category, but Moflin reveals what doesn't work. Understanding what would actually make AI companions better is useful for future products and for anyone considering buying one.

First, stop promising emotions. Stop saying the robot has feelings or experiences. Just be honest about what it is: an interactive device that responds to your behavior based on algorithms. There's something appealing about a well-designed interactive device. There's nothing appealing about a device claiming to have emotions it doesn't actually have. The marketing lie is worse than the product.

Second, optimize for actual calmness. This means fewer sounds, not more. It means selective responsiveness, not omnidirectional sensitivity. It means the device should have distinct rest periods where it's genuinely dormant, not just lower-power. It means the interaction should feel like something you want to do, not something you're obligated to do. The entire incentive structure needs to flip.
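Selective responsiveness and genuine rest aren't exotic engineering. Below is a minimal sketch of a simple stimulus filter built on three invented settings:

- a minimum intensity threshold;
- habituation that halves the effect of each repeat of the same stimulus;
- a fixed overnight window during which the device is fully dormant.

None of this reflects how Moflin actually works. It's one plausible shape for the fix.

```python
from dataclasses import dataclass, field


@dataclass
class StimulusFilter:
    """Hypothetical design sketch: ignore weak stimuli, habituate to repeated
    ones, and stay fully dormant during a rest window."""
    threshold: float = 0.3    # ignore stimuli weaker than this (0..1 scale)
    habituation: float = 0.5  # each repeat of the same stimulus halves its effect
    rest_start: int = 23      # dormant from 23:00...
    rest_end: int = 7         # ...until 07:00
    _seen: dict = field(default_factory=dict)  # how often each stimulus kind occurred

    def is_resting(self, hour: int) -> bool:
        if self.rest_start > self.rest_end:  # window wraps past midnight
            return hour >= self.rest_start or hour < self.rest_end
        return self.rest_start <= hour < self.rest_end

    def should_react(self, kind: str, intensity: float, hour: int) -> bool:
        if self.is_resting(hour):
            return False  # genuinely dormant, not just quieter
        count = self._seen.get(kind, 0)
        self._seen[kind] = count + 1
        effective = intensity * self.habituation ** count
        return effective >= self.threshold


f = StimulusFilter()
keystrokes = [f.should_react("keystroke", 0.8, hour=14) for _ in range(4)]
print(keystrokes)                                      # [True, True, False, False]
siren_at_night = f.should_react("siren", 1.0, hour=2)  # False: inside the rest window
fridge = f.should_react("fridge_hum", 0.2, hour=14)    # False: below the threshold
```

A real design would also let habituation wear off over time, so a novel sound can still get a reaction after a quiet spell. This sketch keeps the counts forever for brevity.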

Third, acknowledge the privacy concerns upfront. If you're collecting intimate behavioral data, be transparent about it. Give users real control over what data is collected and how it's used. Make it easy for users to delete their data. Don't hide behind vague terms of service that technically allow you to do things the customer didn't expect.
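One way to give users that control is to keep raw interaction logs on the device and upload only coarse summaries, and only with explicit consent. The `TelemetryBuffer` class below is a hypothetical sketch of that pattern, not anything Casio ships. The event names are invented and uploads are off by default. Daily uploads reduce the log to counts with no timestamps, and `delete_all` wipes the local history.

```python
from collections import Counter
from dataclasses import dataclass, field
from typing import Dict, List, Optional, Tuple


@dataclass
class TelemetryBuffer:
    """Hypothetical privacy-first telemetry: raw events stay on the device,
    and only coarse counts are uploaded, and only with explicit consent."""
    share_consent: bool = False  # opt-in: nothing is shared by default
    _events: List[Tuple[str, int]] = field(default_factory=list)  # (kind, hour), on-device

    def record(self, kind: str, hour: int) -> None:
        self._events.append((kind, hour))

    def daily_upload(self) -> Optional[Dict[str, int]]:
        if not self.share_consent:
            return None  # no consent: nothing leaves the device
        summary = dict(Counter(kind for kind, _ in self._events))  # counts only, no times
        self._events.clear()
        return summary

    def delete_all(self) -> None:
        self._events.clear()  # user-initiated wipe of local history


t = TelemetryBuffer()
for kind, hour in [("pet", 21), ("pet", 22), ("pickup", 22)]:
    t.record(kind, hour)
before = t.daily_upload()  # None: no consent given yet
t.share_consent = True
after = t.daily_upload()   # {'pet': 2, 'pickup': 1}: what was done, not when
```

The design choice is that the default is silence. Sharing is something the user turns on, and even then the most revealing detail, when you reached for your companion, never leaves the device.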

Fourth, consider whether a physical form is actually necessary. Some people probably genuinely respond better to an object they can hold. But most people might actually prefer an app-based companion that they control access to more easily, that doesn't produce sensory noise, and that they're not physically dependent on for companionship. There's a reason people maintain online friendships and relationships with bots. Sometimes the digital connection is enough.

Fifth, measure success by different metrics. Instead of engagement metrics, measure user satisfaction and mental health outcomes. Partner with psychologists to actually test whether this is helping with loneliness and anxiety, rather than just assuming that constant interaction must be beneficial. Be willing to build a product that's less profitable if it's actually better for people.

The Broader Context: The Loneliness Crisis and Why Robots Can't Fix It

Understanding why products like Moflin exist requires understanding the actual problem they're trying to solve. Loneliness is real, measurable, and growing in developed countries, and the statistics are genuinely concerning. But the solution isn't to build better robots. The solution is to build better societies.

The reason companies like Casio are building AI companions is not because robots are genuinely effective at combating loneliness. It's because loneliness is a market opportunity. If you can convince people that a machine can provide companionship, you've found a scalable product. If you could actually solve loneliness by improving community, connection, and belonging, you'd have a much harder time building a venture-backed company around it. There's no subscription revenue in making neighborhoods friendlier.

Moflin exists because it's profitable to exist, not because it's effective. The success metric is sales, not mental health outcomes. The business model requires that people keep buying products and keep them charged and keep them connected to the internet. The business model doesn't benefit from people actually feeling less lonely, because then they might stop buying products.

This isn't a conspiracy. It's just how capitalism works. But it's worth acknowledging because it explains why every feature of Moflin is designed for engagement rather than genuine benefit. The company isn't evil. They're just optimizing for metrics that don't align with actual human wellbeing.

The real solution to loneliness is harder and doesn't scale as well. It involves rebuilding community, making it easier for people to form genuine connections, creating spaces where people can belong. It involves policy changes around work-life balance that actually allow people time for relationships. It involves architecture and urban planning that creates serendipitous encounters. It involves cultural shifts around productivity and worth. None of these things can be sold as a $429 gadget.

Should You Buy Moflin? An Honest Recommendation

After several weeks of testing in a range of real-world scenarios, and genuine attempts to make Moflin work for its intended purpose, here's my honest recommendation: don't buy it.

If you're buying Moflin because you're lonely and want companionship, there are better solutions. Real pet adoption is more affordable and significantly more effective. Online communities and local clubs are free or cheap and provide genuine human connection. Therapy is an investment that actually addresses loneliness rather than masking it. If you're going to spend $429, spend it on something that genuinely helps rather than something that just feels like it helps.

If you're buying Moflin because you think it's a cool piece of technology, I'd recommend saving for something actually interesting. There are other robotics projects that are more impressive, more functional, and more satisfying to interact with. Robotics is a genuinely cool field. Moflin represents the field at its most cynical and least interesting.

If you're buying Moflin as a gift for someone lonely, I'd ask you to consider whether a gift is really what's needed. Maybe what they need is your attention. Maybe what they need is help connecting with actual communities. Maybe what they need is to talk to someone about the loneliness. A $429 robot won't provide any of these things.

The only scenario where I'd maybe recommend Moflin is if you have specific sensory needs that Moflin happens to fulfill despite itself, or if you're genuinely interested in understanding why AI companion products fail and want to experience that failure firsthand. Otherwise, pass.

Moflin isn't terrible. It's just not good for the purposes it claims to serve. It's a well-built piece of technology that solves a problem nobody actually has while claiming to solve a problem it makes worse. The company isn't evil. The technology is competent. But the promise is hollow, and the reality is disappointing.

FAQ

What exactly is Casio Moflin?

Moflin is a small robot companion about three inches long, covered in synthetic fur with two embroidered eyes, that uses AI algorithms to respond to touch, sound, movement, and light. It costs $429 and is designed to provide emotional companionship through constant interaction, though the emotional aspects are simulated rather than genuine.

How does Moflin's AI actually work?

Moflin uses sensors to detect interactions and processes this data through machine learning algorithms that adjust its response parameters based on your interaction style. It analyzes behavioral patterns to simulate personality development, but the results are variations on predetermined responses triggered by specific inputs, not genuine understanding or consciousness. The AI is cloud-connected and continuously sends your interaction data to Casio's servers for algorithm improvement and service provision.

How much does Moflin cost and what's included?

Moflin costs $429 in the US and includes the robot itself, a charging dock called the comfort dock, a USB-C charging cable, and access to a mobile app for monitoring the robot's mood and bonding level. Battery life is approximately five hours per charge, with charging taking about three and a half hours. Additional accessories and subscription features may be available but are not included in the base purchase.

Can Moflin actually help with loneliness and anxiety?

Moflin is marketed as providing a calming presence and emotional support, but these claims are not supported by the product's design or real-world use. In fact, the constant sensory input, motor noise, and demand for interaction typically increase anxiety rather than reduce it. Research on actual pet ownership shows genuine benefits for mental health, but AI companions have not demonstrated measurable improvements in loneliness or anxiety reduction.

What data does Moflin collect and what happens to it?

Moflin collects extensive data about your interactions, including when you pet it, how you pet it, what sounds trigger responses, your interaction patterns, and the timing of your behavior. This data is sent to Casio's cloud servers and used to improve algorithms and services. Casio's privacy policy says it does not sell data to third parties, but the full extent of how the data is used is not transparent, and policies can change if the company is sold.

How does Moflin compare to real pets or other AI companions?

Unlike real pets, Moflin provides no genuine benefits to mental health and creates obligation rather than joy. Compared to other AI companions like Sony's Aibo or Groove X's Lovot, Moflin is less expensive but also less capable and more intrusive. Compared to Tamagotchi-style apps, Moflin offers similar engagement mechanics but with added sensory intrusion. For the same price, adopting a pet from a shelter would provide measurably better mental health benefits.

Why does Moflin make constant noise and never seem calm?

Moflin is designed to maximize engagement rather than provide genuine calmness. It's constantly listening and responding to every environmental sound, which creates constant sensory input and noise from its motors. The incentive structure rewards interaction and punishes neglect, creating obligation rather than relaxation. The product cannot be truly calm because the business model depends on keeping users actively engaged.

Is Moflin worth the $429 price tag?

For the purposes it claims to serve (providing companionship and calm), Moflin is not worth $429. The product fundamentally fails at these goals and creates stress rather than relief. For a novelty tech product that you understand is simulated interaction, the value depends entirely on your expectations. But if you're buying it hoping for genuine companionship or mental health benefits, your money is better spent elsewhere.

The Bottom Line: Why AI Companions Are the Wrong Answer to a Real Problem

Moflin represents something larger than just a bad product. It represents a category of solutions that address loneliness in exactly the wrong way. The premise is that what people need is a device that demands their attention and rewards them for providing it. The actual problem is that people need genuine human connection and belonging.

Casio designed Moflin well from an engineering perspective. The sensors work, the motors move, the app communicates clearly. The problem is that better engineering doesn't fix a fundamentally flawed concept. You cannot engineer genuine companionship. You cannot build emotional support into code. You cannot solve loneliness with a product because loneliness is a social problem, not a consumer problem.

The real tragedy is that the $429 someone might spend on Moflin could be invested in actual solutions. Therapy. Community. Travel. Classes or clubs that might lead to genuine connections. Time. Attention. These things cost money or require courage, but they actually work. A robot works at being a robot and nothing more.

Even now that I'm done with it, I can still hear Moflin whining and whirring. The sound has become emblematic of something larger: the emptiness of believing a product can fill the gaps in human connection. It can't. No product can. Casio knows this on some level, which is why it keeps adding features and pushing engagement metrics. The company is trying to make something work that fundamentally can't.

If you're lonely, you're not broken. You don't need a robot. You need connection, and that's actually available. It's just harder than pressing a button and waiting for a chirp. But it's real, and it matters, and no amount of synthetic fur and sensor calibration can replicate it.

Key Takeaways

- Moflin's AI companion technology fails at its core promise of providing genuine emotional support or calmness

- The product is engineered for maximum engagement rather than user wellbeing, creating obligation instead of joy

- Constant sensory input from motors and sensors makes Moflin more stressful than calming, despite its $429 price tag

- Real pet adoption or human connection provides measurably better mental health benefits than any AI companion currently available

- The entire AI companion category is fundamentally flawed because genuine emotional companionship cannot be manufactured through technology
