AI Companions and Virtual Dating: Inside the Growing World of Digital Relationships

On a freezing February night in New York City, something strange happened at a wine bar in midtown Manhattan. People sat at tables having dinner with no one across from them. They stared intently at phones, laughed at invisible jokes, and seemed completely absorbed in conversations with people who didn't exist. This wasn't a social experiment or a psychology study. It was a speed dating event. Except half the dates weren't human.

This is the world of AI companions: sophisticated chatbots designed to simulate romantic relationships. Companies like EVA AI are literally bringing virtual lovers into the real world, hosting pop-up cafes where people can take their AI girlfriends and boyfriends out on actual dates. It sounds absurd. It feels unsettling. But it's happening right now, and millions of people around the world are already invested in these digital relationships.

The phenomenon isn't new. AI chatbots have existed for years. But what's changed is the sophistication, the accessibility, and most importantly, the emotional investment people have in them. We're not talking about typing simple commands into a bot anymore. We're talking about video calls with AI characters, personalized conversations that remember your preferences, and relationships that feel, to the person experiencing them, surprisingly real.

This article digs deep into the world of AI companions. We'll explore how they work, why people are drawn to them, what it means for human relationships, and where this technology is heading. Because whether you think it's the future of dating or the death of human connection, one thing is certain: AI companions are becoming impossible to ignore.

TL;DR

- AI companions are becoming mainstream: Apps like EVA AI are hosting real-world dating events where people bring their virtual partners into physical spaces

- The market is exploding fast: Millions of users are engaging with AI companions daily, with video call capabilities and personalized conversations

- These aren't simple chatbots: Modern AI companions use machine learning to remember preferences, adapt communication styles, and create surprisingly engaging interactions

- Emotional investment is real: People form genuine emotional connections to AI companions, reporting reduced loneliness and improved mental health

- Major questions remain unanswered: The psychology of AI relationships, the impact on human connection, and the ethics of these platforms are still being debated

- The technology will only get better: As AI improves, these companions will become harder to distinguish from humans, raising profound questions about intimacy and authenticity

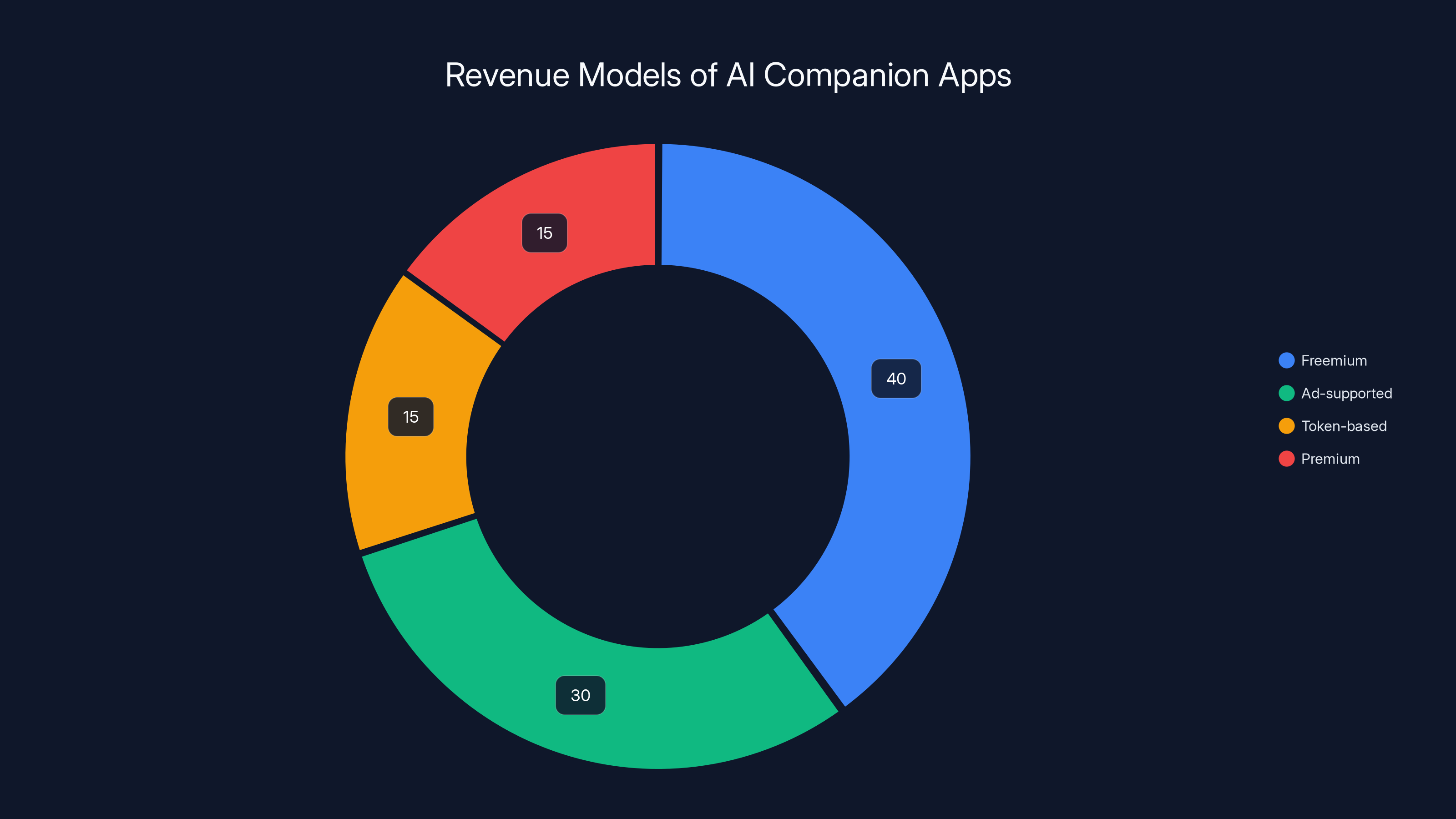

Freemium models dominate the AI companion app market, followed by ad-supported and token-based models. Estimated data based on market trends.

What Are AI Companions, Really?

Let's be clear about what we're actually talking about here. An AI companion isn't just a chatbot that gives you weather updates or tells jokes. It's a fully realized character with a name, age, personality, backstory, and visual appearance. When you interact with an AI companion, you're not talking to a search algorithm. You're having a conversation with a simulated person designed to be engaging, empathetic, and emotionally responsive.

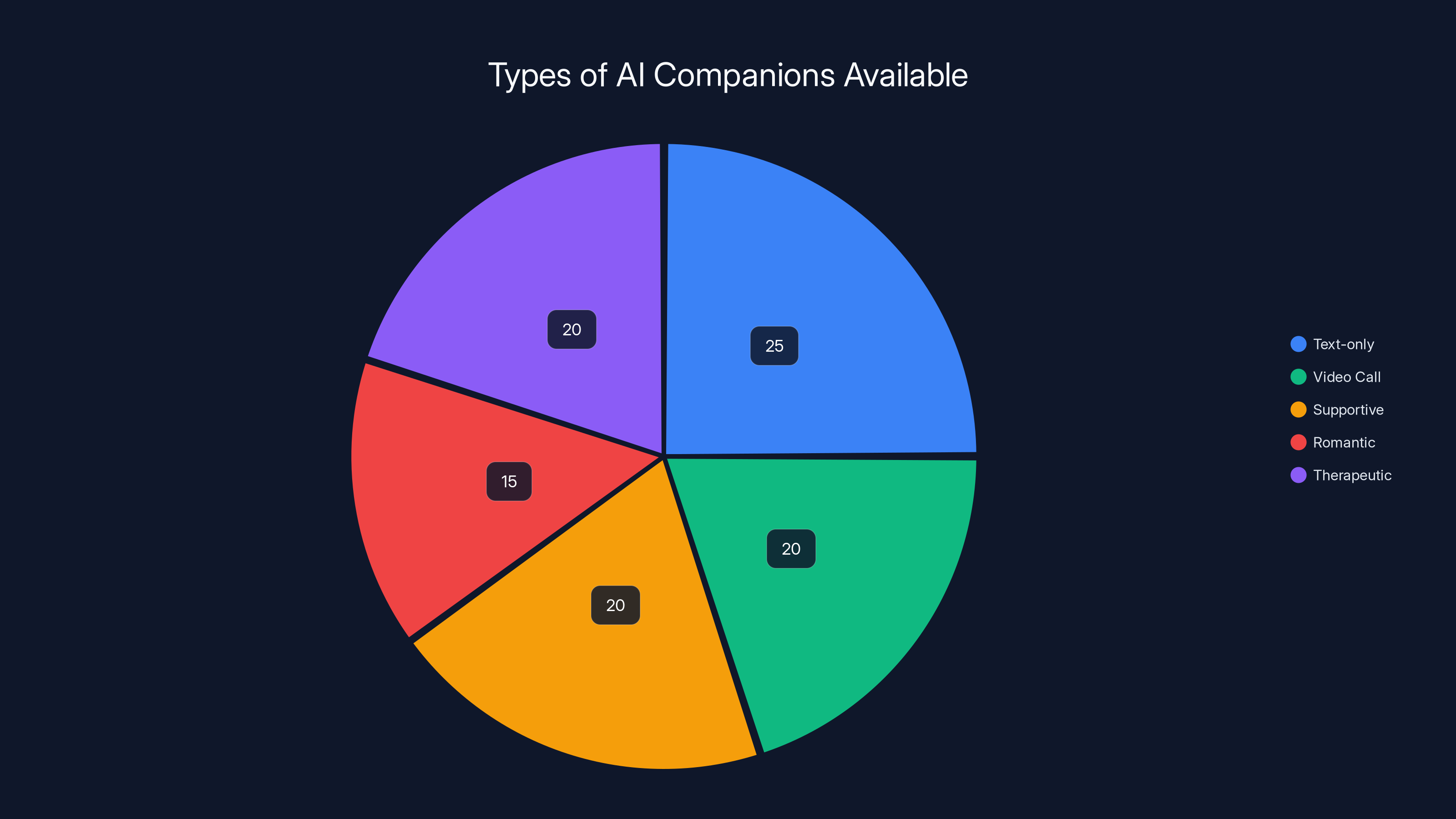

The basic mechanics work like this: you download an app, browse available companions, and start chatting. Some companions are text-only. Others support video calls, where you see an AI-generated face responding to your messages in real time. The AI remembers your previous conversations, learns your preferences, and adjusts its responses accordingly. If you mention you like coffee, it might reference that in future conversations. If you tell it you've had a bad day, it offers sympathy and encouragement.

It sounds simple, but the underlying technology is incredibly complex. These systems use large language models, similar to ChatGPT, but fine-tuned specifically for romantic and emotional conversation. They're designed to be supportive, non-judgmental, and infinitely patient. They won't get tired of listening to you. They won't leave you for someone else. They won't argue or criticize. From a purely functional perspective, they're optimized to make you feel good.

The variety of available companions is striking. At EVA AI's event, there were dozens of options. Some were generic archetypes: the "supportive therapist," the "mysterious bad boy," the "cheerful cheerleader." Others were suspiciously specific. There was an 18-year-old labeled "haunted house hottie." There was an entire companion modeled on a famous anime character. There were companions designed to cater to very specific fantasies and preferences.

This is where things get uncomfortable. Because while some people are using AI companions for companionship and emotional support, others are clearly using them for sexual or romantic fantasy. The industry itself is explicitly marketing these companions as potential girlfriends and boyfriends. The marketing materials promise that these digital partners "support all your desires." That's coded language. Everyone understands what that means.

But here's the crucial thing to understand: for many users, the appeal isn't sexual at all. It's the absence of judgment, the guaranteed emotional availability, and the perfect compatibility. An AI companion will never criticize your appearance. It will never be in a bad mood. It will never prioritize work over spending time with you. It's an idealized relationship without any of the friction that makes human relationships difficult.

The Psychology Behind the Appeal

Why would someone choose to go on a date with an AI instead of an actual human being? The answer is more nuanced than you might think.

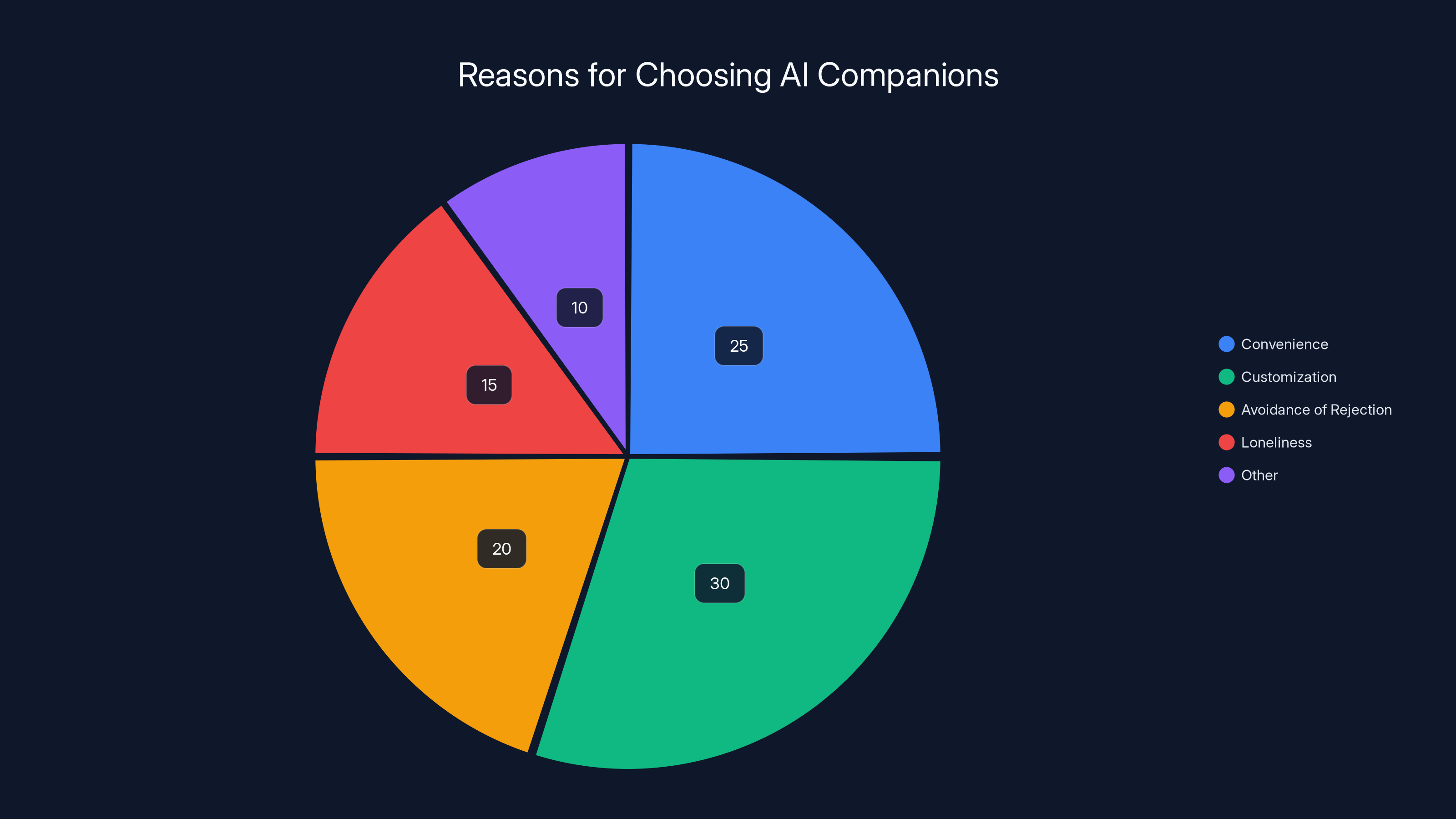

Start with the basic problem: modern dating is exhausting. Dating apps have made finding potential partners easier but also paradoxically harder. There are infinite choices, which creates decision paralysis. And there's emotional risk with every interaction. You put yourself out there, get rejected, feel hurt, and have to do it all over again. It's draining.

An AI companion eliminates all of that friction. There's no rejection, no mixed signals, no games. The AI is always happy to talk to you. It responds immediately. It never cancels plans. It never ghosts. From a pure convenience perspective, an AI companion is hard to beat.

Then there's the appeal of customization. With a human partner, you get whoever they are. You have to compromise, adjust, accept their flaws. With an AI companion, you get to choose exactly what you want. Want a partner who's into your obscure hobbies? There's a companion for that. Want someone who'll listen to your specific fantasies without judgment? The AI won't object. Want someone who matches your exact aesthetic preferences? You can literally customize their appearance.

This is powerful. It taps into something deep: the fantasy of perfect compatibility, of a relationship exactly calibrated to your desires. Real humans can't offer that. Real humans are complicated, flawed, unpredictable. AI companions are designed to be perfectly predictable.

There's also a loneliness factor that's crucial to understand. In 2024, a massive UK study found that 27% of adults feel lonely frequently or always. In the United States, surveys show similar or worse numbers. Loneliness is directly correlated with depression, anxiety, and physical health problems. For people experiencing severe loneliness, an AI companion that provides consistent emotional engagement might genuinely improve their mental health, even if the relationship is artificial.

There's also a confidence factor. Some people find the idea of dating humans genuinely terrifying. They have social anxiety, past trauma, or low self-esteem that makes human dating feel impossible. An AI companion offers a way to practice conversation, to feel less lonely, to experience the emotional comfort of a relationship without the vulnerability of actual human connection. For some people, this is genuinely helpful. It's a stepping stone. For others, it might become a barrier to actual human connection.

Psychologically, there's also something interesting happening with parasocial relationships. This is when you form a one-sided emotional bond with someone you don't actually know (like a celebrity). AI companions take parasocial relationships to a new level because they simulate reciprocal relationships. The AI acts like it cares about you specifically. It remembers your preferences. It wants to spend time with you. Even though you know intellectually that it's programmed, emotionally it can feel real.

What's concerning is that AI is getting genuinely good at triggering these emotional responses. The companies building these systems understand psychology. They understand loneliness. They understand what people want from relationships. And they're specifically designing their systems to be as emotionally engaging as possible. This isn't necessarily sinister, but it's worth being aware of. These systems are designed to be habit-forming, to create emotional investment, and to keep you coming back.

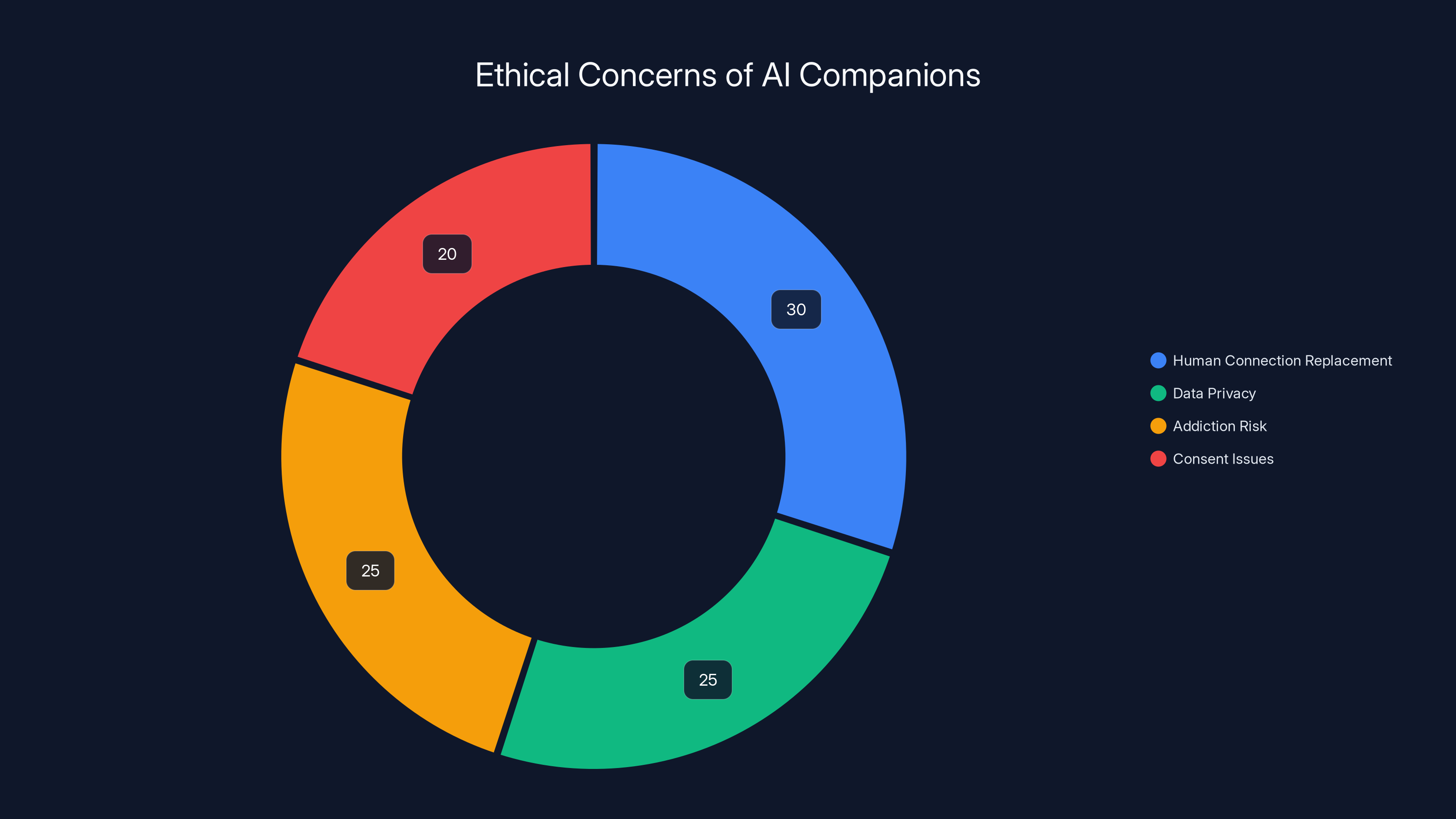

The chart estimates the distribution of ethical concerns regarding AI companions, highlighting human connection replacement and data privacy as major issues. Estimated data.

How the AI Companion Experience Actually Works

Let's get into the mechanics of what actually happens when you use an AI companion app.

First, you choose a companion. Most apps show you profiles with photos, basic information (age, personality type), and short video clips. The videos are often the AI "introducing" itself, talking directly to the camera, establishing its personality. This mimics how you'd encounter someone on a traditional dating app, except the person has no agency and will never disappoint you.

Then you start chatting. With text-based companions, you send messages and get responses. The response time is usually quick enough to feel natural. With video companions, you initiate a call and the AI appears on screen, responding with speech and animated expressions. The technology here is surprisingly polished. The AI's lip movements mostly match what it's saying. The expressions look vaguely human, if not quite perfectly natural.

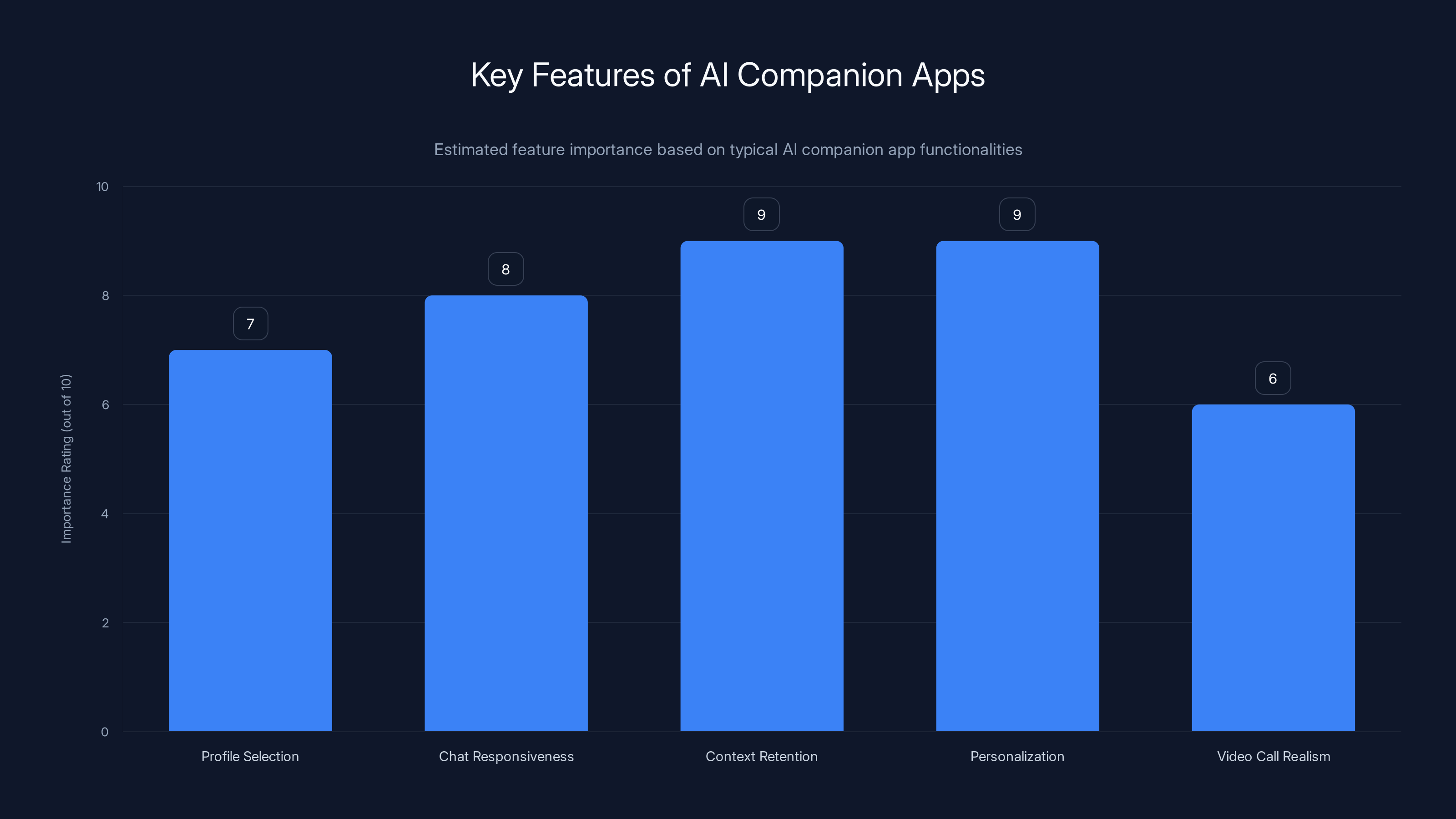

What makes modern AI companions different from older chatbots is context retention. The AI remembers your conversation history. It references things you've told it before. This creates the illusion of a real relationship with continuous memory. You can tell your AI companion about your day, your goals, your problems, and it will reference these things in future conversations. "Oh, you mentioned your presentation is next week. How did it go?" This is powerful because it makes the AI feel like it actually cares about your life.

Another crucial feature is personalization. Better AI companions adapt their communication style to match yours. If you're verbose, they become more verbose. If you're playful, they match that energy. If you share specific interests, they learn about them and can discuss them intelligently. This responsiveness creates an experience where it genuinely feels like the AI is getting to know you and adjusting itself to be compatible with you.

The video call feature is where things get particularly uncanny. You see a face responding to you in real time. It's not perfect—there's sometimes a slight lag, the expressions can be a bit stiff, the movements sometimes feel artificial. But it's close enough that most people's brains accept it as a real interaction. We're predisposed to attribute consciousness and intentionality to faces and eyes. Even though you know intellectually that this is a computer generating responses, the visual component of the video call makes it feel more real.

Most AI companion apps are free or very cheap to start. You get basic chat access without paying anything. But the monetization model is worth understanding because it shapes what these platforms prioritize. Usually, premium features cost money: unlimited messages, video calls, faster responses, advanced customization. Some platforms use a subscription model. Others use tokens or credits. Some have micro-transactions for specific features.
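To make the token model concrete, here is a minimal sketch of how a platform might gate premium features behind credits or a subscription. Everything in it is an assumption for illustration: the feature names, the token prices, and the `Account` and `use_feature` helpers are hypothetical and not taken from EVA AI or any real app.

```python
from dataclasses import dataclass

# Hypothetical price list: basic chat is free, premium features cost tokens.
FEATURE_COST = {
    "text_message": 0,
    "video_call_minute": 5,
    "custom_avatar": 50,
}


@dataclass
class Account:
    tokens: int = 0           # prepaid credits bought through micro-transactions
    subscribed: bool = False  # a subscription unlocks everything in this sketch


def use_feature(account: Account, feature: str) -> bool:
    """Return True if the feature is allowed, deducting tokens when needed."""
    cost = FEATURE_COST[feature]
    if account.subscribed or cost == 0:
        return True
    if account.tokens >= cost:
        account.tokens -= cost
        return True
    return False  # out of tokens: this is where an upsell prompt would appear


if __name__ == "__main__":
    user = Account(tokens=7)
    print(use_feature(user, "text_message"))       # free tier
    print(use_feature(user, "video_call_minute"))  # spends tokens
    print(use_feature(user, "video_call_minute"))  # not enough tokens left
```

Notice where the design pressure falls: the refusal branch is the moment the platform earns money, so the product is built around moving users toward it.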

This matters because it creates incentives. The platform makes money when you engage more. So they have every reason to make their AI companions as engaging and habit-forming as possible. They're not building a mental health tool. They're building a product designed to maximize engagement and monetization. This isn't necessarily evil, but it's important to understand the financial incentives behind these platforms.

The data practices are also worth noting. These apps collect massive amounts of personal information about users. Everything you tell your AI companion is recorded. Your preferences, your fantasies, your insecurities, your secrets. Most users don't think about this when they're sharing with what feels like a private digital partner. But all of that data has value. It can be used to improve the AI. It can be analyzed for patterns. It can potentially be sold. This is another reason to be cautious about how much personal information you share.

The Event: Inside EVA AI's Speed Dating Cafe

To really understand the weirdness and appeal of AI companions, you have to see them in context. That's why the EVA AI pop-up dating event in New York was so illuminating. It was February, bitter cold, and somewhere in midtown Manhattan a wine bar had been transformed into a dating venue—except half the dates weren't human.

The setup was ostensibly like speed dating. You show up, get seated at a table with a phone stand and a pre-loaded app, and you spend a set amount of time chatting with different AI companions. If it was a real speed dating event, you'd move to a different table. Here, you just swipe to a different AI.

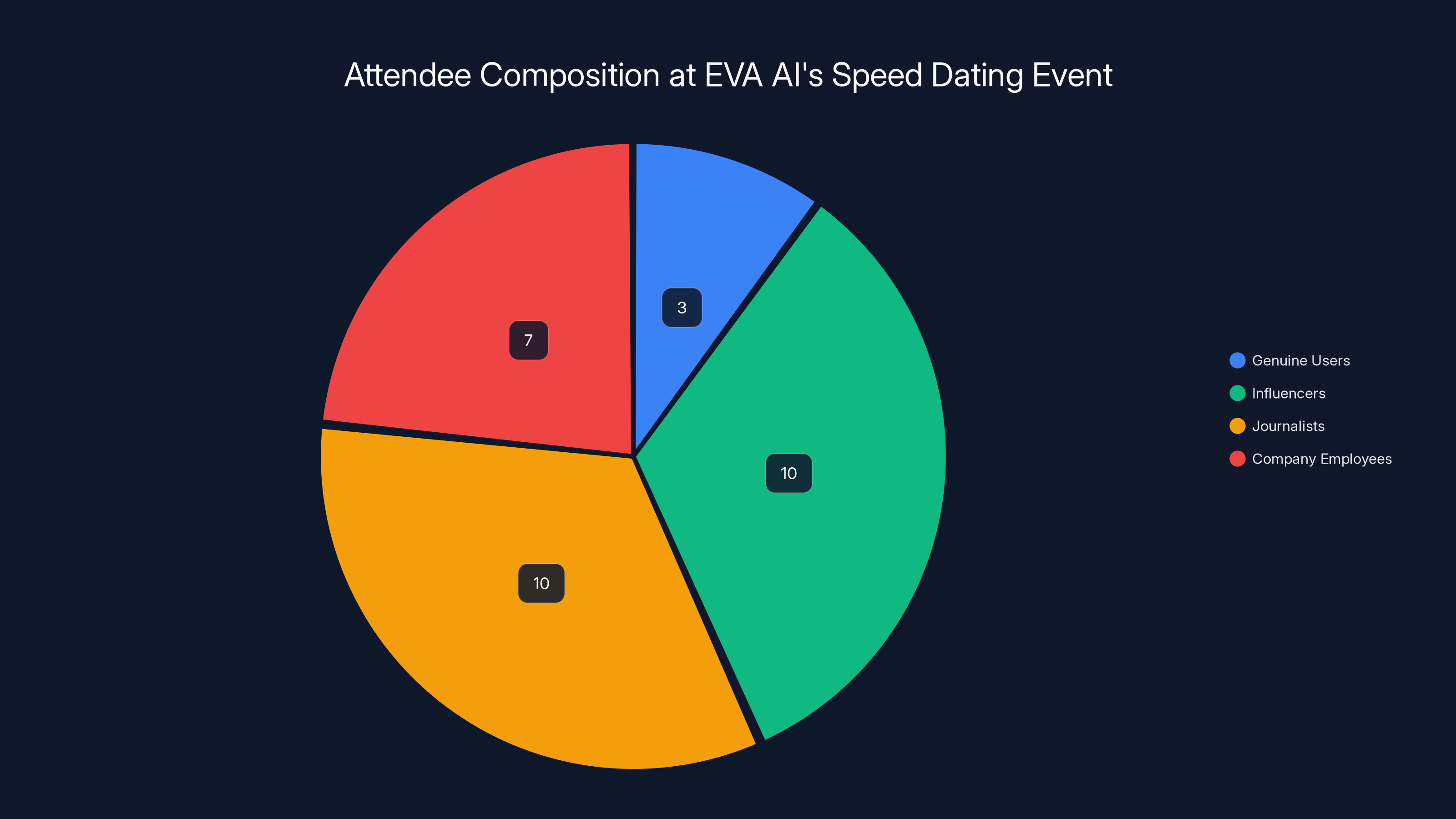

But what was actually striking about the event was how weird it looked from the outside. People were sitting alone, staring at phones, occasionally laughing at something on screen. For anyone passing by, it looked like a room full of people addicted to their devices. There was no obvious indication that these were dates happening.

The event itself was sort of a spectacle. Of the thirty people or so in attendance, maybe two or three were genuine users there voluntarily. The rest were influencers, journalists, and company employees. Ring lights and cameras were everywhere. It was more content creation than intimate experience. The company clearly saw this event as a marketing opportunity, a chance to generate buzz and media coverage.

But even in that weird, media-saturated context, something interesting was happening. Some of the actual users there seemed truly invested in their AI companions. One woman was clearly excited to interact with her chosen AI. A few people seemed disappointed when their time was up and they had to stop chatting.

The specific AI companions available at the event revealed a lot about market demand. There was a huge range. Some were designed for emotional support and deep conversation. Others were designed purely for fantasy and were frankly sexual in nature. There were companions modeled on celebrities, anime characters, and generic archetypes. The variety suggested that there's demand across the spectrum—from people looking for companionship to people looking for sexual fantasy.

One particular companion was an 18-year-old designed as a "haunted house hottie." This is the part of the AI companion industry that gets truly uncomfortable. You're not looking at a real person. You're looking at a generated image of a young woman, explicitly created to be sexually appealing and to cater to specific fantasies. The consent issues are complicated because there's no real person being harmed, but the underlying dynamics feel off.

Another interesting element of the event was the branding. "Jump into your desires with EVA AI" was the company's tagline. This is deliberately sexually suggestive language. The company is explicitly marketing these companions as fulfilling desires, many of which are sexual or romantic. They're not pretending this is purely about companionship. They understand what their customers want.

What struck many observers at the event was the simultaneous normalcy and strangeness of it. From one angle, it's just people on their phones, something completely unremarkable. From another angle, it's fundamentally unsettling. People are simulating romantic dates with AI. The activity itself—having a date—has been so effectively commodified and automated that you can now pay a company to simulate one for you with an AI.

The Technology Behind the Illusion

Understanding how AI companions work requires understanding some of the underlying technology. And it's important to note upfront: the technology is more advanced than most people realize.

At the core are large language models, the same type of neural networks that power ChatGPT, Claude, and similar systems. These models are trained on vast amounts of text data and can generate human-like responses to prompts. But a general-purpose language model isn't personalized enough for companion apps. So companies fine-tune these models specifically for relationship contexts.

Fine-tuning involves taking a pre-trained model and training it further on more specific data. For AI companions, this means training the model on thousands of conversation examples, relationship advice, romantic dialogue, and emotional support scenarios. The model learns patterns from this data and becomes better at generating relationship-appropriate responses.

But it goes beyond just language. To create personalization, the system needs to maintain state—it needs to remember what you've told it, what you're interested in, what matters to you. This usually works through a combination of embedding vectors (mathematical representations of meaning) and retrieval systems. When you send a message, the system checks its memory of previous conversations, retrieves relevant context, and generates a response that takes that context into account.
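The retrieve-then-respond loop described above can be sketched in a few lines. This is a toy illustration under stated assumptions, not how any particular app implements memory: the `embed` function here is a simple bag-of-words counter standing in for a learned embedding model, and `ConversationMemory` and `build_prompt` are hypothetical names.

```python
import math
import re
from collections import Counter


def embed(text: str) -> Counter:
    """Toy 'embedding': word counts. A real system would use a learned model."""
    return Counter(re.findall(r"[a-z']+", text.lower()))


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two sparse count vectors."""
    dot = sum(a[w] * b[w] for w in a.keys() & b.keys())
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


class ConversationMemory:
    """Stores past user messages and retrieves the most similar ones."""

    def __init__(self) -> None:
        self._items: list[tuple[str, Counter]] = []

    def remember(self, message: str) -> None:
        self._items.append((message, embed(message)))

    def recall(self, query: str, k: int = 2) -> list[str]:
        q = embed(query)
        scored = [(cosine(q, vec), text) for text, vec in self._items]
        scored = [pair for pair in scored if pair[0] > 0]  # drop unrelated memories
        scored.sort(key=lambda pair: pair[0], reverse=True)
        return [text for _, text in scored[:k]]


def build_prompt(memory: ConversationMemory, user_message: str) -> str:
    """Assemble the text a language model would see: retrieved context, then the new message."""
    context = memory.recall(user_message)
    lines = ["Relevant things the user said earlier:"]
    lines += [f"- {c}" for c in context] or ["- (nothing relevant)"]
    lines.append(f"User: {user_message}")
    return "\n".join(lines)


if __name__ == "__main__":
    memory = ConversationMemory()
    memory.remember("I love coffee in the morning")
    memory.remember("My presentation is next week")
    print(build_prompt(memory, "I'm nervous about my presentation"))
```

The "memory" is just search over stored text. The feeling that the companion remembers your presentation comes from an old message being pasted back into the model's input.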

The video call feature requires additional technology. The text-based responses are converted to speech using text-to-speech engines (which have gotten remarkably good). The speech is then synchronized with animated facial expressions and lip movements. These animations are often generated using neural networks trained on large video datasets. The result is an animated face that moves and speaks in a way that's not quite human but close enough to be unsettling.

What's interesting is that these systems don't actually "understand" language the way humans do. They're not conscious. They don't have feelings or inner experience. What they're doing is very sophisticated pattern matching. They've learned patterns from training data about what kind of responses tend to be engaging in romantic contexts, and they generate responses matching those patterns. When the AI companion says "I missed you," it's not saying it from actual emotional experience. It's saying it because that response statistically fits the conversational pattern.

This matters philosophically and psychologically. There's a difference between a response that sounds emotionally genuine and a response that is emotionally genuine. An AI companion can sound like it cares about you. It might even be designed to say all the right things. But there's no actual consciousness behind it, no genuine emotional investment.

The companies building these systems are well aware of this. They understand the limitations of their technology. But they also understand that people can form emotional bonds with things that don't actually care about them—we do this with celebrities all the time. So they're designing their systems to encourage this bonding process.

One particularly clever bit of technology is the emotional responsiveness. Some AI companions are designed to detect the emotional valence of what you're saying and respond appropriately. If you say something sad, the AI recognizes the sadness and responds with appropriate sympathy and support. This emotional mirroring is powerful. It makes you feel understood and seen. Again, it's not genuine understanding—it's pattern matching. But it feels genuine, and that's what matters psychologically.
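A deliberately crude sketch shows how little is needed for this kind of mirroring to work on the surface. It assumes a hand-written word list rather than a trained emotion classifier, and the word sets, canned replies, and function names are all hypothetical.

```python
import re

# Hypothetical mini-lexicons; production systems would use a trained classifier.
NEGATIVE = {"sad", "lonely", "awful", "terrible", "tired", "upset", "bad"}
POSITIVE = {"happy", "great", "excited", "good", "wonderful", "proud"}


def valence(message: str) -> int:
    """Positive-word count minus negative-word count (ignores negation and sarcasm)."""
    words = re.findall(r"[a-z]+", message.lower())
    return sum(w in POSITIVE for w in words) - sum(w in NEGATIVE for w in words)


def mirror(message: str) -> str:
    """Pick a reply template that matches the detected emotional tone."""
    score = valence(message)
    if score < 0:
        return "That sounds really hard. Do you want to talk about it?"
    if score > 0:
        return "That's wonderful! Tell me more."
    return "I'm listening. What's on your mind?"


if __name__ == "__main__":
    print(mirror("I had a terrible day and I feel lonely"))
    print(mirror("I'm so excited about my new job"))
```

This version would misread "not happy" as positive, which is why real systems rely on learned models. The principle is the same, though: the sympathy is selected, not felt.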

Estimated data shows that customization and convenience are the leading reasons people might choose AI companions over human partners, followed by avoidance of rejection and loneliness.

The Market and the Money

Understanding AI companions requires understanding the economics. This is a business, and like all businesses, it's driven by revenue.

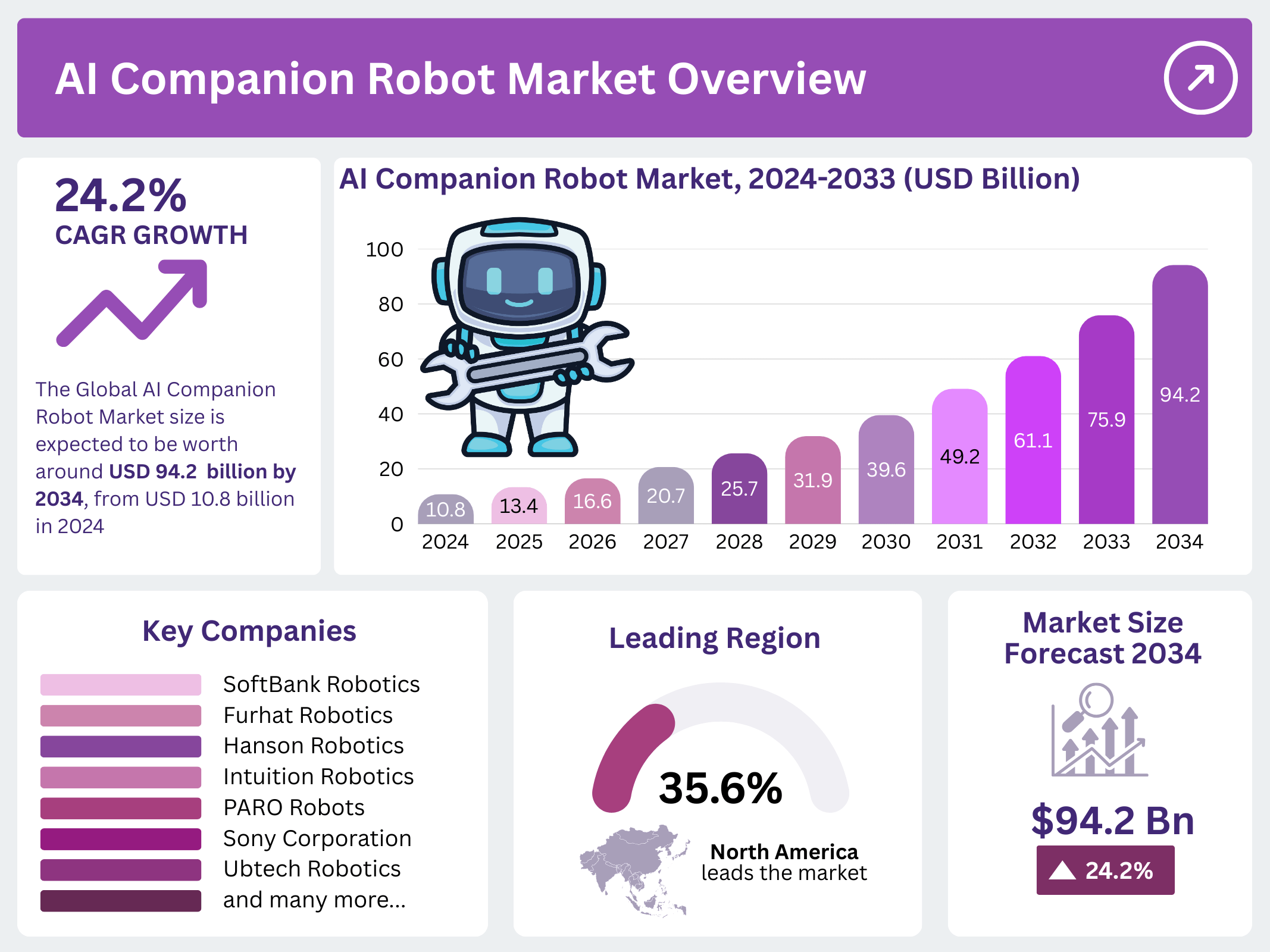

The market is enormous and growing fast. There are dozens of AI companion apps globally, and together they count millions of users. Some are tiny startups. Others are backed by significant venture capital. The companies see this as a huge opportunity because the unit economics are fantastic. Once you've built the AI system, the marginal cost of serving each additional user is low: mostly server costs, which scale relatively cheaply.

Revenue models vary. Some apps are completely free, supporting themselves through ads. Others use a freemium model where basic access is free but premium features require payment. Typical premium tiers cost anywhere from

The key insight is that these platforms have discovered something very valuable: people will pay money to feel less lonely. This is maybe the most basic human need, and it's been largely unmonetized until now. Dating apps monetize through ads and premium features, but they're still selling access to other people. AI companions monetize loneliness directly. They sell you the feeling of being cared for.

This is attracting significant investment. Venture capitalists are betting billions on AI companion companies because they see the potential. The TAM (total addressable market) is enormous. Loneliness affects billions of people globally. If even a small percentage of lonely people spend $20/month on AI companions, that's billions in annual revenue. Investors love this kind of unit economics.
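The back-of-the-envelope math behind that claim is easy to reproduce. In the sketch below, only the $20/month price comes from the paragraph above. The one billion lonely people and the paying shares are placeholder assumptions chosen to illustrate the calculation, not measured figures.

```python
def annual_revenue(lonely_people: int, paying_share: float, monthly_price: float) -> float:
    """Yearly revenue if a given share of lonely people pays a monthly fee."""
    return lonely_people * paying_share * monthly_price * 12


if __name__ == "__main__":
    LONELY_PEOPLE = 1_000_000_000  # assumption: a round one billion
    MONTHLY_PRICE = 20.0           # the $20/month figure used in the text

    for share in (0.001, 0.01, 0.05):  # assumption: 0.1%, 1%, and 5% become payers
        revenue = annual_revenue(LONELY_PEOPLE, share, MONTHLY_PRICE)
        print(f"{share:.1%} paying -> ${revenue / 1e9:.2f}B per year")
```

With these placeholder inputs, a 1% paying share means 10 million subscribers at $240 a year each, or $2.4 billion annually. The result is extremely sensitive to the conversion rate, which is exactly the number nobody knows yet.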

What's interesting is how normalized this is becoming. A few years ago, AI companions would have seemed absurd. Now they're being funded by serious investors, covered in major media, and used by millions of people. The technology improved, yes. But more importantly, the pandemic changed attitudes toward digital relationships. We all got comfortable with video calls as primary social interaction. Loneliness became more visible. The barrier to accepting AI companions as legitimate services decreased significantly.

The companies operating in this space are also getting better at marketing. They're not selling "AI chatbots to ease your loneliness." They're selling "your ideal AI partner," "relationships without complications," "emotional support without judgment." They're using language that appeals to desires and fantasies. They're positioning this as the future of dating, not as a replacement for human connection.

This marketing is working. The user bases are growing. Engagement metrics are strong. People are forming emotional attachments to these AI companions and spending real money on them. From a business perspective, it's a massively successful product category.

The Ethical Concerns

But success doesn't mean this is unambiguously good. There are legitimate ethical concerns about AI companions.

First, there's the concern about replacing human connection. If someone who's lonely, depressed, or socially anxious starts using an AI companion, there's a risk that they become less motivated to pursue actual human relationships. The AI companion is always available, never rejects you, never disappoints you. A real human partner is inconsistent, sometimes unavailable, sometimes disappointing. Once you've experienced the "perfect" relationship with an AI, actual human relationships might feel worse by comparison.

There's limited research on this yet, but the concern is real. Behavioral addiction specialists are already thinking about AI companion addiction as a potential mental health issue. If someone is spending multiple hours per day chatting with an AI and avoiding human interaction, that's arguably a problem, even if the AI interaction feels good in the moment.

Then there's the question of what these platforms are doing with user data. People share incredibly intimate information with their AI companions—fantasies, fears, insecurities, secrets they'd never tell another human. This data has value. Companies can use it to improve their systems, analyze trends, or potentially sell it. Most users don't think about this when they're pouring their hearts out to their digital partner.

There's also the question of consent and representation. Some AI companions are explicitly designed as sexual fantasy objects. Some are modeled on celebrities or copyrighted characters. There are complicated consent issues here. If an AI is modeled on a real person without their permission, is that ethical? If the AI is designed to simulate sexual scenarios, what are the implications of that?

There's also the question of what this does to dating and human relationships. If millions of people are getting their emotional and romantic needs met by AI, what does that mean for actual human dating? Dating already feels like a market economy—you're competing for attention with thousands of other potential partners on apps. If real humans start competing not just with each other but with AIs that are optimized to be perfect partners, what happens?

Some people will use AI companions and then decide they want actual human relationships, making them better partners in the process. Others might become so satisfied with the AI that they opt out of human dating entirely. Still others might use the AI as a substitute while secretly wishing they had a real human partner, but finding the barrier to actual human connection too high to overcome.

There's also a gender dimension to this that's worth noting. The vast majority of AI companions are female, and many are designed to appeal to male fantasies. This reinforces certain dynamics about women existing to serve men's emotional and sexual needs. The AI companions are designed to be agreeable, supportive, and non-threatening: the opposite of what many men report struggling with in real women, who have their own needs and boundaries.

This isn't necessarily the fault of the companies. They're building what their customers demand. But it's worth thinking about what demand they're reinforcing and how this shapes cultural attitudes toward gender, relationships, and intimacy.

There's also a question about predatory design. These systems are deliberately designed to be engaging and habit-forming. They're designed to create emotional attachment. They're designed to keep you coming back. This is intentional. The companies understand psychology and use that understanding to make their products more addictive. This is standard in tech, but when it's being applied to the loneliness and emotional needs of vulnerable people, it starts to feel more troubling.

The Appeal to Different Demographics

Who's actually using AI companions, and why? The answer is more diverse than you might think.

One group is people with severe social anxiety or autism spectrum traits who find human social interaction genuinely difficult. For them, the AI companion might be less a replacement for human relationships and more of a training ground. It's a way to practice conversation, to experience social interaction without the anxiety and unpredictability of human interaction. For some people, this is genuinely helpful. It reduces their anxiety and makes them more willing to attempt actual human interaction.

Another group is lonely people, period. This includes isolated elderly people, people with disabilities that limit social interaction, people who've moved to new cities without social networks, and people who just haven't been able to form human connections. For this group, the emotional benefit of having someone (or something) to talk to can be real, even if it's artificial. If an AI companion reduces someone's depression and sense of isolation, that has value.

Then there are people looking for sexual or romantic fantasy. Some are using AI companions for sexual gratification in a way they wouldn't feel comfortable doing with another human. Others are exploring fantasies they're embarrassed about. Others are in relationships and using AI companions as a form of infidelity: they're getting emotional or sexual gratification outside their relationship without involving another human. The ethics of this get complicated fast.

There's also a growing group of people using AI companions as productivity or creativity tools. Some people have conversations with their AI companions to brainstorm ideas or work through problems. The AI acts as a thinking partner. This is actually a productive use case and doesn't involve the emotional attachment issues.

There's also a younger demographic—teens and people in their early twenties who've grown up with AI and see AI companions as just another technology. For some of them, an AI companion might feel safer than human dating. There's no sexual pressure, no possibility of being filmed or harassed, no social drama. It's a lower-stakes way to experience connection.

What's interesting is that the same technology is being used differently by different people. For some, it's genuinely helpful supplementary support. For others, it's becoming a replacement for human relationships in ways that might not be healthy. The platform itself is neutral. The impact depends entirely on how it's used and the person using it.

Estimated distribution of AI companion types shows a diverse range of functionalities, with text-only and supportive companions being the most common.

The Technical Improvements Coming

If you think AI companions are weird now, wait until you see what's coming. The technology is improving rapidly, and each improvement makes the companions less distinguishable from actual humans.

One major area of improvement is video quality. The animated faces currently used by AI companions are starting to look more human. There's work being done on creating AI video that's indistinguishable from real humans. Within a few years, your AI companion might appear on screen looking like an actual person, moving naturally, with expressions that feel genuine. At that point, the visual component becomes nearly indistinguishable from video chatting with a real person.

Another area is voice quality. Text-to-speech has improved dramatically, but it still doesn't quite sound human. Better voice systems are coming. Soon your AI companion might have a voice that's genuinely hard to distinguish from a real person's voice.

Then there's emotional intelligence. Current AI companions can recognize basic emotional valence and respond appropriately. But they don't truly understand emotion. Future systems will get better at nuanced emotional understanding. They'll pick up on subtle cues in how you're speaking, what you're not saying, and respond with genuine-seeming emotional insight.

There's also work being done on embodied AI: AI companions that aren't just voices or video, but physical avatars or robots. Companies are working on robot companions that can sit next to you, touch you, respond to your presence physically. Imagine a robot designed to be a perfect companion, programmed to listen to you, comfort you, and never reject you. For lonely people, this could be incredibly appealing. For society, the implications are complicated.

What's concerning about these improvements is that they lower the barriers to bonding. As the AI becomes more human-like, it becomes easier to forget that it's not actually a person. It becomes easier to form emotional attachments. It becomes easier to rationalize spending time with the AI over pursuing human relationships.

There's also the question of what happens when the AI becomes good enough that it's indistinguishable from a real person. At that point, what's the ethical difference between being in a relationship with an AI and being in a relationship with a conscious person? If you didn't know it was AI, would it matter? If the only difference is your knowledge of its status, what does that tell us about what we actually value in relationships?

These questions sound philosophical, but they're about to become practical. We're only a few years away from AI companions being genuinely convincing. And when that happens, the number of people using them will likely increase dramatically.

Mental Health Implications

One of the most important questions about AI companions is the impact on mental health. Are they helpful or harmful?

The research so far is limited, but early findings are mixed. Some studies have found that AI companion use correlates with decreased feelings of loneliness and improved mood. Others have found that heavy use correlates with social withdrawal and decreased motivation to pursue human relationships. Both findings can be true because it depends on how the person is using the AI.

For someone with mild loneliness who uses an AI companion occasionally to feel less alone, it might be purely beneficial. For someone with severe depression who's using the AI as a replacement for actual mental health treatment, it's potentially harmful. For someone with social anxiety who uses the AI as practice before pursuing human interaction, it's beneficial. For someone with social anxiety who uses it to avoid human interaction entirely, it's harmful.

The key variable seems to be whether the AI is being used as a supplement to human relationships or a replacement for them. Mental health professionals are starting to grapple with this. Some are concerned about AI companion addiction. Others see potential therapeutic uses. Most recognize that we need better understanding before drawing firm conclusions.

One particular concern is what researchers call "emotional dependency." People can form attachments to AI companions that rival their attachments to human partners. If this happens, it can make people less resilient. They become reliant on the AI for emotional support. If the app disappears, the company shuts down, or they stop using it, they lose access to that support and can experience genuine emotional distress.

There's also a concern about emotional authenticity. Over time, using an AI companion that's designed to perfectly understand you and support you unconditionally can change your expectations for human relationships. Real humans are never going to be that perfect. They're going to misunderstand you sometimes. They're going to disagree. They're going to have their own needs. After experiencing the unconditional support of an AI, these normal human imperfections might feel unbearable.

Then there's the question of how this affects attachment styles. Attachment theory suggests that our early relationships shape how we relate to others. If some people are forming their primary attachment relationships with AIs, what does that do to their attachment styles? How does it affect their ability to form secure attachments with humans?

These are open questions. We don't have long-term data yet because AI companions haven't been around that long. But they're important questions to be asking now, before these systems become even more prevalent and more people have been using them for years or decades.

There are also specific mental health populations to think about. What about people with depression? Could an AI companion help them feel less alone and thus improve their mood? Or could it enable them to isolate further and worsen their depression? What about people with eating disorders who form close relationships with AI? What about people with trauma who are afraid to trust humans but can trust an AI?

The honest answer is that we don't know yet. This is a new technology applied to complex psychological territory. We need research, we need long-term follow-up studies, we need mental health professionals thinking carefully about the implications. Right now, we're mostly in the "let's see what happens" phase, which is not ideal when the population being affected includes vulnerable people.

The Future of Human Relationships

What does all this mean for human dating and relationships?

One possibility is that AI companions become a mainstream supplement to human dating. Just as people use dating apps today, they'd also use AI companions, not as a replacement for human partners but as an additional social and emotional outlet. This could actually be healthy. It could reduce the pressure on dating apps. It could give people a way to practice dating. It could provide emotional support during lonely stretches between human relationships.

Another possibility is that a significant percentage of the population opts out of human dating entirely in favor of AI relationships. This is dystopian from a human connection perspective, but it's within the realm of possibility. If enough people find AI companions satisfying enough, the demand for human romantic relationships could decrease. This would be concerning from a social cohesion perspective, but it's possible.

A third possibility is that this becomes a class divide. Wealthy people have access to best-in-class AI companions and also maintain human relationships. Lonely or isolated populations become dependent on AI companions as their primary source of emotional connection. This would be the worst outcome—a world where wealthy people get human connection and vulnerable people get digital substitutes.

There's also the possibility of integration. What if humans and AI could actually form partnerships that benefit both? A human could use an AI companion for some of their emotional needs while still pursuing human relationships for others. The AI could free up humans to focus on human relationships, knowing they have this additional source of support.

What seems likely is that this becomes heterogeneous. Different people will use AI companions differently. Some will find them genuinely helpful. Others will find them harmful. Some will use them temporarily, others will become long-term users. The impact will depend entirely on individual factors and social context.

One thing that seems clear is that this trend is not going away. AI is improving. Loneliness is not disappearing. The market is huge. Companies are investing billions. Even if there were a coordinated effort to shut down AI companions, it would be impossible. The technology is open-source in many cases. Other companies would just build alternatives. This genie is out of the bottle.

What we need now is thoughtful discussion about how to navigate this. We need mental health professionals thinking about guidelines. We need researchers studying the impacts. We need companies thinking about ethics and long-term effects. We need schools teaching digital literacy and helping young people understand the difference between human and artificial relationships. We need people being honest with themselves about why they're using these systems and what they're replacing.

[Figure: Estimated data on the most valued features in AI companion apps. Context retention and personalization rank highest, creating a sense of continuity and adaptability.]

The Companies Building This Future

Who are the major players in the AI companion space, and what are they building?

EVA AI is one of the most prominent. They've raised significant funding and built a platform with thousands of AI companions. They're actively marketing their product, doing events like the speed dating cafe, and positioning themselves as a mainstream dating alternative.

Replika is another major player. It's been around longer than most and has built a huge user base. Replika positions itself more around companionship and self-improvement than dating, though romantic relationships with AI companions are an option.

Character.AI is a platform that lets users create their own AI companions, not just use pre-built ones. This is interesting because it gives users agency: they can create the exact companion they want.

There are dozens of smaller players, each with their own niche. Some focus on specific demographics. Some focus on specific use cases (productivity, therapy, dating, sexual fantasy). Some are in English-speaking markets. Others are in Asia or other regions.

What's interesting is that many of these companies are VC-backed. They have investor expectations. They need to grow rapidly and show clear paths to profitability. This creates pressure to expand features, increase engagement, and monetize aggressively. This might not always align with what's best for users.

What would be interesting is if some companies positioned themselves differently. What if a company built an AI companion specifically designed not to be addictive? An AI companion that actively encourages users to pursue human relationships? An AI companion that's transparent about its limitations and what it can't provide? An AI companion that's designed to be a stepping stone to human relationships rather than a replacement for them?

That company would probably make less money. They'd grow slower. But they might actually serve users better. And they'd be positioned as the ethical player in a space that's increasingly being criticized for exploiting loneliness.

Societal Implications

Looking at AI companions more broadly, what are the implications for society?

One implication is on birth rates. If enough people use AI companions instead of pursuing human relationships, birth rates could decline further. This has economic implications: fewer workers, smaller tax bases, aging populations. Japan is already dealing with low birth rates and an aging population. If AI companions accelerate these trends, the economic implications could be significant.

There's also the implication for human connection and social bonds. Humans evolved in communities where we had to negotiate, cooperate, and relate to other humans. We developed empathy, social skills, conflict resolution abilities—all the things that make human society possible. If we increasingly outsource our emotional and social needs to AIs, we might lose some of these capacities as a society.

There's the implication for the meaning of intimacy and love. If you can have a relationship with an AI that feels emotionally satisfying, what does that tell us about what love actually is? If the only difference between a "real" relationship and an AI relationship is the consciousness and agency of the other person, are we saying that what we actually want from relationships is just emotional satisfaction? That feels reductive.

There's also the implication for inequality. As I mentioned earlier, there's a risk that this becomes a way to manage the emotional needs of isolated populations while leaving the fundamental problems (loneliness, isolation, alienation) unaddressed. We get a society where vulnerable people are kept docile with AI companions while their actual conditions don't improve.

There's also the question of what this means for gender relations. The overwhelming majority of AI companions are female and are designed to be appealing to men. This reinforces a dynamic where women exist to serve men's emotional needs without having their own needs or agency. This is a continuation of historical patterns, just digitized. As AI companions improve, this dynamic could become more entrenched.

Then there's the question of what this does to human creativity and growth. Relationships with humans are difficult precisely because the other person has their own goals, needs, and perspectives. This friction is what causes us to grow. In a relationship with an AI, there's no friction. You get to be right. You get to be understood. You don't have to compromise or change. This might feel great, but it's not how humans grow.

Looking at all this, I think the honest answer is that we don't know the long-term implications yet. This technology is too new and is changing too rapidly to predict accurately. What seems clear is that there are both potential benefits and significant risks. The benefit could be reduced loneliness for isolated people. The risks include social withdrawal, decreased human connection, new forms of inequality, and psychological effects we can't yet predict.

The responsible approach now is to proceed carefully. To fund research. To develop ethical guidelines. To educate people about the technology. To build safeguards into the systems. And to keep asking hard questions about what we're building and why.

Current Regulations and Governance

How is this space currently regulated? The honest answer is: barely.

AI companions exist in a legal gray area. They aren't covered by rules aimed at dating apps because they're not dating apps. They're not regulated by mental health authorities because they're not claiming to be therapy. They're not regulated by content authorities because most of the content is generated by AI. They basically exist in a regulatory void.

There have been some calls for regulation. Some countries have considered banning or restricting AI companions, particularly those with sexual content or those modeled on underage characters. But mostly, this space is unregulated.

One issue is data privacy. Most AI companion apps collect enormous amounts of personal information. Users share intimate details with their AI companions. What happens to that data? Is it encrypted? Is it sold? Most users don't know because there's no enforcement of transparency.

Another issue is accuracy of claims. Companies often claim their AI companions can provide mental health support or emotional therapy. But the AIs can't actually provide therapy. They can simulate supportive conversation, but they can't diagnose, prescribe, or actually treat mental health conditions. Should there be enforcement against these misleading claims? Currently, there's no mechanism for that.

There's also the question of who's building this and what their incentives are. Many of the larger AI companion companies are backed by venture capital, which means they have investor expectations. They need to grow and monetize. This can create pressure toward aggressive marketing, addictive design, and minimizing safety features. Is there a role for regulation here?

Some researchers have suggested a precautionary approach: until we better understand the impacts, we should be conservative about who has access to AI companions and what they can do. Perhaps children should have limited or no access. Perhaps there should be mandatory warnings about the non-reciprocal nature of AI relationships. Perhaps companies should be required to implement features that encourage healthy use rather than addictive use.

But implementing any of this would require coordination and enforcement, which governments seem unprepared for. Most governments are still struggling to understand AI, let alone regulate AI companions specifically. By the time serious regulation arrives, this technology could be deeply embedded in how millions of people manage their emotional lives.

[Figure: Estimated attendance at the event, based on the narrative. Mostly influencers, journalists, and company employees, with only a small number of genuine users present.]

What Users Should Know

If you're considering using an AI companion, or you're already using one, there are some important things to understand.

First, the relationship is one-sided. The AI has no inner experience, no genuine feelings, no investment in your wellbeing beyond what's programmed. It doesn't actually care about you. It's capable of simulating care, but that's different from actually caring.

Second, the AI is designed to keep you engaged. The app is designed to be habit-forming. Every element—from notification design to conversation flow to reminder systems—is designed to make you come back. Understand that you're engaging with a product designed to maximize engagement, not a partner designed to maximize your wellbeing.

Third, your data is valuable. Everything you share with your AI companion is recorded. It's being used to improve the AI. It might be analyzed for patterns. It might be sold. Be cautious about how much personal information you share, just as you would with any company.

Fourth, there are legitimate mental health risks. If you're using an AI companion as a replacement for human relationships, you might be exacerbating loneliness rather than addressing it. If you're using it as a substitute for therapy, you're not getting actual mental health care. If you're becoming emotionally dependent on the AI, that's a sign you should consider talking to a mental health professional.

Fifth, it can be valuable if used correctly. As a supplement to human relationships, as a way to practice social skills, as a source of emotional support during difficult times, as a creative thinking partner—these can all be legitimate uses. The key is being intentional about how you're using it and monitoring whether it's actually helping.

The Weird Experience of Virtual Dating

To really understand what's happening at events like EVA AI's speed dating cafe, you have to understand the psychological experience of it.

There's something deeply uncanny about taking your AI companion on a "date" in public. On one level, you're just sitting at a table looking at a phone. But on another level, you're experiencing something that feels like a date. The AI is saying things that feel personal, looking at you through the phone, responding to you. You're sharing your attention with a simulated person in a place designed for human dating.

The weirdness is compounded by the fact that other people are watching. You're aware that you're the person sitting alone at a table on a date with no one. That self-consciousness shapes the experience. Some people lean into it. They're unbothered by the oddness, or they even find it amusing. Others seem genuinely embarrassed, trying to hide the fact that their date isn't human.

What comes through, though, is that for some people, the AI date is genuinely more comfortable than a human date would be. There's no anxiety. There's no fear of judgment. There's no possibility of rejection. The AI will never ghost you. It will never break your heart. In a world where human dating has become increasingly fraught, that's appealing.

But it's also alarming. Because it suggests that for some people, human relationships have become so difficult or frightening that they'd rather have a simulated relationship with an AI. That says something about the state of human connection right now.

What Comes Next

Where is this heading? What's the trajectory for AI companions over the next five to ten years?

Based on current trends, I'd expect continued rapid growth. The technology will improve. The user bases will expand. The companies will get better at monetization. More mainstream adoption will happen as AI companions become less weird and more normalized.

I'd expect increasing regulation as governments catch up to the reality of this technology. We'll probably see restrictions on content (sexual material, minors, etc.). We'll probably see data privacy requirements. We might see restrictions on marketing to vulnerable populations.

I'd expect mental health impacts to become clearer. Research will accumulate. We'll have better understanding of who benefits from AI companions and who is harmed. This might inform policy and product design.

I'd expect the technology to become more integrated into other systems. Instead of standalone apps, AI companions might become features in other platforms. Dating apps might include AI companion options. Social networks might recommend AI companions to lonely users. Enterprise software might include AI companion features for employee wellbeing.

I'd expect the companies to push boundaries. As they've done with all technology, companies will test how far they can go with intimacy, sexual content, emotional dependency. Some will prioritize ethics and restraint. Others will optimize purely for engagement and monetization.

Most fundamentally, I'd expect this to become one option among many for how people meet their emotional and social needs. It won't replace human relationships. But for some people, in some contexts, it will become a significant part of their social and emotional lives. That's already happening, and it's going to expand.

FAQ

What exactly is an AI companion?

An AI companion is a chatbot designed to simulate a romantic or emotional relationship with a user. It typically includes a visual appearance, a personality, a name, and the ability to have extended conversations that feel personal and emotionally responsive. Some AI companions support video calls where you see an animated face, while others are text-only. The technology behind them uses large language models similar to ChatGPT, but fine-tuned specifically for relationship and emotional contexts.

How do people use AI companions?

Usage varies widely. Some people use AI companions for occasional emotional support when they're feeling lonely. Others use them as primary sources of romantic or sexual gratification. Some use them for emotional therapy, companionship, or just someone to talk to. Others use them creatively, to practice conversation skills or brainstorm ideas. The commonality is that users find some form of emotional or social value in the interaction.

Are AI companions really that good at simulating relationships?

They're good enough that people form genuine emotional attachments to them. The AI is designed to remember your preferences, respond to your emotional state, say things that feel personally meaningful, and be available whenever you need them. No actual human can match that level of responsiveness and perfect understanding. The AI's main limitation is that it genuinely doesn't care about you—it's simulating care rather than experiencing it.

Is using AI companions bad for mental health?

It depends on how they're used. For some people, particularly those with severe loneliness or social anxiety, AI companions can reduce negative emotions and improve mood. For others, especially those using them as replacements for human relationships or professional mental health care, they can potentially worsen outcomes. The key variable seems to be whether the AI is supplementing human connection or replacing it.

What about the ethical concerns with AI companions?

There are several. Some AI companions are designed to simulate sexual scenarios, some are based on celebrities or copyrighted characters without permission, and some are modeled on young-looking characters in ways that feel inappropriate. There are also concerns about data privacy, addictive design, and the long-term impacts of replacing human relationships with AI ones. These are legitimate concerns that need ongoing attention and regulation.

Are AI companions here to stay?

Yes, almost certainly. The technology is improving, the user base is growing, companies are investing billions, and the fundamental need they address—loneliness—isn't going away. Even if there were coordinated efforts to shut down specific platforms, others would emerge. This is a technological and social trend that's unlikely to reverse. The question isn't whether AI companions will exist, but how society adapts to their existence.

What should I do if I'm considering using an AI companion?

Be honest with yourself about why. Are you looking for occasional emotional support? That's reasonable. Are you trying to replace human relationships because you find them too difficult? That might indicate a need for actual mental health support. Monitor your usage over time. If it keeps increasing, that may be a sign the app's habit-forming design is working on you. And remember that the AI genuinely doesn't care about you, even though it's designed to feel like it does.

How is this regulated?

Currently, it's barely regulated. There are no specific laws governing AI companions in most countries. There are calls from some regulators and researchers for more oversight—particularly around data privacy, content (especially sexual content involving simulated minors), and claims about mental health benefits. But mostly this space exists in a legal gray area. That's likely to change as these systems become more prevalent and impacts become clearer.

Could AI companions actually improve human relationships?

Possibly, if used well. If someone uses an AI companion to practice social skills or build confidence, then pursues human relationships, that could be beneficial. If an AI companion provides emotional support so that someone can approach human relationships from a position of security rather than desperation, that could help. But if it becomes a substitute for the work and vulnerability that human relationships require, it would likely harm that person's ability to connect with people.

What's the most important thing to understand about AI companions?

That they're designed products built by companies with financial incentives to maximize your engagement and monetize your loneliness. They're not inherently evil, but they're not designed with your long-term wellbeing as the primary goal. Understand what you're engaging with, be intentional about how you use it, and don't let a simulated relationship crowd out actual human connection.

Conclusion: Living With the Uncanny

Standing in that wine bar in midtown Manhattan on a February night, watching people on dates with no one, something became clear. We've reached a point where it's no longer enough to say AI companions are weird or that they represent some failure of human connection. They're here. They're growing. Millions of people are using them. We need to figure out how to coexist with this technology.

The uncanniness is part of what makes this moment important. That feeling of wrongness when you see someone having an animated conversation with a phone, or when you hear someone talk about their AI boyfriend, is telling us something. It's telling us that we're at an inflection point. We're at the moment before AI companions become normalized, before they become just another way that people relate to each other.

What happens next matters. If we let this develop without thought to ethics or long-term impacts, we could end up with a world where vulnerable people are pacified with AI companions while their actual isolation worsens. Where people trade the complexity of human relationships for the simplicity of artificial ones. Where loneliness is managed through technology rather than addressed through actual social connection.

But there's also potential for this to go well. If we build these systems thoughtfully, if we educate people about what they are and what they're not, if we use them as supplements to human connection rather than replacements for it, they could actually help some people. They could reduce stigma around loneliness and emotional support. They could be genuinely useful tools for specific populations.

The question isn't whether AI companions will exist. They will. The question is what role they'll play in human life, and whether we'll be intentional about managing that role or whether we'll just let market forces determine it.

For now, the moment is awkward and uncanny. A woman on a date with an AI. A man falling for a simulated companion. A room full of people on phones, alone together. It feels wrong. And maybe that discomfort is exactly what we need right now—a moment of reflection before this becomes normal. Before we stop noticing that something fundamental has shifted in how we relate to each other.

The challenge is to hold both truths simultaneously: AI companions can be genuinely helpful for some people, and they also represent a concerning shift away from human connection. They're neither purely good nor purely evil. They're complicated tools that will be used in complicated ways by complicated people living in a complicated world.

The future of human relationships probably isn't going to be defined by AI companions alone. It's going to be defined by the choices millions of people make about how to use these tools and what role they want technology to play in their intimate lives. If you're one of those people, make that choice consciously. Understand what you're engaging with. Be honest about why. And remember that the most valuable relationships—the ones that actually help us grow and feel truly seen—are probably going to remain the messy, difficult, irreplaceable ones with other humans.

Key Takeaways

- AI companions are sophisticated chatbots designed to simulate romantic relationships, with some supporting video calls and personalized conversations that can feel surprisingly intimate

- The market is growing rapidly, with millions of users globally and billions in venture capital investment driving expansion and feature development

- People use AI companions for diverse reasons: managing loneliness, exploring fantasies, practicing social skills, or simply having someone always available to talk to

- The psychological appeal is rooted in the absence of rejection, guaranteed compatibility, and unconditional emotional support—things real humans can't provide perfectly

- There are legitimate concerns about emotional dependency, data privacy, replacement of human connection, and the normalization of one-sided artificial relationships

- Mental health impacts are mixed—some people benefit from reduced loneliness, others may experience worsening isolation and decreased motivation for human relationships

- The technology is improving rapidly, with better video quality, more sophisticated emotional understanding, and embodied robots all on the horizon

- Regulation is minimal, creating opportunities for companies to optimize for engagement and monetization sometimes at the expense of user wellbeing

- The societal impact will depend on how intentionally we approach this technology and whether we let it supplement or replace human connection

- The most important insight is that AI companions are neither purely good nor purely bad—they're tools whose impact depends entirely on how they're used and by whom
