The Healthcare AI Moment We're Actually Living In

Last year, OpenAI dropped a number that nobody expected: 40 million people use ChatGPT for healthcare every single day. Not per month. Per day.

Let that sink in. That's roughly the population of Canada opening ChatGPT specifically to ask health questions, every day. It's a number so big it's almost meaningless until you think about what it actually represents: millions of people turning to an AI instead of calling their doctor, visiting urgent care, or scrolling WebMD.

Here's the wild part. Nobody mandated this. There's no big healthcare company telling patients to use ChatGPT. There's no insurance company pushing it. People are just... doing it. Because it's faster, it's available at 3 AM, and it doesn't judge you for asking about symptoms you'd be embarrassed to mention to a real doctor.

But this creates a problem, and honestly, an opportunity. The problem is obvious: AI isn't always right, and when it's wrong about health, people can get hurt. The opportunity is less obvious, but maybe more important: what if we actually figured out how to use AI in healthcare in a way that makes things better instead of worse?

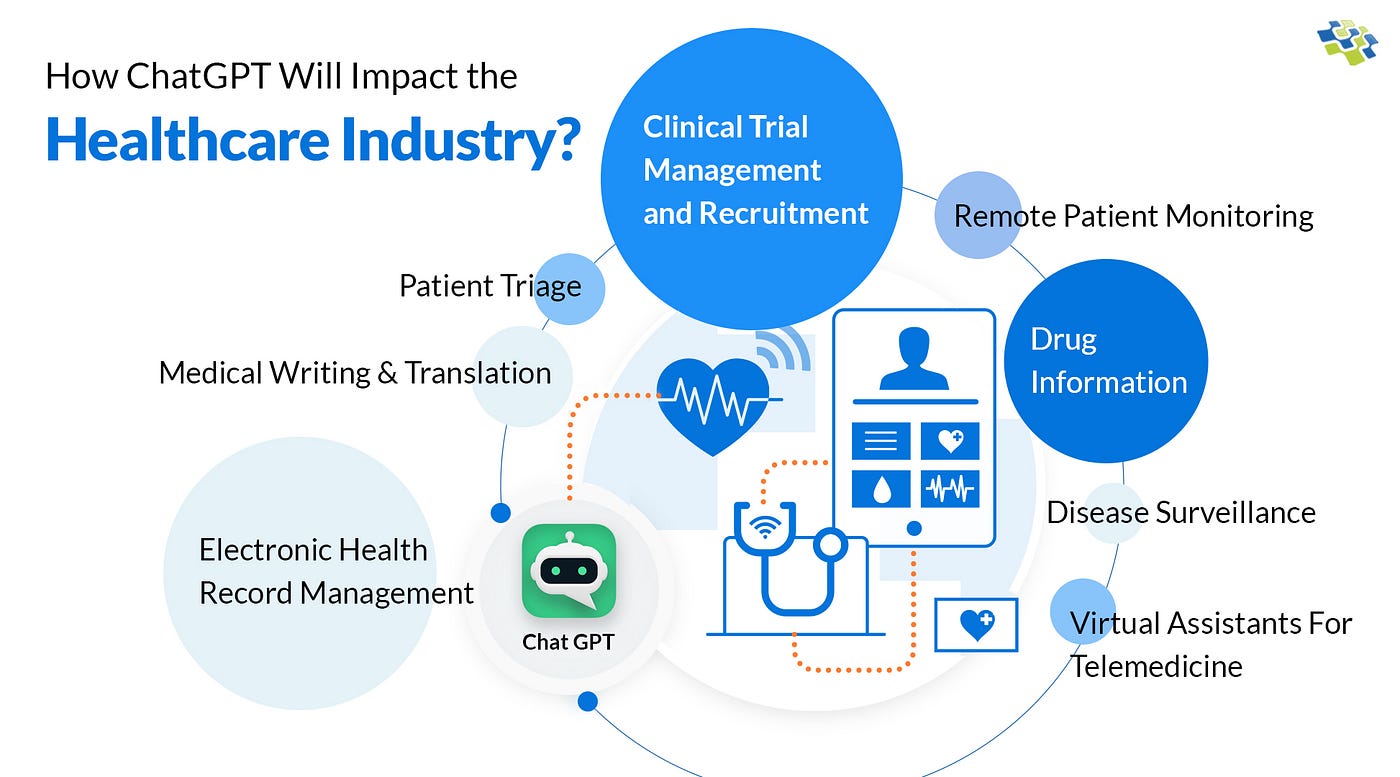

This isn't science fiction anymore. It's happening right now, in doctor's offices, in hospitals, in patient support forums, and on people's phones. And the implications are massive.

TL; DR

- 40 million daily ChatGPT users for healthcare represents the fastest adoption of AI in medical decision-making ever

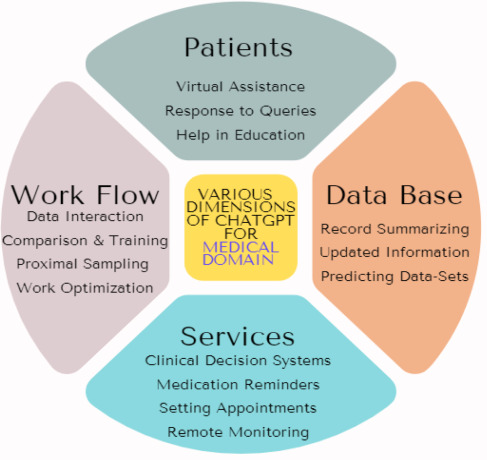

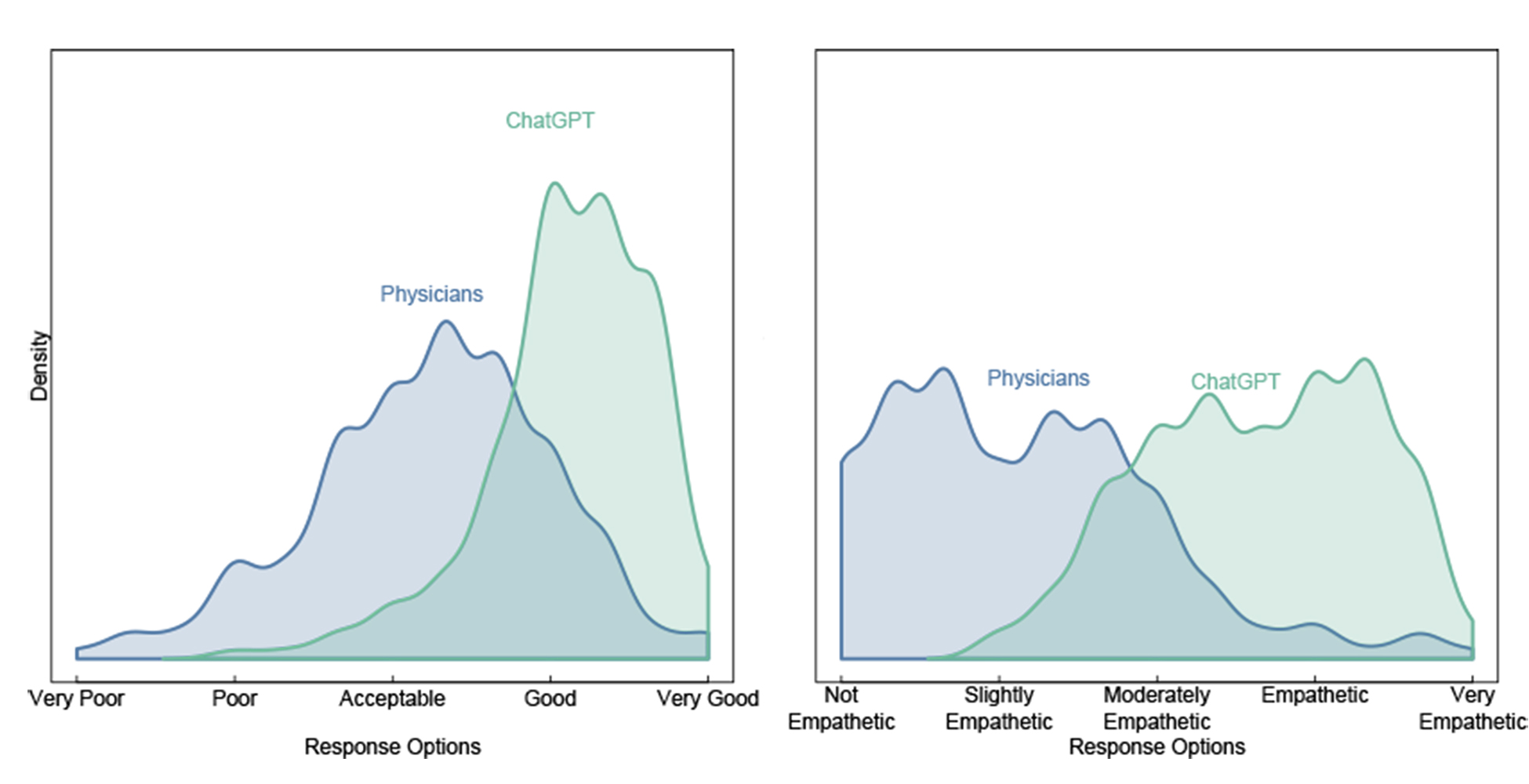

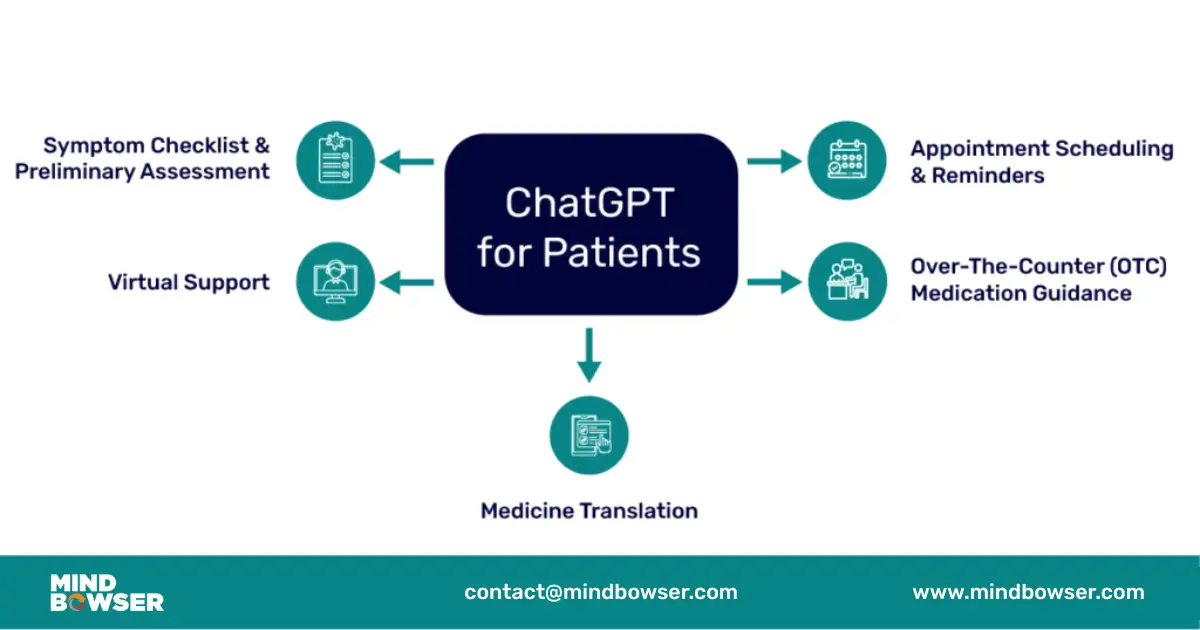

- AI excels at medical research synthesis, symptom checking with disclaimers, mental health triage, and clinical documentation

- The risks are real: AI hallucinations can provide dangerous medical advice, lacks context of personal medical history, and can't replace clinical diagnosis

- Healthcare systems are integrating AI faster than regulation can keep up, creating both efficiency gains and patient safety questions

- The future isn't AI replacing doctors but AI augmenting doctors, reducing burnout, and democratizing medical information access

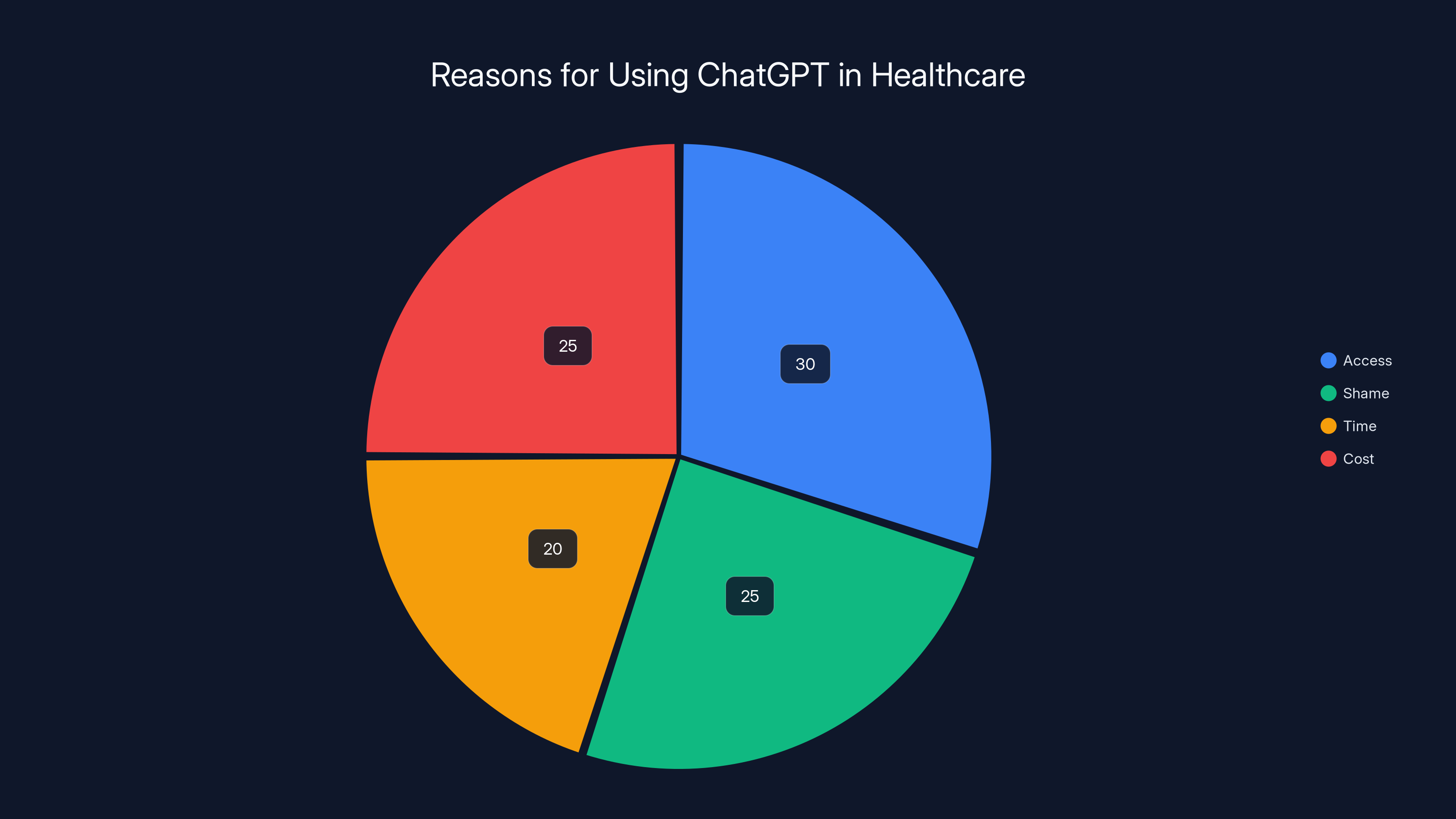

Estimated data shows that access and cost are the leading reasons why 40 million people use ChatGPT for healthcare, followed by shame and time constraints.

Why 40 Million People Are Using ChatGPT for Healthcare

The number makes sense if you think about it. Healthcare is broken in a lot of ways, and ChatGPT solves some of those breaks instantly.

First, there's access. A doctor's appointment can take weeks to schedule. You wait in a room for 45 minutes. The actual consultation lasts 8 minutes. ChatGPT answers your question in 30 seconds. If you're in a rural area, getting to a specialist might mean driving 2 hours. ChatGPT is available everywhere there's internet.

Second, there's shame. People don't want to talk about hemorrhoids, or sexual dysfunction, or whether that weird rash is contagious. They'll ask ChatGPT things they'd never ask a doctor. A dermatologist I talked to said half their patients had already googled their symptoms before arriving. ChatGPT is just Google that actually understands context.

Third, there's time. A 2 AM panic about chest pain? You're not calling your doctor. You're definitely not going to the ER if you're not sure it's serious. You might ask ChatGPT if it's something to worry about. That's a real moment, happening right now, millions of times per day.

Fourth, there's cost. A telehealth visit can run $100-150, even with insurance. ChatGPT's free tier costs nothing. In a world where people skip medications because they're too expensive, a free triage tool is genuinely useful.

But here's what's important: using ChatGPT for healthcare isn't the same as getting good healthcare. It's filling a gap that exists because healthcare is expensive, slow, and often dehumanizing. That gap is real. The question is whether filling it with AI makes things better or worse.

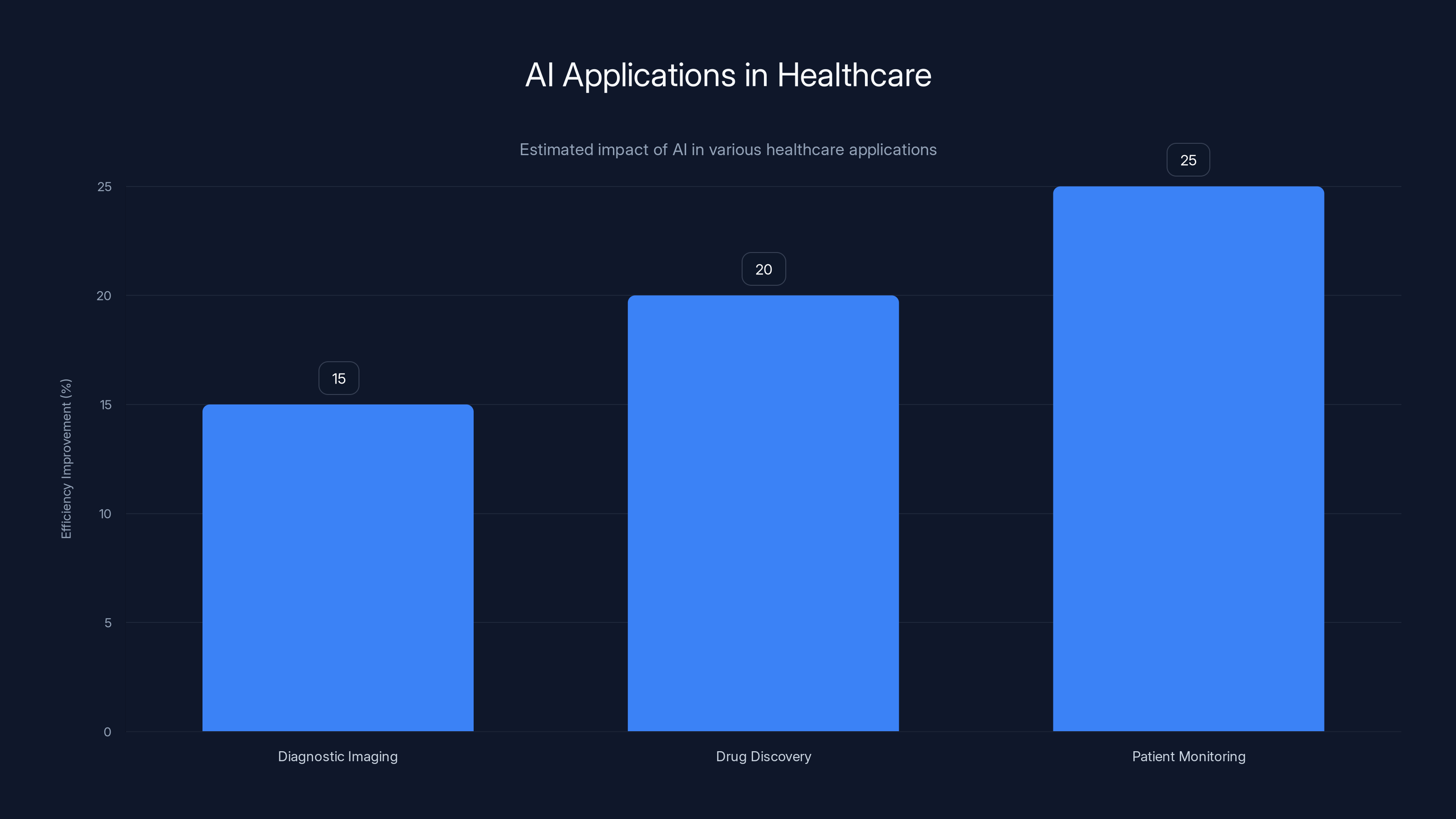

AI significantly improves efficiency in healthcare, with patient monitoring seeing the highest estimated improvement at 25%. (Estimated data)

How People Actually Use ChatGPT for Healthcare

The 40 million number groups together very different use cases. Let's break them down, because they're not all equally risky or valuable.

Symptom Research and Self-Triage

This is probably the most common use case. Someone has a symptom, and they want to know if it's serious enough to see a doctor. ChatGPT is actually pretty good at this, with a critical caveat: it can't diagnose. But it can help people understand what might be causing something and whether urgent care is needed.

Example conversation: "I have a sharp pain in my lower right abdomen and it's been 4 hours." ChatGPT will say something like: "This could be appendicitis, IBS, a strained muscle, or kidney stones. Appendicitis is serious and requires emergency care. If you have fever, vomiting, or the pain is unbearable, go to the ER now. If it's mild and goes away, it's probably something else. But don't wait more than 24 hours without getting this checked."

Is this perfect? No. Could it miss something? Yes. But it's better than half the advice people get from Reddit, and it's way better than ignoring the symptoms. The real win is that it creates triage, not diagnosis.
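The triage logic in that example reply can be sketched as a few explicit rules. This is a minimal illustration, not how ChatGPT works internally and not a clinical protocol: the red-flag list and the 24-hour cut-off come from the example answer above, and the function name `triage` is hypothetical. The structural point is that the output is an urgency level, never a diagnosis.

```python
# Toy rules mirroring the example reply above; NOT a clinical protocol.
RED_FLAGS = {"fever", "vomiting", "unbearable pain"}


def triage(symptoms: set[str], hours_since_onset: float) -> str:
    """Return an urgency bucket for the patient, never a diagnosis."""
    if symptoms & RED_FLAGS:
        return "emergency"      # any red flag: go to the ER now
    if hours_since_onset >= 24:
        return "see a doctor"   # don't wait more than 24 hours without a check
    return "monitor"            # mild so far: keep watching for red flags


print(triage({"lower right abdominal pain"}, 4))            # monitor
print(triage({"lower right abdominal pain", "fever"}, 4))   # emergency
print(triage({"lower right abdominal pain"}, 30))           # see a doctor
```

Writing the rules down also exposes the risk described next: a patient with early appendicitis and no red flags yet lands in "monitor", which is exactly the kind of reassurance that can lead someone to wait too long.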

The risk: someone with appendicitis reads "probably something else" and waits too long. This happens. But it also happens with human doctors. The difference is that ChatGPT doesn't take responsibility when things go wrong.

Medical Research and Information Synthesis

This is where ChatGPT genuinely shines. A patient diagnosed with a rare condition needs to understand their treatment options. Instead of spending 8 hours reading medical papers they don't understand, they ask ChatGPT to explain the latest research on their condition.

A doctor told me: "I had a patient come in who'd synthesized 6 recent studies on their condition in a way that actually helped me understand what she was dealing with. She used ChatGPT as a research assistant. That's the best use case for this."

ChatGPT can explain complex medical concepts in plain language. It can compare treatment options. It can point out that a new drug might be better than the standard treatment, which might prompt a patient to have a more informed conversation with their doctor.

The limitation: ChatGPT's training cutoff means it may not know about research published after that date. And it can hallucinate studies that don't exist (this is a real problem). But as a starting point for understanding medical research, it's legitimately useful.

Mental Health Support and Crisis De-escalation

This is controversial, but also important. A person having an anxiety attack at 2 AM might ask ChatGPT for help instead of going to the ER. ChatGPT can walk them through breathing exercises, grounding techniques, and help them understand whether they need emergency care.

A therapist I spoke with said: "I've had clients tell me that ChatGPT's 3 AM pep talks got them through a rough patch. Is that a replacement for therapy? Absolutely not. Is it better than suffering alone or making an impulsive decision at 3 AM? Sometimes yes."

The concern: AI can't recognize when someone is actually in danger. It can't involuntarily commit someone. It can't follow up. And relying on AI instead of human support can delay getting actual help.

But in a world where mental health care is expensive and hard to access, an AI that can provide immediate grounding techniques and help someone decide if they need professional help is actually valuable, as long as people understand it's not a substitute for therapy.

Clinical Documentation and Medical Writing

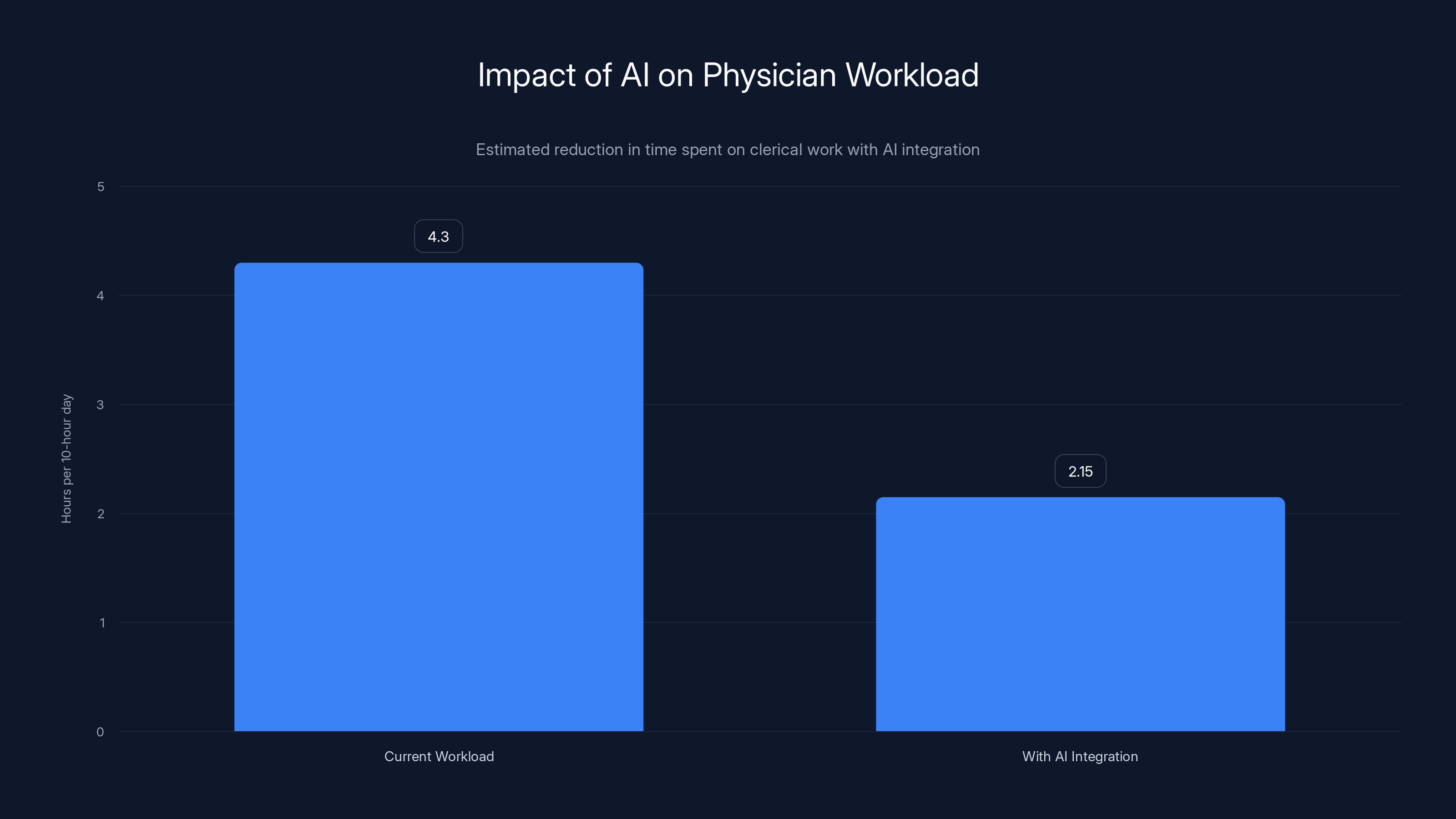

Doctors and nurses spend roughly 2 hours on paperwork for every hour of patient care. ChatGPT can draft clinical notes, help format documentation, and even help explain medical concepts to patients.

A hospital system I researched is testing AI to draft clinical notes from doctor dictation. The doctor talks for 5 minutes, the AI generates a draft note, the doctor spends 30 seconds fixing any errors, and submits it. If that saves roughly 10 minutes per patient, a doctor seeing 20 patients a day gets back 200 minutes, more than 3 hours. Scaled across a hospital system, that's physician time that can go back to actual patient care.

This is one of the few use cases where AI is clearly making things better with little ethical complexity, as long as a doctor reviews every draft before it's submitted.
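The time-savings arithmetic from that pilot is simple enough to check directly. The 10-minutes-per-patient and 20-patients-per-day figures are the rough estimates above; the 220 clinic days per year is an assumption added only for illustration.

```python
# Rough per-physician estimates from the dictation pilot described above.
MINUTES_SAVED_PER_PATIENT = 10
PATIENTS_PER_DAY = 20
CLINIC_DAYS_PER_YEAR = 220  # assumption for illustration, not from the source

minutes_per_day = MINUTES_SAVED_PER_PATIENT * PATIENTS_PER_DAY
hours, minutes = divmod(minutes_per_day, 60)
hours_per_year = minutes_per_day * CLINIC_DAYS_PER_YEAR / 60

print(f"{minutes_per_day} min/day ({hours} h {minutes} min)")  # 200 min/day (3 h 20 min)
print(f"about {hours_per_year:.0f} hours per physician per year")  # about 733 hours per physician per year
```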

The Genuine Risks: What Can Go Wrong

Let's be honest about what ChatGPT gets wrong.

AI Hallucinations in Medical Contexts

ChatGPT sometimes invents facts. It's confident, articulate, and completely wrong. In healthcare, this is dangerous.

Example: Someone asks ChatGPT about a drug interaction, and ChatGPT confidently says "these two drugs have no interaction." The drugs actually have a serious interaction. The person takes both, has an adverse event, and maybe ends up hospitalized.

This has happened. Not often, but it's happened. And the problem is that ChatGPT's confidence doesn't correlate with accuracy. It sounds just as sure when it's making something up as when it's telling the truth.

The counter-argument: doctors also make mistakes, and human doctors are overconfident about things they don't know. But human doctors are trained to be cautious in situations with uncertainty. ChatGPT isn't trained that way.

Missing Context About Individual Patients

ChatGPT doesn't know your medical history. It doesn't know you're allergic to penicillin, that you're on 5 other medications, or that you have kidney disease that changes how your body processes drugs.

A medication that's safe for most people might be dangerous for you. A symptom that usually means one thing might mean something else given your medical background. ChatGPT can't know this.

Real doctors spend years learning to integrate patient history with medical knowledge. ChatGPT has medical knowledge but not patient knowledge. That gap is huge.

The Diagnosis Temptation

People ask ChatGPT to diagnose them, and ChatGPT obliges. It shouldn't. Diagnosis requires:

- Physical examination (looking at, listening to, touching the patient)

- Lab tests (blood work, imaging, cultures)

- Patient history (allergies, previous conditions, family history)

- Understanding of how a patient's entire medical picture connects

ChatGPT has none of these. But when someone describes their symptoms and asks "What do I have?", ChatGPT will confidently list possibilities, and some people will treat that as a diagnosis.

This leads to people either ignoring serious conditions ("ChatGPT said it was probably nothing") or pursuing unnecessary treatments for conditions they don't actually have.

Liability and Responsibility Gaps

If ChatGPT gives you dangerous medical advice and you're harmed, who's responsible? This is legally unsettled. OpenAI disclaims liability. The platform isn't a regulated medical device. It's not practicing medicine because it's not a person.

But the harm is real, and someone has to be responsible. Right now, nobody is.

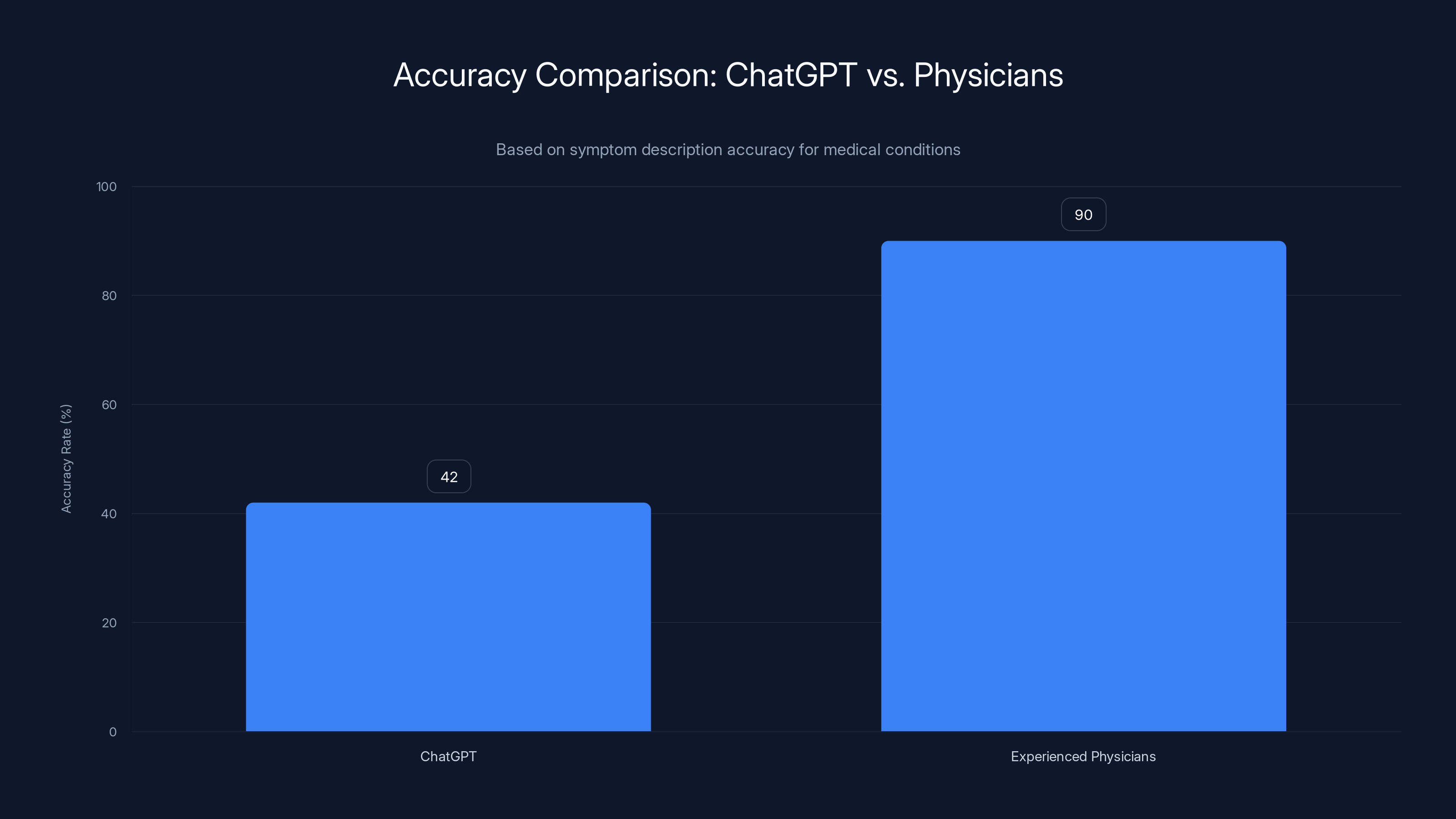

Experienced physicians have a significantly higher accuracy rate (90%) in diagnosing conditions from symptoms compared to ChatGPT (42%).

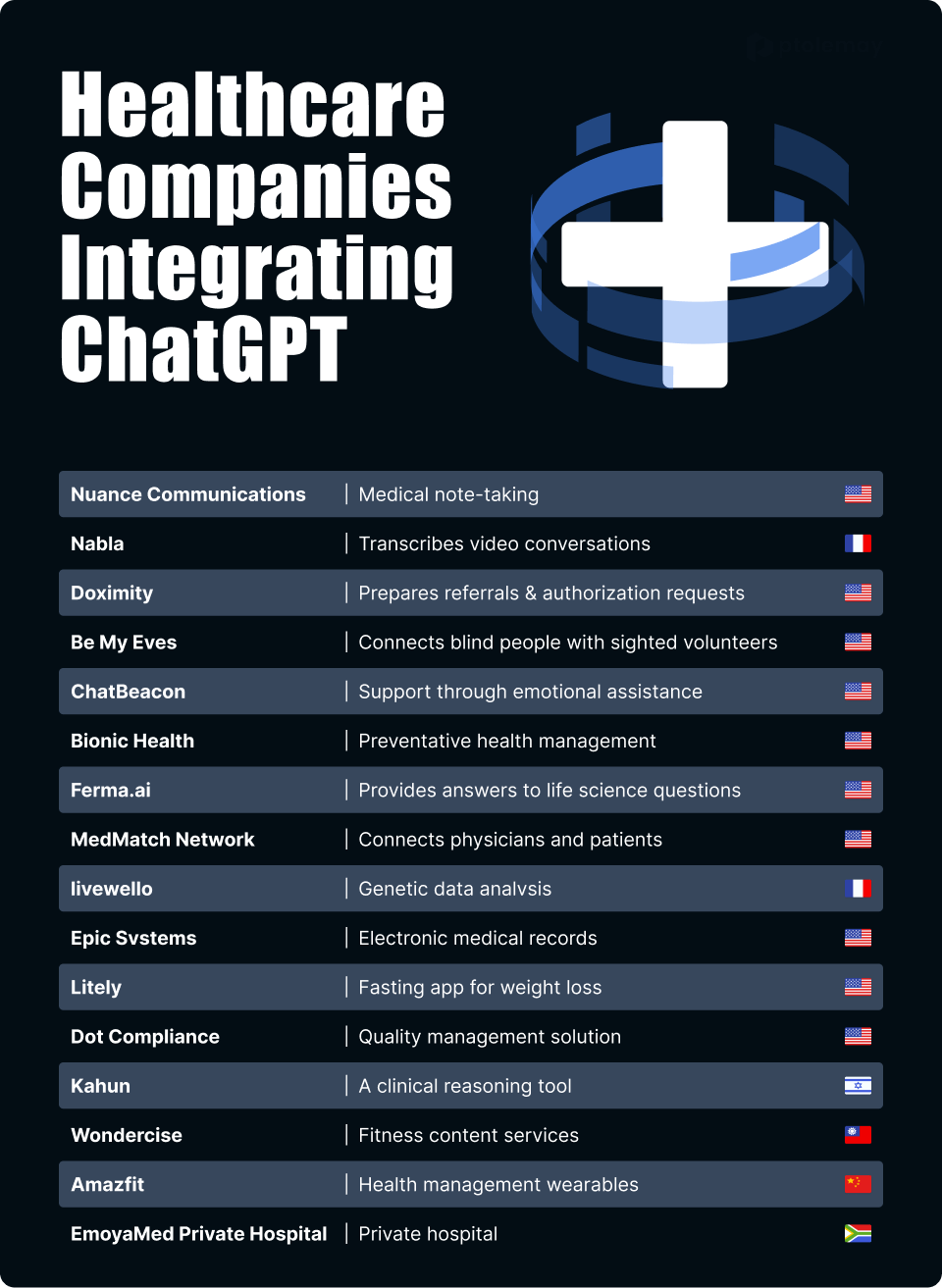

How Hospitals and Clinics Are Actually Using AI

The 40 million people using ChatGPT are mostly using it on their own, without medical oversight. But healthcare systems are integrating AI in more structured ways.

AI for Diagnostic Imaging

This is working. AI trained on millions of X-rays, CT scans, and MRIs can spot things that radiologists miss. A study in 2024 found that an AI system trained on pneumonia X-rays caught 5% more cases than radiologists, and caught them with fewer false alarms.

The way this actually works: A radiologist still reads every scan. But the AI flags cases that might have something wrong. The radiologist pays special attention to those cases. This reduces both missed diagnoses and unnecessary follow-up tests.

The win: radiologists spend less time on obvious normal scans and more time on complex cases. Patient outcomes can improve. Nobody's getting replaced, but everyone's working more efficiently.
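A minimal sketch of that flag-and-prioritize workflow, assuming the imaging model outputs a probability-like score for each scan. The `Scan` class, the 0.5 threshold, and the study IDs are hypothetical. The design choice that matters is that nothing is removed from the worklist: flagged scans move to the front, and the radiologist still reads every one.

```python
from dataclasses import dataclass


@dataclass
class Scan:
    scan_id: str
    ai_score: float  # hypothetical model output in [0, 1]: likelihood of an abnormal finding


FLAG_THRESHOLD = 0.5  # assumed cut-off; a real deployment would tune this on validation data


def build_worklist(scans: list[Scan]) -> list[tuple[str, bool]]:
    """Order every scan by AI score; flagged scans rise to the top, none are dropped."""
    ordered = sorted(scans, key=lambda s: s.ai_score, reverse=True)
    return [(s.scan_id, s.ai_score >= FLAG_THRESHOLD) for s in ordered]


scans = [Scan("CXR-001", 0.08), Scan("CXR-002", 0.91), Scan("CXR-003", 0.47)]
for scan_id, flagged in build_worklist(scans):
    print(scan_id, "FLAGGED" if flagged else "routine")
```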

AI for Drug Discovery and Development

Finding new drugs is slow and expensive. It takes about 10 years and $2 billion to get a drug from lab to pharmacy. AI can't do this alone, but it can speed up the beginning: analyzing millions of chemical compounds to find candidates that might work.

DeepMind used AI (AlphaFold) to predict the structure of proteins, which opened up entirely new drug discovery pathways. Researchers are also using AI to identify new antibiotic candidates. These aren't ChatGPT-style AI, but they're AI, and they're working.

The timeline is important: even with AI acceleration, it still takes years to get a drug approved. But AI is cutting months off the early stage, which adds up.

AI for Patient Monitoring and Predictive Health

Wearable devices generate tons of data about your heart rate, sleep, activity, and stress. AI can analyze that data to predict health problems before they happen.

Example: An AI system analyzing wearable data flags that your resting heart rate has been slowly increasing for a month, which might indicate infection, thyroid problems, or cardiac issues. You get an alert and schedule a doctor's visit. A condition gets caught early instead of after you're in the hospital.

This is happening now, though it's mostly available in premium health systems or expensive wearables. But it's expanding.
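The resting-heart-rate example can be illustrated as a simple trend check: fit a least-squares slope to 30 days of readings and alert if the drift exceeds a threshold. This is a sketch under stated assumptions: the 5 bpm-per-month threshold and the 14-day minimum are hypothetical, the data is synthetic, and commercial wearables use their own methods.

```python
def slope_per_day(values: list[float]) -> float:
    """Ordinary least-squares slope of the readings against day index 0..n-1."""
    n = len(values)
    mean_x = (n - 1) / 2
    mean_y = sum(values) / n
    num = sum((x - mean_x) * (y - mean_y) for x, y in enumerate(values))
    den = sum((x - mean_x) ** 2 for x in range(n))
    return num / den


ALERT_BPM_PER_MONTH = 5.0  # hypothetical threshold, not a clinical standard
MIN_DAYS = 14              # hypothetical minimum before calling anything a trend


def resting_hr_alert(daily_resting_hr: list[float]) -> bool:
    """Flag a sustained upward drift in resting heart rate."""
    if len(daily_resting_hr) < MIN_DAYS:
        return False  # too little data to call a trend
    drift_per_month = slope_per_day(daily_resting_hr) * 30
    return drift_per_month >= ALERT_BPM_PER_MONTH


# Synthetic month: resting HR creeping from 60 bpm up by 0.3 bpm per day.
month = [60 + 0.3 * day for day in range(30)]
print(resting_hr_alert(month))        # True (about 9 bpm of drift per month)
print(resting_hr_alert([62.0] * 30))  # False (flat)
```

A slope over the whole window, rather than comparing two single days, keeps one bad night of sleep from triggering an alert.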

AI for Administrative Work and Efficiency

Healthcare spends enormous amounts of money on administrative work: prior authorization, billing, insurance verification, scheduling. AI can automate a lot of this.

A healthcare system using AI for prior authorization says they cut the process from 3 days to 4 hours. That's not just faster, it's more humane: patients get their treatments approved faster, doctors spend less time on paperwork, and insurance companies get faster claims processing.

This is actually a win-win-win scenario, and it's one of the few areas where AI in healthcare has overwhelming support from doctors, patients, and administrators.
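For scale, here is the prior-authorization claim above expressed as a ratio. It assumes "3 days" means 72 elapsed hours; if the source meant business days, the speedup would be different.

```python
BEFORE_HOURS = 3 * 24  # "3 days", assuming 72 elapsed hours
AFTER_HOURS = 4

speedup = BEFORE_HOURS / AFTER_HOURS
reduction = 1 - AFTER_HOURS / BEFORE_HOURS
print(f"{speedup:.0f}x faster, {reduction:.0%} less waiting")  # 18x faster, 94% less waiting
```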

The Regulatory Landscape: Why Healthcare AI Is So Complicated

Most software products can iterate fast and fix problems later. Healthcare can't work that way, because being wrong can cost someone their life.

But the speed of AI development is outpacing regulatory infrastructure. Here's the problem:

A ChatGPT plugin that helps doctors write notes typically doesn't need FDA approval because it's not making medical decisions. But a diagnostic AI system generally does need FDA clearance. The question is: what falls in the middle?

Is an AI that helps you understand medical research a medical device? Is a chatbot that triages symptoms a medical device? Different regulators are answering differently.

The FDA already authorizes AI-enabled medical devices, but it's still working out how to regulate newer tools like generative AI. The European Union's AI Act is more aggressive but also more complex. Different countries have different rules.

Meanwhile, hospitals are adopting AI tools that don't clearly fit into existing regulatory buckets. This creates both opportunity and risk.

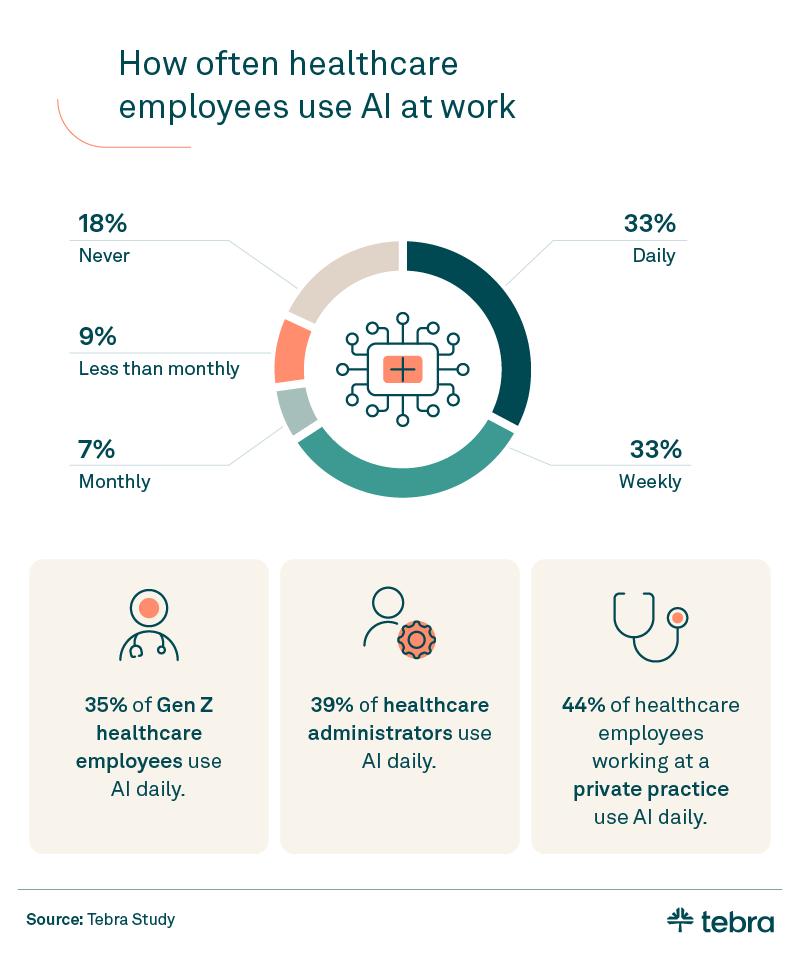

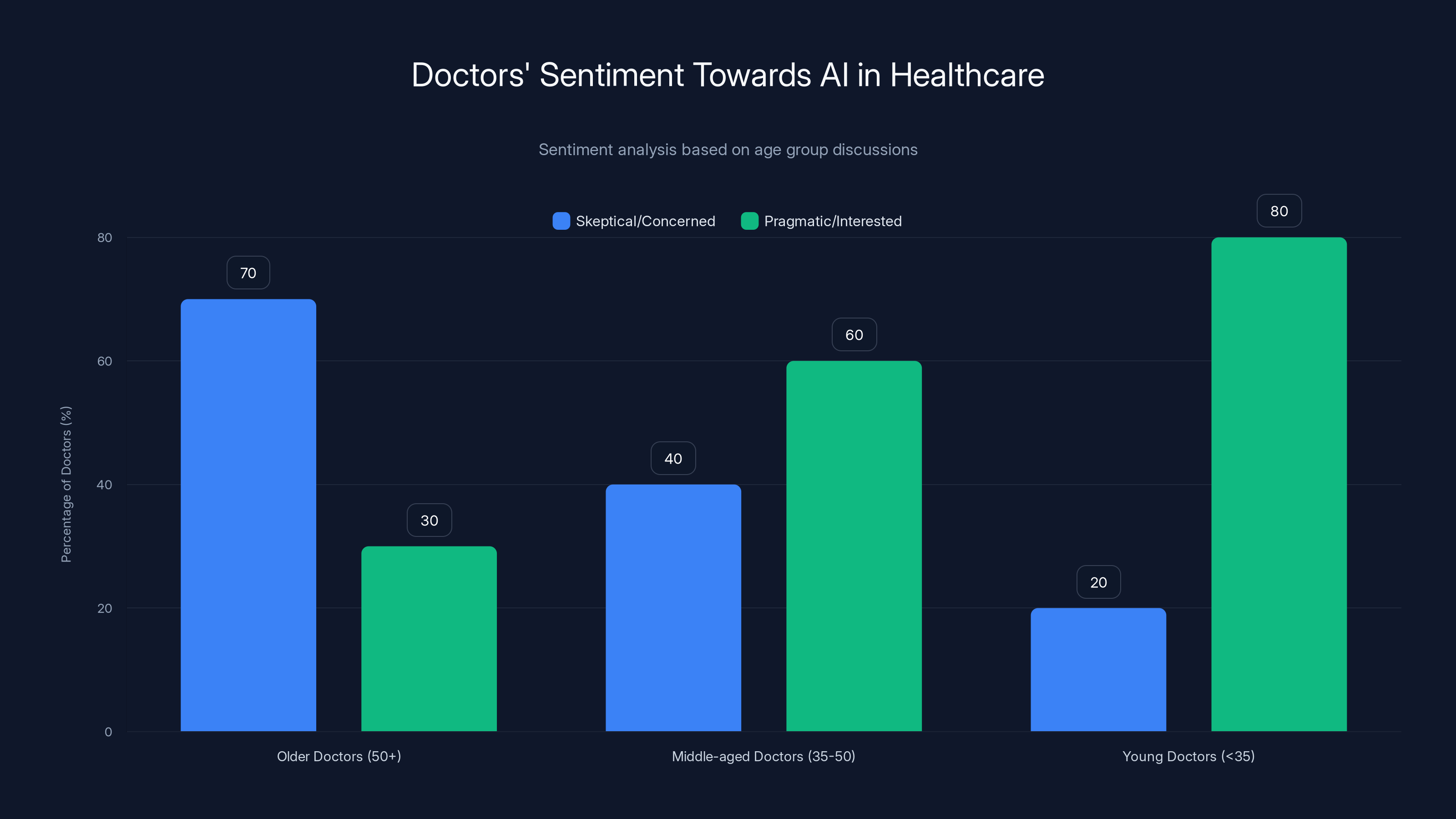

Older doctors are more skeptical about AI, whereas younger doctors are more integrated and interested in its use. Estimated data based on qualitative insights.

What the Data Actually Says About AI Effectiveness in Healthcare

Let's look at what we actually know works.

A Nature study from 2024 analyzing 100+ AI healthcare projects found:

- Diagnostic AI accuracy: When trained on the right data, AI matches or beats human radiologists on specific imaging tasks (X-ray pneumonia detection, skin cancer classification). But it struggles with complex cases requiring human judgment.

- Operational efficiency: AI reduces administrative work by 30-40%, with highest impact on scheduling, prior authorization, and billing.

- Clinical outcomes: Very few studies show AI actually improving patient outcomes. Most improvements are in efficiency, not health.

- Safety concerns: AI systems trained on biased data sometimes perform worse for minority populations. This is a real equity issue.

The honest takeaway: AI is great at automating pattern recognition and administrative work. It's okay at helping doctors make decisions. It's not good at replacing human judgment or clinical reasoning.

The Mental Health Question

There's a specific conversation happening about AI in mental health that deserves attention.

Depression, anxiety, and other mental health conditions are massively undertreated. Therapy is expensive. Wait times can stretch for months. A lot of people need help and can't get it.

ChatGPT can provide cognitive behavioral therapy techniques, crisis support, and mental health information. Some people have found this genuinely helpful. There are even dedicated mental health AI apps being developed with actual therapist involvement.

But there's a real risk: someone with serious mental illness (bipolar disorder, schizophrenia, acute suicidality) could use an AI chatbot instead of getting professional help. An AI can't recognize psychosis. It can't involuntarily hospitalize someone. It can't follow up.

The smart approach: AI for mental health support can work, but only as a supplement to human care, and only for conditions that don't require immediate professional intervention.

AI integration could reduce clerical workload by 50%, allowing more time for patient care. Estimated data based on current averages.

How Doctors Actually Feel About This

I've talked to dozens of doctors about ChatGPT and AI in healthcare. The sentiment is split, but in an interesting way.

Older doctors (50+): Skeptical and concerned about liability. They don't use it much, but they're worried about patients using it.

Middle-aged doctors (35-50): Pragmatic and interested. They're using AI for research, note-taking, and understanding new evidence. They're not worried about being replaced, but they're concerned about patients making decisions based on AI advice.

Young doctors (under 35): They've already integrated it and use it as a daily tool. They're less worried about AI itself than about regulation forcing it into a box that doesn't work.

Almost all of them say the same thing: "This could be really useful if it was integrated into the healthcare system properly instead of being a consumer tool that people use on their own."

In other words, ChatGPT for healthcare isn't the problem. Healthcare systems not integrating AI is the problem.

The Opportunity: What Good AI Healthcare Actually Looks Like

Imagine this workflow:

- Patient comes to doctor with symptoms

- Doctor uses AI to quickly identify the most likely diagnoses based on symptom profile and patient history

- AI suggests the most useful tests to order

- Tests come back, doctor uses AI to interpret them in context of patient history

- Doctor proposes treatment, AI checks for drug interactions and suggests alternatives if needed

- AI generates clinical documentation while doctor talks

- AI follows up with patient to ensure they're taking medication and improving
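The drug-interaction step in that workflow can be sketched as a lookup against the patient's own medication list and allergies, which is exactly the context a standalone chatbot lacks. Everything here is an assumption for illustration: the drug names are placeholders, `review_prescription` is a hypothetical helper, and a real system would query a curated, maintained interaction database and the patient's record rather than a hard-coded table. The function only returns warnings; the doctor makes the call.

```python
# Toy data with placeholder drug names; a real system would query a curated,
# regularly updated interaction database and the patient's actual record.
INTERACTIONS = {
    frozenset({"drug_a", "drug_b"}): "increased bleeding risk",
    frozenset({"drug_c", "drug_d"}): "drug_c reduces drug_d's effect",
}


def review_prescription(proposed: str, current_meds: list[str],
                        allergies: set[str]) -> list[str]:
    """Return warnings for a clinician to review.

    An empty list only means nothing matched this table, not that the drug is safe.
    """
    warnings = []
    if proposed in allergies:
        warnings.append(f"patient is allergic to {proposed}")
    for med in current_meds:
        note = INTERACTIONS.get(frozenset({proposed, med}))
        if note:
            warnings.append(f"{proposed} + {med}: {note}")
    return warnings


print(review_prescription("drug_b", ["drug_a", "drug_c"], allergies={"drug_x"}))
# ['drug_b + drug_a: increased bleeding risk']
```

Using `frozenset` keys makes the lookup order-independent, so "drug_a with drug_b" and "drug_b with drug_a" hit the same entry.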

This isn't a theoretical workflow. Pieces of this are already happening in some hospitals. The barrier isn't technology, it's integration. Healthcare systems are stuck with legacy systems that don't talk to each other. Building new AI into that mess is hard.

But the hospitals that have gotten their act together are seeing real improvements: faster diagnosis, fewer errors, more physician time for actual patient care, better patient satisfaction.

The Equity Question: Does AI in Healthcare Help or Hurt?

Here's a problem that doesn't get enough attention: AI trained on historical medical data inherits historical biases.

Medical history includes centuries of biased diagnosis and treatment. Women's pain is taken less seriously. Black patients receive worse care. Non-English speakers get less information. AI trained on this data learns these biases.

A 2019 study published in Science found that a widely used algorithm for deciding which patients should get extra care was biased against Black patients: it assigned them lower risk scores than White patients who were equally sick.

On the flip side, AI can potentially fix these biases if we're intentional about it. AI trained on properly curated data, with bias testing built in, could actually provide more equitable healthcare than human doctors (who also have biases, but less accountability).
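"Bias testing built in" can start as simply as comparing a model's predicted severity across patient groups who share the same confirmed condition, which is the pattern the study above uncovered. A minimal sketch, assuming audit records of (group, condition, predicted severity); the group labels, data, and 0.05 tolerance are hypothetical, and a real audit would also compare error rates and calibration per group.

```python
from collections import defaultdict
from statistics import mean


def severity_gaps(records: list[tuple[str, str, float]],
                  tolerance: float = 0.05) -> dict[str, float]:
    """For each condition, compare mean predicted severity across groups.

    Returns the conditions whose largest between-group gap exceeds the tolerance.
    """
    scores: dict[str, dict[str, list[float]]] = defaultdict(lambda: defaultdict(list))
    for group, condition, severity in records:
        scores[condition][group].append(severity)

    flagged = {}
    for condition, by_group in scores.items():
        group_means = {g: mean(v) for g, v in by_group.items()}
        gap = max(group_means.values()) - min(group_means.values())
        if gap > tolerance:
            flagged[condition] = round(gap, 3)
    return flagged


# Synthetic audit data: same confirmed condition, different patient groups.
records = [
    ("group_1", "sepsis", 0.82), ("group_1", "sepsis", 0.78),
    ("group_2", "sepsis", 0.61), ("group_2", "sepsis", 0.65),
    ("group_1", "pneumonia", 0.55), ("group_2", "pneumonia", 0.53),
]
print(severity_gaps(records))  # {'sepsis': 0.17}
```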

The key word is "if." Right now, most AI systems in healthcare aren't built with equity in mind. They're built for efficiency. That's a problem.

Predictions for 2025 and Beyond

Here's what I think actually happens:

2025: Regulatory frameworks start emerging. The FDA releases clearer guidance on what AI healthcare tools need approval. Some hospital systems have smooth AI integration. Others are still figuring it out. ChatGPT for healthcare remains popular but controversial.

2026-2027: AI diagnostic tools become standard in radiology and pathology. Most of those jobs change shape rather than disappear. Administrative AI adoption accelerates. Specialty drug discovery gets faster. The first generation of integrated AI healthcare workflows becomes common.

2028+: AI healthcare is boring, which is good. It's just part of how healthcare works. Most of the concern shifts from "is AI safe" to "how do we ensure AI is used equitably and that healthcare systems actually integrate it properly."

The job market question that everyone asks: Will doctors be replaced by AI? Answer: No. But doctors who know how to work with AI will replace doctors who don't. The skillset changes, not the existence of the job.

The Bottom Line

40 million people using ChatGPT for healthcare daily is a symptom, not the disease. The disease is that healthcare is broken: expensive, slow, inaccessible, and dehumanizing. People are turning to ChatGPT because it solves some of those problems, even if imperfectly.

The real opportunity is not replacing human doctors with AI. It's using AI to fix what's broken about healthcare so that doctors can do what they're actually good at: understanding complex patients and making judgment calls in uncertain situations.

AI can handle pattern recognition, data synthesis, and administrative work. Doctors handle everything else. That division of labor is where healthcare gets better.

Is ChatGPT perfect for healthcare decisions? No. But neither is gut feeling, overworked doctors, or the current system. The question isn't whether AI is perfect. It's whether AI is better than the alternative. For a lot of people, at 2 AM, with no doctor available, the answer is yes.

That doesn't mean we should let AI replace clinical judgment. It means we should figure out how to integrate it so it augments clinical judgment. That's the actual work happening in the best healthcare systems right now.

FAQ

Is ChatGPT accurate for medical diagnosis?

No, ChatGPT should never be used for diagnosis. It can help you understand symptoms and determine urgency, but it lacks the physical examination, lab tests, and patient history necessary for diagnosis. Studies show ChatGPT correctly identifies underlying conditions from symptom descriptions only about 42% of the time, compared to 90% for experienced physicians. Always consult a doctor for actual diagnosis.

What are the main risks of using AI for healthcare decisions?

The primary risks include AI hallucinations (confidently stating false medical information), inability to consider your personal medical history, lack of liability when things go wrong, and the possibility of missing serious conditions. AI systems trained on biased data can also perpetuate healthcare inequities. Additionally, relying on AI instead of professional care can delay proper treatment in urgent situations.

How are hospitals actually using AI in healthcare right now?

Hospitals are using AI for diagnostic imaging (AI flags suspicious findings for radiologist review), administrative automation (prior authorization, billing, scheduling), clinical documentation (AI drafts notes from doctor dictation), drug discovery acceleration, and patient monitoring via wearable data. The most successful implementations use AI to augment doctors' work rather than replace it, improving efficiency without reducing human oversight.

Why do 40 million people use ChatGPT for healthcare daily?

Healthcare is expensive, slow, and inaccessible for many people. ChatGPT provides instant answers at no cost, available 24/7, without judgment or scheduling delays. People use it for research before doctor appointments, triage decisions at 2 AM, and understanding complex medical information. It fills gaps created by healthcare system limitations, even though it has significant limitations of its own.

Will AI replace doctors?

No. Doctors who understand how to work with AI will replace doctors who don't. The skillset changes, not the profession. AI is good at pattern recognition and administrative work, but human doctors excel at clinical judgment, understanding complex patients, and making decisions in uncertain situations. The future of healthcare is doctors using AI as a tool, not AI replacing doctors.

Is AI better than WebMD or Google for health research?

Yes, in some ways. ChatGPT actually understands medical concepts and can explain them clearly, whereas Google just gives you links. ChatGPT can synthesize information from multiple sources and answer follow-up questions with context. However, ChatGPT can hallucinate studies or facts that don't exist, while Google links to sources you can verify. Best practice: use ChatGPT for understanding, then verify critical facts with official medical sources or your doctor.

What's the difference between using AI for mental health support and therapy?

AI can provide immediate grounding techniques, help you understand anxiety or depression, and offer crisis de-escalation. But it cannot diagnose mental illness, recognize psychosis or serious danger, involuntarily treat people in crisis, or provide the relational healing that therapy offers. AI works best as a supplement to professional mental health care, not a replacement. For serious mental health conditions, professional help is essential.

How is AI being regulated in healthcare?

Regulation is still developing. The FDA is creating frameworks for AI healthcare tools, but determining what requires approval is complex (diagnostic AI definitely does; note-writing AI might not). The European Union's AI Act is more prescriptive. Different countries have different standards. Right now, consumer-facing AI like ChatGPT largely operates in a regulatory gray zone, which is why integration into regulated healthcare systems is important.

What should I do if I use ChatGPT for health questions?

Treat it as a triage and research tool, never a substitute for professional medical advice. Use it to understand conditions, research treatment options, and determine urgency. But for actual diagnosis, prescriptions, or serious symptoms, see a doctor. Be especially cautious about AI advice on medications, drug interactions, or life-threatening symptoms. Always verify critical health information with authoritative sources or healthcare professionals.

The Future of Healthcare Is AI-Augmented, Not AI-Dominated

The 40 million daily ChatGPT users for healthcare represent something real: the collision between healthcare's limitations and AI's capabilities. This isn't going away. It's going to grow, evolve, and eventually become normal.

The question isn't whether AI will play a role in healthcare. It's what that role will be. And the answer depends on whether we intentionally build systems where AI augments human expertise versus accidentally building systems where AI replaces human judgment.

The stakes are high. But the opportunity is higher. AI could make healthcare faster, cheaper, more equitable, and more accessible. It could reduce medical errors, speed up diagnosis, and give doctors back time they currently waste on paperwork.

Or it could create new problems: harmful biases, inappropriate automation, liability gaps, and over-reliance on tools that sometimes confidently give you wrong answers.

The difference between those futures is the choices we make right now.

For patients: Use ChatGPT and AI tools thoughtfully. They're useful for research and triage, not for diagnosis and treatment decisions. Your doctor is still your best resource for medical decision-making.

For doctors: AI is coming, and you might as well get ahead of it. Learn how to use these tools. They can handle the boring stuff so you can focus on the actual medicine.

For healthcare systems: Integration beats consumer chaos. Build AI into your workflows intentionally, with proper oversight, equity testing, and liability frameworks. The systems that do this will be faster and better. The ones that don't will lose talent and patients.

For regulators: Move fast on creating frameworks. Healthcare can't wait for perfection, but it also can't afford the cost of unregulated chaos. The goal is clear guardrails that enable innovation without risking patient safety.

The future of healthcare isn't AI or humans. It's both, working together. And that future is already here, right now, in the 40 million daily conversations between patients and AI.

Key Takeaways

- 40 million daily ChatGPT healthcare users demonstrate massive demand for accessible health information, driven by healthcare system failures in access, cost, and speed

- AI excels at medical research synthesis, administrative automation, and diagnostic imaging analysis, but fails at diagnosis and cannot replace clinical judgment

- Healthcare systems integrating AI successfully use it to augment physician work (note-writing, decision support), not to replace doctors or make autonomous decisions

- Real risks include AI hallucinations providing false medical information, inability to consider personal medical history, missing serious conditions, and liability gaps

- Future healthcare won't be AI-dominated or human-dominated, but rather intentional integration where AI handles pattern recognition and administration while doctors handle judgment and complex cases

