Introduction: When Characters Feel Real

There's a moment that happens in the best theme park experiences. You're standing there, the music swells, and suddenly you can't tell the difference between the person in front of you and the character you've watched on screen a hundred times. That moment just got way more intense at Disney World.

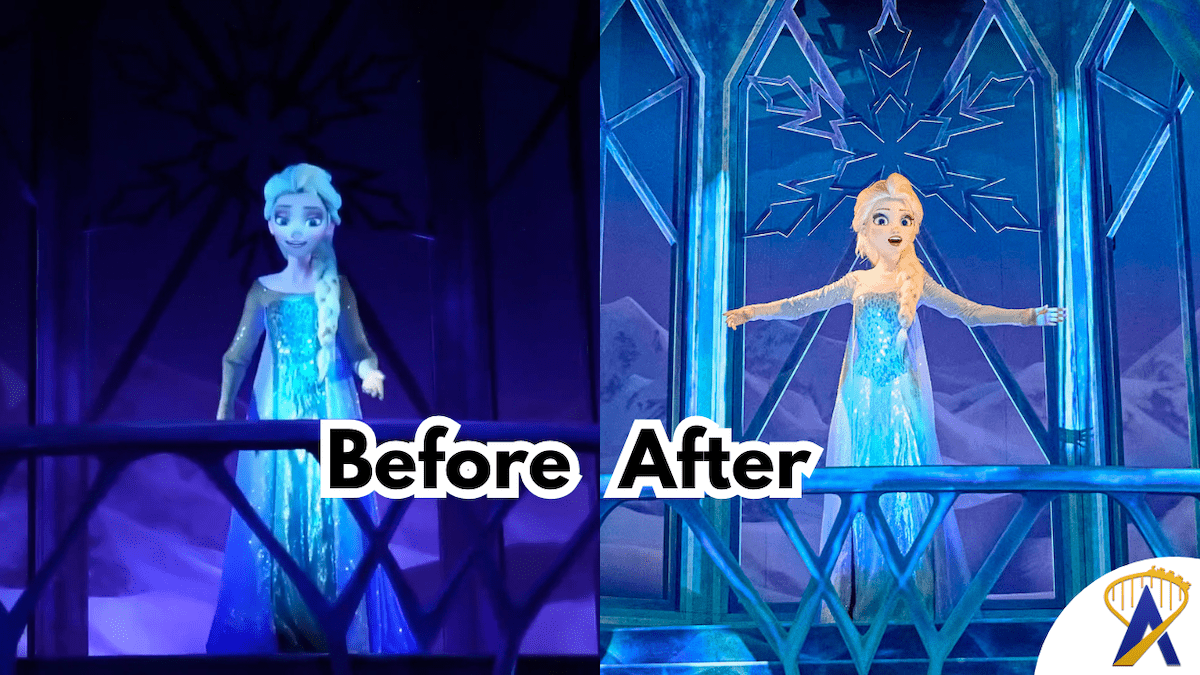

The Frozen Ever After attraction recently reopened with a significant upgrade to its animatronic systems, and the reactions have been nothing short of remarkable. Guests are reporting an almost unsettling level of realism, the kind that makes your brain do a double-take. The animatronics move with fluid grace, their facial expressions shift with genuine emotion, and their interactions feel less like watching a pre-programmed sequence and more like witnessing a live performance.

But here's what makes this worth your attention: this isn't just Disney throwing more budget at the problem. This is engineering meeting storytelling. The technology powering these updated animatronics represents a significant leap forward in how robots can embody character, emotion, and presence. Whether you're interested in theme park innovation, AI-driven robotics, or just want to understand how a massive corporation makes you believe in magic, this upgrade shows us where entertainment technology is headed.

The improvements go deep. We're talking about advances in servo motors, facial recognition systems, motion tracking, real-time expression mapping, and synchronized audio-visual systems. Each character now responds to environmental conditions, adjusts movements based on guest proximity, and performs with a level of consistency that would make any live actor jealous. And yes, there are practical business reasons Disney is making these investments, but that doesn't make the technical achievement any less impressive.

In this guide, we'll break down exactly what's different about the new Frozen Ever After animatronics, how the underlying technology works, why this matters for the future of entertainment, and what it reveals about where Disney and similar companies are taking immersive experiences. If you've ever wondered how theme parks create those "did that just happen?" moments, you're about to get the full picture.

TL;DR

- Advanced Servo Systems: The new animatronics use precision servo motors with millisecond response times, allowing for fluid, natural movement instead of jerky mechanical motion

- Facial Recognition & Expression Mapping: Real-time AI systems track guest positions and adjust character expressions and gaze direction accordingly

- Synchronized Audio-Reactive Movement: Character animations now sync perfectly with dialogue, music, and environmental audio using sophisticated timing algorithms

- Motion Capture Foundation: The character movements are based on motion-capture data from real actors, ensuring authenticity at the foundational level

- Future Applications: This technology will likely expand to other Disney attractions and influence how other entertainment venues approach immersive experiences

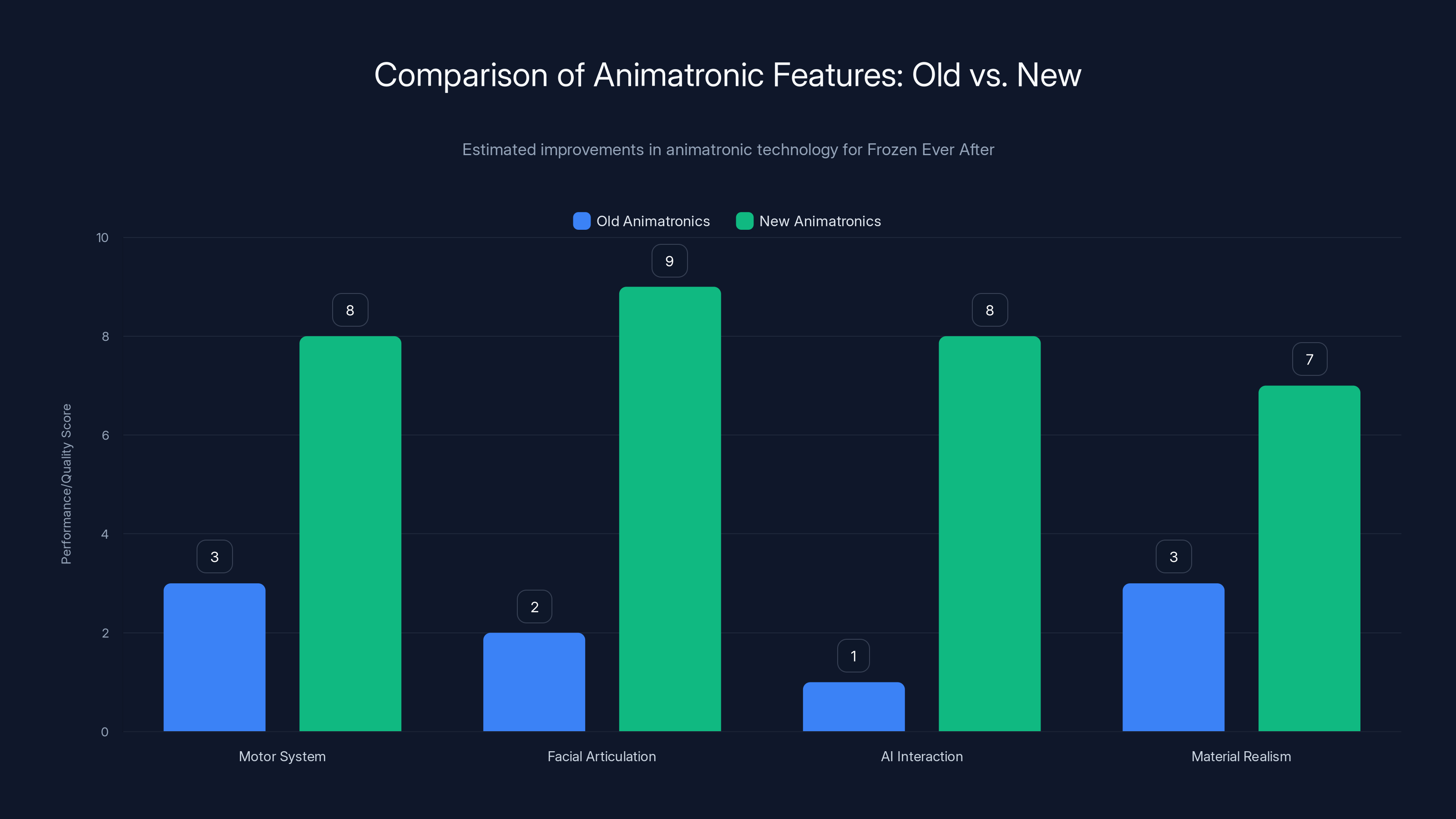

The new animatronics show significant improvements in motor systems, facial articulation, AI interaction, and material realism compared to older models. Estimated data based on described enhancements.

The Original Frozen Ever After: What Guests Knew Before

Frozen Ever After has been a staple of the EPCOT theme park since it opened in 2016, replacing the previous Maelstrom attraction. The ride was well-received from day one, offering a relatively short (around 5 minutes) indoor boat journey through scenes from the Frozen story, with animatronic characters at key emotional moments.

The original animatronics were solid by pre-2024 standards. The characters moved, they had some facial expressions, and the ride itself was mechanically sound. But if you watched them with the eyes of someone who'd seen the Frozen films, there was a noticeable uncanny valley effect. The movements felt slightly stiff. The facial expressions changed in discrete steps rather than flowing naturally. Eye contact with guests felt either too deliberate or completely absent. The overall effect was "we've got a really good robot here," not "that's actually Elsa."

Guests still loved the ride. It was immersive, well-themed, and short enough that kids didn't get bored. But there was room for improvement, and Disney apparently decided the investment was worth it.

The decision to upgrade made strategic sense. Frozen is one of Disney's biggest franchises. The ride was already popular. And the technology for better animatronics had matured enough that the improvements would be noticeable to regular guests, not just theme park enthusiasts. Disney likely saw this as a way to extend the ride's relevance and give guests a reason to experience it again if they'd ridden it before.

How the New Animatronics Were Built: The Foundation

Understanding what makes the new Frozen Ever After animatronics feel different requires understanding how they were engineered from scratch. This wasn't a simple software patch or minor mechanical adjustment. Disney effectively rebuilt the character figures and rewired their control systems.

The first step in this process was motion capture. Disney brought in talented actors and animators to perform the character movements in a motion-capture studio. The actors studied the Frozen films, analyzed how Elsa, Anna, and Kristoff move in specific scenes, and then performed those movements while wearing motion-capture suits. Cameras tracked dozens of points on their bodies, translating their real movements into digital data.

This is standard practice in animation and some advanced robotics, but the key difference here is the purpose. Instead of creating CGI characters for a film, Disney was generating movement templates for physical robots. Every subtle shift in posture, every gesture, every micro-movement was captured with high precision.

Once the motion-capture data was collected, Disney's engineers faced a fascinating challenge: how do you translate human movement onto a robot that doesn't have identical proportions? A human dancer can rotate their torso in ways a robot might not. A human actor's shoulders might shrug in a way that requires specific servo positions in a robot body. The engineering team had to map human kinematics onto the mechanical constraints of the animatronics.
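To make the retargeting problem concrete, here is a minimal Python sketch of one simple approach: scale each captured human joint angle and clamp it to what the robot joint can physically reach. The joint names, limits, and scale factors are hypothetical placeholders, not Disney's actual values, and real retargeting pipelines are considerably more involved.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class JointLimit:
    min_deg: float
    max_deg: float
    scale: float = 1.0  # fraction of the human motion to keep


# Hypothetical mechanical limits for three joints (illustration only).
ROBOT_LIMITS = {
    "torso_twist": JointLimit(-30.0, 30.0, scale=0.6),
    "shoulder_lift": JointLimit(0.0, 25.0),
    "head_turn": JointLimit(-70.0, 70.0),
}


def retarget_frame(human_angles, limits):
    """Map one frame of human joint angles onto the robot's reachable range."""
    robot = {}
    for joint, limit in limits.items():
        # Scale the captured angle, then clamp it to the mechanical range.
        angle = human_angles.get(joint, 0.0) * limit.scale
        robot[joint] = max(limit.min_deg, min(limit.max_deg, angle))
    return robot


# One captured frame in which the actor twists and turns further than the robot can.
frame = {"torso_twist": 80.0, "shoulder_lift": 10.0, "head_turn": -90.0}
print(retarget_frame(frame, ROBOT_LIMITS))
```

Clamping alone can flatten an expressive gesture, which is why a scale factor is included: shrinking the whole motion often preserves its shape better than cutting off the extremes.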

Then came the question of how many degrees of freedom each character needed. How many individual joints and servo motors would give the characters enough expressiveness to feel alive without making them impossibly complex to maintain? Disney apparently decided to prioritize facial expressiveness above all else, with detailed servo systems controlling eyes, eyebrows, mouth, cheeks, and even subtle head tilts.

The actual construction of the animatronics involved 3D-printed components, custom-molded silicone faces (which allow for more natural skin texture and expression), and articulated frameworks that can hold multiple servo motors while remaining light enough for the ride mechanism to smoothly move them. Each animatronic is a marvel of engineering compromise between realism, durability, and maintainability.

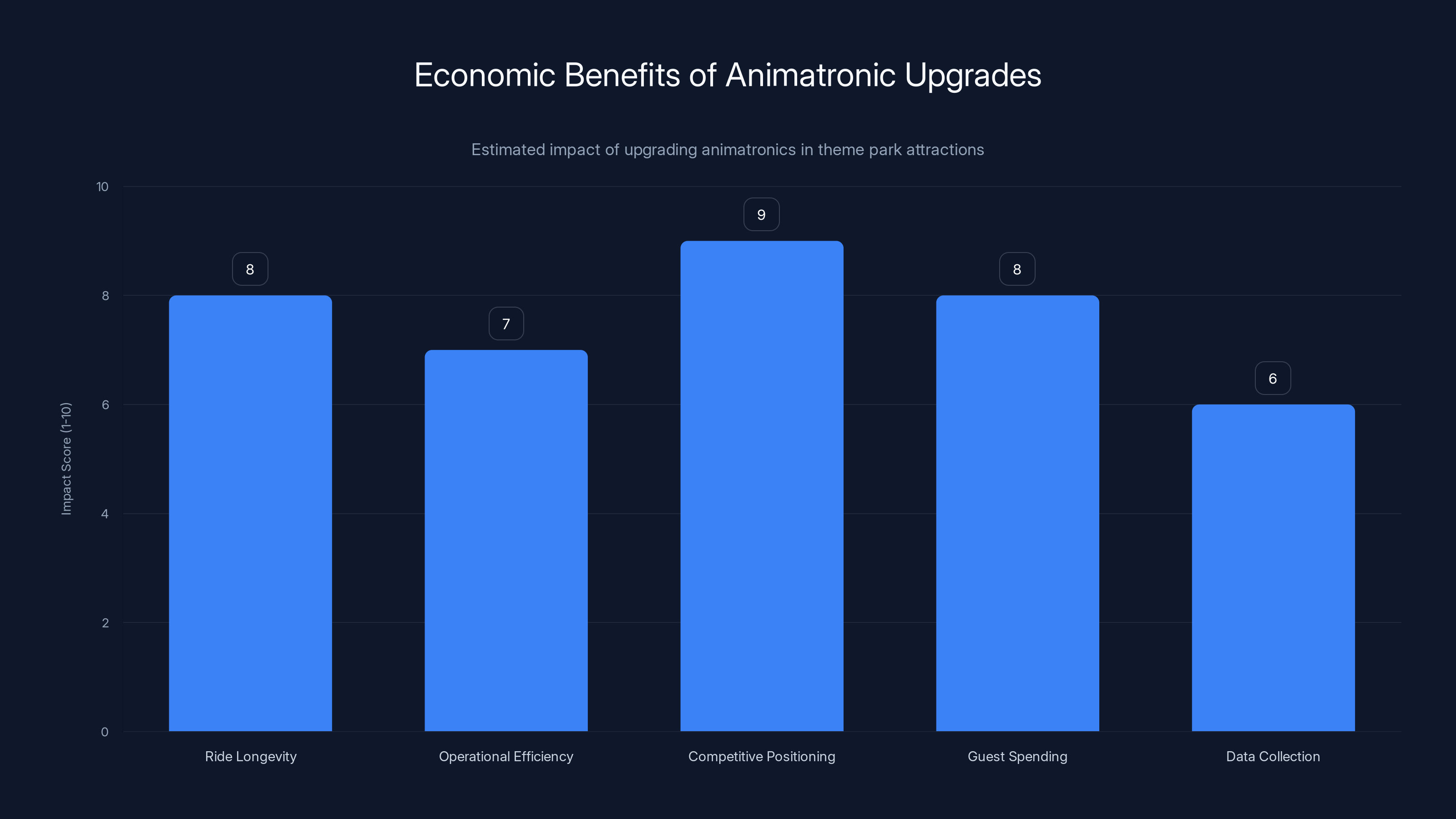

Upgrading animatronics significantly enhances competitive positioning and ride longevity, offering Disney substantial economic benefits. Estimated data.

The Servo Motor Revolution: Making Motion Fluid

If there's one thing that separates the new Frozen Ever After animatronics from older theme park robots, it's the servo motors. And this detail matters more than you might think.

Older animatronics relied on hydraulic or pneumatic actuators, stepper motors, or simple gear mechanisms. These components could move an arm from point A to point B, but the motion itself felt mechanical. The movement would start abruptly, run at a more or less fixed speed regardless of the distance, and then stop. If a stepper motor was moving a head, it would rotate at a fixed speed until it reached the target position. That's why older animatronics often feel "robotic" in the literal sense.

Servo motors are different. A servo motor uses feedback from a sensor (usually a potentiometer or encoder) to continuously adjust its position. If you tell a servo motor to reach position X, it will adjust itself in real time to match that target. More importantly, you can program how it reaches that position. You can tell it to accelerate smoothly, decelerate gently, add a slight overshoot and settle back, or perform a dozen other motion profiles. This allows servo motors to replicate the natural motion of living creatures, where acceleration and deceleration curves are never perfectly linear.
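The idea of a programmable motion profile can be illustrated with a short Python sketch. It uses smoothstep easing, which is just one common choice and not necessarily what Disney's controllers use; the function names and the 40-degree move are assumptions for illustration.

```python
def ease_in_out(t):
    """Smoothstep easing: 0 at t=0, 1 at t=1, with zero velocity at both ends."""
    t = max(0.0, min(1.0, t))
    return t * t * (3.0 - 2.0 * t)


def eased_profile(start_deg, end_deg, steps):
    """Target angles for a move that accelerates gently and settles gently."""
    span = end_deg - start_deg
    return [start_deg + span * ease_in_out(i / steps) for i in range(steps + 1)]


def linear_profile(start_deg, end_deg, steps):
    """Fixed-speed move, the 'robotic' baseline described above."""
    span = end_deg - start_deg
    return [start_deg + span * (i / steps) for i in range(steps + 1)]


print(linear_profile(0.0, 40.0, 4))  # equal-sized steps throughout
print(eased_profile(0.0, 40.0, 4))   # small steps at the ends, larger in the middle
```

Both profiles start and end at the same angles. The eased version spends more time near the start and end of the move, which is what reads as natural acceleration and deceleration.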

The new Frozen animatronics use precision servo motors throughout their skeletal systems. The eyes have individual servo motors for left-right movement, up-down movement, and even slight rotation. The mouth has servos that control jaw position, lip shape, and cheek involvement. The head has multiple servos for tilting, turning, and nodding. Hands have servos for finger positions, wrist angles, and grip strength. The torso might have servos for posture adjustments.

But here's the critical part: all these servos work in concert. When Elsa smiles, it's not just her mouth moving. Her cheeks rise slightly, her eyes narrow just a bit, her head might tilt slightly, and her overall posture might adjust. This coordination is what makes the movement feel alive instead of mechanical. It's the difference between a smile that looks like a motor moving a jaw and a smile that looks like genuine emotion.
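One way to picture this coordination is as a pose: a set of target values for many channels that are blended together rather than driven one at a time. The sketch below is an assumption-laden illustration in Python; the channel names and numbers are invented, not taken from the real figures.

```python
# Hypothetical channel values (0..1 for facial channels, degrees for head tilt).
NEUTRAL = {"jaw": 0.0, "lip_corner": 0.0, "cheek": 0.0, "eyelid": 1.0, "head_tilt": 0.0}
SMILE = {"jaw": 0.1, "lip_corner": 1.0, "cheek": 0.6, "eyelid": 0.8, "head_tilt": 5.0}


def blend_pose(start, end, weight):
    """Linearly blend every channel at once so the whole face moves together."""
    w = max(0.0, min(1.0, weight))
    return {channel: start[channel] + (end[channel] - start[channel]) * w
            for channel in start}


# A half-strength smile: lips, cheeks, eyelids, and head tilt all shift together.
half_smile = blend_pose(NEUTRAL, SMILE, 0.5)
for channel, value in half_smile.items():
    print(f"{channel}: {value:.2f}")
```

Driving the blend weight with an eased curve instead of a linear ramp would make the smile build and fade more naturally.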

The response time of modern servos also matters. Older systems might have had response times measured in hundreds of milliseconds. The new systems have response times in tens of milliseconds. That means when a guest moves in front of Elsa, her eyes can follow them with barely any perceptible lag. The movement feels responsive and intelligent.

Facial Recognition and Expression Mapping: The AI Layer

Now it gets really interesting. The new animatronics aren't just executing pre-programmed movements. They're using real-time AI systems to recognize guests and respond to them.

Facial recognition technology has advanced dramatically in recent years. Modern systems can identify a face in an image, extract dozens of specific features (the distance between eyes, the shape of the chin, the symmetry of facial proportions), and match those against a database of known faces with high accuracy. Theme parks and entertainment venues have started incorporating this technology into attractions.

But Disney's implementation here goes a step further. The system is likely using computer vision to detect guest positions, estimate their gaze direction, and predict their emotional state based on facial expressions. When a guest walks past Elsa, the camera system detects their presence and position. The AI system processes this information and sends commands to Elsa's servo motors to adjust her gaze, ensuring she appears to make eye contact. If the guest smiles, the system might trigger Elsa to smile back or wave. If a guest lingers near a character, the animatronic might perform additional expressions or movements to maintain engagement.
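The gaze-adjustment step described above boils down to geometry: given where the figure's head is and where the guest is, compute the pan and tilt angles that point the eyes at the guest. This Python sketch assumes a coordinate frame (x to the figure's right, y up, z straight ahead, in meters) and mechanical limits that are purely hypothetical.

```python
import math

PAN_LIMIT_DEG = 60.0   # hypothetical left/right limit
TILT_LIMIT_DEG = 20.0  # hypothetical up/down limit


def clamp(value, limit):
    """Keep an angle within +/- limit degrees."""
    return max(-limit, min(limit, value))


def gaze_angles(head, guest):
    """Return (pan, tilt) in degrees that aim the eyes from head toward guest."""
    dx = guest[0] - head[0]
    dy = guest[1] - head[1]
    dz = guest[2] - head[2]
    pan = math.degrees(math.atan2(dx, dz))                   # left/right
    tilt = math.degrees(math.atan2(dy, math.hypot(dx, dz)))  # up/down
    return clamp(pan, PAN_LIMIT_DEG), clamp(tilt, TILT_LIMIT_DEG)


# A seated guest ahead of the figure, off to the right and slightly below eye level.
pan, tilt = gaze_angles((0.0, 1.6, 0.0), (2.0, 1.1, 2.0))
print(f"pan={pan:.1f} deg, tilt={tilt:.1f} deg")
```

In a real system the guest position would come from the vision pipeline, and the resulting angles would be fed through a motion profile rather than sent to the servos directly.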

This is where the "it's as if they hopped off the screen" feeling comes from. The characters aren't just performing a script at set intervals. They're responding to the guests. They seem aware of the audience. They give the impression of being intelligent, present, and engaged.

The technical implementation requires several synchronized systems: a camera array positioned around the attraction, a real-time computer vision pipeline that processes video feeds, a machine learning model that can recognize faces and estimate emotional states, a middleware layer that translates recognition outputs into animatronic commands, and the control system for all the servo motors. If any of these components has latency or performs poorly, the illusion breaks.
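Because every stage in that chain adds delay, it helps to think in terms of a latency budget. The Python sketch below sums per-stage delays and flags the slowest stage. All stage names and millisecond figures are placeholder assumptions chosen for illustration, not measurements from the attraction.

```python
# Hypothetical per-stage latencies in milliseconds (illustration only).
STAGE_MS = {
    "camera_capture": 17,
    "vision_inference": 25,
    "middleware": 5,
    "network": 2,
    "servo_response": 30,
}
BUDGET_MS = 100  # assumed end-to-end target


def total_latency(stages):
    """End-to-end delay if the stages run one after another."""
    return sum(stages.values())


def slowest_stage(stages):
    """Name of the stage that contributes the most delay."""
    return max(stages, key=stages.get)


total = total_latency(STAGE_MS)
print(f"total: {total} ms, headroom: {BUDGET_MS - total} ms")
print(f"optimize first: {slowest_stage(STAGE_MS)}")
```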

Disney has apparently optimized this pipeline well enough that most guests don't notice lag or mistakes. The character's responses feel natural and spontaneous, even though they're the result of complex computation happening in real time.

Synchronized Audio-Visual Systems: Perfect Timing

One of the most noticeable improvements in the updated Frozen Ever After is the timing. The characters' mouth movements match their dialogue perfectly. Their body movements sync with the music. Their gestures align with the emotional beats of the soundtrack. This precision is harder to achieve than it might seem.

In older animatronics, the audio and the animation were often semi-independent. The ride would play a pre-recorded soundtrack, and the animatronics would perform pre-programmed movements. If an engineer set up the timing wrong, the mouth movements might be slightly out of sync with the dialogue. It's subtle, but noticeable, and it breaks the illusion.

The new system uses synchronized timing across all components. The ride control system receives master timing information from the audio system. Every servo motor command is timestamped relative to that master clock. The result is perfect synchronization between what the characters are saying and what their mouths are doing, between the music crescendo and the characters' movements, between the emotional arc of the story and the characters' expressions.
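A minimal way to sketch timestamped commands is a cue track: each servo command carries a time on the audio clock, and the controller fires whatever has come due. The class and field names below are invented for illustration and do not reflect Disney's show-control software.

```python
import bisect
from dataclasses import dataclass


@dataclass(frozen=True)
class Cue:
    time_ms: int    # position on the master audio clock
    channel: str    # which servo channel to drive
    target: float   # commanded position


class CueTrack:
    """Releases cues in order as the master audio clock advances."""

    def __init__(self, cues):
        self._cues = sorted(cues, key=lambda cue: cue.time_ms)
        self._times = [cue.time_ms for cue in self._cues]
        self._next = 0  # index of the first cue not yet fired

    def due(self, audio_clock_ms):
        """Return cues whose timestamps have passed since the last call."""
        end = bisect.bisect_right(self._times, audio_clock_ms)
        fired = self._cues[self._next:end]
        self._next = max(self._next, end)
        return fired


track = CueTrack([
    Cue(250, "jaw", 0.0),
    Cue(0, "jaw", 0.2),
    Cue(120, "jaw", 0.8),
])
for clock_ms in (100, 260, 260):
    print(clock_ms, [(cue.time_ms, cue.target) for cue in track.due(clock_ms)])
```

Because the cues are keyed to the audio clock rather than to wall-clock time, the motion follows the audio position even if a controller wakes up late, instead of drifting out of sync.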

This requires a sophisticated real-time control architecture. The ride's programmable logic controller (PLC) must manage the positions of dozens of servo motors and trigger effects and lighting changes, all while staying synchronized with the audio stream playing to the guests. A delay of even 100 milliseconds would be noticeable.

Modern Disney attractions likely use a network of microcontrollers and real-time operating systems to achieve this coordination. Each animatronic might have its own control computer that receives commands from a central orchestration system. Wired Ethernet networks (more likely than wireless links, whose latency is harder to keep predictable) carry commands between controllers with enough bandwidth and low enough latency to keep everything synchronized.

The sophistication here is often invisible to guests. They just notice that the characters' mouths perfectly match their words, and it feels natural rather than robot-like. But achieving that naturalness requires engineers solving complex distributed systems problems.

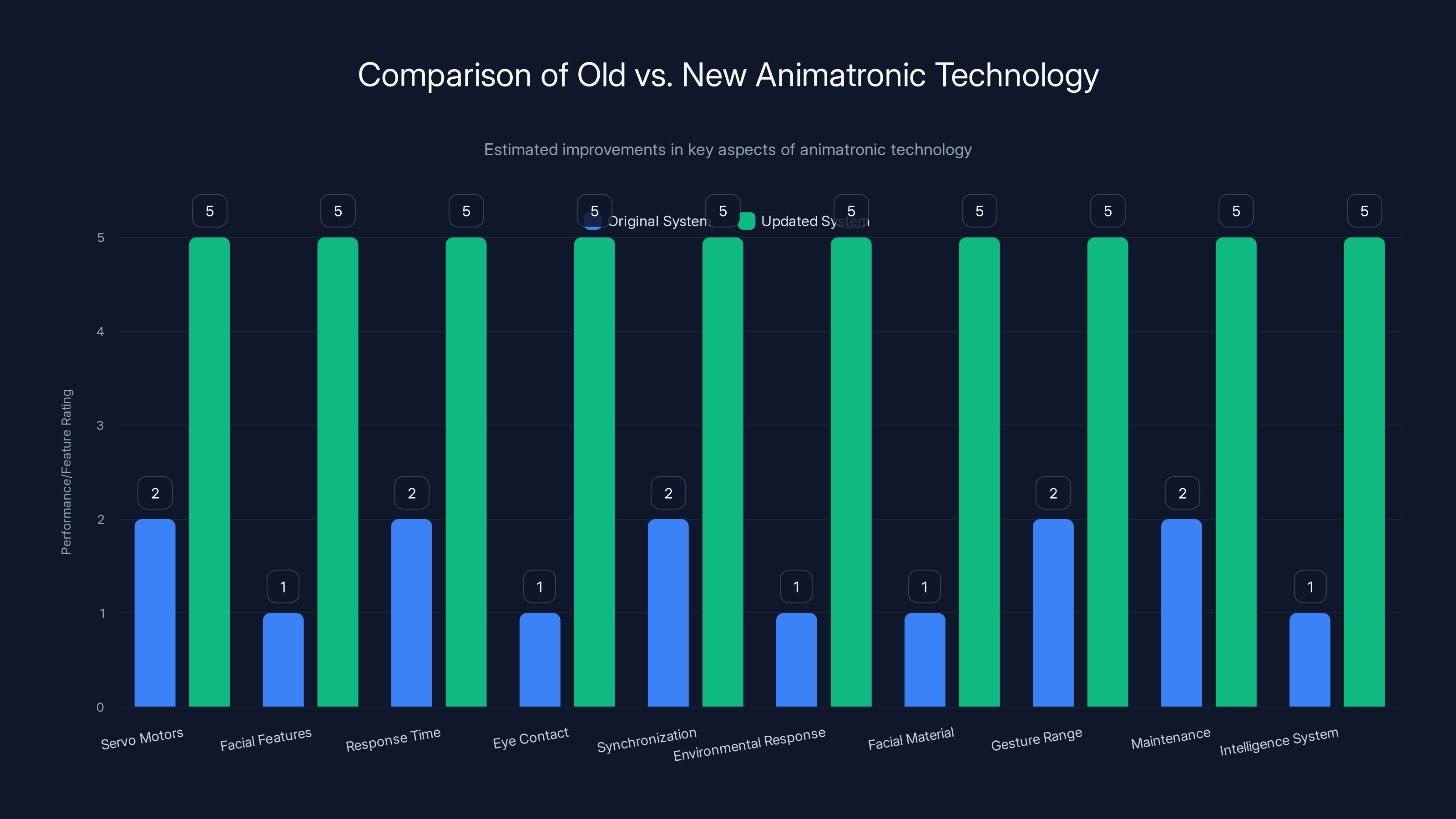

The updated animatronic systems show significant improvements across all aspects, particularly in precision, responsiveness, and intelligence. Estimated data.

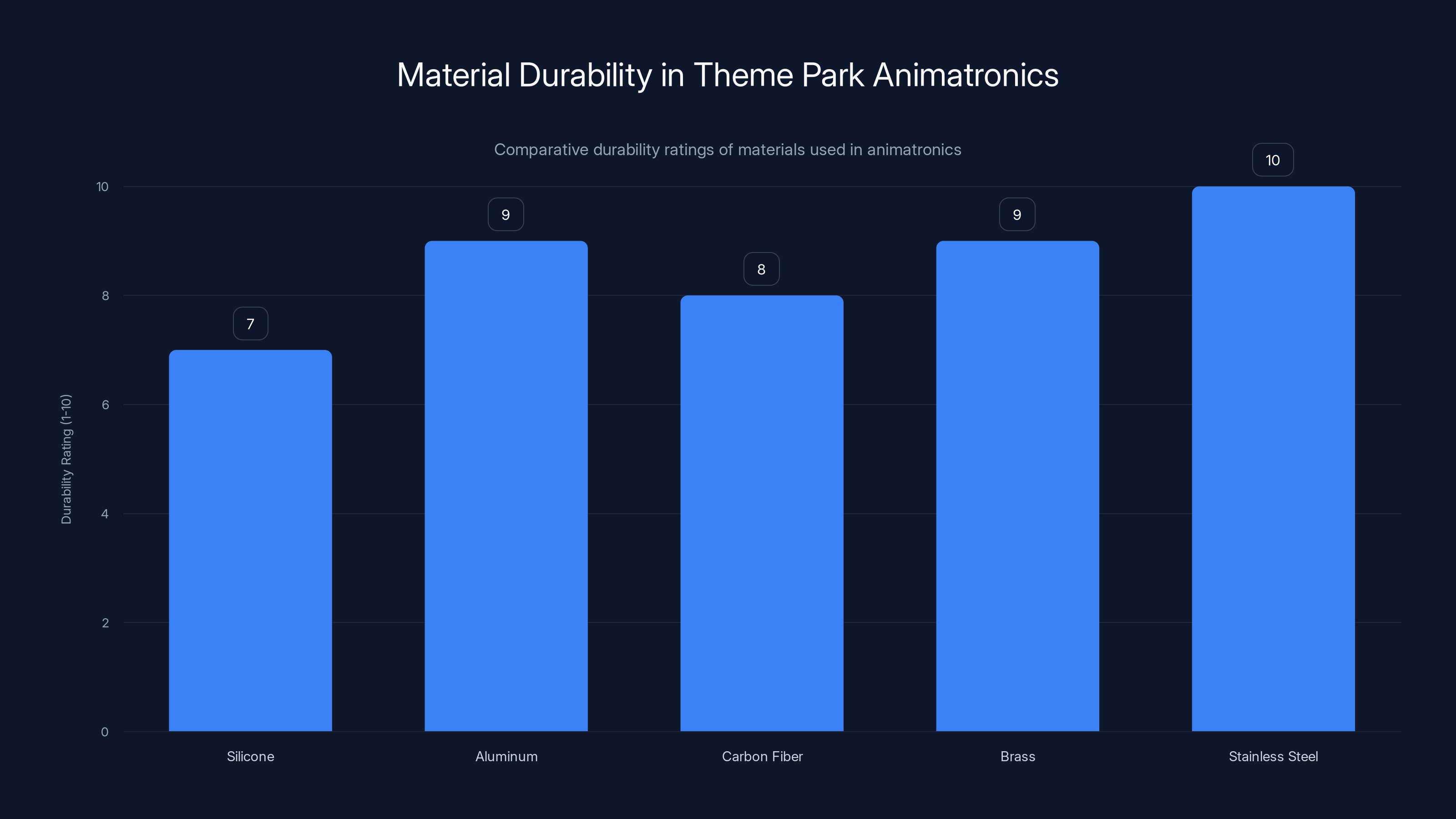

Material Science and Physical Construction: Durability Meets Realism

Building a theme park animatronic that needs to perform dozens of times per day requires serious material science expertise. The characters need to look realistic but also withstand constant use, temperature changes, humidity, and the occasional guest interference.

The faces of the new Frozen animatronics are made from detailed silicone. Silicone is more realistic than rigid plastic because it has some give to it, allowing facial features to move and deform slightly in natural ways. The silicone is pigmented to match human skin tones and is often embedded with hair or crafted to have realistic skin texture. Creating a silicone face involves creating a mold from a sculpture or 3D model, then casting the silicone into that mold with multiple layers for different properties.

But silicone faces have challenges. They're subject to UV degradation from the lights in the attraction. They can stain from the moisture and dust in the Florida air. They can accumulate dirt and grime over time. Disney engineers have to apply protective coatings, use specialized lighting that minimizes UV exposure, and probably incorporate regular cleaning into their maintenance schedule.

The body structures use aluminum frames or possibly carbon-fiber components where weight is a concern. Joints are often brass or stainless steel for durability. Servo motors and moving components are sealed against moisture. The internal mechanisms are designed to be maintainable, with quick-disconnect panels that allow technicians to access servo motors, cables, and control systems for adjustment or replacement.

Cable management is another overlooked but critical aspect. An animatronic with dozens of servos has dozens of cables running from the control computer to the individual motors. These cables need to be routed so they don't tangle, don't get pinched during movement, and don't degrade from exposure to moisture or temperature extremes. Poor cable management is one of the leading causes of animatronic failures in theme parks.

The overall structure might weigh anywhere from 200 to 500+ pounds per full-sized animatronic, depending on the complexity and the number of motors. This weight needs to be supported by the ride mechanism and positioned so that the center of gravity remains stable even as the animatronic moves.

Environmental Responsiveness: Characters That React to Their Surroundings

One of the more sophisticated aspects of the new animatronics is their environmental responsiveness. The characters don't just perform the same movements every ride cycle. They respond to conditions around them.

Temperature affects how servo motors perform. Cold can thicken the lubricants in gearboxes and bearings and reduce responsiveness. Heat can force motors and drive electronics to throttle back to protect themselves. The new animatronics likely have sensor systems that monitor temperature and can adjust servo speeds or movement profiles to compensate. If it's a hot Florida day and the internal electronics are running warm, the system might reduce movement speed or add brief pauses between commands to let components cool.
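Temperature compensation of this kind is often implemented as a derating curve: full speed while the hardware is cool, then a gradual reduction as it warms up. The Python sketch below shows one linear version. The thresholds and the 40% floor are assumptions for illustration, not known values from the attraction.

```python
def speed_scale(temp_c, full_speed_below=60.0, min_speed_at=85.0, floor=0.4):
    """Fraction of normal movement speed to allow at a given temperature.

    All thresholds are hypothetical: full speed up to 60 C, then a linear
    ramp down to 40% of normal speed at 85 C and above.
    """
    if temp_c <= full_speed_below:
        return 1.0
    if temp_c >= min_speed_at:
        return floor
    fraction_hot = (temp_c - full_speed_below) / (min_speed_at - full_speed_below)
    return 1.0 - fraction_hot * (1.0 - floor)


for temp in (45.0, 72.5, 90.0):
    print(f"{temp:.1f} C -> {speed_scale(temp):.2f}x speed")
```

A gradual ramp avoids a visible jump in the character's performance when the temperature crosses a single threshold.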

Humidity is another factor. EPCOT is in a subtropical climate with high humidity, and an indoor water ride creates additional moisture. Electronics need to be sealed and protected. Servo motors need to be designed to resist corrosion. Moving parts might need occasional lubrication to prevent rust.

Lighting changes can affect how realistic an animatronic looks. The new attractions probably incorporate sophisticated lighting design where spotlights follow the characters, highlighting their best angles. The characters' faces might change subtle hues based on the lighting to appear more lifelike. This requires coordination between the lighting system and the character animation system.

Guest proximity is another factor. If a guest gets very close to a character, the computer vision system can detect this and potentially adjust the animatronic's behavior. A character might pull back slightly, maintain eye contact more intensely, or perform a more dramatic expression if guests are crowded close. This prevents the unsettling feeling of a robot not reacting when someone is directly in its face.
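Proximity-based behavior can be sketched as a simple lookup from distance to a behavior name. The distance bands and behavior labels in this Python example are hypothetical and only illustrate the idea described above.

```python
# Hypothetical distance bands in meters, nearest first (illustration only).
BEHAVIOR_BANDS = [
    (0.8, "lean_back"),
    (2.0, "hold_eye_contact"),
    (5.0, "glance_and_wave"),
]
DEFAULT_BEHAVIOR = "idle_loop"


def proximity_behavior(distance_m):
    """Pick a behavior based on how close the nearest guest is."""
    for max_distance, behavior in BEHAVIOR_BANDS:
        if distance_m < max_distance:
            return behavior
    return DEFAULT_BEHAVIOR


for distance in (0.5, 1.5, 3.0, 8.0):
    print(f"{distance} m -> {proximity_behavior(distance)}")
```

A production system would likely add hysteresis so the character does not flicker between behaviors when a guest hovers right at a band boundary.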

The Human Element: Why Real Acting Still Matters

While the technology behind the new Frozen Ever After animatronics is impressive, it's important to understand that it's not replacing human actors. Rather, it's capturing and reproducing the work of human performers.

Disney hired or contracted talented actors and voice professionals to create the motion-capture performances that form the basis of the animatronics. These aren't generic movements. They're nuanced performances informed by understanding the characters, their emotional arcs, and how they interact with guests. An actor bringing authenticity and emotional intelligence to their motion-capture performance results in animatronics that feel alive because they're based on a living person's choices.

The voice acting also matters. The dialogue isn't just synchronized with the mouth movements. The performance quality of the voice acting influences how guests perceive the character. A well-performed line with proper emotional inflection makes the character seem more real, even if it's coming from a speaker.

This is where things get interesting from a labor perspective. Theme parks still employ live character performers for meet-and-greets and other experiences. Animatronics aren't replacing those performers. They're adding a new tool to the entertainment toolbox. A guest might ride Frozen Ever After in the morning and then meet Anna and Elsa as live performers in the afternoon. Each experience has different value.

But the motion-capture performances do involve talent and skill that Disney has invested in and probably continues to invest in. When an animatronic looks particularly lifelike or expressive, it's because somewhere, a real performer gave their time and talent to create that foundation.

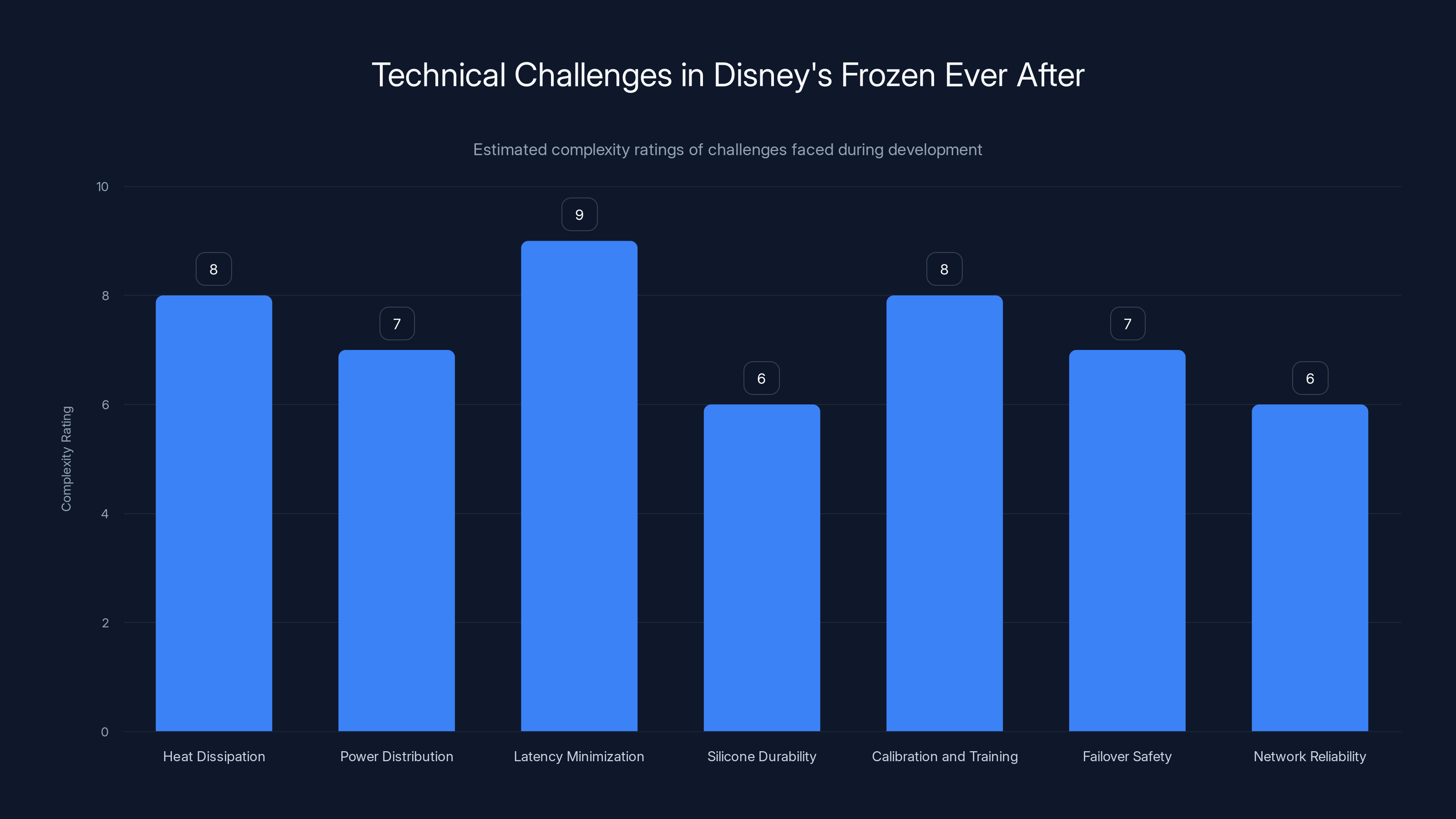

Estimated complexity ratings highlight that latency minimization and heat dissipation were among the most challenging technical issues Disney engineers faced while developing the Frozen Ever After animatronics.

Comparison: Old vs. New Animatronic Technology

Let's break down the specific improvements between the original Frozen Ever After animatronics and the newly updated versions:

| Aspect | Original System | Updated System |
|---|---|---|
| Servo Motors | Basic stepper motors with fixed speeds | Precision servos with programmable motion profiles |
| Facial Features | Limited articulation (mouth, maybe eyebrows) | 30+ individual servo motors for micro-expressions |
| Response Time | 200-500ms latency | 30-100ms latency for real-time reactions |
| Eye Contact | Fixed gaze or simple scanning | Dynamic eye tracking based on guest position |
| Synchronization | Approximate timing with audio | Perfect frame-by-frame sync with dialogue and music |
| Environmental Response | Fixed movement sequence | Adaptive movements based on temperature, guest proximity |
| Facial Material | Rigid plastic or painted foam | Silicone with realistic texture and skin tone |
| Gesture Range | 8-12 key poses per scene | Continuous smooth movement between hundreds of positions |
| Maintenance | Component replacement every 1-2 years | Modular servos with quick replacement capability |
| Intelligence System | Simple timers and triggers | AI-driven facial recognition and expression mapping |

Installation and Integration Challenges

Upgrading the animatronics in an existing attraction that stays open to guests presents massive logistical challenges. Disney couldn't just shut down Frozen Ever After for a year while engineers rebuilt everything. The work had to be done in phases, likely during off-seasons or through careful choreography of closures and maintenance windows.

The physical retrofitting required removing the old animatronics, which had been in place since 2016. These animatronics weren't necessarily designed for easy removal. They were likely bolted securely into fixed positions in the show scenes. Disney engineers had to carefully disassemble them without damaging the ride structure or other components.

Then came the installation of the new animatronics. Every servo motor needed proper power routing. Control cables needed to be run and tested. The computer vision systems needed to be positioned optimally to see guests as they rode through. The new control computer needed to be integrated with the existing ride control system. Everything needed to be tested and calibrated.

Testing is a massive undertaking. Disney engineers would have needed to run hundreds of test cycles to ensure that the animatronics perform reliably. They needed to verify that the motion sequences didn't cause servo strain or premature wear. They needed to test the facial recognition system in actual lighting conditions to ensure it worked reliably. They needed to verify that the updated systems didn't interfere with other ride systems (the boat motion, lighting effects, other animatronics).

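And all of this had to be done while maintaining ride safety and security. In Florida, amusement ride safety falls under the Department of Agriculture and Consumer Services, although the state's largest permanent parks, Disney among them, are largely exempt from state inspection and rely on their own in-house inspection programs, so any re-certification was probably handled mostly internally.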

Guest Reactions and Immersion Theory

The guest reactions to the updated Frozen Ever After have been remarkably positive and notably emotional. Social media posts say the ride "gave chills" and that "it's as if they hopped off the screen." These aren't casual compliments. These are expressions of genuine emotional impact.

This connects to what's called the "uncanny valley" in robotics and animation. The uncanny valley is the phenomenon where something that's almost but not quite human can be deeply unsettling. A robot that moves jerkily or has blank eyes feels eerie rather than impressive. But as robots or animatronics get more realistic, there's a point where they cross from "impressive robot" to "something that might be alive." The new Frozen animatronics seem to have crossed that threshold for most guests.

The updated animatronics achieve immersion through multiple channels simultaneously. The movement is realistic. The facial expressions are nuanced. The eyes respond to the guest's presence. The dialogue is synchronized perfectly. The emotional performance feels authentic. When all these elements work together, the guest's brain accepts the character as real or at least as something worthy of genuine emotional engagement.

There's also an element of nostalgia and story resonance. Guests know Elsa, Anna, and Kristoff from the Frozen films. When the animatronics look and move like the characters from those films, it creates a moment of recognition and pleasure. The animatronics aren't competing with live actors. They're bringing beloved fictional characters into physical space in a way that feels genuine.

Theme parks are basically selling immersion. The entire point is to create an environment where guests can temporarily step out of reality and into another world. Every dollar Disney spends on updating animatronics is an investment in deepening that immersion. When guests describe the experience as "giving chills" or breaking emotional barriers, that's Disney's investment showing returns.

Silicone offers realism but lower durability compared to metals like stainless steel. Estimated data based on typical material properties.

The Future: Where This Technology Goes

The updated Frozen Ever After animatronics represent the current state of the art in theme park character technology, but they're not the endpoint. Several developments suggest where this technology will evolve:

Broader Adoption Across Disney Properties: The technology that worked for Frozen will likely be applied to other attractions. Disney parks have dozens of animatronic characters in attractions around the world. The improvements that worked in EPCOT can be adapted to Magic Kingdom, Hollywood Studios, and international parks. Expect to see more animatronics with improved servo systems, facial recognition, and responsive behavior over the next 5-10 years.

Augmented Reality Integration: Future animatronics might integrate with augmented reality systems that overlay digital information on the real animatronic. A guest might see a digital magical effect originating from an animatronic character, blending the physical and digital in new ways. This requires careful design to not override the impressive physical animatronic work.

More Sophisticated AI: Current systems use facial recognition and relatively simple response logic. Future systems might incorporate more sophisticated natural language processing, allowing characters to respond differently based on what guests say or show. An animatronic might have actual conversations with guests rather than just performing responses to proximity or emotional cues.

Autonomous Movement: Current animatronics are limited to pre-designed spaces along the ride track. Future systems might incorporate mobility, allowing animatronics to move around and interact with guests more freely. This would require solving complex challenges around safety, collision avoidance, and crowd management.

Biomimetic Design: As animatronics get more sophisticated, Disney and other companies will likely invest more in biomimicry, where the design process studies real animals or humans in detail and translates those findings into robotic systems. This goes beyond motion capture to understanding the principles of how creatures move and adapting those principles for mechanical systems.

Emotional Intelligence Systems: Future animatronics might incorporate affective computing systems that can recognize not just faces but emotional states with higher accuracy. An animatronic might genuinely seem to care about how guests feel and adjust its behavior accordingly.

Broader Industry Implications

The investment Disney is making in advanced animatronics has implications beyond theme parks. The technology and expertise being developed here applies to several other industries:

Entertainment and Film: The motion-capture and animatronic systems developed for theme parks directly inform how films and streaming content are created. Better animatronic performance capture means better animated characters in movies.

Healthcare and Robotics: Lifelike animatronics require advances in materials science, servo motor control, and AI-driven interaction systems. These advances often find applications in surgical robots, prosthetics, and therapeutic robots used in healthcare settings.

Customer Service and Retail: The facial recognition and interaction systems being used in theme park animatronics have applications in retail environments, airports, and customer service scenarios. Animated characters in storefronts or service areas might soon be as interactive and responsive as theme park figures.

Education: Educational institutions are exploring using animatronics to teach subjects ranging from biology (using realistic animal models) to history (realistic historical figures for immersive learning). The technology Disney is pioneering will eventually make its way into classrooms.

Museum Exhibits: Museums are increasingly using animatronics and interactive exhibits. The improvements in realism and responsiveness will make museum experiences more engaging, particularly for exhibits about history, nature, or science.

The Cost Factor: Why This Matters Economically

Upgrading animatronics is expensive. Estimates suggest that a complete retrofit of animatronics in a major attraction could cost Disney tens of millions of dollars. This includes engineering, manufacturing, installation, testing, and the cost of taking the attraction offline during construction and retrofitting.

Why would Disney make such a massive investment? Several economic factors likely influenced the decision:

Ride Longevity: Animatronic attractions need regular updates to remain competitive and to keep guests interested. A ride that debuted in 2016 can start feeling dated by 2024. Updating animatronics extends the ride's relevant lifespan by another 5-10 years, delaying the need for a complete attraction replacement, which would cost 10-50 times more.

Operational Efficiency: Modern servo motors and control systems are often more reliable and require less maintenance than older systems. Better reliability means less downtime and fewer missed ride cycles, which directly translates to higher revenue. If a ride has 10% more uptime, that's meaningful income over the years.

Competitive Positioning: Other theme parks and entertainment venues are also investing in advanced animatronics. Disney needs to stay ahead to maintain its reputation for cutting-edge immersive experiences. When potential guests compare options, "more realistic animatronics" is a real factor that influences decisions.

Guest Spending: Better attractions mean longer park stays, which means more spending at restaurants, shops, and other facilities. Guests who have an amazing experience are more likely to return and to recommend the parks to others. The return on investment extends beyond just the ride itself.

Data Collection: Modern animatronic systems with facial recognition capabilities generate valuable data about guest behavior, emotional responses, and preferences. This data helps Disney understand what works and what doesn't, informing future design decisions.
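To make the uptime argument above concrete, here is a rough back-of-the-envelope sketch. Every input (hourly capacity, operating hours, baseline uptime) is a hypothetical placeholder rather than a Disney figure, and the sketch reads "10% more uptime" as a relative improvement over the baseline.

```python
def extra_riders_per_year(riders_per_hour, hours_per_day, days_per_year,
                          baseline_uptime, relative_gain):
    """Estimate additional riders served per year from an uptime improvement.

    All inputs are illustrative assumptions, not published figures.
    """
    scheduled_hours = hours_per_day * days_per_year
    baseline_riders = riders_per_hour * scheduled_hours * baseline_uptime
    # Uptime cannot exceed 100% of scheduled hours, so cap it at 1.0.
    improved_uptime = min(1.0, baseline_uptime * (1 + relative_gain))
    improved_riders = riders_per_hour * scheduled_hours * improved_uptime
    return improved_riders - baseline_riders


if __name__ == "__main__":
    extra = extra_riders_per_year(
        riders_per_hour=1000,   # hypothetical ride capacity
        hours_per_day=12,       # hypothetical operating day
        days_per_year=365,
        baseline_uptime=0.80,   # hypothetical: ride available 80% of the time
        relative_gain=0.10,     # "10% more uptime", read as 0.80 -> 0.88
    )
    print(f"Additional riders per year: {extra:,.0f}")
```

With these made-up inputs the gain works out to roughly 350,000 additional rides a year, which is why reliability shows up in the business case even though guests never see it directly.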

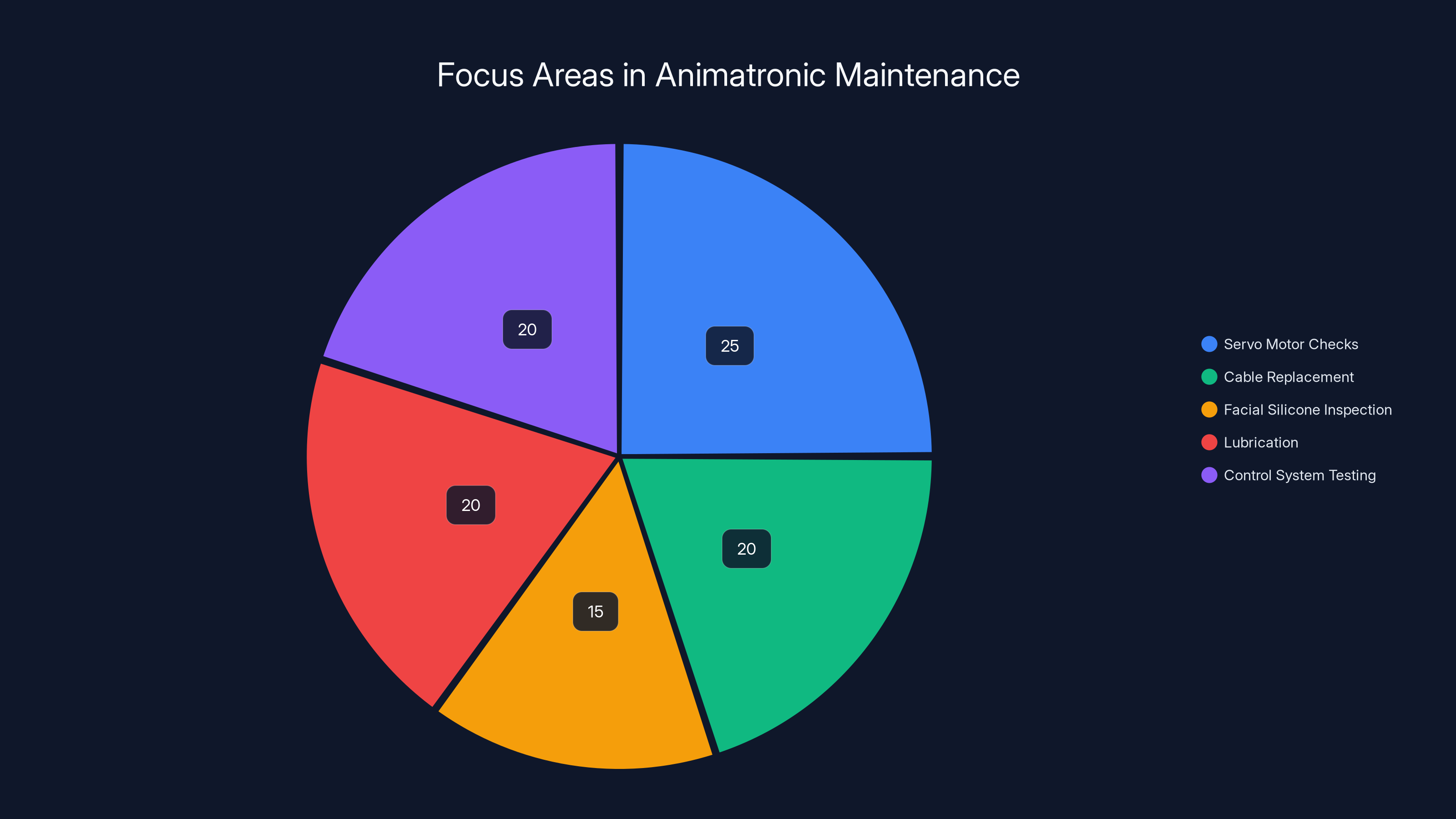

Servo motor checks and control system testing are key focus areas, each comprising 25% of maintenance efforts. Estimated data based on typical maintenance tasks.

Technical Challenges Disney Had to Solve

Creating the new Frozen Ever After animatronics required solving several non-obvious technical challenges:

Heat Dissipation: Multiple servo motors running continuously generate heat. In a small enclosed space in a Florida climate, managing that heat to prevent servo degradation and ensure consistent performance required careful thermal design, possibly including active cooling systems.

Power Distribution: Powering dozens of servo motors simultaneously requires substantial electrical infrastructure. The ride vehicle needs to supply power reliably to the control systems and all the motors without power spikes or drops that could cause glitches.

Latency Minimization: Real-time AI systems operating on video feeds need to process information and send commands with minimal delay. This required optimizing the entire pipeline from camera input to motor command output, possibly including custom hardware or FPGAs (field-programmable gate arrays) for real-time processing.

Silicone Durability: Silicone faces look realistic but degrade under UV light and constant use. Disney engineers had to develop or discover silicone formulations and protective coatings that maintain appearance and durability over years of continuous operation.

Calibration and Training: AI systems for facial recognition and expression mapping need to be trained on diverse data and calibrated to work in the specific lighting and environmental conditions of the attraction. This required collecting training data, iterating on models, and testing in actual conditions.

Failover Safety: If a servo fails, the animatronic needs to gracefully handle the failure without becoming a safety hazard or causing guest injury. The control system probably includes failsafe mechanisms that move the animatronic to a safe position if individual components fail.

Network Reliability: If the animatronics are networked (via Ethernet or wireless), the network needs to be extremely reliable with redundancy and failover capabilities. A network glitch shouldn't cause animatronics to freeze or malfunction.
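The failover and network points above share one idea: when something goes wrong, the figure should settle into a known safe pose instead of freezing mid-gesture. Below is a minimal sketch of that pattern using a heartbeat timeout and a latched safe mode. The class name, timeout value, and pose format are all hypothetical; nothing here reflects Disney's actual control software.

```python
from dataclasses import dataclass, field
from enum import Enum


class Mode(Enum):
    RUNNING = "running"
    SAFE = "safe"


@dataclass
class FigureController:
    """Hypothetical controller for a single animatronic figure."""
    heartbeat_timeout_s: float = 0.25   # assumed value, for illustration only
    safe_pose: dict = field(default_factory=lambda: {"head": 0.0, "arm": 0.0})
    mode: Mode = Mode.RUNNING
    last_heartbeat_s: float = 0.0
    pose: dict = field(default_factory=dict)
    faults: list = field(default_factory=list)

    def on_heartbeat(self, now_s):
        """Record that the show controller is still reachable."""
        self.last_heartbeat_s = now_s

    def on_servo_fault(self, servo_name):
        """Log the fault and latch the figure into its safe pose."""
        self.faults.append(servo_name)
        self._enter_safe_mode()

    def update(self, now_s, commanded_pose):
        """Apply the commanded pose unless a fault or timeout has occurred."""
        if now_s - self.last_heartbeat_s > self.heartbeat_timeout_s:
            self._enter_safe_mode()
        if self.mode is Mode.RUNNING:
            self.pose = dict(commanded_pose)
        return self.pose

    def _enter_safe_mode(self):
        # Safe mode is latched: it stays set until someone resets the figure.
        self.mode = Mode.SAFE
        self.pose = dict(self.safe_pose)
```

Latching is a deliberate choice in this sketch. Once a figure has dropped into its safe pose, it ignores new show commands even if the heartbeat comes back, on the assumption that a technician should confirm the cause before the figure resumes.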

Maintenance and Long-term Operations

Once the upgraded animatronics are installed and operating, the ongoing maintenance becomes critical. Disney has entire teams dedicated to animatronic maintenance across its parks.

Regular maintenance includes checking servo motors for wear, replacing cables that show signs of fraying, verifying that facial silicone hasn't cracked or degraded, ensuring that all moving parts are properly lubricated, and testing the control systems to verify everything is synchronized.

Predictive maintenance is increasingly important. Modern servo motors have built-in sensors that monitor performance and can detect early signs of failure. Disney's systems probably collect telemetry data from the animatronics, looking for patterns that indicate impending problems. This allows maintenance teams to replace components before they fail, reducing unexpected downtime.
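One simple way to look for "patterns that indicate impending problems" is to compare each new telemetry reading against a rolling baseline and flag large deviations. The sketch below does this with a rolling z-score over, say, servo current draw. The window size, threshold, and choice of signal are assumptions for illustration; a production system would likely use richer models.

```python
from statistics import mean, stdev


def flag_anomalies(readings, window=20, threshold=3.0):
    """Return indices of readings that deviate sharply from the recent baseline.

    Each reading is compared against the mean and standard deviation of the
    `window` readings immediately before it.
    """
    flagged = []
    for i in range(window, len(readings)):
        baseline = readings[i - window:i]
        mu = mean(baseline)
        sigma = stdev(baseline)
        if sigma == 0:
            # Perfectly flat baseline: treat any change at all as notable.
            if readings[i] != mu:
                flagged.append(i)
            continue
        if abs(readings[i] - mu) / sigma > threshold:
            flagged.append(i)
    return flagged


if __name__ == "__main__":
    # Hypothetical current draw in amps: steady, then a sudden spike.
    current = [1.0, 1.1] * 10 + [1.05, 2.0]
    print(flag_anomalies(current))
```

A flagged index would not mean the servo has failed, only that it is behaving differently from its own recent history and is worth a look at the next maintenance window.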

Component replacement is designed to be straightforward. Servo motors are probably quick-disconnect units that can be swapped out in minutes rather than hours. This modular design minimizes ride downtime for repairs.

Software updates might be deployed periodically to improve facial recognition accuracy, adjust movement sequences based on guest feedback, or optimize energy consumption. These updates probably don't require taking the ride offline.

How This Compares to Live Actors and Other Solutions

Theme parks have several options for bringing characters to life: live actors, animatronics, projection-based characters, and hybrid approaches. Each has trade-offs.

Live Actors: Genuine human performers can ad-lib, respond naturally, and engage guests in unpredictable ways. But live actors are expensive, require breaks, can be inconsistent in quality, and aren't scalable to the number of ride cycles a busy attraction experiences.

Animatronics: Animatronic figures are consistent, available whenever the park is open, free of wage and benefit costs, and can be engineered to match a specific character's appearance. The downside is that they're expensive to build and maintain, require technical expertise, and can feel impersonal if not well executed.

Projections: Projected characters are relatively inexpensive, easy to update through software changes, and take up little physical space. But projections have no physical presence in the environment and can't interact convincingly with three-dimensional space.

Hybrid Approaches: Some attractions combine live actors with animatronics, or use projections overlaid on animatronics. These can maximize the strengths of each approach.

The updated Frozen Ever After uses primarily animatronics. This was the right choice for a ride-through attraction where guests are moving at a consistent pace through predefined scenes. The animatronics provide physical presence and consistency that guests find more immersive than projections, and they don't have the labor costs of live actors.

What Makes This Moment Significant for Entertainment Technology

The updated Frozen Ever After represents a moment where multiple technologies have matured enough to be integrated into a consumer-facing experience. Servo motors have become more affordable and reliable. AI systems can run on edge devices (local computers) rather than requiring cloud processing. Real-time computer vision has advanced dramatically. Manufacturing processes allow for detailed silicone casting and 3D printing of components.

Separately, each of these technologies has been developing for years. But seeing them all integrated into a single immersive experience that works reliably and impresses guests is significant. It suggests we're at an inflection point where animatronic characters are becoming competitive with or superior to previous technologies for creating immersion.

This will likely cascade through the industry. Other theme parks will see the success of the updated Frozen attraction and invest in similar upgrades. Entertainment venues in shopping malls, museums, and other locations will adopt the technology. The companies manufacturing servo motors, control systems, and AI platforms will see increased demand. The overall trend is toward more lifelike, more interactive, more immersive character experiences in physical spaces.

From a storytelling perspective, this is also significant. Better animatronics mean that stories can be told with greater nuance. Characters can express a wider range of emotions. Guest interactions can be more sophisticated. This opens new creative possibilities for theme park designers and storytellers.

FAQ

What exactly was upgraded in the new Frozen Ever After animatronics?

The new animatronics feature advanced servo motor systems (replacing basic stepper motors), sophisticated facial articulation with 30+ individual servo motors controlling expressions, real-time AI-driven facial recognition systems for guest interaction, perfectly synchronized audio-visual systems, and improved silicone materials for more realistic facial appearance. The characters now have dynamic eye tracking, responsive movement profiles, and environmental adaptation capabilities.

How does the facial recognition system work in the updated attraction?

The system uses camera arrays positioned throughout the ride to capture video feeds. Real-time computer vision software analyzes guest positions, facial features, and emotional expressions. Machine learning models interpret this data and send commands to the animatronics' servo motors to adjust gaze direction, facial expressions, and sometimes movement. This all happens with minimal latency, typically 30-100 milliseconds, making responses feel spontaneous and natural.
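As a rough illustration of the "adjust gaze direction" step, the sketch below converts an estimated guest position into pan and tilt angles for a figure's head, clamped to mechanical limits. The coordinate frame, the limit values, and the function name are hypothetical; it also assumes the vision system has already produced a 3D position for the guest.

```python
import math


def gaze_angles(head_xyz, guest_xyz, pan_limit_deg=60.0, tilt_limit_deg=30.0):
    """Return (pan, tilt) in degrees that point the head toward the guest.

    Assumed frame: x forward, y to the figure's left, z up, in metres.
    """
    dx = guest_xyz[0] - head_xyz[0]
    dy = guest_xyz[1] - head_xyz[1]
    dz = guest_xyz[2] - head_xyz[2]

    # Pan is rotation about the vertical axis; tilt is elevation above horizontal.
    pan = math.degrees(math.atan2(dy, dx))
    tilt = math.degrees(math.atan2(dz, math.hypot(dx, dy)))

    def clamp(value, limit):
        return max(-limit, min(limit, value))

    # Never command the neck past its (assumed) mechanical range.
    return clamp(pan, pan_limit_deg), clamp(tilt, tilt_limit_deg)


if __name__ == "__main__":
    # Guest seated 2 m ahead, 2 m to the left, at the figure's eye height.
    print(gaze_angles((0.0, 0.0, 1.5), (2.0, 2.0, 1.5)))
```

The clamping matters as much as the trigonometry: a guest far off to one side should produce a glance at the limit of the neck's range, not a command the hardware cannot reach.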

What role did motion-capture play in creating the new animatronics?

Motion-capture technology allowed Disney to record performances from trained actors who studied how the Frozen characters move. The captured movement data was then translated into servo motor positions and sequences for the physical animatronics. This ensures that the animatronics' movements are based on authentic human performance and emotional understanding of the characters rather than generic robotic movements.
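One small piece of that translation can be sketched in code: motion-capture data is usually recorded at a different frame rate than the servo controller runs at, so each joint-angle track has to be resampled. The example below uses plain linear interpolation. The rates and function name are hypothetical, and real retargeting also involves mapping a human skeleton onto the figure's joints, which is not shown here.

```python
def resample_track(samples, src_hz, dst_hz):
    """Linearly resample one joint-angle track from src_hz to dst_hz.

    `samples` holds angles in degrees captured at `src_hz` frames per second.
    Both rates are expected to be positive integers.
    """
    if len(samples) < 2:
        return list(samples)

    # Integer arithmetic avoids off-by-one errors from float rounding.
    n_out = (len(samples) - 1) * dst_hz // src_hz + 1
    out = []
    for k in range(n_out):
        pos = k * src_hz / dst_hz              # fractional index into samples
        i = min(int(pos), len(samples) - 2)    # left neighbour, kept in range
        frac = pos - i
        out.append(samples[i] + frac * (samples[i + 1] - samples[i]))
    return out


if __name__ == "__main__":
    # Hypothetical elbow angles captured at 120 fps, replayed at 60 Hz.
    captured = [0.0, 10.0, 20.0, 30.0, 40.0]
    print(resample_track(captured, src_hz=120, dst_hz=60))
```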

How do the servo motors in the new animatronics differ from older systems?

Older animatronics used basic stepper motors or gear mechanisms that moved from one position to another at fixed speeds, resulting in mechanical-looking motion. The new animatronics use precision servo motors that can accelerate and decelerate smoothly, respond in real-time to feedback, and achieve millisecond-level response times. This allows for fluid, natural movement that mimics living creatures.
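The difference between fixed-speed motion and smooth acceleration can be shown with a simple easing curve. The sketch below uses cubic smoothstep easing as a stand-in: the move starts and ends at zero velocity instead of jerking to full speed. This is an illustrative choice, not a description of Disney's controllers; industrial servo drives more commonly use trapezoidal or S-curve velocity profiles.

```python
def linear_position(start, end, t, duration):
    """Fixed-speed move: constant velocity with abrupt starts and stops."""
    if duration <= 0:
        raise ValueError("duration must be positive")
    u = min(max(t / duration, 0.0), 1.0)
    return start + (end - start) * u


def smoothstep_position(start, end, t, duration):
    """Eased move: velocity ramps up from zero and back down to zero."""
    if duration <= 0:
        raise ValueError("duration must be positive")
    u = min(max(t / duration, 0.0), 1.0)
    s = u * u * (3.0 - 2.0 * u)   # cubic smoothstep, zero slope at both ends
    return start + (end - start) * s


if __name__ == "__main__":
    # Hypothetical 90-degree head turn over one second, sampled every 0.25 s.
    for step in range(5):
        t = step * 0.25
        print(t, linear_position(0.0, 90.0, t, 1.0),
              smoothstep_position(0.0, 90.0, t, 1.0))
```

Early in the move the eased position lags the linear one, and late in the move it catches up. That slow-in, slow-out shape is a large part of what makes a gesture read as deliberate rather than mechanical.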

What materials were used to make the animatronics look realistic?

The animatronics' faces are constructed from detailed silicone that's pigmented to match human skin tones and embedded with realistic hair. Silicone allows for subtle deformation and movement, creating more lifelike expressions than rigid plastic. The structural components use aluminum frames and brass or stainless steel joints for durability. Everything is sealed to resist moisture and the humid Florida environment.

How is the synchronization between dialogue and mouth movement achieved?

The ride's master control system synchronizes all components using a central clock tied to the audio playback. Every servo motor command is timestamped relative to this clock, ensuring that mouth movements align perfectly with dialogue. The control architecture uses real-time operating systems and microcontrollers to maintain this synchronization across dozens of animated systems operating simultaneously.
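The timestamping idea can be sketched as a cue timeline driven by the audio clock: every command carries the time at which it should fire, and the controller releases whichever cues have come due each time it checks the clock. The class and field names below are hypothetical, and a real show controller would run this on a real-time system rather than in Python.

```python
import bisect
from dataclasses import dataclass


@dataclass(frozen=True)
class Cue:
    time_s: float      # when to fire, in seconds on the audio clock
    servo: str         # hypothetical servo identifier
    angle_deg: float   # target angle for that servo


class CueTimeline:
    """Releases timestamped servo cues as the audio clock advances."""

    def __init__(self, cues):
        self._cues = sorted(cues, key=lambda c: c.time_s)
        self._times = [c.time_s for c in self._cues]
        self._next = 0   # index of the first cue not yet released

    def due(self, audio_clock_s):
        """Return cues whose timestamp has been reached, each exactly once."""
        end = bisect.bisect_right(self._times, audio_clock_s)
        ready = self._cues[self._next:end]
        # Never move backwards, even if the reported clock jitters.
        self._next = max(self._next, end)
        return ready


if __name__ == "__main__":
    timeline = CueTimeline([
        Cue(0.50, "jaw", 12.0),
        Cue(0.10, "jaw", 4.0),
        Cue(0.30, "brow_left", 8.0),
    ])
    for clock in (0.2, 0.2, 0.5):
        print(clock, timeline.due(clock))
```

Because the cues are keyed to the audio position rather than to wall-clock time, a brief stall in the control loop shows up as several cues released together on the next pass instead of as mouth movements drifting out of sync with the dialogue.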

What kind of maintenance does the new system require?

Regular maintenance includes checking servo motors for wear, replacing cables, verifying silicone condition, ensuring proper lubrication of moving parts, and testing control systems. Predictive maintenance systems monitor servo telemetry to detect early signs of failure. Components are designed for quick replacement, with servo motors featuring quick-disconnect capabilities to minimize downtime.

How does Disney ensure the animatronics are safe around guests?

The systems include failsafe mechanisms that move animatronics to safe positions if individual components fail. Collision avoidance systems prevent the animatronics from moving in ways that could injure guests. Significant updates to an attraction typically go through a fresh round of safety review before reopening; Florida's largest theme parks, Disney included, largely handle ride inspections through their own in-house programs rather than routine state inspections. Regular safety inspections verify that the systems continue to operate safely throughout their lifespan.

What's the approximate lifespan of the updated animatronics before they need another upgrade?

With proper maintenance, the servo motor and control systems should remain effective and competitive for 8-12 years. However, aesthetic elements like silicone faces may require more frequent updates or restoration. The modular design allows components to be replaced individually rather than overhauling the entire system, extending the useful life significantly.

How does this technology compare to the animatronics used in other Disney attractions?

The Frozen Ever After animatronics are among the most advanced character systems Disney operates, and many other attractions still rely on simpler figures or different technologies. The approach used here will likely be adapted and improved for future attractions. Other theme parks are developing comparable systems, but Disney remains at the forefront of consumer-facing animatronic sophistication.

The Bottom Line: Immersion as Engineering

The updated Frozen Ever After is fundamentally an exercise in applied engineering in service of storytelling. Every servo motor, every camera, every line of code exists to serve one purpose: to make guests believe, even for a moment, that they're in the presence of something alive and intelligent.

Guests don't care about the technical specifications of servo response times or facial recognition algorithms. They care about the feeling when Elsa's eyes meet theirs. They care about the visceral reaction when her expression changes in response to their presence. They care about the moment when disbelief is suspended and a character seems real.

Disney's investment in this technology is an investment in what makes theme parks special: the ability to create experiences that reality can't. A guest can watch Frozen on Disney+ at home anytime. But they can only see Elsa move with fluid grace and respond to their presence in a physical space at a Disney park. That's what's worth the trip. That's what's worth the cost of admission. That's what's worth the engineering challenge.

As technology continues to advance, we'll see more of this. Animatronics will become more common, more sophisticated, more responsive. Other entertainment venues will adopt the technology. The line between watching a character and interacting with one will blur further. And somewhere, an engineer will be working on the next problem to solve, the next small detail that makes the illusion slightly more convincing.

That's the real magic behind the magic.

Key Takeaways

- Updated Frozen Ever After features precision servo motors with 30+ individual controls for realistic facial expressions and movements

- Real-time AI facial recognition systems enable animatronics to respond dynamically to guest position and emotions

- Motion-capture performances from trained actors provide the foundation for authentic character movements

- Perfect synchronization between dialogue, music, and animatronic movement creates unprecedented immersion

- This technology represents the current state of the art in consumer-facing entertainment robotics and will likely expand to other theme park attractions
