Ford's AI Revolution: What You Need to Know About the Voice Assistant and Level 3 Autonomy

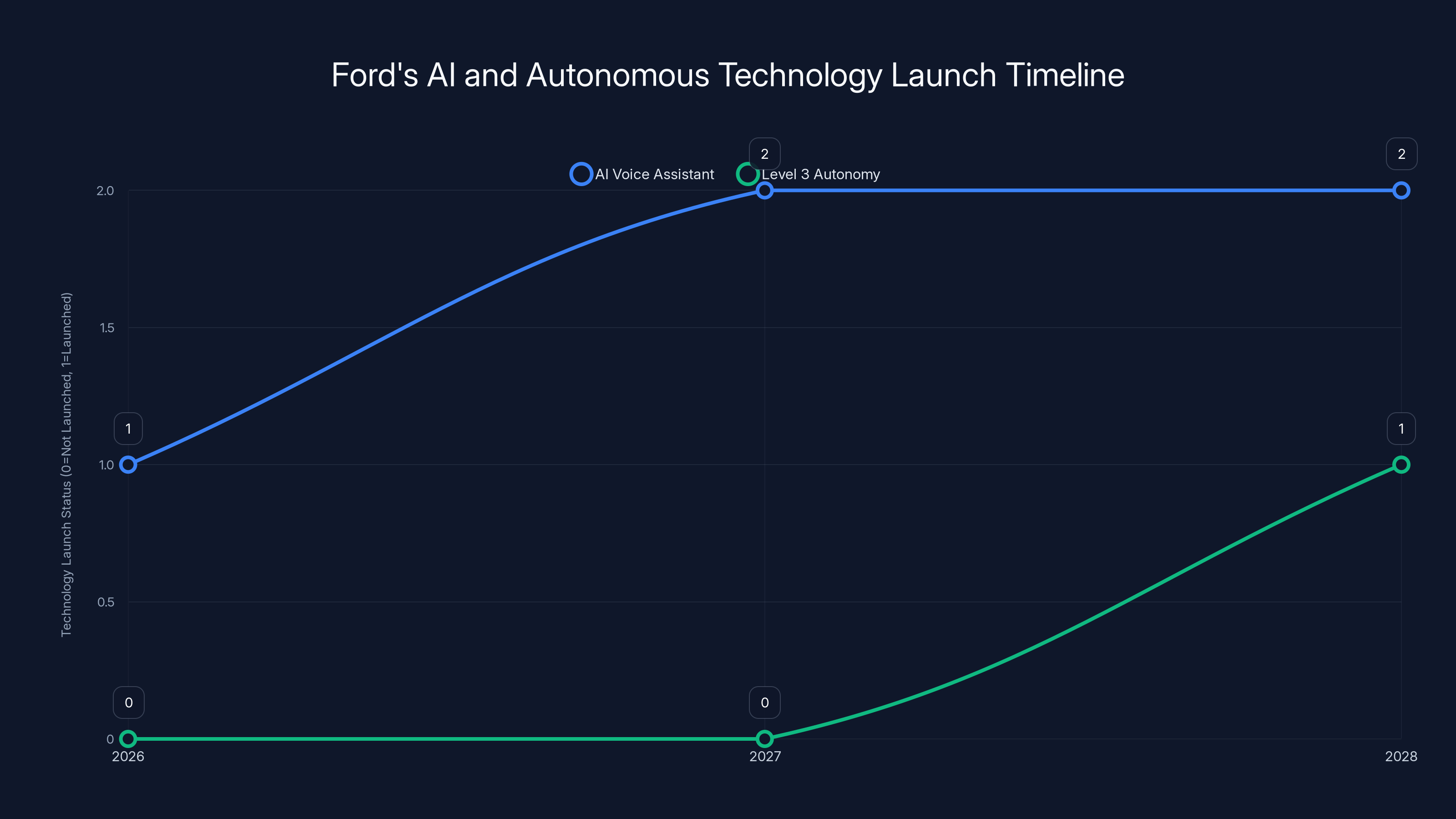

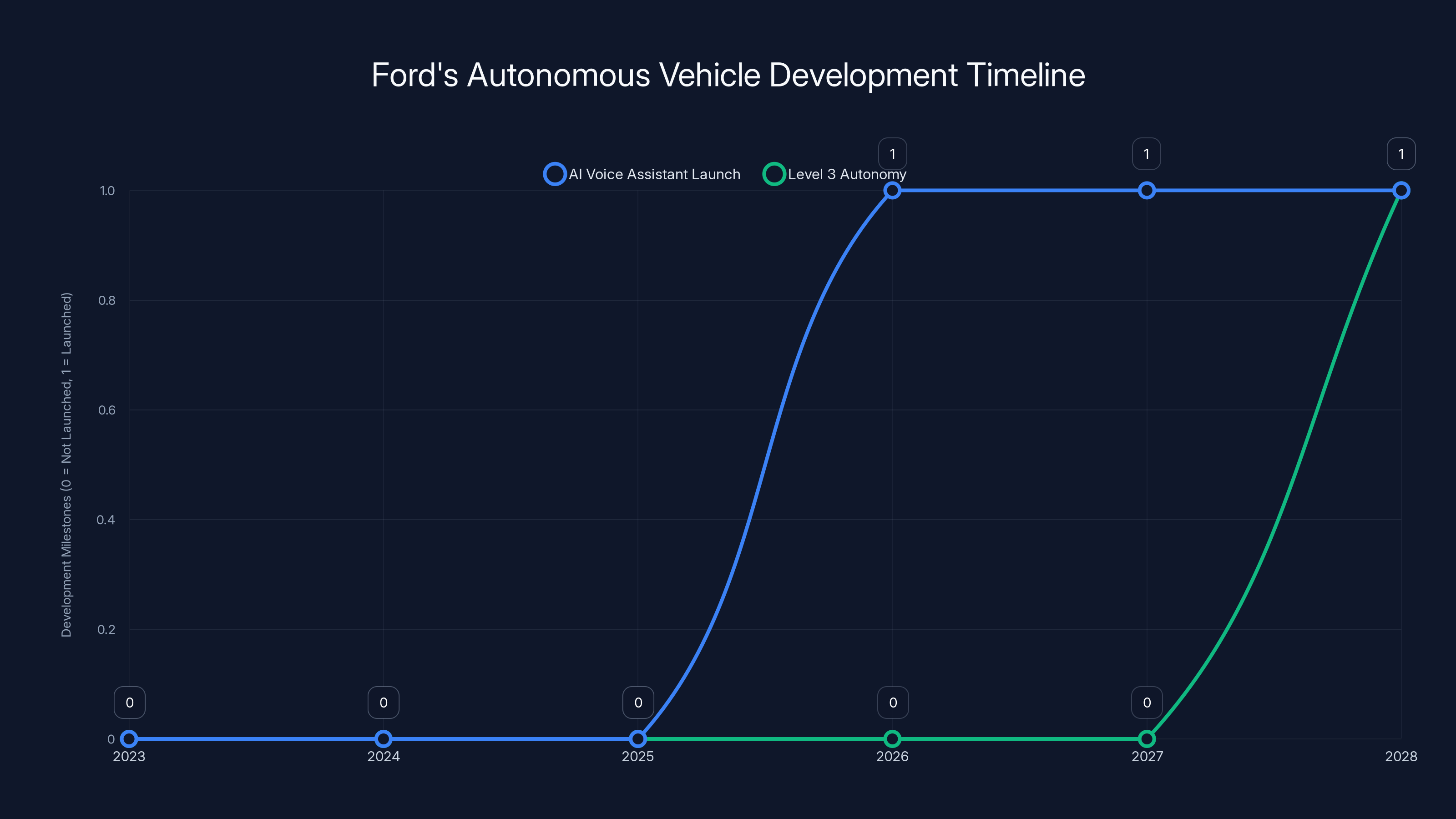

Ford just dropped something pretty significant at CES, and honestly, it caught a lot of people off guard. The automaker announced not one but two major AI-powered features coming to its vehicles over the next few years. An AI voice assistant is rolling out to customers starting this year through mobile apps, then moving into cars in 2027. Then comes the big one: Level 3 autonomous driving hitting Ford vehicles by 2028.

Now, here's what makes this announcement different from all the hype we've seen from Tesla, Rivian, and others. Ford isn't betting the farm on fully autonomous vehicles. Instead, it's taking a more measured, practical approach. The company is developing much of this technology in-house to keep costs down and maintain control over the products customers actually buy.

The automotive industry is at a crossroads. Electric vehicle sales have cooled off considerably. Ford's Mustang Mach-E and F-150 Lightning haven't been the cash cows the company hoped for. The company even cancelled the F-150 Lightning entirely. So Ford is recalibrating. It's betting on AI to make vehicles smarter, more personalized, and hopefully more profitable. But unlike Tesla's full self-driving obsession or Hyundai's robot taxi dreams, Ford is being pragmatic about what AI can realistically deliver in the near term.

Here's the thing: this move matters more than it might seem at first glance. When a traditional automaker like Ford starts talking about in-house AI development and custom hardware, it signals a seismic shift in how the automotive industry views software. For decades, cars were primarily mechanical products. Now, they're becoming software-defined platforms. And Ford is stepping up to compete in that space.

TL;DR

- AI Voice Assistant Launches in 2026: Ford's voice assistant will roll out through mobile apps first, then integrate into vehicles in 2027, with access to vehicle-specific data for better recommendations

- Level 3 Autonomy Coming in 2028: Hands-free, eyes-off driving will arrive on Ford's affordable Universal EV (UEV) platform, letting drivers look away from the road in specific situations

- In-House Development Strategy: Ford is designing custom software and hardware modules in-house rather than relying on external partners, reducing costs and increasing control

- Pragmatic AI Approach: Ford is focusing on conditional autonomous features rather than pursuing Level 4 vehicles that never need a human to take over, making AI technology accessible at lower price points

- Strategic Pivot After Market Headwinds: The announcements follow Ford's cancellation of the F-150 Lightning and reflect broader market cooling on premium electric vehicles

Ford plans to launch its AI voice assistant on mobile apps in 2026, with in-car integration by 2027. Level 3 autonomy is set to debut in 2028. Estimated data.

Understanding Ford's AI Voice Assistant Strategy

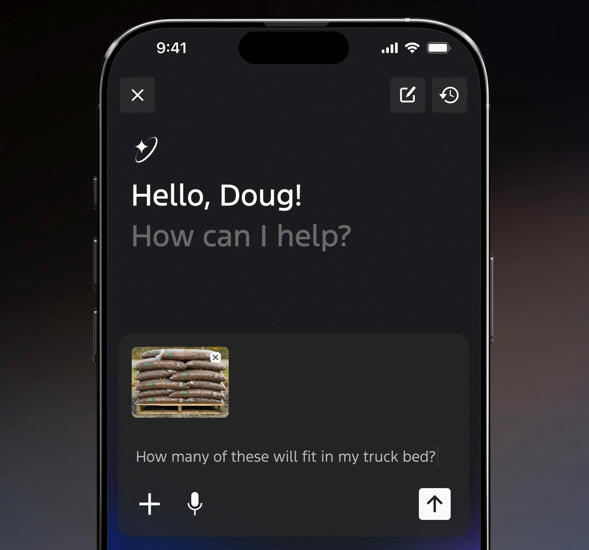

Let's talk about Ford's new AI voice assistant, because it's actually way more sophisticated than "Alexa in your car." This isn't just a gimmick for controlling your lights or playing music. Ford built this thing to understand your specific vehicle and its capabilities.

Imagine you're standing in a hardware store, staring at bags of mulch. You want to haul some home in your F-150, but you're not sure how many bags will fit in the truck bed. You could take a wild guess. Or you could snap a photo with your phone, open the Ford app, and ask the AI assistant. Here's what makes Ford's version special: it knows your exact vehicle specifications. It knows your truck bed dimensions, your trim level, your payload capacity. So instead of a generic answer from ChatGPT or Google Gemini, you get a response tailored to your specific truck.

That's the competitive advantage Ford is after. Most AI assistants work the same way for everyone. Ford's is personalized to your vehicle's unique configuration.

The Multi-LLM Approach

Ford made a clever decision here. Rather than betting everything on a single large language model, the company designed its assistant to be "chatbot-agnostic." That means it can work with different LLMs from different providers. Ford said it would integrate Google Gemini, but it's not exclusive to Gemini.

Why does this matter? Flexibility. If a better LLM comes out next year, Ford can swap it in. If Google's Gemini has an outage, Ford can route requests elsewhere. It's the opposite of the Tesla approach, where everything is tightly coupled to a single proprietary system. Ford's betting that openness is an advantage.
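Ford hasn't described how its routing works. As a minimal sketch of what "chatbot-agnostic" could mean in practice, assume every LLM backend sits behind one small interface and a router tries them in priority order. `LLMProvider`, `ProviderUnavailable`, `EchoProvider`, and `AssistantRouter` are hypothetical names; `EchoProvider` stands in for a real Gemini or other vendor client.

```python
from typing import Protocol


class ProviderUnavailable(Exception):
    """Raised by a backend adapter when its service is down or times out."""


class LLMProvider(Protocol):
    """Hypothetical interface that every LLM backend adapter implements."""

    name: str

    def complete(self, prompt: str) -> str: ...


class EchoProvider:
    """Stand-in backend; a real adapter would call a vendor's API here."""

    def __init__(self, name: str, healthy: bool = True) -> None:
        self.name = name
        self.healthy = healthy

    def complete(self, prompt: str) -> str:
        if not self.healthy:
            raise ProviderUnavailable(self.name)
        return f"[{self.name}] answer to: {prompt}"


class AssistantRouter:
    """Sends each prompt to the first provider that responds, in priority order."""

    def __init__(self, providers: list[LLMProvider]) -> None:
        self.providers = providers

    def ask(self, prompt: str) -> tuple[str, str]:
        for provider in self.providers:
            try:
                return provider.name, provider.complete(prompt)
            except ProviderUnavailable:
                continue  # this backend is down: fall through to the next one
        raise ProviderUnavailable("all providers failed")


if __name__ == "__main__":
    router = AssistantRouter([EchoProvider("primary", healthy=False), EchoProvider("backup")])
    print(router.ask("How much mulch fits in my truck?"))
    # ('backup', '[backup] answer to: How much mulch fits in my truck?')
```

Under this design, swapping in a better model means writing one new adapter and reordering the list, without touching the rest of the assistant.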

Rolling Out in Phases

Ford's timeline is methodical. The assistant launches on Ford and Lincoln mobile apps in 2026. That's the testing ground. Real customers, real use cases, real feedback. Then in 2027, it moves into the in-car experience. That second phase is important because it means integrating with your vehicle's infotainment system, voice controls, and the in-cabin experience.

This phased approach lets Ford work out the kinks without disrupting the entire user base. It's how you do a major rollout without breaking things.

The LLM Integration Layer

Sammy Omari, Ford's head of ADAS and infotainment, explained the technical architecture pretty well. Here's how it works: Ford takes the LLM and gives it access to all the relevant Ford systems. The assistant doesn't just have general knowledge about vehicles. It has deep integration with your specific car's data.

This is non-trivial engineering. It means building APIs between the LLM and your vehicle's computer systems. It means ensuring that vehicle data is passed securely to the LLM without compromising privacy. It means designing fallbacks for when the LLM is unavailable or having connectivity issues.

Ford is handling all of this in-house rather than outsourcing it. That's the key difference. The company isn't just licensing ChatGPT and slapping it in a car. It's building the integration layer, the data pipeline, the security architecture.

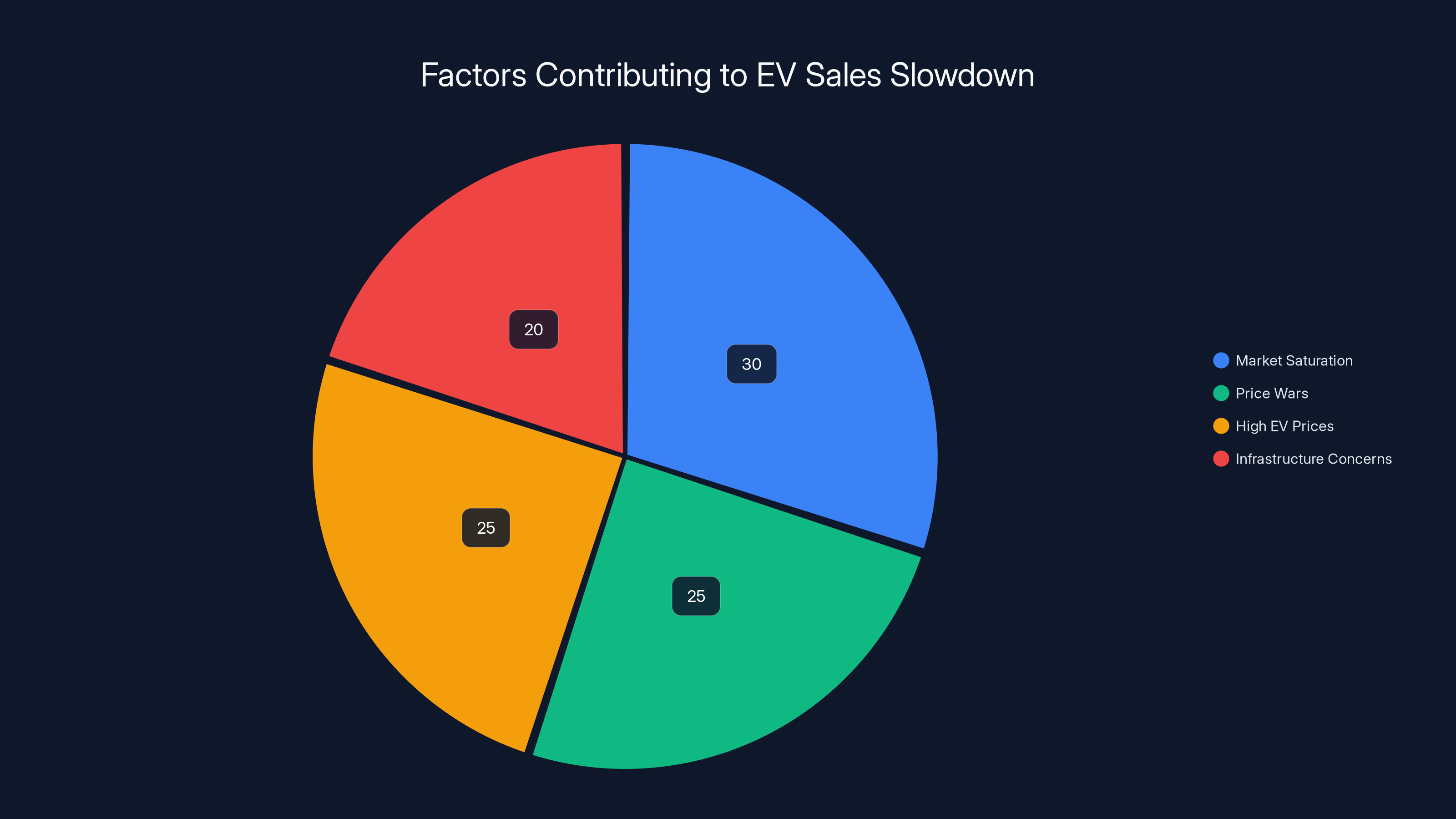

Estimated data shows market saturation and price wars as leading factors in the EV sales slowdown, each contributing significantly to the plateau in 2023-2024.

The Level 3 Autonomous Driving Story

Now here's where things get really interesting. Level 3 autonomy is way more controversial than people realize. In a Level 3 system, the car can drive itself within defined conditions, but the driver must remain available to take over when prompted. Your eyes can be off the road in certain situations. Your hands don't need to be on the wheel.

That might sound like Level 4 (high automation, where the car never needs a human to take over), but it's fundamentally different. And that difference is causing a lot of debate in the industry.

What Level 3 Actually Means

Let's be precise here. SAE International (formerly the Society of Automotive Engineers) defines six levels of driving automation in its J3016 standard, from 0 (no automation) to 5 (full autonomy in all conditions). Here's the breakdown:

Level 0: Human does everything. No automation.

Level 1: Adaptive cruise control or lane keeping. The car assists with either steering or speed, not both at once; the driver handles everything else.

Level 2: Driver assistance on steroids. Car can control steering, acceleration, and braking simultaneously, but driver must pay attention at all times. Tesla's Autopilot and Ford's BlueCruise are Level 2 systems.

Level 3: Conditional autonomy. Car can drive itself in certain situations. Driver can take eyes off road and hands off wheel, but must be ready to take over when the car requests it. This is what Ford is aiming for in 2028.

Level 4: High autonomy. Car can drive itself without any driver intervention or fallback, but only within a defined operational design domain, such as a geofenced city area or specific road types.

Level 5: Full autonomy. Car can drive itself anywhere, in any condition, like a human driver.
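One way to see what separates these levels is to encode who does what. The sketch below is a simplified reading of SAE J3016, not the standard's own wording; the field names are made up for illustration, and Levels 3 and 4 apply only within the conditions the system was designed for.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class AutomationLevel:
    level: int
    name: str
    sustained_steering_and_speed: bool  # system controls both at once
    driver_must_supervise: bool         # human must watch the road continuously
    driver_is_fallback: bool            # human must take over when asked


SAE_LEVELS = [
    AutomationLevel(0, "No Driving Automation", False, True, True),
    AutomationLevel(1, "Driver Assistance", False, True, True),
    AutomationLevel(2, "Partial Driving Automation", True, True, True),
    AutomationLevel(3, "Conditional Driving Automation", True, False, True),
    AutomationLevel(4, "High Driving Automation", True, False, False),
    AutomationLevel(5, "Full Driving Automation", True, False, False),
]


def eyes_off_but_on_call(levels: list[AutomationLevel]) -> list[int]:
    """Levels where the driver may look away yet must still respond to a takeover request."""
    return [lv.level for lv in levels if not lv.driver_must_supervise and lv.driver_is_fallback]


if __name__ == "__main__":
    print(eyes_off_but_on_call(SAE_LEVELS))  # [3]
```

Only Level 3 combines "eyes off" with "still on call," which is exactly the combination behind the handoff debate.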

Ford's Level 3 announcement is significant because the company isn't chasing Level 4 for now. Instead, it's moving one step up from Level 2 to conditional autonomy. It's a smart move given the state of the technology.

Ford's Current BlueCruise System

Before we talk about Level 3, let's look at what Ford already has. BlueCruise is the company's Level 2 hands-free driver assist system. On pre-mapped stretches of divided highway, it handles steering, acceleration, and braking, so you can take your hands off the wheel. But you still need to keep your eyes on the road and be ready to take over.

BlueCruise works on limited routes. It's optimized for highways where traffic flow is relatively predictable. Add a few curves, some traffic lights, or a confusing intersection, and the system hands control back to you.

What Level 3 Changes

Ford's Level 3 system will be significantly more capable. It will recognize traffic lights. It will navigate intersections. It will handle point-to-point routes, not just highways. And critically, it will allow drivers to take their eyes off the road in certain situations.

This is where the controversy comes in. Some experts argue that Level 3 is actually more dangerous than Level 2 or Level 4. Why? Because of the handoff problem. In Level 2, you're already paying attention. If something goes wrong, you can react immediately. In Level 4, the car doesn't rely on you at all; if it reaches its limits, it brings itself to a safe stop instead of handing off. But in Level 3, you're not paying attention. When the car says "I need you to take over," you have a few seconds to regain situational awareness. Can you actually react in time?

Ford is aware of this. The company is investing heavily in sensor redundancy and robust fallback systems. The goal is to never hand off to a driver in a genuinely dangerous situation.
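To put "a few seconds" in perspective, here is the distance a car covers at constant highway speed while the driver re-engages. The 70 mph speed and the 3, 5, and 10 second takeover times are illustrative assumptions, not Ford figures.

```python
MPH_TO_MPS = 0.44704  # exact: 1 mile = 1609.344 m, 1 hour = 3600 s


def distance_during_takeover(speed_mph: float, takeover_s: float) -> float:
    """Meters traveled at constant speed while the driver regains control."""
    return speed_mph * MPH_TO_MPS * takeover_s


if __name__ == "__main__":
    for seconds in (3, 5, 10):
        meters = distance_during_takeover(70, seconds)
        print(f"70 mph, {seconds} s takeover: {meters:.0f} m")
```

That works out to roughly 94 m, 156 m, and 313 m. With a 10 second window, the car travels more than 300 m before the driver is fully back in control.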

The Universal EV Platform Connection

Here's a key piece of context. Ford's Level 3 system is tied to the company's Universal Electric Vehicle (UEV) platform, which debuts in 2027, with eyes-off driving slated for 2028. This timing isn't random. The UEV is Ford's effort to make affordable EVs. The company cancelled the F-150 Lightning because it was too expensive and wasn't selling well. The UEV is supposed to fix that problem.

Ford's strategy is to put advanced autonomous features into the UEV platform. That might seem backwards. Wouldn't you want autonomy features in your expensive, premium vehicles? But Ford's thinking is different. By building autonomy into an affordable platform from the ground up, the company can reach more customers and scale the technology faster.

It's similar to what Tesla did with the Model 3. The Model 3 was the car that made EVs accessible to normal people, not just wealthy early adopters. Ford is betting that the UEV will do the same for Level 3 autonomy.

Why Ford Is Building AI Technology In-House

This is the part that most people are missing in coverage of Ford's announcements. The real story isn't just about the AI features. It's about how Ford is approaching their development.

Traditionally, automakers worked with suppliers. Need a new transmission? Talk to ZF or Allison. Need infotainment software? Work with a tier-one supplier. Need autonomous driving? Partner with a tech company. This model worked for a century because software wasn't central to the vehicle experience.

But that has changed. Software now defines much of the vehicle experience. And Ford recognizes that it can't rely on external partners to build its competitive advantage.

The Cost Reduction Angle

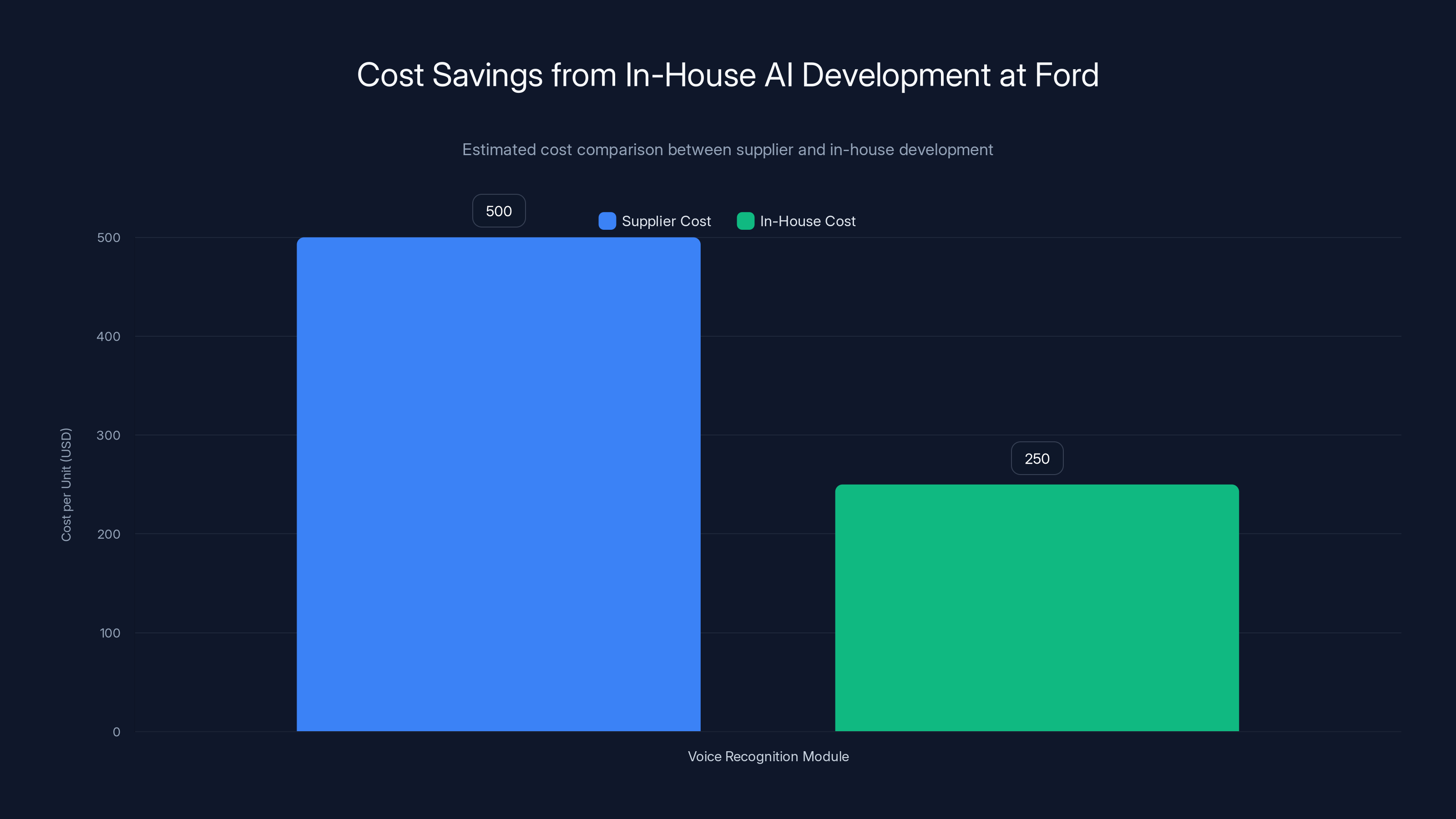

Douglas Field, Ford's chief officer for electric vehicles and software, wrote in a blog post that by designing hardware and software in-house, Ford can reduce costs significantly. This isn't just about engineering efficiency. It's about eliminating middlemen and supply chain complexity.

Here's a simplified example. Suppose Ford wants to integrate voice recognition hardware into a vehicle. If Ford buys this from a supplier, the cost might be $500 per unit. The supplier builds these for multiple customers, so they can't optimize for Ford's specific use case.

But if Ford designs its own module, optimized specifically for its vehicles and its software stack, the cost might drop to $250 per unit. The savings come from eliminating layers of markup, removing unnecessary features, and tighter hardware-software integration.

At $250 saved per unit, every 4 million vehicles adds up to $1 billion, so across several model years the savings can reach billions of dollars. That's the incentive driving Ford's in-house development strategy.
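The arithmetic behind that claim is simple enough to check. This sketch uses the article's hypothetical $500 supplier price and $250 in-house cost; the vehicle volumes are illustrative, not Ford production numbers.

```python
def fleet_savings(supplier_cost: float, in_house_cost: float, units: int) -> float:
    """Total savings from replacing a supplier part with an in-house part on `units` vehicles."""
    return (supplier_cost - in_house_cost) * units


if __name__ == "__main__":
    for units in (1_000_000, 4_000_000, 10_000_000):
        print(f"{units:>10,} vehicles: ${fleet_savings(500, 250, units):,.0f}")
```

The design choice that makes this work is reuse: the same module has to ship across many models and model years for the per-unit gap to compound.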

The Control Factor

There's another benefit beyond cost: control. When you rely on external suppliers for critical components, you're at their mercy. If the supplier goes bankrupt, gets acquired, raises prices, or deprioritizes your product line, you're in trouble.

Ford learned this the hard way during the semiconductor shortage. The company had to shut down production lines because it couldn't get enough chips. That wasn't directly Ford's fault, but it highlighted the risk of depending on suppliers for critical technology.

By developing technology in-house, Ford regains control. The company decides the roadmap, the features, the release schedule. That's invaluable for a company trying to compete in a rapidly changing market.

The Talent Acquisition Challenge

There's a catch, though. Building world-class AI and autonomous driving technology requires world-class engineers. Ford needs to compete with Tesla, Google, OpenAI, and everyone else for top talent.

Ford's advantage? It's a household name with more than 120 years of automotive expertise. Its disadvantage? Dearborn, Michigan isn't Silicon Valley. Salary competition is brutal. Culture at big established companies can feel bureaucratic compared to startups.

Ford is addressing this by creating semi-autonomous research groups, hiring from top tech companies, and giving engineers significant autonomy. The company also acquired key talent through strategic partnerships and selective acquisitions.

What Ford Isn't Building

It's important to understand what Ford isn't doing. The company isn't building its own large language models. Ford isn't designing its own AI chips like Tesla's custom silicon. Those are reasonable decisions. Training a competitive LLM costs hundreds of millions of dollars and requires computational infrastructure at a scale that makes sense only for a few companies.

Instead, Ford is focusing on the integration layer. The company is building custom hardware modules that are smaller and more efficient than off-the-shelf solutions. It's building the software that ties everything together. It's creating the data pipeline and the infrastructure to deliver personalized AI experiences.

This is a smarter approach than trying to do everything from scratch. Ford is making strategic choices about where to invest development resources.

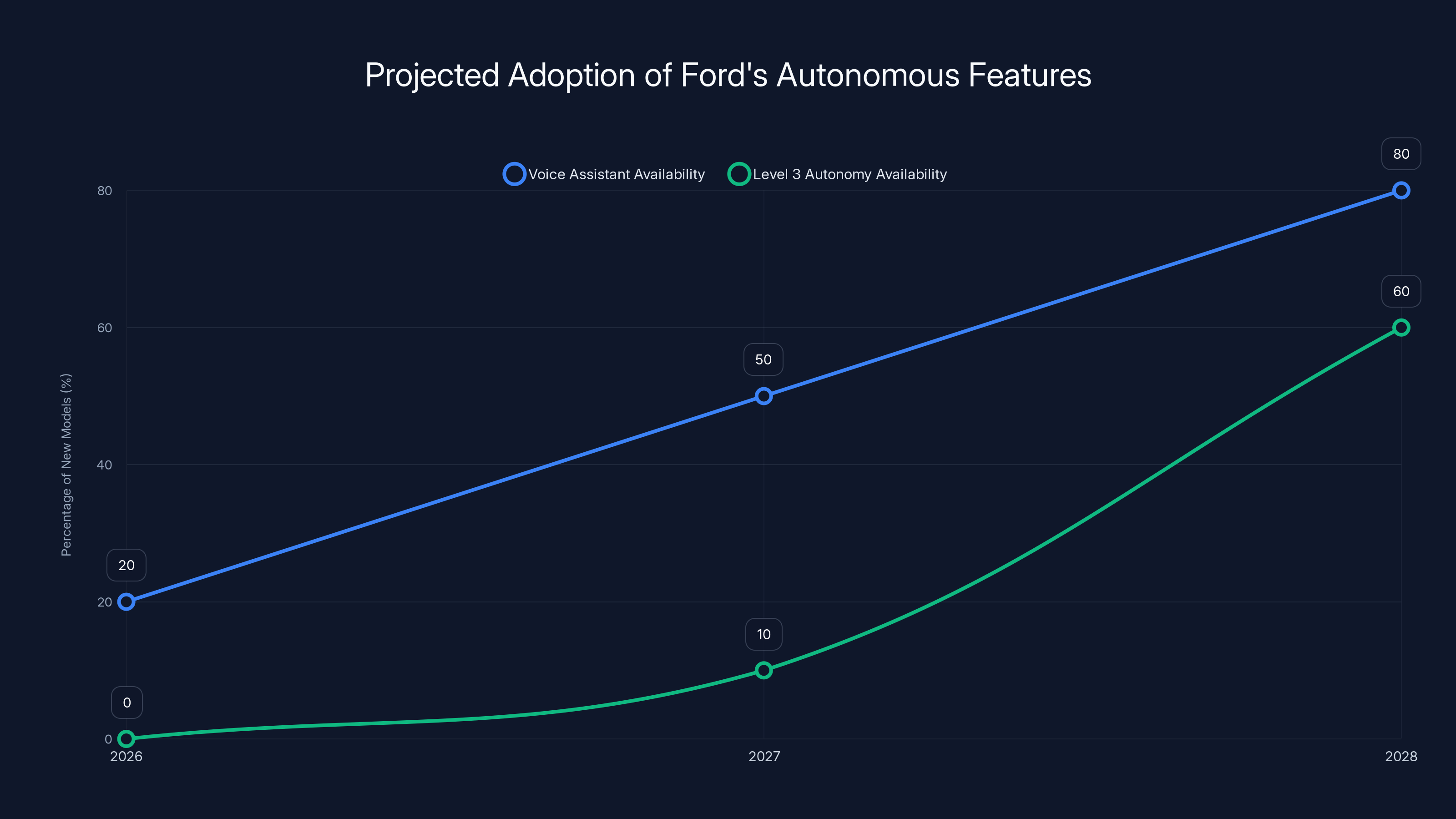

Estimated data shows a gradual increase in the availability of Ford's voice assistant and Level 3 autonomy features, with significant adoption expected by 2028.

The Competitive Landscape: Ford vs. Tesla vs. Others

Ford's announcements don't exist in a vacuum. They're happening in a specific competitive context. Let's look at what other companies are doing.

Tesla's Aggressive Approach

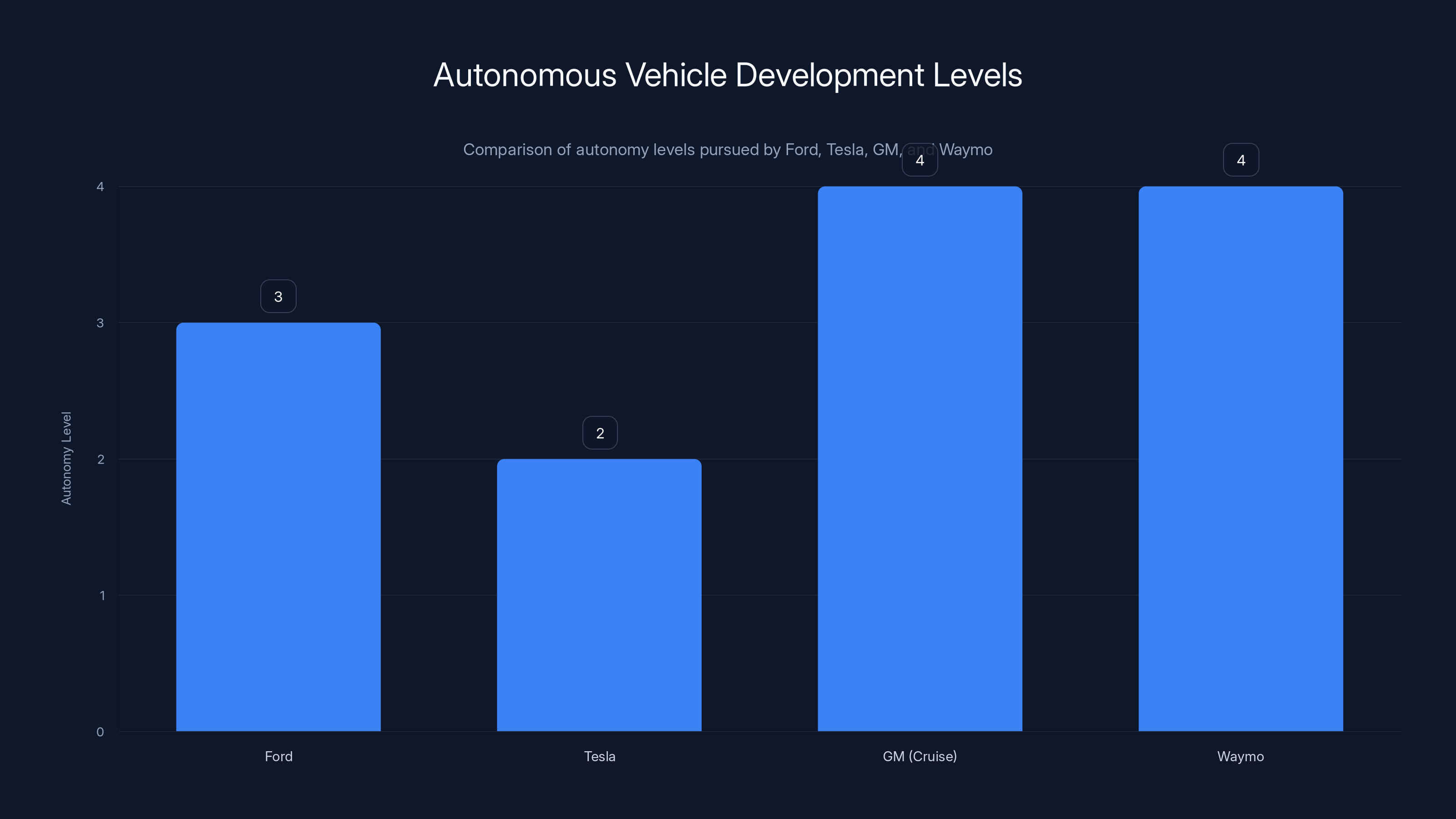

Tesla has invested billions in Full Self-Driving, pursuing Level 4 autonomy. The company has built custom silicon, developed end-to-end neural networks, and accumulated massive amounts of real-world driving data. Elon Musk has consistently promised that Level 4 autonomy is just around the corner.

However, Tesla is still at Level 2 in reality. Full Self-Driving is now sold as "Full Self-Driving (Supervised)," which requires the driver to pay attention at all times, and it's not approved for Level 4 deployment anywhere. Critics argue that Tesla's aggressive timeline claims have consistently missed targets.

Ford's approach is the opposite. The company is being conservative, setting achievable timelines, and planning incremental releases. Level 3 in 2028 is ambitious but more credible than Tesla's vague promises of full autonomy.

General Motors' Autonomous Vehicles Division

General Motors invested heavily in autonomous vehicles through its Cruise division. GM spent billions developing Level 4 technology. Then, in October 2023, a Cruise robotaxi struck and dragged a pedestrian who had been knocked into its path by another car. Cruise lost its California permit, and in December 2024 GM announced it would stop funding Cruise's robotaxi business. The company is now refocusing on driver assistance features instead of full autonomy.

This is a cautionary tale. GM's investment was massive. The technology was sophisticated. But the safety incidents and regulatory scrutiny made it clear that the market wasn't ready, and the liability was too high.

Ford's Level 3 approach sits between Tesla's ambitious full autonomy and GM's now-abandoned Level 4 efforts. It's a middle path that acknowledges both the potential and the real risks.

Waymo's Focused Strategy

Waymo is pursuing Level 4 autonomy in specific, controlled environments. The company operates robotaxi services in Phoenix, San Francisco, and other cities. Waymo isn't trying to sell consumer vehicles. It's focused on commercial autonomous vehicle services.

This is a valid approach, but it's not what Ford is doing. Ford is selling consumer vehicles. Level 3 features need to work in diverse, unpredictable environments. Ford can't afford the hyperlocal, controlled approach that Waymo uses.

Traditional Automakers Going Hybrid

Mercedes-Benz, BMW, and Audi are all pursuing Level 3 autonomy in the next few years. Mercedes has already deployed Level 3 features in some markets. These companies are following a similar playbook to Ford: incremental automation, focus on conditional autonomy, and partnerships with tech companies.

Ford's announcement is part of a broader trend among traditional automakers. The industry is collectively deprioritizing Level 4 autonomy and focusing on Level 2 and Level 3 features that can generate value in the near term.

Market Context: Why Ford Is Making These Moves Now

Ford's AI announcements aren't random. They're a response to specific market pressures and business challenges.

The EV Sales Slowdown

Electric vehicle sales growth has stalled in the United States. After years of rapid expansion, the EV market hit a plateau in 2023 and 2024. Several factors contributed:

First, the easy wins are over. People who desperately wanted an EV already bought one. The remaining market requires addressing concerns about range, charging infrastructure, and price.

Second, EV prices fell as competition increased. Tesla was forced to cut prices across its lineup, including the high-volume Model 3 and Model Y. That triggered price wars across the industry. Margins compressed. Profitability became harder to achieve.

Third, premium EV models like the Mustang Mach-E and F-150 Lightning didn't deliver the sales volume Ford expected. These vehicles were expensive, targeting affluent early adopters. But the market for $50,000+ electric vehicles proved smaller than Ford anticipated.

Ford's response? Pivot to more affordable EVs and focus on features that make vehicles more valuable. That's where the AI voice assistant and Level 3 autonomy come in. These features create differentiation without massive price increases.

The F-150 Lightning Cancellation

Ford cancelled the F-150 Lightning, its electric pickup truck. This was a stunning admission. The F-150 is Ford's most iconic vehicle. An electric version seemed like a sure winner.

What went wrong? Manufacturing complexity. Battery costs were higher than expected. Demand was lower than projected. The vehicle was too expensive to build profitably at the market price customers were willing to pay.

The cancellation freed up resources and manufacturing capacity. Ford can now focus on more achievable products. The Universal EV Platform is supposed to be simpler and cheaper to build than the F-150 Lightning.

The Diversification Strategy

Ford also announced it would be investing in battery storage systems and expanding hybrid vehicle production. This is a savvy move. AI data centers need reliable power storage. Electric utilities need grid storage. There's a massive market opportunity that Ford can address with its battery expertise.

By diversifying beyond just selling vehicles, Ford spreads risk and taps into different revenue streams. The AI assistant and Level 3 autonomy fit into this broader strategy of making Ford more than just a vehicle manufacturer.

Ford plans to launch its AI voice assistant in 2026, followed by Level 3 autonomy in 2028. These milestones mark significant steps in Ford's AI and driver-assistance roadmap. Estimated data.

Technical Deep Dive: How Ford's Voice Assistant Works

Let's get technical for a moment. Understanding how Ford's voice assistant works requires understanding several layers of technology.

The Voice Recognition Layer

When you speak to Ford's assistant, the first thing that happens is voice recognition. Your audio is processed locally or streamed to servers where a speech-to-text engine converts your words to text.

Ford likely uses a combination of local processing and cloud processing. Local processing provides low-latency response for simple commands. Cloud processing provides more sophisticated understanding for complex queries.

The challenge here is accuracy. Background noise, accents, and speech patterns affect recognition. Ford's speech engines need to handle these variations.

The Natural Language Understanding Layer

Once your words are converted to text, the next layer is natural language understanding. This is where the magic happens. The system needs to understand what you're asking, not just what words you said.

For example, "How much mulch fits in my truck?" and "What's the payload capacity of my F-150?" are phrased very differently, but both come down to how much the truck can carry. An NLU engine maps both to the same underlying intent.

Ford's system uses machine learning models trained on vehicle-related queries. The models learn patterns in how people ask vehicle-related questions and what they're actually trying to accomplish.
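Production NLU relies on trained models, but a deliberately crude keyword matcher is enough to show the core idea of collapsing different phrasings onto one intent. The intent names and keyword lists are hypothetical, not Ford's.

```python
import re

# Hypothetical intents and trigger words; a trained classifier would replace this table.
INTENT_KEYWORDS = {
    "cargo_capacity": {"fit", "fits", "haul", "payload", "carry", "bed"},
    "tire_pressure": {"tire", "tires", "psi", "pressure"},
    "towing_capacity": {"tow", "towing", "trailer", "hitch"},
}


def classify(utterance: str) -> str:
    """Return the intent whose keywords overlap the utterance most, or 'unknown'."""
    words = set(re.findall(r"[a-z0-9']+", utterance.lower()))
    scores = {intent: len(words & keywords) for intent, keywords in INTENT_KEYWORDS.items()}
    best = max(scores, key=scores.get)
    return best if scores[best] > 0 else "unknown"


if __name__ == "__main__":
    for q in ("How much mulch fits in my truck?",
              "What's the payload capacity of my F-150?",
              "Play some jazz"):
        print(q, "->", classify(q))
```

Both truck questions land on `cargo_capacity`, while an unrelated request falls through to `unknown`, which is where a real system would hand off to a general-purpose LLM.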

The Vehicle Data Integration Layer

Here's where Ford's system gets special. After understanding your query, the system accesses your vehicle's data. It looks up your truck bed dimensions, payload capacity, trim level, and other specifications.

This integration is non-trivial. It requires secure APIs connecting the voice assistant to your vehicle's computer. It requires real-time data fetching. It requires caching strategies so the system doesn't bog down with unnecessary queries.

Ford is building all of this in-house. That's a significant engineering effort.
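A minimal sketch of the caching idea, assuming vehicle data arrives through some fetch function keyed by VIN. `VehicleSpec`, `CachedSpecService`, and the fetcher are hypothetical; the F-150 values are the ones used in this article's example. Specs like bed length rarely change, so even a long time-to-live avoids most round trips.

```python
import time
from dataclasses import dataclass
from typing import Callable


@dataclass(frozen=True)
class VehicleSpec:
    model: str
    bed_length_ft: float
    payload_lb: int


class CachedSpecService:
    """Wraps a vehicle-data fetcher with a per-VIN time-to-live (TTL) cache."""

    def __init__(
        self,
        fetch: Callable[[str], VehicleSpec],
        ttl_s: float = 3600.0,
        clock: Callable[[], float] = time.monotonic,
    ) -> None:
        self._fetch = fetch
        self._ttl_s = ttl_s
        self._clock = clock  # injectable so the expiry logic can be tested
        self._cache: dict[str, tuple[float, VehicleSpec]] = {}

    def get(self, vin: str) -> VehicleSpec:
        now = self._clock()
        hit = self._cache.get(vin)
        if hit is not None and now - hit[0] < self._ttl_s:
            return hit[1]  # still fresh: skip the round trip to the vehicle or cloud
        spec = self._fetch(vin)
        self._cache[vin] = (now, spec)
        return spec


if __name__ == "__main__":
    calls: list[str] = []

    def fake_fetch(vin: str) -> VehicleSpec:
        calls.append(vin)
        return VehicleSpec("F-150 SuperCrew", 5.5, 1825)

    service = CachedSpecService(fake_fetch, ttl_s=60)
    service.get("EXAMPLE-VIN")
    service.get("EXAMPLE-VIN")
    print(len(calls))  # 1: the second lookup was served from the cache
```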

The LLM Query Layer

Once the system understands your question and has your vehicle data, it constructs a prompt for the large language model. This prompt includes your question plus relevant vehicle data. For example:

"User asked: 'How much mulch fits in my truck?' User's vehicle: F-150 SuperCrew, 5.5-foot bed, 1,825 lb payload capacity. Provide a specific answer based on this vehicle's specifications."

The LLM generates a response tailored to your specific vehicle. That response is then converted back to speech and delivered to you.
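The prompt-assembly step can be sketched as plain string templating, assuming the spec has already been fetched. The field names and the extra instruction asking the model to flag missing information are hypothetical design choices, not Ford's actual prompt.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class VehicleSpec:
    model: str
    bed_length_ft: float
    payload_lb: int


def build_prompt(question: str, spec: VehicleSpec) -> str:
    """Ground the user's question in vehicle data before it is sent to the LLM."""
    return (
        f'User asked: "{question}"\n'
        f"User's vehicle: {spec.model}, {spec.bed_length_ft:g}-foot bed, "
        f"{spec.payload_lb:,} lb payload capacity.\n"
        "Provide a specific answer based on this vehicle's specifications. "
        "If the answer depends on details not listed here, such as bag size or weight, say so."
    )


if __name__ == "__main__":
    spec = VehicleSpec("F-150 SuperCrew", 5.5, 1825)
    print(build_prompt("How much mulch fits in my truck?", spec))
```

The last instruction matters for the mulch question: bed length alone doesn't give volume, and the weight of each bag isn't in the prompt, so a well-behaved answer should say what it assumed.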

The Fallback Layer

What happens if something breaks? If the cloud connection fails? If the LLM times out? Ford needs robust fallback systems.

One approach: cache common queries locally. If your system has processed "How much mulch fits in my truck?" before, it can provide the cached answer without hitting the cloud.

Another approach: have pre-written responses for critical queries. If the LLM fails, the system falls back to structured responses for common vehicle questions.

These fallback systems are essential for making the feature reliable in real-world conditions.
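Both fallback strategies can be combined in one small wrapper, sketched here under a few assumptions: the LLM call is any function that raises `TimeoutError` or `ConnectionError` when the cloud is unreachable, cache keys are normalized query text, and the canned answers are supplied ahead of time. `FallbackAssistant` and `normalize` are hypothetical names.

```python
import re
from typing import Callable

OFFLINE_MESSAGE = "Sorry, I can't answer that right now. Please try again when you're connected."


def normalize(query: str) -> str:
    """Lowercase and strip punctuation so trivially different phrasings share a cache key."""
    return " ".join(re.findall(r"[a-z0-9]+", query.lower()))


class FallbackAssistant:
    def __init__(self, llm: Callable[[str], str], canned: dict[str, str]) -> None:
        self._llm = llm
        self._canned = {normalize(q): a for q, a in canned.items()}
        self._cache: dict[str, str] = {}

    def answer(self, query: str) -> tuple[str, str]:
        """Return (source, answer), where source is 'cache', 'llm', 'canned', or 'none'."""
        key = normalize(query)
        if key in self._cache:
            return "cache", self._cache[key]  # answered before: no cloud round trip needed
        try:
            reply = self._llm(query)
        except (TimeoutError, ConnectionError):
            # Cloud unreachable or too slow: use a pre-written answer if one exists.
            if key in self._canned:
                return "canned", self._canned[key]
            return "none", OFFLINE_MESSAGE
        self._cache[key] = reply
        return "llm", reply


if __name__ == "__main__":
    online = True

    def flaky_llm(query: str) -> str:
        if not online:
            raise ConnectionError("no signal")
        return f"<LLM answer to: {query}>"

    assistant = FallbackAssistant(flaky_llm, {"What tire pressure should I use?": "<pre-written answer>"})
    print(assistant.answer("How much mulch fits in my truck?"))  # served by the LLM
    online = False
    print(assistant.answer("how much mulch fits in my truck"))   # served from the cache
    print(assistant.answer("What tire pressure should I use?"))  # served from canned answers
```

A production cache would also need expiry, since cached answers can go stale after a software update or a change to the vehicle.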

Technical Deep Dive: Level 3 Autonomy Infrastructure

Level 3 autonomy is significantly more complex than voice assistants. Let's break down what's required.

Sensor Architecture

Ford's Level 3 system relies on multiple redundant sensors. This is critical because if a single sensor fails, the system needs to continue functioning safely.

Typical autonomous vehicle sensor suites include:

- LiDAR: Laser-based distance measurement. Provides precise 3D maps of surroundings.

- Radar: Radio-based distance and velocity measurement. Works in bad weather when LiDAR struggles.

- Cameras: Multiple cameras providing different fields of view. Used for object recognition and lane detection.

- Ultrasonic sensors: Short-range sensors for detecting nearby objects.

- IMU (Inertial Measurement Unit): Measures acceleration and rotation.

- GPS: Provides global positioning.

Redundancy is the key. If LiDAR fails, the system can fall back on cameras and radar. If one camera fails, others take over. This architecture ensures the system can handle sensor failures gracefully.
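The redundancy argument can be made concrete by asking, for each perception task, whether any healthy sensor can still serve it. The task-to-sensor table below is a hypothetical simplification, not Ford's sensor design, and the rule that any uncovered task forces a handoff is likewise an assumption.

```python
# Hypothetical mapping from perception task to the sensor types able to serve it.
CAPABLE_SENSORS: dict[str, set[str]] = {
    "distance_to_objects": {"lidar", "radar", "camera"},
    "relative_velocity": {"radar", "lidar"},
    "lane_markings": {"camera"},
    "traffic_light_state": {"camera"},
    "close_range_obstacles": {"ultrasonic", "camera"},
}


def uncovered_tasks(healthy: set[str]) -> list[str]:
    """Tasks with no healthy sensor left; under this sketch's rule, any entry forces a handoff."""
    return sorted(task for task, sensors in CAPABLE_SENSORS.items() if not sensors & healthy)


if __name__ == "__main__":
    all_sensors = {"lidar", "radar", "camera", "ultrasonic"}
    print(uncovered_tasks(all_sensors - {"lidar"}))   # []
    print(uncovered_tasks(all_sensors - {"camera"}))  # ['lane_markings', 'traffic_light_state']
```

Losing every camera leaves traffic lights uncovered because no other sensor in this table can read signal color, which is why having several cameras matters as much as mixing sensor types.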

The Perception Stack

Raw sensor data is useless without processing. Ford's perception stack converts raw sensor data into a useful representation of the world.

First, sensor fusion: data from multiple sensors is combined into a consistent world model. LiDAR provides accurate distance, cameras provide rich visual information, and radar provides velocity. Together, they create a comprehensive picture.

Second, object detection: the system identifies cars, pedestrians, cyclists, lane markings, traffic signals, and other relevant objects.

Third, object tracking: the system follows detected objects over time, maintaining identities and predicting trajectories.

This stack runs in real-time, typically 10-30 times per second. It requires significant computational power.
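Ford hasn't published its perception stack, but two textbook building blocks illustrate the fusion and tracking steps. Inverse-variance weighting combines independent range estimates of the same object, giving more weight to the more precise sensor (it is the static special case of a Kalman filter update). An alpha-beta filter, a fixed-gain cousin of the Kalman filter, then tracks position and velocity across frames. The sensor variances, filter gains, and 20 Hz frame rate are illustrative assumptions.

```python
def fuse(estimates: list[tuple[float, float]]) -> tuple[float, float]:
    """Inverse-variance weighted mean of independent (value, variance) estimates.

    Returns the fused value and its variance, which is never larger than the
    smallest input variance.
    """
    weights = [1.0 / variance for _, variance in estimates]
    total = sum(weights)
    value = sum(w * v for w, (v, _) in zip(weights, estimates)) / total
    return value, 1.0 / total


def alpha_beta_track(
    measurements: list[float], dt: float, alpha: float = 0.5, beta: float = 0.1
) -> list[tuple[float, float]]:
    """Track one object's position and velocity from a series of position fixes.

    Each frame predicts with a constant-velocity model, then corrects position
    and velocity by fractions (alpha, beta) of the prediction error.
    """
    x, v = measurements[0], 0.0  # start at the first fix; velocity unknown
    history = [(x, v)]
    for z in measurements[1:]:
        x_pred = x + v * dt      # predict where the object should be now
        residual = z - x_pred    # disagreement between measurement and prediction
        x = x_pred + alpha * residual
        v = v + (beta / dt) * residual
        history.append((x, v))
    return history


if __name__ == "__main__":
    # One frame: lidar (precise), radar, and camera (noisy) range to the same car, in meters.
    rng, var = fuse([(50.2, 0.01), (50.8, 0.25), (48.0, 4.0)])
    print(f"fused range {rng:.2f} m, variance {var:.4f} m^2")

    # A car pulling away at 2 m/s, observed for 200 frames at 20 Hz (dt = 0.05 s).
    dt = 0.05
    fixes = [rng + 2.0 * k * dt for k in range(200)]
    x, v = alpha_beta_track(fixes, dt)[-1]
    print(f"tracked position {x:.2f} m, velocity {v:.2f} m/s")
```

The fused range lands close to the precise lidar reading, and the tracker settles on the true 2 m/s even though it starts with no velocity estimate. At 20 Hz, each cycle of this loop has a 50 ms budget.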

The Decision-Making Stack

Once the vehicle understands its environment, it needs to decide what to do. Should it accelerate, brake, or turn? When should it change lanes? How should it handle an unexpected obstacle?

Ford's decision-making system uses a combination of approaches:

- Rule-based systems: Hard-coded rules for specific scenarios. "If traffic light is red, stop." These are simple and reliable but can't handle novel situations.

- Machine learning models: Neural networks trained on real-world driving data. These can handle complex scenarios but are harder to debug and validate.

- Planning algorithms: Algorithms that generate candidate trajectories and evaluate them against cost functions. These ensure smooth, comfortable driving.

The key is integration. All three approaches work together. Rule-based systems handle known scenarios. ML models handle ambiguous situations. Planning algorithms ensure smooth execution.
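A compact sketch of how the three layers might cooperate: a rule filter removes candidate trajectories that break hard constraints, a stubbed `predicted_risk` value stands in for an ML model's judgment, and a cost function picks the best remaining option. The `Candidate` fields and all weights are invented for illustration; Ford hasn't published its planner.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Candidate:
    name: str
    max_decel_mps2: float    # hardest braking the trajectory requires
    lateral_offset_m: float  # distance from lane center
    progress_m: float        # distance gained along the route
    crosses_stop_line: bool
    predicted_risk: float    # 0..1; stands in for a learned model's output


def choose(candidates: list[Candidate], light_is_red: bool) -> Candidate:
    # Rule layer: hard constraints remove illegal options before any scoring.
    legal = [c for c in candidates if not (light_is_red and c.crosses_stop_line)]
    if not legal:
        raise RuntimeError("no acceptable trajectory: request driver takeover")

    # Planning layer: weighted cost trades comfort and risk against progress.
    def cost(c: Candidate) -> float:
        return (c.max_decel_mps2 ** 2
                + 4.0 * c.lateral_offset_m ** 2
                + 50.0 * c.predicted_risk
                - 0.1 * c.progress_m)

    return min(legal, key=cost)


if __name__ == "__main__":
    options = [
        Candidate("proceed", 0.0, 0.0, 30.0, True, 0.05),
        Candidate("brake_gently", 2.0, 0.0, 10.0, False, 0.02),
        Candidate("brake_hard", 6.0, 0.0, 12.0, False, 0.02),
    ]
    print(choose(options, light_is_red=True).name)   # brake_gently
    print(choose(options, light_is_red=False).name)  # proceed
```

Keeping the rules as a filter rather than a cost term means no weighting, however badly tuned, can trade a red-light violation for progress.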

The Handoff System

This is specific to Level 3. When the system encounters a situation it can't handle safely, it needs to hand off control to the driver. The handoff system needs to:

- Detect that a handoff is necessary (system limitation, request from user, potential safety issue).

- Alert the driver clearly and urgently.

- Give the driver a few seconds to respond (this is the controversy).

- Monitor whether the driver is taking appropriate action.

- If the driver doesn't respond, execute a safe fallback maneuver.

Ford is investing heavily in robust handoff systems. The goal is to never hand off in a genuinely dangerous situation. The car should only request control in situations where the driver can reasonably take over.
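The handoff steps listed above map naturally onto a small state machine. This is a hypothetical sketch, not Ford's design: `needs_handoff` and `driver_ready` stand in for the detection and driver-monitoring signals, and the 10 second grace period is an assumption.

```python
from enum import Enum, auto


class Mode(Enum):
    AUTOMATED = auto()
    TAKEOVER_REQUESTED = auto()
    DRIVER_IN_CONTROL = auto()
    MINIMAL_RISK_MANEUVER = auto()  # e.g. slow down and stop in a safe place


class HandoffController:
    """Hypothetical Level 3 handoff logic, advanced once per control cycle."""

    def __init__(self, grace_s: float = 10.0) -> None:
        self.mode = Mode.AUTOMATED
        self.grace_s = grace_s  # assumed time the driver gets to respond
        self._requested_at = 0.0

    def step(self, now: float, needs_handoff: bool, driver_ready: bool) -> Mode:
        if self.mode is Mode.AUTOMATED and needs_handoff:
            # A limitation was detected: alert the driver and start the clock.
            self.mode = Mode.TAKEOVER_REQUESTED
            self._requested_at = now
        elif self.mode is Mode.TAKEOVER_REQUESTED:
            if driver_ready:
                # Driver monitoring confirms the driver has re-engaged.
                self.mode = Mode.DRIVER_IN_CONTROL
            elif now - self._requested_at >= self.grace_s:
                # No response within the grace period: fall back to a safe maneuver.
                self.mode = Mode.MINIMAL_RISK_MANEUVER
        return self.mode


if __name__ == "__main__":
    controller = HandoffController(grace_s=10.0)
    for t, needs, ready in [(0.0, False, False), (1.0, True, False), (6.0, True, False), (11.0, True, False)]:
        print(t, controller.step(t, needs, ready).name)
```

The controversial parameter is `grace_s`: shorter windows reduce the distance traveled with nobody in charge but give a distracted driver less time to rebuild situational awareness.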

In the hypothetical $500-versus-$250 module scenario, Ford's in-house development cuts the per-unit cost by 50% compared with buying from an external supplier. Estimated data.

Privacy and Security Concerns

When you're sharing vehicle data with cloud-based AI systems, privacy matters. Ford needs to address several concerns.

Data Collection

Ford's voice assistant will listen to your voice commands. That audio is processed, converted to text, and discarded (hopefully). But during processing, Ford has access to your words.

Likewise, the Level 3 system will collect comprehensive data about your driving: locations, speeds, routes, braking patterns, acceleration patterns. This data is valuable for improving the system, but it's also sensitive.

Ford has stated that it will be transparent about data collection and will provide users with control over what data is collected and how it's used. The company will comply with privacy regulations like GDPR and CCPA.

However, privacy regulations are still evolving. As of now, there's no comprehensive federal privacy law in the US. That means Ford has significant discretion in how it handles user data.

Data Security

If Ford collects sensitive data, it needs to protect it from hackers. This means encryption in transit and at rest, access controls, regular security audits, and incident response plans.

The automotive industry has a relatively poor track record on cybersecurity. Historical breaches have exposed vehicle location data, personal information, and even enabled remote vehicle control.

Ford is aware of this and has invested in security infrastructure. The company hires security researchers, conducts penetration testing, and works with external security firms. However, security is an ongoing arms race. Attackers are constantly finding new vulnerabilities.

Data Retention

How long does Ford keep your data? This is critical for privacy. Audio recordings of your voice commands could contain sensitive information: health conditions, family details, financial information.

Ford should delete voice recordings as soon as they're processed into text. Similarly, driving data should be retained only as long as necessary for system improvement, then deleted.

Ford hasn't provided detailed data retention policies yet. As the systems launch, these policies will become important for users concerned about privacy.
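A retention policy ultimately becomes a scheduled purge job. The sketch below follows the article's recommendation that voice audio be deleted once transcribed; the 30 day transcript window and 365 day driving-log window are placeholders, since Ford hasn't published its actual policy.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta, timezone

# Placeholder windows; Ford has not published its actual retention periods.
RETENTION = {
    "voice_transcript": timedelta(days=30),
    "driving_log": timedelta(days=365),
}


@dataclass(frozen=True)
class Record:
    kind: str                  # "voice_audio", "voice_transcript", or "driving_log"
    created: datetime
    transcribed: bool = False  # only meaningful for voice_audio


def expired(record: Record, now: datetime) -> bool:
    if record.kind == "voice_audio":
        return record.transcribed  # audio is deleted as soon as it has been turned into text
    return now - record.created > RETENTION[record.kind]


def purge(records: list[Record], now: datetime) -> list[Record]:
    """Keep only the records the retention policy still allows."""
    return [r for r in records if not expired(r, now)]


if __name__ == "__main__":
    now = datetime.now(timezone.utc)
    records = [
        Record("voice_audio", now, transcribed=True),
        Record("voice_audio", now, transcribed=False),
        Record("voice_transcript", now - timedelta(days=45)),
        Record("driving_log", now - timedelta(days=10)),
    ]
    print([r.kind for r in purge(records, now)])  # ['voice_audio', 'driving_log']
```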

Regulatory Challenges and Approvals

Neither voice assistants nor Level 3 autonomy currently faces a formal federal pre-approval process in the US. The regulatory landscape is surprisingly permissive.

Voice Assistant Regulations

Voice assistants are essentially software features. The FCC doesn't regulate them specifically. There are some regulations about in-vehicle electronics (EMC emissions, for example) that apply, but nothing specific to AI assistants.

Privacy regulations do apply. GDPR applies if Ford has European customers. CCPA applies to California residents. Various state laws apply elsewhere. But from a pure vehicle functionality perspective, voice assistants face minimal regulatory hurdles.

Level 3 Autonomy Regulations

Level 3 autonomy faces more scrutiny. Regulators want to ensure the systems are safe before allowing them on public roads.

In the US, the NHTSA (National Highway Traffic Safety Administration) oversees vehicle safety. The agency sets the Federal Motor Vehicle Safety Standards, which manufacturers self-certify against, and it can investigate defects and order recalls once vehicles are on the road.

However, the NHTSA has been relatively hands-off on autonomous vehicles. The agency issued its first automated-vehicle policy guidance in 2016, and later updates have remained largely voluntary, although since 2021 it has required manufacturers to report crashes involving automated driving systems and Level 2 driver assistance. There's no explicit approval process for Level 3 systems. Instead, manufacturers can deploy them and the NHTSA can investigate if there are safety issues.

This is actually advantageous for Ford. The company can move faster without waiting for formal approval.

However, public trust is critical. If Ford's Level 3 system causes accidents, there will be immediate backlash. Regulators will step in. Liability concerns will emerge. The company needs to deploy very conservatively.

State-Level Regulations

States have their own vehicle regulations. Some states explicitly allow Level 3 driving. Others have more restrictive policies. Ford will need to ensure its systems comply with laws in all states where they're deployed.

This is complicated because regulations vary. A system that's legal in California might be illegal in Texas. Ford will need a flexible approach to comply with different state rules.

Ford is targeting Level 3 autonomy by 2028, while Tesla is currently at Level 2 despite aiming for Level 4. GM's Cruise division reached Level 4 but was shut down, and Waymo operates at Level 4 in controlled environments.

Real-World Challenges and Limitations

Here's where we get honest. Ford's AI systems will face real-world challenges that aren't obvious in press releases.

Weather and Sensor Degradation

Sensors degrade in bad weather. LiDAR doesn't work well in heavy rain or snow. Cameras struggle in fog. Radar provides velocity information that cameras can't, but it's less precise.

Ford's redundant sensor architecture helps, but it doesn't solve the problem completely. In severe weather, the system might hand off to the driver or slow down dramatically.
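The "hand off or slow down" decision can be sketched as a simple policy over per-sensor health. This is a minimal illustration under stated assumptions, not Ford's design: the sensor names, the confidence scores in [0, 1], and both thresholds are hypothetical, and a production system would fuse far richer signals.

```python
from enum import Enum


class Action(Enum):
    CONTINUE = "continue"
    SLOW_DOWN = "slow_down"
    REQUEST_TAKEOVER = "request_takeover"


# Hypothetical thresholds chosen for illustration only.
HEALTHY_THRESHOLD = 0.8  # sensor is performing normally
USABLE_THRESHOLD = 0.5   # sensor is degraded but still informative


def choose_action(confidence: dict[str, float]) -> Action:
    """Pick a driving action from per-sensor confidence scores in [0, 1].

    Assumed policy: keep driving normally only if every sensor is healthy,
    slow down while at least two sensors remain usable (redundancy preserved),
    and otherwise ask the driver to take over.
    """
    if not confidence:
        # No sensor data at all: the only safe choice is to hand off.
        return Action.REQUEST_TAKEOVER
    if all(c >= HEALTHY_THRESHOLD for c in confidence.values()):
        return Action.CONTINUE
    usable = [name for name, c in confidence.items() if c >= USABLE_THRESHOLD]
    if len(usable) >= 2:
        return Action.SLOW_DOWN
    return Action.REQUEST_TAKEOVER


# Heavy snow: LiDAR and cameras degraded, radar still healthy.
print(choose_action({"lidar": 0.3, "camera": 0.4, "radar": 0.9}))
```

Requiring two usable sensors rather than one reflects the redundancy idea above: a single surviving sensor has nothing to cross-check itself against.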

This is a real limitation. If you live somewhere with frequent snow and ice, Level 3 autonomy might be unavailable for much of the winter.

Edge Cases and Long Tails

Autonomous driving systems are trained mostly on data from normal driving scenarios. But there's a long tail of unusual situations: a car broken down in the middle of the road, a construction zone without clear markings, a cyclist on the sidewalk darting into the road.

Training on more data helps, but you can never capture every possible scenario. Ford will face situations its system hasn't seen before.

The question is how the system handles unknowns. Does it hand off to the driver? Does it slow down? Does it try to navigate anyway? These are hard problems without perfect solutions.
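One widely discussed answer is a timed takeover request with a fallback: alert the driver, wait a few seconds, and if nobody responds, perform a safe stop. The sketch below models that as a small state machine. It is a hypothetical illustration, not Ford's logic; the mode names and the 10-second window are placeholders, since Ford hasn't published its timing.

```python
from enum import Enum, auto
from typing import Optional


class Mode(Enum):
    SYSTEM_DRIVING = auto()
    TAKEOVER_REQUESTED = auto()
    DRIVER_DRIVING = auto()
    MINIMAL_RISK_MANEUVER = auto()  # e.g. slow down and stop on the shoulder


class Level3Supervisor:
    """Hypothetical hand-off logic for a Level 3 system (illustrative only)."""

    def __init__(self, takeover_window_s: float = 10.0) -> None:
        self.mode = Mode.SYSTEM_DRIVING
        self.takeover_window_s = takeover_window_s  # placeholder, not a Ford figure
        self._requested_at: Optional[float] = None

    def on_unknown_situation(self, now: float) -> None:
        """The system meets a scenario it can't handle: alert the driver."""
        if self.mode is Mode.SYSTEM_DRIVING:
            self.mode = Mode.TAKEOVER_REQUESTED
            self._requested_at = now

    def on_driver_takes_control(self) -> None:
        """The driver grabs the wheel or pedals; control passes to them."""
        if self.mode is not Mode.DRIVER_DRIVING:
            self.mode = Mode.DRIVER_DRIVING
            self._requested_at = None

    def tick(self, now: float) -> Mode:
        """Advance the clock; start the fallback if the window has expired."""
        if (
            self.mode is Mode.TAKEOVER_REQUESTED
            and self._requested_at is not None
            and now - self._requested_at >= self.takeover_window_s
        ):
            self.mode = Mode.MINIMAL_RISK_MANEUVER
        return self.mode


supervisor = Level3Supervisor()
supervisor.on_unknown_situation(now=0.0)
print(supervisor.tick(now=4.0))   # still waiting for the driver
print(supervisor.tick(now=10.0))  # window expired: fallback begins
```

Letting the driver take over even mid-maneuver, as `on_driver_takes_control` does, is a design choice; a real system would also need to confirm the driver is actually attentive before handing back control.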

Legal Liability

If a Ford vehicle equipped with Level 3 autonomy causes an accident, who's liable? Is it Ford? Is it the driver? Is it the other person who broke traffic laws?

This is genuinely unsettled legal territory. Courts will need to establish precedent. Regulators will need to create frameworks. Insurance companies will need to figure out how to price this risk.

Ford will likely face lawsuits. The company has insurance and legal resources to handle that, but litigation risk is real.

The Business Model Question

Here's something to think about: how does Ford make money from these AI features?

Subscription Models

One approach: charge subscriptions for advanced features. Ford could offer Level 3 autonomy as a $50/month subscription add-on. Customers who want it pay, others don't.

Tesla uses this model, charging $99/month in the US for Full Self-Driving. However, take rates appear to be relatively low: most Tesla owners don't pay for FSD.

Ford might face similar adoption challenges. Consumers are resistant to subscription fees for features they expected to be included.
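The arithmetic behind that resistance is easy to sketch. The helpers below compare a subscription against a one-time purchase and estimate fleet-wide revenue. The $50/month fee is the hypothetical from above and $99/month is Tesla's FSD rate; the one-time price and the take rate are placeholders for illustration, not announced or reported figures.

```python
import math


def subscription_cost(monthly_fee: float, months: int) -> float:
    """Total paid after `months` of a flat monthly subscription."""
    return monthly_fee * months


def breakeven_months(monthly_fee: float, one_time_price: float) -> int:
    """First month at which cumulative subscription fees reach a one-time price."""
    if monthly_fee <= 0:
        raise ValueError("monthly_fee must be positive")
    return math.ceil(one_time_price / monthly_fee)


def annual_revenue(vehicles: int, take_rate: float, monthly_fee: float) -> float:
    """Yearly subscription revenue across a fleet for an assumed take rate."""
    return vehicles * take_rate * monthly_fee * 12


# Five years of a hypothetical $50/month Level 3 subscription.
print(subscription_cost(50, 60))   # 3000
# $99/month against a placeholder $8,000 one-time price.
print(breakeven_months(99, 8000))  # 81
# One million equipped vehicles at a hypothetical 10% take rate.
print(annual_revenue(1_000_000, 0.10, 50))
```

The take rate dominates the revenue estimate, which is why low adoption is the central risk for a subscription strategy.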

In-Vehicle Monetization

Another approach: use AI features to enable new revenue streams. The voice assistant could recommend nearby restaurants or services. Ford gets a commission if you book through its recommendation.

When the car is driving itself on a long highway trip, Ford could offer premium entertainment or shopping experiences. Passengers could watch movies, order food, shop online.

This is speculative, but it's how tech companies typically monetize AI features: create engagement, then monetize attention.

Hardware Bundling

The simplest model: include AI features as standard on vehicles and make money from the hardware margin. Better features justify higher vehicle prices.

This is a likely starting point for Ford. The voice assistant and Level 3 autonomy could be standard or bundled with higher trim levels rather than sold separately.

Over time, as these features become commoditized, Ford might move to subscription or usage-based models.

What This Means for Ford Owners and Potential Buyers

Let's ground this in reality. If you own a Ford or are considering buying one, what does this announcement mean for you?

For Current Ford Owners

If you own a Ford built before 2026, you're unlikely to get Level 3 autonomy or the in-car version of the voice assistant, though you may be able to use the app-based assistant. The in-car features are designed for new vehicles.

Your BlueCruise system might get software updates that make it more capable. Ford might improve its Level 2 features. But the big AI upgrades require new hardware that older vehicles don't have.

This is typical in the auto industry. Features are baked into new vehicle generations, not retrofitted to older ones.

For People Buying in 2026-2027

If you buy a Ford in 2026 or 2027, you'll get the voice assistant through the mobile app first. That's useful for planning trips, getting information about your vehicle, or controlling some vehicle functions.

You probably won't get Level 3 autonomy yet. That's coming with the UEV platform in 2028.

For People Buying in 2028+

If you buy a new Ford in 2028 or later built on the UEV platform, you should be able to get Level 3 autonomy, assuming Ford offers it on the model and trim you choose. This is where things get genuinely interesting.

Level 3 will change how you experience driving on highways. You can take your eyes off the road in certain situations. You can relax instead of constantly monitoring the road.

But it's not full autonomy. You need to be ready to take over. It requires a mental shift from current driving.

The Pricing Question

Will the UEV platform with Level 3 autonomy be affordable? Ford says the goal is to make advanced features accessible to price-conscious buyers, not just luxury market buyers.

If Ford can deliver an EV with Level 3 autonomy for under $35,000, that's transformative. It would make advanced autonomous features accessible to mainstream buyers.

But there's skepticism. Battery costs, sensor costs, and computing costs are still high. Can Ford really deliver Level 3 autonomy in a $35,000 vehicle? That's the critical question.

Comparing Ford's Strategy to Competitors

Let's zoom out and look at how Ford's approach compares to other companies' strategies.

Tesla's Full Self-Driving Obsession

Tesla is betting everything on Level 4+ autonomy. The company invests billions in FSD development. Elon Musk has promised Level 4 robotaxis for years. The promise keeps getting delayed, but the company keeps investing.

Tesla's advantage: real-world data. With over 3 million vehicles equipped with cameras collecting driving data, Tesla has one of the largest autonomous driving datasets in the world.

Tesla's disadvantage: the tech isn't proven. After years of development, FSD still requires active driver supervision (Tesla now labels it "Supervised"). Its robotaxi service remains a small pilot rather than a broad driverless deployment.

Ford's Level 3 approach is more conservative. The company is being realistic about what's achievable in the near term.

Waymo's Robotaxi Focus

Waymo has taken a different path. Instead of selling autonomous vehicles, Waymo operates robotaxi services. The company controls the vehicles, the driving, and the customer experience.

Waymo's advantage: incredible focus. The company doesn't need to deal with diverse consumer use cases or varied driving environments. Waymo operates in specific, well-mapped cities.

Waymo's disadvantage: limited market. Robotaxi services are most valuable in dense urban areas. Most car purchases happen in suburban and rural areas that Waymo doesn't serve.

Ford's approach reaches different markets: consumers everywhere, not just city dwellers.

Traditional Automakers' Incremental Approach

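Mercedes-Benz, BMW, and Audi are pursuing Level 3 and Level 2+ systems; Mercedes (Drive Pilot) and BMW (Personal Pilot L3) already sell Level 3 features that work only in limited conditions and markets. These are incremental improvements that create value in the near term without betting the company on Level 4.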

This approach is safer and more pragmatic than Tesla's moonshot or Waymo's niche focus. If the technology doesn't pan out, the companies haven't lost everything.

Ford is following this playbook. It's a sensible strategy for an established automaker with existing products and customers.

The Future Roadmap: What's After Level 3?

Ford's 2028 Level 3 launch isn't the end of the story. What comes next?

Level 4 Possibility

Ford hasn't ruled out Level 4 autonomy in the future. At Level 4, the vehicle handles all driving, including emergencies, within its defined operating conditions; no driver needs to be ready to intervene there.

Level 4 is significantly harder than Level 3. It requires solving edge cases that Level 3 systems can hand off. It requires better sensors, more computing power, and more sophisticated algorithms.

Ford probably won't pursue Level 4 for consumer vehicles in the near term. But for robotaxi services or specialized fleet applications, Level 4 might make sense longer term.

AI Assistant Evolution

Ford's voice assistant will evolve. Early versions will handle basic queries and vehicle functions. Future versions will be more sophisticated, more personalized, more proactive.

Imagine an assistant that learns your driving patterns and suggests maintenance before problems occur. Or recommends routes based on your preferences and real-time traffic. Or helps you navigate unfamiliar cities with personalized recommendations.

This requires continuing investment in LLM technology, machine learning, and data collection. But the opportunity is significant.

Integration with Mobility Services

Ford is increasingly interested in mobility services, not just vehicle sales. The company previously owned Spin, an electric scooter company (sold to Tier Mobility in 2022), and has partnerships with ride-sharing services.

Future integration could mean: your Ford's voice assistant coordinates with ride-sharing services if the autonomous system thinks it's safer to hand off to a professional driver. Or your vehicle seamlessly transitions between autonomous driving and calling a professional driver service for complex situations.

These aren't fantasy. They're logical extensions of Ford's strategy.

Investment and Financial Implications

What does this mean for Ford's business?

Capital Expenditure

Developing AI voice assistants and Level 3 autonomy requires substantial investment. Ford is hiring engineers, building R&D facilities, and investing in testing infrastructure.

These investments reduce near-term profitability. They're bets on future differentiation.

Competitive Positioning

If Ford successfully launches Level 3 autonomy in 2028 ahead of competitors, the company gains a competitive advantage. Early adoption of a compelling feature attracts customers.

If competitors beat Ford to market or launch more capable systems, Ford loses ground.

This competitive dynamic drives the entire industry. Everyone is racing to demonstrate autonomous capabilities.

Valuation Impact

Investors reward companies perceived as technology leaders. Tesla commands a valuation far above what its current profits alone would justify, largely because investors believe in its autonomous driving potential.

Ford's AI announcements might improve investor perception. But Ford needs to execute flawlessly. Missed timelines or disappointing capabilities would hurt valuation.

Skepticism Worth Entertaining

It's healthy to be skeptical. Ford's announcements sound great, but there are legitimate reasons to question whether they'll materialize as promised.

Timeline Credibility

Ford says Level 3 autonomy in 2028. That's only a few years away. Given the complexity of autonomous driving systems, this timeline is aggressive.

Almost every company in autonomous vehicles has missed timelines. Tesla promised Level 4 autonomy years ago. Google's Waymo took longer than expected. It's reasonable to expect Ford might slip.

Feature Scope

When Ford launches Level 3 in 2028, what exactly will it do? Will it work everywhere or just on highways? Will it work in rain and snow? Will it work on rural roads or only interstates?

If Level 3 is limited to perfect weather and well-marked highways, it's less impressive than it sounds.

Cost Viability

Ford promises Level 3 in affordable vehicles. But autonomous driving hardware is expensive. Sensors, computers, and redundant systems add up. Can Ford really deliver this in a $35,000 vehicle?

Maybe. With careful engineering and in-house development, it's possible. But don't be shocked if Level 3 vehicles cost considerably more than Ford currently projects.

Adoption Barriers

Even if Ford delivers Level 3 as promised, will customers want it? Consumer trust in autonomous vehicles is still low. Many drivers are skeptical about handing off control to a computer.

Ford might develop a capability that customers don't care about. That would be a massive waste of R&D investment.

The Bigger Picture: AI Transformation in Automotive

Ford's announcements reflect a broader transformation in the automotive industry. AI is becoming central to vehicle design, manufacturing, and operation.

Software-Defined Vehicles

Cars are transitioning from mechanical products with software to software-defined products with mechanical components. This is a fundamental shift.

Ford's in-house development strategy reflects this shift. The company is investing in software engineering capability because software is now central to competitive advantage.

Personalization and Customization

AI enables personalization at scale. Ford's voice assistant that understands your vehicle and preferences is an example. Future vehicles will customize driving modes, recommendations, and interfaces based on individual preferences.

This creates stronger customer relationships and increases switching costs. If your vehicle knows your preferences and adapts to you, you're less likely to switch brands.

Safety and Liability Evolution

As autonomous features become mainstream, safety and liability frameworks will evolve. Insurance will change. Regulations will adapt. Legal precedent will be established.

Ford's willingness to deploy Level 3 suggests confidence in the safety of its systems. If these systems cause accidents, the liability implications could be significant for Ford and the entire industry.

Environmental Considerations

Autonomous vehicles could improve fuel efficiency through smoother driving patterns and optimized routing. They could reduce congestion through better traffic coordination.

Or they could increase total vehicle miles traveled if autonomous vehicles make driving more convenient. This would increase emissions, not reduce them.

The environmental impact depends on how autonomy is deployed and what transportation patterns emerge.

FAQ

What is Ford's AI voice assistant and when is it launching?

Ford's AI voice assistant is an intelligent voice-controlled system that will integrate with your vehicle and understand your specific car's capabilities. It launches on Ford and Lincoln mobile apps in 2026, then moves to in-car infotainment systems in 2027. The assistant accesses vehicle-specific data like truck bed dimensions and payload capacity to provide personalized answers, making it smarter than generic AI assistants like ChatGPT.

What is Level 3 autonomous driving and how does it differ from Level 2?

Level 3 (conditional automation) means the vehicle can drive itself within defined conditions, such as certain highways, and the driver can take their eyes off the road while it's engaged. Level 2 systems, like Ford's current BlueCruise, require drivers to keep their eyes on the road at all times; BlueCruise allows hands-free driving on pre-mapped highways but monitors driver attention. Level 3 gives drivers a break from constant monitoring, though they must remain ready to take control within seconds if the system requests it.
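The distinction maps onto the SAE J3016 levels, which differ mainly in who must watch the road and who serves as the fallback when something goes wrong. The sketch below encodes that as a small lookup table. The two boolean fields are a simplification for illustration; J3016 also ties each level to an operational design domain, which this table leaves out.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class SaeLevel:
    level: int
    name: str
    driver_supervises: bool   # must the driver watch the road while engaged?
    driver_is_fallback: bool  # must a human be ready to take over on request?


# Simplified view of SAE J3016; Levels 4-5 need no human fallback
# within their operating conditions.
SAE_LEVELS = {
    0: SaeLevel(0, "No Driving Automation", True, True),
    1: SaeLevel(1, "Driver Assistance", True, True),
    2: SaeLevel(2, "Partial Driving Automation", True, True),
    3: SaeLevel(3, "Conditional Driving Automation", False, True),
    4: SaeLevel(4, "High Driving Automation", False, False),
    5: SaeLevel(5, "Full Driving Automation", False, False),
}


def eyes_off_allowed(level: int) -> bool:
    """True if the driver may look away while the feature is engaged."""
    return not SAE_LEVELS[level].driver_supervises


def needs_takeover_ready_driver(level: int) -> bool:
    """True if a human must be ready to take control on request."""
    return SAE_LEVELS[level].driver_is_fallback


for lvl in (2, 3, 4):
    info = SAE_LEVELS[lvl]
    print(lvl, info.name, eyes_off_allowed(lvl), needs_takeover_ready_driver(lvl))
```

Level 3 is the only row where the driver may look away yet must still be ready to respond, which is exactly why handoff design matters so much at that level.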

When will Ford's Level 3 autonomous driving feature be available?

Ford plans to introduce Level 3 autonomous driving in 2028 as part of its Universal Electric Vehicle (UEV) platform launch. This hands-free, eyes-off system will initially work on highways and in specific conditions, with plans for point-to-point driving that can recognize traffic lights and navigate intersections.

Why is Ford developing these technologies in-house instead of partnering with suppliers?

Ford is developing core AI and autonomous technologies in-house to reduce costs and maintain control over product development. By building custom hardware modules and integration layers in-house, Ford can optimize systems specifically for its vehicles without the markup of external suppliers, reducing per-unit costs significantly across millions of vehicles.
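The "across millions of vehicles" part carries the argument. In-house development adds a large fixed engineering cost that only pays off once it's spread over enough units. The sketch below shows that break-even logic; every dollar figure is a hypothetical placeholder, not Ford data.

```python
import math


def per_unit_cost(fixed_cost: float, unit_cost: float, volume: int) -> float:
    """Fixed engineering cost spread over `volume` units, plus the per-unit cost."""
    if volume <= 0:
        raise ValueError("volume must be positive")
    return fixed_cost / volume + unit_cost


def breakeven_volume(fixed_cost: float, in_house_unit: float, supplier_unit: float) -> int:
    """Smallest volume at which building in-house costs no more than buying."""
    savings_per_unit = supplier_unit - in_house_unit
    if savings_per_unit <= 0:
        raise ValueError("in-house parts must be cheaper per unit to ever break even")
    return math.ceil(fixed_cost / savings_per_unit)


# Hypothetical: $100M to develop a module that costs $400 to build in-house,
# versus $600 per unit bought from a supplier.
FIXED, IN_HOUSE, SUPPLIER = 100_000_000, 400, 600
print(breakeven_volume(FIXED, IN_HOUSE, SUPPLIER))  # 500000
print(per_unit_cost(FIXED, IN_HOUSE, 2_000_000))    # 450.0
```

Below the break-even volume, buying from a supplier is cheaper; above it, every extra vehicle widens the in-house advantage.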

How does Ford's voice assistant access vehicle information to answer your questions?

Ford hasn't detailed the architecture, but the assistant is designed to draw on data about your specific vehicle. When you ask about your truck's payload capacity or other specifications, it can look up your exact trim level, dimensions, and capabilities, then use that data to generate accurate, personalized responses that a generic AI system couldn't provide.
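A common pattern for this kind of feature is to retrieve the vehicle's facts and inject them into the language model's prompt, so answers are grounded in that car's data rather than generic knowledge. Ford hasn't said it works this way; the sketch below just illustrates the idea. The vehicle ID, spec values, and helper names are hypothetical placeholders, not real Ford specifications or APIs.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class VehicleProfile:
    model: str
    trim: str
    payload_lb: int
    bed_length_in: float


# Placeholder records keyed by a made-up vehicle ID; NOT real Ford specs.
PROFILES = {
    "demo-truck-001": VehicleProfile("Example Pickup", "Example Trim", 1800, 66.0),
}


def build_grounded_prompt(vehicle_id: str, question: str) -> str:
    """Prepend this vehicle's facts so the model answers from them, not guesses."""
    p = PROFILES[vehicle_id]
    facts = (
        f"Vehicle: {p.model} ({p.trim})\n"
        f"Maximum payload: {p.payload_lb} lb\n"
        f"Bed length: {p.bed_length_in} in"
    )
    return (
        "Answer using only the vehicle facts below. "
        "If the facts don't cover the question, say so.\n\n"
        f"{facts}\n\nQuestion: {question}"
    )


def within_payload(vehicle_id: str, cargo_lb: float) -> bool:
    """Deterministic check the assistant could run before answering."""
    return cargo_lb <= PROFILES[vehicle_id].payload_lb


print(build_grounded_prompt("demo-truck-001", "Can I carry 1,500 lb of gravel?"))
print(within_payload("demo-truck-001", 1500))
```

Doing the payload comparison in plain code, rather than asking the model to do the arithmetic, keeps a safety-relevant answer deterministic.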

What are the privacy concerns with Ford's AI systems?

Ford's voice assistant will record and process your voice commands, and Level 3 systems will collect driving data including locations, speeds, and routes. Privacy risks include unauthorized access, data breaches, and unclear data retention policies. Ford must comply with regulations like GDPR and CCPA, and consumers should review data collection policies carefully before opting in to these features.

Will Ford's Level 3 autonomy work in all weather and road conditions?

Level 3 will likely have limitations in severe weather like heavy snow or rain, where sensors like LiDAR and cameras degrade. The system will initially focus on well-marked highways in favorable conditions, expanding to more complex scenarios over time. Drivers in areas with frequent severe weather should expect handoffs to manual driving during poor conditions.

How does Ford's strategy compare to Tesla's Full Self-Driving approach?

Ford is pursuing incremental Level 3 autonomy with more measured timelines, while Tesla is betting billions on Level 4+ full autonomy with aggressive promises. Ford's approach is more conservative and pragmatic, acknowledging current technological limitations, whereas Tesla is pursuing a moonshot that aims to remove the need for driver supervision altogether.

What is the Universal EV Platform (UEV) and why is Level 3 launching with it?

The UEV is Ford's new, more affordable electric vehicle platform designed to compete in the mass market after the F-150 Lightning cancellation. By integrating Level 3 autonomy into this affordable platform from the ground up, Ford can reach broader customers with advanced autonomous features rather than limiting them to expensive vehicles, similar to how Tesla made EVs mainstream with the Model 3.

Will Level 3 autonomous driving require a subscription fee?

Ford hasn't announced specific pricing for Level 3 autonomy. The feature might be included standard, added as a trim upgrade, or offered as a monthly subscription like Tesla's $99/month Full Self-Driving. Ford's focus on affordability suggests the feature will be accessible at reasonable cost points, but specifics will emerge closer to the 2028 launch.

How does Ford's in-house development reduce costs compared to supplier partnerships?

When Ford designs custom hardware modules optimized specifically for its vehicles and software stack, it can eliminate unnecessary features and reduce complexity, dropping per-unit costs from perhaps

What happens if Ford's Level 3 system encounters a situation it can't handle safely?

When Ford's Level 3 system encounters an unfamiliar or dangerous situation, it will alert the driver and request they take control. The driver has a few seconds to respond and take over steering, acceleration, and braking. If the driver doesn't respond, the system executes a safe fallback maneuver, such as gradually slowing to a stop on the shoulder.

Conclusion: The Road Ahead for Ford and Autonomous Vehicles

Ford's announcement of Level 3 autonomy in 2028 and AI voice assistants launching this year represents a genuine inflection point for the company. But it's important to understand this announcement in context: it's not Tesla's moonshot, and it's not revolutionary. It's pragmatic, strategic, and designed to address real business challenges.

The EV market has cooled. Customers aren't excited about $50,000 electric trucks. Profit margins are compressed. Ford needed a strategy to differentiate vehicles and justify premium pricing. AI-powered features and autonomous driving are that strategy.

Ford's in-house development approach is savvy. The company is building the pieces that matter most: the integration layer, the custom hardware, the personalized experience. It's not trying to build its own LLMs or custom silicon. It's being realistic about where to invest R&D resources.

The timeline is aggressive. A few years to Level 3 is optimistic given industry history. Expect delays. But Ford's being specific about what Level 3 will do, not making vague promises about full autonomy sometime in the future.

The real test comes in 2026 when the voice assistant launches through mobile apps. That's when we'll see whether Ford's technology actually works and whether customers actually use it. If that launch is solid, confidence in the 2028 Level 3 timeline increases. If the launch is buggy or underwhelming, skepticism is warranted.

For Ford customers, this is mostly good news. AI features that actually work, that understand your vehicle and preferences, are genuinely useful. Level 3 autonomy, when it arrives, will change how you experience driving.

For the industry, Ford's approach signals a middle path between Tesla's aggressive autonomy bets and the status quo of driver assistance features. Traditional automakers are collectively betting that Level 3 is the sweet spot: ambitious enough to be genuinely transformative, but achievable with current technology and acceptable to regulators.

Time will tell if Ford executes. The company has the resources, the engineering talent, and the motivation. But execution is always harder than announcement. Watch the 2026 voice assistant launch closely. That will tell you everything you need to know about whether Ford can deliver on its 2028 promises.

Key Takeaways

- Ford's AI voice assistant launches on mobile apps in 2026 before integrating into vehicles in 2027, offering personalized vehicle-specific recommendations

- Level 3 autonomy arriving in 2028 with the Universal EV Platform will allow eyes-off driving in specific conditions, representing a pragmatic middle path between current Level 2 systems and full autonomy

- In-house development of software and hardware modules is expected to reduce per-unit costs by an estimated 50% compared to third-party suppliers

- Ford's approach differs significantly from Tesla's aggressive full autonomy bet and Waymo's limited robotaxi service model, focusing on accessible features for mainstream buyers

- Real-world challenges including weather sensor degradation, edge cases, and driver handoff complexity remain significant implementation hurdles for Level 3 systems
