Introduction: The Promise of Truly Personal AI

Last year, asking an AI assistant to do anything meaningful felt like babysitting a brilliant toddler. You'd request something simple—"Add this to my calendar"—and it would stare blankly at you, then confidently suggest something completely unrelated. The experience made you wonder if all this AI hype was just, well, hype.

Then Google's Gemini started getting weird in the best way possible. Not weird in the sense of being creepy or invasive, but weird in the sense that it started actually knowing things about you without you spelling everything out.

In 2025, Google rolled out what they're calling Personal Intelligence, a feature that lets Gemini access your Gmail, Calendar, Photos, and search history without you explicitly asking it to check those sources every single time. It's the kind of functionality that feels simultaneously like a leap forward and oddly familiar, like déjà vu in software form.

Here's the thing: it works. Sometimes brilliantly. Sometimes hilariously badly. And sometimes in ways that make you question whether AI has actually solved anything or just gotten better at knowing your secrets.

I spent weeks testing this feature across dozens of real-world scenarios. Book recommendations based on my reading history. Lawn care planning using photos and search history. Neighborhood suggestions for photography outings. Route planning for bike rides. Everything that seemed impossible six months ago now actually functions—but with catch after catch waiting in the details.

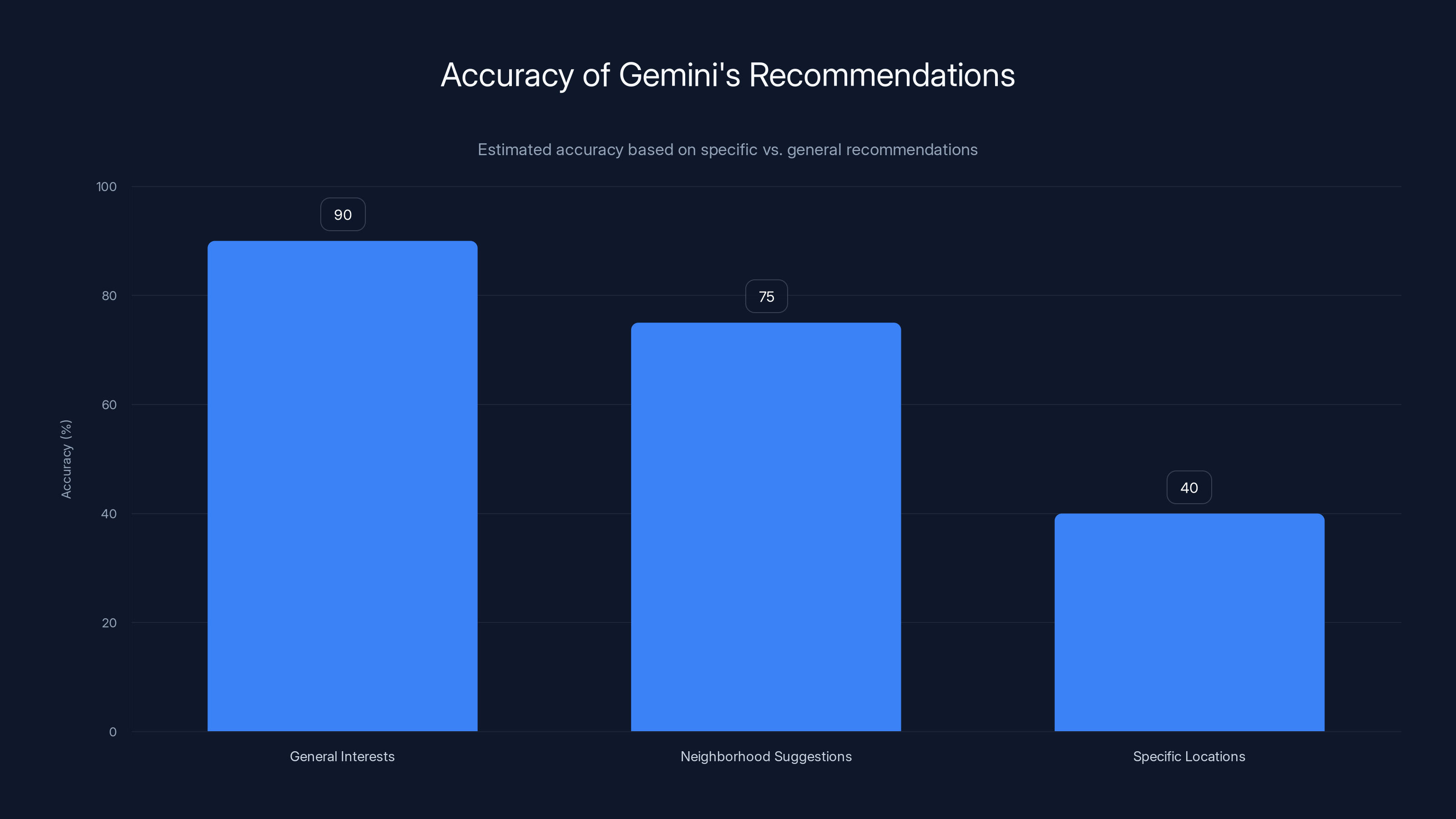

The bigger story isn't that Gemini now has access to your personal data. It's that even with intimate knowledge of who you are, what you like, and where you've been, AI still fundamentally struggles with the kind of reasoning that makes recommendations actually useful. It can spot patterns in your life. It can synthesize broad themes about your interests. But ask it to connect those patterns to specific, verifiable facts about the world? That's where things fall apart.

This article digs into what Personal Intelligence actually is, how it works, what it gets right, what it gets catastrophically wrong, and what it tells us about the future of AI assistants that actually know you.

TL;DR

- Personal Intelligence is opt-in: Once enabled, Gemini can access Gmail, Calendar, Photos, and search history without explicit prompting. It's available only to Gemini Pro and Ultra subscribers

- It actually works for some tasks: Calendar management, photo-based recommendations, and task creation from conversations have become genuinely functional features

- Details are where AI fails: Gemini excels at broad recommendations but struggles with specific locations, exact information, and real-world verification

- The privacy model is reasonable: You control which apps Gemini can access, and there's no automatic data sharing without your explicit setup

- It's still not a replacement for doing your own research: The convenience gains are offset by the fact-checking burden when AI's confidence exceeds its accuracy

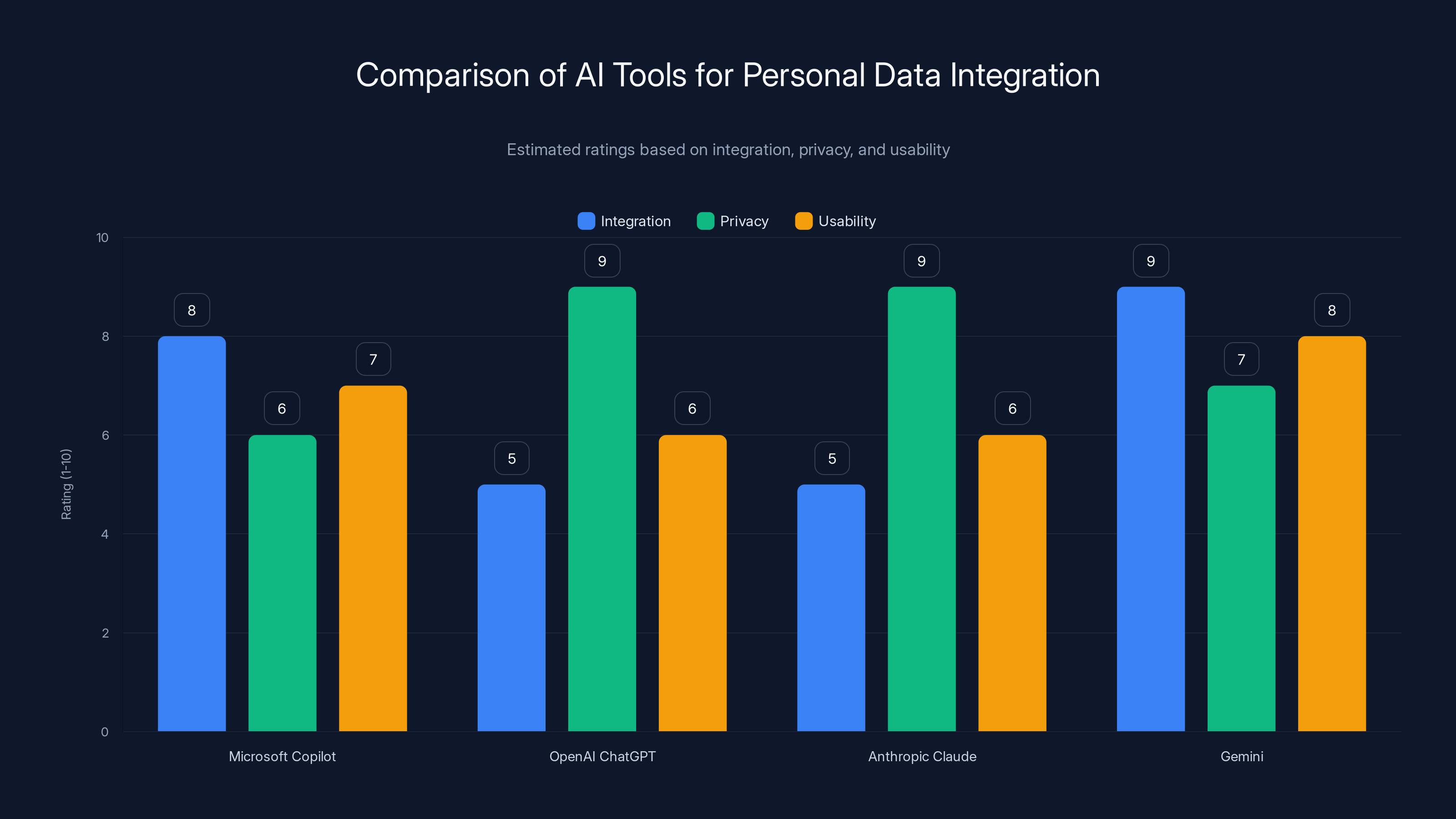

Gemini scores highest in integration and usability, while OpenAI's ChatGPT leads in privacy. Estimated data based on typical feature analysis.

What Personal Intelligence Actually Is (And Isn't)

Personal Intelligence sounds like something out of a sci-fi thriller where your AI assistant knows everything about you. The reality is both more mundane and more interesting than that.

At its core, Personal Intelligence is a permission layer combined with some basic context injection. When you enable it, you're essentially telling Gemini: "You have read-only access to these specific Google services, and if my prompt seems relevant to those services, go ahead and check them without me explicitly asking."

That last part is the key difference from previous versions. Before, Gemini had hooks into Google Workspace. But using those hooks required explicit prompts. You'd have to say, "Check my calendar for conflicts" or "Find that email about the meeting." The AI wouldn't volunteer to check unless you specifically asked.

With Personal Intelligence, Gemini becomes proactive about consulting your personal data. Ask it to "recommend a time for a video call tomorrow," and it doesn't just suggest times based on general best practices. It actually opens your Calendar, sees your schedule, identifies gaps, and makes intelligent suggestions based on what you've actually got scheduled.

That's a genuinely different experience.

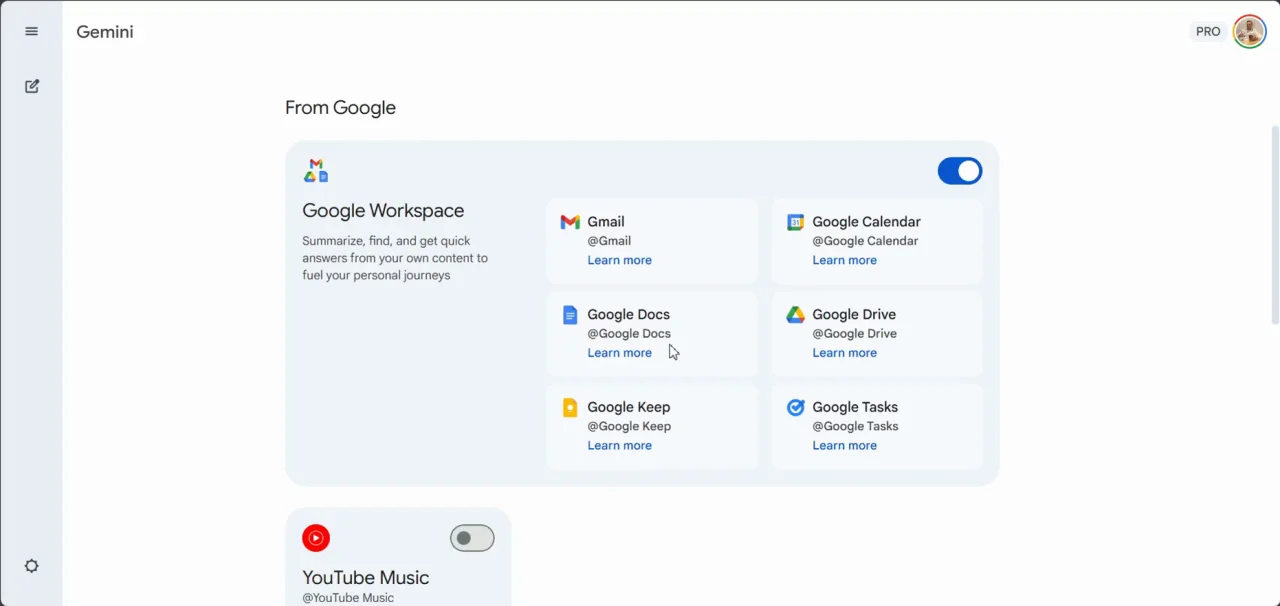

You choose exactly which Google services Gemini can access. Want it to have access to Photos and Calendar but not Gmail? You can do that. Want it locked out of everything except search history? You can configure that too. It's entirely opt-in, and Google provides clear toggles for each service.

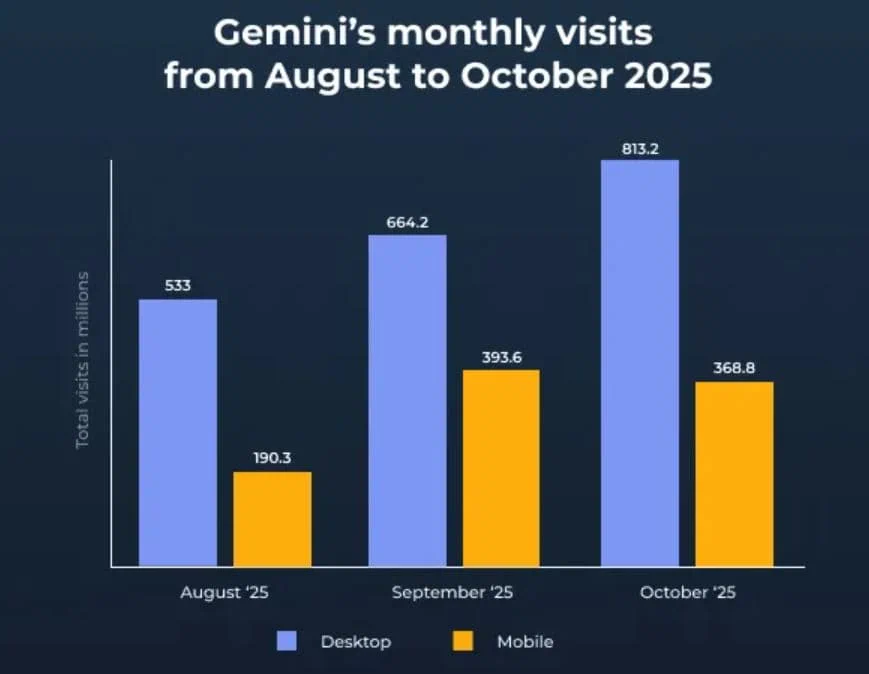

The feature launched in beta exclusively for Gemini Pro and Ultra subscribers, which means the people most likely to encounter it are the ones paying monthly for access. That's an interesting choice, because it suggests Google sees Personal Intelligence as a premium feature rather than something everyone gets automatically.

There's also something important about what Personal Intelligence is not. It's not reading your private messages or eavesdropping on your communications in the way some privacy advocates feared. It's not creating a complete dossier of your life without permission. It's not automatically uploading your personal data to Google's servers in new ways. What it actually does is let an existing AI tool access data you've already given Google, with your explicit permission and clear controls.

That distinction matters because there's been so much hype and fear around AI assistants having personal information that it's easy to assume this feature is creepier than it actually is.

Gemini excels at identifying general interests with 90% accuracy but struggles with specific location recommendations, dropping to 40% accuracy. Estimated data.

The Previous Approach: Why Explicit Prompting Wasn't Cutting It

To understand why Personal Intelligence matters, you need to understand what came before and why it was so frustratingly limited.

For the past couple of years, every major AI assistant has theoretically had the ability to interact with your personal data. ChatGPT can read your files if you ask it to. Claude can process documents you upload. Gemini could already access your Workspace apps with a prompt.

But there was a critical friction point: you had to ask. Every time you wanted the AI to check something, you had to be specific. You couldn't just say, "What should I do next?" You had to say, "Check my calendar, then check my email, then look at my to-do list, and tell me what I should prioritize."

That defeats the purpose of having an AI assistant that actually knows you.

I ran into this constantly about eight months ago when testing earlier versions of Gemini. I'd ask it to "help me plan my week" and it would give me generic productivity advice. Completely useless. When I explicitly asked it to "look at my calendar and then suggest a structure for my week," suddenly it had actual information to work with and could make something relevant.

But that requires you to know that the AI can check your calendar and to think to ask it to do so. Most people don't. Most people treat AI assistants like they're fundamentally disconnected from their lives, which is exactly how they behave without explicit permission and prompting.

There's a reason your phone's assistant has been so useful over the past decade despite being way less capable than a modern large language model. Apple's Siri and Google's Assistant understand context without you spelling it out. You ask Siri "What's on my calendar tomorrow?" and it doesn't need you to explain what calendar you mean or which person you're asking about. It knows because it has access to your information and understands that "my" refers to you.

AI chatbots on the web or mobile phones without personal data integration treat every conversation like meeting a stranger who knows nothing about you. That's useful for abstract reasoning and creative tasks. It's useless for personal assistance.

How the Personal Intelligence Setup Process Works

Enabling Personal Intelligence isn't complicated, which is actually one of the things Google got right here. They didn't bury it behind mysterious menus or require you to jump through security hoops.

When you launch Gemini as a Pro or Ultra subscriber, you get a prompt suggesting you enable Personal Intelligence. You click through, Google shows you a list of all the services it could theoretically access, and you toggle on the ones you want Gemini to have access to.

The default state is off. That's important. Google isn't automatically giving Gemini access to your data. You have to explicitly enable each service. The options include:

- Gmail: Gemini can read your emails to provide context about conversations, meetings, and information you've been sent

- Calendar: Used for scheduling, conflict detection, and time-aware planning

- Photos: For recommendations based on what you've photographed, trips you've taken, and visual interests

- Search history: To understand what you've been researching and what interests you

You can enable all of them, some of them, or none of them. If you only want Gemini to access Photos and Calendar but not Gmail, you can do that. If you want to enable everything for a month to test it and then disable Gmail because it feels too intrusive, you can do that too.

The interface is actually pretty clear about what's happening. When Gemini accesses one of these services to respond to your prompt, it tells you. You'll see something like "Checking your Calendar" or "Looking at your photos" in the conversation. That transparency is surprisingly rare in AI assistants.
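The setup described above amounts to a small permission gate: every service starts off, and a source is consulted only if it is enabled and the prompt looks relevant. Here is a minimal Python sketch of that idea. It is not Google's implementation. The `Permissions` class, the `KEYWORDS` table, and the `sources_to_check` function are hypothetical names invented for illustration, and the simple keyword matching stands in for whatever relevance detection Gemini actually uses.

```python
from dataclasses import dataclass

# Hypothetical keyword table standing in for real relevance detection.
KEYWORDS = {
    "gmail": ("email", "inbox", "message"),
    "calendar": ("schedule", "meeting", "call", "tomorrow"),
    "photos": ("photo", "picture", "trip"),
    "search_history": ("research", "looked up", "reading about"),
}


@dataclass
class Permissions:
    # Every service defaults to off, mirroring the opt-in setup.
    gmail: bool = False
    calendar: bool = False
    photos: bool = False
    search_history: bool = False


def sources_to_check(prompt: str, perms: Permissions) -> list[str]:
    """Return enabled services whose keywords appear in the prompt."""
    text = prompt.lower()
    return [
        service
        for service, words in KEYWORDS.items()
        if getattr(perms, service) and any(w in text for w in words)
    ]


perms = Permissions(calendar=True, photos=True)  # Gmail stays off
for service in sources_to_check("Recommend a time for a video call tomorrow", perms):
    # Surface a notice for each source consulted, like the in-chat status lines.
    print(f"Checking your {service.replace('_', ' ').title()}")
```

With these toggles, a scheduling prompt touches only Calendar. Photos is enabled but not relevant to the prompt, and Gmail is never consulted because it was never switched on.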

Privacy-wise, this is notably different from how Meta's assistants work or how some of the less privacy-conscious AI tools operate. Google isn't creating a copy of your data somewhere else. It's not training models on your personal information. It's just letting Gemini read what it needs to read to respond to your prompt, in real-time, with your permission and your ability to revoke it at any time.

That doesn't mean there are zero privacy implications. Even with clear permissions and transparency, there's something worth considering about how comfortable you are with an AI reading your emails or looking at your photos. But the implementation is thoughtful enough that privacy concerns can at least be evaluated on your own terms rather than being imposed without your knowledge.

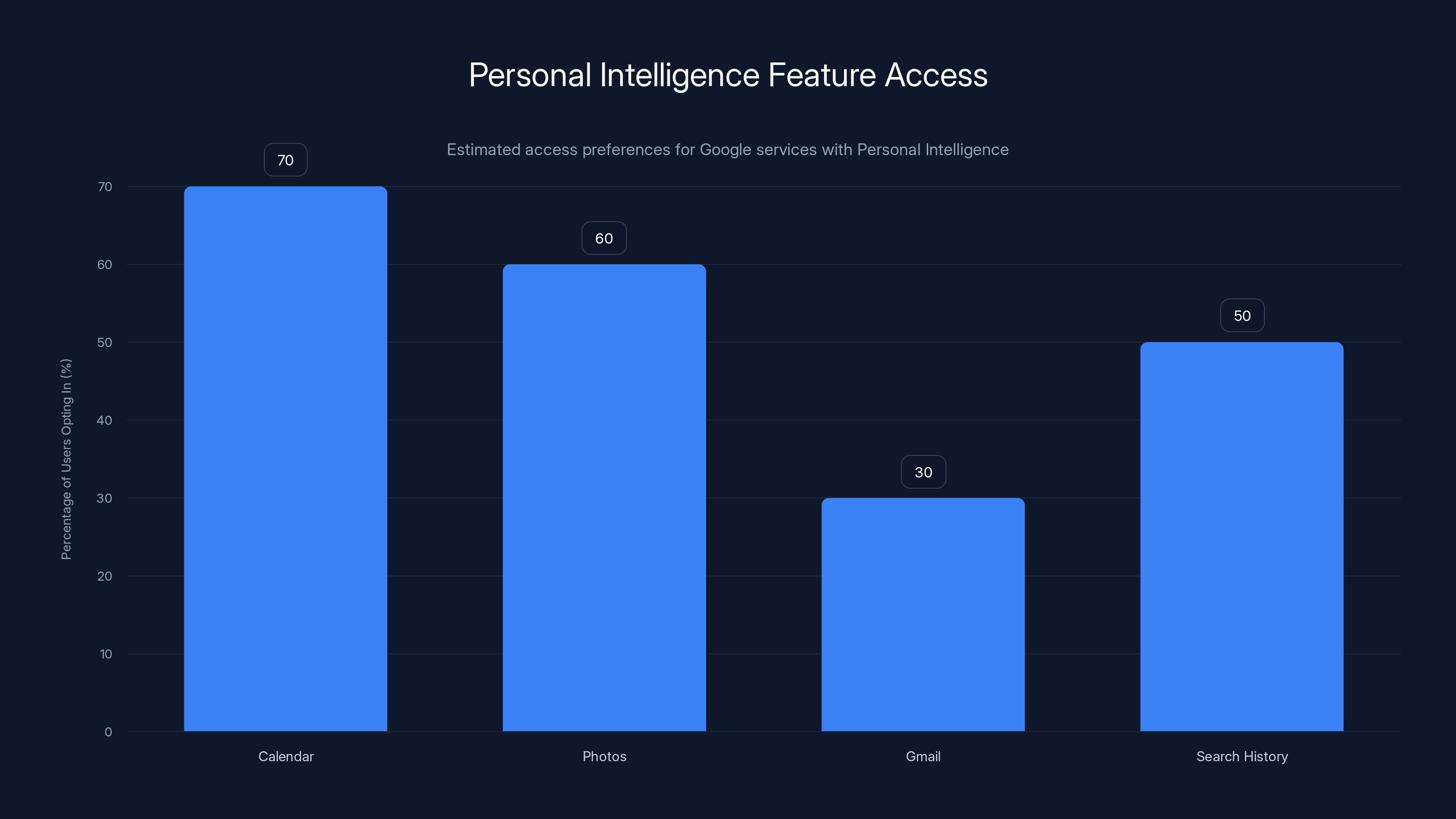

Estimated data suggests that users are more likely to allow access to Calendar and Photos, while Gmail access is less preferred.

When Personal Intelligence Works: The Real Wins

Let's start with what actually works, because there's genuinely impressive stuff here that demonstrates AI has made real progress over the past year.

Calendar Management and Scheduling

Ask Gemini "When's a good time for a video call tomorrow?" and it opens your calendar, sees your existing meetings, identifies your breaks, and suggests specific times when you're free. If you have a pattern of taking lunch between certain hours, it learns that and avoids those times. If you've blocked off focus time on your calendar, it respects those blocks.

This sounds trivial until you realize how different it is from what any AI could do six months ago. Previously, it would suggest times based on generic productivity advice: "Morning is usually good for important calls" or "Try not to schedule more than one meeting per hour." Useless generalizations that apply to no one specifically.

Now, when I ask Gemini about scheduling, I get a response that's actually grounded in my actual schedule. "You have a gap between 2 PM and 4 PM tomorrow before your team standup, or you're clear after 5 PM if evening works better." That's genuinely helpful.
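Finding those gaps is a simple interval problem once the assistant can read the calendar. The sketch below shows one way to do it, assuming busy periods arrive as `(start, end)` pairs that fall within the day. The `free_slots` and `at` helpers and the sample schedule are hypothetical, and nothing here reflects how Gemini computes availability internally.

```python
from datetime import datetime, timedelta


def free_slots(busy, day_start, day_end, min_length=timedelta(minutes=30)):
    """Return (start, end) gaps between busy intervals within the day.

    Assumes every busy interval lies between day_start and day_end.
    """
    gaps = []
    cursor = day_start
    for start, end in sorted(busy):
        # A gap exists if the next meeting starts well after the cursor.
        if start - cursor >= min_length:
            gaps.append((cursor, start))
        # Advance past this meeting, tolerating overlapping entries.
        cursor = max(cursor, end)
    if day_end - cursor >= min_length:
        gaps.append((cursor, day_end))
    return gaps


def at(hour, minute=0):
    # Arbitrary example date; only the times matter here.
    return datetime(2025, 1, 15, hour, minute)


# Hypothetical schedule: meetings until 2 PM, then a standup from 4 to 5 PM.
busy = [(at(9), at(12)), (at(12), at(14)), (at(16), at(17))]
for start, end in free_slots(busy, at(9), at(19)):
    print(f"{start:%I:%M %p} to {end:%I:%M %p}")
```

The hard part was never this arithmetic. It was getting the assistant to look at the real schedule without being told to.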

The calendar integration also handles something that previous versions absolutely botched: adding things back to your calendar based on conversations. I've had Gemini suggest a hiking trip, discuss logistics, settle on a date and time, and then automatically add it to my calendar all in one conversation. Two years ago, Gemini would suggest the hiking trip but then you'd have to manually create the calendar event. Now it just does it.

Photo-Based Recommendations and Memories

Having access to your photo library opens up some genuinely clever possibilities. I tested Gemini's book recommendation feature, which works by analyzing your photos. It's looking for things like: books on your shelves, covers you've photographed, bookmarks you've captured, even coffee shops you've visited.

The recommendations it returned were weirdly accurate. Sci-fi, some literary fiction, the occasional coffee table book about photojournalism. All of these were things I've read or strongly considered reading, but not things I'd explicitly told Gemini about. The AI was extrapolating from visual data in my photo library.

It's the kind of feature that feels invasive at first and then kind of delightful once you realize how well it works. Yes, Google's AI is looking at your photos. But it's not doing anything with that information beyond making recommendations. It's not selling your data, training on your personal images, or creating some creepy database of your life.

Photo analysis also powers neighborhood recommendations. I asked Gemini to "suggest some neighborhoods I haven't explored yet where I could take pictures." It pieced together where I'd been from my search data and photos, figured out where I've already spent time, and suggested alternatives I'd never actually explored. The neighborhoods it suggested were legit good ideas, the kind of thing I actually would go photograph.

Task Creation and List Management

Probably the most immediately useful feature is Gemini's ability to create tasks and add things to lists automatically. We discussed lawn care planning earlier, but the pattern holds across dozens of tasks.

I'd describe a project I was thinking about, Gemini would break it into steps, and then it would actually create tasks in Google Tasks or Google Keep without me manually typing anything. For someone who constantly loses track of tasks they've discussed but not written down, this is genuinely productivity-changing.

Previously, Gemini could suggest a task structure, but you had to manually create each task. Now you can say, "I want to organize my garage this weekend," Gemini breaks it into steps (sort items, donate items, purchase storage, actually organize), and automatically creates a checklist you can mark off as you go.

Search History Context

Less flashy than the other features but arguably more useful: Gemini using your search history to understand what you've been researching or thinking about.

I tested this by asking Gemini "What should I know about electric vehicle ownership?" It looked at my search history, saw that I'd been reading about charging infrastructure, battery longevity, and different manufacturers. Instead of giving generic EV advice, it focused on the specific aspects I'd been researching. It could tell I was seriously considering making a purchase rather than just casually interested.

This context-awareness means Gemini doesn't waste your time explaining things you've already researched. It builds on what you already know rather than starting from zero.

Where It Falls Apart: The Details Problem

Now we get to the frustrating part. Because for every way Personal Intelligence works brilliantly, there's a scenario where it fails in a way that makes you question whether any of this is actually useful.

The core problem is this: Gemini is great at patterns and terrible at facts.

Gemini can look at your photos, your search history, and your previous conversations and correctly infer that you're interested in photography, hiking, coffee, and urban design. It can synthesize those interests into plausible recommendations. It can identify patterns in your calendar and suggest what time would be good for a meeting.

But the moment you ask it to connect those general patterns to specific, verifiable facts about the real world, things fall apart.

The Neighborhood Recommendation Disaster

I asked Gemini to suggest some Seattle neighborhoods for an afternoon outing to take photos and get coffee. It used my personal data to figure out that I'd already lived in and thoroughly explored Ballard, so it wisely excluded that from recommendations.

The neighborhoods it suggested were good: Capitol Hill, Fremont, the International District. All places I've been but where I could spend an afternoon photographing and find good coffee. So far, so good.

Then it got into specifics. It recommended a restaurant in South Park that it claimed was "perfect for a coffee break." When I checked, the restaurant existed, but it was in Georgetown, not South Park. Off by one neighborhood.

It raved about a Caffe Umbria location in the Old Rainier Brewery building, describing it as a perfect place for a long break. I checked. No Caffe Umbria exists in that building. There was one there years ago, but it closed.

It endorsed a t-shirt design shop with enthusiasm. The shop exists, but according to its Google Maps listing, it's been closed for two years. Yet Gemini confidently presented it as an option as if checking would reveal a thriving business.

This is what makes Personal Intelligence frustrating. Gemini had access to accurate information. Google Maps data exists. The café closure information is available. The current locations of businesses are documented. But Gemini's confidence in its recommendations vastly exceeded its accuracy in verifying them.

The Bike Route Planning Fiasco

I asked Gemini to suggest some new bike routes, with a specific request to include a coffee shop stop. The high-level recommendations were good: the neighborhoods and rough directions made sense. But the moment it tried to get specific about actual routes, things got weird.

Gemini generated a map link it claimed showed a specific route. Clicking through showed me completely different directions. The route it described involved several unpaved trails (fine, I bike in the woods sometimes) that culminated in a left turn crossing multiple lanes of traffic on a busy urban road during rush hour. That's not just dangerous. It's indefensible as a recommendation from an AI supposedly helping me plan a safe bike route.

This kind of failure is the AI equivalent of a friend confidently directing you to a destination they describe perfectly but can't actually navigate to themselves. Gemini can tell from your interests that you'd probably like the Fremont area. But when it comes to actually generating a usable route, it's still basically making stuff up.

The Confidence-Accuracy Gap

The worst part isn't that Gemini gets things wrong. Plenty of research tools give you imperfect information. The worst part is that it expresses complete confidence in recommendations that turn out to be inaccurate or dangerous.

There's no qualifier like, "I'm less confident about this specific route because I had to synthesize multiple data sources." It just presents the bike route like it's thoroughly planned and verified.

For comparison, Perplexity also accesses real-time information, but it shows you sources and lets you verify where information comes from. Runable focuses on automation and document generation where accuracy can be verified after output. Neither tries to hide the fact that AI-generated recommendations need human verification.

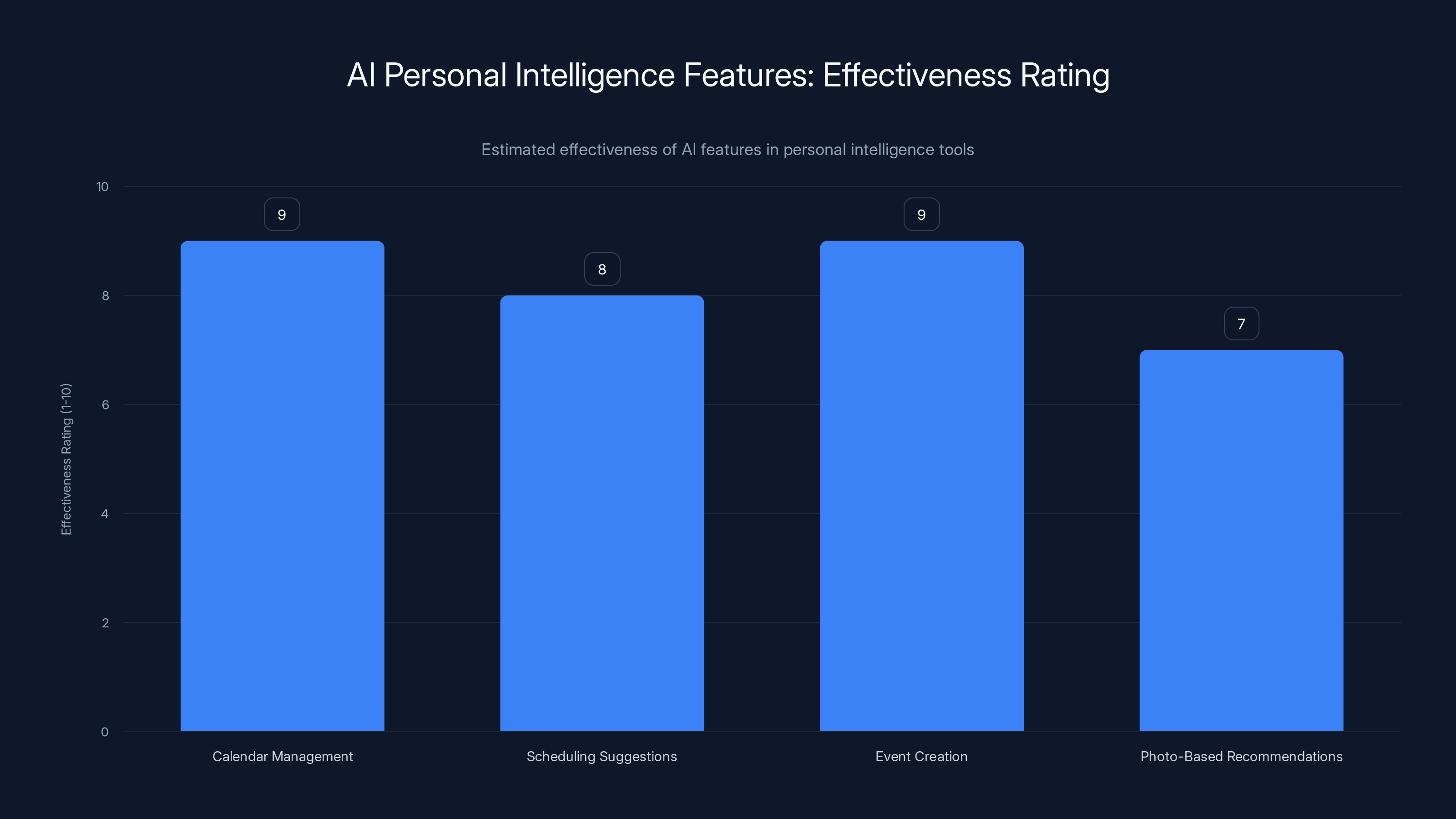

Calendar management and event creation are highly effective, showcasing significant AI advancements in personal intelligence. Estimated data.

Privacy Implications: The Elephant in the Room

Every article about Personal Intelligence eventually has to address the privacy question. Here's my honest take: the feature is less invasive than most people assume, but more invasive than you might want it to be.

What's Actually Happening

Google isn't storing copies of your emails, photos, or calendar events in some separate Personal Intelligence database. When Gemini needs information, it accesses your existing Google services in real-time. The conversation between you and Gemini might reference that data, but the underlying information stays where it was.

Google does, of course, retain conversation logs. Your messages to Gemini are stored on Google's servers. If you ask Gemini something about your calendar, that conversation gets logged. That's worth understanding.

It's also worth understanding that Google's entire business model is based on understanding user data and selling targeted advertising. Personal Intelligence doesn't change that model. It just adds another vector through which Google's AI can examine your behavior and preferences.

The Threat Model

Where you should genuinely be concerned is about access and breach scenarios. Personal Intelligence requires Gemini to have read-only access to sensitive accounts. If someone compromised your Gemini account without taking over your entire Google account, they could potentially read your emails, see your schedule, and access your photos through the Gemini interface.

Google's security is generally pretty solid, but no security is perfect. And the more services that are connected to a single authentication system, the more valuable that system becomes to attackers.

You should also think about what you're comfortable sharing. Not from a "Google is evil" perspective, but from a "what would I be embarrassed about?" perspective. If you're not comfortable with Google's AI having read-only access to your emails, don't enable Gmail integration. This isn't forced. You get to decide.

The Reasonable Approach

If you're going to use Personal Intelligence, use it carefully. Enable specific services based on what's actually useful, not because you can enable everything. Don't assume that because a feature works 80% of the time, it's safe to rely on it 100% of the time.

Treat Gemini's recommendations as starting points, not finished products. When it suggests a restaurant, a route, or a location, verify it before you commit. That shouldn't be surprising—you'd do that anyway with any recommendations from an AI or online source.

And understand what Google is actually doing. The company isn't reading your emails to judge you. It's reading your emails to understand your interests and habits so it can sell ads that align with those interests. That's not great, but it's also the business model you've implicitly agreed to if you're using Google services.

Personal Intelligence makes that data-collection more transparent, because now you're explicitly enabling AI access. That transparency is actually better than the alternative, which would be hidden algorithmic decision-making without your explicit awareness.

Personal Intelligence vs. Earlier Gemini Versions: What Actually Changed

Understanding what actually improved requires understanding what Gemini could do before Personal Intelligence launched.

Earlier versions of Gemini could access your Google Workspace data. That meant, in theory, it could read your Gmail, access your Calendar, and look at documents you'd shared with Gemini. But in practice, you had to explicitly tell it to do so. You had to say "Check my email" or "Look at my calendar" or "Read the attached document."

The assumption was that you wouldn't want an AI automatically accessing your data without you specifically asking. That's a reasonable privacy assumption. It also made the assistant pretty useless for anything requiring context about your life.

Personal Intelligence flips that assumption. Now, Gemini can automatically access those services when it determines your prompt is relevant. You ask about scheduling, it looks at your calendar without you explicitly asking. You ask for a recommendation, it checks your photos without you specifically requesting it.

The Proactivity Difference

That shift from explicit to proactive is the core difference. It sounds subtle, but it completely changes how useful the assistant is.

With explicit access, you had to know that Gemini could check something and remember to ask. Most users didn't. Most conversations with Gemini never involved any personal data access because users didn't think to prompt for it.

With Personal Intelligence, Gemini initiates the access when it recognizes relevance. You ask "What should I do this weekend?" and Gemini automatically checks your Calendar, your Photos from recent trips, and your Search history to understand what you've been interested in recently. It doesn't wait for you to ask. It just does it.

What Didn't Change

Importantly, the underlying quality of Gemini's reasoning didn't fundamentally change. It still has the same hallucination problem. It still struggles with specific facts. It still makes confident recommendations about things it doesn't actually verify.

What changed is that it now has more information to work with. That's genuinely useful for some tasks and actively harmful for others, because now you might trust recommendations more because they're personalized, even when they're just as inaccurate as before.

Comparison to Competitors

Microsoft's Copilot has similar features, though the integration is different because it's tied to Microsoft 365 services like Outlook and OneDrive. OpenAI's ChatGPT doesn't have personal data integration yet, though they've clearly been experimenting with it through their file upload features. Anthropic's Claude also requires explicit file uploads rather than automatic access to personal services.

In that context, Gemini's Personal Intelligence is actually pretty similar to what Copilot does with Microsoft 365 data. The main difference is the implementation details and which services it integrates with.

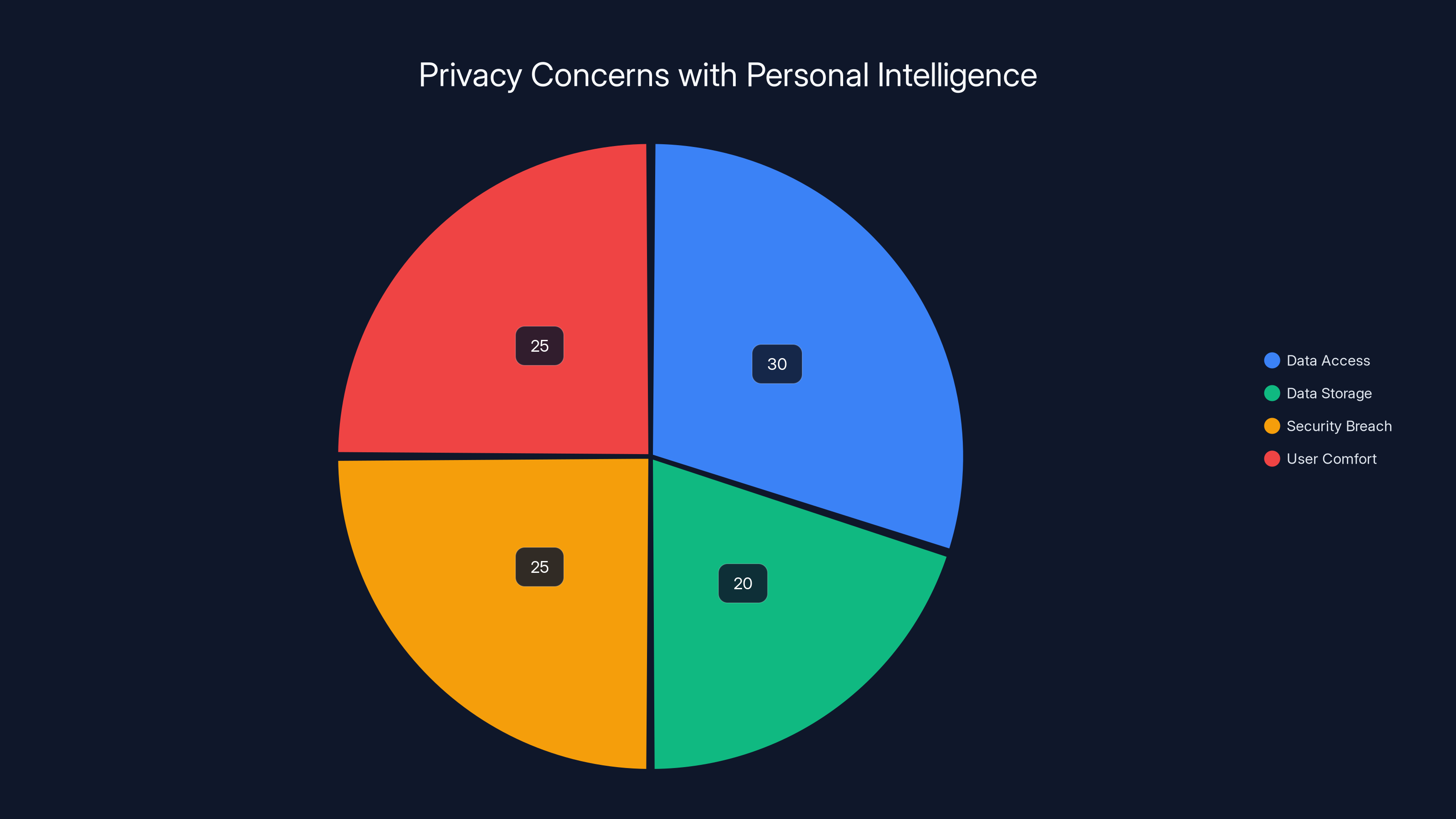

Estimated data shows that data access and user comfort are the primary privacy concerns related to Personal Intelligence, accounting for 30% and 25% respectively.

Real-World Use Cases That Actually Work

Beyond the examples we've already discussed, there are some consistent patterns in where Personal Intelligence genuinely shines.

Project Planning from Conversations

When you're brainstorming a project with Gemini—like planning a trip, organizing a home renovation, or structuring a complex work initiative—Personal Intelligence's ability to automatically create tasks and add calendar events is genuinely useful.

I tested this with a weekend landscaping project. I wanted to redesign a part of my yard, and I asked Gemini for advice. It asked about sun exposure (I showed it photos), asked about my plant preferences (looked at my search history to see what I'd been researching), and then suggested a design approach.

Once we'd agreed on an approach, Gemini broke it into steps, created a task list, and added specific tasks to my calendar with deadlines. All of this happened automatically without me manually creating anything.

A week later, I was able to work through the task list and actually had something resembling a plan instead of a half-remembered conversation.

Interest-Based Research

When Gemini understands your interests through your personal data, it can contextualize research in ways that are actually relevant. I asked about electric vehicle technology, and instead of getting generic information about battery chemistry, I got information tailored to the specific aspects I'd been researching.

This is useful because it saves time. Instead of reading a bunch of general information and then figuring out what's relevant to you, Gemini presents the relevant parts first.

Scheduling Assistance

This is maybe the most straightforward useful application. Asking an AI for scheduling suggestions when it has access to your actual calendar is infinitely more useful than asking it for generic scheduling advice.

Time-Block Suggestions

Beyond just suggesting times for meetings, Gemini can suggest how to structure your day based on your calendar and habits. If your calendar shows you have focus time blocked in the morning and meetings in the afternoon, it can suggest what kind of work to batch into each time block.

This requires understanding both your calendar and your general working patterns, which Personal Intelligence enables better than previous versions.

The Hallucination Problem: Why Details Still Fail

To understand why Personal Intelligence works so well for some tasks and fails catastrophically for others, you need to understand how large language models actually work.

A language model like Gemini is trained to predict the next word in a sequence based on all previous words. It's not actually "thinking" about facts. It's not looking things up in a database. It's running probabilities based on patterns in training data.

When you ask Gemini for a book recommendation based on your reading history, it's running something like this calculation: "This user has read these books and searched for these topics. What books come next in the probability distribution of 'books that people with these interests read?'"

That works remarkably well because book recommendations are about pattern-matching on preferences. People with similar interests tend to like similar books.

When you ask Gemini for a specific restaurant recommendation at a specific location, it's running a different calculation: "I know the user likes coffee and photography. Seattle has neighborhoods where people who like coffee and photography tend to go. What specific restaurants are in those neighborhoods?"

The problem is that the second calculation is trying to look up specific facts by probability. It's generating output based on patterns in what restaurants usually exist in neighborhoods like that, not on actual current data about what restaurants currently exist at specific addresses.
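A toy example makes the difference between the two calculations concrete. In the Python sketch below, everything is hypothetical: the neighborhood, the cafe names, the mention counts, and the directory are invented, and a frequency count is only a crude stand-in for what a real language model learns. The point is the contrast between generating the most familiar answer and looking up the current one.

```python
from collections import Counter

# Hypothetical mentions from old text: (neighborhood, cafe) pairs.
# A real model learns far richer statistics, but the failure mode is similar.
training_mentions = [
    ("Northside", "Cafe Alpha"),
    ("Northside", "Cafe Alpha"),
    ("Northside", "Cafe Alpha"),
    ("Northside", "Cafe Beta"),
]

# Hypothetical up-to-date directory, the kind of source a lookup would use.
current_directory = {
    "Cafe Alpha": {"neighborhood": "Northside", "open": False},
    "Cafe Beta": {"neighborhood": "Northside", "open": True},
}


def generate_by_pattern(neighborhood):
    """Pick the cafe most often mentioned with this neighborhood."""
    counts = Counter(cafe for hood, cafe in training_mentions if hood == neighborhood)
    return counts.most_common(1)[0][0]


def look_up_open_cafes(neighborhood):
    """Return cafes the directory lists as open in this neighborhood."""
    return [
        name
        for name, info in current_directory.items()
        if info["neighborhood"] == neighborhood and info["open"]
    ]


print(generate_by_pattern("Northside"))  # most mentioned, regardless of status
print(look_up_open_cafes("Northside"))   # filtered by current status
```

The pattern-based answer favors the place that was written about most, even though the directory says it has closed. That is the same shape of error as recommending a cafe that shut down years ago.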

Confidence from Authority

What makes this worse is that language models have no built-in confidence calibration. They generate text based on probability, but they don't have a mechanism to know if they're confident or not. They just generate output.

So when Gemini generates a recommendation about a restaurant that closed years ago, it generates it with exactly the same tone and confidence as a recommendation about a restaurant that's currently open. There's no built-in uncertainty in the output.
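One way to picture this: the sentence a model produces can read identically whether the choice behind it was near certain or close to a coin flip. The sketch below is a simplified illustration, not a description of Gemini's internals. The `softmax` and `recommend` functions, the cafe names, and the scores are all made up for the example.

```python
import math


def softmax(scores):
    """Convert raw scores into probabilities that sum to 1."""
    peak = max(scores.values())
    exps = {k: math.exp(v - peak) for k, v in scores.items()}
    total = sum(exps.values())
    return {k: v / total for k, v in exps.items()}


def recommend(scores):
    """Return the top option as text, plus the probability the text omits."""
    probs = softmax(scores)
    best = max(probs, key=probs.get)
    return f"You should visit {best}.", probs[best]


# Hypothetical scores: one clear winner, one near three-way tie.
clear = {"Cafe Alpha": 6.0, "Cafe Beta": 1.0, "Cafe Gamma": 0.5}
murky = {"Cafe Alpha": 1.1, "Cafe Beta": 1.0, "Cafe Gamma": 0.9}

for scores in (clear, murky):
    sentence, prob = recommend(scores)
    print(sentence, f"(probability {prob:.2f}, not shown to the user)")
```

Both cases produce the same confident sentence. Only the number in parentheses differs, and that number never reaches the person reading the recommendation.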

This is the core reason why AI-generated recommendations need human verification. Not because Gemini is bad, but because the fundamental way language models work doesn't include a mechanism for knowing how confident they should be.

Why Personal Data Makes This Worse

Personal Intelligence doesn't actually solve this problem. It just makes it worse by giving you information that's personalized, which tricks you into trusting it more.

When a generic AI assistant recommends a restaurant and it's wrong, you think "Oh well, I should have verified that." When a Personal Intelligence-enabled Gemini recommends a restaurant based on your interests and photos and it's wrong, you think "How could it be wrong? It literally knows me and looked at my data." But the underlying problem is exactly the same: the AI is generating recommendations based on probability patterns, not on verified fact.

The personalization doesn't make the facts more reliable. It just makes the failure more surprising.

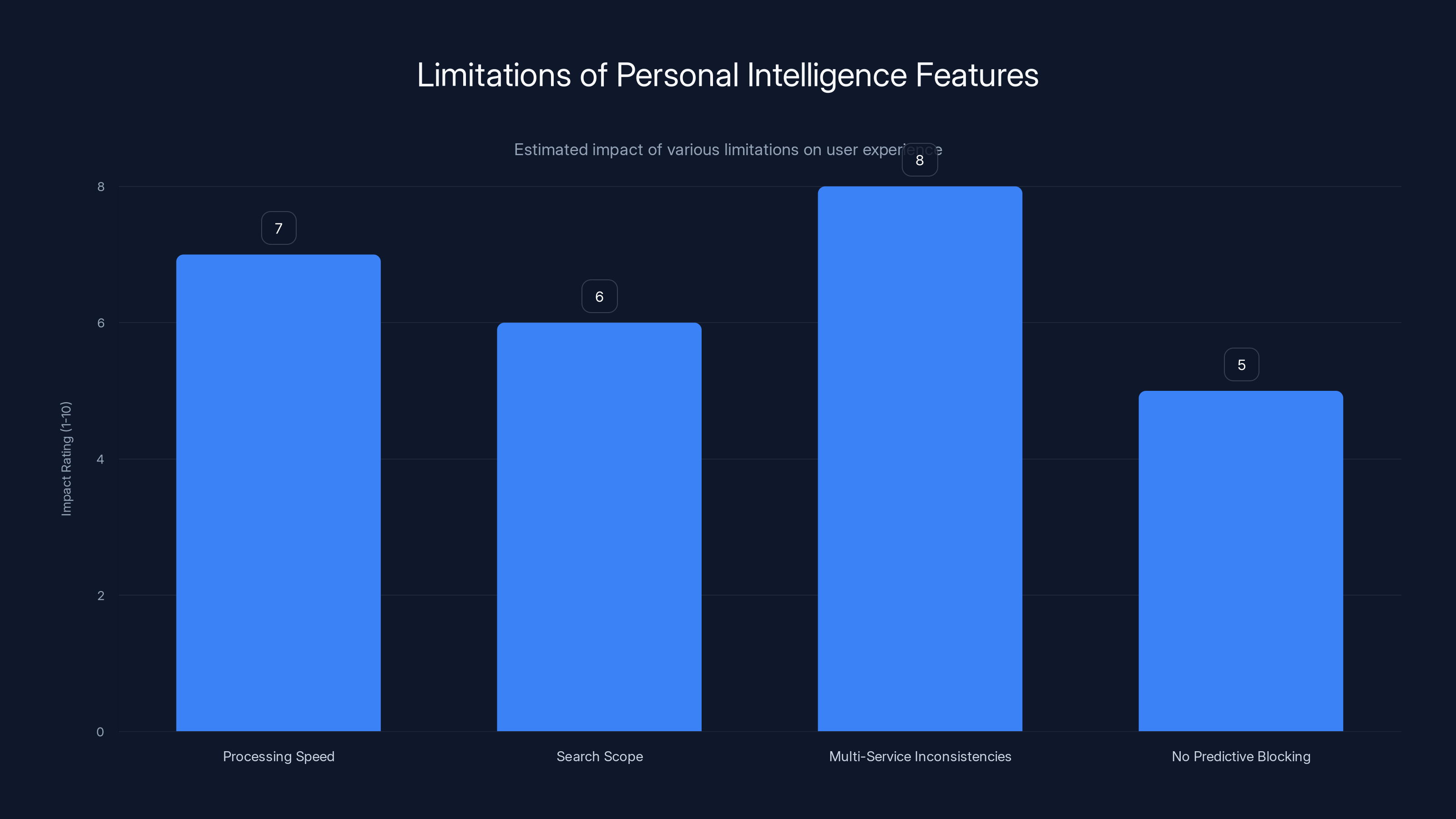

Estimated data suggests that multi-service inconsistencies have the highest impact on user experience, followed by processing speed issues.

Comparison: How This Stacks Up Against Other AI Tools

Personal Intelligence isn't the only AI tool attempting to integrate personal data. How does Gemini's approach compare to alternatives?

Microsoft Copilot and Microsoft 365 Integration

Microsoft has been pushing Copilot integration with Microsoft 365 services (Outlook, Teams, OneDrive) for over a year. The feature set is similar to Personal Intelligence: access to calendar, email, documents, and search history.

Microsoft's implementation is somewhat different because it's integrated directly into Office applications. You don't have a separate conversation with an AI; instead, Copilot appears as a sidebar in Outlook, Word, and Teams. That's arguably more seamless but also means you're less aware that AI is accessing your personal data.

Microsoft's advantage is tighter integration with productivity tools. Its disadvantage is that the model is less transparent about when and how data is being accessed.

OpenAI's ChatGPT and File Uploads

OpenAI hasn't launched automatic personal data access in ChatGPT the way Google has with Gemini. Instead, you can upload files to ChatGPT, and it will analyze them. This gives you more control over what data you're sharing, but it's less seamless. You have to actively remember to upload relevant files.

OpenAI's approach is more privacy-conscious by default, but less useful for day-to-day assistance. At the moment when Personal Intelligence would automatically check your calendar, ChatGPT would just ask "Do you want to share your calendar with me?"

Anthropic's Claude and Document Analysis

Claude similarly requires explicit file uploads. Gemini can read your emails without asking you to upload them. With Claude, you'd have to paste in the email content or upload an email file yourself.

Again, this is more privacy-conscious but less seamless.

The Perplexity Model

Perplexity takes a different approach entirely. Rather than accessing personal data, it accesses real-time information from the web. It doesn't know anything about you personally, but it can search the current internet for information and provide sources.

For tasks requiring current information or verification, Perplexity is arguably better than Personal Intelligence because sources are provided and you can verify claims. For tasks requiring personal context, Personal Intelligence is better because it understands your specific situation.

The Runable Alternative for Automation

If what you're looking for is AI-powered automation rather than a conversational assistant, Runable takes a different approach entirely. Rather than accessing your personal data, Runable focuses on AI-powered document generation, presentation creation, report automation, and workflow automation. It's designed for teams and businesses that want to automate routine tasks like creating slides, documents, or reports from templates or data inputs.

Runable doesn't try to read your emails or understand your personal preferences. Instead, it takes structured inputs and automates the output. That's a different problem than what Personal Intelligence solves, but for many business use cases, it's actually more useful. Runable is available at $9/month for teams looking to automate document and presentation creation.

Privacy Settings and User Control

Let's talk specifically about how much control you actually have over Personal Intelligence and whether that control is meaningful.

When you enable Personal Intelligence, you're not giving blanket permission to Gemini. You're selectively enabling access to specific Google services. The toggles are straightforward:

- Gmail: On/Off

- Calendar: On/Off

- Photos: On/Off

- Search History: On/Off

If you only want Gemini to access your Calendar and Photos but not your email or search history, you can do exactly that. This is more granular control than you get with most AI tools.

You can also revoke access at any time. If you enable Personal Intelligence for a month and then decide it's too invasive, you can turn it off and Gemini goes back to not having access to your data.
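As a mental model, the toggles behave like a small settings object with one switch per service. The sketch below is purely illustrative: `AccessSettings` and its methods are invented names, not part of any Google API.

```python
from dataclasses import dataclass

@dataclass
class AccessSettings:
    """Hypothetical per-service switches, all off until you opt in."""
    gmail: bool = False
    calendar: bool = False
    photos: bool = False
    search_history: bool = False

    def allowed(self):
        """Names of the services that are currently switched on."""
        return [name for name, on in vars(self).items() if on]

    def revoke_all(self):
        """Turn every switch off, as when you disable the feature."""
        for name in list(vars(self)):
            setattr(self, name, False)

# Enable only Calendar and Photos, then change your mind later.
settings = AccessSettings(calendar=True, photos=True)
enabled_before = settings.allowed()
settings.revoke_all()
enabled_after = settings.allowed()
```

The design point is that access is a per-service yes or no, with nothing finer-grained underneath.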

What Google Doesn't Do

It's worth noting what Personal Intelligence does NOT include:

- No access to your Messages or SMS conversations

- No access to your Google Drive documents unless you explicitly share them with Gemini

- No access to your YouTube watch history or search history on YouTube

- No access to Chrome browsing history

- No access to Location history beyond what's captured in your photos

- No access to your Assistant Routines or smart home settings

So while Personal Intelligence gives Gemini broad access to your productivity data, it's not monitoring your entire digital life. There are meaningful boundaries.

The Transparency Factor

When Gemini accesses your data, it tells you. In the conversation, you'll see indicators like "Checking your Calendar" or "Looking at your recent photos." This is more transparent than how many other services operate.

Compare this to how Google Ads works, where Google is using all the data about your behavior to target ads without explicit notification in every interaction. That's far more invasive and far less transparent.

Is Personal Intelligence a perfect model for user control and privacy? No. But it's notably better than many alternatives, and it's transparent about what it's doing.

How Personal Intelligence Actually Accesses Your Data

Understanding the mechanics helps clarify whether the privacy concerns are real or just theoretical.

When you ask Gemini something that seems relevant to your personal data, the AI doesn't immediately access everything. Instead, it determines whether accessing specific services would be relevant to answering your question.

You ask: "What should I do this weekend?"

Gemini thinks: "This question could be answered by checking:

- Calendar (to see if there are existing commitments)

- Photos (to see if there are recent trips or activities that suggest interests)

- Search history (to see what topics have been researched recently)"

Then it accesses those specific services and incorporates that information into its response.

The key point is that this is real-time access, not batch processing. Gemini doesn't download copies of all your data. It doesn't analyze your entire email archive looking for patterns. When it needs information, it requests it from the Google service and uses it to construct a response. The request is logged, but the retrieved data is not archived in a separate Gemini database.
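The flow described above can be sketched in a few lines. Everything here is an assumption made for illustration: the keyword table stands in for whatever relevance judgment the model actually makes, and `pick_services`, `gather_context`, and the fetcher callables are hypothetical names.

```python
# Hypothetical hints standing in for the model's own relevance judgment.
RELEVANCE_HINTS = {
    "gmail": ("email", "receipt", "booking"),
    "calendar": ("weekend", "schedule", "free", "meeting"),
    "photos": ("weekend", "trip", "photo"),
    "search_history": ("weekend", "recommend", "research"),
}

def pick_services(question, enabled):
    """Return the enabled services that look relevant to the question."""
    text = question.lower()
    return [
        service
        for service in enabled
        if any(hint in text for hint in RELEVANCE_HINTS.get(service, ()))
    ]

def gather_context(question, enabled, fetchers):
    """Query only the relevant services, at request time, and keep no copy."""
    return {service: fetchers[service]() for service in pick_services(question, enabled)}

enabled = ["gmail", "calendar", "photos", "search_history"]
chosen = pick_services("What should I do this weekend?", enabled)
```

For the weekend question, Gmail is enabled but never queried because nothing in the question points to it.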

Encryption and Transit

Data travels between Google services using Google's internal network. If you're accessing Gemini through your browser or the Gemini mobile app, your request goes to Google's servers over encrypted connections (HTTPS).

Once Gemini retrieves data from your Gmail, Calendar, or Photos, that data is processed by the AI model. You see the response in the conversation. The underlying data isn't stored in your conversation history in the same way it would be if you'd pasted an email into the chat.

Google does maintain conversation logs of what you ask Gemini and what it responds with. If you ask Gemini something about your calendar, Google stores that conversation. But Google doesn't additionally store the calendar data that was retrieved in response to that query.

The Task Automation Question: When Does It Actually Work?

One of Personal Intelligence's most useful features is its ability to automatically create tasks, calendar events, and reminders from conversations. But this isn't equally useful for all task types.

When Task Automation Works

Task automation works best when the outcome is simple and clear. "Add this to my calendar" works because there's a specific date, time, and event.

"Create a task for lawn care" works because you can break "lawn care" into clear subtasks: "get supplies," "cut grass," "trim hedges," "clean up clippings."

"Set a reminder to call Mom" works because there's a simple action and you'll know when it's done.

When Task Automation Fails

Task automation fails when the outcome requires judgment or ongoing evaluation. "Help me get better at management" isn't a task you can add to your calendar. "Improve my photography" doesn't have clear subtasks.

AI struggles with these because they require understanding not just what the goal is, but why you want to achieve it and what success looks like. A calendar event or a task list works for concrete goals. It fails for abstract aspirations.

The False Confidence Problem

The problem here is that Gemini often doesn't recognize the difference. It will see "Improve my photography" and create a task list of things like "Study composition," "Practice lighting," "Review photographs," even though those aren't really discrete tasks you can just check off.

That might seem helpful until you look at a task list of vague objectives and realize none of them are actually actionable. You can't "practice lighting" and then mark it done. You need to actually practice, and that happens over weeks or months, not in individual sessions.

Limitations and Known Issues

Beyond the hallucination and specificity problems we've discussed, there are some specific limitations to Personal Intelligence that are worth understanding.

Processing Speed

Accessing your personal data takes time. Gemini has to query your Gmail, Calendar, and Photos services, retrieve the relevant data, process it, and incorporate it into the response. This is noticeably slower than a conversation that doesn't require personal data access.

Asking Gemini "What are some interesting Python libraries?" is fast. Asking "What's a good time to call my mom based on my schedule?" is slower because it has to check your calendar.

For most people, the slowdown is acceptable. For people with very large email archives or extensive photo libraries, it might be noticeable.

Search Scope Limitations

When Gemini searches your Gmail or Calendar, it's not searching your entire archive. It's searching recent items and using relevance ranking to determine what's important.

If you ask Gemini about something from two years ago that requires reading emails, it might not find the relevant messages because they're outside the recency window.

Google doesn't publicly document exactly what the search scope is, which is a bit frustrating for understanding the limitations.
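To see why an old email can simply vanish from the results, here is a minimal sketch of a recency window combined with relevance ranking. The 365-day window, the term-count scoring, and the `search` function are all assumptions; Google's real scope is undocumented.

```python
from datetime import date, timedelta

def search(items, query, today, window_days=365, limit=3):
    """Rank matching items, but only those inside the recency window."""
    cutoff = today - timedelta(days=window_days)
    recent = [item for item in items if item["date"] >= cutoff]
    terms = query.lower().split()
    # Score each item by how many query terms appear in its text.
    scored = [(sum(t in item["text"].lower() for t in terms), item) for item in recent]
    hits = [pair for pair in scored if pair[0] > 0]
    # Best score first; newer items win ties.
    hits.sort(key=lambda pair: (pair[0], pair[1]["date"]), reverse=True)
    return [item for _, item in hits[:limit]]

# Made-up inbox: the best match is two years old.
inbox = [
    {"date": date(2023, 5, 1), "text": "Lease renewal terms"},
    {"date": date(2025, 3, 1), "text": "Lease reminder"},
]
today = date(2025, 6, 1)
found = search(inbox, "lease renewal", today)
found_wide = search(inbox, "lease renewal", today, window_days=1000)
```

With the default window, the two-year-old message never reaches the ranking step, however well it matches. Widening the window puts it at the top.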

Multi-Service Inconsistencies

Sometimes Gemini's access works differently depending on which service it's querying. Calendar queries are fast and reliable. Email queries sometimes return fewer results than you'd expect. Photo queries sometimes misidentify what's in images.

These inconsistencies suggest that the underlying integrations with different Google services were built at different times and with different levels of maturity.

No Predictive Blocking

Gemini doesn't let you pre-specify which types of data you're comfortable sharing in different contexts. You can't say "I never want you to read my emails about health," or "Don't access my photos in public conversations."

You can disable access to a service entirely, but you can't exercise nuanced control over which conversations have access to which data types.

The Future of Personal AI and What Comes Next

Personal Intelligence is clearly not the endpoint of this technology. It's a step toward more sophisticated personal AI assistants that understand context, history, and preferences at a deep level.

What comes next? Several directions seem likely:

Deeper Integration with Productivity Tools

Expect Gemini to move beyond reading your email and calendar toward actively participating in them. Instead of just checking your calendar, Gemini might suggest calendar changes, automatically decline conflicting meetings, or reschedule events.

Instead of just reading emails, it might draft responses, identify important messages, or handle routine communications.

Cross-Service Understanding

Right now, Personal Intelligence understands your calendar in the context of calendar queries and your photos in the context of photo queries. Future versions might understand connections across services.

A well-grounded restaurant suggestion would connect several facts: you have a free evening (calendar), you've been researching Seattle restaurants (search history), and you previously photographed similar restaurants (photos). Future systems might make those cross-service connections more naturally.

Behavioral Understanding

Gemini could go from understanding your data to understanding your patterns. Not just "You have this on your calendar" but "You typically spend Sundays doing this kind of activity, so here's something similar."

This requires the AI to recognize patterns in your behavior rather than just accessing specific data points. That's more invasive but also more useful.

Privacy-First Alternatives

Not everyone wants this level of AI integration with personal data. Future directions will likely include more privacy-first alternatives where AI works with your data locally on your device rather than sending it to cloud servers.

Google is working on running more AI on-device with its Gemini Nano models. That could eventually allow personal data processing without sending data to Google's servers.

Responsibility and Guardrails

As these systems get more integrated with personal data, expect more built-in safeguards. Future versions might be specifically trained to identify when they don't have enough information to make a recommendation, or to flag recommendations that are higher-risk.

The goal would be reducing the confidence-accuracy gap we discussed earlier by building uncertainty into the model itself.
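In the simplest form, such a safeguard is a threshold on a confidence estimate. The sketch below assumes that estimate already exists, which is the hard part in practice; `present`, the 0.8 threshold, and the caveat wording are hypothetical.

```python
def present(recommendation, confidence, threshold=0.8):
    """Attach an explicit caveat when the confidence estimate is low."""
    if not 0.0 <= confidence <= 1.0:
        raise ValueError("confidence must be between 0 and 1")
    if confidence >= threshold:
        return recommendation
    return f"{recommendation} (low confidence: verify before acting)"

# Same recommendation, two different (made-up) confidence estimates.
high = present("Try Cafe A.", 0.95)
low = present("Try Cafe A.", 0.40)
```

The low-confidence version reads differently from the high-confidence one, which is exactly what today's output lacks.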

FAQ

What is Personal Intelligence in Gemini?

Personal Intelligence is a feature that allows Google's Gemini AI assistant to automatically access your personal data from Google services like Gmail, Calendar, Photos, and search history without you explicitly asking it to do so for each request. It's an opt-in feature that lets Gemini understand your context, preferences, and schedule to provide more personalized and useful assistance. You can enable access to individual services and revoke permission at any time.

How does Personal Intelligence actually work?

When you enable Personal Intelligence, Gemini gains read-only access to the Google services you specify. When you ask Gemini a question, it evaluates whether accessing your personal data would help answer the question. If it determines relevance, it automatically queries those services in real-time, incorporates the information into its response, and shows you which services it accessed. The data is processed temporarily for that response and isn't stored separately from your conversation history.

What are the main benefits of Personal Intelligence?

The primary benefits include smarter scheduling where Gemini can identify actual free time in your calendar rather than suggesting generic time slots, personalized recommendations based on your interests and history rather than generic suggestions, automatic task creation where Gemini can add calendar events and tasks from conversations without manual entry, and contextual assistance where recommendations are tailored to your specific interests and situation. This makes Gemini significantly more useful for day-to-day personal assistance compared to previous versions that required explicit prompting for data access.

Is Personal Intelligence a privacy risk?

Personal Intelligence has privacy implications that vary based on your comfort level with data access. The good aspects include that it's entirely opt-in with clear toggles for each service, Gemini only gets read-only access to your data, real-time access means Google doesn't create separate copies of your personal information, and it's more transparent than many competitors' implementations. The concerns include that Google maintains conversation logs which could theoretically be breached, the feature increases the value of a compromised Gemini account, and it expands Google's understanding of your behavior for targeted advertising purposes. You should enable it only for services where you're comfortable with the access.

How does Personal Intelligence compare to other AI assistants?

Microsoft's Copilot offers similar features with Microsoft 365 integration but with less transparent data access controls. OpenAI's ChatGPT doesn't have automatic personal data access yet and instead requires file uploads. Anthropic's Claude also requires explicit file uploads for document analysis. Perplexity takes a different approach by accessing real-time web information with sources rather than personal data. Gemini's Personal Intelligence is more seamless for personal assistance but less transparent than Perplexity's approach and less privacy-focused than ChatGPT's or Claude's explicit upload models.

Why does Personal Intelligence sometimes give inaccurate recommendations?

Large language models like Gemini are trained to predict probable text based on patterns, not to verify facts against real-world data. While Personal Intelligence gives Gemini access to your personal data, it doesn't solve the fundamental problem of AI hallucinations. Gemini can correctly identify that you'd be interested in a Seattle neighborhood based on your interests and photos, but it might confidently recommend a restaurant that's actually closed or misidentify its location. The personalization doesn't make the underlying facts more reliable—it just makes you trust inaccurate recommendations more because they're tailored to you.

Can I control which specific data Gemini can access?

Yes. When you enable Personal Intelligence, you individually toggle access to Gmail, Calendar, Photos, and Search History. You can enable all of them, some of them, or none of them. You can change these settings at any time. You cannot, however, set more granular controls like preventing access to emails about specific topics or photos from specific dates. If you need that level of control, you'd have to disable entire services rather than selective data access.

Should I enable Personal Intelligence?

That depends on your priorities. Enable it if you frequently use Gemini for scheduling, recommendations, task planning, or productivity assistance and you're comfortable with those Google services being accessed by AI. Don't enable it if you're concerned about data access or you primarily use Gemini for creative, research, or coding tasks that don't benefit from personal data context. Start with just Calendar and Photos enabled if you're uncertain; you can add Gmail access later if you want more functionality.

What data should I verify when using Personal Intelligence recommendations?

Always independently verify specific recommendations like restaurant locations, business hours, detailed route suggestions, and product availability. Personal Intelligence is excellent for identifying interesting neighborhoods or general categories of things you might like, but terrible at verifying whether those specific things actually exist or are currently operating. Think of it as inspiration rather than directions. Similarly, verify any scheduling recommendations that involve travel times or location-based factors, as Gemini sometimes provides inaccurate geographic information despite having access to your location data.

The Bottom Line

Gemini's Personal Intelligence represents a meaningful step forward in making AI assistants actually useful for personal assistance. The ability to automatically reference your calendar, emails, photos, and search history without explicit prompting transforms Gemini from a generic chatbot into something that actually understands your life.

But it also exposes the fundamental limitation of large language models: they're brilliant at pattern recognition and mediocre at fact verification. You can give them access to all the data about who you are and what you've done, and they'll still confidently recommend a restaurant that closed years ago.

That doesn't mean Personal Intelligence isn't worth using. For scheduling, task management, project planning, and broad recommendation categories, it genuinely works and saves time. You just need to accept that the AI is showing you possibilities and patterns, not delivering verified truth.

The feature is also refreshingly transparent about what it's doing, gives you clear control over data access, and doesn't fundamentally change how Google uses your data—it just makes that data usage more explicit.

Is Personal Intelligence the future of AI? Probably not. It's a step in that direction. The future will likely involve deeper integration with productivity tools, better understanding of your behavioral patterns, more sophisticated personal data processing, and hopefully better mechanisms for distinguishing between high-confidence and low-confidence recommendations.

For now, if you're a Gemini Pro or Ultra subscriber and you use Gemini for anything involving personal assistance, it's worth trying. Just remember to verify the details before acting on recommendations.

Key Takeaways

- Personal Intelligence gives Gemini automatic access to Gmail, Calendar, Photos, and search history without explicit prompting, making personalized assistance significantly more useful

- The feature works excellently for scheduling, task creation, and broad recommendations but fails dramatically when specific facts are needed, revealing the confidence-accuracy gap in AI

- Privacy controls are granular and transparent, letting users enable or disable access to individual Google services, making this more privacy-conscious than many competitors

- AI systems are brilliant at pattern recognition but fundamentally struggle with fact verification, meaning personalized recommendations still need human validation

- Future AI assistants will likely move toward deeper service integration and behavioral understanding, but also need better mechanisms for expressing uncertainty in recommendations
