The Real AI Revolution: Practical Use Cases Beyond Hype

Let me paint you a scenario. You're stranded on the side of a highway at midnight. Your car's navigation system quit, your phone battery's running on fumes, and you can't figure out how to even open your car's manual in the dark. Panic starts creeping in.

Then you remember: you've got ChatGPT.

You pull up the app, snap a photo of your dashboard with your phone's last sliver of battery, and ask: "What does this warning light mean? How do I fix it?" Within seconds, you get a clear, accurate answer. The crisis is averted. You drive home safely.

This isn't some sci-fi fantasy. This actually happened to someone, and it perfectly captures what AI should be: a practical tool that solves immediate, real-world problems. Not a replacement for human creativity. Not a magic bullet that solves everything. Just... useful.

For all the hype around artificial intelligence (the breathless headlines about ChatGPT, the doomsday predictions about the future of work, the endless debate about whether AI will replace human creators), we've lost sight of something crucial: AI actually excels at very specific things. And when you understand what those things are, you can start treating AI not as a career threat, but as a legitimate force multiplier.

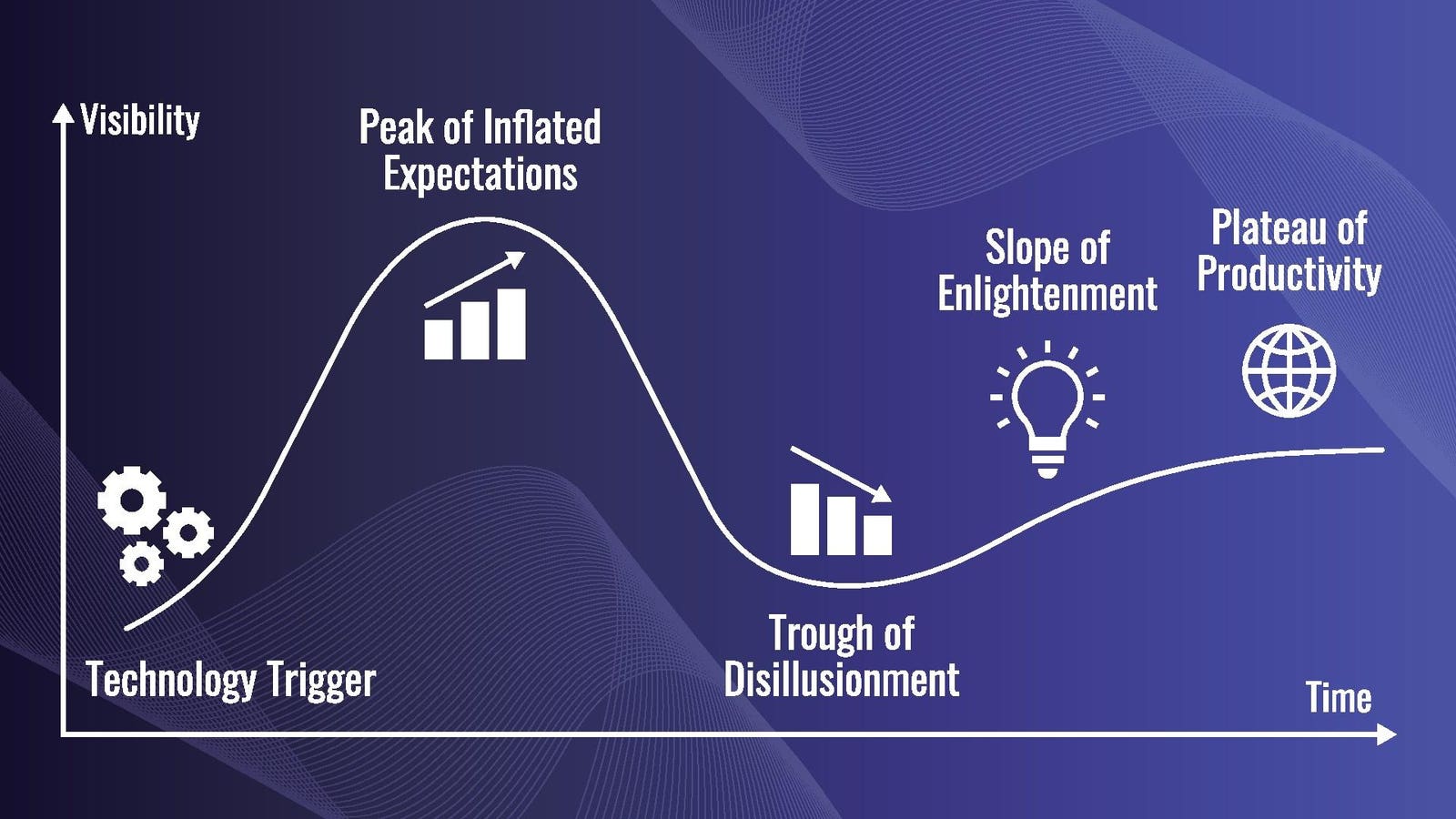

The conversation around AI has become absurdly polarized. On one side, you've got the "AI will solve everything" crowd, treating language models like they're the second coming. On the other, you've got people convinced that AI is coming for their job tomorrow. Both miss the mark.

The truth? AI is exceptionally good at certain tasks. It's mediocre at others. And it's terrible at things that require genuine human judgment, creativity, or context that goes beyond pattern recognition. Understanding which is which changes everything about how you approach AI in your own work.

The roadside car problem is a perfect case study because it highlights what makes AI genuinely useful: it's accessible, it's instant, and it's expert-level information without needing to call a mechanic at midnight. But it also shows AI's limits: it can't actually fix your car. It can't handle situations that require physical intervention, real-time decision-making about your specific circumstances, or accountability if something goes wrong.

This article digs into the real applications where AI shines, the jobs it's actually changing (and how), the misconceptions that have everyone either panicked or unrealistically optimistic, and most importantly, how to think about integrating AI into your workflow without losing the parts of your work that make you valuable.

By the end, you'll have a clearer picture of AI not as some existential threat or savior, but as what it actually is: a powerful tool with specific strengths and hard limits. And that clarity is way more useful than any hype.

TL;DR

- AI excels at information retrieval, pattern matching, and instant expert-level answers on problems you can describe clearly

- AI struggles with subjective judgment, original strategy, and situations requiring human accountability and contextual understanding

- Jobs aren't disappearing because of AI, but job roles are shifting toward higher-level thinking and decision-making

- The best use cases combine AI's speed and pattern recognition with human creativity, judgment, and responsibility

- Practical integration beats hype: use AI where it solves concrete problems, not where it feels innovative

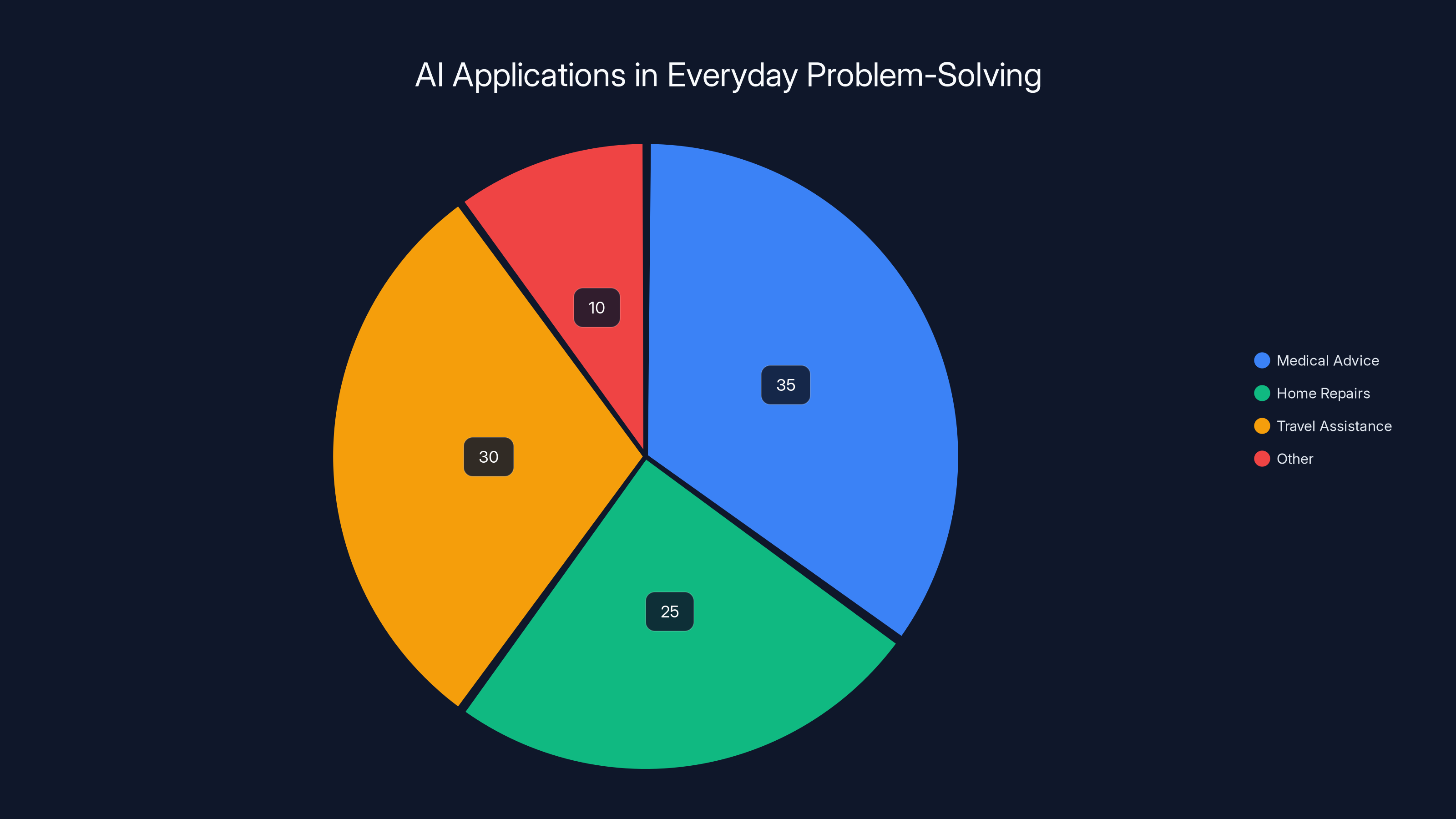

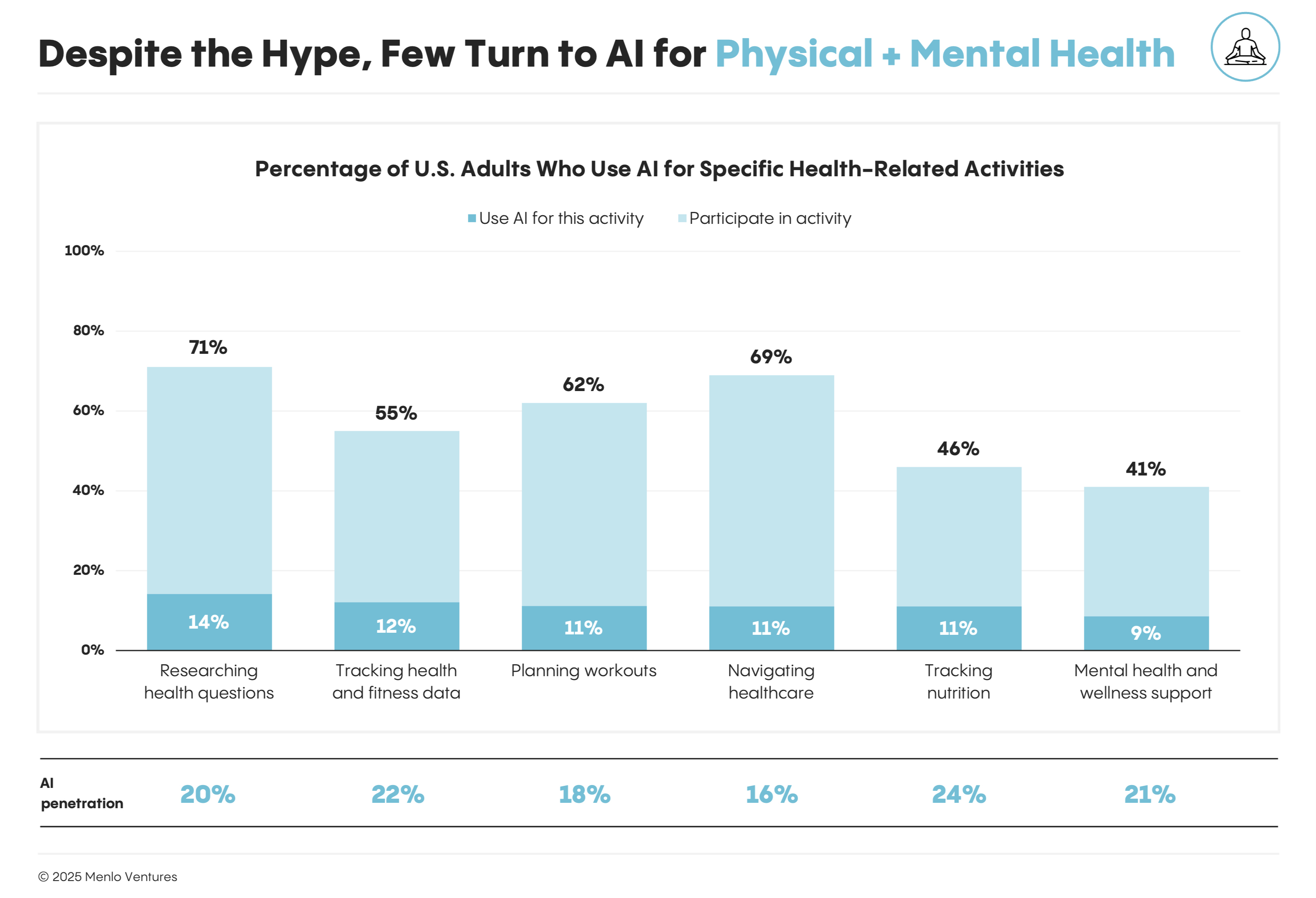

Estimated data shows AI is most frequently used for medical advice and travel assistance, highlighting its role in providing quick, expert-adjacent information.

Where AI Actually Works: Practical Applications That Solve Real Problems

The best place to start isn't with the flashy use cases or the theoretical doomsday scenarios. It's with situations where AI has already proven itself in the real world, where people are using it daily, and where the results are measurable.

Emergency Information and Problem-Solving

That roadside scenario? It's not unique. Every day, thousands of people use AI to answer urgent questions they can't wait to solve:

You're facing a possible medical emergency and need to know whether your symptoms warrant an ER visit. You don't have time to wait for a doctor's appointment, and WebMD is too chaotic. A quick conversation with an AI (with the caveat that it's not a replacement for medical advice) can give you a structured way to think through the decision. According to Cleveland Clinic, AI is increasingly being used in healthcare to provide quick, accessible information.

Your furnace breaks in January. The repair person can't come for three days. You ask ChatGPT what you can do to stay warm, how the thermostat works, and what warning signs suggest the system is dangerous. You get usable answers instantly.

You're traveling abroad and need to understand local regulations quickly. Instead of scrolling through tourist blogs, you ask an AI to explain visa requirements, currency exchanges, or local etiquette. You get structured information in seconds.

The common thread: you have a specific problem, you can articulate it clearly, and you need expert-adjacent information right now. AI delivers on all three. It's not replacing the furnace technician, doctor, or embassy official. It's replacing the wait time and the frustration of finding reliable information.

This is where AI creates the most immediate value. Not in replacing professions, but in democratizing access to information that previously required expensive experts or hours of research.

Content Drafting and Summarization

Here's where the misconception gets thick. When people say "AI will replace writers," they usually mean "AI can write some things." Which is true. But it's not the same as "AI can write well."

AI's genuine superpower in writing is speed and scaffolding, not creativity or voice. If you need to:

- Summarize a 50-page report into a two-page executive summary: AI is exceptional at extracting key points and presenting them in business-standard language. You still need to review it for accuracy and decide what matters, but the heavy lifting is done in seconds.

- Generate first drafts of routine documentation: API documentation, internal process guides, and employee handbook entries all follow patterns. AI can generate solid first drafts that you refine rather than write from scratch.

- Create multiple versions for testing: If you're writing marketing copy and want to test five different angles, AI can generate variations in minutes. You pick which resonates, then you refine it with actual judgment.

- Overcome writer's block with scaffolding: You have ideas but can't get them out. AI can help structure your thoughts, generate outline options, or write a rough version that you rewrite in your voice.

What AI cannot do: write with genuine voice, make creative leaps, take real risks, or know which version is actually right for your specific audience. Those require judgment.

The writers whose jobs are changing aren't the ones writing novels or strategy pieces. They're the ones writing high-volume, low-creativity content at scale—product descriptions, boilerplate email templates, stock social media posts. And honestly? If your job was just generating volume without strategy, you were vulnerable anyway.

The writers whose work is becoming more valuable are the ones who can direct AI, refine it, add judgment, and create original strategy. Basically, the ones doing actual creative work.

Code Generation and Technical Problem-Solving

If you've watched developers work in the last year, you've seen this shift firsthand. AI coding assistants like GitHub Copilot have moved from "cool toy" to "standard part of the toolkit."

The reason is simple: coding has a lot of pattern-matching. If you're writing a function to connect to a database, that's largely scaffolding. If you're validating form inputs, that's boilerplate. AI excels at this.

You describe what you need: "Create a function that validates email format and checks if the domain has an MX record," and the AI generates working code in seconds. You review it for correctness, modify it for your specific context, and move on.
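To make that concrete, here's roughly what a reviewed version of that generated function might look like in Python. Treat it as a sketch under stated assumptions, not a definitive implementation: the regex is intentionally simplistic, and `has_mx_record` and `fake_mx_lookup` are hypothetical names made up for this example. The real DNS lookup is left as a pluggable function because it needs a DNS library that isn't shown here.

```python
import re
from typing import Callable

# Deliberately simple pattern: a local part, one "@", and a dotted domain.
# Real-world address syntax is far more permissive than this.
_EMAIL_RE = re.compile(r"^[A-Za-z0-9._%+-]+@([A-Za-z0-9-]+(?:\.[A-Za-z0-9-]+)+)$")


def validate_email(address: str, has_mx_record: Callable[[str], bool]) -> bool:
    """Return True if the address looks well-formed and its domain has an MX record.

    `has_mx_record` is a hypothetical callable supplied by the caller. In real
    use it would wrap a DNS library; that part is an assumption, not shown.
    """
    match = _EMAIL_RE.match(address.strip())
    if match is None:
        return False  # fails the format check, so skip the DNS lookup entirely
    domain = match.group(1).lower()
    return has_mx_record(domain)


def fake_mx_lookup(domain: str) -> bool:
    """Stand-in for a DNS query, for demonstration only."""
    return domain in {"example.com", "example.org"}
```

Calling `validate_email("dev@example.com", fake_mx_lookup)` passes both checks, while `dev@example` fails on format alone. Keeping the lookup injectable is the kind of change you'd make during review: it lets you test the function without touching the network.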

For experienced developers, this is a huge time saver. Not because it writes the complex logic—it often can't. But because it handles the routine scaffolding, which is maybe 40% of coding. It lets developers focus on architecture, edge cases, and the strategic thinking that actually requires human judgment.

Where it struggles: novel problems with unique constraints, deciding which approach is architecturally best, understanding the broader system context, and debugging complex issues where the error message is vague. These require the kind of human judgment that comes from experience.

The job market for developers is changing. But it's changing toward developers who understand AI as a tool, can verify that generated code actually works, and can handle the parts that require thinking. The developers just generating routine code from spec sheets? Yeah, that role is compressing.

Research and Information Synthesis

Imagine you're a researcher, analyst, or anyone whose job involves understanding a topic deeply. Traditionally, this means reading dozens of papers, articles, and reports, extracting key points, and synthesizing them into your own framework.

AI doesn't replace this work. But it radically accelerates the first 60% of it.

You can ask AI to: read a stack of research, identify the key disagreements between studies, explain what we actually know with confidence versus what's speculative, and point out which sources are contradicting each other. You get a research landscape overview in minutes instead of days.

Then you do the real work: diving into the sources that matter, making judgment calls about which evidence is most credible, and building your own synthesis based on critical thinking.

This is where AI becomes a force multiplier for smart people. It's not thinking for you. It's doing the boring parts of thinking fast, so you can do the interesting parts better.

The caveat: AI often presents confident-sounding information that's wrong or outdated. It hallucinates sources. It misses important nuance. So you must verify anything that matters. But if you're the type of person who would verify anyway, AI just saved you enormous time on the legwork.

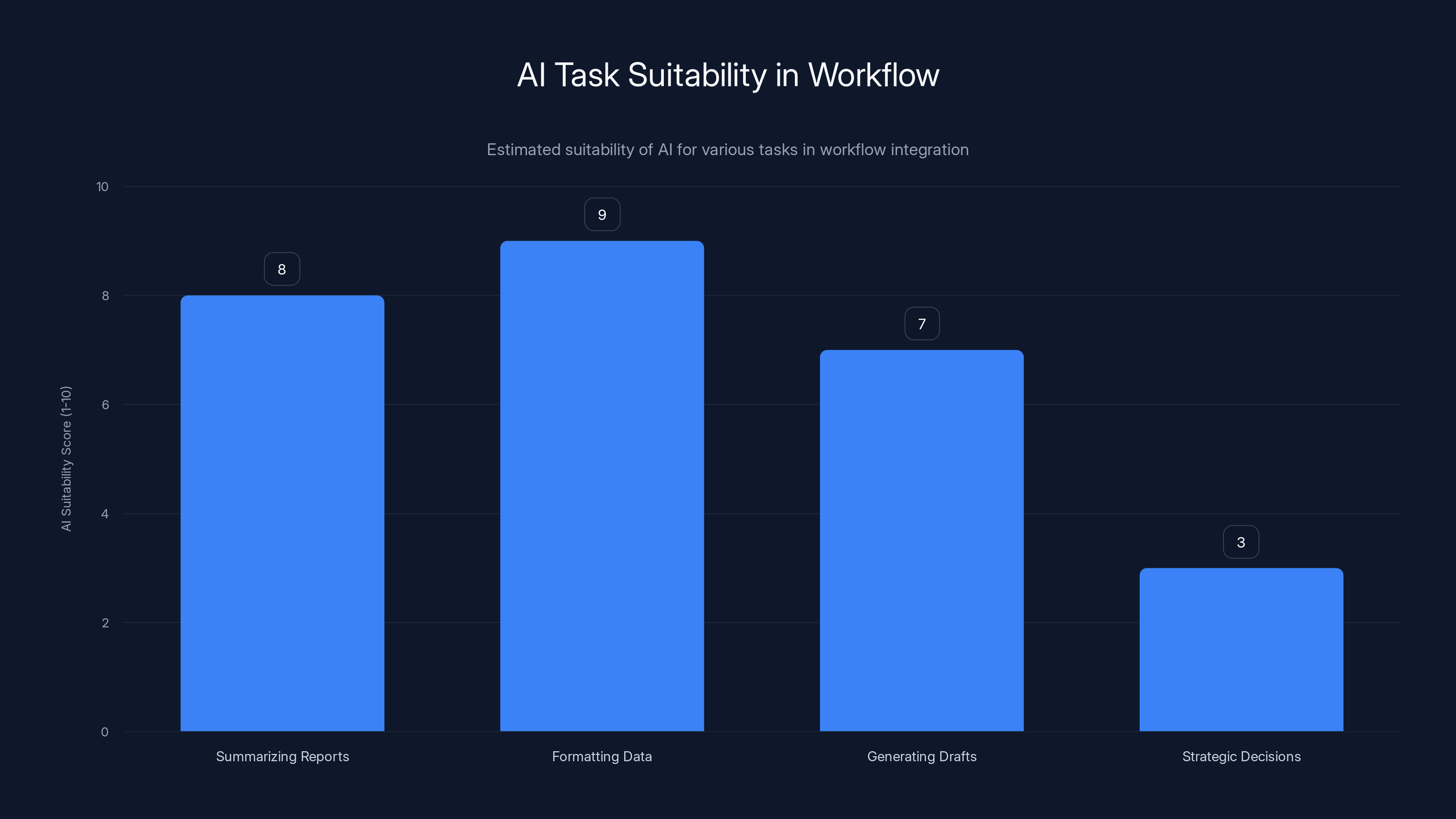

AI is highly suitable for tasks like summarizing reports and formatting data, but less so for strategic decision-making. Estimated data.

Where AI Falls Short: The Limits Nobody Talks About

The hype around AI obscures something important: there are huge categories of work where AI is actively bad, overconfident, or fundamentally misaligned with what's actually needed.

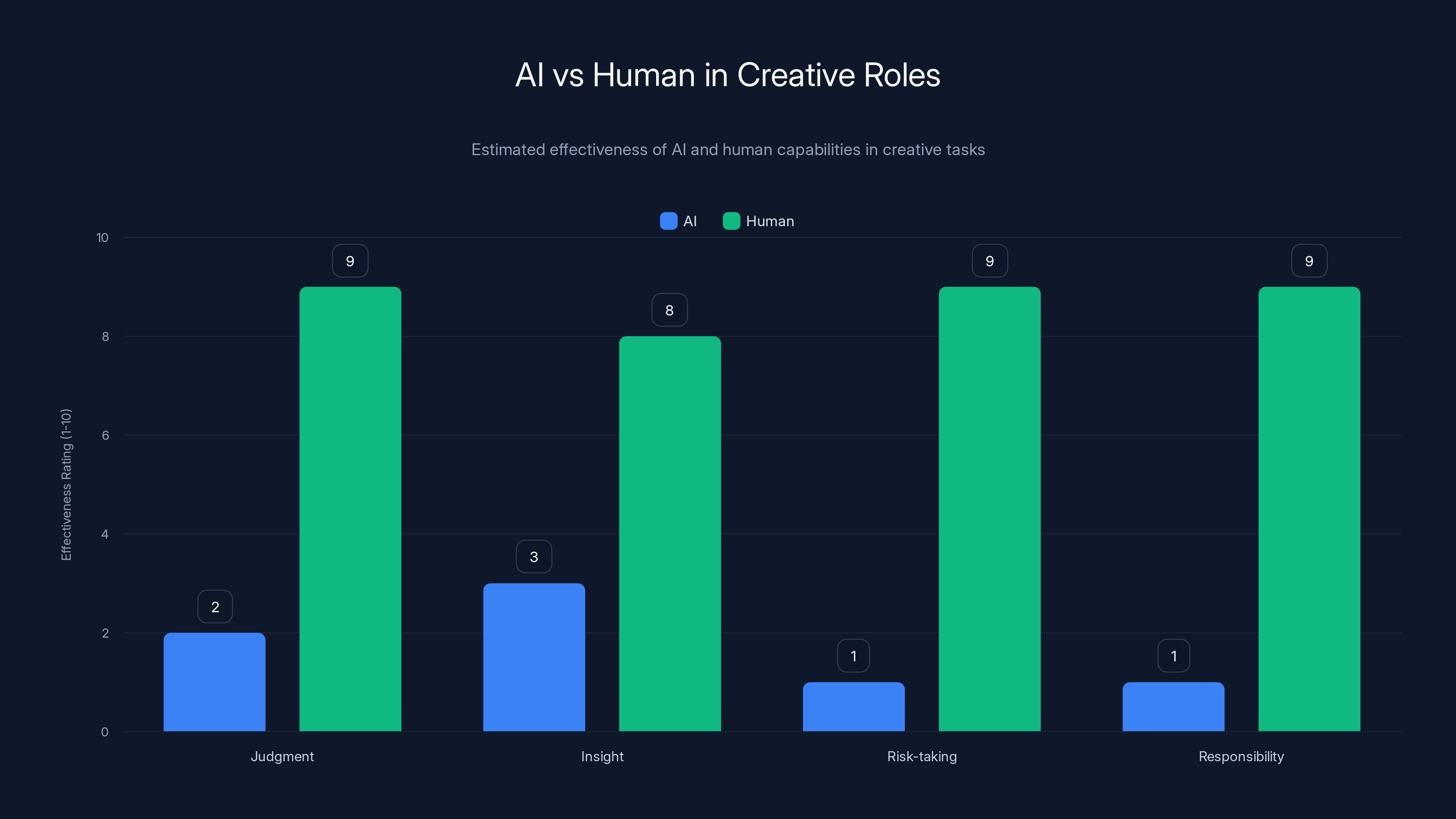

Creative Direction and Strategy

This is where the "AI will replace creatives" argument breaks down completely.

Creative direction isn't about generating content. It's about judgment: deciding what matters, what will resonate with your audience, what's worth the investment, what's genuinely novel versus derivative.

AI can generate logos. It cannot decide if a logo is right. A designer can show you five AI-generated logos and choose the one that captures the brand's essence because she understands the brand's history, audience, and context. That's judgment, and it requires human experience.

AI can write ad copy. It cannot decide that your entire positioning is wrong and you should target a different audience. A strategist can look at your messaging and say, "You're speaking to the wrong people," and restructure your entire approach. That's insight, which requires understanding human behavior and market dynamics in ways that transcend pattern matching.

AI can generate variations. It cannot take a real creative risk. The creative work that actually changes markets—the "different" thinking that breaks through—requires someone to bet on an idea, understand why it's worth the risk, and be willing to defend it when it feels wrong. That's responsibility and judgment, neither of which an AI has.

The creative professionals whose work is becoming more valuable are the ones who use AI as a tool for exploration and iteration, then apply judgment and strategy. The ones whose work is at risk are the ones who weren't doing strategy anyway.

Accountability and High-Stakes Decisions

Here's something that never makes the headlines: if you use an AI's recommendation and it causes harm, who's responsible?

If an AI system recommends denying a loan, and it turns out that decision was based on a discriminatory pattern in training data, who's accountable? The person who relied on the recommendation without questioning it? The company deploying the system? The model creators?

This question isn't abstract. It's actively causing problems in hiring systems, lending decisions, healthcare recommendations, and criminal justice. AI can't be held accountable. Humans can. And for decisions with real consequences, that matters. As noted by Lockton, AI in HR and other sectors requires careful consideration of accountability and bias.

Until we solve the accountability problem—and we haven't—there are certain decisions that require a human to be willing to stand behind them. Using AI to inform those decisions is smart. Using AI to make them is dangerous.

Context-Dependent Judgment

AI sees patterns in data. But it can't really understand context the way humans do.

You mention to an AI that you're having trouble at work, and it might suggest meditation or talking to HR. A real mentor who knows you, knows your workplace, knows your personality, and understands the specific situation can give you advice that actually fits. That requires context that goes way beyond the information in your message.

This is why AI is bad at:

- Advice that depends on knowing the person receiving it: Therapy, coaching, mentorship. You can use AI to supplement these things, but AI can't replace the relationship and contextual understanding that makes the advice actually useful.

- Judgment calls about your specific situation: "Should I take this job?" depends on your priorities, risk tolerance, family situation, career goals, and a dozen other factors that are individual. An AI can explore options but can't actually know what's right for you.

- Negotiation and persuasion: These require understanding the specific person you're talking to, reading the room, adjusting strategy based on reactions, and making judgment calls in real time. AI can help you prepare but can't do the actual negotiation.

- Leadership and team decisions: Leading people requires understanding them as individuals and making judgment calls about development, motivation, and culture. AI can provide frameworks but can't replace the judgment of someone who actually knows the people involved.

Basically, if the decision depends on knowing someone or something specific that isn't in your prompt, AI is going to give you generic advice.

Verification and Accuracy

AI is confidently wrong a surprising share of the time: roughly 30-40% by a rough estimate, and the rate varies widely by model and task. Not in a humble, "I'm not sure" way. In a completely confident, fabricated-a-source way.

This is called hallucination, and it's not getting solved by just scaling up models. It's fundamental to how these systems work. They're predicting the next token based on patterns. Sometimes the pattern leads to false information, and the system has no way to know.

Which means: anything you use from AI needs verification if it matters. You can't use it as your source of truth. You need to check facts, verify sources, and independently confirm key claims.

For straightforward information retrieval, this is manageable. You ask about a technical spec and verify it in documentation. But for complex topics where there are multiple credible perspectives, AI is actively bad. It will confidently present one perspective as fact, miss nuance, ignore contradictory evidence, and sometimes just make things up.

Don't use AI as your source of truth. Use it as a thinking partner that you verify independently.

The Job Shift: What's Actually Changing in the Workforce

Let's address the elephant in the room directly: is AI going to eliminate jobs?

Historical perspective is useful here. Every major technological shift has eliminated certain job categories while creating new ones. Spreadsheets eliminated a lot of human calculator positions. Email changed what administrative assistants do. The internet destroyed some industries while creating entirely new ones.

What's different now is the speed and the breadth. AI can potentially impact a huge range of knowledge work simultaneously, which means the transition period could be genuinely disruptive.

But here's what's actually happening: certain tasks are being eliminated, not entire jobs. And the jobs that are shrinking are, almost universally, the ones that were primarily routine task completion with little judgment.

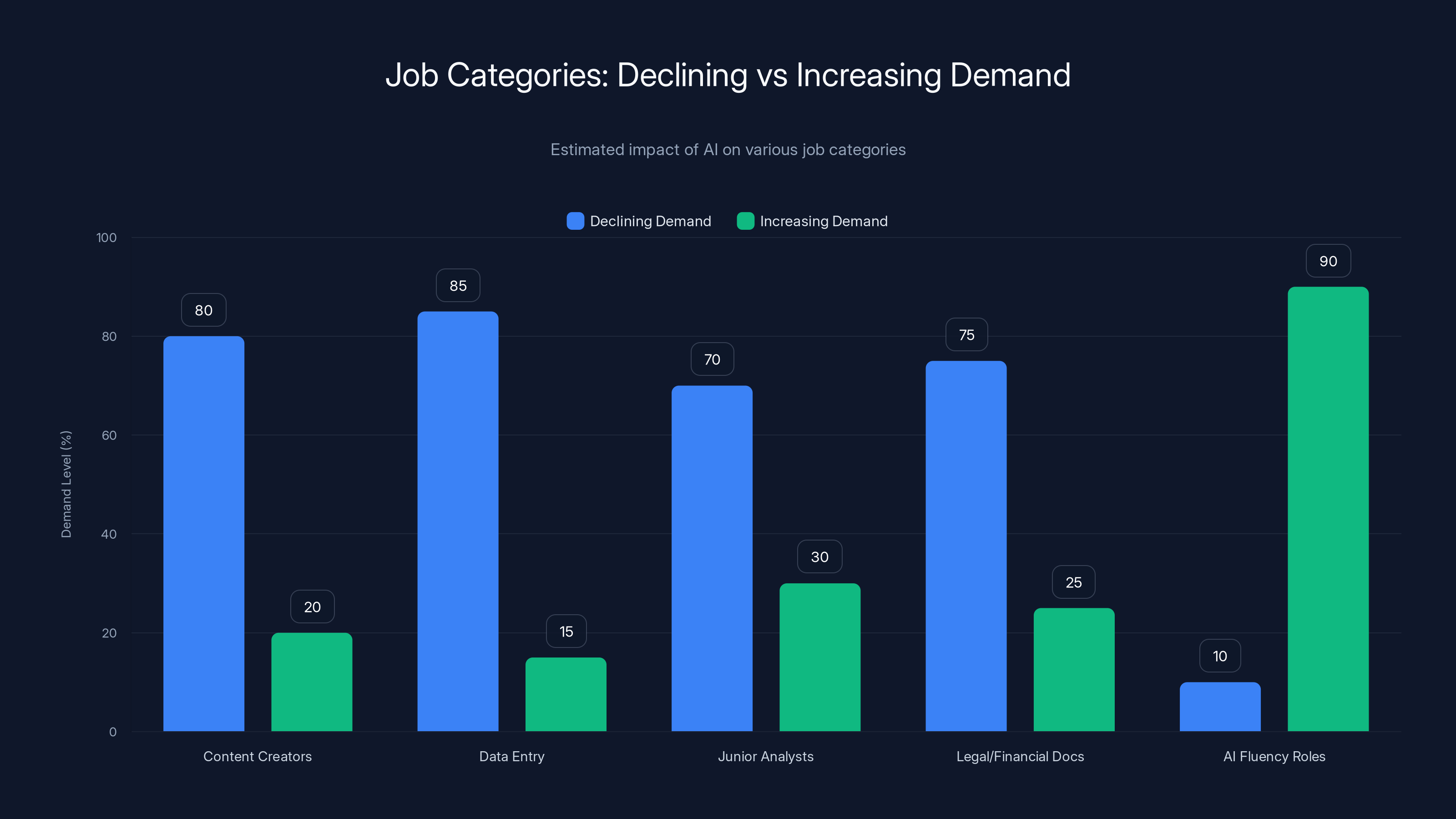

Job Categories with Declining Demand

High-volume content creators working at scale: People writing 50 product descriptions daily, 100 social media posts weekly, routine marketing copy. These roles are compressing because AI can do this volume work now. But the underlying demand for strategy and creative direction isn't going anywhere.

Routine data entry and processing: If your job is entering data into systems, checking formats, and moving information around, automation tools (including AI) have been coming for this role for years. This is accelerating.

Junior analyst roles that are pure information synthesis: Reading reports, summarizing findings, presenting what you learned. If that's your entire job, AI can do parts of it. If that's 20% of your job and the other 80% is judgment and strategy, you're fine.

Boilerplate legal and financial document generation: The routine stuff. Standard contracts, templates, standard explanations. The strategy and judgment parts of law and finance aren't going anywhere.

Job Categories with Increasing Demand

Roles that combine AI fluency with domain expertise: The person who understands marketing deeply and knows how to use AI for research, copy generation, and strategy is way more valuable than someone who does marketing without AI. You're not being replaced. You're being upgraded in value if you learn the tools.

Judgment and decision-making roles: As routine work gets automated, the jobs that remain are the ones requiring judgment, accountability, and real expertise. This is actually a positive shift if you've got skills beyond "execute what you're told."

Strategic and creative direction: The work that actually requires thinking. This is increasing in importance because when routine work gets automated, the remaining value is in strategy, creativity, and judgment.

AI training, fine-tuning, and improvement: A whole new category of jobs is emerging around making AI better, more reliable, and more useful in specific contexts.

Human-AI collaboration roles: Project managers who coordinate between AI tools and human teams. QA roles verifying AI outputs. People who understand what AI can and can't do and work at that boundary.

The pattern is clear: if your job is 80% following process and 20% judgment, you're at risk. If your job is 20% following process and 80% judgment, you're fine. If you work in a field where routine tasks get compressed, you need to move up the value chain.

AI is reducing demand for routine task jobs like content creation and data entry, while increasing demand for roles that require AI fluency and strategic skills. (Estimated data)

How Humans Actually Use AI Well: Frameworks That Work

Okay, so you've got a sense of where AI helps and where it doesn't. How do you actually integrate it into your work without either overselling it or ignoring it completely?

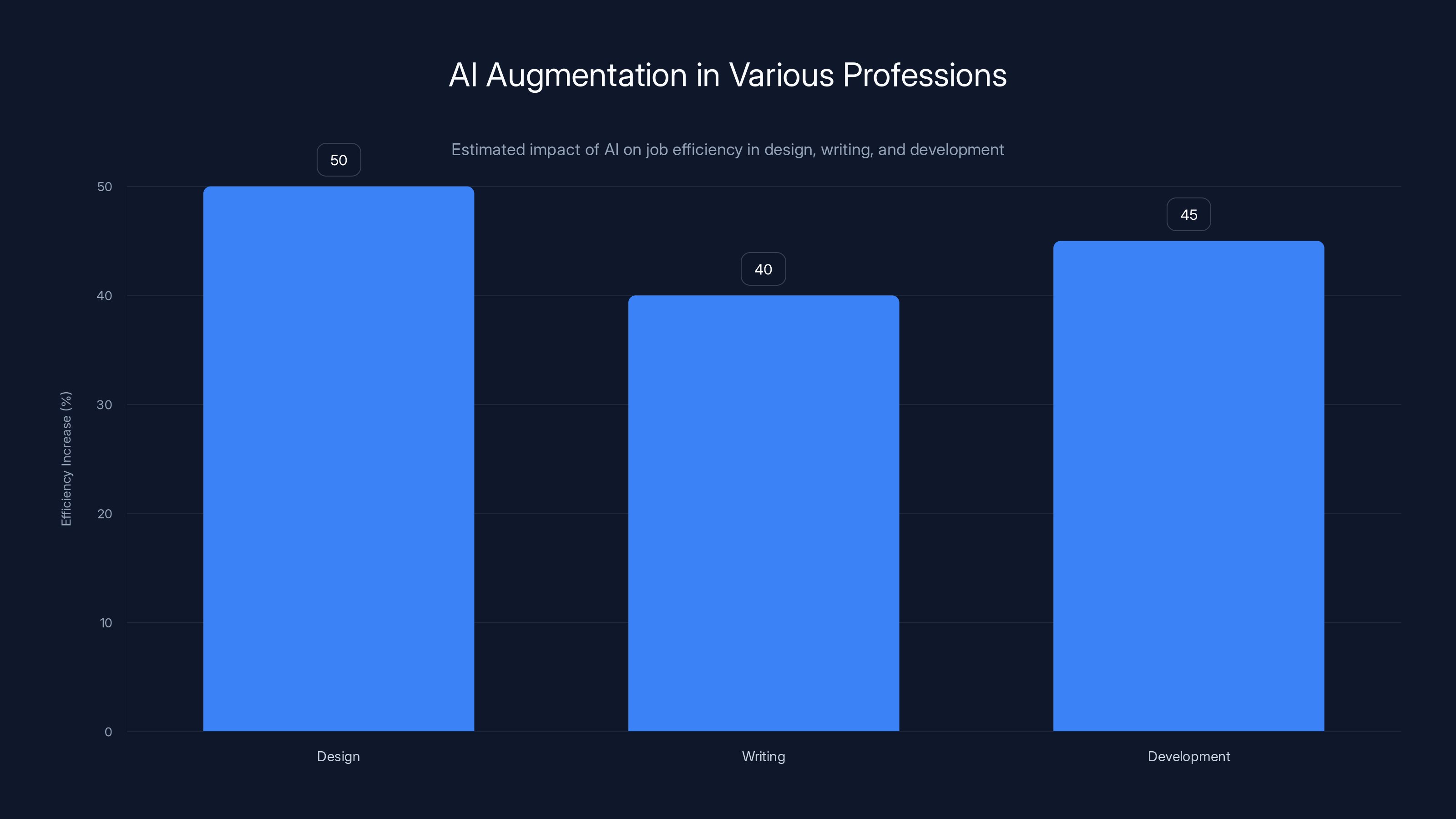

The Augmentation Mindset

Stop thinking of AI as a replacement for your job. Think of it as a tool that makes you faster at certain parts of your job.

A designer still needs to make design decisions. But if the AI can generate five visual directions in the time it used to take to generate one, the designer can iterate faster, explore more options, and make better decisions with more data. That's augmentation.

A writer still needs to write with voice and strategy. But if AI can generate structural options or first drafts, the writer spends less time on scaffolding and more time on the parts that actually matter. The output is better because they could refine more thoroughly.

A developer still needs to architect systems and make judgment calls. But if the AI handles routine boilerplate code, they move faster and can focus on the complex parts. They write better code because they're thinking about the hard problems, not wrestling with scaffolding.

The right mental model: AI is a very fast junior who's good at routine patterns and terrible at judgment. You're the senior. You direct the junior, verify the work, and make the decisions that matter. That's not your job disappearing. That's your job shifting higher up the value chain.

The Verify-and-Refine Loop

Any output from an AI that matters needs a verify-and-refine loop. You don't take what it gives you and ship it. You:

- Review for accuracy: Does this actually say what I want? Are facts correct? Are examples relevant?

- Check for voice and fit: Does this sound right? Does it match my style or brand guidelines?

- Refine for nuance: What's missing? What's overstated? What needs more detail or less?

- Verify critical claims: If this matters, did I independently confirm it?

- Make judgment calls: Is this actually the direction I want, or should we try something different?

This loop is essential. AI output is almost never ready to ship. But it's a great starting point that you refine into something actually good.

For most people, this loop takes 30-50% of the time it would take to build from scratch. So you're still getting major time savings, but you're maintaining quality and control.

Prompt Precision

The quality of what AI gives you is directly proportional to how precisely you ask for it.

Vague prompt: "Write a marketing email." AI output: Generic, could be anything, mostly useless.

Precise prompt: "Write a marketing email for freelance developers announcing a new feature in project management software. The feature cuts meeting time by 40%. The tone is friendly but professional. We want to convey time savings without sounding desperate. The CTA is 'Request a demo.' Keep it under 150 words." AI output: Way more useful because you gave it constraints and direction.

The people using AI well are the ones who think through what they actually want before they ask. That's not different from good communication in general. It just matters more with AI because the system has no context or common sense.

Know Your AI's Weaknesses

Different AI systems have different strengths.

Claude is strong on writing, nuanced thinking, and admitting when it doesn't know something. ChatGPT is strong on broad knowledge and code generation. Perplexity does real-time research with source citations. Gemini integrates with Google's tools and has good image understanding.

For your specific use case, you probably want to use multiple systems: ChatGPT for code generation, Claude for writing, Perplexity for research, then your human judgment to synthesize.

Also know what each system gets wrong systematically. ChatGPT tends to hallucinate sources. Claude is sometimes overly verbose. Perplexity occasionally misses recent information. If you know the blind spots, you can guard against them.

Building AI Into Your Workflow: Practical Integration

At this point, you understand the landscape. Now how do you actually build this into your daily work?

Start with the Most Tedious Tasks

Don't try to revolutionize your entire workflow. Start with the parts of your job that you hate and that don't require much judgment.

Summarizing reports? Great AI task. Formatting data? Great AI task. Generating first draft options? Great AI task. Making strategic decisions about direction? Not a great AI task.

Start by offloading one tedious task. Get comfortable with the AI system. Understand how long the verify-and-refine loop takes. Then expand.

You're not trying to replace yourself. You're trying to buy yourself time to do the parts of your job that actually matter.

Build Templates and Repeatable Prompts

Once you find a prompt that works, save it. Create a swipe file of prompts you use regularly. Test and refine them.

Instead of re-explaining what you want every time, you start with a strong template. This dramatically improves consistency and speed.

Example: If you regularly summarize research, build a prompt template that specifies length, key sections, tone, and examples of what good summaries look like. Then you just drop in the new research and run the same prompt structure.
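A saved template like that can be as simple as a string with named slots. Here's a minimal sketch in Python using the standard library's `string.Template`. The wording of the prompt, the default sections, and the function name `build_summary_prompt` are all assumptions for illustration, so swap in whatever has actually worked for you.

```python
from string import Template
from typing import Sequence

# The reusable part: structure, length, tone, and an example of "good".
# The wording here is illustrative, not a recommended or tested prompt.
SUMMARY_PROMPT = Template(
    "Summarize the research below in at most $max_words words.\n"
    "Include these sections: $sections.\n"
    "Tone: $tone.\n"
    "Here is an example of a good summary:\n$example\n\n"
    "Research to summarize:\n$research"
)


def build_summary_prompt(
    research: str,
    example: str,
    max_words: int = 300,
    sections: Sequence[str] = ("Key findings", "Methods", "Open questions"),
    tone: str = "neutral and plain-spoken",
) -> str:
    """Drop new research into the saved template and return the full prompt."""
    return SUMMARY_PROMPT.substitute(
        max_words=max_words,
        sections=", ".join(sections),
        tone=tone,
        example=example.strip(),
        research=research.strip(),
    )
```

Each time new research comes in, you call `build_summary_prompt(new_text, example=your_best_summary)` and paste the result into whichever AI system you use. The constraints stay fixed, so the outputs stay comparable from one run to the next.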

This is how you turn "occasional AI user" into "power user who gets consistent results."

Integrate with Your Existing Tools

AI isn't separate from your workflow. It should integrate into where you already work.

Runable offers AI-powered automation for presentations, documents, reports, and images starting at $9/month. You can use it to generate slides from data, create documents from outlines, or build reports automatically. When AI output integrates directly into the tools you already use, adoption skyrockets because friction drops to zero.

Many tools are adding AI features directly: Slack with AI summaries, Notion with AI assistance, Google Docs with writing help. Using these integrated versions often beats switching to a separate AI tool because you stay in your existing workflow.

Set Rules for What Doesn't Get AI

Not everything should go through AI. Decide what your lines are.

Maybe you don't use AI for anything that involves client relationships directly. Maybe you don't use it for confidential company information. Maybe you don't use it for high-stakes decisions without human review. Maybe you don't use it for anything you don't understand deeply enough to verify.

Having explicit rules prevents you from accidentally offloading something you shouldn't. And it makes your use of AI more trustworthy to clients and colleagues who can see you have a coherent philosophy about it.
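If you want those rules to be more than good intentions, you can write them down as a checklist that's literally executable. The sketch below encodes the four example lines from this section in Python. The `Task` fields and the `ai_allowed` function are hypothetical names invented for this example, and your own rules will almost certainly differ.

```python
from dataclasses import dataclass
from typing import List, Tuple


@dataclass(frozen=True)
class Task:
    """A piece of work you're considering handing to an AI tool (illustrative)."""
    description: str
    involves_client_relationship: bool = False
    contains_confidential_data: bool = False
    high_stakes: bool = False
    human_review_planned: bool = False
    can_verify_output: bool = True


def ai_allowed(task: Task) -> Tuple[bool, List[str]]:
    """Return (allowed, reasons). An empty reasons list means no rule was hit."""
    reasons: List[str] = []
    if task.involves_client_relationship:
        reasons.append("touches a client relationship directly")
    if task.contains_confidential_data:
        reasons.append("contains confidential company information")
    if task.high_stakes and not task.human_review_planned:
        reasons.append("high-stakes decision without human review")
    if not task.can_verify_output:
        reasons.append("output cannot be verified by the person using it")
    return (len(reasons) == 0, reasons)
```

For example, `ai_allowed(Task("summarize a public report"))` comes back allowed with no reasons, while a high-stakes task with no review planned comes back blocked with the reason spelled out. Returning the reasons rather than a bare yes/no is what makes the policy explainable to clients and colleagues.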

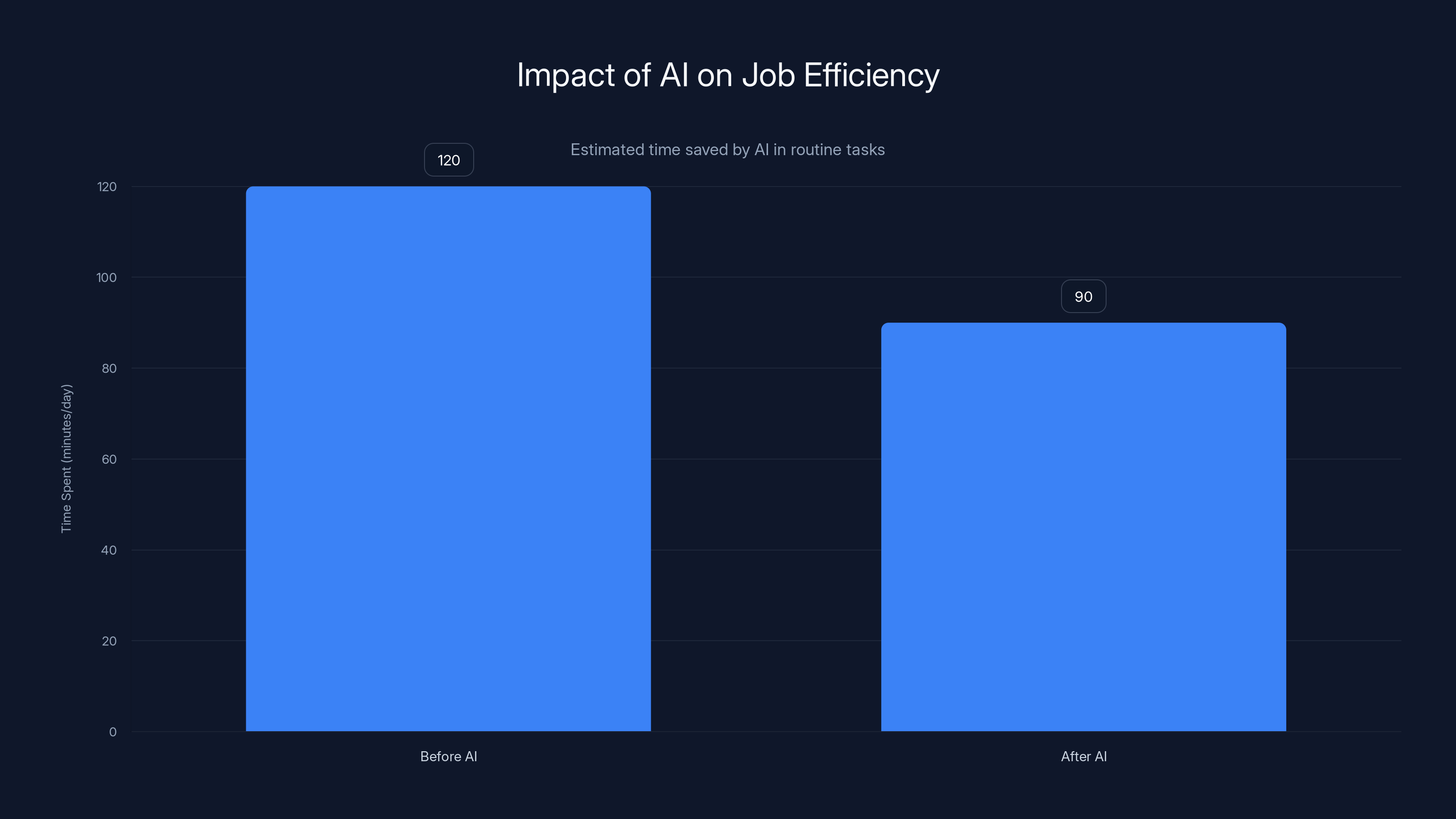

Estimated data shows AI can save approximately 30 minutes per day on routine tasks, allowing more focus on strategic activities.

The Real Misconceptions: Separating Hype from Reality

Let's address the big myths that are driving most of the unrealistic thinking around AI.

Myth 1: AI Will Automate "The Future"

There's this tendency to talk about AI as if it's a future technology that will someday do amazing things. But it's already here and already doing things.

The future of AI isn't some breakthrough moment where suddenly everything changes. It's incremental improvement from where we are now. Better models. More reliable outputs. Better integration with tools you use. Stronger guardrails against hallucination.

But the fundamental limitations won't go away: AI will still lack judgment, still struggle with context, still need human verification. These aren't bugs that get fixed in the next version. They're features of how these systems work.

The hype suggests that AI will transcend these limitations. It probably won't. Instead, we'll get better at living within them.

Myth 2: General Intelligence Is Coming Soon

A lot of the fear about AI replacing humans comes from the assumption that we're heading toward general artificial intelligence, where AI systems can do anything humans can do, better and faster.

Maybe that's true. But we have no idea when, and the more we develop specific AI systems, the more we understand how far we still are. These systems are narrow. They're good at predicting tokens and finding patterns. They're not generally intelligent. They don't understand causation. They don't reason the way humans do.

Assuming general intelligence is coming is like assuming we'll land on Mars next year because we landed on the moon in 1969. Possible? Maybe. Inevitable? No. Imminent? Definitely not.

Treat AI as what it is: very sophisticated pattern matching. That's powerful and useful. But it's not consciousness. It's not judgment. It's not general intelligence. Planning your career around AGI arriving in 5 years is probably premature.

Myth 3: Creative Jobs Are Safe Because AI Can't Be Creative

AI can't have original ideas. That's true. But a lot of job security isn't about originality. It's about cost and volume.

If you're a creative professional, your security doesn't come from the fact that AI can't create. It comes from the fact that your creativity is strategic—you're creating because you have judgment about what will work for your specific audience and goals.

But if you're a creative professional creating on volume without strategy—generating design options, copywriting at scale, producing content for content's sake—that's at risk.

The security for creatives comes from moving up the value chain: from "create the thing" to "decide what thing we should create and why." That still requires human judgment.

Myth 4: Using AI Means You're Lazy or Cheating

This one's particularly pernicious because it makes people feel guilty about using a tool that actually makes them better.

If a carpenter uses a power saw instead of a hand saw, they're not cheating. They're working efficiently. If you use AI to handle routine parts of your job so you can focus on strategy and quality, you're not cheating. You're working smart.

The judge of whether you're cheating is the person you're delivering to. If they care about speed and quality, and AI helps you deliver both, that's fine. If they care about knowing that human hands touched every part, and AI bothers them, that's information you need to know.

But the intrinsic question of whether using AI is legitimate? It is. It's a tool. Tools aren't cheating.

Governance and Risk: What You Actually Need to Be Careful About

While we're on the topic of things people get wrong, let's talk about real risks, not imagined ones.

The Actual Privacy Concerns

When you put data into a public AI system, that data may be used to train the model, depending on the provider's terms and your settings. It can show up in outputs for other users. It can be accessed by the company running the system.

If you're working with confidential information, don't put it in ChatGPT. Use a private/enterprise version or handle it locally.

If you're fine with the data being used to train future models, public systems are fine. But understand the tradeoff.

Bias and Fairness Issues

AI systems learn from data. If the data reflects historical bias, the AI will replicate it. A hiring system trained on historical hiring data will likely discriminate the way the hiring has historically discriminated.

This isn't a theoretical problem. It's actively causing harm in lending, hiring, healthcare, and criminal justice.

If you're using AI for any decision that affects people, you need to:

- Understand what data trained the model

- Test for bias outcomes

- Have a human review edge cases

- Be willing to override the system
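"Test for bias outcomes" can sound abstract, so here's one simple way to start: compare approval rates across groups. The Python sketch below computes each group's selection rate and the ratio between the lowest and highest. The 0.8 threshold is the "four-fifths" rule of thumb from US employment contexts, used here only as an assumed starting point. The function names and the sample data are made up for illustration, and a passing ratio is not proof of fairness.

```python
from collections import defaultdict
from typing import Dict, Iterable, Tuple


def selection_rates(decisions: Iterable[Tuple[str, bool]]) -> Dict[str, float]:
    """Share of approvals per group, given (group, approved) pairs."""
    totals: Dict[str, int] = defaultdict(int)
    approved: Dict[str, int] = defaultdict(int)
    for group, ok in decisions:
        totals[group] += 1
        if ok:
            approved[group] += 1
    return {group: approved[group] / totals[group] for group in totals}


def disparate_impact_ratio(rates: Dict[str, float]) -> float:
    """Lowest group rate divided by highest group rate (1.0 means equal rates)."""
    if not rates:
        raise ValueError("no decisions to evaluate")
    highest = max(rates.values())
    if highest == 0:
        return 1.0  # nobody was approved in any group, so rates are equal
    return min(rates.values()) / highest


# Made-up sample: group A approved 8 of 10 times, group B approved 4 of 10 times.
sample = [("A", True)] * 8 + [("A", False)] * 2 + [("B", True)] * 4 + [("B", False)] * 6
rates = selection_rates(sample)
ratio = disparate_impact_ratio(rates)
needs_human_review = ratio < 0.8  # assumed threshold, adjust for your context
```

With this sample, group B is approved half as often as group A, so the ratio falls well under the threshold and the system's decisions get flagged for a human to look at. That's the point of the check: it doesn't tell you why the gap exists, only that someone accountable needs to find out.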

Hallucination and Reliability

AI makes things up. Not because it's trying to deceive. But because it's trained to predict tokens, and sometimes the prediction leads to false information.

For anything where accuracy matters, you need to verify. For decisions that have consequences, you need to be willing to override the AI if something seems wrong.

This isn't a risk you can eliminate. It's a risk you manage by not fully trusting the system.

Accountability

If you use an AI system to make a decision and that decision causes harm, who's responsible?

Right now, the legal answer is unclear, and the responsibility probably falls on you. The AI developer will argue they're not liable. The company deploying it will argue they only provided tools. You're the one who used it.

So for high-stakes decisions, you need to be prepared to defend your use of AI and explain your reasoning.

This is both a technical problem and a governance problem. We haven't solved it yet.

[Figure: While AI can assist in creative tasks, human professionals excel in judgment, insight, risk-taking, and responsibility, which are crucial for effective creative direction and strategy. Estimated data.]

The Future of AI: Not Sci-Fi, Just Better Tools

If you're tired of the hype cycle, here's a grounded take on what's actually likely to happen.

More Integration, Less Novelty

The exciting moment of ChatGPT arriving and transforming everything? That was the novelty. The actual future is less exciting: AI becomes a standard feature in every tool you use.

Your email client will have AI writing assistance. Your spreadsheet will have AI analysis. Your design tool will have AI generation. Your notes app will have AI organization.

These aren't revolutionary. They're just... normal features that make you faster at routine parts of your work.

Better Specialized Models, Fewer General Models

Right now, the industry is betting on massive general models that try to do everything. The future probably involves smaller, specialized models that are really good at specific things.

A model trained specifically on code is better at code than a general model. A model trained specifically on medical information is better and more reliable for medical questions. A model trained specifically on your company's data is better for your company.

This means AI becomes more reliable and more useful in specific contexts, even if no single AI system is good at everything.

Continued Improvement in Reasoning

Current AI systems are good at pattern matching and recall but weak on reasoning through complex problems. Future systems will probably get better at multi-step reasoning, understanding causation, and working through problems systematically.

This probably means AI moves from "generating content" to "actually thinking through problems with you." Still not replacing human judgment, but closer to a real thinking partner.

Increased Attention to Reliability

Right now, AI is fast and often wrong. The next phase is probably slower and more reliable. Better sources for answers. Better acknowledgment of uncertainty. Better refusal to guess.

This means AI becomes more trustworthy but potentially slower. That tradeoff is probably fine for most use cases, because the most important thing isn't speed. It's accuracy.

Regulatory Frameworks Arriving

Governments are starting to regulate AI. The EU has the AI Act. The US has various agencies examining it. China is regulating it aggressively.

These regulations will probably:

- Require disclosure when AI is used

- Mandate testing for bias and fairness

- Establish liability frameworks

- Limit use of AI for certain high-stakes decisions

This means AI in professional contexts probably gets more restricted, more auditable, and more formally integrated into processes.

It's less exciting than "AI will do everything." But it's more realistic than "AI won't change anything."

Building an AI-Literate Mindset: What You Actually Need to Know

Okay, so here's the thing nobody says clearly: you don't need to understand how AI works to use it well. But you do need certain mental models.

Understand Pattern Matching vs. Understanding

AI is pattern matching. It's not actually thinking. It's not actually understanding. It's predicting what token comes next based on patterns in training data.

This has huge implications:

- It will confidently predict wrong answers if the patterns fit

- It won't understand context the way humans do

- It's great at extrapolating from examples

- It's bad at truly novel situations

If you understand that AI is sophisticated pattern matching, not actual understanding, you'll use it correctly. You'll verify things. You'll understand its limitations. You'll be skeptical of confident-sounding wrong answers.
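
To make "predicting what token comes next" concrete, here is a deliberately tiny sketch: a bigram counter that picks the most frequent follower of a word. Real language models are vastly larger and use neural networks rather than raw counts, so treat this as an analogy only. The training sentences and function names are made up for illustration.

```python
from collections import Counter, defaultdict

def train_bigrams(sentences):
    """Count, for each word, which words follow it in the training text."""
    followers = defaultdict(Counter)
    for sentence in sentences:
        words = sentence.lower().split()
        for current, nxt in zip(words, words[1:]):
            followers[current][nxt] += 1
    return followers

def predict_next(followers, word):
    """Return the most frequent follower of `word`, or None if unseen."""
    counts = followers.get(word.lower())
    if not counts:
        return None
    return counts.most_common(1)[0][0]

# Made-up training data: "is paris" appears more often than "is canberra".
corpus = [
    "the capital of france is paris",
    "the capital of france is paris",
    "the capital of australia is canberra",
]
model = train_bigrams(corpus)

# The model only sees the previous word, so after "is" it always says
# "paris", even if the question was about Australia.
print(predict_next(model, "is"))       # paris
print(predict_next(model, "quantum"))  # None (never seen in training)
```

The wrong answer for Australia comes out with exactly the same confidence as the right answer for France. That is the point of the mental model: the pattern fit, so the prediction was made.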

Understand Training Data Determines Behavior

AI systems behave like the data they trained on. If they trained on high-quality data, they're reliable. If they trained on biased data, they're biased. If they trained on recent data, they're current. If they trained on old data, they're outdated.

This means:

- Different AI systems behave differently because they trained on different data

- All AI systems are only as good as their training data

- You can't fully fix bias by prompting better, because the bias is in the training data

- A model's knowledge updates when it's retrained, not through conversation

Understanding this keeps you from expecting things AI can't deliver.

Understand Hallucination Is Fundamental

Hallucination isn't a bug that'll get fixed. It's inherent to how language models work. They predict tokens. Sometimes the prediction is wrong. That's not a failure. That's how the system works.

The right response isn't to hope for better AI. It's to build verification into your workflow. Always assume AI might be making things up and verify anything that matters.

Understand the Narrow-vs-Broad Tradeoff

AI systems are either:

- Broad but shallow: General-purpose AI that can do lots of things okay but nothing exceptionally well

- Narrow but deep: Specialized AI that does one thing really well

For your work, figure out which you need. If you need a tool that does seven different things at 70% competency, use a general tool. If you need a tool that does one thing at 95% competency, use a specialized tool.

Most people default to general tools because they're convenient. But for important work, specialized usually wins.

[Figure: AI augments human capabilities by increasing efficiency: 50% in design, 40% in writing, and 45% in development. Estimated data based on typical AI integration benefits.]

Real Use Cases You Can Implement Today

Let's get concrete. Here are actual use cases working right now that you can implement without waiting for AI to get better.

Research and Learning

You're trying to understand a new topic. Use AI to:

- Generate an outline of the topic's main concepts

- Explain each concept in simple terms with examples

- Identify areas of disagreement or debate

- Suggest key sources to dive deeper

Then you do the critical work: reading actual sources, forming your own opinions, building nuanced understanding.

Time saved: 50-70% on the initial research phase. Time spent on verification and in-depth study: the same as always.

Content Planning and Iteration

You're creating content. Use AI to:

- Generate multiple angle/approach options

- Create outlines for each approach

- Draft components that you refine

- Test different formats or styles

- Optimize for different audiences

You do the critical work: deciding which angle is right, ensuring accuracy, adding voice and perspective, making final creative decisions.

Time saved: 40-60% on generation. Quality improvement: usually 10-20% because you can iterate more.

Code Generation and Acceleration

You're coding. Use AI to:

- Generate boilerplate and scaffolding

- Write routine helper functions

- Suggest implementations for common patterns

- Generate test cases

- Comment your code

You do the critical work: architecture decisions, complex logic, testing that the AI code actually works, integration decisions.

Time saved: 30-50% on routine coding. Bug risk: higher initially until you build the habit of verifying AI code.

Documentation and Knowledge Management

You're documenting something. Use AI to:

- Generate structure and outline

- Draft sections based on examples

- Create multiple versions for different audiences

- Generate examples and use cases

- Format and organize information

You do the critical work: accuracy, completeness, ensuring the documentation matches reality, updating when things change.

Time saved: 40-60% on initial generation. Maintenance burden: same as always.

Customer and Internal Communication

You're drafting communications. Use AI to:

- Generate multiple versions with different tones

- Create templates for routine communications

- Draft responses to common questions

- Organize information into clear structure

You do the critical work: deciding on tone and approach, ensuring accuracy, adding personal touch, final approval.

Time saved: 30-50% on drafting. Risk: sounding generic if you don't refine.

Making the Call: When to Use AI and When Not To

So you've got the information. How do you actually decide whether to use AI for something?

Here's a simple rubric:

Use AI when:

- The task is routine or follows clear patterns

- Speed matters and accuracy can be verified

- The work is exploratory, not final

- You're trying to overcome friction on boring tasks

- You understand the domain well enough to verify output

- The stakes are low enough that mistakes are correctable

Don't use AI when:

- The task requires genuine originality or strategy

- Accuracy is critical and can't be easily verified

- The work requires personal relationships or trust

- The stakes are so high that mistakes can't be undone

- You don't understand the domain well enough to verify

- The output will be attributed to AI (unless that's explicitly okay)

Maybe use AI when:

- You use it as a starting point you heavily refine

- You use it to accelerate something you'd do anyway

- You use it to generate options you then judge

- You use it to overcome writer's block or analysis paralysis

- You use it alongside human judgment, not instead of it

The difference between good AI use and bad AI use mostly comes down to one thing: understanding when AI is giving you a starting point versus a final answer.

Final answers need judgment. Starting points don't.
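
The rubric above can be condensed into a small decision helper. This is one possible encoding, not a definitive one: the field names, the three-way result, and the way the checks are combined are all assumptions made for illustration.

```python
from dataclasses import dataclass

@dataclass
class Task:
    """Hypothetical description of a task, mirroring the rubric's questions."""
    routine: bool               # follows clear patterns
    output_verifiable: bool     # the result can be checked
    domain_understood: bool     # you know enough to spot errors
    mistakes_correctable: bool  # stakes are low enough to undo errors
    needs_originality: bool     # requires genuine originality or strategy
    relies_on_trust: bool       # depends on personal relationships or trust

def recommend(task: Task) -> str:
    """Return 'avoid', 'use', or 'maybe' for a task."""
    # Any item from the "Don't use AI when" list is a hard stop.
    blockers = (
        task.needs_originality,
        not task.output_verifiable,
        task.relies_on_trust,
        not task.mistakes_correctable,
        not task.domain_understood,
    )
    if any(blockers):
        return "avoid"
    # Routine, verifiable, low-stakes work is the clear "use" case.
    if task.routine:
        return "use"
    # Everything else: a starting point that still needs human judgment.
    return "maybe"

boilerplate = Task(True, True, True, True, False, False)
brainstorm = Task(False, True, True, True, False, False)
strategy = Task(False, True, True, True, True, False)

print(recommend(boilerplate))  # use
print(recommend(brainstorm))   # maybe
print(recommend(strategy))     # avoid
```

Real decisions rarely reduce to six booleans. The value of writing it down is that it forces you to answer each question explicitly before reaching for the tool.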

The Roadside Scenario Revisited: Why This Matters

Let's come back to where we started: someone stuck on the side of a road at midnight using AI to understand their car problem.

Why was this such a good use case?

- Time-sensitive: They needed information immediately, not eventually

- Expertise needed: They needed someone who knows car systems, which is expensive to have on speed dial

- Verifiable: If the advice was wrong, they'd know quickly and could try something else

- Safe stakes: Following wrong advice wouldn't permanently damage the car

- Accessible: All it took was a phone and an internet connection, both of which they had

- Judgment still required: They couldn't just follow blindly. They still needed to understand the answer and apply it

That's AI being genuinely useful. Not solving the entire problem. Not replacing expertise. Just solving the bottleneck—getting expert-level information fast when it wasn't available any other way.

That's the real future of AI, repeated across hundreds of use cases: removing bottlenecks, saving time on routine work, making expertise more accessible, and letting humans focus on the parts of work that require judgment.

It's not going to be revolutionary. It's going to be useful. And honestly, useful is better than revolutionary.

FAQ

What is the real purpose of AI in the workplace?

The real purpose of AI in the workplace isn't to replace workers—it's to accelerate routine tasks and provide expert-level information quickly. AI excels at pattern matching, information synthesis, and speed. The best use cases combine AI's capabilities with human judgment, decision-making, and accountability. When used well, AI lets humans focus on strategy, creativity, and high-level thinking rather than routine scaffolding.

How can I tell if AI is actually working for my specific job?

AI is working for your job if it measurably saves you time on tasks that don't require much judgment and that you dislike doing. Track how much time you spend on your job before and after integrating AI for specific tasks. If AI is saving you 30+ minutes per day on work you'd rather not do, and the output quality is acceptable with light refining, it's working. If you're spending as much time verifying and refining as you would have spent doing the work from scratch, it's not working yet.
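
The before-and-after comparison described above comes down to simple arithmetic. Here is a minimal sketch with made-up numbers and hypothetical helper names. The 30-minute bar is the rule of thumb from the answer above.

```python
def net_minutes_saved(baseline, ai_drafting, verifying):
    """Minutes saved per day: time without AI minus total time with AI.

    All arguments are minutes per day spent on the same set of tasks.
    """
    return baseline - (ai_drafting + verifying)

def is_ai_working(baseline, ai_drafting, verifying, bar=30):
    """Apply the rule of thumb: at least `bar` net minutes saved per day."""
    return net_minutes_saved(baseline, ai_drafting, verifying) >= bar

# Hypothetical daily averages from a week of tracking.
print(net_minutes_saved(baseline=90, ai_drafting=20, verifying=25))  # 45
print(is_ai_working(baseline=90, ai_drafting=20, verifying=25))      # True
# Verification eats the entire gain, so it's not working yet.
print(is_ai_working(baseline=90, ai_drafting=30, verifying=60))      # False
```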

Will AI actually replace my job in the next 5 years?

Most likely no, unless your job is primarily high-volume content generation at scale or routine data entry. Jobs don't disappear overnight. Job roles shift over years—some tasks get automated, new tasks emerge, and the balance of work changes. What's changing faster is the value of different skills. Strategic thinking and judgment are becoming more valuable. Routine task execution is becoming less valuable. If your job is 80% judgment and 20% routine execution, you're probably fine. If it's 20% judgment and 80% routine, you should be developing new skills.

How do I get better at using AI without falling into the hype cycle?

Focus on concrete, measurable outcomes rather than potential. Identify one tedious task and use AI to eliminate it. Track the actual time saved and output quality. Then expand from there. Avoid hypothetical discussions about what AI might do someday. Instead, ask: what is AI actually doing for me right now, measurably, and is that valuable? The hype cycle loses power when you focus on tangible results rather than potential.

What's the biggest risk of using AI at work?

The biggest risk is using AI output without verification when accuracy matters, or without understanding what you're actually responsible for. If you use an AI system to make a decision and that decision causes harm, you're likely accountable. The legal frameworks haven't caught up to the technology. So the rule of thumb is simple: if it matters and it's your name on it, you're responsible for getting it right, which means verifying.

How do I know when I'm using AI well vs. using it as a crutch?

You're using AI well when it helps you do work you already understand, only faster. You're using it as a crutch when it's replacing work you should understand but don't. The test: if the AI output was wrong, could you catch the error? If not, you're relying on it too heavily. If yes, you're using it well. Good AI use increases your capabilities in areas where you're already strong. Bad AI use masks weaknesses and makes you dependent.

Should I disclose when I've used AI to create something?

Depends on context and who you're delivering to. For internal work where speed matters and quality is verifiable, probably no—nobody cares how fast you went as long as it's good. For work where people care about the process or the human hand (art, writing, anything intimate), probably yes. For work where you're claiming expertise (consulting, advice, analysis), probably yes. For work where it matters that humans understand the reasoning (legal, medical, safety), probably yes. The rule of thumb: if you'd feel awkward disclosing it, you probably should.

What skills will become more valuable as AI becomes more common?

Judgment, strategic thinking, creativity, and accountability will become more valuable because these can't be automated. Knowing how to work with AI (directing it, verifying it, integrating it) will become more valuable. Domain expertise will become more valuable because you need to know a field deeply to verify AI output in that field. Communication and persuasion will become more valuable because many routine communication tasks get automated. The skills becoming less valuable are routine task execution, basic information retrieval, and following detailed processes without judgment.

How should companies govern AI use to reduce risk?

The basics are: be explicit about where AI is and isn't allowed, require human review for high-stakes decisions, test systems for bias before deployment, maintain audit trails of AI-generated outputs, and be prepared to override AI when something seems wrong. Treat AI like you'd treat any powerful tool: with respect, with understanding of limitations, and with governance proportional to risk. Companies that are getting this right are treating AI as a tool that requires human judgment, not as a replacement for judgment.
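
Two of those basics, audit trails and mandatory human review for high-stakes decisions, can be sketched in a few lines. This is an illustrative shape for a log entry, not a standard. The field names, the model name, and the decision to store hashes rather than raw text are all assumptions.

```python
import hashlib
from datetime import datetime, timezone

def make_audit_record(prompt, output, model_name, high_stakes, reviewer=None):
    """Build a log entry for one AI-generated output.

    Raises ValueError when a high-stakes output has no named human reviewer.
    """
    if high_stakes and not reviewer:
        raise ValueError("high-stakes output requires a human reviewer")
    return {
        "timestamp": datetime.now(timezone.utc).isoformat(),
        "model": model_name,
        # Storing hashes lets you later check that a saved prompt or output
        # matches this entry without keeping confidential text in the log.
        "prompt_sha256": hashlib.sha256(prompt.encode("utf-8")).hexdigest(),
        "output_sha256": hashlib.sha256(output.encode("utf-8")).hexdigest(),
        "high_stakes": high_stakes,
        "reviewer": reviewer,
    }

record = make_audit_record(
    prompt="Summarize the Q3 churn report",
    output="Churn rose in Q3...",
    model_name="example-model",  # hypothetical name
    high_stakes=False,
)
print(record["model"], record["high_stakes"])
```

Refusing to create the record without a reviewer is one way to make the review step hard to skip. Whether that belongs in code or in process is a judgment call for each team.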

Conclusion: Practical Optimism

We started with someone on a dark highway using AI to understand a car problem. We end with a broader truth: AI is a genuinely useful tool that solves specific problems well and reliably fails at others.

The polarized debate wants you to believe either that AI will solve everything or that it's all hype. Both are wrong. AI is what it is: a powerful tool for pattern matching, information retrieval, content generation, and rapid iteration. It's bad at genuine creativity, at judgment, at understanding context, and at accountability.

The future isn't going to be dramatic. It's not going to be AI taking over or AI being useless. It's going to be ordinary: AI becomes a standard feature in the tools you use, people get better at working with it, regulations arrive to prevent the worst outcomes, and life goes on. Some job roles change. Some jobs compress. New types of work emerge. Productivity increases in some ways and stays the same in others.

The right mindset isn't fear or hype. It's practical. Use AI for what it's good at. Use humans for what humans are good at. Verify critical outputs. Make judgment calls. Build accountability. Stay skeptical of confident-sounding wrong answers. Understand the limitations.

That's not revolutionary. But revolution isn't what you need. You need useful. And AI, used well, is genuinely useful.

The people whose jobs are becoming more valuable aren't the ones denying AI. They're the ones integrating it into their workflow, understanding what it can and can't do, and using it to amplify their own judgment and creativity. They're not replacing themselves with AI. They're using AI to do more of the work that matters.

That's the real opportunity. Not AI replacing humans. Humans getting better at work because they understand how to work with AI.

Take that roadside scenario again. Nobody's impressed that someone used AI to understand a car problem. They're just relieved it worked. In five years, nobody will be impressed with most AI use cases either. They'll just be normal. Tools that work, that save time, that make life easier.

That's not the future the hype predicted. But it's the future that's actually coming. And honestly, it's better.

Key Takeaways

- AI excels at pattern matching, information retrieval, and speed but lacks genuine judgment, strategy, and accountability

- Jobs aren't disappearing because of AI, but job roles are shifting from routine execution toward strategic decision-making

- The best AI use cases combine AI's speed and pattern recognition with human judgment, creative direction, and accountability

- AI hallucination is fundamental and unavoidable, requiring human verification for any critical decisions or outputs

- Practical integration beats hype: use AI for specific bottlenecks and tedious tasks, then apply human judgment to refine results
