Google Gemini Personal Intelligence: AI That Knows Your Life [2025]

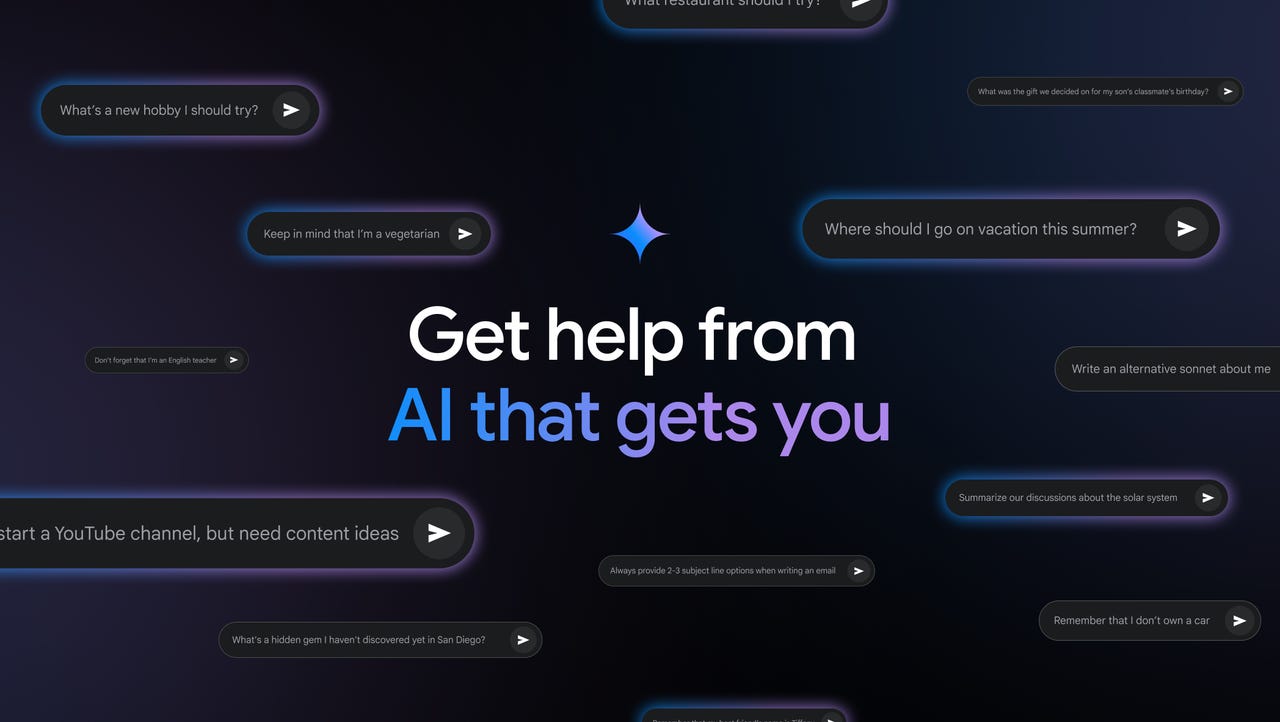

Imagine asking your AI assistant a question and it doesn't just find an answer on the internet. It finds your answer, tailored to your specific situation, your history, your preferences, your life. That's not science fiction anymore. That's Google's new Personal Intelligence feature for Gemini, and it's about to change how we think about AI assistants, privacy, and the line between helpful and creepy.

Google just announced that Gemini will soon connect directly to your Gmail inbox, Google Photos, search history, and YouTube watch history to provide what the company calls "Personal Intelligence" (Fortune). This isn't a minor feature update. This is Google betting its entire AI future on the idea that the most useful AI is an AI that knows everything about you.

But here's where it gets complicated. When an AI has access to your email, your photos, your search queries, and your viewing habits, the stakes change. This is more than a chatbot feature. This is about data, privacy, consent, and the evolving relationship between users and the technology companies that own their information.

Let's break down what Personal Intelligence actually does, why Google's making this move, what could go wrong, and what it means for you.

TL;DR

- Personal Intelligence is opt-in: Google's connecting Gemini to Gmail, Photos, Search, and YouTube history to deliver hyper-personalized AI answers (Google Blog)

- It reasons across your data: Unlike previous features, it can connect dots between your emails, photos, and search history without you asking it to (Bloomberg)

- Privacy by design (theoretically): Google says it doesn't train directly on your Gmail or Photos; data stays in your account (Droid Life)

- Limited rollout for now: Only available to US-based Google AI Pro and AI Ultra subscribers in beta (CNBC)

- The catch: This is the deepest integration of personal data into an AI assistant yet, raising serious questions about privacy, data handling, and long-term implications (Business Insider)

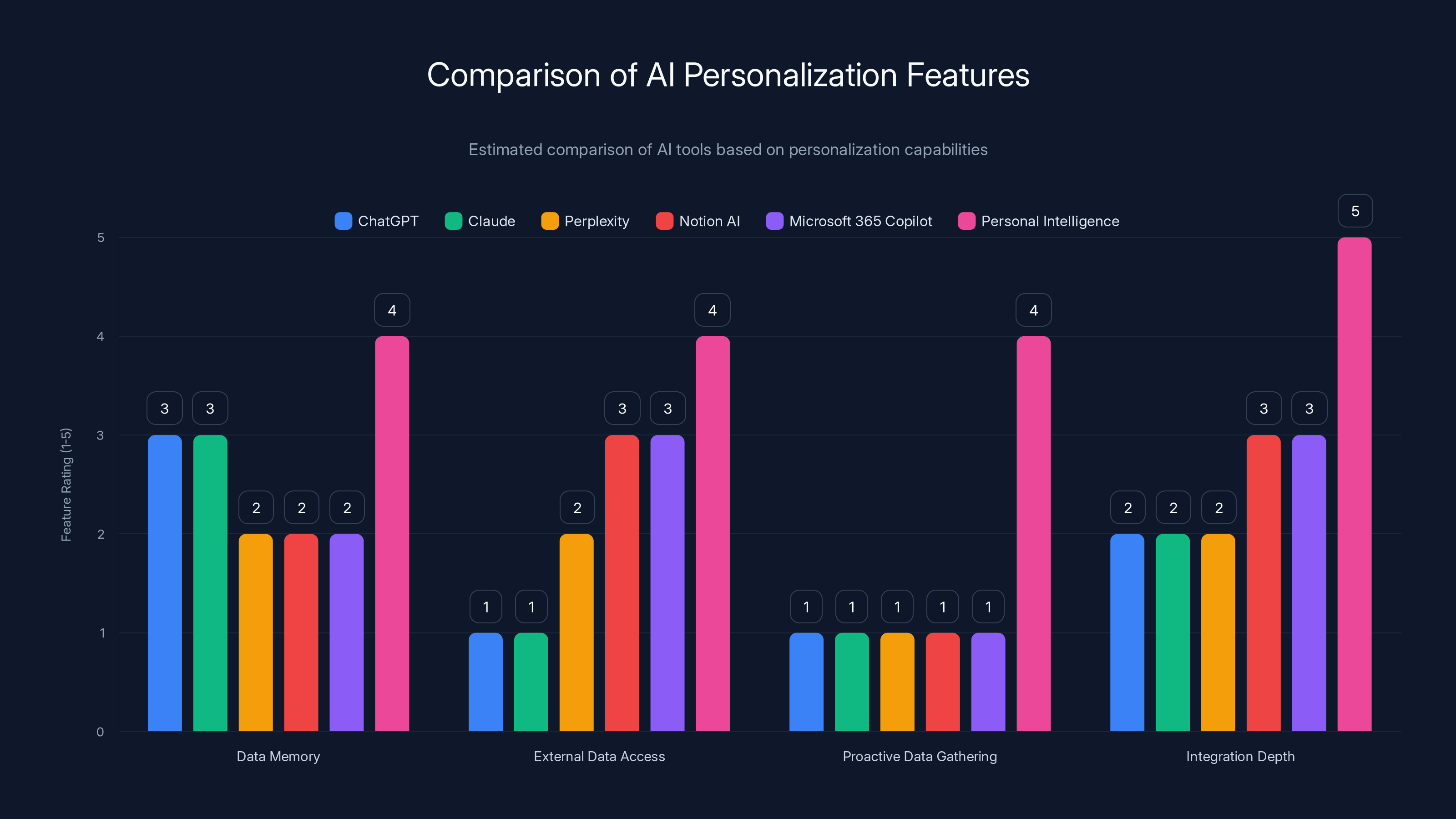

Personal Intelligence stands out with the highest ratings in proactive data gathering and integration depth, indicating its advanced personalization capabilities. Estimated data based on feature descriptions.

What Is Personal Intelligence, Exactly?

Personal Intelligence is Gemini's ability to understand and reason about your personal data without you having to explicitly tell it where to look. It's the difference between asking a normal chatbot a question and asking your best friend who knows everything about you.

Let's use Google's own example. A family needed new tires for their Honda minivan. Standing in line at a tire shop, they asked Gemini for the tire size. A regular chatbot could look that up from the web. But Gemini's Personal Intelligence did something different. It pulled the tire specs, then referenced family road trips to Oklahoma from their Google Photos to suggest all-weather tires for highway driving. It found tire ratings and prices. When they needed their license plate number, it pulled it from a photo in their library and searched their Gmail to identify the specific van trim (Fortune).

That's the magic moment. That's what Personal Intelligence is: an AI that connects information across multiple sources in your Google account to give answers that are actually about you, not just about the general internet.

It sounds incredibly useful. And it is. But the implications are staggering.

Before Personal Intelligence, Gemini had access to Google services, but it worked differently. You had to explicitly tell it "search my Gmail for this" or "look at my Photos for that." The AI wasn't reasoning across your data. It was executing commands. It was a retrieval tool, not an intelligence system.

With Personal Intelligence, Gemini is different. It's actively analyzing your personal information, making connections, drawing conclusions, and using all of that to inform its answers. That's a fundamental shift in how AI interacts with your life.

Google is calling this a "beta" feature, and it's only rolling out to AI Pro and AI Ultra subscribers in the US first (MarketingProfs). But this is clearly the direction Google wants to go. Personal Intelligence isn't an experiment. It's the future of how Google wants Gemini to work.

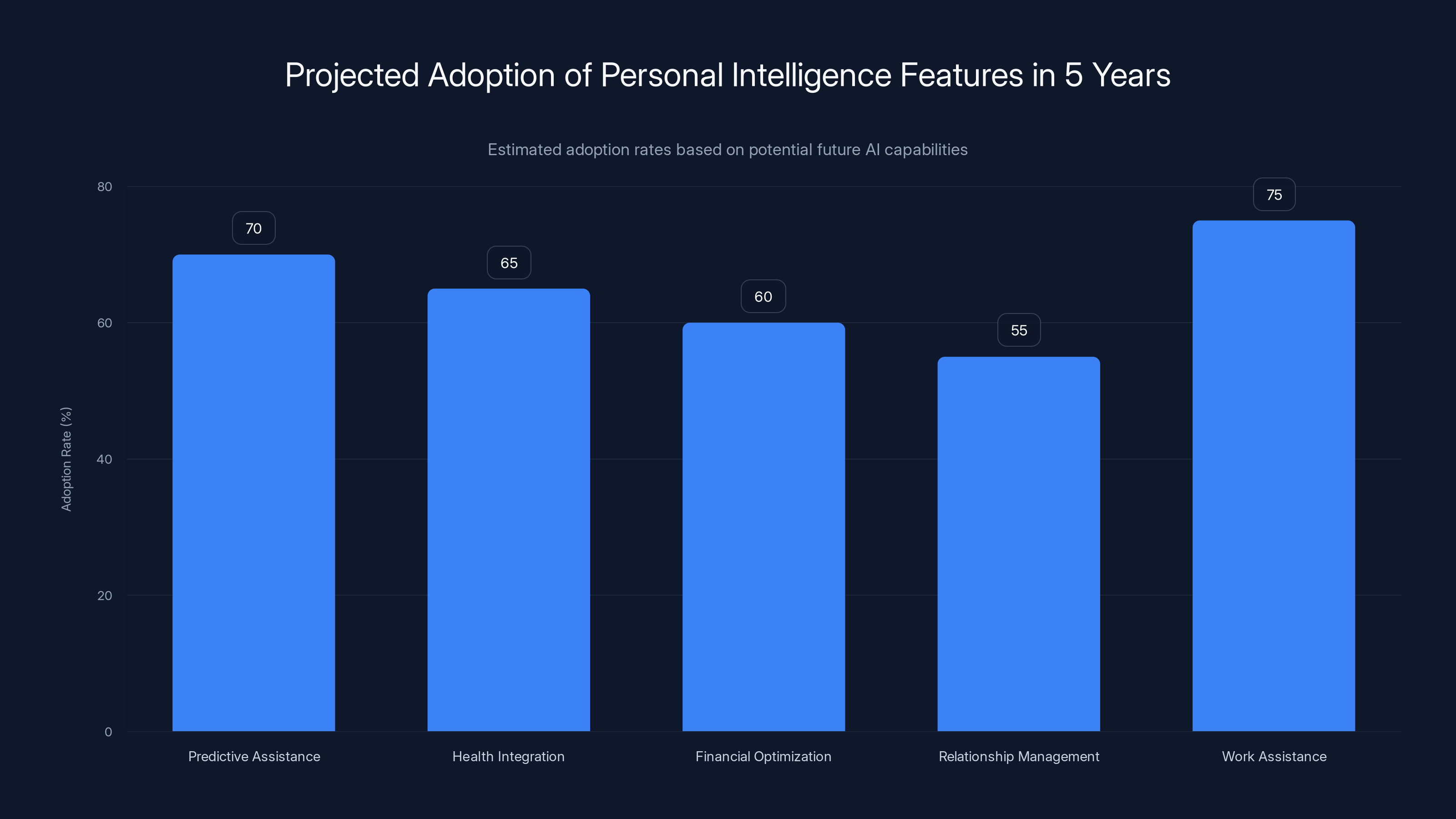

Estimated data suggests that 'Work Assistance' and 'Predictive Assistance' could see the highest adoption rates, with 75% and 70% respectively, as AI becomes more integrated into daily life.

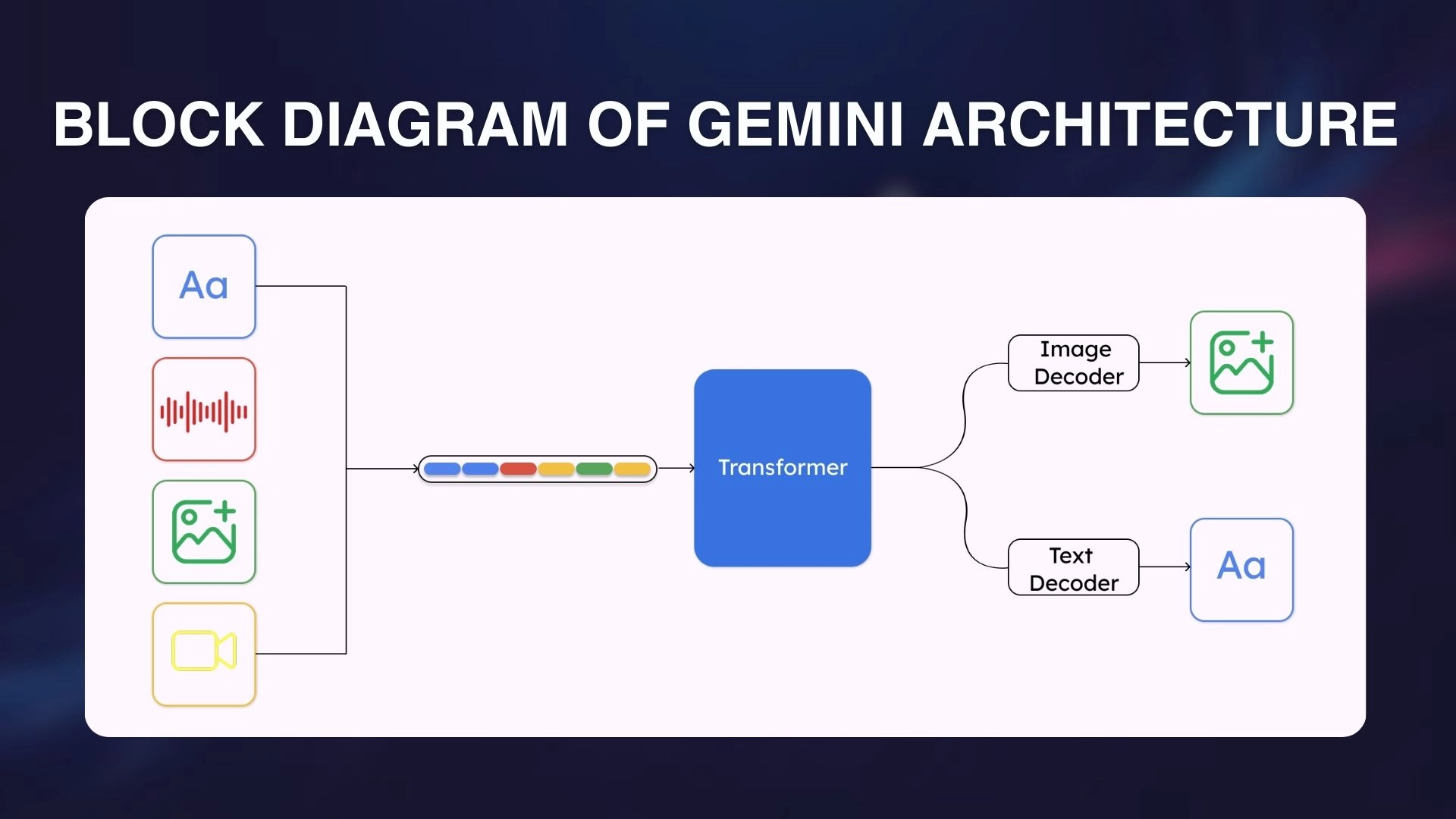

How Does Personal Intelligence Work Under the Hood?

The technical mechanics are worth understanding because they tell you a lot about what Google is actually doing with your data.

Personal Intelligence runs on Google's newer Gemini 3 models, which are designed specifically for reasoning tasks. When you ask Gemini a question, the model analyzes your query and decides whether to pull information from your personal data or just answer from general knowledge (Ars Technica).

If it decides your personal data is relevant, it retrieves information from whichever Google services you've connected: Gmail, Photos, Search history, YouTube history. It then reasons across that data and provides an answer that's contextualized to your life.
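To make that decision step concrete, here is a minimal sketch of how a router might pick which connected sources to consult for a query. It is an illustration only, not Google's implementation: the `SOURCE_HINTS` keywords, the `Account` class, and `route_query` are all hypothetical names, and a real system would presumably use the model itself rather than keyword matching.

```python
from dataclasses import dataclass, field

# Hypothetical keyword hints per source. Keyword matching is an assumption
# made for brevity; it stands in for whatever relevance model is really used.
SOURCE_HINTS = {
    "gmail": {"email", "receipt", "invoice", "booking", "order"},
    "photos": {"photo", "picture", "trip", "license", "plate"},
    "search_history": {"searched", "looked", "lookup"},
    "youtube_history": {"video", "watched", "channel"},
}


@dataclass
class Account:
    # Names of the services the user has opted in to connecting.
    connected: set = field(default_factory=set)


def route_query(query: str, account: Account) -> list[str]:
    """Return the connected sources whose hints appear in the query."""
    words = set(query.lower().replace("?", " ").split())
    return sorted(
        source
        for source, hints in SOURCE_HINTS.items()
        if source in account.connected and words & hints
    )


account = Account(connected={"gmail", "photos"})
print(route_query("What is the license plate on my van?", account))  # ['photos']
print(route_query("What is the capital of France?", account))  # []
```

The point of the sketch is the opt-in check: a source that is not in `connected` is never consulted, and a query with no personal hook falls through to general knowledge.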

Here's what Google claims is not happening: Your Gmail inbox and Google Photos library are not being used to train the Gemini model. Google says the model doesn't learn from your personal data in the way it learns from internet data. Instead, only "limited info" like specific prompts you type to Gemini and the model's responses are used for training and improvement (Droid Life).

That's the official story. And Google has added what it calls "guardrails"—safety measures designed to prevent Gemini from making inappropriate assumptions about sensitive data like health information or relationship status.

The retrieval process itself is where Google claims privacy happens. When you ask Gemini a question, it pulls your data on-device or from your secure account, not from some shared Google training database. The model processes your request, generates an answer, and that's it. Your data stays yours.

But here's where the details matter. Google says it doesn't train the model on your Gmail and Photos. It doesn't say it never looks at that data. And the distinction between "training data" and "data used to improve the model" is getting fuzzier every year (Bloomberg).

Also, Google does train on "specific prompts in Gemini and the model's responses." That means your conversations with Gemini could theoretically be analyzed to improve future models. Google says they use "limited info," but what qualifies as limited is subjective.

The Real Problem: Over-Personalization and False Confidence

Google itself acknowledges that Personal Intelligence has problems. In fact, Google's own executives warned about these issues before rolling out the feature.

Josh Woodward, VP of the Gemini app, warned that users might encounter "inaccurate responses or 'over-personalization,' where the model makes connections between unrelated topics." It might struggle with "timing or nuance, particularly regarding relationship changes, like divorces, or your various interests" (Droid Life).

Translate that from corporate-speak: The AI might get things really, really wrong in ways that are specific to your life.

Think about how this could actually break. Let's say Gemini pulls your email history and sees you talking about an ex-partner. It might not understand that the relationship ended. It might keep making references to that person in future conversations, assuming they're still relevant to your life. Or it might confuse two different people with similar names, pulling information about the wrong person into an answer.

With a regular chatbot, these mistakes are obvious and harmless. With an AI that's supposed to know you, these mistakes could be genuinely disorienting or even harmful.

Another scenario: You have two "Sarah"s in your life—one from work, one from college. You ask Gemini about plans with Sarah. Without a nuanced understanding of context, it might mix up information about both Sarahs, giving you completely wrong information about the wrong person's plans.

Google is supposedly working on fixing these issues, but fixing over-personalization is hard. It requires the model to understand not just what data exists, but what data is relevant to a given query, and how to weight conflicting information.
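One simple way to think about weighting conflicting information is to discount older items. The sketch below is a hypothetical heuristic, not something Google has described: `recency_weight`, `rank`, the 180-day half-life, and the relevance scores are all assumptions chosen for illustration.

```python
from datetime import date


def recency_weight(item_date: date, today: date, half_life_days: float = 180.0) -> float:
    """Exponential decay: an item loses half its weight every half_life_days."""
    age_days = (today - item_date).days
    return 0.5 ** (age_days / half_life_days)


def rank(items, today):
    """Order items by relevance multiplied by recency, best first.

    items is a list of (description, date, relevance) tuples,
    with relevance assumed to be a score between 0 and 1.
    """
    scored = [(rel * recency_weight(d, today), desc) for desc, d, rel in items]
    return [desc for score, desc in sorted(scored, reverse=True)]


today = date(2025, 7, 1)
items = [
    ("Reunion email from Sarah (college)", date(2023, 6, 1), 0.9),
    ("Lunch plans with Sarah (work)", date(2025, 6, 1), 0.8),
]
print(rank(items, today)[0])  # Lunch plans with Sarah (work)
```

Even a crude rule like this shows the trade-off: recency helps with the two-Sarahs case, but it would also bury an old email that happens to be the one you actually need.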

And here's the deeper problem: Even when the AI gets things right, the very fact that it has access to all this data creates what researchers call an "illusion of intimacy." You feel like the AI knows you because it's pulling real facts from your actual life. That feeling might make you trust the AI more than you should, share more information with it, or rely on it for decisions that need human judgment.

Google isn't being intentionally misleading here. But they're not really warning users about these psychological dynamics either.

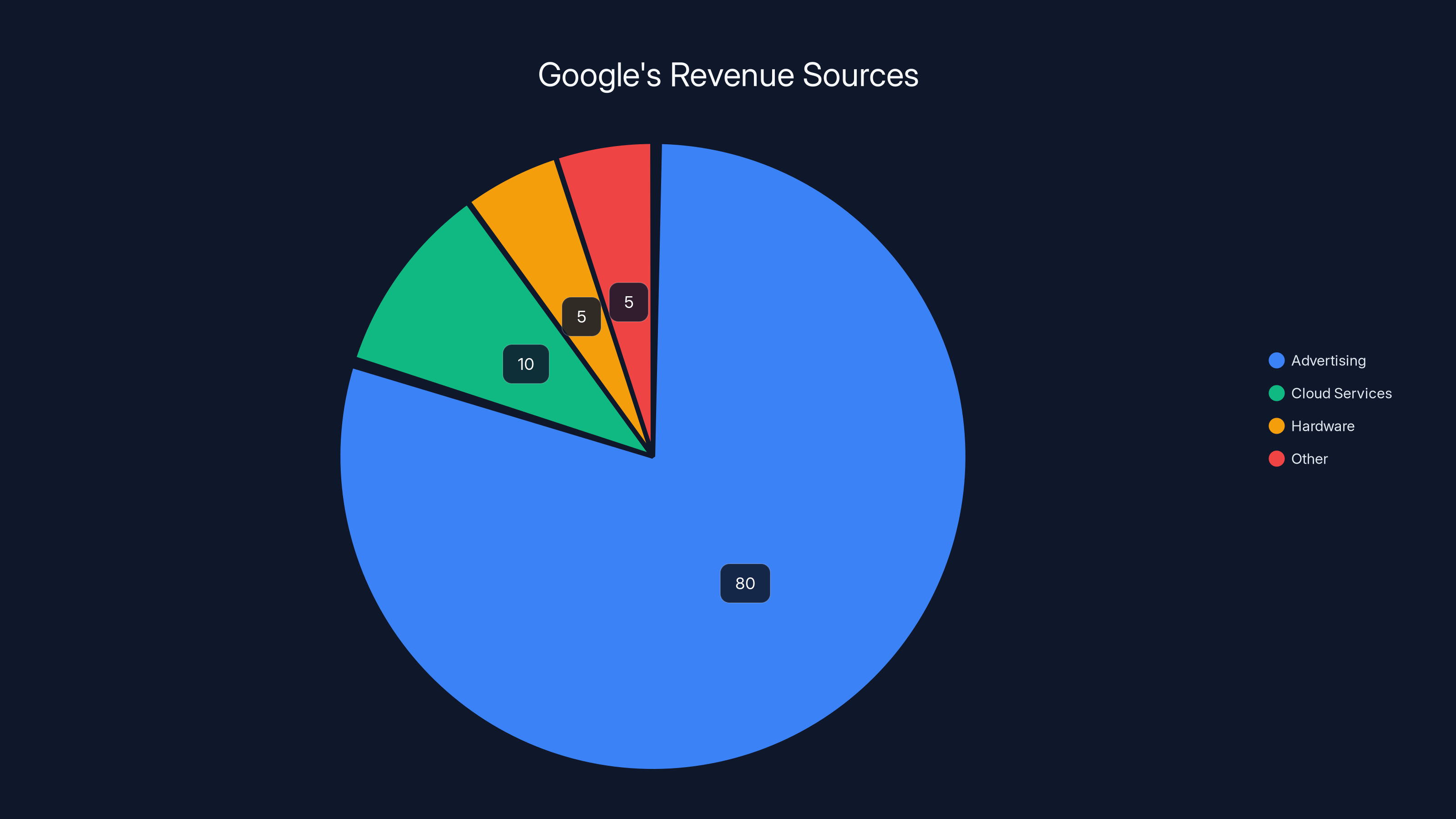

Advertising accounts for approximately 80% of Google's revenue, highlighting its reliance on data-driven ads. Estimated data.

Privacy: What Google Claims vs. What Actually Happens

This is the section where we need to be really careful about the distinction between what Google says and what Google does.

Google's privacy claims around Personal Intelligence are structured around a few key points:

Claim 1: Your data isn't used for training. Google says Gemini doesn't train directly on your Gmail inbox or Google Photos library. What it does train on is "limited info" like prompts you type to Gemini and the responses it generates (Droid Life).

The reality: Google's definition of "limited info" is doing a lot of work in that sentence. What counts as limited? Is it the subject line of emails? The content? Just metadata? Google hasn't specified. And historically, "limited info" has expanded over time as companies found new ways to use data for model improvement.

Claim 2: You have control. Personal Intelligence is opt-in. You choose which services to connect. You can disconnect anytime (Fortune).

The reality: This is actually true, but with caveats. Yes, it's opt-in in the technical sense. But in practice, Google's interfaces are designed to encourage adoption, and most users don't understand what they're opting into. Additionally, once you opt in and start using Personal Intelligence, disconnecting doesn't necessarily delete the data Google has already processed or the improvements Google's made to the model based on your data.

Claim 3: Guardrails prevent abuse. Google says Gemini "aims to avoid making proactive assumptions about sensitive data like your health" (Bloomberg).

The reality: Guardrails are better than nothing, but they're not foolproof. An AI trying to help you understand medical issues in your email could easily veer into "making assumptions" about your health. The difference between helpful and inappropriate is often subjective.

Let's think about what Personal Intelligence actually requires from a privacy perspective. For this to work, Google needs to:

- Give Gemini access to your email (including highly sensitive conversations, financial information, medical records, passwords, legal documents)

- Give Gemini access to your photos (including location data, timestamps, people identifiable from faces)

- Give Gemini access to your search history (including things you searched when you thought you were private)

- Give Gemini access to your YouTube watch history (including recommendations, interests, watch times, video duration data)

That's not a small amount of data. That's essentially the entire digital footprint of your life within Google's ecosystem.

Google's privacy policies say they're taking steps to keep this data secure and separated from the broader AI training infrastructure. But privacy in tech has a history of degrading over time. What's separate today might be integrated tomorrow. What's opt-in today might become mandatory when the next version of Android launches.

It's worth noting that Google's not the only company doing this. Microsoft is working on something called "Recall" for Windows that captures and analyzes everything you do on your PC. Apple is adding on-device AI features that will process your personal information locally. Anthropic's Claude is being integrated into more services that could eventually access personal data.

Personal Intelligence isn't an anomaly. It's the direction the entire AI industry is heading. The question isn't whether AI will have access to your personal data. The question is how much control you'll have over it.

The Comparison: Personal Intelligence vs. Other AI Personalization

Personal Intelligence is not the first time an AI company has tried to personalize AI responses based on user data. But it's different in important ways.

OpenAI's ChatGPT can remember information from previous conversations within the same chat thread. If you tell ChatGPT your name and your job, it'll reference that information in future messages. But it doesn't have access to your email or photos. It only knows what you explicitly tell it.

Claude has similar capabilities. It can reference earlier messages in a conversation, but it doesn't access external data sources by default. You have to share that information explicitly.

Perplexity can search the internet in real time, but it doesn't have access to your personal data. It's personalized by what you ask it, not by who you are.

Personal Intelligence is different because it's proactive. It doesn't wait for you to share information. It goes looking for it in your accounts. It makes connections across different data sources without you explicitly asking it to. It's not just remembering what you've said. It's understanding who you are based on your digital life.

There's also Notion's AI assistant and Microsoft 365's Copilot, both of which can access your documents and files. But those are still more limited in scope than what Personal Intelligence is doing. They're document-aware, not life-aware.

Personal Intelligence is in a different category. It's the deepest integration of personal data into an AI assistant that a major tech company has rolled out to consumers.

Here's a table breaking down how different AI assistants handle personalization:

| Feature | ChatGPT | Claude | Gemini Personal Intelligence | Microsoft 365 Copilot | Perplexity |
|---|---|---|---|---|---|
| Conversation Memory | Yes | Yes | Yes | Yes | No |
| Email Access | No | No | Yes | Yes | No |
| Photo/File Access | No | No | Yes | Yes | No |
| Search History Access | No | No | Yes | No | No |
| Web Search | Optional | No | Yes | Yes | Yes |
| Proactive Data Retrieval | No | No | Yes | No | No |
| On-Device Processing | Some | Some | Partial | Some | No |

You can see the gap. Personal Intelligence is operating in its own category when it comes to personal data integration.

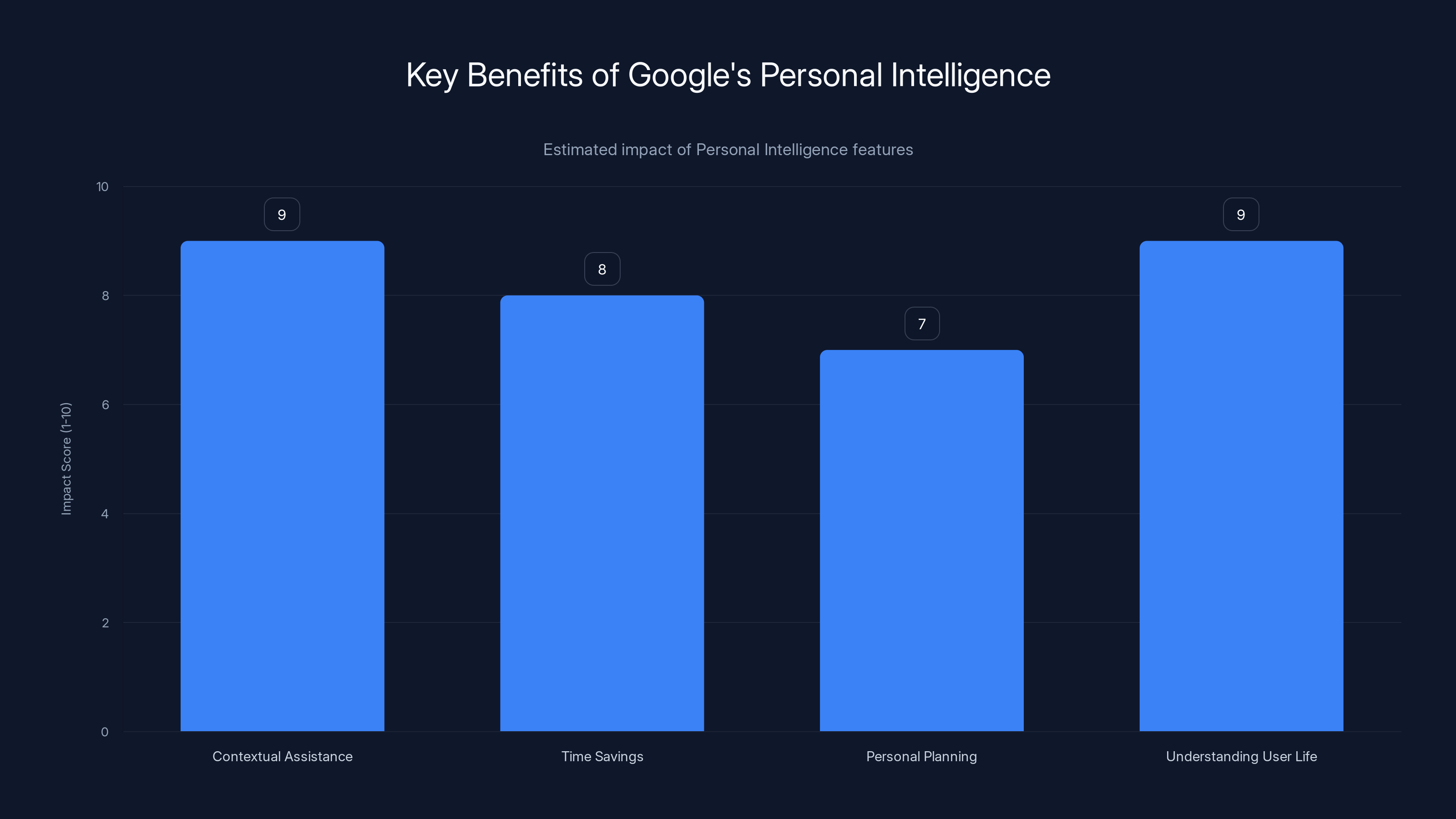

Personal Intelligence provides significant benefits, with high scores in contextual assistance and understanding user life. Estimated data.

Why Google Is Doing This (And Why It Matters)

Google isn't making this move because it's nice to be helpful. Google's making this move because of competitive pressure and strategic positioning in the AI market.

ChatGPT is eating Google's lunch in some markets. Younger users, in particular, are asking ChatGPT questions they used to ask Google. The search habit is breaking. And for Google, that's existential. Google Search is still its primary business, but the company knows that AI is going to change how people find information.

So Google's strategy is: If people are going to use AI assistants, make sure the AI assistant knows you use Google. Make Gemini so useful, so personalized, so integrated into your daily life that it becomes the answer to "I need AI help with something."

Personal Intelligence is essentially Google's answer to the competition. It's Google saying: We can be more helpful than ChatGPT because we actually know you. We have your email, your photos, your search history, your YouTube videos. No other AI company has that advantage (Business Insider).

It's also about competitive moats. If Gemini becomes genuinely more useful because it knows you, then you have a strong reason to stay within Google's ecosystem. You'd use Gmail, Google Photos, YouTube, Google Search—not because you love these individual products, but because they all feed into this one AI assistant that's invaluable to you.

That's worth billions in terms of lock-in and switching costs.

From Google's perspective, Personal Intelligence is a long-term bet on becoming the dominant AI platform. And from the user's perspective, it's a trade-off: more useful AI in exchange for giving that AI access to your personal information.

That's not a conspiracy. It's just how Google's business model works. The personal data that makes AI more useful also makes advertising more targeted. The two are connected.

The Rollout Plan: Limited at First, Everywhere Eventually

Google is being smart about the rollout. They're not forcing Personal Intelligence on everyone immediately. They're starting with AI Pro and AI Ultra subscribers in the US.

Why this approach? A few reasons:

Limiting the user base lets Google test at scale without the entire user base experiencing failures. If Personal Intelligence gets something wrong in front of millions of users, it's a PR nightmare. If it gets something wrong for thousands of paying subscribers, it's manageable feedback.

It creates a premium feature for paying subscribers. Google's trying to grow its paid AI subscription business. Personal Intelligence is a reason to pay. It's a differentiated feature you can't get on the free tier.

It gives Google time to fix problems before broader rollout. Over-personalization, data handling issues, privacy edge cases—all of these are things Google will discover as real users interact with the feature. Limited beta lets them fix the problems before rolling out to hundreds of millions of users.

But make no mistake: Google's not going to keep Personal Intelligence limited to paying subscribers forever. Eventually, this becomes the default way Gemini works. It becomes integrated into Android, into Google's search results, into the Google app. It becomes ubiquitous.

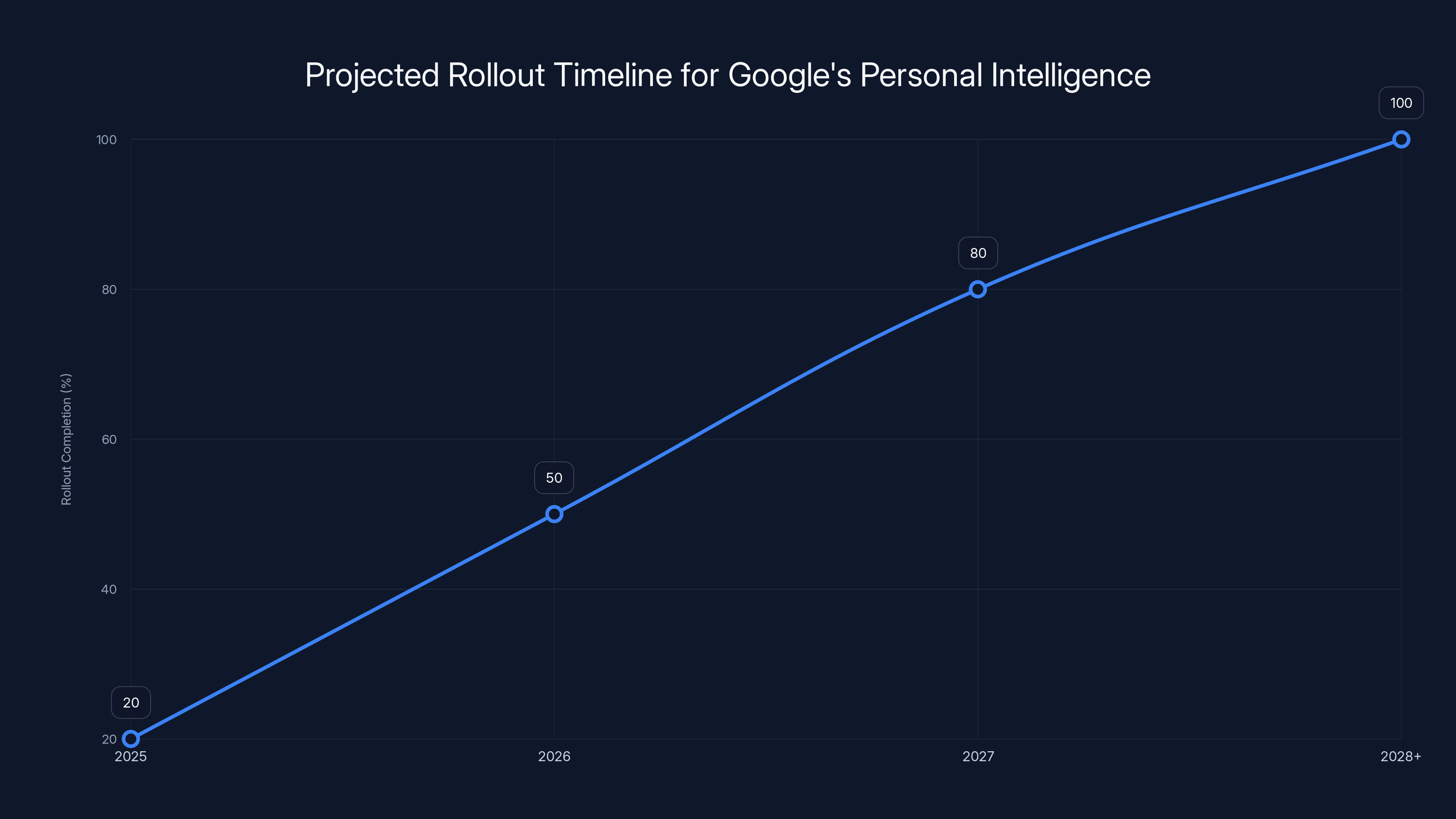

The rollout timeline based on Google's pattern:

- Year 1 (2025): AI Pro/Ultra subscribers, US only, beta

- Year 2 (2026): Expand to other countries, introduce basic version for free users

- Year 3 (2027): Default feature for most Gemini users, integrated into more Google products

- Year 4+ (2028+): Unavoidable part of Google's ecosystem

That's less a prediction than an extrapolation from Google's historical pattern. Google usually launches features as experimental or premium, proves them out, and then makes them standard. This will likely follow the same trajectory.

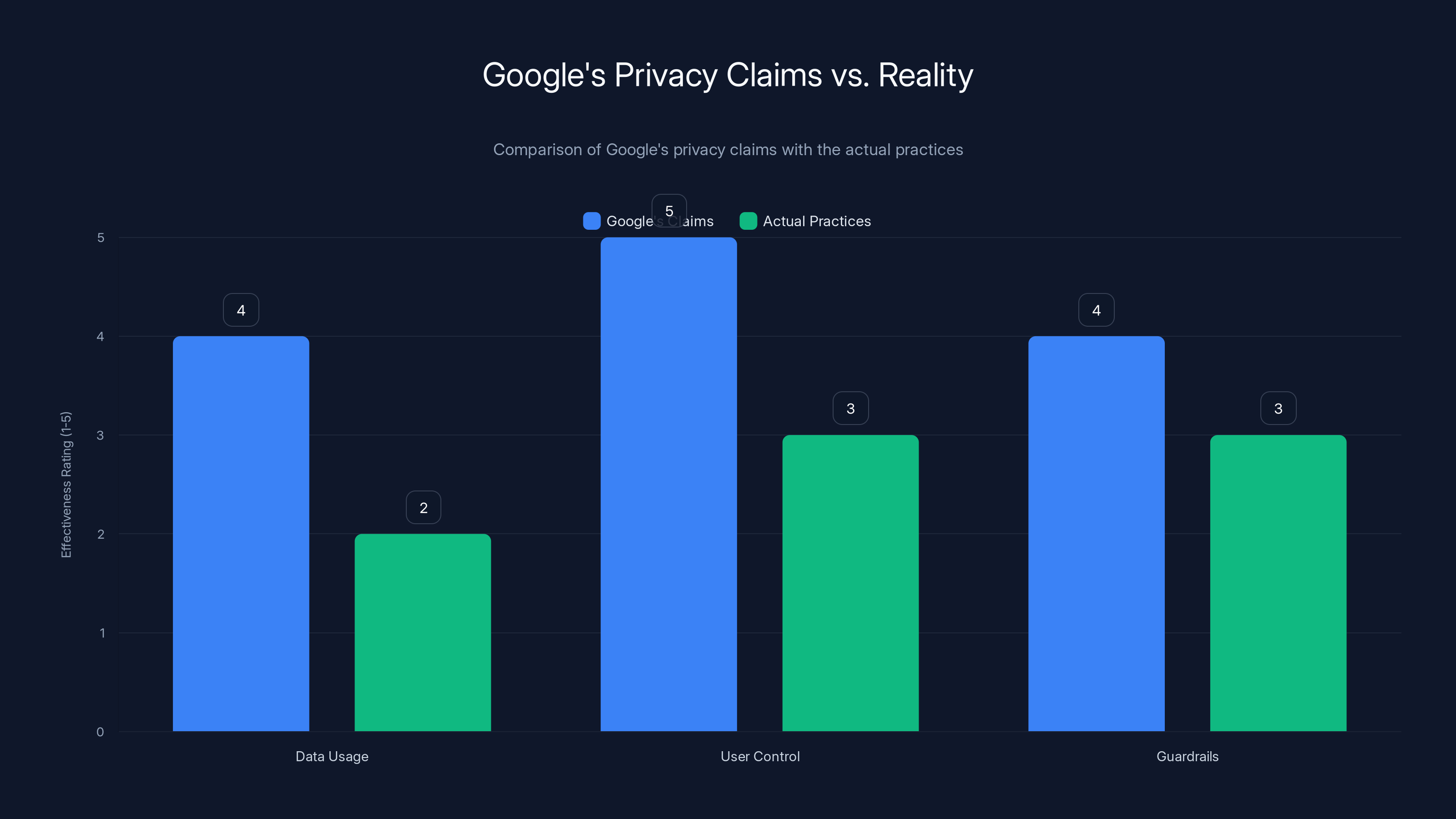

Google's privacy claims often appear more robust than actual practices, with discrepancies in data usage transparency and user control. (Estimated data)

What Could Go Wrong

There are about a hundred ways this could go wrong, and it's worth thinking through the most likely ones.

Data Breaches: Personal Intelligence means Gemini has access to your most sensitive data. If Gemini's infrastructure gets compromised, attackers don't just get generic internet data. They get your emails, your photos, your family information, your location history. The potential damage is far higher.

Google has good security, historically. But "historically good" doesn't mean "perfect." And the more data that flows through AI systems, the larger the attack surface.

Manipulation: An AI that knows everything about you can manipulate you more effectively. It knows your fears, your hopes, your habits, your vulnerabilities. A sophisticated attacker with access to Personal Intelligence data could use that to manipulate users in ways that are nearly impossible to detect or defend against.

Unexpected Data Usage: Google says it doesn't use your email and photos for training. But terms of service change. Business priorities shift. In five years, Google might decide that the opportunity to improve Gemini by training on personal data outweighs the privacy concerns. Users won't know until it happens.

The Permanence Problem: Once you've given Gemini access to years of your personal data, you can't really take that back. Even if you delete the connection, Google may retain logs, insights, and patterns derived from that data. You can't un-know something.

Third-Party Access: Google has a history of allowing third parties to access user data through APIs and integrations. Eventually, other companies might request access to Personal Intelligence data. Could Uber request data about your regular routes? Could health insurance companies request data about your searches? It seems unlikely now, but regulations could change.

Emergent Problems: The hardest thing to predict is emergent behavior. Personal Intelligence might work exactly as designed, and still create problems nobody anticipated. An AI that reasons across all of your personal data might develop behaviors that are problematic in ways nobody thought of yet.

That's why historical caution matters. New technologies often have second and third-order effects that take years to understand.

The Privacy Philosophy Behind Personal Intelligence

Google's approach to privacy with Personal Intelligence is based on a specific philosophy: differential privacy, on-device processing, and separation between training and inference.

Differential privacy is the idea that you add calibrated random noise to results so that it becomes statistically very hard to tell whether any one individual's data was included, while keeping the aggregate patterns useful. So Gemini might learn "people who search for hiking trails in Colorado usually buy boots from these brands," without being able to identify which person searched for hiking trails.
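To make the noise idea concrete, here is a minimal sketch of the classic Laplace mechanism applied to a count. It illustrates the general technique, not how Google applies it to Gemini; the `private_count` helper, the example count of 1200, and the epsilon values are assumptions for illustration.

```python
import random


def laplace_noise(scale: float, rng: random.Random) -> float:
    # The difference of two independent exponentials with mean `scale`
    # follows a Laplace distribution centered on 0 with that scale.
    return rng.expovariate(1 / scale) - rng.expovariate(1 / scale)


def private_count(true_count: int, epsilon: float, rng: random.Random) -> float:
    # A counting query has sensitivity 1: adding or removing one person
    # changes the answer by at most 1. Noise scale is sensitivity / epsilon.
    sensitivity = 1.0
    return true_count + laplace_noise(sensitivity / epsilon, rng)


rng = random.Random(0)
true_count = 1200  # e.g. people who searched for hiking trails (made-up number)
for epsilon in (0.1, 1.0):
    # Smaller epsilon means stronger privacy and a noisier answer.
    print(epsilon, round(private_count(true_count, epsilon, rng), 1))
```

The aggregate stays close to the truth while any single person's contribution is masked by the noise. The guarantee is probabilistic, and it weakens as more queries are answered against the same data.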

On-device processing means that instead of sending your data to Google's servers for analysis, some of the processing happens locally on your phone or computer. That keeps the raw data from leaving your device.

Separation between training and inference means that the data used to train the model and the data used to generate answers to your specific questions are kept separate. Theoretically.

These are genuinely good privacy principles. They're just... not foolproof. Differential privacy has limits. On-device processing can't handle everything. And separation between training and inference is hard to maintain when you're iteratively improving a model based on user feedback.

Google's privacy approach for Personal Intelligence is better than it could be. But it's also not perfect, and it's worth being skeptical of claims that any closed system is completely private.

The question users should ask: Do the benefits of Personal Intelligence justify the privacy trade-offs required to make it work? That's not a question Google can answer for you. It's a personal decision based on your own risk tolerance.

Google's Personal Intelligence is expected to gradually expand from a limited beta in 2025 to full integration across its ecosystem by 2028. Estimated data based on historical rollout patterns.

How to Protect Yourself If You Use Personal Intelligence

If you decide Personal Intelligence is useful enough to enable, here are practical steps to minimize risk:

Step 1: Audit your existing data. Before connecting anything, go through your Google account and see what's actually there. Search through your old emails. Look at what's in Google Photos. Review your search history. This isn't fun, but it's enlightening. You might realize you have data you don't want an AI to see.

Step 2: Connect selectively. You don't have to connect all services. You might connect Gmail and Photos but not YouTube. Or just Gmail. Choose based on what's actually useful, not just what's available.

Step 3: Review sensitivity settings. Google has settings for what topics Gemini won't make assumptions about. Review these and enable the ones that matter to you. You might want to disable health-related inferences, for example.

Step 4: Monitor for strange behavior. If Gemini starts giving you answers that make weird connections or references information you didn't expect it to know, that's a signal that something's off. Report these instances.

Step 5: Keep backups of important documents. If you're using Personal Intelligence and it has access to sensitive documents in Gmail or Photos, make sure you have independent backups. Don't rely on Google as your sole repository for critical information.

Step 6: Understand the permanence. Once data is processed by Personal Intelligence, assume it's permanent. Don't share things you wouldn't be comfortable with Google having forever.

Step 7: Regularly review permissions. Check every few months which services you've connected to Gemini. You might decide something that seemed okay is now too risky.

These aren't foolproof. They're just reasonable precautions based on how technology usually works.

The Competitive Landscape: Who Else Is Doing This?

Google isn't alone in the race to give AI access to personal data. But Google might be the most advanced.

Microsoft: Microsoft's Recall feature for Windows is designed to capture screenshots of everything you do on your computer, process them with AI, and let you search through your past. It's even more invasive than Personal Intelligence in some ways, because it's capturing your entire visual interface, not just data in specific Google products. Microsoft has already had to delay and redesign Recall due to privacy concerns.

Apple: Apple's adding on-device AI to iPhones and Macs. They're emphasizing local processing and privacy by design, but they're still giving AI access to your personal information. The philosophy is different (privacy-first) but the direction is the same.

Meta: Meta's AI assistant in WhatsApp and Facebook can already access some of your message history and behavior data. They're likely to expand this.

Amazon: Amazon's working on Alexa extensions that will have access to your smart home data, your Amazon purchase history, and potentially your connected devices. That's a form of personal data integration, just for voice.

OpenAI: OpenAI doesn't have an equivalent of Personal Intelligence yet, but they're clearly thinking about it. ChatGPT could theoretically be extended to access user data. The question is whether OpenAI will do it.

This is an industry-wide trend. Every major AI company is trying to figure out how to make AI more useful by integrating personal data. Google's just the first to roll it out at scale.

The Future: Where This Is Heading

Personal Intelligence is a milestone, not a destination. It's pointing toward a future where AI knows not just about you, but is integrated into the most intimate parts of your digital life.

In five years, we might see:

Predictive assistance: Gemini not just answering questions, but anticipating what you need before you ask. Based on your patterns, it suggests actions, flags problems, recommends opportunities. "Hey, you usually book your car service in August. It's July 15th. Want me to schedule it now?"

Health integration: If you've given Gemini access to your fitness data, your search history, and your emails, it could potentially advise you on health decisions. "You've been searching a lot about sleep issues, and your Apple Watch shows poor sleep quality. Consider talking to a doctor."

Financial optimization: Gemini could analyze your spending, your income, your bills, and your tax history to suggest ways to save money or optimize your finances. "You're paying $18/month for subscriptions you haven't used in 6 months."

Relationship management: An AI that knows about your relationships through emails, calendar events, and photos could help you maintain them. "It's been 2 months since you last talked to your college roommate. Want help planning a call?"

Work assistance: If Personal Intelligence extends to work accounts, it could help with professional tasks in ways ChatGPT can't. It could analyze your emails and meetings to suggest communication improvements, identify issues before they escalate, or predict project risks.

All of these are useful. And all of them require the AI to know more about you than seems comfortable.

The future is probably going to involve less privacy and more convenience. Users will decide which trade-offs make sense for them. Some will embrace Personal Intelligence fully. Others will opt out entirely. Most will probably do something in between, using selective features and accepting the risks.

The Regulatory Question: Is Personal Intelligence Legal?

This is complicated and varies by jurisdiction.

In the US, there's no specific law regulating how AI can access personal data. The FTC regulates unfair or deceptive practices, but Personal Intelligence, if handled as Google describes, probably isn't either of those. It's opt-in and disclosed.

In the EU, the GDPR is more restrictive. Data minimization is a core principle. Giving an AI access to years of emails and photos might violate GDPR's data minimization requirements, even if it's technically opt-in. The EU could easily decide that Personal Intelligence violates GDPR, and Google would have to change how it works in Europe.

In other regions, data protection laws are still developing. As Personal Intelligence rolls out globally, we'll likely see regulatory pushback in some countries.

There's also the question of whether Personal Intelligence might trigger antitrust concerns. Google already has enormous market power in search, email, cloud storage, and video. Now they're using that market power to push users toward an AI assistant that depends on data from all those products. Regulators might see this as anti-competitive bundling.

So far, regulators haven't moved against Personal Intelligence, but that's probably because they're still figuring out what the rules should be.

Should You Use Personal Intelligence?

That's the question that matters most, and it's a decision only you can make.

Yes, if:

- You're willing to trade privacy for convenience

- You're skeptical that your data was ever truly private anyway (fair point)

- You want AI assistance that's actually aware of your context

- You trust Google more than you trust the alternative (maybe a smaller AI company with fewer resources to protect data)

- The specific use cases (tire sizes, license plates, personalized recommendations) are genuinely valuable to you

No, if:

- You're uncomfortable with any company knowing this much about you

- You work in security, law, journalism, or other sensitive fields

- You're not sure what data you have in your Google account

- You don't trust Google's privacy practices (reasonable)

- You value privacy as a principle, not just pragmatically

The middle ground, which is probably where most people will land, is: Enable it selectively, monitor its behavior, and be ready to disable it if something feels off.

Personal Intelligence is useful. It's also a significant privacy trade-off. Both of those things are true. The question is which matters more to you.

The Bigger Picture: AI and the Future of Privacy

Personal Intelligence is one moment in a larger story about AI, data, and privacy.

For decades, the internet was built on a model where users got free services in exchange for their data. Email, search, social media, cloud storage—all free if you agreed to be tracked and analyzed.

That worked because the tracking was mostly about advertising targeting. Advertisers wanted to know your interests so they could show you relevant ads. It was invasive, but the core business was transparent.

Now we're entering a new phase where the data itself is the product that matters most. Personal data doesn't just help companies target ads. It makes AI assistants more useful, more powerful, more central to people's lives. The value of the data has increased dramatically.

Personal Intelligence is the first mainstream consumer product that shows this shift. It's the first time a major company has said: "We need access to your most personal data not for ads, but to make our AI more useful to you."

That's a bigger shift than it might seem. It's redefining the relationship between users and tech companies. It's saying: "Trust us not just with your data, but with the conclusions we draw from it."

Some people will. Some people won't. And the outcome of that choice will shape what AI looks like in the next decade.

The Technical Marvel and the Ethical Minefield

From a purely technical perspective, Personal Intelligence is genuinely impressive. It represents real advances in AI reasoning, multi-source data integration, and contextual understanding.

The engineers who built this deserve to be proud. Getting an AI to reliably connect information across multiple data sources, to understand context and avoid over-generalization, and to do all that while preserving some level of privacy is hard.

But technical capability and ethical questions are different things. Just because we can build AI that knows everything about you doesn't mean we should. And just because Google built it responsibly (as much as possible) doesn't mean all future versions will be.

That tension is what makes Personal Intelligence worth thinking about seriously. It's not evil. It's just powerful, and power without restraint is always risky.

Google has added some guardrails. They're not connecting to a ton of services yet. They're limiting the initial rollout. They've been transparent about the feature.

But none of that changes the fundamental reality: An AI that knows everything about you is more useful and more dangerous than an AI that doesn't.

Your job is to decide if that trade-off makes sense for you.

Moving Forward

Personal Intelligence is going to change how people interact with AI. Over the next few years, it's going to become more common, more integrated, more essential to Google's product ecosystem.

Other companies will follow. Microsoft's already working on it. Apple's moving toward it. Meta's probably planning it. This isn't a Google anomaly. This is the future of AI.

The question isn't whether Personal Intelligence will exist. It will. The question is what safeguards, regulations, and user controls will shape how it works.

EU regulators are likely to be more restrictive. US regulators are still figuring it out. Individual users need to make choices about what they're comfortable with.

This is genuinely important stuff. Not in a dramatic "AI is going to destroy humanity" way, but in a practical "this is going to affect your digital life significantly" way.

Pay attention. Make informed decisions. And understand that once you give an AI access to your personal data, you're making a choice that's hard to undo.

FAQ

What is Personal Intelligence?

Personal Intelligence is Google's new feature for Gemini that allows the AI assistant to access and reason across your personal data from Gmail, Google Photos, Search history, and YouTube watch history. Unlike previous features that required explicit commands, Personal Intelligence proactively retrieves and connects information from these sources to provide hyper-personalized answers to your questions, understanding context about your life without you having to specify which data source to check.

How does Personal Intelligence work?

Personal Intelligence runs on Google's Gemini 3 models, which analyze your queries and automatically decide whether to retrieve relevant personal information from your connected Google services. When you ask a question, the model pulls data from whichever services you've connected (Gmail, Photos, Search, YouTube), reasons across that information, and generates an answer contextualized to your specific situation. Google claims this data retrieval happens securely within your account and that the model doesn't directly train on your personal data, though your conversations with Gemini may be used for model improvement.

What are the main benefits of Personal Intelligence?

The primary benefits include dramatically more useful and contextual AI assistance, as your questions can be answered with awareness of your specific situation rather than generic web information. For example, instead of just finding tire specifications online, Gemini can suggest tire types based on family road trips stored in your Photos and find prices for those specific recommendations. Other benefits include time savings (not having to manually search across services), better assistance with personal planning, and AI that actually understands your life rather than just patterns from the internet.

What data does Personal Intelligence have access to?

Personal Intelligence can access four primary Google services if you enable them: your complete Gmail inbox including all emails and conversations, your Google Photos library with all images and location metadata, your Google Search history showing what you've searched for, and your YouTube watch history showing what videos you've watched. You choose which services to connect—you don't have to enable all of them. The feature is currently limited to US-based Google AI Pro and AI Ultra subscribers.

What are the privacy risks?

The primary privacy risks include potential data breaches affecting highly sensitive personal information, the difficulty of truly deleting data once an AI has processed it, possible future changes to Google's data usage policies, and the inherent risk of over-personalization where the AI makes incorrect assumptions about sensitive topics like health or relationships. Additionally, there's uncertainty about how this data might be used for advertising targeting in the future, what third parties might eventually access it through integrations, and the emergent problems that could arise from AI reasoning across all your personal data.

Is Personal Intelligence opt-in?

Yes, Personal Intelligence is technically opt-in—you must explicitly enable it and choose which services to connect. However, Google's expansion of this feature will likely follow historical patterns where beta features for paid subscribers eventually become standard or default features. The opt-in nature is genuine now, but users shouldn't assume this level of control will persist as the feature becomes more embedded in Google's products.

How is Personal Intelligence different from ChatGPT or other AI assistants?

Personal Intelligence is fundamentally different because it proactively accesses your personal data without you explicitly asking it to, reasons across multiple personal data sources simultaneously, and integrates with Google's entire ecosystem of services. ChatGPT and Claude remember conversation context but don't access your email, photos, or search history. Microsoft 365 Copilot can access documents but doesn't have the breadth of personal data access. Personal Intelligence represents the deepest integration of personal data into an AI assistant released by a major company.

When will Personal Intelligence be available to everyone?

Personal Intelligence is currently rolling out as a beta to US-based Google AI Pro and AI Ultra subscribers. Based on Google's historical patterns with feature rollouts, it will likely expand to additional countries within 1-2 years, introduce a basic version for free users within 2-3 years, and eventually become a standard feature across most Gemini products within 3-5 years. The exact timeline is uncertain, but broad availability is essentially inevitable if the feature proves successful and doesn't face major regulatory obstacles.

What should I do if I enable Personal Intelligence?

If you decide to enable Personal Intelligence, selectively connect only the services you trust, regularly monitor Gemini's responses for unexpected behavior or incorrect inferences, maintain independent backups of sensitive documents rather than relying solely on Google's storage, review Google's sensitivity settings and enable restrictions for sensitive topics, and periodically audit which services are connected. Treat all conversations with Personal Intelligence as permanent—assume nothing is truly deleted once processed by the AI.

Will Personal Intelligence expand to work accounts and enterprise use?

Eventually, yes. Google is first rolling out Personal Intelligence to consumer accounts, but the company has indicated interest in extending this to Google Workspace (enterprise) accounts. When this happens, it would enable workplace AI with access to corporate emails, documents, and communications. This would trigger different privacy and legal considerations, particularly around employee surveillance and data protection. Enterprise users should prepare for this possibility and establish clear policies before it becomes available.

Final Thoughts

Google's Personal Intelligence is an inflection point. It's the moment when AI assistants stopped being generic tools and started being personal advisors with access to your entire digital life.

That's exciting from a capability perspective. It's also unsettling from a privacy perspective. Both things can be true simultaneously.

The technology is impressive. The implications are significant. The choice is yours. Make it consciously.

Google will keep improving this feature. Other companies will build similar systems. Over the next decade, AI that knows you deeply will become normal. The question is whether you'll be comfortable with that reality, and what guardrails you'll insist on before you get there.

That's a conversation we should all be having.

Key Takeaways

- Personal Intelligence is the deepest integration of personal data into an AI assistant released by a major consumer company, accessing Gmail, Photos, Search history, and YouTube

- Unlike generic chatbots, Personal Intelligence proactively reasons across multiple personal data sources to understand context without explicit user direction

- Google claims it doesn't train directly on personal data, but uses limited information from conversations for model improvement—a distinction that may blur over time

- Privacy risks include data breaches affecting sensitive information, over-personalization errors, unexpected future data usage changes, and unforeseen emergent problems

- Personal Intelligence is currently limited to US-based AI Pro and AI Ultra subscribers but will likely expand to free users and enterprise accounts within 2-5 years based on historical patterns

- The feature represents a broader industry trend toward AI with personal data access, with Microsoft, Apple, Meta, and others developing similar capabilities

- Users can selectively choose which services to connect and should audit their Google account data before enabling Personal Intelligence
