Introduction: The Rise of AI-Powered Personal Meme Generation

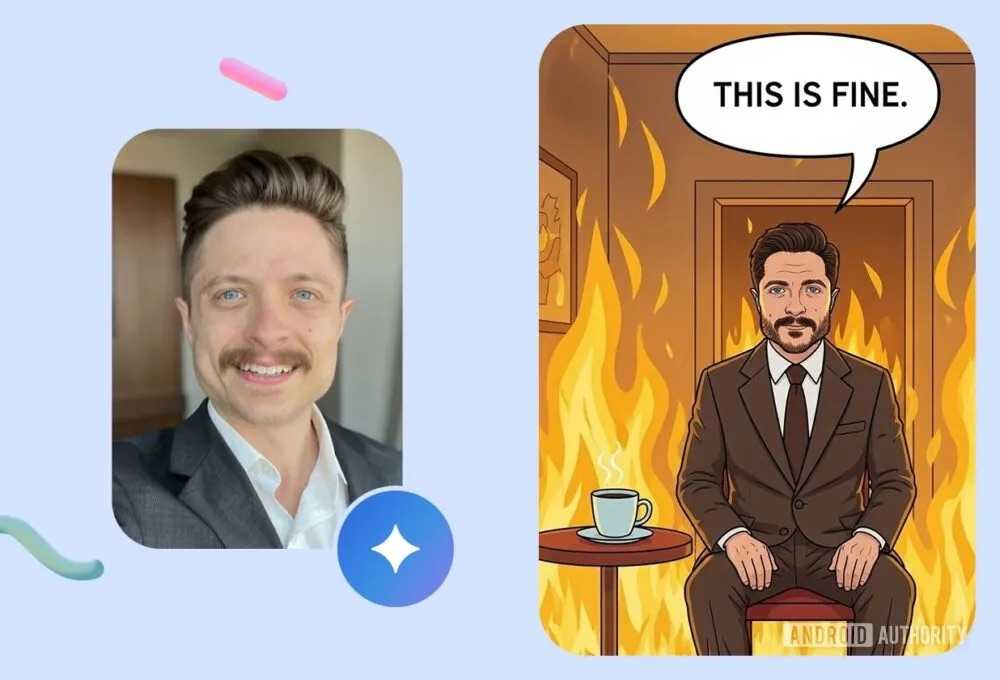

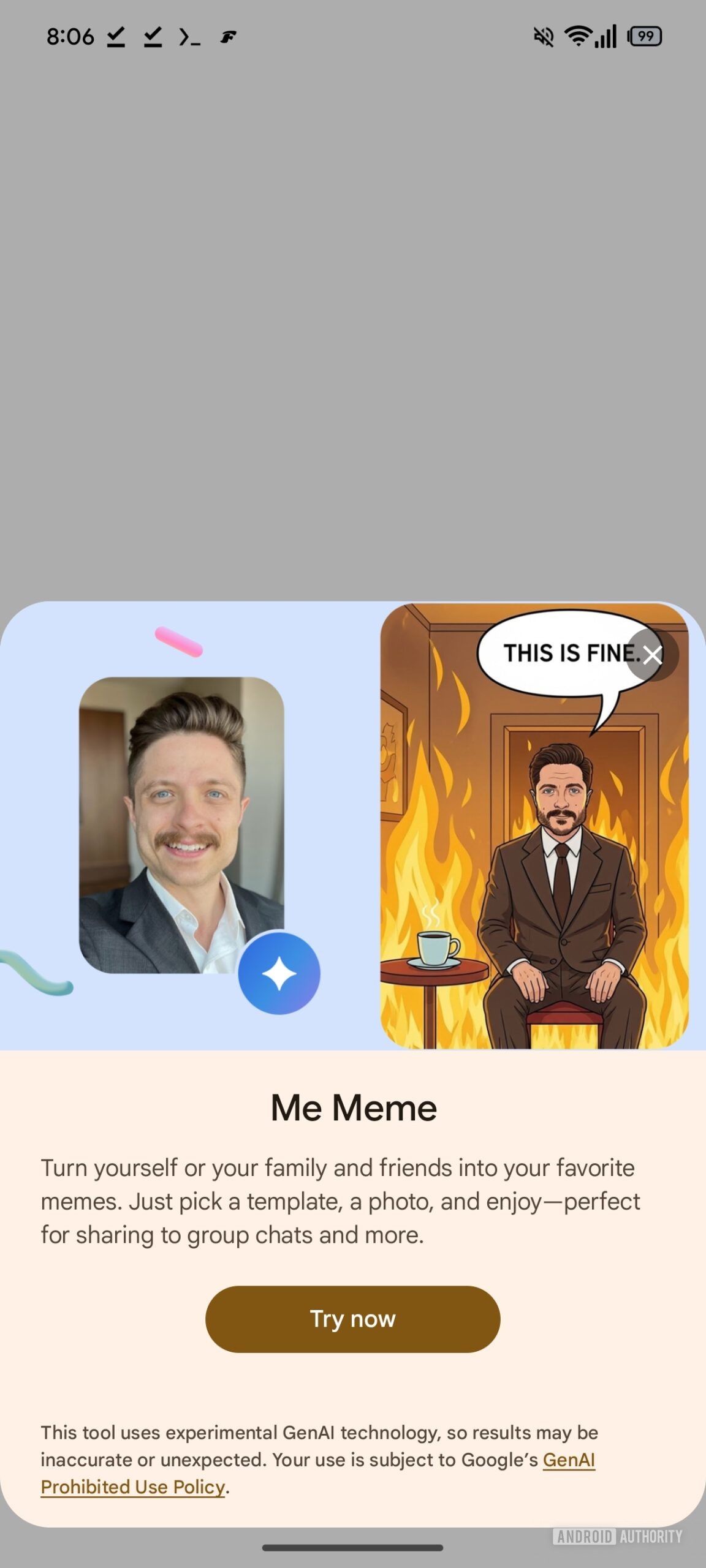

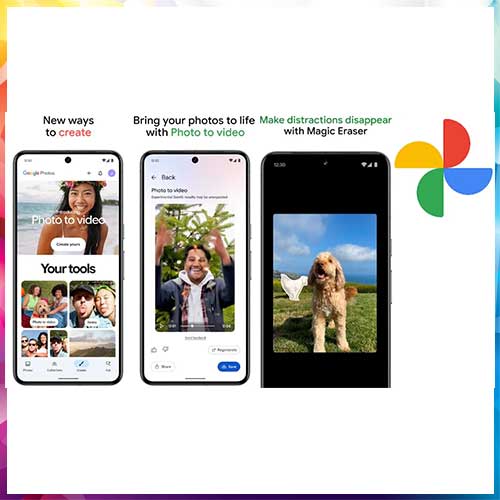

In January 2026, Google introduced one of the most culturally relevant features to hit its Photos application in years: the ability to insert yourself into meme templates using artificial intelligence. The Me Meme feature, powered by Google's Gemini AI technology and the company's Nano Banana image generation model, represents a significant shift in how consumer applications approach personalized content creation. This isn't merely a novelty—it's a strategic move by Google to keep users engaged with its ecosystem by leveraging advances in generative AI to create shareable, engaging content.

The feature arrived after months of development, with keen-eyed tech observers spotting it in beta testing as far back as October 2025. What makes this feature particularly interesting isn't just its entertainment value, but what it reveals about the direction of consumer technology. As AI capabilities become more sophisticated and accessible, companies are racing to integrate these tools into everyday applications where users spend the most time. Google Photos, already one of the most widely used photo management applications globally with over 2 billion active users, became the logical platform for this innovation.

The Me Meme feature addresses a fundamental human desire: to see ourselves represented in content. Social media platforms have shown time and again that users engage far more with content featuring themselves or their friends. OpenAI's success with Sora, its AI video generation tool that specifically allows users to include themselves in generated videos, demonstrated that personalization drives adoption and engagement. Google's approach mirrors this insight, transforming passive photo management into active content creation.

For users, this feature offers genuine utility beyond mere entertainment. The ability to quickly generate meme content without manual editing or design skills democratizes content creation. For teams and marketers, it opens new possibilities for internal communications and social media content. For developers and entrepreneurs, it serves as a case study in how to integrate cutting-edge AI capabilities into existing applications without overwhelming users with complexity.

This comprehensive guide examines every aspect of Google's Me Meme feature, including its technical implementation, practical use cases, limitations, and the broader landscape of AI-powered content generation tools. Whether you're a casual user curious about the feature, a content creator exploring new possibilities, or a business evaluating similar tools for your organization, this article provides the depth you need to make informed decisions about AI-assisted content creation.

Understanding Google Photos Me Meme: How It Works

The Technology Behind Personalized Meme Generation

The Me Meme feature represents a convergence of several advanced technologies working in concert. At its core lies generative AI image synthesis, which has advanced dramatically over the past two years. Google's Nano Banana model, a smaller, more efficient variant of their larger image generation systems, powers the actual image creation process. This model has been specifically optimized for speed and accuracy in recognizing and replicating human faces within creative contexts.

When you use Me Meme, you're essentially initiating a complex workflow. The process begins with computer vision technology that analyzes your uploaded photo, identifying facial features, expressions, head position, and lighting conditions. The system creates what's known as a "facial embedding"—a mathematical representation of your unique facial characteristics. Simultaneously, the template you select is analyzed to understand the composition, color palette, and spatial layout where your face should be inserted.
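To make the idea of a facial embedding more concrete, here is a minimal sketch of how two embeddings might be compared. Google has not published how Me Meme represents faces, so the four-dimensional vectors, their values, and the use of cosine similarity are all illustrative assumptions rather than a description of the actual system.

```python
import math


def cosine_similarity(a, b):
    """Return the cosine of the angle between two equal-length vectors."""
    if len(a) != len(b):
        raise ValueError("embeddings must have the same length")
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0 or norm_b == 0:
        raise ValueError("embeddings must be non-zero")
    return dot / (norm_a * norm_b)


# Hypothetical toy embeddings; production face models typically use
# vectors with hundreds of dimensions.
selfie = [0.9, 0.1, 0.3, 0.5]
same_person = [0.8, 0.2, 0.3, 0.6]
other_person = [0.1, 0.9, 0.7, 0.0]

# Embeddings of the same face should score closer to 1.0 than
# embeddings of different faces.
print(cosine_similarity(selfie, same_person))
print(cosine_similarity(selfie, other_person))
```

The point of the sketch is only that a face becomes a list of numbers, and that "looks like you" can then be expressed as a distance or similarity between two such lists.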

The Nano Banana model then performs what technicians call "guided image generation." Rather than creating an image from scratch, it's working from constraints. It understands the template structure, the position where faces should appear, and the overall aesthetic requirements. It then generates a version of your face as it would appear in that template's context, accounting for lighting, angle, and artistic style. This is significantly more complex than simple face-swapping technology, which can look artificial or uncanny.

Google emphasizes that the feature is experimental, noting that "generated images may not perfectly match the original photo." This transparency is important—AI image generation, especially when dealing with faces, carries inherent imprecision. The model makes educated guesses about how your face should look from different angles or in different lighting conditions. Sometimes these guesses are excellent; sometimes they're obviously artificial. This variance is why Google recommends uploading "well-lit, focused, and front-facing photos" for optimal results. The more information the system has about your actual facial appearance, the better it can extrapolate.

The User Interface and Workflow

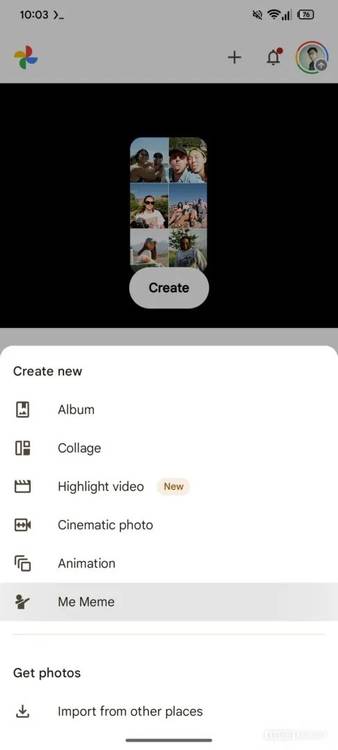

Accessing the Me Meme feature is deliberately straightforward, reflecting Google's design philosophy of hiding complexity behind simple interactions. Within the Google Photos app, you navigate to the "Create" tab, a section that already houses various editing and creation tools. The Me Meme option appears alongside other generative features, making it easily discoverable without cluttering the interface.

The workflow itself follows an intuitive pattern. First, you either select from Google's growing library of meme templates or upload your own template image. Google is continuously adding templates, drawing from popular meme formats and cultural moments. This creates an evolving library that stays relevant as new memes emerge in internet culture. The ability to use custom templates significantly expands the feature's utility—you could use your company logo as a base, a product image, or any visual composition you imagine.

After selecting or uploading a template, the next step is uploading the photo of yourself. This is where the AI begins its analysis. You tap "Add Photo" and select an image from your gallery, take a new photo, or upload from another source. The system analyzes this image in real time, extracting the facial information needed for synthesis. You then tap "Generate," and the AI processes your request. Processing time varies but typically takes 5-15 seconds, depending on server load and image complexity.

Once generated, you have several options. You can save the image directly to your Google Photos library, integrating it with the rest of your digital archive. You can share it directly through Google Photos' sharing features or export it to other platforms. If the first generation isn't satisfactory, you can tap "Regenerate" to have the AI reimagine the image. Each regeneration produces slightly different results, allowing you to experiment until you achieve an output you like.

The Role of Nano Banana and Gemini Integration

Google's Nano Banana represents an important strategic decision in how the company approaches AI deployment. Rather than using its largest, most powerful image generation models (which require significant computational resources), Google chose a more efficient variant. This decision has profound implications for accessibility and cost-effectiveness. Nano Banana can run on mobile devices and standard servers with reasonable latency, meaning users experience minimal wait times and the feature can scale to billions of potential users without requiring a proportional increase in computing infrastructure.

This is integrated within Google's broader Gemini AI ecosystem, which the company has been positioning as its foundational AI platform across products. By routing the Me Meme feature through the same systems that power other Gemini features—like Google's generative AI editing capabilities in Photos—Google achieves efficiency in infrastructure, model updates, and user experience consistency. When Google improves Gemini's understanding of image composition or face generation, those improvements automatically benefit Me Meme users.

The integration also allows Google to collect valuable training data. Each user interaction with Me Meme generates signals that help Google understand how well the model performs in real-world scenarios. This data, provided users consent to analytics, helps Google continuously improve the underlying models. This is a common practice in consumer AI applications, where scale and real-world usage provide invaluable feedback for model refinement.

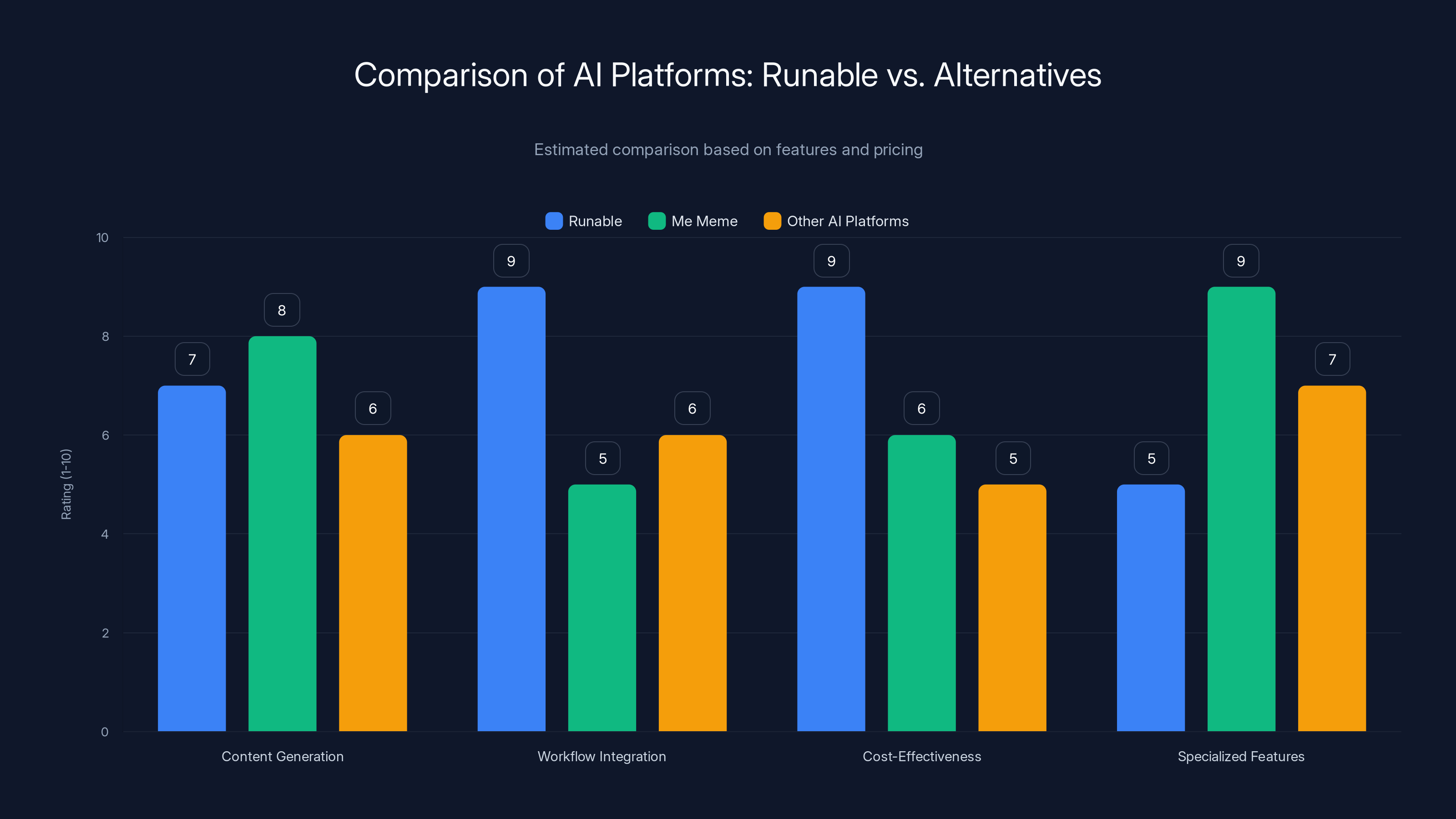

Runable excels in workflow integration and cost-effectiveness, making it ideal for automated document generation. Me Meme offers strong specialized features for personalized content. Estimated data.

Features and Capabilities: What Me Meme Can Do

Template Library and Customization Options

Google's Me Meme feature comes with an expanding template library that covers both evergreen meme formats and contemporary viral memes. Early templates included classic formats like the "Surprised Pikachu," "Drake approval/disapproval," and various text-overlay templates. However, Google's strategy involves continuous updates to keep the library culturally relevant. The company monitors trending memes and adds new templates regularly, ensuring the feature remains engaging and novel.

The diversity of templates available serves different purposes and audiences. Some templates are purely entertainment-focused, designed for maximum humor and shareability. Others have practical applications—consider a template that places your face in a professional context, which could be repurposed for team communications, company announcements, or internal memos that need a human touch. Some templates are minimalist, providing maximum space for your inserted face, while others are complex, integrating your face into intricate, multi-figure compositions.

Beyond using Google's pre-made templates, the custom upload functionality dramatically expands possibilities. You could upload any image you want to use as a base: your company's product photo, a sports team logo, a landscape, or even an abstract artwork. The Me Meme feature would attempt to generate your face in that context. This opens use cases far beyond entertainment. Marketing teams could use it to personalize promotional content. Educational institutions could incorporate it into learning materials. Small businesses could use it for customer engagement initiatives. The flexibility of custom templates transforms the feature from a consumer novelty into a potential productivity tool.

Integration with Google Photos Ecosystem

One of Me Meme's greatest strengths is its seamless integration with everything else in Google Photos. The generated images automatically become part of your photo library, subject to the same organization, search, and sharing capabilities as any other photo. You can use Google Photos' search feature to find generated memes by content or date. You can create albums specifically for meme creations. You can use Google's sharing tools to create shared folders with friends or colleagues containing meme creations.

This integration extends to Google's other products. Generated memes can be easily shared through Google's suite of applications—Google Chat for team communications, Google Drive for file storage and collaboration, or Gmail for email-based sharing. This interconnectedness means Me Meme isn't an isolated tool but part of a broader ecosystem designed for content creation and sharing.

The storage integration is particularly valuable. Generated images take up storage space just like any other photo, but they're subject to Google's generous storage allocations. If you're using Google One storage, your Me Meme creations count toward your allotted space. This encourages users to remain engaged with Google's ecosystem and services, as they're incentivized to maintain their Google One subscriptions to preserve their generated content.

Quality Control and Regeneration Mechanics

Given that AI image generation isn't deterministic—meaning the same input doesn't always produce identical outputs—Google built in the ability to regenerate images. Each time you request regeneration, the underlying model produces a slightly different interpretation of how your face should appear in the template. This randomness, while potentially frustrating, actually serves an important purpose: it allows you to experiment and find the result that best matches your preferences.

Regeneration relies on sampling variance. The underlying image generation model operates probabilistically, making choices about pixels and features based on learned patterns, so each sample drawn from the model is a different valid output. In practice, two or three regenerations might yield noticeably different results: one might emphasize certain facial features more prominently, another might adjust the lighting, and another might interpret the template composition differently.
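The effect of probabilistic sampling can be illustrated with a toy example. The sketch below is not how Nano Banana works internally; the list of "interpretations", their weights, and the function names are hypothetical stand-ins chosen only to show that the same input with a different random seed can yield a different output, while the same seed reproduces the same result.

```python
import random

# Hypothetical outcomes and probabilities, for illustration only.
INTERPRETATIONS = ["stronger facial features", "softer lighting", "wider framing"]
WEIGHTS = [0.5, 0.3, 0.2]


def generate(seed):
    """Draw one interpretation from the distribution using the given seed."""
    rng = random.Random(seed)
    return rng.choices(INTERPRETATIONS, weights=WEIGHTS, k=1)[0]


def regenerate(attempts, base_seed=0):
    """Simulate pressing 'Regenerate' several times, one new seed per attempt."""
    return [generate(base_seed + i) for i in range(attempts)]


print(regenerate(3))
```

A real image model samples far richer structure than a single label, but the principle is the same: each regeneration is another draw from the same learned distribution.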

Google's design choice to allow unlimited regeneration (within practical limits) is important. It gives users agency over the output quality and reduces frustration when the first attempt doesn't meet expectations. This is a critical UX decision that acknowledges the current limitations of generative AI while providing users with the tools to work around them.

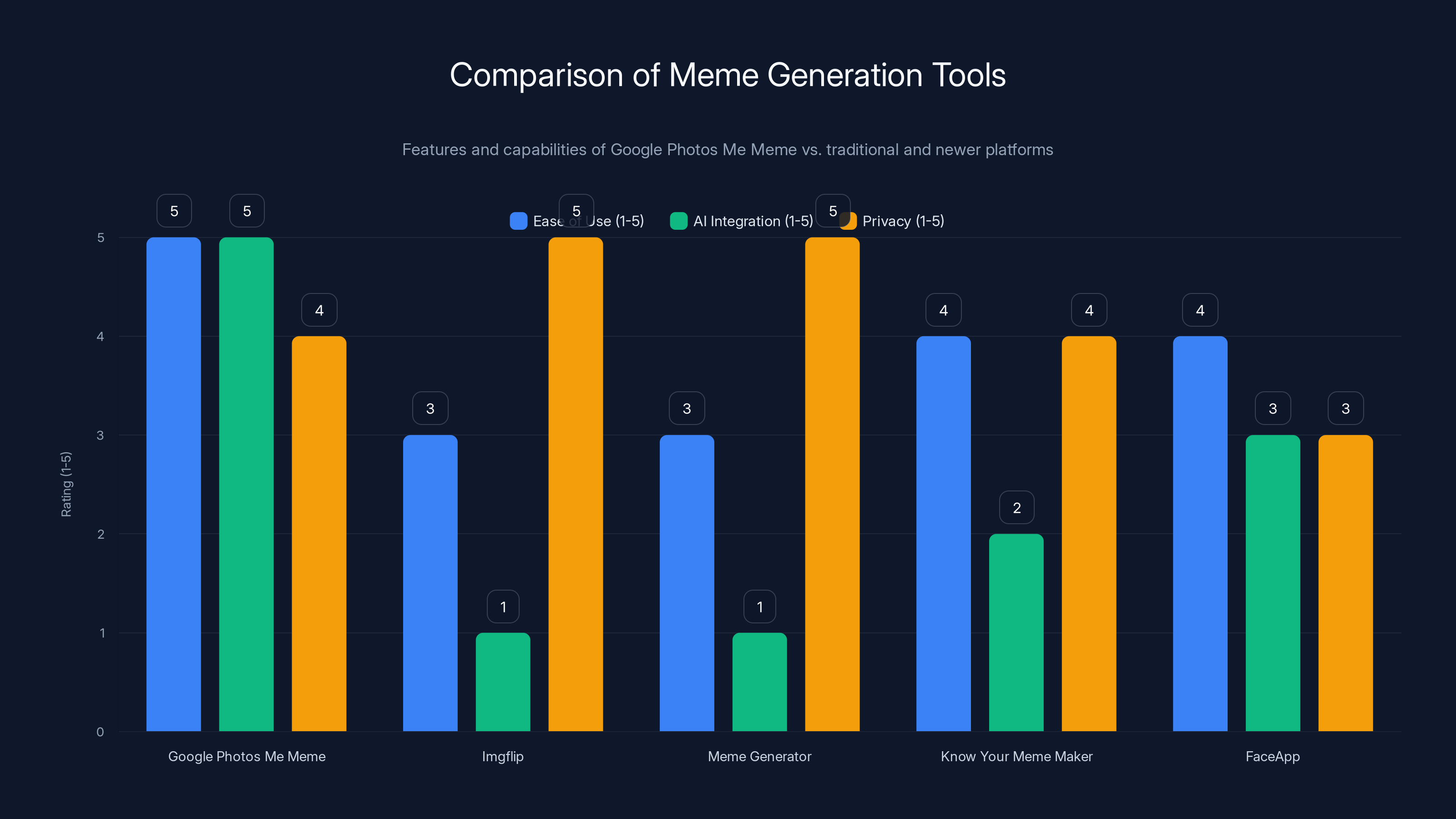

Google Photos Me Meme excels in ease of use and AI integration compared to traditional meme generators, while maintaining good privacy standards. Estimated data based on feature descriptions.

Best Practices for Optimal Me Meme Results

Photo Selection and Preparation

While Google's system is remarkably capable, the quality of input dramatically affects output quality. The company specifically recommends uploading "well-lit, focused, and front-facing photos." This isn't arbitrary guidance—these factors directly impact how well the facial recognition and generation systems function. Well-lit photos provide the AI with clear information about your facial features, skin tone, and expression. Harsh shadows, backlighting, or low-light conditions force the model to make more assumptions, increasing the probability of artifacts or uncanny results.

Focused photos serve a similar function. If your face is slightly out of focus in the source image, the AI must interpolate missing details. This can result in softness or inaccuracy in the generated version. Front-facing photos are optimal because they provide maximum facial information. Angled or profile photos work, but they provide less data about the full frontal appearance of your face, making it harder for the model to generate convincing full-frontal representations in templates that require them.
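If you want to screen source photos before uploading, a rough check for lighting and focus is easy to sketch. The example below assumes the photo has already been converted to a grayscale grid of 0-255 values, and the thresholds are arbitrary illustrative defaults, not values published by Google. Sharpness is estimated with the variance of a simple 4-neighbour Laplacian, a common heuristic in which low variance suggests blur.

```python
from statistics import mean, pvariance


def brightness(gray):
    """Average pixel value of a grayscale image given as a list of rows."""
    return mean(p for row in gray for p in row)


def sharpness(gray):
    """Variance of a 4-neighbour Laplacian over interior pixels."""
    height, width = len(gray), len(gray[0])
    if height < 3 or width < 3:
        raise ValueError("image must be at least 3x3 pixels")
    responses = []
    for y in range(1, height - 1):
        for x in range(1, width - 1):
            laplacian = (
                gray[y - 1][x] + gray[y + 1][x]
                + gray[y][x - 1] + gray[y][x + 1]
                - 4 * gray[y][x]
            )
            responses.append(laplacian)
    return pvariance(responses)


def looks_usable(gray, min_brightness=60, min_sharpness=100):
    """Hypothetical screening rule: bright enough and not too blurry."""
    return brightness(gray) >= min_brightness and sharpness(gray) >= min_sharpness


# A featureless grey image has no edges, so it fails the sharpness check.
flat = [[128] * 5 for _ in range(5)]
print(looks_usable(flat))
```

In practice you would tune both thresholds against photos you already know produce good or poor results.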

A practical approach involves preparing a specific photo for use with Me Meme. Take a selfie or have someone take a photo of you in good lighting, with a neutral or slightly smiling expression, and facing directly toward the camera. This becomes your "Me Meme source photo," usable across multiple template selections. You could even take multiple source photos representing different expressions or moods, using the most appropriate one depending on the template's vibe.

Many users find that wearing solid-colored clothing in their source photo works better than patterns or busy designs. The reason is that the AI's focus should be on your face, not your clothing. Solid colors provide context about your shoulders and upper body without introducing complex visual elements that might distract the model.

Template Selection and Composition

Not all templates are created equal, and selecting the right template significantly impacts results. Simple templates that prominently feature a single face generally produce better results than complex compositions with multiple figures or intricate backgrounds. Templates where the face area is well-defined and clearly a focal point tend to yield more convincing results than templates where faces are small or obscured.

Consider your intended use when selecting templates. Entertainment-focused templates should match your sense of humor and what would resonate with your audience. Professional-use templates should fit your industry context. A tech company executive might find value in professional templates, while a creative agency might explore more experimental or artistic templates.

Template scale matters too. If the template's face area is very large, the generated image must maintain high detail and accuracy across a bigger area, which is more challenging. Medium-sized face areas often produce the best results. Very small face areas might be fine for certain use cases where precision is less critical. Understanding these tradeoffs helps you select templates that align with your expectations and use case.
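One way to reason about template scale is to compute what fraction of the template the face region occupies. The sketch below assumes you know the template dimensions and a rough bounding box for the face area; the cut-offs for "small" and "large" are hypothetical and only illustrate the tradeoff described above.

```python
def face_area_ratio(template_size, face_box):
    """Fraction of the template covered by the face bounding box.

    template_size is (width, height); face_box is (x, y, width, height).
    """
    template_width, template_height = template_size
    _, _, face_width, face_height = face_box
    if template_width <= 0 or template_height <= 0:
        raise ValueError("template dimensions must be positive")
    return (face_width * face_height) / (template_width * template_height)


def scale_category(ratio, small=0.05, large=0.40):
    """Classify a face area ratio using hypothetical thresholds."""
    if ratio < small:
        return "small"
    if ratio > large:
        return "large"
    return "medium"


ratio = face_area_ratio((1000, 1000), (100, 100, 400, 400))
print(ratio, scale_category(ratio))
```

A template that lands in the "medium" band under whatever thresholds you settle on is a reasonable first choice when you care about a convincing result.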

Optimization for Different Use Cases

For social media sharing, you might prioritize entertainment value and template virality. Templates that are already popular memes tend to work well because audiences recognize them and understand the humor format. For professional uses, you might prioritize templates that look polished and contextualize your face in businesslike scenarios. For personal/family use, you might focus on templates that showcase relationships or inside jokes.

If you're planning to use generated memes in marketing or professional contexts, test the feature with multiple source photos and templates before committing to widespread use. Generate several variants, evaluate them critically, and understand how your target audience might perceive them. What's hilarious to a Gen Z audience might land differently with older demographics. What works for internal team communications might not be appropriate for external-facing marketing.

The regeneration feature becomes particularly valuable in optimization scenarios. If a template isn't quite working, regenerate several times before giving up. Sometimes the third or fourth attempt produces significantly better results than the first. This iterative approach, while requiring some patience, often yields results you'll be happy with.

Limitations and Challenges of AI-Generated Memes

Quality Variability and Accuracy Issues

Google's acknowledgment that generated images "may not perfectly match the original photo" is crucial context. This isn't false modesty—it's an honest assessment of current technology limitations. Despite rapid advances in generative AI, several challenges persist. Facial feature accuracy remains a significant limitation. The generated face might not perfectly capture subtle aspects of your actual appearance—the exact shade of your eyes, the specific shape of your nose, distinctive marks or scars, or unique facial proportions.

One common issue is asymmetry. Human faces are naturally somewhat asymmetrical, and AI models often struggle to replicate this correctly. Generated faces sometimes appear too symmetrical, or asymmetrical in the wrong places, creating an uncanny valley effect. Another challenge is expression fidelity. If your source photo shows a specific expression, the generated version might not maintain that exact expression across different template contexts, resulting in expressions that seem slightly off or poorly matched to the template's intended emotion.

Lighting and shadow consistency presents another hurdle. Meme templates have their own lighting conditions and shadow patterns. The generated image must integrate your face with these existing lighting conditions convincingly. Sometimes the AI succeeds brilliantly, and you can't tell the difference between the original and generated elements. Other times, the lighting on the generated face doesn't quite match the template's lighting, creating a visibly artificial seam.

Age and temporal representation add another layer of complexity. If your source photo is several years old, the model works from your appearance at that time. If you've aged, changed your hairstyle, or altered your appearance significantly since then, the generated result will look like an outdated version of yourself. The feature doesn't account for changes to your appearance over time; it treats your source photo as current and authoritative.

Technical Limitations and Processing Constraints

While Nano Banana is optimized for efficiency, it's not unlimited in capability. Complex template compositions can challenge the system. Templates where multiple faces should appear, faces at extreme angles, or faces integrated into highly stylized or artistic contexts sometimes produce weaker results. The model performs best with templates where the face is a clear focal point and the surrounding context is relatively straightforward.

Processing time and resource constraints affect availability. Processing typically takes 5-15 seconds, but during peak usage periods you might wait longer. This is a scalability challenge: Google must balance the computational cost of serving Me Meme requests to billions of potential users against the desire to provide snappy, responsive interactions.

Resolution and size limitations also apply. Generated images are optimized for mobile and social media sharing, not billboard-sized prints. If you need extremely high-resolution outputs for professional printing or large-scale use, Me Meme might not be the optimal solution. The generation process creates images suitable for digital sharing and consumption, not archival-quality prints.

Ethical and Privacy Considerations

Using your face in generated content raises important privacy and security questions. When you upload a photo to Me Meme, Google analyzes and potentially stores facial information. Even with privacy protections and terms of service restrictions, users should be aware of what data they're sharing. Google's privacy policy governs how this data is used, but the fundamental dynamic remains—you're providing facial data to a large technology company.

There are also misuse risks worth considering. Deepfake technology is increasingly sophisticated, and while Me Meme is designed for entertainment, the underlying technology could theoretically be misused by bad actors to create convincing false representations of people. Google has implemented safeguards, but users should be aware of these risks when sharing generated memes publicly. Consider whether you're comfortable with versions of your face existing in various contexts across the internet.

Consent and impersonation issues can arise if someone generates memes using your photo without permission. While Google's terms of service restrict this, enforcement is difficult. Before widely sharing generated memes featuring yourself, consider the implications of AI-manipulated versions of your face existing in shared spaces.

The feature also raises representational questions. Generated images might perpetuate stereotypes or produce results that misrepresent your actual appearance or identity. Testing with diverse users has shown that generative AI sometimes performs less accurately on people with darker skin tones or non-Western facial features. If you're from an underrepresented demographic in AI training data, generated results might be less accurate or might incorporate subtle biases.

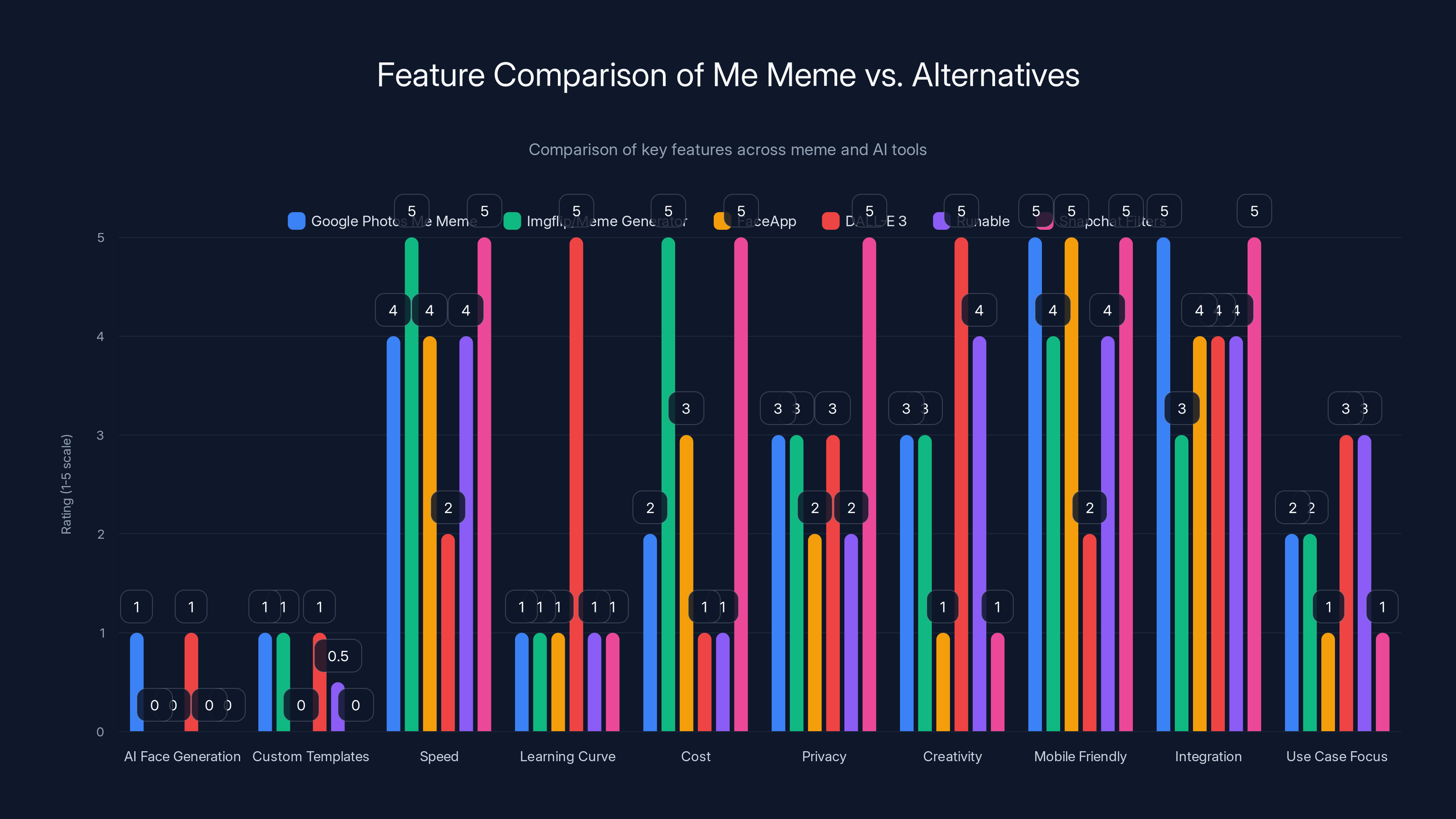

This chart compares the features of Me Meme and its alternatives across various categories. Google Photos Me Meme excels in AI face generation and integration, while DALL-E 3 leads in creativity. Estimated data based on feature descriptions.

Google Photos Me Meme vs. Competing Solutions

Dedicated Meme Generation Platforms

Before Google introduced Me Meme, several dedicated platforms offered meme creation capabilities. Tools like Imgflip and Meme Generator have existed for years, allowing users to create memes by adding text overlays to template images. These platforms are purely text-based and don't use AI to insert actual images or faces into templates. You manually select a template and type text—there's no facial generation involved.

The advantage of these traditional platforms is their simplicity and ubiquity. They've been around long enough that they're culturally established, with massive collections of templates organized and indexed. They don't require uploading personal photos, providing privacy advantages. However, they're entirely manual—creating a meme with your face requires finding a template, uploading your image as a separate element, and manually compositing it using image editing software. It's significantly more labor-intensive than Me Meme.

Newer platforms like Know Your Meme Maker have begun integrating face detection and basic compositing, but none have the AI generation capabilities that Me Meme provides. Me Meme's AI-powered synthesis represents a generational leap in automation and quality: traditional meme generators require manual composition, while Me Meme requires only the input photos and one tap.

Face Swap and Deepfake Applications

Another category of competitors is face-swap applications. Tools like FaceApp, Face Swap Live, and mobile apps using similar technology allow real-time face swapping between images. These applications use deep learning models trained on massive face datasets to swap faces between two images. The primary use case is replacing one person's face with another's in photos or videos.

Face swap technology differs fundamentally from Me Meme. Face swap typically works by identifying a face in a template image and replacing it entirely with a face from another image. The result can be realistic if the lighting and angles match, but it's essentially face replacement, not generation. Me Meme doesn't replace—it generates your face as it would appear in the template context based on your facial characteristics.

The advantage of face swap apps is their realism when successful; they're often more photorealistic than generated faces. The disadvantage is that they need a template that already contains a face to replace, which limits them to swapping existing faces rather than imagining new contexts. Me Meme's generative approach is more flexible and works with templates that don't have existing faces to replace.

AI Art Generation and Creative Tools

Midjourney, DALL-E, and Stable Diffusion represent the broader category of generative AI art tools. These platforms allow you to generate any image imaginable from text descriptions. You could type "me as a Renaissance painting" or "my face as a meme character" and receive generated images.

The advantage of these tools is unlimited creative potential. You can describe almost anything and attempt to generate it. The disadvantage is complexity and cost. You need to write detailed prompts to get good results, most platforms charge per generation, and it's slower than specialized tools. You also need to explicitly tell the system to include your face, which requires providing a photo and writing effective prompts. For meme creation specifically, these tools are overkill—they're powerful but not optimized for the meme use case.

Me Meme, by contrast, is purpose-built. It's optimized specifically for the meme use case, making it faster and easier for that specific application. If your goal is meme creation, Me Meme is more efficient. If your goal is general creative image generation, broader AI tools might be better.

Content Creation and Productivity Platforms

For teams seeking AI-powered automation and content generation beyond photos, platforms like Runable address a related need. Runable provides AI agents for automated content generation, including presentations, documents, and reports, plus workflow automation tools. At $9/month, Runable's pricing is comparable to or lower than many premium photo editing tools, making it accessible for small teams and startups evaluating AI content generation workflows.

While Runable focuses on document and presentation generation rather than image meme creation specifically, it addresses the same underlying need—reducing manual content creation overhead through AI automation. Teams might use both tools complementarily: Runable for automated document and presentation creation, Me Meme for personalized image content. Some organizations are exploring integrated approaches where AI-generated content includes personalized elements created through tools like Me Meme.

Social Media and Sharing Platforms

Facebook, TikTok, Instagram, and Snapchat all have meme and filter features built in. Snapchat's AR filters can overlay virtual elements onto your face in real time. Instagram's face filters provide similar capabilities. TikTok's effects editor allows significant face manipulation. However, these are real-time filters applied to live video or camera input, not generative AI creating synthetic faces in static images.

The key difference is that these social platforms' tools are primarily real-time effects designed for stories, live streaming, and short-form video. Me Meme is designed for static image generation that you can save, edit further, and share across platforms. There's overlap in functionality, but Me Meme is more focused and deeper for the specific use case of creating and saving meme images.

Comparison Table: Me Meme vs. Alternatives

| Feature | Google Photos Me Meme | Imgflip/Meme Generator | FaceApp | DALL-E 3 | Runable | Snapchat Filters |
|---|---|---|---|---|---|---|
| AI Face Generation | Yes | No | No | Yes | No | No |
| Custom Templates | Yes | Yes | No | Yes | Limited | No |
| Speed | Fast (5-15s) | Instant | Fast | Variable (30s-2m) | Fast | Real-time |
| Learning Curve | Minimal | Minimal | Minimal | Steep | Minimal | Minimal |
| Cost | Free (Google One) | Free | Free/Premium | Subscription | $9/month | Free |
| Privacy | Google handles data | Standard | Moderate risk | Standard | Data handling varies | Real-time, local |
| Creativity | Medium | Medium | Low | Very High | High | Low |
| Mobile Friendly | Excellent | Good | Excellent | Limited | Good | Excellent |
| Integration | Google Photos | Web-based | App | Web/API | Cloud-based | Social media |
| Use Case Focus | Entertainment memes | General memes | Face beautification | General art | Business automation | Social stories |

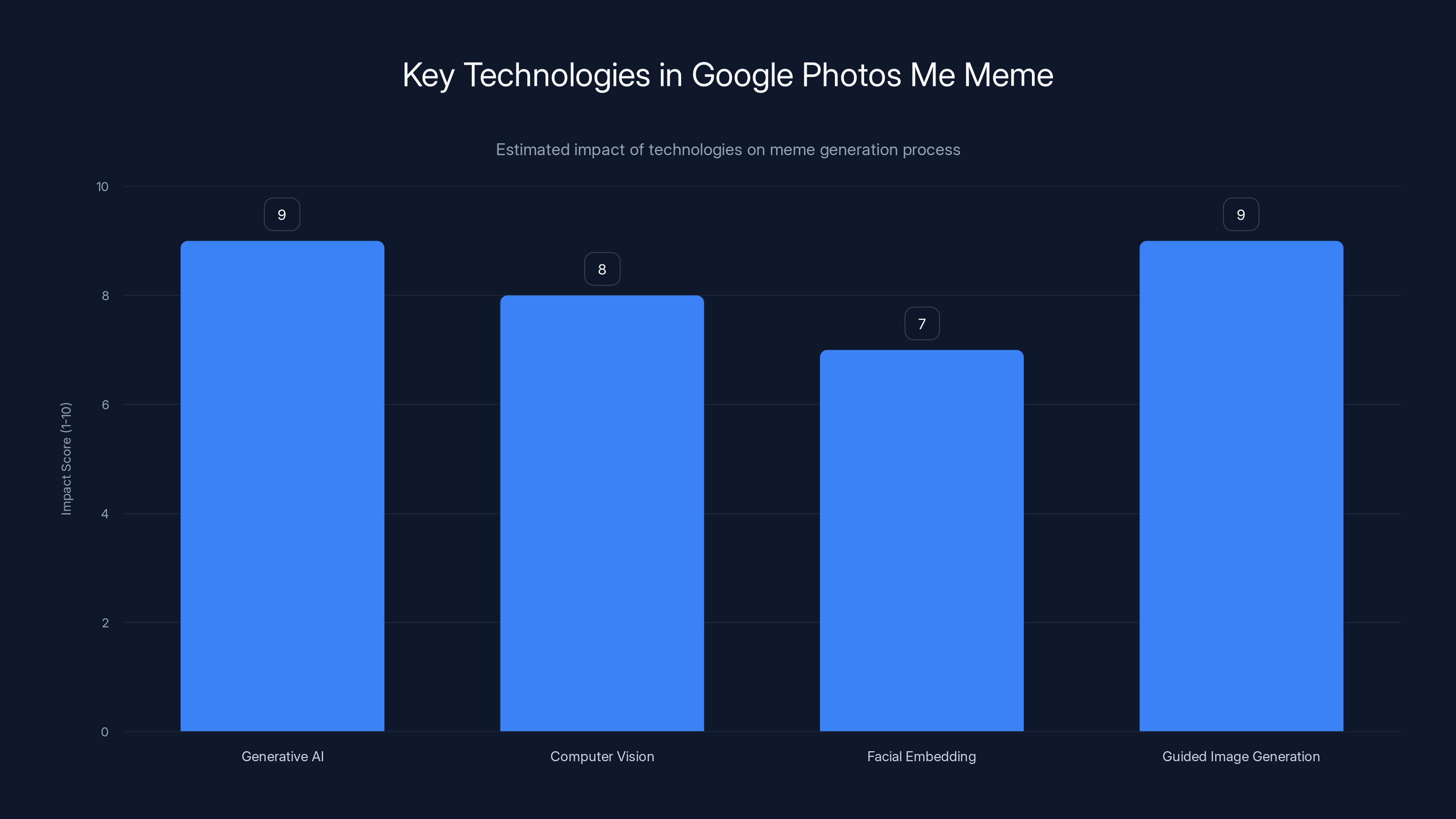

Generative AI and Guided Image Generation have the highest impact on the Google Photos Me Meme feature, highlighting their critical roles in creating personalized memes. Estimated data.

Use Cases and Applications

Entertainment and Social Media

The most obvious use case for Me Meme is entertainment and social media content creation. Users can generate memes featuring themselves, share them with friends, and participate in internet culture in a more personalized way. This addresses a real desire—people naturally gravitate toward content featuring themselves or their friends. By making meme creation accessible without design skills, Me Meme lowers the barrier to participation.

Creators on platforms like Reddit, Tik Tok, Instagram, and Twitter can use Me Meme to generate unique content rapidly. Rather than spending time on image editing or commissioning custom graphics, they can generate multiple variations in minutes. The novelty factor of AI-generated memes also carries appeal—users are naturally curious about how AI interprets their appearance in various contexts, making "my AI meme" content inherently shareable.

Content trends often involve participants creating similar content in different ways. Me Meme enables this at scale. If a particular meme template starts trending, thousands of users can rapidly generate personalized versions, creating a wave of user-generated content. Platforms like TikTok would naturally surface this type of content since it drives engagement.

Professional and Business Communications

Beyond entertainment, Me Meme has legitimate professional applications. Corporate communications teams can use the feature to create personalized greeting images or announcements. Imagine a CEO generating a meme-format message to company employees, or a marketing team creating a series of customer appreciation graphics featuring actual customers. The personalization factor makes these messages more impactful and memorable than generic corporate communications.

Team building and internal culture can benefit from Me Meme. Teams can create inside jokes and shared meme content that strengthens group cohesion. Employee recognition programs might incorporate personalized meme content celebrating individual achievements. The novelty and humor element of AI-generated content can make traditionally dry internal communications more engaging.

Educational institutions could use Me Meme in creative ways. Professors might generate meme-format learning content, making educational material more memorable and engaging. Student presentations could incorporate memes featuring classmates, adding humor and personality. The feature democratizes graphic design, allowing educators without design skills to create visually engaging educational materials.

Marketing and Brand Engagement

For brands and marketers, Me Meme opens possibilities for personalized customer engagement. Customer loyalty programs could offer personalized meme creation as a value-add service. Brands could run campaigns encouraging customers to generate memes featuring themselves with brand products or logos. This user-generated content becomes authentic marketing material with inherent social sharing potential.

Influencer marketing could be enhanced through Me Meme. Brands could collaborate with influencers to create meme content featuring the influencer, their audience, or brand partnerships. The personalization factor makes this content more engaging than traditional sponsored posts. Product launches might incorporate meme campaigns where customers generate images of themselves "using" new products.

Real estate, automotive, and retail sectors could leverage Me Meme for customer engagement. Real estate agents could generate meme-format property listings. Auto dealers could create humorous content featuring customers with vehicles. Retail brands could generate social media content featuring customers with products. The key advantage is speed—personalized content that would traditionally require professional photographers or designers can be generated in seconds.

Creative Experimentation and Artistic Use

Beyond obvious commercial applications, artists and creatives might find value in Me Meme for experimental work. Visual artists could use the feature as a starting point for further digital art creation. They could generate multiple versions and then modify them in Photoshop or Procreate, using AI generation as a creative tool rather than the final product. This blurs the line between AI as a tool and AI as a creator.

Photographers and portrait artists might use Me Meme to explore how AI interprets their subjects. Understanding AI's creative choices could inform their own artistic direction. Some might deliberately use AI-generated images for their lack of human perfection or unique artifacts, viewing these as stylistic elements rather than flaws.

Underrepresented artists and creators without access to professional equipment or services benefit significantly from Me Meme. The feature democratizes content creation, allowing anyone with a smartphone to produce visually engaging content. This democratization extends creative opportunities to people who might otherwise lack resources for graphic design or photo editing.

Technical Deep Dive: How Modern AI Meme Generation Works

Computer Vision and Facial Recognition

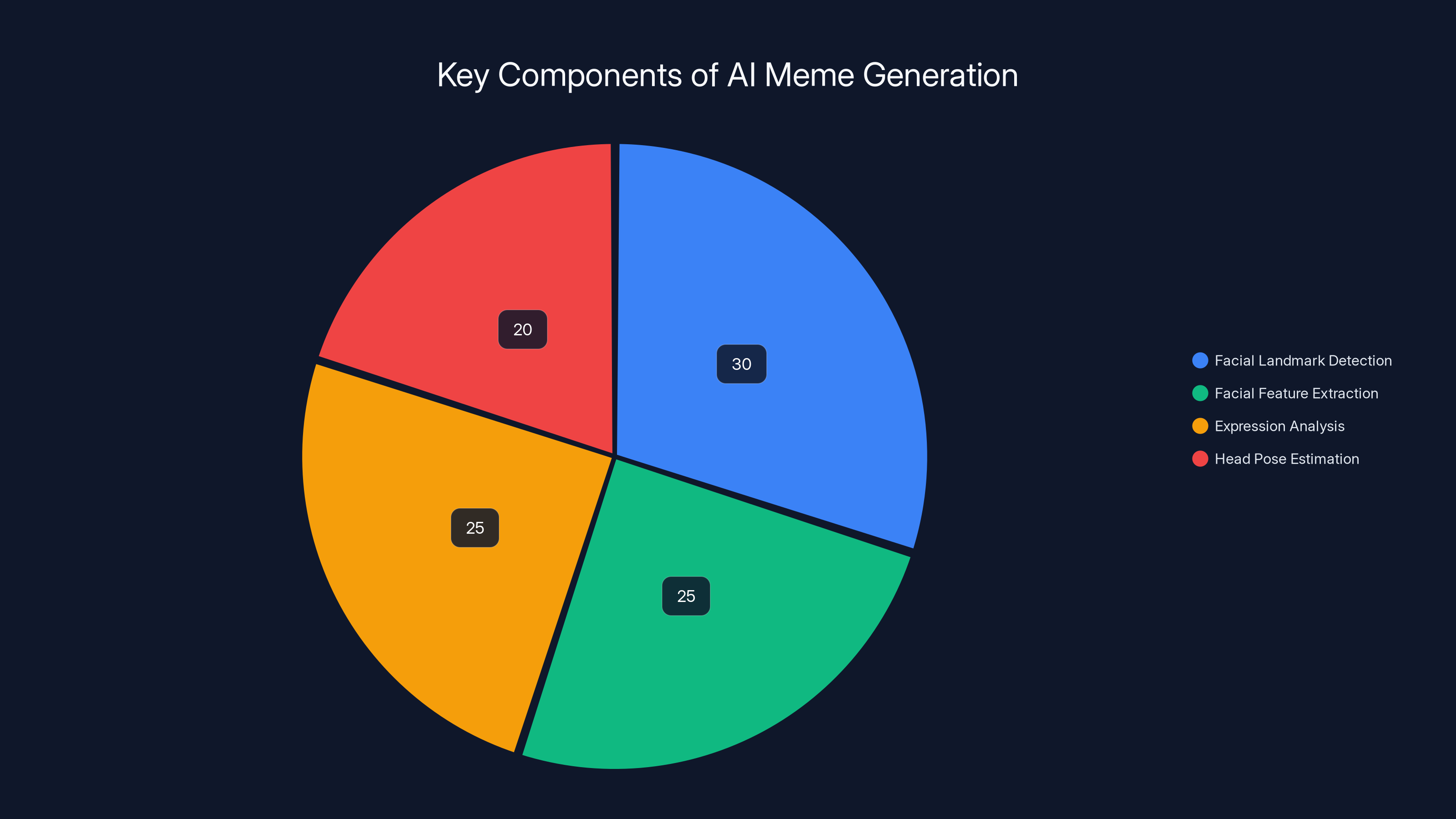

At the foundation of Me Meme is sophisticated computer vision technology. When you upload a photo, the system doesn't just see an image—it extracts detailed information about your face. Facial landmark detection identifies key points: the corners of your eyes, tip of your nose, corners of your mouth, jawline definition, and dozens of other reference points. These landmarks form a three-dimensional model of your face.

Beyond landmarks, the system performs facial feature extraction. This involves identifying and quantifying characteristics like skin tone, hair color and texture, eye color, face shape, and distinctive features (dimples, scars, unique proportions). All of this information is converted into numerical representations—vectors in high-dimensional space that a machine learning model can process and understand.

The system also performs expression analysis. If your photo shows a smile, frown, neutral expression, or surprise, the system quantifies these emotional cues. This becomes relevant when generating your face in template contexts with different emotional connotations. If the template depicts celebration, the system might adjust the generated expression to match. If the template is sad or serious, the generated face might shift accordingly.

Head pose estimation determines how your head is oriented. Are you looking straight at the camera, turned slightly, looking up or down? This spatial information is crucial because meme templates might require your face at a different angle than your source photo. The model uses this information to imagine how your face would look from different angles.

All of this information—landmarks, features, expression, head pose—gets encoded into what's called a facial embedding: a compact numerical representation capturing the essence of your face. This embedding is what the generative model actually works with, not your raw image data. This design choice provides privacy benefits; the raw pixel data isn't stored, only the abstracted representation.
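To make the idea of a facial embedding more concrete, here is a minimal Python sketch that compares embeddings using cosine similarity, a common way to measure how close two vectors are. The four-dimensional vectors and their values are invented for illustration (real embeddings typically have hundreds of dimensions), and nothing here reflects how Google actually encodes or compares faces.

```python
import math


def cosine_similarity(a, b):
    """Return the cosine of the angle between two equal-length vectors."""
    if len(a) != len(b):
        raise ValueError("embeddings must have the same length")
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0 or norm_b == 0:
        raise ValueError("embeddings must be non-zero")
    return dot / (norm_a * norm_b)


# Hypothetical embeddings, made up for this example.
selfie = [0.9, 0.1, 0.4, 0.2]
same_person = [0.8, 0.2, 0.5, 0.1]
other_person = [0.1, 0.9, 0.1, 0.7]

if __name__ == "__main__":
    print(cosine_similarity(selfie, same_person))
    print(cosine_similarity(selfie, other_person))
```

Two photos of the same person should produce embeddings that point in nearly the same direction, so their similarity is close to 1, while embeddings of different people score noticeably lower.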

Generative Modeling and Image Synthesis

Once you have a facial embedding representing you, and the system has analyzed the template image, the actual generation process begins. This involves diffusion models, a breakthrough approach in generative AI that's become dominant in image generation.

Diffusion models work through a fascinating process: they start with pure noise (random pixels) and iteratively refine it into meaningful images. The model has learned, through training on billions of images, how to take a noisy image and denoise it in ways that respect the constraints you've provided—in this case, incorporating your facial characteristics into the template composition.

The generation process follows mathematical principles described by diffusion equations. At each step, the model applies small adjustments to the image, guided by your facial embedding and the template structure. After hundreds of these iterative refinements, the noise gradually becomes a coherent, realistic image. This iterative approach is computationally expensive, which is why Me Meme's processing takes several seconds.
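The iterative refinement described above can be illustrated with a toy Python loop. This is not a real diffusion model: the trained neural network is replaced by a stand-in that already knows the target, and the "image" is just a short list of numbers. The function names, step count, and update rule are all assumptions chosen to show the shape of the process, which starts from noise and nudges the result toward a prediction a little at a time.

```python
import random


def mean_abs_error(a, b):
    """Average absolute difference between two equal-length lists."""
    return sum(abs(x - y) for x, y in zip(a, b)) / len(a)


def toy_denoise(target, steps=200, seed=0):
    """Start from Gaussian noise and refine it step by step toward `target`."""
    rng = random.Random(seed)
    # Pure noise: one random value per "pixel".
    x = [rng.gauss(0.0, 1.0) for _ in target]
    for step in range(steps):
        # A real model would predict the clean image from the noisy one and
        # the conditioning signal. This stand-in simply returns the target.
        predicted_clean = target
        # Take a small step early on and a full step on the last iteration.
        rate = 1.0 / (steps - step)
        x = [xi + rate * (pi - xi) for xi, pi in zip(x, predicted_clean)]
    return x


if __name__ == "__main__":
    target = [0.2, 0.8, 0.5, 0.1]
    result = toy_denoise(target, steps=50)
    print(mean_abs_error(result, target))
```

With this update rule the remaining error shrinks steadily at every step and is essentially zero after the final one. A real sampler follows the same loop structure, but each step requires a full forward pass through a large network, which is where the compute cost comes from.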

Conditioning is the technical term for how the model incorporates your specific facial information. The facial embedding acts as a condition—the model generates images that match the embedding while also respecting the template composition. This is more sophisticated than simple overlay or compositing; the model generates pixels holistically, considering how your face should integrate with the template's colors, lighting, and style.
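One widely used way to steer a diffusion model toward a condition is classifier-free guidance, which blends a prediction made without the condition and one made with it. Whether Nano Banana uses this technique is not something Google has stated, so treat the sketch below as an illustration of conditioning in general. The function name and the example values are hypothetical.

```python
def guided_prediction(unconditional, conditional, guidance_scale):
    """Blend two model predictions, element by element.

    guidance_scale = 0 ignores the condition, 1 uses the conditional
    prediction as-is, and values above 1 push further toward the condition.
    """
    return [
        u + guidance_scale * (c - u)
        for u, c in zip(unconditional, conditional)
    ]


if __name__ == "__main__":
    without_face = [0.0, 1.0]  # prediction that ignores the facial embedding
    with_face = [0.5, 0.5]     # prediction that takes the embedding into account
    print(guided_prediction(without_face, with_face, 2.0))
```

A higher guidance scale generally makes the output follow the condition more closely, at the cost of less variety between generations.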

Quality improvements come through architectural innovations and training data. Nano Banana likely uses a more compact architectural variant of a larger image generation model, optimized for speed and efficiency. The training data—images the model learned from—directly impacts performance. If training data included few faces from particular demographics, the model performs less accurately on those faces. This is an active area of research as companies work to ensure their generative models work fairly across diverse populations.

Server-Side Processing and Optimization

When you hit "Generate," your request travels to Google's servers, where the actual processing happens. Google likely routes your request through a load-balanced system of GPUs or TPUs (Google's custom AI chips) optimized for image generation. The system queues requests and processes them efficiently, balancing latency (how quickly you get a result) against computational cost.

Google likely employs caching strategies to improve performance. If multiple users generate their faces in the same template, the template analysis is cached—no need to re-analyze it. Commonly-used facial embeddings might be cached. Partial results might be cached. These optimizations dramatically reduce computational load and latency.
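The template-caching idea can be sketched in a few lines of Python using the standard library's `functools.lru_cache`. The `analyze_template` function, its return value, and the cache size are hypothetical stand-ins; Google's actual caching layer is not public and would almost certainly be a distributed cache, not an in-process one.

```python
from functools import lru_cache


@lru_cache(maxsize=1024)
def analyze_template(template_id):
    """Pretend to run an expensive analysis of a meme template.

    Returns a tuple of (template_id, face_box). The face box is a
    made-up placeholder for where a face would be placed.
    """
    face_box = (40, 30, 128, 128)  # hypothetical x, y, width, height
    return (template_id, face_box)


if __name__ == "__main__":
    analyze_template("template-a")  # computed: cache miss
    analyze_template("template-a")  # served from cache: cache hit
    print(analyze_template.cache_info())
```

The second request for the same template skips the analysis entirely, which is the effect the paragraph above describes: popular templates are analyzed once and reused across many users.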

Inference optimization is another critical factor. The process of using a trained model to generate new data (inference) is computationally expensive. Google likely uses several techniques to reduce this cost: model quantization (using lower-precision numbers), knowledge distillation (training smaller models to mimic larger ones), and mixed-precision computation (using appropriate precision levels for different operations). Together, these optimizations might cut inference cost and latency by 10-20x compared with an unoptimized model, making global-scale deployment feasible.
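Of the techniques listed, quantization is the easiest to show in a few lines. The Python sketch below applies simple symmetric 8-bit quantization to a list of weights: each value is stored as an integer between -127 and 127 plus one shared scale factor. This is a generic textbook scheme with made-up weights, not a description of how Google quantizes its models.

```python
def quantize_int8(weights):
    """Map floats to integers in [-127, 127] with one shared scale factor."""
    max_abs = max(abs(w) for w in weights)
    scale = max_abs / 127 if max_abs > 0 else 1.0
    quantized = [max(-127, min(127, round(w / scale))) for w in weights]
    return quantized, scale


def dequantize(quantized, scale):
    """Recover approximate float values from the quantized integers."""
    return [q * scale for q in quantized]


if __name__ == "__main__":
    weights = [0.5, -1.27, 0.02, 1.0]  # hypothetical model weights
    quantized, scale = quantize_int8(weights)
    print(quantized, scale)
    print(dequantize(quantized, scale))
```

Each weight now fits in one byte instead of four, and the rounding error per weight is at most half the scale factor. That trade of a small accuracy loss for less memory and faster arithmetic is what makes quantization attractive at scale.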

The generated image, once created, is likely quality-checked before being returned to you. Automated systems might screen for obvious artifacts, quality issues, or policy violations. Very poor-quality results might trigger automatic regeneration. Only images meeting quality thresholds are returned to users, maintaining perceived quality and user satisfaction.
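A quality gate with automatic regeneration could look roughly like the Python sketch below. Everything in it is an assumption made for illustration: the `generate` and `score` callables, the 0.7 threshold, and the three-attempt limit are hypothetical, and the stand-in functions only produce random numbers in place of real images.

```python
import random


def generate_with_quality_gate(generate, score, threshold=0.7,
                               max_attempts=3, seed=0):
    """Regenerate with a new seed until the score meets the threshold.

    Returns (best_image, best_score, attempts_used). If no attempt meets
    the threshold, the best result seen so far is returned.
    """
    if max_attempts < 1:
        raise ValueError("max_attempts must be at least 1")
    best_image, best_score = None, float("-inf")
    for attempt in range(max_attempts):
        image = generate(seed + attempt)  # a different seed gives a different sample
        quality = score(image)
        if quality > best_score:
            best_image, best_score = image, quality
        if quality >= threshold:
            break
    return best_image, best_score, attempt + 1


def fake_generate(seed):
    """Stand-in generator: the 'image' is a single random number."""
    return random.Random(seed).random()


def fake_score(image):
    """Stand-in quality check: the number itself is the score."""
    return image


if __name__ == "__main__":
    print(generate_with_quality_gate(fake_generate, fake_score))
```

Capping the number of attempts matters because each regeneration costs a full generation pass, so a service has to balance output quality against latency and compute.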

Facial landmark detection is the most significant component, contributing 30% to AI meme generation, followed by facial feature extraction and expression analysis at 25% each. Estimated data.

Privacy, Security, and Data Handling

How Google Processes Your Facial Data

When you use Me Meme, you're trusting Google with your facial data. Google's privacy policy governs this relationship, but it's worth understanding how the process works. The source photo you upload is analyzed, facial information is extracted into an embedding, and that embedding (not the original photo) is sent to the generation system. The original photo is typically deleted from Google's systems after processing unless you've enabled photo backup.

Google's privacy commitments state that generative AI features don't use your data for advertisement targeting. Your photos aren't used to train new models without explicit consent. However, the feature does generate usage signals—when you generate, what templates you use, how often you regenerate. These signals help Google understand feature usage and optimize the system. They're treated as analytics data, subject to privacy protections.

The generated images become part of your Google Photos library, subject to your cloud storage settings. If you've enabled Google One backup, they're backed up to Google's servers. If you haven't enabled backup, they're stored locally on your device. You maintain control over sharing—the images are private by default and only visible to people you explicitly grant access.

Security Considerations and Data Protection

Google employs standard security measures: encryption in transit (when data travels between your device and servers), encryption at rest (when data is stored), and access controls limiting who can see your data. The company undergoes regular security audits and penetration testing. These measures protect your data from unauthorized access.

However, security is never absolute. Sophisticated attackers might find vulnerabilities. Large organizations like Google are persistent targets for state-sponsored actors and criminal groups. If you're dealing with particularly sensitive content, you should understand these risks. Using Me Meme means accepting some level of digital risk, as with any cloud service.

The generated images themselves carry security implications. Once you share a generated meme publicly, it exists on the internet permanently. Screenshots can preserve it even if you delete it. Other users can share it. The image might be indexed by search engines. You lose control of the content once it's public. Consider this before creating memes you'd be uncomfortable with existing permanently on the internet.

Regulatory Compliance and Legal Implications

Google's Me Meme likely complies with major regulations like GDPR (in Europe), CCPA (in California), and similar privacy laws. Users in these jurisdictions have specific rights: the right to access their data, the right to deletion, and the right to portability. Google's tools generally support these rights.

Face-related features raise additional regulatory attention. Some regions have begun regulating facial recognition technology specifically. The EU has proposed restrictions on certain facial recognition uses. China has different regulations. The legal landscape is evolving, and companies deploying facial recognition must track regulatory changes to stay compliant.

The use of facial data for generating synthetic images falls into a regulatory gray area in many jurisdictions. There's no clear consensus yet on whether generating synthetic images of your face requires explicit consent or falls under fair use. Future regulations might establish requirements. For now, using Me Meme means accepting some legal uncertainty about how your facial data might be regulated in the future.

The Future of AI-Powered Meme Generation

Technological Roadmap and Expected Improvements

The field of generative AI is advancing rapidly, and Me Meme will benefit from these advances. Higher resolution generation is coming—soon, the feature will generate ultra-high-resolution images suitable for large prints and professional use. Current outputs are optimized for mobile and social media; future versions will handle various use cases.

Multi-face generation will likely arrive, allowing you to generate memes featuring multiple people with AI faces for each. This opens new possibilities for group content creation. Real-time generation might eventually become possible, allowing you to see preview results instantly before committing to generation. Video meme generation could extend the feature to video templates, generating short video clips rather than static images.

More sophisticated expression matching will improve the system's ability to map your face's emotions onto template contexts. Better pose adaptation will allow your face to appear at various angles more convincingly. Style transfer integration might allow you to choose artistic styles—oil painting, watercolor, cartoon—for the generated output.

The most ambitious future direction would be training personal models. If Me Meme allowed you to upload a dozen photos of yourself, the system could train a lightweight model specifically optimized for your face. This would dramatically improve generation quality since a personal model would understand your unique facial characteristics perfectly. Such capability would require more compute but isn't technically infeasible.

Integration with Other Google Products

Google will likely expand Me Meme beyond Photos. Google Slides integration would allow inserting generated memes directly into presentations. Gmail integration would let you generate memes in email. Google Chat integration would allow real-time meme generation during team communications. Workspace integration would extend it to enterprise settings.

Google will probably develop API access allowing third-party developers to integrate Me Meme into their applications. A fitness app could let you generate memes of yourself achieving fitness goals. A gaming platform could let you generate memes featuring your avatar. This ecosystem expansion would dramatically increase usage and engagement.

Market Expansion and Competitive Response

Me Meme's success will inevitably trigger competitive responses. Meta (Facebook/Instagram/WhatsApp) is developing its own generative AI capabilities and will likely launch competing features. Apple, Amazon, and Microsoft are all investing heavily in generative AI. Competition will drive innovation, making meme generation capabilities better and more accessible across platforms.

We'll see specialized meme generation services emerge—startups focused specifically on personalized meme creation, possibly with premium templates, advanced features, or specialized use cases. Some might focus on specific audiences (K-pop fans, sports fans, gaming communities). Others might target professional use cases. The market will likely stratify, with multiple players serving different segments.

Integration with social media platforms will become tighter. Instagram might integrate meme generation directly into Stories. TikTok might add meme templates to its effects editor. Twitter/X might integrate meme generation into the tweet composition experience. This ubiquitous availability will make personalized meme creation a standard feature, not a novelty.

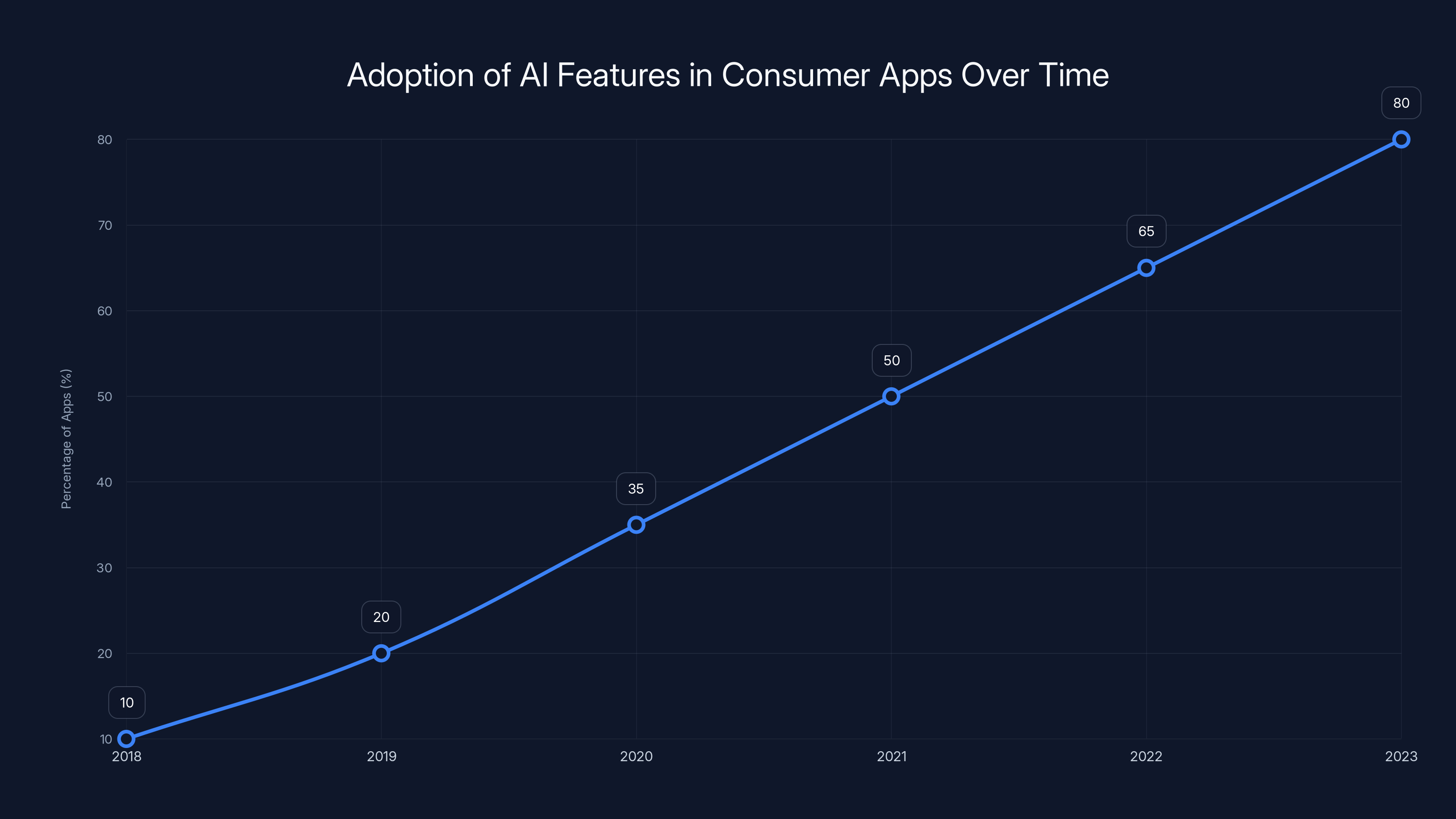

The integration of AI features in consumer applications has grown significantly from 10% in 2018 to an estimated 80% in 2023, reflecting the mainstreaming of AI technologies in everyday tools. Estimated data.

Practical Guide: Creating Your First Me Meme

Step-by-Step Instructions

Step 1: Gather Your Source Photos

Open Google Photos and select or take a high-quality photo of yourself. Remember Google's recommendations: good lighting, focused image, front-facing if possible. If you don't have a suitable photo in your library, take a new one. Use natural light if available. A window with indirect sunlight provides excellent lighting. Position yourself so your entire face is visible and clearly lit. Take several shots to have options.

Step 2: Navigate to the Create Tab

Open the Google Photos app and tap the "Create" button at the bottom of the screen (usually marked with a plus icon or labeled "Create"). You'll see a menu of creation tools. Look for the "Me Meme" option. If you don't see it, the feature might not have rolled out to your account yet. Google was initially rolling it out to US-based users, so availability varies by geography and account.

Step 3: Select a Template

You'll be presented with available templates. Scroll through the options to find one that appeals to you. Consider what vibe you want: funny, professional, artistic, or nostalgic. Read any descriptions if provided. If you have a specific meme template in mind that isn't available, note that you can select "Upload Custom Template" to use your own image.

Step 4: Upload Your Photo

Tap "Add Photo" and select the source photo you want to use. You can select from your existing Google Photos library or take a new photo. Once selected, the system analyzes your facial characteristics. This process is local on your device initially; the analysis happens before any data is sent to Google's servers.

Step 5: Initiate Generation

Tap the "Generate" button. Your browser or app will display a processing indicator. This is when your data travels to Google's servers and the actual AI generation happens. Processing typically takes 5-15 seconds depending on server load. You'll see a loading animation indicating the system is working.

Step 6: Review the Result

Once generation completes, you'll see the generated meme. Take a moment to evaluate it. Does it look convincing? Is the face recognizable as you? Does the expression match your intentions? Does the integration with the template look natural? If satisfied, proceed to Step 8. If unsatisfied, proceed to Step 7.

Step 7: Regenerate for Better Results (Optional)

If the first result wasn't satisfactory, tap the "Regenerate" button. The system will create a different version using the same inputs but different random sampling. Different generations might emphasize different facial features or interpret the template differently. Try 2-3 regenerations if the first few results are unsatisfactory. Keep the best result and discard others.

Step 8: Save or Share

Once you have a result you're happy with, save it. The image is automatically saved to your Google Photos library. You can now share it through Google Photos' sharing features, export it to other apps, or post it on social media. You can also tap "Edit" to make further adjustments using Google Photos' editing tools, for example to adjust brightness or contrast or to add text overlays.

Common Mistakes and Solutions

Mistake 1: Poor Source Photo Quality

Problem: You uploaded a blurry, poorly-lit, or angled photo, and the generated result looks bad.

Solution: Start over with a better source photo. Take a new selfie with better lighting. Use your device's best camera (usually the back camera if you can set up a mirror). Ensure your face takes up a reasonable portion of the frame, neither too close nor too far away.

Mistake 2: Unrealistic Template Expectations

Problem: You selected a complex template with multiple faces or extreme artistic styles, and the results are unconvincing.

Solution: Try simpler templates first while you're learning. Templates with a single, centered face in standard artistic styles work best. Once you understand the system's capabilities, you can experiment with more complex templates.

Mistake 3: Mismatched Emotional Context

Problem: You generated a meme where your face's expression doesn't match the template's intended emotion.

Solution: Choose templates that match your source photo's expression. If your source shows a smile, use templates with happy emotional contexts. If your source is more neutral, use neutral-context templates. Or try different source photos with different expressions to match various templates.

Mistake 4: Over-Sharing Without Consideration

Problem: You generated a meme and immediately shared it publicly without thinking about implications.

Solution: Before sharing widely, consider your audience and context. Show the meme to a trusted friend first and gauge their reaction. Think about whether the content aligns with your personal brand if shared professionally. Remember that once shared publicly, the content exists permanently.

Comparison with Runable and Alternative AI Platforms

Runable's Content Generation Capabilities

While Runable is primarily focused on automation and productivity rather than image generation, it represents an important alternative for teams seeking AI-powered content creation at scale. At $9/month, Runable provides access to AI agents capable of generating presentations, documents, and reports with minimal manual intervention. For businesses evaluating comprehensive AI-assisted content creation workflows, Runable's approach of automating entire documents and presentations complements more specialized tools like Me Meme.

Runable's strength lies in workflow integration. Rather than treating AI content generation as an isolated feature, Runable positions AI as part of an integrated productivity suite. Teams can set up automated workflows that generate content on schedules, triggered by specific events, or integrated into existing processes. For instance, a company might automatically generate weekly reports with Runable while using Me Meme for personalized team announcements or marketing materials.

The cost-effectiveness of Runable is notable. At $9/month, it's more accessible than many enterprise automation platforms, making it attractive for startups and small teams. Compared to hiring graphic designers or video editors, or paying for premium subscription-based content creation tools, Runable's pricing model makes advanced AI capabilities accessible. Teams prioritizing AI-powered automation might find Runable's document and presentation generation more impactful than specialized image generation tools, depending on their primary content needs.

Strategic Tool Combinations

Organizations don't need to choose between Me Meme and other platforms—they can combine them strategically. A content-heavy team might use Runable for automated document and presentation generation while using Me Meme for personalized image content. A marketing team might use Runable to generate social media post templates and descriptions while using Me Meme to create personalized variations featuring team members or customers.

A human resources department might use Runable to generate employee communications and policy documents while using Me Meme to create personalized recognition content or employee spotlights. The combination of automation (Runable) and personalization (Me Meme) creates synergistic value greater than either tool alone.

This multi-tool approach acknowledges that different content types require different solutions. Image generation is Me Meme's specialty. Document and workflow automation is Runable's specialty. Text generation is better handled by dedicated large language models. Rather than forcing all content creation into a single tool, strategic teams select specialized tools and integrate them into cohesive workflows.

Industry Trends and Broader Context

The Mainstreaming of Generative AI in Consumer Apps

Me Meme represents a broader trend: generative AI features becoming standard expectations in consumer applications. Five years ago, AI-powered features were novelties. Today, users increasingly expect major applications to include some form of AI assistance. This expectation will only grow stronger.

We're seeing this pattern across categories. Email clients offer AI-powered compose assistance. Photo apps offer AI-powered editing. Social media platforms offer AI-powered content recommendations and generation. Music apps offer AI-powered remixes and generation. This democratization makes advanced capabilities available to billions of users, not just professionals with specialized training.

The implications are profound. Content creation becomes more accessible. People without design training can create professional-looking content. Time spent on content creation decreases. What took hours now takes minutes. Accessibility improves for people with disabilities who might struggle with traditional tools. These changes reshape creative industries and professional workflows.

The Personalization Imperative

Why did Google choose to add Me Meme now, rather than years ago? The answer involves engagement metrics and personalization trends. Companies have learned that personalized content dramatically outperforms generic content in user engagement. Content featuring users, their friends, or their personal information generates more engagement, shares, and return visits.

This insight drives product decisions across the industry. Apps increasingly ask for personal information. Notifications are personalized. Recommendations are personalized. Content is personalized. Me Meme fits this trend—it personalizes meme creation, making the feature emotionally resonant in ways generic meme tools aren't.

This personalization comes with tradeoffs. More personalization requires more data collection. More data enables better personalization but raises privacy concerns. Companies are walking a tightrope between personalizing enough to drive engagement and collecting as little data as is legally and ethically acceptable.

The Creator Economy Connection

Me Meme sits at the intersection of consumer social media and the creator economy. For creators and influencers, the ability to rapidly generate personalized content is valuable. It allows faster content iteration, more output, and more authentic connection with audiences. Creators who embrace new tools earlier often gain competitive advantages.

The feature also democratizes creation. You don't need professional equipment, design skills, or large budgets to create engaging meme content. This lowers barriers to entry for aspiring creators, increasing the total addressable market for content creation tools. Some of today's casual Me Meme users might become tomorrow's professional creators, having discovered their interest through playful experimentation.

For established creators, Me Meme is one more tool in an expanding kit. Successful creators are typically early adopters of new platforms and tools, experimenting to understand how audiences respond before committing significant resources. Me Meme fits this pattern of innovation and experimentation.

Monetization Opportunities and Business Models

How Google Might Monetize Me Meme

Currently, Me Meme is available free to Google account holders. However, Google might implement monetization strategies in the future. Premium templates featuring celebrity images, brand partnerships, or exclusive designs could be offered for subscription fees. Advanced generation options like higher resolution, faster processing, or style selection might be behind a paywall.

Google could introduce quota systems where free users get a limited number of generations per day, with unlimited generation available through subscription. This is a common freemium model. Alternatively, Google might offer white-label versions of Me Meme to enterprise customers who want the feature for their own products, creating a B2B revenue stream.

Sponsored templates from brands wanting to run meme campaigns could generate revenue. Nike might create branded Me Meme templates promoting new shoes. Coca-Cola might create holiday-themed templates. Brands would pay Google for template placement and access to aggregated usage data. This model already exists in similar forms on other platforms.

Third-Party Developer Opportunities

Google could create a developer API allowing third-party services to integrate Me Meme functionality. Social media scheduling tools could integrate meme generation. Content management systems could use it for personalized content creation. Marketing automation platforms could leverage it for campaign personalization. These integrations would expand Me Meme's reach while generating API revenue for Google.

Third-party developers could build specialized applications on top of Me Meme. A meme-specific marketplace where users create and sell templates. A meme-generation service for businesses. Meme-based marketing campaigns. Plugins for various platforms. The AI image generation market is large and growing, with plenty of room for specialized applications built on foundational models like Nano Banana.

Frequently Asked Questions

What exactly is Google Photos Me Meme?

Google Photos Me Meme is an AI-powered feature that allows you to insert your face into meme templates using generative AI. You upload a photo of yourself and select a meme template, and the system uses Google's Gemini AI technology (specifically the Nano Banana image generation model) to generate an image of you appearing in that meme template. The process is automated and designed to be fun and shareable, requiring no manual image editing skills.

How does Me Meme use my facial data?

When you upload a photo to Me Meme, Google's computer vision systems analyze your facial characteristics and convert them into a numerical representation called a "facial embedding." This embedding captures information like your facial features, skin tone, and expression, but isn't your raw photo data. The embedding is what the AI uses to generate images of your face in various contexts. Google states that it doesn't use this data for ad targeting and doesn't use your photos to train new models without explicit consent, though the company does collect usage analytics to improve the feature.

What are the quality requirements for source photos?

Google recommends uploading well-lit, focused, and front-facing photos for optimal results. Good lighting helps the AI clearly identify your facial features. A focused image ensures sharpness rather than blur. Front-facing photos provide the most information about your face's appearance, making it easier for the system to generate convincing results. You can still use other photos, but results will likely be better with these characteristics. Taking a deliberate selfie under good lighting typically yields better results than using a random photo from your gallery.
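If you want a rough sense of what "well-lit" and "focused" mean numerically, the sketch below scores a grayscale image by average brightness and by contrast between neighboring pixels. It is an illustrative assumption, not how Google evaluates photos: the function names and thresholds are made up, the tiny pixel grids stand in for real images, and it does not check whether the face is front-facing.

```python
def mean_brightness(pixels):
    """Average pixel value of a grayscale image given as rows of 0-255 ints."""
    values = [v for row in pixels for v in row]
    return sum(values) / len(values)


def edge_strength(pixels):
    """Mean absolute difference between horizontally adjacent pixels.

    Blurry images tend to have small differences between neighbors.
    """
    diffs = [
        abs(row[i + 1] - row[i])
        for row in pixels
        for i in range(len(row) - 1)
    ]
    return sum(diffs) / len(diffs)


def looks_usable(pixels, min_brightness=60, max_brightness=200, min_edge=10):
    """Heuristic check; the thresholds are arbitrary illustrative values."""
    brightness = mean_brightness(pixels)
    well_lit = min_brightness <= brightness <= max_brightness
    in_focus = edge_strength(pixels) >= min_edge
    return well_lit and in_focus


# Tiny hand-made grids standing in for real photos.
sharp_and_lit = [[40, 200, 40, 200], [200, 40, 200, 40]]
too_dark = [[5, 10, 5, 10], [10, 5, 10, 5]]
blurry = [[120, 121, 122, 123], [121, 122, 123, 124]]

for name, image in [("sharp", sharp_and_lit), ("dark", too_dark), ("blurry", blurry)]:
    print(name, looks_usable(image))
```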

Can I use Me Meme with custom templates?

Yes, Me Meme allows you to upload custom templates beyond Google's pre-made collection. You can upload any image to use as a base—a company logo, a product photo, an artwork, or any visual composition you want. The system will attempt to generate your face into that custom template. This significantly expands the feature's creative possibilities beyond the standard meme library, though custom templates vary in how well they work depending on composition and where faces would logically appear.

Is Me Meme available worldwide?

Not yet. Me Meme is rolling out first to US-based Google account holders. Google stated it would reach US iOS and Android users over the "coming weeks" following its January 2026 announcement, and international availability might come later. If you don't see the feature in your Google Photos app, it may not have reached your region yet. Also check your app version, since you need the latest Google Photos version to access new features.

How long does it take to generate a meme?

Generating a meme typically takes 5-15 seconds from when you tap the "Generate" button. The processing time depends on server load, image complexity, and template complexity. During peak usage times, processing might take longer as requests queue. Regenerating an alternative version of the same image typically takes a similar amount of time. The entire process is designed to be responsive enough for casual use without significant waiting.

Can I regenerate images multiple times?

Yes, you can tap "Regenerate" multiple times to create different versions of your face in the same template. Each regeneration produces a slightly different output because the underlying AI model works probabilistically, making different random choices each time. This is valuable if the first result isn't satisfactory—you can try several variations until you get one you like. There isn't a strict limit on regenerations, though very high volumes might be rate-limited.
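The reason regeneration gives different results can be illustrated with a toy example. The sketch below is not the Nano Banana model: `generate_variation` is a hypothetical stand-in that makes a few random choices from a seed, which is enough to show that the same seed reproduces the same output while a new seed usually produces a different one.

```python
import random


def generate_variation(template, seed):
    """Toy stand-in for a generative model: random choices driven by a seed."""
    rng = random.Random(seed)  # separate generator so runs are reproducible
    return {
        "template": template,
        "head_tilt_degrees": round(rng.uniform(-10, 10), 1),
        "expression": rng.choice(["neutral", "smirk", "surprised", "grin"]),
        "lighting": rng.choice(["warm", "cool", "flat"]),
    }


# Each "Regenerate" tap is modeled here as drawing a fresh seed.
first_try = generate_variation("distracted-boyfriend", seed=0)
second_try = generate_variation("distracted-boyfriend", seed=1)
repeat_of_first = generate_variation("distracted-boyfriend", seed=0)

print(first_try)
print(second_try)
print("same seed reproduces output:", first_try == repeat_of_first)
```

Real image models sample over far more than three attributes, but the principle is the same: the variation comes from randomness in sampling, not from a change in your source photo.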

How do I share generated memes?

Once you generate a meme, it's automatically saved to your Google Photos library. From there, you can share it through Google Photos' built-in sharing features, export it to your device's camera roll, or send it to specific people. You can also post it directly to social media platforms if you've authorized Google Photos for that. The image is your property and can be shared, deleted, or edited like any other photo in your library.

Are generated images private?

Generated images are private by default—only visible to you unless you explicitly share them. They're stored in your personal Google Photos library with the same privacy and security protections as any other photos. You control who has access through Google Photos' sharing features. However, once you share a generated image publicly on social media or messaging platforms, you lose control of it. Consider this before posting generated memes featuring yourself on public platforms.

What happens if the quality isn't good?

If a generated image has obvious flaws, artifacts, or doesn't match your expectations, you have several options. Try regenerating to get a different interpretation. Select a different source photo that might provide better facial information to the AI. Choose a simpler template that might be easier for the system to work with. Try different templates entirely. If problems persist, the feature might not be producing optimal results for your specific facial characteristics or the template you selected—in which case, other tools might be better suited to your needs.

Is there a cost to use Me Meme?

Me Meme is currently free for all Google account holders. You need a Google account and the latest version of the Google Photos app, but there are no direct usage fees. Google One subscribers get the same feature as non-subscribers (though they have more cloud storage for their generated images). Google could introduce premium features or quotas in the future, but as of now, the feature is included for all users at no additional cost.

How does Me Meme compare to face-swap apps?

Me Meme uses generative AI to create your face as it would appear in a template context, whereas face-swap apps typically replace one person's face with another's from two different images. Me Meme is more creative and flexible since it can work with templates that don't have existing faces, and it can generate your face in new contexts. Face-swap apps are often more photorealistic when successful but are limited to replacing existing faces. The choice between them depends on your specific use case and preferences.

Can I use Me Meme for commercial purposes?

Me Meme's terms of service would govern commercial use. Generally, content you create remains your intellectual property, but you should review Google's full terms of service for specific restrictions. Using generated memes commercially (like in advertising or products) might have different restrictions than personal use. If you plan commercial use, it's worth checking Google's documentation or contacting them to ensure your specific use case is permitted.

What if the generated face doesn't look like me?

Generative AI sometimes struggles with accuracy, especially if your source photo lacks clear facial information or if your face has distinctive features the model hasn't learned well. Try with a different, clearer source photo. Experiment with different head angles in your source photos. Use better lighting. Try simpler templates. If the model consistently struggles with your appearance, it might be a limitation of the current technology—you might need to wait for future improvements or use alternative tools.

Conclusion: The Future of Personalized AI Content

Google's introduction of the Me Meme feature represents a significant milestone in the democratization of AI-powered content creation. What once required specialized skills in graphic design, photography, and digital composition is now accessible to anyone with a smartphone and a Google account. This democratization mirrors broader trends in technology—making advanced capabilities available to the masses rather than reserving them for professionals.

The feature's success—if adoption metrics align with similar personalized features—will likely inspire competitive responses from Meta, Apple, Microsoft, and other major technology companies. Within a few years, personalized image generation might become an expected feature in photo applications across platforms. The competitive landscape will drive innovation, creating better, faster, and more capable systems.

For businesses and organizations, Me Meme and similar tools represent new opportunities for engagement and communication. Personalized content drives dramatically higher engagement than generic content. Teams that master these tools early will likely see competitive advantages in employee engagement, customer retention, and brand affinity. The ability to rapidly generate personalized content at scale opens possibilities for deeper, more authentic communication between organizations and their audiences.

For creators and content professionals, AI-powered image generation tools fundamentally change workflows. Rather than spending hours on image composition and editing, creators can generate dozens of variations in minutes, allowing for faster iteration and more experimentation. This doesn't eliminate the need for human creativity and judgment—in fact, it increases the value of human decision-making about which variations to pursue and how to contextualize generated content.

For those exploring alternative solutions, platforms like Runable offer complementary capabilities at accessible price points. Where Me Meme focuses on personalized image generation, Runable emphasizes document and presentation automation for team productivity. Strategic teams might combine multiple tools—each specialized for its domain—into cohesive workflows that maximize efficiency and output quality.

The broader question isn't whether to use Me Meme or alternative tools, but how to thoughtfully integrate AI-powered capabilities into your creative or business processes. The technology is powerful and increasingly accessible. The limiting factor is human imagination about applications and thoughtful implementation. As you evaluate whether Me Meme or similar tools make sense for your needs, consider not just current capabilities but the trajectory of AI development and how these tools will likely improve and expand in the coming years.

The age of AI-assisted content creation has arrived. Me Meme is one manifestation of this broader transformation. The organizations, creators, and individuals who understand these tools and deploy them thoughtfully will be best positioned to benefit from the dramatic improvements in content creation efficiency and personalization that AI enables.

Key Takeaways

- Google Photos Me Meme uses Gemini AI and the Nano Banana model to generate personalized faces in meme templates through advanced facial recognition and diffusion-based image synthesis

- The feature democratizes meme creation by removing design skills barriers, enabling anyone to generate engaging personalized content in seconds

- Quality is highly dependent on source photo quality—well-lit, focused, front-facing photos yield dramatically better results than poor-quality images

- For teams seeking broader AI automation beyond image generation, platforms like Runable offer complementary capabilities at accessible $9/month pricing

- Me Meme faces legitimate limitations including variable quality, lack of photorealism in some cases, and current US-only availability, but regeneration options help users find satisfactory outputs

- The feature represents a broader trend toward AI-powered personalization in consumer apps, with competitive responses likely from Meta, Apple, and Microsoft

- Privacy considerations exist around facial data usage and permanent digital footprint once content is shared publicly

- Strategic organizations combine Me Meme for personalized image content with other tools like Runable for document automation and broader content workflows

![Google Photos Me Meme: Complete Guide & AI Alternatives [2025]](https://tryrunable.com/blog/google-photos-me-meme-complete-guide-ai-alternatives-2025/image-1-1769189997006.jpg)