The Shift From Experimentation to Implementation

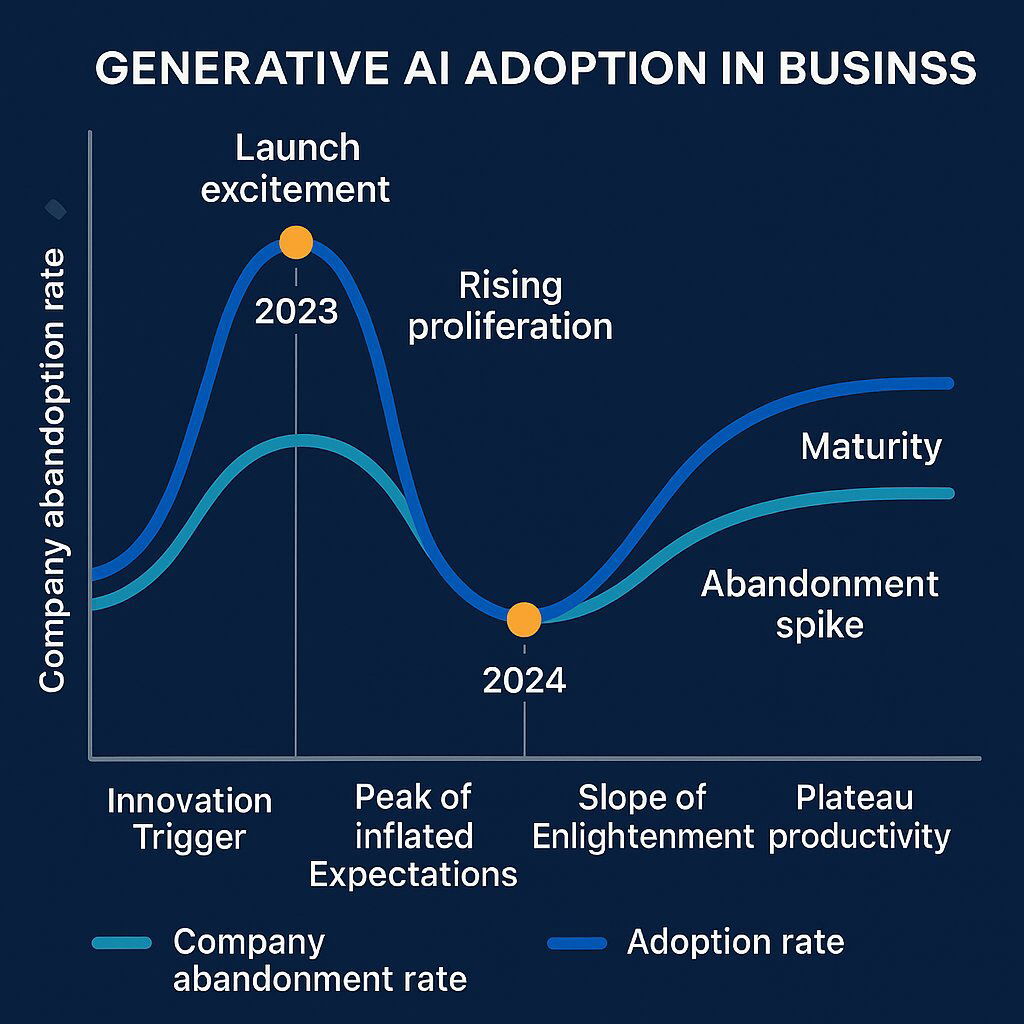

Remember when every company was launching an "AI task force" just to say they had one? Yeah, those days are mostly over.

The AI gold rush mentality is fading. What's replacing it is something far more interesting: real, quantifiable returns. Enterprises aren't asking "Should we use AI?" anymore. They're asking "Where's our ROI, and why isn't it bigger?"

This shift represents one of the most significant changes in how technology gets deployed at scale. For years, AI was funded the way venture capitalists fund startups: spread money across many bets, hope something sticks, and celebrate if 10% of them actually work. But that model breaks down when you're talking about enterprise-wide implementations involving thousands of employees, millions in budget, and actual business outcomes.

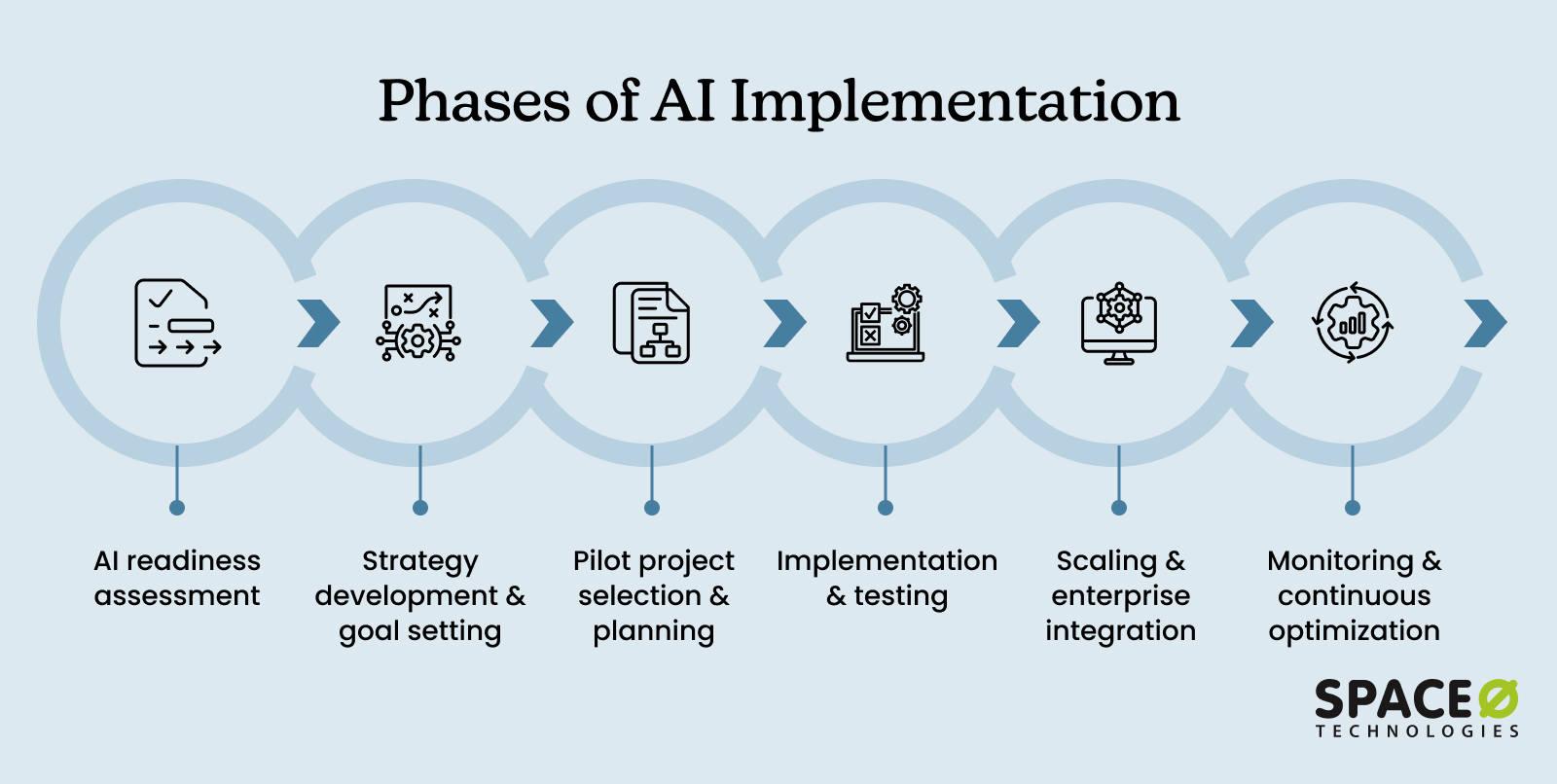

What's changed isn't the technology itself. It's the maturity of implementation. Organizations have moved past the "let's experiment with ChatGPT" phase and into the "let's build systematic AI into our core operations" phase. And that's a fundamentally different challenge.

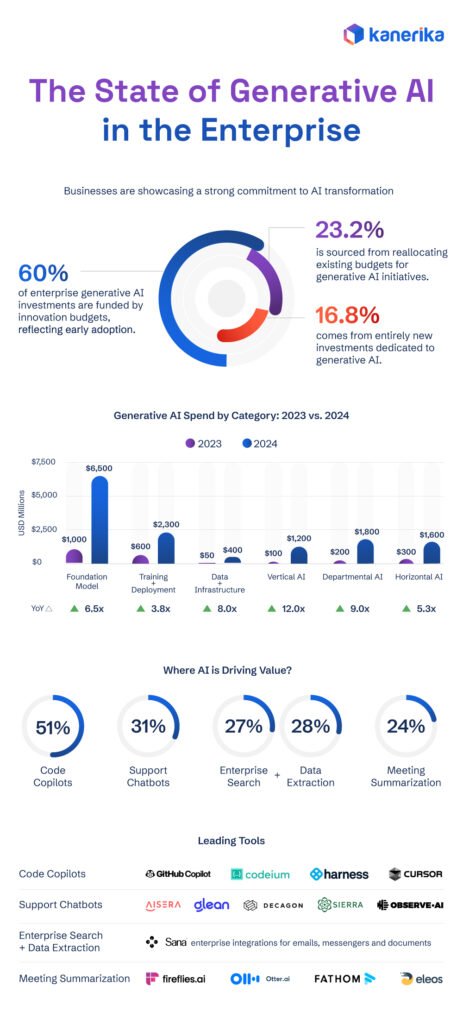

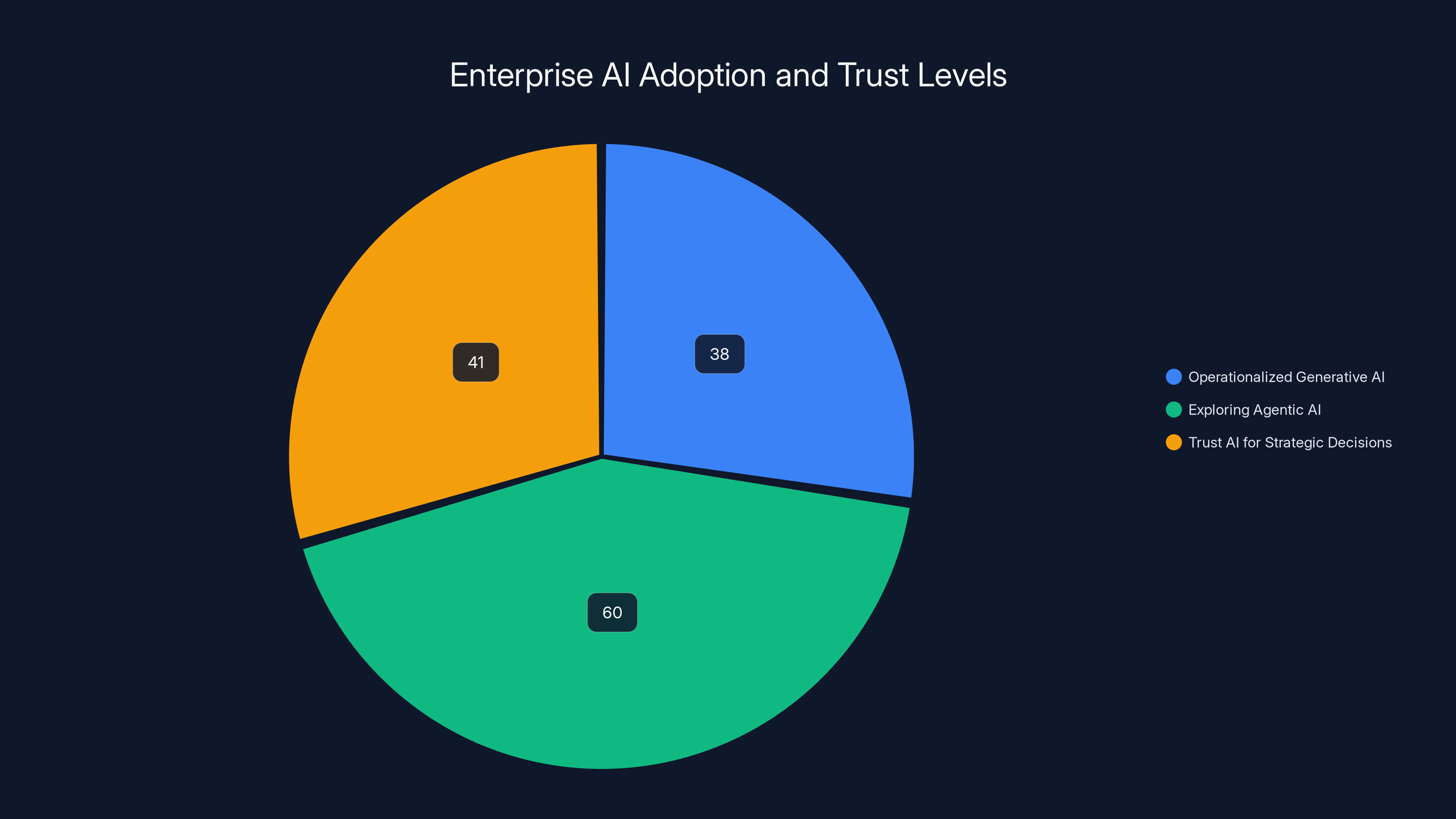

The data backs this up. According to Capgemini's insights, around 38% of organizations have already operationalized generative AI—meaning they've moved it from sandbox projects into production systems serving real customers or internal processes. That's significant. That's not "we're thinking about AI." That's "we're running AI now, and it's affecting our bottom line."

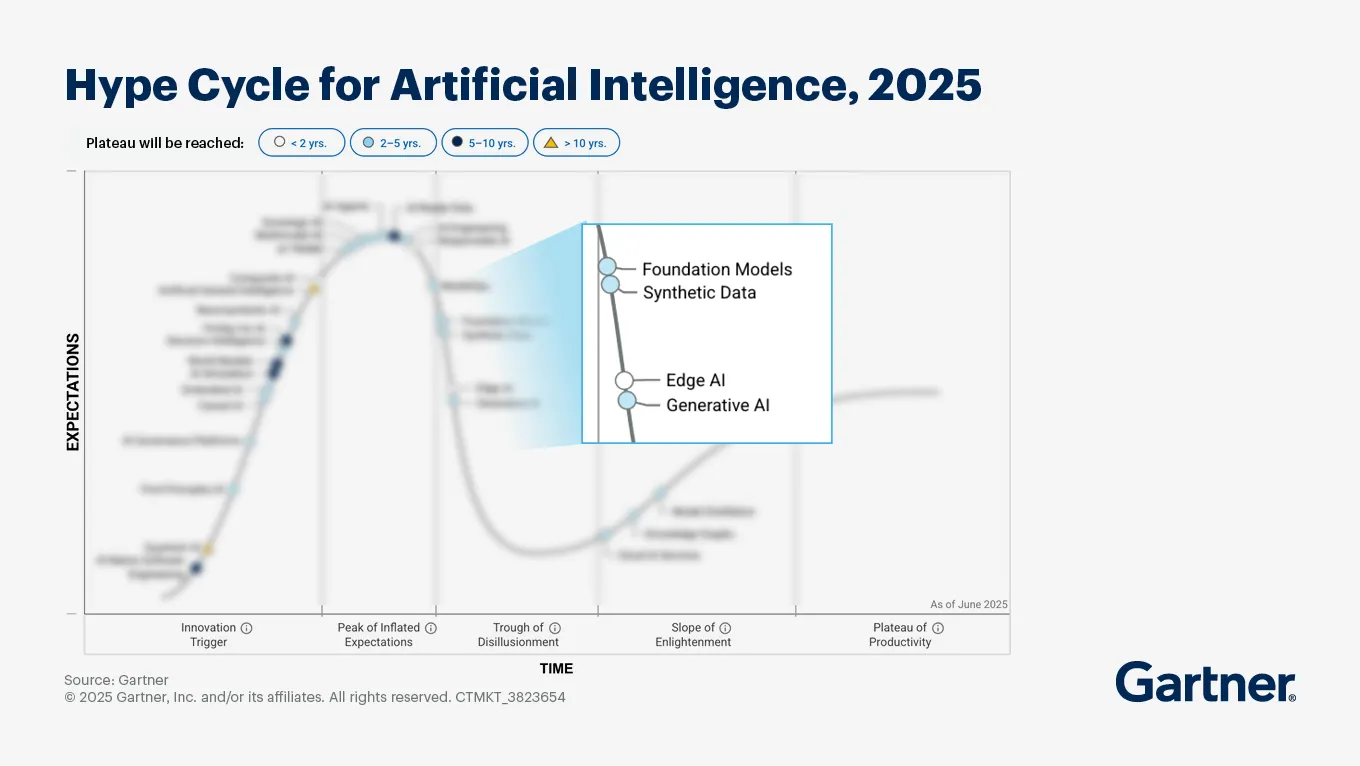

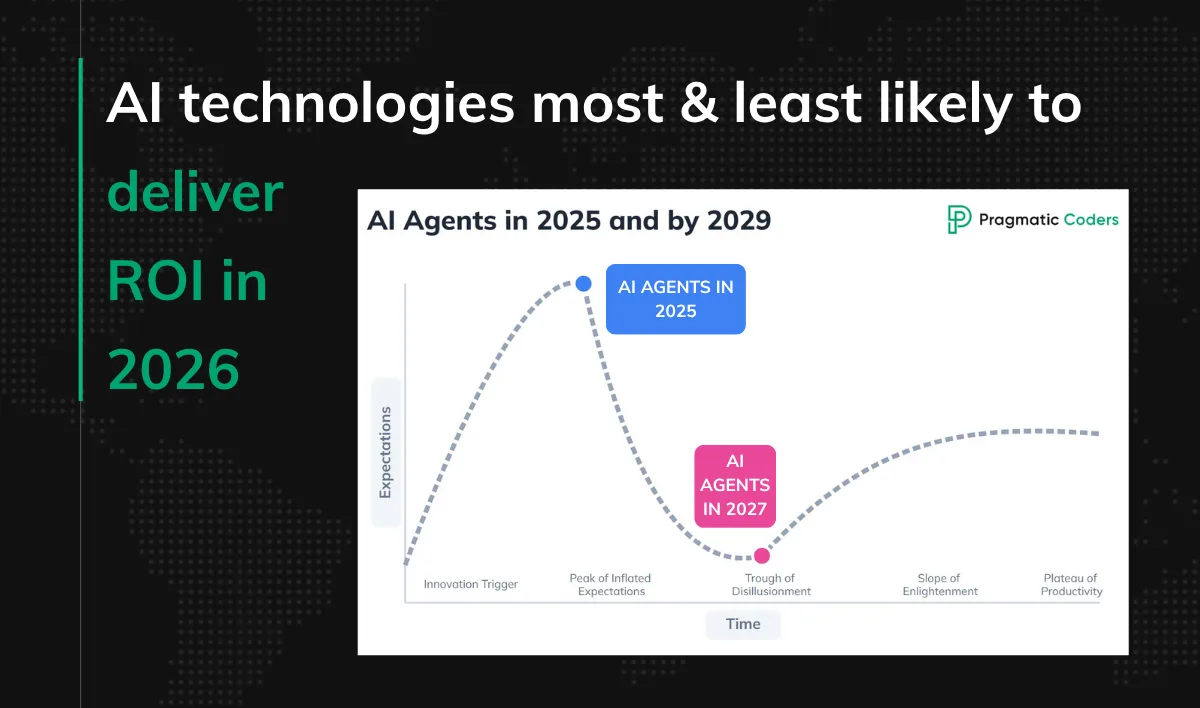

But here's where it gets interesting. While generative AI is being deployed, something else is capturing organizational attention with even more intensity: agentic AI.

What Agentic AI Actually Is (And Why It Matters)

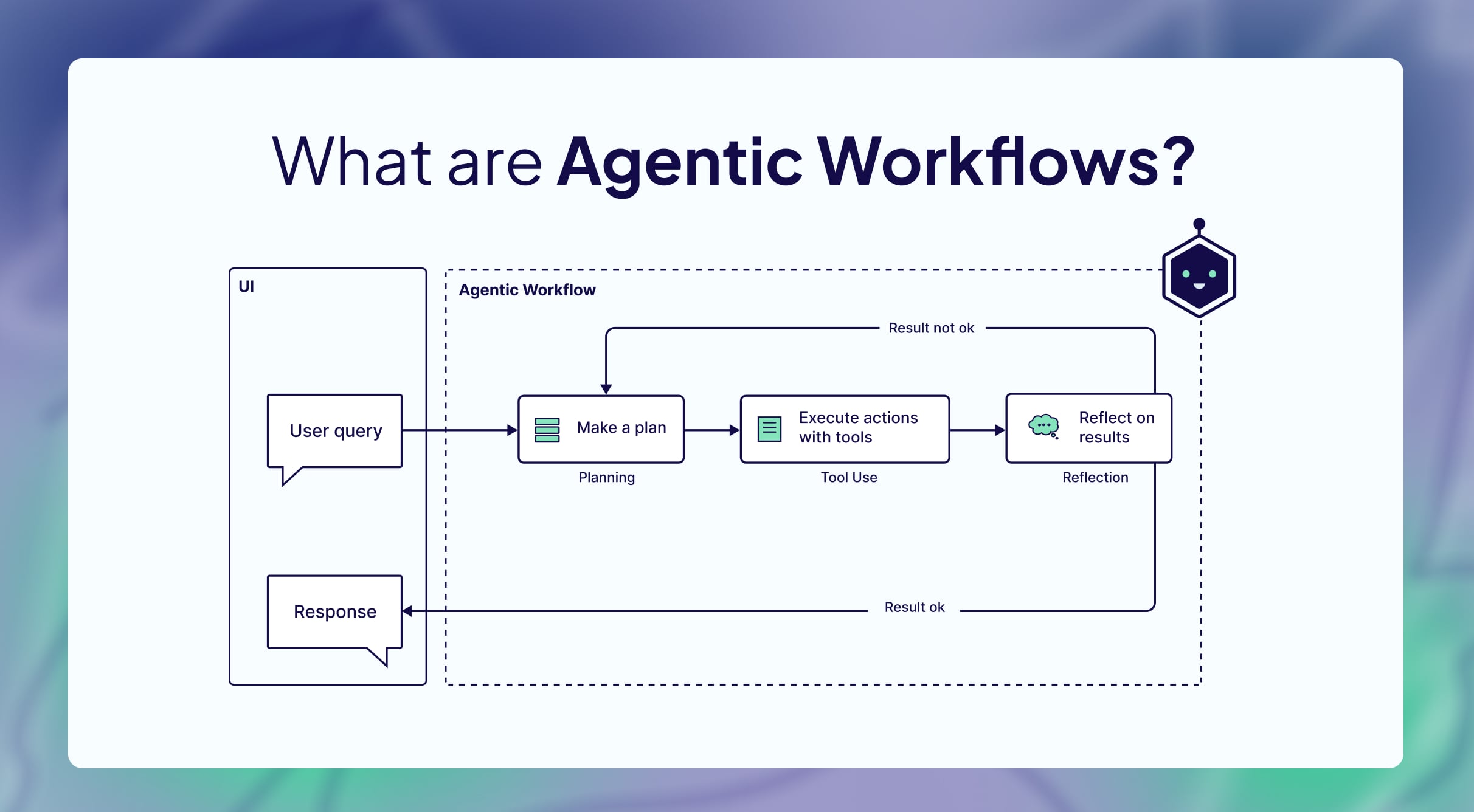

The term "agentic AI" gets thrown around a lot these days, often without much clarity about what it actually means. Let me break this down.

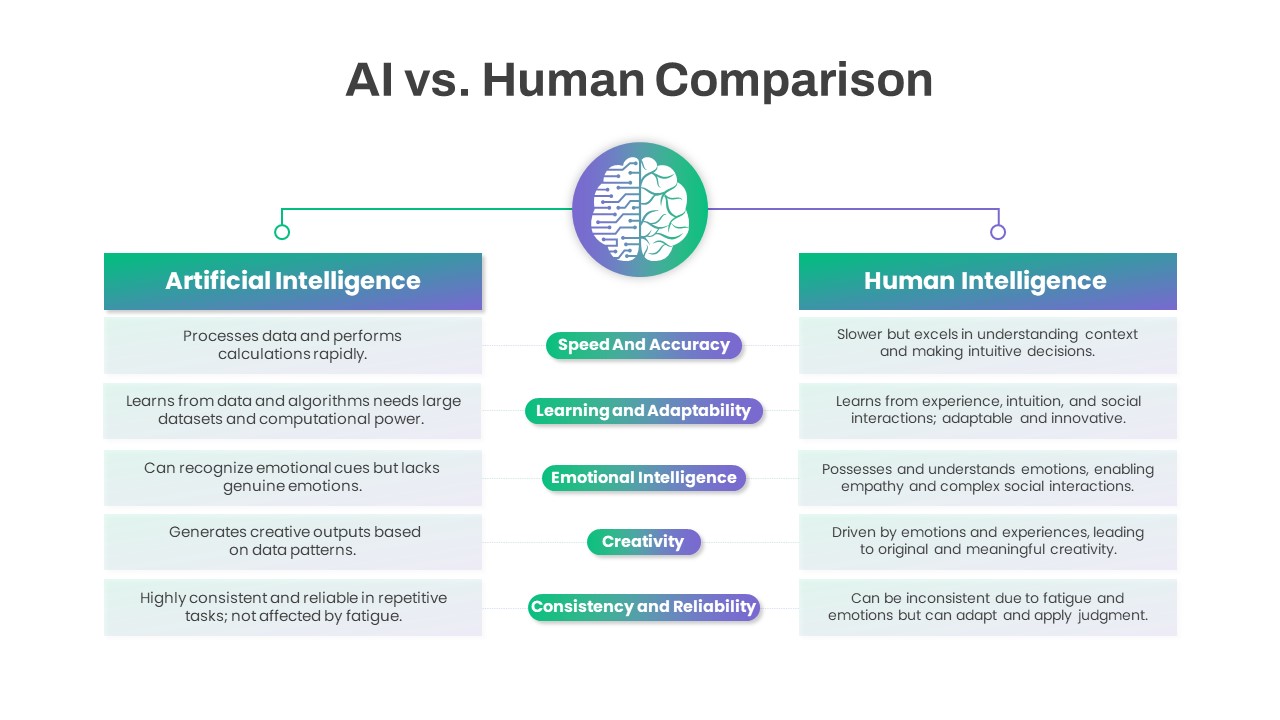

Generative AI, which most people encountered first through tools like ChatGPT, is fundamentally responsive. You ask it a question, it generates an answer. You give it a prompt, it produces text, images, or code. It's reactive. It's a tool you use.

Agentic AI flips that model. Instead of waiting for instructions, agentic systems can break down complex goals into smaller tasks, execute those tasks independently, make decisions about next steps, and adjust course based on results. They can loop back, check their work, and handle multi-step processes without human intervention at each stage.

Think of it this way: generative AI is like having a really smart consultant you can call for advice. Agentic AI is like having an employee who can actually execute on that advice without you supervising every step.
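The plan, execute, check, and adjust cycle described above can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions, not how any particular agent framework works. The task list is fixed up front; in a real agent, a language model or planner would generate and revise it. `Task`, `run_agent`, and the invoice functions are hypothetical names invented for the example.

```python
from dataclasses import dataclass
from typing import Callable, List, Tuple


@dataclass
class Task:
    """One step of a larger goal (hypothetical structure for illustration)."""
    name: str
    run: Callable[[dict], dict]    # does the work and returns updates to shared state
    check: Callable[[dict], bool]  # verifies the result before the agent moves on


def run_agent(tasks: List[Task], state: dict, max_retries: int = 2) -> Tuple[bool, List[str]]:
    """Execute tasks in order, check each result, retry on failure,
    and escalate to a human if a task still fails after max_retries."""
    trace: List[str] = []
    for task in tasks:
        for attempt in range(1, max_retries + 2):  # first try plus retries
            state.update(task.run(state))
            if task.check(state):
                trace.append(f"{task.name}: ok (attempt {attempt})")
                break
            trace.append(f"{task.name}: check failed (attempt {attempt})")
        else:
            # No attempt passed its check: stop and hand off to a person.
            trace.append(f"{task.name}: escalated to human")
            return False, trace
    return True, trace


# Hypothetical invoice workflow: the extractor misses the total on its first pass.
def extract_total(state: dict) -> dict:
    attempts = state.get("extract_attempts", 0) + 1
    return {"extract_attempts": attempts, "total": None if attempts == 1 else 1250.0}


def route_invoice(state: dict) -> dict:
    return {"queue": "finance_review" if state["total"] > 1000 else "auto_approve"}


tasks = [
    Task("extract", extract_total, lambda s: s["total"] is not None),
    Task("route", route_invoice, lambda s: "queue" in s),
]
ok, trace = run_agent(tasks, {})
print(ok, trace)
```

The `for`/`else` carries the key decision. The `else` branch runs only when no attempt broke out of the retry loop. That is exactly the case where the agent should stop and hand off to a person rather than keep going.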

The business appeal is obvious. Organizations can take repetitive, multi-step processes and hand them to AI agents that run 24/7, don't get tired, and can scale horizontally without hiring more people.

The research shows this is resonating. Roughly 60% of organizations have started exploring agentic AI use cases. That's well above the 38% who've operationalized generative AI, although exploring is a lower bar than running something in production. Why the enthusiasm? Because the ROI story is more straightforward. When you can point to a process that previously took a human five hours and say "our AI agent handles this in twelve minutes" (a 96% reduction, or 25 times faster), that's a number that translates directly to the C-suite.

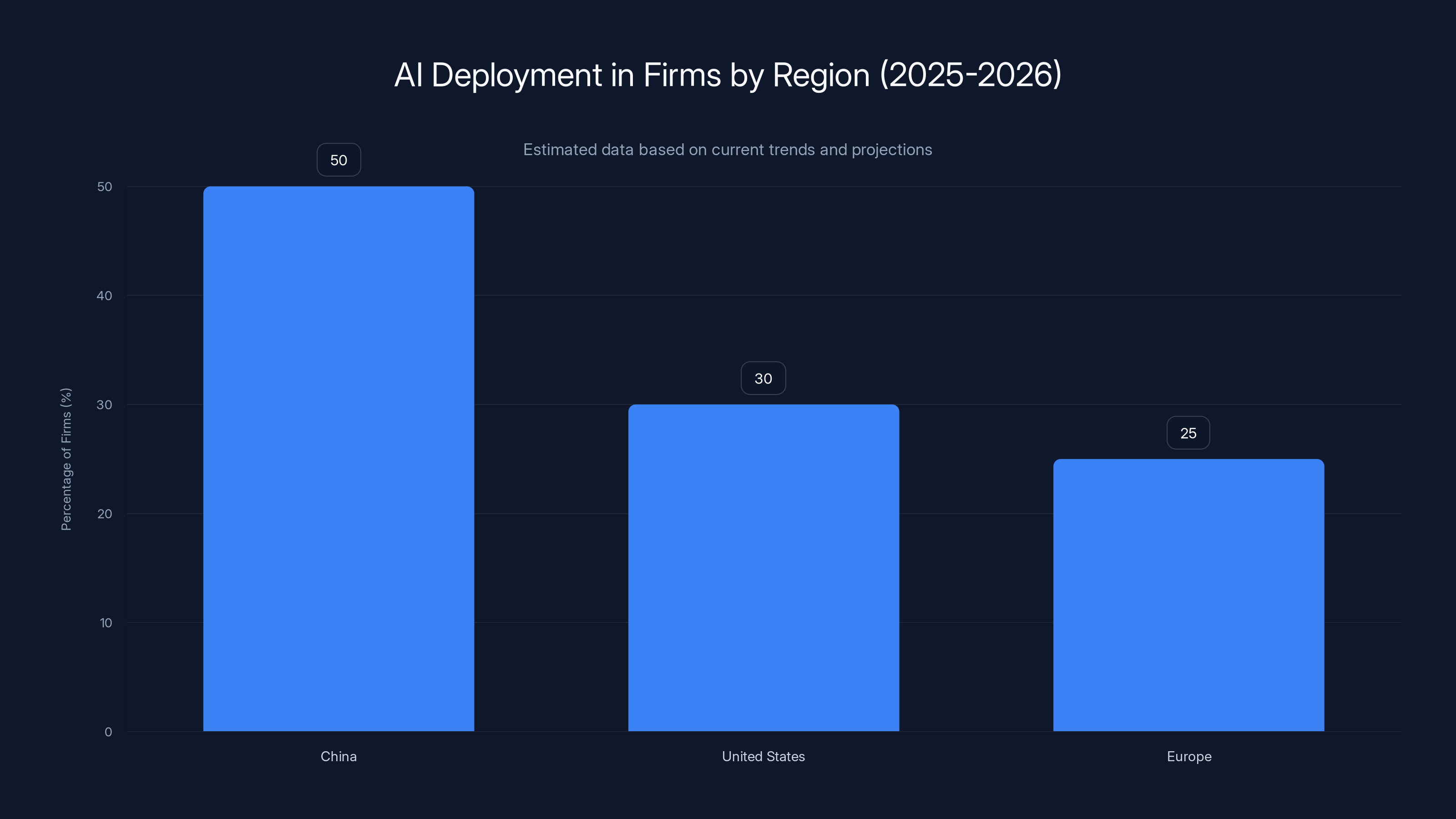

Interestingly, it's China leading the charge here. Nearly half of Chinese firms have already piloted or deployed AI agents, ahead of the US and Europe. This likely reflects both aggressive investment in AI infrastructure and, frankly, fewer regulatory constraints around autonomous systems.

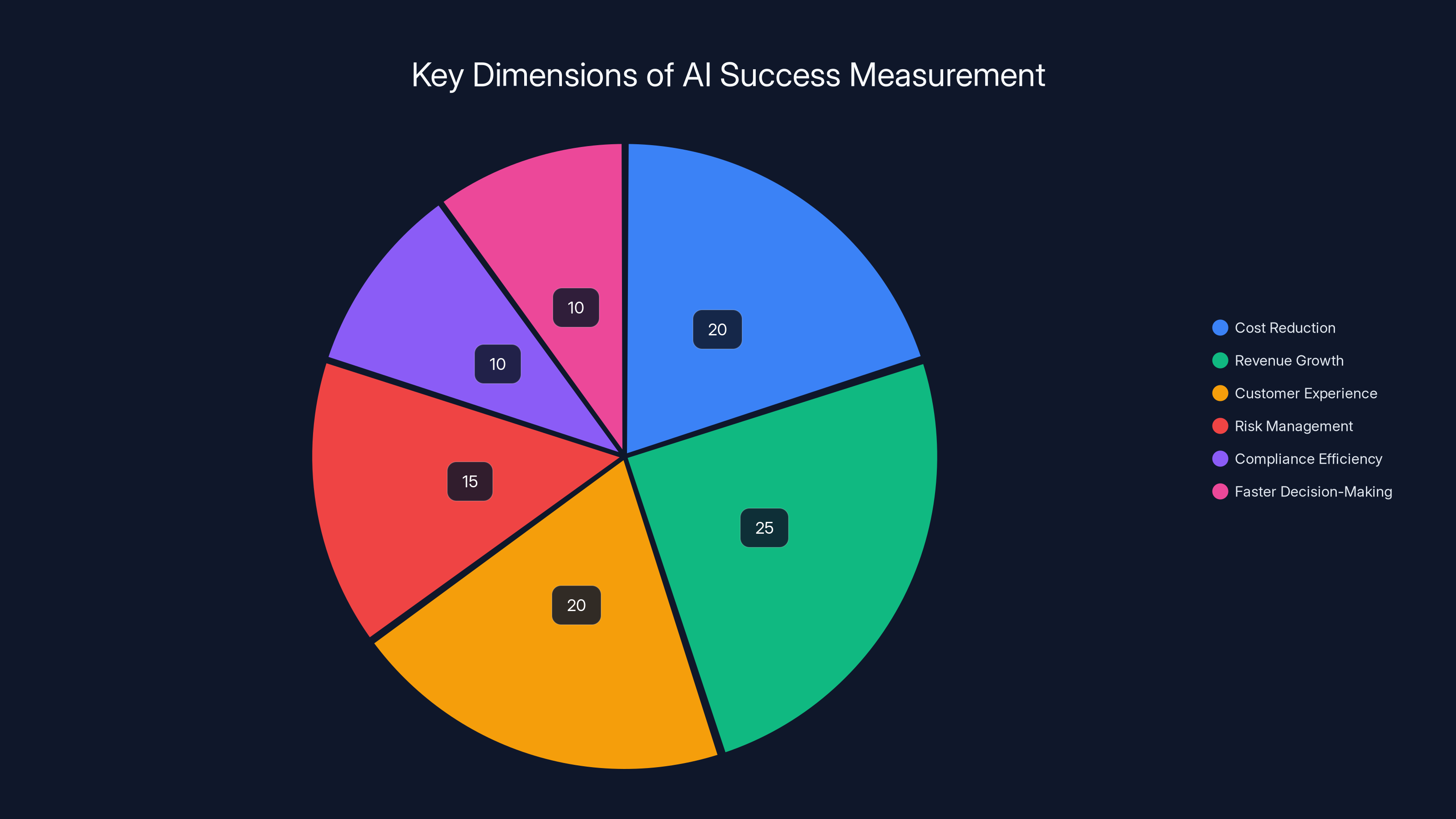

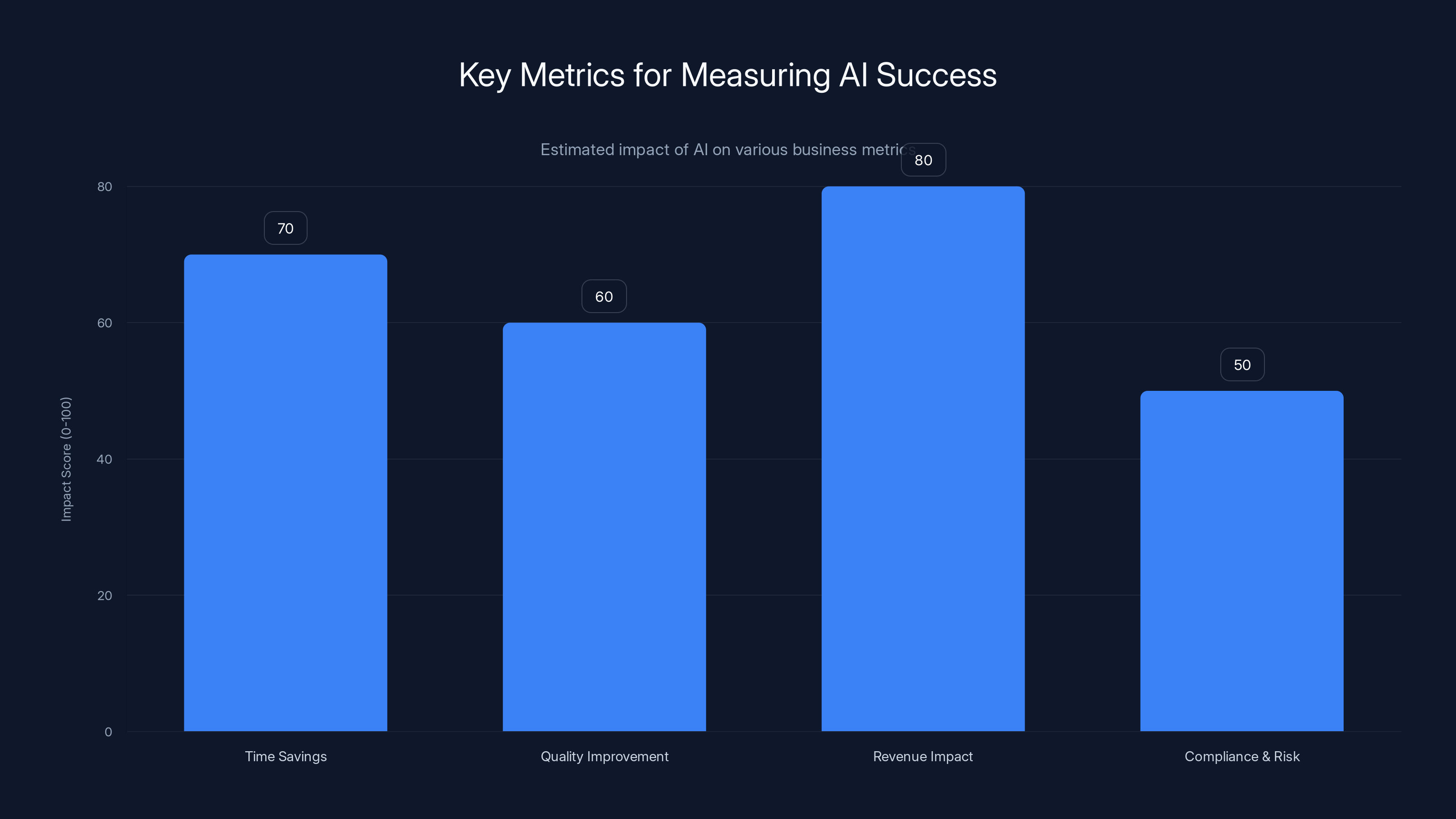

Organizations now measure AI success across multiple dimensions, with revenue growth and customer experience being as important as cost reduction. (Estimated data)

The ROI Conversation Has Changed

For the first couple of years of the AI boom, there was a pretty narrow definition of ROI in most organizations: cost savings.

Cut headcount. Reduce labor costs. That was the story. And sure, that still matters. But it turns out enterprises care about a lot more than just cutting costs.

The sophisticated organizations we're seeing now are measuring AI success across multiple dimensions. Cost savings is still there, but it's sitting alongside revenue growth, customer experience improvements, risk management, compliance efficiency, knowledge management, and personalization capabilities.

This is crucial because it changes how you evaluate AI projects. A project that costs

The metrics matter, and different organizations weight them differently. A financial services firm heavily focused on regulatory compliance might prioritize risk management ROI above all else. A consumer company might care most about customer experience and retention. A manufacturer might focus on operational efficiency and throughput.
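One common way to make "weighted differently" concrete is a weighted scorecard. This is a minimal sketch that assumes each project has already been scored from 0 to 10 on each dimension by some internal process. The metric names, the two weight profiles, and the project scores are all illustrative and are not taken from the research.

```python
import math

# Illustrative weight profiles (assumptions); each must sum to 1.0.
WEIGHTS = {
    "financial_services": {"cost_savings": 0.2, "revenue_growth": 0.1,
                           "customer_experience": 0.1, "risk_management": 0.6},
    "consumer": {"cost_savings": 0.2, "revenue_growth": 0.2,
                 "customer_experience": 0.5, "risk_management": 0.1},
}


def weighted_score(scores: dict, weights: dict) -> float:
    """Combine 0-10 scores per dimension into one number using the given weights."""
    if not math.isclose(sum(weights.values()), 1.0):
        raise ValueError("weights must sum to 1.0")
    missing = set(weights) - set(scores)
    if missing:
        raise ValueError(f"missing scores for: {sorted(missing)}")
    return sum(weights[m] * scores[m] for m in weights)


# Hypothetical project: strong on customer experience, modest elsewhere.
chatbot_project = {"cost_savings": 3, "revenue_growth": 2,
                   "customer_experience": 9, "risk_management": 4}

for profile, w in WEIGHTS.items():
    print(profile, round(weighted_score(chatbot_project, w), 2))
```

The same project scores about 4.1 under the compliance-heavy profile and 5.9 under the customer-focused one. That is the paragraph's point: what counts as good ROI depends on what the business weights.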

What's important is that organizations are now thinking about this holistically. The "pragmatic era" of AI, as one Capgemini executive put it, is about understanding what value AI actually creates in your specific business context, and then measuring whether you're actually getting that value.

That's mature. That's the opposite of hype-driven purchasing.

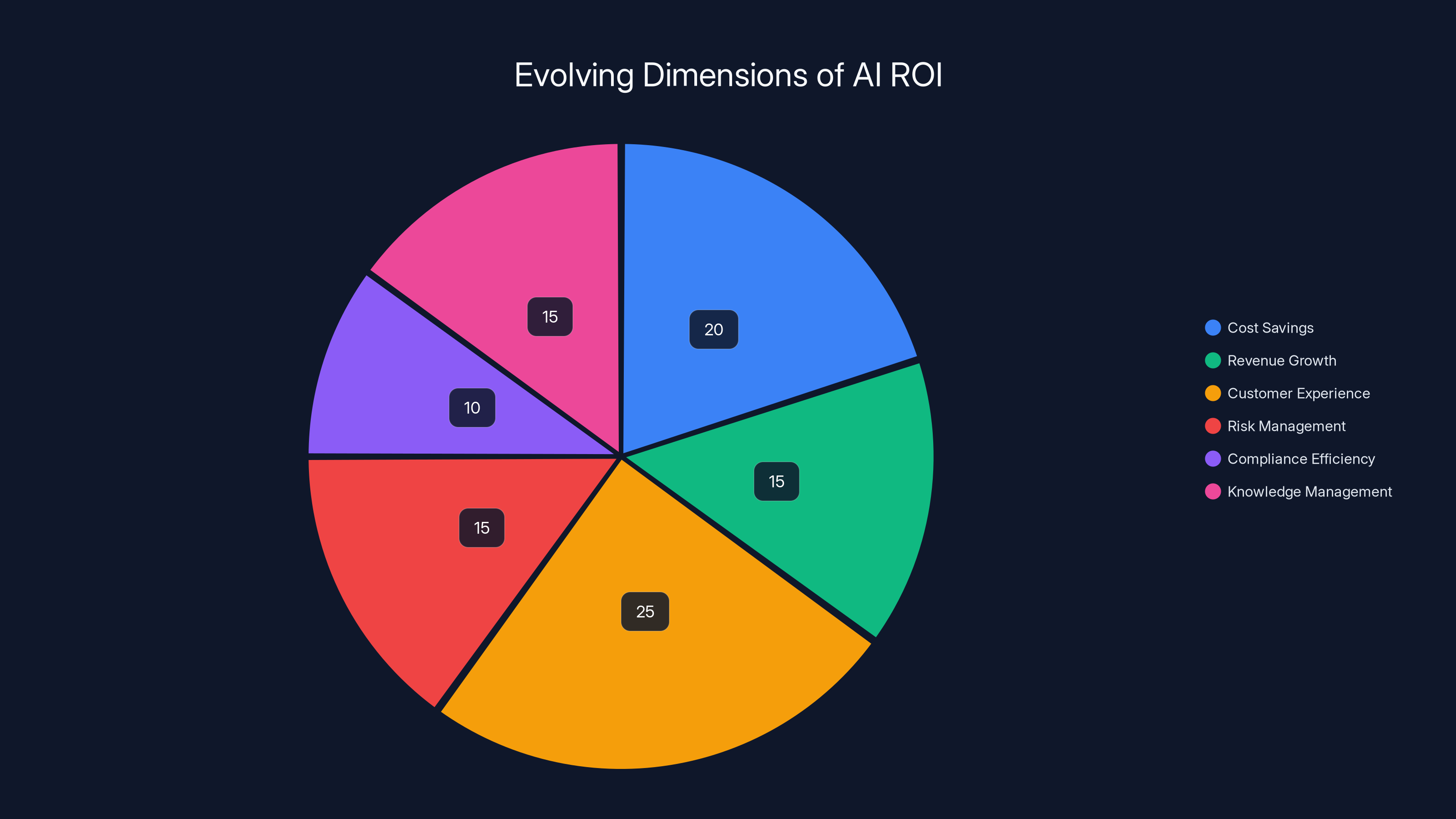

Organizations are now evaluating AI ROI across multiple dimensions, with customer experience and cost savings being prominent focus areas. Estimated data.

Where AI Is Being Deployed Right Now

AI isn't evenly distributed across business functions. Certain use cases have become standard because the ROI case is clear and the technical challenges are manageable.

Email and document processing is one of the biggest. Every organization handles massive volumes of email, documents, and unstructured text. AI can read through this, extract relevant information, categorize it, and route it to the right person or system. A law firm can use AI to review contracts and flag problematic clauses. An insurance company can use it to process claims faster. This isn't speculative. This is deployed and working.

Meeting notes and transcription is another. Record a meeting, feed it to an AI system, get back structured notes with action items, decisions made, and key discussion points. Some organizations are even using AI to summarize meeting notes for people who couldn't attend. Again, this is standard practice now in many large organizations.

Research and analysis is a third major domain. Instead of having analysts spend days pulling together market research, competitive intelligence, or financial analysis, AI can accelerate that process significantly. You still need skilled people to interpret results and make decisions, but the legwork is faster.

But organizations are clearly looking beyond these relatively "safe" use cases. The research indicates that AI in strategic decision-making is the next frontier.

More than half of C-suite executives already use AI to support their decision-making. That's executives using AI to think through strategy, evaluate options, and shape business direction.

Now, this is where skepticism is appropriate.

The Trust Problem Nobody Wants to Talk About

Here's a number that should concern every organization: only 41% of CEOs, CFOs, and COOs have above-average trust in AI for decision-making.

That means more than half of top executives lack strong trust in AI for strategic decisions. The hesitation isn't mainly about capability. It's that they don't understand how the AI is arriving at its conclusions, they worry about hidden biases, they're concerned about legal liability if something goes wrong, and they're anxious about security.

Legal liability is a real issue. If an AI system recommends a strategy that violates antitrust law, or if an AI model trained on biased data recommends discrimination, who's responsible? The company? The AI vendor? The engineer who built the system? The law is genuinely unclear in most jurisdictions, and executives know it.

Explainability matters too. In finance or healthcare or government, you often need to be able to explain decisions. You can't just say "the algorithm decided." Regulators and auditors won't accept that. Some AI systems, particularly large language models, are essentially black boxes. You can see inputs and outputs, but the reasoning in between is opaque.

Security is another legitimate concern. If your AI system is connected to your core business systems, and that system gets compromised, you've created a single point of failure for your entire operation. The risk calculus changes when you're not just protecting customer data, but also protecting your decision-making infrastructure.

So here's the reality: organizations are using AI for decisions. But they're doing it carefully, with humans still firmly in the loop.

Only 1% of executives think AI will autonomously make strategic decisions in the next one to three years. One percent. That's not caution. That's near-universal agreement that human judgment remains essential.

What AI is becoming is a tool for making human decision-makers more effective. It can process more information, spot patterns humans would miss, and present options faster. But the actual judgment call—the decision about which path to take—that still belongs to people.

Estimated data suggests that by 2025-2026, around 50% of Chinese firms will have deployed AI agents, compared to 30% in the US and 25% in Europe, highlighting a significant geographic gap in AI adoption.

Infrastructure and Data: The Unsexy Foundation

If you ask most executives what they're excited about with AI, they'll talk about new capabilities, competitive advantages, customer experiences. Nobody gets excited about data foundations and infrastructure.

But that's where the actual work is happening.

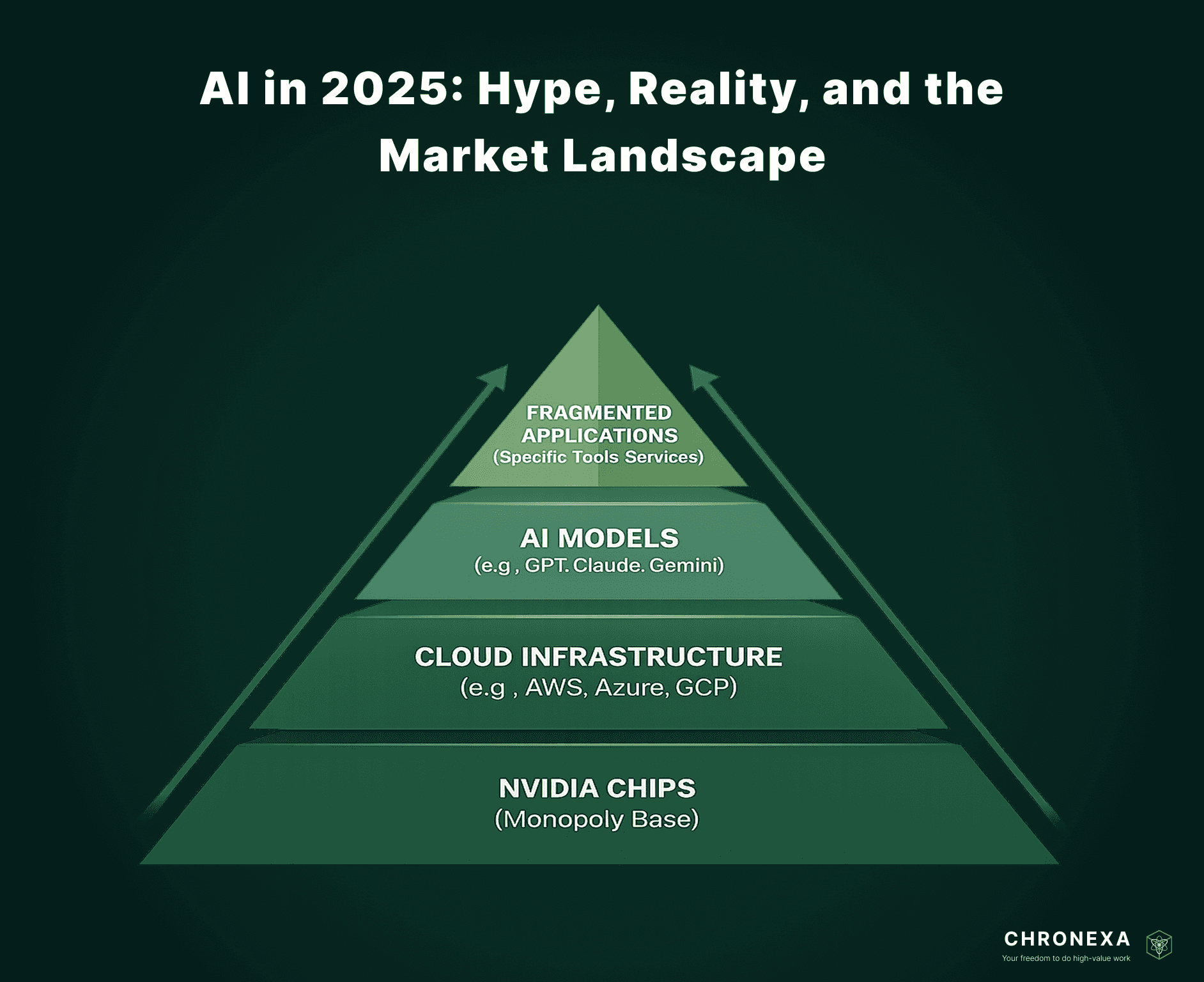

Large language models are expensive to run. They consume enormous amounts of compute power, which means expensive infrastructure. Organizations are investing heavily in GPU clusters, data centers, and cloud computing resources. Nvidia isn't getting rich because AI is solving problems. Nvidia is getting rich because running AI requires expensive chips, and every organization wants them.

And then there's data. High-quality AI models require high-quality training data. That means most organizations are investing heavily in data collection, cleaning, and organization. They're building data pipelines, implementing data governance frameworks, and dedicating people specifically to data quality.

This is unglamorous work. It doesn't make for good press releases. But it's absolutely essential. An AI system trained on garbage data will produce garbage results, no matter how sophisticated the underlying algorithms are.

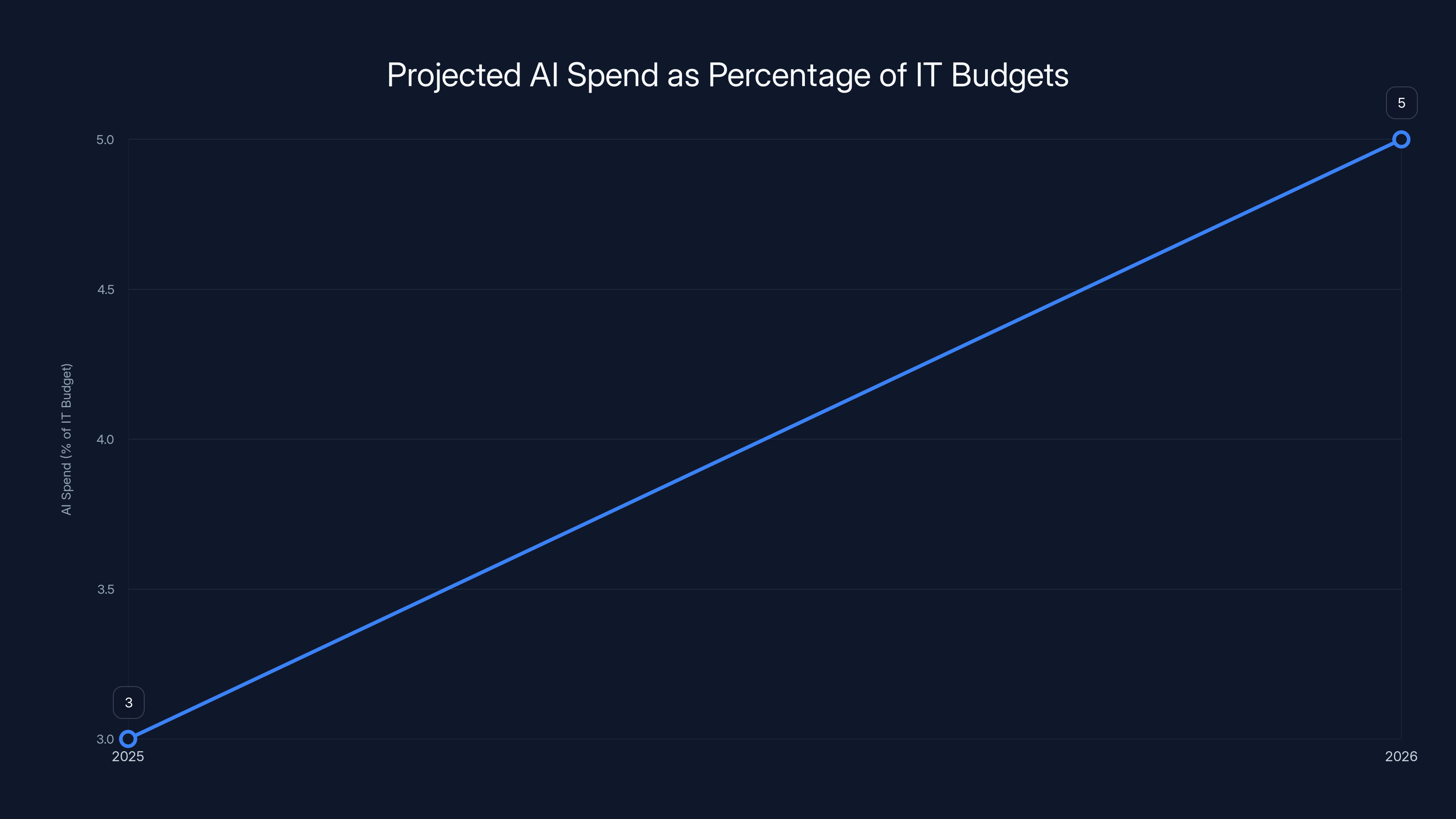

What's changing is that organizations are no longer treating this as optional. The research shows AI spend is rising to 5% of annual IT budgets in 2026, up from 3% in 2025. That's a two-thirds increase in AI's share of the IT budget, and a lot of that money is going to infrastructure and data foundations.

Governance is getting attention too. Most organizations have discovered that you can't just let different departments spin up AI systems independently. You need policies about what data can be used, how models are validated, what safeguards are in place, who can deploy models to production, and how systems are monitored for drift or degradation over time.

This is the work of building AI as a systematic capability rather than a collection of experimental projects.

How Companies Are Actually Measuring Success

The theory of AI ROI is one thing. The practice is messier.

Organizations are learning that you need to measure before you deploy, establish baselines, and track results consistently. A process that takes humans eight hours should become a documented, measurable baseline before you automate it. Then you can measure how much AI actually improves it.

Time savings are measurable, though they're often harder to realize than expected. You might reduce task time by 70%, but if the human who was doing that task doesn't have five hours of other work ready to go, you've just freed up time that gets consumed by meetings or other low-value work.
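Both the baseline-first discipline and the caveat that freed time isn't the same as saved time fit in a few lines. This is a sketch under assumptions. The durations are made-up observations, and `redeployed_fraction` is a hypothetical parameter for the share of freed hours that actually goes to other valuable work. An organization would have to estimate that fraction itself.

```python
from statistics import mean


def measured_reduction(before_hours: list, after_hours: list) -> float:
    """Fractional reduction in average task time, measured against a pre-deployment baseline."""
    baseline = mean(before_hours)
    if baseline <= 0:
        raise ValueError("baseline must be positive")
    return 1 - mean(after_hours) / baseline


def realized_hours_saved(baseline_hours: float, reduction: float,
                         redeployed_fraction: float) -> tuple:
    """Return (hours freed, hours actually redirected to other valuable work) per task."""
    freed = baseline_hours * reduction
    return freed, freed * redeployed_fraction


before = [7.5, 8.0, 8.5]  # observed task durations before automation (hours), hypothetical
after = [2.4, 2.4, 2.4]   # observed durations with AI assistance (hours), hypothetical

reduction = measured_reduction(before, after)
freed, realized = realized_hours_saved(mean(before), reduction, redeployed_fraction=0.5)
print(f"reduction={reduction:.0%} freed={freed:.1f}h realized={realized:.1f}h")
```

Here a measured 70% reduction on an eight-hour baseline frees 5.6 hours per task. If only half of that time is redeployed, just 2.8 hours show up as real capacity. Reporting both numbers keeps the business case honest.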

Quality improvements are real. AI can help catch errors humans miss, maintain consistency, and improve output quality. But measuring quality is often harder than measuring time. How do you quantify the value of catching one more error per thousand items processed?

Revenue impact is the most powerful metric when you can measure it. A sales AI that helps your reps close deals faster? That translates directly to revenue. Customer service AI that reduces wait times and improves satisfaction? That reduces churn, which is a direct revenue impact.

Compliance and risk improvements are hard to measure but potentially enormous. If your AI system catches one regulatory violation that would have cost $5M in fines, that's ROI that justifies years of investment. But it's hard to prove a negative—you can't prove how many problems you avoided.

Most successful organizations are doing something sophisticated: they're measuring multiple metrics simultaneously, understanding that different stakeholders care about different things, and building business cases that show impact across multiple dimensions.
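Quality, retention, and risk can be put on the same dollar scale so they can be reported side by side. Below is one way to do it, with loudly stated assumptions. Every input is hypothetical, and cost per error and revenue per customer would come from an organization's own finance data. The risk line is an expected value: the reduction in annual violation probability times the fine. That is a standard way to price a problem that never happens, rather than trying to prove it was prevented. Only the $5M fine echoes the example in the text.

```python
from dataclasses import dataclass


@dataclass
class RoiInputs:
    # All fields are hypothetical inputs an organization would supply itself.
    items_per_year: int
    errors_avoided_per_1000: float   # extra errors caught per 1,000 items
    cost_per_error: float            # downstream cost of one uncaught error ($)
    customers: int
    churn_reduction_pts: float       # churn reduction in percentage points
    revenue_per_customer: float      # annual revenue per customer ($)
    violation_prob_reduction: float  # drop in annual probability of a violation
    fine_if_violation: float         # cost of that violation ($)


def roi_components(x: RoiInputs) -> dict:
    """Express quality, retention, and risk benefits as annual dollar figures."""
    quality = x.items_per_year / 1000 * x.errors_avoided_per_1000 * x.cost_per_error
    retention = x.customers * (x.churn_reduction_pts / 100) * x.revenue_per_customer
    risk = x.violation_prob_reduction * x.fine_if_violation  # expected value, not a guarantee
    return {"quality": quality, "retention": retention, "risk_expected": risk,
            "total": quality + retention + risk}


example = RoiInputs(items_per_year=2_000_000, errors_avoided_per_1000=1.0, cost_per_error=25.0,
                    customers=10_000, churn_reduction_pts=0.5, revenue_per_customer=1_200.0,
                    violation_prob_reduction=0.02, fine_if_violation=5_000_000.0)
for name, value in roi_components(example).items():
    print(f"{name:>14}: ${value:,.0f}")
```

The components stay separate rather than collapsing into the total alone. That way each stakeholder can see the line they care about, which is the multi-dimensional reporting described above.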

Organizations measure AI success across multiple dimensions, with revenue impact often being the most significant. Estimated data.

The Workforce Challenge

Every organization deploying AI at scale is bumping up against the same constraint: they don't have enough people who understand AI.

Not just data scientists. They need people who understand how to design AI systems, how to evaluate whether AI solutions are appropriate for specific problems, how to manage risks and governance, and how to help the organization extract value from AI investments.

The research explicitly mentions workforce upskilling as a major 2026 investment area. Organizations are building internal AI education programs, hiring people with AI expertise, and trying to develop AI literacy across the organization so that more people understand what AI can and can't do.

This is harder than it sounds. There's a massive shortage of AI talent. Experienced people have a lot of options. And training people takes time—you're expecting skilled engineers and data analysts to spend time on education that could be spent shipping features.

But organizations that aren't making this investment are going to fall behind. It's that straightforward.

The Role of AI Agents in Automation

Agentic AI represents a qualitatively different model of automation than what came before.

Traditional automation—the kind organizations have been building for decades—is task-specific and deterministic. You identify a particular process, you write rules for how to handle it, and you automate exactly that. It's rigid. If the process changes slightly, the automation breaks.

AI agents are more flexible. They can handle variations in input, adapt to changing circumstances, and handle multi-step processes where the right next action depends on the results of previous steps.

This matters because it opens up automation possibilities that were previously too complex or variable for traditional approaches.

A customer service chatbot that follows a decision tree will work great until a customer asks something outside the tree. Then you're escalating to a human. An AI agent can handle a wider range of customer issues, understand context, pull in relevant information from multiple systems, and attempt to resolve issues that traditional automation would immediately escalate.

Supply chain optimization is another domain where agentic systems shine. A supply chain involves countless interdependencies. Demand forecasts change. Supplier availability fluctuates. Shipping costs vary. Rather than building static optimization rules, an AI agent can continuously monitor the supply chain, spot inefficiencies, and recommend adjustments. Or in some cases, actually execute those adjustments without waiting for human approval.

The productivity gains can be substantial. If an AI agent can handle 80% of a business process autonomously, and humans only need to intervene on edge cases and exceptions, that's a dramatic change in economics.
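The economics of that 80% figure can be worked through with simple arithmetic. The case volume, minutes per case, and review-overhead parameter below are assumptions chosen for illustration. The point is that human effort scales with the exception rate, plus whatever time people spend spot-checking the agent's work.

```python
def human_hours(cases: int, minutes_per_case: float, autonomous_share: float,
                review_minutes_per_auto_case: float = 0.0) -> float:
    """Hours of human work per period when an agent resolves `autonomous_share` of cases.

    Humans handle every exception in full and may also spend a little time
    reviewing each case the agent closed on its own (an assumed overhead)."""
    if not 0.0 <= autonomous_share <= 1.0:
        raise ValueError("autonomous_share must be between 0 and 1")
    exceptions = cases * (1 - autonomous_share)
    auto_cases = cases * autonomous_share
    return (exceptions * minutes_per_case + auto_cases * review_minutes_per_auto_case) / 60


# Hypothetical volume: 10,000 cases a month at 15 minutes each.
before = human_hours(10_000, 15, autonomous_share=0.0)
after = human_hours(10_000, 15, autonomous_share=0.8, review_minutes_per_auto_case=1)
print(f"{before:.0f} h -> {after:.0f} h")
```

Under these assumptions, human time drops from 2,500 to about 633 hours. That is roughly a 75% cut rather than a full 80%, because the one-minute review on each autonomous case eats into the headline number.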

But this also creates the trust and governance challenges we discussed earlier. As AI systems become more autonomous, organizations need more sophisticated safeguards, better monitoring, and clearer policies about what they're allowed to do without human review.
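One way to encode "what the agent may do without human review" is an explicit policy check that runs before any action executes. This sketch is hypothetical throughout. The action types, the dollar limit, and the `ProposedAction` and `decide` names are invented for illustration, using the supply-chain example from the text. Anything outside the allow-list or above the limit goes to a person for approval instead of being executed.

```python
from dataclasses import dataclass

# Hypothetical policy: which action types an agent may execute alone, and up to what cost.
AUTO_ALLOWED = {"reorder_stock", "reroute_shipment"}
AUTO_COST_LIMIT = 10_000.0  # dollars


@dataclass
class ProposedAction:
    kind: str
    estimated_cost: float
    rationale: str


def decide(action: ProposedAction) -> str:
    """Return 'execute' if policy allows autonomous action, else 'needs_approval'."""
    if action.kind not in AUTO_ALLOWED:
        return "needs_approval"
    if action.estimated_cost > AUTO_COST_LIMIT:
        return "needs_approval"
    return "execute"


proposals = [
    ProposedAction("reorder_stock", 4_200.0, "forecast demand exceeds on-hand inventory"),
    ProposedAction("reroute_shipment", 18_500.0, "port delay on primary lane"),
    ProposedAction("switch_supplier", 2_000.0, "supplier missed two delivery windows"),
]
for p in proposals:
    print(p.kind, "->", decide(p))
```

One advantage of keeping the policy as plain data outside the agent is that it stays auditable. Governance teams can also tighten or loosen autonomy without touching the model.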

A significant 38% of enterprises have operationalized generative AI, while 60% are exploring agentic AI. However, only 41% of C-suite executives trust AI for strategic decisions.

Where We Are With Strategic Decision-Making

Using AI for strategic decisions is different from using it for tactical automation.

Strategic decisions are where organizations place bets on what matters. They have outsized impact. They're often high-stakes and high-uncertainty. And they tend to involve judgment calls where reasonable people disagree.

More than half of C-suite executives are already using AI tools to support their thinking on these decisions. They're feeding strategic questions to large language models, running scenario analyses with AI assistance, and using AI to help evaluate options.

But, and this is important, they're not using AI to make the decisions. They're using AI as a thinking partner. The research is clear on this: virtually nobody (1% of executives) expects AI to autonomously make strategic decisions in the near term. And frankly, that's probably wise.

AI can synthesize information, highlight patterns, and surface considerations that humans might miss. That's genuinely valuable. But strategic decisions involve values, risk tolerance, organizational culture, and judgment about people and markets. These aren't things AI is equipped to handle autonomously.

The sweet spot appears to be: use AI to process information and generate options faster, but keep humans firmly in charge of actual decisions.

Building Sustainable AI Infrastructure

Organizations learning from early mistakes are building AI infrastructure differently now.

They're not building point solutions for individual problems. They're building platforms.

A data lake or data warehouse gives you a centralized repository where different parts of the organization can access consistent, high-quality data. That foundation enables different teams to build AI applications on top of it without duplicating data infrastructure.

Similarly, organizations are building AI platforms with shared model management, monitoring, governance, and deployment infrastructure. Instead of each team managing their own models and risking inconsistency or drift, there's a central system.

This requires more upfront investment and coordination. It's slower to get to your first AI application. But it pays dividends when you're building the tenth, twentieth, and fiftieth application.

Versioning and monitoring matter too. When you deploy a model to production, you need to track performance over time. Models degrade. Data distributions shift. A model that performed well at launch might be quietly degrading six months later. Organizations are building monitoring systems that track model performance, spot degradation early, and trigger retraining.
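One widely used drift signal is the population stability index (PSI). It compares how a model input or score is distributed in production against a reference window, such as the training data. This is a minimal version in plain Python. The bucket edges and sample data are assumptions, and so is the 0.2 alert threshold, which is a commonly cited rule of thumb rather than a standard. A production monitoring stack would typically compute this per feature on a schedule.

```python
import math
from bisect import bisect_right


def bucket_shares(values, edges):
    """Share of values falling into each bucket defined by sorted interior edges."""
    if not values:
        raise ValueError("need at least one value")
    counts = [0] * (len(edges) + 1)
    for v in values:
        counts[bisect_right(edges, v)] += 1
    return [c / len(values) for c in counts]


def psi(reference, current, edges, eps=1e-6):
    """Population stability index: sum of (actual - expected) * ln(actual / expected)."""
    score = 0.0
    for e, a in zip(bucket_shares(reference, edges), bucket_shares(current, edges)):
        e, a = max(e, eps), max(a, eps)  # avoid log(0) for empty buckets
        score += (a - e) * math.log(a / e)
    return score


edges = [20, 40, 60, 80]  # interior bucket boundaries for a 0-100 score (assumed)
training_scores = [10, 25, 35, 45, 50, 55, 65, 70, 85, 90]
production_scores = [15, 38, 52, 58, 62, 68, 74, 78, 86, 95]

drift = psi(training_scores, production_scores, edges)
print(f"PSI={drift:.2f}", "ALERT: investigate or retrain" if drift > 0.2 else "stable")
```

In this made-up data, production scores have shifted toward the upper-middle buckets, giving a PSI of about 0.25 and tripping the alert. When the check fires, the monitoring system would open a review or start the retraining path described above.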

Governance frameworks are becoming more sophisticated. Who can build models? Who can deploy to production? What validation is required? What happens if a model produces a problematic decision? These aren't technical questions. They're organizational and policy questions. And they need to be decided thoughtfully before you have a crisis.
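Some of those policy answers can be written down as an automated gate that a deployment pipeline runs before anything reaches production. This sketch is illustrative only. The team names, check names, and `review` function are hypothetical. A real gate would pull approvals from a ticketing or CI system rather than a hard-coded set.

```python
from dataclasses import dataclass, field

# Hypothetical governance policy, decided before anything ships.
DEPLOYERS = {"ml-platform-team"}
REQUIRED_CHECKS = {"accuracy_vs_baseline", "bias_review", "security_review", "rollback_plan"}


@dataclass
class DeploymentRequest:
    model_name: str
    requested_by: str
    checks_passed: set = field(default_factory=set)


def review(req: DeploymentRequest) -> list:
    """Return policy violations; an empty list means the deployment may proceed."""
    problems = []
    if req.requested_by not in DEPLOYERS:
        problems.append(f"{req.requested_by} is not authorized to deploy to production")
    for check in sorted(REQUIRED_CHECKS - req.checks_passed):
        problems.append(f"missing required check: {check}")
    return problems


req = DeploymentRequest("claims-triage-v3", "marketing-analytics",
                        {"accuracy_vs_baseline", "rollback_plan"})
for problem in review(req):
    print(problem)
```

Returning every violation at once, rather than failing on the first one, gives the requesting team a complete to-do list before they resubmit.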

AI spend is projected to rise from 3% of IT budgets in 2025 to 5% in 2026, highlighting increased investment in infrastructure and data foundations. Estimated data.

The Reality of Implementation Timelines

If you're expecting AI projects to move quickly, adjust your expectations.

Generative AI makes it seem like AI should be fast. You ask a question, you get an answer in seconds. Why can't enterprise AI implementations work the same way?

They can't because enterprises are complex. You need to identify the right problem to solve. You need to understand current processes and baselines. You need to acquire and clean data. You need to build or adapt models. You need to integrate with existing systems. You need to train people on how to use the system. You need to handle edge cases and exceptions.

A moderate-complexity AI implementation in a large organization is typically a multi-quarter project. A sophisticated, enterprise-wide implementation can take years.

This is where organizations that treated AI as experimentation are now struggling. They spent a year running pilots and experiments. Now they're trying to scale, and they're discovering that scaling is much harder than initial pilots. Pilot projects can cut corners and work around limitations. Production systems need to be robust, maintainable, and integrated with existing infrastructure.

Organizations that are succeeding are setting realistic timelines, staffing appropriately, and treating AI implementation as a serious technology program rather than something you can spin up in a few months.

The Competitive Pressure

Here's what's driving all of this investment and activity: competitive pressure.

If your competitors are using AI to reduce costs, improve quality, and accelerate innovation, and you're not, you're going to fall behind. Gradually at first, then suddenly.

Companies that are deploying AI well are using it to gain competitive advantages in customer experience, operational efficiency, and decision-making speed. Those advantages are real and sustainable, at least in the medium term.

This is creating a kind of arms race. Organizations that don't invest in AI now are betting that they won't need to. That's a risky bet in most industries.

But this competitive pressure is also a corrective to hype. Organizations aren't investing in AI because it's trendy. They're investing because they see competitors using it to gain advantage, and they need to keep pace.

Building Trust in AI Systems

The trust issue we discussed earlier isn't going away.

Organizations are addressing it in several ways. First, transparency. Better understanding of how AI systems work, what data they were trained on, and what limitations they have. Not just understanding for the technical team, but understanding that business stakeholders can grasp and act on.

Second, validation. Before deploying an AI system, validate that it works as expected and produces defensible results. This is more sophisticated than just checking accuracy. It's checking for bias, testing edge cases, understanding failure modes, and ensuring the system behaves reasonably when it encounters data it wasn't trained on.
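One simple bias test compares favorable-outcome rates across groups. This sketch uses as its threshold the "four-fifths" ratio familiar from US employment-selection guidelines. That choice, the group labels, and the data are assumptions for illustration. A real validation suite would add other fairness metrics, edge-case tests, and checks on data the model wasn't trained on.

```python
from collections import defaultdict


def selection_rates(decisions):
    """decisions: iterable of (group, approved: bool). Returns approval rate per group."""
    totals, approved = defaultdict(int), defaultdict(int)
    for group, ok in decisions:
        totals[group] += 1
        approved[group] += int(ok)
    return {g: approved[g] / totals[g] for g in totals}


def disparate_impact_flags(decisions, threshold=0.8):
    """Flag groups whose approval rate is below `threshold` times the highest group's rate."""
    rates = selection_rates(decisions)
    best = max(rates.values())
    if best == 0:
        return {}
    return {g: round(r / best, 2) for g, r in rates.items() if r / best < threshold}


# Hypothetical validation set: model approvals broken out by applicant group.
sample = ([("A", True)] * 60 + [("A", False)] * 40 +
          [("B", True)] * 42 + [("B", False)] * 58)
print(selection_rates(sample))
print(disparate_impact_flags(sample))
```

Group B's approval rate is 0.42 against group A's 0.6, a ratio of 0.7, so it is flagged for review before deployment. A flag is a prompt to investigate, not proof of bias on its own.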

Third, control. Humans should always be able to understand, review, and override AI decisions, at least until there's much higher confidence in autonomous systems. This might mean requiring human approval for certain decisions, or allowing humans to easily intervene when an AI system is taking an action they disagree with.

Fourth, governance. Clear policies about what AI systems are allowed to do, who's responsible when they fail, how they're monitored, and what triggers review or retraining.

Organizations that are doing well on these dimensions are building sustained confidence in their AI systems. They're not asking people to blindly trust AI. They're showing why the AI is trustworthy through transparency, validation, and control.

The 2026 Outlook and Beyond

We're at a turning point. The era of experimentation is ending. The era of implementation is in full swing.

Based on where organizations are now, we should expect to see several trends dominate 2026 and beyond.

Agentic AI will continue to gain ground, particularly in automating multi-step processes that currently require human coordination. Organizations will push agentic systems into more domains and expect them to handle more complexity.

Investment in AI infrastructure and data will continue to grow. The unsexy foundation work—data pipelines, model management, monitoring systems, governance frameworks—will become visible because it's table stakes for doing AI at scale.

Integration with core business systems will deepen. Rather than AI being a separate tool you use sometimes, it'll be built into the systems you use every day. Your email system will have AI built in. Your document creation system will have AI built in. Your analytics platform will have AI built in.

Human-AI collaboration will become more sophisticated. Organizations will develop workflows where humans and AI systems work together, with clear handoffs about who's responsible for what decision.

Regulation will likely increase. Governments are already moving toward AI governance frameworks. Organizations will need to understand regulatory requirements in their jurisdictions and build compliance into their AI implementations.

Workforce skilling will accelerate. Organizations that want to capture AI's benefits will need people who understand AI. That means more education, more hiring of AI talent, and more internal training.

ROI expectations will become more realistic and more rigorous. The pie-in-the-sky projections will be replaced by measured, audited results. Organizations will become more sophisticated about what AI can realistically deliver.

And perhaps most importantly, AI will become normal. Not a special project. Not something you need special permission to think about. Just another tool in the technology toolkit that organizations use to solve problems and create value.

That normalization is the real sign of maturity. When AI stops being a phenomenon and starts being infrastructure, that's when you know the hype is genuinely over.

TL;DR

- From Hype to Implementation: 38% of enterprises have operationalized generative AI in production systems, marking a shift from experimentation to measurable business value.

- Agentic AI Leading the Way: 60% of organizations are exploring agentic AI (autonomous agent systems), drawn by a more direct ROI story built on multi-step process automation.

- Broader ROI Metrics: Organizations now measure AI success across cost savings, revenue growth, customer experience, risk management, and compliance—not just labor reduction.

- Trust Remains Critical: Only 41% of C-suite executives report above-average trust in AI for strategic decisions, and only 1% expect AI to make strategic decisions autonomously within the next one to three years.

- Infrastructure Investment Growing: AI spend is rising to 5% of annual IT budgets in 2026, up from 3% in 2025; heavy investment in data foundations, governance frameworks, and workforce upskilling is needed for sustainable implementations.

- Bottom Line: The AI transition is real but requires patient, systematic implementation with clear metrics, governance, and human oversight at every level.

FAQ

What does "operationalized AI" mean?

Operationalized AI refers to artificial intelligence systems that have moved beyond pilot projects and experimentation into active production use within business operations. These systems are handling real business processes, serving actual customers, or supporting core organizational functions—not just testing in sandboxes. When 38% of organizations have operationalized generative AI, it means they've deployed AI systems that are actively generating business value, managing customer interactions, processing data, or automating workflows at scale.

How is agentic AI different from the ChatGPT experience most people have?

Generative AI like ChatGPT is fundamentally responsive: you ask it a question and it generates an answer. You maintain control and give it new instructions each time. Agentic AI is proactive and autonomous: you give it a goal, and the AI system breaks it into smaller tasks, executes those tasks, makes decisions about next steps, checks its own work, and adjusts course without asking for permission at each stage. Think of it as the difference between consulting an expert (generative) and hiring an employee (agentic). Agentic systems can handle multi-step processes, work 24/7, and scale without hiring more people.

Why do organizations measure AI success differently now?

Early AI implementations focused narrowly on cost savings: reduce headcount, lower labor costs. But organizations learned that this misses most of AI's actual business value. A system that saves $200K in labor and also helps sales teams close deals 30% faster creates far more value than the labor figure alone suggests. Now enterprises measure AI across multiple dimensions: cost reduction, revenue growth, customer experience improvements, risk management, compliance efficiency, and faster decision-making. Different organizations weight these differently based on their business model, but the principle is the same: understand what value AI creates in your specific context, then measure whether you're actually getting it.

What's the biggest obstacle preventing more organizations from deploying AI?

Trust is a major factor, but the real bottleneck is infrastructure and talent. Organizations need sophisticated data pipelines, model management systems, governance frameworks, monitoring infrastructure, and people who understand how to build and maintain these. The unsexy foundation work—data organization, quality assurance, infrastructure setup, workforce training—takes far longer and costs more than most executives expect. Many organizations have the will to deploy AI but lack the infrastructure foundation or skilled people required to do it well at scale.

How long does a realistic AI implementation actually take?

Pilot projects might take months, but scaling to production is typically a multi-quarter effort in large organizations, and sophisticated enterprise-wide implementations can take years. This surprises many executives because generative AI makes it seem like AI should be fast. But enterprise implementation requires identifying the right problems, understanding current baselines, acquiring and cleaning data, building or adapting models, integrating with existing systems, training users, and handling edge cases. Organizations that succeed are setting realistic timelines, staffing appropriately, and treating AI as a serious technology program rather than something you spin up quickly.

What percentage of C-suite executives actually trust AI for strategic decisions right now?

Only 41% of CEOs, CFOs, and COOs report above-average trust in AI for strategic decision-making. The main concerns are legal liability, security risks, lack of explainability (understanding how the AI arrived at conclusions), and biases in the underlying models. But here's the important part: 99% of executives don't expect AI to autonomously make strategic decisions in the next 1-3 years. The trust issue isn't about whether AI is used for strategic decisions—it's about how. AI functions best as a thinking partner that helps humans process information and explore options faster, while humans retain authority over actual decisions.

How much should organizations be budgeting for AI initiatives in 2026?

Based on current trends, AI spend is rising to 5% of annual IT budgets in 2026, up from 3% in 2025. But this varies dramatically by industry and organization size. That spend tends to be spread across three areas: infrastructure and compute costs (expensive because running large AI models consumes significant computing power), data foundations and governance (building clean data pipelines, establishing governance frameworks), and workforce upskilling (training existing employees and hiring AI talent). Organizations should expect actual AI implementation to take more budget than they initially estimate, because teams often underestimate how much foundational work is required before they can deploy sophisticated systems.

Why is China ahead of the US and Europe in deploying AI agents?

China leads in agentic AI deployment with nearly 50% of firms having piloted or deployed AI agents, compared to lower percentages in the US and Europe. This reflects several factors: aggressive government investment in AI infrastructure, fewer regulatory constraints around autonomous systems, strong tech industry competition driving rapid deployment, and cultural factors that may create less resistance to automation. However, deployment speed isn't the same as long-term success—the US and Europe may be moving more slowly but with more attention to governance, risk management, and sustainable implementation practices.

What specific metrics should organizations track to measure AI ROI?

The best organizations track several metrics at once rather than relying on a single measure. Time savings are foundational: how much faster does the process complete? Quality improvements matter too: are error rates lower and consistency higher? Revenue impact is powerful when it's measurable: does the system help sales close faster, reduce customer churn, or increase transaction volume? Risk and compliance impact counts as well: does it catch violations or reduce legal exposure? Finally, there's customer experience: does it improve satisfaction, reduce wait times, or enable personalization? The key is knowing which metrics matter most for your specific implementation, establishing baselines before deployment, and tracking results consistently after launch so you can show the AI actually delivered the expected value.
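One simple way to put "baseline first, then track" into practice is a scorecard that stores each metric's pre-deployment value next to its post-launch value and normalizes direction, since some metrics should go down (handling time, error rate) while others should go up (satisfaction). This is a minimal sketch; the `Metric` class, the metric names, and the numbers are all hypothetical.

```python
from dataclasses import dataclass


@dataclass
class Metric:
    """One ROI metric measured before and after an AI deployment (hypothetical)."""
    name: str
    baseline: float          # measured BEFORE deployment
    current: float           # measured after launch
    higher_is_better: bool   # True for e.g. satisfaction; False for e.g. handling time

    def improvement(self) -> float:
        """Relative change vs. baseline, signed so that positive always means better."""
        if self.baseline == 0:
            raise ValueError(f"{self.name}: baseline must be non-zero")
        change = (self.current - self.baseline) / abs(self.baseline)
        return change if self.higher_is_better else -change


def scorecard(metrics: list[Metric]) -> dict[str, float]:
    """Map each metric name to its direction-normalized relative improvement."""
    return {m.name: m.improvement() for m in metrics}


if __name__ == "__main__":
    # Hypothetical numbers for a hypothetical support-ticket assistant.
    metrics = [
        Metric("avg_handling_minutes", baseline=12.0, current=9.0, higher_is_better=False),
        Metric("error_rate", baseline=0.05, current=0.04, higher_is_better=False),
        Metric("csat_score", baseline=4.1, current=4.3, higher_is_better=True),
    ]
    for name, delta in scorecard(metrics).items():
        print(f"{name}: {delta:+.1%}")
```

Normalizing to relative improvement lets you compare metrics with different units side by side, but it won't tell you which ones matter most for your implementation; that weighting is still a business decision.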

Building Your AI Strategy in 2025-2026

The landscape has shifted significantly. Organizations that are thriving with AI now treat it as serious infrastructure rather than an experimental project. They've moved past asking "Should we use AI?" to asking "How do we build AI capabilities that create measurable, sustainable competitive advantage?"

This requires thinking differently about AI implementation. It's not about finding the flashiest use case or the coolest new model. It's about identifying business problems where AI can genuinely create value, building the infrastructure to deploy solutions reliably, establishing governance frameworks that manage risks, measuring results rigorously, and continuously improving based on what you learn.

It also means accepting that this is a multi-year journey. Organizations don't build sustainable AI capability in six months. They do it over years, with patient investment in the unsexy foundation work that enables everything else.

The good news is that the path is increasingly clear. Thousands of organizations have gone through this journey. There are patterns in what works and what doesn't. Best practices are emerging. The organizations that follow these patterns and invest appropriately are seeing substantial returns.

The ones that don't are discovering that AI hype was easy. AI reality is harder. But the reality is also where the actual value lives. And that's worth the effort.

Key Takeaways

- AI has matured from the experimentation phase into systematic, production-level implementation, with 38% of organizations having operationalized generative AI in real business processes

- Agentic AI (autonomous agent systems) is accelerating faster than generative AI adoption, with 60% of organizations exploring autonomous agents that can handle multi-step processes without human intervention at each stage

- Organizations now measure AI success across multiple dimensions—cost savings, revenue growth, customer experience, risk management, compliance—rather than just labor reduction

- Only 41% of C-suite executives report above-average trust in AI for strategic decisions, and 99% don't expect AI to make strategic decisions autonomously in the next 1-3 years, which keeps humans in control and sets clear guardrails for autonomous systems

- AI spending is rising to 5% of annual IT budgets in 2026 (up from 3% in 2025), with major investment required in infrastructure, data foundations, governance frameworks, and workforce upskilling rather than just model development

![From AI Hype to Real ROI: Enterprise Implementation Guide [2025]](https://tryrunable.com/blog/from-ai-hype-to-real-roi-enterprise-implementation-guide-202/image-1-1768594072484.jpg)