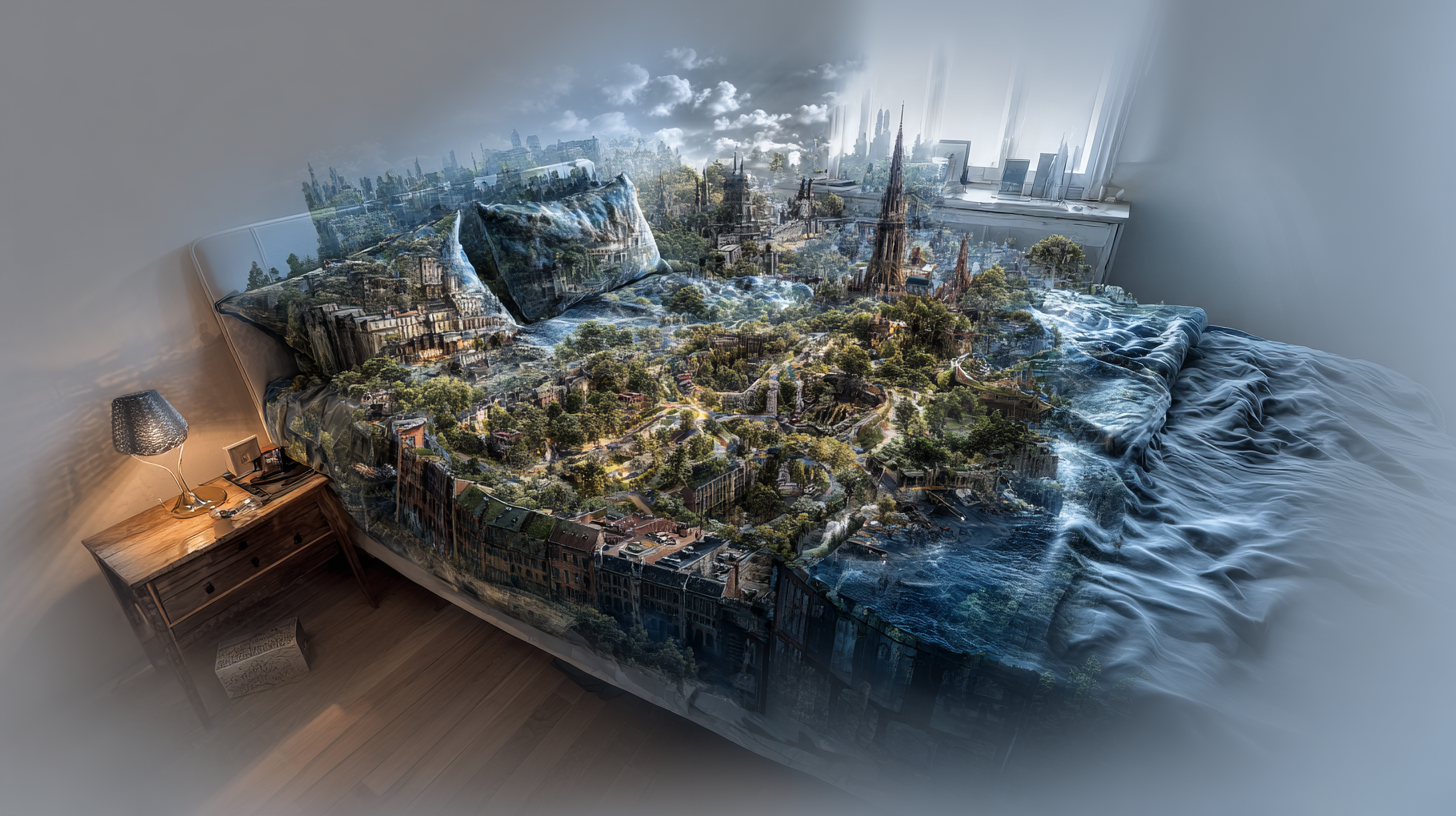

Google Project Genie: Create Interactive Worlds from Photos and Prompts

Imagine showing an AI a single photo, describing a scene in a few words, or sketching out what you want—and within seconds, you're walking around inside a fully interactive 3D-like world that responds to your every keystroke. That's not science fiction anymore. It's Google Project Genie, and it's here.

Google just took something that feels like it shouldn't exist yet and made it available to anyone willing to pay for its premium AI subscription. After years of research locked away in labs, Project Genie has stepped out of the shadows and into the real world. And while there are real limitations worth understanding, the underlying technology represents a genuine leap forward in how machines understand and simulate environments.

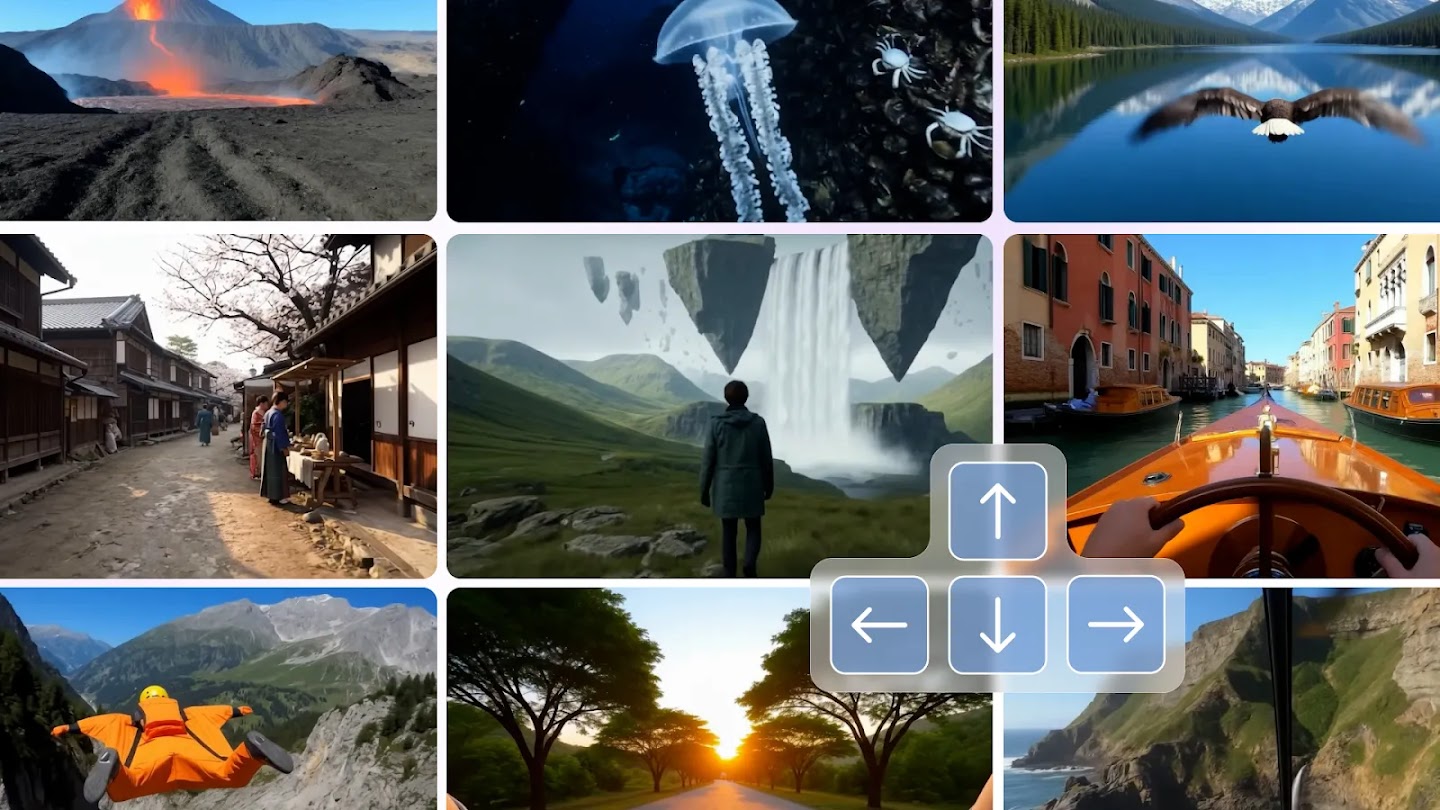

This isn't about static images or pre-recorded videos. Project Genie creates dynamic, responsive worlds that react to your input in real time. Move forward, and the world generates what's ahead. Turn left, and the environment shifts accordingly. It's closer to a video game engine than a text-to-image tool, except instead of artists building the world pixel by pixel, an AI generates it on the fly.

The practical implications are massive. Game developers could prototype worlds in minutes instead of weeks. Architects could walk through buildings that don't exist yet. Educators could create interactive learning environments tailored to students' needs. But there's a catch: it's expensive, limited to 60 seconds per session, and right now, only available to those paying Google's highest subscription tier.

Let's dig into what Project Genie actually is, how it works under the hood, why it matters, and what it means for the future of interactive media.

TL;DR

- World models create dynamic, interactive environments that respond to user input in real time, generating new content as you explore rather than playing back pre-recorded video

- 60-second exploration windows limit each session, but results regenerate differently each time you run the same prompt, creating nearly infinite variations

- Requires Google's AI Ultra subscription at $250/month, making it accessible only to serious researchers, professionals, and well-funded teams

- Breakthrough memory system allows Genie to maintain consistency across minutes of exploration, a massive improvement over earlier world models that forgot details within seconds

- Physics can be inconsistent and some game IP is being blocked after initial testing, revealing current limitations in accuracy and legal compliance

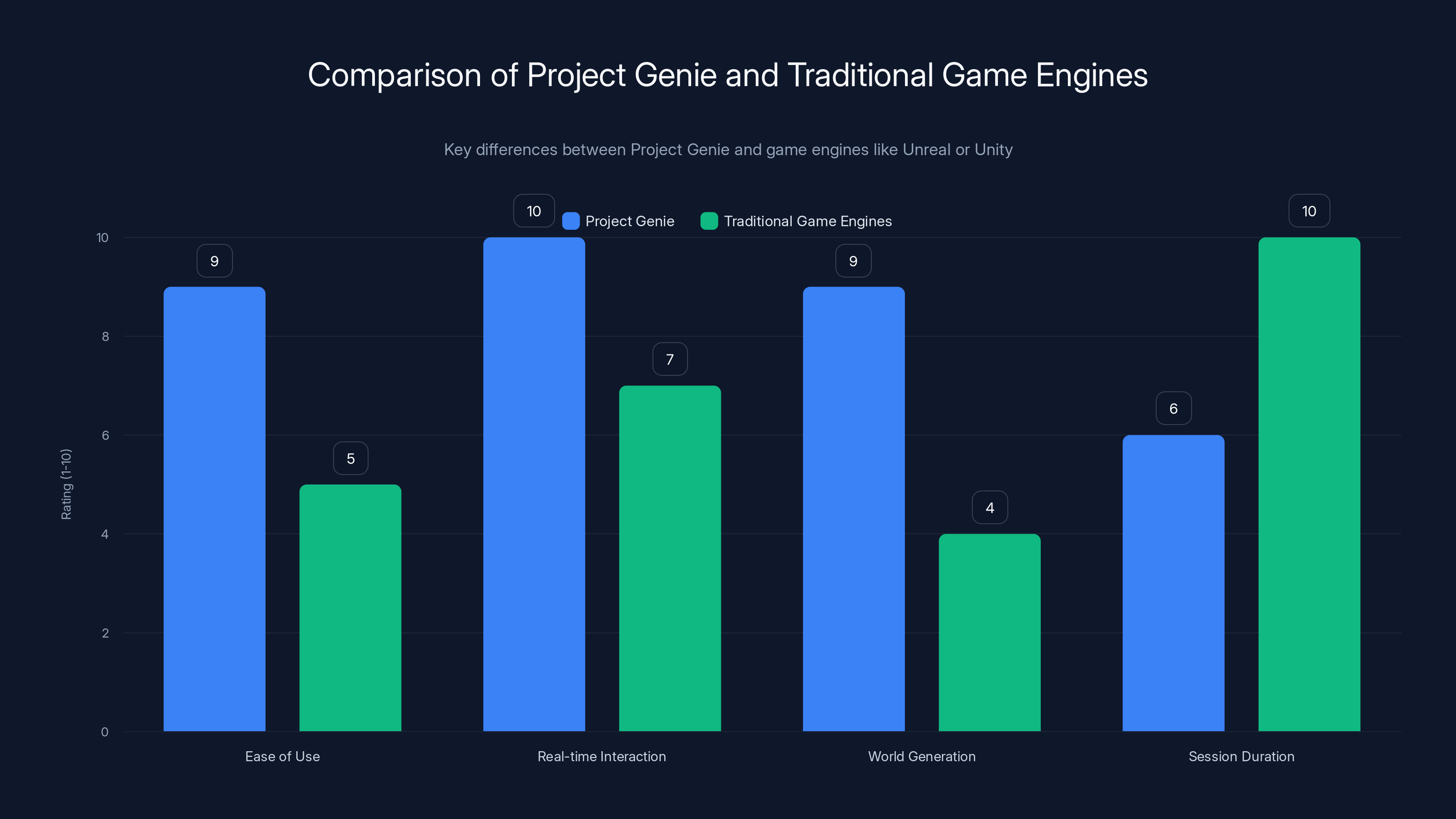

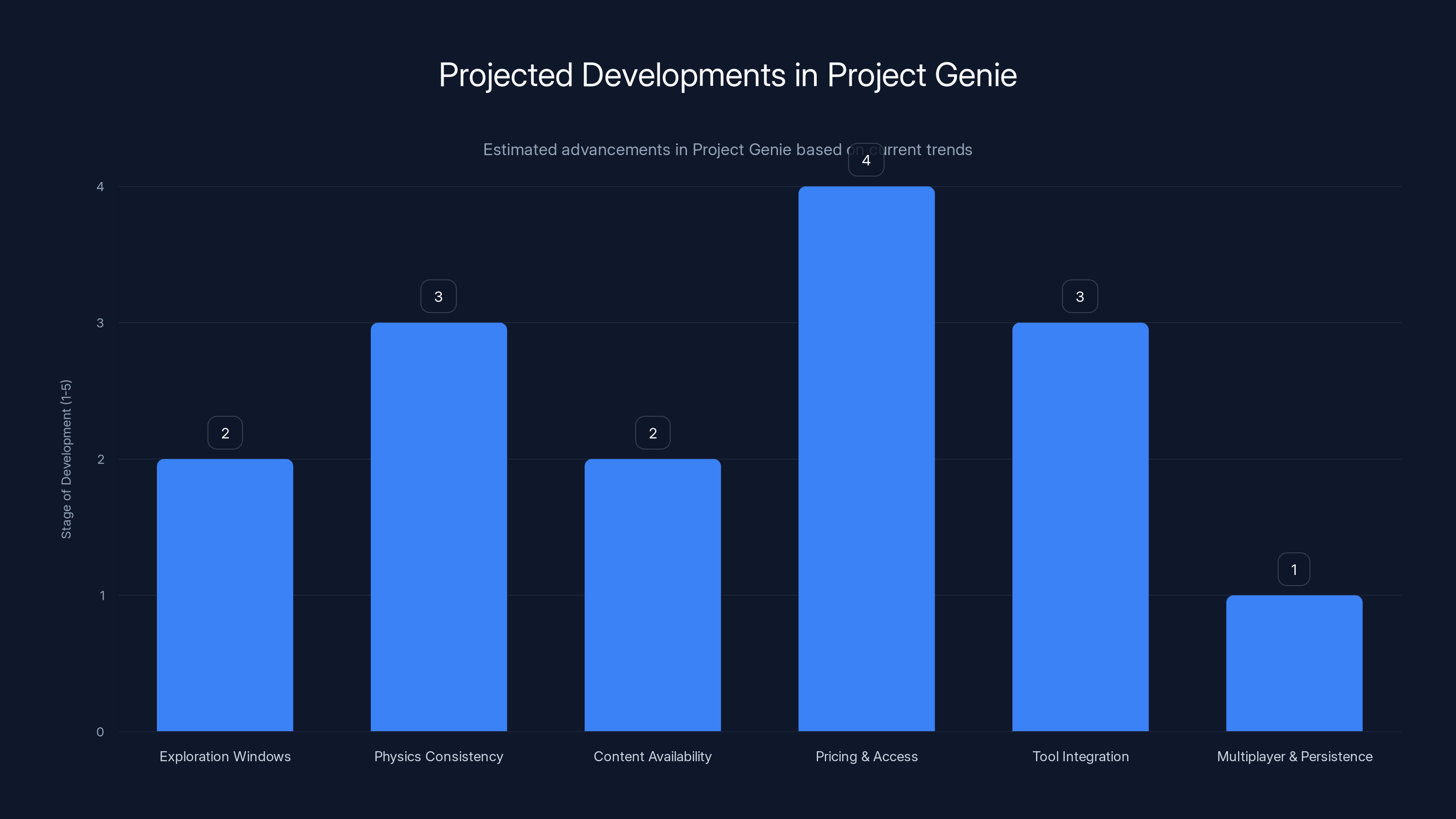

Project Genie excels in ease of use and real-time interaction compared to traditional game engines, but is limited by shorter session durations. Estimated data.

What Is a World Model? Understanding the Technology Behind the Magic

Before you can understand Project Genie, you need to understand what a world model actually is. It's a term that sounds technical, and it is, but the concept itself is beautifully simple.

A world model is an AI system that understands the rules of a simulated environment well enough to generate it frame by frame as you interact with it. It's not a 3D engine like Unreal or Unity. It's not storing pre-made assets. Instead, it's watching your input, predicting what should logically happen next based on physics, perspective, and the laws that govern the world it created, then rendering that prediction as a video frame.

Think of it like this: you tell an AI artist to draw the view ahead of you as you walk through a forest. They draw one frame. You take a step. They draw the next frame based on where you moved, what direction you're looking, and how a forest should actually look from that angle. They're not looking at a map. They're creatively solving what the next moment should look like. That's essentially what a world model does, except it does it 24 times per second.
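To make the frame-by-frame idea concrete, here's a minimal Python sketch of an autoregressive loop. It assumes a hypothetical `toy_predictor` standing in for the neural network (Google hasn't published Genie's interface), and it treats a frame as a string rather than pixels. What it does show is the shape of the process: each new frame is computed from the frames so far plus your latest input, capped at 24 frames per second for 60 seconds.

```python
from typing import Callable, List

FPS = 24              # frame rate reported in the article
SESSION_SECONDS = 60  # session cap reported in the article

# A frame is a string in this toy; a real world model outputs pixels.
Predictor = Callable[[List[str], str], str]

def toy_predictor(history: List[str], action: str) -> str:
    """Hypothetical stand-in for the neural network: the next frame is
    derived from the most recent frame plus the current input."""
    previous = history[-1] if history else "reference image"
    return f"{previous} -> {action}"

def run_session(predict: Predictor, actions: List[str]) -> List[str]:
    """Generate one frame per input, autoregressively, up to the session cap."""
    history: List[str] = []
    for action in actions[: FPS * SESSION_SECONDS]:
        history.append(predict(history, action))  # each frame becomes context
    return history

frames = run_session(toy_predictor, ["W", "W", "A"])
print(frames[-1])  # reference image -> W -> W -> A
```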

The challenge has always been consistency. Early world models would generate a few seconds of coherent video before things started breaking down. A tree would change shape. Physics would stop making sense. The sky would turn purple for no reason. This happened because the AI would forget the details of what it had already created and start generating nonsensical variations.

Project Genie changed that equation with a breakthrough in memory. The system can now maintain internal consistency for a couple of minutes. It remembers what the world looks like from multiple angles. It understands object permanence. If you place something in the world and then walk away, when you turn back around, it's still there. For a generative AI system, that's massive.
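Google hasn't said how Genie's memory works internally, so treat the following as an analogy for the behavior rather than a description of the architecture. The hypothetical `ToyWorldMemory` class generates content the first time you look at a location and recalls it on later visits, which is the object permanence described above.

```python
import random
from typing import Dict, Tuple

Cell = Tuple[int, int]  # a grid location in the toy world

class ToyWorldMemory:
    """Analogy for object permanence: content is generated once per location
    and recalled on later visits instead of being re-imagined."""

    def __init__(self, seed: int = 0) -> None:
        self._rng = random.Random(seed)
        self._seen: Dict[Cell, str] = {}

    def view(self, cell: Cell) -> str:
        if cell not in self._seen:
            # First visit: "generate" something for this spot.
            self._seen[cell] = self._rng.choice(["tree", "rock", "bench", "pond"])
        # Every later visit returns the remembered content.
        return self._seen[cell]

    def place(self, cell: Cell, obj: str) -> None:
        """Record something the user put into the world."""
        self._seen[cell] = obj

memory = ToyWorldMemory(seed=1)
first = memory.view((0, 0))
memory.view((5, 5))                  # wander off somewhere else
print(memory.view((0, 0)) == first)  # True: turning back finds the same thing
```

Early world models behaved more like a version of `view` with no `_seen` dictionary: every glance re-rolled the content.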

The technical architecture is built on a foundation of video prediction models trained on vast amounts of visual data. Genie doesn't just predict pixels randomly. It learns the underlying rules of how the world works. Objects fall down. Light reflects off surfaces. Distances scale correctly as you move through space. Perspective changes realistically. These aren't hardcoded rules. They emerge from training on billions of video frames.

What makes Genie special is that it does this in something approaching real time. When you press the W key to walk forward, there's a brief delay (a few hundred milliseconds), but then the next frames render fast enough that it feels interactive. This is genuinely difficult because the AI has to predict, render, and display each frame quickly enough to keep pace with your input.

The system uses what Google calls "world sketching." You either provide an image, describe what you want, or both. Google's image generation model (currently Nano Banana Pro or Gemini 3) synthesizes a reference image from your prompt. If you're happy with it, you approve it, and Genie takes over from there. If not, you can edit the image before handing it to Genie to generate the interactive world.

The output is 720p video at approximately 24 frames per second. That's lower resolution than you'd get from a modern game engine, but it's plenty to see what's happening, and the resolution is probably a practical trade-off given how computationally expensive the generation is.
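The 720p-at-24-FPS figures translate into a tight time budget. Here's a quick back-of-the-envelope calculation in Python, using only the numbers quoted in this article:

```python
WIDTH, HEIGHT = 1280, 720   # 720p
FPS = 24                    # approximate frame rate reported in the article
SESSION_SECONDS = 60        # session cap reported in the article

frame_budget_ms = 1000 / FPS                # time available to produce each frame
frames_per_session = FPS * SESSION_SECONDS  # frames generated in one session
pixels_per_frame = WIDTH * HEIGHT

print(f"{frame_budget_ms:.1f} ms per frame")       # 41.7 ms per frame
print(f"{frames_per_session} frames per session")  # 1440 frames per session
print(f"{pixels_per_frame:,} pixels per frame")    # 921,600 pixels per frame
```

Each of those 1,440 frames has to be predicted, rendered, and streamed in roughly 42 milliseconds, which helps explain both the modest resolution and the session cap.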

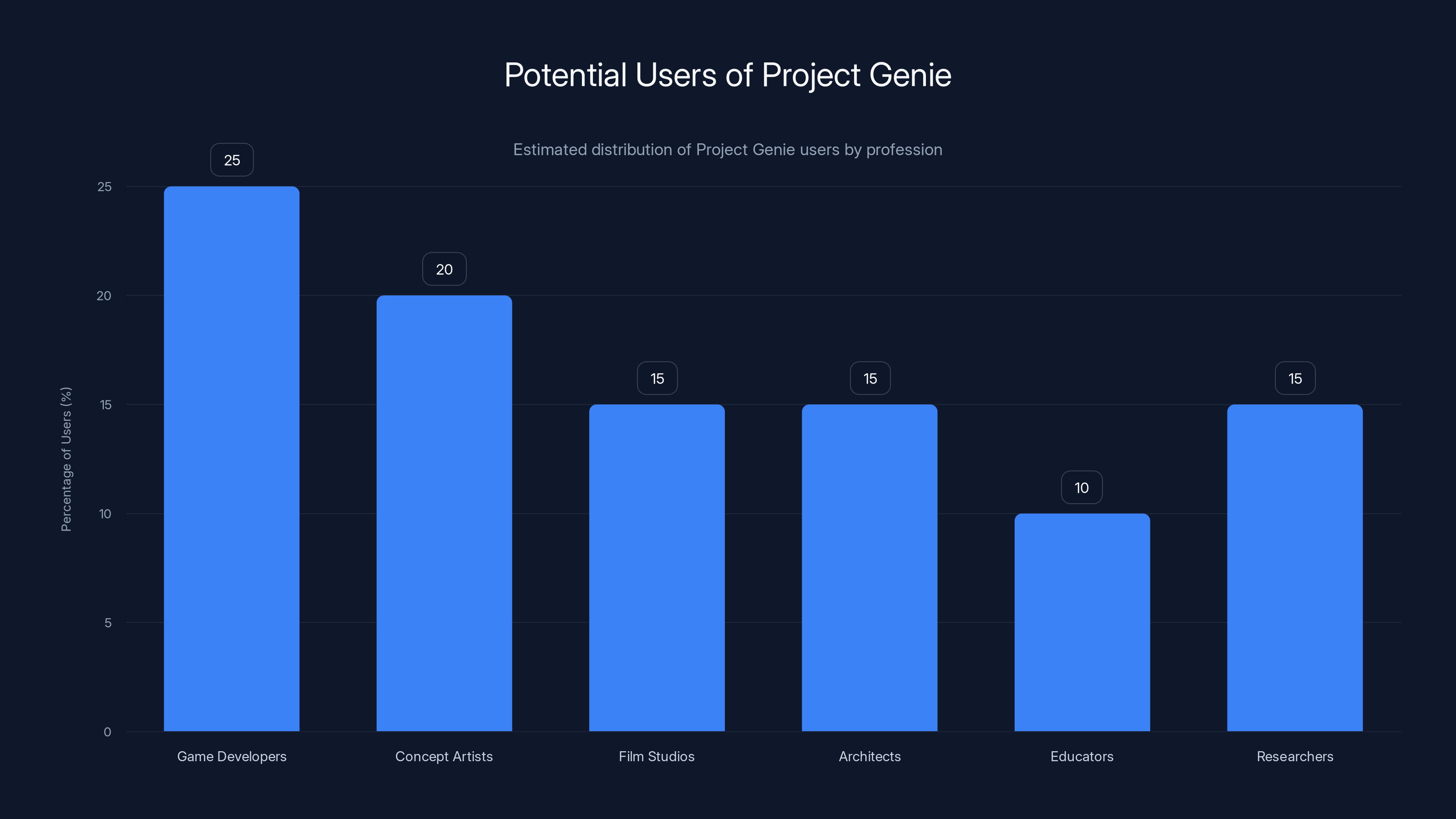

Game developers and concept artists are likely the primary users of Project Genie, followed by film studios and architects. Estimated data.

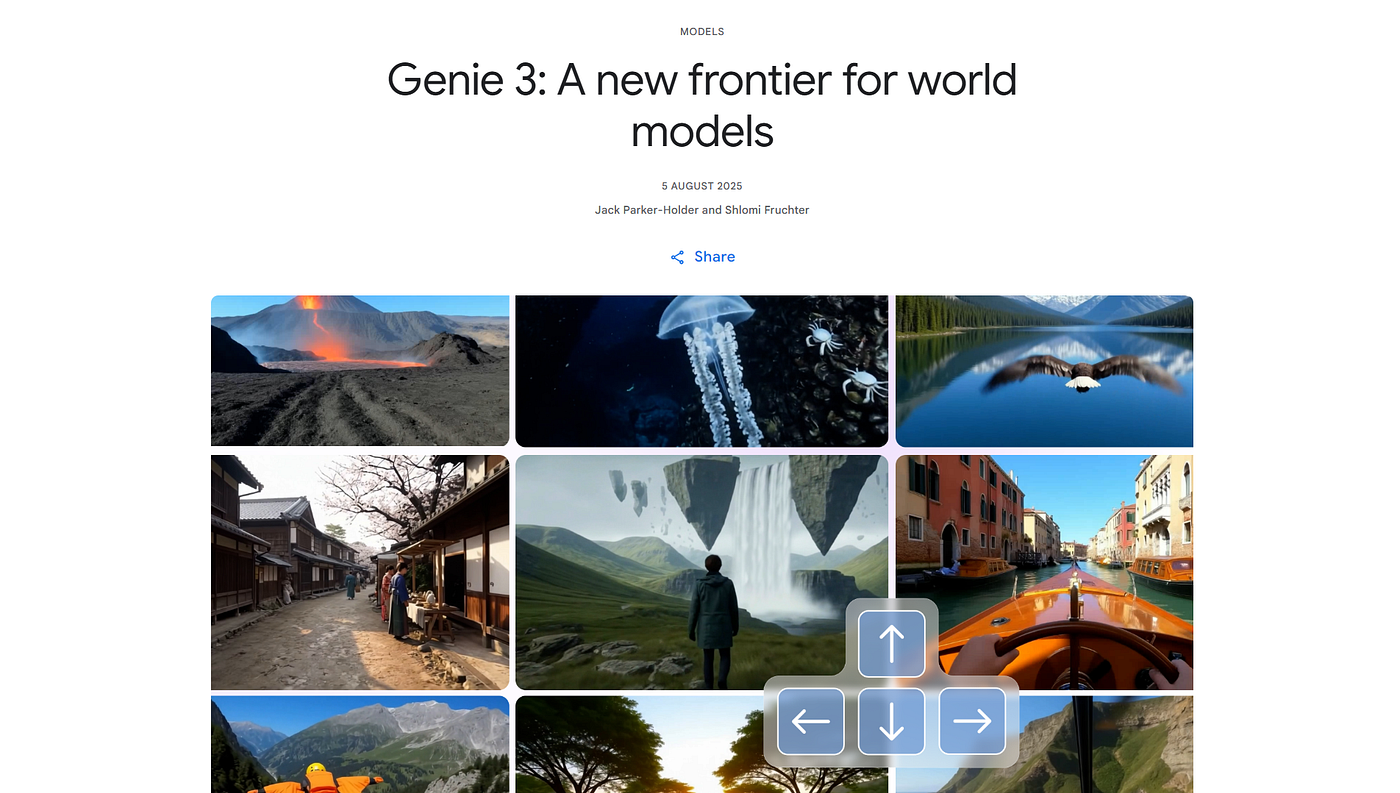

The Evolution From Genie 3 to Project Genie: What's New and What Changed

Google didn't start with Project Genie. It's built on Genie 3, the latest in a line of world models that's been in development for years, so think of it as a polished Genie 3 rather than a Genie 4. Understanding the evolution helps you see what actually improved.

Genie 3 was the breakthrough moment. Announced with significant fanfare in research papers and demos, it showed the world that consistent 60-second interactive environments were possible. But Genie 3 was a research prototype. It existed in labs. A small group of testers could play with it, but it wasn't available to the broader public. Google was still optimizing it, testing it, seeing where it broke.

Project Genie represents the moment Google decided to productize that research. Instead of building something radically different, they took Genie 3, cleaned it up, integrated it with updated AI models (Nano Banana Pro for image generation, Gemini 3 for understanding prompts), and built a web interface around it.

The improvements are subtle but meaningful. The memory system is more robust. The character controls feel more responsive. The image generation that creates the initial reference is better. But honestly, from a user perspective, you're not getting a radically different experience. You're getting the research prototype polished and made available.

Google also added remix functionality. You can take one of their pre-built worlds, swap in different characters, change the visual style, and explore it in a new way. This is useful for creators who want to customize existing environments without starting from scratch.

What's notably absent is the "promptable events" feature that was teased for Genie 3. This was supposed to let you insert new elements into a running world mid-exploration. You'd be walking around, and you could add an object, change the weather, or introduce new NPCs without stopping the simulation. That's not available yet. It's coming, maybe, but not now.

The architecture powering this isn't entirely new. Google has been publishing papers on world models and video prediction for years. This is them taking that accumulated research, combining it with modern large language models and image generation, and shipping it as a service. That's harder than it sounds, but it's not inventing entirely new algorithms. It's excellent product engineering applied to published research.

How Project Genie Actually Works: The Step-by-Step Process

Using Project Genie isn't complicated, but understanding what's happening behind each step matters. Here's the actual workflow:

Step 1: Provide Input

You start by either uploading an image or typing a text description (or both). If you type a description, the system sends that to an image generation model. If you upload an image, it uses that directly. This is the "world sketching" phase. Your input becomes a static reference image.

Step 2: Generate the Reference Image

If you only provided text, Google's image model synthesizes a visual representation. It's not creating the full world yet. It's creating a still image that shows what the world should look like. This image serves as a foundation for what comes next.

You can edit this image before moving forward. Don't like the colors? Edit them. Think the landscape should be different? Modify it. This is your last chance to get the aesthetic right, because Genie uses this as a template.

Step 3: Genie Generates the Interactive World

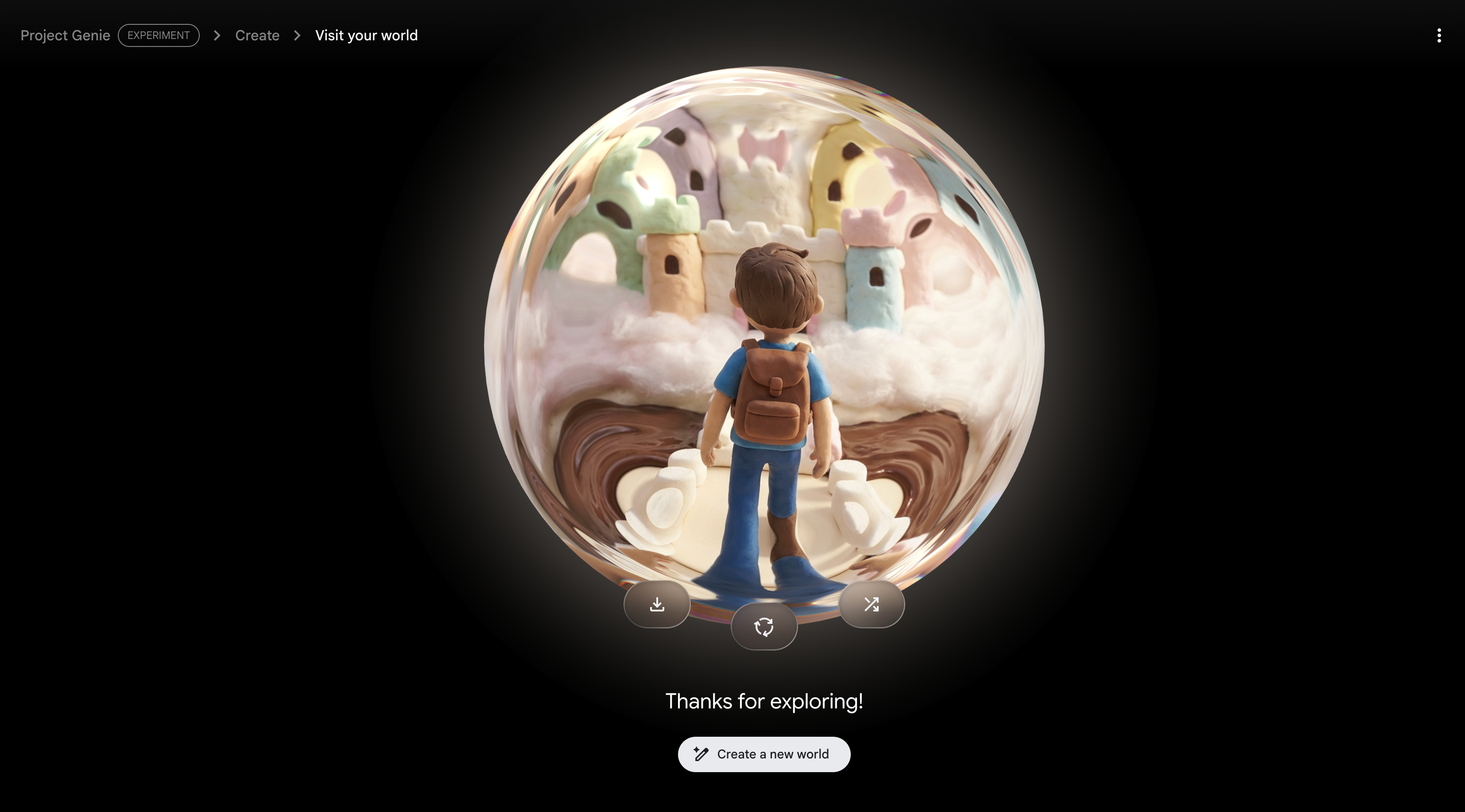

Once you approve the reference image, Genie takes over. It analyzes the image, understands the layout, the lighting, the overall composition, and starts generating frames. You're now inside this world, looking at a first-person perspective.

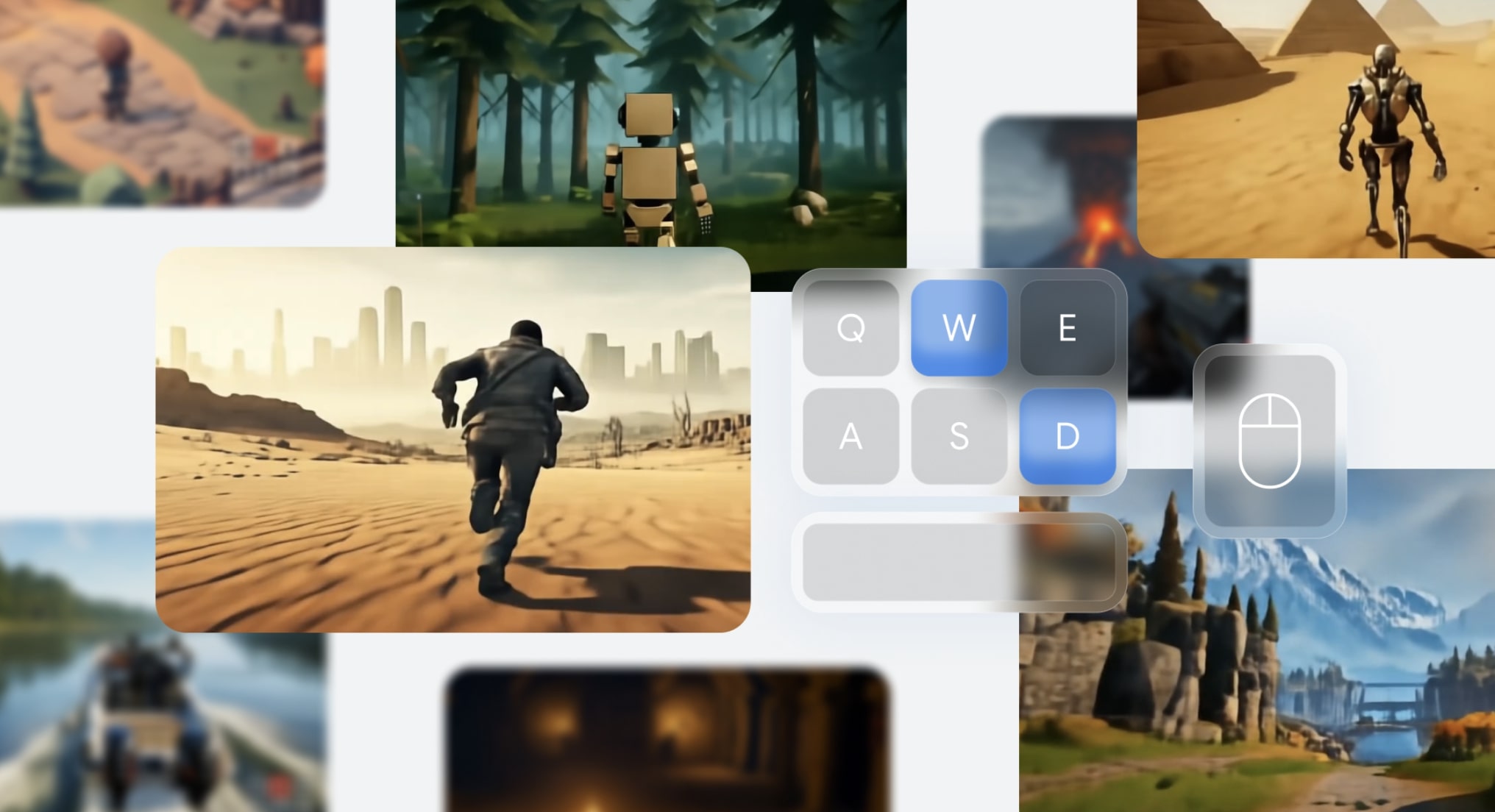

Step 4: Explore Using WASD Controls

Just like a video game, you use W to move forward, A to strafe left, S to move backward, and D to strafe right. As you move, Genie generates new frames in real time (with a brief lag), creating the illusion that you're walking through this environment.

The frame rate is around 24 FPS, not the 60+ you'd get from a game engine, but fast enough to feel like you're exploring rather than watching a slideshow. There's input lag—it's not instant—but it's not so bad that it breaks immersion.
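In a traditional engine, a WASD press is turned into movement with a few lines of vector math, like the sketch below (the `step` function and its yaw convention are illustrative assumptions). As far as the article describes, Genie has no such code path: the key press is an input to the model, which has to learn what moving forward or strafing should look like. The sketch shows the behavior it is imitating.

```python
import math
from typing import Tuple

# Camera-relative (forward, right) components for each movement key:
# W forward, S back, A strafe left, D strafe right.
KEY_TO_MOVE = {"w": (1, 0), "s": (-1, 0), "a": (0, -1), "d": (0, 1)}

def step(position: Tuple[float, float], yaw: float, key: str,
         speed: float = 1.0) -> Tuple[float, float]:
    """Move `speed` units in world space. yaw is the camera heading in radians,
    measured clockwise from the +y axis (an assumed convention)."""
    forward, right = KEY_TO_MOVE[key.lower()]
    x, y = position
    # Rotate the camera-relative move into world coordinates.
    dx = speed * (forward * math.sin(yaw) + right * math.cos(yaw))
    dy = speed * (forward * math.cos(yaw) - right * math.sin(yaw))
    return (x + dx, y + dy)

print(step((0.0, 0.0), 0.0, "w"))  # (0.0, 1.0): straight ahead
print(step((0.0, 0.0), 0.0, "d"))  # (1.0, 0.0): strafe right
```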

Step 5: You Have 60 Seconds

Then you hit a hard limit. The world generation stops. Your 60-second window is over. You can choose to run the same prompt again (you'll get a slightly different world because generative AI is probabilistic), remix an existing world, or create something new.
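Why does the same prompt give a different world each run? Generative models sample from a probability distribution. This toy sampler (hypothetical; the workflow described here doesn't expose a seed) keeps the layout fixed by the prompt while drawing the details at random. Passing the same seed reproduces a run exactly; omitting it gives fresh variations.

```python
import random
from typing import Dict, List, Optional

DETAILS = ["oak", "pine", "boulder", "stream", "ruin", "fog bank"]

def sample_world(prompt: str, seed: Optional[int] = None) -> Dict[str, object]:
    """Toy generative sampler (hypothetical, not a Genie API).

    The prompt fixes the overall layout; the details are drawn at random.
    seed=None uses fresh randomness, so each call can differ.
    """
    rng = random.Random(seed)
    details: List[str] = rng.sample(DETAILS, k=3)
    return {"layout": prompt, "details": details}

print(sample_world("forest clearing")["details"])  # varies run to run
print(sample_world("forest clearing", seed=7) == sample_world("forest clearing", seed=7))  # True
```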

The 60-second limit may look arbitrary, but it's practical. Longer explorations would be more computationally expensive. Shorter explorations wouldn't feel satisfying. Sixty seconds is enough time to explore a modest area, get a feel for the environment, understand the physics, and either be impressed or disappointed.

Step 6: Download Your Video

The video you create can be downloaded. It's 720p, MP4 format, ready to share or use in projects. This is useful for creators who want to capture their explorations.

Each of these steps involves multiple AI systems working in concert. The image model understands prompts. The world model understands space and physics. The rendering system converts predictions into visible frames. The latency optimization system tries to keep things feeling responsive. When it all works, it's impressive. When it doesn't, you notice.

Estimated data suggests that pricing and broader access are likely to advance sooner, while multiplayer and persistence features may take longer to develop.

The 60-Second Limitation: Why It Exists and What It Means

The most obvious constraint you'll hit with Project Genie is the 60-second exploration window. You generate a world, you have one minute to explore it, and then it's done. You can't just keep walking around indefinitely.

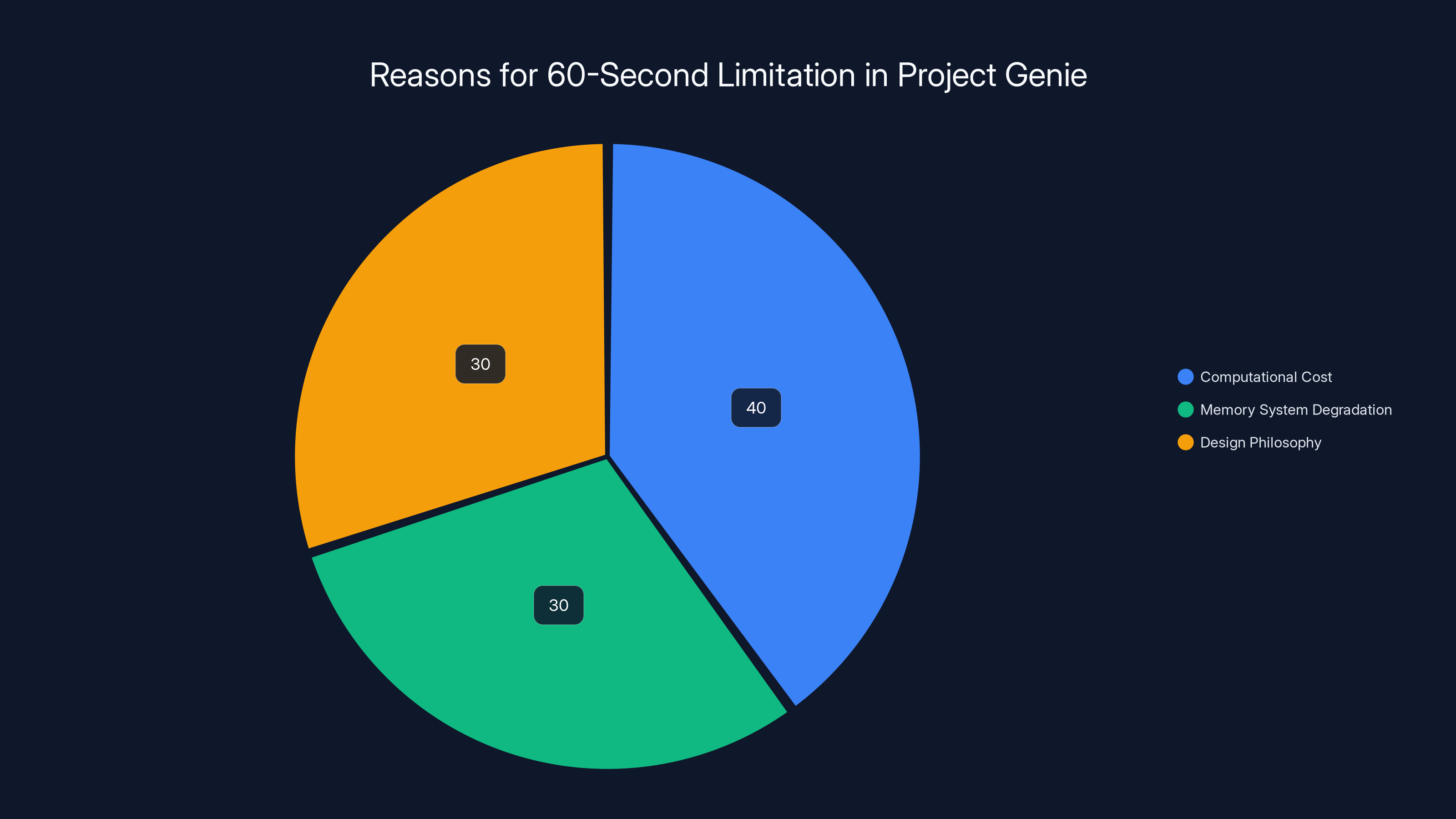

This isn't an arbitrary design choice. It's a necessity dictated by computational cost and current technical limitations. Generating video in real time, frame by frame, while predicting what should happen next based on your input, is computationally expensive. Keeping that consistent for an hour would require enormous compute resources. Even at $250 a month, unlimited sessions would likely cost Google more in compute than the subscription brings in.

Moreover, the memory systems that keep the world consistent degrade over time. The longer you explore, the more drift accumulates. Details start changing. Physics gets weird. The coherence that makes it impressive falls apart. Sixty seconds is roughly the sweet spot where everything still works pretty well.
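A simple probability model shows why drift caps session length. Assume, purely for illustration, that each frame independently stays consistent with probability 0.9999. The chance of a completely clean run is that number raised to the number of frames, so it shrinks exponentially as sessions get longer:

```python
def chance_of_clean_run(per_frame_ok: float, seconds: float, fps: int = 24) -> float:
    """Toy model: probability that no frame introduces an inconsistency,
    assuming each frame independently stays consistent with probability per_frame_ok."""
    frames = int(seconds * fps)
    return per_frame_ok ** frames

for seconds in (60, 600, 3600):  # one minute, ten minutes, one hour
    print(seconds, round(chance_of_clean_run(0.9999, seconds), 3))
```

With that made-up rate, a one-minute session stays clean about 87% of the time, while an hour-long one almost never does. Real drift is likely worse than this model suggests, because a world model conditions on its own earlier outputs, so one mistake can seed the next.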

But sixty seconds is also just long enough to be satisfying. You can explore an area. Walk into a structure. Get a sense of scale. Experience the environment. You're not just seeing a glimpse. You're getting enough time to feel like you explored something.

If you want more time, you re-run the prompt. Each time, you get a slightly different world (same general layout, different details). This isn't a limitation so much as a design philosophy. Instead of longer sessions, you get infinite variety across shorter sessions.

For gaming, this is limiting. You couldn't build a game where players explore one persistent world. You could build a game that generates a new level every 60 seconds, and that's actually kind of interesting. For testing and prototyping, 60 seconds is often enough. For creating reference material for artists, it's plenty.

The limitation also serves as a nudge toward specific use cases. It's not designed for casual exploration. It's designed for rapid prototyping, testing ideas, and generating reference material. Those are the use cases that make sense with 60-second windows.

Project Genie vs. Traditional Game Engines: The Fundamental Difference

The immediate question people ask is: "How does this compare to Unreal or Unity?" The answer is both simpler and more complex than you'd think.

Unreal and Unity are tools for building games and interactive experiences through authoring. A developer (or team of developers) builds the game. They place assets, write code, test extensively, and ship a finished product. The entire experience is predetermined. The engine renders what the developers created.

Project Genie is generative. You don't build anything. You describe it (or show a picture of it), and an AI generates it. The experience is novel every time. There's no predetermined path. The physics aren't coded. They're learned.

This is actually pretty different from traditional game development, and it has different strengths and weaknesses.

Traditional engines are better at:

- Precise control and predictability

- High fidelity and visual quality

- Complex physics simulations

- Polished, consistent experiences

- Large, persistent worlds

Project Genie is better at:

- Rapid prototyping

- Generating novel content

- Testing design ideas

- Creating varied environments quickly

- Lower barrier to entry (describe what you want, don't code it)

They're not really competing in the same space. A professional game developer will continue using game engines. But someone who wants to prototype an idea, test if a concept works, or quickly generate reference material might turn to Project Genie instead.

There's also a hybrid future. Imagine a game engine that can generate terrain procedurally using a world model, then let you hand-edit and polish it. Or an AI that generates the base level, and developers refine it. That's probably where this technology heads.

For now, Project Genie is a standalone tool with distinct advantages for specific workflows, not a replacement for traditional game development infrastructure.

The 60-second limitation in Project Genie is primarily due to computational cost (40%), memory system degradation (30%), and design philosophy (30%). Estimated data.

The Physics Problem: Why Worlds Sometimes Break

Here's something important to understand: Project Genie doesn't have a physics engine in the traditional sense. It doesn't have gravity as a rule, collision detection as code, or material properties hardcoded. Instead, it learned how things behave by watching video of the real world and of simulated environments.

This is amazing when it works. Water flows realistically. Shadows fall correctly. Distances scale properly as you move. The AI understood these rules from patterns in data.

But it also means physics can be inconsistent. Trees might phase through each other. The ground might deform weirdly as you walk. Gravity might behave oddly in certain areas. Objects might not fall where you'd expect. The AI is making its best guess at what should happen next, and sometimes it guesses wrong.
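The difference between coded and learned physics fits in a few lines. Below, `engine_step` applies gravity as an explicit rule, while `learned_step` is a hypothetical stand-in for a learned predictor: it approximates the same rule but adds a little noise to each prediction. This is an analogy only; Genie predicts pixels, not heights and velocities.

```python
import random
from typing import Optional, Tuple

G = 9.81     # gravity, m/s^2
DT = 1 / 24  # one frame at 24 FPS

def engine_step(height: float, velocity: float) -> Tuple[float, float]:
    """Coded physics: the same state always produces the same next state."""
    velocity -= G * DT
    return height + velocity * DT, velocity

def learned_step(height: float, velocity: float, rng: random.Random) -> Tuple[float, float]:
    """Hypothetical learned predictor: close to the real rule, but every
    prediction carries a small error that later frames build on."""
    h, v = engine_step(height, velocity)
    return h + rng.gauss(0, 0.01), v + rng.gauss(0, 0.05)

def simulate(frames: int, rng: Optional[random.Random] = None) -> float:
    """Drop an object from 10 m and return its height after `frames` frames."""
    h, v = 10.0, 0.0
    for _ in range(frames):
        h, v = engine_step(h, v) if rng is None else learned_step(h, v, rng)
    return h

print(simulate(24), simulate(24))  # identical every time
print(simulate(24, random.Random(1)), simulate(24, random.Random(2)))  # differ
```

Run the coded version twice and you get identical results. Run the noisy version with different random seeds and the falling object ends up in slightly different places: the inconsistency described above, in miniature.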

Google acknowledges this explicitly. They tell testers to expect things that "don't look or behave quite right." This is research prototype language. Translation: don't be surprised when the physics is weird.

Why is this important? Because it sets expectations. If you go into Project Genie expecting physics as precise and reliable as a commercial game engine, you'll be disappointed. If you go in understanding that this is a generative system that's learning physics from data, oddness becomes interesting rather than frustrating.

As the underlying models improve, this gets better. The more video data the models train on, the better they understand how the real world works. Eventually, you'd expect physics consistency to approach game engine levels. But not yet.

For specific use cases (rapid prototyping, concept testing, generating reference images), physics inconsistency doesn't matter much. You don't need perfect physics to test if an idea works. You just need something close enough that you understand the spatial relationships and can evaluate the concept.

The Pricing Reality: Why This Costs So Much and Who Should Pay

Project Genie is only available through Google's AI Ultra subscription, which runs $250 per month. That's not cheap. That's a serious commitment.

Why is it so expensive? Because generating all those video frames is genuinely expensive. Each world you create represents significant compute. Each frame generation costs something. At $250/month, Google is likely still subsidizing the actual cost of compute.

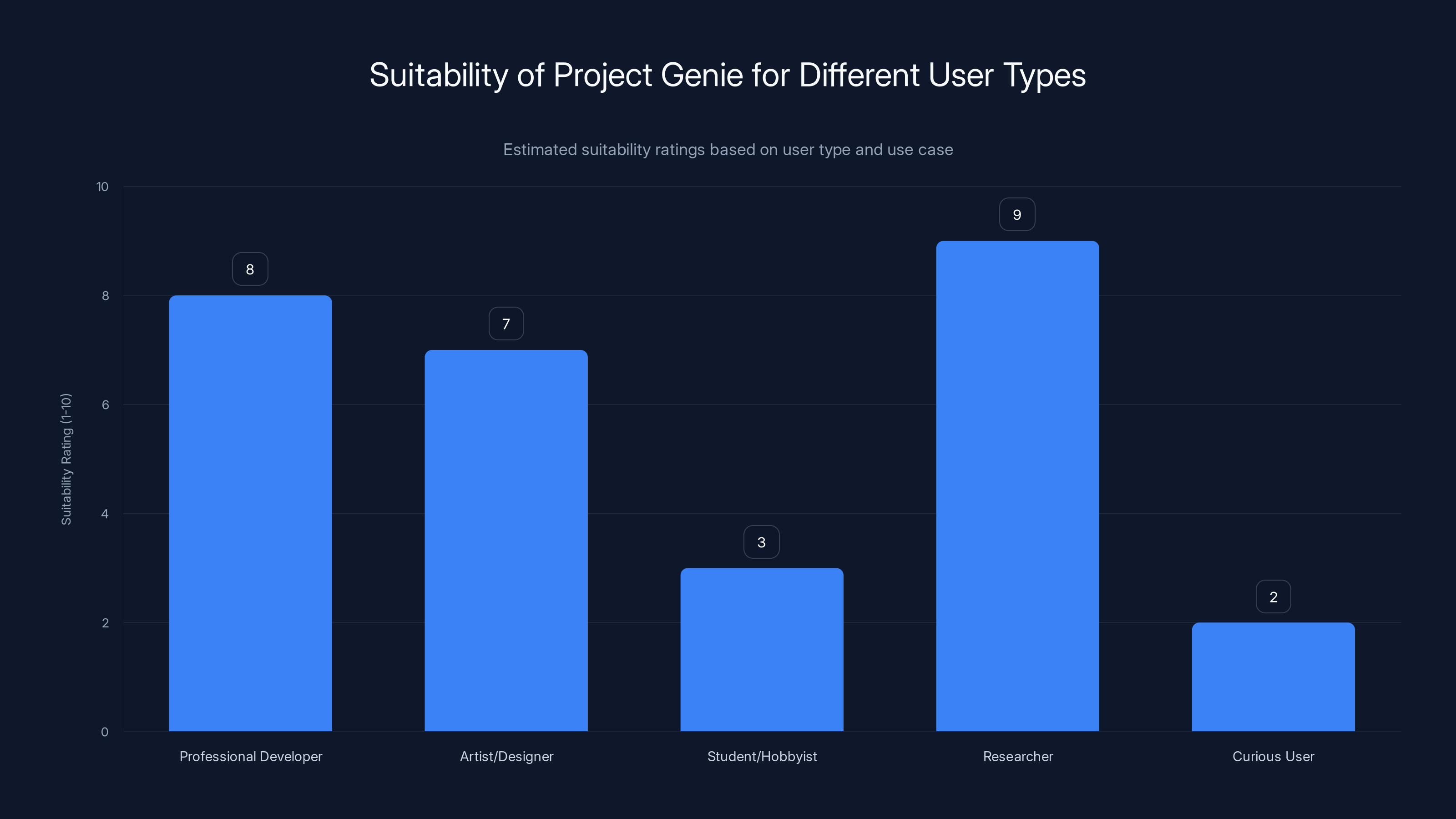

This pricing puts Project Genie in a specific category of tools. It's not for casual users. It's not for hobbyists. It's for professionals, researchers, and companies that have budgets for experimental tools.

Who should actually pay for this?

Game developers prototyping concepts benefit immediately. Instead of spending a week in a game engine building a test level, you describe it and explore it in an afternoon. That's real time saved.

Concept artists and level designers can generate reference material quickly. Instead of painting or sculpting every environment, generate a starting point and iterate from there.

Film and animation studios could use it for pre-visualization. Before filming or rendering a scene, generate it to test composition and camera movement.

Architects and designers could walk through designs that don't exist yet. It's not precision, but it's useful for understanding spatial flow.

Educators could create interactive learning environments tailored to student needs without building everything from scratch.

Researchers studying world models, AI consistency, or generative systems have an obvious use case.

If Project Genie saves you or your team more than $250 a month in time or money, it pays for itself. If you're experimenting and willing to invest in next-gen tools, it's a reasonable cost. If you're curious but not a professional, it's hard to justify.
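The break-even math is simple division. The hourly rates in the usage lines are illustrative assumptions, and the calculation ignores learning time and other overhead:

```python
SUBSCRIPTION_PER_MONTH = 250.0  # AI Ultra price cited in this article, USD

def hours_to_break_even(hourly_rate: float, monthly_cost: float = SUBSCRIPTION_PER_MONTH) -> float:
    """Hours of work the tool must save each month to cover its cost."""
    if hourly_rate <= 0:
        raise ValueError("hourly_rate must be positive")
    return monthly_cost / hourly_rate

print(hours_to_break_even(50))   # 5.0
print(hours_to_break_even(100))  # 2.5
```

At $50 an hour, the subscription needs to save about five hours of work a month; at $100 an hour, two and a half.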

Google has indicated that pricing will drop and access will expand over time. Eventually, you might expect free or low-cost tiers. But that's future speculation. Right now, $250/month is the only way in.

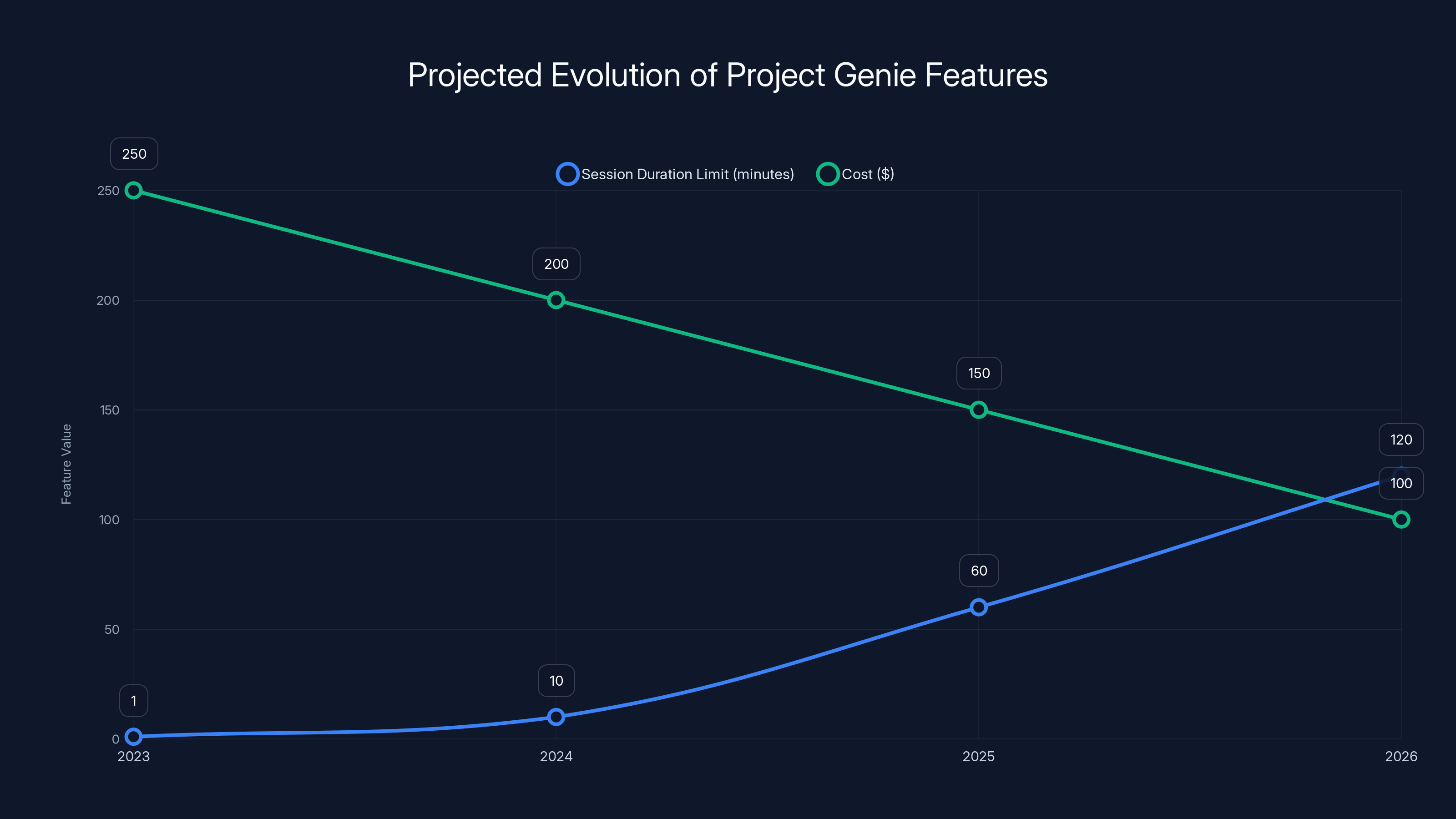

Estimated data shows Project Genie will likely see increased session durations and reduced costs over the next few years, enhancing accessibility and usability.

Content Moderation and IP Blocking: Legal Challenges Emerge

One thing became immediately apparent when testers got access to Project Genie: content moderation is tricky, and IP protection is enforced unevenly.

The Verge reported that initially, they could prompt Project Genie to create knock-off Nintendo games. Super Mario-style worlds. Zelda-like dungeons. These are immediately recognizable to anyone who plays games, but they're also owned by Nintendo. By the end of the testing period, those prompts were being blocked.

This suggests Google's moderation systems are either learning in real time or being updated based on testing feedback. That's reasonable for a research prototype, but it also shows a potential problem: what counts as infringing IP?

There's legitimate ambiguity here. If you prompt for "a fantasy castle," is that infringing? What if you say "a castle like in Zelda"? What if your world just happens to look vaguely similar to a popular game? These are questions that don't have clear answers yet.

Google is being cautious, blocking content that might conflict with the interests of "third-party content providers." Translation: if a company might complain, it gets blocked. This is defensive lawyering, but it narrows what you can use the tool for.

This is a broader problem with generative AI. Training data included copyrighted material. Generated outputs can resemble copyrighted work. The legal frameworks to handle this don't fully exist yet. Companies are erring on the side of caution.

For users, this means some of the most interesting applications (creating game worlds inspired by existing games, for example) are off limits. You can't prompt for specific IP. You can describe what you want in generic terms, but the moment you reference specific games, franchises, or recognizable properties, it gets blocked.
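Google hasn't published how its moderation works, but a naive keyword filter illustrates both the behavior testers saw and its limits. The blocklist below is hypothetical:

```python
import re
from typing import Iterable

# Hypothetical single-word blocklist; Google hasn't published its filter rules.
BLOCKED_TERMS = ("mario", "zelda", "nintendo")

def is_blocked(prompt: str, blocked: Iterable[str] = BLOCKED_TERMS) -> bool:
    """Naive filter: block the prompt if any listed term appears as a whole word."""
    words = set(re.findall(r"[a-z0-9']+", prompt.lower()))
    return any(term in words for term in blocked)

print(is_blocked("A castle like in Zelda"))                 # True
print(is_blocked("A fantasy castle on a cliff"))            # False
print(is_blocked("A castle with a hero in a green tunic"))  # False
```

A prompt that names a franchise gets caught, while a generic description passes, even one that clearly evokes the same property. That gap is why real moderation systems can't rely on keywords alone, and why the line between inspiration and infringement stays blurry.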

This will probably loosen over time as legal frameworks clarify. But right now, it's a constraint worth knowing about.

Use Cases That Actually Make Sense Right Now

Theory is useful, but practical use cases matter more. Where does Project Genie actually solve real problems?

Level Design and Prototyping

A game designer wants to test if a concept works. Instead of opening an engine and building for an hour, they write a prompt, explore for 60 seconds, and know if the idea has legs. Not every idea gets greenlit. Failing fast is valuable. Project Genie accelerates this.

Concept Art Reference

An artist needs to paint a science fiction city. Instead of looking at 50 Pinterest boards, they generate a few variations and use them as references. It's faster, and the variations are all unique.

Pre-visualization for Film

A director is planning a scene in a location that doesn't exist. Generate it with Project Genie, walk through it, understand sight lines and composition, then plan the actual shoot more efficiently.

Architectural Walkthroughs

An architect wants clients to understand a design. Instead of static renders or lengthy 3D model builds, generate an interactive preview they can explore themselves. It won't be perfect, but it communicates the idea faster.

Educational Environments

An educator wants to create a custom interactive learning environment. A teacher could describe "ancient Rome but interactive" and guide students through a generation that's unique to their lesson.

Rapid Prototyping for Startups

A startup is testing if an interactive world-based experience is viable. Rather than building traditional infrastructure, they use Genie to validate the concept before investing engineering resources.

These aren't "nice to have" applications. These are workflows where Project Genie saves meaningful time or enables things that were previously hard to do.

Project Genie is highly suitable for researchers and professional developers due to its advanced capabilities, while students and curious users may find it less cost-effective. Estimated data based on user needs and investment justification.

Limitations You Need to Know Before You Commit

It's important to go in with eyes open. Project Genie has real limitations beyond the 60-second window and physics inconsistency.

Input Lag

The delay between pressing a key and seeing the world respond isn't zero. It's a few hundred milliseconds. That's tolerable for exploration but wouldn't work for action gameplay. You're not playing a game here. You're walking through a simulation.

Resolution

720p is serviceable, but it's not high-end. If you're capturing this for commercial use, you might need higher fidelity. For prototyping and reference, it's fine.

No Persistent State

Every time you run a prompt, you get a slightly different world. There's no saved state. You can't mark a location and teleport back to it. You can't build on your previous exploration. Each 60-second session is independent.

Limited World Sketch Editing

You can modify the generated reference image before exploring, but options are limited. You can't do complex edits. It's minor tweaks, not major restructuring.

No NPC Interaction

The worlds are places to explore, not universes with characters and quests. There are no NPCs, no dialogue, no story elements. You're walking through an environment, not living in a world.

Character Control is Basic

WASD movement and looking around. That's it. No jumping, no crouching, no complex interactions. It's a simple first-person camera, not a full character controller.

Requires High-Speed Internet

The video is streaming to you in real time. A slow or unstable connection will cause stuttering and lag. You need reliable, reasonably fast internet for this to work smoothly.
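How much bandwidth does this need? Uncompressed 720p video at 24 FPS is far too heavy to stream, so it must be compressed. The 5 Mbit/s target below is an assumed figure for a typical compressed 720p stream, not a number Google has published:

```python
WIDTH, HEIGHT, FPS = 1280, 720, 24
BITS_PER_PIXEL = 24  # 8-bit RGB, before any compression

raw_bps = WIDTH * HEIGHT * BITS_PER_PIXEL * FPS  # bits per second, uncompressed

def compression_ratio(target_mbps: float) -> float:
    """How many times smaller the stream must be to fit the target bitrate."""
    return raw_bps / (target_mbps * 1_000_000)

print(f"uncompressed: {raw_bps / 1e6:.0f} Mbit/s")                   # uncompressed: 531 Mbit/s
print(f"needed ratio at 5 Mbit/s: {compression_ratio(5):.0f}:1")  # needed ratio at 5 Mbit/s: 106:1
```

Roughly 531 Mbit/s of raw frames squeezed into a few megabits per second means shrinking the stream by about two orders of magnitude, and any dip in your connection shows up as stutter.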

Blocked Content

As mentioned, specific IP and some content categories are moderated. What you can create is constrained by what Google's systems approve.

These aren't deal-breakers for the intended use cases. But they're worth understanding before you commit to the subscription and start planning workflows around it.

The Technical Stack: What Powers Project Genie

Understanding the technology behind Project Genie helps explain why it works and where its limitations come from.

Image Generation: The system uses Google's image generation models (currently Nano Banana Pro or Gemini 3) to synthesize the reference image from your text prompt. This is not new technology. Google has had strong image generation for years. But it's essential to the workflow because a good reference image makes a better world.

World Model: The core is a video prediction model that understands spatial consistency and physics. This is what Genie 3 pioneered. The model has learned from vast video datasets to understand how environments and objects behave. It's not explicitly programmed with physics rules. It learned them.

Memory System: Breakthrough architecture allowing the model to maintain internal state across 60 seconds. Without this, worlds would fall apart in seconds. With it, they're coherent enough to explore meaningfully.

Rendering Pipeline: Converting model predictions into actual video frames that stream to you. This involves optimizations to keep latency low enough to feel interactive.

Latency Optimization: The system is constantly trying to predict what you're about to do and pre-generate frames so your input feels responsive. It's predictive rendering, and it's crucial for the interactive feel.

Streaming Infrastructure: The video streams to your browser in real time. This requires infrastructure, compression, and bandwidth management. You're not downloading a video. You're receiving a live stream of generated content.

Each of these systems is a complex piece of engineering. Together, they enable something that seemed impossible a few years ago: interactive, consistent, generative environments.
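The "Latency Optimization" idea above resembles speculative execution: guess the user's next input, generate that frame early, and fall back to on-demand generation when the guess is wrong. Google hasn't detailed how Genie does this, so here's a generic sketch with a hypothetical `generate` callback:

```python
from typing import Callable, Dict, List, Tuple

Generate = Callable[[str], str]  # maps a key press to a (toy) frame

def prefetch(generate: Generate, likely_keys: List[str]) -> Dict[str, str]:
    """Generate candidate next frames before the user presses anything."""
    return {key: generate(key) for key in likely_keys}

def next_frame(pressed: str, cache: Dict[str, str], generate: Generate) -> Tuple[str, bool]:
    """Return (frame, was_prefetched). A hit skips the generation wait."""
    if pressed in cache:
        return cache[pressed], True
    return generate(pressed), False  # miss: pay the full generation latency

cache = prefetch(lambda key: f"frame after {key}", ["w"])  # bet on "keep walking"
print(next_frame("w", cache, lambda key: f"frame after {key}"))  # ('frame after w', True)
```

The trade-off is wasted compute: every frame generated for a key the user never presses is thrown away, which is one more reason this kind of service is expensive to run.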

Comparisons to Other Generative Tools: Where Genie Fits

You might be thinking: "How does this compare to text-to-video AI like Sora?" Or "Isn't this similar to what game engines do?" Fair questions. Let's clarify.

vs. Sora (OpenAI's Text-to-Video)

Sora can generate impressive videos from text, but they're not interactive. You watch them. You don't control them. Genie adds interactivity. You're not a passive viewer. You're an active participant. That changes everything about the experience and the use cases.

vs. Game Engines (Unreal, Unity)

Game engines require authoring. Genie requires description. Engines are faster at runtime but slower to create. Genie is faster to create but slower at runtime. Engines are deterministic (the same input always produces the same output). Genie is generative (the same input produces varied outputs). They serve different purposes.

vs. Procedural Generation Systems

Procedural generation (using algorithms to create content) has existed for years. Genie is different because it's learned from data rather than explicitly programmed. The results are more creative and varied but less predictable.

vs. Minecraft or Roblox

These are platforms where users create and explore. Genie is a tool for generating exploration spaces quickly. You could imagine building a game on top of Genie (generate new levels every 60 seconds), but Genie itself is not a game platform.

Genie occupies a unique space. It's not quite a game engine, not quite a video generator, not quite a traditional procedural system. It's something new that borrows from all of these.

The Future: Where This Technology Is Heading

Project Genie is publicly available, but it's explicitly a research prototype. Google has a roadmap, even if it isn't fully transparent about it. Based on what we know, here's what's probably coming.

Longer Exploration Windows

As models improve and compute gets cheaper, the 60-second limit will probably increase. Maybe 5 minutes, then 30 minutes, eventually unlimited sessions. This requires better memory systems and more efficient generation, but it's the obvious direction.

Better Physics Consistency

The inconsistencies you see now will decrease as the underlying models improve. More training data, better architectures, continued research on consistency. Expect physics to feel more solid and predictable over time.

Broader Content Availability

IP restrictions will probably loosen as legal frameworks clarify. Right now, Google is being extremely cautious. As precedent develops, restrictions might relax. (Or they might tighten. The law is still forming.)

Lower Pricing and Broader Access

Getting Project Genie into the hands of more people requires lower prices. Google will likely release free or low-cost tiers eventually. Maybe limited free exploration (a few minutes per day), premium tiers for professionals, and enterprise plans for companies.

Integration With Other Tools

Right now, Project Genie is standalone. You access it through a dedicated web app. Eventually, you'd expect integration with game engines (export a Genie world into Unreal, refine it there), creative software (use Genie worlds as references in art tools), or other platforms.

Multiplayer and Persistence

This is harder technically, but longer-term, you could imagine multiplayer experiences where multiple users explore the same generated world, or persistent worlds that change over time. That's not trivial, but it's probably being researched.

Mobile and Edge Generation

Currently, this all happens on Google's servers. As edge computing improves and models get more efficient, you might generate worlds locally on your device. That would reduce latency and enable offline use.

Specialized Variants

Individual sectors such as game development, architecture, and education might get customized versions optimized for their workflows. A game development version might expose more parameters. An education version might focus on historically accurate environments.

None of this is announced. But it's the logical progression of the technology.

Practical Tips If You're Going to Use Project Genie

If you're considering Project Genie (and have the budget), here's how to get the most out of it.

Start With Clear Mental Images

The better you can describe what you want, the better the reference image, the better the world. Vague prompts produce mediocre results. Specific, visual descriptions work better.

Test Iteratively

Your first prompt probably won't be perfect. Generate a world, explore it, learn what worked and what didn't, then refine your prompt. Iteration beats perfection on the first try.

Capture Good Moments

Download the videos you create. Some will be better than others. Organize them by use case. Build a library of generated environments you can reference or share.

Use It for What It's Good At

Prototyping, reference generation, concept testing. Don't try to use it for things it's not designed for (high-fidelity production assets, complex gameplay, etc.). Match the tool to the task.

Collaborate With Others

Share the videos you generate. Get feedback. Use it as a communication tool. Someone who hasn't used Genie might see your generation and understand the concept better than a description could convey.

Experiment With Remixes

Google's pre-built worlds are a starting point. Don't just explore them as-is. Remix them. Change characters, styles, visual themes. See what works and what doesn't.

Document Your Learnings

If you're using Project Genie professionally, track what prompts work, what doesn't, what generates good reference material, and what's a waste of time. After a few weeks, you'll have strong intuition about how to prompt for what you need.

The Bottom Line: Is Project Genie Worth the Investment?

This depends entirely on your use case and budget.

If you're a professional developer, artist, or designer working on projects where rapid prototyping saves time or money, Project Genie is worth serious consideration. The $250/month investment might pay for itself in hours saved per week.

If you're a student, hobbyist, or small team without a specific use case, it's probably not worth it right now. Wait for pricing to drop. Use free alternatives for experimentation.

If you're a researcher studying world models, AI consistency, or generative systems, it's essential. You need access to this technology to do your work.

If you're just curious and want to play around, the entry cost is too high. Maybe bookmark it and revisit when pricing drops.
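The professional case rests on simple break-even arithmetic: the subscription pays for itself once the time it saves, valued at your hourly rate, covers the monthly fee. Here is a minimal Python sketch, assuming the $250/month price quoted in this article and an average of 52/12 (about 4.33) weeks per month. The hourly rate is an assumption you supply; the function name `breakeven_hours_per_week` is purely illustrative.

```python
def breakeven_hours_per_week(monthly_cost: float, hourly_rate: float,
                             weeks_per_month: float = 52 / 12) -> float:
    """Hours per week the tool must save for the saved time to equal its monthly cost."""
    if hourly_rate <= 0:
        raise ValueError("hourly_rate must be positive")
    hours_per_month = monthly_cost / hourly_rate  # time worth the monthly fee
    return hours_per_month / weeks_per_month      # spread across an average month


# Example: $250/month against an assumed $50/hour rate.
print(f"{breakeven_hours_per_week(250, 50):.2f} hours/week")  # prints "1.15 hours/week"
```

At $50/hour, saving a little over an hour a week covers the subscription. At lower rates, or without a concrete time saving to point to, the case weakens quickly, which is why the answer differs so much by user type.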

Project Genie is real, it works, and it opens up possibilities that seemed impossible a few years ago. But it's also still expensive, limited, and explicitly a research prototype. Those are the facts. Your decision depends on where you fit in that equation.

What's undeniable is that world models are becoming a real category of tools. Project Genie is the first mainstream access to this technology. Whether you use it or not, the trajectory is clear: interactive, generative environments are coming. The question isn't if they'll transform creative work. It's when.

FAQ

What exactly is Project Genie?

Project Genie is Google's AI-powered tool for generating interactive, explorable environments from text prompts or reference images. Instead of creating pre-recorded videos or static renders, Genie builds dynamic worlds that respond to your real-time input, allowing you to walk through them using WASD controls for 60-second sessions. It's closer to a video game than a video generator because you're actively participating in the environment rather than watching a pre-made sequence.

How does the world generation actually work technically?

Project Genie uses a world model—a type of AI system trained on vast amounts of video data that has learned to understand spatial consistency and physics. When you provide a prompt or image, an image generation model (like Gemini 3) creates a reference image. Genie then analyzes that image and generates video frames in response to your movement inputs, maintaining internal consistency about what the world looks like from different angles. It's predicting what should appear next based on physics, perspective, and logical scene understanding, all in something approaching real time.
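To make action-conditioned generation concrete, here is a deliberately tiny Python sketch. It is not Genie's architecture: a real world model predicts pixels with a large neural network, while here a seeded random choice (the hypothetical `_generate` method) stands in for the model, and a grid of positions stands in for the camera. What it does show is the loop described above: each keypress moves the viewpoint, new content is generated only for places you haven't seen yet, and a memory of earlier output keeps revisited places consistent. The names `ToyWorldModel`, `MOVES`, `TILES`, and `explore` are illustrative assumptions.

```python
import random
from dataclasses import dataclass, field

# Hypothetical mapping from WASD keys to grid steps (illustration only).
MOVES = {"w": (0, 1), "a": (-1, 0), "s": (0, -1), "d": (1, 0)}
TILES = ["tree", "rock", "path", "water"]


@dataclass
class ToyWorldModel:
    """Toy stand-in for a world model that lazily 'generates' what each spot looks like."""
    prompt: str
    seed: int = 0
    memory: dict = field(default_factory=dict)  # position -> previously generated content

    def __post_init__(self):
        # Stochastic generator: a different seed yields a different world for the same prompt.
        self.rng = random.Random(f"{self.prompt}|{self.seed}")

    def _generate(self):
        # Placeholder for a neural network predicting the next frame.
        return self.rng.choice(TILES)

    def observe(self, pos):
        # Generate only unseen locations; reuse memory so revisits stay consistent.
        if pos not in self.memory:
            self.memory[pos] = self._generate()
        return self.memory[pos]


def explore(model, keys, start=(0, 0)):
    """Apply a sequence of WASD keypresses; return the final position and all observations."""
    pos = start
    frames = [model.observe(pos)]
    for key in keys:
        dx, dy = MOVES[key]
        pos = (pos[0] + dx, pos[1] + dy)
        frames.append(model.observe(pos))
    return pos, frames


model = ToyWorldModel("misty forest", seed=1)
pos, frames = explore(model, "wdsa")  # walk a small square back to the start
```

Walking the loop `"wdsa"` returns you to `(0, 0)`, and the last observation matches the first because it comes from memory rather than a fresh generation. Changing `seed` produces a different world from the same prompt, loosely mirroring how rerunning a prompt gives a new variation.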

Why is Project Genie limited to 60 seconds per exploration?

The 60-second limit exists for both computational and technical reasons. Generating video frames in real time while maintaining physics consistency is expensive. Longer sessions would require significantly more computational resources, making the service far more costly to operate. Additionally, the memory systems that keep worlds coherent degrade over time, and physics consistency becomes harder to maintain beyond about 60 seconds. The limit is practical rather than arbitrary, and Google has indicated it may extend as technology improves.
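The point about memory degrading over time can be illustrated with a bounded memory. The Python sketch below is a simplification under stated assumptions, not a description of Genie's internals: it assumes the model can keep only a fixed number of recently seen locations (`capacity`, a hypothetical parameter), evicts the least recently seen one when that budget is exceeded, and regenerates anything it has forgotten. A version counter stands in for fresh model output so that regenerations are easy to spot.

```python
from collections import OrderedDict
from itertools import count


class BoundedWorldMemory:
    """Toy memory that only remembers the `capacity` most recently seen locations."""

    def __init__(self, capacity):
        self.capacity = capacity
        self.cache = OrderedDict()  # position -> content, least recently seen first
        self._versions = count()    # stand-in for fresh (and different) model output

    def observe(self, pos):
        if pos in self.cache:
            self.cache.move_to_end(pos)  # seen again: keep it around longer
            return self.cache[pos]
        content = f"scene@{pos}#v{next(self._versions)}"
        self.cache[pos] = content
        if len(self.cache) > self.capacity:
            self.cache.popitem(last=False)  # forget the least recently seen location
        return content


def walk_and_return(capacity, steps):
    """Walk `steps` cells forward, then look back at the start. True if it looks the same."""
    mem = BoundedWorldMemory(capacity)
    first = mem.observe((0, 0))
    for y in range(1, steps + 1):
        mem.observe((0, y))
    return mem.observe((0, 0)) == first


print(walk_and_return(capacity=10, steps=5))  # prints True: the start is still remembered
print(walk_and_return(capacity=3, steps=5))   # prints False: the start was forgotten and regenerated
```

In this toy, a larger `capacity` delays the inconsistency but costs more memory, and in a real model more compute per frame, which is the same trade-off the answer attributes to a fixed session length.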

What makes Project Genie different from traditional game engines like Unreal or Unity?

Game engines require developers to author worlds explicitly: placing assets, writing code, and building finished products. Project Genie is generative, meaning you describe what you want and AI creates it. Traditional engines are predictable and precise but require more upfront work. Genie is faster for prototyping and generates varied, novel content, but offers less control and consistency. They serve different purposes: engines for finished products, Genie for rapid exploration and reference generation.

Who is Project Genie actually designed for?

Project Genie is aimed at professionals with specific use cases: game developers prototyping concepts quickly, concept artists generating reference material, architects creating walkthroughs of designs, filmmakers doing pre-visualization, and researchers studying world models. The $250/month subscription cost puts it in the professional tier. It's not intended for casual exploration or hobbyists—those users should wait for pricing to drop or use free alternatives.

What happens with copyrighted content and game IP?

Google moderates Project Genie to prevent creation of worlds that closely resemble copyrighted properties. During testing, prompts for Nintendo games were initially allowed but later blocked "in the interests of third-party content providers." This suggests real-time content moderation that errs on the side of caution. You can describe generic game-like scenarios, but referencing specific franchises or recognizable IP gets blocked. These restrictions may loosen as legal frameworks clarify, but for now, they're a meaningful limitation.

Can you explore Project Genie worlds with other people?

No, not currently. Each exploration is a single-user experience. Worlds are generated fresh for each user and each prompt. There's no shared persistence or multiplayer functionality. Google hasn't announced plans for multiplayer, but it's theoretically possible in the future if technical challenges around consistency and streaming can be solved.

How much does Project Genie cost and what subscription plan is required?

Project Genie is only available as part of Google's AI Ultra subscription, which costs $250 per month. You can't access Genie through lower-tier subscriptions or buy it separately. Google has indicated pricing and access will expand over time, but currently, this is the only way in. The high cost reflects the computational expense of real-time world generation and is likely subsidized by Google even at this price.

What's the difference between Project Genie and OpenAI's Sora?

Sora is a text-to-video model that generates impressive, high-quality videos from text descriptions, but those videos are passive: you watch them rather than control them. Project Genie generates interactive environments you can explore in real time using keyboard controls. Sora might generate a beautiful clip of a scene. Genie generates a 60-second environment you navigate through. They're fundamentally different tools serving different purposes. Sora is for creating video content. Genie is for interactive exploration and rapid prototyping.

What are the most realistic use cases for Project Genie right now?

The strongest use cases are rapid prototyping for game designers (test concepts in minutes), reference generation for artists (create varied visual references quickly), architectural walkthroughs for clients (explore designs interactively), pre-visualization for filmmakers (understand spatial composition before shooting), and concept testing for startups (validate ideas before engineering investment). These are workflows where generating something roughly correct quickly is more valuable than generating something perfectly detailed slowly.

Will Project Genie eventually replace game development as we know it?

No, but it might change it. Project Genie is excellent for prototyping and rapid idea validation. But for polished, commercial games, traditional game engines provide the precision, performance, and control that professionals need. The likely future is hybrid: use Genie to prototype and generate rough assets, then refine them in traditional engines. Genie is a tool that speeds up early-stage development, not a replacement for the entire game development pipeline.

Conclusion: A Glimpse Into the Future of Interactive Design

Project Genie represents a genuine inflection point in how we think about creating interactive experiences. For years, digital worlds were authored by humans through game engines, each building block placed deliberately, each interaction coded by developers. Project Genie flips that script. You describe what you want, and an AI builds it.

The technology isn't perfect. Physics sometimes breaks. Sessions are capped at 60 seconds. It's expensive. Copyrighted content gets blocked. These are real constraints.

But the trajectory is undeniable. The fact that this is possible at all is remarkable. A year ago, interacting with AI-generated environments in real time seemed years away. Now it's available to anyone willing to pay for it.

What matters most is what comes next. As world models improve, compute gets cheaper, and legal frameworks clarify, the constraints should loosen. The 60-second limit could stretch to 10 minutes, then an hour, and eventually disappear. The $250 price will likely drop. The physics should become more consistent. The creative possibilities will expand.

For professionals in design, game development, architecture, and creative fields, Project Genie is worth paying attention to right now. It's not the final form. It's the early version of technology that's going to change how creative professionals work.

For everyone else, keep watching. The future of interactive media is being built in tools like this. Project Genie is the first mainstream glimpse of where we're heading.

Think about what you could create if generating interactive environments were as easy as describing them. That's the question Google is asking with Project Genie. We're only just starting to find the answers.

Key Takeaways

- Project Genie uses breakthrough world model technology with improved memory systems to maintain consistency across 60-second interactive explorations, a 20x improvement over early systems

- The tool requires Google's AI Ultra subscription ($250/month), positioning it exclusively for professionals with clear ROI, not casual users

- Primary use cases include game developer prototyping, concept art reference generation, architectural walkthroughs, and film pre-visualization where rapid generation beats polished precision

- Physics consistency is still imperfect, content moderation blocks specific game IP, and the 60-second limit is a computational necessity rather than a design choice

- Future improvements likely include longer exploration windows, lower pricing tiers, and broader integration with game engines as technology matures and compute costs decrease
