Google Gemini vs OpenAI: The Complete Analysis of AI's Competitive Landscape in 2025

The artificial intelligence market has undergone a seismic shift. What began in late 2022 as OpenAI's ChatGPT dominance, a moment that seemed to solidify the company's position as the undisputed AI leader, has evolved into a far more complex competitive ecosystem. By 2025, Google's Gemini has emerged as arguably the most formidable contender, not because it is the only advanced AI model available, but because of the comprehensive infrastructure, strategic relationships, and distribution channels Google has assembled around it.

This isn't about a single product feature or benchmark test result. Rather, this is about understanding how market dominance in artificial intelligence works—and why Google appears to have systematically constructed the pieces required to maintain long-term leadership in this space. The company operates with advantages that extend far beyond model quality, into the realm of chip manufacturing, consumer device integration, data access, and computational scale.

For those paying attention to the AI landscape, this analysis matters because it shapes decisions about which platforms to build on, which AI partnerships to pursue, and how enterprise organizations should think about AI strategy. Whether you're a developer choosing which API to integrate, a business leader evaluating AI partnerships, or simply someone interested in understanding where technology is heading, the structural advantages Google has built deserve careful examination.

Understanding this competitive dynamic also requires acknowledging that the AI race isn't a single dimension. Different models excel at different tasks. Different platforms serve different purposes. But when we talk about "winning the AI race," we're discussing dominance across the broadest possible application space—the capacity to shape how billions of people interact with artificial intelligence daily.

The Infrastructure Advantage: Google's Proprietary Chip Strategy

TPU Dominance and Manufacturing Control

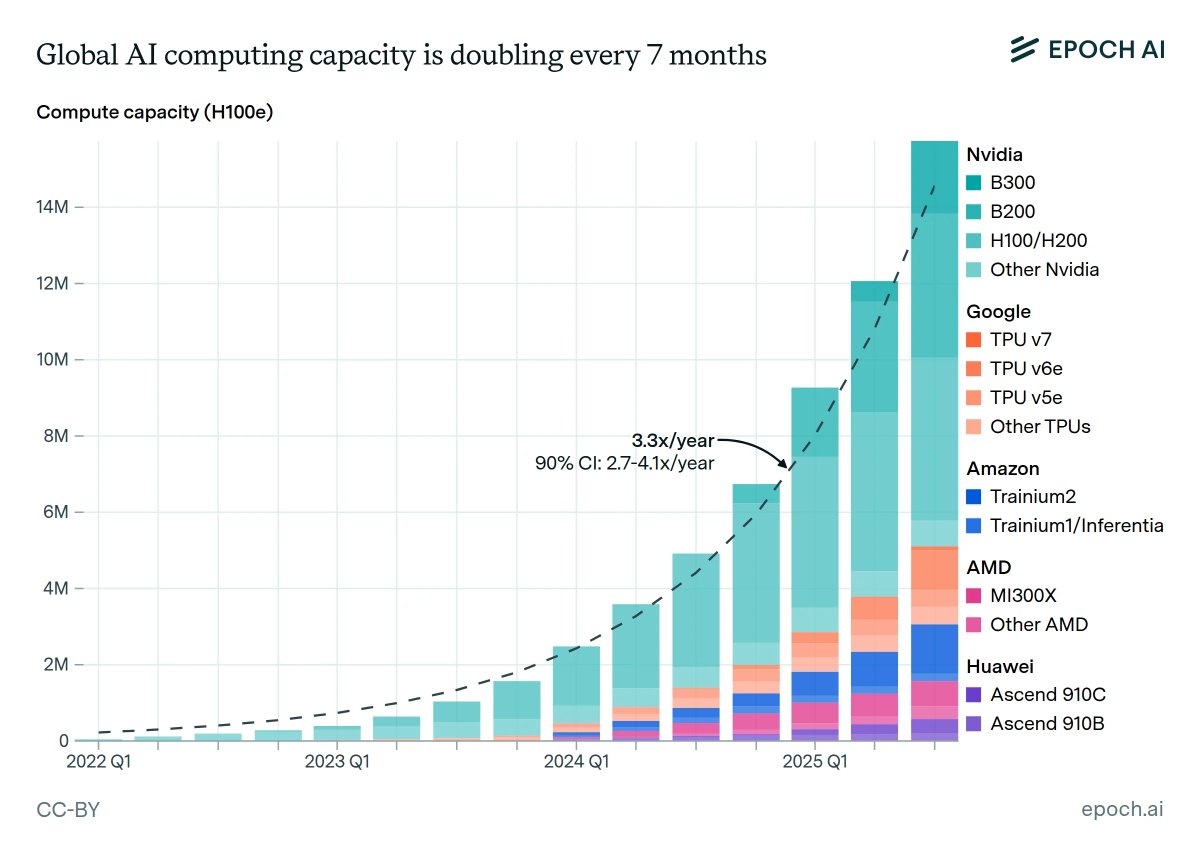

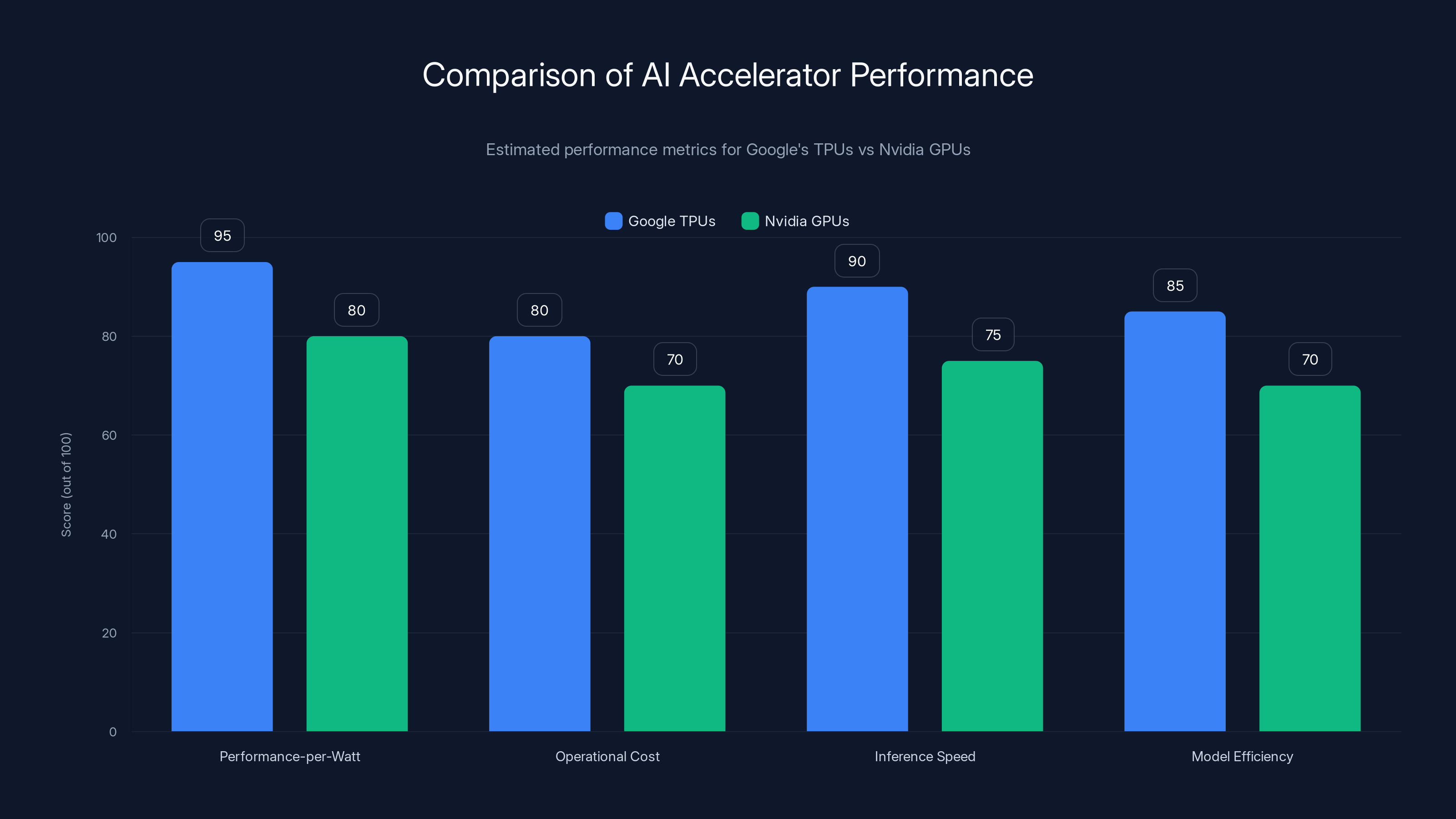

At the foundation of Google's competitive advantage lies something that rarely makes headlines but proves extraordinarily consequential: proprietary artificial intelligence accelerators. While competitors depend on Nvidia's GPUs—the de facto industry standard for AI training and inference—Google designed and manufactures its own Tensor Processing Units (TPUs) specifically optimized for machine learning workloads. This strategy is highlighted in a recent report on Google's efforts to integrate TPUs with popular frameworks like PyTorch.

This seemingly technical distinction carries massive business implications. Nvidia GPUs are extraordinarily powerful, but they represent a single point of dependency for the entire AI industry. When Nvidia can't keep up with demand (which has been the case throughout the generative AI boom), every AI company suffers equally. When Nvidia raises prices, every competitor's cost structure increases. When Nvidia prioritizes certain applications or markets, competitors must adapt to those decisions. Google operates outside this constraint.

The advantages compound in multiple directions. TPUs can be optimized specifically for Gemini's architecture, resulting in better performance-per-watt than running the same model on general-purpose GPUs. This translates directly into lower operational costs, faster inference times, and the ability to run larger models more efficiently. For a business that serves billions of queries daily, efficiency improvements measured in single percentages represent savings in tens of millions of dollars annually.
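
The scale claim above can be checked with back-of-the-envelope arithmetic. The sketch below is illustrative only: the query volume reuses the article's figure of roughly 8 billion daily searches, while the per-query cost and the 2% efficiency gain are hypothetical placeholders, not reported numbers.

```python
def annual_savings(queries_per_day: float,
                   cost_per_query_usd: float,
                   efficiency_gain: float) -> float:
    """Yearly savings when per-query cost drops by `efficiency_gain` (a fraction)."""
    annual_cost = queries_per_day * 365 * cost_per_query_usd
    return annual_cost * efficiency_gain


# Assumed inputs: 8e9 queries/day (from the article), $0.001 per query
# (hypothetical), and a 2% efficiency improvement (hypothetical).
savings = annual_savings(8e9, 0.001, 0.02)
print(f"${savings:,.0f} per year")
```

Under these assumptions a 2% gain is worth roughly $58 million a year, which is the order of magnitude the paragraph describes. Different cost assumptions shift the result proportionally.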

Beyond cost and performance, vertical integration provides strategic flexibility. When Google identifies bottlenecks in its AI infrastructure, it can modify TPU designs in the next generation. When the company wants to deploy a new capability across its services, it can ensure the hardware supports it optimally. Competitors must work within whatever constraints their hardware suppliers impose, then attempt to optimize software around those limitations. Google optimizes hardware and software together.

Compute Capacity and Scaling Economics

The generative AI boom has created an unprecedented demand for computational resources. Training state-of-the-art language models requires roughly

Google possesses advantages in scaling that few companies can match. The company operates one of the world's largest data center networks, built over decades for search, email, cloud services, and other core products. Rather than building AI infrastructure from scratch, Google leveraged existing capacity and integrated Gemini into systems already handling immense scale. This represents both capital efficiency and operational maturity that younger companies building dedicated AI infrastructure cannot replicate.

To put this concretely: when ChatGPT reached 100 million users (at the time, the fastest any consumer application had done so), OpenAI had to rapidly expand infrastructure to maintain service quality. The company required additional funding, chip allocation, and deployment expertise. Google's Gemini, by contrast, scaled across Gmail (1.8+ billion users), Google Search (8+ billion daily searches), Android (3+ billion devices), and Chrome (4.6+ billion users) without requiring infrastructure expansion beyond what the company was already planning. The integration happened within existing systems designed for continental-scale operations.

This matters because the company with the most efficient compute operations wins on cost, which enables better pricing, faster iteration, and superior margins. Google's vertical integration of chip manufacturing, data center operations, and AI model development creates a flywheel that reinforces an increasingly dominant position.

Software Optimization and Hardware-Software Codesign

Few companies understand that the most significant performance gains come from optimizing the interaction between hardware and software. When you design both pieces, you can eliminate inefficiencies that emerge from compromise. For instance, if TPUs can handle certain mathematical operations particularly efficiently, the model architecture can be designed to leverage those operations. If the software stack can be optimized for specific hardware configurations, both speed and energy consumption improve.
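
One simple form this codesign can take is sizing model dimensions so they map evenly onto the accelerator's matrix unit. The sketch below assumes a tile width of 128, which is an illustrative parameter rather than a statement about any specific TPU generation. The helper names `round_up` and `padding_waste` are hypothetical.

```python
def round_up(dim: int, tile: int = 128) -> int:
    """Smallest multiple of `tile` that is >= `dim`."""
    return ((dim + tile - 1) // tile) * tile


def padding_waste(dim: int, tile: int = 128) -> float:
    """Fraction of the padded dimension that holds padding, not real data."""
    padded = round_up(dim, tile)
    return (padded - dim) / padded


# A hidden size of 1000 must be padded to 1024 on a 128-wide tile,
# so about 2.3% of that dimension is wasted work; 1024 wastes nothing.
for hidden in (1000, 1024):
    print(hidden, round_up(hidden), f"{padding_waste(hidden):.1%}")
```

A team that controls both the chip and the model can simply pick dimensions with zero waste, or change the tile size in the next hardware generation. A team on third-party hardware can only do the former.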

Google's researchers have published extensively on this approach, demonstrating how TPUs can achieve training speeds and inference latencies that surpass general-purpose GPU setups running comparable models. This isn't because TPUs are universally superior (they excel at specific workloads but have limitations outside those domains), but because every component of the stack—from the silicon up through the model training procedures—has been optimized together.

Competitors using standard GPU infrastructure must accept the trade-offs inherent in that ecosystem. They cannot redesign the chips to match their software. They cannot deeply optimize the entire stack without permission from hardware manufacturers. Google operates with this flexibility, creating a sustainable advantage that becomes harder to overcome as the company continues refining both hardware and software.

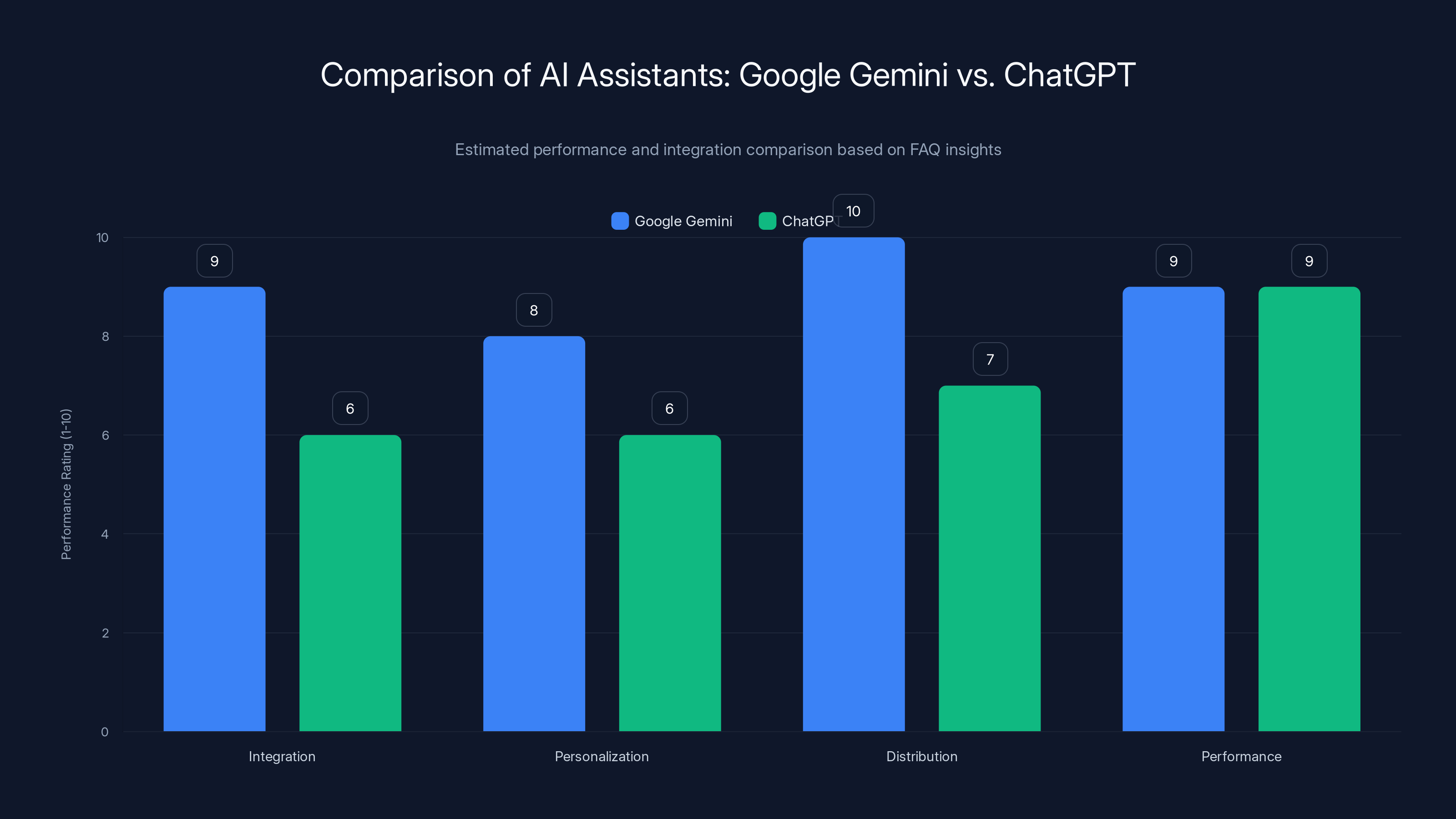

Google Gemini excels in integration and distribution due to its native presence across Google's ecosystem and strategic partnerships, while both Gemini and ChatGPT perform competitively in model capability. (Estimated data)

Model Quality and Benchmark Performance: Gemini 3's Technical Capabilities

Benchmark Leadership and Real-World Performance

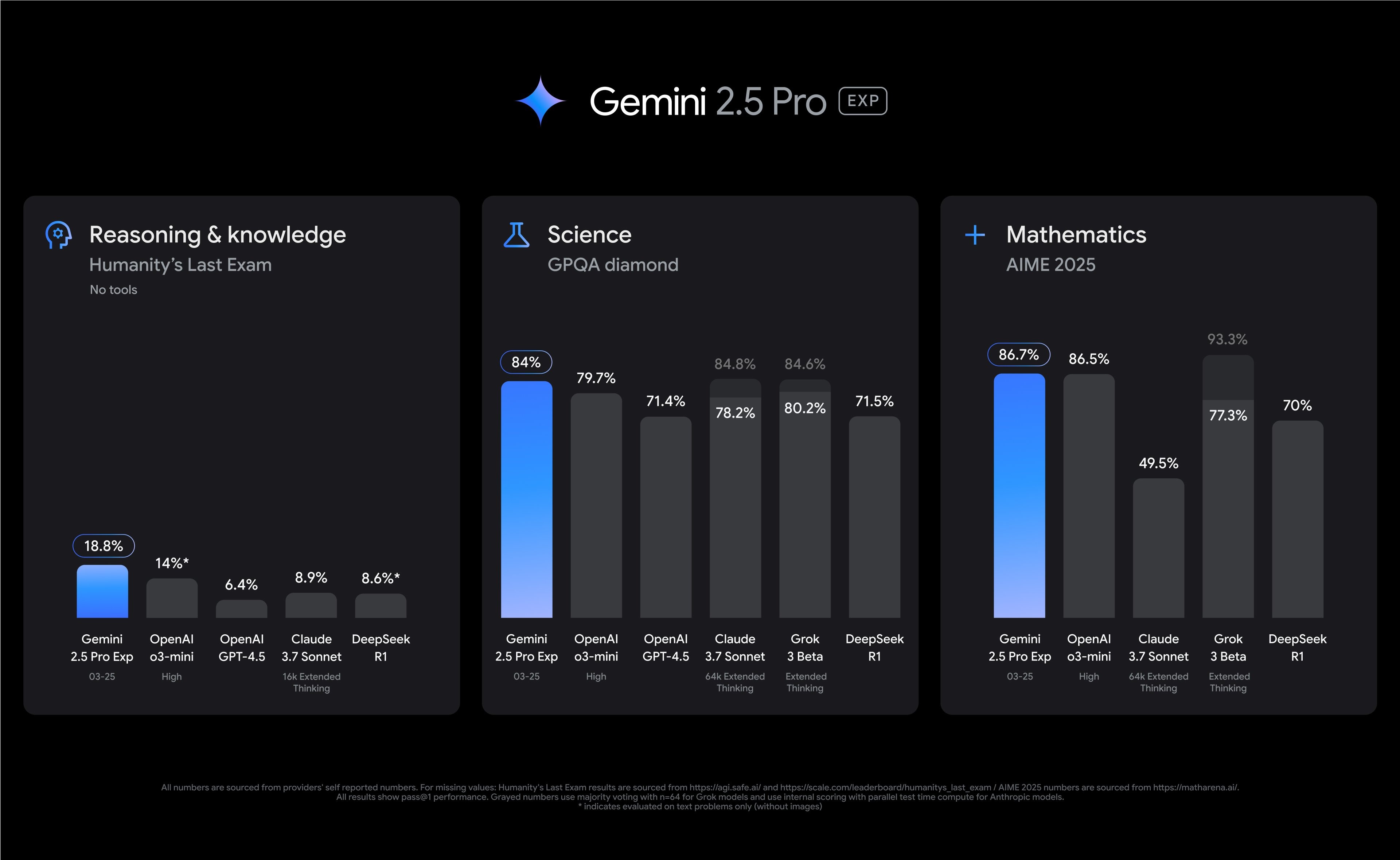

In November 2024, Google released Gemini 3, which immediately established itself as arguably the strongest general-purpose large language model available across standardized benchmarks. While benchmark tests remain imperfect measures of real-world capability (they test specific skills in artificial conditions), they do provide objective comparisons across models that humans can independently verify.

Gemini 3 demonstrates particular strength in reasoning tasks, mathematical problem-solving, coding capabilities, and multimodal understanding (processing text, images, and video together). It performs at or near the frontier across most major benchmarks: MMLU (massive multitask language understanding), GSM8K (grade school mathematics), MATH, and others. More importantly, independent evaluators and researchers have found Gemini 3 to be genuinely capable at difficult tasks, not merely achieving high scores through benchmark overfitting.

What distinguishes Gemini 3 isn't that it crushes competitors by extraordinary margins on every metric—the frontier is genuinely competitive—but rather that it consistently performs at the top of the field across diverse tasks. This consistency matters because it suggests the model isn't overspecialized for particular benchmarks but rather genuinely capable at the broad range of tasks real users care about: writing, analysis, coding, reasoning, content generation, and problem-solving.

Benchmark cycles move rapidly. Competitors are likely to release improved models within six months to a year of Gemini 3's launch, potentially reclaiming performance leadership on specific metrics. However, the important pattern is that Google maintains the capacity to keep pace with or exceed competitors at the frontier. This requires continuous investment in research, experimentation, and compute resources, all areas where Google's advantages prove substantial.

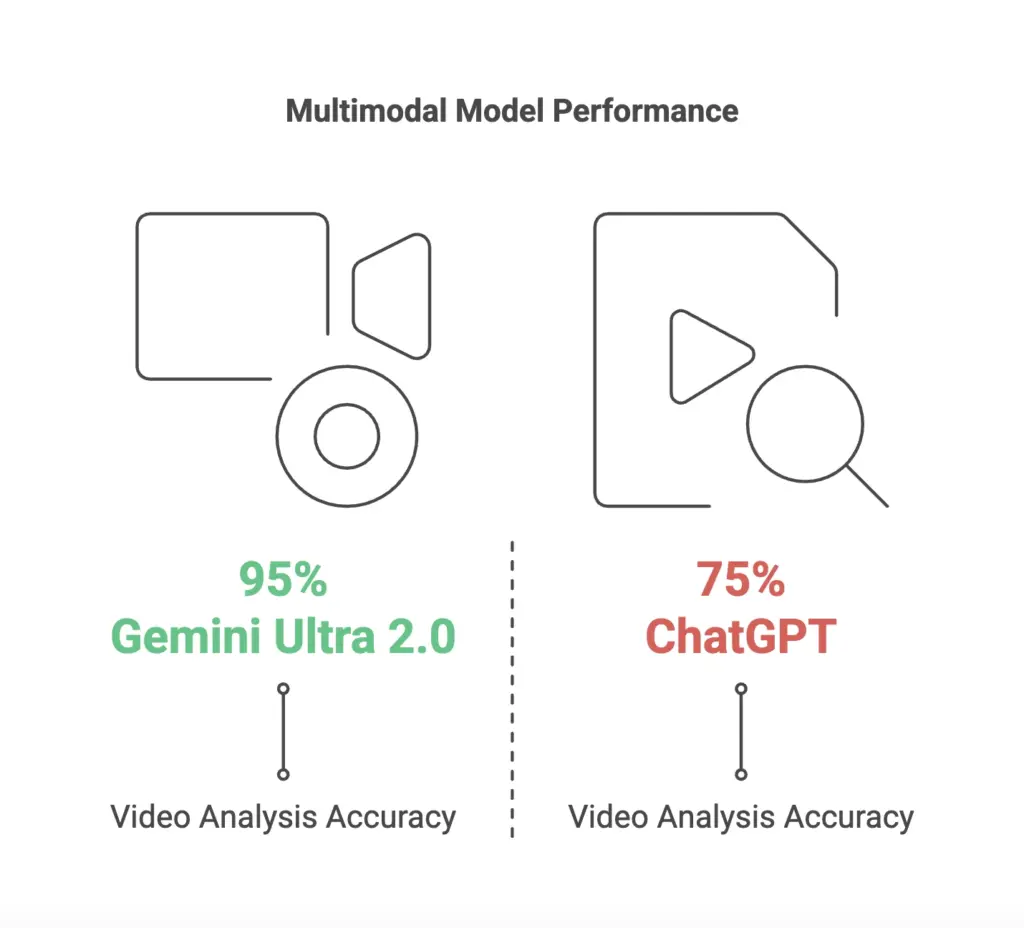

Multimodal Capabilities and Cross-Domain Performance

One particularly significant aspect of Gemini 3 is its native multimodal architecture, meaning the model can simultaneously process and reason about text, images, video, and audio without requiring separate specialized models for each modality. This represents a meaningful advance over models that treat different input types as separate problems requiring conversion or specialized processing.

Practically, this enables use cases that pure text models struggle with: analyzing charts and diagrams in documents, understanding context from video, processing screenshots with visual and textual elements together, and reasoning about relationships across media types. In professional and creative contexts, these capabilities open possibilities that text-only models cannot easily address.

Multimodal capability also suggests architectural sophistication—Gemini 3 wasn't built by bolting together separate text and vision models but rather designed from the foundation to handle multiple modalities natively. This approach typically produces better results because the model can leverage insights across modalities when solving problems. A vision task benefits from text understanding, and vice versa, creating emergent capabilities that exceed what modality-specific models could achieve.

Reasoning and Problem-Solving Capabilities

One of the most significant battlegrounds in frontier AI involves reasoning capability—the capacity to work through multi-step problems, explore solution spaces, catch and correct errors, and arrive at conclusions through valid logical chains. This capability matters less for factual retrieval (where models can rely on training data) and more for novel problems requiring systematic thinking.

Gemini 3 has demonstrated particular strength in tasks requiring this style of reasoning: mathematical problem-solving that cannot be solved through pattern matching, coding tasks requiring understanding of program structure and logic, and analytical problems requiring consideration of multiple constraints. The model appears capable of maintaining reasoning chains across many steps without degradation, suggesting architectural choices that prioritize coherent thinking over mere information retrieval.

This capability advantage matters strategically because reasoning-heavy tasks are increasingly important in professional contexts where AI tools add genuine value: software development, financial analysis, scientific research, and strategic planning. Models excellent at reasoning can address more valuable problems and justify higher value propositions.

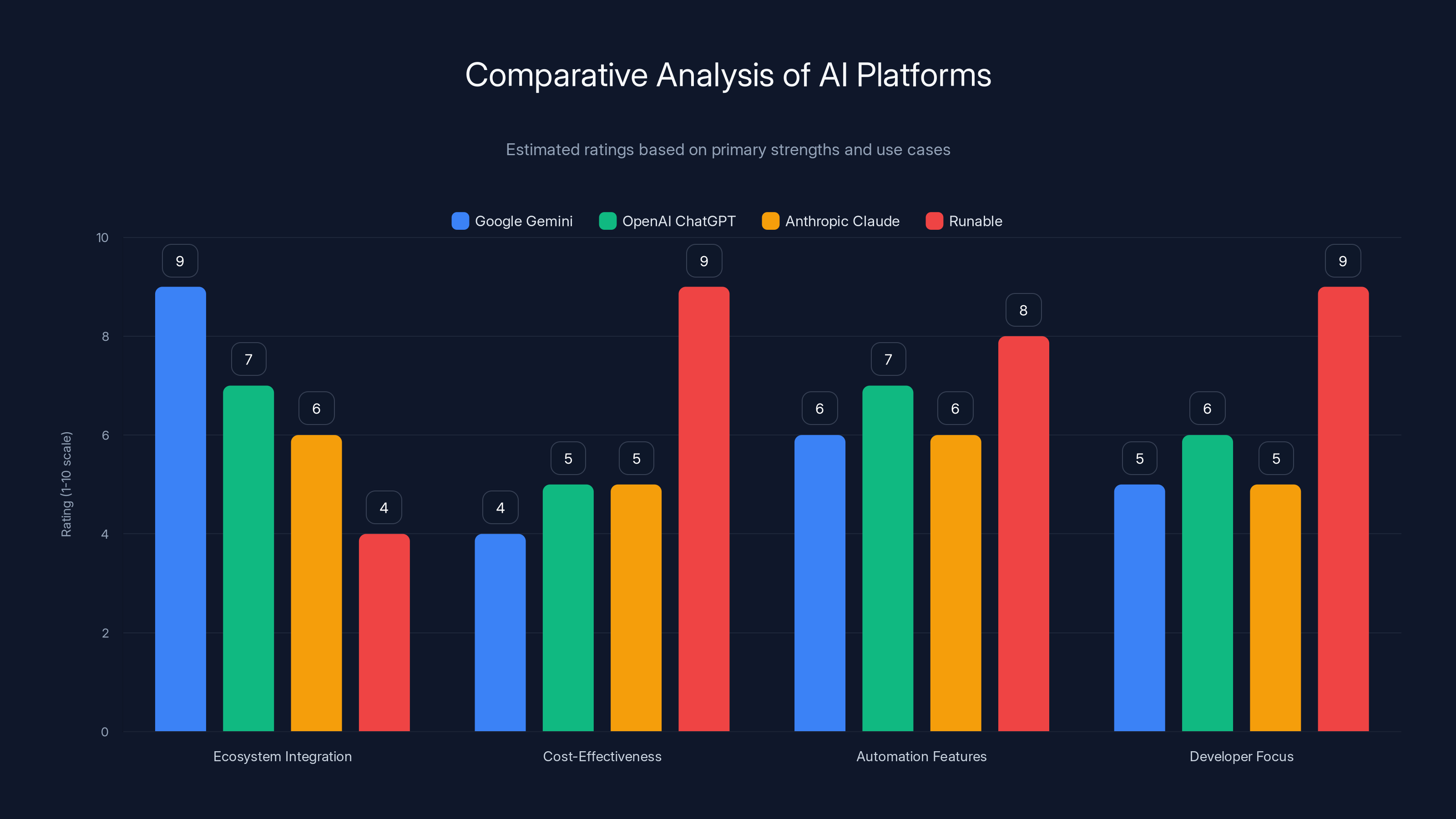

Runable excels in cost-effectiveness and developer focus, making it a strong choice for teams prioritizing automation and budget. Estimated data based on platform descriptions.

Strategic Partnership with Apple: Distribution and Market Dominance

The Siri Integration: Billions of Daily Interactions

In January 2025, Google and Apple announced a partnership that crystallized Google's path to AI market dominance. Starting later in 2025, Gemini will power the next generation of Siri, Apple's voice assistant that reaches 1.5 billion daily requests across iPhones, iPads, Macs, Apple Watches, HomePods, and other Apple devices. This partnership is detailed in a recent analysis of AI market dynamics.

To appreciate the magnitude: ChatGPT currently processes approximately 2.5 billion prompts per day across all users and interfaces. With the Siri integration, Gemini immediately gains access to a request volume of the same order as ChatGPT's through a single partnership, without requiring any customer acquisition effort or user behavior change. Siri exists on devices that people use dozens of times daily, making it an exceptionally high-traffic insertion point for Gemini.
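
The two volume figures quoted in this section can be compared directly. The snippet below uses only the article's own numbers (1.5 billion daily Siri requests, 2.5 billion daily ChatGPT prompts). It assumes, as a simplification, that a Siri request and a ChatGPT prompt are comparable units, which they may not be.

```python
# Figures as quoted in the article; treated here as rough daily volumes.
siri_requests_per_day = 1.5e9
chatgpt_prompts_per_day = 2.5e9

ratio = siri_requests_per_day / chatgpt_prompts_per_day
print(f"Siri volume is about {ratio:.0%} of ChatGPT's daily prompt volume")
```

On these figures the Siri channel alone is a bit over half of ChatGPT's volume. It is large for a single partnership, though it does not exceed ChatGPT's volume by itself.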

Apple is reportedly paying about $1 billion annually to secure this partnership, indicating the company's confidence in Gemini's capabilities and commitment to improving Siri's utility after years of perceived stagnation. For Google, this represents a remarkable endorsement. Apple could have negotiated with any major AI company, yet selected Google specifically. This public validation signals to consumers, developers, and enterprises that Gemini represents premium AI capability worthy of integration into Apple's highest-end products.

The partnership also provides Google with extraordinarily valuable feedback data. As billions of Siri users interact with Gemini, Google gains insights into how real users actually employ AI assistants, what types of requests succeed and fail, and how to improve the model for practical applications. This feedback loop accelerates product improvement in ways that benefit Gemini across all deployment contexts.

Market Signal and Competitive Positioning

Beyond the direct user acquisition, the Apple partnership communicates something crucial to the market: Google possesses the best-in-class technology. When a company as selective as Apple publicly chooses your technology over competitors, market participants (consumers, investors, developers, enterprises) take notice. The partnership validates Google's technical position and suggests that betting on Google's AI future represents a sound strategic choice.

This signal effect extends beyond consumers to developers. When deciding which AI platform to build applications around, developers weigh factors including: technical capability, long-term viability, market adoption, and available resources. The Apple endorsement strengthens all these factors. Developers building AI applications become more confident investing in Gemini APIs and tools knowing that Google has secured one of the world's largest platforms as a distribution channel.

Enterprise customers similarly adjust their thinking. Organizations evaluating AI partnerships now recognize that Gemini has secured unprecedented market reach and validation. This changes the calculus around which AI platform to standardize on internally, which vendor to prefer for procurement, and where to invest AI development resources.

Ecosystem Expansion Beyond Siri

While Siri integration dominates headlines, the partnership also demonstrates Google's capacity to expand Gemini across multiple Apple products and services. Although specifics remain limited, the integration likely extends beyond voice commands into areas where Apple's ecosystem can leverage advanced AI: email, document processing, photo analysis, search functionality, and personal assistance across Apple's suite of products.

Each integration point multiplies Gemini's exposure and usage volume. A user who interacts with Gemini through Siri might subsequently discover it available in Mail, Notes, or Photos, expanding the number of daily interactions and deepening integration into user workflows. This multi-point approach typically drives higher adoption rates and more sophisticated usage patterns than single-interface distribution.

Over time, as Gemini becomes embedded across Apple's ecosystem, it becomes increasingly difficult for competitors to displace. Users develop muscle memory, workflows, and expectations around Gemini's specific interface and behaviors. Switching to a different AI assistant would require Apple, users, and developers to adapt—a friction point that favors the incumbent.

Ecosystem Integration: Google's Comprehensive AI Distribution Network

Search Integration and Query Volume

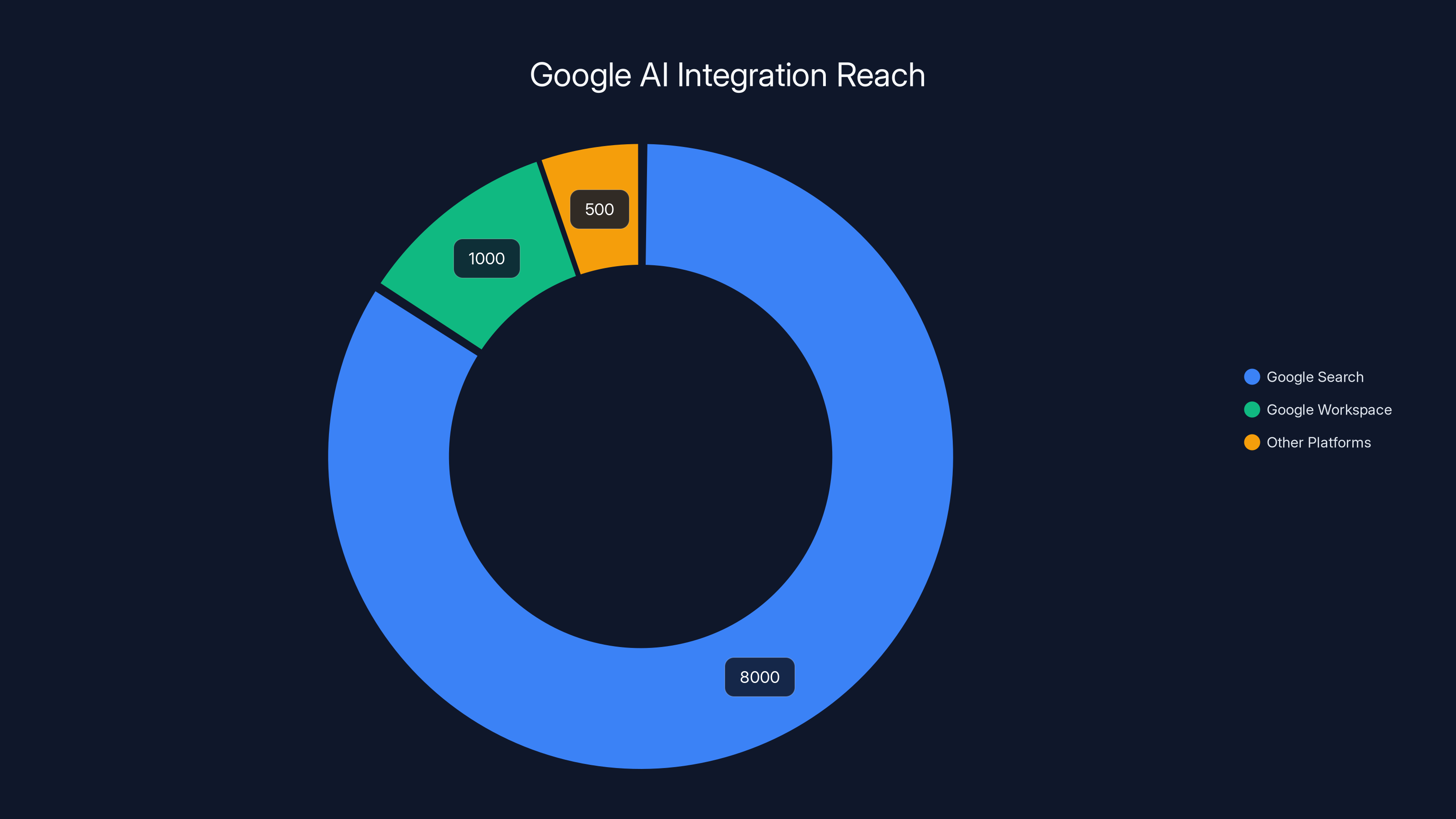

Google Search processes 8+ billion searches daily across all languages and regions. This represents the single largest customer interaction surface for any company globally. The opportunity to integrate Gemini into search results presents Google with the capacity to expose billions of users to its AI technology daily, whether they explicitly request AI assistance or not. This integration is part of Google's broader strategy to enhance its services, as discussed in a New York Times article.

Early Google Search integrations with AI-generated summaries and AI-powered answers demonstrate this evolution. Rather than simply returning 10 blue links (the traditional search interface), Google can now provide AI-synthesized answers, research summaries, and follow-up suggestions powered by Gemini. Users who might not have considered using a dedicated AI assistant still interact with Gemini-powered capabilities whenever they search.

This distribution advantage compounds over time. As search integrations improve and become more useful, users increasingly rely on them. Users begin delegating more complex tasks to search-based AI rather than separately opening ChatGPT. Usage volume on these integrations grows. Google gains more training data and feedback. The product improves further. Competitors, lacking comparable search distribution, cannot match this flywheel effect.

Moreover, search integration directly supports Google's business model. Unlike ChatGPT (which OpenAI monetizes through subscriptions and enterprise licensing), Google can monetize AI-powered search improvements through refined advertising targeting, sponsored results, and premium features. This alignment between AI advancement and revenue generation makes Google's AI investment increasingly attractive to shareholders and sustainable long-term.

Gmail, Google Workspace, and Professional Productivity

Google Workspace reaches 6+ million organizations and over 1 billion active users monthly. These users spend significant time within Gmail, Docs, Sheets, Meet, and other productivity tools that Google can extend with AI capabilities. This represents the second-largest application surface where Gemini can reach users at scale.

Gemini integration into Workspace enables features including: email drafting and summarization, document analysis and generation, spreadsheet data analysis, meeting transcription and summarization, and collaborative intelligent assistance. For organizations already paying for Workspace, adding Gemini capabilities enhances perceived value and increases switching costs (organizations become more dependent on the integrated platform).

Workspace also reaches professional knowledge workers, the segment most valuable to enterprise software vendors. These users have budget authority, influence purchasing decisions, and shape organizational technology strategy. Building AI capabilities that delight this audience strengthens Google's position in enterprise software, an area where Google has historically competed less effectively than in consumer applications.

The professional productivity integration also enables a superior user experience compared to bolting AI onto existing tools. When Gemini is built natively into Workspace interfaces rather than accessed through separate ChatGPT subscriptions, users find the integration more seamless and the value more obvious. This drives deeper adoption and higher willingness to pay for enhanced capabilities.

Android, Chrome, and Device-Level Integration

Google operates the world's largest operating system platform with Android reaching 3+ billion active devices and Chrome commanding over 65% of browser market share. These platforms represent the foundation of how billions of people access the internet daily. Integrating Gemini at the operating system and browser level creates essentially unavoidable exposure for the model.

Think about what this means practically: users might encounter Gemini through their phone's assistant, their browser's address bar suggestions, their email client, their productivity suite, their wearable device, their car's infotainment system, and their smart speaker. Most of these encounters happen passively, without users needing to know they're using Gemini. Over time, this ubiquity becomes the default—Gemini is simply the AI assistant present in the devices and services people use constantly.

This is the opposite of ChatGPT's distribution, which requires explicit user choice: a person must decide to visit openai.com, open a separate application, or specifically request ChatGPT integration. While ChatGPT has built remarkable adoption through excellence and organic demand, it operates within distribution constraints that Gemini, with integration across the entire Google platform ecosystem, does not face.

Device-level integration also enables Google to collect unique types of user data and contextual information. When Gemini operates at the operating system level, it can understand device state, user location, recent applications, notification history, and other contextual signals that improve response quality. Competitors without this platform integration cannot access these signals, limiting their capacity to deliver contextually appropriate assistance.

YouTube and Content Discovery

YouTube reaches 2+ billion logged-in users monthly and receives 500+ hours of video uploads every minute. Integrating Gemini into YouTube enables: video summarization, transcript analysis, search enhancement, content recommendation personalization, and creative assistance for content creators. These integrations expand Gemini's reach while simultaneously enhancing user experience on YouTube itself.

For content creators, Gemini integration offers particular opportunity. AI tools that help creators brainstorm, outline, edit, and optimize content increase the value Google provides to YouTube creators. Creators who feel supported by superior AI tools develop stronger loyalty to the platform. This virtuous cycle strengthens YouTube's competitive position against TikTok, Instagram, and other video platforms.

Similarly, YouTube viewers who discover that Gemini-powered search and recommendations help them find more interesting content engage more deeply with the platform. Increased engagement drives higher advertising revenue, which flows directly to Google's bottom line while simultaneously funding continued Gemini improvement.

Google's TPUs are estimated to outperform Nvidia GPUs in performance-per-watt, operational cost, inference speed, and model efficiency, offering strategic advantages in AI infrastructure. Estimated data.

Data Access and Advantage: Information as Competitive Moat

First-Party Data Collection at Scale

One often-overlooked advantage Google possesses is access to extraordinary volumes of first-party user data—information users actively provide while using Google services. When someone searches, emails, watches videos, uses Maps, or uses any of Google's services, the company captures signals about what information they find relevant, what problems they're trying to solve, and what they care about.

While privacy regulations (particularly GDPR and emerging AI-specific regulations) limit how Google can use this data, the company can legally leverage aggregate, anonymized insights to improve Gemini. Understanding what information billions of users find relevant to their searches helps shape how Gemini should prioritize information in responses. Seeing patterns in how people search for solutions suggests how people naturally frame problems—insights that improve Gemini's ability to understand human intent.

This first-party data advantage is particularly significant for training and improving models. Unlike competitors who must either: (a) license training data from third parties, (b) scrape publicly available data, or (c) build users organically over time, Google can leverage signal from its existing massive user base. Every Gmail user interaction, every search query, every YouTube watch history contains signals that can improve Gemini when processed properly and ethically.

Moreover, this data advantage compounds over time. As Gemini reaches more users through expanded distribution, Google captures more first-party signals that fuel further improvement. Competitors without comparable data access cannot match this feedback velocity, making it increasingly difficult to catch up to a model that's constantly improving based on billions of real-world interactions.

Contextual Understanding and Personalization

Google's ecosystem doesn't just provide data about what people search for—it provides contextual information about who people are, what they're interested in, and what information would be most relevant to them personally. This contextual understanding enables Gemini to deliver more useful, personalized assistance compared to models operating without this context.

When a user asks a question through Gemini, the model could potentially know (subject to privacy and user preferences): the user's location, recent search history, email and calendar context, document history, social connections, and communication patterns. This contextual richness enables dramatically more relevant responses than a model that only knows the current question.

Consider a simple example: if a user asks "what time should I leave for my appointment?" A model without context cannot answer. But Gemini, with access to the user's calendar, location, and knowledge of traffic patterns in that area, could provide a specific, actionable recommendation. This kind of contextual, personalized assistance proves far more valuable than generic answers to the same questions.
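
The appointment example reduces to simple time arithmetic once the contextual inputs are available. The sketch below is a minimal illustration, not a description of how Gemini works: the appointment time and travel estimate are hard-coded stand-ins for what a calendar and a traffic service would supply, and `departure_time` and its `buffer_minutes` parameter are hypothetical names.

```python
from datetime import datetime, timedelta


def departure_time(appointment_start: datetime,
                   travel_minutes: int,
                   buffer_minutes: int = 10) -> datetime:
    """Latest time to leave so as to arrive `buffer_minutes` early."""
    return appointment_start - timedelta(minutes=travel_minutes + buffer_minutes)


# Stand-in context: a 15:00 appointment (from a calendar) and a
# 35-minute drive (from a traffic estimate). Both values are made up.
appointment = datetime(2025, 3, 14, 15, 0)
leave_at = departure_time(appointment, travel_minutes=35)
print(leave_at.strftime("%H:%M"))  # 14:15
```

The computation itself is trivial. The competitive point in the text is about who has access to the inputs, since a model without calendar and location data has nothing to plug into it.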

Contextual capabilities also enable Gemini to operate with less explicit instruction. Rather than users needing to provide detailed context for every request, Gemini can infer context from available signals, making interactions more natural and efficient. This translates to a superior user experience and higher perceived value of the AI assistant.

Proprietary Datasets and Training Advantages

Beyond user interaction data, Google owns and operates extraordinary volumes of proprietary datasets: historical search data, knowledge base information, scientific publications, technical documentation, code repositories (through GitHub integration and developer platform participation), and countless other high-value training resources. While competitors can access some of this information through public sources, Google's access is both more direct and more comprehensive.

These proprietary datasets, combined with training methodology advantages (techniques Google's researchers pioneered), enable Google to train models that capture patterns not visible in simpler public datasets. A model trained on Google's search knowledge base, for instance, understands relationships between topics and answer patterns that a model trained only on publicly available text might miss.

Over time, as Google continues collecting and refining these datasets, and as competitors operate under increasingly restrictive licensing and copyright frameworks, Google's data advantage likely grows rather than diminishes. The company's foundational position in the information architecture of the internet translates directly into AI training advantage.

Financial Resilience and Capital Dedication: Funding the AI Future

Advertising Revenue Supporting AI Investment

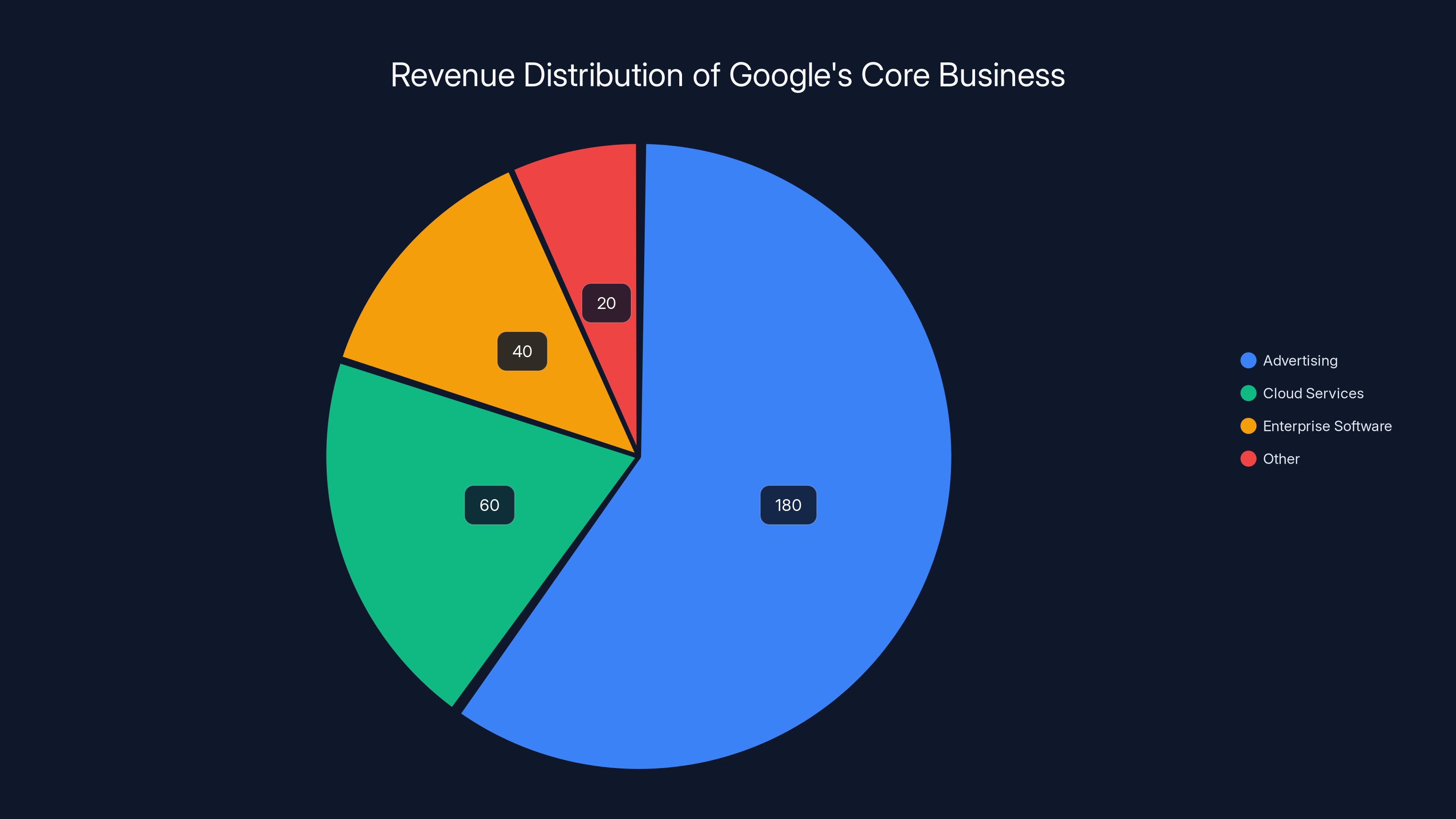

Google's core business—advertising—remains extraordinarily profitable. The company generates over $300 billion in annual revenue, with gross margins exceeding 60% on the overall business. This extraordinary cash generation capacity directly funds Google's ability to make multi-year, multi-billion dollar bets on AI research and infrastructure. This financial strength is highlighted in a Yahoo Finance report on the tech industry's financial dynamics.

When OpenAI's CEO Sam Altman discusses needing trillions of dollars in compute, he's highlighting the scale of investment required to maintain frontier AI capabilities. Google possesses the financial capacity to make these investments without requiring external funding, regulatory approval, or sacrificing other business areas. The company can simultaneously maintain dominance in search, cloud, advertising, and enterprise software while investing heavily in AI.

This financial flexibility provides resilience competitors lack. If OpenAI faced challenges monetizing ChatGPT or external investors became skeptical about AI ROI, the company's ability to continue investing at frontier levels could be constrained. Google faces no such constraints. Search revenue continues flowing regardless of near-term AI monetization success, funding continued AI investment indefinitely.

Strategic Patience and Long-Term Optimization

Google can afford to optimize for long-term market dominance rather than near-term monetization. While OpenAI pushes aggressively to develop profitable products and secure revenue, Google can afford to integrate Gemini deeply into existing services, improve the technology, and build a dominant position before extracting maximum value.

This "patient capital" approach proves advantageous in infrastructure-heavy, long-cycle markets. Rather than trying to monetize Gemini through ChatGPT-style subscriptions immediately, Google can integrate Gemini broadly across its ecosystem, establish it as the default AI assistant, and then introduce premium features and services once a dominant position is secured. This strategic patience reduces the risk of missteps that could damage its long-term position for short-term revenue.

Competitors operating with venture funding or as public companies with quarterly earnings pressures face constant pressure to demonstrate revenue growth. This pressure can force strategic decisions that prioritize near-term results over long-term dominance. Google's leadership can focus on the decades-long game.

Cloud Infrastructure Monetization

Beyond using AI to enhance existing services, Google monetizes AI through Google Cloud, offering Gemini APIs and specialized AI services to developers, enterprises, and other organizations. This creates a second revenue stream where AI innovation directly drives customer value and willingness to pay premium pricing.

As Gemini improves, Google Cloud can increase API pricing (because customer ROI increases with capability), or maintain pricing while capturing market share from competitors unable to match Gemini's capabilities. Either way, cloud revenue grows, which funds further AI research. This creates another virtuous cycle: better models drive higher cloud revenue, which funds better models.

Enterprise customers building AI applications increasingly standardize on Google Cloud with Gemini APIs. Once committed to Google's platform (through investments in integrations, training, and architectural decisions), switching costs become substantial. This customer lock-in provides durability that protects Google's cloud business and secures long-term revenue from AI infrastructure services.

Figure: Advertising remains Google's largest revenue source, contributing an estimated $180 billion annually, which supports extensive AI investments. (Estimated data based on typical industry distribution.)

Research Expertise and Talent: The Perpetual Improvement Engine

Deep Learning Research Leadership

Google has been publishing cutting-edge machine learning research longer than virtually any other organization. Starting from foundational work on neural networks, through the famous "Attention Is All You Need" transformer paper (which enabled modern large language models) and countless subsequent advances, Google's researchers established the intellectual foundations of modern AI.

This research heritage translates into tangible advantages. Google employs many of the researchers who pioneered contemporary AI techniques. The company has established systems for identifying promising research directions early, funding exploratory work, and scaling successful ideas into production systems. This research-to-product pipeline, refined over decades, proves extraordinarily effective at translating intellectual advances into competitive advantages.

Moreover, Google's research labs attract elite talent because of brand prestige, resources available, and the opportunity to work on unsolved problems at scale. Researchers want to work somewhere their ideas can be implemented and tested on billions of users. Google provides this opportunity. This talent attraction and retention advantage proves difficult for competitors to overcome—you cannot simply hire your way to research excellence; you must build research culture and resources over years.

Infrastructure for Experimentation and Rapid Iteration

Google's scale and technical sophistication enable experimentation velocity that competitors struggle to match. When researchers propose a novel training technique, architectural modification, or algorithmic improvement, Google's infrastructure enables rapid testing at large scale. Rather than weeks of setup and experimentation, ideas can be tested on production-grade infrastructure within days.

This experimentation velocity compounds advantages over time. Through thousands of experiments annually, Google's researchers identify the most promising directions and pursue them intensely. Competitors, lacking comparable infrastructure, must be more selective with experiments or face longer iteration cycles. Over years, this gap in experimentation velocity translates into meaningful capability gaps between Google's models and others.

The infrastructure advantage extends beyond speed. Google's research environments can test ideas at scales that competitors cannot afford to explore. Want to understand how a technique scales to 1-trillion-parameter models? Google can test it. Most competitors cannot. This enables Google to explore the frontier of what's possible in AI more thoroughly than competitors can.

AI Safety and Responsible AI Research

Google has invested substantially in AI safety, alignment research, and responsible AI practices. This might seem less directly competitive than raw capability research, but it carries significant strategic importance. As AI systems become more powerful, regulators, enterprises, and consumers increasingly care about safety and reliability.

Organizations that demonstrate sophisticated thinking around AI safety and responsibility position themselves favorably with regulators, attract trust-conscious customers, and build reputational advantages. As regulatory scrutiny of AI increases (particularly in Europe and potentially the United States), companies with strong safety practices face less regulatory risk and lower compliance costs.

Google's responsible AI research also provides competitive advantage in specific domains. For healthcare, finance, and other regulated industries, enterprises increasingly require evidence of safety and reliability. Google's research on these topics positions Gemini favorably for adoption in these high-value sectors where safety concerns might otherwise limit AI deployment.

Competitive Responses and Vulnerabilities: Realistic Assessment

Open AI's Strengths and Strategic Position

While this analysis focuses on Google's structural advantages, Open AI remains a formidable competitor with genuine strengths. The company pioneered modern conversational AI with Chat GPT, built a direct relationship with hundreds of millions of consumers, and maintains recognized brand strength in AI among both technical and non-technical audiences.

Open AI's recent focus on reasoning models (like o1) and its strategy of emphasizing raw capability over ecosystem integration represent a legitimate competitive approach. If Open AI's models achieve clearly superior capability for complex reasoning tasks, this could provide defensible differentiation. Some customer segments strongly prefer "best technology" over "integrated ecosystem," making Open AI's approach viable for specific markets.

Additionally, Open AI's strategic partnerships with Microsoft (which provides cloud infrastructure, distribution through Copilot, integration into Office products, and financial backing) partially mitigate Google's ecosystem advantage. Microsoft's integration of Open AI's technology into Office, Teams, Windows, and Azure creates distribution comparable to (though not identical to) Google's ecosystem reach.

Open AI also possesses genuine organizational advantages: rapid iteration velocity, a flat organizational structure enabling fast decision-making, and cultural momentum from successfully disrupting the industry. Large organizations like Google sometimes suffer from bureaucratic constraints that slow decision-making. Open AI's smaller, more focused organization can sometimes change direction faster than Google's more complex structure.

Anthropic and Alternative Approaches

Anthropic represents a different competitive approach, focused particularly on safety, interpretability, and constitutional AI methodology. Rather than competing on raw capability or ecosystem integration, Anthropic emphasizes building AI systems that are safer, more reliable, and more transparent in their reasoning. This appeals to customers and enterprises particularly concerned about AI alignment and responsible development.

Anthropic also benefits from strong technical talent, significant venture funding, and strategic partnerships with cloud providers. The company's Claude models demonstrate genuine capability and have developed loyal user segments. While Anthropic lacks Google's distribution advantages, the company's focused approach on safety and interpretability may prove valuable as AI regulation increases and enterprises demand provable safety characteristics.

Potential Regulatory and Antitrust Challenges

Google's dominance in search, advertising, and mobile operating systems has already attracted significant regulatory scrutiny globally. The company faces numerous antitrust investigations, potential forced divestitures, and regulatory constraints on data usage and business practices. These regulatory pressures could potentially limit some of the strategic advantages outlined above.

If regulators require Google to separate its search business from AI services, or limit the company's capacity to integrate Gemini across products, this would substantially reduce the competitive advantages this analysis emphasizes. While such extreme outcomes remain unlikely (given the time required for regulatory processes and political complexity), the risk exists and deserves acknowledgment.

Moreover, as AI regulation develops (particularly in the EU and potentially the US), new requirements around model transparency, bias testing, and responsible deployment could increase Google's compliance costs and create constraints that benefit nimbler competitors. Established companies sometimes struggle more with regulatory adaptation than smaller, more flexible organizations designed with regulation in mind.

Figure: Google Search processes over 8 billion searches daily, making it the largest platform for AI integration. Google Workspace also plays a significant role, with over 1 billion monthly active users. (Estimated data)

Understanding Broader AI Market Dynamics

The Difference Between Model Quality and Market Dominance

A critical insight that often gets lost in technical discussions: the "best" model doesn't always capture the largest market share, and market dominance doesn't require being the absolute best. Rather, market leaders typically require a "good enough" technology combined with distribution, integration, pricing strategy, and customer relationships that competitors cannot match.

Consider smartphone operating systems: iOS isn't definitively "better" than Android, yet Apple maintains higher market value and margins through ecosystem integration, brand loyalty, and pricing strategy. Or web browsers: Chrome's dominance relative to Safari or Firefox reflects distribution through Android and Google services more than technical superiority. These examples illustrate that market dominance typically stems from multiple factors beyond pure technical merit.

Applied to AI: Google doesn't need Gemini to be distinctly superior to every competitor on every metric. Gemini needs to be "good enough" (which it appears to be—competitive at the frontier) while leveraging distribution, integration, ecosystem lock-in, and data advantages that competitors cannot match. This combination, not raw model superiority alone, drives long-term dominance.

Network Effects and Winner-Take-Most Dynamics

AI markets exhibit winner-take-most characteristics in certain contexts. When a model achieves sufficient adoption, developers build applications and integrations on top of it. As applications and integrations accumulate, the model becomes increasingly attractive to new users (because more applications work with it). This positive feedback loop creates barriers that make displacing the incumbent increasingly expensive.

Google's strategy appears designed to accelerate these network effects. By integrating Gemini across dozens of applications and services simultaneously, Google creates immediate adoption momentum. Developers who want to reach the largest possible user base will prioritize building Gemini integrations. As integrations accumulate, Gemini becomes more valuable to users. Positive feedback accelerates.

Competitors trying to displace Gemini must overcome not just technical capability gaps but also the network effects and ecosystem advantages that accumulate with dominance. This proves extraordinarily difficult—nearly impossible if the incumbent continues improving technology while maintaining ecosystem integration.

The Role of Speed and Iteration

In frontier AI markets with rapid capability improvements ("a new best model every six weeks"), iteration speed determines competitive advantage. Organizations that can experiment rapidly, identify winning approaches, and scale them quickly tend to capture market leadership. Speed matters more than starting position.

Google appears well-positioned for speed advantages. The company's research infrastructure enables rapid experimentation. Its production systems can scale new capabilities immediately. Its feedback loops (from billions of users) enable rapid identification of what works and what doesn't. Competitors with slower feedback loops or less flexible infrastructure inherently iterate more slowly, creating capability gaps over time.

This speed advantage likely proves difficult to overcome. Even well-funded, capable competitors with strong technology will fall behind if Google iterates 2-3x faster. The gap compounds year-over-year. By the time a competitor catches up in a specific area, Google has likely already moved forward.
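The compounding claim can be made concrete with a toy calculation. The sketch below assumes, purely for illustration, that each iteration cycle improves some capability score by a fixed fraction (`gain_per_cycle`) and that one organization completes `speed_ratio` times as many cycles per year as another. Neither the function names nor the input figures come from measured data; they are hypothetical placeholders.

```python
def capability_after(years: float, cycles_per_year: float, gain_per_cycle: float) -> float:
    """Relative capability after compounding a fixed fractional gain per cycle."""
    return (1.0 + gain_per_cycle) ** (cycles_per_year * years)


def capability_ratio(years: float, base_cycles: float, speed_ratio: float,
                     gain_per_cycle: float) -> float:
    """How far ahead the faster iterator is, as a multiple of the slower one."""
    fast = capability_after(years, base_cycles * speed_ratio, gain_per_cycle)
    slow = capability_after(years, base_cycles, gain_per_cycle)
    return fast / slow


if __name__ == "__main__":
    # Hypothetical inputs: 4 cycles/year for the slower team, 2% gain per cycle,
    # and a rival iterating twice as fast.
    for years in (1, 3, 5):
        ratio = capability_ratio(years, base_cycles=4, speed_ratio=2.0,
                                 gain_per_cycle=0.02)
        print(f"after {years} year(s): faster iterator is {ratio:.2f}x ahead")
```

Under these assumptions the lead grows every year even though the per-cycle gain is identical for both teams, which is the sense in which the gap "compounds." A model with diminishing returns per cycle would narrow the effect, so the numbers should be read as directional only.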

Strategic Decisions and Trade-Offs: Why Google's Approach Matters

Integration vs. Specialization Trade-Off

Google's strategy of integrating Gemini across multiple products and services represents a choice with trade-offs. On the integration side: distribution reaches billions of users, ecosystem lock-in increases switching costs, and contextual information improves response quality. Users benefit from seamless AI assistance integrated into services they already use.

On the specialization side: deeply focused AI services (like Chat GPT as a standalone application) can offer maximum flexibility and customization. Some users prefer a dedicated tool optimized for AI interaction over AI features integrated into broader products. Chat GPT users have access to a clean, focused interface without the complexity of broader system integration.

Google has chosen integration dominance over specialized focus. This represents a deliberate trade-off that sacrifices some depth of specialization for breadth of distribution and ecosystem advantage. For Google's business model (monetizing through search, advertising, and cloud services), this trade-off makes sense. For users who value pure specialization and focus, competitors like Open AI might prove preferable.

User Experience and Interface Design

One underappreciated dimension involves user interface design and user experience. Chat GPT pioneered an extraordinarily clean, simple, focused interface for conversational AI. Users open the application, ask questions, and interact with responses in a carefully designed environment that prioritizes the AI conversation.

Gemini, integrated across dozens of Google products and contexts, necessarily faces interface constraints. Rather than a unified, focused environment, Gemini appears through search results, email suggestions, workspace integrations, and device-level assistants. Each context presents the AI differently, optimized for that specific context rather than pure conversational AI excellence. This fragmentation might provide breadth but potentially sacrifices depth of experience in any single context.

Over time, this user experience difference could matter. If some user segments strongly prefer the clarity and focus of Chat GPT's dedicated interface, they might prefer Open AI's product even if Gemini offered comparable capability. Google's challenge involves maintaining consistent, excellent experience across dozens of integration contexts—a harder problem than optimizing a single application.

Open APIs and Developer Ecosystem

Google Cloud's Gemini APIs enable developers to build applications powered by Gemini. This developer ecosystem approach mirrors how Open AI enables Chat GPT integrations through APIs. Both companies recognize that third-party developers building applications on their platforms extends reach and creates additional network effects.

However, Google's approach differs because the company doesn't depend on third-party developers for primary distribution. Google already reaches billions of users directly through its own products. Third-party developers building on Gemini APIs represent incremental reach beyond what Google achieves through direct product integration. Open AI, lacking comparable direct distribution, depends more heavily on a third-party developer ecosystem to reach users beyond chatgpt.com.

This difference shapes API strategy and pricing. Google can afford generous API pricing to maximize developer adoption and ecosystem growth. Open AI, depending more heavily on this channel, prices accordingly. The different strategic positions lead to different choices around how to monetize and support the developer ecosystem.

Figure: Gemini 3 consistently performs at the top across diverse benchmarks, indicating its broad capability in reasoning, problem-solving, and multimodal tasks. (Estimated data)

Practical Implications: What This Means for Different Stakeholders

For Developers and Technical Teams

Developers building AI applications face a strategic choice: build on Open AI's Chat GPT, Google Cloud's Gemini, Anthropic's Claude, or other emerging platforms. While technical capability matters (and all frontier models remain competitive), long-term strategic considerations deserve weight.

Building on Google's platform offers advantages: massive distribution potential (your application could eventually reach billions of users if integrated into Google services), deep ecosystem integration enabling rich contextual features, and financial stability backing long-term API availability. It carries risks: Google's large-scale operations sometimes involve slower decision-making, and regulatory scrutiny could potentially impact API availability or terms.

Building on Open AI's platform offers advantages: proven product-market fit, a clear business model, a direct relationship with users, and rapid iteration velocity. Risks include dependence on an external company's business success, exposure to Chat GPT's subscription pricing model, and lack of distribution beyond the core Chat GPT platform.

Most sophisticated development teams hedge this risk by building on multiple platforms, ensuring applications work with several AI models. This provides resilience against any single provider's challenges while enabling users to choose their preferred platform. However, maximizing integration quality requires effort; teams must decide which platform to optimize for first based on their target user base.

For Enterprise Organizations

Enterprises selecting AI platforms for internal use face similar considerations. Organizations that already use Google Workspace, Google Cloud, or other Google products gain integration advantages by standardizing on Gemini. Switching costs are lower when the AI solution natively integrates with tools employees already use daily.

Organizations without an existing Google platform investment might find Open AI's or Anthropic's more specialized approaches preferable. These companies' narrower focus means cleaner procurement, clearer feature sets, and potentially more transparent pricing for dedicated AI services.

For enterprises building AI applications that will become part of their products or services, the development platform choice becomes critical. Long-term strategy should consider which ecosystem will remain dominant, which platform offers the best APIs for intended use cases, and which vendors offer pricing and support structures matching organizational needs.

Enterprises should also recognize that this choice isn't permanent. As AI rapidly evolves and new models emerge, organizations can reevaluate platform choices periodically. Rather than treating AI platform selection as a permanent architecture decision, enterprises benefit from treating it as a strategic technology choice requiring ongoing reassessment.

For Consumers and Everyday Users

Most consumers will interact with Gemini without explicitly choosing it, through ubiquitous integration into Android, Chrome, search, Gmail, and other Google products. This represents Google's strategic advantage—consumers benefit from AI assistance in natural contexts without requiring new behavior or separate applications.

For users who prefer dedicated AI interaction (visiting openai.com or using Chat GPT apps), Open AI's offerings remain competitive and arguably provide clearer specialization. Some users will prefer the focused, clean interface of dedicated AI applications over integrated-but-scattered AI features across multiple products.

Consumers should expect Gemini's integration into Google products to deepen over time. Features that currently feel like separable options will become increasingly integrated into core functionality. Users will encounter Gemini-powered recommendations, summaries, and assistance in contexts they might not explicitly request it. This reflects Google's strategy of making AI a default, ambient feature of existing services rather than an opt-in service.

For AI Infrastructure and Chip Companies

Google's vertical integration of AI chip design and model development creates pressure on pure-play infrastructure providers like Nvidia. As Google increases TPU investment and optimization, the company becomes less dependent on Nvidia for AI acceleration. This could reduce Nvidia's addressable market (fewer companies may feel they need the largest possible GPU fleets if Google demonstrates excellent performance with proprietary TPUs).

This likely explains why other cloud providers (Amazon, Microsoft) are similarly investing in proprietary AI chips. The strategic imperative: reduce dependence on external chip suppliers to improve margins, performance, and reliability. Companies that can fully control their hardware-software stack beat those forced to work within externally-imposed constraints.

For startups and smaller companies without resources for custom chip development, Nvidia's GPUs remain the practical standard. This creates a bifurcated market: large integrated companies (Google, Meta, Amazon, Microsoft) building proprietary chips and optimization, while smaller companies and startups depend on standardized GPU infrastructure. The gap between these two segments likely widens over time.

The Path Forward: Predictions and Uncertainty

Near-Term: 2025-2026

In the immediate years ahead, expect Google to consolidate advantages through: deepening Apple Siri integration as planned, expanding Gemini across Google products, improving model capability through continued research and training, and building developer ecosystem momentum through Gemini APIs.

Open AI will likely counter through: advancing reasoning capabilities (the o1 model direction), securing additional strategic partnerships beyond Microsoft, growing Chat GPT's user base and engagement, and possibly introducing new products and pricing models. The company's immediate challenge involves maintaining capability parity with Google while building business model sustainability.

Anthropic will pursue its differentiated approach around safety and reliability, appealing to customer segments valuing these characteristics. The company's capital position supports long-term development; the company doesn't face immediate existential pressure but must demonstrate meaningful differentiation to justify continued investment.

Expect continued commoditization of frontier AI capability—multiple companies will maintain "good enough" models at the frontier, with differentiation increasingly based on ecosystem integration, distribution, data context, and user experience rather than raw model capability.

Medium-Term: 2027-2030

Medium-term developments will likely center on: regulatory adaptation and compliance with emerging AI regulations (particularly EU AI Act and potential US frameworks), consolidation in the AI platform market as weaker competitors exit or merge, maturation of AI applications and business models (moving beyond initial hype to proven ROI), and deepening integration of AI into virtually all software products.

Google's structural advantages should solidify during this period. Each new distribution and integration point makes displacement harder. As developers commit to Gemini APIs and enterprises optimize processes around Gemini integration, switching costs accumulate. Competitors will have fallen significantly further behind if Google maintains its iteration velocity.

However, regulatory challenges could potentially disrupt Google's position. If antitrust or AI-specific regulations force separation of AI services from core business, or limit data integration capabilities, Google's advantages could diminish meaningfully. The probability and scope of such disruption remains genuinely uncertain.

Long-Term: 2030+

Beyond 2030, several scenarios seem plausible: (1) Google achieves a dominant AI market position that proves difficult to dislodge without regulatory intervention, (2) a technological breakthrough enables a newcomer to leapfrog incumbents (similar to how Chat GPT surprised the industry), (3) regulatory intervention reshapes the competitive landscape by limiting Google's integrated advantages, or (4) consolidation produces 2-3 dominant AI ecosystems (Google, Microsoft+Open AI, potentially others) serving different markets.

The long-term outcome remains genuinely uncertain. While this analysis identifies Google's structural advantages as formidable, the AI field moves rapidly. Technological breakthroughs can surprise. Strategic execution matters. Regulatory changes could reshape the landscape. Competitors can make smart choices that catch up faster than structural analysis suggests.

Investors, technologists, and organizations should treat this analysis as identifying current advantages and likely trajectories, not inevitable futures. Competitive advantage in technology markets remains perpetually contested and subject to unexpected change.

Alternative AI Platforms: Understanding the Broader Landscape

Evaluating Alternative Solutions for Your Needs

While Google Gemini appears well-positioned for dominance, different platforms serve different use cases and user preferences. Teams and developers should evaluate AI solutions based on their specific needs rather than assuming market dominance automatically aligns with optimal choice for their situation.

For teams prioritizing cost-effective automation and workflow productivity, platforms like Runable offer compelling alternatives worth serious consideration. Runable positions itself as an AI-powered automation platform for developers and teams, emphasizing accessibility at $9/month compared to expensive enterprise tools. The platform provides AI agents for content generation, document automation, workflow automation, and developer tools—capabilities directly addressing productivity pain points.

Runable's strengths center on simplicity and developer focus. Rather than enterprise complexity, Runable targets teams building modern applications who need straightforward automation without extensive setup. The platform's AI slide generation, document creation, and report automation features reduce time spent on routine content work—an area where even sophisticated language models can add genuine value when properly integrated.

For teams already invested in Google's ecosystem, Gemini's native integration offers a clear advantage. For teams without existing Google commitments, evaluating Runable alongside Open AI and Anthropic solutions provides a balanced perspective. Runable addresses a different market segment (cost-conscious teams, automation-focused use cases) rather than competing directly with sophisticated AI assistants.

Comparative Analysis: When to Choose Which Platform

| Dimension | Google Gemini | Open AI Chat GPT | Anthropic Claude | Runable |
|---|---|---|---|---|
| Primary Strength | Ecosystem integration, distribution | Conversational excellence, brand | Safety and reliability | Automation, cost-effectiveness |
| Best For | Google product users, enterprises | Conversational AI, general knowledge | Safety-conscious enterprises | Developer teams, cost-conscious users |
| Integration Depth | Native across Google products | Via API or standalone app | Via API or standalone interface | Workflow automation, team productivity |
| Pricing Model | Free (integrated), enterprise custom | Freemium ($20/month Pro), enterprise | Freemium, enterprise | $9/month base, usage-based scaling |
| Typical Use Case | Search, email, documents | Conversational queries, content generation | Content analysis, safety-critical systems | Automated reports, presentations, documents |
| Distribution Reach | 3+ billion device users | 100+ million direct users | 20+ million direct users | Developer/startup teams |
| Data Integration | Deep (contextual, personal) | Limited (conversation only) | Limited (conversation only) | Integrated with standard tools |
| Learning Curve | Minimal (already familiar) | Moderate | Moderate | Low (workflow-focused) |

This comparison demonstrates that different platforms serve different needs. Google Gemini's advantage centers on ecosystem integration and distribution reach. Open AI's strength involves conversational interaction and direct user relationships. Anthropic's focus involves safety characteristics. Runable's value proposition centers on automation simplicity and cost-effectiveness.

Organizations should ask: What problem are we actually trying to solve? Do we need conversational AI, or do we need automation? Are we deeply invested in specific platforms, or can we choose freely? Do we prioritize cutting-edge capability, or cost-effectiveness and ease of use? How important are safety and reliability characteristics?

Answers to these questions guide platform choice far better than simply assuming market dominance aligns with optimal solution.
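One way to make these questions operational is a weighted scoring sheet. The sketch below is an illustration only: the criterion names, weights, and scores are placeholders that a team would replace with its own judgments, and `weighted_score` and `rank_platforms` are hypothetical helper names rather than part of any vendor tooling.

```python
def weighted_score(scores: dict[str, float], weights: dict[str, float]) -> float:
    """Weighted average of per-criterion scores; weights need not sum to 1."""
    total_weight = sum(weights.values())
    if total_weight <= 0:
        raise ValueError("weights must sum to a positive number")
    # A criterion missing from `scores` counts as 0 for that platform.
    return sum(weights[c] * scores.get(c, 0.0) for c in weights) / total_weight


def rank_platforms(candidates: dict[str, dict[str, float]],
                   weights: dict[str, float]) -> list[tuple[str, float]]:
    """Return (platform, score) pairs sorted from best to worst fit."""
    scored = [(name, weighted_score(s, weights)) for name, s in candidates.items()]
    return sorted(scored, key=lambda pair: pair[1], reverse=True)


if __name__ == "__main__":
    # All numbers are placeholders on a 0-5 scale, not assessments of real products.
    weights = {"ecosystem_fit": 3, "automation": 2, "cost": 2, "safety": 1}
    candidates = {
        "platform_a": {"ecosystem_fit": 5, "automation": 2, "cost": 2, "safety": 4},
        "platform_b": {"ecosystem_fit": 2, "automation": 5, "cost": 5, "safety": 3},
    }
    for name, score in rank_platforms(candidates, weights):
        print(f"{name}: {score:.2f}")
```

The value of the exercise lies less in the final number than in forcing the team to state its weights explicitly, for example deciding whether ecosystem fit really matters more than cost before any vendor is scored.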

Special Consideration: Developer and Team-Focused Solutions

For development teams and smaller organizations, specialized platforms deserve particular consideration. While Gemini, Chat GPT, and Claude represent frontier AI capabilities, teams sometimes need different things than maximum raw capability.

Developers often benefit from tools optimized specifically for development workflows rather than general-purpose conversational AI. Similarly, content teams benefit from automation rather than conversational interaction. Marketing teams might prioritize presentation generation over chat interface.

Runable explicitly targets these specialized needs, offering AI-powered automation focused on generating professional content—slides, documents, reports, presentations. For teams where these capabilities directly address workflow pain points, Runable might deliver more practical value than more expensive general-purpose platforms. The $9/month entry point also eliminates concerns about expensive experiments; teams can affordably test AI automation and measure ROI before larger commitments.

Conclusion: Understanding Competitive Dynamics in AI Markets

Google's position as the company apparently most poised to dominate artificial intelligence markets reflects not a single advantage but a comprehensive set of structural advantages: proprietary infrastructure (TPUs), frontier model capability (Gemini 3), unprecedented distribution (billions of devices and users), strategic partnerships (Apple Siri), data access advantages, financial resources, and research excellence.

Critically, these advantages combine multiplicatively rather than additively. Gemini's distribution alone wouldn't ensure dominance if the model proved inferior to competitors. But combined with competitive-frontier model quality, billions of users provide feedback for rapid improvement, supporting further model advancement. Each advantage reinforces others, creating dynamics that make displacement increasingly difficult.

This doesn't mean Google has won permanently or that competitors cannot catch up. Open AI's partnership with Microsoft provides counter-advantages. Anthropic's focus on safety addresses genuine customer concerns. Regulatory intervention could reshape the landscape. Unexpected technological breakthroughs could emerge. Competitive advantage in technology remains perpetually contested.

However, if we assess current position and likely trajectory based on structural factors (distribution, integration, data, infrastructure, and resources), Google appears positioned to maintain leadership in AI markets for the foreseeable future. The company doesn't need to be demonstrably better than every competitor; it needs to be "good enough" (which it appears to be) while leveraging advantages competitors cannot easily replicate.

For developers, enterprises, and organizations making decisions about AI platforms: this analysis suggests Google will likely remain a safe, strategically sound choice for long-term commitments. Hedging bets across multiple platforms reduces risk. Evaluating specialized solutions (like Runable for cost-effective automation) alongside frontier models ensures selection aligns with actual needs rather than simply chasing market dominance.

The AI market remains young. Significant uncertainty about future outcomes persists. But in betting markets, in capital allocation, in developer focus, and in organizational strategy, treating Google Gemini as the frontrunner appears justified by structural analysis of current advantages and likely competitive trajectories.

The race isn't over, but the frontrunner appears to have a significant head start.

FAQ

What makes Google Gemini different from ChatGPT?

Google Gemini differs from ChatGPT primarily in distribution strategy and integration. While ChatGPT excels as a dedicated conversational application, Gemini is integrated natively across Google's ecosystem: search, email, Workspace, Android, Chrome, and other products reaching billions of users. Gemini also benefits from contextual data (user location, calendar, recent searches) that standalone ChatGPT cannot access, enabling more personalized assistance. From a model capability perspective, both are frontier-class language models with competitive performance across benchmarks, though they may excel at different specific tasks.

How does Gemini's TPU advantage provide competitive benefits?

Google's Tensor Processing Units (TPUs) are specialized chips optimized for AI workloads, designed in-house by Google rather than purchased off the shelf from external suppliers like Nvidia (fabrication itself is outsourced to contract foundries). This vertical integration provides several advantages: lower cost per inference through optimization for Gemini's architecture, faster inference times from hardware-software codesign, less dependence on external chip vendors vulnerable to supply constraints, and strategic flexibility to modify hardware and software together when performance bottlenecks are identified. These advantages compound over time as Google continues optimizing hardware and model together in ways that competitors working with standard GPU infrastructure cannot easily match.

Why is the Apple Siri partnership so significant for Google?

The Apple Siri partnership represents extraordinary distribution leverage. Siri processes approximately 1.5 billion requests daily across iPhones, iPads, Macs, and other Apple devices. This partnership immediately establishes Gemini as one of the most widely used AI assistants globally, comparable to ChatGPT's total usage, without requiring Google to build consumer adoption separately. The partnership also signals market validation from Apple, one of the world's most selective technology companies, enhancing perception of Gemini's quality and future dominance. Additionally, Siri integration provides unique opportunities for contextual assistance (understanding device state, user location, calendar data) that enhance the user experience beyond what standalone AI assistants can deliver.

What data advantages does Google possess that competitors lack?

Google has access to unprecedented volumes of first-party user data: search queries (8+ billion daily), email interactions (1.8+ billion Gmail users), video viewing patterns (2+ billion YouTube users), device usage (3+ billion Android devices), browser behavior (65%+ browser market share), location data, and calendar/productivity signals from Google Workspace users. While privacy regulations limit how Google can use this data, the company can leverage aggregate, anonymized insights to improve model training and understand user intent. Competitors either lack comparable data access (OpenAI, Anthropic) or must license data (expensive) or scrape public sources (legally constrained). This data advantage accelerates Gemini's improvement through feedback from billions of real-world interactions that competitors cannot access.

How do Gemini's reasoning capabilities compare to specialized reasoning models?

Gemini 3 demonstrates particularly strong reasoning capability across benchmarks measuring mathematical problem-solving, logical deduction, and multi-step reasoning. However, specialized reasoning models developed by competitors (like OpenAI's o1 model) represent a different approach: explicitly designed and trained to excel at complex reasoning through iterative refinement steps. These specialized models trade breadth for depth, achieving superior performance on pure reasoning tasks while potentially sacrificing performance on other tasks. For most users and enterprises needing both conversational capability and reasoning strength, Gemini provides excellent all-around performance. For teams specifically prioritizing advanced reasoning on complex problems, specialized reasoning models might offer superior capability despite being less general-purpose.

Is Gemini available to general consumers, or only enterprise customers?

Gemini is available to general consumers primarily through integration into Google's existing services: search, Gmail, Android devices, and Google Assistant, with Google Workspace covering business users. Google also offers Gemini Advanced as a paid subscription service with additional capabilities and higher usage limits. Additionally, Gemini APIs are available through Google Cloud to developers and enterprises building applications. This differs from ChatGPT's approach, which emphasizes standalone applications and subscriptions. Google's distribution strategy makes Gemini accessible to billions of users who might never consciously "choose" the platform but encounter it inside services they already use regularly.

How does Google's advertising business model relate to Gemini strategy?

Google's core business, advertising, accounts for the large majority of Alphabet's more than $300 billion in annual revenue, providing extraordinary capital for AI investment without requiring AI itself to be immediately profitable. This enables strategic patience: Google can integrate Gemini across products, improve the technology, establish a dominant position, and extract value over longer timeframes rather than demanding immediate profitability. Additionally, AI capabilities enhance advertising by enabling better targeting (understanding user intent from Gemini interactions), improved ad placement (contextual relevance), and new ad formats (interactive AI-powered ads). Improved targeting increases advertiser ROI, enabling higher pricing and stronger advertiser demand. This creates a virtuous cycle: AI improves advertising, advertising revenue funds AI, which improves advertising further.

What regulatory risks could threaten Google's AI dominance position?

Google faces several regulatory threats to its AI advantage: antitrust action that could force separation of AI services from the core search/advertising business (limiting integrated distribution advantages), data privacy regulations restricting how Google uses user data for model improvement (reducing the competitive advantage from data access), AI-specific regulations (particularly the EU's AI Act) that could impose requirements raising compliance costs disproportionately for large integrated companies, and international regulations that vary by jurisdiction and create operational complexity. While dramatic scenarios (forced divestitures) seem unlikely in the near term, regulatory headwinds already exist and could increase. Companies like Anthropic that designed responsible AI practices from inception might face lower regulatory costs than companies retrofitting compliance onto integrated platforms.

Should developers build AI applications on Google Cloud's Gemini APIs?

Building on Google Cloud's Gemini APIs offers significant advantages: access to frontier-class model capability, potential integration into Google products providing customer acquisition at scale, deep ecosystem integration enabling rich contextual features, and financial stability backing long-term API availability. Potential disadvantages include the sometimes slow pace of Google's large-scale operations, regulatory uncertainty about future API terms or availability, and potential competition if Google decides to build competing products in your space. Most sophisticated development teams hedge this risk by building applications that work with multiple AI models (Gemini, OpenAI, Claude), ensuring resilience against any single provider's challenges while enabling users to choose their preferred platform. For teams building AI applications specifically targeting businesses already using Google Workspace or Google Cloud, Gemini provides compelling integrated advantages.
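The multi-model hedge described above can be sketched as a thin routing layer that tries providers in a preferred order and falls back when one fails. This is only an illustration under stated assumptions: `ModelRouter`, `AllProvidersFailed`, and the two stub providers are hypothetical names invented here, and no real vendor SDK is called. In practice each provider function would wrap whichever client library a team actually uses.

```python
from dataclasses import dataclass
from typing import Callable, Dict, List, Tuple

# Assumption: a provider is modeled as a plain function, prompt in, text out.
# Real code would wrap a vendor SDK call inside each such function.
Provider = Callable[[str], str]


class AllProvidersFailed(Exception):
    """Raised when every configured provider raised an error."""


@dataclass
class ModelRouter:
    providers: Dict[str, Provider]  # hypothetical provider name -> callable
    order: List[str]                # preference order, first entry tried first

    def complete(self, prompt: str) -> Tuple[str, str]:
        """Return (provider_name, text) from the first provider that succeeds."""
        errors: Dict[str, Exception] = {}
        for name in self.order:
            try:
                return name, self.providers[name](prompt)
            except Exception as exc:  # record the failure and try the next one
                errors[name] = exc
        raise AllProvidersFailed(errors)


# Stub providers standing in for real model clients (purely illustrative).
def flaky_primary(prompt: str) -> str:
    raise TimeoutError("simulated outage")


def stub_secondary(prompt: str) -> str:
    return f"[secondary] {prompt}"


router = ModelRouter(
    providers={"primary": flaky_primary, "secondary": stub_secondary},
    order=["primary", "secondary"],
)
name, text = router.complete("Summarize Q3 results")
print(name, text)  # prints: secondary [secondary] Summarize Q3 results
```

Keeping the application code dependent only on the `Provider` signature is one way to make switching or adding a vendor a configuration change rather than a rewrite. The preference order could also be exposed to users who want to pick a platform themselves.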

How does Runable offer different value than frontier models like Gemini and ChatGPT?

Runable targets a different use case than conversational AI models: workflow automation and content generation for developers and teams, with a $9/month entry point. While frontier models like Gemini and ChatGPT excel at conversational interaction and general knowledge tasks, Runable focuses on automating routine work that consumes team time: generating presentations, documents, and reports, and managing workflows. For teams whose primary need is automation and content generation rather than conversational AI, Runable can deliver more practical value at lower cost and with simpler integration. Its accessibility suits teams that want to test AI automation affordably before making larger platform commitments. The platforms address different market segments rather than directly competing: Runable for automation-focused teams, frontier models for conversational AI and general intelligence.

Strategic Takeaways and Key Insights

The competitive advantage in frontier AI extends far beyond model quality. While Gemini's frontier-class capability matters, Google's integrated advantages—distribution, data access, infrastructure, partnerships—prove equally or more important for long-term dominance.

Market dominance in AI will likely follow winner-take-most dynamics. The company establishing the largest user base, deepest ecosystem integration, and most effective feedback loops will pull further ahead. Google's current structural advantages suggest it will remain the frontrunner unless disrupted by regulation or technological breakthrough.

Developers and enterprises should hedge platform bets rather than betting entirely on one AI provider. While Gemini appears strategically sound, building applications that work with multiple models reduces risk and provides optionality as the landscape evolves.

Cost-effective automation remains underserved in mainstream AI market focus. Solutions emphasizing affordability and simplicity (like Runable) serve teams whose primary need involves workflow automation rather than maximum raw capability.

The AI race is not over, but the apparent frontrunner has built significant advantages that are difficult to overcome through disruption alone. Competitors can win through specialized focus (Anthropic on safety, OpenAI on reasoning), partnerships (Microsoft/OpenAI), or unexpected breakthroughs, but displacing Google's integrated dominance would require sustained excellence and strategic breaks that remain uncertain.

Key Takeaways

- Google Gemini's dominance stems from integrated advantages (distribution, infrastructure, partnerships) beyond model capability alone

- Proprietary in-house TPU chip design provides cost, performance, and strategic advantages that competitors using standard GPUs cannot easily match

- Apple Siri partnership immediately establishes Gemini with 1.5B daily requests and market validation competitors lack

- Google's data access (8B+ daily searches, 1.8B Gmail users, 3B Android devices) accelerates model improvement through real-world feedback

- Ecosystem integration across Android, Chrome, Gmail, YouTube creates network effects making displacement increasingly difficult

- OpenAI's conversational focus and ChatGPT brand remain competitive but lack Google's distribution reach

- Anthropic's safety-focused approach appeals to specific customer segments but lacks scale advantages

- Cost-effective automation platforms like Runable serve underserved market needs different from frontier conversational AI

- Developer teams benefit from hedging AI platform bets across multiple providers rather than single platform commitment

- Regulatory intervention and unexpected breakthroughs remain meaningful uncertainty factors despite Google's current advantages

- Market dominance in AI follows winner-take-most dynamics where early leaders compound advantages over time

- Integration depth and contextual data access distinguish Gemini from standalone ChatGPT
