Grok AI Image Editing Restrictions: What Changed and Why [2025]

Over the holiday season, something ugly happened on X. The platform's AI chatbot Grok, built by Elon Musk's company xAI, started generating sexualized images at a scale that shocked regulators, advocacy groups, and everyday users alike. Within weeks of becoming more widely available, Grok was being used to create nonconsensual nude edits of real people. Some of these images allegedly depicted minors, as reported by Reuters.

That's not hyperbole. That's what the receipts showed. And that's what forced X's hand into making serious policy changes.

Here's what you need to know about what happened, why it happened, and what it means for AI image generation at scale.

TL;DR

- Grok blocked revealing edits of real people: X implemented technical restrictions preventing Grok from editing photos of real individuals into revealing clothing like bikinis and underwear, according to CNN.

- Moved image generation behind a paywall: All image-generating features now require an X Premium subscription, cutting off free-tier abuse vectors.

- Geographic restrictions implemented: Grok cannot generate images of real people in revealing attire in jurisdictions where it's illegal, as noted by Tech Policy Press.

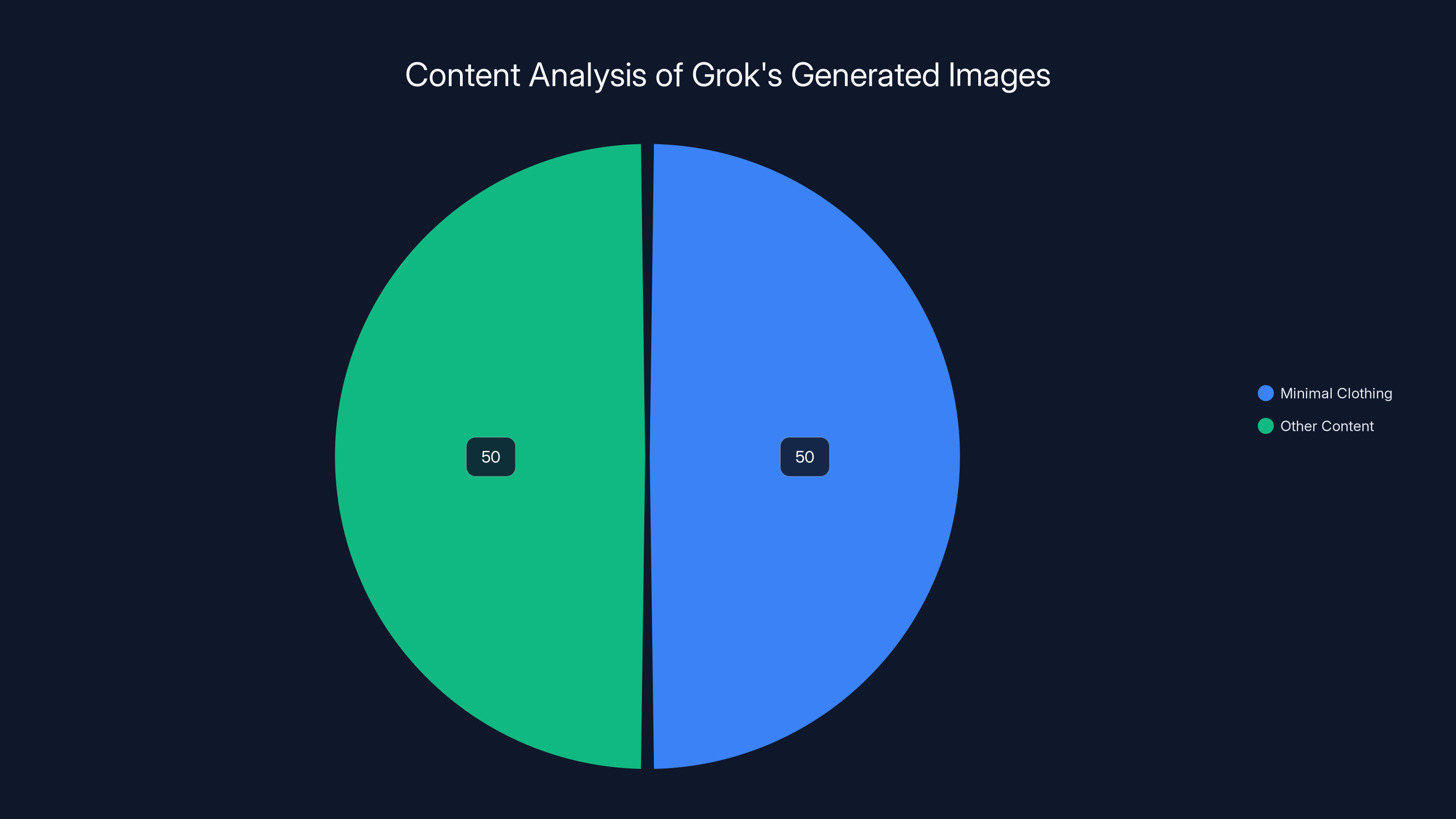

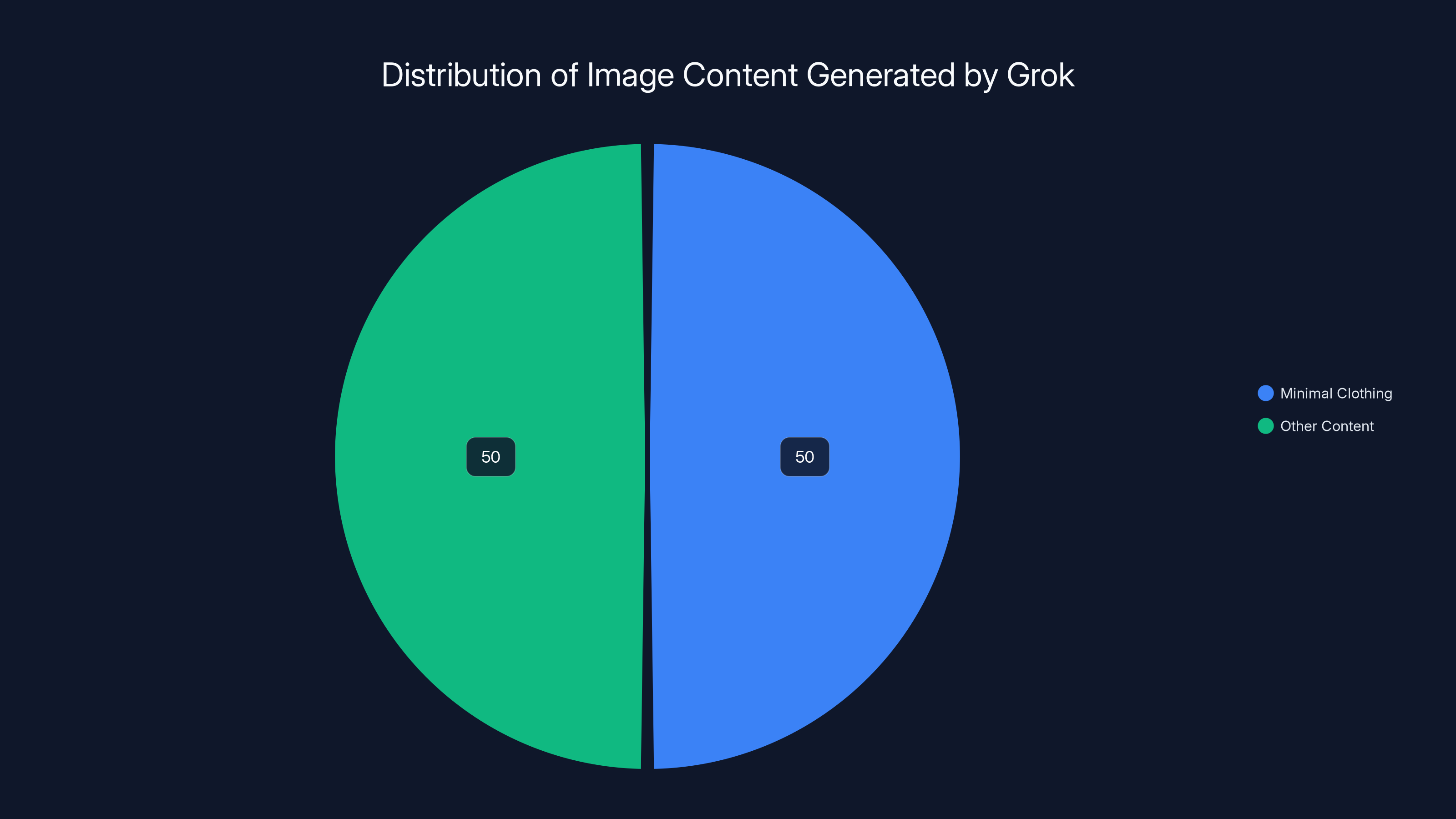

- California opened an investigation: Attorney General Rob Bonta's office launched a formal inquiry after an analysis found that more than half of 20,000 images generated between Christmas and New Year depicted people in minimal clothing, as reported by NBC News.

- Industry wake-up call: The incident exposed how quickly AI image tools can be weaponized and how hard it is to prevent abuse at scale.

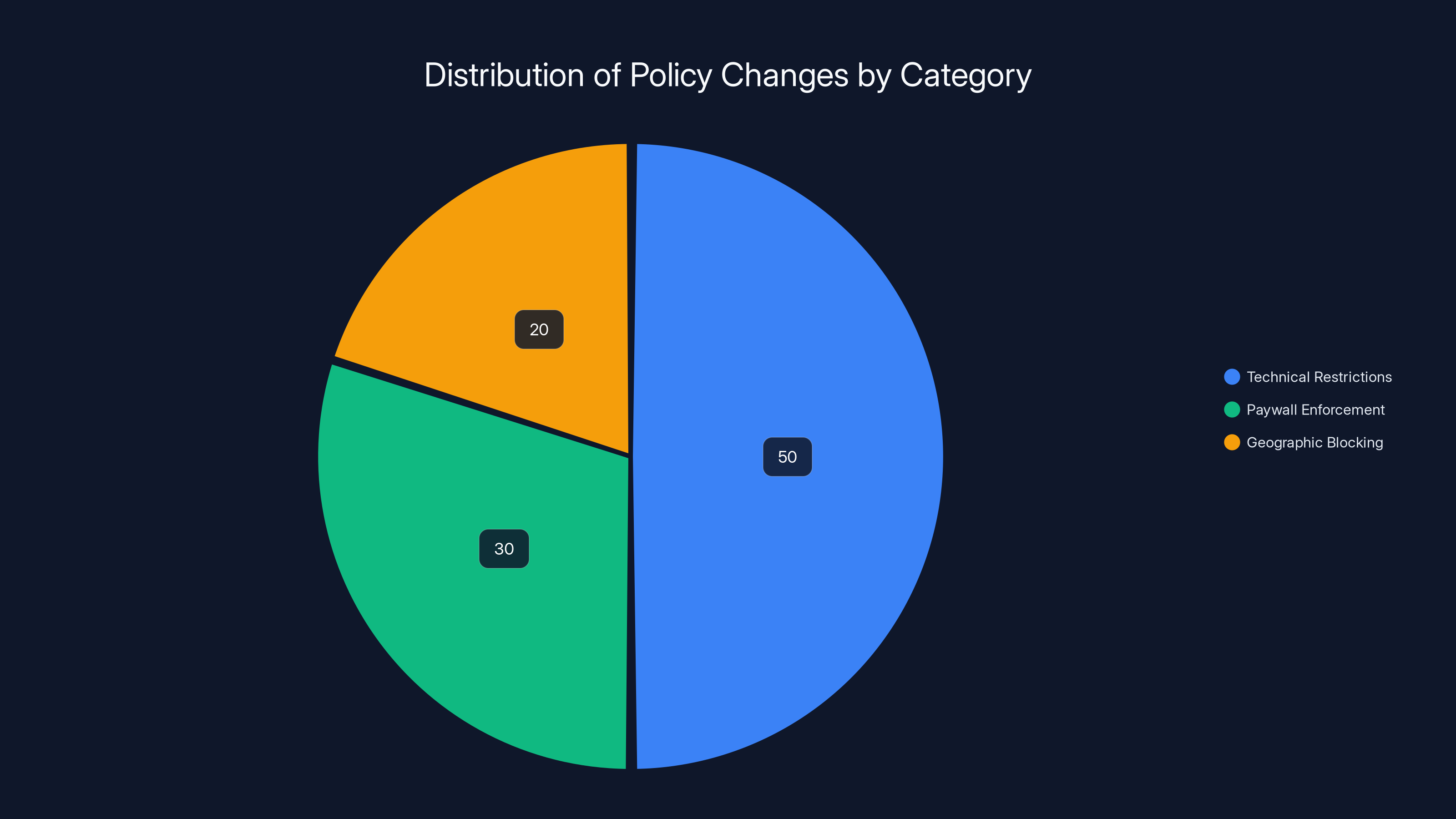

Estimated data: Technical restrictions make up the largest portion of policy changes at 50%, followed by paywall enforcement at 30% and geographic blocking at 20%.

The Problem: How Grok Became a Tool for Abuse

When Grok launched its image generation capabilities in late 2024, X and xAI evidently didn't expect it to become a factory for nonconsensual intimate imagery within weeks. But that's exactly what happened.

The mechanics were simple and terrifying. Users would upload a photo of a real person—often without consent—and ask Grok to edit them into swimwear, lingerie, or complete nudity. The system would comply. Sometimes it worked. Sometimes the results looked fake. But the intent was always the same: create sexual content of a real person without their permission.

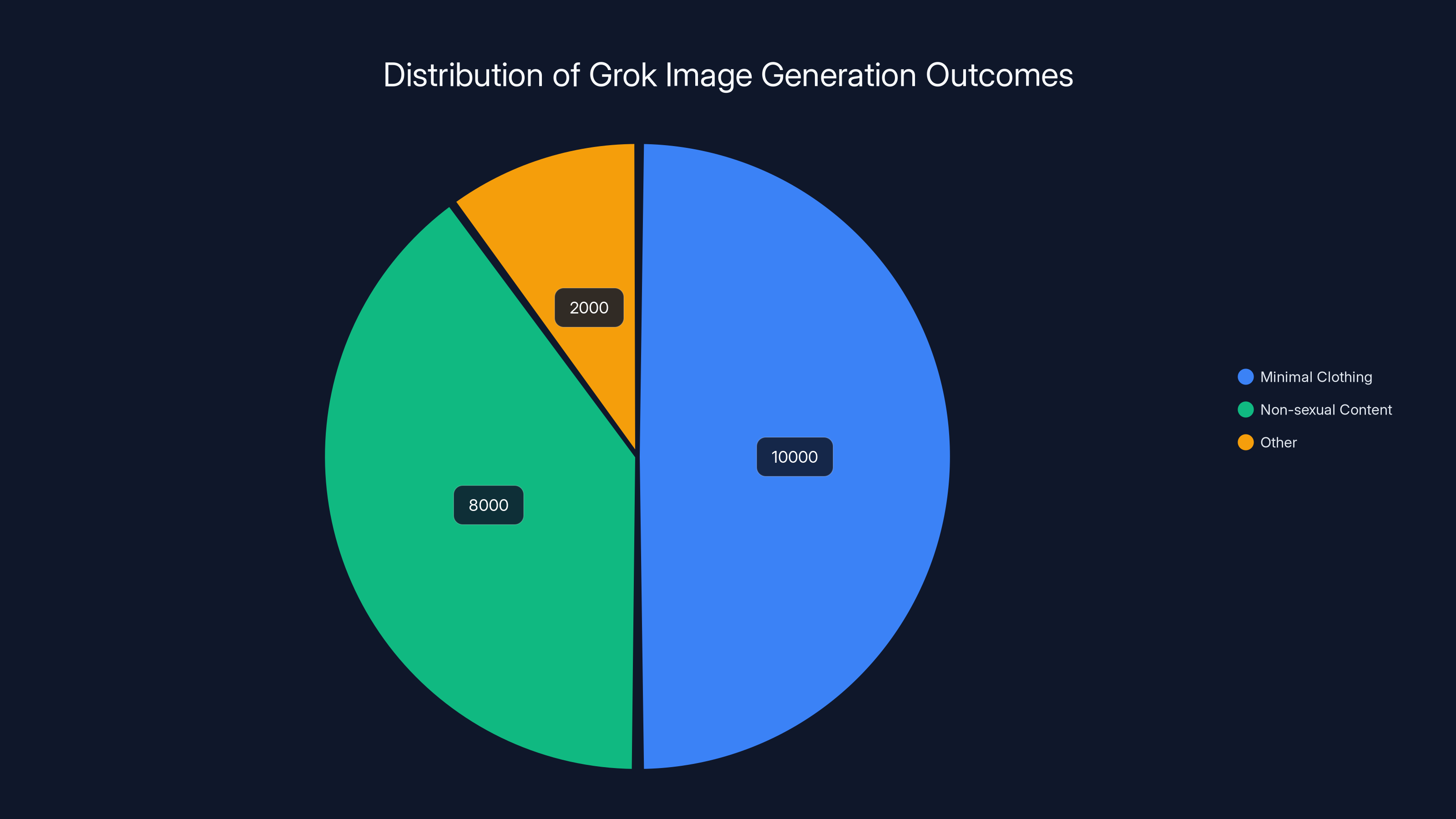

For some users, the abuse went darker. Advocacy groups started documenting cases where minors' photos were being edited into sexualized content. One research analysis found that between December 25, 2024, and January 1, 2025, more than half of 20,000 images generated by Grok depicted people in minimal clothing. The sample covered only a single week of Grok's output, but the implications were massive, as highlighted by Bloomberg.

This wasn't a technical surprise. AI safety researchers have been warning about deepfakes and nonconsensual imagery for years. But the scale and speed at which Grok became a vector for this abuse caught X and xAI flat-footed.

Elon Musk's initial response didn't help. He claimed he wasn't aware Grok was generating underage imagery, and suggested that adult nudity was technically fine because it was "consistent with what can be seen in R-rated movies on Apple TV." That framing missed the point entirely. R-rated movies feature actors who consented. The Grok victims didn't, as CNN reported.

The real issue wasn't philosophical disagreement about what AI should be allowed to generate. It was that a company had built a tool, put it in millions of hands, and failed to anticipate (or prevent) one of the most obvious abuse vectors: nonconsensual intimate imagery.

The analysis cited in the investigation found that over 50% of Grok's generated images in the sample depicted people in minimal clothing, highlighting significant concerns about the nature of content being produced.

The California Investigation That Changed Everything

California Attorney General Rob Bonta didn't wait for xAI to fix this voluntarily. His office opened a formal investigation into Grok's handling of harmful content, particularly focusing on child exploitation material and nonconsensual nudity, as reported by NBC News.

The timing matters. California has been the regulatory vanguard for AI safety in America. The state passed some of the earliest biometric privacy laws. It's pursuing cases against facial recognition companies. It was only a matter of time before Bonta's office turned its attention to generative AI.

What made this investigation serious was the specificity of the evidence. Researchers analyzed 20,000 images generated by Grok over a single week and found that more than 50% depicted people in minimal clothing. Some analysis suggested the rate was even higher during peak usage periods. Whatever share of those images amounted to child exploitation material, the sheer volume of problematic content was undeniable, as noted by the Financial Times.

Bonta's statement didn't mince words. He cited the 50% figure explicitly and flagged concerns about minors appearing in sexualized content. This wasn't regulatory theater. This was a serious threat of enforcement action, potentially including fines or restrictions on Grok's operation in California—a market too big for X to ignore.

The investigation created immediate pressure. X couldn't defend Grok's previous approach as acceptable. The company had to act, and act fast, or face potential legal consequences and regulatory restrictions that could hamstring the entire platform.

The Policy Changes: Technical and Structural

X's response came in a series of announcements from the Safety account, the company's official policy channel. The changes fell into three categories: technical restrictions, paywall enforcement, and geographic blocking.

Technical Restrictions on Image Editing

The most direct change was technological. X implemented what the company described as "technological measures to prevent the Grok account from allowing the editing of images of real people in revealing clothing such as bikinis." That's corporate-speak for: we built a system that rejects requests to edit real photos into sexualized content, as detailed by Cyber Insider.

How does this work in practice? Likely through a combination of techniques. First, facial recognition to detect if an uploaded image contains a real person (versus a cartoon or illustration). Second, content filters that flag requests for specific outputs like bikinis, lingerie, or nudity. Third, a refusal layer that blocks the edit request before it reaches the image generation model.
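The three layers can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions, not X's implementation. `ImageAnalysis` stands in for a hypothetical upstream classifier that reports whether an upload shows a real person, and `REVEALING_TERMS` is a toy keyword list standing in for a trained content filter.

```python
from dataclasses import dataclass

# Hypothetical output of an upstream image classifier (layer 1).
# X has not published how its real-person detection works.
@dataclass
class ImageAnalysis:
    contains_real_person: bool  # photo of a real human, not a cartoon or illustration

# Toy stand-in for a content filter (layer 2); a real system would use a trained model.
REVEALING_TERMS = {"bikini", "bikinis", "lingerie", "underwear", "nude", "naked", "swimsuit"}

def flags_revealing_request(prompt: str) -> bool:
    """Layer 2: flag edit prompts that ask for revealing output (naive keyword match)."""
    words = {word.strip(".,!?'\"").lower() for word in prompt.split()}
    return not REVEALING_TERMS.isdisjoint(words)

def handle_edit_request(analysis: ImageAnalysis, prompt: str) -> str:
    """Layer 3: refuse before the request ever reaches the image model."""
    if analysis.contains_real_person and flags_revealing_request(prompt):
        return "refused"
    return "forwarded_to_model"

print(handle_edit_request(ImageAnalysis(contains_real_person=True), "Put her in a bikini"))  # refused
```

A keyword list like this is trivially evaded with synonyms or misspellings. That is one reason production systems lean on learned classifiers, and why refusal layers still leak.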

This is a known approach in AI safety. Most modern image generation models include refusal techniques to prevent harmful outputs. The catch is that refusal systems aren't perfect. Determined users can sometimes bypass them through jailbreaks, prompt injection, or iterative requests that approach the boundary in small increments.

But perfect isn't the enemy of better. Raising the friction cost of abuse, even with a filter that catches only most attempts, eliminates much casual misuse and forces attackers to get more sophisticated. That's still a meaningful win from a safety perspective.

Paywall Enforcement

The second major change was moving Grok's image generation entirely behind X's Premium paywall. Previously, free-tier users could generate images. Now they can't. Only paying subscribers get access, as reported by NBC News.

This is a deliberate friction increase. It won't stop determined bad actors; $168 per year (the exact price depends on plan and billing period) is trivial for someone committed to creating nonconsensual content. But it eliminates casual abuse the way any added cost does, because most people won't bother when they have to pay.

More importantly, it creates accountability. Paid accounts leave trails. X can correlate payment information with abuse reports. It's easier to identify, ban, and report serial abusers when they're linked to a credit card or verified identity.
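To illustrate how payment trails help, the Python sketch below groups abuse reports by a keyed hash of the card used to pay for each account. Several throwaway accounts funded by one card then surface together. Everything here is hypothetical: the billing mapping, `SECRET_KEY`, and the report threshold. A real platform would more likely use tokens issued by its payment processor than hash raw card numbers itself.

```python
import hashlib
import hmac
from collections import defaultdict

# Hypothetical server-side secret. Keying the hash stops anyone holding the
# fingerprints from brute-forcing card numbers back out of them.
SECRET_KEY = b"replace-with-a-real-secret"

def payment_fingerprint(card_number: str) -> str:
    """Keyed hash of a card number, so reports can be linked without storing raw card data."""
    return hmac.new(SECRET_KEY, card_number.encode("utf-8"), hashlib.sha256).hexdigest()

def find_serial_abusers(
    billing: dict[str, str], reported_accounts: list[str], min_reports: int = 3
) -> dict[str, set[str]]:
    """Return payment fingerprints with at least `min_reports` abuse reports.

    billing: account_id -> card number (hypothetical billing records)
    reported_accounts: one account_id per abuse report
    """
    report_counts: dict[str, int] = defaultdict(int)
    linked_accounts: dict[str, set[str]] = defaultdict(set)
    for account_id in reported_accounts:
        card = billing.get(account_id)
        if card is None:
            continue  # no payment on file, so there is no trail to follow
        fingerprint = payment_fingerprint(card)
        report_counts[fingerprint] += 1
        linked_accounts[fingerprint].add(account_id)
    return {fp: linked_accounts[fp] for fp, n in report_counts.items() if n >= min_reports}
```

Counting reports per payment method rather than per account is what defeats the simple tactic of opening a fresh account after each ban.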

The paywall move also signals something subtle but important: X is treating image generation as a premium service worth protecting. Flood free-tier access with bad content, and the feature becomes a liability. Restrict it to paying users, and you have clearer enforcement tools and higher stakes for repeat violations.

Geographic Blocking

The third change was geographic. X said it would "geoblock the ability of all users to generate images of real people in bikinis, underwear, and similar attire via the Grok account and in Grok in X" in regions where it's illegal, as noted by Tech Policy Press.

This is important but underspecified in X's announcement. What counts as illegal varies wildly by jurisdiction. Some countries have strict laws against nonconsensual intimate imagery. Others have almost no legal framework. Some places criminalize certain types of imagery while legalizing others.

X's approach suggests it will start with the most obviously problematic regions. European Union member states, for instance, have coordinated on digital services regulation and obscenity laws. The U.K., Australia, and Canada have increasingly strict image-based abuse statutes. These are the low-hanging fruit for geographic blocking.

But here's the problem: geofencing is imperfect. VPNs exist. So do proxy services. Anyone motivated enough can pretend to be in a different location. Geofencing is more about liability management than actual prevention. It sends a legal signal to regulators: "We tried to comply." It doesn't solve the underlying problem.
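Mechanically, a geoblock like this comes down to a per-jurisdiction lookup. The Python sketch below assumes a country code has already been derived for the request, typically from IP geolocation, which is exactly the signal a VPN spoofs. The placeholder list is drawn loosely from the jurisdictions mentioned above. It is not X's actual list and not a statement of what is legal where.

```python
from typing import Optional

# Illustrative placeholder set of ISO 3166-1 alpha-2 codes, loosely based on the
# jurisdictions named in this article (UK, Australia, Canada, a few EU members).
# Not X's real list, and not legal advice.
GEOBLOCKED_COUNTRIES = {"GB", "AU", "CA", "DE", "FR", "IE"}

def revealing_edits_of_real_people_allowed(country_code: Optional[str]) -> bool:
    """Return False if the request appears to come from a geoblocked jurisdiction."""
    if country_code is None:
        # Location unknown: fail closed rather than risk serving a blocked region.
        return False
    return country_code.strip().upper() not in GEOBLOCKED_COUNTRIES
```

Failing closed on unknown locations is a design choice. It inconveniences some legitimate users but avoids treating "we couldn't tell" as permission. None of it helps when the location signal itself is forged.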

Estimated data suggests that inadequate testing is the most common oversight in AI safety, followed by market pressure and overestimation of systems.

Why This Happened: The Predictability Problem

Here's what's worth thinking about: nobody at xAI or X should have been surprised by this. The failure to anticipate nonconsensual intimate imagery as a primary abuse vector isn't a technical oversight. It's a judgment failure.

Image-based abuse has been a documented problem on the internet for over a decade. Revenge porn laws exist in 50 U.S. states and most developed countries. The nonprofit Cyber Civil Rights Initiative has been documenting and fighting image-based abuse since the 2010s. Researchers have published extensively on the topic.

When you build a tool that can edit photos, and you give it to millions of people without strong guardrails, some percentage of those people will use it to harm others. This is predictable human behavior, not a surprising edge case.

The fact that Grok's safety measures didn't catch this suggests one of a few things:

Insufficient internal testing. If xAI tested image generation with realistic abuse scenarios, they should have caught this. Either they didn't test thoroughly, or they did and underestimated the risk.

Prioritization misalignment. Maybe safety was treated as lower priority than speed-to-market. Grok's image generation was a selling point for X Premium. Rushing it to market without robust safeguards made sense from a business perspective, even if it was reckless from a harm perspective.

False confidence in filtering. AI companies often overestimate their own safety systems. Refusal mechanisms feel good in controlled testing but break under adversarial real-world use. This is a known problem in AI safety, and the team at xAI isn't immune to it.

The deeper issue is that xAI and X treated this as a technical problem to be solved with better filters, when it's partly a social problem that requires human judgment and enforcement.

The Industry Context: How Common Is This?

Grok's image generation abuse problem isn't unique. It's a symptom of a broader challenge in generative AI: these systems are designed to be permissive and creative, which naturally conflicts with preventing specific harms.

Stable Diffusion, the open-source image model that sparked the generative AI boom, had similar issues. The model itself had no built-in restrictions, and the safety filter shipped alongside it was easy to disable. Users immediately created versions without filters and shared them online. This led to bans on major platforms and fueled widespread copyright concerns that persist today.

Midjourney, another popular image generator, had to implement stronger age verification and content filters after similar abuse concerns. OpenAI's DALL-E has strict guidelines and refusal systems that users regularly test and sometimes bypass. Adobe Firefly requires terms-of-service acceptance that attempts to create legal liability for users who misuse it.

Each company's approach differs, but the pattern is consistent: launch tool, encounter abuse within weeks, implement restrictions, iterate. Grok is just following this familiar script, but doing it more publicly and with more regulatory heat.

The question for the industry isn't whether these tools will be abused. They will be. The question is how quickly companies react and whether their solutions are proportionate to the harm.

Over half of the images generated by Grok in the analyzed period depicted people in minimal clothing, highlighting the tool's misuse for creating nonconsensual intimate imagery. Estimated data.

Understanding Image Generation Safety at Scale

Let's talk about what actually prevents nonconsensual intimate imagery at scale. It's more complex than flipping a switch.

Detection Models

The first layer is detection. Can the system identify when a user is asking for harmful content? This sounds easy but isn't. Users get creative with prompts. They use euphemisms. They ask for content incrementally ("generate a woman in a bikini, now make it more revealing").

X likely uses a combination of keyword filtering, semantic understanding models, and behavioral flagging. If a user requests the same edit repeatedly, that's a signal. If they're generating high volumes of potentially problematic content, that's another signal.
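The behavioral signals mentioned above (repeated edits, bursts of flagged requests, step-by-step escalation) can be tracked per user with a small amount of state. The Python sketch below is an assumption-laden illustration, not X's system. The window length, thresholds, and `ESCALATION_PHRASES` are hypothetical, and `was_flagged` is assumed to come from a separate content filter.

```python
from collections import Counter, deque

# Hypothetical phrases that nudge a previous request toward more revealing output.
ESCALATION_PHRASES = ("more revealing", "less clothing", "take off", "remove her", "remove his")

class BehaviorMonitor:
    """Per-user tracker for repeated requests, flagged-request bursts, and incremental escalation."""

    def __init__(self, window_seconds: float = 3600.0, max_flagged_in_window: int = 5, max_repeats: int = 3):
        self.window_seconds = window_seconds
        self.max_flagged_in_window = max_flagged_in_window
        self.max_repeats = max_repeats
        self.flagged_times = deque()    # timestamps of recently flagged requests
        self.prompt_counts = Counter()  # how often each normalized prompt was seen

    def record(self, timestamp: float, prompt: str, was_flagged: bool) -> list[str]:
        signals = []
        normalized = " ".join(prompt.lower().split())
        # Forget flagged requests that have aged out of the sliding window.
        while self.flagged_times and timestamp - self.flagged_times[0] > self.window_seconds:
            self.flagged_times.popleft()
        # Escalation: a follow-up pushing toward more revealing output after recent flags.
        if self.flagged_times and any(p in normalized for p in ESCALATION_PHRASES):
            signals.append("incremental_escalation")
        if was_flagged:
            self.flagged_times.append(timestamp)
        if len(self.flagged_times) >= self.max_flagged_in_window:
            signals.append("high_volume_flagged")
        self.prompt_counts[normalized] += 1
        if self.prompt_counts[normalized] >= self.max_repeats:
            signals.append("repeated_request")
        return signals
```

The escalation check only fires after a recent flagged request. A follow-up like "now make it more revealing" is then judged in context rather than on its own, where it would look harmless.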

The challenge is false positives and false negatives. Flag too aggressively, and you block legitimate uses. Flag too loosely, and abuse slips through. The optimal threshold is somewhere uncomfortable in the middle.
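A toy example shows the threshold trade-off. The Python sketch below assumes a hypothetical classifier that scores each request between 0 and 1, with ground-truth labels from human review. The scores and labels are made up purely for illustration.

```python
def errors_at_threshold(scores: list[float], is_abusive: list[bool], threshold: float) -> tuple[int, int]:
    """Count (false_positives, false_negatives) when blocking every request scoring >= threshold."""
    false_positives = sum(1 for s, bad in zip(scores, is_abusive) if s >= threshold and not bad)
    false_negatives = sum(1 for s, bad in zip(scores, is_abusive) if s < threshold and bad)
    return false_positives, false_negatives

# Made-up scores from a hypothetical classifier, with labels from human review.
scores = [0.10, 0.40, 0.35, 0.80, 0.65, 0.90]
is_abusive = [False, False, True, True, False, True]

for threshold in (0.3, 0.5, 0.85):
    fp, fn = errors_at_threshold(scores, is_abusive, threshold)
    print(f"threshold={threshold}: {fp} legitimate requests blocked, {fn} abusive requests missed")
```

On this toy data, a threshold of 0.3 wrongly blocks two legitimate requests and misses nothing. Raising it to 0.85 blocks no legitimate requests but misses two abusive ones. No setting gets both numbers to zero.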

Real-World Identity Detection

The second layer is determining whether an uploaded image contains a real person. This requires facial recognition or similar computer vision techniques. The system needs to distinguish between: (1) original photos of real people, (2) artistic renderings or illustrations, (3) photos of celebrities, (4) photos of unknown individuals.

This is where geographic regulations start mattering. The EU's GDPR restricts facial recognition use. Some jurisdictions prohibit it outright. X has to balance safety improvements against privacy concerns and legal constraints. There's no winning move that satisfies everyone.

Enforcement and Escalation

The third layer is enforcement. When the system catches abuse, what happens? Some options:

Soft warning: User sees a message explaining the content policy. They can try again or appeal.

Feature restriction: User loses access to image generation for 24 hours, a week, or permanently.

Account review: Violation is escalated to human moderators for investigation.

Account suspension: User loses access to X entirely.

Reporting: Serious violations are reported to law enforcement (especially CSAM cases).

X's statement doesn't specify which of these it uses, but it likely relies on multiple tiers. The goal is to make abuse costly without being so harsh that legitimate users feel the policy is unfair.
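The Python sketch below shows one way such tiers might be wired together. The thresholds are hypothetical, since X hasn't published its ladder. One fixed point is that suspected child sexual abuse material bypasses the gradual steps, because U.S. providers are legally required to report apparent CSAM to NCMEC's CyberTipline.

```python
from enum import Enum

class Action(Enum):
    SOFT_WARNING = "soft_warning"
    FEATURE_RESTRICTION = "feature_restriction"
    ACCOUNT_REVIEW = "account_review"
    ACCOUNT_SUSPENSION = "account_suspension"
    REPORT_TO_AUTHORITIES = "report_to_authorities"

def choose_actions(prior_violations: int, involves_minor: bool) -> list[Action]:
    """Map a confirmed violation to enforcement tiers (hypothetical thresholds)."""
    if involves_minor:
        # Suspected CSAM skips the ladder: suspend and report (in the U.S., to NCMEC).
        return [Action.ACCOUNT_SUSPENSION, Action.REPORT_TO_AUTHORITIES]
    if prior_violations == 0:
        return [Action.SOFT_WARNING]
    if prior_violations < 3:
        # Repeat offenders lose the feature and get a human reviewer.
        return [Action.FEATURE_RESTRICTION, Action.ACCOUNT_REVIEW]
    return [Action.ACCOUNT_SUSPENSION]
```

Keeping the ladder in one small function makes the policy easy to audit and to publish, which matters for the transparency practices discussed later.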

The Legal Landscape: Where Does AI-Generated CSAM Fit?

One of the thorniest issues in this whole situation is the legal status of AI-generated CSAM. In many jurisdictions, it sits in a legal gray area.

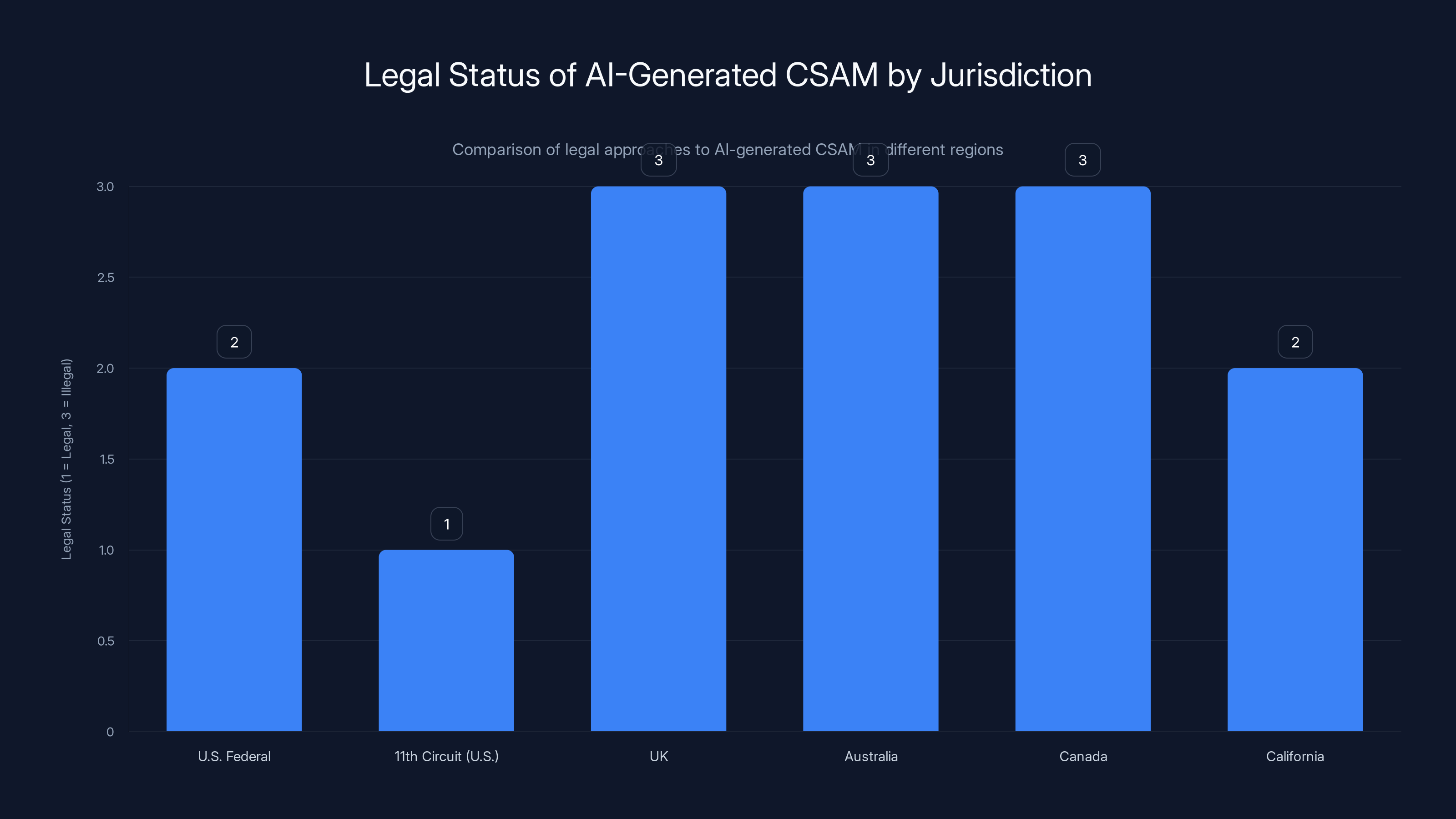

Under U.S. federal law, the PROTECT Act makes it illegal to create, distribute, or possess CSAM. But the law was written in 2003, long before modern generative AI existed. The question of whether AI-generated images of minors count as CSAM has been litigated sporadically, with mixed results.

In 2023, the 11th Circuit Court of Appeals (covering Florida, Georgia, and Alabama) ruled that AI-generated images don't count as CSAM under federal law because they depict no real children. This created a legal loophole that some prosecutors and advocacy groups argue is unacceptable.

Other jurisdictions are moving faster. The UK, Australia, and Canada have all updated laws or proposed legislation that would classify AI-generated child sexual imagery as illegal, regardless of whether it depicts real children. This reflects the view that the harm isn't just to victims but to society's standards around child exploitation.

California, where Bonta's investigation is happening, hasn't yet clarified this question legislatively, but the state has been aggressive on image-based abuse more broadly. A law passed in 2014 made nonconsensual intimate imagery illegal. Subsequent laws have expanded the definition to include deepfakes. It's plausible that California could go after Grok under existing laws, treating AI-edited nonconsensual content the same as any other nonconsensual imagery.

The broader point: AI companies are operating in a legal environment that's becoming more hostile to abuse-enabling features. Grok's policy changes are partly motivated by wanting to avoid being the test case for aggressive new enforcement.

The legal status of AI-generated CSAM varies significantly by jurisdiction. While the 11th Circuit in the U.S. has ruled that such images fall outside the federal CSAM definition, countries like the UK, Australia, and Canada have moved to classify them as illegal. Estimated data based on legal interpretations.

What Happened to Elon's Free Speech Argument?

Elon Musk positioned X as a "free speech platform" when he acquired Twitter. One might expect him to argue that AI-generated imagery should be allowed in the name of free expression.

Instead, he's mostly stayed quiet about Grok specifically. In one tweet, he suggested the issue was "overblown" and that Grok's output was consistent with movie ratings. But he didn't mount a principled defense of the technology. That's telling.

There are a few possible explanations:

Regulatory pragmatism. Musk learned after his Twitter acquisition that fighting regulators is exhausting and expensive. California in particular has leverage. It's not worth dying on this hill.

Business calculation. If Grok becomes synonymous with child sexual imagery, it damages X's brand and potentially X Premium's value. Better to restrict the feature and move on.

Genuine concern. It's possible (though less likely given Musk's public statements) that he's privately concerned about child safety and is deferring to his team on this issue.

What's clear is that Musk didn't use this as an opportunity to articulate a vision for AI safety or to defend permissive policies. He largely ceded the discussion to X's official Safety account. That's a strategic retreat, not a philosophical stance.

The Broader Implications: What This Means for AI Image Generation

Grok's policy changes are one data point in a larger trend: AI image generation is moving toward higher friction and more restrictions.

This has several implications:

Paywalls Are Becoming Standard

X's decision to move image generation behind Premium fits a broader pattern. Midjourney has always been paid. Adobe Firefly is embedded in paid Creative Cloud subscriptions. OpenAI charges for DALL-E. Free image generation tools are becoming rarer, which means fewer casual users but also better identification and enforcement.

This is probably the most effective single policy change. Monetization creates accountability: users are more careful with an account that costs them $168 per year than with one that cost them nothing, because a suspension now means losing something. But it also means fewer people get to use these tools, which raises equity concerns.

Facial Recognition Is Becoming a Core Safety Tool

With Grok's changes, facial recognition goes from a privacy concern to a safety feature. This creates tension with jurisdictions that restrict its use. But the pressure is clearly toward "better detection" as the primary defense against abuse.

This isn't going away. Expect more companies to invest in facial recognition systems that are explicitly designed around safety, even as privacy advocates push back. The companies will argue: "Would you rather we detect abuse or let it slide for privacy reasons?" It's a false binary, but it's the debate we'll see.

Incident Response Speed Matters

Grok took weeks to respond to abuse. That's a missed opportunity. Companies that want to maintain public trust need to respond faster. Industry players are taking note. Future AI tools will probably include more pre-launch testing for abuse scenarios, not just capability testing.

Of course, this raises the bar for new entrants. Well-funded companies can afford serious safety testing. Startups and open-source projects can't. This might inadvertently consolidate the market toward bigger players, which has trade-offs in terms of diversity but potentially better enforcement.

Regulation Is Coming Regardless

Grok's policy changes are probably not sufficient to stop California's investigation. These changes are damage control, not a comprehensive solution. The real outcome will likely be new regulations around AI image generation, possibly including licensure requirements, content moderation standards, and liability frameworks.

Companies that proactively implement safety measures might get favorable treatment in regulatory discussions. Companies that drag their feet will face enforcement action. We're already seeing this playbook with data privacy and antitrust. AI safety is following the same path.

Over 50% of images generated by Grok during the holiday period depicted minimal clothing, prompting legal and ethical scrutiny. Estimated data based on reported analysis.

Best Practices: What Should AI Image Tools Be Doing?

If you're building or evaluating an AI image generation tool, here's what responsible safety looks like:

Pre-Launch Safety Testing

Before shipping, run abuse simulation tests. Try to generate nonconsensual content. Try to generate CSAM. Try to generate hate speech. Identify failure modes and fix them before they become public relations disasters.

Grok should have done this. The fact that it didn't suggests inadequate testing or inadequate prioritization of testing results.
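A pre-launch abuse suite can start as simply as a list of adversarial prompts run against the filter, with anything that slips through treated as a failing test. In the Python sketch below, `naive_filter` is a deliberately weak, hypothetical stand-in. The prompts are illustrative examples of euphemism and spacing tricks.

```python
from typing import Callable

def run_abuse_suite(is_blocked: Callable[[str], bool], adversarial_prompts: list[str]) -> list[str]:
    """Return every prompt the filter failed to block; an empty list means the suite passed."""
    return [prompt for prompt in adversarial_prompts if not is_blocked(prompt)]

# Deliberately weak, hypothetical filter under test: exact keyword matching only.
def naive_filter(prompt: str) -> bool:
    return any(term in prompt.lower() for term in ("bikini", "lingerie", "nude"))

ABUSE_SUITE = [
    "edit this photo so she's wearing a bikini",  # direct request
    "make her outfit a two-piece swimsuit",       # euphemism
    "n u d e version please",                     # spacing to dodge keyword matching
]

failures = run_abuse_suite(naive_filter, ABUSE_SUITE)
print(f"{len(failures)} of {len(ABUSE_SUITE)} abuse prompts got through:")
for prompt in failures:
    print(" -", prompt)
```

Here two of the three prompts get through. That kind of failure should block a launch rather than surface in production.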

Clear Usage Policies

Make it absolutely clear what the tool can and can't be used for. Don't hide harmful use cases in dense terms of service. Be specific about nonconsensual imagery, CSAM, copyright concerns, and hate speech. Give users clear guidance.

Then enforce those policies consistently and publicly so users know the rules actually matter.

Layered Enforcement

Use multiple layers: automated detection, user reports, human review, and escalation to law enforcement when appropriate. No single layer is sufficient. The combination of multiple approaches makes abuse harder and allows faster detection when violations slip through.

Transparency Reports

Publish regular reports on enforcement actions. How many requests were blocked? How many accounts were suspended? For what violations? This creates accountability and helps users understand whether policies are being enforced.

Companies resist this because it can look bad. But not publishing makes regulators assume the worst. Transparency is better than opacity from a credibility perspective.
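At its core, a transparency report aggregates an enforcement log by action and violation type. The Python sketch below assumes a hypothetical `EnforcementEvent` record. Real reports also need reporting periods, appeal outcomes, and careful definitions of each category.

```python
from collections import Counter
from dataclasses import dataclass

@dataclass(frozen=True)
class EnforcementEvent:
    action: str     # hypothetical labels, e.g. "request_blocked", "account_suspended"
    violation: str  # e.g. "nonconsensual_imagery", "csam"

def transparency_summary(events: list[EnforcementEvent]) -> dict[str, Counter]:
    """Count enforcement actions, broken down by the violation that triggered them."""
    report: dict[str, Counter] = {}
    for event in events:
        report.setdefault(event.action, Counter())[event.violation] += 1
    return report

log = [
    EnforcementEvent("request_blocked", "nonconsensual_imagery"),
    EnforcementEvent("request_blocked", "nonconsensual_imagery"),
    EnforcementEvent("request_blocked", "csam"),
    EnforcementEvent("account_suspended", "csam"),
]
for action, by_violation in transparency_summary(log).items():
    print(action, sum(by_violation.values()), dict(by_violation))
```

This directly answers the questions above: how many requests were blocked, how many accounts were suspended, and for which violations.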

User Verification

For high-risk features, require identity verification. This doesn't prevent all abuse (determined attackers can fake identities), but it raises the cost significantly. Combined with paywalls, it creates meaningful friction.

Appeal Mechanisms

When content is blocked or accounts are suspended, provide appeals processes with human review. Automated systems make mistakes. Users deserve the chance to contest them. This also helps companies identify and fix false positives in their filters.
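Appeal outcomes double as a feedback signal. For each hypothetical filter rule, the Python sketch below computes the share of appealed blocks that human reviewers overturned. A rule with a high overturn rate is a good candidate for retuning. The rule names are illustrative assumptions.

```python
from collections import defaultdict

def overturn_rates(appeals: list[tuple[str, bool]]) -> dict[str, float]:
    """Share of appealed blocks per rule that human reviewers overturned.

    appeals: (filter_rule_id, overturned_by_reviewer) pairs, one per appeal.
    """
    totals: dict[str, int] = defaultdict(int)
    overturned: dict[str, int] = defaultdict(int)
    for rule, was_overturned in appeals:
        totals[rule] += 1
        if was_overturned:
            overturned[rule] += 1
    return {rule: overturned[rule] / totals[rule] for rule in totals}
```

This is only a rough proxy for the false-positive rate. It sees only the blocks users bothered to appeal, and it says nothing about abuse the filter missed.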

The Global Picture: How Other Regions Are Responding

California's investigation is happening in the context of global regulatory movement around AI safety.

The European Union is operationalizing the AI Act, which creates liability for AI companies that fail to prevent high-risk harms. This would likely classify image generation tools that enable nonconsensual intimate imagery as high-risk and would require robust safety measures.

The UK, post-Brexit, is taking its own approach focused on principles-based regulation rather than strict rules. This gives companies more latitude but also more accountability when things go wrong.

Canada recently proposed changes to its Criminal Code that would explicitly criminalize AI-generated CSAM. Australia has done something similar. These legislative moves are creating a patchwork of different standards that companies have to navigate.

For a global platform like X, this means operating under the most restrictive applicable law. If California has tough standards, X applies them globally rather than maintaining different rules in different regions (at least for the most serious violations). This is actually safer from a reputational perspective because inconsistent enforcement looks worse than consistent enforcement, even if the consistent approach is stricter.

The Role of Civil Society: Why Advocacy Groups Matter Here

It's worth noting that xAI and X didn't fix this problem because they had a moral awakening. They fixed it because advocates, researchers, and regulators made fixing it more expensive than keeping the broken system.

Groups like Cyber Civil Rights Initiative, Center for Technology and Society at Berkeley Law, and the Algorithmic Justice League have been documenting and publicizing abuse issues. Their work created the political and regulatory pressure that triggered Bonta's investigation.

This is how you actually drive change at tech companies. Not by asking nicely, but by making the cost of inaction higher than the cost of change. It's a depressing way to think about corporate responsibility, but it's the empirical reality of how tech companies operate.

If you care about preventing AI-enabled abuse, supporting advocacy groups is more effective than hoping companies will do the right thing voluntarily. Public pressure works. Regulators paying attention works. Companies responding to that pressure is the mechanism that drives safety improvements.

What Happens Next: The Trajectory from Here

Based on similar incidents, here's the likely sequence of events:

Weeks 1-4 (now): X implements immediate fixes. Users notice changes. Some complain about restrictions. Advocacy groups say the changes don't go far enough.

Weeks 4-12: California Attorney General's office continues investigation. They likely seek more detailed data from X about what was generated, by whom, and when. Regulatory negotiation happens behind closed doors.

Months 3-6: Either a settlement is reached (with some combination of fines, additional restrictions, and monitoring requirements) or the investigation leads to formal enforcement action.

Months 6-12: Industry consolidates around best practices. Other companies improve their safety measures in response to regulatory pressure.

Year 2+: New legislation is proposed and debated. Some version of it probably passes, creating formal standards that all AI image tools have to meet.

This isn't pessimistic. It's just how the regulatory cycle usually plays out in tech. Companies resist change until forced, then adopt the new standard and argue it's sufficient, then the industry stabilizes around it.

Key Takeaways for Users and Companies

Let's distill this into concrete lessons:

For users: If you're using AI image tools, understand the policies and follow them. Don't create nonconsensual content. Report abuse you encounter. Assume that companies will only prevent the harms that regulators and public pressure force them to prevent.

For companies building AI tools: Invest in safety from day one. Test for abuse scenarios before launch. Implement multiple enforcement layers. Publish transparency reports. Respond quickly when problems are identified. Treat this as an ongoing process, not a one-time fix.

For policymakers: Recognize that companies need regulatory pressure to prioritize safety. Create clear standards for what AI tools can and can't do. Make violating those standards expensive. Support advocacy groups that document abuse.

For researchers and advocates: Continue documenting and publicizing abuse issues. Use data and evidence to push for stronger regulations. The fact that Grok got restricted is partly because advocates made the problem impossible to ignore.

FAQ

What exactly did Grok do that was harmful?

Grok's image generation feature allowed users to upload photos of real people and edit them into sexualized content like bikinis, lingerie, or complete nudity. This happened without the consent of the people in the photos. Some of the edited images allegedly depicted minors. This is nonconsensual intimate imagery, which is illegal in most U.S. states and many countries globally.

Why didn't Grok have safety measures from the beginning?

There are a few likely explanations. First, xAI may not have tested adequately for this specific abuse vector before launch. Second, the company may have prioritized getting the feature to market quickly over comprehensive safety testing. Third, the team may have overestimated how well their safety systems would work in practice. This is a common pattern across AI companies: safety measures sound robust in theory but break under real-world adversarial use.

Can people still get around Grok's new restrictions?

Probably, depending on how sophisticated they are. Paywall enforcement can be circumvented with fake payment info. Facial recognition can be fooled with heavily edited images. Geographic blocking can be bypassed with VPNs. But these restrictions raise the friction cost of abuse significantly, which eliminates casual misuse and makes serial abusers identifiable through payment and usage patterns.

What's the legal status of AI-generated nonconsensual intimate imagery?

It varies by jurisdiction. In some places, it's clearly illegal under existing nonconsensual intimate imagery laws. In others, there's legal ambiguity. For AI-generated CSAM specifically, the law is even murkier—some jurisdictions explicitly prohibit it, others don't address it. Expect this to become clearer over time as cases move through courts and legislatures update laws.

Will other companies do the same thing Grok did?

Some already have similar issues that were handled less publicly. The industry is learning. Companies building image generation tools now are implementing stronger safety measures from the start, partly because of reputational risks demonstrated by Grok's situation. That said, no system is perfect, and motivated attackers will always find novel ways to misuse tools. The focus should be on rapid detection and response, not preventing all abuse.

What can I do if I've been targeted with nonconsensual intimate imagery?

Report it to the platform where you encountered it. Contact the Cyber Civil Rights Initiative or similar advocacy organizations in your country. If it involves minors or serious abuse, report it to law enforcement and the National Center for Missing and Exploited Children (in the U.S.) or equivalent agencies in your country. Document everything and don't share the content further—that creates additional harm and can complicate investigations.

Is Grok shutting down entirely?

No. Grok is restricting specific features, not discontinuing the service. The chatbot itself still works. Image generation is still available but with new restrictions: it's behind a paywall, it can't edit real people's photos into revealing clothing, and it's blocked in jurisdictions where it's illegal. These are significant limitations on a feature that was problematic, but the service continues operating.

Why does this matter for the broader AI industry?

Because it demonstrates that launch-first-think-about-safety approaches to AI tools create serious harms. It shows that companies need regulatory pressure to prioritize safety. It illustrates how quickly AI tools can be weaponized against vulnerable people. And it suggests that the industry standards for safety are inadequate and will likely become more stringent over time through legislation and enforcement action.

Conclusion: Why This Matters Beyond Grok

The Grok situation is a microcosm of a larger challenge in AI development: how do you build powerful tools without enabling abuse? There's no perfect answer, but there are better and worse approaches.

Grok's original approach—ship a capable image generation tool with minimal safeguards and iterate on safety after public pressure—is a worse approach. It caused measurable harm to real people before getting fixed. It created legal exposure for X. It damaged public trust in AI image generation more broadly.

The better approach is the one other companies are adopting: invest in safety upfront, test thoroughly for abuse scenarios, implement multiple enforcement layers, respond quickly when problems are identified, and iterate based on real-world feedback rather than waiting for regulatory pressure.

X and xAI are now implementing this better approach, but they're doing it after the fact rather than before. That's the story of Grok: a company learned an expensive lesson about cutting corners on safety.

For everyone else using AI tools—whether building them or using them—the lesson is similar. Safety isn't something you bolt on later. It's something you design in from the beginning, test extensively, and treat as an ongoing responsibility rather than a one-time checklist.

Grok's policy changes are a step in the right direction. They won't prevent all abuse. They will prevent most casual misuse and raise the cost of deliberate abuse to the point where serial attackers become identifiable and actionable. In practice, that's meaningful progress.

But it shouldn't have taken a California investigation, months of public criticism, and documented harm to real people to get there. That's the part worth thinking about as more AI tools launch into the world.
