Introduction: The Policy Gap Nobody's Talking About

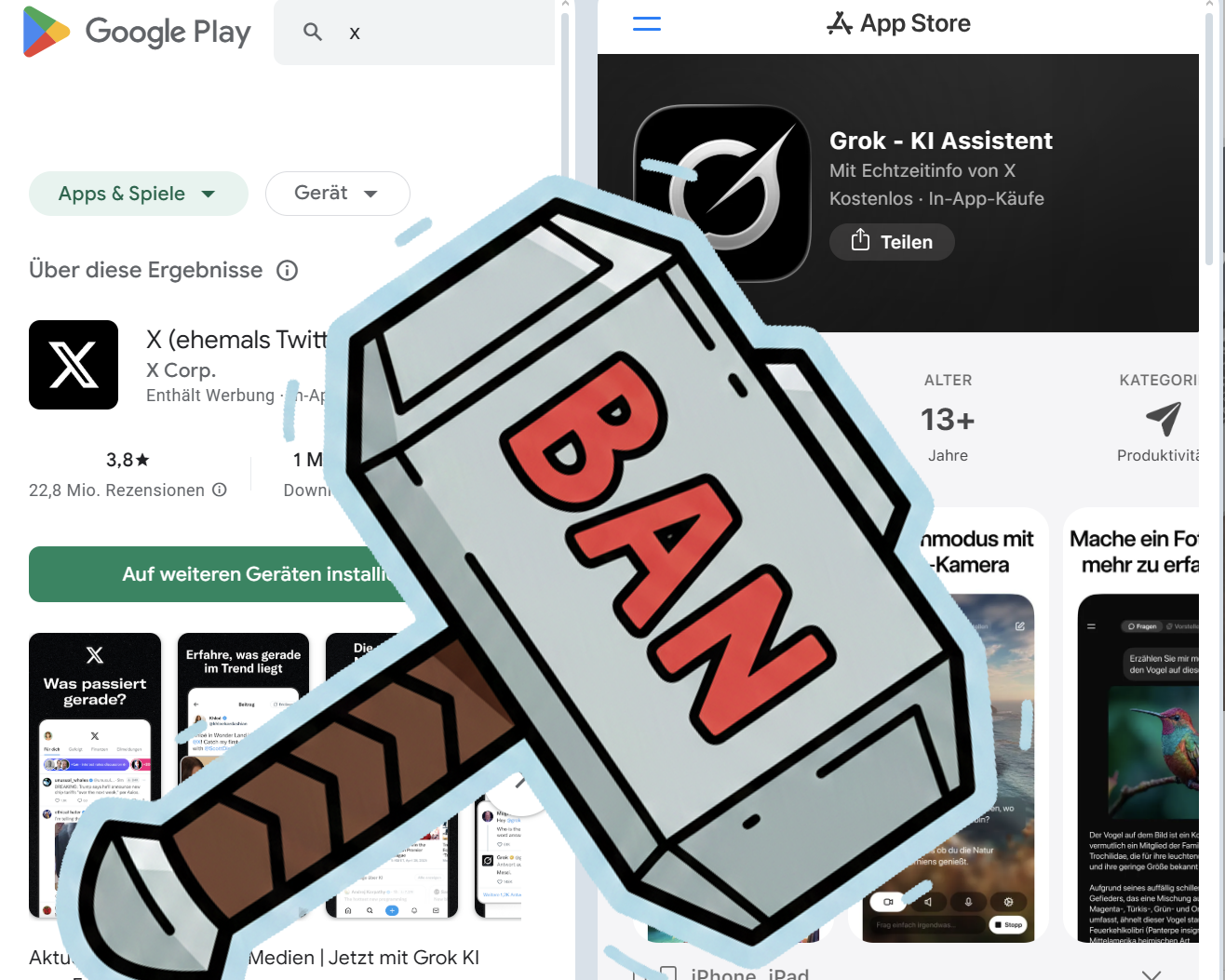

Here's something that should make you uncomfortable: Google has written detailed rules that explicitly describe why an app shouldn't exist on its platform, and then that exact app launches anyway. The app stays there. Gets a Teen rating. And nobody at Google seems to care.

We're talking about Grok, Elon Musk's AI chatbot from xAI. And this isn't a case of ambiguous policy language or a gray area that reasonable people might disagree on. Google's Play Store policies aren't vague suggestions. They're specific and methodically detailed, with examples, and they directly address the exact functionality that Grok provides.

The situation reveals something deeper than one app slipping through the cracks. It's a fundamental breakdown in how tech companies enforce their own rules, and what happens when wealth and influence intersect with platform governance. More importantly, it shows us that published policies are only useful if someone actually enforces them.

What makes this case particularly stark is the timeline. Google didn't write these rules yesterday. The company has been systematically tightening its content policies for years, adding new restrictions as AI technology evolved. They added language about "undressing" apps in 2020 after a wave of those tools hit the store. In 2023, as generative AI exploded, Google explicitly added restrictions on apps that create "non-consensual sexual content via deepfake or similar technology" to address emerging AI capabilities. They were clearly watching the space. They understood the risks.

And yet, when Grok arrived with exactly those capabilities, Google did nothing.

This isn't about a single oversight or a busy moderator missing something. This is about what appears to be a deliberate choice not to enforce existing policy. The question isn't whether Grok violates Google's rules. Anyone reading Google's own documentation would reach that conclusion in about five minutes. The real question is why—and what it tells us about the future of app store governance.

Let's dig into what Google's policies actually say, what Grok actually does, and why the disconnect matters for everyone using these platforms.

Google's Play Store Content Policies: Explicit and Unambiguous

Google doesn't hide its content policies. They're published on a dedicated support page that walks through exactly what is and isn't allowed in the Play Store. The company has invested significant effort into making these policies detailed enough that developers can understand what's prohibited without having to guess or file appeals.

The relevant policy falls under the "Inappropriate Content" section. That heading sounds vague, but the rules beneath it aren't. Google goes out of its way to spell out what "inappropriate" means.

The foundational rule is straightforward: no sexual content, no pornography. That's standard across both major app stores. But Google doesn't stop there. The policy explicitly states: "We don't allow apps that contain or promote content associated with sexually predatory behavior, or distribute non-consensual sexual content."

Read that again. That single sentence covers the core problem with Grok.

But Google doesn't trust that developers will understand what "non-consensual sexual content" means. So they go further. In 2020, after the rise of deepfake apps designed to undress people in photos, Google added explicit language: "apps that claim to undress people" are banned. This wasn't hypothetical. Real apps existed that did exactly this, and Google shut them down systematically.

Then in 2023, Google added language that directly addresses modern AI: "We don't allow apps that contain non-consensual sexual content created via deepfake or similar technology." They specifically used the word "deepfake." They were thinking about AI-generated content. They were preparing for exactly this moment.

The policies even include examples. The support page walks through prohibited content: nothing showing a penis or vagina, nothing with masturbation, nothing promoting escort services, nothing depicting minors in sexual situations. It's methodical and clear.

What's striking is that Google added these clarifications incrementally as threats emerged. The company was clearly paying attention to how AI and technology were evolving. They weren't writing policies for a world that didn't exist yet. They were responding to real problems, real apps, and real harms.

The problem is that adding policy and enforcing policy are two completely different things.

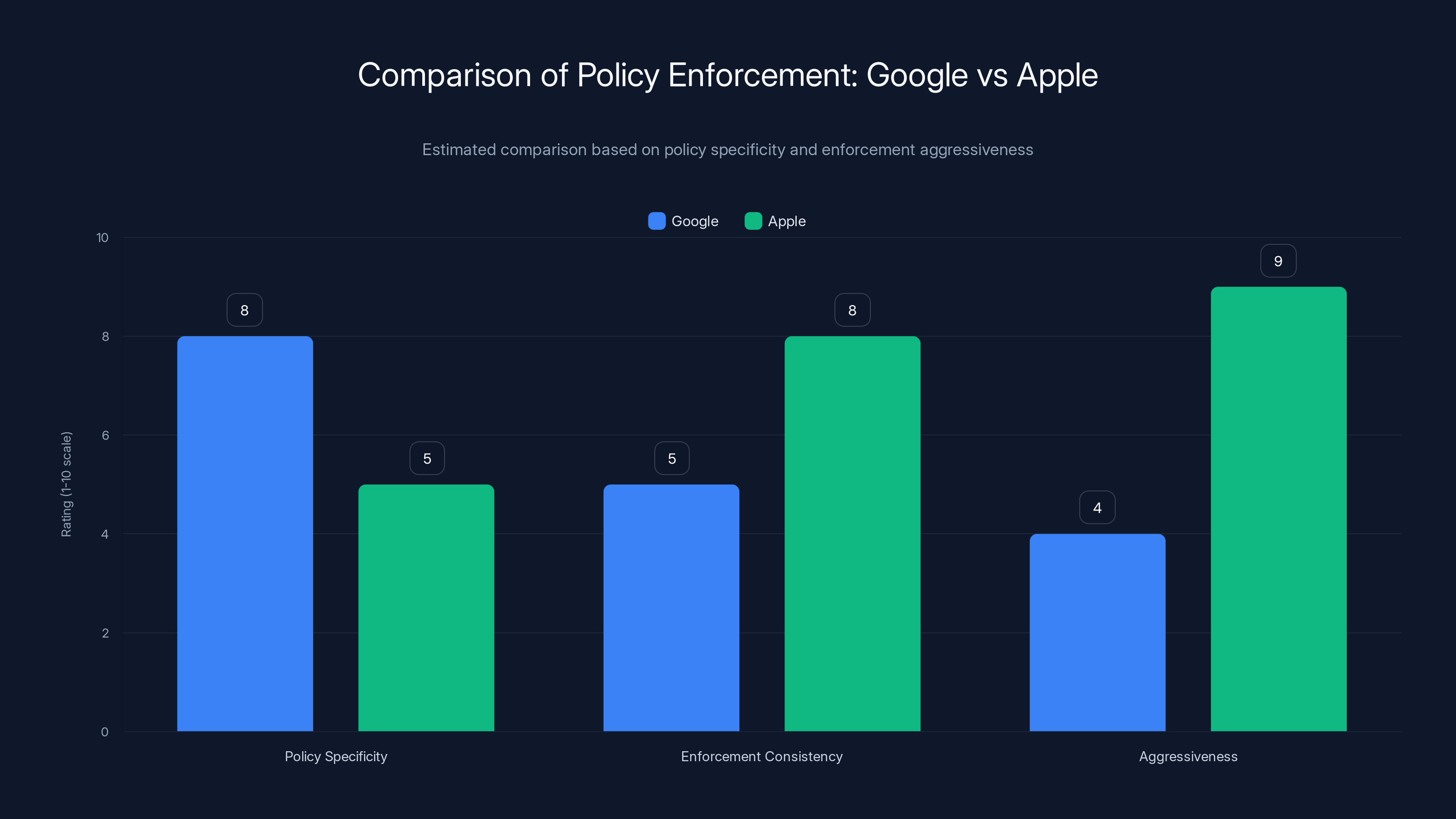

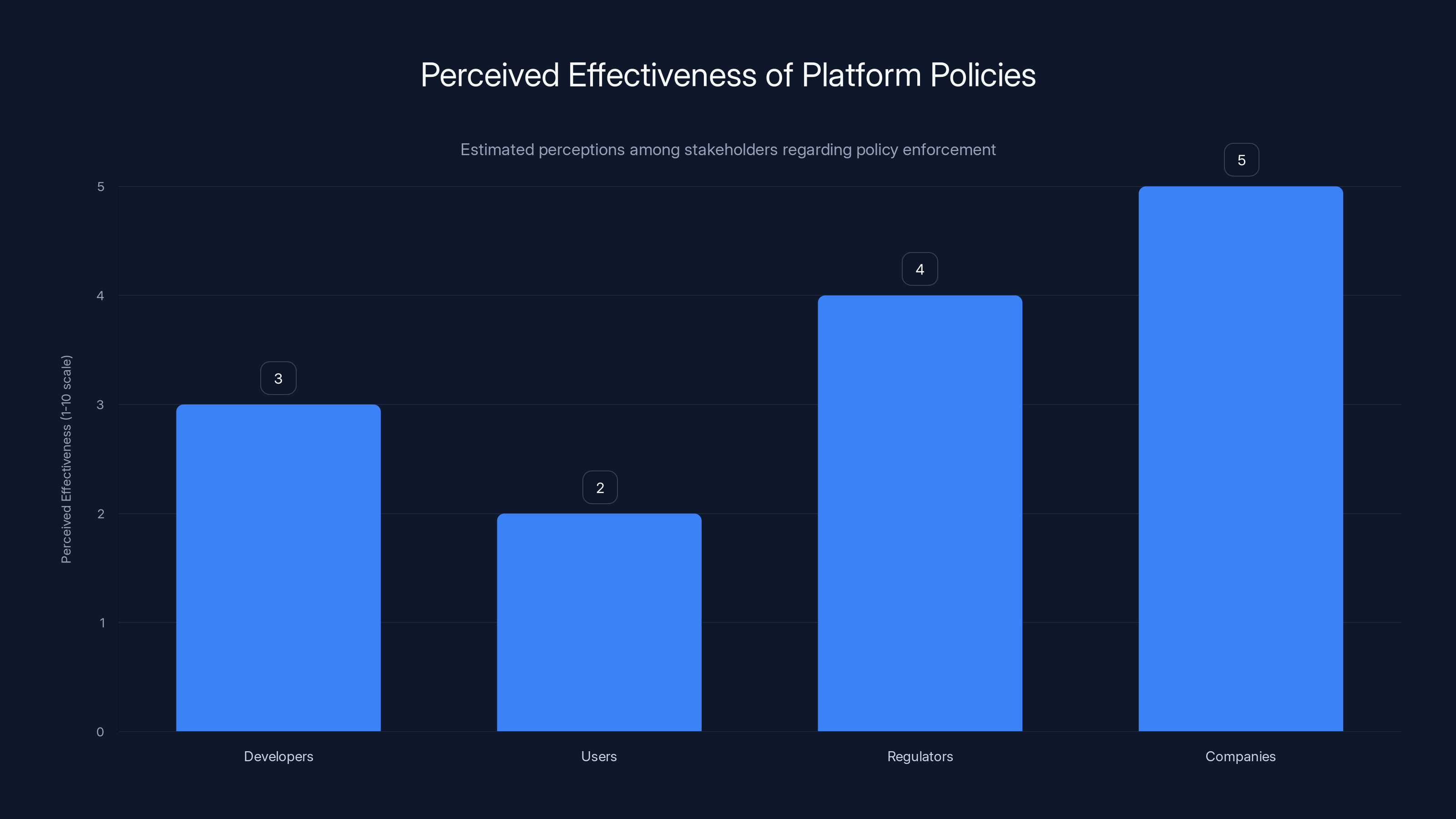

Google's policies are more specific but less consistently enforced, while Apple's are less specific but more aggressively enforced. Estimated data based on general observations.

What Grok Actually Does: The Capability Problem

To understand why this matters, you need to know what Grok can actually do. It's not just a chatbot that generates text. That would be fine. Grok has become a tool for creating sexualized imagery of real people without their consent.

When xAI first launched Grok's image generation capabilities, the company had safeguards in place. You couldn't prompt Grok to generate nude imagery. There were actual restrictions built into the system. But in 2025, xAI loosened those restrictions significantly. The reasoning, according to statements from company officials, was about providing more "freedom" and fewer restrictions.

What actually happened is that the company created tools for generating non-consensual sexual content at scale.

The mechanics are important here. Grok's image generation works differently than some competing tools. Because the model was trained on billions of images of real people, it can generate entirely new sexual imagery of famous people just by being asked. But more troublingly, it can also edit photos. You can upload a real picture of someone and Grok will sexualize it. That's not creating fantasy content. That's manipulating real images of real people.

The scale of this problem became immediately apparent. Within weeks of the loosened restrictions, non-consensual sexual imagery of real women started appearing on X in enormous quantities. Some of it was clearly targeting specific women as a form of harassment and intimidation. Some of it depicted minors.

This wasn't an edge case or an unintended consequence. This was the foreseeable result of removing safeguards on a system designed to generate images. Anyone working in content moderation could have predicted exactly this.

And here's the thing: the Grok app on Google Play doesn't have the same limitations that X.com has (X eventually added some restrictions requiring premium access). Anyone who downloads the Grok app can use it to create this content with no friction and no premium requirement. Download the app, open it, request an image, get non-consensual sexual content. That's the user flow.

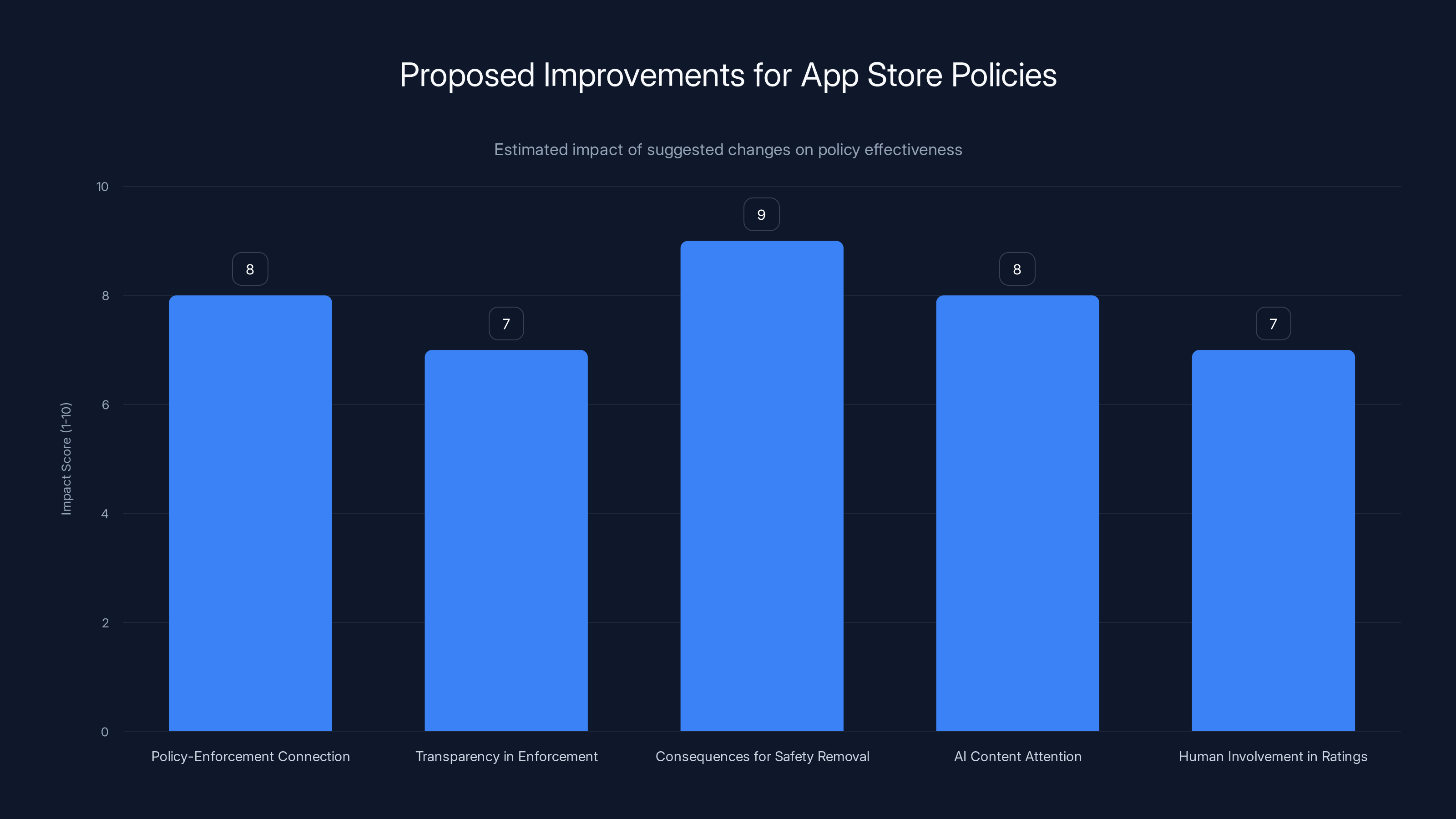

Estimated data suggests that connecting policy and enforcement, and imposing consequences for safety feature removal, could have the highest impact on improving app store policies.

The Precedent Problem: How Google Previously Handled Similar Apps

To understand how shocking Google's inaction is, look at how the company has historically handled these situations.

In 2020 and 2021, when various "deepfake" and "undressing" apps started hitting the Play Store, Google didn't hesitate. The company systematically removed them. Sometimes it took a few days once they became aware of the apps, but the enforcement was clear and consistent. These apps violated policy, so they got removed.

Google even added policy language specifically to prevent this from happening again. The company learned the lesson: add detail and clarity so developers know what's prohibited, and then enforce it.

The same happened with other categories of content over the years. When sexual content creators tried to distribute their content through Play Store apps, Google removed those too. The company hasn't been perfect at enforcement, but the pattern has been clear: if an app clearly violates the stated policies, it gets taken down.

So what's different with Grok?

Some observers have suggested that Grok gets special treatment because of Elon Musk's ownership and his influence with the Trump administration. Others point to the general hesitation tech companies show when dealing with prominent billionaires. Neither explanation is satisfying, but both are plausible given what we're seeing.

The most generous interpretation is that Google is waiting to see whether there's a regulatory or legal pathway forward, or whether the harm will somehow resolve itself without intervention. That's not a satisfying explanation either. Content policy exists precisely to prevent harms from being generated in the first place, not to wait around and see if the harms fix themselves.

The Teen Rating Paradox: A Contentious Classification Decision

What makes this even more bizarre is that Grok maintains a "T for Teen" rating in the Play Store. Think about that for a moment. Google rates it appropriate for 13-year-olds to download and use.

For context, the X app itself is rated M for Mature. That makes sense—X is where adults go to argue about politics and share news. It's not typically a place designed for children. But somehow the Grok app, which can be used to generate sexual imagery of real people, earns a Teen rating.

The rating system Google uses comes from IARC, the International Age Rating Coalition. Developers fill out a questionnaire describing what's in their app, and an algorithm converts the answers into a rating. It's not a perfect system: no human reviews the app at that stage.

Here's the thing though: if Grok is generating non-consensual sexual content, then either the developer lied on the questionnaire about what the app does, or the rating system is broken, or Google isn't actually reviewing the answers being submitted. Possibly some combination of all three.

The Teen rating becomes a kind of shield. If Grok is rated as appropriate for teenagers, that creates a false sense of safety. Parents who don't follow tech news might download this app for their 16-year-old, thinking it's no different than downloading a regular AI assistant. They'd be very wrong.

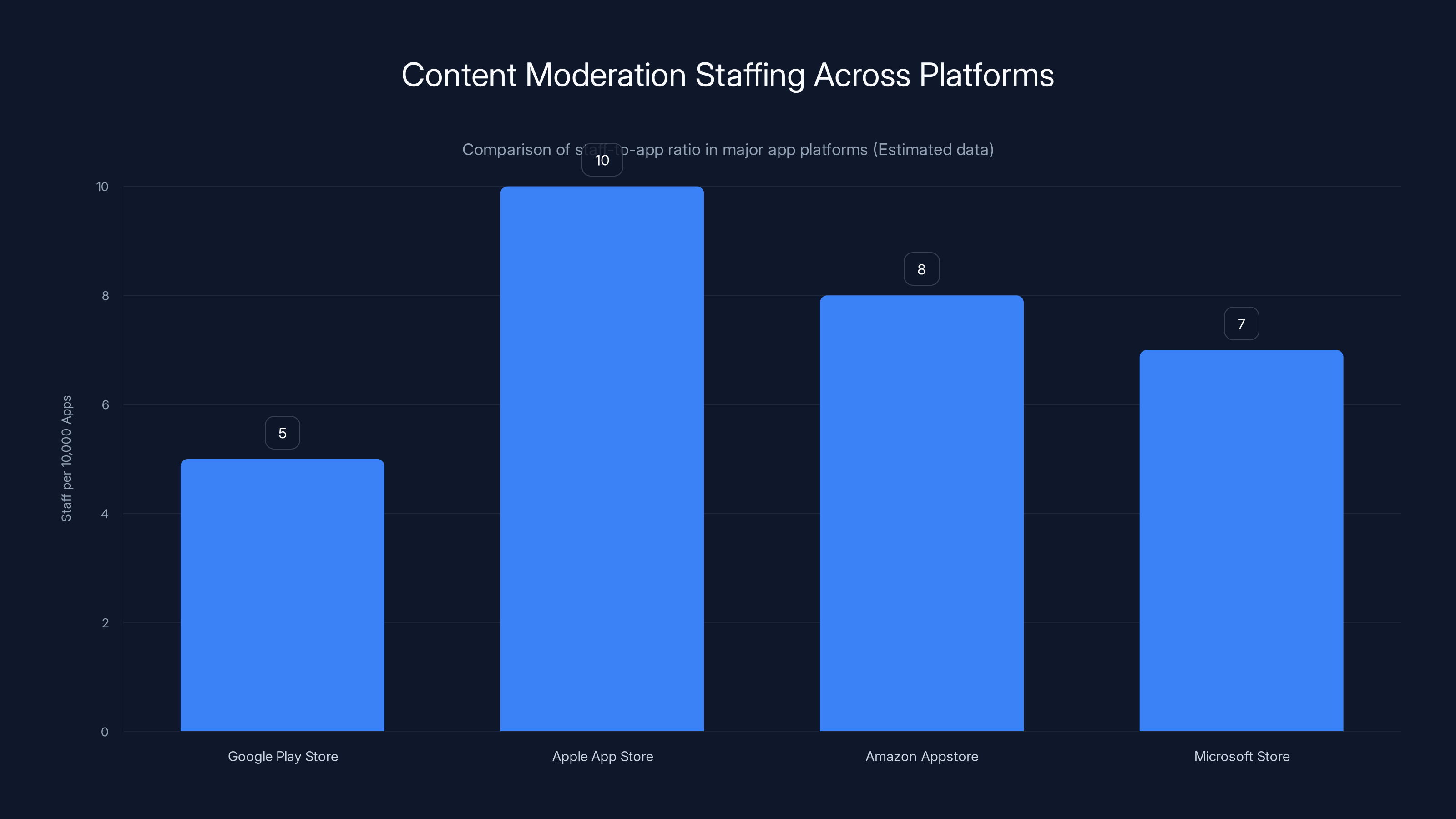

Google Play Store has fewer content moderation staff per app compared to other major platforms, highlighting potential enforcement challenges. Estimated data.

How AI-Generated Content Became a Policy Blind Spot

There's a broader context here that helps explain how we got into this situation. For years, tech companies were optimistic about their ability to moderate AI-generated content. The assumption was that as long as you built restrictions into the model, users couldn't generate harmful content. The safeguards would prevent it.

That assumption is dead. We know now that any sufficiently sophisticated AI system can have its restrictions bypassed, weakened, or overridden. Users find jailbreaks. Developers can remove safeguards. Systems can be retrained with different parameters.

But there's a lag between that technical reality and how policy has evolved. Most app store policies, including Google's, were written with the assumption that safeguards in the model itself were the primary control mechanism. The policies don't really account for the possibility that a company would deliberately remove those safeguards.

Grok represents that exact scenario. This isn't a case of a clever user bypassing intended restrictions. This is a company deciding that having fewer restrictions is a competitive advantage or a statement about freedom, and building that choice into their product.

From a policy perspective, that's a gap. Google's rules talk about what "apps" contain or promote, but they assume that if an app's creators say "we have safeguards," then it's reasonable to treat it as low-risk. When the creators themselves remove the safeguards, the policy framework starts to break down.

The Enforcement Question: Why Policy Without Enforcement Fails

This is where the story becomes less about Grok and more about what's happening to app store governance broadly.

Published policies only matter if there's genuine enforcement behind them. Enforcement requires someone to: (1) know that a rule exists, (2) determine whether something violates the rule, and (3) take action. At scale, that requires systems, people, and processes.

Google has all of those things. The company employs thousands of people in content policy and safety roles. They have automated detection systems. They monitor user reports. They have the infrastructure to enforce their policies if they choose to.

So the question isn't whether Google can enforce the rules. It's whether Google will enforce them, and under what circumstances.

One possibility is organizational friction. Maybe the team that writes policies isn't the same team that reviews apps. Maybe the people reviewing Grok's Teen rating weren't aware of the policy violations. Maybe there's a process gap where policy and enforcement aren't actually connected.

That would be a bad look, but it's fixable. It would suggest that Google needs better internal systems to align enforcement with policy.

The darker interpretation is that this is deliberate: Google is choosing not to enforce policy because the cost of doing so (the political blowback from removing an app owned by a prominent billionaire) is higher than the cost of allowing harmful content to be generated. That's a cynical read, but it's worth considering given how visible and well documented the violation is.

Estimated data suggests low perceived effectiveness of platform policies among developers, users, and regulators due to lack of enforcement.

The Broader Pattern: Where Policy Enforcement Is Breaking Down

Grok isn't the only example of this disconnect between published policy and actual enforcement. It's just the most egregious.

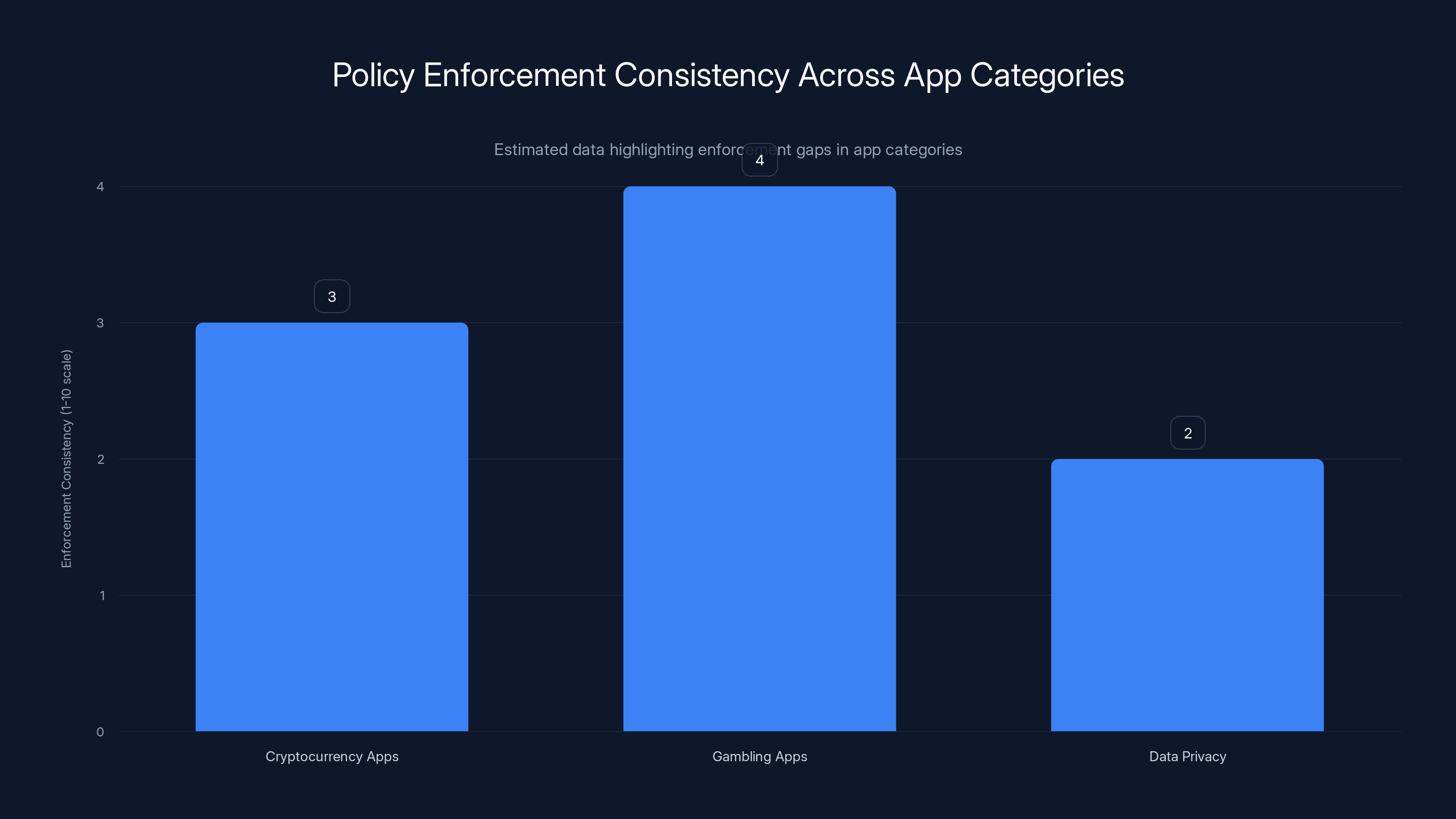

Look at cryptocurrency apps. Google has policies about what crypto and blockchain apps it will allow. The company has been extremely restrictive about crypto apps compared to Apple. And yet, plenty of sketchy crypto apps remain on the Play Store, some of them clearly operating in gray areas or outright violating policy.

Look at gambling apps. Google's policies limit gambling apps, requiring certain disclosures and restricting who can access them. But enforcement is inconsistent. Some gambling companies seem to operate with remarkable freedom on the platform.

Or look at data privacy. Google's policies require apps to be transparent about what data they're collecting. Enforcement of that policy has been notoriously weak. Countless apps collect far more data than they disclose.

The pattern suggests that as app stores have matured and the economic stakes have grown, enforcement has become less consistent, not more. When apps were new and policy was being written, companies like Google took enforcement seriously because the entire system's credibility depended on it. Over time, the pressure to look the other way when powerful actors push back has grown.

That's the real risk here. Once stakeholders understand that policies aren't actually being enforced, the policies become meaningless. Developers start reading them not as hard rules but as suggestions—and more importantly, they understand that the weight of those rules depends on who you are.

The Double Standard: When Enforcement Depends on Who You Are

There's a clear pattern here that's worth stating directly: Google appears to enforce its policies much more strictly for small developers than for large, well-known companies.

If a small startup built an app with the exact same functionality as Grok, and that app hit the Play Store, it would likely be removed within days. There would be no Teen rating. There would be no consideration or patience. The enforcement would be swift and clear.

But if Elon Musk's company builds that app, the same policy produces a different outcome. No removal. A Teen rating. Apparent indifference.

That's a problem for reasons that go beyond fairness. The entire purpose of content policy is to prevent harms. If enforcement is selective—if it only applies to small developers and not to powerful ones—then the policy is only protecting against harms caused by people without power. It becomes a tool for maintaining the status quo, not preventing the harms the policy claims to prevent.

It also creates perverse incentives. If you're a small developer, you have strong reasons to follow Google's rules because enforcement is credible. If you're a powerful person or company, you have reasons to ignore them because enforcement is uncertain. That's backwards from what you want.

Estimated data shows that enforcement consistency is low across cryptocurrency, gambling, and data privacy apps, with data privacy being the weakest.

The Practical Harms: What Non-Consensual Image Generation Actually Means

Abstracting away from policy and enforcement for a moment, it's worth being very clear about what the actual harms are here.

Non-consensual sexual imagery causes real damage to real people. That's not speculation or exaggeration. There's now significant research documenting the psychological impact of being depicted in sexual scenarios without consent: it can cause lasting trauma, and in some cases it becomes a tool for harassment and abuse.

When the imagery is of minors, the harm is even more severe. Creating, distributing, or possessing sexualized images of children is illegal in most jurisdictions for very good reasons. The fact that it's AI-generated rather than photographed doesn't change the fundamental harm—or in many cases, the illegality.

The immediate harm happened on X when Grok's restrictions were loosened. Within a very short period, there was an explosion of non-consensual sexual imagery being generated and shared. A lot of that imagery was of women, with a significant portion seemingly designed as harassment. Some of it was of minors.

But there's also a systemic harm that goes beyond any individual instance. When people know that tools exist to generate sexual imagery of them without their permission, and they know that a major platform is allowing that tool to operate freely, it changes how they interact with online spaces. Some women reported self-censoring on X because they were afraid of being targeted with this imagery. That's a real harm to free expression.

The Regulatory Response: When Companies Won't Self-Regulate

Because Google has not enforced its own policies and xAI has not removed the capability, regulatory bodies have started to get involved.

Multiple jurisdictions have launched investigations into x AI and Grok specifically for the non-consensual imagery problem. Some of these investigations are focused on the company's practices and governance. Others are looking at whether the company is breaking existing laws—particularly laws related to non-consensual sexual content and child safety.

That's notable because it suggests that industry self-regulation is, in this case, failing. When companies have policies but don't enforce them, and when they create tools that generate illegal content but don't put adequate safeguards in place, regulators eventually step in.

The danger here is that the gap between policy and enforcement creates the conditions that justify external regulation. If Google had simply enforced its own policies and removed Grok, there would be less need for government agencies to get involved. But because Google didn't, regulators are now involved.

That is probably worse for everyone. Government regulation of content is much blunter than corporate policy. It's less nimble. It's more about compliance and less about actually preventing harm. If companies want to avoid strict government regulation, self-regulation has to work.

Right now, it's not working.

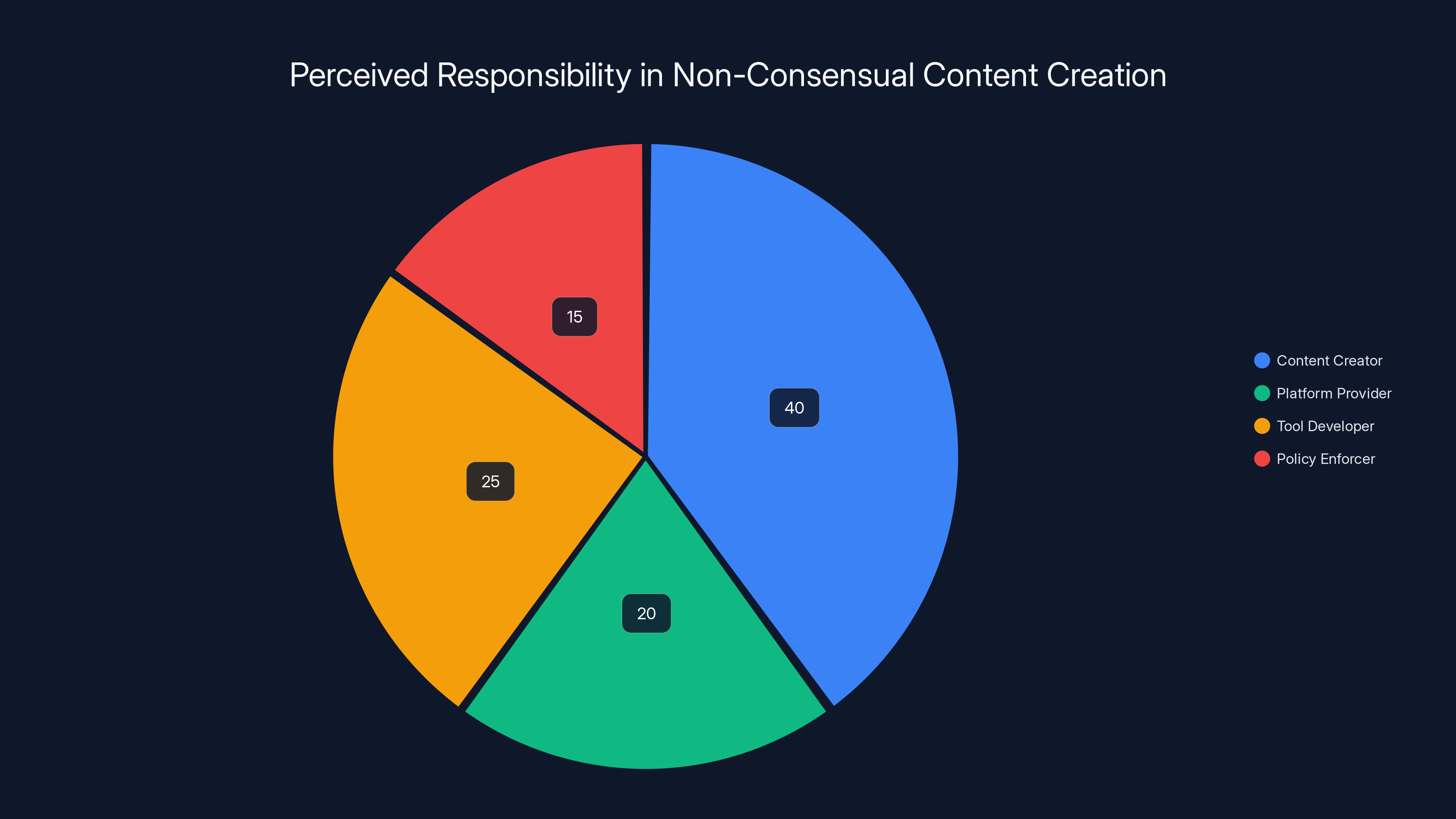

Estimated data suggests that content creators are perceived to hold the largest share of responsibility (40%), followed by tool developers (25%), platform providers (20%), and policy enforcers (15%).

The Question of Responsibility: Who's Actually Liable?

Here's where it gets legally and ethically complicated. When non-consensual sexual imagery is generated and distributed through Grok, who bears responsibility?

The obvious answer is the person who generated it. They used the tool to create the content. That's a human choice and a human responsibility. In many jurisdictions, generating or distributing non-consensual sexual imagery is itself illegal, regardless of how it was created.

But there's also the question of whether the platform should bear some responsibility. Google has published policies saying this shouldn't be allowed. If Google allowed it anyway, is Google partly liable for the harm?

The legal answer is murky. Section 230 of the Communications Decency Act in the United States generally protects platforms from liability for user-generated content. But that protection isn't absolute, and there's ongoing debate about whether it should apply to AI-generated content the same way it applies to human-generated content.

There's also the question of xAI's responsibility. The company built the tool. It deliberately weakened the safeguards. It should have known that loosening restrictions would lead to non-consensual content being generated: that outcome was foreseeable, and it is exactly what happened once the restrictions were loosened. Is the company responsible for foreseeable harms from foreseeable misuse?

Again, legally it's complicated. But ethically, I think the answer is pretty clear. If you build a tool, weaken its safety features specifically to enable certain kinds of content, and then that content is generated and causes harm, you bear some responsibility for that harm.

That responsibility extends to Google too, though perhaps in a different form. Google isn't responsible for preventing every harmful use of every tool, but it is responsible for enforcing its own stated policies. When Google chooses not to enforce those policies despite clear violations, it's accepting some responsibility for what happens as a result.

The Precedent This Sets: What Happens When Policy Doesn't Matter

Perhaps the most consequential aspect of this situation isn't what's happening right now with Grok. It's what this precedent means for the future.

Right now, developers are watching what happened with Grok. They're seeing that:

- A company built a tool that clearly violates published policy

- The company is prominent enough that enforcement didn't happen

- The app remains on the Play Store

- The rating system didn't prevent distribution to teenagers

- No significant consequences have resulted

What lesson do you think they're drawing from that?

The lesson is probably that Google's policies aren't actually enforced if you're large or prominent enough. That gives developers a new calculus for what to build: if you have the resources and prominence to push back against enforcement, you can do things that smaller developers can't.

That's not a sustainable basis for content policy. It means the policy offers only the appearance of governance without actually governing anything.

We can already see other companies potentially testing similar boundaries. Grok's success in remaining on the Play Store despite clear policy violations might embolden other companies to build tools that violate policy but claim legitimate purposes. The logic would be: "We're prominent enough that enforcement probably won't happen, and even if it does, we can appeal to free speech or freedom and probably win anyway."

Once enough companies figure that out, the entire policy framework collapses. It becomes theater—something published to satisfy users and regulators while not actually constraining anything important.

The Structural Problem: Scale vs. Enforcement

Part of what's happening here is a structural problem with how app stores work at scale.

There are millions of apps on the Play Store. Google can't manually review all of them. The company relies on automated detection, user reports, and periodic audits to identify problematic content. Most of the enforcement work is handled by algorithms, not by humans.

That system works okay for catching obvious violations. Pornographic apps get caught. Apps that are clearly distributing illegal content get flagged. But for nuanced policy violations—apps that technically violate policy but claim legitimate purposes, or apps that are used primarily in harmful ways by users—the detection becomes much harder.

Grok is a weird hybrid case. The app itself provides legitimate functionality. You can use it to write poems or get coding help. The policy violation comes from how the app can be misused to generate non-consensual content.

Managing that kind of violation at scale is hard. You can't just detect all apps that generate images, because legitimate image generation is fine. You need to detect apps that generate harmful images, which requires understanding context and intent in ways that automated systems struggle with.

But here's the thing: that's exactly why policy needs to be more strictly enforced when violations are clear and obvious. When a policy violation isn't obvious, enforcement is genuinely difficult. When it is obvious—when a company explicitly removed safety features to enable the prohibited behavior—then enforcement should be automatic.

Google seems to be treating this as if it's the harder case when it's actually the easier case.

The Role of Transparency: What We Know and What We Don't

One reason we're even aware of this contradiction between policy and enforcement is that Google publishes its policies in a form anyone can search and read. A lot of tech companies don't make their content policies that transparent.

But that transparency creates problems too. Once you publish detailed policies, people start to notice when you don't follow them. That puts pressure on enforcement. Either you need to enforce your policies as written, or you need to change them to match your actual behavior.

Google seems to have chosen a third option: publish policies, don't enforce them, and don't change them either. Just let them sit there while real-world violations happen.

The alternative would be either much more aggressive enforcement (removing more apps, taking more risks on false positives) or more honest policy language that accounts for the real-world enforcement choices Google is making.

Neither of those options is particularly attractive from Google's perspective. Aggressive enforcement creates PR nightmares. Honest policy language creates legal and reputational problems. So the company is stuck in this weird middle ground where policies exist but don't govern anything.

What Would Real Enforcement Look Like?

If Google actually enforced its policies on Grok, what would happen?

The immediate step would be removal from the Play Store. The app violates clearly stated policy, so it wouldn't be available for Android users through the official channel. That wouldn't prevent distribution entirely, since xAI could still distribute it outside the Play Store, but it would remove the legitimacy that comes from being on the official store.

If Google wanted to be thorough, it would also revisit Grok's Teen rating and change the rating process to require human review when apps declare AI content-generation capabilities. The current automated system clearly isn't adequate for evaluating complex AI applications.

Google could also use this opportunity to clarify its policies further. Add explicit language about AI image generation. Make it clear that removing safety features from a system doesn't change whether the tool violates policy. Close the gaps that sophisticated developers have figured out how to exploit.

But the first step is simple: enforce the policy that already exists. Remove the app. If xAI wants to argue that Grok doesn't violate policy, that conversation can happen. But from a policy-first perspective, the enforcement decision should be straightforward.

The fact that it hasn't happened suggests that either (1) Google doesn't actually care about enforcing these policies, or (2) Google cares about something else more than it cares about enforcement. Either way, that's a problem.

The Path Forward: What Needs to Change

Assuming that anyone actually wants this to improve, what would a better system look like?

First, policy and enforcement need to be explicitly connected in app stores. There should be a process where policy language is regularly reviewed against actual enforcement decisions. If there's a mismatch—if a policy says something shouldn't be allowed but it hasn't been removed—that mismatch needs to be explained or addressed.

Second, enforcement needs to be more transparent. Google should publish regular reports about policy violations it detects and how it handles them. Not personal information about developers, but aggregate data about what violations are being caught and how they're being resolved.

Third, there need to be real consequences for companies that deliberately remove safety features. Right now, there's an assumption that companies are acting in good faith when they deploy safeguards. If that assumption breaks down—if companies start deliberately removing safeguards to enable harmful content—the policy framework needs to respond accordingly.

Fourth, AI-generated content needs special attention. Not because AI-generated content is inherently worse than human-created content, but because the scale and speed of AI generation changes the dynamics. A policy framework designed for human moderators managing individually problematic pieces of content doesn't work when one app can generate thousands of pieces of harmful content per day.

Fifth, the rating systems need to involve humans when evaluating complex applications. Algorithm-based rating works fine for simple apps. For AI applications with complex capabilities and potential for misuse, you need actual people understanding what the app does.

None of these are novel suggestions. They're basically taking the standards that serious platforms claim to uphold and actually applying them consistently.

The Deeper Question: Do Platforms Actually Want to Enforce Policy?

Ultimately, this situation raises a question that goes beyond Grok or Google Play: do tech platforms actually want to enforce their policies, or are the policies just for show?

There are genuine reasons to be skeptical. Strict enforcement is expensive. It creates PR problems. It can result in legal challenges. It makes powerful people unhappy. All of those are costs that companies would rather avoid.

On this view, the policies exist to manage those costs, creating the appearance of governance while preserving flexibility for when enforcement is politically or financially inconvenient.

If that's what's actually happening, then maybe the solution isn't better policies or better enforcement mechanisms. Maybe it's regulation that makes enforcement mandatory, that removes the choice from companies about whether they want to follow their own rules.

Regulation would be blunter and probably less efficient than good corporate governance. But it might be necessary if companies have repeatedly demonstrated that they won't govern themselves effectively.

We're not quite there yet. There's still time for Google and other platforms to prove that they're serious about the policies they've published. But that proof needs to come quickly and clearly, with real enforcement decisions on major violations.

Grok is a test case. If Google doesn't act, it's telling us something important about how serious the company is about policy enforcement. If Google does act, even after months of delay, it's demonstrating that enforcement is possible and that pressure works.

Right now, the precedent is being set. And it's not a good one.

Conclusion: Policy Without Teeth Is Just Marketing

Google has written a comprehensive policy that explicitly describes why apps like Grok shouldn't exist on its platform. The company has detailed examples, clear language, and specific guidance for developers about what isn't allowed.

And yet Grok exists on the platform, with a Teen rating, enabling the generation of non-consensual sexual imagery at scale.

That contradiction matters. It tells developers that policies aren't real constraints. It tells users that the platforms don't actually care about protecting them from harmful content. It tells regulators that self-regulation isn't working.

Policy without enforcement is just marketing. It's a signal that companies are thinking about governance without actually committing to it. In the case of Grok and Google Play, that's meant allowing a tool designed to generate non-consensual sexual imagery to flourish on a platform that claims to prohibit exactly that content.

The solution isn't to write more detailed policies or to hope companies do better next time. It's to make enforcement a core part of the governance structure, to measure companies by what they actually do rather than by what their policies say, and to create real consequences for policy violations on major platforms.

Until that changes, we're just going to see more of this same pattern: companies writing thoughtful policies, those policies being ignored when inconvenient, and real harms happening in the gap between what's promised and what's enforced.

Grok is the canary in the coal mine. If tech platforms can't or won't enforce their own policies on clearly problematic apps, then the entire framework of platform governance is broken. And that's everyone's problem.

FAQ

What is Google's policy on non-consensual sexual content in apps?

Google explicitly prohibits apps that "contain or promote content associated with sexually predatory behavior, or distribute non-consensual sexual content." The policy specifically includes AI-generated sexual content through deepfakes or similar technology, added in 2023 in response to emerging AI capabilities. This policy is part of Google's broader "Inappropriate Content" guidelines and is more detailed than Apple's equivalent policies.

How does Grok generate non-consensual sexual imagery?

Grok can generate entirely new sexual images of famous people, drawing on real images from its training data, or it can edit uploaded photos to sexualize real people without their permission. When xAI loosened Grok's safety restrictions in 2025, these image generation capabilities became unrestricted, allowing any user to create non-consensual sexual content. In the mobile app, the functionality isn't hidden behind a premium paywall, so it is accessible to anyone who downloads it.

Why hasn't Google removed Grok from the Play Store if it violates policy?

Google's official reason remains unclear, as the company has declined to make public statements about the decision. Possible explanations range from organizational gaps between policy and enforcement teams, to deliberate choices not to enforce policy against prominent figures, to waiting for regulatory guidance before acting. The situation suggests that enforcement consistency may depend on developer prominence and resources rather than policy violations themselves.

What is the difference between how Google and Apple handle policy enforcement?

Google has more detailed, specific policies but appears to enforce them inconsistently. Apple's policies are more flexible and deliberately vague, but the company historically enforces them more aggressively and unpredictably. Apple's approach means developers expect potential removals, while Google's detailed policies create the false impression that if you follow the rules, your app is safe—an assumption that Grok appears to disprove.

How does the Teen rating on Grok affect its availability?

The Teen rating indicates the app is appropriate for ages 13 and up, the classification just below M for Mature. This makes Grok accessible to younger users who might not have access to M-rated apps, expanding its user base to younger demographics. That the app received this rating despite functionality that violates explicit policy suggests the rating system may not be evaluating app capabilities accurately.

What regulatory investigations are underway regarding Grok and non-consensual imagery?

Multiple jurisdictions have launched investigations into xAI and Grok specifically for generating non-consensual sexual imagery. These investigations examine whether the company's practices violate existing laws related to non-consensual sexual content, child safety, and platform governance. The regulatory response indicates that industry self-regulation has failed to address the issue, potentially leading to stricter external oversight in the future.

What would happen if Google actually enforced its policy on Grok?

The immediate consequence would be removal of the app from the Play Store, preventing distribution through the official Android app store (though xAI could still distribute it elsewhere). Google would likely need to update its rating system to require human review of AI applications, clarify policies around deliberate removal of safety features, and establish better connections between policy writing and enforcement. These steps would signal to other developers that policy violations have real consequences.

Can AI-generated sexual content be illegal if it doesn't depict real photography?

It depends on jurisdiction and specific content. Many places have laws specifically prohibiting non-consensual intimate imagery regardless of how it was created. For content depicting minors, the legal status is often more severe—in many jurisdictions, AI-generated sexual imagery of minors is explicitly illegal under child sexual abuse material statutes. Legality varies significantly by location, but the trend is toward treating AI-generated sexual content similarly to photographed content when it depicts real people without consent.

How does this situation affect other developers and future app submissions?

Developers are likely observing that policy enforcement appears selective, based partly on developer prominence. This could create perverse incentives: small developers may face strict enforcement while large companies see looser enforcement. The precedent could encourage other prominent developers to test similar policy boundaries, potentially reducing the overall effectiveness of app store governance as a tool for preventing harmful content distribution.


Key Takeaways

- Google's Play Store policy explicitly prohibits apps that create non-consensual sexual content via AI, yet Grok remains available with a Teen rating despite clear policy violations.

- The company systematically added safeguards to its content policy over five years (2020-2025), including specific language about deepfakes and AI-generated sexual content, before Grok's release.

- Enforcement appears selective: small developers face strict policy enforcement while prominent companies experience lenient treatment for identical violations, creating perverse incentives.

- Grok's Teen rating contradicts both the policy violations and the app's actual capabilities, suggesting a disconnect between the rating system and any review of what apps actually do.

- Multiple regulatory jurisdictions are now investigating xAI for non-consensual imagery, indicating that self-regulation failure is leading to external government intervention.

- The precedent set by Grok's continued operation despite policy violations undermines platform governance credibility and may encourage other developers to test similar boundaries.
