How to Buy a GPU in 2026: Complete Guide & Best Options

Introduction: Navigating the GPU Market in 2026

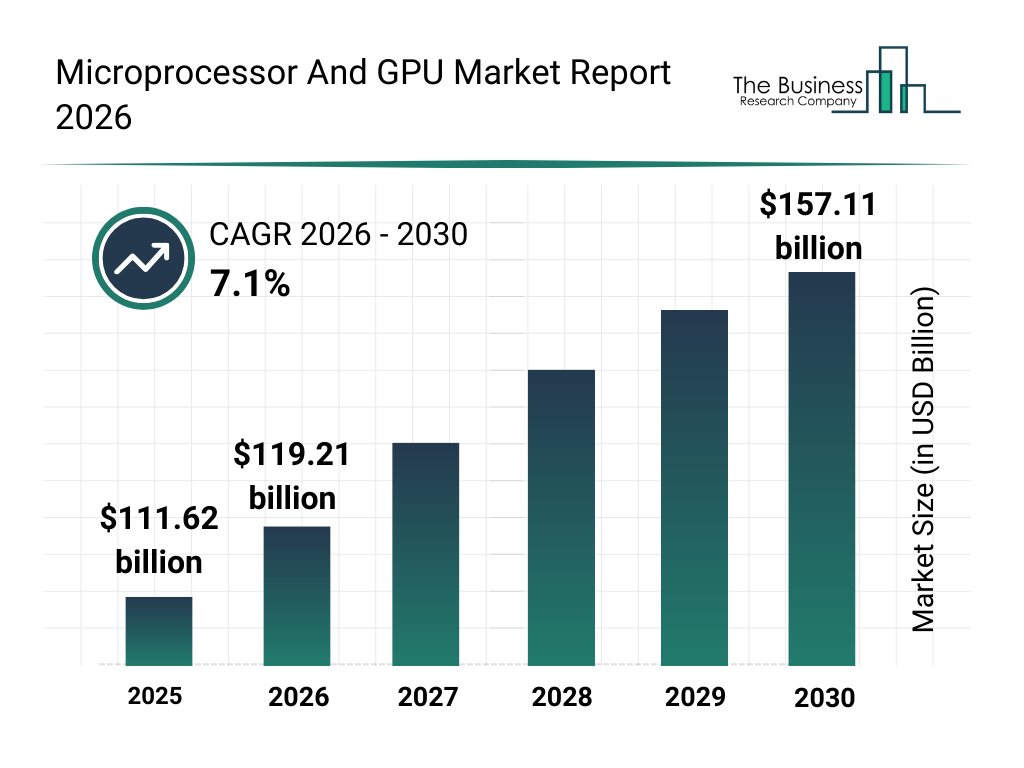

Purchasing a graphics processing unit (GPU) has never been more complex. The graphics card market in 2026 presents both unprecedented opportunities and significant challenges. You're navigating an industry where three major manufacturers—NVIDIA, AMD, and Intel—compete for dominance while countless add-in board (AIB) partners manufacture multiple versions of the same chip at vastly different prices.

The stakes have increased considerably. A GPU represents the single most important component in a gaming PC build, influencing everything from frame rates and visual fidelity to content creation capabilities and machine learning performance. Unlike processors or motherboards, where diminishing returns become apparent quickly, a GPU upgrade can fundamentally transform your computing experience across gaming, professional workloads, and emerging AI applications.

In early 2025, NVIDIA released its anticipated RTX 50 series, but the results disappointed many enthusiasts expecting dramatic generational leaps. This shift has created a unique buying environment where AMD and Intel suddenly offer compelling alternatives at entry and mid-range price points, challenging NVIDIA's market dominance for the first time in several years. Simultaneously, supply chain stabilization has eased some of the pricing pressures that plagued the market in previous years, though manufacturers still struggle to align MSRP with real-world pricing.

This comprehensive guide addresses the fundamental questions you need to answer before spending hundreds or thousands of dollars on a GPU. Whether you're building your first gaming PC, upgrading an aging system, or choosing a card for professional work, you'll learn the critical factors that separate exceptional purchases from regrettable ones. We'll explore the technical specifications that actually matter, decode the marketing language used by manufacturers, analyze pricing strategies, and provide transparent recommendations across multiple budget categories.

The modern GPU market rewards informed buyers. Understanding what drives performance, recognizing the importance of memory bandwidth and VRAM capacity, and timing your purchase strategically can save you hundreds of dollars while delivering better long-term value. This guide is designed to transform GPU shopping from an overwhelming experience into a confident, data-driven decision.

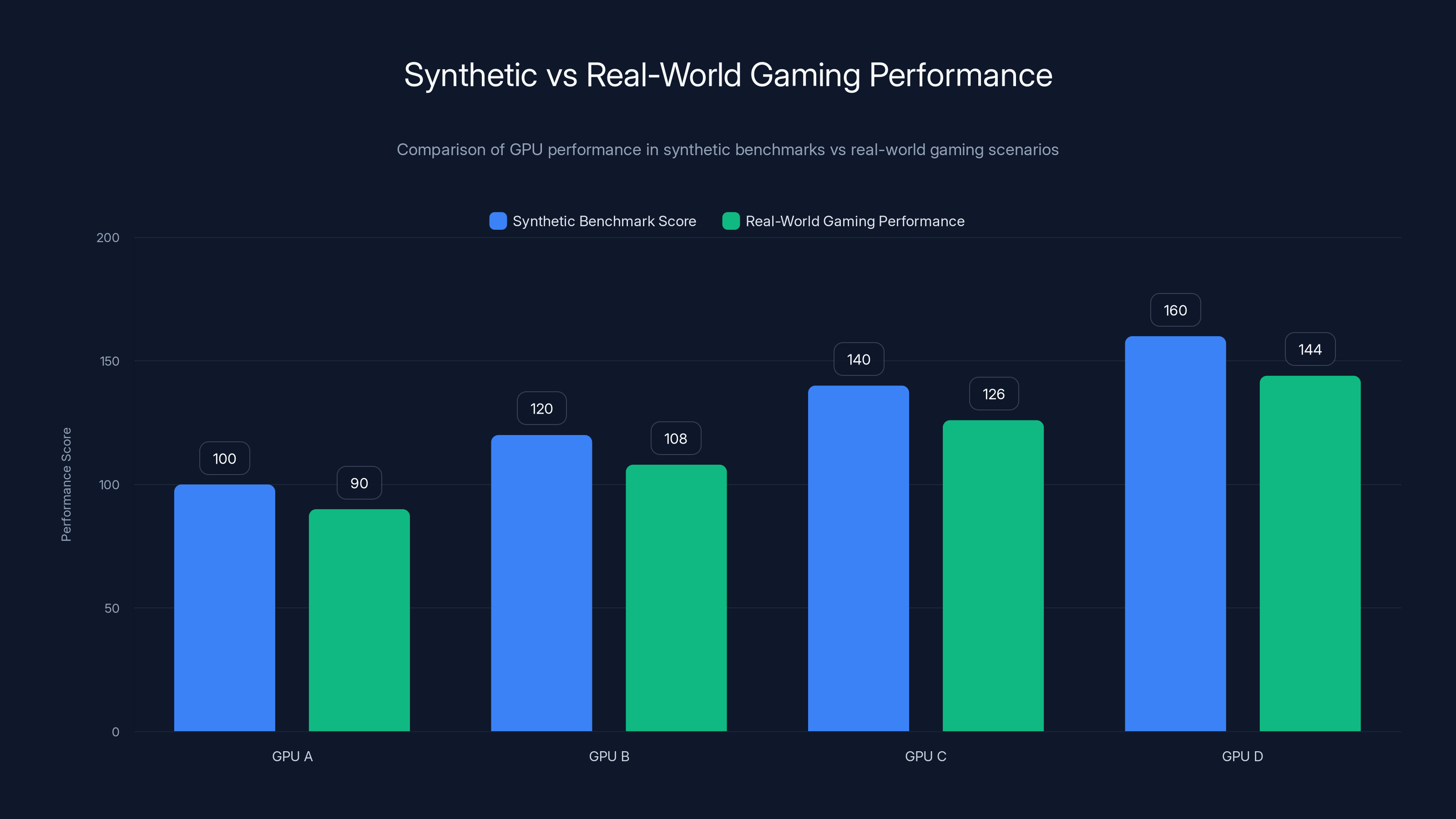

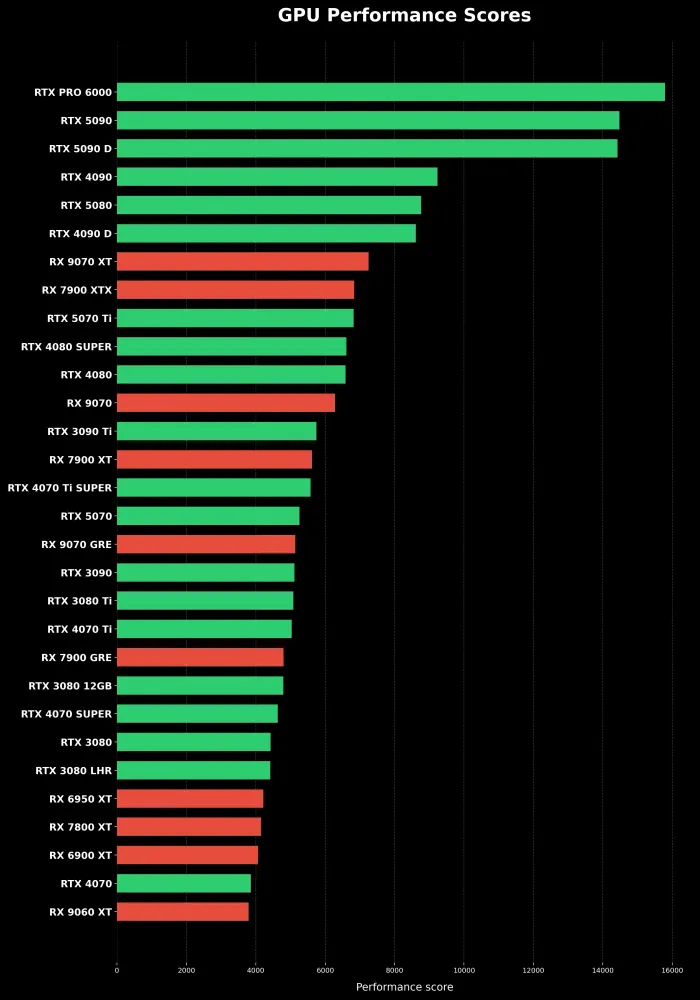

Synthetic benchmarks often show higher performance scores compared to real-world gaming, highlighting the gap due to real-world limitations such as driver overhead and CPU bottlenecks. Estimated data.

Understanding GPU Fundamentals: What Actually Matters

The Role of Graphics Processing Units in Modern Computing

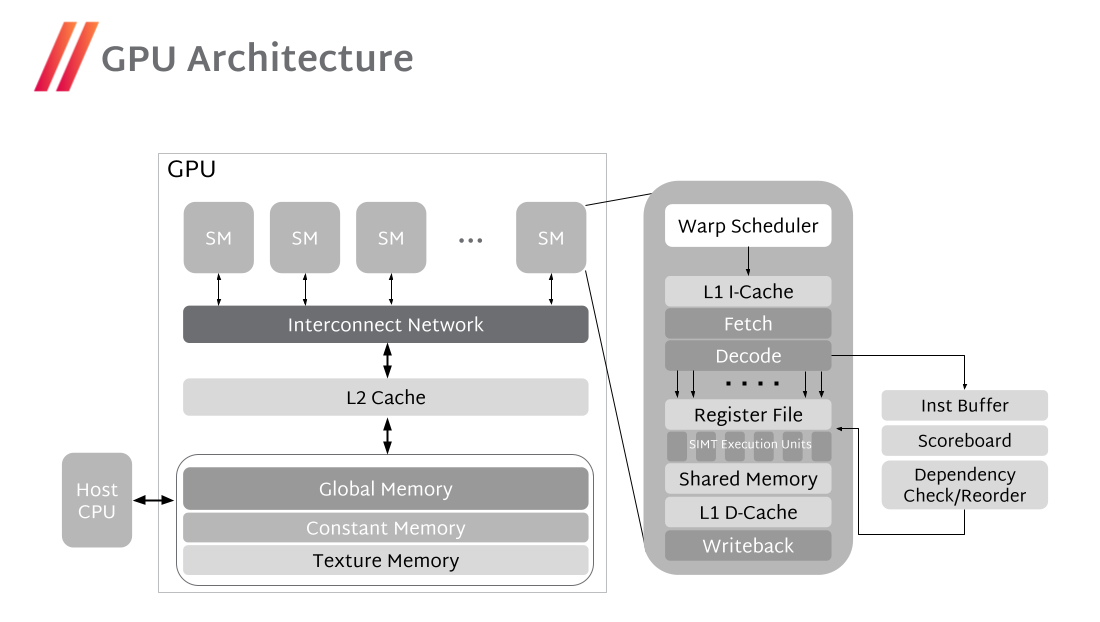

Graphics processing units evolved far beyond their original purpose of rendering pixels to displays. Modern GPUs are parallel processing powerhouses containing thousands of specialized cores designed to handle multiple calculations simultaneously. This architecture makes them invaluable across gaming, content creation, scientific simulation, and artificial intelligence applications.

In gaming contexts, the GPU handles the computationally intensive work of converting 3D data into 2D images displayed on your monitor. It must repeat this work dozens to hundreds of times per second, and each frame contains millions of pixels, so performance must be consistent and reliable. The difference between a capable GPU and an inadequate one manifests not as minor performance dips but as the difference between smooth, responsive gameplay and frustrating stuttering that makes competitive gaming nearly impossible.

Beyond gaming, professional workloads increasingly depend on GPU acceleration. Video editing, 3D rendering, machine learning model training, and cryptocurrency operations all benefit dramatically from GPU compute capabilities. A capable graphics card can reduce video export times from hours to minutes and accelerate machine learning training by factors of 10x or more compared to CPU-only processing.

Memory Architecture and Bandwidth Considerations

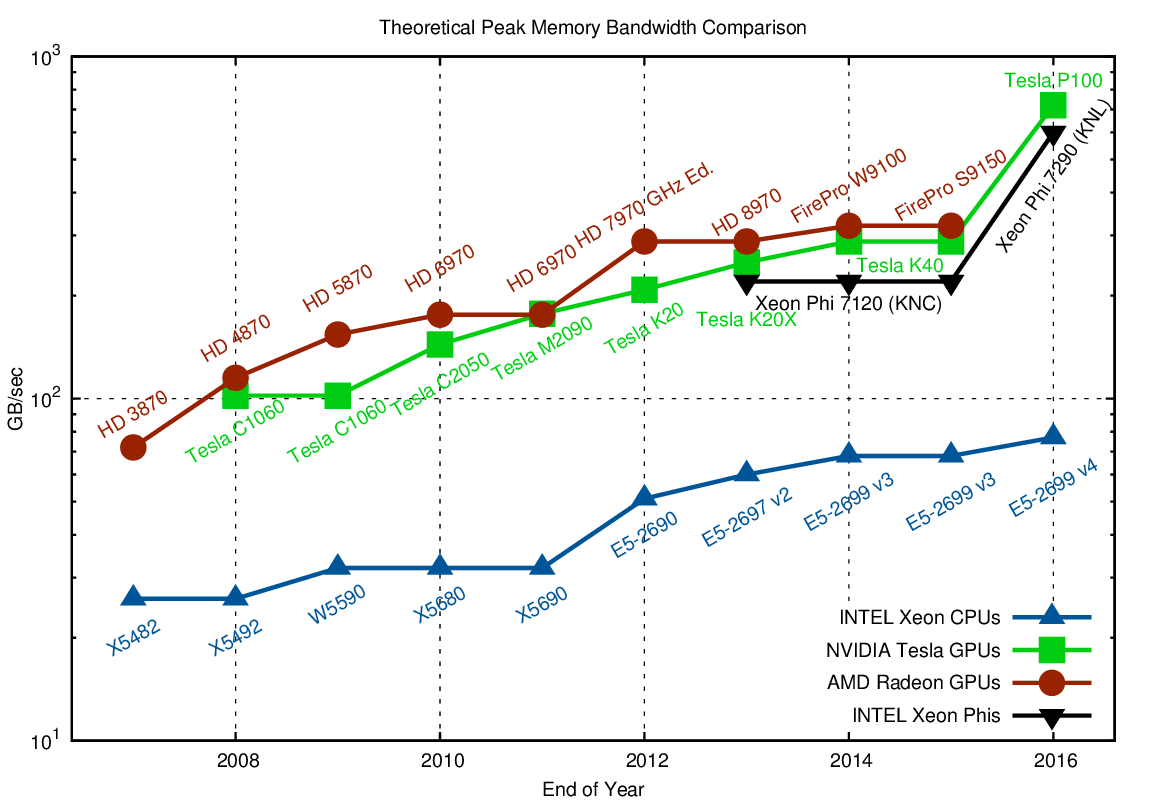

GPU memory architecture differs fundamentally from system RAM. Graphics cards utilize specialized VRAM (video RAM) optimized for high-bandwidth data access patterns. The memory bandwidth—measured in GB/s—determines how quickly the GPU can read and write data, directly impacting performance in memory-intensive operations.

Consider a concrete example: the RTX 5070 pairs a 192-bit memory bus with 28 Gbps GDDR7 for 672 GB/s of memory bandwidth, while the RTX 4070 uses the same bus width with 21 Gbps GDDR6X for 504 GB/s. With bus width held equal, the faster memory alone gives the newer card about one-third more bandwidth, an advantage that shows up most in memory-constrained scenarios such as high resolutions and large data sets. This is why specifications extend far beyond simple GPU core counts: bandwidth is worth comparing directly.
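
Peak memory bandwidth follows from two specifications: bus width in bits and per-pin data rate in Gbps. The short Python sketch below applies that standard formula. The bus widths and data rates passed in are assumed inputs taken from NVIDIA's published specifications (a 192-bit bus on both cards, 28 Gbps GDDR7 on the RTX 5070, 21 Gbps GDDR6X on the RTX 4070), and the function name is purely illustrative.

```python
def memory_bandwidth_gbs(bus_width_bits: int, data_rate_gbps: float) -> float:
    """Peak memory bandwidth in GB/s.

    Every pin on the bus moves data_rate_gbps gigabits per second, so the
    whole bus moves bus_width_bits * data_rate_gbps gigabits per second;
    dividing by 8 converts gigabits to gigabytes.
    """
    return bus_width_bits * data_rate_gbps / 8


# Assumed inputs from published specs: both cards use a 192-bit bus.
rtx_5070 = memory_bandwidth_gbs(192, 28.0)  # 28 Gbps GDDR7
rtx_4070 = memory_bandwidth_gbs(192, 21.0)  # 21 Gbps GDDR6X

print(f"RTX 5070: {rtx_5070:.0f} GB/s")      # RTX 5070: 672 GB/s
print(f"RTX 4070: {rtx_4070:.0f} GB/s")      # RTX 4070: 504 GB/s
print(f"Ratio: {rtx_5070 / rtx_4070:.2f}x")  # Ratio: 1.33x
```

Most spec sheets list both numbers, so you can check any card's bandwidth the same way instead of relying on the memory type name.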

Memory capacity requirements have risen sharply over the past three years. In 2023, 8GB of VRAM felt adequate for gaming and light professional work. By 2026, the baseline for serious gaming has shifted to 12GB, with 16GB becoming standard for content creation. Emerging AI applications increasingly demand 24GB or more. Choosing a card with insufficient VRAM forces compromises in texture quality, rendering resolution, or AI feature utilization, limitations that devalue your entire purchase.

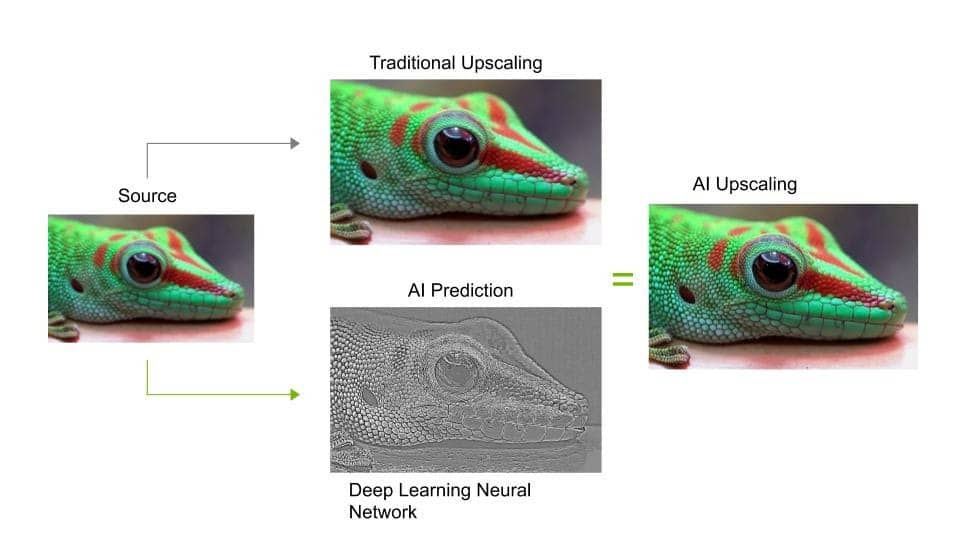

The Emergence of AI-Accelerated Features

Artificial intelligence fundamentally reshapes GPU purchasing decisions in 2026. NVIDIA's DLSS (Deep Learning Super Sampling), AMD's FidelityFX Super Resolution (FSR), and Intel's Xe Super Sampling (XeSS) represent a category shift in how games and applications approach performance optimization.

These upscaling technologies render games at a lower internal resolution, then reconstruct the missing visual detail. DLSS and XeSS use neural networks trained on high-resolution reference images, while FSR 3 relies on a hand-tuned temporal algorithm. The results prove remarkably convincing, offering 30-50% performance improvements with minimal quality loss. Modern games increasingly require these technologies to achieve smooth performance at high resolutions and refresh rates.
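
To see where the speedup comes from, the sketch below computes the internal render resolution for a 4K output. It assumes the per-axis scale ratios that AMD documents for FSR 2's quality modes (1.5x, 1.7x, 2.0x, and 3.0x). DLSS uses similar ratios, but exact values vary by upscaler and version, so treat these as illustrative.

```python
# Per-axis scale ratios for FSR 2's quality modes (assumed; other upscalers
# and versions may differ slightly).
MODES = {
    "Quality": 1.5,
    "Balanced": 1.7,
    "Performance": 2.0,
    "Ultra Performance": 3.0,
}


def internal_resolution(out_w: int, out_h: int, ratio: float) -> tuple[int, int]:
    """Resolution rendered before upscaling to out_w x out_h."""
    return round(out_w / ratio), round(out_h / ratio)


def pixel_fraction(out_w: int, out_h: int, ratio: float) -> float:
    """Share of the output's pixels that are actually rendered."""
    w, h = internal_resolution(out_w, out_h, ratio)
    return (w * h) / (out_w * out_h)


for mode, ratio in MODES.items():
    w, h = internal_resolution(3840, 2160, ratio)
    share = pixel_fraction(3840, 2160, ratio)
    print(f"{mode:<18} renders {w}x{h} ({share:.0%} of native 4K pixels)")
```

Under these ratios, Quality mode at 4K renders 2560x1440, about 44% of native pixels. The upscaler then spends a comparatively small amount of work reconstructing the rest.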

Beyond upscaling, GPU manufacturers integrate AI capabilities into ray tracing operations, physics simulations, and image processing pipelines. A GPU without strong AI acceleration becomes progressively less suitable as game engines evolve to depend on these capabilities by default.

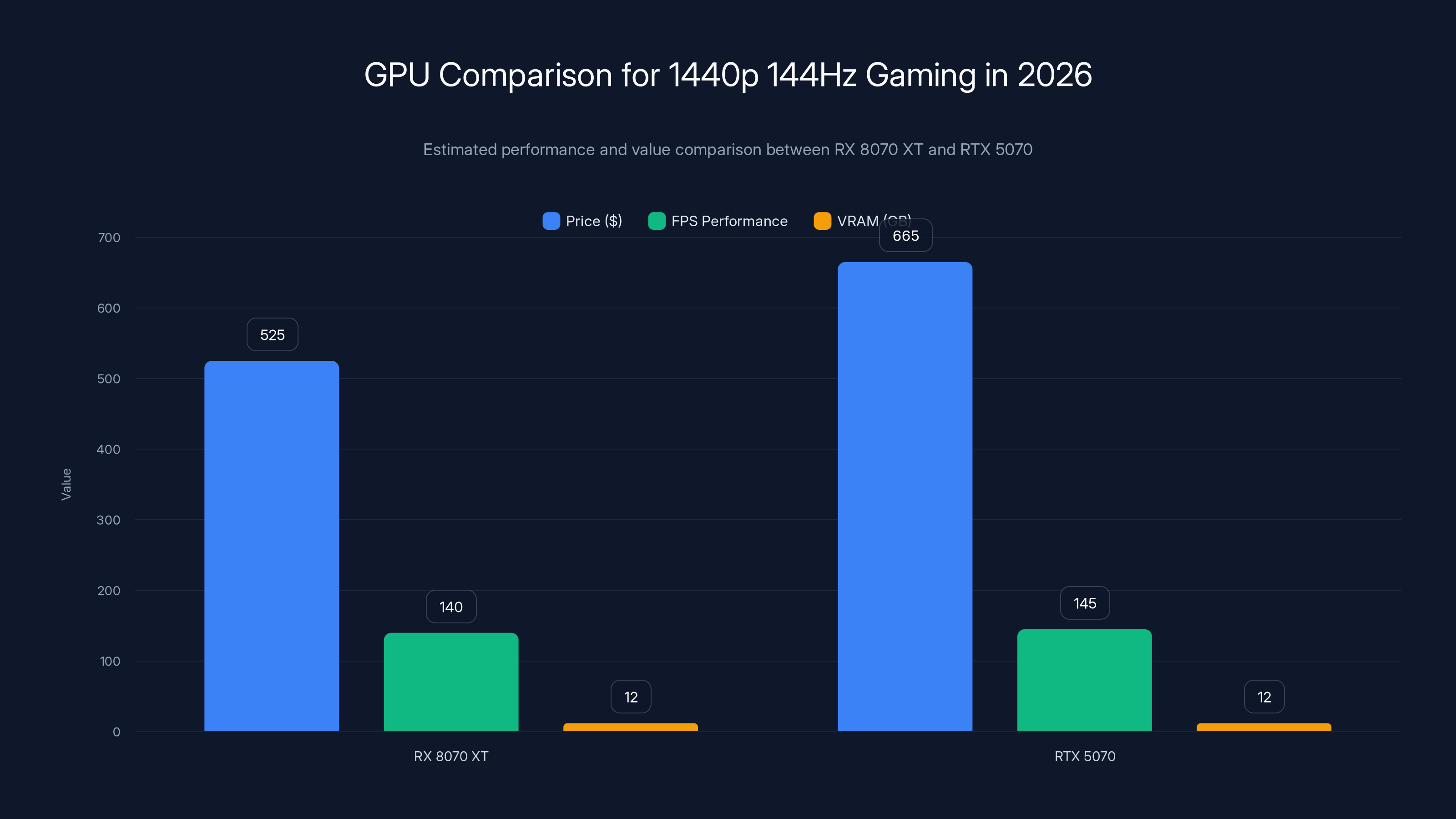

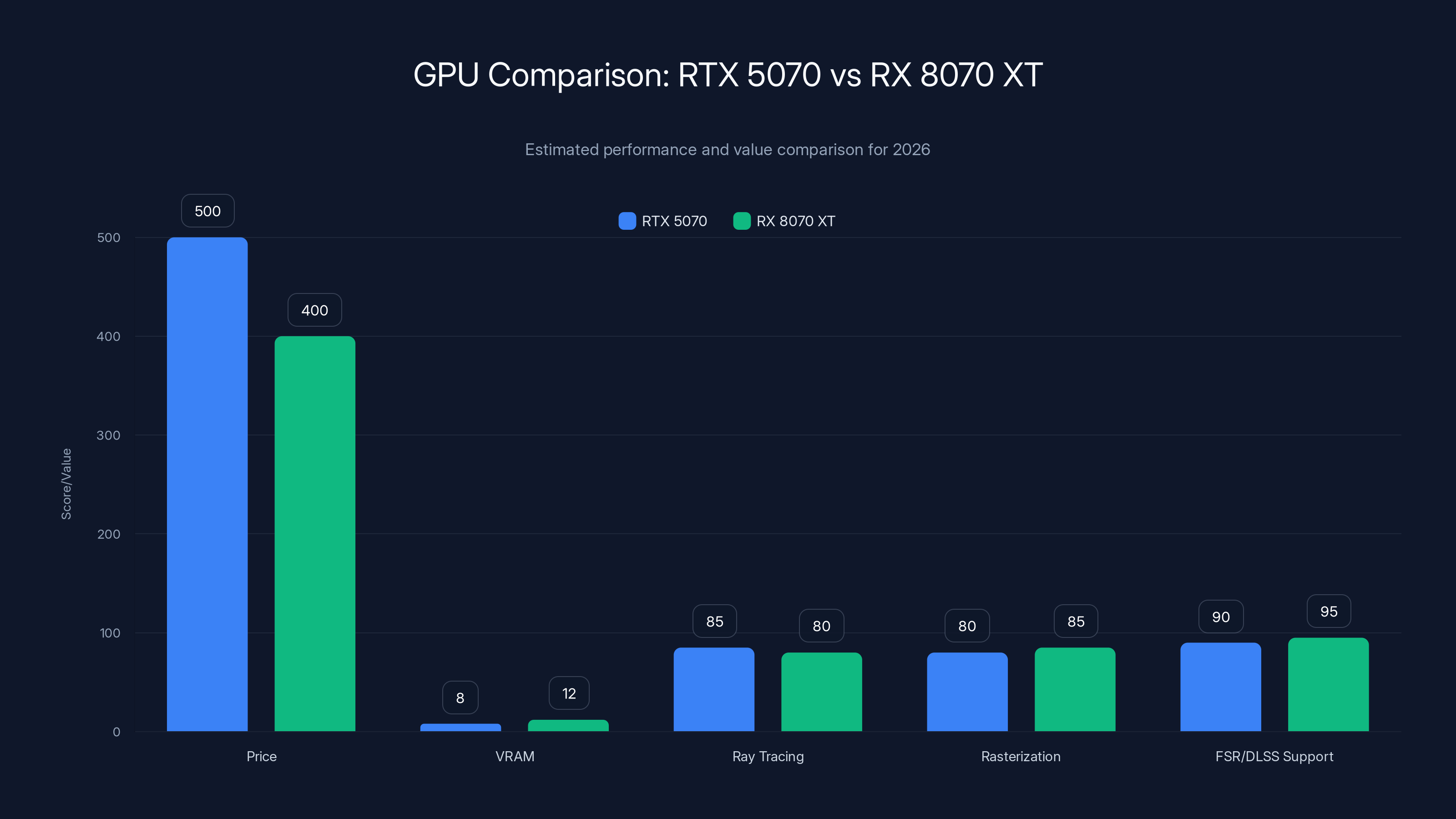

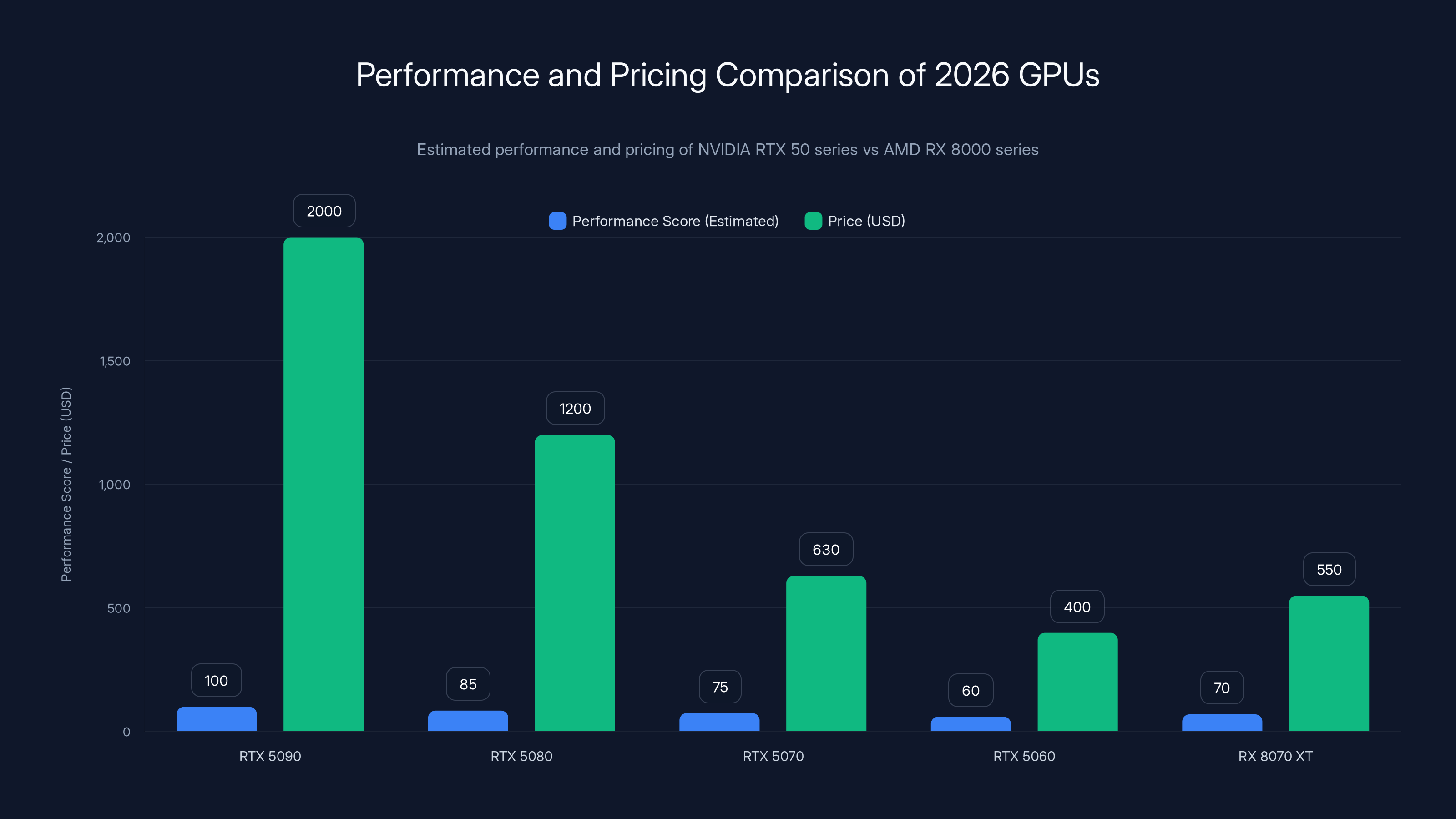

The RX 8070 XT offers better value with a lower price, while the RTX 5070 provides slightly better performance, especially in ray tracing. Estimated data.

Game Requirements and Resolution Planning

Categorizing Game Types by Performance Demands

Not all games demand identical GPU resources. Competitive shooters like Valorant, Counter-Strike 2, and Overwatch 2 prioritize frame rates over graphical fidelity. These titles, designed to run on hardware from 2010-2015, achieve 120+ frames per second on entry-level GPUs. An RTX 5060 easily pushes Valorant to 240+ fps at 1080p, creating the responsive, fluid experience competitive players demand.

Conversely, modern single-player experiences like Stalker 2 and Dragon Age: The Veilguard demand substantial GPU resources. These games exploit ray tracing extensively, implementing physically accurate reflections, shadows, and global illumination that necessitate AI upscaling to achieve playable frame rates. Running these titles at 1440p with high ray tracing settings requires a mid-range GPU at minimum, with high-end cards recommended for 4K gaming.

A practical approach involves categorizing your gaming habits: Do you primarily play competitive titles, demanding single-player experiences, or a mixture? Your answer should heavily influence GPU selection and budget allocation.

Resolution, Refresh Rate, and the GPU-Display Relationship

Resolution and refresh rate determine pixel throughput requirements. A 1440p display at 60 Hz requires the GPU to render about 221 million pixels per second, while 4K at 144 Hz demands about 1.19 billion pixels per second, roughly 5.4 times as many. This mathematical relationship explains why high-refresh 4K gaming requires substantially more powerful hardware than 1440p.
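
The arithmetic is easy to reproduce. This sketch multiplies width, height, and refresh rate. It counts raw pixels only and ignores per-pixel shading cost, which also varies between games and settings.

```python
RESOLUTIONS = {
    "1080p": (1920, 1080),
    "1440p": (2560, 1440),
    "4K": (3840, 2160),
}


def pixels_per_second(resolution: str, refresh_hz: int) -> int:
    """Pixels the GPU must deliver each second to keep pace with the display."""
    width, height = RESOLUTIONS[resolution]
    return width * height * refresh_hz


qhd_60 = pixels_per_second("1440p", 60)
uhd_144 = pixels_per_second("4K", 144)
print(f"1440p @ 60 Hz: {qhd_60 / 1e6:.0f} million pixels/s")  # 221 million
print(f"4K @ 144 Hz: {uhd_144 / 1e9:.2f} billion pixels/s")   # 1.19 billion
print(f"Ratio: {uhd_144 / qhd_60:.1f}x")                      # 5.4x
```

The ratio breaks down as 2.25x more pixels per frame multiplied by 2.4x more frames per second.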

The relationship between GPU capability and monitor specifications must remain balanced. Pairing an expensive 4K monitor with an entry-level GPU creates frustration and wastes money. Conversely, using a high-end GPU with a modest 1080p 60 Hz display fails to leverage your hardware investment. Optimal builds align GPU capability with monitor specifications to achieve your target resolution and refresh rate in your actual games.

Consider this practical framework: A well-chosen mid-range GPU at 1440p with 144 Hz refresh rate strikes an excellent balance for most users. This combination feels fluid and responsive, accommodates both competitive and single-player games, and remains affordable for most budgets. It represents the "sweet spot" where GPU improvements deliver noticeable frame rate gains without requiring premium-tier spending.

Understanding Frame Rate Requirements and Perception

Frame rate directly impacts perceived smoothness and responsiveness. The threshold between stuttering and smooth performance occurs around 60 frames per second for casual players, but competitive gamers demand 120+ fps for responsive mouse tracking and quick reaction times. 144+ fps has become the competitive standard for esports titles, with some players preferring 240+ fps for maximum responsiveness.

However, frame rate perception diminishes above certain thresholds. The difference between 60 and 120 fps feels dramatic and obvious. The difference between 120 and 240 fps feels incrementally better but less transformative. Understanding your own preferences matters enormously—some users value graphical quality over frame rate, while others prioritize responsiveness above all else. No universal "correct" answer exists; only your preferences matter.
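
One way to see why the gains shrink is to convert frame rate into frame time, the milliseconds the GPU has to produce each frame. The following is a minimal sketch of that conversion, not a model of perceived smoothness.

```python
def frame_time_ms(fps: float) -> float:
    """Milliseconds available to render each frame at a given frame rate."""
    return 1000.0 / fps


for low, high in [(60, 120), (120, 240)]:
    saved = frame_time_ms(low) - frame_time_ms(high)
    print(
        f"{low} -> {high} fps: {frame_time_ms(low):.2f} ms -> "
        f"{frame_time_ms(high):.2f} ms per frame (saves {saved:.2f} ms)"
    )
```

Both steps double the frame rate. Going from 60 to 120 fps cuts each frame's wait by about 8.3 ms, but going from 120 to 240 fps saves only about 4.2 ms.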

Ray Tracing Technology: The Visual Innovation Reshaping Gaming

The Physics of Ray Tracing and Real-Time Rendering

For decades, game developers rendered scenes with rasterization, which projects 3D geometry onto the screen and approximates lighting with techniques such as shadow maps and screen-space reflections. These methods simulate light's behavior through educated guesses and clever visual tricks, producing convincing results that viewers accept as realistic despite being physically inaccurate.

Ray tracing approaches lighting simulation differently. Instead of approximations, ray tracing algorithms actually simulate light rays bouncing through a virtual scene, calculating how light reflects off materials, passes through translucent objects, and creates realistic shadows. This physics-based approach produces dramatically more convincing lighting, especially in reflections, shadows, and indirect illumination.

The computational cost is staggering. A single pixel might require hundreds or thousands of light ray calculations to achieve photorealistic results. Until 2018, ray tracing remained largely confined to offline rendering (creating static images over hours or days). NVIDIA's RTX 20 series changed everything by introducing specialized RT cores, hardware designed specifically to accelerate ray tracing calculations. Suddenly, real-time ray tracing became possible during gameplay, opening entirely new visual possibilities.
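
At its core, each ray calculation is an intersection test that asks whether a ray hits an object and, if so, how far away. The Python sketch below shows a textbook ray-sphere test, plus the rays-per-second arithmetic for a hypothetical budget of 1440p at 60 fps with 100 rays per pixel. Real games test rays against triangles organized into a bounding volume hierarchy, and RT cores accelerate that traversal and intersection work in hardware. The spheres here are only a simplified stand-in.

```python
import math


def ray_sphere_distance(origin, direction, center, radius):
    """Distance along the ray to the nearest hit in front of it, or None.

    Solves |origin + t * direction - center|^2 = radius^2, a quadratic in t.
    """
    oc = [o - c for o, c in zip(origin, center)]
    a = sum(d * d for d in direction)
    b = 2.0 * sum(o * d for o, d in zip(oc, direction))
    c = sum(o * o for o in oc) - radius * radius
    disc = b * b - 4.0 * a * c
    if disc < 0:
        return None  # the ray misses the sphere entirely
    root = math.sqrt(disc)
    for t in ((-b - root) / (2.0 * a), (-b + root) / (2.0 * a)):
        if t > 1e-9:  # ignore hits behind (or at) the ray origin
            return t
    return None


def rays_per_second(width, height, fps, rays_per_pixel):
    """Total rays traced each second for a given per-pixel budget."""
    return width * height * fps * rays_per_pixel


# A camera ray pointing down +z at a unit sphere centered 5 units away.
print(ray_sphere_distance((0, 0, 0), (0, 0, 1), (0, 0, 5), 1.0))  # 4.0

# Hypothetical budget: 1440p at 60 fps with 100 rays per pixel.
print(f"{rays_per_second(2560, 1440, 60, 100) / 1e9:.1f} billion rays/s")  # 22.1 billion rays/s
```

Even this modest hypothetical budget works out to about 22 billion rays per second, each needing intersection tests before any shading happens.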

Ray Tracing in Contemporary Games: Impact and Implementation

Modern games implement ray tracing at varying intensity levels. Some titles apply it selectively to reflective surfaces, creating stunning reflections in puddles, windows, and polished metals. Others extend ray tracing to shadows and global illumination, transforming the entire scene's lighting. The visual impact ranges from subtle (you might not immediately notice) to dramatic and transformative.

Titles like Cyberpunk 2077 with full ray tracing enabled present environments that feel tangibly more realistic than comparable rasterization-only scenes. Reflections in rain-covered surfaces and glass show the world accurately. Shadows consistently align with light sources. Indirect lighting from bouncing light creates natural color bleeding and soft shadows. For visually oriented players, ray tracing represents gaming's most significant graphics advance in decades.

The performance cost remains substantial. Ray tracing typically reduces frame rates by 30-60% depending on intensity settings and implementation. This steep cost explains why AI upscaling technologies emerged alongside ray tracing—they address the performance penalty while maintaining visual quality.

Comparing NVIDIA DLSS, AMD FSR, and Intel Xe Super Sampling

Three major upscaling technologies dominate in 2026, each offering different approaches and trade-offs:

NVIDIA DLSS represents the most mature technology, with years of refinement and integration into hundreds of games. The latest DLSS 4 implementation uses neural networks trained on massive datasets to reconstruct high-resolution images from lower-resolution inputs. Quality improvements from DLSS 3 to 4 proved substantial—visual quality gaps between native 4K and DLSS 4 upscaling from 1440p have nearly vanished. DLSS availability remains NVIDIA's strongest competitive advantage, with exclusive access in numerous major titles.

AMD FidelityFX Super Resolution has matured considerably, offering comparable quality to DLSS in many scenarios while remaining available in open-source form. FSR 3 supports more games than DLSS 3, though availability gaps exist in newer AAA titles where NVIDIA has secured exclusive partnerships. Rather than a neural network, FSR 3 uses a hand-tuned temporal upscaling algorithm, which lets it run on NVIDIA and Intel GPUs as well as AMD's own.

Intel Xe Super Sampling remains the newest technology, available in select titles but lacking the game support of competing solutions. Intel's approach leverages Arc GPU capabilities to deliver competitive visual quality, though limited adoption restricts its current relevance for most gamers.

For GPU purchasing, the practical implication is clear: NVIDIA's DLSS advantage remains significant for ray tracing enthusiasts, while AMD's FSR represents a genuine alternative with comparable quality and better game availability in non-NVIDIA exclusive titles.

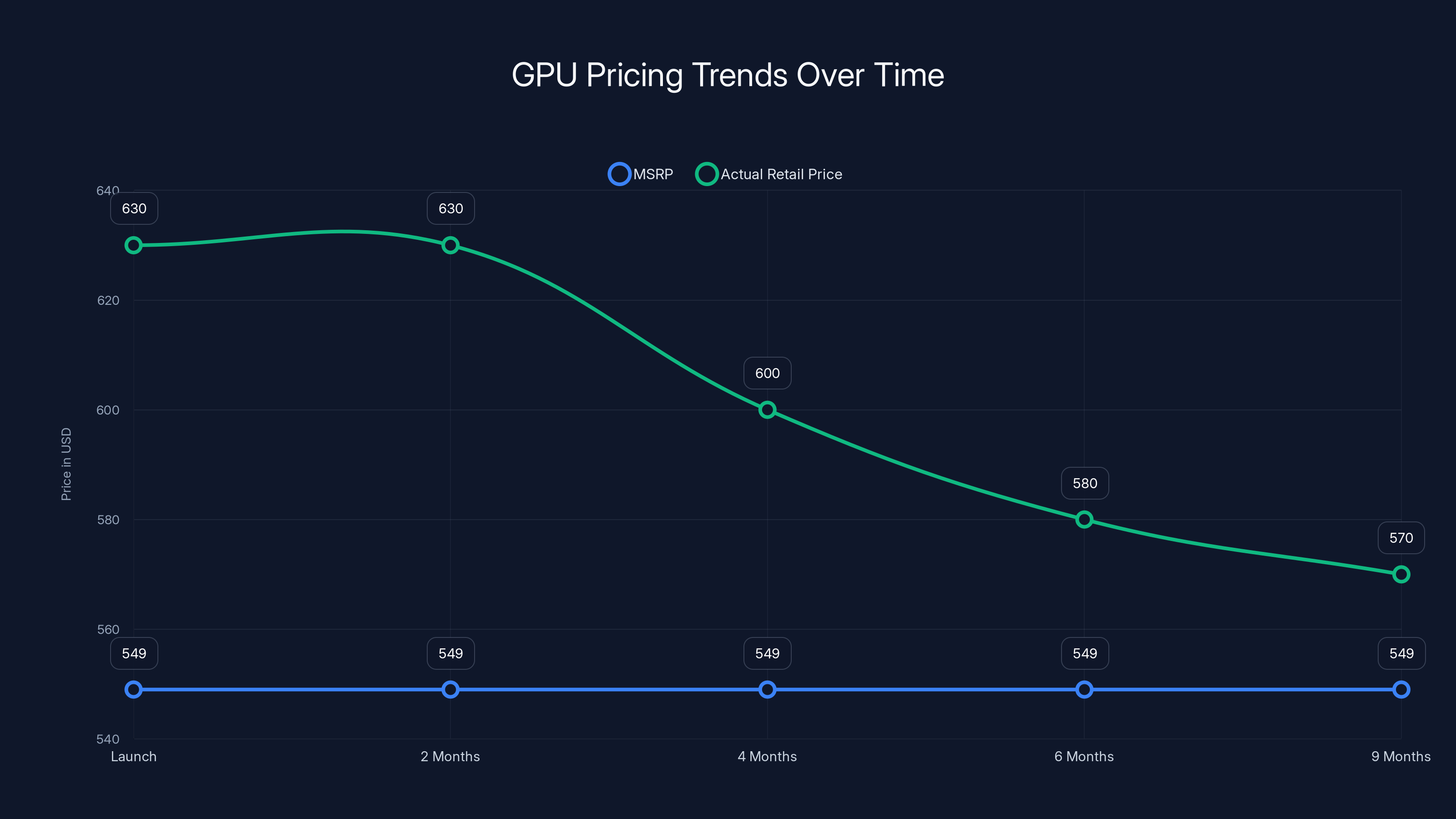

Estimated data shows that GPU prices often start 15-27% above MSRP and gradually decrease over 9 months, aligning closer to MSRP as supply stabilizes.

NVIDIA vs AMD vs Intel: Navigating the Competitive Landscape

NVIDIA's Market Position and RTX 50 Series Reality Check

NVIDIA historically dominated the discrete GPU market through consistent performance advantages and forward-thinking feature development. The introduction of DLSS created a competitive moat—exclusive features that justified premium pricing. For years, buying NVIDIA meant accessing technologies unavailable on competing platforms, making the purchase decision straightforward for serious gamers.

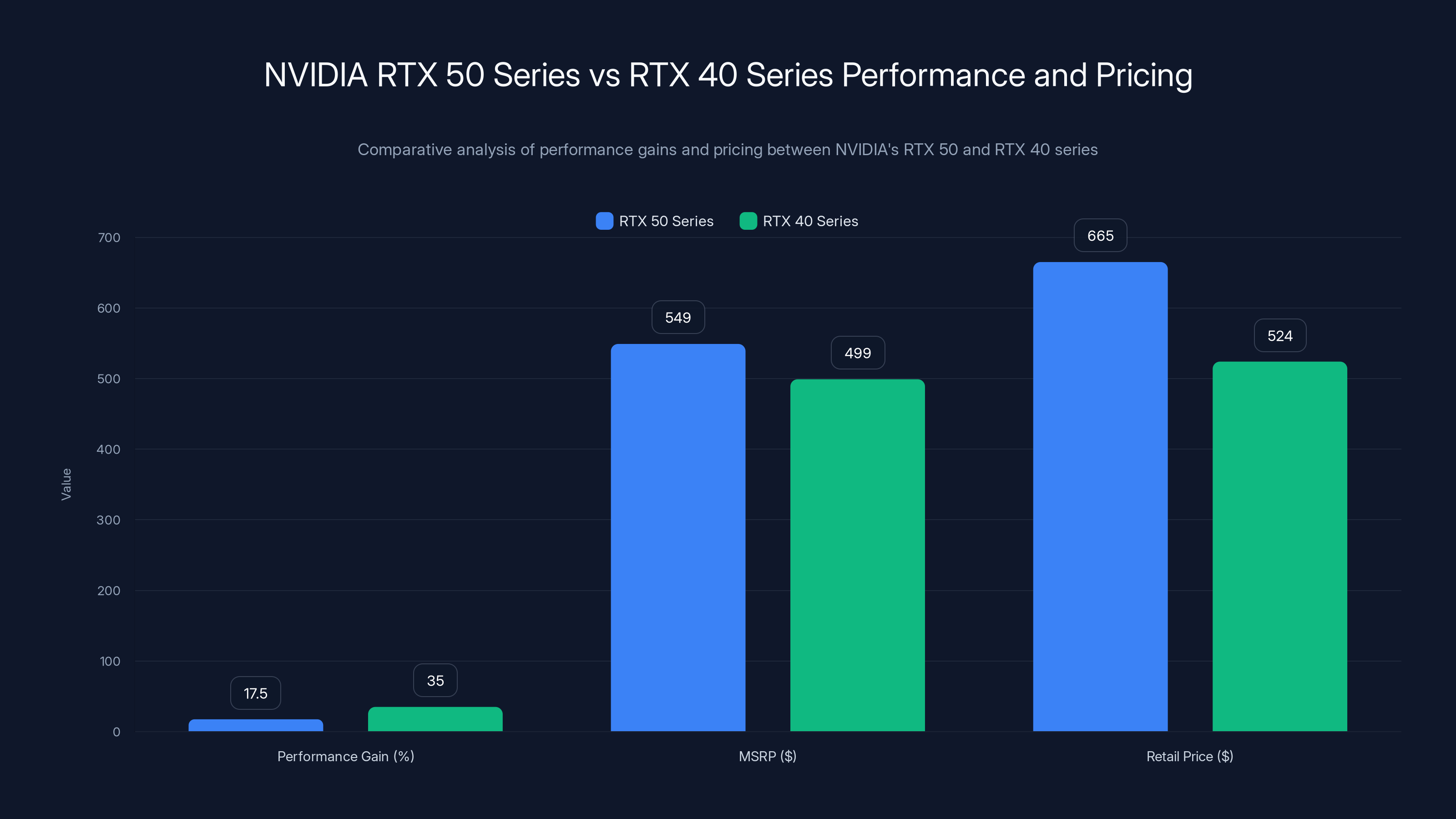

The 2025 RTX 50 series release disrupted this narrative. Rather than delivering the 30-40% performance gains typically expected in a new generation, performance improvements averaged 15-20% against the previous RTX 40 series. While manufacturing cost reductions allowed NVIDIA to adjust pricing, real-world pricing often exceeded MSRP by $80-150 across the product stack. The perceived value proposition weakened considerably.

Specifically, the RTX 5070 launched at a stated

NVIDIA's strength remains its ray tracing performance and DLSS availability, particularly in AAA titles where NVIDIA has secured preferential treatment. The GeForce RTX 5090 and 5080 lack direct AMD competition, leaving NVIDIA dominant in the ultra-premium segment. However, at entry and mid-range price points, NVIDIA's advantage eroded significantly in 2025.

AMD's Resurgence with RDNA 4 Architecture

AMD enters 2026 with genuine competitiveness. The RDNA 4 architecture, with cards like the Radeon RX 8070 XT and RX 8050 XT, delivers impressive rasterization performance exceeding comparable NVIDIA cards while providing more VRAM as standard. An AMD card often delivers equal or superior performance to its NVIDIA counterpart while costing $50-100 less and including more memory.

AMD's FidelityFX Super Resolution has matured to compete genuinely with DLSS in visual quality. While DLSS maintains slight advantages in cutting-edge games, the gap has narrowed substantially. Because FSR is open source, developers can implement it independently without a vendor partnership, improving adoption in indie and smaller studio titles.

However, AMD faces challenges in premium ray tracing performance, particularly in titles optimized for NVIDIA hardware. In games that support both upscalers, DLSS on an NVIDIA card often shows visible image-quality advantages over FSR 3 on an AMD card. Additionally, professional software support remains stronger on NVIDIA, limiting appeal to creative professionals.

For most gamers prioritizing value—particularly those playing older titles, competitive shooters, and games with strong FSR support—AMD represents an increasingly compelling choice. The value proposition particularly shines at the $350-500 price range where AMD's VRAM and rasterization advantages become most pronounced.

Intel Arc: The Promising Wildcard

Intel's discrete GPU initiative remains relatively new, with second-generation Arc Battlemage (B-series) cards, successors to the first-generation Alchemist (A-series), gaining traction throughout 2025. Intel's hardware delivers competitive rasterization performance with significant VRAM allocations (typically 12GB standard), targeting value-conscious builders. Pricing proves particularly aggressive, with Arc A770 models frequently appearing at $250-300, substantially undercutting NVIDIA and AMD at similar performance levels.

Intel's weakness centers on driver maturity and game optimization. The Arc GPU architecture differs substantially from NVIDIA and AMD designs, requiring dedicated driver and software development for optimal performance. Early Arc releases suffered from inconsistent performance, driver issues, and limited game support. While improvements have been substantial, the perception of unreliability persists.

Xe Super Sampling technology works adequately but lacks the maturity and game support of DLSS or FSR. Developers show little incentive to optimize specifically for Intel Arc when NVIDIA and AMD install bases remain vastly larger. For buyers comfortable managing driver updates and accepting potential compatibility issues for maximum value, Intel presents an intriguing option. For those prioritizing stability and broad game support, NVIDIA or AMD remain safer choices.

Memory Capacity and GPU VRAM Considerations

Why VRAM Capacity Matters More Than Ever

Memory capacity—the total amount of VRAM on the card—represents one of 2026's most critical GPU specifications. Five years ago, 6GB seemed adequate for gaming. Today, many games refuse to run properly below 8GB, and 10GB represents a practical minimum for serious gaming in 2026.

The shift toward more demanding graphical standards drives VRAM requirements upward. Detailed textures, high-resolution normal maps, complex shader systems, and AI-upscaled content all consume video memory. Games increasingly implement settings requiring 12GB+ to enable maximum quality without exhausting VRAM, which would force slower data transfers from system memory.
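
A rough sense of scale helps here. The sketch below estimates the VRAM footprint of a single 4096x4096 texture, both uncompressed (4 bytes per texel, RGBA8) and with BC7 block compression (1 byte per texel), each including a full mipmap chain. The 250-texture scene is hypothetical. Real engines also stream textures in and out, use other formats, and allocate render targets, so treat this as an illustration rather than a memory budget.

```python
def texture_mib(width: int, height: int, bytes_per_texel: float, mipmaps: bool = True) -> float:
    """Approximate VRAM used by one texture, in MiB.

    A full mip chain adds 1/4 + 1/16 + ... of the base level, which
    approaches one third, so the total is roughly base * 4/3.
    """
    base = width * height * bytes_per_texel
    total = base * 4 / 3 if mipmaps else base
    return total / (1024 ** 2)


print(f"RGBA8: {texture_mib(4096, 4096, 4):.1f} MiB")  # RGBA8: 85.3 MiB
print(f"BC7:   {texture_mib(4096, 4096, 1):.1f} MiB")  # BC7:   21.3 MiB

# A hypothetical scene with 250 unique BC7 textures of this size:
scene_gib = 250 * texture_mib(4096, 4096, 1) / 1024
print(f"250 BC7 textures: {scene_gib:.1f} GiB")  # 250 BC7 textures: 5.2 GiB
```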

AMD understands this reality, equipping RX cards with 12-16GB standard across mid-range offerings. NVIDIA still ships RTX cards with 8GB at entry and mid-range price points, forcing buyers to spend extra for 12GB variants. This specification gap gives AMD a distinct advantage for buyers planning to keep hardware for 5+ years.

VRAM Requirements by Gaming Segment

Competitive esports titles (Valorant, CS2, Overwatch 2) operate with minimal VRAM demands—4-6GB covers extreme cases. Resolution doesn't substantially increase VRAM needs since texture counts remain consistent. An 8GB GPU covers these titles comfortably across any resolution.

Modern single-player games with ray tracing demand 10-12GB minimum at 1440p and high settings. At 4K with ray tracing maximized, many 2024-2025 releases prefer 12-16GB for optimal performance. Stalker 2, Dragon Age: The Veilguard, and similar AAA titles show stuttering with 8GB cards when running maximum quality settings, as insufficient VRAM forces constant data transfers between GPU and system RAM—operations significantly slower than on-GPU access.
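
To illustrate why spilling out of VRAM hurts, this sketch compares how long it takes to move 1 GB of data from on-card memory versus across the PCIe bus. Both bandwidth figures are assumptions. The 504 GB/s figure matches a 192-bit, 21 Gbps GDDR6X card, and about 31.5 GB/s is the theoretical one-way rate of a PCIe 4.0 x16 link. The 1 GB spill size is hypothetical.

```python
def transfer_ms(gigabytes: float, bandwidth_gbs: float) -> float:
    """Milliseconds to move a block of data at a given bandwidth."""
    return gigabytes / bandwidth_gbs * 1000.0


VRAM_GBS = 504.0               # assumed: 192-bit bus, 21 Gbps GDDR6X
PCIE4_X16_GBS = 31.5           # assumed: theoretical one-way PCIe 4.0 x16 rate
FRAME_BUDGET_MS = 1000.0 / 60  # time available per frame at 60 fps

spill_gb = 1.0  # hypothetical amount of data that no longer fits in VRAM
for label, bw in [("On-card VRAM", VRAM_GBS), ("PCIe 4.0 x16", PCIE4_X16_GBS)]:
    ms = transfer_ms(spill_gb, bw)
    print(f"{label}: {ms:.1f} ms ({ms / FRAME_BUDGET_MS:.1f} frames at 60 fps)")
```

At 60 fps each frame has about 16.7 ms. A transfer that takes roughly 32 ms over PCIe spans nearly two frames, which is how insufficient VRAM turns into visible stutter.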

Content creation workloads prove most demanding. Video editing, 3D rendering, and AI operations benefit from abundant VRAM. A content creator building a workstation should target 16-24GB minimum, with professional workloads sometimes preferring 48GB or more on specialized enterprise cards.

Understanding Memory Types: GDDR6X, GDDR6, and HBM

GPU memory comes in multiple varieties, each offering different bandwidth and power characteristics. GDDR6X remains standard in high-end gaming GPUs, offering excellent bandwidth (

GDDR6 appears in budget and entry-level cards, offering lower bandwidth but also lower cost and power consumption. A card with GDDR6 might show noticeable performance differences compared to GDDR6X in memory-intensive games, particularly at high resolutions.

HBM (High Bandwidth Memory) represents professional-grade memory found in workstation and AI accelerator cards, offering exceptional bandwidth (

For gaming and general-purpose GPUs, GDDR-family memory (GDDR6, GDDR6X, and the newer GDDR7 used on NVIDIA's RTX 50 series) remains the standard choice for balancing performance and cost. Memory type matters less than the actual bandwidth figure, so compare GB/s specifications rather than memory type names when evaluating different cards.

The RX 8070 XT offers better value with more VRAM and competitive performance at a lower price than the RTX 5070. (Estimated data)

Pricing Strategies and Real-World Market Dynamics

Understanding MSRP Versus Actual Retail Pricing

Manufacturer Suggested Retail Price (MSRP) represents a theoretical starting point disconnected from reality. NVIDIA's RTX 5070 carries a

Why does this gap exist? Demand consistently exceeds supply at MSRP, allowing AIB partners to charge premium prices. Retailers face no incentive to sell below market rates when customers willingly pay more. The gap typically shrinks after 3-6 months as supply increases, making timing an important purchasing consideration. Buying immediately after a generation launch means paying premium pricing, while waiting creates opportunities for modest discounts.

Recent historical patterns show 15-25% price premiums over MSRP persisting for 2-3 months, then declining toward 5-10% over the following 3 months. Patient buyers can save hundreds by waiting, though early adopters gain access to the latest features and to games optimized for the newest architectures.
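
The pattern described above can be turned into a rough estimator. This sketch uses only the ranges stated in this guide: 15-25% for the first three months, then 5-10% by month six. The straight-line decline between months three and six and the $1,000 MSRP are hypothetical assumptions, not market data.

```python
def premium_range(month: float) -> tuple[float, float]:
    """Estimated (low, high) premium over MSRP, as fractions.

    Months 0-3: 15-25%, the launch premium described in this guide.
    Months 3-6: a straight-line decline to 5-10% (the linear shape is an
    assumption). Later months are outside this rough model.
    """
    if not 0 <= month <= 6:
        raise ValueError("this rough model only covers months 0-6")
    if month <= 3:
        return 0.15, 0.25
    progress = (month - 3) / 3  # 0.0 at month 3, 1.0 at month 6
    low = 0.15 + (0.05 - 0.15) * progress
    high = 0.25 + (0.10 - 0.25) * progress
    return low, high


def expected_price(msrp: float, month: float) -> tuple[float, float]:
    """Estimated (low, high) street price for a card with the given MSRP."""
    low, high = premium_range(month)
    return msrp * (1 + low), msrp * (1 + high)


MSRP = 1000.0  # hypothetical MSRP in USD
for month in (0, 3, 4.5, 6):
    low, high = expected_price(MSRP, month)
    print(f"Month {month}: ${low:,.0f} to ${high:,.0f}")
```

Under these assumptions, waiting from launch to month six trims the estimated price of a $1,000-MSRP card by roughly $100-150.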

Seasonal Price Variations and Historical Trends

GPU pricing shows seasonal patterns tied to retailer inventory cycles and gaming industry events. Summer typically brings higher prices as the gaming season begins, with prices moderating in autumn and winter. Holiday periods (November-December) sometimes feature bundled promotions reducing effective prices despite stated prices remaining high.

Historically, price drops of 20-30% occur 6-9 months after a generation launches, as manufacturing scales and new announcements create inventory-clearing pressure. A GPU launching at

Inventory management by retailers creates opportunities. When new generations release, older inventory must clear. A previous-generation card's price might drop $100-200 overnight as sellers compete to move stock. Savvy buyers monitor price history and grab sudden drops indicating inventory management events.

Evaluating Value Across Price Tiers

Price-to-performance ratios differ substantially across the product stack. Budget cards (

This diminishing returns curve means budget builders maximize value by selecting the highest performance card possible within their budget constraint. Stretching from
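
The diminishing-returns idea can be made concrete by comparing frames per second per dollar, both on average and at the margin. Every tier, price, and frame rate below is hypothetical and chosen only to illustrate the calculation. To use it for a real purchase, substitute benchmark results and current prices for the cards you are actually considering.

```python
# Hypothetical tiers: (label, price in USD, average fps in some benchmark suite).
CARDS = [
    ("Budget", 300, 60),
    ("Mid-range", 550, 95),
    ("High-end", 1000, 130),
]


def fps_per_100_dollars(price: float, fps: float) -> float:
    """Average value: frames per second bought per $100."""
    return fps / price * 100


def marginal_fps_per_100_dollars(cheaper, pricier) -> float:
    """Extra fps gained per extra $100 when stepping up a tier."""
    _, price_a, fps_a = cheaper
    _, price_b, fps_b = pricier
    return (fps_b - fps_a) / (price_b - price_a) * 100


for label, price, fps in CARDS:
    print(f"{label:<10} {fps_per_100_dollars(price, fps):.1f} fps per $100")

for cheaper, pricier in zip(CARDS, CARDS[1:]):
    step = marginal_fps_per_100_dollars(cheaper, pricier)
    print(f"{cheaper[0]} -> {pricier[0]}: +{step:.1f} fps per extra $100")
```

The marginal figure is the one that exposes diminishing returns. In this made-up example, each extra $100 buys about 14 fps when moving from budget to mid-range, but under 8 fps when moving from mid-range to high-end.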

New GPU Releases in 2026: What's Changed

NVIDIA's RTX 50 Series: Promise and Reality

NVIDIA's RTX 50 series (RTX 5090, 5080, 5070, 5060) released throughout early 2025, completing the lineup into 2026. The architecture promised improvements in ray tracing and AI capabilities, but measured performance gains disappointed many enthusiasts expecting 30-40% jumps between generations.

The RTX 5090 maintains dominance in pure performance but carries a nearly

NVIDIA's primary advantage remains ray tracing in exclusive titles and comprehensive DLSS support. For buyers prioritizing access to latest features and cutting-edge performance regardless of cost, NVIDIA remains the default choice. For value-conscious buyers, the 2025 lineup offered fewer compelling reasons to choose NVIDIA over AMD or Intel.

AMD's RDNA 4 Architecture and Competitive Response

AMD's RDNA 4 architecture, embodied in RX 8000 series cards released throughout 2025, represents a genuine competitive threat to NVIDIA's value proposition. The RX 8070 XT delivers comparable performance to the RTX 5070 while including 12GB standard VRAM versus 8GB, often at

Rasterization performance often exceeds NVIDIA's at equivalent price points, with benchmarks showing 5-15% advantages in non-ray-traced games. Ray tracing remains AMD's weakness, but FidelityFX Super Resolution has matured sufficiently that AMD's ray tracing disadvantage matters less than it did previously.

AMD's strategy prioritizes value and VRAM, acknowledging that NVIDIA's strengths lie in ray tracing performance and feature exclusivity. Rather than compete directly on those fronts, AMD offers superior rasterization, more memory, and lower prices—a compelling alternative for gamers playing non-exclusive titles or prioritizing frame rates over ray tracing effects.

Intel Arc's Strategic Positioning

Intel continues aggressive pricing on its Arc Battlemage cards, offering 12GB standard memory at

Intel's weakness remains game optimization and driver maturity. Buying Intel Arc requires comfort with potential driver issues and accepting that some games may show inconsistent performance compared to NVIDIA or AMD equivalents. For buyers accepting these limitations, Intel provides unmatched value.

The RTX 50 Series shows reduced performance gains (15-20%) compared to typical expectations (30-40%) and higher retail prices (

Used and Refurbished GPU Market Considerations

Evaluating Used GPU Purchases

The used GPU market offers opportunities for budget builders but carries real risks. Mining-used GPUs represent a particular concern—crypto miners often run cards at high temperatures for extended periods, degrading components and reducing longevity. While a used card might function perfectly immediately after purchase, mining damage can manifest as sudden failures or gradual performance degradation months later.

Assessing mining history proves nearly impossible without manufacturer documentation. Many used GPUs lack original packaging or receipts, making verification difficult. A used RTX 3080 selling for $300 might provide years of reliable service—or fail catastrophically after three months.

Refurbished GPUs from authorized retailers offer superior protection. These cards undergo testing, repair, and come with manufacturer warranties (typically 3-6 months). The price premium over mystery used cards ($50-150 typically) proves worthwhile for the peace of mind and warranty coverage.

When Used Purchases Make Sense

Used GPUs make financial sense in specific scenarios: When needing temporary hardware for a short-term project, when budget constraints are severe and risk tolerance is high, or when purchasing from trusted sources (friend upgrades, known retailers with return policies).

A more conservative strategy involves waiting for sale prices on new cards rather than purchasing used. A used RTX 4080 at

For casual gamers, the used market offers access to previous-generation high-end cards at mid-range prices—a genuinely compelling value proposition if you can verify condition and mining history.

Gaming Monitor Selection and GPU-Display Harmony

Panel Technology and Refresh Rate Synchronization

GPU selection must align with monitor specifications. A powerful GPU paired with a 60 Hz 1080p monitor represents wasted potential. Conversely, an RTX 5060 paired with a 4K 144 Hz monitor will frustrate with stuttering and low frame rates.

Modern gaming monitors standardize on IPS or VA panel technologies with 144 Hz+ refresh rates. IPS panels offer superior color accuracy and viewing angles, ideal for hybrid gaming/creative work. VA panels provide superior contrast but narrower viewing angles. Neither significantly affects gaming performance—choosing between them depends on use case and budget.

Adaptive sync technologies (NVIDIA G-Sync, AMD FreeSync) reduce visual tearing when frame rates don't align perfectly with monitor refresh rates. These technologies have become nearly mandatory for smooth gaming, particularly in variable performance scenarios. Check compatibility before buying: NVIDIA cards support both G-Sync and FreeSync displays, while AMD and Intel cards work with FreeSync and other VESA Adaptive-Sync displays but not with monitors that rely solely on a dedicated G-Sync module.

Resolution and GPU-Monitor Matching Framework

Optimal GPU-monitor pairing follows this framework:

1080p 144 Hz pairs with RTX 5060, RX 8050 XT, or budget equivalents. These GPUs deliver 100+ fps in modern games and can saturate the monitor's refresh rate in lighter titles.

1440p 144 Hz pairs with RTX 5070, RX 8070 XT, RTX 4070 Super—the sweet spot balancing performance, cost, and smoothness. These cards deliver 80-120 fps in demanding titles, 150+ in competitive games.

4K 60 Hz requires an RTX 5080, RTX 4090, or RX 8070 XT with AI upscaling enabled. Even on these cards, demanding titles at 4K typically need AI upscaling to reach acceptable frame rates.

4K 144 Hz demands RTX 5090 or equivalent high-end hardware, typically with aggressive AI upscaling. This combination remains expensive and primarily relevant for enthusiasts with unlimited budgets.

Mismatches in either direction create problems: underpowered GPUs cause frustrating stuttering; overpowered GPUs waste investment potential. Finding the right balance matters far more than selecting the most expensive option available.
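
If you want to encode the framework above in a build-planning script, a plain lookup table is enough. This is a hypothetical sketch that transcribes the pairings listed in this guide and nothing more. It does not account for game choice, upscaling settings, or current prices, and the function name is illustrative.

```python
# Display target -> GPUs this guide pairs with it (transcribed, not exhaustive).
# The guide assumes AI upscaling for the 4K targets.
PAIRINGS = {
    ("1080p", 144): ["RTX 5060", "RX 8050 XT"],
    ("1440p", 144): ["RTX 5070", "RX 8070 XT", "RTX 4070 Super"],
    ("4K", 60): ["RTX 5080", "RTX 4090", "RX 8070 XT"],
    ("4K", 144): ["RTX 5090"],
}


def suggest_gpus(resolution: str, refresh_hz: int) -> list[str]:
    """Return the GPUs listed for a display target, or raise if none is listed."""
    try:
        return PAIRINGS[(resolution, refresh_hz)]
    except KeyError:
        raise ValueError(
            f"no pairing listed for {resolution} at {refresh_hz} Hz"
        ) from None


print(suggest_gpus("1440p", 144))
```

Raising an error for unlisted targets, rather than guessing, keeps the script from implying recommendations the framework never made.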

The RTX 5090 leads in performance but at a high cost, while the RX 8070 XT offers competitive performance at a lower price. Estimated data based on typical market trends.

Professional Use Cases: Content Creation and AI Workloads

Video Editing, 3D Rendering, and Encoding Acceleration

Professional workflows depend heavily on GPU acceleration. Video editors using Adobe Premiere Pro benefit from NVIDIA CUDA acceleration, reducing timeline scrubbing latency and export times substantially. 3D rendering platforms like Blender and Octane Render leverage CUDA, HIP (AMD), or OptiX acceleration to reduce render times from hours to minutes.

The GPU significantly impacts productivity for creators. An RTX 5070 might render a complex 3D scene in 10 minutes compared to 60 minutes on CPU alone, a 6x improvement that adds up to hours per week for active professionals. Over a year, this productivity boost justifies substantial GPU investment through direct time savings.
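
The productivity argument is simple arithmetic. Using the 60-minute CPU and 10-minute GPU render times from the example above, this sketch estimates the hours saved. The workload of 10 renders per week over 48 working weeks per year is a hypothetical assumption.

```python
def hours_saved_per_week(cpu_minutes: float, gpu_minutes: float, renders_per_week: int) -> float:
    """Hours saved each week by rendering on the GPU instead of the CPU."""
    return (cpu_minutes - gpu_minutes) * renders_per_week / 60


weekly = hours_saved_per_week(cpu_minutes=60, gpu_minutes=10, renders_per_week=10)
print(f"{weekly:.1f} hours saved per week")       # 8.3 hours saved per week
print(f"{weekly * 48:.0f} hours saved per year")  # 400 hours saved per year
```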

Specialized encoding (converting video between formats) accelerates dramatically with GPU assist. Exporting a 1-hour video project from 4K to 1080p might take 30 minutes on CPU alone, but 3-5 minutes with GPU acceleration. For video professionals working with multiple projects daily, this matters enormously.

NVIDIA's CUDA platform dominates professional software, providing significantly better compatibility than AMD HIP or Intel's limited support. Video editors, 3D artists, and motion graphics professionals should heavily weight CUDA availability when selecting GPUs, often justifying NVIDIA's premium despite AMD's superior gaming value.

Machine Learning and AI Model Training

Artificial intelligence workloads represent the fastest-growing GPU use case. Training machine learning models on GPU hardware is often an order of magnitude or more faster than on CPUs. A model requiring 100 hours on a CPU might train in 5-10 hours on a capable GPU, enabling researchers and developers to iterate far faster.

NVIDIA's CUDA ecosystem dominates AI development, with each GPU architecture mapped to a specific CUDA Compute Capability version that determines which features and framework builds it supports. The RTX 5090 offers roughly 105 TFLOPS of peak FP32 compute, enabling massive parallel processing for neural network training. AMD Radeon and Intel Arc cards lag significantly in mature AI framework support despite reasonable hardware specifications.
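Peak FP32 figures can be sanity-checked from two published specs. By the usual convention, each CUDA core retires one fused multiply-add (counted as two floating-point operations) per clock, so peak throughput is cores × 2 × boost clock. The sketch below assumes NVIDIA's listed RTX 5090 figures of 21,760 CUDA cores and a 2.41 GHz boost clock. The result is a theoretical ceiling that real training workloads rarely sustain.

```python
def peak_fp32_tflops(cuda_cores: int, boost_clock_ghz: float) -> float:
    """Theoretical peak FP32 throughput in TFLOPS.

    Assumes one fused multiply-add (2 FLOPs) per core per clock, the usual
    convention for quoting shader throughput.
    """
    flops_per_second = cuda_cores * 2 * boost_clock_ghz * 1e9
    return flops_per_second / 1e12


if __name__ == "__main__":
    # Assumed RTX 5090 specs: 21,760 CUDA cores, 2.41 GHz boost clock.
    print(f"RTX 5090 peak FP32: {peak_fp32_tflops(21_760, 2.41):.1f} TFLOPS")
```

The much larger "AI TOPS" figures in marketing material describe low-precision tensor-core throughput, not FP32, so the two numbers aren't directly comparable.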

For AI enthusiasts and researchers, NVIDIA remains the default choice despite higher costs. The software ecosystem advantage often outweighs hardware cost considerations. For casual AI experimenters, AMD or Intel provide adequate capability at lower costs, though expect more configuration challenges.

Building a Complete System: Complementary Components

Power Supply Sizing for Modern GPUs

High-end GPUs demand substantial power. The RTX 5090 is rated for up to 575W of total graphics power, and NVIDIA recommends a quality 1000W PSU at minimum for stable operation. The RTX 5080 is rated at 360W, with an 850W PSU recommended. Mid-range cards like the RTX 5070 draw around 250W, which a 650-750W PSU supports comfortably.

Calculate PSU requirements by adding the CPU and GPU TDP (Thermal Design Power) figures, then adding 20-30% headroom for transient spikes and the rest of the system. In other words, PSU wattage ≈ (CPU TDP + GPU TDP) × 1.2 to 1.3.
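The rule above translates directly into a small calculator. This sketch assumes a 25% headroom default (the middle of the 20-30% range) and rounds up to a hypothetical list of common retail PSU sizes. Because it only counts CPU and GPU TDP, it can land below a GPU vendor's official recommendation, which also budgets for the motherboard, drives, fans, and transient spikes. Treat the result as a floor, and go with the vendor figure when it is higher.

```python
import math

# Assumed list of common retail PSU capacities in watts; adjust to what's on sale.
COMMON_PSU_SIZES_W = [450, 550, 650, 750, 850, 1000, 1200, 1600]


def recommended_psu_watts(cpu_tdp_w: float, gpu_tdp_w: float, headroom: float = 0.25) -> int:
    """Smallest common PSU size covering (CPU TDP + GPU TDP) * (1 + headroom)."""
    if not 0.0 <= headroom <= 1.0:
        raise ValueError("headroom is a fraction, e.g. 0.25 for 25%")
    target = (cpu_tdp_w + gpu_tdp_w) * (1.0 + headroom)
    for size in COMMON_PSU_SIZES_W:
        if size >= target:
            return size
    # Beyond the listed sizes: round up to the next 100 W.
    return int(math.ceil(target / 100.0) * 100)


if __name__ == "__main__":
    # A 125 W CPU is an illustrative assumption; GPU figures are from the text above.
    for name, gpu_w in [("RTX 5090", 575), ("RTX 5070", 250)]:
        print(f"{name}: {recommended_psu_watts(125, gpu_w)} W")
```

With a 125 W CPU, the RTX 5090 case works out to 875 W and rounds up to a 1000 W unit.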

Cheap, poorly built power supplies can fail catastrophically, potentially damaging expensive GPU hardware through voltage instability. Invest in 80 Plus Gold or Platinum certified PSUs from reputable manufacturers (Corsair, EVGA, Seasonic, Super Flower). The extra $50-100 helps prevent devastating hardware failures.

Cooling Considerations and GPU Temperature Management

Modern GPUs operate safely up to 85-90°C under sustained load, though performance throttles above that threshold. Adequate case airflow and GPU cooling prevent thermal throttling that reduces frame rates and potentially shortens component lifespan.

GPU cooler quality varies dramatically between card manufacturers. NVIDIA's Founders Edition designs and AMD's reference designs often prove adequate, though AIB partners (ASUS, MSI, Gigabyte) frequently offer superior cooling solutions. A high-quality AIB card might run 5-10°C cooler than a reference design, improving stability and longevity.

Liquid-cooled cards offer only marginal advantages over quality air coolers except at extreme overclocking levels. For standard gaming, air-cooled cards suffice for most users. Ensure adequate case ventilation (at least one front intake fan and one rear exhaust fan) to prevent hot air recirculation.

Motherboard and CPU Compatibility Verification

GPU compatibility with motherboards remains straightforward; virtually all discrete GPUs fit any motherboard with a PCIe x16 slot. However, verify PCIe generation compatibility: PCIe 5.0 GPUs work in PCIe 4.0 and 3.0 slots (at reduced bandwidth), while older GPUs gain nothing from a newer slot.

CPU-GPU balance matters more than raw compatibility. A high-end RTX 5090 paired with an entry-level CPU creates bottlenecking where the CPU fails to feed the GPU sufficient data, reducing GPU utilization and leaving performance on the table. Balance CPU budget with GPU budget—roughly equal investment often proves optimal, though this varies by use case.

Timing Your Purchase: Strategic Considerations

Generational Release Cycles and Optimal Purchase Windows

GPU generations release on roughly 2-3 year cycles. NVIDIA launched the RTX 40 series in October 2022 and the RTX 50 series in January 2025, suggesting the RTX 60 series will arrive around late 2026 or early 2027. Knowing generational timelines helps you decide whether to buy now or wait.

Buying immediately before a generation launch (the month before release) represents poor timing—you'll pay peak prices for hardware about to become obsolete relative to newer options. Buying 2-3 months after launch provides access to new architecture advantages while avoiding extreme pricing premiums as supply stabilizes.

Older generations become bargains 6-12 months post-launch as previous inventory clears. An RTX 4070 Super cost

Price Prediction and Market Forecasting

Historical price patterns suggest:

- Months 1-3 post-launch: MSRP premiums of 15-25%

- Months 3-6: Premiums decline to 10-15%

- Months 6-9: Stabilization near MSRP or 5-10% premium

- Months 9-12: Often 5-10% discounts as new generation rumors build

- After 12 months: Previous generation prices collapse 30-50% below launch
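The pattern above can be encoded as a small lookup that turns an MSRP and the months since launch into an expected street-price range. This is a sketch under stated assumptions: the band edges and percentages come straight from the list, a boundary month (such as month 3) is assigned to the later band, "near MSRP" is read as a 0% premium, and the post-12-month drop is measured against MSRP. Like the historical patterns it encodes, its output is not a guarantee.

```python
import math
from typing import Tuple

# (months-since-launch upper bound (exclusive), low %, high %) relative to MSRP,
# transcribed from the historical pattern list above.
PRICE_BANDS = [
    (3, 15, 25),           # months 1-3: 15-25% premium
    (6, 10, 15),           # months 3-6: 10-15% premium
    (9, 0, 10),            # months 6-9: near MSRP up to a 10% premium
    (12, -10, -5),         # months 9-12: 5-10% discount
    (math.inf, -50, -30),  # after 12 months: 30-50% below (assumed: below MSRP)
]


def expected_price_range(msrp: float, months_since_launch: float) -> Tuple[float, float]:
    """Return the (low, high) expected street price for a card with the given MSRP."""
    if months_since_launch < 0:
        raise ValueError("months_since_launch must be non-negative")
    for upper, low_pct, high_pct in PRICE_BANDS:
        if months_since_launch < upper:
            return msrp * (100 + low_pct) / 100, msrp * (100 + high_pct) / 100
    raise AssertionError("unreachable: the last band is unbounded")


if __name__ == "__main__":
    # Hypothetical $500 MSRP card.
    for month in (1, 4, 7, 10, 13):
        low, high = expected_price_range(500, month)
        print(f"month {month:>2}: ${low:.0f}-${high:.0f}")
```

Working in integer percentages keeps the arithmetic exact for round MSRPs; the bands themselves are the part worth revisiting as each generation's real pricing comes in.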

These patterns aren't guaranteed, but they reflect historical trends across multiple generations. Building a purchase plan around them can save hundreds of dollars, though it requires patience and accepting temporary hardware limitations.

When to Buy Immediately Despite Higher Prices

Immediate purchase makes sense in specific situations: when current hardware is failing and replacement is critical, when new features (such as DLSS 4 Multi Frame Generation, exclusive to the RTX 50 series) provide a substantial advantage for your specific use case, when supply is severely limited and availability is uncertain, or when you've already delayed beyond a reasonable waiting period.

Perfectionism about purchase timing often leads to decision paralysis. Analyzing pricing variations endlessly while missing gaming experiences represents poor optimization. Sometimes buying now, enjoying the hardware for 2-3 years, then upgrading beats perpetually waiting for better timing.

Understanding Benchmark Data and Performance Metrics

Interpreting Synthetic Benchmarks

Synthetic benchmarks (3DMark, GFXBench, SPECviewperf) measure specific hardware capabilities under controlled conditions, providing standardized comparison points. These benchmarks prove valuable for technical comparison but often diverge from real-world gaming performance.

A GPU scoring 20% higher on synthetic benchmarks might show only 10-15% real-world gaming improvements. Benchmark scores reflect peak theoretical performance, while actual games rarely achieve perfect utilization due to driver overhead, CPU bottlenecking, and other real-world limitations.

Use synthetic benchmarks for broad tier comparisons (RTX 5070 belongs roughly here, RTX 5080 belongs roughly there) rather than assuming exact performance correlations. They're useful for rapidly assessing relative performance across dozens of GPUs, but actual gaming benchmarks matter more for purchase decisions.

Real-World Gaming Benchmarks and Frame Rate Testing

Actual game benchmarks reveal real-world performance—the metric that ultimately matters. Modern games like Cyberpunk 2077, Alan Wake 2, and Stalker 2 provide reliable performance benchmarks revealing how specific GPUs handle demanding workloads with specific settings.

Frame rate testing should use your actual monitor resolution and refresh rate. Testing a card's ability to deliver 240 fps doesn't matter if your monitor maxes at 144 Hz. Similarly, testing ray tracing off provides irrelevant data if you intend to use ray tracing. Performance testing should replicate your actual usage patterns.

Thorough benchmarking involves testing 4-6 games spanning competitive (Valorant, CS2), mid-tier (Hogwarts Legacy, Baldur's Gate 3), and demanding (Cyberpunk 2077, Stalker 2) categories. This diversity provides realistic expectations across varied use cases. Relying on single-game benchmarks risks making decisions based on unrepresentative data.
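Once you have results from several games, you need a single summary figure. A common choice among hardware reviewers (not something this guide prescribes) is the geometric mean, which keeps one very high-FPS esports title from dominating the average. The sketch below also caps each result at your monitor's refresh rate, following the earlier point that frames beyond it don't matter for your setup. The FPS values in the usage block are hypothetical placeholders.

```python
from statistics import geometric_mean
from typing import Dict


def summarize_fps(fps_by_game: Dict[str, float], refresh_hz: float) -> float:
    """Geometric mean of per-game average FPS, each capped at the monitor refresh rate."""
    if not fps_by_game:
        raise ValueError("need at least one benchmark result")
    if any(fps <= 0 for fps in fps_by_game.values()):
        raise ValueError("FPS values must be positive")
    capped = [min(fps, refresh_hz) for fps in fps_by_game.values()]
    return geometric_mean(capped)


if __name__ == "__main__":
    # Hypothetical results on a 144 Hz monitor, spanning the three categories.
    results = {
        "Valorant": 400.0,
        "CS2": 310.0,
        "Hogwarts Legacy": 95.0,
        "Baldur's Gate 3": 110.0,
        "Cyberpunk 2077": 62.0,
        "Stalker 2": 58.0,
    }
    print(f"Capped geometric mean: {summarize_fps(results, 144):.1f} FPS")
```

Comparing two cards' capped means on the same game list gives a fairer picture than comparing their best-case numbers.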

Noise and Power Consumption Data

Beyond frame rates, thermal performance, power consumption, and noise matter for daily usability. A GPU delivering great performance but generating excessive noise proves frustrating in practice.

Noise levels vary dramatically between cooler designs. High-quality AIB coolers often operate 5-10 dB quieter than reference designs through improved thermal efficiency and better fan characteristics. At 1-2 meters distance (typical desk distance), the difference between 30 dB (near silent) and 40 dB (noticeable) feels substantial during quiet gaming sessions.
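Decibels are logarithmic, which is why a 10 dB gap feels larger than the numbers suggest. Each 10 dB step means ten times the sound power, and a common psychoacoustic rule of thumb (an approximation, not an exact law) says it is perceived as roughly twice as loud. A quick sketch of that arithmetic:

```python
def sound_power_ratio(delta_db: float) -> float:
    """Ratio of acoustic power for a difference of delta_db decibels."""
    return 10 ** (delta_db / 10)


def perceived_loudness_ratio(delta_db: float) -> float:
    """Rule-of-thumb perceived loudness ratio: +10 dB sounds about twice as loud."""
    return 2 ** (delta_db / 10)


if __name__ == "__main__":
    # Compare the 30 dB and 40 dB examples from the paragraph above.
    delta = 40 - 30
    print(f"{delta} dB: {sound_power_ratio(delta):.0f}x the sound power, "
          f"about {perceived_loudness_ratio(delta):.0f}x as loud")
    # A cooler 5 dB quieter, the low end of the 5-10 dB range above.
    print(f"5 dB: about {perceived_loudness_ratio(5):.2f}x as loud")
```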

Power consumption affects both electricity costs and thermal output. A 250W GPU versus 375W GPU running 8 hours daily costs roughly
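The running-cost difference between two cards follows from the wattage gap, daily hours, and your local electricity price. The sketch below uses the 250W versus 375W comparison and 8 hours a day from the paragraph above. The $0.15/kWh rate is a placeholder assumption, so substitute your own tariff. The sketch also assumes both cards draw their rated power the whole time, which overstates typical gaming draw.

```python
def annual_kwh(watts: float, hours_per_day: float, days: int = 365) -> float:
    """Energy used per year, in kilowatt-hours, at a constant power draw."""
    return watts * hours_per_day * days / 1000


def annual_cost(watts: float, hours_per_day: float, price_per_kwh: float, days: int = 365) -> float:
    """Yearly electricity cost at a constant power draw."""
    return annual_kwh(watts, hours_per_day, days) * price_per_kwh


if __name__ == "__main__":
    PRICE_PER_KWH = 0.15  # assumed placeholder rate in dollars; use your own tariff
    gap_watts = 375 - 250
    print(f"Extra energy: {annual_kwh(gap_watts, 8):.0f} kWh/year")
    print(f"Extra cost:   ${annual_cost(gap_watts, 8, PRICE_PER_KWH):.2f}/year")
```

The 125W gap at 8 hours a day comes to 365 kWh per year. Multiply that by your actual rate to get the dollar figure for your situation.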

Avoiding Common GPU Purchasing Mistakes

Overspending on Unnecessary Performance

Budget creep represents the most common purchasing mistake—gradually increasing budget throughout the decision process until buying something far beyond original constraints. The psychology is understandable: "Just

Resist this temptation by establishing budget constraints early and seeking the best performance within that constraint, rather than constantly evaluating higher price tiers. A well-chosen

Neglecting VRAM Capacity in Pursuit of Raw Performance

Chasing spec sheets while ignoring memory capacity leads to regret. An 8GB card with slightly faster memory can look better on paper than a 10GB card with slightly slower memory, yet the 8GB card hits memory limits in demanding games while the 10GB card operates comfortably.

Prioritize having sufficient VRAM for your intended use case. Eight gigabytes works for competitive gaming and older titles. Twelve gigabytes suits modern AAA games. Sixteen gigabytes provides comfortable headroom for content creation and future-proofing. Insufficient VRAM isn't overcome through faster memory—it's a hard limitation.
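The guideline above maps neatly onto a lookup table. In this sketch the gigabyte thresholds are the ones stated in the paragraph, while the dictionary keys and the function name are hypothetical labels chosen for illustration.

```python
# Minimum VRAM in GB per use case, taken from the guideline above.
# The dictionary keys are hypothetical labels chosen for this sketch.
MIN_VRAM_GB = {
    "competitive": 8,        # competitive gaming and older titles
    "modern_aaa": 12,        # modern AAA games
    "content_creation": 16,  # content creation and future-proofing
}


def vram_shortfall_gb(card_vram_gb: int, use_case: str) -> int:
    """Return how many GB the card falls short of the guideline (0 means it meets it)."""
    try:
        required = MIN_VRAM_GB[use_case]
    except KeyError:
        raise ValueError(
            f"unknown use case {use_case!r}; expected one of {sorted(MIN_VRAM_GB)}"
        ) from None
    return max(0, required - card_vram_gb)


if __name__ == "__main__":
    for vram in (8, 12, 16):
        print(f"{vram}GB card, modern AAA shortfall: {vram_shortfall_gb(vram, 'modern_aaa')}GB")
```

Any nonzero shortfall is the "hard limitation" the paragraph describes: no amount of memory speed makes it up.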

Ignoring Driver Quality and Software Support

Hardware quality matters less than software maturity. A technically superior GPU with poor driver support or limited game optimization delivers inferior real-world performance compared to slightly inferior hardware with mature, stable drivers.

NVIDIA's driver maturity represents its biggest advantage over Intel Arc and, to a lesser extent, AMD. Buying Intel Arc as your primary gaming GPU invites frustration unless you're comfortable troubleshooting driver issues. AMD's drivers have matured substantially but still occasionally lag NVIDIA's optimization in exclusive AAA titles.

Check game support and driver quality reviews before purchase. A poorly supported GPU turns every new release into a lottery (will my card perform adequately?), while mature drivers largely eliminate this concern.

Failing to Account for Entire System Synergy

Choosing a GPU in isolation from the rest of the system often results in mismatches. A powerful GPU paired with an inadequate power supply, insufficient cooling, a bottlenecking CPU, or an incompatible display creates frustration and wastes your investment.

Context matters enormously. The best GPU for a

Future-Proofing Considerations and Technology Trends

Ray Tracing Becoming Mandatory Rather Than Optional

Ray tracing is shifting from an optional extra to a standard feature. A growing number of games ship with ray tracing enabled by default, offering rasterized fallbacks rather than the reverse, and some require ray-tracing-capable hardware outright. This shift makes solid ray tracing performance a requirement rather than a bonus when choosing a GPU.

AMD's ray tracing improvements and the maturation of FidelityFX Super Resolution have narrowed the performance gap with NVIDIA. AMD cards should become increasingly viable for ray-traced games as developers optimize for multiple architectures. For now, however, NVIDIA holds an advantage, possibly a temporary one, that is worth weighing if ray tracing matters in your gaming library.

AI Acceleration as Default Computing Model

Artificial intelligence acceleration is becoming ubiquitous across games and applications. DLSS, FSR, and similar upscaling technologies represent just the beginning. Expect AI to power physics simulation, non-player character behavior, player prediction, dynamic quality adjustment, and countless other features.

GPUs with strong AI acceleration become increasingly valuable. NVIDIA's tensor cores provide a substantial advantage for AI workloads, making RTX cards particularly relevant despite AMD's gaming value proposition. AMD is improving, but the gap remains significant for AI-intensive applications.

VRAM Scaling and Larger Memory Pools

VRAM requirements are growing faster than raw performance. Games increasingly demand 12GB+ to enable maximum quality settings at native resolution. Generational progression suggests 12GB will become standard across mid-range products by 2027, with 16GB or more becoming the norm in premium offerings.

Choosing sufficient VRAM for your intended 3-5 year usage period matters enormously. An 8GB card today becomes increasingly limiting toward the end of its lifespan. Paying $50-100 more for 12GB or 16GB provides substantially better longevity and resale value.

Expert Recommendations by Use Case and Budget

Budget Gaming ($200-350)

Target RTX 5060 (

The RTX 5060 offers 8GB VRAM and DLSS access, suitable for years of 1080p gaming. The RX 8050 XT provides 12GB VRAM and FSR 3 support at similar pricing. Either choice provides excellent value for budget-constrained builders. Avoid stretching beyond this range unless planning 1440p gaming—the value proposition worsens significantly.

Mid-Range Gaming ($400-550)

Select RTX 5070 (actual prices

This category handles 1440p 144 Hz gaming smoothly, with access to ray tracing at moderate quality settings. Lean toward the RX 8070 XT unless you prioritize NVIDIA-optimized ray-traced titles, in which case stretch to the RTX 5070.

High-End Gaming ($700-1000)

Consider RTX 5080 (

The RTX 4090 offers extraordinary performance for 4K gaming but at premium pricing. The RTX 5080 provides more reasonable pricing with competitive 4K performance. For 4K gaming enthusiasts with generous budgets, these represent legitimate choices. Otherwise, mid-range cards provide better value unless you absolutely need maximum performance.

Content Creation Workloads

Prioritize CUDA support for software compatibility. RTX cards provide optimized performance for Adobe Creative Cloud, Blender with CUDA, and professional encoding tools. An RTX 5070 (

For teams requiring consistent performance, professional RTX cards (RTX 4500, RTX 5000) offer stability guarantees and long-term support. These exceed gaming GPU budgets substantially but provide assurance for income-generating work.

AI and Machine Learning Projects

Focus on GPUs with CUDA Compute Capability 8.6 or higher (RTX 30, 40, and 50 series) for broad AI framework support. An RTX 5080 or RTX 4090 provides the compute performance needed for serious model training. For hobbyists, even an RTX 4070 or RTX 5070 delivers reasonable performance for small-to-medium models.
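You can check where a card sits relative to a compute-capability threshold in code. The sketch below hard-codes the values for the three GeForce generations (8.6 for RTX 30, 8.9 for RTX 40, 12.0 for RTX 50, per NVIDIA's CUDA GPU list). If PyTorch happens to be installed, it also reads the local GPU's value with `torch.cuda.get_device_capability()`. The default threshold of 8.6 is chosen so that RTX 30 series cards, which the guidance above includes, pass the check. Individual frameworks may set their own minimums.

```python
from typing import Dict, Optional, Tuple

# Compute capability (major, minor) by GeForce generation, per NVIDIA's CUDA GPU list.
GEFORCE_COMPUTE_CAPABILITY: Dict[str, Tuple[int, int]] = {
    "RTX 30": (8, 6),
    "RTX 40": (8, 9),
    "RTX 50": (12, 0),
}


def meets_minimum(capability: Tuple[int, int], minimum: Tuple[int, int] = (8, 6)) -> bool:
    """Tuple comparison checks the major version first, then the minor version."""
    return capability >= minimum


def local_gpu_capability() -> Optional[Tuple[int, int]]:
    """Return the first CUDA device's compute capability, or None if unavailable."""
    try:
        import torch  # optional dependency; only used if installed
    except ImportError:
        return None
    if not torch.cuda.is_available():
        return None
    return torch.cuda.get_device_capability(0)


if __name__ == "__main__":
    for generation, cc in GEFORCE_COMPUTE_CAPABILITY.items():
        status = "ok" if meets_minimum(cc) else "below minimum"
        print(f"{generation}: {cc[0]}.{cc[1]} -> {status}")
    local = local_gpu_capability()
    print("Local GPU:", "none detected" if local is None else f"{local[0]}.{local[1]}")
```

Comparing `(major, minor)` tuples avoids the classic mistake of comparing capabilities as floats or strings, where "12.0" can sort below "8.9".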

AMD Radeon and Intel Arc cards represent emerging options but lack mature framework support. NVIDIA remains the default for professional AI work despite higher cost. Budget constraints might justify AMD or Intel for personal projects where framework limitations prove acceptable.

FAQ

What GPU should I buy for 1440p 144 Hz gaming in 2026?

For 1440p 144 Hz gaming, the RX 8070 XT or RTX 5070 represent ideal choices, though at vastly different actual prices (

How much VRAM do I actually need for gaming in 2026?

Minimum viable VRAM has shifted to 12GB for contemporary single-player gaming with ray tracing. Competitive esports titles (Valorant, Counter-Strike 2) run fine on 8GB, but modern AAA releases like Cyberpunk 2077, Stalker 2, and Dragon Age: The Veilguard prefer 12GB+ for maximum quality settings at native resolution. Insufficient VRAM forces texture-quality compromises or causes stuttering as game assets spill over into slower system memory. Plan for 12-16GB minimum if you intend to keep your hardware for 3+ years, as games will increasingly demand more memory. Content creators should target 16GB+ for comfortable operation without memory constraints.

Is ray tracing worth the performance cost in 2026?

Ray tracing has become increasingly valuable as game implementations have matured and upscaling (DLSS 4, FSR 3) has largely offset its severe frame rate penalty. In titles like Cyberpunk 2077 with full ray tracing, the visual transformation is dramatic: reflections become accurate, shadows align with light sources, and indirect lighting creates a natural ambiance impossible through rasterization alone. However, not all games implement ray tracing meaningfully; some titles show minimal visual difference. If your game library consists primarily of competitive shooters (Valorant, Overwatch 2), ray tracing matters less. If you play modern single-player games, ray tracing has become worth supporting, and upscaling means you don't need extreme performance to enable it.

Should I buy a GPU now or wait for 2027 releases?

The answer depends on your patience tolerance and current hardware status. If your existing GPU functions adequately, waiting 6-12 months potentially saves 15-25% on current-generation pricing and enables comparison shopping as supply stabilizes. RTX 60 series will likely release late 2026 or early 2027, creating discounts on 50 series inventory. However, if your current GPU is failing or severely limiting your gaming experience, waiting costs experiences and frustration—sometimes buying now at premium pricing beats waiting indefinitely. A practical approach: establish maximum comfortable wait time (3-6 months), then purchase rather than perpetually optimizing for perfect timing.

What's the difference between DLSS and FSR, and which should I prioritize?

DLSS (Deep Learning Super Sampling) uses neural networks trained on large high-resolution datasets to upscale lower-resolution images intelligently. FSR (FidelityFX Super Resolution) 3 pursues the same goal with conventional, non-neural upscaling algorithms. In practice, both deliver usable results, though DLSS 4 generally holds an image-quality edge, particularly in cutting-edge games where NVIDIA has secured optimization partnerships. DLSS also remains more widely supported in AAA titles, while FSR's open-source availability benefits indie developers. The key hardware difference: FSR runs on GPUs from NVIDIA, AMD, and Intel, whereas DLSS requires an NVIDIA RTX card. For GPU purchasing, prioritize the upscaling support available in your actual game library rather than choosing hardware on upscaling preference alone.

How do I know if my power supply is adequate for a new GPU?

Calculate total system power draw by adding your CPU TDP and GPU TDP, then multiplying by 1.25 for headroom: recommended PSU wattage ≈ (CPU TDP + GPU TDP) × 1.25.

Can I use a used or refurbished GPU safely?

Refurbished GPUs from authorized retailers offer reasonable safety through testing and warranty coverage (typically 3-6 months). Used GPUs from unknown sources carry more risk, particularly former mining cards, which endured extended operation at high temperatures that can degrade components and cause unexpected failures months after purchase. Assessing mining history is nearly impossible without manufacturer documentation. If you consider a used card, buy from trusted sources (a friend upgrading with a known history, or a retailer with a return policy) rather than anonymous online listings. The extra discount a used card offers over a refurbished one rarely justifies the risk; warranty coverage and verification are worth the modest premium.

What GPU is best for content creation and professional work?

NVIDIA RTX cards dominate professional software with superior CUDA support and optimization in Adobe Creative Cloud, Blender, Octane Render, and professional encoding tools. An RTX 4070 Super or RTX 5070 provides excellent professional performance at reasonable pricing (

How important is cooling design for GPU longevity and performance?

Cooling design significantly impacts both longevity and thermal performance. High-quality AIB coolers (ASUS, MSI Suprim, Gigabyte Gaming OC) operate 5-10°C cooler than reference designs through superior heat sink design and fan configurations. Cooler operation extends component lifespan, improves stability, and reduces noise through lower fan speeds. For daily use, the difference between 60°C (quiet cooler) and 75°C (poor cooler) feels dramatic. Reference designs work adequately with proper case ventilation, but quality AIB coolers justify modest premium ($30-50 typically) through superior thermal performance and quieter operation during long gaming sessions. Ensure your case provides adequate ventilation—at minimum one front intake fan and one rear exhaust fan—to prevent hot air recirculation regardless of GPU cooler quality.

Conclusion: Making Your GPU Purchase Decision

Selecting the right graphics card in 2026 requires balancing multiple competing priorities: performance, cost, reliability, future compatibility, and your specific use case requirements. The GPU market has evolved dramatically from NVIDIA's previous dominance into a genuinely competitive three-way race where manufacturers offer meaningfully different approaches to solving graphics processing challenges.

The fundamental principle guiding smart GPU purchases remains constant: choose the highest performance card within your budget constraint, properly aligned with your monitor specifications and use case requirements. This principle sounds simple but requires resisting continuous budget creep, avoiding specification obsession, and maintaining focus on real-world performance metrics that impact your daily experience.

For most gamers, the sweet spot lands in the mid-range category at 1440p and 144 Hz, a combination that accommodates both competitive esports and modern single-player games, feels smooth and responsive in daily use, and doesn't require premium-tier spending. A well-chosen mid-range card provides 3-5 years of satisfying performance before needing an upgrade, making it the best value for budget-conscious builders.

NVIDIA retains advantages in ray tracing performance and DLSS availability, particularly in exclusive AAA titles. However, the RTX 50 series, released in 2025, failed to deliver substantial generational improvements, creating genuine opportunities for AMD's RDNA 4 architecture. The RX 8070 XT represents better value than the RTX 5070 for most builders, offering 12GB VRAM as standard, competitive rasterization performance, and mature FSR 3 support at $100-150 lower pricing.

Timing matters significantly but shouldn't create decision paralysis. Buying immediately after a generation launch means paying 15-25% premiums, while waiting 6-9 months can reduce prices 10-20%. However, perfect timing requires patience many users lack. A practical approach involves establishing maximum acceptable wait time (2-6 months) and purchasing within that window rather than perpetually chasing optimal pricing.

Future-proofing your purchase requires allocating adequate VRAM for your intended 3-5 year ownership period. Eight gigabytes represents the bare minimum for contemporary gaming; 12GB reflects the practical standard; 16GB provides comfortable headroom for long-term ownership. The relatively small cost increase ($30-70 typically) provides substantial longevity value—a 3-4 year old card with 12GB VRAM remains relevant far longer than a 3-4 year old card with insufficient memory.

Beyond the GPU itself, consider your complete system context. Adequate power supply, proper cooling, complementary CPU choice, and monitor alignment all influence whether your GPU investment delivers satisfaction or frustration. GPU performance means nothing without adequate electricity, sufficient cooling to prevent thermal throttling, a CPU capable of feeding the GPU data, and a monitor capable of displaying the frame rates your GPU produces.

For specialized use cases—content creation, professional AI training, competitive esports—priorities shift from gaming optimization toward software support, compute performance, and stability. These workloads often justify different GPU choices than gaming optimization would suggest.

Ultimately, GPU purchasing decisions involve calculated risk-taking. You're committing hundreds or thousands of dollars to hardware you can't fully evaluate before purchase. Mitigating this risk requires research, understanding manufacturer claims versus real-world performance, recognizing value propositions across price tiers, and making decisions based on your actual use case rather than specification chasing or emotion-driven budget creep.

The information provided throughout this guide equips you to navigate these decisions confidently. You now understand ray tracing technology, recognize upscaling importance, can evaluate manufacturer claims critically, identify genuine value propositions, and understand the trade-offs defining each price tier. Armed with this knowledge, you can make GPU purchasing decisions aligned with your priorities, budget constraints, and long-term satisfaction.

The perfect GPU doesn't exist—only the GPU optimal for your specific situation. By thoughtfully analyzing your requirements, researching current options, and resisting decision paralysis, you'll select a graphics card delivering years of satisfying performance. That confidence, grounded in understanding rather than luck, represents the ultimate value of informed GPU purchasing decision-making.

Key Takeaways

- GPU selection must align with monitor resolution and refresh rate for optimal value; 1440p 144Hz represents the sweet spot for most builders

- VRAM capacity has become critically important; 12GB minimum is now standard for contemporary gaming, with 16GB providing better long-term value

- AMD's RDNA 4 architecture offers better value than NVIDIA in the mid-range category; the RTX 50 series disappointed with limited generational improvements

- Ray tracing has become increasingly important as implementations matured; upscaling (DLSS 4, FSR 3) makes ray tracing viable without extreme GPUs

- Real-world retail pricing typically exceeds MSRP by 15-25% immediately after launch; waiting 6-9 months can reduce prices 10-20%

- Sufficient power supply, proper cooling, and complementary CPU selection matter as much as GPU choice for a satisfying gaming experience

- Professional workloads prioritize NVIDIA CUDA support over gaming value; AMD and Intel lack mature professional software optimization
