Instagram's AI Media Crisis: Why Fingerprinting Real Content Matters [2025]

Something fundamental is breaking at Instagram, and Adam Mosseri just confirmed it.

The head of Instagram posted a sobering observation recently: AI is about to completely flood the platform. Not in five years. Now. And his solution? Stop trying to block fake content. Instead, fingerprint the real stuff.

This isn't some niche tech talk. It's a seismic shift in how social media platforms will operate, and it's going to affect millions of creators, marketers, and casual scrollers. Let's dig into what's actually happening, why it matters, and what comes next.

TL;DR

- AI content is overtaking authentic media: Mosseri expects synthetic content to dominate Instagram feeds in 2025-2026, as reported by Business Insider.

- Fingerprinting real media is the new strategy: Instead of detecting fakes, Instagram plans to mark and authenticate genuine content, according to Engadget.

- Creators face an authenticity crisis: Traditional creator advantages—rawness, realness, unfiltered voice—are now easier to fake than ever, as noted by The Verge.

- The "polished square image" era is over: Instagram is pivoting toward "raw and unflattering" content as proof of authenticity, highlighted by Mashable.

- Photography and content creation industries face disruption: Professional creators and photographers must adapt to compete with AI-generated alternatives, as discussed in WebProNews.

- Bottom line: The future of Instagram depends on successfully identifying real humans in an ocean of synthetic everything.

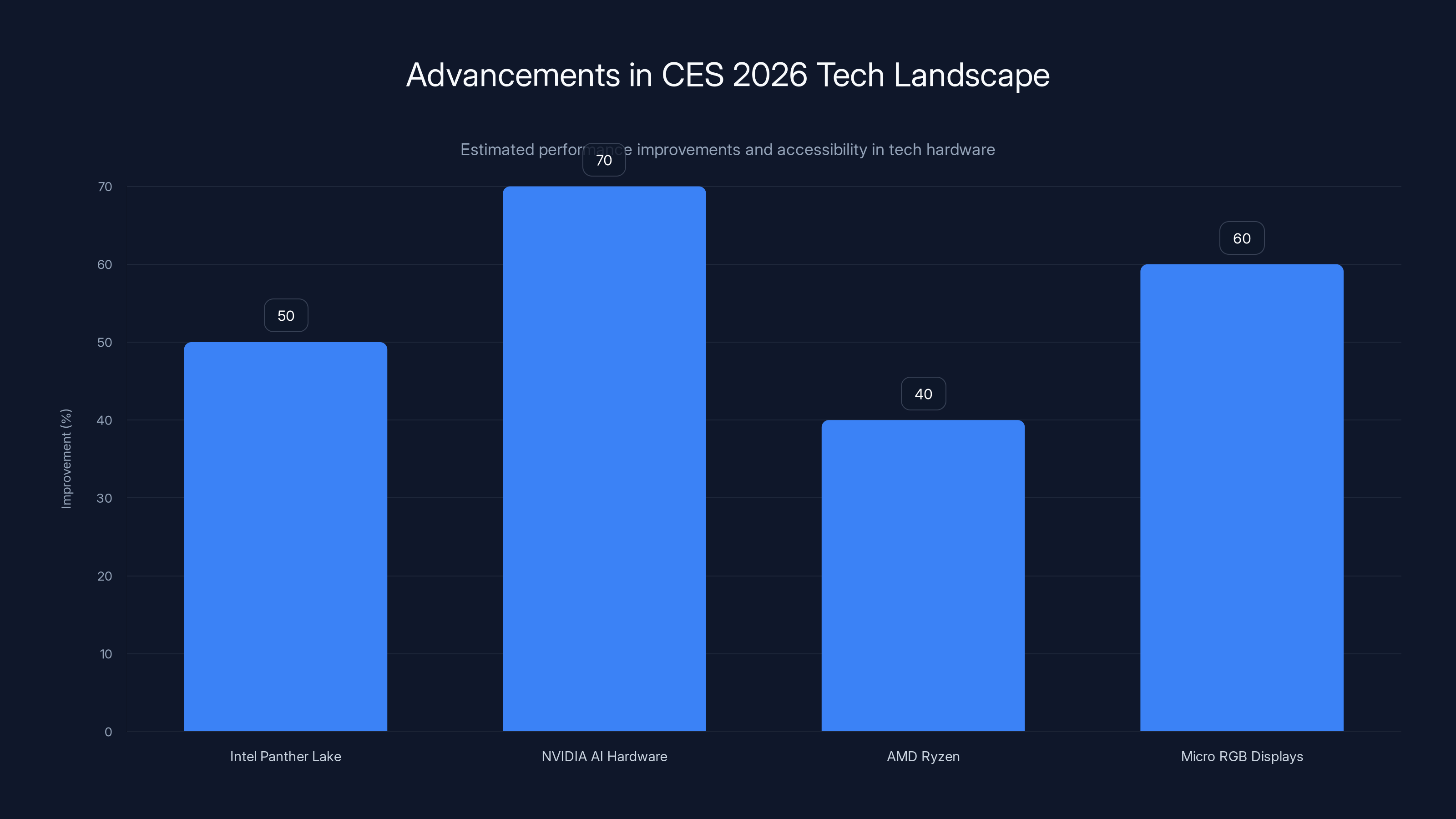

CES 2026 showcases significant advancements in tech hardware, with Intel's Panther Lake processors promising a 50% performance boost and NVIDIA's AI hardware leading with 70% estimated improvement. Estimated data.

The AI Flood Is Already Here

Adam Mosseri didn't speculate about some distant future. He explicitly stated that Instagram feeds are "starting to fill up with synthetic everything" right now. Not eventually. Not next year. Right now, in early 2025, as reported by Nation Thailand.

Think about what this means. A creator posts a photo of themselves. Next to it, an AI-generated image of a completely fictional person wearing the same outfit. Scroll down and find an AI video of a product demo that never actually happened. Keep scrolling and you'll find AI-generated memes, AI-written captions, AI-designed graphics. All indistinguishable from the real thing.

The tools have gotten embarrassingly good. Text-to-image generators can now produce photorealistic content, as noted by PCMag. Video generation tools can create believable footage in seconds. Voice synthesis is nearly perfect. And most importantly, these tools are cheap, fast, and accessible to literally anyone.

Mosseri's core observation cuts to the heart of the problem: "Everything that made creators matter — the ability to be real, to connect, to have a voice that couldn't be faked — is now suddenly accessible to anyone with the right tools."

This is devastating for professional creators. The moat that protected them—authenticity, realness, genuine connection—has collapsed. A teenager with a $10/month AI subscription can now do what took professional photographers years to master.

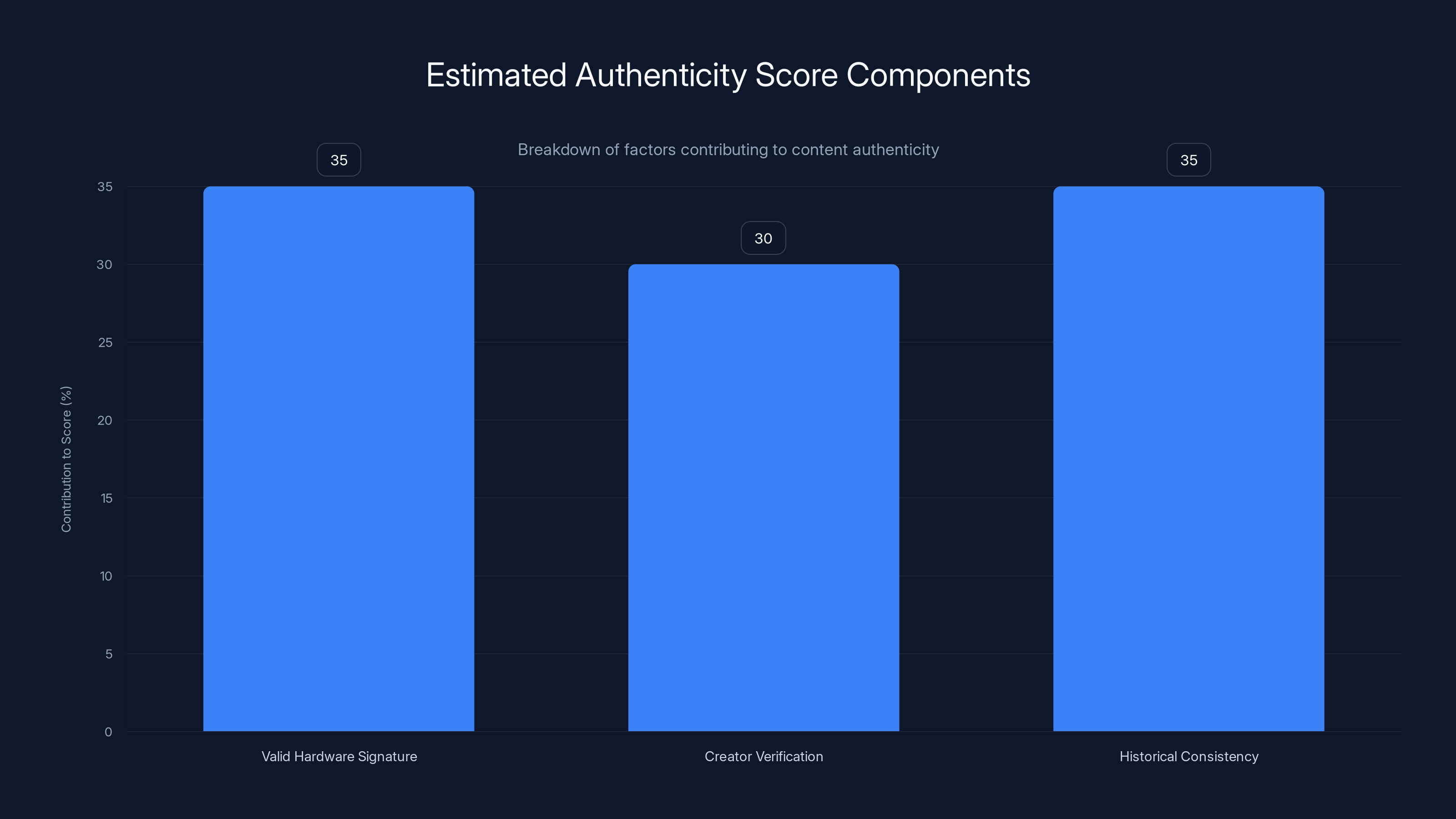

Estimated data shows that valid hardware signatures and historical consistency are key components, each contributing 35% to the authenticity score, with creator verification adding 30%.

Why Fingerprinting Real Media Makes Sense (Even If It Sounds Backwards)

Here's where Mosseri's thinking gets interesting, even if it initially sounds insane. Instead of trying to detect fake content, Instagram wants to fingerprint real content, as explained in Engadget.

This seems backward, right? Why not just get better at detecting AI-generated images? The answer is practical: detection is a perpetual arms race. Every time you build a detector, someone improves the generator. It's like whack-a-mole on steroids.

Fingerprinting flips the problem. Rather than trying to catch every fake in a growing sea of synthetic content, you mark the real stuff and treat everything else as potentially synthetic by default.

How would this actually work? Several approaches are theoretically possible:

Cryptographic fingerprinting would embed invisible metadata into original content at the moment of creation. A smartphone camera or professional editing software would sign the content with a unique identifier that proves it came from an authenticated source. Think of it like a digital passport for your photos.

Blockchain-based verification could create an immutable record of content origin. Post a photo to Instagram, and it gets timestamped on a distributed ledger. Anyone viewing that content can verify its authenticity by checking the chain.

Hardware-level authentication would embed verification chips directly into smartphone cameras. Every photo taken would automatically include cryptographic proof that it came from a specific device at a specific time. This is actually possible with modern phone processors.

AI-resistant watermarking involves adding digital signatures that survive compression, cropping, and other typical edits. These watermarks would be invisible to humans but readable by Instagram's systems, proving authenticity without compromising visual quality.

The catch? All of these require buy-in from device manufacturers, camera makers, and potentially legislation. You can't fingerprint real media if people aren't actually generating the fingerprints.
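To make the "digital passport" idea concrete, here is a minimal sketch of signing a photo at capture time and verifying it later. It uses HMAC-SHA256 from Python's standard library as a stand-in for the asymmetric signatures (for example Ed25519 or ECDSA) that a real device would use. The names `DEVICE_KEY`, `sign_capture`, and `verify_capture` are hypothetical, and nothing here reflects how Instagram or any phone maker actually implements this.

```python
import hashlib
import hmac
import json

# Hypothetical device secret. A real system would keep a private key in
# secure hardware and publish a public key; HMAC is only a stdlib stand-in.
DEVICE_KEY = b"demo-device-key"


def sign_capture(image_bytes: bytes, device_id: str, timestamp: int) -> dict:
    """Return a provenance record binding the image hash to a device and time."""
    claim = {
        "device_id": device_id,
        "timestamp": timestamp,
        "sha256": hashlib.sha256(image_bytes).hexdigest(),
    }
    payload = json.dumps(claim, sort_keys=True).encode()
    signature = hmac.new(DEVICE_KEY, payload, hashlib.sha256).hexdigest()
    return {"claim": claim, "signature": signature}


def verify_capture(image_bytes: bytes, record: dict) -> bool:
    """Check the signature, then check the image still matches the signed hash."""
    payload = json.dumps(record["claim"], sort_keys=True).encode()
    expected = hmac.new(DEVICE_KEY, payload, hashlib.sha256).hexdigest()
    if not hmac.compare_digest(expected, record["signature"]):
        return False
    return hashlib.sha256(image_bytes).hexdigest() == record["claim"]["sha256"]


photo = b"raw sensor bytes"
record = sign_capture(photo, device_id="phone-123", timestamp=1735689600)
print(verify_capture(photo, record))            # original bytes
print(verify_capture(photo + b"edit", record))  # altered bytes
```

The first check prints `True` and the second prints `False`, because the signed claim includes a hash of the exact bytes. That same property means a signature copied onto a different image fails verification. It also means any re-encode or crop breaks a strict hash match, which is why watermarks that survive compression are listed as a separate approach.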

The Death of the Polished Aesthetic

Mosseri made a striking statement: the feed of "polished" square images is dead, as noted by The Verge.

This is huge for understanding where Instagram is headed. For the past decade, the platform rewarded aesthetic perfection. Filters, careful composition, curated lighting, professional editing. The bar for "Instagram-worthy" became prohibitively high, which is why Instagram broke people's mental health and spawned an entire industry of influencer coaches.

But perfect-looking content is exactly what AI generators excel at. You can ask Midjourney or DALL-E to create a flawlessly composed landscape, a geometrically perfect meal photo, a model with impossible beauty standards. AI doesn't get tired, doesn't have bad skin days, doesn't struggle with unflattering angles.

So Mosseri is essentially saying: we're going to win this by doing the opposite. Raw is the new aesthetic. Unflattering is the new authentic.

This makes sense from a detection standpoint. An imperfect photo—bad lighting, awkward angle, visible flaws, genuine emotion—is actually harder for AI to generate convincingly. When you're trying to recreate real human messiness, that's where synthetic content breaks down.

But this creates an interesting social consequence. For years, Instagram trained people to polish and perfect. Creators spent thousands on equipment, lighting, editing software, and coaching to get that perfect shot. Now Instagram is saying: that's over. The path to credibility is literally the opposite—embrace imperfection.

For many creators, especially those who built their entire brand on aesthetic perfection, this is a gut punch. Their competitive advantage just got inverted.

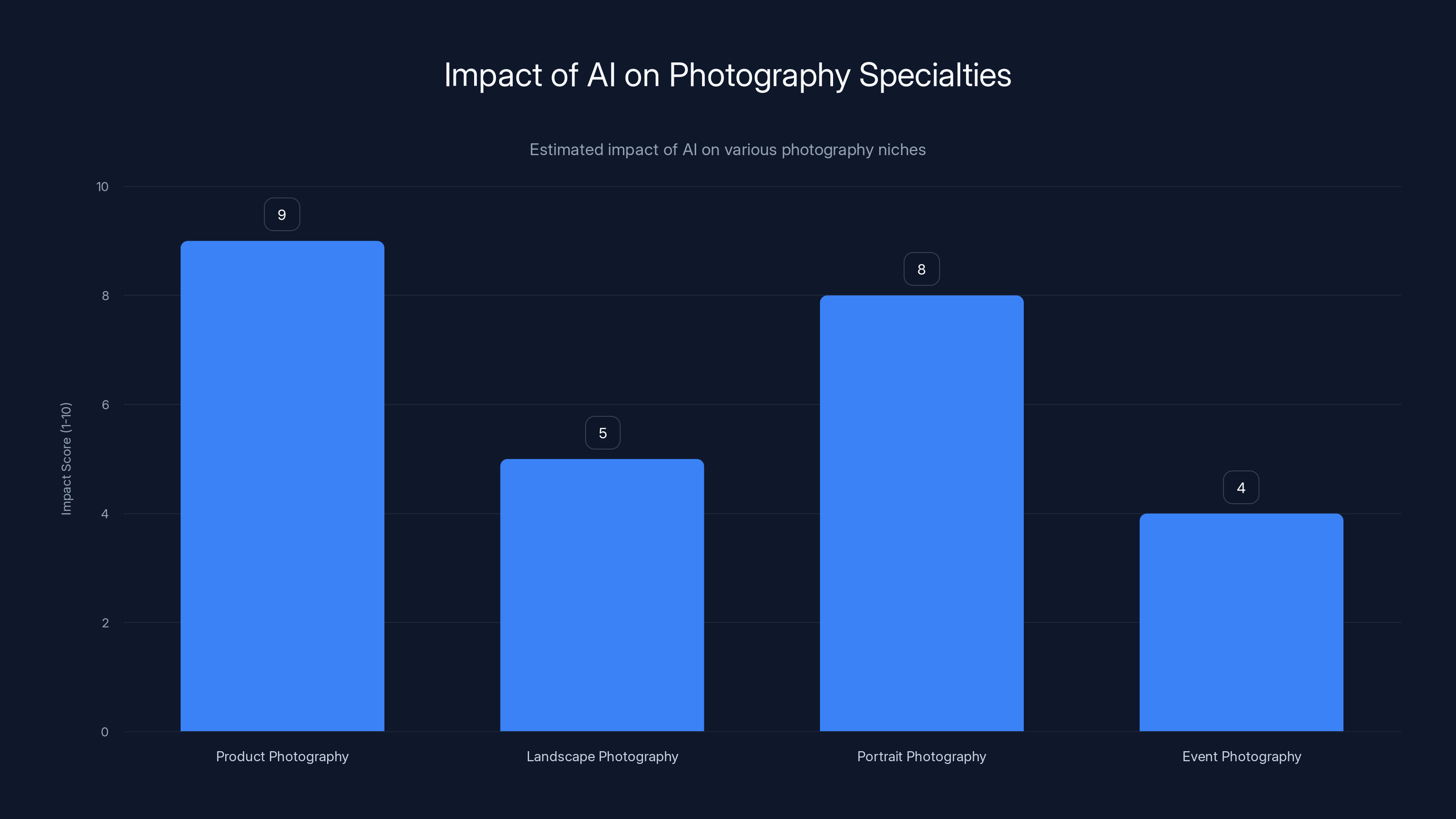

AI is significantly impacting product and portrait photography, while event photography remains less affected for now. Estimated data.

What This Means for Professional Creators

Photographers and content creators are rightfully panicked. Let's be clear about what's actually happening to their livelihoods.

Professional photography was already being disrupted. Stock photo sites cannibalized work that used to require hiring photographers. AI image generation is now cannibalizing the stock photo market. And AI-generated "content" is starting to compete directly with creators on social platforms.

A marketing team used to hire an influencer to post about their product. That cost could run from

For photographers, the impact varies by specialty:

Product photography is getting demolished. E-commerce companies can now generate product images in multiple contexts, angles, and settings instantly. Why hire a photographer when you can prompt an AI?

Landscape and travel photography faces pressure but has more staying power. There's something about real experience and location scouting that AI struggles with—but not for long.

Portraits and headshots are becoming commoditized. Professional headshot photographers are already seeing demand drop as AI can generate dozens of professional-looking headshots in minutes.

Event photography remains safe-ish for now because it requires being physically present, but even this is changing as AI video generation improves.

The photographers and creators who'll survive this transition are the ones who lean into what AI can't do: genuine human connection, real-world experience, and documented authenticity. The irony is brutal—you win by being less polished, more imperfect, and more obviously real.

The CES 2026 Tech Landscape Shaping This Crisis

While Instagram grapples with AI content, the broader tech industry is about to ship hardware that will make the problem worse.

At CES 2026, the industry is focused on chips and displays. Intel is rolling out Panther Lake processors with a promised 50% performance boost. NVIDIA will demo their latest AI hardware. AMD is refreshing the Ryzen lineup. The TV obsession this year is Micro RGB displays—thousands of dimming zones for perfect contrast.

But buried in these announcements is a deeper reality: the hardware to generate, edit, and deploy AI content is about to become exponentially more powerful and accessible.

Consider what this means practically:

Mobile processors are getting AI chips built directly in. Your next iPhone or Android phone will have dedicated silicon for running AI models locally. This means generating AI images or video won't require hitting a server—it happens on your device, instantly and privately.

Desktop GPUs will become even cheaper and more powerful. The barrier to entry for running state-of-the-art AI models will continue dropping. A

Display technology is getting better at rendering hyperrealistic content. Micro RGB displays with thousands of dimming zones can display subtly imperfect details that make synthetic content look more real. The hardware is literally getting better at lying.

This isn't a tech problem anymore. It's an infrastructure problem. The technology to create convincing fake content is becoming as accessible as the technology to share content online. We're not dealing with edge cases. We're dealing with a complete inversion of the signal-to-noise ratio.

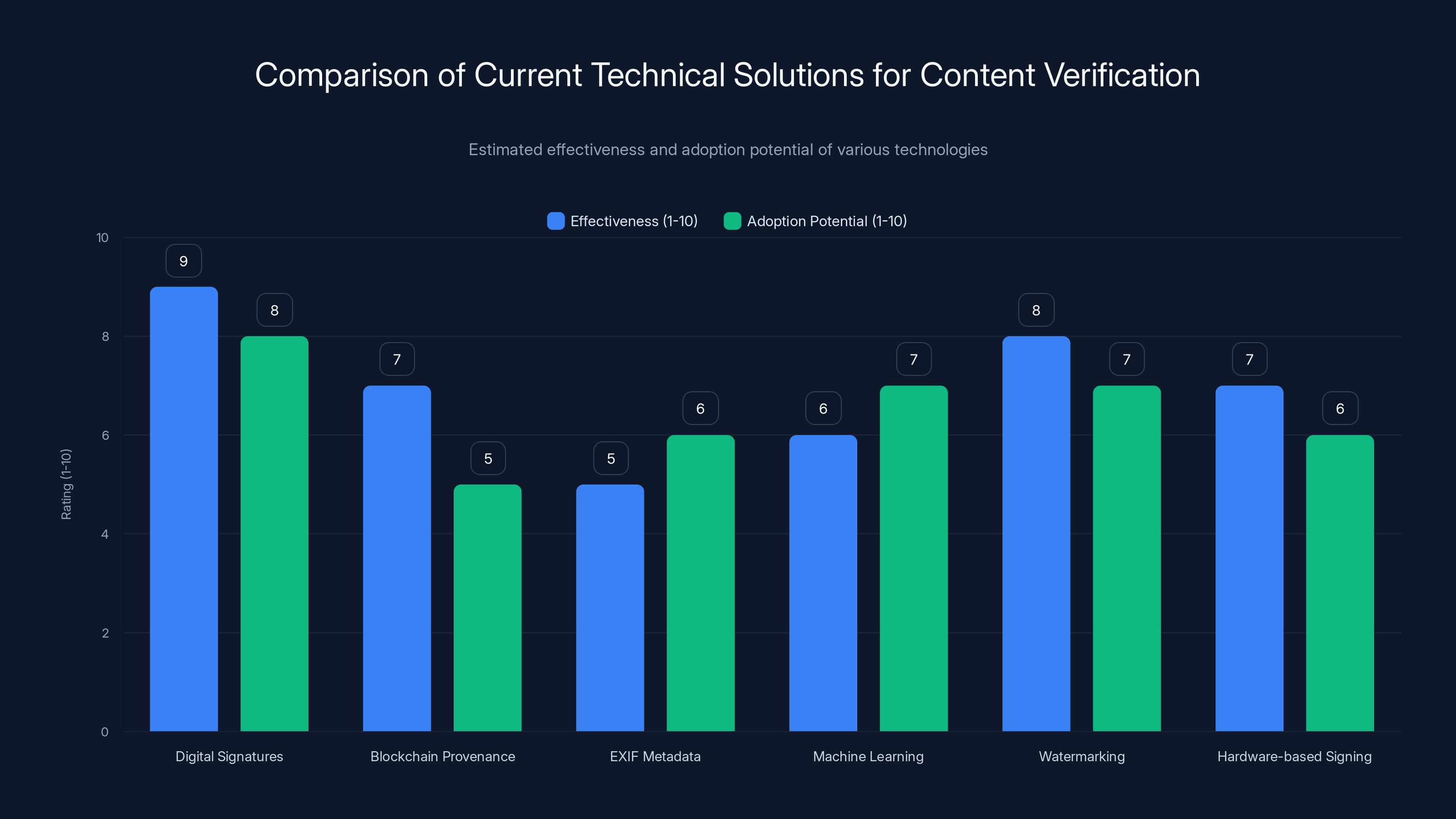

Digital signatures and watermarking technologies are currently the most effective and have high adoption potential. However, a combination of multiple solutions is necessary for comprehensive content verification. (Estimated data)

Hardware Authentication: A Technical Deep Dive

One practical solution gaining traction is authenticating content at the hardware level.

Imagine a smartphone that cryptographically signs every photo the moment the camera sensor captures light. The signing happens in dedicated secure hardware that is designed to be very hard to spoof. You take a photo, and it gets a unique digital signature proving it came from your specific device at a specific timestamp.

When you post to Instagram, that signature goes with it. Instagram's servers can instantly verify that the image hasn't been edited after capture and that it genuinely originated from your device. AI-generated images, by definition, can't have these signatures because they never came from an actual camera.

Apple has talked about building this into iPhones. Microsoft has similar initiatives for Windows devices. The C2PA (Coalition for Content Provenance and Authenticity) has been working on open standards for exactly this.

The scoring logic would be something like this: if you have a hardware signature proving device origin, plus a verified account history of authentic content, plus posting patterns consistent with a real human, your authenticity score climbs toward 100%. AI-generated content and heavily edited content would have lower scores.
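One way to read that scoring idea is as a weighted sum. The sketch below borrows the 35% / 35% / 30% split from the figure caption earlier in this article, which is labeled estimated data. The signal names, the 0-to-1 scale, and the function `authenticity_score` are assumptions for illustration, not a published Instagram formula.

```python
from typing import Mapping

# Weights taken from the article's figure caption (estimated data only).
WEIGHTS = {
    "hardware_signature": 0.35,
    "historical_consistency": 0.35,
    "creator_verification": 0.30,
}


def authenticity_score(signals: Mapping[str, float]) -> float:
    """Weighted sum of signals in [0, 1], returned as a percentage."""
    total = 0.0
    for name, weight in WEIGHTS.items():
        value = signals.get(name, 0.0)  # a missing signal counts as zero
        if not 0.0 <= value <= 1.0:
            raise ValueError(f"{name} must be between 0 and 1")
        total += weight * value
    return round(100 * total, 1)


signed_human = {
    "hardware_signature": 1.0,
    "historical_consistency": 1.0,
    "creator_verification": 1.0,
}
unsigned_upload = {
    "hardware_signature": 0.0,
    "historical_consistency": 0.8,
    "creator_verification": 1.0,
}
print(authenticity_score(signed_human))
print(authenticity_score(unsigned_upload))
```

With every signal present the score reaches 100. Under these assumed weights, a verified creator with a strong history but no hardware signature lands at about 58, which shows how heavily such a scheme would lean on device support.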

The challenge is getting universal adoption. You need smartphone manufacturers, operating systems, camera makers, and social platforms all agreeing on standards. That's politics, not just technology.

The Creator Economy in Transition

We're witnessing the transition of the creator economy from "content production" to "authenticity verification."

In the early days of Instagram and YouTube, creators won by producing better content. More beautiful, more entertaining, more polished. The production quality gap between professionals and amateurs was massive.

AI has collapsed that gap. Now the average person can produce technically excellent content. The new differentiator is authenticity. Can you prove you're actually you? Can you prove your experiences are real? Can you build trust at scale?

This fundamentally changes what creators should be optimizing for:

Instead of production quality, focus on personality and perspective. Why should people follow you instead of an AI bot with perfect images? Because you have a unique viewpoint that can't be faked.

Instead of polished aesthetics, embrace the messy. Show the work. Document the failures. Be visibly human.

Instead of maximizing reach, focus on building genuine community. Engagement with real followers matters more than raw follower count when everything is potentially fake.

Instead of one-way broadcasting, embrace actual conversation. Respond to comments. Answer questions. Build relationships.

This is almost the opposite of what Instagram optimized for during the influencer era. That era rewarded big numbers, aesthetic perfection, and one-way fame. The next era will reward authenticity, realness, and genuine connection.

Creators who thrive will be the ones who adapt to this. That might mean smaller but more engaged audiences. That might mean lower sponsorship payouts but higher lifetime value. That definitely means being more visible as a real human.

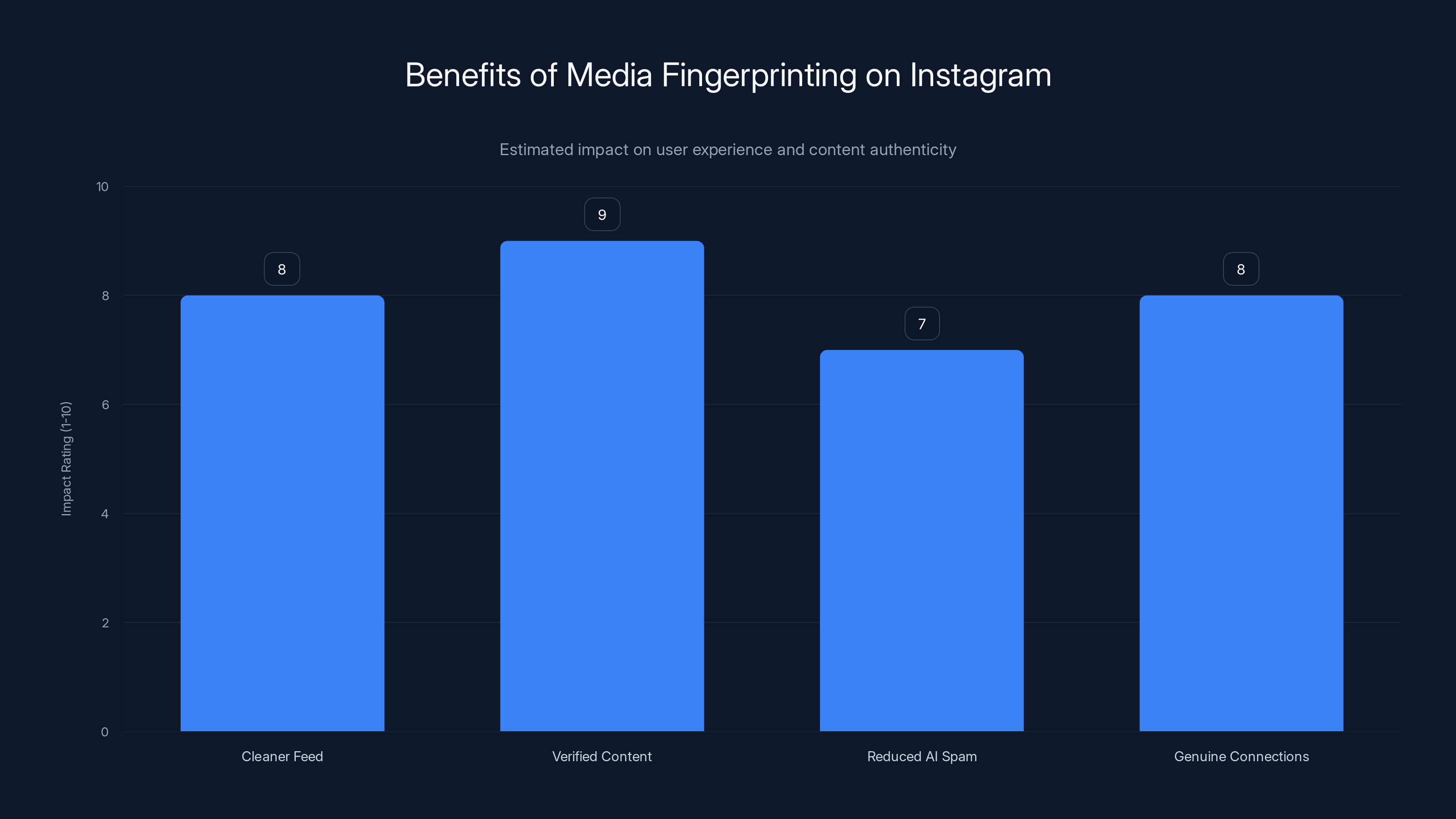

Media fingerprinting is expected to significantly enhance user experience by ensuring content authenticity and reducing AI-generated spam. Estimated data.

The Verification Arms Race

Once Instagram implements fingerprinting for real media, an inevitable arms race begins.

Bad actors will immediately try to fake authentication. They'll attempt to spoof hardware signatures, create convincing fake provenance records, or manually add fingerprints to AI-generated content. Some of these attacks might work, at least initially.

Here's how the escalation might play out:

Phase 1: Basic fingerprinting emerges. Real media gets marked. AI content is unmarked. Works reasonably well initially.

Phase 2: Fingerprint spoofing becomes common. Bad actors figure out how to fake signatures or copy them from real photos. Instagram adapts with more sophisticated authentication methods.

Phase 3: AI detection improves anyway. Even as fingerprinting emerges, AI detection algorithms get better. Instagram probably invests in both approaches simultaneously.

Phase 4: Legislation catches up. Governments start requiring authentication standards. The C2PA's work becomes legally mandated in some jurisdictions.

This isn't unique to Instagram. It's the eternal game of security: every detection method spawns evasion techniques, which spawn better detection, which spawn better evasion. It's a treadmill that never stops.

The difference is that this time, the stakes are social. It's not just about protecting financial systems or corporate networks. It's about truth and trust at scale.

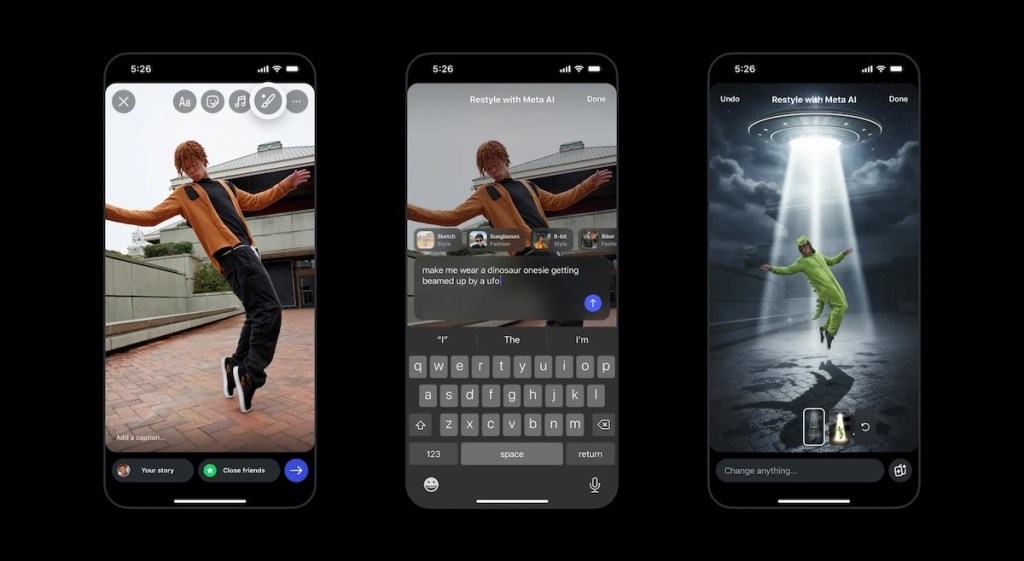

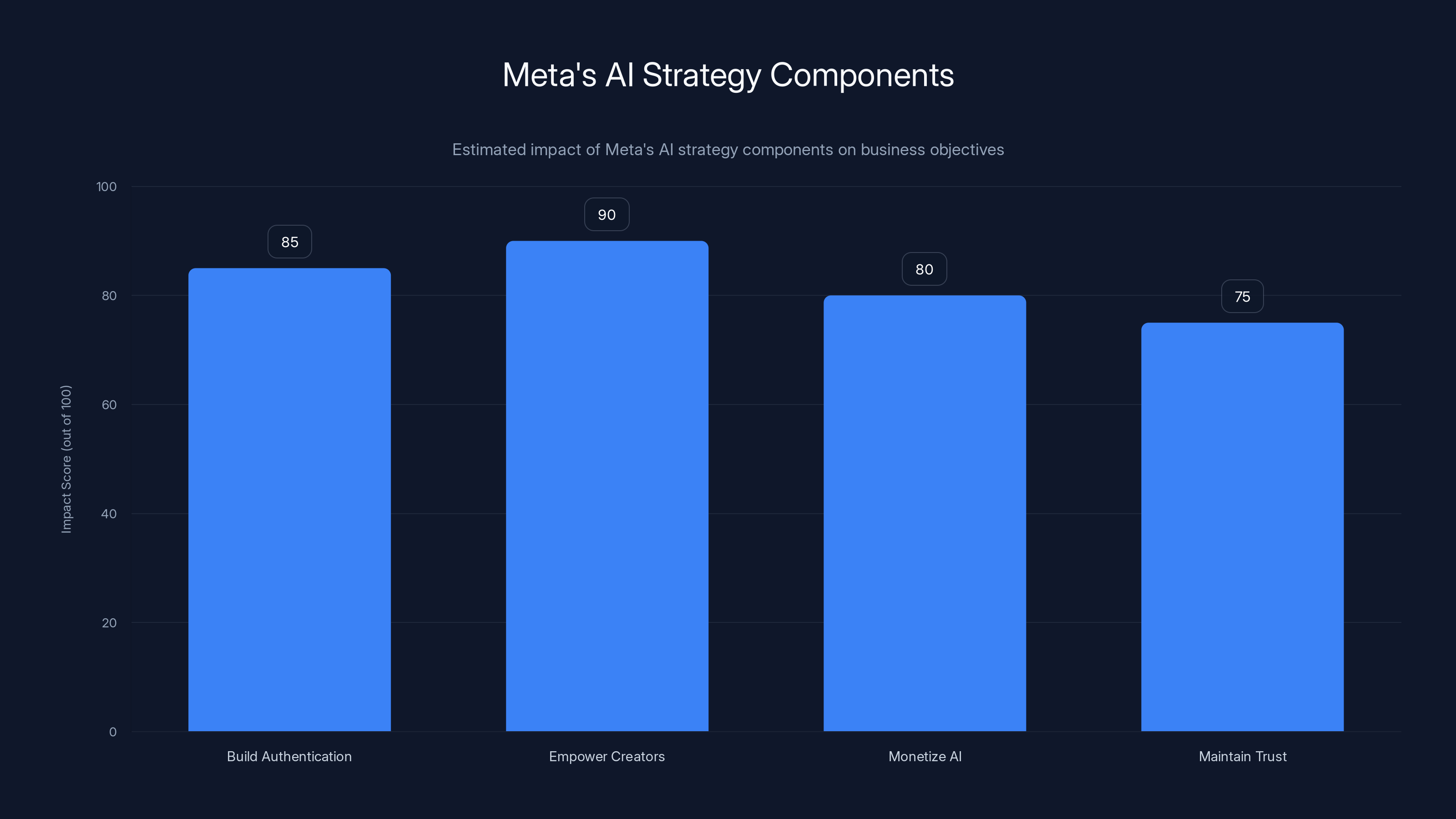

Meta's Broader AI Bet

Meta's recent acquisition of a startup focused on AI task automation agents signals a broader strategy. The company isn't just defending against synthetic content. It's building AI-powered tools that help creators and marketers work at scale.

Think about what this means: Meta is simultaneously fighting AI-generated spam while building tools that help creators use AI productively. That sounds contradictory, but it actually makes sense.

The company recognizes that AI isn't going away. Instead of fighting the tide, Meta is trying to:

- Build authentication to distinguish real from fake

- Empower creators with AI tools to compete

- Monetize AI through advertising and premium features

- Maintain trust so users believe what they're seeing

This is a sophisticated play. Meta isn't choosing between "embrace AI" and "fight AI spam." They're doing both simultaneously.

For creators, this means opportunity. The AI tools Meta and other platforms provide could become an essential competitive advantage. A creator who masters using AI for productivity while maintaining authentic personal connection could dominate the algorithm.

Meta's AI strategy focuses on authentication, empowering creators, monetization, and trust. Empowering creators is estimated to have the highest impact. Estimated data.

Why This Matters Beyond Instagram

Instagram's AI crisis isn't just about Instagram. It's a preview of every social platform's future.

TikTok faces identical problems. YouTube is already dealing with AI-generated channels and deepfake videos. Twitter/X has verification and bot issues. LinkedIn is getting flooded with AI-generated "thought leadership" posts. Discord communities are fighting AI-generated spam.

The core issue is platform-agnostic: when the cost of generating synthetic content approaches zero, platforms need new ways to maintain signal-to-noise ratios.

Some platforms are choosing detection: trying to identify and remove AI content. Others are choosing labeling: requiring AI content to be marked as synthetic. Instagram is choosing authentication: marking real content instead.

Each approach has tradeoffs. Detection is always behind. Labeling relies on user honesty. Authentication requires infrastructure and buy-in from device manufacturers.

But all of them are saying the same thing: authenticity has become valuable. And valuable things get protected, verified, and commodified.

For users, this means the social media of 2026 will look very different from the Instagram of 2020. Feeds will be slower, more thoughtful, more curated toward authenticity. The algorithm will favor verified creators with longer histories. Paid verification might become table stakes for professional accounts.

The age of infinite growth through engagement metrics is ending. The age of authenticity verification is beginning.

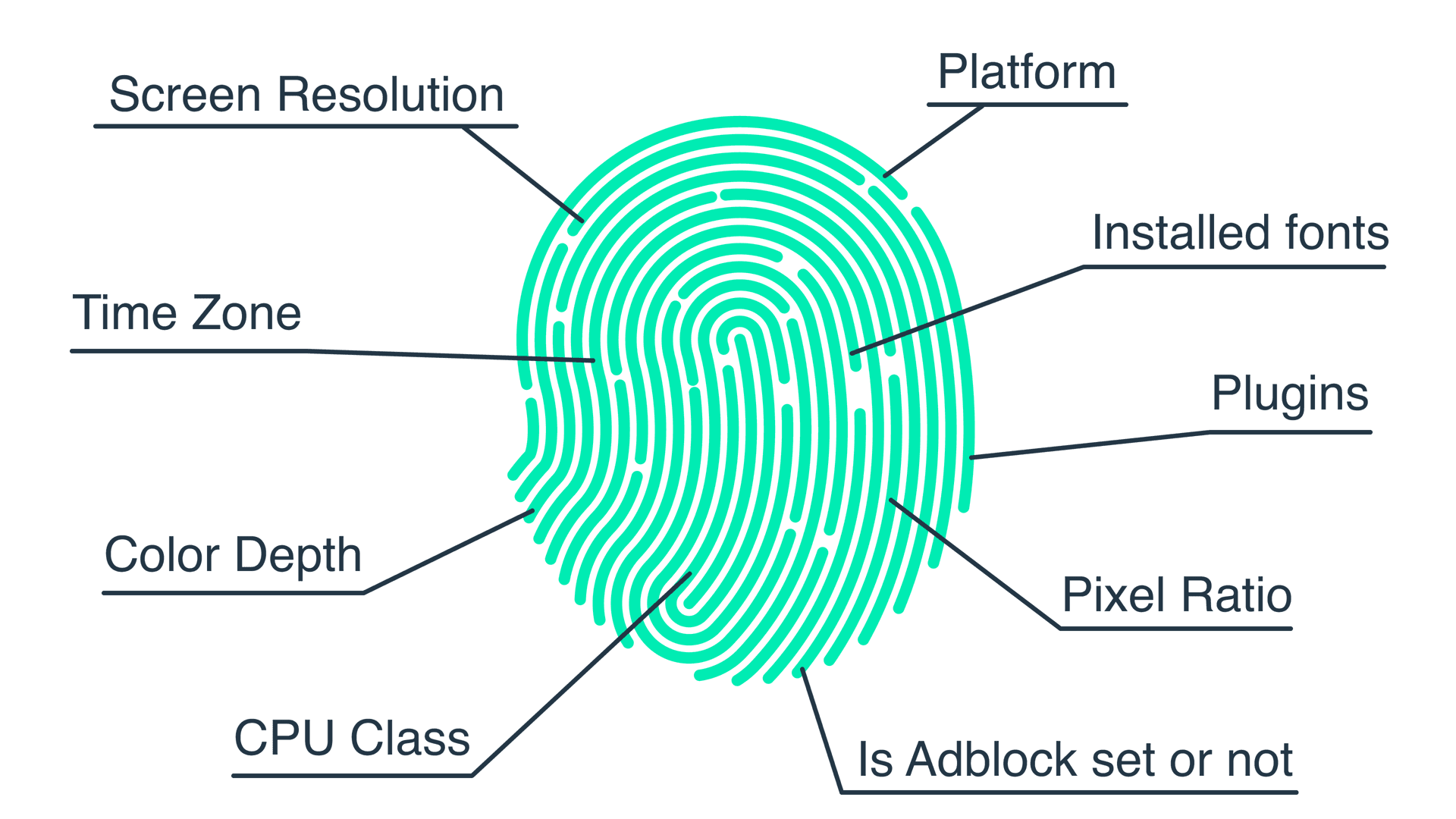

The Technical Solutions Currently Available

It's worth understanding what's actually possible right now, versus what's still theoretical.

Digital signatures using cryptographic keys are mature technology. Every bank, government, and large company uses them. Instagram absolutely could implement this today if they had user device cooperation. The challenge is getting billions of smartphones to participate.

Blockchain-based provenance tracking is technically proven but hits scalability and environmental concerns. Trust is distributed but transparency is total. Adoption is difficult because incentives aren't aligned yet.
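The core mechanism behind ledger-based provenance is a hash chain: each record commits to the one before it, so rewriting history is detectable. The sketch below is a single-process toy with hypothetical function names (`append_record`, `chain_is_valid`). It has no consensus, networking, or identity layer, so it only illustrates tamper evidence, not a distributed ledger.

```python
import hashlib
import json

GENESIS_HASH = "0" * 64  # placeholder "previous hash" for the first record


def block_hash(block: dict) -> str:
    """Hash every field except the stored hash itself."""
    body = {k: block[k] for k in ("index", "content_sha256", "timestamp", "prev_hash")}
    return hashlib.sha256(json.dumps(body, sort_keys=True).encode()).hexdigest()


def append_record(chain: list, content: bytes, timestamp: int) -> dict:
    """Append a record that commits to the content and to the previous record."""
    block = {
        "index": len(chain),
        "content_sha256": hashlib.sha256(content).hexdigest(),
        "timestamp": timestamp,
        "prev_hash": chain[-1]["hash"] if chain else GENESIS_HASH,
    }
    block["hash"] = block_hash(block)
    chain.append(block)
    return block


def chain_is_valid(chain: list) -> bool:
    """Recompute every hash and check each link to its predecessor."""
    prev_hash = GENESIS_HASH
    for block in chain:
        if block["prev_hash"] != prev_hash or block["hash"] != block_hash(block):
            return False
        prev_hash = block["hash"]
    return True


ledger = []
append_record(ledger, b"photo one", timestamp=1735689600)
append_record(ledger, b"photo two", timestamp=1735689660)
print(chain_is_valid(ledger))

ledger[0]["timestamp"] = 0  # try to backdate the first record
print(chain_is_valid(ledger))
```

The first check prints `True` and the second prints `False`, since changing any earlier field invalidates that record's stored hash. Note that the ledger proves when a hash was registered, not that the content behind it came from a camera.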

EXIF metadata verification could work for photos, though it's easy to strip and spoof. The amount of data embedded in modern photos (location, camera model, timestamp) could theoretically help verify authenticity, but it's not cryptographically secure.

Machine learning for detection has improved dramatically but still has a 15-30% false positive/negative rate depending on sophistication. Not reliable enough for enforcement but good enough for scoring.
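A quick back-of-the-envelope calculation shows why error rates in that range are a problem for enforcement. The sketch below uses the low end of the range quoted above (15%) for both error types; the one million posts and the 40% synthetic share are made-up inputs chosen only to make the arithmetic easy to follow.

```python
def expected_errors(total_posts: int, synthetic_share: float,
                    false_positive_rate: float, false_negative_rate: float) -> dict:
    """Estimate how many posts a detector would misclassify at a given scale."""
    synthetic = round(total_posts * synthetic_share)
    real = total_posts - synthetic
    real_flagged = round(real * false_positive_rate)           # real posts wrongly flagged
    synthetic_missed = round(synthetic * false_negative_rate)  # fakes that slip through
    synthetic_caught = synthetic - synthetic_missed
    flagged = real_flagged + synthetic_caught
    precision = synthetic_caught / flagged if flagged else 0.0
    return {
        "real_flagged": real_flagged,
        "synthetic_missed": synthetic_missed,
        "flag_precision": round(precision, 3),
    }


# Hypothetical inputs: 1,000,000 posts, 40% synthetic, 15% error rates.
result = expected_errors(1_000_000, 0.40, 0.15, 0.15)
print(result)
```

Under these assumptions, 90,000 genuine posts would be wrongly flagged and 60,000 synthetic posts would pass unnoticed, with roughly one in five flags landing on a real post. Taking posts down on that basis would be hard to defend, but feeding the detector's output into a ranking score is more forgiving.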

Watermarking technology from companies like Truepic and others can embed invisible signatures in photos. These are hard to remove without degrading image quality and provide decent but not perfect authentication.

Hardware-based signing using TPM (Trusted Platform Module) chips in modern computers is available but not well integrated with content creation workflows. Apple and others are working on this.

None of these are silver bullets. Each has limitations. The practical solution will likely combine multiple approaches: hardware signing where possible, cryptographic signatures for account verification, AI detection for flagging suspicious content, and user reporting for community moderation.
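The layered approach described above can be sketched as a simple triage function that consults each signal in turn. Everything here is hypothetical: the `Post` fields, the labels, the thresholds, and the order of checks are illustrative choices, not a description of any platform's moderation pipeline.

```python
from dataclasses import dataclass


@dataclass
class Post:
    has_valid_signature: bool  # hardware or cryptographic signature verified
    account_verified: bool     # account-level verification
    detector_score: float      # 0.0 = likely real, 1.0 = likely synthetic
    user_reports: int = 0      # community moderation signal


def triage(post: Post, detector_threshold: float = 0.8,
           report_threshold: int = 5) -> str:
    """Combine the four layers into one label, strongest evidence first."""
    if post.user_reports >= report_threshold:
        return "human_review"            # enough reports overrides everything
    if post.has_valid_signature:
        return "authenticated"           # provenance proven at capture
    if post.detector_score >= detector_threshold:
        return "likely_synthetic"        # unsigned and the detector is confident
    if post.account_verified:
        return "verified_account_unsigned"
    return "unverified"


print(triage(Post(True, True, 0.1)))
print(triage(Post(False, False, 0.95)))
print(triage(Post(False, True, 0.3)))
print(triage(Post(True, True, 0.1, user_reports=12)))
```

The design choice worth noticing is that the detector only matters for content without a valid signature, which keeps its error rate from touching authenticated posts, while user reports can still send anything to human review.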

What Happens to Less Authentic Creators?

Let's talk about the uncomfortable consequence: many creators who built audiences aren't actually that authentic.

There are creators who've been using filters, facetune, heavily edited photos, and curated narratives to build followings. Their content is technically real, but it's not honestly real. It's an optimized, idealized version of reality.

When the platform pivots toward rewarding authentic imperfection, these creators hit a wall. Their strategy—polish, perfect, and present an aspirational image—becomes a liability. Suddenly, the follower count that was an asset becomes evidence that they've been optimizing for the wrong thing.

Some will adapt. They'll loosen up, show more realness, lean into flaws. Their engagement might actually improve because audiences are hungry for actual connection.

Others won't. They'll hold onto the polished aesthetic, double down on filters, and slowly fade as the algorithm deprioritizes them. Their audiences will migrate to creators who embrace the new authenticity standard.

This is actually healthy for the platform. It's painful for some creators, but it makes the whole ecosystem better. It shifts incentives away from "look perfect" and toward "be real."

The Regulatory Dimension

Authentication and fingerprinting aren't just technical problems. They're regulatory ones.

The EU has already started requiring platforms to take responsibility for synthetic content through the Digital Services Act. The US is considering similar legislation. Governments are asking: how do you know what's real? How do you verify content? How do you prevent AI-generated disinformation?

Companies that move early on authentication might get regulatory advantages. Companies that wait might face mandates. There's incentive to implement this voluntarily before governments require it.

But regulation cuts both ways. Strict requirements could push small creators off platforms because they can't afford verification infrastructure. Heavy-handed detection could censor legitimate content. Balance is hard.

We're likely looking at a future where:

- Some jurisdictions mandate content authentication (EU leading)

- Platforms adopt voluntary standards to avoid regulation (C2PA becoming industry norm)

- Professional creators get verified badges and authentication

- Casual users remain mostly unverified but flagged by algorithm

- AI content gets labeled or deprioritized but not banned

The result is a tiered system where authenticity becomes a visible attribute. Real might become the new premium.

Implications for Different User Groups

How this plays out matters very differently depending on who you are:

For casual users who just scroll Instagram, authenticity markers might be invisible. They'll notice that the feed looks different—less polished, more raw—but won't understand why. The experience gets cleaner because spam and AI content get deprioritized.

For content creators with verified accounts, authentication becomes table stakes. Not having it signals lower quality. Getting it signals professional legitimacy. Competitive advantage goes to those who embrace it first.

For photographers and artists, this is existential. Your competitive advantage has been the quality of your work. If AI can match that quality, you need a new moat. Authenticity and personality become your survival mechanisms.

For marketers and agencies, using AI-generated content becomes complicated. You can still use it (labeled and deprioritized), but authentic creator partnerships become more valuable. The economics shift back toward real people doing real work.

For bad actors trying to spread misinformation, this gets considerably harder. Fake accounts, inauthentic networks, and AI-generated disinformation campaigns all become easier to detect and stop.

For journalists and newsrooms, authentication standards become incredibly useful. Being able to verify that a photo or video came from a real source at a real location is exactly what you need for credible reporting.

Each group faces different challenges and opportunities in this transition.

Historical Parallels and Lessons

This isn't the first time technology platforms have faced the "authenticity vs. scale" problem.

Email had the same crisis with spam. Early email assumed good intentions. Anyone could send from anyone else. It broke. The solution was gradually layering on authentication (SPF, DKIM, DMARC), reputation systems, and detection. Email is still spammy, but at least critical messages make it through.

Payment systems faced identical problems. Credit cards enabled fraud. The solution was layering on authentication (CVV, 3D Secure, device fingerprinting) and making fraud detection smarter. It's a constant arms race.

Search engines faced it too. Early Google simply ranked by links. Spammers immediately gamed the system. The response was better ranking algorithms, editorial guidelines, and detection. Twenty years later, search is still fighting spam.

Each of these industries converged on the same solution: authentication + detection + human judgment. Not one alone, but all three together.

Instagram is probably heading the same direction. Fingerprinting real media plus improved AI detection plus human moderation. No perfect solution, but much better than what exists today.

The lesson from history: this transition is painful, but it's also necessary and eventually settles into a new normal.

FAQ

What exactly is media fingerprinting and how does it work?

Media fingerprinting is the process of cryptographically signing digital content to prove its origin and authenticity. When you take a photo on your phone, a unique digital signature is embedded that proves the image came from your specific device at a specific timestamp. This signature is computationally infeasible to forge without access to your device's private cryptographic keys. Instagram would then be able to instantly verify that content came from a real person's camera rather than being AI-generated, because AI-generated images never originated from an actual camera sensor.

Why is Instagram choosing to fingerprint real media instead of detecting fake media?

Detecting fake media is a perpetual arms race. Every time AI detection improves, generators improve to evade detection. By the time you catch one generation of fakes, a better one already exists. Fingerprinting flips this by focusing on proving what's real rather than identifying what's fake. This is more efficient because you only need to authenticate a fraction of content (real media) instead of constantly improving detection for the ever-growing percentage of synthetic content flooding the platform. It's more sustainable in practice because the verification burden doesn't grow every time generators improve.

How will this affect regular Instagram users who just scroll their feed?

Most casual users likely won't notice the technical infrastructure changes, but they will notice effects. The feed should become cleaner because AI spam gets deprioritized. Content you see will increasingly be from verified creators with proven authenticity. The algorithm will still use engagement metrics, but authenticity becomes a stronger signal in ranking what appears in your feed. For many users, this means seeing less polished content but more genuine connection and fewer manipulated accounts.

What competitive advantages will creators have in this new environment?

Creators who build verified, authentic presences early will have massive advantages. The competitive advantages shift from production quality (where AI now competes) toward personality, perspective, and genuine community building. Creators who embrace rawness and imperfection—behind-the-scenes content, failures, real emotions—will outperform those chasing perfect aesthetics. Being early to verification signals professionalism. Building authentic audience relationships matters more than raw follower count. Basically, realness becomes the commodity creators compete on.

Is this good or bad for professional photographers and content creators?

It's disruptive in the short term but ultimately clarifying. Professional photographers face immediate pressure from AI image generation, but authentication systems create a moat: they can prove their images are real. This matters for journalism, commercial work, and credible documentation. Photographers who lean into uniqueness—specific style, genuine expertise, real-world presence at events—will survive better than those competing on pure technical quality. The painful truth is some photographic work will be commoditized by AI, but the authentic, human-centered work will become more valued.

When will Instagram actually implement fingerprinting and authentication?

Authentication standards are being developed now through initiatives like the Coalition for Content Provenance and Authenticity (C2PA), which Meta participates in. Hardware-level support is coming in smartphones and computers over the next 1-2 years. Instagram could begin implementing basic authentication systems in 2025-2026, but full platform-wide adoption will take longer because it requires device manufacturer cooperation, user adoption, and refinement of detection algorithms. Expect a gradual rollout rather than a sudden switch, with verification badges appearing on creator accounts first.

What happens to accounts that can't or won't get verified?

Unverified accounts won't disappear, but they'll face algorithm deprioritization. The platform likely implements a tiered system where verified accounts get higher reach and visibility, while unverified content is available but harder to find. This creates incentive for serious creators to verify but doesn't completely exclude casual accounts. Think of it like verified badges on Twitter—they matter for visibility, but verified accounts don't completely replace unverified ones. Verification becomes a competitive advantage rather than a requirement for existing.

How does AI detection fit into this if fingerprinting is the main solution?

They work together rather than replacing each other. Fingerprinting handles content that's verifiably from real sources. AI detection catches synthetic content that doesn't have valid fingerprints. You need both because not all devices will have fingerprinting capability, especially legacy phones. Users in different regions might not have access to authentication hardware. Having multiple verification layers makes the system more robust. It's like email: SPF, DKIM, and DMARC all exist together, not as competing solutions.

Will this authentication system work internationally or just in certain countries?

Authentication standards will likely be global because the C2PA is developing open international standards. However, implementation and enforcement will vary. The EU might mandate stronger authentication requirements through digital regulation. The US will probably see voluntary adoption. China and other countries might implement their own systems. For platforms like Instagram, this means supporting multiple standards and allowing regional variation. Content verification might look different in Europe than in India or Brazil based on local requirements.

What's the timeline for this transition away from polished aesthetics?

The shift is already happening. Raw, authentic content is already outperforming highly edited content in engagement metrics, according to Meta's own data. Full platform transition toward rewarding imperfection will probably be complete by late 2025 or 2026. Creators will need to adapt their strategies within the next 6-12 months to stay competitive. If you're still optimizing for perfect aesthetics and polished feeds right now, you're already behind the curve. The transition is measured in months, not years.

What This Means for the Future of Social Media

We're witnessing the end of one era of social media and the beginning of another.

The Instagram era—roughly 2010 to 2025—was defined by aesthetic perfection and the influencer economy. It was beautiful but often fake. Mental health consequences were real. Comparison culture was rampant. Authenticity was the currency but the least valued resource.

The next era will be defined by verified authenticity and creator specialization. It won't be as pretty, but it'll be more genuine. The algorithm will reward realness over polish. Professional creators will have clearer competitive advantages because verification signals will be visible. The influencer economy will become more about genuine expertise and community building than raw follower count.

For users, this means more trustworthy feeds and less AI spam. For creators, it means adaptation is mandatory but opportunity exists for those who embrace it early. For platforms, it means heavy infrastructure investment in authentication, detection, and verification systems.

The uncomfortable truth is this transition is necessary. AI is flooding social platforms with synthetic content. Something has to give. Either platforms implement verification and authentication, or social media becomes worthless as a source of real information. Given those options, Mosseri's fingerprinting strategy makes sense.

It's not perfect. It requires device cooperation. It creates new attack surfaces. It might be gamed initially. But it's more sustainable than the alternative: trying to detect an ever-growing mountain of increasingly convincing synthetic content.

The creators who adapt first—who embrace authenticity, build verified presences, and lean into realness—will thrive in this new environment. Those who cling to the old aesthetic perfection will struggle. The platform has essentially announced it's changing the rules of the game.

Welcome to the post-polish era of social media. It's messier, but it's more real.

Key Takeaways

- AI-generated content is already flooding Instagram feeds faster than detection can manage, forcing the platform to pivot its strategy

- Fingerprinting real media instead of detecting fakes is more sustainable than arms-race detection approaches

- Creator economy is shifting from aesthetic perfection to authentic rawness as the primary competitive differentiator

- Hardware-level authentication (cryptographic signing) is the most promising technical solution but requires device manufacturer cooperation

- Professional photographers face disruption but can survive by leveraging verified authenticity as a competitive moat

- The influencer era is ending; the verification era is beginning with visible badges signaling authentication status

- Creators who embrace imperfection and real-world messiness will outperform those clinging to polished aesthetics

- Regulatory pressure from EU's Digital Services Act is accelerating platform adoption of authentication standards
