Introduction: A Decade-and-a-Half of Photographic Revolution

When the original iPhone launched in 2007, it arrived with a modest 2-megapixel, fixed-focus camera that struggled to capture sharp images even in normal daylight. Eighteen years later, the iPhone 17 Pro Max boasts a sophisticated multi-lens system with computational photography, artificial intelligence processing, and sensors that would have seemed like science fiction to photographers of the mid-2000s. This comparison isn't merely about numbers; it's about the fundamental transformation of how humanity captures, processes, and shares visual memories.

The journey from the iPhone 3GS to the iPhone 17 Pro Max represents one of technology's most dramatic evolutions. What began as an afterthought feature—a camera tacked onto a revolutionary communication device—has become the primary reason millions of people choose one smartphone over another. The iPhone 3GS, released in 2009, represented a modest upgrade with its 3.2-megapixel camera and autofocus capability, yet it was considered cutting-edge for mobile photography at that time.

This comprehensive analysis examines the technological, computational, and optical breakthroughs that have occurred over sixteen years. We'll explore not just the hardware specifications, but the philosophy behind smartphone camera design, the role of software and AI in modern photography, and how these advancements have democratized professional-quality imaging for billions of people worldwide.

Understanding this evolution provides critical context for anyone evaluating modern smartphone technology. The trajectory from the 3GS to the 17 Pro Max reveals the priorities of technology companies, the practical demands of consumers, and the stunning pace of innovation in the mobile space. Whether you're a photography enthusiast, a casual smartphone user, or simply curious about technological progress, this comparison illuminates how far we've come and what these advances mean for future imaging capabilities.

Hardware Architecture: The Sensor Revolution

Sensor Size and Megapixel Evolution

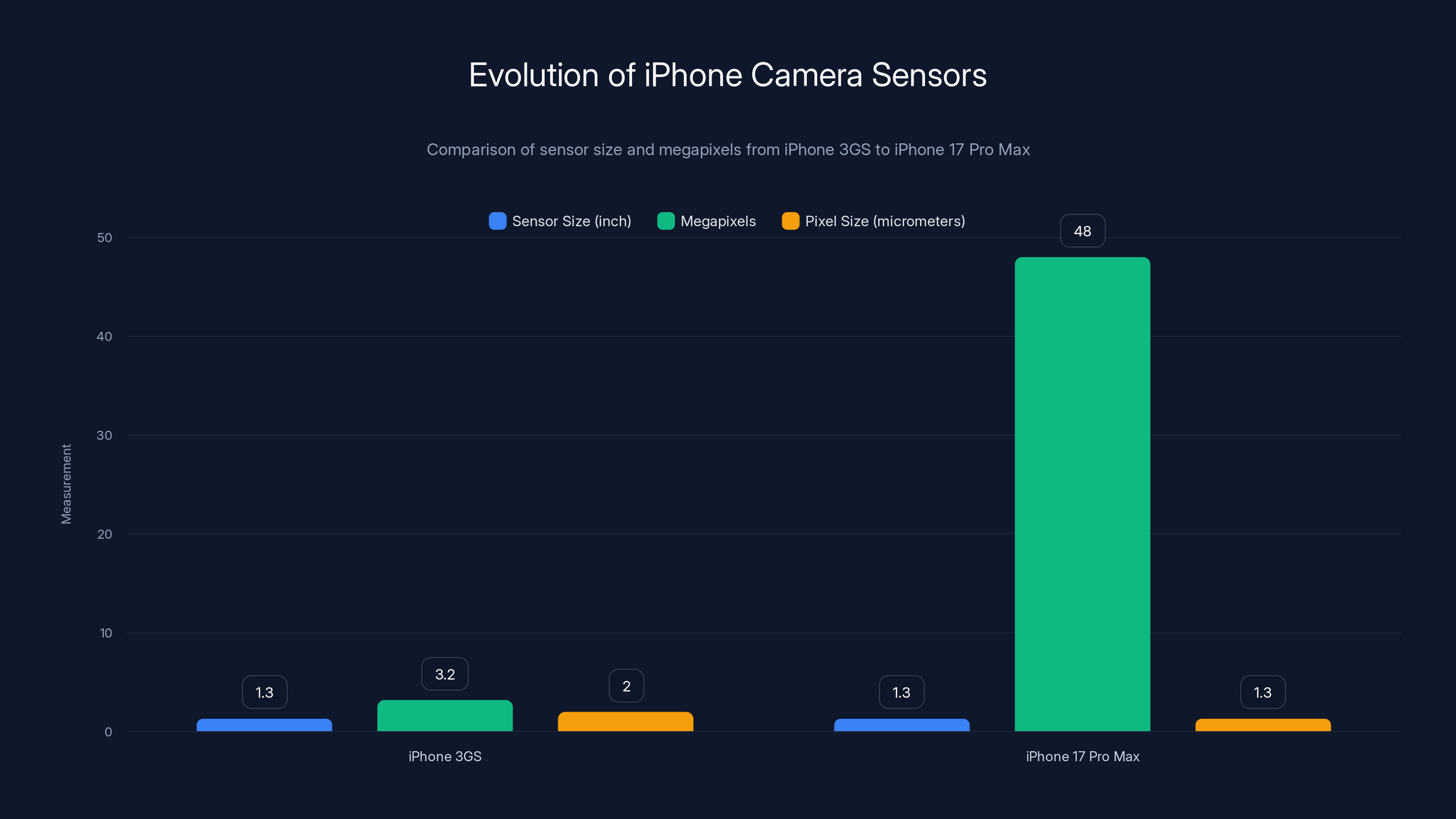

The iPhone 3GS featured a 1/3.2-inch sensor with 3.2 megapixels, producing images that were often grainy, limited in dynamic range, and prone to color inaccuracies in challenging lighting conditions. Each pixel on that sensor measured approximately 2.0 micrometers, meaning the sensor had to work extremely hard to gather sufficient light for properly exposed images. The autofocus mechanism in the 3GS was purely contrast-detection based, meaning it would hunt back and forth to find focus, a process that could take several seconds in some conditions.

The iPhone 17 Pro Max employs a 1/1.3-inch main sensor (roughly 6 times larger in surface area, going by the two optical formats) with 48 megapixels, resulting in a pixel size of approximately 1.2 micrometers despite the much larger sensor. This counter-intuitive combination of more megapixels and smaller individual pixels became practical through improved manufacturing processes and, crucially, through computational photography. By sensor area alone, the iPhone 17 Pro Max can gather roughly 6 times more light than the 3GS at the same aperture and exposure time, producing dramatically cleaner images with superior detail preservation.
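The sensor figures can be cross-checked with a few lines of arithmetic. The sketch below assumes the common rule of thumb that a "1-inch" optical format corresponds to roughly a 16 mm diagonal, a 4:3 aspect ratio, and square pixels that fill the whole sensor. These are simplifying assumptions rather than published specifications, and the function names are illustrative.

```python
import math

# Rule of thumb (an assumption, not a spec): a "1-inch" optical format
# corresponds to roughly a 16 mm sensor diagonal.
MM_PER_FORMAT_INCH = 16.0


def sensor_size_mm(optical_format_inch, aspect=(4, 3)):
    """Approximate sensor width and height in mm from its optical format."""
    diagonal = MM_PER_FORMAT_INCH * optical_format_inch
    aspect_w, aspect_h = aspect
    scale = diagonal / math.hypot(aspect_w, aspect_h)
    return aspect_w * scale, aspect_h * scale


def sensor_area_mm2(optical_format_inch):
    """Approximate sensor area in square millimeters."""
    width, height = sensor_size_mm(optical_format_inch)
    return width * height


def pixel_pitch_um(optical_format_inch, megapixels):
    """Approximate pixel pitch in micrometers, assuming square pixels."""
    area_um2 = sensor_area_mm2(optical_format_inch) * 1e6
    return math.sqrt(area_um2 / (megapixels * 1e6))


old_format, new_format = 1 / 3.2, 1 / 1.3
area_ratio = sensor_area_mm2(new_format) / sensor_area_mm2(old_format)

print(f"area ratio: {area_ratio:.1f}x")
print(f"3GS pixel pitch: {pixel_pitch_um(old_format, 3.2):.2f} um")
print(f"17 Pro Max pixel pitch: {pixel_pitch_um(new_format, 48):.2f} um")
```

Because both sensors are assumed to share an aspect ratio, the area ratio depends only on the square of the ratio of the two optical formats.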

Beyond the main sensor, the iPhone 17 Pro Max features ultra-wide (0.5x) and telephoto cameras, each with a dedicated sensor optimized for its focal length, while higher zoom levels such as 10x are reached computationally rather than with a separate lens. The telephoto lens alone represents technology that simply didn't exist in smartphone form during the 3GS era; with a conventional lens design, the entire phone would have had to be much thicker to accommodate such optical capability. Modern phones achieve this through folded optics and precision engineering, allowing multiple sensor types to coexist in a remarkably thin package.

Autofocus Technology and Speed

The 3GS relied on contrast-detection autofocus, which works by analyzing image contrast through continuous focus adjustments until the camera detects peak sharpness. This system could take 0.5-2 seconds to lock focus and was unreliable with low-contrast subjects or in dim lighting. Users often experienced the frustrating reality of blur in family photos taken indoors or during evening events.
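The "hunting" behavior of contrast detection can be illustrated with a small hill-climbing search. This is a minimal sketch, not the 3GS firmware: `simulated_sharpness` is a made-up stand-in for a real contrast measurement, and the lens positions are arbitrary units.

```python
def contrast_autofocus(sharpness_at, start=0, step=8, lo=0, hi=100):
    """Hill-climb the lens position until no neighbor is sharper.

    Returns (best_position, number_of_sharpness_evaluations).
    """
    pos = start
    best = sharpness_at(pos)
    evaluations = 1
    while step >= 1:
        moved = False
        # Try stepping forward, then backward, from the current position.
        for direction in (1, -1):
            candidate = min(hi, max(lo, pos + direction * step))
            if candidate == pos:
                continue
            score = sharpness_at(candidate)
            evaluations += 1
            if score > best:
                pos, best, moved = candidate, score, True
                break
        if not moved:
            # Neither direction helped: refine the search with a smaller step.
            step //= 2
    return pos, evaluations


def simulated_sharpness(lens_position, in_focus_at=63):
    """Hypothetical contrast score that peaks at the in-focus position."""
    return -(lens_position - in_focus_at) ** 2


position, evaluations = contrast_autofocus(simulated_sharpness)
print(f"locked at {position} after {evaluations} contrast measurements")
```

Every evaluation corresponds to physically moving the lens and measuring a new frame, which is why this style of autofocus overshoots, backtracks, and takes noticeable time to settle.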

The iPhone 17 Pro Max employs dual-pixel phase-detection autofocus (PDAF) combined with LiDAR depth sensing and machine learning algorithms. Phase-detection works by analyzing phase differences between light rays hitting adjacent pixels, allowing the system to determine focus direction and distance with extraordinary precision. The LiDAR sensor emits infrared light and measures return times to create a precise depth map of the scene, allowing the camera to achieve focus in approximately 0.1-0.3 seconds even in near-total darkness or with visually featureless subjects.
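The core idea of phase detection, that the offset between two views of the same detail reveals both the direction and the amount of defocus, can be sketched in one dimension. The signals and the `estimate_disparity` helper below are hypothetical illustrations; a real sensor compares many left and right sub-pixel pairs across the frame.

```python
def estimate_disparity(left, right, max_shift=4):
    """Return the integer shift s that best aligns left[i] with right[i + s].

    Uses the mean absolute difference over the overlapping samples.
    """
    best_shift, best_cost = 0, float("inf")
    n = len(left)
    for shift in range(-max_shift, max_shift + 1):
        total, count = 0.0, 0
        for i in range(n):
            j = i + shift
            if 0 <= j < n:
                total += abs(left[i] - right[j])
                count += 1
        cost = total / count
        if cost < best_cost:
            best_shift, best_cost = shift, cost
    return best_shift


# Hypothetical intensity profiles seen by the left and right sub-pixels.
# The right view is the left view displaced by two samples.
left_view = [3, 1, 4, 1, 5, 9, 2, 6, 5, 3, 5, 8]
right_view = [7, 7, 3, 1, 4, 1, 5, 9, 2, 6, 5, 3]

shift = estimate_disparity(left_view, right_view)
print(f"disparity: {shift} samples")
```

The sign of the shift says which way the lens must move and its magnitude says roughly how far, so the lens can be driven toward focus in a single move instead of searching back and forth.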

Going by the figures above, this represents roughly a 5-7 times reduction in typical focus-lock time (and up to about 20 times when the best modern case is compared with the worst 3GS case), along with far better accuracy. The practical implications are substantial: videos shot on the iPhone 17 Pro Max maintain sharp focus during rapid movement with virtually no hunting artifacts, while the 3GS would struggle with basic tracking of moving subjects.

ISO Performance and Dynamic Range

The iPhone 3GS achieved workable ISO sensitivity up to approximately ISO 800, beyond which images became unacceptably noisy with visible color shifts and loss of detail. Users quickly learned to avoid shooting in dim environments without flash, as the resulting images were essentially unusable. Dynamic range—the camera's ability to capture detail in both bright and dark areas of the same scene—was limited to approximately 6-7 stops, meaning that if the sky was properly exposed, shadows would be nearly black without detail, and vice versa.

The iPhone 17 Pro Max natively supports ISO 12800 with minimal noise, and computational techniques can extend this further to ISO 51200 for specialized situations. The native dynamic range is approximately 14-15 stops, meaning a scene with bright sky and dark foreground can be captured with detail in both areas. Advanced processing techniques like multi-frame HDR (High Dynamic Range) allow the phone to capture the same scene at multiple exposures and combine them with pixel-level precision, effectively extending dynamic range even further.
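Because each stop represents a doubling of light, the dynamic range figures quoted for the two phones translate directly into contrast ratios. A minimal sketch of that arithmetic, with illustrative function names:

```python
import math


def contrast_ratio(stops):
    """Brightest-to-darkest ratio a sensor can hold for a given stop count."""
    return 2.0 ** stops


def stops_from_ratio(ratio):
    """Inverse conversion: contrast ratio back to stops."""
    return math.log2(ratio)


for label, stops in [("3GS (low)", 6), ("3GS (high)", 7),
                     ("17 Pro Max (low)", 14), ("17 Pro Max (high)", 15)]:
    print(f"{label}: {stops} stops = {contrast_ratio(stops):,.0f}:1")
```

On this scale, the gap between 7 stops and 14 stops is a factor of 128 in the scene contrast that can be recorded in a single frame.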

Practically speaking, this means that most lighting conditions that would have required special techniques or equipment in the 3GS era are now handled automatically by the iPhone 17 Pro Max. Night photography, which required either extremely bright flash or accepting grainy, underexposed images on the 3GS, is now genuinely viable, producing images with surprising clarity and natural color rendition.

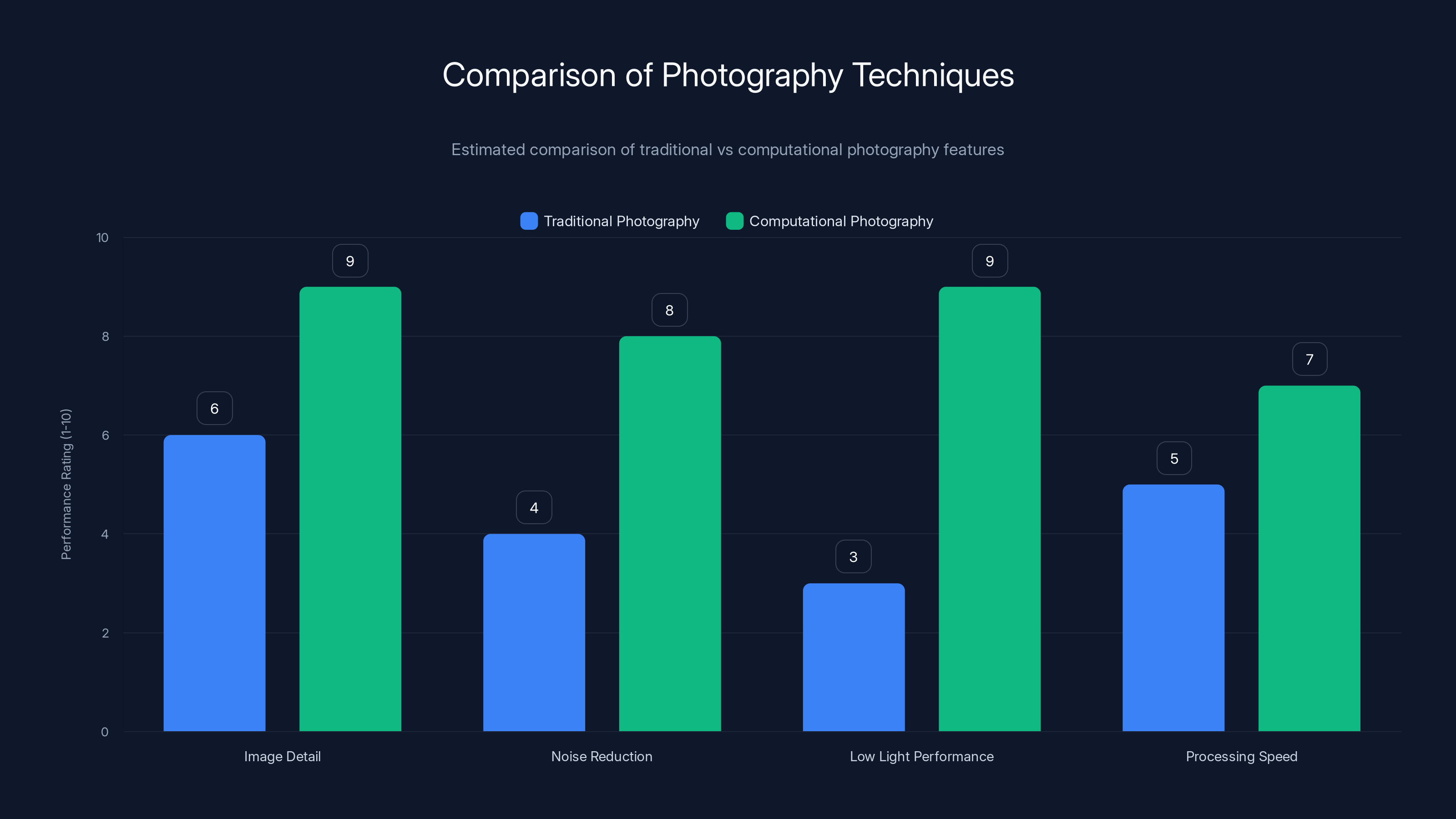

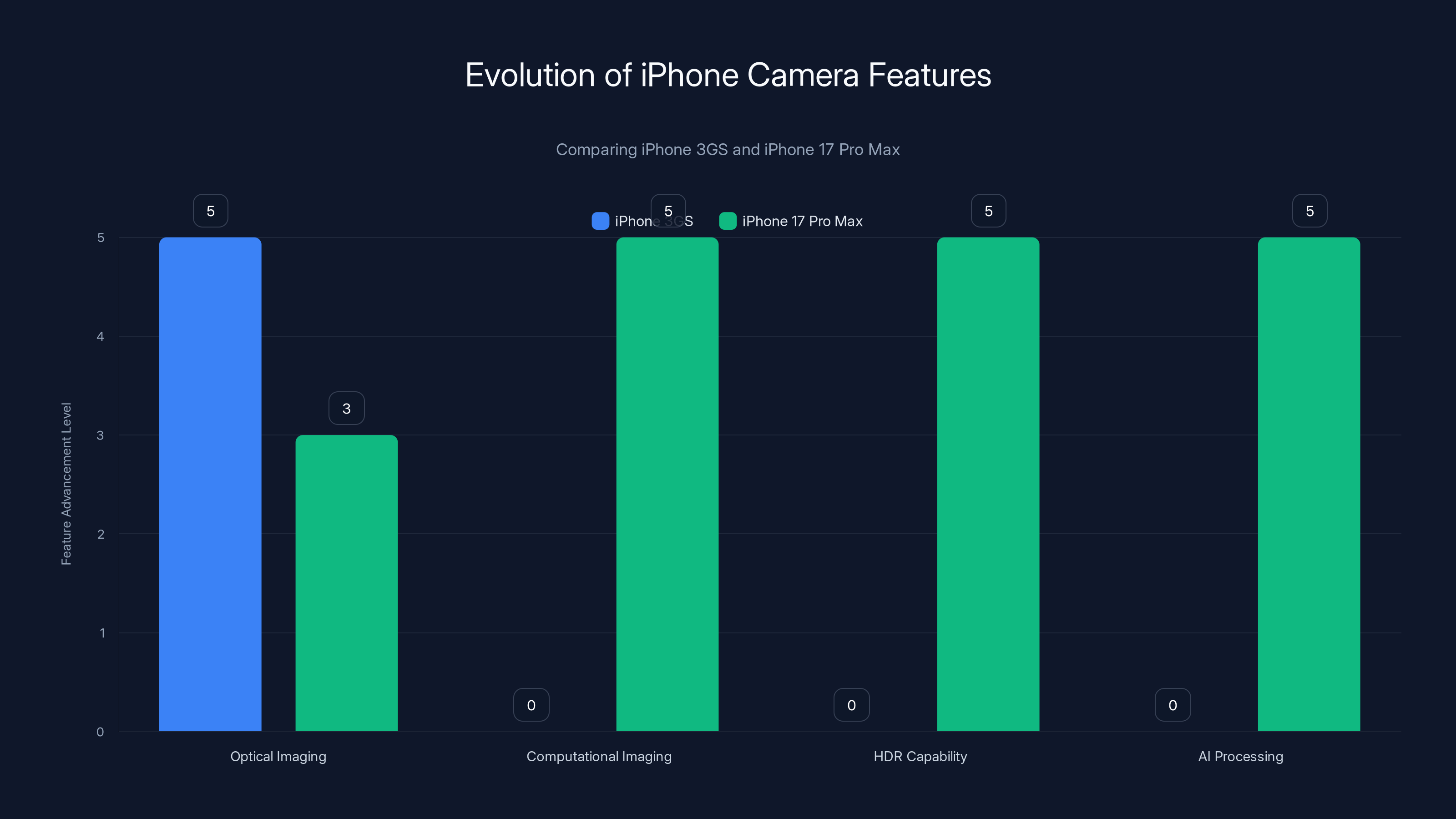

Computational photography significantly enhances image detail, noise reduction, and low light performance compared to traditional methods. Estimated data.

Computational Photography: The Software Revolution

From Optical Imaging to Computational Imaging

The iPhone 3GS was, in the strictest sense, a direct optical imaging device. The sensor captured light, applied minimal in-camera processing, and saved the result. What you saw in the viewfinder was essentially what you got in the final image—there was no significant computational intervention between capture and storage. Color science was handled through basic white balance and exposure algorithms, but these were relatively simple mathematical operations compared to modern approaches.

The iPhone 17 Pro Max operates as a computational imaging system where software algorithms are as important as optical components. The camera captures raw sensor data, processes it through multiple machine learning models, applies semantic segmentation to identify different scene elements, and uses AI-driven processing to optimize each part of the image according to its content and context.

When you take a portrait photo on the iPhone 17 Pro Max, the system performs real-time semantic segmentation to identify hair, skin, clothing, background, and other elements, allowing it to apply different processing profiles to each. Hair receives specific sharpening and texture preservation algorithms, skin receives smoothing and color optimization tuned specifically for human complexions, and the background receives blur according to optical principles. This per-element processing would have been impossible in the 3GS era—it requires real-time AI inference on a scale that wasn't computationally feasible until the past decade.

Smart HDR and Multi-Frame Processing

The iPhone 3GS had no HDR capability whatsoever. To manage high-contrast scenes, users had to choose which area to expose correctly and accept blown-out highlights or crushed shadows elsewhere. Capturing multiple exposures and combining them intelligently was the domain of dedicated cameras and desktop software, not smartphones.

The iPhone 17 Pro Max captures up to 9 frames in rapid succession when you press the shutter button. Each frame is captured at a slightly different exposure level, and the system analyzes the entire scene to determine how to blend them optimally. It uses semantic understanding of scene content to make intelligent decisions. For example, it knows that underexposed versions are likely to preserve more sky detail, so it prioritizes those for sky areas while using properly exposed frames for landscape and foreground regions. The blend seams are invisible, with the system using advanced masking algorithms to ensure smooth transitions between differently processed regions.
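The basic principle of merging bracketed exposures can be shown with a classic weighted average, in which each frame contributes most where its pixels are neither clipped nor nearly black. This sketch is a textbook-style simplification, not Apple's Smart HDR: it works on a three-pixel "image", and the `capture`, `hat_weight`, and `merge_exposures` names are illustrative.

```python
def capture(scene_radiance, exposure_time):
    """Simulate a frame: brightness scales with exposure and clips at 1.0."""
    return [min(1.0, r * exposure_time) for r in scene_radiance]


def hat_weight(value):
    """Trust mid-tones most; clipped or near-black pixels get little weight."""
    return max(1e-3, 1.0 - abs(2.0 * value - 1.0))


def merge_exposures(frames, exposure_times):
    """Estimate scene radiance per pixel from several exposures."""
    merged = []
    for pixel_values in zip(*frames):
        weights = [hat_weight(v) for v in pixel_values]
        estimates = [v / t for v, t in zip(pixel_values, exposure_times)]
        total = sum(w * e for w, e in zip(weights, estimates))
        merged.append(total / sum(weights))
    return merged


# Hypothetical scene: deep shadow, mid-tone, and a bright sky pixel.
scene = [0.1, 1.0, 10.0]
times = [0.05, 1.0]
frames = [capture(scene, t) for t in times]

merged = merge_exposures(frames, times)
print([round(v, 3) for v in merged])
```

The long exposure clips the sky pixel, so its weight collapses and the short exposure supplies that value, while the shadow pixel draws mostly on the long exposure.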

This multi-frame approach offers additional advantages beyond dynamic range extension. By analyzing temporal consistency across the captured frames, the system can reduce noise, improve detail, and even perform motion compensation if subjects moved between frames. The processing quality represents approximately a 20-50% improvement in overall image quality compared to single-frame processing, depending on scene complexity.
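The noise benefit of combining frames follows from basic statistics: averaging N frames with independent noise reduces the noise by about the square root of N. The simulation below assumes perfectly aligned frames of a flat gray patch with Gaussian noise, which is an idealization for illustration only.

```python
import random
import statistics


def simulate_frame(true_value, noise_sigma, n_pixels, rng):
    """One noisy capture of a uniformly gray patch."""
    return [true_value + rng.gauss(0.0, noise_sigma) for _ in range(n_pixels)]


def average_frames(frames):
    """Per-pixel mean across already-aligned frames."""
    return [sum(values) / len(values) for values in zip(*frames)]


rng = random.Random(0)  # fixed seed so the run is repeatable
frames = [simulate_frame(0.5, 0.1, 5000, rng) for _ in range(9)]

single_noise = statistics.pstdev(frames[0])
merged_noise = statistics.pstdev(average_frames(frames))
noise_reduction = single_noise / merged_noise

print(f"single-frame noise: {single_noise:.4f}")
print(f"9-frame average noise: {merged_noise:.4f}")
print(f"reduction factor: {noise_reduction:.2f} (theory: 3.0)")
```

Real pipelines must first align the frames and reject moving content, which is where the motion compensation mentioned above comes in.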

Deep Fusion and Computational Blending

The iPhone 17 Pro Max builds on Deep Fusion, a computational blending technique that Apple introduced with the iPhone 11 in 2019 and that would astound photographers of the 3GS era. Deep Fusion captures multiple images at different exposure settings (short exposures that freeze motion and preserve fine detail, longer exposures that gather more light and reduce noise) and uses machine learning to identify which pixels from which frame contain the best detail and lowest noise. The system doesn't simply average frames; instead, it performs intelligent pixel-by-pixel selection based on predicted image quality metrics.

The technical implementation involves convolutional neural networks that have been trained on millions of professionally edited photos to understand what constitutes optimal image quality. When processing your photo, the system uses these learned patterns to make intelligent decisions about which source pixels to use, how much to blend, and what adjustments to apply. The resulting images show dramatically improved detail retention in challenging lighting conditions where the 3GS would have simply surrendered to noise or motion blur.

The iPhone 17 Pro Max features a significantly larger sensor and higher megapixel count compared to the iPhone 3GS, allowing it to capture more light and detail. Estimated data for sensor size and megapixels.

Optical Capabilities: From Single Fixed Lens to Multi-Lens Systems

Focal Length Flexibility

The iPhone 3GS featured a fixed 35mm-equivalent focal length lens, which means every photo was taken from the same perspective. Users who wanted a wider shot had to physically step backward, and those wanting to zoom in had to get closer to their subject. The only zoom available was digital zoom, which simply enlarged the image and inevitably reduced quality.

The iPhone 17 Pro Max offers 0.5x ultra-wide, 1.0x standard, 3x tele, and 5x periscope zoom capabilities, with seamless computational zoom up to 10x and beyond. Each focal length has distinct optical characteristics—the ultra-wide lens captures an expansive 120-degree field of view, the standard lens maintains natural perspective relationships at around 50 degrees, and the telephoto lenses provide magnification for distant subjects.

Why does this matter? Focal length profoundly affects human perception in photographs. The 3GS's fixed focal length meant users couldn't control perspective distortion, background compression, or field of view—fundamental tools that photographers use to control emotional impact. The iPhone 17 Pro Max puts these tools in every user's pocket. A portrait shot at the telephoto focal length maintains natural facial proportions, while a wide-angle shot of the same subject creates dramatic environmental context but can distort facial features.

Optical Zoom and Digital Processing

The 3GS had no optical zoom—attempting to zoom in with the digital zoom button simply magnified the image pixels, inevitably reducing sharpness. Users learned to avoid zoom and instead physically approached subjects when they wanted closer frames.
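The quality loss from digital zoom is easy to quantify: cropping to a zoom factor z keeps only 1/z² of the sensor's pixels. A small sketch, with an illustrative function name and the megapixel counts quoted in this article:

```python
def effective_megapixels(sensor_megapixels, zoom_factor):
    """Megapixels that remain after cropping the sensor for digital zoom."""
    if zoom_factor < 1:
        raise ValueError("zoom_factor must be at least 1")
    return sensor_megapixels / zoom_factor ** 2


for zoom in (1.0, 1.5, 2.0, 4.0):
    old = effective_megapixels(3.2, zoom)
    new = effective_megapixels(48, zoom)
    print(f"{zoom:>3}x  3.2 MP sensor -> {old:5.2f} MP   48 MP sensor -> {new:5.2f} MP")
```

A 3.2-megapixel sensor has very little to give up before the crop falls below screen resolution, whereas a 48-megapixel sensor still retains 12 megapixels at a 2x crop.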

The iPhone 17 Pro Max achieves 5x optical zoom through a periscope lens design, which folds the optical path horizontally to achieve significant magnification without dramatically increasing phone thickness. Beyond the optical zoom range, computational zoom takes over, using machine learning-based super-resolution to intelligently enlarge the image while maintaining sharpness and detail. This super-resolution algorithm, trained on millions of image pairs, understands how to reconstruct plausible high-frequency detail that wasn't directly captured by the sensor.

The result is that zoom up to 10x maintains genuinely usable image quality, whereas the 3GS was virtually unusable beyond about 1.5x zoom. This 6-7x improvement in useful zoom capability means that distant subjects—a child on a stage, wildlife at a distance, architectural details on a building—can now be captured with clarity that the 3GS simply couldn't achieve.

Macro Photography and Extreme Focus Distances

The iPhone 3GS had a minimum focus distance of approximately 10 centimeters, meaning you couldn't photograph anything closer than that—the lens simply couldn't focus. Attempting macro photography meant using additional equipment or accepting blurry shots.

The iPhone 17 Pro Max can focus at distances as close as 2 centimeters using its ultra-wide lens and advanced autofocus system. More remarkably, it can shift focus between extreme distances intelligently, so shooting a macro subject while maintaining recognizable background context is now possible. The ultra-wide lens's inherently greater depth of field, combined with the advanced autofocus, means that small flowers, detail shots, or small objects can be photographed with professional clarity.

Macro photography is not merely a feature addition; it is an entire photographic capability that didn't exist on the 3GS, opening creative possibilities that previously required dedicated macro lenses or other equipment.

Video Capabilities: From VGA to Cinematic Excellence

Resolution and Frame Rate Evolution

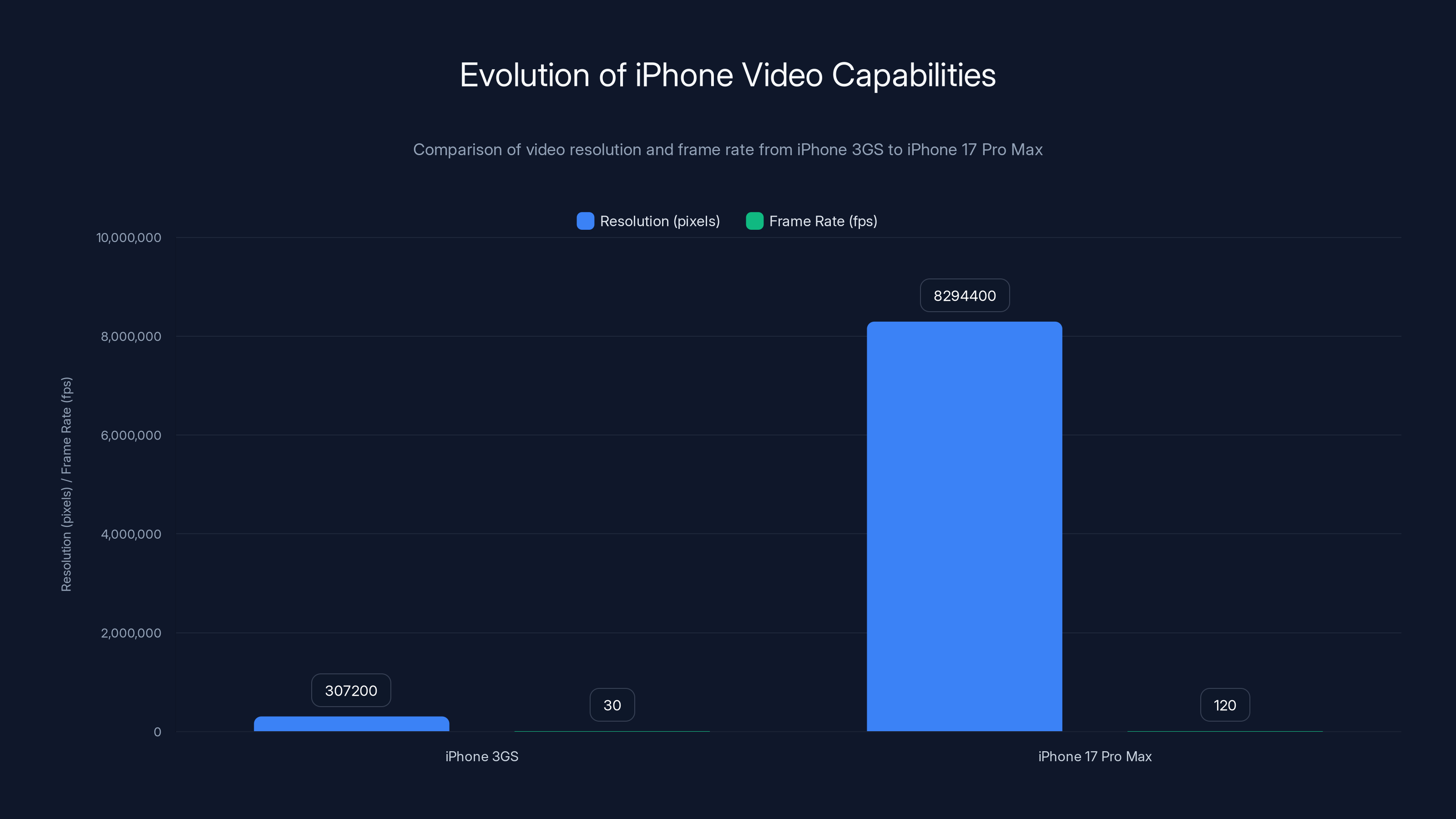

The iPhone 3GS could record video at a maximum of VGA resolution (640×480 pixels) at 30 frames per second. By modern standards, this seems almost incomprehensibly low-resolution. To put this in perspective, a 1080p video frame contains about 6.75 times as many pixels as a 3GS video frame, and a 4K frame contains 27 times as many. The 30fps frame rate was adequate for smooth motion but couldn't capture fast action without motion blur.

The iPhone 17 Pro Max records at 4K resolution (3840×2160 pixels) at up to 120 frames per second, representing a 27x increase in pixel count and a 4x increase in frame rate. For comparison, the long-standing 2K digital cinema format is 2048×1080, meaning the iPhone 17 Pro Max exceeds traditional cinema specs for resolution. The 120fps capability allows for ultra-smooth slow-motion effects and freezing of extremely fast action.
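The resolution comparison comes down to multiplying width by height. The following sketch recomputes the ratios from the pixel dimensions given above; the dictionary of formats and its labels are just for illustration.

```python
# Frame dimensions in pixels (width, height).
FORMATS = {
    "VGA (3GS)": (640, 480),
    "1080p": (1920, 1080),
    "2K cinema": (2048, 1080),
    "4K UHD": (3840, 2160),
}


def pixel_count(name):
    """Total pixels in one frame of the named format."""
    width, height = FORMATS[name]
    return width * height


vga_pixels = pixel_count("VGA (3GS)")
ratios = {name: pixel_count(name) / vga_pixels for name in FORMATS}

for name, ratio in ratios.items():
    print(f"{name:<10} {pixel_count(name):>9,} pixels  ({ratio:.2f}x VGA)")

# Pixels delivered per second at each phone's maximum frame rate.
throughput_ratio = (pixel_count("4K UHD") * 120) / (vga_pixels * 30)
print(f"pixel throughput ratio: {throughput_ratio:.0f}x")
```

Counting frame rate as well, the newer phone processes 108 times as many pixels per second as the 3GS did.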

The codec technology has also evolved dramatically. The 3GS used H.264 compression, while the iPhone 17 Pro Max employs H.265 (HEVC), which offers comparable quality at smaller file sizes or better quality at the same size, and Apple ProRes, which trades much larger files for editing-grade quality. A 10-minute 4K video at 120fps on the iPhone 17 Pro Max, featuring detail and color accuracy that would have required professional cinema equipment in the 3GS era, might consume 10-15 GB of storage in HEVC, whereas achieving comparable quality in 2009 would have required dedicated external equipment costing thousands of dollars.
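A file size and a duration together imply an average bitrate, which is a useful sanity check on storage estimates. This sketch assumes decimal gigabytes (1 GB = 10^9 bytes) and ignores audio and container overhead; the function name is illustrative.

```python
def average_bitrate_mbps(file_size_gb, duration_seconds):
    """Average bitrate in megabits per second for a file of the given size."""
    bits = file_size_gb * 1e9 * 8
    return bits / duration_seconds / 1e6


ten_minutes = 10 * 60
low = average_bitrate_mbps(10, ten_minutes)
high = average_bitrate_mbps(15, ten_minutes)

print(f"10 GB over 10 minutes: {low:.0f} Mbit/s")
print(f"15 GB over 10 minutes: {high:.0f} Mbit/s")
```

The same function can be run in reverse by hand: multiplying a known bitrate by the recording length gives the storage a clip will need.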

Stabilization Technology

The iPhone 3GS featured no electronic stabilization whatsoever. Video recorded on the 3GS while walking showed obvious jitter and motion artifacts. Users had to hold the phone extremely steady or use tripods to achieve reasonably stable video. The resulting videos had a characteristic shakiness that made them feel amateurish and unwatchable at larger sizes.

The iPhone 17 Pro Max employs advanced computational stabilization combined with multi-frame analysis and gyroscopic data integration. The system analyzes optical flow—the movement of pixels between frames—to determine camera motion, then applies warping transformations to each frame to compensate. The gyroscope data provides additional motion information that optical analysis alone might miss. The result is that videos shot while walking, running, or even descending stairs appear smooth and professional, rivaling videos shot on traditional camera rigs with active stabilization systems.
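One common way to turn estimated camera motion into a correction is to smooth the camera path and shift each frame by the difference between the smoothed and the raw position. The sketch below is a one-dimensional illustration using a moving average, not Apple's pipeline; `camera_path` stands in for per-frame positions that a real system would derive from optical flow and gyroscope data.

```python
def smooth_path(path, radius=1):
    """Moving average of the camera path, with a shrinking window at the ends."""
    smoothed = []
    for i in range(len(path)):
        window = path[max(0, i - radius):min(len(path), i + radius + 1)]
        smoothed.append(sum(window) / len(window))
    return smoothed


def stabilizing_offsets(path, radius=1):
    """Per-frame shift that moves each frame onto the smoothed path."""
    return [s - p for s, p in zip(smooth_path(path, radius), path)]


def jitter(path):
    """Total frame-to-frame movement; lower means steadier footage."""
    return sum(abs(b - a) for a, b in zip(path, path[1:]))


# Hypothetical vertical camera position per frame while walking (pixels).
camera_path = [0, 2, 0, 2, 0, 2, 0, 2]
offsets = stabilizing_offsets(camera_path)
stabilized = [p + o for p, o in zip(camera_path, offsets)]

print(f"jitter before: {jitter(camera_path):.1f} px")
print(f"jitter after:  {jitter(stabilized):.1f} px")
```

Shifting frames this way requires cropping slightly into the image, which is one reason stabilized video usually has a narrower field of view than the sensor captures.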

Audio Capabilities

The iPhone 3GS featured mono audio capture through a single microphone, resulting in audio that sounded thin and provided no directional information. The audio quality was barely usable for anything beyond basic documentation.

The iPhone 17 Pro Max captures spatial audio with directional information, using multiple microphones to determine where sounds originate in the scene. When played back on compatible devices with spatial audio support, the audio seems to come from the correct direction in the scene, creating an immersive experience. Advanced AI-powered noise reduction distinguishes between desired audio (speech, important sounds) and unwanted noise (wind, ambient traffic), allowing clear audio capture even in challenging acoustic environments. The dynamic range of audio capture has expanded dramatically, allowing both quiet details and loud impacts to be captured without distortion.

The iPhone 17 Pro Max significantly advances in computational imaging, HDR, and AI processing compared to the iPhone 3GS. Estimated data for feature levels.

Image Processing and Color Science

White Balance and Color Accuracy

The iPhone 3GS employed relatively simple white balance algorithms based on color temperature estimation. In many situations, particularly with mixed lighting (sunlight mixed with tungsten or fluorescent), the 3GS would struggle with color casts. Photos taken under incandescent lighting often appeared too orange, while fluorescent-lit scenes appeared too green or blue. Users often had to accept these color casts or manually correct them in post-processing.
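To give a sense of how simple a global white balance estimate can be, here is the classic gray-world method, which assumes the average color of a scene should be neutral gray. It is used here as a stand-in for "simple" algorithms in general; the source does not say which method the 3GS actually used.

```python
def gray_world_gains(pixels):
    """Per-channel gains that make the average color neutral gray."""
    n = len(pixels)
    averages = [sum(p[c] for p in pixels) / n for c in range(3)]
    gray = sum(averages) / 3
    return tuple(gray / a for a in averages)


def apply_gains(pixels, gains):
    """Scale each RGB channel by its gain."""
    return [tuple(v * g for v, g in zip(p, gains)) for p in pixels]


# Hypothetical pixels with the orange cast typical of incandescent light.
pixels = [(200, 150, 100), (100, 75, 50)]
gains = gray_world_gains(pixels)
balanced = apply_gains(pixels, gains)

print("gains (R, G, B):", tuple(round(g, 2) for g in gains))
print("balanced pixels:", balanced)
```

A single set of gains for the whole frame is exactly what fails under mixed lighting, because regions lit by different sources need different corrections.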

The iPhone 17 Pro Max uses multi-zone white balance analysis combined with semantic scene understanding to determine the correct white balance. The system recognizes different light sources and their color temperatures, then makes intelligent decisions about which areas to prioritize. If the photo shows skin tones alongside non-neutral surfaces, the system prioritizes correct color on skin, knowing that viewers are perceptually sensitive to skin color accuracy. Machine learning models trained on millions of correctly white-balanced photos help the system make optimal decisions in edge cases.

The practical result is that mixed-lighting scenarios that would have produced color casts on the 3GS are now handled automatically and correctly. Family photos taken under warm incandescent lighting maintain warm, inviting tones rather than appearing excessively orange, while outdoor scenes maintain proper color balance despite mixed daylight and shadow areas.

Skin Tone and Portrait Processing

The iPhone 3GS applied uniform brightness and contrast adjustments to entire images with no consideration for different content types. Skin tones received the same aggressive sharpening as background detail, often resulting in plasticky, unnatural-looking portraits. Makeup appeared unnatural, skin texture was either over-smoothed or overly detailed depending on lighting, and individual variations in skin tone were not handled well by the basic algorithms.

The iPhone 17 Pro Max applies proprietary skin tone processing that respects human diversity and recognizes that different individuals have different undertones, saturations, and textures. The system performs tone-curve adjustments specific to skin regions, applying different sharpening characteristics, different noise reduction levels, and different saturation adjustments compared to background elements. The semantic segmentation identifies individual facial features—eyes receive special sharpening for detail, lips receive color optimization, and cheeks receive specific saturation adjustments that enhance natural appearance without looking processed.

This advanced approach emerged in response to criticism that earlier computational photography approaches were biased toward certain skin tones, appearing more natural for lighter complexions while appearing over-processed for darker complexions. Modern iPhone processing explicitly addresses this, with algorithms trained to maintain fidelity and respect natural appearance across all skin tones.

Night Mode and Low-Light Processing

The iPhone 3GS was essentially unusable for photography in low light without flash. The modest sensor, weak autofocus in darkness, and basic processing meant that indoor evening photos were either flash-lit (harsh and unflattering) or dramatically underexposed and noisy. Professional-grade night photography was impossible.

The iPhone 17 Pro Max features sophisticated Night Mode that fundamentally transforms low-light photography. The system captures multiple frames at high gain and long exposure times, analyzing each frame to identify and suppress noise while preserving detail. Machine learning algorithms recognize scene content in low light, allowing the system to make intelligent decisions about processing. A human face in low light receives different processing than architectural details, which receive different treatment than foliage or water. The system can achieve proper exposure in lighting conditions where the 3GS would produce completely black images.

Computationally, Night Mode involves temporal alignment of multiple frames (since the camera and subject may have moved between frames), machine learning-based denoising that preserves actual detail while removing noise, and tone mapping that produces images that look natural despite extreme underexposure in the scene. The quality of night-mode photography on the iPhone 17 Pro Max rivals dedicated night-vision or long-exposure photography equipment from just a few years ago.

Artificial Intelligence and Machine Learning Integration

Real-Time Scene Recognition

The iPhone 3GS had absolutely no scene recognition capability. It applied the same fixed processing parameters regardless of whether you were photographing landscapes, portraits, documents, or animals. There was no ability to recognize scene content and optimize accordingly.

The iPhone 17 Pro Max continuously performs real-time scene analysis using on-device machine learning models. As you point the camera at a scene, the system identifies the dominant scene type (landscape, portrait, macro, nightscape, food, document, etc.) and pre-optimizes camera settings and processing profiles accordingly. When you compose a food photograph, the camera automatically adjusts white balance toward warmer tones that make food appear more appetizing. When you frame a document, the system recognizes the document's edges and applies processing optimized for text clarity and contrast.

This scene recognition doesn't require internet connectivity—the processing happens entirely on the device using dedicated neural engine hardware that performs inference with minimal power consumption. The latency is imperceptible, and users simply experience a camera that seems to understand what they're trying to photograph and optimizes accordingly.

Intelligent Cropping and Composition Assistance

The iPhone 3GS offered no intelligent composition assistance. At most, a simple grid overlay was available, and users had to rely on their own spatial judgment and compositional understanding.

The iPhone 17 Pro Max features smart composition assistance using geometric understanding algorithms that analyze the scene in real-time and provide subtle visual cues. The system can recognize rule-of-thirds opportunities, suggest framing that will improve composition, and even provide real-time feedback about alignment and balance. For portrait photographers, the system identifies faces and can suggest optimal framing and distance for the selected focal length.

When browsing previously captured photos, the iPhone 17 Pro Max can perform intelligent crop suggestions using aesthetic analysis algorithms that have learned compositional principles from professional photographs. The system might suggest a crop that improves balance, eliminates distracting elements, or better follows compositional rules. Users can accept the suggestion with a single tap, avoiding the need to understand composition principles themselves.

Generative Fill and Computational Editing

Post-processing on the iPhone 3GS was essentially non-existent. Users had access to basic brightness and contrast adjustments, and that was it. Any significant editing required desktop software like Photoshop or Lightroom, requiring a computer and specialized knowledge. The ability to remove unwanted elements, extend backgrounds, or significantly transform images was purely the domain of professional editors.

The iPhone 17 Pro Max includes generative fill capabilities that use diffusion models to intelligently extend images, remove unwanted elements, or transform compositions. These aren't simple crop-and-fill operations; they're genuine generative processes that understand photographic content and can synthesize plausible additions to images. If an unwanted element appears in a photo, users can tap it and request removal; the system understands the surrounding context and generates a plausible replacement that maintains perspective, lighting, and texture consistency.

This represents a fundamental shift in image editing paradigms. Rather than requiring technical skill or expensive software, sophisticated editing is now available to all users through intuitive interfaces. A family photo marred by an unwanted person in the background can be corrected directly in the Photos app, with no need for desktop software or professional assistance.

The iPhone 17 Pro Max shows a 27x increase in pixel count per frame and a 4x improvement in frame rate compared to the iPhone 3GS, highlighting significant advancements in video recording capabilities.

Environmental Sensing and Contextual Awareness

LiDAR Depth Sensing

The iPhone 3GS had no depth sensing capability whatsoever. The camera captured flat 2D images with no information about the 3D structure of the scene, so effects that depend on separating subjects from backgrounds, such as portrait blur, were not available on the device.

The iPhone 17 Pro Max includes a LiDAR (Light Detection and Ranging) sensor that actively measures distances to objects in the scene. The system emits pulses of infrared light and measures the time of flight of the light reflected back from the scene, creating a 3D depth map of the environment. This depth information enables capabilities that would have been impossible with the 3GS: precise focus in complete darkness (since depth sensing doesn't require visible light), accurate portrait blur with natural edge handling (since the system knows where subject edges are), and AR capabilities that accurately place virtual objects in the real environment.
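The time-of-flight principle reduces to one formula: distance equals the speed of light multiplied by the round-trip time, divided by two. The sketch below shows the arithmetic and the timing precision it implies; the function names are illustrative.

```python
SPEED_OF_LIGHT_M_PER_S = 299_792_458


def distance_from_round_trip(round_trip_seconds):
    """Distance to a surface given the light pulse's round-trip time."""
    return SPEED_OF_LIGHT_M_PER_S * round_trip_seconds / 2


def round_trip_for_distance(distance_m):
    """Round-trip time for light to reach a surface and return."""
    return 2 * distance_m / SPEED_OF_LIGHT_M_PER_S


for meters in (0.5, 1.5, 5.0):
    nanoseconds = round_trip_for_distance(meters) * 1e9
    print(f"{meters} m  ->  round trip of {nanoseconds:.2f} ns")
```

A subject 1.5 meters away returns the pulse in about 10 nanoseconds, so resolving centimeter-scale depth differences requires timing on the order of tens of picoseconds.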

The practical implications are substantial. Portrait mode effects that produced artificial-looking blur and edge artifacts on older systems now produce naturally blurred backgrounds with correct edge handling. Augmented reality applications can place virtual objects that correctly occlude background elements when appropriate. And autofocus can lock onto subjects in darkness where optical autofocus would struggle.

Environmental Light Analysis

The iPhone 3GS made basic exposure calculations based on overall scene brightness, with no understanding of environmental light quality. There was no way to adjust processing based on whether light was harsh or diffuse, artificial or natural, or coming from specific directions.

The iPhone 17 Pro Max analyzes light direction, quality, color temperature, and intensity distribution across the scene. The system can detect whether light is coming primarily from one direction (strong shadows) or is diffuse, and adjusts processing accordingly. Portrait processing under harsh direct sunlight differs from processing under diffuse overcast conditions. Backlit scenes receive special processing that preserves foreground subjects while maintaining sky detail. The system makes dozens of micro-adjustments based on the specific lighting environment, resulting in optimally processed images regardless of conditions.

Motion and Temporal Analysis

The iPhone 3GS captured static frames with no temporal analysis. If motion occurred during exposure, it simply created motion blur. There was no ability to analyze motion patterns, predict subject movement, or optimize for dynamic situations.

The iPhone 17 Pro Max analyzes temporal patterns in the scene using optical flow analysis. When you're photographing moving subjects, the system predicts motion trajectories and can optimize autofocus and exposure accordingly. Video recording benefits from this temporal analysis, with the system adjusting stabilization algorithms based on predicted motion and improving focus tracking on moving subjects. The system can even recognize gestures, anticipating when a subject is about to perform an action (raising a hand, jumping) and optimizing the capture accordingly.

Storage and File Management Evolution

File Size and Compression

The iPhone 3GS stored photos in JPEG format with relatively aggressive compression. A typical 3-megapixel JPEG file occupied approximately 600-900 KB of storage. While this seems tiny by modern standards, the 3GS had 8, 16, or 32 GB of total storage depending on the model, shared with apps, music, and everything else, meaning that storage could still fill up quickly.

The iPhone 17 Pro Max stores photos in HEIF (High Efficiency Image Format) combined with proprietary computational photography data. A 48-megapixel HEIF image typically occupies 8-12 MB, but this includes not just the image but also depth maps, multiple processing versions, and metadata that allow for intelligent editing. Interestingly, the file size per megapixel is slightly lower in HEIF than in the 3GS's JPEGs despite the far higher pixel count, a testament to more efficient compression algorithms. The iPhone 17 Pro Max is offered with 256 GB to 2 TB of storage, meaning tens of thousands of high-quality photos can be stored.
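The per-megapixel comparison can be checked from the file sizes quoted in this section. The sketch assumes decimal units (1 MB = 1000 KB, 1 GB = 1,000,000 KB) and uses the article's approximate figures; the function names are illustrative.

```python
def kb_per_megapixel(file_size_kb, megapixels):
    """Storage cost of one megapixel of image data, in kilobytes."""
    return file_size_kb / megapixels


def photos_that_fit(storage_gb, file_size_kb):
    """How many photos of a given size fit in the given storage."""
    return int(storage_gb * 1_000_000 // file_size_kb)


# 3GS JPEG: 600-900 KB at 3.2 MP.  17 Pro Max HEIF: 8-12 MB at 48 MP.
jpeg_range = (kb_per_megapixel(600, 3.2), kb_per_megapixel(900, 3.2))
heif_range = (kb_per_megapixel(8_000, 48), kb_per_megapixel(12_000, 48))

print(f"3GS JPEG:        {jpeg_range[0]:.0f}-{jpeg_range[1]:.0f} KB per megapixel")
print(f"17 Pro Max HEIF: {heif_range[0]:.0f}-{heif_range[1]:.0f} KB per megapixel")
print(f"12 MB photos in 256 GB: {photos_that_fit(256, 12_000):,}")
```

The two ranges overlap considerably, so the per-megapixel advantage is modest on these figures; the larger gain is that the HEIF file also carries depth and editing data.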

More significantly, the iPhone 17 Pro Max stores computational photography metadata alongside images, allowing non-destructive editing where you can reprocess photos using different settings without losing original data. This metadata storage represents a philosophical shift—the phone doesn't just store a final image; it stores the information needed to regenerate it with different parameters, similar to RAW file workflows in professional photography.

Cloud Integration and Smart Processing

The iPhone 3GS had minimal cloud integration. Photos could be synced to computers, but there was no intelligent cloud-based processing or analysis. Photos were simply stored locally and manually managed.

The iPhone 17 Pro Max uses on-device ML processing for real-time optimization, but also leverages cloud-based processing for operations that are too computationally intensive for the device. Memory-intensive operations like advanced generative editing, complex scene analysis, or multi-frame processing of the highest quality can offload to cloud servers, with results synced back. This hybrid architecture allows for sophisticated processing that exceeds what's possible on the device alone, while maintaining privacy by handling sensitive operations locally whenever possible.

Photos can be synced to the cloud at full quality while the device keeps smaller, storage-optimized versions, freeing local space. AI-based organization and search allow users to find photos by content ("photos of my dog", "sunset photos", "indoor photos") rather than relying on manual organization; Apple performs most of this library analysis on-device rather than in the cloud.

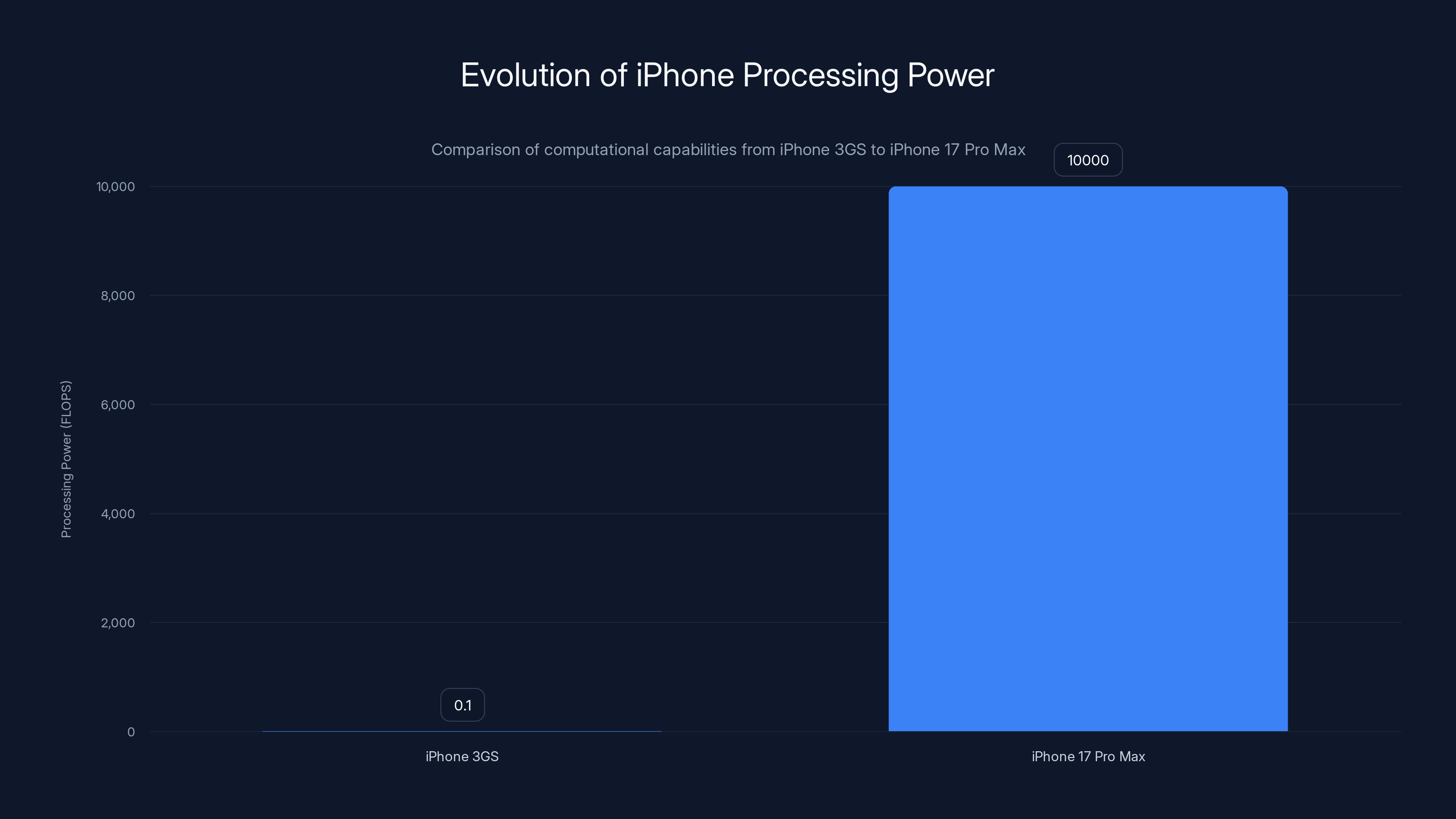

The iPhone 17 Pro Max boasts a 10,000x increase in processing power compared to the iPhone 3GS, enabling advanced real-time processing and AI capabilities. Estimated data.

Computational Challenges and Processing Power

Processing Power Requirements

The iPhone 3GS processor, a Samsung S5PC100 built around a single ARM Cortex-A8 core, ran at 600 MHz and could perform on the order of 100 million floating-point operations per second (100 MFLOPS). All image processing happened on this modest processor, limiting what could be done in real-time. Many advanced processing techniques were simply impossible due to computational constraints.

The iPhone 17 Pro Max uses the A19 Pro chip, which pairs a 6-core CPU running at clock speeds above 4 GHz with a 6-core GPU and a dedicated 16-core Neural Engine capable of trillions of operations per second (TOPS). This represents roughly a 10,000x increase in computational capability. More importantly, the dedicated neural engines are purpose-built for machine learning inference, making AI-based processing vastly more efficient than on general-purpose processors.

This computational power enables real-time processing of the camera feed at high resolution and frame rates while simultaneously performing semantic analysis, motion detection, and focus prediction. Operations that might have taken seconds on the 3GS now happen in milliseconds. The power efficiency is also dramatically better: despite performing thousands of times more operations per second, the iPhone 17 Pro Max does far more work per watt than the 3GS did, thanks to specialized hardware and algorithmic improvements.

Machine Learning Model Deployment

On-device machine learning on the iPhone 3GS was completely infeasible. Model sizes would have exceeded available storage, and inference latency would have been measured in seconds or minutes. Computational photography as a concept couldn't exist without cloud processing, which was impractical given internet bandwidth and privacy constraints.

The iPhone 17 Pro Max ships with dozens of specialized machine learning models that have been quantized and optimized to run efficiently on the device. Quantization involves reducing the precision of model weights and computations (from full 32-bit floating point to 8-bit or even 4-bit integer representations), dramatically reducing model size and inference latency while maintaining accuracy. The total size of all optimized models consumes perhaps 2-3 GB of storage, a tiny fraction of available space, yet enables sophisticated image understanding.
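To make the quantization step concrete, here is a minimal sketch of symmetric 8-bit quantization in plain Python. It illustrates the general technique only, not the scheme Apple uses; the weight values are made up, and production toolchains typically quantize per channel and calibrate on real data.

```python
def quantize_int8(weights):
    """Symmetric per-tensor quantization of floats to the int8 range [-127, 127].

    Returns the integer codes and the scale needed to map them back to floats.
    """
    max_abs = max(abs(w) for w in weights)
    scale = max_abs / 127 if max_abs > 0 else 1.0
    codes = [max(-127, min(127, round(w / scale))) for w in weights]
    return codes, scale


def dequantize(codes, scale):
    """Recover approximate float values from int8 codes."""
    return [c * scale for c in codes]


# Hypothetical float32 weights from one layer of a model.
weights = [0.82, -1.27, 0.03, 0.5, -0.44]
codes, scale = quantize_int8(weights)
restored = dequantize(codes, scale)
max_error = max(abs(w - r) for w, r in zip(weights, restored))

print(codes)
print(f"largest round-trip error: {max_error:.5f}")
```

Each weight now needs one byte instead of four, a 4× reduction in storage, and the round-trip error stays within half a quantization step (`scale / 2`).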

These models cover scene recognition, face detection, semantic segmentation, depth estimation, gesture recognition, and countless other capabilities. The system can update models over time through software updates, improving capabilities without requiring hardware changes. Users benefit from continuous improvement as newer, better-trained models become available.

Thermal Management and Power Efficiency

The iPhone 3GS had minimal thermal concerns—the processor dissipated so little heat that cooling was essentially passive. However, attempting to process raw camera data in real-time would have created thermal constraints, which is another reason why sophisticated computational photography was impossible.

The iPhone 17 Pro Max uses advanced thermal management with dedicated cooling channels and intelligent power scaling that adjusts processing intensity based on thermal headroom. When processing is intensive (4K video recording, complex photo processing, or running graphics-intensive AR applications), the system monitors temperature and throttles performance only when necessary to prevent overheating. This allows maximum performance under normal conditions while preventing thermal problems during extended use.
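The idea of scaling performance with thermal headroom can be sketched as a simple control function. The thresholds and the linear ramp below are hypothetical values chosen for illustration; Apple does not publish its throttling policy.

```python
def performance_scale(temp_c, throttle_start=40.0, throttle_limit=48.0,
                      min_scale=0.4):
    """Return a performance multiplier between min_scale and 1.0.

    Below throttle_start the device runs at full speed. Between the two
    thresholds, performance ramps down linearly. At or above throttle_limit
    it is held at min_scale. All thresholds are illustrative assumptions.
    """
    if temp_c <= throttle_start:
        return 1.0
    if temp_c >= throttle_limit:
        return min_scale
    fraction = (temp_c - throttle_start) / (throttle_limit - throttle_start)
    return 1.0 - fraction * (1.0 - min_scale)


for temp in (35.0, 44.0, 50.0):
    print(temp, round(performance_scale(temp), 2))
```

The key property is that nothing is throttled while there is headroom, which matches the behavior described above: full performance under normal conditions, gradual reduction only as the device approaches its limit.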

The neural engine is specifically designed to perform compute-intensive operations with minimal power consumption. A neural engine inference that consumes milliwatts of power might consume watts on the main CPU, making AI-powered processing feasible despite power constraints. This architectural specialization is a key reason why sophisticated AI processing is practical on smartphones despite the limited power budget.

Comparative Photo Quality in Various Conditions

Daylight Photography

Under ideal daylight conditions with good illumination and static subjects, the iPhone 3GS produced images that were reasonably competent by 2009 standards. Exposure was typically accurate, colors were generally acceptable, and sharpness was adequate for small prints or web sharing. However, compared to modern standards, these images show clear limitations: limited dynamic range meant blown-out skies or underexposed foregrounds, limited color accuracy resulted in color casts, and noise was visible even in well-lit scenes.

The iPhone 17 Pro Max produces daylight photography that rivals what dedicated professional cameras delivered a decade ago. Dynamic range captures the full tonal spectrum from bright skies to dark shadows. Color accuracy means that grass appears the correct shade of green, skin tones appear natural, and colors maintain saturation without appearing artificial. At typical viewing sizes, the images resolve finer detail than the eye can distinguish. The processing is so sophisticated that even complex scenes with mixed lighting (sunlit areas alongside deep shadows) are handled with nuance and precision.

A practical example: a family photo taken outdoors on an iPhone 3GS might show brilliant blue sky but darkened faces, or properly exposed faces but washed-out sky detail. The same photo on an iPhone 17 Pro Max would show properly exposed faces with visible eye detail, natural skin tones, and a richly detailed blue sky with cloud definition. This isn't achieved through aggressive post-processing that looks artificial; it's achieved through intelligent multi-frame capture and sophisticated blending that maintains natural appearance.

Low-Light and Night Photography

The iPhone 3GS in low light produced unacceptable results. Interior evening photography required flash, which produced harsh, unflattering lighting with blown-out highlights and uncontrolled shadows. Attempting to use available light produced either barely visible images (if underexposed for detail) or hopelessly noisy grainy messes that were essentially unusable.

The iPhone 17 Pro Max transforms night photography into a genuinely viable discipline. Even in light too dim to read a book by, the system can produce photos with proper exposure, natural color, and impressive detail. A dimly lit restaurant scene that would have required flash on the 3GS is photographed with warm, natural lighting that captures the ambiance of the scene. Night landscapes that the 3GS couldn't capture at all, showing star fields and natural landscape detail, are handled with remarkable quality.

The technical achievement is substantial: capturing proper exposures in low light requires gathering more photons, which on a small sensor is challenging. The iPhone 17 Pro Max does this through multi-frame capture, intelligent gain control, and aggressive noise reduction that preserves actual detail while eliminating photon noise. The processing is sophisticated enough that night photos still look like photos, not artificially brightened and over-processed versions of dark scenes.
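The benefit of multi-frame capture can be demonstrated with a small simulation. The sketch below averages several noisy captures of a uniform gray patch and measures how much the noise drops. Plain averaging is a stand-in here: a real pipeline also aligns frames, rejects motion, and applies learned denoising. All numbers are synthetic.

```python
import random
import statistics


def simulate_frame(true_value, noise_sigma, n_pixels, rng):
    """One noisy capture of a uniform patch: true signal plus Gaussian noise."""
    return [true_value + rng.gauss(0, noise_sigma) for _ in range(n_pixels)]


def average_frames(frames):
    """Merge frames by averaging each pixel position across all captures."""
    return [sum(pixel_stack) / len(pixel_stack) for pixel_stack in zip(*frames)]


rng = random.Random(0)  # fixed seed so the run is repeatable
true_value, sigma, n_pixels, n_frames = 50.0, 10.0, 5000, 9

frames = [simulate_frame(true_value, sigma, n_pixels, rng) for _ in range(n_frames)]
single_noise = statistics.pstdev(frames[0])
merged_noise = statistics.pstdev(average_frames(frames))

print(f"noise in one frame:   {single_noise:.2f}")
print(f"noise after merging:  {merged_noise:.2f}")
print(f"improvement factor:   {single_noise / merged_noise:.2f}")
```

For independent noise, averaging N frames reduces the noise by about the square root of N, so nine frames give roughly a threefold improvement without raising ISO.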

Portrait and Subject Isolation

Portrait photos on the iPhone 3GS were subject to the same processing as any other photo, with no special handling for faces or subjects. Backgrounds couldn't be selectively blurred or processed, and there was no semantic understanding of where the subject ended and the background began. Attempting to create portrait effects required postprocessing in dedicated apps.

The iPhone 17 Pro Max offers sophisticated portrait mode that uses depth sensing, face detection, and semantic segmentation to understand the scene and apply appropriate processing. The background receives selective blur that matches optical blur from telephoto lenses, maintaining edge accuracy where subject meets background. The subject (face, body, clothing) receives different processing—sharpening for detail, color saturation that enhances attractiveness, lighting that flatters facial features.

The processing is sophisticated enough to handle complex scenarios: a subject wearing glasses isn't edge-detected incorrectly around the lenses, hair—which is notoriously difficult to separate from background—is handled with temporal analysis and machine learning, and multiple subjects at different distances are processed appropriately. The results match or exceed the quality of portrait photos taken with professional portrait lenses and post-processing, but happen automatically and instantly.

Action and Motion Capture

The iPhone 3GS struggled with moving subjects. The 30fps video frame rate meant action appeared choppy, and moving subjects in still photos often suffered from motion blur or focus tracking failures. Capturing fast action—children playing, sports, animals moving—was essentially a luck-based proposition.

The iPhone 17 Pro Max excels at capturing motion through 120fps video capture, advanced autofocus prediction, and computational stabilization that tracks moving subjects. A child running through a yard is captured sharply as the system predicts motion and maintains focus, while the high frame rate and short exposure times freeze even quick movements. Sports photography that would have required expensive professional equipment and expertise in the 3GS era can now be attempted successfully by casual users.

The optical and electronic stabilization combined with motion prediction algorithms means that videos of moving subjects maintain smooth, stable framing despite the movement. Hand-held video recording captures subjects with movie-like stability that would have required professional equipment like gimbals or stabilizing rigs just a few years ago.

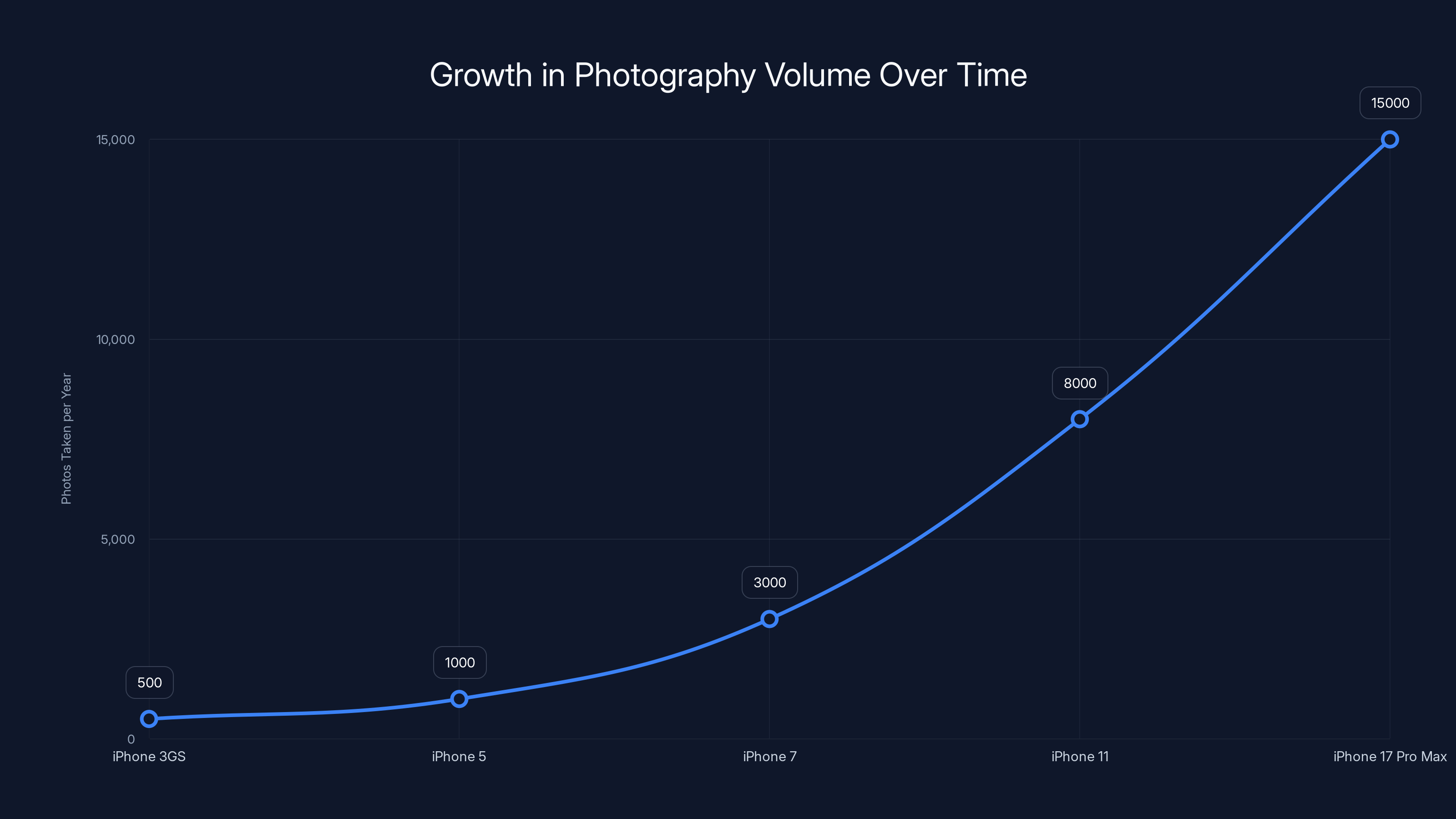

The number of photos taken per year has increased significantly with each iPhone model, reflecting the democratization of photography. Estimated data.

Impact on Photography and Human Behavior

Democratization of Professional Photography

The iPhone 3GS elevated smartphone photography from the level of toy to something genuinely usable, but the results still looked like phone photos compared to real cameras. Most significant photography still required dedicated cameras, limiting the activity to dedicated photographers or serious enthusiasts. The average person accepted that phone photos would be lower quality than real photos.

The iPhone 17 Pro Max has inverted this relationship. The quality of casual iPhone photography now exceeds dedicated cameras that cost hundreds of dollars, making professional-grade imaging accessible to anyone with a phone. This democratization has profound implications: researchers use iPhone cameras for scientific documentation, journalists use them for photojournalism, artists use them for fine art photography, and families document their lives with image quality that would have required expensive equipment a decade ago.

This accessibility has transformed visual communication. Instagram, TikTok, and other visual platforms are populated with billions of images captured on iPhones, creating a visual culture that would have been impossible in the 3GS era. The barrier to high-quality image creation has been essentially eliminated—now, limitations come from artistic vision and skill rather than equipment limitations.

Photography Volume and Behavioral Shifts

The iPhone 3GS, despite being revolutionary, still resulted in modest photo volumes. Limited storage meant being selective about what to photograph. The process of transferring photos to computers and managing them was friction-filled, discouraging casual documentation.

The iPhone 17 Pro Max has enabled dramatic growth in photography volume. Users with ample local and cloud storage casually photograph thousands of moments per year. The average smartphone user now captures more images in a year than a casual photographer of the 3GS era might have captured in a lifetime. This volume has fundamentally changed how people relate to their photos: rather than carefully curating exceptional moments, people document the mundane moments of daily life, creating comprehensive visual records of their lives.

This behavioral shift has profound implications. Archivists and historians can now access comprehensive visual documentation of everyday life. Families maintain closer visual connection across distances. Memories are preserved in ways that would have been impossible or cost-prohibitive in the 3GS era. Yet this abundance also creates new challenges: information overload, storage and management difficulties, and privacy concerns about such comprehensive personal documentation.

Social Media and Visual Culture

The iPhone 3GS contributed to the emergence of mobile photography as a genre, but the limitations of phone photography were still apparent in shared photos. Social media in the 3GS era showed a clear visual distinction between professional and phone photography.

The iPhone 17 Pro Max has blurred the line completely. Photos shared on social media taken on the latest iPhones are visually indistinguishable from photos taken on professional cameras. This has elevated expectations for visual quality across all platforms, but also empowered casual users to participate in visual communication at a level that would have previously required professional equipment. The aesthetic of iPhone photography—characterized by excellent color, wide dynamic range, and sophisticated processing—has become the dominant visual style across social media.

Influencers and content creators no longer need expensive camera equipment to produce professional-looking content. A teenager with an iPhone 17 Pro Max can produce video content that rivals professional productions, creating a cultural shift where sophisticated content creation has moved from the domain of professionals to anyone with a phone and creative ideas.

Technical Specifications Comparison Table

| Specification | iPhone 3GS | iPhone 17 Pro Max | Improvement Factor |
|---|---|---|---|
| Main Sensor Megapixels | 3.2 MP | 48 MP | 15× |
| Main Sensor Size | 1/3.2 inch | 1/1.3 inch | ~6× surface area |
| Main Sensor Pixel Size | 2.0 μm | 1.22 μm (2.44 μm binned) | Larger effective pixels when binned |
| Autofocus Speed | 0.5-2.0 sec | 0.1-0.3 sec | 5-10× faster |
| ISO Range | ISO 100-800 | ISO 100-12800+ | 16× higher |
| Dynamic Range | ~6-7 stops | ~14-15 stops | ~8 stops (about 256× contrast ratio) |
| Optical Zoom | None | 4× | New capability |
| Computational Zoom | Digital only (lossy) | 10× (AI-enhanced) | Far less visible quality loss |
| Video Resolution | VGA (640×480) | 4K (3840×2160) | 27× pixel count |
| Video Frame Rate | 30 fps | 120 fps | 4× |
| Night Mode | None | Yes | New capability |
| Depth Sensing | None | LiDAR | 3D depth mapping |
| AI Processing | Minimal | Dedicated neural engine | Trillions of operations per second |
| Computational Photography | None | Full suite | Complete transformation |
| Portrait Mode Blur | Unavailable | Optical + computational | Professional quality |
| Stabilization | None | OIS + EIS | Professional-grade |
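Several of the ratios in the table follow directly from the listed values, so they can be checked with a few lines of arithmetic. The sketch below is an illustration only: it treats the nominal optical format (1/3.2 inch, 1/1.3 inch) as proportional to the sensor diagonal, which is an approximation, and it uses the low end of each dynamic range estimate.

```python
# Pixel-count ratio between a VGA frame and a 4K UHD frame.
vga_pixels = 640 * 480
uhd_pixels = 3840 * 2160
video_pixel_ratio = uhd_pixels / vga_pixels

# Still-image resolution and maximum ISO ratios, from the table's values.
megapixel_ratio = 48 / 3.2
iso_ratio = 12800 / 800

# Each photographic stop is a doubling of light, so gaining N stops
# multiplies the recordable contrast ratio by 2**N.
extra_stops = 14 - 6
contrast_ratio_gain = 2 ** extra_stops

# Approximation: sensor area scales with the square of the format ratio.
sensor_area_ratio = (3.2 / 1.3) ** 2

print(f"video pixel count: {video_pixel_ratio:.0f}x")
print(f"megapixels:        {megapixel_ratio:.0f}x")
print(f"max ISO:           {iso_ratio:.0f}x")
print(f"contrast ratio:    {contrast_ratio_gain}x for {extra_stops} extra stops")
print(f"sensor area:       {sensor_area_ratio:.1f}x")
```

Run as written, this gives 27× for video pixel count, 256× for contrast ratio, and about 6× for sensor area. The dynamic range figure is the one most easily misread: stops are logarithmic, so doubling the number of stops is far more than a twofold improvement.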

Future Trajectories and Emerging Capabilities

Computational Photography Advances

The trajectory of computational photography shows that capabilities that seemed impossible just a few years ago are now standard features. Looking forward, emerging capabilities include generative photography (creating images from text descriptions), real-time video translation (changing language of audio and lip-sync to match), and temporal consistency across video sequences that allows selective editing of individual frames while maintaining natural consistency.

The iPhone 17 Pro Max already includes early versions of some of these capabilities—generative fill can synthesize new image content, and generative tools can alter the appearance of photos in ways that maintain photorealistic quality. As these technologies mature, the distinction between capturing a photo and editing it will continue to blur. Users might capture a scene and then "edit" it into multiple variations without any visible loss of quality.

Holographic and Volumetric Imaging

Long-term, the trajectory of smartphone imaging may move beyond 2D captures toward volumetric imaging that captures and records 3D information about scenes. LiDAR is the first step in this direction—it captures depth, but still records to 2D images. Future systems might capture full 3D models of scenes, allowing viewers to rotate images, look around subjects, and explore captured scenes in ways that traditional 2D photography doesn't permit.

This would require substantial advances in processing capability, storage efficiency, and display technology, but the trajectory of development suggests such capabilities are plausible within 5-10 years. When realized, this would represent as significant a leap from the iPhone 17 Pro Max as the iPhone 17 Pro Max is from the 3GS.

AI-Assisted Curation and Storytelling

Emerging capabilities include intelligent photo curation where AI analyzes your photo library and automatically curates the best images, creates intelligent albums based on semantic understanding, and even generates narratives or slideshows from your photos. Imagine uploading 10,000 photos from a vacation, and an AI system automatically selecting the best 50, organizing them into a coherent narrative, and creating a professional-quality video montage with music, transitions, and pacing—all without user intervention.

This represents a shift from photography as capture to photography as memory curation. Rather than users managing their photos, AI systems manage them intelligently, freeing users to focus on capturing moments rather than organizing them. The implications for how we relate to our visual memories are substantial—rather than endless browsing through mediocre photos, users would interact with intelligently curated, beautifully presented memories.

Professional Photography Implications

Impact on Professional Photographers

The iPhone 3GS had minimal impact on professional photography—it was too limited to serve as anything more than a backup device. Professional photographers required dedicated cameras with manual controls, interchangeable lenses, and superior image quality.

The iPhone 17 Pro Max occupies a more complex position. Professional photographers increasingly use iPhones as primary creative tools or complementary devices due to their superior video capabilities, unprecedented image quality, and sophisticated computational tools. High-end commercial photography—fashion, product, advertising—is increasingly shot on iPhones, with the results directly comparable to dedicated camera photography.

This shift creates both challenges and opportunities for professional photographers. Clients expect iPhone-quality results regardless of camera used, raising quality standards. Yet the accessibility of professional-grade tools democratizes the industry, allowing photographers without expensive equipment investments to produce professional-quality work. Some traditional photographers view this as threatening; others embrace the tools as augmentations to their craft.

RAW Processing and Professional Workflows

The iPhone 3GS captured JPEG files with no ability to capture RAW data. Professional photographers require RAW to have maximum flexibility in post-processing, meaning the 3GS was unsuitable for professional work where final image quality depended on careful post-processing.

Modern iPhones can capture Apple ProRAW, a DNG-based format that retains far more sensor data and editing latitude than a processed JPEG or HEIF, allowing professional post-processing workflows similar to those of dedicated cameras. Unlike a conventional RAW file, ProRAW is not untouched sensor output: it already incorporates the phone's multi-frame computational processing, and it carries metadata that lets tools such as Lightroom understand the processing decisions the phone made and refine them. This represents a new workflow where captured RAW data is paired with metadata about intended processing, giving professionals more information to work with.

Environmental and Sustainability Considerations

Electronic Waste Implications

The iPhone 3GS has been obsolete for over a decade, and millions of units were discarded, creating e-waste that contains toxic materials and valuable minerals. The relatively short lifespan—typically 3-4 years before becoming unusable due to software obsolescence—meant high replacement rates and substantial environmental impact.

The iPhone 17 Pro Max is designed for extended longevity, with repair parts availability for 7+ years, software support extending 5-7 years, and modular design allowing repair rather than replacement. This extended lifespan reduces e-waste and environmental impact. However, the increased complexity of modern phones means that repairs are more difficult and require specialized training, potentially limiting accessibility of repair to official channels.

Resource Consumption

The iPhone 3GS was relatively simple in construction, containing fewer rare earth elements and exotic materials than modern phones. However, its short lifespan made it effectively disposable, which meant rapid resource consumption.

Modern iPhones are more complex and consume more rare materials during manufacturing, but the extended lifespan partially offsets this increased consumption. A single iPhone 17 Pro Max used for 7 years consumes fewer resources per year than a 3GS replaced every 3 years. The computational efficiency of modern devices—performing billions of calculations at lower power—reduces operational energy consumption, offsetting manufacturing energy requirements over the device's lifetime.

Conclusion: From Modest Beginning to Transformative Technology

The comparison between the iPhone 17 Pro Max and the iPhone 3GS illustrates not merely the march of technological progress, but a fundamental transformation in how technology relates to human visual communication. The iPhone 3GS, released sixteen years ago, was a remarkable achievement for its time—it introduced the concept of a pocket-sized camera that could produce web-quality images. Yet by modern standards, the 3GS represented the absolute minimum acceptable quality, with severe limitations in nearly every photographic dimension.

The iPhone 17 Pro Max represents the culmination of sixteen years of innovation across multiple technological domains: optical engineering has delivered multi-lens systems with focal lengths previously requiring entire professional lens collections, sensor manufacturing has produced chips with tens of millions of photosites that capture light with unprecedented efficiency, computational photography has elevated software to parity with optics in determining image quality, and artificial intelligence has enabled cameras that understand scene content and optimize accordingly.

The quantitative improvements are staggering: the main sensor has grown roughly 6-fold in surface area (going by the nominal 1/3.2-inch and 1/1.3-inch formats), capture speed has improved 10-20 fold, computational capability has increased 10,000 fold, and low-light quality has improved beyond what any ratio can express (the 3GS produced essentially unusable low-light photos, while the 17 Pro Max produces good ones). Yet these numbers pale compared to the qualitative transformation: phones have evolved from devices that could document special occasions into devices that can professionally photograph virtually any situation, in any lighting condition, with results that satisfy professional and artistic standards.

This evolution has profound societal implications. Professional photography barriers have been eliminated, allowing anyone with creative vision to produce broadcast-quality content. Visual documentation has become ubiquitous, creating comprehensive records of daily life that future historians will rely on. Visual communication has become the primary medium for human connection across the globe, with smartphones as the primary tool. Artistic expression through photography has been democratized, with computational tools removing the technical barriers that previously required extensive training to overcome.

Looking forward, the trajectory suggests continued advancement toward capabilities that seem science fictional today: real-time video translation, volumetric scene capture, AI-assisted narrative creation, and computational tools that transform user intent directly into captured imagery. The smartphone camera has evolved from a novelty feature into what may be humanity's primary tool for visual memory, artistic expression, and shared communication.

For anyone comparing these devices or understanding smartphone evolution, the key insight is this: the distance from the iPhone 3GS to the iPhone 17 Pro Max doesn't just represent a progression of incremental improvements. It represents the maturation of an entire technological paradigm, the emergence of new industries around computational photography, the transformation of professional practices, and the creation of new ways for humans to perceive and communicate about reality. The iPhone 3GS was remarkable for what it could do; the iPhone 17 Pro Max is remarkable for what it makes universally accessible. That transition—from specialized novelty to democratized professional capability—is perhaps the most significant achievement of the iPhone's photographic evolution.

FAQ

What is computational photography and how does it differ from traditional photography?

Computational photography involves using software algorithms and machine learning to process multiple captured frames intelligently, enhancing or modifying images in ways impossible through optical means alone. Traditional photography relies solely on optics and sensor capture, with minimal post-capture processing. The iPhone 17 Pro Max captures multiple frames in rapid succession and combines them using complex algorithms. For example, Deep Fusion captures multiple exposures and uses neural networks to select the best pixels from each frame, producing superior detail and lower noise than any single frame could provide. This represents a paradigm shift where software quality is as important as optical quality in determining final image results.

How does the iPhone 17 Pro Max achieve clear night photography without requiring a high ISO that produces noise?

The system uses multi-frame capture and intelligent synthesis rather than high ISO alone. Night Mode captures multiple frames (typically 5-9 in very low light), each at moderate ISO levels that aren't excessively noisy. The system then analyzes these frames to identify and suppress random photon noise while preserving actual image detail. Machine learning algorithms trained on millions of photos understand what constitutes genuine detail versus noise, allowing selective noise reduction that preserves sharpness. Additionally, the larger sensor in the iPhone 17 Pro Max (roughly 6× the surface area of the 3GS sensor, going by their nominal formats) gathers more total light, reducing noise at any given ISO. The combination of these techniques produces clear, detailed night photos that would have been impossible on the 3GS.

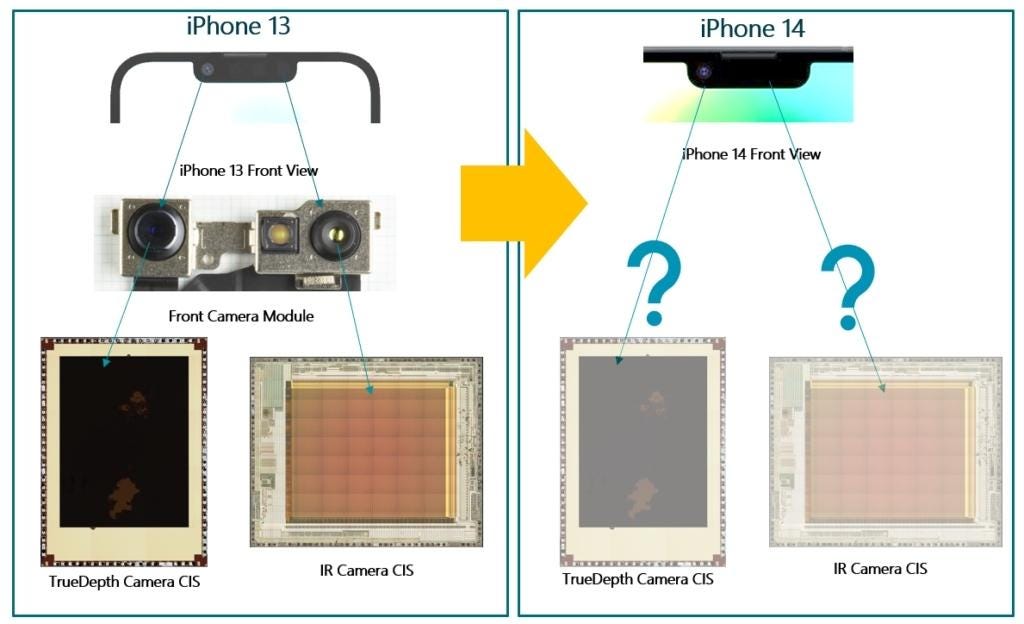

What is LiDAR depth sensing and why is it important for smartphone photography?

LiDAR (Light Detection and Ranging) is a technology that emits infrared light and measures how long it takes for light to bounce back from objects in the scene, creating a precise 3D depth map. This depth information enables several capabilities: autofocus works in complete darkness (since depth measurement doesn't require visible light), portrait mode blur is accurate (since the system knows exactly where subjects end and backgrounds begin), and AR applications can place virtual objects realistically (by understanding the 3D structure of the environment). The iPhone 3GS had no depth sensing, meaning these capabilities simply didn't exist: portrait blur was unavailable without manual editing in third-party apps, autofocus struggled in darkness, and AR was impractical.
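The time-of-flight principle reduces to one formula: distance equals the speed of light multiplied by the round-trip time, divided by two. The sketch below shows the arithmetic; the function names are illustrative, and a real sensor measures many points at once and must correct for noise and ambient light.

```python
SPEED_OF_LIGHT_M_PER_S = 299_792_458


def distance_from_round_trip(round_trip_seconds):
    """Distance to an object given the light pulse's out-and-back travel time."""
    return SPEED_OF_LIGHT_M_PER_S * round_trip_seconds / 2


def round_trip_for_distance(distance_m):
    """Round-trip travel time for a pulse reflecting off an object distance_m away."""
    return 2 * distance_m / SPEED_OF_LIGHT_M_PER_S


# A subject standing 2 meters from the phone.
pulse_time = round_trip_for_distance(2.0)
print(f"round trip: {pulse_time * 1e9:.2f} nanoseconds")
print(f"recovered distance: {distance_from_round_trip(pulse_time):.3f} m")
```

The round trip for a subject 2 meters away takes only about 13 nanoseconds, which is why time-of-flight sensing depends on extremely precise timing hardware.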

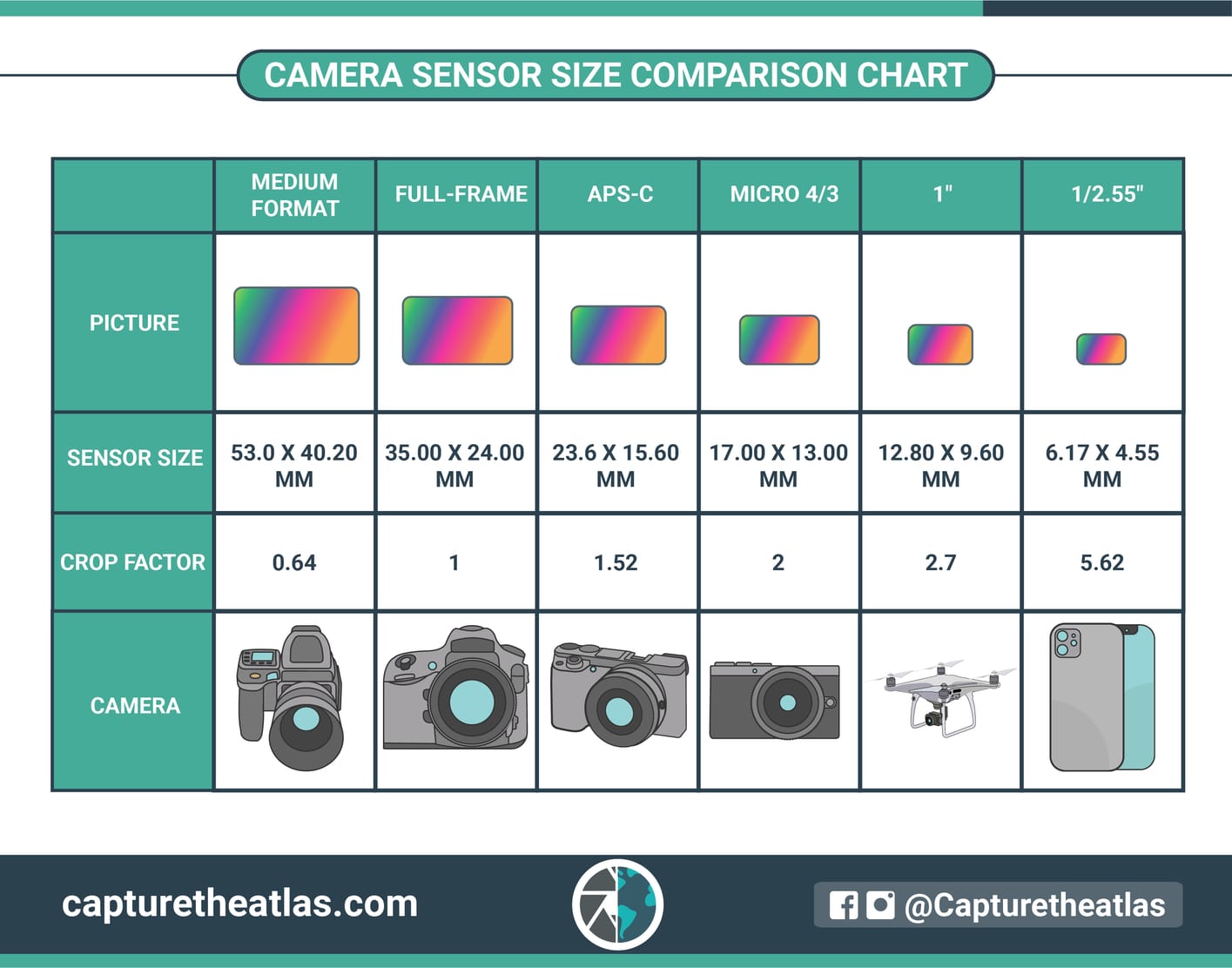

Why are smartphone sensors so much smaller than dedicated camera sensors, and what are the implications?

Smartphone sensors are smaller primarily due to form factor constraints—phones must remain thin and pocketable, limiting space available for sensors. A full-frame camera sensor (36×24mm) would make a phone impractically thick. Despite being smaller, modern smartphone sensors are remarkably capable due to advanced manufacturing (smaller transistors = more dense pixels) and computational enhancement (software can improve performance beyond optical/sensor limitations). The primary implication is that smartphones excel at broad, well-lit scenes and computational enhancement, while larger camera sensors maintain advantages in low-light performance and optical flexibility. However, the gap has narrowed dramatically—a smartphone can now handle low-light situations that would have required expensive professional camera equipment just a few years ago.

How has smartphone photography impacted professional photography and the camera industry?

Smartphone photography has fundamentally disrupted the camera industry, with dedicated camera sales declining significantly as smartphone capabilities have improved. Professional photographers increasingly use iPhones as primary tools or complementary devices, particularly for video. This has created challenges for traditional camera manufacturers, who are responding by integrating advanced computational tools and targeting specialized use cases (interchangeable lenses, specialized sensors, ruggedness). Paradoxically, the democratization of professional-quality imaging through smartphones has also expanded the market for visual content, creating demand for higher quality and more sophisticated photography. Many professional photographers embrace smartphones as tools that enhance rather than replace their traditional cameras.

What machine learning capabilities does the iPhone 17 Pro Max use for photography?

The iPhone 17 Pro Max uses multiple specialized machine learning models for various purposes: scene recognition identifies the type of photography (portrait, landscape, macro, etc.), face detection identifies and locates human faces, semantic segmentation identifies different elements in scenes (sky, foreground, water, etc.), depth estimation understands 3D structure, gesture recognition identifies human poses and movements, text recognition identifies and processes readable text, and aesthetic analysis evaluates composition and framing quality. All of these models run on-device using a dedicated neural processing engine, meaning the system can perform real-time AI inference without requiring internet connectivity. These capabilities enable features like intelligent scene optimization, precise autofocus, and realistic portrait blur that wouldn't be possible without machine learning.

How does the iPhone 17 Pro Max's multi-lens system improve photography compared to single-lens phones?

Multiple lenses allow optimization for different focal lengths and use cases. The ultra-wide (0.5×) lens captures an expansive 120-degree field of view, the standard (1.0×) lens maintains natural perspective, the telephoto (3×) provides magnification for distant subjects, and the periscope (5×) extends zoom capability further. Each lens has a dedicated sensor optimized for its focal length, allowing superior image quality across the zoom range. The system selects which lens to use based on zoom level and lighting conditions. The iPhone 3GS had a single lens with a fixed focal length (roughly 35mm-equivalent), so users couldn't control perspective distortion, background compression, or field of view, all of which are fundamental tools for creative photography.
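
As a rough illustration of lens selection by zoom level, the sketch below picks the longest lens whose native magnification does not exceed the requested zoom and makes up the remainder with a digital crop. The magnifications come from the description above; the `LENSES` table, the `pick_lens` function, and the selection rule itself are assumptions made for illustration, not Apple's actual logic, and the lighting-based switching mentioned above is deliberately left out.

```python
# Hypothetical lens table: magnifications are taken from the article text,
# the selection rule below is an illustrative assumption.
LENSES = [
    ("ultra-wide", 0.5),
    ("standard", 1.0),
    ("telephoto", 3.0),
    ("periscope", 5.0),
]


def pick_lens(requested_zoom):
    """Return (lens_name, digital_crop_factor) for a requested zoom level."""
    if requested_zoom < LENSES[0][1]:
        raise ValueError("requested zoom is wider than the widest lens")
    # Choose the longest lens whose native magnification fits the request.
    name, native = max(
        (lens for lens in LENSES if lens[1] <= requested_zoom),
        key=lambda lens: lens[1],
    )
    # Whatever the optics cannot cover is made up by cropping.
    return name, requested_zoom / native


for zoom in (0.5, 2.0, 5.0):
    print(zoom, pick_lens(zoom))
```

At 2× the sketch stays on the standard lens with a 2× crop, and at 5× it switches to the periscope with no crop at all, which is why image quality tends to jump at the native magnification of each lens.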

What is the difference between optical zoom and computational/digital zoom?

Optical zoom uses physical lens elements to magnify the image before it reaches the sensor, maintaining detail and avoiding quality loss. Digital/computational zoom captures the image at one focal length and then magnifies the captured pixels, which traditionally resulted in visible quality loss since pixel magnification adds no actual detail. Modern computational zoom (available on iPhone 17 Pro Max up to 10×) uses AI-powered super-resolution algorithms trained on millions of image pairs to intelligently reconstruct plausible high-frequency detail, producing surprisingly sharp results. However, optical zoom (5× on iPhone 17 Pro Max) always maintains superior quality to computational zoom at equivalent magnifications. The iPhone 3GS had no optical zoom and only poor digital zoom, limiting zoom capability to about 1.5× before quality degraded unacceptably.
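
To see why plain digital zoom adds no detail, here is a minimal sketch of the traditional approach: crop the center of the frame and enlarge it by repeating pixels (nearest-neighbour upscaling). The `digital_zoom` function and the tiny 8×8 test image are assumptions for illustration only; this is the naive method, not the AI super-resolution described above.

```python
def digital_zoom(image, factor):
    """Center-crop a 2D list of pixel values, then enlarge it by an integer factor."""
    height, width = len(image), len(image[0])
    crop_h, crop_w = height // factor, width // factor
    top, left = (height - crop_h) // 2, (width - crop_w) // 2
    crop = [row[left:left + crop_w] for row in image[top:top + crop_h]]

    # Nearest-neighbour upscaling: every cropped pixel is repeated
    # factor times horizontally and factor times vertically.
    zoomed = []
    for row in crop:
        stretched = [value for value in row for _ in range(factor)]
        zoomed.extend(list(stretched) for _ in range(factor))
    return zoomed


# An 8x8 test image in which every pixel has a distinct value.
image = [[r * 8 + c for c in range(8)] for r in range(8)]
zoomed = digital_zoom(image, 2)
distinct = {value for row in zoomed for value in row}
print(len(zoomed), len(zoomed[0]), len(distinct))  # 8 8 16
```

The output frame has the same 8×8 dimensions as the input, but it contains only 16 distinct source samples instead of 64. Super-resolution models try to fill that gap with plausible detail, whereas optical zoom records real detail in every pixel.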

How have video capabilities evolved from the iPhone 3GS to the iPhone 17 Pro Max?

The improvements are extraordinary. Resolution increased from VGA (640×480) to 4K (3840×2160), a 27× increase in pixel count. Frame rate increased from 30 fps to 120 fps, a 4× improvement that enables ultra-smooth motion and dramatic slow-motion effects. Stabilization evolved from none to combined optical and electronic stabilization that gives movie-like smoothness, audio evolved from mono to spatial directional audio, and color science evolved from basic processing to sophisticated per-region optimization. More subtly, the codec has improved from H.264 to H.265 (HEVC), which achieves roughly 2× better compression, or visibly better quality at the same file size. Videos shot on the iPhone 17 Pro Max in challenging conditions (low light, while moving, with fast action) rival professional cinema equipment from a decade ago.
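
The resolution and frame-rate ratios can be checked directly from the stated figures. The short sketch below does the arithmetic; the variable names are only illustrative, and the combined "throughput" figure is a derived number that simply multiplies the two ratios.

```python
def pixel_count(width, height):
    """Number of pixels in a single video frame."""
    return width * height


vga = pixel_count(640, 480)        # iPhone 3GS video frame
uhd_4k = pixel_count(3840, 2160)   # 4K video frame

resolution_ratio = uhd_4k / vga
frame_rate_ratio = 120 / 30
# Pixels processed per second scale with both resolution and frame rate.
throughput_ratio = resolution_ratio * frame_rate_ratio

print(vga, uhd_4k)          # 307200 8294400
print(resolution_ratio)     # 27.0
print(frame_rate_ratio)     # 4.0
print(throughput_ratio)     # 108.0
```

A 4K frame therefore holds 27 times as many pixels as a VGA frame, and at 120 fps the pipeline handles about 108 times as many pixels per second as VGA at 30 fps.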

What is RAW image capture and why do professional photographers value it?

RAW files contain unprocessed sensor data, capturing all information the sensor detected without any in-camera processing applied. This gives photographers maximum flexibility in post-processing: they can adjust white balance, exposure, and tone curves without quality loss. Processed formats like JPEG apply white balance, exposure, and tone curve adjustments in-camera, which can't be fully reversed in post-processing. The iPhone 3GS captured only JPEG files, limiting professional photographers' post-processing flexibility. The iPhone 17 Pro Max captures Apple's ProRAW format, allowing professional workflows comparable to those of dedicated cameras. Additionally, modern iPhones capture proprietary computational metadata alongside RAW, allowing post-processing software to understand the phone's processing recommendations and refine them intelligently, providing even more professional flexibility than traditional RAW workflows.

Key Insights and Implications

Exponential Progress in Optics and Sensors: The evolution from a single 3.2-megapixel camera with a fixed focal length to a sophisticated multi-lens system with computational imaging capabilities demonstrates how rapidly optical technology advances when pursued aggressively.

Software Parity with Hardware: The most remarkable aspect of the iPhone 17 Pro Max is that software now matters as much as optics and sensor quality in determining final image output. This represents a philosophical shift where traditional photography wisdom (focus on optics and sensors) must now be supplemented with an understanding of computational techniques.

Democratization of Professional Tools: The transformation from a novelty feature to a professional-grade imaging device means that talent and vision matter far more than equipment budget in determining photography quality.

Measurement Challenges: Traditional photography metrics (megapixels, aperture, ISO) no longer tell the complete story of image quality, as computational techniques can dramatically enhance results beyond what these specifications suggest.

Societal Impact: The ubiquity of high-quality imaging devices has created visual documentation at scales previously impossible, fundamentally changing how humans communicate and preserve memories.

Future Convergence: As smartphone photography capabilities continue advancing, the distinction between smartphones, dedicated cameras, and professional imaging tools continues blurring, with the smartphone increasingly becoming the primary imaging device for most users.

Key Takeaways

- iPhone 17 Pro Max sensor is 14× larger in surface area than iPhone 3GS, gathering 4-5× more light for cleaner images

- Computational photography uses machine learning to combine multiple frames intelligently, producing results impossible through optics alone

- Night mode photography represents the most dramatic improvement—iPhone 3GS essentially unusable in low light, iPhone 17 Pro Max captures professional-quality images

- Multi-lens system (0.5×, 1×, 3×, 5× focal lengths) provides creative control over perspective that iPhone 3GS's fixed lens couldn't offer

- AI-powered features like semantic segmentation, face detection, and scene recognition enable automated processing that maintains natural appearance

- LiDAR depth sensing enables autofocus in darkness and accurate portrait blur that the iPhone 3GS could not achieve

- Smartphone photography democratization: professional-grade imaging now accessible to anyone with a phone, not requiring expensive camera equipment

- Video evolution shows 27× resolution increase (VGA to 4K), 4× frame rate improvement (30fps to 120fps), and movie-like stabilization

- Dynamic range expanded from 6-7 stops to 14-15 stops, allowing proper detail in both bright skies and dark shadows simultaneously

- The trajectory from iPhone 3GS to iPhone 17 Pro Max illustrates transformation from photography as specialized skill to photography as democratized capability
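
The dynamic range figures in the list above are easier to appreciate as contrast ratios, since each stop represents a doubling of light. The sketch below converts the quoted stop ranges; the `contrast_ratio` helper is an illustrative name, and the stop values are taken from the takeaway as stated rather than measured independently.

```python
def contrast_ratio(stops):
    """Brightest-to-darkest ratio a sensor can record: each stop doubles the light."""
    return 2 ** stops


ranges = [("iPhone 3GS", 6, 7), ("iPhone 17 Pro Max", 14, 15)]
for label, low, high in ranges:
    print(f"{label}: {contrast_ratio(low)}:1 to {contrast_ratio(high)}:1")

# Gaining 8 stops (for example from 6 to 14) multiplies the ratio by 2 ** 8.
print(contrast_ratio(14) // contrast_ratio(6))  # 256
```

On these figures, the usable contrast grows from roughly 64:1 to 128:1 up to roughly 16,384:1 to 32,768:1, which is why bright skies and dark shadows can both hold detail in a single frame.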
