Samsung Galaxy S26 AI Photography Tools: Complete Guide to Mobile AI Imaging [2025]

Samsung just dropped a hint that got everyone paying attention. The Galaxy S26 is coming, and it's bringing something different to mobile photography: a unified AI imaging system that actually sounds useful.

Here's what we're looking at. Samsung's taking everything you normally do across five different apps—capture, edit, enhance, merge, share—and consolidating it into a single Galaxy AI photography suite. That's not just convenient. That's a fundamental shift in how smartphones approach image processing.

The company shared demos showing some genuinely impressive capabilities. Photos going from daylight to nighttime in seconds. Objects that were partially obscured getting restored automatically. Low-light shots getting detail that shouldn't be there. Multiple frames merging into one cohesive image without the weird blending artifacts you're used to seeing.

This matters because mobile photography hit a ceiling. Better sensors, better lenses, bigger pixels—we've wrung everything out of hardware optimization. The next frontier is software. And Samsung's betting that AI is the answer.

But here's what's interesting. This isn't just Samsung throwing AI at problems because everyone else is doing it. There's a genuine workflow logic here. You're not switching between apps constantly. You're not learning ten different interfaces. Everything lives in one place.

Let me walk you through what Samsung's actually building, what it means for photographers (casual and serious), and whether this actually changes anything about how you take pictures.

The Core Problem Samsung Is Solving

Mobile photography has gotten good. Too good, actually. A smartphone camera now produces images that would've required a DSLR ten years ago. Dynamic range is solid. Color science is sophisticated. Autofocus is snappy.

But there's a massive gap between "good" and "what I was actually trying to capture."

You've felt this. You're at dinner. The light is moody and atmospheric to your eye. You take a photo. It comes out dark and muddy. The restaurant hired a lighting designer. Your phone didn't notice. Or the opposite happens: bright sunlight washes everything out. Contrast goes flat. Details disappear.

Or you're trying to get a group photo. Someone blinked. Someone's half cut off. You have seventeen takes. Picking the best one means compromising: blinker or composition. You pick composition.

Or you've got a shot you love from the wrong angle. There's a telephone pole photobombing the background. You could try Photoshop, but that takes 20 minutes and looks fake anyway.

These are workflow problems. Not camera problems. The hardware is fine. The software is what's broken.

Samsung's approach is fundamentally different from what competitors are doing. Google's leaning into computational photography—taking multiple exposures and blending them intelligently. Apple's focusing on processing optimization—making sure RAW files give you more control. Samsung's saying: what if we just made the phone understand what you were trying to photograph and fix it?

That's the insight. Your phone shoots hundreds of photos. You keep barely any. What if, instead of chasing a higher keeper rate through hardware, you just made the keepers better?

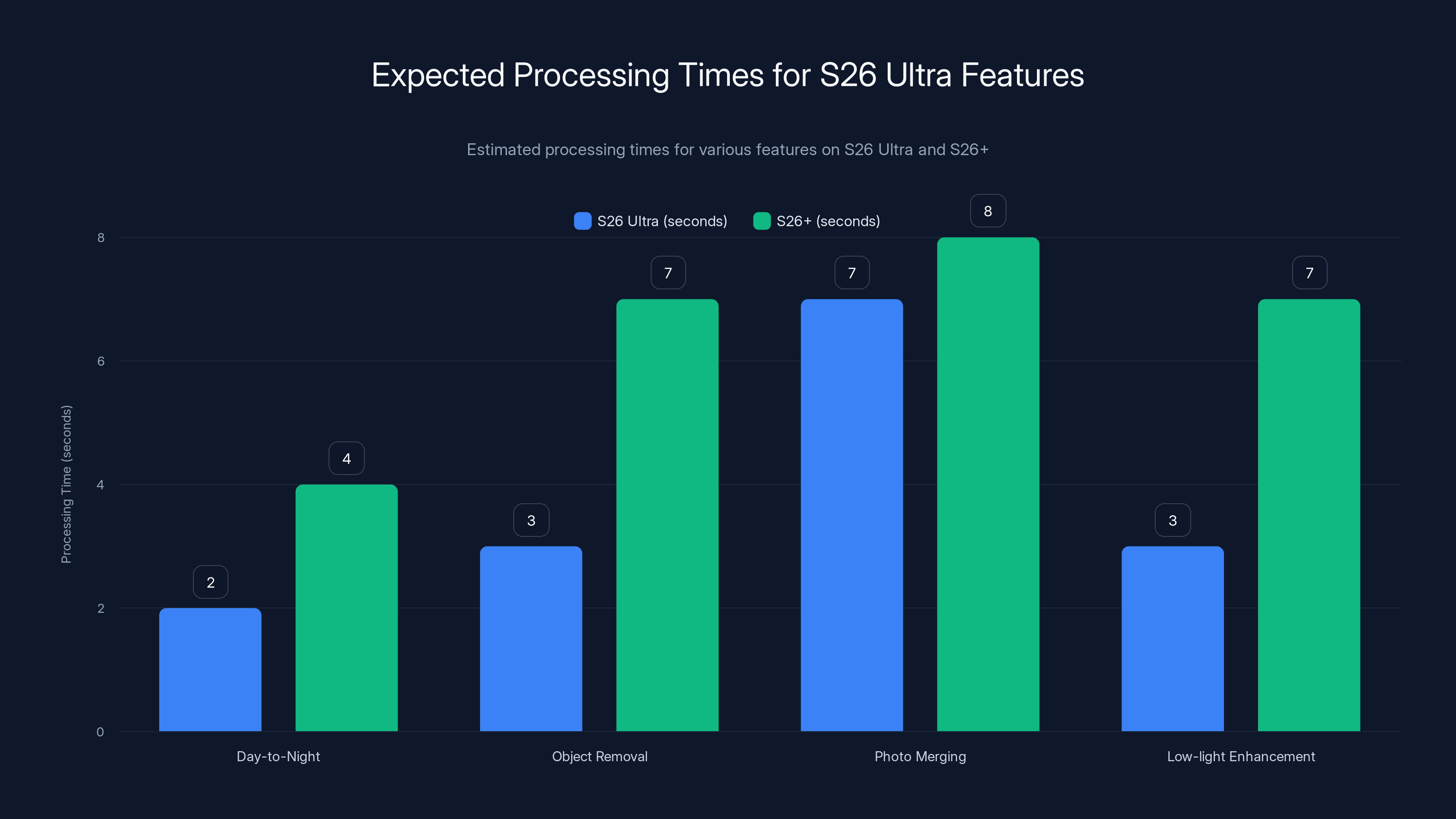

The S26 Ultra is expected to perform faster than the S26+ across all features, with day-to-night transformations being the quickest. Estimated data.

Day-to-Night Transformation: How It Actually Works

Let's talk about the feature that got everyone's attention first. Converting a daytime photo to nighttime in seconds.

This is wild on the surface. You're not just darkening the image. That's trivial. You're restructuring the entire lighting environment. Shadows appear where they should be. Highlights shift. The moon appears in a dark sky. Streetlights glow. The entire mood transforms.

Under the hood, here's what's happening. The AI isn't magic. It's trained on thousands of paired images: the same scene shot in daylight and nighttime. It learned the patterns. When you feed it a daytime image, it's not just adjusting curves and saturation. It's identifying scene elements—buildings, trees, sky, foreground—and applying learned transformations specific to each element.

The sky becomes darker and gains stars or moon. Buildings keep their detail but shift to cooler tones. Streetlights and windows gain warm glow. Shadows deepen naturally, not just by reducing brightness uniformly.

This is different from traditional photo editing. When you manually adjust a daytime photo to look like night in Lightroom, you're making global adjustments. Darken overall exposure. Cool the white balance. Increase contrast. It looks obviously edited. Wrong.

AI-driven transformation respects scene structure. It knows that a wall facing a streetlight stays brighter at night than one facing away from it. It knows streetlights cast light in specific directions. It's applying learned structural rules, not just global filters.
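To make the contrast concrete, here's a toy sketch of a global edit versus a region-aware one. Nothing here is Samsung's method: the labels and the `NIGHT_GAINS` table are hypothetical, and a hand-written lookup stands in for what a trained model would learn.

```python
# Toy illustration only: a hand-written lookup table stands in for what a
# trained model would learn. Labels and gain values are hypothetical.

# Per-region (R, G, B) gains: skies go very dark, buildings cool down,
# lamps stay bright and warm.
NIGHT_GAINS = {
    "sky": (0.10, 0.12, 0.25),
    "building": (0.35, 0.40, 0.55),
    "lamp": (1.00, 0.85, 0.55),
}


def clamp(value):
    """Keep a channel value inside the 0-255 range."""
    return max(0, min(255, round(value)))


def global_darken(pixels, factor=0.3):
    """Global edit: every pixel gets the same multiplier."""
    return [tuple(clamp(c * factor) for c in rgb) for rgb in pixels]


def region_aware_night(pixels, labels, gains=NIGHT_GAINS):
    """Apply a different gain per semantic label (one label per pixel)."""
    out = []
    for rgb, label in zip(pixels, labels):
        gain = gains[label]
        out.append(tuple(clamp(c * g) for c, g in zip(rgb, gain)))
    return out


# Three pixels: a bit of sky, a wall, and a lamp housing.
pixels = [(120, 180, 240), (200, 190, 180), (230, 230, 220)]
labels = ["sky", "building", "lamp"]

print(global_darken(pixels))
print(region_aware_night(pixels, labels))
```

The global version dims the lamp along with everything else. The region-aware version keeps the lamp bright and warm while the sky drops to a deep blue, which is the kind of per-element behavior described above.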

Now, here's the constraint. This works best on certain types of scenes. A landscape photo of a mountain? Perfect. A street scene with buildings and lights? Excellent. An indoor portrait? You're going to see issues. The AI doesn't know where the fill light should come from. Your subject's face might look weirdly shadowed.

Samsung's demos conveniently showed what works. Outdoor scenes. Rich environments. Multiple light sources. That's where the transformation shines.

The practical application is interesting though. Photographers have been asking for this for years. You want to show how a location looks at different times without returning to shoot it again. Social media content creators can show variety without shooting multiple times. Real estate photography could show properties in different lighting scenarios.

But honestly? For most users, the appeal is simpler. You've got a great daytime photo. You think it would look cooler as a night shot. You don't want to sit in Photoshop for 30 minutes. One tap. Done.

Object Restoration and Content Removal

This is where Samsung's getting into genuinely complex territory. Partially hidden objects getting revealed. Unwanted elements disappearing. The technical term is "inpainting"—using surrounding context to intelligently fill missing areas.

You've seen this in Photoshop's content-aware fill. You've seen it in Google Photos' magic eraser. But those tools require active user intervention. You draw a selection. You process. You wait.

Samsung's approach sounds more automated. The system identifies what's missing or unwanted and suggests fixes. Maybe your subject's hand is partially cut off at the frame edge. The AI fills in what the hand should look like based on learned patterns of hands, anatomical correctness, and the specific person's appearance in other parts of the photo.

Here's the challenge: this is incredibly easy to mess up. If the AI makes a wrong assumption about what should be there, you get artifacts. A hand might have six fingers. A face might have warped symmetry. The learning model is only as good as its training data.

Samsung's had time to work on this. They've been iterating through multiple Galaxy generations. The technology is more mature than it was two years ago. But the concept is still fundamentally limited by context. If there's ambiguity—is that shadow a missing object or just shadow?—the algorithm has to guess.

The practical cases where this shines are specific. You've got a group photo and someone's head is cut off at the edge: the AI can fill in the missing part. Or there's a photobomber partially in frame: remove them. Or your subject's eyes are closed, but another photo from the burst has them open: the AI can combine the two.

The constraint: it's not magic. Complex scenes with lots of texture, like crowds or forests, are harder. Simple scenes with clear subjects, like portraits, work better. You'll get the best results if you understand the limitations and set your expectations accordingly.

Low-Light Detail Extraction: More Than Increased Brightness

Smartphones have gotten dramatically better at low light. Larger sensors. Better noise reduction. More sophisticated computational photography. But there's still a hard limit: if the light isn't there, the detail isn't there.

Except Samsung's saying not quite. With AI, you can infer detail that should exist based on what you can see and what similar scenes look like.

This is noise reduction taken to the next level. Traditional noise reduction averages pixels. If something looks noisy, it gets smoothed. Result: detail loss. You reduce noise, but you lose texture. Faces look plastic. Fabrics look flat.

AI-driven detail extraction is smarter. It's trained to recognize what parts of an image are actually "noise" (random sensor artifacts) versus what's "detail" (real texture and structure). It aggressively removes noise while preserving detail.
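Here's a small sketch of why plain averaging costs you detail. It uses a one-dimensional row of brightness values, and a median filter as a classical stand-in for "edge-preserving" denoising. To be clear, a median filter is not a learned model and not what Samsung ships; it just shows the difference between smoothing everything and removing only the outlier.

```python
from statistics import median


def box_blur(signal, radius=1):
    """Average each sample with its neighbours (classic smoothing)."""
    out = []
    for i in range(len(signal)):
        window = signal[max(0, i - radius): i + radius + 1]
        out.append(sum(window) / len(window))
    return out


def median_filter(signal, radius=1):
    """Replace each sample with the median of its neighbourhood."""
    out = []
    for i in range(len(signal)):
        window = signal[max(0, i - radius): i + radius + 1]
        out.append(median(window))
    return out


# A hard edge (dark to bright) with one noisy outlier at index 2.
row = [10, 10, 90, 10, 10, 200, 200, 200, 200, 200]

blurred = box_blur(row)
filtered = median_filter(row)

# Edge strength = jump between the last dark and first bright sample.
print("original edge:", row[5] - row[4])
print("box blur edge:", blurred[5] - blurred[4])
print("median edge:  ", filtered[5] - filtered[4])
```

The box blur softens the noisy sample but also smears the edge. The median filter removes the outlier and leaves the edge intact. A learned denoiser aims for the same outcome on far messier, two-dimensional data.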

It also uses learned priors. It knows what fabric texture should look like. What skin texture should be. What foliage detail should be. When it encounters a low-light image with heavy noise, it's not just smoothing. It's reconstructing what the scene likely actually looks like based on patterns it's learned.

Take a low-light photo of someone's face. Traditional processing either keeps the detail and the noise, or removes noise and loses detail. AI-processing can often get both. The face is clear. The skin texture is there. But the photon noise is gone.

The limitation: this only works so far. At some point, the light is just too low. There isn't enough information to reconstruct. The AI starts hallucinating detail that wasn't there. A face might get sharpness that makes it look weirdly unnatural. You gain detail but lose authenticity.

Samsung's solution is probably conservative. The demos showed reasonable light levels—dimly lit restaurant, nighttime street—not complete darkness. That's where the technology works best.

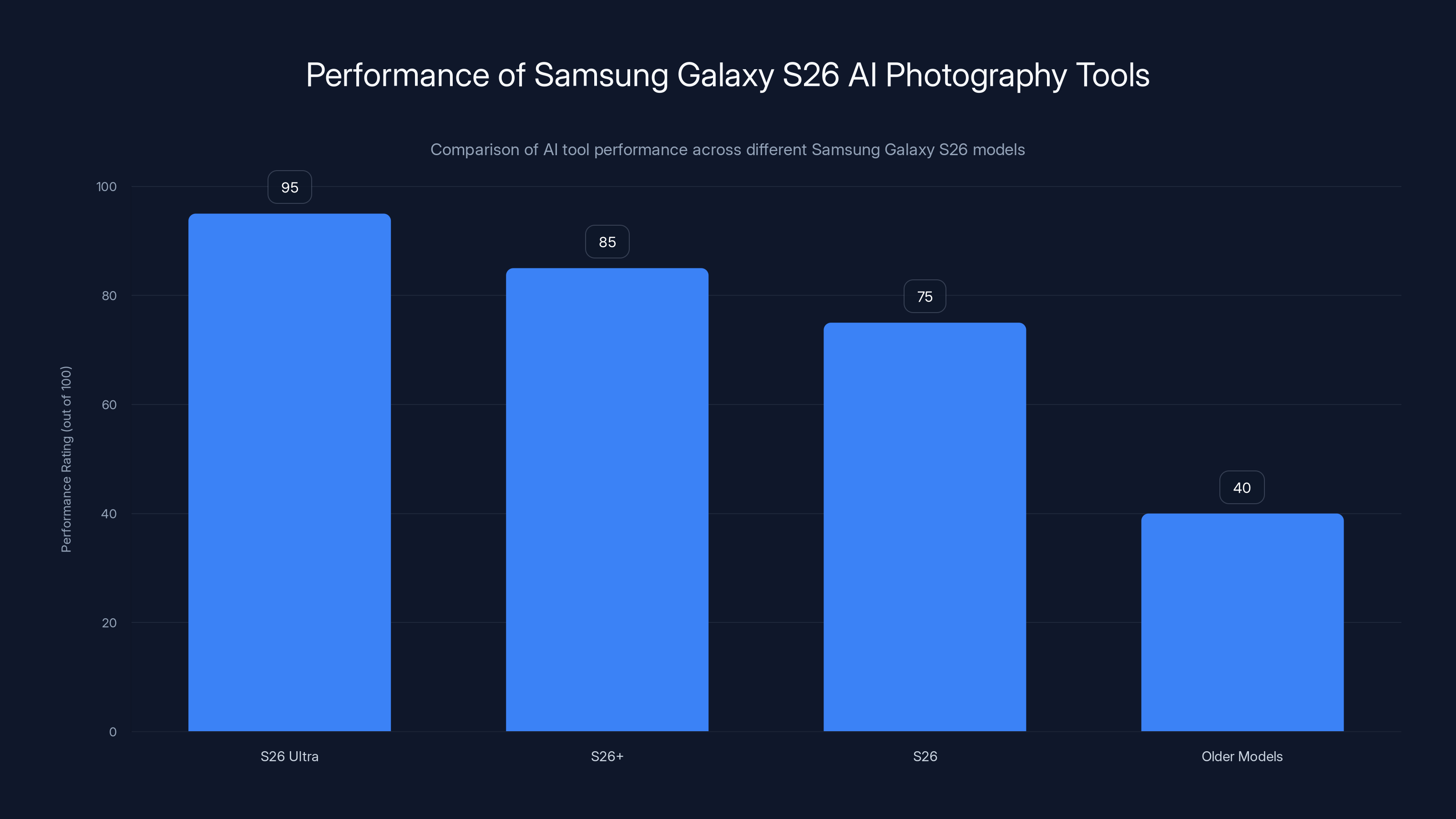

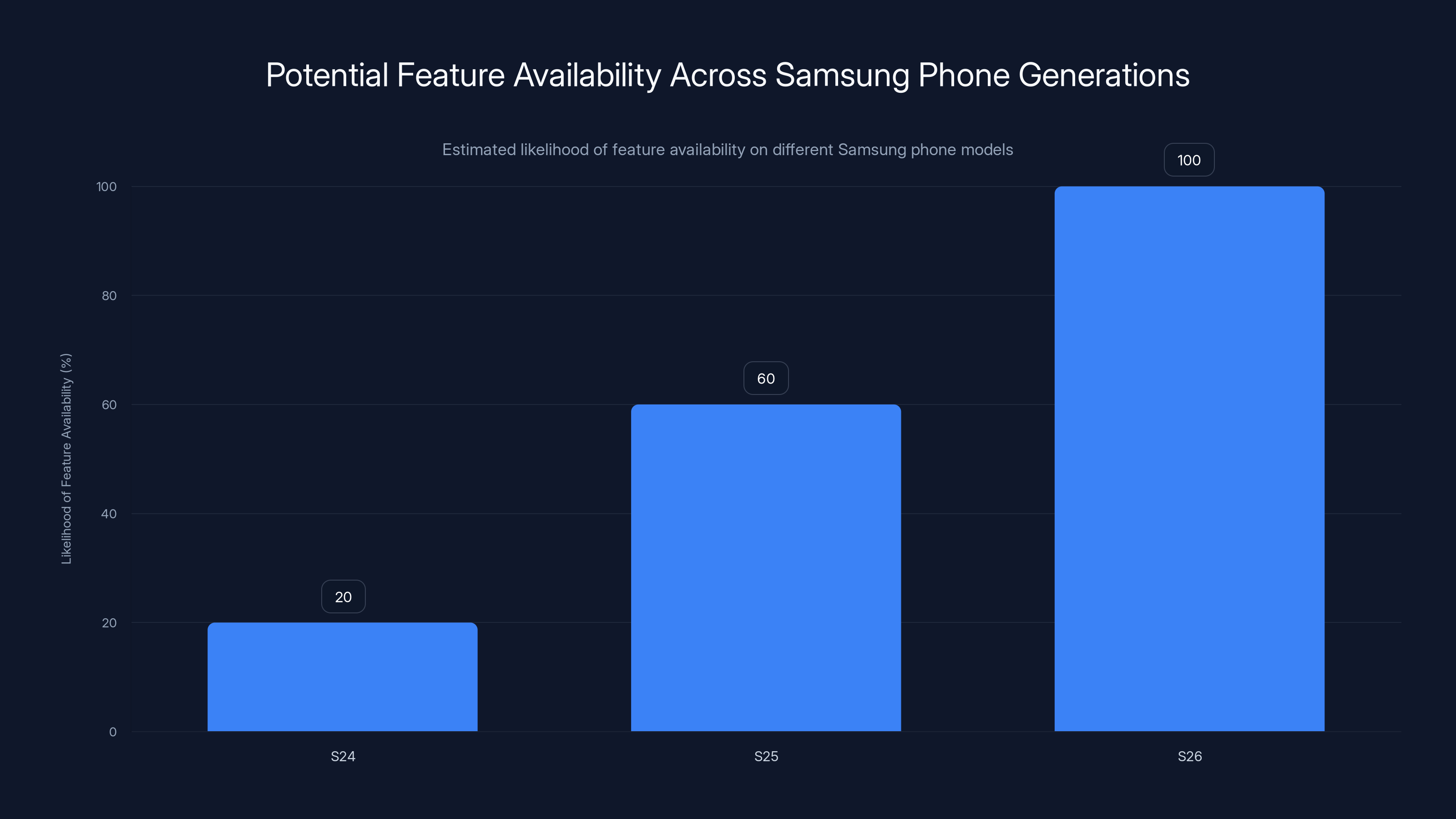

The Samsung Galaxy S26 Ultra offers the best performance for AI photography tools, followed by the S26+ and S26. Older models struggle due to hardware limitations. Estimated data based on processing capabilities.

Photo Merging: The Underrated Capability

Here's the feature nobody's talking about but photographers should be excited about: intelligent photo merging.

You've taken five shots. One has perfect exposure but soft focus. One has sharp focus but overexposed sky. One has great color but the subject blinked. Traditionally, you're picking the best compromise or spending time in post.

AI-driven merging can analyze all five and combine the best elements from each. Exposure from photo three. Focus from photo one. Expression from photo five. It's blending at the semantic level, not pixel level.

This is monumentally useful for serious photographers. Capture three exposures for an HDR merge? The phone does it automatically now and does it better. Shoot burst mode to get perfect focus? The phone picks the sharpest frame from any shot in the series. Facial expression issues? It can analyze which frame has the best face and which has the best body and blend them.

The technical challenge: alignment and ghosting. If you move between shots, merging creates artifacts. The AI needs to detect movement, align frames correctly, and blend smoothly.

Samsung's approach likely includes intelligent alignment detection and rejection of frames that moved too much. It probably also uses face detection to ensure that if multiple faces are present, expressions are blended correctly.
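A minimal sketch of that "align, then reject" idea, assuming one-dimensional rows and whole-pixel shifts. The function names and thresholds are hypothetical, and real pipelines work in two dimensions with sub-pixel motion.

```python
def estimate_shift(reference, frame, max_search=4):
    """Find the integer shift where frame[i + shift] best matches reference[i],
    by brute-force search for the lowest mean absolute difference."""
    n = len(reference)
    best_shift, best_cost = 0, float("inf")
    # Try small shifts first so that ties favour "no movement".
    for shift in sorted(range(-max_search, max_search + 1), key=abs):
        total, count = 0, 0
        for i in range(n):
            j = i + shift
            if 0 <= j < n:
                total += abs(reference[i] - frame[j])
                count += 1
        cost = total / count
        if cost < best_cost:
            best_shift, best_cost = shift, cost
    return best_shift


def keep_aligned_frames(reference, frames, max_allowed=2, max_search=4):
    """Keep (shift, frame) pairs whose estimated motion is small enough."""
    kept = []
    for frame in frames:
        shift = estimate_shift(reference, frame, max_search)
        if abs(shift) <= max_allowed:
            kept.append((shift, frame))
    return kept


def shift_right(row, k):
    """Build test data: move the content k samples to the right."""
    return [0] * k + row[:len(row) - k]


reference = [0, 0, 0, 50, 200, 50, 0, 0, 0, 0, 0, 0]
steady = shift_right(reference, 1)   # slight hand shake
moved = shift_right(reference, 4)    # too much movement

kept = keep_aligned_frames(reference, [steady, moved])
print([shift for shift, _ in kept])
```

The slightly shaken frame is kept along with its measured offset, so it can be shifted back before blending. The frame that moved four samples exceeds `max_allowed` and is dropped rather than risk ghosting.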

The practical impact is substantial. Your keeper rate goes up. Your success rate with group photos goes up. You can be more aggressive with challenging lighting because the AI can blend multiple exposures.

The Unified App Strategy: Why This Matters More Than You Think

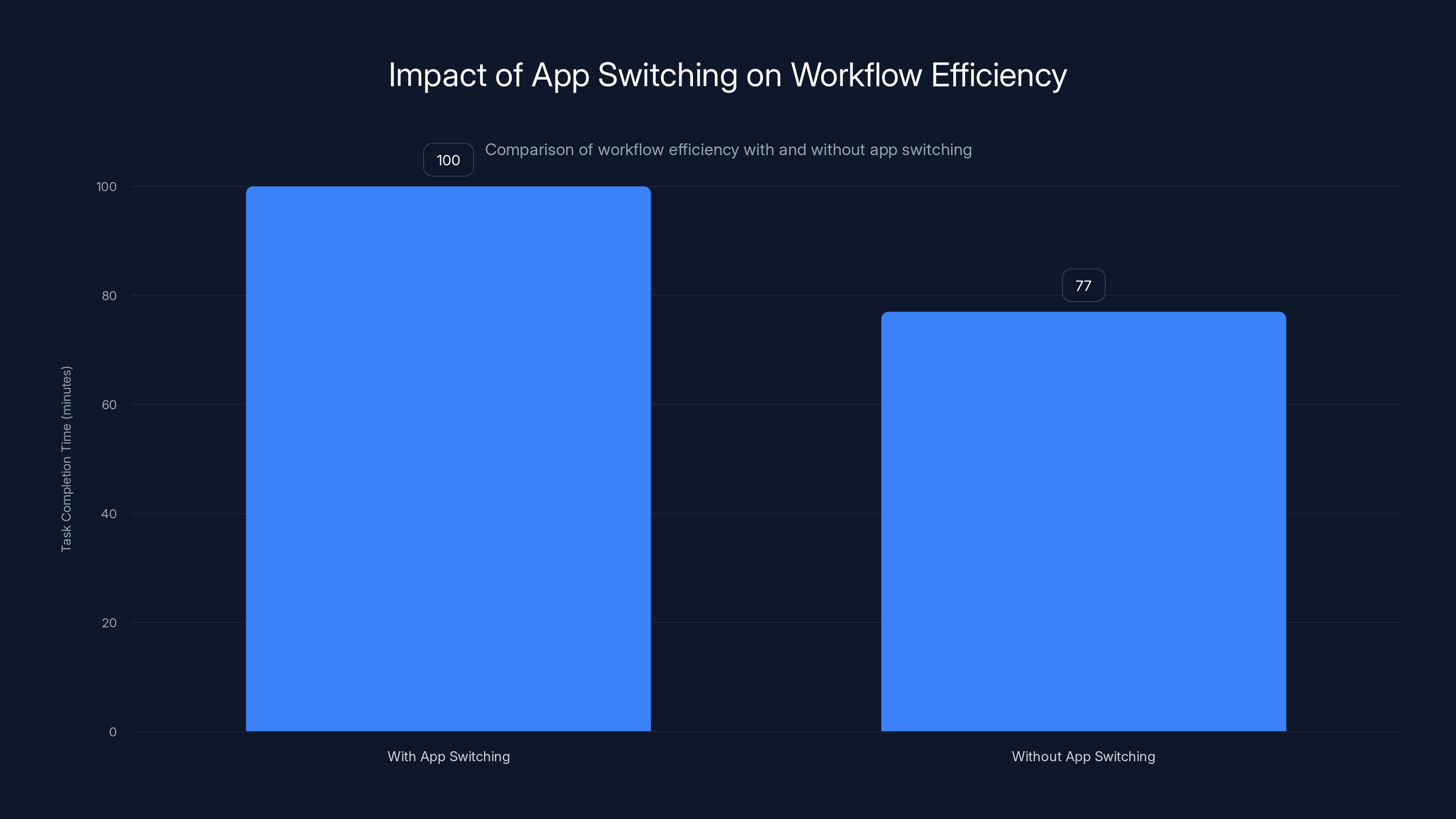

Here's something people gloss over: everything lives in one app.

That sounds trivial. It's not. Here's why it matters.

Every other smartphone maker's approach requires switching. You take a photo in the camera app. It goes to a gallery. You open a photo editor. Make changes. Open a sharing app. Post. Or you're trying to fix something: camera app doesn't have the tool you need, so you open Snapseed. Or Instagram's native editor. Or Lightroom. You're juggling apps.

App switching costs time. It also breaks workflow momentum. Every switch is a cognitive interruption. You lose the creative thread.

Samsung's consolidation means: capture, edit, merge, adjust, share—all in sequence, all in one place. The photo stays in context. You're never hunting for where your image went. The tools you need are always right there.

This is also a potential competitive advantage. Samsung controls both the hardware and the One UI software layer that sits on top of Android, so it can integrate these tools deeply into the phone rather than bolting them on as separate apps.

It's also a data play. Every operation stays within Samsung's system. Every transformation is logged. That data trains future iterations of the AI. The system gets smarter over time.

The practical impact for users: faster workflows. For Samsung: competitive moat. The more people use the system, the more data it generates, the better the AI gets, the more people use it.

Comparison With Competing AI Photography Systems

Google's approach with Pixel phones has been computational photography focused. They take multiple exposures, intelligently combine them, and use AI to enhance the result. It works brilliantly. The results are consistently good.

But Google's tools are mostly automatic. You take a photo. It gets processed. You get a result. There's not much active editing. You can adjust exposure and color temperature, but you're not doing transformative work.

Apple's approach is more control-oriented. They give you RAW files. They give you advanced sliders. They're saying "here's the data, you decide what to do with it." The AI is more subtle—helping with autofocus, exposure metering, noise reduction.

Neither is really doing transformative editing at the on-device level.

Samsung's approach sits between them. More transformative than Google, more automated than Apple. The AI isn't suggesting adjustments. It's making intelligent changes. Day to night. Removing objects. Merging exposures. These are significant alterations.

The advantage: non-professionals can do professional-level edits. The constraint: it could feel like the phone is making decisions about your photos without you. Some photographers will love the autonomy. Others will want more control.

Huawei and some Chinese manufacturers have been exploring similar territory, but they don't have the ecosystem reach or the training data volume that Samsung does.

Microsoft (through their AI partnerships) has been investing in similar technologies, but not in a focused consumer product.

Performance Requirements and Processing Power

Here's the technical reality: this stuff requires processing power.

AI models that can do real-time transformation, inpainting, and intelligent merging need either a dedicated AI accelerator or a very efficient model. The Snapdragon processors Samsung uses have dedicated neural processing units (NPUs). That's where this processing happens.

Qualcomm's flagship chip (the Snapdragon 8 Elite line, which the S26 Ultra will likely use) has a dedicated NPU that can handle trillions of operations per second. That's enough for on-device processing of these kinds of transformations.

The advantage of on-device processing: privacy. Your photos never leave the phone. They're not sent to Samsung's servers. You're not waiting for cloud processing. The edit happens instantly.

The constraint: the model has to be relatively efficient. The most sophisticated AI models are massive—billions of parameters. You can't fit those on a phone. Samsung's models have to be compressed, quantized, and optimized.

That optimization means slight quality loss compared to server-side processing. But it's a tradeoff Samsung's making intentionally: real-time performance and privacy over maximum quality.
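Quantization is the easiest of those optimizations to show in a few lines. This is a generic sketch of symmetric 8-bit quantization, not Samsung's pipeline, and the weight values are made up for illustration.

```python
def quantize_int8(weights):
    """Symmetric 8-bit quantization: map floats to integers in [-127, 127]."""
    max_abs = max(abs(w) for w in weights)
    scale = max_abs / 127 if max_abs else 1.0
    quantized = [round(w / scale) for w in weights]
    return quantized, scale


def dequantize(quantized, scale):
    """Recover approximate float weights from the stored integers."""
    return [q * scale for q in quantized]


# Hypothetical weights from one small layer.
weights = [0.8123, -0.4071, 0.0032, 0.2546, -1.27]

q, scale = quantize_int8(weights)
restored = dequantize(q, scale)
worst_error = max(abs(a - b) for a, b in zip(weights, restored))

print("stored integers:", q)
print("worst rounding error:", worst_error)
```

Each weight now fits in one byte instead of four, and the rounding error is bounded by half the scale step. That small per-weight error, multiplied across a whole network, is where the slight quality loss comes from.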

For the S26 and S26+, which use less powerful processors, the transformations will still work but might be slightly slower. The processing might happen in 2-3 seconds instead of 1 second. Still fast, but noticeable.

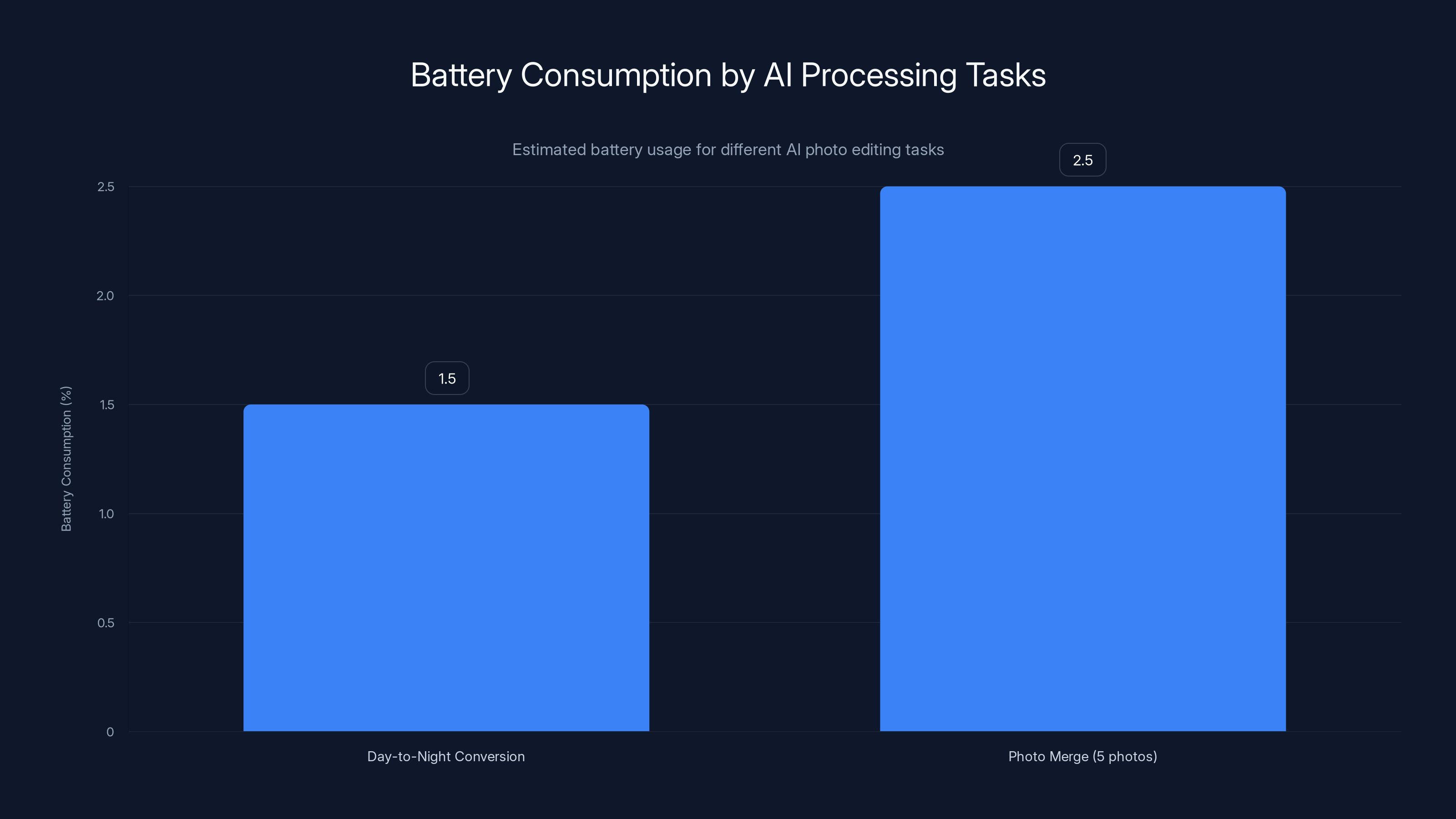

Battery impact is real too. Processing-intensive AI operations drain the battery faster. Samsung's probably done work to optimize this: using lower-precision math where possible, offloading some processing to idle times, limiting intensive operations to when the phone is plugged in.

But if you're aggressively using these tools, you'll notice battery drain. That's the tradeoff.

Reducing app switching can improve task completion time by approximately 23%, highlighting the efficiency of a unified app strategy. (Estimated data)

Privacy and Data Handling Considerations

Samsung's statement that these are on-device processing is significant. But let's dig into what that actually means.

On-device doesn't mean Samsung never sees your photos. It means the transformation processing happens on the phone. But Samsung still might log metadata. What transformations did you use? How often? What types of images do you edit?

That metadata is valuable for training. It tells Samsung what works, what doesn't, what features are popular.

For most users, this is fine. You're getting a service. Samsung gets data about how you use it. Standard tradeoff.

For privacy-conscious users, it's worth understanding. You're not sending raw image data to the cloud. But you're generating usage signals that go back to Samsung.

You can presumably opt out of this, but you might lose some functionality. For instance, if the system learns that "day to night" edits on beach scenes look weird, and you've got that data, the system could warn you. Without that data, no warning.

Samsung hasn't been particularly sketchy about privacy relative to competitors. But they're also not a privacy-first company like Signal or Apple (at least, not consistently).

The real privacy question: what about the AI models themselves? Where were they trained? Are they trained on user photos? On licensed datasets?

Samsung's probably training on a mix: their own collected data (from beta testers and users who opted in), licensed datasets, and public datasets. The ethical considerations around AI training are substantial, and Samsung hasn't been transparent about this.

Real-World Use Cases and Scenarios

Let's talk about when these tools actually matter in practice.

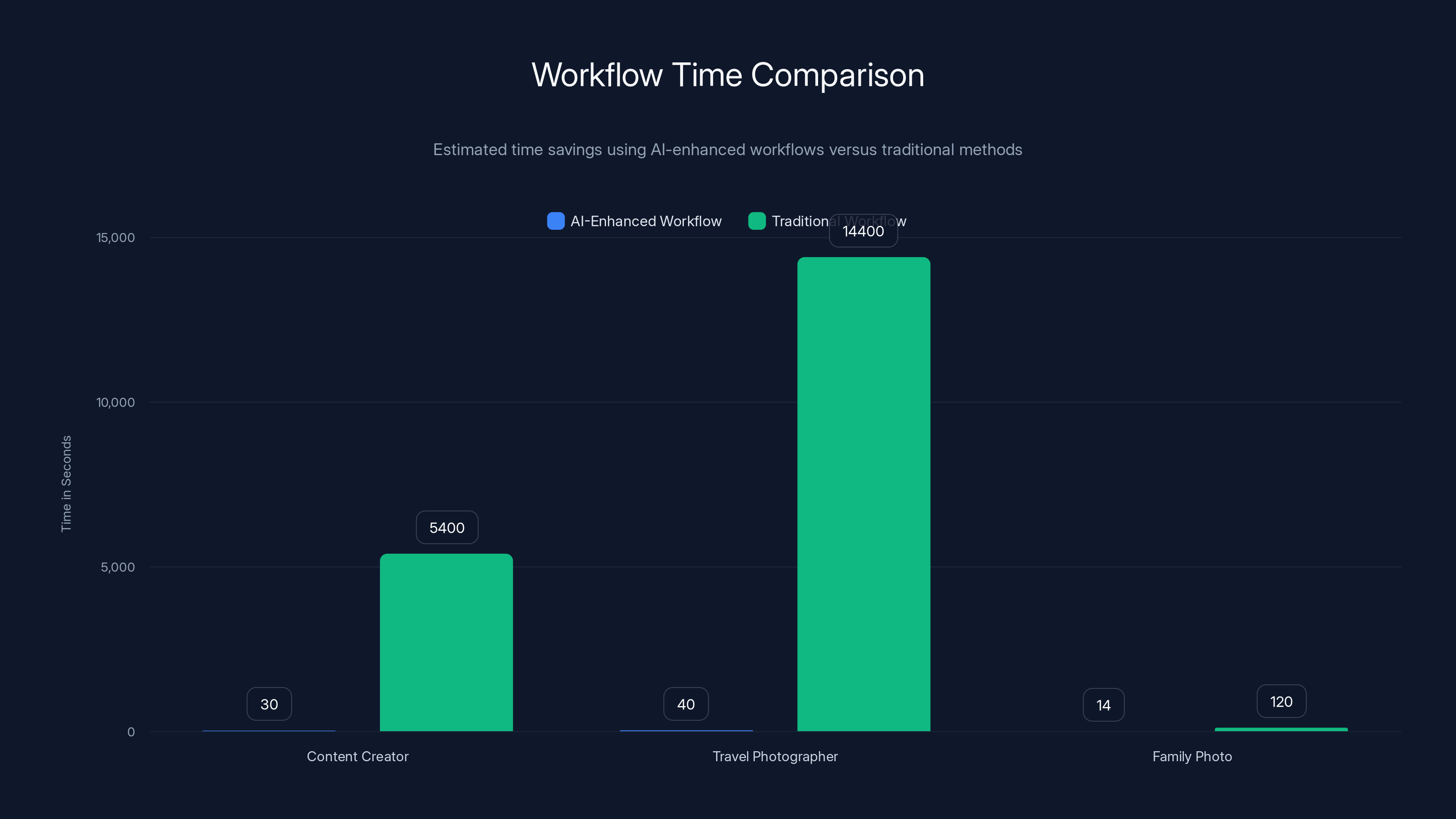

Scenario 1: Content Creator. You're running Instagram or TikTok. You need a consistent aesthetic. Shooting multiple times for variety is impractical. With these tools: one location, multiple interpretations. Daytime shot, plus night shot. One merged exposure. Better composition. Different lighting scenarios. Same location, more content variety. Time saved: hours per week.

Scenario 2: Travel Photography. You're visiting somewhere once. You're not coming back. You want to capture both daytime and nighttime versions of the same location. Traditional approach: return at night. With Samsung: capture once in daylight, create a convincing night version algorithmically. You're getting two shots' worth of content from one trip.

Scenario 3: Family Photos. Group photos with kids are hard. Someone blinks. Someone picks their nose. Someone's blurry. Traditional: retake until everyone looks good. With Samsung: take three shots, the system blends the best face from each, best composition, best eyes. One keeper instead of fighting for it.

Scenario 4: Low-Light Events. You're at a concert or theater event. The light is terrible. You want the memory. Traditional approach: blurry photos you can barely see. With Samsung: low-light extraction pulls out detail. You can actually see what happened.

Scenario 5: Imperfect Shots. You've got a great photo but there's a photobomber in the background. Or a telephone pole through someone's head. Object removal fixes it. You're not spending 30 minutes in Photoshop.

The common thread: these are use cases where traditional photography hits friction. The AI removes friction.

Limitations You Should Know About

Let's be real about what won't work.

Highly detailed outdoor scenes with complex textures—forests, crowds, intricate architecture—are harder. The AI might create artifacts. Objects might blend weirdly. Textures might look painted.

Portraits with complex expressions are tricky. If you're trying to merge expressions, the AI might not understand subtle emotion. The result could look odd.

Extreme transformations—converting a bright noon photo to believable midnight, or a portrait to full landscape—exceed the AI's capability. The further the transformation, the more obvious the artifacts.

Rare or unusual subjects—you have a pet bird the AI has never seen. A specific architecture style from a region the training data didn't cover. The AI defaults to more generic outputs.

Moving subjects are problematic for merging. If you shot multiple frames and someone moved between them, the alignment gets confused. Multiple exposures of moving subjects are harder to blend.

Very high resolution photos strain on-device processing. The phone might downscale, process, then upscale. That introduces quality loss.
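A quick sketch of why that round trip hurts, using a one-dimensional row of pixels. The averaging downscale and nearest-neighbour upscale here are the simplest possible choices, assumed for illustration; a phone would use better resampling, but the information lost in the downscale step is gone either way.

```python
def downscale(row, factor=2):
    """Average each group of `factor` samples into one.
    Assumes the row length is a multiple of `factor`."""
    return [sum(row[i:i + factor]) / factor for i in range(0, len(row), factor)]


def upscale(row, factor=2):
    """Nearest-neighbour upscaling: repeat each sample `factor` times."""
    return [value for value in row for _ in range(factor)]


# Fine texture: alternating dark and bright pixels (think fabric weave).
fine_texture = [0, 255, 0, 255, 0, 255, 0, 255]
# Coarse detail: features already two pixels wide.
coarse_detail = [10, 10, 50, 50, 90, 90, 130, 130]

print(upscale(downscale(fine_texture)))
print(upscale(downscale(coarse_detail)))
```

The coarse row survives the round trip unchanged. The fine texture collapses to flat mid-grey, which is the "painted" look you get when pixel-level detail is averaged away before processing.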

Samsung's probably being conservative with their default settings. The AI might have the capability to do more, but defaults to safer transformations. Advanced users might find the results underwhelming. Casual users will probably find them impressive.

Software Updates and Iterative Improvement

Here's something important: these AI systems get better over time.

Samsung will update the models. Each update brings improvements. The first version might struggle with certain scenarios. Six months later, they fix it.

This is a contrast to hardware upgrades. You buy a camera with certain capabilities. They don't improve. Your S26's photography AI could be significantly better in a year.

Samsung has experience with this from Galaxy AI's rollout. They launched it somewhat half-baked, then iterated. Each update improved the system.

For users: this is good. Your phone gets smarter. But it also means early adopters might hit rough edges.

For Samsung: it's a win. They can launch with "beta" quality and improve without hardware changes. The software that's been refined over a year is what most users experience.

The implication: reviewing the S26's photography at launch will tell you what the early experience is like. Reviewing it in 6 months will tell you what the mature experience is like.

AI-enhanced workflows significantly reduce time investment compared to traditional methods, offering creative flexibility and efficiency. Estimated data.

Integration With Other Samsung Services

Samsung's not building this in isolation. It connects to their broader ecosystem.

Galaxy Cloud presumably gets direct integration. You're editing on the phone, the cloud has your originals, you can sync edited versions.

Galaxy Buds could be relevant—hands-free voice commands for triggering edits or sharing.

SmartThings (Samsung's home IoT platform) could theoretically integrate: imagine smart home lighting information helping inform how the photo should be processed.

Samsung's tablet and laptop ecosystem could extend this. You start editing on your phone, continue on your tablet with a stylus, finish on your laptop with keyboard and mouse.

None of this is confirmed, but it's where Samsung's probably thinking. The photography AI isn't a one-off feature. It's part of a broader suite.

Battery and Thermal Implications

Intensive AI processing generates heat and drains battery. This is real, not theoretical.

A day-to-night conversion might consume 1-2% battery. A complex merge of five photos might consume 2-3%. If you're extensively using these tools, you're watching battery percentage drop.
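To see how that adds up over an editing session, here's a back-of-the-envelope calculation using the estimated ranges above. The operation names and the session counts are hypothetical, and the per-operation figures are this article's estimates, not measurements.

```python
# Per-operation cost ranges quoted above, in percent of a full charge.
COST_RANGE = {
    "day_to_night": (1.0, 2.0),
    "merge_five": (2.0, 3.0),
}


def session_drain(counts, cost_range=COST_RANGE):
    """Return (low, high) estimated battery drain in percent."""
    low = sum(cost_range[op][0] * n for op, n in counts.items())
    high = sum(cost_range[op][1] * n for op, n in counts.items())
    return low, high


# A heavy session: ten conversions and four five-photo merges.
low, high = session_drain({"day_to_night": 10, "merge_five": 4})
print(f"estimated drain: {low:.0f}% to {high:.0f}%")
```

Under these assumptions, that session costs somewhere between 18% and 32% of a charge. Occasional edits barely register, but a long editing run is clearly visible on the battery meter.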

Samsung's probably included thermal throttling. If the processor gets too hot, processing slows down or stops. You don't want your phone getting uncomfortably warm in your pocket.

For most users, this is fine. You're doing occasional editing, not continuous heavy processing. But power users who aggressively edit photos will notice.

The S26 Ultra will handle this better than the S26+, which will handle it better than the S26. The larger batteries and better cooling in the flagship help.

How This Compares to Desktop Software

Professional photographers use Lightroom, Capture One, or custom workflows. The level of control available is substantially greater than what a phone can offer.

But that control comes with friction. You're on a computer. You're navigating menus. You're waiting for processing. It's not portable.

Samsung's approach trades control for convenience. You give up pixel-level adjustment and you gain speed and accessibility. For 95% of photos, the results are probably good enough. For the 5% where you need fine control, you're still moving to desktop.

But the gap is closing. Each year, on-device tools get more powerful. At some point, professional photographers might find they don't need the desktop for 80% of their work.

That's years away though. Current generation tools still have limitations.

Market Positioning and Competitive Landscape

Samsung's making a clear statement: we're the smartphone for serious photographers.

Not casual snapshots. Serious photographers. Creative professionals. Content creators.

Google's Pixel line is incredibly good for automatic photography. Apple's iPhone is incredibly polished and accessible. But neither is explicitly targeting the creative professional.

Samsung's saying: if you care about photography and want professional-level tools on your phone, we're your choice.

This positioning makes sense. It's a differentiator. It justifies premium pricing. It attracts users who switch because they want better control and creative capability.

The risk: if the tools don't deliver, the positioning falls apart. If day-to-night conversion looks fake, or object removal creates artifacts, the credibility evaporates.

Samsung needs these to work genuinely well, not just impressively in demos.

AI processing tasks like day-to-night conversion and photo merging consume approximately 1.5% and 2.5% battery respectively. Estimated data.

What We Still Don't Know

Samsung's been selective about what they're revealing.

Pricing isn't confirmed. Will there be a pro mode with more control? Will advanced features be behind a paywall? This is Samsung—probably not, but possible.

Processor exclusivity: will these features work on previous generation phones? Probably not. The S26 generation will get the full suite. Maybe the S25. The S24? Unlikely.

Release timeline: Unpacked is February 25. Sales presumably start in early March. When do you actually get the software? Could be day one. Could be a month later as a software update.

Regional limitations: will all features be available everywhere, or will privacy regulations exclude certain regions?

Does the system work in landscape mode? Horizontal videos? These seem like basic things but you'd be surprised how many features don't.

Voice control integration: can you trigger transformations with voice commands, or is it tap-only?

Samsung will probably answer these at or shortly after Unpacked.

TL;DR

- Galaxy S26 brings a unified AI photography system combining capture, editing, enhancement, and sharing in one app, eliminating constant app switching

- Day-to-night transformation converts daytime photos to convincing nighttime versions by restructuring lighting and shadow patterns, best for outdoor scenes

- Intelligent object restoration and removal uses inpainting AI to reveal partially obscured objects or remove unwanted elements based on contextual analysis

- Low-light detail extraction reconstructs texture in dim environments by removing noise while preserving actual detail through learned pattern recognition

- Photo merging capability intelligently combines multiple shots to get perfect exposure, focus, and expression from different frames automatically

- On-device processing means transformations happen instantly without cloud upload, protecting privacy while requiring dedicated NPU hardware

- Bottom Line: Samsung's repositioning itself as the serious photographer's smartphone choice, offering professional-level creative tools that work at phone speeds and don't require desktop software

The Technology Behind the Transformation

Let's get technical. Understanding how these work matters because it determines what they can actually do.

Day-to-Night Transformation Process

The system isn't simply darkening your image. That would be trivial and obviously fake. Instead, it's performing what researchers call "image-to-image translation"—learning the mapping between daytime and nighttime versions of scenes.

The training process involved thousands of paired images: the same location shot in daylight and the same location shot at night. The AI learned the structural transformations. How does sky change? How do shadows appear? How do artificial lights get positioned? What color shifts happen?

When you feed it a new daytime image, the system applies learned transformations based on scene content. It's not generic. It's specific.

Think of it this way. If there's a streetlamp post visible in the daytime image, the system knows streetlamps produce warm light in specific directions. It knows the ground beneath gets illuminated. It knows shadows get cast. All based on patterns learned from thousands of examples.

The result feels realistic because it's not inventing things. It's applying learned structural rules.

Inpainting and Object Removal

This uses what's called "context-aware fill"—analyzing the surrounding pixels to infer what should be in the empty space.

Simple version: you have a uniform wall with a person in front. Remove the person. The system sees the wall on all sides of the person and fills with wall texture.
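The simple case can be sketched without any neural network at all. This toy version fills a masked hole by averaging known neighbours and working inward, which is a classical diffusion-style fill and only a stand-in for the learned approach. The grid values and the `inpaint` function are illustrative assumptions.

```python
def inpaint(grid, mask, max_passes=100):
    """Fill masked cells with the average of already-known 4-neighbours,
    working inward from the edge of the hole one ring at a time."""
    height, width = len(grid), len(grid[0])
    result = [row[:] for row in grid]
    unknown = {(y, x) for y in range(height) for x in range(width) if mask[y][x]}
    for _ in range(max_passes):
        if not unknown:
            break
        filled = {}
        for y, x in unknown:
            neighbours = [
                result[ny][nx]
                for ny, nx in ((y - 1, x), (y + 1, x), (y, x - 1), (y, x + 1))
                if 0 <= ny < height and 0 <= nx < width and (ny, nx) not in unknown
            ]
            if neighbours:
                filled[(y, x)] = sum(neighbours) / len(neighbours)
        if not filled:
            break  # the hole has no known neighbours at all
        for (y, x), value in filled.items():
            result[y][x] = value
        unknown.difference_update(filled)
    return result


# A uniform wall (brightness 180) with a dark "person" (20) in the middle.
wall = [[180] * 5 for _ in range(5)]
mask = [[False] * 5 for _ in range(5)]
for y in (1, 2, 3):
    wall[y][2] = 20
    mask[y][2] = True

for row in inpaint(wall, mask):
    print(row)
```

On a uniform wall the hole fills perfectly, because every neighbour agrees. The moment the background has structure (a second person, a railing, a doorway), averaging produces a smear, and that's the gap the trained network is meant to close.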

Complex version: you have a crowded scene and want to remove someone. The system has to understand what's behind them. Is it another person? Scenery? How does that person overlap with what's behind? It requires sophisticated spatial reasoning.

The neural network that does this is trained on images where objects are deliberately masked out. The network learned to reconstruct what should be there. It's not magic. It's statistical prediction based on millions of examples.

Artifacts appear when the system makes wrong assumptions. The training data didn't include your specific scenario. Maybe you're trying to remove an object from a rare angle. The network defaults to its most confident guess, which might be wrong.

Low-Light Detail Extraction

Traditional denoising is destructive. It blurs to remove noise. This is smarter.

The system is trained to distinguish noise (random variations caused by sensor limitations) from detail (actual structure in the scene). It aggressively removes noise while preserving detail.

It also uses learned priors about what things look like. It knows what fabric texture should look like, what skin should look like, what foliage should look like. When encountering low-light images with heavy noise, it's not just denoising. It's reconstructing.

Example: a portrait photo with heavy shadow noise on the subject's face. Traditional denoising removes the noise but also removes subtle skin texture. The face looks plastic. The AI-driven approach knows skin has specific texture characteristics. It removes sensor noise but preserves skin texture.

The limitation is hallucination. If the light is extremely low, there's not much information to work with. The system might invent detail that wasn't there. A face might get sharper features that look unnatural.

Multi-Frame Intelligent Merging

You've got burst mode: five frames taken rapidly. Some are sharp. Some have good exposure. Some have good color. Merging them intelligently means analyzing each frame and combining the best parts.

First step: alignment. The frames were taken rapidly but there was likely some small movement. The system aligns them to compensate.

Second step: difference detection. What varies between frames? Exposure? Focus? Expression? The system identifies what changed.

Third step: selective merging. For each pixel location, the system picks the best version from the available frames. For exposure, it might average or pick the frame with optimal exposure. For focus, it picks the sharpest version. For faces, it analyzes expression and picks the best.

Final step: blending. Seamlessly combining different source frames without visible boundaries.

This is computationally expensive. Analyzing five 48-megapixel photos, aligning them, differencing them, and merging requires serious processing. That's why it needs the dedicated NPU.
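The align-then-merge idea can be sketched on one-dimensional "frames". This is a simplified illustration under stated assumptions: alignment is a brute-force search over small integer shifts, and merging is a per-sample median. Real pipelines work in 2-D with sub-pixel motion and per-region selection, and nothing here reflects Samsung's actual implementation. All function names are hypothetical.

```python
from statistics import median
from typing import List

Frame = List[float]


def shift_frame(frame: Frame, offset: int) -> Frame:
    """Shift a frame by `offset` samples, repeating the edge value to fill gaps."""
    n = len(frame)
    return [frame[min(max(i - offset, 0), n - 1)] for i in range(n)]


def best_offset(reference: Frame, frame: Frame, max_shift: int = 3) -> int:
    """Alignment: try small shifts and keep the one that best matches the reference."""
    def cost(offset: int) -> float:
        shifted = shift_frame(frame, offset)
        return sum(abs(a - b) for a, b in zip(reference, shifted))
    return min(range(-max_shift, max_shift + 1), key=cost)


def merge_frames(frames: List[Frame]) -> Frame:
    """Align every frame to the first one, then take the per-sample median."""
    reference = frames[0]
    aligned = [shift_frame(f, best_offset(reference, f)) for f in frames]
    return [median(samples) for samples in zip(*aligned)]


scene = [0.1, 0.1, 0.1, 0.9, 0.9, 0.2, 0.2, 0.1, 0.1, 0.1]
frame_a = scene[:]
frame_b = shift_frame(scene, 1)   # the camera moved by one sample
frame_c = scene[:]
frame_c[4] = 0.0                  # one corrupted sample (a noise spike, say)

merged = merge_frames([frame_a, frame_b, frame_c])
```

Even in this toy version, every frame is compared against the reference at several candidate shifts. Scaling that to five 48-megapixel frames (roughly 240 million pixels in total) gives a sense of why the workload is pushed to dedicated hardware.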

Practical Workflow Examples

Let's walk through some realistic scenarios.

Content Creator Workflow

You need Instagram content. You're at a coffee shop with nice morning light.

- Shoot daytime photo with good composition (5 seconds)

- Tap "AI enhance" and select day-to-night transformation (3 seconds, 2% battery)

- Edit the result—adjust warmth, saturation if needed (10 seconds)

- Save original and night version (2 seconds)

- Crop for 9:16 story format using on-phone editor (5 seconds)

- Post story with both versions (5 seconds)

Total workflow: 30 seconds. You got two different looks from one location.

Compare to traditional approach: return at night, shoot again, spend time in Lightroom processing both, schedule posts. You're looking at 90 minutes of your time spread across multiple days.
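For anyone who wants to check the arithmetic, here is a quick tally of the step timings listed above against the 90-minute traditional estimate. The numbers are the article's own estimates, not measurements; the dictionary keys are just labels.

```python
# Step durations in seconds, copied from the content creator workflow above.
steps_seconds = {
    "shoot daytime photo": 5,
    "day-to-night transformation": 3,
    "adjust warmth and saturation": 10,
    "save both versions": 2,
    "crop to 9:16": 5,
    "post story": 5,
}

ai_workflow_seconds = sum(steps_seconds.values())
traditional_seconds = 90 * 60  # the 90-minute estimate for the traditional approach
speedup = traditional_seconds / ai_workflow_seconds

print(f"AI workflow: {ai_workflow_seconds} s, roughly {speedup:.0f}x faster")
```

The ratio only holds if the transformed image is good enough to post without a reshoot, which is the real question for any of these workflows.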

Travel Photographer Workflow

You're visiting Machu Picchu. You're there once. You want photos of the location in different lighting scenarios.

- Shoot during golden hour (sunset) with good light (1 minute for composition, 1 shot)

- Immediately transform to night version, to early morning version, to noon version (3 x 3 seconds = 9 seconds, 6% battery)

- You've got four completely different "times of day" of the same location

- Later, merge similar shots to get perfect exposure for each version (10 seconds each, 3 versions = 30 seconds)

Total new investment: 40 seconds plus battery. You've got portfolio variety from a single visit that traditionally would've required four trips.

Family Photo Workflow

You're trying to get a family group photo. Kids are involved. Getting everyone looking good simultaneously is hard.

- Set up camera, take continuous burst (20 frames over 2 seconds)

- Tap "smart merge" (2 seconds, processing happens)

- System analyzes all 20 frames and creates a version where everyone looks good (5-10 seconds depending on phone)

- Result: one keeper where everyone has the right expression, eyes open, good composition

Instead of: take 20 frames, review each one, settle for the best compromise.
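The difference between "settle for the best compromise" and a per-person merge can be sketched as a selection problem. The expression scores below are invented for illustration; a real system would get them from a face-analysis model, and the final compositing step (blending the chosen faces into one image) is not shown.

```python
from typing import Dict, List

# Hypothetical per-frame expression scores (0 = eyes closed, 1 = ideal).
scores: List[Dict[str, float]] = [
    {"mom": 0.9, "dad": 0.4, "kid": 0.2},   # frame 0
    {"mom": 0.6, "dad": 0.8, "kid": 0.3},   # frame 1
    {"mom": 0.7, "dad": 0.5, "kid": 0.95},  # frame 2
]


def best_single_frame(frame_scores: List[Dict[str, float]]) -> int:
    """Traditional approach: pick the frame whose worst face is least bad."""
    return max(range(len(frame_scores)),
               key=lambda i: min(frame_scores[i].values()))


def best_frame_per_person(frame_scores: List[Dict[str, float]]) -> Dict[str, int]:
    """Smart-merge idea: choose the best source frame for each face separately."""
    people = frame_scores[0].keys()
    return {person: max(range(len(frame_scores)),
                        key=lambda i: frame_scores[i][person])
            for person in people}


compromise = best_single_frame(scores)
sources = best_frame_per_person(scores)
```

With these made-up scores, the single-frame choice is frame 2, where two of the three faces are mediocre. The per-person choice pulls each face from a different frame, which is why alignment and seamless blending matter so much afterwards.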

Low-Light Event Workflow

You're at a concert. Lighting is dramatic but dim.

- Shoot normally with phone's stabilization (photos will be sharp but dark)

- Open the photo, tap "low-light enhance" (2 seconds)

- System extracts hidden detail (5-10 seconds depending on extent of enhancement)

- Result: you can actually see the performance, the emotion, the detail

Traditional approach: photo too dark to see anything useful. It gets deleted.

Estimated data suggests that the S26 will most likely have full feature availability, with decreasing likelihood for S25 and S24 models.

Integration With Galaxy Ecosystem

Samsung's building this as part of a larger system.

Galaxy Cloud Syncing

You edit a photo on your S26. The original and edited version sync to Galaxy Cloud. You can access them on your tablet or laptop.

You start editing on the phone with quick transformations. Later, on your tablet, you do more refinement with a stylus. On your laptop, final touch-ups. All versions sync back.

This is more seamless than having to export, move files, import.

Galaxy Buds Integration

Theoretically, you could command transformations with voice. "Convert to night." "Enhance low light." "Merge frames."

For accessibility and hands-free operation, this matters. You're driving, you want to process a photo you just shot, you don't want to mess with the phone's screen.

Samsung hasn't confirmed this, but it's probably coming.

SmartThings Home Context

Here's a wild idea: your home has smart bulbs and cameras. Your SmartThings system knows your home's lighting setup.

When you're processing a photo taken at home, the system could use the lighting data. You're trying to fix a photo from your living room at 8 PM. SmartThings knows your lighting setup. The transformation could account for that.

This is speculative, but Samsung's positioning SmartThings as increasingly central to their strategy.

DeX Integration

Connect your S26 to a monitor and keyboard (DeX mode). You've got a desktop interface. Full-screen photo editor with more controls than the phone interface offers.

You're editing the same photos, synced across devices, with interface scaling to each device.

Quality Expectations vs. Hype

Samsung's shown selected results. These will be impressive. They're marketing materials.

Real-world results will be more variable.

Day-to-night transformation will look great on landscape and architectural photos. It'll look less convincing on portraits. It'll look weird on action shots with motion blur.

Object removal will work great on simple backgrounds. It'll struggle on complex textures or multiple overlapping objects.

Low-light enhancement will be excellent for photos that are 1-3 stops underexposed. In extreme darkness, it'll start to look artificial.

This isn't a knock on Samsung. It's realistic. AI is probabilistic. It makes its best guess based on what it learned. Sometimes that guess is perfect. Sometimes it's wrong.

The competitive advantage isn't that Samsung's system is perfect. It's that it's better than what competitors offer and it's integrated into the workflow.

For most users, most of the time, the results will exceed expectations. There will be edge cases where the limitations become obvious.

Performance Benchmarks We Should Expect

Here are the metrics that actually matter.

Processing Speed

Day-to-night transformation should complete in under 2 seconds on the S26 Ultra, probably 2-5 seconds on the S26+.

Object removal should take a similar amount of time for simple scenarios, maybe 5-10 seconds for complex ones.

Merging five photos should take 5-10 seconds.

Low-light enhancement varies with severity. Minor enhancements: 2-3 seconds. Heavy enhancement: 5-10 seconds.

If processing takes significantly longer, the feature feels sluggish and you'll use it less.
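Reviewers who want to check these numbers could use a small timing harness like the sketch below. The budgets are the upper bounds estimated in this section, not published Samsung figures, and `fake_transform` is a placeholder workload standing in for whatever actually triggers the edit on a test device.

```python
import time
from typing import Callable, Dict

# Upper bounds in seconds, taken from the estimates in this section.
BUDGET_SECONDS: Dict[str, float] = {
    "day_to_night": 5.0,
    "object_removal": 10.0,
    "merge_five_frames": 10.0,
    "low_light_enhance": 10.0,
}


def time_operation(operation: Callable[[], object]) -> float:
    """Return the wall-clock seconds a single call takes."""
    start = time.perf_counter()
    operation()
    return time.perf_counter() - start


def within_budget(name: str, elapsed_seconds: float) -> bool:
    """True if the measured time fits the expected ceiling for that feature."""
    return elapsed_seconds <= BUDGET_SECONDS[name]


def fake_transform() -> None:
    """Placeholder workload; a real test would trigger the actual edit."""
    sum(i * i for i in range(100_000))


elapsed = time_operation(fake_transform)
print(f"day_to_night took {elapsed:.3f} s, within budget: {within_budget('day_to_night', elapsed)}")
```

Running each operation several times and reporting the median would give a fairer picture than a single run, especially once thermal throttling enters the equation.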

Quality Consistency

Samsung should publish quality metrics. What percentage of test images does the day-to-night conversion handle perfectly? 80%? 90%?

What about edge cases? How does it perform on rare scenarios?

They probably won't publish this—marketing wants it to sound flawless—but independent reviewers will test.

Battery Consumption

Each transformation's battery cost should be disclosed or easily testable. If day-to-night costs 5% battery, that's significant. If it costs 1%, it's negligible.
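A quick way to see why the per-edit cost matters: fix a battery budget for editing and count how many transformations fit. The 20% budget is an arbitrary assumption for illustration, and the helper name is hypothetical.

```python
def edits_within_budget(cost_percent_per_edit: float, battery_budget_percent: float) -> int:
    """How many whole edits fit inside a given share of the battery."""
    return int(battery_budget_percent // cost_percent_per_edit)


# Assume you are willing to spend 20% of a charge on editing.
edits_at_1_percent = edits_within_budget(1.0, 20.0)
edits_at_5_percent = edits_within_budget(5.0, 20.0)

print(edits_at_1_percent, edits_at_5_percent)
```

At 1% per edit you can experiment freely; at 5% you get four attempts before the budget is gone, which changes how people actually use the feature.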

Thermal Performance

Does the phone get uncomfortably warm during extended editing? Or does thermal throttling kick in?

You shouldn't need to wait for the phone to cool down between edits.

Software Update Strategy and Future Roadmap

Samsung doesn't typically announce features beyond launch, but based on their pattern:

Month 1-2 After Launch: Stabilization. Bug fixes. Performance optimization. Early user feedback incorporated.

Month 3-6: First Major Update. Better quality through model refinement. More scenarios working well. Possibly new features (video transformation? batch processing?).

Month 6-12: Second Major Update. Significant quality improvements. Model size optimizations. Better integration with other services.

By year-end, the system should be noticeably better than launch version.

Long-term prediction: Samsung probably wants these tools expanding to video. Transforming video from day to night. Color grading video with AI. Stabilizing shaky video better. These are natural expansions.

Who This Is Built For

Primary Users Who'll Benefit Most:

- Content creators and influencers needing variety without multiple shoots

- Travel photographers wanting to show same location in different conditions

- Family photographers dealing with challenging group dynamics

- Professional photographers looking for efficiency gains

- Casual users taking pictures in suboptimal light

Users Who'll See Less Benefit:

- Professional photographers with specific aesthetic requirements (they'll want more control)

- People who mostly take candid social snapshots (current phones are already good enough)

- Users with older phones that can't upgrade (features unavailable)

The addressable market is substantial. Editing tools at this level have rarely been built directly into a phone's default camera workflow. If it works, this creates a new product category.

Pricing Implications and Value Proposition

These features justify a premium. Not every phone needs AI photography tools. Users who care about photography are willing to pay more.

For Samsung: this is a selling point for flagship devices. You're not buying the S26 Ultra just for processing power. You're buying it for tools you can't get elsewhere.

Expect a significant price gap between the S26 and S26 Ultra, justified largely by camera capabilities.

Possible pricing models:

- Free features on all S26 models (basic transformations)

- Premium features for S26 Ultra only (complex merging, batch processing)

- Or: free on-device, paid cloud-based advanced editing

Samsung historically doesn't charge for on-device features, so expect most of this free.

But integration with Galaxy Cloud and cross-device editing might have tiers.

FAQ

What are Samsung Galaxy S26 AI photography tools?

They're a suite of AI-powered image processing features built into the Galaxy S26 smartphone line, designed to transform how photos are captured and edited. The tools live in a single unified app and include day-to-night conversion, intelligent object removal, low-light detail extraction, and multi-frame intelligent merging. Rather than switching between separate apps, you do everything in one place directly after capturing the photo.

How does the day-to-night transformation actually work?

The system uses neural networks trained on thousands of paired images showing the same scenes in both daylight and nighttime conditions. When you input a daytime photo, the AI applies learned transformations that restructure the entire lighting environment: the sky darkens and gains stars, artificial lights gain warm glow, shadows deepen naturally, and the overall mood shifts. It's not simple darkening—it's semantic transformation based on learned patterns about how scenes actually change at night.

Can the object removal tool work on any background?

Object removal works best on simpler scenes with clear, uniform backgrounds or easily understood spatial relationships. It struggles more with complex textures, crowds, intricate patterns, or ambiguous spatial layouts. A photobomber in front of a plain wall? Easy. Removing someone from a crowded concert photo? Much harder. The AI reconstructs based on context, so if the context is complex or ambiguous, the results become less reliable.

Will these tools work on phones older than the S26?

Unlikely for the full feature set. The transformations require significant processing power, specifically a dedicated neural processing unit (NPU). The S26 Ultra will have the best performance, the S26+ will be slightly slower, and the S26 will be adequate. Previous generation phones lack the necessary hardware, though Samsung might offer limited versions through software optimization. This is where having the ecosystem matters—Samsung controls enough of the hardware stack to optimize deeply.

What's the battery impact of using these tools frequently?

Each transformation consumes power depending on complexity. A day-to-night conversion might use 1-3% of your battery. Merging five photos might use 2-5%. If you're extensively editing photos, battery drain will be noticeable. Samsung's probably included thermal throttling to prevent overheating, which means processing might slow down if you use the tools continuously. For casual editing, it's negligible. For power users, it's worth being aware of.

Are my photos sent to Samsung's servers for processing?

No. All transformations happen on the device using dedicated NPU hardware. Your photos never leave the phone during the editing process. This means zero cloud latency, instant processing, and your images stay private. Samsung might collect metadata about which features you use and how often (for improving the system), but not the actual photos or processed images unless you explicitly sync them to Galaxy Cloud.

How does this compare to desktop photo editing software like Lightroom?

Desktop software gives you far more granular control over every aspect of the image. You can adjust precise color values, tone curves, specific areas independently. Phone tools offer much less control but far more convenience and speed. For 80-90% of photos, the phone tools will produce satisfactory results. For the 10-20% where you want specific creative vision, you'd still move to desktop. The gap is narrowing each year as mobile tools become more sophisticated.

Will there be a paid tier or premium features?

Samsung hasn't announced this. Historically, they include on-device processing features free with the phone. However, cloud-based features, advanced controls, or integration with professional tools might have subscription tiers. Cross-device editing and cloud backup of original files might require a Galaxy Cloud subscription. Samsung's pattern suggests the core transformations will be free, but advanced or cloud-based options might cost.

Can you undo a transformation if you don't like it?

Yes. The system works non-destructively—you're not overwriting the original. The edited version and original coexist. You can always revert to the original or try a different transformation. Samsung's unified app approach means you're seeing the transformation preview before committing, which adds safety. This is different from destructive editing where applying a change locks it in.
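Non-destructive editing is easy to picture as a data structure: keep the original untouched and store edits as a list that gets replayed on demand. The sketch below is a generic illustration of that pattern, not Samsung's storage format; `EditablePhoto` and `darken` are hypothetical names, and a photo is reduced to a short list of brightness values.

```python
from dataclasses import dataclass, field
from typing import Callable, List

Pixels = List[float]
Edit = Callable[[Pixels], Pixels]


@dataclass
class EditablePhoto:
    original: Pixels                               # never modified
    edits: List[Edit] = field(default_factory=list)

    def apply(self, edit: Edit) -> None:
        """Record an edit without touching the original pixels."""
        self.edits.append(edit)

    def undo(self) -> None:
        """Drop the most recent edit, if there is one."""
        if self.edits:
            self.edits.pop()

    def render(self) -> Pixels:
        """Replay all recorded edits on a copy of the original."""
        pixels = list(self.original)
        for edit in self.edits:
            pixels = edit(pixels)
        return pixels


def darken(pixels: Pixels) -> Pixels:
    """Stand-in for a transformation such as day-to-night."""
    return [p * 0.5 for p in pixels]


photo = EditablePhoto(original=[0.2, 0.6, 1.0])
photo.apply(darken)
night_version = photo.render()
photo.undo()
restored = photo.render()
```

Because the original is only ever copied, reverting is just a matter of dropping entries from the edit list.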

What types of photos will these tools struggle with?

Highly detailed scenes with complex textures (forests, crowds), portraits where expression is critical, moving subjects between frames, extreme lighting differences, or very rare/unusual subjects not well-represented in the training data. Also, any image where the transformation contradicts physics in obvious ways—trying to add extreme detail where there's truly no information, or transforming a scene in a way that creates impossible shadows or lighting. The system is probabilistic, so edge cases always exist.

Looking Forward: What's Next

Samsung's positioning this as the beginning, not the endpoint. If the initial launch goes well, expect rapid feature expansion.

Video transformation is inevitable. Color grading AI tools. Batch processing entire albums automatically. Perhaps generative fill—create missing image content rather than just restore it.

Integration with Samsung's broader services—maybe photo analysis powered by AI that automatically tags, sorts, and organizes your library.

The long-term vision seems to be: your phone becomes your primary creative tool for photography. You don't need desktop software for 95% of your work.

That's aspirational and probably 2-3 years away. But it's the trajectory.

For competitive context: this is Samsung saying, "We understand photography and creative tools. We're not trying to make the best camera sensor. We're trying to make photography accessible and efficient for everyone."

It's smart positioning. It's differentiated. And if the tools actually work as promised, it's genuinely useful.

The proof will be in the reviews and real-world results. Samsung's shown impressive demos. When thousands of users put these tools in their hands, we'll see how well they actually perform on real, messy, imperfect photographs in challenging scenarios.

That's when we'll know if Samsung's genuinely advanced mobile photography or just created an impressive-looking feature that works great on marketing materials.

Key Takeaways

- Samsung's Galaxy S26 consolidates photography tools (capture, edit, enhance, merge, share) into one unified AI app, eliminating context-switching friction

- Day-to-night transformation uses neural networks trained on paired daytime/nighttime images to structurally reshape lighting environments, not just darken photos

- Object removal and restoration employ AI inpainting to intelligently reconstruct missing content based on surrounding context and learned visual patterns

- Low-light detail extraction distinguishes sensor noise from actual texture, reconstructing detail while preserving authenticity in dim environments

- All processing happens on-device using dedicated NPU hardware, ensuring instant processing, privacy, and no cloud dependency
