Introduction: The Year Nvidia Bet Everything on AI

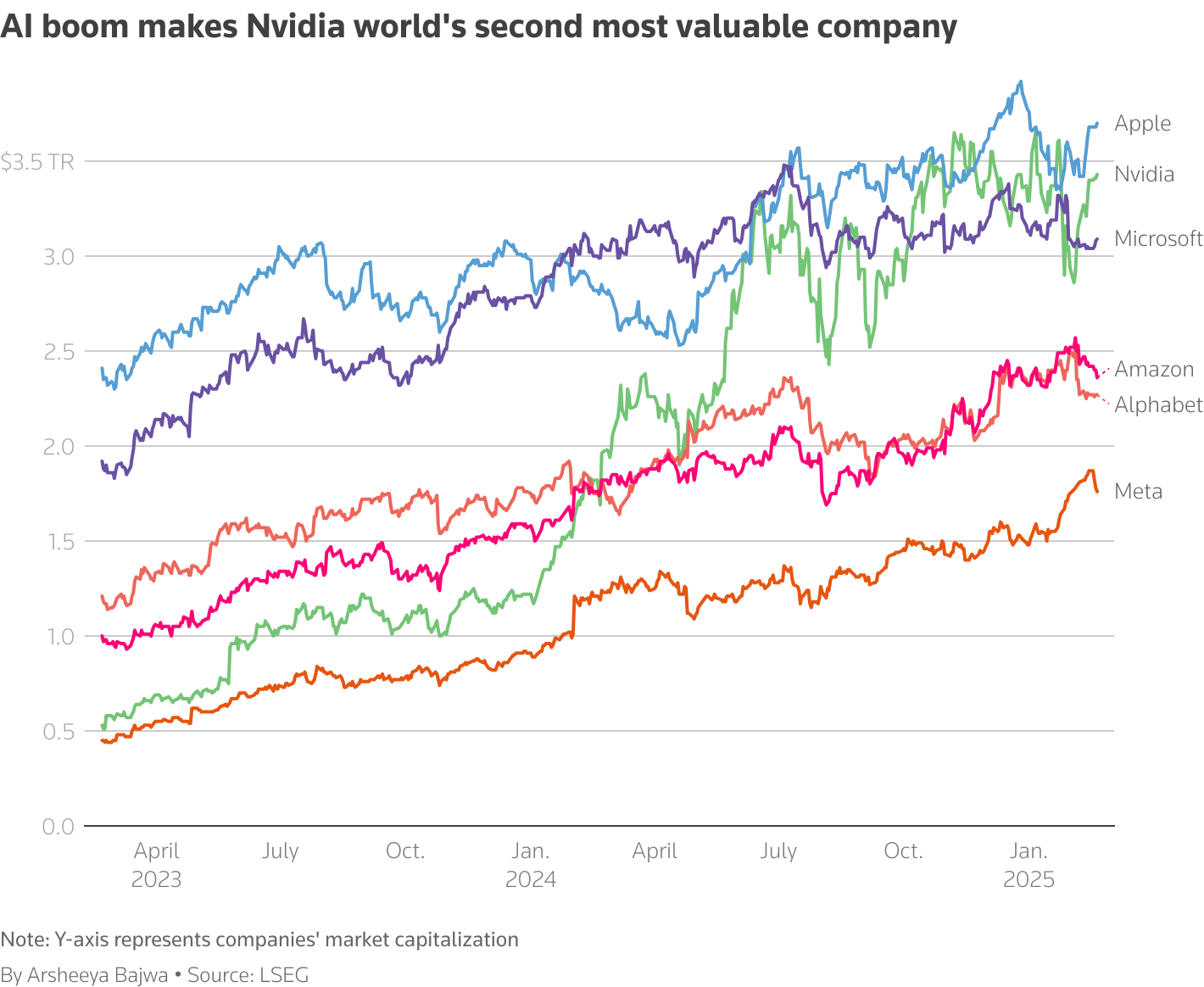

Nvidia didn't just have a good year in 2025. It had the kind of year that fundamentally reshapes how we think about computing hardware. When a company goes from being a graphics card manufacturer to the backbone of artificial intelligence infrastructure, things get complicated.

And complicated is exactly what 2025 was for Nvidia.

The company faced a peculiar problem: it was simultaneously breaking records and breaking trust. Revenue hit astronomical numbers thanks to relentless demand for AI accelerators, but the consumer GPU division faced unprecedented backlash. The RTX 5000 series launch sparked controversy over pricing strategies, performance gains, and what gamers were actually getting for their money. Meanwhile, DLSS technology experienced a renaissance, becoming less of a novelty and more of an industry standard that competitors couldn't ignore.

What made 2025 truly interesting wasn't just what Nvidia accomplished. It was the collision between three forces: explosive AI demand pushing profit margins higher, consumer GPU markets becoming increasingly fractious, and an emerging philosophical debate about what gaming hardware should cost and deliver.

This article breaks down exactly what happened at Nvidia in 2025, why it matters for the broader tech landscape, and what it tells us about where computing is headed. We'll dig into the wins that seemed too good to be true, the controversies that divided the community, and the technological breakthroughs that actually changed how games and AI applications work.

Let's start with the elephant in the room: money.

TL;DR

- Nvidia's AI division revenue exceeded $60 billion in 2025, making it one of the most profitable semiconductor companies ever.

- RTX 5000 pricing strategy alienated consumers with modest performance gains but significant price increases, creating a generational divide in GPU adoption.

- DLSS 4 became industry-standard technology, with Unreal Engine and other major platforms making it a default feature rather than optional optimization.

- Data center GPU margins hit 75%+, while consumer GPU margins compressed to historically low levels due to competition from AMD and Intel.

- Architectural efficiency improvements delivered roughly 40-50% better performance per watt than the previous generation, changing data center economics.

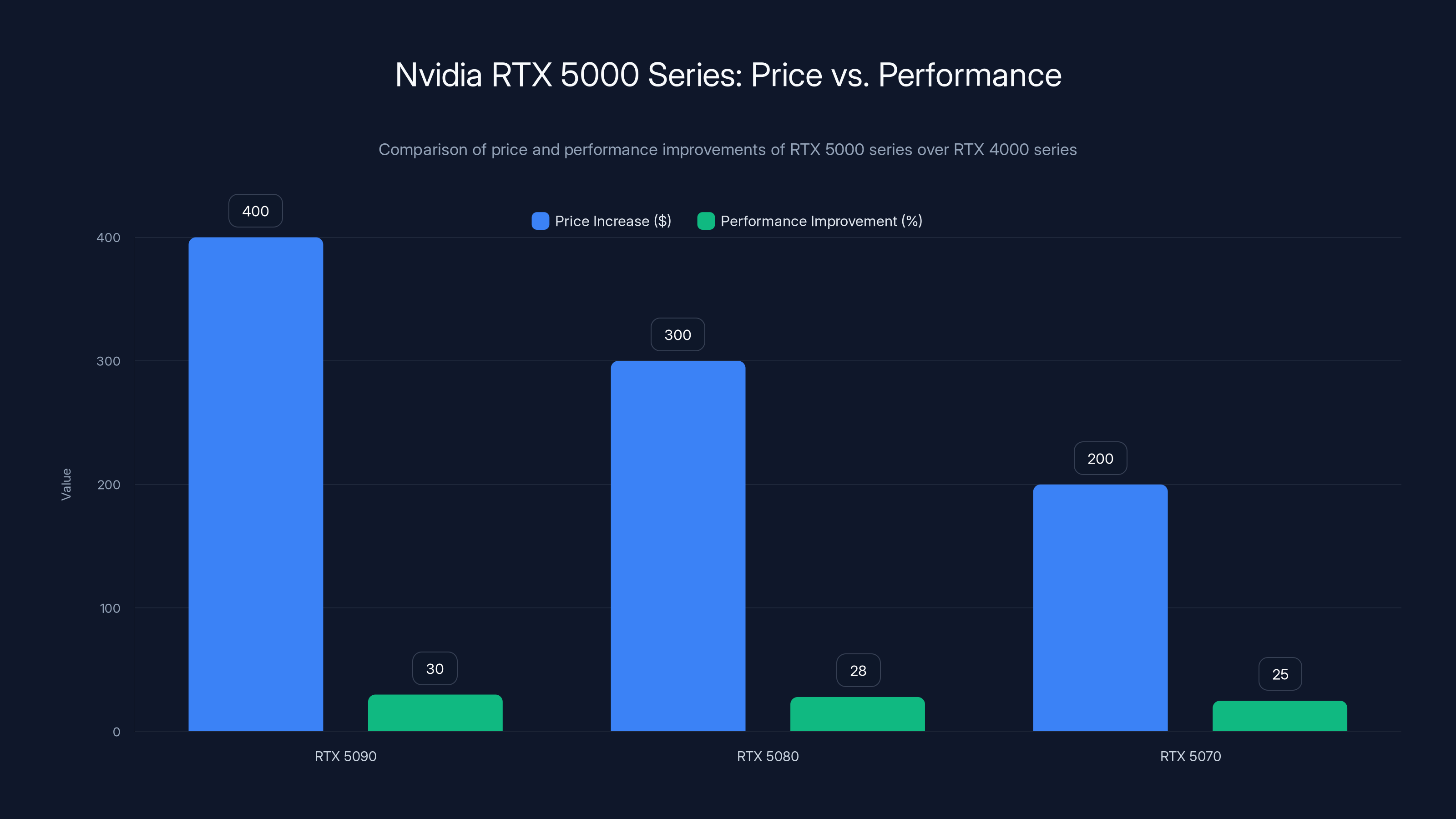

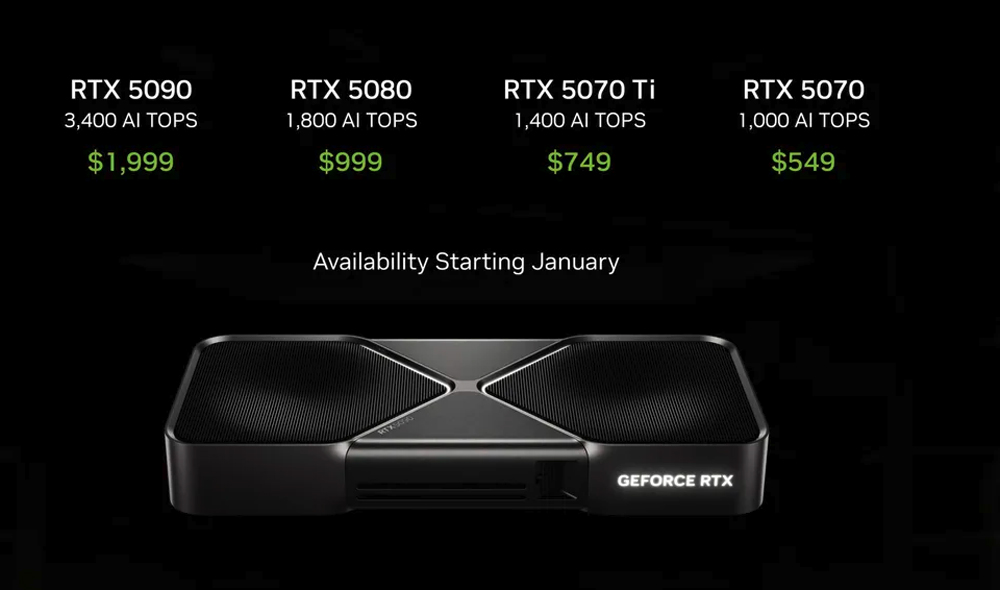

The RTX 5000 series saw significant price increases without proportional performance gains, with the RTX 5090 priced $400 higher than its predecessor for only a 30% performance improvement. Estimated data.

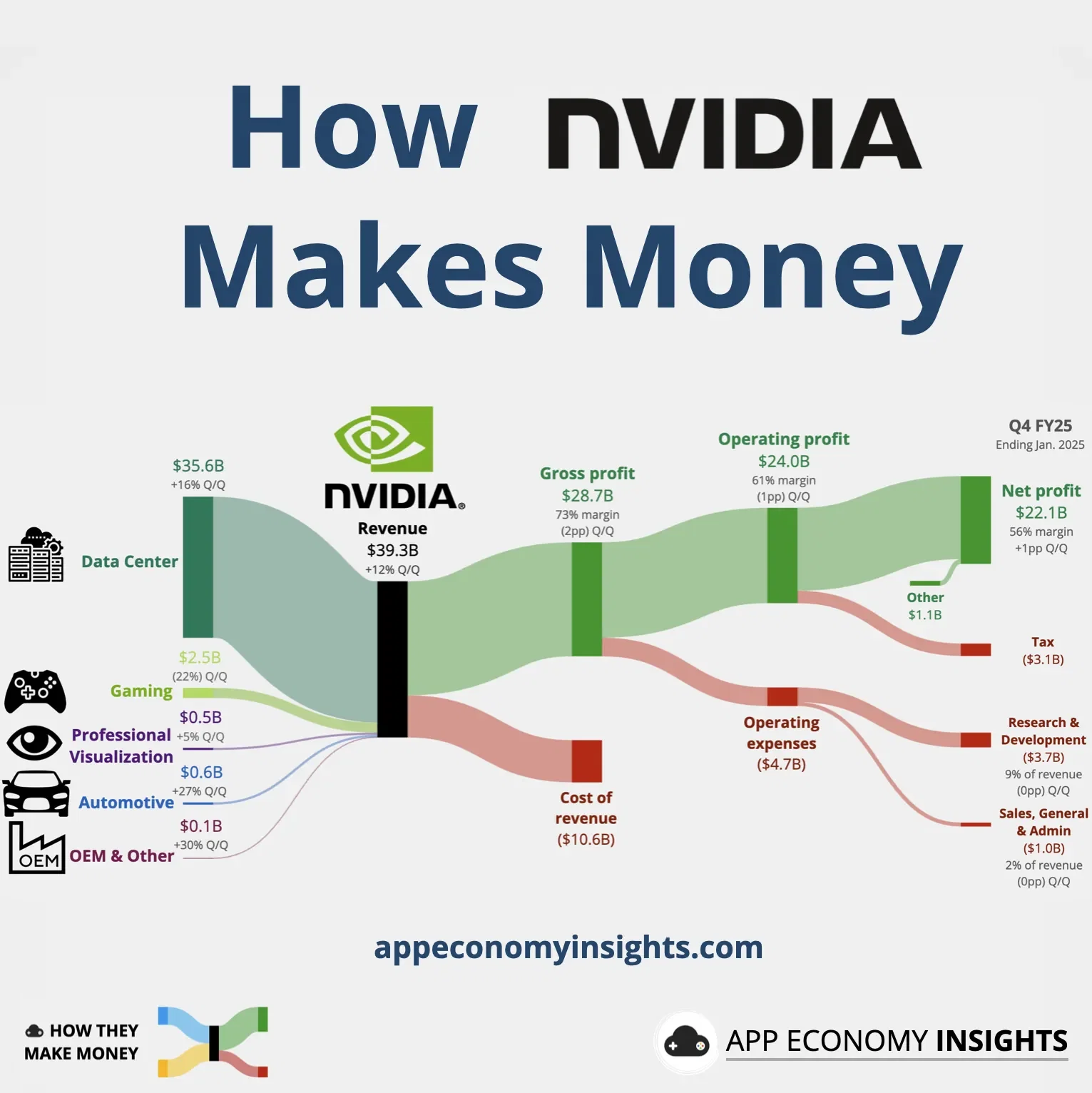

The AI Revenue Machine: How Nvidia Printed Money in 2025

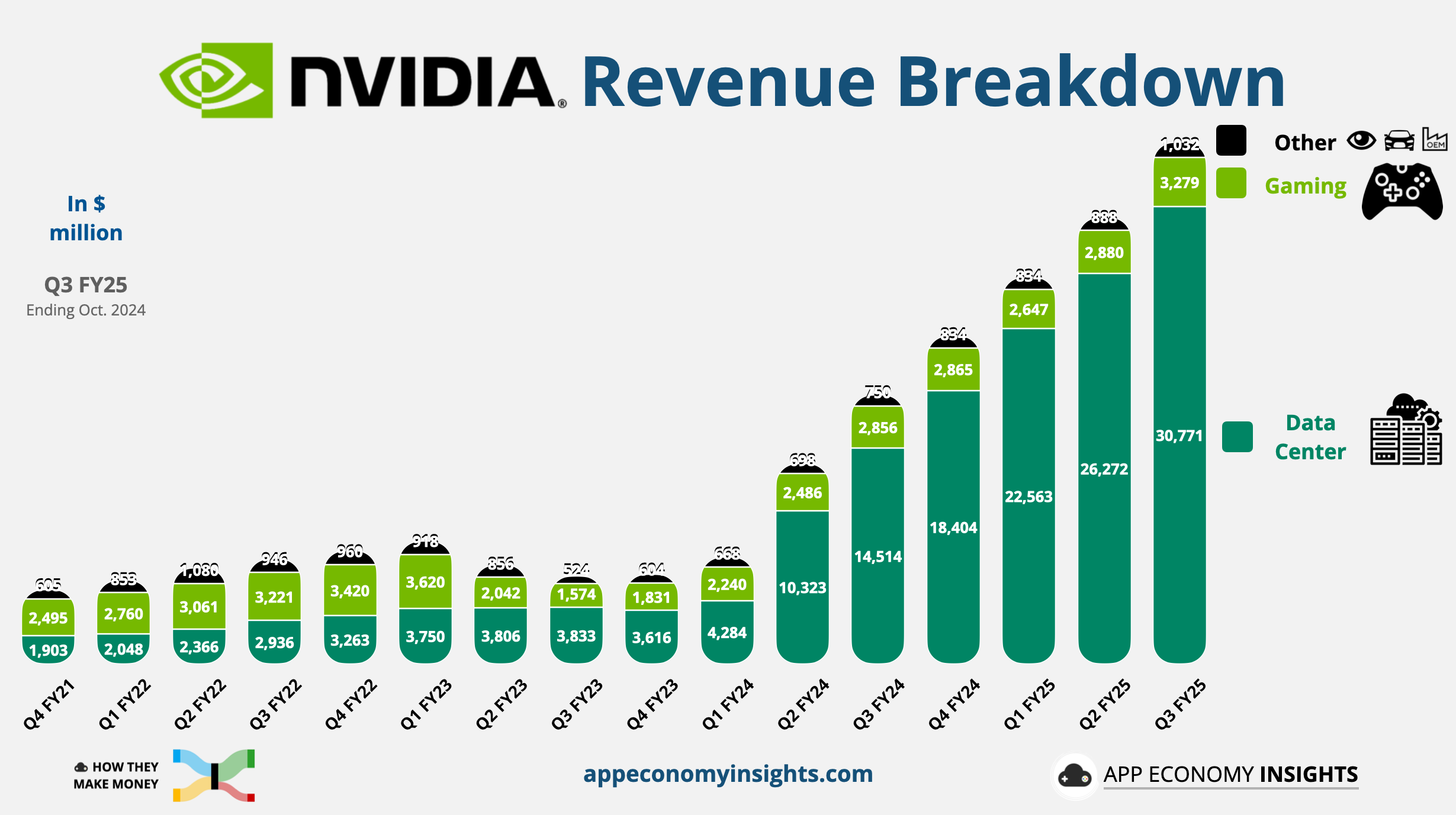

Let's be direct: Nvidia's financial performance in 2025 was almost absurd. The company's data center segment, which houses its AI accelerators and enterprise GPUs, generated approximately $60-65 billion in annual revenue. To put this in perspective, that's more than double the roughly $27 billion Nvidia earned across the entire company in fiscal 2023.

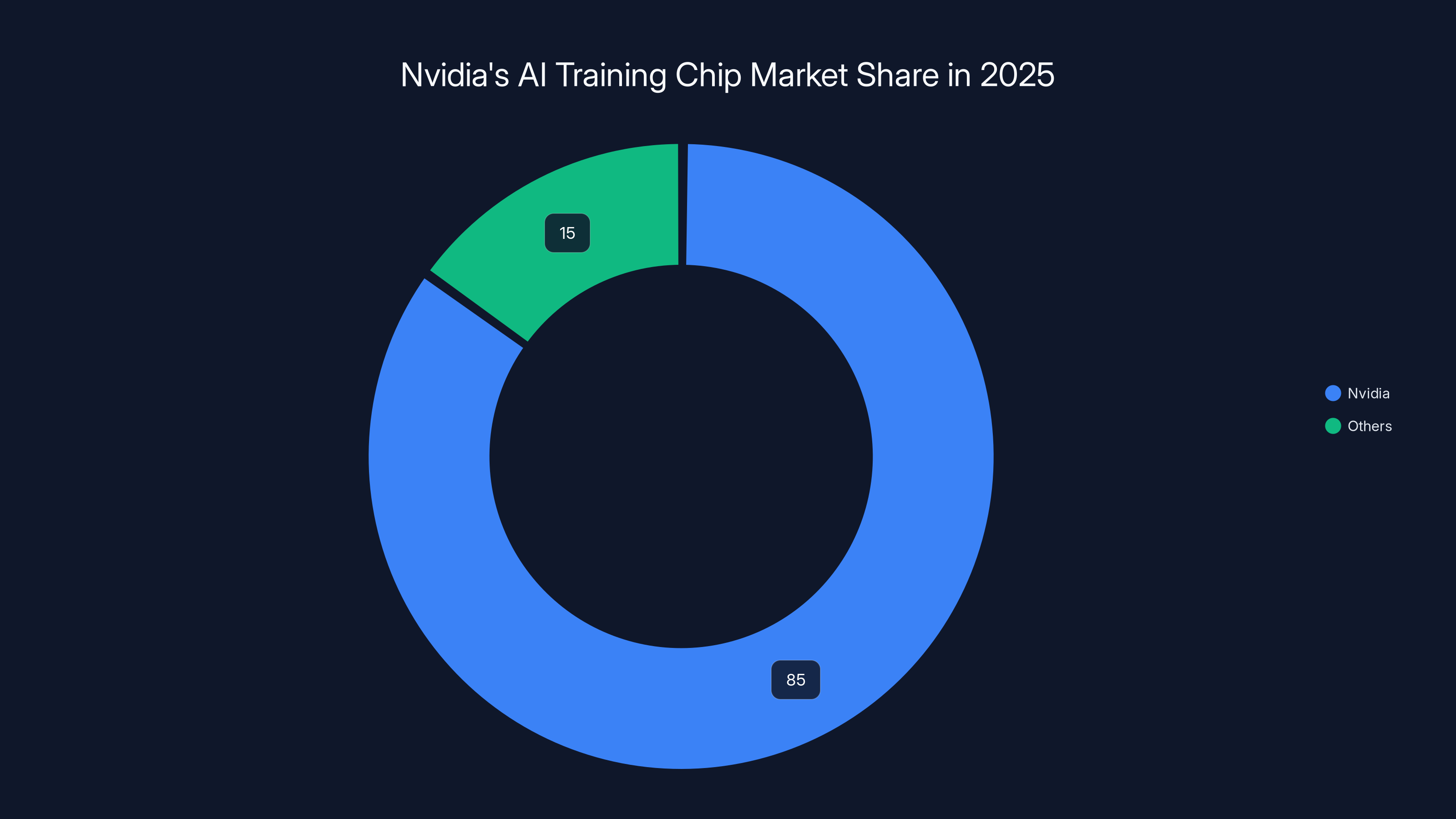

What's remarkable is how this revenue concentration happened. Nvidia controlled an estimated 80-90% of the global market for AI training chips. Most major AI models being trained, from OpenAI's latest systems to Anthropic's offerings, ran on Nvidia hardware.

This dominance wasn't an accident. It was the result of five years of strategic decisions that locked competitors out and locked customers in. Nvidia's CUDA ecosystem, the software framework that makes Nvidia chips work with AI frameworks, had become so entrenched that switching costs were prohibitive. A major tech company couldn't simply pivot to AMD or Intel chips without rewriting years of optimized code.

The H200 and subsequent Blackwell-generation chips became the economic engine. A single H200 sold for

But here's where it gets interesting: those margins weren't sustainable forever. By late 2025, competitors were gaining ground.

Why Margins Were So High

Nvidia's extraordinary margins came down to simple economics: demand vastly exceeded supply, and no realistic alternatives existed. Every major cloud provider—Amazon Web Services, Google Cloud, Microsoft Azure—needed Nvidia chips to offer AI services to their customers.

Companies literally couldn't wait for alternatives. If you were running an AI startup in 2025, you needed to train your models now, not in two years when competitors might catch up. This urgency pushed prices upward and created scarcity premiums that Nvidia captured entirely.

The gross margin picture told another story though. While Nvidia's data center segment was printing money, the company's gross margins on consumer GPUs had compressed dramatically. RTX 4090s were selling at thin margins because retailers and board partners were competing fiercely. By 2025, Nvidia's consumer GPU margins had fallen to the 35-40% range, comparable to older smartphone manufacturers.

This margin compression would become crucial to understanding the RTX 5000 controversy.

Revenue Concentration Risk

One thing Wall Street analysts mentioned but Nvidia executives downplayed: revenue concentration was at dangerous levels. Losing even one major customer—say, Meta deciding to build its own AI chips—could wipe out 10-15% of total revenue.

This wasn't theoretical. Intel had been working on AI accelerators. AMD had released the MI300 series. Custom chips from Google's TPU program were becoming competitive for specific workloads. By late 2025, the assumption that Nvidia would own AI chips forever was starting to look naive.

But let's talk about what everyone was actually arguing about: consumer GPUs.

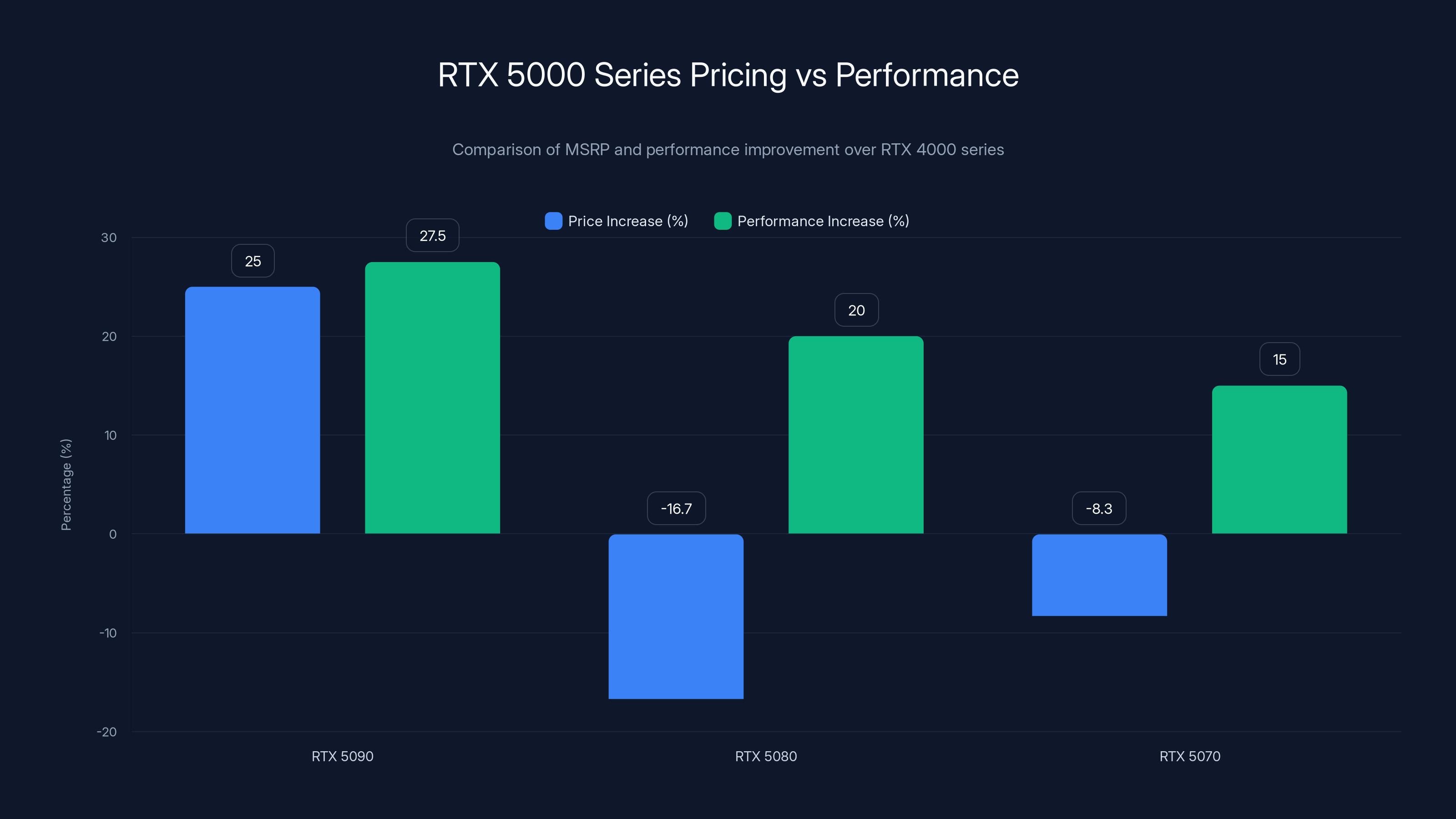

The RTX 5090's price increase matched its performance gain, while the RTX 5080 and 5070 offered better value with lower prices and significant performance improvements. Estimated data based on typical performance gains.

The RTX 5000 Controversy: When Pricing Met Performance

When Nvidia announced the RTX 5000 series in early 2025, the reaction was immediate and brutal. Gamers, content creators, and enthusiasts felt betrayed.

The numbers tell the story:

RTX 5090:

RTX 5080:

RTX 5070:

On the surface, these look reasonable. The 5070 was cheaper than its predecessor. But here's the problem: the performance improvements didn't justify the economics for many users.

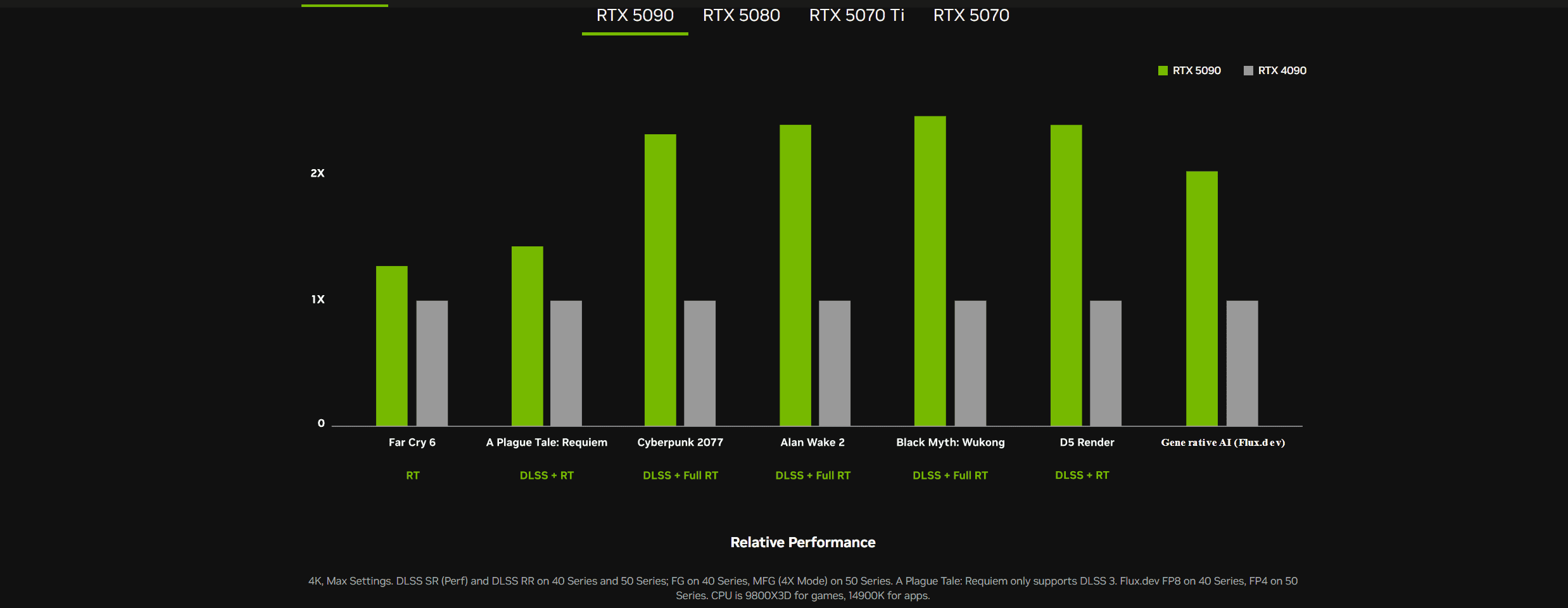

When benchmarks dropped, enthusiasts did the math: the RTX 5090 cost 25% more than the 4090 but delivered 25-30% more performance. That's breakeven. For high-refresh gaming at 1440p, the upgrade wasn't compelling. For content creators, the decision was even harder.

Why did this happen? Several factors collided at once.

The Architecture Plateau

First, GPU architecture was hitting physical limits. The RTX 5000 series stayed on a refined TSMC 4nm-class process, the same node family as RTX 4000, so it didn't get the typical efficiency gains of a node shrink. The company was pushing into territory where gains got harder and more expensive to achieve.

Second, DLSS technology (which we'll discuss in detail) meant that raw GPU performance mattered less than in previous generations. An RTX 5070 with DLSS 4 could match or exceed an RTX 4090 with DLSS 3 in many games. The performance ceiling for gaming had shifted.

Third, and this is crucial, Nvidia was optimizing for data center, not consumers. The company's engineering resources went toward AI accelerators and enterprise hardware. Consumer GPU architecture got treated as a secondary product line.

The Backlash

Community response was harsh. Reddit's Nvidia subreddit filled with posts about waiting for RTX 6000 series or looking at AMD's RDNA 4 cards instead. YouTubers published "Should You Buy RTX 5000?" videos that overwhelmingly concluded "no, wait for next year."

The backlash mattered because it revealed something uncomfortable: Nvidia had been coasting on brand loyalty and CUDA's software moat. Without compelling hardware, that loyalty had limits.

Board partners—companies like ASUS, MSI, and Gigabyte that make the physical cards—were particularly frustrated. They had inventory of RTX 4000 cards that weren't selling because customers were waiting to see if RTX 5000 was worth the jump. Nvidia's shortage-driven pricing strategy from 2021-2023 had eroded too much goodwill.

That said, the RTX 5000 series did sell. Corporations bought them, enthusiasts with deep wallets bought them, and professional software optimized for Nvidia hardware meant some segments had no realistic alternative. But the magic was gone.

Performance Per Dollar Analysis

Here's a concrete comparison that drove the controversy:

For RTX 4090 at $1,599 with approximately 2,500 TFLOPS: 1.56 TFLOPS per dollar

For RTX 5090 at $1,999 with approximately 3,200 TFLOPS: 1.60 TFLOPS per dollar

Virtually identical. In 2019, generational upgrades typically delivered 40-50% better performance per dollar. The RTX 5000 series broke that expectation.
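The comparison above is plain division, and a short Python sketch makes it easy to rerun with other prices. The throughput and price figures are the approximate ones quoted in this section, not measured benchmarks, and the function name `tflops_per_dollar` is only illustrative.

```python
def tflops_per_dollar(tflops: float, price_usd: float) -> float:
    """Return throughput per dollar for a card."""
    return tflops / price_usd

# Approximate figures quoted in the article, not measured benchmarks.
cards = {
    "RTX 4090": (2500, 1599),
    "RTX 5090": (3200, 1999),
}

value = {name: tflops_per_dollar(t, p) for name, (t, p) in cards.items()}

# Relative improvement in value from one generation to the next.
gain = value["RTX 5090"] / value["RTX 4090"] - 1

for name, v in value.items():
    print(f"{name}: {v:.2f} TFLOPS per dollar")
print(f"Generational gain in value: {gain:.1%}")
```

On these numbers the value improvement is a little over 2%, far short of the 40-50% that earlier generations typically delivered.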

This mathematical reality turned the RTX 5000 launch from "exciting new hardware" into "Nvidia extracting maximum profit from a captive customer base."

DLSS 4: The Technology That Made Older GPUs Relevant

If the RTX 5000 series was Nvidia's controversial move, DLSS 4 was its redemption. The deep learning super sampling technology, in its fourth major iteration, crossed a threshold in 2025: it became essential infrastructure rather than optional optimization.

Let's understand what DLSS 4 actually does. Traditional rendering works like this: your GPU renders a full-resolution image, every pixel calculated natively. DLSS 4 inverts this logic: render at lower resolution (say, 1440p instead of 4K), then use AI to reconstruct the missing pixels, creating a native-quality image at higher resolutions.

The key innovation in DLSS 4 was frame generation. Nvidia's AI models didn't just upscale images; they predicted what the next frame would look like and rendered it. A game running at 60 FPS could become 120 FPS with DLSS Frame Gen, with the technology generating every other frame entirely from AI prediction.

This sounds like science fiction. And for most of 2024, it kind of was. But by late 2025, the technology had matured to the point where it was indistinguishable from native rendering to most human eyes.

Industry Adoption Explosion

What made DLSS 4 genuinely significant was adoption. By mid-2025, DLSS wasn't a special feature you enabled in settings anymore. It was the default rendering path.

Unreal Engine 5.5 made DLSS the standard upscaling method. Unity integrated it into the standard rendering pipeline. Even community-driven open-source engines like Godot added DLSS support because game developers demanded it.

The implications were massive. A game developer in 2025 didn't ask "should we use DLSS?" They asked "how much DLSS can we use before the player notices?"

This adoption meant that Nvidia's RTX 5000 controversy partially solved itself. Yes, the 5090 was expensive and barely faster than the 4090. But with DLSS 4, an RTX 4080 could deliver 4K performance comparable to a native-rendering RTX 4090 from the previous year. The performance hierarchy flattened.

The Ray Tracing Integration

Where DLSS 4 really shined was in ray tracing, a rendering technique that's computationally expensive but produces photorealistic lighting. Traditional ray tracing can reduce frame rates by 50% or more.

DLSS 4 combined three techniques:

- Resolution upscaling (render at 1440p, display at 4K)

- Ray tracing acceleration (use tensor cores to speed ray tracing calculations)

- Frame generation (AI predicts in-between frames)

A game that would run at 45 FPS with native ray tracing at 4K could hit 90-120 FPS with DLSS 4 enabled. The visual difference was negligible to imperceptible.
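To get a feel for how much work upscaling and frame generation take off the GPU, here is a rough Python sketch. It assumes a 1440p internal render resolution, a 4K output, and one AI-generated frame per rendered frame (the "every other frame" case described earlier). It only counts pixels and ignores the cost of running the AI models themselves.

```python
def pixels(width: int, height: int) -> int:
    """Number of pixels in one frame."""
    return width * height

render = pixels(2560, 1440)   # internal render resolution (1440p)
display = pixels(3840, 2160)  # output resolution (4K)

# Share of each displayed frame's pixels that were rendered conventionally.
upscale_fraction = render / display

# Assumption: one AI-generated frame per rendered frame.
generated_per_rendered = 1
rendered_fps = 60
displayed_fps = rendered_fps * (1 + generated_per_rendered)

# Share of all displayed pixels per second that the GPU rendered conventionally.
native_share = upscale_fraction / (1 + generated_per_rendered)

print(f"Rendered pixels per frame: {upscale_fraction:.1%} of 4K")
print(f"Displayed FPS: {displayed_fps}")
print(f"Conventionally rendered share of displayed pixels: {native_share:.1%}")
```

Under these assumptions, fewer than a quarter of the pixels reaching the screen were rendered the traditional way. The rest were reconstructed or predicted.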

Competitive Response

AMD and Intel couldn't sit idle. AMD pushed its RDNA 4 cards with the argument that they were faster on paper than older Nvidia hardware, and you wouldn't need DLSS. Intel promoted its Arc GPUs with native performance claims.

But here's the thing: once software became optimized around DLSS, hardware without DLSS support became secondary. It's like the CUDA situation in AI, but for gaming. The software ecosystem matters more than raw hardware specs.

By late 2025, DLSS had become what CUDA was in AI—a moat that competitors couldn't replicate quickly, and that made Nvidia hardware the default choice even if it wasn't technically the fastest on every benchmark.

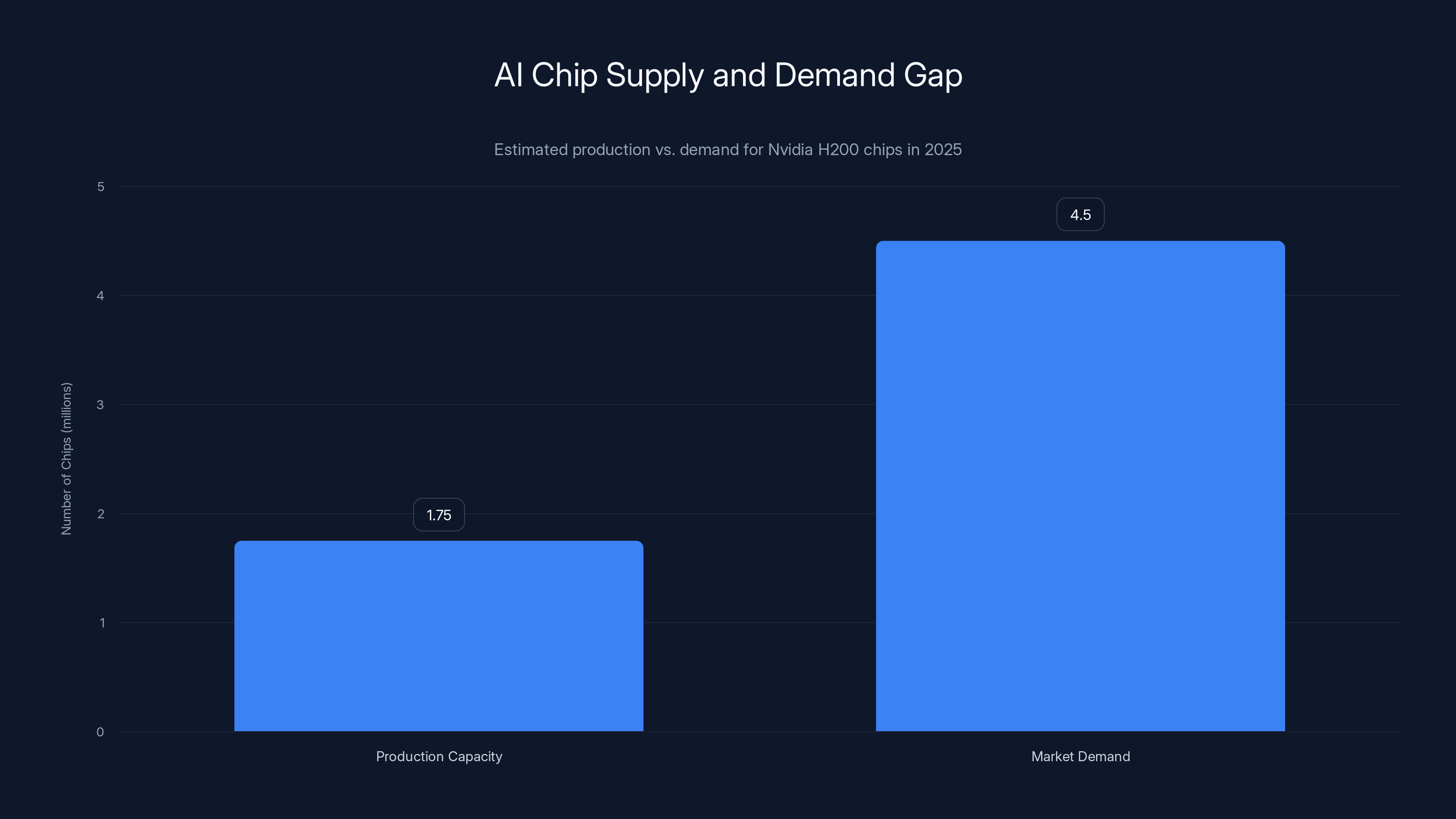

In 2025, Nvidia's production capacity for H200 chips was estimated at 1.75 million units, while market demand was approximately 4.5 million, highlighting a significant supply gap. Estimated data.

Data Center Chaos: The AI Chip Supply Crisis

While consumers argued about RTX 5000 pricing, something more dramatic was unfolding in data centers: the supply chain was breaking.

Every major technology company was simultaneously trying to build AI infrastructure. Microsoft was building massive data centers for its partnership with OpenAI. Google was scaling up TPU farms while also maintaining Nvidia chip orders. Meta, Amazon, Apple, and Chinese tech giants all needed more chips than Nvidia could possibly produce.

Nvidia's production capacity in 2025 was around 1.5-2 million H200 chips annually. The market demand was closer to 4-5 million. The gap meant that every chip produced was selling before manufacturing even completed.

Lead times stretched to 6-12 months. You couldn't just order Nvidia chips and get them in weeks. You needed to place orders and wait up to a year, hoping Nvidia prioritized your company's order.

This created perverse incentives. Companies overbought chips to build inventory buffers, which further tightened supply. Used Nvidia chips from the secondary market commanded prices only 10-15% below MSRP, suggesting demand hadn't softened at all.

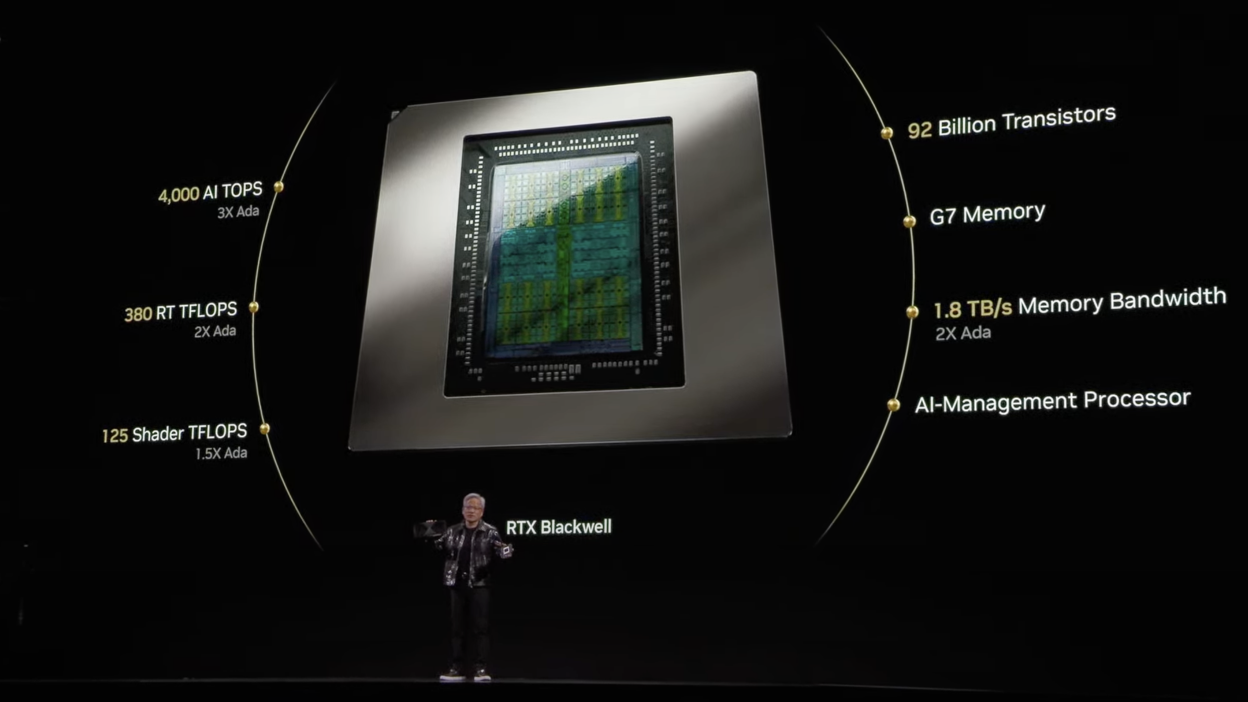

The Architecture Evolution

Nvidia's response was aggressive. The company released the Blackwell architecture and announced quarterly upgrades to chip specifications. The H200, announced in late 2023, included 141GB of HBM3e memory compared to H100's 80GB.

Memory bandwidth became the critical bottleneck. AI workloads were increasingly memory-bandwidth limited, not compute limited. A bigger matrix of processors didn't matter if you couldn't feed them data fast enough.

HBM3e memory—high-bandwidth memory in the third-generation, extended variant—was the solution. But producing HBM3e at scale was harder than producing the GPU chips themselves. Memory yields became the limiting factor in chip production.

Architectural Efficiency Gains

One area where Nvidia genuinely delivered value in 2025 was power efficiency. The H200 consumed 700-800 watts compared to H100's 600-700 watts, but delivered roughly 60-70% more compute performance. That's approximately 40% better performance per watt.
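The performance-per-watt figure follows from dividing the speedup by the increase in power draw. A minimal Python sketch, taking the midpoints of the ranges quoted above as an assumption:

```python
def perf_per_watt_gain(speedup: float, old_watts: float, new_watts: float) -> float:
    """Relative change in performance per watt between two chips."""
    return speedup / (new_watts / old_watts) - 1

# Midpoints of the ranges quoted above (assumed for illustration).
h100_watts = 650   # midpoint of 600-700 W
h200_watts = 750   # midpoint of 700-800 W
speedup = 1.65     # midpoint of 60-70% more compute

gain = perf_per_watt_gain(speedup, h100_watts, h200_watts)
print(f"Performance per watt gain: {gain:.0%}")
```

With midpoint inputs the result lands at roughly 43%, consistent with the "approximately 40%" figure. Using the ends of the ranges instead moves the answer by several points in either direction.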

In data center economics, power efficiency translates directly to infrastructure costs. A 30% reduction in power consumption means 30% less cooling infrastructure, 30% less electricity costs, and 30% better utilization of your facility's power budget.

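For a company running thousands of GPUs, this wasn't trivial. At roughly 750 watts each, 10,000 chips draw about 7.5 megawatts, or around $6.6 million a year in electricity at an assumed $0.10 per kilowatt-hour, before cooling overhead. Each 1% improvement in power efficiency is worth tens of thousands of dollars a year at that scale, and a 40% generational gain in performance per watt cuts the bill for the same work by nearly $1.9 million.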

The Profitability Squeeze

Here's the paradox: despite astronomical data center revenue, Nvidia's profit margins were getting pressured. The company had to spend heavily on:

- R&D: Developing next-generation chips ($5+ billion annually)

- Manufacturing: Paying TSMC for wafer production (rising each year)

- Inventory: Building chips that wouldn't ship for months

- Logistics: Managing global supply chains

The gross margin on data center chips was 78%, but operating expenses and manufacturing costs ate into net profit more each quarter. By Q4 2025, Nvidia's net margins had compressed from 55% to closer to 40%.

This compression would accelerate in 2026 as competitors ramped production and started capturing market share.

Architectural Innovation: What Actually Changed Under the Hood

Beyond marketing and pricing, Nvidia did make genuine architectural improvements in 2025. Let's dig into what changed in the actual hardware.

Tensor Core Evolution

Tensor cores—specialized processors for AI math—got a significant upgrade. The RTX 5000 series used fifth-generation tensor cores with support for TF32 (Tensor Float-32) operations natively in hardware.

TF32 is a hybrid format that keeps the 8-bit exponent of 32-bit floating point for dynamic range but stores only 10 bits of mantissa (the fractional part), the same precision as FP16. In plain English: it lets GPUs compute with less precision, which is fine for AI training where approximate math is acceptable, while maintaining the dynamic range for large or small numbers.

This seems like a minor change. In reality, it enabled 2-3x speedups for many AI workloads because the hardware could pack more operations into the same time.
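The trade-off is easier to see in code. The sketch below emulates TF32 precision in Python by taking a 32-bit float and clearing the low 13 of its 23 mantissa bits, leaving 10. Truncation is a simplification chosen for clarity (real hardware may round differently), and `to_tf32` is a made-up helper name, not an Nvidia API.

```python
import struct

def to_tf32(x: float) -> float:
    """Truncate a value to TF32 precision (8-bit exponent, 10-bit mantissa)."""
    # Reinterpret the value as the raw bits of a 32-bit float.
    bits = struct.unpack("<I", struct.pack("<f", x))[0]
    # FP32 has 23 mantissa bits; keep the top 10 by clearing the low 13.
    bits &= ~((1 << 13) - 1) & 0xFFFFFFFF
    return struct.unpack("<f", struct.pack("<I", bits))[0]

# The exponent is untouched, so very large and very small values survive.
for value in (1e30, 1e-30):
    approx = to_tf32(value)
    rel_err = abs(approx - value) / value
    print(f"{value:g} -> relative error {rel_err:.2e}")

# Precision is coarser: steps smaller than 2**-10 near 1.0 are lost.
print(to_tf32(1 + 2**-10) == 1 + 2**-10)  # representable step
print(to_tf32(1 + 2**-11) == 1.0)         # too fine, truncated away
```

The range of representable magnitudes matches FP32, while the relative error stays below about one part in a thousand. That level of error is usually tolerable for training neural networks.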

Memory Architecture Overhaul

As mentioned, HBM3e memory was the story of 2025's architecture. But the memory controller redesign mattered too. Nvidia's new memory controllers included:

- Prefetching logic that predicted which memory addresses would be accessed next

- Compression for certain data types, reducing memory bandwidth demand by 20-30%

- Caching hierarchies that kept frequently-accessed data closer to compute cores

These changes addressed a fundamental problem: AI models were getting bigger faster than bandwidth was improving.

In 2020, a typical AI model used 100-200GB of GPU memory. By 2025, cutting-edge models needed 500GB to several terabytes. You couldn't just fit these models on a single GPU anymore. You needed interconnects linking multiple GPUs together, and those interconnects had bandwidth limits.
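A quick way to see why interconnects became unavoidable is to count how many GPUs are needed just to hold a model's weights. The sketch below uses the 141GB H200 capacity mentioned earlier and the model sizes from this paragraph. It ignores activations, optimizer state, and other overhead, so real deployments need more.

```python
import math

def min_gpus_for_weights(model_gb: float, gpu_memory_gb: float) -> int:
    """Smallest number of GPUs whose combined memory can hold the weights."""
    return math.ceil(model_gb / gpu_memory_gb)

H200_MEMORY_GB = 141  # HBM3e capacity quoted earlier in the article

for model_gb in (500, 2000):
    n = min_gpus_for_weights(model_gb, H200_MEMORY_GB)
    print(f"{model_gb} GB model -> at least {n} GPUs")
```

Even as a lower bound, a 500GB model spans four GPUs, so every forward and backward pass depends on how fast those GPUs can exchange data.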

Interconnect Improvements

Nvidia's NVLink interconnect, which connects multiple GPUs together, improved significantly. NVLink 5, the Blackwell-generation link, offered 1.8TB/sec of bandwidth per GPU, compared to NVLink 4's 900GB/sec.

This doubling of inter-GPU bandwidth meant that distributed AI training across multiple GPUs became less of a bottleneck. Models could scale to more GPUs with less severe diminishing returns.

Sparsity and Pruning Hardware

An emerging trend in AI was sparsity: many values in neural networks are zero or near-zero, and you don't need to compute them. Nvidia added hardware support for sparse tensor operations, allowing GPUs to skip computations on zero values.

This could provide 2-4x speedups for certain AI workloads, though it required software optimization to take advantage of it.
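The idea behind sparsity can be illustrated in a few lines of Python. This is a software illustration of the concept only, not a description of how the hardware implements it. The function name and the sample values are made up for the example.

```python
def sparse_dot(weights: list[float], activations: list[float]) -> tuple[float, int]:
    """Dot product that skips zero weights; returns (result, multiplies performed)."""
    total = 0.0
    multiplies = 0
    for w, a in zip(weights, activations):
        if w == 0.0:
            continue  # nothing to compute for a zero weight
        total += w * a
        multiplies += 1
    return total, multiplies

# Hypothetical pruned weights: 5 of the 8 entries are zero.
weights = [0.5, 0.0, 0.0, -1.0, 0.0, 2.0, 0.0, 0.0]
activations = [1.0, 2.0, 3.0, 4.0, 5.0, 6.0, 7.0, 8.0]

result, multiplies = sparse_dot(weights, activations)
print(f"result={result}, multiplies={multiplies} of {len(weights)}")
```

Here only three of eight multiplications are performed. The speedup in practice depends on how many values are zero and whether the software arranges them in a pattern the hardware can exploit, which is why the gains require software optimization.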

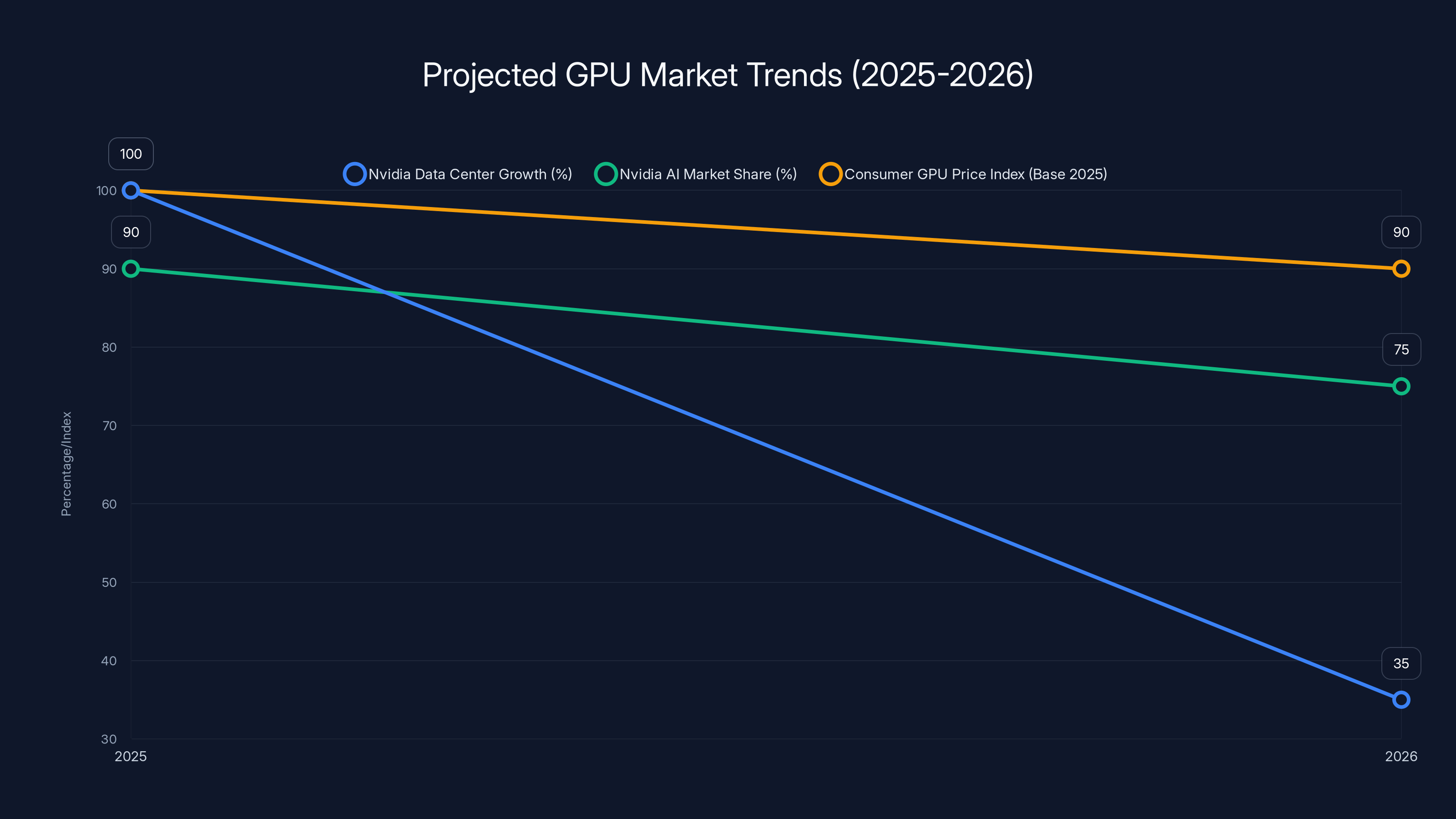

Projected data suggests Nvidia's data center growth will moderate, AI market share will decline slightly, and consumer GPU prices will normalize by 2026. (Estimated data)

Competition Emerging: AMD and Intel Fight Back

Nvidia's dominance in 2025 was absolute in AI, but the competitive landscape was shifting in consumer and server segments.

AMD's RDNA 4 Strategy

AMD released RDNA 4 consumer GPUs in mid-2025, positioning them as better value than Nvidia's RTX 5000 series. The RDNA 4 GPUs:

- Offered similar gaming performance to RTX 4080/4090 at lower prices

- Consumed less power (240-380W vs Nvidia's 320-550W)

- Supported ray tracing and upscaling technology (though AMD's DLSS alternative, FSR 3.1, wasn't as polished)

AMD's strategy was clever: don't try to beat Nvidia on the high end. Instead, dominate the value segment where consumers wanted performance without paying flagship prices.

It worked. By Q4 2025, AMD captured approximately 20-25% of the discrete consumer GPU market, up from 15% in 2024.

Intel's Arc Ambitions

Intel's Arc GPUs had a rougher year. The Arc 3rd generation, released early 2025, still suffered from driver issues, software compatibility problems, and inferior ray tracing performance compared to Nvidia and AMD.

But Intel was investing heavily. The company spent billions on driver development and partnered with major game publishers to optimize Arc support. By late 2025, Intel's market share was still under 5%, but the trajectory was improving.

The real story with Intel was data center. Intel's Ponte Vecchio accelerators, while not competitive with Nvidia's H200 for general AI workloads, found niches in specific scientific computing applications where their architecture had advantages.

The Custom Chip Revolution

The bigger threat to Nvidia wasn't AMD or Intel. It was custom chips built by tech giants themselves.

Google's TPU v5, optimized specifically for Google's AI workloads, was faster and more efficient for those specific tasks. Amazon's Trainium chips for AI training were gaining adoption. Apple's Neural Engine was becoming increasingly sophisticated.

None of these custom chips were better than Nvidia for general AI purposes. But for specific workloads (Google search ranking, Amazon recommendation systems, Apple on-device AI), custom hardware was superior.

Nvidia executives didn't talk about this risk publicly, but internally, it was a growing concern. If large customers built custom chips for 30-40% of their workloads, that was 30-40% of Nvidia's addressable market gone.

The Software Moat: CUDA's Dominance in 2025

Here's the thing that made Nvidia's position nearly impossible to disrupt: CUDA, the company's software framework for GPU computing.

CUDA was released in 2007. By 2025, after 18 years of development, it had become the de facto standard for GPU programming. Every major AI framework—PyTorch, TensorFlow, DeepSpeed—optimized primarily for CUDA.

Competitors like AMD (with HIP) and Intel (with oneAPI) offered alternatives, but these were always secondary. Libraries were optimized for CUDA first, other platforms second.

Switching from CUDA to an alternative GPU platform meant rewriting and reoptimizing all your code. For a company with thousands of developers and millions of lines of GPU code, that was prohibitively expensive.

This software lock-in was worth billions to Nvidia. It ensured that even if AMD or Intel released faster hardware, customers couldn't easily switch. Nvidia's advantage was architectural (the hardware is good) plus software (the ecosystem is deeply entrenched).

AMD and Intel both tried to make their platforms CUDA-compatible through compatibility layers that translated CUDA code to HIP or oneAPI. These tools worked for simple cases but struggled with complex, optimized code. The compatibility layer itself became a performance bottleneck.

Beyond that, developers had learned CUDA. They wrote books about it, taught courses on it, built careers around CUDA expertise. Switching to a different platform meant devaluing that expertise.

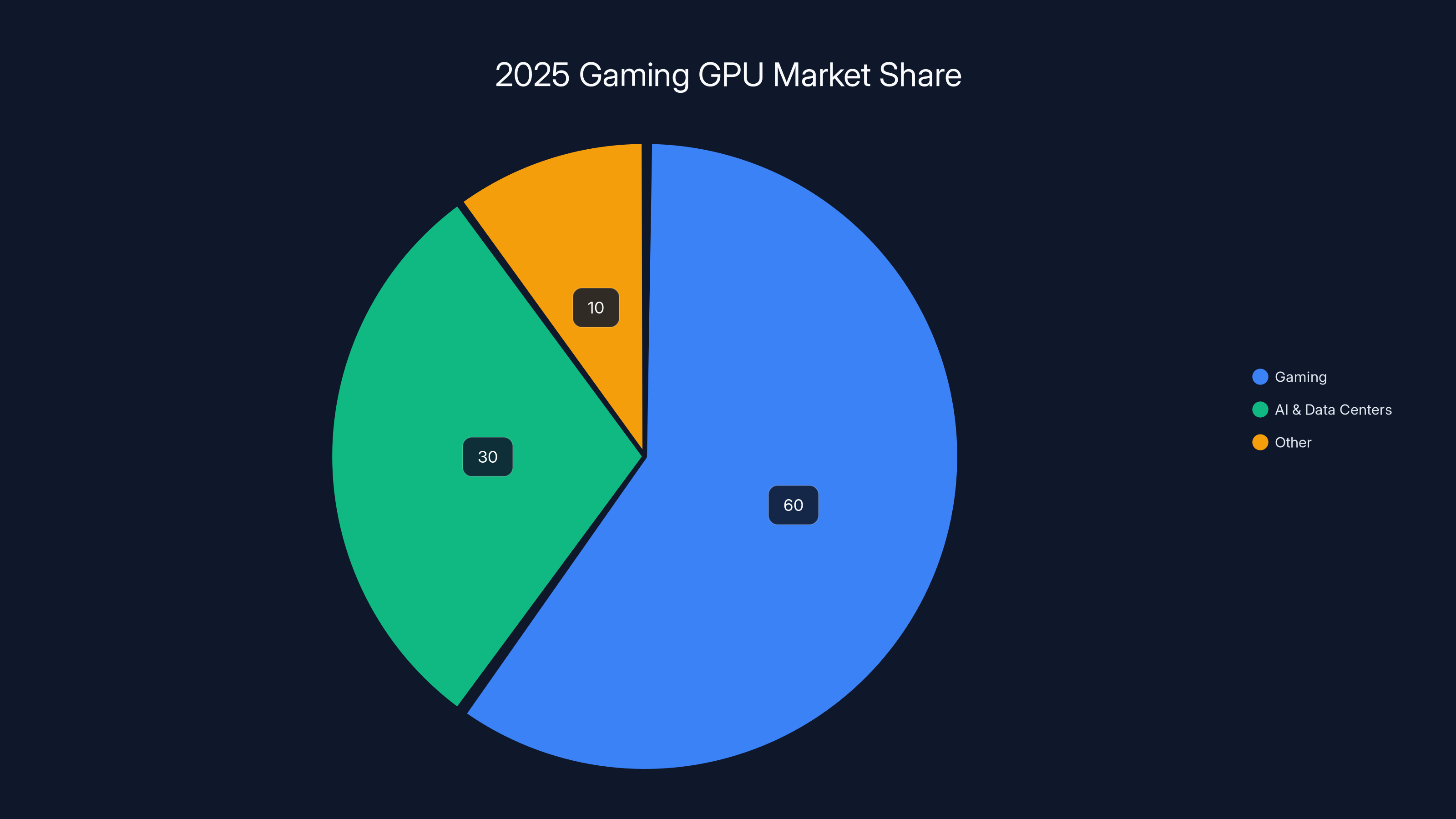

In 2025, gaming continued to dominate the GPU market with an estimated 60% share, despite growing interest in AI and data centers. (Estimated data)

Gaming Market Realities: Where GPUs Actually Matter

Most conversations about 2025's GPU market focused on AI and data centers. But for the majority of people actually buying GPUs, gaming was still the primary use case.

What did 2025 reveal about gaming GPU demands? Several things changed fundamentally.

Resolution and Ray Tracing Maturity

4K gaming with ray tracing became genuinely mainstream in 2025. It wasn't a niche enthusiast feature anymore. New games expected you to run at 4K with ray tracing at 60+ FPS.

Two generations ago, that would have required Nvidia's flagship hardware. In 2025, a mid-range GPU with DLSS 4 could achieve it.

This democratization meant fewer people needed to spend

From Nvidia's perspective, this was bad. Lower prices per unit meant lower revenue. From consumers' perspective, it was great.

Variable Refresh Rate Adoption

VRR (Variable Refresh Rate) technology—which synchronizes your monitor's refresh rate to your GPU's frame rate—went from a luxury to a standard. By 2025, virtually every gaming monitor supported either G-Sync (Nvidia's version) or FreeSync (AMD's open standard).

This mattered because VRR removed the need to hit a fixed frame rate that matched the monitor. Your GPU could deliver frames at a 67 FPS pace one moment and a 73 FPS pace the next, and your monitor would adjust. The important metric shifted from maximum FPS to frame time stability.

Nvidia's new GPUs had better frame time consistency, which mattered under VRR technology. But AMD's cards were close enough that the advantage was marginal.
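Frame time is the reciprocal of frame rate, which makes the 67 and 73 FPS example easy to translate into milliseconds. A small Python sketch:

```python
def frame_time_ms(fps: float) -> float:
    """Time spent on one frame, in milliseconds, at a given frame rate."""
    return 1000.0 / fps

for fps in (67, 73):
    print(f"{fps} FPS -> {frame_time_ms(fps):.1f} ms per frame")

# Difference in frame delivery time between the two rates.
jitter_ms = frame_time_ms(67) - frame_time_ms(73)
print(f"Frame-to-frame difference: {jitter_ms:.2f} ms")
```

The gap between those two rates is a little over one millisecond per frame, small enough for a VRR display to absorb. Larger swings in frame time are what players perceive as stutter.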

Game Engine Changes

Unreal Engine 5 became the dominant platform for AAA game development, and its default settings favored specific GPU features. Nanite (for geometry) and Lumen (for global illumination) were optimized for modern Nvidia hardware, but AMD and Intel could run them adequately with different optimization paths.

This meant that "optimal" GPU performance varied by game engine and specific game. A game built in Unreal might strongly favor Nvidia, while a game in Godot might be engine-agnostic.

VR Gaming's Second Wave

2025 was the year VR gaming crossed a threshold. Meta's Quest 4, paired with PC via Display Link, offered desktop-quality VR at $799. Previous VR solutions either required expensive dedicated headsets or looked terrible.

VR workloads are GPU-intensive—you're rendering two separate 4K images (one per eye) at high frame rates (90 FPS minimum). This created demand for high-end GPUs among VR enthusiasts, which benefited Nvidia.

Manufacturing Challenges and TSMC Dependency

Nvidia produces zero chips itself. The company designs chips and contracts production to TSMC, the Taiwan Semiconductor Manufacturing Company.

This outsourcing model worked beautifully when TSMC could scale production. But in 2025, TSMC hit constraints that reverberated through the entire industry.

Wafer Capacity Limits

TSMC produces chips on silicon wafers, each wafer containing hundreds of chips depending on size. Advanced wafers (5nm and below) are produced in limited quantities. Demand for 5nm wafers in 2025 exceeded supply by 30-40%.

Nvidia competed with Apple (for A-series chips), AMD (for CPUs and GPUs), Qualcomm (for mobile processors), and others for wafer allocation.

Nvidia's relationship with TSMC was strong, so it got priority. But priority only goes so far when the physical fab capacity is constrained.

Geopolitical Risks

Taiwan is a geopolitical flashpoint. The U.S. and China both have strategic interests in semiconductor manufacturing, and Taiwan sits in the middle.

Throughout 2025, tensions were elevated. There were no actual military incidents, but the threat of supply chain disruption was real enough that companies started diversifying manufacturing away from Taiwan.

Intel announced plans to manufacture Nvidia chips at Intel fabs (Intel Foundry Services). Samsung offered 3nm manufacturing. Neither was as advanced as TSMC's processes, but having alternatives reduced risk.

Cost Pressure

TSMC's wafer prices increased 15-20% year-over-year in 2025. This was partly due to demand and partly due to geopolitical concerns driving investment in manufacturing infrastructure.

Nvidia absorbed these cost increases rather than passing them directly to consumers (which would have caused even more backlash on RTX pricing). The company's gross margins compressed partially as a result.

In 2025, Nvidia controlled an estimated 85% of the global market for AI training chips, highlighting its dominance in the sector. (Estimated data)

The Power and Cooling Infrastructure Crisis

One issue that rarely made headlines but was critical for data center operators: power and cooling became the limiting factor, not chip performance.

Power Density Escalation

An H200 chip consumed 700-800 watts. A single GPU. The power density on the chip—watts per square millimeter—was approaching the physical limits of what cooling systems could handle.

A rack of 8 H200 chips consumed roughly 5.6-6.4 kilowatts for the GPUs alone. A typical data center power distribution can handle 5-10 kilowatts per rack. Fill your data center with H200 racks and you'd exceed power budgets.

This meant that data centers couldn't simply pack in more GPUs to get more performance. They'd hit their power budget first.
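The power ceiling can be turned into a simple capacity check. The Python sketch below uses the per-GPU and per-rack figures from this section and counts GPU power only. Host CPUs, networking, and fans are ignored, so the real limits are tighter.

```python
def gpus_per_rack(rack_budget_kw: float, gpu_watts: float) -> int:
    """How many GPUs fit in a rack's power budget, counting GPU power only."""
    return int(rack_budget_kw * 1000 // gpu_watts)

for budget_kw in (5, 10):
    for gpu_watts in (700, 800):
        n = gpus_per_rack(budget_kw, gpu_watts)
        print(f"{budget_kw} kW rack, {gpu_watts} W GPUs -> {n} GPUs")
```

At the low end of the rack budget, a standard 8-GPU server does not fit even before the rest of the system is counted. Even a 10-kilowatt rack holds only one such server with little headroom.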

Nvidia's response was efficiency improvements (the 40% better performance per watt mentioned earlier). But even 40% efficiency gains weren't enough to keep pace with compute performance growth.

Liquid Cooling Adoption

Traditional air cooling became inadequate for dense GPU deployments. Data centers increasingly adopted liquid cooling—circulating cool liquid directly to chips or components near them.

Companies like The Link Ahead (which helps manage GPU deployments) reported that 60%+ of new data center GPU installations in 2025 used liquid cooling, compared to 30% in 2024.

Liquid cooling worked better but added complexity, cost, and potential reliability concerns. If a pump fails, your $40,000 of GPUs can overheat in minutes.

Electricity Costs as Competitive Advantage

With power being the limiting factor, data center location became more important. A data center in a region with cheap electricity (like Iceland, with geothermal power, or Washington state, with hydroelectric power) had a massive competitive advantage.

A 1-cent difference in per-kilowatt-hour electricity costs, when running tens of thousands of GPUs 24/7, translated to millions of dollars annually in competitive advantage.
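The scale of that advantage is straightforward to estimate. The Python sketch below assumes 750 watts per GPU (the midpoint of the H200 range quoted above), continuous operation, and GPU power only. The fleet sizes are illustrative, not reported figures.

```python
HOURS_PER_YEAR = 24 * 365

def annual_cost_delta_usd(num_gpus: int, gpu_watts: float, price_delta_per_kwh: float) -> float:
    """Annual cost difference from a change in electricity price, GPUs only."""
    kw = num_gpus * gpu_watts / 1000
    return kw * HOURS_PER_YEAR * price_delta_per_kwh

# Illustrative fleet sizes; 0.01 is a 1-cent difference per kWh.
for num_gpus in (10_000, 50_000):
    delta = annual_cost_delta_usd(num_gpus, gpu_watts=750, price_delta_per_kwh=0.01)
    print(f"{num_gpus:,} GPUs: ${delta:,.0f} per year per 1 cent/kWh")
```

On these assumptions a 10,000-GPU fleet gains or loses several hundred thousand dollars a year per cent of electricity price, and the figure passes the million-dollar mark once the fleet reaches the tens of thousands. Cooling overhead, which scales with the same power draw, pushes it higher.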

This meant that Nvidia's chip performance mattered less than proximity to cheap power and cooling. A company with older hardware but superior power infrastructure could offer cheaper AI services than a company with the newest hardware but expensive power.

Enterprise and AI Platform Implications

What did Nvidia's 2025 mean for companies building AI products? Several important shifts happened.

Cost of AI Training

Training a large AI model on Nvidia hardware became absurdly expensive. Training a model with 100+ billion parameters could cost $5-20 million in chip rental alone, not counting electricity, bandwidth, and personnel.

This created a winner-take-most dynamic. Only well-funded companies could afford to train large models. Smaller startups couldn't compete on model scale, so they competed on efficiency, specialized models, or better software.

OpenAI, Google, Meta, and a handful of others dominated because they could afford

Inference Became the Focus

Most companies didn't need to train models. They needed to run existing models (inference). An RTX 4070 or even an RTX 3080 could run inference for most applications.

This meant that the most common GPU purchase in 2025 wasn't for cutting-edge training. It was for mid-range inference servers. Nvidia's RTX 5070 and 5080 weren't positioned well for this segment (they were too expensive), which was one reason they didn't generate as much enthusiasm.

AMD's and Intel's competition mattered more in inference, where raw compute power mattered less and cost/performance mattered more.

Quantization and Model Compression

Companies increasingly used quantization and pruning techniques to reduce model size and inference demands. A 70-billion-parameter model could be quantized to 4-bit precision, reducing memory requirements by 75% and speeding inference 3-4x.
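The 75% figure comes straight from the bit widths. A minimal Python sketch, assuming the unquantized model stores weights in 16-bit precision and counting weights only (no activations or cache):

```python
def weights_gb(num_params: float, bits_per_param: int) -> float:
    """Memory for model weights only, in gigabytes (10^9 bytes)."""
    return num_params * bits_per_param / 8 / 1e9

params = 70e9                  # 70-billion-parameter model
fp16 = weights_gb(params, 16)  # assumed 16-bit baseline
int4 = weights_gb(params, 4)   # after 4-bit quantization
reduction = 1 - int4 / fp16

print(f"16-bit: {fp16:.0f} GB, 4-bit: {int4:.0f} GB, reduction: {reduction:.0%}")
```

Going from 16 bits to 4 bits per weight shrinks the model from 140GB to 35GB. The inference speedup is a separate effect that depends on memory bandwidth and kernel support, so it is not modeled here.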

These techniques meant you didn't need a high-end GPU to run capable models. An RTX 4070, with 12GB of memory, could adequately run 4-bit quantized models in the 7-13 billion parameter range.

Quantization cut both ways for Nvidia: more people could run models on Nvidia hardware, but fewer of them needed high-end hardware.

Security and Reliability Concerns in 2025

As Nvidia's market dominance increased, security became a concern.

Supply Chain Attacks

Rumors circulated throughout 2025 that state actors were interested in compromising Nvidia GPUs or the manufacturing process. While no confirmed large-scale attacks happened, the risk was real enough that intelligence agencies warned data centers about supply chain security.

Data centers began implementing hardware supply chain verification, ensuring chips came directly from TSMC and Nvidia, not through unauthorized distributors.

Thermal Reliability Issues

The RTX 5090, running at 575 watts, had some reports of thermal throttling in poorly ventilated systems. Users complained that the card's maximum boost clocks weren't sustained at rated power levels, suggesting the thermal design was optimistic.

These weren't widespread failures, but they reinforced a perception that Nvidia was pushing density and power beyond what cooling systems could reliably handle.

Memory Reliability

HBM3e memory, while high-performance, had early reliability concerns. Some users reported memory errors at scale (running thousands of chips simultaneously). Nvidia and memory manufacturers (Samsung, SK Hynix) issued firmware updates throughout 2025 to address issues.

This was expected: new memory types always have early issues. But it meant that deploying H200s at massive scale required careful testing and gradual rollout.

Market Predictions and 2026 Outlook

What did 2025 tell us about where GPU markets were heading?

AI Market Saturation

By late 2025, supply chain chatter suggested that the explosive AI GPU demand was moderating. Companies that started building AI infrastructure in 2024 had completed first-phase rollouts by 2025. The incremental demand was still strong, but the exponential growth was cooling.

This meant that Nvidia's data center growth, while still strong, would moderate from 100%+ YoY to 30-40% YoY by 2026.

Consumer GPU Price Normalization

The RTX 5000 series backlash signaled that consumer GPU pricing had hit a ceiling. Nvidia couldn't sustain the prices that were possible when chips were in shortage.

Expect 2026 to bring price cuts on the RTX 5000 series as it ages, and expect the RTX 6000 series (anticipated for late 2026) to launch at lower MSRPs to avoid the same backlash.

Architectural Innovation Pace

Nvidia's ability to deliver major architectural improvements was slowing. The jump from RTX 4000 to 5000 was incremental. The jump from RTX 5000 to 6000 would be similarly incremental.

This suggests that GPU performance scaling will slow, and differentiation will shift from raw speed to software optimization, power efficiency, and specialized features.

Competitor Viability

AMD and Intel proved in 2025 that alternatives existed. Not at Nvidia's level, but workable for many use cases. As alternatives improve and software ecosystems catch up, Nvidia's dominant position will face more competition.

Custom chips (TPU, Trainium) will capture an increasing share of AI workloads. Nvidia will remain dominant, but its market share in AI infrastructure will slowly decline from 90% toward 70-75% by 2027.
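A falling share does not by itself mean falling revenue, because revenue is market size times share. The sketch below works through that relationship using the share figures above. The 50% cumulative market growth is a purely hypothetical input chosen for illustration, and both function names are invented for this example.

```python
def revenue_growth(market_growth: float, share_start: float, share_end: float) -> float:
    """Vendor revenue growth when the market grows and the vendor's share changes.

    revenue = market_size * share, so the growth factor is
    (1 + market_growth) * (share_end / share_start).
    """
    return (1 + market_growth) * (share_end / share_start) - 1


def breakeven_market_growth(share_start: float, share_end: float) -> float:
    """Market growth at which vendor revenue stays flat despite the share change."""
    return share_start / share_end - 1


SHARE_2025 = 0.90
SHARE_2027 = 0.725  # midpoint of the 70-75% range

# Cumulative market growth needed just to keep revenue flat.
print(f"Break-even market growth: {breakeven_market_growth(SHARE_2025, SHARE_2027):.1%}")

# Hypothetical scenario: the market grows 50% in total over the same period.
print(f"Revenue growth at +50% market: {revenue_growth(0.50, SHARE_2025, SHARE_2027):.1%}")
```

With these inputs, the AI infrastructure market only needs to grow about 24% in total for revenue to hold steady. In the hypothetical 50% scenario, revenue still grows roughly 21% despite the lost share.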

The Philosophical Shift: From Growth to Optimization

Perhaps the most important story of Nvidia in 2025 wasn't financial or technical. It was strategic.

Nvidia transitioned from a growth company to an optimization company. For two decades, the strategy was simple: build faster hardware, grow market share, expand into new areas (mobile, data centers, automotive).

In 2025, Nvidia's growth looked different. The company wasn't expanding into new markets much. It was maximizing profit from existing markets.

RTX 5000 pricing reflected this shift. Instead of dropping prices to drive volume, Nvidia maintained or increased prices. The company optimized for profit, not growth.

In data centers, the strategy was similar. Nvidia could have increased H200 production aggressively. Instead, it managed scarcity, maintaining high prices and margins.

This is a natural evolution. When you have 90% market share, there's no growth upside from share gains. Your growth comes from the market itself growing, plus squeezing more profit per unit.

For customers, this meant GPU costs would remain elevated. For Nvidia investors, it meant steadier, higher profitability. For competitors, it meant an opportunity to attack on price and value.

FAQ

What was the RTX 5000 series and why was it controversial?

The RTX 5000 series was Nvidia's next-generation consumer GPU lineup released in 2025, featuring the RTX 5090, 5080, and 5070. It was controversial because the price increases (5090 at

How did DLSS 4 change gaming and GPU requirements?

DLSS 4 introduced frame generation technology powered by AI, allowing GPUs to predict and render entire frames rather than just upscale images. This meant that older GPUs with DLSS 4 could match newer GPUs without it, effectively making high-end hardware less necessary. By late 2025, DLSS 4 was the default rendering path in major engines like Unreal Engine 5, fundamentally changing how GPU performance mapped to gaming experience.

Why was Nvidia's data center profit so high in 2025?

Nvidia's data center margins ran at 75-78% primarily because demand vastly exceeded supply, competitors had no viable alternatives (due to CUDA ecosystem lock-in), and customers were willing to pay premium prices to access any available capacity. Lead times stretched to 6-12 months, allowing Nvidia to capture full scarcity premiums without competing on price.

What architectural improvements did the RTX 5000 series actually deliver?

Key improvements included fifth-generation tensor cores with native TF32 support (2-3x speedups for AI workloads), HBM3e memory with 900GB/sec bandwidth (50% improvement over HBM3), and memory controllers with prefetching, compression, and caching logic. While meaningful for AI workloads, these improvements provided minimal benefit for gaming, which is why gaming performance gains were modest compared to data center performance.

Could AMD or Intel seriously challenge Nvidia in 2025?

AMD challenged Nvidia in consumer gaming (capturing 20-25% market share) and Intel showed competitive products, but neither threatened Nvidia's dominance in AI and data centers. The combination of CUDA software lock-in, architectural leadership, first-to-market advantage, and entrenched developer ecosystems meant competitors could compete on gaming but not on enterprise AI. Custom chips from Google, Amazon, and others posed a bigger threat to specific Nvidia use cases.

What was the manufacturing bottleneck in 2025?

TSMC's limited 5nm wafer capacity (constrained by physical fab capacity, not demand) was the primary bottleneck. Nvidia's demand for advanced process nodes competed with Apple, AMD, and others, limiting how many chips Nvidia could produce. Geopolitical risks around Taiwan also drove efforts to diversify manufacturing, though no viable alternatives matched TSMC's performance in 2025.

How did power consumption become the limiting factor in data centers?

As GPU power consumption increased (H200 at 700-800W), data center operators hit their facility power budgets before hitting performance limits. A rack of 8 H200s drew roughly 5.6-6.4 kilowatts for the GPUs alone, saturating a typical 5-10 kW rack power budget. This forced adoption of liquid cooling and made electricity costs a competitive factor: regions with cheap power gained an advantage over otherwise high-performance regions with expensive power.
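The rack arithmetic is easy to check. The sketch below uses the 700-800 W per-GPU range and the 5-10 kW rack budgets from the answer above. The `overhead_fraction` parameter (power reserved for CPUs, networking, and fans) and its 25% value are assumptions added for illustration, not figures from any vendor.

```python
def rack_gpu_power_kw(gpus_per_rack: int, watts_per_gpu: float) -> float:
    """Total GPU power draw for one rack, in kilowatts."""
    return gpus_per_rack * watts_per_gpu / 1000


def max_gpus_within_budget(rack_budget_kw: float, watts_per_gpu: float,
                           overhead_fraction: float = 0.0) -> int:
    """Whole GPUs that fit in a rack power budget.

    overhead_fraction is a hypothetical share of the budget reserved for
    non-GPU components (CPUs, networking, fans).
    """
    usable_watts = rack_budget_kw * 1000 * (1 - overhead_fraction)
    return int(usable_watts // watts_per_gpu)


# Eight GPUs at the low and high end of the quoted power range.
print(rack_gpu_power_kw(8, 700), "kW to", rack_gpu_power_kw(8, 800), "kW")

# How many GPUs fit in the smallest and largest typical rack budgets.
print(max_gpus_within_budget(5, 700))    # small budget, low-power estimate
print(max_gpus_within_budget(10, 800))   # large budget, high-power estimate

# Same large budget with an assumed 25% reserved for everything else.
print(max_gpus_within_budget(10, 800, overhead_fraction=0.25))
```

Even before any overhead is counted, a 5 kW rack cannot hold eight of these GPUs. That is the sense in which power, not compute, became the binding constraint.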

What does GPU market consolidation mean for consumers in 2026?

Consumers should expect slower price declines and continued premium pricing on flagship hardware. However, competition from AMD and the maturity of optimization technologies (DLSS 4, quantization) mean that mid-range GPUs will deliver better value than flagship options for most gaming use cases. The era of significant generational performance jumps appears over, shifting competition toward power efficiency, software optimization, and specialized features.

Conclusion: The End of an Era

2025 was Nvidia's most contradictory year. The company hit revenue and profit records that seemed impossible. Yet it faced consumer backlash, competitive challenges, and infrastructure constraints that revealed cracks in the dominance narrative.

The RTX 5000 series controversy exposed a fundamental truth: Nvidia's strength came from software and market lock-in, not raw hardware superiority. The company could charge premium prices and deliver mediocre performance improvements because customers had nowhere else to go. That calculus was changing.

DLSS 4's success proved something counterintuitive. Better software could make older hardware viable. This benefited Nvidia in the long term (locked customers in deeper) but hurt in the short term (reduced need for new hardware upgrades).

Data center dominance was real and extraordinarily profitable. But it was also fragile. It relied on supply scarcity, CUDA lock-in, and competitor incompetence. None of these were guaranteed to persist. In 2026 and beyond, expect competition to intensify.

The philosophical question for 2026 isn't "Can Nvidia remain dominant?" It's "Will Nvidia remain dominant because it deserves to, or because switching costs are too high?" Those are different things.

In 2025, Nvidia benefited from both. Going forward, it will be increasingly important to deliver genuine value, not just vendor lock-in.

For consumers, the lesson is simple: Nvidia's market position is strong, but it's not permanent. Wait for generational leaps. Moderate your expectations. And recognize that software matters more than hardware specs when choosing your platform.

For the industry, 2025 was a signal that the AI GPU boom, while continuing, has entered a different phase. The explosive growth is moderating. Profitability is becoming the focus. Competition is real. The era of unchallenged Nvidia dominance has started its transition to a more contested, mature market.

Nvidia's 2025 wasn't a failure. It was a success that revealed its own limitations.

Key Takeaways

- Nvidia's data center segment generated $60-65 billion with 78% gross margins, while consumer GPU margins compressed to 35-40% due to competition

- RTX 5000 series offered minimal performance-per-dollar improvement (1.56 vs 1.60 TFLOPS per dollar), sparking consumer backlash and signaling shift toward profit optimization over growth

- DLSS 4 frame generation technology became industry standard, making older GPUs viable for 4K gaming and reducing demand for flagship hardware

- TSMC wafer capacity constraints, not Nvidia innovation, became the limiting factor on AI accelerator production, with lead times extending to 6-12 months

- AMD captured 20-25% of consumer GPU market share by Q4 2025, while custom chips from Google, Amazon, and Intel began fragmenting Nvidia's enterprise AI monopoly

- CUDA software ecosystem lock-in remained Nvidia's strongest competitive moat, but emerging alternatives and compatibility layers were slowly eroding that advantage

- Power consumption and cooling infrastructure became critical data center constraints, favoring regions with cheap electricity and making thermal management essential

- Nvidia transitioned from a growth company to a profit optimization company, prioritizing margin expansion over market share gains in mature segments

![Nvidia in 2025: GPU Innovation, AI Dominance & RTX 5000 Debates [2025]](https://tryrunable.com/blog/nvidia-in-2025-gpu-innovation-ai-dominance-rtx-5000-debates-/image-1-1767100070222.png)