How Nvidia's Music Flamingo Is Reshaping the Music Industry's AI Future

Last January, something genuinely interesting happened in the music world. Universal Music Group, home to Taylor Swift, The Weeknd, and basically every artist your parents have heard of, announced a major partnership with Nvidia around a new AI model called Music Flamingo. Not another round of tech hype. Not another rushed rollout of AI slop designed to flood streaming platforms with mediocre beats. This was different. It was the industry saying, "Okay, we know AI is happening. Let's do this right."

Here's the thing: just two years earlier, Universal Music was suing Anthropic for distributing song lyrics without permission. The music industry was in full defensive mode. Artists were terrified. Labels were writing cease-and-desist letters faster than ChatGPT could generate lyrics. But something shifted. The threat of regulation, the reality of AI's capabilities, and maybe, just maybe, the potential upside finally made the music industry realize that fighting AI wasn't sustainable. Joining forces was.

The Nvidia Music Flamingo deal represents a crucial inflection point. Not for hype reasons. For real reasons. We're talking about an AI model that understands music the way humans do: recognizing song structure, harmony, emotional arcs, and chord progressions. We're talking about artist incubators designed to prevent AI garbage from flooding Spotify. We're talking about ensuring creators actually get paid when their work fuels machine learning models.

This article breaks down what's actually happening with this partnership, why it matters, what could go wrong, and what it means for musicians, fans, and the future of music discovery. Buckle up. The music industry's AI moment is here.

TL;DR

- Music Flamingo isn't a music generator: It's a model built to understand music at a human level, recognizing harmony, structure, emotion, and technical elements with what its backers describe as unprecedented accuracy

- Universal Music's 180-degree turn: From suing AI companies in 2023 to partnering with Nvidia in 2025, the label realized that collaboration beats litigation in the AI era

- Discovery gets smarter: Fans can find music by emotional tone, cultural resonance, and nuanced characteristics—not just genre or playlist algorithms

- Artist tools, not replacement: The real value is giving creators better analysis of their own work and helping them understand their music at a deeper technical level

- The elephant in the room: Despite "responsible AI" language, questions remain about copyright enforcement, artist compensation, and whether AI slop prevention will actually work at scale

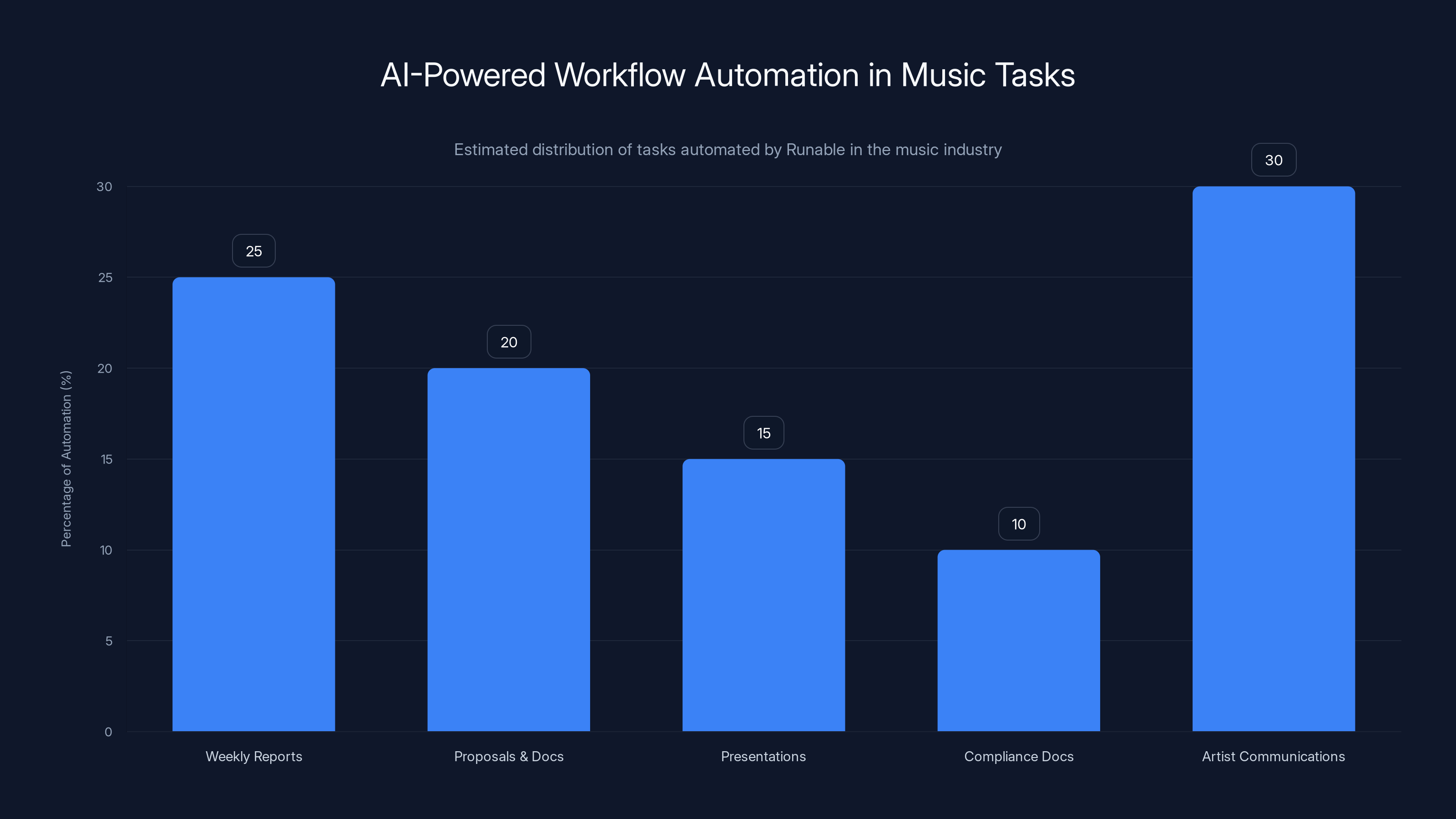

Estimated data shows that artist communications and weekly reports are the most automated tasks in music industry workflows using Runable, highlighting the platform's efficiency in handling repetitive tasks.

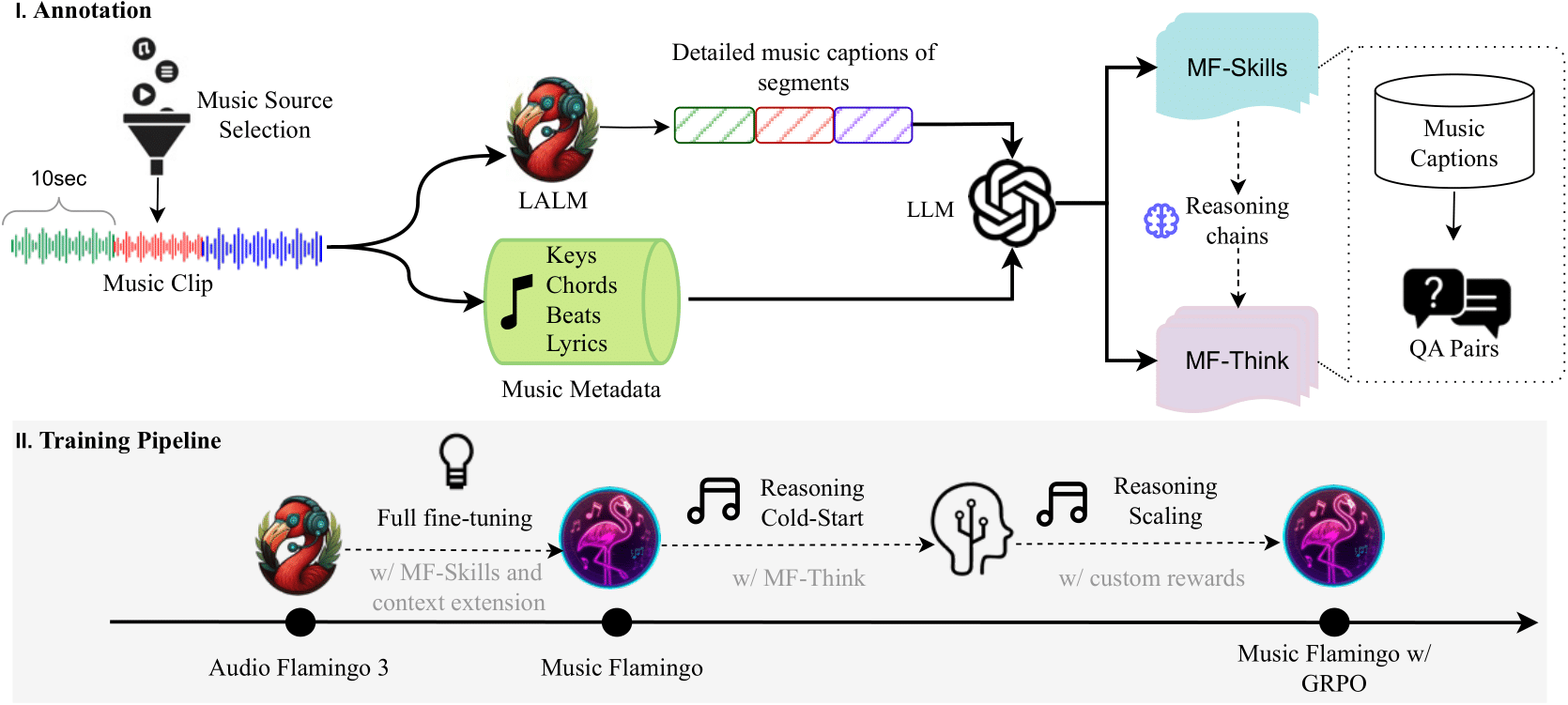

What Is Music Flamingo? Understanding the Core Technology

Music Flamingo isn't what you think it is. And that's actually the best part.

When most people hear "AI music model," they imagine Udio or similar platforms where you type a prompt and get a generated song. Music Flamingo doesn't do that. Instead, it listens to music (real, complete tracks, the kind of audio you actually hear every day) and analyzes what's happening at every level.

Think of it like the difference between a person who can hum along to a song versus a music theory professor who can break down exactly why that song makes you feel something. Music Flamingo is the latter. It can identify chord progressions, recognize how dynamics shift, detect emotional arcs, understand cultural resonances, and map relationships between different compositional elements. A 15-minute track? No problem. Music Flamingo processes the full thing, not just snippets.

The model was trained on music data in ways that (theoretically) respect copyright and artist rights, though the complete details remain confidential. What we know is that it can recognize musical patterns that most AI systems struggle with: the subtle emotional progression in a Radiohead song, the harmonic complexity in a Kendrick Lamar beat, the cultural significance of a traditional folk melody.

The technical architecture leverages transformer-based deep learning—the same fundamental approach that powers OpenAI's language models. But instead of processing text tokens, Music Flamingo processes audio spectrograms and learns relationships between audio features, semantic descriptors, and cultural context. The result? An AI that understands music at a depth that previous models couldn't touch.
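To make the "spectrograms instead of text tokens" idea concrete, here is a minimal, generic sketch of how an audio front end can turn a waveform into token-like vectors: slice the signal into overlapping frames, take a windowed magnitude spectrum of each, and group consecutive frames into patches. It is not Music Flamingo's actual pipeline, whose details aren't fully public. The frame length, hop size, and frames-per-token values are arbitrary assumptions, and a naive DFT stands in for the FFT and mel filterbank a production system would use.

```python
import cmath
import math


def frame_signal(samples, frame_len, hop):
    """Split a mono waveform into overlapping frames, dropping any ragged tail."""
    return [samples[i:i + frame_len]
            for i in range(0, len(samples) - frame_len + 1, hop)]


def log_magnitude_spectrum(frame):
    """Periodic-Hann-windowed naive DFT; returns log(1 + |X[k]|) for k = 0..N/2."""
    n = len(frame)
    windowed = [x * 0.5 * (1 - math.cos(2 * math.pi * i / n))
                for i, x in enumerate(frame)]
    spectrum = []
    for k in range(n // 2 + 1):
        coeff = sum(x * cmath.exp(-2j * math.pi * k * i / n)
                    for i, x in enumerate(windowed))
        spectrum.append(math.log1p(abs(coeff)))
    return spectrum


def spectrogram_tokens(samples, frame_len=64, hop=32, frames_per_token=4):
    """Group consecutive spectrum frames into flat patches, one patch per 'token'."""
    frames = [log_magnitude_spectrum(f)
              for f in frame_signal(samples, frame_len, hop)]
    tokens = []
    for start in range(0, len(frames) - frames_per_token + 1, frames_per_token):
        patch = frames[start:start + frames_per_token]
        tokens.append([value for frame in patch for value in frame])
    return tokens


# Example: a hypothetical 500 Hz test tone sampled at 8 kHz.
sample_rate = 8000
tone = [math.sin(2 * math.pi * 500 * t / sample_rate) for t in range(1024)]
tokens = spectrogram_tokens(tone)
```

With these settings each spectrum bin spans 8000 / 64 = 125 Hz, so the 500 Hz tone lands in bin 4, and 1,024 samples yield 31 frames grouped into 7 tokens of 4 × 33 values each. A real model would then project each token into its transformer's embedding space.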

The catch? Nvidia hasn't released the full technical paper. We've got outlines and statements, but not complete methodology. This is intentional. Music Flamingo is proprietary, and Nvidia (partnering with Universal Music) wants to control how it's deployed. That transparency gap matters when we talk about the music industry's "responsible AI" claims later.

The Music Industry's Pivot: From Lawsuits to Partnerships

To understand why this deal is significant, you need to understand how badly things went wrong in 2023.

Universal Music sued Anthropic. Not because Anthropic was making music. Because Anthropic's Claude AI was trained on song lyrics scraped from the internet, and when users asked Claude to reproduce lyrics, it would do exactly that. Entire copyrighted lyrics. Word for word. No license. No royalties. Universal Music's position was crystal clear: this is theft, and AI companies need to stop treating the music catalog as free training data.

They weren't wrong. The problem was that the music industry's legal playbook didn't scale. You can't sue every AI company individually. You can't litigate your way out of a technological shift. Every lawsuit they won would just encourage the next scrappy startup to try a different approach. The music industry was in an impossible position: either fight an endless legal war, or find a way to participate in the AI future on their own terms.

Enter Nvidia and the Music Flamingo partnership.

This wasn't surrender. It was strategic repositioning. Universal Music realized that sitting on the sidelines while AI reshapes music discovery and creation would hand the entire future to competitors. If AI is going to understand and analyze music, Universal Music wants a seat at the table. If AI is going to help artists create better music, Universal Music wants to shape how that works. If AI is going to discover songs for fans, Universal Music wants their catalog at the center.

The timeline matters here. In October 2024, Universal Music signed a deal with Udio, an AI music generator platform. That signaled the shift was real. Then came the Nvidia deal. These weren't one-off partnerships. This was the music industry fundamentally changing its AI strategy.

But let's be clear about what changed: not the industry's skepticism of AI, but their approach to managing it. They stopped treating AI as a threat and started treating it as infrastructure. That's a massive difference.

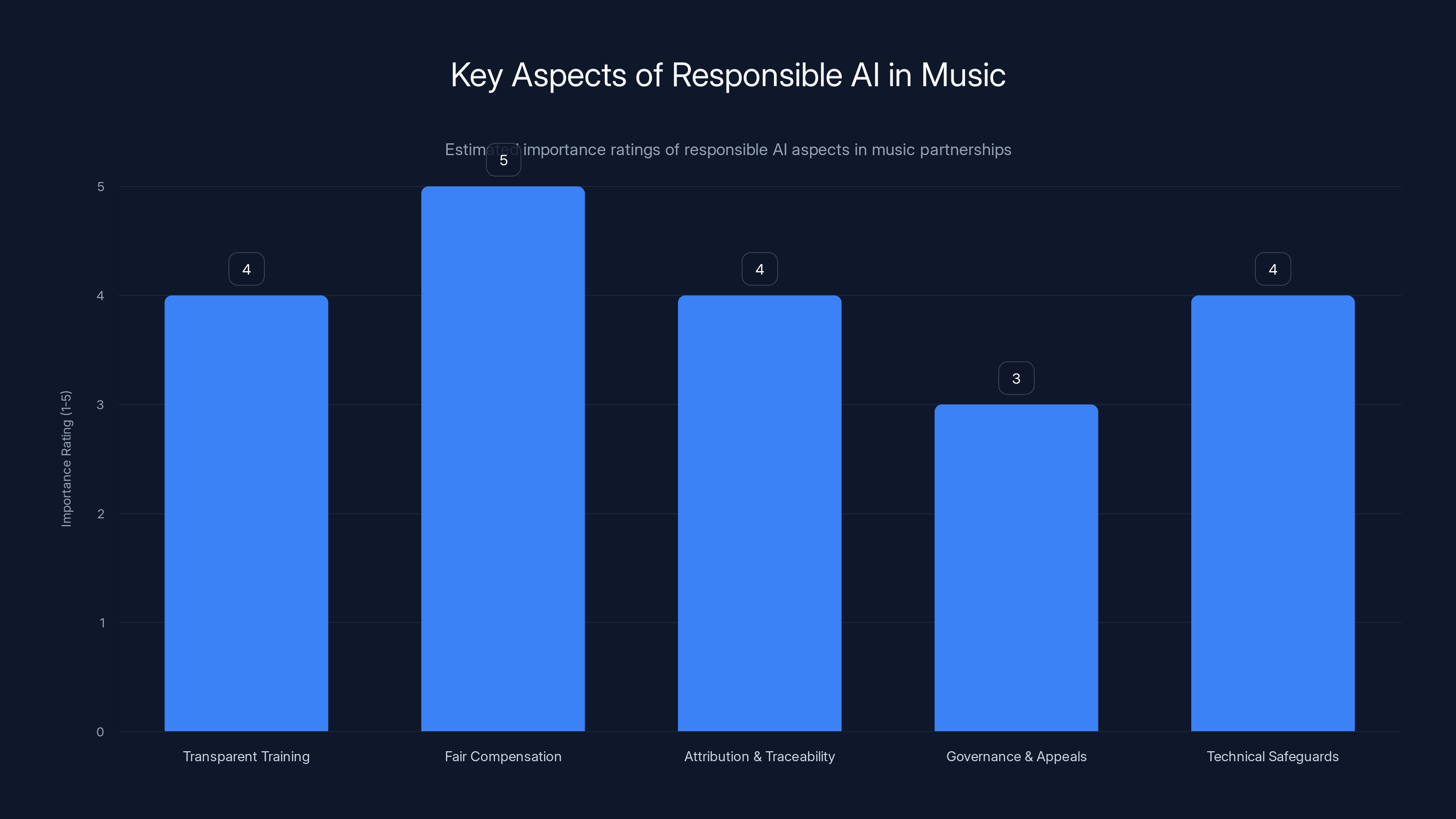

Fair compensation and transparent training are rated as the most important aspects of responsible AI in music, reflecting the need for ethical practices and artist rights. (Estimated data)

How Music Flamingo Changes Music Discovery

Here's where things get practical.

Today, how you find new music is limited. You search by artist name, browse a genre category, or let a recommendation algorithm guess based on your listening history. These systems work, but they're crude. A recommendation engine that says "you like pop, so here's more pop" misses everything interesting. It can't recognize that you specifically love minor-key melodies, or that you're drawn to music with sparse instrumentation, or that you respond to certain vocal timbres.

Music Flamingo changes this. Because the model can understand these nuanced elements, search and discovery become multidimensional. Instead of browsing by genre, you could search for music by:

- Emotional tone: Find songs with melancholic crescendos, joyful instrumental breaks, or anxious vocal delivery

- Cultural resonance: Discover music that shares thematic or structural elements with cultural movements, historical moments, or geographic traditions

- Harmonic characteristics: Search for songs with specific chord progressions, modal tonality, or harmonic complexity

- Structural patterns: Find music with similar buildup, drop, and release patterns

- Instrumental textures: Discover based on the specific combination of instruments, production techniques, or sonic characteristics
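A rough sketch of what descriptor-based search could look like: if a model scored every track along named dimensions, a query becomes a target profile plus optional per-dimension weights, and ranking is a weighted distance. The descriptor names, scores, and weighting scheme below are all hypothetical; nothing here reflects a published Music Flamingo interface.

```python
import math
from dataclasses import dataclass


@dataclass
class Track:
    title: str
    descriptors: dict[str, float]  # hypothetical model scores in [0, 1]


def match_score(track, target, weights):
    """Weighted Euclidean distance from a track to the target profile (lower is better).

    Only dimensions named in the target count; a missing track score is treated as 0.
    """
    total = 0.0
    for dim, wanted in target.items():
        have = track.descriptors.get(dim, 0.0)
        total += weights.get(dim, 1.0) * (have - wanted) ** 2
    return math.sqrt(total)


def search(catalog, target, weights=None, top_k=3):
    """Return the titles of the top_k tracks closest to the target profile."""
    weights = weights or {}
    ranked = sorted(catalog, key=lambda t: match_score(t, target, weights))
    return [t.title for t in ranked[:top_k]]


catalog = [
    Track("A", {"melancholy": 0.9, "sparseness": 0.8, "harmonic_complexity": 0.3}),
    Track("B", {"melancholy": 0.2, "sparseness": 0.1, "harmonic_complexity": 0.7}),
    Track("C", {"melancholy": 0.8, "sparseness": 0.3, "harmonic_complexity": 0.6}),
]
```

A query like `search(catalog, {"melancholy": 0.9, "sparseness": 0.9}, top_k=2)` asks for "sad and sparse" and returns `["A", "C"]`. Weights let a listener say which quality matters most: boosting `sparseness` can change the top result even when the target profile stays the same.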

This isn't a hypothetical feature list. It's the use case Universal Music says it's building toward.

For fans, this means better music discovery. You're not fighting algorithmic homogenization anymore. You're actually finding the songs that match what you're looking for.

For Universal Music, this means stickier engagement. If fans can discover music more effectively using Music Flamingo-powered tools, they spend more time on streaming platforms. More time equals more advertising revenue and higher subscription value. It's a win-win that doesn't require creating new music—just making existing music more discoverable.

The implementation details matter, though. Universal Music has been vague about exactly how Music Flamingo will integrate into Spotify, YouTube Music, or Apple Music. Will it be a new search feature? A recommendation algorithm layer? A separate discovery platform? The statement mentions that artists will be able to "describe and share the music with unprecedented depth," which suggests something more like metadata enhancement. Artists could tag their own songs with detailed descriptors that Music Flamingo understands, making discovery smarter without requiring end-user changes.
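If the metadata-enhancement reading is right, each track would carry a validated set of artist-supplied descriptors. Here is a hypothetical sketch, assuming a small controlled vocabulary invented for illustration and using the ISRC (the standard recording identifier) only as a familiar key; no real Music Flamingo schema is implied.

```python
import json

# Hypothetical controlled vocabulary, invented for this sketch.
ALLOWED_TAGS = {
    "mood": {"melancholic", "joyful", "anxious", "calm"},
    "texture": {"sparse", "dense", "acoustic", "electronic"},
    "structure": {"slow-build", "drop", "verse-chorus", "through-composed"},
}


def build_metadata(isrc, artist_tags):
    """Validate artist-supplied tags and return a JSON metadata record.

    Unknown categories or tags raise ValueError; duplicate tags are collapsed and sorted.
    """
    record = {"isrc": isrc, "descriptors": {}}
    for category, tags in artist_tags.items():
        allowed = ALLOWED_TAGS.get(category)
        if allowed is None:
            raise ValueError(f"unknown category: {category}")
        unknown = set(tags) - allowed
        if unknown:
            raise ValueError(f"unknown {category} tags: {sorted(unknown)}")
        record["descriptors"][category] = sorted(set(tags))
    return json.dumps(record, sort_keys=True)
```

Validating against a fixed vocabulary is the design choice that makes the tags useful: a discovery system can only match on descriptors it recognizes, so free-text tags would fragment quickly.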

Artist Tools and the Fight Against "AI Slop"

The second major component of the partnership is artist-focused. And this is where things get interesting—and controversial.

Universal Music's statement emphasizes a "dedicated artist incubator" where creators can design and test AI-powered tools. The goal, according to the companies, is to prevent generic "AI slop"—the mediocre, derivative, soulless music that floods SoundCloud, Spotify playlists, and TikTok daily.

But here's the honest truth: preventing AI slop at scale is genuinely hard.

You can't engineer human creativity. You can't write code that says "only generate great songs." What you can do is give artists better tools to understand their own work, analyze why certain songs resonate, and use AI as a creative companion rather than a replacement.

Music Flamingo enables this. An artist could upload their own track and get detailed analysis: what chord progressions are driving engagement, where the emotional peaks occur, how the song compares structurally to similar tracks in their genre, what cultural resonances might make it more discoverable. That's valuable. That helps artists make better creative decisions.
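As a stand-in for "where the emotional peaks occur," here is a deliberately crude sketch that uses loudness as a proxy: compute RMS energy per window, then report local maxima close to the track's loudest point. Loudness is an assumption chosen for illustration, not how Music Flamingo models emotion, and the window size and 0.8 ratio are arbitrary.

```python
import math


def rms_envelope(samples, window):
    """RMS loudness of consecutive, non-overlapping windows of a mono waveform."""
    return [math.sqrt(sum(x * x for x in samples[i:i + window]) / window)
            for i in range(0, len(samples) - window + 1, window)]


def find_peaks(envelope, min_ratio=0.8):
    """Indices of local maxima that reach at least min_ratio of the loudest window."""
    if not envelope:
        return []
    floor = min_ratio * max(envelope)
    peaks = []
    for i, value in enumerate(envelope):
        left = envelope[i - 1] if i > 0 else float("-inf")
        right = envelope[i + 1] if i + 1 < len(envelope) else float("-inf")
        # Strictly above the left neighbour, at least the right one: a plateau counts once.
        if value >= floor and value > left and value >= right:
            peaks.append(i)
    return peaks
```

A peak at window index `i` starts `i * window / sample_rate` seconds into the track. A model that actually understands emotional arcs would look at harmony, lyrics, and arrangement, not just volume.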

Where the artist incubator gets fuzzy is on the creation side. The companies say they're committed to "responsible AI innovation" and placing "artists at the center." But what does that actually mean when you're talking about AI generation tools? Does it mean artists can use AI-generated backgrounds? Full tracks? Only certain kinds of generation?

Universal Music's Lucian Grainge said the partnership aims to "direct AI's unprecedented transformational potential towards the service of artists and their fans." That's positive language. But it's also vague. Vague language in partnership announcements usually means the companies haven't actually figured out the details yet.

What we know: there will be an incubator program. Artists will have access to Music Flamingo tools. The focus will be on "advancing human music creation," not replacement. But the mechanics of how that works at scale? That's still being designed.

Rightsholder Compensation: The Critical Question

Here's what matters most for artists and everyone who creates music: money.

The Nvidia partnership statement mentions a shared objective of "advancing human music creation and rightsholder compensation." That's the money sentence. Because if AI is going to learn from existing music, artists need to get paid. Period.

This is where things get complicated, because the music industry has spent the last two decades discovering that "fair compensation" is nearly impossible to define when technology changes how music is used.

When you stream a song on Spotify, the rights holder gets paid: a fraction of a cent, but it's something. When an AI trains on your music, learns your patterns, and generates new music based on that understanding, who gets paid? How much? For how long?

Universal Music's partnership with Nvidia doesn't fully answer these questions. The statement emphasizes "safeguards that protect artists' work, ensure attribution, and respect copyright." But safeguards sound nice in press releases. What actually happens in implementation?

There are models that could work. Blanket licensing, the approach ASCAP and BMI use for performance rights, could be extended to AI training. A small percentage of revenues generated by Music Flamingo tools could be pooled and distributed to artists whose work trained the model. Attribution systems could ensure that when Music Flamingo-powered tools create something derivative, the original artist gets recognition and revenue.
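The pooled-revenue option reduces to simple arithmetic once someone decides the weights. A minimal sketch, assuming a hypothetical pool rate and per-artist contribution weights (the genuinely hard part, which this code simply takes as given), working in whole cents and using the largest-remainder method so the payouts add up exactly to the pool:

```python
def distribute_pool(revenue_cents, pool_rate, weights):
    """Split pool_rate * revenue among rights holders pro rata by non-negative weight.

    Amounts are whole cents. Each holder first gets the floor of their exact share;
    the cents left over go to the largest fractional remainders, so the total matches.
    """
    pool = round(revenue_cents * pool_rate)
    total_weight = sum(weights.values())
    if total_weight <= 0:
        raise ValueError("weights must sum to a positive number")
    exact = {name: pool * w / total_weight for name, w in weights.items()}
    payout = {name: int(share) for name, share in exact.items()}
    leftover = pool - sum(payout.values())
    by_remainder = sorted(exact, key=lambda n: exact[n] - payout[n], reverse=True)
    for name in by_remainder[:leftover]:
        payout[name] += 1
    return payout
```

With $1,000 of tool revenue (100,000 cents) and a 5% pool, three equally weighted artists receive 1,667, 1,667, and 1,666 cents. Equal weights are exactly the blanket-licensing tradeoff: everyone gets the same cut whether or not their music mattered to the output.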

But here's the tension: none of these models are perfect. Blanket licensing means everyone gets a cut regardless of whether their music actually contributed. Revenue-sharing models require perfect tracking, which doesn't exist. Attribution systems can be gamed. Perfect solutions don't exist. Only tradeoffs.

Universal Music is in a position to actually solve this—or at least to set industry standards that others would follow. But there's no public indication they're doing that. Which means we're probably going to see the same fights that happened with streaming play out all over again with AI.

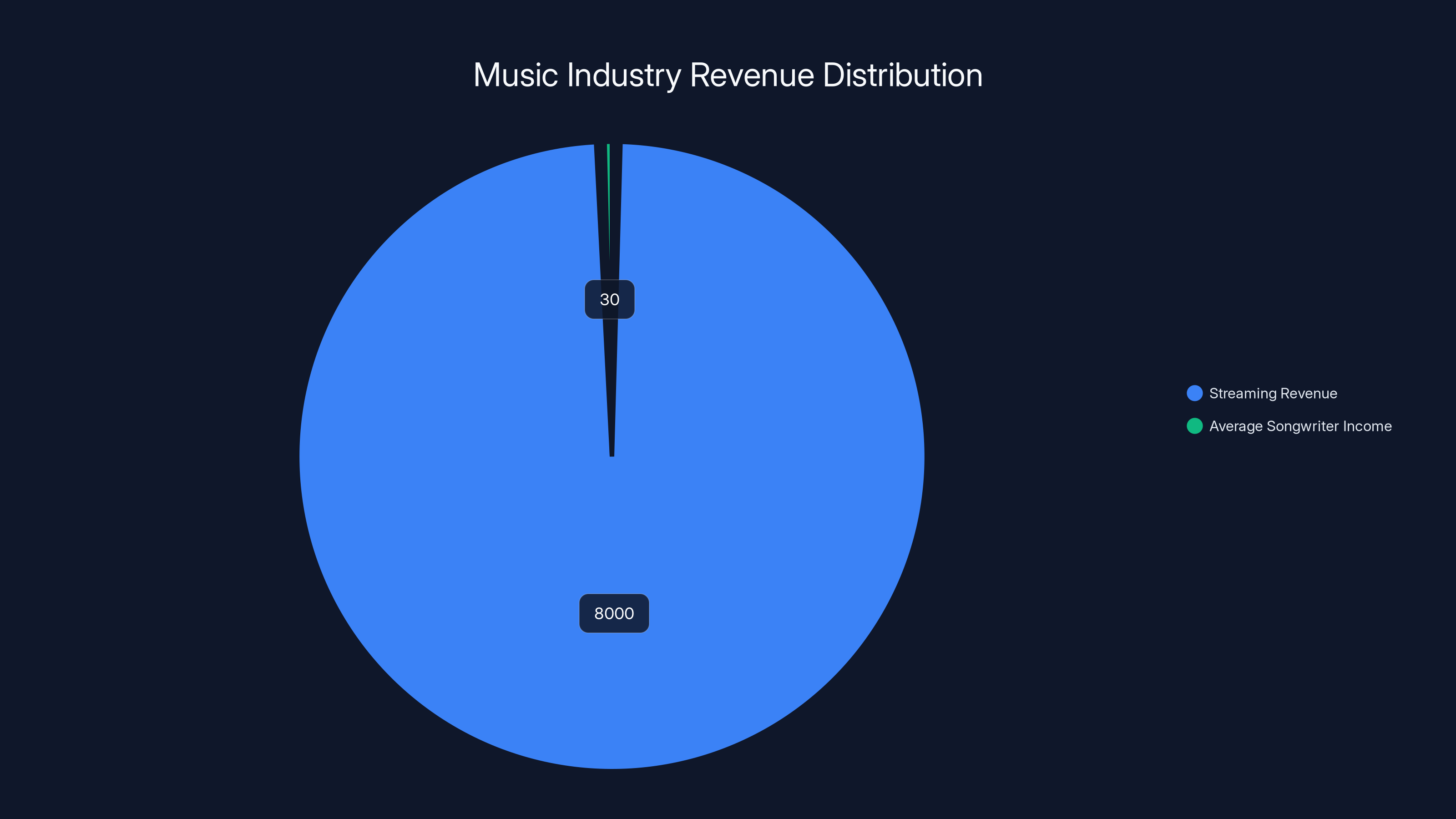

Streaming generates approximately

The Copyright Protection Problem

Let's talk about the elephant in the room: Music Flamingo doesn't magically solve copyright issues. It might actually make them worse.

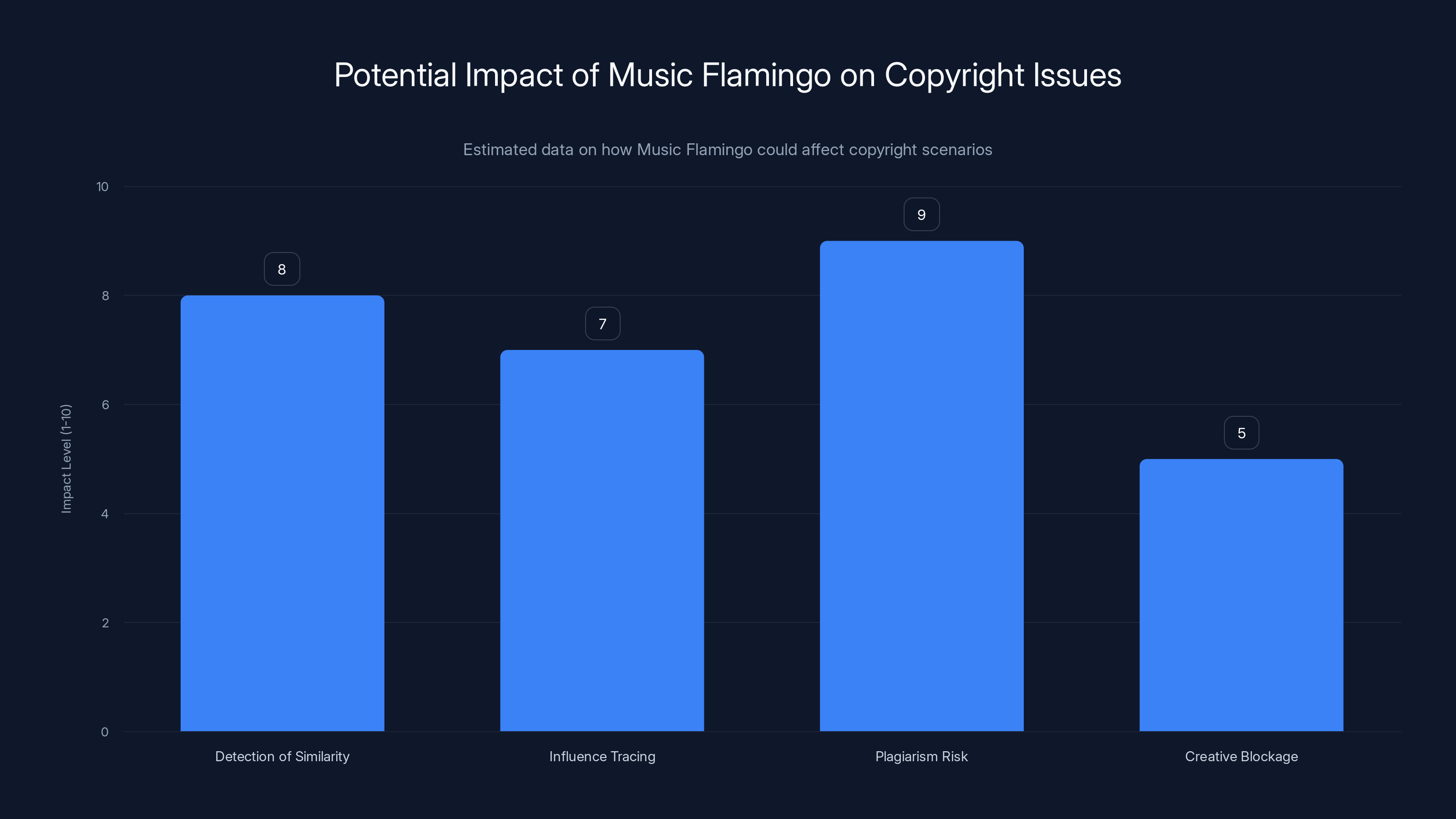

The model can understand and recognize music at a detailed level. That means it can identify similarities between songs, recognize when one piece borrows from another, and potentially trace influence or inspiration across vast catalogs. That's useful for music analysis. It's useful for discovery. It's also useful for plagiarism.

Imagine you're an artist using Music Flamingo. You upload your song. The model tells you exactly what chord progressions, melodic structures, and harmonic elements are in your work. Now imagine someone else using those same insights to generate a "derivative" track that borrows everything interesting about your song. The line between influence and copying gets blurrier, and proving infringement gets harder.

Nvidia and Universal Music say they're committed to "protecting artists' work" and "respecting copyright." But how?

They could use Music Flamingo to detect when generated music is too similar to existing work. They could set threshold rules: if a generated track is more than 75% similar to an existing song, flag it or block it. But those thresholds are arbitrary. Music borrows from music. Influence is how the art form works. Set the threshold too low and you've just created a system that flags ordinary influence and prevents all creativity. Set it too high and it's useless.
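Here is what such a threshold rule could look like, assuming each track is summarized as a feature vector and "similar" means cosine similarity (both assumptions; the vectors below are toy values). The point of the example is how much the outcome depends on where the threshold sits.

```python
import math


def cosine_similarity(a, b):
    """Cosine of the angle between two feature vectors (0.0 if either is all zeros)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0


def flag_similar(candidate, catalog, threshold=0.75):
    """Sorted titles of catalog tracks whose similarity to candidate exceeds threshold."""
    return sorted(title for title, vec in catalog.items()
                  if cosine_similarity(candidate, vec) > threshold)


catalog = {"X": [1, 0, 0], "Y": [1, 1, 0], "Z": [0, 1, 0]}
```

For the candidate `[1, 0, 0]`, track Y scores about 0.71: it passes a 0.75 threshold untouched but gets flagged at 0.5. Nothing in the math says which answer is right; that judgment has to come from people.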

The other option is technical enforcement at the point of creation. Tools built on Music Flamingo could be designed to refuse to generate anything that violates copyright. But AI models can be fine-tuned, remixed, or used in ways their creators didn't intend. There's no such thing as an AI system that's perfectly controlled. Someone will figure out how to work around the safeguards.

This isn't necessarily a deal-breaker for the partnership. But it's a reality that Universal Music and Nvidia haven't been fully transparent about. The model is powerful. Powerful tools can be misused. Both companies are betting that the benefits outweigh the risks.

What About AI-Generated Music Quality?

One thing the partnership carefully sidesteps: Music Flamingo isn't being positioned as a tool for creating hits.

Nvidia and Universal Music are both acutely aware that Spotify is already drowning in AI-generated music. Thousands of accounts, many of them automated, upload algorithmic beats every single day. The platform is full of music that technically exists but that nobody wants to hear. It's noise. And streaming platforms, record labels, and legitimate artists all hate it.

Music Flamingo being a recognition and analysis tool rather than a generation tool is deliberate. The message is: "We're using AI to make music better, not to make more music." That's the distinction the music industry is trying to hold onto.

But here's where the framing gets tricky. If Music Flamingo is so good at understanding music, why wouldn't you use it for generation? A model that understands harmony, emotion, structure, and cultural resonance could theoretically generate music that sounds human. That's exactly what Udio and other generative models do.

So the partnership effectively says: "This model could generate music, but Universal Music's partners won't use it that way." That's a commitment based on trust and business alignment. Not on technical limitations.

What happens in three years if Nvidia or Universal Music or a licensee decides the revenue from generation is too good to ignore? What happens if a competitor gets access to a similar model and takes exactly the opposite approach?

These aren't paranoid questions. They're practical questions about governance and control in the AI era. Commitments can change when financial incentives shift.

Integration with Streaming Platforms: The Real Challenge

Here's what actually matters for users: how does this show up in Spotify, YouTube Music, or Apple Music?

The partnership statement mentions that Music Flamingo will be "integrated into UMG's catalog," but the mechanics are vague. Universal Music controls one of the world's largest music catalogs, but they don't control Spotify or YouTube. They're licensing partners. Which means any Music Flamingo-powered discovery or tools have to work within the ecosystem that streaming platforms already built.

Streaming platforms have existed for over a decade now. Their recommendation algorithms are fairly mature. YouTube Music has YouTube's recommendation infrastructure. Spotify has invested billions into recommendation technology. Apple Music has Siri integration and deep learning systems.

Music Flamingo is better at understanding music, but that doesn't automatically mean it's better than what these platforms already have. It's different. And different often means fragmented. Artists would have to optimize for different discovery systems. Listeners would have to use different tools depending on their streaming platform. That friction defeats the purpose.

For Music Flamingo to actually change how people discover music, it needs to be integrated into the platforms people already use. Not as a separate tool. Not as an experimental feature. As the default recommendation engine.

Universal Music can push for that. They have leverage. Their artists generate massive streaming revenue for these platforms. They could theoretically say, "If you want UMG music, you need to implement Music Flamingo as your primary recommendation layer." But that kind of leverage creates antitrust questions. Regulators are already watching how major labels leverage their market position with streaming platforms.

So real integration will probably be slow, gradual, and voluntary—which means Music Flamingo-powered discovery might be a niche feature for years before becoming mainstream.

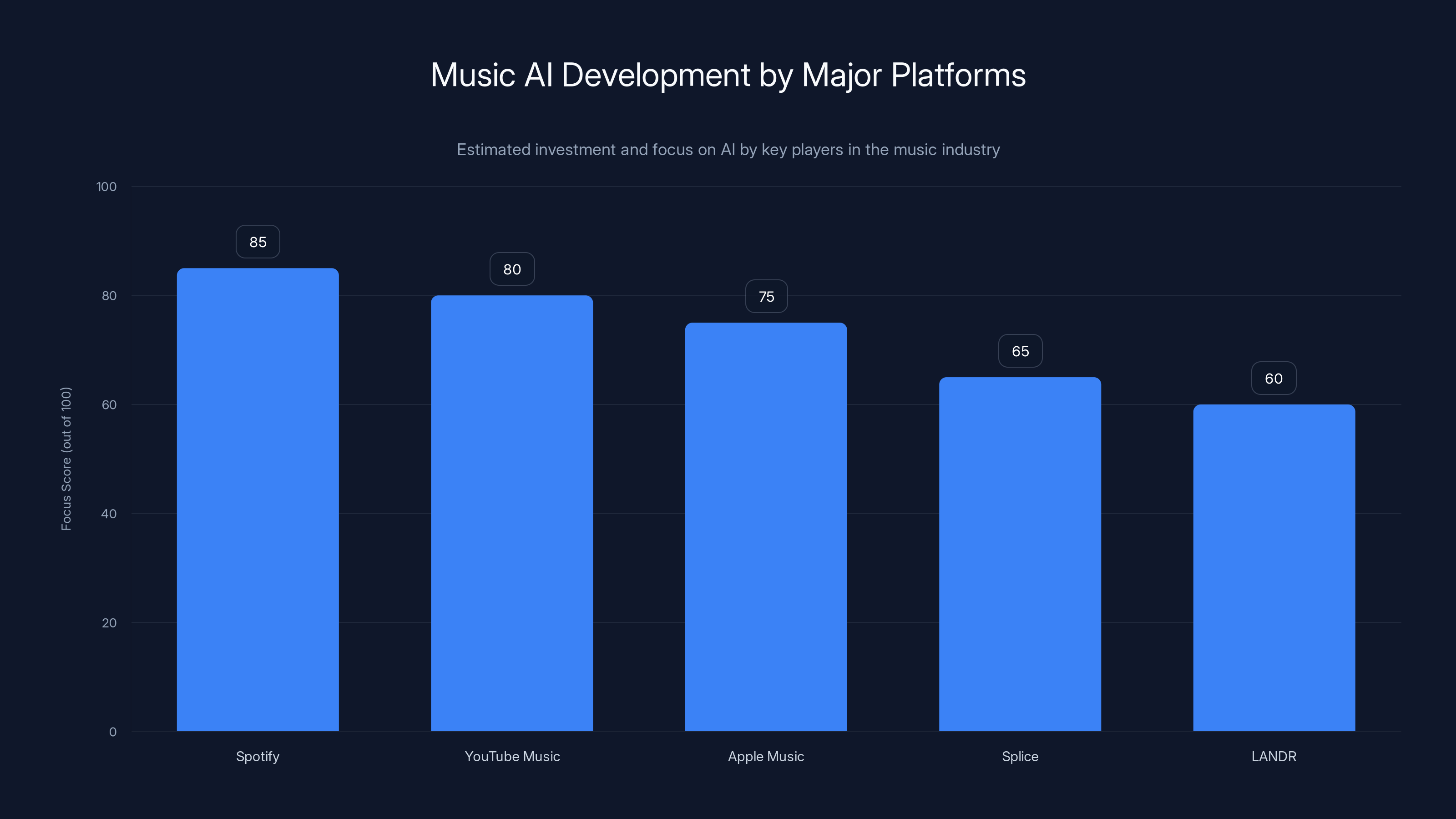

Spotify leads in AI development focus, followed by YouTube Music and Apple Music. Estimated data based on industry insights.

The Competitive Landscape: Who Else Is Building Music AI?

Nvidia and Universal Music aren't alone in this space. They're just the most high-profile partnership so far.

Spotify has been investing heavily in music AI for years. They've acquired recommendation startups, developed internal AI research teams, and are building music understanding tools that could theoretically rival Music Flamingo. YouTube Music, powered by Google's AI capabilities, has massive advantages in scale and infrastructure. Apple Music is quietly integrating AI into Siri and Apple Music's discovery features.

Outside the major platforms, companies like Splice, LANDR, and other music production platforms are incorporating AI tools for mixing, mastering, and composition assistance.

The difference between Music Flamingo and most of these is legitimacy. When Nvidia and Universal Music partner, they're saying, "We've got the music industry's support." When a startup builds AI music tools in isolation, they're constantly fighting copyright concerns and artist pushback.

But that legitimacy can also be limiting. Nvidia can't innovate as fast as a startup with a lean team. Universal Music has to balance innovation with the interests of thousands of artists and songwriters. That creates bureaucratic friction.

The real competitive test will come when Music Flamingo-powered tools actually launch and users try them. If they genuinely improve music discovery, adoption will snowball. If they feel gimmicky or don't integrate well with existing platforms, they'll be another high-profile AI initiative that sounds good but doesn't change much in practice.

What "Responsible AI" Actually Means in Music

Both Nvidia and Universal Music use the phrase "responsible AI" constantly. It's in every press release, every statement. But what does it actually mean?

Responsible AI is a buzzword. Everyone wants to be "responsible." But the term covers everything from basic ethics to serious technical commitments. For a music partnership, responsible AI should mean:

- Transparent training: Artists should know when their work was used to train a model. This doesn't mean consent, which the companies aren't committing to. It means transparency about data sources.

- Fair compensation: When AI trained on copyrighted music generates value, rights holders should receive a share. How that gets calculated is the hard part.

- Attribution and traceability: When Music Flamingo-powered tools are used, the system should track and attribute influence clearly. Derivative works should point back to their sources.

- Governance and appeals: If an artist believes their work is being used unfairly, there should be a process to challenge it.

- Technical safeguards: The model should have built-in limits to prevent obvious copyright violations, plagiarism, or misuse.
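Attribution, appeals, and independent auditing all depend on a usage record that can't be quietly rewritten. One common technique is a hash-chained, append-only log, sketched below under the assumption that each tool invocation can report which catalog works it drew on. The field names and IDs are hypothetical, and this is a generic pattern, not something either company has announced.

```python
import hashlib
import json

GENESIS = "0" * 64  # placeholder "previous hash" for the first entry


def _digest(body):
    """SHA-256 over a canonical JSON encoding of an entry body."""
    return hashlib.sha256(json.dumps(body, sort_keys=True).encode()).hexdigest()


class AttributionLog:
    """Append-only log; each entry commits to the previous entry's hash."""

    def __init__(self):
        self.entries = []

    def record(self, tool, output_id, source_ids):
        prev_hash = self.entries[-1]["hash"] if self.entries else GENESIS
        body = {"tool": tool, "output": output_id,
                "sources": sorted(source_ids), "prev": prev_hash}
        digest = _digest(body)
        self.entries.append({**body, "hash": digest})
        return digest

    def verify(self):
        """True if no entry was altered, removed, or reordered after being written."""
        prev_hash = GENESIS
        for entry in self.entries:
            body = {k: v for k, v in entry.items() if k != "hash"}
            if body["prev"] != prev_hash or _digest(body) != entry["hash"]:
                return False
            prev_hash = entry["hash"]
        return True

    def outputs_using(self, source_id):
        """Every recorded output that drew on a given catalog work."""
        return [e["output"] for e in self.entries if source_id in e["sources"]]
```

An artist filing an appeal could ask for `outputs_using(their_track_id)`, and an auditor could run `verify()` to check that the answer wasn't edited after the fact.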

Nvidia and Universal Music haven't explicitly committed to all of these. They've mentioned some. They've been vague about others. "Responsible AI" in their framing seems to mean, "We're thinking about this carefully and we're committed to doing better than previous AI music systems."

That's... something. But it's not a guarantee.

The test of whether this partnership is truly responsible will be in how it's implemented over the next 18-24 months. If artists start seeing revenue from Music Flamingo usage. If they have transparency into how their work was used. If copyright protection actually works. Then maybe "responsible" was more than marketing.

If none of that happens, and Music Flamingo becomes another revenue stream for Nvidia and Universal Music while artists continue to get squeezed, then "responsible" just meant "better PR."

Artist Reactions: Mixed Signals So Far

How have musicians responded to the Nvidia partnership?

It's complicated. Some artists and producers see legitimate potential. Better discovery tools could mean their music reaches more people. AI-powered analysis could help them improve their craft. The artist incubator program could create opportunities for those interested in experimenting with AI.

Other artists are skeptical. They've heard these promises before. They've watched streaming destroy the economics of music for everyone except superstars. They've seen AI companies train on their work without permission. Trust is low. Promises are cheap.

The union perspective is cautious support with heavy conditions. The American Federation of Musicians and other unions have been clear: AI tools for analysis and enhancement are acceptable. AI tools for replacement are not. Any deal that reduces session musician work or allows AI-generated tracks to compete directly with human-created work is a problem.

Universal Music's commitment to "advancing human music creation" is partly a response to union pressure. The major labels need union cooperation to operate—especially in film and TV scoring where unions have significant leverage. Any deal that looks like labor replacement would face immediate pushback.

What would actually build artist confidence in this partnership?

- Clear compensation mechanisms laid out in advance

- Independent auditing of how Music Flamingo is being used

- Artist opt-in (not just opt-out) for training data usage

- Union signoff on terms and conditions

- Case studies showing artists actually benefiting

None of those have been announced yet. Which means artist buy-in is still theoretical. That matters because without artist participation in the incubator program and the development process, Music Flamingo becomes just another tech-company tool imposed on the music industry from above.

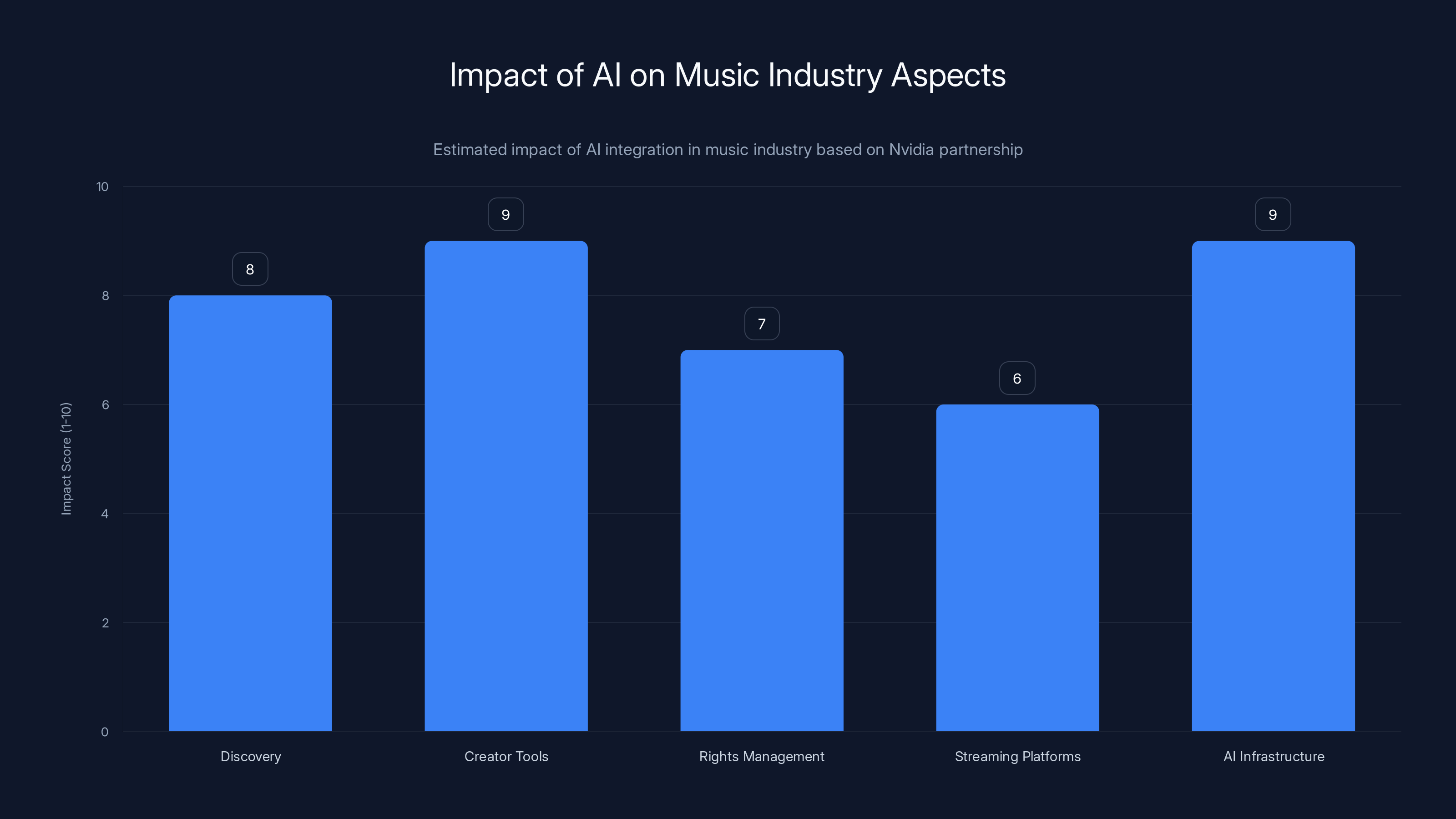

AI integration is expected to significantly enhance creator tools and AI infrastructure in the music industry, with discovery and rights management also seeing notable improvements. (Estimated data)

The Licensing and Rights Management Problem

Let's get into the weeds on rights, because this is where partnerships like this can either work brilliantly or collapse catastrophically.

Music rights are already incredibly complex. A single song might have multiple rights holders: the composer, the lyricist, the recording artist, the producer, the label, possibly other contributors. When you create AI that understands and learns from music, every single one of those parties needs to be considered. Or at least, they're going to think they need to be considered, which amounts to the same thing legally.

Universal Music controls a huge portion of the global music catalog. They have exclusive licenses to distribute and monetize that music. When Nvidia and Universal Music partner to build Music Flamingo, they're essentially saying, "Universal Music's catalog is fair game for training and analysis."

But Universal Music doesn't own all the music. They own the right to exploit it—to distribute it, sell it, license it. Training an AI model on that music to create new insights and tools might fall under those rights. Or it might not. That's literally what lawyers are going to argue about for years.

The partnership is trying to pre-empt these arguments by framing Music Flamingo as beneficial to artists and rights holders. That's smart PR. But it doesn't actually solve the legal complexities. When someone in three years sues because they believe their song was used improperly in training, Universal Music and Nvidia will still have to defend their practices.

The way rights holders typically protect themselves is through licensing agreements. Every use of copyrighted material requires explicit permission. But AI training is so new that the licensing frameworks don't exist yet. Spotify doesn't have a standard license for "AI training." Publishers don't have rates for "machine learning usage."

Universal Music is trying to create those frameworks unilaterally through this partnership. Whether other rights holders will accept those terms remains to be seen.

Looking Forward: How This Changes the Music Industry

Assuming the Nvidia partnership actually delivers on its promises—and that's a big assumption—what happens to the music industry?

Discovery becomes smarter and more personal. Users can find music that matches their taste at a granular level. That means less algorithmic homogenization, more long-tail discovery, and potentially more value for mid-tier artists who don't fit neatly into major genres.

Creator tools get significantly better. Artists will have access to AI systems that understand music at a technical level. That enables better composition, better production, better decision-making. The gap between professional and amateur producers might actually narrow.

Rights management becomes more complex but also more transparent. If Music Flamingo can track influence, understand similarity, and map relationships between songs, the industry has better tools for managing rights. That's both good (better attribution) and bad (more opportunities for disputes).

Streaming platforms might finally get disrupted. If Music Flamingo's discovery is significantly better than Spotify's or YouTube's, independent platforms built around Music Flamingo-powered discovery could compete. That would create real alternatives to the handful of services that dominate streaming today.

AI becomes a default infrastructure layer in music. Like it or not, within two to three years, every major music platform will have AI understanding and analysis as a core component. Artists will need to understand how to work with these systems. That's a skill that separates successful creators from everyone else.

The partnership also sets a precedent: major labels partnering with AI companies isn't adversarial anymore. It's collaborative. That changes the entire conversation around AI and music. Artists and creators will increasingly be asked: "Are you going to work with AI or against it?" "Against" is becoming a much harder position to defend.

But precedent cuts both ways. If Universal Music's partnership with Nvidia sets a standard where artists' work is used to train AI without explicit consent, that sets a very different precedent. Rights holders who don't get to negotiate with AI companies could find their work used anyway. That's the real worry.

The Technical Limitations Nobody's Talking About

Music Flamingo is impressive. But it has real limitations that Universal Music and Nvidia haven't discussed in detail.

First, the model is trained on existing music. All the beautiful, culturally significant music that humans have created. But AI learns patterns. If your song sounds like something the model has heard before, Music Flamingo will understand it. If your music is genuinely novel—if it breaks rules in new ways—the model will struggle. That's not a flaw specific to Music Flamingo. That's how all AI systems work. They're pattern learners, not philosophers.
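One common way to quantify "sounds like something the model has heard before" is distance in an embedding space: measure how far a track sits from its nearest neighbours in the reference data. The sketch below assumes tracks are already embedded as numeric vectors (toy 2-D values here). A k-nearest-neighbour distance is a generic out-of-distribution heuristic, not a documented Music Flamingo feature.

```python
import math


def novelty_score(embedding, reference_set, k=3):
    """Mean Euclidean distance from embedding to its k nearest reference vectors.

    Higher scores mean the input sits farther from anything the reference set covers,
    which is where a pattern-learning model's judgments are least trustworthy.
    """
    if not reference_set:
        raise ValueError("reference_set must not be empty")
    distances = sorted(math.dist(embedding, ref) for ref in reference_set)
    nearest = distances[:k]
    return sum(nearest) / len(nearest)


refs = [[0, 0], [1, 0], [0, 1], [1, 1]]
```

A track embedded at `[10, 10]` scores far higher than one at `[0.5, 0.5]`, which is the quantitative version of the point above: the model is most confident exactly where music is least new.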

Second, understanding music and appreciating music are different things. Music Flamingo can recognize that a chord progression is minor-key and sparse. It can identify that as emotionally melancholic. But does it understand why that evokes melancholy? Can it recognize when a minor-key progression is beautiful versus boring? These are philosophical questions that AI isn't equipped to answer. At least not yet.

Third, cultural context is fragile. Music is deeply tied to culture, community, history. A traditional folk song means something different to someone from that culture versus someone from outside it. Music Flamingo can recognize cultural resonances, but it does so based on training data. If the training data doesn't include certain cultures or traditions, the model will have blind spots.

These aren't reasons to dismiss Music Flamingo. They're reasons to be realistic about what it can and can't do. The partnership will succeed or fail based on whether it actually improves the music experience for users. Not on whether the AI is philosophically perfect.

Chart (estimated data): Music Flamingo's potential impact on plagiarism detection and influence tracing, alongside the risk of creative blockage from arbitrary similarity thresholds.

Competitive Threats and What Could Go Wrong

The Nvidia partnership sounds promising. But several things could derail it.

Regulatory intervention: The FTC has been increasingly interested in how major labels use their market power. If Music Flamingo is used to give Universal Music's artists an unfair advantage on streaming platforms, that could trigger antitrust action. The labels have already been sued multiple times for anti-competitive behavior.

Technology advancement elsewhere: Spotify, Google, or another company could build a competing music AI that's equally capable but more tightly integrated with its existing platform. If those companies move faster, they could own the space.

Artist backlash: If artists believe Music Flamingo is being used unfairly or without proper compensation, they'll make noise. The music industry is relationship-based. Bad relationships kill deals.

Copyright litigation: Someone may well sue, claiming their work was improperly used to train Music Flamingo. Such lawsuits could tie up the partnership in litigation for years.

Poor initial execution: If the first Music Flamingo-powered tools are buggy, unhelpful, or poorly integrated, adoption will stall. First impressions matter in tech.

Any of these scenarios could happen. None of them are inevitable. But they're realistic risks that the partnership needs to navigate.

Runable and AI-Powered Workflow Automation in Music

As the music industry increasingly adopts AI tools like Music Flamingo, many production teams and labels are discovering they need better systems for managing the workflow around these technologies.

When Music Flamingo analyzes a catalog of 10,000 tracks, you need to process and organize those results. When artist incubators generate proposals and presentations for new tools, you need to create documentation quickly. When labels need to generate reports on AI-driven discovery metrics or compensation calculations, you need something that can automate those processes.

That's where platforms like Runable become valuable. Runable is an AI-powered automation platform that helps music industry professionals create presentations, documents, reports, and slides automatically—without manual creation or repetitive work.

For example, a label could use Runable to:

- Automatically generate weekly reports on how Music Flamingo-powered discovery is performing

- Create artist incubator proposals and documentation with AI assistance

- Build presentations for rights holder meetings with data visualizations

- Produce compliance documentation for regulatory filings

- Generate artist communications and educational materials about Music Flamingo

Rather than spending hours building these documents manually, Runable's AI agents do the heavy lifting. At just $9/month, it's a fraction of what you'd spend on admin staff or consultants.

As AI becomes more integrated into music workflows, the tools that manage those workflows become critical infrastructure.

Use Case: Generate comprehensive reports on AI-powered music discovery metrics and artist compensation automatically in minutes.

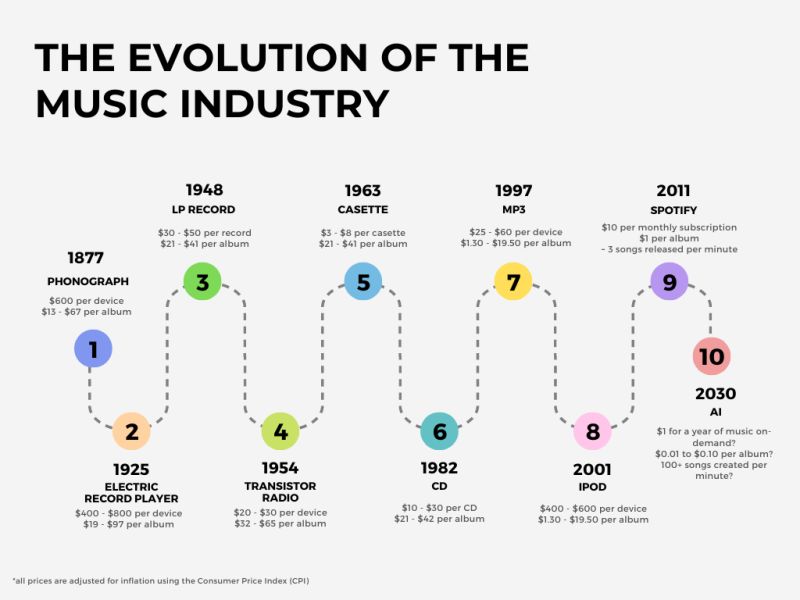

The Historical Precedent: Why This Matters for AI Governance

The Nvidia and Universal Music partnership isn't just about music. It's a test case for how industries should work with AI companies.

For the last few years, tech companies and creative industries have been mostly adversarial. Tech companies build AI. Creative industries sue them for copyright violation. Everyone loses. The music industry's pivot—from suing to partnering—sets a completely different template.

The message is: "Instead of fighting AI, let's figure out how to do AI in a way that benefits creators."

If that works, you'll see similar partnerships in:

- Publishing and books: Authors and publishers working with AI companies to improve discovery and reading

- Film and television: Studios partnering with AI companies for editing, effects, and creative tools

- Visual art: Artists and galleries partnering with AI companies for curation and discovery

- News and journalism: Publishers working with AI for research, fact-checking, and story generation

Each of these industries has the same problem: AI is coming whether they like it or not. The intelligent move is to shape how it arrives, not to try to prevent arrival.

This could be the model for responsible AI in the 2020s. Or it could be the blueprint for how big companies co-opt and control AI on their terms. History will tell. But the partnership sets a precedent either way.

What Artists Actually Need From Music AI

Let's zoom out from the partnership mechanics and think about what musicians actually need.

Better discovery tools. Artists spend more time trying to get people to listen to their music than actually making music. If AI can fix that, it's genuinely valuable.

Affordable creation and production tools. Professional recording and production costs thousands of dollars. AI tools that democratize production—that let bedroom producers sound professional—are net positive for music creation.

Fair compensation when AI uses their work. This is non-negotiable. If someone profits from your music without paying you, that's exploitation.

Transparency about how their work is being used. Artists deserve to know when and how their music is part of AI training. This isn't about permission (the industry has acted as if it doesn't need permission). It's about information.

Tools that augment, not replace. Music Flamingo as an analysis tool is valuable. Music Flamingo as a composition tool that replaces human musicians is not.

Protection against plagiarism and copyright theft. If AI can understand similarity and influence, it should be used to prevent copyright violations, not enable them.
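
How might "understanding similarity" be used to flag possible copying? A common generic technique is to compare embedding vectors with cosine similarity and flag anything above a cutoff. The sketch below assumes each track has already been turned into such a vector; the article doesn't describe Music Flamingo's outputs, so the vectors, the `flag_similar` helper, and the 0.9 threshold are all hypothetical.

```python
import math


def cosine_similarity(a: list[float], b: list[float]) -> float:
    """Cosine similarity of two equal-length vectors (1.0 means same direction)."""
    if len(a) != len(b):
        raise ValueError("vectors must have the same length")
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0 or norm_b == 0:
        return 0.0  # an all-zero vector has no direction to compare
    return dot / (norm_a * norm_b)


def flag_similar(query, catalog, threshold=0.9):
    """Return (title, score) pairs at or above threshold, most similar first.

    The 0.9 default is an arbitrary, hypothetical cutoff.
    """
    scored = [(title, cosine_similarity(query, vec)) for title, vec in catalog.items()]
    flagged = [pair for pair in scored if pair[1] >= threshold]
    return sorted(flagged, key=lambda pair: pair[1], reverse=True)


# Hypothetical 3-dimensional embeddings; real models use far more dimensions.
catalog = {
    "track_a": [0.9, 0.1, 0.3],
    "track_b": [0.1, 0.8, 0.5],
}
new_song = [0.85, 0.15, 0.3]
print(flag_similar(new_song, catalog))
```

Notice how much rides on `threshold`: lower it from 0.9 to 0.4 and `track_b` is flagged too. That sensitivity is why any similarity cutoff used for plagiarism claims needs transparent standards and human review.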

The Nvidia partnership addresses some of these. Not all of them. Whether it's actually executed in a way that benefits artists remains to be seen. But those are the criteria that matter.

Conclusion: A Pivotal Moment for Music and AI

The Nvidia and Universal Music partnership around Music Flamingo represents a genuine inflection point. For the first time, the music industry and an AI company are working together at scale to shape how AI understands, analyzes, and engages with music.

That's significant. Not because it solves everything. But because it represents a shift in strategy. Instead of litigation and opposition, the music industry is now building infrastructure.

Music Flamingo itself is impressive. A model that understands music at the level of harmony, structure, emotion, and cultural resonance opens possibilities that previous AI systems couldn't touch. Better discovery. Better analysis. Better tools for creators.

But the technical capability is only half the story. The real test is implementation. How does Music Flamingo actually get integrated into the platforms people use? How are artists compensated when their work trains the model? How are copyright protections enforced? What happens when other companies try to build competing systems? These are the questions that determine whether this partnership is genuinely transformative or just another high-profile AI initiative that doesn't change much.

The honest assessment is: promising, but unproven. Nvidia and Universal Music have committed to responsible AI in music. But "responsible" is still being defined. The proof will be in the actual deployment, integration, and results over the next 18-24 months.

For artists, the advice is simple: stay skeptical. These partnerships are valuable only if they actually deliver benefit. Watch for transparency. Watch for compensation. Watch for whether your work is being used fairly. If this partnership genuinely serves artists and fans, it's a model the entire creative industry should copy. If it's just a way for big companies to control AI for their benefit, it's a cautionary tale.

One more thing: this partnership is evidence that the era of AI being something that happens to industries is ending. The era of industries actively shaping AI is beginning. Music isn't passive anymore. Neither should any creative field be.

The Nvidia deal isn't the end of the story. It's the beginning of the next chapter. And that chapter is still being written.

FAQ

What is Music Flamingo?

Music Flamingo is an AI model developed by Nvidia and researchers at the University of Maryland that can understand music at a human level by recognizing nuanced elements like song structure, harmony, emotional arcs, and chord progressions. Unlike generative AI models that create music, Music Flamingo is designed to analyze and understand existing music, processing complete tracks up to 15 minutes long to extract detailed insights about how songs work at a technical and emotional level.

How does Music Flamingo differ from other music AI tools?

Most music AI tools focus on generation—creating new music from prompts or parameters. Music Flamingo takes the opposite approach: it's built for recognition and understanding. It can identify what makes a song work, how it compares to other music, and what cultural or emotional resonances it carries. This makes it useful for discovery, artist analysis, and music exploration rather than music creation, which is a fundamentally different application than tools like Udio or other generative platforms.

Why did Universal Music partner with Nvidia instead of continuing to sue AI companies?

Universal Music realized that fighting AI through litigation alone wasn't sustainable. The music industry shifted strategy from opposition to participation, recognizing that AI is reshaping the industry whether they like it or not. By partnering with Nvidia, Universal Music gets to shape how AI understands and analyzes their catalog, ensure artist interests are considered, and build revenue models around AI capabilities. It's a strategic pivot from defense to offense.

Will Music Flamingo help artists get discovered?

Potentially, yes. If Music Flamingo-powered discovery tools are integrated into streaming platforms, fans could find music through more nuanced searches—by emotional tone, harmonic characteristics, or cultural resonance rather than just genre. This would theoretically help artists reach listeners who might love their music but wouldn't find them through traditional browsing. But the actual impact depends on how streaming platforms implement the technology, which hasn't been fully detailed yet.
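
As a rough illustration of searching by mood and harmony instead of genre, the sketch below assumes an analysis model has already tagged each track with a mood label, a major/minor mode, and a tempo. The `TrackAnalysis` fields, the labels, and the `discover` function are hypothetical; no streaming platform's actual schema is implied.

```python
from dataclasses import dataclass


@dataclass
class TrackAnalysis:
    """Hypothetical per-track descriptors an analysis model might produce."""
    title: str
    genre: str
    mood: str        # e.g. "melancholic", "euphoric" (illustrative labels)
    key_mode: str    # "major" or "minor"
    tempo_bpm: float


def discover(tracks, mood, key_mode, target_bpm, limit=5):
    """Filter by mood and mode (genre is ignored), then rank by tempo closeness."""
    matches = [t for t in tracks if t.mood == mood and t.key_mode == key_mode]
    matches.sort(key=lambda t: abs(t.tempo_bpm - target_bpm))
    return matches[:limit]


tracks = [
    TrackAnalysis("Slow Folk Lament", "folk", "melancholic", "minor", 72),
    TrackAnalysis("Dark Synthwave", "electronic", "melancholic", "minor", 96),
    TrackAnalysis("Summer Anthem", "pop", "euphoric", "major", 124),
    TrackAnalysis("Rainy Jazz Ballad", "jazz", "melancholic", "minor", 80),
]

for t in discover(tracks, mood="melancholic", key_mode="minor", target_bpm=75):
    print(t.title, t.genre)
```

The query returns folk, jazz, and electronic tracks together because genre never enters the filter, which is the kind of cross-genre discovery the answer describes.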

How will artists be compensated when their work is used in Music Flamingo training?

Universal Music and Nvidia have committed to "rightsholder compensation" but haven't released specific details about how that will work. The companies could use blanket licensing models, revenue-sharing from Music Flamingo tools, or direct licensing arrangements. The exact mechanism will likely be negotiated with individual artists, songwriters, and publishers over the coming months. This remains one of the most critical and unresolved questions about the partnership.
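
Since the real mechanism hasn't been disclosed, the following is only a hypothetical illustration of one option named above: revenue sharing. It splits a revenue pool in proportion to a per-rights-holder usage count. What should count as "usage" is itself an open question, so the function name, holders, and numbers are invented for the example.

```python
def pro_rata_split(pool_cents: int, usage: dict[str, int]) -> dict[str, int]:
    """Split pool_cents across rights holders in proportion to usage counts.

    Uses the largest-remainder method so payouts sum exactly to the pool.
    """
    total = sum(usage.values())
    if total <= 0:
        raise ValueError("usage counts must sum to a positive number")
    payouts = {}
    remainders = []
    for holder, count in usage.items():
        share, rem = divmod(pool_cents * count, total)  # floor share plus leftover
        payouts[holder] = share
        remainders.append((rem, holder))
    # Distribute the leftover cents, one each, to the largest remainders.
    leftover = pool_cents - sum(payouts.values())
    for _, holder in sorted(remainders, reverse=True)[:leftover]:
        payouts[holder] += 1
    return payouts


# Hypothetical: a $1,000 pool split by invented usage counts.
print(pro_rata_split(100_000, {"artist_a": 500, "artist_b": 300, "artist_c": 200}))
```

Working in integer cents avoids floating-point drift, and the largest-remainder step hands out rounding leftovers one cent at a time, so no money is created or lost when a pool doesn't divide evenly.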

What does "responsible AI" mean in this context?

"Responsible AI" in the Nvidia and Universal Music partnership means the companies are committed to protecting artist rights, ensuring attribution, preventing AI-generated slop, and using AI in ways that advance human creativity rather than replace it. However, the term remains somewhat vague in practical terms. True responsibility would include transparent data usage, fair compensation mechanisms, effective copyright protection, and accountability mechanisms when artists believe their work is being misused.

Could Music Flamingo be used to create AI-generated music?

In principle, a model that understands music at Music Flamingo's level could be adapted for generation. But Nvidia and Universal Music have explicitly stated that the partnership focuses on analysis and understanding, not creation. The partnership includes an "artist incubator" for creative tools, but details about what that means remain vague. The companies are betting that the value of Music Flamingo for discovery and analysis is sufficient without adding generative capabilities.

How will this partnership affect streaming platforms like Spotify?

Music Flamingo will likely be integrated into streaming platforms gradually, probably as an enhanced recommendation or discovery layer. However, streaming platforms like Spotify and YouTube Music have their own recommendation systems and won't necessarily replace them with Music Flamingo. The real test is whether Music Flamingo-powered discovery outperforms existing algorithms, which could shift user behavior and platform adoption over time.

What are the copyright concerns with Music Flamingo?

Music Flamingo was trained on music data, and while Universal Music says the training respected copyright, the specific data sources and licensing remain confidential. Key concerns include: determining whether all rights holders agreed to the use, establishing fair compensation, preventing the model from being misused for plagiarism, and ensuring that generated insights don't enable copyright violation. These are largely unresolved questions that will likely involve litigation if not proactively addressed.

Will Music Flamingo replace human musicologists and music producers?

Music Flamingo is designed to augment human creativity and analysis, not replace it. The model can provide detailed technical analysis and suggestions, helping producers and musicians make better decisions. But creating great music requires human judgment, emotion, cultural understanding, and creativity—all things machines still struggle with. Music Flamingo is a tool to improve those human processes, not eliminate them.

Key Takeaways

- Music Flamingo is an understanding tool, not a generation tool: It analyzes music at a technical and emotional level, enabling better discovery and artist analysis without replacing human creativity

- Universal Music's strategy shifted from litigation to participation: Partnering with Nvidia rather than suing AI companies signals the music industry recognizes AI is inevitable and wants to shape it

- Discovery could get smarter: Fans could find music by emotional tone, harmonic characteristics, and cultural resonance rather than just genre, potentially helping artists reach their real audience

- Compensation mechanisms remain vague: Despite commitment to "rightsholder compensation," specific details about how artists will be paid haven't been released

- Integration with existing platforms is critical: Music Flamingo only matters if it's actually built into the tools people use daily, which remains unconfirmed

- Copyright protection and enforcement are open questions: The partnership claims to protect artists' work, but how that actually works at scale with other AI systems remains unclear

- This sets a precedent for industry-AI partnerships: If successful, this model could reshape how creative industries work with AI companies across publishing, film, visual art, and journalism

- Skepticism is warranted but optimism is fair: The partnership addresses real problems and represents genuine progress, but execution will determine whether it actually delivers on its promises

![Nvidia Music Flamingo & Universal Music AI Deal [2025]](https://tryrunable.com/blog/nvidia-music-flamingo-universal-music-ai-deal-2025/image-1-1767732073443.jpg)