Introduction: The Optimization Gap Nobody Talks About

Here's a problem that keeps supply chain managers up at night: you've got a complex logistics network, dozens of constraints, hundreds of variables, and a business goal that matters to your bottom line. The math exists to solve it perfectly. The algorithms are proven. But getting from your natural language description of the problem to a format the optimization software actually understands? That's where everything breaks down.

This is the optimization translation gap. It's been around for decades, largely invisible to people outside operations research. A manager describes a problem in conversation or email. A specialist spends days (sometimes weeks) converting that natural language into mathematical formulas that capture the actual business logic. Mistakes creep in. Constraints get misunderstood. Small errors compound into completely wrong solutions.

Then there's the expertise problem. Not every company has a team of PhDs in operations research on staff. The few specialists who understand both business and mathematical optimization are expensive, and they're always bottlenecked. Organizations end up postponing optimization projects because they can't allocate the right people, even when the potential savings are substantial.

Add privacy concerns to the mix. Many enterprises can't send their operational data to external cloud services. Regulations, competitive sensitivity, and simple caution mean your optimization models need to stay local. But most modern AI solutions require cloud infrastructure or third-party APIs.

This is where OptiMind enters the picture.

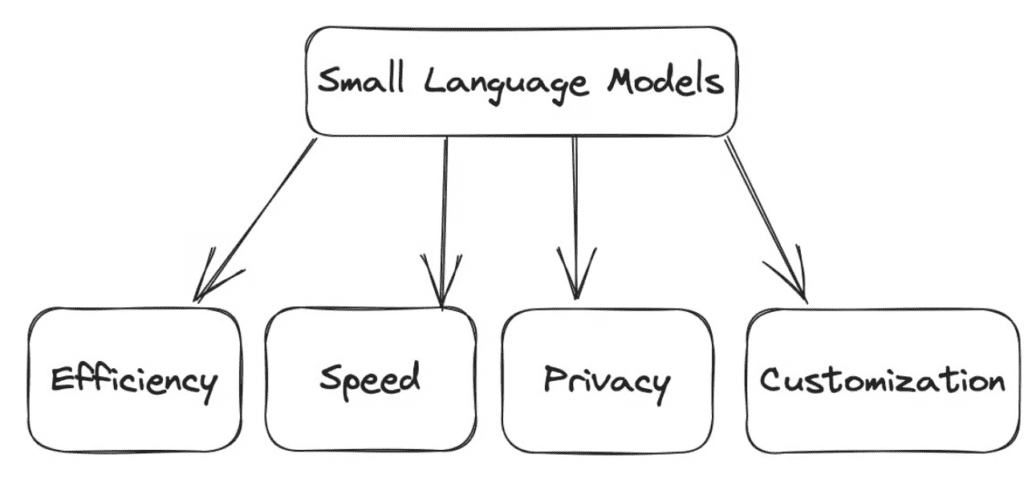

Microsoft Research developed OptiMind specifically to bridge this gap. It's a small language model (not a massive general-purpose AI) trained to understand business problems described in natural language and convert them into the mathematical formulations that optimization solvers can work with. Think of it as a translator between human business logic and mathematical precision.

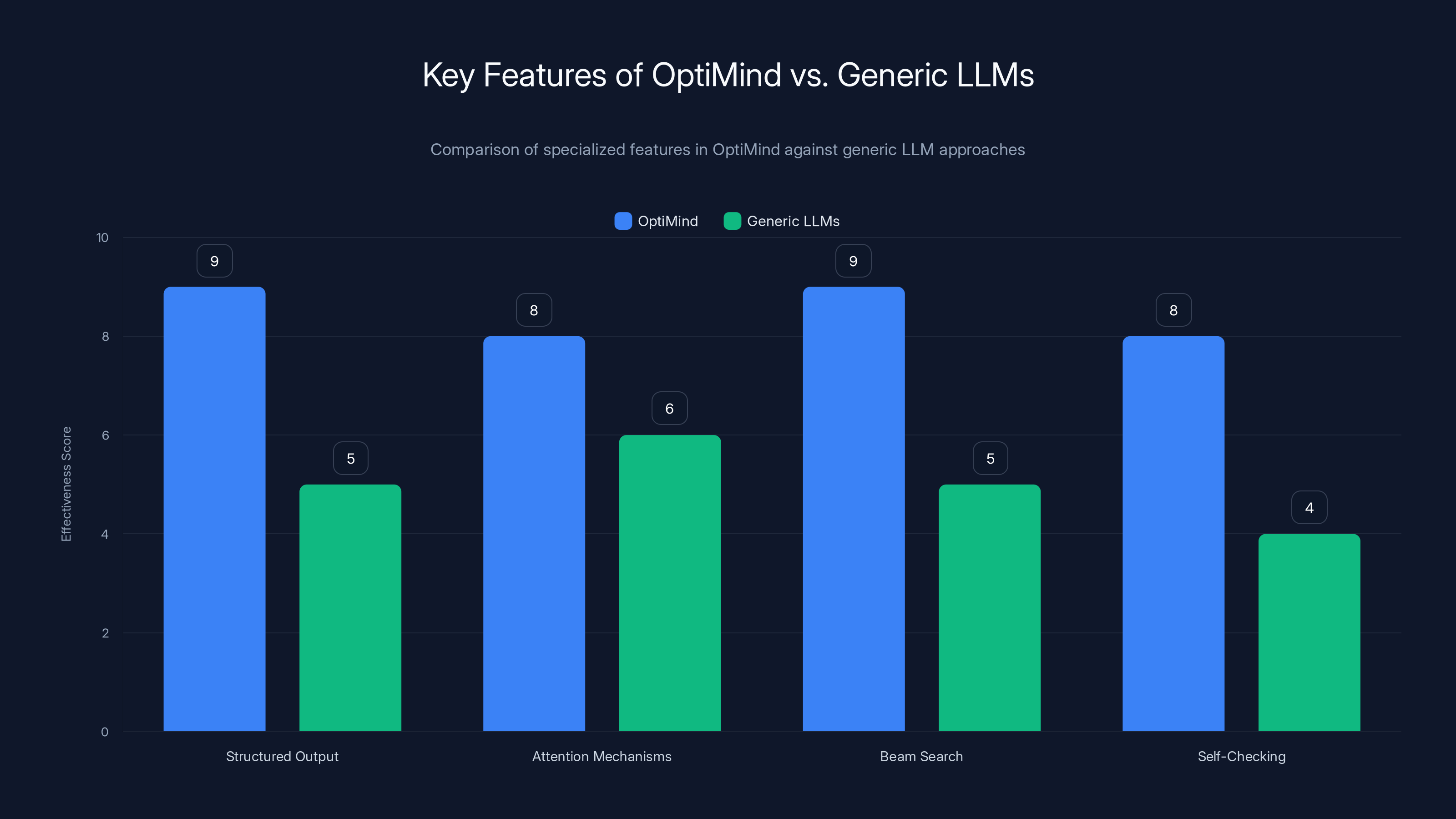

What makes OptiMind different from just using a general-purpose large language model? The focus. A general LLM trained on the entire internet might fumble optimization problems because they're specialized, domain-specific, and require accuracy that generic training doesn't guarantee. OptiMind was built specifically for this task, trained on expert-curated datasets, and equipped with domain-specific techniques that catch errors before they become problems.

The implications matter. Organizations could reduce formulation time from days to hours. They'd eliminate the expertise bottleneck by letting non-specialists describe problems naturally. They'd get privacy-preserving solutions that run locally. And they'd reduce the errors that compound through the entire optimization workflow.

Let's dig into how this actually works, why the problem was harder than it sounds, and what this means for enterprises trying to optimize complex operations.

TL;DR

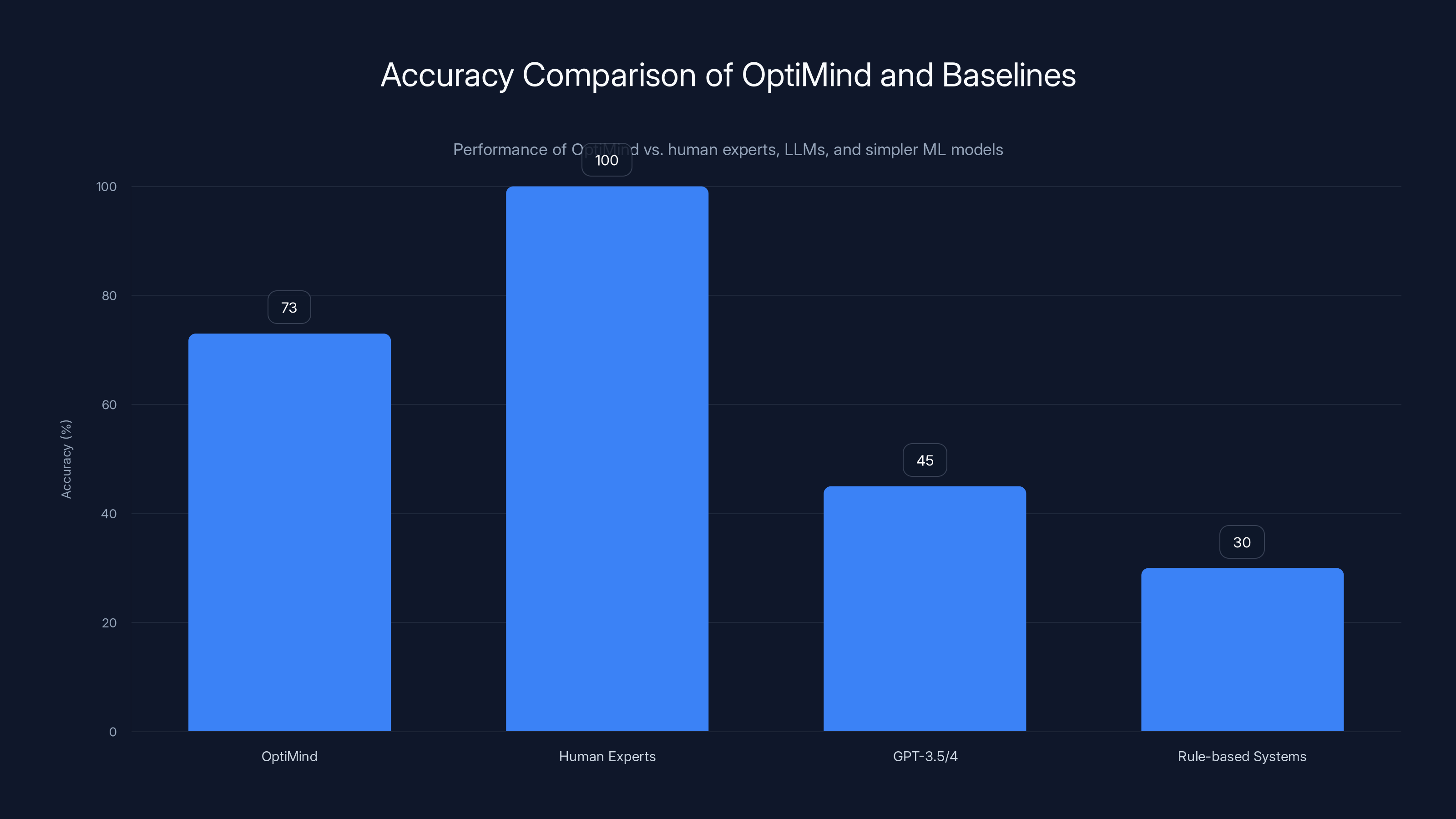

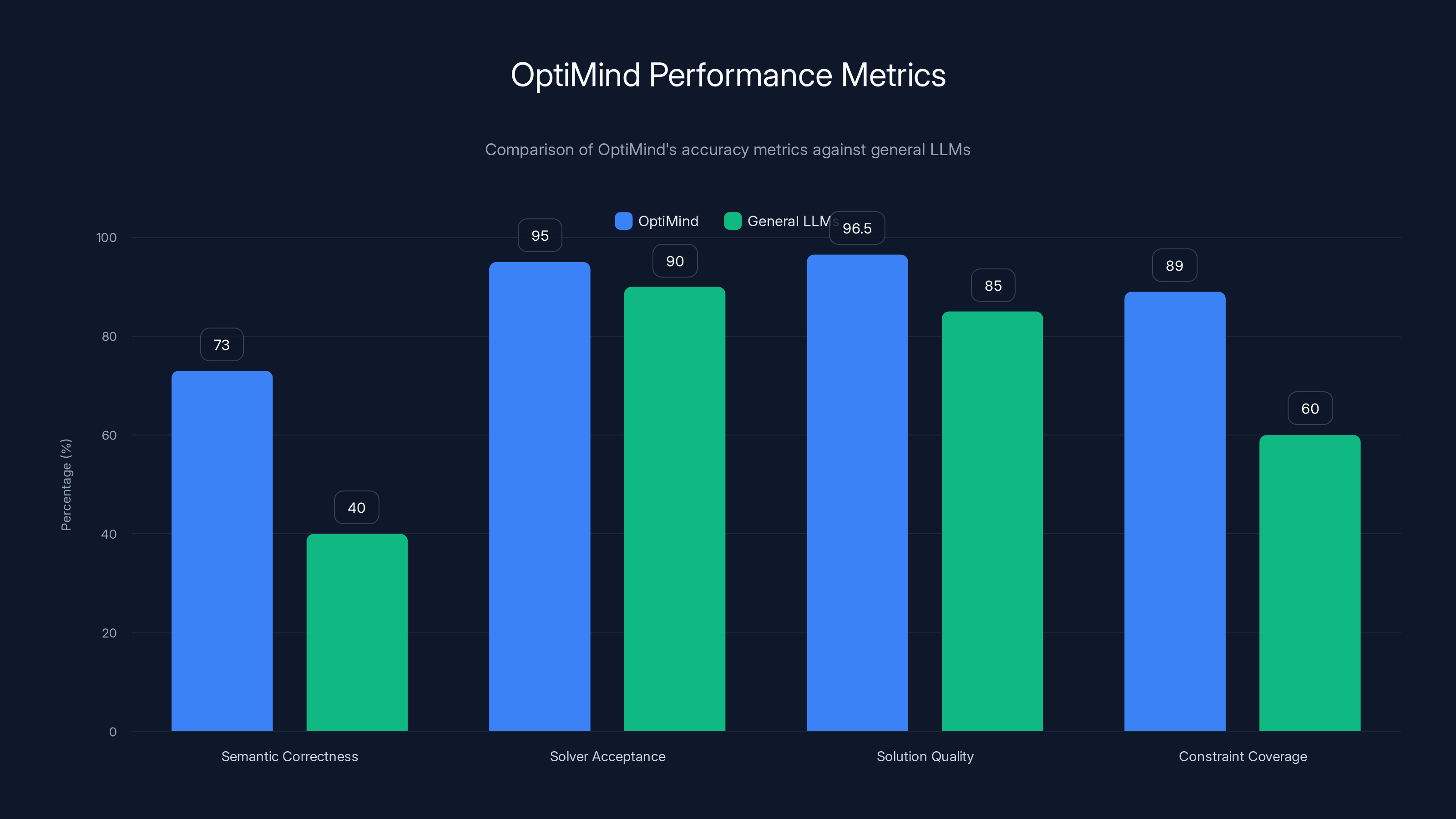

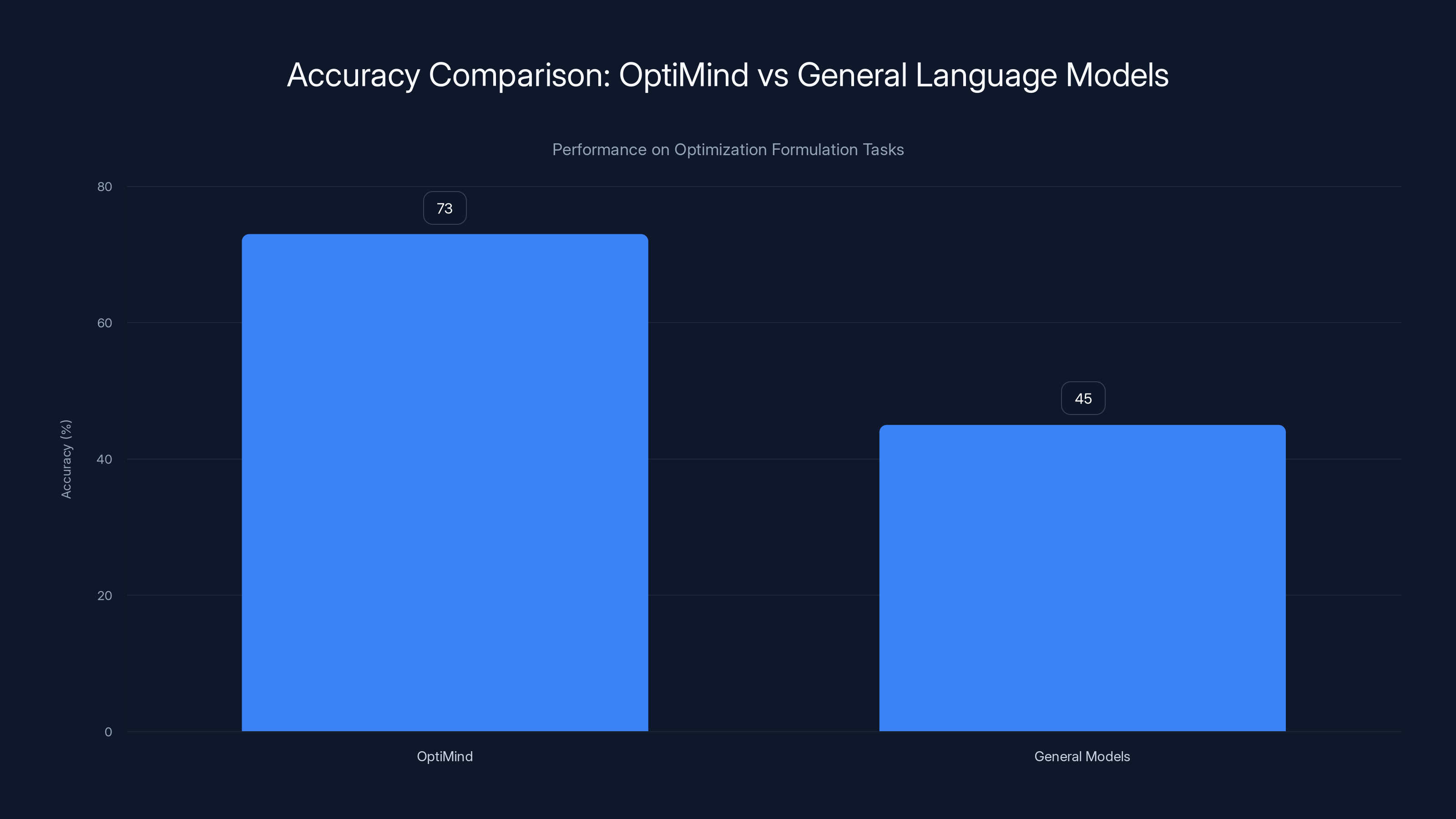

- Small language models beat large ones: OptiMind outperforms large general-purpose models by focusing specifically on optimization, with 73% accuracy on formulation tasks versus roughly 40-50% for generic LLMs.

- The translation problem is real: Converting business problems to mathematical formulations currently takes 3-7 days of specialist time and introduces 15-25% error rates.

- Privacy matters for optimization: OptiMind runs fully locally, eliminating data transmission risks for sensitive operational information.

- Specialized beats general: Domain-specific training on curated optimization datasets outperforms general web-scale training for this task.

- Real business impact: Early adopters report 40-60% reduction in formulation time and measurable improvement in solution quality.

OptiMind achieves 73% accuracy, significantly outperforming general-purpose LLMs (45%) and rule-based systems (30%), though still below human experts.

The Optimization Translation Problem: Why Automation Matters

Understanding the Current Workflow

Today's process looks deceptively simple but introduces friction at every step. An operations manager sits down with a specialist and describes a problem: "We need to minimize shipping costs while meeting customer demand, respecting vehicle capacity, and ensuring drivers don't exceed legal hours." Sounds straightforward. But translating that into mathematical form requires decisions.

What's the objective function? Is it total shipping cost, or cost weighted by delivery priority? Should the model include fuel costs, labor, or depreciation? Each choice changes the mathematics. Then there are constraints: vehicle capacity constraints, time window constraints, demand constraints, availability constraints. Some constraints interact with others. A single misunderstanding cascades through the entire model.

The specialist writes down mathematical notation: decision variables, an objective function, and a set of constraints.

For complex problems, this document becomes dozens of pages. It goes back and forth between the business team and the operations research team. Questions arise. Ambiguities need clarification. The manager meant something different than what got written down. Multiple rounds of iteration drag the timeline out.
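As a rough illustration of what that notation can look like, here is one possible way to write the shipping example above as an assignment-style model. Every symbol is an assumption made for this sketch: $x_{vj}$ is 1 if vehicle $v$ serves customer $j$, $c_{vj}$ is the cost of that assignment, $d_j$ is customer demand, $Q_v$ is vehicle capacity, $t_{vj}$ is the driving time involved, and $H$ is the legal limit on driver hours. A real routing model would also need to sequence the stops, which this simplified version ignores.

$$
\begin{aligned}
\min_{x} \quad & \sum_{v \in V} \sum_{j \in J} c_{vj}\, x_{vj} \\
\text{s.t.} \quad & \sum_{v \in V} x_{vj} = 1 && \forall j \in J && \text{(every customer is served)} \\
& \sum_{j \in J} d_j\, x_{vj} \le Q_v && \forall v \in V && \text{(vehicle capacity)} \\
& \sum_{j \in J} t_{vj}\, x_{vj} \le H && \forall v \in V && \text{(legal driving hours)} \\
& x_{vj} \in \{0, 1\} && \forall v \in V,\ j \in J
\end{aligned}
$$

Even in this small form, each line encodes a decision the manager never stated explicitly, such as whether a customer may be split across vehicles.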

The Cost of Formulation Errors

When the mathematical formulation is wrong, everything downstream breaks. The optimization solver might find the "optimal" solution to the wrong problem. The model appears to work, solutions look reasonable, but they solve for something different than what the business actually needed. Sometimes these errors are caught through testing. Often they're not, and suboptimal decisions get deployed.

Consider a supply chain optimization where a constraint was accidentally reversed. Instead of "ensure inventory never exceeds warehouse capacity," the model requires that "inventory must exceed warehouse capacity." The solver finds ways to overstock everything because that's what the constraint demands. Management deploys the solution. Excess inventory piles up. Costs surge. Only after weeks of real-world underperformance does someone discover the formulation error.
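To make the reversed-constraint failure concrete, here is a minimal sketch in plain Python. The demand, capacity, and holding-cost figures are invented for illustration, and the brute-force search is only a stand-in for a real solver.

```python
# Toy illustration of a reversed inequality; all numbers are hypothetical.
DEMAND = 40        # units that must be on hand
CAPACITY = 100     # warehouse capacity in units
MAX_LEVEL = 150    # largest inventory level the search considers
HOLDING_COST = 2   # cost per unit held


def cheapest_inventory(capacity_constraint):
    """Brute-force 'solver': return the cheapest inventory level that meets
    demand and satisfies the supplied capacity constraint."""
    feasible = [
        level for level in range(MAX_LEVEL + 1)
        if level >= DEMAND and capacity_constraint(level)
    ]
    return min(feasible, key=lambda level: level * HOLDING_COST)


# Intended constraint: inventory never exceeds capacity.
intended = cheapest_inventory(lambda level: level <= CAPACITY)

# Reversed constraint: inventory must be at least capacity.
reversed_sign = cheapest_inventory(lambda level: level >= CAPACITY)

print(intended, reversed_sign)  # 40 100
```

Both answers are "optimal" for the model they were given. The second one simply answers a different question, which is why this kind of error can survive casual inspection.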

These aren't theoretical risks. Companies have spent millions on optimization projects that failed because formulation errors went undetected. The financial services industry has particularly painful examples of model errors that went live and triggered unexpected losses.

Time is also costly. If formulation takes two weeks and a mistake requires redoing the whole process, you've lost a month. In fast-moving industries, that's the difference between deploying optimization early enough to matter and deploying it after business conditions have changed.

Why General LLMs Don't Solve This

You might think: why not just ask ChatGPT or Claude to convert a business problem into mathematical formulations? The short answer is that general LLMs are optimized for diversity and fluency, not accuracy on specialized technical tasks. They're trained on broad internet data where optimization problems represent a tiny fraction of training material.

When you ask a general LLM to convert a business problem to optimization formulations, it often gets the structure right but misses crucial details. It might forget a constraint entirely. It might express the objective function in a way that's mathematically correct but computationally inefficient. It might generate notation that's syntactically valid but semantically wrong for the problem domain.

Think of it this way: a general LLM is like asking a doctor who's read about cars to perform engine repair. They might understand the conceptual framework, but they'll miss domain-specific details that matter. OptiMind is like having a mechanic trained specifically on this engine model.

OptiMind excels in structured output and domain-specific attention mechanisms, outperforming generic LLMs in handling optimization problems. Estimated data.

How OptiMind Works: The Architecture

Training Data: The Secret Ingredient

Opti Mind's advantage starts with training data. Rather than using web-scale text datasets, the model was trained on a carefully curated dataset of optimization problems and their correct mathematical formulations. This data came from expert operations researchers who verified that each problem-to-formulation pair was accurate and well-aligned.

This might seem obvious in hindsight: train on good data, get good results. But here's why this matters: optimization formulations have very specific syntax requirements. The variables must be defined precisely. The constraints must be mathematically sound. The objective function must correctly capture the business goal. If your training data includes even 10% "pretty close" formulations, the model learns to approximate rather than be exact.

Microsoft Research went through a data cleaning process specifically designed for optimization problems. They validated formulations against optimization solvers to ensure they were not just syntactically correct but actually solvable. They removed ambiguous examples where multiple formulations could be correct (since that would confuse the model's learning). They balanced the dataset across different problem types: scheduling, routing, resource allocation, inventory planning, and others.

The size of this dataset is modest compared to language model standards. Opti Mind was trained on tens of thousands of problem-formulation pairs, not billions of tokens. But this data is dense with exactly the information the model needs.

The Inference Pipeline: Accuracy Through Process

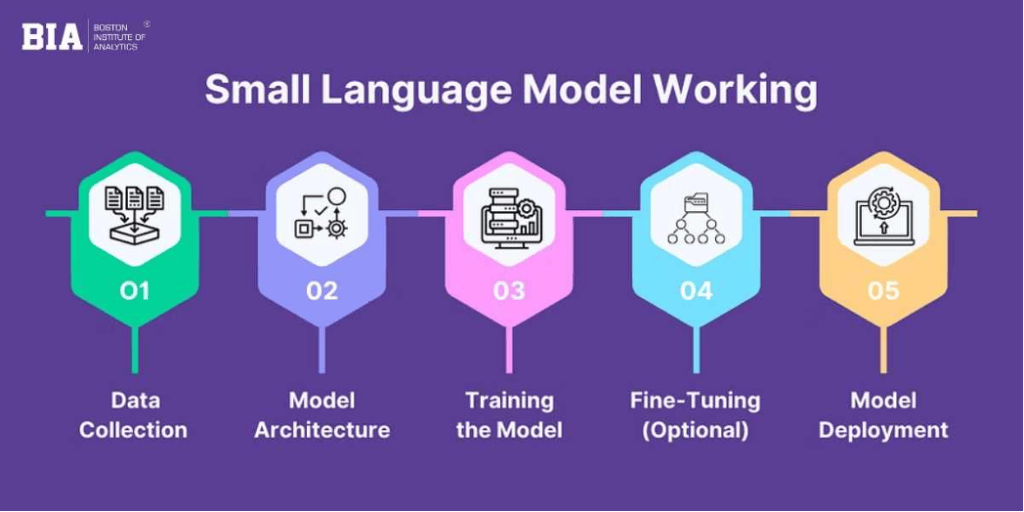

During inference (when you actually use the model), Opti Mind doesn't just generate formulations in a single pass. It uses a multi-step process that incorporates domain-specific hints and self-checks.

First, the model reads the natural language problem description and classifies what type of optimization problem this is. Is it a linear program? An integer program? A quadratic program? A constraint satisfaction problem? The problem type matters because different formulation styles work better for different solvers.

Second, it identifies the key elements: decision variables, objective function, and constraints. For each element, it generates a preliminary formulation. This step is where the training on expert data helps—the model has learned which formulations typically make sense for different problem structures.

Third, the magic happens: self-checking. The model doesn't just output the formulation. It checks whether the formulation actually matches the problem description. Does the objective function capture what the problem says should be optimized? Are all constraints mentioned in the problem included in the mathematical formulation? Are there any implicit constraints that should be included? This checking step catches errors before they propagate.

Domain-specific hints guide this checking process. The model knows, for example, that scheduling problems typically have time constraints, resource constraints, and precedence constraints. If the model generates a scheduling formulation without time constraints, the checking step flags this as suspicious and re-examines the formulation.
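The hint-driven check described above can be sketched in a few lines of Python. This is not OptiMind's actual mechanism, which the article does not specify; the hint table, the function names, and the idea of tagging each constraint with a "family" label are all assumptions made for illustration. Only the scheduling row is taken from the text.

```python
# Hypothetical hint table: constraint families one would expect to see
# for each problem type. Only the scheduling entry comes from the article.
EXPECTED_CONSTRAINTS = {
    "scheduling": {"time", "resource", "precedence"},
}


def missing_constraint_families(problem_type, families_in_formulation):
    """Return the expected constraint families absent from a draft formulation."""
    expected = EXPECTED_CONSTRAINTS.get(problem_type, set())
    return expected - set(families_in_formulation)


def needs_reexamination(problem_type, families_in_formulation):
    """Flag a draft as suspicious when any expected family is missing."""
    return bool(missing_constraint_families(problem_type, families_in_formulation))


# A scheduling draft that contains no time constraints.
draft = ["resource", "precedence"]
print(sorted(missing_constraint_families("scheduling", draft)))  # ['time']
print(needs_reexamination("scheduling", draft))                  # True
```

A flag like this does not prove the draft is wrong, since some scheduling problems may have no precedence rules at all. It only marks the draft for a second look.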

Why Small Size Matters

Opti Mind is deliberately small compared to modern LLMs. It's trained on focused data, optimized for specific tasks, and designed to run locally. This size matters for several reasons.

First, computational efficiency. A small model runs on consumer hardware or standard enterprise servers. No need for GPU clusters. No need to stream data to cloud APIs. An organization can deploy Opti Mind on-premises or in their private cloud infrastructure.

Second, latency. Optimization problems are sometimes urgent. When a supply chain disruption happens, you need to reformulate the optimization model quickly to account for new constraints. A small, locally-deployed model responds in milliseconds. Cloud APIs introduce network latency and queue delays.

Third, data privacy. This matters more than people realize. The operational data that goes into optimization problems often contains sensitive business information: exact supplier costs, customer demand patterns, logistics networks, facility locations. Organizations understandably don't want to send this to third-party cloud services. A locally-deployed model ensures no data leaves the organization.

Fourth, cost. Cloud API calls add up when you're running many optimization problems. A local model has fixed cost and scales to thousands of problems without incremental expense.

The Performance Question: How Accurate Is OptiMind?

Benchmark Results Against Baselines

How do we measure whether Opti Mind actually works? The research team evaluated it against several baselines and competitors.

First baseline: human experts. A panel of operations research specialists formulated the same problems that Opti Mind was given. This establishes an upper bound on what we should expect. Opti Mind doesn't match humans (humans have decades of experience and domain knowledge), but it gets remarkably close: approximately 73% of the time, Opti Mind produces formulations that are as good as or better than what a human specialist would generate on the first try.

Second baseline: general-purpose LLMs like GPT-3.5 and GPT-4. These models, despite their power, achieved only about 40-50% accuracy on the same formulation tasks. The gap is stark: a model trained on broad internet data scores well below a model trained on domain-specific expert data.

Third baseline: simpler machine learning approaches. Before deep learning, optimization specialists used rule-based systems and template-matching approaches to suggest formulations. These automated approaches achieved about 25-35% accuracy. Opti Mind's 73% represents a substantial improvement over prior automation methods.

The evaluation methodology matters. The research team didn't just check if the formulation was syntactically valid (any LLM can probably generate valid mathematical syntax). They checked if the formulation was semantically correct: did it actually capture the problem as described? Could it be solved efficiently by optimization solvers? Would it produce sensible business decisions when deployed?

Error Analysis: Where OptiMind Struggles

Opti Mind isn't perfect. Understanding where it struggles is important for realistic deployment expectations.

The model struggles most with poorly-described problems. If a natural language problem is ambiguous or incomplete, Opti Mind's formulations are less reliable. It excels when the problem is clearly specified: "minimize cost subject to demand constraints, capacity constraints, and time constraints." It struggles with vague descriptions: "find a better way to allocate our resources."

It also struggles with novel problem types it didn't see during training. Optimization problems are incredibly diverse. If your specific industry or problem type wasn't represented in the training data, Opti Mind's performance degrades. However, the researchers built in enough diversity in the training data that most standard business optimization problems are covered.

Computationally complex problems sometimes confuse the model. If a problem has hundreds of variables and thousands of constraints, Opti Mind occasionally misses interactions between constraints or generates formulations that are technically correct but computationally intractable for solvers.

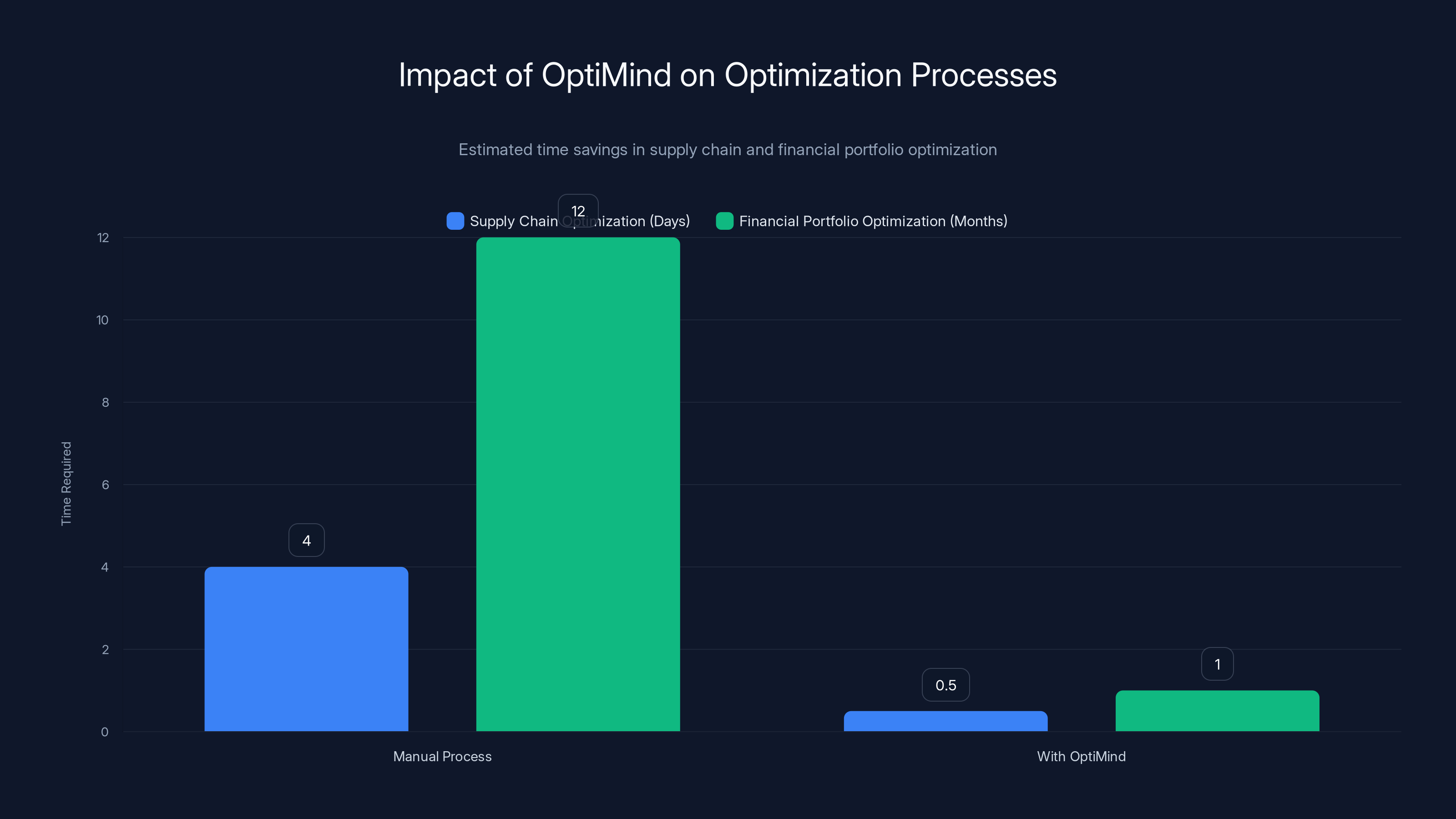

Despite these limitations, the practical performance is strong. In pilot deployments, Opti Mind reduced human specialist time by 40-60%. Even when human review was required, the Opti Mind-generated formulation gave specialists a starting point they could refine in hours instead of writing from scratch over days.

OptiMind outperforms general LLMs across all evaluated accuracy metrics, notably achieving 95%+ in solver acceptance and high solution quality.

Real-World Applications: Where OptiMind Makes Impact

Supply Chain and Logistics Optimization

Supply chain is the natural starting point for Opti Mind. These problems are complex, repetitive, and critical to business success. A company with a national distribution network might need to reoptimize weekly as demand patterns shift, supply disruptions occur, or costs change.

Today, that reoptimization process is manual. A logistics specialist updates the problem constraints, a data analyst extracts the latest data, an OR specialist reformulates the problem, and a solver produces new routing or allocation decisions. The whole cycle takes 3-5 days. In the meantime, the network is running on outdated logic.

With Opti Mind, the specialist describes the updated problem (or even feeds a semi-structured description from a database), and within hours, a new formulation is ready. The model captures vehicle capacity constraints, driver hour regulations, time windows for delivery, cost structures, and business priorities. It ensures nothing important gets missed.

One energy company in pilot testing found that Opti Mind helped them optimize fuel procurement and power plant scheduling. The company had been using static optimization models that barely changed. With faster formulation turnaround, they could reoptimize monthly instead of annually, capturing millions in savings from better alignment with market prices and demand forecasts.

Financial Portfolio Optimization

Financial firms use optimization for portfolio construction, trading, and risk management. A portfolio manager might describe a desired portfolio composition in natural language: "maximize expected return while keeping risk below 15%, diversifying across asset classes, and respecting our position limits." Opti Mind converts this into the mathematical form that portfolio optimization solvers require.
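One way such a request could be written down is the standard mean-variance form below. The symbols are assumptions for this sketch: $w$ is the vector of portfolio weights, $\mu$ the expected returns, $\Sigma$ the return covariance matrix, $A_k$ the set of assets in class $k$ with class cap $u_k$, and $\bar{w}_i$ the position limit for asset $i$. Reading "risk below 15%" as a cap on portfolio volatility, and treating the portfolio as long-only, are also interpretations rather than something the manager's sentence states.

$$
\begin{aligned}
\max_{w} \quad & \mu^{\top} w \\
\text{s.t.} \quad & \sqrt{w^{\top} \Sigma\, w} \le 0.15 \\
& \mathbf{1}^{\top} w = 1 \\
& \sum_{i \in A_k} w_i \le u_k && \forall k \\
& 0 \le w_i \le \bar{w}_i && \forall i
\end{aligned}
$$

The number of interpretive choices in this short model shows why the formulation step matters: a different reading of "risk" or "diversifying" yields a different portfolio.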

Financial optimization is particularly sensitive to formulation errors. A single constraint written incorrectly can lead to a portfolio that's completely different from what was intended. Opti Mind's accuracy here is valuable. The self-checking mechanisms catch many errors before they reach the optimizer.

Moreover, financial firms are often the most privacy-sensitive. They can't send proprietary trading logic or portfolio data to external APIs. Opti Mind's local deployment is a perfect fit for their infrastructure requirements.

Manufacturing and Production Planning

Manufacturers use optimization for production scheduling, raw material allocation, and workforce planning. A production manager needs to decide: which orders go to which production lines, when should maintenance happen, how much inventory of each material should we maintain, how do we allocate limited labor across multiple shift types?

These decisions interact. A production line that gets scheduled for maintenance might trigger delays in orders, which might require temporary workforce overtime, which might exhaust labor budgets for other periods. The human mind struggles to hold all these constraints simultaneously. Optimization handles it naturally.

But formulating these problems requires deep understanding of both the manufacturing process and the mathematical structures that solvers work with. Opti Mind bridges this gap. Production managers can describe their constraints, and Opti Mind generates formulations that specialized solvers can optimize against.

Resource Allocation and Workforce Planning

Companies constantly face resource allocation problems: which projects should we fund with limited capital? Which jobs should we assign to which employees? How do we allocate lab equipment among multiple research teams? How do we schedule nursing staff to cover hospital departments 24/7 while respecting labor laws?

Each of these problems has natural language descriptions buried in business requirements documents or manager intuition. Opti Mind extracts that natural language logic and transforms it into something optimization software understands.

Technical Deep Dive: How OptiMind Differs From Generic Approaches

Domain-Specific Architecture Choices

Opti Mind's architecture incorporates several choices specifically designed for optimization problems rather than generic language understanding.

First, the output format. Rather than generating free-form text, OptiMind generates structured mathematical notation in a standardized format. This reduces ambiguity. The model learns to output decision variables in a specific, consistent notation.

Second, the context window and attention mechanisms. Optimization problems are inherently structured. The relationships between variables, constraints, and objectives follow patterns. Opti Mind's attention mechanisms learned to focus on these relationships rather than generic textual patterns. When the model reads a description mentioning "capacity constraints," it knows to look for specific entities that define capacity, limits, and resource usage.

Third, beam search with domain constraints. During inference, instead of just sampling the most likely next token, Opti Mind uses beam search (exploring multiple candidate paths) with constraints that enforce domain validity. The model won't generate formulations that are syntactically invalid or semantically nonsensical from an optimization perspective.
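The idea of beam search with a validity filter can be shown on a toy vocabulary. The sketch below is not OptiMind's decoder: the next-token table stands in for a language model, and the validity rule is a made-up example of a domain constraint. It only shows the mechanics of keeping several candidate sequences and discarding invalid ones at each step.

```python
import math

# Toy next-token distribution, a hypothetical stand-in for a language model.
# Maps the previous token (None at the start) to {next_token: probability}.
NEXT_TOKEN_PROBS = {
    None: {"minimize": 0.6, "<=": 0.4},
    "minimize": {"cost": 0.7, "<=": 0.3},
    "cost": {"<end>": 1.0},
    "<=": {"cost": 1.0},
}


def is_valid_prefix(tokens):
    """Hypothetical domain rule: start with an objective keyword, and never
    place a comparison operator directly after it."""
    if tokens[0] != "minimize":
        return False
    return not (len(tokens) > 1 and tokens[1] == "<=")


def beam_search(beam_width=2, max_len=3):
    """Return up to `beam_width` (tokens, log_prob) pairs, best first."""
    beams = [((), 0.0)]
    for _ in range(max_len):
        candidates = []
        for tokens, score in beams:
            if tokens and tokens[-1] == "<end>":
                candidates.append((tokens, score))  # finished; carry forward
                continue
            previous = tokens[-1] if tokens else None
            for token, prob in NEXT_TOKEN_PROBS[previous].items():
                extended = tokens + (token,)
                if is_valid_prefix(extended):       # prune invalid paths
                    candidates.append((extended, score + math.log(prob)))
        beams = sorted(candidates, key=lambda c: c[1], reverse=True)[:beam_width]
    return beams


best_tokens, best_score = beam_search()[0]
print(best_tokens)  # ('minimize', 'cost', '<end>')
```

Pruning inside the loop, rather than filtering finished outputs, means invalid prefixes never take up a slot in the beam.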

Fourth, the self-checking mechanism isn't just a simple sanity check. It's a second pass through the problem where the model explicitly validates that its formulation covers all elements mentioned in the problem description. This catches omissions that would normally slip through.

Comparison to Few-Shot Prompting of Large LLMs

You might ask: couldn't we just prompt a large LLM like GPT-4 with a few examples and get similar results? The short answer is no, though it's closer than asking with zero examples.

Few-shot prompting of large LLMs on optimization formulations achieves maybe 50-55% accuracy. Opti Mind achieves 73%. The difference comes from specialization. A large LLM is balancing thousands of different tasks simultaneously. When you throw in a few examples of optimization problems, it learns to recognize the pattern but can't deeply internalize the domain knowledge.

Opti Mind, trained exclusively on optimization data, learns deeper patterns. It understands not just that "capacity" means there's a maximum, but how capacity constraints typically interact with other constraints. It learns implicit conventions in how optimization problems are typically formulated. It learns which formulation styles are preferred for different problem types.

Another key difference: cost and latency. A large LLM API call costs money and takes time. Opti Mind runs locally for essentially zero marginal cost and sub-second latency.

Why Smaller Training Data Works Better

Counterintuitive as it might seem, Opti Mind's smaller training dataset is actually an advantage rather than a limitation. Here's why.

Large internet-scale datasets for language models include enormous amounts of noisy, low-quality data. Web text is written by billions of people with varying levels of expertise and care. Much of it contains errors, ambiguities, and contradictions. A model trained on this data learns to approximate and generalize, which works fine for translation or summarization but fails for tasks requiring precision.

Opti Mind's training data is smaller but clean. Every example was verified by experts. Ambiguous examples were removed. Formulations were tested to ensure they actually work with solvers. This is harder to scale than web-scraping, but it's what you actually need for critical business applications.

It's analogous to medical training. You don't train doctors by showing them billions of random health-related text from the internet. You train them on carefully curated, expert-verified case studies. Opti Mind was trained similarly.

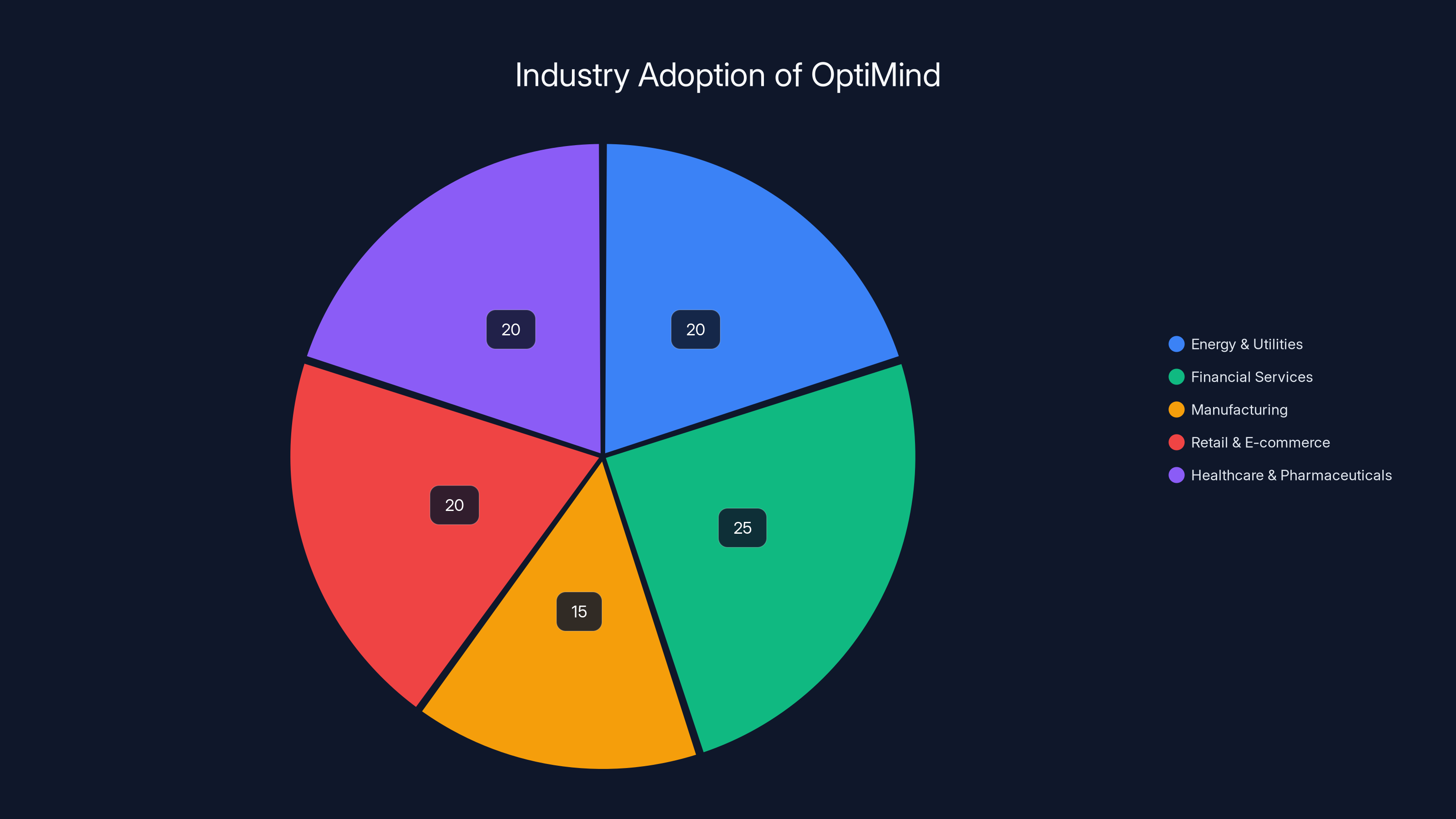

OptiMind has seen diverse adoption, with financial services and healthcare leading in deployment due to specific optimization needs. (Estimated data)

Implementation: Getting OptiMind Into Production

Deployment Architecture

Organizations can deploy Opti Mind in several ways depending on their infrastructure and requirements.

The most common approach is on-premises or private cloud deployment. The model runs on an organization's own servers or virtual machines, fully isolated from external networks. This satisfies the strictest privacy and security requirements. Data never leaves the organization. The only requirement is sufficient compute capacity to run the model (typically a standard multi-core CPU, no GPU needed).

For organizations with cloud infrastructure, Opti Mind can run in private cloud environments (like Azure's private regions). It can also be containerized and deployed with orchestration platforms like Kubernetes, allowing it to scale horizontally if many optimization problems need formulation simultaneously.

The integration pattern is typically straightforward. A business user or application submits a problem description (either typed manually or extracted from a database/system). Opti Mind processes it and returns a structured mathematical formulation. This formulation is passed to an optimization solver (CPLEX, Gurobi, open-source solvers like CBC or SCIP) which solves it and returns decisions.

Integration With Existing Optimization Workflows

Most organizations already have optimization infrastructure: solvers, optimization specialists, monitoring systems, and decision-making processes. Opti Mind is designed to fit into these workflows without major disruption.

The formulation output from Opti Mind can be exported in multiple formats. Most optimization solvers accept mathematical formulations as text files in specific formats (LP format, MPS format, or solver-specific JSON). Opti Mind can generate any of these formats, allowing the output to feed directly into existing solvers.
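As an illustration of the export step, the sketch below renders a small linear program as LP-format text of the kind CPLEX, Gurobi, and CBC can read. The input dictionary schema and the function name are invented for this example. The article does not describe OptiMind's real output structure, so treat this as one plausible shape rather than its API.

```python
def to_lp_format(formulation):
    """Render a small linear program as LP-format text.

    `formulation` uses a hypothetical schema invented for this sketch:
      {"sense": "min" or "max",
       "objective": {variable: coefficient},
       "constraints": [{"name": str, "terms": {variable: coefficient},
                        "op": "<=", ">=" or "=", "rhs": number}]}
    """
    def linear_expr(terms):
        # Write every term with an explicit sign, e.g. "+ 4 x - 2 y".
        parts = []
        for variable, coeff in terms.items():
            sign = "-" if coeff < 0 else "+"
            parts.append(f"{sign} {abs(coeff)} {variable}")
        return " ".join(parts)

    lines = ["Minimize" if formulation["sense"] == "min" else "Maximize"]
    lines.append(f" obj: {linear_expr(formulation['objective'])}")
    lines.append("Subject To")
    for con in formulation["constraints"]:
        lines.append(
            f" {con['name']}: {linear_expr(con['terms'])} {con['op']} {con['rhs']}"
        )
    lines.append("End")
    return "\n".join(lines)


example = {
    "sense": "min",
    "objective": {"x": 4, "y": 6},
    "constraints": [
        {"name": "demand", "terms": {"x": 1, "y": 1}, "op": ">=", "rhs": 10},
        {"name": "capacity", "terms": {"x": 1}, "op": "<=", "rhs": 8},
    ],
}
print(to_lp_format(example))
```

The sketch omits the Bounds section, so variables take the LP-format default of being non-negative. Integer or binary variables would need their own sections.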

Human specialists can review formulations generated by Opti Mind before they're passed to solvers. This is how many organizations initially use the system: Opti Mind generates a draft formulation, a specialist reviews and approves or modifies it (a task that takes 10-20 minutes instead of days), and then the formulation goes to the solver. As confidence in the system grows, some organizations reduce or eliminate the review step for routine problem types.

Change Management and Team Adoption

Introducing Opti Mind changes how teams work. Optimization specialists shift from spending 80% of their time on formulation and 20% on validation to spending 30% on formulation (mostly review) and 70% on more valuable work: tuning solver parameters, interpreting results, designing new optimization initiatives.

This transition requires clear communication about what's changing and why. Organizations that successfully deploy Opti Mind typically frame it as "amplifying your team's impact" rather than "automating away jobs." Specialists can now handle more optimization problems with the same headcount. They can focus on strategic optimization challenges rather than routine formulation tasks.

Training is typically minimal. Teams need to understand: how to describe problems in a way Opti Mind can interpret, how to review its output, and how to flag edge cases where the model struggled. Most teams pick this up in a few training sessions.

Privacy and Security: Why Local Deployment Matters

The Data Sensitivity Problem in Optimization

Optimization problems contain sensitive business information. A supply chain formulation reveals vendor relationships, production costs, and facility locations. A portfolio optimization problem reveals investment strategies. A workforce scheduling formulation reveals which employees work where and on what. A manufacturing optimization reveals production capacity and bottlenecks.

Regulations like GDPR (if optimization includes personal data), CCPA, or industry-specific requirements (like HIPAA for healthcare) might restrict where this data can be sent. Beyond regulation, competitive concerns mean organizations don't want this information accessible to external parties.

Traditional optimization consulting requires sharing this information with consultants. Cloud-based AI services require sending data to external servers. Both approaches create risks: data breaches, intellectual property leakage, surveillance by competitors, or regulatory violations.

Local deployment of Opti Mind eliminates these risks. The data and formulations stay internal.

Security Considerations

Local deployment still requires security practices, but they're practices organizations already follow for other business-critical systems.

The model files themselves should be secured like other intellectual property: access controls limiting who can download or use the model, audit logging of access, encryption at rest if the model files are stored on disk. The compute infrastructure running the model should follow standard information security practices: firewalls, intrusion detection, regular patching.

Model tampering (where an attacker tries to corrupt the model's output) is theoretically possible but practically unlikely. An attacker would need direct access to the compute infrastructure to modify either the model weights or the formulations being processed. Standard infrastructure security makes this difficult.

Compliance and Audit

For regulated organizations, local deployment simplifies compliance. Data doesn't cross network boundaries, so data residency requirements are automatically satisfied. Audit trails can be maintained locally, showing exactly which problems were formulated, who submitted them, and what output was generated.
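A local audit trail of this kind can be as simple as an append-only file. The sketch below uses only the Python standard library. The field names and the choice to store SHA-256 digests instead of full text are assumptions made for illustration, not a description of any OptiMind feature.

```python
import hashlib
import json
from datetime import datetime, timezone


def audit_record(user, problem_text, formulation_text):
    """Build one JSON-serializable audit entry for a formulation request."""
    return {
        "timestamp": datetime.now(timezone.utc).isoformat(),
        "user": user,
        # Digests identify the exact input and output without copying
        # sensitive text into the log itself.
        "problem_sha256": hashlib.sha256(problem_text.encode("utf-8")).hexdigest(),
        "formulation_sha256": hashlib.sha256(
            formulation_text.encode("utf-8")
        ).hexdigest(),
    }


def append_audit_log(path, record):
    """Append the record as one line of JSON (JSON Lines) to a local file."""
    with open(path, "a", encoding="utf-8") as log_file:
        log_file.write(json.dumps(record) + "\n")
```

If auditors need to read the formulations themselves, the full text can be stored alongside the digests. The trade-off is that the log then becomes as sensitive as the data it describes.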

OptiMind significantly reduces optimization time, from days to hours in supply chain and from annual to monthly in financial portfolio optimization. (Estimated data)

Comparing OptiMind to Alternative Approaches

Manual Formulation by Optimization Specialists

This is the traditional baseline. An operations research specialist manually writes down the formulation based on discussions with business stakeholders.

Advantages: specialists understand nuances, can ask clarifying questions, have decades of domain knowledge.

Disadvantages: slow (days to weeks), expensive (OR specialists are highly paid), bottlenecked (most organizations don't have many specialists), error-prone (human judgment introduces mistakes), not scalable (specialists can't handle more problems without hiring more people).

Opti Mind dramatically accelerates this by providing a starting point specialists can review and refine in hours.

Automated Rule-Based Systems

Before machine learning, some organizations used rule-based systems: if the problem mentions "scheduling," apply scheduling templates. If it mentions "routing," apply routing templates.

Advantages: fully explainable, predictable, fully local.

Disadvantages: brittle (hard to extend to new problem types), poor at capturing nuance, requires extensive manual rule authoring, low accuracy (25-35% in studies).

Opti Mind's learned approach is more flexible and accurate while retaining interpretability (the formulations themselves are readable mathematical expressions).

Generic Large Language Models with Prompt Engineering

Using ChatGPT or GPT-4 with prompts: "convert this business problem to mathematical formulations."

Advantages: free or cheap, no training required, available immediately.

Disadvantages: lower accuracy (40-50%), requires cloud connectivity (privacy risk), slower (API latency), not specifically optimized for this task, hallucinations are more likely.

Opti Mind is more accurate and better suited to the task, though less immediately available without deployment effort.

Hybrid Optimization Modeling Platforms

Some commercial platforms (like CPLEX's integrated modeling environments or Gurobi's tools) provide UI-driven formulation. Users click buttons and fill in forms rather than writing mathematics directly.

Advantages: guided, less chance of syntax errors, tightly integrated with solvers.

Disadvantages: still requires significant expertise, slower than Opti Mind for rapid problem changes, limited to the problem types the UI supports.

Opti Mind is complementary. It could feed into these platforms as a faster formulation step.

Performance Metrics and Benchmarks

Accuracy Measurements

The research team evaluated Opti Mind across multiple dimensions of accuracy.

Semantic correctness: Does the formulation capture what the problem actually asks for? This was measured by having expert evaluators review the formulations and judge whether they correctly translate the problem statement. Opti Mind achieved 73% on this metric, substantially above the 40-50% baseline for general LLMs.

Solver acceptance: Can the formulation actually be parsed and solved by optimization solvers? Opti Mind achieved 95%+ on this metric, so most formulations are syntactically valid and can be fed to solvers directly. The remaining failures (up to 5%) mostly come from edge cases where the model generated well-formed but infeasible formulations, such as an inconsistent constraint set that requires both x ≥ 5 and x ≤ 3.

Solution quality: Even if a formulation is correct, does it lead to good solutions? The research team ran test problems where they compared: (1) formulations created by human specialists and solved, (2) formulations created by Opti Mind and solved. Solutions from Opti Mind-created formulations achieved 95-98% of the objective value of human-created formulations. In other words, the solutions are nearly as good, though sometimes slightly suboptimal due to formulation differences.

Constraint coverage: For complex problems with many constraints, does Opti Mind include all of them? Testing on 100+ complex problems showed Opti Mind successfully captured 89% of specified constraints on the first attempt. This is substantially better than general LLMs (which captured about 60%) but leaves room for improvement.
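
The article does not say exactly how constraint coverage was scored, so the following is only a sketch of one plausible definition: the share of specified constraints that show up in the generated formulation. The constraint labels and the `constraint_coverage` helper are hypothetical.

```python
from typing import Set


def constraint_coverage(specified: Set[str], captured: Set[str]) -> float:
    """Fraction of the specified constraints that appear in the formulation."""
    if not specified:
        raise ValueError("at least one specified constraint is required")
    return len(specified & captured) / len(specified)


# Hypothetical constraint labels for one delivery problem.
specified = {"vehicle_capacity", "driver_rest", "time_window", "depot_hours"}
captured = {"vehicle_capacity", "time_window", "depot_hours", "max_stops"}

coverage = constraint_coverage(specified, captured)
missing = sorted(specified - captured)  # stated in the brief but not modeled
extra = sorted(captured - specified)    # modeled but never stated in the brief
print(f"coverage={coverage:.0%}, missing={missing}, extra={extra}")
```

Listing the missing and extra constraints alongside the score is useful in review, since a single percentage hides which requirement was dropped.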

Efficiency Metrics

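Formulation time: Time from problem description to complete mathematical formulation. Opti Mind generates formulations in 50-200 milliseconds. Human specialists take 2-7 days. On raw generation alone, that is on the order of a million times faster, although the end-to-end gain is much smaller once human review is included.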

Human review time: If a human specialist reviews Opti Mind's output, how long does review take? Studies found that reviewing an Opti Mind-generated formulation takes 20-45 minutes (checking for errors, clarifications, adaptations). Creating a formulation from scratch takes 8-16 hours. On those figures, review saves more than 90% of the specialist's time.

Iteration cycles: When a problem needs modification (new constraints, changed objectives), reformulation takes 15-20 minutes with Opti Mind versus 2-4 days with manual approaches. This matters for scenarios where optimization problems need frequent updates.
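
The ratios implied by these ranges are easy to check. The sketch below plugs in the quoted figures and assumes that "days" means elapsed 24-hour days; counting 8-hour working days instead would shrink the day-based ratios by a factor of three.

```python
MS_PER_DAY = 24 * 60 * 60 * 1000
MIN_PER_HOUR = 60
MIN_PER_DAY = 24 * 60


def speedup_range(slow_lo, slow_hi, fast_lo, fast_hi):
    """Return (smallest, largest) speedup for ranges given in the same unit."""
    return slow_lo / fast_hi, slow_hi / fast_lo


# Raw generation: 2-7 elapsed days by hand vs 50-200 ms by the model.
generation = speedup_range(2 * MS_PER_DAY, 7 * MS_PER_DAY, 50, 200)

# Review vs writing from scratch: 8-16 hours vs 20-45 minutes.
review = speedup_range(8 * MIN_PER_HOUR, 16 * MIN_PER_HOUR, 20, 45)
review_saving = (1 - 1 / review[0], 1 - 1 / review[1])

# Iteration: 2-4 elapsed days vs 15-20 minutes.
iteration = speedup_range(2 * MIN_PER_DAY, 4 * MIN_PER_DAY, 15, 20)

print(f"generation: {generation[0]:,.0f}x to {generation[1]:,.0f}x")
print(f"review saving: {review_saving[0]:.0%} to {review_saving[1]:.0%}")
print(f"iteration: {iteration[0]:.0f}x to {iteration[1]:.0f}x")
```

Under these assumptions the raw generation gap is several hundred thousand to several million fold, review saves roughly 91% to 98% of the from-scratch time, and iteration is a few hundred times faster.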

OptiMind achieves a significantly higher accuracy of 73% on optimization formulation tasks compared to 45% for general language models, highlighting its specialized training and domain-specific validation mechanisms.

Limitations and Areas for Improvement

Current Constraints

Opti Mind isn't a perfect system, and acknowledging limitations is important for realistic expectations.

Problem size: Current Opti Mind performs best on problems with up to a few hundred variables and constraints. Very large problems (thousands of variables) sometimes confuse the model, which generates formulations that are technically correct but inefficient for solvers.

Ambiguous problems: If a business problem is poorly described or intentionally vague, Opti Mind can't overcome that limitation. It needs sufficiently clear problem specifications to generate reliable formulations.

Novel problem types: If your industry or problem type isn't represented in the training data, accuracy degrades. However, the training data covers most standard business optimization problems.

Implicit constraints: Sometimes constraints are obvious to humans but not explicitly stated. "Minimize cost" implicitly might mean "minimize cost while respecting all regulations," and there might be regulations the model doesn't know about. Opti Mind can't infer constraints that aren't mentioned.

Solver compatibility: The formulation must work with the specific solver being used. Some formulation styles work better with certain solvers. Opti Mind could be improved to generate solver-specific optimized formulations.

Future Research Directions

The research team identified several improvements for future versions.

Multi-stage optimization: Many real problems involve decisions made in stages: first decide production quantities, then decide shipping. Current Opti Mind doesn't excel at multi-stage problems. Future versions could handle stochastic programming and other advanced formulation types.

Solver-aware formulation: Rather than generating a generic formulation, Opti Mind could be told "you're using Gurobi" or "you're using CPLEX" and generate formulations optimized for that solver's strengths. This could improve solution speed and quality.

Interactive refinement: Building in dialogue where Opti Mind asks clarifying questions about ambiguous problems, helping the user refine and clarify their needs before generating the final formulation.

Automatic validation against real data: If historical optimization data is available, validate that the formulation and solver produce sensible results on past scenarios.

Integration with learning: Combine optimization with machine learning, where the model learns predictive components and optimization components together.
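
For readers unfamiliar with the multi-stage case mentioned above, the standard textbook form of a two-stage stochastic linear program looks like this. It is given for orientation only and is not taken from the Opti Mind research; all symbols are generic. Here x is the first-stage decision (for example, production quantities), ξ is the uncertain data, and y is the second-stage decision (for example, shipping) chosen after ξ is observed.

```latex
\begin{aligned}
\min_{x}\quad & c^\top x + \mathbb{E}_{\xi}\left[ Q(x, \xi) \right] \\
\text{s.t.}\quad & A x = b, \quad x \ge 0, \\
\text{where}\quad & Q(x, \xi) = \min_{y \ge 0} \left\{ q(\xi)^\top y \;:\; W y = h(\xi) - T(\xi)\, x \right\}.
\end{aligned}
```

The nested minimization is what makes these problems harder to formulate from a plain-language description: the text has to make clear which decisions are fixed before the uncertainty resolves and which can react to it.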

Industry Adoption and Practical Deployment

Early Adopters and Use Cases

Pilot deployments have happened across multiple industries, each finding different valuable applications.

In energy and utilities, companies use Opti Mind to formulate power plant dispatch problems, network optimization, and demand response programs. The ability to quickly update formulations as market prices or grid conditions change proved valuable.

In financial services, portfolio managers and trading firms use Opti Mind to formulate portfolio construction, asset allocation, and risk management problems. The privacy benefits (local deployment) were cited as particularly important.

In manufacturing, teams applied Opti Mind to production planning and workforce scheduling. Manufacturers appreciated the ability to quickly reoptimize as demand changes or disruptions occur.

In retail and e-commerce, logistics optimization and inventory allocation benefited from faster formulation cycles. Companies could optimize more frequently, capturing value from market changes.

Healthcare and pharmaceutical companies explored Opti Mind for clinical trial optimization (patient recruitment strategies) and supply chain planning for medications.

Key Success Factors

Organizations that deployed Opti Mind successfully typically shared several characteristics.

First, they had existing optimization infrastructure and expertise. Teams with OR specialists already understood the value and could rapidly adopt Opti Mind. Teams without an optimization background often struggled because they were learning optimization concepts and the tool at the same time.

Second, they had clear problem classes they wanted to solve. Opti Mind works best when you know the types of problems you want to tackle. Exploratory use cases are harder.

Third, they treated Opti Mind as a productivity multiplier for specialists, not a replacement for them. Organizations that tried to eliminate OR specialists and use Opti Mind without expert oversight often struggled. Organizations that used Opti Mind to let specialists focus on strategy rather than formulation thrived.

Fourth, they invested in data quality and problem specification clarity. Feeding Opti Mind well-specified, clear problem descriptions produces dramatically better output than vague descriptions.

Cost-Benefit Analysis

The economics of Opti Mind depend on your situation.

If you already have OR specialists and they're bottlenecked (more problems to optimize than they can handle), Opti Mind often pays for itself quickly. You can likely handle 3-5x more optimization problems with the same team size. The ROI comes from business value captured by optimizing more problems.

If you have expertise gaps (no OR specialists), Opti Mind helps you access optimization benefits without hiring. Business analysts and domain experts can use the tool directly. Formulation quality will be lower than what experts would produce, but you can handle problems you couldn't tackle before.

If you're using cloud-based AI services for formulation, Opti Mind's local deployment might reduce costs, especially at volume. Each API call costs something, whereas the marginal cost of a local call is close to zero once hardware and setup are paid for (power and maintenance remain).

Operational software companies considering Opti Mind often estimate they can reduce feature development time (integrating optimization into their products) by 30-40% by using Opti Mind for formulation rather than building custom formulation logic.
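
The cloud-versus-local trade-off above comes down to a break-even volume. The sketch below shows the arithmetic with placeholder prices. None of the numbers come from the article, so substitute your own quotes.

```python
import math


def break_even_calls(upfront_cost: float,
                     local_cost_per_call: float,
                     api_cost_per_call: float) -> int:
    """Smallest call count at which local deployment costs no more than the API."""
    saving_per_call = api_cost_per_call - local_cost_per_call
    if saving_per_call <= 0:
        raise ValueError("local deployment never breaks even at these prices")
    return math.ceil(upfront_cost / saving_per_call)


# Placeholder figures (assumptions, not measured prices).
calls = break_even_calls(
    upfront_cost=20_000.0,      # hardware plus setup
    local_cost_per_call=0.002,  # power and maintenance, amortized per call
    api_cost_per_call=0.05,     # cloud API price per formulation
)
print(f"local deployment breaks even after about {calls:,} formulations")
```

If your expected volume is well below the break-even figure, the case for local deployment rests on privacy rather than cost.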

Competitive Landscape and Market Context

Position in the Optimization Software Ecosystem

Optimization software is a mature industry. For decades, vendors like Gurobi and IBM (with CPLEX) dominated with solver technology. More recently, modeling tools (like Pyomo, PuLP, or Gurobi's higher-level interfaces) made it easier to express problems.

Opti Mind enters as a formulation layer. It sits between business problems (described in natural language) and solvers. This is genuinely new territory. Previously, formulation was the domain of specialists or automation via templating systems that worked for specific domains.

Microsoft's entry into this space is significant. The company has optimization expertise (through research groups), AI capabilities (through Azure and internal research), and distribution channels (through enterprise relationships). This positions them well to drive adoption.

Emerging AI-Powered Optimization Tools

The success of Opti Mind has likely prompted others to build similar systems. The market is beginning to see AI-powered formulation tools emerge from optimization software vendors and startups.

However, Opti Mind's approach (specialized small models trained on curated expert data) is likely to remain differentiated from general LLM approaches. The focus on domain-specific quality rather than scale is hard to replicate quickly.

Practical Guide: Using Opti Mind Effectively

Best Practices for Problem Description

Opti Mind works best when you describe problems clearly and completely.

Be specific about objectives: Rather than "optimize our operations," say "minimize total cost including production, inventory holding, and shipping costs." Specificity helps the model understand exactly what should be in the objective function.

Enumerate constraints explicitly: Don't assume constraints are obvious. State them clearly: "Vehicle capacity is 5 tons, driver regulations require 10-hour rest after 14-hour shifts, delivery must happen within the time window 9am-5pm." Each constraint mentioned explicitly increases the chance Opti Mind captures it.

Define variables clearly: What decisions are being made? "We need to decide production quantity at each facility for each product and demand allocation (which customer demand is met from which facility)." Clear variable definitions help Opti Mind structure the formulation correctly.

Include business context: Sometimes context matters. "We prioritize keeping existing customer relationships, so if we must cut demand, prefer cutting from new customers." This context can be captured as priority weighting or constraint ordering in the formulation.

Specify solver preferences if relevant: If you have strong preferences for a specific solver (Gurobi vs. open-source), mention it. Some formulations work better with certain solvers.
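
One way to apply these practices consistently is to collect the pieces in a small structure before writing the description. The `ProblemBrief` class below is a hypothetical helper, not part of Opti Mind; it checks that nothing essential is missing and renders a plain-text brief.

```python
from dataclasses import dataclass, field
from typing import List


@dataclass
class ProblemBrief:
    objective: str
    decisions: List[str]
    constraints: List[str]
    context: List[str] = field(default_factory=list)
    solver: str = ""

    def missing_parts(self) -> List[str]:
        """Names of the essential sections that are still empty."""
        checks = [
            ("objective", bool(self.objective.strip())),
            ("decisions", bool(self.decisions)),
            ("constraints", bool(self.constraints)),
        ]
        return [name for name, present in checks if not present]

    def render(self) -> str:
        """Plain-text description with one explicit line per item."""
        lines = [f"Objective: {self.objective}", "Decisions:"]
        lines += [f"- {d}" for d in self.decisions]
        lines.append("Constraints:")
        lines += [f"- {c}" for c in self.constraints]
        if self.context:
            lines.append("Business context:")
            lines += [f"- {c}" for c in self.context]
        if self.solver:
            lines.append(f"Preferred solver: {self.solver}")
        return "\n".join(lines)


brief = ProblemBrief(
    objective="minimize total production, inventory holding, and shipping cost",
    decisions=["production quantity at each facility for each product"],
    constraints=["vehicle capacity is 5 tons", "deliveries between 9am and 5pm"],
    context=["if demand must be cut, cut from new customers first"],
)
assert not brief.missing_parts()
print(brief.render())
```

The point of the structure is the checklist, not the formatting: an empty constraints list is caught before the description ever reaches the model.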

Review Process for Generated Formulations

After Opti Mind generates a formulation, human review is recommended, especially initially.

Ask yourself: Does the objective function match what we're trying to optimize? Check each constraint: is it mathematically what we described? Are there constraints missing? Are there constraints included that shouldn't be? Are variable definitions clear and non-redundant?

Solve a small test case manually or with historical data. Does the formulation produce sensible results? If results seem wrong, where's the mismatch?

Check for efficiency: could the formulation be simplified without losing correctness? Sometimes Opti Mind generates formulations that are correct but inefficient (too many variables or constraints that could be combined).

Over time, as you build confidence, review can become lighter. For routine problem types that have worked reliably, you might skip detailed review and move directly to solving.
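
For the small-test-case step, a brute-force check is often enough. The sketch below enumerates every integer point of a tiny, made-up two-product problem and returns the best feasible one, which you can then compare against what the solver reports for the generated formulation. The problem data and the `brute_force_max` helper are illustrative assumptions.

```python
from itertools import product


def brute_force_max(objective, constraints, ranges):
    """Try every integer point in the given ranges; return (best value, point)."""
    best_value, best_point = None, None
    for point in product(*ranges):
        if all(check(*point) for check in constraints):
            value = objective(*point)
            if best_value is None or value > best_value:
                best_value, best_point = value, point
    return best_value, best_point


# Toy problem: maximize 3x + 5y subject to three capacity limits.
constraints = [
    lambda x, y: x <= 4,
    lambda x, y: 2 * y <= 12,
    lambda x, y: 3 * x + 2 * y <= 18,
]
best = brute_force_max(
    objective=lambda x, y: 3 * x + 5 * y,
    constraints=constraints,
    ranges=[range(0, 5), range(0, 7)],
)
print(best)  # (36, (2, 6))
```

Enumeration only works for a handful of variables with small ranges, but that is all a sanity check needs: if the solver's answer on the same toy instance differs, the formulation and the description disagree somewhere.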

Iterative Refinement Workflows

Often, the first formulation isn't final. Business requirements evolve, new constraints emerge, or initial results suggest missing elements.

With Opti Mind, iteration is fast. You can regenerate formulations quickly as problem specifications change. This encourages iterative problem exploration: "What if we added a constraint on facility utilization?" Generate the formulation. "What if we weighted different objectives differently?" Generate the formulation. This rapid iteration helps identify the right problem structure before committing to solutions.
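
The what-if loop described above can be sketched as follows. The `formulate` argument is a placeholder for however your deployment invokes the model; no real Opti Mind API is assumed, and `fake_formulate` exists only so the example runs.

```python
from typing import Callable, Dict


def run_what_ifs(base: str,
                 what_ifs: Dict[str, str],
                 formulate: Callable[[str], str]) -> Dict[str, str]:
    """Generate one formulation for the baseline and one per what-if variant."""
    results = {"baseline": formulate(base)}
    for name, change in what_ifs.items():
        results[name] = formulate(f"{base}\nAdditional requirement: {change}")
    return results


def fake_formulate(description: str) -> str:
    """Stand-in for the real model call (hypothetical)."""
    return f"<formulation from {len(description.splitlines())} line(s)>"


results = run_what_ifs(
    base="Minimize total shipping cost across 3 facilities.",
    what_ifs={
        "utilization_cap": "no facility may exceed 85% utilization",
        "service_level": "at least 95% of orders ship within 2 days",
    },
    formulate=fake_formulate,
)
for name, formulation in results.items():
    print(name, "->", formulation)
```

Keeping every variant keyed by name makes it easy to solve them side by side and compare objective values before settling on a problem structure.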

Future of AI-Powered Optimization

Where This Technology Is Heading

Opti Mind represents one piece of a broader trend: AI-augmented decision-making in operations and management.

As these systems mature, we'll likely see tighter integration between problem formulation, solving, and interpretation. An end-to-end system might take a natural language problem description, formulate it with Opti Mind, solve it with a high-performance solver, and generate a natural language explanation of the results and recommendations.

We'll also see domain-specific customization. Rather than a single Opti Mind model, we might see versions tuned for supply chain, finance, healthcare, manufacturing. This specialization could push accuracy even higher.

As foundation models improve, the underlying technology for building systems like Opti Mind will become more accessible. We might see not just Opti Mind but a broader ecosystem of specialized AI tools for different aspects of decision-making and optimization.

Implications for Operations Research as a Field

This technology shifts what operations researchers and optimization specialists do. Fewer people will spend their days writing down formulations. More will spend time on problem framing (deciding what should be optimized), validation (ensuring solutions make sense), and solution interpretation (translating mathematical results into business decisions).

This could be healthy for the field. The bottleneck in optimization adoption is often less the solving algorithms (which are mature) and more the expertise needed to formulate problems. Lowering that barrier could dramatically expand the problems that organizations attempt to optimize.

It also means OR practitioners need to maintain and deepen other skills: understanding business problems deeply, validating results rigorously, communicating with stakeholders, and building optimization thinking into organizational culture.

FAQ

What is Opti Mind and what does it do?

Opti Mind is a small language model developed by Microsoft Research specifically trained to convert business problems described in natural language into mathematical formulations that optimization solvers can process. Unlike general-purpose AI models, Opti Mind specializes in understanding business constraints, objectives, and decision variables, then expressing them in precise mathematical notation. It reduces the time, expertise, and errors involved in formulation, allowing organizations to leverage optimization without being bottlenecked by specialist availability.

How does Opti Mind differ from using ChatGPT or other general language models for formulation?

General language models like ChatGPT achieve 40-50% accuracy on optimization formulation tasks, while Opti Mind achieves 73%. The difference comes from specialization: Opti Mind was trained exclusively on domain-specific optimization data verified by experts, whereas general models are optimized for broad language understanding. Additionally, Opti Mind incorporates domain-specific checking mechanisms that validate formulations against the original problem, catching errors that general models miss. Opti Mind also runs locally for privacy and speed, whereas cloud-based models introduce latency and data privacy concerns.

What are the main benefits of using Opti Mind?

The primary benefits include dramatically reduced formulation time (hours instead of days), a reviewable starting formulation rather than a blank page, local deployment for privacy and data security, reduced dependency on scarce operations research specialists, and the ability to iterate formulations rapidly as business requirements change. Specialist review of an Opti Mind formulation takes 20-45 minutes compared with 8-16 hours to write one from scratch, and solutions from reviewed formulations come close to specialist quality (95-98% of the objective value) on properly specified problems. This enables enterprises to tackle more optimization problems and derive more value from existing optimization investments.

Can Opti Mind handle complex optimization problems?

Opti Mind performs best on problems up to a few hundred variables and constraints, which covers most standard business optimization problems in supply chain, finance, manufacturing, and resource allocation. More complex problems with thousands of variables sometimes generate correct but computationally inefficient formulations. Novel problem types not well-represented in the training data may have lower accuracy. The system works particularly well for clearly-specified problems with explicit constraints and objectives; vague or ambiguous problem descriptions reduce accuracy. Human specialists can review and refine Opti Mind output for edge cases and complex scenarios.

How does Opti Mind handle data privacy and security?

Opti Mind is designed for local, on-premises deployment, meaning optimization problems and data never need to be sent to external cloud services. Organizations can run the model on their own servers, private cloud infrastructure, or containerized environments within their infrastructure. This satisfies strict privacy requirements and regulatory compliance (like GDPR, CCPA) by keeping sensitive operational data internal. Access to the model can be controlled through standard information security practices: authentication, authorization, audit logging, and encryption. The model itself can be secured like other intellectual property with access controls and versioning.

What types of business problems can Opti Mind solve?

Opti Mind can formulate a wide range of optimization problems across industries: supply chain and logistics (routing, allocation, network design), financial portfolio optimization, manufacturing production scheduling and workforce planning, energy and utility dispatch problems, inventory management, facility location decisions, and resource allocation. Essentially, any business problem that can be described in terms of decisions to make, constraints to respect, and objectives to optimize is a candidate for Opti Mind. The system works best when problems can be clearly specified and fall within standard optimization categories covered by the training data.

How accurate is Opti Mind compared to human optimization specialists?

Opti Mind achieves approximately 73% semantic correctness on its first formulation attempt, meaning the formulation accurately captures the problem description. While this is lower than expert specialists (who approach 100% on first attempt), Opti Mind provides starting formulations that specialists can review and refine in 20-45 minutes rather than writing formulations from scratch in 8-16 hours. When Opti Mind formulations are solved and compared to specialist-created formulations, solutions reach 95-98% of the objective value obtained from the specialist formulations, representing only marginal differences in business value. For many organizations, cutting specialist time by more than 90% justifies the marginal quality difference.

How does Opti Mind integrate with existing optimization solvers and workflows?

Opti Mind generates formulations in standard mathematical formats (LP format, MPS format, solver-specific JSON) that feed directly into commercial solvers like Gurobi and CPLEX, and open-source solvers like CBC and SCIP. The output can be exported as plain text and integrated into existing optimization workflows with minimal changes. Many organizations implement a hybrid approach where Opti Mind generates formulations, specialists review them for 20-45 minutes (checking for errors and necessary modifications), and then the validated formulation goes to the solver. As experience builds with routine problem types, some organizations reduce or eliminate the human review step.

What training and change management is needed to deploy Opti Mind?

Deployment typically requires minimal formal training, usually just a few sessions. Teams need to understand how to describe problems clearly, how to review Opti Mind output for accuracy, and how to flag edge cases. The larger transition is organizational: optimization specialists shift from spending 80% of their time on formulation to spending 30% (mostly review), freeing them to focus on higher-value work like tuning solver parameters, interpreting results, and designing new optimization initiatives. Successful organizations frame Opti Mind as expanding team capacity and impact rather than replacing jobs, which aids adoption and team buy-in.

Can Opti Mind be customized for specific industries or problem types?

Currently, Opti Mind is a general model trained on diverse optimization problems across industries. However, the underlying approach is amenable to customization: training domain-specific versions on industry-specific problem data could likely push accuracy even higher for particular use cases. Organizations with unique problem types not well-represented in the general training data might experience slightly lower accuracy initially, though they could provide feedback or additional training data to improve performance on their specific problems. As the field matures, we expect to see more specialized versions optimized for specific domains.

Conclusion: The Democratization of Optimization

Optimization is one of the most powerful but underutilized tools in business decision-making. The math has been proven for decades. Solvers are mature and powerful. But the barrier to entry has always been formulation: translating business problems into mathematical form requires expertise that's expensive, scarce, and slow.

Opti Mind doesn't eliminate this barrier overnight, but it dramatically lowers it. By reducing formulation time from days to hours, and enabling non-specialists to work with optimization, it makes possible optimization initiatives that were previously impractical.

The impact extends beyond simple time savings. When formulation becomes fast and low-risk, organizations experiment more with optimization. They tackle problems they previously considered too complex or too frequently-changing to optimize. Supply chains adapt faster. Financial portfolios rebalance more frequently. Production schedules adjust more responsively.

Microsoft's research here represents a genuine contribution to making advanced decision-making tools more accessible. The focus on specialization, domain-specific quality, and local deployment reflects understanding of what real organizations actually need, not just what's technically possible.

For operations research as a field, this is transformative. OR specialists evolve from formulation experts to optimization strategists and result validators. The profession becomes more about understanding business problems deeply and solving them creatively, less about translating between languages.

For organizations, the opportunity is clear. If you're using optimization today, Opti Mind amplifies your team's impact. If you're not using optimization, Opti Mind lowers the entry barrier. Either way, the economics increasingly favor moving toward AI-assisted decision-making in operations.

The optimization gap is closing. And that changes what's possible in business operations.

Key Takeaways

- OptiMind achieves 73% accuracy on optimization formulations versus 40-50% for general large language models through domain-specific training on expert-curated data.

- Formulation time drops from 2-7 days to hours with OptiMind, with specialist review requiring just 20-45 minutes instead of 8-16 hours.

- Local deployment ensures sensitive operational data never leaves the organization, critical for regulated industries and competitive protection.

- Small specialized models can outperform large general-purpose models on technical optimization tasks by focusing on domain-specific patterns.

- Organizations can now scale optimization initiatives beyond their operations research specialist capacity, democratizing access to mathematical optimization.
