Introduction: The Convergence of Exceptional Talent and Transformative Technology

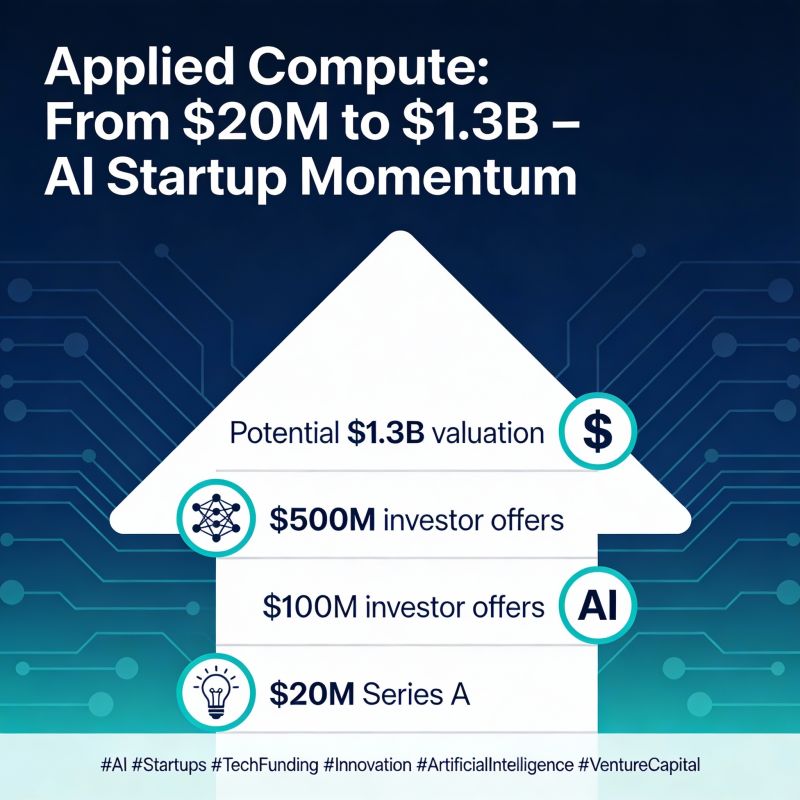

In early 2026, the venture capital world witnessed a phenomenon that rarely occurs in the startup ecosystem: a company raising

What made Ricursive's funding trajectory so remarkable wasn't the capital amount itself, though that certainly turned heads. Rather, it was the speed and the near-universal enthusiasm from top-tier venture firms like Lightspeed and Sequoia, combined with participation from industry titans including Nvidia, AMD, and Intel. The reason was deceptively simple: the founding team.

Anna Goldie and Azalia Mirhoseini represented something rare in Silicon Valley: founders whose technical accomplishments and industry reputation so thoroughly preceded them that investors needed minimal convincing. The two had worked together at Google Brain, where they created AlphaChip, an artificial intelligence system that could generate semiconductor layouts in hours rather than the year or more typically required by human designers. They subsequently joined Anthropic, one of the most prominent AI safety companies, further cementing their status as leading researchers in the field.

The convergence of exceptional founders, a massive addressable market, critical infrastructure technology, and flawless execution created a funding story worth examining in detail. Beyond the headline numbers, Ricursive Intelligence represents a broader shift in how the technology industry approaches hardware innovation. Rather than trying to design and manufacture chips themselves (a path that has bankrupted countless startups), Ricursive is building the tools that make chip design so efficient that companies can innovate at the pace of software.

This comprehensive analysis explores the story behind Ricursive's unprecedented funding, the technical innovations that make their approach unique, the market dynamics that created such demand for their solution, and the competitive landscape of AI-powered chip design tools. We'll also examine alternative approaches to semiconductor automation and what this means for the future of artificial intelligence infrastructure.

Part 1: The Founders—Why Venture Capitalists Lined Up

The Google Brain Connection: Creating AlphaChip

The story of Ricursive Intelligence begins not in a startup incubator or venture capitalist's office, but at Google Brain, the research division of Google dedicated to artificial intelligence and machine learning. Anna Goldie and Azalia Mirhoseini arrived at Google at different times but eventually collaborated on one of the most significant projects in the company's chip history: AlphaChip.

AlphaChip represented a paradigm shift in how computers could approach chip design. Traditional chip layout (the process of placing millions or billions of transistors and logic gates on a silicon die) has remained fundamentally similar for decades. Engineers use design rules, heuristics, and iterative manual refinement to optimize for performance, power consumption, thermal characteristics, and manufacturing yield. This process is extraordinarily time-consuming, often requiring teams of specialists working for 12-24 months on a single design.

Goldie and Mirhoseini's breakthrough was applying reinforcement learning, a form of artificial intelligence in which an algorithm learns through trial and feedback, to this classical engineering problem. Their AI system could generate chip layouts in approximately 6 hours, roughly a 100x speedup over the weeks of expert effort that manual layout iteration typically requires. More impressively, the AI-generated layouts were competitive with or superior to human designs on many metrics, including power efficiency, performance characteristics, and manufacturing yield.

The technical sophistication of their approach involved using reward signals to rate design quality across multiple dimensions. The AI agent would receive feedback on how good its design was, then update its underlying deep neural network parameters to improve. After designing thousands of variations, the system became increasingly sophisticated, much like how a master chess player improves through playing thousands of games. The system also became faster over time, as the neural network learned patterns and shortcuts that accelerated the design process.
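The reward-and-update loop described above can be illustrated with a toy example. This is a minimal sketch, not AlphaChip's actual method: the three blocks, the two nets, and the one-row layout are hypothetical, and the policy is a plain table of logits rather than a deep neural network. To keep the run deterministic, it applies the exact expected policy gradient over an enumerated set of layouts instead of learning from sampled rollouts.

```python
import itertools
import math

# Hypothetical toy problem: three blocks placed in a single row of three slots.
BLOCKS = ("A", "B", "C")
NETS = (("A", "B"), ("B", "C"))  # assumed connectivity between blocks


def wirelength(layout):
    """Total distance between connected blocks for one left-to-right ordering."""
    pos = {block: slot for slot, block in enumerate(layout)}
    return sum(abs(pos[a] - pos[b]) for a, b in NETS)


def reward(layout):
    """Reward signal: shorter wiring earns a higher (less negative) reward."""
    return -float(wirelength(layout))


def softmax(logits):
    peak = max(logits)
    exps = [math.exp(x - peak) for x in logits]
    total = sum(exps)
    return [e / total for e in exps]


def train(steps=200, lr=0.5):
    """Gradient ascent on expected reward for a softmax policy over layouts."""
    layouts = list(itertools.permutations(BLOCKS))
    rewards = [reward(layout) for layout in layouts]
    logits = [0.0] * len(layouts)  # start from a uniform policy
    for _ in range(steps):
        probs = softmax(logits)
        expected = sum(p * r for p, r in zip(probs, rewards))
        # Exact policy gradient for a softmax policy: p_k * (r_k - E[r]).
        logits = [t + lr * p * (r - expected)
                  for t, p, r in zip(logits, probs, rewards)]
    return layouts, softmax(logits)


layouts, probs = train()
best = layouts[probs.index(max(probs))]
print(best, wirelength(best))
```

Layouts that score above the current average gain probability and the rest lose it, which is the same feedback principle the paragraph describes, only at a scale small enough to enumerate.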

Industrial Impact and Recognition

The practical impact of AlphaChip at Google was substantial and immediate. The technology was used to design three generations of Google's Tensor Processing Units (TPUs), the custom chips that power Google's machine learning infrastructure. This wasn't a research project or a proof of concept; it was deployed on some of the most critical hardware in Google's entire technology stack.

The technical accomplishment earned Goldie and Mirhoseini recognition throughout the artificial intelligence and semiconductor communities. In an industry where most engineers work anonymously, rarely achieving household recognition, their work became something of a legend. Colleagues, collaborators, and industry peers understood that they had solved a problem that semiconductor companies had been struggling with for decades.

Interestingly, the narrative around Alpha Chip also highlights the interpersonal dynamics within tech companies. Goldie and Mirhoseini were known to be exceptionally close collaborators—so much so that they became known within Google as "A&A" among colleagues. They even worked out together, both enjoying circuit training exercises, which led Google engineer Jeff Dean (himself a legendary figure in AI) to affectionately nickname their project "chip circuit training"—a clever pun on their shared fitness routine and the project itself.

The Controversy and Vindication

Success in highly competitive technical fields often attracts envy, and Goldie and Mirhoseini's rapid rise within Google was no exception. According to reporting, one of their colleagues at Google spent years attempting to discredit their work and diminish their accomplishments. This colleague was eventually fired for this behavior, but the episode illustrates a reality of competitive technical environments: groundbreaking work sometimes generates resistance from those who feel threatened by it.

The vindication came through deployment and real-world results. AlphaChip wasn't an abstract research paper; it was used to design chips that Google deployed at massive scale. This practical validation demonstrated the approach's legitimacy in a way that no academic publication could match.

The Anthropic Period and Advanced AI Understanding

After their success at Google, Goldie and Mirhoseini joined Anthropic, one of the most prestigious artificial intelligence safety and research companies. Anthropic, founded by former OpenAI researchers including Dario and Daniela Amodei, focuses on developing AI systems that are safer, more interpretable, and more aligned with human values.

Their tenure at Anthropic further developed their expertise in advanced artificial intelligence techniques, frontier AI capabilities, and the challenges of building increasingly powerful AI systems. This experience would prove invaluable in founding Ricursive, as it provided insight into both the computational demands of cutting-edge AI and the infrastructure bottlenecks that limit AI progress.

The "Weird Emails" from Tech Giants

The level of talent acquisition competition surrounding Goldie and Mirhoseini reached such intensity that Goldie later recounted receiving what she described as "weird emails from Zuckerberg making crazy offers." While the details of these recruitment efforts remain private, the anecdote illustrates the extraordinary demand for their talents. Meta, Google, Anthropic, and other tech giants were all competing for their expertise.

The fact that they turned down these offers, whatever compensation packages Meta, Google, and other companies proposed, to found Ricursive instead demonstrates their confidence in their vision and their conviction that building a new company around chip design automation was a more compelling opportunity than joining any existing organization.

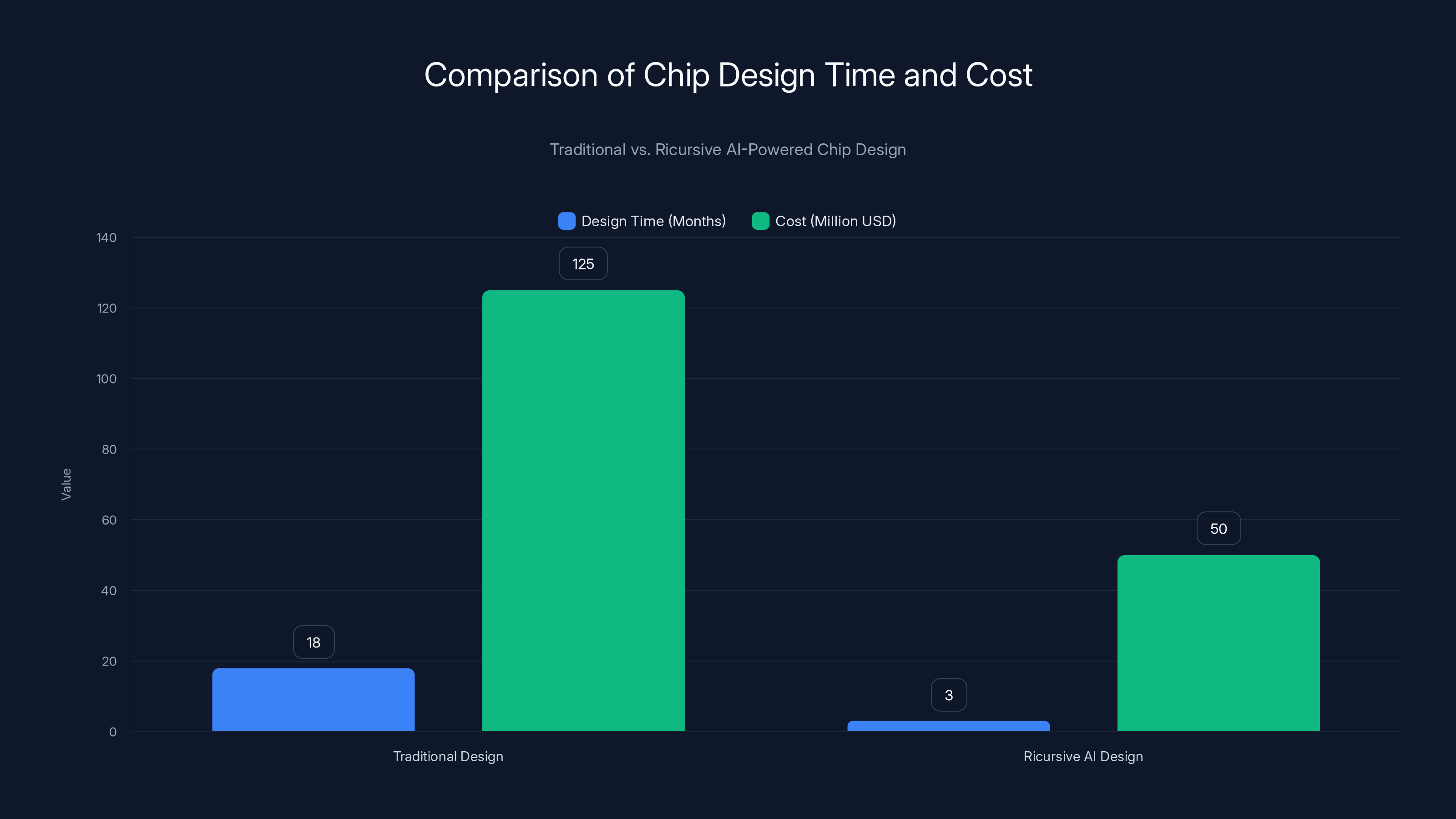

Ricursive's AI reduces chip design time from 18 to 3 months and costs from

Part 2: The Semiconductor Industry Context—Why Chip Design is a Bottleneck

The Scale of the Chip Design Challenge

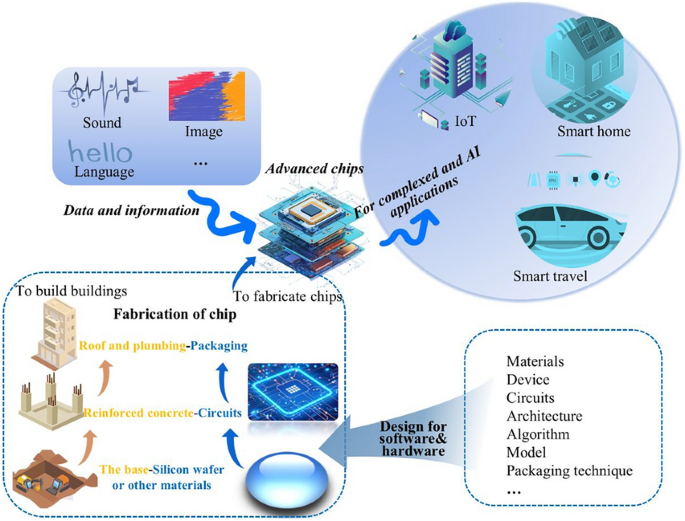

To understand why Ricursive Intelligence's technology is so valuable, we need to understand the complexity and scale of modern chip design. Modern semiconductors—whether they're processors, graphics processing units, or specialized AI accelerators—contain billions of transistors packed into an area smaller than a fingernail.

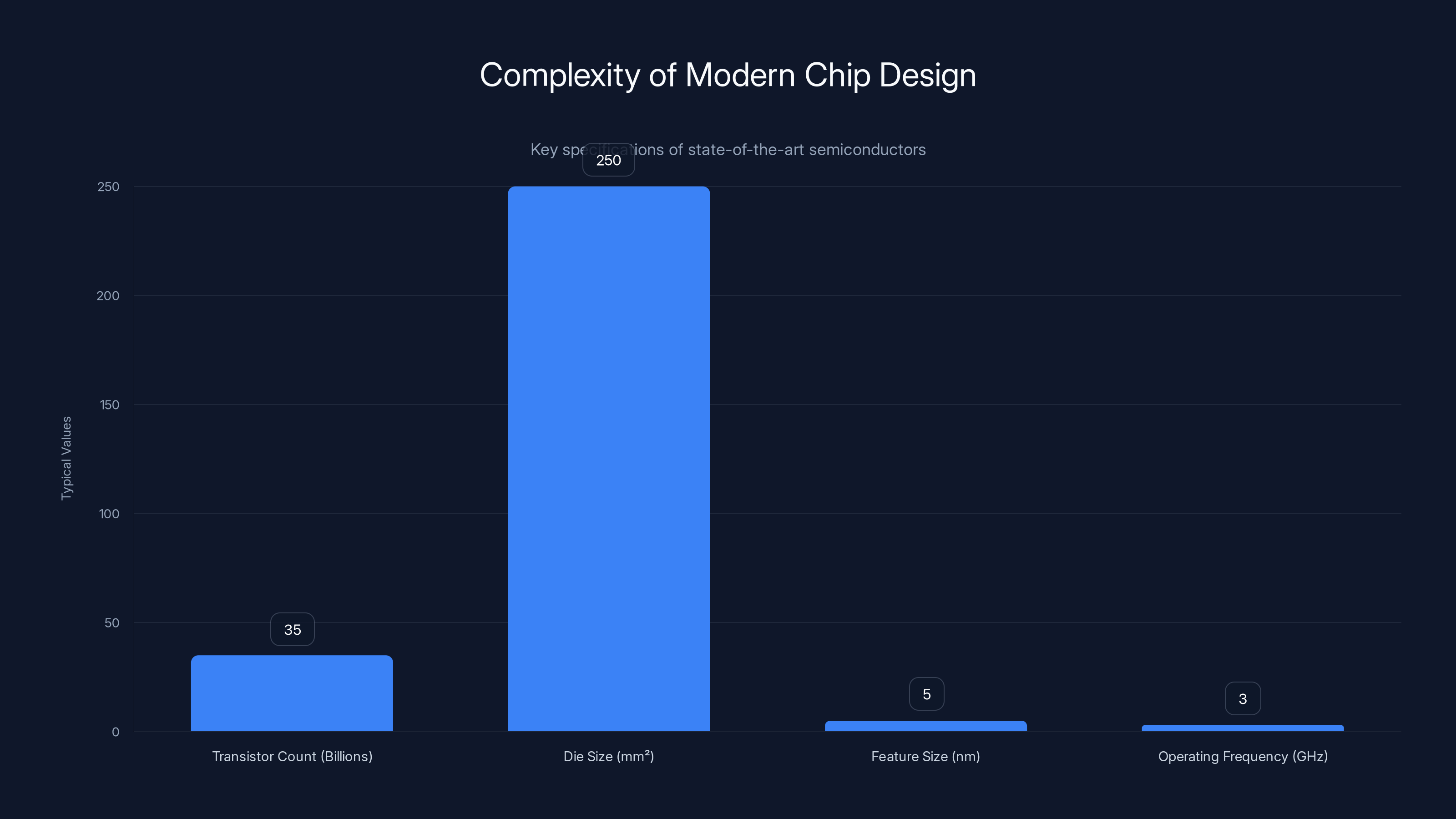

Consider the specifications of a modern chip:

- Transistor Count: A state-of-the-art processor might contain 20-50 billion transistors

- Die Size: The actual silicon area might be only 100-400 square millimeters

- Feature Size: Individual circuit elements measure just 3-7 nanometers (billionths of a meter)

- Performance Requirements: These chips must operate at 2-4 GHz (billions of cycles per second) with incredibly tight tolerances

- Power Constraints: Depending on the application, chips must stay within power budgets measured in watts to kilowatts

The relationship between these factors is highly nonlinear. Placing transistors too close together creates heat dissipation problems. Spreading them too far apart creates signal propagation delays that reduce performance. The routing of electrical connections between components must minimize both distance and electromagnetic interference. Power must be distributed evenly to prevent voltage drops. Thermal hotspots must be avoided. Manufacturing variations and defects must be accounted for.

The Design Process: A Year-Long Engineering Endeavor

The traditional chip design flow involves multiple specialized phases, each requiring months of work:

- Architectural Design (2-3 months): Engineers define the high-level logic and structure

- Register Transfer Level (RTL) Design (3-4 months): Circuit designers describe the chip's behavior at a lower level of abstraction

- Logic Synthesis (2-3 months): Electronic design automation tools convert RTL descriptions into gate-level netlists

- Physical Design and Layout (4-8 months): This is where the AlphaChip breakthrough applies. Engineers and EDA tools must place the chip's circuit blocks and cells and route the connections between them

- Verification and Tape-out (3-6 months): Extensive testing and final preparation for manufacturing
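A quick tally of the phase estimates above shows where the overall timeline comes from. This is a rough sketch that assumes the phases run strictly one after another; in practice they often overlap, so the totals are an upper-bound style estimate.

```python
# Phase durations in months (low, high), taken from the list above.
PHASES = {
    "Architectural design": (2, 3),
    "RTL design": (3, 4),
    "Logic synthesis": (2, 3),
    "Physical design and layout": (4, 8),
    "Verification and tape-out": (3, 6),
}

# Assumption: phases are sequential with no overlap.
total_low = sum(low for low, _ in PHASES.values())
total_high = sum(high for _, high in PHASES.values())

layout_low, layout_high = PHASES["Physical design and layout"]

print(f"End-to-end estimate: {total_low}-{total_high} months")
print(f"Layout share at the high end: {layout_high / total_high:.0%}")
```

Under that assumption, physical design is the largest single phase, accounting for about a third of the schedule at the high end.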

The physical design and layout phase (the fourth phase above) is particularly time-consuming and challenging because it requires simultaneous optimization across multiple competing objectives:

- Timing: Ensuring signals propagate between components within acceptable time windows

- Power: Minimizing energy consumption while meeting performance requirements

- Area: Fitting everything within the physical die size to control costs

- Yield: Designing with manufacturing tolerances to maximize the percentage of chips that function correctly

- Thermal: Distributing heat generation evenly to prevent localized hotspots

- Routability: Ensuring that all electrical connections can actually be routed without conflicts

These objectives are not just competing—they're often contradictory. Reducing power consumption might increase area. Maximizing performance might require more area and power. Improving yield might require larger features and more spacing. A human designer must navigate these tradeoffs using experience, intuition, and iterative refinement.
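One common way to reason about tradeoffs like these is Pareto dominance: a candidate layout is only worth keeping if no other candidate is at least as good on every objective and strictly better on one. The sketch below is illustrative only; the candidate names and metric values are invented, and it tracks just three of the six objectives listed above.

```python
from typing import NamedTuple


class Candidate(NamedTuple):
    name: str
    delay_ns: float   # lower is better
    power_w: float    # lower is better
    area_mm2: float   # lower is better


def dominates(a: Candidate, b: Candidate) -> bool:
    """True if a is no worse than b on every metric and better on at least one."""
    a_metrics, b_metrics = a[1:], b[1:]
    no_worse = all(x <= y for x, y in zip(a_metrics, b_metrics))
    better = any(x < y for x, y in zip(a_metrics, b_metrics))
    return no_worse and better


def pareto_front(candidates):
    """Keep only candidates that no other candidate dominates."""
    return [c for c in candidates
            if not any(dominates(other, c) for other in candidates)]


# Hypothetical layout candidates with made-up numbers.
candidates = [
    Candidate("fast", 1.0, 5.0, 120.0),
    Candidate("low_power", 1.4, 3.0, 130.0),
    Candidate("small", 1.6, 4.0, 100.0),
    Candidate("dominated", 1.5, 5.5, 135.0),
]

front = pareto_front(candidates)
print([c.name for c in front])
```

Three of the four candidates survive because each wins on a different objective, which is exactly the situation a designer (or an optimizer) has to arbitrate.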

The Economic Impact of Design Time

The 12-24 month design timeline translates directly into enormous financial consequences:

- Team Costs: A chip design team for a major project might include 50-200 engineers at total compensation of up to $500,000 per person annually. A 2-year project represents $30-100 million in labor costs alone.

- Opportunity Cost: If a competitor can design a chip in 3 months instead of 18 months, they can deploy 6 generations of products in the same timeframe, creating an insurmountable competitive advantage.

- Manufacturing Costs: Modern chip fabrication plants (fabs) cost $10-20 billion to build. They run continuously; any delay in tape-out (final chip submission to manufacturing) represents millions of dollars in opportunity cost for that fab.

- Time-to-Market: In fast-moving markets like artificial intelligence, a 12-month delay in chip availability might mean losing an entire generation of market opportunity.

Current Limitations of Electronic Design Automation

While electronic design automation (EDA) tools like those from Synopsys, Cadence, and Mentor Graphics (now Siemens EDA) have improved significantly over decades, they still rely heavily on human expertise:

- Rule-Based Approaches: Traditional EDA tools encode design rules as explicit constraints and algorithms. These work well for routine design tasks but struggle with novel design challenges.

- Limited Optimization: These tools optimize locally (e.g., minimizing delay on a specific signal path) but can't effectively optimize globally across all design objectives simultaneously.

- Slow Iteration: Making changes to a design and re-running place-and-route tools can take hours to days, limiting how many design iterations engineers can explore.

- Specialization Required: Using these tools effectively requires years of expertise; the learning curve is steep and the knowledge base is difficult to transfer.

Ricursive's AI-based approach aims to address these limitations by learning design patterns from data rather than relying on explicit rules, optimizing across global objectives rather than local metrics, and potentially enabling faster iteration through more efficient algorithms.

Part 3: Ricursive Intelligence's Technical Approach

Beyond Alpha Chip: Building a Platform

While AlphaChip proved that AI could accelerate chip design, it was built around Google's specific needs and chip designs. Ricursive Intelligence is building something more ambitious: a generalizable platform that can accelerate design for any type of chip, from custom accelerators to traditional processors to analog and mixed-signal designs.

The key technical innovation is that Ricursive's AI system will learn across different chips. Each new chip design problem it solves should make it smarter and more efficient at solving the next design problem. This is fundamentally different from traditional EDA tools, where each new chip design starts essentially from scratch using the same algorithmic approach.

This "learning across different chips" capability creates a powerful compounding effect. Early in the platform's lifecycle, the AI system learns what works and what doesn't across different design scenarios. Over time, as it accumulates more design experience, it becomes increasingly sophisticated, much like how a senior engineer with 20 years of experience is more capable than a junior engineer with 1 year of experience.

Multi-Modal AI Integration

Ricursive's platform integrates multiple forms of artificial intelligence:

- Reinforcement Learning: Used for layout optimization and design space exploration, the same approach that powered AlphaChip

- Large Language Models (LLMs): Increasingly, chip design involves understanding specifications, requirements, and design constraints expressed in natural language. LLMs can help interpret these specifications and generate design parameters.

- Graph Neural Networks: Chip designs can be represented as graphs (components as nodes, connections as edges), and graph neural networks are particularly effective at learning patterns in such structured data

- Evolutionary Algorithms: Population-based optimization techniques can explore the design space more effectively than simple gradient descent

The integration of these multiple AI approaches allows Ricursive to address different aspects of the chip design problem with the most appropriate technique.
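To make the graph representation mentioned above concrete, the sketch below builds a small netlist graph (components as nodes, connections as edges) and scores a placement with half-perimeter wirelength (HPWL), a widely used proxy for routing cost. The component names, nets, and coordinates are hypothetical, and nothing here reflects Ricursive's internal data model.

```python
import itertools

# Hypothetical netlist: each net is a group of components that must be connected.
NETS = [
    ("cpu", "cache"),
    ("cpu", "cache", "dram_ctrl"),
    ("cpu", "io"),
]

# Hypothetical placement: component -> (x, y) grid coordinates.
POSITIONS = {
    "cpu": (0, 0),
    "cache": (1, 0),
    "dram_ctrl": (3, 2),
    "io": (0, 3),
}


def build_adjacency(nets):
    """Expand every net into pairwise edges (clique expansion)."""
    adjacency = {}
    for net in nets:
        for a, b in itertools.combinations(net, 2):
            adjacency.setdefault(a, set()).add(b)
            adjacency.setdefault(b, set()).add(a)
    return adjacency


def hpwl(net, positions):
    """Half the perimeter of the bounding box around a net's components."""
    xs = [positions[c][0] for c in net]
    ys = [positions[c][1] for c in net]
    return (max(xs) - min(xs)) + (max(ys) - min(ys))


adjacency = build_adjacency(NETS)
total_hpwl = sum(hpwl(net, POSITIONS) for net in NETS)

print(sorted(adjacency["cpu"]))
print(total_hpwl)
```

A graph neural network would consume a structure like `adjacency` as its input, while a metric like `total_hpwl` could serve as one term in a reward signal.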

Design Verification and Completeness

A critical aspect of Ricursive's approach is that it doesn't just handle layout—it encompasses everything from component placement through design verification. This is significant because:

- Layout alone is insufficient: A well-placed chip that doesn't meet electrical specifications is worthless

- Verification is time-consuming: Currently, verification (ensuring a design meets all functional and performance requirements) can consume 30-50% of total design time

- Integration challenges: If layout and verification are handled by different tools and teams, coordination becomes a bottleneck

By handling the complete design flow within a unified AI platform, Ricursive can optimize across all design phases simultaneously, identifying design solutions that not only look good from a placement perspective but also meet all electrical and functional requirements.

The Vision: AI Designing AI Chips

Ricursive's ultimate vision extends beyond automating chip design—it's enabling co-evolution of AI models and the chips that run them. This is a profound concept with major implications:

Current State: AI researchers develop new models (like larger language models or new neural network architectures), then chip designers must optimize existing chips to run these models more efficiently. The models are fixed; the chips adapt to them. This is a sequential, iterative process that takes months or years.

Ricursive's Vision: AI researchers propose a new model, and simultaneously, chip designers use Ricursive to design custom chips optimized specifically for that model's computational patterns. Then researchers might adjust the model based on what the custom chip can efficiently execute, leading to a new chip design. This creates a feedback loop where models and chips co-evolve to reach new levels of efficiency and capability.

As Mirhoseini told TechCrunch, "We think we can enable this fast co-evolution of the models and the chips that basically power them," allowing AI to grow smarter faster because neither models nor hardware will be constrained by static designs.

This vision has profound implications for artificial general intelligence (AGI) development. If the rate of AI progress is currently constrained by hardware limitations and the time required to design new chips, then dramatically accelerating chip design could remove a key bottleneck for AGI research.

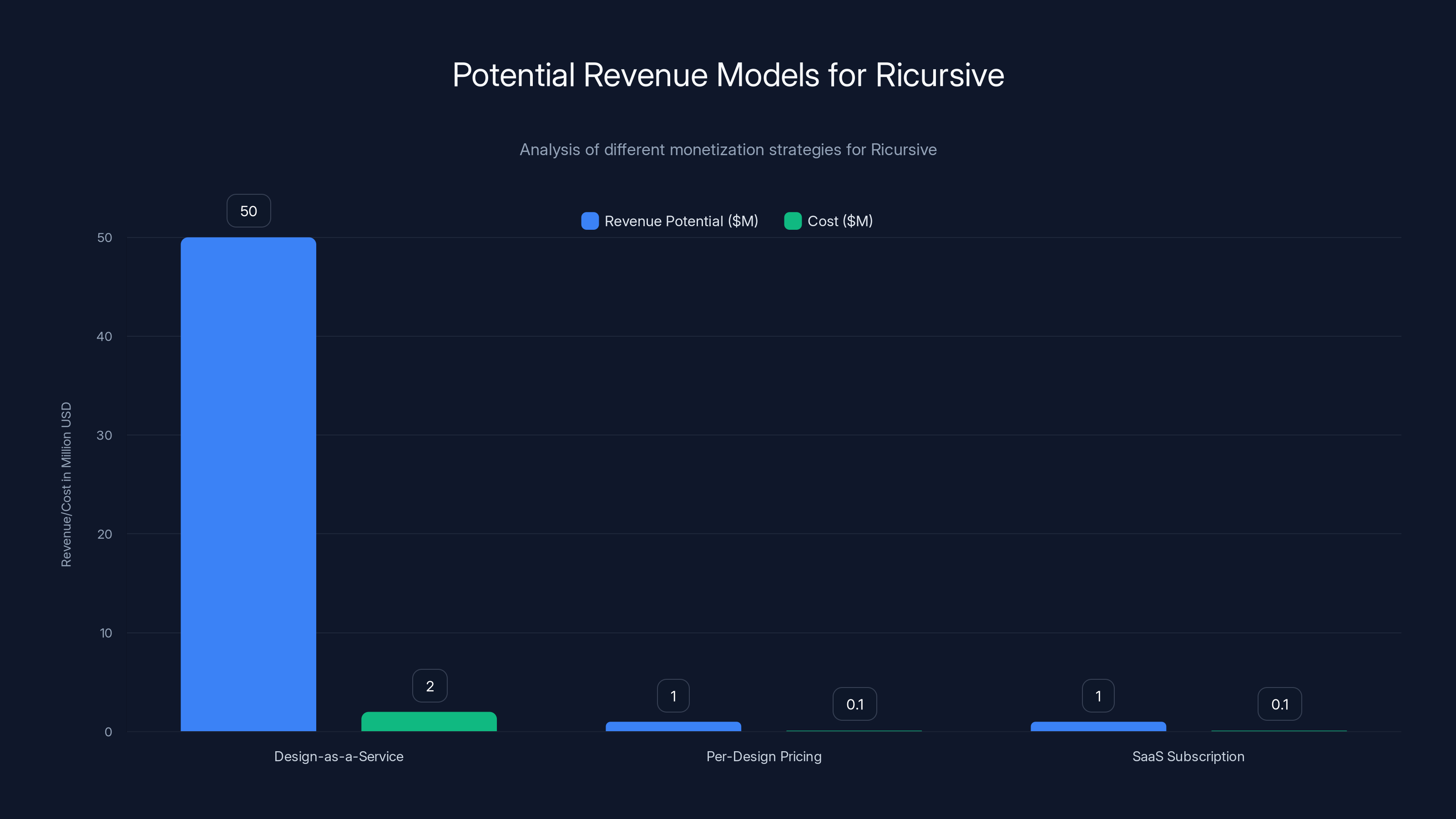

The 'Design-as-a-Service' model offers the highest revenue potential but also incurs higher costs. The 'SaaS Subscription' model provides predictable revenue with lower costs, making it attractive for scalability. (Estimated data)

Part 4: The Funding Story—$335M in Four Months

The Seed Round: Sequoia's Conviction

Ricursive Intelligence's first major funding milestone was a **

Sequoia's participation was significant because the firm has a long history of identifying foundational technologies and exceptional founders. Sequoia led early investments in companies like Apple, Cisco, Google, and Airbnb—firms that didn't just succeed financially but created entire categories or transformed existing categories.

Sequoia's investment reflected confidence in several factors:

- Founder pedigree: Two founders with verified track records of creating transformative technology

- Massive addressable market: The semiconductor industry represents hundreds of billions in annual spending

- Urgency: AI's rapid advancement had created urgent demand for better chips, making the timing optimal

- Technical risk reduction: AlphaChip's existence and deployment significantly reduced technical risk compared to typical deep-tech startups

- Network effects: Each chip design Ricursive completed would make the platform better for the next design

The Series A: Lightspeed's Bold Bet

Just months after raising

Let that valuation sink in: a company with no publicly announced customers, no revenue, and minimal operational history reached a $4 billion valuation. This is extraordinary even by Silicon Valley standards.

Several factors explain the aggressive Series A:

1. Founder Momentum: Founders who are this celebrated have a track record of raising capital quickly and executing ambitiously. Investors know that if Ricursive has raised $335M from top-tier firms, other investors will fight to get in the next round. This creates competitive dynamics that drive up valuations.

2. Market Urgency: The artificial intelligence industry was experiencing unprecedented growth and investment in 2025-2026. Every major AI company (OpenAI, Anthropic, Meta, Google, Microsoft) was competing for more computational power. Better chip design tools directly address this bottleneck. Lightspeed likely saw the risk of Ricursive raising capital from non-traditional tech investors (private equity firms, corporate ventures) or going in a different direction, and moved aggressively to secure board representation and influence.

3. Multiple De-Risking Factors: Unlike most deep-tech startups, Ricursive had several risk-reducing factors:

- Technical proof of concept: AlphaChip demonstrated the core technology worked

- Founder execution track record: Goldie and Mirhoseini had successfully shipped technology at scale at Google

- Customer signals: While not publicly named, the founders indicated they had heard from "every big chip making name you can imagine," suggesting strong market validation

- Platform scalability: The platform design (learning across multiple chips) suggested the technology could scale beyond specific use cases

4. Strategic Investor Participation: Beyond Lightspeed, Ricursive secured participation from Nvidia, AMD, Intel, and other chip makers as strategic investors. This was crucial for several reasons:

- It reduced business risk (these companies would be natural customers or partners)

- It provided distribution channels (chip makers have relationships with fabless design companies)

- It provided validation (no chip maker would invest in a competing technology unless they believed in its potential)

- It created lock-in (if competitors are investing in Ricursive, non-investing chip makers must worry they're falling behind)

The Valuation Mathematics

How does a $4 billion valuation make sense for a company with no announced revenue? The answer lies in how venture capital firms value early-stage companies.

Venture Capital Valuation Framework:

For a Series A-stage deep-tech company, multiples vary wildly:

- High-risk biotech: 5-15x forward revenue

- Proven SaaS companies: 8-12x forward revenue

- Venture-backed software: 4-8x forward revenue

- Deep-tech infrastructure: 10-30x forward revenue (or valued on TAM/market potential rather than revenue)

Ricursive appears to be valued primarily on total addressable market (TAM) rather than near-term revenue:

TAM Analysis:

- Semiconductor design services market: ~$150 billion globally

- EDA tool market: ~$15 billion annually

- Custom chip/fabless design market: ~$200 billion

- Potential value capture: Even 1-2% of the ~$200 billion custom chip market would represent $2-4 billion in annual software/services revenue

At a 20x forward revenue multiple (high for software, but justified for infrastructure that accelerates AI), a company capturing even 2% of the chip design market could expect

Additionally, venture capitalists often use Option Value frameworks for deep-tech investments: even if the probability of success is 30-50%, the upside is so large that expected value justifies the investment.
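The reasoning above can be written out as back-of-the-envelope arithmetic. This is a rough sketch using only the figures quoted in this section; it assumes the 2% share applies to the ~$200 billion custom chip market, and it treats failure as a zero payoff, which is a deliberate simplification rather than how any investor actually models the deal.

```python
def implied_value_bn(market_bn, share_pct, revenue_multiple):
    """Implied company value in $ billions from market share and a revenue multiple."""
    revenue_bn = market_bn * share_pct / 100
    return revenue_bn * revenue_multiple


def expected_value_bn(success_pct, upside_bn):
    """Probability-weighted value, assuming failure is worth nothing."""
    return upside_bn * success_pct / 100


# Inputs taken from the text: ~$200B market, 2% share, 20x forward revenue.
upside = implied_value_bn(market_bn=200, share_pct=2, revenue_multiple=20)

# Success probabilities of 30% and 50%, as quoted above.
ev_low = expected_value_bn(30, upside)
ev_high = expected_value_bn(50, upside)

print(f"Upside if the thesis holds: ${upside:.0f}B")
print(f"Expected value range: ${ev_low:.0f}B-${ev_high:.0f}B")
```

Under these assumptions the probability-weighted outcome sits well above the $4 billion valuation, which is the shape of the argument investors make, though every input here is an estimate.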

Why the Speed?

The four-month timeline from Series Seed (

1. Supply-Side Dynamics: Top venture firms have allocation committees and internal capital competitions. When Lightspeed saw that Sequoia had invested, and heard early signals about customer demand, missing the opportunity carried significant internal cost (explaining their aggressive check size).

2. Founder Agency: Goldie and Mirhoseini, as celebrated founders with optionality, could have waited longer to raise, demanding higher valuations or different terms. By raising quickly, they proved they didn't "need" the capital—investors were pushing it on them. This changes negotiating dynamics.

3. Technical de-risking: The existence of AlphaChip meant that, unlike most deep-tech companies, Ricursive didn't need to prove the technology worked in a research phase. The critical path items were already de-risked.

4. Competitive investor dynamics: Once word spread that Lightspeed was leading a massive Series A, other investors (especially those who passed on the seed round) wanted in. This created competitive pressure that Lightspeed could capitalize on by sizing the round to absorb demand from follow-on investors.

Part 5: Market Traction and Early Customer Signals

The Hidden Customer Base

While Ricursive's founders explicitly declined to name their early customers, they provided a telling comment: they've heard from "every big chip making name you can imagine." This isn't casual interest—it's the semiconductor industry knocking on the door.

Who is Ricursive's potential customer base?

Tier 1: Established Chip Makers (IDMs and Large Fabless Designers)

- Intel: Struggling with manufacturing node transitions and competing with TSMC. Custom chip design acceleration could help regain process leadership.

- Samsung: Major chip manufacturer with both IDM and foundry business

- SK Hynix, Micron: Memory chip manufacturers who could use faster design iteration

- Qualcomm: Fabless chip designer dependent on design efficiency

Tier 2: AI Semiconductor Specialists

- Cerebras, Graphcore, SambaNova: These companies are trying to design custom chips optimized for AI. Faster design cycles could help them iterate and compete against established players.

- Groq: A custom chip designer in the AI accelerator space that could dramatically accelerate its design cycles

Tier 3: AI Companies Building Custom Chips

- Meta: Building in-house chips for data centers

- Google: Continuing to design next-generation TPUs

- Tesla: Designing custom chips for autonomous driving

- Microsoft: Working on AI accelerators through partnerships and internal development

- Amazon AWS: Designing custom chips for data center workloads (Trainium, Inferentia)

- Apple: Designing chips for devices

Tier 4: Fabless Semiconductor Companies

- Hundreds of companies designing chips for various applications (automotive, IoT, networking, etc.) who could use faster design cycles

The Competitive Advantage Ricursive Offers Each Segment

For Established Chip Manufacturers: The ability to design chips 10x faster could convert a 24-month design cycle to 2-3 months. This would allow them to:

- Iterate more rapidly on designs, exploring more design space

- Respond more quickly to market demands or competitive threats

- Reduce the engineering team size required for a design (and thus reduce costs by 30-50%)

- Bring new capabilities to market before competitors

For AI Chip Specialists: Speed matters even more in the fast-moving AI space. The ability to design new chips in weeks rather than months could allow:

- Rapid iteration based on feedback from software teams

- Multiple product lines serving different market segments without time-to-market pressure

- Cost reduction that improves margin on expensive specialty chips

For AI Companies: Custom chips optimized specifically for their workloads provide enormous advantages:

- 5-10x better performance per dollar spent on hardware

- Reduced power consumption (critical for data center economics)

- Competitive moat (competitors can't buy the same chip)

- Reduced dependency on external suppliers

Early Market Signals: What the Founder Comments Reveal

When Goldie stated that "we want to enable any chip, like a custom chip or a more traditional chip, any kind of chip, to be built in an automated and very accelerated way," she was signaling that Ricursive's ambition extends far beyond the initial AlphaChip focus on high-performance computing.

The inclusion of "custom chip" and "traditional chip" indicates Ricursive is pursuing a broad platform approach:

- Custom chips (like AI accelerators) appeal to companies with unique computational needs and high margins

- Traditional chips (like standard processors or memory controllers) appeal to larger manufacturers with massive volumes

- Mixed approach shows they're not limiting themselves to a single market segment

Part 6: The Hardware Acceleration Imperative—Why Chip Design Innovation is Critical Now

The AI Compute Scaling Problem

Training artificial intelligence systems, particularly large language models and other deep learning systems, has become extraordinarily expensive. The computational cost of training state-of-the-art models has been doubling approximately every 3-4 months, a trend that cannot continue indefinitely.

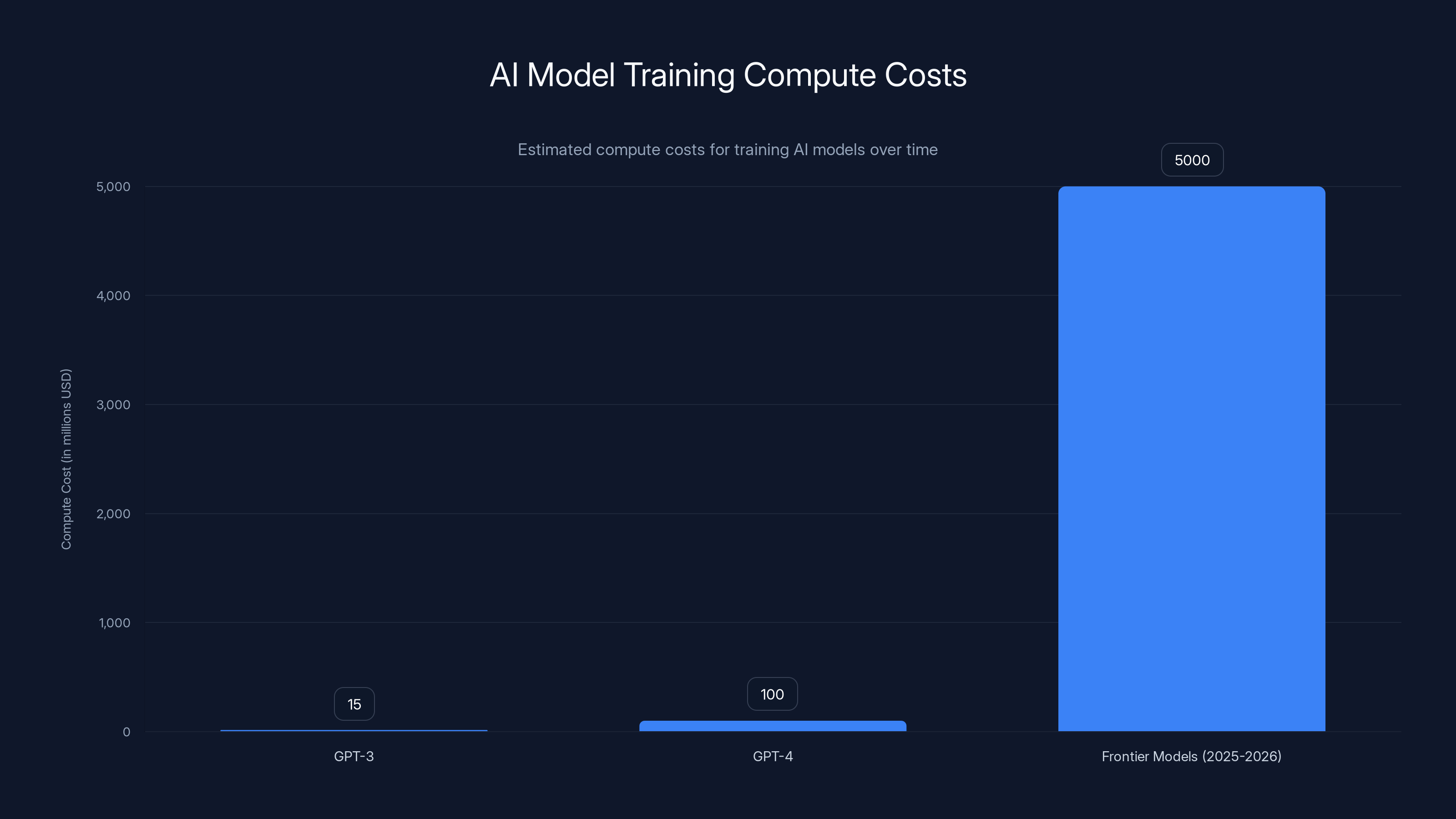

Consider the costs:

- GPT-3 Training: Estimated $10-20 million in compute costs

- GPT-4 Training: Estimated $100+ million in compute costs

- Frontier Models (2025-2026): Estimated $1-10 billion+ in compute costs

These costs are driven by:

- Increasing Model Size: Each generation of model has more parameters (weights and biases)

- Increasing Training Data: Larger models require larger, more diverse training datasets

- Hardware Limitations: Current GPUs are increasingly optimized for inference rather than training, creating inefficiencies

If this exponential cost growth continues, eventually the entire AI industry hits a compute-cost ceiling where training new models becomes economically infeasible.
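To see why a 3-4 month doubling time compounds so quickly, the short calculation below converts it into an annual growth factor and into the time needed for a 100x increase. It is a simple exponential model applied to the doubling figure quoted earlier in this section, not a forecast.

```python
import math


def annual_growth_factor(doubling_months):
    """How many times larger the cost becomes after 12 months."""
    return 2 ** (12 / doubling_months)


def years_to_grow(factor, doubling_months):
    """Years needed for cost to grow by `factor` at a fixed doubling time."""
    return math.log2(factor) * doubling_months / 12


for months in (3, 4):
    growth = annual_growth_factor(months)
    years = years_to_grow(100, months)
    print(f"Doubling every {months} months: {growth:.0f}x per year, "
          f"100x in {years:.1f} years")
```

At these rates, costs grow between 8x and 16x per year, so a hundredfold increase takes roughly two years.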

The Hardware Design Bottleneck

Custom hardware accelerators (chips specifically designed for AI workloads) can provide 5-100x better performance per dollar compared to general-purpose GPUs. However, designing these custom chips currently takes 18-24 months and costs $50-200 million.

This creates a paradox:

- Opportunity: Custom chips could dramatically reduce AI compute costs

- Constraint: The time and cost to design custom chips prevents most companies from pursuing them

- Competitive Moat: Only the wealthiest companies (Google, Meta, Tesla, Apple) can afford to design custom chips, creating an unfair advantage

Ricursive's technology directly addresses this bottleneck:

Current State: Design cycle time = 18-24 months → Cost per new design = $50-200 million

With Ricursive (Estimated): Design cycle time = 2-4 months → Cost per new design = $5-20 million

This 5-10x improvement in speed and cost could democratize custom chip design, allowing companies that couldn't previously afford it to design chips optimized for their specific workloads.
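Because both the current and the estimated figures are ranges, the improvement factor is itself a range. The sketch below computes the most conservative and most optimistic pairings from the numbers quoted above; the pairing logic is an illustrative assumption, not part of the original estimates.

```python
def improvement_range(before, after):
    """Return (conservative, optimistic) improvement factors.

    `before` and `after` are (low, high) ranges. The conservative case pairs
    the best current figure with the worst new one, and the optimistic case
    pairs the worst current figure with the best new one.
    """
    before_low, before_high = before
    after_low, after_high = after
    return before_low / after_high, before_high / after_low


# Design cycle time in months: 18-24 today versus an estimated 2-4.
speedup = improvement_range((18, 24), (2, 4))

# Cost per design in $ millions: 50-200 today versus an estimated 5-20.
savings = improvement_range((50, 200), (5, 20))

print(f"Speedup: {speedup[0]:.1f}x to {speedup[1]:.1f}x")
print(f"Cost reduction: {savings[0]:.1f}x to {savings[1]:.1f}x")
```

The quoted 5-10x figure sits inside both ranges, and the spread shows how sensitive the headline number is to which ends of the estimates are compared.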

The Energy Efficiency Imperative

Beyond pure speed, there's another critical factor: energy efficiency. As AI systems grow more powerful, their energy consumption becomes a major constraint:

- Data Center Power Consumption: A large AI data center might consume 5-10 megawatts of electricity (comparable to a small city)

- Cooling Costs: In many data centers, cooling represents 30-50% of operational costs

- Carbon Emissions: Training a single large AI model can generate 100+ tons of CO2 equivalent in emissions

- Geopolitical Constraints: Countries with power shortages may restrict AI development to preserve power for other uses

Custom hardware can improve energy efficiency by 2-5x compared to general-purpose hardware through:

- Specialized computation: Only implementing the specific operations the AI workload needs

- Optimized precision: Using lower-precision arithmetic (e.g., 8-bit integers instead of 32-bit floats) where acceptable

- Reduced data movement: Minimizing power-hungry memory accesses through specialized caches and data paths
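The optimized-precision item above can be illustrated with a minimal symmetric int8 quantization sketch. The weight values are invented, and real accelerators typically use more elaborate schemes (per-channel scales, calibration data), so this shows only the basic idea of trading a small rounding error for a 4x smaller representation than 32-bit floats.

```python
def quantize_int8(values):
    """Map floats to integers in [-127, 127] using one shared scale factor."""
    scale = max(abs(v) for v in values) / 127 or 1.0  # avoid a zero scale
    quantized = [max(-127, min(127, round(v / scale))) for v in values]
    return quantized, scale


def dequantize(quantized, scale):
    """Recover approximate float values from the int8 representation."""
    return [q * scale for q in quantized]


# Hypothetical weights, for illustration only.
weights = [0.5, -1.27, 0.03, 1.0]

quantized, scale = quantize_int8(weights)
restored = dequantize(quantized, scale)
max_error = max(abs(w - r) for w, r in zip(weights, restored))

print(quantized)
print(f"largest rounding error: {max_error:.6f}")
```

Each value now fits in one byte instead of four, and the worst-case rounding error is bounded by half the scale factor.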

As Goldie noted, "We could design a computer architecture that's uniquely suited to that model, and we could achieve almost a 10x improvement in performance per total cost of ownership." This efficiency gain is not just financially important—it's environmentally critical as AI systems consume ever more energy.

The AGI Timeline Connection

Though neither Goldie nor Mirhoseini explicitly claims their technology will enable AGI, they hint at this possibility: "Chips are the fuel for AI. I think by building more powerful chips, that's the best way to advance that frontier."

The logic is straightforward: if hardware has been a bottleneck on AI progress, then removing that bottleneck could accelerate progress toward artificial general intelligence. Conversely, if AGI requires massive amounts of custom-optimized hardware, then the ability to design that hardware quickly becomes critical.

This connection explains why top AI researchers (and companies training frontier AI models) would be deeply interested in Ricursive's technology. It's not just about incremental improvements—it's about removing a potential constraint on AI development.

Modern chips are incredibly complex, with billions of transistors, compact die sizes, and high operating frequencies. Estimated data.

Part 7: Competitive Landscape and Strategic Considerations

The Traditional EDA Incumbents

The electronic design automation industry is dominated by three companies:

Synopsys: ~

These companies have dominated chip design for decades through:

- Entrenched relationships with major chip designers

- Continuous incremental improvements to existing tools

- High switching costs (training, workflow integration, custom scripts)

- Platform lock-in (tools from one vendor often work better together)

Ricursive's approach could be genuinely disruptive to this incumbency because:

Orthogonal Technology: AI-driven design is different enough from rule-based EDA that these incumbents might struggle to integrate it without rearchitecting their entire platform

Performance Advantage: If Ricursive delivers 5-10x faster design cycles, users might tolerate some loss of control or understanding to get that speed benefit

Generational Shift: Younger chip designers trained on AI-assisted design might prefer Ricursive's approach to traditional EDA workflows

However, the incumbents aren't powerless:

Acquisition Risk: Synopsys and Cadence both have acquisition budgets and could buy Ricursive or a similar company

Feature Integration: They could implement AI-assisted design capabilities within existing tools

Customer Lock-in: Deep integrations with existing workflows make switching costs high even with better technology

Complementary Positioning: Rather than direct competition, Ricursive could become a complementary tool that works alongside traditional EDA

Emerging Competitors and Alternatives

Ricursive is not alone in pursuing AI-driven chip design. Several other efforts exist:

Academic Research Groups: Universities including UC Berkeley, MIT, and Stanford have published research on neural networks for chip placement. While not commercial products, they prove the concept is viable and could eventually produce startups.

Internal Development Efforts: Large chip makers (particularly Google, Meta, and others investing in Ricursive) are likely developing internal tools as well. Ricursive's value is that they can serve all customers equally, whereas internal tools serve only one company.

Smaller Startups: Various other startups are pursuing AI-driven chip design (e.g., Magillem Design Services, though focused on a different problem). The space is nascent enough that multiple companies could succeed.

Open Source Initiatives: DARPA and other government agencies have funded open-source chip design tools. While not directly competitive with Ricursive, they could provide baseline capabilities that reduce the premium companies will pay for faster tools.

Ricursive's Defensibility

What would prevent Ricursive from being commoditized or duplicated by competitors?

1. Founder Moat: Goldie and Mirhoseini's exceptional talents and reputations make execution significantly more likely than for competing teams. This is a real advantage, though not a permanent one; it lasts only if it compounds into a stronger team as the company grows.

2. Data Advantage: Each chip design Ricursive works on trains their AI system, making future designs easier. This creates a learning curve disadvantage for competitors entering later (though not an insurmountable one).

3. Network Effects: As more chip makers use Ricursive, they generate more data, training a better system, which attracts more customers. This creates a virtuous cycle that's difficult for later entrants to overcome.

4. Customer Relationships: Deep integrations with chip design teams create switching costs and habit formation that make replacing Ricursive difficult.

5. First-Mover Advantage: Being first to market with a working AI chip design platform gives time to improve the product before competitors arrive.

However, these advantages are not permanent. Eventually, competitors will emerge, EDA incumbents will integrate AI capabilities, or open-source solutions will commoditize the technology. Ricursive must execute flawlessly and continuously innovate to maintain leadership.

Part 8: The Business Model—How Ricursive Will Make Money

Potential Monetization Approaches

Ricursive hasn't publicly disclosed its business model, but analyzing their target market and the value they create suggests several possibilities:

Model 1: Design-as-a-Service

Ricursive could provide a full chip design service: companies submit specifications, Ricursive uses its AI platform to design the chip, and charges a fee ($5-50 million per chip). This model:

Advantages:

- High margin (the cost of delivering the service would likely be a fraction of the $5-50M fee)

- Predictable revenue

- Deep customer relationships

- Defensible (secret algorithms and training data)

Disadvantages:

- Limited scalability (each design requires significant human oversight and verification)

- Customer concentration risk (few companies commission large custom chips)

- Requires massive team of verification engineers

Model 2: Software Platform with Per-Design Pricing

License the Ricursive platform to chip design teams, charging per design or per design iteration. Pricing might be

Advantages:

- Higher volume potential (many designs per customer)

- Better scalability (software, not services)

- Customers retain control and IP

- Broader TAM (fabless companies and chip makers)

Disadvantages:

- Lower per-unit revenue than services

- Requires robust software product

- Customer support costs

- Easier for competitors to replicate

Model 3: SaaS Subscription Model

Subscription fee (

Advantages:

- Predictable recurring revenue

- High margin (software scales)

- Incentivizes customer stickiness

- Matches buyer preferences (opex vs. capex)

Disadvantages:

- Lower upfront revenue

- Requires critical mass of customers for revenue significance

- Customers might balk at recurring costs for occasional designs

Model 4: Revenue Share

Charge a percentage (1-5%) of the savings that chip designs generate (e.g., 3% of the cost reduction achieved by faster design cycles). This model:

Advantages:

- Perfectly aligned with customer success

- Scales with customer value realization

- Shares risk (if design fails, Ricursive doesn't make money)

Disadvantages:

- Difficult to measure and verify

- Adversarial (customers try to understate savings)

- Revenue delays (only paid after design is deployed)
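The measurement problem in the revenue-share model is easier to see with numbers. The sketch below is a hypothetical illustration: the function name, the project costs, and the disputed baseline are all invented, and only the 3% rate comes from the example above.

```python
def revenue_share_fee(baseline_cost, actual_cost, share_rate):
    """Fee owed as a share of realized savings; zero if the design saved nothing."""
    savings = max(0.0, baseline_cost - actual_cost)
    return share_rate * savings


# Hypothetical project, in $ millions: a design that would have cost 100
# under the traditional process is delivered for 10.
fee_agreed = revenue_share_fee(100, 10, 0.03)

# Same project, but the customer argues the traditional baseline was only 70.
fee_disputed = revenue_share_fee(70, 10, 0.03)

print(f"fee with agreed baseline:   ${fee_agreed:.1f}M")
print(f"fee with disputed baseline: ${fee_disputed:.1f}M")
```

The gap between the two fees (about $2.7M versus $1.8M here) comes entirely from the choice of baseline, a counterfactual that neither side can observe directly. That is why this model is hard to verify and gives customers an incentive to understate savings.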

Likely Business Model Hybrid

Ricursive probably pursues a hybrid model:

- Initial customers (Nvidia, AMD, Intel, Google, Meta): Custom design services at premium prices ($10-50M per design), where Ricursive provides full design services

- Growing customers (smaller chip makers, new startups): Software platform licensing with per-design fees or SaaS subscription (…2M annually)

- Mature phase: Evolve toward software licensing as the platform matures and becomes self-service

This approach allows Ricursive to:

- Generate substantial near-term revenue from services

- Build relationships with key industry players

- Gather data to improve AI platform

- Eventually transition to higher-margin software model

Path to Profitability

With $335M in funding, Ricursive has substantial runway:

Burn Rate Estimate:

- Team: 200 employees = $60M annually (about $300K per employee, as implied by the total)

- Infrastructure: Cloud compute, facilities, equipment = $20M annually

- Other: Sales, marketing, G&A = $20M annually

- Total: ~$100M annual burn

Revenue Required for Breakeven:

- At a gross margin of 70% (typical for software/services companies), Ricursive needs ~$140M in annual revenue to break even

- This might require 10-30 major design projects or 200-500 smaller projects annually
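The burn and break-even arithmetic above can be reproduced in a few lines. This is a back-of-the-envelope sketch using the article's own estimates (in $ millions); the variable and function names are illustrative, and the two simplifying assumptions are marked in the comments.

```python
FUNDING_MUSD = 335                     # total funding cited in the article
ANNUAL_BURN_MUSD = {"team": 60, "infrastructure": 20, "other": 20}
GROSS_MARGIN = 0.70                    # the article's assumed gross margin

annual_burn = sum(ANNUAL_BURN_MUSD.values())

# Assumption: break-even means gross profit (revenue x margin) covers the burn.
breakeven_revenue = annual_burn / GROSS_MARGIN

# Assumption: no revenue at all, so this is a floor on the runway.
runway_years = FUNDING_MUSD / annual_burn


def revenue_per_project(total_revenue, project_counts):
    """Implied average revenue per project for a (low, high) range of project counts."""
    low_count, high_count = project_counts
    return total_revenue / high_count, total_revenue / low_count


major = revenue_per_project(breakeven_revenue, (10, 30))
smaller = revenue_per_project(breakeven_revenue, (200, 500))

print(f"annual burn: ${annual_burn}M")
print(f"break-even revenue: ${breakeven_revenue:.1f}M")
print(f"zero-revenue runway: {runway_years:.2f} years")
print(f"implied revenue per major project: ${major[0]:.1f}M to ${major[1]:.1f}M")
print(f"implied revenue per smaller project: ${smaller[0]:.2f}M to ${smaller[1]:.2f}M")
```

Under these assumptions the break-even figure is about $143M (rounded to ~$140M above), the zero-revenue runway is roughly 3.35 years, and the project counts imply about $5-14M per major project or $0.3-0.7M per smaller one.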

Timeline to Profitability: 2-3 years

With

Part 9: The Broader AI Infrastructure Thesis

Why Chip Design is Critical AI Infrastructure

Ricursive Intelligence represents a specific instance of a broader thesis gaining traction in venture capital: AI infrastructure is the new frontier. Here's why:

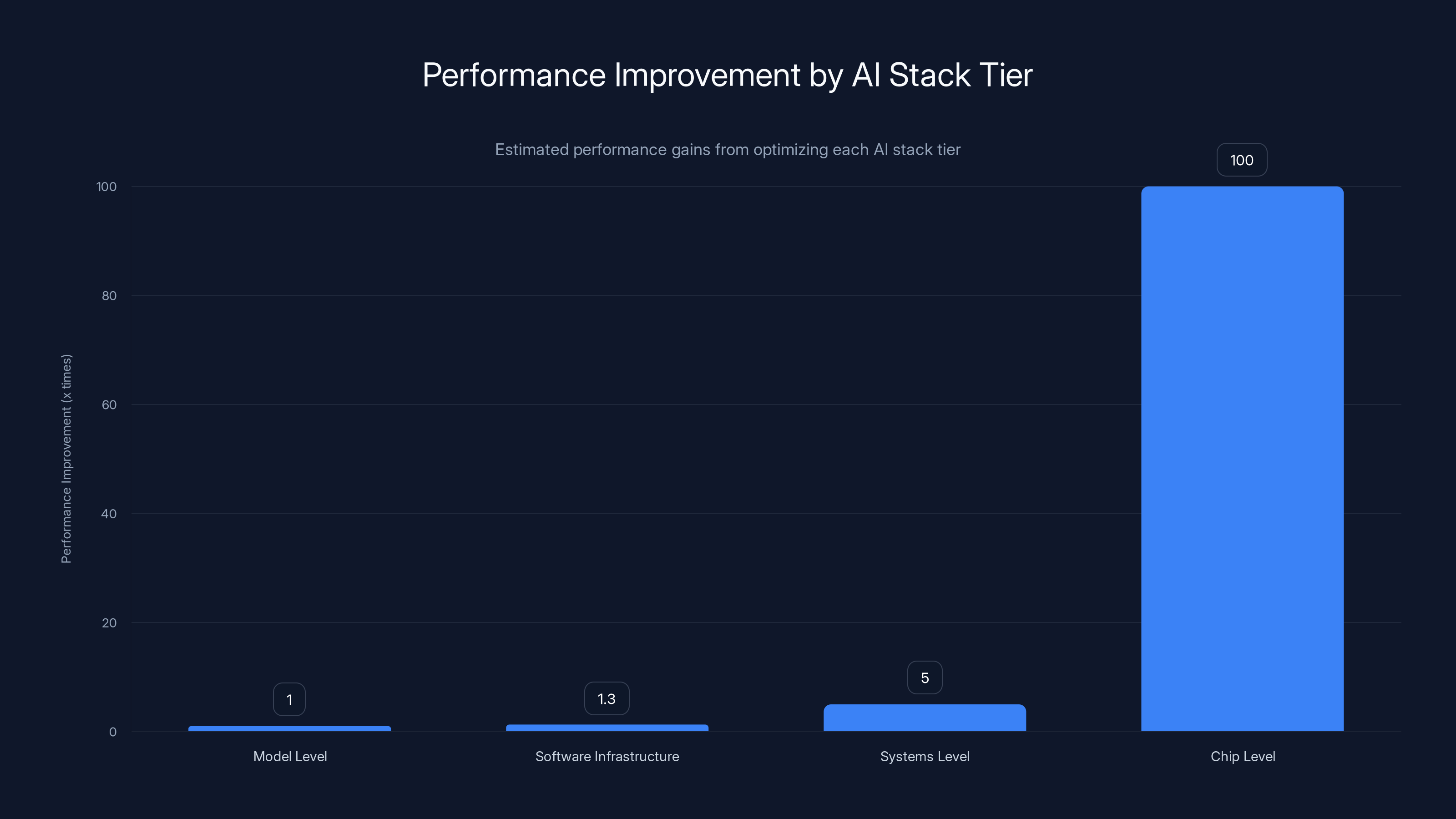

The AI Stack Pyramid:

- Bottom Tier (Chips): Custom-optimized silicon designed for specific AI workloads

- Next Tier (Systems): Integration of multiple chips into coherent systems (networking, cooling, power management)

- Next Tier (Software Infrastructure): Operating systems, compilers, scheduling software that makes clusters of chips work efficiently

- Application Tier (Models): The large language models, diffusion models, and other AI systems that end users interact with

Traditionally, companies have focused on the Application Tier (OpenAI, Anthropic, Stability AI) or the Software Infrastructure tier (Hugging Face, vLLM). But over time, advantages in lower tiers become critical:

- Model Level: Differences between GPT-4 and Claude can be compensated for with more compute

- Software Infrastructure Level: Better compilers and scheduling can improve performance by 20-30%

- Systems Level: Better integration of chips can improve performance by 2-5x

- Chip Level: Custom chips optimized for specific workloads can improve performance by 5-100x

As the frontier of AI has matured, competitive advantage has progressively shifted toward lower layers of the stack. This is why:

- Google invested so heavily in TPUs

- Meta is designing custom AI accelerators

- Nvidia's GPU dominance is being challenged by custom chips from multiple companies

- Chip design efficiency becomes increasingly valuable

The Infrastructure Consolidation Trend

Historically, chip design (Ricursive's domain) was separate from:

- Chip manufacturing (handled by TSMC, Samsung, Intel)

- Systems integration (handled by server manufacturers or in-house)

- Software infrastructure (handled by Linux vendors, database companies)

But increasingly, top AI companies are consolidating across these layers:

Vertical Integration Examples:

- Google: Designs TPUs, integrates them into systems, develops custom software stack, trains frontier models

- Meta: Designs custom accelerators, builds systems, develops software infrastructure, trains LLaMA

- Tesla: Designs custom automotive chips, integrates into vehicles, develops inference software

- Apple: Designs SoCs, outsources manufacturing to foundries, develops OS and applications

Ricursive enables this vertical integration by making it fast and cost-effective for companies to design the chips that sit at the foundation of their AI infrastructure. Rather than waiting 2 years and paying $100M for a chip design, companies could iterate rapidly and affordably.

The Winner-Takes-Most Risk

One concern with infrastructure consolidation is "winner-takes-most" dynamics:

- If large AI companies (Google, Meta, OpenAI if it ever develops its own chips) can design chips 10x faster than competitors, they create an insurmountable advantage

- Smaller competitors can't catch up because they lack both Ricursive's technology and the capital to fund chip development

- The market consolidates toward 2-3 giant companies

This would be negative for:

- Innovation (fewer competitors means less diverse approaches)

- Competition (consumers lose choices)

- Progress (consolidation often leads to slower innovation)

It would be positive for:

- The few winner companies (Google, Meta, OpenAI, Microsoft)

- Ricursive (as the enabler of this consolidation, they capture massive value)

- Chip efficiency (consolidation could accelerate optimization)

How this plays out will depend on factors beyond Ricursive's control:

- Regulatory responses to AI company consolidation

- Competing technologies emerging from other groups

- Open-source or public alternatives to custom chips

The compute costs for training AI models are escalating rapidly, with projected costs for future models reaching up to $1-10 billion. Estimated data.

Part 10: Risk Factors and Challenges

Execution Risk

Despite exceptional founders, Ricursive faces significant execution challenges:

1. Scaling the Team: Ricursive likely has 50-100 employees currently. Scaling to 200-500 employees over 3 years while maintaining culture and focus is difficult. Chip design expertise is rare; attracting top talent is challenging.

2. Supporting Diverse Chip Types: AlphaChip worked well for high-performance computing chips. But extending to analog chips, mixed-signal designs, memory chips, and other chip types requires different expertise and algorithms. Mitigating this risk requires either:

- Hiring chip design experts for each domain

- Building a flexible platform that truly generalizes (extremely difficult)

- Partnering with chip design specialists

3. Customer Support and Verification: Each chip design must be thoroughly verified. This requires expertise and time. Early customer issues could damage reputation and slow expansion.

4. Continuous Improvement Pressure: Once Ricursive ships their platform, continuous improvement is necessary to maintain competitive advantage. Failure to keep improving could lead to commoditization.

Market Adoption Risk

1. Organizational Inertia: Chip design teams are conservative and risk-averse. They've worked with specific EDA tools and methodologies for years. Switching to a new approach carries risk (what if the AI-generated layouts are subtly flawed?). Some customers might be unwilling to trust AI for such critical infrastructure.

2. Regulatory Concerns: Chip design has national security implications. Governments might restrict use of AI-based design tools due to concerns about backdoors, lack of explainability, or foreign involvement. If the U.S. government restricted use of Ricursive's tools by defense contractors, the market could shrink significantly.

3. Verification Challenges: Proving that AI-generated chip layouts are correct is theoretically and practically difficult. If early customers experience chip tapeouts that fail due to AI-generated design flaws, it could poison the well for the entire category.

4. Incumbent Resistance: Synopsys and Cadence have deep relationships with chip design teams. They'll integrate AI capabilities into their existing tools, making it harder for Ricursive to displace them.

Technology Risk

1. Learning Transfer: Ricursive's thesis that learning from one chip design transfers to future designs is unproven. If each chip requires largely starting from scratch, the promised efficiency gains don't materialize.

2. Hardware Generalization: Extending from high-performance computing chips (Google's domain) to other domains (automotive, analog, mixed-signal) might require fundamentally different approaches. Building a true general-purpose chip design AI could be much harder than extending AlphaChip.

3. AI Advancement Rate: Ricursive's competitive advantage depends on leveraging cutting-edge AI techniques faster than competitors. If AI research slows, or if competitors catch up with comparable techniques, Ricursive's advantage erodes.

Financial Risk

1. Burn Rate: At ~$100M annual burn, Ricursive has 3-4 years of runway. If they fail to generate meaningful revenue in that timeframe, they'll need additional funding at potentially lower valuations or face difficult decisions about profitability.

2. Market Timing: If the AI chip market cools (e.g., if AI progress plateaus), demand for Ricursive's services could disappear. Ricursive's success is tied to continued growth in AI, which is not guaranteed indefinitely.

3. Customer Concentration: If initial customers are primarily Nvidia, Intel, AMD, and Google, Ricursive faces concentration risk. Losing one of these customers could be devastating.

Part 11: Comparative Analysis—Ricursive vs. Alternatives for Chip Design Acceleration

Comparison Table: Chip Design Acceleration Approaches

To understand Ricursive's positioning, it's helpful to compare different approaches to accelerating chip design:

| Approach | Timeline | Cost | Result Quality | Scalability | Current Status |
|---|---|---|---|---|---|
| Traditional EDA | 12-24 months | $50-200M | Mature, proven | Limited (humans required) | Industry standard |
| Ricursive (AI-based) | 2-4 months | $5-20M | TBD, promising | High (AI scales) | Early commercial |
| Internal Development | 6-18 months | $20-100M | Company-specific | Very limited (single company) | Used by Google, Meta, Tesla |
| Outsourced Design | 12-18 months | $50-150M | Dependent on vendor | Limited (vendor capacity) | Traditional approach |
| Open Source EDA | 12-24 months | $0-20M | Improving, but gaps | Medium | GDS II, OpenROAD gaining traction |
| Partial Automation | 8-16 months | $10-50M | Moderate improvement | Medium | Some companies pursuing |

When Each Approach Makes Sense

Traditional EDA: Best for well-defined designs with proven methodologies. Synopsys and Cadence tools will continue to dominate for standard, non-AI-specific applications.

Ricursive (AI-based): Best for:

- Novel chip designs requiring innovation (new architectures, emerging applications)

- Companies that need multiple design iterations (rapid prototyping)

- Cost-conscious organizations that can't afford traditional EDA budgets

- AI-specific chips where specialized optimization is valuable

Internal Development: Only makes sense for companies like Google, Meta, Tesla with:

- $1B+ annual design budgets

- Critical competitive need for custom chips

- In-house chip design expertise

- IP protection concerns

Open Source EDA: Emerging as a viable alternative for:

- Research organizations

- Early-stage chip companies

- Academic institutions

- Applications where trading some capability for lower cost is acceptable

Key Differentiators

What makes Ricursive different from alternatives:

- AI-Native Rather Than AI-Augmented: While traditional EDA companies add AI features, Ricursive is built ground-up on AI. This allows optimization across all design phases simultaneously, rather than AI improving specific local tasks.

- Founder Credibility: Goldie and Mirhoseini's proven track record (AlphaChip, Google, Anthropic) provides confidence that they can execute, distinguishing Ricursive from other AI chip design startups.

- Customer Validation: Participation from Nvidia, AMD, and Intel signals industry confidence. Competitors would struggle to match this validation.

- Capital: $335M provides resources to develop broadly useful tools and scale customer support. Most competitors have much less capital.

Part 12: The Broader Implications for AI Development

Removing the Hardware Bottleneck

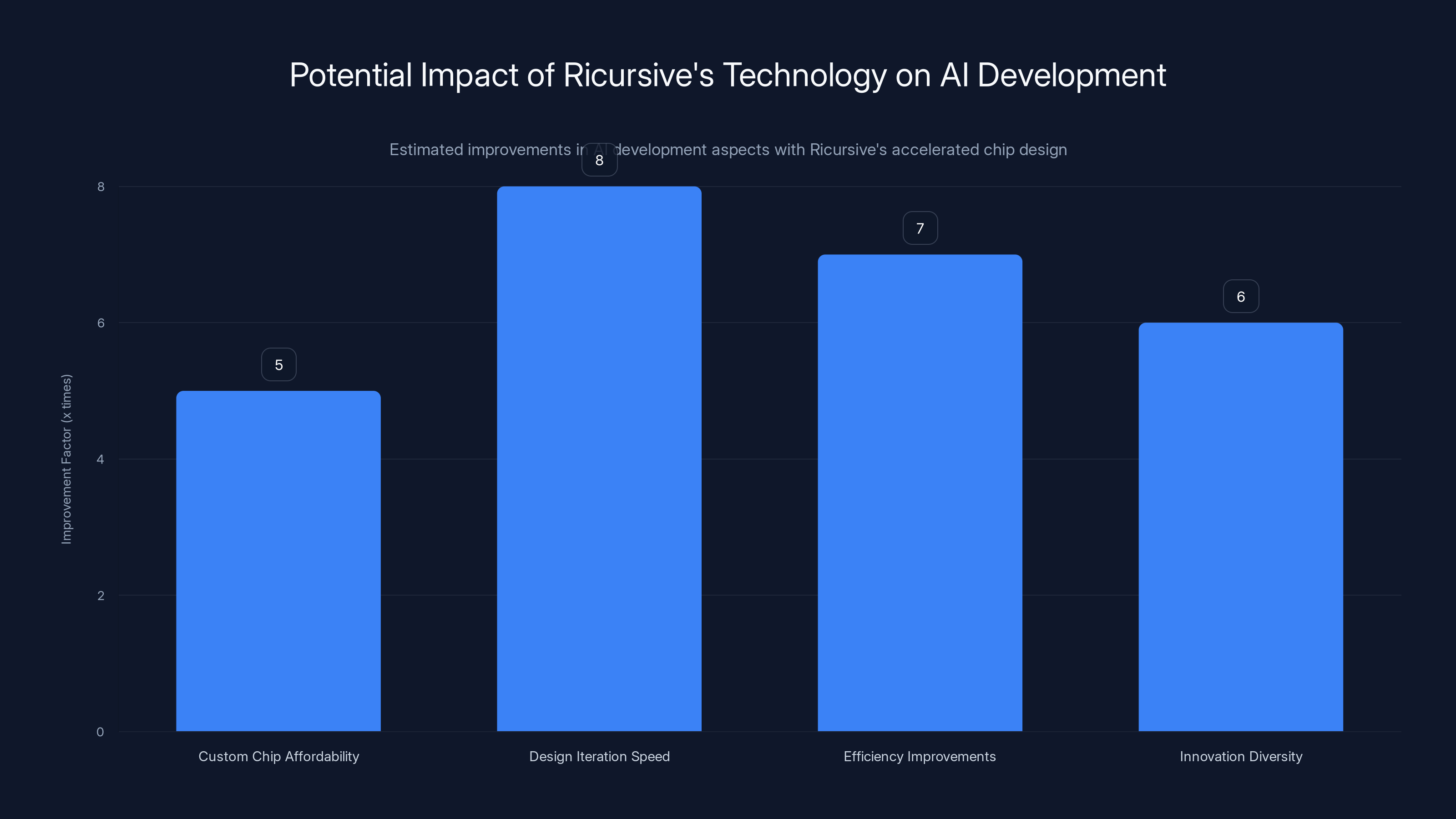

If Ricursive succeeds in accelerating chip design by 5-10x, the implications for AI development are substantial. Currently:

Hardware Constraints on AI Development:

- Training Large Models: Limited by GPU availability and cost. Companies compete for access to limited NVIDIA inventory.

- Specialization: Only well-funded companies can afford custom chips, limiting innovation to players like Google, Meta, Tesla.

- Energy Consumption: Massive, requiring constant infrastructure investment.

- Geographic Constraints: Computation must be located near cheap power sources, limiting some companies.

With Ricursive's Technology:

- Custom Chips Become Affordable: Companies with 100M+) can commission custom chips

- Rapid Iteration: Designs that took 24 months now take 2-3 months, allowing faster exploration of design space

- Efficiency Improvements: Better hardware drives down AI inference and training costs

- Democratization: More companies can design custom hardware, increasing innovation diversity

Implications for AGI Timeline

Could Ricursive's technology accelerate artificial general intelligence development?

The Argument For Acceleration:

- If hardware has been a bottleneck on AI capability, removing it could accelerate progress

- Faster chip design → better hardware → better foundation for AI models → faster progress

- Custom chips optimized for AI could be 5-10x more efficient than general-purpose hardware

- Companies could iterate on hardware and software (models) in tandem rather than sequentially

The Argument Against:

- Hardware is one constraint among many; others include data availability, algorithmic breakthroughs, compute scaling laws

- Diminishing returns to hardware improvements; doubling hardware doesn't double AI capability

- Some claims about hardware-software co-evolution might be overstated

- Progress in AI might be limited by factors other than hardware (e.g., safety, alignment)

Most Likely Scenario: Ricursive's technology provides a meaningful acceleration (perhaps 10-20% faster progress toward AGI) but is not the primary driver. Progress toward AGI is constrained by multiple factors: algorithm innovation, data availability, understanding of scaling laws, safety/alignment work, and hardware. Ricursive removes one constraint but others remain.

Geopolitical and Policy Implications

Ricursive's technology has geopolitical dimensions:

Advantages for U.S./Western Companies: If Ricursive is based in the U.S. and controlled by Americans, it provides U.S.-based AI companies with a tool for designing custom chips faster than competitors in other countries.

Concerns About Technology Diffusion: If Ricursive's technology leaks to other countries, or if competing tools are developed elsewhere, the advantage evaporates. This could prompt regulatory pressure for export controls on Ricursive's technology.

Potential Regulation: Governments might:

- Restrict Ricursive's use for sensitive applications (defense, critical infrastructure)

- Require review of chip designs created with Ricursive before production

- Impose export controls to prevent technology transfer

These regulatory concerns add uncertainty to Ricursive's growth prospects.

Chip-level optimization can lead to the most significant performance improvements, up to 100x, compared to other tiers in the AI stack. Estimated data.

Part 13: Alternative Approaches and Complementary Solutions

Exploring Different Chip Design Paradigms

While Ricursive focuses on accelerating traditional chip design using AI, other approaches to chip design acceleration exist:

Heterogeneous Computing: Rather than designing one powerful chip, use multiple specialized chips working together. This is harder to design but can match custom chip benefits. Companies like AMD are pursuing this.

Reconfigurable Hardware: FPGAs (field-programmable gate arrays) allow engineers to reprogram hardware logic after fabrication. This trades some performance for flexibility and eliminates long design cycles. Companies like Xilinx (now part of AMD) pursue this.

Chiplets: Break monolithic chips into smaller interchangeable modules that can be combined in various ways. Intel and AMD are investing in this. Ricursive's technology could accelerate chiplet design.

Analog and Neuromorphic: Some researchers are exploring analog computing (continuous signals instead of digital bits) and neuromorphic computing (chips designed like brains) as alternatives to traditional digital logic. These represent different design paradigms that existing tools (and potentially Ricursive) would need to adapt to.

For Teams Seeking AI-Powered Automation Solutions

While Ricursive focuses specifically on chip design, teams seeking broader AI automation capabilities have alternatives worth considering. Runable, for example, offers AI-powered automation for content generation, document creation, and workflow automation starting at $9/month. While these are different domains from semiconductor design, they share a common principle: using AI agents to automate previously manual, time-consuming tasks.

For developer teams specifically, platforms offering AI-powered automation for documentation, code generation, and infrastructure tasks might provide complementary value alongside specialized tools like Ricursive. Teams prioritizing AI-driven productivity and cost reduction might explore solutions like Runable's automated workflow capabilities as part of a broader automation strategy.

Complementary Infrastructure Investments

Ricursive's success depends on complementary infrastructure investments:

Manufacturing Capacity: Faster design means nothing without foundries (TSMC, Samsung, Intel) willing to manufacture small-volume custom chips affordably. Currently, foundries require large minimum order quantities, limiting small companies. If this changes, Ricursive benefits.

Packaging and Integration: Moving from design to finished chip involves packaging, testing, and integration steps that add months to timelines. Innovations in these areas (e.g., chiplets, advanced packaging) could further reduce timelines.

Software Stacks: Custom chips need software optimizations to achieve theoretical performance. Compilers and runtime systems must evolve to target Ricursive-designed chips. Collaboration with LLVM, compiler companies, and OS vendors will be important.

Supply Chain: Sourcing materials, components, and manufacturing resources for chip production is complex. Ricursive likely needs partnerships with supply chain companies.

Part 14: Competitive Responses and Market Evolution

How Incumbents Will Respond

Synopsys and Cadence Response: Rather than compete head-to-head on AI-based design, incumbents will likely:

- Acquire AI Talent: Hire researchers, acquire small AI companies, or license technology from Ricursive competitors

- Integrate AI Into Existing Tools: Add AI-assisted placement and routing to their existing EDA suites

- Leverage Customer Lock-in: Use existing relationships to convince customers that integrated AI tools are better than point solutions like Ricursive

- Acquire Ricursive or Competitors: If Ricursive is particularly successful, Synopsys or Cadence might acquire them for $5-20B

- Compete on Ecosystem: Build more complementary tools and services that work better together

Chip Maker Response (Nvidia, Intel, AMD):

- Internalize Development: Some might license Ricursive's tools; others will develop internal capabilities

- Invest in Competitors: Spread bets by investing in multiple chip design automation companies

- Partner with Ricursive: Use Ricursive's tools for some products while maintaining internal tools for others

- Accelerate Own Roadmaps: Use Ricursive to design new products faster

New Competitors Entering the Market

Ricursive's success will likely attract competitors:

Venture-Backed Startups: Other founders, seeing the $4B valuation, will start companies in adjacent spaces (chip verification, chip testing, chip simulation). Some might directly compete on design acceleration.

Academic Spinouts: Researchers at MIT, UC Berkeley, Stanford, and other universities who published papers on neural networks for chip design will start companies. These might be well-funded by venture firms seeking alternatives to Ricursive.

Open Source Alternatives: Community-driven open-source projects might develop AI-based design tools that compete on price (free or low-cost) rather than quality or support.

International Competitors: Competitors based in Europe, Israel, China, or other regions might emerge with different approaches to the same problem.

Market Evolution Scenarios

Scenario 1: Ricursive Wins (30% probability): Ricursive executes flawlessly, becomes the standard tool for chip design, is acquired by or partners with Synopsys/Cadence for

Scenario 2: Market Fragmentation (40% probability): Multiple competitors emerge, each with specialized strengths. Ricursive remains leading but faces real competition. Market evolves toward AI-assisted design from multiple vendors, with Ricursive commanding 40-50% share.

Scenario 3: Incumbent Consolidation (20% probability): Synopsys or Cadence acquire or partner with Ricursive, integrating their technology into existing tools. Ricursive remains important but becomes a feature rather than a standalone product.

Scenario 4: Disruption of Disruption (10% probability): A new technology or approach (e.g., neuromorphic computing, novel design paradigms) emerges that makes even Ricursive's approach obsolete. All chip design tools must be completely rethought.

Part 15: Investment Thesis and What It Means for Investors

Why Investors Are Excited

The

The TAM Argument: The global chip design market (including EDA tools, design services, and custom chip development) represents $200-500B annually. Even capturing 1-2% would justify a multi-billion-dollar business.

The Timing Argument: AI has created urgent demand for custom chips and fast iteration. Ricursive appears at exactly the moment when the market is desperate for faster chip design. First-mover advantage is critical.

The Founder Argument: Goldie and Mirhoseini have proven they can deliver transformative technology. Past success is the best predictor of future success.

The Optionality Argument: Even if chip design acceleration isn't the full story, Ricursive's technology might enable:

- Faster hardware/software co-design

- More efficient chip designs

- Democratization of custom chips

Each of these alone might justify the valuation.

The Infrastructure Consolidation Argument: As AI companies consolidate stack components, owning or having access to the best chip design tools becomes increasingly valuable. Ricursive captures value from this consolidation.

Return Scenarios for Investors

For Sequoia (

Bear Case: Ricursive's technology doesn't scale as promised, or is displaced by competitors or acquirers developing internal tools. Acquired for $1-3B. Investors see 3-10x return.

Base Case: Ricursive becomes the leading AI chip design platform, with

Bull Case: Ricursive's technology enables explosive growth in custom chip design, transforming semiconductor economics. Valuation reaches $50B+ at IPO. Investors see 100-200x return.

Given the $4B valuation, investors are betting on somewhere between base and bull case scenarios.

What This Funding Says About VC Sentiment

Ricursive's funding reflects current venture capital sentiment:

- AI Infrastructure is Hot: Any company claiming to be "AI infrastructure" can raise capital at premium valuations

- Founder Worship: Proven founders with track records can raise capital faster and at higher valuations than less proven founders with similar ideas

- Urgency Narrative: Companies that can position themselves as solving critical bottlenecks (hardware, speed, cost) attract premium valuations

- Large Check Willingness: Top-tier VCs are willing to write massive checks ($300M+) for opportunities that fit their theses

- Competitive Dynamics: Fear of missing out drives up valuations as multiple VCs compete for allocation

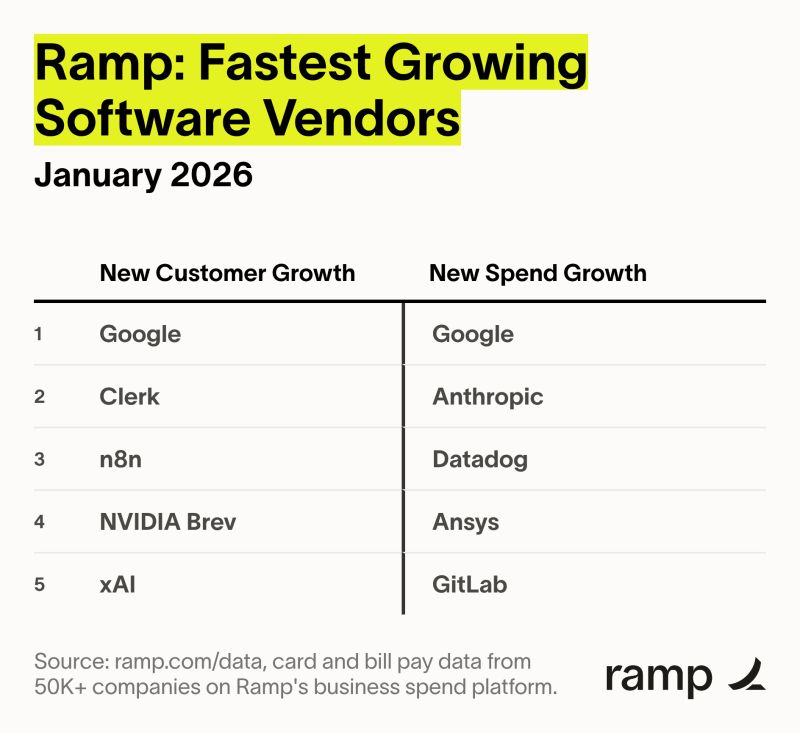

Ricursive's technology could significantly enhance AI development by making custom chips more affordable, speeding up design iterations, improving efficiency, and increasing innovation diversity. Estimated data.

Part 16: Long-Term Vision and Strategic Implications

The Path to AGI Through Hardware Optimization

Goldie and Mirhoseini's ultimate vision—using custom chip design to enable AI models and chips to co-evolve—points toward a larger thesis about AI progress:

Current Paradigm: AI researchers design models → Systems engineers design hardware for those models → Feedback loop is slow and decoupled

Proposed Paradigm: AI researchers propose new model capabilities → Hardware designers design custom chips optimized for those capabilities → Model researchers adjust models based on hardware constraints and opportunities → Tight feedback loop between models and hardware

This tight coupling could accelerate AI progress because neither models nor hardware would be static; both would evolve together based on insights from the other domain.
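The difference between the two paradigms can be illustrated with a toy alternating-optimization loop. This is a sketch only, not Ricursive's method: the quadratic cost, the targets 3.0 and 2.0, the coupling weight, and every function name are invented for illustration. One pass (model first, then hardware) stands in for the decoupled paradigm; repeated passes stand in for the tight feedback loop.

```python
def joint_cost(m: float, h: float, coupling: float = 1.0) -> float:
    """Toy cost: each side has its own target, plus a penalty for mismatch."""
    return (m - 3.0) ** 2 + (h - 2.0) ** 2 + coupling * (m - h) ** 2


def best_model(h: float, coupling: float = 1.0) -> float:
    """Model setting that minimizes joint_cost with the hardware held fixed."""
    return (3.0 + coupling * h) / (1.0 + coupling)


def best_hardware(m: float, coupling: float = 1.0) -> float:
    """Hardware setting that minimizes joint_cost with the model held fixed."""
    return (2.0 + coupling * m) / (1.0 + coupling)


def co_design(rounds: int, m: float = 0.0, h: float = 0.0) -> tuple[float, float]:
    """Alternate model and hardware updates for the given number of rounds."""
    for _ in range(rounds):
        m = best_model(h)      # model team adapts to current hardware
        h = best_hardware(m)   # hardware team adapts to the updated model
    return m, h


decoupled_cost = joint_cost(*co_design(rounds=1))   # one hand-off, no feedback
tight_cost = joint_cost(*co_design(rounds=20))      # repeated feedback
print(decoupled_cost, tight_cost)
```

In this toy setup the single hand-off ends at a cost of 2.375, while the repeated loop approaches 1/3, the joint optimum. The point is only qualitative: when the two sides depend on each other, iterating between them can reach designs that a one-way hand-off misses.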

For AGI specifically, if the path requires custom-optimized hardware at each stage, then the ability to design that hardware quickly becomes a critical capability. Ricursive potentially removes a significant constraint on this path.

Market Structure Implications

If Ricursive succeeds, the semiconductor industry could restructure:

Current Structure:

- Layer 1: Chip designers (Intel, Nvidia, AMD, Qualcomm, ARM, Broadcom)

- Layer 2: EDA tool vendors (Synopsys, Cadence, Siemens EDA, formerly Mentor Graphics)

- Layer 3: Fabless companies and chip makers

Potential Future Structure (with Ricursive):

- Layer 1: Chip design acceleration platforms (Ricursive, competitors)

- Layer 2: Chip designers (traditional + many new entrants)

- Layer 3: Manufacturers (fewer due to consolidation)

- Layer 4: Fabless companies (more, because design is more affordable)

This could lead to more innovation and diversity (more companies can design chips) but could also lead to consolidation (manufacturing becomes more concentrated).

The Winner-Take-Most Concern Revisited

One long-term concern: if chip design accelerates dramatically, will this help large companies (Google, Meta, Microsoft) at the expense of startups?

The Argument That It Helps Large Companies:

- Large companies have capital to design many chips

- They benefit more from a 5-10x speed improvement than startups that design fewer chips

- They can fully exploit custom hardware efficiency

The Argument That It Helps Startups:

- Chip design cost drops from 10M, within reach of well-funded startups

- Speed improvement means startups can iterate faster, potentially outmaneuvering incumbents

- Democratization of chip design could enable new companies

Most Likely Outcome: Ricursive helps both large and small companies, but advantages accrue based on how well each executes. The overall market might grow (more chip designs happen) while large companies still benefit disproportionately due to scale economies in manufacturing and deployment.

Part 17: Expert Perspectives and Industry Commentary

What Chip Design Experts Say

While public commentary on Ricursive has been limited (the company is new and private), the broader chip design community's perspective on AI-assisted design is becoming clearer:

Skeptical View: "AI is a hammer looking for a nail. Chip design is fundamentally about tradeoffs between power, performance, area, and yield. Humans are good at navigating these tradeoffs through experience and intuition. AI might optimize locally but won't make truly creative leaps."

Optimistic View: "Chip design has stagnated due to EDA tool limitations. Humans are reaching the limits of what we can hand-optimize. AI brings fresh approaches and can explore design space faster than humans ever could. This could unlock decades of stagnated innovation."

Balanced View: "AI is likely to become a valuable tool in chip designers' arsenal—not a replacement for human expertise, but an augmentation. Designers will use AI to generate layouts, then refine them, verify them, and integrate them. The best results will come from human-AI collaboration."

Ricursive's success will depend partly on which view proves most accurate.

What AI/ML Researchers Say

Among AI researchers, Goldie and Mirhoseini are widely respected for their Alpha Chip work. Common perspectives:

"Alpha Chip was a genuine breakthrough—applying reinforcement learning to a domain where it hadn't been tried before. Ricursive's ambition to scale this across all chip types is natural but might underestimate the specialization required for different domains."

"The chip design problem is compelling because it has a clear objective (optimize design) and clear feedback signal (whether the design works). These conditions make it well-suited for AI. Many other engineering problems have more ambiguous objectives or harder-to-measure outcomes."

"Transfer learning from one chip to another is plausible but not guaranteed. Whether Ricursive can achieve this will be a key technical question. If they can, it's genuinely transformative. If they can't, the advantage is much smaller."

What Chip Manufacturers Say (Publicly)

Public statements from chip makers about Ricursive's technology have been limited, but their investment speaks louder than words. The fact that Nvidia, AMD, and Intel all invested signals belief that:

- The technology works (or at least has plausible potential)

- It's important enough to invest in (not just watch from the sidelines)

- They don't see it as a threat to their core business (they're comfortable with Ricursive existing)

The third point is interesting: chip makers aren't trying to block or acquire Ricursive immediately, suggesting they see it as complementary to their businesses rather than directly competitive.

Part 18: Future Developments and What to Watch

Key Milestones to Watch

Over the next 2-3 years, several developments will indicate whether Ricursive's thesis is holding up:

Technical Milestones:

- First Commercial Chip Design: When does Ricursive's platform deliver its first commercial chip design to a paying customer? How long did the design take vs. traditional methods?

- Design Verification Success: Do chips designed with Ricursive's tools pass all verification checks on first try, or do they require iteration and debugging?

- Cross-Domain Extension: Does Ricursive successfully extend beyond high-performance computing chips to other domains (analog, mixed-signal, automotive)?

- Learning Transfer: Do the 10th, 20th, and 50th designs run on the platform show marked improvement over the initial designs, proving that learning across chips is real?

Business Milestones:

- Customer Announcements: Which companies become Ricursive customers, and what chip designs are they working on?

- Revenue Disclosure: When does Ricursive disclose revenue numbers? ($100M? $500M?)

- Team Expansion: How large does Ricursive's team grow? Are they hiring top chip design talent?

- Series B Funding: Does Ricursive raise additional funding, and at what valuation?

Competitive Milestones:

- Incumbent Response: How quickly do Synopsys and Cadence integrate AI into their tools?

- Competitor Emergence: How many other startups pursue AI-driven chip design, and which ones gain traction?

- Open Source Development: Do open-source chip design tools incorporate AI capabilities?

Market Milestones:

- Adoption Rates: How quickly do companies adopt Ricursive's tools vs. sticking with traditional EDA?

- Chip Tapeout Success: Are chips designed with Ricursive's platform successfully manufactured and deployed?

- Performance Validation: Do Ricursive-designed chips actually achieve promised performance, power, and area improvements?

Potential Pivots and Adaptations

Ricursive's current strategy (building a general-purpose AI chip design platform) might need adaptation based on market realities:

Possible Pivot 1: Narrow focus to specific chip types where AI provides clear advantages (e.g., AI accelerators, custom processors) rather than attempting broad generality.

Possible Pivot 2: Shift from design-as-a-service to software licensing, recognizing that customers want to retain control of designs and IP.

Possible Pivot 3: Develop complementary services (verification, testing, manufacturing liaison) that increase customer stickiness and capture more value.

Possible Pivot 4: Partner with or integrate with traditional EDA vendors rather than competing directly.

Possible Pivot 5: Focus on emerging design paradigms (neuromorphic computing, analog, quantum-readiness) where incumbents have less advantage.

The ability to adapt while maintaining focus will be critical to long-term success.

Part 19: Lessons for Founders, Investors, and the Tech Industry

Lessons for Founders

1. Founder Track Record Matters: Goldie and Mirhoseini's track record (Alpha Chip, Google Brain, Anthropic) was a key factor in their ability to raise $335M in 4 months. Building proven accomplishments before starting your own company creates optionality and credibility.

2. Timing is Critical: Ricursive launched at exactly the moment when AI companies were desperate for more and better chips. Timing your launch to match market urgency dramatically improves odds of success.

3. Choose Big Problems: Chip design affects the entire electronics industry. Choosing problems with billion-dollar TAMs creates the possibility of billion-dollar companies. Small problems rarely lead to large outcomes, regardless of execution quality.

4. Leverage Domain Expertise: Goldie and Mirhoseini's domain expertise in chip design and AI gave them credibility and insight that outsiders couldn't easily replicate. Founders with deep expertise in a domain have advantages over generalists.

5. Build Network Effects: The vision of learning across different chips creates network effects (each design makes the platform better for subsequent designs). Products with network effects are more defensible.

Lessons for Investors

1. Founder Selection is Paramount: The greatest indicator of Ricursive's likely success is the quality of its founders. Two legendary researchers backing a plausible thesis justifies large check sizes.

2. Market Timing Matters: Investing in companies solving problems the market is actively demanding (better chip design in 2026) is lower risk than investing in solutions for future problems.

3. Infrastructure Beats Applications: Investing in infrastructure (tools that everyone needs) typically creates more value than investing in applications (tools that specific users want). Ricursive is infrastructure; most AI companies are applications.

4. Competitive Dynamics Affect Returns: The presence of credible competitors reduces expected returns. Ricursive's funding size might be partly driven by competitive concerns (fear that competitors might raise capital from other sources).

5. Conviction Drives Speed: Lightspeed's rapid decision to invest $300M suggests strong internal conviction