The Future of Smartphone Photography Is Here

Samsung's camera technology has always been the gold standard in the smartphone world. But the S26? That's going to be something else entirely.

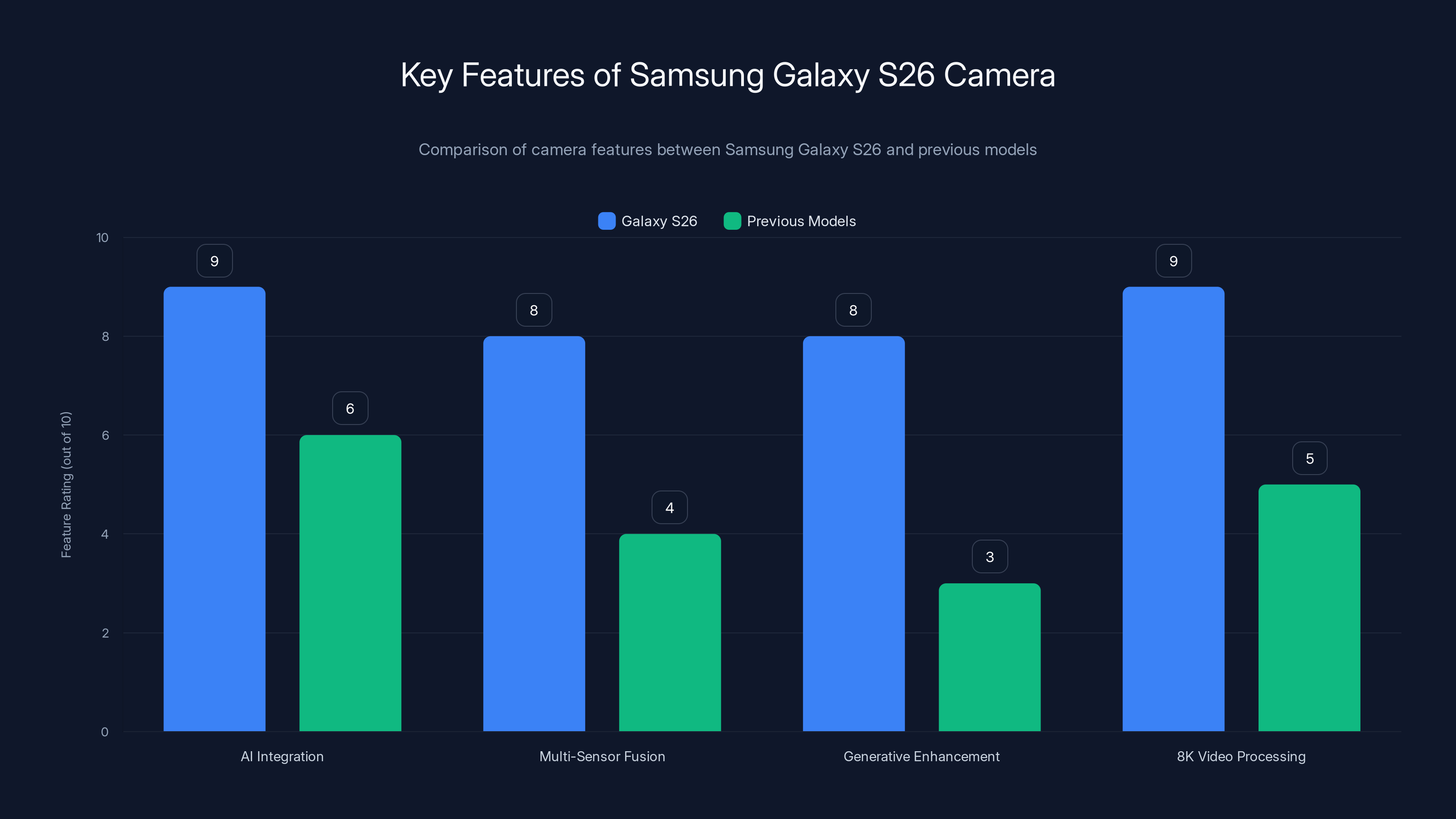

We're not talking about incremental improvements or a few extra megapixels. Samsung is fundamentally rethinking how phones capture and process images by putting AI at the absolute center of everything. The Galaxy S26 is shaping up to be the most AI-integrated camera system ever shipped in a smartphone, and honestly, once you understand what they're building, you'll see why this matters.

The core insight here is simple: computational photography has already replaced hardware specs as the differentiator. Raw megapixels? They don't mean much anymore. What matters is what the phone does after you press the shutter. The S26 takes this to an extreme, with AI handling everything from scene detection to pixel-level enhancement in real time.

Let's break down what Samsung is actually building, why it's different from what Apple and Google are doing, and what it means for how you'll take photos starting next year.

Why AI Camera Processing Matters More Than Hardware

For the past five years, smartphone camera companies have obsessed over megapixel counts and sensor sizes. Bigger sensors catch more light. More pixels mean more detail. That logic made sense when phones had terrible software. But that era is over.

Today's flagship phones all have excellent hardware. The real separation comes from software. A decent sensor paired with great AI beats a great sensor with mediocre processing every single time. Google proved this with the Pixel series. Apple doubled down on it with the iPhone 16. Samsung watched, learned, and is about to flip the entire table with the S26.

The computational advantage comes from what AI can do faster than ever before. Real-time object detection. Instant scene recognition. Pixel-level enhancement happening before you even see the preview. This requires serious processing power and intelligent algorithms working together seamlessly.

Here's the practical reality: a 10-megapixel image processed by advanced AI often looks better than a 108-megapixel image processed with basic algorithms. The difference is in signal-to-noise ratio, dynamic range recovery, and color accuracy. AI handles all three at once, across millions of pixels, in milliseconds.

Samsung's bet on the S26 is that this computational advantage matters infinitely more than one-upmanship on sensor specs.

Multi-Sensor AI Fusion: The Real Game-Changer

Every flagship phone has multiple cameras. But they rarely work together intelligently. You pick one, shoot, and that's what you get. The S26 changes this completely.

Imagine a system where all cameras fire simultaneously, and AI picks the best image from each sensor, then blends them together. Or a system that uses the ultra-wide lens to understand scene context while the main camera captures detail. Or the telephoto providing depth information to enhance the main shot. That's what the S26 is building.

How Multi-Sensor Fusion Actually Works

The technical approach is elegant. Each camera on the S26 captures the same scene from a slightly different angle and with different optical properties. The telephoto compresses perspective. The ultra-wide spreads it out. The macro sees tiny details. The main camera balances everything.

Traditionally, these streams never talk to each other. You choose which one to use. But with AI running on the S26's dedicated NPU (neural processing unit), all four cameras can contribute to a single final image.

The AI handles exposure merging, which solves one of photography's hardest problems: capturing bright skies and dark shadows in the same shot. A bright sky blown out in the main camera? The telephoto might have preserved highlights. Shadows crushed in the wide shot? The main camera might have detail there. AI blends them at the pixel level, recovering detail that was technically in the scene but invisible in any single capture.
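To make the idea of pixel-level blending concrete, here is a minimal sketch, not Samsung's method. It assumes two already-aligned grayscale captures with values between 0 and 1, and it weights each pixel by how close it sits to mid-gray, in the spirit of classic exposure fusion. The function names and sample values are hypothetical.

```python
import math

def well_exposedness(value, sigma=0.2):
    """Weight a pixel by how close it is to mid-gray (0.5)."""
    return math.exp(-((value - 0.5) ** 2) / (2 * sigma ** 2))

def fuse_exposures(frames):
    """Blend aligned frames pixel by pixel using well-exposedness weights.

    frames: list of equally sized 2D lists of floats in [0, 1].
    """
    height, width = len(frames[0]), len(frames[0][0])
    fused = [[0.0] * width for _ in range(height)]
    for y in range(height):
        for x in range(width):
            weights = [well_exposedness(f[y][x]) for f in frames]
            total = sum(weights)
            fused[y][x] = sum(w * f[y][x] for w, f in zip(weights, frames)) / total
    return fused

# Hypothetical 1x2 scene: the first pixel is sky, the second is shadow.
main_cam = [[1.00, 0.45]]   # sky clipped, shadow well exposed
tele_cam = [[0.60, 0.02]]   # sky preserved, shadow crushed
fused = fuse_exposures([main_cam, tele_cam])
print(fused)
```

In this toy case the fused sky pixel lands near the telephoto's value and the fused shadow pixel lands near the main camera's value, which is the behavior the paragraph above describes. A real pipeline would also need alignment, color handling, and smoothing of the weights.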

Color accuracy gets a huge boost too. Each sensor has slightly different color response curves. AI learns these and corrects them, producing images with color consistency across the frame that single-sensor processing can't achieve.

Real-Time Scene Recognition Changes Everything

The S26 doesn't just capture images. It understands them before you even shoot.

Advanced scene detection has been around for years. But the S26 takes it further. The system recognizes not just what you're photographing, but the intent behind the shot. Are you trying to capture detail? Movement? Mood? Professional users often know which settings they want. Casual users don't. The S26 learns from your shooting patterns and starts suggesting modes before you even think about them.

This ties directly into computational processing. If the AI detects that you're shooting a concert, it can pre-optimize for high ISO, motion blur reduction, and skin tone preservation. All that processing happens before the sensor even fires. By the time you hit the shutter button, the pipeline is already optimized.

Face recognition works the same way. But here's the creepy part: the S26 recognizes faces and immediately applies beautification algorithms specific to that person. It learns which adjustments you like for your partner, which ones you hate for yourself, and applies those learned preferences automatically. You might never even realize the software is working.

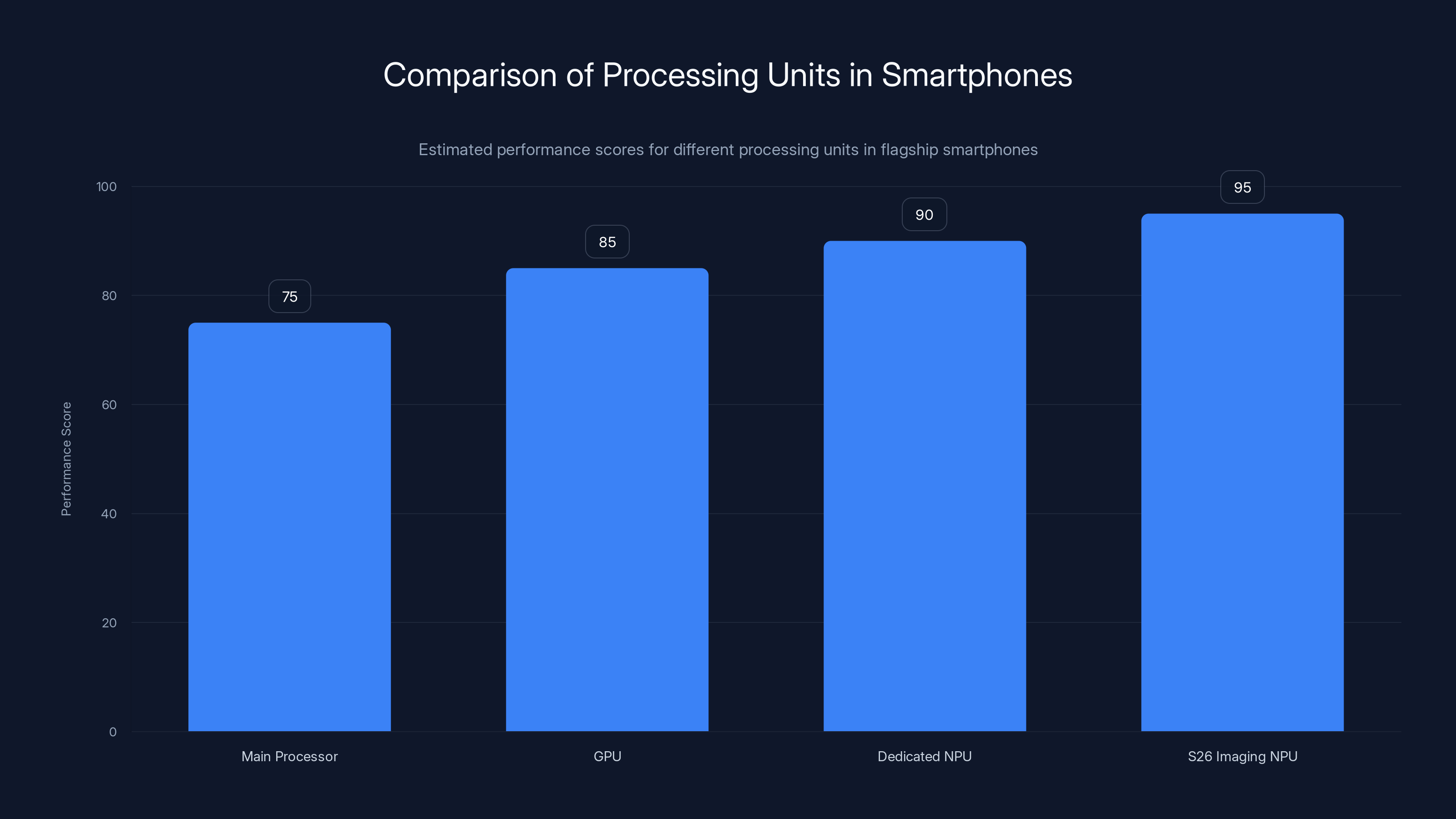

The S26's imaging NPU is estimated to outperform other processing units in handling imaging tasks due to its specialized design. (Estimated data)

Real-Time Generative Enhancement: The Controversial Feature

Here's where things get spicy. Samsung isn't just capturing and processing photos anymore. They're starting to generate pixels.

Inpainting technology (filling in missing or damaged parts of an image) has been around in Photoshop for years. But doing this in real-time on a phone, automatically, while you're taking the photo? That's new territory for consumer devices.

How Generative Photo Filling Works

The most obvious use case is removing unwanted objects. You're taking a sunset photo, but there's a power line ruining the composition. Older phones made you deal with it. Google's Pixel started letting you remove it in post-processing. The S26 removes it while you're shooting, so the live preview shows the cleaned-up version before you ever capture the image.

The same idea applies to inpainting detail into shadows. If an area of your photo is too dark to see texture, generative AI can infer what should be there based on surrounding pixels and photographic patterns it learned from training data. The result looks natural because it's statistically consistent with what would be there in good lighting.

This creates a weird question: is this a photograph or a composite? Technically, it's both. Every phone already blends multiple exposures for HDR. Samsung is just taking that philosophy further, using AI instead of simple averaging.

The controversy is coming. Photography purists will hate this. Professional photographers will have a field day pointing out that generative enhancement isn't true photography. But casual users? They'll love it. To their eyes, a sunset photo with no distracting power lines and fully detailed shadows simply looks better than the "pure" version.

Video Becomes AI-First

Still photography gets all the attention, but video on the S26 might be even more impressive.

Real-time video stabilization has been around. But the S26 adds AI-powered motion anticipation. The system predicts camera shake before it happens, based on hand movement patterns and device gyroscope data. It over-corrects slightly to prevent the shake from appearing in the first place.

For 8K video (which the S26 will definitely support), processing requirements are insane. An 8K frame is 7680×4320, about 33 million pixels, so stabilizing 8K at 60fps means analyzing roughly 2 billion pixels per second. No traditional processor can do that fast enough without power-hungry computation. The dedicated NPU on the S26 handles it, which means stabilized 8K video that doesn't murder your battery.
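The pixel throughput for 8K at 60fps is easy to check with arithmetic. This short sketch assumes the standard 8K UHD frame size of 7680×4320; the variable names are just for illustration.

```python
WIDTH, HEIGHT = 7680, 4320   # 8K UHD frame dimensions (assumed)
FPS = 60

pixels_per_frame = WIDTH * HEIGHT
pixels_per_second = pixels_per_frame * FPS

print(f"{pixels_per_frame:,} pixels per frame")      # 33,177,600
print(f"{pixels_per_second:,} pixels per second")    # 1,990,656,000
```

That is about 2 billion pixels every second before any AI model touches the data, which is why per-pixel work at this resolution is so demanding.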

Color grading during capture is another weird advantage. You know how pro cameras record RAW video? That's because footage needs heavy color correction in post-production. The S26 applies real-time color intelligence to video, adjusting white balance, saturation, and tone on the fly based on the detected scene. Your videos look professionally graded right out of the phone.

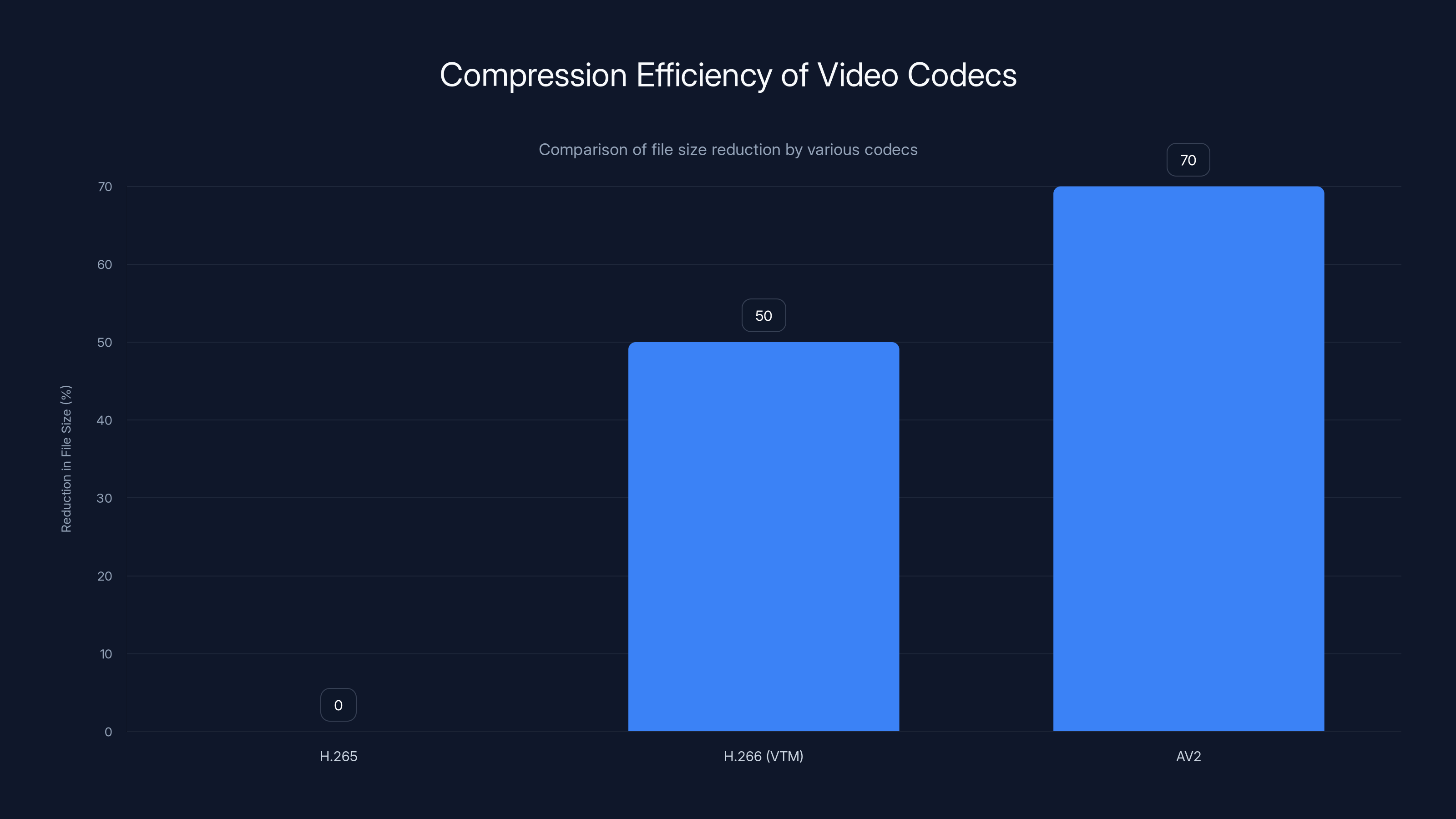

H.266 (VVC) reduces file sizes by 50% compared to H.265, while AV2 offers an additional 20-30% reduction, making it the most efficient codec in this comparison. Estimated data.

Night Photography Gets a Complete Overhaul

Every flagship claims to be good at night shots. But night photography is genuinely difficult. Light is scarce. Sensors are struggling. Motion becomes a problem because exposure times get longer. The S26 approaches this completely differently than previous generations.

Computational Night Mode vs. Hardware Approaches

Traditional night modes work by stacking multiple exposures. You hold the phone still (or use a tripod), and the phone takes 10+ shots, each exposed for different durations. The processor blends them together, recovering shadow detail while keeping highlights from blowing out.
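Why stacking helps can be shown with a small simulation. This is a simplified sketch: it assumes every frame has the same exposure and is already perfectly aligned (real night modes vary exposure and must align frames first), and the scene and noise values are made up.

```python
import random
import statistics

def capture_frame(scene, noise_sigma, rng):
    """Simulate one short exposure: the true scene plus Gaussian sensor noise."""
    return [pixel + rng.gauss(0.0, noise_sigma) for pixel in scene]

def stack_frames(frames):
    """Average aligned frames pixel by pixel."""
    return [sum(values) / len(values) for values in zip(*frames)]

rng = random.Random(42)            # fixed seed so the run is repeatable
scene = [0.2] * 2000               # flat, dim patch (hypothetical values)
frames = [capture_frame(scene, 0.1, rng) for _ in range(16)]

single_noise = statistics.pstdev(frames[0])
stacked_noise = statistics.pstdev(stack_frames(frames))
print(f"single frame noise:   {single_noise:.3f}")
print(f"16-frame stack noise: {stacked_noise:.3f}")
```

Averaging N frames with independent noise reduces the noise by roughly the square root of N, so 16 frames should leave about a quarter of the grain of a single frame.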

But this approach has limits. You need to hold the phone still for several seconds. Movement in the scene causes ghosting. Bright lights create haloes. The S26 fixes these with AI.

Multi-frame alignment gets smarter. If a person moves between frames, the AI detects this and handles it properly instead of creating a blurry ghost. If a car drives through the shot, the system recognizes it as a coherent object moving through space and tracks it, aligning it across frames instead of averaging it away.

This means night mode works well with subject movement now. Handheld shooting becomes viable. Longer exposures aren't necessary.

The real breakthrough is noise reduction. Traditional denoising blurs fine details to reduce grain. AI-based denoising understands what's important detail versus random noise. It preserves texture while eliminating grain, which traditional filters struggle to do, and it works because the AI learned patterns of real textures from millions of images.

Low-Light Color Accuracy

One of the hardest problems in night photography is color. Human vision copes with odd lighting because our brains are incredible at color constancy (perceiving the right color even when lighting is weird). Phone sensors aren't smart. They see orange sodium vapor lights and struggle to correct them accurately.

The S26 uses AI-learned color models trained on thousands of night scenes. It recognizes the type of lighting (sodium vapor, neon, tungsten, LED) and applies specific color corrections tuned for that light source. The result is night photos with natural colors, which older phones simply can't achieve.

Zoom in night mode? Usually a disaster with older phones because telephoto sensors are smaller and gather even less light. The S26 uses the main camera's larger sensor to gather light, crops to the telephoto's angle, and upscales using AI super-resolution. The result looks like a telephoto shot but with better light gathering, creating night zoom that actually works.

Portrait Mode Becomes Impossibly Good

Blurred backgrounds (bokeh) used to require specialized hardware. Smartphones faked it by detecting depth and applying software blur. Google and Apple got good at this with machine learning. Samsung's doing something even more interesting.

Depth Sensing Gets Smarter

The traditional approach: use a dedicated depth sensor, or use two cameras to calculate depth through stereo vision, or use machine learning to infer depth from a single image.

The S26 uses all three simultaneously. The telephoto camera provides one depth perspective. The ultra-wide provides another. The main camera provides a third. Machine learning unifies these into a coherent depth map. The result is depth information that's more accurate than any single method could produce.

This matters because bad depth maps create bad blur. If the AI thinks the background is nearby when it's actually far, the blur doesn't look right. With three depth sources being merged, errors in one source get caught and corrected by the others.
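One simple way to see how three sources can correct each other is a per-pixel median, where a single wrong estimate gets outvoted. This is a stand-in for illustration only; the article describes a learned fusion, and the depth values and names below are hypothetical.

```python
import statistics

def fuse_depth(depth_maps):
    """Per-pixel median across depth maps, so one outlier source is outvoted."""
    height, width = len(depth_maps[0]), len(depth_maps[0][0])
    return [
        [statistics.median(d[y][x] for d in depth_maps) for x in range(width)]
        for y in range(height)
    ]

# Hypothetical depths in meters for a 1x2 patch: subject, then background.
from_telephoto = [[1.2, 8.0]]
from_ultrawide = [[1.1, 7.5]]
from_main      = [[1.3, 1.0]]   # wrongly places the background up close

fused = fuse_depth([from_telephoto, from_ultrawide, from_main])
print(fused)   # [[1.2, 7.5]]
```

The bad background estimate of 1.0 m is ignored because the other two sources agree that the background is far away. A learned model can go further by weighting each source according to how reliable it tends to be in a given situation.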

Subject-Aware Bokeh Rendering

Here's where it gets fancy. Different subjects deserve different blur looks. A person in a portrait looks best with circular bokeh balls (beautiful when backlit). A landscape with depth looks better with graduated blur (closer things blurred more, far things less). Architecture looks better with less blur overall.

The S26 detects the subject (person, pet, object, landscape) and applies subject-specific bokeh rendering. Portrait of a person? Maximum bokeh with beautiful highlight rendering. Landscape with foreground interest? Subtle graduated blur. This is AI-driven taste-making applied to photography.

The ethical question is real. Is the phone making decisions about what looks good? Yes. Is that a problem? Probably not for casual users. Professional photographers might disable it. Everyone in between probably never notices, but loves the results.

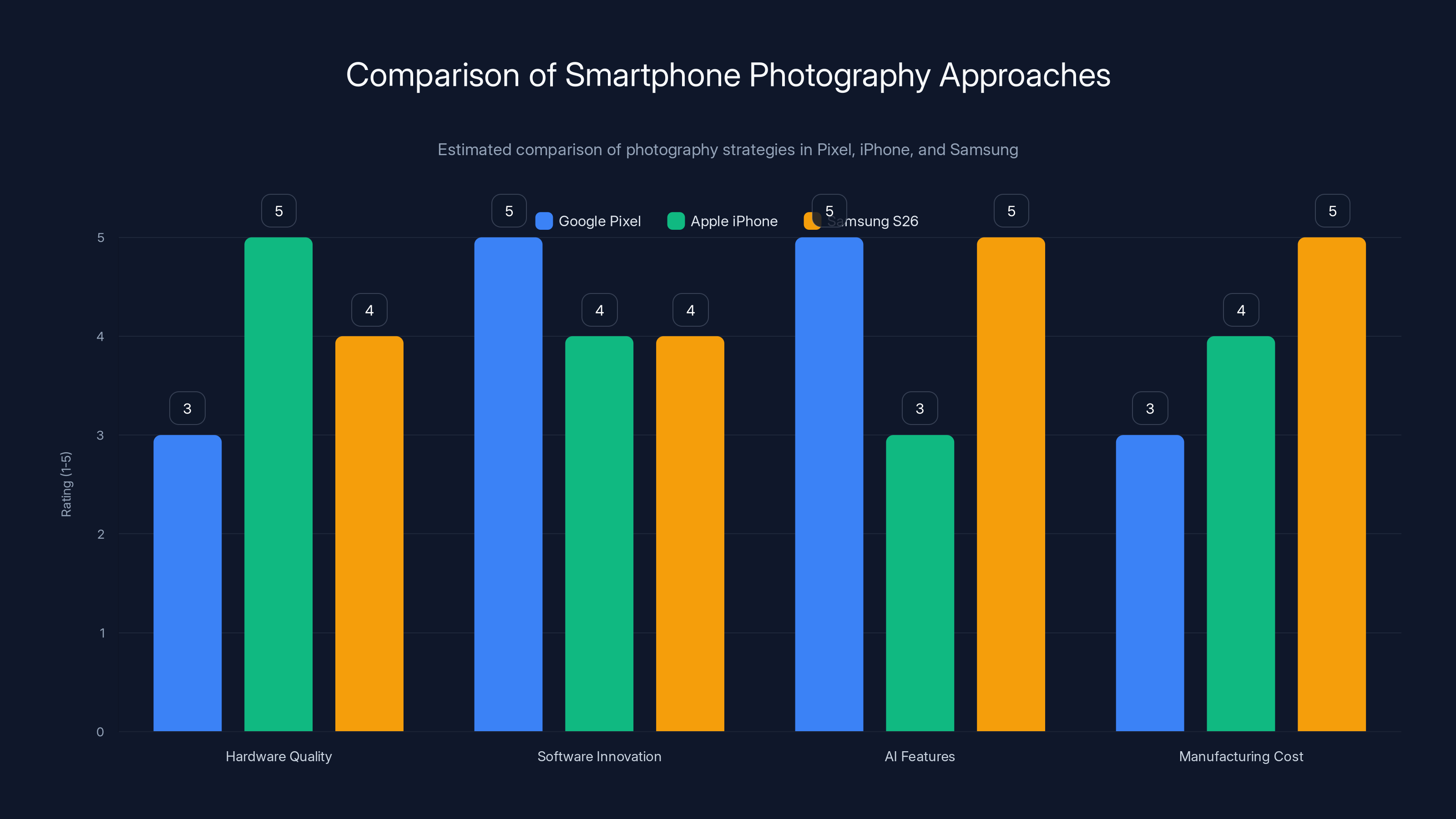

Estimated data shows that Google Pixel excels in software innovation and AI features, Apple iPhone leads in hardware quality, while Samsung S26 balances all aspects with high manufacturing cost.

Zoom Without the Pain

Optical zoom is limited by physics. A phone can only be so thick, which limits focal length. Zoom beyond 5x or so requires digital zoom, which historically meant blurry pictures.

Google tackled this with Super Res Zoom and other computational techniques. Apple uses telephoto tricks. Samsung's S26 approach is more direct: AI super-resolution at every zoom level.

How AI Super-Resolution Works

Upscaling images with traditional algorithms (bilinear, bicubic interpolation) is simple but produces soft, blurry results. Neural networks trained on thousands of high-quality image pairs can learn to upscale intelligently, inferring fine details that would be invisible with traditional methods.
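To see why traditional interpolation looks soft, here is a minimal sketch of linear interpolation on a single row of pixels (the 1D version of bilinear upscaling). The function name and the sample edge are illustrative.

```python
def upscale_row_linear(row, factor):
    """Upscale a 1D row of pixel values by linear interpolation."""
    out_len = (len(row) - 1) * factor + 1
    out = []
    for i in range(out_len):
        pos = i / factor                      # position in source coordinates
        left = int(pos)
        right = min(left + 1, len(row) - 1)   # clamp at the last pixel
        t = pos - left                        # blend factor between neighbors
        out.append((1 - t) * row[left] + t * row[right])
    return out

edge = [0.0, 0.0, 1.0, 1.0]                   # a hard black-to-white edge
upscaled = upscale_row_linear(edge, 4)
print(upscaled)
```

The hard edge in the input becomes a ramp through 0.25, 0.5, and 0.75 in the output. Interpolation can only average neighboring pixels, so it smears edges instead of reconstructing them, which is the gap learned super-resolution tries to close.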

The S26 doesn't just upscale. Its upscaling is subject-aware. Detected faces get enhanced with face-specific detail recovery (eyes stay sharp, skin texture looks natural). Text gets sharpened with text-specific algorithms. Natural textures (plants, water, stone) get detail inferred from patterns the AI learned.

At 3x zoom, the S26 uses the telephoto lens, so you get optical quality. At 5x zoom, it blends telephoto with AI upscaling. At 10x zoom, it's pure AI upscaling from the telephoto input. At 20x zoom, it's upscaling from a cropped telephoto image. By 50x or 100x, you're in pure AI territory.

Does it look perfect? No. Heavy zoom will always show artifacts. But the S26's approach creates zoom that's usable up to 30x or 40x, where previous phones looked mushy at 10x. That's a generational improvement.

Processing Pipeline: Where the Real Magic Happens

Having all these AI features means nothing without the processing architecture to execute them fast enough. The S26's neural processing unit is where everything comes together.

Dedicated NPU vs. Main Processor

Every flagship phone now has a dedicated processor for AI tasks. But the S26's NPU is different. It's designed specifically for imaging, not general AI.

The main processor (probably a Snapdragon 8 variant) handles traditional computing tasks. The GPU handles 3D graphics. But imaging tasks get their own processor optimized for the workloads: tensor operations, convolutions, matrix multiplications. The result is fast enough to process 8K video in real-time while the main processor handles everything else.

This separation means you can take 8K stabilized video with real-time color grading without your camera app freezing or your battery draining instantly. The imaging NPU is specialized and efficient.

Real-Time Processing Pipeline

Here's roughly what happens when you take a photo with the S26:

- Sensor captures image

- Raw ISP (image signal processor) does basic debayering and white balance

- Multiple cameras are aligned and fused

- Scene recognition AI labels the content

- Subject detection identifies people, objects, text

- Exposure merging combines multiple exposures (if needed)

- Generative enhancement fills shadows or removes objects (if enabled)

- Color grading applies learned adjustments

- Denoising removes grain while preserving texture

- Upscaling is applied if needed

- Compression encodes the final image

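This entire pipeline happens in under 500 milliseconds. For video, it runs continuously at the frame rate (30fps or 60fps), which leaves a budget of roughly 33 ms or 17 ms per frame, so stages have to overlap across frames rather than run one after another. The processing load is insane, which is why the dedicated NPU matters.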
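The stage list above can be sketched as an ordered pipeline in which the "if needed" and "if enabled" steps are switched on per shot. This is a structural sketch only: the stage names are paraphrased from the list, the stages are placeholders that record that they ran, and nothing here reflects Samsung's actual software.

```python
from dataclasses import dataclass, field

@dataclass
class Frame:
    """Stand-in for image data; real stages would operate on pixel buffers."""
    history: list = field(default_factory=list)

def make_stage(name):
    """Build a placeholder stage that only records that it ran."""
    def stage(frame):
        frame.history.append(name)
        return frame
    return stage

def build_pipeline(multi_exposure=False, generative=False, upscale=False):
    """Return the ordered list of stages, skipping disabled optional ones."""
    steps = [
        ("debayer_and_white_balance", True),
        ("align_and_fuse_cameras", True),
        ("scene_recognition", True),
        ("subject_detection", True),
        ("exposure_merging", multi_exposure),
        ("generative_enhancement", generative),
        ("color_grading", True),
        ("denoising", True),
        ("upscaling", upscale),
        ("compression", True),
    ]
    return [make_stage(name) for name, enabled in steps if enabled]

def run(frame, pipeline):
    """Pass the frame through every stage in order."""
    for stage in pipeline:
        frame = stage(frame)
    return frame

result = run(Frame(), build_pipeline(multi_exposure=True))
print(result.history)
```

Keeping the stages in a plain list makes the order explicit and lets optional steps drop out without touching the rest, which is one reasonable way to organize a chain like this.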

Memory Architecture

Processing multiple full-resolution images simultaneously requires massive memory bandwidth. The S26 uses LPDDR6 memory (or newer) with bandwidth around 200GB/s. For comparison, traditional phone memory provides about 50GB/s. That 4x increase lets the system process multiple imaging streams simultaneously without bottlenecks.

Image data gets cached at different stages. Raw sensor data sits in one buffer. Processed intermediate frames sit in another. The final output gets compressed before writing to storage. Managing all this happens in microseconds, which is why the memory controller is as important as the NPU itself.

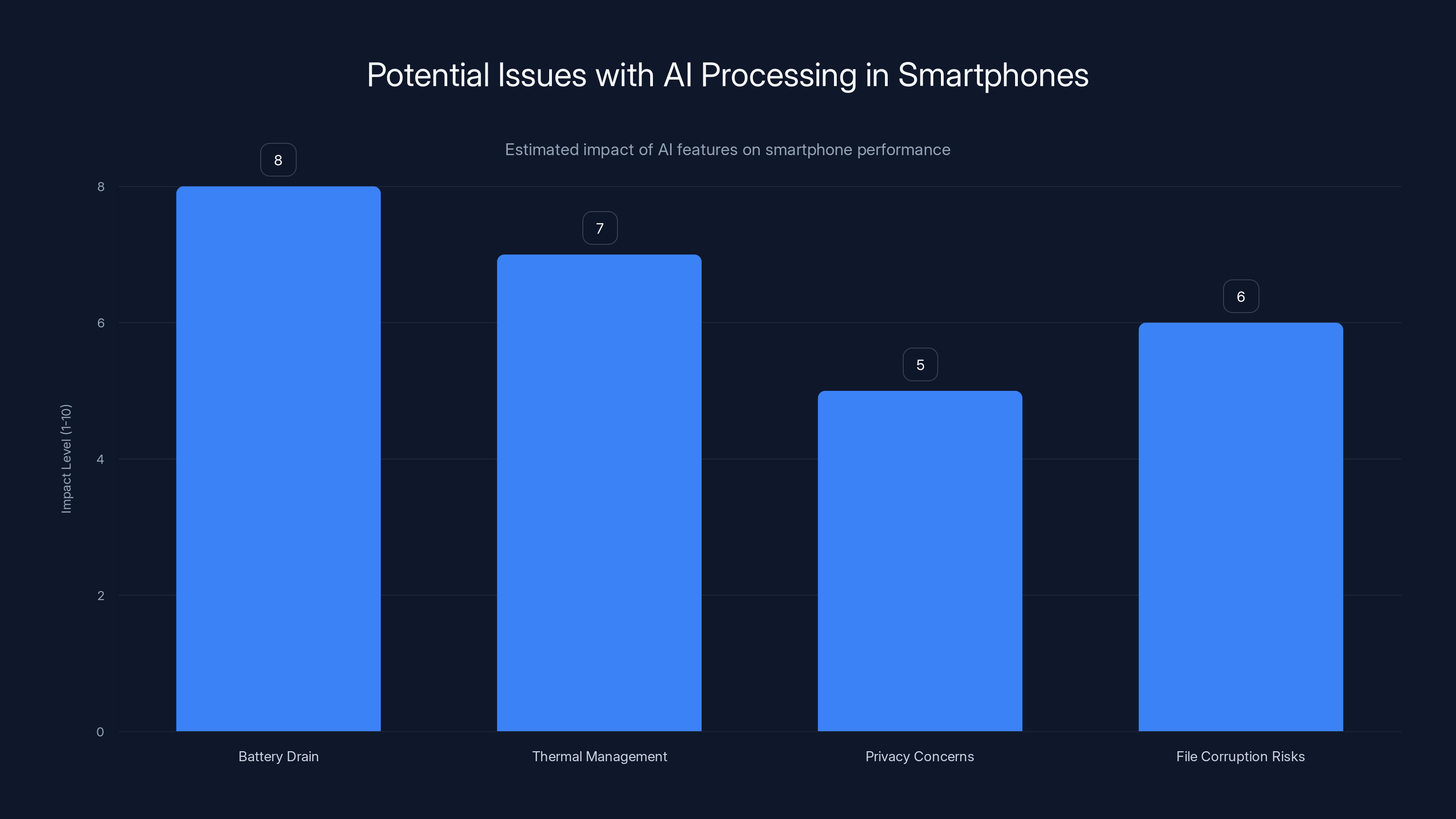

AI processing in smartphones can lead to significant battery drain and thermal management issues, with moderate privacy and file corruption concerns. Estimated data based on typical AI processing challenges.

Storage and File Formats: The Hidden Challenge

Capturing all this computational brilliance means generating massive files. An 8K 60fps video with AI processing is potentially 1TB per hour. That's not sustainable.

Next-Generation Compression

The S26 uses advanced codecs like H.266 (also called VVC, the successor to H.265), which cuts file sizes roughly 50% compared to H.265 at the same quality. Even more aggressively, it supports AV2, which is another 20-30% smaller than H.266.
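Stacking those two savings gives the combined figure. This sketch simply applies the article's estimated percentages to an arbitrary baseline; the percentages are the estimates quoted above, not measured results.

```python
h265_size = 100.0                      # arbitrary baseline units
h266_size = h265_size * (1 - 0.50)     # roughly 50% smaller than H.265
av2_low = h266_size * (1 - 0.30)       # 30% smaller than H.266
av2_high = h266_size * (1 - 0.20)      # 20% smaller than H.266

print(f"H.266: {h266_size:.0f}% of the H.265 size")
print(f"AV2:   {av2_low:.0f}-{av2_high:.0f}% of the H.265 size")
```

If the estimates hold, an AV2 file would be around 35-40% the size of the same footage in H.265.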

For photos, the S26 probably uses modern formats like AVIF or the latest JPEG XL variants. These achieve 30-40% better compression than traditional JPEG while supporting way more color information.

But here's the catch: better compression means more processing during capture. Compressing while shooting requires the NPU to handle encoding in real-time. This is possible, but it's why the dedicated hardware matters so much.

RAW Capture Philosophy

Professional photographers often want RAW files (unprocessed sensor data) so they can edit in post-production. The S26 captures RAW, but with a twist: it also captures all the intermediate processing steps, so you can choose how much AI enhancement to keep or discard.

This is actually brilliant. The phone applies all its intelligence, creating a beautiful final image. But if you're a professional photographer who hates the generative enhancement, you can reprocess the RAW using different settings. Best of both worlds.

How This Compares to iPhone and Pixel

Google and Apple have been doing computational photography longer. Both have incredible systems. But they approached it differently than Samsung.

Google Pixel's Approach: Maximum Software

Pixel phones often use smaller sensors in smaller bodies overall. Google compensates with aggressive computational techniques. Magic Eraser removes objects. Night Sight creates usable images in near-total darkness. Face Unblur recovers blurry faces using AI inference.

The Pixel's philosophy is: fix everything in software. Use the smallest hardware that's acceptable, then make it beautiful through algorithms. This approach is cheaper to manufacture and creates incredibly smart results. But there are limits. You can't fix everything, and the software can't overcome every hardware limitation.

The S26 takes a hybrid approach: good hardware plus aggressive computation. Better sensors and lenses do part of the work. AI refinement does the rest. This is more expensive but produces more robust results.

Apple's Approach: Hardware Optimization First

Apple designs custom sensors and lenses for its phones. It invests heavily in optical quality. Then the software layer applies computational refinement. Apple's ProRAW format, offered on Pro iPhones since the iPhone 12 Pro, stores 12-bit color, which is 4,096 levels of tone per color channel versus the 256 levels of 8-bit JPEG.

Apple's recent strategy has been adding "Apple Intelligence" features, though initial implementations have been somewhat limited. The Pixel has more aggressive AI features. Apple is moving toward more AI, but staying conservative. Samsung is jumping in aggressively.

Samsung's Differentiator: Scale and Boldness

Samsung has the manufacturing scale to build custom silicon, big sensors, and advanced lenses. They also have the software engineering to implement aggressive AI. The S26 will be the first phone that truly commits to "AI first" camera design.

Pixel is still software-first. iPhone is still hardware-first. Samsung is saying "AI-first, with great hardware and smart software." That's the positioning that sets the S26 apart.

The Samsung Galaxy S26 significantly enhances camera capabilities with AI integration and multi-sensor fusion, outperforming previous models in all key areas. Estimated data.

The Problems You Should Know About

All this AI processing creates challenges.

Battery Drain

Processing high-resolution images through multiple AI models is energy-intensive. Even with a dedicated NPU, running heavy computational photography drains battery faster than minimal processing. The S26 will have a bigger battery (probably 6,000mAh+) to compensate, but expect heavier battery drain than the S25 when using AI features heavily.

Samsung will probably make this toggleable. Maximum AI processing mode uses more power. Reduced processing mode extends battery life. Users pick their balance.

Thermal Management

When the NPU, GPU, and main processor all run simultaneously (capturing video while applying AI processing), heat generation gets serious. Phones aren't great at dissipating heat. Extended 8K video recording with AI enhancement will probably thermal throttle, reducing performance.

Expect Samsung to add better heat pipes or phase-change materials. But expect thermal limits regardless. Professional video workflows might need cooling solutions.

Privacy Concerns

AI processing requires understanding what's in your photos. Face detection, subject recognition, scene understanding all require analyzing your images. The S26 likely does this on-device (without sending to servers), but you're still trusting Samsung's implementation.

Generative features that infer or create pixels are even more philosophically problematic. You might not notice the phone is generating content. That's concerning from a transparency perspective.

File Corruption Risks

Complex processing pipelines have more failure points. If any step in the AI pipeline crashes, your photo is corrupted. Samsung will probably implement fallback modes (use unprocessed output if AI fails), but expect occasional glitches.
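A fallback of that kind can be sketched in a few lines. This is a hypothetical illustration of the pattern, not Samsung's implementation; the pipeline functions are made-up stand-ins.

```python
def process_with_fallback(raw_image, ai_pipeline):
    """Return the AI-enhanced image, or the unprocessed one if the pipeline fails."""
    try:
        return ai_pipeline(raw_image), "enhanced"
    except Exception as error:
        # Keep the shot rather than lose it; a real system would also log the error.
        return raw_image, f"fallback ({type(error).__name__})"

def working_pipeline(image):
    """Stand-in enhancement: brighten and clip to the valid range."""
    return [min(1.0, pixel * 1.2) for pixel in image]

def flaky_pipeline(image):
    """Stand-in for a stage that crashes mid-processing."""
    raise RuntimeError("model crashed")

raw = [0.1, 0.5, 0.9]
print(process_with_fallback(raw, working_pipeline))
print(process_with_fallback(raw, flaky_pipeline))
```

The key design choice is that the unprocessed capture is kept until enhancement succeeds, so a crash costs you the AI polish rather than the photo itself.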

The software will mature over time, as it always does. Early adopters might encounter more issues. If you need reliability, wait for the S26 Ultra refresh or the S27.

When Will We Actually See This?

Samsung typically announces Galaxy S models in January or February. The S26 would likely launch in early 2026. Possibly late 2025 if they accelerate.

But here's the reality: most of these features might not ship at launch. Samsung will probably roll out AI capabilities in software updates over the first 6 months. The cameras will be ready, but the full software stack takes time to optimize.

Expect the S26 Ultra to get features first. Standard S26 and S26+ will follow. Some features might be exclusive to higher tiers (Samsung likes segmentation).

The long game for Samsung is clear: own computational photography the way Apple owns hardware integration and Google owns software intelligence. The S26 is their flagship move in that direction.

The Bottom Line

The Samsung Galaxy S26 represents something genuinely different in smartphone design. It's not just another phone with better camera hardware. It's an AI-first imaging device that uses hardware as support for software intelligence.

Every major phone brand claims to use AI. But most of it is marketing fluff: computational photography that improves results incrementally using modest neural networks. The S26 is committing to deep AI involvement at every level: multi-sensor fusion, generative enhancement, real-time processing, subject-aware optimizations.

For casual photographers, this means photos that look better immediately, without any effort. No editing needed. The phone just makes smart decisions and you get beautiful results.

For professionals, it means more options. Capture with AI, then disable it for specific shots. Use RAW with processing metadata. Edit knowing exactly what the camera did.

For the industry, it means the competition is now in computational intelligence, not megapixel counts. Google and Apple will respond. All three companies will invest billions in imaging AI. Within two years, every flagship will have something similar. But Samsung's first-mover advantage with the S26 matters. They'll own the perception that they're the "AI camera phone" maker.

The question isn't whether the S26 camera will be good. It'll be excellent. The question is whether you're comfortable with a phone that's making increasingly automated decisions about how your photos should look. That comfort level varies. Some people hate it. Some can't imagine going back.

Once you've used a phone that automatically removes power lines from sunset photos and recovers detail from impossible shadows, regular computational photography feels primitive. Samsung's betting you'll feel the same way about the S26. They're probably right.

FAQ

What makes the Samsung Galaxy S26 camera different from previous models?

The S26 doesn't just improve camera hardware—it puts AI at the center of the entire imaging pipeline. Multi-sensor fusion means all cameras work together intelligently, not separately. Real-time generative enhancement removes objects and recovers shadow detail during capture. The dedicated imaging NPU processes 8K video with computational effects simultaneously. Previous Samsung phones used AI for scene detection and optimization. The S26 uses AI to fundamentally change how images are captured and processed at every stage.

How does multi-sensor fusion improve photo quality?

Instead of choosing which camera to use, all four cameras (main, ultra-wide, telephoto, macro) capture simultaneously and AI blends them intelligently. The telephoto might preserve bright highlights that the main camera loses. The ultra-wide adds depth context. The main camera provides detail. AI merges these at the pixel level, recovering information that would be lost in any single capture. This creates images with better dynamic range, more accurate colors, and more recovered detail than traditional single-camera approaches.

Is generative photo enhancement the same as AI-created content?

Not quite. Generative enhancement uses AI to infer pixels that should logically be there based on surrounding context. Removing a power line means the AI reconstructs what should be behind it. Recovering shadow detail means inferring texture based on photographic patterns the AI learned. This is different from AI creating entirely new content. The underlying scene was captured—you're just seeing a computationally enhanced version of it. Whether you view this as cheating or smart processing depends on your philosophy about photography.

Will AI processing drain the battery faster?

Yes. Running heavy computational photography continuously is energy-intensive, even with a dedicated NPU. The S26 will likely have a larger battery (probably 6,000mAh) to compensate. Samsung will probably offer modes: maximum AI enhancement (uses more power), standard mode (balanced), and minimal processing mode (extends battery life). You'll choose your balance between image quality and battery longevity.

How does the S26 handle night photography differently?

Previous night modes required holding the phone still and waiting several seconds for multiple exposures to be captured and merged. The S26 uses AI-powered multi-frame alignment that handles subject movement, real-time color correction for different light sources (sodium vapor, LED, neon), and noise reduction that preserves fine texture while eliminating grain. This means night mode works with handheld shooting, moving subjects, and produces images with natural colors that previous phones struggled to achieve.

Is the RAW capture option still available for professionals?

Yes, and better than before. The S26 captures RAW sensor data, plus all the intermediate AI processing steps. Professional photographers can reprocess the RAW using different settings, essentially having the option to keep or discard AI enhancements. This gives professionals the control they want while casual users get the benefit of AI optimization by default. Best of both worlds.

How does AI super-resolution improve zoom quality?

Traditional digital zoom just magnifies pixels, creating blurry results. AI super-resolution learns patterns from millions of images and intelligently infers details that should be visible when zooming. At 3x zoom, you get the telephoto lens (optical). At 5x, it blends telephoto with AI enhancement. At 10x and beyond, it's pure AI upscaling. The result is usable zoom quality up to 30-40x, where older phones looked mushy at 10x. The AI doesn't create perfect detail, but creates believable detail that looks natural.

Will thermal throttling be a problem with all these AI features?

Possibly, especially with extended 8K video recording combined with real-time AI processing. Running the NPU, GPU, and main processor simultaneously generates significant heat. Phones don't dissipate heat efficiently. Samsung will likely add better thermal solutions (heat pipes, phase-change materials), but expect some thermal throttling during extended heavy use. This is a known limitation of mobile devices, not unique to the S26. It just becomes more noticeable when you're pushing the hardware harder.

How do privacy implications differ with AI-based photo processing?

The S26 likely processes images entirely on-device without sending data to servers (this is becoming standard practice). However, face detection, subject recognition, and scene analysis require the phone to understand what's in your photos. Generative enhancement means the phone is creating content you didn't directly capture—some people find this philosophically concerning. Samsung should be transparent about what data is processed, where it's processed, and how long it's retained. On-device processing is better for privacy than cloud processing, but neither is perfectly private if the phone manufacturer wanted to extract data.

What Comes Next in Computational Photography

The S26 is the beginning of a new era, not the end of it. Within two years, expect even more aggressive AI integration. Real-time style transfer (making your photos look like specific art styles). Instant object replacement (not just removal). Temporal coherence in video (making edits consistent across frames). Voice-controlled composition adjustments.

The S26's significance is that it proves computational photography can be mainstream. Samsung is betting billions that average users will love phones that make AI-driven decisions about their photos. Google will intensify Pixel's software approach. Apple will add more "Apple Intelligence" to the camera pipeline. All three companies will race to own computational photography supremacy.

The camera is becoming a computational device first, an optical device second. The S26 fully embraces this reality. Everything else is just refinement.

Key Takeaways

- The S26 uses multi-sensor AI fusion, allowing all four cameras to contribute intelligently to a single final image rather than choosing one camera per shot

- Generative AI enhancement removes objects and recovers shadow detail in real-time during capture, not just in post-processing

- A dedicated imaging NPU runs computational photography alongside 8K video more efficiently than the main processor could, though heavy use will still cost battery life and can trigger thermal throttling

- Night photography uses AI-powered multi-frame alignment that works with handheld shooting and moving subjects, plus subject-specific color correction for different light sources

- AI super-resolution makes digital zoom usable up to 30-40x magnification, compared to 10x on previous phones, by intelligently inferring details rather than just magnifying pixels
