The AI Ad Problem That Samsung Can't Hide

It starts with a simple question: what are you actually seeing on your screen?

Last month, Samsung posted a video to Instagram and TikTok called "Brighten your after hours." It shows two people skateboarding through a nighttime urban landscape. The video promises to showcase the low-light video capabilities of the upcoming Galaxy S26 smartphone series. But here's the catch: the video itself was created and edited using generative AI tools, as highlighted by MSN.

Now, Samsung did include a fine print disclaimer at the bottom of the video. It says "generated with the assistance of AI tools." Problem is, most people scrolling through social media never see that disclaimer. They're watching what looks like a real product demonstration, except it's not real at all.

This isn't an isolated incident. Samsung has been flooding its YouTube, Instagram, and TikTok channels with AI-generated and AI-edited content for weeks. Low-quality cartoons promoting smart home appliances. Cats being digitally edited in impossible ways. Snowmen struggling to figure out what's real (the irony is almost too perfect). All of it tagged with vague AI disclosures that most viewers never catch, as noted by The Verge.

The problem runs deeper than just Samsung being lazy with their marketing. This is about the collapse of trust in digital advertising at a moment when authenticity is already on life support. When a major tech company can't even be consistent about disclosing AI usage in their own ads, what does that say about the future of online content?

Let me break down what's actually happening here, why it matters, and what comes next.

Understanding AI-Generated Content and Its Role in Marketing

First, let's be clear about what we're talking about. AI-generated content isn't new. Companies have been experimenting with generative AI tools for marketing materials for the past two or three years. The technology itself is neutral. A hammer can build a house or destroy one. The question is how you use it and how honest you are about using it.

Generative AI tools can create images, videos, text, and audio that are hard to distinguish from authentic content. OpenAI's DALL-E can generate photorealistic images. Runway and Pika Labs can create videos from text descriptions. The outputs have gotten so good that it's genuinely difficult to tell what's real without careful examination.

For marketing teams, the appeal is obvious. You can create dozens of variations of an ad in hours instead of weeks. You can test different messaging, visuals, and product angles without hiring expensive production crews. You can scale content creation to dozens of platforms simultaneously. The efficiency gains are real, as discussed in BCG's analysis.

But here's where it gets murky. There's a difference between using AI to speed up the creative process and using AI to deceive your audience about what they're looking at. Samsung isn't just using AI to edit footage more efficiently. They're using AI to create content that looks like real product demonstrations when it's actually synthetic.

The vegetable-laden shopping bags in that "Brighten your after hours" video? They look slightly wrong because they were generated by an AI. The cobblestones in the road? They shift and warp in ways that only happen when an AI hallucinates spatial relationships. These artifacts are visible if you know what to look for, but most viewers don't know what to look for.

Samsung is betting that most people won't dig deeper than the surface. And statistically, they're probably right.

The pie chart highlights the key challenges in Samsung's AI content disclosure, with invisible fine-print and lack of platform-level labeling being major issues. Estimated data.

The Disclosure Problem That Everyone's Missing

Here's where things get really interesting. Samsung did disclose the AI usage in these videos. They put it right there in the fine print. So technically, they're following the rules, right?

Not really. And that's the entire problem.

The disclosure exists, but it's practically invisible. It's small text at the bottom of a video, shown for a few seconds. On TikTok and Instagram, where most people watch videos in full-screen mode on their phones, you'd have to actively pause the video and look closely to see it.

Compare that to how other companies are handling AI disclosures. Google has committed to labeling AI-generated content with visible, easy-to-understand badges. Meta has done the same. The industry standard is moving toward making disclosures prominent and unavoidable, as reported by Built In.

Samsung's approach? It's the bare minimum. Technically compliant, practically useless.

There's also the problem of inconsistency. Not all of Samsung's AI-generated ads include the fine print disclosure. Some videos have it, others don't. This suggests there's no consistent policy governing how Samsung labels AI content. It's ad hoc. It's reactive. It's not thought through.

The lack of platform-level labeling makes this worse. Even when Samsung includes a disclosure, YouTube and Instagram haven't added their own AI labels to the content. Samsung, Google, and Meta have all adopted the C2PA standard for content authenticity, the industry framework for labeling AI-generated content. But these videos aren't getting flagged with C2PA labels on the platforms themselves. The disclosure exists only within the video itself, in tiny text that most people never see.

Why? Because the disclosure systems aren't standardized yet. Samsung adds the disclosure themselves. The platforms could add their own labels, but they haven't. There's a gap in the system, and Samsung is exploiting it.

The use of AI-generated ads initially boosts interest but may reduce credibility upon disclosure, especially among tech reviewers and engaged consumers. (Estimated data)

The History of Samsung's Misrepresentations in Camera Marketing

Here's the thing about Samsung and camera marketing: this isn't their first rodeo with exaggeration and misrepresentation.

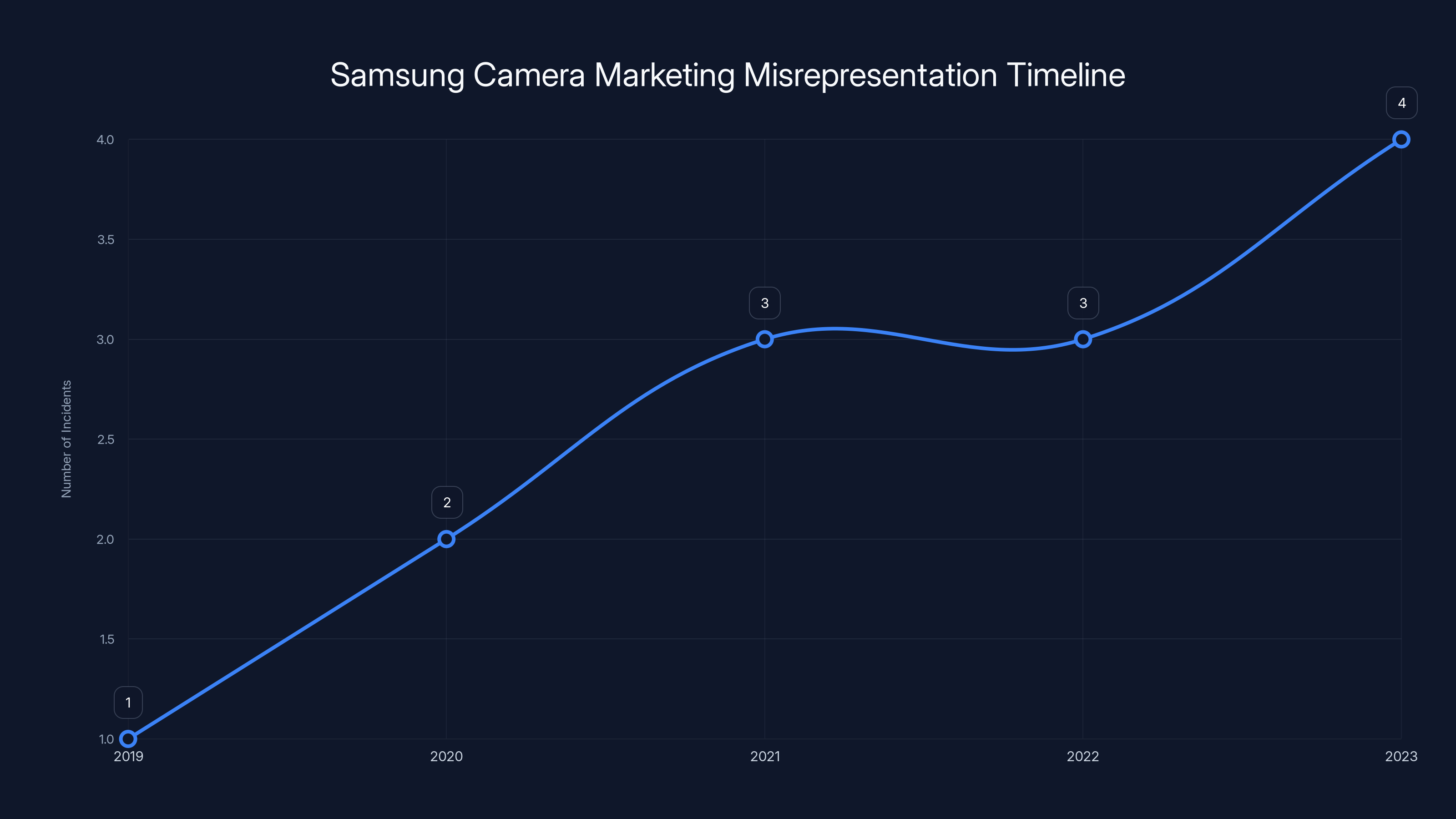

Back in 2021, Samsung faced criticism for using edited and composite images in marketing materials for the Galaxy S21 Ultra's camera capabilities. The company showed photos that looked incredible, but they weren't actually taken with the phone. They were heavily processed, combined, and enhanced in ways that real users could never replicate. The low-light photography capabilities looked amazing in the marketing materials, but real-world results were less impressive, as noted by Business Model Analyst.

This created a pattern. Samsung shows off impressive camera features in marketing materials, users buy the phone expecting those results, and then reality doesn't match the hype. It's not fraud exactly, but it's misleading at best.

Now Samsung is taking that one step further. Instead of just editing photos, they're using AI to generate entire scenarios that never happened. The skateboarding video meant to showcase low-light performance? Nothing in it can be verified as footage shot with a Galaxy S26. The scenario is synthetic.

This is where it gets dangerous. There's a difference between enhancing a photo and creating an entirely fictional scenario designed to look real. One is marketing exaggeration. The other is closer to fraud.

The problem is that Samsung can hide behind the fine print disclosure. Legally, they're protected. But morally and ethically, they're crossing a line that the industry hasn't fully grappled with yet.

Why Platform Labeling Matters More Than You Think

Platform-level labeling is crucial because it's the only system that reaches the average person.

Most people don't read disclaimers. They scroll through social media quickly, catch the headline or the visuals, and move on. For those people, the only labeling that matters is what the platform itself shows them. If YouTube or Instagram adds an "AI-generated" label to a video, people see it. If Samsung buries the disclosure in fine print, people don't see it.

Google, Meta, and other platforms have adopted C2PA, the standard published by the Coalition for Content Provenance and Authenticity. This is the industry standard for labeling and tracking AI-generated content. But adoption is inconsistent. Some platforms are better at labeling than others. Some content slips through the cracks, as discussed by Digiday.

In this case, the "Brighten your after hours" video has a disclosure inside the video itself, but no platform-level label on YouTube or Instagram. That's a failure of the system. The platforms know their own standards. They know Samsung disclosed the AI usage. But they're not enforcing platform-level labeling consistently.

Why? Partly because the systems aren't fully automated yet. Detecting AI-generated content is harder than it sounds. The technology for identifying synthetic content is still evolving. Partly because the platforms are still figuring out how to implement these standards across billions of pieces of content. And partly because there's no real enforcement mechanism. No regulatory requirement. No financial penalty for inconsistency.

This creates a world where companies like Samsung can technically follow the rules while still being misleading to the vast majority of viewers.
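To make the gap concrete, here is a minimal sketch of the kind of rule a platform might apply when deciding whether to show its own label. Everything in it is an assumption for illustration: the `Upload` fields, the `"ai_generated"` value, and the `platform_label` function are hypothetical and do not describe how YouTube, Instagram, or TikTok actually work.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class Upload:
    title: str
    # Hypothetical: the uploader ticked an "AI-generated" box at upload time.
    creator_declared_ai: bool
    # Hypothetical: a source type read from provenance data attached to the file.
    credential_source_type: Optional[str]
    # Fine print rendered into the video frames; the rule below never reads it.
    fine_print_in_video: bool


def platform_label(upload: Upload) -> Optional[str]:
    """Return the label the platform would show, or None when it has no signal."""
    if upload.creator_declared_ai or upload.credential_source_type == "ai_generated":
        return "AI-generated"
    return None


# A video whose only disclosure is fine print inside the frames.
fine_print_only = Upload("Night skate demo", False, None, True)
# The same video with machine-readable provenance attached.
with_credential = Upload("Night skate demo", False, "ai_generated", True)

print(platform_label(fine_print_only))   # None
print(platform_label(with_credential))   # AI-generated
```

Under these assumptions, a disclosure that lives only in the pixels of the video gives the platform nothing to act on, which is one plausible way a disclosed video still ends up without a platform label.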

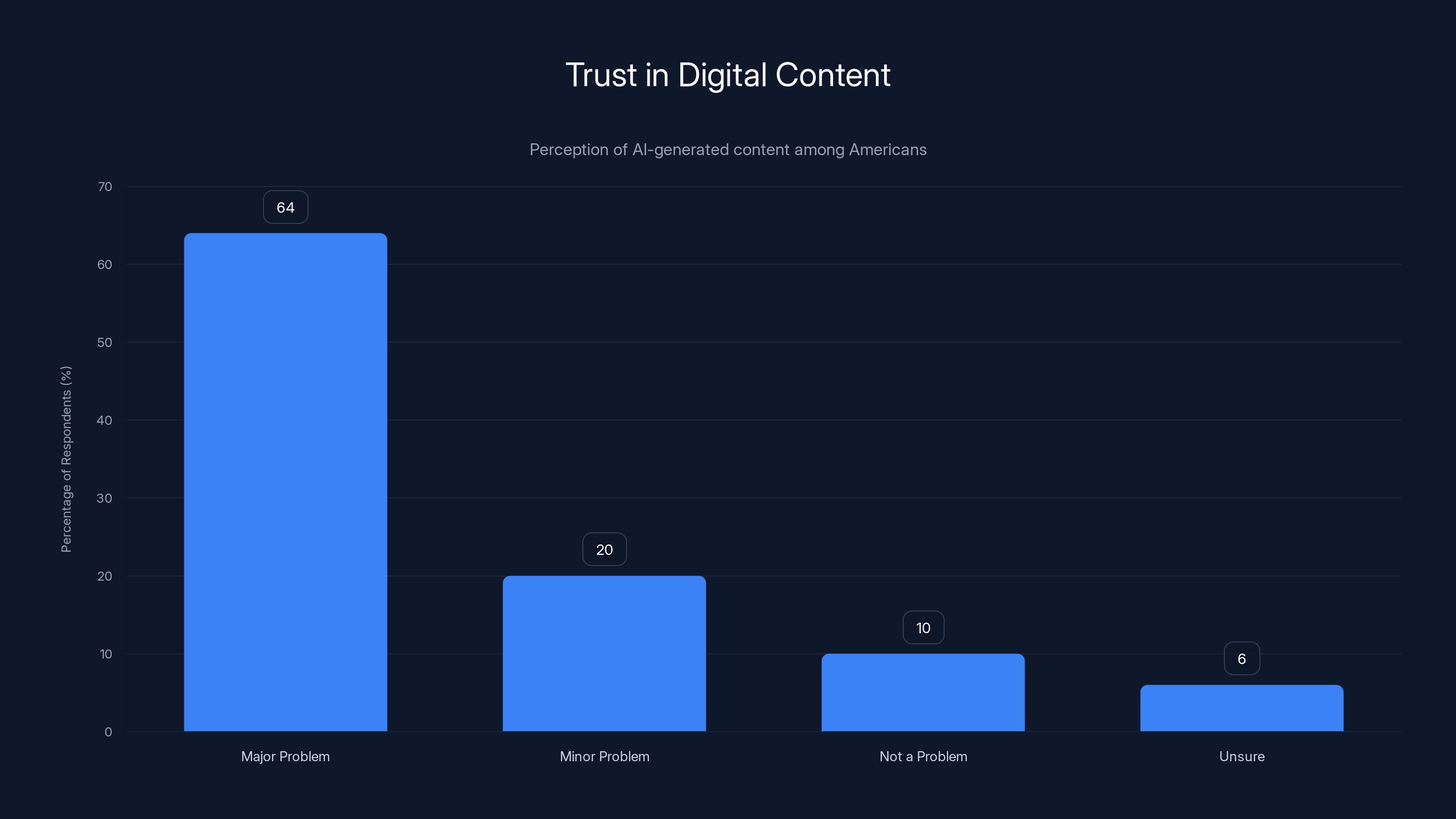

64% of Americans view AI-generated content as a major problem, indicating significant skepticism towards digital authenticity.

The Business Case for AI-Generated Ad Content

Viewed objectively, Samsung's decision to use AI-generated content makes business sense.

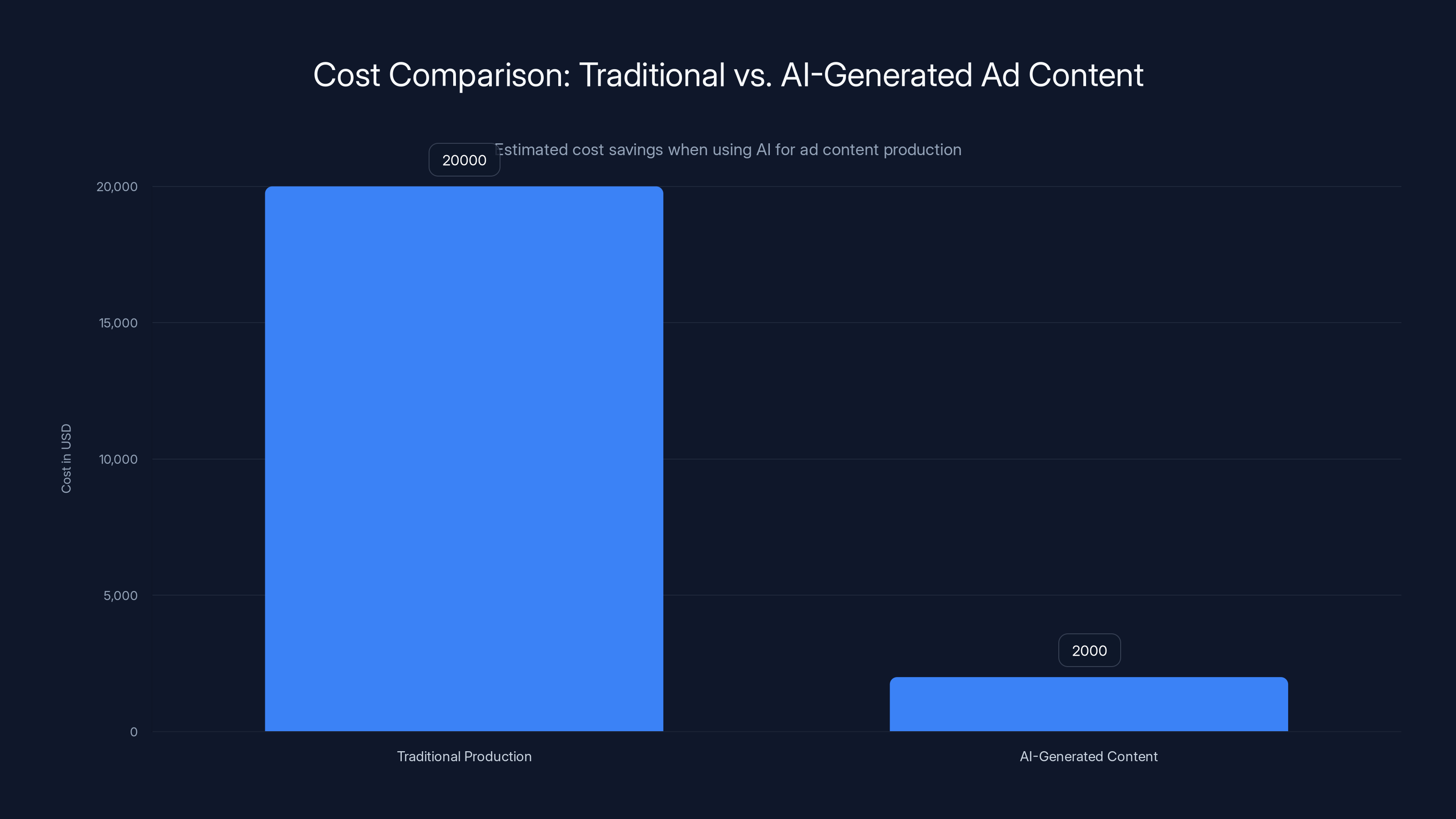

Producing high-quality video content is expensive. You need cameras, crews, locations, talent, and post-production work. A single product video can cost tens of thousands of dollars. Creating dozens of variations for different markets and platforms multiplies that cost significantly.

With AI, you can generate dozens of variations in hours. The cost drops from tens of thousands to potentially hundreds or thousands. The efficiency gains are enormous. And if you're trying to market a phone, showing different scenarios and use cases becomes much cheaper, as detailed by CyberNews.

But there's a hidden cost that Samsung isn't accounting for: brand trust.

When people find out that a company has been showing them AI-generated content without being clear about it, trust evaporates. This isn't theoretical. We've seen this play out repeatedly in recent years. When brands get caught using AI-generated images or deepfakes in marketing, the backlash is immediate and harsh.

The calculation Samsung seems to be making is: the cost savings from using AI-generated content are higher than the reputational risk of being less than transparent about it. They're betting that most people won't notice, and those who do will either not care or forget about it quickly.

They might be right in the short term. But over time, this erodes brand credibility. It feeds into growing consumer skepticism about what's real and what's synthetic online. It contributes to what some researchers are calling the "authenticity crisis" in digital marketing.

The Broader Industry Trend: Everyone's Slipping AI Into Everything

Samsung isn't alone in this. This is becoming an industry-wide trend.

Companies across every sector are experimenting with AI-generated content. Some are transparent about it. Many aren't. The disclosure standards are all over the place. Some companies put tiny labels in the corner. Others hide it in the terms of service. Some don't disclose it at all.

The problem is that there's no unified standard yet. Each company, each platform, and each country is making its own rules. Until governments step in with regulations, there's no enforcement mechanism that forces consistency.

Meanwhile, consumers are getting increasingly skeptical about what's real online. Trust in digital content is declining. AI-generated deepfakes are becoming more sophisticated. The ability to verify authenticity is becoming a more valuable skill, as highlighted by The Information.

In this environment, companies that are transparent and honest about their use of AI will have a competitive advantage. Companies that are opaque and misleading about it will face backlash when they're caught—and they will be caught eventually.

Samsung seems to be betting that the benefits of cheaper, faster content creation outweigh the risks. History suggests they're wrong about that calculation.

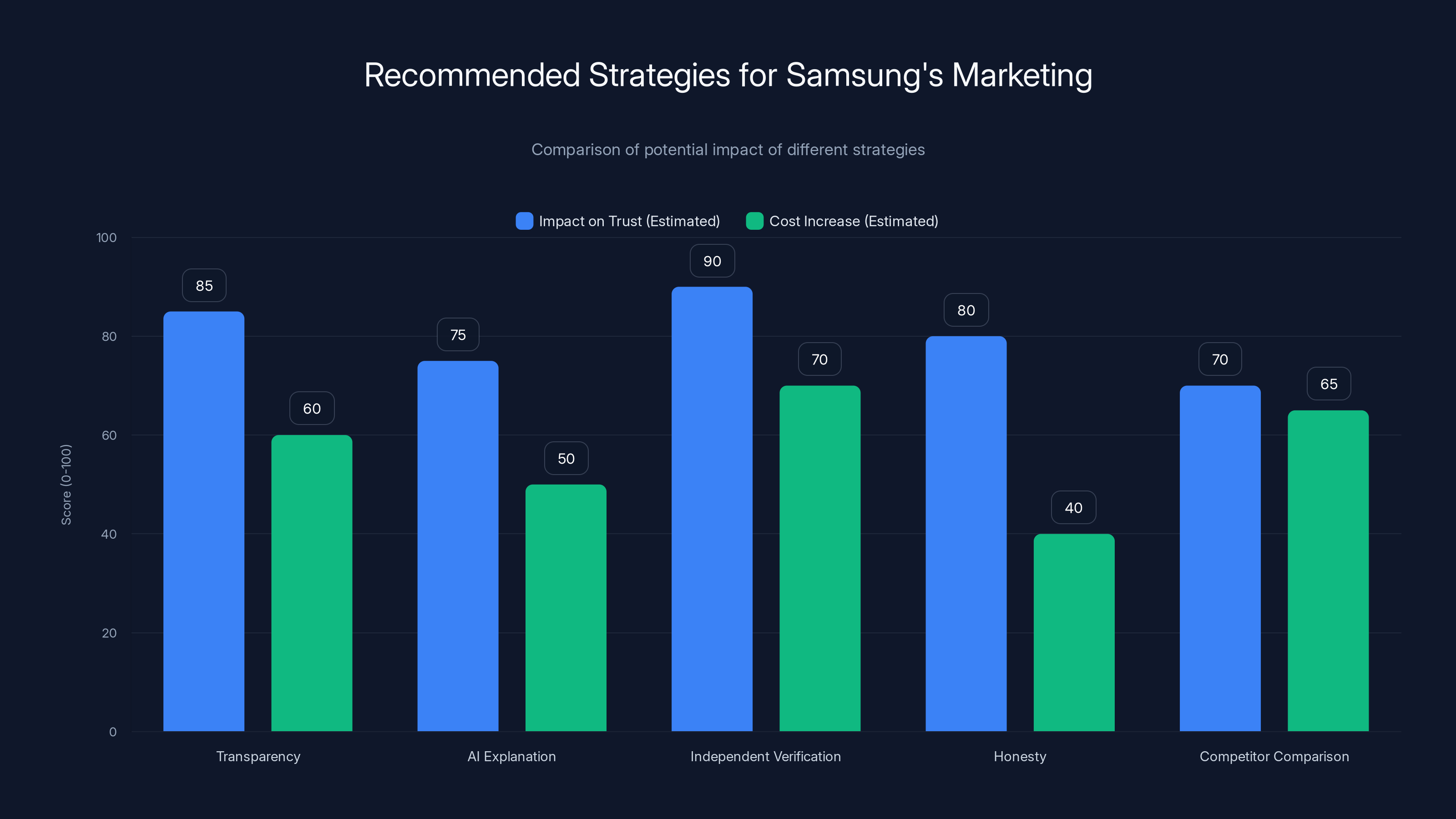

Implementing transparency and independent verification is estimated to have the highest impact on consumer trust, though it may increase costs. Estimated data.

How Consumers Can Spot AI-Generated Content

If you're scrolling through social media and you want to know whether something is AI-generated or real, here are the signs to look for:

Artifacts in backgrounds. AI-generated images and videos often contain strange visual glitches in the background. Objects that don't make physical sense. Hands that have the wrong number of fingers. Text that's garbled or doesn't make sense. These are called artifacts, and they're the most reliable way to spot AI-generated content. Look at Samsung's skateboarding video. The shopping bags and cobblestones are the tells.

Unnatural lighting and shadows. AI models sometimes struggle with how light behaves in the real world. Shadows might fall in impossible directions. Lighting might be inconsistent. Reflections might not work the way they should. Once you train your eye to spot these issues, they become obvious.

Unconvincing textures. AI-generated images sometimes have smooth, plastic-looking textures. Skin might look too smooth. Fabric might not have realistic wrinkles and folds. Hair might look like plastic. Real textures are complex and varied in ways that AI still struggles to replicate perfectly.

Strange anatomy. This is less of an issue now than it was a year ago, but AI still sometimes generates human figures with anatomically wrong proportions. Limbs that are the wrong length. Asymmetrical faces. Bodies that don't make sense.

Inconsistent details. If you zoom in on different parts of an AI-generated image, the details sometimes don't match up. A person's hand might be a different size than it should be relative to the rest of their body. Faces in the background might have wrong proportions. Consistency is one of the hardest things for AI to get right.

The challenge is that AI is getting better at avoiding these artifacts every month. Six months ago, these tells were obvious. Now they're more subtle. And in another year, they might be nearly impossible to spot with the naked eye.

This is why platform-level labeling matters so much. We can't rely on humans to spot AI-generated content. We need the platforms themselves to be transparent about what's real and what's synthetic.

The Role of C2PA and Content Authenticity Standards

C2PA is the industry standard that's supposed to solve this problem.

It's a technical specification that allows creators and platforms to attach cryptographic credentials to digital content. Essentially, it's a way of saying: "This content came from this source, on this date, and was edited in these ways." It's like a digital certificate of authenticity.

The idea is that as content moves across the internet, the C2PA credentials move with it. So if Samsung creates an AI-generated video and adds C2PA credentials, those credentials follow the video when it's posted to YouTube, Instagram, TikTok, wherever. And when you view the video, the platform can show you information about where it came from and how it was created.
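The general idea of a credential bound to a piece of content can be sketched in a few lines. This is a simplified illustration, not the real C2PA manifest format: actual credentials use certificate-based signatures, while this sketch assumes a shared secret (`SIGNING_KEY`) and made-up field names purely to stay self-contained.

```python
import hashlib
import hmac
import json

# Hypothetical key for illustration; real systems sign with certificates, not a shared secret.
SIGNING_KEY = b"demo-key-not-for-production"


def make_manifest(content: bytes, source: str, created: str, edits: list) -> dict:
    """Build a simplified provenance record tied to the content by its SHA-256 hash."""
    claim = {
        "content_sha256": hashlib.sha256(content).hexdigest(),
        "source": source,
        "created": created,
        "edits": edits,
    }
    payload = json.dumps(claim, sort_keys=True).encode("utf-8")
    signature = hmac.new(SIGNING_KEY, payload, hashlib.sha256).hexdigest()
    return {"claim": claim, "signature": signature}


def verify_manifest(content: bytes, manifest: dict) -> bool:
    """Return True only if the claim is unaltered and still matches the content."""
    payload = json.dumps(manifest["claim"], sort_keys=True).encode("utf-8")
    expected = hmac.new(SIGNING_KEY, payload, hashlib.sha256).hexdigest()
    if not hmac.compare_digest(expected, manifest["signature"]):
        return False
    return manifest["claim"]["content_sha256"] == hashlib.sha256(content).hexdigest()


video = b"example video bytes"
manifest = make_manifest(video, "Example Brand", "2025-01-01", ["generated_with_ai"])

print(verify_manifest(video, manifest))                # True
print(verify_manifest(video + b"tampered", manifest))  # False
```

The point of the sketch is that the record says where the content came from and how it was made, and that changing either the content or the record makes verification fail. Whether a viewer ever sees that record is a separate question that depends on the platform.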

In theory, this solves the disclosure problem. In practice, adoption is inconsistent and implementation is complicated.

Google, Meta, and other major platforms have adopted C2PA as a standard. But enforcement and labeling vary widely. Some platforms are better at displaying C2PA information to users. Others make it hard to find. And the system relies on creators actually adding the credentials in the first place.

Samsung has adopted C2PA, which means they should be adding credentials to their AI-generated videos. But there's no requirement that platforms visibly label or display those credentials to users. So the credentials exist, but most people never see them.

C2PA is a good start, but it's not a complete solution. What we really need is platform-level labeling that's visible and easy to understand. When you view a video on Instagram or TikTok, you should be able to see at a glance whether it's AI-generated or authentic.

Some platforms are moving in that direction. Others are moving slowly. And in the gaps, companies like Samsung are finding ways to be technically compliant while still being misleading to most viewers.

Estimated data shows a rising trend in Samsung's camera marketing misrepresentations, peaking in 2023 with AI-generated scenarios.

What This Means for Galaxy S26 Product Credibility

Here's the million-dollar question: does using AI-generated ads actually hurt the Galaxy S26's credibility?

In the short term, probably not. Most people who see these ads won't know or care that they're AI-generated. They'll see what looks like an impressive camera demo and they'll be interested in buying the phone. The ads will work.

But there's a longer-term problem. When people find out (and they will find out, because tech media will cover it), their perception of the phone changes. They start wondering: if Samsung is faking the camera demo in ads, how good is the camera actually? Are they hiding something? Is the real-world performance worse than what they're showing?

This creates a credibility gap. Samsung is trying to use AI to make their product look better. But the use of AI itself undermines trust in what they're showing.

It's a paradox. The technology that's supposed to make the product look better actually makes people trust the product less.

For the Galaxy S26, specifically, this means Samsung is starting its marketing campaign with a credibility deficit. Tech reviewers will be more skeptical. Consumers will be more suspicious. Any claims about camera performance will be met with extra scrutiny.

Samsung could have avoided this by being transparent from the start. "We're using AI-generated scenarios to show you the potential of the Galaxy S26's camera. Here's what real users can actually achieve." Honesty builds trust. Secrecy erodes it.

Instead, Samsung chose to bury the disclosure in fine print. They're betting on the fact that most people won't dig deeper. But the people who do dig deeper—the tech influencers, the reviewers, the engaged consumers—will be the ones who drive the narrative. And that narrative is already starting to form around the use of AI-generated content in marketing.

The Regulatory Void: Why There Are No Real Consequences

One of the biggest problems with the current situation is that there's no regulatory framework for this.

The FTC has guidelines about truth in advertising, but they're not very specific about AI-generated content. The guidelines say you can't make claims that are false or misleading. But what counts as misleading? Is it misleading to show an AI-generated scenario without clear disclosure? Technically, Samsung did disclose it. So are they complying with regulations?

The legal answer is probably yes. The ethical answer is probably no.

This is the gap that Samsung is exploiting. They're doing something that feels wrong to most people, but they're doing it in a way that technically complies with existing regulations.

Different countries are approaching this differently. The EU is working on stricter AI regulations that might require clearer labeling of AI-generated content. The UK has similar initiatives. The US is still figuring out its approach. Until there's real regulation, there's no enforcement mechanism.

Meanwhile, companies like Samsung will continue to push the boundaries of what's acceptable, testing how far they can go before facing real consequences. They're operating in a gray zone where the rules aren't clear, enforcement is inconsistent, and the benefit of the doubt goes to the company, not the consumer.

This will continue until either regulations catch up or consumers demand better practices. Given the current pace of regulatory change, I'd guess we're years away from real enforcement.

AI-generated content can reduce production costs from tens of thousands to just thousands of dollars, offering significant savings. (Estimated data)

The Authenticity Crisis: A Broader Context

The Samsung situation is just one symptom of a much bigger problem.

We're entering an era where it's becoming increasingly difficult to distinguish authentic content from synthetic content. AI is getting better at generating images, videos, audio, and text that are indistinguishable from real content. Deepfakes are becoming more sophisticated. Synthetic media is becoming more common.

At the same time, trust in digital content is declining. Pew Research found that about 64% of Americans believe that made-up news and images created with AI are a major problem. That skepticism is justified. If you can't tell what's real, you can't trust anything.

This creates a cascading problem. As people trust digital content less, companies have to work harder to prove authenticity. But as more companies use AI-generated content, it becomes harder for anyone to prove authenticity. We're spiraling toward a world where everything online is assumed to be fake unless proven otherwise.

Companies that build trust early—by being transparent about their use of AI and honest about the limitations—will have an advantage. Companies that try to sneak AI-generated content past consumers will face backlash.

Samsung is on the wrong side of that divide. They're betting on deception when they should be betting on transparency.

The Future of Product Marketing in an AI-Generated World

So what does product marketing look like in the future when AI-generated content is cheap and ubiquitous?

One possibility is that we all become more skeptical. We assume everything is AI-generated until proven otherwise. We demand independent verification and third-party reviews. Companies can't use their own marketing materials as evidence of product quality. We have to rely on reviewers and customers to tell us what's real.

Another possibility is that platform-level labeling becomes mandatory. Every piece of content on social media gets automatically scanned for AI-generated elements. C2PA credentials become visible and impossible to ignore. Consumers can see at a glance whether something is authentic or synthetic.

A third possibility is that we bifurcate into two worlds: one where AI-generated content is clearly labeled and accepted as part of the marketing mix, and another where "authentic" and "verified" content becomes a luxury good that commands a premium.

My guess is that we end up somewhere in the middle. Platforms will improve their labeling. Regulations will tighten. Consumers will become more skeptical. Companies that are transparent about their use of AI will have an advantage. Companies that try to hide it will face backlash.

For Samsung, specifically, the question is whether they'll learn from this and change their approach. Will they be more transparent about AI usage in future campaigns? Or will they double down on the fine print disclosure strategy?

Based on their history with camera marketing exaggeration, I'd guess they'll continue pushing boundaries. They'll find new ways to be technically compliant while still being misleading to most viewers. And they'll eventually face more backlash for it.

How Other Tech Companies Are Handling AI Disclosures

For context, let's look at how other companies are approaching this.

Google has been relatively transparent about using AI-generated images in some advertising materials. They label them clearly. They don't try to hide the fact that they're AI-generated. Apple tends to avoid using AI-generated content in advertising altogether. They prefer authentic product photography and user-generated content. Microsoft uses AI-generated content in some materials but tries to be clear about it.

Smaller companies and startups are all over the map. Some are very transparent. Others are less so. Without regulation, there's no incentive to standardize disclosure practices.

The brands that are winning in this space are the ones that are being most transparent. Consumers appreciate honesty. When a company clearly labels AI-generated content and explains why they're using it, people are generally fine with it. It's the deception that people hate.

Samsung's approach—fine print disclosures that most people never see—is the opposite of transparency. It's transparency theater. They're technically compliant but practically deceptive.

The Skills Gap: Can Most People Even Spot AI Content?

Here's a sobering reality: most people don't have the skills to spot AI-generated content reliably.

I mentioned earlier the visual artifacts to look for. But realistically, the average person scrolling through social media isn't trained to spot these artifacts. They're not going to zoom in on images and look for anatomical inconsistencies. They're going to see something that looks impressive and move on.

This is actually a larger problem than Samsung's disclosures. It's about digital literacy.

As AI-generated content becomes more sophisticated and more common, digital literacy becomes more important. People need to understand how AI works. They need to know how to spot synthetic content. They need to be skeptical of what they see online.

But the vast majority of people don't have this training. Schools are just starting to teach media literacy. Most adults learned to consume media in an era before AI-generated content was possible. They don't have the mental models or the skills to navigate this new landscape.

Companies like Samsung are exploiting this skills gap. They know that most people won't be able to tell that content is AI-generated. They know that even the fine print disclosure will go unnoticed by most viewers. They're betting on digital illiteracy.

Filling this skills gap is going to require a coordinated effort from schools, platforms, and regulators. It's not something that's going to happen quickly. In the meantime, companies will continue to exploit the gap.

What Samsung Should Do Instead

Let me be direct: Samsung's current approach is a mistake.

They should dump the fine print disclosures and adopt a more transparent strategy. Here's what I'd recommend:

- Use visible labels on all AI-generated content. Not fine print. Not buried in the description. Right on the video itself. A clear, unmistakable label that says "AI-Generated Scenario."

- Explain why you're using AI-generated content. "We're using AI to show you the creative potential of the Galaxy S26's camera. Here's what professional videographers can actually achieve."

- Back up marketing claims with independent verification. Partner with tech reviewers. Let them test the camera in real conditions. Show side-by-side comparisons of marketing materials and real-world results.

- Be honest about the limitations. "The camera is great, but it won't look exactly like this in your hands unless you spend hours editing." Honesty builds trust.

- Provide clear comparison with competitors. Show how the Galaxy S26 compares to the iPhone, Pixel, and other flagships. Give people concrete reasons to buy your phone beyond flashy marketing.

This strategy would be more expensive and less flashy than AI-generated fake product demos. But it would build trust. And in the long run, trust is worth more than any ad campaign.

The Bigger Picture: Content Authenticity in 2025

We're at an inflection point.

For the past few years, AI-generated content has been a novelty. Something companies experiment with. But now it's becoming mainstream. Major corporations are using it for product marketing. It's appearing everywhere on social media.

At the same time, people are becoming more skeptical. Trust in online content is declining. Concerns about deepfakes and synthetic media are rising.

The two trends are converging. On one side, the supply of synthetic content is increasing rapidly. On the other side, demand for authentic content is increasing. The gap between the two is growing.

This creates opportunity for companies that can prove authenticity. It also creates danger for companies that rely on deception.

Samsung is on the wrong side of this divide. They're betting on the fact that most people won't notice that their ads are synthetic. But as awareness of AI-generated content increases, that bet becomes riskier.

In a few years, this whole episode might look quaint. By 2027 or 2028, AI-generated content might be so common that nobody cares. Or conversely, regulations might be so strict that Samsung's approach would be illegal.

But in 2025, we're in the gray zone. Samsung is testing the boundaries of what's acceptable. They're betting on fine print disclosures and obscurity. And they're probably going to lose that bet eventually.

Key Lessons From Samsung's AI Ad Misstep

So what's the takeaway from all this?

First: transparency always wins in the long run. Companies that are honest about their use of AI-generated content build trust. Companies that try to hide it get caught and face backlash.

Second: disclosure systems need to work at the platform level, not just in fine print. Consumers see what platforms show them. If platforms don't label AI-generated content visibly, most people won't know it's AI-generated.

Third: the technology for creating synthetic content is moving faster than the regulations designed to govern it. Until that gap closes, companies will continue to push boundaries.

Fourth: authenticity is becoming a competitive advantage. As AI-generated content becomes more common, authentic content becomes more valuable. Companies that can prove they're showing real results will stand out.

And fifth: we need better digital literacy. Most people don't know how to spot AI-generated content. As consumers get smarter about spotting synthetic media, companies like Samsung will face more scrutiny.

Samsung's AI ad strategy is a sign of the times. It's a company testing how far they can push AI-generated marketing without facing real consequences. And they're finding that the answer is: pretty far, as long as you include tiny disclaimers that most people never see.

But this strategy is unsustainable. Eventually, either regulations will tighten, platforms will improve their labeling, or consumers will demand better. When that happens, companies that have been transparent about their use of AI will have an advantage. Companies like Samsung that have been opaque about it will face a credibility crisis.

The choice Samsung made—to use AI-generated content with minimal disclosure—makes sense from a short-term business perspective. But it's a bet against the future. It's a bet that deception will continue to work indefinitely. And that's a bet that never pays off in the long run.

FAQ

What is AI-generated content in advertising?

AI-generated content refers to images, videos, audio, or text created or significantly edited using artificial intelligence tools rather than produced by humans in real-world settings. In advertising, this includes AI-generated scenarios, characters, backgrounds, and effects designed to showcase product features or benefits. Samsung's "Brighten your after hours" video is an example where the entire skateboarding scene was created or heavily enhanced using AI technology, rather than being filmed with an actual Galaxy S26 device.

How does Samsung's disclosure system work for AI content?

Samsung includes fine-print text at the bottom of videos stating "generated with the assistance of AI tools" as their disclosure method. However, this text appears only briefly during the video and is often difficult to notice on mobile devices or when videos are watched in full-screen mode. While Samsung has adopted the C2PA authenticity standard, YouTube and Instagram have not added their own platform-level labels to these videos, making the disclosure practically invisible to most viewers despite being technically present.

Why is this approach to AI disclosure problematic?

The main issues with Samsung's approach include: invisible fine-print disclosures that most viewers never see, inconsistent labeling across different videos, reliance on viewer knowledge to find and read disclaimers, and a lack of platform-level labeling that would be automatically visible to all users. This creates a situation where Samsung is technically compliant with disclosure requirements while remaining practically deceptive to the majority of consumers who encounter the content. The approach prioritizes minimal legal liability over genuine transparency and consumer education.

What are the signs that content is AI-generated?

Key indicators of AI-generated content include: visual artifacts like glitches in backgrounds, objects with wrong proportions or dimensions, hands with incorrect finger counts, unnatural lighting and shadow placement, plastic-looking or overly smooth textures, strange anatomical features, and inconsistent details when comparing different parts of an image. In Samsung's skateboarding video, the unnaturally weighted shopping bags and shifting cobblestones are clear AI artifacts. However, as AI technology improves, these tells become increasingly subtle and harder to spot without technical training.

What is C2PA and how does it help with AI disclosure?

C2PA (Coalition for Content Provenance and Authenticity) is the industry group behind an open standard that lets creators attach cryptographically signed provenance credentials to digital content, essentially a tamper-evident record of where the content came from. These credentials are designed to travel with the content across platforms and can include information about the source, creation date, and editing history. Major platforms including Google, Meta, and others have adopted C2PA, but the system's effectiveness depends on consistent platform-level implementation and visible labeling to users, which currently varies significantly across different social media platforms.
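To make the idea of credentials that are cryptographically bound to content more concrete, here is a minimal Python sketch. It is not the C2PA format or any real C2PA library: the function names, the `DEMO_KEY`, and the manifest fields are all hypothetical, and it uses a shared-secret HMAC as a stand-in where the actual standard relies on certificate-based signatures embedded in the file. It only illustrates the general principle that a signed record tied to a content hash makes tampering detectable.

```python
import hashlib
import hmac
import json

# Hypothetical shared key, for illustration only. Real C2PA signing uses
# certificate-based asymmetric signatures, not a shared secret.
DEMO_KEY = b"demo-signing-key"


def make_manifest(content: bytes, source: str, ai_generated: bool,
                  key: bytes = DEMO_KEY) -> dict:
    """Build a toy provenance record bound to the content by its hash."""
    claim = {
        "source": source,
        "ai_generated": ai_generated,
        "content_sha256": hashlib.sha256(content).hexdigest(),
    }
    # Serialize deterministically so signing and verifying see the same bytes.
    payload = json.dumps(claim, sort_keys=True).encode("utf-8")
    signature = hmac.new(key, payload, hashlib.sha256).hexdigest()
    return {"claim": claim, "signature": signature}


def verify_manifest(content: bytes, manifest: dict,
                    key: bytes = DEMO_KEY) -> bool:
    """Return True only if the claim is unmodified and matches the content."""
    payload = json.dumps(manifest["claim"], sort_keys=True).encode("utf-8")
    expected = hmac.new(key, payload, hashlib.sha256).hexdigest()
    if not hmac.compare_digest(expected, manifest["signature"]):
        return False  # the claim itself was altered after signing
    actual_hash = hashlib.sha256(content).hexdigest()
    return manifest["claim"]["content_sha256"] == actual_hash


if __name__ == "__main__":
    video = b"stand-in bytes for an ad video"
    manifest = make_manifest(video, source="Example Brand", ai_generated=True)
    print(verify_manifest(video, manifest))              # original content
    print(verify_manifest(video + b"edited", manifest))  # modified content
```

Note what the sketch does not solve: verification only tells a platform that the record is intact. Whether a viewer ever sees the "ai_generated" flag still depends on how, and whether, the platform chooses to display it.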

How does this compare to other tech companies' approaches to AI disclosure?

Different companies take varying approaches to AI transparency. Google tends to label AI-generated materials clearly when used in advertising. Apple generally avoids AI-generated content in marketing, preferring authentic product photography. Microsoft uses AI content but attempts to be transparent about it. Smaller companies and startups show inconsistent practices. The companies most successful with consumers are those being most transparent about AI usage, while those attempting to hide or minimize disclosure face greater backlash when discovered. Samsung's fine-print approach falls on the less transparent end of the spectrum.

What regulatory frameworks currently exist for AI advertising disclosure?

Currently, oversight relies primarily on general FTC guidelines regarding truth in advertising and false claims, which predate generative AI and lack specific provisions for AI-generated content. The EU's AI Act includes transparency obligations for AI-generated content that are still being phased in, with potential implications for advertising transparency. The UK has similar initiatives underway. The US is still developing its regulatory approach. This creates a regulatory vacuum where companies like Samsung can technically comply with vague existing rules while remaining practically misleading to consumers, with no clear enforcement mechanism or financial penalty for inconsistency.

How might AI-generated ads affect consumer trust in Samsung products?

The short-term impact is minimal, since most viewers don't realize the ads are AI-generated. However, once the practice is discovered, particularly through tech media coverage, consumers may question Samsung's credibility and wonder whether the company is hiding poor real-world performance. This undermines confidence in product marketing claims and creates a credibility gap where any assertions about camera performance or other features face extra skepticism. Early adopters and tech-savvy consumers who discover the deception become skeptical influencers who shape the broader narrative around the Galaxy S26, potentially affecting sales and brand perception over the longer term.

What's the difference between AI-enhanced marketing and AI-generated deception?

AI-enhanced marketing uses artificial intelligence to improve existing content—like automatically adjusting lighting, removing backgrounds, or optimizing photos—while being transparent about the process and acknowledging that the core product still performs as shown. AI-generated deception creates entirely fictional scenarios that never happened with the product and presents them as real demonstrations without clear acknowledgment. Samsung crosses from enhancement into deception by creating synthetic skateboarding scenarios and presenting them as camera capability demonstrations without sufficient disclosure that the entire scene is synthetic rather than a real product in action.

Key Takeaways

- Samsung uses AI-generated content in Galaxy S26 ads but buries disclosure in fine print most viewers never see

- Fine-print disclosures are technically compliant but practically deceptive—real transparency requires platform-level labeling

- C2PA authenticity standards are adopted by Samsung and platforms but inconsistently implemented, creating enforcement gaps

- Consumers lack digital literacy to spot AI artifacts; companies exploit this skills gap using barely-visible disclosures

- Samsung has a history of camera marketing exaggeration; AI-generated ads represent an escalation from enhancement to pure fiction

- Regulatory frameworks haven't caught up to technology; companies face no real enforcement penalties for misleading AI usage

- Tech companies show vastly different approaches—Google transparent, Apple avoids AI, Microsoft moderate, Samsung minimal

- Brand credibility suffers long-term when AI deception is discovered; transparency builds trust while secrecy erodes it

- Authenticity is becoming a competitive advantage as synthetic content becomes ubiquitous; honest companies will stand out

- Future depends on stricter regulations, better platform labeling, and improved consumer digital literacy to combat AI deception


![Samsung's AI Slop Ads: The Dark Side of AI Marketing [2025]](https://tryrunable.com/blog/samsung-s-ai-slop-ads-the-dark-side-of-ai-marketing-2025/image-1-1771326342246.jpg)